Swift Development with Cocoa (2015)

Chapter 9. Audio and Video

As we’ve seen, Cocoa and Cocoa Touch have a lot of support for displaying still images and text. The APIs also have great support for displaying video and audio—either separately or at the same time.

OS X and iOS have historically had APIs for displaying audiovisual (AV) content, but only recently did Apple introduce a comprehensive API for loading, playing, and otherwise working with AV content. This API, AV Foundation, is nearly identical on OS X and iOS, and is the one-stop shop for both AV playback and editing.

In this chapter, you’ll learn how to use AV Foundation to display video and audio. We’ll demonstrate how to use the framework in OS X, but the same API also applies to iOS.

You’ll also learn how to access the user’s photo library on iOS, as well as how to capture photos and videos using the built-in camera available on iOS and OS X.

NOTE

AV Foundation is a large and powerful framework, capable of performing very complex operations with audio and video. Final Cut Pro, Apple’s professional-level video editing tool, uses AV Foundation for all of the actual work involved in editing video. Covering all the features of this framework is beyond the scope of this book, so we address only audio and video playback in this chapter. If you want to learn about the more advanced features in AV Foundation, check out the AV Foundation Programming Guide in the Xcode documentation.

AV Foundation

AV Foundation is designed to load and play back a large number of popular audiovisual formats. The formats supported by AV Foundation include:

§ QuickTime (.mov files)

§ MPEG-4 audio and video (including .mp4, .m4a, and .m4v)

§ Wave, AIFF, and CAF audio

§ Apple Lossless audio

§ MP3 and AAC audio
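Because the exact set of supported types may vary between OS releases, it can be useful to ask AV Foundation at run time which file types it will open. The following is a minimal sketch in current Swift syntax (not the Swift 1.x syntax used in this chapter's listings); it relies on the AVURLAsset.audiovisualTypes() class method, which returns the supported types as UTI strings wrapped in AVFileType.

```swift
import AVFoundation

// Ask AV Foundation which file types (UTIs) it can open as assets.
let supportedTypes = AVURLAsset.audiovisualTypes()

// AVFileType values are UTI strings, e.g. "com.apple.quicktime-movie" for .mov.
let canOpenQuickTime = supportedTypes.contains(.mov)
let canOpenMP3 = supportedTypes.contains(.mp3)

print("QuickTime: \(canOpenQuickTime), MP3: \(canOpenMP3)")
print("\(supportedTypes.count) file types supported on this system")
```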

From a coding perspective, there’s no distinction between these formats—you simply tell AV Foundation to load the resource and start playing.

AV Foundation refers to media that can be played as an asset. Assets can be loaded from URLs (which can point to a resource on the Internet or a file stored locally), or they can be created from other assets (content creation apps, like iMovie, do this). In this chapter, we’ll be looking at media loaded from URLs.

When you have a file you want to play—such as an H.264 movie or an MP3 file—you can create an AVPlayer to coordinate playback.

Playing Video with AVPlayer

The AVPlayer class is a high-level object that can play back any media that AV Foundation supports. AVPlayer is capable of playing both audio and video content, though if you only want to play back audio, AV Foundation provides an object dedicated to sound playback (AVAudioPlayer, discussed later). In this section, we talk about playing videos.

When you want to play back media, you create an AVPlayer and provide it with the URL of the video you want to play back:

let contentURL = NSURL(fileURLWithPath:"/Users/jon/Desktop/AVFile.m4v")

let player = AVPlayer(URL: contentURL)

When you set up a player with a content URL, the player will take a moment to get ready to play back the content. The amount of time needed depends on the content and where it’s being kept. If it’s a video file, the decoder will take longer to get ready than for an audio file, and if the file is hosted on the Internet, it will take longer to transfer enough data to start playing.
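If you need to know when that moment has passed, you can observe the status of the player's AVPlayerItem. Below is a minimal sketch using Swift key-value observation, written in current Swift rather than the chapter's Swift 1.x; ReadyThenPlay is a hypothetical helper name, and the file path is the placeholder from the example above.

```swift
import AVFoundation

// Hypothetical helper: starts playback only once the item is ready.
final class ReadyThenPlay {
    let player: AVPlayer
    // The KVO observation ends when this token is released, so keep it alive.
    private var statusObservation: NSKeyValueObservation?

    init(url: URL) {
        let item = AVPlayerItem(url: url)
        player = AVPlayer(playerItem: item)
        statusObservation = item.observe(\.status, options: [.initial, .new]) { [weak self] item, _ in
            switch item.status {
            case .readyToPlay:
                self?.player.play()   // enough data is loaded to start
            case .failed:
                print("Could not load media:", item.error?.localizedDescription ?? "unknown error")
            default:
                break                 // .unknown: still loading
            }
        }
    }
}

// Keep a strong reference (e.g. in a property) for as long as it should play.
let video = ReadyThenPlay(url: URL(fileURLWithPath: "/Users/jon/Desktop/AVFile.m4v"))
```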

AVPlayer acts as the controller for your media playback. At its simplest, you can tell the player to just start playing:

player.play()

NOTE

Under the hood, the play method simply sets the playback rate to 1.0, which means that it should play back at normal speed. You could also start playback at half speed by setting player.rate = 0.5. In the same vein, setting the rate to 0 pauses playback, which is exactly what the pause method does.
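The rate-based controls described in this note can be sketched as follows, in current Swift syntax (AVPlayer(url:) rather than the Swift 1.x AVPlayer(URL:) used above). The file path is a placeholder; AVPlayer accepts the URL immediately and loads the media in the background.

```swift
import AVFoundation

let player = AVPlayer(url: URL(fileURLWithPath: "/Users/jon/Desktop/AVFile.m4v"))

player.play()       // starts playback at the default rate of 1.0
player.rate = 0.5   // switch to half-speed playback
player.pause()      // same effect as setting the rate to 0

print("rate after pause:", player.rate)
```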

AVPlayerLayer

AVPlayer is only responsible for coordinating playback, not for displaying the video content to the user. If you want video playback to be visible, you need a Core Animation layer to display the content on.

AV Foundation provides a Core Animation layer called AVPlayerLayer that presents video content from the AVPlayer. Because it’s a CALayer, you need to add it to an existing layer tree in order for it to be visible. We’ll recap how to work with layers later in this chapter.

You create an AVPlayerLayer with the AVPlayerLayer(player:) initializer:

let playerLayer = AVPlayerLayer(player: player)

(Yes, we are now in tongue-twister territory.)

Once created, the player layer will display whatever image the AVPlayer you provided tells it to. It’s up to you to actually size the layer appropriately and add it to the layer tree:

let view = UIView(frame: CGRectMake(0, 0, 640, 360))

playerLayer.frame = view.bounds

view.layer.addSublayer(playerLayer)

Once the player layer is visible, you can forget about it—all of the actual work involved in controlling video playback is handled by the AVPlayer.

Putting It Together

To demonstrate how to use AVPlayer and AVPlayerLayer, we’ll build a simple video player application for OS X.

NOTE

The same API applies to iOS and OS X.

Before you start building this project, download the sample video from the book’s examples. Here are the steps you’ll need to take to build the video player application:

1. First, create a new Cocoa application named VideoPlayer, and drag the sample video into the project navigator.

The interface for this project will consist of an NSView, which will play host to the AVPlayerLayer, as well as buttons that make the video play back at normal speed, play back at one-quarter speed, and rewind.

In order to add the AVPlayerLayer to the view, that view must be backed by a CALayer. This requires checking a checkbox in Interface Builder; once that's done, the view will have a layer to which we can add the AVPlayerLayer as a sublayer.

WARNING

On OS X, NSViews do not use a CALayer by default. This is not the case in iOS, where all UIViews have a CALayer.

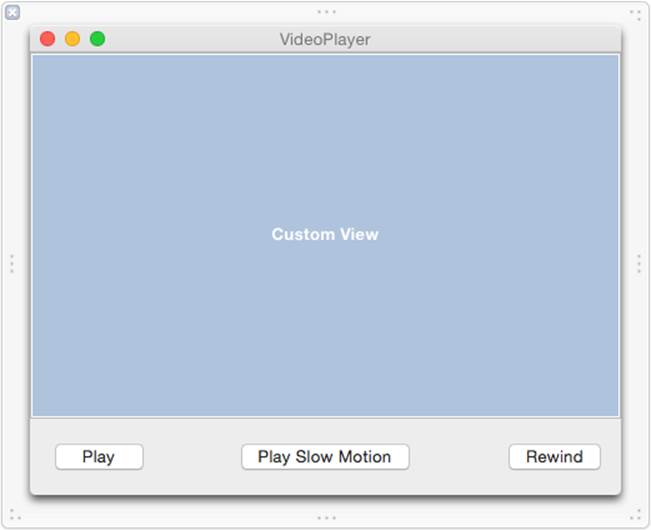

2. Next, create the interface. Open MainMenu.xib and drag a custom view into the main window. Make it fill the window, but leave some space at the bottom. This view will contain the video playback layer.

Drag in three buttons and place them underneath the video playback view. Label them Play, Play Slow Motion, and Rewind.

To add an AVPlayerLayer to the window, the view that it's being inserted into must have its own CALayer. To make this happen, you tell either the video playback view or any of its superviews that it should use a CALayer. Once a view has a CALayer, it and all of its subviews use a CALayer to display their content.

3. Make the window use a CALayer. Click inside the window and open the View Effects Inspector, which is the last button at the top of the inspector.

The Core Animation Layer section of the inspector will list the selected view. Check the checkbox to give it a layer; once this is done you should have an interface looking like Figure 9-1.

Figure 9-1. The interface layout for the VideoPlayer app

4. Finally, connect the code to the interface. Now that the interface is laid out correctly, we’ll make the code aware of the view that the video should be displayed in, and create the actions that control playback.

Open AppDelegate.swift in the assistant.

Control-drag from the video container view into AppDelegate. Create an outlet called playerView.

Control-drag from each of the buttons under the video container view into AppDelegate, and create actions for each of them. Name these actions play, playSlowMotion, and rewind.

Now we'll write the code that loads and prepares the AVPlayer and AVPlayerLayer. Because we want to control the player, we'll keep a reference to it around by adding a property that stores the AVPlayer. We don't need to keep the AVPlayerLayer around in the same way, because once we add it to the layer tree, we can forget about it; it will just display whatever the AVPlayer needs to show.

We'll also need to import the AVFoundation and QuartzCore frameworks in order to work with the necessary classes:

1. To import the frameworks, add the following lines to the import statements at the top of AppDelegate.swift:

import AVFoundation
import QuartzCore

2. To store the player, add a new property to the AppDelegate class in AppDelegate.swift:

var player: AVPlayer?

Next, we'll create and set up the AVPlayer and AVPlayerLayer. To set up the AVPlayer, you need something to play. In this case, we'll make the application determine the location of the test video that was copied into the application's bundle, and give that to the AVPlayer.

Once the AVPlayer is ready, we can create the AVPlayerLayer. The AVPlayerLayer needs to be added to the video player view's layer and resized to fill the layer. Setting the frame property of the layer accomplishes this. As a final touch, we'll also make the layer automatically resize when its superlayer resizes.

Finally, we'll set the player's actionAtItemEnd property to AVPlayerActionAtItemEnd.None, so that the player takes no further action when it reaches the end of the video:

3. To set up the AVPlayer, replace the applicationDidFinishLaunching() method in AppDelegate.swift with the following code:

func applicationDidFinishLaunching(aNotification: NSNotification?) {
    let contentURL = NSBundle.mainBundle().URLForResource("TestVideo",
        withExtension: "m4v")
    player = AVPlayer(URL: contentURL)

    let playerLayer = AVPlayerLayer(player: player)
    self.playerView.layer?.addSublayer(playerLayer)
    playerLayer.frame = self.playerView.bounds
    playerLayer.autoresizingMask =
        CAAutoresizingMask.LayerHeightSizable |
        CAAutoresizingMask.LayerWidthSizable

    self.player!.actionAtItemEnd = AVPlayerActionAtItemEnd.None
}

4. The last step in coding the application is to create the control methods, which run when the buttons are clicked. These controls (Play, Play Slow Motion, and Rewind) simply tell the AVPlayer to set the rate of playback. In the case of rewinding, it's a matter of telling the player to seek to the start.

To add the control methods, replace the play(), playSlowMotion(), and rewind() methods in AppDelegate.swift with the following code:

@IBAction func play(sender: AnyObject) {

self.player!.play()

}

@IBAction func playSlowMotion(sender: AnyObject) {

self.player!.rate = 0.25

}

@IBAction func rewind(sender: AnyObject) {

self.player!.seekToTime(kCMTimeZero)

}

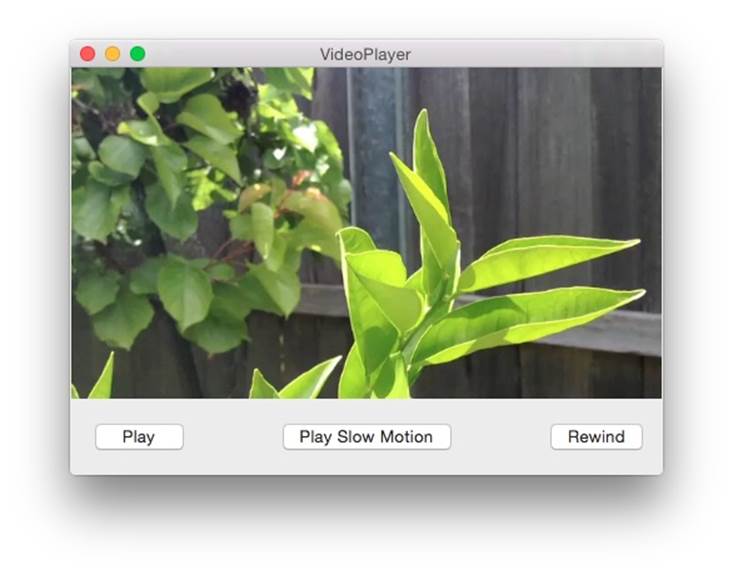

It’s time to test the app, so go ahead and launch it. Play around with the buttons and resize the window. Video should be visible in the window, as seen in Figure 9-2.

Figure 9-2. The completed interface for our VideoPlayer app
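If you prefer not to rely on the Interface Builder checkbox, the same layer setup can be done in code. This is a minimal sketch in current Swift (so the autoresizing options are spelled differently from the Swift 1.x listing above); the view size and file path are placeholders, and setting wantsLayer to true plays the role of the Core Animation Layer checkbox.

```swift
import AppKit
import AVFoundation

let playerView = NSView(frame: NSRect(x: 0, y: 0, width: 640, height: 360))
playerView.wantsLayer = true   // NSView is not layer-backed until asked

let player = AVPlayer(url: URL(fileURLWithPath: "/Users/jon/Desktop/AVFile.m4v"))
let playerLayer = AVPlayerLayer(player: player)
playerLayer.frame = playerView.bounds
playerLayer.videoGravity = .resizeAspect   // letterbox rather than stretch (the default)
// Resize with the superlayer, as the autoresizingMask in the app above does.
playerLayer.autoresizingMask = [.layerWidthSizable, .layerHeightSizable]
playerView.layer?.addSublayer(playerLayer)
```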

AVKit

The preceding example is a good way of loading media into a layer, but it is also a little clunky. Your users already expect video and audio, and their playback controls, to look and behave in a particular way. Luckily, OS X 10.9 introduced a dedicated subclass of NSView called AVPlayerView, designed specifically for playing audio and video. AVPlayerView is the same view that QuickTime Player uses when it plays audio or video, and it is part of the AVKit framework. It is designed to work with an AVPlayer: the view's built-in controls drive the player, and your code can still send messages directly to that same AVPlayer. Starting with iOS 8, AVKit is also available on iOS; instead of an AVPlayerView it uses an AVPlayerViewController, but it works in a very similar manner to its OS X cousin.

WARNING

The desktop version of AVKit is only available on OS X 10.9 and later, and the mobile version is only available on iOS 8 and later. Sorry, iOS 7 and Mountain Lion users!

Hooking an AVPlayerView up to an existing AVPlayer is straightforward. To demonstrate, let’s make a few changes to our VideoPlayer app:

1. First, to modify the interface, open MainMenu.xib and delete the custom view and all buttons.

Drag an AVPlayerView onto the main window, and resize it to be the full size of the window.

At this stage, your interface should look similar to Figure 9-3.

Figure 9-3. The updated interface for our app

2. Then, to connect the code, open AppDelegate.swift in the assistant.

Control-drag from the AVPlayerView into the AppDelegate, and create an outlet called playerView.

Delete or comment out the previous playerView outlet.

3. Next, to import the framework, add the following code to the top of AppDelegate.swift:

import AVKit

4. Finally, we need to set up the AVPlayerView. Replace the applicationDidFinishLaunching() method in AppDelegate.swift with the following code:

func applicationDidFinishLaunching(aNotification: NSNotification?) {
    let contentURL = NSBundle.mainBundle().URLForResource("TestVideo",
        withExtension: "m4v")

    self.playerView.player = AVPlayer(URL: contentURL)
}

Now when you run the application, you should see an app that looks very similar to QuickTime Player.

Finally, it is good practice to delete the methods for handling the play, slow motion, and rewind options, and to remove the import QuartzCore statement, as they are no longer needed.
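An AVPlayerView can also be created without Interface Builder. The sketch below uses current Swift and AppKit naming; the frame size and the TestVideo.m4v resource name are assumptions carried over from the example, and controlsStyle and showsFullScreenToggleButton are two of the view's presentation options.

```swift
import AppKit
import AVKit
import AVFoundation

let playerView = AVPlayerView(frame: NSRect(x: 0, y: 0, width: 640, height: 360))
playerView.controlsStyle = .inline              // QuickTime-style control bar
playerView.showsFullScreenToggleButton = true   // adds a full-screen button

if let contentURL = Bundle.main.url(forResource: "TestVideo", withExtension: "m4v") {
    playerView.player = AVPlayer(url: contentURL)
} else {
    print("TestVideo.m4v is not in the app bundle")
}
// To show it, add the view to a window, e.g. window.contentView?.addSubview(playerView)
```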

AVKit on iOS

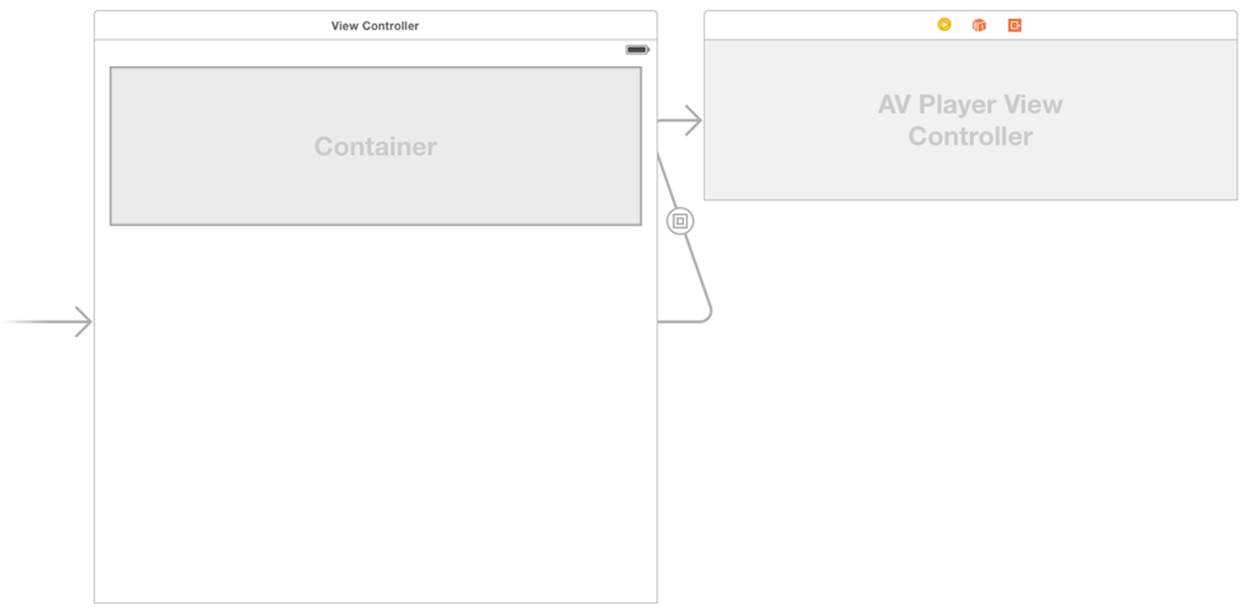

AVKit behaves a little bit differently on iOS than it does on OS X. On OS X, you use an NSView subclass to present the player, its content, and its controls. On iOS, you instead use an AVPlayerViewController, a subclass of UIViewController, which, despite being an entire view controller, works in a very similar fashion. Just as on OS X, it is very simple to start using an AVPlayerViewController in your code. To demonstrate this, let's create a simple iOS video app:

1. Create a new, single view iOS application, and call it AVKit-iOS.

Drag a video into the project navigator to add it to the project.

2. Then create the interface, which for this application will use an AVPlayerViewController and a container view. A container view is a view that embeds an entire child view controller within it; here, it will let us display the AVPlayerViewController and its content.

Open Main.storyboard. In the object library, search for a container view and drag it into the view controller. Resize the container view to be as large or as small as you want; in our app we made it take up about a third of the screen.

Delete the additional view controller. By default, adding a container view to an app will also include an additional view controller that will be the contents for the container view to show; we don’t want this.

Add an AVPlayerViewController to the interface. Place the new view controller off to the side of the main view controller.

Connect the AVPlayerViewController to the container view. Control-drag from the container view to the AVPlayerViewController and select the embed segue option from the list of segues that appears. This will embed the AVPlayerViewController and its content into the container view.

The final step is to give this new segue an identifier. Select the segue in Interface Builder and open the Attributes Inspector in the Utilities area on the right of Xcode. Change the identifier of the segue to videoSegue; we will use this identifier later to configure the video content.

Once complete, the interface should look similar to Figure 9-4.

Figure 9-4. The interface for our AVKit app

3. To import the frameworks, open ViewController.swift and add the following to the top of the file:

import AVKit
import AVFoundation

WARNING

It is very important to remember to import the AV Foundation framework as well as AVKit, as AVKit only provides the AVPlayerViewController and does not come with any of the classes needed to actually configure the view controller.

4. The last thing we need to do is configure what content we want the AVPlayerViewController to show.

Inside ViewController.swift, add the following function:

override func prepareForSegue(segue: UIStoryboardSegue, sender: AnyObject?)

{

if segue.identifier == "videoSegue"

{

// set up the player

let videoURL = NSBundle.mainBundle().URLForResource("TestVideo",

withExtension: "m4v")

let videoViewController =

segue.destinationViewController as AVPlayerViewController

videoViewController.player = AVPlayer(URL: videoURL)

}

}

This code runs when the videoSegue segue we named earlier is performed. When it is, we get a reference to our sample video and set the player property of the AVPlayerViewController so that it plays this video.

Now if you run the app you should see a small window in the app playing the video. It has the default iOS video controls and as an added bonus, the fullscreen button will also automagically expand the video to full size and will also support landscape!
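In current Swift, prepareForSegue has been renamed to prepare(for:sender:), and downcasts that can fail are written with as? or as!. The following sketch shows the same segue handling under those newer conventions, assuming the videoSegue identifier and the TestVideo.m4v file from the steps above; the conditional cast means an unexpected destination is ignored rather than crashing the app.

```swift
import UIKit
import AVKit
import AVFoundation

class ViewController: UIViewController {
    override func prepare(for segue: UIStoryboardSegue, sender: Any?) {
        // Only configure the embedded player for our segue, and only if the
        // destination really is an AVPlayerViewController and the video exists.
        guard segue.identifier == "videoSegue",
              let videoViewController = segue.destination as? AVPlayerViewController,
              let videoURL = Bundle.main.url(forResource: "TestVideo", withExtension: "m4v")
        else { return }

        videoViewController.player = AVPlayer(url: videoURL)
    }
}
```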

Playing Sound with AVAudioPlayer

AVPlayer is designed for playing back any kind of audio or video. AV Foundation also provides a class specifically designed for playing back sounds, called AVAudioPlayer.

AVAudioPlayer is a simpler choice than AVPlayer for playing audio. It’s useful for playing back sound effects and music in apps that don’t use video, and has a couple of advantages over AVPlayer:

§ AVAudioPlayer allows you to set the volume and stereo pan on a per-player basis.

§ AVAudioPlayer is easier to loop.

§ You can query an AVAudioPlayer for its current output power, which you can use to show volume levels over time.
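The third point, reading the output power, can be sketched as below in current Swift. currentLevel(of:) is a hypothetical helper; it assumes you already have an AVAudioPlayer (ideally one that is playing) and would typically be called from a timer to animate a level meter.

```swift
import AVFoundation

// Hypothetical helper: returns the current average and peak output level.
func currentLevel(of player: AVAudioPlayer) -> (average: Float, peak: Float) {
    player.isMeteringEnabled = true   // metering is off by default; enabling twice is harmless
    player.updateMeters()             // refresh the values before reading them
    // Levels are in decibels: 0 dB is full scale, -160 dB is minimum power.
    return (player.averagePower(forChannel: 0), player.peakPower(forChannel: 0))
}
```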

NOTE

AVAudioPlayer is the simplest way to play back audio files, but it's not the only option. If you need more control over how the audio is played back (e.g., you might want to generate audio yourself, or apply effects to the audio), then you should use the more powerful AVAudioEngine API. For more information on this, see the Xcode documentation for the AVAudioEngine class.

AVAudioPlayer works in the same way as AVPlayer. Given an NSURL that points to a sound file that OS X or iOS supports, you create an AVAudioPlayer, set it up the way you want (by setting balance, volume, and looping), and then play it:

let soundFileURL = NSBundle.mainBundle().URLForResource("TestSound",

withExtension: "wav")

var error: NSError?

audioPlayer = AVAudioPlayer(contentsOfURL: soundFileURL, error: &error)

NOTE

You need to keep a reference to your AVAudioPlayer, or it will be deallocated and stop playing. Therefore, you should store the player in a property (as audioPlayer is here) to keep it from being freed.

Telling an AVAudioPlayer to play is a simple matter:

audioPlayer.play()

You can also set the volume and indicate how many times the sound should loop. The volume is a number between 0 and 1. The number of loops defaults to 0 (play once); if you set it to 1, it will play twice. Set the number of loops to -1 to make the sound loop until stopped:

audioPlayer.volume = 0.5

audioPlayer.numberOfLoops = -1

To seek to a point in the sound, set the currentTime property. This property is measured in seconds, so to seek to the start of the sound, set currentTime to 0:

audioPlayer.currentTime = 0

Seeking to a point in the sound doesn’t affect whether the sound is playing or not. If you seek while the sound is playing, the sound will jump.
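Putting these pieces together, here is one way to wrap an AVAudioPlayer so that the reference is kept alive, as the earlier note recommends. It is a minimal sketch in current Swift, where AVAudioPlayer(contentsOf:) throws an error instead of taking an NSError pointer; SoundEffect and the soundEffect property in the usage comment are hypothetical names.

```swift
import AVFoundation

// Hypothetical wrapper; owning the AVAudioPlayer here keeps it alive while it plays.
final class SoundEffect {
    private let player: AVAudioPlayer

    // Fails (returns nil) if the file is missing or not a supported audio format.
    init?(url: URL) {
        do {
            player = try AVAudioPlayer(contentsOf: url)
        } catch {
            print("Could not load \(url.lastPathComponent): \(error)")
            return nil
        }
        player.prepareToPlay()   // preload buffers to reduce start-up delay
    }

    // volume: 0.0 (silent) to 1.0 (full); loops: 0 plays once, -1 repeats until stopped.
    func play(volume: Float = 1.0, loops: Int = 0) {
        player.volume = volume
        player.numberOfLoops = loops
        player.currentTime = 0   // seek to the start (measured in seconds)
        player.play()
    }

    func stop() {
        player.stop()
    }
}

// Usage, assuming a TestSound.wav resource and a `soundEffect` property:
// soundEffect = Bundle.main.url(forResource: "TestSound", withExtension: "wav")
//     .flatMap { SoundEffect(url: $0) }
// soundEffect?.play(volume: 0.5, loops: -1)
```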

NOTE

In this chapter, we’ve focused on media playback. However, AV Foundation has full support for capturing media as well, including audio and video. For information on how this works, see the “Media Capture” section in the AV Foundation Programming Guide, available in the Xcode documentation.

Speech Synthesis

A feature introduced in iOS 7 within AV Foundation is the ability to synthesize speech: you can now have your application say almost any text that you want. There are two main components to synthesizing speech. The first is an AVSpeechUtterance, which represents the text you want to have synthesized. This includes the rate at which you want it to be spoken, the volume, pitch, any delay, and the voice to use when synthesizing the text. The second component, an AVSpeechSynthesizer, is the object responsible for actually synthesizing, speaking, and controlling any utterances passed to it:

// creating a synthesizer
let synthesizer = AVSpeechSynthesizer()

// creating an utterance to synthesize
let utteranceString = "I am the very model of a modern major general"
let utterance = AVSpeechUtterance(string: utteranceString)

// setting a rate to speak at
utterance.rate = 0.175

// synthesizing and speaking the utterance
synthesizer.speakUtterance(utterance)

Should you want to pause the utterance, call pauseSpeakingAtBoundary() on your AVSpeechSynthesizer, which takes an AVSpeechBoundary as a parameter that controls when to pause. Passing in AVSpeechBoundary.Immediate pauses the utterance immediately, whereas AVSpeechBoundary.Word pauses it at the end of the current word. Call continueSpeaking() to continue the utterance. To fully stop a speech synthesizer and all utterances associated with it, call stopSpeakingAtBoundary(), which also takes a speech boundary to tell the synthesizer when to stop.
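In current Swift, these methods are named speak(_:), pauseSpeaking(at:), continueSpeaking(), and stopSpeaking(at:). The sketch below strings them together; the en-US voice and the use of AVSpeechUtteranceDefaultSpeechRate are illustrative choices, not requirements. In a real app, the pause and stop calls would run in response to user actions rather than immediately after speaking starts.

```swift
import AVFoundation

// Keep a strong reference to the synthesizer (e.g. in a property);
// speech is generally cut off if the synthesizer is deallocated.
let synthesizer = AVSpeechSynthesizer()

let utterance = AVSpeechUtterance(string: "I am the very model of a modern major general")
utterance.rate = AVSpeechUtteranceDefaultSpeechRate
utterance.voice = AVSpeechSynthesisVoice(language: "en-US")   // nil means the default voice
synthesizer.speak(utterance)

// Pause once the current word has been spoken...
synthesizer.pauseSpeaking(at: .word)
// ...pick up where it left off...
synthesizer.continueSpeaking()
// ...or stop right away, discarding any queued utterances.
synthesizer.stopSpeaking(at: .immediate)
```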

Working with the Photo Library

In addition to playing back video and audio, iOS and OS X allow you to access the built-in camera to capture photos and video. Similar hardware is available on both systems; in fact, Apple refers to the front-facing camera on the iPhone and iPad and the built-in camera on Macs as the “FaceTime camera,” suggesting that users are meant to treat the camera the same way across all devices. The camera can record still images as well as video.

The APIs for accessing the camera are different on OS X and iOS. The camera was introduced on the Mac well before iOS was released, and iOS’s implementation is somewhat easier to use and cleaner, as the API benefited from several years of development experience.

NOTE

If you really need a consistent API for recording camera content across both iOS and OS X, AV Foundation provides a set of classes for capturing content—the key ones being AVCaptureSession, AVCaptureInput, and AVCaptureOutput. However, this system is designed for more finely grained control over data flows from the camera to consumers of that data, and isn’t terribly convenient for simple uses like recording video and saving it to a file. In this chapter, therefore, we’ll only be covering the iOS implementation. For OS X developers, please refer to the QTKit Application Tutorial included in the Xcode documentation.

Capturing Photos and Video from the Camera

To capture video and photos from the camera on iOS, you use a view controller called UIImagePickerController.

At its simplest, UIImagePickerController allows you to present an interface almost identical to the built-in camera application on the iPhone and iPad. Using this interface, the user can take a photo that is delivered to your application as a UIImage object.

You can also configure the UIImagePickerController to capture video. In this case, the user can record video (up to 10 minutes by default; the videoMaximumDuration property changes this limit), which is delivered to your application as an NSURL pointing to the captured video file.

UIImagePickerController can be set up to control which camera is used (front-side or back-side camera), whether the LED flash is available (on devices that have one), and whether the user is allowed to crop or adjust the photo he took or trim the video he recorded.
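As a sketch of those options, the function below configures a picker for video capture. It uses current Swift and the UniformTypeIdentifiers framework (iOS 14 and later) to name the movie type; makeVideoPicker(maxDuration:) is a hypothetical helper, and the 60-second default limit is an arbitrary example.

```swift
import UIKit
import UniformTypeIdentifiers

// Hypothetical helper: returns nil when the device cannot record video
// with the rear camera (for example, in the Simulator).
func makeVideoPicker(maxDuration: TimeInterval = 60) -> UIImagePickerController? {
    let movieType = UTType.movie.identifier   // "public.movie"
    guard UIImagePickerController.isSourceTypeAvailable(.camera),
          UIImagePickerController.isCameraDeviceAvailable(.rear),
          let types = UIImagePickerController.availableMediaTypes(for: .camera),
          types.contains(movieType)
    else { return nil }

    let picker = UIImagePickerController()
    picker.sourceType = .camera
    picker.mediaTypes = [movieType]            // record video rather than photos
    picker.cameraDevice = .rear
    picker.videoMaximumDuration = maxDuration  // in seconds
    picker.allowsEditing = true                // lets the user trim the recording
    return picker
}
```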

UIImagePickerController works like this:

1. You create an instance of the class.

2. You optionally configure the picker to use the settings that you want.

3. You provide the picker with a delegate object that conforms to the UIImagePickerControllerDelegate protocol.

4. You present the view controller, usually modally, by having the current view controller call presentViewController(_:animated:completion:).

5. The user takes a photo or records a video. When they're done, the delegate object receives the imagePickerController(_:didFinishPickingMediaWithInfo:) message.

This method receives a dictionary that contains information about the media that the user captured, which you can query to retrieve data like the original or edited photos, the location of the video file, and other useful information.

In this method, your view controller must dismiss the image picker controller by calling the dismissViewControllerAnimated(_:completion:) method on the current view controller.

6. If the user chooses to cancel the image picker (by tapping the Cancel button that appears), the delegate object receives the imagePickerControllerDidCancel(_:) message.

In this method, your view controller must also dismiss the image picker by calling dismissViewControllerAnimated(_:completion:). If this method isn't called, the Cancel button won't appear to do anything when tapped, and the user will think that your application is buggy.
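The two delegate callbacks can be handled by a single object, sketched below in current Swift, where the info dictionary is keyed by UIImagePickerController.InfoKey. MediaPickerHandler and its onImage and onVideo closures are hypothetical names; photos arrive as a UIImage and recorded videos as a file URL.

```swift
import UIKit

// Hypothetical handler for both image picker delegate callbacks.
final class MediaPickerHandler: NSObject,
    UIImagePickerControllerDelegate, UINavigationControllerDelegate {

    var onImage: ((UIImage) -> Void)?
    var onVideo: ((URL) -> Void)?

    func imagePickerController(_ picker: UIImagePickerController,
                               didFinishPickingMediaWithInfo info: [UIImagePickerController.InfoKey: Any]) {
        // Prefer the edited version if the user was allowed to edit.
        if let image = (info[.editedImage] as? UIImage) ?? (info[.originalImage] as? UIImage) {
            onImage?(image)
        } else if let videoURL = info[.mediaURL] as? URL {
            onVideo?(videoURL)   // file URL of the recorded movie
        }
        picker.dismiss(animated: true)   // the picker does not dismiss itself
    }

    func imagePickerControllerDidCancel(_ picker: UIImagePickerController) {
        picker.dismiss(animated: true)   // otherwise Cancel appears to do nothing
    }
}
```

Because the picker's delegate property is a weak reference, store the handler in a property of the presenting view controller before assigning it to picker.delegate.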

When using UIImagePickerController, it’s important to remember that the hardware on the device your app is running on may vary. Not all devices have a front-facing camera, which was only introduced in the iPhone 4 and the iPad 2; on earlier devices, you can only use the rear-facing camera. Some devices don’t have a camera at all, such as the early iPod touch models and the first iPad. As time goes on, devices are getting more and more capable, but it is always good practice to make sure a camera is there before you use it.

You can use UIImagePickerController to determine which features are available and adjust your app’s behavior accordingly. For example, to determine if any kind of camera is available, you use the isSourceTypeAvailable(_:) class method:

let sourceType = UIImagePickerControllerSourceType.Camera

if (UIImagePickerController.isSourceTypeAvailable(sourceType))

{

// we can use the camera

}

else

{

// we can't use the camera

}

You can further determine whether a front- or rear-facing camera is available by using the class method isCameraDeviceAvailable(_:):

let frontCamera = UIImagePickerControllerCameraDevice.Front

let rearCamera = UIImagePickerControllerCameraDevice.Rear

if (UIImagePickerController.isCameraDeviceAvailable(frontCamera))

{

// the front camera is available

}

if (UIImagePickerController.isCameraDeviceAvailable(rearCamera))

{

// the rear camera is available

}

NOTE

The iOS simulator does not have a camera, and UIImagePickerController reports this. If you want to test out using the camera, you must test your app on a device that actually has a built-in camera. This doesn’t stop you from using UIImagePickerController itself, because you can still access the user’s photo library. We’ll be talking about this in more detail in the next section.

Building a Photo Application

To demonstrate how to use UIImagePickerController, we’ll build a simple application that allows the user to take a photo, which is then displayed on the screen. The image picker will be configured to take only photos, and will use the front-facing camera if it’s available and the rear-facing camera if it’s not:

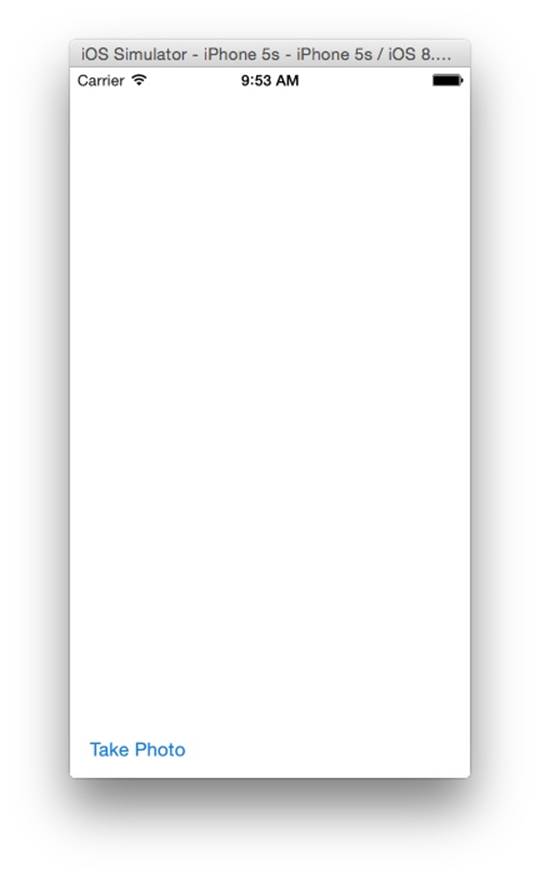

1. Create a single view iPhone application and name it Photos.

2. The interface for this application will be deliberately simple: a button that brings up the camera view, and an image view that displays the photo that the user took.

To create the interface, open Main.storyboard and drag a UIImageView into the main screen. Resize it so that it takes up the top half of the screen.

Drag a UIButton into the main screen and place it under the image view. Make the button’s label read Take Photo.

When you’re done, the interface should look like Figure 9-5.

3. Next, connect the interface to the code. Open ViewController.swift in the assistant.

Control-drag from the image view into ViewController. Create an outlet called imageView.

Control-drag from the button into ViewController. Create an action called takePhoto.

4. Then make the view controller conform to the UIImagePickerControllerDelegate and UINavigationControllerDelegate protocols. (The image picker's delegate property requires an object that adopts both.) Update the class declaration in ViewController.swift to look like the following:

class ViewController: UIViewController,
    UIImagePickerControllerDelegate,
    UINavigationControllerDelegate {

Figure 9-5. The photo-picking app’s interface

5. Then add the code that shows the image picker. When the button is tapped, we need to create, configure, and present the image picker view. Replace the takePhoto() method with the following code:

@IBAction func takePhoto(sender: AnyObject) {
    let picker = UIImagePickerController()

    let sourceType = UIImagePickerControllerSourceType.Camera
    if (UIImagePickerController.isSourceTypeAvailable(sourceType))
    {
        // we can use the camera
        picker.sourceType = UIImagePickerControllerSourceType.Camera

        let frontCamera = UIImagePickerControllerCameraDevice.Front
        let rearCamera = UIImagePickerControllerCameraDevice.Rear
        // use the front-facing camera if available
        if (UIImagePickerController.isCameraDeviceAvailable(frontCamera))
        {
            picker.cameraDevice = frontCamera
        }
        else
        {
            picker.cameraDevice = rearCamera
        }
        // make this object be the delegate for the picker
        picker.delegate = self

        self.presentViewController(picker, animated: true,
            completion: nil)
    }
}

6. We now need to add the UIImagePickerControllerDelegate methods: specifically, the one called when the user finishes taking a photo, and the one called when the user cancels taking a photo.

Add the following methods to ViewController.swift:

func imagePickerController(picker: UIImagePickerController!,

didFinishPickingMediaWithInfo info: [NSObject : AnyObject]!)

{

let image: UIImage =

info[UIImagePickerControllerOriginalImage] as UIImage

self.imageView.image = image

picker.dismissViewControllerAnimated(true, completion: nil)

}

func imagePickerControllerDidCancel(picker: UIImagePickerController!) {

picker.dismissViewControllerAnimated(true, completion: nil)

}

Now run the application and test it out on an iPhone or iPad. Take a photo of yourself, and see that it appears in the image view.

If you try to test out the application on the iOS Simulator, the button won’t appear to do anything at all. That’s because the if statement in the takePhoto() method keeps the image picker from trying to work with hardware that isn’t there.

WARNING

If you ask UIImagePickerController to work with a camera and there isn’t one present on the device, an exception will be thrown and your application will crash. Always test to see if the camera device that you intend to use is actually available.

The Photo Library

Capturing a photo with the camera is useful, but the user will likely also want to work with photos that he’s previously taken or downloaded from the Internet. For example, a social networking application should include some method of sharing photos from the user’s photo collection.

To let the user access his photo library from within your app, you use UIImagePickerController again. If you want to present the photo library instead of the camera, set the sourceType property of the image picker to UIImagePickerControllerSourceType.PhotoLibrary or UIImagePickerControllerSourceType.SavedPhotosAlbum.

NOTE

UIImagePickerControllerSourceType.PhotoLibrary makes the UIImagePickerController display the entire photo library, while UIImagePickerControllerSourceType.SavedPhotosAlbum makes the UIImagePickerController display only the Camera Roll album on devices that have a camera, or the Saved Photos album on devices that don’t.
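When the camera is missing, the Saved Photos album is a reasonable fallback, which also makes the app usable in the Simulator. A minimal sketch in current Swift follows; preferredSourceType() is a hypothetical helper, and you would assign its result to the picker's sourceType property.

```swift
import UIKit

// Hypothetical helper: use the camera when there is one, otherwise
// fall back to the Saved Photos album (for example, in the Simulator).
func preferredSourceType() -> UIImagePickerController.SourceType {
    if UIImagePickerController.isSourceTypeAvailable(.camera) {
        return .camera
    }
    return .savedPhotosAlbum
}

// Usage: picker.sourceType = preferredSourceType()
```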

When you present an image picker controller that has been set up to use a noncamera source, the photo library interface used in the built-in Photos application appears. The user then browses for and selects a photo, at which point the image picker’s delegate receives the imagePickerController(_:didFinishPickingMediaWithInfo:) message, just as if the image picker had been set up to use the camera.

To demonstrate this, we’ll update the application to include a button that displays the Saved Photos album:

1. To update the interface, add a new button to the application’s main screen and make its label read Photo Library.

2. Then connect the interface to the code. Open ViewController.swift in the Assistant again. Control-drag from the new button into ViewController, and create a new action called loadFromLibrary.

3. Replace the loadFromLibrary() method in ViewController.swift with the following code:

@IBAction func loadFromLibrary(sender: AnyObject) {
    let picker = UIImagePickerController()
    picker.sourceType =
        UIImagePickerControllerSourceType.SavedPhotosAlbum
    picker.delegate = self
    self.presentViewController(picker, animated: true, completion: nil)
}

Test the application by running the app and tapping the Photo Library button. Select a photo to make it appear on the screen.

If you’re testing this in the simulator, you won’t have access to a large array of user-taken photos, but since Xcode 6, the iOS Simulator does come with a few photos in the library for testing purposes.