C# 5.0 in a Nutshell (2012)

Chapter 14. Concurrency & Asynchrony

Most applications need to deal with more than one thing happening at a time (concurrency). In this chapter, we start with the essential prerequisites, namely the basics of threading and tasks, and then describe the principles of asynchrony and C# 5.0’s asynchronous functions in detail.

In Chapter 22, we’ll revisit multithreading in greater detail, and in Chapter 23, we’ll cover the related topic of parallel programming.

Introduction

The most common concurrency scenarios are:

Writing a responsive user interface

In WPF, Metro, and Windows Forms applications, you must run time-consuming tasks concurrently with the code that runs your user interface to maintain responsiveness.

Allowing requests to process simultaneously

On a server, client requests can arrive concurrently and so must be handled in parallel to maintain scalability. If you use ASP.NET, WCF, or Web Services, the .NET Framework does this for you automatically. However, you still need to be aware of shared state (for instance, the effect of using static variables for caching.)

Parallel programming

Code that performs intensive calculations can execute faster on multicore/multiprocessor computers if the workload is divided between cores (Chapter 23 is dedicated to this).

Speculative execution

On multicore machines, you can sometimes improve performance by predicting something that might need to be done, and then doing it ahead of time. LINQPad uses this technique to speed up the creation of new queries. A variation is to run a number of different algorithms in parallel that all solve the same task. Whichever one finishes first “wins”—this is effective when you can’t know ahead of time which algorithm will execute fastest.

The general mechanism by which a program can simultaneously execute code is called multithreading. Multithreading is supported by both the CLR and operating system, and is a fundamental concept in concurrency. Understanding the basics of threading, and in particular, the effects of threads on shared state, is therefore essential.

Threading

A thread is an execution path that can proceed independently of others.

Each thread runs within an operating system process, which provides an isolated environment in which a program runs. With a single-threaded program, just one thread runs in the process’s isolated environment and so that thread has exclusive access to it. With a multithreaded program, multiple threads run in a single process, sharing the same execution environment (memory, in particular). This, in part, is why multithreading is useful: one thread can fetch data in the background, for instance, while another thread displays the data as it arrives. This data is referred to asshared state.

Creating a Thread

NOTE

The Windows Metro profile does not let you create and start threads directly; instead you must do this via tasks (see Tasks). Tasks add a layer of indirection that complicates learning, so the best way to start is with Console applications (or LINQPad) and create threads directly until you’re comfortable with how they work.

A client program (Console, WPF, Metro, or Windows Forms) starts in a single thread that’s created automatically by the operating system (the “main” thread). Here it lives out its life as a single-threaded application, unless you do otherwise, by creating more threads (directly or indirectly).[12]

You can create and start a new thread by instantiating a Thread object and calling its Start method. The simplest constructor for Thread takes a ThreadStart delegate: a parameterless method indicating where execution should begin. For example:

// NB: All samples in this chapter assume the following namespace imports:

using System;

using System.Threading;

class ThreadTest

{

static void Main()

{

Thread t = new Thread (WriteY); // Kick off a new thread

t.Start(); // running WriteY()

// Simultaneously, do something on the main thread.

for (int i = 0; i < 1000; i++) Console.Write ("x");

}

static void WriteY()

{

for (int i = 0; i < 1000; i++) Console.Write ("y");

}

}

// Typical Output:

xxxxxxxxxxxxxxxxyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxyyyyyyyyyyyyy

yyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyxxxxxxxxxxxxxxxxxxxxxx

xxxxxxxxxxxxxxxxxxxxxxyyyyyyyyyyyyyyyyyyyyyyyyyyyyyyy

yyyyyyyyyyyyyxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

...

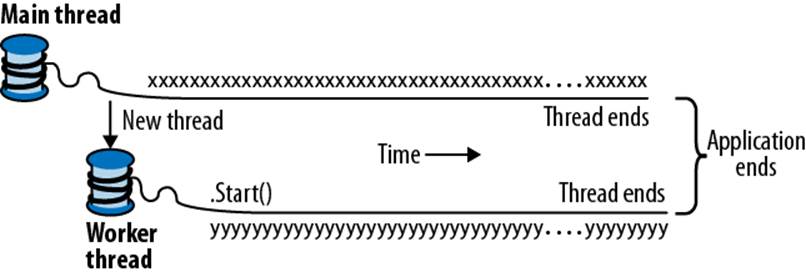

The main thread creates a new thread t on which it runs a method that repeatedly prints the character y. Simultaneously, the main thread repeatedly prints the character x, as shown in Figure 14-1. On a single-core computer, the operating system must allocate “slices” of time to each thread (typically 20 ms in Windows) to simulate concurrency, resulting in repeated blocks of x and y. On a multicore or multiprocessor machine, the two threads can genuinely execute in parallel (subject to competition by other active processes on the computer), although you still get repeated blocks of x and y in this example because of subtleties in the mechanism by which Console handles concurrent requests.

Figure 14-1. Starting a new thread

NOTE

A thread is said to be preempted at the points where its execution is interspersed with the execution of code on another thread. The term often crops up in explaining why something has gone wrong!

Once started, a thread’s IsAlive property returns true, until the point where the thread ends. A thread ends when the delegate passed to the Thread’s constructor finishes executing. Once ended, a thread cannot restart.

Each thread has a Name property that you can set for the benefit of debugging. This is particularly useful in Visual Studio, since the thread’s name is displayed in the Threads Window and Debug Location toolbar. You can set a thread’s name just once; attempts to change it later will throw an exception.

The static Thread.CurrentThread property gives you the currently executing thread:

Console.WriteLine (Thread.CurrentThread.Name);

Join and Sleep

You can wait for another thread to end by calling its Join method:

static void Main()

{

Thread t = new Thread (Go);

t.Start();

t.Join();

Console.WriteLine ("Thread t has ended!");

}

static void Go() { for (int i = 0; i < 1000; i++) Console.Write ("y"); }

This prints “y” 1,000 times, followed by “Thread t has ended!” immediately afterward. You can include a timeout when calling Join, either in milliseconds or as a TimeSpan. It then returns true if the thread ended or false if it timed out.

Thread.Sleep pauses the current thread for a specified period:

Thread.Sleep (TimeSpan.FromHours (1)); // Sleep for 1 hour

Thread.Sleep (500); // Sleep for 500 milliseconds

Thread.Sleep(0) relinquishes the thread’s current time slice immediately, voluntarily handing over the CPU to other threads. Thread.Yield() method does the same thing—except that it relinquishes only to threads running on the same processor.

NOTE

Sleep(0) or Yield is occasionally useful in production code for advanced performance tweaks. It’s also an excellent diagnostic tool for helping to uncover thread safety issues: if inserting Thread.Yield() anywhere in your code breaks the program, you almost certainly have a bug.

While waiting on a Sleep or Join, a thread is blocked.

Blocking

A thread is deemed blocked when its execution is paused for some reason, such as when Sleeping or waiting for another to end via Join. A blocked thread immediately yields its processor time slice, and from then on consumes no processor time until its blocking condition is satisfied. You can test for a thread being blocked via its ThreadState property:

bool blocked = (someThread.ThreadState & ThreadState.WaitSleepJoin) != 0;

NOTE

ThreadState is a flags enum, combining three “layers” of data in a bitwise fashion. Most values, however, are redundant, unused, or deprecated. The following extension method strips a ThreadState to one of four useful values: Unstarted, Running, WaitSleepJoin, and Stopped:

public static ThreadState Simplify (this ThreadState ts)

{

return ts & (ThreadState.Unstarted |

ThreadState.WaitSleepJoin |

ThreadState.Stopped);

}

The ThreadState property is useful for diagnostic purposes, but unsuitable for synchronization, because a thread’s state may change in between testing ThreadState and acting on that information.

When a thread blocks or unblocks, the operating system performs a context switch. This incurs a small overhead, typically one or two microseconds.

I/O-bound versus compute-bound

An operation that spends most of its time waiting for something to happen is called I/O-bound—an example is downloading a web page or calling Console.ReadLine. (I/O-bound operations typically involve input or output, but this is not a hard requirement: Thread.Sleep is also deemed I/O-bound.) In contrast, an operation that spends most of its time performing CPU-intensive work is called compute-bound.

Blocking versus spinning

An I/O-bound operation works in one of two ways: it either waits synchronously on the current thread until the operation is complete (such as Console.ReadLine, Thread.Sleep, or Thread.Join), or operates asynchronously, firing a callback when the operation finishes some time later (more on this later).

I/O-bound operations that wait synchronously spend most of their time blocking a thread. They may also “spin” in a loop periodically:

while (DateTime.Now < nextStartTime)

Thread.Sleep (100);

Leaving aside that there are better ways to do this (such as timers or signaling constructs), another option is that a thread may spin continuously:

while (DateTime.Now < nextStartTime);

In general, this is very wasteful on processor time: as far as the CLR and operating system are concerned, the thread is performing an important calculation, and so gets allocated resources accordingly. In effect, we’ve turned what should be an I/O-bound operation into a compute-bound operation.

NOTE

There are a couple of nuances with regard to spinning versus blocking. First, spinning very briefly can be effective when you expect a condition to be satisfied soon (perhaps within a few microseconds) because it avoids the overhead and latency of a context switch. The .NET Framework provides special methods and classes to assist—see “SpinLock and SpinWait” athttp://albahari.com/threading/.

Second, blocking does not incur a zero cost. This is because each thread ties up around 1MB of memory for as long as it lives and causes an ongoing administrative overhead for the CLR and operating system. For this reason, blocking can be troublesome in the context of heavily I/O-bound programs that need to handle hundreds or thousands of concurrent operations. Instead, such programs need to use a callback-based approach, rescinding their thread entirely while waiting. This is (in part) the purpose of the asynchronous patterns that we’ll discuss later.

Local Versus Shared State

The CLR assigns each thread its own memory stack so that local variables are kept separate. In the next example, we define a method with a local variable, then call the method simultaneously on the main thread and a newly created thread:

static void Main()

{

new Thread (Go).Start(); // Call Go() on a new thread

Go(); // Call Go() on the main thread

}

static void Go()

{

// Declare and use a local variable - 'cycles'

for (int cycles = 0; cycles < 5; cycles++) Console.Write ('?');

}

A separate copy of the cycles variable is created on each thread’s memory stack, and so the output is, predictably, ten question marks.

Threads share data if they have a common reference to the same object instance:

class ThreadTest

{

bool _done;

static void Main()

{

ThreadTest tt = new ThreadTest(); // Create a common instance

new Thread (tt.Go).Start();

tt.Go();

}

void Go() // Note that this is an instance method

{

if (!_done) { _done = true; Console.WriteLine ("Done"); }

}

}

Because both threads call Go() on the same ThreadTest instance, they share the _done field. This results in “Done” being printed once instead of twice.

Local variables captured by a lambda expression or anonymous delegate are converted by the compiler into fields, and so can also be shared:

class ThreadTest

{

static void Main()

{

bool done = false;

ThreadStart action = () =>

{

if (!done) { done = true; Console.WriteLine ("Done"); }

};

new Thread (action).Start();

action();

}

}

Static fields offer another way to share data between threads:

class ThreadTest

{

static bool _done; // Static fields are shared between all threads

// in the same application domain.

static void Main()

{

new Thread (Go).Start();

Go();

}

static void Go()

{

if (!_done) { _done = true; Console.WriteLine ("Done"); }

}

}

All three examples illustrate another key concept: that of thread safety (or rather, lack of it!). The output is actually indeterminate: it’s possible (though unlikely) that “Done” could be printed twice. If, however, we swap the order of statements in the Go method, the odds of “Done” being printed twice go up dramatically:

static void Go()

{

if (!_done) { Console.WriteLine ("Done"); _done = true; }

}

The problem is that one thread can be evaluating the if statement right as the other thread is executing the WriteLine statement—before it’s had a chance to set done to true.

NOTE

Our example illustrates one of many ways that shared writable state can introduce the kind of intermittent errors for which multithreading is notorious. We’ll see next how to fix our program with locking; however it’s better to avoid shared state altogether where possible. We’ll see later how asynchronous programming patterns help with this.

Locking and Thread Safety

NOTE

Locking and thread safety are large topics. For a full discussion, see Exclusive Locking and Locking and Thread Safety in Chapter 22.

We can fix the previous example by obtaining an exclusive lock while reading and writing to the shared field. C# provides the lock statement for just this purpose:

class ThreadSafe

{

static bool _done;

static readonly object _locker = new object();

static void Main()

{

new Thread (Go).Start();

Go();

}

static void Go()

{

lock (_locker)

{

if (!_done) { Console.WriteLine ("Done"); _done = true; }

}

}

}

When two threads simultaneously contend a lock (which can be upon any reference-type object, in this case, _locker), one thread waits, or blocks, until the lock becomes available. In this case, it ensures only one thread can enter its code block at a time, and “Done” will be printed just once. Code that’s protected in such a manner—from indeterminacy in a multithreaded context—is called thread-safe.

NOTE

Even the act of autoincrementing a variable is not thread-safe: the expression x++ executes on the underlying processor as distinct read-increment-write operations. So, if two threads execute x++ at once outside a lock, the variable may end up getting incremented once rather than twice (or worse, x could be torn, ending up with a bitwise-mixture of old and new content, under certain conditions).

Locking is not a silver bullet for thread safety—it’s easy to forget to lock around accessing a field, and locking can create problems of its own (such as deadlocking).

A good example of when you might use locking is around accessing a shared in-memory cache for frequently accessed database objects in an ASP.NET application. This kind of application is simple to get right, and there’s no chance of deadlocking. We give an example in Thread Safety in Application Servers in Chapter 22.

Passing Data to a Thread

Sometimes you’ll want to pass arguments to the thread’s startup method. The easiest way to do this is with a lambda expression that calls the method with the desired arguments:

static void Main()

{

Thread t = new Thread ( () => Print ("Hello from t!") );

t.Start();

}

static void Print (string message) { Console.WriteLine (message); }

With this approach, you can pass in any number of arguments to the method. You can even wrap the entire implementation in a multistatement lambda:

new Thread (() =>

{

Console.WriteLine ("I'm running on another thread!");

Console.WriteLine ("This is so easy!");

}).Start();

Lambda expressions didn’t exist prior to C# 3.0. So you might also come across an old-school technique, which is to pass an argument into Thread’s Start method:

static void Main()

{

Thread t = new Thread (Print);

t.Start ("Hello from t!");

}

static void Print (object messageObj)

{

string message = (string) messageObj; // We need to cast here

Console.WriteLine (message);

}

This works because Thread’s constructor is overloaded to accept either of two delegates:

public delegate void ThreadStart();

public delegate void ParameterizedThreadStart (object obj);

The limitation of ParameterizedThreadStart is that it accepts only one argument. And because it’s of type object, it usually needs to be cast.

Lambda expressions and captured variables

As we saw, a lambda expression is the most convenient and powerful way to pass data to a thread. However, you must be careful about accidentally modifying captured variables after starting the thread. For instance, consider the following:

for (int i = 0; i < 10; i++)

new Thread (() => Console.Write (i)).Start();

The output is nondeterministic! Here’s a typical result:

0223557799

The problem is that the i variable refers to the same memory location throughout the loop’s lifetime. Therefore, each thread calls Console.Write on a variable whose value may change as it is running! The solution is to use a temporary variable as follows:

for (int i = 0; i < 10; i++)

{

int temp = i;

new Thread (() => Console.Write (temp)).Start();

}

Each of the digits 0 to 9 is then written exactly once. (The ordering is still undefined because threads may start at indeterminate times.)

NOTE

This is analogous to the problem we described in Captured Variables in Chapter 8. The problem is just as much about C#’s rules for capturing variables in for loops as it is about multithreading.

This problem also applies to foreach loops prior to C# 5.

Variable temp is now local to each loop iteration. Therefore, each thread captures a different memory location and there’s no problem. We can illustrate the problem in the earlier code more simply with the following example:

string text = "t1";

Thread t1 = new Thread ( () => Console.WriteLine (text) );

text = "t2";

Thread t2 = new Thread ( () => Console.WriteLine (text) );

t1.Start(); t2.Start();

Because both lambda expressions capture the same text variable, t2 is printed twice.

Exception Handling

Any try/catch/finally blocks in effect when a thread is created are of no relevance to the thread when it starts executing. Consider the following program:

public static void Main()

{

try

{

new Thread (Go).Start();

}

catch (Exception ex)

{

// We'll never get here!

Console.WriteLine ("Exception!");

}

}

static void Go() { throw null; } // Throws a NullReferenceException

The try/catch statement in this example is ineffective, and the newly created thread will be encumbered with an unhandled NullReferenceException. This behavior makes sense when you consider that each thread has an independent execution path.

The remedy is to move the exception handler into the Go method:

public static void Main()

{

new Thread (Go).Start();

}

static void Go()

{

try

{

...

throw null; // The NullReferenceException will get caught below

...

}

catch (Exception ex)

{

Typically log the exception, and/or signal another thread

that we've come unstuck

...

}

}

You need an exception handler on all thread entry methods in production applications—just as you do (usually at a higher level, in the execution stack) on your main thread. An unhandled exception causes the whole application to shut down. With an ugly dialog box!

NOTE

In writing such exception handling blocks, rarely would you ignore the error: typically, you’d log the details of the exception, and then perhaps display a dialog box allowing the user to automatically submit those details to your web server. You then might choose to restart the application, because it’s possible that an unexpected exception might leave your program in an invalid state.

Centralized exception handling

In WPF, Metro, and Windows Forms applications, you can subscribe to “global” exception handling events, Application.DispatcherUnhandledException and Application.ThreadException, respectively. These fire after an unhandled exception in any part of your program that’s called via the message loop (this amounts to all code that runs on the main thread while the Application is active). This is useful as a backstop for logging and reporting bugs (although it won’t fire for unhandled exceptions on non-UI threads that you create). Handling these events prevents the program from shutting down, although you may choose to restart the application to avoid the potential corruption of state that can follow from (or that led to) the unhandled exception.

AppDomain.CurrentDomain.UnhandledException fires on any unhandled exception on any thread, but since CLR 2.0, the CLR forces application shutdown after your event handler completes. However, you can prevent shutdown by adding the following to your application configuration file:

<configuration>

<runtime>

<legacyUnhandledExceptionPolicy enabled="1" />

</runtime>

</configuration>

This can be useful in programs that host multiple application domains (Chapter 24): if an unhandled exception occurs in a non-default application domain, you can destroy and recreate the offending domain rather than restarting the whole application.

Foreground Versus Background Threads

By default, threads you create explicitly are foreground threads. Foreground threads keep the application alive for as long as any one of them is running, whereas background threads do not. Once all foreground threads finish, the application ends, and any background threads still running abruptly terminate.

NOTE

A thread’s foreground/background status has no relation to its priority (allocation of execution time).

You can query or change a thread’s background status using its IsBackground property:

static void Main (string[] args)

{

Thread worker = new Thread ( () => Console.ReadLine() );

if (args.Length > 0) worker.IsBackground = true;

worker.Start();

}

If this program is called with no arguments, the worker thread assumes foreground status and will wait on the ReadLine statement for the user to press Enter. Meanwhile, the main thread exits, but the application keeps running because a foreground thread is still alive. On the other hand, if an argument is passed to Main(), the worker is assigned background status, and the program exits almost immediately as the main thread ends (terminating the ReadLine).

When a process terminates in this manner, any finally blocks in the execution stack of background threads are circumvented. If your program employs finally (or using) blocks to perform cleanup work such as deleting temporary files, you can avoid this by explicitly waiting out such background threads upon exiting an application, either by joining the thread, or with a signaling construct (see Signaling). In either case, you should specify a timeout, so you can abandon a renegade thread should it refuse to finish, otherwise your application will fail to close without the user having to enlist help from the Task Manager.

Foreground threads don’t require this treatment, but you must take care to avoid bugs that could cause the thread not to end. A common cause for applications failing to exit properly is the presence of active foreground threads.

Thread Priority

A thread’s Priority property determines how much execution time it gets relative to other active threads in the operating system, on the following scale:

enum ThreadPriority { Lowest, BelowNormal, Normal, AboveNormal, Highest }

This becomes relevant when multiple threads are simultaneously active. Elevating a thread’s priority should be done with care as it can starve other threads. If you want a thread to have higher priority than threads in other processes, you must also elevate the process priority using theProcess class in System.Diagnostics:

using (Process p = Process.GetCurrentProcess())

p.PriorityClass = ProcessPriorityClass.High;

This can work well for non-UI processes that do minimal work and need low latency (the ability to respond very quickly) in the work they do. With compute-hungry applications (particularly those with a user interface), elevating process priority can starve other processes, slowing down the entire computer.

Signaling

Sometimes you need a thread to wait until receiving notification(s) from other thread(s). This is called signaling. The simplest signaling construct is ManualResetEvent. Calling WaitOne on a ManualResetEvent blocks the current thread until another thread “opens” the signal by calling Set. In the following example, we start up a thread that waits on a ManualResetEvent. It remains blocked for two seconds until the main thread signals it:

var signal = new ManualResetEvent (false);

new Thread (() =>

{

Console.WriteLine ("Waiting for signal...");

signal.WaitOne();

signal.Dispose();

Console.WriteLine ("Got signal!");

}).Start();

Thread.Sleep(2000);

signal.Set(); // "Open" the signal

After calling Set, the signal remains open; it may be closed again by calling Reset.

ManualResetEvent is one of several signaling constructs provided by the CLR; we cover all of them in detail in Chapter 22.

Threading in Rich Client Applications

In WPF, Metro, and Windows Forms applications, executing long-running operations on the main thread makes the application unresponsive, because the main thread also processes the message loop which performs rendering and handles keyboard and mouse events.

A popular approach is to start up “worker” threads for time-consuming operations. The code on a worker thread runs a time-consuming operation and then updates the UI when complete. However, all rich client applications have a threading model whereby UI elements and controls can be accessed only from the thread that created them (typically the main UI thread). Violating this causes either unpredictable behavior, or an exception to be thrown.

Hence when you want to update the UI from a worker thread, you must forward the request to the UI thread (the technical term is marshal). The low-level way to do this is as follows (later, we’ll discuss other solutions which build on these):

§ In WPF, call BeginInvoke or Invoke on the element’s Dispatcher object.

§ In Metro apps, call RunAsync or Invoke on the Dispatcher object.

§ In Windows Forms, call BeginInvoke or Invoke on the control.

All of these methods accept a delegate referencing the method you want to run. BeginInvoke/RunAsync work by enqueuing the delegate to the UI thread’s message queue (the same queue that handles keyboard, mouse, and timer events). Invoke does the same thing, but then blocks until the message has been read and processed by the UI thread. Because of this, Invoke lets you get a return value back from the method. If you don’t need a return value, BeginInvoke/RunAsync are preferable in that they don’t block the caller and don’t introduce the possibility of deadlock (see Deadlocks in Chapter 22).

NOTE

You can imagine, that when you call Application.Run, the following pseudo-code executes:

while (!thisApplication.Ended)

{

wait for something to appear in message queue

Got something: what kind of message is it?

Keyboard/mouse message -> fire an event handler

User BeginInvoke message -> execute delegate

User Invoke message -> execute delegate & post result

}

It’s this kind of loop that enables a worker thread to marshal a delegate for execution onto the UI thread.

To demonstrate, suppose that we have a WPF window that contains a text box called txtMessage, whose content we wish a worker thread to update after performing a time-consuming task (which we will simulate by calling Thread.Sleep). Here’s how we’d do it:

partial class MyWindow : Window

{

public MyWindow()

{

InitializeComponent();

new Thread (Work).Start();

}

void Work()

{

Thread.Sleep (5000); // Simulate time-consuming task

UpdateMessage ("The answer");

}

void UpdateMessage (string message)

{

Action action = () => txtMessage.Text = message;

Dispatcher.BeginInvoke (action);

}

}

Running this results in a responsive window appearing immediately. Five seconds later, it updates the textbox. The code is similar for Windows Forms, except that we call the (Form’s) BeginInvoke method instead:

void UpdateMessage (string message)

{

Action action = () => txtMessage.Text = message;

this.BeginInvoke (action);

}

MULTIPLE UI THREADS

It’s possible to have multiple UI threads if they each own different windows. The main scenario is when you have an application with multiple top-level windows, often called a Single Document Interface (SDI) application, such as Microsoft Word. Each SDI window typically shows itself as a separate “application” on the taskbar and is mostly isolated, functionally, from other SDI windows. By giving each such window its own UI thread, each window can be made more responsive with respect to the others.

Synchronization Contexts

In the System.ComponentModel namespace, there’s an abstract class called SynchronizationContext which enables the generalization of thread marshaling.

WPF, Metro, and Windows Forms each define and instantiate SynchronizationContext subclasses which you can obtain via the static property SynchronizationContext.Current (while running on a UI thread). Capturing this property lets you later “post” to UI controls from a worker thread:

partial class MyWindow : Window

{

SynchronizationContext _uiSyncContext;

public MyWindow()

{

InitializeComponent();

// Capture the synchronization context for the current UI thread:

_uiSyncContext = SynchronizationContext.Current;

new Thread (Work).Start();

}

void Work()

{

Thread.Sleep (5000); // Simulate time-consuming task

UpdateMessage ("The answer");

}

void UpdateMessage (string message)

{

// Marshal the delegate to the UI thread:

_uiSyncContext.Post (_ => txtMessage.Text = message);

}

}

This is useful because the same technique works for WPF, Metro, and Windows Forms (SynchronizationContext also has a ASP.NET specialization where it serves a more subtle role, ensuring that page processing events are processed sequentially following asynchronous operations, and to preserve the HttpContext.)

Calling Post is equivalent to calling BeginInvoke on a Dispatcher or Control; there’s also a Send method which is equivalent to Invoke.

NOTE

Framework 2.0 introduced the BackgroundWorker class which used the SynchronizationContext class to make the job of managing worker threads in rich client applications a little easier. BackgroundWorker has since been made redundant by the Tasks and asynchronous functions, which as we’ll see, also leverage SynchronizationContext.

The Thread Pool

Whenever you start a thread, a few hundred microseconds are spent organizing such things as a fresh local variable stack. The thread pool cuts this overhead by having a pool of pre-created recyclable threads. Thread pooling is essential for efficient parallel programming and fine-grained concurrency; it allows short operations to run without being overwhelmed with the overhead of thread startup.

There are a few things to be wary of when using pooled threads:

§ You cannot set the Name of a pooled thread, making debugging more difficult (although you can attach a description when debugging in Visual Studio’s Threads window).

§ Pooled threads are always background threads.

§ Blocking pooled threads can degrade performance (see Hygiene in the thread pool).

You are free to change the priority of a pooled thread—it will be restored to normal when released back to the pool.

You can query if you’re currently executing on a pooled thread via the property Thread.CurrentThread.IsThreadPoolThread.

Entering the thread pool

The easiest way to explicitly run something on a pooled thread is to use Task.Run (we’ll cover this in more detail in the following section):

// Task is in System.Threading.Tasks

Task.Run (() => Console.WriteLine ("Hello from the thread pool"));

As tasks didn’t exist prior to Framework 4.0, a common alternative is to call ThreadPool.QueueUserWorkItem:

ThreadPool.QueueUserWorkItem (notUsed => Console.WriteLine ("Hello"));

NOTE

The following use the thread pool implicitly:

§ WCF, Remoting, ASP.NET, and ASMX Web Services application servers

§ System.Timers.Timer and System.Threading.Timer

§ The parallel programming constructs that we describe in Chapter 23

§ The (now redundant) BackgroundWorker class

§ Asynchronous delegates (also now redundant)

Hygiene in the thread pool

The thread pool serves another function, which is to ensure that a temporary excess of compute-bound work does not cause CPU oversubscription. Oversubscription is the condition of there being more active threads than CPU cores, with the operating system having to time-slice threads.Oversubscription hurts performance because time-slicing requires expensive context switches and can invalidate the CPU caches which have become essential in delivering performance to modern processors.

The CLR avoids oversubscription in the thread pool by queuing tasks and throttling their startup. It begins by running as many concurrent tasks as there are hardware cores, and then tunes the level of concurrency via a hill-climbing algorithm, continually adjusting the workload in a particular direction. If throughput improves, it continues in the same direction (otherwise it reverses). This ensures that it always tracks the optimal performance curve—even in the face of competing process activity on the computer.

The CLR’s strategy works best if two conditions are met:

§ Work items are mostly short-running (<250ms, or ideally <100ms), so that the CLR has plenty of opportunities to measure and adjust.

§ Jobs that spend most of their time blocked do not dominate the pool.

Blocking is troublesome because it gives the CLR the false idea that it’s loading up the CPU. The CLR is smart enough to detect and compensate (by injecting more threads into the pool), although this can make the pool vulnerable to subsequent oversubscription. It also may introduce latency, as the CLR throttles the rate at which it injects new threads, particularly early in an application’s life (more so on client operating systems where it favors lower resource consumption).

Maintaining good hygiene in the thread pool is particularly relevant when you want to fully utilize the CPU (e.g., via the parallel programming APIs in Chapter 23).

Tasks

A thread is a low-level tool for creating concurrency, and as such it has limitations. In particular:

§ While it’s easy to pass data into a thread that you start, there’s no easy way to get a “return value” back from a thread that you Join. You have to set up some kind of shared field. And if the operation throws an exception, catching and propagating that exception is equally painful.

§ You can’t tell a thread to start something else when it’s finished; instead you must Join it (blocking your own thread in the process).

These limitations discourage fine-grained concurrency; in other words, they make it hard to compose larger concurrent operations by combining smaller ones (something essential for the asynchronous programming that we’ll look at in following sections). This in turn leads to greater reliance on manual synchronization (locking, signaling, and so on) and the problems that go with it.

The direct use of threads also has performance implications that we discussed in The Thread Pool. And should you need to run hundreds or thousands of concurrent I/O-bound operations, a thread-based approach consumes hundreds or thousands of MB of memory purely in thread overhead.

The Task class helps with all of these problems. Compared to a thread, a Task is a higher-level abstraction—it represents a concurrent operation that may or may not be backed by a thread. Tasks are compositional (you can chain them together through the use of continuations). They can use the thread pool to lessen startup latency, and with a TaskCompletionSource, they can leverage a callback approach that avoid threads altogether while waiting on I/O-bound operations.

The Task types were introduced in Framework 4.0 as part of the parallel programming library. However they have since been enhanced (through the use of awaiters) to play equally well in more general concurrency scenarios, and are backing types for C# 5.0’s asynchronous functions.

NOTE

In this section, we’ll ignore the features of tasks that are aimed specifically at parallel programming and cover them instead in Chapter 23.

Starting a Task

From Framework 4.5, the easiest way to start a Task backed by a thread is with the static method Task.Run (the Task class is in the System.Threading.Tasks namespace). Simply pass in an Action delegate:

Task.Run (() => Console.WriteLine ("Foo"));

The Task.Run method is new to Framework 4.5. In Framework 4.0, you can accomplish the same thing by calling Task.Factory.StartNew. (The former is mostly a shortcut for the latter.)

NOTE

Tasks use pooled threads by default, which are background threads. This means that when the main thread ends, so do any tasks that you create. Hence, to run these examples from a Console application, you must block the main thread after starting the task (for instance, by Waiting the task or by calling Console.ReadLine):

static void Main()

{

Task.Run (() => Console.WriteLine ("Foo"));

Console.ReadLine();

}

In the book’s LINQPad companion samples, Console.ReadLine is omitted because the LINQPad process keeps background threads alive.

Calling Task.Run in this manner is similar to starting a thread as follows (except for the thread pooling implications that we’ll discuss shortly):

new Thread (() => Console.WriteLine ("Foo")).Start();

Task.Run returns a Task object that we can use to monitor its progress, rather like a Thread object. (Notice, however, that we didn’t call Start because Task.Run creates “hot” tasks; you can instead use Task’s constructor to create “cold” tasks although this is rarely done in practice.)

NOTE

You can track a task’s execution status via its Status property.

Wait

Calling Wait on a task blocks until it completes and is the equivalent of calling Join on a thread:

Task task = Task.Run (() =>

{

Thread.Sleep (2000);

Console.WriteLine ("Foo");

});

Console.WriteLine (task.IsCompleted); // False

task.Wait(); // Blocks until task is complete

Wait lets you optionally specify a timeout and a cancellation token to end the wait early (see Cancellation).

Long-running tasks

By default, the CLR runs tasks on pooled threads, which is ideal for short-running compute-bound work. For longer-running and blocking operations (such as our example above), you can prevent use of a pooled thread as follows:

Task task = Task.Factory.StartNew (() => ...,

TaskCreationOptions.LongRunning);

NOTE

Running one long-running task on a pooled thread won’t cause trouble; it’s when you run multiple long-running tasks in parallel (particularly ones that block) that performance can suffer. And in that case, there are usually better solutions than TaskCreationOptions.LongRunning:

§ If the tasks are I/O-bound, TaskCompletionSource and asynchronous functions let you implement concurrency with callbacks (continuations) instead of threads.

§ If the tasks are compute-bound, a producer/consumer queue lets you throttle the concurrency for those tasks, avoiding starvation for other threads and processes (see Writing a Producer/Consumer Queue in Chapter 23).

Returning values

Task has a generic subclass called Task<TResult> which allows a task to emit a return value. You can obtain a Task<TResult> by calling Task.Run with a Func<TResult> delegate (or a compatible lambda expression) instead of an Action:

Task<int> task = Task.Run (() => { Console.WriteLine ("Foo"); return 3; });

// ...

You can obtain the result later by querying the Result property. If the task hasn’t yet finished, accessing this property will block the current thread until the task finishes:

int result = task.Result; // Blocks if not already finished

Console.WriteLine (result); // 3

In the following example, we create a task that uses LINQ to count the number of prime numbers in the first three million (+2) integers:

Task<int> primeNumberTask = Task.Run (() =>

Enumerable.Range (2, 3000000).Count (n =>

Enumerable.Range (2, (int)Math.Sqrt(n)-1).All (i => n % i > 0)));

Console.WriteLine ("Task running...");

Console.WriteLine ("The answer is " + primeNumberTask.Result);

This writes “Task running...”, and then a few seconds later, writes the answer of 216815.

NOTE

Task<TResult> can be thought of as a “future,” in that it encapsulates a Result that becomes available later in time.

Interestingly, when Task and Task<TResult> first debuted in an early CTP, the latter was actually called Future<TResult>.

Exceptions

Unlike with threads, tasks conveniently propagate exceptions. So, if the code in your task throws an unhandled exception (in other words, if your task faults), that exception is automatically re-thrown to whoever calls Wait()—or accesses the Result property of a Task<TResult>:

// Start a Task that throws a NullReferenceException:

Task task = Task.Run (() => { throw null; });

try

{

task.Wait();

}

catch (AggregateException aex)

{

if (aex.InnerException is NullReferenceException)

Console.WriteLine ("Null!");

else

throw;

}

(The CLR wraps the exception in an AggregateException in order to play well with parallel programming scenarios; we discuss this in Chapter 23.)

You can test for a faulted task without re-throwing the exception via the IsFaulted and IsCanceled properties of the Task. If both properties return false, no error occurred; if IsCanceled is true, an OperationCanceledOperation was thrown for that task (see Cancellation); ifIsFaulted is true, another type of exception was thrown and the Exception property will indicate the error.

Exceptions and autonomous tasks

With autonomous “set-and-forget” tasks (those for which you don’t rendezvous via Wait() or Result, or a continuation that does the same), it’s good practice to explicitly exception-handle the task code to avoid silent failure, just as you would with a thread.

Unhandled exceptions on autonomous tasks are called unobserved exceptions and in CLR 4.0, they would actually terminate your program (the CLR would re-throw the exception on the finalizer thread when the task dropped out of scope and was garbage collected). This was helpful in indicating that a problem had occurred that you might not have been aware of; however the timing of the error could be deceptive in that the garbage collector can lag significantly behind the offending task. Hence, when it was discovered that this behavior complicated certain patterns of asynchrony (see Parallelism and WhenAll), it was dropped in CLR 4.5.

NOTE

Ignoring exceptions is fine when an exception solely indicates a failure to obtain a result that you’re no longer interested in. For example, if a user cancels a request to download a web page, we wouldn’t care if turns out that the web page didn’t exist.

Ignoring exceptions is problematic when an exception indicates a bug in your program, for two reasons:

§ The bug may have left your program in an invalid state.

§ More exceptions may occur later as a result of the bug, and failure to log the initial error can make diagnosis difficult.

You can subscribe to unobserved exceptions at a global level via the static event TaskScheduler.UnobservedTaskException; handling this event and logging the error can make good sense.

There are a couple of interesting nuances on what counts as unobserved:

§ Tasks waited upon with a timeout will generate an unobserved exception if the faults occurs after the timeout interval.

§ The act of checking a task’s Exception property after it has faulted makes the exception “observed.”

Continuations

A continuation says to a task, “when you’ve finished, continue by doing something else.” A continuation is usually implemented by a callback that executes once upon completion of an operation. There are two ways to attach a continuation to a task. The first is new to Framework 4.5 and is particularly significant because it’s used by C# 5’s asynchronous functions, as we’ll see soon. We can demonstrate it with the prime number counting task that we wrote a short while ago in Returning values:

Task<int> primeNumberTask = Task.Run (() =>

Enumerable.Range (2, 3000000).Count (n =>

Enumerable.Range (2, (int)Math.Sqrt(n)-1).All (i => n % i > 0)));

var awaiter = primeNumberTask.GetAwaiter();

awaiter.OnCompleted (() =>

{

int result = awaiter.GetResult();

Console.WriteLine (result); // Writes result

});

Calling GetAwaiter on the task returns an awaiter object whose OnCompleted method tells the antecedent task (primeNumberTask) to execute a delegate when it finishes (or faults). It’s valid to attach a continuation to an already-completed task, in which case the continuation will be scheduled to execute right away.

NOTE

An awaiter is any object that exposes the two methods that we’ve just seen (OnCompleted and GetResult), and a Boolean property called IsCompleted. There’s no interface or base class to unify all of these members (although OnCompleted is part of the interface INotifyCompletion). We’ll explain the significance of the pattern in Asynchronous Functions in C# 5.0.

If an antecedent task faults, the exception is re-thrown when the continuation code calls awaiter.GetResult(). Rather than calling GetResult, we could simply access the Result property of the antecedent. The benefit of calling GetResult is that if the antecedent faults, the exception is thrown directly without being wrapped in AggregateException, allowing for simpler and cleaner catch blocks.

For nongeneric tasks, GetResult() has a void return value. Its useful function is then solely to rethrow exceptions.

If a synchronization context is present, OnCompleted automatically captures it and posts the continuation to that context. This is very useful in rich client applications, as it bounces the continuation back to the UI thread. In writing libraries, however, it’s not usually desirable because the relatively expensive UI-thread-bounce should occur just once upon leaving the library, rather than between method calls. Hence you can defeat it with the ConfigureAwait method:

var awaiter = primeNumberTask.ConfigureAwait (false).GetAwaiter();

If no synchronization context is present—or you use ConfigureAwait(false)—the continuation will (in general) execute on the same thread as the antecedent, avoiding unnecessary overhead.

The other way to attach a continuation is by calling the task’s ContinueWith method:

primeNumberTask.ContinueWith (antecedent =>

{

int result = antecedent.Result;

Console.WriteLine (result); // Writes 123

});

ContinueWith itself returns a Task, which is useful if you want to attach further continuations. However, you must deal directly with AggregateException if the task faults, and write extra code to marshal the continuation in UI applications (see Task Schedulers in Chapter 23). And in non-UI contexts, you must specify TaskContinuationOptions.ExecuteSynchronously if you want the continuation to execute on the same thread; otherwise it will bounce to the thread pool. ContinueWith is particularly useful in parallel programming scenarios; we cover it in detail in Continuations in Chapter 23.

TaskCompletionSource

We’ve seen how Task.Run creates a task that runs a delegate on a pooled (or non-pooled) thread. Another way to create a task is with TaskCompletionSource.

TaskCompletionSource lets you create a task out of any operation that starts and finishes some time later. It works by giving you a “slave” task that you manually drive—by indicating when the operation finishes or faults. This is ideal for I/O-bound work: you get all the benefits of tasks (with their ability to propagate return values, exceptions, and continuations) without blocking a thread for the duration of the operation.

To use TaskCompletionSource, you simply instantiate the class. It exposes a Task property that returns a task upon which you can wait and attach continuations—just as with any other task. The task, however, is controlled entirely by the TaskCompletionSource object via the following methods:

public class TaskCompletionSource<TResult>

{

public void SetResult (TResult result);

public void SetException (Exception exception);

public void SetCanceled();

public bool TrySetResult (TResult result);

public bool TrySetException (Exception exception);

public bool TrySetCanceled();

...

}

Calling any of these methods signals the task, putting it into a completed, faulted, or canceled state (we’ll cover the latter in the section Cancellation). You’re supposed to call one of these methods exactly once: if called again, SetResult, SetException, or SetCanceled will throw an exception, whereas the Try* methods return false.

The following example prints 42 after waiting for five seconds:

var tcs = new TaskCompletionSource<int>();

new Thread (() => { Thread.Sleep (5000); tcs.SetResult (42); })

.Start();

Task<int> task = tcs.Task; // Our "slave" task.

Console.WriteLine (task.Result); // 42

With TaskCompletionSource, we can write our own Run method:

Task<TResult> Run<TResult> (Func<TResult> function)

{

var tcs = new TaskCompletionSource<TResult>();

new Thread (() =>

{

try { tcs.SetResult (function()); }

catch (Exception ex) { tcs.SetException (ex); }

}).Start();

return tcs.Task;

}

...

Task<int> task = Run (() => { Thread.Sleep (5000); return 42; });

Calling this method is equivalent to calling Task.Factory.StartNew with the TaskCreationOptions.LongRunning option to request a non-pooled thread.

The real power of TaskCompletionSource is in creating tasks that don’t tie up threads. For instance, consider a task that waits for five seconds and then returns the number 42. We can write this without a thread through use the Timer class, which with the help of the CLR (and in turn, the operating system) fires an event in x milliseconds (we revisit timers in Chapter 22):

Task<int> GetAnswerToLife()

{

var tcs = new TaskCompletionSource<int>();

// Create a timer that fires once in 5000 ms:

var timer = new System.Timers.Timer (5000) { AutoReset = false };

timer.Elapsed += delegate { timer.Dispose(); tcs.SetResult (42); };

timer.Start();

return tcs.Task;

}

Hence our method returns a task that completes five seconds later, with a result of 42. By attaching a continuation to the task, we can write its result without any blocking any thread:

var awaiter = GetAnswerToLife().GetAwaiter();

awaiter.OnCompleted (() => Console.WriteLine (awaiter.GetResult()));

We could make this more useful and turn it into a general-purpose Delay method by parameterizing the delay time and getting rid of the return value. This means having it return a Task instead of a Task<int>. However, there’s no nongeneric version of TaskCompletionSource, which means we can’t directly create a nongeneric Task. The workaround is simple: since Task<TResult> derives from Task, we create a TaskCompletionSource<anything> and then implicitly convert the Task<anything> that it gives you into a Task, like this:

var tcs = new TaskCompletionSource<object>();

Task task = tcs.Task;

Now we can write our general-purpose Delay method:

Task Delay (int milliseconds)

{

var tcs = new TaskCompletionSource<object>();

var timer = new System.Timers.Timer (milliseconds) { AutoReset = false };

timer.Elapsed += delegate { timer.Dispose(); tcs.SetResult (null); };

timer.Start();

return tcs.Task;

}

Here’s how we can use it to write “42” after five seconds:

Delay (5000).GetAwaiter().OnCompleted (() => Console.WriteLine (42));

Our use of TaskCompletionSource without a thread means that a thread is engaged only when the continuation starts, five seconds later. We can demonstrate this by starting 10,000 of these operations at once without error or excessive resource consumption:

for (int i = 0; i < 10000; i++)

Delay (5000).GetAwaiter().OnCompleted (() => Console.WriteLine (42));

NOTE

Timers fire their callbacks on pooled threads, so after five seconds, the thread pool will receive 10,000 requests to call SetResult(null) on a TaskCompletionSource. If the requests arrive faster than they can be processed, the thread pool will respond by enqueuing and then processing them at the optimum level of parallelism for the CPU. This is ideal if the thread-bound jobs are short-running, which is true in this case: the thread-bound job is merely the call to SetResult plus either the action of posting the continuation to the synchronization context (in a UI application) or otherwise the continuation itself (Console.WriteLine(42)).

Task.Delay

The Delay method that we just wrote is sufficiently useful that it’s available as a static method on the Task class:

Task.Delay (5000).GetAwaiter().OnCompleted (() => Console.WriteLine (42));

or:

Task.Delay (5000).ContinueWith (ant => Console.WriteLine (42));

Task.Delay is the asynchronous equivalent of Thread.Sleep.

Principles of Asynchrony

In demonstrating TaskCompletionSource, we ended up writing asynchronous methods. In this section, we’ll define exactly what asynchronous operations are, and explain how this leads to asynchronous programming.

Synchronous Versus Asynchronous Operations

A synchronous operation does its work before returning to the caller.

An asynchronous operation does (most or all of) its work after returning to the caller.

The majority of methods that you write and call are synchronous. An example is Console.WriteLine or Thread.Sleep. Asynchronous methods are less common, and initiate concurrency, because work continues in parallel to the caller. Asynchronous methods typically return quickly (or immediately) to the caller; hence they are also called non-blocking methods.

Most of the asynchronous methods that we’ve seen so far can be described as general-purpose methods:

§ Thread.Start

§ Task.Run

§ Methods that attach continuations to tasks

In addition, some of the methods that we discussed in Synchronization Contexts (Dispatcher.BeginInvoke, Control.BeginInvoke and SynchronizationContext.Post) are asynchronous, as are the methods that we wrote in the section, TaskCompletionSource, including Delay.

What is Asynchronous Programming?

The principle of asynchronous programming is that you write long-running (or potentially long-running) functions asynchronously. This is in contrast to the conventional approach of writing long-running functions synchronously, and then calling those functions from a new thread or task to introduce concurrency as required.

The difference with the asynchronous approach is that concurrency is initiated inside the long-running function, rather than from outside the function. This has two benefits:

§ I/O-bound concurrency can be implemented without tying up threads (as we demonstrated in “TaskCompletionSource”), improving scalability and efficiency.

§ Rich-client applications end up with less code on worker threads, simplifying thread safety.

This, in turn, leads to two distinct uses for asynchronous programming. The first is writing (typically server-side) applications that deal efficiently with a lot of concurrent I/O. The challenge here is not thread-safety (as there’s usually minimal shared state) but thread-efficiency; in particular, not consuming a thread per network request. Hence in this context, it’s only I/O-bound operations that benefit from asynchrony.

The second use is to simplify thread-safety in rich-client applications. This is particularly relevant as a program grows in size, because to deal with complexity, we typically refactor larger methods into smaller ones, resulting in chains of methods that call one another (call graphs).

With a traditional synchronous call graph, if any operation within the graph is long-running, we must run the entire call graph on a worker thread to maintain a responsive UI. Hence, we end up with a single concurrent operation that spans many methods (course-grained concurrency), and this requires considering thread-safety for every method in the graph.

With an asynchronous call graph, we need not start a thread until it’s actually needed, typically low in the graph (or not at all in the case of I/O-bound operations). All other methods can run entirely on the UI thread, with much-simplified thread-safety. This results in fine-grained concurrency—a sequence of small concurrent operations, in between which execution bounces to the UI thread.

NOTE

To benefit from this, both I/O- and compute-bound operations need to be written asynchronously; a good rule of thumb is to include anything that might take longer than 50ms.

(On the flipside, excessively fine-grained asynchrony can hurt performance, because asynchronous operations incur an overhead—see Optimizations.)

In this chapter, we’ll focus mostly on the rich-client scenario which is the more complex of the two. In Chapter 16, we give two examples that illustrate the I/O-bound scenario (see Concurrency with TCP and Writing an HTTP Server).

NOTE

The Metro and Silverlight .NET profiles encourage asynchronous programming to the point where synchronous versions of some long-running methods are not even exposed. Instead, you get asynchronous methods that return tasks (or objects that can be converted into tasks via the AsTask extension method).

Asynchronous Programming and Continuations

Tasks are ideally suited to asynchronous programming, because they support continuations which are essential for asynchrony (consider the Delay method that we wrote previously in “TaskCompletionSource”). In writing Delay, we used TaskCompletionSource, which is a standard way to implement “bottom-level” I/O-bound asynchronous methods.

For compute-bound methods, we use Task.Run to initiate thread-bound concurrency. Simply by returning the task to the caller, we create an asynchronous method. What distinguishes asynchronous programming is that we aim to do so lower in the call graph, so that in rich-client applications, higher-level methods can remain on the UI thread and access controls and shared state without thread-safety issues. To illustrate, consider the following method which computes and counts prime numbers, using all available cores (we discuss ParallelEnumerable inChapter 23):

int GetPrimesCount (int start, int count)

{

return

ParallelEnumerable.Range (start, count).Count (n =>

Enumerable.Range (2, (int)Math.Sqrt(n)-1).All (i => n % i > 0));

}

The details of how this works are unimportant; what matters is that it can take a while to run. We can demonstrate this by writing another method to call it:

void DisplayPrimeCounts()

{

for (int i = 0; i < 10; i++)

Console.WriteLine (GetPrimesCount (i*1000000 + 2, 1000000) +

" primes between " + (i*1000000) + " and " + ((i+1)*1000000-1));

Console.WriteLine ("Done!");

}

with the following output:

78498 primes between 0 and 999999

70435 primes between 1000000 and 1999999

67883 primes between 2000000 and 2999999

66330 primes between 3000000 and 3999999

65367 primes between 4000000 and 4999999

64336 primes between 5000000 and 5999999

63799 primes between 6000000 and 6999999

63129 primes between 7000000 and 7999999

62712 primes between 8000000 and 8999999

62090 primes between 9000000 and 9999999

Now we have a call graph, with DisplayPrimeCounts calling GetPrimesCount. The former uses Console.WriteLine for simplicity, although in reality it would more likely be updating UI controls in a rich-client application, as we’ll demonstrate later. We can initiate course-grained concurrency for this call graph as follows:

Task.Run (() => DisplayPrimeCounts());

With a fine-grained asynchronous approach, we instead start by writing an asynchronous version of GetPrimesCount:

Task<int> GetPrimesCountAsync (int start, int count)

{

return Task.Run (() =>

ParallelEnumerable.Range (start, count).Count (n =>

Enumerable.Range (2, (int) Math.Sqrt(n)-1).All (i => n % i > 0)));

}

Why Language Support is Important

Now we must modify DisplayPrimeCounts so that it calls GetPrimesCountAsync. This is where C#’s new await and async keywords come into play, because to do so otherwise is trickier than it sounds. If we simply modify the loop as follows:

for (int i = 0; i < 10; i++)

{

var awaiter = GetPrimesCountAsync (i*1000000 + 2, 1000000).GetAwaiter();

awaiter.OnCompleted (() =>

Console.WriteLine (awaiter.GetResult() + " primes between... "));

}

Console.WriteLine ("Done");

then the loop will rapidly spin through ten iterations (the methods being nonblocking) and all ten operations will execute in parallel (followed by a premature “Done”).

NOTE

Executing these tasks in parallel is undesirable in this case because their internal implementations are already parallelized; it will only make us wait longer to see the first results (and muck up the ordering).

There is a much more common reason, however, for needing to serialize the execution of tasks, which is that Task B depends on the result of Task A. For example, in fetching a web page, a DNS lookup must precede the HTTP request.

To get them running sequentially, we must trigger the next loop iteration from the continuation itself. This means eliminating the for loop and resorting to a recursive call in the continuation:

void DisplayPrimeCounts()

{

DisplayPrimeCountsFrom (0);

}

void DisplayPrimeCountsFrom (int i)

{

var awaiter = GetPrimesCountAsync (i*1000000 + 2, 1000000).GetAwaiter();

awaiter.OnCompleted (() =>

{

Console.WriteLine (awaiter.GetResult() + " primes between...");

if (i++ < 10) DisplayPrimeCountsFrom (i);

else Console.WriteLine ("Done");

});

}

It gets even worse if we want to make DisplayPrimesCount itself asynchronous, returning a task that it signals upon completion. To accomplish this requires creating a TaskCompletionSource:

Task DisplayPrimeCountsAsync()

{

var machine = new PrimesStateMachine();

machine.DisplayPrimeCountsFrom (0);

return machine.Task;

}

class PrimesStateMachine

{

TaskCompletionSource<object> _tcs = new TaskCompletionSource<object>();

public Task Task { get { return _tcs.Task; } }

public void DisplayPrimeCountsFrom (int i)

{

var awaiter = GetPrimesCountAsync (i*1000000+2, 1000000).GetAwaiter();

awaiter.OnCompleted (() =>

{

Console.WriteLine (awaiter.GetResult());

if (i++ < 10) DisplayPrimeCountsFrom (i);

else { Console.WriteLine ("Done"); _tcs.SetResult (null); }

});

}

}

Fortunately, C# 5’s asynchronous functions do all of this work for us. With the async and await keywords, we need only write this:

async Task DisplayPrimeCounts()

{

for (int i = 0; i < 10; i++)

Console.WriteLine (await GetPrimesCountAsync (i*1000000 + 2, 1000000) +

" primes between " + (i*1000000) + " and " + ((i+1)*1000000-1));

Console.WriteLine ("Done!");

}

Hence async and await are essential for implementing asynchrony without excessive complexity. Let’s now see how these keywords work.

NOTE

Another way of looking at the problem is that imperative looping constructs (for, foreach and so on), do not mix well with continuations, because they rely on the current local state of the method (“how many more times is this loop going to run?”)

While the async and await keywords offer one solution, it’s sometimes possible to solve it in another way by replacing the imperative looping constructs with the functional equivalent (in other words, LINQ queries). This is the basis of Reactive Framework (Rx) and can be a good option when you want to execute query operators over the result—or combine multiple sequences. The price to pay is that to avoid blocking, Rx operates over push-based sequences, which can be conceptually tricky.

Asynchronous Functions in C# 5.0

C# 5.0 introduces the async and await keywords. These keywords let you write asynchronous code that has the same structure and simplicity as synchronous code, as well as eliminating the “plumbing” of asynchronous programming.

Awaiting

The await keyword simplifies the attaching of continuations. Starting with a basic scenario, the compiler expands:

var result = await expression;

statement(s);

into something functionally similar to:

var awaiter = expression.GetAwaiter();

awaiter.OnCompleted (() =>

{

var result = awaiter.GetResult();

statement(s);

);

NOTE

The compiler also emits code to short-circuit the continuation in case of synchronous completion (see Optimizations) and to handle various nuances that we’ll pick up in later sections.

To demonstrate, let’s revisit the asynchronous method that we wrote previously that computes and counts prime numbers:

Task<int> GetPrimesCountAsync (int start, int count)

{

return Task.Run (() =>

ParallelEnumerable.Range (start, count).Count (n =>

Enumerable.Range (2, (int)Math.Sqrt(n)-1).All (i => n % i > 0)));

}

With the await keyword, we can call it as follows:

int result = await GetPrimesCountAsync (2, 1000000);

Console.WriteLine (result);

In order to compile, we need to add the async modifier to the containing method:

async void DisplayPrimesCount()

{

int result = await GetPrimesCountAsync (2, 1000000);

Console.WriteLine (result);

}

The async modifier tells the compiler to treat await as a keyword rather than an identifier should an ambiguity arise within that method (this ensures that code written prior to C# 5 that might use await as an identifier will still compile without error). The async modifier can be applied only to methods (and lambda expressions) that return void or (as we’ll see later) a Task or Task<TResult>.

NOTE

The async modifier is similar to the unsafe modifier in that it has no effect on a method’s signature or public metadata; it affects only what happens inside the method. For this reason, it makes no sense to use async in an interface. However it is legal, for instance, to introduce async when overriding a non-async virtual method, as long as you keep the signature the same.

Methods with the async modifier are called asynchronous functions, because they themselves are typically asynchronous. To see why, let’s look at how execution proceeds through an asynchronous function.

Upon encountering an await expression, execution (normally) returns to the caller—rather like with yield return in an iterator. But before returning, the runtime attaches a continuation to the awaited task, ensuring that when the task completes, execution jumps back into the method and continues where it left off. If the task faults, its exception is re-thrown, otherwise its return value is assigned to the await expression. We can summarize everything we just said by looking at the logical expansion of the asynchronous method above:

void DisplayPrimesCount()

{

var awaiter = GetPrimesCountAsync (2, 1000000).GetAwaiter();

awaiter.OnCompleted (() =>

{

int result = awaiter.GetResult();

Console.WriteLine (result);

});

}

The expression upon which you await is typically a task; however any object with a GetAwaiter method that returns an awaitable object (implementing INotifyCompletion.OnCompleted and with a appropriately typed GetResult method and a bool IsCompleted property) will satisfy the compiler.

Notice that our await expression evaluates to an int type; this is because the expression that we awaited was a Task<int> (whose GetAwaiter().GetResult() method returns an int).

Awaiting a nongeneric task is legal and generates a void expression:

await Task.Delay (5000);

Console.WriteLine ("Five seconds passed!");

Capturing local state

The real power of await expressions is that they can appear almost anywhere in code. Specifically, an await expression can appear in place of any expression (within an asynchronous function) except for inside a catch or finally block, lock expression, unsafe context or an executable’s entry point (main method).

In the following example, we await inside a loop:

async void DisplayPrimeCounts()

{

for (int i = 0; i < 10; i++)

Console.WriteLine (await GetPrimesCountAsync (i*1000000+2, 1000000));

}

Upon first executing GetPrimesCount, execution returns to the caller by virtue of the await expression. When the method completes (or faults), execution resumes where it left off, with the values of local variables and loop counters preserved.

Without the await keyword, the simplest equivalent might be the example we wrote in “Why Language Support is Important.” The compiler, however, takes the more general strategy of refactoring such methods into state machines (rather like it does with iterators).

The compiler relies on continuations (via the awaiter pattern) to resume execution after an await expression. This means that if running on the UI thread of a rich client application, the synchronization context ensures execution resumes on the same thread. Otherwise, execution resumes on whatever thread the task finished on. The change-of-thread does not affect the order execution and is of little consequence unless you’re somehow relying on thread affinity, perhaps through the use of thread-local storage (see Thread-Local Storage in Chapter 22). It’s rather like touring a city and hailing taxis to get from one destination to another. With a synchronization context, you’ll always get the same taxi; with no synchronization context, you’ll usually get a different taxi each time. In either case, though, the journey is the same.

Awaiting in a UI

We can demonstrate asynchronous functions in a more practical context by writing a simple UI that remains responsive while calling a compute-bound method. Let’s start with a synchronous solution:

class TestUI : Window

{

Button _button = new Button { Content = "Go" };

TextBlock _results = new TextBlock();

public TestUI()

{

var panel = new StackPanel();

panel.Children.Add (_button);

panel.Children.Add (_results);

Content = panel;

_button.Click += (sender, args) => Go();

}

void Go()

{

for (int i = 1; i < 5; i++)

_results.Text += GetPrimesCount (i * 1000000, 1000000) +

" primes between " + (i*1000000) + " and " + ((i+1)*1000000-1) +

Environment.NewLine;

}

int GetPrimesCount (int start, int count)

{

return ParallelEnumerable.Range (start, count).Count (n =>

Enumerable.Range (2, (int) Math.Sqrt(n)-1).All (i => n % i > 0));

}

}

Upon pressing the “Go” button, the application becomes unresponsive for the time it takes to execute the compute-bound code. There are two steps in asynchronizing this; the first is to switch to the asynchronous version of GetPrimesCount that we used in previous examples:

Task<int> GetPrimesCountAsync (int start, int count)

{

return Task.Run (() =>

ParallelEnumerable.Range (start, count).Count (n =>

Enumerable.Range (2, (int) Math.Sqrt(n)-1).All (i => n % i > 0)));

}

The second step is to modify Go to call GetPrimesCountAsync:

async void Go()

{

_button.IsEnabled = false;

for (int i = 1; i < 5; i++)

_results.Text += await GetPrimesCountAsync (i * 1000000, 1000000) +

" primes between " + (i*1000000) + " and " + ((i+1)*1000000-1) +

Environment.NewLine;

_button.IsEnabled = true;

}

This illustrates the simplicity of programming with asynchronous functions: you program as you would synchronously, but call asynchronous functions instead of blocking functions and await them. Only the code within GetPrimesCountAsync runs on a worker thread; the code in Go“leases” time on the UI thread. We could say that Go executes pseudo-concurrently to the message loop (in that its execution is interspersed with other events that the UI thread processes). With this pseudo-concurrency, the only point at which preemption can occur is during an await. This simplifies thread-safety: in our case, the only problem that this could cause is reentrancy (clicking the button again while it’s running, which we avoid by disabling the button). True concurrency occurs lower in the call stack, inside code called by Task.Run. To benefit from this model, truly concurrent code avoids accessing shared state or UI controls.

To give another example, suppose that instead of calculating prime numbers, we want to download several web pages and sum their lengths. Framework 4.5 exposes numerous task-returning asynchronous methods, one of which is the WebClient class in System.Net. TheDownloadDataTaskAsync method asynchronously downloads a URI to a byte array, returning a Task<byte[]>, so by awaiting it, we get a byte[]. Let’s now rewrite our Go method:

async void Go()

{

_button.IsEnabled = false;

string[] urls = "www.albahari.com www.oreilly.com www.linqpad.net".Split();

int totalLength = 0;

try

{

foreach (string url in urls)

{

var uri = new Uri ("http://" + url);

byte[] data = await new WebClient().DownloadDataTaskAsync (uri);

_results.Text += "Length of " + url + " is " + data.Length +

Environment.NewLine;

totalLength += data.Length;

}

_results.Text += "Total length: " + totalLength;

}

catch (WebException ex)

{

_results.Text += "Error: " + ex.Message;

}

finally { _button.IsEnabled = true; }

}

Again, this mirrors how we’d write it synchronously—including the use of catch and finally blocks. Even though execution returns to the caller after the first await, the finally block does not execute until the method has logically completed (by virtue of all its code executing—or an early return or unhandled exception).

It can be helpful to consider exactly what’s happening underneath. First, we need to revisit the pseudo-code that runs the message loop on the UI thread:

Set synchronization context for this thread to WPF sync context

while (!thisApplication.Ended)

{

wait for something to appear in message queue

Got something: what kind of message is it?

Keyboard/mouse message -> fire an event handler

User BeginInvoke/Invoke message -> execute delegate

}

Event handlers that we attach to UI elements execute via this message loop. When our Go method runs, execution proceeds as far as the await expression, and then returns to the message loop (freeing the UI to respond to further events). The compiler’s expansion of await ensures that before returning, however, a continuation is set up such that execution resumes where it left off upon completion of the task. And because we awaited on a UI thread, the continuation posts to the synchronization context which executes it via the message loop, keeping our entire Go method executing pseudo-concurrently on the UI thread. True (I/O-bound) concurrency occurs within the implementation of DownloadDataTaskAsync.

Comparison to course-grained concurrency

Asynchronous programming was difficult prior to C# 5, not only because there was no language support, but because the .NET Framework exposed asynchronous functionality through clumsy patterns called the EAP and the APM (see Obsolete Patterns), rather than task-returning methods.