Optimized C++ (2016)

Chapter 6. Optimize Dynamically Allocated Variables

That’s where the money is.

Bank robber Willie Sutton (1901–1980)

This quote was attributed to Sutton as the answer to a reporter’s 1952 question, “Why do you rob banks?” Sutton later denied ever having said it.

Except for the use of less-than-optimal algorithms, the naïve use of dynamically allocated variables is the greatest performance killer in C++ programs. Improving a program’s use of dynamically allocated variables is so often “where the money is” that a developer can be an effective optimizer knowing nothing other than how to reduce calls into the memory manager.

C++ features that use dynamically allocated variables, like standard library containers, smart pointers, and strings, make writing applications in C++ productive. But there is a dark side to this expressive power. When performance matters, new is not your friend.

Lest I start a panic, let me say that the goal in optimizing memory management is not to live an ascetic life free of distraction from the many useful C++ features that use dynamically allocated variables. Rather, the goal is to remove performance-robbing, unneeded calls into the memory manager through skillful use of these same features.

My experience is that removing even one call into the memory manager from a loop or frequently called function is enough to significantly boost performance, and there are generally opportunities to remove many more than one call.

Before talking about how to optimize the use of dynamically allocated variables, this chapter offers a review of how C++ thinks about variables. It also reviews the set of tools in the dynamic memory API.

C++ Variables Refresher

Every C++ variable (every variable of plain data type; every array, struct, or class instance) has a fixed layout in memory, the size of which is known at compile time. C++ allows a program to get the size in bytes of a variable and obtain a pointer to that variable, but does not specify the bit-by-bit layout of variables. C++ rules allow the developer to reason about the order and layout of member variables in structs. C++ also provides union types that overlay multiple variables onto the same block of memory, but what the program sees when it looks at a union is implementation-dependent.

Storage Duration of Variables

Every variable has a storage duration, or lifetime, that describes the period of time during which the storage, or memory bytes occupied by the variable, has a meaningful value. The cost to allocate memory for the variable depends on the storage duration.

The C++ syntax for declaring variables can be confusing, due to the need to maintain compatibility with C syntax while adding several new concepts. In C++, the storage duration is not directly specified, but is inferred from the variable’s declaration:

Static storage duration

Variables with static storage duration live in memory reserved by the compiler. Each static variable occupies a fixed amount of memory at a fixed address determined at compile time. The memory for static variables is reserved for the life of the program. Each global static variable is constructed before execution enters main() and destroyed after execution leaves main(). Static variables declared a function scope are only constructed “before the first time execution enters a function”, which can be as early as when global statics are constructed, or as late as the first call to that function. C++ specifies an order of construction and destruction for global static variables so that the developer can reason exactly about their lifetimes, but the rules are so complex that they serve more as a warning than a behavior to make use of.

Each static variable is known by a name, and may also be referred to by pointers and references. The name that refers to a static variable, as well as pointers and references to that variable, may be visible to code in the constructors and destructors of other static variables before it has a meaningful value and after its value is destroyed.

There is no runtime cost to create the storage for static variables. However, the storage is not available for reuse. Static variables are thus appropriate for data that will be used for the whole life of the program.

Variables declared at namespace scope and variables declared static or extern have static storage duration.

Thread-local storage duration

Since C++11, a program can declare variables with thread-local storage duration. Before C++11, some compilers and frameworks provided similar facilities in a nonstandard manner.

Thread-local variables are constructed at thread entry and destroyed at thread exit; their lifetime is the same as the lifetime of the thread. Each thread has its own separate copy of these variables.

Thread-local variables can be more expensive to access than static variables, depending on the operating system and compiler. In some systems, the thread-local storage is allocated by the thread, so that the cost of accessing a thread-local variable is a single indirection greater than that of accessing a static variable. In others, thread-local variables must be accessed through a global table indexed by the thread ID. Although this operation can be performed in constant time, it requires a function call and some computation, making the cost of access considerably greater.

In C++11, variables declared with the thread_local storage class specifier keyword have thread-local storage duration.

Automatic storage duration

Variables with automatic storage duration exist in memory reserved by the compiler on the function call stack. Each automatic variable occupies a fixed amount of memory at a fixed offset from the stack pointer that is determined at compile time, but the absolute address of the automatic variable is not fixed until execution enters the scope of the declaration.

Automatic variables exist during the time that execution is within the scope of the surrounding code block delimited by curly braces. They are constructed at the point where they are declared, and destroyed when execution leaves the surrounding scope.

Like static variables, automatic variables are known by name. Unlike static variables, the name is visible only after the variable is constructed, and before it is destroyed. References and pointers to automatic variables may exist after the variable is destroyed, causing the chaos of undefined behavior if they are dereferenced.

Like with static variables, there is no runtime cost to allocate the storage used by automatic variables. Unlike with static variables, there is a limit to the total amount of memory that can be occupied by automatic variables at any one time. Exceeding this maximum value, via runaway recursion or very deeply nested function calls, causes a stack overflow and abrupt, irrevocable program termination. Automatic variables are appropriate for objects used by the code that surrounds them.

A function’s formal argument variables have automatic storage duration. So do variables declared within executable blocks and not otherwise marked.

Dynamic storage duration

Variables with dynamic storage duration live in memory requested by the running program. The program calls into the memory manager, the collection of C++ runtime system functions and data structures that administers a pool of memory on behalf of the program. The program explicitly requests storage for and constructs the dynamic variable in a new-expression (covered in more detail in “New-Expressions Construct Dynamic Variables”), which may occur at any point in the program. The program later explicitly destroys the variable and returns the variable’s memory to the memory manager in a delete-expression (see “Delete-Expressions Dispose of Dynamic Variables”). This can happen at any point in the program when the variable is no longer needed.

Like with automatic variables, but unlike with static variables, the address of a dynamic variable is determined at run time.

Unlike with static, thread-local, and automatic variables, the declaration syntax for arrays is extended so that the highest dimension of a dynamic array variable may be specified at run time by a (non-constant) expression. This is the only case in C++ where the size of a variable is not fixed at compile time.

A dynamic variable does not have its own name. Instead, when it’s constructed, the C++ memory manager returns a pointer to the dynamic variable. The program must assign this pointer to a variable so it can return the dynamic variable to the memory manager before the last pointer to that variable is destroyed, or risk running out of the memory used for creating dynamic variables. A modern processor can exhaust gigabytes of memory in minutes if dynamic variables are not returned properly.

Unlike static and thread-local variables, the number and type of dynamic variables can change over time without any fixed limit to the amount of storage they consume. Also, unlike with static and automatic variables, there is a significant runtime cost to manage the memory used by dynamic variables.

Variables returned by new-expressions have dynamic storage duration.

Ownership of Variables

Another important concept of C++ variables is ownership. The owner of a variable determines when the variable is created and when it is destroyed. Sometimes the storage duration tells when the variable is created and destroyed, but ownership is a separate concept, and it is an important one for optimization of dynamic variables. Here are some guidelines:

Global ownership

Variables with static storage duration are owned by the program as a whole. They are constructed before execution enters the main() function and destroyed after the program returns from main().

Lexically scoped ownership

Variables with automatic storage duration are owned by a lexical scope consisting of a block of code surrounded by curly braces. This may be a function body; the controlled statement block of an if, while, for, or do statement; a try or catch clause; or a compound statement delimited only by curly braces. They are constructed when execution enters the lexical scope, and destroyed when execution passes out of the lexical scope.

The outermost lexical scope—the scope entered first and exited last—is the body of main(). For all intents and purposes, an automatic variable declared in main() has the same lifetime as a static variable.

Member ownership

Member variables of classes and structs are owned by the class instance that contains them. They are constructed by the class constructor as an instance of the class is constructed, and destroyed as the instance is destroyed.

Ownership of dynamic variables

Dynamic variables have no predefined owner. Rather, the new-expression that creates the dynamic variable returns a pointer, which must be explicitly managed by the program. The dynamic variable must be returned to the memory manager via a delete-expression to be destroyed before the last pointer to the dynamic variable is destroyed. The lifetime of the dynamic variable is thus completely programmable—a powerful but dangerous tool. If a dynamic variable is not returned to the memory manager through a delete-expression before the last pointer to it is destroyed, the memory manager loses track of that variable for the rest of the program’s run time.

Ownership of dynamic variables must be enforced by the developer and encoded into the program logic. It is not controlled by the compiler or defined by C++. Ownership of dynamic variables is important to optimization. Programs with strongly defined ownership can be made more efficient than programs where ownership is diffuse.

Value Objects and Entity Objects

Some variables get their meaning in a program from their contents. They are called value objects. Other variables get their meaning from the role they play in a program. These variables are called entities or entity objects.

C++ does not encode whether a particular variable behaves as a value or entity object. The developer encodes the variable’s role into the program logic. C++ allows the developer to define a copy constructor and an operator==() for any class. The role of a variable determines whether the developer should define these operators. If the developer does not take steps to forbid operations that don’t make sense, the compiler will not complain.

Entity objects may be identified by their common properties:

Entities are unique

Some objects in a program conceptually have a unique identity. The mutex that guards a specific critical region and a symbol table with many entries are examples of objects with unique identities in a program.

Entities are mutable

A program can lock or unlock a mutex, but it is still the same mutex. A program can add symbols to a symbol table, but it’s still the symbol table. You can start your car and drive it to work, and it is still your car. Entities have a meaning as a whole. Changing the state of an entity doesn’t change its fundamental meaning to the program.

Entities are not copyable

Entities are not copied. Their nature comes from the way they are used, not from their bits. You might copy all the bits of the system symbol table into another data structure, but it would not be the system symbol table. The program would still look for the system symbol table in the old place, and the copy would be unused. If the program sometimes modified the copy instead of the original, the symbol table would stop being valid. You might copy the bits of a mutex that guarded a critical section, but locking the copy would not produce mutual exclusion. Mutual exclusion is a property that arises from two threads agreeing to signal each other using a specific set of bits.

Entities are not comparable

The operation of comparing entities for equality does not make sense. Entities are, by their nature, individuals. A comparison must always return false.

Likewise, value objects have common properties that are the opposite of the properties of entity objects:

Values are interchangeable and comparable

The integer 4 and the string "Hello, World!" are values. The expression 2 + 2 compares equal to the value 4, and not equal to the value 5. The value of the expression string("Hello") + string("!") compares equal to the string "Hello!", and not equal to "Hi". The meaning of a value comes from its bits, not from its use in the program.

Values are immutable

There is no operation able to change a 4 into a 5, so that 2 + 2 = 5. You can change an integer variable that holds a 4 so that it now holds a 5. That changes the variable, which is an entity with a unique name. But that doesn’t change the value 4.

Values are copyable

Copying a value makes sense. Two string variables can have the value "foo" and the program is still correct.

Whether a variable is an entity object or a value object determines whether copying and comparison for equality make sense. Entities should not be copied or compared. Whether a class member variable is an entity or a value determines how the class copy constructor should be written. Class instances may share ownership of an entity, but cannot validly copy the entity. Understanding entities and value objects is important because variables that behave as entities are often structures with many dynamically allocated variables that would be expensive to copy if copying even made sense.

C++ Dynamic Variable API Refresher

C++ contains a robust toolkit for managing dynamic variables. Options range from automation to fine control over memory management and the construction of dynamically allocated C++ variables. Even experienced developers may have used only the most basic of these tools. We’ll start with a quick look at those, before going into depth on the features that bear particularly on optimization:

Pointers and references

Dynamic variables in C++ don’t have a name. They are accessed through a C-style pointer or a reference variable. Pointers abstract hardware addresses to hide the complexity and variability of computer architectures. Not every value a pointer variable can hold corresponds to a usable memory location, but there is nothing about the bits that tell the programmer that. One specific value called nullptr in C++11 is guaranteed by the C++ standard never to point to a valid memory location. The integer 0 stood in for nullptr prior to C++11; and is convertible tonullptr in C++11, however, the all-zeros bit pattern in a pointer variable is not necessarily equal to nullptr. C-style pointers that are declared without an initializer have no predefined value (for efficiency’s sake). Reference variables cannot be declared without an initializer, so they always point to valid locations.1

New- and delete-expressions

Dynamic variables in C++ are created via a new-expression (see “New-Expressions Construct Dynamic Variables”). A new-expression gets the storage needed to hold the variable, constructs a variable of a specified type in the storage, and returns a typed pointer to the newly constructed variable. The new-expression for creating arrays is different from the new-expression for creating a single instance, but both return the same type of pointer.

Dynamic variables are disposed of via a delete-expression (see “Delete-Expressions Dispose of Dynamic Variables”). A delete-expression destroys a variable and disposes of its storage. The delete-expression for disposing of an array is different from the one for individual instances. Both operate on a pointer of the same type, however, so you can’t tell from the pointer whether it points to an array or a scalar. The developer must remember the kind of new-expression used to create a dynamic variable and destroy it with a matching kind of delete-expression, or chaos reigns. Undefined behavior results if a pointer-to-array is deleted as a pointer-to-instance, or vice versa.

New-expressions and delete-expressions are baked into the syntax of the C++ language. Here are some brief examples of the basic kinds of new- and delete-expressions that all developers are aware of:

{

int n = 100;

char* cp; // cp has no particular value

Book* bp = nullptr; // bp points to invalid address

// ...

cp = new char[n]; // cp points to new dynamic array

bp = new Book("Optimized C++"); // new dynamic class instance

// ...

char array[sizeof(Book)];

Book* bp2 = new(array) Book("Moby Dick"); // placement new

// ...

delete[] cp; // delete dynamic array before changing pointer

cp = new char; // cp now points to a single dynamic char

// ...

delete bp; // done using class instance

delete cp; // done using dynamically allocated char

bp2->~Book(); // done using placed class instance

}

// delete dynamic variables before pointer goes out of scope

Memory management functions

New-expressions and delete-expressions call memory management functions from the C++ standard library to allocate storage from and return storage to a pool that the C++ standard calls the “free store.” These functions are overloads of operator new(), operator new[]() for arrays, operator delete(), and operator delete[]() for arrays. C++ also provides classic C-library memory management functions such as malloc() and free(), which allocate and release untyped blocks of storage.

Class constructors and destructors

C++ allows each class to define a constructor member function that is called to establish an initial value when an instance of that class is created. Another member function, the destructor, is called whenever a class instance is destroyed. Among other benefits, these special member functions provide a place to put new- and delete-expressions so that any dynamic member variables are automatically managed with the class instance that contains them.

Smart pointers

The C++ standard library provides “smart pointer” template classes that behave like the raw pointer types, except they also delete the pointed-to variables when they go out of scope. Smart pointers remember whether the allocated storage is an array or a single instance, and call the correct delete-expression for the type of the smart pointer. Smart pointers are explored further in the next section.

Allocator templates

The C++ standard library provides allocator templates, which generalize new- and delete-expressions for use with standard containers. Allocators are explored further in “Provide Custom Standard Library Allocators”.

Smart Pointers Automate Ownership of Dynamic Variables

Ownership of dynamic variables is not controlled by the compiler or defined by C++. A program may declare a pointer variable in one place, assign a dynamic variable to the pointer using a new-expression in another place, copy the pointer to another pointer in a third place, and destroy the dynamic variable referenced by that second pointer using a delete-expression in yet another place. However, a program that behaves in this way can be hard to test and debug because ownership of the dynamic variable is so diffuse. Ownership of dynamic variables is enforced by the developer and encoded into the program logic. When ownership is diffuse, every line of the program may potentially create a dynamic variable, add or remove references, or destroy the variable. The developer must potentially trace all execution paths to ensure the dynamic variables are always properly returned to the memory manager.

This complexity can be reduced through the use of programming idioms. One common practice is to declare a pointer variable as a private member of some class. The class constructor can set the pointer to nullptr, copy a pointer argument, or contain a new-expression to create a dynamic variable. Because the pointer is a private member, any change to the pointer must happen within member functions of the class. This limits the number of code lines that can affect the pointer, making coding and debugging far easier. The class destructor can contain a delete-expression for the dynamic variable. An instance of a class that behaves in this way is said to own the dynamic variable.

A simple class may be designed with the single purpose of owning a dynamic variable. In addition to constructing and destroying the dynamic variable, the class also implements operator->() and operator*(). Such a class is called a smart pointer, because it mostly behaves like a C-style pointer, but also destroys the dynamic object it points to when the smart pointer instance is destroyed.

C++ provides a smart pointer template class called std::unique_ptr<T> to maintain ownership of a dynamic variable of type T. unique_ptr compiles to code that is comparable in efficiency to handwritten code.

Automating ownership of dynamic variables

Smart pointers automate ownership of dynamic variables by coupling the lifetime of the dynamic variable to the lifetime of the smart pointer that owns it. The dynamic variable is properly destroyed and its memory is freed automatically, depending on the declaration of the pointer:

· A smart pointer instance declared with automatic storage duration deletes the dynamic variable it owns when execution passes out of the scope enclosing its declaration, whether this happens by executing a break or continue statement, returning from the function, or throwing an exception through the scope.

· A smart pointer instance declared as a class member deletes the dynamic variable it owns when the containing class is destroyed. In addition, because the rules for class destruction cause each member to be destroyed after the class destructor executes, it is not necessary to write explicit code in the destructor to delete the dynamic variable. The smart pointer is deleted by the built-in machinery of C++.

· A smart pointer instance declared with thread-local storage duration deletes the dynamic variable it owns when the thread terminates normally (but not, generally, if the thread is terminated by the operating system).

· A smart pointer instance declared with static storage duration deletes the dynamic variable it owns when the program ends.

Maintaining a single owner in general, and using std::unique_ptr to maintain ownership in particular, makes it significantly easier to reason about whether a dynamic variable points to valid storage, and whether it is properly returned to the memory manager when it is no longer needed. There is little cost penalty for using unique_ptr, so it should be the first choice for developers interested in optimization.

Shared ownership of dynamic variables is more expensive

C++ allows multiple pointers and references to a dynamic variable. Multiple pointers may refer to a dynamic variable if several data structures point to the dynamic variable, or if a pointer to the dynamic variable is passed as a function argument, so that one pointer is in the caller’s scope and another pointer is in the scope of the called function. Multiple pointers to a dynamic variable exist briefly during assignment or construction of an object intended to own a dynamic variable.

During any time that multiple pointers refer to a dynamic variable, the developer must reason about which pointer is the owner. It is up to the developer not to explicitly delete the dynamic variable through a nonowning pointer, dereference any pointer after deleting the dynamic variable, or cause two owning pointers to refer to the same object so that it is deleted twice. This analysis becomes particularly important when an error or exception occurs.

Sometimes ownership of a dynamic variable must be shared between two or more pointers. Ownership must be shared when the lifetimes of two pointers to the variable overlap, but the lifetime of neither pointer fully contains the lifetime of the other.

When ownership must be shared, the C++ standard library template std::shared_ptr<T> provides a smart pointer capable of managing shared ownership. Instances of shared_ptr contain a pointer to the dynamic variable, and another pointer to a dynamic object containing a reference count. When a dynamic variable is assigned to a shared_ptr, the assignment operator constructs the reference counter object and sets the reference count to 1. When one shared_ptr is assigned to another, the reference count is incremented. When a shared_ptr is destroyed, the destructor decrements the reference counter, and deletes the dynamic variable only if the reference count is 0. Expensive atomic increment and decrement operations are performed on the reference count so that shared_ptr works in multithreaded programs. std::shared_ptr is therefore considerably more expensive than either a C-style pointer or std::unique_ptr.

It is up to the developer not to assign a C-style pointer (such as the pointer returned by a new-expression) to multiple smart pointers, but only to assign from one smart pointer to another. If the same C-style pointer is assigned to several smart pointers, the pointer will be deleted several times, resulting in what the C++ standard inscrutibly calls “undefined behavior.” This sounds obvious, but because a smart pointer can be constructed from a plain old pointer, type conversion during argument passing can silently cause this to happen.

std::auto_ptr versus container classes

A pre-C++11 smart pointer called std::auto_ptr<T> can also manage unshared ownership of a dynamic variable. In many ways, auto_ptr behaves like unique_ptr. However, auto_ptr does not implement move semantics (discussed in “Implement Move Semantics”) and does not have a copy constructor.

Most standard library containers prior to C++11 copy-constructed their value type into the container’s internal storage, so auto_ptr could not be used as the value type. Prior to the introduction of unique_ptr, standard library containers had to be programmed using C-style pointers, deep object copies, or shared_ptr. Each of these solutions had problems. Native C-style pointers created risks of errors or memory leaks, deep object copies were inefficient for large objects, and shared_ptr was inherently expensive. Some projects implemented special unsafe smart pointers for container classes whose copy constructor performed a move-like operation, such as using std::swap() to transfer an owned pointer to the constructor. This let many, but not all, container class member functions work as expected. It was efficient, but was unsafe and difficult to debug.

Prior to C++11, it was common practice to use std::shared_ptr for the value type in standard library container class instances. This practice produced correct and debuggable code, but at the expense of shared_ptr’s inefficiency.

Dynamic Variables Have Runtime Cost

Because C++ is compiled to native machine code and directly executed by the computer, the cost of most C++ statements is at most a few memory accesses. However, the cost of allocating memory for a dynamic variable is measured in thousands of memory accesses. This cost is so high, on average, that eliminating even one call into the memory manager can noticeably improve the performance of a function, as demonstrated repeatedly in Chapter 4.

Conceptually, a function for allocating memory searches a collection of free memory blocks looking for a block able to satisfy the request. If the function finds a block the right size, it removes the block from the collection and returns it. If the function finds a block that is much bigger than the request, it can choose to split the block and return a portion. Obviously, this general description leaves room for many implementation choices.

If no free memory blocks are available to fill the request, the allocation function makes an expensive call into the operating system kernel for an additional large memory block from the system’s pool of available memory. The memory returned by the kernel may or may not be cached in physical RAM (see “Memory Has Finite Capacity”), perhaps resulting in even more delay the first time it is accessed. Walking the list of free memory blocks is itself expensive. They are scattered through memory and less likely to be in cache than are memory blocks currently visible to the running program.

The collection of free memory blocks is a resource shared by all the threads of a program. Any changes to the free block collection must be thread-safe. If several threads make frequent calls to allocate or free memory, they experience contention for the memory manager as a resource, causing all but one thread to wait.

A C++ program must free allocated memory when the dynamic variable it contains is no longer needed. Conceptually, a function to free memory puts the returned memory block onto the free block collection. In real implementations, the behavior of the free function is considerably more complex. Most implementations try to coalesce a newly freed memory block with adjacent free blocks. This keeps the free memory collection from filling up with blocks too small to be useful. Calls to the memory freeing function have the same issues of reduced cache performance and contention for multithreaded access to the free blocks as do calls to allocate memory.

Reduce Use of Dynamic Variables

Dynamic variables are a robust solution to many problems. However, they are too expensive to use for solving every problem. Statically created variables can often do the work of dynamic variables.

Create Class Instances Statically

It is possible to create class instances dynamically. However, most class instances that are not part of containers can and generally should be created statically (that is, without using new). Sometimes class instances are created dynamically because the developer does not realize there is another option. For instance, an inexperienced C++ developer who learned Java first might know that typical Java syntax for instantiating a class looks like this:

MyClass myInstance = new MyClass("hello", 123);

If the naïve user types the Java syntax into a C++ program, the C++ compiler encourages him to make myInstance a pointer, saying “cannot convert from ‘MyClass *' to ‘MyClass’.” The slightly more experienced developer might make myInstance a smart pointer to avoid having to explicitly delete the dynamically created class instance, if he is even aware that this is an issue:

MyClass* myInstance = new MyClass("hello", 123);

However, both solutions are wasteful. Instead, the class should usually be statically constructed, like this:

MyClass myInstance("hello", 123);

or:

MyClass anotherMC = MyClass("hello", 123); // maybe less efficient

If myInstance is declared in a block of executable code, it has automatic storage duration when declared in this way. It will be destroyed when execution leaves the block containing this declaration. If the program needs myInstance to persist longer than this, it can declare myInstancewithin an outer scope or a longer-lived object and pass a pointer into functions that need to use myInstance. The declaration can be moved to file scope if myInstance needs to live as long as the whole program.

Create class member variables statically

When member variables of a class are themselves class instances, these members can be constructed statically when the containing class is constructed. This saves the cost of allocating memory for the members.

Sometimes it seems like a class instance must be constructed dynamically because it is a member of another class, and some resource needed to construct the member is not available at the time the enclosing class is constructed. An alternative pattern is to make the problem class (rather than a pointer to it) a member of the containing class, and simply not to initialize the problem class fully when it is constructed. Create a member function in the problem class to initialize the variable fully once resources are available. Then insert a call to the initialization member function at the point where a dynamic instance would have been instantiated using new. This common pattern is called two-part initialization.

There is no cost to two-part initialization. The member could not be used before the point where it was constructed anyway. Any cost of checking whether the member instance was fully initialized is the same as the cost to see whether the pointer to it was nullptr. An additional benefit of the method described here is that an initialization member function can return error codes or other information, whereas a constructor cannot.

Two-part initialization is especially useful when a class must do something during initialization that is time-consuming, like reading a file, or that can fail, like requesting a web page from the Internet. Providing a separate initialization function makes it possible to do either of these kinds of initialization concurrently (see Chapter 12) with other program activity, and makes it easier to repeat the second kind of initialization in case of failure.

Use Static Data Structures

std::string, std::vector, std::map, and std::list are the containers most C++ developers use every day. Used carefully, they can be reasonably efficient. But they’re not the only choices. std::string and std::vector reallocate their storage occasionally to grow as you add items to the container. std::map and std::list allocate a new node for every added item. Sometimes, this is too expensive. We’ll explore alternatives here.

Use std::array instead of std::vector

std::vector allows the program to define dynamically resizable arrays of any type. If the size of the array, or the maximum size, is known at compile time, std::array offers a similar interface, but with a fixed-size array and no calls into the memory manager.

std::array is copy-constructible and offers standard library–style random-access iterators and subscripting via operator[]. size() returns the fixed size of the array.

From an optimization standpoint, std::array is almost indistinguishable from C-style arrays. From a programming standpoint, std::array has a comfortable similarity to the standard library containers.

Create large buffers on the stack

Chapter 4 demonstrated that inserting into a string can be expensive because of the need to reallocate the string’s storage as it grows longer. If the developer knows the maximum possible size the string can reach, or at least its maximum reasonable size, it may be possible to use an oversized C-style array having automatic storage duration as a temporary, mutate the string in the temporary, and then copy the result.

The design pattern is to call a function to create or mutate the data, which declares a very large automatic array. The function copies the data from its argument to the local array and performs insertions, deletions, or other permutations on the static array.

Although there is a limit to the total storage you can declare on the stack, this limit is frequently very large. On desktop systems, for instance, unless the algorithm uses very deep recursion, there is room on the stack for arrays of 10,000 characters.

A cautious developer worried about overflowing the local array can check the length of the argument string or array, and default to a dynamically constructed array if the argument is too big for the local array.

Why is all this copying any faster than using a dynamic data structure like std::string? Partly, it’s because mutating operations often copy the input anyway. Partly it’s because copying blocks of even a few thousand bytes is inexpensive on desktop-class hardware, compared to the cost of allocating dynamic storage for intermediate results.

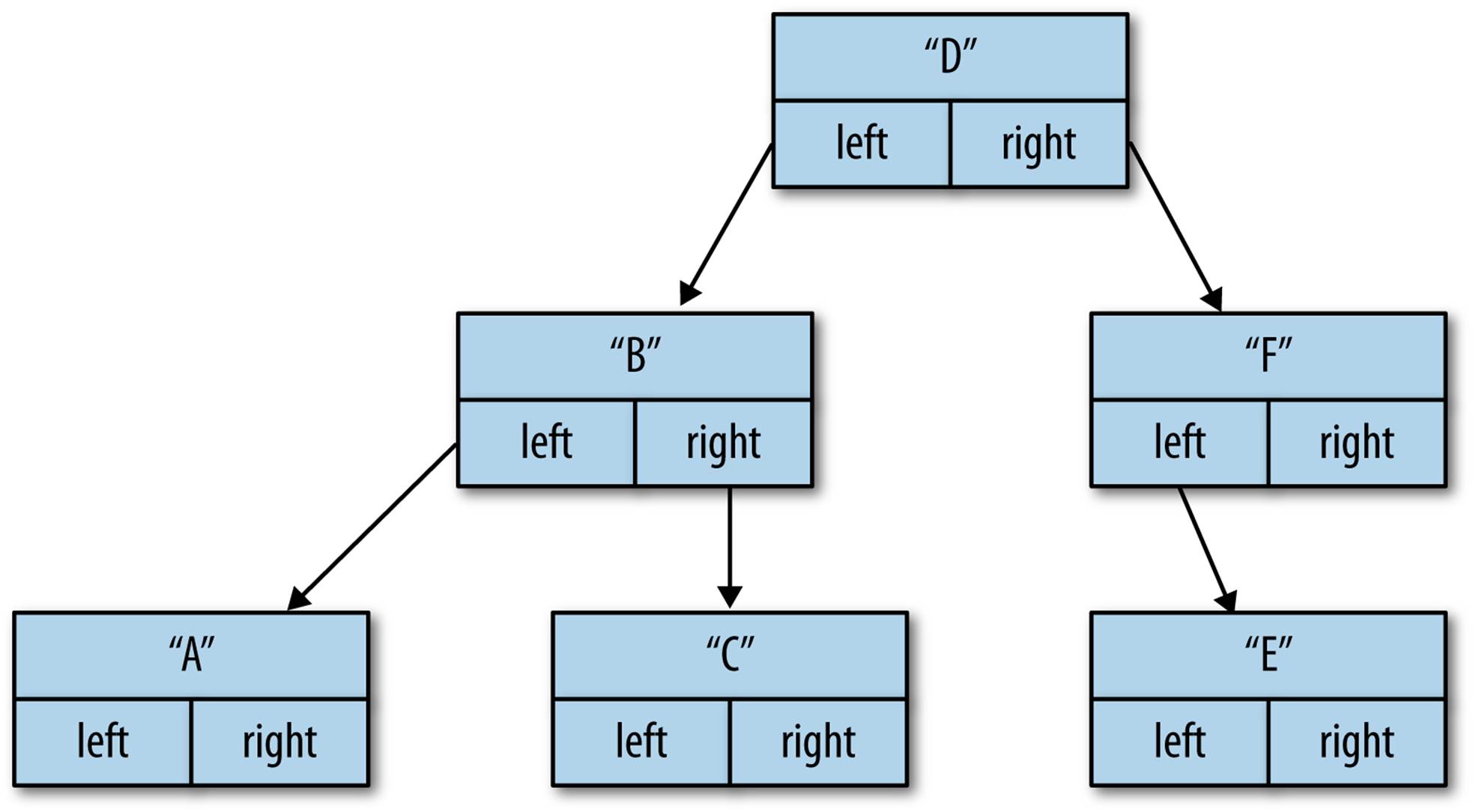

Create linked data structures statically

Linked data structures can be constructed as static initialized data. For instance, the tree structure shown in Figure 6-1 can be reproduced statically.

Figure 6-1. Simple tree structure

In this particular example, the tree is a binary search tree, the nodes are laid out in an array in breadth-first order, and the first element is the root:

struct treenode {

char const* name;

treenode* left;

treenode* right;

} tree[] = {

{ "D", &tree[1], &tree[2] }

{ "B", &tree[3], &tree[4] },

{ "F", &tree[5], nullptr },

{ "A", nullptr, nullptr },

{ "C", nullptr, nullptr },

{ "E", nullptr, nullptr },

};

This works because the addresses of array elements are constant expressions. This notation allows any linked structure to be defined, but the initializers are not very mnemonic, so it’s easy to make a typing error when building such a structure.

Another way to statically create linked structures is to initialize a variable for each element of the structure. This mechanism is very mnemonic, but has the disadvantage that forward references (like the one from fourth to first in the following code) have to be specially declared. The most natural way to declare this structure (first, second, third, fourth) would require extern declarations for all four variables. It’s defined in reverse order so that most of the references are to already-declared variables:

struct cyclenode {

char const* name;

cyclenode* next;

}

extern cyclenode first; // forward reference

cyclenode fourth = { "4", &first );

cyclenode third = { "3", &fourth };

cyclenode second = { "2", &third };

cyclenode first = { "1", &second };

Create binary trees in an array

Most developers learn about binary trees as an example of a linked data structure, in which each node is a separate instance of a class that contains pointers to the left and right child nodes. An unfortunate consequence of defining the tree data structure in this way is that a class storing something as small as a single character requires enough storage for two pointers as well, plus any overhead imposed by the dynamic memory manager.

An alternative approach is to build a binary tree in an array. Instead of containing explicit links to child nodes, the array indexes of a node’s children are computed from the index of the node. If the index of a node is i, its two children are at index 2i and 2i+1. As an additional bonus, the parent of a node is at index i/2. Because these multiplications and divisions can be implemented as left and right shifts, the computation is inexpensive even on extremely modest embedded processors.

The nodes of a tree implemented in an array require a mechanism to determine whether their left and right children are valid nodes in the tree or if they are the equivalent of null pointers. If the tree is left-balanced, a single integer retaining the array index of the first invalid node suffices.

These properties—the ability to compute child and parent nodes, and the efficiency of representation of a left-balanced tree—make a tree-in-an-array an efficient implementation for heap data structures.

A tree-in-an-array representation may be less efficient for balanced binary trees than a linked representation. An array of up to 2n positions may be required to hold a balanced binary tree of n nodes, depending on the balancing algorithm. Further, a balancing operation requires copying nodes to different array locations, rather than merely updating pointers. On smaller processors, and for larger node sizes, the copy operation may be too expensive. But if the node size is smaller than three pointers, the tree-in-an-array representation may still be a performance win.

Use a circular buffer instead of a deque

std::deque and std::list are so often used for a FIFO (first-in-first-out) buffer that the standard library has a container adapter called std::queue.

A double-ended queue, or deque, may also be implemented over a circular buffer. A circular buffer is an array data structure in which the front and back of the queue are given by two array indexes, modulo the length of the array. A circular buffer has similar properties to a deque, including constant-time push_back() and pop_front(), and random-access iterators. However, a circular buffer does not require reallocation as long as the consumer keeps up with the producer. The size of the circular buffer does not determine how many inputs it can process, but instead determines how far ahead the producer can get.

The difference between a circular buffer and a list or deque is the limit to the number of items in the buffer made visible by the circular buffer. Specializing the general queue data structure by exposing this constraint to the user makes a significantly more efficient implementation possible.

Boost has a circular buffer implemented as a standard library container. There are plenty of other implementations on the Web. A circular buffer can be designed with a static buffer whose size is given by a template parameter, with an initial size requiring a single allocation, or with a dynamically resizable buffer like std::vector. The performance of the circular buffer is similar to that of std::vector.

Use std::make_shared Instead of new

A shared pointer such as std::shared_ptr really contains two pointers. One points to the object referred to by the pointer. The other points to a second dynamic variable holding the reference count shared by all the std::shared_ptrs that refer to the object. So, a statement like:

std::shared_ptr<MyClass> p(new MyClass("hello", 123));

calls into the memory manager twice—once to create an instance of MyClass, and once again to create the hidden reference count object.

The pre-C++11 way around the expense of allocating the reference counter is to add an intrusive reference count as a base class of MyClass and create a custom smart pointer that uses the intrusive reference count:

custom_shared_ptr<RCClass> p(new RCClass("Goodbye", 999));

The standard writers heard our pain, and invented a template function called std::make_shared() that allocates a single block of storage to hold both the reference count and an instance of MyClass. std::shared_ptr also has a deleter function that knows which of the two ways the shared pointer was constructed. Now you know why std::shared_ptr seems so complicated internally when you step through it in the debugger.

make_shared() is pretty easy to use:

std::shared_ptr<MyClass> p = std::make_shared<MyClass>("hello", 123);

or the even simpler C++11-style declaration:

auto p = std::make_shared<MyClass>("hello", 123);

Don’t Share Ownership Unnecessarily

Multiple std::shared_ptr instances can share ownership of a dynamic variable. shared_ptr is useful when the lifetimes of the various pointers overlap unpredictably, but it is expensive. Incrementing and decrementing the reference count in a shared_ptr is performed not with a simple increment instruction, but with an expensive atomic increment with a full memory fence (see “Atomic Operations on Shared Variables”) so that shared_ptr works in multithreaded programs.

If the lifetime of one shared_ptr completely encloses the lifetime of another, the expense of the second shared_ptr is unnecessary. The following code illustrates a common situation:

void fiddle(std::shared_ptr<Foo> f);

...

shared_ptr<Foo> myFoo = make_shared<Foo>();

...

fiddle(myFoo);

myFoo owns a dynamic instance of Foo. When the program calls fiddle(), the call creates a second link to the dynamic Foo instance, incrementing the shared_ptr’s reference count. When fiddle() returns, the shared_ptr argument releases its ownership of the dynamic Fooinstance. The caller still owns a pointer. The minimum cost of this is an unnecessary atomic increment and decrement, each with a full memory fence. Across one function call, this extra cost is negligible. But as a programming practice, making every function argument that passes a pointer-to-Foo a shared_ptr across a whole program can result in significant cost.

Making the argument to fiddle() a plain pointer saves this cost:

void fiddle(Foo* f);

...

shared_ptr<Foo> myFoo = make_shared<Foo>();

...

fiddle(myFoo.get());

There is received wisdom that naked C-style pointers should never appear in a program except in the implementation of smart pointers, but another view is that plain pointers are fine as long as they are understood to represent nonowning pointers. In a team where developers feel really strongly that plain pointers are the devil’s playthings, the same result can be accomplished with references. Making a function argument a Foo& sends two messages—that the caller is responsible to ensure the reference remains valid for the duration of the call, and that the pointer is non-null:

void fiddle(Foo& f);

...

shared_ptr<Foo> myFoo = make_shared<Foo>();

...

if (myFoo)

fiddle(*myFoo.get());

The dereferencing operator * converts the pointer-to-Foo returned by get() into a reference to Foo. That is to say, it generates no code at all, just a note to the compiler. References are a convention that means “unowned, non-null pointer.”

Use a “Master Pointer” to Own Dynamic Variables

std::shared_ptr is easy. It automates management of a dynamic variable. But as previously noted, shared pointers are expensive. In many cases, they are unnecessary.

Very often, a single data structure owns a dynamic variable for its whole lifetime. References or pointers to the dynamic variable may be passed to and returned by functions, assigned to variables, and so on, but none of these references outlive the “master” reference.

If there is a master reference, it can be efficiently implemented using std::unique_ptr. A naked C-style pointer or C++ reference can be used to refer to the object during function calls. When this is done consistently, the use of naked pointers and references documents that these are “unowned” pointers.

A faction of developers become very uncomfortable with any deviation from use of std::shared_ptr. However, these same developers use iterators every day, not realizing perhaps that they behave as unowned pointers that can be made invalid. My experience using master pointers in several large projects is that problems leading to memory leaks or double frees do not happen in practice. When there is an obvious owner, master pointers are a big optimization win. When in doubt, std::shared_ptr is always available.

Reduce Reallocation of Dynamic Variables

Often the convenience of a dynamic variable is just too much to pass up. std::string comes to mind. But that doesn’t excuse the developer from being careful. Techniques exist to reduce the number of allocations made when using standard library containers. These techniques can be generalized even to the developer’s own data structures.

Preallocate Dynamic Variables to Prevent Reallocation

As data is appended to a std::string or std::vector, its dynamically allocated internal storage eventually becomes full. The next append operation causes the string or vector to allocate a larger block of storage and copy the old contents to the new storage. Both the call to the memory manager and the copy perform many memory accesses and consume many instructions. Yes, it’s true that an append takes place in O(1) time, but the constant of proportionality (how big the constant time is in milliseconds) can be significant.

Both string and vector have a member function, reserve(size_t n), that tells the string or vector to ensure there is sufficient space for at least n entries. If the size can be computed or estimated, a call to reserve() keeps the string or vector’s internal storage from needing to be reallocated as it grows to this limit:

std::string errmsg;

errmsg.reserve(100); // only one allocation for all this appending

errmsg += "Error 1234: variable ";

errmsg += varname;

errmsg += " was used before set. Undefined behavior.";

A call to reserve() acts as a hint to the string or vector. Unlike with allocating a worst-case static buffer, the only penalty for too low a guess is the possibility of additional reallocations. Even an extravagant overestimate for the expected size is not a problem if the string or vector will be used briefly and then destroyed. The shrink_to_fit() member function of std::string or std::vector can also be used to return unused space to the memory manager after using reserve() to preallocate a string or vector.

The standard library hash table type std::unordered_map (see “std::unordered_map and std::unordered_multimap”) has a backbone array (a list of buckets) containing links to the rest of the data structure. It also has a reserve() member function. Unfortunately, std::deque, which also has a backbone array, lacks a reserve() member function.

Developers designing their own data structures containing a backbone array would do their users a favor by including a function like reserve() to preallocate this backbone.

Create Dynamic Variables Outside of Loops

The following small loop has a big problem. It appends the lines of each file in namelist to the std::string variable config, then extracts a small amount of data from config. The problem is that config is created each time through the loop, and each time through the loop it’s reallocated as it grows longer. Then, at the end of the loop, config goes out of scope and is destroyed, returning its storage to the memory manager:

for (auto& filename : namelist) {

std::string config;

ReadFileXML(filename, config);

ProcessXML(config);

}

One way to make this loop more efficient is to move the declaration of config outside the loop. Inside the loop, config is cleared. However, clear() does not free the dynamically sized buffer inside config. It just sets the length of the contents to zero. After the first time through the loop, config won’t be reallocated unless a subsequent file is significantly larger than the first file:

std::string config;

for (auto& filename : namelist) {

config.clear();

ReadFileXML(filename, config);

ProcessXML(config);

}

A variation on this theme is to make config a class member variable. This latter advice may smell to some developers like advocating the unclean practice of using global variables. And in a sense it is. However, making dynamically allocated variables long lived can have a dramatic impact on performance.

This trick works not just with std::string, but also with std::vector and any other data structure that has a dynamically resizable backbone.

OPTIMIZATION WAR STORY

I once wrote a multithreaded program in which each thread entered a class instance. These threads each logged their activities. The logging functions originally defined a local temporary string into which the log information was formatted. I discovered that when there were more than half a dozen threads, each banging away at the memory manager to create lines of logging information, contention for the memory manager became so great that performance fell precipitously. The solution was to make the temporary string that held each log line a class member that was reused for each logged line. Once the string had been used a few times, it became long enough that reallocation was never required. Replicating this single trick through a large program provided the largest performance boost of any change I made, allowing the program (a telephony server) to scale easily to 10 times as many simultaneous calls.

Eliminate Unneeded Copying

In the classical Kernighan and Ritchie (K & R) definition of C, all the entities that could be directly assigned were primitive types like char, int, float, and pointers, which fit into a single machine register. Thus, any assignment statement like a = b; was efficient, generating only one or two instructions to fetch the value of b and store it into a. In C++, assignment of basic types like char, int, or float is just as efficient.

But there are simple-looking assignment statements that are not efficient in C++. If a and b are instances of the class BigClass, the assignment a = b; calls a member function of BigClass called the assignment operator. The assignment operator can be as simple as copying the fields ofb into a. But the point is that it may do absolutely anything a C++ function can do. BigClass may have dozens of fields to copy. If BigClass owns dynamic variables, these may be copied, resulting in calls to the memory manager. If BigClass owns a std::map with a million entries, or even a char array with a million characters, the cost of the assignment statement may be significant.

In C++, the initialized declaration Foo a = b; may invoke another member function called the copy constructor if Foo names a class. The copy constructor and assignment operator are closely related member functions that mostly do the same thing: copy the fields of one class instance to another. And like with the assignment operator, there is no upper limit to the cost of the copy constructor.

A developer scanning a hot piece of code for optimization opportunities must pay special attention to assignments and declarations, because these are places where expensive copying may lurk. In fact, copying may occur in any of the following places:

· Initialization (calls constructor)

· Assignment (calls assignment operator)

· Function arguments (each argument expression is move- or copy-constructed into its formal argument)

· Function return (calls move or copy constructor, perhaps twice)

· Inserting items into a standard library container (items are move- or copy-constructed)

· Inserting items into a vector (all items are move- or copy-constructed if the vector is reallocated)

Scott Meyers, among many others, covers the topic of copy construction extensively and well in his book Effective C++. The brief notes here are just an outline.

Disable Unwanted Copying in the Class Definition

Not every object in a program should be copied. For instance, objects that behave as entities (see “Value Objects and Entity Objects”) must not be copied or they lose their meaning.

Many objects that behave as entities have a considerable amount of state (a vector of 1,000 strings or a table of 1,000 symbols, for instance). A program that inadvertently copies an entity into a function meant to examine the entity’s state may appear to function correctly, but the runtime cost of the copy may be considerable.

If copying a class instance is expensive or undesirable, one expedient way to avoid this expense is to forbid copying. Declaring the copy constructor and assignment operator private prevents them being called. Since they can’t be called, no definition is even necessary; the declaration alone is enough. For example:

// pre-C++11 way to disable copying

class BigClass {

private:

BigClass(BigClass const&);

BigClass& operator=(BigClass const&);

public:

...

};

In C++11, the delete keyword can be appended to a declaration of the copy constructor and assignment operator to achieve the same result. It’s a good idea to make deleted constructors public because the compiler provides a clearer error message in this case:

// C++11 way to disable copying

class BigClass {

public:

BigClass(BigClass const&) = delete;

BigClass& operator=(BigClass const&) = delete;

...

};

Any attempt to assign an instance of a class declared in this way—or pass it by value to a function, or return it by value, or use it as the value of a standard library container class—will result in compile errors.

Still permitted is to assign or initialize variables with pointers or references to the class, or pass and return references or pointers to the instance in functions. From an optimization standpoint, assigning, passing, or returning a pointer or reference is far more efficient, because the pointer or reference fits into a machine register.

Eliminate Copying on Function Call

I’ll start this section by detailing the overhead during a function call when a C++ program evaluates the arguments. Read carefully, because the consequences for optimization are significant. When a program calls a function, each argument expression is evaluated, and each formal argument is constructed, with the value of the corresponding argument expression as its initializer.

“Constructed” means that a constructor member function of the formal argument is invoked. If the formal argument is a basic type like an int, double, or char*, the basic type’s constructor is conceptual, not an actual function. The program just copies the value into the formal argument’s storage.

If a formal argument is an instance of some class, however, one of the class’s copy constructors is called to initialize the instance. In all but trivial cases, the copy constructor is an actual function. Code is executed to call the function, and for whatever the copy constructor does. If the argument class is a std::list with a million entries, its copy constructor calls the memory manager a million times to create new entries. If the argument is a list of maps of strings, that whole data structure may be copied, node by node. For a very large and complex argument, the copy will probably take long enough to attract the original developer’s attention. But if the argument only had a few entries during testing, there’s a risk that the error will remain undiscovered until the design is long entrenched and becomes an obstacle to scaling the program. Consider the following example:

int Sum(std::list<int> v) {

int sum = 0;

for (auto it : v)

sum += *it;

return sum;

}

When the function Sum() shown here is called, the actual argument is a list: for instance, int total = Sum(MyList);. The formal argument v is also a list. v is constructed by finding a constructor that takes a reference to a list. This is the copy constructor. The copy constructor forstd::list makes a copy of each element of the list. If MyList is always just a few elements long, the cost, while unecessary, is bearable. But the overhead can be burdensome as MyList scales. If it has 1,000 elements, the memory manager is invoked 1,000 times. At the end of the function, the formal argument v goes out of scope, and those 1,000 elements are returned to the free list one by one.

To avoid this expense, formal arguments can be defined as types that have trivial copy constructors. To pass class instances into a function, pointers or references have trivial constructors. In the previous example, v could be a std::list<int> const&. Then, instead of copy-constructing an instance of the class, the reference is initialized with a reference to the actual argument. The reference typically is implemented as a pointer.

Passing a reference to a class instance can improve performance if copy-constructing the instance would call into the memory manager to copy its internal data, as with standard library containers, or when the class contains an array that must be copied, or has many local variables. There is some cost to accessing the instance through a reference: the pointer implementing the reference must be dereferenced each time the instance is accessed. If the function is large, and the function body uses the value of the argument many times, a point of diminishing returns may be reached where the cost of continually dereferencing the reference outweighs the cost savings by avoiding the copy. But for brief functions, passing arguments by reference is a win for all but the smallest classes.

Reference arguments don’t behave exactly like value arguments. A reference argument modified within the function modifies the referenced instance, while modifying a value argument has no effect outside the function. Declaring reference arguments as const prevents accidental modification.

Reference arguments can also introduce aliases, which can cause unanticipated effects. That is, if a function signature is:

void func(Foo& a, Foo& b);

the call func(x,x); introduces an alias. If func() updates a, it will find that b also is suddenly updated.

Eliminate Copying on Function Return

When a function returns a value, the value is copy-constructed into an unnamed temporary of the type returned by the function. Copy construction is trivial for basic types like long or double, or pointers, but when variables are classes, the copy constructor is typically a real function call. The bigger and more complex the class is, the more time-consuming its copy constructor is. Here is a simple example:

std::vector<int> scalar_product(std::vector<int> const& v, int c) {

std::vector<int> result;

result.reserve(v.size());

for (auto val : v)

result.push_back(val * c);

return result;

}

The cost of copy-constructing that already-constructed return value can be avoided in some cases by returning a reference, as discussed in the previous section. Unfortunately, that trick doesn’t work if the value to be returned is computed within the function and assigned to a variable with automatic storage duration. Such a variable goes out of scope when the function returns, leaving a dangling reference to unknown bytes off the end of the stack that will almost immediately be written over. Worse still, computing a result within the function is a very common use case, so that most functions return values, not references.

As if the cost of copy-constructing into the return value wasn’t bad enough, the value returned from a function is often assigned to a variable in the caller, as in auto res = scalar_product(argarray, 10);. So, on top of the copy constructor called inside the function, another copy constructor or assignment operator is invoked in the caller.

This double copy construction was a tremendous performance killer in early C++ programs. Fortunately, the big-brained folks who brought us the C++ standard and the many wonderful C++ compilers found a way to eliminate the extra copy constructor. This optimization is variously calledcopy elision or return value optimization (RVO). Developers may have heard of RVO, and may think it means they can return objects by value without worrying about the cost. This is unfortunately not the case. The conditions under which the compiler can perform RVO are very specific. The function must return a locally constructed object. The compiler must be able to determine that the same object is returned on all control paths. The object must have the same type as the declared return type of the function. To a first order of approximation, if a function is brief and has a single control path, the compiler is quite likely to do RVO. If the function is larger, or the control path is branched, it’s harder for the compiler to determine that RVO is possible. Compilers also vary in the quality of their analysis.

There is a way to eliminate construction of a class instance inside the function, plus both copy constructions (or the equivalent copy constructor followed by assignment operator) that happen on returning from a function. It involves direct action by the developer, so the result is deterministically better than hoping the compiler does RVO in a given situation. Instead of returning a value using a return statement, the value can be returned as an out parameter, a reference argument updated to the returned value inside the function:

void scalar_product(

std::vector<int> const& v,

int c,

vector<int>& result) {

result.clear();

result.reserve(v.size());

for (auto val : v)

result.push_back(val * c);

}

Here, an out parameter named result has been added to the function’s argument list. There are several advantages to this mechanism:

· The object is already constructed when the function is called. Sometimes the object must be cleared or reinitialized, but this is unlikely ever to be more expensive than construction.

· The object updated inside the function need not be copied into an unnamed temporary in the return statement.

· Because the actual data is returned in an argument, the return type of the function can be void, or the return value can be used for status or error information.

· Because the updated object is already bound to a name in the caller, it need not be copied or assigned upon return from the function.

But wait, there’s more. Many data structures (strings, vectors, hash tables) have a dynamically allocated backbone array that can often be reused if a function is called more than once in a program. Sometimes the result of the function must be saved in the caller, but this cost is never more than the cost of the copy constructor that would be called if the function returned a class instance by value.

Are there any extra runtime costs to this mechanism, such as the cost of an extra argument? Not really. What the compiler actually does with an instance-returning function is convert it to a form with an extra argument, a reference to uninitialized storage for the unnamed temporary returned by the function.

There’s one place in C++ where there’s no choice but to return objects by value: operator functions. Developers writing matrix math functions who want to use the readable operator syntax A = B * C; cannot use reference arguments, but must focus instead on carefully implementing operator functions so that they can use RVO and move semantics to maximal efficiency.

Copy Free Libraries

When a buffer, struct, or other data structure to be filled is a function argument, a reference can be passed through several layers of library calls inexpensively. I have heard libraries that implement this behavior described as “copy free” libraries. This pattern appears in many libraries of performance-critical functions. It’s worth learning.

For example, the C++ standard library istream::read() member function has the following signature:

istream& read(char* s, streamsize n);

This function reads up to n bytes into the storage pointed to by s. The buffer s is an out parameter, so the read data doesn’t have to be copied into newly allocated storage. Since s is an argument, istream::read() can use the returned value for something else; in this case, returning its “this” pointer as a reference.

But istream::read() doesn’t actually fetch data from the operating system kernel itself. It calls another function. While implementations differ, it may call the C library function fread(), which has the following signature:

size_t fread(void* ptr, size_t size, size_t nmemb, FILE* stream);

fread() reads size*nmemb bytes of data and stores them in the storage pointed to by ptr. The ptr argument of fread() is the same as the s argument of istream::read().

But fread() isn’t the end of the calling chain. On Linux, fread() calls the standard Unix function read(). On Windows, fread() calls the ReadFile() function from Win32. These two functions have similar signatures:

ssize_t read(int fd, void *buf, size_t count);

BOOL ReadFile(HANDLE hFile, void* buf, DWORD n, DWORD* bytesread,

OVERLAPPED* pOverlapped);

Both functions take a void* to the buffer to fill in, and a maximum number of bytes to read. Although its type has been cast from char* to void* on its way down the calling chain, the pointer refers to the same storage.

There is an alternative design aesthetic that says these structures and buffers should be returned by value. They are created in the function, so they shouldn’t exist prior to the function call. The function is “simpler” with one less argument. C++ allows the developer to return structures by value, so this way must be the “natural” way in C++. With the developers of Unix, Windows, C, and C++ all behind the copy-free style, I am bewildered that there are supporters for this alternative aesthetic, which has a high cost: that of copying the structure or buffer, not once, but several times as it passes up the layers of a library. If the returned value has dynamic variables, the cost may include calling the memory manager several times to make copies. An attempt to allocate the structure once and return a pointer requires ownership of the pointer to be transferred several times. RVO and move semantics only partially address these costs, and require careful attention from the developer to implement well. From the standpoint of performance, a copy-free design is far more efficient.

Implement the “Copy on Write” Idiom

Copy on write (sometimes abbreviated COW) is a programming idiom used to copy class instances containing expensive-to-copy dynamic variables efficiently. COW is a well-known optimization with a long history. It has been used frequently over the years, especially in the implementation of C++ character string classes. CString character strings in Windows use COW. Some older implementations of std::string also use COW. However, the C++11 standard forbids its use in implementing std::string. COW is not always an optimization win, so it must be used judiciously, despite its august tradition.

Normally, if an object that owns a dynamic variable is copied, a new copy must be made of the dynamic variable. This is called a deep copy operation. An object that contains nonowning pointers can get by with copying the pointers, rather than the pointed-to variables. This is called ashallow copy.

The idea of copy on write is that two copies of an object are the same until one of them is modified. So, until one instance or the other is modified, they can share pointers to any expensive-to-copy parts. Copy on write initially performs a shallow copy operation, and delays performing the deep copy until an element of the object is mutated.

In a modern C++ implementation of COW, any class member that refers to a dynamic variable is implemented using a shared-ownership smart pointer such as std::shared_ptr. The class copy constructor copies the shared-ownership pointer, delaying the creation of a new copy of the dynamic variable until any copy wants to mutate the dynamic variable.

Any mutating operation in the class checks the reference count of the shared pointer before proceeding. If the reference count is greater than 1, indicating shared ownership, the operation makes a new copy of the object, swaps the shared pointer member with the shared pointer to the new copy, and releases the old copy, decrementing its reference count. Now the mutating operation can proceed, knowing that the dynamic variable is unshared.

It’s important to construct the dynamic variable in the COW class using std::make_shared(). Otherwise, use of the shared pointer costs an extra trip to the memory manager to get the reference count object. For many dynamic variables, this is the same cost as simply copying the dynamic variable into new storage and assigning it to a (nonsharing) smart pointer. So, unless many copies are made, or mutating operators are not usually called, the COW idiom may not buy anything.

Slice Data Structures

Slicing is a programming idiom in which one variable refers to a portion of another. For instance, the experimental string_view type proposed for C++17 refers to a substring of another character string, containing a char* pointer to the beginning of the substring and a length that places the substring’s end within the string being referred to.

Slices are small, easily copied objects, without the high cost of allocating storage for and copying contents into a subarray or substring. If the sliced data structure is owned by shared pointers, slices can be completely safe. But experience teaches that slices are ephemeral. They typically serve their purpose briefly and then go out of scope, before the sliced data structure can be deleted. string_view, for instance, uses an unowned pointer into the strings.

Implement Move Semantics

With respect to optimization, the move semantics added to C++11 are the biggest thing to happen to C++ in, well, ever. Move semantics solve several recurring problems from previous versions of C++. For example:

· An object is assigned to a variable, causing the internal contents of the object to be copied at significant runtime expense, after which the original object is immediately destroyed. The effort of copying is wasted, because the contents of the original object could have been reused.

· The developer wishes to assign an entity (see “Value Objects and Entity Objects”), such as an auto_ptr or resource handle, to a variable. The “copy” operation in the assignment is undefined on such an object because it has a unique identity.

Both these cases bite hard in dynamic containers such as std::vector, where the internal storage of the container must be reallocated as the number of items in the container grows. The first case makes reallocating a container more expensive than necessary. The second case prevents entities like auto_ptr from being stored in containers at all.

The problem arises because the copy operation performed by copy constructors and assignment operators works fine for basic types and unowned pointers, but doesn’t make sense for entities. Objects with this type of member could be put in C-style arrays, but not in dynamic containers likestd::vector.

Prior to C++11, C++ offered no standard way to efficiently move the contents of a variable to another variable, leaving nothing behind, for situations when the expense of copy was not needed.

Nonstandard Copy Semantics: A Painful Hack

When a variable behaves as an entity, making a copy is typically a one-way ticket to undefined behavior land. It is good practice to disable the copy constructor and assignment operator for such a variable. But containers like std::vector require contained objects to be copied when the container is reallocated, so disabling copy means you can’t use that type of object in a container.

For the desperate designer wanting to put entities into standard library containers before the advent of move semantics, a workaround was to implement assignment in a nonstandard way. For instance, a kind of smart pointer could be created that implemented assignment as shown inExample 6-1.

Example 6-1. Hacky smart pointer noncopying assignment

hacky_ptr& hacky_ptr::operator=(hacky_ptr& rhs) {

if (*this != rhs) {

this->ptr_ = rhs.ptr_;

rhs.ptr_ = nullptr;

}

return *this;

}

This assignment operator compiles and runs. A statement such as q = p; transfers ownership of the pointer to q, setting the pointer in p to nullptr. Ownership is preserved by this definition. A pointer defined in this way works in std::vector:

Although the signature of the assignment operator offers a subtle hint that rhs is modified, which is not usual for assignment, the assignment itself offers no clue to its deviant behavior (see Example 6-2).

Example 6-2. hacky_ptr use causes surprises

hacky_ptr p, q;

p = new Foo;

q = p;

...

p->foo_func(); // surprise, dereferences nullptr

A new developer expecting this code to work will be disappointed, possibly after a lengthy debugging session. Debasing the meaning of “copy” in this way is a coding horror, difficult to justify even when it seems necessary.

std::swap(): The Poor Man’s Move Semantics

Another possible operation between two variables is “swap,” in which the two variables exchange contents. Swap is well defined even when the variables are entities, because at the end of the operation, only one variable contains each entity. C++ provides the template functionstd::swap() to exchange the contents of two variables:

std::vector<int> a(1000000,0);

...

std::vector<int> b; // b is empty

std::swap(v,w); // now b has a million entries

The default instantiation of std::swap() prior to move semantics is equivalent to this:

template <typename T> void std::swap(T& a, T& b) {

T tmp = a; // make a new copy of a

a = b; // copy b to a, discarding a's old value

b = tmp; // copy temp to b, discarding b's old value

}

This default instantiation works only for objects for which the copy operation is defined. It is also potentially inefficient: a’s original value is copied twice, and b’s original value is copied once. If type T contains dynamically allocated members, three copies are made, and two are destroyed. This is more expensive than the conceptual copy operation, which does only one copy and one delete.