High Performance MySQL (2012)

Chapter 5. Indexing for High Performance

Indexes (also called “keys” in MySQL) are data structures that storage engines use to find rows quickly. They also have several other beneficial properties that we’ll explore in this chapter.

Indexes are critical for good performance, and become more important as your data grows larger. Small, lightly loaded databases often perform well even without proper indexes, but as the dataset grows, performance can drop very quickly.[51] Unfortunately, indexes are often forgotten or misunderstood, so poor indexing is a leading cause of real-world performance problems. That’s why we put this material early in the book—even earlier than our discussion of query optimization.

Index optimization is perhaps the most powerful way to improve query performance. Indexes can improve performance by many orders of magnitude, and optimal indexes can sometimes boost performance about two orders of magnitude more than indexes that are merely “good.” Creating truly optimal indexes will often require you to rewrite queries, so this chapter and the next one are closely related.

Indexing Basics

The easiest way to understand how an index works in MySQL is to think about the index in a book. To find out where a particular topic is discussed in a book, you look in the index, and it tells you the page number(s) where that term appears.

In MySQL, a storage engine uses indexes in a similar way. It searches the index’s data structure for a value. When it finds a match, it can find the row that contains the match. Suppose you run the following query:

mysql> SELECT first_name FROM sakila.actor WHERE actor_id = 5;

There’s an index on the actor_id column, so MySQL will use the index to find rows whose actor_id is 5. In other words, it performs a lookup on the values in the index and returns any rows containing the specified value.

An index contains values from one or more columns in a table. If you index more than one column, the column order is very important, because MySQL can only search efficiently on a leftmost prefix of the index. Creating an index on two columns is not the same as creating two separate single-column indexes, as you’ll see.

IF I USE AN ORM, DO I NEED TO CARE?

The short version: yes, you still need to learn about indexing, even if you rely on an object-relational mapping (ORM) tool.

ORMs produce logically and syntactically correct queries (most of the time), but they rarely produce index-friendly queries, unless you use them for only the most basic types of queries, such as primary key lookups. You can’t expect your ORM, no matter how sophisticated, to handle the subtleties and complexities of indexing. Read the rest of this chapter if you disagree! It’s sometimes a hard job for an expert human to puzzle through all of the possibilities, let alone an ORM.

Types of Indexes

There are many types of indexes, each designed to perform well for different purposes. Indexes are implemented in the storage engine layer, not the server layer. Thus, they are not standardized: indexing works slightly differently in each engine, and not all engines support all types of indexes. Even when multiple engines support the same index type, they might implement it differently under the hood.

That said, let’s look at the index types MySQL currently supports, their benefits, and their drawbacks.

B-Tree indexes

When people talk about an index without mentioning a type, they’re probably referring to a B-Tree index, which typically uses a B-Tree data structure to store its data.[52] Most of MySQL’s storage engines support this index type. The Archive engine is the exception: it didn’t support indexes at all until MySQL 5.1, when it started to allow a single indexed AUTO_INCREMENT column.

We use the term “B-Tree” for these indexes because that’s what MySQL uses in CREATE TABLE and other statements. However, storage engines might use different storage structures internally. For example, the NDB Cluster storage engine uses a T-Tree data structure for these indexes, even though they’re labeled BTREE, and InnoDB uses B+Trees. The variations in the structures and algorithms are out of scope for this book, though.

Storage engines use B-Tree indexes in various ways, which can affect performance. For instance, MyISAM uses a prefix compression technique that makes indexes smaller, but InnoDB leaves values uncompressed in its indexes. Also, MyISAM indexes refer to the indexed rows by their physical storage locations, but InnoDB refers to them by their primary key values. Each variation has benefits and drawbacks.

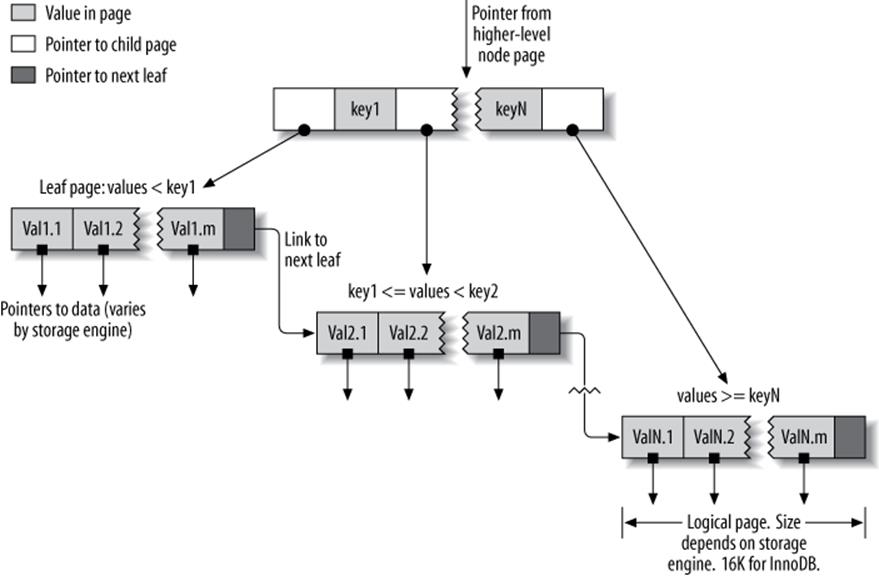

The general idea of a B-Tree is that all the values are stored in order, and each leaf page is the same distance from the root. Figure 5-1 shows an abstract representation of a B-Tree index, which corresponds roughly to how InnoDB’s indexes work. MyISAM uses a different structure, but the principles are similar.

A B-Tree index speeds up data access because the storage engine doesn’t have to scan the whole table to find the desired data. Instead, it starts at the root node (not shown in this figure). The slots in the root node hold pointers to child nodes, and the storage engine follows these pointers. It finds the right pointer by looking at the values in the node pages, which define the upper and lower bounds of the values in the child nodes. Eventually, the storage engine either determines that the desired value doesn’t exist or successfully reaches a leaf page.

Figure 5-1. An index built on a B-Tree (technically, a B+Tree) structure

Leaf pages are special, because they have pointers to the indexed data instead of pointers to other pages. (Different storage engines have different types of “pointers” to the data.) Our illustration shows only one node page and its leaf pages, but there might be many levels of node pages between the root and the leaves. The tree’s depth depends on how big the table is.

Because B-Trees store the indexed columns in order, they’re useful for searching for ranges of data. For instance, descending the tree for an index on a text field passes through values in alphabetical order, so looking for “everyone whose name begins with I through K” is efficient.

Suppose you have the following table:

CREATE TABLE People (

last_name varchar(50) not null,

first_name varchar(50) not null,

dob date not null,

gender enum('m', 'f')not null,

key(last_name, first_name, dob)

);

The index will contain the values from the last_name, first_name, and dob columns for every row in the table. Figure 5-2 illustrates how the index arranges the data it stores.

Figure 5-2. Sample entries from a B-Tree (technically, a B+Tree) index

Notice that the index sorts the values according to the order of the columns given in the index in the CREATE TABLE statement. Look at the last two entries: there are two people with the same name but different birth dates, and they’re sorted by birth date.

Types of queries that can use a B-Tree index

B-Tree indexes work well for lookups by the full key value, a key range, or a key prefix. They are useful only if the lookup uses a leftmost prefix of the index.[53] The index we showed in the previous section will be useful for the following kinds of queries:

Match the full value

A match on the full key value specifies values for all columns in the index. For example, this index can help you find a person named Cuba Allen who was born on 1960-01-01.

Match a leftmost prefix

This index can help you find all people with the last name Allen. This uses only the first column in the index.

Match a column prefix

You can match on the first part of a column’s value. This index can help you find all people whose last names begin with J. This uses only the first column in the index.

Match a range of values

This index can help you find people whose last names are between Allen and Barrymore. This also uses only the first column.

Match one part exactly and match a range on another part

This index can help you find everyone whose last name is Allen and whose first name starts with the letter K (Kim, Karl, etc.). This is an exact match on last_name and a range query on first_name.

Index-only queries

B-Tree indexes can normally support index-only queries, which are queries that access only the index, not the row storage. We discuss this optimization in Covering Indexes.

Because the tree’s nodes are sorted, they can be used for both lookups (finding values) and ORDER BY queries (finding values in sorted order). In general, if a B-Tree can help you find a row in a particular way, it can help you sort rows by the same criteria. So, our index will be helpful forORDER BY clauses that match all the types of lookups we just listed.

Here are some limitations of B-Tree indexes:

§ They are not useful if the lookup does not start from the leftmost side of the indexed columns. For example, this index won’t help you find all people named Bill or all people born on a certain date, because those columns are not leftmost in the index. Likewise, you can’t use the index to find people whose last name ends with a particular letter.

§ You can’t skip columns in the index. That is, you won’t be able to find all people whose last name is Smith and who were born on a particular date. If you don’t specify a value for the first_name column, MySQL can use only the first column of the index.

§ The storage engine can’t optimize accesses with any columns to the right of the first range condition. For example, if your query is WHERE last_name="Smith" AND first_name LIKE 'J%' AND dob='1976-12-23', the index access will use only the first two columns in the index, because the LIKE is a range condition (the server can use the rest of the columns for other purposes, though). For a column that has a limited number of values, you can often work around this by specifying equality conditions instead of range conditions. We show detailed examples of this in the indexing case study later in this chapter.

Now you know why we said the column order is extremely important: these limitations are all related to column ordering. For optimal performance, you might need to create indexes with the same columns in different orders to satisfy your queries.

Some of these limitations are not inherent to B-Tree indexes, but are a result of how the MySQL query optimizer and storage engines use indexes. Some of them might be removed in the future.

Hash indexes

A hash index is built on a hash table and is useful only for exact lookups that use every column in the index.[54] For each row, the storage engine computes a hash code of the indexed columns, which is a small value that will probably differ from the hash codes computed for other rows with different key values. It stores the hash codes in the index and stores a pointer to each row in a hash table.

In MySQL, only the Memory storage engine supports explicit hash indexes. They are the default index type for Memory tables, though Memory tables can have B-Tree indexes, too. The Memory engine supports nonunique hash indexes, which is unusual in the database world. If multiple values have the same hash code, the index will store their row pointers in the same hash table entry, using a linked list.

Here’s an example. Suppose we have the following table:

CREATE TABLE testhash (

fname VARCHAR(50) NOT NULL,

lname VARCHAR(50) NOT NULL,

KEY USING HASH(fname)

) ENGINE=MEMORY;

containing this data:

mysql> SELECT * FROM testhash;

+--------+-----------+

| fname | lname |

+--------+-----------+

| Arjen | Lentz |

| Baron | Schwartz |

| Peter | Zaitsev |

| Vadim | Tkachenko |

+--------+-----------+

Now suppose the index uses an imaginary hash function called f(), which returns the following values (these are just examples, not real values):

f('Arjen')= 2323

f('Baron')= 7437

f('Peter')= 8784

f('Vadim')= 2458

The index’s data structure will look like this:

|

Slot |

Value |

|

2323 |

Pointer to row 1 |

|

2458 |

Pointer to row 4 |

|

7437 |

Pointer to row 2 |

|

8784 |

Pointer to row 3 |

Notice that the slots are ordered, but the rows are not. Now, when we execute this query:

mysql> SELECT lname FROM testhash WHERE fname='Peter';

MySQL will calculate the hash of 'Peter' and use that to look up the pointer in the index. Because f('Peter') = 8784, MySQL will look in the index for 8784 and find the pointer to row 3. The final step is to compare the value in row 3 to 'Peter', to make sure it’s the right row.

Because the indexes themselves store only short hash values, hash indexes are very compact. As a result, lookups are usually lightning fast. However, hash indexes have some limitations:

§ Because the index contains only hash codes and row pointers rather than the values themselves, MySQL can’t use the values in the index to avoid reading the rows. Fortunately, accessing the in-memory rows is very fast, so this doesn’t usually degrade performance.

§ MySQL can’t use hash indexes for sorting because they don’t store rows in sorted order.

§ Hash indexes don’t support partial key matching, because they compute the hash from the entire indexed value. That is, if you have an index on (A,B) and your query’s WHERE clause refers only to A, the index won’t help.

§ Hash indexes support only equality comparisons that use the =, IN(), and <=> operators (note that <> and <=> are not the same operator). They can’t speed up range queries, such as WHERE price > 100.

§ Accessing data in a hash index is very quick, unless there are many collisions (multiple values with the same hash). When there are collisions, the storage engine must follow each row pointer in the linked list and compare their values to the lookup value to find the right row(s).

§ Some index maintenance operations can be slow if there are many hash collisions. For example, if you create a hash index on a column with a very low selectivity (many hash collisions) and then delete a row from the table, finding the pointer from the index to that row might be expensive. The storage engine will have to examine each row in that hash key’s linked list to find and remove the reference to the one row you deleted.

These limitations make hash indexes useful only in special cases. However, when they match the application’s needs, they can improve performance dramatically. An example is in data-warehousing applications where a classic “star” schema requires many joins to lookup tables. Hash indexes are exactly what a lookup table requires.

In addition to the Memory storage engine’s explicit hash indexes, the NDB Cluster storage engine supports unique hash indexes. Their functionality is specific to the NDB Cluster storage engine, which we don’t cover in this book.

The InnoDB storage engine has a special feature called adaptive hash indexes. When InnoDB notices that some index values are being accessed very frequently, it builds a hash index for them in memory on top of B-Tree indexes. This gives its B-Tree indexes some properties of hash indexes, such as very fast hashed lookups. This process is completely automatic, and you can’t control or configure it, although you can disable the adaptive hash index altogether.

Building your own hash indexes

If your storage engine doesn’t support hash indexes, you can emulate them yourself in a manner similar to that InnoDB uses. This will give you access to some of the desirable properties of hash indexes, such as a very small index size for very long keys.

The idea is simple: create a pseudohash index on top of a standard B-Tree index. It will not be exactly the same thing as a real hash index, because it will still use the B-Tree index for lookups. However, it will use the keys’ hash values for lookups, instead of the keys themselves. All you need to do is specify the hash function manually in the query’s WHERE clause.

An example of when this approach works well is for URL lookups. URLs generally cause B-Tree indexes to become huge, because they’re very long. You’d normally query a table of URLs like this:

mysql> SELECT id FROM url WHERE url="http://www.mysql.com";

But if you remove the index on the url column and add an indexed url_crc column to the table, you can use a query like this:

mysql> SELECT id FROM url WHERE url="http://www.mysql.com"

-> AND url_crc=CRC32("http://www.mysql.com");

This works well because the MySQL query optimizer notices there’s a small, highly selective index on the url_crc column and does an index lookup for entries with that value (1560514994, in this case). Even if several rows have the same url_crc value, it’s very easy to find these rows with a fast integer comparison and then examine them to find the one that matches the full URL exactly. The alternative is to index the full URL as a string, which is much slower.

One drawback to this approach is the need to maintain the hash values. You can do this manually or, in MySQL 5.0 and newer, you can use triggers. The following example shows how triggers can help maintain the url_crc column when you insert and update values. First, we create the table:

CREATE TABLE pseudohash (

id int unsigned NOT NULL auto_increment,

url varchar(255) NOT NULL,

url_crc int unsigned NOT NULL DEFAULT 0,

PRIMARY KEY(id)

);

Now we create the triggers. We change the statement delimiter temporarily, so we can use a semicolon as a delimiter for the trigger:

DELIMITER //

CREATE TRIGGER pseudohash_crc_ins BEFORE INSERT ON pseudohash FOR EACH ROW BEGIN

SET NEW.url_crc=crc32(NEW.url);

END;

//

CREATE TRIGGER pseudohash_crc_upd BEFORE UPDATE ON pseudohash FOR EACH ROW BEGIN

SET NEW.url_crc=crc32(NEW.url);

END;

//

DELIMITER ;

All that remains is to verify that the trigger maintains the hash:

mysql> INSERT INTO pseudohash (url) VALUES ('http://www.mysql.com');

mysql> SELECT * FROM pseudohash;

+----+----------------------+------------+

| id | url | url_crc |

+----+----------------------+------------+

| 1 | http://www.mysql.com | 1560514994 |

+----+----------------------+------------+

mysql> UPDATE pseudohash SET url='http://www.mysql.com/' WHERE id=1;

mysql> SELECT * FROM pseudohash;

+----+---------------------- +------------+

| id | url | url_crc |

+----+---------------------- +------------+

| 1 | http://www.mysql.com/ | 1558250469 |

+----+---------------------- +------------+

If you use this approach, you should not use SHA1() or MD5() hash functions. These return very long strings, which waste a lot of space and result in slower comparisons. They are cryptographically strong functions designed to virtually eliminate collisions, which is not your goal here. Simple hash functions can offer acceptable collision rates with better performance.

If your table has many rows and CRC32() gives too many collisions, implement your own 64-bit hash function. Make sure you use a function that returns an integer, not a string. One way to implement a 64-bit hash function is to use just part of the value returned by MD5(). This is probably less efficient than writing your own routine as a user-defined function (see Chapter 7), but it’ll do in a pinch:

mysql> SELECT CONV(RIGHT(MD5('http://www.mysql.com/'), 16), 16, 10) AS HASH64;

+---------------------+

| HASH64 |

+---------------------+

| 9761173720318281581 |

+---------------------+

Handling hash collisions

When you search for a value by its hash, you must also include the literal value in your WHERE clause:

mysql> SELECT id FROM url WHERE url_crc=CRC32("http://www.mysql.com")

-> AND url="http://www.mysql.com";

The following query will not work correctly, because if another URL has the CRC32() value 1560514994, the query will return both rows:

mysql> SELECT id FROM url WHERE url_crc=CRC32("http://www.mysql.com");

The probability of a hash collision grows much faster than you might think, due to the so-called Birthday Paradox. CRC32() returns a 32-bit integer value, so the probability of a collision reaches 1% with as few as 93,000 values. To illustrate this, we loaded all the words in/usr/share/dict/words into a table along with their CRC32() values, resulting in 98,569 rows. There is already one collision in this set of data! The collision makes the following query return more than one row:

mysql> SELECT word, crc FROM words WHERE crc = CRC32('gnu');

+---------+------------+

| word | crc |

+---------+------------+

| codding | 1774765869 |

| gnu | 1774765869 |

+---------+------------+

The correct query is as follows:

mysql> SELECT word, crc FROM words WHERE crc = CRC32('gnu')AND word = 'gnu';

+------+------------+

| word | crc |

+------+------------+

| gnu | 1774765869 |

+------+------------+

To avoid problems with collisions, you must specify both conditions in the WHERE clause. If collisions aren’t a problem—for example, because you’re doing statistical queries and you don’t need exact results—you can simplify, and gain some efficiency, by using only the CRC32() value in the WHERE clause. You can also use the FNV64() function, which ships with Percona Server and can be installed as a plugin in any version of MySQL. It’s 64 bits long, very fast, and much less prone to collisions than CRC32().

Spatial (R-Tree) indexes

MyISAM supports spatial indexes, which you can use with partial types such as GEOMETRY. Unlike B-Tree indexes, spatial indexes don’t require your WHERE clauses to operate on a leftmost prefix of the index. They index the data by all dimensions at the same time. As a result, lookups can use any combination of dimensions efficiently. However, you must use the MySQL GIS functions, such as MBRCONTAINS(), for this to work, and MySQL’s GIS support isn’t great, so most people don’t use it. The go-to solution for GIS in an open source RDBMS is PostGIS in PostgreSQL.

Full-text indexes

FULLTEXT is a special type of index that finds keywords in the text instead of comparing values directly to the values in the index. Full-text searching is completely different from other types of matching. It has many subtleties, such as stopwords, stemming and plurals, and Boolean searching. It is much more analogous to what a search engine does than to simple WHERE parameter matching.

Having a full-text index on a column does not eliminate the value of a B-Tree index on the same column. Full-text indexes are for MATCH AGAINST operations, not ordinary WHERE clause operations.

We discuss full-text indexing in more detail in Chapter 7.

Other types of index

Several third-party storage engines use different types of data structures for their indexes. For example, TokuDB uses fractal tree indexes. This is a newly developed data structure that has some of the same benefits as B-Tree indexes, without some of the drawbacks. As you read through this chapter, you’ll see many InnoDB topics, including clustered indexes and covering indexes. In most cases, the discussions of InnoDB apply equally well to TokuDB.

ScaleDB uses Patricia tries (that’s not a typo), and other technologies such as InfiniDB or Infobright have their own special data structures for optimizing queries.

[51] This chapter assumes you’re using conventional hard drives, unless otherwise stated. Solid-state drives have different performance characteristics, which we cover throughout this book. The indexing principles remain true, but the penalties we’re trying to avoid aren’t as large with solid-state drives as they are with conventional drives.

[52] Many storage engines actually use a B+Tree index, in which each leaf node contains a link to the next for fast range traversals through nodes. Refer to computer science literature for a detailed explanation of B-Tree indexes.

[53] This is MySQL-specific, and even version-specific. Some other databases can use nonleading index parts, though it’s usually more efficient to use a complete prefix. MySQL might offer this option in the future; we show workarounds later in the chapter.

[54] See the computer science literature for more on hash tables.

Benefits of Indexes

Indexes enable the server to navigate quickly to a desired position in the table, but that’s not all they’re good for. As you’ve probably gathered by now, indexes have several additional benefits, based on the properties of the data structures used to create them.

B-Tree indexes, which are the most common type you’ll use, function by storing the data in sorted order, and MySQL can exploit that for queries with clauses such as ORDER BY and GROUP BY. Because the data is presorted, a B-Tree index also stores related values close together. Finally, the index actually stores a copy of the values, so some queries can be satisfied from the index alone. Three main benefits proceed from these properties:

1. Indexes reduce the amount of data the server has to examine.

2. Indexes help the server avoid sorting and temporary tables.

3. Indexes turn random I/O into sequential I/O.

This subject really deserves an entire book. For those who would like to dig in deeply, we recommend Relational Database Index Design and the Optimizers, by Tapio Lahdenmaki and Mike Leach (Wiley). It explains topics such as how to calculate the costs and benefits of indexes, how to estimate query speed, and how to determine whether indexes will be more expensive to maintain than the benefit they provide.

Lahdenmaki and Leach’s book also introduces a three-star system for grading how suitable an index is for a query. The index earns one star if it places relevant rows adjacent to each other, a second star if its rows are sorted in the order the query needs, and a final star if it contains all the columns needed for the query.

We’ll return to these principles throughout this chapter.

IS AN INDEX THE BEST SOLUTION?

An index isn’t always the right tool. At a high level, keep in mind that indexes are most effective when they help the storage engine find rows without adding more work than they avoid. For very small tables, it is often more effective to simply read all the rows in the table. For medium to large tables, indexes can be very effective. For enormous tables, the overhead of indexing, as well as the work required to actually use the indexes, can start to add up. In such cases you might need to choose a technique that identifies groups of rows that are interesting to the query, instead of individual rows. You can use partitioning for this purpose; see Chapter 7.

If you have lots of tables, it can also make sense to create a metadata table to store some characteristics of interest for your queries. For example, if you execute queries that perform aggregations over rows in a multitenant application whose data is partitioned into many tables, you can record which users of the system are actually stored in each table, thus letting you simply ignore tables that don’t have information about those users. These tactics are usually useful only at extremely large scales. In fact, this is a crude approximation of what Infobright does. At the scale of terabytes, locating individual rows doesn’t make sense; indexes are replaced by per-block metadata.

Indexing Strategies for High Performance

Creating the correct indexes and using them properly is essential to good query performance. We’ve introduced the different types of indexes and explored their strengths and weaknesses. Now let’s see how to really tap into the power of indexes.

There are many ways to choose and use indexes effectively, because there are many special-case optimizations and specialized behaviors. Determining what to use when and evaluating the performance implications of your choices are skills you’ll learn over time. The following sections will help you understand how to use indexes effectively.

Isolating the Column

We commonly see queries that defeat indexes or prevent MySQL from using the available indexes. MySQL generally can’t use indexes on columns unless the columns are isolated in the query. “Isolating” the column means it should not be part of an expression or be inside a function in the query.

For example, here’s a query that can’t use the index on actor_id:

mysql> SELECT actor_id FROM sakila.actor WHERE actor_id + 1 = 5;

A human can easily see that the WHERE clause is equivalent to actor_id = 4, but MySQL can’t solve the equation for actor_id. It’s up to you to do this. You should get in the habit of simplifying your WHERE criteria, so the indexed column is alone on one side of the comparison operator.

Here’s another example of a common mistake:

mysql> SELECT ... WHERE TO_DAYS(CURRENT_DATE) - TO_DAYS(date_col) <= 10;

Prefix Indexes and Index Selectivity

Sometimes you need to index very long character columns, which makes your indexes large and slow. One strategy is to simulate a hash index, as we showed earlier in this chapter. But sometimes that isn’t good enough. What can you do?

You can often save space and get good performance by indexing the first few characters instead of the whole value. This makes your indexes use less space, but it also makes them less selective. Index selectivity is the ratio of the number of distinct indexed values (the cardinality) to the total number of rows in the table (#T), and ranges from 1/#T to 1. A highly selective index is good because it lets MySQL filter out more rows when it looks for matches. A unique index has a selectivity of 1, which is as good as it gets.

A prefix of the column is often selective enough to give good performance. If you’re indexing BLOB or TEXT columns, or very long VARCHAR columns, you must define prefix indexes, because MySQL disallows indexing their full length.

The trick is to choose a prefix that’s long enough to give good selectivity, but short enough to save space. The prefix should be long enough to make the index nearly as useful as it would be if you’d indexed the whole column. In other words, you’d like the prefix’s cardinality to be close to the full column’s cardinality.

To determine a good prefix length, find the most frequent values and compare that list to a list of the most frequent prefixes. There’s no good table to demonstrate this in the Sakila sample database, so we derive one from the city table, just so we have enough data to work with:

CREATE TABLE sakila.city_demo(city VARCHAR(50) NOT NULL);

INSERT INTO sakila.city_demo(city) SELECT city FROM sakila.city;

-- Repeat the next statement five times:

INSERT INTO sakila.city_demo(city) SELECT city FROM sakila.city_demo;

-- Now randomize the distribution (inefficiently but conveniently):

UPDATE sakila.city_demo

SET city = (SELECT city FROM sakila.city ORDER BY RAND() LIMIT 1);

Now we have an example dataset. The results are not realistically distributed, and we used RAND(), so your results will vary, but that doesn’t matter for this exercise. First, we find the most frequently occurring cities:

mysql> SELECT COUNT(*) AS cnt, city

-> FROM sakila.city_demo GROUP BY city ORDER BY cnt DESC LIMIT 10;

+-----+----------------+

| cnt | city |

+-----+----------------+

| 65 | London |

| 49 | Hiroshima |

| 48 | Teboksary |

| 48 | Pak Kret |

| 48 | Yaound |

| 47 | Tel Aviv-Jaffa |

| 47 | Shimoga |

| 45 | Cabuyao |

| 45 | Callao |

| 45 | Bislig |

+-----+----------------+

Notice that there are roughly 45 to 65 occurrences of each value. Now we find the most frequently occurring city name prefixes, beginning with three-letter prefixes:

mysql> SELECT COUNT(*) AS cnt, LEFT(city, 3) AS pref

-> FROM sakila.city_demo GROUP BY pref ORDER BY cnt DESC LIMIT 10;

+-----+------+

| cnt | pref |

+-----+------+

| 483 | San |

| 195 | Cha |

| 177 | Tan |

| 167 | Sou |

| 163 | al- |

| 163 | Sal |

| 146 | Shi |

| 136 | Hal |

| 130 | Val |

| 129 | Bat |

+-----+------+

There are many more occurrences of each prefix, so there are many fewer unique prefixes than unique full-length city names. The idea is to increase the prefix length until the prefix becomes nearly as selective as the full length of the column. A little experimentation shows that 7 is a good value:

mysql> SELECT COUNT(*) AS cnt, LEFT(city, 7) AS pref

-> FROM sakila.city_demo GROUP BY pref ORDER BY cnt DESC LIMIT 10;

+-----+---------+

| cnt | pref |

+-----+---------+

| 70 | Santiag |

| 68 | San Fel |

| 65 | London |

| 61 | Valle d |

| 49 | Hiroshi |

| 48 | Teboksa |

| 48 | Pak Kre |

| 48 | Yaound |

| 47 | Tel Avi |

| 47 | Shimoga |

+-----+---------+

Another way to calculate a good prefix length is by computing the full column’s selectivity and trying to make the prefix’s selectivity close to that value. Here’s how to find the full column’s selectivity:

mysql> SELECT COUNT(DISTINCT city)/COUNT(*) FROM sakila.city_demo;

+-------------------------------+

| COUNT(DISTINCT city)/COUNT(*) |

+-------------------------------+

| 0.0312 |

+-------------------------------+

The prefix will be about as good, on average (there’s a caveat here, though), if we target a selectivity near .031. It’s possible to evaluate many different lengths in one query, which is useful on very large tables. Here’s how to find the selectivity of several prefix lengths in one query:

mysql> SELECT COUNT(DISTINCT LEFT(city, 3))/COUNT(*) AS sel3,

-> COUNT(DISTINCT LEFT(city, 4))/COUNT(*) AS sel4,

-> COUNT(DISTINCT LEFT(city, 5))/COUNT(*) AS sel5,

-> COUNT(DISTINCT LEFT(city, 6))/COUNT(*) AS sel6,

-> COUNT(DISTINCT LEFT(city, 7))/COUNT(*) AS sel7

-> FROM sakila.city_demo;

+--------+--------+--------+--------+--------+

| sel3 | sel4 | sel5 | sel6 | sel7 |

+--------+--------+--------+--------+--------+

| 0.0239 | 0.0293 | 0.0305 | 0.0309 | 0.0310 |

+--------+--------+--------+--------+--------+

This query shows that increasing the prefix length results in successively smaller improvements as it approaches seven characters.

It’s not a good idea to look only at average selectivity. The caveat is that the worst-case selectivity matters, too. The average selectivity might make you think a four- or five-character prefix is good enough, but if your data is very uneven, that could be a trap. If you look at the number of occurrences of the most common city name prefixes using a value of 4, you’ll see the unevenness clearly:

mysql> SELECT COUNT(*) AS cnt, LEFT(city, 4) AS pref

-> FROM sakila.city_demo GROUP BY pref ORDER BY cnt DESC LIMIT 5;

+-----+------+

| cnt | pref |

+-----+------+

| 205 | San |

| 200 | Sant |

| 135 | Sout |

| 104 | Chan |

| 91 | Toul |

+-----+------+

With four characters, the most frequent prefixes occur quite a bit more often than the most frequent full-length values. That is, the selectivity on those values is lower than the average selectivity. If you have a more realistic dataset than this randomly generated sample, you’re likely to see this effect even more. For example, building a four-character prefix index on real-world city names will give terrible selectivity on cities that begin with “San” and “New,” of which there are many.

Now that we’ve found a good value for our sample data, here’s how to create a prefix index on the column:

mysql> ALTER TABLE sakila.city_demo ADD KEY (city(7));

Prefix indexes can be a great way to make indexes smaller and faster, but they have downsides too: MySQL cannot use prefix indexes for ORDER BY or GROUP BY queries, nor can it use them as covering indexes.

A common case we’ve found to benefit from prefix indexes is when long hexadecimal identifiers are used. We discussed more efficient techniques of storing such identifiers in the previous chapter, but what if you’re using a packaged solution that you can’t modify? We see this frequently with vBulletin and other applications that use MySQL to store website sessions, keyed on long hex strings. Adding an index on the first eight characters or so often boosts performance significantly, in a way that’s completely transparent to the application.

NOTE

Sometimes suffix indexes make sense (e.g., for finding all email addresses from a certain domain). MySQL does not support reversed indexes natively, but you can store a reversed string and index a prefix of it. You can maintain the index with triggers; see Building your own hash indexes.

Multicolumn Indexes

Multicolumn indexes are often very poorly understood. Common mistakes are to index many or all of the columns separately, or to index columns in the wrong order.

We’ll discuss column order in the next section. The first mistake, indexing many columns separately, has a distinctive signature in SHOW CREATE TABLE:

CREATE TABLE t (

c1 INT,

c2 INT,

c3 INT,

KEY(c1),

KEY(c2),

KEY(c3)

);

This strategy of indexing often results when people give vague but authoritative-sounding advice such as “create indexes on columns that appear in the WHERE clause.” This advice is very wrong. It will result in one-star indexes at best. These indexes can be many orders of magnitude slower than truly optimal indexes. Sometimes when you can’t design a three-star index, it’s much better to ignore the WHERE clause and pay attention to optimal row order or create a covering index instead.

Individual indexes on lots of columns won’t help MySQL improve performance for most queries. MySQL 5.0 and newer can cope a little with such poorly indexed tables by using a strategy known as index merge, which permits a query to make limited use of multiple indexes from a single table to locate desired rows. Earlier versions of MySQL could use only a single index, so when no single index was good enough to help, MySQL often chose a table scan. For example, the film_actor table has an index on film_id and an index on actor_id, but neither is a good choice for both WHERE conditions in this query:

mysql> SELECT film_id, actor_id FROM sakila.film_actor

-> WHERE actor_id = 1 OR film_id = 1;

In older MySQL versions, that query would produce a table scan unless you wrote it as the UNION of two queries:

mysql> SELECT film_id, actor_id FROM sakila.film_actor WHERE actor_id = 1

-> UNION ALL

-> SELECT film_id, actor_id FROM sakila.film_actor WHERE film_id = 1

-> AND actor_id <> 1;

In MySQL 5.0 and newer, however, the query can use both indexes, scanning them simultaneously and merging the results. There are three variations on the algorithm: union for OR conditions, intersection for AND conditions, and unions of intersections for combinations of the two. The following query uses a union of two index scans, as you can see by examining the Extra column:

mysql> EXPLAIN SELECT film_id, actor_id FROM sakila.film_actor

-> WHERE actor_id = 1 OR film_id = 1\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: film_actor

type: index_merge

possible_keys: PRIMARY,idx_fk_film_id

key: PRIMARY,idx_fk_film_id

key_len: 2,2

ref: NULL

rows: 29

Extra: Using union(PRIMARY,idx_fk_film_id); Using where

MySQL can use this technique on complex queries, so you might see nested operations in the Extra column for some queries.

The index merge strategy sometimes works very well, but it’s more common for it to actually be an indication of a poorly indexed table:

§ When the server intersects indexes (usually for AND conditions), it usually means that you need a single index with all the relevant columns, not multiple indexes that have to be combined.

§ When the server unions indexes (usually for OR conditions), sometimes the algorithm’s buffering, sorting, and merging operations use lots of CPU and memory resources. This is especially true if not all of the indexes are very selective, so the scans return lots of rows to the merge operation.

§ Recall that the optimizer doesn’t account for this cost—it optimizes just the number of random page reads. This can make it “underprice” the query, which might in fact run more slowly than a plain table scan. The intensive memory and CPU usage also tends to impact concurrent queries, but you won’t see this effect when you run the query in isolation. Sometimes rewriting such queries with a UNION, the way you used to have to do in MySQL 4.1 and earlier, is more optimal.

When you see an index merge in EXPLAIN, you should examine the query and table structure to see if this is really the best you can get. You can disable index merges with the optimizer_switch option or variable. You can also use IGNORE INDEX.

Choosing a Good Column Order

One of the most common causes of confusion we’ve seen is the order of columns in an index. The correct order depends on the queries that will use the index, and you must think about how to choose the index order such that rows are sorted and grouped in a way that will benefit the query. (This section applies to B-Tree indexes, by the way; hash and other index types don’t store their data in sorted order as B-Tree indexes do.)

The order of columns in a multicolumn B-Tree index means that the index is sorted first by the leftmost column, then by the next column, and so on. Therefore, the index can be scanned in either forward or reverse order, to satisfy queries with ORDER BY, GROUP BY, and DISTINCT clauses that match the column order exactly.

As a result, the column order is vitally important in multicolumn indexes. The column order either enables or prevents the index from earning “stars” in Lahdenmaki and Leach’s three-star system (see Benefits of Indexes earlier in this chapter for more on the three-star system). We will show many examples of how this works through the rest of this chapter.

There is an old rule of thumb for choosing column order: place the most selective columns first in the index. How useful is this suggestion? It can be helpful in some cases, but it’s usually much less important than avoiding random I/O and sorting, all things considered. (Specific cases vary, so there’s no one-size-fits-all rule. That alone should tell you that this rule of thumb is probably less important than you think.)

Placing the most selective columns first can be a good idea when there is no sorting or grouping to consider, and thus the purpose of the index is only to optimize WHERE lookups. In such cases, it might indeed work well to design the index so that it filters out rows as quickly as possible, so it’s more selective for queries that specify only a prefix of the index in the WHERE clause. However, this depends not only on the selectivity (overall cardinality) of the columns, but also on the actual values you use to look up rows—the distribution of values. This is the same type of consideration we explored for choosing a good prefix length. You might actually need to choose the column order such that it’s as selective as possible for the queries that you’ll run most.

Let’s use the following query as an example:

SELECT * FROM payment WHERE staff_id = 2 AND customer_id = 584;

Should you create an index on (staff_id, customer_id), or should you reverse the column order? We can run some quick queries to help examine the distribution of values in the table and determine which column has a higher selectivity. Let’s transform the query to count the cardinality of each predicate[55] in the WHERE clause:

mysql> SELECT SUM(staff_id = 2), SUM(customer_id = 584) FROM payment\G

*************************** 1. row ***************************

SUM(staff_id = 2): 7992

SUM(customer_id = 584): 30

According to the rule of thumb, we should place customer_id first in the index, because the predicate matches fewer rows in the table. We can then run the query again to see how selective staff_id is within the range of rows selected by this specific customer ID:

mysql> SELECT SUM(staff_id = 2) FROM payment WHERE customer_id = 584\G

*************************** 1. row ***************************

SUM(staff_id = 2): 17

Be careful with this technique, because the results depend on the specific constants supplied for the chosen query. If you optimize your indexes for this query and other queries don’t fare as well, the server’s performance might suffer overall, or some queries might run unpredictably.

If you’re using the “worst” sample query from a report from a tool such as pt-query-digest, this technique can be an effective way to see what might be the most helpful indexes for your queries and your data. But if you don’t have specific samples to run, it might be better to use the old rule of thumb, which is to look at the cardinality across the board, not just for one query:

mysql> SELECT COUNT(DISTINCT staff_id)/COUNT(*) AS staff_id_selectivity,

> COUNT(DISTINCT customer_id)/COUNT(*) AS customer_id_selectivity,

> COUNT(*)

> FROM payment\G

*************************** 1. row ***************************

staff_id_selectivity: 0.0001

customer_id_selectivity: 0.0373

COUNT(*): 16049

customer_id has higher selectivity, so again the answer is to put that column first in the index:

mysql> ALTER TABLE payment ADD KEY(customer_id, staff_id);

As with prefix indexes, problems often arise from special values that have higher than normal cardinality. For example, we have seen applications treat users who aren’t logged in as “guest” users, who get a special user ID in session tables and other places where user activity is recorded. Queries involving that user ID are likely to behave very differently from other queries, because there are usually a lot of sessions that aren’t logged in. We’ve also seen system accounts cause similar problems. One application had a magical administrative account, which wasn’t a real user, who was “friends” with every user of the whole website so that it could send status notices and other messages. That user’s huge list of friends was causing severe performance problems for the site.

This is actually fairly typical. Any outlier, even if it’s not an artifact of a poor decision in how the application is managed, can cause problems. Users who really do have lots of friends, photos, status messages, and the like can be just as troublesome as fake users.

Here’s a real example we saw once, on a product forum where users exchanged stories and experiences about the product. Queries of this particular form were running very slowly:

mysql> SELECT COUNT(DISTINCT threadId) AS COUNT_VALUE

-> FROM Message

-> WHERE (groupId = 10137) AND (userId = 1288826) AND (anonymous = 0)

-> ORDER BY priority DESC, modifiedDate DESC

This query appeared not to have a very good index, so the customer asked us to see if it could be improved. The EXPLAIN follows:

id: 1

select_type: SIMPLE

table: Message

type: ref

key: ix_groupId_userId

key_len: 18

ref: const,const

rows: 1251162

Extra: Using where

The index that MySQL chose for this query is on (groupId, userId), which would seem like a pretty decent choice if we had no information about the column cardinality. However, a different picture emerged when we looked at how many rows matched that user ID and group ID:

mysql> SELECT COUNT(*), SUM(groupId = 10137),

-> SUM(userId = 1288826), SUM(anonymous = 0)

-> FROM Message\G

*************************** 1. row ***************************

count(*): 4142217

sum(groupId = 10137): 4092654

sum(userId = 1288826): 1288496

sum(anonymous = 0): 4141934

It turned out that this group owned almost every row in the table, and the user had 1.3 million rows—in this case, there simply isn’t an index that can help! This was because the data was migrated from another application, and all of the messages were assigned to the administrative user and group as part of the import process. The solution to this problem was to change the application code to recognize this special-case user ID and group ID, and not issue this query for that user.

The moral of this little story is that rules of thumb and heuristics can be useful, but you have to be careful not to assume that average-case performance is representative of special-case performance. Special cases can wreck performance for the whole application.

In the end, although the rule of thumb about selectivity and cardinality is interesting to explore, other factors—such as sorting, grouping, and the presence of range conditions in the query’s WHERE clause—can make a much bigger difference to query performance.

Clustered Indexes

Clustered indexes[56] aren’t a separate type of index. Rather, they’re an approach to data storage. The exact details vary between implementations, but InnoDB’s clustered indexes actually store a B-Tree index and the rows together in the same structure.

When a table has a clustered index, its rows are actually stored in the index’s leaf pages. The term “clustered” refers to the fact that rows with adjacent key values are stored close to each other.[57] You can have only one clustered index per table, because you can’t store the rows in two places at once. (However, covering indexes let you emulate multiple clustered indexes; more on this later.)

Because storage engines are responsible for implementing indexes, not all storage engines support clustered indexes. We focus on InnoDB in this section, but the principles we discuss are likely to be at least partially true for any storage engine that supports clustered indexes now or in the future.

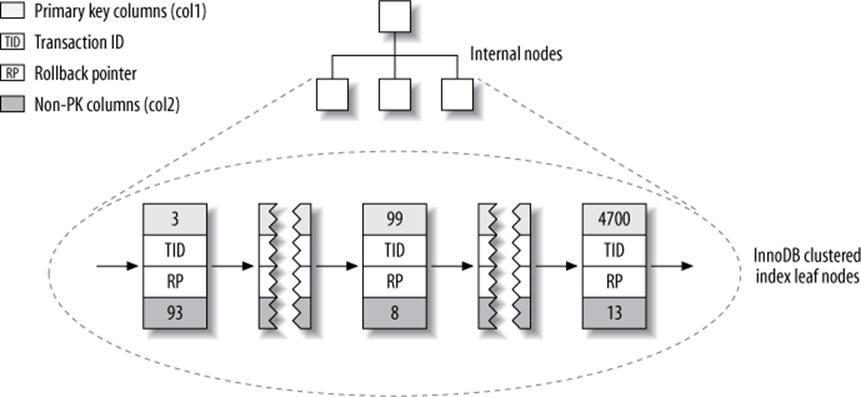

Figure 5-3 shows how records are laid out in a clustered index. Notice that the leaf pages contain full rows but the node pages contain only the indexed columns. In this case, the indexed column contains integer values.

Figure 5-3. Clustered index data layout

Some database servers let you choose which index to cluster, but none of MySQL’s built-in storage engines does at the time of this writing. InnoDB clusters the data by the primary key. That means that the “indexed column” in Figure 5-3 is the primary key column.

If you don’t define a primary key, InnoDB will try to use a unique nonnullable index instead. If there’s no such index, InnoDB will define a hidden primary key for you and then cluster on that. InnoDB clusters records together only within a page. Pages with adjacent key values might be distant from each other.

A clustering primary key can help performance, but it can also cause serious performance problems. Thus, you should think carefully about clustering, especially when you change a table’s storage engine from InnoDB to something else (or vice versa).

Clustering data has some very important advantages:

§ You can keep related data close together. For example, when implementing a mailbox, you can cluster by user_id, so you can retrieve all of a single user’s messages by fetching only a few pages from disk. If you didn’t use clustering, each message might require its own disk I/O.

§ Data access is fast. A clustered index holds both the index and the data together in one B-Tree, so retrieving rows from a clustered index is normally faster than a comparable lookup in a nonclustered index.

§ Queries that use covering indexes can use the primary key values contained at the leaf node.

These benefits can boost performance tremendously if you design your tables and queries to take advantage of them. However, clustered indexes also have disadvantages:

§ Clustering gives the largest improvement for I/O-bound workloads. If the data fits in memory the order in which it’s accessed doesn’t really matter, so clustering doesn’t give much benefit.

§ Insert speeds depend heavily on insertion order. Inserting rows in primary key order is the fastest way to load data into an InnoDB table. It might be a good idea to reorganize the table with OPTIMIZE TABLE after loading a lot of data if you didn’t load the rows in primary key order.

§ Updating the clustered index columns is expensive, because it forces InnoDB to move each updated row to a new location.

§ Tables built upon clustered indexes are subject to page splits when new rows are inserted, or when a row’s primary key is updated such that the row must be moved. A page split happens when a row’s key value dictates that the row must be placed into a page that is full of data. The storage engine must split the page into two to accommodate the row. Page splits can cause a table to use more space on disk.

§ Clustered tables can be slower for full table scans, especially if rows are less densely packed or stored nonsequentially because of page splits.

§ Secondary (nonclustered) indexes can be larger than you might expect, because their leaf nodes contain the primary key columns of the referenced rows.

§ Secondary index accesses require two index lookups instead of one.

The last point can be a bit confusing. Why would a secondary index require two index lookups? The answer lies in the nature of the “row pointers” the secondary index stores. Remember, a leaf node doesn’t store a pointer to the referenced row’s physical location; rather, it stores the row’s primary key values.

That means that to find a row from a secondary index, the storage engine first finds the leaf node in the secondary index and then uses the primary key values stored there to navigate the primary key and find the row. That’s double work: two B-Tree navigations instead of one.[58] In InnoDB, the adaptive hash index can help reduce this penalty.

Comparison of InnoDB and MyISAM data layout

The differences between clustered and nonclustered data layouts, and the corresponding differences between primary and secondary indexes, can be confusing and surprising. Let’s see how InnoDB and MyISAM lay out the following table:

CREATE TABLE layout_test (

col1 int NOT NULL,

col2 int NOT NULL,

PRIMARY KEY(col1),

KEY(col2)

);

Suppose the table is populated with primary key values 1 to 10,000, inserted in random order and then optimized with OPTIMIZE TABLE. In other words, the data is arranged optimally on disk, but the rows might be in a random order. The values for col2 are randomly assigned between 1 and 100, so there are lots of duplicates.

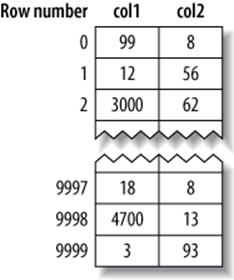

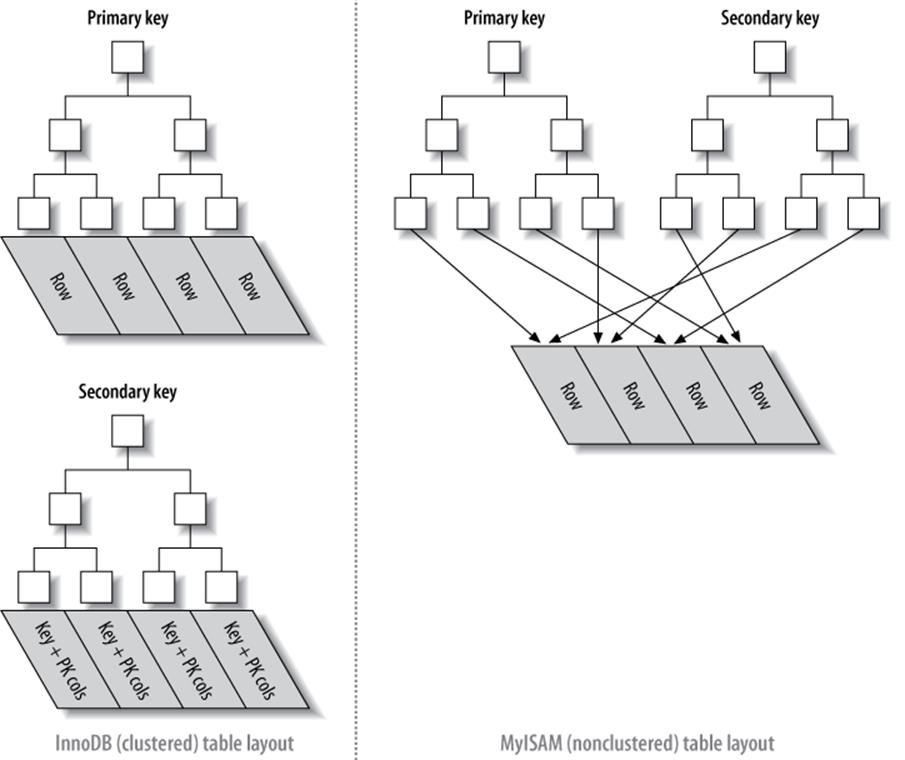

MyISAM’s data layout

MyISAM’s data layout is simpler, so we’ll illustrate that first. MyISAM stores the rows on disk in the order in which they were inserted, as shown in Figure 5-4.

We’ve shown the row numbers, beginning at 0, beside the rows. Because the rows are fixed-size, MyISAM can find any row by seeking the required number of bytes from the beginning of the table. (MyISAM doesn’t always use “row numbers,” as we’ve shown; it uses different strategies depending on whether the rows are fixed-size or variable-size.)

This layout makes it easy to build an index. We illustrate with a series of diagrams, abstracting away physical details such as pages and showing only “nodes” in the index. Each leaf node in the index can simply contain the row number. Figure 5-5 illustrates the table’s primary key.

Figure 5-4. MyISAM data layout for the layout_test table

Figure 5-5. MyISAM primary key layout for the layout_test table

We’ve glossed over some of the details, such as how many internal B-Tree nodes descend from the one before, but that’s not important to understanding the basic data layout of a nonclustered storage engine.

What about the index on col2? Is there anything special about it? As it turns out, no—it’s just an index like any other. Figure 5-6 illustrates the col2 index.

Figure 5-6. MyISAM col2 index layout for the layout_test table

In fact, in MyISAM, there is no structural difference between a primary key and any other index. A primary key is simply a unique, nonnullable index named PRIMARY.

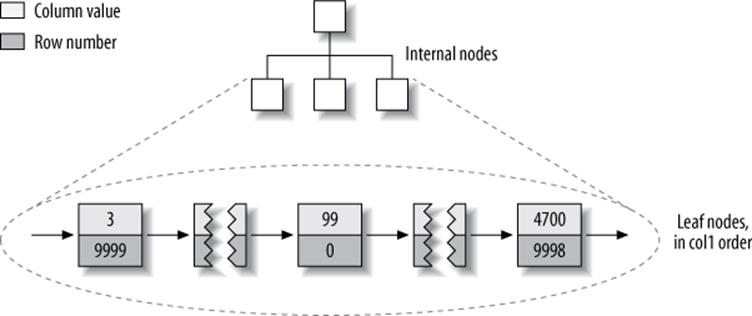

InnoDB’s data layout

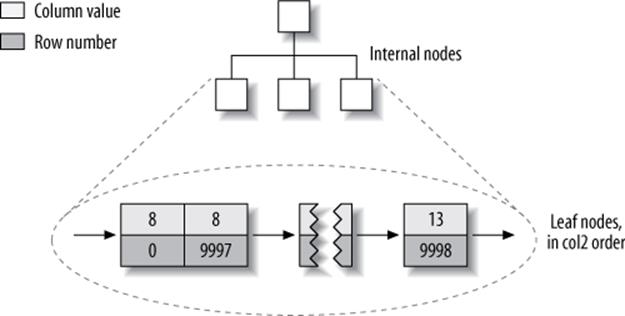

InnoDB stores the same data very differently because of its clustered organization. InnoDB stores the table as shown in Figure 5-7.

Figure 5-7. InnoDB primary key layout for the layout_test table

At first glance, that might not look very different from Figure 5-5. But look again, and notice that this illustration shows the whole table, not just the index. Because the clustered index “is” the table in InnoDB, there’s no separate row storage as there is for MyISAM.

Each leaf node in the clustered index contains the primary key value, the transaction ID, and rollback pointer InnoDB uses for transactional and MVCC purposes, and the rest of the columns (in this case, col2). If the primary key is on a column prefix, InnoDB includes the full column value with the rest of the columns.

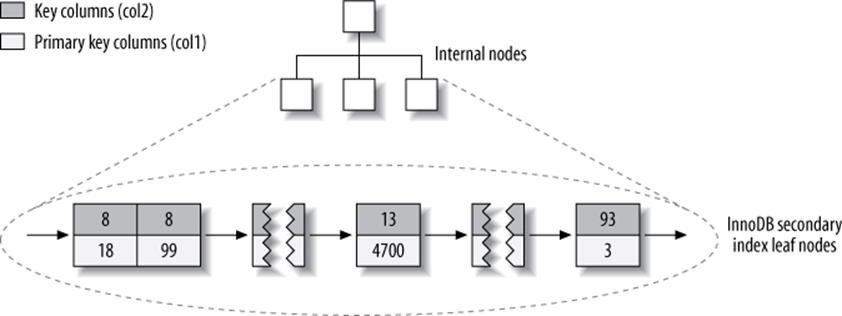

Also in contrast to MyISAM, secondary indexes are very different from clustered indexes in InnoDB. Instead of storing “row pointers,” InnoDB’s secondary index leaf nodes contain the primary key values, which serve as the “pointers” to the rows. This strategy reduces the work needed to maintain secondary indexes when rows move or when there’s a data page split. Using the row’s primary key values as the pointer makes the index larger, but it means InnoDB can move a row without updating pointers to it.

Figure 5-8 illustrates the col2 index for the example table. Each leaf node contains the indexed columns (in this case just col2), followed by the primary key values (col1).

Figure 5-8. InnoDB secondary index layout for the layout_test table

These diagrams have illustrated the B-Tree leaf nodes, but we intentionally omitted details about the non-leaf nodes. InnoDB’s non-leaf B-Tree nodes each contain the indexed column(s), plus a pointer to the next-deeper node (which might be either another non-leaf node or a leaf node). This applies to all indexes, clustered and secondary.

Figure 5-9 is an abstract diagram of how InnoDB and MyISAM arrange the table. This illustration makes it easier to see how differently InnoDB and MyISAM store data and indexes.

If you don’t understand why and how clustered and nonclustered storage are different, and why it’s so important, don’t worry. It will become clearer as you learn more, especially in the rest of this chapter and in the next chapter. These concepts are complicated, and they take a while to understand fully.

Inserting rows in primary key order with InnoDB

If you’re using InnoDB and don’t need any particular clustering, it can be a good idea to define a surrogate key, which is a primary key whose value is not derived from your application’s data. The easiest way to do this is usually with an AUTO_INCREMENT column. This will ensure that rows are inserted in sequential order and will offer better performance for joins using primary keys.

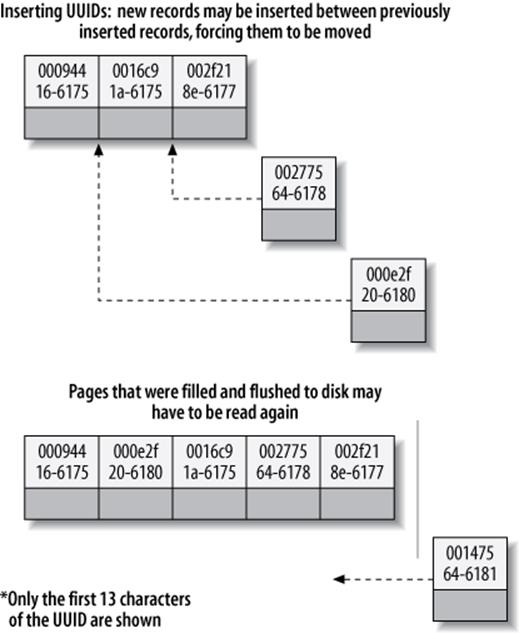

It is best to avoid random (nonsequential and distributed over a large set of values) clustered keys, especially for I/O-bound workloads. For example, using UUID values is a poor choice from a performance standpoint: it makes clustered index insertion random, which is a worst-case scenario, and does not give you any helpful data clustering.

To demonstrate, we benchmarked two cases. The first is inserting into a userinfo table with an integer ID, defined as follows:

CREATE TABLE userinfo (

id int unsigned NOT NULL AUTO_INCREMENT,

name varchar(64) NOT NULL DEFAULT '',

email varchar(64) NOT NULL DEFAULT '',

password varchar(64) NOT NULL DEFAULT '',

dob date DEFAULT NULL,

address varchar(255) NOT NULL DEFAULT '',

city varchar(64) NOT NULL DEFAULT '',

state_id tinyint unsigned NOT NULL DEFAULT '0',

zip varchar(8) NOT NULL DEFAULT '',

country_id smallint unsigned NOT NULL DEFAULT '0',

gender ('M','F')NOT NULL DEFAULT 'M',

account_type varchar(32) NOT NULL DEFAULT '',

verified tinyint NOT NULL DEFAULT '0',

allow_mail tinyint unsigned NOT NULL DEFAULT '0',

parrent_account int unsigned NOT NULL DEFAULT '0',

closest_airport varchar(3) NOT NULL DEFAULT '',

PRIMARY KEY (id),

UNIQUE KEY email (email),

KEY country_id (country_id),

KEY state_id (state_id),

KEY state_id_2 (state_id,city,address)

) ENGINE=InnoDB

Figure 5-9. Clustered and nonclustered tables side-by-side

Notice the autoincrementing integer primary key.[59]

The second case is a table named userinfo_uuid. It is identical to the userinfo table, except that its primary key is a UUID instead of an integer:

CREATE TABLE userinfo_uuid (

uuid varchar(36) NOT NULL,

...

We benchmarked both table designs. First, we inserted a million records into both tables on a server with enough memory to hold the indexes. Next, we inserted three million rows into the same tables, which made the indexes bigger than the server’s memory. Table 5-1 compares the benchmark results.

Table 5-1. Benchmark results for inserting rows into InnoDB tables

|

Table |

Rows |

Time (sec) |

Index size (MB) |

|

userinfo |

1,000,000 |

137 |

342 |

|

userinfo_uuid |

1,000,000 |

180 |

544 |

|

userinfo |

3,000,000 |

1233 |

1036 |

|

userinfo_uuid |

3,000,000 |

4525 |

1707 |

Notice that not only does it take longer to insert the rows with the UUID primary key, but the resulting indexes are quite a bit bigger. Some of that is due to the larger primary key, but some of it is undoubtedly due to page splits and resultant fragmentation as well.

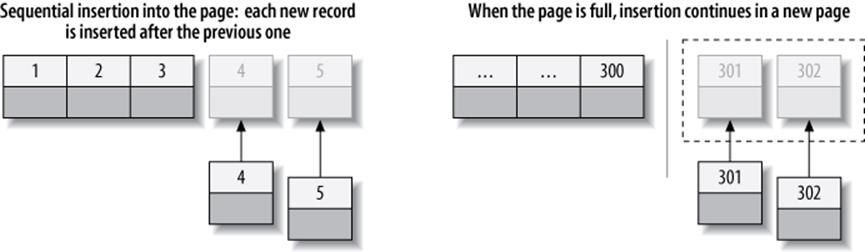

To see why this is so, let’s see what happened in the index when we inserted data into the first table. Figure 5-10 shows inserts filling a page and then continuing on a second page.

Figure 5-10. Inserting sequential index values into a clustered index

As Figure 5-10 illustrates, InnoDB stores each record immediately after the one before, because the primary key values are sequential. When the page reaches its maximum fill factor (InnoDB’s initial fill factor is only 15/16 full, to leave room for modifications later), the next record goes into a new page. Once the data has been loaded in this sequential fashion, the primary key pages are packed nearly full with in-order records, which is highly desirable. (The secondary index pages are not likely to differ, however.)

Contrast that with what happened when we inserted the data into the second table with the UUID clustered index, as shown in Figure 5-11.

Figure 5-11. Inserting nonsequential values into a clustered index

Because each new row doesn’t necessarily have a larger primary key value than the previous one, InnoDB cannot always place the new row at the end of the index. It has to find the appropriate place for the row—on average, somewhere near the middle of the existing data—and make room for it. This causes a lot of extra work and results in a suboptimal data layout. Here’s a summary of the drawbacks:

§ The destination page might have been flushed to disk and removed from the caches, or might not have ever been placed into the caches, in which case InnoDB will have to find it and read it from the disk before it can insert the new row. This causes a lot of random I/O.

§ When insertions are done out of order, InnoDB has to split pages frequently to make room for new rows. This requires moving around a lot of data, and modifying at least three pages instead of one.

§ Pages become sparsely and irregularly filled because of splitting, so the final data is fragmented.

After loading such random values into a clustered index, you should probably do an OPTIMIZE TABLE to rebuild the table and fill the pages optimally.

The moral of the story is that you should strive to insert data in primary key order when using InnoDB, and you should try to use a clustering key that will give a monotonically increasing value for each new row.

WHEN PRIMARY KEY ORDER IS WORSE

For high-concurrency workloads, inserting in primary key order can actually create points of contention in InnoDB. The upper end of the primary key is one hot spot. Because all inserts take place there, concurrent inserts might fight over next-key locks. Another hot spot is the AUTO_INCREMENT locking mechanism; if you experience problems with that, you might be able to redesign your table or application, or configure innodb_autoinc_lock_mode. If your server version doesn’t support innodb_autoinc_lock_mode, you can upgrade to a newer version of InnoDB that will perform better for this specific workload.

Covering Indexes

A common suggestion is to create indexes for the query’s WHERE clause, but that’s only part of the story. Indexes need to be designed for the whole query, not just the WHERE clause. Indexes are indeed a way to find rows efficiently, but MySQL can also use an index to retrieve a column’s data, so it doesn’t have to read the row at all. After all, the index’s leaf nodes contain the values they index; why read the row when reading the index can give you the data you want? An index that contains (or “covers”) all the data needed to satisfy a query is called a covering index.

Covering indexes can be a very powerful tool and can dramatically improve performance. Consider the benefits of reading only the index instead of the data:

§ Index entries are usually much smaller than the full row size, so MySQL can access significantly less data if it reads only the index. This is very important for cached workloads, where much of the response time comes from copying the data. It is also helpful for I/O-bound workloads, because the indexes are smaller than the data and fit in memory better. (This is especially true for MyISAM, which can pack indexes to make them even smaller.)

§ Indexes are sorted by their index values (at least within the page), so I/O-bound range accesses will need to do less I/O compared to fetching each row from a random disk location. For some storage engines, such as MyISAM and Percona XtraDB, you can even OPTIMIZE the table to get fully sorted indexes, which will let simple range queries use completely sequential index accesses.

§ Some storage engines, such as MyISAM, cache only the index in MySQL’s memory. Because the operating system caches the data for MyISAM, accessing it typically requires a system call. This might cause a huge performance impact, especially for cached workloads where the system call is the most expensive part of data access.

§ Covering indexes are especially helpful for InnoDB tables, because of InnoDB’s clustered indexes. InnoDB’s secondary indexes hold the row’s primary key values at their leaf nodes. Thus, a secondary index that covers a query avoids another index lookup in the primary key.

In all of these scenarios, it is typically much less expensive to satisfy a query from an index instead of looking up the rows.

A covering index can’t be just any kind of index. The index must store the values from the columns it contains. Hash, spatial, and full-text indexes don’t store these values, so MySQL can use only B-Tree indexes to cover queries. And again, different storage engines implement covering indexes differently, and not all storage engines support them (at the time of this writing, the Memory storage engine doesn’t).

When you issue a query that is covered by an index (an index-covered query), you’ll see “Using index” in the Extra column in EXPLAIN.[60] For example, the sakila.inventory table has a multicolumn index on (store_id, film_id). MySQL can use this index for a query that accesses only those two columns, such as the following:

mysql> EXPLAIN SELECT store_id, film_id FROM sakila.inventory\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: inventory

type: index

possible_keys: NULL

key: idx_store_id_film_id

key_len: 3

ref: NULL

rows: 4673

Extra: Using index

Index-covered queries have subtleties that can disable this optimization. The MySQL query optimizer decides before executing a query whether an index covers it. Suppose the index covers a WHERE condition, but not the entire query. If the condition evaluates as false, MySQL 5.5 and earlier will fetch the row anyway, even though it doesn’t need it and will filter it out.

Let’s see why this can happen, and how to rewrite the query to work around the problem. We begin with the following query:

mysql> EXPLAIN SELECT * FROM products WHERE actor='SEAN CARREY'

-> AND title like '%APOLLO%'\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: products

type: ref

possible_keys: ACTOR,IX_PROD_ACTOR

key: ACTOR

key_len: 52

ref: const

rows: 10

Extra: Using where

The index can’t cover this query for two reasons:

§ No index covers the query, because we selected all columns from the table and no index covers all columns. There’s still a shortcut MySQL could theoretically use, though: the WHERE clause mentions only columns the index covers, so MySQL could use the index to find the actor and check whether the title matches, and only then read the full row.

§ MySQL can’t perform the LIKE operation in the index. This is a limitation of the low-level storage engine API, which in MySQL 5.5 and earlier allows only simple comparisons (such as equality, inequality, and greater-than) in index operations. MySQL can perform prefix-match LIKEpatterns in the index because it can convert them to simple comparisons, but the leading wildcard in the query makes it impossible for the storage engine to evaluate the match. Thus, the MySQL server itself will have to fetch and match on the row’s values, not the index’s values.

There’s a way to work around both problems with a combination of clever indexing and query rewriting. We can extend the index to cover (artist, title, prod_id) and rewrite the query as follows:

mysql> EXPLAIN SELECT *

-> FROM products

-> JOIN (

-> SELECT prod_id

-> FROM products

-> WHERE actor='SEAN CARREY' AND title LIKE '%APOLLO%'

-> ) AS t1 ON (t1.prod_id=products.prod_id)\G

*************************** 1. row ***************************

id: 1

select_type: PRIMARY

table: <derived2>

...omitted...

*************************** 2. row ***************************

id: 1

select_type: PRIMARY

table: products

...omitted...

*************************** 3. row ***************************

id: 2

select_type: DERIVED

table: products

type: ref

possible_keys: ACTOR,ACTOR_2,IX_PROD_ACTOR

key: ACTOR_2

key_len: 52

ref:

rows: 11

Extra: Using where; Using index

We call this a “deferred join” because it defers access to the columns. MySQL uses the covering index in the first stage of the query, when it finds matching rows in the subquery in the FROM clause. It doesn’t use the index to cover the whole query, but it’s better than nothing.

The effectiveness of this optimization depends on how many rows the WHERE clause finds. Suppose the products table contains a million rows. Let’s see how these two queries perform on three different datasets, each of which contains a million rows:

1. In the first, 30,000 products have Sean Carrey as the actor, and 20,000 of those contain “Apollo” in the title.

2. In the second, 30,000 products have Sean Carrey as the actor, and 40 of those contain “Apollo” in the title.

3. In the third, 50 products have Sean Carrey as the actor, and 10 of those contain “Apollo” in the title.

We used these three datasets to benchmark the two variations of the query and got the results shown in Table 5-2.

Table 5-2. Benchmark results for index-covered queries versus non-index-covered queries

|

Dataset |

Original query |

Optimized query |

|

Example 1 |

5 queries per sec |

5 queries per sec |

|

Example 2 |

7 queries per sec |

35 queries per sec |

|

Example 3 |

2400 queries per sec |

2000 queries per sec |

Here’s how to interpret these results:

§ In example 1 the query returns a big result set, so we can’t see the optimization’s effect. Most of the time is spent reading and sending data.

§ Example 2, where the second condition filter leaves only a small set of results after index filtering, shows how effective the proposed optimization is: performance is five times better on our data. The efficiency comes from needing to read only 40 full rows, instead of 30,000 as in the first query.

§ Example 3 shows the case when the subquery is inefficient. The set of results left after index filtering is so small that the subquery is more expensive than reading all the data from the table.

In most storage engines, an index can cover only queries that access columns that are part of the index. However, InnoDB can actually take this optimization a little bit further. Recall that InnoDB’s secondary indexes hold primary key values at their leaf nodes. This means InnoDB’s secondary indexes effectively have “extra columns” that InnoDB can use to cover queries.

For example, the sakila.actor table uses InnoDB and has an index on last_name, so the index can cover queries that retrieve the primary key column actor_id, even though that column isn’t technically part of the index:

mysql> EXPLAIN SELECT actor_id, last_name

-> FROM sakila.actor WHERE last_name = 'HOPPER'\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: actor

type: ref

possible_keys: idx_actor_last_name

key: idx_actor_last_name

key_len: 137

ref: const

rows: 2

Extra: Using where; Using index

IMPROVEMENTS IN FUTURE MYSQL VERSIONS

Many of the particulars we’ve mentioned here are a result of the limited storage engine API, which doesn’t allow MySQL to push filters through the API to the storage engine. If MySQL could do that, it could send the query to the data, instead of pulling the data into the server where it evaluates the query. At the time of writing, the unreleased MySQL 5.6 contains a significant improvement to the storage engine API, called index condition pushdown. This feature will change query execution greatly and render obsolete many of the tricks we’ve discussed.

Using Index Scans for Sorts

MySQL has two ways to produce ordered results: it can use a sort operation, or it can scan an index in order.[61] You can tell when MySQL plans to scan an index by looking for “index” in the type column in EXPLAIN. (Don’t confuse this with “Using index” in the Extra column.)

Scanning the index itself is fast, because it simply requires moving from one index entry to the next. However, if MySQL isn’t using the index to cover the query, it will have to look up each row it finds in the index. This is basically random I/O, so reading data in index order is usually much slower than a sequential table scan, especially for I/O-bound workloads.

MySQL can use the same index for both sorting and finding rows. If possible, it’s a good idea to design your indexes so that they’re useful for both tasks at once.

Ordering the results by the index works only when the index’s order is exactly the same as the ORDER BY clause and all columns are sorted in the same direction (ascending or descending).[62] If the query joins multiple tables, it works only when all columns in the ORDER BY clause refer to the first table. The ORDER BY clause also has the same limitation as lookup queries: it needs to form a leftmost prefix of the index. In all other cases, MySQL uses a sort.

One case where the ORDER BY clause doesn’t have to specify a leftmost prefix of the index is if there are constants for the leading columns. If the WHERE clause or a JOIN clause specifies constants for these columns, they can “fill the gaps” in the index.

For example, the rental table in the standard Sakila sample database has an index on (rental_date, inventory_id, customer_id):

CREATE TABLE rental (

...

PRIMARY KEY (rental_id),

UNIQUE KEY rental_date (rental_date,inventory_id,customer_id),

KEY idx_fk_inventory_id (inventory_id),

KEY idx_fk_customer_id (customer_id),

KEY idx_fk_staff_id (staff_id),

...

);

MySQL uses the rental_date index to order the following query, as you can see from the lack of a filesort[63] in EXPLAIN:

mysql> EXPLAIN SELECT rental_id, staff_id FROM sakila.rental

-> WHERE rental_date = '2005-05-25'

-> ORDER BY inventory_id, customer_id\G

*************************** 1. row ***************************

type: ref

possible_keys: rental_date

key: rental_date

rows: 1

Extra: Using where

This works, even though the ORDER BY clause isn’t itself a leftmost prefix of the index, because we specified an equality condition for the first column in the index.

Here are some more queries that can use the index for sorting. This one works because the query provides a constant for the first column of the index and specifies an ORDER BY on the second column. Taken together, those two form a leftmost prefix on the index:

... WHERE rental_date = '2005-05-25' ORDER BY inventory_id DESC;

The following query also works, because the two columns in the ORDER BY are a leftmost prefix of the index:

... WHERE rental_date > '2005-05-25' ORDER BY rental_date, inventory_id;

Here are some queries that cannot use the index for sorting:

§ This query uses two different sort directions, but the index’s columns are all sorted ascending:

... WHERE rental_date = '2005-05-25' ORDER BY inventory_id DESC, customer_id ASC;

§ Here, the ORDER BY refers to a column that isn’t in the index:

... WHERE rental_date = '2005-05-25' ORDER BY inventory_id, staff_id;