High Performance MySQL (2012)

Chapter 6. Query Performance Optimization

In the previous chapters we explained schema optimization and indexing, which are necessary for high performance. But they aren’t enough—you also need to design your queries well. If your queries are bad, even the best-designed schema and indexes will not perform well.

Query optimization, index optimization, and schema optimization go hand in hand. As you gain experience writing queries in MySQL, you will learn how to design tables and indexes to support efficient queries. Similarly, what you learn about optimal schema design will influence the kinds of queries you write. This process takes time, so we encourage you to refer back to these three chapters as you learn more.

This chapter begins with general query design considerations—the things you should consider first when a query isn’t performing well. We then dig much deeper into query optimization and server internals. We show you how to find out how MySQL executes a particular query, and you’ll learn how to change the query execution plan. Finally, we’ll look at some places MySQL doesn’t optimize queries well and explore query optimization patterns that help MySQL execute queries more efficiently.

Our goal is to help you understand deeply how MySQL really executes queries, so you can reason about what is efficient or inefficient, exploit MySQL’s strengths, and avoid its weaknesses.

Why Are Queries Slow?

Before trying to write fast queries, remember that it’s all about response time. Queries are tasks, but they are composed of subtasks, and those subtasks consume time. To optimize a query, you must optimize its subtasks by eliminating them, making them happen fewer times, or making them happen more quickly.[69]

What are the subtasks that MySQL performs to execute a query, and which ones are slow? The full list is impossible to include here, but if you profile a query as we showed in Chapter 3, you will find out what tasks it performs. In general, you can think of a query’s lifetime by mentally following the query through its sequence diagram from the client to the server, where it is parsed, planned, and executed, and then back again to the client. Execution is one of the most important stages in a query’s lifetime. It involves lots of calls to the storage engine to retrieve rows, as well as post-retrieval operations such as grouping and sorting.

While accomplishing all these tasks, the query spends time on the network, in the CPU, in operations such as statistics and planning, locking (mutex waits), and most especially, calls to the storage engine to retrieve rows. These calls consume time in memory operations, CPU operations, and especially I/O operations if the data isn’t in memory. Depending on the storage engine, a lot of context switching and/or system calls might also be involved.

In every case, excessive time may be consumed because the operations are performed needlessly, performed too many times, or are too slow. The goal of optimization is to avoid that, by eliminating or reducing operations, or making them faster.

Again, this isn’t a complete or accurate picture of a query’s life. Our goal here is to show the importance of understanding a query’s lifecycle and thinking in terms of where the time is consumed. With that in mind, let’s see how to optimize queries.

[69] Sometimes you might also need to modify a query to reduce its impact on other queries running on the system. In this case, you’re trying to reduce the query’s resource consumption, a topic we discussed in Chapter 3.

Slow Query Basics: Optimize Data Access

The most basic reason a query doesn’t perform well is that it’s working with too much data. Some queries just have to sift through a lot of data and can’t be helped. That’s unusual, though; most bad queries can be changed to access less data. We’ve found it useful to analyze a poorly performing query in two steps:

1. Find out whether your application is retrieving more data than you need. That usually means it’s accessing too many rows, but it might also be accessing too many columns.

2. Find out whether the MySQL server is analyzing more rows than it needs.

Are You Asking the Database for Data You Don’t Need?

Some queries ask for more data than they need and then throw some of it away. This demands extra work of the MySQL server, adds network overhead,[70] and consumes memory and CPU resources on the application server.

Here are a few typical mistakes:

Fetching more rows than needed

One common mistake is assuming that MySQL provides results on demand, rather than calculating and returning the full result set. We often see this in applications designed by people familiar with other database systems. These developers are used to techniques such as issuing a SELECT statement that returns many rows, then fetching the first N rows and closing the result set (e.g., fetching the 100 most recent articles for a news site when they only need to show 10 of them on the front page). They think MySQL will provide them with these 10 rows and stop executing the query, but what MySQL really does is generate the complete result set. The client library then fetches all the data and discards most of it. The best solution is to add a LIMIT clause to the query.

Fetching all columns from a multitable join

If you want to retrieve all actors who appear in the film Academy Dinosaur, don’t write the query this way:

mysql> SELECT * FROM sakila.actor

-> INNER JOIN sakila.film_actor USING(actor_id)

-> INNER JOIN sakila.film USING(film_id)

-> WHERE sakila.film.title = 'Academy Dinosaur';

That returns all columns from all three tables. Instead, write the query as follows:

mysql> SELECT sakila.actor.* FROM sakila.actor...;

Fetching all columns

You should always be suspicious when you see SELECT *. Do you really need all columns? Probably not. Retrieving all columns can prevent optimizations such as covering indexes, as well as adding I/O, memory, and CPU overhead for the server.

Some DBAs ban SELECT * universally because of this fact, and to reduce the risk of problems when someone alters the table’s column list.

Of course, asking for more data than you really need is not always bad. In many cases we’ve investigated, people tell us the wasteful approach simplifies development, because it lets the developer use the same bit of code in more than one place. That’s a reasonable consideration, as long as you know what it costs in terms of performance. It might also be useful to retrieve more data than you actually need if you use some type of caching in your application, or if you have another benefit in mind. Fetching and caching full objects might be preferable to running many separate queries that retrieve only parts of the object.

Fetching the same data repeatedly

If you’re not careful, it’s quite easy to write application code that retrieves the same data repeatedly from the database server, executing the same query to fetch it. For example, if you want to find out a user’s profile image URL to display next to a list of comments, you might request this repeatedly for each comment. Or you could cache it the first time you fetch it, and reuse it thereafter. The latter approach is much more efficient.
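The caching approach can be sketched in a few lines of Python. This is a minimal illustration, not a recommended design: the function name fetch_profile_image_url, the dictionary cache, and the fake_fetch stand-in for the real query are all assumptions made for the example.

```python
from typing import Callable, Dict, List


def attach_profile_images(
    comments: List[dict],
    fetch_profile_image_url: Callable[[int], str],
) -> List[dict]:
    """Add an image URL to each comment, querying once per distinct user."""
    cache: Dict[int, str] = {}
    result = []
    for comment in comments:
        user_id = comment["user_id"]
        if user_id not in cache:
            # Only the first comment by each user triggers a query.
            cache[user_id] = fetch_profile_image_url(user_id)
        result.append({**comment, "image_url": cache[user_id]})
    return result


# Hypothetical stand-in for a query such as
#   SELECT image_url FROM user_profile WHERE user_id = ?
query_log: List[int] = []


def fake_fetch(user_id: int) -> str:
    query_log.append(user_id)  # record each simulated round trip
    return f"/img/{user_id}.png"


comments = [
    {"user_id": 7, "text": "first"},
    {"user_id": 7, "text": "second"},
    {"user_id": 9, "text": "third"},
]
decorated = attach_profile_images(comments, fake_fetch)
```

Three comments by two users produce two lookups instead of three; the saving grows with the number of comments per user.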

Is MySQL Examining Too Much Data?

Once you’re sure your queries retrieve only the data you need, you can look for queries that examine too much data while generating results. In MySQL, the simplest query cost metrics are:

§ Response time

§ Number of rows examined

§ Number of rows returned

None of these metrics is a perfect way to measure query cost, but they reflect roughly how much data MySQL must access internally to execute a query and translate approximately into how fast the query runs. All three metrics are logged in the slow query log, so looking at the slow query log is one of the best ways to find queries that examine too much data.

Response time

Beware of taking query response time at face value. Hey, isn’t that the opposite of what we’ve been telling you? Not really. It’s still true that response time is what matters, but it’s a bit complicated.

Response time is the sum of two things: service time and queue time. Service time is how long it takes the server to actually process the query. Queue time is the portion of response time during which the server isn’t really executing the query—it’s waiting for something, such as waiting for an I/O operation to complete, waiting for a row lock, and so forth. The problem is, you can’t break the response time down into these components unless you can measure them individually, which is usually hard to do. In general, the most common and important waits you’ll encounter are I/O and lock waits, but you shouldn’t count on that, because it varies a lot.

As a result, response time is not consistent under varying load conditions. Other factors, such as storage engine locks (table locks and row locks), high concurrency, and hardware, can also have a considerable impact on response times. Response time can also be both a symptom and a cause of problems, and it’s not always obvious which is the case, unless you can use the techniques shown in “Single-Query Versus Server-Wide Problems” to find out.

When you look at a query’s response time, you should ask yourself whether the response time is reasonable for the query. We don’t have space for a detailed explanation in this book, but you can actually calculate a quick upper-bound estimate (QUBE) of query response time using the techniques explained in Tapio Lahdenmaki and Mike Leach’s book Relational Database Index Design and the Optimizers (Wiley). In a nutshell: examine the query execution plan and the indexes involved, determine how many sequential and random I/O operations might be required, and multiply these by the time it takes your hardware to perform them. Add it all up and you have a yardstick to judge whether a query is slower than it could or should be.
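As a rough sketch of that arithmetic, the following Python function multiplies operation counts by per-operation times and adds them up. The default timings (10 ms per random I/O, 0.01 ms per sequential I/O) and the operation counts are placeholder assumptions for illustration; substitute figures measured on your own hardware and counted from your own execution plan.

```python
def estimate_response_time_ms(
    random_ios: int,
    sequential_ios: int,
    random_io_ms: float = 10.0,      # assumed cost of one random I/O
    sequential_io_ms: float = 0.01,  # assumed cost of one sequential I/O
) -> float:
    """Upper-bound estimate: each I/O type times its assumed unit cost."""
    return random_ios * random_io_ms + sequential_ios * sequential_io_ms


# Hypothetical plan: 12 random reads to reach the index ranges,
# then 5,000 rows read sequentially.
estimate = estimate_response_time_ms(random_ios=12, sequential_ios=5000)
print(f"estimated upper bound: {estimate:.1f} ms")
```

If the measured response time is far above such an estimate, the query is probably waiting on something rather than working; if it is close, the plan itself is the place to look for savings.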

Rows examined and rows returned

It’s useful to think about the number of rows examined when analyzing queries, because you can see how efficiently the queries are finding the data you need.

However, this is not a perfect metric for finding “bad” queries. Not all row accesses are equal. Shorter rows are faster to access, and fetching rows from memory is much faster than reading them from disk.

Ideally, the number of rows examined would be the same as the number returned, but in practice this is rarely possible. For example, when constructing rows with joins, the server must access multiple rows to generate each row in the result set. The ratio of rows examined to rows returned is usually small—say, between 1:1 and 10:1—but sometimes it can be orders of magnitude larger.
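One way to put this ratio to work is to compute it from the Rows_sent and Rows_examined fields of a slow query log entry. The Python sketch below assumes the usual header layout; the sample line is invented for illustration, and the exact layout can differ between server versions.

```python
import re
from typing import Optional

# Matches the row counters in a slow query log header line.
_ROW_COUNTS = re.compile(r"Rows_sent:\s*(\d+)\s+Rows_examined:\s*(\d+)")


def examined_to_returned_ratio(header_line: str) -> Optional[float]:
    """Return rows examined per row returned, or None if it is undefined."""
    match = _ROW_COUNTS.search(header_line)
    if match is None:
        return None
    rows_sent = int(match.group(1))
    rows_examined = int(match.group(2))
    if rows_sent == 0:
        return None  # nothing returned, so the ratio has no meaning
    return rows_examined / rows_sent


# Invented sample line in the common slow-log format.
sample = ("# Query_time: 0.002310  Lock_time: 0.000080 "
          "Rows_sent: 10  Rows_examined: 5073")
ratio = examined_to_returned_ratio(sample)
```

A ratio in the hundreds, as in this sample, is a hint to check the access type, not proof of a bad query: an aggregate can legitimately read many rows per row returned.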

Rows examined and access types

When you’re thinking about the cost of a query, consider the cost of finding a single row in a table. MySQL can use several access methods to find and return a row. Some require examining many rows, but others might be able to generate the result without examining any.

The access method(s) appear in the type column in EXPLAIN’s output. The access types range from a full table scan to index scans, range scans, unique index lookups, and constants. Each of these is faster than the one before it, because it requires reading less data. You don’t need to memorize the access types, but you should understand the general concepts of scanning a table, scanning an index, range accesses, and single-value accesses.

If you aren’t getting a good access type, the best way to solve the problem is usually by adding an appropriate index. We discussed indexing in the previous chapter; now you can see why indexes are so important to query optimization. Indexes let MySQL find rows with a more efficient access type that examines less data.

For example, let’s look at a simple query on the Sakila sample database:

mysql> SELECT * FROM sakila.film_actor WHERE film_id = 1;

This query will return 10 rows, and EXPLAIN shows that MySQL uses the ref access type on the idx_fk_film_id index to execute the query:

mysql> EXPLAIN SELECT * FROM sakila.film_actor WHERE film_id = 1\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: film_actor

type: ref

possible_keys: idx_fk_film_id

key: idx_fk_film_id

key_len: 2

ref: const

rows: 10

Extra:

EXPLAIN shows that MySQL estimated it needed to access only 10 rows. In other words, the query optimizer knew the chosen access type could satisfy the query efficiently. What would happen if there were no suitable index for the query? MySQL would have to use a less optimal access type, as we can see if we drop the index and run the query again:

mysql> ALTER TABLE sakila.film_actor DROP FOREIGN KEY fk_film_actor_film;

mysql> ALTER TABLE sakila.film_actor DROP KEY idx_fk_film_id;

mysql> EXPLAIN SELECT * FROM sakila.film_actor WHERE film_id = 1\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: film_actor

type: ALL

possible_keys: NULL

key: NULL

key_len: NULL

ref: NULL

rows: 5073

Extra: Using where

Predictably, the access type has changed to a full table scan (ALL), and MySQL now estimates it’ll have to examine 5,073 rows to satisfy the query. The “Using where” in the Extra column shows that the MySQL server is using the WHERE clause to discard rows after the storage engine reads them.

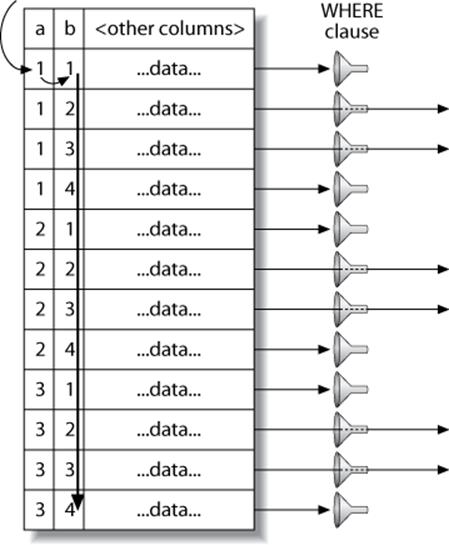

In general, MySQL can apply a WHERE clause in three ways, from best to worst:

§ Apply the conditions to the index lookup operation to eliminate nonmatching rows. This happens at the storage engine layer.

§ Use a covering index (“Using index” in the Extra column) to avoid row accesses, and filter out nonmatching rows after retrieving each result from the index. This happens at the server layer, but it doesn’t require reading rows from the table.

§ Retrieve rows from the table, then filter nonmatching rows (“Using where” in the Extra column). This happens at the server layer and requires the server to read rows from the table before it can filter them.
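The difference between the three approaches can be made concrete with a toy model in Python. Nothing here reflects MySQL’s internals: the table is a list, the index is a dictionary, and the counters simply record how many index entries and table rows each strategy touches. The same equality condition is used for all three only so the counters are comparable; a real optimizer would choose the first strategy for it.

```python
from collections import defaultdict

# Toy table of (actor_id, film_id) rows: 50 films with 5 actors each.
TABLE = [(actor_id, film_id)
         for film_id in range(1, 51)
         for actor_id in range(1, 6)]

# Toy secondary index on film_id: key -> positions of matching table rows.
INDEX = defaultdict(list)
for position, (_, film_id) in enumerate(TABLE):
    INDEX[film_id].append(position)


def index_lookup(film_id):
    """Best: the condition drives the lookup, so only matches are read."""
    positions = INDEX.get(film_id, [])
    rows = [TABLE[p] for p in positions]
    return rows, {"index_entries": len(positions), "table_rows": len(rows)}


def covering_index_filter(film_id):
    """Middle: read every index entry, filter there, never touch the table."""
    matches, examined = [], 0
    for key, positions in INDEX.items():
        for _ in positions:
            examined += 1
            if key == film_id:
                matches.append(key)
    return matches, {"index_entries": examined, "table_rows": 0}


def table_scan_filter(film_id):
    """Worst: read every table row, then discard the nonmatching ones."""
    matches, examined = [], 0
    for row in TABLE:
        examined += 1
        if row[1] == film_id:
            matches.append(row)
    return matches, {"index_entries": 0, "table_rows": examined}
```

All three return the same five matches for a given film, but the work counters differ by a factor of 50 in this toy data set.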

This example illustrates how important it is to have good indexes. Good indexes help your queries get a good access type and examine only the rows they need. However, adding an index doesn’t always mean that MySQL will access and return the same number of rows. For example, here’s a query that uses the COUNT() aggregate function:[71]

mysql> SELECT actor_id, COUNT(*) FROM sakila.film_actor GROUP BY actor_id;

This query returns only 200 rows, but it needs to read thousands of rows to build the result set. An index can’t reduce the number of rows examined for a query like this one.

Unfortunately, MySQL does not tell you how many of the rows it accessed were used to build the result set; it tells you only the total number of rows it accessed. Many of these rows could be eliminated by a WHERE clause and end up not contributing to the result set. In the previous example, after removing the index on sakila.film_actor, the query accessed every row in the table and the WHERE clause discarded all but 10 of them. Only the remaining 10 rows were used to build the result set. Understanding how many rows the server accesses and how many it really uses requires reasoning about the query.

If you find that a huge number of rows were examined to produce relatively few rows in the result, you can try some more sophisticated fixes:

§ Use covering indexes, which store data so that the storage engine doesn’t have to retrieve the complete rows. (We discussed these in the previous chapter.)

§ Change the schema. An example is using summary tables (discussed in Chapter 4).

§ Rewrite a complicated query so the MySQL optimizer is able to execute it optimally. (We discuss this later in this chapter.)

[70] Network overhead is worst if the application is on a different host from the server, but transferring data between MySQL and the application isn’t free even if they’re on the same server.

[71] See “Optimizing COUNT() Queries” for more on this topic.

Ways to Restructure Queries

As you optimize problematic queries, your goal should be to find alternative ways to get the result you want—but that doesn’t necessarily mean getting the same result set back from MySQL. You can sometimes transform queries into equivalent forms that return the same results, and get better performance. However, you should also think about rewriting the query to retrieve different results, if that provides an efficiency benefit. You might be able to ultimately do the same work by changing the application code as well as the query. In this section, we explain techniques that can help you restructure a wide range of queries and show you when to use each technique.

Complex Queries Versus Many Queries

One important query design question is whether it’s preferable to break up a complex query into several simpler queries. The traditional approach to database design emphasizes doing as much work as possible with as few queries as possible. This approach was historically better because of the cost of network communication and the overhead of the query parsing and optimization stages.

However, this advice doesn’t apply as much to MySQL, because it was designed to handle connecting and disconnecting very efficiently and to respond to small and simple queries very quickly. Modern networks are also significantly faster than they used to be, reducing network latency. Depending on the server version, MySQL can run well over 100,000 simple queries per second on commodity server hardware and over 2,000 queries per second from a single correspondent on a gigabit network, so running multiple queries isn’t necessarily such a bad thing.

Connection response is still slow compared to the number of rows MySQL can traverse per second internally, though, which is counted in millions per second for in-memory data. All else being equal, it’s still a good idea to use as few queries as possible, but sometimes you can make a query more efficient by decomposing it and executing a few simple queries instead of one complex one. Don’t be afraid to do this; weigh the costs, and go with the strategy that causes less work. We show some examples of this technique a little later in the chapter.

That said, using too many queries is a common mistake in application design. For example, some applications perform 10 single-row queries to retrieve data from a table when they could use a single 10-row query. We’ve even seen applications that retrieve each column individually, querying each row many times!
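The difference between the two patterns is easy to see in code. The sketch below uses Python’s built-in sqlite3 module as a stand-in for a MySQL connection so that it runs anywhere; the users table and its columns are hypothetical. The same IN() technique applies to MySQL with a real driver.

```python
import sqlite3


def fetch_users_one_by_one(conn, ids):
    """Anti-pattern: one query (and one round trip) per row."""
    rows = []
    for user_id in ids:
        cursor = conn.execute(
            "SELECT id, name FROM users WHERE id = ?", (user_id,))
        rows.extend(cursor.fetchall())
    return rows


def fetch_users_batched(conn, ids):
    """One query with an IN() list sized to the input."""
    ids = list(ids)
    if not ids:
        return []  # an empty IN() list is not valid SQL
    placeholders = ", ".join("?" for _ in ids)
    sql = (f"SELECT id, name FROM users "
           f"WHERE id IN ({placeholders}) ORDER BY id")
    return conn.execute(sql, ids).fetchall()


# Hypothetical sample data in an in-memory database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [(i, f"user{i}") for i in range(1, 21)])

wanted = [3, 1, 4, 15, 9, 2, 6, 5, 8, 7]
slow = fetch_users_one_by_one(conn, wanted)
fast = fetch_users_batched(conn, wanted)
```

Both functions return the same ten rows; the batched version issues one statement instead of ten.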

Chopping Up a Query

Another way to slice up a query is to divide and conquer, keeping it essentially the same but running it in smaller “chunks” that affect fewer rows each time.

Purging old data is a great example. Periodic purge jobs might need to remove quite a bit of data, and doing this in one massive query could lock a lot of rows for a long time, fill up transaction logs, hog resources, and block small queries that shouldn’t be interrupted. Chopping up the DELETE statement and using medium-size queries can improve performance considerably, and reduce replication lag when a query is replicated. For example, instead of running this monolithic query:

mysql> DELETE FROM messages WHERE created < DATE_SUB(NOW(),INTERVAL 3 MONTH);

you could do something like the following pseudocode:

rows_affected = 0

do {

rows_affected = do_query(

"DELETE FROM messages WHERE created < DATE_SUB(NOW(),INTERVAL 3 MONTH)

LIMIT 10000")

} while rows_affected > 0

Deleting 10,000 rows at a time is typically a large enough task to make each query efficient, and a short enough task to minimize the impact on the server[72] (transactional storage engines might benefit from smaller transactions). It might also be a good idea to add some sleep time between the DELETE statements to spread the load over time and reduce the amount of time locks are held.
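A runnable version of the chunked purge might look like the following Python sketch. It uses the built-in sqlite3 module as a stand-in so the example is self-contained, and that forces one difference: SQLite’s default build has no DELETE ... LIMIT, so the limit is applied in a subquery on an assumed id primary key. Against MySQL you would send the DELETE ... LIMIT statement from the pseudocode directly. The table layout, cutoff value, and pause_seconds parameter are assumptions for illustration.

```python
import sqlite3
import time


def purge_in_chunks(conn, cutoff, chunk_size=10000, pause_seconds=0.0):
    """Delete rows older than cutoff in batches; return the total deleted."""
    total_deleted = 0
    while True:
        cursor = conn.execute(
            "DELETE FROM messages WHERE id IN ("
            " SELECT id FROM messages WHERE created < ? LIMIT ?)",
            (cutoff, chunk_size),
        )
        conn.commit()  # keep each chunk in its own short transaction
        if cursor.rowcount == 0:
            break  # nothing left to purge
        total_deleted += cursor.rowcount
        if pause_seconds:
            time.sleep(pause_seconds)  # spread the load over time
    return total_deleted


# Hypothetical data: 25 old rows and 5 recent ones.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE messages (id INTEGER PRIMARY KEY, created TEXT)")
conn.executemany("INSERT INTO messages (created) VALUES (?)",
                 [("2020-01-01",)] * 25 + [("2030-01-01",)] * 5)
deleted = purge_in_chunks(conn, cutoff="2025-01-01", chunk_size=10)
```

With a chunk size of 10, the 25 old rows are removed in three batches, and a fourth pass that deletes nothing ends the loop.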

Join Decomposition

Many high-performance applications use join decomposition. You can decompose a join by running multiple single-table queries instead of a multitable join, and then performing the join in the application. For example, instead of this single query:

mysql> SELECT * FROM tag

-> JOIN tag_post ON tag_post.tag_id=tag.id

-> JOIN post ON tag_post.post_id=post.id

-> WHERE tag.tag='mysql';

You might run these queries:

mysql> SELECT * FROM tag WHERE tag='mysql';

mysql> SELECT * FROM tag_post WHERE tag_id=1234;

mysql> SELECT * FROM post WHERE post.id in (123,456,567,9098,8904);

Why on earth would you do this? It looks wasteful at first glance, because you’ve increased the number of queries without getting anything in return. However, such restructuring can actually give significant performance advantages:

§ Caching can be more efficient. Many applications cache “objects” that map directly to tables. In this example, if the object with the tag mysql is already cached, the application can skip the first query. If you find posts with an ID of 123, 567, or 9098 in the cache, you can remove them from the IN() list. The query cache might also benefit from this strategy. If only one of the tables changes frequently, decomposing a join can reduce the number of cache invalidations.

§ Executing the queries individually can sometimes reduce lock contention.

§ Doing joins in the application makes it easier to scale the database by placing tables on different servers.

§ The queries themselves can be more efficient. In this example, using an IN() list instead of a join lets MySQL sort row IDs and retrieve rows more optimally than might be possible with a join. We explain this in more detail later.

§ You can reduce redundant row accesses. Doing a join in the application means you retrieve each row only once, whereas a join in the query is essentially a denormalization that might repeatedly access the same data. For the same reason, such restructuring might also reduce the total network traffic and memory usage.

§ To some extent, you can view this technique as manually implementing a hash join instead of the nested loops algorithm MySQL uses to execute a join. A hash join might be more efficient. (We discuss MySQL’s join strategy later in this chapter.)

As a result, doing joins in the application can be more efficient when you cache and reuse a lot of data from earlier queries, you distribute data across multiple servers, you replace joins with IN() lists on large tables, or a join refers to the same table multiple times.
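To make the caching benefit concrete, here is a Python sketch of an application-side join for the tag example. The three query callables and the dictionary cache are hypothetical stand-ins for the single-table queries shown earlier; the point is only that posts already in the cache are removed from the IN() list before the last query runs.

```python
def posts_for_tag(tag_name, query_tag, query_post_ids, query_posts, post_cache):
    """Join tag -> tag_post -> post in the application, reusing cached posts."""
    tag = query_tag(tag_name)              # SELECT * FROM tag WHERE tag=...
    if tag is None:
        return []
    post_ids = query_post_ids(tag["id"])   # SELECT ... FROM tag_post WHERE tag_id=...
    # Only ask the database for posts the cache does not already hold.
    missing = [post_id for post_id in post_ids if post_id not in post_cache]
    if missing:
        for post in query_posts(missing):  # SELECT * FROM post WHERE id IN (...)
            post_cache[post["id"]] = post
    return [post_cache[post_id] for post_id in post_ids if post_id in post_cache]


# Hypothetical in-memory stand-ins for the three tables.
TAGS = {"mysql": {"id": 1234, "tag": "mysql"}}
TAG_POST = {1234: [123, 456, 567, 9098, 8904]}
POSTS = {pid: {"id": pid, "title": f"post {pid}"} for pid in TAG_POST[1234]}
requested_id_lists = []


def fake_query_posts(ids):
    requested_id_lists.append(list(ids))   # record what the IN() list held
    return [POSTS[i] for i in ids]


cache = {pid: POSTS[pid] for pid in (123, 567, 9098)}  # already cached
posts = posts_for_tag("mysql", TAGS.get, TAG_POST.get, fake_query_posts, cache)
```

With posts 123, 567, and 9098 already cached, the final query asks only for 456 and 8904, yet the caller still receives all five posts in order.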

[72] Percona Toolkit’s pt-archiver tool makes these types of jobs easy and safe.

Query Execution Basics

If you need to get high performance from your MySQL server, one of the best ways to invest your time is in learning how MySQL optimizes and executes queries. Once you understand this, much of query optimization is a matter of reasoning from principles, and query optimization becomes a very logical process.

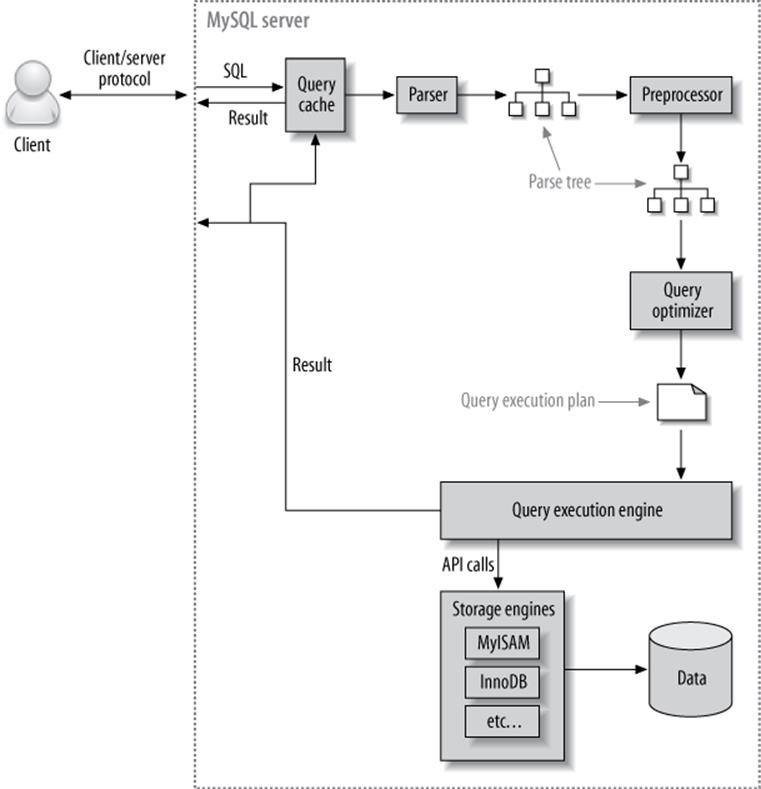

In other words, it’s time to revisit what we discussed earlier: the process MySQL follows to execute queries. Follow along with Figure 6-1 to see what happens when you send MySQL a query:

1. The client sends the SQL statement to the server.

2. The server checks the query cache. If there’s a hit, it returns the stored result from the cache; otherwise, it passes the SQL statement to the next step.

3. The server parses, preprocesses, and optimizes the SQL into a query execution plan.

4. The query execution engine executes the plan by making calls to the storage engine API.

5. The server sends the result to the client.

Each of these steps has some extra complexity, which we discuss in the following sections. We also explain which states the query will be in during each step. The query optimization process is particularly complex and important to understand. There are also exceptions or special cases, such as the difference in execution path when you use prepared statements; we discuss that in the next chapter.

Figure 6-1. Execution path of a query

The MySQL Client/Server Protocol

Though you don’t need to understand the inner details of MySQL’s client/server protocol, you do need to understand how it works at a high level. The protocol is half-duplex, which means that at any given time the MySQL server can be either sending or receiving messages, but not both. It also means there is no way to cut a message short.

This protocol makes MySQL communication simple and fast, but it limits it in some ways too. For one thing, it means there’s no flow control; once one side sends a message, the other side must fetch the entire message before responding. It’s like a game of tossing a ball back and forth: only one side has the ball at any instant, and you can’t toss the ball (send a message) unless you have it.

The client sends a query to the server as a single packet of data. This is why the max_allowed_packet configuration variable is important if you have large queries.[73] Once the client sends the query, it doesn’t have the ball anymore; it can only wait for results.

In contrast, the response from the server usually consists of many packets of data. When the server responds, the client has to receive the entire result set. It cannot simply fetch a few rows and then ask the server not to bother sending the rest. If the client needs only the first few rows that are returned, it either has to wait for all of the server’s packets to arrive and then discard the ones it doesn’t need, or disconnect ungracefully. Neither is a good idea, which is why appropriate LIMIT clauses are so important.

Here’s another way to think about this: when a client fetches rows from the server, it thinks it’s pulling them. But the truth is, the MySQL server is pushing the rows as it generates them. The client is only receiving the pushed rows; there is no way for it to tell the server to stop sending rows. The client is “drinking from the fire hose,” so to speak. (Yes, that’s a technical term.)

Most libraries that connect to MySQL let you either fetch the whole result set and buffer it in memory, or fetch each row as you need it. The default behavior is generally to fetch the whole result and buffer it in memory. This is important because until all the rows have been fetched, the MySQL server will not release the locks and other resources required by the query. The query will be in the “Sending data” state. When the client library fetches the results all at once, it reduces the amount of work the server needs to do: the server can finish and clean up the query as quickly as possible.

Most client libraries let you treat the result set as though you’re fetching it from the server, although in fact you’re just fetching it from the buffer in the library’s memory. This works fine most of the time, but it’s not a good idea for huge result sets that might take a long time to fetch and use a lot of memory. You can use less memory, and start working on the result sooner, if you instruct the library not to buffer the result. The downside is that the locks and other resources on the server will remain open while your application is interacting with the library.[74]

Let’s look at an example using PHP. First, here’s how you’ll usually query MySQL from PHP:

<?php

$link = mysql_connect('localhost', 'user', 'p4ssword');

$result = mysql_query('SELECT * FROM HUGE_TABLE', $link);

while ( $row = mysql_fetch_array($result) ) {

// Do something with result

}

?>

The code seems to indicate that you fetch rows only when you need them, in the while loop. However, the code actually fetches the entire result into a buffer with the mysql_query() function call. The while loop simply iterates through the buffer. In contrast, the following code doesn’t buffer the results, because it uses mysql_unbuffered_query() instead of mysql_query():

<?php

$link = mysql_connect('localhost', 'user', 'p4ssword');

$result = mysql_unbuffered_query('SELECT * FROM HUGE_TABLE', $link);

while ( $row = mysql_fetch_array($result) ) {

// Do something with result

}

?>

Programming languages have different ways to override buffering. For example, the Perl DBD::mysql driver requires you to specify the C client library’s mysql_use_result attribute (the default is mysql_store_result). Here’s an example:

#!/usr/bin/perl

use DBI;

my $dbh = DBI->connect('DBI:mysql:;host=localhost', 'user', 'p4ssword');

my $sth = $dbh->prepare('SELECT * FROM HUGE_TABLE', { mysql_use_result => 1 });

$sth->execute();

while ( my @row = $sth->fetchrow_array() ) {

# Do something with result

}

Notice that the call to prepare() specified to “use” the result instead of “buffering” it. You can also specify this when connecting, which will make every statement unbuffered:

my $dbh = DBI->connect('DBI:mysql:;mysql_use_result=1', 'user', 'p4ssword');
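The trade-off between the two modes can be modeled without a server. The Python sketch below is a toy simulation, not a MySQL client: ToyServerResult is a made-up class that flips a flag when its last row has been read, standing in for the server releasing locks and other resources.

```python
class ToyServerResult:
    """Made-up server-side result that holds resources until fully read."""

    def __init__(self, rows):
        self._rows = iter(rows)
        self.resources_released = False

    def __iter__(self):
        return self

    def __next__(self):
        try:
            return next(self._rows)
        except StopIteration:
            # The last row has been sent, so the "server" can clean up.
            self.resources_released = True
            raise


def buffered_fetch(server_result):
    """Drain everything at once: more client memory, server freed early."""
    return list(server_result)


def unbuffered_fetch(server_result):
    """Hand rows over one at a time: less memory, server kept waiting."""
    for row in server_result:
        yield row


buffered_source = ToyServerResult([1, 2, 3])
buffered_rows = buffered_fetch(buffered_source)

unbuffered_source = ToyServerResult([1, 2, 3])
row_iterator = unbuffered_fetch(unbuffered_source)
first_row = next(row_iterator)  # the server side is still holding resources
```

After buffered_fetch returns, the simulated server is already free; in the unbuffered case it stays tied up until the application reads the final row.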

Query states

Each MySQL connection, or thread, has a state that shows what it is doing at any given time. There are several ways to view these states, but the easiest is to use the SHOW FULL PROCESSLIST command (the states appear in the Command and State columns). As a query progresses through its lifecycle, its state changes many times, and there are dozens of states. The MySQL manual is the authoritative source of information for all the states, but we list a few here and explain what they mean:

Sleep

The thread is waiting for a new query from the client.

Query

The thread is either executing the query or sending the result back to the client.

Locked

The thread is waiting for a table lock to be granted at the server level. Locks that are implemented by the storage engine, such as InnoDB’s row locks, do not cause the thread to enter the Locked state. This thread state is the classic symptom of MyISAM locking, but it can occur in other storage engines that don’t have row-level locking, too.

Analyzing and statistics

The thread is checking storage engine statistics and optimizing the query.

Copying to tmp table [on disk]

The thread is processing the query and copying results to a temporary table, probably for a GROUP BY, for a filesort, or to satisfy a UNION. If the state ends with “on disk,” MySQL is converting an in-memory table to an on-disk table.

Sorting result

The thread is sorting a result set.

Sending data

This can mean several things: the thread might be sending data between stages of the query, generating the result set, or returning the result set to the client.

It’s helpful to at least know the basic states, so you can get a sense of “who has the ball” for the query. On very busy servers, you might see an unusual or normally brief state, such as statistics, begin to take a significant amount of time. This usually indicates that something is wrong, and you should use the techniques shown in Chapter 3 to capture detailed diagnostic data when it happens.
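A quick tally of states across all threads makes such anomalies easier to spot. The Python sketch below assumes the process list has already been fetched into dictionaries keyed by column name, with Command and State keys; the sample rows are invented, and fetching them from a live server is left out.

```python
from collections import Counter


def summarize_states(processlist_rows):
    """Count threads per state, falling back to Command when State is empty."""
    counts = Counter()
    for row in processlist_rows:
        label = row.get("State") or row.get("Command") or "unknown"
        counts[label] += 1
    return counts


# Invented sample rows shaped like SHOW FULL PROCESSLIST output.
sample_rows = [
    {"Id": 1, "Command": "Sleep", "State": ""},
    {"Id": 2, "Command": "Query", "State": "Sending data"},
    {"Id": 3, "Command": "Query", "State": "Sending data"},
    {"Id": 4, "Command": "Query", "State": "statistics"},
]
summary = summarize_states(sample_rows)
```

Sampling this repeatedly and comparing the counts over time shows whether a normally brief state has started to dominate.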

The Query Cache

Before even parsing a query, MySQL checks for it in the query cache, if the cache is enabled. This operation is a case-sensitive hash lookup. If the query differs from a similar query in the cache by even a single byte, it won’t match,[75] and the query processing will go to the next stage.

If MySQL does find a match in the query cache, it must check privileges before returning the cached query. This is possible without parsing the query, because MySQL stores table information with the cached query. If the privileges are OK, MySQL retrieves the stored result from the query cache and sends it to the client, bypassing every other stage in query execution. The query is never parsed, optimized, or executed.
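The byte-for-byte matching rule is easy to demonstrate with a toy cache in Python. This is only a model of the lookup behavior described above: the class name is made up, SHA-1 is an arbitrary choice of hash, and the sketch does not reproduce MySQL’s actual hashing scheme, its privilege checks, or its invalidation logic.

```python
import hashlib


class ToyQueryCache:
    """Toy model: results keyed by a hash of the exact query bytes."""

    def __init__(self):
        self._results = {}

    @staticmethod
    def _key(sql: str) -> str:
        # No normalization: case and whitespace are part of the key.
        return hashlib.sha1(sql.encode("utf-8")).hexdigest()

    def store(self, sql: str, result) -> None:
        self._results[self._key(sql)] = result

    def lookup(self, sql: str):
        return self._results.get(self._key(sql))  # None means a miss


cache = ToyQueryCache()
cache.store("SELECT COUNT(*) FROM film_actor", [(5462,)])

hit = cache.lookup("SELECT COUNT(*) FROM film_actor")
miss_case = cache.lookup("select count(*) from film_actor")
miss_space = cache.lookup("SELECT  COUNT(*) FROM film_actor")
```

Only the first lookup hits. In practice this means applications benefit from generating query text consistently, for example through a single code path.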

You can learn more about the query cache in Chapter 7.

The Query Optimization Process

The next step in the query lifecycle turns a SQL query into an execution plan for the query execution engine. It has several substeps: parsing, preprocessing, and optimization. Errors (for example, syntax errors) can be raised at any point in the process. We’re not trying to document the MySQL internals here, so we’re going to take some liberties, such as describing steps separately even though they’re often combined wholly or partially for efficiency. Our goal is simply to help you understand how MySQL executes queries so that you can write better ones.

The parser and the preprocessor

To begin, MySQL’s parser breaks the query into tokens and builds a “parse tree” from them. The parser uses MySQL’s SQL grammar to interpret and validate the query. For instance, it ensures that the tokens in the query are valid and in the proper order, and it checks for mistakes such as quoted strings that aren’t terminated.

The preprocessor then checks the resulting parse tree for additional semantics that the parser can’t resolve. For example, it checks that tables and columns exist, and it resolves names and aliases to ensure that column references aren’t ambiguous.

Next, the preprocessor checks privileges. This is normally very fast unless your server has large numbers of privileges.

The query optimizer

The parse tree is now valid and ready for the optimizer to turn it into a query execution plan. A query can often be executed many different ways and produce the same result. The optimizer’s job is to find the best option.

MySQL uses a cost-based optimizer, which means it tries to predict the cost of various execution plans and choose the least expensive. The unit of cost was originally a single random 4 KB data page read, but it has become more sophisticated and now includes factors such as the estimated cost of executing a WHERE clause comparison. You can see how expensive the optimizer estimated a query to be by running the query, then inspecting the Last_query_cost session variable:

mysql> SELECT SQL_NO_CACHE COUNT(*) FROM sakila.film_actor;
+----------+
| count(*) |
+----------+
|     5462 |
+----------+
mysql> SHOW STATUS LIKE 'Last_query_cost';
+-----------------+-------------+
| Variable_name   | Value       |
+-----------------+-------------+
| Last_query_cost | 1040.599000 |
+-----------------+-------------+

This result means that the optimizer estimated it would need to do about 1,040 random data page reads to execute the query. It bases the estimate on statistics: the number of pages per table or index, the cardinality (number of distinct values) of the indexes, the length of the rows and keys, and the key distribution. The optimizer does not include the effects of any type of caching in its estimates—it assumes every read will result in a disk I/O operation.

The optimizer might not always choose the best plan, for many reasons:

§ The statistics could be wrong. The server relies on storage engines to provide statistics, and they can range from exactly correct to wildly inaccurate. For example, the InnoDB storage engine doesn’t maintain accurate statistics about the number of rows in a table because of its MVCC architecture.

§ The cost metric is not exactly equivalent to the true cost of running the query, so even when the statistics are accurate, the query might be more or less expensive than MySQL’s approximation. A plan that reads more pages might actually be cheaper in some cases, such as when the reads are sequential so the disk I/O is faster, or when the pages are already cached in memory. MySQL also doesn’t understand which pages are in memory and which pages are on disk, so it doesn’t really know how much I/O the query will cause.

§ MySQL’s idea of “optimal” might not match yours. You probably want the fastest execution time, but MySQL doesn’t really try to make queries fast; it tries to minimize their cost, and as we’ve seen, determining cost is not an exact science.

§ MySQL doesn’t consider other queries that are running concurrently, which can affect how quickly the query runs.

§ MySQL doesn’t always do cost-based optimization. Sometimes it just follows the rules, such as “if there’s a full-text MATCH() clause, use a FULLTEXT index if one exists.” It will do this even when it would be faster to use a different index and a non-FULLTEXT query with a WHERE clause.

§ The optimizer doesn’t take into account the cost of operations not under its control, such as executing stored functions or user-defined functions.

§ As we’ll see later, the optimizer can’t always estimate every possible execution plan, so it might miss an optimal plan.

MySQL’s query optimizer is a highly complex piece of software, and it uses many optimizations to transform the query into an execution plan. There are two basic types of optimizations, which we call static and dynamic. Static optimizations can be performed simply by inspecting the parse tree. For example, the optimizer can transform the WHERE clause into an equivalent form by applying algebraic rules. Static optimizations are independent of values, such as the value of a constant in a WHERE clause. They can be performed once and will always be valid, even when the query is reexecuted with different values. You can think of these as “compile-time optimizations.”

In contrast, dynamic optimizations are based on context and can depend on many factors, such as which value is in a WHERE clause or how many rows are in an index. They must be reevaluated each time the query is executed. You can think of these as “runtime optimizations.”

The difference is important when executing prepared statements or stored procedures. MySQL can do static optimizations once, but it must reevaluate dynamic optimizations every time it executes a query. MySQL sometimes even reoptimizes the query as it executes it.[76]

Here are some types of optimizations MySQL knows how to do:

Reordering joins

Tables don’t always have to be joined in the order you specify in the query. Determining the best join order is an important optimization; we explain it in depth later in this chapter.

Converting OUTER JOINs to INNER JOINs

An OUTER JOIN doesn’t necessarily have to be executed as an OUTER JOIN. Some factors, such as the WHERE clause and table schema, can actually cause an OUTER JOIN to be equivalent to an INNER JOIN. MySQL can recognize this and rewrite the join, which makes it eligible for reordering.

Applying algebraic equivalence rules

MySQL applies algebraic transformations to simplify and canonicalize expressions. It can also fold and reduce constants, eliminating impossible constraints and constant conditions. For example, the term (5=5 AND a>5) will reduce to just a>5. Similarly, (a<b AND b=c) AND a=5 becomes b>5 AND b=c AND a=5. These rules are very useful for writing conditional queries, which we discuss later in this chapter.
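
To make the constant-folding idea concrete, here is a small sketch that reduces a list of AND-ed terms. The tuple representation of a term and the fold_and helper are assumptions made for this illustration; the server’s own expression trees are far richer.

```python
# Illustrative constant folding over a list of AND-ed terms.
# Each term is a tuple (left, op, right); operands are column names (str)
# or integer constants. This representation is an assumption for the sketch.
import operator

OPS = {"=": operator.eq, ">": operator.gt, "<": operator.lt}

def fold_and(terms):
    """Drop always-true terms; return None if any term is always false."""
    kept = []
    for left, op, right in terms:
        if isinstance(left, int) and isinstance(right, int):
            if OPS[op](left, right):
                continue          # constant true: contributes nothing to AND
            return None           # constant false: the whole AND is impossible
        kept.append((left, op, right))
    return kept

reduced = fold_and([(5, "=", 5), ("a", ">", 5)])      # (5=5 AND a>5)
impossible = fold_and([(5, "=", 6), ("a", ">", 5)])   # (5=6 AND a>5)
```

The first call keeps only the a>5 term, mirroring the reduction described above. The second returns None to signal a condition that can never be true.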

COUNT(), MIN(), and MAX() optimizations

Indexes and column nullability can often help MySQL optimize away these expressions. For example, to find the minimum value of a column that’s leftmost in a B-Tree index, MySQL can just request the first row in the index. It can even do this in the query optimization stage, and treat the value as a constant for the rest of the query. Similarly, to find the maximum value in a B-Tree index, the server reads the last row. If the server uses this optimization, you’ll see “Select tables optimized away” in the EXPLAIN plan. This literally means the optimizer has removed the table from the query plan and replaced it with a constant.

Likewise, COUNT(*) queries without a WHERE clause can often be optimized away on some storage engines (such as MyISAM, which keeps an exact count of rows in the table at all times).

Evaluating and reducing constant expressions

When MySQL detects that an expression can be reduced to a constant, it will do so during optimization. For example, a user-defined variable can be converted to a constant if it’s not changed in the query. Arithmetic expressions are another example.

Perhaps surprisingly, even something you might consider to be a query can be reduced to a constant during the optimization phase. One example is a MIN() on an index. This can even be extended to a constant lookup on a primary key or unique index. If a WHERE clause applies a constant condition to such an index, the optimizer knows MySQL can look up the value at the beginning of the query. It will then treat the value as a constant in the rest of the query. Here’s an example:

mysql> EXPLAIN SELECT film.film_id, film_actor.actor_id
    -> FROM sakila.film
    ->    INNER JOIN sakila.film_actor USING(film_id)
    -> WHERE film.film_id = 1;
+----+-------------+------------+-------+----------------+-------+------+
| id | select_type | table      | type  | key            | ref   | rows |
+----+-------------+------------+-------+----------------+-------+------+
|  1 | SIMPLE      | film       | const | PRIMARY        | const |    1 |
|  1 | SIMPLE      | film_actor | ref   | idx_fk_film_id | const |   10 |
+----+-------------+------------+-------+----------------+-------+------+

MySQL executes this query in two steps, which correspond to the two rows in the output. The first step is to find the desired row in the film table. MySQL’s optimizer knows there is only one row, because there’s a primary key on the film_id column, and it has already consulted the index during the query optimization stage to see how many rows it will find. Because the query optimizer has a known quantity (the value in the WHERE clause) to use in the lookup, this table’s ref type is const.

In the second step, MySQL treats the film_id column from the row found in the first step as a known quantity. It can do this because the optimizer knows that by the time the query reaches the second step, it will know all the values from the first step. Notice that the film_actor table’s ref type is const, just as the film table’s was.

Another way you’ll see constant conditions applied is by propagating a value’s constant-ness from one place to another if there is a WHERE, USING, or ON clause that restricts the values to being equal. In this example, the optimizer knows that the USING clause forces film_id to have the same value everywhere in the query—it must be equal to the constant value given in the WHERE clause.

Covering indexes

MySQL can sometimes use an index to avoid reading row data, when the index contains all the columns the query needs. We discussed covering indexes at length in the previous chapter.

Subquery optimization

MySQL can convert some types of subqueries into more efficient alternative forms, reducing them to index lookups instead of separate queries.

Early termination

MySQL can stop processing a query (or a step in a query) as soon as it fulfills the query or step. The obvious case is a LIMIT clause, but there are several other kinds of early termination. For instance, if MySQL detects an impossible condition, it can abort the entire query. You can see this in the following example:

mysql> EXPLAIN SELECT film.film_id FROM sakila.film WHERE film_id = -1;
+----+...+-----------------------------------------------------+
| id |...| Extra                                               |
+----+...+-----------------------------------------------------+
|  1 |...| Impossible WHERE noticed after reading const tables |
+----+...+-----------------------------------------------------+

This query stopped during the optimization step, but MySQL can also terminate execution early in some other cases. The server can use this optimization when the query execution engine recognizes the need to retrieve distinct values, or to stop when a value doesn’t exist. For example, the following query finds all movies without any actors:[77]

mysql> SELECT film.film_id

-> FROM sakila.film

-> LEFT OUTER JOIN sakila.film_actor USING(film_id)

-> WHERE film_actor.film_id IS NULL;

This query works by eliminating any films that have actors. Each film might have many actors, but as soon as it finds one actor, it stops processing the current film and moves to the next one because it knows the WHERE clause prohibits outputting that film. A similar “Distinct/not-exists” optimization can apply to certain kinds of DISTINCT, NOT EXISTS(), and LEFT JOIN queries.
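
The early-exit behavior can be sketched in a few lines. The sample rows below are invented and do not come from the Sakila database, and the linear scan of film_actor is a simplification; a real server would probe an index instead.

```python
# Sketch of the not-exists idea for the LEFT JOIN ... IS NULL query:
# stop probing film_actor for a film as soon as one actor row is found.
films = [1, 2, 3]
film_actor = [(1, 10), (1, 11), (1, 12), (3, 10)]  # (film_id, actor_id)

def films_without_actors(films, film_actor):
    result = []
    probes = 0                      # rows of film_actor examined in total
    for film_id in films:
        found = False
        for fa_film_id, _actor_id in film_actor:
            probes += 1
            if fa_film_id == film_id:
                found = True
                break               # one match is enough to reject this film
        if not found:
            result.append(film_id)
    return result, probes

no_actors, probes = films_without_actors(films, film_actor)
```

Film 1 has three actors in this sample data, but the loop examines only the first before moving on to film 2.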

Equality propagation

MySQL recognizes when a query holds two columns as equal—for example, in a JOIN condition—and propagates WHERE clauses across equivalent columns. For instance, in the following query:

mysql> SELECT film.film_id

-> FROM sakila.film

-> INNER JOIN sakila.film_actor USING(film_id)

-> WHERE film.film_id > 500;

MySQL knows that the WHERE clause applies not only to the film table but to the film_actor table as well, because the USING clause forces the two columns to match.

If you’re used to another database server that can’t do this, you might have been advised to “help the optimizer” by manually specifying the WHERE clause for both tables, like this:

... WHERE film.film_id > 500 AND film_actor.film_id > 500

This is unnecessary in MySQL. It just makes your queries harder to maintain.

IN() list comparisons

In many database servers, IN() is just a synonym for multiple OR clauses, because the two are logically equivalent. Not so in MySQL, which sorts the values in the IN() list and uses a fast binary search to see whether a value is in the list. This is O(log n) in the size of the list, whereas an equivalent series of OR clauses is O(n) in the size of the list (i.e., much slower for large lists).
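
The difference between the two strategies is easy to see in a sketch. The function names and sample values here are our own; only the sort-then-binary-search idea comes from the description above.

```python
import bisect

def in_list_or_chain(value, candidates):
    # Equivalent of value = c1 OR value = c2 OR ...: up to n comparisons.
    for c in candidates:
        if value == c:
            return True
    return False

def make_in_list(candidates):
    # Sort once, when the list is prepared.
    return sorted(candidates)

def in_list_binary_search(value, sorted_candidates):
    # O(log n) membership test on the pre-sorted list.
    i = bisect.bisect_left(sorted_candidates, value)
    return i < len(sorted_candidates) and sorted_candidates[i] == value

prepared = make_in_list([42, 7, 19, 3, 88])
```

Both functions return the same answers. The binary search simply needs far fewer comparisons per row once the list grows large.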

The preceding list is woefully incomplete, because MySQL performs more optimizations than we could fit into this entire chapter, but it should give you an idea of the optimizer’s complexity and intelligence. If there’s one thing you should take away from this discussion, it’s don’t try to outsmart the optimizer. You might end up just defeating it, or making your queries more complicated and harder to maintain for zero benefit. In general, you should let the optimizer do its work.

Of course, as smart as the optimizer is, there are times when it doesn’t give the best result. Sometimes you might know something about the data that the optimizer doesn’t, such as a fact that’s guaranteed to be true because of application logic. Also, sometimes the optimizer doesn’t have the necessary functionality, such as hash indexes; at other times, as mentioned earlier, its cost estimates might prefer a query plan that turns out to be more expensive than an alternative.

If you know the optimizer isn’t giving a good result, and you know why, you can help it. Some of the options are to add a hint to the query, rewrite the query, redesign your schema, or add indexes.

Table and index statistics

Recall the various layers in the MySQL server architecture, which we illustrated in Figure 1-1. The server layer, which contains the query optimizer, doesn’t store statistics on data and indexes. That’s a job for the storage engines, because each storage engine might keep different kinds of statistics (or keep them in a different way). Some engines, such as Archive, don’t keep statistics at all!

Because the server doesn’t store statistics, the MySQL query optimizer has to ask the engines for statistics on the tables in a query. The engines provide the optimizer with statistics such as the number of pages per table or index, the cardinality of tables and indexes, the length of rows and keys, and key distribution information. The optimizer can use this information to help it decide on the best execution plan. We see how these statistics influence the optimizer’s choices in later sections.

MySQL’s join execution strategy

MySQL uses the term “join” more broadly than you might be used to. In sum, it considers every query a join—not just every query that matches rows from two tables, but every query, period (including subqueries, and even a SELECT against a single table). Consequently, it’s very important to understand how MySQL executes joins.

Consider the example of a UNION query. MySQL executes a UNION as a series of single queries whose results are spooled into a temporary table, then read out again. Each of the individual queries is a join, in MySQL terminology—and so is the act of reading from the resulting temporary table.

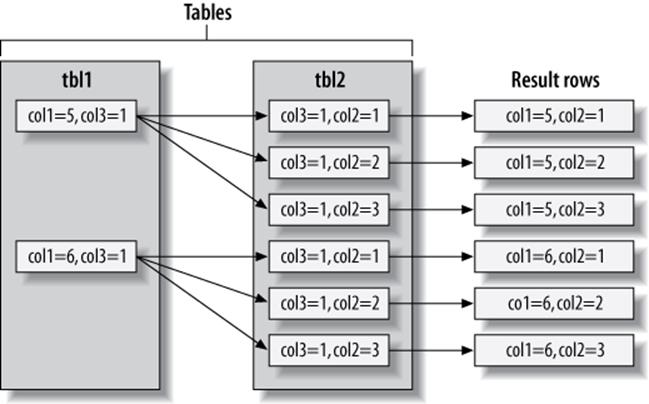

At the moment, MySQL’s join execution strategy is simple: it treats every join as a nested-loop join. This means MySQL runs a loop to find a row from a table, then runs a nested loop to find a matching row in the next table. It continues until it has found a matching row in each table in the join. It then builds and returns a row from the columns named in the SELECT list. It tries to build the next row by looking for more matching rows in the last table. If it doesn’t find any, it backtracks one table and looks for more rows there. It keeps backtracking until it finds another row in some table, at which point it looks for a matching row in the next table, and so on.[78]

This process of finding rows, probing into the next table, and then backtracking can be written as nested loops in the execution plan—hence the name “nested-loop join.” As an example, consider this simple query:

mysql> SELECT tbl1.col1, tbl2.col2

-> FROM tbl1 INNER JOIN tbl2 USING(col3)

-> WHERE tbl1.col1 IN(5,6);

Assuming MySQL decides to join the tables in the order shown in the query, the following pseudocode shows how MySQL might execute the query:

outer_iter = iterator over tbl1 where col1 IN(5,6)
outer_row  = outer_iter.next
while outer_row
   inner_iter = iterator over tbl2 where col3 = outer_row.col3
   inner_row  = inner_iter.next
   while inner_row
      output [ outer_row.col1, inner_row.col2 ]
      inner_row = inner_iter.next
   end
   outer_row = outer_iter.next
end
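
For readers who want to run the loop, here is one possible Python rendering of the pseudocode above. The tables are plain lists of dictionaries with invented rows, and the inner loop scans tbl2 rather than using an index, so treat it as a sketch of the control flow only.

```python
# Runnable rendering of the nested-loop pseudocode, using lists of dicts
# as tables. The sample rows are invented for illustration.
tbl1 = [{"col1": 5, "col3": "a"}, {"col1": 6, "col3": "b"}, {"col1": 7, "col3": "a"}]
tbl2 = [{"col2": "x", "col3": "a"}, {"col2": "y", "col3": "a"}, {"col2": "z", "col3": "c"}]

def nested_loop_join(outer, inner):
    for outer_row in outer:
        if outer_row["col1"] not in (5, 6):
            continue                                    # WHERE tbl1.col1 IN(5,6)
        for inner_row in inner:
            if inner_row["col3"] == outer_row["col3"]:  # USING(col3)
                yield (outer_row["col1"], inner_row["col2"])

inner_result = list(nested_loop_join(tbl1, tbl2))
```

The row with col1 = 6 produces no output because nothing in tbl2 shares its col3 value, and the row with col1 = 7 is filtered out by the WHERE condition.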

This query execution plan applies as easily to a single-table query as it does to a many-table query, which is why even a single-table query can be considered a join—the single-table join is the basic operation from which more complex joins are composed. It can support OUTER JOINs, too. For example, let’s change the example query as follows:

mysql> SELECT tbl1.col1, tbl2.col2

-> FROM tbl1 LEFT OUTER JOIN tbl2 USING(col3)

-> WHERE tbl1.col1 IN(5,6);

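Here’s the corresponding pseudocode. The changed parts are the if test wrapped around the inner loop and the else branch, which outputs a row with NULL for tbl2.col2 when no inner row matches: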

outer_iter = iterator over tbl1 where col1 IN(5,6)
outer_row  = outer_iter.next
while outer_row
   inner_iter = iterator over tbl2 where col3 = outer_row.col3
   inner_row  = inner_iter.next
   if inner_row
      while inner_row
         output [ outer_row.col1, inner_row.col2 ]
         inner_row = inner_iter.next
      end
   else
      output [ outer_row.col1, NULL ]
   end
   outer_row = outer_iter.next
end
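
A runnable Python rendering of the LEFT OUTER JOIN pseudocode might look like the following. As before, the rows are invented, None stands in for NULL, and the inner scan replaces what would normally be an index lookup.

```python
# Runnable rendering of the LEFT OUTER JOIN pseudocode. Sample rows are invented.
tbl1 = [{"col1": 5, "col3": "a"}, {"col1": 6, "col3": "b"}, {"col1": 7, "col3": "a"}]
tbl2 = [{"col2": "x", "col3": "a"}, {"col2": "y", "col3": "a"}, {"col2": "z", "col3": "c"}]

def nested_loop_left_join(outer, inner):
    for outer_row in outer:
        if outer_row["col1"] not in (5, 6):
            continue                                    # WHERE tbl1.col1 IN(5,6)
        matched = False
        for inner_row in inner:
            if inner_row["col3"] == outer_row["col3"]:  # USING(col3)
                matched = True
                yield (outer_row["col1"], inner_row["col2"])
        if not matched:
            yield (outer_row["col1"], None)             # NULL-extended row

left_result = list(nested_loop_left_join(tbl1, tbl2))
```

Compared with the inner join, the only new output is the row for col1 = 6, which has no partner in tbl2 and is therefore emitted with None in place of col2.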

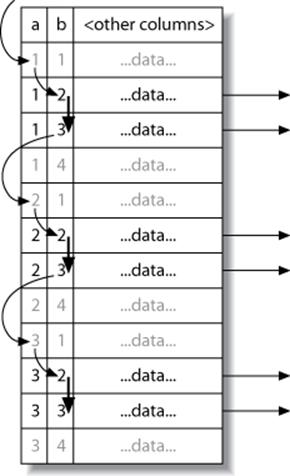

Another way to visualize a query execution plan is to use what the optimizer folks call a “swim-lane diagram.” Figure 6-2 contains a swim-lane diagram of our initial INNER JOIN query. Read it from left to right and top to bottom.

Figure 6-2. Swim-lane diagram illustrating retrieving rows using a join

MySQL executes every kind of query in essentially the same way. For example, it handles a subquery in the FROM clause by executing it first, putting the results into a temporary table,[79] and then treating that table just like an ordinary table (hence the name “derived table”). MySQL executes UNION queries with temporary tables too, and it rewrites all RIGHT OUTER JOIN queries to equivalent LEFT OUTER JOINs. In short, current versions of MySQL coerce every kind of query into this execution plan.[80]

It’s not possible to execute every legal SQL query this way, however. For example, a FULL OUTER JOIN can’t be executed with nested loops that backtrack as soon as a table yields no matching rows, because the join might begin with a table that has no matching rows. This explains why MySQL doesn’t support FULL OUTER JOIN. Still other queries can be executed with nested loops, but perform very badly as a result. We’ll look at some of those later.

The execution plan

MySQL doesn’t generate byte-code to execute a query, as many other database products do. Instead, the query execution plan is actually a tree of instructions that the query execution engine follows to produce the query results. The final plan contains enough information to reconstruct the original query. If you execute EXPLAIN EXTENDED on a query, followed by SHOW WARNINGS, you’ll see the reconstructed query.[81]

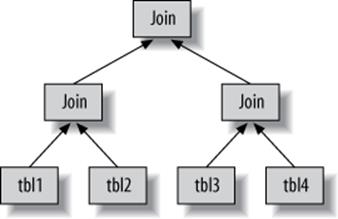

Any multitable query can conceptually be represented as a tree. For example, it might be possible to execute a four-table join as shown in Figure 6-3.

Figure 6-3. One way to join multiple tables

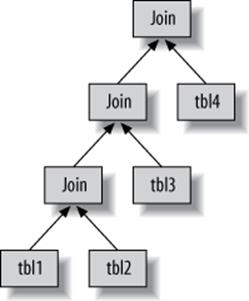

This is what computer scientists call a balanced tree. This is not how MySQL executes the query, though. As we described in the previous section, MySQL always begins with one table and finds matching rows in the next table. Thus, MySQL’s query execution plans always take the form of a left-deep tree, as in Figure 6-4.

Figure 6-4. How MySQL joins multiple tables

The join optimizer

The most important part of the MySQL query optimizer is the join optimizer, which decides the best order of execution for multitable queries. It is often possible to join the tables in several different orders and get the same results. The join optimizer estimates the cost for various plans and tries to choose the least expensive one that gives the same result.

Here’s a query whose tables can be joined in different orders without changing the results:

mysql> SELECT film.film_id, film.title, film.release_year, actor.actor_id,

-> actor.first_name, actor.last_name

-> FROM sakila.film

-> INNER JOIN sakila.film_actor USING(film_id)

-> INNER JOIN sakila.actor USING(actor_id);

You can probably think of a few different query plans. For example, MySQL could begin with the film table, use the index on film_id in the film_actor table to find actor_id values, and then look up rows in the actor table’s primary key. Oracle users might phrase this as “The film table is the driver table into the film_actor table, which is the driver for the actor table.” This should be efficient, right? Now let’s use EXPLAIN to see how MySQL wants to execute the query:

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: actor

type: ALL

possible_keys: PRIMARY

key: NULL

key_len: NULL

ref: NULL

rows: 200

Extra:

*************************** 2. row ***************************

id: 1

select_type: SIMPLE

table: film_actor

type: ref

possible_keys: PRIMARY,idx_fk_film_id

key: PRIMARY

key_len: 2

ref: sakila.actor.actor_id

rows: 1

Extra: Using index

*************************** 3. row ***************************

id: 1

select_type: SIMPLE

table: film

type: eq_ref

possible_keys: PRIMARY

key: PRIMARY

key_len: 2

ref: sakila.film_actor.film_id

rows: 1

Extra:

This is quite a different plan from the one suggested in the previous paragraph. MySQL wants to start with the actor table (we know this because it’s listed first in the EXPLAIN output) and go in the reverse order. Is this really more efficient? Let’s find out. The STRAIGHT_JOIN keyword forces the join to proceed in the order specified in the query. Here’s the EXPLAIN output for the revised query:

mysql> EXPLAIN SELECT STRAIGHT_JOIN film.film_id...\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: film

type: ALL

possible_keys: PRIMARY

key: NULL

key_len: NULL

ref: NULL

rows: 951

Extra:

*************************** 2. row ***************************

id: 1

select_type: SIMPLE

table: film_actor

type: ref

possible_keys: PRIMARY,idx_fk_film_id

key: idx_fk_film_id

key_len: 2

ref: sakila.film.film_id

rows: 1

Extra: Using index

*************************** 3. row ***************************

id: 1

select_type: SIMPLE

table: actor

type: eq_ref

possible_keys: PRIMARY

key: PRIMARY

key_len: 2

ref: sakila.film_actor.actor_id

rows: 1

Extra:

This shows why MySQL wants to reverse the join order: doing so will enable it to examine fewer rows in the first table.[82] In both cases, it will be able to perform fast indexed lookups in the second and third tables. The difference is how many of these indexed lookups it will have to do:

§ Placing film first will require about 951 probes into film_actor and actor, one for each row in the first table.

§ If the server scans the actor table first, it will have to do only 200 index lookups into later tables.

In other words, the reversed join order will require less backtracking and rereading. To double-check the optimizer’s choice, we executed the two query versions and looked at the Last_query_cost variable for each. The reordered query had an estimated cost of 241, while the estimated cost of forcing the join order was 1,154.
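
The probe counts follow directly from the rows column of the two EXPLAIN outputs. The helper below is our own rough arithmetic, not the optimizer’s cost formula: it multiplies the row estimates along the join order to approximate how many lookups each later table receives.

```python
# Row estimates are taken from the "rows" column of the EXPLAIN outputs above.
def estimated_probes(rows_per_table):
    """Return the estimated number of lookups into each later table."""
    probes = []
    rows_so_far = 1
    for rows in rows_per_table[:-1]:
        rows_so_far *= rows          # rows flowing out of the join so far
        probes.append(rows_so_far)   # each one triggers a lookup in the next table
    return probes

optimizer_order = estimated_probes([200, 1, 1])   # actor, film_actor, film
forced_order = estimated_probes([951, 1, 1])      # film, film_actor, actor
```

With these estimates, the optimizer’s order needs about 200 lookups into each later table, versus about 951 for the forced order. The ratio differs somewhat from the Last_query_cost figures because the real cost model accounts for more than lookup counts.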

This is a simple example of how MySQL’s join optimizer can reorder queries to make them less expensive to execute. Reordering joins is usually a very effective optimization. There are times when it won’t result in an optimal plan, though, and for those times you can use STRAIGHT_JOIN and write the query in the order you think is best, but such times are rare. In most cases, the join optimizer will outperform a human.

The join optimizer tries to produce a query execution plan tree with the lowest achievable cost. When possible, it examines all potential combinations of subtrees, beginning with all one-table plans.

Unfortunately, a join over n tables will have n-factorial combinations of join orders to examine. This is called the search space of all possible query plans, and it grows very quickly—a 10-table join can be executed up to 3,628,800 different ways! When the search space grows too large, it can take far too long to optimize the query, so the server stops doing a full analysis. Instead, it resorts to shortcuts such as “greedy” searches when the number of tables exceeds the limit specified by the optimizer_search_depth variable (which you can change if necessary).
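
The growth of the search space is easy to verify. This short sketch counts join orders with the factorial function and enumerates the orders for the three Sakila tables used earlier; the helper name is our own.

```python
import math
from itertools import permutations

def join_order_count(n_tables):
    # Number of distinct orderings of n tables.
    return math.factorial(n_tables)

# Every possible ordering of the three tables from the earlier example.
three_table_orders = list(permutations(["film", "film_actor", "actor"]))
ten_table_count = join_order_count(10)
```

Three tables give only six orders, which is cheap to examine exhaustively. Ten tables give 3,628,800, which is why the server falls back to shortcuts.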

MySQL has many heuristics, accumulated through years of research and experimentation, that it uses to speed up the optimization stage. This can be beneficial, but it can also mean that MySQL might (on rare occasions) miss an optimal plan and choose a less optimal one because it’s trying not to examine every possible query plan.

Sometimes queries can’t be reordered, and the join optimizer can use this fact to reduce the search space by eliminating choices. A LEFT JOIN is a good example, as are correlated subqueries (more about subqueries later). This is because the results for one table depend on data retrieved from another table, so the dependent table must come after the table it depends on.

Sort optimizations

Sorting results can be a costly operation, so you can often improve performance by avoiding sorts or by performing them on fewer rows.

We showed you how to use indexes for sorting in Chapter 5. When MySQL can’t use an index to produce a sorted result, it must sort the rows itself. It can do this in memory or on disk, but it always calls this process a filesort, even if it doesn’t actually use a file.

If the values to be sorted will fit into the sort buffer, MySQL can perform the sort entirely in memory with a quicksort. If MySQL can’t do the sort in memory, it performs it on disk by sorting the values in chunks. It uses a quicksort to sort each chunk and then merges the sorted chunks into the results.
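
The chunk-and-merge strategy can be sketched as follows. The buffer_size parameter is a stand-in for the sort buffer, the chunks stay in memory rather than going to disk, and Python’s built-in sort stands in for the quicksort, so this shows the shape of the algorithm rather than the server’s implementation.

```python
import heapq

def chunked_sort(values, buffer_size):
    """Sort values in buffer_size chunks, then merge the sorted chunks."""
    chunks = []
    for start in range(0, len(values), buffer_size):
        # Sort one buffer's worth of values (stands in for the quicksort).
        chunk = sorted(values[start:start + buffer_size])
        chunks.append(chunk)   # a real server would write each chunk to disk
    # Merge the sorted chunks into a single sorted result.
    return list(heapq.merge(*chunks))

data = [9, 4, 7, 1, 8, 2, 6, 3, 5]
result = chunked_sort(data, buffer_size=4)
```

With a buffer of four values, the nine inputs are sorted as three chunks and then merged in one pass.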

There are two filesort algorithms:

Two passes (old)

Reads row pointers and ORDER BY columns, sorts them, and then scans the sorted list and rereads the rows for output.

The two-pass algorithm can be quite expensive, because it reads the rows from the table twice, and the second read causes a lot of random I/O. This is especially expensive for MyISAM, which uses a system call to fetch each row (because MyISAM relies on the operating system’s cache to hold the data). On the other hand, it stores a minimal amount of data during the sort, so if the rows to be sorted are completely in memory, it can be cheaper to store less data and reread the rows to generate the final result.

Single pass (new)

Reads all the columns needed for the query, sorts them by the ORDER BY columns, and then scans the sorted list and outputs the specified columns.

This algorithm is available only in MySQL 4.1 and newer. It can be much more efficient, especially on large I/O-bound datasets, because it avoids reading the rows from the table twice and trades random I/O for more sequential I/O. However, it has the potential to use a lot more space, because it holds all the desired columns from each row, not just the columns needed to sort the rows. This means fewer tuples will fit into the sort buffer, and the filesort will have to perform more sort merge passes.

It’s tricky to say which algorithm is more efficient, and there are best and worst cases for each algorithm. MySQL uses the new algorithm if the total size of all the columns needed for the query, plus the ORDER BY columns, is no more than max_length_for_sort_data bytes, so you can use this setting to influence which algorithm is used. See Optimizing for Filesorts in Chapter 8 for more on this topic.
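
The following sketch contrasts the two algorithms on a toy table. The table layout, column names, and function names are invented, and choose_algorithm simply encodes the rule stated above with sizes supplied by the caller.

```python
# Toy table: row pointer -> full row. Column names and values are invented.
table = {
    0: {"id": 3, "name": "c", "notes": "long text"},
    1: {"id": 1, "name": "a", "notes": "long text"},
    2: {"id": 2, "name": "b", "notes": "long text"},
}

def two_pass_sort(table, order_by, select_cols):
    # Pass 1: collect only (sort key, row pointer) pairs and sort them.
    keys = sorted((row[order_by], ptr) for ptr, row in table.items())
    # Pass 2: reread each row by pointer, in sorted order (random access).
    return [tuple(table[ptr][c] for c in select_cols) for _key, ptr in keys]

def single_pass_sort(table, order_by, select_cols):
    # Read every needed column once, sort the wider tuples, then output.
    tuples = [(row[order_by],) + tuple(row[c] for c in select_cols)
              for row in table.values()]
    tuples.sort(key=lambda t: t[0])
    return [t[1:] for t in tuples]

def choose_algorithm(needed_bytes, max_length_for_sort_data):
    # Selection rule as described in the text.
    if needed_bytes <= max_length_for_sort_data:
        return "single-pass"
    return "two-pass"

two_pass = two_pass_sort(table, "id", ["id", "name"])
single_pass = single_pass_sort(table, "id", ["id", "name"])
```

Both functions return the same rows. The difference is what each one holds while sorting: the two-pass version keeps only keys and pointers, and the single-pass version carries every selected column.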

MySQL might use much more temporary storage space for a filesort than you’d expect, because it allocates a fixed-size record for each tuple it will sort. These records are large enough to hold the largest possible tuple, including the full length of each VARCHAR column. Also, if you’re using UTF-8, MySQL allocates three bytes for each character. As a result, we’ve seen cases where poorly optimized schemas caused the temporary space used for sorting to be many times larger than the entire table’s size on disk.

When sorting a join, MySQL might perform the filesort at two stages during the query execution. If the ORDER BY clause refers only to columns from the first table in the join order, MySQL can filesort this table and then proceed with the join. If this happens, EXPLAIN shows “Using filesort” in the Extra column. In all other circumstances—such as a sort against a table that’s not first in the join order, or when the ORDER BY clause contains columns from more than one table—MySQL must store the query’s results into a temporary table and then filesort the temporary table after the join finishes. In this case, EXPLAIN shows “Using temporary; Using filesort” in the Extra column. If there’s a LIMIT, it is applied after the filesort, so the temporary table and the filesort can be very large.

MySQL 5.6 introduces significant changes to how sorts are performed when only a subset of the rows will be needed, such as a LIMIT query. Instead of sorting the entire result set and then returning a portion of it, MySQL 5.6 can sometimes discard unwanted rows before sorting them.
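
One general way to discard rows before sorting them is a bounded priority queue, sketched here with Python’s heapq module. We are assuming this technique purely for illustration; the description above does not say how the server decides which rows to discard.

```python
import heapq

def order_by_limit(rows, key, limit):
    # Keeps at most `limit` candidates while scanning, instead of
    # sorting every row and discarding the tail afterwards.
    return heapq.nsmallest(limit, rows, key=key)

rows = [(7, "g"), (2, "b"), (9, "i"), (1, "a"), (5, "e")]
top3 = order_by_limit(rows, key=lambda r: r[0], limit=3)
```

The result matches what a full sort followed by LIMIT 3 would return, but the working set never needs to exceed three rows.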

The Query Execution Engine

The parsing and optimizing stage outputs a query execution plan, which MySQL’s query execution engine uses to process the query. The plan is a data structure; it is not executable byte-code, which is how many other databases execute queries.

In contrast to the optimization stage, the execution stage is usually not all that complex: MySQL simply follows the instructions given in the query execution plan. Many of the operations in the plan invoke methods implemented by the storage engine interface, also known as the handler API. Each table in the query is represented by an instance of a handler. If a table appears three times in the query, for example, the server creates three handler instances. Though we glossed over this before, MySQL actually creates the handler instances early in the optimization stage. The optimizer uses them to get information about the tables, such as their column names and index statistics.

The storage engine interface has lots of functionality, but it needs only a dozen or so “building-block” operations to execute most queries. For example, there’s an operation to read the first row in an index, and one to read the next row in an index. This is enough for a query that does an index scan. This simplistic execution method makes MySQL’s storage engine architecture possible, but it also imposes some of the optimizer limitations we’ve discussed.
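
To illustrate how two building-block operations are enough for an index scan, here is a toy handler. The class and its read_first and read_next methods are hypothetical names chosen for this sketch; they are not the actual handler API.

```python
class ToyIndexHandler:
    """Hypothetical stand-in for a storage engine handler over one index."""

    def __init__(self, index_entries):
        self._entries = sorted(index_entries)
        self._pos = -1

    def read_first(self):
        # Position on the first index entry; None if the index is empty.
        self._pos = 0
        return self._entries[0] if self._entries else None

    def read_next(self):
        # Advance one entry in index order; None when past the end.
        self._pos += 1
        if self._pos < len(self._entries):
            return self._entries[self._pos]
        return None

def index_scan(handler):
    # The execution side only ever calls the two building blocks.
    row = handler.read_first()
    while row is not None:
        yield row
        row = handler.read_next()

scanned = list(index_scan(ToyIndexHandler([30, 10, 20])))
```

The scan loop knows nothing about how the entries are stored, which is the point of the interface: any engine that supplies these two operations can serve the same plan.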

NOTE

Not everything is a handler operation. For example, the server manages table locks. The handler might implement its own lower-level locking, as InnoDB does with row-level locks, but this does not replace the server’s own locking implementation. As explained in Chapter 1, anything that all storage engines share is implemented in the server, such as date and time functions, views, and triggers.

To execute the query, the server just repeats the instructions until there are no more rows to examine.

Returning Results to the Client

The final step in executing a query is to reply to the client. Even queries that don’t return a result set still reply to the client connection with information about the query, such as how many rows it affected.

If the query is cacheable, MySQL will also place the results into the query cache at this stage.

The server generates and sends results incrementally. Think back to the single-sweep multijoin method we mentioned earlier. As soon as MySQL processes the last table and generates one row successfully, it can and should send that row to the client.

This has two benefits: it lets the server avoid holding the row in memory, and it means the client starts getting the results as soon as possible.[83]

Each row in the result set is sent in a separate packet in the MySQL client/server protocol, although protocol packets can be buffered and sent together at the TCP protocol layer.
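The SQL_BUFFER_RESULT hint mentioned in the footnotes changes this incremental behavior. Here is a small sketch against the Sakila sample database; the query itself is only an illustration, and whether the hint helps depends on your workload.

```sql
-- Default behavior: rows are sent to the client as they are generated.
SELECT film_id, title FROM sakila.film;

-- With the hint, the server first places the result in a temporary table
-- and sends it from there. This can let it release table locks sooner
-- when the client is slow to read, at the cost of the extra copy.
SELECT SQL_BUFFER_RESULT film_id, title FROM sakila.film;
```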

[73] If the query is too large, the server will refuse to receive any more data and throw an error.

[74] You can work around this with SQL_BUFFER_RESULT, which we’ll see a bit later.

[75] Percona Server has a feature that strips comments from queries before the hash lookup is performed, which can help make the query cache more effective when queries differ only in the text contained in their comments.

[76] For example, the range check query plan reevaluates indexes for each row in a JOIN. You can see this query plan by looking for “range checked for each record” in the Extra column in EXPLAIN. This query plan also increments the Select_range_check status variable.

[77] We agree, a movie without actors is strange, but the Sakila sample database lists no actors for SLACKER LIAISONS, which it describes as “A Fast-Paced Tale of a Shark And a Student who must Meet a Crocodile in Ancient China.”

[78] As we show later, MySQL’s query execution isn’t quite this simple; there are many optimizations that complicate it.

[79] There are no indexes on the temporary table, which is something you should keep in mind when writing complex joins against subqueries in the FROM clause. This applies to UNION queries, too.

[80] There are significant changes in MySQL 5.6 and in MariaDB, which introduce more sophisticated execution paths.

[81] The server generates the output from the execution plan. It thus has the same semantics as the original query, but not necessarily the same text.

[82] Strictly speaking, MySQL doesn’t try to reduce the number of rows it reads. Instead, it tries to optimize for fewer page reads. But a row count can often give you a rough idea of the query cost.

[83] You can influence this behavior if needed—for example, with the SQL_BUFFER_RESULT hint. See Query Optimizer Hints.

Limitations of the MySQL Query Optimizer

MySQL’s “everything is a nested-loop join” approach to query execution isn’t ideal for optimizing every kind of query. Fortunately, there are only a limited number of cases where the MySQL query optimizer does a poor job, and it’s usually possible to rewrite such queries more efficiently. Even better, when MySQL 5.6 is released it will eliminate many of MySQL’s limitations and make a variety of queries execute much more quickly.

Correlated Subqueries

MySQL sometimes optimizes subqueries very badly. The worst offenders are IN() subqueries in the WHERE clause. As an example, let’s find all films in the Sakila sample database’s sakila.film table whose casts include the actress Penelope Guiness (actor_id=1). This feels natural to write with a subquery, as follows:

mysql> SELECT * FROM sakila.film

-> WHERE film_id IN(

-> SELECT film_id FROM sakila.film_actor WHERE actor_id = 1);

It’s tempting to think that MySQL will execute this query from the inside out, by finding a list of film_id values and substituting them into the IN() list. We said an IN() list is generally very fast, so you might expect the query to be optimized to something like this:

-- SELECT GROUP_CONCAT(film_id) FROM sakila.film_actor WHERE actor_id = 1;

-- Result: 1,23,25,106,140,166,277,361,438,499,506,509,605,635,749,832,939,970,980

SELECT * FROM sakila.film

WHERE film_id

IN(1,23,25,106,140,166,277,361,438,499,506,509,605,635,749,832,939,970,980);

Unfortunately, exactly the opposite happens. MySQL tries to “help” the subquery by pushing a correlation into it from the outer table, which it thinks will let the subquery find rows more efficiently. It rewrites the query as follows:

SELECT * FROM sakila.film

WHERE EXISTS (

SELECT * FROM sakila.film_actor WHERE actor_id = 1

AND film_actor.film_id = film.film_id);

Now the subquery requires the film_id from the outer film table and can’t be executed first. EXPLAIN shows the result as DEPENDENT SUBQUERY (you can use EXPLAIN EXTENDED to see exactly how the query is rewritten):

mysql> EXPLAIN SELECT * FROM sakila.film ...;

+----+--------------------+------------+--------+------------------------+
| id | select_type        | table      | type   | possible_keys          |
+----+--------------------+------------+--------+------------------------+
|  1 | PRIMARY            | film       | ALL    | NULL                   |
|  2 | DEPENDENT SUBQUERY | film_actor | eq_ref | PRIMARY,idx_fk_film_id |
+----+--------------------+------------+--------+------------------------+
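To see the rewritten statement for yourself on the 5.x servers discussed here, you can follow EXPLAIN EXTENDED with SHOW WARNINGS. This is a sketch that assumes the Sakila sample database; the exact text the server reports varies by version, so no output is reproduced.

```sql
-- Ask the server to keep the optimizer's rewritten form of the statement.
EXPLAIN EXTENDED
SELECT * FROM sakila.film
WHERE film_id IN (
    SELECT film_id FROM sakila.film_actor WHERE actor_id = 1);

-- The Note-level message returned here contains the statement
-- as the optimizer rewrote it, including any pushed-down correlation.
SHOW WARNINGS;
```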

According to the EXPLAIN output, MySQL will table-scan the film table and execute the subquery for each row it finds. This won’t cause a noticeable performance hit on small tables, but if the outer table is very large, the performance will be extremely bad. Fortunately, it’s easy to rewrite such a query as a JOIN:

mysql> SELECT film.* FROM sakila.film

-> INNER JOIN sakila.film_actor USING(film_id)

-> WHERE actor_id = 1;

Another good optimization is to manually generate the IN() list by executing the subquery as a separate query with GROUP_CONCAT(). Sometimes this can be faster than a JOIN. And finally, although IN() subqueries work poorly in many cases, EXISTS() or equality subqueries sometimes work much better. Here is another way to rewrite our IN() subquery example:

mysql> SELECT * FROM sakila.film

-> WHERE EXISTS(

-> SELECT * FROM sakila.film_actor WHERE actor_id = 1

-> AND film_actor.film_id = film.film_id);
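The manual IN() list approach mentioned above can also be sketched entirely in SQL, using a user variable and a prepared statement. The names @film_ids, @sql, and stmt are our own, and in practice the second step is often done in application code instead.

```sql
-- GROUP_CONCAT() output is truncated at group_concat_max_len bytes
-- (1024 by default), so raise it for the session if the list may be long.
SET SESSION group_concat_max_len = 1000000;

-- Step 1: run the former subquery on its own and capture the list.
SELECT GROUP_CONCAT(film_id) INTO @film_ids
FROM sakila.film_actor
WHERE actor_id = 1;

-- Step 2: build and run the outer query with a literal IN() list.
-- If step 1 matched no rows, @film_ids is NULL and the PREPARE fails,
-- so real code should check for that case first.
SET @sql = CONCAT('SELECT * FROM sakila.film WHERE film_id IN (', @film_ids, ')');
PREPARE stmt FROM @sql;
EXECUTE stmt;
DEALLOCATE PREPARE stmt;
```

As with the other rewrites, measure this against the JOIN before adopting it; the extra round trip and statement preparation are not free.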

NOTE

The optimizer limitations we’ll discuss throughout this section apply to the official MySQL server from Oracle Corporation as of version 5.5. The MariaDB fork of MySQL has several related query optimizer and execution engine enhancements, such as executing correlated subqueries from the inside out.

When a correlated subquery is good

MySQL doesn’t always optimize correlated subqueries badly. If you hear advice to always avoid them, don’t listen! Instead, measure and make your own decision. Sometimes a correlated subquery is a perfectly reasonable, or even optimal, way to get a result. Let’s look at an example:

mysql> EXPLAIN SELECT film_id, language_id FROM sakila.film

-> WHERE NOT EXISTS(

-> SELECT * FROM sakila.film_actor

-> WHERE film_actor.film_id = film.film_id

-> )\G

*************************** 1. row ***************************

id: 1

select_type: PRIMARY

table: film

type: ALL

possible_keys: NULL

key: NULL

key_len: NULL

ref: NULL

rows: 951

Extra: Using where

*************************** 2. row ***************************

id: 2

select_type: DEPENDENT SUBQUERY

table: film_actor

type: ref

possible_keys: idx_fk_film_id

key: idx_fk_film_id

key_len: 2

ref: film.film_id

rows: 2

Extra: Using where; Using index

The standard advice for this query is to write it as a LEFT OUTER JOIN instead of using a subquery. In theory, MySQL’s execution plan will be essentially the same either way. Let’s see:

mysql> EXPLAIN SELECT film.film_id, film.language_id

-> FROM sakila.film

-> LEFT OUTER JOIN sakila.film_actor USING(film_id)

-> WHERE film_actor.film_id IS NULL\G

*************************** 1. row ***************************

id: 1

select_type: SIMPLE

table: film

type: ALL

possible_keys: NULL

key: NULL

key_len: NULL

ref: NULL

rows: 951

Extra:

*************************** 2. row ***************************

id: 1

select_type: SIMPLE

table: film_actor

type: ref

possible_keys: idx_fk_film_id

key: idx_fk_film_id

key_len: 2

ref: sakila.film.film_id

rows: 2

Extra: Using where; Using index; Not exists

The plans are nearly identical, but there are some differences:

§ The SELECT type against film_actor is DEPENDENT SUBQUERY in one query and SIMPLE in the other. This difference simply reflects the syntax, because the first query uses a subquery and the second doesn’t. It doesn’t make much difference in terms of handler operations.

§ The second query doesn’t say “Using where” in the Extra column for the film table. That doesn’t matter, though: the second query’s USING clause is the same thing as a WHERE clause anyway.

§ The second query says “Not exists” in the film_actor table’s Extra column. This is an example of the early-termination algorithm we mentioned earlier in this chapter. It means MySQL is using a not-exists optimization to avoid reading more than one row in the film_actor table’s idx_fk_film_id index. This is equivalent to a NOT EXISTS() correlated subquery, because it stops processing the current row as soon as it finds a match.

So, in theory, MySQL will execute the queries almost identically. In reality, measuring is the only way to tell which approach is really faster. We benchmarked both queries on our standard setup. The results are shown in Table 6-1.

Table 6-1. NOT EXISTS versus LEFT OUTER JOIN

| Query | Result in queries per second (QPS) |
| NOT EXISTS subquery | 360 QPS |
| LEFT OUTER JOIN | 425 QPS |

Our benchmark found that the subquery is quite a bit slower!

However, this isn’t always the case. Sometimes a subquery can be faster. For example, it can work well when you just want to see rows from one table that match rows in another table. Although that sounds like it describes a join perfectly, it’s not always the same thing. The following join, which is designed to find every film that has an actor, will return duplicates because some films have multiple actors:

mysql> SELECT film.film_id FROM sakila.film

-> INNER JOIN sakila.film_actor USING(film_id);

We need to use DISTINCT or GROUP BY to eliminate the duplicates:

mysql> SELECT DISTINCT film.film_id FROM sakila.film

-> INNER JOIN sakila.film_actor USING(film_id);

But what are we really trying to express with this query, and is it obvious from the SQL? The EXISTS operator expresses the logical concept of “has a match” without producing duplicated rows and avoids a GROUP BY or DISTINCT operation, which might require a temporary table. Here’s the query written as a subquery instead of a join:

mysql> SELECT film_id FROM sakila.film

-> WHERE EXISTS(SELECT * FROM sakila.film_actor

-> WHERE film.film_id = film_actor.film_id);

Again, we benchmarked to see which strategy was faster. The results are shown in Table 6-2.

Table 6-2. EXISTS versus INNER JOIN

| Query | Result in queries per second (QPS) |
| INNER JOIN | 185 QPS |
| EXISTS subquery | 325 QPS |

In this example, the subquery performs much faster than the join.
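Before benchmarking, you can check whether the DISTINCT version needs a temporary table on your server by comparing the two plans. This is only a sketch: the plan depends on the server version, indexes, and statistics, so we make no claim about what you will see.

```sql
-- Look for "Using temporary" in the Extra column of the join's plan.
EXPLAIN SELECT DISTINCT film.film_id FROM sakila.film
    INNER JOIN sakila.film_actor USING(film_id);

-- The EXISTS form has no DISTINCT or GROUP BY step to plan for.
EXPLAIN SELECT film_id FROM sakila.film
WHERE EXISTS(SELECT * FROM sakila.film_actor
             WHERE film.film_id = film_actor.film_id);

-- After actually running either query, this shows whether
-- temporary tables were created in the current session.
SHOW SESSION STATUS LIKE 'Created_tmp%';
```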

We showed this lengthy example to illustrate two points: you should not heed categorical advice about subqueries, and you should measure to prove your assumptions about query plans and response time.

A final note on subqueries: this is one of the rare cases where we need to mention a bug in MySQL. In MySQL version 5.1.48 and earlier, the following syntax can lock a row in table2:

SELECT ... FROM table1 WHERE col = (SELECT ... FROM table2 WHERE ...);

This bug, if it affects you, can cause subqueries to behave much differently under high concurrency than if you measure their performance in a single thread. This is bug 46947, and even though it’s solved, it still reinforces our point: don’t assume.

UNION Limitations

MySQL sometimes can’t “push down” conditions from the outside of a UNION to the inside, where they could be used to limit results or enable additional optimizations.

If you think any of the individual queries inside a UNION would benefit from a LIMIT, or if you know they’ll be subject to an ORDER BY clause once combined with other queries, you need to put those clauses inside each part of the UNION. For example, if you UNION together two tables and LIMIT the result to the first 20 rows, MySQL will store both tables into a temporary table and then retrieve just 20 rows from it:

(SELECT first_name, last_name

FROM sakila.actor

ORDER BY last_name)

UNION ALL

(SELECT first_name, last_name

FROM sakila.customer

ORDER BY last_name)

LIMIT 20;