Introduction to 3D Game Programming with DirectX 12 (Computer Science) (2016)

|

Part 1 |

MATHEMATICAL

In 3D computer graphics, we use matrices to compactly describe geometric transformations such as scaling, rotation, and translation, and also to change the coordinates of a point or vector from one frame to another. This chapter explores the mathematics of matrices. Objectives: 1. To obtain an understanding of matrices and the operations defined on them. 2. To discover how a vector-matrix multiplication can be viewed as a linear combination. 3. To learn what the identity matrix is, and what the transpose, determinant, and inverse of a matrix are. 4. To become familiar with the subset of classes and functions provided by the DirectX Math library used for matrix mathematics. 2.1 DEFINITION An m × n matrix M is a rectangular array of real numbers with m rows and n columns. The product of the number of rows and columns gives the dimensions of the matrix. The numbers in a matrix are called elements or entries. We identify a matrix element by specifying the row and column of the element using a double subscript notation Mij, where the first subscript identifies the row and the second subscript identifies the column.

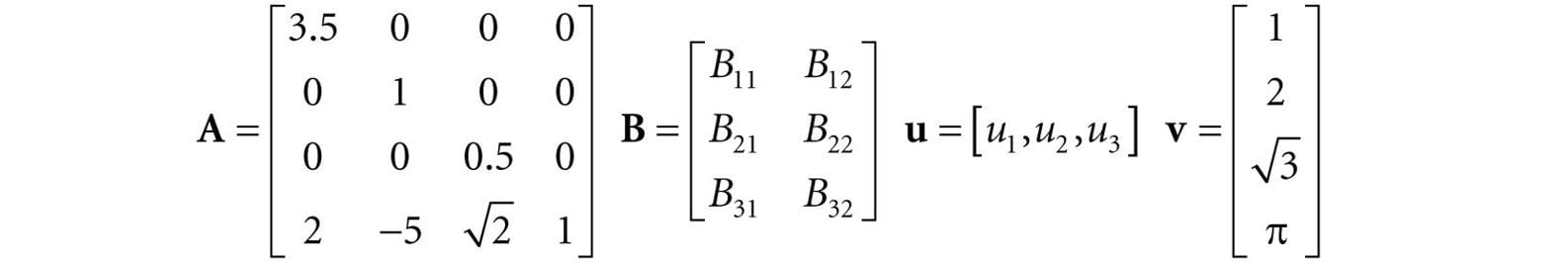

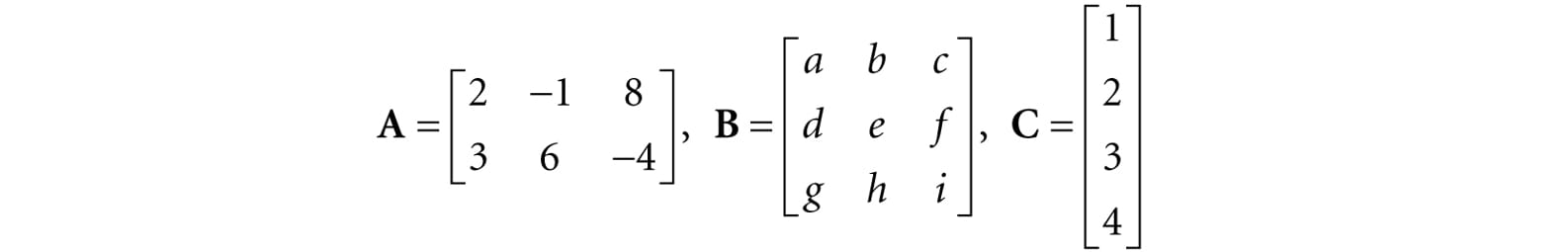

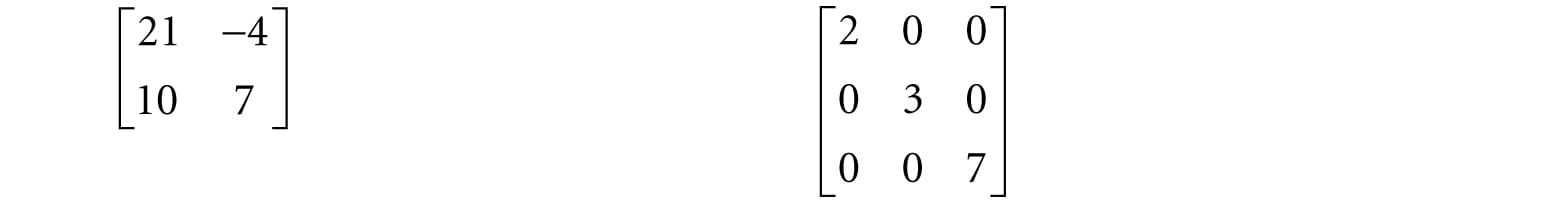

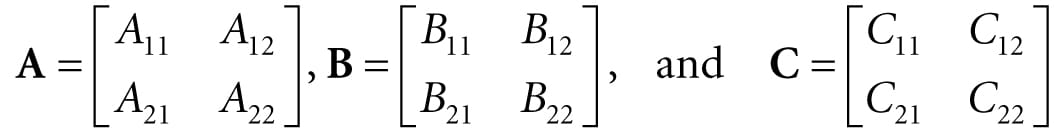

Consider the following matrices:

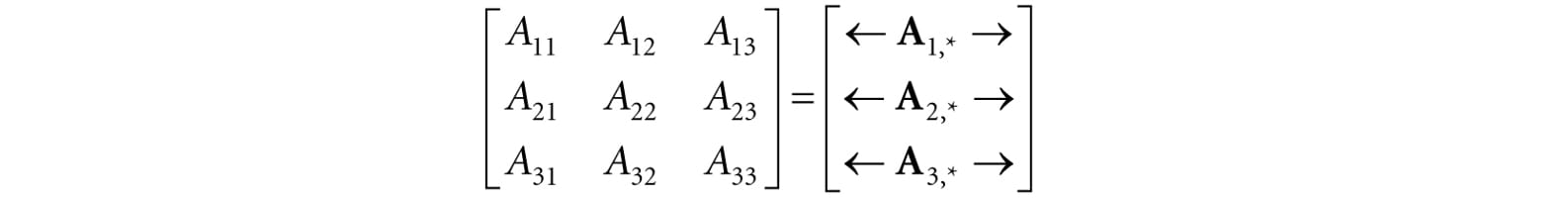

1. The matrix A is a 4 × 4 matrix; the matrix B is a 3 × 2 matrix; the matrix u is a 1 × 3 matrix; and the matrix v is a 4 × 1 matrix. 2. We identify the element in the fourth row and second column of the matrix A by A42 = −5. We identify the element in the second row and first column of the matrix B by B21. 3. The matrices u and v are special matrices in the sense that they contain a single row or column, respectively. We sometimes call these kinds of matrices row vectors or column vectors because they are used to represent a vector in matrix form (e.g., we can freely interchange the vector notations (x, y, z) and [x, y, z]). Observe that for row and column vectors, it is unnecessary to use a double subscript to denote the elements of the matrix—we only need one subscript. Occasionally we like to think of the rows of a matrix as vectors. For example, we might write:

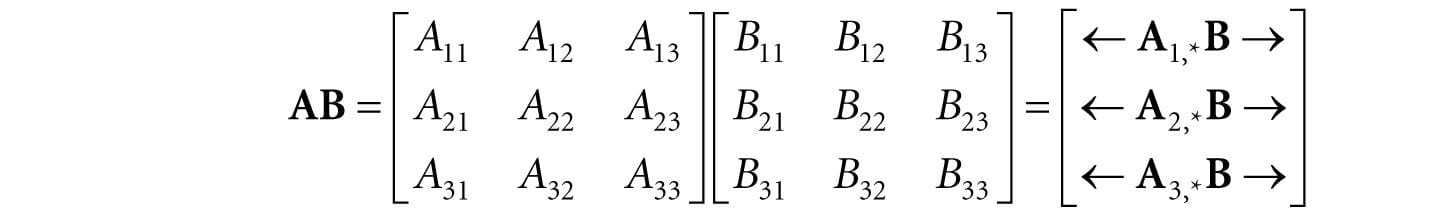

where A1,* = [A11, A12, A13], A2,* = [A21, A22, A23] and A3,* = [A31, A32, A33]. In this notation, the first index specifies the row, and we put a ‘*’ in the second index to indicate that we are referring to the entire row vector. Likewise, we like to do the same thing for the columns:

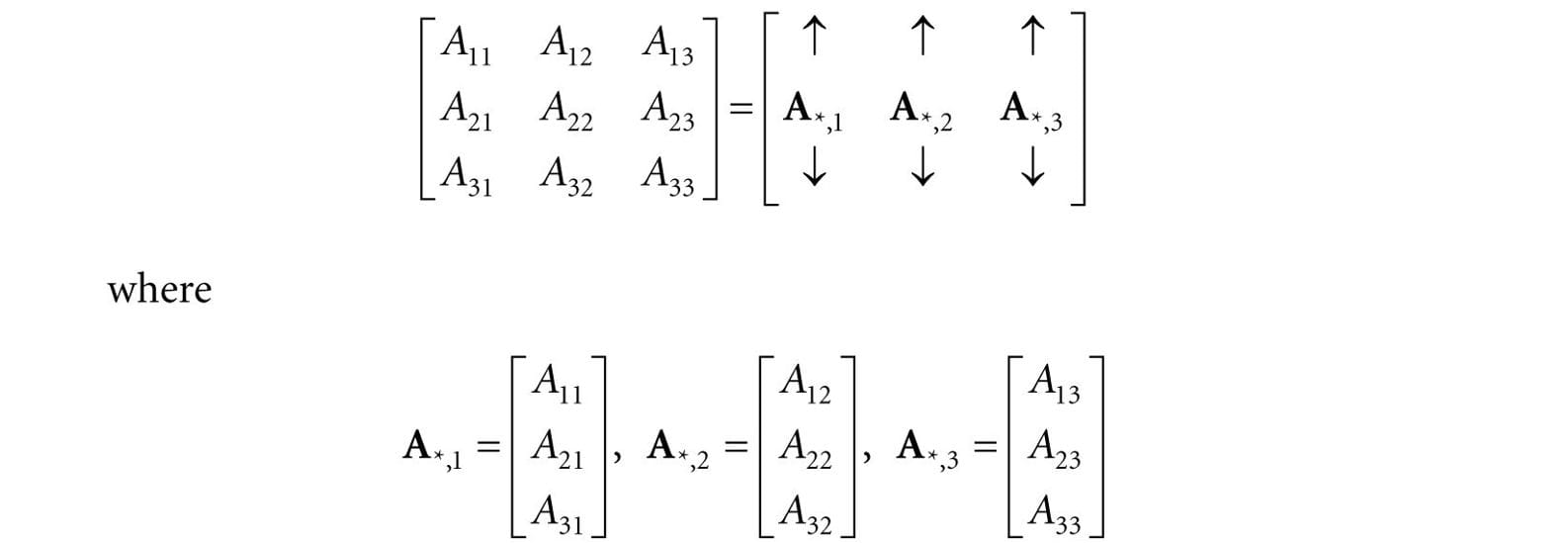

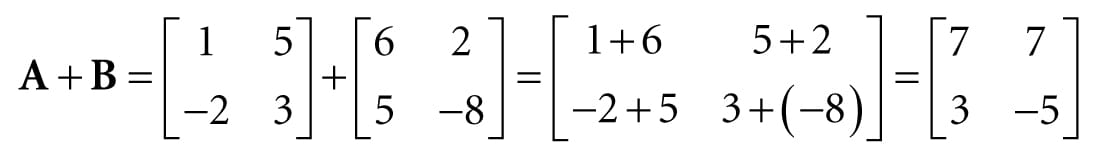

In this notation, the second index specifies the column, and we put a ‘*’ in the first index to indicate that we are referring to the entire column vector. We now define equality, addition, scalar multiplication, and subtraction on matrices. 1. Two matrices are equal if and only if their corresponding elements are equal; as such, two matrices must have the same number of rows and columns in order to be compared. 2. We add two matrices by adding their corresponding elements; as such, it only makes sense to add matrices that the same number of rows and columns. 3. We multiply a scalar and a matrix by multiplying the scalar with every element in the matrix. 4. We define subtraction in terms of matrix addition and scalar multiplication. That is, A − B = A + (−1 B) = A + (−B).

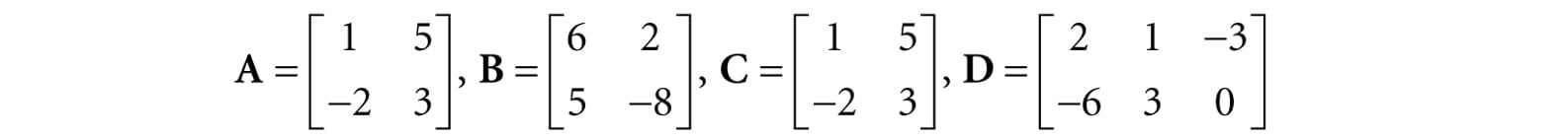

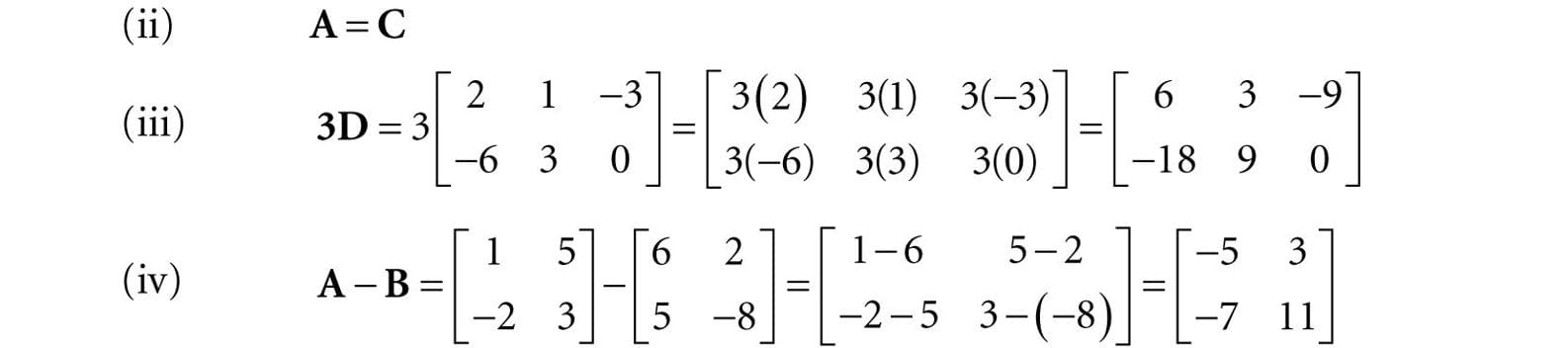

Let

Then, (i)

1. A + B = B + A Commutative law of addition 2. (A + B) + C = A + (B + C) Associative law of addition 3. r(A + B) = rA + rB Scalar distribution over matrices 4. (r + s)A = rA + sA Matrix distribution over scalars 2.2 MATRIX MULTIPLICATION 2.2.1 Definition If A is a m × n matrix and B is a n × p matrix, then the product AB is defined and is a m × p matrix C, where the ijth entry of the product C is given by taking the dot product of the ith row vector in A with the jth column vector in B, that is,

So note that in order for the matrix product AB to be defined, we require that the number of columns in A equal the number of rows in B, which is to say, we require that the dimension of the row vectors in A equal the dimension of the column vectors in B. If these dimensions did not match, then the dot product in Equation 2.1 would not make sense.

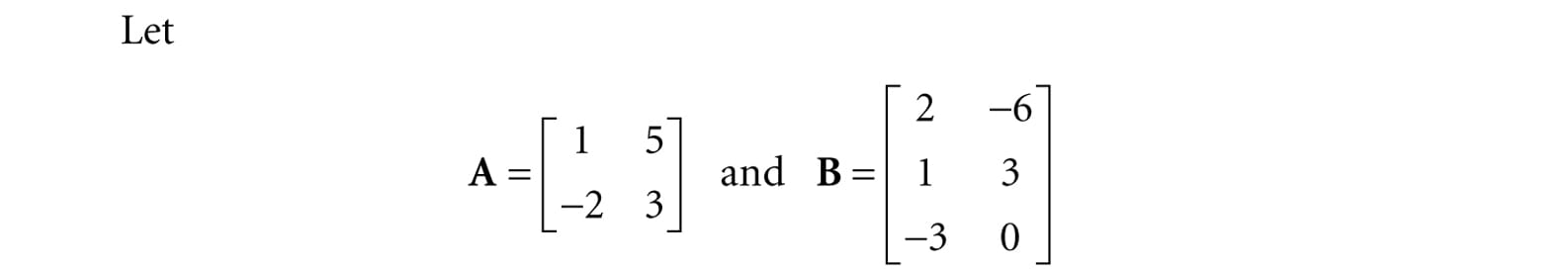

The product AB is not defined since the row vectors in A have dimension 2 and the column vectors in B have dimension 3. In particular, we cannot take the dot product of the first row vector in A with the first column vector in B because we cannot take the dot product of a 2D vector with a 3D vector.

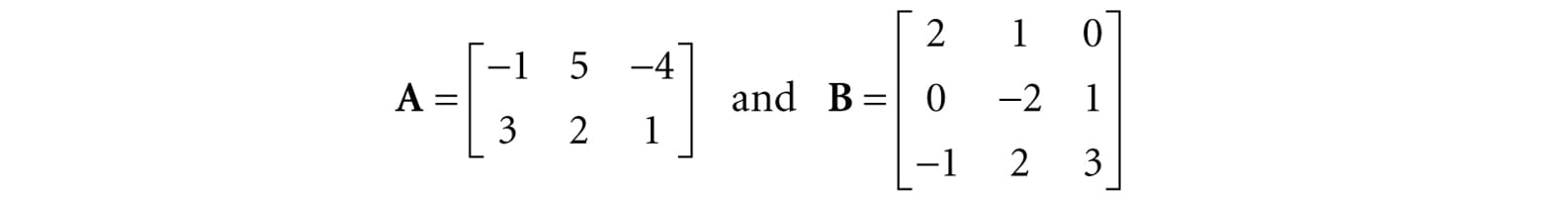

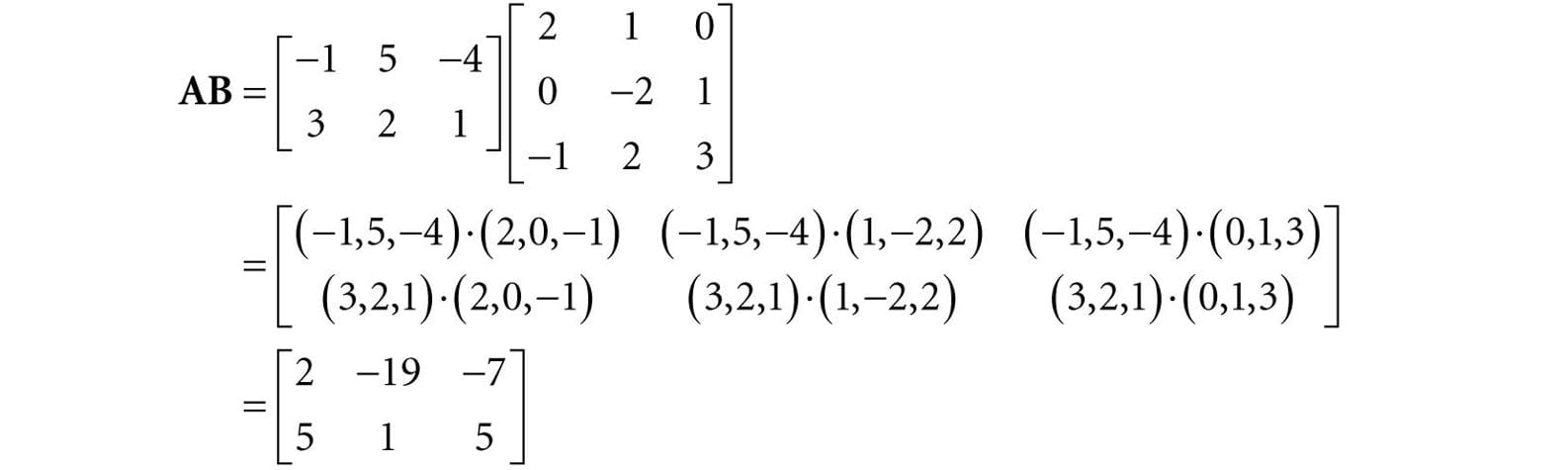

Let

We first point out that the product AB is defined (and is a 2 × 3 matrix) because the number of columns of A equals the number of rows of B. Applying Equation 2.1 yields:

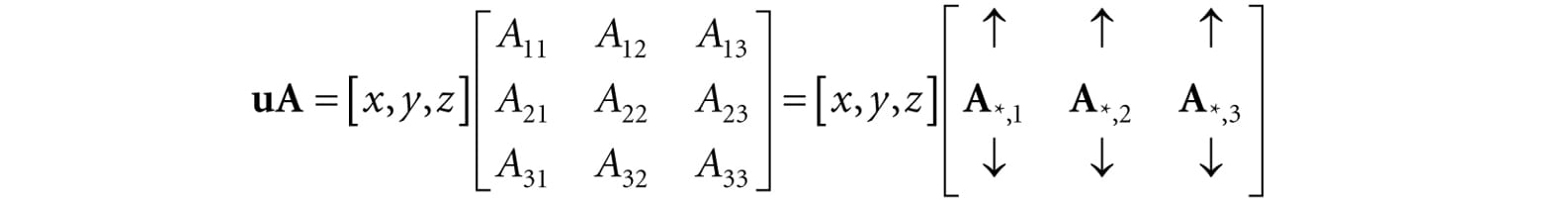

Observe that the product BA is not defined because the number of columns in B does not equal the number of rows in A. This demonstrates that, in general, matrix multiplication is not commutative; that is, AB ≠ BA. 2.2.2 Vector-Matrix Multiplication Consider the following vector-matrix multiplication:

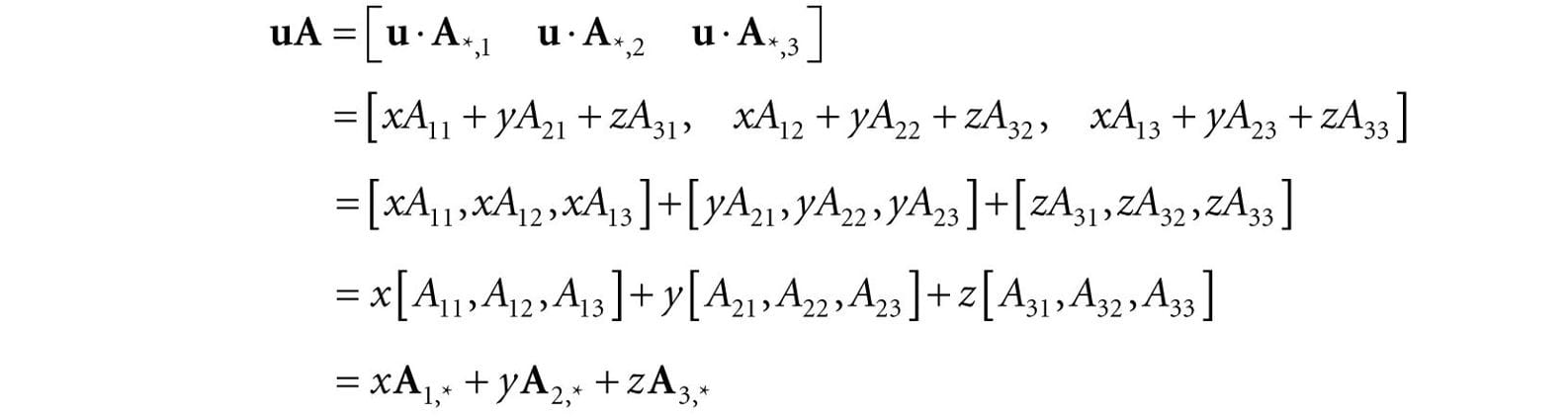

Observe that uA evaluates to a 1 × 3 row vector in this case. Now, applying Equation 2.1 gives:

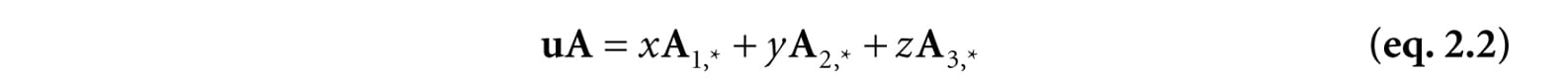

Thus,

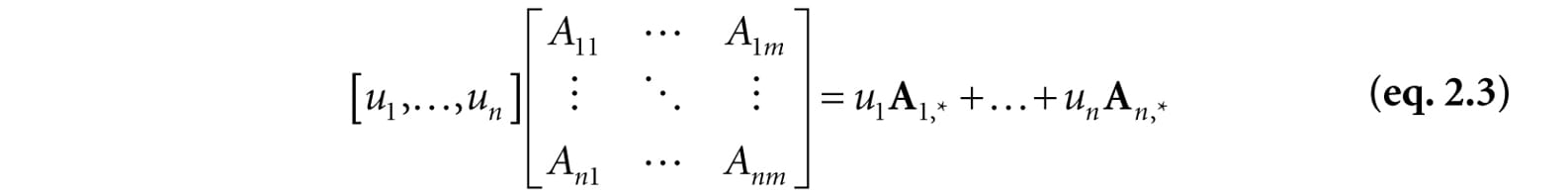

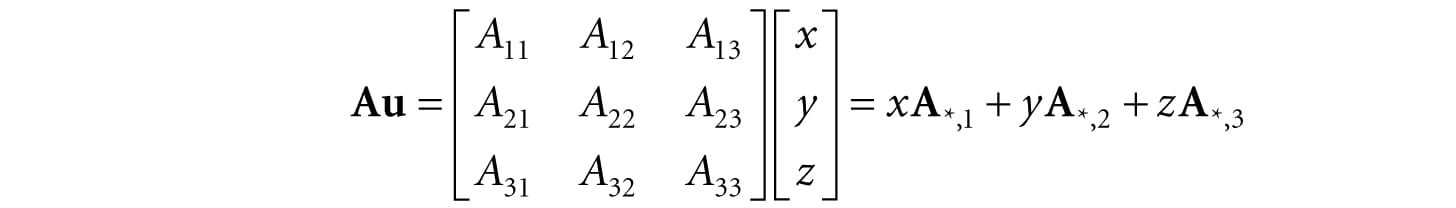

Equation 2.2 is an example of a linear combination, and it says that the vector-matrix product uA is equivalent to a linear combination of the row vectors of the matrix A with scalar coefficients x, y, and z given by the vector u. Note that, although we showed this for a 1 × 3 row vector and a 3 × 3 matrix, the result is true in general. That is, for a l × n row vector u and a n × m matrix A, we have that uA is a linear combination of the row vectors in A with scalar coefficients given by u:

2.2.3 Associativity Matrix multiplication has some nice algebraic properties. For example, matrix multiplication distributes over addition: A(B + C) = AB + AC) and (A + B)C = AC + BC). In particular, however, we will use the associative law of matrix multiplication from time to time, which allows us to choose the order we multiply matrices: (AB)C = A(BC) 2.3 THE TRANSPOSE OF A MATRIX The transpose of a matrix is found by interchanging the rows and columns of the matrix. Thus the transpose of an m × n matrix is an n × m matrix. We denote the transpose of a matrix M as MT.

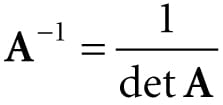

Find the transpose for the following three matrices:

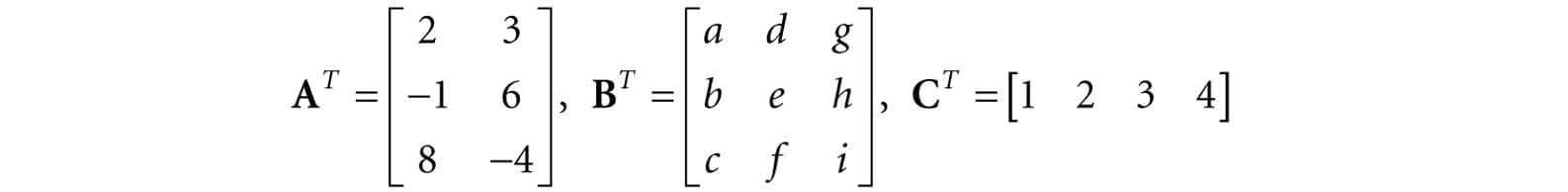

To reiterate, the transposes are found by interchanging the rows and columns, thus

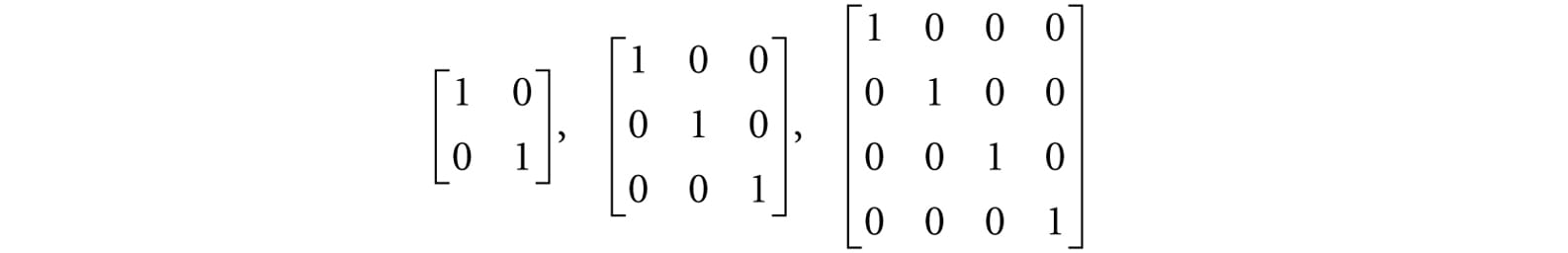

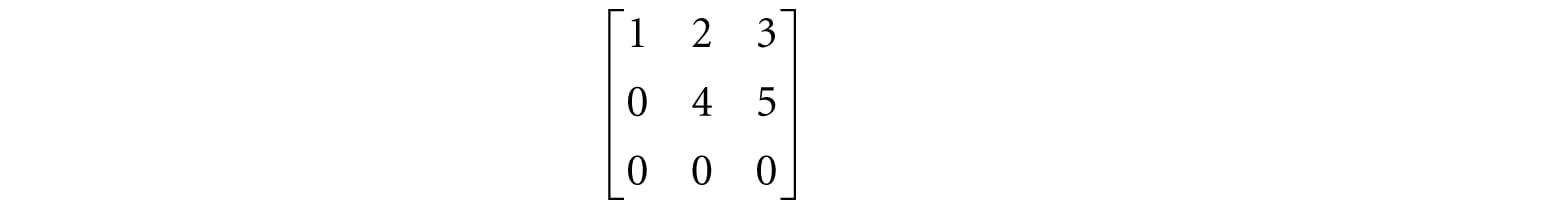

The transpose has the following useful properties: 1. (A + B)T = AT + BT 2. (cA)T = cAT 3. (AB)T = BTAT 4. (AT)T = A 5. (A−1)T = (AT)−1 2.4 THE IDENTITY MATRIX There is a special matrix called the identity matrix. The identity matrix is a square matrix that has zeros for all elements except along the main diagonal; the elements along the main diagonal are all ones. For example, below are 2 × 2, 3 × 3 and 4 × 4 identity matrices.

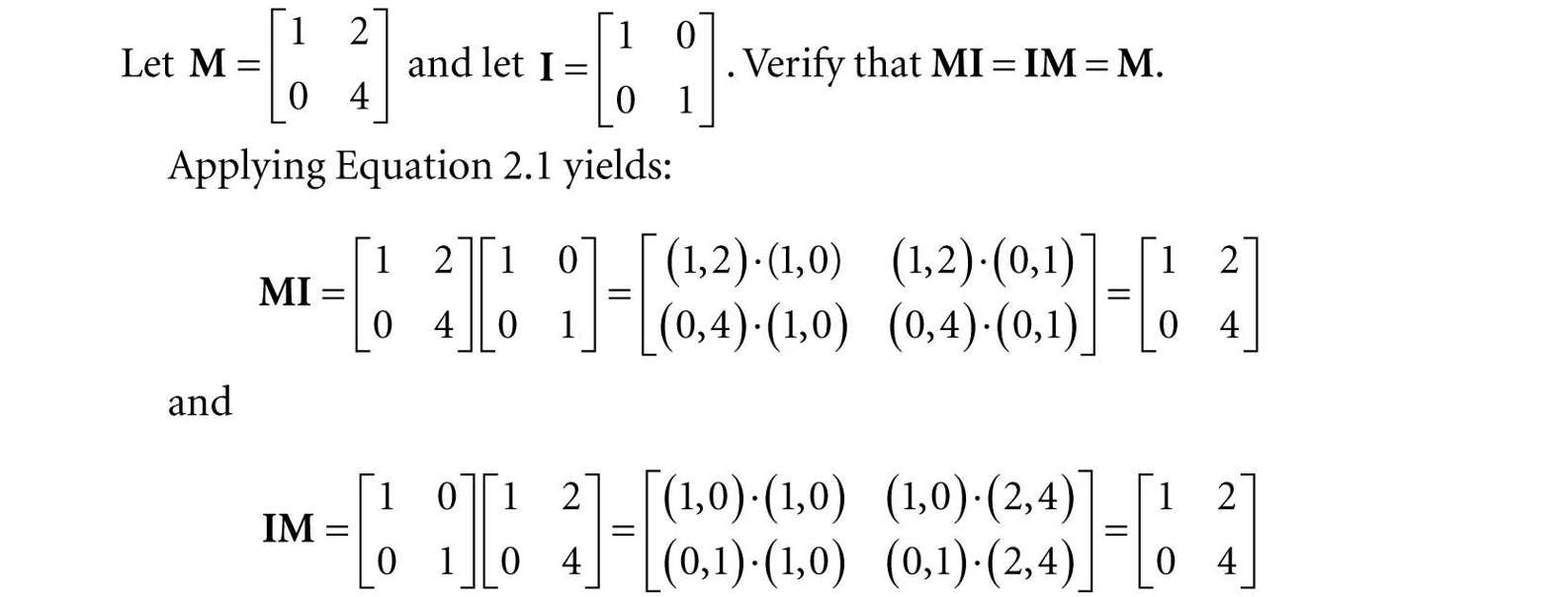

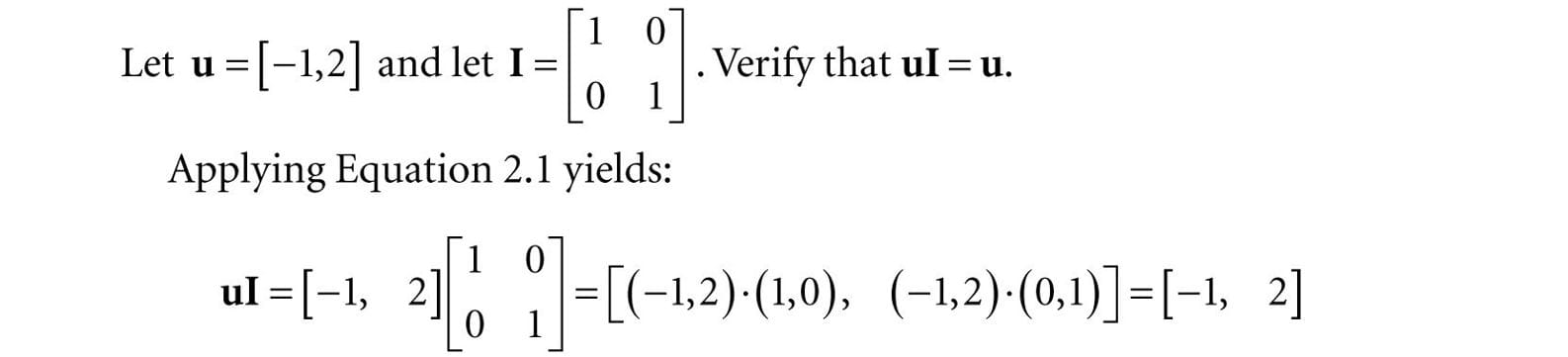

The identity matrix acts as a multiplicative identity; that is, if A is an m × n matrix, B is an n × p matrix, and I is the n × n identity matrix, then AI = A and IB = B In other words, multiplying a matrix by the identity matrix does not change the matrix. The identity matrix can be thought of as the number 1 for matrices. In particular, if M is a square matrix, then multiplication with the identity matrix is commutative: MI = IM = M

Thus it is true that MI = IM = M.

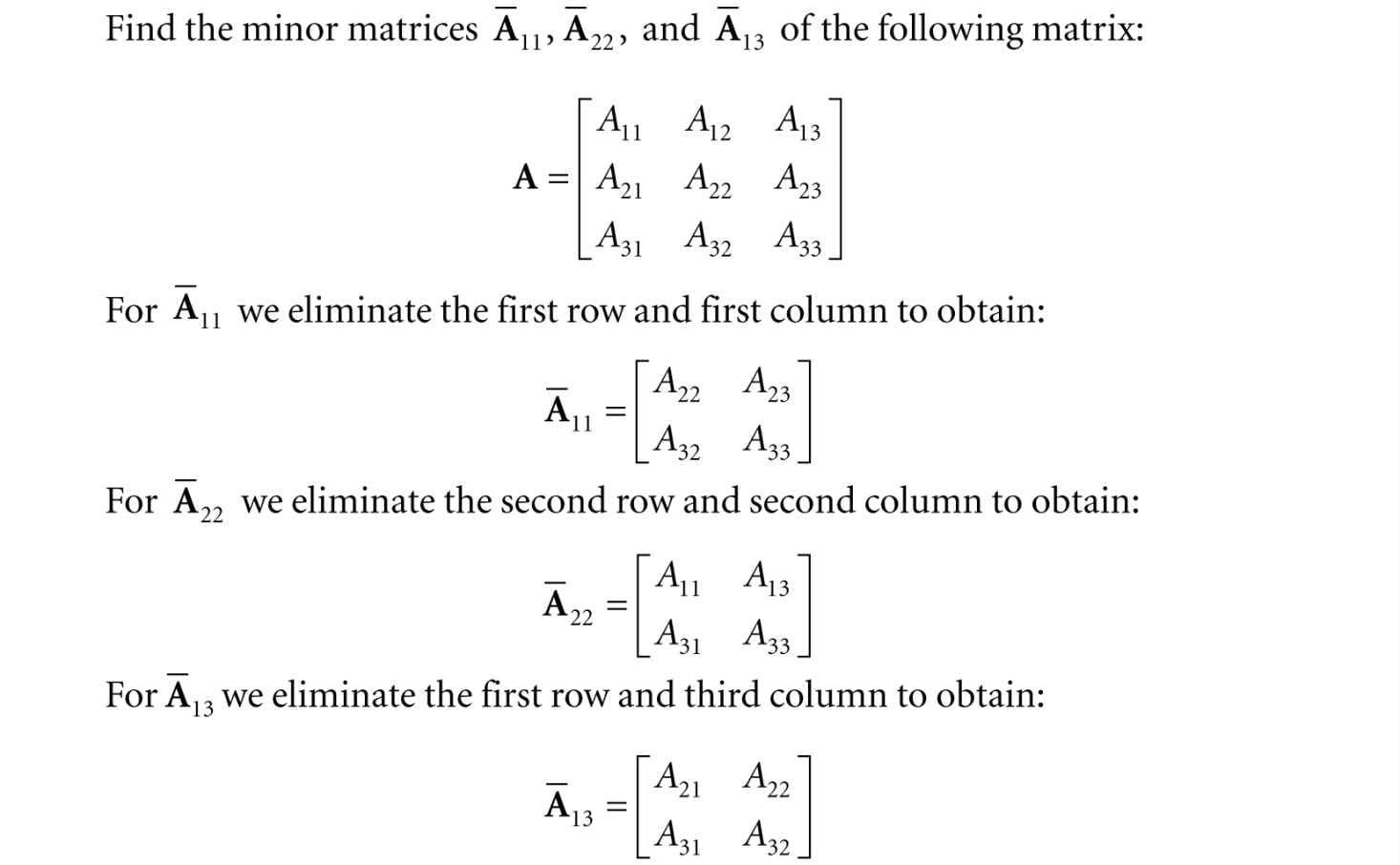

Note that we cannot take the product Iu because the matrix multiplication is not defined. 2.5 THE DETERMINANT OF A MATRIX The determinant is a special function which inputs a square matrix and outputs a real number. The determinant of a square matrix A is commonly denoted by det A. It can be shown that the determinant has a geometric interpretation related to volumes of boxes and that the determinant provides information on how volumes change under linear transformations. In addition, determinants are used to solve systems of linear equations using Cramer’s Rule. However, for our purposes, we are mainly motivated to study the determinant because it gives us an explicit formula for finding the inverse of a matrix (the topic of §2.7). In addition, it can be proved that: A square matrix A is invertible if and only if det A ≠ 0. This fact is useful because it gives us a computational tool for determining if a matrix is invertible. Before we can define the determinant, we first introduce the concept of matrix minors. 2.5.1 Matrix Minors Given an n × n matrix A, the minor matrix is the (n − 1) × (n − 1) matrix found by deleting the ith row and jth column of A.

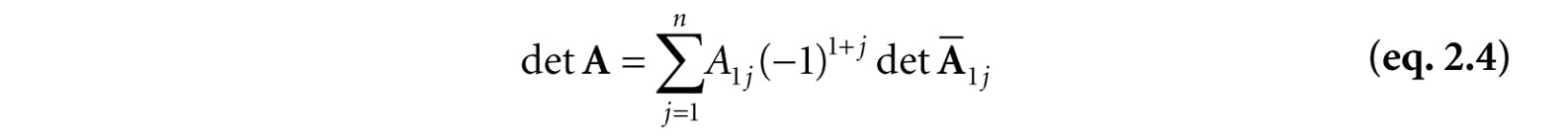

2.5.2 Definition The determinant of a matrix is defined recursively; for instance, the determinant of a 4 × 4 matrix is defined in terms of the determinant of a 3 × 3 matrix, and the determinant of a 3 × 3 matrix is defined in terms of the determinant of a 2 × 2 matrix, and the determinant of a 2 × 2 matrix is defined in terms of the determinant of a 1 × 1 matrix (the determinant of a 1 × 1 matrix A = [A11] is trivially defined to be det [A11] = A11). Let A be an n × n matrix. Then for n > 1 we define:

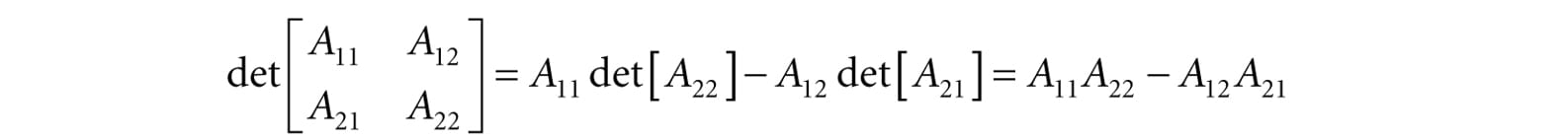

Recalling the definition of the minor matrix , for 2 × 2 matrices, this gives the formula:

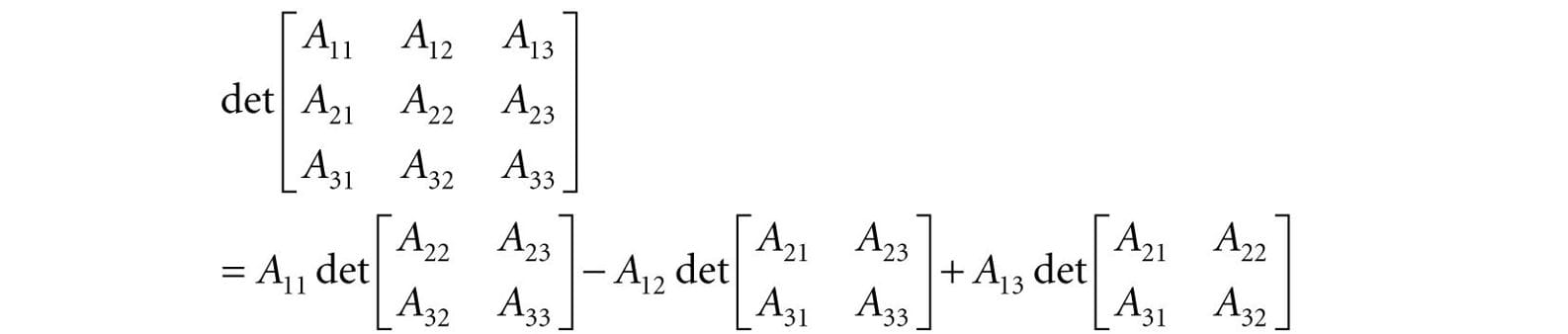

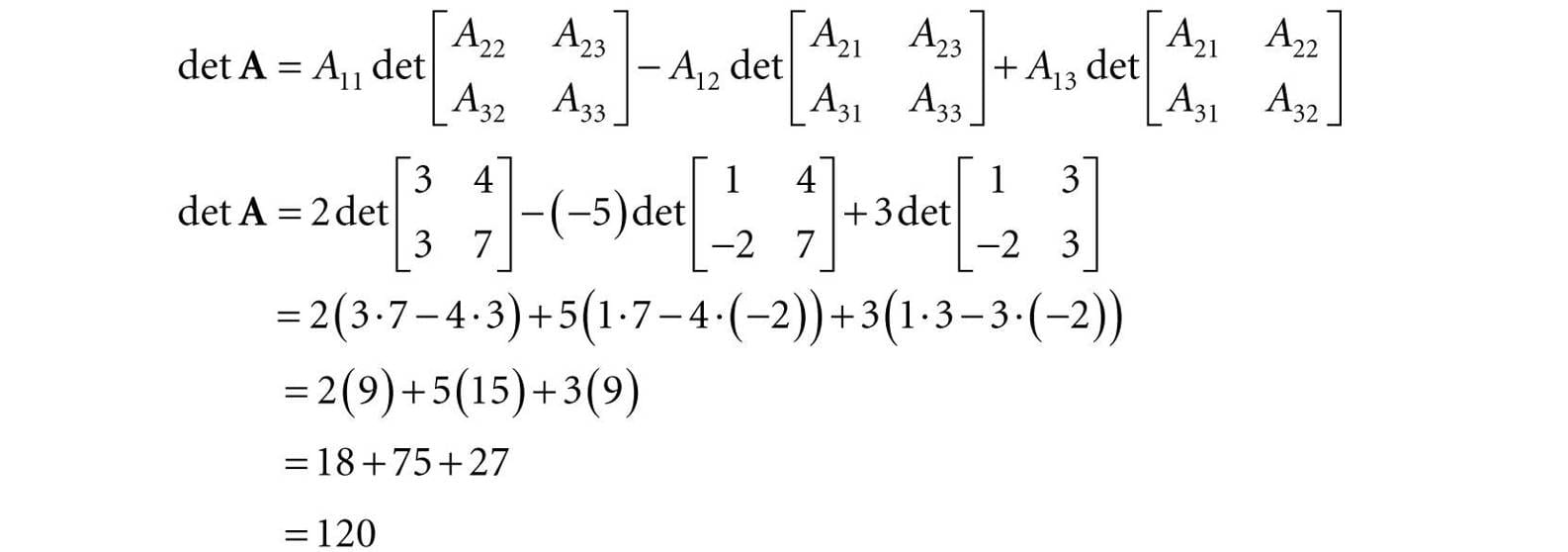

For 3 × 3 matrices, this gives the formula:

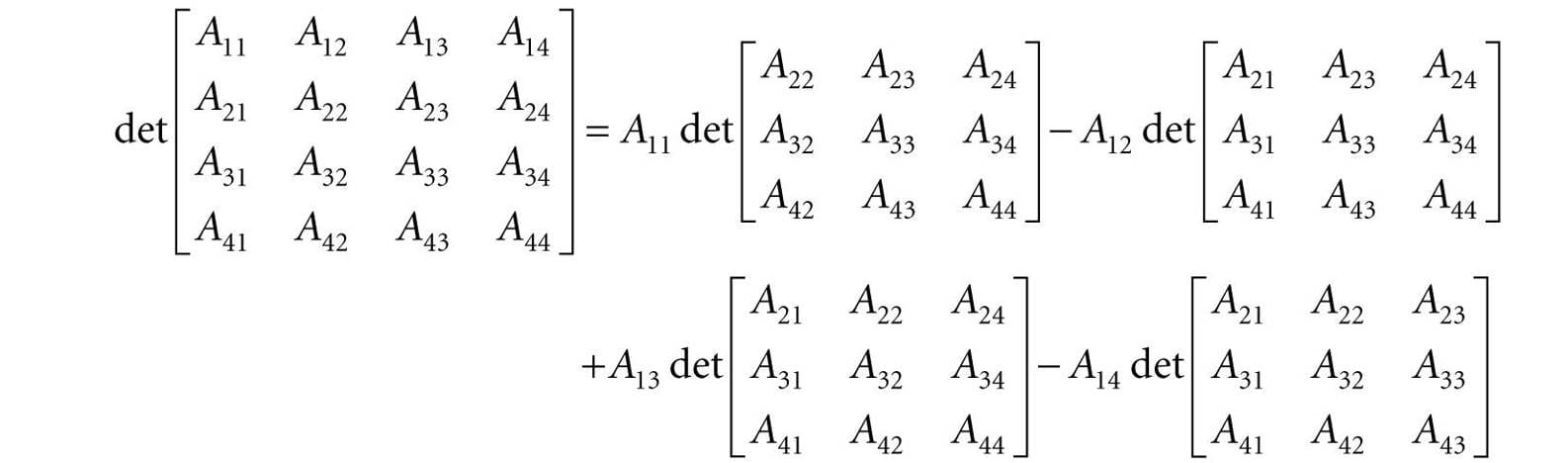

And for 4 × 4 matrices, this gives the formula:

In 3D graphics, we primarily work with 4 × 4 matrices, and so we do not need to continue generating explicit formulas for n > 4.

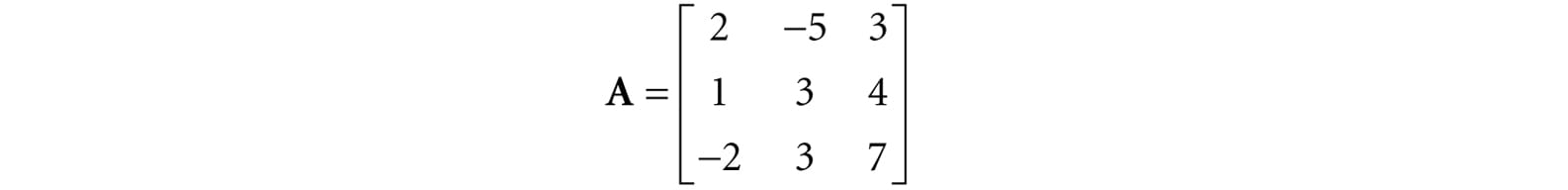

Find the determinant of the matrix

We have that:

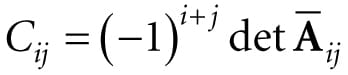

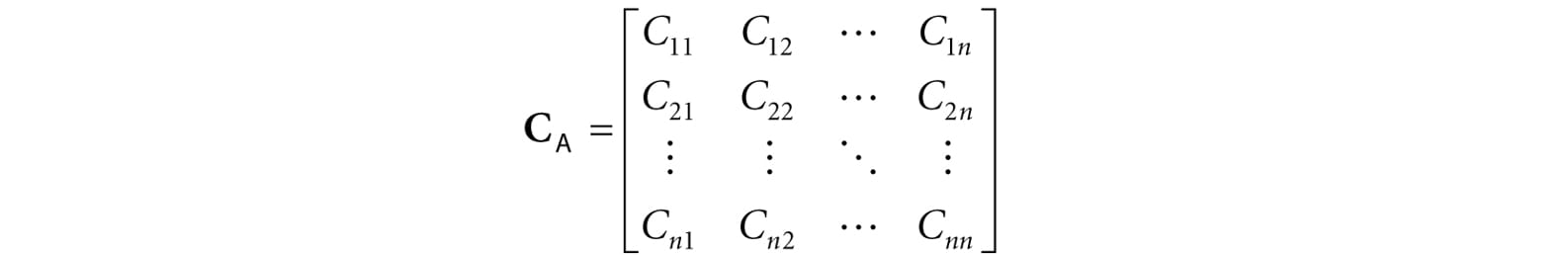

2.6 THE ADJOINT OF A MATRIX Let A be an n × n matrix. The product is called the cofactor of Aij. If we compute Cij and place it in the ijth position of a corresponding matrix CA for every element in A, we obtain the cofactor matrix of A:

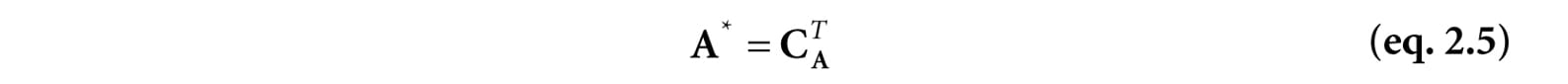

If we take the transpose of CA we get a matrix that is called adjoint of A, which we denote by

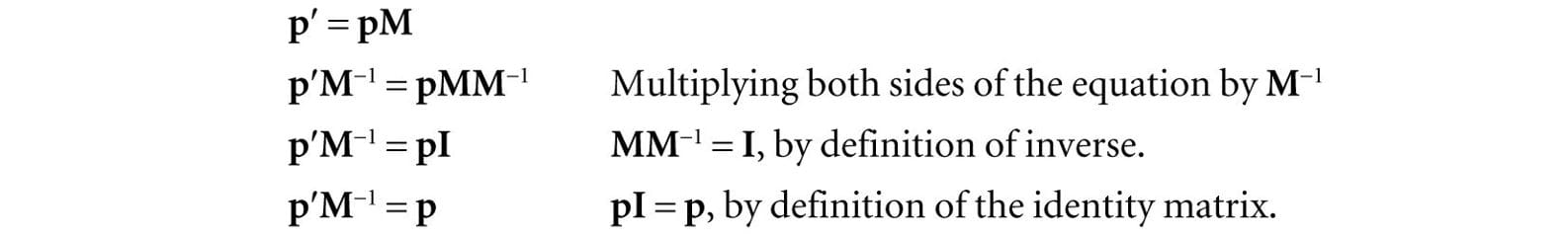

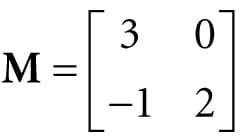

In the next section, we learn that the adjoint enables us to find an explicit formula for computing matrix inverses. 2.7 THE INVERSE OF A MATRIX Matrix algebra does not define a division operation, but it does define a multiplicative inverse operation. The following list summarizes the important information about inverses: 1. Only square matrices have inverses; therefore, when we speak of matrix inverses, we assume we are dealing with a square matrix. 2. The inverse of an n × n matrix M is an n × n matrix denoted by M−1. 3. Not every square matrix has an inverse. A matrix that does have an inverse is said to be invertible, and a matrix that does not have an inverse is said to be singular. 4. The inverse is unique when it exists. 5. Multiplying a matrix with its inverse results in the identity matrix: MM−1 = M−1M = I. Note that multiplying a matrix with its own inverse is a case when matrix multiplication is commutative. Matrix inverses are useful when solving for other matrices in a matrix equation. For example, suppose that we are given the matrix equation p′ = pM. Further suppose that we are given p′ and M, and want to solve for p. Assuming that M is invertible (i.e., M−1 exists), we can solve for p like so:

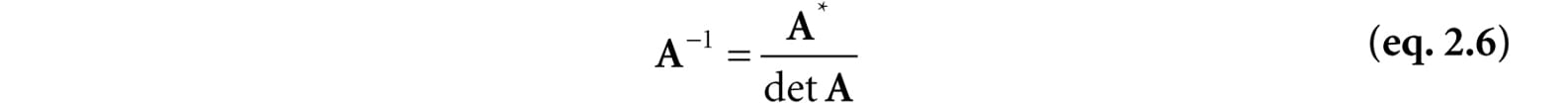

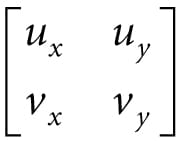

A formula for finding inverses, which we do not prove here but should be proved in any college level linear algebra text, can be given in terms of the adjoint and determinant:

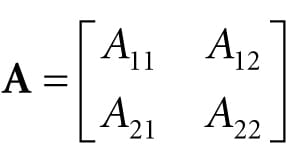

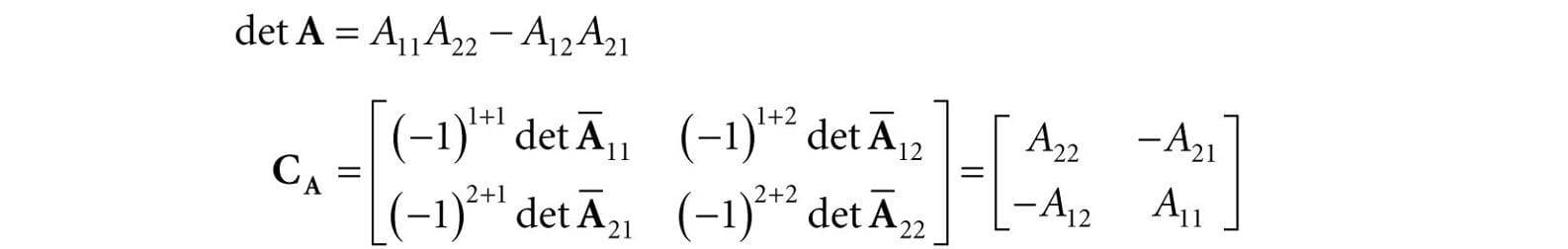

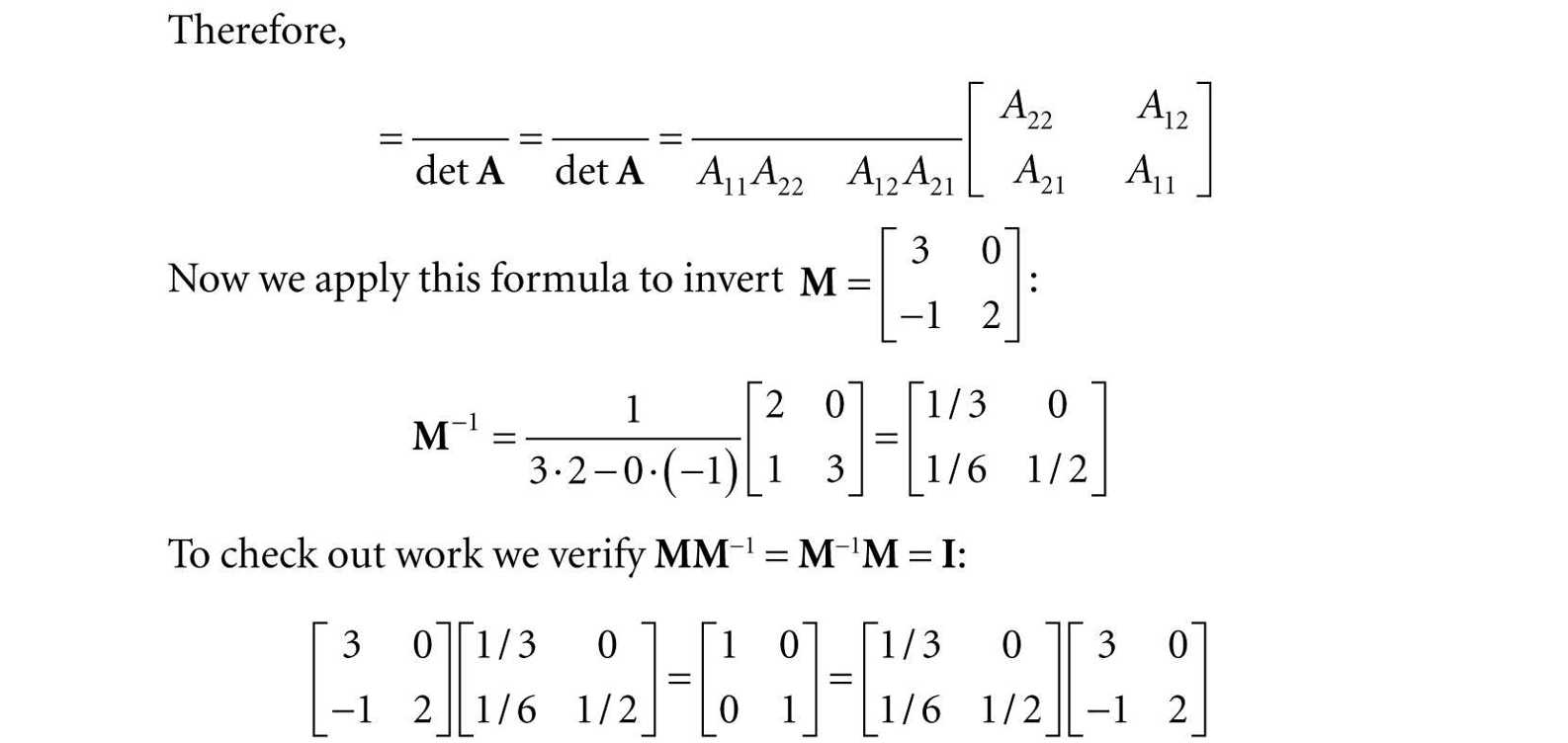

Find a general formula for the inverse of a 2 × 2 matrix , and use this formula to find the inverse of the matrix We have that

To conclude this section on inverses, we present the following useful algebraic property for the inverse of a product: (AB)−1 = B−1A−1 This property assumes both A and B are invertible and that they are both square matrices of the same dimension. To prove that B−1A−1 is the inverse of AB, we must show (AB)(B−1A−1) = I and (B−1A−1)(AB) = I. This is done as follows: (AB)(B−1A−1) = A(BB−1)A−1 = AIA−1 = AA−1 = I (B−1A−1)(AB) = B−1(A−1A)B = B−1IB = B−1B = I 2.8 DIRECTX MATH MATRICES For transforming points and vectors, we use 1 × 4 row vectors and 4 × 4 matrices. The reason for this will be explained in the next chapter. For now, we just concentrate on the DirectX Math types used to represent 4 × 4 matrices. 2.8.1 Matrix Types To represent 4 × 4 matrices in DirectX math, we use the XMMATRIX class, which is defined as follows in the DirectXMath.h header file (with some minor adjustments we have made for clarity): #if (defined(_M_IX86) || defined(_M_X64) || defined(_M_ARM)) && defined(_XM_NO_INTRINSICS_) struct XMMATRIX #else __declspec(align(16)) struct XMMATRIX #endif { // Use 4 XMVECTORs to represent the matrix for SIMD. XMVECTOR r[4]; XMMATRIX() {} // Initialize matrix by specifying 4 row vectors. XMMATRIX(FXMVECTOR R0, FXMVECTOR R1, FXMVECTOR R2, CXMVECTOR R3) { r[0] = R0; r[1] = R1; r[2] = R2; r[3] = R3; } // Initialize matrix by specifying 4 row vectors. XMMATRIX(float m00, float m01, float m02, float m03, float m10, float m11, float m12, float m13, float m20, float m21, float m22, float m23, float m30, float m31, float m32, float m33); // Pass array of sixteen floats to construct matrix. explicit XMMATRIX(_In_reads_(16) const float *pArray); XMMATRIX& operator= (const XMMATRIX& M) { r[0] = M.r[0]; r[1] = M.r[1]; r[2] = M.r[2]; r[3] = M.r[3]; return *this; } XMMATRIX operator+ () const { return *this; } XMMATRIX operator- () const; XMMATRIX& XM_CALLCONV operator+= (FXMMATRIX M); XMMATRIX& XM_CALLCONV operator-= (FXMMATRIX M); XMMATRIX& XM_CALLCONV operator*= (FXMMATRIX M); XMMATRIX& operator*= (float S); XMMATRIX& operator/= (float S); XMMATRIX XM_CALLCONV operator+ (FXMMATRIX M) const; XMMATRIX XM_CALLCONV operator- (FXMMATRIX M) const; XMMATRIX XM_CALLCONV operator* (FXMMATRIX M) const; XMMATRIX operator* (float S) const; XMMATRIX operator/ (float S) const; friend XMMATRIX XM_CALLCONV operator* (float S, FXMMATRIX M); }; As you can see, XMMATRIX uses four XMVECTOR instances to use SIMD. Moreover, XMMATRIX provides overloaded operators for matrix arithmetic. In addition to using the various constructors, an XMMATRIX instance can be created using the XMMatrixSet function: XMMATRIX XM_CALLCONV XMMatrixSet( float m00, float m01, float m02, float m03, float m10, float m11, float m12, float m13, float m20, float m21, float m22, float m23, float m30, float m31, float m32, float m33); Just as we use XMFLOAT2 (2D), XMFLOAT3 (3D), and XMFLOAT4 (4D) when storing vectors in a class, it is recommended, by the DirectXMath documentation to use the XMFLOAT4X4 type to store matrices as class data members. struct XMFLOAT4X4 { union { struct { float _11, _12, _13, _14; float _21, _22, _23, _24; float _31, _32, _33, _34; float _41, _42, _43, _44; }; float m[4][4]; }; XMFLOAT4X4() {} XMFLOAT4X4(float m00, float m01, float m02, float m03, float m10, float m11, float m12, float m13, float m20, float m21, float m22, float m23, float m30, float m31, float m32, float m33); explicit XMFLOAT4X4(_In_reads_(16) const float *pArray); float operator() (size_t Row, size_t Column) const { return m[Row][Column]; } float& operator() (size_t Row, size_t Column) { return m[Row][Column]; } XMFLOAT4X4& operator= (const XMFLOAT4X4& Float4x4); }; We use the following method to load data from XMFLOAT4X4 into XMMATRIX: inline XMMATRIX XM_CALLCONV XMLoadFloat4x4(const XMFLOAT4X4* pSource); We use the following method to store data from XMMATRIX into XMFLOAT4X4: inline void XM_CALLCONV XMStoreFloat4x4(XMFLOAT4X4* pDestination, FXMMATRIX M); 2.8.2 Matrix Functions The DirectX Math library includes the following useful matrix related functions: XMMATRIX XM_CALLCONV XMMatrixIdentity(); // Returns the identity matrix I bool XM_CALLCONV XMMatrixIsIdentity( // Returns true if M is the identity matrix FXMMATRIX M); // Input M XMMATRIX XM_CALLCONV XMMatrixMultiply( // Returns the matrix product AB FXMMATRIX A, // Input A CXMMATRIX B); // Input B XMMATRIX XM_CALLCONV XMMatrixTranspose( // Returns MT FXMMATRIX M); // Input M XMVECTOR XM_CALLCONV XMMatrixDeterminant( // Returns (det M, det M, det M, det M) FXMMATRIX M); // Input M XMMATRIX XM_CALLCONV XMMatrixInverse( // Returns M−1 XMVECTOR* pDeterminant, // Input (det M, det M, det M, det M) FXMMATRIX M); // Input M When we declare a XMMATRIX parameter to a function, we use the same rules we used when passing XMVECTOR parameters (see §1.6.3), except that an XMMATRIX counts as four XMVECTOR parameters. Assuming there are no more than two additional FXMVECTOR parameters in total to the function, the first XMMATRIX should be of type FXMMATRIX, and any other XMMATRIX should be of type CXMMATRIX. We illustrate how these types are defined on 32-bit Windows with a compiler that supports the __fastcall calling convention and a compiler that supports the newer __vectorcall calling convention: // 32-bit Windows __fastcall passes first 3 XMVECTOR arguments // via registers, the remaining on the stack. typedef const XMMATRIX& FXMMATRIX; typedef const XMMATRIX& CXMMATRIX; // 32-bit Windows __vectorcall passes first 6 XMVECTOR arguments // via registers, the remaining on the stack. typedef const XMMATRIX FXMMATRIX; typedef const XMMATRIX& CXMMATRIX; Observe that on 32-bit Windows with __fastcall, a XMMATRIX cannot be passed through SSE/SSE2 registers because only three XMVECTOR arguments via registers are supported, and a XMMATRIX requires four; thus the matrix is just passed on the stack by reference. For the details on how these types are defined for the other platforms, see “Calling Conventions” under “Library Internals” in the DirectXMath documentation [DirectXMath]. The exception to these rules is with constructor methods. [DirectXMath] recommends always using CXMMATRIX for constructors that takes XMMATRIX parameters. Furthermore, do not use the annotation XM_CALLCONV for constructors. 2.8.3 DirectX Math Matrix Sample Program The following code provides some examples on how to use the XMMATRIX class and most of the functions listed in the previous section. #include <windows.h> // for XMVerifyCPUSupport #include <DirectXMath.h> #include <DirectXPackedVector.h> #include <iostream> using namespace std; using namespace DirectX; using namespace DirectX::PackedVector; // Overload the "<<" operators so that we can use cout to // output XMVECTOR and XMMATRIX objects. ostream& XM_CALLCONV operator << (ostream& os, FXMVECTOR v) { XMFLOAT4 dest; XMStoreFloat4(&dest, v); os << "(" << dest.x << ", " << dest.y << ", " << dest.z << ", " << dest.w << ")"; return os; } ostream& XM_CALLCONV operator << (ostream& os, FXMMATRIX m) { for (int i = 0; i < 4; ++i) { os << XMVectorGetX(m.r[i]) << ″\t"; os << XMVectorGetY(m.r[i]) << "\t"; os << XMVectorGetZ(m.r[i]) << "\t"; os << XMVectorGetW(m.r[i]); os << endl; } return os; } int main() { // Check support for SSE2 (Pentium4, AMD K8, and above). if (!XMVerifyCPUSupport()) { cout << "directx math not supported" << endl; return 0; } XMMATRIX A(1.0f, 0.0f, 0.0f, 0.0f, 0.0f, 2.0f, 0.0f, 0.0f, 0.0f, 0.0f, 4.0f, 0.0f, 1.0f, 2.0f, 3.0f, 1.0f); XMMATRIX B = XMMatrixIdentity(); XMMATRIX C = A * B; XMMATRIX D = XMMatrixTranspose(A); XMVECTOR det = XMMatrixDeterminant(A); XMMATRIX E = XMMatrixInverse(&det, A); XMMATRIX F = A * E; cout << "A = " << endl << A << endl; cout << "B = " << endl << B << endl; cout << "C = A*B = " << endl << C << endl; cout << "D = transpose(A) = " << endl << D << endl; cout << "det = determinant(A) = " << det << endl << endl; cout << "E = inverse(A) = " << endl << E << endl; cout << "F = A*E = " << endl << F << endl; return 0; }

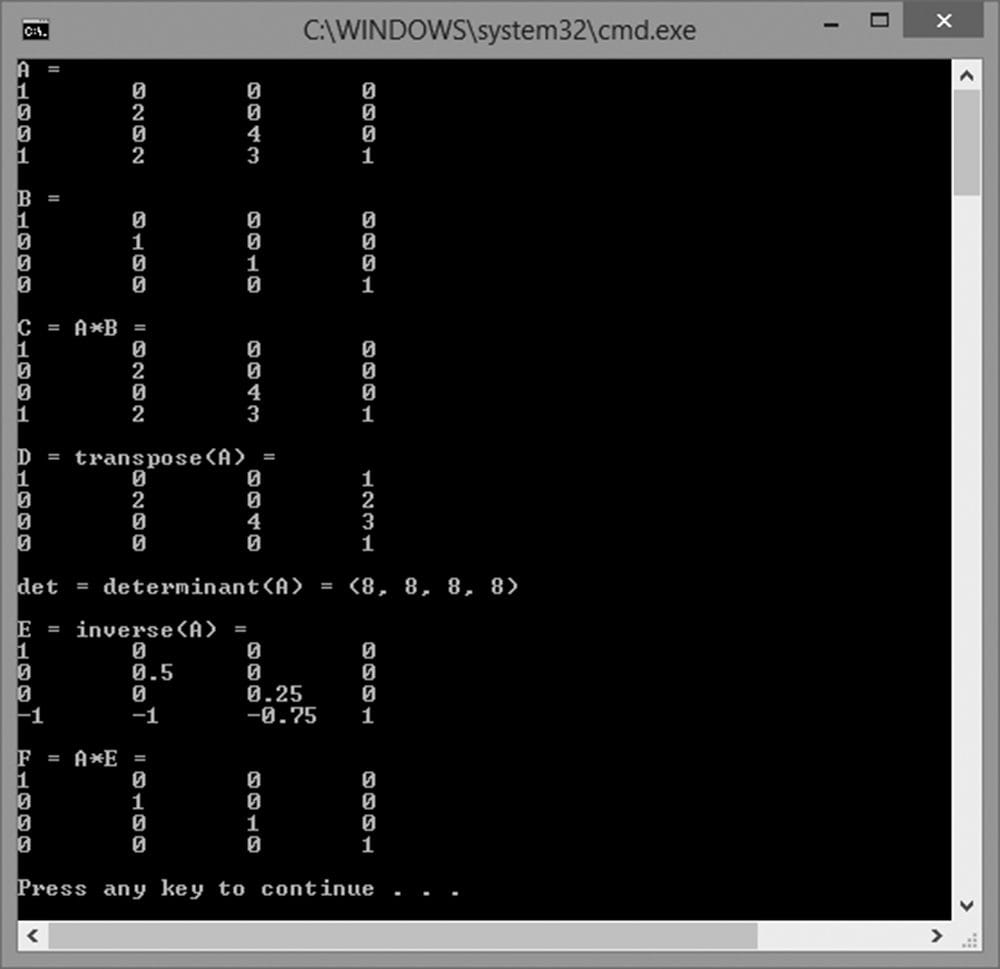

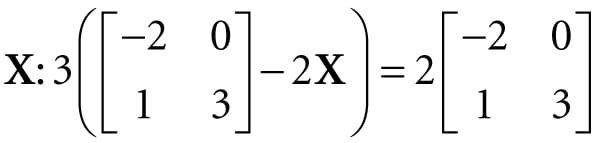

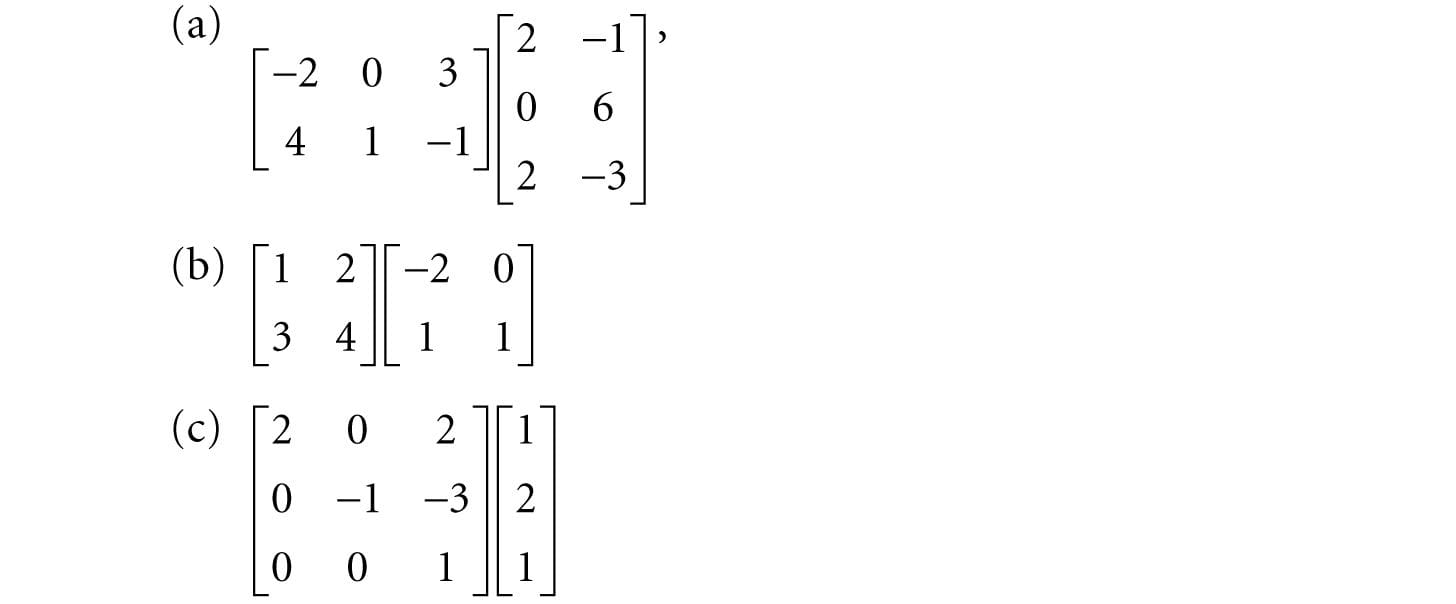

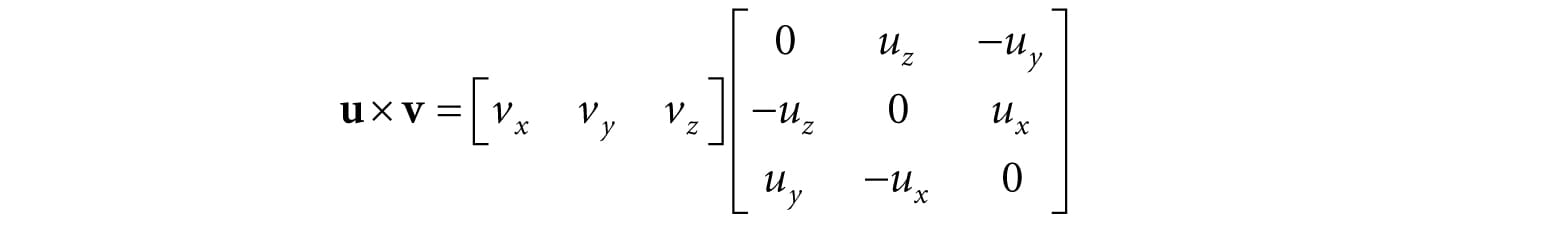

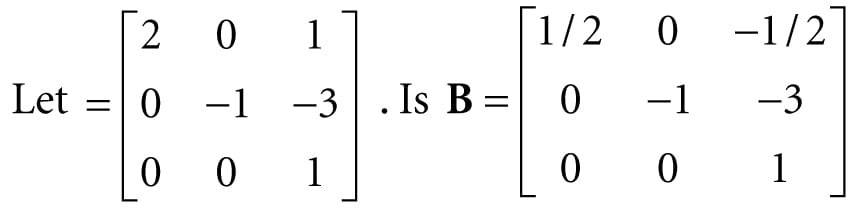

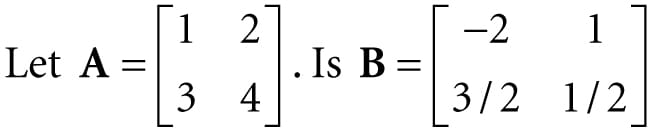

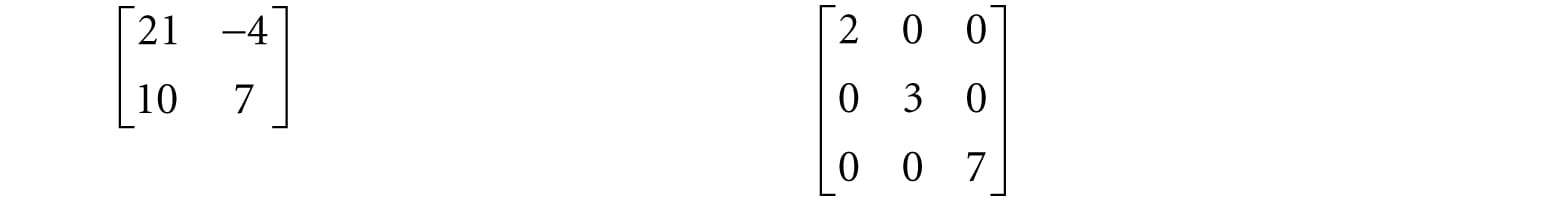

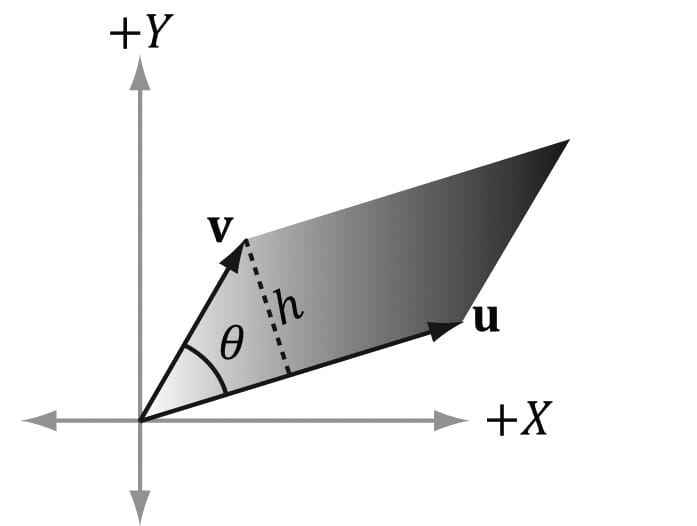

Figure 2.1. Output of the above program. 2.9 SUMMARY 1. An m × n matrix M is a rectangular array of real numbers with m rows and n columns. Two matrices of the same dimensions are equal if and only if their corresponding components are equal. We add two matrices of the same dimensions by adding their corresponding elements. We multiply a scalar and a matrix by multiplying the scalar with every element in the matrix. 2. If A is a m × n matrix and B is a n × p matrix, then the product AB is defined and is a m × p matrix C, where the ijth entry of the product C is given by taking the dot product of the ith row vector in A with the jth column vector in B, that is, . 3. Matrix multiplication is not commutative (i.e., AB ≠ BA, in general). Matrix multiplication is associative: (AB)C = A(BC). 4. The transpose of a matrix is found by interchanging the rows and columns of the matrix. Thus the transpose of an m × n matrix is an n × m matrix. We denote the transpose of a matrix M as MT. 5. The identity matrix is a square matrix that has zeros for all elements except along the main diagonal, and the elements along the main diagonal are all ones. 6. The determinant, det A, is a special function which inputs a square matrix and outputs a real number. A square matrix A is invertible if and only if det A ≠ 0. The determinant is used in the formula for computing the inverse of a matrix. 7. Multiplying a matrix with its inverse results in the identity matrix: MM−1 = M−1M = I. The inverse of a matrix, if it exists, is unique. Only square matrices have inverses and even then, a square matrix may not be invertible. The inverse of a matrix can be computed with the formula: , where is the adjoint (transpose of the cofactor matrix of A). 8. We use the DirectX Math XMMATRIX type to describe 4 × 4 matrices efficiently in code using SIMD operations. For class data members, we use the XMFLOAT4X4 class, and then use the loading (XMLoadFloat4x4) and storage (XMStoreFloat4x4) methods to convert back and forth between XMMATRIX and XMFLOAT4X4. The XMMATRIX class overloads the arithmetic operators to do matrix addition, subtraction, matrix multiplication, and scalar multiplication. Moreover, the DirectX Math library provides the following useful matrix functions for computing the identity matrix, product, transpose, determinant, and inverse: XMMATRIX XM_CALLCONV XMMatrixIdentity(); XMMATRIX XM_CALLCONV XMMatrixMultiply(FXMMATRIX A, CXMMATRIX B); XMMATRIX XM_CALLCONV XMMatrixTranspose(FXMMATRIX M); XMVECTOR XM_CALLCONV XMMatrixDeterminant(FXMMATRIX M); XMMATRIX XM_CALLCONV XMMatrixInverse(XMVECTOR* pDeterminant, FXMMATRIX M); 2.10 EXERCISES 1. Solve the following matrix equation for . 2. Compute the following matrix products: 3. Compute the transpose of the following matrices: 4. Write the following linear combinations as vector-matrix products: 1. v = 2(1, 2, 3) − 4(−5, 0, −1) +3(2, −2, 3) 2. v = 3(2, −4) + 2(1, 4) −1(−2, −3) + 5(1, 1) 5. Show that 6. Show that 7. Prove that the cross product can be expressed by the matrix product: 8. the inverse of A? 9. the inverse of A? 10.Find the determinants of the following matrices: 11.Find the inverse of the following matrices: 12.Is the following matrix invertible? 13.Show that (A−1)T = (AT)−1, assuming A is invertible. 14.Let A and B be n × n matrices. A fact proved in linear algebra books is that det(AB) = det A · det B. Use this fact along with the fact that det I = 1 to prove det , assuming A is invertible. 15.Prove that the 2D determinant gives the signed area of the parallelogram spanned by u = (ux, uy) and v = (vx, vy). The result is positive if u can be rotated counterclockwise to coincide with v by an angle θ ∈ (0, π), and negative otherwise. 16.Find the area of the parallelogram spanned by: 1. u = (3, 0) band v = (1, 1) 2. u = (−1, −1) and v = (0, 1) 17.Let . 18.Write a computer program that computes the transpose of an m × n matrix without using DirectX Math (just use an array of arrays in C++). 19.Write a computer program that computes the determinant and inverse of 4 × 4 matrices without using DirectX Math (just use an array of arrays in C++). All materials on the site are licensed Creative Commons Attribution-Sharealike 3.0 Unported CC BY-SA 3.0 & GNU Free Documentation License (GFDL) If you are the copyright holder of any material contained on our site and intend to remove it, please contact our site administrator for approval. © 2016-2025 All site design rights belong to S.Y.A. |

Example 2.1

Example 2.1