Introduction to 3D Game Programming with DirectX 12 (Computer Science) (2016)

Part 2

DIRECT3D

In this part, we study fundamental Direct3D concepts and techniques that are used throughout the rest of this book. With these fundamentals mastered, we can move on to writing more interesting applications. A brief description of the chapters in this part follows.

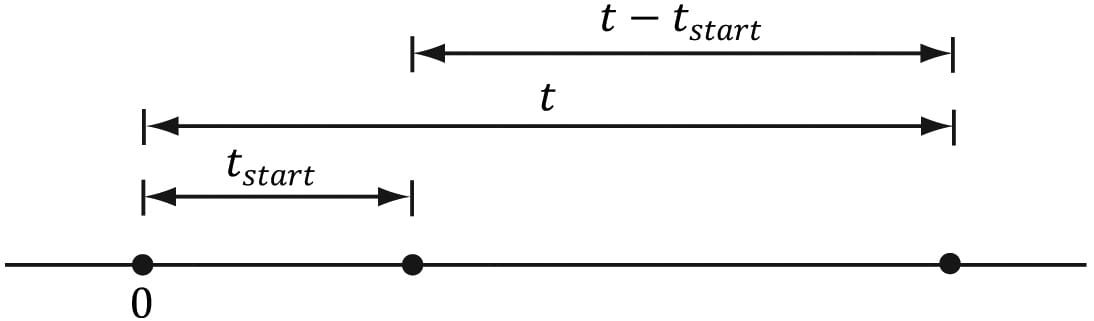

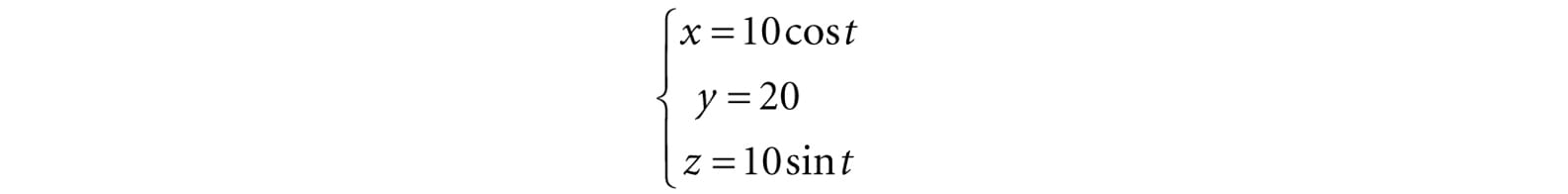

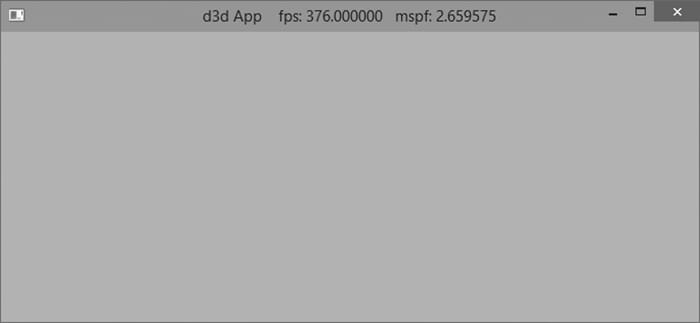

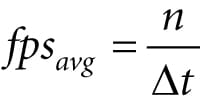

Chapter 4, Direct3D Initialization: In this chapter, we learn what Direct3D is about and how to initialize it in preparation for 3D drawing. Basic Direct3D topics are also introduced, such as surfaces, pixel formats, page flipping, depth buffering, and multisampling. We also learn how to measure time with the performance counter, which we use to compute the frames rendered per second. In addition, we give some tips on debugging Direct3D applications. We develop and use our own application framework—not the SDK's framework.

Chapter 5, The Rendering Pipeline: In this long chapter, we provide a thorough introduction to the rendering pipeline, which is the sequence of steps necessary to generate a 2D image of the world based on what the virtual camera sees. We learn how to define 3D worlds, control the virtual camera, and project 3D geometry onto a 2D image plane.

Chapter 6, Drawing in Direct3D: This chapter focuses on the Direct3D API interfaces and methods needed to define 3D geometry, configure the rendering pipeline, create vertex and pixel shaders, and submit geometry to the rendering pipeline for drawing. By the end of this chapter, you will be able to draw a 3D box and transform it.

Chapter 7, Drawing in Direct3D Part II: This chapter introduces a number of drawing patterns that will be used throughout the remainder of the book, ranging from improving the workload balance between the CPU and GPU to organizing how our renderer draws objects. The chapter concludes by showing how to draw more complicated objects like grids, spheres, cylinders, and an animated wave simulation.

Chapter 8, Lighting: This chapter shows how to create light sources and define the interaction between light and surfaces via materials. In particular, we show how to implement directional lights, point lights, and spotlights with vertex and pixel shaders.

Chapter 9, Texturing: This chapter describes texture mapping, which is a technique used to increase the realism of the scene by mapping 2D image data onto a 3D primitive. For example, using texture mapping, we can model a brick wall by applying a 2D brick wall image onto a 3D rectangle. Other key texturing topics covered include texture tiling and animated texture transformations.

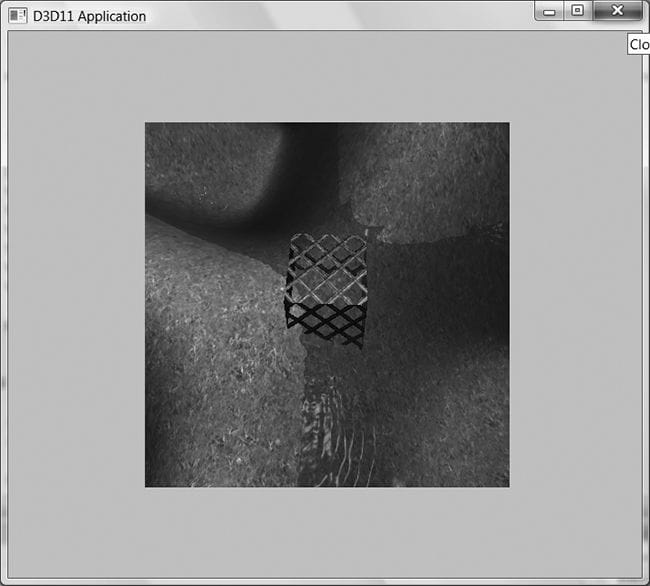

Chapter 10, Blending: Blending allows us to implement a number of special effects like transparency. In addition, we discuss the intrinsic clip function, which enables us to mask out certain parts of an image so that they do not show up; this can be used to implement fences and gates, for example. We also show how to implement a fog effect.

Chapter 11, Stenciling: This chapter describes the stencil buffer, which, like a stencil, allows us to block pixels from being drawn. Masking out pixels is a useful tool for a variety of situations. To illustrate the ideas of this chapter, we include a thorough discussion on implementing planar reflections and planar shadows using the stencil buffer.

Chapter 12, The Geometry Shader: This chapter shows how to program geometry shaders, which are special because they can create or destroy entire geometric primitives. Some applications include billboards, fur rendering, subdivisions, and particle systems. In addition, this chapter explains primitive IDs and texture arrays.

Chapter 13, The Compute Shader: The compute shader is a programmable shader that Direct3D exposes but that is not directly part of the rendering pipeline. It enables applications to use the graphics processing unit (GPU) for general-purpose computation. For example, an imaging application can take advantage of the GPU to speed up image processing algorithms by implementing them with the compute shader. Because the compute shader is part of Direct3D, it reads from and writes to Direct3D resources, which enables us to integrate results directly into the rendering pipeline. Therefore, in addition to general-purpose computation, the compute shader is still applicable to 3D rendering.

Chapter 14, The Tessellation Stages: This chapter explores the tessellation stages of the rendering pipeline. Tessellation refers to subdividing geometry into smaller triangles and then offsetting the newly generated vertices in some way. The motivation to increase the triangle count is to add detail to the mesh. To illustrate the ideas of this chapter, we show how to tessellate a quad patch based on distance, and we show how to render cubic Bézier quad patch surfaces.

Chapter 4

DIRECT3D INITIALIZATION

The initialization process of Direct3D requires us to be familiar with some basic Direct3D types and basic graphics concepts; the first and second sections of this chapter address these requirements. We then detail the necessary steps to initialize Direct3D. Next, a small detour is taken to introduce accurate timing and the time measurements needed for real-time graphics applications. Finally, we explore the sample framework code, which is used to provide a consistent interface that all demo applications in this book follow.

Objectives:

1. To obtain a basic understanding of Direct3D’s role in programming 3D hardware.

2. To understand the role COM plays with Direct3D.

3. To learn fundamental graphics concepts, such as how 2D images are stored, page flipping, depth buffering, multi-sampling, and how the CPU and GPU interact.

4. To learn how to use the performance counter functions for obtaining high-resolution timer readings.

5. To find out how to initialize Direct3D.

6. To become familiar with the general structure of the application framework that all the demos of this book employ.

4.1 PRELIMINARIES

The Direct3D initialization process requires us to be familiar with some basic graphics concepts and Direct3D types. We introduce these ideas and types in this section, so that we do not have to digress when we cover the initialization process.

4.1.1 Direct3D 12 Overview

Direct3D is a low-level graphics API (application programming interface) used to control and program the GPU (graphics processing unit) from our application, thereby allowing us to render virtual 3D worlds using hardware acceleration. For example, to submit a command to the GPU to clear a render target (e.g., the screen), we would call the Direct3D method ID3D12GraphicsCommandList::ClearRenderTargetView. The Direct3D layer and hardware drivers will translate the Direct3D commands into native machine instructions understood by the system’s GPU; thus, we do not have to worry about the specifics of the GPU, so long as it supports the Direct3D version we are using. To make this work, GPU vendors like NVIDIA, Intel, and AMD must work with the Direct3D team and provide compliant Direct3D drivers.

Direct3D 12 adds some new rendering features, but the main improvement over the previous version is that it has been redesigned to significantly reduce CPU overhead and improve multi-threading support. In order to achieve these performance goals, Direct3D 12 has become a much lower level API than Direct3D 11; it has less abstraction, requires additional manual “bookkeeping” from the developer, and more closely mirrors modern GPU architectures. The improved performance is, of course, the reward for using this more difficult API.

4.1.2 COM

Component Object Model (COM) is the technology that allows DirectX to be programming-language independent and backward compatible. We usually refer to a COM object as an interface, which for our purposes can be thought of and used as a C++ class. Most of the details of COM are hidden from us when programming DirectX with C++. The only thing that we must know is that we obtain pointers to COM interfaces through special functions or through the methods of another COM interface; we do not create a COM interface with the C++ new keyword. In addition, COM objects are reference counted: when we are done with an interface, we call its Release method rather than delete it (all COM interfaces inherit functionality from the IUnknown COM interface, which provides the Release method). COM objects free their memory when their reference count goes to 0.

To help manage the lifetime of COM objects, the Windows Runtime C++ Template Library (WRL) provides the Microsoft::WRL::ComPtr class (#include <wrl.h>), which can be thought of as a smart pointer for COM objects. When a ComPtr instance goes out of scope, it automatically calls Release on the underlying COM object, saving us from having to call Release manually. The three main ComPtr methods we use in this book are:

1. Get: Returns a pointer to the underlying COM interface. This is often used to pass arguments to functions that take a raw COM interface pointer. For example:

```cpp
ComPtr<ID3D12RootSignature> mRootSignature;
...
// SetGraphicsRootSignature expects ID3D12RootSignature* argument.
mCommandList->SetGraphicsRootSignature(mRootSignature.Get());
```

2. GetAddressOf: Returns the address of the pointer to the underlying COM interface. This is often used to return a COM interface pointer through a function parameter. For example:

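```cpp
ComPtr<ID3D12CommandAllocator> mDirectCmdListAlloc;
...
// IID_PPV_ARGS supplies both the interface ID and the output pointer
// that CreateCommandAllocator fills in.
ThrowIfFailed(md3dDevice->CreateCommandAllocator(
    D3D12_COMMAND_LIST_TYPE_DIRECT,
    IID_PPV_ARGS(mDirectCmdListAlloc.GetAddressOf())));
```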

3. Reset: Sets the ComPtr instance to nullptr and decrements the reference count of the underlying COM interface. Equivalently, you can assign nullptr to a ComPtr instance.

There is, of course, much more to COM, but more detail is not necessary for using DirectX effectively.
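To make the reference-counting rules concrete without requiring the Windows SDK, here is a minimal sketch of a toy object and a stripped-down ComPtr-like wrapper. ToyComObject, CreateToyObject, and MiniComPtr are hypothetical names invented for illustration; they are not WRL or COM APIs, and the wrapper omits copying, moving, and QueryInterface.

```cpp
#include <cassert>

// Hypothetical stand-in for a COM object. Real COM objects get AddRef/Release from IUnknown.
struct ToyComObject {
    static int liveCount;            // number of toy objects still alive
    unsigned long refCount = 1;      // a creation function hands back one reference
    ToyComObject() { ++liveCount; }
    ~ToyComObject() { --liveCount; }
    unsigned long AddRef() { return ++refCount; }
    unsigned long Release() {
        unsigned long remaining = --refCount;
        if (remaining == 0) delete this;  // the object frees itself when the count hits 0
        return remaining;
    }
};
int ToyComObject::liveCount = 0;

// Hypothetical creation function that returns the pointer through an out parameter,
// the way Direct3D creation methods do.
void CreateToyObject(ToyComObject** out) { *out = new ToyComObject(); }

// Minimal ComPtr-like wrapper showing Get, GetAddressOf, and Reset.
template <typename T>
class MiniComPtr {
public:
    MiniComPtr() = default;
    MiniComPtr(const MiniComPtr&) = delete;
    MiniComPtr& operator=(const MiniComPtr&) = delete;
    ~MiniComPtr() { Reset(); }              // release automatically at end of scope
    T* Get() const { return ptr_; }         // raw pointer; ownership unchanged
    T** GetAddressOf() { return &ptr_; }    // lets a function write the pointer (does not release first)
    void Reset() {                          // release and set to nullptr
        if (ptr_) { ptr_->Release(); ptr_ = nullptr; }
    }
private:
    T* ptr_ = nullptr;
};
```

The object is destroyed only after every holder has called Release, which is exactly the bookkeeping ComPtr automates.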

COM interfaces are prefixed with a capital I. For example, the COM interface that represents a command list is called ID3D12GraphicsCommandList.

4.1.3 Texture Formats

A 2D texture is a matrix of data elements. One use for 2D textures is to store 2D image data, where each element in the texture stores the color of a pixel. However, this is not the only usage; for example, in an advanced technique called normal mapping, each element in the texture stores a 3D vector instead of a color. Therefore, although it is common to think of textures as storing image data, they are really more general purpose than that. A 1D texture is like a 1D array of data elements, a 2D texture is like a 2D array of data elements, and a 3D texture is like a 3D array of data elements. As will be discussed in later chapters, textures are actually more than just arrays of data; they can have mipmap levels, and the GPU can do special operations on them, such as applying filters and multisampling. In addition, a texture cannot store arbitrary kinds of data elements; it can only store certain kinds of data element formats, which are described by the DXGI_FORMAT enumerated type. Some example formats are:

1. DXGI_FORMAT_R32G32B32_FLOAT: Each element has three 32-bit floating-point components.

2. DXGI_FORMAT_R16G16B16A16_UNORM: Each element has four 16-bit components mapped to the [0, 1] range.

3. DXGI_FORMAT_R32G32_UINT: Each element has two 32-bit unsigned integer components.

4. DXGI_FORMAT_R8G8B8A8_UNORM: Each element has four 8-bit unsigned components mapped to the [0, 1] range.

5. DXGI_FORMAT_R8G8B8A8_SNORM: Each element has four 8-bit signed components mapped to the [-1, 1] range.

6. DXGI_FORMAT_R8G8B8A8_SINT: Each element has four 8-bit signed integer components in the [-128, 127] range.

7. DXGI_FORMAT_R8G8B8A8_UINT: Each element has four 8-bit unsigned integer components in the [0, 255] range.
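The "mapped to the [0, 1] range" and "mapped to the [-1, 1] range" wording for UNORM and SNORM formats can be sketched as scale-and-clamp conversions of a single 8-bit component. This is a CPU-side illustration under the usual normalized-integer conventions (divide by 255 or 127, clamp -128 to -1); the function names are hypothetical, and the rounding in FloatToUnorm is an assumption that may not match a given GPU bit for bit.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// 8-bit UNORM to float: 0 maps to 0.0 and 255 maps to 1.0.
float UnormToFloat(std::uint8_t v) { return v / 255.0f; }

// 8-bit SNORM to float: 127 maps to 1.0 and -127 maps to -1.0.
// -128 would land slightly below -1, so it is clamped to -1.0.
float SnormToFloat(std::int8_t v) { return std::max(v / 127.0f, -1.0f); }

// Float to 8-bit UNORM: saturate to [0, 1], then scale and round to nearest.
// Assumes f is not NaN.
std::uint8_t FloatToUnorm(float f) {
    f = std::min(std::max(f, 0.0f), 1.0f);
    return static_cast<std::uint8_t>(f * 255.0f + 0.5f);
}
```

Integer formats such as R8G8B8A8_UINT skip this step entirely: the shader sees the raw integer values.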

Note that the R, G, B, A letters are used to stand for red, green, blue, and alpha, respectively. Colors are formed as combinations of the basis colors red, green, and blue (e.g., equal amounts of red and green make yellow). The alpha channel or alpha component is generally used to control transparency. However, as we said earlier, textures need not store color information even though the format names suggest that they do; for example, the format

DXGI_FORMAT_R32G32B32_FLOAT

has three floating-point components and can therefore store any 3D vector with floating-point coordinates. There are also typeless formats, where we just reserve memory and then specify how to reinterpret the data at a later time (sort of like a C++ reinterpret cast) when the texture is bound to the pipeline; for example, the following typeless format reserves elements with four 16-bit components, but does not specify the data type (e.g., integer, floating-point, unsigned integer):

DXGI_FORMAT_R16G16B16A16_TYPELESS
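As a rough CPU analogy for typeless storage, the sketch below keeps four raw bytes and reads them back through two different "views", one as a 32-bit float and one as a 32-bit unsigned integer (similar in spirit to viewing an R32_TYPELESS texture as R32_FLOAT or R32_UINT). The struct and function names are hypothetical, and the sketch assumes IEEE-754 single-precision floats; the GPU does not literally run this code.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

static_assert(sizeof(float) == 4, "sketch assumes 32-bit floats");

// Raw storage with no declared type, like one element of a typeless texture.
struct TypelessElement32 { unsigned char bytes[4]; };

// Write a float's bit pattern into the raw storage.
TypelessElement32 StoreFloat(float f) {
    TypelessElement32 e;
    std::memcpy(e.bytes, &f, sizeof f);
    return e;
}

// "View" 1: interpret the same bits as a float.
float ViewAsFloat(const TypelessElement32& e) {
    float f;
    std::memcpy(&f, e.bytes, sizeof f);
    return f;
}

// "View" 2: interpret the same bits as an unsigned integer.
std::uint32_t ViewAsUint(const TypelessElement32& e) {
    std::uint32_t u;
    std::memcpy(&u, e.bytes, sizeof u);
    return u;
}
```

For example, storing 1.0f and reading it through the integer view yields 0x3F800000, the IEEE-754 bit pattern of 1.0.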

We will see in Chapter 6 that the DXGI_FORMAT enumerated type is also used to describe vertex data formats and index data formats.

4.1.4 The Swap Chain and Page Flipping

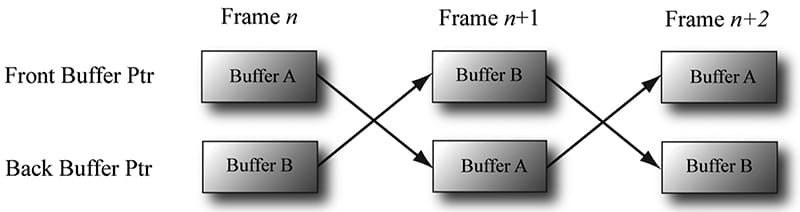

To avoid flickering in animation, it is best to draw an entire frame of animation into an off-screen texture called the back buffer. Once the entire scene has been drawn to the back buffer for the given frame of animation, it is presented to the screen as one complete frame; in this way, the viewer does not watch as the frame gets drawn—the viewer only sees complete frames. To implement this, two texture buffers are maintained by the hardware, one called the front buffer and a second called the back buffer. The front buffer stores the image data currently being displayed on the monitor, while the next frame of animation is being drawn to the back buffer. After the frame has been drawn to the back buffer, the roles of the back buffer and front buffer are reversed: the back buffer becomes the front buffer and the front buffer becomes the back buffer for the next frame of animation. Swapping the roles of the back and front buffers is called presenting. Presenting is an efficient operation, as the pointer to the current front buffer and the pointer to the current back buffer just need to be swapped. Figure 4.1 illustrates the process.

Figure 4.1. For frame n, Buffer A is currently being displayed and we render the next frame to Buffer B, which is serving as the current back buffer. Once the frame is completed, the pointers are swapped and Buffer B becomes the front buffer and Buffer A becomes the new back buffer. We then render the next frame n+1 to Buffer A. Once the frame is completed, the pointers are swapped and Buffer A becomes the front buffer and Buffer B becomes the back buffer again.

The front and back buffers form a swap chain. In Direct3D, a swap chain is represented by the IDXGISwapChain interface. This interface stores the front and back buffer textures and provides methods for resizing the buffers (IDXGISwapChain::ResizeBuffers) and presenting (IDXGISwapChain::Present).

Using two buffers (front and back) is called double buffering. More than two buffers can be employed; using three buffers is called triple buffering. Two buffers are usually sufficient, however.
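The page-flipping idea from Figure 4.1 can be simulated with a few indices: presenting only changes which buffer index counts as the front buffer, and no pixel data is copied. ToySwapChain is a hypothetical class, not IDXGISwapChain, and each "buffer" just holds a frame label; with three buffers the same code rotates through them, which is one simple model of triple buffering.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Toy swap chain: N buffers plus the index of the one currently on screen.
class ToySwapChain {
public:
    explicit ToySwapChain(int bufferCount) : buffers_(bufferCount), front_(0) {}
    int FrontIndex() const { return front_; }
    int BackIndex() const { return (front_ + 1) % static_cast<int>(buffers_.size()); }
    std::string& BackBuffer() { return buffers_[BackIndex()]; }      // where we draw
    const std::string& FrontBuffer() const { return buffers_[front_]; } // what is displayed
    // Presenting: the finished back buffer becomes the front buffer (an index swap only).
    void Present() { front_ = BackIndex(); }
private:
    std::vector<std::string> buffers_;  // each "buffer" just stores a frame label
    int front_;
};
```

With two buffers, rendering "frame n" and presenting makes buffer 1 the front buffer, and buffer 0 becomes the back buffer for frame n+1, matching the roles swapped in Figure 4.1.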

Even though the back buffer is a texture (so an element should be called a texel), we often call an element a pixel since, in the case of the back buffer, it stores color information. Sometimes people will call an element of a texture a pixel, even if it doesn’t store color information (e.g., “the pixels of a normal map”).

4.1.5 Depth Buffering

The depth buffer is an example of a texture that does not contain image data, but rather depth information about a particular pixel. The possible depth values range from 0.0 to 1.0, where 0.0 denotes the closest an object in the view frustum can be to the viewer and 1.0 denotes the farthest an object in the view frustum can be from the viewer. There is a one-to-one correspondence between each element in the depth buffer and each pixel in the back buffer (i.e., the ijth element in the back buffer corresponds to the ijth element in the depth buffer). So if the back buffer had a resolution of 1280 × 1024, there would be 1280 × 1024 depth entries.

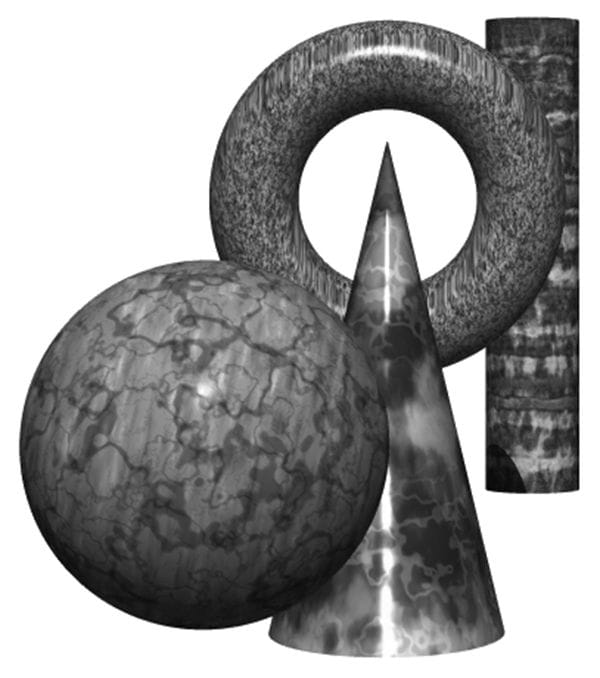

Figure 4.2 shows a simple scene, where some objects partially obscure the objects behind them. In order for Direct3D to determine which pixels of an object are in front of another, it uses a technique called depth buffering or z-buffering. Let us emphasize that with depth buffering, the order in which we draw the objects does not matter.

Figure 4.2. A group of objects that partially obscure each other.

To handle the depth problem, one might suggest drawing the objects in the scene in the order of farthest to nearest. In this way, near objects will be painted over far objects, and the correct results should be rendered. This is how a painter would draw a scene. However, this method has its own problems: sorting a large data set in back-to-front order, and handling intersecting geometry, which no back-to-front ordering of whole objects can resolve. Besides, the graphics hardware gives us depth buffering for free.

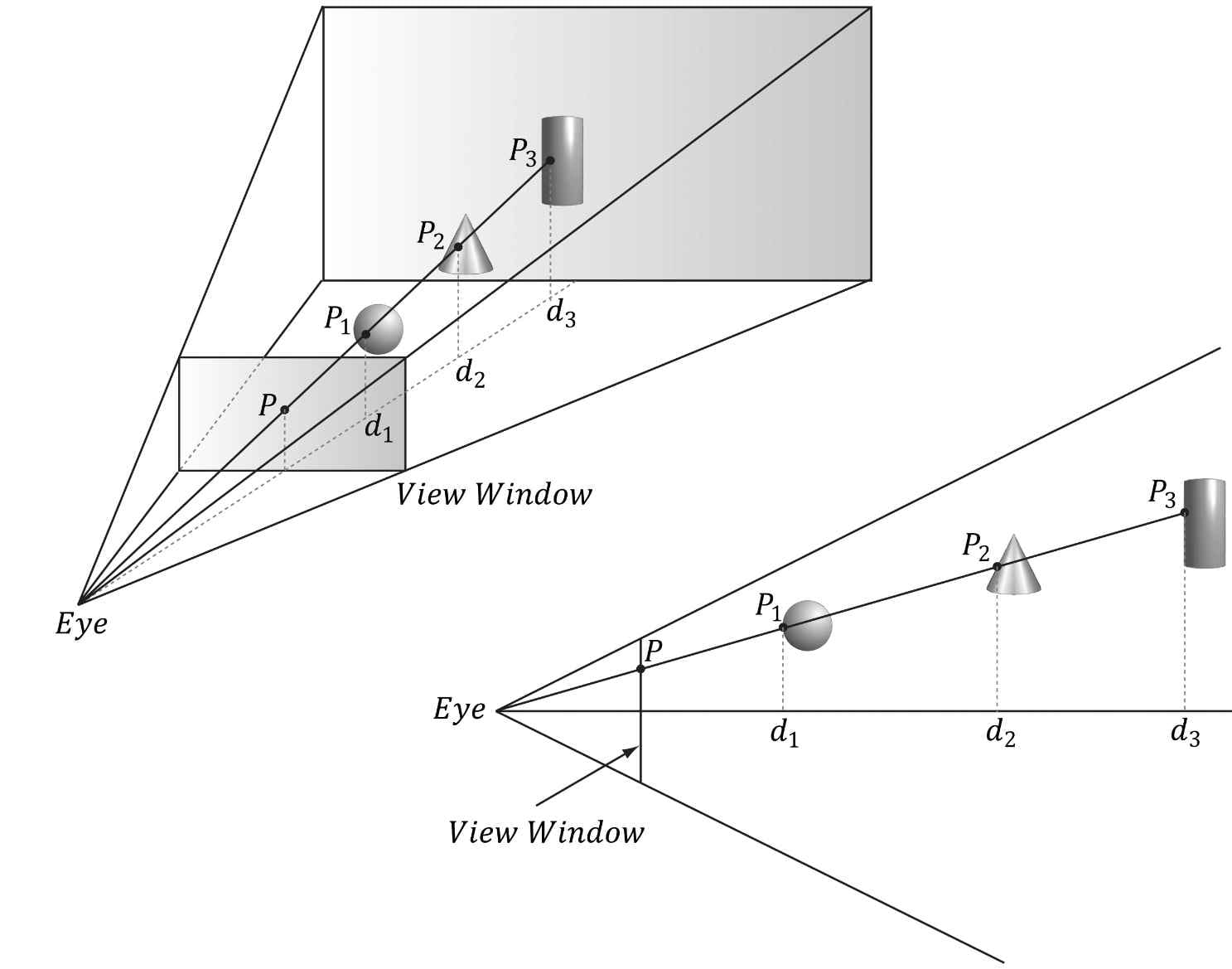

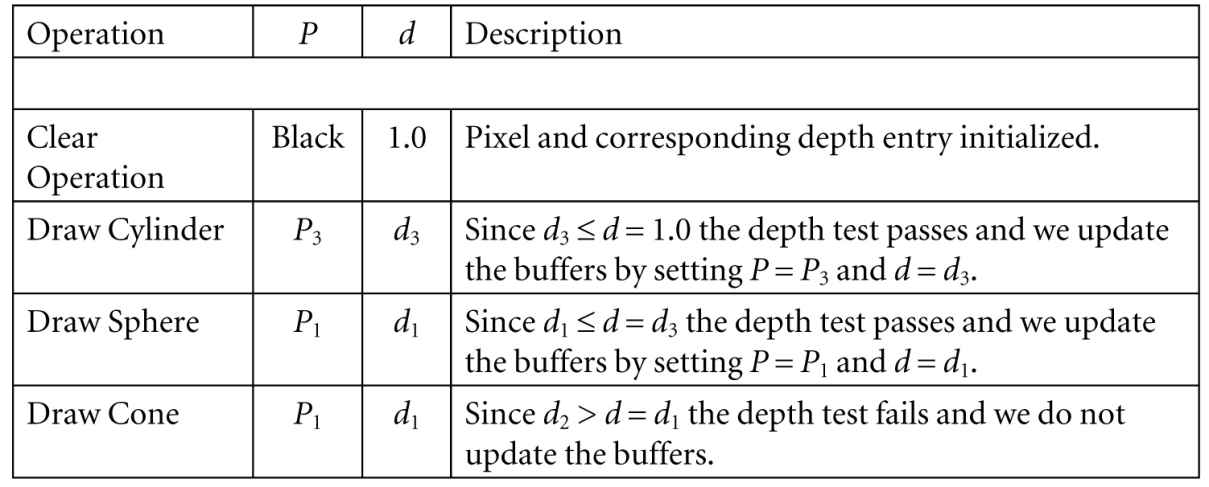

To illustrate how depth buffering works, let us look at an example. Consider Figure 4.3, which shows the volume the viewer sees and a 2D side view of that volume. From the figure, we observe that three different pixels compete to be rendered onto the pixel P on the view window. (Of course, we know the closest pixel should be rendered to P since it obscures the ones behind it, but the computer does not.) First, before any rendering takes place, the back buffer is cleared to a default color, and the depth buffer is cleared to a default value—usually 1.0 (the farthest depth value a pixel can have). Now, suppose that the objects are rendered in the order of cylinder, sphere, and cone. The following table summarizes how the pixel P and its corresponding depth value d are updated as the objects are drawn; a similar process happens for the other pixels.

Figure 4.3. The view window corresponds to the 2D image (back buffer) we generate of the 3D scene. We see that three different pixels can be projected to the pixel P. Intuition tells us that P1 should be written to P since it is closer to the viewer and blocks the other two pixels. The depth buffer algorithm provides a mechanical procedure for determining this on a computer. Note that we show the depth values relative to the 3D scene being viewed, but they are actually normalized to the range [0.0, 1.0] when stored in the depth buffer.

As you can see, we only update the pixel and its corresponding depth value in the depth buffer when we find a pixel with a smaller depth value. In this way, after all is said and done, the pixel that is closest to the viewer will be the one rendered. (You can try switching the drawing order around and working through this example again if you are still not convinced.)

To summarize, depth buffering works by computing a depth value for each pixel and performing a depth test. The depth test compares the depths of pixels competing to be written to a particular pixel location on the back buffer. The pixel with the depth value closest to the viewer wins, and that is the pixel that gets written to the back buffer. This makes sense because the pixel closest to the viewer obscures the pixels behind it.
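The depth test just summarized can be sketched on the CPU: the depth buffer is cleared to 1.0, and a candidate pixel is written (color and depth) only if its depth is smaller than the stored value. ToyTargets and Fragment are hypothetical types, and the fragment depths in the usage check are invented values rather than the ones from the table above; the point is that drawing order does not change the result.

```cpp
#include <cassert>
#include <vector>

// A candidate pixel: a color ID and a normalized depth in [0, 1].
struct Fragment { int color; float depth; };

// Toy back buffer and depth buffer with a one-to-one correspondence:
// element (i, j) of both lives at index i * width + j.
struct ToyTargets {
    int width, height;
    std::vector<int> color;
    std::vector<float> depth;
    ToyTargets(int w, int h, int clearColor)
        : width(w), height(h), color(w * h, clearColor), depth(w * h, 1.0f) {}  // depth cleared to farthest

    // Depth test: keep the incoming fragment only if it is closer than what is stored.
    void Write(int i, int j, const Fragment& f) {
        int idx = i * width + j;
        if (f.depth < depth[idx]) {
            color[idx] = f.color;
            depth[idx] = f.depth;
        }
    }
};
```

Writing three competing fragments to the same pixel in two different orders leaves the nearest one in both cases.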

The depth buffer is a texture, so it must be created with certain data formats. The formats used for depth buffering are as follows:

1. DXGI_FORMAT_D32_FLOAT_S8X24_UINT: Specifies a 32-bit floating-point depth buffer, with 8 bits (unsigned integer) reserved for the stencil buffer in the [0, 255] range and 24 bits that are unused and serve only as padding.

2. DXGI_FORMAT_D32_FLOAT: Specifies a 32-bit floating-point depth buffer.

3. DXGI_FORMAT_D24_UNORM_S8_UINT: Specifies an unsigned 24-bit depth buffer mapped to the [0, 1] range with 8 bits (unsigned integer) reserved for the stencil buffer in the [0, 255] range.

4. DXGI_FORMAT_D16_UNORM: Specifies an unsigned 16-bit depth buffer mapped to the [0, 1] range.

An application is not required to have a stencil buffer, but if it does, the stencil buffer is always attached to the depth buffer. For example, the 32-bit format

DXGI_FORMAT_D24_UNORM_S8_UINT

uses 24 bits for the depth buffer and 8 bits for the stencil buffer. For this reason, the depth buffer is better called the depth/stencil buffer. Using the stencil buffer is a more advanced topic and will be explained in Chapter 11.
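To see how 24 bits of depth and 8 bits of stencil can share one 32-bit element, here is a sketch that packs a [0, 1] depth as a 24-bit UNORM value plus an 8-bit stencil value. The bit layout (depth in the low 24 bits, stencil in the high 8 bits) is an assumption made for illustration; the GPU's internal storage of a depth/stencil buffer is driver-specific, and applications normally never pack these bits by hand.

```cpp
#include <cassert>
#include <cstdint>

// Assumed layout for illustration: bits 0-23 hold UNORM depth, bits 24-31 hold stencil.
std::uint32_t PackD24S8(float depth, std::uint8_t stencil) {
    const std::uint32_t maxD24 = (1u << 24) - 1;  // 0xFFFFFF represents depth 1.0
    // Saturate to [0, 1]; use double so 1.0 * 0xFFFFFF + 0.5 does not round up past 24 bits.
    double d = depth < 0.0f ? 0.0 : (depth > 1.0f ? 1.0 : depth);
    std::uint32_t depthBits = static_cast<std::uint32_t>(d * maxD24 + 0.5) & maxD24;
    return (static_cast<std::uint32_t>(stencil) << 24) | depthBits;
}

// Recover the normalized depth from the low 24 bits.
float UnpackDepth(std::uint32_t packed) { return (packed & 0xFFFFFFu) / 16777215.0f; }

// Recover the stencil value from the high 8 bits.
std::uint8_t UnpackStencil(std::uint32_t packed) { return static_cast<std::uint8_t>(packed >> 24); }
```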

4.1.6 Resources and Descriptors

During the rendering process, the GPU will write to resources (e.g., the back buffer, the depth/stencil buffer) and read from resources (e.g., textures that describe the appearance of surfaces, buffers that store the 3D positions of geometry in the scene). Before we issue a draw command, we need to bind (or link) to the rendering pipeline the resources that are going to be referenced in that draw call. Some of the resources may change per draw call, so we need to update the bindings per draw call if necessary. However, GPU resources are not bound directly. Instead, a resource is referenced through a descriptor object, which can be thought of as a lightweight structure that describes the resource to the GPU. Essentially, it is a level of indirection; given a resource descriptor, the GPU can get the actual resource data and know the necessary information about it. We bind resources to the rendering pipeline by specifying the descriptors that will be referenced in the draw call.

Why go to this extra level of indirection with descriptors? The reason is that GPU resources are essentially generic chunks of memory. Resources are kept generic so they can be used at different stages of the rendering pipeline; a common example is to use a texture as a render target (i.e., Direct3D draws into the texture) and later as a shader resource (i.e., the texture will be sampled and serve as input data for a shader). A resource by itself does not say if it is being used as a render target, depth/stencil buffer, or shader resource. Also, perhaps we only want to bind a subregion of the resource data to the rendering pipeline—how can we do that given the whole resource? Moreover, a resource can be created with a typeless format, so the GPU would not even know the format of the resource.

This is where descriptors come in. In addition to identifying the resource data, descriptors describe the resource to the GPU: they tell Direct3D how the resource will be used (i.e., what stage of the pipeline you will bind it to); where applicable, they let us specify a subregion of the resource we want to bind; and if the resource format was specified as typeless at creation time, we must now state the type when creating the descriptor.

A view is a synonym for descriptor. The term “view” was used in previous versions of Direct3D, and it is still used in some parts of the Direct3D 12 API. We use both interchangeably in this book; for example, constant buffer view and constant buffer descriptor mean the same thing.

Descriptors have a type, and the type implies how the resource will be used. The types of descriptors we use in this book are:

1. CBV/SRV/UAV descriptors describe constant buffers, shader resources and unordered access view resources.

2. Sampler descriptors describe sampler resources (used in texturing).

3. RTV descriptors describe render target resources.

4. DSV descriptors describe depth/stencil resources.

A descriptor heap is an array of descriptors; it is the memory backing for all the descriptors of a particular type your application uses. You will need a separate descriptor heap for each type of descriptor. You can also create multiple heaps of the same descriptor type.

We can have multiple descriptors referencing the same resource. For example, we can have multiple descriptors referencing different subregions of a resource. Also, as mentioned, resources can be bound to different stages of the rendering pipeline. For each stage, we need a separate descriptor. For the example of using a texture as a render target and shader resource, we would need to create two descriptors: an RTV typed descriptor, and an SRV typed descriptor. Similarly, if you create a resource with a typeless format, it is possible for the elements of a texture to be viewed as floating-point values or as integers, for example; this would require two descriptors, where one descriptor specifies the floating-point format, and the other the integer format.

Descriptors should be created at initialization time. This is because there is some type checking and validation that occurs, and it is better to do this at initialization time rather than runtime.
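The relationships described above (one resource, several typed descriptors, one heap per descriptor type, and validation at creation time) can be modeled with a few plain structs. Everything here is a hypothetical toy (ToyResource, ToyDescriptor, ToyDescriptorHeap); real descriptors are opaque handles created through ID3D12Device methods, and format names are plain strings only to keep the sketch short.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class DescriptorType { CbvSrvUav, Sampler, Rtv, Dsv };

// Toy resource: a generic chunk of memory with a (possibly typeless) creation format.
struct ToyResource {
    std::string format;
    std::vector<unsigned char> memory;
};

// Toy descriptor: which resource, how it will be used, and the format to read it with.
struct ToyDescriptor {
    const ToyResource* resource;
    DescriptorType type;
    std::string viewFormat;
};

// Toy descriptor heap: an array of descriptors that all share one type.
class ToyDescriptorHeap {
public:
    explicit ToyDescriptorHeap(DescriptorType t) : type_(t) {}
    // Validates at creation time: returns the new descriptor's index, or -1 on a type mismatch.
    int Create(const ToyResource& r, DescriptorType t, const std::string& fmt) {
        if (t != type_) return -1;
        descriptors_.push_back({&r, t, fmt});
        return static_cast<int>(descriptors_.size()) - 1;
    }
    const ToyDescriptor& operator[](int i) const { return descriptors_[i]; }
private:
    DescriptorType type_;
    std::vector<ToyDescriptor> descriptors_;
};
```

In the usage check, a single typeless texture gets an RTV plus two SRVs that read it with different formats, and all three point at the same resource.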

The August 2009 SDK documentation says: “Creating a fully-typed resource restricts the resource to the format it was created with. This enables the runtime to optimize access […].” Therefore, you should only create a typeless resource if you really need the flexibility they provide (the ability to reinterpret the data in multiple ways with multiple views); otherwise, create a fully typed resource.

4.1.7 Multisampling Theory

Because the pixels on a monitor are not infinitely small, an arbitrary line cannot be represented perfectly on the computer monitor. Figure 4.4 illustrates a “stair-step” (aliasing) effect, which can occur when approximating a line by a matrix of pixels. Similar aliasing effects occur with the edges of triangles.

Figure 4.4. On the top we observe aliasing (the stairstep effect when trying to represent a line by a matrix of pixels). On the bottom, we see an antialiased line, which generates the final color of a pixel by sampling and using its neighboring pixels; this results in a smoother image and dilutes the stairstep effect.

Shrinking the pixel sizes by increasing the monitor resolution can alleviate the problem significantly, to the point where the stair-step effect goes largely unnoticed.

When increasing the monitor resolution is not possible or not enough, we can apply antialiasing techniques. One technique, called supersampling, works by making the back buffer and depth buffer 4X bigger than the screen resolution (twice the width and twice the height, so four times as many pixels). The 3D scene is then rendered to the back buffer at this larger resolution. Then, when it comes time to present the back buffer to the screen, the back buffer is resolved (or downsampled) such that the colors of each 2×2 block of pixels are averaged together to get one averaged pixel color. In effect, supersampling works by increasing the resolution in software.
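The resolve (downsample) step of 4X supersampling can be sketched as averaging each 2×2 block of a (2w × 2h) image into one pixel of a (w × h) image. Downsample2x2 is a hypothetical helper that uses grayscale floats to stay short; a real back buffer stores RGBA colors and the resolve is done by the GPU.

```cpp
#include <cassert>
#include <vector>

// Averages each 2x2 block of a (2w x 2h) row-major image into one pixel of a (w x h) image.
std::vector<float> Downsample2x2(const std::vector<float>& hi, int w, int h) {
    std::vector<float> lo(w * h);
    const int hiWidth = 2 * w;
    for (int y = 0; y < h; ++y) {
        for (int x = 0; x < w; ++x) {
            float sum = hi[(2 * y) * hiWidth + 2 * x] + hi[(2 * y) * hiWidth + 2 * x + 1]
                      + hi[(2 * y + 1) * hiWidth + 2 * x] + hi[(2 * y + 1) * hiWidth + 2 * x + 1];
            lo[y * w + x] = sum / 4.0f;  // one averaged pixel per 2x2 block
        }
    }
    return lo;
}
```

Note that every one of the four samples was shaded separately before this step, which is where the fourfold cost of supersampling comes from.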

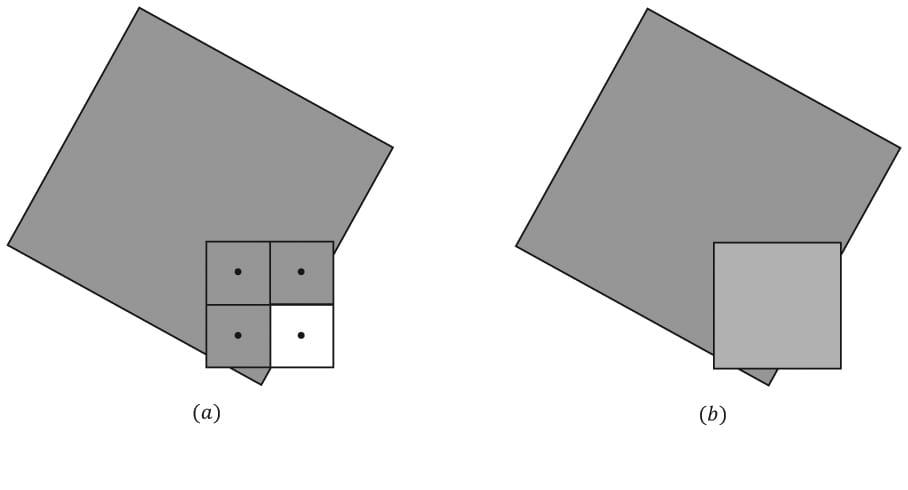

Supersampling is expensive because it increases the amount of pixel processing and memory fourfold. Direct3D supports a compromise antialiasing technique called multisampling, which shares some computational information across subpixels, making it less expensive than supersampling. Assuming we are using 4X multisampling (4 subpixels per pixel), multisampling also uses a back buffer and depth buffer 4X bigger than the screen resolution; however, instead of computing the image color for each subpixel, it computes it only once per pixel, at the pixel center, and then shares that color information with its subpixels based on visibility (the depth/stencil test is evaluated per subpixel) and coverage (does the subpixel center lie inside or outside the polygon?). Figure 4.5 shows an example.

Figure 4.5. We consider one pixel that crosses the edge of a polygon. (a) The green color evaluated at the pixel center is stored in the three visible subpixels that are covered by the polygon. The subpixel in the fourth quadrant is not covered by the polygon and so does not get updated with the green color—it just keeps its previous color computed from previously drawn geometry or the Clear operation. (b) To compute the resolved pixel color, we average the four subpixels (three green pixels and one white pixel) to get a light green along the edge of the polygon. This results in a smoother looking image by diluting the stairstep effect along the edge of the polygon.

Observe the key difference between supersampling and multisampling. With supersampling, the image color is computed per subpixel, and so each subpixel could potentially be a different color. With multisampling (Figure 4.5), the image color is computed once per pixel and that color is replicated into all visible subpixels that are covered by the polygon. Because computing the image color is one of the most expensive steps in the graphics pipeline, the savings from multisampling over supersampling is significant. On the other hand, supersampling is more accurate.
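The Figure 4.5 example can be reproduced numerically: one color is computed for the pixel, copied into the three covered and visible subpixels, and the resolve averages all four samples. Color, Pixel4x, WriteMultisampled, and Resolve are hypothetical names; the coverage mask is supplied by hand here, whereas real hardware computes coverage and runs the depth/stencil test per sample.

```cpp
#include <array>
#include <cassert>

struct Color { float r, g, b; };

// One pixel with 4 subpixel samples (4X multisampling).
using Pixel4x = std::array<Color, 4>;

// The color is computed once per pixel ("shaded") and copied only into samples that are
// covered by the polygon and pass the depth/stencil test; other samples keep their old color.
void WriteMultisampled(Pixel4x& px, Color shaded, const std::array<bool, 4>& coveredAndVisible) {
    for (int s = 0; s < 4; ++s)
        if (coveredAndVisible[s]) px[s] = shaded;
}

// Resolve: average the four samples to get the displayed pixel color.
Color Resolve(const Pixel4x& px) {
    Color c{0.0f, 0.0f, 0.0f};
    for (const Color& s : px) { c.r += s.r; c.g += s.g; c.b += s.b; }
    return {c.r / 4.0f, c.g / 4.0f, c.b / 4.0f};
}
```

Starting from a white (cleared) pixel and writing green into three samples resolves to (0.25, 1, 0.25), the light green along the polygon edge described in Figure 4.5.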

In Figure 4.5, we show a pixel subdivided into four subpixels in a uniform grid pattern. The actual pattern used (the points where the subpixels are positioned) can vary across hardware vendors, as Direct3D does not define the placement of the subpixels. Some patterns do better than others in certain situations.

4.1.8 Multisampling in Direct3D

In the next section, we will be required to fill out a DXGI_SAMPLE_DESC structure. This structure has two members and is defined as follows:

```cpp
typedef struct DXGI_SAMPLE_DESC
{
    UINT Count;
    UINT Quality;
} DXGI_SAMPLE_DESC;
```

The Count member specifies the number of samples to take per pixel, and the Quality member is used to specify the desired quality level (what “quality level” means can vary across hardware manufacturers). Higher sample counts or higher quality levels are more expensive to render, so a tradeoff between quality and speed must be made. The range of quality levels depends on the texture format and the number of samples to take per pixel.

We can query the number of quality levels for a given texture format and sample count using the ID3D12Device::CheckFeatureSupport method like so:

```cpp
typedef struct D3D12_FEATURE_DATA_MULTISAMPLE_QUALITY_LEVELS {
    DXGI_FORMAT Format;
    UINT SampleCount;
    D3D12_MULTISAMPLE_QUALITY_LEVELS_FLAG Flags;
    UINT NumQualityLevels;
} D3D12_FEATURE_DATA_MULTISAMPLE_QUALITY_LEVELS;

D3D12_FEATURE_DATA_MULTISAMPLE_QUALITY_LEVELS msQualityLevels;
msQualityLevels.Format = mBackBufferFormat;
msQualityLevels.SampleCount = 4;
msQualityLevels.Flags = D3D12_MULTISAMPLE_QUALITY_LEVELS_FLAG_NONE;
msQualityLevels.NumQualityLevels = 0;
ThrowIfFailed(md3dDevice->CheckFeatureSupport(
    D3D12_FEATURE_MULTISAMPLE_QUALITY_LEVELS,
    &msQualityLevels,
    sizeof(msQualityLevels)));
```

Note that the second parameter is both an input and an output parameter. As input, we must specify the texture format, sample count, and flags we want to query multisampling support for. The function then fills in the number of quality levels (NumQualityLevels) as the output. Valid quality levels for a texture format and sample count combination range from 0 to NumQualityLevels − 1.

The maximum number of samples that can be taken per pixel is defined by:

```cpp
#define D3D11_MAX_MULTISAMPLE_SAMPLE_COUNT ( 32 )
```

However, a sample count of 4 or 8 is common in order to keep the performance and memory cost of multisampling reasonable. If you do not wish to use multisampling, set the sample count to 1 and the quality level to 0. All Direct3D 11 capable devices support 4X multisampling for all render target formats.
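Putting the quality-level rules together, the sketch below picks sample settings from the value CheckFeatureSupport would report: 4 samples with a quality level in [0, NumQualityLevels − 1] when 4X multisampling is wanted and supported, otherwise a count of 1 and quality 0. SampleDesc is a hypothetical local mirror of DXGI_SAMPLE_DESC so the code compiles without the Windows SDK, and choosing the highest valid quality level is a design choice made here, not a requirement.

```cpp
#include <cassert>

// Local mirror of DXGI_SAMPLE_DESC (hypothetical, for a self-contained sketch).
struct SampleDesc { unsigned Count; unsigned Quality; };

// numQualityLevels: what the quality-level query reported for (format, 4 samples).
// Zero levels means that format/sample-count combination is not supported.
SampleDesc ChooseSampleDesc(bool want4xMsaa, unsigned numQualityLevels) {
    if (want4xMsaa && numQualityLevels > 0)
        return {4, numQualityLevels - 1};  // highest valid quality level for 4X
    return {1, 0};                         // multisampling off
}
```

The same SampleDesc values would then be used for both the swap chain buffers and the depth buffer, since their multisampling settings must match.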

A DXGI_SAMPLE_DESC structure needs to be filled out for both the swap chain buffers and the depth buffer. Both the back buffer and depth buffer must be created with the same multisampling settings.

4.1.9 Feature Levels

Direct3D 11 introduced the concept of feature levels (represented in code by the D3D_FEATURE_LEVEL enumerated type), which roughly correspond to various Direct3D versions from version 9 to 11:

```cpp
typedef enum D3D_FEATURE_LEVEL
{
    D3D_FEATURE_LEVEL_9_1 = 0x9100,
    D3D_FEATURE_LEVEL_9_2 = 0x9200,
    D3D_FEATURE_LEVEL_9_3 = 0x9300,
    D3D_FEATURE_LEVEL_10_0 = 0xa000,
    D3D_FEATURE_LEVEL_10_1 = 0xa100,
    D3D_FEATURE_LEVEL_11_0 = 0xb000,
    D3D_FEATURE_LEVEL_11_1 = 0xb100
} D3D_FEATURE_LEVEL;
```

Feature levels define a strict set of functionality (see the SDK documentation for the specific capabilities each feature level supports). For example, a GPU that supports feature level 11 must support the entire Direct3D 11 capability set, with few exceptions (some things like the multisampling count still need to be queried, as they are allowed to vary between different Direct3D 11 hardware). Feature levels make development easier: once you know the supported feature level, you know the Direct3D functionality you have at your disposal.

If a user’s hardware does not support a certain feature level, the application can fall back to an older feature level. For example, to support a wider audience, an application might support Direct3D 11, 10, and 9.3 level hardware. The application would check feature level support from newest to oldest: that is, it would first check whether Direct3D 11 is supported, then Direct3D 10, and finally Direct3D 9.3. In this book, we always require support for feature level D3D_FEATURE_LEVEL_11_0. However, real-world applications do need to worry about supporting older hardware to maximize their audience.

4.1.10 DirectX Graphics Infrastructure

DirectX Graphics Infrastructure (DXGI) is an API used along with Direct3D. The basic idea of DXGI is that some graphics-related tasks are common to multiple graphics APIs. For example, a 2D rendering API would need swap chains and page flipping for smooth animation just as much as a 3D rendering API; thus the swap chain interface IDXGISwapChain (§4.1.4) is actually part of the DXGI API. DXGI handles other common graphical functionality like full-screen mode transitions and enumerating graphical system information such as display adapters, monitors, and supported display modes (resolution, refresh rate, and such); it also defines the various supported surface formats (DXGI_FORMAT).

We briefly describe some DXGI concepts and interfaces that will be used during our Direct3D initialization. One of the key DXGI interfaces is the IDXGIFactory interface, which is primarily used to create the IDXGISwapChain interface and enumerate display adapters. Display adapters implement graphical functionality. Usually, the display adapter is a physical piece of hardware (e.g., graphics card); however, a system can also have a software display adapter that emulates hardware graphics functionality. A system can have several adapters (e.g., if it has several graphics cards). An adapter is represented by the IDXGIAdapter interface. We can enumerate all the adapters on a system with the following code:

```cpp
void D3DApp::LogAdapters()
{
    UINT i = 0;
    IDXGIAdapter* adapter = nullptr;
    std::vector<IDXGIAdapter*> adapterList;
    while(mdxgiFactory->EnumAdapters(i, &adapter) != DXGI_ERROR_NOT_FOUND)
    {
        DXGI_ADAPTER_DESC desc;
        adapter->GetDesc(&desc);

        std::wstring text = L"***Adapter: ";
        text += desc.Description;
        text += L"\n";

        OutputDebugString(text.c_str());

        adapterList.push_back(adapter);

        ++i;
    }

    for(size_t i = 0; i < adapterList.size(); ++i)
    {
        LogAdapterOutputs(adapterList[i]);
        ReleaseCom(adapterList[i]);
    }
}
```

An example of the output from this method is the following:

```
***Adapter: NVIDIA GeForce GTX 760
***Adapter: Microsoft Basic Render Driver
```

The “Microsoft Basic Render Driver” is a software adapter included with Windows 8 and above.

A system can have several monitors. A monitor is an example of a display output. An output is represented by the IDXGIOutput interface. Each adapter is associated with a list of outputs. For instance, consider a system with two graphics cards and three monitors, where two monitors are hooked up to one graphics card, and the third monitor is hooked up to the other graphics card. In this case, one adapter has two outputs associated with it, and the other adapter has one output associated with it. We can enumerate all the outputs associated with an adapter with the following code:

```cpp
void D3DApp::LogAdapterOutputs(IDXGIAdapter* adapter)
{
    UINT i = 0;
    IDXGIOutput* output = nullptr;
    while(adapter->EnumOutputs(i, &output) != DXGI_ERROR_NOT_FOUND)
    {
        DXGI_OUTPUT_DESC desc;
        output->GetDesc(&desc);

        std::wstring text = L"***Output: ";
        text += desc.DeviceName;
        text += L"\n";
        OutputDebugString(text.c_str());

        LogOutputDisplayModes(output, DXGI_FORMAT_B8G8R8A8_UNORM);

        ReleaseCom(output);

        ++i;
    }
}
```

Note that, per the documentation, the “Microsoft Basic Render Driver” has no display outputs.

Each monitor has a set of display modes it supports. A display mode refers to the following data in DXGI_MODE_DESC:

```cpp
typedef struct DXGI_MODE_DESC
{
    UINT Width;                                 // Resolution width
    UINT Height;                                // Resolution height
    DXGI_RATIONAL RefreshRate;
    DXGI_FORMAT Format;                         // Display format
    DXGI_MODE_SCANLINE_ORDER ScanlineOrdering;  // Progressive vs. interlaced
    DXGI_MODE_SCALING Scaling;                  // How the image is stretched
                                                // over the monitor.
} DXGI_MODE_DESC;

typedef struct DXGI_RATIONAL
{
    UINT Numerator;
    UINT Denominator;
} DXGI_RATIONAL;

typedef enum DXGI_MODE_SCANLINE_ORDER
{
    DXGI_MODE_SCANLINE_ORDER_UNSPECIFIED       = 0,
    DXGI_MODE_SCANLINE_ORDER_PROGRESSIVE       = 1,
    DXGI_MODE_SCANLINE_ORDER_UPPER_FIELD_FIRST = 2,
    DXGI_MODE_SCANLINE_ORDER_LOWER_FIELD_FIRST = 3
} DXGI_MODE_SCANLINE_ORDER;

typedef enum DXGI_MODE_SCALING
{
    DXGI_MODE_SCALING_UNSPECIFIED = 0,
    DXGI_MODE_SCALING_CENTERED    = 1,
    DXGI_MODE_SCALING_STRETCHED   = 2
} DXGI_MODE_SCALING;
```

Fixing a display mode format, we can get a list of all the display modes an output supports in that format with the following code:

```cpp
void D3DApp::LogOutputDisplayModes(IDXGIOutput* output, DXGI_FORMAT format)
{
    UINT count = 0;
    UINT flags = 0;

    // Call with nullptr to get list count.
    output->GetDisplayModeList(format, flags, &count, nullptr);

    std::vector<DXGI_MODE_DESC> modeList(count);
    // data() is safe even when count is 0; &modeList[0] would be undefined then.
    output->GetDisplayModeList(format, flags, &count, modeList.data());

    for(auto& x : modeList)
    {
        UINT n = x.RefreshRate.Numerator;
        UINT d = x.RefreshRate.Denominator;
        std::wstring text =
            L"Width = " + std::to_wstring(x.Width) + L" " +
            L"Height = " + std::to_wstring(x.Height) + L" " +
            L"Refresh = " + std::to_wstring(n) + L"/" + std::to_wstring(d) +
            L"\n";

        ::OutputDebugString(text.c_str());
    }
}
```

An example of some of the output from this code is as follows:

```
***Output: \\.\DISPLAY2
...
Width = 1920 Height = 1080 Refresh = 59950/1000
Width = 1920 Height = 1200 Refresh = 59950/1000
```

Enumerating display modes is particularly important when going into full-screen mode. To get optimal full-screen performance, the specified display mode (including refresh rate) must exactly match a display mode the monitor supports. Specifying an enumerated display mode guarantees this.
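Because refresh rates are stored as rationals, 59950/1000 and 5995/100 describe the same rate. The following sketch uses hypothetical, simplified stand-ins for DXGI_RATIONAL and DXGI_MODE_DESC (only the fields needed here); it compares rates by 64-bit cross-multiplication, so no floating-point rounding is involved, and returns an enumerated mode only when both resolution and refresh rate match. It illustrates the idea and is not a DXGI function.

```cpp
#include <cstdint>
#include <optional>
#include <vector>

// Hypothetical simplified stand-ins for DXGI_RATIONAL and DXGI_MODE_DESC.
struct Rational { unsigned Numerator; unsigned Denominator; };
struct ModeDesc { unsigned Width; unsigned Height; Rational RefreshRate; };

// Refresh rate in Hz, e.g. 59950/1000 -> 59.95 (for display/logging only).
double RefreshHz(const Rational& r) {
    return r.Denominator == 0 ? 0.0 : static_cast<double>(r.Numerator) / r.Denominator;
}

// n1/d1 == n2/d2 exactly, tested as n1*d2 == n2*d1 in 64-bit arithmetic.
bool SameRate(const Rational& a, const Rational& b) {
    if (a.Denominator == 0 || b.Denominator == 0) return false;
    return static_cast<std::uint64_t>(a.Numerator) * b.Denominator ==
           static_cast<std::uint64_t>(b.Numerator) * a.Denominator;
}

// Returns the enumerated mode that matches the request, so the caller can use
// the driver-reported values verbatim; std::nullopt if no mode matches.
std::optional<ModeDesc> FindExactMode(const std::vector<ModeDesc>& modes,
                                      unsigned width, unsigned height, Rational rate) {
    for (const ModeDesc& m : modes)
        if (m.Width == width && m.Height == height && SameRate(m.RefreshRate, rate))
            return m;
    return std::nullopt;
}
```

Returning the enumerated entry, rather than the caller's own values, is the design choice that matters: the mode handed back is one the output actually listed.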

For more reference material on DXGI, we recommend reading the following articles, available online:

DXGI Overview: http://msdn.microsoft.com/en-us/library/windows/desktop/bb205075(v=vs.85).aspx

DirectX Graphics Infrastructure: Best Practices: http://msdn.microsoft.com/en-us/library/windows/desktop/ee417025(v=vs.85).aspx

DXGI 1.4 Improvements: https://msdn.microsoft.com/en-us/library/windows/desktop/mt427784%28v=vs.85%29.aspx

4.1.11 Checking Feature Support

We already used the ID3D12Device::CheckFeatureSupport method to check multisampling support by the current graphics driver. However, multisampling is just one of the features this method can query. Its prototype is as follows:

```cpp
HRESULT ID3D12Device::CheckFeatureSupport(
    D3D12_FEATURE Feature,
    void *pFeatureSupportData,
    UINT FeatureSupportDataSize);
```

1. Feature: A member of the D3D12_FEATURE enumerated type identifying the type of feature for which we want to check support:
   1. D3D12_FEATURE_D3D12_OPTIONS: Checks support for various Direct3D 12 features.
   2. D3D12_FEATURE_ARCHITECTURE: Checks support for hardware architecture features.
   3. D3D12_FEATURE_FEATURE_LEVELS: Checks feature level support.
   4. D3D12_FEATURE_FORMAT_SUPPORT: Checks feature support for a given texture format (e.g., whether the format can be used as a render target, or with blending).
   5. D3D12_FEATURE_MULTISAMPLE_QUALITY_LEVELS: Checks multisampling feature support.

2. pFeatureSupportData: Pointer to a data structure that receives the feature support information. The type of structure you use depends on what you specified for the Feature parameter:
   1. If you specified D3D12_FEATURE_D3D12_OPTIONS, then pass an instance of D3D12_FEATURE_DATA_D3D12_OPTIONS.
   2. If you specified D3D12_FEATURE_ARCHITECTURE, then pass an instance of D3D12_FEATURE_DATA_ARCHITECTURE.
   3. If you specified D3D12_FEATURE_FEATURE_LEVELS, then pass an instance of D3D12_FEATURE_DATA_FEATURE_LEVELS.
   4. If you specified D3D12_FEATURE_FORMAT_SUPPORT, then pass an instance of D3D12_FEATURE_DATA_FORMAT_SUPPORT.
   5. If you specified D3D12_FEATURE_MULTISAMPLE_QUALITY_LEVELS, then pass an instance of D3D12_FEATURE_DATA_MULTISAMPLE_QUALITY_LEVELS.

3. FeatureSupportDataSize: The size of the data structure passed into the pFeatureSupportData parameter.

The ID3D12Device::CheckFeatureSupport method checks support for many features, most of which are advanced and do not need to be checked in this book; see the SDK documentation for details on the data members of each feature structure. As an example, however, we show below how to check for supported feature levels (§4.1.9):

```cpp
typedef struct D3D12_FEATURE_DATA_FEATURE_LEVELS {
    UINT NumFeatureLevels;
    const D3D_FEATURE_LEVEL *pFeatureLevelsRequested;
    D3D_FEATURE_LEVEL MaxSupportedFeatureLevel;
} D3D12_FEATURE_DATA_FEATURE_LEVELS;

D3D_FEATURE_LEVEL featureLevels[3] =
{
    D3D_FEATURE_LEVEL_11_0, // First check D3D 11 support
    D3D_FEATURE_LEVEL_10_0, // Next, check D3D 10 support
    D3D_FEATURE_LEVEL_9_3   // Finally, check D3D 9.3 support
};

D3D12_FEATURE_DATA_FEATURE_LEVELS featureLevelsInfo;
featureLevelsInfo.NumFeatureLevels = 3;
featureLevelsInfo.pFeatureLevelsRequested = featureLevels;
md3dDevice->CheckFeatureSupport(
    D3D12_FEATURE_FEATURE_LEVELS,
    &featureLevelsInfo,
    sizeof(featureLevelsInfo));
```

Note that the second parameter is both an input and output parameter. For the input, we specify the number of elements (NumFeatureLevels) in a feature level array, and a pointer to a feature level array (pFeatureLevelsRequested) which contains a list of feature levels we want to check hardware support for. The function outputs the maximum supported feature level through the MaxSupportedFeatureLevel field.
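Because the D3D_FEATURE_LEVEL values encode the version in hex (0xb000 for 11_0, 0xa000 for 10_0, and so on), larger values mean newer levels. The sketch below models, under a simplifying assumption, what MaxSupportedFeatureLevel reports: it assumes the hardware supports every level up to a hypothetical `hardwareMax` and returns the highest requested level within that bound. `FeatureLevel` and `MaxSupportedLevel` are illustrative names, and returning 0 when nothing qualifies is a convention of this model only.

```cpp
#include <cstdint>
#include <vector>

// Same numeric values as the D3D_FEATURE_LEVEL enumerators listed in §4.1.9.
enum FeatureLevel : std::uint32_t {
    FL_9_1 = 0x9100, FL_9_2 = 0x9200, FL_9_3 = 0x9300,
    FL_10_0 = 0xa000, FL_10_1 = 0xa100,
    FL_11_0 = 0xb000, FL_11_1 = 0xb100,
};

// Hypothetical model: every level up to hardwareMax is supported.
// Returns the highest requested level that is supported, or 0 if none is.
std::uint32_t MaxSupportedLevel(const std::vector<FeatureLevel>& requested,
                                FeatureLevel hardwareMax) {
    std::uint32_t best = 0;
    for (FeatureLevel fl : requested)
        if (fl <= hardwareMax && fl > best) best = fl;  // numeric order == version order
    return best;
}
```

With the request array {11_0, 10_0, 9_3}, a 10.1-class GPU would report 10_0 in this model, because 10_1 was not among the requested levels.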

4.1.12 Residency

A complex game will use a lot of resources such as textures and 3D meshes, but many of these resources will not be needed by the GPU all the time. For example, if we imagine a game with an outdoor forest that has a large cave in it, the cave resources will not be needed until the player enters the cave, and when the player enters the cave, the forest resources will no longer be needed.

In Direct3D 12, applications manage resource residency (essentially, whether a resource is in GPU memory) by evicting resources from GPU memory and then making them resident on the GPU again as needed. The basic idea is to minimize how much GPU memory the application is using because there might not be enough to store every resource for the entire game, or the user has other applications running that require GPU memory. As a performance note, the application should avoid the situation of swapping the same resources in and out of GPU memory within a short time frame, as there is overhead for this. Ideally, if you are going to evict a resource, that resource should not be needed for a while. Game level/area changes are good examples of times to change resource residency.

By default, when a resource is created it is made resident and it is evicted when it is destroyed. However, an application can manually control residency with the following methods:

```cpp
HRESULT ID3D12Device::MakeResident(
    UINT NumObjects,
    ID3D12Pageable *const *ppObjects);

HRESULT ID3D12Device::Evict(
    UINT NumObjects,
    ID3D12Pageable *const *ppObjects);
```

For both methods, the second parameter is an array of ID3D12Pageable resources, and the first parameter is the number of resources in the array.

In this book, for simplicity and due to our demos being small compared to a game, we do not manage residency. See the documentation on residency for more information: https://msdn.microsoft.com/en-us/library/windows/desktop/mt186622%28v=vs.85%29.aspx
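The residency advice above (evict only resources that will not be needed for a while, to avoid swapping the same resources in and out) can be expressed as simple bookkeeping. This is a hypothetical policy sketch, not part of Direct3D: `ResidencyTracker` records the last frame each resource was used and proposes eviction only for resources idle for at least a chosen number of frames. A real application would then pass the corresponding ID3D12Pageable objects to ID3D12Device::Evict.

```cpp
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical bookkeeping only; nothing here calls Direct3D.
class ResidencyTracker {
public:
    explicit ResidencyTracker(std::uint64_t minIdleFrames) : minIdle_(minIdleFrames) {}

    // Record that a resource was referenced by rendering in the given frame.
    void MarkUsed(const std::string& name, std::uint64_t frame) { lastUsed_[name] = frame; }

    // Resources unused for at least minIdle_ frames. Recently used resources are
    // never proposed, so they are not evicted and immediately made resident again.
    std::vector<std::string> EvictionCandidates(std::uint64_t currentFrame) const {
        std::vector<std::string> out;
        for (const auto& [name, last] : lastUsed_)
            if (currentFrame >= last && currentFrame - last >= minIdle_)
                out.push_back(name);
        return out;
    }

private:
    std::uint64_t minIdle_;
    std::unordered_map<std::string, std::uint64_t> lastUsed_;
};
```

In the forest/cave scenario, cave resources last touched hundreds of frames ago would be proposed for eviction while the forest, used this frame, would not.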

4.2 CPU/GPU INTERACTION

We must understand that with graphics programming we have two processors at work: the CPU and GPU. They work in parallel and sometimes need to be synchronized. For optimal performance, the goal is to keep both busy for as long as possible and minimize synchronizations. Synchronizations are undesirable because they mean one processing unit sits idle while waiting on the other to finish some work; in other words, they ruin the parallelism.

4.2.1 The Command Queue and Command Lists

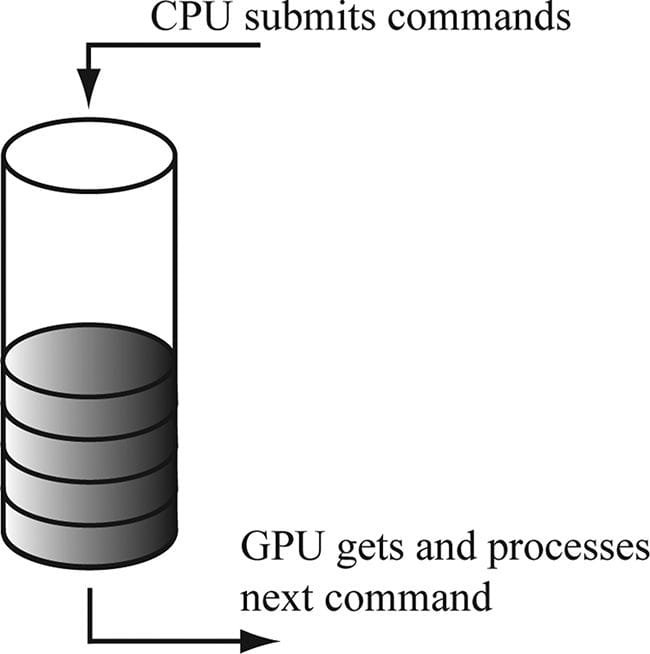

The GPU has a command queue. The CPU submits commands to the queue through the Direct3D API using command lists (see Figure 4.6). It is important to understand that once a set of commands has been submitted to the command queue, the commands are not immediately executed by the GPU. They sit in the queue until the GPU is ready to process them, as the GPU is likely busy processing previously inserted commands.

Figure 4.6. The command queue.

If the command queue becomes empty, the GPU will idle because it does not have any work to do; on the other hand, if the command queue becomes too full, the CPU will at some point have to idle while the GPU catches up [Crawfis12]. Both of these situations are undesirable; for high-performance applications like games, the goal is to keep both CPU and GPU busy to take full advantage of the hardware resources available.
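The two failure modes can be seen in a toy discrete-time model of Figure 4.6. Assumptions, all hypothetical: the CPU has one command ready every `cpuPeriod` ticks, the GPU can consume one command every `gpuPeriod` ticks, the CPU acts first within a tick, and the queue holds at most `capacity` commands. `SimulateQueue` only counts stalls; it does not model real GPU timing.

```cpp
// Stall counters for the toy queue model.
struct QueueStalls {
    int cpuStalls;  // times the CPU had a command ready but the queue was full
    int gpuStalls;  // times the GPU wanted work but the queue was empty
};

// Hypothetical discrete-time model of the command queue.
// cpuPeriod and gpuPeriod must be positive.
QueueStalls SimulateQueue(int capacity, int cpuPeriod, int gpuPeriod, int ticks) {
    QueueStalls s{0, 0};
    int queued = 0;
    for (int t = 1; t <= ticks; ++t) {
        if (t % cpuPeriod == 0) {       // CPU has a command ready
            if (queued < capacity) ++queued;
            else ++s.cpuStalls;         // queue full: CPU must wait
        }
        if (t % gpuPeriod == 0) {       // GPU is ready for more work
            if (queued > 0) --queued;
            else ++s.gpuStalls;         // queue empty: GPU idles
        }
    }
    return s;
}
```

A CPU that produces work four times faster than the GPU consumes it fills the queue and stalls, while the reverse ratio leaves the GPU starved; neither case keeps both processors busy.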

In Direct3D 12, the command queue is represented by the ID3D12CommandQueue interface. It is created by filling out a D3D12_COMMAND_QUEUE_DESC structure describing the queue and then calling ID3D12Device::CreateCommandQueue. The way we create our command queue in this book is as follows:

```cpp
Microsoft::WRL::ComPtr<ID3D12CommandQueue> mCommandQueue;

D3D12_COMMAND_QUEUE_DESC queueDesc = {};
queueDesc.Type = D3D12_COMMAND_LIST_TYPE_DIRECT;
queueDesc.Flags = D3D12_COMMAND_QUEUE_FLAG_NONE;
ThrowIfFailed(md3dDevice->CreateCommandQueue(
    &queueDesc, IID_PPV_ARGS(&mCommandQueue)));
```

The IID_PPV_ARGS helper macro is defined as:

```cpp
#define IID_PPV_ARGS(ppType) __uuidof(**(ppType)), IID_PPV_ARGS_Helper(ppType)
```

where __uuidof(**(ppType)) evaluates to the COM interface ID of (**(ppType)), which in the above code is ID3D12CommandQueue. The IID_PPV_ARGS_Helper function essentially casts ppType to a void**. We use this macro throughout this book, as many Direct3D 12 API calls take the COM ID of the interface being created followed by a void** output parameter.

One of the primary methods of this interface is ExecuteCommandLists, which adds the commands in the command lists to the queue:

```cpp
void ID3D12CommandQueue::ExecuteCommandLists(
    // Number of command lists in the array
    UINT Count,
    // Pointer to the first element in an array of command lists
    ID3D12CommandList *const *ppCommandLists);
```

The command lists are executed in order starting with the first array element.

As the above method declarations imply, a command list for graphics is represented by the ID3D12GraphicsCommandList interface which inherits from the ID3D12CommandList interface. The ID3D12GraphicsCommandList interface has numerous methods for adding commands to the command list. For example, the following code adds commands that set the viewport, clear the render target view, and issue a draw call:

```cpp
// mCommandList: pointer to ID3D12GraphicsCommandList
mCommandList->RSSetViewports(1, &mScreenViewport);
mCommandList->ClearRenderTargetView(mBackBufferView,
    Colors::LightSteelBlue, 0, nullptr);
mCommandList->DrawIndexedInstanced(36, 1, 0, 0, 0);
```

The names of these methods suggest that the commands are executed immediately, but they are not. The above code just adds commands to the command list. The ExecuteCommandLists method adds the commands to the command queue, and the GPU processes commands from the queue. We will learn about the various commands ID3D12GraphicsCommandList supports as we progress through this book. When we are done adding commands to a command list, we must indicate that we are finished recording commands by calling the ID3D12GraphicsCommandList::Close method:

```cpp
// Done recording commands.
mCommandList->Close();
```

The command list must be closed before passing it off to ID3D12CommandQueue::ExecuteCommandLists.
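The record-now, execute-later behavior can be modeled in plain C++. In this hypothetical sketch, `SimCommandList` only appends command names, `Close` must be called before submission, and `SimCommandQueue::ExecuteCommandLists` appends the lists' commands in array order. One deliberate simplification: Direct3D has the queue reference commands stored in the command allocator, whereas this model simply copies them.

```cpp
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical model: recording only appends; nothing "runs" yet.
class SimCommandList {
public:
    void Record(std::string cmd) {
        if (closed_) throw std::logic_error("recording into a closed command list");
        cmds_.push_back(std::move(cmd));
    }
    void Close() { closed_ = true; }
    bool IsClosed() const { return closed_; }
    const std::vector<std::string>& Commands() const { return cmds_; }
    void Reset() { cmds_.clear(); closed_ = false; }  // reopen for new recording
private:
    std::vector<std::string> cmds_;
    bool closed_ = false;
};

class SimCommandQueue {
public:
    // Lists are processed in array order; each must already be closed.
    void ExecuteCommandLists(const std::vector<const SimCommandList*>& lists) {
        for (const SimCommandList* l : lists) {
            if (!l->IsClosed()) throw std::logic_error("command list must be closed");
            for (const std::string& c : l->Commands()) pending_.push_back(c);
        }
    }
    // Stand-in for the GPU later consuming everything that was queued.
    std::vector<std::string> Drain() {
        std::vector<std::string> out = std::move(pending_);
        pending_.clear();
        return out;
    }
private:
    std::vector<std::string> pending_;
};
```

Recording `RSSetViewports`, `ClearRenderTargetView`, and `DrawIndexedInstanced` leaves the queue untouched; they reach the queue only after `Close` and `ExecuteCommandLists`.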

Associated with a command list is a memory backing class called an ID3D12CommandAllocator. As commands are recorded to the command list, they will actually be stored in the associated command allocator. When a command list is executed via ID3D12CommandQueue::ExecuteCommandLists, the command queue will reference the commands in the allocator. A command allocator is created from the ID3D12Device:

```cpp
HRESULT ID3D12Device::CreateCommandAllocator(
    D3D12_COMMAND_LIST_TYPE type,
    REFIID riid,
    void **ppCommandAllocator);
```

1. type: The type of command lists that can be associated with this allocator. The two common types are:
   1. D3D12_COMMAND_LIST_TYPE_DIRECT: Stores a list of commands to directly be executed by the GPU (the type of command list we have been describing thus far).
   2. D3D12_COMMAND_LIST_TYPE_BUNDLE: Specifies that the command list represents a bundle. There is some CPU overhead in building a command list, so Direct3D 12 provides an optimization that allows us to record a sequence of commands into a so-called bundle. After a bundle has been recorded, the driver will preprocess the commands to optimize their execution during rendering. Therefore, bundles should be recorded at initialization time. The use of bundles should be thought of as an optimization to use if profiling shows that building particular command lists is taking significant time. The Direct3D 12 drawing API is already very efficient, so you should not need to use bundles often, and you should only use them if you can demonstrate a performance gain from them; that is to say, do not use them by default. We do not use bundles in this book; see the DirectX 12 documentation for further details.

2. riid: The COM ID of the ID3D12CommandAllocator interface we want to create.

3. ppCommandAllocator: Outputs a pointer to the created command allocator.

Command lists are also created from the ID3D12Device:

```cpp
HRESULT ID3D12Device::CreateCommandList(
    UINT nodeMask,
    D3D12_COMMAND_LIST_TYPE type,
    ID3D12CommandAllocator *pCommandAllocator,
    ID3D12PipelineState *pInitialState,
    REFIID riid,
    void **ppCommandList);
```

1. nodeMask: Set to 0 for single-GPU systems. Otherwise, the node mask identifies the physical GPU this command list is associated with. In this book we assume single-GPU systems.

2. type: The type of command list: either D3D12_COMMAND_LIST_TYPE_DIRECT or D3D12_COMMAND_LIST_TYPE_BUNDLE.

3. pCommandAllocator: The allocator to be associated with the created command list. The command allocator type must match the command list type.

4. pInitialState: Specifies the initial pipeline state of the command list. This can be null for bundles, and in the special case where a command list is executed for initialization purposes and does not contain any draw commands. We discuss ID3D12PipelineState in Chapter 6.

5. riid: The COM ID of the command list interface we want to create (e.g., ID3D12GraphicsCommandList).

6. ppCommandList: Outputs a pointer to the created command list.

You can use the ID3D12Device::GetNodeCount method to query the number of GPU adapter nodes on the system.

You can create multiple command lists associated with the same allocator, but they cannot record commands at the same time. That is, all command lists must be closed except the one whose commands we are going to record. Thus, all commands from a given command list will be added to the allocator contiguously. Note that when a command list is created or reset, it is in an “open” state. So if we tried to create two command lists in a row with the same allocator, we would get an error:

```
D3D12 ERROR: ID3D12CommandList::{Create,Reset}CommandList: The command allocator is currently in-use by another command list.
```

After we have called ID3D12CommandQueue::ExecuteCommandLists(C), it is safe to reuse the internal memory of C to record a new set of commands by calling the ID3D12GraphicsCommandList::Reset method. The parameters of this method are the same as the matching parameters in ID3D12Device::CreateCommandList.

```cpp
HRESULT ID3D12GraphicsCommandList::Reset(
    ID3D12CommandAllocator *pAllocator,
    ID3D12PipelineState *pInitialState);
```

This method puts the command list in the same state as if it were just created, but allows us to reuse the internal memory and avoid deallocating the old command list and allocating a new one. Note that resetting the command list does not affect the commands in the command queue because the associated command allocator still has the commands in memory that the command queue references.

After we have submitted the rendering commands for a complete frame to the GPU, we would like to reuse the memory in the command allocator for the next frame. The ID3D12CommandAllocator::Reset method may be used for this:

```cpp
HRESULT ID3D12CommandAllocator::Reset(void);
```

The idea of this is analogous to calling std::vector::clear, which resizes a vector back to zero, but keeps the current capacity the same. However, because the command queue may be referencing data in an allocator, a command allocator must not be reset until we are sure the GPU has finished executing all the commands in the allocator; how to do this is covered in the next section.
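The reset rule can be stated as a single comparison of fence values. In the hypothetical `SimAllocator` below, the fence value signaled right after the allocator's last submission is recorded, and a reset is refused until the GPU's completed fence value has reached it. Flushing the command queue, described in the next section, is the simplest way to make that condition true; the class itself is an illustration, not a Direct3D type.

```cpp
#include <cstdint>

// Hypothetical model of the "do not reset while the GPU may still read" rule.
class SimAllocator {
public:
    // Call after submitting this allocator's commands and signaling fenceValue.
    void OnSubmitted(std::uint64_t fenceValue) { lastSubmitFence_ = fenceValue; }

    // Safe only once the GPU has passed the fence point that followed the submission.
    bool CanReset(std::uint64_t completedFenceValue) const {
        return completedFenceValue >= lastSubmitFence_;
    }

    // Returns false (and keeps the memory untouched) if the GPU might still read it.
    bool Reset(std::uint64_t completedFenceValue) {
        if (!CanReset(completedFenceValue)) return false;
        ++resetCount_;  // stands in for reclaiming the allocator's memory
        return true;
    }

    int ResetCount() const { return resetCount_; }

private:
    std::uint64_t lastSubmitFence_ = 0;
    int resetCount_ = 0;
};
```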

4.2.2 CPU/GPU Synchronization

Due to having two processors running in parallel, a number of synchronization issues appear.

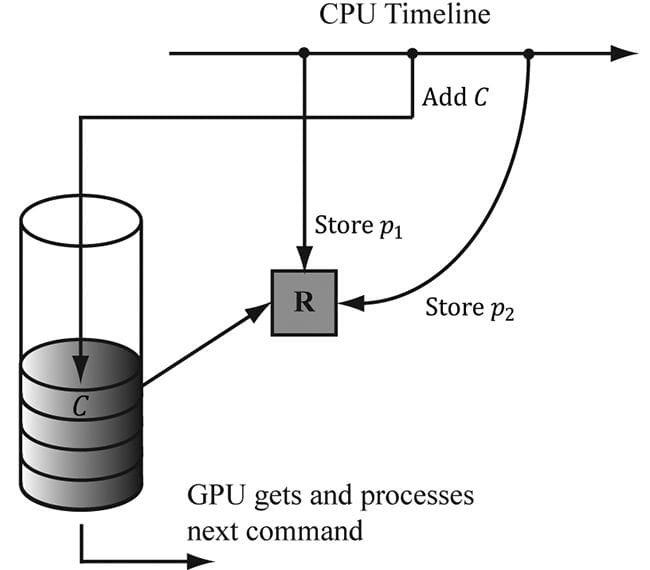

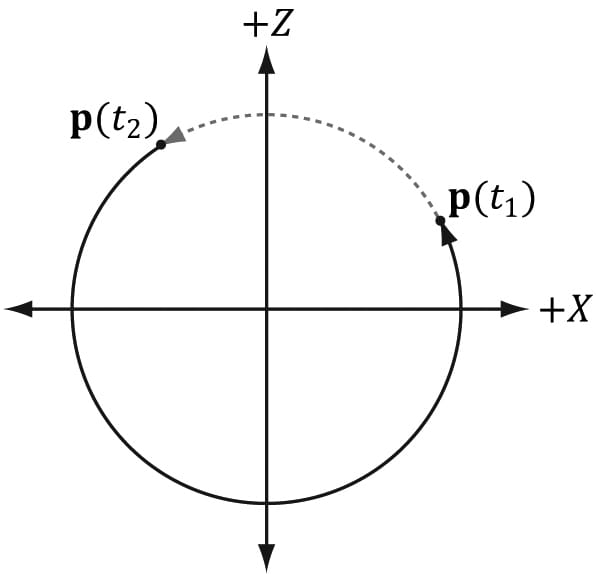

Suppose we have some resource R that stores the position of some geometry we wish to draw. Furthermore, suppose the CPU updates the data of R to store position p1 and then adds a drawing command C that references R to the command queue with the intent of drawing the geometry at position p1. Adding commands to the command queue does not block the CPU, so the CPU continues on. It would be an error for the CPU to continue on and overwrite the data of R to store a new position p2 before the GPU executed the draw command C (see Figure 4.7).

Figure 4.7. This is an error because C draws the geometry with p2 or draws while R is in the middle of being updated. In any case, this is not the intended behavior.

One solution to this situation is to force the CPU to wait until the GPU has finished processing all the commands in the queue up to a specified fence point. We call this flushing the command queue. We can do this using a fence. A fence is represented by the ID3D12Fence interface and is used to synchronize the GPU and CPU. A fence object can be created with the following method:

```cpp
HRESULT ID3D12Device::CreateFence(
    UINT64 InitialValue,
    D3D12_FENCE_FLAGS Flags,
    REFIID riid,
    void **ppFence);

// Example
ThrowIfFailed(md3dDevice->CreateFence(
    0,
    D3D12_FENCE_FLAG_NONE,
    IID_PPV_ARGS(&mFence)));
```

A fence object maintains a UINT64 value, which is just an integer to identify a fence point in time. We start at value zero and every time we need to mark a new fence point, we just increment the integer. Now, the following code/comments show how we can use a fence to flush the command queue.

```cpp
UINT64 mCurrentFence = 0;

void D3DApp::FlushCommandQueue()
{
    // Advance the fence value to mark commands up to this fence point.
    mCurrentFence++;

    // Add an instruction to the command queue to set a new fence point.
    // Because we are on the GPU timeline, the new fence point won't be
    // set until the GPU finishes processing all the commands prior to
    // this Signal().
    ThrowIfFailed(mCommandQueue->Signal(mFence.Get(), mCurrentFence));

    // Wait until the GPU has completed commands up to this fence point.
    if(mFence->GetCompletedValue() < mCurrentFence)
    {
        // Unnamed, auto-reset event in the nonsignaled state.
        HANDLE eventHandle = CreateEventEx(nullptr, nullptr, 0, EVENT_ALL_ACCESS);

        // Fire event when GPU hits current fence.
        ThrowIfFailed(mFence->SetEventOnCompletion(mCurrentFence, eventHandle));

        // Block until the event fires, i.e., the GPU has reached the current fence.
        WaitForSingleObject(eventHandle, INFINITE);
        CloseHandle(eventHandle);
    }
}
```

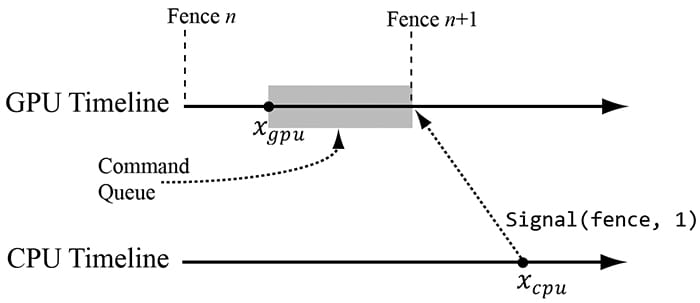

Figure 4.8 explains this code graphically.

Figure 4.8. At this snapshot, the GPU has processed commands up to xgpu and the CPU has just called the ID3D12CommandQueue::Signal(fence, n+1) method. This essentially adds an instruction to the end of the queue to change the fence value to n + 1. However, mFence->GetCompletedValue() will continue to return n until the GPU processes all the commands in the queue that were added prior to the Signal(fence, n+1) instruction.

So in the previous example, after the CPU issued the draw command C, it would flush the command queue before overwriting the data of R to store a new position p2. This solution is not ideal because it means the CPU is idle while waiting for the GPU to finish, but it provides a simple solution that we will use until Chapter 7. You can flush the command queue at almost any point (not necessarily only once per frame); if you have some initialization GPU commands, you can flush the command queue to execute the initialization before entering the main rendering loop, for example.

Note that flushing the command queue also can be used to solve the problem we mentioned at the end of the last section; that is, we can flush the command queue to be sure that all the GPU commands have been executed before we reset the command allocator.
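To see the fence logic of FlushCommandQueue run end to end without a GPU, the following sketch replaces the GPU with a worker thread that executes queued tasks strictly in order. `SimFence`, `SimGpuQueue`, and `Flush` are hypothetical names built only on the C++ standard library: `WaitFor` stands in for SetEventOnCompletion plus WaitForSingleObject, and `Signal` enqueues a task that updates the fence only after all previously queued work has run.

```cpp
#include <condition_variable>
#include <cstdint>
#include <deque>
#include <functional>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical CPU-side stand-in for ID3D12Fence.
class SimFence {
public:
    std::uint64_t GetCompletedValue() const {
        std::lock_guard<std::mutex> lock(m_);
        return completed_;
    }
    void Complete(std::uint64_t v) {  // called on the "GPU" thread
        { std::lock_guard<std::mutex> lock(m_); if (v > completed_) completed_ = v; }
        cv_.notify_all();
    }
    void WaitFor(std::uint64_t v) {   // blocks the CPU until the fence reaches v
        std::unique_lock<std::mutex> lock(m_);
        cv_.wait(lock, [&] { return completed_ >= v; });
    }
private:
    mutable std::mutex m_;
    std::condition_variable cv_;
    std::uint64_t completed_ = 0;
};

// Hypothetical in-order queue whose worker thread plays the GPU.
class SimGpuQueue {
public:
    SimGpuQueue() : worker_([this] { Run(); }) {}
    ~SimGpuQueue() {
        { std::lock_guard<std::mutex> lock(m_); stop_ = true; }
        cv_.notify_one();
        worker_.join();  // remaining work is finished before the thread exits
    }
    void Submit(std::function<void()> cmd) {
        { std::lock_guard<std::mutex> lock(m_); q_.push_back(std::move(cmd)); }
        cv_.notify_one();
    }
    // Like ID3D12CommandQueue::Signal: runs only after all earlier submissions.
    void Signal(SimFence& fence, std::uint64_t v) {
        Submit([&fence, v] { fence.Complete(v); });
    }
private:
    void Run() {
        for (;;) {
            std::function<void()> cmd;
            {
                std::unique_lock<std::mutex> lock(m_);
                cv_.wait(lock, [this] { return stop_ || !q_.empty(); });
                if (q_.empty()) return;  // stop requested and nothing left to do
                cmd = std::move(q_.front());
                q_.pop_front();
            }
            cmd();
        }
    }
    std::mutex m_;
    std::condition_variable cv_;
    std::deque<std::function<void()>> q_;
    bool stop_ = false;
    std::thread worker_;  // declared last so the members above exist before Run() starts
};

// Mirrors FlushCommandQueue: advance, signal, then wait if the GPU is behind.
void Flush(SimGpuQueue& queue, SimFence& fence, std::uint64_t& currentFence) {
    ++currentFence;
    queue.Signal(fence, currentFence);
    if (fence.GetCompletedValue() < currentFence) fence.WaitFor(currentFence);
}
```

After `Flush` returns, every task submitted before it has run, which is exactly the guarantee the CPU needs before overwriting R or resetting a command allocator.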

4.2.3 Resource Transitions

To implement common rendering effects, the GPU often writes to a resource R in one step and then, in a later step, reads from R. However, reading from a resource before the GPU has finished writing to it (or before it has even started writing) is a resource hazard. To solve this problem, Direct3D associates a state with each resource. Resources are in a default state when they are created, and it is up to the application to tell Direct3D about any state transitions. This enables the GPU to do any work it needs to do to make the transition and prevent resource hazards. For example, if we are writing to a resource, say a texture, we will set the texture state to a render target state; when we need to read the texture, we will change its state to a shader resource state. By informing Direct3D of a transition, the GPU can take steps to avoid the hazard, for example, by waiting for all the write operations to complete before reading from the resource. The burden of resource transitions falls on the application developer for performance reasons: the application developer knows when these transitions are happening, whereas an automatic transition tracking system would impose additional overhead.
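The bookkeeping the application owns can be modeled as a small state machine. This is a hypothetical sketch: `ResState` is a simplified three-state enum, not the real D3D12_RESOURCE_STATES values, and `TrackedResource::Transition` plays the role of a transition barrier whose before state must match the state the resource is actually in.

```cpp
#include <stdexcept>

// Hypothetical, simplified subset of resource states.
enum class ResState { Present, RenderTarget, ShaderResource };

class TrackedResource {
public:
    explicit TrackedResource(ResState initial) : state_(initial) {}

    ResState State() const { return state_; }

    // Like a transition barrier: 'before' must describe the current state.
    void Transition(ResState before, ResState after) {
        if (before != state_)
            throw std::logic_error("before state does not match current state");
        state_ = after;
    }

    // Reading as a texture is hazard-free only after the transition to a read state.
    bool CanSample() const { return state_ == ResState::ShaderResource; }

private:
    ResState state_;
};
```

A texture rendered to in one pass stays unreadable in this model until the application explicitly transitions it from RenderTarget to ShaderResource, mirroring the write-then-read example above.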

A resource transition is specified by setting an array of transition resource barriers on the command list; it is an array in case you want to transition multiple resources with one API call. In code, a resource barrier is represented by the D3D12_RESOURCE_BARRIER structure. The following helper function (defined in d3dx12.h) returns a transition resource barrier description for a given resource, and specifies the before and after states:

```cpp
struct CD3DX12_RESOURCE_BARRIER : public D3D12_RESOURCE_BARRIER
{
    // [...] convenience methods

    static inline CD3DX12_RESOURCE_BARRIER Transition(
        _In_ ID3D12Resource* pResource,
        D3D12_RESOURCE_STATES stateBefore,
        D3D12_RESOURCE_STATES stateAfter,
        UINT subresource = D3D12_RESOURCE_BARRIER_ALL_SUBRESOURCES,
        D3D12_RESOURCE_BARRIER_FLAGS flags = D3D12_RESOURCE_BARRIER_FLAG_NONE)
    {
        CD3DX12_RESOURCE_BARRIER result;
        ZeroMemory(&result, sizeof(result));
        D3D12_RESOURCE_BARRIER &barrier = result;
        result.Type = D3D12_RESOURCE_BARRIER_TYPE_TRANSITION;
        result.Flags = flags;
        barrier.Transition.pResource = pResource;
        barrier.Transition.StateBefore = stateBefore;
        barrier.Transition.StateAfter = stateAfter;
        barrier.Transition.Subresource = subresource;
        return result;
    }

    // [...] more convenience methods
};
```

Observe that CD3DX12_RESOURCE_BARRIER extends D3D12_RESOURCE_BARRIER and adds convenience methods. Most Direct3D 12 structures have extended helper variations, and we prefer those variations for the convenience. The CD3DX12 variations are all defined in d3dx12.h. This file is not part of the core DirectX 12 SDK, but is available for download from Microsoft. For convenience, a copy is included in the Common directory of the book’s source code.

An example use of this function from this chapter’s sample application is as follows:

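```cpp
// Keep the barrier in a named variable: taking the address of the temporary
// returned by Transition() is not standard C++ (MSVC accepts it as an extension).
CD3DX12_RESOURCE_BARRIER barrier = CD3DX12_RESOURCE_BARRIER::Transition(
    CurrentBackBuffer(),
    D3D12_RESOURCE_STATE_PRESENT,
    D3D12_RESOURCE_STATE_RENDER_TARGET);
mCommandList->ResourceBarrier(1, &barrier);
```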

This code transitions a texture representing the image we are displaying on screen from a presentation state to a render target state. Observe that the resource barrier is added to the command list. You can think of the resource barrier transition as a command itself instructing the GPU that the state of a resource is being transitioned, so that it can take the necessary steps to prevent a resource hazard when executing subsequent commands.

There are other types of resource barriers besides transition types. For now, we only need the transition types. We will introduce the other types when we need them.

4.2.4 Multithreading with Commands

Direct3D 12 was designed for efficient multithreading. The command list design is one way Direct3D takes advantage of multithreading. For large scenes with lots of objects, building the command list to draw the entire scene can take CPU time. So the idea is to build command lists in parallel; for example, you might spawn four threads, each responsible for building a command list to draw 25% of the scene objects.

A few things to note about command list multithreading:

1. Command lists are not free-threaded; that is, multiple threads may not share the same command list and call its methods concurrently. So generally, each thread will get its own command list.

2. Command allocators are not free-threaded; that is, multiple threads may not share the same command allocator and call its methods concurrently. So generally, each thread will get its own command allocator.

3. The command queue is free-threaded, so multiple threads can access the command queue and call its methods concurrently. In particular, each thread can submit its generated command list to the command queue concurrently.

4. For performance reasons, the application must specify at initialization time the maximum number of command lists they will record concurrently.

For simplicity, we will not use multithreading in this book. Once the reader is finished with this book, we recommend they study the Multithreading12 SDK sample to see how command lists can be generated in parallel. Applications that want to maximize system resources should definitely use multithreading to take advantage of multiple CPU cores.
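The per-thread ownership rules listed above can be sketched with std::thread. In this hypothetical model, each "command list" is just a vector of strings owned by exactly one thread, so recording needs no locks; after the threads join, the lists are submitted from one thread in a fixed order so the draw order stays deterministic. `RecordInParallel` is an illustrative name, not an SDK function, and the model does not exercise the free-threaded queue.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <thread>
#include <vector>

// Hypothetical sketch: split the scene into contiguous chunks, one per thread.
std::vector<std::string> RecordInParallel(const std::vector<std::string>& objects,
                                          std::size_t numThreads) {
    if (numThreads == 0) numThreads = 1;
    std::vector<std::vector<std::string>> lists(numThreads);  // one list per thread
    const std::size_t chunk = (objects.size() + numThreads - 1) / numThreads;

    std::vector<std::thread> workers;
    for (std::size_t t = 0; t < numThreads; ++t) {
        workers.emplace_back([&, t] {
            // Each thread touches only lists[t], so no synchronization is needed.
            const std::size_t begin = std::min(objects.size(), t * chunk);
            const std::size_t end = std::min(objects.size(), begin + chunk);
            for (std::size_t i = begin; i < end; ++i)
                lists[t].push_back("Draw " + objects[i]);
        });
    }
    for (std::thread& w : workers) w.join();

    // Submit the finished lists in thread order.
    std::vector<std::string> submitted;
    for (const auto& l : lists) submitted.insert(submitted.end(), l.begin(), l.end());
    return submitted;
}
```

With four threads, each records roughly 25% of the objects, matching the example split described earlier in this section.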

4.3 INITIALIZING DIRECT3D

The following subsections show how to initialize Direct3D for our demo framework. It is a long process, but only needs to be done once. Our process of initializing Direct3D can be broken down into the following steps:

1. Create the ID3D12Device using the D3D12CreateDevice function.

2. Create an ID3D12Fence object and query descriptor sizes.

3. Check 4X MSAA quality level support.

4. Create the command queue, command list allocator, and main command list.

5. Describe and create the swap chain.

6. Create the descriptor heaps the application requires.

7. Resize the back buffer and create a render target view to the back buffer.

8. Create the depth/stencil buffer and its associated depth/stencil view.

9. Set the viewport and scissor rectangles.

4.3.1 Create the Device

Initializing Direct3D begins by creating the Direct3D 12 device (ID3D12Device). The device represents a display adapter. Usually, the display adapter is a physical piece of 3D hardware (e.g., graphics card); however, a system can also have a software display adapter that emulates 3D hardware functionality (e.g., the WARP adapter). The Direct3D 12 device is used to check feature support, and create all other Direct3D interface objects like resources, views, and command lists. The device can be created with the following function:

```cpp
HRESULT WINAPI D3D12CreateDevice(
    IUnknown* pAdapter,
    D3D_FEATURE_LEVEL MinimumFeatureLevel,
    REFIID riid,  // Expected: ID3D12Device
    void** ppDevice);
```

1. pAdapter: Specifies the display adapter we want the created device to represent. Specifying null for this parameter uses the primary display adapter. We always use the primary adapter in the sample programs of this book. §4.1.10 showed how to enumerate all the system’s display adapters.

2. MinimumFeatureLevel: The minimum feature level our application requires support for; device creation will fail if the adapter does not support this feature level. In our framework, we specify D3D_FEATURE_LEVEL_11_0 (i.e., Direct3D 11 feature support).

3. riid: The COM ID of the ID3D12Device interface we want to create.

4. ppDevice: Returns the created device.

Here is an example call of this function:

```cpp
#if defined(DEBUG) || defined(_DEBUG)
// Enable the D3D12 debug layer.
{
    ComPtr<ID3D12Debug> debugController;
    ThrowIfFailed(D3D12GetDebugInterface(IID_PPV_ARGS(&debugController)));
    debugController->EnableDebugLayer();
}
#endif

ThrowIfFailed(CreateDXGIFactory1(IID_PPV_ARGS(&mdxgiFactory)));

// Try to create hardware device.
HRESULT hardwareResult = D3D12CreateDevice(
    nullptr,  // default adapter
    D3D_FEATURE_LEVEL_11_0,
    IID_PPV_ARGS(&md3dDevice));

// Fallback to WARP device.
if(FAILED(hardwareResult))
{
    ComPtr<IDXGIAdapter> pWarpAdapter;
    ThrowIfFailed(mdxgiFactory->EnumWarpAdapter(IID_PPV_ARGS(&pWarpAdapter)));

    ThrowIfFailed(D3D12CreateDevice(
        pWarpAdapter.Get(),
        D3D_FEATURE_LEVEL_11_0,
        IID_PPV_ARGS(&md3dDevice)));
}
```

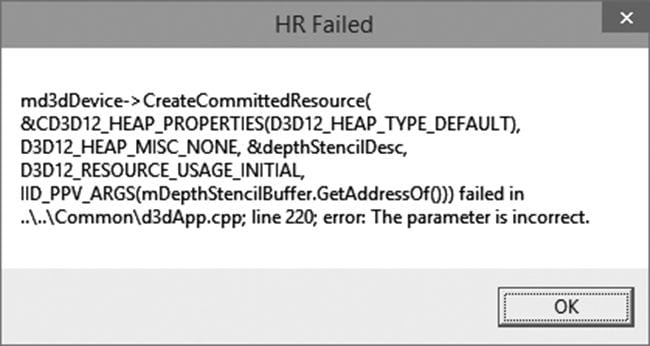

Observe that we first enable the debug layer for debug mode builds. When the debug layer is enabled, Direct3D performs extra validation and sends debug messages to the VC++ output window, such as the following:

D3D12 ERROR: ID3D12CommandList::Reset: Reset fails because the command list was not closed.

Also observe that if our call to D3D12CreateDevice fails, we fall back to a WARP device, which is a software adapter. WARP stands for Windows Advanced Rasterization Platform. On Windows 7 and lower, the WARP device supports up to feature level 10.1; on Windows 8, the WARP device supports up to feature level 11.1. In order to create a WARP adapter, we need to create an IDXGIFactory4 object so that we can enumerate the WARP adapter:

```cpp
ComPtr<IDXGIFactory4> mdxgiFactory;
ComPtr<IDXGIAdapter> pWarpAdapter;

ThrowIfFailed(CreateDXGIFactory1(IID_PPV_ARGS(&mdxgiFactory)));
ThrowIfFailed(mdxgiFactory->EnumWarpAdapter(IID_PPV_ARGS(&pWarpAdapter)));
```

The mdxgiFactory object will also be used to create our swap chain, since the swap chain is part of DXGI rather than Direct3D.
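The hardware-then-WARP fallback can be modeled without the Windows SDK. In this sketch, `AdapterModel`, `TryCreateDevice`, and `PickAdapter` are hypothetical names, and device creation is reduced to one rule from the text: it succeeds only if the adapter supports at least `MinimumFeatureLevel`. The enum values copy those of `D3D_FEATURE_LEVEL`, which encode the major and minor version in hex (for example, 11_0 is `0xb000`), so ordinary comparisons order the levels correctly.

```cpp
#include <cassert>
#include <optional>
#include <string>

// Values mirror D3D_FEATURE_LEVEL (e.g. D3D_FEATURE_LEVEL_11_0 == 0xb000).
enum FeatureLevel : unsigned {
    FL_10_1 = 0xa100,
    FL_11_0 = 0xb000,
    FL_11_1 = 0xb100,
    FL_12_0 = 0xc000,
};

// Hypothetical adapter description: a name and the highest level it supports.
struct AdapterModel {
    std::string name;
    FeatureLevel maxLevel;
};

// In this model, device creation succeeds only if the minimum level is met.
bool TryCreateDevice(const AdapterModel& adapter, FeatureLevel minLevel) {
    return adapter.maxLevel >= minLevel;
}

// Try the default hardware adapter first, then fall back to WARP.
std::optional<std::string> PickAdapter(const std::optional<AdapterModel>& hardware,
                                       const AdapterModel& warp,
                                       FeatureLevel minLevel) {
    if (hardware && TryCreateDevice(*hardware, minLevel))
        return hardware->name;
    if (TryCreateDevice(warp, minLevel))
        return warp.name;
    return std::nullopt;  // the framework's ThrowIfFailed would throw here
}
```

For instance, a hardware adapter that only reaches feature level 10.1 is rejected for a minimum of 11_0, and a WARP adapter supporting 11.1 is chosen instead.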

4.3.2 Create the Fence and Descriptor Sizes

After we have created our device, we can create our fence object for CPU/GPU synchronization. In addition, once we start working with descriptors, we will need to know their sizes. Descriptor sizes can vary across GPUs, so we need to query this information. We cache the size of each descriptor type we use so that it is available whenever we need it:

```cpp
ThrowIfFailed(md3dDevice->CreateFence(
    0, D3D12_FENCE_FLAG_NONE, IID_PPV_ARGS(&mFence)));

mRtvDescriptorSize = md3dDevice->GetDescriptorHandleIncrementSize(
    D3D12_DESCRIPTOR_HEAP_TYPE_RTV);
mDsvDescriptorSize = md3dDevice->GetDescriptorHandleIncrementSize(
    D3D12_DESCRIPTOR_HEAP_TYPE_DSV);
mCbvSrvDescriptorSize = md3dDevice->GetDescriptorHandleIncrementSize(
    D3D12_DESCRIPTOR_HEAP_TYPE_CBV_SRV_UAV);
```
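The fence created above is what lets the CPU wait for the GPU. As a rough, portable illustration (not Direct3D code), the hypothetical `FenceModel` below stores a completed value that starts at 0, like `CreateFence(0, ...)`; a worker thread plays the role of the GPU reaching a `Signal` command, and `WaitFor` stands in for `ID3D12Fence::SetEventOnCompletion` followed by a wait on the event. The name `FlushOnce` and the use of `std::condition_variable` are assumptions of this sketch.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstdint>
#include <mutex>
#include <thread>

// Hypothetical CPU-only model of a fence: a monotonically increasing value.
class FenceModel {
public:
    // What the "GPU" does when it reaches the fence point.
    void Signal(uint64_t value) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            completed_ = value;
        }
        cv_.notify_all();
    }
    uint64_t GetCompletedValue() {
        std::lock_guard<std::mutex> lock(mutex_);
        return completed_;
    }
    // Blocks until the completed value reaches at least `value`.
    void WaitFor(uint64_t value) {
        std::unique_lock<std::mutex> lock(mutex_);
        cv_.wait(lock, [&] { return completed_ >= value; });
    }
private:
    std::mutex mutex_;
    std::condition_variable cv_;
    uint64_t completed_ = 0;  // matches the initial value passed to CreateFence
};

// One flush: choose the next fence value, let a worker thread "execute" and
// signal it, and block the CPU until the fence reaches that value.
uint64_t FlushOnce(FenceModel& fence, uint64_t& currentFence) {
    ++currentFence;
    const uint64_t target = currentFence;
    std::thread gpu([&fence, target] { fence.Signal(target); });
    fence.WaitFor(target);
    gpu.join();
    return fence.GetCompletedValue();
}
```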

4.3.3 Check 4X MSAA Quality Support

In this book, we use 4X MSAA. We choose 4X because it gives a good quality improvement without being overly expensive, and because all Direct3D 11 capable devices support 4X MSAA with all render target formats. Therefore, 4X MSAA is guaranteed to be available on Direct3D 11 hardware, and we do not have to verify support for it. However, we do have to check the supported quality level, which can be done with the ID3D12Device::CheckFeatureSupport method:

```cpp
D3D12_FEATURE_DATA_MULTISAMPLE_QUALITY_LEVELS msQualityLevels;
msQualityLevels.Format = mBackBufferFormat;
msQualityLevels.SampleCount = 4;
msQualityLevels.Flags = D3D12_MULTISAMPLE_QUALITY_LEVELS_FLAG_NONE;
msQualityLevels.NumQualityLevels = 0;
ThrowIfFailed(md3dDevice->CheckFeatureSupport(
    D3D12_FEATURE_MULTISAMPLE_QUALITY_LEVELS,
    &msQualityLevels,
    sizeof(msQualityLevels)));

m4xMsaaQuality = msQualityLevels.NumQualityLevels;
assert(m4xMsaaQuality > 0 && "Unexpected MSAA quality level.");
```

Because 4X MSAA is always supported, the returned number of quality levels should always be greater than 0; therefore, we assert that this is the case.
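The value returned in `NumQualityLevels` is a count, so the valid quality levels are 0 through `NumQualityLevels - 1`; that is why the swap chain code in §4.3.5 uses `m4xMsaaQuality - 1`. A minimal sketch of that selection, using a hypothetical `SampleDescModel` in place of `DXGI_SAMPLE_DESC` and a hypothetical `ChooseSampleDesc` helper:

```cpp
#include <cassert>
#include <stdexcept>

// Mirrors the two fields of DXGI_SAMPLE_DESC.
struct SampleDescModel {
    unsigned Count;
    unsigned Quality;
};

// numQualityLevels plays the role of msQualityLevels.NumQualityLevels.
SampleDescModel ChooseSampleDesc(bool use4xMsaa, unsigned numQualityLevels) {
    if (!use4xMsaa)
        return {1, 0};  // single sampling: count 1, quality 0
    if (numQualityLevels == 0)
        throw std::runtime_error("4X MSAA not supported for this format");
    return {4, numQualityLevels - 1};  // highest supported quality level
}
```

For example, if the query reports one quality level, the only valid 4X MSAA quality is 0.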

4.3.4 Create Command Queue and Command List

Recall from §4.2.1 that a command queue is represented by the ID3D12CommandQueue interface, a command allocator is represented by the ID3D12CommandAllocator interface, and a command list is represented by the ID3D12GraphicsCommandList interface. The following function shows how we create a command queue, command allocator, and command list:

```cpp
ComPtr<ID3D12CommandQueue> mCommandQueue;
ComPtr<ID3D12CommandAllocator> mDirectCmdListAlloc;
ComPtr<ID3D12GraphicsCommandList> mCommandList;

void D3DApp::CreateCommandObjects()
{
    D3D12_COMMAND_QUEUE_DESC queueDesc = {};
    queueDesc.Type = D3D12_COMMAND_LIST_TYPE_DIRECT;
    queueDesc.Flags = D3D12_COMMAND_QUEUE_FLAG_NONE;
    ThrowIfFailed(md3dDevice->CreateCommandQueue(
        &queueDesc, IID_PPV_ARGS(&mCommandQueue)));

    ThrowIfFailed(md3dDevice->CreateCommandAllocator(
        D3D12_COMMAND_LIST_TYPE_DIRECT,
        IID_PPV_ARGS(mDirectCmdListAlloc.GetAddressOf())));

    ThrowIfFailed(md3dDevice->CreateCommandList(
        0,
        D3D12_COMMAND_LIST_TYPE_DIRECT,
        mDirectCmdListAlloc.Get(), // Associated command allocator
        nullptr,                   // Initial PipelineStateObject
        IID_PPV_ARGS(mCommandList.GetAddressOf())));

    // Start off in a closed state. This is because the first time we
    // refer to the command list we will Reset it, and it needs to be
    // closed before calling Reset.
    mCommandList->Close();
}
```

Observe that for CreateCommandList, we specify null for the pipeline state object parameter. In this chapter’s sample program, we do not issue any draw commands, so we do not need a valid pipeline state object. We will discuss pipeline state objects in Chapter 6.
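The closing comment in CreateCommandObjects reflects a simple rule: a command list is created open for recording, and it can only be reset once it has been closed. The hypothetical `CommandListStateModel` below captures just that rule in portable C++; the real `ID3D12GraphicsCommandList::Reset` also takes a command allocator and an initial pipeline state, which this sketch omits. Rejecting a second `Close` is this model's own simplification.

```cpp
#include <cassert>

// Hypothetical model of the open/closed state of a graphics command list.
class CommandListStateModel {
public:
    // Like a list returned by CreateCommandList, a new model starts open.
    bool IsOpen() const { return open_; }

    // Finishes recording; this model rejects closing an already-closed list.
    bool Close() {
        if (!open_) return false;
        open_ = false;
        return true;
    }

    // Only legal on a closed list; otherwise the debug layer reports
    // "Reset fails because the command list was not closed."
    bool Reset() {
        if (open_) return false;
        open_ = true;
        return true;
    }

private:
    bool open_ = true;
};

// Mirrors CreateCommandObjects: create, close immediately, so the first
// per-frame Reset succeeds.
bool FirstFrameResetSucceeds() {
    CommandListStateModel list;
    list.Close();
    return list.Reset();
}
```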

4.3.5 Describe and Create the Swap Chain

The next step in the initialization process is to create the swap chain. This is done by first filling out an instance of the DXGI_SWAP_CHAIN_DESC structure, which describes the characteristics of the swap chain we are going to create. This structure is defined as follows:

```cpp
typedef struct DXGI_SWAP_CHAIN_DESC
{
    DXGI_MODE_DESC BufferDesc;
    DXGI_SAMPLE_DESC SampleDesc;
    DXGI_USAGE BufferUsage;
    UINT BufferCount;
    HWND OutputWindow;
    BOOL Windowed;
    DXGI_SWAP_EFFECT SwapEffect;
    UINT Flags;
} DXGI_SWAP_CHAIN_DESC;
```

The DXGI_MODE_DESC type is another structure, defined as:

```cpp
typedef struct DXGI_MODE_DESC
{
    UINT Width;                                 // Buffer resolution width
    UINT Height;                                // Buffer resolution height
    DXGI_RATIONAL RefreshRate;
    DXGI_FORMAT Format;                         // Buffer display format
    DXGI_MODE_SCANLINE_ORDER ScanlineOrdering;  // Progressive vs. interlaced
    DXGI_MODE_SCALING Scaling;                  // How the image is stretched
                                                // over the monitor.
} DXGI_MODE_DESC;
```
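`RefreshRate` is a `DXGI_RATIONAL` (a `Numerator` and `Denominator` pair) rather than an integer so that rates such as 59.94 Hz (60000/1001) can be expressed exactly. A small sketch of converting such a value to hertz, using a hypothetical `RationalModel` mirror of the structure and a hypothetical `ToHertz` helper; returning 0 for a zero denominator is this sketch's own choice.

```cpp
#include <cassert>
#include <cmath>

// Mirrors DXGI_RATIONAL: a refresh rate stored as Numerator / Denominator.
struct RationalModel {
    unsigned Numerator;
    unsigned Denominator;
};

// Converts the rational to hertz; a zero denominator yields 0 in this sketch.
double ToHertz(RationalModel r) {
    if (r.Denominator == 0) return 0.0;
    return static_cast<double>(r.Numerator) / r.Denominator;
}
```

The framework's `60/1` setting corresponds to exactly 60 Hz.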

In the following data member descriptions, we only cover the common flags and options that are most important to a beginner at this point. For a description of further flags and options, refer to the SDK documentation.

1. BufferDesc: This structure describes the properties of the back buffer we want to create. The main properties we are concerned with are the width, height, and pixel format; see the SDK documentation for further details on the other members.

2. SampleDesc: The number of multisamples and quality level; see §4.1.8. For single sampling, specify a sample count of 1 and quality level of 0.

3. BufferUsage: Specify DXGI_USAGE_RENDER_TARGET_OUTPUT since we are going to be rendering to the back buffer (i.e., use it as a render target).

4. BufferCount: The number of buffers to use in the swap chain; specify two for double buffering.

5. OutputWindow: A handle to the window we are rendering into.

6. Windowed: Specify true to run in windowed mode or false for full-screen mode.

7. SwapEffect: Specify DXGI_SWAP_EFFECT_FLIP_DISCARD.

8. Flags: Optional flags. If you specify DXGI_SWAP_CHAIN_FLAG_ALLOW_MODE_SWITCH, then when the application is switching to full-screen mode, it will choose a display mode that best matches the current application window dimensions. If this flag is not specified, then when the application is switching to full-screen mode, it will use the current desktop display mode.
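Two constraints on the fields above are easy to violate when combining them: flip-model swap effects such as `DXGI_SWAP_EFFECT_FLIP_DISCARD` require a `BufferCount` of at least 2, and they do not support multisampled back buffers, so `SampleDesc` must specify a count of 1 and quality 0. The hypothetical `Validate` function below checks a reduced mirror of `DXGI_SWAP_CHAIN_DESC` against these rules, plus the single-sampling rule from the `SampleDesc` item; it is a sketch, not a complete list of what DXGI validates.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical subset of DXGI_SWAP_CHAIN_DESC holding only the checked fields.
struct SwapChainDescModel {
    unsigned SampleCount;
    unsigned SampleQuality;
    unsigned BufferCount;
    bool FlipModel;  // true for DXGI_SWAP_EFFECT_FLIP_DISCARD / FLIP_SEQUENTIAL
};

// Returns the problems found; an empty result means these checks pass.
std::vector<std::string> Validate(const SwapChainDescModel& d) {
    std::vector<std::string> problems;
    if (d.FlipModel && d.BufferCount < 2)
        problems.push_back("flip model needs at least two buffers");
    if (d.FlipModel && (d.SampleCount != 1 || d.SampleQuality != 0))
        problems.push_back("flip model back buffers cannot be multisampled");
    if (d.SampleCount == 1 && d.SampleQuality != 0)
        problems.push_back("single sampling requires quality 0");
    return problems;
}
```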

After we have described our swap chain, we can create it with the IDXGIFactory::CreateSwapChain method:

```cpp
HRESULT IDXGIFactory::CreateSwapChain(
    IUnknown *pDevice,              // Pointer to ID3D12CommandQueue.
    DXGI_SWAP_CHAIN_DESC *pDesc,    // Pointer to swap chain description.
    IDXGISwapChain **ppSwapChain);  // Returns created swap chain interface.
```

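The following code shows how we create the swap chain in our sample framework. Observe that this function has been designed so that it can be called multiple times: it destroys the old swap chain before creating the new one. This allows us to recreate the swap chain with different settings; in particular, the framework uses it to change the multisampling settings at runtime. Be aware, however, that flip-model swap effects such as DXGI_SWAP_EFFECT_FLIP_DISCARD do not support multisampled back buffers, so swap chain creation fails if SampleDesc.Count is greater than 1 with this swap effect; in that case, multisampled rendering must go to a separate render target that is then resolved into the back buffer.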

```cpp
DXGI_FORMAT mBackBufferFormat = DXGI_FORMAT_R8G8B8A8_UNORM;

void D3DApp::CreateSwapChain()
{
    // Release the previous swapchain we will be recreating.
    mSwapChain.Reset();

    DXGI_SWAP_CHAIN_DESC sd;
    sd.BufferDesc.Width = mClientWidth;
    sd.BufferDesc.Height = mClientHeight;
    sd.BufferDesc.RefreshRate.Numerator = 60;
    sd.BufferDesc.RefreshRate.Denominator = 1;
    sd.BufferDesc.Format = mBackBufferFormat;
    sd.BufferDesc.ScanlineOrdering = DXGI_MODE_SCANLINE_ORDER_UNSPECIFIED;
    sd.BufferDesc.Scaling = DXGI_MODE_SCALING_UNSPECIFIED;
    sd.SampleDesc.Count = m4xMsaaState ? 4 : 1;
    sd.SampleDesc.Quality = m4xMsaaState ? (m4xMsaaQuality - 1) : 0;
    sd.BufferUsage = DXGI_USAGE_RENDER_TARGET_OUTPUT;
    sd.BufferCount = SwapChainBufferCount;
    sd.OutputWindow = mhMainWnd;
    sd.Windowed = true;
    sd.SwapEffect = DXGI_SWAP_EFFECT_FLIP_DISCARD;
    sd.Flags = DXGI_SWAP_CHAIN_FLAG_ALLOW_MODE_SWITCH;

    // Note: Swap chain uses queue to perform flush.
    ThrowIfFailed(mdxgiFactory->CreateSwapChain(
        mCommandQueue.Get(),
        &sd,
        mSwapChain.GetAddressOf()));
}
```

4.3.6 Create the Descriptor Heaps

We need to create the descriptor heaps to store the descriptors/views (§4.1.6) our application needs. A descriptor heap is represented by the ID3D12DescriptorHeap interface and is created with the ID3D12Device::CreateDescriptorHeap method. In this chapter’s sample program, we need SwapChainBufferCount render target views (RTVs) to describe the buffer resources in the swap chain we will render into, and one depth/stencil view (DSV) to describe the depth/stencil buffer resource used for depth testing. Therefore, we need one heap for storing SwapChainBufferCount RTVs and another heap for storing one DSV. These heaps are created with the following code:

```cpp
ComPtr<ID3D12DescriptorHeap> mRtvHeap;
ComPtr<ID3D12DescriptorHeap> mDsvHeap;

void D3DApp::CreateRtvAndDsvDescriptorHeaps()
{
    D3D12_DESCRIPTOR_HEAP_DESC rtvHeapDesc;
    rtvHeapDesc.NumDescriptors = SwapChainBufferCount;
    rtvHeapDesc.Type = D3D12_DESCRIPTOR_HEAP_TYPE_RTV;
    rtvHeapDesc.Flags = D3D12_DESCRIPTOR_HEAP_FLAG_NONE;
    rtvHeapDesc.NodeMask = 0;
    ThrowIfFailed(md3dDevice->CreateDescriptorHeap(
        &rtvHeapDesc, IID_PPV_ARGS(mRtvHeap.GetAddressOf())));

    D3D12_DESCRIPTOR_HEAP_DESC dsvHeapDesc;
    dsvHeapDesc.NumDescriptors = 1;
    dsvHeapDesc.Type = D3D12_DESCRIPTOR_HEAP_TYPE_DSV;
    dsvHeapDesc.Flags = D3D12_DESCRIPTOR_HEAP_FLAG_NONE;
    dsvHeapDesc.NodeMask = 0;
    ThrowIfFailed(md3dDevice->CreateDescriptorHeap(
        &dsvHeapDesc, IID_PPV_ARGS(mDsvHeap.GetAddressOf())));
}
```

In our application framework, we define

```cpp
static const int SwapChainBufferCount = 2;
int mCurrBackBuffer = 0;
```

and we keep track of the current back buffer index with mCurrBackBuffer (recall that the front and back buffers get swapped in page flipping, so we need to track which buffer is the current back buffer so we know which one to render to).
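Given mCurrBackBuffer and the cached mRtvDescriptorSize, the RTV for the current back buffer can be located by offsetting from the start of the RTV heap (as returned by `GetCPUDescriptorHandleForHeapStart`) by mCurrBackBuffer descriptors, and the index advances modulo SwapChainBufferCount after each present. The sketch below shows only that arithmetic, with a hypothetical `CpuDescriptorHandleModel` standing in for `D3D12_CPU_DESCRIPTOR_HANDLE` (which wraps a `SIZE_T ptr`); `OffsetHandle` and `NextBackBuffer` are illustrative names, not framework functions.

```cpp
#include <cassert>
#include <cstddef>

// Mirrors D3D12_CPU_DESCRIPTOR_HANDLE, which wraps a pointer-sized integer.
struct CpuDescriptorHandleModel {
    std::size_t ptr;
};

constexpr int SwapChainBufferCount = 2;

// Handle of the index-th descriptor in a heap: start + index * increment size.
// This is the arithmetic the d3dx12.h helper CD3DX12_CPU_DESCRIPTOR_HANDLE does.
CpuDescriptorHandleModel OffsetHandle(CpuDescriptorHandleModel heapStart, int index,
                                      unsigned descriptorSize) {
    return {heapStart.ptr + static_cast<std::size_t>(index) * descriptorSize};
}

// After each present, the next buffer in the chain becomes the back buffer.
int NextBackBuffer(int currBackBuffer) {
    return (currBackBuffer + 1) % SwapChainBufferCount;
}
```

For example, with an arbitrary heap start of 1000 and a descriptor size of 32 (real sizes come from GetDescriptorHandleIncrementSize), back buffer 0's RTV is at 1000 and back buffer 1's RTV is at 1032.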