Game Programming Algorithms and Techniques: A Platform-Agnostic Approach (2014)

Chapter 5. Input

Without input, games would be a static form of entertainment, much like film or television. It is the fact that the game responds to the keyboard, mouse, controller, or other input device that enables interactivity.

This chapter takes an in-depth look at a wide variety of input devices, including those that are predominantly for mobile devices. It further covers how a high-level input system might be designed and implemented.

Input Devices

As shown in Figure 5.1, there is a host of types of input devices, some more common than others. Although a keyboard, mouse, or controller might be the most familiar input device for a PC or console gamer, on the mobile side of things touch screens and accelerometers dominate. Other more recent input devices include the WiiMote, Kinect, guitar controllers, and head-tracking devices for virtual reality headsets. Regardless of the device, however, certain core techniques are applied when the device is queried for information.

Figure 5.1 A variety of input devices.

Most forms of input can be broken down into two categories: digital and analog. A digital form of input is one that has a binary state: It can be either “on” or “off.” For example, a key on a keyboard typically is digital—either the spacebar is pressed, or it isn’t. Most keyboards do not have an in-between state. An analog form of input is one where there can be a range of values returned by the device. One common analog device is a joystick, which will have a range of values in two dimensions.

Whereas a keyboard may only have digital inputs, many input devices have both analog and digital components. Take, for instance, the Xbox 360 controller: The directional pad and the face buttons are digital, but the thumb sticks and triggers are analog. Note that the face buttons on a controller are not always digital, as in the case of the Playstation 2, but it’s a typical implementation.

One other consideration for some types of games is that the input system may need to respond to chords (multiple button presses at once) or sequences (a series of inputs). A genre where these are prevalent is the fighting game, which often has special moves that utilize both chords and sequences. For example, in the later Street Fighter games, if a Blanka player presses forward and all three punch or kick buttons at the same time (a chord), the character will quickly jump forward. Similarly, all Street Fighter games have the concept of a fireball, which is performed with a quarter circle forward followed by a punch button (a sequence). Handling chords and sequences is beyond the scope of this chapter, but one approach is to utilize a state machine designed to recognize the different special moves as they are performed.

Digital Input

Other than for text-based games, standard console input (such as cin) typically is not used. For graphical games, the console usually is disabled altogether, so it would be impossible to use standard input in any event. Most games will instead use a library that enables much more granular device queries—one possibility for a cross-platform title might be Simple DirectMedia Layer (SDL; www.libsdl.org).

With libraries such as SDL, it is possible to query the current state of a digital input device. In such systems, it usually is possible to grab an array of Booleans that describe the state of every key on the keyboard (or any other digital input device). So if the spacebar is pressed, the index corresponding to the spacebar would be set to true. Memorizing which index numbers correspond to which keys would be tiresome, so usually there is a keycode enumeration that maps each index to a specific name. For example, if you want to check the status of the spacebar, you might be able to check the index defined by K_SPACE.

Now suppose you are making a simple game where pressing the spacebar causes your ship to fire a missile. It might be tempting to have code similar to this:

if IsKeyDown(K_SPACE)

fire missile

end

But the problem with this code is that even if the player quickly presses and releases the spacebar, the if statement will be true for multiple consecutive frames. That’s because if the game runs at 60 FPS, the player would have to press and release the spacebar within the span of 16ms for it to only be detected on one single frame. Because this is unlikely to occur, each tap of the spacebar would probably fire three or four missiles on consecutive frames. Furthermore, if the player simply holds down the spacebar, a missile will fire once per frame until it’s released. This might be the desired behavior, but it also might completely imbalance the game.

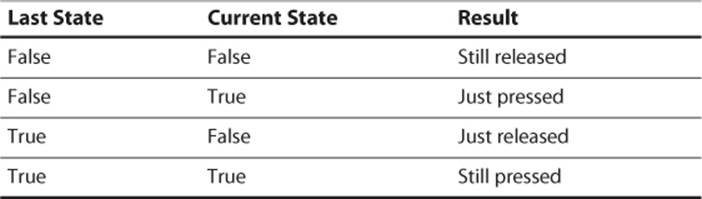

A simple but effective improvement is to track the state of the key on both the current frame and the previous frame. Because there are now two Boolean values, there are a total of four possible combinations, and each maps to a logically different state, as detailed in Table 5.1.

Table 5.1 Last and Current Key State Combinations

So if on the last frame the spacebar was not pressed, but on the current frame it is pressed, that would correspond to the “just pressed” result. This means if the missile fire action were instead mapped to a “just pressed” spacebar action, the ship would only fire one missile per discrete button press.

This system also provides more flexibility than the previous approach. Suppose our game allows the player to charge up his missile the longer he holds down the spacebar. In this case, we would begin charging the missile on “just pressed,” increase the power of the missile the longer the spacebar is “still pressed,” and finally launch the missile when “just released” occurs.

In order to support these four different results, we first must declare an enum of possibilities:

enum KeyState

StillReleased,

JustPressed,

JustReleased,

StillPressed

end

Then, when the input is updated, the current snapshot needs to be grabbed:

function UpdateKeyboard()

// lastState and currentState are arrays defined elsewhere,

// which store the entire state of the keyboard.

lastState = currentState

currentState = get keyboard state

end

Finally, a function can be implemented that returns the correct KeyState value depending on the permutation:

function GetKeyState(int keyCode)

if lastState[keyCode] == true

if currentState[keyCode] == true

return StillPressed

else

return JustReleased

end

else

if currentState[keyCode] == true

return JustPressed

else

return StillReleased

end

end

end

With this function, it is then possible to check the KeyState of any key. Later in this chapter, we will discuss an event-based system that builds on this. But for now, let’s move on to analog devices.

Analog Input

Because analog devices have a wide range of values, it is common to have spurious input. Suppose a joystick has its x and y values represented by a signed 16-bit integer. This means both the x and y values can range from –32,768 to +32,767. If the joystick is placed down on a flat surface and the player doesn’t touch it, in theory both the x and y values should be zero, because the joystick is not being touched. However, in practice the values will be around zero, but rarely precisely zero.

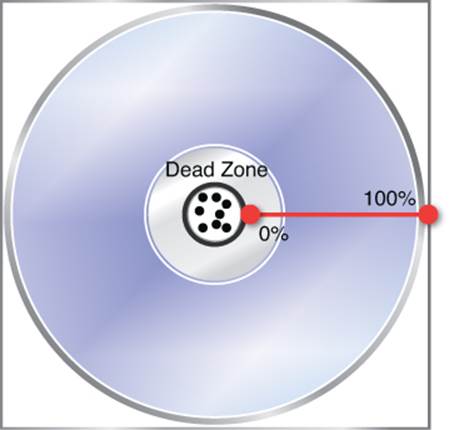

Because of this, if the raw analog input was just applied directly to the movement of a character, the character would never stay still. This means you could put down your controller yet still have your character move onscreen. To resolve this problem, most games implement some sort ofanalog filtering, which is designed to eliminate spurious input data. Figure 5.2 illustrates how this filtering might be used to implement a dead zone, which is an area around the center of the joystick input range that is ignored.

Figure 5.2 Joystick dead zone causes input within the inner circle to be ignored.

A very basic dead zone implementation would be to set the x and y values to zero in cases where they are relatively close to zero. If the values range between +/– 32K, perhaps a ~10% dead zone of 3,000 could be utilized:

int deadZone = 3000

Vector2 joy = get joystick input

if joy.x >= -deadZone && joy.x <= deadZone

joy.x = 0

end

if joy.y >= -deadZone && joy.y <= deadZone

joy.y = 0

end

However, there are two problems with this approach. First of all, the dead zone will be a square instead of a circle. This means if you press the joystick such that the x and y values of the joystick are both slightly less than the dead zone value, you won’t get any movement even though you have moved the joystick beyond the 10% threshold.

Another issue is that this approach does not use the full range of values available. There is a smooth ramp from 10% speed to 100% speed, but everything below 10% is lost. We would prefer a solution where the movement can be anywhere from 0% speed to 100% speed. Or in other words, we want valid movement input to start at slightly higher than 0% speed, rather than slightly higher than 10% speed. This will require modifying the range so an “original” value of 55% speed, which is half way between 10% speed and 100% speed, is instead filtered into 50% speed.

To solve this problem, rather than treating the x and y values as individual components, we can treat the joystick input as a 2D floating point vector. Then vector operations can be performed in order to filter for the dead zone.

float deadZone = 3000

float maxValue = 32677

Vector2 joy = get joystick input

float length = joy.length()

// If the length < deadZone, we want no input

if length < deadzone

joy.x = 0

joy.y = 0

else

// Calculate the percent between the deadZone and maxValue circles

float pct = (length – deadZone) / (maxValue – deadZone)

// Normalize vector and multiply to get correct final value

joy = joy / length

joy = joy * maxValue * pct

end

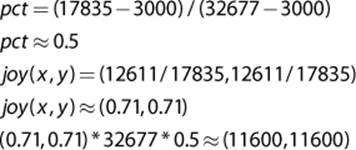

Let’s test out the preceding pseudocode. The midpoint between 32,677 and 3,000 is ~17,835, so a vector with that length should yield a percent of ~50%. One such vector with that length would be x = 12,611 and y = 12,611. Because the length of 17,835 is greater than the dead zone of 3,000, the else case will be utilized and the following calculations will occur:

After the analog filtering is applied, the resultant vector has a length of ~16,404. In other words, a value that’s 50% between 3,000 and 32,677 was adjusted to be the value 50% between 0 and 32,677, which allows us to get the full range of movement speeds from 0% all the way to 100%.

Event-Based Input Systems

Imagine you are driving a kid to an amusement park. The kid is very excited, so every minute she asks, “Are we there yet?” She keeps asking this over and over again until you finally arrive, at which point the answer to her question is yes. Now imagine that instead of one kid, you’re driving a car full of kids. Every single kid is interested in going to the amusement park, so each of them repeatedly asks the same question. Not only is this annoying for the driver, it’s also a waste of energy on the part of the kids.

This scenario is essentially a polling system in that each kid is polling for the result of the query. For an input system, a polling approach would be one where any code interested in whether the spacebar was “just pressed” would have to manually check. Over and over again on every single frame, and in multiple locations, GetKeyState would be called on K_SPACE. This not only leads to a duplication of effort, but it could also introduce bugs if the state of the keyboard changes in the middle of the frame.

If we return to the amusement park analogy, imagine once again you have that car full of kids. Rather than having the kids poll over and over again, you can employ an event-based or push system. In an event-based system, the kids “register” to an event that they care about (in this case, arrival at the amusement park) and are then notified once the event occurs. So the drive to the amusement park is serene, and once you arrive you simply notify all the kids that you’ve arrived.

An input system can be designed to be event based as well. There can be an overarching system that accepts registrations to particular input events. When the event occurs, the system can then notify any code that registered to said event. So all the systems that must know about the spacebar can register to a spacebar “just pressed” event, and they will all be notified once it occurs.

Note that the underlying code for an event-based input system still must use a polling mechanism. Just like how you as the driver must continue to check whether or not you’ve arrived at the park so you can notify the kids, the event-based input system must be polling for the spacebar so it can notify the appropriate systems. However, the difference is that the polling is now being performed in only one location in code.

A Basic Event System

Certain languages, such as C#, have native support for events. However, even if events are not natively supported by your language of choice, it is very much possible to implement an event system. Nearly every language has support for saving a function in a variable, whether it’s through an anonymous function, lambda expression, function object, or function pointer. As long as functions can be saved to variables, it is possible to have a list of functions that are registered to a particular event. When that event is triggered, those registered functions can then each be executed.

Suppose you want to implement a simple event-driven system for mouse clicks. Different parts of the game code will want to be notified when a mouse click occurs and in which location on screen. In this case, there needs to be a central “mouse manager” that allows interested parties to register to the mouse click event. The declaration for this class might look something like this:

class MouseManager

List functions

// Accepts functions passed as parameters with signature of (int, int)

function RegisterToMouseClick(function handler(int, int))

functions.Add(handler)

end

function Update(float deltaTime)

bool mouseClicked = false

int mouseX = 0, mouseY = 0

// Poll for a mouse click

...

if mouseClicked

foreach function f in functions

f(mouseX, mouseY)

end

end

end

end

Then any party interested in receiving a mouse click would be able to register a function by calling RegisterToMouseClick in the following manner:

// Register "myFunction" to mouse clicks

MouseManager.RegisterToMouseClick(myFunction)

Once registered, the function will be automatically called every time a mouse click occurs. The best way to make the MouseManager globally accessible is to use the singleton design pattern, which is a way to provide access to a class that has only one instance in the entire program.

The event system used for the mouse manager could easily be expanded so that it covers all button presses—it wouldn’t be that much more work to allow functions to be registered to every key on the keyboard, for example. But there are a few issues with the approach used in this basic event system.

Input in Unreal

The Unreal Engine uses a fairly customizable event-driven system for player input. The key bindings are all defined in an INI file, and each key binding can be directly mapped to one or more functions written in the Unreal scripting language. For example, if there were a Fire script function that causes the player to fire his weapon, it could be mapped to both the left mouse button and the right trigger on a controller with the following bindings:

Bindings=(Name="LeftMouseButton",Command="Fire")

Bindings=(Name="XboxTypeS_RightTrigger",Command="Fire")

By default, these bindings get triggered when the key is “just pressed.” It’s also possible to have different actions occur when the key is “just released” if the onrelease modifier is used. For example, the following binding would cause the StartFire script function to be called when the left mouse button is first pressed, and then the StopFire function when it’s released:

Bindings=(Name="LeftMouseButton",Command="StartFire | onrelease

StopFire")

The pipe operator can be used to actually map any number of script functions to one key press, so there is a great deal of flexibility. One thing that this event system is not really designed to handle, however, is menu input. For UI screens, Unreal instead provides an UpdateInputfunction that can be overloaded to create the desired behavior.

Further information on the Unreal binding system can be found on Epic’s site at http://udn.epicgames.com/Three/KeyBinds.html.

One issue is that the current system has a tight coupling between specific buttons and events. That is to say interested parties must register to a specific key press (left mouse button) rather than an abstract action (such as fire weapon). What if one player wants to fire his weapon with the spacebar instead of the left mouse button? Any firing code that was registered directly to the left mouse button wouldn’t work properly in this case. So an ideal input system would allow for abstract actions that can be bound to specific buttons.

Another issue is that the event system described does not allow any one registered party to prevent any other parties from receiving the event. Suppose you are making a simple RTS (such as the tower defense game in Chapter 14, “Sample Game: Tower Defense for PC/Mac”). If such a game were on a PC, it would make sense that a mouse click could be directed either at the user interface (the menus) or at the game world itself (selecting units). Because the UI is displayed on top of the game world, the UI needs to have first priority on mouse clicks. This means when a mouse click occurs, the UI must first inspect it and determine whether the click is directed at the UI. If it is, then that click event should not be dispatched to the game world. But in our current input system, both the game world and UI would receive the mouse click, which could potentially cause unintended behavior.

A More Complex System

The system covered in this section does not allow direct registration of a function to a specific event. It instead creates a list of active input actions that can then be queried by interested parties. So while perhaps it is not an event-based system in the strict definition, it is fairly similar. It also happens to be that the system outlined here is very similar to the one used in Chapter 14’s tower defense game.

The basic premise of this system is that there is a map of key bindings. Recall that a map is a set of (key, value) pairs, which, depending on the type of map, may or may not be sorted. In this system, the key is the name of the binding (such as “Fire”), and the value is information about that binding such as what key it is mapped to and when it gets triggered (just pressed, still pressed, and so on). Because we want to support multiple bindings for actions (so “Fire” can be the left mouse button or the spacebar), we’d ideally select a type of map that does allow duplicate entries with the same key.

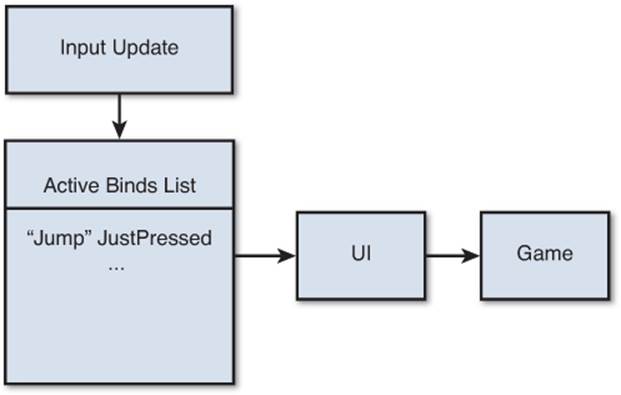

Every frame, the input system goes through the map of key bindings and determines which bindings (if any) are active. The active bindings for that specific frame are then stored in an “active” bindings map. This map of active bindings is first provided to the UI system, which will go through and determine whether there are any actions that affect the UI. If there are such actions, they will be removed from the active bindings map and processed. Any remaining bindings are then passed on to the gameplay system for processing. Figure 5.3 visualizes how the bindings are passed between the systems.

Figure 5.3 Proposed input system.

Although it might seem odd that all the bindings in the game, including actions such as “Jump,” might be first processed by the UI, it provides a great deal of flexibility. For example, in a tutorial it’s common to have a dialog box that only goes away once a particular action is performed. In this case, you could have the “Press ... to Jump” dialog box pause the game and only go away once the button is pressed.

The code for this system is not terribly complex and is provided in Listing 5.1. If you’d like to see a live implementation of a system very similar to this one, take a look at InputManager.cs, which is provided in the source for Chapter 14’s tower defense game.

Listing 5.1 A More Complex Input Manager

// KeyState enum (JustPressed, JustReleased, etc.) from earlier in this

chapter

...

// GetKeyState from earlier in this chapter

...

// BindInfo is used for the "value" in the map

struct BindInfo

int keyCode

KeyState stateType

end

class InputManager

// Stores all the bindings

Map keyBindings;

// Stores only the active bindings

Map activeBindings;

// Helper function to add a binding to keyBindings

function AddBinding(string name, int code, KeyState type)

keyBindings.Add(name, BindInfo(code, type))

end

// Initializes all bindings

function InitializeBindings()

// These could be parsed from a file.

// Call AddBinding on each binding.

// For example, this would be a binding "Fire" mapped to

// enter's keyCode and a KeyState of JustReleased:

// AddBinding("Fire", K_ENTER, JustReleased)

...

end

function Update(float deltaTime)

// Clear out any active bindings from last frame

activeBindings.Clear()

// KeyValuePair has a .key and .value member for the

// key and value from the map, respectively.

foreach KeyValuePair k in keyBindings

// Use previously-defined GetKeyState to grab the state

// of this particular key code.

if GetKeyState(k.value.keyCode) == k.value.stateType

// If this matches, this binding is active

activeBindings.Add(k.key, k.value)

end

end

// If there are any active bindings, send it to the UI first

if activeBindings.Count() != 0

// Send active bindings to UI

...

// Send active bindings to rest of game

...

end

end

end

One inefficiency of this system is that if there are multiple bindings that use the same key, their state will be checked multiple times every frame. This could definitely be optimized, but I chose not to for the purposes of simplicity and ease of implementation.

It also would be possible to extend this system further so that arbitrary functions could be mapped to the bindings, perhaps with the option of either registering through the UI or the game. But in the particular case of the tower defense game, doing so would be an example of over-architecting a system. If it’s not really necessary for a game to have so much flexibility, there’s no reason to add further complication to the code for the input manager.

Mobile Input

Most smartphones and tablets have input mechanisms that differ from the traditional keyboard, mouse, or controller. Because of this, it is fitting to have a section focused on the input varieties typically encountered on mobile devices.

Note that simply because a mobile device has a large number of input options at its disposal does not mean that most games should use them. Mobile games must be very careful not to have an overly complex control scheme, which may turn away potential players.

Touch Screens and Gestures

Touch screens enable players to interact with the device’s screen using their fingers. At a basic level, if only single finger tapping is allowed in the game, it could be implemented in the same manner as mouse clicking. However, most touch devices support the idea of multi-touch, which means multiple fingers can be active on the screen at the same time.

Some mobile games use multi-touch to implement a virtual controller, which has a virtual joystick and virtual buttons that the player can interact with. This is especially popular for mobile games that are a version of an existing console game, but it can sometimes be a frustrating experience for the player because a virtual controller doesn’t have the same tactile feedback as an actual controller.

Something that can only be done with a touch screen, though, is a gesture, which is a series of touch actions that cause a specific behavior. An example of a gesture would be the very popular “pinch-to-zoom” on iOS devices, where the user can pinch her index finger and thumb together to zoom in, or pull them apart to zoom out.

Thankfully, both the iOS and Android SDKs provide ways to easily detect system-supplied gestures, including pinch-to-zoom. In iOS, there is a set of classes that inherits from UIGestureRecognizer, and a function to handle said gestures can easily be added to any program. On the Android side of things, there is the android.gesture package, which contains several libraries that do the same.

For customized gestures, things get a bit hairier. For iOS, really the only option is to create a custom subclass of UIGestureRecognizer that will go through the touch events and try to detect the gesture. But in the case of the Android SDK, a very handy “Gestures Builder” app can be used to create custom gestures.

You could potentially recognize gestures in any number of ways, but one popular algorithm is the Rubine algorithm, which was outlined in Dean Rubine’s 1991 paper “Specifying gestures by example.” Although the Rubine algorithm was originally developed for pen-based gestures, it can also be applied to either single-finger gestures or each finger of a multi-finger gesture.

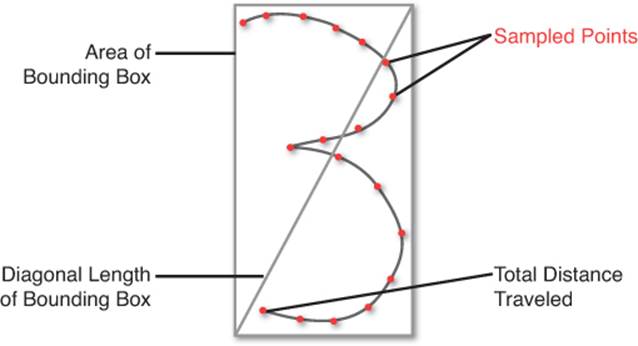

To utilize the Rubine algorithm, we first must create a library of gestures. The way this library is created is by drawing the desired gestures in a test program. As the gesture is drawn, 14 mathematical properties of the gesture, called features, are calculated. These features include elements such as the total time to draw the gesture, the total distance travelled, the area of the gesture, the midpoint of the gesture, the initial position, and so on. Figure 5.4 illustrates several of the features in the original Rubine algorithm.

Figure 5.4 Some of the Rubine algorithm features.

Once the features have been calculated for a particular gesture, it is saved into the library. And once the library is complete, it can then be loaded by whichever program that wants to recognize said gestures. Then when the end user starts drawing a gesture, the particular gesture has the same mathematical features calculated for it. Once the user lifts the pen (or finger), the algorithm uses statistical regression to determine which gesture, if any, closely matches the user’s gesture.

Although it’s true that not many games will want to use advanced gestures, there certainly are some instances where they are used. For example, Brain Age on the Nintendo DS requires users to write in numbers for solutions to math problems. Another example might be a hangman game that’s designed to teach kids how to properly write letters of the alphabet.

Accelerometer and Gyroscope

The accelerometer and gyroscope are the primary mechanisms for determining small movements on a mobile device. Early iOS and Android devices only had accelerometers, but now essentially all mobile devices have both. Although we will discuss both alongside each other, they actually serve two distinct purposes.

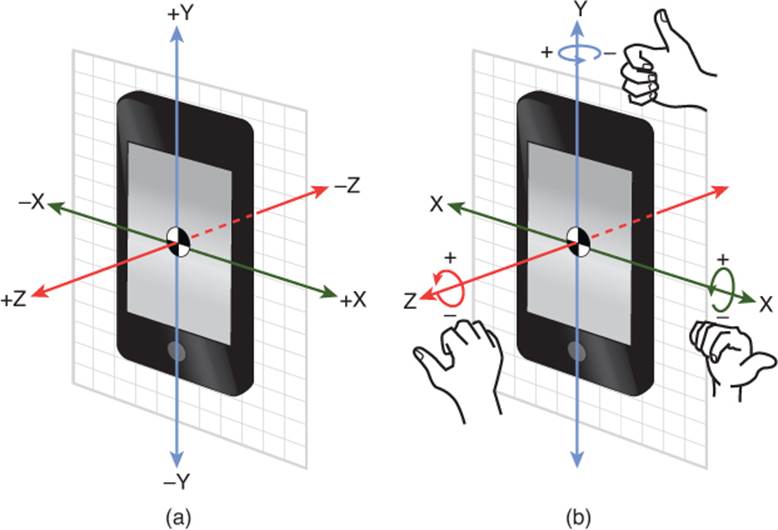

The accelerometer detects acceleration along the coordinate space represented by the device itself at the origin. On iOS devices, the orientation of the axes is such that if you hold the device in a portrait orientation, the y-axis is up and down on the screen, the x-axis is left and right along the screen, and the z-axis is into and out of the screen. So, for example, if the device is in a portrait orientation and moves up, the acceleration would be registered along the y-axis. These axes are illustrated in Figure 5.5(a).

Figure 5.5 Accelerometer (a) and gyroscope (b).

Because an accelerometer measures acceleration, there is one constant that will always be applied to the device: gravity. This means that if the device is at rest, the accelerometer can roughly determine the orientation the device is held at based on which axis is being affected by gravity. So if the device is in a portrait orientation, gravity should be along the device’s y-axis, whereas if it’s in a landscape orientation, gravity would be along the device’s x-axis.

A gyroscope, on the other hand, is designed to measure rotation about the device’s principle axes, as illustrated in Figure 5.5(b). This means that it is possible to get very precise measurements of the device’s orientation with the gyroscope. One non-gaming example that the gyroscope is well-suited for is a bubble level app that can determine whether or not a picture on the wall is level.

Trying to derive meaningful information from the raw accelerometer and gyroscope data can be challenging, so the device SDKs typically have higher-level functionality that aggregates it into easy-to-use information. For example, on iOS it is possible to use CMDeviceMotion to extract information such as the gravity vector, user acceleration, and attitude (or orientation of the device). And each of these components is provided with the usual mathematical objects—gravity and user acceleration are represented by 3D vectors, and attitude can be queried either as Euler angles, a rotation matrix, or a quaternion.

Other Mobile Input

Some types of games might expand and use additional methods of input that are only available on mobile devices. For example, an augmented reality game might use data from the camera and draw on top of objects in the physical world. Still other games might use GPS data to determine if there are any other players nearby who are playing the same game as you. Some of these input mechanisms will definitely carry over to wearable computing (such as virtual reality devices). However, even though there have been exciting developments in this area in recent years (such as the Oculus Rift), VR still has a little bit to go before it becomes a part of mainstream gaming.

Summary

Because every game must have input, it’s a very fundamental concept. Both digital and analog devices exist in many different forms, but the techniques used to query them are often similar. With digital devices, we typically want to add some type of meaning to the binary “on” and “off” state. With analog devices, we often need to have some type of filtering to remove spurious input. But on a higher level, we want a way to abstract discrete input events into actions performed by the player, which is where event-based systems as well as gestures come into play.

Review Questions

1. What is the difference between a digital and an analog device? Give an example of each.

2. In input, what is a chord and what is a sequence?

3. Why are the “just pressed” and “just released” events necessary, as opposed to simply querying the state of the device?

4. What is analog filtering, and what problem does it try to solve?

5. What advantages does an event-based input system have over a polling system?

6. In a basic event-based system, how can you track which functions are registered to the event?

7. In a mouse-based game, where clicks can be processed by either the UI or the game world, what is one method you can use to ensure the UI has the first opportunity to process a click?

8. What problem does the Rubine algorithm attempt to solve? How, roughly speaking, does it function?

9. Outline the differences between an accelerometer and gyroscope.

10. What types of input mechanisms might an augmented reality game use, and for what purpose?

Additional References

Abrash, Michael. Ramblings in Valve Time. http://blogs.valvesoftware.com/abrash/. This blog, written by game industry legend Michael Abrash, features quite a few insights into the future of virtual and augmented reality.

Rubine, Dean. “Specifying gestures by example.” In SIGGRAPH ’91: Proceedings of the 18th annual conference on computer graphics and interactive techniques. (1991): 329–337. This is the original paper that outlines the Rubine gesture-recognition algorithm.

All materials on the site are licensed Creative Commons Attribution-Sharealike 3.0 Unported CC BY-SA 3.0 & GNU Free Documentation License (GFDL)

If you are the copyright holder of any material contained on our site and intend to remove it, please contact our site administrator for approval.

© 2016-2026 All site design rights belong to S.Y.A.