Client-Server Web Apps with JavaScript and Java (2014)

Chapter 7. Rapid Development Practices

To find my home in one sentence, concise, as if hammered in metal. Not to enchant anybody. Not to earn a lasting name in posterity. An unnamed need for order, for rhythm, for form, which three words are opposed to chaos and nothingness.

—Czeslaw Milosz

Developer Productivity

Conciseness, efficiency, and simplicity are highly valued in modern culture. Perhaps this is because of the relative abundance and complexity that distinguishes our time from previous generations. A web application built using simple, concise code and efficient and streamlined practices will result in a final product that is easier to maintain, easier to adapt, and will ultimately be more profitable. Likewise, a programmer’s workflow and tools should be efficient and avoid unnecessary complexity. Because there are so many options available, it is particularly important for a developer to step back from coding and consider if the workflow in use is truly optimal and productive.

Along with a fundamental shift to a client-server web paradigm, there have been incremental changes and improvements to development workflows. These improvements eliminate extraneous, unnecessary work by using reasonable defaults and removing rarely used configuration options. Simplification of workflows results in smaller, tighter feedback loops. Frequent feedback promotes quicker recognition and elimination of problems. The early identification and remediation of bugs results in increased productivity and happier programmers. Furthermore, it introduces the possibility of creating complex, high-quality software that, ironically, requires less time and fewer resources to construct and maintain.

COPIOUS HAND-WAVING…

Measuring programmer productivity is notoriously difficult. Measures such as hours worked, lines of code per day, or defects resolved are objectively measurable, but not terribly meaningful. The unique purpose, time frame, and intended longevity of a project make it difficult to compare to others. A truly formal and objective measurement that fairly and accurately reflects productivity across all projects simply does not exist. In practice, most software development managers engage in a bit of artful spreadsheet manipulation and develop a knack for accurately assessing the degree of correlation between the estimates given by their developers and actual outcomes. With that understood, this chapter simply assumes that improvements to processes can be made that benefit both individual and group performance.

Agile methodology was initially presented as a correction to waterfall methods, which tend to be encumbered early on with a large amount of effort and activity that actually prevents meaningful progress. Limiting this tendency toward “analysis-paralysis” and recognizing the need to immediately begin creating a workable product was refreshing when introduced. Unfortunately, the term has become diluted over time and now can often simply suggest a “ready-fire-aim” approach where there is a great deal of activity early on with no well-defined goal. In this context, though it might seem counterintuitive, being truly productive requires one initially to cease working. Obviously, this is not meant as an absolute cessation of activity. It is the ability to sacrifice immediately measurable and visible progress for long-term project quality and productivity.

This is a significant challenge. Management wants to see progress on the project at hand. Developers like coding. Users want evidence that work has commenced. But starting prematurely can result in the wrong (or at least less than ideal) tool or approach being adopted. An inapplicable convention or tradition introduced to a project can be very damaging. An early deviation can set a project on a bad trajectory and lead to problems that compound as the project progresses. It takes a bit of vision to realize that ceasing work to do some up-front analysis can result in far more productive work and higher-quality results.

Up-front analysis has suffered quite a bit with the adoption of pseudo-agile methodologies (“pseudo” is intended to indicate that what is suggested here is not opposed to an agile approach). It is far more beneficial to measure twice and cut once. This applies on many levels. Development team leads need to make decisions relevant to their projects. Individual developers need to remain aware of the best practices and emerging techniques that might be applicable to the problem at hand. Having the right tool or approach for a given job can reduce the time and effort required to complete the initial work, and done correctly, can result in a system that is simpler and easier to maintain in the long run. The pressure to focus all energy and attention on whatever task is deemed most urgent must be resisted to make the fundamental adjustments required to work productively. An agile approach that welcomes changing business requirements throughout the project can be used, but foundational technical decisions and related developer workflow should generally not be significantly affected.

PRODUCTIVITY IN ISOLATION

It should be apparent that a focus on productivity alone is inadequate. If productivity were to be considered in absolute isolation, then doing nothing might be considered the best option! Software quality, reliability, clear communication, correctness of functionality, and adherence to processes and conventions are important values as well. All other things being equal, it is better to complete a required task through fewer actions using fewer resources. So changes to processes in the name of productivity still need to be considered in light of this wider range of concerns. Besides, a truly productive process will tend to promote quality, reliability, and other important values as well.

It is somewhat disappointing that there is no simple plan or theory that will result in productivity gains. True software productivity improvements are discovered and enacted in practice during specific projects in an ad hoc manner. Work is done, and over time, improvements to the process are identified and implemented, and the cycle repeats. The knowledge gleaned is a source of reflection on areas that can be improved, optimized, or even eliminated on other projects.

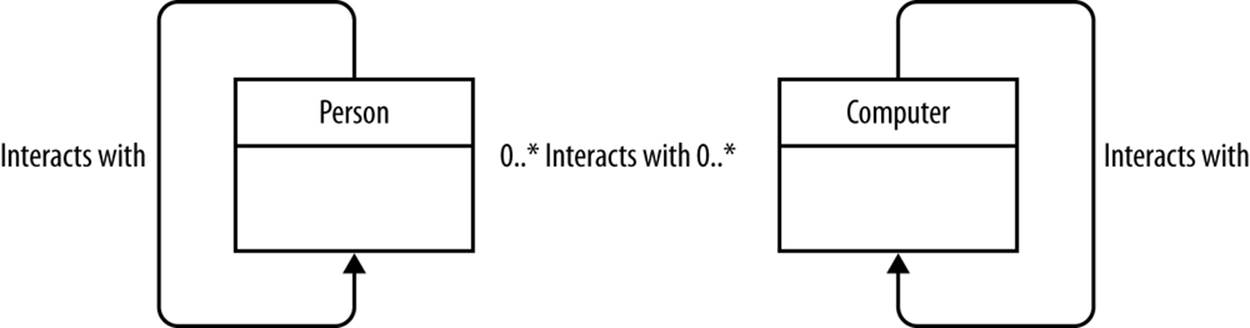

In the abstract, a software project requires that one or more tasks be completed, ideally in the most effective and productive manner possible. Productivity is often most easily comprehended and measured when isolated to a defined task. Every software development task involves one or more people and one or more computers. Each person and computer can interact with other people and computers over the course of a project. All of these are likely to be geographically dispersed. This is shown in Figure 7-1.

Figure 7-1. Interactions between people and computers

With this in mind, productivity improvements can be made to interactions between:

§ People (with each other)

§ Computers

§ People and computers

People and computers are the resources that perform the work required to complete a task, as shown in Table 7-1. To increase productivity:

§ Redefine the task.

§ Increase efficiency (of a given resource or in interactions between resources).

§ Increase resources.

§ Increase effort (get more out of each resource through additional work).

Table 7-1. Areas for productivity improvement

| Action | Humans | Computers |
| --- | --- | --- |
| Redefine the task | Identify requirements, plan, architect, manage | Languages, software, programming paradigms |
| Increase efficiency | Develop skills, minimize distractions | Automate, preprocess, compress, optimize, tune |
| Increase resources | Developers, consultants | Scale, add hardware/processing power |
| Increase effort | Time management, workload | Parallelize |

These areas are important to recognize on a couple of counts. A failure to take advantage of productivity improvements in one area (such as recent technical innovations classified under the Computers column) results in an increase in factors affecting productivity in the Humans column (additional hours or personnel). A bit of reflection about these areas as they relate to a project can help to suggest actions that require relatively minor effort and yield significantly better results.

Acknowledgement of this more holistic view can help avoid overemphasis on a single category for solving all problems. A well-known example is the irrational hope that increasing workload and adding developers late in a project will result in meeting an overly ambitious deadline. Another is the sophomoric developer trap of believing that everything can be automated through additional homegrown software development regardless of the nature of the task. The particular emphasis of this book is in the Computers column.

Optimizing Developer and Team Workflow

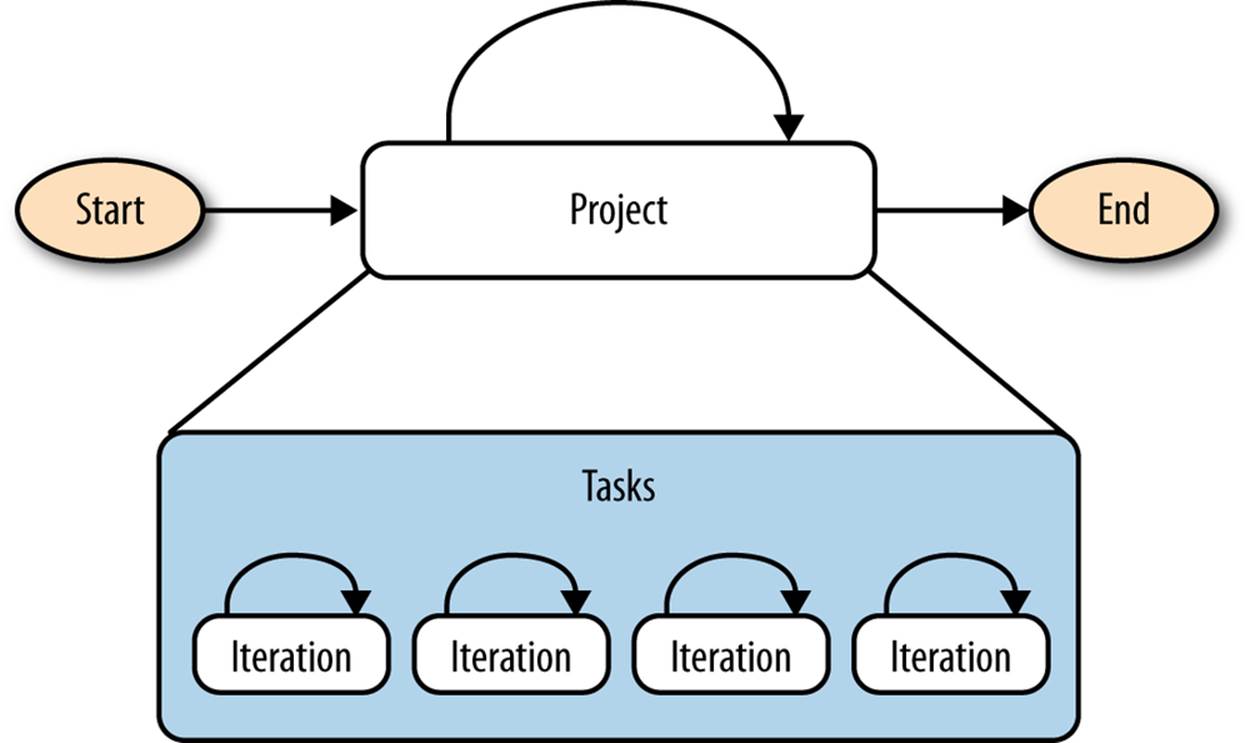

Iteration involves repeating a process with the aim of approaching (and eventually completing) an intended result. Each part of a project includes tasks that are repeated a number of times, as shown in Figure 7-2. This basic observation can be applied to many different aspects of software development, including requirement-gathering, design, development, testing, and deployment.

Figure 7-2. Project iterations

There are a few key insights to keep in mind:

§ An iteration should conclude with an observable result. This result can be compared with the state prior to completing the iteration as well as with the intended result. If the result is not observable, there is a problem. What is observable can be measured and improved. What is not visible cannot be evaluated, fixed, or enhanced. An iteration can also be thought of as a feedback loop, beginning with an action and resulting in a response that must be evaluated.

§ An iteration (or feedback loop) can be small or large. A single code change made by a developer is a small iteration, while the final delivery of an entire large-scale system is relatively large. Feedback from a deployment can be automated or manual. Automated feedback can provide a general indication that a deployed system is functioning as expected but cannot replace an actual end-user response to a release.

§ Shorter iterations allow for increased feedback. Increased feedback is essentially increased visibility. Increased feedback is desirable for many reasons. It results in a quicker identification of problems (and even opportunities), and it is easier to make corrections to the project trajectory early in the process. The smaller the loop and more immediate the feedback, the better.

§ By its very nature, any gain to a given iteration will result in a much more significant gain to the overall project due to the fact that it is repeated. The challenge is to recognize that a task is being repeated and to make improvements to tasks that will have the greatest overall impact to the entire project.

§ Any optimization to a task in the project is worthwhile. An optimization to a task that is repeated many times is generally even more beneficial. It is better to automate a task altogether if possible. What is somewhat counterintuitive to those geared toward “doing work” is that the best option, if available, is to eliminate unneeded tasks altogether.

These observations are rather obvious and boil down to simple common sense. But as those who have spent any time in the software development world can attest, developers are creatures of habit. Many get comfortable with a certain sequence in a workflow or a given set of tools. This can result in a large amount of unnecessary work that complicates projects and produces suboptimal results, to say the least.
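The compounding effect of improving a repeated task can be made concrete with some simple arithmetic. The following is a minimal sketch; the helper name and every figure in it (a four-minute loop shortened to one minute, 40 iterations a day, a 20-day stretch of work) are hypothetical and chosen only for illustration.

```javascript
// Hypothetical helper: total minutes spent waiting on a repeated task.
function totalWaitMinutes(secondsPerIteration, iterationsPerDay, days) {
  return (secondsPerIteration * iterationsPerDay * days) / 60;
}

// Assumed figures, for illustration only.
const slowLoop = totalWaitMinutes(240, 40, 20); // 4-minute build-and-deploy loop
const fastLoop = totalWaitMinutes(60, 40, 20);  // 1-minute shortened loop

// The per-iteration gain is small; the repeated gain is not.
const savedHours = (slowLoop - fastLoop) / 60;
console.log(`Hours saved per developer: ${savedHours}`);
```

Under these assumptions, a three-minute improvement to a single iteration adds up to 40 hours per developer over the period, which is why frequently repeated tasks are usually the first place to look.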

FASTER IS BETTER

Boyd decided that the primary determinant to winning dogfights was not observing, orienting, planning, or acting better. The primary determinant to winning dogfights was observing, orienting, planning, and acting faster. In other words, how quickly one could iterate. Speed of iteration, Boyd suggested, beats quality of iteration...

I’ll state Boyd’s discovery as Boyd’s Law of Iteration: In analyzing complexity, fast iteration almost always produces better results than in-depth analysis.

— Roger Sessions, “A Better Path to Enterprise Architectures”

Most of us are not going to create the next big thing that is going to revolutionize software development processes. But simply stepping outside of one’s own programming culture can be an eye-opening experience. There are plenty of improvements available that can be leveraged, and delving a bit deeper into well-established technologies can provide significant results for individual developers let alone wider project considerations. A few examples might help illustrate this.

Example: Web Application Fix

A change needs to be made to a Java JEE web application (an EAR comprised of several WARs) built using Maven and deployed to a JBoss web application server. The developer who needs to make the change will need to make several code changes (likely making a mistake or two in the process). One way to approach the problem involves a few different steps. First, make code changes. Next, type out commands to do a standard full build, followed by a deployment of the application. This second step might take several minutes to complete depending on the size of the application, the number of unit tests being run, the build, and other factors. What improvements to the process might be made?

§ To start, there are numerous shell command options that might be of use. Command history (and searching) immediately comes to mind.

§ Does every step in the build process need to be performed? For example, in Maven, the -DskipTests parameter might shorten the build time significantly.

§ Is a full deployment even required? It might be possible to hot deploy code in such a manner that no full build is required to test a change.

§ Does the change require an initial deployment at all? Initial tests might be done within the browser (if the changes relate primarily to HTML, CSS, or JavaScript). For server-side code, attaching the remote debugger and observing relevant objects and variables in their immediate, populated context might allow enough discovery to prevent a few unnecessary iterations. The Java code might be able to be evaluated in a unit test outside of the full deployment as well (which suggests a productivity benefit of Test-Driven Development).

Example: Testing Integration

This example is regarding the same web application project, now well underway. There is a general recognition of the need to include testing as part of the SDLC. This can occur at many stages and at many levels:

§ Manual testing by a QA resource or a developer peer might do a good job of validating that the code matches the initial requirements, but people do not tend to be consistent or exhaustive.

§ Unit tests (using JUnit) are created. They provide more extensive testing but are of little use if not run consistently.

§ Unit tests are quickly integrated into the Maven build. The build is done on a continuous integration server so developers are quickly alerted to a change that breaks the build. Yet it is difficult to tell how extensive or valuable the tests are.

§ A coverage report can be generated to provide some indication of code coverage. One area noted is that testing is specifically server-side. This is an issue because the browser-side code in the project is significant.

§ Fortunately, our crafty front-end engineers have already begun doing unit testing using Jasmine. These can be integrated into the Maven build using a plug-in. In addition, JavaScript developers run unit tests on their client-side code using Karma installed on their individual workstations.

§ As the project proceeds, the project flow solidifies, and broader functional tests that reflect user experiences can be written. These functional tests can then be run on various browsers using Selenium.

The focus with this testing scenario has been on increasing feedback regarding project status rather than quality itself. The value in optimization is evident, as bugs are quickly identified and fixed. In addition, incongruities between requirements and implementation are easily identified. New developers can be added to such a project because they can learn about code in relative isolation by observing and running tests. A project with significant test coverage can survive sweeping refactoring changes. The confidence to undertake such refactoring is a result of having suites of automated tests that verify a significant subset of existing functionality.

Example: Greenfield Development

As a software architect on a new project, you need to choose the best set of tools and set up an initial application structure. Because the application will be cloud-deployed and highly scalable, the client-server architecture described in this book is selected. It is accepted that the team will adopt some new tools and processes, but Java is mandated as the programming language due to organizational practices and in-house abilities (which eliminates possibilities like Rails and Grails that rely on other languages):

§ Maven/JEE is initially considered. Although JAX-RS is suitable for server-side development, JSF development does not fit due to a tendency for developers to use sessions.

§ After a bit of investigation, it turns out that the entire build/deployment to an application server can be avoided by using a server-side framework like Spring Roo or the Play framework. Play is selected, and a server-side web API is generated that serves some sample JSON files from the filesystem. These mock services will later be replaced with integrations with a variety of other backend services.

§ Yeoman can be used to generate a front-end project that uses the JavaScript framework and relevant HTML5 starter project. A quick npm search yeoman-generator yields a few likely candidates that are used to generate not just one but several client-side projects—each in its own directory. A few hours of evaluation (hooking up the frontends to the existing services) provides a sense of the value each generated project brings, and one is selected.

§ Some cleanup is done, including providing example server-side and client-side tests that run automatically when a file is saved. Automated documentation utilities are set up for Java and JavaScript. Code is checked into SVN, and the project is registered and configured on the continuous integration server. The server also generates documentation when code is built and publishes it to a known central documentation server. An IDE template is set up that includes acceptable defaults for code formatting.

With this initial work in place, many of the most significant and important decisions have been made prior to individual developers implementing more specific business requirements. These decisions have led to a process that allows for relatively isolated (parallel) client and server development. It also includes immediate feedback using unit tests and specific examples for developers to copy as they add tests for new functionality. Published auto-generated documentation and an IDE template encourage relatively homogeneous coding and commenting practices.

These examples are subjective and will undoubtedly be changed and improved as new technologies emerge. The point is to provide an example of the analysis that can be done to make incremental improvements to processes rather than blindly following previous practices and conventions. The section that follows is intended to help you brainstorm and identify areas of your projects that are applicable targets for improvement.

Productivity and the Software Development Life Cycle

Productivity needs to be considered at each point in the software development life cycle. This is because a glaring inefficiency in a fundamental step in the process cannot necessarily be overcome by productivity gains in another. In general, tasks related to productivity can be prioritized in order of diminishing returns. Although each project and team is unique, some general statements can be made concerning which areas will tend to have the greatest overall effect. Generally, management and cultural decisions are foundational, followed by overall technical architecture, specific application design, and lower-level programming and platform concerns. Given an accurate analysis and prioritization of tasks, optimal results can be obtained by addressing productivity issues in order.

Management and Culture

In general, the largest gains can be had when considering the overall scope of a project involving many individuals working on a team. Although not the focus here, management actions, team dynamics, and work culture have a profound impact on the work that will be accomplished. These broad environmental considerations set the stage for the work to be done and the value proposition for the overall organization as well as each individual. They are significant and often the primary areas that should be addressed. One challenge that can be significant—especially in larger organizations—is to align goals. Charlie Munger, the businessman and investor best known for his association with Warren Buffett, described the challenge that Federal Express once faced to align the goals of workers with the organizational mandate to eliminate delays. The solution to the problem involved making sure all parties involved had the proper incentives:

From all business, my favorite case on incentives is Federal Express. The heart and soul of their system—which creates the integrity of the product—is having all their airplanes come to one place in the middle of the night and shift all the packages from plane to plane. If there are delays, the whole operation can’t deliver a product full of integrity to Federal Express customers.

And it was always screwed up. They could never get it done on time. They tried everything—moral persuasion, threats, you name it. And nothing worked.

Finally, somebody got the idea to pay all these people not so much an hour, but so much a shift—and when it’s all done, they can all go home. Well, their problems cleared up overnight.

So getting the incentives right is a very, very important lesson. It was not obvious to Federal Express what the solution was. But maybe now, it will hereafter more often be obvious to you.

—Charlie Munger

Other “big-picture” considerations: basic well-known organizational and management principles hold true. Assign responsibility, centralize documentation, and use version control. These concerns are obvious yet frequently ignored.

Technical Architecture

The overall architecture of a system dictates many facets of subsequent technology selection and implementation. A highly scalable, cloud-based application targeted for a widespread public deployment involves a more sophisticated setup than an application that is going to be used internally by an organization. There is far more margin for error in the latter case. The overall productivity of a team will be severely hindered if members are required to either code with consideration for scenarios that will never occur or make changes late in the project to address unexpected architectural requirements. A clear sense of project scope should be reflected in architectural choices.

A similar concern is the choice of data storage medium. Traditional relational databases are a relatively well-understood resource that provides services like referential and transactional integrity that developers tend to take for granted. New NoSQL solutions offer the ability to optimize write operations and store data in a manner that is far less constrained. Each has its benefits, but there is no single silver bullet that will address all concerns. A NoSQL solution might be selected because of initial scalability concerns related to incoming data. But if reporting capabilities are not also considered up front, data might not be stored in a way that will allow for efficient reporting. Individual developers might be remarkably capable and productive, but will not be able to overcome a fundamentally incorrect data storage decision through isolated effort on a specific reporting task.

Each programming language has dogmatic adherents who enter into epic debates on the relative virtues of their language. What is certain is that there are characteristics of a language that make it simpler to complete a given programming task in less time. For instance, if a compilation step can be eliminated (through the use of automated compiling in an IDE or the use of a scripting language), a task will require less time. Languages like Java have a huge number of available supporting libraries, while some newer languages like Scala and Groovy boast fundamental language differences that reduce the amount of code that needs to be written to perform an equivalent task. Scripting languages like Ruby and Python have their own unique workflows that have been effective on their own and influenced the development of tools and processes elsewhere.

Software Tools

Selection of programming languages, development tools, and frameworks is a major area where an architect steers project direction. The power and constraints available to individual programmers throughout development of a project are influenced heavily by these decisions. Technologies and their associated workflows were created with a variety of values in mind. Productivity will inevitably be impacted by this selection.

In Software Tools (Addison-Wesley Professional, 1976), Brian Kernighan famously said, “Controlling complexity is the essence of computer programming.” The range of software tools that have assisted in the attempt to tame complexity touches on every part of the software development life cycle: version control systems, automated documentation, coverage and quality reports, testing tools, issue management systems, and continuous integration servers, to name a few. Besides these, the simple everyday tools a developer has mastered can be the difference that makes a developer an order of magnitude more effective than his peers.

Each language has associated build tools. Although you can mix and match languages and build tools, there is a close association between Maven and Java, Gradle and Groovy, SBT and Scala, and Rake and Ruby. Each language has associated frameworks for developing client-server web applications. Java is known for JEE and Spring (which is also available through a highly automated utility called Roo) as well as for newer frameworks like Play (which also supports Scala). The same could be said for Ruby and Rails, Groovy and Grails, and Python and Django. Most of these frameworks include embedded servers that tend to promote developer workflow. They also tend to be coupled with starter projects that can eliminate a significant amount of time-consuming, boilerplate coding. The selection of a relevant framework can result in reduced build time, the elimination of a build altogether, the benefits of preprocessing of an asset pipeline, and easy incorporation of integrated test suites.

IDEs include features such as code completion, intelligent searching and code navigation, refactoring functionality (encapsulating code in a new method, and renaming a variable across files of different types), unit test integration, and background compilation. They are a mainstay in the Java community. They provide tremendous value when working in a language like Java, so much so that some developers find it hard to believe that every programmer does not use one for every task.

Developers using scripting languages (particularly those that were not initially created for the JVM) tend to use lighter-weight code editors. If working at the command line in an *nix type environment,[3] vi (or vim) and Emacs along with a few of the built-in utilities can provide analogous (or even superior) mechanisms for a variety of software development tasks. Even if you’re not working all the time at the command line, it is worthwhile to be conversant at this level because so many support tasks (deployment to a server, viewing logs, and checking server performance) take place in an environment where only the command line is available.

Performance

Applications that perform well can be debugged more quickly. Minimizing the time required for an iteration (the size of the feedback loop) makes for a larger number of possible changes and validations. The optimization of a poorly performing section of code can provide time for developers to work on other issues that would otherwise be spent waiting for a system to respond. The initial selection of APIs, algorithms, data storage mechanisms, and related processing has a tremendous downstream effect in this regard. Even the choice of programming language paradigms has an effect; for example, functional programming (which is widely publicized for its virtues of limiting side effects) commonly utilizes highly efficient caching mechanisms. It can also be used to efficiently traverse structures with an extremely terse, easily understood code representation. It simplifies processing and requires fewer lines of code that need to be sifted through when refactoring. Both actual application performance and human readability can benefit from the use of the right technology (with the right team). Even in a mature project, there are often areas that are candidates for optimization. For instance, network performance in many web applications can benefit from simple compression or reduction of calls. These details can be overlooked early in a project but can often be implemented later in a nondisruptive manner. And the gains from improving performance always extend beyond the specific area addressed, as time is freed up for developers to actively program and test rather than wait for the system to respond.
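The connection between limiting side effects and caching can be sketched briefly. A function whose result depends only on its argument can have that result stored and reused safely. The example below is a minimal illustration, not a production cache; the names memoize, slowSquare, and fastSquare are hypothetical, and the call counter exists only to show that the cache is being used.

```javascript
// Hypothetical helper: cache the results of a pure, single-argument function.
function memoize(fn) {
  const cache = new Map();
  return (arg) => {
    if (!cache.has(arg)) {
      cache.set(arg, fn(arg));
    }
    return cache.get(arg);
  };
}

// Count invocations so the effect of the cache is observable.
let calls = 0;
const slowSquare = (n) => {
  calls += 1;
  return n * n;
};
const fastSquare = memoize(slowSquare);

// Terse traversal: sum of squares, with repeated inputs served from the cache.
const sumOfSquares = [1, 2, 2, 3, 3, 3]
  .map(fastSquare)
  .reduce((total, value) => total + value, 0);

console.log(sumOfSquares, calls); // 36 3
```

Six values are traversed but the underlying function runs only three times. The same caching would be unsafe if slowSquare read or modified outside state, which is why the technique pairs naturally with a functional style.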

By way of more general application design, the benefits of RESTful application constraints promote productivity. A client-server paradigm allows for parallel development, easier debugging and maintenance, and simplification of otherwise complex tasks. These benefits also apply to the practice of creating discrete modules elsewhere in a system. Proper modularization of a project can allow an initial creation of a project using a highly productive but less scalable solution that can later be rewritten. Good design tends to promote later productivity.

Testing

Once done in a largely haphazard and ad hoc manner, testing has become a much more formalized discipline. Automation of testing (along with integrating tests into builds) is required to ensure confidence in large-scale refactoring and is foundational to practices like automated deployment. Automated tests were initially run intermittently and infrequently. With available processing power, it is now feasible to run test suites every time a project is built (or even every time a file is saved). The extent of testing might vary a bit as a project matures but is a necessity in some form in most nontrivial modern web development. Testing has become firmly established in the JavaScript community, allowing for the development of larger projects that perform reliably across browsers and devices. New forms of testing are being developed to address cloud-based deployments such as Netflix’s Chaos Monkey, which assists the development of resilient services by actively causing failures.

Testing, when properly instituted, can fulfill a unique role in facilitating communication between programmers and computers as well as between programmers and other members of their team. This might not be obvious at first; after all, the purpose of testing is generally understood to be validating the reliability or functionality of software. Certain types of testing can also contribute significantly to the overall productivity of a large-scale project. This is immediately evident when tests reduce the pain of integration and result in quality improvements that require fewer fixes. In a somewhat more subtle manner, tests that follow the Behavior-Driven Development paradigm can improve productivity by creating a means of consistent communication between project team members representing different areas. As stated in The Cucumber Book: Behaviour-Driven Development for Testers and Developers:

Software teams work best when the developers and business stakeholders are communicating clearly with one another. A great way to do that is to collaboratively specify the work that’s about to be done using automated acceptance tests…When the team writes their acceptance tests collaboratively, they can develop their own ubiquitous language for talking about their problem domain. This helps them avoid misunderstandings.

Better communication results in less time wasted due to misunderstandings. Better understanding results in greater productivity.

WHEN TESTING OPPOSES PRODUCTIVITY

There are times when running tests can become burdensome and inhibit productivity. Constructing and maintaining tests takes time. Running extensive test suites requires time and resources. Like any other development task, effort and time are required for testing that could be spent elsewhere. This has led to many developers and other parties dismissing testing efforts in large part.

It has become relatively easy to integrate testing into web applications at many levels. Many starter projects include testing configured out of the box. To get the maximum value from testing, a culture is required that values the benefits of tests and considers their maintenance to be real work and worth the effort. It is hard to sell testing in terms of directly benefiting productivity. For most projects that have any significant lifespan, its value cannot be overstated.

Underlying Platform(s)

The operating system along with installed infrastructure software comprises the underlying local deployment platform. A reasonably powerful workstation or two (along with an extra monitor for added screen real estate) is the equivalent of a supercomputer from a few years ago. Initial setup to allocate sufficient JVM memory or to prevent unneeded programs from consuming resources might be of some value, but often, fundamental aspects of application design play a larger role. In some cases, having a faster file system will make a noticeable impact on build time.

The use of centralized databases versus developer-maintained copies can be an important decision. In a developer-maintained scenario, migration frameworks like FlyWayDB can be of assistance. If working with remote resources, networking can become a significant concern, particularly when working with distributed teams.
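The general idea behind a migration framework is that schema changes are versioned and applied in order, so that each developer-maintained copy can be brought up to date by running only the changes it has not yet seen. The sketch below illustrates that idea in memory. It is not the API of any real migration tool; applyPending, the migration objects, and the schema array are all hypothetical stand-ins.

```javascript
// Hypothetical in-memory stand-in for a schema migration runner.
// It only illustrates versioned, ordered migrations; a real tool would run SQL.
function applyPending(migrations, appliedVersions) {
  const pending = migrations
    .filter((m) => !appliedVersions.includes(m.version))
    .sort((a, b) => a.version - b.version);
  const log = [];
  for (const migration of pending) {
    migration.run();
    appliedVersions.push(migration.version);
    log.push(migration.description);
  }
  return log;
}

// A local copy that is already at version 1 receives only version 2.
const schema = [];
const migrations = [
  { version: 2, description: "add email column", run: () => schema.push("email") },
  { version: 1, description: "create users table", run: () => schema.push("users") },
];
const applied = [1];
const ran = applyPending(migrations, applied);
console.log(ran, applied);
```

Because the list of applied versions travels with each database copy, running the same set of migrations against copies at different versions leaves them all at the same final state.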

Conclusion

By design, there is no sample project with this chapter. Productivity, or simply efficiency during the development process, requires a step back to consider available options and how they fit with the project at hand. Each stage of a project includes macro- and micro-level tasks that might be simplified, automated, or performed more efficiently. There is no substitute for “coming up for air” and giving the appearance of leisure that allows sufficient reflection on the best options available.

[3] For the uninitiated, an asterisk is a wildcard in programmer-speak. *nix is shorthand for “Unix or Unix-like systems such as Linux.” The term *nix also includes Apple’s OS X but excludes Windows. Windows can run an emulation environment like Cygwin to make it look like a Unix system.