JavaScript Application Design: A Build First Approach (2015)

Part 1. Build processes

The first part of this book is dedicated to build processes and provides a practical introduction to Grunt. You’ll learn the why, how, and what of build processes, both in theory and in practice.

In chapter 1, we go over what the Build First philosophy entails: a build process and application complexity management. Then, we’ll start fiddling with our first build task, using lint to prevent syntax errors in our code.

Chapter 2 is all about build tasks. You’ll learn about the various tasks that comprise a build, how to configure them, and how to create your own tasks. In each case, we’ll take a look at the theory and then walk through practical examples using Grunt.

In chapter 3, we’ll learn how to configure application environments while keeping sensitive information safe. We’ll go over the development environment workflow, and you’ll learn how to automate the build step itself.

Chapter 4 then describes a few more tasks we need to take into account when releasing our application, such as asset optimization and managing documentation. You’ll learn about keeping code quality in check with continuous integration, and we’ll also go through the motions of deploying an application to a live environment.

Chapter 1. Introduction to Build First

This chapter covers

· Identifying problems in modern application design

· Defining Build First

· Building processes

· Managing complexity within applications

Developing an application properly can be hard. It takes planning. I’ve created applications over a weekend, but that doesn’t mean they were well-designed. Improvisation is great for throw-away prototypes and great when concept-proofing an idea; however, building a maintainable application requires a plan, the glue that holds together the features you currently have in mind and maybe even those you might add in the near future. I’ve participated in countless endeavors where the application’s front-end wasn’t all it could be.

Eventually, I realized that back-end services usually have an architect devoted to their planning, design, and overview—and often it’s not one architect but an entire team of them. This is hardly the case with front-end development, where a developer is expected to prototype a working sketch of the application and then asked to run with it, hoping that the prototype will survive an implementation in production. Front-end development requires as much dedication to architecture planning and design as back-end development does.

Long gone are the days when we’d copy a few snippets of code off the internet, paste them in our page, and call it a day. Mashing together JavaScript code as an afterthought no longer holds up to modern standards. JavaScript is now front and center. We have many frameworks and libraries to choose from, which can help you organize your code by allowing you to write small components rather than a monolithic application. Maintainability isn’t something you can tack onto a code base whenever you’d like; it’s something you have to build into the application, and the philosophy under which the application is designed, from the beginning. Writing an application that isn’t designed to be maintainable translates into stacking feature after feature in an ever-so-slightly tilting Jenga tower.

If maintainability isn’t built in, it gets to a point where you can’t add any more pieces to the tower. The code becomes convoluted and bugs become increasingly hard to track down. Refactoring means halting product development, and the business can’t afford that. The release schedule must be maintained, and letting the tower come crashing down is unacceptable, so we compromise.

1.1. When things go wrong

You might want to deploy a new feature to production, so humans can try it out. How many steps do you have to take to do that? Eight? Five? Why would you risk a mistake in a routine task such as a deployment? Deploying should be no different than building your application locally. One step. That’s it.

Unfortunately that’s rarely the standard. Have you faced the challenging position I’ve found myself in of having to take many of these steps manually? Sure, you can compile the application in a single step, or you might use an interpreted server-side language that doesn’t need any pre-compilation. Maybe later you need to update your database to the latest version. You may have even created a script for those updates, and yet you log into your database server, upload the file, and run the schema updates yourself.

Cool, you’ve updated the database; however, something’s not right and the application is throwing an error. You look at the clock. Your application has been down for more than 10 minutes. This should’ve been a straightforward update. You check the logs; you forgot to add that new variable to your configuration file. Silly! You add it promptly, mumbling something about wrestling with the code base. You forgot to alter the config file before the deployment; updating it for production simply slipped your mind!

Sound like a familiar ritual? Fear not, this is an unfortunately common illness, spread through different applications. Consider the crisis scenarios described next.

1.1.1. How to lose $172,222 a second for 45 minutes

I bet you’d consider losing almost half a billion dollars a serious issue, and that’s exactly what happened to Knight’s Capital.[1] They developed a new feature to allow stock traders to participate in something called the Retail Liquidity Program (RLP). The RLP functionality was intended to replace an unused piece of functionality called Power Peg (PP), which had been discontinued for close to nine years. The RLP code reused a flag, which was used to activate the PP code. They removed the Power Peg feature when they added RLP, so all was good. Or at least they thought it was good, until the point when they flipped the switch.

1 For more information about Knight’s Capital, see http://bevacqua.io/bf/knight.

Deployments had no formal process and were executed by hand by a single technician. This person forgot to deploy the code changes to one of their eight servers, meaning that in the case of the eighth server, the PP code, and not the RLP feature, would be behind the activation flag. They didn’t notice anything wrong until a week later when they turned on the flag, activating RLP on all servers but one, and the nine-year-old Power Peg feature on the other.

Orders routed through the eighth server triggered the PP code rather than RLP. As a result, the wrong types of orders were sent to trading centers. Attempts to amend the situation only further aggravated it, because they removed the RLP code from the servers which did have it. Long story short, they lost somewhere in the vicinity of $460 million in less than an hour. When you consider that all they needed to do to avoid their downfall was have a more formal build process in place, the whole situation feels outrageous, irresponsible, and, in retrospect, easily averted. Granted, this is an extreme case, but it boldly illustrates the point. An automated process would have increased the probability that human errors could be prevented or at least detected sooner.

1.1.2. Build First

In this book, my goal is to teach you the Build First philosophy of designing for clean, well-structured, and testable applications before you write a single line of code. You’ll learn about process automation, which will mitigate the odds of human error, such as those leading to Knight’s Capital’s bankruptcy. Build First is the foundation that will empower you to design clean, well-structured, and testable applications, which are easy to maintain and refactor. Those are the two fundamental aspects of Build First: process automation and design.

To teach you the Build First approach, this book will show you techniques that will improve the quality of your software as well as your web development workflow. In Part 1, we’ll begin by learning how to establish build processes appropriate for modern web application development. Then, you’ll walk through best practices for productive day-to-day development, such as running tasks when your code changes, deploying applications from your terminal by entering a single command, and monitoring the state of your application once it’s in production.

The second part of the book—managing complexity and design—focuses on application quality. Here I give you an introduction to writing more modular JavaScript components by comparing the different options that are currently available. Asynchronous flows in JavaScript tend to grow in complexity and length, which is why I prepared a chapter where you’ll gain insight into writing cleaner asynchronous code while learning about different tools you can use to improve that code. Using Backbone as your gateway drug of choice, you’ll learn enough about MVC in JavaScript to get you started on the path to client-side MVC. I mentioned testable applications are important, and while modularity is a great first step in the right direction, testing merits a chapter of its own. The last chapter dissects a popular API design mentality denominated REST (Representational State Transfer), helping you design your own, as well as delving into application architecture on the server side, but always keeping an eye on the front end. We’ll begin our exploration of build processes after looking at one more crisis scenario Build First can avert by automating your process.

1.1.3. Rites of initiation

Complicated setup procedures, such as when new team members come onboard, are also a sign you may be lacking in the automation department. Much to my torment, I’ve worked on projects where getting a development environment working for the first time took a week. A full week before you can even begin to fathom what the code does.

Download approximately 60 gigabytes worth of database backups, create a database configuring things you’ve never heard of before, such as collation, and then run a series of schema upgrade scripts that don’t quite work. Once you’ve figured that out, you might want to patch your Windows Media Player by installing specific and extremely outdated codecs in your environment, which will feel as futile as attempts to cram a pig into a stuffed refrigerator.

Last, try compiling the 130+ project monolith in a single pass while you grab a cup of coffee. Oh, but you forgot to install the external dependencies; that’ll do it. Nope, wait, you also need to compile a C++ program so codecs will work again. Compile again, and another 20 minutes go by. Still failing? Shoot. Ask around, maybe? Well, nobody truly knows. All of them went through that excruciating process when they started out, and they erased the memory from their minds. Check out the wiki? Sure, but it’s all over the place. It has bits of information here and there, but they don’t address your specific problems.

The company never had a formal initiation workflow, and as things started to pile up, it became increasingly hard to put one together. They had to deal with giant backups, upgrades, codecs, multiple services required by the website, and compiling the project took half an hour for every semi-colon you changed. If they’d automated these steps from the beginning, like we’ll do in Build First, the process would’ve been that much smoother.

Both the Knight’s Capital debacle and the overly complicated setup story have one thing in common: if they’d planned ahead and automated their build and deployment processes, their issues would’ve been averted. Planning ahead and automating the processes surrounding your applications are fundamental aspects of the Build First philosophy, as you’ll learn in the next section.

1.2. Planning ahead with Build First

In the case of Knight’s Capital, where they forgot to deploy code to one of the production web servers, having a single-step deployment process that automatically deployed the code to the whole web farm would’ve been enough to save the company from bankruptcy. The deeper issue in this case was code quality, because they had unused pieces of code sitting around in their code base for almost 10 years.
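A single-step deployment of this kind could be sketched as follows. This is a minimal illustration, not a reconstruction of any real system: the server names, the deployOne callback, and the version strings are all hypothetical. The point is that the script walks the whole farm itself and refuses to report success if any server ends up on a different version.

```javascript
// Hypothetical sketch: deployOne(server, version) stands in for whatever
// actually ships code to a server and returns the version now running there.
function deployToAll(servers, version, deployOne) {
  // Walk the entire farm, so no server depends on someone remembering it.
  var results = servers.map(function (server) {
    return { server: server, running: deployOne(server, version) };
  });

  // Fail loudly if any server is left on a different version.
  var stale = results.filter(function (result) {
    return result.running !== version;
  });
  if (stale.length > 0) {
    var names = stale.map(function (result) { return result.server; });
    throw new Error('Deployment incomplete on: ' + names.join(', '));
  }
  return results;
}

// Usage with a fake deployOne that "forgets" one server.
var farm = ['web1', 'web2', 'web3'];
function flakyDeploy(server, version) {
  return server === 'web3' ? 'old' : version;
}

try {
  deployToAll(farm, 'v2', flakyDeploy);
} catch (err) {
  console.log(err.message); // Deployment incomplete on: web3
}
```

A check like this doesn’t prevent every mistake, but it turns a silently skipped server into an error somebody sees at deployment time.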

A complete refactor that doesn’t provide any functional gains isn’t appealing to a product manager; their goal is to improve the visible, consumer-facing product, not the underlying software. Instead, you can continuously improve the average quality of code in your project by progressively improving the code base and refactoring code as you touch it, writing tests that cover the refactored functionality, and wrapping legacy code in interfaces, so you can refactor later.
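To make the idea of wrapping legacy code in an interface concrete, here’s a small sketch. The legacy_calc function and the calculator object are invented for illustration; the assumption is only that the legacy code has an awkward signature you’d rather not spread through new code.

```javascript
// Hypothetical legacy function: positional flags and magic numbers.
function legacy_calc(a, b, mode, flag) {
  if (mode === 1) {
    return flag ? a + b : a - b;
  }
  return a * b;
}

// A small interface that hides the legacy signature behind clear names.
// New code depends on this object, never on legacy_calc directly.
var calculator = {
  add: function (a, b) { return legacy_calc(a, b, 1, true); },
  subtract: function (a, b) { return legacy_calc(a, b, 1, false); },
  multiply: function (a, b) { return legacy_calc(a, b, 0, false); }
};

console.log(calculator.add(2, 3));      // 5
console.log(calculator.subtract(2, 3)); // -1
console.log(calculator.multiply(2, 3)); // 6
```

Tests written against calculator keep passing when legacy_calc is eventually replaced, which is what makes the later refactor safe.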

Refactoring won’t do the trick on its own, though. Good design that’s ingrained into the project from its inception is much more likely to stick, rather than attempts to tack it onto a poor structure as an afterthought. Design is the other fundamental aspect of the book, along with build processes mentioned previously.

Before we dive into the uncharted terrains of Build First, I want to mention this isn’t a set of principles that only apply to JavaScript. For the most part, people usually associate these principles with back-end languages, such as Java, C#, or PHP, but here I’m applying them to the development process for JavaScript applications. As I mentioned previously, client-side code often doesn’t get the love and respect it deserves. That often means broken code because we lack proper testing, or a code base that’s hard to read and maintain. The product (and developer productivity) suffers as a result.

When it comes to JavaScript, given that interpreted languages don’t need a compiler, naive developers might think that’s justification enough to ditch the build process entirely. The problem when going down that road is that they’ll be shooting in the dark: the developer won’t know whether the code works until it’s executed by a browser, and won’t know whether it does what it’s expected to, either. Later on, they might find themselves manually deploying to a hosting environment and logging into it remotely to tweak a few configuration settings to make it work.

1.2.1. Core principles in Build First

At its core, the Build First approach encourages establishing not only a build process but also clean application design. The following list shows at a high level what embracing the Build First approach gives us:

· Reduced error proclivity because there’s no human interaction

· Enhanced productivity by automating repetitive tasks

· Modular, scalable application design

· Testability and maintainability by shrinking complexity

· Releases that conform to performance best practices

· Deployed code that’s always tested before a release

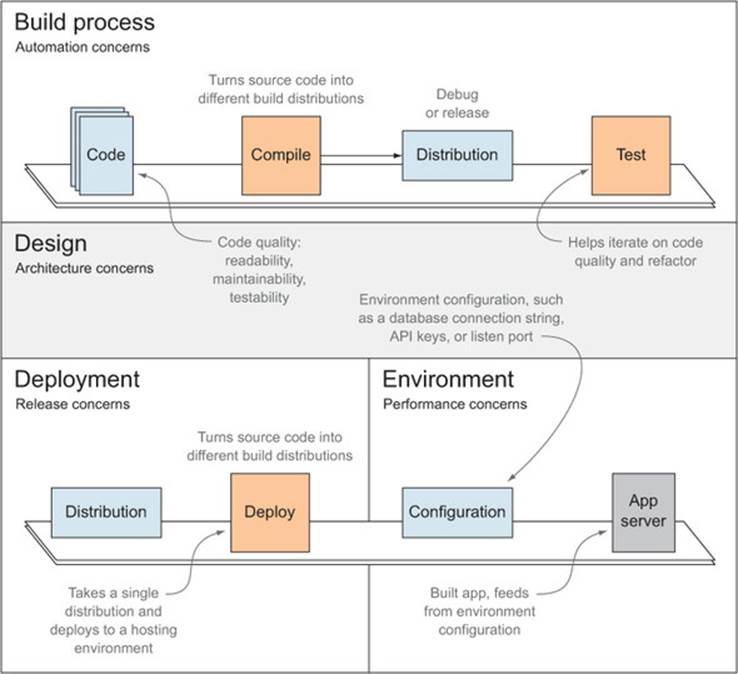

Looking at figure 1.1, starting with the top row and moving down, you can see

· Build process: This is where you compile and test the application in an automated fashion. The build can be aimed at facilitating continuous development or tuned for maximum performance for a release.

· Design: You’ll spend most of your time here, coding and augmenting the architecture as you go. While you’re at it, you might refactor your code and update the tests to ensure components work as expected. Whenever you’re not tweaking the build process or getting ready for a deployment, you’ll be designing and iterating on the code base for your application.

· Deployment and Environment: These are concerned with automating the release process and configuring the different hosted environments. The deployment process is in charge of delivering your changes to the hosted environment, while environment configuration defines the environment and the services—or databases—it interacts with, at a high level.

Figure 1.1. High-level view of the four areas of focus in Build First: Build process, Design, Deployment, and Environment

As figure 1.1 illustrates, Build First applications have two main components: the processes surrounding the project, such as building and deploying the application, and the design and quality of the application code itself, which is iteratively improved on a daily basis as you work on new features. Both are equally important, and they depend on each other to thrive. Good processes don’t do any good if you’re lacking in your application design. Similarly, good design won’t survive crises such as the ones I described previously without the help of decent build and deployment procedures.

As with the Build First approach, this book is broken into two parts. In part 1, we look at the build process (tuned for either development or release) and the deployment process, as well as environments and how they can be configured. Part 2 delves into the application itself, and helps us come up with modular designs that are clear and concise. It also takes us through the practical design considerations you’ll have to make when building modern applications.

In the next two sections, you’ll get an overview of the concepts discussed in each part of the book.

1.3. Build processes

A build process is intended to automate repetitive tasks such as installing dependencies, compiling code, running unit tests, and performing any other important functions. The ability to execute all of the required tasks in a single step, called a one-step build, is critical because of the powerful opportunities it unveils. Once you have a one-step build in place, you can execute it as many times as required, without the outcome changing. This property is called idempotence: no matter how many times you invoke the operation, the result will be the same.
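The following sketch illustrates idempotence with a toy build function. Everything in it is an assumption made for the example: the file names are arbitrary, and the "minify" step merely trims lines and drops blank ones as a stand-in for a real minifier. What matters is that each run starts from a clean slate, so repeated runs on the same input produce the same output.

```javascript
// Hypothetical one-step build over an in-memory list of source lines.
function build(sources) {
  var output = {}; // fresh output on every run, nothing carried over
  output['app.js'] = sources.join('\n');
  output['app.min.js'] = output['app.js']
    .split('\n')
    .map(function (line) { return line.trim(); })
    .filter(function (line) { return line.length > 0; })
    .join('\n');
  return output;
}

var sources = ['var a = 1;  ', '', '  var b = a + 1;'];
var first = build(sources);
var second = build(sources);

// Same input, same output, no matter how many times the build runs.
console.log(JSON.stringify(first) === JSON.stringify(second)); // true
```

A build that appended to an existing bundle instead of regenerating it would fail this check on the second run.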

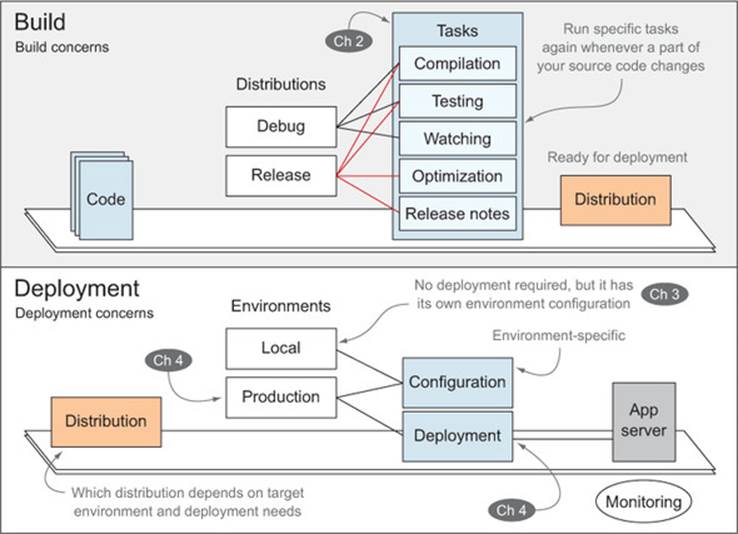

Figure 1.2 highlights in more detail the steps that make up the automated build and deployment processes.

Figure 1.2. High-level view of the processes in Build First: Build and Deployment

Pros and cons of automating your build processes

Possibly the most important advantage to having an automated build process is that you can deploy as frequently as needed. Providing humans with the latest features as soon as they’re ready allows us to tighten the feedback loop through which we can gain better insights into the product we should be building.

The main disadvantage to setting up an automated process is the time you’ll need to spend putting the process together before you can start seeing the real benefits, but the benefits—such as automated testing, higher code quality, a leaner development workflow, and a safer deployment flow—far outweigh the effort spent putting together that process. As a general rule, you’ll set up the process once and then replay it as much as you’d like, tweaking it a little as you go.

Build

The top of figure 1.2 zooms in on the build portion in the build process workflow (shown back in figure 1.1), detailing the concerns as you aim for either development or release. If you aim for development, you’ll want to maximize your ability to debug, and I bet you’ll like a build that knows when to execute parts of itself without you taking any action. That’s called continuous development (CD), and you’ll learn about it in chapter 3. The release distribution of a build isn’t concerned with CD, but you’ll want to spend time optimizing your assets so they perform as fast as possible in production environments where humans will use your application.

Deployment

The bottom of figure 1.2 zooms into the deployment process (originally shown in figure 1.1), which takes either the debug or release distribution and deploys it to a hosted environment. Throughout the book, I use distribution to mean a distinct process flow with a specific purpose.

This package will work together with the environment-specific configuration (which keeps secrets, such as database connection strings and API keys, safe, and is discussed in chapter 3) to serve the application.
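As a rough sketch of environment-specific configuration, the function below reads settings from an environment object instead of hard-coding them. The variable names (DATABASE_URL, API_KEY, PORT) and the default port are assumptions chosen for the example, not conventions prescribed by the book.

```javascript
// Hypothetical configuration loader; variable names are illustrative.
function loadConfig(env) {
  var required = ['DATABASE_URL', 'API_KEY'];
  var missing = required.filter(function (name) { return !env[name]; });
  if (missing.length > 0) {
    // Fail at startup rather than at the first request that needs the value.
    throw new Error('Missing configuration: ' + missing.join(', '));
  }
  return {
    databaseUrl: env.DATABASE_URL,
    apiKey: env.API_KEY,
    port: parseInt(env.PORT, 10) || 3000
  };
}

// An application would pass process.env; a literal is used here so the
// example runs anywhere and keeps real secrets out of the source.
var config = loadConfig({
  DATABASE_URL: 'db://localhost/app',
  API_KEY: 'not-a-real-key'
});
console.log(config.port); // 3000
```

Because the secrets live in the environment rather than in the code base, the same package can be deployed to any environment unchanged.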

Part 1 is dedicated to the build aspect of Build First:

· Chapter 2 explains build tasks, teaching you how to write tasks and configure them using Grunt, the task runner you’ll use as a build tool throughout part 1.

· Chapter 3 covers environments, how to securely configure your application, and the development workflow.

· Chapter 4 discusses tasks you should perform during release builds. Then you’ll learn about deployments, running tests on every push to version control, and production monitoring.

Benefits of a Build Process

Once you’re done with part 1, you’ll feel confident performing the following operations on your own applications:

· Automating repetitive tasks such as compilation, minification, and testing

· Building an icon spritesheet so that HTTP requests for iconography are reduced to a single one. Such spriting techniques are discussed in chapter 2, as well as other HTTP 1.x optimization tricks, as a means to improve page speed and application delivery performance.

· Spinning up new environments effortlessly, without having to treat development and production as special cases

· Restarting a web server and recompiling assets automatically whenever related files change

· Supporting multiple environments with flexible, single-step deployments

The Build First approach eliminates manual labor when it comes to tedious tasks, while also improving your productivity from the beginning. Build First acknowledges the significance of the build process for shaping a maintainable application iteratively. The application itself is also built by iteratively chipping away at its complexity.

Clean application design and architecture are addressed in part 2 of this book, which covers complexity management within the application, as well as design considerations with a focus on raising the quality bar. Let’s go over that next.

1.4. Handling application complexity and design

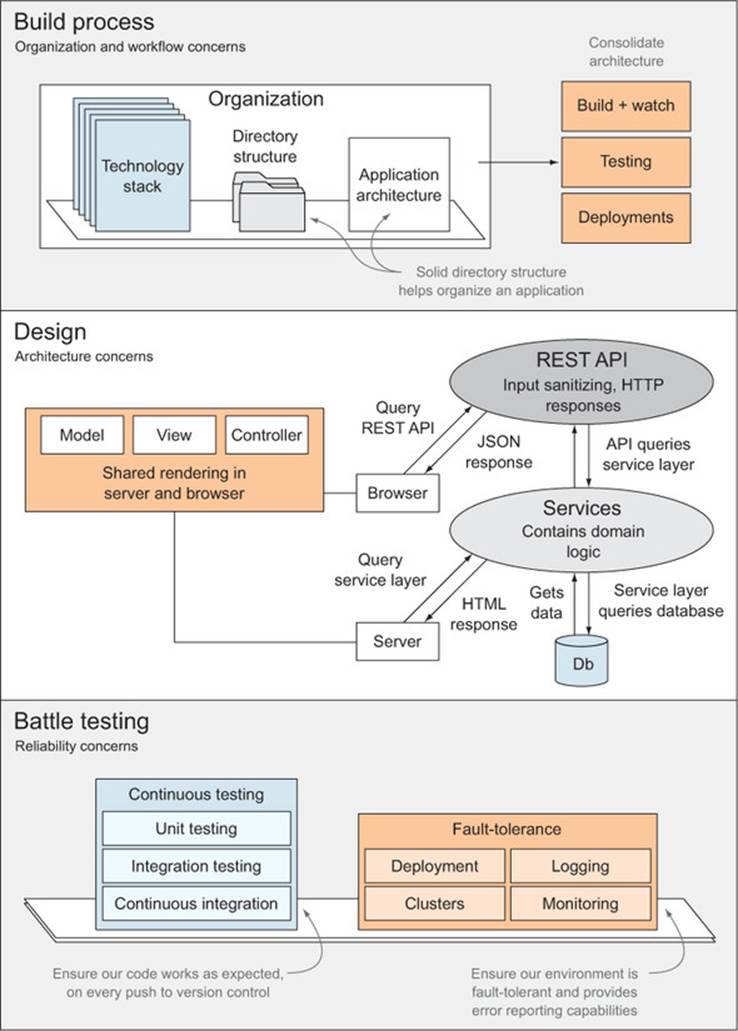

Modularization, managing dependencies, understanding asynchronous flow, carefully following the right patterns, and testing are all crucial if you expect your code to work at a certain scale, regardless of language. In part 2 you’ll learn different concepts, techniques, and patterns to apply to your applications, making them more modular, focused, testable, and maintainable. Figure 1.3, viewed from the top down, shows the progression we’ll follow in part 2.

Figure 1.3. Application design and development concerns discussed in part 2

Modularity

You’ll learn how to break your application into components, break those components down into modules, and then write concise functions that have a single purpose inside those modules. Modules can come from external packages, developed by third parties, and you can also develop them yourself. External packages should be handled by a package manager that takes care of versioning and updates on your behalf, which eliminates the need to manually download dependencies (such as jQuery) and automates the process.

As you’ll learn in chapter 5, modules indicate their dependencies (the modules they depend upon) in code, as opposed to grabbing them from the global namespace; this improves self-containment. A module system will take advantage of this information, being able to resolve all of these dependencies; it’ll save you from having to maintain long lists of <script> tags in the appropriate order for your application to work correctly.
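To show how declared dependencies let a module system do the ordering for you, here’s a toy registry. It’s an illustration only, not how any particular module system is implemented: the define and resolve functions and the module names are made up, and there’s no cycle detection.

```javascript
// Toy module registry, for illustration only.
var definitions = Object.create(null);
var instances = Object.create(null);

// Each module states its dependencies by name instead of reading globals.
function define(name, dependencies, factory) {
  definitions[name] = { dependencies: dependencies, factory: factory };
}

function resolve(name) {
  if (name in instances) {
    return instances[name]; // each module is built only once
  }
  var definition = definitions[name];
  if (!definition) {
    throw new Error('Unknown module: ' + name);
  }
  // Resolve dependencies first, then hand them to the factory.
  var args = definition.dependencies.map(function (dependency) {
    return resolve(dependency);
  });
  instances[name] = definition.factory.apply(null, args);
  return instances[name];
}

// Declaration order doesn't matter; app is defined before greeter.
define('app', ['greeter'], function (greeter) {
  return { run: function (name) { return greeter.greet(name); } };
});
define('greeter', [], function () {
  return { greet: function (name) { return 'Hello, ' + name + '!'; } };
});

console.log(resolve('app').run('Ada')); // Hello, Ada!
```

The dependency list is the information that would otherwise be encoded in the order of your script tags.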

Design

You’ll get acquainted with separation of concerns and how to design your application in a layered way by following the Model-View-Controller pattern, further tightening the modularity in your applications. I’ll tell you about shared rendering in chapter 7, the technique where you render views on the server side first, and then let the client side do view rendering for subsequent requests on the same single-page application.

Asynchronous Code

I’ll teach you about the different types of asynchronous code flow techniques, using callbacks, Promises, generators, and events and helping you tame the asynchronous beast.
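As a small taste of two of those techniques, the sketch below takes a callback-style function and wraps it in a Promise. The readSetting function and its settings are hypothetical; the only assumptions are the common convention of calling back with an error first and a runtime that provides Promise.

```javascript
// Hypothetical callback-style function: calls back with (error, result).
function readSetting(key, done) {
  var settings = { port: 8080 };
  setTimeout(function () {
    if (!(key in settings)) {
      done(new Error('Unknown setting: ' + key));
      return;
    }
    done(null, settings[key]);
  }, 0);
}

// The same operation wrapped in a Promise.
function readSettingAsync(key) {
  return new Promise(function (resolve, reject) {
    readSetting(key, function (err, value) {
      if (err) {
        reject(err);
        return;
      }
      resolve(value);
    });
  });
}

readSettingAsync('port')
  .then(function (port) { console.log('port is', port); })
  .catch(function (err) { console.error(err.message); });
```

With the Promise version, error handling moves into a single catch at the end of the chain instead of being repeated in every callback.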

Testing Practices

In chapter 5 we discuss everything about modularity, learn about closures and the module pattern, talk about module systems and package managers, and try to pinpoint the strengths found in each solution. Chapter 6 takes a deep dive into asynchronous programming in JavaScript. You’ll learn how to avoid writing a callback soup that will confuse you a week from now, and then you’ll learn about the Promise pattern and the generators API coming in ES6.

Chapter 7 is dedicated to patterns and practices, such as how to best develop code, whether jQuery is the right choice for you, and how to write JavaScript code you can use in both the client and the server. We’ll then look at the Backbone MVC framework. Keep in mind that Backbone is the tool I’ll use to introduce you to MVC in JavaScript, but it’s by no means the only tool you can use to this end.

In chapter 8 we’ll go over testing solutions, automation, and tons of practical examples of unit testing client-side JavaScript. You’ll learn how to develop tests in JavaScript at the unit level by testing a particular component and at the integration level by testing the application as a whole.

The book closes with a chapter on REST API design, and the implications of consuming a REST API in the front end, as well as a proposed structure to take full advantage of REST.

Practical design considerations

The book aims to get you thinking about practical design considerations made when building a real application, as well as deciding thoughtfully on the best possible tool for a job, all the while focusing on quality in both your processes and the application itself. When you set out to build an application, you start by determining the scope, choosing a technology stack, and composing a minimum viable build process. Then you begin building the app, maybe using an MVC architecture and sharing the view rendering engine in both the browser and the server, something we discuss in chapter 7. In chapter 9 you’ll learn the important bits on how to put an API together, and you’ll learn how to define backing services that will be used by both the server-side view controllers and the REST API.

Figure 1.4 is an overview of how typical Build First applications may be organized.

Figure 1.4. Pragmatic architectural considerations

Build process

Beginning at the upper left, figure 1.4 outlines how you can start by composing a build process which helps consolidate a starting point for your architecture, by deciding how to organize your code base. Defining a modular application architecture is the key to a maintainable code base, as you’ll observe in chapter 5. The architecture is then consolidated by putting in place automated processes that provide you with continuous development, integration, and deployment capabilities.

Design and REST API

Designing the application itself, including a REST API that can effectively increase maintainability, is only possible by identifying clear-cut components with clear purposes so they’re orthogonal (meaning that they don’t fight for resources on any particular concern). In chapter 9 we’ll explore a multi-tiered approach to application design which can help you quickly isolate the web interface from your data and your business logic by strictly defining layers and the communication paths between those layers.

Battle testing

Once a build process and architecture are designed, battle testing is where you’ll get drenched in reliability concerns. Here you’ll plumb together continuous integration, where tests are executed on every push to your version control system, and maybe even continuous deployments, making several deployments to production per day. Last, fault tolerance concerns such as logging, monitoring, and clustering are discussed. These are touched on in chapter 4, and help make your production environment more robust, or (at worst) warn you when things go awry.

All along the way, you’ll write tests, adjust the build process, and tweak the code. It will be a terrific experiment for you to battle test Build First. It’s time you get comfortable and start learning specifics about the Build First philosophy.

1.5. Diving into Build First

Quality is the cornerstone of Build First, and every measure taken by this approach works toward the simple goal of improving quality in both your code and the structure surrounding it. In this section, you’ll learn about code quality and setting up lint, a code quality tool, in your command line. Measuring code quality is a good first step toward writing well-structured applications. If you start doing it early enough, it’ll be easy to have your code base conform to a certain quality standard, and that’s why we’ll do it right off the bat.

In chapter 2, once you’ve learned about lint, I’ll introduce you to Grunt, the build tool you’ll use throughout the book to compose and automate build processes. Using Grunt allows you to run the code quality checks as part of a build, meaning you won’t forget about them.

Grunt: the means to an end

Grunt is used intensively in part 1 and in some of part 2 to drive our build processes. I chose Grunt because it’s a popular tool that’s easy to teach and satisfies most needs:

· It has full support for Windows.

· Little JavaScript knowledge is required and it takes little effort to pick up and run.

It’s important to understand that Grunt is a means to an end, a tool that enables you to easily put together the build processes described in this book. This doesn’t make Grunt the absolute best tool for the job, and in an effort to make that clear, I’ve compiled a comparison between Grunt and two other tools: npm, which is a package manager that can double as a lean build tool, and Gulp, a code-driven build tool that has several conventions in common with Grunt.

If you’re curious about other build tools such as Gulp or using npm run as a build system, then you should read more about the topic in appendix C, which covers picking your own build tool.

Lint is a code-quality tool that’s perfect for keeping an interpreted program—such as those written in JavaScript—in check. Rather than firing up a browser to check if your code has any syntax errors, you can execute a lint program in the command line. It can tell you about potential problems in your code, such as undeclared variables, missing semicolons, or syntax errors. That being said, lint isn’t a magic wand: it won’t detect logic issues in your code, it’ll only warn you about syntax and style errors.

1.5.1. Keeping code quality in check

Lint is useful for determining if a given piece of code contains any syntax errors. It also enforces a set of JavaScript coding best practice rules, which we’ll cover at the beginning of part 2, in chapter 5, when we look at modularity and dependency management.

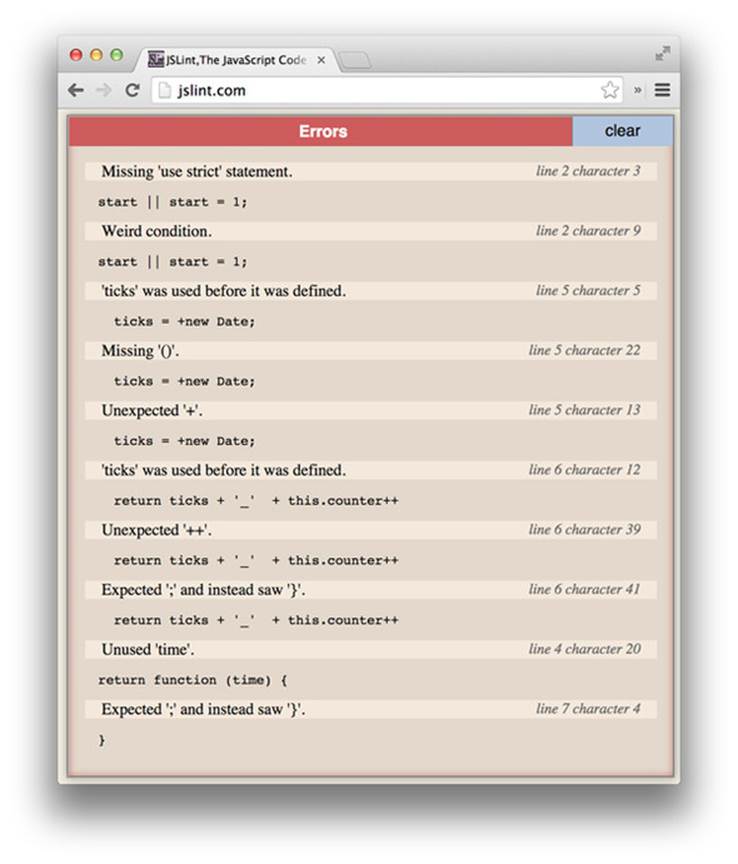

Around 10 years ago Douglas Crockford released JSLint, a harsh tool that checks code and tells us all the little things that are wrong with it. Linting exists to help us improve the overall quality of our code. A lint program can tell you about the potential issues with a snippet, or even a list of files, straight from the command line, and this has the added benefit that you don’t even have to execute the code to learn what’s wrong with it. This process is particularly useful when it comes to JavaScript code, because the lint tool will act as a compiler of sorts, making sure that to the best of its knowledge your code can be interpreted by a JavaScript engine.
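The idea of checking code without running it can be illustrated with Node’s built-in vm module, which compiles a script without executing it. This is only a sketch of the "compiler of sorts" aspect, and the checkSyntax helper is a made-up name: it catches syntax errors but none of the style or undeclared-variable problems a real linter reports.

```javascript
var vm = require('vm');

// Returns null when the source parses, or the error message otherwise.
// Constructing a vm.Script compiles the code but does not run it.
function checkSyntax(source) {
  try {
    new vm.Script(source);
    return null;
  } catch (err) {
    if (err && err.name === 'SyntaxError') {
      return err.message;
    }
    throw err; // anything else is unexpected, so don't swallow it
  }
}

console.log(checkSyntax('var total = 1 + 2;'));    // null
console.log(checkSyntax('var total = ;') !== null); // true
```

A linter goes well beyond this, but the principle is the same: problems surface on the command line before the code ever reaches a browser.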

On another level, linters (the name given to lint programs) can be configured to warn you about code that’s too complex, such as functions that include too many lines, obscure constructs that might confuse other people (such as with blocks, new statements, or using this too aggressively, in the case of JavaScript), or similar code style checks. Take the following code snippet as an example (listed as ch01/01_lint-sample in the samples online):

    function compose_ticks_count (start) {
      start || start = 1;
      this.counter = start;
      return function (time) {
        ticks = +new Date;
        return ticks + '_' + this.counter++
      }
    }

Plenty of problems lurk in this small piece, but they may not be that easy to spot. When you analyze it through JSLint, you’ll get both expected and interesting results. It’ll complain that you must declare your variables before you try to use them, or that you’re missing semicolons. Depending on the lint tool you use, it might complain about your use of the this keyword. Most linters will also complain about the way you’re using || rather than using a more readable if statement. You can lint this sample online.[2] Figure 1.5 shows the output of Crockford’s tool.

2 Go to http://jslint.com for the online sample. This is the original JavaScript linter Crockford maintains.

Figure 1.5. Lint errors found in a code snippet.

In the case of compiled languages, these kinds of errors are caught whenever you attempt to compile your code, and you don’t need any lint tools. In JavaScript, though, there’s no compiler because of the dynamic nature of the language. This is decidedly powerful, but also more error-prone than what you might expect from compiled languages, which wouldn’t even allow you to execute the code in the first place.

Instead of being compiled ahead of time, JavaScript code is interpreted by an engine such as V8 (as seen in Google Chrome) or SpiderMonkey (the engine powering Mozilla Firefox). Although some engines, most famously V8, do compile JavaScript code internally, you can’t benefit from their static code analysis outside the browser.[3] One of the perceived disadvantages of dynamic languages like JS is that you can’t know for sure whether code will work until you execute it. Although that’s true, you can vastly diminish this uncertainty using a lint tool. Furthermore, JSLint advises us to stay away from certain coding practices, such as using eval, leaving variables undeclared, omitting braces in block statements, and so on.

3 You can see Node.js, a server-side JavaScript platform that also runs on V8, in effect in the console instead, but by the time V8 detects syntax issues, it’ll be too late for your program, which will implode. It’s always best to lint first, regardless of the platform.
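To make the “compiler of sorts” idea concrete, here is a minimal sketch that asks the JavaScript engine to parse a piece of source without running it, using Node’s built-in vm module. The checkSyntax helper and the file names are hypothetical, made up for illustration. Keep in mind that this only catches syntax errors; a linter such as JSLint or JSHint reports far more, including undeclared variables and style issues.

```javascript
var vm = require('vm');

// Hypothetical helper: returns null when the source parses,
// or the engine's error message when it contains a syntax error.
// new vm.Script() compiles the code but never executes it.
function checkSyntax(source, filename) {
  try {
    new vm.Script(source, { filename: filename });
    return null;
  } catch (err) {
    if (err && err.name === 'SyntaxError') {
      return err.message;
    }
    throw err; // anything else is unexpected, so let it surface
  }
}

console.log(checkSyntax('var a = 1;', 'ok.js'));
console.log(checkSyntax('function broken( {', 'broken.js'));
```

The first call should report no problem, and the second should report a message describing the parse failure, all without either snippet ever being run.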

Has your eye caught a potential problem in the last code snippet we looked at? Check out the accompanying code sample (chapter 1, 01_lint-sample) to verify your answer! Hint: the problem lies in repetition. The fixed version is also found in the source code example; make sure to check out all that good stuff.
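If you don’t have the samples at hand, the following sketch shows one way the snippet could be rewritten to keep a linter happy. It is not the fixed version from the book’s source code. The name composeTicksCount is an assumption, as is the guess that each returned function is meant to keep its own counter; the unused time parameter has been dropped.

```javascript
'use strict';

// A possible lint-friendly rewrite (illustrative, not the book's sample).
function composeTicksCount(start) {
  // A closure variable replaces this.counter, so the count is not shared
  // between separate calls to composeTicksCount.
  var counter = start || 1;

  return function () {
    var ticks = Date.now(); // declared locally instead of leaking a global
    var id = ticks + '_' + counter;
    counter += 1;
    return id;
  };
}

var next = composeTicksCount(5);
console.log(next()); // a timestamp followed by _5
console.log(next()); // a timestamp followed by _6
```

Every variable is declared, every statement ends in a semicolon, and the function no longer depends on what this happens to be when it’s called.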

Understanding the source code that comes with this book

The source code included with this book has many nuggets of information, including a tweaked version of the linting example function, which passes the lint verification, fully commented to let you understand the changes made to it. The sample also goes on to explain that linters aren’t bulletproof.

The other code samples in the book contain similar pieces of advice and nuggets of information, so be sure to check them out! Samples are organized by chapter, and they appear in the same order as in the book. Several examples are only discussed at a glance in the book, but all of the accompanying code samples are fully documented and ready to use.

The reason for this discrepancy between code in the book and the source code is that sometimes I want to explain a topic, but there may be too much code involved to be included in the book. In those cases, I didn’t want to drift too much from the concept in question, but still wanted you to have the code. This way you can focus on learning while reading the book, and focus on experimenting when browsing the code samples.

Linting is often referred to as the first test you should set up when writing JavaScript. Where linters fail, unit tests come in. This isn’t to say that using linters is unnecessary, but rather, that linting alone is insufficient! Unit testing helps ensure your code behaves the way you expect it to. Unit testing is discussed in chapter 8, where you’ll learn how to write tests for the code you develop throughout part 2, which is dedicated to writing modular, maintainable, and testable JavaScript code.

Next up, you’ll start putting together a build process from scratch. You’ll start small, setting up a task to lint the code, then running it from the command line, similar to how the process looks if you use a compiler; you’ll learn to make a habit of running the build every time you make a change and see whether the code still “compiles” against the linter. Chapter 3 teaches you how to have the build run itself, so you don’t have to repeat yourself like that, but it’ll be fine for the time being.

How can you use a lint tool such as JSLint straight in the command line? Well, I’m glad you asked.

1.5.2. Lint in the command line

One of the most common ways to add a task to a build process is to execute that task using a command line. If you can execute the task from the command line, it’ll be easy to integrate it into your build process. You’re going to use JSHint[4] to lint your software.

4 For more information on JSHint, see http://jshint.com.

JSHint is a command-line tool that lints JavaScript files and snippets. It’s written in Node.js, which is a platform for developing applications using JavaScript. If you need a quick overview of Node.js fundamentals, refer to appendix A, where I explain what modules are and how they work. If you want a deeper analysis of Node.js, refer to Node.js in Action by Mike Cantelon et al. (Manning, 2013). Understanding Node.js will also be useful when working with Grunt, our build tool of choice, in the next chapter.

Node.js explained

Node is a relatively new platform you’ve surely heard of by now. It was initially released in 2009, and it follows event-driven and single-threaded patterns, which translates into high-performing concurrent request handling. In this regard, it’s comparable to the design in Nginx, a highly scalable multi-purpose—and very popular—reverse proxy server meant to serve static content and pipe other requests to an application server (such as Node).

Node.js has been praised as particularly easy to adopt by front-end engineers, considering it’s merely JavaScript on the server side (for the most part). It also made it possible to abstract the front end from the back end entirely,[a] only interacting through data and REST API interfaces, such as the one you’ll learn to design and then build in chapter 9.

a For more information on abstracting the front end from the back end, see http://bevacqua.io/bf/node-frontend.

Node.js and JSHint Installation

Here are the steps for installing Node.js and the JSHint command-line interface (CLI) tool. Alternative Node.js installation methods and troubleshooting are also offered in appendix A.

1. Go to http://nodejs.org, as shown in figure 1.6, and click on the INSTALL button to download the latest version of node.

Figure 1.6. The http://nodejs.org website

2. Execute the downloaded file and follow the installation instructions.

You’ll get a command-line tool called npm (Node Package Manager) for free, as it comes bundled with Node. This package manager, npm, can be used from your terminal to install, publish, and manage modules in your node projects. Packages can be installed on a project-by-project basis or they can be installed globally, making them easier to access directly in the terminal. In reality, the difference between the two is that globally installed packages are put in a folder that’s in the PATH environment variable, and those that aren’t are put in a folder named node_modules in the same folder you’re in when you execute the command. To keep projects self-contained, local installs are always preferred. But in the case of utilities such as the JSHint linter, which you want to use system-wide, a global install is more appropriate. The -g modifier tells npm to install jshint globally. That way, you can use it on the command line as jshint.

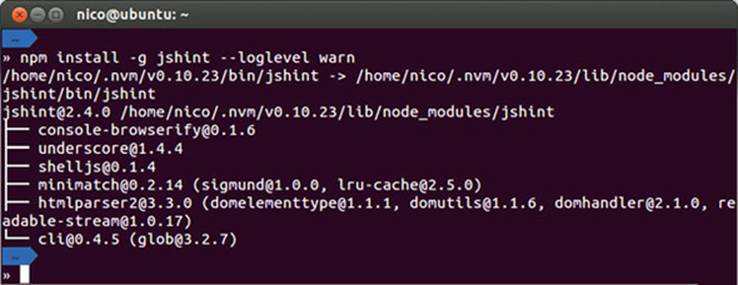

1. Open your favorite terminal window and execute npm install -g jshint, as shown in figure 1.7. If it failed, you may need to use sudo to get elevated privileges; for example, sudo npm install -g jshint.

Figure 1.7. Installing jshint through npm

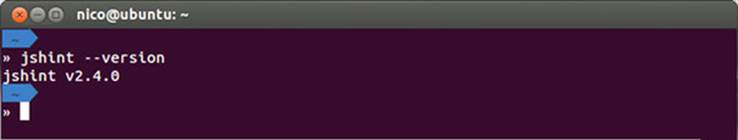

2. Run jshint --version. It should output the version number for the jshint program, as shown in figure 1.8. It’ll probably be a different version, as module versions in actively developed packages change frequently.

Figure 1.8. Verifying jshint works in your terminal

The next section explains how to lint your code.

Linting your code

You should now have jshint installed on your system, and accessible in your terminal, as you’ve verified. To lint your code using JSHint, you can change directories using cd to your project root, and then type in jshint . (the dot tells JSHint to lint all of the files in the current folder). If the operation is taking too long, you may need to add the --exclude node_modules option; this way you’ll only lint your own code and ignore third-party code installed via npm install.

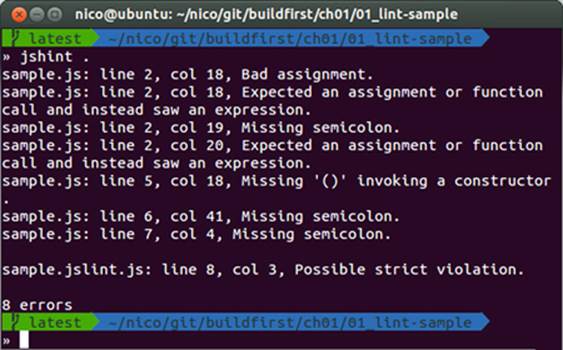

When the command completes, you’ll get a detailed report indicating the status of your code. If your code has any problems, the tool will report the expected result and line number for each of those problems. Then it will exit with an error code, allowing you to “break the build” if the lint fails. Whenever a build task fails to produce the expected output, the entire process should be aborted. This presents a number of benefits because it prevents work from continuing if something goes wrong, refusing to complete a build until you fix any issues. Figure 1.9 shows the results of linting a snippet of code.
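The exit code is what makes “breaking the build” possible from a script. The following is a minimal sketch, assuming Node’s built-in child_process module; runStep is a hypothetical helper name, and the jshint invocation is left as a comment because it assumes the global install described earlier.

```javascript
var spawnSync = require('child_process').spawnSync;

// Hypothetical helper: runs a command, streams its output to the terminal,
// and returns the command's exit code (0 means success).
function runStep(command, args) {
  var result = spawnSync(command, args, { stdio: 'inherit' });
  if (result.error) {
    // For example, the command could not be found.
    console.error(result.error.message);
    return 1;
  }
  // status is null when the process was killed by a signal.
  return result.status === null ? 1 : result.status;
}

// Usage, assuming jshint is installed globally:
//   var code = runStep('jshint', ['.']);
//   if (code !== 0) { process.exit(code); } // abort: the lint failed
```

On Windows, globally installed npm commands are usually .cmd wrappers, so you may need to pass shell: true in the options for the command to be found.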

Figure 1.9. Linting with JSHint from your terminal

Once JSHint is all set, you might be tempted to call it a day, because it’s your only task; however, that approach wouldn’t scale if you wanted to add extra tasks to your build. You might want to include a unit testing step in your build process; this becomes a problem because you now have to run at least two commands: jshint and another one to execute your tests. Imagine remembering to use jshint and half a dozen other commands complete with their parameters. It would be too cumbersome, hard to remember, and error prone. You wouldn’t want to lose half a billion dollars, would you?

Then you’d better start putting your build tasks together, because even if you only have a single one for now, you’ll soon have a dozen! Composing a build process helps you think in terms of automation, and it’ll help you save time by avoiding repetition of steps.
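To give a feel for what putting tasks together means, here is a rough sketch of a build as an ordered list of steps that stops at the first failure. This is plain JavaScript rather than Grunt, and runBuild, the step names, and the step functions are all made up for illustration; a real build tool offers much more than this.

```javascript
// Runs each step in order and stops as soon as one of them fails.
// Each step is a hypothetical object with a name and a run() function
// that returns true on success.
function runBuild(steps) {
  for (var i = 0; i < steps.length; i++) {
    if (!steps[i].run()) {
      return { ok: false, failed: steps[i].name, completed: i };
    }
  }
  return { ok: true, failed: null, completed: steps.length };
}

var log = [];
var result = runBuild([
  { name: 'lint', run: function () { log.push('lint'); return true; } },
  { name: 'test', run: function () { log.push('test'); return false; } },
  { name: 'package', run: function () { log.push('package'); return true; } }
]);

console.log(result.ok, result.failed, log.join(',')); // prints "false test lint,test"
```

Because the test step fails, the package step never runs. You invoke one command instead of remembering three, and a failure anywhere aborts the whole process.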

Every language has its own set of build tools you can use. Most have a tool that stands out and sees far wider adoption than the rest. When it comes to JavaScript build systems, Grunt is one of the most popular tools, with thousands of plugins (to help you with build tasks) to pick from. If you’re writing a build process for another language, you’ll probably want to research your own. Even though the build tasks in the book are written in JavaScript and use Grunt, the principles I describe should apply to almost any language and build tool.

Flip over to chapter 2 to see how you can integrate JSHint into Grunt, as you begin your hands-on journey through the land of build processes.

1.6. Summary

This chapter serves as an overview of the concepts you’ll dig into throughout the rest of the book. Here are highlights about what you’ve learned in this chapter:

· Modern JavaScript application development is problematic because of the lack of regard given to design and architecture.

· Build First is a solution that enables automated processes and maintainable application design, and encourages you to think about what you’re building.

· You learned about lint and ran code through a linter, improving its code quality without using a browser.

· In part 1 you’ll learn all about build processes, deployments, and environment configuration. You’ll use Grunt to develop builds, and in appendix C you’ll learn about other tools you can use.

· Part 2 is dedicated to complexity in application design. Modularity, asynchronous code flows, application and API design, and testability all have a role to play, and they come together in part 2.

You’ve barely scratched the surface of what you can achieve using a Build First approach to application design! We have much ground to cover! Let’s move to chapter 2, where we’ll discuss the most common tasks you might need to perform during a build and go over implementation examples using Grunt.