Windows Internals, Sixth Edition, Part 1 (2012)

Chapter 3. System Mechanisms

The Windows operating system provides several base mechanisms that kernel-mode components such as the executive, the kernel, and device drivers use. This chapter explains the following system mechanisms and describes how they are used:

§ Trap dispatching, including interrupts, deferred procedure calls (DPCs), asynchronous procedure calls (APCs), exception dispatching, and system service dispatching

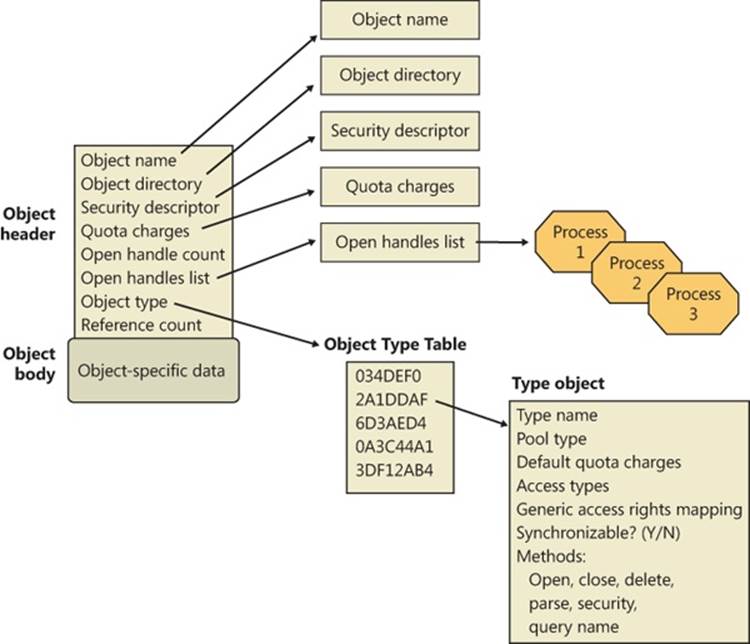

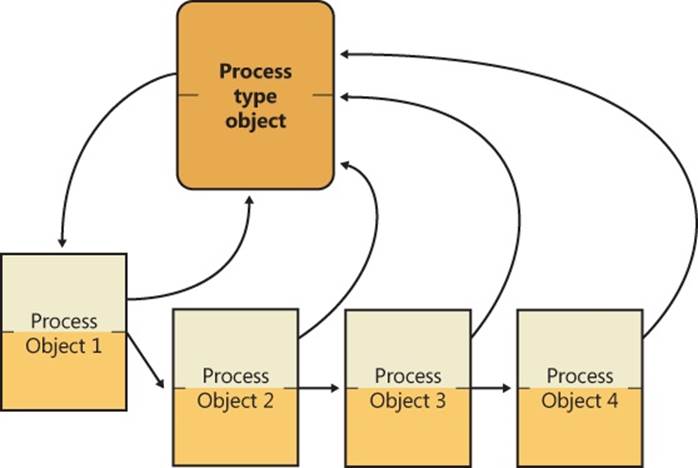

§ The executive object manager

§ Synchronization, including spinlocks, kernel dispatcher objects, how waits are implemented, as well as user-mode-specific synchronization primitives that avoid trips to kernel mode (unlike typical dispatcher objects)

§ System worker threads

§ Miscellaneous mechanisms such as Windows global flags

§ Advanced Local Procedure Calls (ALPCs)

§ Kernel event tracing

§ Wow64

§ User-mode debugging

§ The image loader

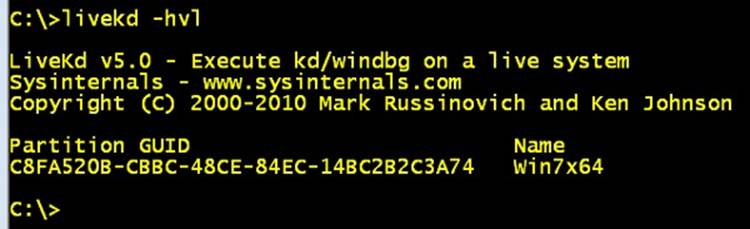

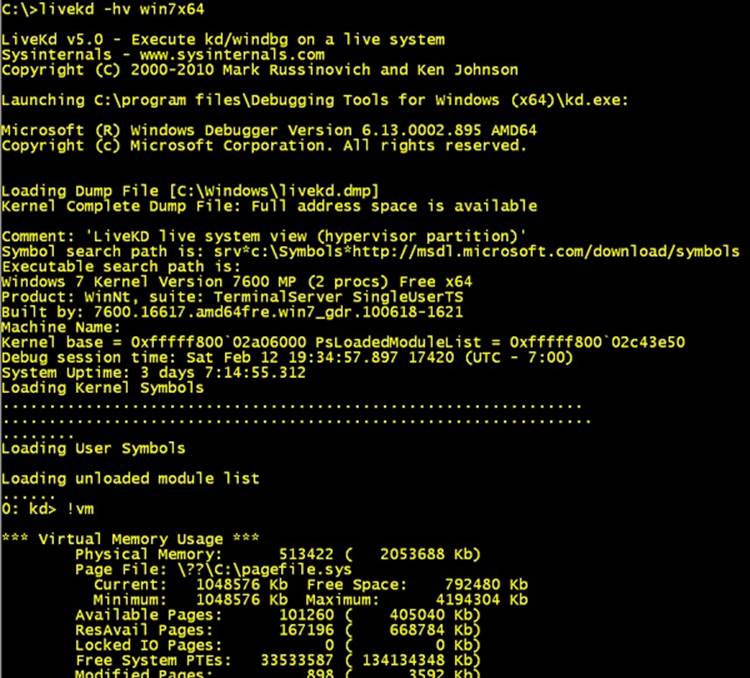

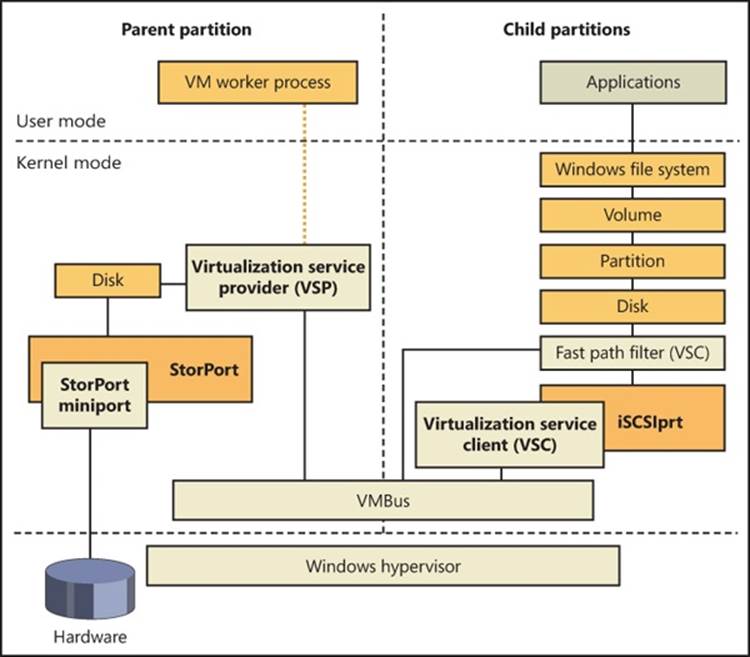

§ Hypervisor (Hyper-V)

§ Kernel Transaction Manager (KTM)

§ Kernel Patch Protection (KPP)

§ Code integrity

Trap Dispatching

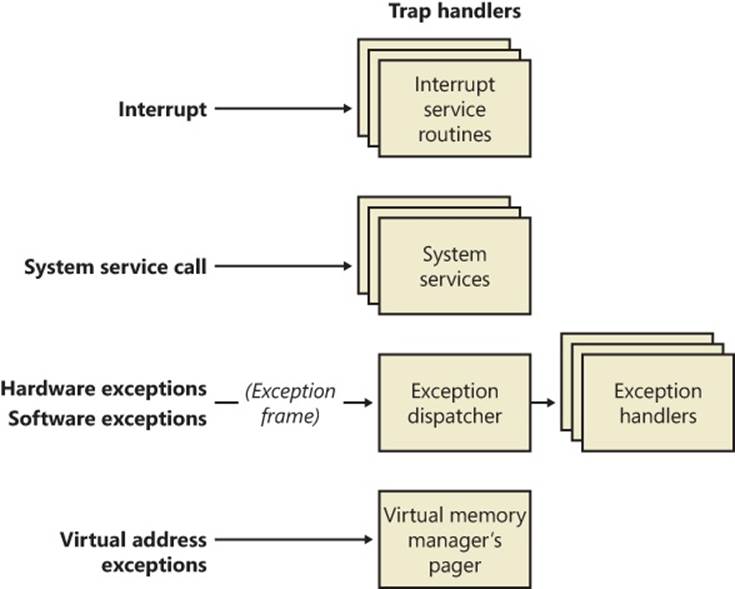

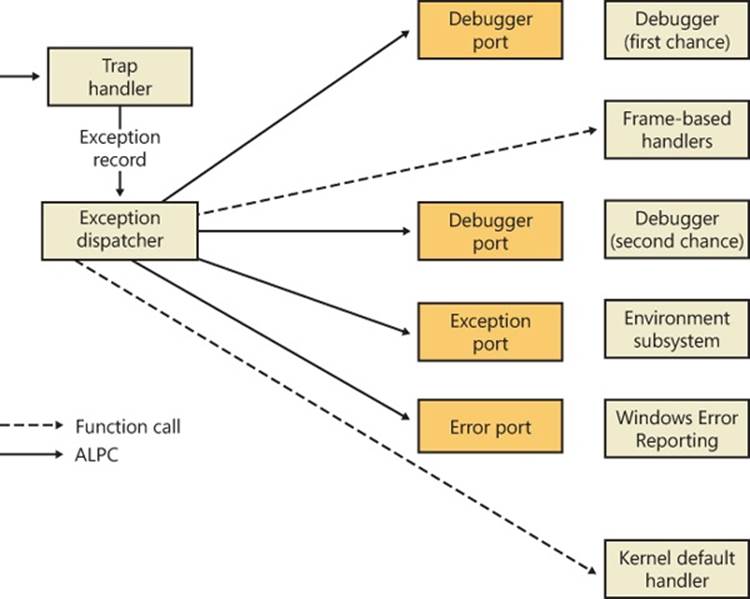

Interrupts and exceptions are operating system conditions that divert the processor to code outside the normal flow of control. Either hardware or software can detect them. The term trap refers to a processor’s mechanism for capturing an executing thread when an exception or an interrupt occurs and transferring control to a fixed location in the operating system. In Windows, the processor transfers control to a trap handler, which is a function specific to a particular interrupt or exception. Figure 3-1 illustrates some of the conditions that activate trap handlers.

The kernel distinguishes between interrupts and exceptions in the following way. An interrupt is an asynchronous event (one that can occur at any time) that is unrelated to what the processor is executing. Interrupts are generated primarily by I/O devices, processor clocks, or timers, and they can be enabled (turned on) or disabled (turned off). An exception, in contrast, is a synchronous condition that usually results from the execution of a particular instruction. (Aborts, such as machine checks, are a type of processor exception that’s typically not associated with instruction execution.) Running a program a second time with the same data under the same conditions can reproduce exceptions. Examples of exceptions include memory-access violations, certain debugger instructions, and divide-by-zero errors. The kernel also regards system service calls as exceptions (although technically they’re system traps).

Figure 3-1. Trap dispatching

Either hardware or software can generate exceptions and interrupts. For example, a bus error exception is caused by a hardware problem, whereas a divide-by-zero exception is the result of a software bug. Likewise, an I/O device can generate an interrupt, or the kernel itself can issue a software interrupt (such as an APC or DPC, both of which are described later in this chapter).

When a hardware exception or interrupt is generated, the processor records enough machine state on the kernel stack of the thread that’s interrupted to return to that point in the control flow and continue execution as if nothing had happened. If the thread was executing in user mode, Windows switches to the thread’s kernel-mode stack. Windows then creates a trap frame on the kernel stack of the interrupted thread into which it stores the execution state of the thread. The trap frame is a subset of a thread’s complete context, and you can view its definition by typing dt nt!_ktrap_frame in the kernel debugger. (Thread context is described in Chapter 5.) The kernel handles software interrupts either as part of hardware interrupt handling or synchronously when a thread invokes kernel functions related to the software interrupt.

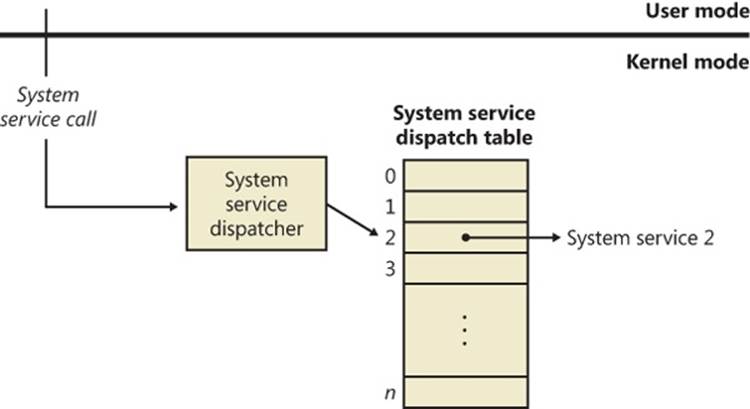

In most cases, the kernel installs front-end, trap-handling functions that perform general trap-handling tasks before and after transferring control to other functions that field the trap. For example, if the condition was a device interrupt, a kernel hardware interrupt trap handler transfers control to the interrupt service routine (ISR) that the device driver provided for the interrupting device. If the condition was caused by a call to a system service, the general system service trap handler transfers control to the specified system service function in the executive. The kernel also installs trap handlers for traps that it doesn’t expect to see or doesn’t handle. These trap handlers typically execute the system function KeBugCheckEx, which halts the computer when the kernel detects problematic or incorrect behavior that, if left unchecked, could result in data corruption. (For more information on bug checks, see Chapter 14, “Crash Dump Analysis,” in Part 2.) The following sections describe interrupt, exception, and system service dispatching in greater detail.

Interrupt Dispatching

Hardware-generated interrupts typically originate from I/O devices that must notify the processor when they need service. Interrupt-driven devices allow the operating system to get the maximum use out of the processor by overlapping central processing with I/O operations. A thread starts an I/O transfer to or from a device and then can execute other useful work while the device completes the transfer. When the device is finished, it interrupts the processor for service. Pointing devices, printers, keyboards, disk drives, and network cards are generally interrupt driven.

System software can also generate interrupts. For example, the kernel can issue a software interrupt to initiate thread dispatching and to asynchronously break into the execution of a thread. The kernel can also disable interrupts so that the processor isn’t interrupted, but it does so only infrequently—at critical moments while it’s programming an interrupt controller or dispatching an exception, for example.

The kernel installs interrupt trap handlers to respond to device interrupts. Interrupt trap handlers transfer control either to an external routine (the ISR) that handles the interrupt or to an internal kernel routine that responds to the interrupt. Device drivers supply ISRs to service device interrupts, and the kernel provides interrupt-handling routines for other types of interrupts.

In the following subsections, you’ll find out how the hardware notifies the processor of device interrupts, the types of interrupts the kernel supports, the way device drivers interact with the kernel (as a part of interrupt processing), and the software interrupts the kernel recognizes (plus the kernel objects that are used to implement them).

Hardware Interrupt Processing

On the hardware platforms supported by Windows, external I/O interrupts come into one of the lines on an interrupt controller. The controller, in turn, interrupts the processor on a single line. Once the processor is interrupted, it queries the controller to get the interrupt request (IRQ). The interrupt controller translates the IRQ to an interrupt number, and the processor uses this number as an index into a structure called the interrupt dispatch table (IDT) and transfers control to the appropriate interrupt dispatch routine. At system boot time, Windows fills in the IDT with pointers to the kernel routines that handle each interrupt and exception.

Windows maps hardware IRQs to interrupt numbers in the IDT, and the system also uses the IDT to configure trap handlers for exceptions. For example, the x86 and x64 exception number for a page fault (an exception that occurs when a thread attempts to access a page of virtual memory that isn’t defined or present) is 0xe (14). Thus, entry 0xe in the IDT points to the system’s page-fault handler. Although the architectures supported by Windows allow up to 256 IDT entries, the number of IRQs a particular machine can support is determined by the design of the interrupt controller the machine uses.
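The idea of a table indexed by interrupt number can be sketched in a few lines of Python. This is only an illustrative model, not how the kernel builds the real IDT: the function names are hypothetical, and the default entry stands in for the handlers that the kernel installs for traps it doesn’t expect.

```python
IDT_ENTRIES = 256        # upper limit on IDT entries mentioned in the text
PAGE_FAULT_VECTOR = 0xE  # x86/x64 exception number for a page fault


def unexpected_trap(vector):
    # Hypothetical stand-in for the handlers installed for unexpected traps;
    # the real ones typically end up in KeBugCheckEx.
    return f"unexpected trap 0x{vector:02x}: bug check"


def page_fault_handler(vector):
    # Hypothetical stand-in for the system's page-fault handler.
    return f"vector 0x{vector:02x}: page-fault handler"


def build_idt():
    # Every slot starts out pointing at the catch-all handler, and specific
    # vectors are then overwritten with their own handlers.
    idt = [unexpected_trap] * IDT_ENTRIES
    idt[PAGE_FAULT_VECTOR] = page_fault_handler
    return idt


def dispatch(idt, vector):
    # The interrupt or exception number is used directly as the table index.
    return idt[vector](vector)


idt = build_idt()
print(dispatch(idt, 0xE))
print(dispatch(idt, 0x55))
```

The lookup is a plain array index, which is why each interrupt or exception number has to be fixed before the table is consulted.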

EXPERIMENT: VIEWING THE IDT

You can view the contents of the IDT, including information on what trap handlers Windows has assigned to interrupts (including exceptions and IRQs), using the !idt kernel debugger command. The !idt command with no flags shows simplified output that includes only registered hardware interrupts (and, on 64-bit machines, the processor trap handlers).

The following example shows what the output of the !idt command looks like:

lkd> !idt

Dumping IDT:

00: fffff80001a7ec40 nt!KiDivideErrorFault

01: fffff80001a7ed40 nt!KiDebugTrapOrFault

02: fffff80001a7ef00 nt!KiNmiInterrupt Stack = 0xFFFFF80001865000

03: fffff80001a7f280 nt!KiBreakpointTrap

04: fffff80001a7f380 nt!KiOverflowTrap

05: fffff80001a7f480 nt!KiBoundFault

06: fffff80001a7f580 nt!KiInvalidOpcodeFault

07: fffff80001a7f7c0 nt!KiNpxNotAvailableFault

08: fffff80001a7f880 nt!KiDoubleFaultAbort Stack = 0xFFFFF80001863000

09: fffff80001a7f940 nt!KiNpxSegmentOverrunAbort

0a: fffff80001a7fa00 nt!KiInvalidTssFault

0b: fffff80001a7fac0 nt!KiSegmentNotPresentFault

0c: fffff80001a7fc00 nt!KiStackFault

0d: fffff80001a7fd40 nt!KiGeneralProtectionFault

0e: fffff80001a7fe80 nt!KiPageFault

10: fffff80001a80240 nt!KiFloatingErrorFault

11: fffff80001a803c0 nt!KiAlignmentFault

12: fffff80001a804c0 nt!KiMcheckAbort Stack = 0xFFFFF80001867000

13: fffff80001a80840 nt!KiXmmException

1f: fffff80001a5ec10 nt!KiApcInterrupt

2c: fffff80001a80a00 nt!KiRaiseAssertion

2d: fffff80001a80b00 nt!KiDebugServiceTrap

2f: fffff80001acd590 nt!KiDpcInterrupt

37: fffff8000201c090 hal!PicSpuriousService37 (KINTERRUPT fffff8000201c000)

3f: fffff8000201c130 hal!PicSpuriousService37 (KINTERRUPT fffff8000201c0a0)

51: fffffa80045babd0 dxgkrnl!DpiFdoLineInterruptRoutine (KINTERRUPT fffffa80045bab40)

52: fffffa80029f1390 USBPORT!USBPORT_InterruptService (KINTERRUPT fffffa80029f1300)

62: fffffa80029f15d0 USBPORT!USBPORT_InterruptService (KINTERRUPT fffffa80029f1540)

USBPORT!USBPORT_InterruptService (KINTERRUPT fffffa80029f1240)

72: fffffa80029f1e10 ataport!IdePortInterrupt (KINTERRUPT fffffa80029f1d80)

81: fffffa80045bae10 i8042prt!I8042KeyboardInterruptService (KINTERRUPT fffffa80045bad80)

82: fffffa80029f1ed0 ataport!IdePortInterrupt (KINTERRUPT fffffa80029f1e40)

90: fffffa80045bad50 Vid+0x7918 (KINTERRUPT fffffa80045bacc0)

91: fffffa80045baed0 i8042prt!I8042MouseInterruptService (KINTERRUPT fffffa80045bae40)

a0: fffffa80045bac90 vmbus!XPartPncIsr (KINTERRUPT fffffa80045bac00)

a2: fffffa80029f1210 sdbus!SdbusInterrupt (KINTERRUPT fffffa80029f1180)

rimmpx64+0x9FFC (KINTERRUPT fffffa80029f10c0)

rimspx64+0x7A14 (KINTERRUPT fffffa80029f1000)

rixdpx64+0x9C50 (KINTERRUPT fffffa80045baf00)

a3: fffffa80029f1510 USBPORT!USBPORT_InterruptService (KINTERRUPT fffffa80029f1480)

HDAudBus!HdaController::Isr (KINTERRUPT fffffa80029f1c00)

a8: fffffa80029f1bd0 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1b40)

a9: fffffa80029f1b10 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1a80)

aa: fffffa80029f1a50 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f19c0)

ab: fffffa80029f1990 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1900)

ac: fffffa80029f18d0 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1840)

ad: fffffa80029f1810 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1780)

ae: fffffa80029f1750 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f16c0)

af: fffffa80029f1690 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1600)

b0: fffffa80029f1d50 NDIS!ndisMiniportMessageIsr (KINTERRUPT fffffa80029f1cc0)

b1: fffffa80029f1f90 ACPI!ACPIInterruptServiceRoutine (KINTERRUPT fffffa80029f1f00)

b3: fffffa80029f1450 USBPORT!USBPORT_InterruptService (KINTERRUPT fffffa80029f13c0)

c1: fffff8000201c3b0 hal!HalpBroadcastCallService (KINTERRUPT fffff8000201c320)

d1: fffff8000201c450 hal!HalpHpetClockInterrupt (KINTERRUPT fffff8000201c3c0)

d2: fffff8000201c4f0 hal!HalpHpetRolloverInterrupt (KINTERRUPT fffff8000201c460)

df: fffff8000201c310 hal!HalpApicRebootService (KINTERRUPT fffff8000201c280)

e1: fffff80001a8e1f0 nt!KiIpiInterrupt

e2: fffff8000201c270 hal!HalpDeferredRecoveryService (KINTERRUPT fffff8000201c1e0)

e3: fffff8000201c1d0 hal!HalpLocalApicErrorService (KINTERRUPT fffff8000201c140)

fd: fffff8000201c590 hal!HalpProfileInterrupt (KINTERRUPT fffff8000201c500)

fe: fffff8000201c630 hal!HalpPerfInterrupt (KINTERRUPT fffff8000201c5a0)

On the system used to provide the output for this experiment, the keyboard ISR of the keyboard device driver (I8042prt.sys) is at interrupt number 0x81. You can also see that interrupt 0xe corresponds to KiPageFault, as explained earlier.

Each processor has a separate IDT so that different processors can run different ISRs, if appropriate. For example, in a multiprocessor system, each processor receives the clock interrupt, but only one processor updates the system clock in response to this interrupt. All the processors, however, use the interrupt to measure thread quantum and to initiate rescheduling when a thread’s quantum ends. Similarly, some system configurations might require that a particular processor handle certain device interrupts.

x86 Interrupt Controllers

Most x86 systems rely on either the i8259A Programmable Interrupt Controller (PIC) or a variant of the i82489 Advanced Programmable Interrupt Controller (APIC); today’s computers include an APIC. The PIC standard originates with the original IBM PC. The i8259A PIC works only with uniprocessor systems and has only eight interrupt lines. However, the IBM PC architecture defined the addition of a second PIC, called the slave, whose interrupts are multiplexed into one of the master PIC’s interrupt lines. This provides 15 total interrupts (seven on the master and eight on the slave, multiplexed through the master’s eighth interrupt line). APICs and Streamlined Advanced Programmable Interrupt Controllers (SAPICs, discussed shortly) work with multiprocessor systems and have 256 interrupt lines. Intel and other companies have defined the Multiprocessor Specification (MP Specification), a design standard for x86 multiprocessor systems that centers on the use of APIC. To provide compatibility with uniprocessor operating systems and boot code that starts a multiprocessor system in uniprocessor mode, APICs support a PIC compatibility mode with 15 interrupts and delivery of interrupts to only the primary processor. Figure 3-2 depicts the APIC architecture.

The APIC actually consists of several components: an I/O APIC that receives interrupts from devices, local APICs that receive interrupts from the I/O APIC on the bus and that interrupt the CPU they are associated with, and an i8259A-compatible interrupt controller that translates APIC input into PIC-equivalent signals. Because there can be multiple I/O APICs on the system, motherboards typically have a piece of core logic that sits between them and the processors. This logic is responsible for implementing interrupt routing algorithms that both balance the device interrupt load across processors and attempt to take advantage of locality, delivering device interrupts to the same processor that has just fielded a previous interrupt of the same type. Software programs can reprogram the I/O APICs with a fixed routing algorithm that bypasses this piece of chipset logic. Windows does this by programming the APICs in an “interrupt one processor in the following set” routing mode.

Figure 3-2. x86 APIC architecture

x64 Interrupt Controllers

Because the x64 architecture is compatible with x86 operating systems, x64 systems must provide the same interrupt controllers as the x86. A significant difference, however, is that the x64 versions of Windows will not run on systems that do not have an APIC because they use the APIC for interrupt control.

IA64 Interrupt Controllers

The IA64 architecture relies on the Streamlined Advanced Programmable Interrupt Controller (SAPIC), which is an evolution of the APIC. Even if load balancing and routing are present in the firmware, Windows does not take advantage of it; instead, it statically assigns interrupts to processors in a round-robin manner.
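As a rough illustration of static round-robin assignment, the following Python sketch hands each interrupt source to the next processor in turn. The device names and the function are hypothetical, and the sketch says nothing about how the HAL actually stores or applies the assignment.

```python
from itertools import cycle


def assign_round_robin(interrupts, processors):
    """Pair each interrupt source with the next processor in turn."""
    if not processors:
        raise ValueError("at least one processor is required")
    next_cpu = cycle(processors)
    # The assignment is computed once and never rebalanced afterward.
    return {source: next(next_cpu) for source in interrupts}


# Hypothetical interrupt sources on a two-processor system.
assignment = assign_round_robin(
    ["disk", "network", "usb", "audio", "keyboard"], [0, 1]
)
for source, cpu in assignment.items():
    print(f"{source} -> processor {cpu}")
```

Because the mapping is fixed up front, it ignores the actual interrupt load on each processor, which is the trade-off of a static scheme.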

EXPERIMENT: VIEWING THE PIC AND APIC

You can view the configuration of the PIC on a uniprocessor and the current local APIC on a multiprocessor by using the !pic and !apic kernel debugger commands, respectively. Here’s the output of the !pic command on a uniprocessor. (Note that the !pic command doesn’t work if your system is using an APIC HAL.)

lkd> !pic

----- IRQ Number ----- 00 01 02 03 04 05 06 07 08 09 0A 0B 0C 0D 0E 0F

Physically in service: . . . . . . . . . . . . . . . .

Physically masked: . . . Y . . Y Y . . Y . . Y . .

Physically requested: . . . . . . . . . . . . . . . .

Level Triggered: . . . . . Y . . . Y . Y . . . .

Here’s the output of the !apic command on a system running with an APIC HAL. Note that during local kernel debugging, this command shows the APIC associated with the current processor (in other words, whichever processor the debugger’s thread happens to be running on as you enter the command). When looking at a crash dump or remote system, you can use the ~ (tilde) command followed by the processor number to switch to the processor whose local APIC you want to see.

lkd> !apic

Apic @ fffe0000 ID:0 (50014) LogDesc:01000000 DestFmt:ffffffff TPR 20

TimeCnt: 00000000clk SpurVec:3f FaultVec:e3 error:0

Ipi Cmd: 01000000'0000002f Vec:2F FixedDel Ph:01000000 edg high

Timer..: 00000000'000300fd Vec:FD FixedDel Dest=Self edg high m

Linti0.: 00000000'0001003f Vec:3F FixedDel Dest=Self edg high m

Linti1.: 00000000'000004ff Vec:FF NMI Dest=Self edg high

TMR: 51-52, 62, A3, B1, B3

IRR:

ISR::

The numbers following the Vec labels indicate the IDT vector associated with each entry. For example, in this output, interrupt number 0xFD is associated with the APIC Timer, and interrupt number 0xE3 handles APIC errors. Because this experiment was run on the same machine as the earlier !idt experiment, you can see that 0xFD is the HAL’s Profiling Interrupt (which uses a timer for profile intervals), and 0xE3 is the HAL’s Local APIC Error Handler, as expected.
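If you capture this output to a text file, the cross-reference against the IDT can be automated. The following Python sketch is one possible approach, assuming the line layout shown above. The regular expression and the helper name are illustrative and are not part of any debugger tooling.

```python
import re

# Lines copied from the !apic output above.
APIC_OUTPUT = """\
TimeCnt: 00000000clk SpurVec:3f FaultVec:e3 error:0
Ipi Cmd: 01000000'0000002f Vec:2F FixedDel Ph:01000000 edg high
Timer..: 00000000'000300fd Vec:FD FixedDel Dest=Self edg high m
Linti0.: 00000000'0001003f Vec:3F FixedDel Dest=Self edg high m
Linti1.: 00000000'000004ff Vec:FF NMI Dest=Self edg high
"""

# Handlers copied from the earlier !idt output for the same machine.
IDT_HANDLERS = {
    0x2F: "nt!KiDpcInterrupt",
    0x3F: "hal!PicSpuriousService37",
    0xFD: "hal!HalpProfileInterrupt",
}

# Matches "<label>: ... Vec:<hex>"; the word boundary skips SpurVec/FaultVec.
VEC_FIELD = re.compile(r"^(?P<label>[^:]+):.*?\bVec:(?P<vec>[0-9A-Fa-f]+)")


def apic_vectors(text):
    """Return {label: vector} for every line that carries a Vec field."""
    vectors = {}
    for line in text.splitlines():
        match = VEC_FIELD.match(line)
        if match:
            label = match.group("label").rstrip(".")
            vectors[label] = int(match.group("vec"), 16)
    return vectors


for label, vector in apic_vectors(APIC_OUTPUT).items():
    handler = IDT_HANDLERS.get(vector, "(not in the !idt listing)")
    print(f"{label:8} vector 0x{vector:02x} -> {handler}")
```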

The following output is for the !ioapic command, which displays the configuration of the I/O APICs, the interrupt controller components connected to devices:

lkd> !ioapic

IoApic @ FEC00000 ID:0 (51) Arb:A951

Inti00.: 0000a951'0000a951 Vec:51 LowestDl Lg:0000a951 lvl low

Software Interrupt Request Levels (IRQLs)

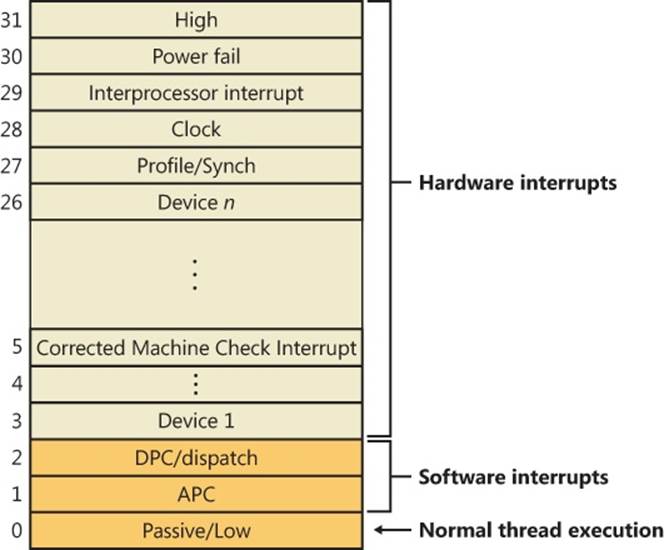

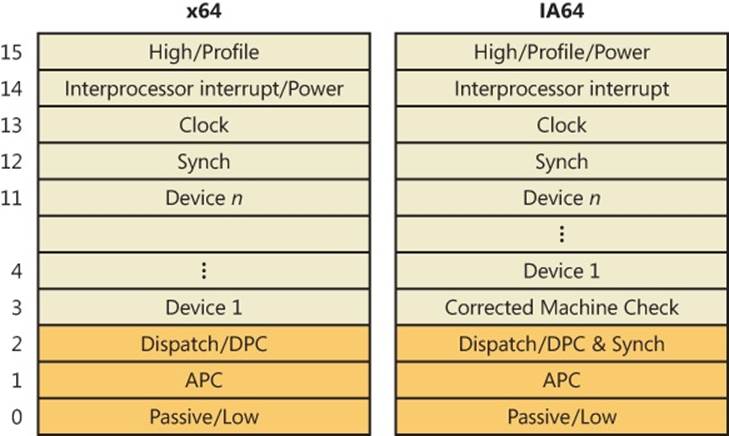

Although interrupt controllers perform interrupt prioritization, Windows imposes its own interrupt priority scheme known as interrupt request levels (IRQLs). The kernel represents IRQLs internally as a number from 0 through 31 on x86 and from 0 to 15 on x64 and IA64, with higher numbers representing higher-priority interrupts. Although the kernel defines the standard set of IRQLs for software interrupts, the HAL maps hardware-interrupt numbers to the IRQLs. Figure 3-3 shows IRQLs defined for the x86 architecture, and Figure 3-4 shows IRQLs for the x64 and IA64 architectures.

Figure 3-3. x86 interrupt request levels (IRQLs)

Interrupts are serviced in priority order, and a higher-priority interrupt preempts the servicing of a lower-priority interrupt. When a high-priority interrupt occurs, the processor saves the interrupted thread’s state and invokes the trap dispatcher associated with the interrupt. The trap dispatcher raises the IRQL and calls the interrupt’s service routine. After the service routine executes, the trap dispatcher lowers the processor’s IRQL to where it was before the interrupt occurred and then loads the saved machine state. The interrupted thread resumes executing where it left off. When the kernel lowers the IRQL, lower-priority interrupts that were masked might materialize. If this happens, the kernel repeats the process to handle the new interrupts.
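The raise, service, lower, and replay sequence just described can be modeled with a small simulation. This Python sketch is a simplification under stated assumptions: the class, the device names, and the IRQL numbers (3, 5, and 13) are hypothetical, and saving and restoring machine state is not modeled.

```python
class Processor:
    """Toy model of one processor's IRQL handling (names are hypothetical)."""

    def __init__(self):
        self.irql = 0      # passive level
        self.pending = []  # masked interrupts, as (irql, name, isr)
        self.log = []

    def interrupt(self, irql, name, isr=None):
        if irql <= self.irql:
            # At or below the current IRQL: masked until the IRQL drops.
            self.pending.append((irql, name, isr))
            self.log.append(f"pend {name}")
        else:
            self._service(irql, name, isr)

    def _service(self, irql, name, isr):
        previous = self.irql
        self.irql = irql  # raise the IRQL to that of the interrupt source
        self.log.append(f"enter {name} at IRQL {irql}")
        if isr is not None:
            isr(self)     # the service routine may itself be interrupted
        self.log.append(f"leave {name}")
        self._lower(previous)

    def _lower(self, irql):
        self.irql = irql
        # Lowering the IRQL lets masked interrupts materialize, highest first.
        while True:
            ready = [p for p in self.pending if p[0] > self.irql]
            if not ready:
                break
            entry = max(ready, key=lambda p: p[0])
            self.pending.remove(entry)
            self._service(*entry)


def disk_isr(cpu):
    cpu.interrupt(3, "keyboard")  # lower priority: stays pending
    cpu.interrupt(13, "clock")    # higher priority: preempts the disk ISR


cpu = Processor()
cpu.interrupt(5, "disk", disk_isr)
print("\n".join(cpu.log))
```

In this run the clock interrupt preempts the disk service routine, whereas the keyboard interrupt is serviced only after the IRQL falls back to passive level.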

Figure 3-4. x64 and IA64 interrupt request levels (IRQLs)

IRQL priority levels have a completely different meaning than thread-scheduling priorities (which are described in Chapter 5). A scheduling priority is an attribute of a thread, whereas an IRQL is an attribute of an interrupt source, such as a keyboard or a mouse. In addition, each processor has an IRQL setting that changes as operating system code executes.

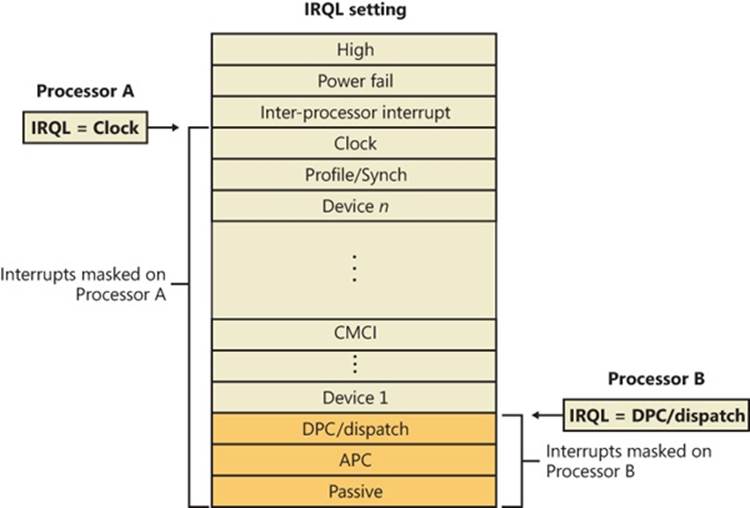

Each processor’s IRQL setting determines which interrupts that processor can receive. IRQLs are also used to synchronize access to kernel-mode data structures. (You’ll find out more about synchronization later in this chapter.) As a kernel-mode thread runs, it raises or lowers the processor’s IRQL either directly by calling KeRaiseIrql and KeLowerIrql or, more commonly, indirectly via calls to functions that acquire kernel synchronization objects. As Figure 3-5 illustrates, interrupts from a source with an IRQL above the current level interrupt the processor, whereas interrupts from sources with IRQLs equal to or below the current level are masked until an executing thread lowers the IRQL.

Because accessing a PIC is a relatively slow operation, HALs that require accessing the I/O bus to change IRQLs, such as for PIC and 32-bit Advanced Configuration and Power Interface (ACPI) systems, implement a performance optimization, called lazy IRQL, that avoids PIC accesses. When the IRQL is raised, the HAL notes the new IRQL internally instead of changing the interrupt mask. If a lower-priority interrupt subsequently occurs, the HAL sets the interrupt mask to the settings appropriate for the first interrupt and does not quiesce the lower-priority interrupt until the IRQL is lowered (thus keeping the interrupt pending). Thus, if no lower-priority interrupts occur while the IRQL is raised, the HAL doesn’t need to modify the PIC.
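To make the saving concrete, the following Python sketch counts simulated PIC accesses with and without an intervening lower-priority interrupt. It is a toy model built on assumptions: the class and method names are hypothetical, the PIC is reduced to a single mask level, and nested servicing is not modeled.

```python
class LazyIrqlHal:
    """Toy model of lazy IRQL; all names here are hypothetical."""

    def __init__(self):
        self.irql = 0        # IRQL the HAL has noted internally
        self.pic_level = 0   # level actually programmed into the PIC
        self.pic_writes = 0  # count of (slow) PIC accesses
        self.pending = []    # interrupts held back, as (level, name)
        self.delivered = []  # names of interrupts that were serviced

    def _program_pic(self, level):
        self.pic_level = level
        self.pic_writes += 1

    def raise_irql(self, level):
        # Lazy step: record the new IRQL but leave the PIC alone.
        self.irql = level

    def interrupt(self, level, name):
        if level <= self.pic_level:
            # The PIC already masks this level, so it simply stays pending.
            self.pending.append((level, name))
        elif level <= self.irql:
            # The interrupt slipped through; set the mask now and hold it.
            self._program_pic(self.irql)
            self.pending.append((level, name))
        else:
            self.delivered.append(name)

    def lower_irql(self, level):
        self.irql = level
        if self.pic_level > level:
            self._program_pic(level)  # unmask only if a mask was ever set
        ready = sorted((p for p in self.pending if p[0] > level), reverse=True)
        for entry in ready:
            self.pending.remove(entry)
            self.delivered.append(entry[1])


quiet = LazyIrqlHal()
quiet.raise_irql(5)
quiet.lower_irql(0)
print("PIC writes with no interrupts:", quiet.pic_writes)

busy = LazyIrqlHal()
busy.raise_irql(5)
busy.interrupt(3, "keyboard")
busy.interrupt(4, "mouse")
busy.lower_irql(0)
print("PIC writes with interrupts:", busy.pic_writes, busy.delivered)
```

The quiet case never touches the simulated PIC, which is the common path that the optimization targets.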

Figure 3-5. Masking interrupts

A kernel-mode thread raises and lowers the IRQL of the processor on which it’s running, depending on what it’s trying to do. For example, when an interrupt occurs, the trap handler (or perhaps the processor) raises the processor’s IRQL to the assigned IRQL of the interrupt source. This elevation masks all interrupts at and below that IRQL (on that processor only), which ensures that the processor servicing the interrupt isn’t waylaid by an interrupt at the same level or a lower level. The masked interrupts are either handled by another processor or held back until the IRQL drops. Therefore, all components of the system, including the kernel and device drivers, attempt to keep the IRQL at passive level (sometimes called low level). They do this because device drivers can respond to hardware interrupts in a more timely manner if the IRQL isn’t kept unnecessarily elevated for long periods.

NOTE

An exception to the rule that raising the IRQL blocks interrupts of that level and lower relates to APC-level interrupts. If a thread raises the IRQL to APC level and then is rescheduled because of a dispatch/DPC-level interrupt, the system might deliver an APC-level interrupt to the newly scheduled thread. Thus, APC level can be considered a thread-local rather than processor-wide IRQL.

EXPERIMENT: VIEWING THE IRQL

You can view a processor’s saved IRQL with the !irql debugger command. The saved IRQL represents the IRQL at the time just before the break-in to the debugger, which raises the IRQL to a static, meaningless value:

kd> !irql

Debugger saved IRQL for processor 0x0 -- 0 (LOW_LEVEL)

Note that the IRQL value is saved in two locations. The first location, which holds the current IRQL, is the processor control region (PCR); its extension, the processor control block (PRCB), holds the saved IRQL in the DebuggerSaveIrql field. The PCR and PRCB contain information about the state of each processor in the system, such as the current IRQL, a pointer to the hardware IDT, the currently running thread, and the next thread selected to run. The kernel and the HAL use this information to perform architecture-specific and machine-specific actions. Portions of the PCR and PRCB structures are defined publicly in the Windows Driver Kit (WDK) header file Ntddk.h.

You can view the contents of the current processor’s PCR with the kernel debugger by using the !pcr command. To view the PCR of a specific processor, add the processor’s number after the command, separated with a space:

lkd> !pcr 0

KPCR for Processor 0 at fffff80001bfad00:

Major 1 Minor 1

NtTib.ExceptionList: fffff80001853000

NtTib.StackBase: fffff80001854080

NtTib.StackLimit: 000000000026ea28

NtTib.SubSystemTib: fffff80001bfad00

NtTib.Version: 0000000001bfae80

NtTib.UserPointer: fffff80001bfb4f0

NtTib.SelfTib: 000007fffffdb000

SelfPcr: 0000000000000000

Prcb: fffff80001bfae80

Irql: 0000000000000000

IRR: 0000000000000000

IDR: 0000000000000000

InterruptMode: 0000000000000000

IDT: 0000000000000000

GDT: 0000000000000000

TSS: 0000000000000000

CurrentThread: fffff80001c08c40

NextThread: 0000000000000000

IdleThread: fffff80001c08c40

DpcQueue:

Because changing a processor’s IRQL has such a significant effect on system operation, the change can be made only in kernel mode—user-mode threads can’t change the processor’s IRQL. This means that a processor’s IRQL is always at passive level when it’s executing user-mode code. Only when the processor is executing kernel-mode code can the IRQL be higher.

Each interrupt level has a specific purpose. For example, the kernel issues an interprocessor interrupt (IPI) to request that another processor perform an action, such as dispatching a particular thread for execution or updating its translation look-aside buffer (TLB) cache. The system clock generates an interrupt at regular intervals, and the kernel responds by updating the clock and measuring thread execution time. If a hardware platform supports two clocks, the kernel adds another clock interrupt level to measure performance. The HAL provides a number of interrupt levels for use by interrupt-driven devices; the exact number varies with the processor and system configuration. The kernel uses software interrupts (described later in this chapter) to initiate thread scheduling and to asynchronously break into a thread’s execution.

Mapping Interrupts to IRQLs

IRQL levels aren’t the same as the interrupt requests (IRQs) defined by interrupt controllers—the architectures on which Windows runs don’t implement the concept of IRQLs in hardware. So how does Windows determine what IRQL to assign to an interrupt? The answer lies in the HAL. In Windows, a type of device driver called a bus driver determines the presence of devices on its bus (PCI, USB, and so on) and what interrupts can be assigned to a device. The bus driver reports this information to the Plug and Play manager, which decides, after taking into account the acceptable interrupt assignments for all other devices, which interrupt will be assigned to each device. Then it calls a Plug and Play interrupt arbiter, which maps interrupts to IRQLs. (The root arbiter is used on non-ACPI systems, while the ACPI HAL has its own arbiter on ACPI-compatible systems.)

The algorithm for assignment differs for the various HALs that Windows includes. On ACPI systems (including x86, x64, and IA64), the HAL computes the IRQL for a given interrupt by dividing the interrupt vector assigned to the IRQ by 16. As for selecting an interrupt vector for the IRQ, this depends on the type of interrupt controller present on the system. On today’s APIC systems, this number is generated in a round-robin fashion, so there is no computable way to figure out the IRQ based on the interrupt vector or the IRQL. However, an experiment later in this section shows how the debugger can query this information from the interrupt arbiter.
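The divide-by-16 rule is easy to check against the !idt output shown earlier in this chapter. The following Python sketch applies it to a few of those vectors. The function name is hypothetical, and the sketch covers only the ACPI rule described above, not vector selection.

```python
def irql_for_vector(vector):
    """Apply the rule described above: IRQL = interrupt vector / 16."""
    if not 0 <= vector <= 0xFF:
        raise ValueError("interrupt vectors range from 0x00 through 0xFF")
    return vector // 16  # equivalent to vector >> 4


# Vectors and handlers copied from the earlier !idt output.
SAMPLE_VECTORS = {
    0x51: "dxgkrnl!DpiFdoLineInterruptRoutine",
    0x81: "i8042prt!I8042KeyboardInterruptService",
    0x91: "i8042prt!I8042MouseInterruptService",
    0xB1: "ACPI!ACPIInterruptServiceRoutine",
    0xD1: "hal!HalpHpetClockInterrupt",
}

for vector, handler in SAMPLE_VECTORS.items():
    print(f"vector 0x{vector:02x} -> IRQL {irql_for_vector(vector):2d}  {handler}")
```

The computation runs in one direction only: because vectors are handed out round-robin, knowing the IRQL or the vector does not reveal the IRQ.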

Predefined IRQLs

Let’s take a closer look at the use of the predefined IRQLs, starting from the highest level shown in Figure 3-4:

§ The kernel uses high level only when it’s halting the system in KeBugCheckEx and masking out all interrupts.

§ Power fail level originated in the original Windows NT design documents, which specified the behavior of system power failure code, but this IRQL has never been used.

§ Interprocessor interrupt level is used to request another processor to perform an action, such as updating the processor’s TLB cache, system shutdown, or system crash.

§ Clock level is used for the system’s clock, which the kernel uses to track the time of day as well as to measure and allot CPU time to threads.

§ The system’s real-time clock (or another source, such as the local APIC timer) uses profile level when kernel profiling (a performance-measurement mechanism) is enabled. When kernel profiling is active, the kernel’s profiling trap handler records the address of the code that was executing when the interrupt occurred. A table of address samples is constructed over time that tools can extract and analyze. You can obtain Kernrate, a kernel profiling tool that you can use to configure and view profiling-generated statistics, from the Windows Driver Kit (WDK). See the Kernrate experiment for more information on using this tool.

§ The synchronization IRQL is internally used by the dispatcher and scheduler code to protect access to global thread scheduling and wait/synchronization code. It is typically defined as the highest level right after the device IRQLs.

§ The device IRQLs are used to prioritize device interrupts. (See the previous section for how hardware interrupt levels are mapped to IRQLs.)

§ The corrected machine check interrupt level is used to signal the operating system after a serious but corrected hardware condition or error that was reported by the CPU or firmware through the Machine Check Error (MCE) interface.

§ DPC/dispatch-level and APC-level interrupts are software interrupts that the kernel and device drivers generate. (DPCs and APCs are explained in more detail later in this chapter.)

§ The lowest IRQL, passive level, isn’t really an interrupt level at all; it’s the setting at which normal thread execution takes place and all interrupts are allowed to occur.

EXPERIMENT: USING KERNEL PROFILER (KERNRATE) TO PROFILE EXECUTION

You can use the Kernel Profiler tool (Kernrate) to enable the system-profiling timer, collect samples of the code that is executing when the timer fires, and display a summary showing the frequency distribution across image files and functions. It can be used to track CPU usage consumed by individual processes and/or time spent in kernel mode independent of processes (for example, interrupt service routines). Kernel profiling is useful when you want to obtain a breakdown of where the system is spending time.

In its simplest form, Kernrate samples where time has been spent in each kernel module (for example, Ntoskrnl, drivers, and so on). For example, after installing the Windows Driver Kit, try performing the following steps:

1. Open a command prompt.

2. Type cd C:\WinDDK\7600.16385.1\tools\other (the path to your installation of the Windows 7/Server 2008 R2 WDK).

3. Type dir. (You will see directories for each platform.)

4. Run the image that matches your platform (with no arguments or switches). For example, i386\kernrate.exe is the image for an x86 system.

5. While Kernrate is running, perform some other activity on the system. For example, run Windows Media Player and play some music, run a graphics-intensive game, or perform network activity such as doing a directory listing of a remote network share.

6. Press Ctrl+C to stop Kernrate. This causes Kernrate to display the statistics from the sampling period.

In the following sample output from Kernrate, Windows Media Player was running, playing a recorded movie from disk:

C:\WinDDK\7600.16385.1\tools\Other\i386>kernrate.exe

/==============================\

< KERNRATE LOG >

\==============================/

Date: 2011/03/09 Time: 16:44:24

Machine Name: TEST-LAPTOP

Number of Processors: 2

PROCESSOR_ARCHITECTURE: x86

PROCESSOR_LEVEL: 6

PROCESSOR_REVISION: 0f06

Physical Memory: 3310 MB

Pagefile Total: 7285 MB

Virtual Total: 2047 MB

PageFile1: \??\C:\pagefile.sys, 4100MB

OS Version: 6.1 Build 7601 Service-Pack: 1.0

WinDir: C:\Windows

Kernrate Executable Location: C:\WINDDK\7600.16385.1\TOOLS\OTHER\I386

Kernrate User-Specified Command Line:

kernrate.exe

Kernel Profile (PID = 0): Source= Time,

Using Kernrate Default Rate of 25000 events/hit

Starting to collect profile data

***> Press ctrl-c to finish collecting profile data

===> Finished Collecting Data, Starting to Process Results

------------Overall Summary:--------------

P0 K 0:00:00.000 ( 0.0%) U 0:00:00.234 ( 4.7%) I 0:00:04.789 (95.3%)

DPC 0:00:00.000 ( 0.0%) Interrupt 0:00:00.000 ( 0.0%)

Interrupts= 9254, Interrupt Rate= 1842/sec.

P1 K 0:00:00.031 ( 0.6%) U 0:00:00.140 ( 2.8%) I 0:00:04.851 (96.6%)

DPC 0:00:00.000 ( 0.0%) Interrupt 0:00:00.000 ( 0.0%)

Interrupts= 7051, Interrupt Rate= 1404/sec.

TOTAL K 0:00:00.031 ( 0.3%) U 0:00:00.374 ( 3.7%) I 0:00:09.640 (96.0%)

DPC 0:00:00.000 ( 0.0%) Interrupt 0:00:00.000 ( 0.0%)

Total Interrupts= 16305, Total Interrupt Rate= 3246/sec.

Total Profile Time = 5023 msec

BytesStart BytesStop BytesDiff.

Available Physical Memory , 1716359168, 1716195328, -163840

Available Pagefile(s) , 5973733376, 5972783104, -950272

Available Virtual , 2122145792, 2122145792, 0

Available Extended Virtual , 0, 0, 0

Committed Memory Bytes , 1665404928, 1666355200, 950272

Non Paged Pool Usage Bytes , 66211840, 66211840, 0

Paged Pool Usage Bytes , 189083648, 189087744, 4096

Paged Pool Available Bytes , 150593536, 150593536, 0

Free System PTEs , 37322, 37322, 0

Total Avg. Rate

Context Switches , 30152, 6003/sec.

System Calls , 110807, 22059/sec.

Page Faults , 226, 45/sec.

I/O Read Operations , 730, 145/sec.

I/O Write Operations , 1038, 207/sec.

I/O Other Operations , 858, 171/sec.

I/O Read Bytes , 2013850, 2759/ I/O

I/O Write Bytes , 28212, 27/ I/O

I/O Other Bytes , 19902, 23/ I/O

-----------------------------

Results for Kernel Mode:

-----------------------------

OutputResults: KernelModuleCount = 167

Percentage in the following table is based on the Total Hits for the Kernel

Time 3814 hits, 25000 events per hit --------

Module Hits msec %Total Events/Sec

NTKRNLPA 3768 5036 98 % 18705321

NVLDDMKM 12 5036 0 % 59571

HAL 12 5036 0 % 59571

WIN32K 10 5037 0 % 49632

DXGKRNL 9 5036 0 % 44678

NETW4V32 2 5036 0 % 9928

FLTMGR 1 5036 0 % 4964

================================= END OF RUN ==================================

============================== NORMAL END OF RUN ==============================

The overall summary shows that the system spent 0.3 percent of the time in kernel mode, 3.7 percent in user mode, 96.0 percent idle, 0.0 percent at DPC level, and 0.0 percent at interrupt level. The module with the highest hit rate was Ntkrnlpa.exe, the kernel for machines with Physical Address Extension (PAE) or NX support. The module with the second-highest hit rate was nvlddmkm.sys (tied with the HAL at 12 hits), the driver for the video card on the machine used for the test. This makes sense because the major activity going on in the system was Windows Media Player sending video I/O to the video driver.
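The %Total and Events/Sec columns in the sample output can be reproduced from the Hits and msec columns. The following Python sketch is a reading of the sample numbers, not a description of how Kernrate is implemented: it assumes that each hit stands for 25,000 timer events (the default rate shown in the log) and that both columns are truncated to whole numbers. The function names are made up for illustration.

```python
# Reconstructs two columns of the Kernrate module table from raw hit counts.
# Assumption: values are truncated, which is what the sample output suggests.

EVENTS_PER_HIT = 25000  # "Using Kernrate Default Rate of 25000 events/hit"


def events_per_sec(hits, msec, events_per_hit=EVENTS_PER_HIT):
    """Events per second = hits * events per hit / elapsed seconds (truncated)."""
    return hits * events_per_hit * 1000 // msec


def percent_of_total(hits, total_hits):
    """Share of all kernel-mode hits, truncated to a whole percent."""
    return hits * 100 // total_hits


TOTAL_HITS = 3814  # "Time 3814 hits, 25000 events per hit"
rows = [("NTKRNLPA", 3768, 5036), ("NVLDDMKM", 12, 5036), ("WIN32K", 10, 5037)]

for module, hits, msec in rows:
    print(module, percent_of_total(hits, TOTAL_HITS), events_per_sec(hits, msec))
```

Running the sketch against the three rows taken from the first sample run gives the same %Total and Events/Sec values that Kernrate printed for those modules.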

If you have symbols available, you can zoom in on individual modules and see the time spent by function name. For example, profiling the system while rapidly dragging a window around the screen resulted in the following (partial) output:

C:\WinDDK\7600.16385.1\tools\Other\i386>kernrate.exe -z ntkrnlpa -z win32k

/==============================\

< KERNRATE LOG >

\==============================/

Date: 2011/03/09 Time: 16:49:56

Time 4191 hits, 25000 events per hit --------

Module Hits msec %Total Events/Sec

NTKRNLPA 3623 5695 86 % 15904302

WIN32K 303 5696 7 % 1329880

INTELPPM 141 5696 3 % 618855

HAL 61 5695 1 % 267778

CDD 30 5696 0 % 131671

NVLDDMKM 13 5696 0 % 57057

----- Zoomed module WIN32K.SYS (Bucket size = 16 bytes, Rounding Down) --------

Module Hits msec %Total Events/Sec

BltLnkReadPat 34 5696 10 % 149227

memmove 21 5696 6 % 92169

vSrcTranCopyS8D32 17 5696 5 % 74613

memcpy 12 5696 3 % 52668

RGNOBJ::bMerge 10 5696 3 % 43890

HANDLELOCK::vLockHandle 8 5696 2 % 35112

----- Zoomed module NTKRNLPA.EXE (Bucket size = 16 bytes, Rounding Down) --------

Module Hits msec %Total Events/Sec

KiIdleLoop 3288 5695 87 % 14433713

READ_REGISTER_USHORT 95 5695 2 % 417032

READ_REGISTER_ULONG 93 5695 2 % 408252

RtlFillMemoryUlong 31 5695 0 % 136084

KiFastCallEntry 18 5695 0 % 79016

The module with the second-highest hit rate was Win32k.sys, the windowing system driver. Also high on the list were the video driver and Cdd.dll, a global video driver used for the 3D-accelerated Aero desktop theme. These results make sense because the main activity in the system was drawing on the screen. Note that in the zoomed display for Win32k.sys, the functions with the highest hits are related to merging, copying, and moving bits, the main GDI operations for painting a window dragged on the screen.

One important restriction on code running at DPC/dispatch level or above is that it can’t wait for an object if doing so would require the scheduler to select another thread to execute. Such a wait is illegal because the scheduler relies on DPC-level software interrupts to schedule threads. Another restriction is that only nonpaged memory can be accessed at IRQL DPC/dispatch level or higher.

This second rule is actually a side effect of the first restriction because attempting to access memory that isn’t resident results in a page fault. When a page fault occurs, the memory manager initiates a disk I/O and then needs to wait for the file system driver to read the page in from disk. This wait would, in turn, require the scheduler to perform a context switch (perhaps to the idle thread if no user thread is waiting to run), thus violating the rule that the scheduler can’t be invoked (because the IRQL is still DPC/dispatch level or higher at the time of the disk read). A further problem results from the fact that I/O completion typically occurs at APC_LEVEL, so even in cases where a wait wouldn’t be required, the I/O would never complete because the completion APC would not get a chance to run.

If either of these two restrictions is violated, the system crashes with an IRQL_NOT_LESS_OR_EQUAL or a DRIVER_IRQL_NOT_LESS_OR_EQUAL crash code. (See Chapter 14 in Part 2 for a thorough discussion of system crashes.) Violating these restrictions is a common bug in device drivers. The Windows Driver Verifier (explained in the section “Driver Verifier” in Chapter 10, “Memory Management,” in Part 2) has an option you can set to assist in finding this particular type of bug.
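The relationship between the two restrictions can be sketched with a toy model in Python. This is not kernel code: the Cpu class, its methods, and the BugCheck exception are hypothetical names chosen for illustration, and the only facts taken from the text are that a wait at DPC/dispatch level or above is illegal and that a page fault implies a wait. The IRQL numbers are the usual x86/x64 values for the three lowest levels.

```python
# Toy model of the two IRQL restrictions described above. Not kernel code.
PASSIVE_LEVEL, APC_LEVEL, DISPATCH_LEVEL = 0, 1, 2


class BugCheck(Exception):
    """Stands in for a system crash such as IRQL_NOT_LESS_OR_EQUAL."""


class Cpu:
    def __init__(self):
        self.irql = PASSIVE_LEVEL
        self.resident_pages = set()  # pages currently in physical memory

    def wait_for_object(self):
        # Blocking needs the scheduler, which cannot run at or above DISPATCH_LEVEL.
        if self.irql >= DISPATCH_LEVEL:
            raise BugCheck("IRQL_NOT_LESS_OR_EQUAL")
        return "waited"

    def touch(self, page):
        if page in self.resident_pages:
            return "hit"
        # Page fault: the memory manager must wait for disk I/O to finish.
        self.wait_for_object()
        self.resident_pages.add(page)
        return "paged in"


cpu = Cpu()
print(cpu.touch("A"))      # at PASSIVE_LEVEL the fault can be resolved
cpu.irql = DISPATCH_LEVEL
print(cpu.touch("A"))      # already resident, so no wait is needed
try:
    cpu.touch("B")         # not resident: the fault would require a wait
except BugCheck as crash:
    print("crash:", crash)
```

The second call succeeds only because the page happens to be resident, which is one reason this class of driver bug can stay hidden until memory pressure changes which pages are in memory.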

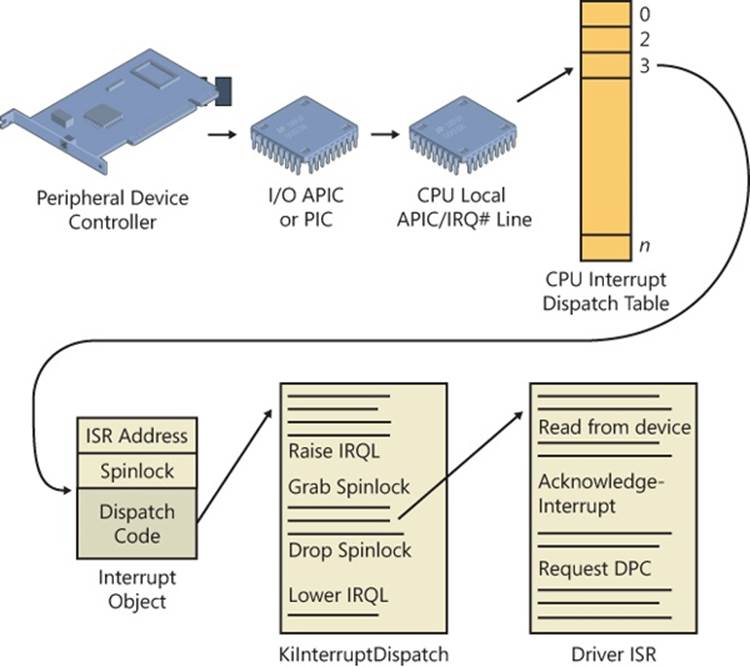

Interrupt Objects

The kernel provides a portable mechanism—a kernel control object called an interrupt object—that allows device drivers to register ISRs for their devices. An interrupt object contains all the information the kernel needs to associate a device ISR with a particular level of interrupt, including the address of the ISR, the IRQL at which the device interrupts, and the entry in the kernel’s interrupt dispatch table (IDT) with which the ISR should be associated. When an interrupt object is initialized, a few instructions of assembly language code, called the dispatch code, are copied from an interrupt-handling template, KiInterruptTemplate, and stored in the object. When an interrupt occurs, this code is executed.

This interrupt-object resident code calls the real interrupt dispatcher, which is typically either the kernel’s KiInterruptDispatch or KiChainedDispatch routine, passing it a pointer to the interrupt object. KiInterruptDispatch is the routine used for interrupt vectors for which only one interrupt object is registered, and KiChainedDispatch is for vectors shared among multiple interrupt objects. The interrupt object contains information that this second dispatcher routine needs to locate and properly call the ISR the device driver provides.

The interrupt object also stores the IRQL associated with the interrupt so that KiInterruptDispatch or KiChainedDispatch can raise the IRQL to the correct level before calling the ISR and then lower the IRQL after the ISR has returned. This two-step process is required because there’s no way to pass a pointer to the interrupt object (or any other argument for that matter) on the initial dispatch because the initial dispatch is done by hardware. On a multiprocessor system, the kernel allocates and initializes an interrupt object for each CPU, enabling the local APIC on that CPU to accept the particular interrupt.
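The two-step dispatch can be illustrated with a small Python model. It is only a sketch under stated assumptions: the class and function names are hypothetical, a Python closure stands in for the dispatch code copied into each interrupt object, and the vector and IRQL numbers are arbitrary. The point it shows is that the first step takes no arguments, so the stub itself has to know which interrupt object to hand to the second step.

```python
# Toy model of two-step interrupt dispatch. Names and numbers are illustrative only.
class Processor:
    def __init__(self):
        self.irql = 0
        self.idt = {}  # vector -> zero-argument stub, as the hardware would see it


class InterruptObject:
    def __init__(self, cpu, vector, irql, service_routine, service_context):
        self.cpu = cpu
        self.vector = vector
        self.irql = irql
        self.service_routine = service_routine
        self.service_context = service_context
        # Stand-in for the per-object dispatch code: a stub that takes no
        # arguments but already knows which interrupt object it belongs to.
        self.dispatch_code = lambda: interrupt_dispatch(self)

    def connect(self):
        self.cpu.idt[self.vector] = self.dispatch_code


def interrupt_dispatch(interrupt):
    """Second step: raise IRQL, call the driver's ISR, restore IRQL."""
    cpu = interrupt.cpu
    old_irql = cpu.irql
    cpu.irql = interrupt.irql
    try:
        return interrupt.service_routine(interrupt, interrupt.service_context)
    finally:
        cpu.irql = old_irql


seen = []


def keyboard_isr(interrupt, context):
    seen.append((context, interrupt.cpu.irql))
    return True  # the device was serviced


cpu = Processor()
InterruptObject(cpu, vector=0x81, irql=8, service_routine=keyboard_isr,
                service_context="keyboard").connect()

handled = cpu.idt[0x81]()  # the "hardware" jumps to the stub with no arguments
print(handled, seen, cpu.irql)
```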

On x64 Windows systems, the kernel optimizes interrupt dispatch by using specific routines that save processor cycles by omitting functionality that isn’t needed. One is KiInterruptDispatchNoLock, which is used for interrupts that do not have an associated kernel-managed spinlock (typically used by drivers that want to synchronize with their ISRs). Another is KiInterruptDispatchNoEOI, which is used for interrupts that have programmed the APIC in “Auto-End-of-Interrupt” (Auto-EOI) mode; because the interrupt controller sends the EOI signal automatically, the kernel does not need the extra code to perform the EOI itself. Finally, for the performance/profiling interrupt specifically, the KiInterruptDispatchLBControl handler is used, which supports the Last Branch Control MSR available on modern CPUs. This register enables the kernel to track/save the branch instruction when tracing; during an interrupt, this information would be lost because it’s not stored in the normal thread register context, so special code must be added to preserve it. The HAL’s performance and profiling interrupts use this functionality, for example, while the other HAL interrupt routines take advantage of the “no-lock” dispatch code, because the HAL does not require the kernel to synchronize with its ISR.

Another kernel interrupt handler is KiFloatingDispatch, which is used for interrupts that require saving the floating-point state. Unlike kernel-mode code, which typically is not allowed to use floating-point (MMX, SSE, 3DNow!) operations because these registers won’t be saved across context switches, ISRs might need to use these registers (such as the video card ISR performing a quick drawing operation). When connecting an interrupt, drivers can set the FloatingSave argument to TRUE, requesting that the kernel use the floating-point dispatch routine, which will save the floating registers. (However, this greatly increases interrupt latency.) Note that this is supported only on 32-bit systems.

Figure 3-6 shows typical interrupt control flow for interrupts associated with interrupt objects.

Figure 3-6. Typical interrupt control flow

EXPERIMENT: EXAMINING INTERRUPT INTERNALS

Using the kernel debugger, you can view details of an interrupt object, including its IRQL, ISR address, and custom interrupt-dispatching code. First, execute the !idt command and locate the entry that includes a reference to I8042KeyboardInterruptService, the ISR for the PS/2 keyboard device:

81: fffffa80045bae10 i8042prt!I8042KeyboardInterruptService (KINTERRUPT fffffa80045bad80)

To view the contents of the interrupt object associated with the interrupt, execute dt nt!_kinterrupt with the address following KINTERRUPT:

lkd> dt nt!_KINTERRUPT fffffa80045bad80

+0x000 Type : 22

+0x002 Size : 160

+0x008 InterruptListEntry : _LIST_ENTRY [ 0x00000000'00000000 - 0x0 ]

+0x018 ServiceRoutine : 0xfffff880'0356ca04 unsigned char i8042prt!I8042KeyboardInterruptService+0

+0x020 MessageServiceRoutine : (null)

+0x028 MessageIndex : 0

+0x030 ServiceContext : 0xfffffa80'02c839f0

+0x038 SpinLock : 0

+0x040 TickCount : 0

+0x048 ActualLock : 0xfffffa80'02c83b50 -> 0

+0x050 DispatchAddress : 0xfffff800'01a7db90 void nt!KiInterruptDispatch+0

+0x058 Vector : 0x81

+0x05c Irql : 0x8 ''

+0x05d SynchronizeIrql : 0x9 ''

+0x05e FloatingSave : 0 ''

+0x05f Connected : 0x1 ''

+0x060 Number : 0

+0x064 ShareVector : 0 ''

+0x065 Pad : [3] ""

+0x068 Mode : 1 ( Latched )

+0x06c Polarity : 0 ( InterruptPolarityUnknown )

+0x070 ServiceCount : 0

+0x074 DispatchCount : 0

+0x078 Rsvd1 : 0

+0x080 TrapFrame : 0xfffff800'0185ab00 _KTRAP_FRAME

+0x088 Reserved : (null)

+0x090 DispatchCode : [4] 0x8d485550

In this example, the IRQL that Windows assigned to the interrupt is 8. Although there is no direct mapping between an interrupt vector and an IRQ, Windows does keep track of this translation when managing device resources through what are called arbiters. For each resource type, an arbiter maintains the relationship between virtual resource usage (such as an interrupt vector) and physical resources (such as an interrupt line). As such, you can query either the root IRQ arbiter (on systems without ACPI) or the ACPI IRQ arbiter and obtain this mapping. Use the !acpiirqarb command to obtain information on the ACPI IRQ arbiter:

lkd> !acpiirqarb

Processor 0 (0, 0):

Device Object: 0000000000000000

Current IDT Allocation:

...

0000000000000081 - 0000000000000081 D fffffa80029b4c20 (i8042prt)

A:0000000000000000 IRQ:0

...

If you don’t have an ACPI system, you can use !arbiter 4 (4 tells the debugger to display only IRQ arbiters):

lkd> !arbiter 4

DEVNODE fffffa80027c6d90 (HTREE\ROOT\0)

Interrupt Arbiter "RootIRQ" at fffff80001c82500

Allocated ranges:

0000000000000081 - 0000000000000081 Owner fffffa80029b4c20 (i8042prt)

In both cases, you will be given the owner of the vector, in the form of a device object. You can then use the !devobj command to get information on the i8042prt device in this example (which corresponds to the PS/2 driver):

lkd> !devobj fffffa80029b4c20

Device object (fffffa80029b4c20) is for:

00000061 \Driver\ACPI DriverObject fffffa8002888e70

Current Irp 00000000 RefCount 1 Type 00000032 Flags 00003040

Dacl fffff9a100096a41 DevExt fffffa800299f740 DevObjExt fffffa80029b4d70 DevNode fffffa80029b54b0

The device object is associated with a device node, which stores all the device’s physical resources. You can now dump these resources with the !devnode command, using the 6 flag to ask for resource information:

lkd> !devnode fffffa80029b54b0 6

DevNode 0xfffffa80029b54b0 for PDO 0xfffffa80029b4c20

Parent 0xfffffa800299b390 Sibling 0xfffffa80029b5230 Child 0000000000

InstancePath is "ACPI\PNP0303\4&17aa870d&0"

ServiceName is "i8042prt"

...

CmResourceList at 0xfffff8a00185bf40 Version 1.1 Interface 0xf Bus #0

Entry 0 - Port (0x1) Device Exclusive (0x1)

Flags (0x11) - PORT_MEMORY PORT_IO 16_BIT_DECODE

Range starts at 0x60 for 0x1 bytes

Entry 1 - Port (0x1) Device Exclusive (0x1)

Flags (0x11) - PORT_MEMORY PORT_IO 16_BIT_DECODE

Range starts at 0x64 for 0x1 bytes

Entry 2 - Port (0x1) Device Exclusive (0x1)

Flags (0x11) - PORT_MEMORY PORT_IO 16_BIT_DECODE

Range starts at 0x62 for 0x1 bytes

Entry 3 - Port (0x1) Device Exclusive (0x1)

Flags (0x11) - PORT_MEMORY PORT_IO 16_BIT_DECODE

Range starts at 0x66 for 0x1 bytes

Entry 4 - Interrupt (0x2) Device Exclusive (0x1)

Flags (0x01) - LATCHED

Level 0x1, Vector 0x1, Group 0, Affinity 0xffffffff

The device node tells you that this device has a resource list with five entries, one of which is an interrupt entry corresponding to IRQ 1. (The level and vector numbers represent the IRQ vector, not the interrupt vector.) IRQ 1 is the traditional PC/AT IRQ number associated with the PS/2 keyboard device, so this is the expected value. (A USB keyboard would have a different interrupt.)

On ACPI systems, you can obtain this information in a slightly easier way by reading the extended output of the !acpiirqarb command introduced earlier. As part of its output, it displays the IRQ to IDT mapping table:

Interrupt Controller (Inputs: 0x0-0x17 Dev: 0000000000000000):

(00)Cur:IDT-a1 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(01)Cur:IDT-81 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(02)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(03)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(04)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(05)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(06)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(07)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(08)Cur:IDT-71 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(09)Cur:IDT-b1 Ref-1 lev hi Pos:IDT-00 Ref-0 edg hi

(0a)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(0b)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi

(0c)Cur:IDT-91 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(0d)Cur:IDT-61 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(0e)Cur:IDT-82 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(0f)Cur:IDT-72 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi

(10)Cur:IDT-51 Ref-3 lev low Pos:IDT-00 Ref-0 edg hi

(11)Cur:IDT-b2 Ref-1 lev low Pos:IDT-00 Ref-0 edg hi

(12)Cur:IDT-a2 Ref-5 lev low Pos:IDT-00 Ref-0 edg hi

(13)Cur:IDT-92 Ref-1 lev low Pos:IDT-00 Ref-0 edg hi

(14)Cur:IDT-62 Ref-2 lev low Pos:IDT-00 Ref-0 edg hi

(15)Cur:IDT-a3 Ref-2 lev low Pos:IDT-00 Ref-0 edg hi

(16)Cur:IDT-b3 Ref-1 lev low Pos:IDT-00 Ref-0 edg hi

(17)Cur:IDT-52 Ref-1 lev low Pos:IDT-00 Ref-0 edg hi

As expected, IRQ 1 is associated with IDT entry 0x81. For more information on device objects, resources, and other related concepts, see Chapter 8, “I/O System,” in Part 2.
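If you save the !acpiirqarb output to a text file, the IRQ-to-IDT table can be turned into a lookup with a few lines of Python. The sketch below is based only on the line format visible in the sample output; it assumes that the number in parentheses is the controller input (IRQ) in hexadecimal, that Cur:IDT-xx is the vector currently assigned, and that a nonzero Ref value means the input is in use. The function name is hypothetical.

```python
import re

# Matches lines such as "(01)Cur:IDT-81 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi".
LINE = re.compile(
    r"\((?P<irq>[0-9a-f]{2})\)Cur:IDT-(?P<idt>[0-9a-f]{2}) Ref-(?P<ref>[0-9a-f]+)"
)


def irq_to_idt(text):
    """Return {irq: idt_vector} for controller inputs that are currently in use."""
    mapping = {}
    for match in LINE.finditer(text):
        if int(match["ref"], 16) > 0:  # assumption: Ref-0 means "unused"
            mapping[int(match["irq"], 16)] = int(match["idt"], 16)
    return mapping


sample = """
(00)Cur:IDT-a1 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi
(01)Cur:IDT-81 Ref-1 edg hi Pos:IDT-00 Ref-0 edg hi
(02)Cur:IDT-00 Ref-0 edg hi Pos:IDT-00 Ref-0 edg hi
(12)Cur:IDT-a2 Ref-5 lev low Pos:IDT-00 Ref-0 edg hi
"""

mapping = irq_to_idt(sample)
print({hex(irq): hex(vector) for irq, vector in mapping.items()})
```

With the four sample lines, the lookup reports vector 0x81 for IRQ 1, which agrees with the Vector field of the keyboard's interrupt object shown earlier.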

The ISR’s address for the interrupt object is stored in the ServiceRoutine field (which is what !idt displays in its output), and the interrupt code that actually executes when an interrupt occurs is stored in the DispatchCode array at the end of the interrupt object. The interrupt code stored there is programmed to build the trap frame on the stack and then call the function stored in the DispatchAddress field (KiInterruptDispatch in the example), passing it a pointer to the interrupt object.

WINDOWS AND REAL-TIME PROCESSING

Deadline requirements, either hard or soft, characterize real-time environments. Hard real-time systems (for example, a nuclear power plant control system) have deadlines the system must meet to avoid catastrophic failures, such as loss of equipment or life. Soft real-time systems (for example, a car’s fuel-economy optimization system) have deadlines the system can miss, but timeliness is still a desirable trait. In real-time systems, computers have sensor input devices and control output devices. The designer of a real-time computer system must know worst-case delays between the time an input device generates an interrupt and the time the device’s driver can control the output device to respond. This worst-case analysis must take into account the delays the operating system introduces as well as the delays the application and device drivers impose.

Because Windows doesn’t enable controlled prioritization of device IRQs and user-level applications execute only when a processor’s IRQL is at passive level, Windows isn’t typically suitable as a real-time operating system. The system’s devices and device drivers—not Windows—ultimately determine the worst-case delay. This factor becomes a problem when the real-time system’s designer uses off-the-shelf hardware. The designer can have difficulty determining how long every off-the-shelf device’s ISR or DPC might take in the worst case. Even after testing, the designer can’t guarantee that a special case in a live system won’t cause the system to miss an important deadline. Furthermore, the sum of all the delays a system’s DPCs and ISRs can introduce usually far exceeds the tolerance of a time-sensitive system.

Although many types of embedded systems (for example, printers and automotive computers) have real-time requirements, Windows Embedded Standard 7 doesn’t have real-time characteristics. It is simply a version of Windows 7 that makes it possible to produce small-footprint versions of Windows 7 suitable for running on devices with limited resources. For example, a device that has no networking capability would omit all the Windows 7 components related to networking, including network management tools and adapter and protocol stack device drivers.

Still, there are third-party vendors that supply real-time kernels for Windows. The approach these vendors take is to embed their real-time kernel in a custom HAL and to have Windows run as a task in the real-time operating system. The task running Windows serves as the user interface to the system and has a lower priority than the tasks responsible for managing the device.

Associating an ISR with a particular level of interrupt is called connecting an interrupt object, and dissociating an ISR from an IDT entry is called disconnecting an interrupt object. These operations, accomplished by calling the kernel functions IoConnectInterruptEx and IoDisconnectInterruptEx, allow a device driver to “turn on” an ISR when the driver is loaded into the system and to “turn off” the ISR if the driver is unloaded.

Using the interrupt object to register an ISR prevents device drivers from fiddling directly with interrupt hardware (which differs among processor architectures) and from needing to know any details about the IDT. This kernel feature aids in creating portable device drivers because it eliminates the need to code in assembly language or to reflect processor differences in device drivers.

Interrupt objects provide other benefits as well. By using the interrupt object, the kernel can synchronize the execution of the ISR with other parts of a device driver that might share data with the ISR. (See Chapter 8 in Part 2 for more information about how device drivers respond to interrupts.)

Furthermore, interrupt objects allow the kernel to easily call more than one ISR for any interrupt level. If multiple device drivers create interrupt objects and connect them to the same IDT entry, the interrupt dispatcher calls each routine when an interrupt occurs at the specified interrupt line. This capability allows the kernel to easily support daisy-chain configurations, in which several devices share the same interrupt line. The chain breaks when one of the ISRs claims ownership for the interrupt by returning a status to the interrupt dispatcher.

If multiple devices sharing the same interrupt require service at the same time, devices not acknowledged by their ISRs will interrupt the system again once the interrupt dispatcher has lowered the IRQL. Chaining is permitted only if all the device drivers wanting to use the same interrupt indicate to the kernel that they can share the interrupt; if they can’t, the Plug and Play manager reorganizes their interrupt assignments to ensure that it honors the sharing requirements of each. If the interrupt vector is shared, the interrupt object invokes KiChainedDispatch, which will invoke the ISRs of each registered interrupt object in turn until one of them claims the interrupt or all have been executed. In the earlier sample !idt output (in the EXPERIMENT: Viewing the IDT section), vector 0xa2 is connected to several chained interrupt objects. On the system it was run on, it happens to correspond to an integrated 7-in-1 media card reader, which is a combination of Secure Digital (SD), Compact Flash (CF), MultiMedia Card (MMC), and other types of readers, each with its own interrupt. Because it’s packaged as one device by the same vendor, it makes sense that its interrupts share the same vector.
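The chaining behavior described above can be sketched in Python as follows. This is a toy model with hypothetical names, not the kernel's KiChainedDispatch: each ISR is modeled as a function that returns True when its device raised the interrupt, and the dispatcher stops at the first ISR that claims it. A device that is still pending afterward is serviced on the next interrupt.

```python
# Toy model of chained dispatch on a shared vector. Not the kernel's code.
def chained_dispatch(interrupt_objects):
    """Call each ISR in turn; stop at the first one that claims the interrupt."""
    called = []
    for name, isr in interrupt_objects:
        called.append(name)
        if isr():
            return name, called
    return None, called  # no ISR claimed the interrupt


def make_isr(device_state):
    # An ISR typically asks its own device whether it raised the interrupt.
    def isr():
        if device_state["pending"]:
            device_state["pending"] = False  # acknowledge the device
            return True
        return False
    return isr


sd, cf, mmc = {"pending": False}, {"pending": True}, {"pending": True}
shared_vector = [("sd", make_isr(sd)), ("cf", make_isr(cf)), ("mmc", make_isr(mmc))]

print(chained_dispatch(shared_vector))  # cf claims the interrupt; mmc is not reached
print(chained_dispatch(shared_vector))  # mmc is still pending, so it is serviced next
```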

LINE-BASED VS. MESSAGE SIGNALED-BASED INTERRUPTS

Shared interrupts are often the cause of high interrupt latency and can also cause stability issues. They are typically undesirable and a side effect of the limited number of physical interrupt lines on a computer. For example, in the previous example of the 7-in-1 media card reader, a much better solution is for each device to have its own interrupt and for one driver to manage the different interrupts knowing which device they came from. However, consuming four IRQ lines for a single device quickly leads to IRQ line exhaustion. Additionally, PCI devices are each connected to only one IRQ line anyway, so the media card reader cannot use more than one IRQ in the first place.

Another problem with generating interrupts through an IRQ line is that incorrect management of the IRQ signal can lead to interrupt storms or other kinds of deadlocks on the machine, because the signal is driven “high” or “low” until the ISR acknowledges it. (Furthermore, the interrupt controller must typically receive an EOI signal as well.) If either of these does not happen due to a bug, the system can end up in an interrupt state forever, further interrupts could be masked away, or both. Finally, line-based interrupts provide poor scalability in multiprocessor environments. In many cases, the hardware has the final decision as to which processor will be interrupted out of the possible set that the Plug and Play manager selected for this interrupt, and there is little device drivers can do.

A solution to all these problems is a new interrupt mechanism first introduced in the PCI 2.2 standard called message-signaled interrupts (MSI). Although it remains an optional component of the standard that is seldom found in client machines, an increasing number of servers and workstations implement MSI support, which is fully supported by all recent versions of Windows. In the MSI model, a device delivers a message to its driver by writing to a specific memory address. This action causes an interrupt, and Windows then calls the ISR with the message content (value) and the address where the message was delivered. A device can also deliver multiple messages (up to 32) to the memory address, delivering different payloads based on the event.

Because communication is based on a memory value, and because the content is delivered with the interrupt, the need for IRQ lines is removed (making the total system limit of MSIs equal to the number of interrupt vectors, not IRQ lines), as is the need for a driver ISR to query the device for data related to the interrupt, which decreases latency. The large number of device interrupts available through this model effectively nullifies any benefit of sharing interrupts, and latency decreases further because the interrupt data is delivered directly to the concerned ISR.

Finally, MSI-X, an extension to the MSI model that was introduced in PCI 3.0, adds support for 32-bit messages (instead of 16-bit), a maximum of 2048 different messages (instead of just 32), and more importantly, the ability to use a different address (which can be dynamically determined) for each of the MSI payloads. Using a different address allows the MSI payload to be written to a different physical address range that belongs to a different processor, or a different set of target processors, effectively enabling nonuniform memory access (NUMA)-aware interrupt delivery by sending the interrupt to the processor that initiated the related device request. This improves latency and scalability by monitoring both load and closest NUMA node during interrupt completion.

INTERRUPT AFFINITY AND PRIORITY

On systems that both support ACPI and contain an APIC, Windows enables driver developers and administrators to somewhat control the processor affinity (selecting the processor or group of processors that receives the interrupt) and affinity policy (selecting how processors will be chosen and which processors in a group will be chosen). Furthermore, it enables a primitive mechanism of interrupt prioritization based on IRQL selection. Affinity policy is defined according to Table 3-1, and it’s configurable through a registry value called InterruptPolicyValue in the Interrupt Management\Affinity Policy key under the device’s instance key in the registry. Because of this, it does not require any code to configure: an administrator can add this value to a given driver’s key to influence its behavior. Microsoft provides a tool for this purpose, called the Interrupt Affinity Policy Tool, which can be downloaded from http://www.microsoft.com/whdc/system/sysperf/intpolicy.mspx.

TABLE 3-1. IRQ AFFINITY POLICIES

| Policy | Meaning |
| --- | --- |
| IrqPolicyMachineDefault | The device does not require a particular affinity policy. Windows uses the default machine policy, which (for machines with fewer than eight logical processors) is to select any available processor on the machine. |
| IrqPolicyAllCloseProcessors | On a NUMA machine, the Plug and Play manager assigns the interrupt to all the processors that are close to the device (on the same node). On non-NUMA machines, this is the same as IrqPolicyAllProcessorsInMachine. |
| IrqPolicyOneCloseProcessor | On a NUMA machine, the Plug and Play manager assigns the interrupt to one processor that is close to the device (on the same node). On non-NUMA machines, the chosen processor will be any available on the system. |
| IrqPolicyAllProcessorsInMachine | The interrupt is processed by any available processor on the machine. |
| IrqPolicySpecifiedProcessors | The interrupt is processed only by one of the processors specified in the affinity mask under the AssignmentSetOverride registry value. |
| IrqPolicySpreadMessagesAcrossAllProcessors | Different message-signaled interrupts are distributed across an optimal set of eligible processors, keeping track of NUMA topology issues, if possible. This requires MSI-X support on the device and platform. |

Other than setting this affinity policy, another registry value can also be used to set the interrupt’s priority, based on the values in Table 3-2.

TABLE 3-2. IRQ PRIORITIES

| Priority | Meaning |
| --- | --- |
| IrqPriorityUndefined | No particular priority is required by the device. It receives the default priority (IrqPriorityNormal). |
| IrqPriorityLow | The device can tolerate high latency and should receive a lower IRQL than usual. |
| IrqPriorityNormal | The device expects average latency. It receives the default IRQL associated with its interrupt vector. |
| IrqPriorityHigh | The device requires as little latency as possible. It receives an elevated IRQL beyond its normal assignment. |

As discussed earlier, it is important to note that Windows is not a real-time operating system, and as such, these IRQ priorities are hints given to the system that control only the IRQL associated with the interrupt and provide no extra priority other than the Windows IRQL priority-scheme mechanism. Because the IRQ priority is also stored in the registry, administrators are free to set these values for drivers should there be a requirement of lower latency for a driver not taking advantage of this feature.
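As a small worked example, the following Python sketch lists the policy and priority names from Tables 3-1 and 3-2 and computes an affinity mask of the kind that IrqPolicySpecifiedProcessors reads from AssignmentSetOverride. The numeric values are an assumption: they simply follow the order of the tables starting at zero, and should be checked against the WDK headers before being written to a registry. The class and function names are hypothetical.

```python
from enum import IntEnum


class IrqPolicy(IntEnum):
    # Order of Table 3-1; the numeric values are assumed, not taken from the text.
    MachineDefault = 0
    AllCloseProcessors = 1
    OneCloseProcessor = 2
    AllProcessorsInMachine = 3
    SpecifiedProcessors = 4
    SpreadMessagesAcrossAllProcessors = 5


class IrqPriority(IntEnum):
    # Order of Table 3-2; the numeric values are assumed, not taken from the text.
    Undefined = 0
    Low = 1
    Normal = 2
    High = 3


def affinity_mask(processors):
    """Build a bitmask in which bit n set means 'logical processor n is eligible'."""
    mask = 0
    for cpu in processors:
        mask |= 1 << cpu
    return mask


# Restrict a device's interrupt to logical processors 0 and 2.
settings = {
    "policy": IrqPolicy.SpecifiedProcessors,
    "priority": IrqPriority.High,
    "mask": affinity_mask([0, 2]),
}
print(settings["policy"].name, settings["priority"].name, hex(settings["mask"]))
```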

Software Interrupts

Although hardware generates most interrupts, the Windows kernel also generates software interrupts for a variety of tasks, including these:

§ Initiating thread dispatching

§ Non-time-critical interrupt processing

§ Handling timer expiration

§ Asynchronously executing a procedure in the context of a particular thread

§ Supporting asynchronous I/O operations

These tasks are described in the following subsections.

Dispatch or Deferred Procedure Call (DPC) Interrupts

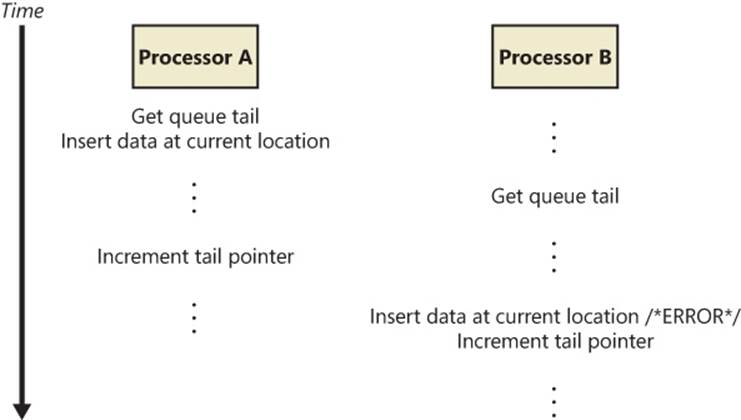

When a thread can no longer continue executing, perhaps because it has terminated or because it voluntarily enters a wait state, the kernel calls the dispatcher directly to effect an immediate context switch. Sometimes, however, the kernel detects that rescheduling should occur when it is deep within many layers of code. In this situation, the kernel requests dispatching but defers its occurrence until it completes its current activity. Using a DPC software interrupt is a convenient way to achieve this delay.

The kernel always raises the processor’s IRQL to DPC/dispatch level or above when it needs to synchronize access to shared kernel structures. This disables additional software interrupts and thread dispatching. When the kernel detects that dispatching should occur, it requests a DPC/dispatch-level interrupt; but because the IRQL is at or above that level, the processor holds the interrupt in check. When the kernel completes its current activity, it sees that it’s going to lower the IRQL below DPC/dispatch level and checks to see whether any dispatch interrupts are pending. If there are, the IRQL drops to DPC/dispatch level and the dispatch interrupts are processed. Activating the thread dispatcher by using a software interrupt is a way to defer dispatching until conditions are right. However, Windows uses software interrupts to defer other types of processing as well.
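The deferral described in this paragraph can be modeled in a few lines of Python. The Processor class and its methods are hypothetical and greatly simplified: the sketch only shows a dispatch request being held while the IRQL is at DPC/dispatch level or above and being delivered when the IRQL is about to drop below that level.

```python
# Toy model of a deferred dispatch interrupt. Not kernel code.
PASSIVE_LEVEL, APC_LEVEL, DISPATCH_LEVEL = 0, 1, 2


class Processor:
    def __init__(self):
        self.irql = PASSIVE_LEVEL
        self.dispatch_pending = False
        self.log = []

    def request_dispatch_interrupt(self):
        if self.irql >= DISPATCH_LEVEL:
            # The interrupt is masked at this IRQL, so it is held in check.
            self.dispatch_pending = True
            self.log.append("deferred")
        else:
            old_irql = self.irql
            self.irql = DISPATCH_LEVEL
            self._run_dispatcher()
            self.irql = old_irql

    def lower_irql(self, new_irql):
        if new_irql < DISPATCH_LEVEL and self.dispatch_pending:
            # Drop to DISPATCH_LEVEL first and process the pending interrupt.
            self.irql = DISPATCH_LEVEL
            self._run_dispatcher()
        self.irql = new_irql

    def _run_dispatcher(self):
        self.dispatch_pending = False
        self.log.append("dispatched at IRQL %d" % self.irql)


cpu = Processor()
cpu.irql = DISPATCH_LEVEL          # kernel is synchronizing access to shared data
cpu.request_dispatch_interrupt()   # rescheduling is needed, but not yet possible
cpu.lower_irql(PASSIVE_LEVEL)      # current activity is complete
print(cpu.log)
```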

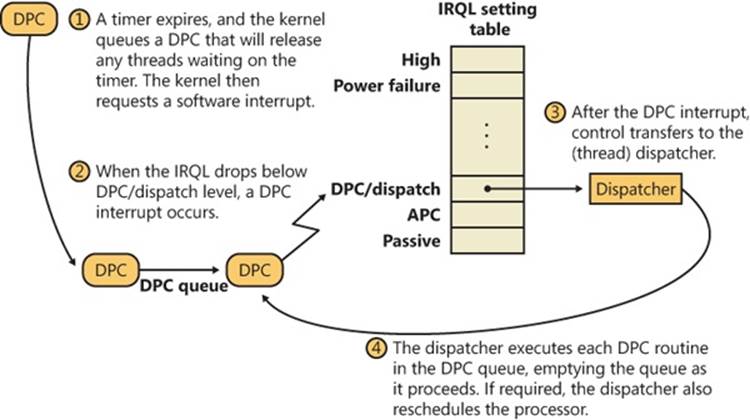

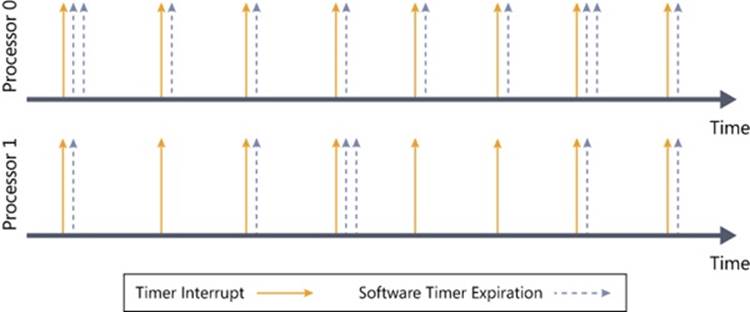

In addition to thread dispatching, the kernel also processes deferred procedure calls (DPCs) at this IRQL. A DPC is a function that performs a system task—a task that is less time-critical than the current one. The functions are called deferred because they might not execute immediately.

DPCs provide the operating system with the capability to generate an interrupt and execute a system function in kernel mode. The kernel uses DPCs to process timer expiration (and release threads waiting for the timers) and to reschedule the processor after a thread’s quantum expires. Device drivers use DPCs to process interrupts. To provide timely service for hardware interrupts, Windows—with the cooperation of device drivers—attempts to keep the IRQL below device IRQL levels. One way that this goal is achieved is for device driver ISRs to perform the minimal work necessary to acknowledge their device, save volatile interrupt state, and defer data transfer or other less time-critical interrupt processing activity for execution in a DPC at DPC/dispatch IRQL. (See Chapter 8 in Part 2 for more information on DPCs and the I/O system.)

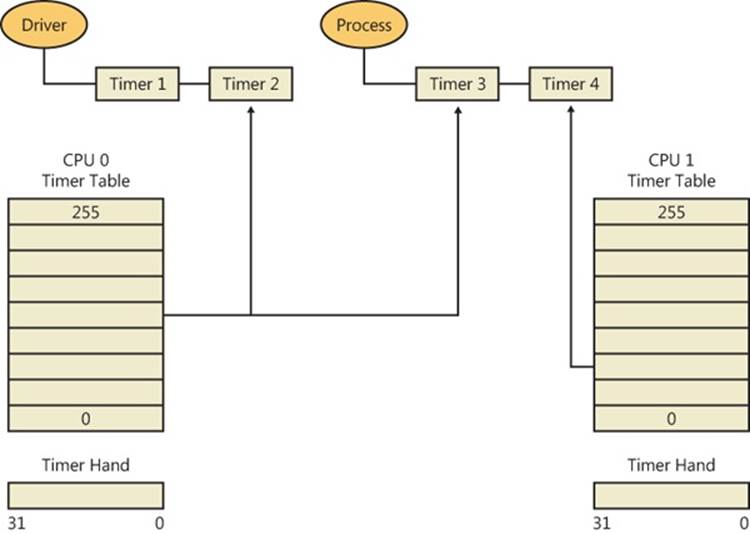

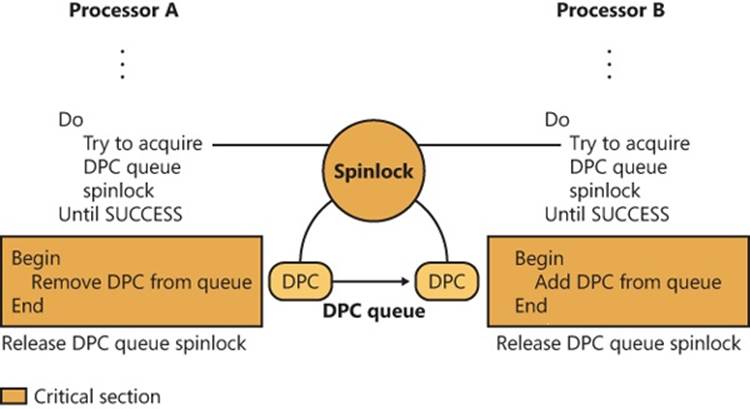

A DPC is represented by a DPC object, a kernel control object that is not visible to user-mode programs but is visible to device drivers and other system code. The most important piece of information the DPC object contains is the address of the system function that the kernel will call when it processes the DPC interrupt. DPC routines that are waiting to execute are stored in kernel-managed queues, one per processor, called DPC queues. To request a DPC, system code calls the kernel to initialize a DPC object and then places it in a DPC queue.

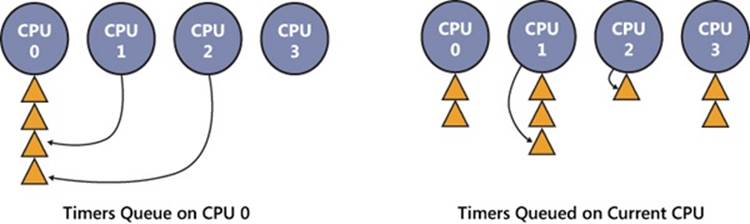

By default, the kernel places DPC objects at the end of the DPC queue of the processor on which the DPC was requested (typically the processor on which the ISR executed). A device driver can override this behavior, however, by specifying a DPC priority (low, medium, medium-high, or high, where medium is the default) and by targeting the DPC at a particular processor. A DPC aimed at a specific CPU is known as a targeted DPC. If the DPC has a high priority, the kernel inserts the DPC object at the front of the queue; for all other priorities, it places the object at the end of the queue.
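The insertion rule is simple enough to model in a few lines. The following Python sketch is illustrative only: DpcQueue, its methods, and the priority constants are hypothetical names, not kernel interfaces, and the model ignores processor targeting entirely.

```python
from collections import deque

# Priority labels as named in the text; the string values are arbitrary.
HIGH, MEDIUM_HIGH, MEDIUM, LOW = "high", "medium-high", "medium", "low"


class DpcQueue:
    """Toy per-processor queue of pending DPCs (not the kernel's structure)."""

    def __init__(self):
        self._entries = deque()

    def insert(self, name, priority=MEDIUM):
        if priority == HIGH:
            self._entries.appendleft(name)  # high priority: head of the queue
        else:
            self._entries.append(name)      # every other priority: tail

    def pending(self):
        """Return the queued DPC names in the order they would be drained."""
        return list(self._entries)
```

In this model, only a high-priority DPC jumps ahead of work that is already queued. Medium-high, medium, and low entries are drained in arrival order relative to one another.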

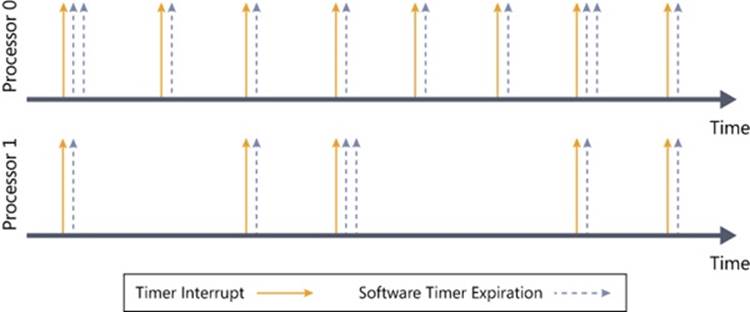

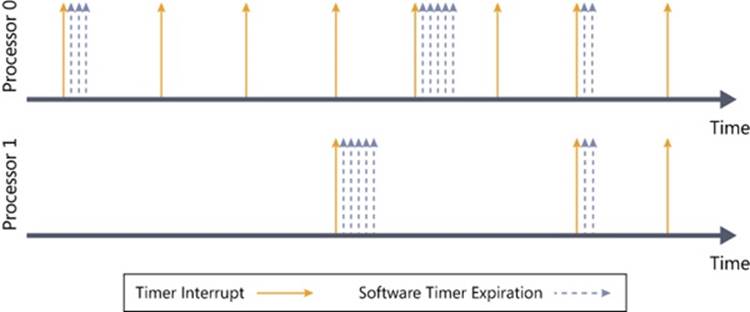

When the processor’s IRQL is about to drop from an IRQL of DPC/dispatch level or higher to a lower IRQL (APC or passive level), the kernel processes DPCs. Windows ensures that the IRQL remains at DPC/dispatch level and pulls DPC objects off the current processor’s queue until the queue is empty (that is, the kernel “drains” the queue), calling each DPC function in turn. Only when the queue is empty will the kernel let the IRQL drop below DPC/dispatch level and let regular thread execution continue. DPC processing is depicted in Figure 3-7.

DPC priorities can affect system behavior another way. The kernel usually initiates DPC queue draining with a DPC/dispatch-level interrupt. The kernel generates such an interrupt only if the DPC is directed at the current processor (the one on which the ISR executes) and the DPC has a priority higher than low. If the DPC has a low priority, the kernel requests the interrupt only if the number of outstanding DPC requests for the processor rises above a threshold or if the number of DPCs requested on the processor within a time window is low.

Figure 3-7. Delivering a DPC

If a DPC is targeted at a CPU different from the one on which the ISR is running and the DPC’s priority is either high or medium-high, the kernel immediately signals the target CPU (by sending it a dispatch IPI) to drain its DPC queue, but only if the target processor is idle. If the priority is medium or low, the number of DPCs queued on the target processor must exceed a threshold for the kernel to trigger a DPC/dispatch interrupt. The system idle thread also drains the DPC queue for the processor it runs on. Although DPC targeting and priority levels are flexible, device drivers rarely need to change the default behavior of their DPC objects. Table 3-3 summarizes the situations that initiate DPC queue draining. Medium-high and high appear to be, and in fact are, equal priorities as far as the generation rules are concerned. The difference lies in where they are inserted in the queue: high-priority DPCs go at the head, and medium-high-priority DPCs go at the tail.

Table 3-3. DPC Interrupt Generation Rules

| DPC Priority | DPC Targeted at ISR’s Processor | DPC Targeted at Another Processor |
| --- | --- | --- |
| Low | DPC queue length exceeds maximum DPC queue length, or DPC request rate is less than minimum DPC request rate | DPC queue length exceeds maximum DPC queue length, or system is idle |
| Medium | Always | DPC queue length exceeds maximum DPC queue length, or system is idle |
| Medium-High | Always | Target processor is idle |
| High | Always | Target processor is idle |
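The rules in Table 3-3 can be restated as a single decision function. The following Python sketch is a reading of the table, not kernel code: the function name and all parameter names are hypothetical, the thresholds are supplied by the caller rather than taken from any real system setting, and the table's "system is idle" condition is simplified to the same target_idle flag used for the high-priority rows.

```python
def requests_dpc_interrupt(priority, targets_current_cpu, *, queue_depth,
                           max_queue_depth, request_rate, min_request_rate,
                           target_idle):
    """Return True if queuing this DPC should trigger a DPC/dispatch interrupt.

    priority is one of "low", "medium", "medium-high", "high".
    """
    if priority not in ("low", "medium", "medium-high", "high"):
        raise ValueError(f"unknown DPC priority: {priority!r}")

    queue_too_long = queue_depth > max_queue_depth

    if targets_current_cpu:
        if priority == "low":
            # Low: only when the queue is too deep or requests are infrequent.
            return queue_too_long or request_rate < min_request_rate
        return True  # medium, medium-high, high: always

    # DPC targeted at another processor.
    if priority in ("high", "medium-high"):
        return target_idle
    # Medium and low (assumption: "system is idle" modeled as target_idle).
    return queue_too_long or target_idle
```

Note that a deep queue never forces an interrupt for a high or medium-high DPC aimed at another processor in this model. According to the table, only an idle target does.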

Because user-mode threads execute at low IRQL, the chances are good that a DPC will interrupt the execution of an ordinary user’s thread. DPC routines execute without regard to what thread is running, meaning that when a DPC routine runs, it can’t assume what process address space is currently mapped. DPC routines can call kernel functions, but they can’t call system services, generate page faults, or create or wait for dispatcher objects (explained later in this chapter). They can, however, access nonpaged system memory addresses, because system address space is always mapped regardless of what the current process is.

DPCs are provided primarily for device drivers, but the kernel uses them too. The kernel most frequently uses a DPC to handle quantum expiration. At every tick of the system clock, an interrupt occurs at clock IRQL. The clock interrupt handler (running at clock IRQL) updates the system time and then decrements a counter that tracks how long the current thread has run. When the counter reaches 0, the thread’s time quantum has expired and the kernel might need to reschedule the processor, a lower-priority task that should be done at DPC/dispatch IRQL. The clock interrupt handler queues a DPC to initiate thread dispatching and then finishes its work and lowers the processor’s IRQL. Because the DPC interrupt has a lower priority than do device interrupts, any pending device interrupts that surface before the clock interrupt completes are handled before the DPC interrupt occurs.
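The division of labor between the clock interrupt handler and the DPC can be sketched as follows. This Python model is a simplification under stated assumptions: ClockModel and its members are hypothetical names, the quantum is counted in whole clock ticks, and resetting the counter when the DPC runs stands in for the real rescheduling decision, which the text does not detail here.

```python
class ClockModel:
    """Toy model of quantum accounting on one processor (not kernel code)."""

    def __init__(self, quantum_ticks):
        self.quantum_ticks = quantum_ticks
        self.remaining = quantum_ticks  # ticks left in the current quantum
        self.system_time = 0
        self.dpc_queue = []

    def clock_interrupt(self):
        """Runs at clock IRQL: bookkeeping only, no rescheduling here."""
        self.system_time += 1
        self.remaining -= 1
        if self.remaining == 0:
            # Rescheduling is lower-priority work, so defer it to a DPC.
            self.dpc_queue.append("quantum_end")

    def drain_dpcs(self):
        """Runs later at DPC/dispatch IRQL; returns True if it rescheduled."""
        rescheduled = False
        while self.dpc_queue:
            self.dpc_queue.pop(0)
            # Assumption: whichever thread runs next starts a fresh quantum.
            self.remaining = self.quantum_ticks
            rescheduled = True
        return rescheduled
```

The point of the split is that clock_interrupt stays short. Anything that might take longer, such as choosing the next thread, happens only after the IRQL has come back down to DPC/dispatch level.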

Because DPCs execute regardless of which thread is currently running on the system (much like interrupts), they are a primary cause of perceived unresponsiveness on client systems and in workstation workloads: even the highest-priority thread will be interrupted by a pending DPC. Some DPCs run long enough that users might perceive video or sound lagging, and even abnormal mouse or keyboard latencies, so for the benefit of drivers with long-running DPCs, Windows supports threaded DPCs.

Threaded DPCs, as their name implies, function by executing the DPC routine at passive level on a real-time priority (priority 31) thread. This allows the DPC to preempt most user-mode threads (because most application threads don’t run at real-time priority ranges), but it allows other interrupts, nonthreaded DPCs, APCs, and higher-priority threads to preempt the routine.

The threaded DPC mechanism is enabled by default, but you can disable it by adding a DWORD value HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\Session Manager\kernel\ThreadDpcEnable and setting it to 0. Because threaded DPCs can be disabled, driver developers who make use of threaded DPCs must write their routines following the same rules as for nonthreaded DPC routines: the routines cannot access paged memory, perform dispatcher waits, or make assumptions about the IRQL at which they are executing. In addition, they must not use the KeAcquire/ReleaseSpinLockAtDpcLevel APIs because those functions assume the CPU is at dispatch level. Instead, threaded DPCs must use KeAcquire/ReleaseSpinLockForDpc, which perform the appropriate action after checking the current IRQL.

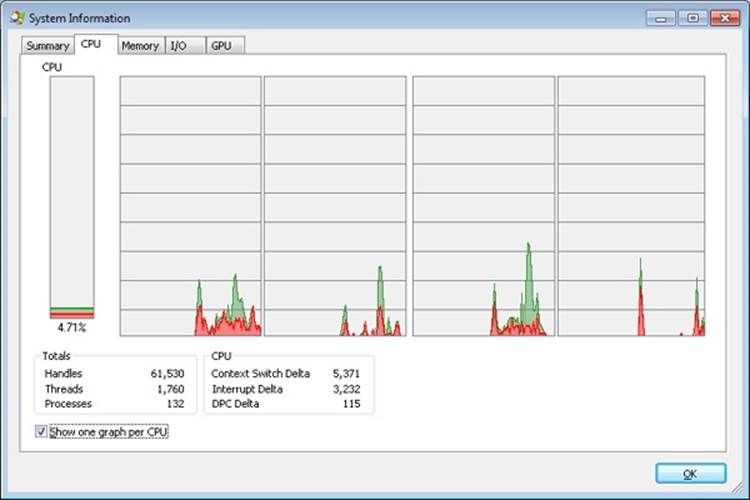

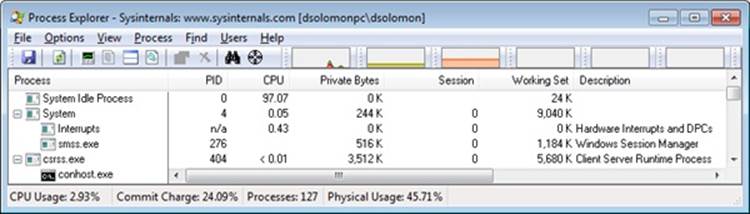

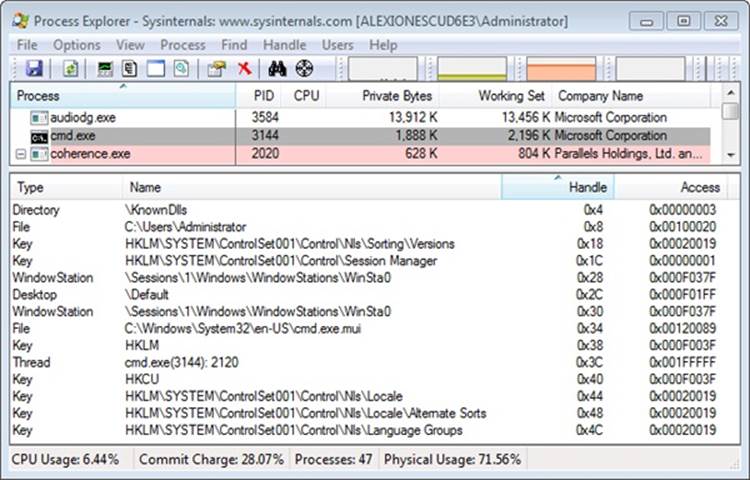

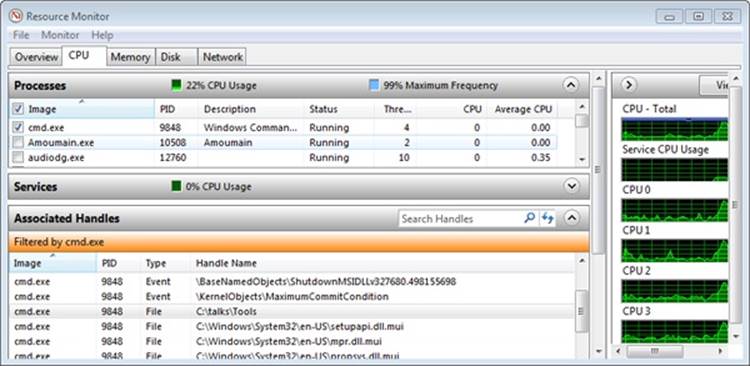

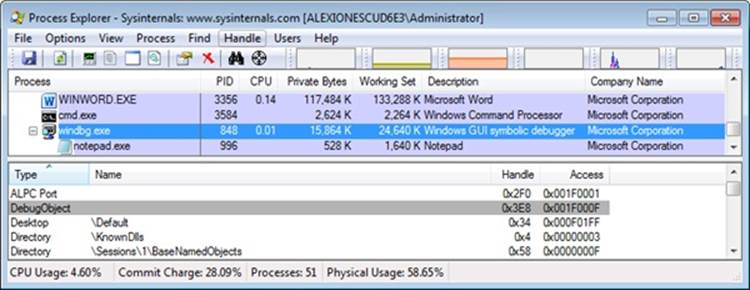

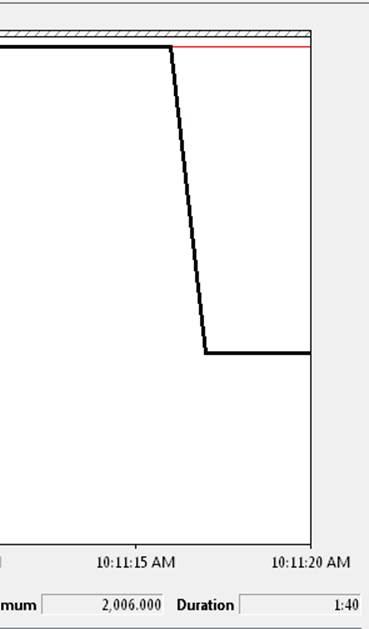

EXPERIMENT: MONITORING INTERRUPT AND DPC ACTIVITY

You can use Process Explorer to monitor interrupt and DPC activity by opening the System Information dialog and switching to the CPU tab, where it lists the number of interrupts and DPCs executed each time Process Explorer refreshes the display (1 second by default):

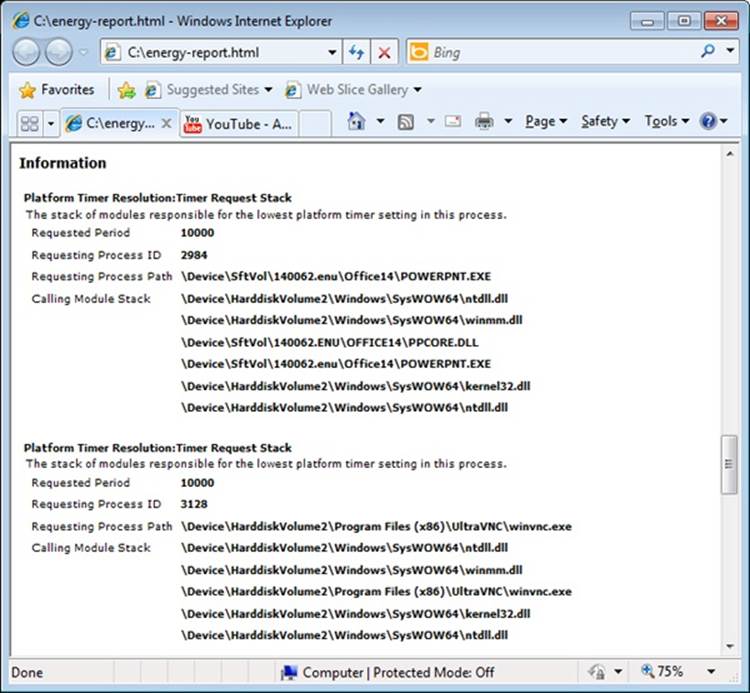

You can also trace the execution of specific interrupt service routines and deferred procedure calls with the built-in event tracing support (described later in this chapter):

1. Start capturing events by opening an elevated command prompt, navigating to the Microsoft Windows Performance Toolkit directory (typically in c:\Program Files) and typing the following command (make sure no other program is capturing events, such as Process Explorer or Process Monitor, or this will fail with an error):

xperf -on PROC_THREAD+LOADER+DPC+INTERRUPT

2. Stop capturing events by typing the following:

xperf -d dpcisr.etl

3. Generate reports for the event capture by typing this:

xperf dpcisr.etl

tracerpt \kernel.etl -report dpcisr.html -f html

This will generate a web page called dpcisr.html.

4. Open dpcisr.html, and expand the DPC/ISR subsection. Expand the DPC/ISR Breakdown area, and you will see summaries of the time spent in ISRs and DPCs by each driver. For example:

Running an ln command in the kernel debugger on the address of each event record shows the name of the function that executed the DPC or ISR:

lkd> ln 0x806321C7

(806321c7) ndis!ndisInterruptDpc

lkd> ln 0x820AED3F

(820aed3f) nt!IopTimerDispatch

lkd> ln 0x82051312

(82051312) nt!PpmPerfIdleDpc

The first is a DPC queued by a network card NDIS miniport driver. The second is a DPC for a generic I/O timer expiration. The third address is the address of a DPC for an idle performance operation.

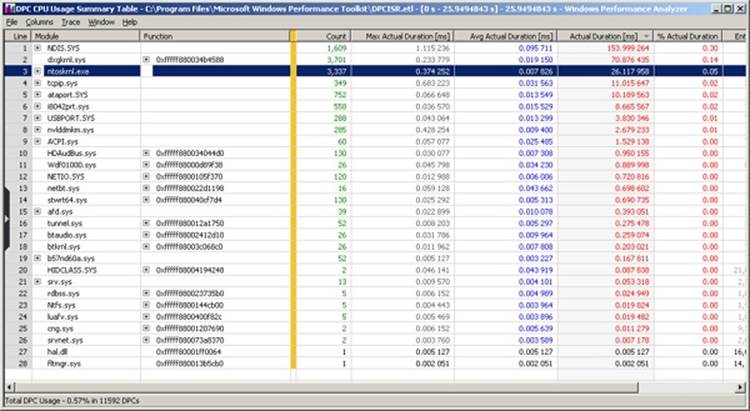

Besides generating an HTML report, you can use the Xperf Viewer to show a detailed overview of all DPC and ISR events by right-clicking the DPC or ISR CPU Usage graph in the main Xperf window and choosing Summary Table. The table gives a per-driver view of each DPC and ISR, along with its duration and count, as shown in the following graphic:

Asynchronous Procedure Call Interrupts

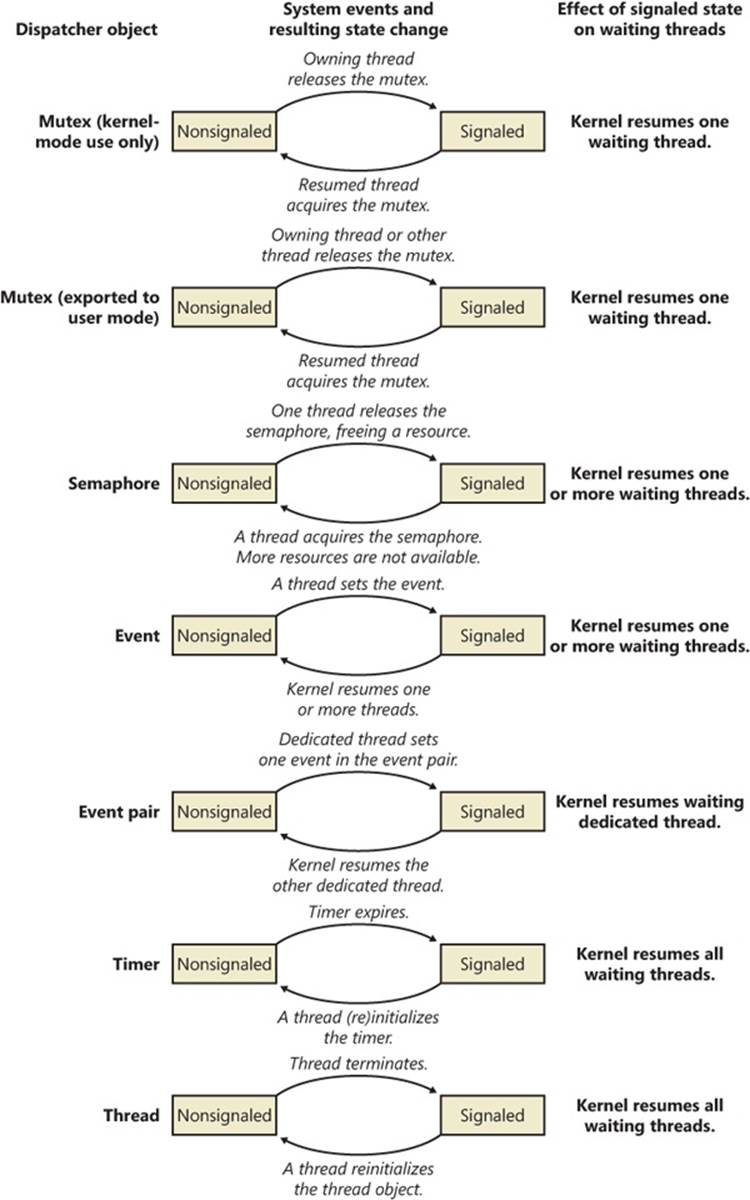

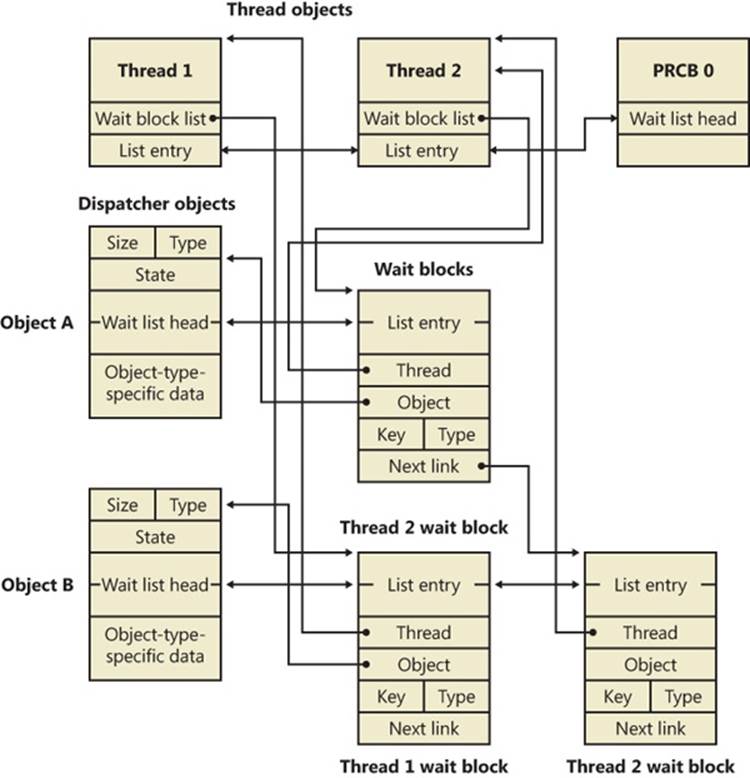

Asynchronous procedure calls (APCs) provide a way for user programs and system code to execute in the context of a particular user thread (and hence a particular process address space). Because APCs are queued to execute in the context of a particular thread and run at an IRQL less than DPC/dispatch level, they don’t operate under the same restrictions as a DPC. An APC routine can acquire resources (objects), wait for object handles, incur page faults, and call system services.

APCs are described by a kernel control object, called an APC object. APCs waiting to execute reside in a kernel-managed APC queue. Unlike the DPC queues, which are per-processor, the APC queue is thread-specific: each thread has its own APC queue. When asked to queue an APC, the kernel inserts it into the queue belonging to the thread that will execute the APC routine. The kernel, in turn, requests a software interrupt at APC level, and when the thread eventually begins running, it executes the APC.

There are two kinds of APCs: kernel mode and user mode. Kernel-mode APCs don’t require permission from a target thread to run in that thread’s context, while user-mode APCs do. Kernel-mode APCs interrupt a thread and execute a procedure without the thread’s intervention or consent. There are also two types of kernel-mode APCs: normal and special. Special APCs execute at APC level and allow the APC routine to modify some of the APC parameters. Normal APCs execute at passive level and receive the modified parameters from the special APC routine (or the original parameters if they weren’t modified).

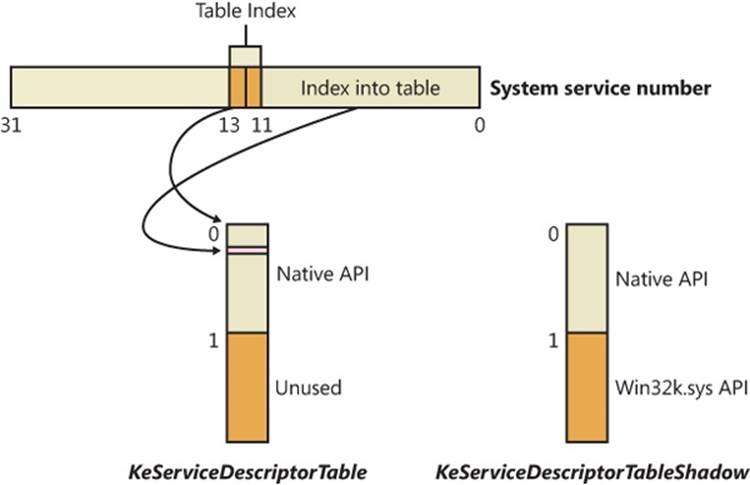

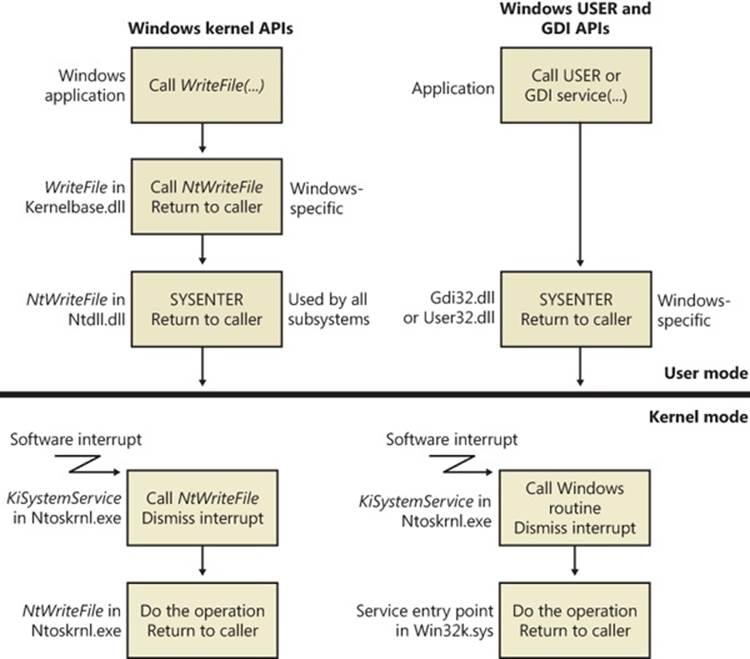

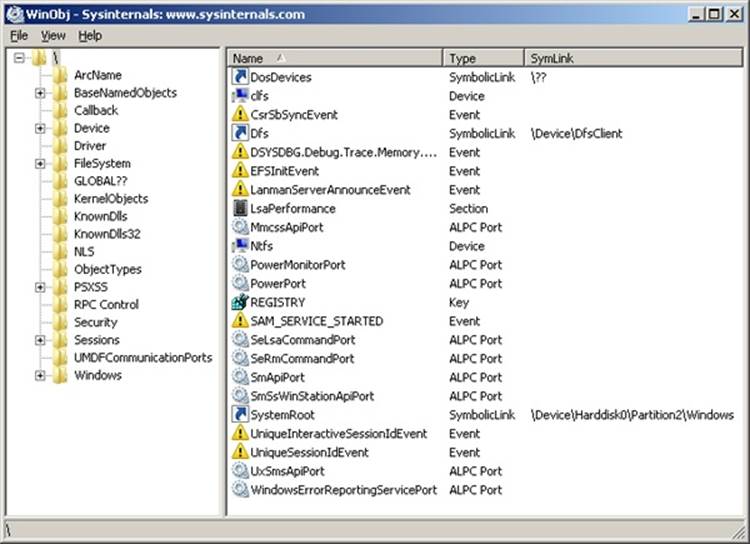

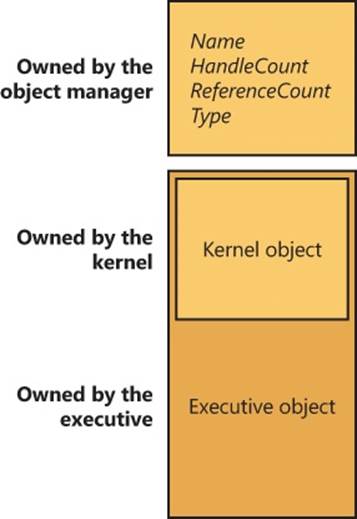

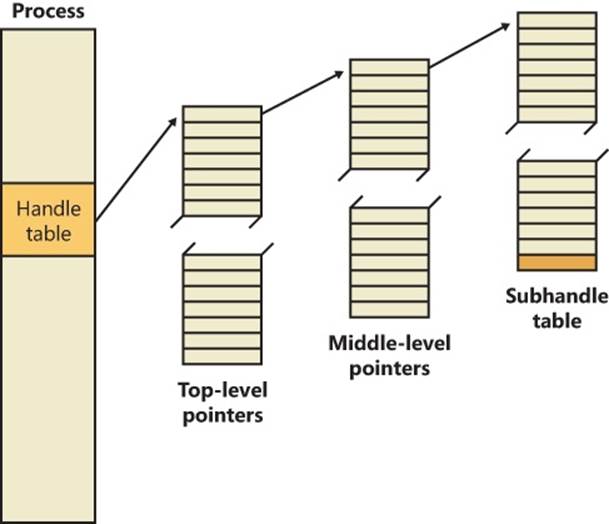

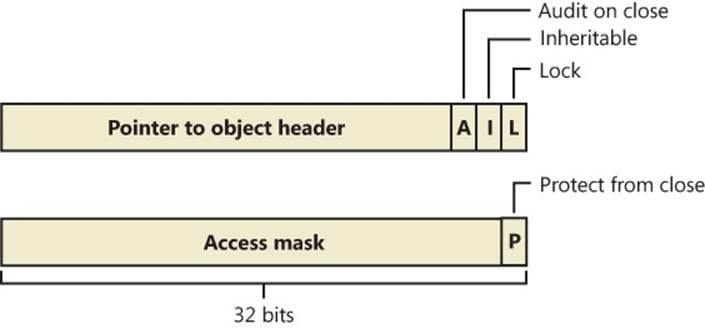

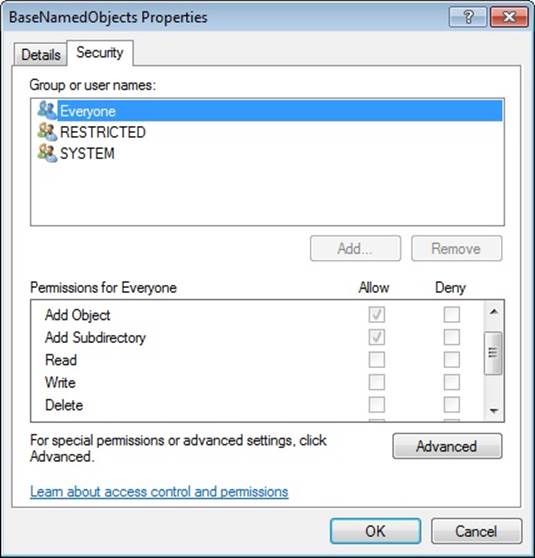

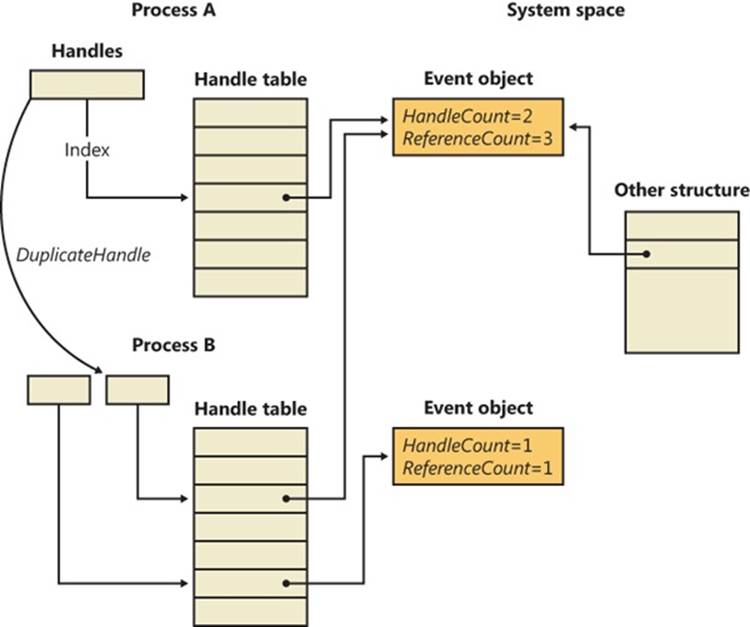

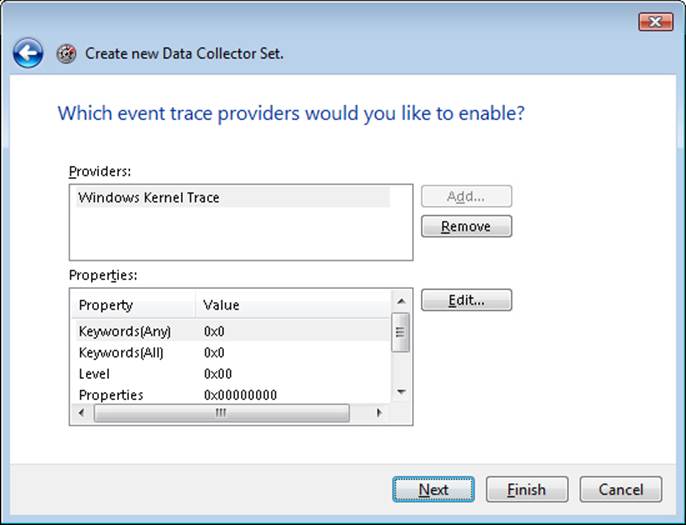

Both normal and special APCs can be disabled by raising the IRQL to APC level or by calling KeEnterGuardedRegion. KeEnterGuardedRegion disables APC delivery by setting the SpecialApcDisable field in the calling thread’s KTHREAD structure (described further in Chapter 5). A thread can disable only normal APCs, leaving special APCs deliverable, by calling KeEnterCriticalRegion, which sets the KernelApcDisable field in the thread’s KTHREAD structure. Table 3-4 summarizes the APC insertion and delivery behavior for each type of APC.
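The disabling rules for kernel-mode APCs can be captured in a short model. The following Python sketch is not kernel code: ThreadModel and its method names are hypothetical, the two attributes merely stand in for the KTHREAD fields named above, and treating them as nesting counters is an assumption of this sketch rather than something the text states.

```python
PASSIVE_LEVEL, APC_LEVEL = 0, 1  # x86/x64 IRQL values


class ThreadModel:
    """Toy model of one thread's kernel-APC disable state."""

    def __init__(self):
        self.irql = PASSIVE_LEVEL
        self.kernel_apc_disable = 0   # stands in for KTHREAD.KernelApcDisable
        self.special_apc_disable = 0  # stands in for KTHREAD.SpecialApcDisable

    def enter_critical_region(self):
        self.kernel_apc_disable += 1

    def leave_critical_region(self):
        self.kernel_apc_disable -= 1

    def enter_guarded_region(self):
        self.special_apc_disable += 1

    def leave_guarded_region(self):
        self.special_apc_disable -= 1

    def can_deliver(self, kind):
        """kind is 'special' or 'normal' (kernel-mode APCs only)."""
        if kind not in ("special", "normal"):
            raise ValueError(f"unknown APC kind: {kind!r}")
        # APC level or a guarded region blocks both kinds.
        if self.irql >= APC_LEVEL or self.special_apc_disable:
            return False
        # A critical region blocks only normal APCs.
        if kind == "normal":
            return self.kernel_apc_disable == 0
        return True
```

In this model a critical region is the weaker of the two protections: special APCs still get through, whereas a guarded region or an IRQL of APC level blocks both kinds.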