Scenario-Focused Engineering (2014)

Appendix D. Selected case studies

This appendix contains two case studies of teams that followed many of the concepts and techniques described in this book to deliver solutions to customers.

The first case study is a textbook account of how an expert used a design-thinking approach in a real-world situation. It details how he discovered the unarticulated need the company was hunting for, the process he followed, and the artifacts he created on the way to a final solution. It also shows how quickly these techniques can be applied: a full iteration took only one week.

The second is a good illustration of what the path more often looks like for teams that are embarking on a journey to delight their customers. This one is an account of implementing Scenario-Focused Engineering in a large team at Microsoft. This case study is written by an engineer who loved the ideas he was exposed to in the SFE workshop and was passionate about implementing them on his own team. The case study follows how he and his team worked through the speed bumps and challenges that ensued.

Case study: Scenarios set the course for a drifting project

Chris Nodder, Interface Tamer, Chris Nodder Consulting, LLC

Summary: Taking one week to plan a project by following a user-centered design process with a focus on common user scenarios stopped churn and led to the fast, focused delivery of a well-received social media product for hotel staff worldwide.

Hampton Hotels is part of Hilton Worldwide, with nearly 2,000 focused-service hotels in fifteen countries. Hampton prides itself on participation from hotel employees, or Team Members. A big differentiator from other hotel chains is Hampton’s brand personality—summed up as “Hamptonality.” The more engaged hotel Team Members are, the more likely they are to exhibit Hamptonality and thus delight guests.

A General Manager (GM) at each property is the focal point for questions from their teams and their management companies. GMs have formal channels to turn to for procedural advice, but when I got involved there was no common place to share tips and tricks on topics such as staff motivation or increasing customer loyalty, the kinds of elements that make up Hamptonality.

To help GMs with these informal questions, the Brand team had planned a video-based tips-and-tricks site called Sharecast, which would be populated with both top-down content and grassroots Hamptonality suggestions.

The Brand team wanted to announce Sharecast at the GM conference, a massive annual event that was just seven months away. But the project had been stalled for months, partly because the team wasn’t sure that it was the right direction to take.

To get the project back on track, we set up a one-week user-centered planning and design workshop with the business sponsor, user representatives, a project manager, a UX designer, and the primary developer.

Locking everyone in a room for a week was highly successful in getting them on the same page. We started by reviewing all the information we had about users of the system from site visits, user-testing sessions, and the personal experiences of the user representatives. This led to three personas: a sharer (knowledgeable, confident, posts best practices), a searcher (smart, curious, but lacks confidence, wants to gain knowledge), and a connector (isolated, hungry for community, wants reassurance).

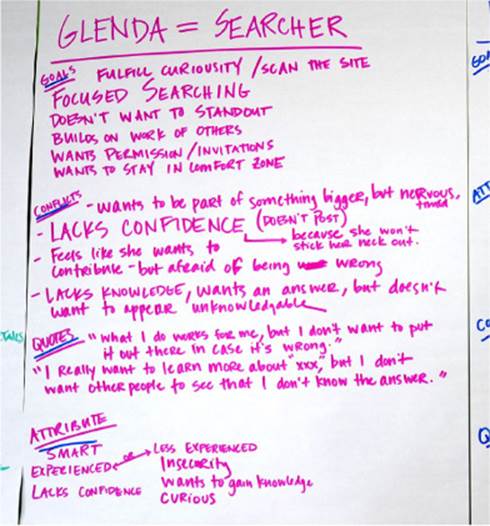

To help project teams pull various information sources together, I normally create four headings for each persona: goals, conflicts, quotes, and attributes. You can see the resulting thumbnail persona sketch for the searcher persona in Figure D-1. In future design conversations, the persona names serve as a placeholder or shorthand for all of this information and for the discussions that led to each persona’s creation. With this type of information written down, it’s straightforward to work out how a key persona would want to use the system.

FIGURE D-1 Goals, conflicts, quotes, and attributes provide a quick summary of target personas.

We next wrote scenarios to work out how these personas would interact with each other and with the system. These scenarios played a pivotal role in finding a way forward. Almost immediately, it became clear that the stories we were telling for these personas had very little to do with video and showed no real need for company-produced content. The sharers, searchers, and connectors would create and consume content without any corporate assistance; in fact, they’d be more likely to use the system if it remained “corporate free.”

This was a major turning point for the project team. With the scenarios written, we ran several rounds of design-charrette ideation exercises to find creative ways for the different personas to interact on the website. Although people from multiple roles were in the room, everyone’s voice was heard, because in a charrette each person works individually before the group shares ideas.

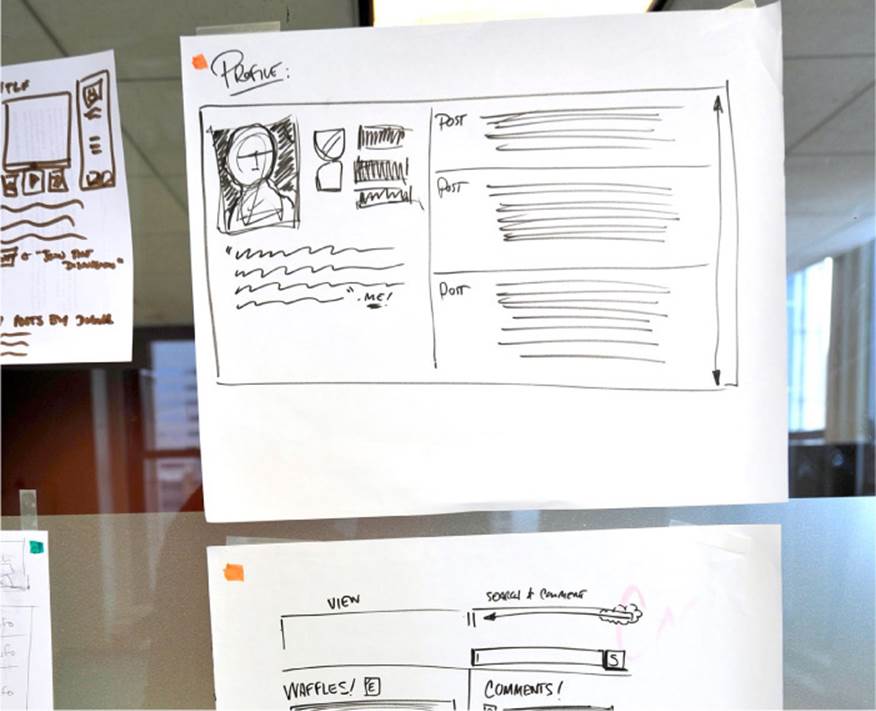

The project team was surprisingly creative, and several of these charrette sketches formed central parts of the final interface. Figure D-2 shows a charrette sketch of a profile page. This two-pane approach became a key design feature for the finished product.

FIGURE D-2 Design charrette output is normally just quick sketches to get across a key idea. We mined these sketches heavily for design ideas later in the process.

We created the first prototype interface using the scenarios as our guide. Although the scenarios themselves very clearly avoided defining interface components, it was easy to read through each one and find where an interaction was described. For every interaction, we then turned to the ideation sketches for inspiration on how we could create a suitable interface component. Pauses in the scenario coincided very well with the move to a new screen in the prototype interface.

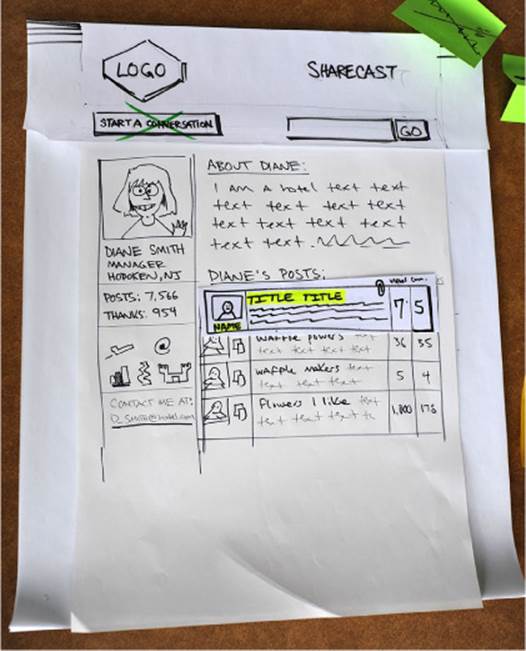

We drew each component on paper with sticky notes glued to the back so that we could easily reposition each piece as the interface evolved. I find that this approach works really well for keeping the interface fluid. Reworking the design costs almost nothing, whereas when a design is drawn out fully on one sheet of paper, the designer may be reluctant to make changes because each one means redrawing the whole thing. Figure D-3 shows one of the screens developed in this way. I often get even more granular than this, creating individual pieces of paper for each design element.

FIGURE D-3 The profile view as a paper prototype. Note how the prototyped view compares with the original ideation sketch shown in Figure D-2.

On Thursday of this intensive week, we performed our first usability test to verify that we were on the right track. After just three participants, we had sufficiently consistent results to know what we needed to change before starting to code.

On Friday, we wrote high-level Agile user stories and prioritized them so that we could work out how soon we would have a sufficiently advanced product to be able to conduct alpha and beta tests of the interface with larger groups of users. We used a Story Map to prioritize the work items: we laid out each screen of the prototype interface on a long table and wrote user stories to cover every capability of each screen, placing the story cards directly below the screen they referred to.

It was then relatively simple to sort the user stories into must-haves (core functionality), essential experiences (what makes the interface workable for the personas), and nice-to-haves (which add delight). Or, to use a cupcake analogy—cake, frosting, and sprinkles. There is no point building sprinkles capabilities in one area of the interface if you still lack cake functionality in another area, but adding sprinkles at the end can really complete the experience.

Compared with a straight backlog, this two-dimensional approach, with interface components on the horizontal axis and priority on the vertical axis, lets you see the interaction between stories more easily. This quick prioritization exercise gave the developer enough information to estimate the effort involved, and the good news was that we could still be done in time for the GM conference.
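The Story Map described above is essentially a two-dimensional grid: prototype screens across the horizontal axis and priority tiers down the vertical axis. As a rough illustration only, the following Python sketch models that grid and one way to derive a build order that finishes a tier on every screen before starting the next tier. The `Story` type, the function names, and the sample stories are hypothetical; the team in this case study worked with paper cards on a table, not code.

```python
from collections import defaultdict
from dataclasses import dataclass

# Priority tiers from the cupcake analogy; a lower number means "build first".
TIERS = {"cake": 0, "frosting": 1, "sprinkles": 2}


@dataclass(frozen=True)
class Story:
    screen: str  # column: the prototype screen the card sits under
    tier: str    # row: "cake", "frosting", or "sprinkles"
    text: str


def story_map(stories):
    """Group stories into a grid: screen -> tier -> list of story texts."""
    grid = defaultdict(lambda: defaultdict(list))
    for s in stories:
        grid[s.screen][s.tier].append(s.text)
    return grid


def build_order(stories):
    """Order stories tier by tier across all screens, so that no screen
    gets sprinkles while another screen still lacks cake."""
    return sorted(stories, key=lambda s: (TIERS[s.tier], s.screen))


# Hypothetical sample cards, invented for illustration.
stories = [
    Story("Profile", "sprinkles", "Show a badge for top sharers"),
    Story("Search", "cake", "Search posts by keyword"),
    Story("Profile", "cake", "Show a member's posts"),
    Story("Search", "frosting", "Filter results by topic"),
]

for s in build_order(stories):
    print(f"{s.tier:<10}{s.screen:<9}{s.text}")
```

Sorting on the tier first is what enforces the "no sprinkles before cake" rule; sorting on the screen name second only keeps the output stable and easy to read.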

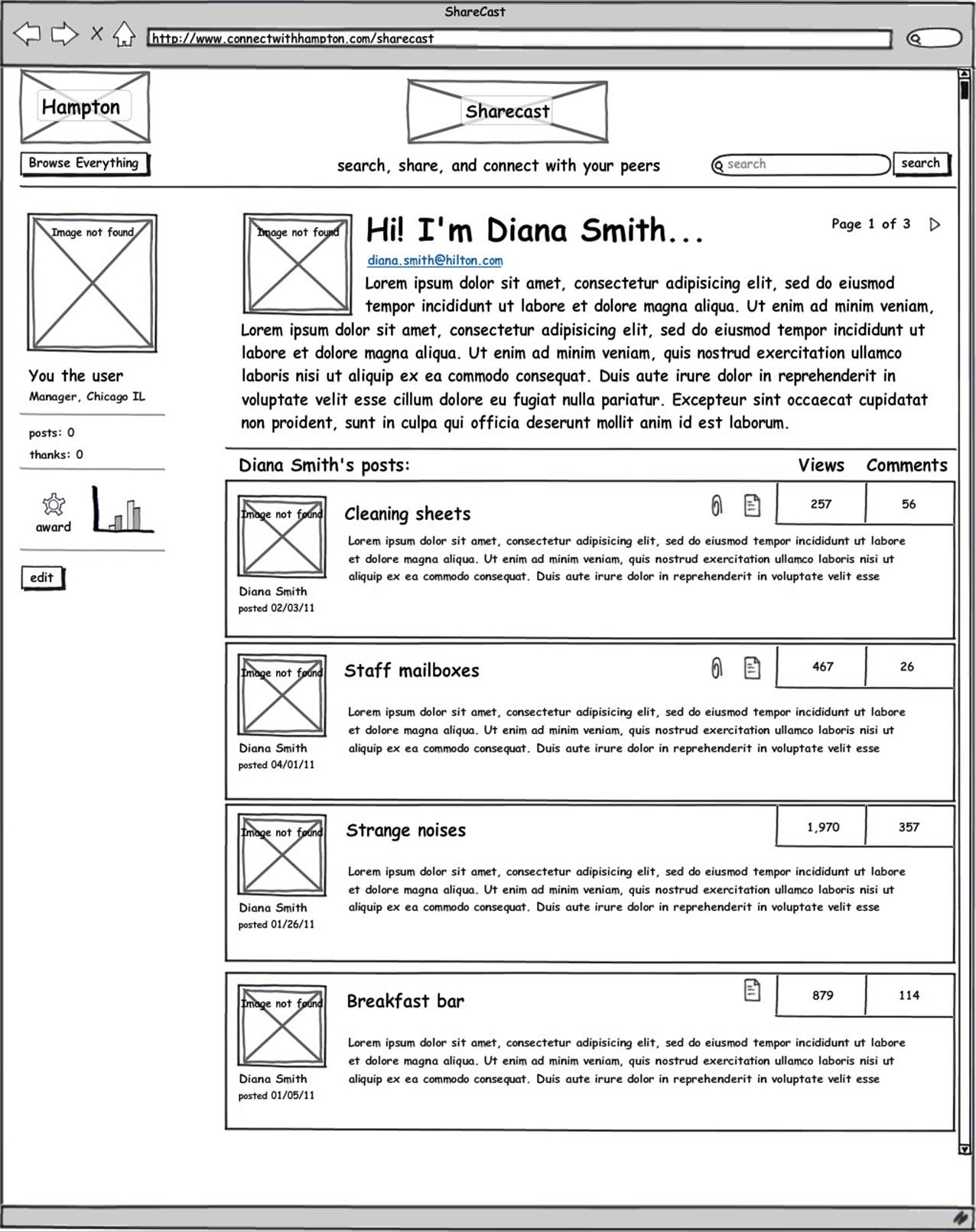

Two weeks after the workshop, we visited two hotel properties and ran a more formal set of usability tests with computer-drawn paper prototypes to confirm that we were on the right track before coding. These tests provided the final set of tweaks to our assumptions. The capabilities we chose to build for our target personas ended up delighting the whole user base. The clean, minimal, focused interface offered just enough features beyond the basic set, implemented so well that users expressed genuine delight with the system even though it was still only a wireframe sketch on paper (Figure D-4).

FIGURE D-4 A wireframe mockup of the profile view. Notice the design elements that have been carried through from the initial concept sketches but have been fine-tuned based on feedback.

When the alpha and beta releases rolled out to select groups of GMs, usage did indeed fit with our scenario predictions, so we felt confident gearing up to release the production version at the GM conference. User testing was a pleasant experience at this point because there were no big surprises. Early verification of the layout and functionality meant that there were only a few course corrections as we moved from wireframes to final design (Figure D-5).

FIGURE D-5 The final design that was released.

Alpha and beta participants were sufficiently engaged that they built out hundreds of entries for the community before release. At the conference, the launch was a big success. When the solution was announced in a room full of 1,800 GMs, the alpha and beta participants spontaneously cheered and applauded. There was immediately a lot of activity and energy on the site.

Today, Sharecast has been adopted not just by hotel GMs but by individual Team Members as well. One front-desk agent captured it perfectly in a note she sent to a GM she had never met, in a Hampton hotel five states away: “I’ve been following your posts on Sharecast and I just wanted to thank you. My goal is to be a GM of a Hampton Inn and I love your enthusiasm and the ideas you have shared. I’ve written many of your ideas in my journal so I can implement them at my own property.”

And this wouldn’t truly be a user-centered tale without some quantitative metrics to accompany the qualitative data. Today on the site, around 70 percent of views are from returning visitors, people spend an average of 15 minutes per visit, and in that time they view around 14 pages. That’s a good set of engagement metrics by any estimation!

Case study: Visual C++ 2012

Mike Pietraszak, Senior Test Lead, Microsoft Visual C++

Summary: A large two-year project with limited customer interaction in the middle of the cycle was struggling, but after making short-term modifications to the scenario-focused techniques and through perseverance, the large team delivered a well-received product and began a long-term change in engineering practices.

In the summer of 2010, the various product groups that form the Visual Studio suite (Visual C++, Visual C#, Visual Basic, and others) started putting Scenario-Focused Engineering (SFE) techniques into practice. SFE would be used for the first time in the two-year development cycle for the Visual Studio 2012 suite, including for Visual C++ 2012. After completing SFE training, each product group took a slightly different approach to adopting SFE. I was a test lead in the Visual C++ group, and along with the efforts of many others, I played a part in the adoption of SFE for our group.

The Visual C++ group had spent several decades building expertise in feature-focused software development. The bug-tracking database, design specifications, milestone reviews, and even the organizational structure (feature teams) were all built around the concept of features. Over many years and many product releases, our seasoned group of engineers had also seen its share of new processes and procedures come and go. Some of these were viewed as flavor-of-the-week fads, and we learned to be cautious about investing in something that might not be around for the next product release cycle. We wondered whether our deep-rooted feature focus would be an obstacle to the adoption of SFE.

Caution and enthusiasm

In May of 2010, Austina De Bonte and Drew Fletcher conducted an SFE training session for the entire Visual C++ product group, more than 90 developers, testers, and program managers (PMs). During the training, our product group showed a palpable degree of skepticism about the wisdom of pivoting from our proven feature-focused processes to a process that focused more on customer scenarios.

But Visual C++ management was enthusiastic about the promise of SFE, and so was I. Our group hired a user-experience expert and created a three-person subgroup. The SFE subgroup was not focused on a particular feature, as the other feature teams in the organization were. Instead, the subgroup had the unique charter to coordinate customer interactions and organize scenario-related test activities for all Visual C++ scenarios. I was thrilled to be the lead for the SFE subgroup.

Early on, the Visual C++ group chose a customer persona as our focus: Liam, a rising-star programmer who wants to develop and self-publish his own apps. My SFE subgroup planned, scheduled, and conducted interviews and focus groups. We turned our findings into large posters that were hung in the hallway, complete with photos of the customers we had met. The PMs made a healthy investment of their time in brainstorming. Together, we identified seven C++ scenarios for the 2012 product release, and we built end-to-end scenario walk-throughs and storyboards. As the product took shape in the very early stages, we conducted lab studies with customers. The development team reviewed this feedback and made product changes. We were on our way.

But as the product took shape, we had to make a tradeoff. We valued direct customer validation of our implementation, but we also wanted to keep our new features and designs from leaking publicly before the product launch. We decided to halt customer lab studies until much closer to launch. In place of customers, the Visual C++ testers took turns evaluating different scenarios (“fresh eyes”) and continued using Usable, Useful, Desirable (UUD) surveys to rate the scenarios at each milestone.

Struggles

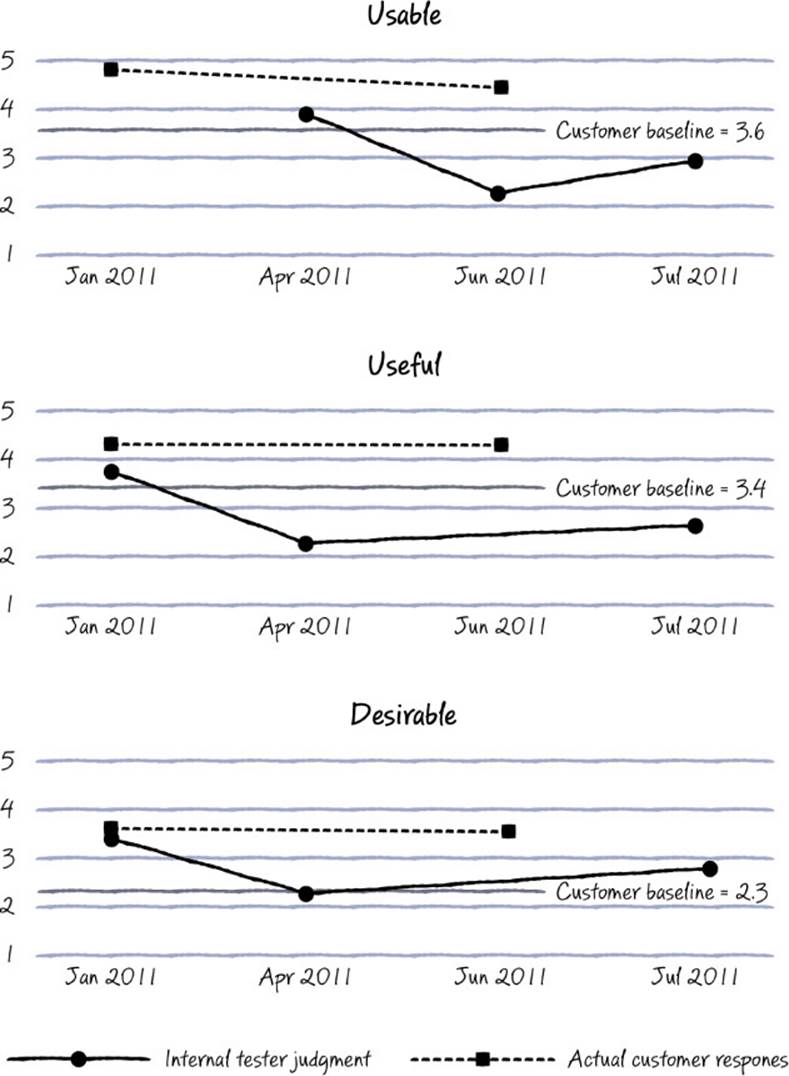

But the following months were not exactly smooth sailing. In fact, adapting our historical feature-focused processes and “getting” SFE was very challenging. Milestone progress reviews were conducted, but they remained mostly feature-focused, with scenario reviews happening afterward, if at all. Some scenarios were defined from the customer’s perspective, but several were really just collections of features that we called “scenarios” (for example, “Compiler toolchain fundamentals” and “Developer IDE productivity fundamentals”). The results of our first lab-study UUD survey with customers did show an improvement over our 2010 baseline, but subsequent surveys weren’t trending upward for 2012 as we expected. Our testers were also filling out UUD surveys to augment the survey data from real customers, but their ratings were trending downward. Was our product actually getting worse, or were we not using UUD correctly? (See Figure D-6.) We started doubting SFE and ourselves. Our confidence waned.

FIGURE D-6 Customer vs. tester survey data variance (for the scenario related to building a 3-D game in C++).

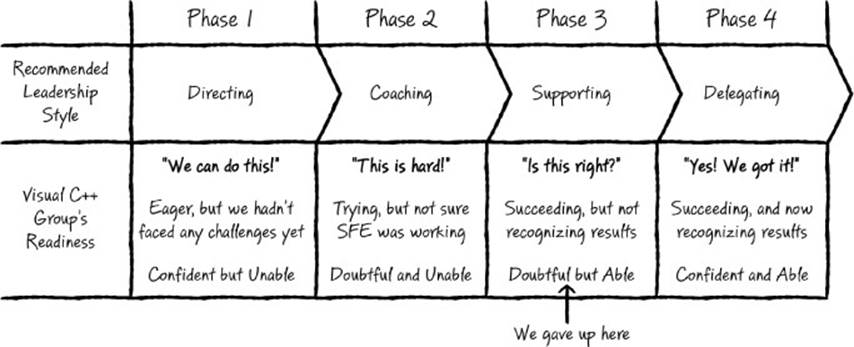

We should have expected this. Authors Paul Hersey (The Situational Leader) and Ken Blanchard (The One Minute Manager) capture this phenomenon precisely in their situational-leadership model. The model holds that as teams take on a new skill (like SFE), they go through four phases, and leaders should engage their teams differently depending on which phase of maturity a team is in. Phase 3, when a team is proficient in the new skill but doubts itself, is particularly tenuous. At that point, a significant investment of time, energy, and money has been made, but the full impact of the results isn’t obvious yet. Bingo.

Waving the white flag

So we reached the summer of 2011, one year into our adoption of SFE. With all the effort we’d put into SFE, we did see some positive results, but our accumulated and persistent struggles, and our doubts, hit us pretty hard. We were aware of the Hersey-Blanchard model, and as leaders we should have recognized where we were. We should have been supportive, encouraging our group to push forward. But it was precisely at this point that we buckled. (See Figure D-7.) At a scenario review that summer, a weary participant asked, “Why are we even doing this?” That turned out to be the last scenario review the Visual C++ group held for the 2012 product.

FIGURE D-7 Visual C++ group’s readiness and recommended leadership style

We reverted to a full-on feature-focus groove, effectively abandoning SFE. Testers stopped producing survey data for scenarios. We stopped scheduling customer lab studies. We shut down my SFE subgroup, reassigning people to other feature work. Our user-experience expert left the company. SFE was only a fad, just as our skeptical inner voices had predicted a year before. SFE had fizzled out.

Or had it?

Rebound

That same summer, independently of the Visual C++ group’s efforts, the larger Visual Studio group began conducting new reviews for a small number of key scenarios across all languages in the suite. The Visual C++ group—which had merged with the Accelerated Massive Parallelism (AMP) group—was now responsible for representing four of these key scenarios at the Visual Studio reviews:

• Create high performance computing apps with C++ (AMP).

• Create a Windows Store application with HTML, JavaScript, and C++.

• Create a Windows Store application with XAML and C++.

• Create a Windows Store 3-D game with C++.

Our work began again, but with a different outlook and technique. Instead of our original seven scenarios and the AMP scenario, Visual C++ now focused on just four. Instead of having an SFE subgroup coordinating and advising all Visual C++ testers, the four key scenario testers now worked on their own but shared their best practices with peers. Instead of UUD surveys, our test team used a new evaluation method: customer experience values (CEV). The CEV rating system breaks a scenario into steps or “experiences.” Each step is performed manually and is rated as Blocked, Poor, Fair, or Good. Bug reports describing the variance from expectations are required for any rating other than Good.

One group member, Gabriel Esparza-Romero, started and led the test-driven CEV effort as a way to quantify scenario progress and state, while also indicating what was needed to complete the scenario by highlighting gaps and areas for improvement. He was helped along by many others, and this methodology evolved and branched out throughout the release. In hindsight, this approach produced tester-provided ratings that were consistently lower than actual customer ratings. Later, in 2014, this was addressed by breaking tester-provided CEV into three components: an implementation quality rating, provided by the development team; a design completeness rating, provided by the new “experiences and insights” team (more on that later); and a customer insight rating, drawn from customer interviews and social media. While the CEV rating was invaluable, it wasn’t a silver bullet. CEV ratings don’t provide an automatic tripwire or mechanism to steer teams toward a change in course when the data indicates one is necessary. Teams using CEV must decide for themselves how to detect and make those course corrections.
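The CEV rules described above (each step rated Blocked, Poor, Fair, or Good, with a bug report required for any rating other than Good) are simple enough to capture in a few lines. The following Python sketch is a hypothetical illustration, not the tooling the Visual C++ team used: `Rating`, `Experience`, `weakest_steps`, and the bug IDs are invented names, and apart from the Poor rating for step 2 of the 3-D game scenario, the ratings are made up for the example.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Rating(IntEnum):
    # Ordered so that a lower value means a worse experience.
    BLOCKED = 0
    POOR = 1
    FAIR = 2
    GOOD = 3


@dataclass
class Experience:
    step: str
    rating: Rating
    bugs: list = field(default_factory=list)  # hypothetical bug-report IDs

    def __post_init__(self):
        # CEV rule: any rating other than Good must be backed by a bug report
        # describing the variance from expectations.
        if self.rating != Rating.GOOD and not self.bugs:
            raise ValueError(
                f"{self.step!r} rated {self.rating.name} needs a bug report"
            )


def weakest_steps(experiences):
    """Return the steps that share the scenario's lowest rating
    (a hypothetical roll-up, not part of the CEV method as described)."""
    worst = min(e.rating for e in experiences)
    return [e.step for e in experiences if e.rating == worst]


# The 3-D game scenario; only step 2's Poor rating comes from the case study.
game = [
    Experience("Create a 3-D model with the 3-D Model Editor", Rating.GOOD),
    Experience("Display the model using the 3-D Project Template",
               Rating.POOR, ["BUG-101"]),
    Experience("Debug HLSL shaders with the 3-D Graphics Debugger",
               Rating.FAIR, ["BUG-102"]),
]

print(weakest_steps(game))
```

Note that `weakest_steps` only points at where a scenario is weakest. As the text says, CEV itself is no tripwire: deciding whether a Poor rating warrants a course correction is still up to the team.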

Taking up the AMP scenario went smoothly for the Visual C++ team, despite a change to this scenario to support the C++ customer persona—Liam, the self-published, indie app developer. This scenario included the AMP language and library as well as the parallel debugger. The AMP group based its initial designs on the needs of technical computing customers, but it was able to adapt designs to also satisfy customers building consumer-facing parallel computing projects such as computer vision and general image processing. The parallel debugger was also adapted to this new focus, but it didn’t require a significant change in design.

Recovery

We were very fortunate. The Visual Studio scenario reviews started at precisely the moment when we had lost our gumption and reverted to focusing on features. If we had truly abandoned SFE in the summer of 2011, it would have sent the message that SFE really was just a passing fad. But by sticking with SFE in its new form through the rest of the cycle, we made it clear that it was an important, sustained, long-term shift.

The Visual Studio scenario reviews also gave Visual C++ a consistent drumbeat and a forum for accountability and visibility. This, it turns out, was when the Visual C++ group found its SFE groove. SFE had taken on a new look, but it was back and here to stay.

Addressing scenario gaps

The Visual Studio scenario reviews proved to be particularly impactful. One example is the effect these reviews had on the 3-D game scenario. To complete this scenario, customers needed to use several new features:

• Create 3-D models with the 3-D Model Editor

• Create preliminary C++ and HLSL code with the 3-D Project Template

• Debug HLSL shaders with the 3-D Graphics Debugger (Pixel History)

In this new era, with the SFE subgroup dissolved, I had changed gears and was now leading a small test team focusing on the 3-D game scenario. As we evaluated the scenario using CEV, we found that once a customer produced a model with the 3-D Model Editor (step 1), they could not use the new 3-D Project Template (step 2) to display the model in their game, because the template did not include code to parse and render the model’s file format. This was a big scenario gap. Reporting a “Poor” CEV rating for step 2 generated a lot of discussion during reviews and ultimately helped win approval for the Visual Studio 3-D Starter Kit, which shipped shortly after Visual Studio 2012 was released in August 2012. The kit helped customers complete the full end-to-end scenario, and it was updated and rereleased for new devices, like Windows Phone 8. The kit consistently receives ratings of 4.5 out of 5 stars from customers. Without the CEV reviews, customers might still be struggling to write their own code to work around the scenario gap, but we caught the problem and were able to fix it.

Shipping Visual C++ 2012

Along with the rest of the Visual Studio suite, Visual C++ shipped in August 2012. InfoWorld did not evaluate Visual C++ on its own, but it rated the combined Visual Studio 2012 suite 9/10 for capability (utility), 9/10 for ease of use (usability), and 9/10 for value (desirability). Not bad. (See http://www.infoworld.com/d/application-development/review-visual-studio-2012-shines-windows-8-205971.)

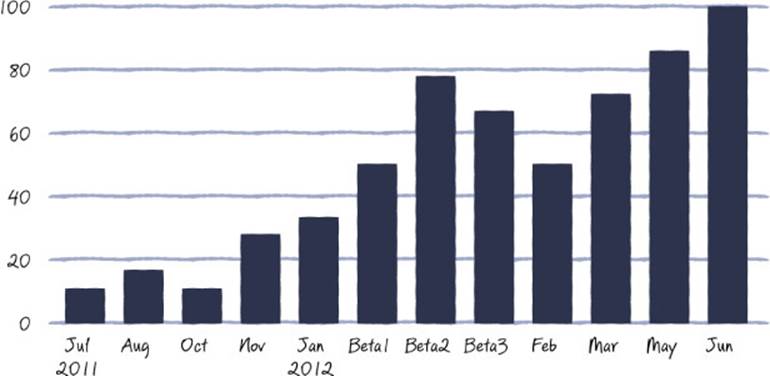

The CEV evaluation method was time-consuming and took its toll on those providing regular evaluations, but using it on the reduced set of scenarios proved to be an effective technique. The 3-D game scenario, for example, saw a clear trend of improvement with each evaluation. The bugs we entered for each CEV evaluation were fixed incrementally as we went along, improving ratings and cumulatively piling up in number over the course of the release.

As the 2012 release ended, we didn’t immediately recognize our own successes. The 2012 cycle didn’t result in a rapid, seismic shift from features to scenarios. Instead, we gradually found a balance between the two. But we learned from both the successes and the painful aspects of the 2012 release, made iterative improvements in the 2013 release, and then made even more dramatic changes for our next release.

SFE process scorecard

Looking back at the 2012 release, how well did the Visual C++ group follow SFE principles and techniques? Here’s a recap:

• Identify target customers: We defined a persona (Liam, the rising-star developer) for this release.

• Observe: We used interviews, focus groups, and lab studies, but we could have “soaked in data” more to gain greater empathy and new insights. Our decision to restrict customer access to prerelease builds limited our ability to observe customers using our product, and we used testers as substitutes.

• Frame: Product management made a healthy investment to create scenarios and stories, but we initially felt the need to treat all work as a scenario and mistakenly applied this term to some groups of feature work.

• Brainstorm: We did brainstorm, but we didn’t get customer feedback on many variations.

• Build: We didn’t create many prototypes but went right to production code.

• Observe: We did use lab studies to guide us to make design changes (generative data). We struggled with UUD survey data as a means to track our progress (summative data).

• Repeat: We didn’t do frequent, rapid cycles. We spent about two months in each stage and then shipped in August of 2012.

So, what went wrong? In retrospect, we could have done several things differently.

• Lost the faith: We gave up, but only temporarily. We were lucky that a white knight (in the form of the Visual Studio scenario reviews) appeared to help us restart. But we should have pushed through, adapted, and continued on our own.

• Using testers for lab studies: We thought that by rotating the scenarios, we could use the “fresh eyes” of testers to act as the customer and rate our scenarios using UUD surveys. But as we should have known, we are not the customer, and the tester-generated survey data didn’t show a good correlation to (or prediction of) what real customers thought. (See Figure D-6 earlier.)

• Not everything needs to be a scenario: The Visual C++ 2012 release added great new functionality in the IDE and compiler (for example, support for the C++11 standard), and initially we tried to create a false wrapper around this functionality and call the work “scenarios,” which was unnatural and unnecessary.

• Didn’t iterate designs with real customers frequently or consistently enough: We did meet with customers at the beginning and end of the release, but in the middle we used team members as proxies for customers to limit potential leaks. Our customer interactions were somewhat far apart, and we didn’t always use them to validate changes we made in response to feedback from a previous interaction. We didn’t do frequent, rapid cycles, and instead spent about two months in each SFE stage.

• Overscoped: To be successful with SFE, you need to be able to modify your plans to adapt to feedback. We bit off a lot for this release and didn’t make the necessary cuts to allow for unplanned new work.

What went right? A few key things really had a significant, positive impact on our scenario efforts.

![]() Finished strong and adapted Midway through the product release, after we gave up, we dug deep, got back on the SFE horse, and shipped. Finishing this way allowed us to look back at our struggles and make iterative improvements to how we could use SFE in future releases (more on that later).

Finished strong and adapted Midway through the product release, after we gave up, we dug deep, got back on the SFE horse, and shipped. Finishing this way allowed us to look back at our struggles and make iterative improvements to how we could use SFE in future releases (more on that later).

![]() Interviews, focus groups, lab studies The data we collected from customers confirmed the directions we were already planning and helped us make adjustments. We turned this data into large posters—which included photos of the people we’d met with—that we hung in the hallways to remind us of what was important to customers. Our developers made good use of this data. With more iterations, we could have responded even better to this input.

Interviews, focus groups, lab studies The data we collected from customers confirmed the directions we were already planning and helped us make adjustments. We turned this data into large posters—which included photos of the people we’d met with—that we hung in the hallways to remind us of what was important to customers. Our developers made good use of this data. With more iterations, we could have responded even better to this input.

Brainstorming: Program managers deeply considered multiple approaches, which resulted in better designs.

Walk-throughs: Our user-experience expert led the generation of detailed scenario walk-throughs and storyboards to help engineers visualize the product. The walk-through technique was very valuable and is still frequently used today.

Just a few scenarios: Focusing on everything means you don’t focus on anything. Reducing our focus to fewer scenarios helped us invest attention and resources in those scenarios and deliver a better product.

The importance of timing

When we started using SFE, we created a dedicated SFE subgroup. Doing this at the beginning, as we were all learning SFE, was probably a mistake. Forming an SFE subgroup created the unintended perception that it wasn’t necessary for everyone to become more scenario-focused because that was the SFE subgroup’s job. Also, my original SFE subgroup acted as consultants, but we were not scenario owners ourselves. Thus, we lacked credibility with other testers who were scenario owners and responsible for evaluating the scenarios they owned. In their book Switch: How to Change Things When Change Is Hard, Chip and Dan Heath stress the importance of “shaping the path” when making a difficult change, like the one from features to scenarios. You can desire change emotionally and intellectually, but when things get tough, you’ll often fall back to a well-worn (feature) path. Having scenario owners provide guidance to other owners as peers proved to be far more effective than my “consulting” subgroup of nonowners. The owners of our four key scenarios cleared new paths themselves, sharing the processes that were working for them.

However, as of this writing in 2014, the Visual C++ team has decided that the time is right to reintroduce a dedicated “experiences and insights” team, similar to the initial SFE subgroup. The members of this team are unique: they form a new discipline, on par with the traditional developer, tester, and PM roles. They visit, interact with, and poll customers. Then they analyze qualitative interaction data along with quantitative product usage data to ensure that customers’ needs are fulfilled.

Using temporary stopgaps

As I mentioned, we chose to restrict customer access to prerelease builds and instead used testers as substitutes. Initially, our testers used UUD surveys, but then we switched to CEV. Neither using testers as substitute customers nor using CEV instead of UUD surveys was the right long-term solution for us, but using them temporarily was good because they allowed us to move forward without abandoning SFE.

By using CEV to rate each step in a scenario, by filing bugs for gaps/defects in the scenario steps, and by reviewing the CEV ratings with peers and management, we shipped a better product. This system also proved to be consistent and a good summative metric for tracking and predicting progress. (See Figure D-8.) The testers also created detailed, end-to-end, manual scripts to follow when evaluating CEV data, which provided consistency during evaluation. These scripts were eventually automated, creating a great set of check-in tests that were run before developers committed product changes. The automation also reduced the cost and number of issues found during manual testing. The danger here was that CEV would just become another checklist of features rather than an evaluation of the customer experience, so it was important to switch back to UUD surveys and real customers.

FIGURE D-8 Percent of scenario steps with CEV rating of Good or better

Temporarily using CEV also delayed our analysis and adaptation of how we used UUD surveys. It was great having a UUD baseline (from the 2010 version of our product), and we believed that by conducting future UUD surveys on incremental monthly builds of the 2012 product in development, we could track our progress toward being “done” (summative). However, our incremental data points didn’t change much, even after six months of development. (See Figure D-6.) Before using UUD surveys again, we should better understand why this happened. Switching to CEV delayed our investigation of this situation.

Beyond 2012

SFE took root in the 2010–12 cycle, slowly at first, but it has continued to grow and flourish and has increasingly changed how we organize and produce software.

Work is still organized into features, but scenarios are driving the features that are implemented. The organization is changing and has restructured around the “experiences and insights” discipline and scenarios. Today, you’ll more likely hear someone in the group ask, “What scenario are you working on?” than “What feature are you working on?”

With product updates shipping every three months instead of every two to three years, the group is building a fast (and positive) feedback cycle with customers. Through the team’s agility and its willingness to make product changes based on feedback, customer engagement has increased. Scenario walk-throughs are now a core part of all milestone reviews. Product telemetry and customer data are now commonly used during planning and design. While independent prototypes are not common, the minimum viable product approach proposed by Eric Ries (in his book The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses) is often taken when we plan and brainstorm new product changes.

But one of the most striking and sustained changes from a test perspective is in the number and type of bug reports filed. In the Visual C++ 2010 release, only 6 percent of bug reports were related to scenarios/experiences, usability, or customer-reported issues. After we adopted SFE in Visual C++ 2012, bug reports of this type jumped to 22 percent and then to 31 percent in the Visual C++ 2013 release. This reflects the fact that the team is spending significantly more time evaluating the product from the perspective of delivering complete customer scenarios.

So while we hit a period of doubt one year in, the shift to scenarios did take root in the end. Scenarios are now a core part of the way the group thinks and works. Just as it is for products, iteration is the key to the success of SFE for your organization and project. Even when things look grim, keep going! If you experiment, listen, adapt, and move forward, you will succeed in bringing change to your organization.

My sincere thanks and gratitude to my Visual C++ colleagues for their review and input for this case study: Ale Contenti, Ayman Shoukry, Birgit Hindman, Chris Shaffer, Gabriel Esparza-Romero, Jerry Higgins, Jim Griesmer, Martha Wieczorek, Orville McDonald, Rui Sun, Steve Carroll, Tarek Madkour, and Tim Gerken.
