Scenario-Focused Engineering (2014)

Part II: The Fast Feedback Cycle

Chapter 5. Observing customers: Building empathy

Now that you know who your target customers are, the next step is to learn all about them—to figure out what their needs are, what problems they have, and what motivates them. Having a deep understanding of your customers allows you to discover what an outstanding solution looks like from their point of view—a solution that meets their functional needs as well as their emotional wants.

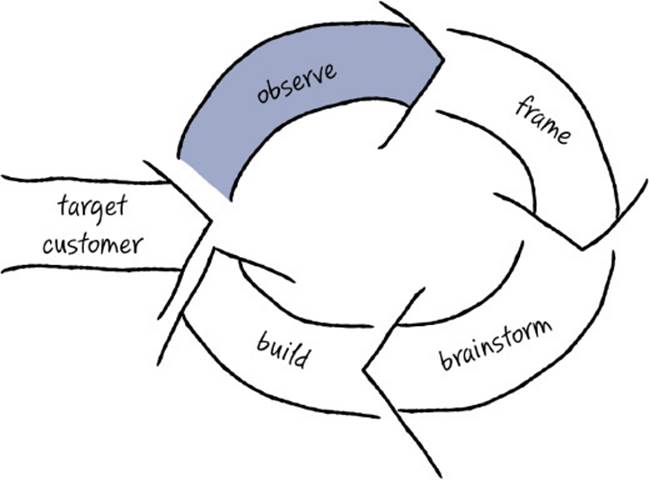

In this first stage of the Fast Feedback Cycle, you do the research required to make data-based decisions about which experiences to build for your customers. This stage is all about data collection and the synthesis of that data. In gathering this data, stay grounded in observation, whether you are observing customers directly, observing customer behavior through instrumentation or A/B testing, or observing industrywide patterns and trends. Rather than basing decisions on opinions, judgments, or assumptions, you want to remain data-driven and base your decisions on actual customer research.

This chapter presents some of our favorite research techniques for collecting the data you need to kick off your project and inform your decisions. As in each stage of the Fast Feedback Cycle, there are many tools that you can use to achieve the intent of this stage. Remember that the tools and techniques presented in this chapter (and throughout this book, for that matter) are items in your toolbox, and you decide which techniques work best for you in any particular situation. By no means does this chapter list every available research technique. We just describe our favorites—those we’ve found most useful in our experience.

As with many of the techniques we discuss in this book, don’t feel as though you have to be an expert in customer research techniques to get worthwhile results. Even small improvements in collecting valid customer data can have a tremendous impact on your product. In short, don’t let the perfect get in the way of the good enough.

You are not the customer

To deeply understand your customers, you first have to accept that your customers’ situation, background, experience, motivations, and needs are different from your own. Let’s go out on a limb and posit that as a software professional you understand a lot more about technology than your customers do. You probably have a degree in computer science, math, or engineering (or the equivalent professional experience). You have firsthand knowledge of how software is made. You understand how 1s and 0s at the machine level relate to a programming language and compiler. When someone says that data is accessed “in the cloud,” you have a good sense of local versus remote persistence. When using software you unconsciously avoid bugs because you have a sense for what software typically can and cannot do.

Now think about your customers. Do the qualities that describe you also describe them? What is their level of computer science experience or education? How do they spend their time, day in and day out? What are their priorities and motivations? Almost certainly, your customers’ lives are quite different from yours. When it comes to software, you are a power user. (Remember the dangers associated with focusing on power users described in the previous chapter?) While building for power users is often tempting—because those needs are so familiar to you—solutions that address that audience likely won’t generate much carryover to (or revenue from) broader, more mainstream customer segments.

Let’s say, for example, that your customer is a tax accountant or a teenage gamer. Acknowledging that you are not the customer here is simple logic. You are not a tax accountant or a teenage gamer (sorry, as much as you’d like to be that person again, you aren’t now). You went to a different school, you have a different degree and a different type of job. Your work schedule and cycle of output are different. The tools you use to get your job done are different, as is the style in which you communicate with your customers, peers, and managers. In cases where the customer’s background is so obviously different from yours, it’s not hard to accept that you are different from them.

However, internalizing that you are not the customer becomes more difficult when the domain you work in is well aligned with your target customer’s domain. What if you are a regular user of the software you create? Or what if you are an avid photographer and your company is creating photo software? Or what if you work in what’s mostly a consumer domain, where nearly everyone uses products such as mobile phones, televisions, game systems, refrigerators, and so on? In situations such as these, it is still extremely dangerous and short-sighted to assume that you are a mirror of your customer and that if you build software that you like, your customers will like it as well.

Sure, you may also have a smartphone, but unlike the average home user, you know exactly how to customize it, can debate whether syncing your mail with IMAP or POP3 is a better bet, and know what to do when your kid bricks it by mistake or a new app fails to install. In those cases, the average consumer probably heads to the Genius Bar to get help, or calls the teenager next door. Remember that you’re getting paid to write this software, while your customers are paying to use it. Given that fact alone, the motivation gap is pretty huge.

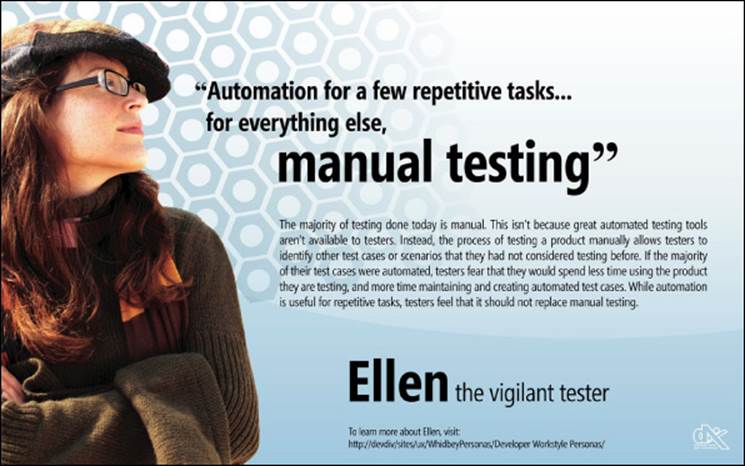

Here’s a short case study of a team whose target customer mirrored the team itself . . . or so they thought. The team named this quintessential customer Ellen and gave her the tagline “the vigilant tester.” You’ll learn more about Ellen later in this chapter.

SFE in action: Ellen, the vigilant tester

Steven Clarke, Principal UX Researcher, Microsoft Visual Studio

As part of the Visual Studio 2005 release, we integrated a set of test tools, code-named Camano, into the Visual Studio suite of developer tools. Prior to that release, Visual Studio had contained no significant support for testing software, as it was primarily used by software developers to write, compile, and debug code. Given a very short time frame, the Camano team set out to build what we believed would become a compelling set of test tools that would compete with the handful of sophisticated test-tool offerings already in the market.

We utilized multiple sources of information available to us at the time to figure out what we should build. We performed competitive analyses of existing tools, spent time talking to testers at Microsoft about the tools they used and why they used them, did extensive market research, and studied the work of recognized experts in the field. All of the information that we collected pointed us in the direction of building tools for automating the creation and running of test scripts and code. At that time, this was what almost all of the competitor tools did, and it was also what the vast majority of testers at Microsoft did. Given this information, we assumed that most customers would place a high value on tools that allowed them to easily create and manage their automated tests.

But after we shipped two releases of the Camano test tools, the product had not achieved the market share and revenue goals we had hoped for, so we started another round of analysis. We first brought in a handful of customers to the Microsoft usability lab. What we observed surprised us. Customers said things like, “Why are you making me do this? You are making me think like a developer!” And while the customers were able to perform their assigned tasks in the lab, when we listened carefully to what they were saying, the tone was not very complimentary, and it was clear that they were not having a good time completing their tasks.

We followed up these usability studies by observing professional software testers at work in their own environments—to observe their workflow, to see what tools they used, and to understand the context of their work. Previously we had observed testers at Microsoft to understand how testers work. Now we were looking outside Microsoft, and our observations were in stark contrast to what we had learned internally. In hindsight, it’s interesting to see that though we felt like we had done enough solid research to get the first version completed quickly, we hadn’t taken the time to talk to any external customers directly.

What we learned when we did talk to external customers was that testers outside Microsoft had different demands, worked in different situations, and required different tools. It wasn’t that they were naïve or inexperienced—far from it! In fact, they were quite sophisticated in how they approached their jobs. It’s just that their approach to testing software was different from the way the Microsoft testers worked. This was a pretty big new idea for us. Frankly, many of us were shocked to hear this because we had been thinking about the customer differently for such a long time.

There were certainly common attributes. We learned that great testers, no matter where they worked, were driven by raising the quality of the product they tested. They took pride in representing their customers and making their customers’ lives easier. The big difference between our customers and testers at Microsoft was the type of team they worked on and the type of product they worked on. This had a large impact on the approach they took. For a variety of reasons (resources, type of application being built, etc.), testers outside Microsoft relied heavily on manual and exploratory testing to find product bugs. Occasionally they’d use automation to speed up the process for some often-repeated tasks, but, by and large, manual tests allowed the tester to focus on seeing the user’s experience, which they found to be a more productive way to find bugs.

It was now clear why the previous releases had not been as successful as we had hoped. Customers who purchase and use Camano want to spend their time acting like their own end users, finding and removing bugs. They do not want to write automated tests. They seek out and use tools that make the process of finding and reporting good bugs easy. We learned that if the Camano team could find a way to make running and managing manual tests easier, and at the same time improve the communication flow between testers and developers, we would have a very good chance of creating a product that a large segment of the general testing community would genuinely want to use, and it would solve a real need for them.

Invariably, after presenting this case study in our workshops, someone shouts out, “But what about the C++ team?” You might think that surely Visual C++ is a product for which Microsoft engineers would make excellent proxies for the target customer, but it turns out even that’s not true. One difference is in the libraries that are used. It’s quite common for Visual C++ customers to use high-level libraries such as DirectX/Direct3D in their solutions. But the developers on the VC++ team don’t typically use these libraries; instead they are authoring the libraries, as well as writing the low-level code for the compiler itself. Also, the build systems used inside Microsoft are often specialized (and optimized) for extremely large projects such as Windows and Office, whereas many VC++ customers use the Visual Studio build system, often together with open source tooling such as Git for version control. Also, the developers working on the back end of the C++ optimizing compiler tend to spend much more time debugging assembly-level code than do typical VC++ customers. The list goes on and on. At the core, it’s true that both Visual C++ developers at Microsoft and their customers are coding in C++, but the use of the C++ language, the available libraries, and the supporting tools are different enough that the VC++ team at Microsoft still has a very strong need to study its customers, because the team members simply are not the customer.

Building empathy

After you accept the fact that you are not the customer and cannot rely on your own experiences to know what’s important to build, the natural next question is, “How will I know what my customer wants?” The answer is that you need to do a bunch of customer research to figure that out. But as we explore in this chapter, doing extensive research alone is not enough. Research and data alone don’t provide you with answers to all of your questions. Delighting a customer is not simply an equation, nor is there an algorithm to guide you. During the design and implementation of software, you are going to make judgment calls—many of them, every day—and you need empathy for your customer to inform your judgments.

Vocab

Empathy is the capacity to deeply understand the inner experience of another person, allowing you to see and understand from that person’s point of view.

Scott Cook, founder and former CEO at Intuit, is largely credited with turning that company around from the brink of bankruptcy. Cook talks about gaining understanding by walking in your customers’ shoes. Here’s a wonderful excerpt from an interview with Scott Cook about what it takes to build customer empathy:1

How do you develop and nurture the kind of culture that can continue to innovate?

First you have to understand the customers intimately, deeply, from observing them working in their offices. The key is face-to-face, regular close contact with the customer. It’s crucial. You can’t do it fly-by-wire, you can’t do it by remote control. It’s just got to be full frontal contact. Otherwise you misunderstand their needs. There’s a proverb that I teach people in the company. It goes something like “Empathy is not just about walking in another’s shoes. First you must remove your own shoes.” You have to get past your own blinders and biases and assumptions before you can see with clear eyes what’s really going on. And building a culture where that is valued, where engineers want to do that, where that’s what’s known to be valued, is very hard. Most companies don’t have that culture. A company has to be willing to stop believing its own beliefs and believe what it’s hearing from customers. Then you’ve got to translate that into products that are such a breakthrough that they transform business, and people can’t imagine going back to the old way.

The idea behind empathy is to become so immersed in your customers’ perspective that you can imagine what they see, hear what they hear, and understand the world from their point of view. To do this, you must take off your own “shoes”—you must shed your own biases, attitudes, and preconceived notions, which are largely based on your own lifetime of personal experiences.

This deep level of understanding helps you predict what your customers would say or how they might react to various designs you consider, so empathy helps you streamline decision making. It helps you to narrow the places where you get feedback and to focus on a few concepts that you believe are most important to your customers. You need empathy because you simply can’t collect conclusive data about every possible question that might emerge during the product-design process—you won’t always have directly relevant data to inform each decision point. This isn’t to say that you should make every decision from your empathetic gut instincts, but you need to become comfortable making progress using decisions based on empathy, trusting in the iterative process to provide course corrections if you get off track.

Tip

Talk to real customers, not proxies. Some teams hire employees to be a proxy for the end customer and to bring that perspective inside the company in an ever-present way. Their job is to talk to lots of customers and convey those needs to the development team. This approach has a nugget of potential value, but we have rarely seen it work well in practice—usually it results in a distorted view of what customers’ needs are. If you want to be customer-focused and want to build empathy, the team needs to talk to real live customers, not proxies.

Evoking empathy across a team encourages people to do their best work, to really, deeply care about their customers not because their boss told them to or because their bonus depends on it, but because they genuinely want to help customers achieve their goals. This allows the team to be intrinsically motivated to solve a customer’s problem, rather than feel as though it is simply earning a paycheck. And as the psychologist Mihaly Csikszentmihalyi suggests in his famous work on “flow,” intrinsic motivation is linked to more creative and successful solutions.2

Empathy is not an inborn talent that only some people have. Rather, empathy can be developed in nearly everyone with a little time, experience, and effort. A curious, customer-focused mindset gets you started, and from there it is a continuous process of learning more and more about a customer to build up a body of knowledge and experience over time.

Tip

Everyone, not just the boss, needs to develop empathy for the customer. Every team member needs to be able to step into the customer’s shoes from time to time to consider an alternative or evaluate a potential solution or decide whether to take a bug fix late in the project. Having empathy for the customer helps every individual person on the team make better decisions on the customer’s behalf.

The data-gathering techniques described later in this chapter show how to collect information about your customers. As that customer data is compiled, shared, and analyzed, it becomes the basis for creating broadly based, deep empathy for your customers across the entire team.

SFE in action: Trading places

Susan Palmer, Program Manager, Microsoft Bing Advertising

As a program manager on the Bing Ads team, I saw a need to have our engineers connect more directly with our customers. I ended up creating a program called “Trade Places with an Advertiser,” which was designed to provide our team’s engineers with an opportunity to step into the shoes of a real small-business owner who has to figure out how to create, manage, and monitor paid search advertising using both the Bing Ads platform and Google AdWords, our main competitor.

Here’s how it works. When engineers on the team sign up for the program, they’re paired with a small business and are tasked with creating and managing a real ad campaign using Bing Ads and Google AdWords. The program takes place entirely in the live environment, where participants have a real budget and real deadlines. For a period of four weeks, participants behave as though they were the real owner of that business. During that time, our participating engineers must navigate the experience of paid search advertising across two different platforms in the same way our customers do—through trial and error. They’re not allowed to use any internal Microsoft resources or support. They have access only to the same resources that our customers have access to. The engineers who participate in this program also have to continue to manage their very full-time jobs, just like our customers. This full immersion program is a great opportunity for our engineers to actually use the platform they work on in the same way that our customers do.

The results have been remarkable. Through weekly one-hour group discussions, engineers explain the challenges, frustrations, and surprises they experienced acting as customers, and they brainstorm ways they can make using our platform easier for our customers. It’s not uncommon to hear participants request that everyone joining the Bing Ads platform team be required to participate in the Trade Places program because the experience is so eye opening. It’s also not uncommon for a participant to reprioritize a feature request once he or she has gone through the program and truly understands the benefit and impact of the request on our customers. The Trade Places program has enabled our engineers to gain deep customer empathy by living through a real-world, end-to-end experience of what our customers do and feel every day.

What customers won’t tell you

The obvious approach to gaining empathy is to ask customers what they want. And this does work to a degree. Customers are usually glad to answer your questions, share their experiences, complain about their pain points, and even suggest improvements that they’d like to see. But have you ever noticed that when you ask customers why they want what they’re asking for, they tend to talk about their surface needs, most likely in the context of fixing or improving a specific task at hand? That’s great information to have, but when you’re looking for future needs and root causes, what customers say doesn’t quite give you all the information you’re looking for.

The truth is that customers usually find that articulating their deep needs is difficult or impossible. Often they fixate on their wish list, so you hear more about their surface-level wants than the real needs they forget to mention, or they take for granted that you already understand those basics. Sometimes, however, customers don’t really know what they need; they just have a vague feeling that’s difficult to put into words. (Remember the story about purchasing the car? Part of the criteria was “I’ll know it when I see it.”) Sometimes customers can’t imagine the future, or they limit what they ask for on the basis of what they believe is technically feasible. Sometimes customers are trying their best to tell you, but because you don’t understand their context, you don’t understand the full implications of what they’re saying.

People often see only what is broken in their individual situation and tend to miss the larger picture. Customers won’t be able to tell you what is wrong with the entire end-to-end system because they see only what is broken with the portion they use. Deeper needs and more important problems become visible only when you zoom out to see issues and opportunities across several different roles or steps in a process, which gives you a more systemic perspective.

Furthermore, it may be difficult for customers to pinpoint their needs because, frankly, that need has not yet been discovered. People are so used to doing things the usual way that no one has noticed the opportunity to make something even better. Some of the most exciting needs might lie dormant, waiting for someone to do the digging necessary to reveal them.

Unearthing unarticulated needs

Henry Ford, whose Model T began the automobile age, is widely credited with a famous line about customers’ ability to articulate their needs: “If I had asked people what they wanted, they would have said faster horses.” You need to look beyond what people are saying to figure out what they really need. This is why many people say that listening to customers is dangerous, that giving them what they ask for is rarely the right plan. The answer, however, is not to stop asking but to listen more deeply, to watch customer behavior and read between the lines, and to be ready to analyze patterns and triangulate different sources to figure out what customers really need. The goal is to identify unarticulated needs.

Vocab

Unarticulated needs, sometimes referred to as latent needs, are the deeper needs that customers can’t quite tell you. They are the deep desires and underlying needs that drive customer behaviors.

An unarticulated need is one that is present but is hidden or not obvious. Customers are generally not aware of these needs on a conscious level. However, after you identify such a need and state it out loud, both the team and your customers usually recognize it as “obvious” and wonder why they did not notice it before. Identifying and solving a novel, unarticulated need is a great way to delight customers by solving a problem that they didn’t even realize they had. Once they see the solution, they can’t imagine how they ever lived without it.

Think back to Chapter 2, “End-to-end experiences, not features.” The Nintendo Wii Fit tapped into the unarticulated needs of customers by giving them not just a game but new hope for losing weight in a fast and fun way. The milk shake vendor discovered a surprising unarticulated need—that people purchased milk shakes to pass the time during a boring commute. 3M’s invention of its ubiquitous Post-it notes also hinges on unarticulated needs, and it reminds us how hard those needs can be to notice. 3M unintentionally came up with a glue that was only a little bit sticky, but 10 years passed before the company found a use for it, when a 3M engineer carrying a dog-eared Bible with lots of paper tabs sticking out had the eureka moment that maybe those paper tabs would mark pages better if they were a little bit sticky.3 Even needs that in hindsight are patently obvious weren’t so obvious in the moment.

Tip

Be careful about getting distracted by wants instead of needs. Even though customers can spin a passionate story, if what they are asking for is a surface-level desire or a nice-to-have feature, solving that problem may not really be your priority. You will likely find that customers are not as willing to pay for a solution that satisfies their wants if it doesn’t also satisfy a true need.

It turns out that uncovering unarticulated needs can be one of the best ways to create a breakthrough product or service. If you can uncover a hidden need that your competition hasn’t noticed yet, and can come up with a great solution that addresses that need, that can give you a significant competitive advantage in attracting customers to your offering.

That said, it’s true that not every great product or service has based its success on unarticulated needs. Sometimes just getting the basics done, and done extremely well, with an eye toward fulfilling your customers’ end-to-end problems, is all that you need. However, as the market continues to mature, we expect that an increasing number of major advances will be tied back to novel, unarticulated needs.

You won’t end up acting on every unarticulated need that you discover. Instead, you will prioritize and probably blend some, winnow others, and synthesize your discoveries into a few highly meaningful needs—which you then combine with obvious, clearly stated needs—that you target in your solution.

Generating insights about customers

When you identify an important customer need, whether it’s articulated or not, the crucial next step is to back up and understand why that need is so important. Good questions to ask are “What is the customer’s motivation behind this need?” or “Why does the customer care about this?” Sometimes the answer is very obvious, while other times it requires some interpolation and triangulation between different data points for you to come up with a hypothesis. Other times you must go beyond identifying a pain point and take it a few steps further, looking for the root cause of that failure. Once you get to the reason for that need, and the reason explains something central about the customers you are targeting, those nuggets are referred to as insights.

Vocab

An insight boils down customer needs and desires to a root cause—not a symptom or side effect, but the original motivation or deep need behind the customer’s behavior. An insight tells you something new from observations, synthesis, or understanding. It is not a new fact, but a new meaning of the facts, derived from observations. Insights tell you why customers are doing what they’re doing, not just what they are doing.

The deepest insights about your customers are the ones that are laced with what Annette Simmons, author of The Story Factor, calls “big T” truth.4 That is, they pinpoint fundamental truths about human needs and desires that span cultures and epochs: the desire to belong to a community, to feel safe and secure, to express love, or to feel vengeful when wronged. As with an unarticulated need, when you hit upon one of these big-T truths, it can seem so obvious in retrospect. How could you never have realized that the reason teens spend hours texting each other well after they should be asleep is that they don’t want to feel left out of something? That behavior is not about being addicted to technology, as parents might assume. Rather, it’s about a teenager yearning to fit in with a group and to always be in the know.

The power of direct observation

Your first instinct may be to do research at arm’s length: to make use of online discussion boards, email contacts, Twitter feeds, and analyst reports and to interview people over the phone. While these can be good sources of information and worthwhile activities, the richest source of unarticulated needs is often direct observation of customers in their native habitat, whether that is at work, at home, on the bus, or in a coffee shop. When you observe customers directly, you see their end-to-end needs in the context of their real-world situations. The Lean Startup movement has created a memorable acronym for this principle: “GOOB,” or “Get Out of the Building.”5 Don’t just sit in your office building. You need to go to where your customers are.

While you observe customers, you likely won’t be silent. You’ll want to ask questions to understand their beliefs about what they do and their attitudes about those tasks. But many of those questions will be spurred by watching what your customers are doing and how they’re doing it (which, by the way, will often not be what you predicted you’d see). You will notice things that people would never think to mention in an interview, such as the fact that they are using an awkward workaround to smooth the integration between two pieces of software (but they don’t think of it as awkward—“That’s just the way we’ve always done it”), or that they aren’t using the new automation for a key task and continue doing it the old, laborious way (“I don’t have time to figure it out,” “It doesn’t actually work because I need it to do this slightly different thing, and the old way still works fine”).

Direct customer observation also gives you clues about the potentially biggest areas for delight—perhaps the customer has an irrational hatred for a particular part of your service, and fixing what appears to be a minor annoyance could yield huge delight for the customer, which you wouldn’t have predicted. You will also learn more about your customers’ context just by being in their space—seeing how much paper clutter is on the average kitchen counter or tacked to the fridge is an important piece of situational context if you are building a family calendar appliance, for instance. Or you notice how much of knowledge workers’ desk space is taken up by family photos, helping you see where the workers’ true emotional priorities are, despite the business software you are building for them. This style of observational customer research is sometimes called design ethnography.

Vocab

Design ethnography is a style of customer research that relies heavily on observation to learn deeply about a particular individual in the context of that person’s group, culture, environment, etc. The science of ethnography is rooted in anthropology and often includes detailed, in-depth descriptions of everyday life and practices.

The power of observing customers as a way to alert you to possible unarticulated needs is greatly underappreciated. Many teams tell us that they were skeptical at first but found that visiting just a handful of customers was transformative for helping the team unearth insights that they would not likely have found any other way.

Tip

If some team members are having particular difficulty establishing empathy with the chosen target customer, sending them on a visit to observe a customer is a particularly good way to help them refocus. It’s tough to sit with live customers and not start seeing the world a bit more from their perspective. Site visits like this can be an eye-opening experience, and it’s not uncommon for the experience to stimulate exhilarating discoveries and new insights, even from recalcitrant team members.

Neuroscience break, by Dr. Indrė Viskontas

How do we empathize with others? What is the mechanism underlying our ability to imagine how someone else might feel or what he or she might be thinking? It turns out that our brains have evolved a pretty simple solution to this problem: if you want to know what it feels like to be someone else, activate the parts of your brain that would be active if you were in the same situation. That is, when we see someone perform a goal-oriented action, and we are motivated to empathize with him or her, our brains behave as if we’re the ones doing whatever it is that our demonstrator is doing.

First discovered in monkeys, so-called mirror neurons are active not only when a monkey or a person performs a specific action, but also when that person or monkey watches another person or monkey do the same thing, effectively mirroring what’s happening in the other brain. These neurons are not morphologically or structurally different from other brain cells—they are simply defined by their receptive fields, by which stimuli in the environment cause them to fire. And it turns out that they are part of a neural circuit that underlies empathy. This is the same circuit that seems to be impaired in people with autism spectrum disorders, who have difficulty empathizing with others. When we cry at a movie or feel embarrassed on behalf of a colleague’s faux pas, that mirror neuron circuit is active.

So if you want to empathize or put yourself in someone else’s shoes, go observe them. Pay close attention to what they are doing, how they are behaving, and what they may be expressing in terms of emotions. That will set off your mirror neuron network and allow your brain to mimic the activity in the brain of the person you are observing.

Here are a couple of examples illustrating the power of direct observation. When the company OXO looked at how to redesign measuring cups, customers never mentioned the biggest problem with a traditional glass measuring cup: that you have to bend down to check the level. But this problem was plainly visible when OXO observed customers in the kitchen. Based on this observation, OXO designed a new cup with a ramp along the inside edge of the cup, marked with graduated measurements. (See Figure 5-1.) The result is that users can measure liquid in the cup simply by looking down as they pour. This created a surprisingly simple solution that solved a problem the customer didn’t even realize could be solved. The measuring cup OXO designed was a huge success and sold a couple of million units within the first 18 months after it launched.6

FIGURE 5-1 A standard measuring cup (left) requires a user to bend down to check the level of the liquid being measured. In an OXO measuring cup (right), you can read the level of the liquid by looking down as you stand.

The power of observation isn’t just for consumer products. While on a site visit, members of the Microsoft Visual Studio team observed a group of their customers, software developers, attempting to use instant messaging (IM) to communicate with each other. A member of the Visual Studio team took note of this behavior and got to thinking about what it might be like to incorporate some form of IM directly in the Visual Studio user interface.

When the team went back to that same set of developers to explore this idea further, they asked them to demonstrate how they currently use IM in their work. The developers pointed out something that annoyed them greatly—when they copied code from Visual Studio into an IM window, the code became scrambled. The team probed further and asked why the developers were copying code into the IM window. They learned that these customers were trying to use IM to do informal code reviews. That was their primary use of IM in the first place.

After observing and interviewing this set of customers, the Visual Studio team concluded that the solution to the customer need was not to embed IM into Visual Studio. Rather, their customers needed to do code reviews and were looking for a better mechanism than looking over each other’s shoulders to share and review sections of code, especially when they weren’t located in the same place. Once the team realized that key insight, it shifted its approach to focus on supporting code reviews and code sharing rather than building a general purpose IM solution.

This is a great example of how building just what the customer asked for would never have solved the full, underlying need. In the end, the Visual Studio team built a rich suite of code-sharing features that was much simpler and more integrated with developers’ natural workflow. Even the best embedded IM solution would have paled in comparison had the team not stopped to understand the reason behind their customers’ behaviors.

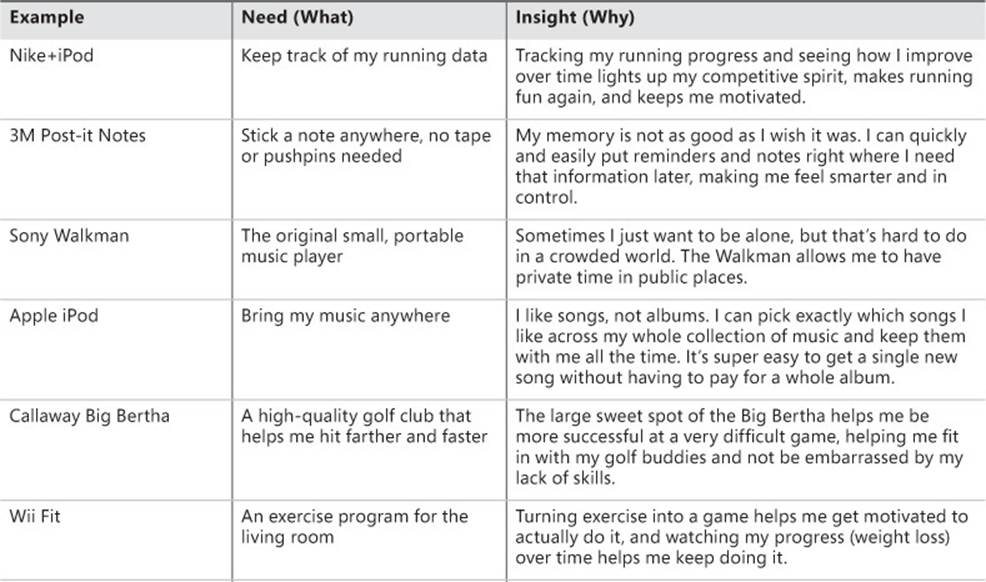

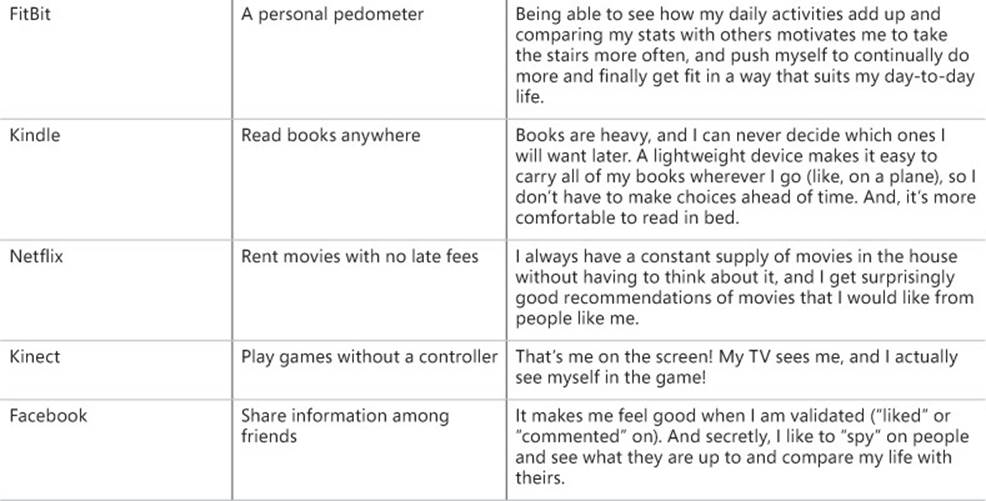

Needs versus insights

We’ve talked a lot about the need to develop empathy with customers to truly understand them. You need to dig beyond surface-level needs to get to the insights that explain why those needs are important to solve. Take a look at the following table for some examples of companies that have capitalized on the powerful, motivating insights behind their customers’ needs.

This table illustrates the difference between a surface need and an insight. It’s important to realize that these companies could have created other solutions that might have addressed the same identified need but been out of sync with the insight behind that need, and likely would not have been as successful. Each of these insights reveals why customers don’t just appreciate a product’s functionality but have an emotional connection with it. There is an element of deep surprise or delight or a big-T human truth embedded in every one of these insights.

The multiple dimensions of customer research

The good news is that there are decades of experience and practice on how to research customers quickly and efficiently and get valid information you can trust. You don’t have to reinvent the wheel here or talk to hundreds of customers to find the patterns. It’s a lot more doable than it looks, but there are a few gotchas to be aware of. In this section we take a high-level look at several approaches for how you find, record, and interpret customer data. Understanding how these different types of research fit together is critical to creating a balanced research plan—you need to use a rich mix of approaches to get the most accurate results.

Generative versus evaluative research

The first time through the Fast Feedback Cycle, you build empathy for customers so that you can start exploring ideas that are likely to resonate well with them. Gathering customer data with the intent of generating new ideas is called generative research. The insights that result from generative research provide the meat of the scenarios you’ll write, which in turn help you create solution ideas that address the problems those scenarios describe.

Vocab

Generative research is used to collect data that helps create an understanding of customer needs and inspire solution ideas.

Later in the iterative cycle, another kind of customer research becomes important. Once you have a prototype, product, service, or website and you want to evaluate how well your proposed solutions are meeting customer needs and whether customers actually like them, you gather data with the intent of evaluating a specific solution, which is called evaluative research.

Vocab

Evaluative research is used to measure or evaluate something you have created.

In the rest of this chapter we focus mainly on generative research approaches. As we explore the Fast Feedback Cycle in the chapters that follow, we’ll peel the onion to see how some of these same approaches can be used in an evaluative mode, and we’ll discuss evaluative research in detail in Chapter 9, “Observing customers: Getting feedback.”

Do as I SAY, or as I DO?

If you had some magic crystal ball and the ability to interact with any customer, any time, what do you think would be most effective? To interview a customer in depth? To track a customer’s actions via software instrumentation? To observe the customer’s behavior in person, maybe with the ability to interrupt in real time to ask questions? The common thread in all of these actions is observation, yet each approach is profoundly different in what you observe and what kind of data you collect. Some of these techniques are about watching what a customer actually does, while others are about listening to what a customer says. Which is better? Of course, you already know the answer. Both approaches are valuable, and you ideally want a mix.

Vocab

DO data, sometimes called objective data, focuses on watching what customers actually do when faced with a decision or a particular situation. SAY data, sometimes called subjective data, focuses on listening to what customers say about their experience, behavior, needs, or desires.

However, some researchers and businesspeople feel strongly that a customer taking action is ultimately the only thing that matters. Did the customer purchase the product or not? Was the customer able to complete the task or not? Did she use the new feature, or didn’t she? It’s true that customers often say that they would do one thing but actually do the other in a real situation, so DO data is pretty important. We’ve seen some teams go so far as to convince themselves that the only valid type of customer research is objective DO data, such as instrumentation, A/B testing, or other types of usage analytics.

On the other hand, SAY data is usually captured by asking questions during customer observations and interviews, as well as through surveys. By asking questions, you can learn about subtleties of your customers’ behavior and attitudes, such as how they perform a task or why they like a particular feature so much or what annoys them about it. Remember that to generate insights, you need to understand why a customer is motivated to solve this problem in the first place. Because no one has figured out yet how to read minds, to get WHY data you need to be able to ask the customer some questions and get SAY data.

So what about those teams that believe DO data is all that matters? Although it’s important to observe and measure a customer’s actions, DO data on its own can hardly ever explain why customers did what they did and leaves you guessing. Relying exclusively on DO data makes it very easy to oversimplify cause and effect or to make assumptions that may or may not be true. Furthermore, by looking at your instrumentation, you might see the customer succeeding at the tasks you are watching, but if you don’t question them, you might never realize that they were irritated the whole time because they found the experience unsatisfying, frustrating, or just plain annoying. You are aiming to build a positive emotional connection with your customers, so it’s vitally important to understand their emotional state as well, and that requires getting robust SAY data. Luckily, some of the most powerful techniques inherently capture a mix of SAY and DO data at the same time. We’ll highlight these in the “Observe stage: Key tools and techniques” section later.

QUANT versus QUAL

Another dimension that differentiates customer research techniques is whether the approach generates primarily quantitative data or qualitative data.

Vocab

Quantitative data, or QUANT, focuses on capturing measurements, usually in a numerical or other well-structured way.

Quantitative data is what many engineers are most familiar with, and it typically gets reported as graphs, charts, statistics, trends, and other types of numerical analysis. When applied to DO data, QUANT can answer questions such as “What is the customer doing?” or “How much are they doing it, and how often?” This is usually done by capturing and analyzing usage data to produce metrics. Many people assume that QUANT research is always focused on studying large numbers of participants so that you get statistically significant results. This assumption is reinforced by the current trend to harness the jewels hidden in “big data.”

Big data refers to a data set that is so large it cannot easily be managed, queried, charted, and viewed using traditional data management tools such as spreadsheets and databases. While the term is applicable to many sciences—meteorology, genomics, and biology, for example—it is especially applicable to computer science because of the vast amounts of data captured through instrumentation of online services, otherwise known as “data exhaust.” Think of the data that your mobile phone, your favorite apps, and the websites you visit are collecting about your actions every second of every day. As of 2012, it’s estimated that we create 2.5 quintillion bytes of data daily.7 The field of data science is emerging and is focused on extracting meaningful analysis from these extremely large data sets. Analyzing big data is a fundamentally quantitative technique.

However, QUANT approaches do not always need to rely on large data sets. Particularly when focusing on SAY data, you can use quantitative approaches to capture subjective data from customers, such as to ask about attitudes or overall satisfaction via a short survey at the end of a usability test. Customers could be asked to answer each question on a scale of 1 to 5, which would result in numerical data that can be graphed and trended over time. Those trends can serve as an early warning system for new problems that may have inadvertently been introduced (such as usability issues when multiple new experiences are integrated). Those statistics can also serve as a progress meter on how close your solution is to being ready to ship.
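As a minimal sketch of the early-warning idea, assume the 1-to-5 answers are stored per usability-test round. The round names, the ratings, and the 0.5-point threshold below are all invented for illustration; the point is only that a small amount of QUANT/SAY data can be averaged and trended with very little machinery.

```python
from statistics import mean

# Hypothetical 1-to-5 satisfaction ratings from a short post-test survey,
# grouped by usability-test round. All values are invented for illustration.
ratings_by_round = {
    "round 1": [3, 4, 3, 4, 3],
    "round 2": [4, 4, 5, 4, 4],
    "round 3": [3, 2, 3, 4, 3],
}

def satisfaction_trend(ratings):
    """Return (round, average rating) pairs in the order the rounds were run."""
    return [(name, mean(scores)) for name, scores in ratings.items()]

def flag_drops(trend, threshold=0.5):
    """Return rounds whose average fell by more than `threshold` compared
    with the previous round. The threshold is an assumed, arbitrary cutoff."""
    flagged = []
    for (_, prev_avg), (name, avg) in zip(trend, trend[1:]):
        if prev_avg - avg > threshold:
            flagged.append(name)
    return flagged

trend = satisfaction_trend(ratings_by_round)
for name, avg in trend:
    print(f"{name}: {avg:.1f}")
print("Rounds to investigate:", flag_drops(trend))
```

A flagged round says only that satisfaction dropped, not why. Finding the reason still takes the kind of qualitative follow-up described in this chapter.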

Vocab

Qualitative data, or QUAL, focuses on gaining understanding by gathering and analyzing data such as descriptions, verbatim quotes, photos, videos, and other unstructured but rich data sources.

Qualitative data is typically gathered by doing research with a small number of participants, going deep with each participant to fully understand his or her situation. Qualitative research is about collecting detailed descriptions and narratives about an individual customer and is most often done through observations and interviews, which can capture both DO and SAY data. QUAL often answers questions such as “How is the customer doing that, and why is he doing it?”

Just like with DO data, teams can get into a bad habit of believing that QUANT is the only true source of valid data. But like DO data, QUANT rarely explains why things are happening—it only helps you quantify what is going on and how much it’s happening. This means that QUANT will never be enough on its own for you to form insights about your customers, no matter how much big data is behind it. You need to use QUAL to figure out why. To get the best picture of what your customers are doing, and why they are doing it, you need to use QUANT and QUAL techniques together.

Mindshift

Data is data. We hear lots of stories from user experience researchers, product planners, marketers, and field people who say they are the lone voice in their company, desperately trying to get the engineers to add some qualitative research to the mix. This person should not have to be an underdog, the customer-focused David fighting the numbers-driven Goliath. Data is data, and qualitative data is just as valid and important as quantitative data. Each has its role in helping you figure out what customers are doing and what they might need in the future.

Using complementary research approaches

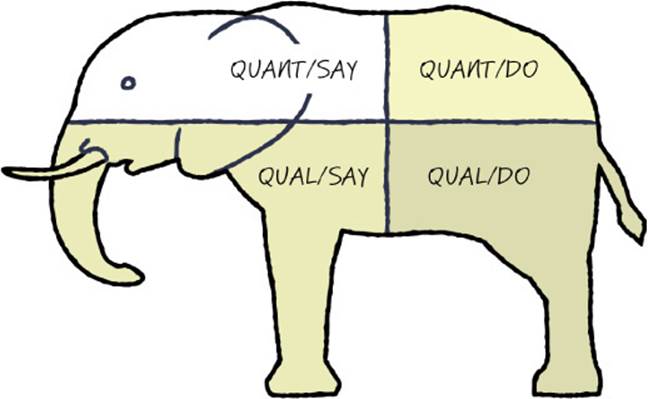

Have you heard the ancient Indian story of the group of blind men, each touching a different part of an elephant? The man touching the tusk proclaims the elephant is like a spear, while the one touching the skin says it is like a wall. Another, touching the tail, says it’s like a rope, and the man touching the trunk says it is like a spout. Just like the men touching the elephant one part at a time, different research methods highlight different aspects of the same situation. The reality is that every research method is imperfect, so to see the whole elephant, you must have multiple data points from multiple sources using multiple research techniques. Only by triangulating those data points do you come away with a valid picture of what’s really going on for the customer, and you can begin to see the whole elephant.

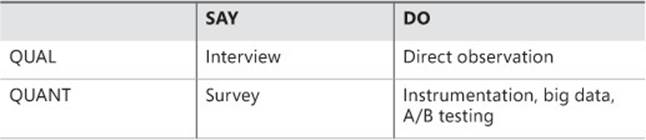

When you look at SAY versus DO and QUANT versus QUAL approaches, their cross-products combine to form a grid that describes four main classes of research activities:

Most research techniques boil down to applying one or more of these four basic approaches. The more important the decision you are making, the more important it is to get robust data from all four of these research quadrants. Some of the more powerful research techniques span these cells, and this greater coverage is the very reason they are so powerful. For instance, site visits often incorporate elements of both direct observation (QUAL/DO) and interview (QUAL/SAY)—you observe your customers in their native habitat and ask a few probing questions along the way. Similarly, usability testing can combine direct observation (QUAL/DO) of customers attempting to use a solution with a short questionnaire (QUANT/SAY) about their satisfaction afterward.

This grid is an excellent tool for making sure that you’ve chosen complementary research approaches and not just different forms of the same basic approach. For instance, consider a research plan that includes both a big-data analysis of instrumentation usage data and A/B testing of concepts on a live service. This plan seems good—until you realize that both of these techniques are fundamentally QUANT/DO techniques. Even though you are using two different techniques, you’re still looking at only one-fourth of the elephant.
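The coverage check described above can be sketched in a few lines of Python. The technique-to-quadrant mapping below comes from the examples in this section; the dictionary layout and function names are illustrative assumptions, not an established tool.

```python
# The four quadrants of the grid, written as (data type, behavior).
ALL_QUADRANTS = {
    ("QUAL", "DO"), ("QUAL", "SAY"),
    ("QUANT", "DO"), ("QUANT", "SAY"),
}

# Quadrants touched by each technique, following the examples in the text.
TECHNIQUE_QUADRANTS = {
    "site visit": {("QUAL", "DO"), ("QUAL", "SAY")},
    "usability test with questionnaire": {("QUAL", "DO"), ("QUANT", "SAY")},
    "big-data usage analysis": {("QUANT", "DO")},
    "A/B testing": {("QUANT", "DO")},
}

def coverage(plan):
    """Return the set of quadrants covered by the techniques in a plan."""
    covered = set()
    for technique in plan:
        covered |= TECHNIQUE_QUADRANTS[technique]
    return covered

def missing_quadrants(plan):
    """Return the quadrants that no technique in the plan touches."""
    return ALL_QUADRANTS - coverage(plan)

plan = ["big-data usage analysis", "A/B testing"]
print(len(coverage(plan)), "of 4 quadrants covered")
print("Missing:", sorted(missing_quadrants(plan)))
```

Run on the plan from the example, the check reports a single covered quadrant. Adding a site visit and a usability test with a questionnaire would fill in the other three.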

When complementary approaches are used together, they can help you find deep insights and give you the statistical confidence you need, and they do it in an efficient, cost-effective way. There are a couple of basic patterns that come up often. The classic approach is to start with QUAL/SAY and QUAL/DO methods, observe a small number of customers, and understand their specific situations in detail. When you do qualitative observation of a few customers, you’re likely to develop several hypotheses about unarticulated needs that seem to be driving those customers’ behavior. However, because you talked with only a handful of people, you can’t be sure whether the patterns you see will hold with a larger population.

The next step is to use QUANT approaches to validate whether your hypotheses hold true for a statistically significant sample of your target customers.8 If the QUANT checks out, you can feel confident that you have unearthed a valid insight. Practically speaking, professional user researchers find that most insights generated through QUAL research are surprisingly correct and bear out in QUANT research with the larger population, despite the initially small sample size.
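One way to put a number on "checks out", assuming the hypothesis can be tested with a yes/no survey question, is a confidence interval on the proportion of customers who agree. The sketch below uses the Wilson score interval, a standard statistical method chosen here for illustration (the text does not prescribe one), and the counts are invented.

```python
from math import sqrt

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a proportion; z=1.96 gives roughly 95%."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = z * sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width

# Hypothetical counts: 4 of 6 site-visit customers showed the need,
# then 270 of 400 survey respondents confirmed it.
small = wilson_interval(4, 6)
large = wilson_interval(270, 400)
print(f"6 customers:   {small[0]:.2f} to {small[1]:.2f}")
print(f"400 customers: {large[0]:.2f} to {large[1]:.2f}")
```

The interval from six customers is very wide, which is why the qualitative finding is treated as a hypothesis. The larger sample narrows the range enough to act on.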

Tip

Our personal experience concurs that even with small sample sizes, going deep with a few customers is the most efficient way to get insights that are almost always correct. If you have time for only one research approach, start with direct customer observation, which will get you both QUAL/DO and QUAL/SAY data.

However, if you already have some QUANT data on hand, you can start your research from a different direction. Perhaps you have usage data (DO) that you got through instrumentation, or perhaps you crawled through your database of customer support reports (SAY). Start by analyzing the existing data set for patterns. You may find patterns in pain points, usage, and the like, but also keep an eye out for anomalies in the data, for places where the data doesn’t quite make sense. Sometimes you’ll find outlying data points that you would just as soon throw out because they don’t fit the curve. Sometimes a statistic that you expected to be high turns out low.

Instead of ignoring those cases, use the anomalies as jumping-off points to do some QUAL research to try to figure out what’s going on. Go deep with a few people in your target segment, observe them, ask questions, and try to understand why customers might be behaving in a way that would generate that unusual statistic. Chances are you will learn something new, and maybe something that your competitors haven’t noticed yet. Often these anomalies represent lead users, who can be very productive research subjects for identifying potential new unarticulated needs.
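To make the "keep the outliers" advice concrete, here is a minimal sketch that flags unusual values instead of discarding them. It uses a modified z-score based on the median absolute deviation, which is one common choice among many; the segment names, the numbers, and the 3.5 cutoff are all assumptions made up for illustration.

```python
from statistics import median

# Hypothetical usage metric (sessions per week) for six customer segments.
weekly_sessions = {
    "segment A": 12, "segment B": 14, "segment C": 11,
    "segment D": 47, "segment E": 13, "segment F": 2,
}

def find_anomalies(values, cutoff=3.5):
    """Return entries whose modified z-score exceeds `cutoff`.

    These are candidates for qualitative follow-up, not data to throw away.
    """
    med = median(values.values())
    mad = median(abs(v - med) for v in values.values())
    if mad == 0:
        return {}  # no spread around the median, so nothing stands out
    return {
        name: v for name, v in values.items()
        if 0.6745 * abs(v - med) / mad > cutoff
    }

for name, value in find_anomalies(weekly_sessions).items():
    print(f"Follow up with customers in {name} (value: {value})")
```

The output is a short list of places to go ask questions. The code cannot say why those segments behave differently.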

Mindshift

Addicted to QUANT? Gathering and analyzing quantitative data can be addictive, especially to someone with a strong engineering mindset. We’ve observed many teams, organizations, and companies that have recently adopted a strong data-driven culture, which is fantastic. Having a penchant for data is a great skill to have in the business world. And in the age of big data, having the ability to understand how to collect and synthesize massive amounts of data is transitioning from a “nice to have” to a “must-have” for our industry. But this focus on vast quantities of data can sometimes lead to problems. We’ve seen many teams fall into the trap of relying solely on quantitative data for most of their decision making, and that data usually represents only DO data. It is extremely easy to jump to incorrect conclusions based on quantitative data and analysis paired with your personal experience and opinions. This is a particularly insidious way to forget that you are not the customer.

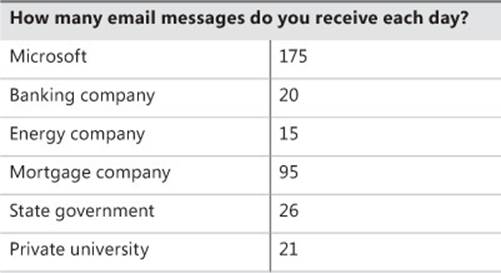

Here’s a final example that illustrates the need to go beyond QUANT. Several years ago, the Microsoft Exchange team collected email usage data from several large enterprise companies, as well as within Microsoft itself. The following table shows the average number of email messages that were received by each mailbox, in each of these companies (listed by industry for the sake of anonymity).

During our workshops, before we reveal this data, we ask the class to guess how many messages per day Microsoft employees receive on average. It turns out that Microsoft engineers have a pretty good idea about themselves and give pretty accurate estimates of their email usage patterns. However, it’s consistently a surprise that other companies’ use of email is dramatically lower in comparison.

Furthermore, when you look at the numbers, you can’t just create an average and say something like “non-software companies use email one-fifth as much as software companies do,” because that’s not what the data shows. Take a look at the mortgage company. Why is it that its employees receive almost four times as much email as those at the other companies? Is there a business reason? A cultural reason? Looking at this data also raises other questions, such as “I’m pretty sure workers in government and academia communicate with each other regularly, yet their email traffic seems to be low. If they aren’t relying on email so much, how are they communicating with each other?”

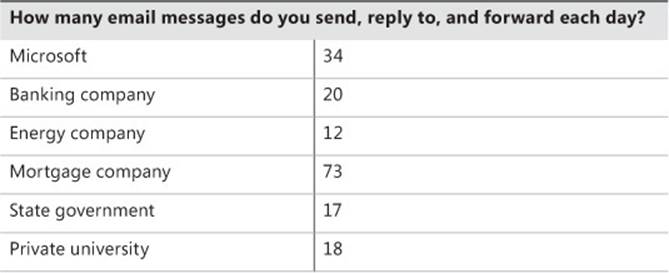

Then we look at how many messages the average employee sends each day, including original emails, replies, and forwards.

Again, we ask the audience to guess the numbers. When we reveal the actual numbers, some people are again surprised. Despite receiving fewer messages per day, employees at the companies studied still send almost as much email as a Microsoft employee. Most people notice a pattern that the number of messages received is roughly proportional to the number of messages sent, but this pattern isn’t true for Microsoft, and there is still the anomaly of the mortgage company, which has a higher level of mail traffic overall.

At this point, after we pause to let the class consider the data, a discussion emerges. At first no one speaks, but then people chime in with explanations for why these companies all send about the same amount of email as they receive: “I think they must not use distribution lists like we do,” “They must not get nearly as many automatically generated status emails as we do,” and “I bet they actually respond to all of their email!” Someone points out the anomaly (the mortgage company), and another begins to explain to the class all of the business reasons why it makes sense that a mortgage company employee sends more than twice as much email in a day as someone at Microsoft. The class actually comes up with some very compelling reasons (at least they sound compelling), such as “Mortgage companies have to send all of their documents around to be signed,” or “In a mortgage company you are dealing with financial transactions and you need to send email to all of the parties in order to have accurate records of intent.”

We then interrupt them and announce to the class that they are all liars. Bald-faced liars. We then plead with the class—don’t tell lies. Don’t make up stories to explain why the numbers are the way they are. It’s so easy to jump to conclusions, to see a pattern and believe that you understand the reason for that pattern. In fact, you may be right about some of your hypotheses, but you simply do not know for sure, so don’t make it up. Instead, notice anomalies like these and use them to trigger some QUAL research to help explain the WHY behind the data. You might generate some educated guesses, a point of view about what may be occurring based on the data as well as your empathy for the customer. You might even consider your own intuition and life experience if that is relevant and not too biased. But then it’s essential to go do some further research to validate that what you think is happening is actually happening, and find out if you are correct.

We hope that everyone gets a laugh and egos remain intact. However, the point remains—don’t make up lies. Be curious and use QUAL to understand what customers are actually doing and why. And be careful with quantitative analysis so that you don’t interpret more than is there in the data.

Where do I find customers?

Gaining easy access to a robust pipeline of target customers is where the value of having a strong user researcher or product planner on the team becomes apparent. If you already have a researcher on your team, he probably has a good head start on understanding your customers and knowing where to find them, so buy him a cup of coffee and see what he has to say. It may be the most valuable cup of coffee you’ve ever purchased.

If you don’t have a researcher dedicated to your team, and you don’t have the resources to hire a consultant, you need to do the work of creating a customer pipeline. Develop a list of people who match the profile of your target customers and who you can contact for ongoing research, whether that is the generative research that we’re focused on right now, or evaluative research that you will do once you have some initial concepts and prototypes to show. It’s important to have a deep bench of people available, because generally you don’t want to go back to the same individuals over and over again. If you start using the same people repeatedly, you’ll notice that they’ve learned too much about you and your offerings and can no longer provide unbiased, impartial, unvarnished feedback that is representative of the larger population of customers out there in the real world. Additionally, although most customers are flattered that you genuinely care what they think and that their feedback might make a difference, they will also become fatigued if you call on them too often.

Where do you find customers? Here are some good places to start looking:

Online groups: Post a request online in a discussion forum, social network group, or Twitter hashtag related to your industry or specialty.

Go where your customers are: If you are looking for skiers, go to a ski area. If you are looking for parents, try local PTA meetings. If you’re looking for small-business owners, go to a conference that attracts lots of small businesses. You get the idea.

Ask around: Use your network of friends, family, and colleagues to recommend people who match your target customer profile.

Get recommendations: Once you gather an initial base of customers, ask them to recommend friends or colleagues who they think might also be interested in providing feedback.

Be sure that you have a clear list of criteria that matches the profile of your target customer. This is sometimes called a screener. The screener typically consists of about a dozen questions that cover basic demographic information such as age and gender, as well as several specific questions that are unique to your target customer profile. When recruiting customers for research, you typically ask the screener questions over the phone before scheduling a time to meet, which ensures that it’s worth your time (and theirs) to study them.
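A screener can be thought of as a list of pass/fail criteria applied to each candidate before a session is scheduled. The sketch below is only an illustration: the `Candidate` fields, the criteria, and the sample people are hypothetical, loosely modeled on a novice point-and-shoot photographer profile, and a real screener would use about a dozen questions.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    """Answers collected during a screening call (hypothetical fields)."""
    name: str
    age: int
    owns_point_and_shoot: bool
    photos_per_month: int
    edits_photos_professionally: bool

# Each criterion is a label plus a test; all of them are assumed examples.
SCREENER = [
    ("is an adult", lambda c: c.age >= 18),
    ("uses a point-and-shoot camera", lambda c: c.owns_point_and_shoot),
    ("takes photos at least monthly", lambda c: c.photos_per_month >= 1),
    ("is not a professional or power user",
     lambda c: not c.edits_photos_professionally),
]

def screen(candidate):
    """Return the criteria a candidate fails; an empty list means a match."""
    return [label for label, passes in SCREENER if not passes(candidate)]

novice = Candidate("novice", 34, True, 10, False)
power_user = Candidate("power user", 41, False, 300, True)

for person in (novice, power_user):
    failed = screen(person)
    status = "schedule a visit" if not failed else f"skip ({', '.join(failed)})"
    print(f"{person.name}: {status}")
```

Recording which criteria a candidate failed, and not only that they failed, makes it easier to notice when almost nobody matches the profile.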

Mindshift

Make sure you’re talking to the right people. One of the biggest mistakes teams make when they do their own customer research, without the help of an expert, is to start gathering data from people outside the target customer demographic. They don’t do it intentionally—the people they talk with seem close enough, and time is of the essence, so they take what they can get. But later on they often regret not being more careful when they realize that some of their data was contradictory or actually pointed them in the wrong direction. It’s easy to see how this could happen when you mistakenly interview a power-user photographer instead of a more novice point-and-shoot-camera user who is your intended target. A more subtle problem happens when you run an Internet survey that gets a strong response rate from a demographic you hadn’t intended, but you forgot to include screener questions that would have alerted you to this fact. Take the time to build a screener and use it. If, after repeated attempts, you have trouble finding people who are a reasonable match for your target customer’s profile, maybe that target customer doesn’t actually exist. You may need to return to the activities described in Chapter 4, “Identifying your target customer,” and reconsider your business strategy and target customer.

How many customers should I study?

Your instincts may lead you to want to do research with large numbers of people to maximize statistical significance and the validity of the data you find. However, doing deep, observational research with large numbers of people can easily become prohibitively expensive and time-consuming. Thankfully, it turns out that you can discover unarticulated needs extremely effectively by going deep with just a small number of research subjects. In fact, you need to observe only about a half-dozen customers, provided that each is aligned to your target segment. Once you start interviewing the seventh, eighth, or ninth customer in that same profile, it’s very likely that you will have diminishing returns and not learn anything substantially new. The important thing is that you specify a customer profile based on your target customer segment and identify people who match that profile. Nonetheless, once engineers get bitten by the research bug, they tend to overdo research in an attempt to be “sure.” Chances are that you don’t need to do as much research as you think to inform any single iteration of the Fast Feedback Cycle.

Sometimes just having a strong and persistent curiosity can lead to valuable time with a customer. A former colleague of ours, Prashant, traveled to New Orleans years ago for a conference about some aspect of the latest version of Visual Basic. He was excited about the trip because, it turns out, he had a few hidden tattoos and had made an appointment to get another from a well-known artist in the city. To his surprise and delight, the artist happened to be an avid part-time VB programmer, and for as long as the tattoo session went on, Prashant had a terrific representative customer as a captive audience. Prashant shared his knowledge of the new product, and the artist engaged him in deep technical conversation. He gave Prashant plenty of feedback, ideas, and a wealth of well-thought-out opinions on what he needed and why.

Mindshift

Lead users can be a gold mine for insights. Sometimes you may explicitly choose to study a lead user, even if she isn’t exactly your target customer. As we mentioned in Chapter 4, lead users have deeper and sharper needs than others. But you’ll find that “average” customers will have most of these same needs, just not to as great a degree. Because of this, spotting unarticulated needs by studying a lead user may be easier than by studying a more average customer. For instance, if you’re aiming your service at a typical point-and-shoot photographer, you might study a complete beginner to notice more clearly what he stumbles on. An early adopter lead user may help you discover a new trend before it goes mainstream. A lead user may also help you uncover the true root cause behind a particular behavior. The flip side of studying lead users is that you need to carefully validate that the lead user’s needs actually carry over and are representative of the larger target market you are going after. It’s worth repeating: beware of studying power users who know the ins and outs of every bit of software they use and have figured out exactly how it was built. Their needs rarely carry over to a broader market.

How do you know you are done, that you have talked with enough customers? The answer is quite subjective. If you are looking for new insights, the hope is that you hear something interesting within your first few customer encounters, something deep that leads you to a possible new insight about your customers. If you hear similar statements or see behaviors that point to the same insight from a few different people, that should be plenty of evidence to trigger your curiosity and excitement that you are on to something. You’ll have opportunities in future iterations to further validate that insight with more customers, so you don’t need to be completely sure; you just need a well-informed hunch. You might need only a few qualified people to develop a promising hypothesis that is worth moving forward with.

Tip

If the data you’re collecting doesn’t seem rich enough for distilling interesting insights, instead of talking to more customers, focus on gathering different types of data and using different research techniques. Have you looked at all four quadrants of the elephant: QUANT/SAY, QUANT/DO, QUAL/SAY, QUAL/DO?

On the other hand, you may talk to plenty of customers, feel like you have lots of data, but still not see any clear patterns or insights. Or perhaps you see so many possible needs that it’s hard to prioritize which are the most important. In this case, the synthesis techniques discussed later in this chapter will be of particular interest. These techniques help you find the needle in a haystack of data, help you make sense of what customers are telling you, and help you identify more subtle patterns in your data.

It’s important to realize that collecting data and understanding customers is ongoing and cumulative. It’s not a waterfall process where you stop everything, learn everything there is to know, and then proceed. You target your data gathering such that you learn enough to at least partially answer your current questions and move forward, but you remain open to new data and even new questions because you know you’ll never have the complete picture. More data won’t necessarily lead you to more or better insights, especially not right away, before you have explored some possible solutions and seen how customers react to them.

Do I need an expert?

A lot of science exists about how to do solid customer research, whether it’s knowing exactly how many people you need to observe to get good coverage, how to interpret results, how many survey respondents you need to get statistical significance for a given target population, how best to capture data, or what inherent biases lurk in each technique. It’s possible to hire a consultant to help you with your research, and many small and midsize companies go this route rather than employ their own full-time researchers. Professional researchers might have job titles such as user researcher, design researcher, ethnographer, market researcher, usability engineer, or product planner. The User Experience Professionals Association (UXPA) has consultancy listings on their website at www.uxpa.org. If you can afford it, it’s well worth hiring a professional.

However, it is also possible to do research on your own. As we walk through the techniques later in this chapter, we’ll point out some of the most salient gotchas to help you avoid pitfalls. The most common mistake is to introduce bias into your data without realizing it. One way to mitigate many biases is to simply be sure you have multiple observers. But every technique has inherent biases and blind spots, so picking the right technique for the job depends a lot on deciding which kinds of problems you can live with and which you can’t. Despite the risks, we do strongly encourage you to give it a try. Getting started isn’t as hard as it looks. You will gain some important benefits, even from an imperfect, first-time effort.

Conducting customer research is considered a branch of social science research in most contexts, and as such is subject to certain rules about the ethical treatment of research subjects. Generally, you have an obligation to inform research subjects about how their data will be used and that they have the right to decline to answer any question or to cease their participation at any point. You also are required to get the research subject’s consent before recording them in any way, so no hidden cameras. Please learn your local laws and abide by them.

Mindshift

Will the real researcher please stand up? We often see teams of engineers fail to recognize the difference in approach between the two main types of customer-research experts. The key distinction is between user researchers, who usually come from a social-science background, and market researchers or product planners, who often come from a business or marketing background. User researchers tend to focus more on ethnographic, qualitative approaches, whereas product planners tend to use more quantitative approaches, often starting from a business or competitive-landscape perspective. Both approaches are valid and important, but sometimes a bit of tension can arise between these two types of experts because they employ different points of view. Both are passionate about uncovering the deep insights about customers that should inform the project team’s plans and strategies, and both have a tendency to think their methods are superior.

As the client who needs to make decisions based on research, ideally you want to be informed by both types of expertise and to take the time to understand the findings across both of them to gain the sharpest, most robust insights. If you decide to hire a consultant, clarify which approaches and techniques the consultant will use, and ideally draw from both schools of thought or link the consultant with staff in your company to bring in a complementary approach. It’s worth the effort to help ensure that everyone works together and synthesizes their insights in harmony—otherwise, you might end up with two different sets of data that don’t quite jibe, which could encourage an engineering team under stress to ignore both of them.

What is the engineering team’s role in research?

Even if you hire some professional help, that doesn’t mean the rest of the team is off the hook. It’s vital for members of the engineering team to be involved in the research effort, including attending site visits, interviews, and focus groups; developing surveys; examining competitive data; and synthesizing the data into insights. But who exactly on the team should be involved, and how many people should participate firsthand?

Some teams we’ve worked with take the approach that every member of the project team needs to have a personal hand in some part of the customer research. These teams are more likely to dedicate a block of time at the beginning of a new project for the whole team to become familiar with the available research or do a consolidated push of interviews, site visits, and other studies. They might require every member of the team to attend at least one in-person research activity over the course of a project or during the year. These teams believe that there is no substitute for experience when it comes to developing deep empathy with your target customer.

Other teams have decided that it’s better to have a small team of people, a mix of professional researchers and engineers, lead the research effort. It becomes that small group’s job to keep everyone informed so that the rest of the team can also build empathy for its customers. Even if you have a dedicated research team, it is important that a few engineers be an intimate part of this group. They provide a translation layer when it is time to explain the insights to the rest of the team, and they bring an engineering mindset to bear on understanding the context or technical implications of comments customers make during an observation, interview, or other encounter.

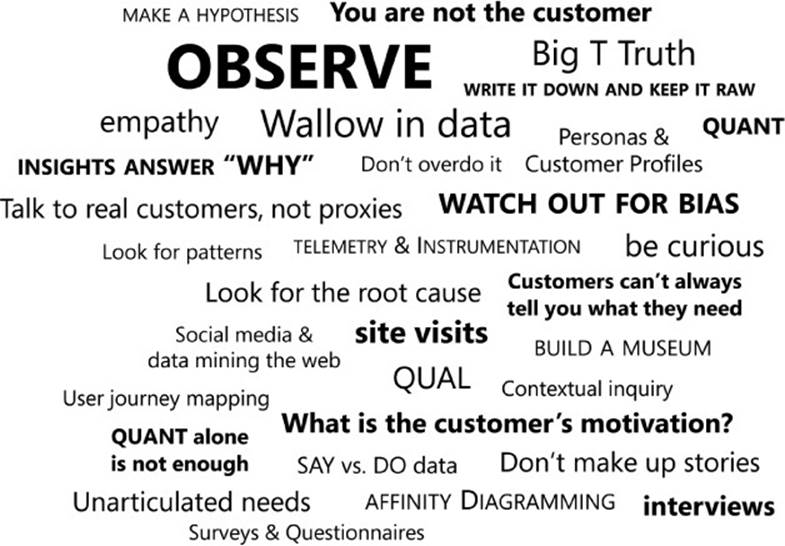

Observe stage: Key tools and techniques

Now it’s time to get to the nitty-gritty and talk about how to actually do generative research about customers. This section offers an overview of our favorite tools and techniques. The techniques are broken into two large categories: data-gathering techniques that help you collect generative customer data, and synthesis techniques that help you sort through and synthesize your data to find customer insights. The chapter finishes with a deep dive into one of our favorite techniques: affinity diagramming.

Of course, you won’t use all the tools and techniques outlined here. Pick the ones (or one) that are most relevant to your situation, and remember that you probably don’t have to do as much research as you think to kick off a rapid iterative process. Once you have a hypothesis for some key insights that seem promising, you are ready to move on to the next stage of the Fast Feedback Cycle.

Mindshift

Write it down and keep it raw. When doing customer research of any type, it’s essential to capture the data that you collect in a robust way. For even a moderate amount of research, you will amass a lot of information, and you can easily forget the details if you don’t write them down and keep them catalogued. Also, don’t just go for rough impressions—you need all the details at your fingertips to do this well.

Data can come in a lot of forms. You might get multimedia data such as photos, video, or audio clips. You might also amass piles of quantitative data that will be processed into charts, graphs, and other visualizations. You should keep detailed notes of every customer encounter, which will give you transcripts, verbatim quotes, and other notes about what you’ve noticed about the customer’s environment, motivation, or attitude. Think about what synthesis techniques you plan to use. This might affect how you capture your data—whether that means writing each separate quote or idea on its own sticky note or index card, capturing it electronically, or using a particular tool set.

The most important thing to remember when capturing data is to keep it as “raw” as possible. Especially when you talk with customers directly, write down as much as possible and exactly what the person says. Do not mix in your own interpretation of what was said or your ideas for what themes you see emerging at this stage. You can keep notes for potential hypotheses and insights separately, but it’s better to spend your mental energy paying attention to the specific person or case at hand, capturing it as accurately as you can, and not try to draw conclusions. Save that for later when you can look across all of your data points. Also, don’t zoom in too quickly on one specific case that may turn out to be fairly exclusive to a single individual. At the data collection stage, your primary attitude should be one of curiosity and of trying to understand your customers’ environment, situation, tasks, pain points, and desires in as much detail as possible. Save the interpretation for later.

Data-gathering techniques

Here we’ll introduce you to techniques that we find most useful for doing primary research, where you study your customers directly. These techniques represent a variety of DO and SAY approaches. Some approaches produce QUANT data, others produce QUAL data, and many produce a mix. We’ll point out which techniques are best for different situations and where the major sources of potential bias stem from. Remember that no technique is perfect; each has its pros and cons.

Site visits and interviews

Primary usage: QUAL/DO data (observing behavior), QUAL/SAY data (interviews).

Best for: In-person observation to understand context, attitudes, and behaviors and to notice needs that customers may not be aware of.