Scenario-Focused Engineering (2014)

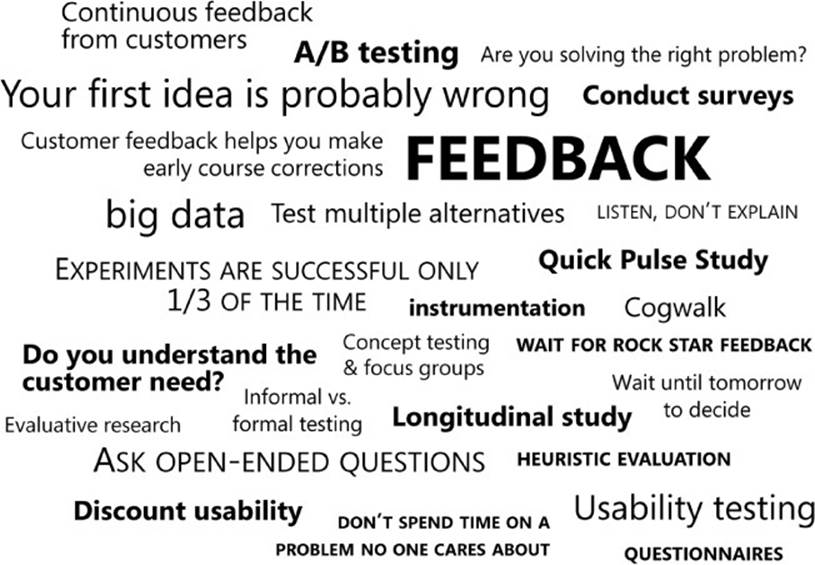

Part II: The Fast Feedback Cycle

Chapter 9. Observing customers: Getting feedback

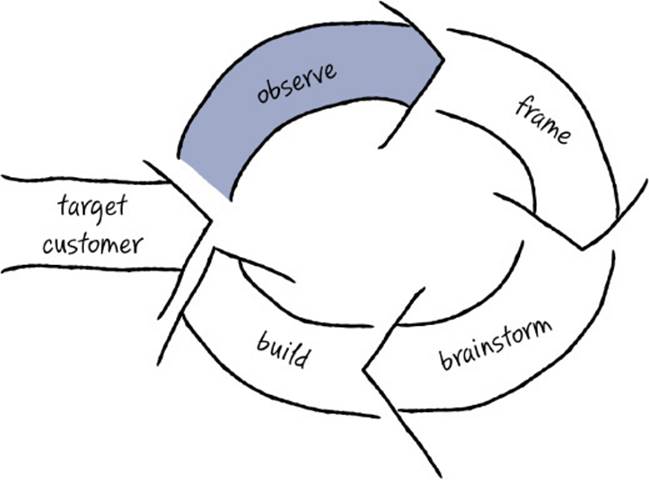

Congratulations. You’ve completed your first iteration of the Fast Feedback Cycle and built something that you expect will delight your customers. Now you repeat the cycle, and it’s time to check in again with customers. It’s time to find out what customers think of your ideas. It’s time for you to be objective and listen to what they have to say.

This chapter returns to the first stage of the Fast Feedback Cycle, “Observe.” In Chapter 5, “Observing customers: Building empathy,” we explored generative research techniques for building empathy and identifying new customer insights; the focus of this chapter is on how to use evaluative research techniques to get customer feedback on what you’ve built, whether that’s a prototype or a functional solution.1

Why get feedback?

Remember the tunnel? You may be in it. You’ve invested a lot of time, energy, and emotion in your solution idea up to this point. You’ve researched your customers and developed empathy for them. You’ve made decisions on their behalf and now have something to show them. You’ve built it for them, and you love it. Of course your customers are going to love it as well . . . or are they?

The fact is, as hard as you try and as smart as you are, you don’t own a functioning crystal ball. And cold, hard data shows that even when you start with great customer research and build something based on that, you rarely achieve your goals on the first attempt. This is why we believe so strongly in iteration.

So what are the odds of getting it right the first time, without any feedback from your customers? Dr. Ron Kohavi, general manager of the Analysis & Experimentation team in Microsoft’s Applications & Services Group, has made a career of testing ideas through online controlled experimentation, otherwise known as A/B testing. After performing countless experiments at Microsoft, starting before 2007, he concludes, “We are poor at assessing the value of ideas. Only one-third of the ideas tested at Microsoft improved the metric(s) they were designed to improve. Success is even harder to find in well-optimized domains like Bing.” This problem is not unique to Microsoft. Dr. Kohavi gathered data from many other sources to demonstrate that this is a systemic problem across the entire software industry:

Jim Manzi wrote that at Google, only “about 10 percent of these [controlled experiments, were] leading to business changes.” Avinash Kaushik wrote in his Experimentation and Testing primer that “80% of the time you/we are wrong about what a customer wants.” Mike Moran wrote that Netflix considers 90% of what they try to be wrong. Regis Hadiaris from Quicken Loans wrote that “in the five years I’ve been running tests, I’m only about as correct in guessing the results as a major league baseball player is in hitting the ball. That’s right—I’ve been doing this for five years, and I can only “guess” the outcome of a test about 33% of the time!” Dan McKinley at Etsy wrote “nearly everything fails” and “it’s been humbling to realize how rare it is for them [features] to succeed on the first attempt. I strongly suspect that this experience is universal, but it is not universally recognized or acknowledged.” Finally, Colin McFarland wrote in the book Experiment!, “No matter how much you think it’s a no-brainer, how much research you’ve done, or how many competitors are doing it, sometimes, more often than you might think, experiment ideas simply fail.”2

Don’t be depressed by these statistics; getting it wrong isn’t all bad news. In the process of running an experiment, you almost always learn something that helps you adjust your approach, and then you are that much more likely to nail it on your next iteration. By using some of the techniques listed in this chapter, you’ll figure out which of your ideas work and which don’t. You will get a much better idea of what customers really want by watching how they react to your proposed solutions, deepening your customer insights. You’ll usually leave with inspiration for what new ideas (or what blend of existing ideas) might work better. And you will weed out bad ideas instead of carrying their costs through the longer development and delivery process.

Mindshift

Many engineers tell us that they have lightning-bolt moments when they finally get to watch customers use the stuff they’ve worked so hard to build. As soon as you sit down with a customer, a magical thing happens—all of a sudden, you start to see the problem space through the customer’s eyes. You may notice problems in your approach that you didn’t see at all during the long hours you spent designing or coding your solution. That profound shift in perspective is quite easy to achieve and can make all the difference in helping you see what isn’t quite working, to notice where your customer is stumbling, and to know where to focus your energy next.

User testing comes in many flavors

Now that you have developed some ideas, the main goal of the Observe stage in the Fast Feedback Cycle is to put customers in front of your prototypes, your working code, or whatever your latest progress is and see how they react and what they do with it. Is their response enthusiastic? Does your solution actually solve the problem you’ve set out to solve? Do they intuitively navigate through the user interface? Did they do what you expected? Can you measure improvement in the key metrics you’re trying to move? You may be surprised by what you learn.

You can discover many different kinds of things in user testing, from whether you are solving a problem that your customers care about to whether you picked the right solution for the problem you addressed. You can also compile real-world usage data about your solution. The focus of your testing and what you are seeking to learn progresses in a predictable pattern throughout a project’s phases, from early to midgame to endgame. It’s worth thinking about what you’re trying to learn at any given phase of your project so that you can pick the right approach for getting feedback.

Testing whether you fully understand the customer need

In the first couple of iterations of the Fast Feedback Cycle, you want to make sure that customers actually care about the problems you decided to solve for them, and that you fully understand all the nuances of their needs. To do this, you show customers your latest thinking and look primarily for confirmation that the problems your solutions are trying to solve resonate with them. You should also listen for how you might adjust your framing to describe the customer need more directly. Do you understand the customer problem deeply and thoroughly, in all its complexities? Are you thinking about solving that problem in a way that your customers understand and appreciate? Is this problem important enough that they will pay for a solution to it? Do you have a clear understanding of what you need to deliver to make a useful and desirable solution?

Since you are primarily interested in testing your overall direction and customer insights, it’s quite likely that you can get feedback long before you have any working code. As we’ve mentioned, it’s usually most efficient in the first iteration or two to use low-fidelity, rapid prototyping techniques such as sketches, storyboards, or paper prototypes, or just have customers read and react to written customer scenario narratives. The goal is to get directional feedback that tells you whether you are on the right track before you get too far along in selecting, building, and fine-tuning a specific solution, which prevents you from wasting time on a dead end.

For example, imagine that you decided to build a new service that provides a way for small businesses to establish a web presence. At this stage, you show customers some early concepts, perhaps prototypes of tools to help build a website, or easily establish a business-oriented Facebook presence, or create a blog, or more easily post and respond to Twitter messages. Showing these early concepts helps customers describe what additional aspects of the solution they were expecting or perhaps triggers them to mention more-subtle problems you haven’t heard from them before, or they might comment on where your ideas haven’t really met their goals. You might simply show the scenario your team developed and ask whether the story sounds familiar or how they might change it to better reflect their situation.

After showing customers your early ideas, you might ask them again how they currently do these tasks, what it would take for a solution to be better enough that they would switch to it, and what would be involved in switching. You might also do some price testing at this stage to see what customers would be willing to pay for or how they react to advertising.

In doing all this, you might better understand that even though most small-business customers aren’t totally satisfied with their current web presence, they’re also loath to put their energy into rebuilding it, and their most pressing needs are actually in managing their social-network presence and communicating with their customers. In deeper conversations, you now discover that the biggest issue for these business owners turns out to be how to reach their customers without having their emails land in the junk mail folder, which was not what you first assumed. It’s common for teams to find that the problem they thought was most important really wasn’t as important as they thought. If you went full steam ahead and delivered a fancy new tool set for establishing a website presence for small businesses, you might have been disappointed by poor sales, regardless of how well the solution worked or how innovative it was, because this just wasn’t an important problem for them to solve right now.

Remember that to validate your direction and confirm whether you fully understand your customers’ needs and are on a path to delight them, it’s vital that you test with customers who are representative of your target market. Only your target customers can answer the question “Is this device, service, or app solving a problem that I care about?”

Mindshift

Don’t spend time on a problem no one cares about. It can be very tempting to skip customer feedback and move on based on your intuition. However, remember that you are trying to avoid spending a lot of effort on a problem, only to realize later that your target customers don’t actually care enough about that problem to switch providers, pay money, or actually engage with your product. Sadly, this mistake happens a lot in our industry, so it’s worth making a quick iteration to avoid having to throw out a lot of work later on—or worse, end up shipping something you later realize isn’t the right thing but is the only thing you’ve got.

Thankfully, getting feedback doesn’t have to take much time. In fact, because you’re only looking for directional feedback, you don’t have to have a complete, buttoned-up prototype to get started talking to customers about your ideas. The sooner you float your half-baked, early concepts with customers to see whether you are on the right track, the quicker you’ll be able to make crucial course corrections that point you in the right direction.

SFE in action: You’re wrong

Jeff Derstadt, Principal Development Lead, Microsoft Azure

For nine months, starting in the fall of 2013, I participated in an incubation experiment within Microsoft where we adopted “lean startup” techniques to find new business value for the Azure platform. Our team developed a three-day workshop to give other engineers at Microsoft a taste of what it is like to practice lean techniques and the kind of customer development that mirrors that of successful startups.

At the start of the workshop, attendees form groups of four around an idea for a new consumer product that all team members are passionate about (such as a wearable device for children or a movie recommendation service). Each team starts by developing a pitch about their idea and then delivers the pitch to the entire group on the morning of the first day. As pitches go on, we ask team members whether they believe in their idea and think it’s a winner. Everyone raises their hands in affirmation: customers will love their idea and product.

Following the pitches, we provide a brief overview of lean techniques and make the point that in today’s technology markets, the most significant business risks have shifted from “Can we build this?” to “Have we built the right thing and achieved successful product-market fit?” Halfway through the first day, the learning begins when we kick everyone out of the building and have them go talk to customers about their product idea and record what they learn. Each team is tasked with interviewing 15-20 real customers, that is, people who do not work for Microsoft and aren’t friends or family members.

On the morning of day two, teams come with a readout of their idea and their learnings from the customer interviews. As though following the same playbook, each team delivers a scarily similar message: not many people liked their solution, the team is a little depressed, and talking with customers is hard stuff! What we’ve observed is that teams typically spend that first customer outing talking to people about their solution and its features (“Do you want music with your workout reminder app?”). From this experience, most of the attendees get a good taste of being wrong, and being proven wrong by people who were supposed to buy their product.

To get teams back on track, the day-two readouts are followed by a discussion about customer development, which is the process of learning about customers, their activities, and their problems and leaving out any mention of a solution. Teams regroup and come up with a line of questioning that allows them to inquire about a day in the life of their customers, how they spend their time, and the problems they have trying to achieve something. At midday, teams again head out of the building to talk to 15-20 new customers and prepare a readout for the next day.

Day three is about promise and hope. Teams again present their readouts, but the sentiment is always very different: people are reinvigorated and full of new ideas. From their new line of questioning, teams have learned why people rejected their initial solution and why it may not have fit for their customers, and they have usually discovered a new problem that their customers wish could be solved. The creative gears are turning again, innovation is happening, and people are excited about how easy it seems to now get to a right answer.

Regardless of engineering position or level, people start the workshop by raising their hands and saying they are right, and they end the workshop knowing they were wrong. They learn to fail fast and that gathering data about all aspects of their customers can lead to something customers will love. Being wrong isn’t so bad after all, and learning how to get to being right and then delivering that value is the action we all have to take.

Testing whether you’ve got the right solution

As you gain confidence that you’ve focused on the right problems, your attention shifts to identifying an optimal solution that strikes the right balance between great customer experience, technology feasibility, and business strategy. At this point you want to get feedback that helps you make the big tradeoffs between what is ideal versus what is feasible and helps you balance multiple, sometimes conflicting, goals.

To get this type of feedback, you test the alternative solution ideas you developed in the prototyping stage. If the ideas are relatively easy to code, then A/B testing several different approaches can be a good option. But the more you are facing a larger or newer problem or exploring an unknown solution space, the more you should lean toward rough, noncode prototypes that you test with customers in person.

Remember from the last chapter: While you explore alternatives, don’t let your prototypes become unnecessarily detailed, which distracts you from the main decision points. At this point you care mostly about the rough flow of your solution, whether it makes sense to the customer, and whether it feels good to them. Again, if your prototype has too many bells and whistles, your customers can easily be distracted and start telling you about how they don’t care for that animation or that they really prefer blue rather than orange—small details that are not really central to the essence of your solution.

Following along with our small-business example, you might use early feedback to reframe your scenario to focus on social networking and customer communication as the problems your customers care most about. In the next round of user testing (with a fresh group of representative customers), you might show some paper prototypes of several approaches for how to manage Twitter, Facebook, and email communications. A few of the prototypes might propose out-of-the-box ideas for how to ensure that email doesn’t get junked, or new approaches for how to communicate with customers in ways that don’t involve email, which avoids the junk mail perception to begin with. You carefully listen to feedback about which aspects of the updated solutions resonate with customers and which don’t. You notice where customers say that key functionality is still missing and use that feedback to create a smaller set of revised alternatives that you test with another set of customers.

Mindshift

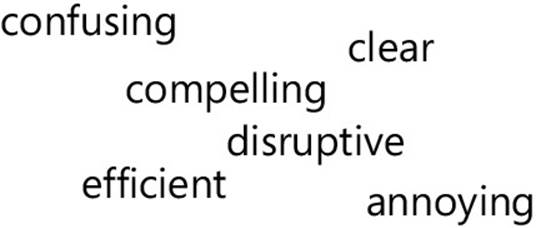

Keep iterating until you get rock-star feedback. It’s easy to convince yourself that customers love your solution, so beware of feedback that sounds like this:

“Well, that’s kind of cool, but it’s not for me. But I like it. Good job. You know I think I have a friend who might really like it . . .”

The feedback you receive when you have solved a well-understood, articulated need will be generally positive. You’ll get encouraging and optimistic feedback from your customers. After all, you are solving a pain point for them, and people mostly want to be nice and tell you that they like your work.

However, when you solve an unarticulated need exceptionally well, when you have found a way to address a problem that customers might not even know they had, your customers are surprised and deeply delighted, and the feedback you receive cannot be misread. When you rock your customers’ world, you know it. And you will know this very early on when you give customers a first glimpse of your ideas in prototype form, or even in a simple sketch. If you are not getting extremely positive, glowing feedback that shows that customers think you are a magician or a rock star, you probably haven’t hit the optimal solution yet—or you aren’t yet solving an important problem—and need to continue trying out ideas. Don’t be impatient: it may take a few iterations before you find the sweet spot that truly resonates with your customers.

SFE in action: Testing early prototypes of Kinect

Ramon Romero, Senior Design Research Lead, Microsoft Kinect

With Kinect we were able to successfully predict the device’s appeal and potential well in advance of release. The key was to understand the questions we wanted to answer with our prototypes. Early testing of Kinect experiences focused on two parallel questions, both fundamental to the ultimate success of the accessory:

First and foremost, could consumers adapt to controlling Kinect?

Second, could we create a set of experiences that would be fun, attractive, and novel while sufficiently demonstrating the potential of the technology?

Kinect prototype testing with consumers began in early 2009, 22 months prior to release. Before shipping, Kinect would be experienced by hundreds of participants, but in those first few months we conducted only a few simple tests of prototypes as they were updated. Testing was undertaken in the utmost secrecy. Participants were led to believe that an existing Xbox video camera was the active device. The early Kinect prototype was hidden within a PC chassis.

We followed standard usability protocols with the exception that we gave the Kinect itself only a limited introduction (“Imagine controlling a game system without using a controller”) and required that customers figure out how to control their interaction with the game without further instruction. This was our success criterion: Kinect’s success hinged on it being instantly understandable.

The answer to our first question was clear almost immediately. Consumers readily adapted to Kinect in even the earliest versions of its prototype. We had the answer to our experience question as well. In these earliest tests we saw success in one of the mini-games that ultimately shipped as part of Kinect Adventures!: Rally Ball, a game that requires the user to bounce a virtual ball against a wall at the end of a hallway to clear tiles.

Interestingly, Kinect looked good in all phases of its testing, even when it ostensibly should not have. Prototypes were rough, failed frequently, and often contained only the seed of a coherent game design. Nonetheless, the potential shined through and participants expressed their excitement directly. This is not a common thing, even for games.

We should use this experience to teach ourselves something. Ultimately, the release of Kinect was highly successful. Microsoft announced 24 million units sold by 2013, meaning about one in three Xbox 360 owners bought a Kinect to go with it.3 We cannot conclusively state that we predicted this level of success in prototype. But we do know one thing. True potential shined, and we could see it well before the final experiences were created.

Testing whether your solution works well

As you start winnowing your options down toward a single solution, the fidelity of your solution prototypes needs to increase. If you haven’t already, you should switch from testing prototypes to building end-to-end slices of functionality and testing working code. As you do this, you’ll use the feedback you receive to shift from deciding which solution to implement toward tuning the interaction and usability of your implementation. Is the experience smooth, fluid, and easy to use? Does it flow? Is it aesthetically pleasing? Is it meeting your experience metrics?

This is the time when you might take a few shortcuts in recruiting customers for in-person testing. It can take a lot of effort to locate, recruit, and screen for target customers who are willing to take the time to give you early feedback. Especially if your contact list of target customers is small, you need to use your customer pool wisely. Because obvious usability problems often mask nonobvious ones, you want to take care of the obvious problems before you recruit actual target customers to give you detailed feedback.

Big usability issues that you uncover first (such as can users find the command, can they follow the steps, does the UI follow well-known standards, can users remember what to do, does the language make sense, does the experience flow naturally, and so on) tend to be universal. When flushing out these types of early issues, it’s okay to get feedback from a teammate (someone who did not work on the product) or from people who are not directly in your target market, as long as they are about as savvy with technology as your target customers are. You might even make the code available to the engineering team for “dogfooding” (Microsoft’s lingo for “eating your own dog food,” meaning to use your own prerelease software), which helps identify obvious problems quickly without the expense of customer testing.

Asking a user experience expert to do a heuristic evaluation of your proposed design is another way to flush out the more obvious problems. Or try asking a few team members to put on their user hats and do a cognitive walk-through to predict what customers might be thinking at each stage and where possible confusions might lie. These are good techniques not only for identifying obvious usability problems but also for identifying blocking issues such as logic problems, gaps in the end-to-end experience, or written text that makes no sense to anyone. We’ll discuss these techniques in more detail later in the chapter.

Mindshift

You are (still) not the customer. Be careful at this stage not to overuse feedback from teammates and other tech-savvy members of your company. Self-hosting your code and getting quick feedback on prototypes give you a good gut check about whether your solutions basically make sense, but internal feedback tells you little about whether those solutions are truly tuned to your customers’ needs and preferences. Getting a thumbs-up from your colleagues is the easy part, but it definitely doesn’t tell you whether you’ve achieved delight for your real-world customers. You have to take your testing to the next level and bring your solutions to target customers, see what areas need adjustments, and keep iterating to get to finished, polished, thoroughly tuned solutions.

Fine-tuning the details of your solution

Once you’ve smoked out and resolved the big problems, you need to test with customers in your target demographic again and watch those customers use your solution. At this point, you are almost always testing live, functioning code. As your solution matures, your focus moves from optimizing the basic flow for your customer to finding more-subtle problems and fine-tuning the details of usability and aesthetics, all as the team continues to write code in parallel.

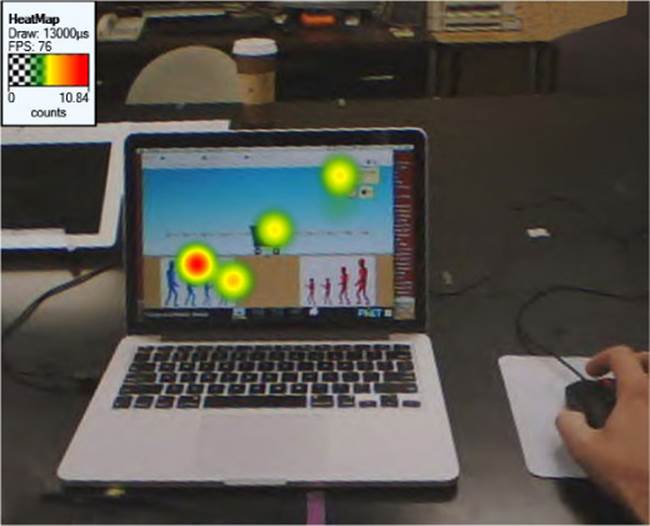

You might conduct an A/B test with a percentage of your user base by hosting your idea on your live service to see what happens in actual usage. You can also get feedback in person by asking customers to perform a series of specific tasks while you observe and pay attention to what they do, what they say, and what they have trouble with. Usability testing is a good mechanism for doing this. It can be done informally with good results to fuel quick iterations, or formally if you need to get more statistically reliable results for benchmarking.
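One common way to expose an idea to only a percentage of your user base is deterministic bucketing: hash each user ID so that the same person always lands in the same variant. The sketch below is a minimal illustration, not a description of any particular experimentation platform. The function name `assign_variant`, the experiment label, and the 10 percent exposure are all assumptions made for this example.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, treatment_percent: float) -> str:
    """Return "treatment" or "control" for a user, stably across calls.

    Hypothetical helper: the names and the bucketing scheme are
    illustrative assumptions, not a real service's API.
    """
    if not 0 <= treatment_percent <= 100:
        raise ValueError("treatment_percent must be between 0 and 100")

    # Salt the hash with the experiment name so that different experiments
    # split the same user base in unrelated ways.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()

    # Map the first 8 bytes of the hash to one of 10,000 buckets
    # (each bucket is one hundredth of a percent).
    bucket = int.from_bytes(digest[:8], "big") % 10_000

    return "treatment" if bucket < treatment_percent * 100 else "control"


if __name__ == "__main__":
    # Show the new idea to roughly 10 percent of users.
    for user in ("alice", "bob", "carol"):
        print(user, assign_variant(user, "new-signup-flow", 10))
```

Because the assignment depends only on the user ID and the experiment name, a returning customer keeps seeing the same experience, which keeps the usage data for each variant clean.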

Testing real-world usage over time

Sometimes it’s advantageous to do longitudinal research to track a set of customers using your product over a period of time to see how their behavior changes. Do they hate your product at first but then, after day three, realize they can’t live without it? Or perhaps their first impression is delight, but they discover that what first delighted them becomes annoying later on as they become more fluent with the system and want a more streamlined experience? What features and functions do they discover right away and which do they discover later or never? Which do they continue to use and how often? Are they using your solution for the scenarios you expected and optimized for?

Vocab

Longitudinal research is when you give your solution to customers and track their behavior as they use the solution over a period of days, weeks, or months.

Longitudinal studies take more effort to do effectively. You first have to build something robust enough that someone can use it under real circumstances, and you need to devise ways to get regular feedback from your participants throughout the study. However, the big advantage to doing a longitudinal study is that you get feedback about how the product is being used in the customer’s context—home, workplace, automobile, or wherever you expect your solution will be used in real life. Sure, you can always try hard to re-create a real-life situation in a usability lab, but there’s nothing like the real thing. It’s very difficult to simulate environmental inputs such as the phone ringing during dinner, the crazy driver cutting you off at the intersection, or your boss popping into your office to say hi while you’re deep in thought about a gnarly coding problem.

Although conducting a longitudinal study outside the lab—for example, in the customer’s real-world environment—is usually preferable, it’s not always possible. Sometimes, the specific environment is difficult to use for firsthand observation. An IT server environment is one example. What IT pro would expose the live corporate network to early, potentially unstable prototype code? In these cases, do your best to mimic the real-world situation in the lab, and then bring customers into the lab for several sessions over a period of time.

Formal versus informal testing approaches

You can make use of a continuum of approaches for user testing, from formalized, to informal, to ad hoc. For almost all of the techniques we describe in this chapter, you can adjust the level of formality to best suit your situation. If you’re an engineer who is very close to the implementation of the project, you’ll usually want to engage in informal user research techniques that allow you to get frequent, quick feedback, and then use that input to rapidly iterate ideas and continue to build on your sense of customer empathy.

An informal user test is done quickly and easily, with little to no setup or planning costs. Informal user tests are highly influenced by the intuition, knowledge, and communication style of the researcher (in this case, that would be you). You just go out and show your stuff to a few customers, watch what they do, listen to what they have to say, make observations, and take notes. You can do this type of informal user testing with a prototype, an early build, a sketch, working code, or whatever work output you have created. You are looking for problems in your design, to see what works and what doesn’t and how customers react to your ideas. Quick, informal methods usually let you find most of the big problems in your early solution ideas after interacting with just a few customers. However, be attentive that you don’t introduce bias into the feedback by trying to sell your ideas; be sure you create an environment for honest feedback.

Mindshift

Be opportunistic about gathering informal feedback. Don’t be shy about engaging people and getting quick, informal feedback about your product or the early ideas you are considering. Remember the story about the marketing manager who got great customer insights while being tattooed? If you have that level of customer desire, curiosity, and connection, you’ll be able to get brilliant feedback on your ideas every day, armed with nothing more than a piece of paper and a Sharpie. Taking a trip on an airplane? Don’t think of the airplane as a mode of travel, think of it as your personal customer feedback lab. Open your laptop, and get quick feedback from your row mates about what you are working on. Go ahead and carry a paper prototype, folded up in your pocket, and show it to people when you go to a café or keep an interactive prototype installed on your smartphone. You never know when you’ll have a great opportunity for a quick user test.4 But be sure that you ask enough questions to determine whether you’re talking to an actual target customer so that you can interpret the feedback accordingly.

As your solution matures, you may want to add more formality to your user testing. By formality, we mean the structure and rigor around which you run your user tests and collect and report on the resulting data. You might conduct an A/B test online with a large number of participants, tracking specific metrics with telemetry and with careful attention to the experiment’s design and the statistical validity of the results. For more-formal usability tests, you would determine how many customers you need to test ahead of time, write down a specific set of tasks for the user to perform, and ask each user to perform the same set of tasks in the same order, in the same environment, and with the same facilitator. You might even time the customers’ actions with a stopwatch so that you can create a mathematical model of user behavior and learn how long it takes customers to do a particular task. With formal testing, observations are recorded in a structured format that allows the data to be merged and analyzed after all the tests have been completed, and the data is usually compiled in a written report.
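To make “statistical validity” a little more concrete, here is a rough sketch of one standard check for an A/B test: a two-proportion z-test that asks whether the difference in success rate between two variants is larger than chance alone would explain. The function name and the counts in the usage example are invented for illustration, and a real experiment would also need a sample size and success metric chosen before the test starts.

```python
import math


def two_proportion_z_test(success_a: int, n_a: int, success_b: int, n_b: int):
    """Two-sided z-test for a difference between two success rates.

    Returns (z, p_value). Hypothetical helper for illustration only.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each variant needs at least one participant")

    rate_a = success_a / n_a
    rate_b = success_b / n_b

    # Under the null hypothesis both variants share one underlying rate,
    # so pool the data to estimate it.
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Every participant succeeded or every participant failed:
        # there is no observable difference to test.
        return 0.0, 1.0

    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up counts: 1,000 users per variant.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests that the difference between variants is unlikely to be noise, but it says nothing about whether the difference is large enough to matter to customers or to the business.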

The advantage of increasing the formality and rigor of your user testing is that it increases the reliability of the data you collect—you gain the ability to extrapolate your findings more reliably to your target population. Many teams use a more formal user-testing approach to help them understand when their experience metrics have been met, to tell them when they are “done,” or for benchmarking against a competitor. While you can conduct formal testing at any time, it typically begins after the project’s direction is well understood and the big usability issues have been uncovered and fixed. This kind of testing tends to be more expensive, so you should use it sparingly. Because of the need for an impartial facilitator, formal user testing is another area where you would be well served to hire a professional.

Testing for improvement versus confirmation

While it is generally true that informal user-testing techniques are great for quick iterations and getting feedback to further develop and refine your ideas, and that more-formalized user tests are employed to answer questions about experience metrics, this isn’t always the case—formal and informal methods simply refer to how you approach the user test; they don’t define why or what you are testing. To help you communicate clearly with user researchers (and the product team in general), there’s one more concept to learn that helps make the definition of different kinds of user testing more precise.

In the world of user research, evaluative techniques are often classified as formative (you are looking to make improvements that will help shape or “form” the product) or summative (you are measuring results against a benchmark, answering the question “Are we done yet?”).

Vocab

Formative research techniques are used to determine what works well and what doesn’t, with the goal of making improvements or validating your ideas. Your intent is to uncover problems so that you can fix them. Summative research techniques provide a systematic and objective way to gather measurable feedback data about a specific problem, product, scenario, or hypothesis you want to test. Summative research gives you statistical power and allows you to make statements such as “90% of people were successful in winning a pink bunny within the first 10 minutes of play.”

Summative approaches are much more rigorous and require a deeper level of expertise than do formative approaches. They also take more time to prepare, execute, analyze, and report. It is overkill to use summative methods during early iterations. Because summative research involves quite a bit more science and statistical knowledge, it is another area where hiring a professional is extremely helpful.
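One reason summative statements such as "90% of people were successful" need more participants is that the uncertainty around a success rate shrinks only as the sample grows. The sketch below illustrates this with a Wilson score interval, one common way to put a range around a proportion. The function name and the participant counts are assumptions made for illustration, not figures from a real study.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a success rate (z=1.96)."""
    if n <= 0:
        raise ValueError("need at least one participant")
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width

# The same observed 90% success rate, with 10 versus 100 participants.
for successes, n in [(9, 10), (90, 100)]:
    low, high = wilson_interval(successes, n)
    print(f"{successes}/{n}: {low:.0%} to {high:.0%}")
```

With 9 of 10 participants succeeding, the plausible range runs from roughly 60% to 98%. With 90 of 100 it narrows to roughly 83% to 94%, which is much closer to something you could report against a metric.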

In this chapter we focus mostly on formative techniques for validating customer delight and finding issues. In Chapter 11, “The way you work,” we offer a bit more about summative approaches that help you track the progress of your solution against user-experience metrics, using tools such as scorecarding and benchmarking.

The Zen of giving and receiving feedback

Hearing feedback about your work can be painful. It’s hard to be critiqued. It’s hard to remain objective and continue listening when a customer finds fault with something you built for them, something you thought they would love. Thankfully, accepting feedback becomes easier with practice. It especially helps if you can keep a Zen frame of mind about receiving feedback: the process is not about you being right, but about finding an optimal solution, which is everyone’s common goal. The more you understand how hard it is to pinpoint another person’s true needs, the easier you will be on yourself when you inevitably discover that your first set of ideas isn’t perfect and you need to adjust.

How to listen for feedback

Receiving feedback can be really hard on a lot of people. But this is just another instance of tunnel vision. Your idea makes so much sense in your own mind, and seems like the only viable approach, that you can’t imagine how another person might not understand it or see how wonderful it is. As soon as someone challenges an idea, suggests that it might not be working, or suggests an alternative, our overwhelming tendency is to jump in and argue, often before the reviewer even finishes a sentence. Or, if you’re feeling charitable, you might not so much argue as explain: “Clearly, they aren’t understanding my idea,” you think, “so if I just explain it a little bit better, then they’ll get it and appreciate how great my idea is.”

The trouble is that software doesn’t come prepackaged with an engineer who sits on your shoulder, ready to explain anything that doesn’t make sense. If you have to explain how your solution is supposed to work, then by definition it isn’t working yet. And if you’re spending so much of your energy explaining and arguing, then you really aren’t listening to the feedback and may miss out on valuable clues for how you might improve your solution. Remember that feedback is not about you, it’s about the solution. It’s not personal. As such, everyone, including team members and customers, is on the same side, trying to find the absolute best solution possible.

So whether you are getting feedback from a teammate or from a customer, develop the self-discipline to just listen and not speak, other than to say “Uh huh” every now and again. You may be tempted to, but don’t explain anything or argue or justify. Just listen. As Karl Melder discussed in his sidebar “How to interact with customers” in Chapter 5, body language is an important part of listening. Be open to the feedback and present a body language that conveys that you are actively listening and that you value what the reviewer is saying, no matter what.

If you are a solution-oriented problem solver, which is generally a good trait to have as an engineer, it may be particularly hard for you to listen for very long because you so much want to apply a fix to everything the customer is telling you—you want to get on with it and build a better solution. Other times, the feedback might cause your head to spin and make you worry about how the heck you’re ever going to fix your solution or if it’s even possible. It’s really easy for your mind to take over and lose focus on what the customer is telling you right now.

The antidote to these mind games is to get back into your Zen frame of mind. Remember that your immediate job is to listen to the feedback and understand what the customer is telling you. Remind yourself that you can wait until tomorrow to decide whether to act on that feedback. In other words, to make the best decisions about what to do, you need to marinate the feedback. By the next day, the feedback often makes a lot more sense than it did in the moment. Acting right away often produces a poorer result.

When you listen to a customer who is giving you feedback on your solution, remember that the value of listening doesn’t only come from hearing customers tell you that they adore your solution. The value lies in hearing the good and the bad together, because they are often intertwined. Pay special attention to the places where the customer is confused, frustrated, or doesn’t use your solution in the way you anticipated. Those rough spots give you clues to what you should focus on next.

Ask open-ended questions

Any time you observe a customer using your solution, it’s tempting to try to help them out, give them hints, tell them what you know, or give them the context you have so that they’ll come to the same conclusions you have. But you’ll get much better feedback if you can learn to use the language of psychologists.

Limit your speech. If a customer asks for help, pause and let him work it out himself. If he continues to struggle, try to respond first by asking a question. Finally, when you ask the customer for information (versus helping the customer get through a task), ask open-ended questions. If he answers you with a question, put the question back to him and have the customer, not you, answer. Doing this may seem awkward or rude, but customers quickly understand that this is not a normal conversational setting. You will be amazed how often a single question from you will help customers become unstuck (which, by the way, usually indicates that the problem is not a large one).

Here are some phrases you can practice using:

Interesting, tell me more about that.

Yes, I understand.

What would you have expected?

What do you think you should do next?

How do you think it works?

What do you expect this component to do?

Sometimes, it’s productive to simply have a frank conversation with a customer as she is experiencing your solution. But oftentimes your talking can actually get in the way of hearing the customer’s feedback (or it changes the flow of how she is experiencing your product and thus alters her experience and feedback). In a semiformal setting, such as a usability test, it’s a best practice for everyone but the facilitator to keep quiet and for you to ask any questions you have at the very end.

But if you find that you can’t resist, that you must ask users questions as they experience your solution (“Why did you do that? Any idea what would work better for you right now?”), you can do so as long as you are intentional about having an investigative conversation (not just constantly interrupting). A useful technique is to define breakpoints as part of the user test and ask your questions when the user reaches those moments. Asking questions in this manner helps you avoid contaminating the customer’s behavior on any subsequent task.

You may think that holding an informal conversation while walking a customer through your solution is one of the easiest ways to get feedback. In fact, it’s one of the more difficult techniques to do well without introducing significant bias, and it requires practice.5

Present multiple options

It’s easier for most people to give richer feedback when they have multiple options to compare and contrast. If you present only one idea, the only possibility is for customers to like or dislike that idea. Perhaps customers dislike some aspect of a particular implementation but would still find a solution valuable. Perhaps customers like one aspect of this solution but another aspect of a second solution. When you present only one option, it can be hard to tease apart these sorts of things.

Neuroscience break by Dr. Indrė Viskontas

For most of the decisions we make, we go through a step-wise process of evaluating alternatives, and we feel much more satisfied about our decision when we have had the opportunity to compare multiple options. Marketing companies have known this for years: it’s why you see several options whenever you shop, whether you’re buying magazine subscriptions ($24.99 for 1 year, $29.99 for 2 years), trying on running shoes, or ordering lunch. It’s hard to evaluate the worth of something on its own—we assign value by comparing things. What’s more, we find it easier to compare like with like, and that comparison influences our decision making.

For example, if we are in the process of buying a house, any good real-estate agent knows that to encourage us to buy house A, say a three-bedroom, two-bath, ranch-style home in an up-and-coming neighborhood, she should show us two other options—a very similar house B, the decoy, say a two-bedroom, two-bath home in the same neighborhood, and house C, a totally different style of home in a different neighborhood. Our natural tendency is to fixate on comparing house A with house B and then put an offer on A. However, when there are too many differences between options, or too many options to consider, we tend to find it much more difficult to make a decision.

Remember that even though you have been thinking long and hard about this problem space, you can be almost certain that the customer has not been and that this user test is probably the very first time the customer is seeing your solution. When you first explore the solution space by showing multiple ideas or approaches to customers, you expose them a little bit to the universe of what is possible. This allows customers to give you deeper and broader feedback rather than limiting them to the path you already chose. Customers might even suggest a different path, one you haven’t considered.

How to give feedback (to teammates)

Before we get into the details about methods of customer feedback, there’s one more topic to touch on. When teammates ask you to comment on their work, how do you give productive feedback?

Teams that put the customer at the center of their work tend to be highly collaborative in nature. When working on such a team, it’s especially important to learn how to give constructive feedback that is easy to swallow so that it really gets heard and considered by your teammates.

When you’re the one giving feedback, you can make it easier for the recipient to hear your perspective. Here are a few tips for giving feedback:

Offer your thoughts. Don’t claim to be right, just offer your opinions as you see them. Remind yourself that diversity helps teams be more effective in the long run. If you see something differently from the rest of the group, don’t be shy; you owe it to the team to speak up. The recipient gets to decide whether to act on your feedback. Your job is to make sure that your teammate hears the feedback so that he or she gets the benefit of your perspective and can make an informed decision.

Say what you see. Providing a running commentary about which parts of the user interface you’ve noticed first and what thoughts are going through your head can be extremely helpful. You may not have noticed the crucial interface item that was added, for instance, which is valuable feedback for the designer. Or you may have misunderstood what it was for. Or you may have noticed something on the page that confused you but that the designer hadn’t given a second thought to. If all those thoughts are locked in your head, people aren’t getting the full benefit of your feedback. Say it out loud.

Don’t try to convince anyone. This is not the time to drive for a decision. Give the other person time and space to marinate on your feedback. Wait until tomorrow to plan your next steps.

Remember to point out the positives. It’s easy to zoom in on the problems, but pointing out things that are working well is equally important so that they don’t get lost inadvertently in the next iteration. Sandwiching negative feedback between two positive comments can also make the negatives a bit easier to hear.

Be genuine. Don’t compliment something that you don’t really like, just to have something nice to say. Similarly, don’t go looking for problems to quibble about. It’s okay to say, “Hmm, this looks pretty good to me, nothing is jumping out at me right now.”

Remember that you’re on the same team, with the same goals. Everyone is trying to get to an optimal solution for your customers. Try not to let personal egos drive decisions. If in doubt, instead of going head-to-head and debating your opinion with others, suggest doing a user test to see what works better.

Observe stage (feedback): Key tools and techniques

In this section, we present a handful of specific research methods for getting customer feedback and also make some suggestions for which techniques best fit different situations and phases of your project. These methods are presented roughly in the order in which you might use them in a project, starting with techniques for early iterations and ending with techniques you would use against real code as you fine-tune your solutions and get ready for release.

Just like the generative research techniques we discussed in Chapter 5, these evaluative research techniques break down into SAY, DO, QUANT, and QUAL. You still want a good mix of all four quadrants of the research elephant for the best validity of your results. Also, it’s customary to refer to the people who take part in customer research studies as participants, and we’ve used that nomenclature in this section.

Scenario interview

Primary usage: QUAL/SAY data.

Best for: Testing whether you fully understand the customer need.

Many teams find it useful to get a quick round of feedback on their scenarios before they start building prototypes. This is a particularly good idea when you are in a brand-new solution space or don’t have a lot of experience with your target customers. Getting feedback on your scenario helps ensure that your scenario is correct, that it reflects your customers’ reality, and that it captures the most important nuances and motivations of your customers. Taking the time to test your scenario helps you move forward with confidence that you are on the right path to solving a problem your customers care about, even before you start generating solution ideas and prototypes.

Instead of just handing a written scenario to a customer and asking, “What do you think?” create a structured interview and ask questions such as these:

Does this scenario ever happen to you?

Can you talk about the problems the scenario presents and how it affects you or someone else?

Is there anything left out that’s important?

Did we get any of the details wrong?

If we were to build a solution for this, on a scale of 1-5, how important would that be to you? Why?

Which of these two scenarios would you rather we focus on?

Lean Startup “fake homepage” approach

Primary usage: QUANT/DO data.

Best for: Testing whether you’ve got the right solution; testing marketing messages and pricing.

In The Lean Startup, Eric Ries underlines the importance of testing with customers before you commit to a business plan or solution, to make sure that demand for your solution exists before you invest in building it. One of the central techniques that Ries suggests is based on a very simple rapid prototype.

The idea is to publish a single webpage that acts as the home page of your new service. It should display the marketing message that conveys the core value proposition of your solution, including pricing or other terms. Put a “Buy” or “Download” button at the bottom, and see if anyone clicks it. (Be sure you have web analytics enabled so that you get reports of page views as well as clicks.) If a customer does click to purchase your service, the next page might show a 404 error (page not found) or perhaps say “Coming soon” and ask for information about how to notify customers when the product is available. These customers would make great candidates for future customer testing, by the way.

Ries makes the point that if no one clicks the “Buy” button, you know that you need to try something else. Either you’re solving the wrong problem or your pricing or business model needs to be rethought. In any case, don’t bother building the solution until you can see that enough people are actually clicking “Buy.”

This is a very simple idea, but it’s profoundly useful to know what percentage of visitors to your website would actually want to buy your solution—not just in a lab setting, but in real life. You can imagine extending this approach to other domains as well. This is one of the few ways we’ve seen to test an early concept with a QUANT/DO approach, so it’s well worth having in your toolbox.
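As a rough illustration of the number this technique produces, the sketch below computes the share of unique visitors who clicked “Buy” from a list of analytics events. The event format, the event names `view` and `buy_click`, and the visitor IDs are hypothetical. A real web analytics package would report its own equivalents.

```python
def conversion_rate(events):
    """Share of unique visitors who viewed the page and clicked Buy at least once.

    `events` is an iterable of (visitor_id, event_name) pairs; the event names
    "view" and "buy_click" are assumptions made for this sketch.
    """
    events = list(events)
    viewers = {visitor for visitor, name in events if name == "view"}
    # Count each visitor once, no matter how many times they clicked.
    buyers = {visitor for visitor, name in events if name == "buy_click"} & viewers
    if not viewers:
        return 0.0
    return len(buyers) / len(viewers)

# Hypothetical log: four visitors, one of whom clicked Buy twice.
log = [
    ("v1", "view"), ("v2", "view"), ("v3", "view"), ("v4", "view"),
    ("v2", "buy_click"), ("v2", "buy_click"),
]
print(f"{conversion_rate(log):.0%} of visitors clicked Buy")
```

Counting unique visitors rather than raw clicks keeps one enthusiastic visitor from inflating the result.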

Concept testing and focus groups

Primary usage: QUAL/SAY data.

Best for: Testing whether you’ve fully understood the customer need, testing whether you’ve got the right solution, testing marketing messages and pricing.

The idea behind concept testing is to get feedback on your initial solution ideas very early in your project, often before you have anything that looks like a user interface. This is another approach to take in early iterations to see whether you are solving a problem customers actually have and whether customers respond well to your rough ideas. The goal isn’t to nail down a specific solution but to see whether you’re in the right ballpark and engage customers in a conversation that would help you deepen your understanding of their needs—and thereby learn how to improve your solution ideas to better meet their needs.

The basic approach to concept testing is to show customers both the original scenarios and a few possible ideas for solutions that might address the problem or situation raised in each scenario. If you have sketches or mockups for some or all of your ideas, you might show those. You might show some key marketing messages or pricing ideas to get feedback in those areas as well.6

Ask customers which solution ideas they would prefer for each scenario and why. Ask how they might use such a solution in their daily life. We strongly recommend asking customers how they think the solution would work; this can be illuminating and may identify hidden assumptions, both yours and theirs. Encourage customers to share ideas they have for how to improve your solution. You might ask customers to rank the scenarios or the solutions you show over the course of the session. Or, to elicit a finer-grained prioritization, give them $100 of fake money and ask them to spend it on the ideas or scenarios they would find the most valuable. A couple of ideas may be dramatically more valuable than the rest, which would not be as evident if you asked them simply to rank the ideas in priority order.
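The following sketch shows why the $100 exercise can reveal more than a simple ranking. It totals each participant’s spending per idea. The idea names and dollar amounts are invented for illustration, and the function is a hypothetical helper rather than part of any tool.

```python
from collections import defaultdict

def tally_spend(allocations, budget=100):
    """Total the fake money each idea received, highest first."""
    totals = defaultdict(int)
    for spend in allocations:
        if sum(spend.values()) != budget:
            raise ValueError(f"each participant must spend exactly ${budget}")
        for idea, dollars in spend.items():
            totals[idea] += dollars
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)

# Three hypothetical participants, each ranking the ideas A > B > C.
allocations = [
    {"A": 80, "B": 15, "C": 5},
    {"A": 90, "B": 5, "C": 5},
    {"A": 70, "B": 20, "C": 10},
]
for idea, dollars in tally_spend(allocations):
    print(f"{idea}: ${dollars}")
```

A ranking would report only that A beats B and B beats C. The totals show that A attracted $240 of the $300 spent, which suggests it is in a different league from the other two ideas.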

To do concept testing, you might meet with one customer at a time or with a group of customers to gather feedback in a focus-group format. However, remember the caveats about focus groups from Chapter 5. Even with an experienced facilitator, focus groups often result in biased feedback because of groupthink, when some participants heavily lead the discussion and others yield to their opinions. Our experience is that testing concepts with a few individuals is usually more efficient and reliable than a focus group, and you’re likely to elicit deeper, more personal feedback with that approach.

SFE in action: The case against focus groups

Paul Elrif, PhD, Principal at Caelus LLC, Owner of Dragonfly Nutrition LLC

Focus groups are often used by trained and untrained researchers without regard to the tradeoffs of the method. I assert that there are more useful and efficient methods for data collection than running focus groups. I find that one-to-one interviews, surveys, and some lesser-known methods are much more robust and, more importantly, more reproducible than focus groups are.

Robert K. Merton is largely credited with developing the idea of focused interviews and focus groups. One interesting thing about Merton is that he viewed focus groups as a method to test known hypotheses rather than to tease out new hypotheses or features from participants. When run well, a focus group can contribute to a product development idea. The problem is that a properly run focus group requires expert skill as a facilitator, and expert skill as a study designer, to know what is appropriate for a focus group and what is not.

The main problem with focus groups is the influence of groupthink. Several studies demonstrate that individuals tend to conform to what the group expresses rather than be seen as disagreeing. For example, Solomon Asch (1951)7 ran some interesting studies demonstrating that even when participants were asked simply to judge the difference between the lengths of lines drawn on a piece of paper, they often conformed to the group even when they did not agree with it. That people conform on such a benign judgment highlights that, even for small disagreements, individuals are eager to conform.

Focus groups are often made up of people who are unfamiliar with one another. This poses a number of concerns. For instance, if you have people who are under NDA with their own employers, they will likely not provide honest answers if they believe they will be in violation by talking about their work with competitors in the room. Also, many people will become reticent in the presence of their managers or coworkers, especially if they do not want to be seen as disagreeing.

The many personality types in focus groups can direct an entire room in ways that are not repeatable. Based on my own observations, at least five types of participants will negatively affect your study: the agreeable, the contrarian, the loudmouth, the one-upper, and the quiet polite guy (similar to “the agreeable,” except he may not agree but will remain silent).

You will often find that focus groups do not allow nearly enough time to get through an entire structured question list. It’s difficult to give every person in a focus group an opportunity to provide complete answers to your questions. How will you know whether individuals had time to fully provide data?

Two of the hallmarks of good research are reproducibility of results and accuracy of the data collected. To assert that a study is both reproducible and accurate, one must try to reduce the number of confounding variables. It can be very tricky to acquire both reproducible and accurate data from a focus group conversation.

Even though focus groups are very difficult to administer well, there are indeed some specific cases where a focus group may be the right tool for the job. The classic case is when you want to discover how a group of people will react to an idea, or you want to observe how a group of people will interact when they are presented with an idea. Another situation is one where you expect that multiple customers will be using the hardware or software at the same time, or when there is workflow involving multiple users. These are all situations where hosting focus group conversations would be a good additional exercise to conduct. The same goes for families that share a computer or some kind of system.

If you want to gather feedback from multiple customers and you are not an expert facilitator, I highly recommend that you put the time and energy into scheduling a series of one-to-one interviews. One-to-one interviews are a much easier method for collecting independent and unbiased customer observations.

Surveys and questionnaires

Primary usage: QUANT/SAY data.

Best for: Testing whether you fully understand the customer need, testing whether you’ve got the right solution, testing whether your solution works well, fine-tuning the details of your solution.

We talked about surveys in detail in Chapter 5. Surveys are commonly used for generative research, although you can also use this technique to gather feedback about solution ideas. The difference is in the types of questions you ask. Generative surveys focus on a customer’s attitudes and preferences before any solution is available. Now that you have a solution and are looking for feedback, your survey questions will focus on evaluating how well customers believe your solution ideas will satisfy their needs. As we mentioned earlier, while surveys are easy to create and administer, they are deceptively difficult to do well. Many bad decisions have been made based on the data coming from flawed surveys. Professionals are plentiful; leverage one to help you build an unbiased survey.

The most common form of survey used for getting feedback is a questionnaire, a small set of survey questions that is administered at the end of a usability test. You can also implement an online survey with a percentage of your live customers during their regular use of your service or after the customers finish a particular experience such as sending an online invitation to a party or placing an order. The value of using a questionnaire after a customer experiences your solution is that it gives you an easy way to collect some numerical preference data about that experience, which you can then track and trend over time. Keep the number of questions to a minimum, on the order of 5 to 10. The most common types of questions provide structured answers that allow for straightforward numerical analysis afterward:

Yes/No questions on a Likert scale of 1-Definitely No, 2-Probably No, 3-Maybe, 4-Probably Yes, 5-Definitely Yes.

Rate the level of agreement to a statement on a Likert scale of 1-Strongly Disagree to 3-Neutral to 5-Strongly Agree.

Goldilocks questions that use a slightly different scale: 1-Too much, to 3-Just Right, to 5-Too Little. Goldilocks questions create a slightly more complicated analysis because the optimal answer is at the middle of the range; they don’t offer a simple “higher is better” calculation.

You may also want to include an open-ended question at the end to capture anything that is top-of-mind for the customer that you hadn’t thought to ask about.
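The difference between analyzing “higher is better” questions and Goldilocks questions can be sketched as follows. This is one possible way to summarize the answers, not a prescribed method, and the function names and sample responses are hypothetical.

```python
from statistics import mean

def likert_mean(responses):
    """Average of 1-5 answers where a higher number is better."""
    return mean(responses)

def goldilocks_summary(responses, ideal=3):
    """Summarize 1-5 answers where 1 is too much, 3 is just right, 5 is too little."""
    count = len(responses)
    return {
        # Distance from the ideal midpoint: 0 is best, 2 is worst.
        "mean_distance_from_ideal": mean(abs(r - ideal) for r in responses),
        "share_too_much": sum(r < ideal for r in responses) / count,
        "share_too_little": sum(r > ideal for r in responses) / count,
    }

# Hypothetical Goldilocks answers: half say "too much," half say "too little."
answers = [1, 5, 1, 5]
print(likert_mean(answers))         # a plain average lands on 3, "just right"
print(goldilocks_summary(answers))  # the distance from 3 shows nobody was satisfied
```

Averaging Goldilocks answers directly lets opposite complaints cancel out, which is why the sketch measures distance from the midpoint and reports each direction separately.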

The results of a questionnaire may provide you with hints for questions to ask customers during a post-usability study interview. For example, perhaps a customer gave a high satisfaction rating to a task that you noticed he was struggling with, or a very low satisfaction rating to a task he seemed to do quickly and easily. Often, task performance and satisfaction are not congruent, and you may not recognize the disconnect unless you ask. It’s important to discover these discrepancies and then probe with the customer to find out why. Also, be sure to provide the questionnaire to customers after they are done with their task so that you don’t interrupt their flow or influence their opinions during a task.

Questionnaires are commonly administered following both informal and formal usability testing. Because this is such a common practice, there are a handful of industry-recognized and standardized usability questionnaires that you may consider using. These include QUIS (Questionnaire for User Interface Satisfaction) and SUS (System Usability Scale). Some of these questionnaires are so widely used that you can get industry data about how customers have responded to similar products, which lets you set some benchmarks for how usable your product is relative to others in your industry. Note that you are required to pay a license fee to use many of these questionnaires. A more detailed introduction to the world of questionnaires, along with a list of commonly available questionnaires (with links to the specific questions/forms), can be found at http://hcibib.org/perlman/question.html.
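As an illustration of how a standardized questionnaire turns answers into a comparable number, the sketch below applies the commonly published SUS scoring rule. That rule is not described in this chapter: it assumes ten items rated 1 to 5, where odd-numbered items are positively worded and even-numbered items are negatively worded. The function name is hypothetical.

```python
def sus_score(responses):
    """Convert ten 1-5 SUS item ratings into a 0-100 score.

    Odd-numbered items contribute (rating - 1); even-numbered items
    contribute (5 - rating). The sum (0-40) is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly 10 item ratings")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each rating must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # positively worded item
        else:
            total += 5 - rating   # negatively worded item
    return total * 2.5


print(sus_score([3] * 10))                        # 50.0
print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0
```

Because every participant's answers collapse to one number on the same 0-to-100 scale, scores can be averaged across participants and compared against published benchmarks.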

Another way to use a questionnaire is to embed it in your product or service. At some point in the customer’s experience, pop up a questionnaire that asks about the customer’s perception or satisfaction right when she is using your solution. This approach is common in web experiences these days, but it can also be used in a desktop application. For example, the Send-a-Smile feedback program was implemented during the beta for Office 2010. In the beta release, the feedback team added two icons to the Windows taskbar in the lower-right corner of the desktop: a smiley face and a frowny face. The idea was to encourage beta users to click the smiley face when something in the beta product made them happy, and to click the frowny face when something in the product made them unhappy. The tool automatically took a picture of the screen that the user was working on at the time and also prompted the user to type in a reason for his or her response. Send-a-Smile generated a tremendous amount of beta feedback. It identified key areas where the product was missing the mark and validated that places built for delight were actually having the desired effect.

Cognitive walk-through

Primary usage: QUAL/SAY data.

Best for: Testing whether your solution works well, identifying more commonly found problems without needing real customers.

A cognitive walk-through, or a cogwalk for short, is a straightforward, informal evaluation method that focuses on the interaction and flow of a solution.8 It’s a good technique to use early on when you are ferreting out problems with concepts and mental-model issues and making sure that you catch bigger issues before you test with customers directly, which is more expensive.

When doing a cogwalk, you don’t need actual customers. Instead, you gather several teammates or colleagues together to evaluate the flow of a prototype. The team starts out with a set of tasks to achieve. Together, the group’s members put on their metaphorical customer hats and walk through the prototype one screen at a time, putting themselves in the place of the customer and imagining what the customer might do, think, or wonder about at each stage. They run through the specific set of tasks to simulate how users will experience the flow of the application. You aren’t so concerned about the total time needed to perform each task; rather, you want to observe whether people can easily navigate through the system, find what they need, figure out what to do when they need to do it, and most especially, predict where customers might get stuck. In short, you want to see whether your solution makes sense to human beings.

Practically speaking, cognitive walk-throughs usually involve showing a series of static screens in some sort of slide show, a PowerPoint prototype, or a paper prototype. This helps the team focus on the right level of feedback (concepts and flow rather than small details) and also makes it doable very early on in a project, before the team spends a lot of time making more elaborate prototypes or investing in functional code.

As team members walk through each screen in the experience, they answer some standard sets of questions.9 By using the same set of questions for each stage, you can calibrate the responses you get over time:

Do users know what to do at this step?

Do users feel like they are making progress toward a specific goal?

At each screen, the group’s members collectively discuss the steps they would take to achieve the set of tasks presented to them. You want to predict where customers might get stuck, and what they might misunderstand. For example, will they know what to do with the big blue button on the Update screen? As you walk through the prototype, a scribe takes notes on any potential problems to be addressed in the next iteration.
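If the scribe wants a consistent record, the notes can be kept as one yes/no answer per question per screen. The following Python sketch shows one possible shape for such a log. The structure, the function name, and the sample notes are assumptions for illustration; the two questions are the ones listed above.

```python
QUESTIONS = (
    "Do users know what to do at this step?",
    "Do users feel like they are making progress toward a specific goal?",
)


def problem_screens(notes):
    """Return (screen, question, comment) for every question answered 'no'.

    `notes` is a list of (screen_name, answers) pairs, where `answers`
    holds one (ok, comment) tuple per entry in QUESTIONS.
    """
    problems = []
    for screen, answers in notes:
        for question, (ok, comment) in zip(QUESTIONS, answers):
            if not ok:
                problems.append((screen, question, comment))
    return problems


# Hypothetical notes from one walk-through.
walkthrough_notes = [
    ("Home", [(True, ""), (True, "")]),
    ("Update", [(False, "Purpose of the big blue button is unclear"), (True, "")]),
]

for screen, question, comment in problem_screens(walkthrough_notes):
    print(f"{screen}: {question} -> {comment}")
```

Keeping the same questions in the same order for every screen is what makes the notes comparable from one iteration to the next.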

Heuristic evaluation

Primary usage: QUAL/SAY data.

Best for: Testing whether your solution works well, identifying more commonly found problems without needing real customers.

Another method that is often used to identify early usability issues is a heuristic evaluation.10 In contrast to a cognitive walk-through, which addresses interaction and flow, a heuristic evaluation assesses the user interface against a set of established principles, or heuristics, to look for well-understood gotchas and common mistakes that are known to make interfaces hard to use. Like a cogwalk, this evaluation is a good way to identify the bigger, more obvious problems in an interface before you go to the trouble of recruiting customers for testing.

In his book Usability Engineering (1993), Jakob Nielsen (who’s often considered the father of usability testing) explains in detail how you go about doing a heuristic evaluation. He’s also written many articles on the subject that are easily discovered on the web.11 We’ll present a condensed version of his thoughts here.

Heuristic evaluations are typically done by a reviewer who has a lot of experience observing customers and who is very familiar with common usability issues. Before the evaluation takes place, though, teams must decide upon a set of heuristics on which the application will be evaluated. Those heuristics are usually a set of user interface guidelines or a set of platform-specific user experience principles. Examples of user interface principles include consistency (Are similar actions performed in the same way?) and user control (Can the user back out, or escape, from any step or application state?).

In running the evaluation, a best practice is to have the reviewer go through the prototype twice. The first time through, the reviewer becomes familiar with the prototype. She gets a feel for what the system does, how it works, and how the user interface flows. Once she’s familiar with the system, she goes through the prototype a second time with the intent of providing feedback, stepping through the application and evaluating each screen against the heuristics.

Remember that a heuristic evaluation is a bit different from a usability study. In a usability study you really want users to try to make sense of the system, even if they have to struggle to do so. In a heuristic evaluation, you are primarily focused on discovering usability issues by systematically judging the user interface against a set of established principles, so if the reviewer gets stuck, it’s okay to move her along quickly through the application by helping, answering questions, or providing hints. However, definitely note those stumbling blocks and address them in your next iteration.

While a heuristic evaluation is typically performed by a single reviewer, it’s understandable that no single person is likely to find the majority of usability issues in a system. When you use this method, it’s ideal to recruit a few people to do the evaluations and then merge their feedback afterward. According to Nielsen, to get the best return on your investment, heuristic evaluations work best with three to five reviewers.
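Merging the reviewers' feedback can be as simple as counting how many reviewers reported each issue. The sketch below assumes each finding is recorded as a (screen, heuristic) pair. That format, the function name, and the reviewer names are hypothetical.

```python
from collections import defaultdict


def merge_findings(findings_by_reviewer):
    """Combine per-reviewer findings, most widely reported first.

    `findings_by_reviewer` maps a reviewer name to a list of
    (screen, heuristic) pairs. Returns a list of
    ((screen, heuristic), set_of_reviewers) tuples.
    """
    reporters = defaultdict(set)
    for reviewer, findings in findings_by_reviewer.items():
        for finding in findings:
            reporters[finding].add(reviewer)
    # Sort by number of reviewers (descending), then alphabetically.
    return sorted(reporters.items(), key=lambda item: (-len(item[1]), item[0]))


# Hypothetical findings from three reviewers.
findings = {
    "reviewer_a": [("Update", "consistency"), ("Login", "user control")],
    "reviewer_b": [("Update", "consistency")],
    "reviewer_c": [("Update", "consistency"), ("Login", "user control"),
                   ("Home", "consistency")],
}

for (screen, heuristic), who in merge_findings(findings):
    print(f"{screen} / {heuristic}: reported by {len(who)} reviewer(s)")
```

Issues found by only one reviewer still belong in the merged list; catching what the others missed is the reason for using several reviewers.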

For a heuristic evaluation, you can use either low- or high-fidelity prototypes and even recruit teammates and others who do not perfectly match your target audience to do the evaluations. However, it is important that the reviewer or reviewers have expertise in good user interface design practices in addition to being familiar with the intended customer for the solution.

A quick Internet search for “usability heuristics” provides a long list of papers, articles, and information on guidelines and rules of thumb for usability and evaluation. Jakob Nielsen publishes a particularly useful and widely used set of usability heuristics.12

Wizard of Oz test

Primary usage: QUAL/DO data.

Best for: Testing whether you have the right solution, testing whether your solution works well; especially appropriate for voice- or text-based interfaces.

One clever approach to user testing is called a Wizard of Oz test. Instead of building a paper or other visual prototype, have a “man behind the curtain” make an experience magically work by responding to the customer manually. This technique is most natural if you are testing a voice-based interface, where the person behind the curtain speaks aloud to mimic an automated voice system. For example, if you were building a service such as Siri or Cortana or redesigning an automated telephone menu, you might do some Wizard of Oz testing to try out different voice commands and interaction patterns to see which are most natural and understandable for customers. This approach can also work well for a simple text interface or a command-line interface, where the person behind the curtain simply types the response to each command.
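For a text interface, the "curtain" can be a small relay that passes each customer command to a person and records the exchange. The Python sketch below is a hypothetical illustration, not a tool from this chapter. Here `wizard_reply` is a scripted stand-in for a teammate typing replies live; in a real session it could wrap a prompt on a second console.

```python
def run_wizard_session(customer_commands, wizard_reply):
    """Relay each customer command to the 'wizard' and log the exchange.

    `wizard_reply` is any callable that takes the customer's text and
    returns the text the pretend system should show.
    """
    transcript = []
    for command in customer_commands:
        reply = wizard_reply(command)
        transcript.append({"customer": command, "system": reply})
    return transcript


def scripted_wizard(command):
    """Stand-in for a human wizard, used here so the sketch runs unattended."""
    canned = {"what's the weather": "It is sunny today."}
    return canned.get(command.lower(), "Sorry, I didn't catch that.")


transcript = run_wizard_session(
    ["What's the weather", "play jazz"], scripted_wizard
)
for turn in transcript:
    print(f"> {turn['customer']}\n{turn['system']}")
```

The transcript is the useful output: it shows which phrasings customers tried on their own and where the pretend system had no good answer.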

You might imagine using a Wizard of Oz approach for a visual interface to test the magic of desktop sharing or something similar, with someone sitting behind a curtain manually changing what appears on the customer’s screen. This is more work to set up, however, and less common. By the time you have enough visual material to show on a screen, not much more effort is needed to link it up into a click-through prototype.

Informal testing and observation

Primary usage: QUAL/SAY data.

Best for: All stages, for quickly testing to see whether you are on the right track.

Informal observation can very quickly and easily provide you with customer feedback about your solution and your customer. With informal testing, you have a great deal of discretion in what you show, say, and ask, and it can be applied at nearly any phase of the project.

The important step in an informal test is to show customers some sort of artifact representing your solution and let them react to it. You might just ask them to comment on it, but it is helpful to have a few questions in mind to make sure you are getting feedback on the aspects you are most interested in. And whether you are sitting in a usability lab or in a café, you want to pay attention to minimizing bias, which means you want to keep your tone as neutral as possible and generally ask open-ended questions and then just listen. Although it is tempting to explain your idea to customers, doing so tells you little about whether they would understand it on their own or find it useful. The more you explain, the less likely customers are to offer their own thoughts. The hardest part about doing any kind of user research is often just listening, and this is particularly true for informal testing because you don’t have an impartial facilitator running the test.

One way to get more frequent and earlier feedback is to think more about what you are testing than how you are testing it. Go ahead and test your earliest work. Remember the outputs you created in the earlier stages of the iterative cycle—insights, scenarios, sketches, and storyboards? In addition to prototypes, these are also relatively well-formalized bodies of work that you and your team have created. They all represent some amount of teamwork, consensus building, and decision making and are all well suited for getting very quick, informal feedback. The goal of this feedback is not to know definitively that you’ve found the right solution, but to get directional feedback that confirms you’re on the right track or to help you find an appropriate course correction.

Even though you can get a lot of great data from informal testing, be sure that the people you talk with are target customers—or ask enough questions to understand how far away from your target they are. You don’t want to get blown off course by getting conflicting feedback from people who are not your target customer.

Here are some ideas for getting quick, informal feedback on a customer insight, scenario, sketch, or early product design:

![]() Read your scenario (or list of insights) to customers and ask them what they think. Do they tell you something like, “Oh man, that is so me. I can totally relate to that person. Please let me know when you solve that problem. Can I be on your beta test?” Or do they tell you something more like, “Hmmm . . . yes, that’s interesting. It’s not me, but I guess I can see how someone else would have that problem.” But beware of an answer like this: “Yeah, that sounds like an important problem for person X, and I bet they want this, this, and this.” This suggestion is no more based in data than your own guesses about that customer’s needs, and this kind of statement also confirms that a person who makes it is either not your customer or isn’t all that excited about your proposed scenario, or both.