Effective MySQL: Backup and Recovery (2012)

Chapter 8. Optimizing Backup and Recovery

Once you know there is a valid backup and restore process for your environment, how can you improve and refine this process? Depending on your locking strategy, diskspace availability, or business data recovery service level agreement (SLA), there are various techniques you can use to optimize and streamline your process. There are also architectural considerations for further optimizations.

In this chapter we will discuss:

• Use and benefits of compression

• Levering streaming

• Parallelism with mydumper

• Full and incremental backups

• Architectural considerations

![]()

Example Backup Environment

Chapter 2 discussed the primary backup options available for a MySQL instance. This chapter has specific demonstrations for mysqldump, MySQL Enterprise Backup (MEB), and XtraBackup products described as well as mydumper.

All tests were performed on an Amazon Web Services (AWS) Elastic Compute Cloud (EC2) large instance with a dedicated Elastic Block Storage (EBS) partition for the MySQL data and the MySQL backup location. An EC2 m1.large instance is defined with the following characteristics from http://aws.amazon.com/ec2/instance-types/:

• 7.5GB memory

• 4 EC2 Compute Units (2 virtual cores with 2 EC2 Compute Units each)

• 850GB instance storage

• 64-bit platform

• I/O Performance: High

• API name: ml.large

The following articles will provide all the steps necessary to start using AWS without any prior knowledge in order to repeat any examples in this chapter:

• http://effectivemysql.com/article/setting-up-amazon-web-services/

• http://effectivemysql.com/article/using-amazon-web-services/

Refer to the GitHub repository of code for this book to reproduce the full environment and commands used in the following examples. Details can be found at http://effectivemysql.com/book/backup-recovery/.

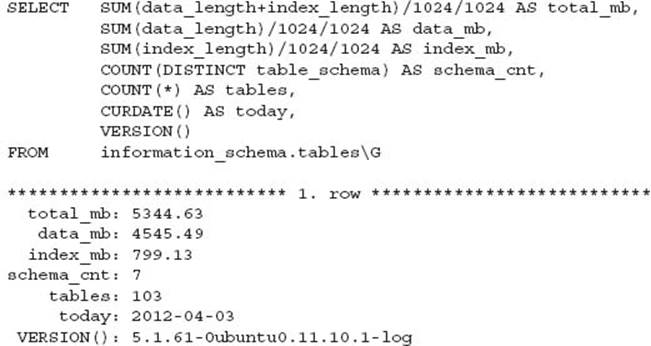

The small database environment used is approximately 5GB.

For the purposes of testing and providing shorter commands, the MySQL privileges have been recorded in a user MySQL configuration file. This does not represent the optimal MySQL user account or approach for securing MySQL backups. An appropriate and secure approach should be used for production systems.

All times shown are for a single execution of the respective command and are provided as an example representation. Appropriate error checking is not shown in the following examples. This should be applied accordingly in a production setting. Accurate benchmarking should involve several iterations of the same test and should include monitoring additional system resources, including CPU, disk, and network throughput to determine a more specific measurement.

![]()

Using Compression

One of the most common improvements to any backup strategy is the use of compression. The time savings for transferring backups offsite or to external media, including tape, can be an important benefit. Compression can also be used to reduce disk I/O during the backup or recovery approach. This feature has one significant limitation—that is, the time taken to compress or uncompress files may impact individual steps in the backup or recovery strategy.

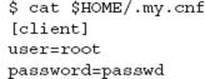

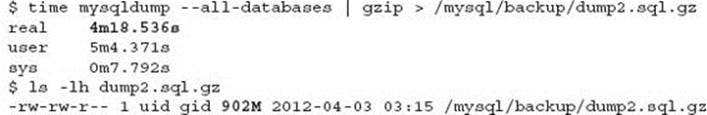

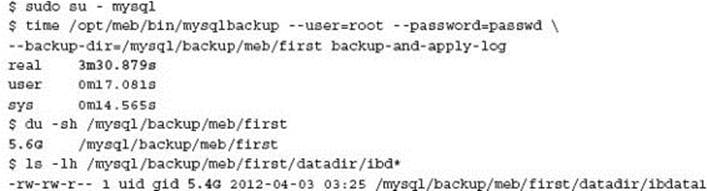

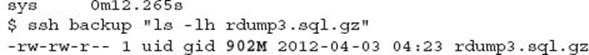

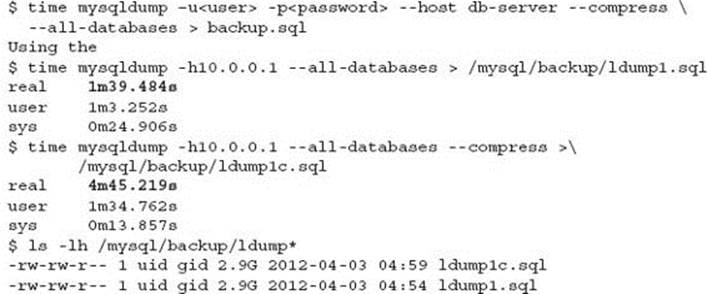

mysqldump

When using mysqldump, compression can simply be included as an additional step or in the command line via a piped command. For example:

or

![]()

TIP While the gzip command is demonstrated here, other compression tools exist, including bzip and 7zip. These tools can provide better compression ratios for certain types of data. Compressions tools also generally include different options between the fastest and best compression.

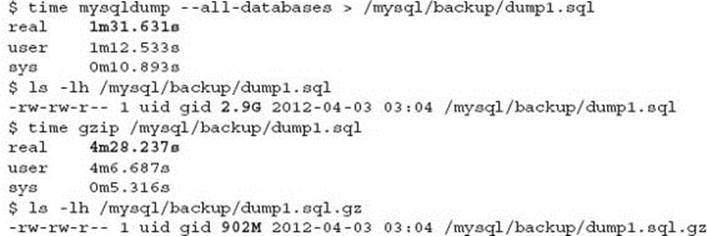

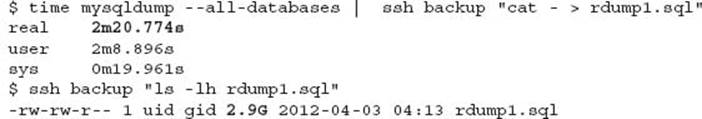

Using the example MySQL database of approximately 5GB:

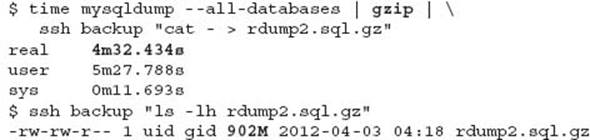

With compression combined as a single command the results are:

When using a piped command the first benefit is that the output file is automatically compressed on the fly, without requiring any additional temporary disk space. This helps if your system has limited diskspace. The disadvantage is the additional time this command may take to execute. When combined with the mysqldump command using default settings, a lock of all tables can affect application access. In this example, the locking was increased from 91 seconds to 258 seconds. Locking is not a consideration for an InnoDB only database when the --single-transactionoption is used.

Testing is necessary to confirm the benefits for your environment. It is easy to time the backup and compress commands separately and the time taken when combined. The combined time may not be a significant overhead in some situations. This will depend on disk throughput capacity and memory. In the preceding example, 359 seconds was reduced to 258 seconds when the statements were combined, producing a saving in time. In the following example of a different sized database on a different system, the time is about the same, 83 seconds compared with 81 seconds.

Compression with a filesystem snapshot is a process that occurs as a post-step, generally before copying the snapshot files. As the size of the backup grows, the negative impact on the production system and the recovery process becomes more obvious.

The compression on the database server can have an effect on the database I/O performance. Is the copy of an uncompressed backup that is five to ten times larger on a dedicated network interface less of an impact than the compression? This will be discussed in the following sections.

TIP The nice and ionice Linux commands can change the priority of work on a system and lower the system impact of certain commands.

While you consider this, the greatest issue uncovered during consulting in a disaster recovery situation is either the time taken to uncompress the data before restoration, or insufficient disk space to uncompress a backup and restore accordingly. In the first situation, a client with a large centralized SAN for more than 30 databases had a 17 hour delay in the database restoration due to the time taken to uncompress data. In the second situation, your system may require at least two times the database size in diskspace, the uncompressed backup file, and the restored database.

Under normal circumstances the most common database recovery is that of the last physical backup. It would be optimal to always ensure an uncompressed copy of the system you wish to restore is on disk.

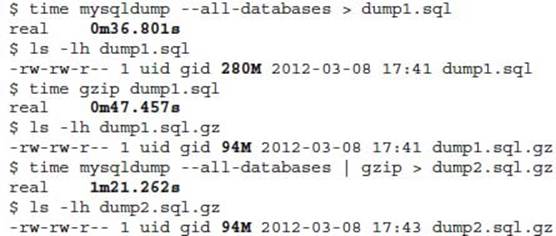

Compression Utilities

Using the mysqldump backup of 2.9GB the following testing was performed to compare the time and % compression savings of various available open source products.

The percentage savings and compression time of results will vary depending on the type of data that is stored in the MySQL database.

NOTE The pigz compression utility was the surprising winner in best compression time producing at least a size of gzip. This was a full 50% faster than gzip.

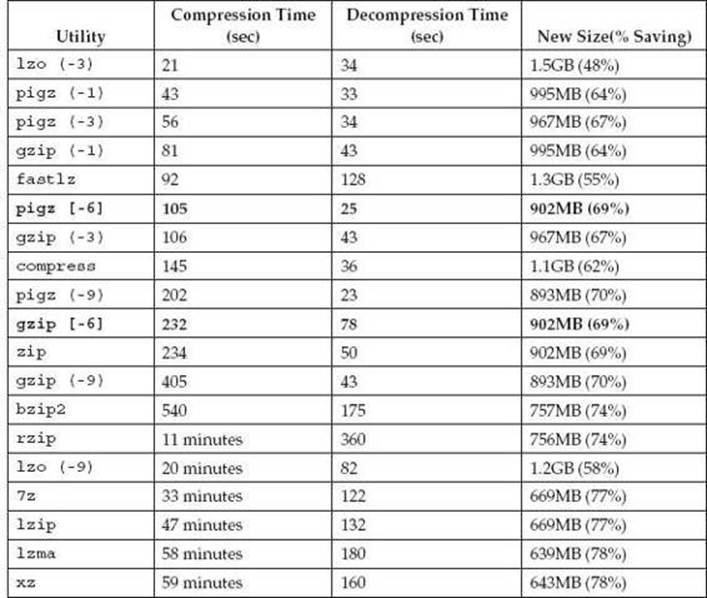

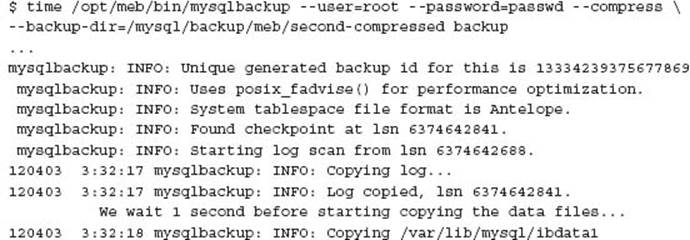

MySQL Enterprise Backup (MEB)

A backup with MEB can enable compression with the --compress option. With compression you are unable to apply the logs within a single backup command, i.e., --compress and the action backup-and-apply-log are incompatible. Compression is also incompatible with all incremental backup options including --incremental and --incremental-with-redo-log-only.

The following information is for a normal MEB backup:

Only InnoDB tablespace files are compressed. These are given a.ibz extension accordingly for both the per tablespace .ibd data files and the common tablespace ibdata file. Large MyISAM data files are not compressed. For example:

By comparison the backup without the --compress option produced a 5.6GB backup in 210 seconds. This compressed backup of 1.7GB took 282 seconds to complete.

The --compress-level=N option enables further compression. A value of 1 is the default and fastest compression; 9 is the slowest compression. Subsequent tests with --compress-level=9 produced only slightly better compression; however, the time taken was six times longer.

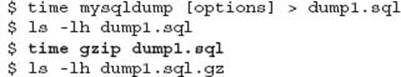

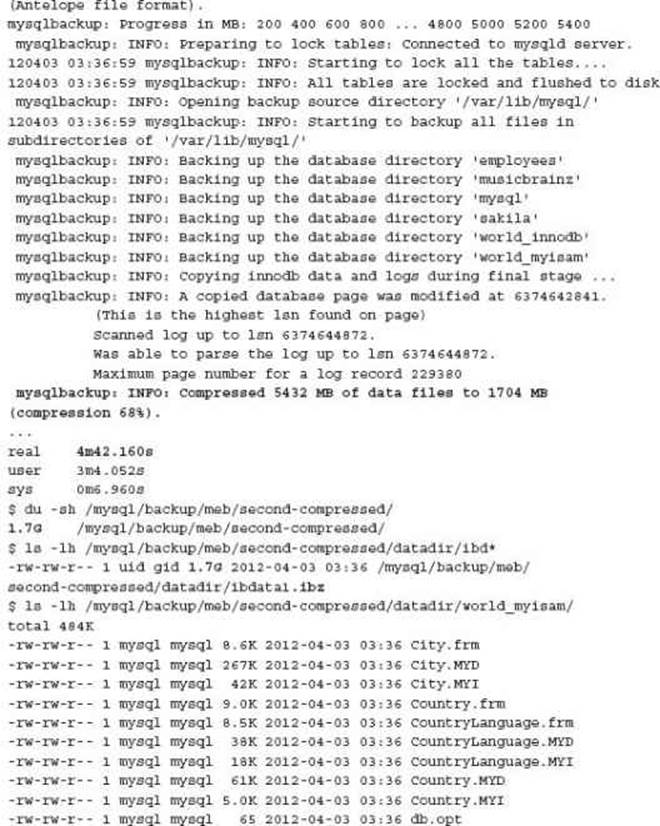

XtraBackup

To enable compression with XtraBackup, you must first stream the data with the --stream=tar option and pipe accordingly to an applicable compression command. For example:

![]()

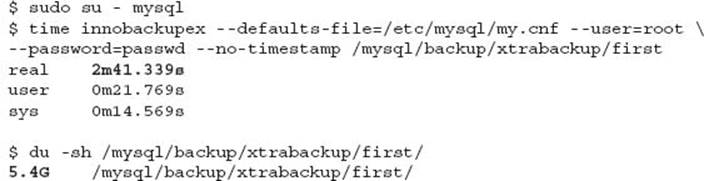

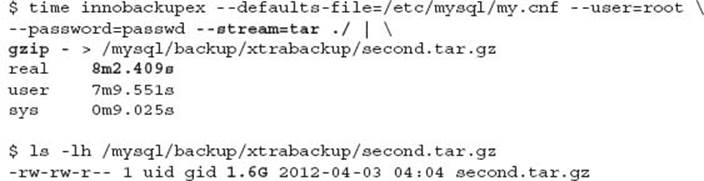

A normal XtraBackup produces the following results for the example database:

The following is produced for an XtraBackup with compression:

For comparison, without the streaming and compression the backup took 161 seconds and produced a backup directory 5.4GB in size compared with 482 seconds and a backup file of 1.6GB in size with compression.

When extracting the backup file, the following syntax is used:

![]()

CAUTION When uncompressing XtraBackup tar files, the -i option is required.

![]()

Streaming Backups

A Linux pipe combined with an applicable command can be used to stream output across the network, avoiding the need to write any backup information on the database server.

Using SSH

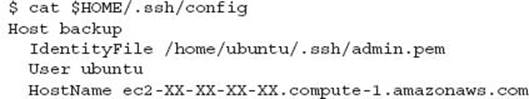

Using standard SSH with keyed authentication you can automate the network transfer of a backup. In the following examples the SSH connection has been simplified to just using the alias backup:

TIP You can remove the complexity for remote connections by defining the hostname, port, user, and key details in the SSH configuration file $HOME/.ssh/config.

For example:

This can be combined with compression as described previously. For example:

It is also possible to offload the compression to the remote host by sending the data uncompressed and applying at the destination. For example:

You can also throttle throughput in a pipe with the pv command. For example:

![]()

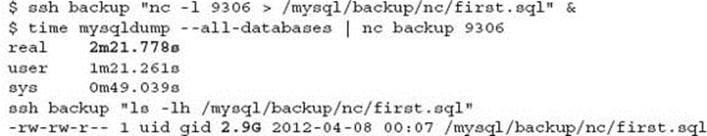

Using nc

Using netcat (nc) you can transfer a file via TCP/UDP directly on a given port. This generally requires defining the receiving communication on the destination server, and may require additional firewall access on a defined port. For example:

This command is generally considered more lightweight than ssh and possibly a little faster. The size of this backup example does not represent what true savings may occur for larger files. The time saving can be attributed to less authentication and encryption requirements; however, this highlights a potential security impact for a plain text SQL dump. Adequate firewall security for an internal network is an important consideration. Compression can also be included with these piped commands at either the source or destination host.

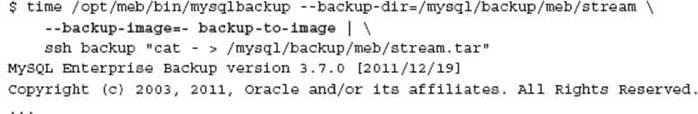

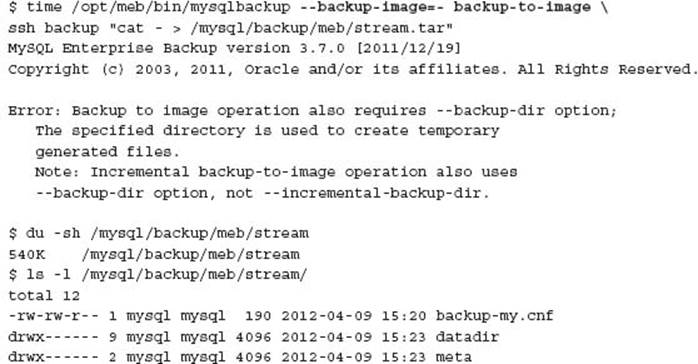

MySQL Enterprise Backup (MEB)

To achieve streaming with MEB, the [backup-to-image] option can be used in conjunction with writing the output to standout and using an appropriate piped output. For example:

While you are performing a remote backup, MEB does require a local working directory and it does leave files on the backup server.

With MEB version 3.7 the backup-to-image backup feature now includes checksum verification to ensure the backup data remains unchanged during any transfers to other systems. Each file within the backup image is tested against a checksum calculated using the CRC32 algorithm, either when files are extracted from the backup image, or using the new mysqlbackup option validate to test a backup image without extracting.

MEB also provides streaming options to high-capacity storage devices using the System Backup to Tape (SBT) interface. This enables MEB to integrate with Oracle Secure Backup (OSB) or other compatible media management software (MMS) products to manage the backup and restore process. More information about the various --sbt options can be found at http://dev.mysql.com/doc/mysql-enterprise-backup/3.7/en/meb-backup-tape.html.

XtraBackup

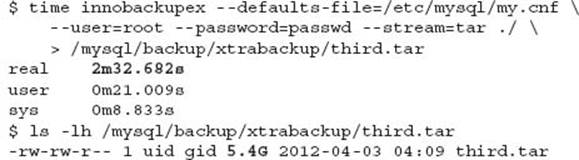

To enable streaming with XtraBackup, the --stream=tar option is required and combined with one of the preceding examples. For example:

![]()

The --incremental option is not applicable if specified with the --stream option. The --stream option will always produce a full backup.

The execution time and resulting backup size are comparable to a standard XtraBackup command as shown previously.

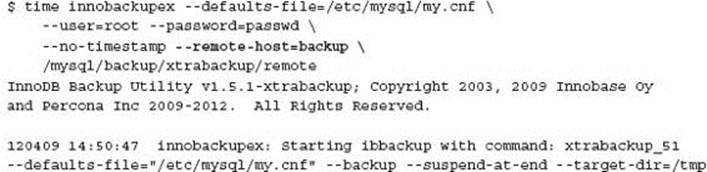

XtraBackup also provides a push of a backup to a remote host with the --remote-host option.

For more information and other examples see http://www.percona.com/doc/percona-xrrabackup/howtos/recipes_ibkx_stream.html.

![]()

Remote Backups

In the previous section it was possible to push a MySQL backup to an external server. With all client/server backup options it is possible to pull a MySQL backup from the database server. One of the benefits of this process is the necessary access requirements. In a pull process the only permissions on the database server are the necessary MySQL permissions and firewall access to the MySQL port; no operating system user is necessary.

mysqldump

Using the --host option enables the mysqldump command to connect to a remote server.

The use of --compress can provide some assistance in client/server network communications of the data; however, this does not result in a compressed backup file.

Surprisingly, the use of the --compress option results in a much slower backup.

MySQL Enterprise Backup (MEB)

MEB does not support connecting to a remote host.

NOTE While MEB has a --host configuration option, this is used only for the validation of parsing this option when it exists in a [client] configuration section without producing an error message during execution.

XtraBackup

XtraBackup does not support connecting to a remote host.

![]()

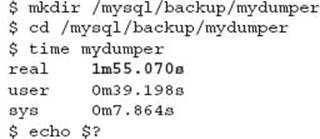

Parallel Processing

Using the default mysqldump command has the limitation of being a single threaded process. This is particularly important during the restoration process of a large database. While mysqldump natively does not support parallel processing, the open source mydumper provides a suitable replacement.

mydumper

Mydumper (http://www.mydumper.org/) is a high-performance MySQL backup and restore toolset released under the GNU GPLv3 license. Domas Mituzas, Andrew Hutchings, and Mark Leith created the mydumper toolset for use in both MySQL and Drizzle. Mydumper was created as a tool that competes with the mysqldump client program. Although there are many installations currently using mysqldump, it can be slow considering that it is not multi-threaded.

Given that mydumper is multi-threaded it can create a MySQL backup much faster than the mysqldump tool distributed with MySQL. Mydumper also has the capability to retrieve the binary logs from a remote server. Copying the binary logs at the same time as the dump has the advantage of supporting a point in time backup.

The major advantages of mydumper are as follows:

• Multi-threaded, which makes dumping data much faster.

• Mydumper output is easy to manage and parse because there are separate files for tables and meta-data.

• All threads maintain a consistent snapshot that provides accurate master and slave positions.

• Mydumper supports Perl Regular Expressions (PCRE), which enable pattern matching for database names and table names to be included or excluded.

• The mydumper toolset also comes with the ability to restore data from a mydumper backup through the multi-threaded tool called myloader.

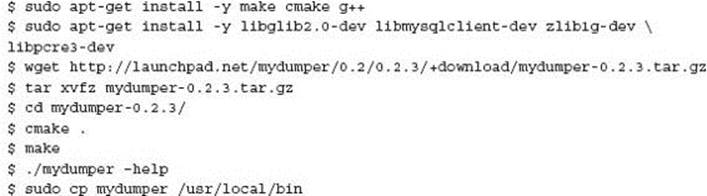

Installation

Mydumper must be compiled from source code. This will require a system that has a C++ compiler available. Additional dependencies include:

• CMake

• Glib2 with development packages

• PCRE with development packages

• MySQL client libraries and development packages

Refer to http://docs.mydumper.org/compiling.html for operating specific commands to install these dependencies.

The following commands were used for an Ubuntu environment:

NOTE The current stable version is 0.23. The current development version is 0.5.1. This development version includes additional options for enabling daemon mode, defining an interval between snapshots, and an output log file option.

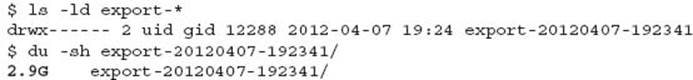

Usage

On an operational MySQL system, mydumper can operate with no arguments and will attempt to connect to MySQL via the local socket file. For example:

No output is produced to indicate success or failure, or to provide details of the export produced. By default a directory with a name of export-[date/time] will be produced.

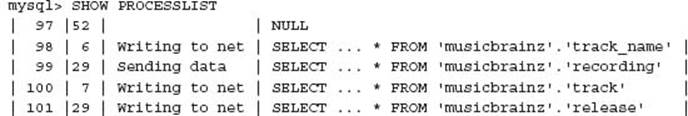

During the backup you can monitor the multiple threads with the SHOW PROCESSLIST command. For example:

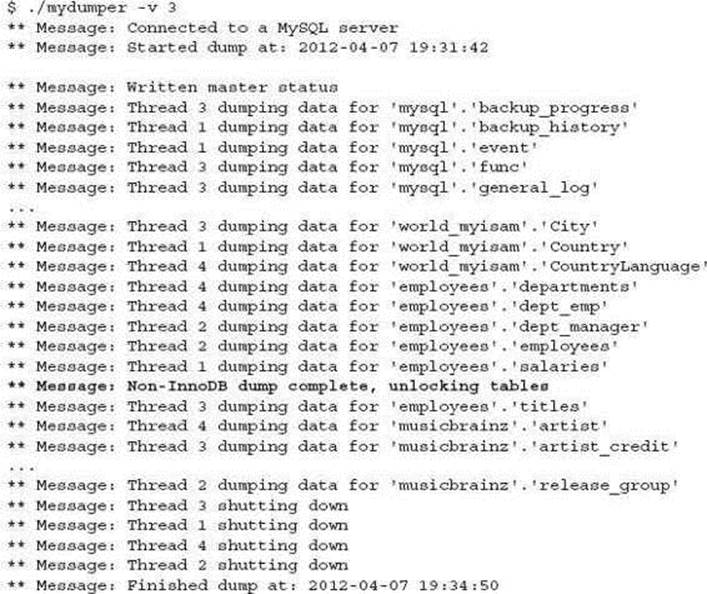

Running in verbose mode produces additional output; however, the full output directory is not included in the information provided:

The output provides some additional insight into the operation. As shown by the message, non-InnoDB tables are backed first to improve locking during the entire dump process.

The following example uses the regular expression options to exclude any mysql and test schema objects.

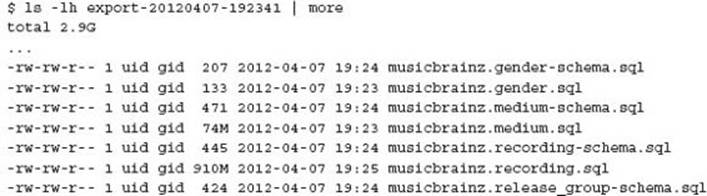

![]()

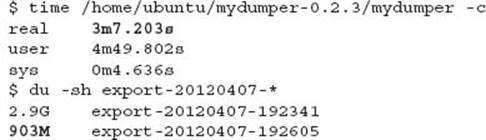

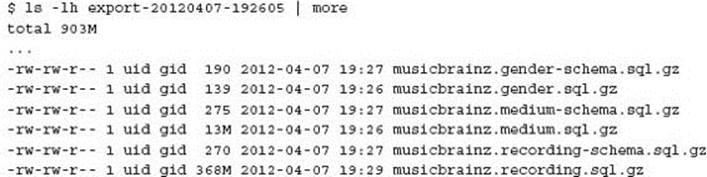

Compression

By default all output files are uncompressed. By using the -c option, all files will be compressed, producing a much smaller database backup. All files in the directory are compressed with gzip.

More Information

Mydumper generates several files pertaining to meta-data, table data, table Schemas, and binary logs.

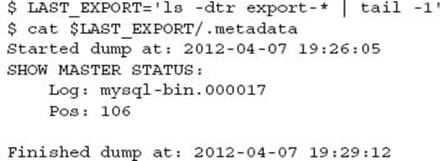

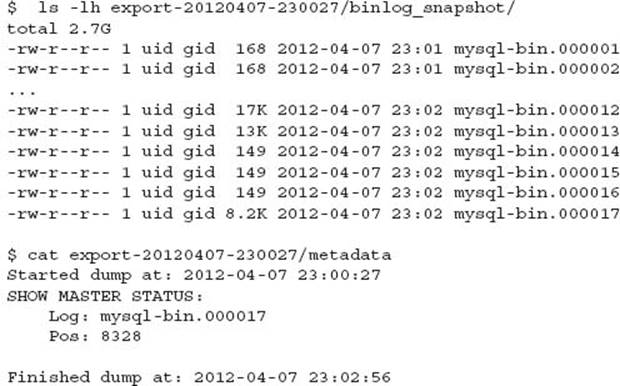

The .metadata file stores the start and end times of the dump as well as the master binary log position. When a dump is executed a .metadata file is created in the output directory.

Table data can be stored in two different ways, one file with all table data or many files with chunks of data for one table. If the --rows option is added to the command, then many files will be created for one table with a naming convention like database.table.chunk, sql. If the --rowsoption is not specified, one file per table will be create with a naming convention like database.table.sql.

When using the compression option, all files are included:

Table Schemas are created by default and stored in individual files named databases.table-schema.sql. These files can be removed from the dump process with the --no-Schemas option. There is no companion option to produce only the schema objects. You should use mysqldump for this functionality.

When the --binlogs option is used mydumper will store binary logs in a sub-directory inside the dump directory unless otherwise specified by the --binlog-outdir option. Binary logs will have the same filename as the MySQL server that supplies them. The meta-file will also reflect the current master position:

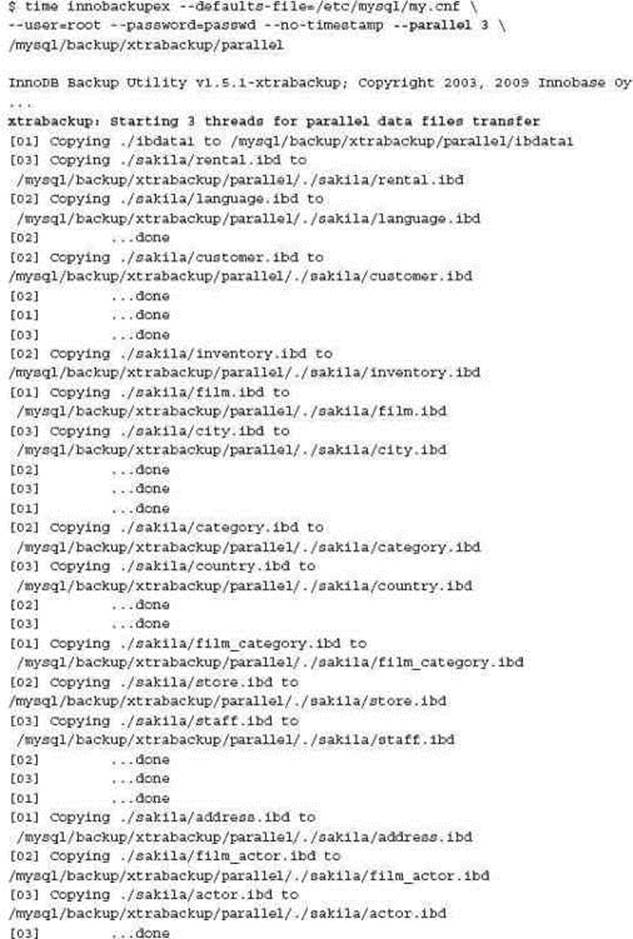

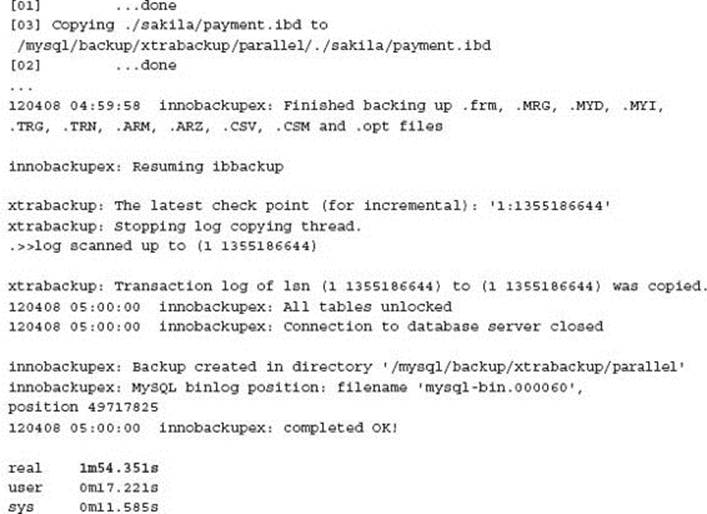

XtraBackup

Parallel copying for a local backup with XtraBackup is possible when multiple InnoDB data files exist, either from using the innodb_file_per_table configuration option or when multiple data files in the innodb_data_file_path configuration option exist. Parallel processing is enabled by adding the --parallel option to the backup process. For example:

![]()

Incremental Backups

In addition to performing a full backup of your MySQL database, several options exist to perform incremental backups. These can reduce the time to perform a backup and the size of backup files; however, a restore process will be more complex and may be more time consuming.

The choice for using a full backup versus an incremental backup can depend also on physical resources. The added steps during the restore process may introduce an additional chance of error under a crisis situation. The simplicity of a full server restore may also be more easily automated.

Depending on the volume and rate of change of data, an incremental backup may result in a smaller backup; however, it may take a similar amount of time to execute.

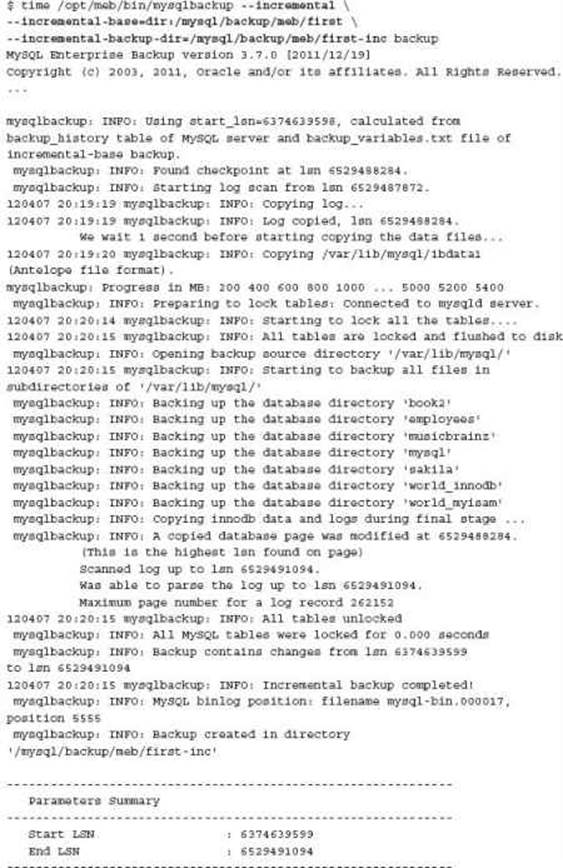

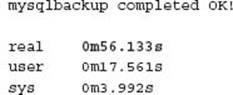

MySQL Enterprise Backup (MEB)

With the --incremental option and either the --incremental-base option or the --start-lsn of an appropriate backup, an incremental backup can be performed. For example:

The backup directory is significantly smaller than the previous full backup. The saving is in the ibdata1 tablespace file, which is not the full size.

The --incremental option is for InnoDB tables, or for infrequent updates of non-InnoDB tables. If a non-InnoDB table has been modified, the entire file is included in the backup. The --incremental option is incompatible with the --compress option and also with the backup-and-apply-logcommand.

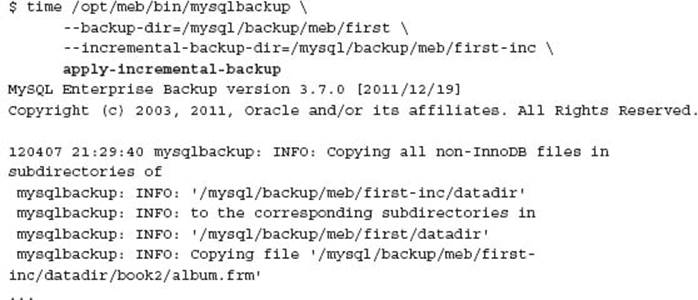

Producing a Full Restore

In order to utilize an incremental backup, this has to be applied to the full backup with the apply-incremental-backup command. For example:

For more information visit the MEB Reference Manual at http://dev.mysql.com/doc/mysql-enterprise-backup/3.7/en/mysqlbackup.incremental.html and the blog post at https://blogs.oracle.com/MySQL/entry/mysql_enterprise_backup_taking_incremental.

MEB also provides an alternative means of producing an incremental backup with the --incremental-with-redo-log-only option. This option uses the InnoDB transactional log files and requires that all information is still contained within these circular files. See more details from the MEB Reference Manual at http://dev.mysql.com/doc/mysql-enterprise-backup/3.7/en/backup-incremental-options.html and the blog post at https://blogs.oracle.com/MySQL/entry/mysql_enterprise_backup_redo_log.

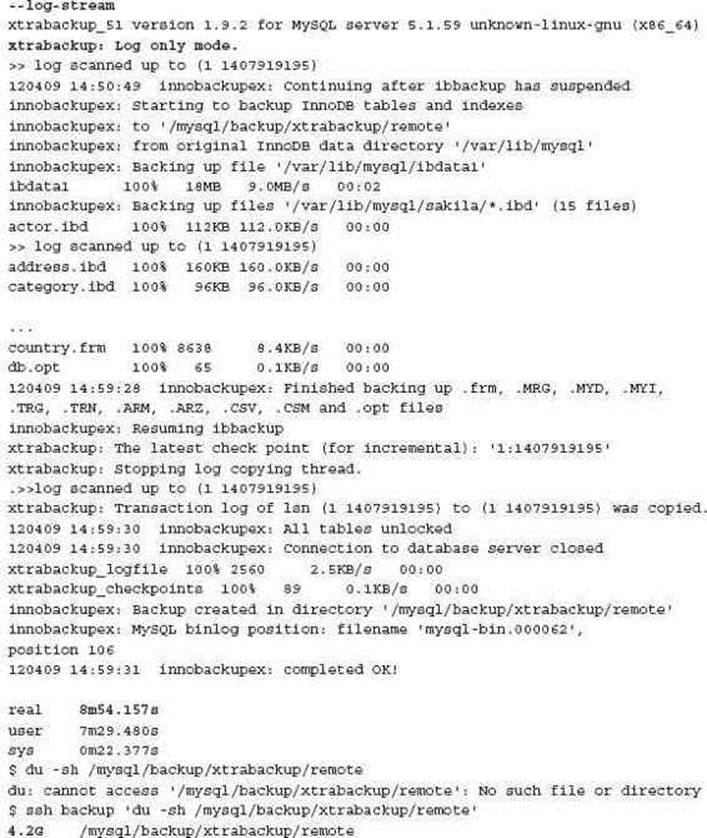

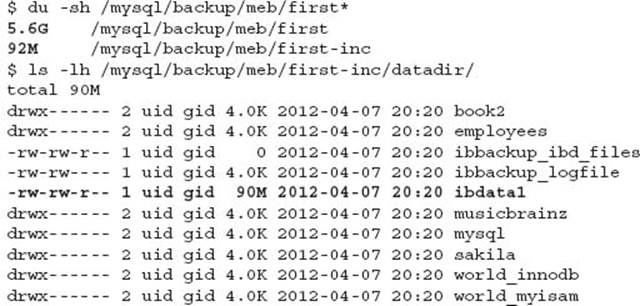

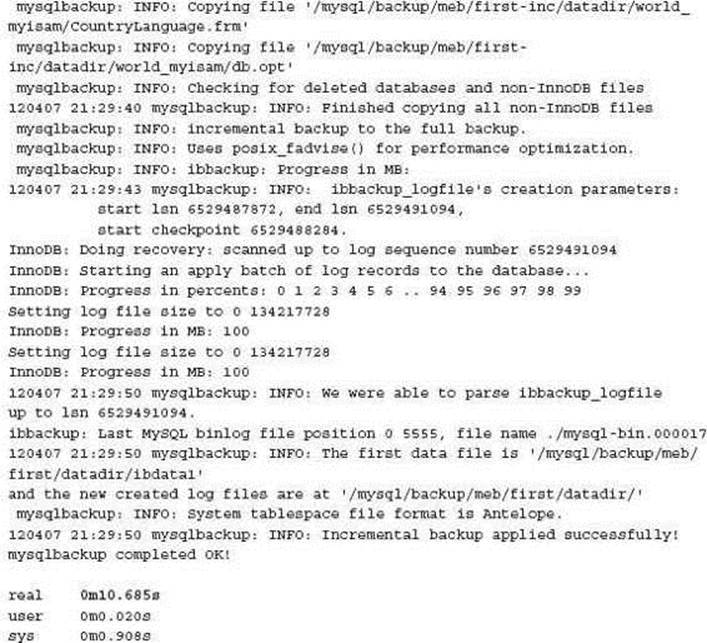

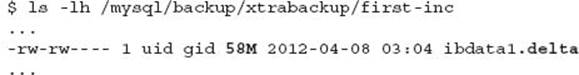

XtraBackup

XtraBackup supports the ability to perform an incremental backup with the --incremental and --incremental-basedir options. A previous full backup is required to perform an incremental backup. For example:

An incremental backup can now be created:

The output will show a delta of the InnoDB common tablespace files was generated:

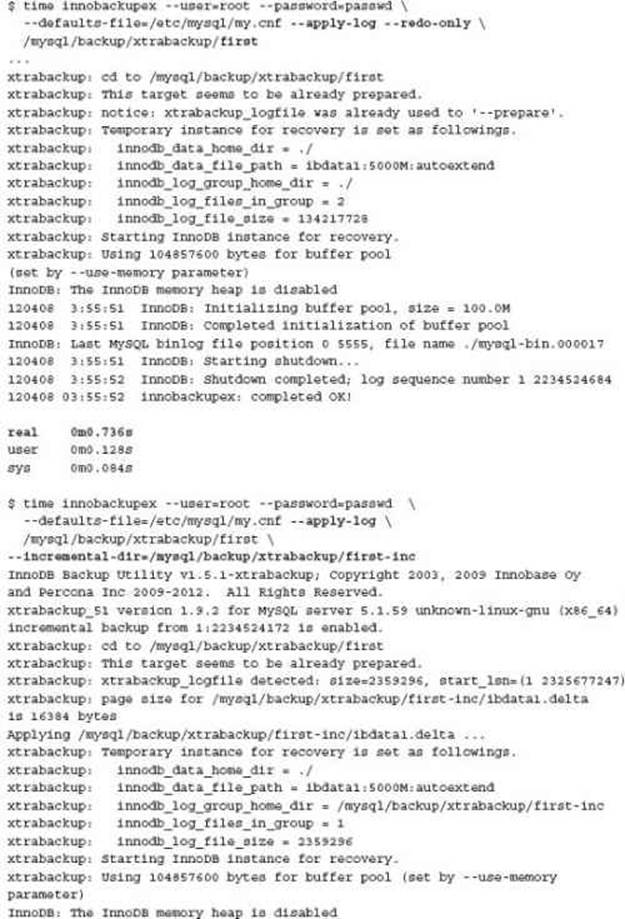

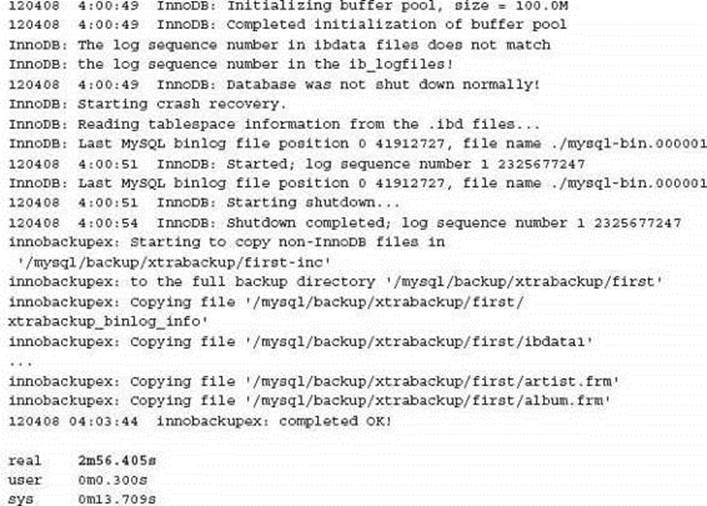

Two additional steps are required to apply the incremental backup to the full backup to enable the successful restore of this backup:

The end result is a complete backup in the original full backup directory that contains all information from the incremental backup.

![]()

Partial Backups

Generally for a backup and recovery strategy, partial backups are not practical due to absence of some data. A relational database also defines consistency with database constraints, including foreign keys, which may not be included in a partial backup. These options, however, may be of benefit in a partial data recovery process for a corrupt or dropped table, or for convenience in testing.

A partial backup may be practical in an ETL process when only a subset of data is necessary and additional data can be regenerated without a backup. This can be an optimization that saves backup space and time.

mysqldump

The mysqldump command allows for the specification of various database Schemas or tables with the --databases and --tables options.

MySQL Enterprise Backup (MEB)

MEB supports the ability to perform partial backups. These options include --only-innodb, --only-innodb-with-frm, --only-known-file-types, --databases, --databases-list-path, and--include. For more information see http://dev.mysql.com/doc/mysql-enterprise-backup/3.7/en/backup-partial-options.html.

XtraBackup

XtraBackup provides an export of an individual InnoDB and XtraDB table with the --export option; however, an import is only possible with Percona Server using XtraDB.

More information can be found at http://www.percona.com/doc/percona-xtrabackup/innobackupex/importing_exporting_tables_ibk.html.

![]()

MySQL Backup Security

Throughout these examples the topic of appropriate MySQL security has not been discussed. This is an important consideration for any complete backup and recovery strategy. A recent poll at the 2012 annual MySQL conference highlighted that very few organizations use SSL for accessing MySQL data.

MySQL provides SSL support for client connections, for example a remote backup, and for MySQL replication. In Chapter 3 of Effective MySQL: Advanced Replication Techniques, SSL usage is described in detail. The following sections can be found in the MySQL Reference Manual.

• Client connections - http://dev.mysql.com/doc/refman/5.5/en/secure-connections.html

• Replication - http://dev.mysql.com/doc/refman/5.5/en/replication-solutions-ssl.html

With the wider adoption of MySQL in the Cloud as discussed in Chapter 9, the use of SSL for client communications and MySQL replication will become an important requirement. It is possible to encrypt files created on a production server before network transfer with generally available utilities including openssl and gpg.

Using transparent encryption techniques can provide a level of adequate security on the filesystem and can be integrated into the existing MySQL backup and recovery options with little impact. The ezNcrypt product from Gazzang (http://www.gazzang.com/) is one offering that provides examples for implementation with MySQL. This blog post by Mike Frank provides an introduction: http://mikefrank.wordpress.com/tag/mysql-mysqldump-ezncrypt-gazzang-linux-backup-xrrabackup-transparent-encryption/.

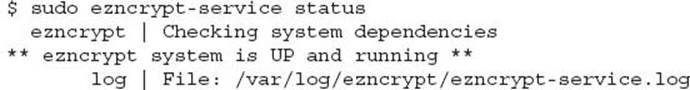

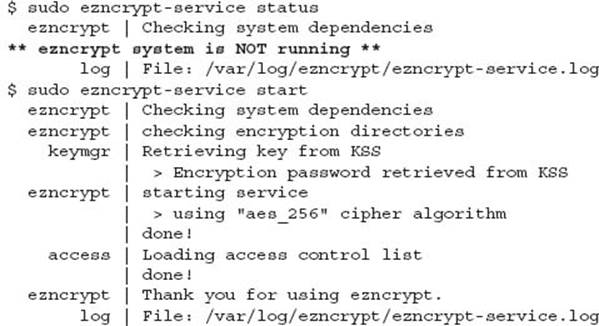

Encryption with ezNcrypt

The following steps demonstrate how to set up a mysqldump encrypted backup with ezNcrypt. You can request a free trial evaluation of the software from http://blog.gazzang.com/request-a-free-trial-of-ezncrypt/. Following installation and configuration, the first step is to verify the ezNcrypt process is running:

If the process is not running you would find the following error message:

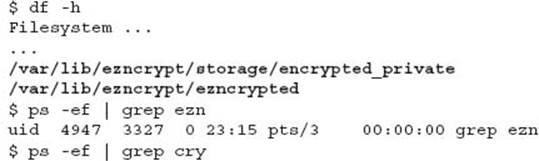

Under the covers you will find the following attached devices, and no actual processes.

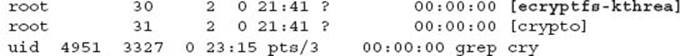

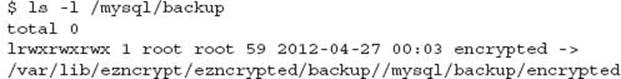

The first step is to create a backup directory and encrypt all contents that are placed in the directory. ezNcrypt uses the concept of an @category for reference with an encrypted file or directory.

The underlying regular directory is now replaced:

Any attempts to write to this directory will fail, even with the Linux super user:

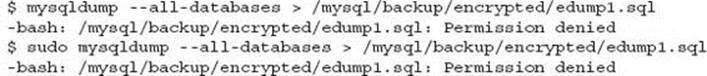

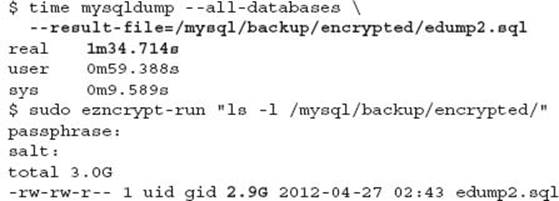

mysqldump

In order to read and write from an encrypted directory you need to grant access controls to a given program, for example mysqldump:

You verify the defined access control rules with:

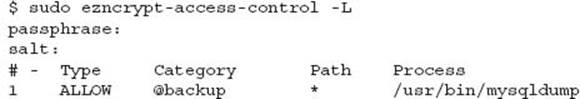

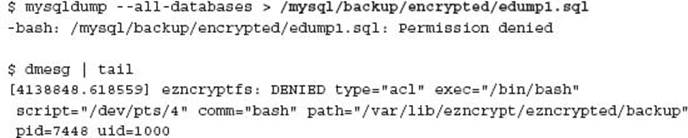

However, writing with mysqldump still causes an error because it is the shell redirection that is performing the writing, as seen in the system error log:

You can use the --result-file option with mysqldump to enable the process to create the file directly. For example:

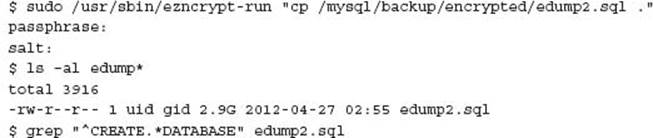

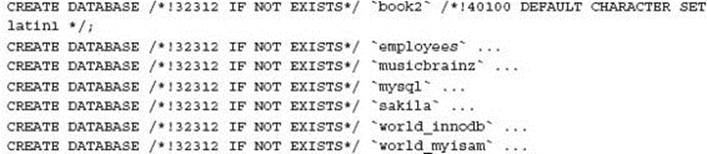

In this single test, the transparent encryption added only a very nominal overhead. You can easily extract the file from the encrypted directory; however, that would defeat the purpose of using encryption. The following syntax is shown just to confirm the validity of the encrypted file:

When using correctly configured directories and access controls, the use is truly transparent to the backup process.

Restoring an encrypted file is a little more involved. The best approach is to create a script to perform the work, then encrypt this script. When executed, this script will have the permissions necessary to read and apply the encrypted file.

TIP Using transparent encryption it is possible to encrypt the MySQL user and password securely in a plain text configuration file and used with appropriate MySQL client commands.

![]()

Architectural Considerations

Given the various options for backup, it is possible to optimize a recovery strategy to minimize downtime. A failover to a standby system is generally the best approach for critical operations.

The archiving of data from an OLTP system to a secondary MySQL instance can be a great benefit for ensuring smaller backups and a more efficient restore process. If your application stores logging, history, or archive information in individual tables or large amounts of reproducible transient data or read-only data, considering the separation of this information into different instances can also serve to reduce the dependency on a primary system. This is an approach for designing your application to support sharding and partitioning.

A simple example is a new system for analyzing stock information. With a large amount of historical information (over 30 years and approximately 500GB), the application was designed for data recorded in two individual tables: a historical table of data before the system went live and a second table for data following the go live date. The application was written to query one or both tables appropriately based on date parameters. By recommending the client split the MySQL instance into two separate instances, placing all historical data on one instance, the only application modification was an additional database connection management.

The backup strategy was also optimized now for two different sets of data. The first set was a static copy of historical data, no daily backup was needed, and no binary logging was necessary. This requirement actually enabled an additional benefit of parallelism and enabling the MySQL query cache. The second set of current data, which was much smaller, could be managed with a different backup and recovery process to meet SLA requirements. This architectural change enabled a different strategy for read scalability and negating the requirement of replication for historical information. The system was also able to support a partial failure of any historical information by reporting this information as unavailable.

TIP A well designed and configured MySQL replication topology can be the first step for a minimal recovery time. MySQL replication is not a complete backup solution but can support optimizing many common failure scenarios. The Effective MySQL: Advanced Replication Techniquesbook will focus on the various options that are available with MySQL.

![]()

Conclusion

With any RDBMS system, time and new features will always result in more data being recorded. While an appropriate MySQL backup and recovery strategy may meet business expectations today, this may not be so in six months’ time. The decision of which backup strategy to use can also be affected by optimization factors. The addition of more hardware such as an additional network card or an additional low cost hard drive can change the decision process for optimizing backup and recovery.

The SQL statements and web links listed in this chapter can be downloaded from http://effectivemysql.com/book/backup-recovery/.

All materials on the site are licensed Creative Commons Attribution-Sharealike 3.0 Unported CC BY-SA 3.0 & GNU Free Documentation License (GFDL)

If you are the copyright holder of any material contained on our site and intend to remove it, please contact our site administrator for approval.

© 2016-2025 All site design rights belong to S.Y.A.