How to Use Objects: Code and Concepts (2016)

Part III: Events

Chapter 8. A Brief Introduction to Threads

Programming with threads is in many ways very different from single-threaded code, and threads often exhibit surprising and unintuitive behavior. It would therefore be irresponsible to leave you with the impression that background threads in user interfaces are merely about how to start,![]() 7.10 stop, and track long-running tasks. This chapter highlights the new challenges and techniques that arise with using multiple threads, whether in the form of actual Thread objects or through task execution frameworks. We

7.10 stop, and track long-running tasks. This chapter highlights the new challenges and techniques that arise with using multiple threads, whether in the form of actual Thread objects or through task execution frameworks. We![]() 7.10.2 also hope to encourage you to follow up on this fascinating and practically highly relevant topic in the literature.

7.10.2 also hope to encourage you to follow up on this fascinating and practically highly relevant topic in the literature.![]() 148

148

8.1 The Core: Parallel Code Execution

Modern users expect modern applications to do many things at once: to display animations, to perform extensive computations, to access the network, and many more. Managing these activities is much simplified if each task can be coded as if it were the only one, and then the Java platform takes care to execute the tasks at the same time, or at least virtually the same time. Threads enable precisely this programming model.

Wrap code fragments in Runnables to start them in parallel.

Suppose, for instance, that one needs to download files and do heavy computation simultaneously. First, one wraps up the code as objects:![]() 1.8.6

1.8.6

threads.MinimalThreads

class DownloadWorker implements Runnable {

public void run() {

... perform work as if only task

}

}

class ComputationWorker implements Runnable {

public void run() {

... perform work as if only task

}

}

Then, one can tell Java to execute the two fragments in the run() methods simultaneously, by constructing Thread objects and starting the threads.

threads.MinimalThreads.startActivities

new Thread(new DownloadWorker()).start();

new Thread(new ComputationWorker()).start();

![]()

![]() 148This code shows the accepted way of creating threads. In principle, the API would allow overriding the run() method of Thread directly before starting the thread, but the solution presented here has the advantage of keeping the language infrastructure separate from the application objects.

148This code shows the accepted way of creating threads. In principle, the API would allow overriding the run() method of Thread directly before starting the thread, but the solution presented here has the advantage of keeping the language infrastructure separate from the application objects.

The scheduler decides nondeterministically which threads get to run.

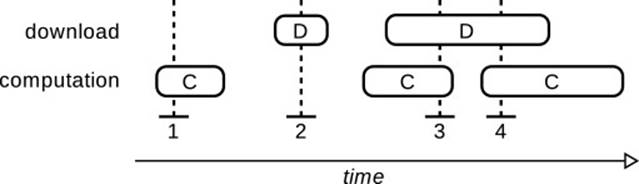

Fig. 8.1 shows the resulting runtime behavior. Code from the download task and the computation task is executed for brief periods of time, then is preempted and another thread gets to run. Since the switches happen so frequently, the user perceives the tasks as running simultaneously.

Figure 8.1 Interleaving of Code Execution by Threads

At this point, the connection between parallel and concurrent execution must be clarified. Because the scheduler makes tasks appear to be running simultaneously, one also says that the tasks execute concurrently and speaks of concurrent execution, concurrent programming, and so on. Since modern CPUs have multiple cores, threads very often will run truly in parallel: You cannot assume that other threads are stopped while your thread is working. The connection is captured succinctly by the popular characterization that “concurrency is potential parallelism.”

Let us examine Fig. 8.1 in more detail. At points 1 and 2, the two threads run on their own, but the execution can actually overlap, as at points 3 and 4, if the system has multiple CPUs or a CPU with multiple cores. The question of which thread runs at which point in time is decided by a central scheduler component, which usually resides in the operating system. Its decisions are based on how many threads are waiting, their different priorities, whether a thread tries to read data that is not yet![]() 8.2 available, whether it is waiting for some lock, and many other details. From the application’s perspective, its choices appear to be completely arbitrary and nondeterministic, so you should always assume that a thread might be running at any time, unless you actively prevent this—for instance, by using locks.

8.2 available, whether it is waiting for some lock, and many other details. From the application’s perspective, its choices appear to be completely arbitrary and nondeterministic, so you should always assume that a thread might be running at any time, unless you actively prevent this—for instance, by using locks.

Threads create entirely new programming challenges.

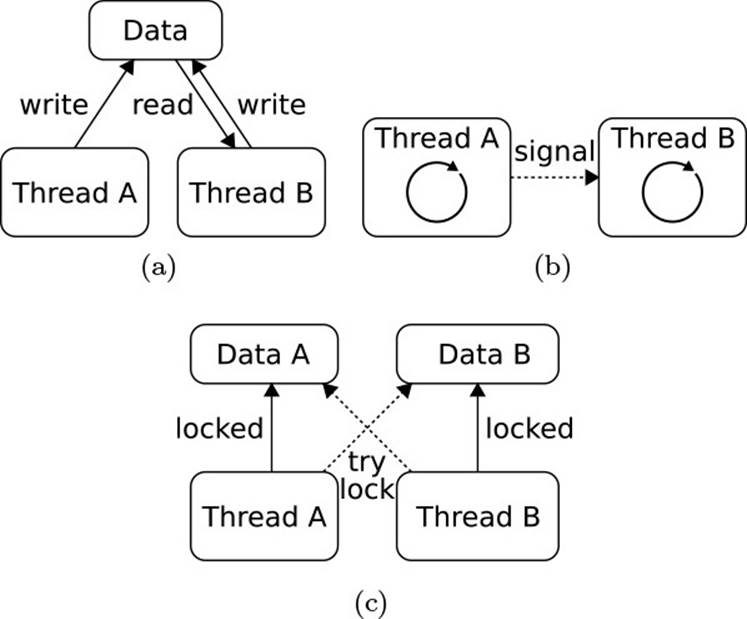

The remainder of this chapter discusses solutions to the fundamental challenges arising from the use of threads (Fig. 8.2).

Figure 8.2 New Challenges with Threads

1. When two threads, with the unpredictable runtime behavior from Fig. 8.1, access the same data, the state of the data structure becomes unpredictable as well, because it is unclear which writes take effect before which reads. Because the central question is which one of several operations gets executed first, this problem is termed a race condition. The challenge is to restrain the nondeterministic behavior so that one can still create programs that are correct and perform reliably.

2. Sometimes, threads need to communicate, mostly about events. But each thread is running its own code, so they cannot simply call methods of one another. We need a mechanism for sending signals between threads and for waiting for signals within a thread.

3. The solution to the first problem consists of locking the data: Before a thread accesses the data, it must obtain the lock on the data. Since no two threads can ever obtain the lock at the same time, the order of writes and reads has become somewhat more predictable. However, a new problem arises: If threads access several pieces of data, it may happen that each of them acquires one lock and then waits indefinitely, because the other thread holds the other lock. Such deadlocks are dreaded because they are usually not reproducible, so that they are challenging to track down and fix.

Objects confined to threads do not require special attention.

Before we delve into the details, here is a shortcut that can be taken very often: If only a single thread holds a reference to an object, then it can work![]() 148(§2.3) with that object as if there was no multithreading at all, since other threads cannot interfere with the object. This technique is calledconfinement. The object in question never “escapes from” a specific thread, and this is guaranteed by obeying a strict ownership discipline that avoids leaking object

148(§2.3) with that object as if there was no multithreading at all, since other threads cannot interfere with the object. This technique is calledconfinement. The object in question never “escapes from” a specific thread, and this is guaranteed by obeying a strict ownership discipline that avoids leaking object![]() 2.2.1 references to places where they might be accessible to other threads. Confinement is the deeper reason for introducing a dedicated event dispatch

2.2.1 references to places where they might be accessible to other threads. Confinement is the deeper reason for introducing a dedicated event dispatch![]() 7.10.1 thread in user interfaces and using other threads only as “producers” of data that they hand to the event thread via asyncExec(). One effective strategy for coping with threads is to identify as many object structures as possible that can be managed within one thread. For the remaining cases, read on.

7.10.1 thread in user interfaces and using other threads only as “producers” of data that they hand to the event thread via asyncExec(). One effective strategy for coping with threads is to identify as many object structures as possible that can be managed within one thread. For the remaining cases, read on.

8.2 Correctness in the Presence of Threads

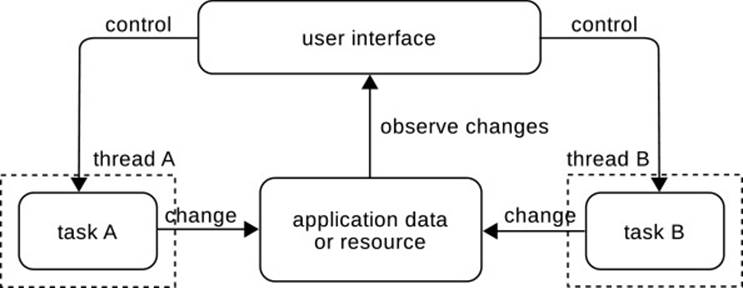

To keep the discussion focused, let us get back to the original motivation of![]() 7.10 background threads in user interfaces. Fig. 8.3 shows the pattern observed in many similar situations. The user interface spawns two threads A and B. Both collect data from somewhere and modify the application’s data structures

7.10 background threads in user interfaces. Fig. 8.3 shows the pattern observed in many similar situations. The user interface spawns two threads A and B. Both collect data from somewhere and modify the application’s data structures![]() 9.1 accordingly. The user interface mirrors that data on the screen. Since

9.1 accordingly. The user interface mirrors that data on the screen. Since![]() 7.10.1 displaying the data already involves the event dispatch thread, the same problems will occur with a single background thread A alone. Here are some concrete examples of such situations:

7.10.1 displaying the data already involves the event dispatch thread, the same problems will occur with a single background thread A alone. Here are some concrete examples of such situations:

• The application is a web browser and the threads perform different downloads simultaneously.

• ![]() 237The application is a server, and each thread is responsible for communicating with a single client.

237The application is a server, and each thread is responsible for communicating with a single client.

• ![]() 235The application is Eclipse, and a builder thread transforms an EMF model into Java source code in a file that an editor is currently visiting.

235The application is Eclipse, and a builder thread transforms an EMF model into Java source code in a file that an editor is currently visiting.

Figure 8.3 The Challenge of Multithreading

Threads challenge the correctness arguments.

The primary challenge in the situation of Fig. 8.3 is that the access to shared resources, such as data structures or files, must be coordinated to avoid causing havoc in the resources’ representations and endangering the correctness of the software. After all, the entire correctness argument introduced![]() 4 with contracts at the core rests on the notion of assertions, which capture knowledge about the program’s behavior in statements of the form: “Whenever execution reaches this point in the code, I know that ...”

4 with contracts at the core rests on the notion of assertions, which capture knowledge about the program’s behavior in statements of the form: “Whenever execution reaches this point in the code, I know that ...”![]() 4.1

4.1

Threads introduce the problem of interference. That is, one thread![]() 6,15,204 reaches an assertion and knows that it holds; in the next microsecond, a second thread modifies the memory described by the assertion and the assertion does not hold any longer. In other words, with threads you must assume that unexpected modifications of memory can occur at any time—unless you exclude them explicitly.

6,15,204 reaches an assertion and knows that it holds; in the next microsecond, a second thread modifies the memory described by the assertion and the assertion does not hold any longer. In other words, with threads you must assume that unexpected modifications of memory can occur at any time—unless you exclude them explicitly.

A particular danger lies in breaking the often intricate invariants of![]() 4.1 objects. The invariant describes what a “proper object” looks like, and it must hold whenever no code of the object’s class runs. However, if the object is currently “working,” then it usually breaks the invariant temporarily. As a result, a different thread accessing the same object can no longer assume that the invariant holds.

4.1 objects. The invariant describes what a “proper object” looks like, and it must hold whenever no code of the object’s class runs. However, if the object is currently “working,” then it usually breaks the invariant temporarily. As a result, a different thread accessing the same object can no longer assume that the invariant holds.

To demonstrate the problem of interference, let us break a GapText Store. Our previous analyses have convinced us that the code is correct.![]() 1.3.1

1.3.1 ![]() 4.1 Yet, the following code produces an exception: As soon as the access in line 7 happens to run simultaneously in the two threads created in lines 11 and 12, some exception or other will be thrown; because of the intricate arithmetic on indices, it is most probably an ArrayIndexOutOfBoundsException.

4.1 Yet, the following code produces an exception: As soon as the access in line 7 happens to run simultaneously in the two threads created in lines 11 and 12, some exception or other will be thrown; because of the intricate arithmetic on indices, it is most probably an ArrayIndexOutOfBoundsException.

swt.threads.BreakRepresentation.main

1 final GapTextStore shared = new GapTextStore();

2 class Worker implements Runnable {

3 public void run() {

4 for (int i = 0; i != 10000; i++) {

5 String text = String.format("%03d ",

6 rand.nextInt(1000));

7 shared.replace(shared.getLength(), 0, text);

8 }

9 }

10 }

11 new Thread(new Worker()).start();

12 new Thread(new Worker()).start();

The minimal requirement is mutual exclusion.

Interference can occur at any time, unless one prevents it explicitly. Mutual exclusion means denying all but a single thread access to a shared resource for some period of time, so that the thread can work independently of other threads in the system. Mutual exclusion is minimal in that without it, the nondeterministic scheduling results in unpredictable states of the shared resource.

Mutual exclusion is usually accomplished by locks, as shown in the following code. First, we create a Lock object. Locks have two main methods lock() and unlock(). When a thread calls lock(), then it acquires the lock and holds it until it calls unlock(). The internal mechanisms guarantee that no two threads can ever hold the same lock simultaneously. If a thread calls lock() while another thread happens to hold the lock already, the first thread must simply wait until the lock becomes available again.

swt.threads.PreserveRepresentation.main

final Lock lock = new ReentrantLock();

![]() The interface Lock specifies only the basic behavior, but there can be variations. A common one is a ReentrantLock, which allows a thread that already holds the lock to lock() it once more. The lock keeps a count of lock() and unlock() calls and frees the lock only when the counter reaches 0. This behavior is useful, for instance, when one public method calls another public method and both start by locking the entire object, as seen in the next example.

The interface Lock specifies only the basic behavior, but there can be variations. A common one is a ReentrantLock, which allows a thread that already holds the lock to lock() it once more. The lock keeps a count of lock() and unlock() calls and frees the lock only when the counter reaches 0. This behavior is useful, for instance, when one public method calls another public method and both start by locking the entire object, as seen in the next example.

Using a lock, we can now protect the GapTextStore by replacing the problematic line 6 in the original with the following code block. In line 1, the thread executing the code obtains the lock, or waits until the lock is available. The crucial observation is that line 3 can be reached only by a single thread—namely, the thread that holds the lock. As a result, only a single thread at a time can perform the modification of the gap store, so that the correctness argument is not invalidated by interference, and no exception is ever thrown. The lock protects the code between lock()and unlock() from interference.

swt.threads.PreserveRepresentation.main

1 lock.lock();

2 try {

3 shared.replace(shared.getLength(), 0, text);

4 } finally {

5 lock.unlock();

6 }

![]() Make sure to always release a lock you have acquired, since otherwise the shared resource can never be accessed again. The preceding code shows the idiom of using a

Make sure to always release a lock you have acquired, since otherwise the shared resource can never be accessed again. The preceding code shows the idiom of using a![]() 148 try-finally combination for this purpose; regardless of how the protected region between

148 try-finally combination for this purpose; regardless of how the protected region between![]() 1.5.6lock() and unlock() is left, the lock is freed.

1.5.6lock() and unlock() is left, the lock is freed.

![]() Very often, locks are conceptually associated with a specific object, or a group of objects. Code that wishes to work with the object has to acquire the lock beforehand and release it afterward. In such cases, one says that one holds the lock on the object, even if the connection is only conceptual, not technical.

Very often, locks are conceptually associated with a specific object, or a group of objects. Code that wishes to work with the object has to acquire the lock beforehand and release it afterward. In such cases, one says that one holds the lock on the object, even if the connection is only conceptual, not technical.

![]() Java offers a built-in locking mechanism through the synchronized keyword. Each

Java offers a built-in locking mechanism through the synchronized keyword. Each![]() 148 object has an associated lock that synchronized acquires and releases, usually for the this object. Writing synchronized before all public methods of a class protects its internal invariants: Only one thread at a time can ever enter the class’s code, so the normal

148 object has an associated lock that synchronized acquires and releases, usually for the this object. Writing synchronized before all public methods of a class protects its internal invariants: Only one thread at a time can ever enter the class’s code, so the normal![]() 4.1 correctness arguments apply. Such objects are also called monitors. (Unfortunately, that term is used by the Java Language Specification in a different sense.) Compared to Lock objects, this mechanism is much less flexible. We will discuss the inflexibility later at the appropriate points.

4.1 correctness arguments apply. Such objects are also called monitors. (Unfortunately, that term is used by the Java Language Specification in a different sense.) Compared to Lock objects, this mechanism is much less flexible. We will discuss the inflexibility later at the appropriate points.

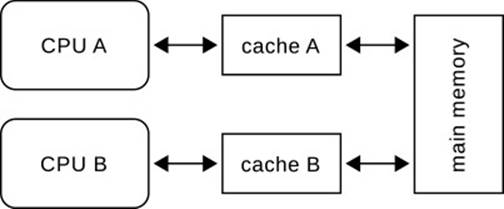

![]() Multithreading on modern processors goes beyond nondeterministic execution of one thread or another. The threads may actually run in parallel, on different CPUs or different cores of the same CPU (Fig. 8.4). The memory hierarchy of the processor then introduces a new problem beyond mere interference: When one thread overwrites the memory accessed by a different thread, the other thread may not even become aware of the fact. Suppose one thread runs on CPU A and writes some data to a shared object. For efficiency, that data is not written through to main memory, but is kept in the CPU’s local cache A until the cache is required elsewhere. The second thread, when it runs,

Multithreading on modern processors goes beyond nondeterministic execution of one thread or another. The threads may actually run in parallel, on different CPUs or different cores of the same CPU (Fig. 8.4). The memory hierarchy of the processor then introduces a new problem beyond mere interference: When one thread overwrites the memory accessed by a different thread, the other thread may not even become aware of the fact. Suppose one thread runs on CPU A and writes some data to a shared object. For efficiency, that data is not written through to main memory, but is kept in the CPU’s local cache A until the cache is required elsewhere. The second thread, when it runs,![]() 115 reads only from its own local cache B and is not aware of the new data in cache A.

115 reads only from its own local cache B and is not aware of the new data in cache A.

Figure 8.4 Threads and Caching

Java’s mutual exclusion mechanisms also include a memory barrier, which synchronizes the different caches and the main memory at the hardware level, thereby ensuring that all threads do see the current data stored in an object. The keyword volatile also introduces a memory barrier, without providing locking at the same time. The details![]() 111,166 are specified in the Java Memory Model. The specification itself is rather daunting. Essentially, it guarantees that when using locking or volatile, the reads and writes to a memory location are always properly ordered in the sense that a read either sees or doesn’t see a write, depending on the scheduler’s decisions, but there is no state of undefinedness in between.

111,166 are specified in the Java Memory Model. The specification itself is rather daunting. Essentially, it guarantees that when using locking or volatile, the reads and writes to a memory location are always properly ordered in the sense that a read either sees or doesn’t see a write, depending on the scheduler’s decisions, but there is no state of undefinedness in between.

Make protection from interference part of the encapsulation.

The guard for the shared gap store in the example is rather unreliable, since all clients must obey the protocol of acquiring the lock before accessing the store. A better solution is to build objects in such a way that they protect their own data structures and invariants by its own locks. Such objects are called thread-safe.

![]() 2.4In the example, we could just wrap the GapTextStore in a class that acquires a lock before accessing the wrapped store, as shown next. If each public method proceeds in the same way as replace(), then the invariants of the store are always safe and clients cannot circumvent the locking protocol.

2.4In the example, we could just wrap the GapTextStore in a class that acquires a lock before accessing the wrapped store, as shown next. If each public method proceeds in the same way as replace(), then the invariants of the store are always safe and clients cannot circumvent the locking protocol.

swt.threads.ThreadSafeTextStore

class ThreadSafeTextStore {

private Lock lock = new ReentrantLock();

private GapTextStore rep = new GapTextStore();

public final void replace(int offset, int length, String text) {

lock.lock();

try {

rep.replace(offset, length, text);

} finally {

lock.unlock();

}

}

... similarly for get() and getLength()

}

![]() In such small examples, the coding overhead for lock acquisition and release does seem rather tremendous. In real-world code, the locks protect longer sequences of operations, so that the necessary try-finally block is not as intrusive.

In such small examples, the coding overhead for lock acquisition and release does seem rather tremendous. In real-world code, the locks protect longer sequences of operations, so that the necessary try-finally block is not as intrusive.

![]() The Java library offers wrappers for the classes of the collections framework. To make an ArrayList thread-safe, one wraps it by Collections.synchronizedList(). The concurrency library in java.util.concurrent also offers special data structures, such asConcurrentHashMap, which are thread-safe but may also allow more concurrency—for instance, for simultaneous traversals through iterators. For storing single values in a thread-safe manner, one use the classes AtomicInteger, AtomicIntegerArray, and so on fromjava.util.concurrent.atomic.

The Java library offers wrappers for the classes of the collections framework. To make an ArrayList thread-safe, one wraps it by Collections.synchronizedList(). The concurrency library in java.util.concurrent also offers special data structures, such asConcurrentHashMap, which are thread-safe but may also allow more concurrency—for instance, for simultaneous traversals through iterators. For storing single values in a thread-safe manner, one use the classes AtomicInteger, AtomicIntegerArray, and so on fromjava.util.concurrent.atomic.

Since the new gap store objects are thread-safe, one can simply share an instance between threads.

swt.threads.ThreadSafeRepresentation.main

final ThreadSafeTextStore shared = new ThreadSafeTextStore();

The problematic access in the example no longer requires explicit protection.

swt.threads.ThreadSafeRepresentation.main

shared.replace(shared.getLength(), 0, text);

From a higher perspective, thread-safe objects encapsulate not only their data structures, but also the protection of those data structures. Their Lock objects become part of their internal representation, and one can formulate assertions about whether the lock is currently held at some given point. The main advantage is that clients no longer have to understand the necessity to lock, so this is one more encapsulated detail.

Beware of multistep operations and cross-object invariants.

Thread-safety is not the ultimate solution to threading problems, but addresses only a bare minimum requirement: When the invariants of objects are broken, there is no chance of ever obtaining correct software. However, it is important to remember that even a thread-safe object can potentially be modified between two calls to locking methods, so that the assertions about the object’s state may get broken.

To demonstrate, consider the following very simple case: We append a string text to the thread-safe text store shared in lines 1–2 and read the text back immediately afterward in line 3. Lines 4–7 write an error message if the text has been changed unexpectedly.

swt.threads.MultiStepBroken.main

1 int insertAt = shared.getLength();

2 shared.replace(insertAt, 0, text);

3 String retrieved = shared.get(insertAt, text.length());

4 if (!retrieved.equals(text)) {

5 System.err.println("Unexpected text read back at position "

6 + insertAt + " and step " + i);

7 }

In a single-threaded setting, we can track the state of shared in detail by![]() 4.7 assertions. After line 2, shared contains text at insertAt, so retrieved should be the same text. Surprisingly often, however, this is not the case. In experiments with executing the preceding code 10,000 times in a loop in two threads, usually between 20 and 60 violations were detected.

4.7 assertions. After line 2, shared contains text at insertAt, so retrieved should be the same text. Surprisingly often, however, this is not the case. In experiments with executing the preceding code 10,000 times in a loop in two threads, usually between 20 and 60 violations were detected.

Lock objects that are mentioned in assertions.

The previously mentioned experiments show that there is no such thing as “immediately afterward” with threads; thus, you should always assume that a different thread will happen to run at the most inappropriate moment.![]() 4.1 This attitude is very similar to the advice to be paranoid about the correctness of software.

4.1 This attitude is very similar to the advice to be paranoid about the correctness of software.

![]() 4.1In consequence, whenever one makes an assertion about an object in a multithreaded context, one must check at the same time that one holds a lock on the object. Assertions without locks will always be broken at some point by an unfortunate combination of thread interactions.

4.1In consequence, whenever one makes an assertion about an object in a multithreaded context, one must check at the same time that one holds a lock on the object. Assertions without locks will always be broken at some point by an unfortunate combination of thread interactions.

In the preceding example, the crucial lines 1–3 work with shared, and it is in this code region that we make assumptions about the state of shared. Lines 4–7, in contrast, work with local variables, which cannot be accessed from a different thread. The minimum required locking therefore protects the problematic steps, as shown here:

swt.threads.MultiStepCorrect.main

int insertAt;

String retrieved;

shared.lock();

try {

insertAt = shared.getLength();

shared.replace(insertAt, 0, text);

retrieved = shared.get(insertAt, text.length());

} finally {

shared.unlock();

}

Note that we must use the lock built into shared, because otherwise other clients of that object resource might still modify it at the same time. The ThreadSafeTextStore therefore exports its lock.

swt.threads.ThreadSafeTextStore

public void lock() {

lock.lock();

}

public void unlock(){

lock.unlock();

}

![]() At this point, we see the first advantage of explicit locks over synchronized. That statement always releases the lock in the same method where it obtains the lock—it supports block-structured locking. Explicit Lock objects, in contrast, can be held for arbitrary periods of time, independently of the program’s block structure.

At this point, we see the first advantage of explicit locks over synchronized. That statement always releases the lock in the same method where it obtains the lock—it supports block-structured locking. Explicit Lock objects, in contrast, can be held for arbitrary periods of time, independently of the program’s block structure.

Missing mutual exclusion makes for elusive bugs.

It is important to realize that a missing lock may not show up immediately, but may remain undetected for a very long time. Especially if the shared data structure is accessed only infrequently, chances are that the bug will lie dormant for months or even years. When it does show up, it will be hard to trace down and virtually impossible to reproduce. You should really be paranoid about holding all necessary locks.

8.3 Notifications Between Threads

We have seen the wide application and importance of notifications through the OBSERVER pattern. Event-driven software generalizes this idea to say![]() 2.1 that an object’s behavior can be understood as reactions to events. It is therefore necessary to investigate the connection with threads: What happens if the execution of one thread discovers some state change or event that a different thread should be notified about or that it is even waiting for? Unlike with the usual pattern, one cannot simply call a method on a thread and pass some event object. Instead, the software must include mechanisms to synchronize the working of threads at specific points.

2.1 that an object’s behavior can be understood as reactions to events. It is therefore necessary to investigate the connection with threads: What happens if the execution of one thread discovers some state change or event that a different thread should be notified about or that it is even waiting for? Unlike with the usual pattern, one cannot simply call a method on a thread and pass some event object. Instead, the software must include mechanisms to synchronize the working of threads at specific points.

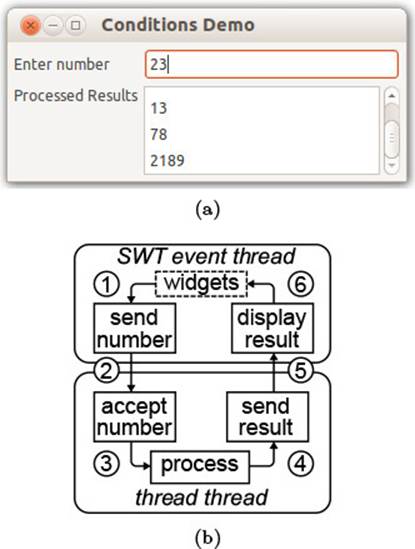

We use a simple example to understand the mechanics of interthread notifications [Fig. 8.5(a)]: The user enters a number that gets processed in a background thread. The result of the processing appears in the list below the entry field. Fig. 8.5(b) shows the sequence of necessary steps from a high-level perspective. The example involves two threads, the SWT event![]() 7.10.1 dispatch thread and a background worker thread. The new challenge is the synchronization in step 2 of Fig. 8.5(b), which passes the entered number across the thread boundary.

7.10.1 dispatch thread and a background worker thread. The new challenge is the synchronization in step 2 of Fig. 8.5(b), which passes the entered number across the thread boundary.

Figure 8.5 Mechanics of the Conditions Demo

But let us treat the other steps first to get the overall picture. In step 1, when the user presses enter in the text field, an event-listener dispatches the number for processing:

swt.threads.ConditionsDemo.ConditionsDemo

number.addSelectionListener(new SelectionAdapter() {

public void widgetDefaultSelected(SelectionEvent e) {

int input = Integer.parseInt(number.getText());

number.setText("");

sendNumber(input);

}

});

![]() In this code, we finish the UI-level work before sending off the number. Had we left number.setText()

In this code, we finish the UI-level work before sending off the number. Had we left number.setText()![]() 8.1 as the last statement, the answer might have arrived before the old number was cleared. Although in the specific example this does not matter, we prefer to keep the sequence clear: We complete fetching the number from the UI before starting the processing.

8.1 as the last statement, the answer might have arrived before the old number was cleared. Although in the specific example this does not matter, we prefer to keep the sequence clear: We complete fetching the number from the UI before starting the processing.

Step 3 in Fig. 8.5(b) is a simple method call to processNumber(), which is a no-op and passes the input on in step 4:

swt.threads.ConditionsDemo

public void processNumber(Integer number) {

Integer result = number; // pseudo-"processing"

sendResult(result);

}

In step 5, the worker thread accesses the user interface in the usual![]() 7.10.1 manner using asyncExec(). Step 6 is inlined in the following code:

7.10.1 manner using asyncExec(). Step 6 is inlined in the following code:

swt.threads.ConditionsDemo.sendResult

display.asyncExec(new Runnable() {

public void run() {

if (!results.isDisposed()) {

results.add(result.toString());

}

}

});

![]() In case you are wondering about the asymmetry, recall that step 5 involves a second

In case you are wondering about the asymmetry, recall that step 5 involves a second![]() 7.10.1 queue, with very similar coding idioms as the ones shown next. It is the SWT event queue, but it remains hidden in the implementation of asyncExec().

7.10.1 queue, with very similar coding idioms as the ones shown next. It is the SWT event queue, but it remains hidden in the implementation of asyncExec().

So the remaining task is to complete step 2 in Fig. 8.5(b). The general approach is shown in Fig. 8.6. The two threads share a lock dataLock, a condition variable dataAvailable, and a plain, unsynchronized queue postbox for buffering data. Briefly speaking, condition variables enable signals to be sent across thread boundaries. Here is what will happen: (1) The worker thread keeps waiting for a signal that new data is available. (2) When the user enters a new number, the SWT thread acquires the lock for synchronization, (3) pushes the data into the queue, and (4) sends the signal that the worker is waiting for. (5) The worker then acquires the lock and fetches the newly arrived data from the queue.

Figure 8.6 Using Condition Variables for Thread Synchronization

So much for the conceptual side. Because of interactions between the lock and the condition variables, the technical details and coding idioms are somewhat more involved.

Condition variables serve to signal state changes between threads.

Our example follows a standard approach to the producer–consumer pattern (Fig. 7.22, page 397): The producer drops data into a queue, where the consumer can pick it up later. In the next code snippet, we create the setup from Fig. 8.6: A postbox contains the actual data, and we choose a plain linked list. Since different threads will work with the data, we need a dataLock to protect the accesses.

swt.threads.ConditionsDemo

private Queue<Integer> postbox = new LinkedList<Integer>();

private Lock dataLock = new ReentrantLock();

private Condition dataAvailable = dataLock.newCondition();

The new element in the example is the condition variable data Available. A condition variable enables one thread to suspend execution until a particular condition on the program state may hold, which a different thread signals through the condition variable after it has changed the program state. The qualification “may hold” is necessary, of course, because the state may change again between the sending and the processing of the notification. Also, the condition variable does not check whether the signal is actually justified. Condition variables are always associated with locks,![]() 8.2 because otherwise the notifications themselves may be prone to interference and race conditions.

8.2 because otherwise the notifications themselves may be prone to interference and race conditions.

In the current example, the condition variable dataAvailable will be used to signal the specific state that the postbox now contains data. When the user presses “enter” in the number field, a listener for the widget DefaultSelected event executes the following code. It locks the postbox as usual to guarantee exclusive access. It then pushes the new number into the queue (line 3). Finally, it uses the condition variable dataAvailable to send a signal to any waiting thread that new data has arrived (line 4).

swt.threads.ConditionsDemo.sendNumber

1 dataLock.lock();

2 try {

3 postbox.add(number);

4 dataAvailable.signalAll();

5 } finally {

6 dataLock.unlock();

7 }

![]() Like a lock, the condition variable is not technically associated with a specific data structure or a state of that data structure. The chosen name reflects the conceptual relationship to keep the code readable.

Like a lock, the condition variable is not technically associated with a specific data structure or a state of that data structure. The chosen name reflects the conceptual relationship to keep the code readable.

![]()

![]() 148The method signalAll() wakes up all threads waiting for the given condition. It is customary to use signalAll() even if one expects only a single thread to be waiting, because lost signals can easily occur if there happen to be several threads and the one being woken up cannot handle the signal. The idioms for preventing lost signals would complicate the code, while the gain in runtime would be marginal. It is better to be conservative when programming with threads.

148The method signalAll() wakes up all threads waiting for the given condition. It is customary to use signalAll() even if one expects only a single thread to be waiting, because lost signals can easily occur if there happen to be several threads and the one being woken up cannot handle the signal. The idioms for preventing lost signals would complicate the code, while the gain in runtime would be marginal. It is better to be conservative when programming with threads.

The background thread keeps on processing numbers from the postbox. (The loop follows in the next code snippet.) Whenever it is ready for the next input, it will look at the postbox. If no number has arrived as yet, it must wait. Conditions enable the thread to implement just this behavior. Since the thread needs to access the postbox, it acquires the corresponding lock (lines 1–2, 8–10). The counterpart of the signaling from the event thread is found in lines 3–4: The background thread checks whether the postbox contains data and otherwise goes to sleep until the event thread announces that data is available. Once data becomes available, it is processed as usual in lines 5–7.

swt.threads.ConditionsDemo.run

1 dataLock.lock();

2 try {

3 while (postbox.isEmpty())

4 dataAvailable.await();

5 do {

6 processNumber(postbox.remove());

7 } while (!postbox.isEmpty());

8 } finally {

9 dataLock.unlock();

10 }

Three crucial details are worth mentioning. First, calling await() on a condition variable also releases the lock that it is associated with. In the preceding code, line 4 therefore releases the lock acquired in line 1. This is necessary to enable other threads to modify the data structure—here the postbox—at all, because they need the lock to do so. When a signal is received and await() is about to return, it reacquires the lock. (If several threads have called await(), the first one to acquire the lock proceeds; the others continue to wait for the lock to become available.)

As a related second detail, the entire region from line 1 to line 9 is protected by the lock from the thread’s perspective. In particular, when the check in line 3 detects available data, then this assertion cannot be![]() 8.2 invalidated by interference afterward, so that line 6 is justified in assuming that the call to remove() will not fail. (We have chosen a do-while loop to demonstrate this detail.)

8.2 invalidated by interference afterward, so that line 6 is justified in assuming that the call to remove() will not fail. (We have chosen a do-while loop to demonstrate this detail.)

Finally, the while loop in line 3 needs explaining: Would not an if be sufficient? After all, the intuition is to “wait if no data needs processing.” The problem with this reasoning is that it assumes that the await() returns precisely if data is available. However, there are several reasons why this might not be the case. Not all are valid in the current context, but they explain the general idiom of using a while loop. First, several threads might wait simultaneously and the first one to reacquire the lock after await() would process all the data. The others would wake up to find that no data is available after all and would fail in line 6. Second, the condition might be signaled inadvertently, especially if the condition variable is used by many clients or is even public. Finally, the Java platform does not guarantee absolutely that await() returns only when a corresponding signal() has been called—spurious wakeups may make await() return without provocation. Since programming with threads should be very conservative, one always uses a loop to wait for the desired condition.

In summary, the example has introduced the fundamental idea of sending notifications between threads: One uses conditions to send the actual signal and employs data structures to contain the event objects or other data associated with the notifications. Conditions can be thought of as a predefined interthread observer pattern.

Use predefined building blocks for synchronization wherever possible.

This idiom for sending and receiving notifications is extremely common.![]() 8.2 The Java library offers a set of blocking queues that encapsulate all the necessary locking and signaling. The term refers to the fact that these queues will block the execution of reading threads when no data is available and the execution of writing threads when the queue’s capacity is exhausted. Blocking queues offer just the functionality for passing data items or events safely from one thread to the other.

8.2 The Java library offers a set of blocking queues that encapsulate all the necessary locking and signaling. The term refers to the fact that these queues will block the execution of reading threads when no data is available and the execution of writing threads when the queue’s capacity is exhausted. Blocking queues offer just the functionality for passing data items or events safely from one thread to the other.

As a result, blocking queues make programming with threads a breeze. In the previous example, we can replace the bare postbox with a synchronized queue.

swt.threads.BlockingQueueDemo

private BlockingQueue<Integer> postbox =

new LinkedBlockingQueue<Integer>();

Whenever the user enters data, the event dispatch thread puts it into the queue, relying on the queue’s internal mechanisms to take care of all interthread communication issues.

swt.threads.BlockingQueueDemo.sendNumber

postbox.put(Integer.parseInt(number.getText()));

number.setText("");

Likewise, the background thread can simply fetch the next item from the queue. The method take() blocks until data is actually available.

swt.threads.BlockingQueueDemo.run

processData(postbox.take());

![]()

![]() 7.10.1

7.10.1 ![]() 148Blocking queues are the basic ingredient to implementing producer–consumer relationships between threads: One thread keeps producing data and putting it into the queue; the other thread keeps taking data from the queue and processing it further. In such a situation, one more aspect of synchronization must be considered—namely, that of processing speed. If producing data is much quicker than processing it in the consumer, then memory will overflow with the items stored in the queue. For this reason, blocking queues in the library usually have a fixed capacity. When the producer calls put() and the queue is already full, then the call will block, making the behavior symmetric to that of take() on an empty queue. As a result, the producer will be slowed down to the speed of the consumer and a memory overflow is avoided.

148Blocking queues are the basic ingredient to implementing producer–consumer relationships between threads: One thread keeps producing data and putting it into the queue; the other thread keeps taking data from the queue and processing it further. In such a situation, one more aspect of synchronization must be considered—namely, that of processing speed. If producing data is much quicker than processing it in the consumer, then memory will overflow with the items stored in the queue. For this reason, blocking queues in the library usually have a fixed capacity. When the producer calls put() and the queue is already full, then the call will block, making the behavior symmetric to that of take() on an empty queue. As a result, the producer will be slowed down to the speed of the consumer and a memory overflow is avoided.

In the preceding example, the LinkedBlockingQueue has unbounded capacity, which mirrors the previous behavior of the postbox and ensures that the call to put() will not block in the event thread, which would freeze the user interface. This situation is really special. In usual producer–consumer relationships, you should prefer queues with a limited capacity.

The library supports further common synchronization tasks. For instance,![]() 148 a CountDownLatch enables a thread to wait until one or more other threads have reached some specific point—for example, until all threads have started up or have finished processing different parts of a computation. A CyclicBarrier lets threads wait for one another repeatedly at specific points, such as when data needs to be exchanged. It is highly advisable to look through the library before attempting to implement a custom mechanism.

148 a CountDownLatch enables a thread to wait until one or more other threads have reached some specific point—for example, until all threads have started up or have finished processing different parts of a computation. A CyclicBarrier lets threads wait for one another repeatedly at specific points, such as when data needs to be exchanged. It is highly advisable to look through the library before attempting to implement a custom mechanism.

Stop threads using interrupt().

The final notification between threads concerns their termination. Suppose the user interface has started a background thread, but the expected results have become redundant due to further user actions. How should one notify the thread about this event? Within the Eclipse framework, this is accomplished through the progress monitors. At the level of the threads![]() 7.10.2 themselves, interrupts serve the purpose.

7.10.2 themselves, interrupts serve the purpose.

In the running example of processing entered numbers, the background thread must be stopped when the dialog closes. We attach a Dispose Listener to the dialog and interrupt the background thread (line 5).

swt.threads.ConditionsDemo.ConditionsDemo

1 workerThread = new Thread(worker);

2 workerThread.start();

3 addDisposeListener(new DisposeListener() {

4 public void widgetDisposed(DisposeEvent e) {

5 workerThread.interrupt();

6 }

7 });

The thread being interrupted may receive the interrupt in two possible ways. When it is currently blocked in waiting for some signal or condition to occur, such as through await() on a Condition or take() on a BlockingQueue, the call will return with anInterruptedException (possibly after waiting to reacquire any released locks). Otherwise, the thread’s built-in interruption status flag will be set to true.

The processing loop of the running example, shown next, exhibits a common idiom for handling interrupts. Before starting on any further work, the thread checks whether it has already been interrupted (line 2). Otherwise, it waits for more data and processes it. Furthermore, it expects anInterruptedException to be thrown while it waits for new data. Lines 5–8 exhibit the idiom of converting the exception to a set interruption status![]() 148 flag to unify the two cases of receiving an interruption. In the example, the loop and the thread then terminate.

148 flag to unify the two cases of receiving an interruption. In the example, the loop and the thread then terminate.

swt.threads.ConditionsDemo

1 public void run() {

2 while (!Thread.currentThread().isInterrupted()) {

3 try {

4 ...

5 } catch (InterruptedException exc) {

6 // convert to flag

7 Thread.currentThread().interrupt();

8 }

9 }

10 }

11

In summary, the thread terminates itself rather than being terminated from the outside. The interrupt sent by another thread is understood as a![]() 1.5.6 kind request. In particular, the receiver must be exception-safe for possible InterruptedExceptions, meaning it must close open resources and leave invariants of data structures intact.

1.5.6 kind request. In particular, the receiver must be exception-safe for possible InterruptedExceptions, meaning it must close open resources and leave invariants of data structures intact.

![]() Do not ignore InterruptedExceptions. When writing code, these exceptions often appear to be merely a nuisance to be dealt with later on, and one is tempted to add an empty catch clause to be rid of them. However, you should be aware that the proper termination of a thread is just as much a part of its life cycle and behavior as the actual processing that it performs. Properly handling InterruptedExceptions is part of the requirements.

Do not ignore InterruptedExceptions. When writing code, these exceptions often appear to be merely a nuisance to be dealt with later on, and one is tempted to add an empty catch clause to be rid of them. However, you should be aware that the proper termination of a thread is just as much a part of its life cycle and behavior as the actual processing that it performs. Properly handling InterruptedExceptions is part of the requirements.

![]() Be aware of which blocking methods are interruptible. For instance, the method Lock.lock() blocks until the lock becomes available but it is not terminated with an InterruptedException when the thread receives an interrupt in the meantime. The reason is that usually one does not expect to wait long for locks, so that the behavior is a useful default. If your application does expect to wait longer, it might make sense to use lockInterruptibly() instead.

Be aware of which blocking methods are interruptible. For instance, the method Lock.lock() blocks until the lock becomes available but it is not terminated with an InterruptedException when the thread receives an interrupt in the meantime. The reason is that usually one does not expect to wait long for locks, so that the behavior is a useful default. If your application does expect to wait longer, it might make sense to use lockInterruptibly() instead.

![]() The ability to lock while accepting interrupts and the ability to lock with a given timeout are two more aspects that make Lock objects much more flexible than the built-in synchronized command.

The ability to lock while accepting interrupts and the ability to lock with a given timeout are two more aspects that make Lock objects much more flexible than the built-in synchronized command.

![]() Never use the stop() method on Threads. It aborts the computation immediately without giving the thread the chance to clean up. Even the finally clauses, which

Never use the stop() method on Threads. It aborts the computation immediately without giving the thread the chance to clean up. Even the finally clauses, which![]() 1.5.6 are used to protect against unexpected termination, will not be executed.

1.5.6 are used to protect against unexpected termination, will not be executed.

Ensure that all threads that get started are also interrupted.

Threads that are no longer required must be interrupted to free their resources. One further reason is that the JVM will not terminate until all started threads have terminated. In user interface examples, this means that even closing their shell and terminating their event dispatch thread will not be sufficient. It is necessary to add a dispose-listener as shown earlier. The requirement to stop threads is really no different from the general injunction of managing an object’s life cycle, for instance, by unregistering![]() 2.1.2 the object from all subjects that it observes and freeing any resources it

2.1.2 the object from all subjects that it observes and freeing any resources it![]() 7.4.1 might hold.

7.4.1 might hold.

![]() The JVM offers daemon threads that get terminated automatically when the last non-daemon thread terminates. As a result, daemon threads never force the JVM to keep running. However, daemon threads are stopped abruptly, without any notification to the threads themselves, and the reasons against using Thread.stop() apply here as well.

The JVM offers daemon threads that get terminated automatically when the last non-daemon thread terminates. As a result, daemon threads never force the JVM to keep running. However, daemon threads are stopped abruptly, without any notification to the threads themselves, and the reasons against using Thread.stop() apply here as well.

8.4 Asynchronous Messages

Normal communication between objects consists of calling methods of each other. Since method calls are always associated with technical details of parameter passing and the call stack, it is simpler to use the abstraction that objects communicate by sending messages to each other, even if in the![]() 1.4.1

1.4.1 ![]() 1.1 end “sending a message” really translates to calling a method. The objects in this abstraction become more independent of one another, with one sending a message and the other reacting appropriately.

1.1 end “sending a message” really translates to calling a method. The objects in this abstraction become more independent of one another, with one sending a message and the other reacting appropriately.

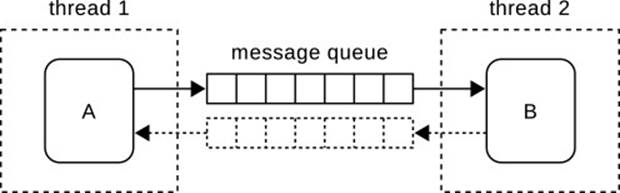

In the context of threads, this independence can be strengthened, since the receiving object does not have to react to a message immediately, but can defer processing until a convenient time. Such messages are called asynchronous messages. Fig. 8.7 gives an overview. Object A lives in a thread 1; its code is executed in that thread. Object B lives in a different thread 2. Usually, B will execute a loop to wait for messages and call the appropriate methods on itself. If A wants to send a message to B, it puts the message into a synchronized queue and proceeds with its own processing. At some![]() 8.3 later point, B will receive the message and react to it. Since the sender does not wait for a return value, any results are often transmitted again asynchronously through a second queue, shown as dashed lines in the figure.

8.3 later point, B will receive the message and react to it. Since the sender does not wait for a return value, any results are often transmitted again asynchronously through a second queue, shown as dashed lines in the figure.

Figure 8.7 Asynchronous Messages

For a concrete example, consider a user interface that downloads web pages in the background. Object A in Fig. 8.7 is part of the user interface![]() 7.10.1 and lives in the event thread. It uses the following method download() whenever it requires a web page. Lines 2–4 create a request and display it in a table. Line 5 associates the table row with the request in a hash map. This is necessary because of asynchronous completion: When the answer to the request arrives, its result has to be shown in the correct table row.

7.10.1 and lives in the event thread. It uses the following method download() whenever it requires a web page. Lines 2–4 create a request and display it in a table. Line 5 associates the table row with the request in a hash map. This is necessary because of asynchronous completion: When the answer to the request arrives, its result has to be shown in the correct table row.

swt.threads.AsyncMessages

1 public void download(String url) throws InterruptedException {

2 DownloadRequest req = new DownloadRequest(url);

3 TableItem item = new TableItem(table, SWT.NONE);

4 item.setText(0, url);

5 openRequests.put(req, item);

6 requests.put(req);

7 }

The results, through the dashed queue in Fig. 8.7, are received in a separate thread, which executes the following loop and displays the results![]() 7.10.1 in the correct table row (switching to the event thread as usual).

7.10.1 in the correct table row (switching to the event thread as usual).

swt.threads.AsyncMessages.run

while (true) {

final DownloadResult res = results.take();

final TableItem origin = openRequests.remove(res.request);

display.asyncExec(new Runnable() {

public void run() {

origin.setText(1, res.message);

}

});

}

![]()

![]() 218The ASYNCHRONOUS COMPLETION TOKEN pattern generalizes this approach to messages sent between processes and over the network. In this setting, the original request to which an answer belongs cannot be given by a simple object reference, as done in the preceding example, because the asynchronous message leaves the JVM’s address space. Instead, each request contains a unique identifier, the completion token, which the receiver passes back unmodified with the answer. The sender keeps a table of open requests to assign the incoming answers correctly.

218The ASYNCHRONOUS COMPLETION TOKEN pattern generalizes this approach to messages sent between processes and over the network. In this setting, the original request to which an answer belongs cannot be given by a simple object reference, as done in the preceding example, because the asynchronous message leaves the JVM’s address space. Instead, each request contains a unique identifier, the completion token, which the receiver passes back unmodified with the answer. The sender keeps a table of open requests to assign the incoming answers correctly.

![]() Functional languages—notably Erlang, ML, and Scala—have long since discovered

Functional languages—notably Erlang, ML, and Scala—have long since discovered![]() 16

16 ![]() 212

212 ![]() 113 that sending asynchronous messages through message queues is a viable model of concurrent execution itself. Rather than having to talk about bare-bones threads executing methods of objects, one can talk directly about objects, sometimes called actors in this context, which send and receive messages through channels. The fact that asynchronous messages can carry entire programming models highlights their practical utility.

113 that sending asynchronous messages through message queues is a viable model of concurrent execution itself. Rather than having to talk about bare-bones threads executing methods of objects, one can talk directly about objects, sometimes called actors in this context, which send and receive messages through channels. The fact that asynchronous messages can carry entire programming models highlights their practical utility.

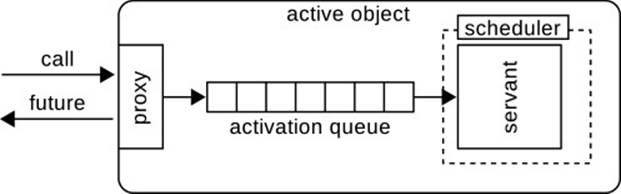

Asynchronous messages introduce some extra complexity, since we cannot wait for the return value of a method call as usual. Sometimes it will be possible to hide this complexity. The ACTIVE OBJECT pattern gives the![]() 218 general idea.

218 general idea.

Pattern: Active Object

The ACTIVE OBJECT pattern offers an abstraction over asynchronous message processing (Fig. 8.8) by encapsulating the details of queuing and multi-threading. Internally, the active object contains a servant, which contains the real functionality. To the outside, the active object acts as a PROXY for that servant. It starts a scheduler thread, which repeatedly takes method calls from an activation queue and calls the corresponding method in the servant.

Figure 8.8 Active Object Pattern

Clients of active objects appear to simply call methods on the proxy, knowing that the calls will be processed asynchronously in the background. Active objects can maintain this abstraction of method calls for the return values by employing futures. Futures are simply containers for the value![]() 148 to be computed later on. The active object’s methods return a future immediately, and the client can later obtain the result by calling get() on the future. If the processing has not yet been completed, the call blocks. The Java library contains a collection of future implementations, which are intended to be used with the executors framework.

148 to be computed later on. The active object’s methods return a future immediately, and the client can later obtain the result by calling get() on the future. If the processing has not yet been completed, the call blocks. The Java library contains a collection of future implementations, which are intended to be used with the executors framework.

8.5 Open Calls for Notification

User interfaces and event-driven software in general rely heavily on notifications,![]() 2.1 and in particular on the OBSERVER pattern. In connection with threads, a fundamental question is whether notifications should be sent while holding the lock or after releasing it. Notifications sent without holding

2.1 and in particular on the OBSERVER pattern. In connection with threads, a fundamental question is whether notifications should be sent while holding the lock or after releasing it. Notifications sent without holding![]() 148 on to the lock are termed open calls. We will now look at the consequences and the differences to the usual observer pattern that result from this relaxation of locking.

148 on to the lock are termed open calls. We will now look at the consequences and the differences to the usual observer pattern that result from this relaxation of locking.

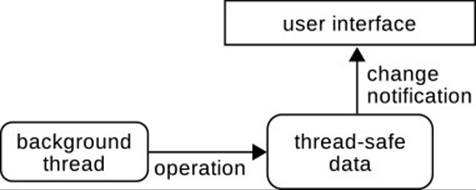

Fig. 8.9 is a typical situation: The application data is stored in some![]() 8.2 thread-safe object, so that background threads can work with it by calling the available methods to perform operations. The user interface seeks to

8.2 thread-safe object, so that background threads can work with it by calling the available methods to perform operations. The user interface seeks to![]() 9.1 reflect the state of the data on the screen in a timely fashion. For that purpose, it registers an observer and updates the display according to the reported changes.

9.1 reflect the state of the data on the screen in a timely fashion. For that purpose, it registers an observer and updates the display according to the reported changes.

Figure 8.9 Reflecting Thread-Safe Data in the User Interface

As a concrete example, let us examine a class ThreadSafeData that maintains a single value data, as a representative for a larger data structure. The first and obvious way to implement the OBSERVER pattern here would be the following: We protect both the actual modification and the change notification by locking, so as to make the whole block behave just as in the single-threaded setting.

swt.threads.ThreadSafeData

1 public void setValueSync(int value) {

2 lock.lock();

3 try {

4 int oldData = this.value;

5 this.value = value;

6 changes.firePropertyChange("data", oldData, value);

7 } finally {

8 lock.unlock();

9 }

10 }

When we turn to the user interface, we see that this strict locking discipline may, in fact, not be necessary. The user interface registers a listener with the data structure, uses asyncExec to switch to the event thread, and updates the text field value with the new content of the data structure (lines 6–7).

swt.threads.ReflectThreadSafeData.setThreadSafeData

1 public void propertyChange(final PropertyChangeEvent evt) {

2 display.asyncExec(new Runnable() {

3 public void run() {

4 if (isDisposed())

5 return;

6 int curValue = data.getValue();

7 value.setText(String.format("%03d", curValue));

8 int reportedValue = (Integer) evt.getNewValue();

9 state.setText(reportedValue == curValue ? "==" : "!=");

10 }

11 });

12 }

Lines 8–9 drive home the crucial point of the example: They check whether the current value of data is still the same as the value reported with the change event—that is, the value for which the event has been sent originally. When the background threads keep modifying the shared data structure, this will usually not be the case: Line 2 schedules the runnable to be executed on the event thread at some later point, so the code at line 4 does not start executing immediately and there is plenty of time for the thread to modify the data in between (see also Fig. 7.21 on page 396).

Notifications with locks provide strong guarantees.

Now suppose we attach a second listener to the shared data. It is independent of the user interface and simply checks whether the data is still intact when the listener receives the callback. In fact, this is always the case, because the lock in setValueSync() prevents a change between lines 5 and 6 of that method.

swt.threads.DifferencingObserver

public void propertyChange(PropertyChangeEvent evt) {

int reportedValue = (Integer) evt.getNewValue();

int actualValue = data.getValue();

if (reportedValue != actualValue)

System.out.format("detected difference %03d != %03d\n",

reportedValue, actualValue);

}

From a more general perspective, sending notifications while still holding the lock ensures that the observers see exactly the state that is the result of a single modification. This behavior is just the same as in a single-threaded environment. This strong guarantee is bought at the expense that no other thread can work with the data structure until all observers have finished.

Open calls can decrease lock contention.

![]() 148The example of the user interface also shows that in many situations the observers do not rely on finding exactly the state as it was after the change. That is, they will look at the data structure anyway to retrieve the most recent values, so it does not matter whether these have been changed in the meantime. We can therefore recode the setter in ThreadSafeData: Before sending out the notifications in line 10; we release the lock in line 8 to enable other threads to operate on the data.

148The example of the user interface also shows that in many situations the observers do not rely on finding exactly the state as it was after the change. That is, they will look at the data structure anyway to retrieve the most recent values, so it does not matter whether these have been changed in the meantime. We can therefore recode the setter in ThreadSafeData: Before sending out the notifications in line 10; we release the lock in line 8 to enable other threads to operate on the data.

swt.threads.ThreadSafeData

1 public void setDataOpen(int value) {

2 lock.lock();

3 int oldValue;

4 try {

5 oldValue = this.value;

6 this.value = value;

7 } finally {

8 lock.unlock();

9 }

10 changes.firePropertyChange("data", oldValue, value);

11 }

Open calls in general are notifications, whether to observers or calls to arbitrary collaborators, that are sent without holding on to locks after the actual operation has completed. When the data structure in question is central to the application and is heavily used by different threads, then switching to open calls for notifications can make the application more efficient, because fewer threads must wait for the lock—the potential for![]() 8.6 lock contention is reduced. Open calls can also help prevent deadlocks, as will be clarified by our later analysis of that phenomenon.

8.6 lock contention is reduced. Open calls can also help prevent deadlocks, as will be clarified by our later analysis of that phenomenon.

Open calls can endanger correctness.

Suppose the data structure is a list and the observer pattern follows the![]() 2.1.3 usual push variant, in which the subject sends along detailed change information. One change might read “added item at position 5.” However, when the observer finally receives the call, that item might already have been moved elsewhere, or the index 5 might be invalid because the list has been emptied in the meantime.

2.1.3 usual push variant, in which the subject sends along detailed change information. One change might read “added item at position 5.” However, when the observer finally receives the call, that item might already have been moved elsewhere, or the index 5 might be invalid because the list has been emptied in the meantime.

![]() 8.2

8.2 ![]() 4.1In essence, this behavior once again reflects assertions invalidated by interference. At the end of the list operation, the index was valid because the assertion “the index is in the list” was protected by the lock. As soon as the lock is released, the assertion can be invalidated.

4.1In essence, this behavior once again reflects assertions invalidated by interference. At the end of the list operation, the index was valid because the assertion “the index is in the list” was protected by the lock. As soon as the lock is released, the assertion can be invalidated.

Use open calls only when no observer will rely on the stronger guarantees.

We have presented open calls here mainly to point out their challenges, and to discuss the decision necessary about holding onto or releasing a lock for notifications. Open calls can be useful in heavily used data structures, but they require all possible receivers of the calls to be aware of the fact that the message they receive may no longer be up-to-date.

Open calls are very similar to asynchronous messages in this respect:![]() 8.4 There, too, the reception of the message may be delayed to the point that the data that it concerns has changed in the meantime. The huge success of message-driven systems such as Erlang’s actors shows that open calls do

8.4 There, too, the reception of the message may be delayed to the point that the data that it concerns has changed in the meantime. The huge success of message-driven systems such as Erlang’s actors shows that open calls do![]() 16 have a place in performance-critical applications.

16 have a place in performance-critical applications.

8.6 Deadlocks

There is one more complication in connection with threads that needs to be discussed: deadlocks. In general, this term covers situations where one or more threads fail to make progress because a condition that they are waiting for can never occur. More particularly, deadlocks happen when threads cannot obtain the locks they require because other threads hold onto those locks and cannot make progress themselves.

Deadlocks arise easily in operations involving multiple objects.

We will illustrate the problem in the context of multistep operations. These![]() 8.2 must often obtain multiple locks on different objects from a collection that is shared with other threads, since they can exclude interference only by holding all the necessary locks for the entire duration of the operation.

8.2 must often obtain multiple locks on different objects from a collection that is shared with other threads, since they can exclude interference only by holding all the necessary locks for the entire duration of the operation.

Let us proceed with a simple example, where operations simply copy sections of text from one ThreadSafeTextStore to another. At the core,![]() 8.2 they perform the following actions (lines 4–6): They compute the section of text to be copied, obtain the characters from the source, and write them to the destination. Both src and dst are shared among different operations running simultaneously. Therefore, assertions established about the character range in line 4 hold true in lines 5–6 only if the whole sequence is protected by locks.

8.2 they perform the following actions (lines 4–6): They compute the section of text to be copied, obtain the characters from the source, and write them to the destination. Both src and dst are shared among different operations running simultaneously. Therefore, assertions established about the character range in line 4 hold true in lines 5–6 only if the whole sequence is protected by locks.

swt.threads.DeadlocksBroken.main

1 src.lock();

2 dst.lock();

3 try {

4 ... determine positions and length for

5 String text = src.get(srcOffset, srcLength);

6 dst.replace(dstOffset, 0, text);

7 } finally {

8 src.unlock();

9 dst.unlock();

10 }

So far, the code looks good, and no assertion can ever be broken by interference. However, it can easily happen that operations prevent one another from obtaining the necessary locks in lines 1–2. Suppose, for instance, that two operations copy texts around between two buffers, as in the following setup: One copies from a to b, the other in the other direction. (Worker performs this action many times in its run() method.)

swt.threads.DeadlocksBroken.main

new Thread(new Worker(a, b)).start();

new Thread(new Worker(b, a)).start();

Now a new interaction between threads occurs. The action starts by locking both the source and the target, as would be expected:

swt.threads.DeadlocksBroken.main

1 src.lock();

2 dst.lock();

However, the two threads lock in a different order: The first thread locks a and then b, but the second thread locks b and then a. As a result, each thread may acquire in line 1 the very lock that the other thread is trying to acquire in line 2. Effectively, the threads prevent each other from proceeding beyond line 2, and the application, or at least some of its background threads, freezes completely.

As before, such a problem may or may not occur depending on the exact timing. If one thread happens to pass lines 1–2 while the other is doing something else, everything will be in order. Such bugs can be difficult to trace and to fix, because their manifestation depends on the exact timings![]() 8.1 of the run and the decisions taken by the scheduler.

8.1 of the run and the decisions taken by the scheduler.

Avoid deadlocks by resource ordering.

The core of the deadlock problem lies in the different order in which the threads acquire the locks. If both threads were to lock first a and then b, regardless of which is their respective source and destination, then nothing bad would happen: the thread which first locks a can also proceed to lock b, since the other thread is still waiting for its first lock on a. This seems simple enough, but does this idea also work with more than two objects?![]() 148 The answer is “yes”, and the approach is called resource ordering.

148 The answer is “yes”, and the approach is called resource ordering.

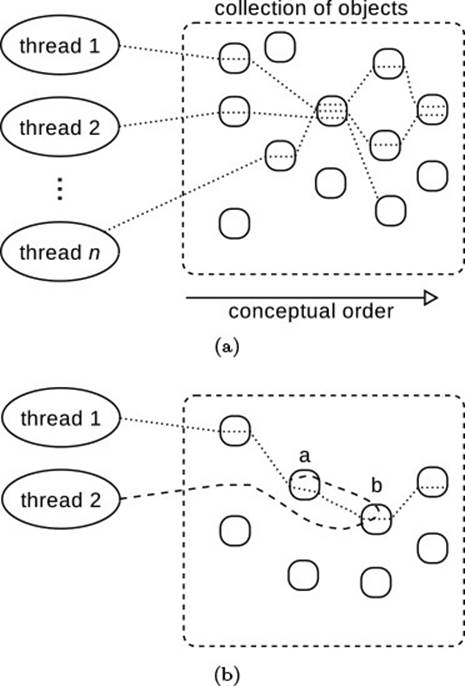

To see the point, suppose there are many threads working on a collection of objects, as shown in Fig. 8.10(a). Each object is protected by a specific lock, which a thread must acquire before working with the object. The dotted lines indicate the order in which each thread would like to acquire the necessary locks. In the example, all threads at some point require the object in the middle, but otherwise they access different sets of objects, sharing only some with other threads.

Figure 8.10 Intuition of Resource Ordering

The intuition of the picture suggests that deadlocks are avoided if threads always lock objects “left-to-right.” A deadlock would require a situation like that in Fig. 8.10(b), where thread 1 locks a, thread 2 locks b, and then thread 2 goes “back left” to lock a. However, this cannot happen if locking proceeds from left to right.

The graphical notion of “locking left-to-right” can be made precise by imposing some conceptual order on the objects by expressing “a is left of b” by the symbol a < b. The figure suggests that it is not necessary to choose a linear order, such as for natural numbers, which arranges the objects strictly from left to right. It can be allowed that two objects occupy the same horizontal position, which leads to a partial order, where a ≤ b and b ≤ a imply a = b (i.e., two different objects on the same horizontal position cannot be compared).

How can one establish such an order on objects? Since any partial order will do, we are actually quite free in the choice.

• One can use some intrinsic attribute of the locked objects. For instance, when moving money from one BankAccount to another, one could order bank accounts by their account numbers.

• ![]() 148(§2.4.5)

148(§2.4.5) ![]() 2.2.1Hierarchical containment locking proceeds along the ownership structure of objects, always locking owners before their parts. This strategy is particularly useful if the parts also protect their own invariants by locking, since then a call from the owner to a part’s method locks in the correct order.