Learning Python (2013)

Part II. Types and Operations

Chapter 9. Tuples, Files, and Everything Else

This chapter rounds out our in-depth tour of the core object types in Python by exploring the tuple, a collection of other objects that cannot be changed, and the file, an interface to external files on your computer. As you’ll see, the tuple is a relatively simple object that largely performs operations you’ve already learned about for strings and lists. The file object is a commonly used and full-featured tool for processing files on your computer. Because files are so pervasive in programming, the basic overview of files here is supplemented by larger examples in later chapters.

This chapter also concludes this part of the book by looking at properties common to all the core object types we’ve met—the notions of equality, comparisons, object copies, and so on. We’ll also briefly explore other object types in Python’s toolbox, including the None placeholder and the namedtuple hybrid; as you’ll see, although we’ve covered all the primary built-in types, the object story in Python is broader than I’ve implied thus far. Finally, we’ll close this part of the book by taking a look at a set of common object type pitfalls and exploring some exercises that will allow you to experiment with the ideas you’ve learned.

NOTE

This chapter’s scope—files: As in Chapter 7 on strings, our look at files here will be limited in scope to file fundamentals that most Python programmers—including newcomers to programming—need to know. In particular, Unicode text files were previewed in Chapter 4, but we’re going to postpone full coverage of them until Chapter 37, as optional or deferred reading in the Advanced Topics part of this book.

For this chapter’s purpose, we’ll assume any text files used will be encoded and decoded per your platform’s default, which may be UTF-8 on Windows, and ASCII or other elsewhere (and if you don’t know why this matters, you probably don’t need to up front). We’ll also assume that filenames encode properly on the underlying platform, though we’ll stick with ASCII names for portability here.

If Unicode text and files are critical subjects for you, I suggest reading the Chapter 4 preview for a quick first look, and continuing on to Chapter 37 after you master the file basics covered here. For all others, the file coverage here will apply both to typical text and binary files of the sort we’ll meet here, as well as to more advanced file-processing modes you may choose to explore later.

Tuples

The last collection type in our survey is the Python tuple. Tuples construct simple groups of objects. They work exactly like lists, except that tuples can’t be changed in place (they’re immutable) and are usually written as a series of items in parentheses, not square brackets. Although they don’t support as many methods, tuples share most of their properties with lists. Here’s a quick look at the basics. Tuples are:

Ordered collections of arbitrary objects

Like strings and lists, tuples are positionally ordered collections of objects (i.e., they maintain a left-to-right order among their contents); like lists, they can embed any kind of object.

Accessed by offset

Like strings and lists, items in a tuple are accessed by offset (not by key); they support all the offset-based access operations, such as indexing and slicing.

Of the category “immutable sequence”

Like strings and lists, tuples are sequences; they support many of the same operations. However, like strings, tuples are immutable; they don’t support any of the in-place change operations applied to lists.

Fixed-length, heterogeneous, and arbitrarily nestable

Because tuples are immutable, you cannot change the size of a tuple without making a copy. On the other hand, tuples can hold any type of object, including other compound objects (e.g., lists, dictionaries, other tuples), and so support arbitrary nesting.

Arrays of object references

Like lists, tuples are best thought of as object reference arrays; tuples store access points to other objects (references), and indexing a tuple is relatively quick.

Table 9-1 highlights common tuple operations. A tuple is written as a series of objects (technically, expressions that generate objects), separated by commas and normally enclosed in parentheses. An empty tuple is just a parentheses pair with nothing inside.

Table 9-1. Common tuple literals and operations

| Operation | Interpretation |
| () | An empty tuple |
| T = (0,) | A one-item tuple (not an expression) |
| T = (0, 'Ni', 1.2, 3) | A four-item tuple |
| T = 0, 'Ni', 1.2, 3 | Another four-item tuple (same as prior line) |
| T = ('Bob', ('dev', 'mgr')) | Nested tuples |
| T = tuple('spam') | Tuple of items in an iterable |
| T[i] T[i][j] T[i:j] len(T) | Index, index of index, slice, length |
| T1 + T2 T * 3 | Concatenate, repeat |
| for x in T: print(x) 'spam' in T [x ** 2 for x in T] | Iteration, membership |
| T.index('Ni') T.count('Ni') | Methods in 2.6, 2.7, and 3.X: search, count |
| namedtuple('Emp', ['name', 'jobs']) | Named tuple extension type |

Tuples in Action

As usual, let’s start an interactive session to explore tuples at work. Notice in Table 9-1 that tuples do not have all the methods that lists have (e.g., an append call won’t work here). They do, however, support the usual sequence operations that we saw for both strings and lists:

>>> (1, 2) + (3, 4) # Concatenation

(1, 2, 3, 4)

>>> (1, 2) * 4 # Repetition

(1, 2, 1, 2, 1, 2, 1, 2)

>>> T = (1, 2, 3, 4) # Indexing, slicing

>>> T[0], T[1:3]

(1, (2, 3))

Tuple syntax peculiarities: Commas and parentheses

The second and fourth entries in Table 9-1 merit a bit more explanation. Because parentheses can also enclose expressions (see Chapter 5), you need to do something special to tell Python when a single object in parentheses is a tuple object and not a simple expression. If you really want a single-item tuple, simply add a trailing comma after the single item, before the closing parenthesis:

>>> x = (40) # An integer!

>>> x

40

>>> y = (40,) # A tuple containing an integer

>>> y

(40,)

As a special case, Python also allows you to omit the opening and closing parentheses for a tuple in contexts where it isn’t syntactically ambiguous to do so. For instance, the fourth line of Table 9-1 simply lists four items separated by commas. In the context of an assignment statement, Python recognizes this as a tuple, even though it doesn’t have parentheses.

Now, some people will tell you to always use parentheses in your tuples, and some will tell you to never use parentheses in tuples (and still others have lives, and won’t tell you what to do with your tuples!). The most common places where the parentheses are required for tuple literals are those where:

§ Parentheses matter—within a function call, or nested in a larger expression.

§ Commas matter—embedded in the literal of a larger data structure like a list or dictionary, or listed in a Python 2.X print statement.

In most other contexts, the enclosing parentheses are optional. For beginners, the best advice is that it’s probably easier to use the parentheses than it is to remember when they are optional or required. Many programmers (myself included) also find that parentheses tend to aid script readability by making the tuples more explicit and obvious, but your mileage may vary.
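
As a brief illustration of the two cases listed above, here is a minimal sketch. It assumes Python 3.X, and the function name show is made up for this example only:

```python
def show(arg):
    # Hypothetical helper: returns the number of items in its one argument
    return len(arg)

pair_count = show((1, 2))        # Parentheses required: passes one tuple
points = [(1, 2), (3, 4)]        # Parentheses required: tuples nested in a list
flat = [1, 2, 3, 4]              # Without them, the commas separate list items
T = 1, 2                         # Optional here: the assignment is unambiguous

print(pair_count, len(points), len(flat), T)
```

Calling show(1, 2) without the inner parentheses would instead pass two separate arguments, which this one-argument function would reject.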

Conversions, methods, and immutability

Apart from literal syntax differences, tuple operations (the middle rows in Table 9-1) are identical to string and list operations. The only differences worth noting are that the +, *, and slicing operations return new tuples when applied to tuples, and that tuples don’t provide the same methods you saw for strings, lists, and dictionaries. If you want to sort a tuple, for example, you’ll usually have to either first convert it to a list to gain access to a sorting method call and make it a mutable object, or use the newer sorted built-in that accepts any sequence object (and other iterables—a term introduced in Chapter 4 that we’ll be more formal about in the next part of this book):

>>> T = ('cc', 'aa', 'dd', 'bb')

>>> tmp = list(T) # Make a list from a tuple's items

>>> tmp.sort() # Sort the list

>>> tmp

['aa', 'bb', 'cc', 'dd']

>>> T = tuple(tmp) # Make a tuple from the list's items

>>> T

('aa', 'bb', 'cc', 'dd')

>>> sorted(T) # Or use the sorted built-in, and save two steps

['aa', 'bb', 'cc', 'dd']

Here, the list and tuple built-in functions are used to convert the object to a list and then back to a tuple; really, both calls make new objects, but the net effect is like a conversion.
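
If a sorted tuple is the goal, the two approaches above can probably be combined in a single expression. This is just one way to code it, shown as a short script rather than an interactive session:

```python
T = ('cc', 'aa', 'dd', 'bb')

S = tuple(sorted(T))             # sorted makes a new list; tuple wraps its items
print(S)                         # The sorted copy
print(T)                         # The original tuple is unchanged
```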

List comprehensions can also be used to convert tuples. The following, for example, makes a list from a tuple, adding 20 to each item along the way:

>>> T = (1, 2, 3, 4, 5)

>>> L = [x + 20 for x in T]

>>> L

[21, 22, 23, 24, 25]

List comprehensions are really sequence operations—they always build new lists, but they may be used to iterate over any sequence objects, including tuples, strings, and other lists. As we’ll see later in the book, they even work on some things that are not physically stored sequences—any iterable objects will do, including files, which are automatically read line by line. Given this, they may be better called iteration tools.
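
To make this concrete, here is a small sketch of comprehensions run over a tuple and a string. The variable names are arbitrary, and the tuple call simply converts the comprehension's list result back to a tuple:

```python
T = (1, 2, 3, 4, 5)

doubled = tuple([x * 2 for x in T])     # Comprehension over a tuple, back to a tuple
upper = [c.upper() for c in 'spam']     # Comprehension over a string's characters

print(doubled)
print(upper)
```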

Although tuples don’t have the same methods as lists and strings, they do have two of their own as of Python 2.6 and 3.0—index and count work as they do for lists, but they are defined for tuple objects:

>>> T = (1, 2, 3, 2, 4, 2) # Tuple methods in 2.6, 3.0, and later

>>> T.index(2) # Offset of first appearance of 2

1

>>> T.index(2, 2) # Offset of appearance after offset 2

3

>>> T.count(2) # How many 2s are there?

3

Prior to 2.6 and 3.0, tuples have no methods at all—this was an old Python convention for immutable types, which was violated years ago on grounds of practicality with strings, and more recently with both numbers and tuples.

Also, note that the rule about tuple immutability applies only to the top level of the tuple itself, not to its contents. A list inside a tuple, for instance, can be changed as usual:

>>> T = (1, [2, 3], 4)

>>> T[1] = 'spam' # This fails: can't change tuple itself

TypeError: object doesn't support item assignment

>>> T[1][0] = 'spam' # This works: can change mutables inside

>>> T

(1, ['spam', 3], 4)

For most programs, this one-level-deep immutability is sufficient for common tuple roles. Which, coincidentally, brings us to the next section.

Why Lists and Tuples?

This seems to be the first question that always comes up when teaching beginners about tuples: why do we need tuples if we have lists? Some of the reasoning may be historic; Python’s creator is a mathematician by training, and he has been quoted as seeing a tuple as a simple association of objects and a list as a data structure that changes over time. In fact, this use of the word “tuple” derives from mathematics, as does its frequent use for a row in a relational database table.

The best answer, however, seems to be that the immutability of tuples provides some integrity—you can be sure a tuple won’t be changed through another reference elsewhere in a program, but there’s no such guarantee for lists. Tuples and other immutables, therefore, serve a similar role to “constant” declarations in other languages, though the notion of constantness is associated with objects in Python, not variables.

Tuples can also be used in places that lists cannot—for example, as dictionary keys (see the sparse matrix example in Chapter 8). Some built-in operations may also require or imply tuples instead of lists (e.g., the substitution values in a string format expression), though such operations have often been generalized in recent years to be more flexible. As a rule of thumb, lists are the tool of choice for ordered collections that might need to change; tuples can handle the other cases of fixed associations.
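
The dictionary key case is easy to try for yourself. The following sketch uses made-up coordinate keys, and traps the error that standard Python raises for a list key:

```python
coords = {(2, 3, 4): 88, (7, 8, 9): 99}   # Tuples work as dictionary keys
print(coords[(2, 3, 4)])

error = None
try:
    bad = {[2, 3, 4]: 88}                 # Lists are mutable: not allowed as keys
except TypeError as exc:
    error = str(exc)
    print('TypeError:', error)
```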

Records Revisited: Named Tuples

In fact, the choice of data types is even richer than the prior section may have implied—today’s Python programmers can choose from an assortment of both built-in core types, and extension types built on top of them. For example, in the prior chapter’s sidebar Why You Will Care: Dictionaries Versus Lists, we saw how to represent record-like information with both a list and a dictionary, and noted that dictionaries offer the advantage of more mnemonic keys that label data. As long as we don’t require mutability, tuples can serve similar roles, with positions for record fields like lists:

>>> bob = ('Bob', 40.5, ['dev', 'mgr']) # Tuple record

>>> bob

('Bob', 40.5, ['dev', 'mgr'])

>>> bob[0], bob[2] # Access by position

('Bob', ['dev', 'mgr'])

As for lists, though, field numbers in tuples generally carry less information than the names of keys in a dictionary. Here’s the same record recoded as a dictionary with named fields:

>>> bob = dict(name='Bob', age=40.5, jobs=['dev', 'mgr']) # Dictionary record

>>> bob

{'jobs': ['dev', 'mgr'], 'name': 'Bob', 'age': 40.5}

>>> bob['name'], bob['jobs'] # Access by key

('Bob', ['dev', 'mgr'])

In fact, we can convert parts of the dictionary to a tuple if needed:

>>> tuple(bob.values()) # Values to tuple

(['dev', 'mgr'], 'Bob', 40.5)

>>> list(bob.items()) # Items to tuple list

[('jobs', ['dev', 'mgr']), ('name', 'Bob'), ('age', 40.5)]

But with a bit of extra work, we can implement objects that offer both positional and named access to record fields. For example, the namedtuple utility, available in the standard library’s collections module mentioned in Chapter 8, implements an extension type that adds logic to tuples that allows components to be accessed by both position and attribute name, and can be converted to dictionary-like form for access by key if desired. Attribute names come from classes and are not exactly dictionary keys, but they are similarly mnemonic:

>>> from collections import namedtuple # Import extension type

>>> Rec = namedtuple('Rec', ['name', 'age', 'jobs']) # Make a generated class

>>> bob = Rec('Bob', age=40.5, jobs=['dev', 'mgr']) # A named-tuple record

>>> bob

Rec(name='Bob', age=40.5, jobs=['dev', 'mgr'])

>>> bob[0], bob[2] # Access by position

('Bob', ['dev', 'mgr'])

>>> bob.name, bob.jobs # Access by attribute

('Bob', ['dev', 'mgr'])

Converting to a dictionary supports key-based behavior when needed:

>>> O = bob._asdict() # Dictionary-like form

>>> O['name'], O['jobs'] # Access by key too

('Bob', ['dev', 'mgr'])

>>> O

OrderedDict([('name', 'Bob'), ('age', 40.5), ('jobs', ['dev', 'mgr'])])

As you can see, named tuples are a tuple/class/dictionary hybrid. They also represent a classic tradeoff. In exchange for their extra utility, they require extra code (the two startup lines in the preceding examples that import the type and make the class), and incur some performance costs to work this magic. (In short, named tuples build new classes that extend the tuple type, inserting a property accessor method for each named field that maps the name to its position—a technique that relies on advanced topics we’ll explore in Part VIII, and uses formatted code strings instead of class annotation tools like decorators and metaclasses.) Still, they are a good example of the kind of custom data types that we can build on top of built-in types like tuples when extra utility is desired.
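
The tuple heritage described above can be checked directly. This is a rough sketch, not a full tour: it relies on the _fields attribute and _replace method that named tuples provide in the standard library, and reuses the Rec class from the earlier listing:

```python
from collections import namedtuple

Rec = namedtuple('Rec', ['name', 'age', 'jobs'])
bob = Rec('Bob', 40.5, ['dev', 'mgr'])

print(isinstance(bob, tuple))    # The generated class extends tuple
print(Rec._fields)               # Field names, in positional order

try:
    bob.age = 41                 # Fields are read-only, like tuple items
except AttributeError:
    print('cannot assign to a named-tuple field')

older = bob._replace(age=41)     # Makes a new record; bob itself is unchanged
print(older.age, bob.age)
```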

Named tuples are available in Python 3.X, 2.7, 2.6 (where _asdict returns a true dictionary), and perhaps earlier, though they rely on features relatively modern by Python standards. They are also extensions, not core types—they live in the standard library and fall into the same category as Chapter 5’s Fraction and Decimal—so we’ll delegate to the Python library manual for more details.

As a quick preview, though, both tuples and named tuples support unpacking tuple assignment, which we’ll study formally in Chapter 11, as well as the iteration contexts we’ll explore in Chapter 14 and Chapter 20 (notice the positional initial values here: named tuples accept these by name, position, or both):

>>> bob = Rec('Bob', 40.5, ['dev', 'mgr']) # For both tuples and named tuples

>>> name, age, jobs = bob # Tuple assignment (Chapter 11)

>>> name, jobs

('Bob', ['dev', 'mgr'])

>>> for x in bob: print(x) # Iteration context (Chapters 14, 20)

...prints Bob, 40.5, ['dev', 'mgr']...

Tuple-unpacking assignment doesn’t quite apply to dictionaries, short of fetching and converting keys and values and assuming or imposing a positional ordering on them (dictionaries are not sequences), and iteration steps through keys, not values (notice the dictionary literal form here: an alternative to dict):

>>> bob = {'name': 'Bob', 'age': 40.5, 'jobs': ['dev', 'mgr']}

>>> job, name, age = bob.values()

>>> name, job # Dict equivalent (but order may vary)

('Bob', ['dev', 'mgr'])

>>> for x in bob: print(bob[x]) # Step through keys, index values

...prints values...

>>> for x in bob.values(): print(x) # Step through values view

...prints values...

Watch for a final rehash of this record representation thread when we see how user-defined classes compare in Chapter 27; as we’ll find, classes label fields with names too, but can also provide program logic to process the record’s data in the same package.

Files

You may already be familiar with the notion of files, which are named storage compartments on your computer that are managed by your operating system. The last major built-in object type that we’ll examine on our object types tour provides a way to access those files inside Python programs.

In short, the built-in open function creates a Python file object, which serves as a link to a file residing on your machine. After calling open, you can transfer strings of data to and from the associated external file by calling the returned file object’s methods.

Compared to the types you’ve seen so far, file objects are somewhat unusual. They are considered a core type because they are created by a built-in function, but they’re not numbers, sequences, or mappings, and they don’t respond to expression operators; they export only methods for common file-processing tasks. Most file methods are concerned with performing input from and output to the external file associated with a file object, but other file methods allow us to seek to a new position in the file, flush output buffers, and so on. Table 9-2 summarizes common file operations.

Table 9-2. Common file operations

| Operation | Interpretation |
| output = open(r'C:\spam', 'w') | Create output file ('w' means write) |
| input = open('data', 'r') | Create input file ('r' means read) |
| input = open('data') | Same as prior line ('r' is the default) |
| aString = input.read() | Read entire file into a single string |
| aString = input.read(N) | Read up to next N characters (or bytes) into a string |
| aString = input.readline() | Read next line (including \n newline) into a string |
| aList = input.readlines() | Read entire file into list of line strings (with \n) |
| output.write(aString) | Write a string of characters (or bytes) into file |
| output.writelines(aList) | Write all line strings in a list into file |
| output.close() | Manual close (done for you when file is collected) |
| output.flush() | Flush output buffer to disk without closing |
| anyFile.seek(N) | Change file position to offset N for next operation |
| for line in open('data'): use line | File iterators read line by line |
| open('f.txt', encoding='latin-1') | Python 3.X Unicode text files (str strings) |
| open('f.bin', 'rb') | Python 3.X bytes files (bytes strings) |
| codecs.open('f.txt', encoding='utf8') | Python 2.X Unicode text files (unicode strings) |
| open('f.bin', 'rb') | Python 2.X bytes files (str strings) |

Opening Files

To open a file, a program calls the built-in open function, with the external filename first, followed by a processing mode. The call returns a file object, which in turn has methods for data transfer:

afile = open(filename, mode)

afile.method()

The first argument to open, the external filename, may include a platform-specific and absolute or relative directory path prefix. Without a directory path, the file is assumed to exist in the current working directory (i.e., where the script runs). As we’ll see in Chapter 37’s expanded file coverage, the filename may also contain non-ASCII Unicode characters that Python automatically translates to and from the underlying platform’s encoding, or be provided as a pre-encoded byte string.

The second argument to open, processing mode, is typically the string 'r' to open for text input (the default), 'w' to create and open for text output, or 'a' to open for appending text to the end (e.g., for adding to logfiles). The processing mode argument can specify additional options:

§ Adding a b to the mode string allows for binary data (end-of-line translations and 3.X Unicode encodings are turned off).

§ Adding a + opens the file for both input and output (i.e., you can both read and write to the same file object, often in conjunction with seek operations to reposition in the file).

Both of the first two arguments to open must be Python strings. An optional third argument can be used to control output buffering—passing a zero means that output is unbuffered (it is transferred to the external file immediately on a write method call), and additional arguments may be provided for special types of files (e.g., an encoding for Unicode text files in Python 3.X).

We’ll cover file fundamentals and explore some basic examples here, but we won’t go into all file-processing mode options; as usual, consult the Python library manual for additional details.
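
As a rough sketch of the mode strings just described, the following script writes, appends to, and then updates a single file. The filename and temporary directory are assumptions made for the demo, and the code assumes Python 3.X text files:

```python
import os
import tempfile

# Hypothetical scratch file in a temporary directory, used only for this demo
path = os.path.join(tempfile.mkdtemp(), 'log.txt')

f = open(path, 'w')              # 'w': create (or empty) and open for output
f.write('first\n')
f.close()

f = open(path, 'a')              # 'a': open for appending to the end
f.write('second\n')
f.close()

f = open(path, 'r+')             # 'r+': both input and output on one object
f.write('FIRST')                 # Overwrites the first five characters in place
f.seek(0)                        # Reposition to the start before reading
content = f.read()
f.close()

print(repr(content))
```

Note that 'w' would have erased the existing text on the second open, which is why 'a' is the usual choice for logfiles.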

Using Files

Once you make a file object with open, you can call its methods to read from or write to the associated external file. In all cases, file text takes the form of strings in Python programs; reading a file returns its content in strings, and content is passed to the write methods as strings. Reading and writing methods come in multiple flavors; Table 9-2 lists the most common. Here are a few fundamental usage notes:

File iterators are best for reading lines

Though the reading and writing methods in the table are common, keep in mind that probably the best way to read lines from a text file today is to not read the file at all—as we’ll see in Chapter 14, files also have an iterator that automatically reads one line at a time in a for loop, list comprehension, or other iteration context.

Content is strings, not objects

Notice in Table 9-2 that data read from a file always comes back to your script as a string, so you’ll have to convert it to a different type of Python object if a string is not what you need. Similarly, unlike with the print operation, Python does not add any formatting and does not convert objects to strings automatically when you write data to a file—you must send an already formatted string. Because of this, the tools we have already met to convert objects to and from strings (e.g., int, float, str, and the string formatting expression and method) come in handy when dealing with files.

Python also includes advanced standard library tools for handling generic object storage (the pickle module), for dealing with packed binary data in files (the struct module), and for processing special types of content such as JSON, XML, and CSV text. We’ll see these at work later in this chapter and book, but Python’s manuals document them in full.

Files are buffered and seekable

By default, output files are always buffered, which means that text you write may not be transferred from memory to disk immediately—closing a file, or running its flush method, forces the buffered data to disk. You can avoid buffering with extra open arguments, but it may impede performance. Python files are also random-access on a byte offset basis—their seek method allows your scripts to jump around to read and write at specific locations.

close is often optional: auto-close on collection

Calling the file close method terminates your connection to the external file, releases its system resources, and flushes its buffered output to disk if any is still in memory. As discussed in Chapter 6, in Python an object’s memory space is automatically reclaimed as soon as the object is no longer referenced anywhere in the program. When file objects are reclaimed, Python also automatically closes the files if they are still open (this also happens when a program shuts down). This means you don’t always need to manually close your files in standard Python, especially those in simple scripts with short runtimes, and temporary files used by a single line or expression.

On the other hand, including manual close calls doesn’t hurt, and may be a good habit to form, especially in long-running systems. Strictly speaking, this auto-close-on-collection feature of files is not part of the language definition—it may change over time, may not happen when you expect it to in interactive shells, and may not work the same in other Python implementations whose garbage collectors may not reclaim and close files at the same points as standard CPython. In fact, when many files are opened within loops, Pythons other than CPython may require close calls to free up system resources immediately, before garbage collection can get around to freeing objects. Moreover, close calls may sometimes be required to flush buffered output of file objects not yet reclaimed. For an alternative way to guarantee automatic file closes, also see this section’s later discussion of the file object’s context manager, used with the with/as statement in Python 2.6, 2.7, and 3.X.
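
The buffering, seeking, and closing notes above can be sketched together in a short script. Treat this as an illustration under stated assumptions rather than a recipe: the file lives in a temporary directory invented for the demo, and the with/as statement shown here requires Python 2.6 or later:

```python
import os
import tempfile

# Hypothetical scratch file in a temporary directory, used only for this demo
path = os.path.join(tempfile.mkdtemp(), 'buffered.txt')

out = open(path, 'w')
out.write('spam and eggs\n')     # May still be sitting in a memory buffer
out.flush()                      # Force buffered text to disk without closing

check = open(path)               # A second file object now sees the text
on_disk = check.read()
check.close()
out.close()                      # Manual close: flushes and releases the file

with open(path, 'rb') as inp:    # with/as closes the file on block exit
    inp.seek(5)                  # Jump to byte offset 5
    chunk = inp.read(3)          # Read the next 3 bytes

print(repr(on_disk), chunk, inp.closed)
```

The seek here is run on a binary-mode file, because offsets are byte-based; in text mode, offsets and characters may not line up once non-ASCII text or end-of-line translations are involved.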

Files in Action

Let’s work through a simple example that demonstrates file-processing basics. The following code begins by opening a new text file for output, writing two lines (strings terminated with a newline marker, \n), and closing the file. Later, the example opens the same file again in input mode and reads the lines back one at a time with readline. Notice that the third readline call returns an empty string; this is how Python file methods tell you that you’ve reached the end of the file (empty lines in the file come back as strings containing just a newline character, not as empty strings). Here’s the complete interaction:

>>> myfile = open('myfile.txt', 'w') # Open for text output: create/empty

>>> myfile.write('hello text file\n') # Write a line of text: string

16

>>> myfile.write('goodbye text file\n')

18

>>> myfile.close() # Flush output buffers to disk

>>> myfile = open('myfile.txt') # Open for text input: 'r' is default

>>> myfile.readline() # Read the lines back

'hello text file\n'

>>> myfile.readline()

'goodbye text file\n'

>>> myfile.readline() # Empty string: end-of-file

''

Notice that file write calls return the number of characters written in Python 3.X; in 2.X they don’t, so you won’t see these numbers echoed interactively. This example writes each line of text, including its end-of-line terminator, \n, as a string; write methods don’t add the end-of-line character for us, so we must include it to properly terminate our lines (otherwise the next write will simply extend the current line in the file).

If you want to display the file’s content with end-of-line characters interpreted, read the entire file into a string all at once with the file object’s read method and print it:

>>> open('myfile.txt').read() # Read all at once into string

'hello text file\ngoodbye text file\n'

>>> print(open('myfile.txt').read()) # User-friendly display

hello text file

goodbye text file

And if you want to scan a text file line by line, file iterators are often your best option:

>>> for line in open('myfile.txt'): # Use file iterators, not reads

... print(line, end='')

...

hello text file

goodbye text file

When coded this way, the temporary file object created by open will automatically read and return one line on each loop iteration. This form is usually easiest to code, good on memory use, and may be faster than some other options (depending on many variables, of course). Since we haven’t reached statements or iterators yet, though, you’ll have to wait until Chapter 14 for a more complete explanation of this code.
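
For comparison, here is a sketch of the list-based methods from Table 9-2 alongside a file iterator in a list comprehension. The file is recreated in a temporary directory (an assumption for the demo) so that the script stands on its own:

```python
import os
import tempfile

# Hypothetical scratch copy of myfile.txt in a temporary directory
path = os.path.join(tempfile.mkdtemp(), 'myfile.txt')

out = open(path, 'w')
out.writelines(['hello text file\n', 'goodbye text file\n'])  # No \n is added for us
out.close()

inp = open(path)
lines = inp.readlines()          # Whole file at once, as a list of line strings
inp.close()

inp = open(path)
stripped = [line.rstrip() for line in inp]   # File iterator in a comprehension
inp.close()

print(lines)
print(stripped)
```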

NOTE

Windows users: As mentioned in Chapter 7, open accepts Unix-style forward slashes in place of backward slashes on Windows, so any of the following forms work for directory paths—raw strings, forward slashes, or doubled-up backslashes:

>>> open(r'C:\Python33\Lib\pdb.py').readline()

'#! /usr/bin/env python3\n'

>>> open('C:/Python33/Lib/pdb.py').readline()

'#! /usr/bin/env python3\n'

>>> open('C:\\Python33\\Lib\\pdb.py').readline()

'#! /usr/bin/env python3\n'

The raw string form in the first command is still useful to turn off accidental escapes when you can’t control string content, and in other contexts.

Text and Binary Files: The Short Story

Strictly speaking, the example in the prior section uses text files. In both Python 3.X and 2.X, file type is determined by the second argument to open, the mode string—an included “b” means binary. Python has always supported both text and binary files, but in Python 3.X there is a sharper distinction between the two:

§ Text files represent content as normal str strings, perform Unicode encoding and decoding automatically, and perform end-of-line translation by default.

§ Binary files represent content as a special bytes string type and allow programs to access file content unaltered.

In contrast, Python 2.X text files handle both 8-bit text and binary data, and a special string type and file interface (unicode strings and codecs.open) handles Unicode text. The differences in Python 3.X stem from the fact that simple and Unicode text have been merged in the normal string type—which makes sense, given that all text is Unicode, including ASCII and other 8-bit encodings.

Because most programmers deal only with ASCII text, they can get by with the basic text file interface used in the prior example, and normal strings. All strings are technically Unicode in 3.X, but ASCII users will not generally notice. In fact, text files and strings work the same in 3.X and 2.X if your script’s scope is limited to such simple forms of text.

If you need to handle internationalized applications or byte-oriented data, though, the distinction in 3.X impacts your code (usually for the better). In general, you must use bytes strings for binary files, and normal str strings for text files. Moreover, because text files implement Unicode encodings, you should not open a binary data file in text mode—decoding its content to Unicode text will likely fail.

Let’s look at an example. When you read a binary data file you get back a bytes object—a sequence of small integers that represent absolute byte values (which may or may not correspond to characters), which looks and feels almost exactly like a normal string. In Python 3.X, and assuming an existing binary file:

>>> data = open('data.bin', 'rb').read() # Open binary file: rb=read binary

>>> data # bytes string holds binary data

b'\x00\x00\x00\x07spam\x00\x08'

>>> data[4:8] # Act like strings

b'spam'

>>> data[4:8][0] # But really are small 8-bit integers

115

>>> bin(data[4:8][0]) # Python 3.X/2.6+ bin() function

'0b1110011'

In addition, binary files do not perform any end-of-line translation on data; text files by default map all forms to and from \n when written and read and implement Unicode encodings on transfers in 3.X. Binary files like this one work the same in Python 2.X, but byte strings are simply normal strings and have no leading b when displayed, and text files must use the codecs module to add Unicode processing.
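
The listing above assumed an existing binary file. If you want to try it, a file with the same content can probably be made as follows. This is a sketch for Python 3.X only; the temporary directory is an assumption for the demo, and the rejected flag exists just to record the error:

```python
import os
import tempfile

# Hypothetical scratch copy of data.bin in a temporary directory
path = os.path.join(tempfile.mkdtemp(), 'data.bin')

out = open(path, 'wb')                       # wb=write binary
out.write(b'\x00\x00\x00\x07spam\x00\x08')   # Binary files take bytes strings
rejected = False
try:
    out.write('spam')                        # A str is refused in binary mode (3.X)
except TypeError:
    rejected = True
out.close()

inp = open(path, 'rb')
data = inp.read()
inp.close()

print(data)
print(list(data[4:8]))                       # The integer byte values behind b'spam'
```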

Per the note at the start of this chapter, though, that’s as much as we’re going to say about Unicode text and binary data files here, and just enough to understand upcoming examples in this chapter. Since the distinction is of marginal interest to many Python programmers, we’ll defer to the files preview in Chapter 4 for a quick tour and postpone the full story until Chapter 37. For now, let’s move on to some more substantial file examples to demonstrate a few common use cases.

Storing Python Objects in Files: Conversions

Our next example writes a variety of Python objects into a text file on multiple lines. Notice that it must convert objects to strings using conversion tools. Again, file data is always strings in our scripts, and write methods do not do any automatic to-string formatting for us (for space, I’m omitting byte-count return values from write methods from here on):

>>> X, Y, Z = 43, 44, 45 # Native Python objects

>>> S = 'Spam' # Must be strings to store in file

>>> D = {'a': 1, 'b': 2}

>>> L = [1, 2, 3]

>>>

>>> F = open('datafile.txt', 'w') # Create output text file

>>> F.write(S + '\n') # Terminate lines with \n

>>> F.write('%s,%s,%s\n' % (X, Y, Z)) # Convert numbers to strings

>>> F.write(str(L) + '$' + str(D) + '\n') # Convert and separate with $

>>> F.close()

Once we have created our file, we can inspect its contents by opening it and reading it into a string (strung together as a single operation here). Notice that the interactive echo gives the exact byte contents, while the print operation interprets embedded end-of-line characters to render a more user-friendly display:

>>> chars = open('datafile.txt').read() # Raw string display

>>> chars

"Spam\n43,44,45\n[1, 2, 3]${'a': 1, 'b': 2}\n"

>>> print(chars) # User-friendly display

Spam

43,44,45

[1, 2, 3]${'a': 1, 'b': 2}

We now have to use other conversion tools to translate from the strings in the text file to real Python objects. As Python never converts strings to numbers (or other types of objects) automatically, this is required if we need to gain access to normal object tools like indexing, addition, and so on:

>>> F = open('datafile.txt') # Open again

>>> line = F.readline() # Read one line

>>> line

'Spam\n'

>>> line.rstrip() # Remove end-of-line

'Spam'

For this first line, we used the string rstrip method to get rid of the trailing end-of-line character; a line[:-1] slice would work, too, but only if we can be sure all lines end in the \n character (the last line in a file sometimes does not).
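To see why the slice is the riskier choice, this short sketch applies both techniques to a line with and without a trailing \n. The sample strings are made up for illustration and are not read from datafile.txt:

```python
# Hypothetical sample lines: the second mimics a final line with no newline.
with_newline = 'Spam\n'
without_newline = 'Spam'

# rstrip removes trailing whitespace only if some is present.
stripped = [with_newline.rstrip(), without_newline.rstrip()]

# A [:-1] slice always drops the last character, whatever it is.
sliced = [with_newline[:-1], without_newline[:-1]]

print(stripped)   # ['Spam', 'Spam']
print(sliced)     # ['Spam', 'Spa']
```

Note that a no-argument rstrip also removes trailing spaces and tabs; rstrip('\n') is narrower if those matter.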

So far, we’ve read the line containing the string. Now let’s grab the next line, which contains numbers, and parse out (that is, extract) the objects on that line:

>>> line = F.readline() # Next line from file

>>> line # It's a string here

'43,44,45\n'

>>> parts = line.split(',') # Split (parse) on commas

>>> parts

['43', '44', '45\n']

We used the string split method here to chop up the line on its comma delimiters; the result is a list of substrings containing the individual numbers. We still must convert from strings to integers, though, if we wish to perform math on these:

>>> int(parts[1]) # Convert from string to int

44

>>> numbers = [int(P) for P in parts] # Convert all in list at once

>>> numbers

[43, 44, 45]

As we have learned, int translates a string of digits into an integer object, and the list comprehension expression introduced in Chapter 4 can apply the call to each item in our list all at once (you’ll find more on list comprehensions later in this book). Notice that we didn’t have to run rstrip to delete the \n at the end of the last part; int and some other converters quietly ignore whitespace around digits.

Finally, to convert the stored list and dictionary in the third line of the file, we can run them through eval, a built-in function that treats a string as a piece of executable program code (technically, a string containing a Python expression):

>>> line = F.readline()

>>> line

"[1, 2, 3]${'a': 1, 'b': 2}\n"

>>> parts = line.split('$') # Split (parse) on $

>>> parts

['[1, 2, 3]', "{'a': 1, 'b': 2}\n"]

>>> eval(parts[0]) # Convert to any object type

[1, 2, 3]

>>> objects = [eval(P) for P in parts] # Do same for all in list

>>> objects

[[1, 2, 3], {'a': 1, 'b': 2}]

Because the end result of all this parsing and converting is a list of normal Python objects instead of strings, we can now apply list and dictionary operations to them in our script.
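The whole round trip can be collected into one script, sketched here under a few assumptions: the file lives in a temporary directory rather than the current one, with statements close the files, and ast.literal_eval is shown next to eval as a stricter stand-in that is not part of the preceding session (it accepts only literal syntax, not arbitrary expressions):

```python
import ast
import os
import tempfile

X, Y, Z = 43, 44, 45
S = 'Spam'
D = {'a': 1, 'b': 2}
L = [1, 2, 3]

# Hypothetical location for the data file.
path = os.path.join(tempfile.mkdtemp(), 'datafile.txt')

# Store: every object is converted to a string by hand.
with open(path, 'w') as F:
    F.write(S + '\n')
    F.write('%s,%s,%s\n' % (X, Y, Z))
    F.write(str(L) + '$' + str(D) + '\n')

# Load: each line needs its own conversion back to objects.
with open(path) as F:
    text = F.readline().rstrip()                         # 'Spam'
    numbers = [int(P) for P in F.readline().split(',')]  # [43, 44, 45]
    parts = F.readline().split('$')
    objects = [eval(P) for P in parts]                   # As in the text
    # Assumption: literal_eval used as a more restrictive alternative.
    literals = [ast.literal_eval(P.strip()) for P in parts]

print(text, numbers, objects)
```

Both conversions yield the same list and dictionary here, because the stored text contains nothing but literals.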

Storing Native Python Objects: pickle

Using eval to convert from strings to objects, as demonstrated in the preceding code, is a powerful tool. In fact, sometimes it’s too powerful. eval will happily run any Python expression, even one that might delete all the files on your computer, given the necessary permissions! If you really want to store native Python objects but would rather not run eval on a file’s content, Python’s standard library pickle module is a convenient alternative. Be aware, though, that loading a pickle file can also run arbitrary code, so the library manual advises unpickling only data that comes from a source you trust.

The pickle module is a more advanced tool that allows us to store almost any Python object in a file directly, with no to- or from-string conversion requirement on our part. It’s like a super-general data formatting and parsing utility. To store a dictionary in a file, for instance, we pickle it directly:

>>> D = {'a': 1, 'b': 2}

>>> F = open('datafile.pkl', 'wb')

>>> import pickle

>>> pickle.dump(D, F) # Pickle any object to file

>>> F.close()

Then, to get the dictionary back later, we simply use pickle again to re-create it:

>>> F = open('datafile.pkl', 'rb')

>>> E = pickle.load(F) # Load any object from file

>>> E

{'a': 1, 'b': 2}

We get back an equivalent dictionary object, with no manual splitting or converting required. The pickle module performs what is known as object serialization—converting objects to and from strings of bytes—but requires very little work on our part. In fact, pickle internally translates our dictionary to a string form, though it’s not much to look at (and may vary if we pickle in other data protocol modes):

>>> open('datafile.pkl', 'rb').read() # Format is prone to change!

b'\x80\x03}q\x00(X\x01\x00\x00\x00bq\x01K\x02X\x01\x00\x00\x00aq\x02K\x01u.'

Because pickle can reconstruct the object from this format, we don’t have to deal with it ourselves. For more on the pickle module, see the Python standard library manual, or import pickle and pass it to help interactively. While you’re exploring, also take a look at the shelve module. shelve is a tool that uses pickle to store Python objects in an access-by-key filesystem, which is beyond our scope here (though you will get to see an example of shelve in action in Chapter 28, and other pickle examples in Chapter 31 and Chapter 37).
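As a further sketch, pickle handles nested and mixed structures just as easily, can store more than one object per file, and also works on in-memory byte strings through dumps and loads. The record and the temporary file path below are made up for illustration:

```python
import os
import pickle
import tempfile

# Hypothetical record mixing a tuple, a list, and a set.
rec = {'name': ('Bob', 'Smith'), 'jobs': ['dev', 'mgr'], 'tags': {'a', 'b'}}
path = os.path.join(tempfile.mkdtemp(), 'datafile.pkl')

# Objects are written one after another in binary mode...
with open(path, 'wb') as F:
    pickle.dump(rec, F)
    pickle.dump([1, 2, 3], F)

# ...and come back in the same order, one load call per object.
with open(path, 'rb') as F:
    first = pickle.load(F)
    second = pickle.load(F)

# The same conversion is available in memory, without a file.
data = pickle.dumps(rec)
clone = pickle.loads(data)

print(first == rec, second, type(data).__name__)   # True [1, 2, 3] bytes
```

Unlike the manual string conversions shown earlier, the tuple and set come back as a tuple and a set, not as text to be parsed.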

NOTE

Notice that I opened the file used to store the pickled object in binary mode; binary mode is always required in Python 3.X, because the pickler creates and uses a bytes string object, and these objects imply binary-mode files (text-mode files imply str strings in 3.X). In earlier Pythons it’s OK to use text-mode files for protocol 0 (the default, which creates ASCII text), as long as text mode is used consistently; higher protocols require binary-mode files. Python 3.X’s default protocol is 3 (binary), but it creates bytes even for protocol 0. See Chapter 28, Chapter 31, and Chapter 37; Python’s library manual; or reference books for more details on and examples of pickled data.

Python 2.X also has a cPickle module, which is an optimized version of pickle that can be imported directly for speed. Python 3.X renames this module _pickle and uses it automatically in pickle—scripts simply import pickle and let Python optimize itself.
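A quick way to check the binary-mode requirement in 3.X is to pickle into in-memory streams, as in this sketch. io.StringIO and io.BytesIO stand in for text-mode and binary-mode files here, which is an assumption made to avoid creating real files; the default protocol number also depends on the Python release you run:

```python
import io
import pickle

D = {'a': 1, 'b': 2}

# Protocol 0 is ASCII-only, yet 3.X still hands back a bytes object.
ascii_form = pickle.dumps(D, protocol=0)
default_form = pickle.dumps(D)

# A text-mode stream accepts only str, so the pickler's bytes are rejected.
try:
    pickle.dump(D, io.StringIO())
except TypeError as exc:
    text_mode_error = exc
else:
    text_mode_error = None

# A binary-mode stream works for any protocol.
buffer = io.BytesIO()
pickle.dump(D, buffer, protocol=0)
buffer.seek(0)
restored = pickle.load(buffer)

print(type(ascii_form).__name__, type(text_mode_error).__name__, restored)
```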

Storing Python Objects in JSON Format

The prior section’s pickle module translates nearly arbitrary Python objects to a proprietary format developed specifically for Python, and honed for performance over many years. JSON is a newer and emerging data interchange format, which is both programming-language-neutral and supported by a variety of systems. MongoDB, for instance, stores data in a JSON document database (using a binary JSON format).

JSON does not support as broad a range of Python object types as pickle, but its portability is an advantage in some contexts, and it represents another way to serialize a specific category of Python objects for storage and transmission. Moreover, because JSON is so close to Python dictionaries and lists in syntax, the translation to and from Python objects is trivial, and is automated by the json standard library module.

For example, a Python dictionary with nested structures is very similar to JSON data, though Python’s variables and expressions support richer structuring options (any part of the following can be an arbitrary expression in Python code):

>>> name = dict(first='Bob', last='Smith')

>>> rec = dict(name=name, job=['dev', 'mgr'], age=40.5)

>>> rec

{'job': ['dev', 'mgr'], 'name': {'last': 'Smith', 'first': 'Bob'}, 'age': 40.5}

The final dictionary format displayed here is a valid literal in Python code, and almost passes for JSON when printed as is, but the json module makes the translation official—here translating Python objects to and from a JSON serialized string representation in memory:

>>> import json

>>> json.dumps(rec)

'{"job": ["dev", "mgr"], "name": {"last": "Smith", "first": "Bob"}, "age": 40.5}'

>>> S = json.dumps(rec)

>>> S

'{"job": ["dev", "mgr"], "name": {"last": "Smith", "first": "Bob"}, "age": 40.5}'

>>> O = json.loads(S)

>>> O

{'job': ['dev', 'mgr'], 'name': {'last': 'Smith', 'first': 'Bob'}, 'age': 40.5}

>>> O == rec

True

It’s similarly straightforward to translate Python objects to and from JSON data strings in files. Prior to being stored in a file, your data is simply Python objects; the JSON module recreates them from the JSON textual representation when it loads it from the file:

>>> json.dump(rec, fp=open('testjson.txt', 'w'), indent=4)

>>> print(open('testjson.txt').read())

{
    "job": [
        "dev",
        "mgr"
    ],
    "name": {
        "last": "Smith",
        "first": "Bob"
    },
    "age": 40.5
}

>>> P = json.load(open('testjson.txt'))

>>> P

{'job': ['dev', 'mgr'], 'name': {'last': 'Smith', 'first': 'Bob'}, 'age': 40.5}

Once you’ve translated from JSON text, you process the data using normal Python object operations in your script. For more details on JSON-related topics, see Python’s library manuals and search the Web.

Note that strings are all Unicode in JSON to support text drawn from international character sets, so you’ll see a leading u on strings after translating from JSON data in Python 2.X (but not in 3.X); this is just the syntax of Unicode objects in 2.X, as introduced in Chapter 4 and Chapter 7, and covered in full in Chapter 37. Because Unicode text strings support all the usual string operations, the difference is negligible to your code while text resides in memory; the distinction matters most when transferring text to and from files, and then usually only for non-ASCII types of text where encodings come into play.
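To make the earlier point about JSON's narrower type support concrete, here is a small sketch run under Python 3.X. The record is hypothetical; it shows that tuples come back as lists, that non-string keys come back as strings, and that sets are refused outright:

```python
import json

# Hypothetical record with a tuple value and an integer key.
rec = {'point': (1, 2), 1: 'one', 'nothing': None, 'flag': True}

text = json.dumps(rec)    # None and True become JSON null and true
back = json.loads(text)   # Tuple is now a list; key 1 is now '1'

# Sets have no JSON equivalent, so the encoder raises an exception.
try:
    json.dumps({'tags': {'a', 'b'}})
except TypeError as exc:
    set_error = exc
else:
    set_error = None

print(back)
print(back == rec)        # False: the round trip changed two types
```

When such differences matter, pickle preserves the original types, at the cost of a Python-specific format.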

NOTE

There is also support in the Python world for translating objects to and from XML, a text format used in Chapter 37; see the web for details. For another semirelated tool that deals with formatted data files, see the standard library’s csv module. It parses and creates CSV (comma-separated value) data in files and strings. This doesn’t map as directly to Python objects, but is another common data exchange format:

>>> import csv

>>> rdr = csv.reader(open('csvdata.txt'))

>>> for row in rdr: print(row)

...

['a', 'bbb', 'cc', 'dddd']

['11', '22', '33', '44']
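The csv module also writes data, which this sketch pairs with the reader shown above. It uses an in-memory io.StringIO object in place of csvdata.txt, an assumption made so the example needs no existing file:

```python
import csv
import io

rows = [['a', 'bbb', 'cc', 'dddd'], [11, 22, 33, 44]]

# Write: the writer converts each field to text and adds line terminators.
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
text = buffer.getvalue()

# Read: every field comes back as a string, so numbers need converting.
parsed = list(csv.reader(io.StringIO(text)))
numbers = [int(field) for field in parsed[1]]

print(repr(text))   # 'a,bbb,cc,dddd\r\n11,22,33,44\r\n'
print(parsed)       # [['a', 'bbb', 'cc', 'dddd'], ['11', '22', '33', '44']]
print(numbers)      # [11, 22, 33, 44]
```

When writing to a real file in 3.X, the library manual recommends opening it with newline='' so that the writer's own line terminators are not translated again.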

Storing Packed Binary Data: struct

One other file-related note before we move on: some advanced applications also need to deal with packed binary data, created perhaps by a C language program or a network connection. Python’s standard library includes a tool to help in this domain—the struct module knows how to both compose and parse packed binary data. In a sense, this is another data-conversion tool that interprets strings in files as binary data.

We saw an overview of this tool in Chapter 4, but let’s take another quick look here for more perspective. To create a packed binary data file, open it in 'wb' (write binary) mode, and pass struct a format string and some Python objects. The format string used here means pack as a 4-byte integer, a 4-character string (which must be a bytes string as of Python 3.2), and a 2-byte integer, all in big-endian form (other format codes handle padding bytes, floating-point numbers, and more):

>>> F = open('data.bin', 'wb') # Open binary output file

>>> import struct

>>> data = struct.pack('>i4sh', 7, b'spam', 8) # Make packed binary data

>>> data

b'\x00\x00\x00\x07spam\x00\x08'

>>> F.write(data) # Write byte string

>>> F.close()

Python creates a binary bytes data string, which we write out to the file normally—this one consists mostly of nonprintable characters printed in hexadecimal escapes, and is the same binary file we met earlier. To parse the values out to normal Python objects, we simply read the string back and unpack it using the same format string. Python extracts the values into normal Python objects—integers and a string:

>>> F = open('data.bin', 'rb')

>>> data = F.read() # Get packed binary data

>>> data

b'\x00\x00\x00\x07spam\x00\x08'

>>> values = struct.unpack('>i4sh', data) # Convert to Python objects

>>> values

(7, b'spam', 8)

Binary data files are advanced and somewhat low-level tools that we won’t cover in more detail here; for more help, see the struct coverage in Chapter 37, consult the Python library manual, or import struct and pass it to the help function interactively. Also note that you can use the binary file-processing modes 'wb' and 'rb' to process a simpler binary file, such as an image or audio file, as a whole without having to unpack its contents; in such cases your code might pass it unparsed to other files or tools.
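For a bit more perspective on the format string, this sketch checks the packed size, contrasts big-endian and little-endian layouts of the same values, and decodes the bytes field into a str. The little-endian variant and the variable names are additions for illustration only:

```python
import struct

fmt = '>i4sh'                  # Big-endian: 4-byte int, 4 bytes, 2-byte int
size = struct.calcsize(fmt)    # 4 + 4 + 2 = 10 bytes, no padding

big = struct.pack(fmt, 7, b'spam', 8)
little = struct.pack('<i4sh', 7, b'spam', 8)   # Same values, bytes reversed

# unpack always returns a tuple, which can be unpacked into names.
count, label, code = struct.unpack(fmt, big)
text_label = label.decode('ascii')             # bytes to str, if text is needed

print(size)     # 10
print(big)      # b'\x00\x00\x00\x07spam\x00\x08'
print(little)   # b'\x07\x00\x00\x00spam\x08\x00'
print(count, text_label, code)                 # 7 spam 8
```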

File Context Managers

You’ll also want to watch for Chapter 34’s discussion of the file’s context manager support, new as of Python 3.0 and 2.6. Though more a feature of exception processing than files themselves, it allows us to wrap file-processing code in a logic layer that ensures that the file will be closed (and if needed, have its output flushed to disk) automatically on exit, instead of relying on the auto-close during garbage collection:

with open(r'C:\code\data.txt') as myfile:     # See Chapter 34 for details
    for line in myfile:
        ...use line here...

The try/finally statement that we’ll also study in Chapter 34 can provide similar functionality, but at some cost in extra code—three extra lines, to be precise (though we can often avoid both options and let Python close files for us automatically):

myfile = open(r'C:\code\data.txt')
try:
    for line in myfile:
        ...use line here...
finally:
    myfile.close()

The with context manager scheme ensures release of system resources in all Pythons, and may be more useful for output files to guarantee buffer flushes; unlike the more general try, though, it is also limited to objects that support its protocol. Since both these options require more information than we have yet obtained, however, we’ll postpone details until later in this book.
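Though details wait until Chapter 34, a runnable sketch shows the guarantee in action. It assumes a scratch file in a temporary directory and simulates a failure with a ValueError; both are illustrative choices, not part of the examples above:

```python
import os
import tempfile

# Hypothetical scratch file.
path = os.path.join(tempfile.mkdtemp(), 'data.txt')

# Output is flushed and the file closed when the with block exits.
with open(path, 'w') as out:
    out.write('spam\n')
closed_after_with = out.closed

# The file is closed even if the block exits because of an exception.
try:
    with open(path) as myfile:
        first = myfile.readline()
        raise ValueError('simulated failure')
except ValueError:
    pass
closed_after_error = myfile.closed

# The try/finally form gives the same guarantee with more code.
other = open(path)
try:
    lines = [line.rstrip() for line in other]
finally:
    other.close()

print(closed_after_with, closed_after_error, other.closed)   # True True True
```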

Other File Tools

There are additional, more specialized file methods shown in Table 9-2, and even more that are not in the table. For instance, as mentioned earlier, seek resets your current position in a file (the next read or write happens at that position), flush forces buffered output to be written out to disk without closing the connection (by default, files are always buffered), and so on.

The Python standard library manual and the reference books described in the preface provide complete lists of file methods; for a quick look, run a dir or help call interactively, passing in an open file object (in Python 2.X but not 3.X, you can pass in the name file instead). For more file-processing examples, watch for the sidebar Why You Will Care: File Scanners in Chapter 13. It sketches common file-scanning loop code patterns with statements we have not covered enough yet to use here.
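As a brief sketch of seek and flush, the following opens a file for both writing and reading. Binary mode ('w+b') and the scratch path are assumptions chosen so that positions are plain byte offsets on every platform:

```python
import os
import tempfile

# Hypothetical scratch file.
path = os.path.join(tempfile.mkdtemp(), 'seekdemo.bin')

F = open(path, 'w+b')             # Create for writing and reading, binary
F.write(b'Hello file world\n')    # May still be sitting in a buffer
F.flush()                         # Force buffered output to disk, stay open
size_on_disk = os.path.getsize(path)

F.seek(6)                         # Next read happens at byte offset 6
word = F.read(4)
position = F.tell()               # Current offset after the read

F.seek(0)                         # Back to the start
whole = F.read()
F.close()

print(size_on_disk, word, position)   # 17 b'file' 10
```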

Also, note that although the open function and the file objects it returns are your main interface to external files in a Python script, there are additional file-like tools in the Python toolset. Among these:

Standard streams

Preopened file objects in the sys module, such as sys.stdout (see Print Operations in Chapter 11 for details)

Descriptor files in the os module

Integer file handles that support lower-level tools such as file locking (see also the “x” mode in Python 3.3’s open for exclusive creation)

Sockets, pipes, and FIFOs

File-like objects used to synchronize processes or communicate over networks

Access-by-key files known as “shelves”

Used to store unaltered and pickled Python objects directly, by key (used in Chapter 28)

Shell command streams

Tools such as os.popen and subprocess.Popen that support spawning shell commands and reading and writing to their standard streams (see Chapter 13 and Chapter 21 for examples)

The third-party open source domain offers even more file-like tools, including support for communicating with serial ports in the PySerial extension and interactive programs in the pexpect system. See applications-focused Python texts and the Web at large for additional information on file-like tools.

NOTE

Version skew note: In Python 2.X, the built-in name open is essentially a synonym for the name file, and you may technically open files by calling either open or file (though open is generally preferred for opening). In Python 3.X, the name file is no longer available, because of its redundancy with open.

Python 2.X users may also use the name file as the file object type, in order to customize files with object-oriented programming (described later in this book). In Python 3.X, files have changed radically. The classes used to implement file objects live in the standard library module io. See this module’s documentation or code for the classes it makes available for customization, and run a type(F) call on an open file F for hints.
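The type(F) hint can be tried directly, as in this sketch for Python 3.X. The scratch file is hypothetical, and the class names reported are those of the standard CPython implementation:

```python
import io
import os
import tempfile

# Hypothetical scratch file.
path = os.path.join(tempfile.mkdtemp(), 'types.txt')

with open(path, 'w') as F:
    F.write('spam\n')
    text_type = type(F)           # Text mode: io.TextIOWrapper

with open(path, 'rb') as F:
    binary_read_type = type(F)    # Binary input: io.BufferedReader

with open(path, 'ab') as F:
    binary_write_type = type(F)   # Binary output: io.BufferedWriter

print(text_type, binary_read_type, binary_write_type)
```

The mode string passed to open thus selects among different classes in io, rather than configuring a single file type.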

Core Types Review and Summary

Now that we’ve seen all of Python’s core built-in types in action, let’s wrap up our object types tour by reviewing some of the properties they share. Table 9-3 classifies all the major types we’ve seen so far according to the type categories introduced earlier. Here are some points to remember:

§ Objects share operations according to their category; for instance, sequence objects—strings, lists, and tuples—all share sequence operations such as concatenation, length, and indexing.

§ Only mutable objects—lists, dictionaries, and sets—may be changed in place; you cannot change numbers, strings, or tuples in place.

§ Files export only methods, so mutability doesn’t really apply to them—their state may be changed when they are processed, but this isn’t quite the same as Python core type mutability constraints.

§ “Numbers” in Table 9-3 includes all number types: integer (and the distinct long integer in 2.X), floating point, complex, decimal, and fraction.

§ “Strings” in Table 9-3 includes str, as well as bytes in 3.X and unicode in 2.X; the bytearray string type in 3.X, 2.6, and 2.7 is mutable.

§ Sets are something like the keys of a valueless dictionary, but they don’t map to values and are not ordered, so sets are neither a mapping nor a sequence type; frozenset is an immutable variant of set.

§ In addition to type category operations, as of Python 2.6 and 3.0 all the types in Table 9-3 have callable methods, which are generally specific to their type.

Table 9-3. Object classifications

| Object type | Category | Mutable? |
|---|---|---|
| Numbers (all) | Numeric | No |
| Strings (all) | Sequence | No |
| Lists | Sequence | Yes |
| Dictionaries | Mapping | Yes |
| Tuples | Sequence | No |
| Files | Extension | N/A |
| Sets | Set | Yes |
| Frozenset | Set | No |
| bytearray | Sequence | Yes |
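The Mutable? column can be verified with a short sketch like the following, run under Python 3.X. The objects and names are chosen here purely for illustration:

```python
# Mutable types accept in-place changes.
L = [1, 2, 3]
L[0] = 99
D = {'a': 1}
D['b'] = 2
S = {1, 2}
S.add(3)
B = bytearray(b'spam')
B[0] = ord('S')

# Immutable sequences reject item assignment with a TypeError.
rejected = []
for obj in ('spam', (1, 2, 3), b'spam'):
    try:
        obj[0] = obj[0]
    except TypeError:
        rejected.append(type(obj).__name__)

# frozenset simply lacks the set methods that change an object in place.
frozen = frozenset(S)
frozen_can_add = hasattr(frozen, 'add')

print(L, D, S, B)        # [99, 2, 3] {'a': 1, 'b': 2} {1, 2, 3} bytearray(b'Spam')
print(rejected)          # ['str', 'tuple', 'bytes']
print(frozen_can_add)    # False
```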

WHY YOU WILL CARE: OPERATOR OVERLOADING

In Part VI of this book, we’ll see that objects we implement with classes can pick and choose from these categories arbitrarily. For instance, if we want to provide a new kind of specialized sequence object that is consistent with built-in sequences, we can code a class that overloads things like indexing and concatenation:

class MySequence:
    def __getitem__(self, index):
        # Called on self[index], others
        ...
    def __add__(self, other):
        # Called on self + other
        ...
    def __iter__(self):
        # Preferred in iterations
        ...

and so on. We can also make the new object mutable or not by selectively implementing methods called for in-place change operations (e.g., __setitem__ is called on self[index]=value assignments). Although it’s beyond this book’s scope, it’s also possible to implement new objects in an external language like C as C extension types. For these, we fill in C function pointer slots to choose between number, sequence, and mapping operation sets.
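As a preview of Part VI, here is one minimal way the outline above might be filled in. The choice to wrap a built-in list, along with the __init__, __len__, and __setitem__ methods, are assumptions made to keep the sketch runnable; real classes can implement these operations however they like:

```python
class MySequence:
    """Hypothetical sequence class that delegates to an embedded list."""

    def __init__(self, items):
        self._items = list(items)

    def __getitem__(self, index):          # Called on self[index], slices
        return self._items[index]

    def __setitem__(self, index, value):   # Called on self[index] = value
        self._items[index] = value

    def __add__(self, other):              # Called on self + other
        return MySequence(self._items + list(other))

    def __iter__(self):                    # Preferred in iterations
        return iter(self._items)

    def __len__(self):                     # Called on len(self)
        return len(self._items)


seq = MySequence('abc')
joined = seq + [1, 2]          # New object; seq itself is unchanged
seq[0] = 'X'                   # In-place change: this class is mutable

print(list(seq))               # ['X', 'b', 'c']
print(list(joined))            # ['a', 'b', 'c', 1, 2]
print(seq[1:], len(joined))    # ['b', 'c'] 5
```

Omitting __setitem__ would make instances behave more like tuples: indexing and concatenation would work, but item assignment would fail.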

Object Flexibility

This part of the book introduced a number of compound object types—collections with components. In general:

§ Lists, dictionaries, and tuples can hold any kind of object.

§ Sets can contain any type of immutable object.

§ Lists, dictionaries, and tuples can be arbitrarily nested.

§ Lists, dictionaries, and sets can dynamically grow and shrink.

Because they support arbitrary structures, Python’s compound object types are good at representing complex information in programs. For example, values in dictionaries may be lists, which may contain tuples, which may contain dictionaries, and so on. The nesting can be as deep as needed to model the data to be processed.

Let’s look at an example of nesting. The following interaction defines a tree of nested compound sequence objects, shown in Figure 9-1. To access its components, you may include as many index operations as required. Python evaluates the indexes from left to right, and fetches a reference to a more deeply nested object at each step. Figure 9-1 may be a pathologically complicated data structure, but it illustrates the syntax used to access nested objects in general:

>>> L = ['abc', [(1, 2), ([3], 4)], 5]

>>> L[1]

[(1, 2), ([3], 4)]

>>> L[1][1]

([3], 4)

>>> L[1][1][0]

[3]

>>> L[1][1][0][0]

3

![A nested object tree with the offsets of its components, created by running the literal expression ['abc', [(1, 2), ([3], 4)], 5]. Syntactically nested objects are internally represented as references (i.e., pointers) to separate pieces of memory.](learning_1.files/image011.jpg)

Figure 9-1. A nested object tree with the offsets of its components, created by running the literal expression ['abc', [(1, 2), ([3], 4)], 5]. Syntactically nested objects are internally represented as references (i.e., pointers) to separate pieces of memory.
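Because each index step fetches a reference rather than a copy, a chained index expression is equivalent to a series of single steps, as this sketch suggests. The step names and the rec dictionary are hypothetical additions:

```python
L = ['abc', [(1, 2), ([3], 4)], 5]

# One index per step, left to right, mirrors L[1][1][0].
step1 = L[1]          # [(1, 2), ([3], 4)]
step2 = step1[1]      # ([3], 4)
step3 = step2[0]      # [3]
same_object = step3 is L[1][1][0]

# The fetched reference leads to the nested list itself, not a copy,
# so an in-place change shows up in the original structure.
step3.append(99)

# The same syntax works across mappings and sequences alike.
rec = {'name': {'first': 'Bob'}, 'jobs': [('dev', 2010), ('mgr', 2013)]}
year = rec['jobs'][1][1]

print(same_object)    # True
print(L)              # ['abc', [(1, 2), ([3, 99], 4)], 5]
print(year)           # 2013
```

Notice that the tuple in the middle did not have to change for this to work; only the list it references was modified.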

References Versus Copies

Chapter 6 mentioned that assignments always store references to objects, not copies of those objects. In practice, this is usually what you want. Because assignments can generate multiple references to the same object, though, it’s important to be aware that changing a mutable object in place may affect other references to the same object elsewhere in your program. If you don’t want such behavior, you’ll need to tell Python to copy the object explicitly.

We studied this phenomenon in Chapter 6, but it can become more subtle when larger objects of the sort we’ve explored since then come into play. For instance, the following example creates a list assigned to X, and another list assigned to L that embeds a reference back to list X. It also creates a dictionary D that contains another reference back to list X:

>>> X = [1, 2, 3]

>>> L = ['a', X, 'b'] # Embed references to X's object

>>> D = {'x':X, 'y':2}

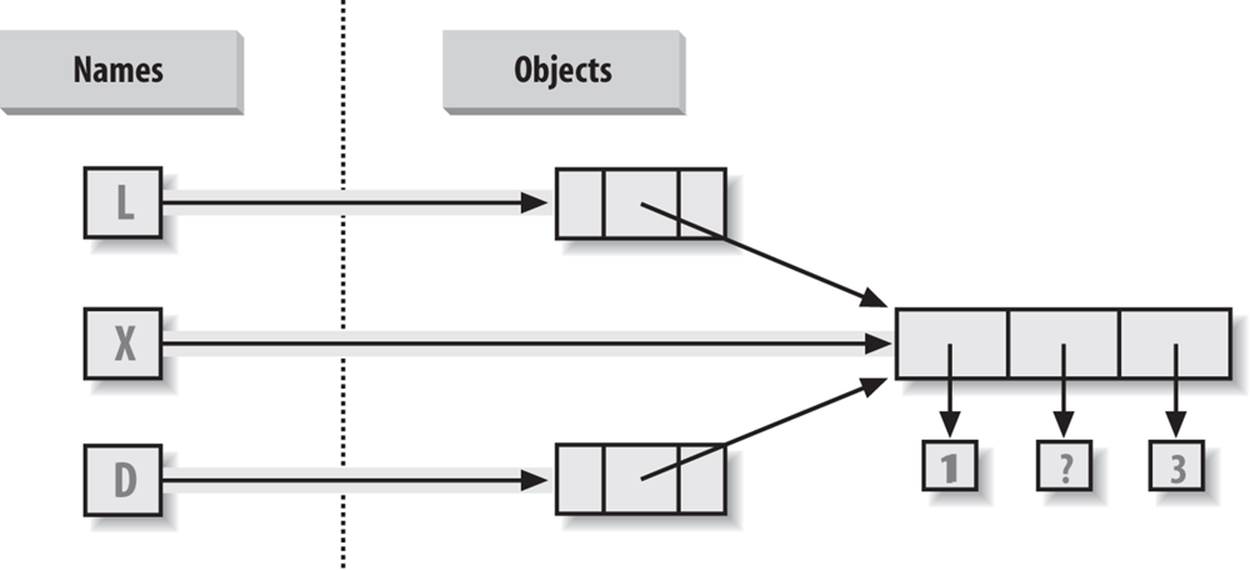

At this point, there are three references to the first list created: from the name X, from inside the list assigned to L, and from inside the dictionary assigned to D. The situation is illustrated in Figure 9-2.

Figure 9-2. Shared object references: because the list referenced by variable X is also referenced from within the objects referenced by L and D, changing the shared list from X makes it look different from L and D, too.

Because lists are mutable, changing the shared list object from any of the three references also changes what the other two reference:

>>> X[1] = 'surprise' # Changes all three references!

>>> L

['a', [1, 'surprise', 3], 'b']

>>> D

{'x': [1, 'surprise', 3], 'y': 2}

References are a higher-level analog of pointers in other languages that are always followed when used. Although you can’t grab hold of the reference itself, it’s possible to store the same reference in more than one place (variables, lists, and so on). This is a feature—you can pass a large object around a program without generating expensive copies of it along the way. If you really do want copies, however, you can request them:

§ Slice expressions with empty limits (L[:]) copy sequences.

§ The dictionary, set, and list copy method (X.copy()) copies a dictionary, set, or list (the list’s copy is new as of 3.3).

§ Some built-in functions, such as list, dict, and set, make copies (list(L), dict(D), set(S)).

§ The copy standard library module makes full copies when needed.

For example, say you have a list and a dictionary, and you don’t want their values to be changed through other variables:

>>> L = [1,2,3]

>>> D = {'a':1, 'b':2}

To prevent this, simply assign copies to the other variables, not references to the same objects:

>>> A = L[:] # Instead of A = L (or list(L))

>>> B = D.copy() # Instead of B = D (ditto for sets)

This way, changes made from the other variables will change the copies, not the originals:

>>> A[1] = 'Ni'

>>> B['c'] = 'spam'

>>>

>>> L, D

([1, 2, 3], {'a': 1, 'b': 2})

>>> A, B

([1, 'Ni', 3], {'a': 1, 'c': 'spam', 'b': 2})

In terms of our original example, you can avoid the reference side effects by slicing the original list instead of simply naming it:

>>> X = [1, 2, 3]

>>> L = ['a', X[:], 'b'] # Embed copies of X's object

>>> D = {'x':X[:], 'y':2}

This changes the picture in Figure 9-2—L and D will now point to different lists than X. The net effect is that changes made through X will impact only X, not L and D; similarly, changes to L or D will not impact X.

One final note on copies: empty-limit slices and the dictionary copy method only make top-level copies; that is, they do not copy nested data structures, if any are present. If you need a complete, fully independent copy of a deeply nested data structure (like the various record structures we’ve coded in recent chapters), use the standard copy module, introduced in Chapter 6:

import copy

X = copy.deepcopy(Y) # Fully copy an arbitrarily nested object Y

This call recursively traverses objects to copy all their parts. This is a much more rare case, though, which is why you have to say more to use this scheme. References are usually what you will want; when they are not, slices and copy methods are usually as much copying as you’ll need to do.
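The difference between top-level and full copies is easiest to see side by side. In this sketch, the record Y and the other names are made up for illustration:

```python
import copy

# Hypothetical record with a nested list.
Y = {'name': 'Bob', 'jobs': ['dev', 'mgr']}

shallow = Y.copy()            # Top-level copy: nested list is shared
deep = copy.deepcopy(Y)       # Full copy: nested list is copied too

Y['name'] = 'Sue'             # Top-level change: affects Y only
Y['jobs'].append('cto')       # Nested in-place change: shared with shallow

# Slices behave the same way for lists.
M = [[1, 2], 3]
N = M[:]
M[0][0] = 'x'                 # N[0] is the same nested list as M[0]

print(shallow)   # {'name': 'Bob', 'jobs': ['dev', 'mgr', 'cto']}
print(deep)      # {'name': 'Bob', 'jobs': ['dev', 'mgr']}
print(N)         # [['x', 2], 3]
```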

Comparisons, Equality, and Truth

All Python objects also respond to comparisons: tests for equality, relative magnitude, and so on. Python comparisons always inspect all parts of compound objects until a result can be determined. In fact, when nested objects are present, Python automatically traverses data structures to apply comparisons from left to right, and as deeply as needed. The first difference found along the way determines the comparison result.

This is sometimes called a recursive comparison—the same comparison requested on the top-level objects is applied to each of the nested objects, and to each of their nested objects, and so on, until a result is found. Later in this book—in Chapter 19—we’ll see how to write recursive functions of our own that work similarly on nested structures. For now, think about comparing all the linked pages at two websites if you want a metaphor for such structures, and a reason for writing recursive functions to process them.

In terms of core types, the recursion is automatic. For instance, a comparison of list objects compares all their components automatically until a mismatch is found or the end is reached:

>>> L1 = [1, ('a', 3)] # Same value, unique objects

>>> L2 = [1, ('a', 3)]

>>> L1 == L2, L1 is L2 # Equivalent? Same object?

(True, False)

Here, L1 and L2 are assigned lists that are equivalent but distinct objects. As a review of what we saw in Chapter 6, because of the nature of Python references, there are two ways to test for equality:

§ The == operator tests value equivalence. Python performs an equivalence test, comparing all nested objects recursively.

§ The is operator tests object identity. Python tests whether the two are really the same object (i.e., live at the same address in memory).

In the preceding example, L1 and L2 pass the == test (they have equivalent values because all their components are equivalent) but fail the is check (they reference two different objects, and hence two different pieces of memory). Notice what happens for short strings, though:

>>> S1 = 'spam'

>>> S2 = 'spam'

>>> S1 == S2, S1 is S2

(True, True)

Here, we should again have two distinct objects that happen to have the same value: == should be true, and is should be false. But because Python internally caches and reuses some strings as an optimization, there really is just a single string 'spam' in memory, shared by S1 and S2; hence, the is identity test reports a true result. To trigger the normal behavior, we need to use longer strings:

>>> S1 = 'a longer string'

>>> S2 = 'a longer string'

>>> S1 == S2, S1 is S2

(True, False)

Of course, because strings are immutable, the object caching mechanism is irrelevant to your code—strings can’t be changed in place, regardless of how many variables refer to them. If identity tests seem confusing, see Chapter 6 for a refresher on object reference concepts.

As a rule of thumb, the == operator is what you will want to use for almost all equality checks; is is reserved for highly specialized roles. We’ll see cases later in the book where both operators are put to use.
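As a sketch of the two operators' roles, the following compares equal lists, an alias, and one of the specialized cases where is is conventionally used: testing for the None placeholder. The describe function is hypothetical, and the None convention is common practice rather than something stated above:

```python
L1 = [1, ('a', 3)]
L2 = [1, ('a', 3)]
L3 = L1                       # Alias: a second reference to the same object

value_equal = L1 == L2        # True: same value
same_object = L1 is L2        # False: two distinct objects
alias_same = L3 is L1         # True: one object, two names

def describe(arg=None):
    # There is only one None object, so identity is the precise test;
    # == would also treat other falsy-looking values differently anyway.
    if arg is None:
        return 'no argument'
    return 'got %r' % (arg,)

print(value_equal, same_object, alias_same)   # True False True
print(describe(), describe(0))                # no argument got 0
```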

Relative magnitude comparisons are also applied recursively to nested data structures:

>>> L1 = [1, ('a', 3)]

>>> L2 = [1, ('a', 2)]

>>> L1 < L2, L1 == L2, L1 > L2 # Less, equal, greater: tuple of results

(False, False, True)

Here, L1 is greater than L2 because the nested 3 is greater than 2. By now you should know that the result of the last line is really a tuple of three objects—the results of the three expressions typed (an example of a tuple without its enclosing parentheses).

More specifically, Python compares types as follows:

§ Numbers are compared by relative magnitude, after conversion to the common highest type if needed.

§ Strings are compared lexicographically (by the character set code point values returned by ord), and character by character until the end or first mismatch ("abc" < "ac").

§ Lists and tuples are compared by comparing each component from left to right, and recursively for nested structures, until the end or first mismatch ([2] > [1, 2]).

§ Sets are equal if both contain the same items (formally, if each is a subset of the other), and set relative magnitude comparisons apply subset and superset tests.

§ Dictionaries compare as equal if their sorted (key, value) lists are equal. Relative magnitude comparisons are not supported for dictionaries in Python 3.X, but they work in 2.X as though comparing sorted (key, value) lists.

§ Nonnumeric mixed-type magnitude comparisons (e.g., 1 < 'spam') are errors in Python 3.X. They are allowed in Python 2.X, but use a fixed but arbitrary ordering rule based on type name string. By proxy, this also applies to sorts, which use comparisons internally: nonnumeric mixed-type collections cannot be sorted in 3.X.

In general, comparisons of structured objects proceed as though you had written the objects as literals and compared all their parts one at a time from left to right. In later chapters, we’ll see other object types that can change the way they get compared.
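The rules in the preceding list can be checked one at a time, as in this sketch for Python 3.X. The checks dictionary is just a convenient way to label each result here:

```python
checks = {
    'numbers':    1 < 2.5,                          # Mixed numeric types
    'strings':    'abc' < 'ac',                     # 'b' < 'c' decides
    'code point': ord('b') < ord('c'),              # Why 'abc' < 'ac'
    'lists':      [2] > [1, 2],                     # First items decide
    'nested':     [1, ('a', 3)] > [1, ('a', 2)],    # Recursive, 3 > 2
    'set equal':  {1, 2} == {2, 1},                 # Same items
    'subset':     {1, 2} < {1, 2, 3},               # Proper subset test
    'dict equal': {'a': 1, 'b': 2} == {'b': 2, 'a': 1},
}

# Nonnumeric mixed-type magnitude tests are errors in 3.X.
try:
    1 < 'spam'
except TypeError as exc:
    mixed_error = exc
else:
    mixed_error = None

print(all(checks.values()))          # True
print(type(mixed_error).__name__)    # TypeError
```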

Python 2.X and 3.X mixed-type comparisons and sorts

Per the last point in the preceding section’s list, the change in Python 3.X for nonnumeric mixed-type comparisons applies to magnitude tests, not equality, but it also applies by proxy to sorting, which does magnitude testing internally. In Python 2.X these all work, though mixed types compare by an arbitrary ordering:

c:\code> c:\python27\python

>>> 11 == '11' # Equality does not convert non-numbers

False

>>> 11 >= '11' # 2.X compares by type name string: int, str

False

>>> ['11', '22'].sort() # Ditto for sorts

>>> [11, '11'].sort()

But Python 3.X disallows mixed-type magnitude testing, except numeric types and manually converted types:

c:\code> c:\python33\python

>>> 11 == '11' # 3.X: equality works but magnitude does not

False

>>> 11 >= '11'

TypeError: unorderable types: int() >= str()

>>> ['11', '22'].sort() # Ditto for sorts

>>> [11, '11'].sort()

TypeError: unorderable types: str() < int()

>>> 11 > 9.123 # Mixed numbers convert to highest type

True

>>> str(11) >= '11', 11 >= int('11') # Manual conversions force the issue

(True, True)
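The same manual-conversion idea extends to sorts in 3.X. As a minimal sketch (the list contents here are made up for illustration), passing a key function to sorted converts each item for comparison purposes only, leaving the original objects in the result:

```python
mixed = [11, '2', 3.5, '10']

# A plain sort fails in 3.X, because int, float, and str items get compared
try:
    sorted(mixed)
    plain_sort_failed = False
except TypeError:
    plain_sort_failed = True

# A key function converts each item before comparing, so the sort works
by_number = sorted(mixed, key=float)   # compare all items as floats
by_text = sorted(mixed, key=str)       # compare all items as strings

print(plain_sort_failed, by_number, by_text)
```

Which key you choose matters: comparing as numbers puts '2' first, while comparing as strings puts '10' first, because strings are ordered character by character.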

Python 2.X and 3.X dictionary comparisons

The second-to-last point in the preceding section also merits illustration. In Python 2.X, dictionaries support magnitude comparisons, as though you were comparing sorted key/value lists:

C:\code> c:\python27\python

>>> D1 = {'a':1, 'b':2}

>>> D2 = {'a':1, 'b':3}

>>> D1 == D2 # Dictionary equality: 2.X + 3.X

False

>>> D1 < D2 # Dictionary magnitude: 2.X only

True

As noted briefly in Chapter 8, though, magnitude comparisons for dictionaries are removed in Python 3.X because they incur too much overhead when equality is desired (equality uses an optimized scheme in 3.X that doesn’t literally compare sorted key/value lists):

C:\code> c:\python33\python

>>> D1 = {'a':1, 'b':2}

>>> D2 = {'a':1, 'b':3}

>>> D1 == D2

False

>>> D1 < D2

TypeError: unorderable types: dict() < dict()

The alternative in 3.X is to either write loops to compare values by key, or compare the sorted key/value lists manually; the items dictionary method and sorted built-in suffice:

>>> list(D1.items())

[('b', 2), ('a', 1)]

>>> sorted(D1.items())

[('a', 1), ('b', 2)]

>>>

>>> sorted(D1.items()) < sorted(D2.items()) # Magnitude test in 3.X

True

>>> sorted(D1.items()) > sorted(D2.items())

False

This takes more code, but in practice, most programs requiring this behavior will develop more efficient ways to compare data in dictionaries than either this workaround or the original behavior in Python 2.X.
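For the loop-based alternative, here is one possible sketch. The function name differing_keys is hypothetical, invented for this illustration, and the code assumes the keys of both dictionaries can be sorted together (all strings, for example):

```python
def differing_keys(d1, d2):
    """Return the sorted keys whose values differ, or that appear in only one dict."""
    missing = object()                      # unique marker for an absent key
    diffs = []
    for key in sorted(set(d1) | set(d2)):   # visit every key from either dict
        if d1.get(key, missing) != d2.get(key, missing):
            diffs.append(key)
    return diffs

D1 = {'a': 1, 'b': 2}
D2 = {'a': 1, 'b': 3}
print(differing_keys(D1, D2))               # keys where the two dicts disagree
print(differing_keys(D1, dict(D1)))         # empty list: the dicts are equal
```

A loop like this reports where two dictionaries differ, which is often more useful in practice than a single less-than or greater-than result.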

The Meaning of True and False in Python

Notice that the test results returned in the last two examples represent true and false values. They print as the words True and False, but now that we’re using logical tests like these in earnest, I should be a bit more formal about what these names really mean.

In Python, as in most programming languages, an integer 0 represents false, and an integer 1 represents true. In addition, though, Python recognizes any empty data structure as false and any nonempty data structure as true. More generally, the notions of true and false are intrinsic properties of every object in Python—each object is either true or false, as follows:

§ Numbers are false if zero, and true otherwise.

§ Other objects are false if empty, and true otherwise.

Table 9-4 gives examples of true and false values of objects in Python.

Table 9-4. Example object truth values

| Object | Value |
|---|---|
| "spam" | True |
| "" | False |
| [1, 2] | True |
| [] | False |
| {'a': 1} | True |
| {} | False |
| 1 | True |
| 0.0 | False |
| None | False |

As one application, because objects are true or false themselves, it’s common to see Python programmers code tests like if X:, which, assuming X is a string, is the same as if X != '':. In other words, you can test the object itself to see if it contains anything, instead of comparing it to an empty, and therefore false, object of the same type (more on if statements in the next chapter).
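To see these rules in action, the short sketch below asks bool for the truth value of each object in Table 9-4, and then codes the string test both ways. The names samples, truth, and status are arbitrary, chosen for this example only:

```python
# The objects from Table 9-4, in order
samples = ["spam", "", [1, 2], [], {"a": 1}, {}, 1, 0.0, None]
truth = [bool(obj) for obj in samples]
print(truth)

# Testing the object itself gives the same answer as comparing to an empty string
X = ""
if X:
    status = "nonempty"
else:
    status = "empty"
print(status, bool(X) == (X != ""))
```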

The None object

As shown in the last row in Table 9-4, Python also provides a special object called None, which is always considered to be false. None was introduced briefly in Chapter 4; it is the only value of a special data type in Python and typically serves as an empty placeholder (much like a NULL pointer in C).

For example, recall that for lists you cannot assign to an offset unless that offset already exists—the list does not magically grow if you attempt an out-of-bounds assignment. To preallocate a 100-item list such that you can add to any of the 100 offsets, you can fill it with None objects:

>>> L = [None] * 100

>>>

>>> L

[None, None, None, None, None, None, None, ... ]

This doesn’t limit the size of the list (it can still grow and shrink later), but simply presets an initial size to allow for future index assignments. You could initialize a list with zeros the same way, of course, but best practice dictates using None if the type of the list’s contents is variable or not yet known.

Keep in mind that None does not mean “undefined.” That is, None is something, not nothing (despite its name!)—it is a real object and a real piece of memory that is created and given a built-in name by Python itself. Watch for other uses of this special object later in the book; as we’ll learn in Part IV, it is also the default return value of functions that don’t exit by running into a return statement with a result value.
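The following sketch pulls these points together: index assignment works on a preallocated list but not past its end, and a function that never returns a value hands back None. The function name no_result is made up for this illustration:

```python
L = [None] * 100        # Preallocate 100 slots
L[42] = "spam"          # OK: offset 42 already exists

try:
    L[100] = "eggs"     # Offsets run 0..99, so this is out of bounds
    assigned = True
except IndexError:
    assigned = False

def no_result():
    pass                # No return statement with a value

result = no_result()    # The call still yields an object: None

print(len(L), L[42], assigned, result is None, type(None))
```

The last item printed shows that None has a type of its own, which is another sign that it is a real object and not an absence of one.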

The bool type

While we’re on the topic of truth, also keep in mind that the Python Boolean type bool, introduced in Chapter 5, simply augments the notions of true and false in Python. As we learned in Chapter 5, the built-in words True and False are just customized versions of the integers 1 and 0—it’s as if these two words have been preassigned to 1 and 0 everywhere in Python. Because of the way this new type is implemented, this is really just a minor extension to the notions of true and false already described, designed to make truth values more explicit:

§ When used explicitly in truth test code, the words True and False are equivalent to 1 and 0, but they make the programmer’s intent clearer.

§ Results of Boolean tests run interactively print as the words True and False, instead of as 1 and 0, to make the type of result clearer.

You are not required to use only Boolean types in logical statements such as if; all objects are still inherently true or false, and all the Boolean concepts mentioned in this chapter still work as described if you use other types. Python also provides a bool built-in function that can be used to test the Boolean value of an object if you want to make this explicit (i.e., whether it is true—that is, nonzero or nonempty):

>>> bool(1)

True

>>> bool('spam')

True

>>> bool({})

False

In practice, though, you’ll rarely notice the Boolean type produced by logic tests, because Boolean results are used automatically by if statements and other selection tools. We’ll explore Booleans further when we study logical statements in Chapter 12.
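If you want to confirm that True and False really are customized versions of 1 and 0, a few quick tests suffice. This is only a sketch, and the variable names are arbitrary:

```python
is_int = isinstance(True, int)              # bool is a kind of integer
same_values = (True == 1, False == 0)       # Same values as 1 and 0
arithmetic = True + True                    # Works in math, though rarely wise
own_type = type(True) is bool               # But displayed and typed as bool

# One occasional use: counting how many tests succeed
count = sum([x > 2 for x in [1, 3, 5]])

print(is_int, same_values, arithmetic, own_type, count)
```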

Python’s Type Hierarchies

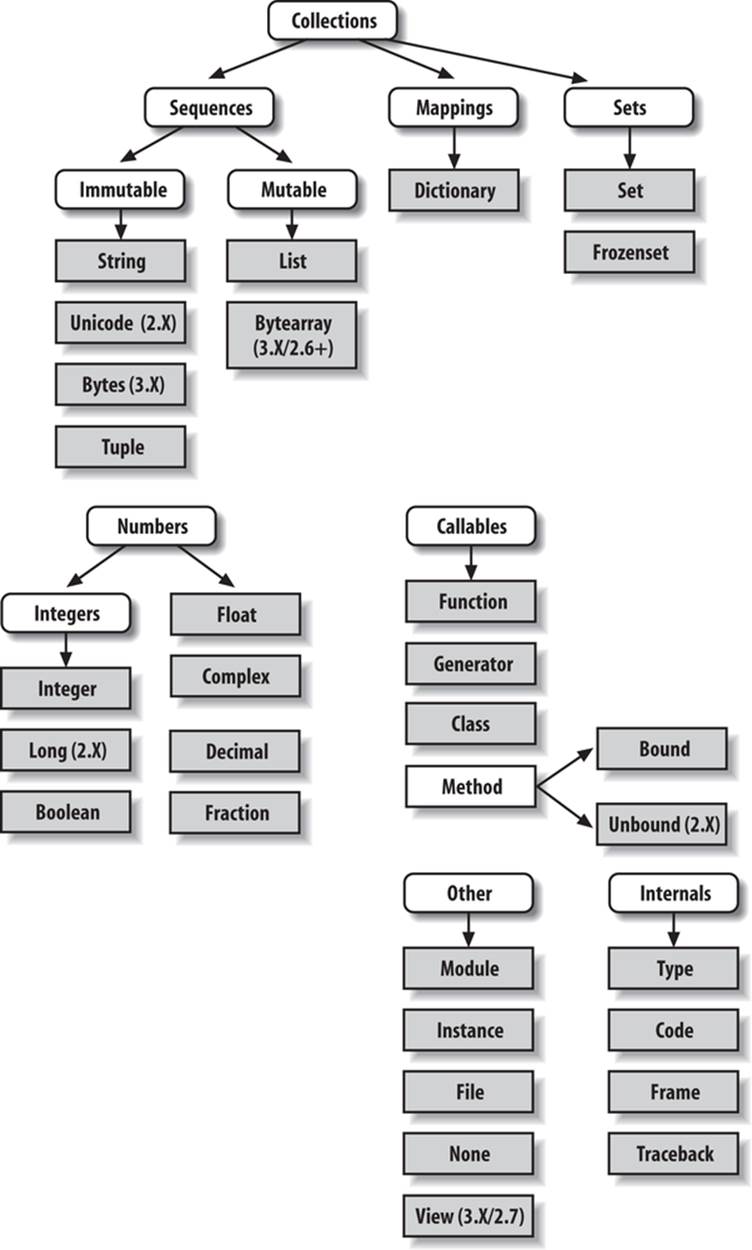

As a summary and reference, Figure 9-3 sketches all the built-in object types available in Python and their relationships. We’ve looked at the most prominent of these; most of the other kinds of objects in Figure 9-3 correspond to program units (e.g., functions and modules) or exposed interpreter internals (e.g., stack frames and compiled code).

The largest point to notice here is that everything in a Python system is an object type and may be processed by your Python programs. For instance, you can pass a class to a function, assign it to a variable, stuff it in a list or dictionary, and so on.
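Here is a minimal sketch of that idea. The class Spam, the function make, and the registry dictionary are all hypothetical names invented for this example; we won't study classes in depth until later in the book, so treat this as a preview:

```python
class Spam:
    pass

def make(klass):
    return klass()               # Call whatever class object was passed in

alias = Spam                     # Assign a class to a variable
registry = {"spam": Spam, "list": list}    # Store classes in a dictionary

obj1 = make(alias)               # Pass a class to a function
obj2 = make(registry["list"])    # Built-in types work the same way

print(type(obj1) is Spam, obj2)
```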

Type Objects

In fact, even types themselves are an object type in Python: the type of an object is an object of type type (say that three times fast!). Seriously, a call to the built-in function type(X) returns the type object of object X. The practical application of this is that type objects can be used for manual type comparisons in Python if statements. However, for reasons introduced in Chapter 4, manual type testing is usually not the right thing to do in Python, since it limits your code’s flexibility.

One note on type names: as of Python 2.2, each core type has a new built-in name added to support type customization through object-oriented subclassing: dict, list, str, tuple, int, float, complex, bytes, type, set, and more. In Python 3.X these names all reference classes, and in Python 2.X but not 3.X, file is also a type name and a synonym for open. Calls to these names are really object constructor calls, not simply conversion functions, though you can treat them as simple functions for basic usage.

In addition, the types standard library module in Python 3.X provides additional type names for types that are not available as built-ins (e.g., the type of a function; in Python 2.X but not 3.X, this module also includes synonyms for built-in type names), and it is possible to do type tests with the isinstance function. For example, all of the following type tests are true:

type([1]) == type([]) # Compare to type of another list

type([1]) == list # Compare to list type name

isinstance([1], list) # Test if list or customization thereof

import types # types has names for other types

def f(): pass

type(f) == types.FunctionType

Because types can be subclassed in Python today, the isinstance technique is generally recommended. See Chapter 32 for more on subclassing built-in types in Python 2.2 and later.
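To see why, consider this small sketch, which uses a hypothetical list subclass named MyList. An exact type comparison rejects the subclass instance, while isinstance accepts it:

```python
class MyList(list):              # A customization of the built-in list
    pass

x = MyList([1, 2])

exact = type(x) == list          # False: x's type is MyList, not list
flexible = isinstance(x, list)   # True: a MyList is also a list

print(exact, flexible, x + [3])  # x still supports all list operations
```

Because x behaves like a list in every other respect, code that tests with type(x) == list would turn it away for no good reason.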

Figure 9-3. Python’s major built-in object types, organized by categories. Everything is a type of object in Python, even the type of an object! Some extension types, such as named tuples, might belong in this figure too, but the criteria for inclusion in the core types set are not formal.

NOTE

Also in Chapter 32, we will explore how type(X) and type testing in general apply to instances of user-defined classes. In short, in Python 3.X and for new-style classes in Python 2.X, the type of a class instance is the class from which the instance was made. For classic classes in Python 2.X, all class instances are instead of the type “instance,” and we must compare instance __class__ attributes to compare their types meaningfully. Since we’re not yet equipped to tackle the subject of classes, we’ll postpone the rest of this story until Chapter 32.

Other Types in Python

Besides the core objects studied in this part of the book, and the program-unit objects such as functions, modules, and classes that we’ll meet later, a typical Python installation has dozens of additional object types available as linked-in C extensions or Python classes—regular expression objects, DBM files, GUI widgets, network sockets, and so on. Depending on whom you ask, the named tuple we met earlier in this chapter may fall in this category too (Decimal and Fraction of Chapter 5 tend to be more ambiguous).

The main difference between these extra tools and the built-in types we’ve seen so far is that the built-ins provide special language creation syntax for their objects (e.g., 4 for an integer, [1,2] for a list, the open function for files, and def and lambda for functions). Other tools are generally made available in standard library modules that you must first import to use, and aren’t usually considered core types. For instance, to make a regular expression object, you import re and call re.compile(). See Python’s library reference for a comprehensive guide to all the tools available to Python programs.
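As a brief sketch of the regular expression case just mentioned, the code below imports re, compiles a pattern, and uses the resulting object. The pattern and the text it is matched against are made up for this illustration:

```python
import re                                   # No literal syntax: import first

pattern = re.compile(r"(\w+)@(\w+)\.com")   # Make a pattern object with a call
match = pattern.match("bob@example.com")    # Use it via its methods

print(match.groups())                       # The two parenthesized parts
print(pattern.match("no address here"))     # None when there is no match
```

Contrast this with a list or string, which you can create by simply typing a literal.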

Built-in Type Gotchas

That’s the end of our look at core data types. We’ll wrap up this part of the book with a discussion of common problems that seem to trap new users (and the occasional expert), along with their solutions. Some of this is a review of ideas we’ve already covered, but these issues are important enough to warn about again here.

Assignment Creates References, Not Copies