Python in Practice: Create Better Programs Using Concurrency, Libraries, and Patterns (2014)

Chapter 3. Behavioral Design Patterns in Python

The behavioral patterns are concerned with how things get done; that is, with algorithms and object interactions. They provide powerful ways of thinking about and organizing computations, and like a few of the patterns seen in the previous two chapters, some of them are supported directly by built-in Python syntax.

The Perl programming language’s well-known motto is, “there’s more than one way to do it”; whereas in Tim Peters’ Zen of Python, “there should be one—and preferably only one—obvious way to do it”.* Yet, like any programming language, there are sometimes two or more ways to do things in Python, especially since the introduction of comprehensions (use a comprehension or a for loop) and generators (use a generator expression or a function with a yield statement). And as we will see in this chapter, Python’s support for coroutines adds a new way to do certain things.

*To see the Zen of Python, enter import this at an interactive Python prompt.

3.1. Chain of Responsibility Pattern

The Chain of Responsibility Pattern is designed to decouple the sender of a request from the recipient that processes the request. So, instead of one function directly calling another, the first function sends a request to a chain of receivers. The first receiver in the chain either can handle the request and stop the chain (by not passing the request on) or can pass on the request to the next receiver in the chain. The second receiver has the same choices, and so on, until the last one is reached (which could choose to throw the request away or to raise an exception).

Let’s imagine that we have a user interface that receives events to be handled. Some of the events come from the user (e.g., mouse and key events), and some come from the system (e.g., timer events). In the following two subsections we will look at a conventional approach to creating an event-handling chain, and then at a pipeline-based approach using coroutines.

3.1.1. A Conventional Chain

In this subsection we will review a conventional event-handling chain where each event has a corresponding event-handling class.

handler1 = TimerHandler(KeyHandler(MouseHandler(NullHandler())))

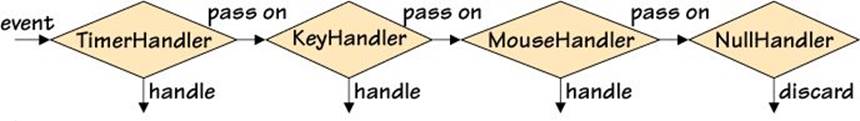

Here is how the chain might be set up using four separate handler classes. The chain is illustrated in Figure 3.1. Since we throw away unhandled events, we could have just passed None—or nothing—as the MouseHandler’s argument.

Figure 3.1 An event-handling chain

The order in which we create the handlers should not matter since each one handles events only of the type it is designed for.

while True:

event = Event.next()

if event.kind == Event.TERMINATE:

break

handler1.handle(event)

Events are normally handled in a loop. Here, we exit the loop and terminate the application if there is a TERMINATE event; otherwise, we pass the event to the event-handling chain.

handler2 = DebugHandler(handler1)

Here we have created a new handler (although we could just as easily have assigned back to handler1). This handler must be first in the chain, since it is used to eavesdrop on the events passing into the chain and to report them, but not to handle them (so it passes on every event it receives).

We can now call handler2.handle(event) in our loop, and in addition to the normal event handlers we will now have some debugging output to see the events that are received.

class NullHandler:

def __init__(self, successor=None):

self.__successor = successor

def handle(self, event):

if self.__successor is not None:

self.__successor.handle(event)

This class serves as the base class for our event handlers and provides the infrastructure for handling events. If an instance is created with a successor handler, then when this instance is given an event, it simply passes the event down the chain to the successor. However, if there is no successor, we have decided to simply discard the event. This is the standard approach in GUI (graphical user interface) programming, although we could easily log or raise an exception for unhandled events (e.g., if our program was a server).

class MouseHandler(NullHandler):

def handle(self, event):

if event.kind == Event.MOUSE:

print("Click: {}".format(event))

else:

super().handle(event)

Since we haven’t reimplemented the __init__() method, the base class one will be used, so the self.__successor variable will be correctly created.

This handler class handles only those events that it is interested in (i.e., of type Event.MOUSE) and passes any other kind of event on to its successor in the chain (if there is one).

The KeyHandler and TimerHandler classes (neither of which is shown) have exactly the same structure as the MouseHandler. These other classes only differ in which kind of event they respond to (e.g., Event.KEYPRESS and Event.TIMER) and the handling they perform (i.e., they print out different messages).

class DebugHandler(NullHandler):

def __init__(self, successor=None, file=sys.stdout):

super().__init__(successor)

self.__file = file

def handle(self, event):

self.__file.write("*DEBUG*: {}\n".format(event))

super().handle(event)

The DebugHandler class is different from the other handlers in that it never handles any events, and it must be first in the chain. It takes a file or file-like object to direct its reports to, and when an event occurs, it reports the event and then passes it on.

3.1.2. A Coroutine-Based Chain

A generator is a function or method that has one or more yield expressions instead of returns. Whenever a yield is reached, the value yielded is produced, and the function or method is suspended with all its state intact. At this point the function has yielded the processor (to the receiver of the value it has produced), so although suspended, the function does not block. Then, when the function or method is used again, execution resumes from the statement following the yield. So, values are pulled from a generator by iterating over it (e.g., using for value ingenerator:) or by calling next() on it.

A coroutine uses the same yield expression as a generator but has different behavior. A coroutine executes an infinite loop and starts out suspended at its first (or only) yield expression, waiting for a value to be sent to it. If and when a value is sent, the coroutine receives this as the value of its yield expression. The coroutine can then do any processing it wants and when it has finished, it loops and again becomes suspended waiting for a value to arrive at its next yield expression. So, values are pushed into a coroutine by calling the coroutine’s send() or throw()methods.

In Python, any function or method that contains a yield is a generator. However, by using a @coroutine decorator, and by using an infinite loop, we can turn a generator into a coroutine. (We discussed decorators and the @functools.wraps decorator in the previous chapter; §2.4, 48![]() .)

.)

def coroutine(function):

@functools.wraps(function)

def wrapper(*args, **kwargs):

generator = function(*args, **kwargs)

next(generator)

return generator

return wrapper

The wrapper calls the generator function just once and captures the generator it produces in the generator variable. This generator is really the original function with its arguments and any local variables captured as its state. Next, the wrapper advances the generator—just once, using the built-in next() function—to execute it up to its first yield expression. The generator—with its captured state—is then returned. This returned generator function is a coroutine, ready to receive a value at its first (or only) yield expression.

If we call a generator, it will resume execution where it left off (i.e., continue after the last—or only—yield expression it executed). However, if we send a value into a coroutine (using Python’s generator.send(value) syntax), this value will be received inside the coroutine as the currentyield expression’s result, and execution will resume from that point.

Since we can both receive values from and send values to coroutines, they can be used to create pipelines, including event-handling chains. Furthermore, we don’t need to provide a successor infrastructure, since we can use Python’s generator syntax instead.

pipeline = key_handler(mouse_handler(timer_handler()))

Here, we create our chain (pipeline) using a bunch of nested function calls. Every function called is a coroutine, and each one executes up to its first (or only) yield expression, here suspending execution, ready to be used again or sent a value. So, the pipeline is created immediately, with no blocking.

Instead of having a null handler, we pass nothing to the last handler in the chain. We will see how this works when we look at a typical handler coroutine (key_handler()).

while True:

event = Event.next()

if event.kind == Event.TERMINATE:

break

pipeline.send(event)

Just as with the conventional approach, once the chain is ready to handle events, we handle them in a loop. Because each handler function is a coroutine (a generator function), it has a send() method. So, here, each time we have an event to handle, we send it into the pipeline. In this example, the value will first be sent to the key_handler() coroutine, which will either handle the event or pass it on. As before, the order of the handlers often doesn’t matter.

pipeline = debug_handler(pipeline)

This is the one case where it does matter which order we use for a handler. Since the debug_handler() coroutine is intended to spy on the events and simply pass them on, it must be the first handler in the chain. With this new pipeline in place, we can once again loop over events, sending each one to the pipeline in turn using pipeline.send(event).

@coroutine

def key_handler(successor=None):

while True:

event = (yield)

if event.kind == Event.KEYPRESS:

print("Press: {}".format(event))

elif successor is not None:

successor.send(event)

This coroutine accepts a successor coroutine to send to (or None) and begins executing an infinite loop. The @coroutine decorator ensures that the key_handler() is executed up to its yield expression, so when the pipeline chain is created, this function has reached its yieldexpression and is blocked, waiting for the yield to produce a (sent) value. (Of course, it is only the coroutine that is blocked, not the program as a whole.)

Once a value is sent to this coroutine—either directly, or from another coroutine in the pipeline—it is received as the event value. If the event is of a kind that this coroutine handles (i.e., of type Event.KEYPRESS), it is handled—in this example, just printed—and not sent any further. However, if the event is not of the right type for this coroutine, and providing that there is a successor coroutine, it is sent on to its successor to handle. If there is no successor, and the event isn’t handled here, it is simply discarded.

After handling, sending, or discarding an event, the coroutine returns to the top of the while loop, and then, once again, waits for the yield to produce a value sent into the pipeline.

The mouse_handler() and timer_handler() coroutines (neither of which is shown), have exactly the same structure as the key_handler(); the only differences being the type of event they handle and the messages they print.

@coroutine

def debug_handler(successor, file=sys.stdout):

while True:

event = (yield)

file.write("*DEBUG*: {}\n".format(event))

successor.send(event)

The debug_handler() waits to receive an event, prints the event’s details, and then sends it on to the next coroutine to be handled.

Although coroutines use the same machinery as generators, they work in a very different way. With a normal generator, we pull values out one at a time (e.g., for x in range(10):). But with coroutines, we push values in one at a time using send(). This versatility means that Python can express many different kinds of algorithm in a very clean and natural way. For example, the coroutine-based chain shown in this subsection was implemented using far less code than the conventional chain shown in the previous subsection.

We will see coroutines in action again when we look at the Mediator Pattern (§3.5, ![]() 100).

100).

The Chain of Responsibility Pattern can, of course, be applied in many other contexts than those illustrated in this section. For example, we could use the pattern to handle requests coming into a server.

3.2. Command Pattern

The Command Pattern is used to encapsulate commands as objects. This makes it possible, for example, to build up a sequence of commands for deferred execution or to create undoable commands. We have already seen a basic use of the Command Pattern in the ImageProxy example (§2.7, 67 ![]() ), and in this section we will go a step further and create classes for undoable individual commands and for undoable macros (i.e., undoable sequences of commands).

), and in this section we will go a step further and create classes for undoable individual commands and for undoable macros (i.e., undoable sequences of commands).

Let’s begin by seeing some code that uses the Command Pattern, and then we will look at the classes it uses (UndoableGrid and Grid) and the Command module that provides the do–undo and macro infrastructure.

grid = UndoableGrid(8, 3) # (1) Empty

redLeft = grid.create_cell_command(2, 1, "red")

redRight = grid.create_cell_command(5, 0, "red")

redLeft() # (2) Do Red Cells

redRight.do() # OR: redRight()

greenLeft = grid.create_cell_command(2, 1, "lightgreen")

greenLeft() # (3) Do Green Cell

rectangleLeft = grid.create_rectangle_macro(1, 1, 2, 2, "lightblue")

rectangleRight = grid.create_rectangle_macro(5, 0, 6, 1, "lightblue")

rectangleLeft() # (4) Do Blue Squares

rectangleRight.do() # OR: rectangleRight()

rectangleLeft.undo() # (5) Undo Left Blue Square

greenLeft.undo() # (6) Undo Left Green Cell

rectangleRight.undo() # (7) Undo Right Blue Square

redLeft.undo() # (8) Undo Red Cells

redRight.undo()

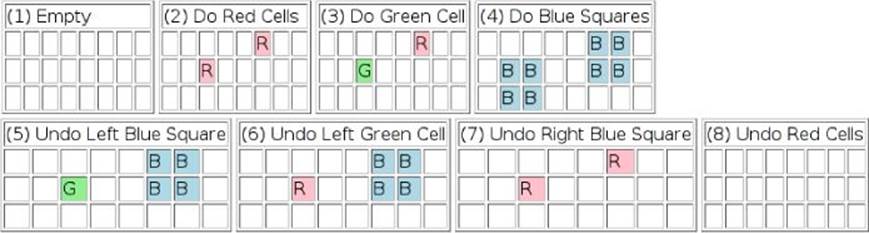

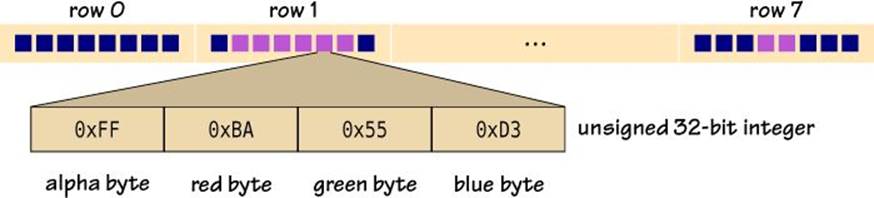

Figure 3.2 shows the grid rendered as HTML eight different times. The first one shows the grid after it has been created in the first place (i.e., when it is empty). Then, each subsequent one shows the state of things after each command or macro is created and then called (either directly or using its do() method) and after every undo() call.

Figure 3.2 A grid being done and undone

class Grid:

def __init__(self, width, height):

self.__cells = [["white" for _ in range(height)]

for _ in range(width)]

def cell(self, x, y, color=None):

if color is None:

return self.__cells[x][y]

self.__cells[x][y] = color

@property

def rows(self):

return len(self.__cells[0])

@property

def columns(self):

return len(self.__cells)

This Grid class is a simple image-like class that holds a list of lists of color names.

The cell() method serves as both a getter (when the color argument is None) and a setter (when a color is given). The rows and columns read-only properties return the grid’s dimensions.

class UndoableGrid(Grid):

def create_cell_command(self, x, y, color):

def undo():

self.cell(x, y, undo.color)

def do():

undo.color = self.cell(x, y) # Subtle!

self.cell(x, y, color)

return Command.Command(do, undo, "Cell")

To make the Grid support undoable commands, we have created a subclass that adds two additional methods, the first of which is shown here.

Every command must be of type Command.Command or Command.Macro. The former takes do and undo callables and an optional description. The latter has an optional description and can have any number of Command.Commands added to it.

In the create_cell_command() method, we accept the position and color of the cell to set and then create the two functions required to create a Command.Command. Both commands simply set the given cell’s color.

Of course, at the time the do() and undo() functions are created, we cannot know what the color of the cell will be immediately before the do() command is applied, so we don’t know what color to undo it to. We have solved this problem by retrieving the cell’s color inside the do()function—at the time the function is called—and setting it as an attribute of the undo() function. Only then do we set the new color. Note that this works because the do() function is a closure that not only captures the x, y, and color parameters as part of its state, but also the undo()function that has just been created.

Once the do() and undo() functions have been created, we create a new Command.Command that incorporates them, plus a simple description, and return the command to the caller.

def create_rectangle_macro(self, x0, y0, x1, y1, color):

macro = Command.Macro("Rectangle")

for x in range(x0, x1 + 1):

for y in range(y0, y1 + 1):

macro.add(self.create_cell_command(x, y, color))

return macro

This is the second UndoableGrid method for creating doable–undoable commands. This method creates a macro that will create a rectangle spanning the given coordinates. For each cell to be colored, a cell command is created using the class’s other method (create_cell_command()), and this command is added to the macro. Once all the commands have been added, the macro is returned.

As we will see, both commands and macros support do() and undo() methods. Since commands and macros support the same methods, and macros contain commands, their relationship to each other is a variation of the Composite Pattern (§2.3, 40 ![]() ).

).

class Command:

def __init__(self, do, undo, description=""):

assert callable(do) and callable(undo)

self.do = do

self.undo = undo

self.description = description

def __call__(self):

self.do()

A Command.Command expects two callables: the first is the “do” command, and the second is the “undo” command. (The callable() function is a Python 3.3 built-in; for earlier versions an equivalent function can be created with: def callable(function): return isinstance(function, collections.Callable).)

A Command.Command can be executed simply by calling it (thanks to our implementation of the __call__() special method) or equivalently by calling its do() method. The command can be undone by calling its undo() method.

class Macro:

def __init__(self, description=""):

self.description = description

self.__commands = []

def add(self, command):

if not isinstance(command, Command):

raise TypeError("Expected object of type Command, got {}".

format(type(command).__name__))

self.__commands.append(command)

def __call__(self):

for command in self.__commands:

command()

do = __call__

def undo(self):

for command in reversed(self.__commands):

command.undo()

The Command.Macro class is used to encapsulate a sequence of commands that should all be done—or undone—as a single operation.* The Command.Macro offers the same interface as Command.Commands: do() and undo() methods, and the ability to be called directly. In addition, macros provide an add() method through which Command.Commands can be added.

*Although we speak of macros executing in a single operation, this operation is not atomic from a concurrency point of view, although it could be made atomic if we used appropriate locks.

For macros, commands must be undone in reverse order. For example, suppose we created a macro and added the commands A, B, and C. When we executed the macro (i.e., called it or called its do() method), it would execute A, then B, and then C. So when we call undo(), we must execute the undo() methods for C, then B, and then A.

In Python, functions, bound methods, and other callables are first-class objects that can be passed around and stored in data structures such as lists and dicts. This makes Python an ideal language for implementations of the Command Pattern. And the pattern itself can be used to great effect, as we have seen here, in providing do–undo functionality, as well as being able to support macros and deferred execution.

3.3. Interpreter Pattern

The Interpreter Pattern formalizes two common requirements: providing some means by which users can enter nonstring values into applications, and allowing users to program applications.

At the most basic level, an application will receive strings from the user—or from other programs—that must be interpreted (and perhaps executed) appropriately. Suppose, for example, we receive a string from the user that is supposed to represent an integer. An easy—and unwise—way to get the integer’s value is like this: i = eval(userCount). This is dangerous, because although we hope the string is something innocent like "1234", it could be "os.system('rmdir /s /q C:\\\\')".

In general, if we are given a string that is supposed to represent the value of a specific data type, we can use Python to obtain the value directly and safely.

try:

count = int(userCount)

when = datetime.datetime.strptime(userDate, "%Y/%m/%d").date()

except ValueError as err:

print(err)

In this snippet, we get Python to safely try to parse two strings, one into an int and the other into a datetime.date.

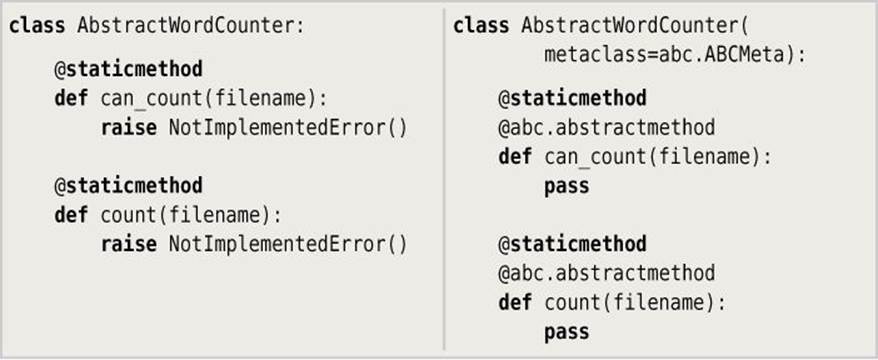

Sometimes, of course, we need to go beyond interpreting single strings into values. For example, we might want to provide an application with a calculator facility or allow users to create their own code snippets to be applied to application data. One popular approach to these kinds of requirements is to create a DSL (Domain Specific Language). Such languages can be created with Python out of the box—for example, by writing a recursive descent parser. However, it is much simpler to use a third-party parsing library such as PLY (www.dabeaz.com/ply), PyParsing (pyparsing.wikispaces.com), or one of the many other libraries that are available.*

*Parsing, including using PLY and PyParsing, is introduced in this author’s book, Programming in Python 3, Second Edition; see the Selected Bibliography for details (![]() 287).

287).

If we are in an environment where we can trust our applications’ users, we can give them access to the Python interpreter itself. The IDLE IDE (Integrated Development Environment) that is included with Python does exactly this, although IDLE is smart enough to execute user code in a separate process, so that if it crashes IDLE isn’t affected.

3.3.1. Expression Evaluation with eval()

The built-in eval() function evaluates a single string as an expression (with access to any global or local context we give it) and returns the result. This is sufficient to build the simple calculator.py application that we will review in this subsection. Let’s begin by looking at some interaction.

$ ./calculator.py

Enter an expression (Ctrl+D to quit): 65

A=65

ANS=65

Enter an expression (Ctrl+D to quit): 72

A=65, B=72

ANS=72

Enter an expression (Ctrl+D to quit): hypotenuse(A, B)

name 'hypotenuse' is not defined

Enter an expression (Ctrl+D to quit): hypot(A, B)

A=65, B=72, C=97.0

ANS=97.0

Enter an expression (Ctrl+D to quit): ^D

The user entered two sides of a right-angled triangle and then used the math.hypot() function (after making a mistake) to calculate the hypotenuse. After each expression is entered, the calculator.py program prints the variables it has created so far (and that are accessible to the user) and the answer to the current expression. (We have indicated user-entered text using bold—with Enter or Return at the end of each line implied—and Ctrl+D with ^D.)

To make the calculator as convenient as possible, the result of each expression entered is stored in a variable, starting with A, then B, and so on, and restarting at A if Z is reached. Furthermore, we have imported all the math module’s functions and constants (e.g., hypot(), e, pi, sin(), etc.) into the calculator’s namespace so that the user can access them without qualifying them (e.g., cos() rather than math.cos()).

If the user enters a string that cannot be evaluated, the calculator prints an error message and then repeats the prompt, and all the existing context is kept intact.

def main():

quit = "Ctrl+Z,Enter" if sys.platform.startswith("win") else "Ctrl+D"

prompt = "Enter an expression ({} to quit): ".format(quit)

current = types.SimpleNamespace(letter="A")

globalContext = global_context()

localContext = collections.OrderedDict()

while True:

try:

expression = input(prompt)

if expression:

calculate(expression, globalContext, localContext, current)

except EOFError:

print()

break

We have used EOF (End Of File) to signify that the user has finished. This means that the calculator can be used in a shell pipeline, accepting input redirected from a file, as well as for interactive user input.

We need to keep track of the name of the current variable (A or B or ...) so that we can update it each time a calculation is done. However, we can’t simply pass it as a string, since strings are copied and cannot be changed. A poor solution is to use a global variable. A better and much more common solution is to create a one-item list; for example, current = ["A"]. This list can be passed as current and its string can be read or changed by accessing it as current[0].

For this example, we have taken a more modern approach and created a tiny namespace with a single attribute (letter) whose value is "A". We can freely pass the current simple namespace instance around, and since it has a letter attribute, we can read or change the attribute’s value using the nice current.letter syntax.

The types.SimpleNamespace class was introduced in Python 3.3. For earlier versions an equivalent effect can be achieved by writing current = type("_", (), dict(letter="A"))(). This creates a new class called _ with a single attribute called letter with an initial value of "A". The built-in type() function returns the type of an object if called with one argument, or creates a new class if given a class name, a tuple of base classes, and a dictionary of attributes. If we pass an empty tuple, the base class will be object. Since we don’t need the class but only an instance, having called type(), we immediately call the class itself—hence the extra parentheses—to return the instance of it that we assign to current.

Python can supply the current global context using the built-in globals() function; this returns a dict that we can modify (e.g., add to, as we saw earlier; 23 ![]() ). Python can also supply the local context using the built-in locals() function, although the dict returned by this function must not be modified.

). Python can also supply the local context using the built-in locals() function, although the dict returned by this function must not be modified.

We want to provide a global context supplemented with the math module’s constants and functions and an initially empty local context. Although the global context must be a dict, the local context can be supplied as a dict—or as any other mapping object. Here, we have chosen to use acollections.OrderedDict—an insertion-ordered dictionary—as the locals context.

Since the calculator can be used interactively, we have created an event loop that is terminated when EOF is encountered. Inside the loop we prompt the user for input (also telling them how to quit), and if they enter any text, we call our calculate() function to perform the calculation and to print the results.

import math

def global_context():

globalContext = globals().copy()

for name in dir(math):

if not name.startswith("_"):

globalContext[name] = getattr(math, name)

return globalContext

This helper function starts by creating a local (shallow-copied) dict of the program’s global modules, functions, and variables. Then it iterates over all the public constants and functions in the math module and, for each one, adds its unqualified name to the globalContext dict and sets its value to be the actual math module constant or function it refers to. So, for example, when the name is "factorial", this name is added as a key in the globalContext, and its value is set to be the (i.e., a reference to the) math.factorial() function. This is what allows the calculator’s users to use unqualified names.

A simpler approach would have been to do from math import * and then use globals() directly, with no need for the globalContext dict. Such an approach is probably okay for the math module, but the way we have done it here provides finer control that might be more appropriate for other modules.

def calculate(expression, globalContext, localContext, current):

try:

result = eval(expression, globalContext, localContext)

update(localContext, result, current)

print(", ".join(["{}={}".format(variable, value)

for variable, value in localContext.items()]))

print("ANS={}".format(result))

except Exception as err:

print(err)

This is the function where we ask Python to evaluate the string expression using the global and local context dictionaries that we have created. If the eval() succeeds, we update the local context with the result and print the variables and the result. And if an exception occurs, we safely print it. Since we used a collections.OrderedDict for the local context, the items() method returns the items in insertion order without the need for an explicit sort. (Had we used a plain dict we would have needed to write sorted(localContext.items()).)

Although it is usually poor practice to use the Exception catch-all exception, it seems reasonable in this case, because the user’s expression could raise any kind of exception at all.

def update(localContext, result, current):

localContext[current.letter] = result

current.letter = chr(ord(current.letter) + 1)

if current.letter > "Z": # We only support 26 variables

current.letter = "A"

This function assigns the result to the next variable in the cyclic sequence A ... Z A ... Z .... This means that after the user has entered 26 expressions, the result of the last one is set as Z’s value, and the result of the next one will overwrite A’s value, and so on.

The eval() function will evaluate any Python expression. This is potentially dangerous if the expression is received from an untrusted source. An alternative is to use the standard library’s more restrictive—and safe—ast.literal_eval() function.

3.3.2. Code Evaluation with exec()

The built-in exec() function can be used to execute arbitrary pieces of Python code. Unlike eval(), exec() is not restricted to a single expression and always returns None. Context can be passed to exec() in the same way as for eval(), via globals and locals dictionaries. Results can be retrieved from exec() through the local context it is passed.

In this subsection, we will review the genome1.py program. This program creates a genome variable (a string of random A, C, G, and T letters) and executes eight pieces of user code with the genome in the code’s context.

context = dict(genome=genome, target="G[AC]{2}TT", replace="TCGA")

execute(code, context)

This code snippet shows the creation of the context dictionary with some data for the user’s code to work on and the execution of a user’s Code object (code) with the given context.

TRANSFORM, SUMMARIZE = ("TRANSFORM", "SUMMARIZE")

Code = collections.namedtuple("Code", "name code kind")

We expect user code to be provided in the form of Code named tuples, with a descriptive name, the code itself (as a string), and a kind—either TRANSFORM or SUMMARIZE. When executed, the user code should create either a result object or an error object. If their code’s kind isTRANSFORM, the result is expected to be a new genome string, and if the kind is SUMMARIZE, result is expected to be a number. Naturally, we will try to make our code robust enough to cope with user code that doesn’t meet these requirements.

def execute(code, context):

try:

exec(code.code, globals(), context)

result = context.get("result")

error = context.get("error")

handle_result(code, result, error)

except Exception as err:

print("'{}' raised an exception: {}\n".format(code.name, err))

This function performs the exec() call on the user’s code, using the program’s own global context and the provided local context. It then tries to retrieve the result and error objects, one of which the user code should have created, and passes them on to the customhandle_result() function.

Just as with the previous subsection’s eval() example, we have used the (normally to be avoided) Exception exception, since the user code could raise any kind of exception.

def handle_result(code, result, error):

if error is not None:

print("'{}' error: {}".format(code.name, error))

elif result is None:

print("'{}' produced no result".format(code.name))

elif code.kind == TRANSFORM:

genome = result

try:

print("'{}' produced a genome of length {}".format(code.name,

len(genome)))

except TypeError as err:

print("'{}' error: expected a sequence result: {}".format(

code.name, err))

elif code.kind == SUMMARIZE:

print("'{}' produced a result of {}".format(code.name, result))

print()

If the error object is not None, it is printed. Otherwise, if the result is None, we print a “produced no result” message. If we have a result and the user code’s kind is TRANSFORM, we assign result to genome, and in this case we simply print the genome’s new length. The try... except block is designed to protect our program from a user code error (e.g., returning a single value rather than a string or other sequence for a TRANSFORM). If the result’s kind is SUMMARIZE, we just print a summary line containing the result.

The genome1.py program has eight Code items: the first two (which we will see shortly) produce legitimate results, the third has a syntax error, the fourth reports an error, the fifth does nothing, the sixth has the wrong kind set, the seventh calls sys.exit(), and the eighth is never reached because the seventh terminates the program. Here is the program’s output.

$ ./genome1.py

'Count' produced a result of 12

'Replace' produced a genome of length 2394

'Exception Test' raised an exception: invalid syntax (<string>, line 4)

'Error Test' error: 'G[AC]{2}TT' not found

'No Result Test' produced no result

'Wrong Kind Test' error: expected a sequence result: object of type 'int' has

no len()

As the output makes clear, because the user code is executing in the same interpreter as the program itself, the user code can terminate or crash the program. (Note that the last line has been wrapped to fit on the page.)

Code("Count",

"""

import re

matches = re.findall(target, genome)

if matches:

result = len(matches)

else:

error = "'{}' not found".format(target)

""", SUMMARIZE)

This is the “Count” Code item. The item’s code does much more than is possible in a single expression of the kind that eval() could handle. The target and genome strings are taken from the context object that is passed as the exec()’s local context—and it is this same contextobject that any new variables (such as result and error) are implicitly stored in.

Code("Replace",

"""

import re

result, count = re.subn(target, replace, genome)

if not count:

error = "no '{}' replacements made".format(target)

""", TRANSFORM)

The “Replace” Code item’s code performs a simple transformation on the genome string, replacing nonoverlapping substrings that match the target regex with the replace string.

The re.subn() function (and regex.subn() method) performs substitutions exactly the same as re.sub() (and regex.sub()). However, whereas the sub() function (and method) returns a string where all the replacements have been made, the subn() function (and method) returns both the string and a count of how many replacements were made.

Although the genome1.py program’s execute() and handle_result() functions are easy to use and understand, in one respect the program is fragile: if the user code crashes—or simply calls sys.exit()—our program will terminate. In the next subsection we will explore a solution to this problem.

3.3.3. Code Evaluation Using a Subprocess

One possible answer to executing user code without compromising our application is to execute it in a separate process. This subsection’s genome2.py and genome3.py programs show how we can execute a Python interpreter in a subprocess, feed the interpreter with a program to execute through its standard input, and retrieve its results by reading its standard output.

We have given the genome2.py and genome3.py programs exactly the same eight Code items as the genome1.py program. Here is genome2.py’s output (genome3.py’s is identical):

$ ./genome2.py

'Count' produced a result of 12

'Replace' produced a genome of length 2394

'Exception Test' has an error on line 3

if genome[i] = "A":

^

SyntaxError: invalid syntax

'Error Test' error: 'G[AC]{2}TT' not found

'No Result Test' produced no result

'Wrong Kind Test' error: expected a sequence result: object of type 'int' has

no len()

'Termination Test' produced no result

'Length' produced a result of 2406

Notice that even though the seventh Code item calls sys.exit(), the genome2.py program continues afterward, merely reporting “produced no result” for that piece of code, and then going on to execute the “Length” code. (The genome1.py program was terminated by thesys.exit() call, so its last line of output was “...error: expected a sequence...”.) Another point to note is that genome2.py produces much better error reporting (e.g., the “Exception Test” code’s syntax error).

context = dict(genome=genome, target="G[AC]{2}TT", replace="TCGA")

execute(code, context)

The creation of the context and the execution of the user’s code with the context is exactly the same as for the genome1.py program.

def execute(code, context):

module, offset = create_module(code.code, context)

with subprocess.Popen([sys.executable, "-"], stdin=subprocess.PIPE,

stdout=subprocess.PIPE, stderr=subprocess.PIPE) as process:

communicate(process, code, module, offset)

This function begins by creating a string of code (module) containing the user’s code plus some supporting code that we will see in a moment. The offset is the number of lines we have added before the user’s code—this will help us to provide accurate line numbers in error messages. The function then starts a subprocess in which it executes a new instance of the Python interpreter, whose name is in sys.executable, and whose - (hyphen) argument means that the interpreter will expect to execute Python code sent to its sys.stdin.* The interaction with the process—including sending it the module code—is handled by our custom communicate() function.

*The subprocess.Popen() function added support for context managers (i.e., the with statement) in Python 3.2.

def create_module(code, context):

lines = ["import json", "result = error = None"]

for key, value in context.items():

lines.append("{} = {!r}".format(key, value))

offset = len(lines) + 1

outputLine = "\nprint(json.dumps((result, error)))"

return "\n".join(lines) + "\n" + code + outputLine, offset

This function creates a list of lines that will form a new Python module to be executed by a Python interpreter in a subprocess. The first line imports the json module that we will use to return results to the initiating process (i.e., to the genome2.py program). The second line initializes theresult and error variables to ensure that they exist. Then, we add a line for each of the context variables. Finally, we store the result and error (which the user’s code might have changed) inside a string using JSON (JavaScript Object Notation) that will be printed to sys.stdoutafter the user’s code has been executed.

UTF8 = "utf-8"

def communicate(process, code, module, offset):

stdout, stderr = process.communicate(module.encode(UTF8))

if stderr:

stderr = stderr.decode(UTF8).lstrip().replace(", in <module>", ":")

stderr = re.sub(", line (\d+)",

lambda match: str(int(match.group(1)) - offset), stderr)

print(re.sub(r'File."[^"]+?"', "'{}' has an error on line "

.format(code.name), stderr))

return

if stdout:

result, error = json.loads(stdout.decode(UTF8))

handle_result(code, result, error)

return

print("'{}' produced no result\n".format(code.name))

The communicate() function begins by sending the module code we created earlier to the subprocess’s Python interpreter to execute, and then blocks waiting for results to be produced. Once the interpreter finishes execution, its standard output and standard error output are collected in our local stdout and stderr variables. Note that all communication takes place using raw bytes—hence our need to encode the module string into UTF-8-encoded bytes.

If there is any error output (i.e., if an exception was raised, or if anything is written to sys.stderr), we replace the reported line number (which includes the lines we added before the user’s code) with the actual line number in the user’s code, and we replace the “File "<stdin>"” text with the Code object’s name. Then, we print the error text as a string.

The re.sub() call matches—and captures—the line number’s digits with (\d+) and replaces them with the result of the call to the lambda function given as its second argument. (More commonly, we give a string as second argument, but here we need to do some computation.) Thelambda function converts the digits into an integer and subtracts the offset, then returns the new line number as a string to replace the original. This ensures that the error message’s line number is correct for the user’s code, regardless of how many lines we put in front of it when creating the module we sent to be interpreted.

If there was no error output, but there was standard output, we decode the output’s bytes into a string (which we expect to be in JSON format) and parse this into Python objects—in this case a 2-tuple of a result and an error. Then we call our custom handle_result() function. (This function is identical in genome1.py, genome2.py, and genome3.py, and was shown earlier; 89 ![]() .)

.)

The genome2.py program’s user code is identical to genome1.py’s, although for genome2.py we provide some additional supporting code before and after the user code. Using JSON format to return results is safe and convenient but limits the data types we can return (e.g., result’s type) to dict, list, str, int, float, bool, or None, and where a dict or list may only contain objects of these types.

The genome3.py program is almost the same as genome2.py but returns its results in a pickle. This means that most Python types can be used.

def create_module(code, context):

lines = ["import pickle", "import sys", "result = error = None"]

for key, value in context.items():

lines.append("{} = {!r}".format(key, value))

offset = len(lines) + 1

outputLine = "\nsys.stdout.buffer.write(pickle.dumps((result, error)))"

return "\n".join(lines) + "\n" + code + outputLine, offset

This function is very similar to the genome2.py version. A minor difference is that we must import sys. The major difference is that whereas the json module’s loads() and dumps() methods work on strs, the pickle module’s equivalent functions work on bytes. So, here, we must write the raw bytes directly to sys.stdout’s underlying buffer to avoid the bytes being erroneously encoded.

def communicate(process, code, module, offset):

stdout, stderr = process.communicate(module.encode(UTF8))

...

if stdout:

result, error = pickle.loads(stdout)

handle_result(code, result, error)

return

The genome3.py program’s communicate() method is the same as for genome2.py (93 ![]() ) except for the line that has the loads() method call. For the JSON data we had to decode the bytes into a UTF-8-encoded str, but here we work directly on the raw bytes.

) except for the line that has the loads() method call. For the JSON data we had to decode the bytes into a UTF-8-encoded str, but here we work directly on the raw bytes.

Using exec() to execute arbitrary pieces of Python code received from the user or from other programs gives that code access to the full power of the Python interpreter—and to its entire standard library. And by executing the user code in a separate Python interpreter in a subprocess, we can protect our program from being crashed or terminated by it. However, we cannot really stop the user code from doing anything malicious. To execute untrusted code we would need to use some kind of sandbox; for example, the one provided by the PyPy Python interpreter (pypy.org).

For some programs, blocking while waiting for user code to finish execution might be acceptable, but it does run the risk of waiting “forever” if the user code has a bug (e.g., an infinite loop). One possible solution would be to create the subprocess in a separate thread and use a timer in the main thread. If the timer times out, we could then forcibly terminate the subprocess and report the problem to the user. Concurrent programming is introduced in the next chapter.

3.4. Iterator Pattern

The Iterator Pattern provides a way of sequentially accessing the items inside a collection or an aggregate object without exposing any of the internals of the collection or aggregate’s implementation. This pattern is so useful that Python provides built-in support for it, as well as providing special methods that we can implement in our own classes to make them seamlessly support iteration.

Iteration can be supported by satisfying the sequence protocol, or by using the two-argument form of the built-in iter() function, or by satisfying the iterator protocol. We will see examples of all these in the following subsections.

3.4.1. Sequence Protocol Iterators

One way to provide iterator support for our own classes is to make them support the sequence protocol. This means that we must implement a __getitem__() special method that can accept an integer index argument that starts from 0 and that raises an IndexError exception if no further iteration is possible.

for letter in AtoZ():

print(letter, end="")

print()

for letter in iter(AtoZ()):

print(letter, end="")

print()

ABCDEFGHIJKLMNOPQRSTUVWXYZ

ABCDEFGHIJKLMNOPQRSTUVWXYZ

These two code snippets create an AtoZ() object and then iterate over it. The object first returns the single character string "A", then "B", and so on, up to "Z". The object could have been made iterable in a number of ways, although in this case we’ve provided a __getitem__()method, as we will see in a moment.

In the second loop we have used the built-in iter() function to obtain an iterator to an instance of the AtoZ class. Clearly, this isn’t necessary in this case, but as we will see in a moment (and elsewhere in the book), iter() does have its uses.

class AtoZ:

def __getitem__(self, index):

if 0 <= index < 26:

return chr(index + ord("A"))

raise IndexError()

This is the complete AtoZ class. We have provided it with a __getitem__() method that satisfies the sequence protocol. When an object of this class is iterated, on the twenty-seventh iteration it will raise an IndexError. If this occurs inside a for loop, the exception is discarded, the loop is cleanly terminated, and execution resumes from the first statement after the loop.

3.4.2. Two-Argument iter() Function Iterators

Another way to provide iteration support is to use the built-in iter() function, but passing it two arguments instead of one. When this form is used, the first argument must be a callable (a function, a bound method, or any other callable object), and the second argument must be a sentinel value. When this form is used the callable is called at each iteration—with no arguments—and iteration will stop only if the callable raises a StopIteration exception or if it returns the sentinel value.

for president in iter(Presidents("George Bush"), None):

print(president, end=" * ")

print()

for president in iter(Presidents("George Bush"), "George W. Bush"):

print(president, end=" * ")

print()

George Bush * Bill Clinton * George W. Bush * Barack Obama *

George Bush * Bill Clinton *

The Presidents() call creates an instance of the Presidents class, and—thanks to the implementation of the __call__() special method—such instances are callable. So, here, we create a Presidents object that is a callable (as required by the two-argument form of the built-initer() function), and we provide a sentinel of None. A sentinel must be provided, even if it is None, so that Python knows to use the two-argument iter() function rather than the one-argument version.

The Presidents constructor creates a callable that will return each president in turn, starting with George Washington or, optionally, from the president we give it. In this case we told it to start from George Bush. Here, the first time we iterate, we have used a sentinel of None to signify “go to the end”, which, at the time of this writing, is Barack Obama. The second time we iterate we have provided the name of a president as the sentinel; this means that the callable will output each president from the first up to the one before the sentinel.

class Presidents:

__names = ("George Washington", "John Adams", "Thomas Jefferson",

...

"Bill Clinton", "George W. Bush", "Barack Obama")

def __init__(self, first=None):

self.index = (-1 if first is None else

Presidents.__names.index(first) - 1)

def __call__(self):

self.index += 1

if self.index < len(Presidents.__names):

return Presidents.__names[self.index]

raise StopIteration()

The Presidents class keeps a static (that is, class-wide) __names list with the names of all the U.S. presidents. The __init__() method sets the initial index to one less than either the first president in the list or the president the user has specified.

The instances of any class that implements the __call__() special method are callable. And when such an instance is called, it is this __call__() method that is actually executed.*

*In languages that don’t support functions as first-class objects, callable instances are called functors.

In this class’s __call__() special method, we either return the name of the next president in the list, or we raise a StopIteration exception. In the first iteration where the sentinel was None, the sentinel was never reached (since __call__() never returns None), but iteration still stopped cleanly because once we ran out of presidents, we raised the StopIteration exception. But in the second iteration, as soon as the sentinel president was returned to the built-in iter() function, the function itself raised StopIteration to terminate the loop.

3.4.3. Iterator Protocol Iterators

Probably the easiest way to provide iterator support in our own classes is to make them support the iterator protocol. This protocol requires that a class implements the __iter__() special method and that this method returns an iterator object. The iterator object must have its own__iter__() method that returns the iterator itself and a __next__() method that returns the next item—or that raises a StopIteration exception if there are no more items. Python’s for loop and in statement make use of this protocol under the hood. One simple way to implement an __iter__() method is to make it a generator—or to make it return a generator, since generators satisfy the iterator protocol. (See §3.1.2, 76 ![]() for more about generators.)

for more about generators.)

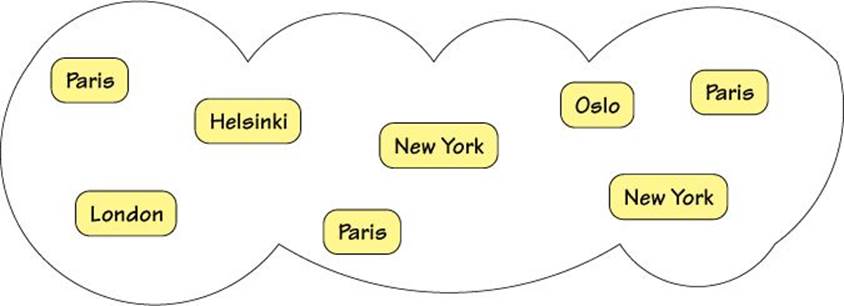

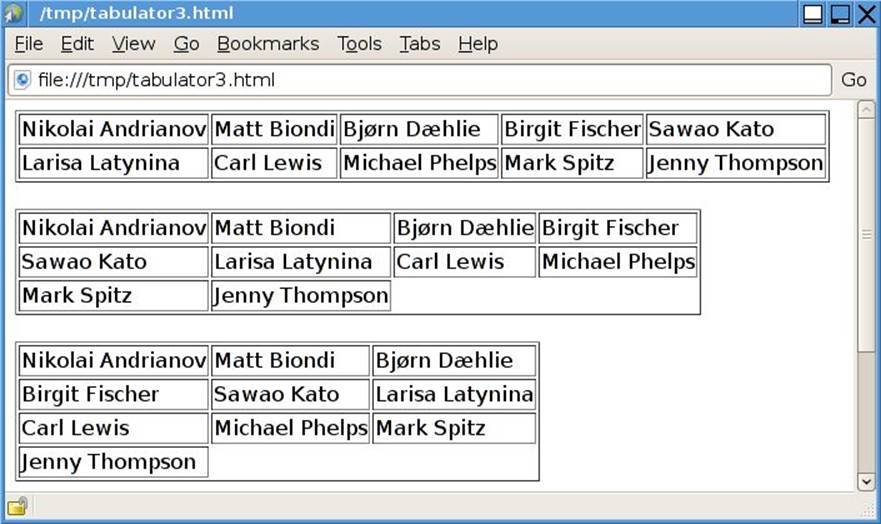

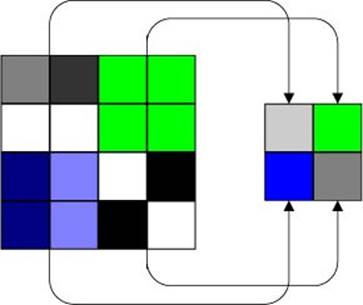

In this subsection we will create a simple bag class (also called a multiset). A bag is a collection class that is like a set but which allows duplicate items. An example bag is illustrated in Figure 3.3. Naturally, we will make the bag iterable and show three ways to do so. All the code is quoted from Bag1.py except where stated otherwise.

Figure 3.3 A bag is an unsorted collection of values with duplicates allowed

class Bag:

def __init__(self, items=None):

self.__bag = {}

if items is not None:

for item in items:

self.add(item)

The bag’s data is stored in the private self.__bag dictionary. The dictionary’s keys are anything hashable (i.e., they are the bag’s items), and the values are counts (i.e., how many of the item are in the bag). Users can add some initial items to a newly created bag if they wish.

def add(self, item):

self.__bag[item] = self.__bag.get(item, 0) + 1

Since self.__bag is not a collections.defaultdict, we must be careful to only increment an item that already exists; otherwise, we would get a KeyError exception. We use the dict.get() method to retrieve an existing item’s count, or 0 if there isn’t such an item, and set the dictionary to have an item with this number plus 1, creating the item if necessary.

def __delitem__(self, item):

if self.__bag.get(item) is not None:

self.__bag[item] -= 1

if self.__bag[item] <= 0:

del self.__bag[item]

else:

raise KeyError(str(item))

If an attempt is made to delete an item that isn’t in the bag, we raise a KeyError exception containing the item in string form. On the other hand, if the item is in the bag, we begin by reducing its count. If this drops to zero or less, we delete it from the bag.

We have not implemented the __getitem__() or __setitem__() special methods, because neither of them make sense for bags (since bags are unordered). Instead, we use bag.add() to add items, del bag[item] to delete items, and bag.count(item) to check how many of a particular item are in the bag.

def count(self, item):

return self.__bag.get(item, 0)

This method simply returns how many occurrences of the given item are in the bag—or zero if the item isn’t in the bag. A perfectly reasonable alternative would be to raise a KeyError for attempts to count an item that isn’t in the bag. This could be done simply by changing the method’s body to return self.__bag[item].

def __len__(self):

return sum(count for count in self.__bag.values())

This method is subtle since we must count all the duplicate items in the bag separately. To do this, we iterate over all the bag’s values (i.e., its item counts) and sum them using the built-in sum() function.

def __contains__(self, item):

return item in self.__bag

This method returns True if the bag contains at least one of the given item (since if an item is in the bag at all, its count is at least 1); otherwise, it returns False.

We have now seen all of the bag’s methods except for its iteration support. First, we’ll look at the Bag1.py module’s Bag.__iter__() method.

def __iter__(self): # This needlessly creates a list of items!

items = []

for item, count in self.__bag.items():

for _ in range(count):

items.append(item)

return iter(items)

This method is a first attempt. It builds up a list of items—as many of each as its count indicates—and then returns an iterator for the list. For a large bag, this could result in the creation of a very large list, which is rather inefficient, so we will look at two better approaches.

def __iter__(self):

for item, count in self.__bag.items():

for _ in range(count):

yield item

This code is from the Bag2.py module and is the only method that is different from Bag1.py’s Bag class.

Here, we iterate over the bag’s items, retrieving each item and its count, and yielding each item count times. There is a tiny fixed overhead for making the method a generator, but this is independent of the number of items, and of course, no separate list needs to be created, so this method is much more efficient than the Bag1.py version.

def __iter__(self):

return (item for item, count in self.__bag.items()

for _ in range(count))

Here is the Bag3.py module’s version of the Bag.__iter__() method. It is effectively the same as the Bag2.py module’s version, only instead of making the method into a generator, it returns a generator expression.

Although the book’s bag implementations work perfectly well, keep in mind that the standard library has its own bag implementation: collections.Counter.

3.5. Mediator Pattern

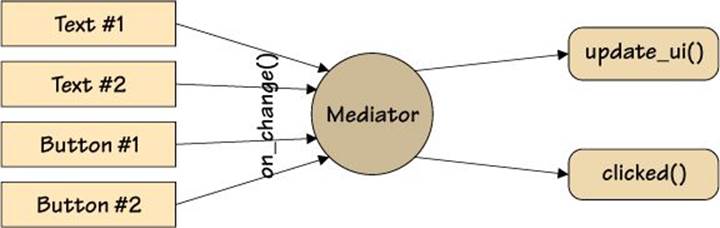

The Mediator Pattern provides a means of creating an object—the mediator—that can encapsulate the interactions between other objects. This makes it possible to achieve interactions between objects that have no direct knowledge of each other. For example, if a button object is clicked, it need only tell the mediator; then, it is up to the mediator to notify any objects that are interested in the button click. A mediator for a form with some text and button widgets, and a couple of associated methods, is illustrated in Figure 3.4.

Figure 3.4 A form’s widget mediator

This pattern is clearly of great utility in GUI programming. In fact, all of the GUI toolkits available for Python (e.g., Tkinter, PyQt/PySide, PyGObject, wxPython) provide some equivalent facility. We will see Tkinter examples of this in Chapter 7 (![]() 231).

231).

In this section’s two subsections, we will look at two approaches to implementing a mediator. The first is quite conventional; the second uses coroutines. Both make use of Form, Button, and Text classes (whose implementations we will see) for a fictitious user interface toolkit.

3.5.1. A Conventional Mediator

In this subsection we will create a conventional mediator—a class that will orchestrate interactions—in this case, for a form. All the code shown here is from the mediator1.py program.

class Form:

def __init__(self):

self.create_widgets()

self.create_mediator()

Like most functions and methods shown in this book, this method has been ruthlessly refactored, in this case to the point where it passes on all its work.

def create_widgets(self):

self.nameText = Text()

self.emailText = Text()

self.okButton = Button("OK")

self.cancelButton = Button("Cancel")

This form has two text entry widgets for a user’s name and email address, and two buttons, OK and Cancel. Naturally, in a real user interface we would have to include label widgets, and then lay out the widgets, but here our example is purely to show the Mediator Pattern, so we don’t do any of that. We will see the Text and Button classes shortly.

def create_mediator(self):

self.mediator = Mediator(((self.nameText, self.update_ui),

(self.emailText, self.update_ui),

(self.okButton, self.clicked),

(self.cancelButton, self.clicked)))

self.update_ui()

We create a single mediator object for the entire form. This object takes one or more widget–callable pairs, which describe the relationships the mediator must support. In this case all the callables are bound methods. (See the “Bound and Unbound Methods” sidebar, 63 ![]() .) Here, we are saying that if the text of one of the text entry widgets changes, the Form.update_ui() method should be called; and that if one of the buttons is clicked, the Form.clicked() method should be called. After creating the mediator, we call the update_ui() method to initialize the form.

.) Here, we are saying that if the text of one of the text entry widgets changes, the Form.update_ui() method should be called; and that if one of the buttons is clicked, the Form.clicked() method should be called. After creating the mediator, we call the update_ui() method to initialize the form.

def update_ui(self, widget=None):

self.okButton.enabled = (bool(self.nameText.text) and

bool(self.emailText.text))

This method enables the OK button if both the text entry widgets have some text in them; otherwise it disables the button. Clearly, this method should be called whenever the text of one of the text entry widgets is changed.

def clicked(self, widget):

if widget == self.okButton:

print("OK")

elif widget == self.cancelButton:

print("Cancel")

This method is designed to be called whenever a button is clicked. In a real application it would do something more interesting than printing the button’s text.

class Mediator:

def __init__(self, widgetCallablePairs):

self.callablesForWidget = collections.defaultdict(list)

for widget, caller in widgetCallablePairs:

self.callablesForWidget[widget].append(caller)

widget.mediator = self

This is the first of the Mediator class’s two methods. We want to create a dictionary whose keys are widgets and whose values are lists of one or more callables. This is achieved by using a default dictionary. When we access an item in a default dictionary, if the item is not present, it is created and added with the value being created by the callable given to the dictionary in the first place. In this case, we gave the dictionary a list object, which when called returns a new empty list. So, the first time a particular widget is looked up in the dictionary, a new item is inserted with the widget as the key and an empty list as the value, and we immediately append the caller to the list. And whenever a widget is looked up subsequently, the caller is appended to the item’s existing list. We also set the widget’s mediator attribute (creating it if necessary) to this mediator (self).

The method adds the bound methods in the order they appear in the pairs; if we didn’t care about the order we could pass set instead of list when creating the default dictionary, and use set.add() instead of list.append() to add the bound methods.

def on_change(self, widget):

callables = self.callablesForWidget.get(widget)

if callables is not None:

for caller in callables:

caller(widget)

else:

raise AttributeError("No on_change() method registered for {}"

.format(widget))

Whenever a mediated object—that is, any widget passed to a Mediator—has a change of state, it is expected to call its mediator’s on_change() method. This method then retrieves and calls every bound method associated with the widget.

class Mediated:

def __init__(self):

self.mediator = None

def on_change(self):

if self.mediator is not None:

self.mediator.on_change(self)

This is a convenience class designed to be inherited by mediated classes. It keeps a reference to the mediator object, and if its on_change() method is called, it calls the mediator’s on_change() method, parameterized by this widget (i.e., self, the widget that has had a change of state).

Since this base class’s method is never modified in any of its subclasses, we could replace the base class with a class decorator, as we saw earlier (§2.4.2.2, 58 ![]() ).

).

class Button(Mediated):

def __init__(self, text=""):

super().__init__()

self.enabled = True

self.text = text

def click(self):

if self.enabled:

self.on_change()

This Button class inherits Mediated. This gives the button a self.mediator attribute and an on_change() method that it is expected to call when it experiences a change of state; for example, when it is clicked.

So, in this example, a call to Button.click() will result in a call to Button.on_change() (inherited from Mediated), which will result in a call to the media-tor’s on_change() method, which will then call whatever method or methods are associated with this button—in this case, the Form.clicked() method, with the button as the widget argument.

class Text(Mediated):

def __init__(self, text=""):

super().__init__()

self.__text = text

@property

def text(self):

return self.__text

@text.setter

def text(self, text):

if self.text != text:

self.__text = text

self.on_change()

Structurally, the Text class is the same as the Button class and also inherits Mediated.

For any widget (button, text entry, and so on), so long as we make them a Mediated subclass and call on_change() whenever they have a change of state, we can leave it to the Mediator to take care of the interactions. Of course, when we create the Mediator, we must also register the widgets and the associated methods we want called. This means that all of a form’s widgets are loosely coupled, thereby avoiding direct—and potentially fragile—relationships.

3.5.2. A Coroutine-Based Mediator

A mediator can be viewed as a pipeline that receives messages (on_change() calls) and passes these on to interested objects. As we have already seen (§3.1.2, 76 ![]() ), coroutines can be used to provide such facilities. All the code shown here is from the mediator2.py program, and all the code not shown is identical to that shown in the previous subsection from the mediator1.py program.

), coroutines can be used to provide such facilities. All the code shown here is from the mediator2.py program, and all the code not shown is identical to that shown in the previous subsection from the mediator1.py program.

The approach used in this subsection is different from that taken in the previous subsection. There, we associated pairs of widgets and methods, and whenever the widget notified it had changed, the mediator called the associated methods.

Here, every widget is given a mediator that is actually a pipeline of coroutines. Whenever a widget has a change of state, it sends itself into the pipeline, and it is up to the pipeline components (i.e., the coroutines) to decide whether they want to perform any action in response to a change in the widget they are sent.

def create_mediator(self):

self.mediator = self._update_ui_mediator(self._clicked_mediator())

for widget in (self.nameText, self.emailText, self.okButton,

self.cancelButton):

widget.mediator = self.mediator

self.mediator.send(None)

For the coroutine version we don’t need a separate mediator class. Instead, we create a pipeline of coroutines; in this case, one with two components, self._update_ui_mediator() and self._clicked_mediator(). (These are all Form methods.)

Once the pipeline is in place, we set each widget’s mediator attribute to the pipeline. And at the end, we send None down the pipeline. Since no widget is None, no widget-specific actions will be triggered, but any form-level actions (such as enabling or disabling the OK button in_update_ui_mediator()) will be performed.

@coroutine

def _update_ui_mediator(self, successor=None):

while True:

widget = (yield)

self.okButton.enabled = (bool(self.nameText.text) and

bool(self.emailText.text))

if successor is not None:

successor.send(widget)

This coroutine is part of the pipeline. (The @coroutine decorator was shown and discussed earlier; 77 ![]() .)

.)

Whenever a widget reports a change, the widget is passed into the pipeline and is returned by the yield expression into the widget variable. When it comes to enabling or disabling the OK button, we do this regardless of which widget has changed. (After all, it may be that no widget has changed, that widget is None, and so the form is simply being initialized.) After dealing with the button the coroutine passes on the changed widget to the next coroutine in the chain (if there is one).

@coroutine

def _clicked_mediator(self, successor=None):

while True:

widget = (yield)

if widget == self.okButton:

print("OK")

elif widget == self.cancelButton:

print("Cancel")

elif successor is not None:

successor.send(widget)

This pipeline coroutine is only concerned with OK and Cancel button clicks. If either of these buttons is the changed widget, this coroutine handles it; otherwise, it passes on the widget to the next coroutine, if any.

class Mediated:

def __init__(self):

self.mediator = None

def on_change(self):

if self.mediator is not None:

self.mediator.send(self)

The Button and Text classes are the same as for mediator1.py, but the Mediated class has one tiny change: if its on_change() method is called, it sends the changed widget (self) into the mediator pipeline.

As we mentioned in the previous subsection, the Mediated class could be replaced with a class decorator. The book’s examples include a mediator2d.py version of this example where this is done. (See §2.4.2.2, 58 ![]() .)

.)

The Mediator Pattern can also be varied to provide multiplexing; that is, many-to-many communications between objects. See, also, the Observer Pattern (§3.7, ![]() 107) and the State Pattern (§3.8,

107) and the State Pattern (§3.8, ![]() 111).

111).

3.6. Memento Pattern

The Memento Pattern is a means of saving and restoring an object’s state without violating encapsulation.

Python has support for this pattern out of the box: we can use the pickle module to pickle and unpickle arbitrary Python objects (with a few constraints; e.g., we cannot pickle a file object). In fact, Python can pickle None, bools, bytearrays, bytes, complexes, floats, ints, andstrs, as well as dicts, lists, and tuples that contain only pickleable objects (including collections), top-level functions, top-level classes, and instances of custom top-level classes whose __dict__ is pickleable; that is, objects of most custom classes. It is also possible to achieve the same effect using the json module, although this only supports Python’s basic types along with dictionaries and lists. (We saw examples of json and pickle use in §3.3.3, 91 ![]() ).

).

Even in the quite rare cases where we hit a limitation in what can be pickled, we can always add our own custom pickling support; for example, by implementing the __getstate__() and __setstate__() special methods, and possibly the __getnewargs__() method. Similarly, if we want to use JSON format with our own custom classes, we can extend the json module’s encoder and decoder.

We could also create our own format and protocols, but there is little point in doing so, given Python’s rich support for this pattern.

Unpickling essentially involves executing arbitrary Python code, so it is poor practice to unpickle pickles that are received from untrusted sources such as physical media or over a network connection. In such cases JSON is safer, or we can use checksums and encryption with pickling to ensure that the pickle hasn’t been meddled with.

3.7. Observer Pattern

The Observer Pattern supports many-to-many dependency relationships between objects, such that when one object changes state, all its related objects are notified. Nowadays, probably the most common expression of this pattern and its variants is the model/view/controller (MVC) paradigm. In this paradigm, a model represents data, one or more views visualize that data, and one or more controllers mediate between input (e.g., user interaction) and the model. And any changes to the model are automatically reflected in the associated views.

One popular simplification of the MVC approach is to use a model/view where the views both visualize the data and mediate input to the model; that is, the views and controllers are combined. In terms of the Observer Pattern, this means that the views are observers of the model, and the model is the subject being observed.

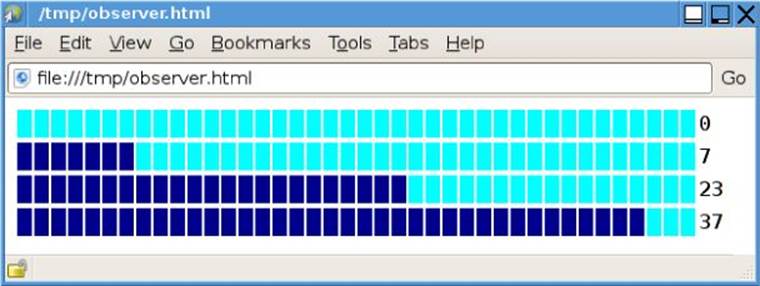

In this section we will create a model that represents a value with a minimum and a maximum (such as a scrollbar or slider widget or a temperature monitor). And we will create two separate observers (views) for the model: one to output the model’s value whenever it changes (as a kind of progress bar using HTML), and another to keep a history of the changes (their values and timestamps). Here is a sample run of the observer.py program.

$ ./observer.py > /tmp/observer.html

0 2013-04-09 14:12:01.043437

7 2013-04-09 14:12:01.043527

23 2013-04-09 14:12:01.043587

37 2013-04-09 14:12:01.043647

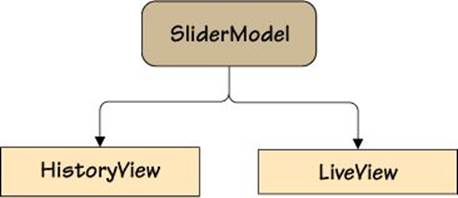

The history data is sent to sys.stderr and the HTML to sys.stdout, which we have redirected into an HTML file. The HTML page is shown in Figure 3.5. The program outputs four one-row HTML tables, the first when the (empty) model is first observed, and then each time the model is changed. Figure 3.6 illustrates the example’s model/view architecture.

Figure 3.5 The observer example’s HTML output as the model changes

Figure 3.6 A model and two views

This section’s example, observer.py, uses an Observed base class to provide the functionality for adding, removing, and notifying observers. The SliderModel class provides a value with a minimum and maximum, and inherits the Observed class so that it can support being observed. And then we have two views that observe the model, HistoryView and LiveView. Naturally, we will review all of these classes, but first we will look at the program’s main() function to see how they are used and how the output shown earlier and in Figure 3.5 was obtained.

def main():

historyView = HistoryView()

liveView = LiveView()

model = SliderModel(0, 0, 40) # minimum, value, maximum

model.observers_add(historyView, liveView) # liveView produces output

for value in (7, 23, 37):

model.value = value # liveView produces output

for value, timestamp in historyView.data:

print("{:3} {}".format(value, datetime.datetime.fromtimestamp(

timestamp)), file=sys.stderr)

We begin by creating the two views. Next we create a model with a minimum of 0, a current value of 0, and a maximum of 40. Then we add the two views as observers of the model. As soon as the LiveView is added as an observer it produces its first output, and as soon as theHistoryView is added it records its first value and timestamp. We then update the model’s value three times, and at each update the LiveView outputs a new one-row HTML table and the HistoryView records the value and the timestamp.

At the end we print out the entire history to sys.stderr (i.e., to the console). The datetime.datetime.fromtimestamp() function accepts a timestamp (number of seconds since the epoch as returned by time.time()) and returns an equivalent datetime.datetimeobject. The str.format() method is smart enough to output datetime.datetimes in ISO-8601 format.

class Observed:

def __init__(self):

self.__observers = set()

def observers_add(self, observer, *observers):

for observer in itertools.chain((observer,), observers):

self.__observers.add(observer)

observer.update(self)

def observer_discard(self, observer):

self.__observers.discard(observer)

def observers_notify(self):

for observer in self.__observers:

observer.update(self)

This class is designed to be inherited by models or by any other class that wants to support observation. The Observed class maintains a set of observing objects. Whenever an object is added, its update() method is called to initialize it with the model’s current state. Then, whenever the model changes state it is expected to call its inherited observers_notify() method, so that every observer’s update() method can be called to ensure that every observer (i.e., every view) is representing the model’s new state.

The observers_add() method is subtle. We want to accept one or more observers to add, but using just *observers would allow zero or more. So, we require at least one observer (observer) and accept zero or more in addition (*observers). We could have done this using tuple concatenation (e.g., for observer in (observer,) + observers:), but we have used the more efficient itertools.chain() function instead. As noted earlier (46 ![]() ), this function accepts any number of iterables and returns a single iterable that is effectively the concatenation of all the iterables passed to it.

), this function accepts any number of iterables and returns a single iterable that is effectively the concatenation of all the iterables passed to it.

class SliderModel(Observed):

def __init__(self, minimum, value, maximum):

super().__init__()

# These must exist before using their property setters

self.__minimum = self.__value = self.__maximum = None

self.minimum = minimum

self.value = value

self.maximum = maximum

@property

def value(self):

return self.__value

@value.setter

def value(self, value):

if self.__value != value:

self.__value = value

self.observers_notify()

...

This is the particular model class for this example, but of course, it could be any kind of model. By inheriting Observed, the class gains a private set of observers (initially empty) and the observers_add(), observer_discard(), and observers_notify() methods. Whenever the model’s state changes—for example, when its value is changed—it must call its observers_notify() method so that any observers can respond accordingly.

The class also has minimum and maximum properties whose code has been elided; they are structurally identical to the value property, and, of course, their setters also call observers_notify().

class HistoryView:

def __init__(self):

self.data = []

def update(self, model):

self.data.append((model.value, time.time()))

This view is an observer of the model since it provides an update() method that accepts the observed model as its only argument besides self. Whenever the update() method is called, it adds a value–timestamp 2-tuple to its self.data list, thus preserving a history of all the changes that are applied to the model.

class LiveView:

def __init__(self, length=40):

self.length = length