Algorithms (2014)

Six. Context

COMPUTING DEVICES ARE UBIQUITOUS in the modern world. In the last several decades, we have evolved from a world where computing devices were virtually unknown to a world where billions of people use them regularly. Moreover, today’s cellphones are orders of magnitude more powerful than the supercomputers that were available only to the privileged few as little as 30 years ago. But many of the underlying algorithms that enable these devices to work effectively are the same ones that we have studied in this book. Why? Survival of the fittest. Scalable (linear and linearithmic) algorithms have played a central role in the process and validate the idea that efficient algorithms are important. Researchers of the 1960s and 1970s built the basic infrastructure that we now enjoy with such algorithms. They knew that scalable algorithms are the key to the future; the developments of the past several decades have validated that vision. Now that the infrastructure is built, people are beginning to use it, for all sorts of purposes. As B. Chazelle has famously observed, the 20th century was the century of the equation, but the 21st century is the century of the algorithm.

Our treatment of fundamental algorithms in this book is only a starting point. The day is soon coming (if it is not already here) when one could build a college major around the study of algorithms. In commercial applications, scientific computing, engineering, operations research (OR), and countless other areas of inquiry too diverse to even mention, efficient algorithms make the difference between being able to solve problems in the modern world and not being able to address them at all. Our emphasis throughout this book has been to study important and useful algorithms. In this chapter, we reinforce this orientation by considering examples that illustrate the role of the algorithms that we have studied (and our approach to the study of algorithms) in several advanced contexts. To indicate the scope of the impact of the algorithms, we begin with a very brief description of several important areas of application. To indicate the depth, we later consider specific representative examples in detail and introduce the theory of algorithms. In both cases, this brief treatment at the end of a long book can only be indicative, not inclusive. For every area of application that we mention, there are dozens of others, equally broad in scope; for every point that we describe within an application, there are scores of others, equally important; and for every detailed example we consider, there are hundreds if not thousands of others, equally impactful.

Commercial applications

The emergence of the internet has underscored the central role of algorithms in commercial applications. All of the applications that you use regularly benefit from the classic algorithms that we have studied:

• Infrastructure (operating systems, databases, communications)

• Applications (email, document processing, digital photography)

• Publishing (books, magazines, web content)

• Networks (wireless networks, social networks, the internet)

• Transaction processing (financial, retail, web search)

As a prominent example, we consider in this chapter B-trees, a venerable data structure that was developed for mainstream computers of the 1960s but still serves as the basis for modern database systems. We will also discuss suffix arrays, for text indexing.

Scientific computing

Since von Neumann developed mergesort in 1950, algorithms have played a central role in scientific computing. Today’s scientists are awash in experimental data and are using both mathematical and computational models to understand the natural world for:

• Mathematical calculations (polynomials, matrices, differential equations)

• Data processing (experimental results and observations, especially genomics)

• Computational models and simulation

All of these can require complex and extensive computing with huge amounts of data. As a detailed example of an application in scientific computing, we consider in this chapter a classic example of event-driven simulation. The idea is to maintain a model of a complicated real-world system, controlling changes in the model over time. There are a vast number of applications of this basic approach. We also consider a fundamental data-processing problem in computational genomics.

Engineering

Almost by definition, modern engineering is based on technology. Modern technology is computer-based, so algorithms play a central role for

• Mathematical calculations and data processing

• Computer-aided design and manufacturing

• Algorithm-based engineering (networks, control systems)

• Imaging and other medical systems

Engineers and scientists use many of the same tools and approaches. For example, scientists develop computational models and simulations for the purpose of understanding the natural world; engineers develop computational models and simulations for the purpose of designing, building, and controlling the artifacts they create.

Operations research

Researchers and practitioners in OR develop and apply mathematical models for problem solving, including

• Scheduling

• Decision making

• Assignment of resources

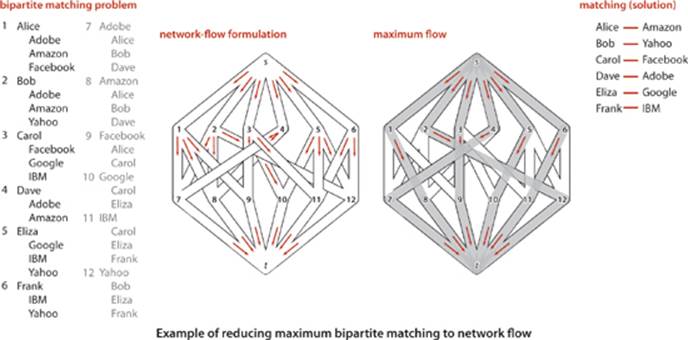

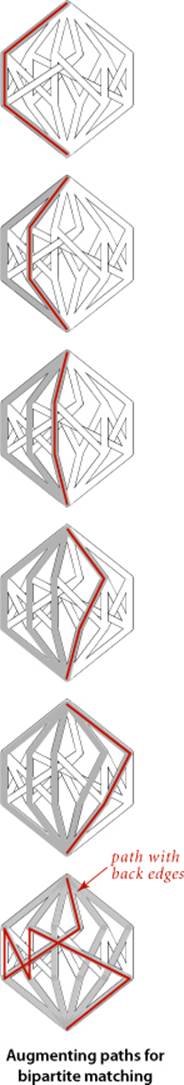

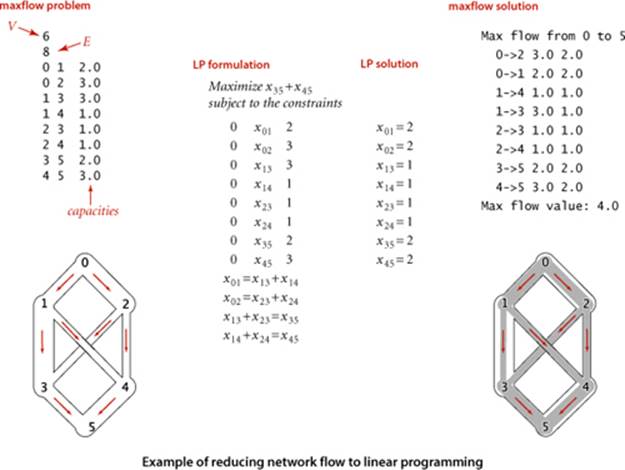

The shortest-paths problem of SECTION 4.4 is a classic OR problem. We revisit this problem and consider the maxflow problem, illustrate the importance of reduction, and discuss implications for general problem-solving models, in particular the linear programming model that is central in OR.

ALGORITHMS PLAY AN IMPORTANT ROLE in numerous subfields of computer science with applications in all of these areas, including, but certainly not limited to

• Computational geometry

• Cryptography

• Databases

• Programming languages and systems

• Artificial intelligence

In each field, articulating problems and finding efficient algorithms and data structures for solving them play an essential role. Some of the algorithms we have studied apply directly; more important, the general approach of designing, implementing, and analyzing algorithms that lies at the core of this book has proven successful in all of these fields. This effect is spreading beyond computer science to many other areas of inquiry, from games to music to linguistics to finance to neuroscience.

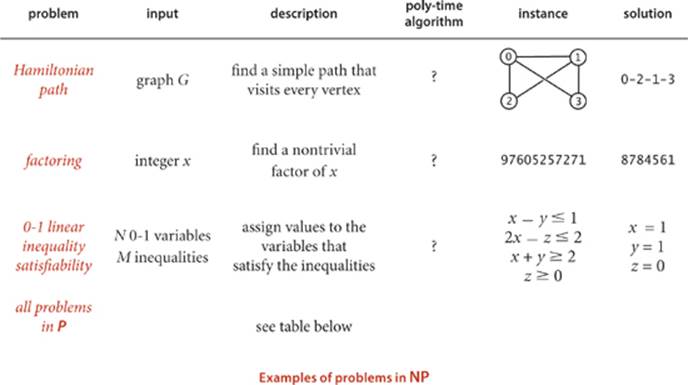

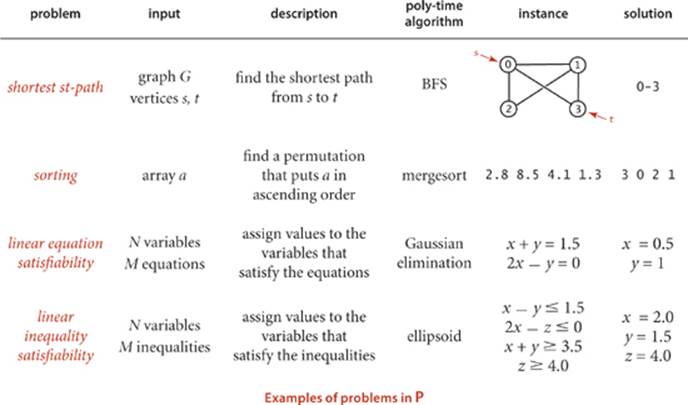

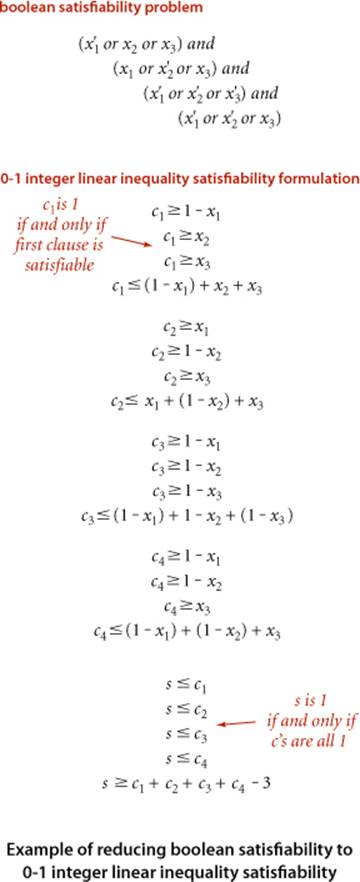

So many important and useful algorithms have been developed that learning and understanding relationships among them are essential. We finish this section (and this book!) with an introduction to the theory of algorithms, with particular focus on intractability and the P=NP? question that still stands as the key to understanding the practical problems that we aspire to solve.

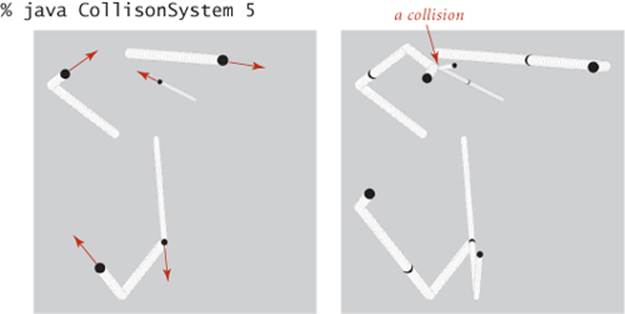

Event-driven simulation

Our first example is a fundamental scientific application: simulate the motion of a system of moving particles that behave according to the laws of elastic collision. Scientists use such systems to understand and predict properties of physical systems. This paradigm embraces the motion of molecules in a gas, the dynamics of chemical reactions, atomic diffusion, sphere packing, the stability of the rings around planets, the phase transitions of certain elements, one-dimensional self-gravitating systems, front propagation, and many other situations. Applications range from molecular dynamics, where the objects are tiny subatomic particles, to astrophysics, where the objects are huge celestial bodies.

Addressing this problem requires a bit of high-school physics, a bit of software engineering, and a bit of algorithmics. We leave most of the physics for the exercises at the end of this section so that we can concentrate on the topic at hand: using a fundamental algorithmic tool (heap-based priority queues) to address an application, enabling calculations that would not otherwise be possible.

Hard-disc model

We begin with an idealized model of the motion of atoms or molecules in a container that has the following salient features:

• Moving particles interact via elastic collisions with each other and with walls.

• Each particle is a disc with known position, velocity, mass, and radius.

• No other forces are exerted.

This simple model plays a central role in statistical mechanics, a field that relates macroscopic observables (such as temperature and pressure) to microscopic dynamics (such as the motion of individual atoms and molecules). Maxwell and Boltzmann used the model to derive the distribution of speeds of interacting molecules as a function of temperature; Einstein used the model to explain the Brownian motion of pollen grains immersed in water. The assumption that no other forces are exerted implies that particles travel in straight lines at constant speed between collisions. We could also extend the model to add other forces. For example, if we add friction and spin, we can more accurately model the motion of familiar physical objects such as billiard balls on a pool table.

Time-driven simulation

Our primary goal is simply to maintain the model: that is, we want to be able to keep track of the positions and velocities of all the particles as time passes. The basic calculation that we have to do is the following: given the positions and velocities for a specific time t, update them to reflect the situation at a future time t+dt for a specific amount of time dt. Now, if the particles are sufficiently far from one another and from the walls that no collision will occur before t+dt, then the calculation is easy: since particles travel in a straight-line trajectory, we use each particle’s velocity to update its position. The challenge is to take the collisions into account. One approach, known as time-driven simulation, is based on using a fixed value of dt. To do each update, we need to check all pairs of particles, determine whether or not any two occupy the same position, and then back up to the moment of the first such collision. At that point, we are able to properly update the velocities of the two particles to reflect the collision (using calculations that we will discuss later). This approach is computationally intensive when simulating a large number of particles: if dt is measured in seconds (fractions of a second, usually), it takes time proportional to N^2/dt to simulate an N-particle system for 1 second. This cost is prohibitive (even worse than usual for quadratic algorithms): in the applications of interest, N is very large and dt is very small. The challenge is that if we make dt too small, the computational cost is high, and if we make dt too large, we may miss collisions.
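
To make the N^2/dt estimate concrete, the following sketch counts the pairwise checks that a time-driven simulation would perform. The class and method names are hypothetical, and the count deliberately ignores the extra work of backing up to the moment of a collision, so it is only a rough lower estimate.

```java
public class TimeDrivenCost {
    // Number of pairwise overlap checks needed to simulate n particles
    // for the given number of seconds with a fixed time step dt.
    static long pairChecks(int n, double seconds, double dt) {
        long steps = Math.round(seconds / dt);        // number of updates
        long pairsPerStep = (long) n * (n - 1) / 2;   // all unordered pairs
        return steps * pairsPerStep;
    }

    public static void main(String[] args) {
        // 10,000 particles for 1 second with dt = 1/1000 second
        System.out.println(pairChecks(10_000, 1.0, 0.001));
    }
}
```

With these sample values the count is already in the tens of billions, and halving dt doubles it.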

Event-driven simulation

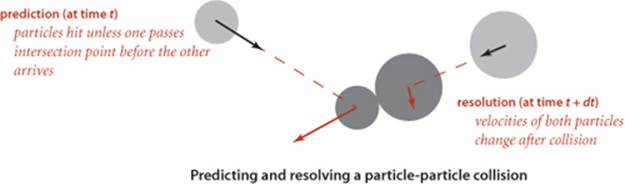

We pursue an alternative approach that focuses only on those times at which collisions occur. In particular, we are always interested in the next collision (because the simple update of all of the particle positions using their velocities is valid until that time). Therefore, we maintain a priority queue of events, where an event is a potential collision sometime in the future, either between two particles or between a particle and a wall. The priority associated with each event is its time, so when we remove the minimum from the priority queue, we get the next potential collision.
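
The idea can be tried in miniature before any two-particle physics is involved. The sketch below is an illustration under simplifying assumptions, not the simulation developed in this section: point particles move on the segment [0, 1] and interact only with the two end walls, so no predicted event is ever invalidated. It uses java.util.PriorityQueue rather than the MinPQ of SECTION 2.4, and all names are hypothetical.

```java
import java.util.PriorityQueue;

public class WallBounce1D {
    // A predicted wall hit: particle index i at absolute time `time`.
    static final class Hit {
        final double time; final int i;
        Hit(double time, int i) { this.time = time; this.i = i; }
    }

    // Returns the number of wall hits that occur up to time `limit`.
    static int countHits(double[] x, double[] v, double limit) {
        PriorityQueue<Hit> pq =
            new PriorityQueue<>((a, b) -> Double.compare(a.time, b.time));
        double[] last = new double[x.length];   // time of each particle's last update
        for (int i = 0; i < x.length; i++) predict(pq, x, v, i, 0.0, limit);

        int hits = 0;
        while (!pq.isEmpty()) {
            Hit e = pq.poll();                            // next event in time order
            x[e.i] += v[e.i] * (e.time - last[e.i]);      // advance particle to the wall
            last[e.i] = e.time;
            v[e.i] = -v[e.i];                             // elastic reflection
            hits++;
            predict(pq, x, v, e.i, e.time, limit);        // schedule its next hit
        }
        return hits;
    }

    // Put particle i's next wall hit on the queue if it happens by `limit`.
    static void predict(PriorityQueue<Hit> pq, double[] x, double[] v,
                        int i, double now, double limit) {
        if (v[i] == 0.0) return;                          // never reaches a wall
        double dt = (v[i] > 0) ? (1.0 - x[i]) / v[i] : -x[i] / v[i];
        if (now + dt <= limit) pq.add(new Hit(now + dt, i));
    }

    public static void main(String[] args) {
        // One particle starting at 0.5 with speed 1 hits walls at t = 0.5, 1.5, 2.5.
        System.out.println(countHits(new double[] {0.5}, new double[] {1.0}, 3.0));
    }
}
```

The work done is proportional to the number of events rather than to the number of time steps, which is the point of the approach.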

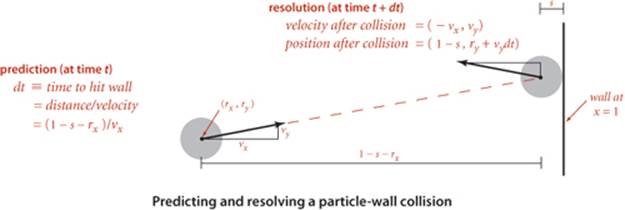

Collision prediction

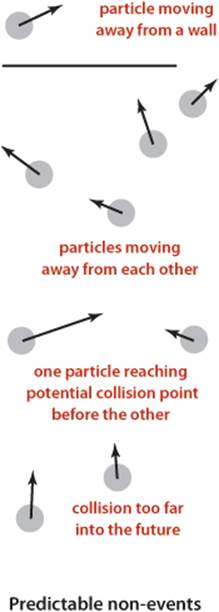

How do we identify potential collisions? The particle velocities provide precisely the information that we need. For example, suppose that we have, at time t, a particle of radius s at position (rx, ry) moving with velocity (vx, vy) in the unit box. Consider the vertical wall at x = 1 with y between 0 and 1. Our interest is in the horizontal component of the motion, so we can concentrate on the x-component of the position rx and the x-component of the velocity vx. If vx is negative, the particle is not on a collision course with the wall, but if vx is positive, there is a potential collision with the wall. Dividing the horizontal distance to the wall (1 − s − rx) by the magnitude of the horizontal component of the velocity (vx), we find that the particle will hit the wall after dt = (1 − s − rx)/vx time units, when the particle will be at (1 − s, ry + vy dt), unless it hits some other particle or a horizontal wall before that time. Accordingly, we put an entry on the priority queue with priority t + dt (and appropriate information describing the particle-wall collision event). The collision-prediction calculations for other walls are similar (see EXERCISE 6.1). The calculation for two particles colliding is also similar, but more complicated. Note that it is often the case that the calculation leads to a prediction that the collision will not happen (if the particle is moving away from the wall, or if two particles are moving away from one another); we do not need to put anything on the priority queue in such cases. To handle another typical situation where the predicted collision might be too far in the future to be of interest, we include a parameter limit that specifies the time period of interest, so we can also ignore any events that are predicted to happen at a time later than limit.
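
The wall calculation just described can be sketched as a small function. The case vx > 0 is the formula from the text; the case vx < 0 (the wall at x = 0) is an assumption obtained by symmetry, since the text leaves the other walls to EXERCISE 6.1. The class name and the standalone static form are hypothetical.

```java
public class WallPrediction {
    // Time until a disc of radius s whose center has x-coordinate rx,
    // moving with horizontal velocity vx in the unit box, hits a vertical wall.
    static double timeToHitVerticalWall(double rx, double vx, double s) {
        if (vx > 0) return (1.0 - s - rx) / vx;   // heading for the wall at x = 1
        if (vx < 0) return (s - rx) / vx;         // heading for the wall at x = 0 (by symmetry)
        return Double.POSITIVE_INFINITY;          // no horizontal motion: no collision course
    }

    public static void main(String[] args) {
        // dt = (1 - 0.1 - 0.5) / 0.25
        System.out.println(timeToHitVerticalWall(0.5, 0.25, 0.1));
    }
}
```

Returning infinity for the no-collision case lets a client compare t + dt against limit without a special case.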

Collision resolution

When a collision does occur, we need to resolve it by applying the physical formulas that specify the behavior of a particle after an elastic collision with a reflecting boundary or with another particle. In our example where the particle hits the vertical wall, if the collision does occur, the velocity of the particle will change from (vx, vy) to (−vx, vy) at that time. The collision-resolution calculations for other walls are similar, as are the calculations for two particles colliding, but these are more complicated (see EXERCISE 6.1).

Invalidated events

Many of the collisions that we predict do not actually happen because some other collision intervenes. To handle this situation, we maintain an instance variable for each particle that counts the number of collisions in which it has been involved. When we remove an event from the priority queue for processing, we check whether the counts corresponding to its particle(s) have changed since the event was created. This approach to handling invalidated collisions is the so-called lazy approach: when a particle is involved in a collision, we leave the now-invalid events associated with it on the priority queue and essentially ignore them when they come off. An alternative approach, the so-called eager approach, is to remove from the priority queue all events involving any colliding particle before calculating all of the new potential collisions for that particle. This approach requires a more sophisticated priority queue (that implements the remove operation).

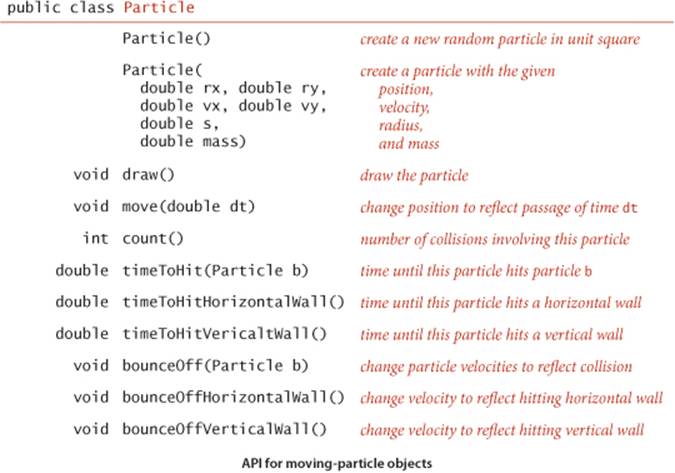

THIS DISCUSSION sets the stage for a full event-driven simulation of particles in motion, interacting according to the physical laws of elastic collisions. The software architecture is to encapsulate the implementation in three classes: a Particle data type that encapsulates calculations that involve particles, an Event data type for predicted events, and a CollisionSystem client that does the simulation. The centerpiece of the simulation is a MinPQ that contains events, ordered by time. Next, we consider implementations of Particle, Event, and CollisionSystem.

Particles

EXERCISE 6.1 outlines the implementation of a data type for particles, based on a direct application of Newton’s laws of motion. A simulation client needs to be able to move particles, draw them, and perform a number of calculations related to collisions, as detailed in the following API:

• Particle(): create a new random particle

• void draw(): draw the particle

• void move(double dt): change position to reflect the passage of time dt

• int count(): number of collisions involving this particle

• double timeToHit(Particle b): time until this particle hits particle b

• double timeToHitVerticalWall(): time until this particle hits a vertical wall

• double timeToHitHorizontalWall(): time until this particle hits a horizontal wall

• void bounceOff(Particle b): change the velocities of this particle and b to reflect their collision

• void bounceOffVerticalWall(): change velocity to reflect hitting a vertical wall

• void bounceOffHorizontalWall(): change velocity to reflect hitting a horizontal wall

The three timeToHit*() methods all return Double.POSITIVE_INFINITY for the (rather common) case when there is no collision course. These methods allow us to predict all future collisions that are associated with a given particle, putting an event on a priority queue corresponding to each one that happens before a given time limit. We use the bounceOff() method each time that we process an event that corresponds to two particles colliding to change the velocities (of both particles) to reflect the collision, and the bounceOff*() methods for events corresponding to collisions between a particle and a wall.
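
For readers who want to see the particle-particle calculations before working EXERCISE 6.1, here is a minimal sketch of the two-disc methods. It applies the standard formulas for elastic collisions of hard discs and is not necessarily the implementation the exercise has in mind. The class name DiscSketch and its six-argument constructor are hypothetical, and the wall and drawing methods are omitted.

```java
public class DiscSketch {
    double rx, ry;               // position of the center
    double vx, vy;               // velocity
    final double radius, mass;
    private int count;           // number of collisions so far

    DiscSketch(double rx, double ry, double vx, double vy, double radius, double mass) {
        this.rx = rx; this.ry = ry; this.vx = vx; this.vy = vy;
        this.radius = radius; this.mass = mass;
    }

    int count() { return count; }

    // Advance along a straight line for time dt (no forces act between collisions).
    void move(double dt) { rx += vx * dt; ry += vy * dt; }

    // Time until this disc touches that disc, or infinity if they never meet.
    double timeToHit(DiscSketch that) {
        if (this == that) return Double.POSITIVE_INFINITY;
        double dx = that.rx - rx, dy = that.ry - ry;
        double dvx = that.vx - vx, dvy = that.vy - vy;
        double dvdr = dx * dvx + dy * dvy;
        if (dvdr >= 0) return Double.POSITIVE_INFINITY;   // not approaching each other
        double dvdv = dvx * dvx + dvy * dvy;              // positive because dvdr < 0
        double drdr = dx * dx + dy * dy;
        double sigma = radius + that.radius;              // center distance at contact
        double d = dvdr * dvdr - dvdv * (drdr - sigma * sigma);
        if (d < 0) return Double.POSITIVE_INFINITY;       // paths pass without touching
        return -(dvdr + Math.sqrt(d)) / dvdv;
    }

    // Update both velocities for an elastic collision; assumes the discs are touching.
    void bounceOff(DiscSketch that) {
        double dx = that.rx - rx, dy = that.ry - ry;
        double dvx = that.vx - vx, dvy = that.vy - vy;
        double dvdr = dx * dvx + dy * dvy;
        double dist = radius + that.radius;
        // Impulse along the line joining the centers.
        double j = 2 * mass * that.mass * dvdr / ((mass + that.mass) * dist);
        double jx = j * dx / dist, jy = j * dy / dist;
        vx += jx / mass;            vy += jy / mass;
        that.vx -= jx / that.mass;  that.vy -= jy / that.mass;
        count++;
        that.count++;
    }
}
```

As a check, two equal-mass discs approaching head-on exchange velocities, and each collision count increases by one, which is what the event-invalidation scheme relies on.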

Events

We encapsulate in a private class the description of the objects to be placed on the priority queue (events). The instance variable time holds the time when the event is predicted to happen, and the instance variables a and b hold the particles associated with the event. We have three different types of events: a particle may hit a vertical wall, a horizontal wall, or another particle. To develop a smooth dynamic display of the particles in motion, we add a fourth event type, a redraw event that is a command to draw all the particles at their current positions. A slight twist in the implementation of Event is that we use the fact that particle values may be null to encode these four different types of events, as follows:

• Neither a nor b null: particle-particle collision

• a not null and b null: collision between a and a vertical wall

• a null and b not null: collision between b and a horizontal wall

• Both a and b null: redraw event (draw all particles)

While not the finest object-oriented programming, this convention is a natural one that enables straightforward client code and leads to the implementation shown below.

Event class for particle simulation

    private static class Event implements Comparable<Event>
    {
        private final double time;
        private final Particle a, b;
        private final int countA, countB;
        public Event(double t, Particle a, Particle b)
        {  // Create a new event to occur at time t involving a and b.
            this.time = t;
            this.a = a;
            this.b = b;
            if (a != null) countA = a.count(); else countA = -1;
            if (b != null) countB = b.count(); else countB = -1;
        }
        public int compareTo(Event that)
        {
            if      (this.time < that.time) return -1;
            else if (this.time > that.time) return +1;
            else                            return 0;
        }
        public boolean isValid()
        {
            if (a != null && a.count() != countA) return false;
            if (b != null && b.count() != countB) return false;
            return true;
        }
    }

A second twist in the implementation of Event is that we maintain the instance variables countA and countB to record the number of collisions involving each of the particles at the time the event is created. If these counts are unchanged when the event is removed from the priority queue, we can go ahead and simulate the occurrence of the event, but if one of the counts changes between the time an event goes on the priority queue and the time it leaves, we know that the event has been invalidated and can ignore it. The method isValid() allows client code to test this condition.

Simulation code

With the computational details encapsulated in Particle and Event, the simulation itself requires remarkably little code, as you can see in the implementation in the class CollisionSystem (given in the two listings that follow). Most of the calculations are encapsulated in the predictCollisions() method shown below. This method calculates all potential future collisions involving particle a (either with another particle or with a wall) and puts an event corresponding to each onto the priority queue.

Predicting collisions with other particles

    private void predictCollisions(Particle a, double limit)
    {
        if (a == null) return;
        for (int i = 0; i < particles.length; i++)
        {  // Put collision with particles[i] on pq.
            double dt = a.timeToHit(particles[i]);
            if (t + dt <= limit)
                pq.insert(new Event(t + dt, a, particles[i]));
        }
        double dtX = a.timeToHitVerticalWall();
        if (t + dtX <= limit)
            pq.insert(new Event(t + dtX, a, null));
        double dtY = a.timeToHitHorizontalWall();
        if (t + dtY <= limit)
            pq.insert(new Event(t + dtY, null, a));
    }

The heart of the simulation is the simulate() method shown in the primary-loop listing below. We initialize by calling predictCollisions() for each particle to fill the priority queue with the potential collisions involving all particle-wall and all particle-particle pairs. Then we enter the main event-driven simulation loop, which works as follows:

• Delete the impending event (the one with minimum priority t).

• If the event is invalid, ignore it.

• Advance all particles to time t on a straight-line trajectory.

• Update the velocities of the colliding particle(s).

• Use predictCollisions() to predict future collisions involving the colliding particle(s) and insert onto the priority queue an event corresponding to each.

This simulation can serve as the basis for computing all manner of interesting properties of the system, as explored in the exercises. For example, one fundamental property of interest is the amount of pressure exerted by the particles against the walls. One way to calculate the pressure is to keep track of the number and magnitude of wall collisions (an easy computation based on particle mass and velocity) so that we can easily compute the total. Temperature involves a similar calculation.
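
One way to organize the pressure bookkeeping is sketched below. It assumes that each elastic wall collision transfers momentum 2·m·|v| to the wall, where v is the velocity component perpendicular to the wall, and it reports the total transferred momentum per unit time per unit wall length (the two-dimensional analog of pressure). The class PressureMeter and its methods are hypothetical and are not part of the CollisionSystem code in the text.

```java
public class PressureMeter {
    private double totalImpulse = 0.0;   // momentum transferred to the walls so far
    private int hits = 0;                // number of wall collisions recorded

    // Record one elastic wall collision by a particle of the given mass whose
    // velocity component perpendicular to the wall is normalVelocity.
    public void recordWallHit(double mass, double normalVelocity) {
        totalImpulse += 2.0 * mass * Math.abs(normalVelocity);
        hits++;
    }

    public int hits() { return hits; }

    // Average force per unit wall length over the elapsed simulation time.
    public double pressure(double elapsed, double wallLength) {
        return totalImpulse / (elapsed * wallLength);
    }

    public static void main(String[] args) {
        PressureMeter meter = new PressureMeter();
        meter.recordWallHit(0.5, -2.0);
        meter.recordWallHit(1.0, 1.0);
        System.out.println(meter.pressure(2.0, 4.0));   // unit box: total wall length 4
    }
}
```

A client would call recordWallHit() wherever it processes a particle-wall event, just before the velocity is reversed.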

Event-driven simulation of colliding particles (scaffolding)

    public class CollisionSystem
    {
        private class Event implements Comparable<Event>
        {  /* See text. */  }
        private MinPQ<Event> pq;        // the priority queue
        private double t = 0.0;         // simulation clock time
        private Particle[] particles;   // the array of particles
        public CollisionSystem(Particle[] particles)
        {  this.particles = particles;  }
        private void predictCollisions(Particle a, double limit)
        {  /* See text. */  }
        public void redraw(double limit, double Hz)
        {  // Redraw event: redraw all particles.
            StdDraw.clear();
            for (int i = 0; i < particles.length; i++) particles[i].draw();
            StdDraw.show(20);
            if (t < limit)
                pq.insert(new Event(t + 1.0 / Hz, null, null));
        }
        public void simulate(double limit, double Hz)
        {  /* See the primary loop that follows. */  }
        public static void main(String[] args)
        {
            StdDraw.show(0);
            int N = Integer.parseInt(args[0]);
            Particle[] particles = new Particle[N];
            for (int i = 0; i < N; i++)
                particles[i] = new Particle();
            CollisionSystem system = new CollisionSystem(particles);
            system.simulate(10000, 0.5);
        }
    }

This class is a priority-queue client that simulates the motion of a system of particles over time. The main() test client takes a command-line argument N, creates N random particles, creates a CollisionSystem consisting of the particles, and calls simulate() to do the simulation. The instance variables are a priority queue for the simulation, the time, and the particles.

Event-driven simulation of colliding particles (primary loop)

    public void simulate(double limit, double Hz)
    {
        pq = new MinPQ<Event>();
        for (int i = 0; i < particles.length; i++)
            predictCollisions(particles[i], limit);
        pq.insert(new Event(0, null, null));   // Add redraw event.
        while (!pq.isEmpty())
        {  // Process one event to drive simulation.
            Event event = pq.delMin();
            if (!event.isValid()) continue;
            for (int i = 0; i < particles.length; i++)
                particles[i].move(event.time - t);   // Update particle positions
            t = event.time;                          // and time.
            Particle a = event.a, b = event.b;
            if      (a != null && b != null) a.bounceOff(b);
            else if (a != null && b == null) a.bounceOffVerticalWall();
            else if (a == null && b != null) b.bounceOffHorizontalWall();
            else if (a == null && b == null) redraw(limit, Hz);
            predictCollisions(a, limit);
            predictCollisions(b, limit);
        }
    }

This method represents the main event-driven simulation. First, the priority queue is initialized with events representing all predicted future collisions involving each particle. Then the main loop takes an event from the queue, updates time and particle positions, and adds new events to reflect changes.

Performance

As described at the outset, our interest in event-driven simulation is to avoid the computationally intensive inner loop intrinsic in time-driven simulation.

Proposition A. An event-driven simulation of N colliding particles requires at most N^2 priority queue operations for initialization, and at most N priority queue operations per collision (with one extra priority queue operation for each invalid collision).

Proof Immediate from the code.

Using our standard priority-queue implementation from SECTION 2.4, which guarantees logarithmic time per operation, the time needed per collision is linearithmic. Simulations with large numbers of particles are therefore quite feasible.

EVENT-DRIVEN SIMULATION applies to countless other domains that involve physical modeling of moving objects, from molecular modeling to astrophysics to robotics. Such applications may involve extending the model to add other kinds of bodies, to operate in three dimensions, to include other forces, and in many other ways. Each extension involves its own computational challenges. This event-driven approach results in a more robust, accurate, and efficient simulation than many other alternatives that we might consider, and the efficiency of the heap-based priority queue enables calculations that might not otherwise be possible.

Simulation plays a vital role in helping researchers to understand properties of the natural world in all fields of science and engineering. Applications ranging from manufacturing processes to biological systems to financial systems to complex engineered structures are too numerous to even list here. For a great many of these applications, the extra efficiency afforded by the heap-based priority queue data type or an efficient sorting algorithm can make a substantial difference in the quality and extent that are possible in the simulation.

B-trees

In CHAPTER 3, we saw that algorithms that are appropriate for accessing items from huge collections of data are of immense practical importance. Searching is a fundamental operation on huge data sets, and such searching consumes a significant fraction of the resources used in many computing environments. With the advent of the web, we have the ability to access a vast amount of information that might be relevant to a task; our challenge is to be able to search through it efficiently. In this section, we describe a further extension of the balanced-tree algorithms from SECTION 3.3 that can support external search in symbol tables that are kept on a disk or on the web and are thus potentially far larger than those we have been considering (which have to fit in addressable memory). Modern software systems are blurring the distinction between local files and web pages, which may be stored on a remote computer, so the amount of data that we might wish to search is virtually unlimited. Remarkably, the methods that we shall study can support search and insert operations on symbol tables containing trillions of items or more using only four or five references to small blocks of data.

Cost model

Data storage mechanisms vary widely and continue to evolve, so we use a simple model to capture the essentials. We use the term page to refer to a contiguous block of data and the term probe to refer to the first access to a page. We assume that accessing a page involves reading its contents into local memory, so that subsequent accesses are relatively inexpensive. A page could be a file on your local computer or a web page on a distant computer or part of a file on a server, or whatever. Our goal is to develop search implementations that use a small number of probes to find any given key. We avoid making specific assumptions about the page size and about the ratio of the time required for a probe (which presumably requires communicating with a distant device) to the time required, subsequently, to access items within the block (which presumably happens in a local processor). In typical situations, these values are likely to be on the order of 100 or 1,000 or 10,000; we do not need to be more precise because the algorithms are not highly sensitive to differences in the values in the ranges of interest.

B-tree cost model. When studying algorithms for external searching, we count page accesses (the number of times a page is accessed, for read or write).

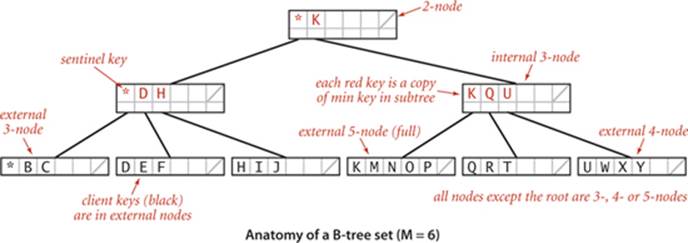

B-trees

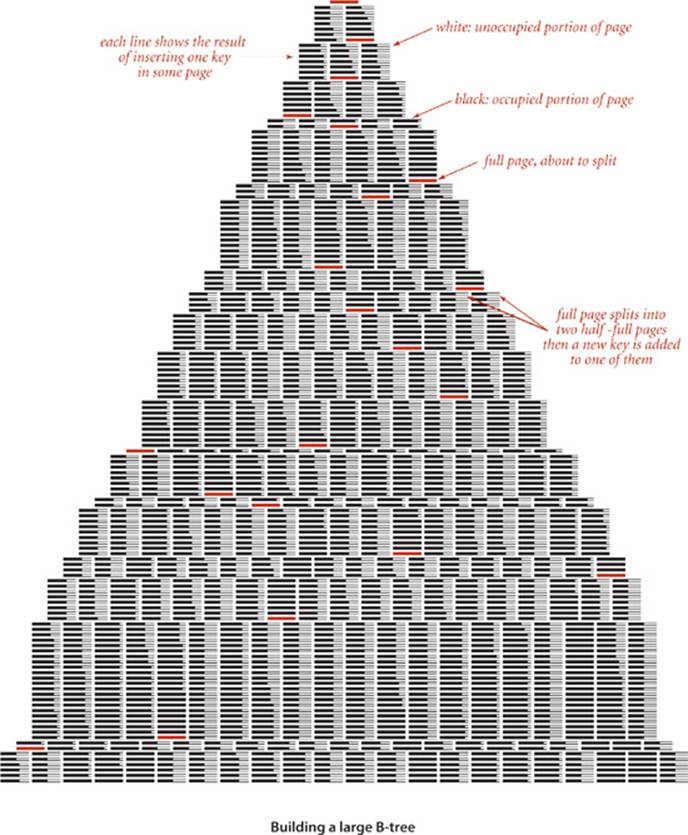

The approach is to extend the 2-3 tree data structure described in SECTION 3.3, with a crucial difference: rather than store the data in the tree, we build a tree with copies of the keys, each key copy associated with a link. This approach enables us to more easily separate the index from the table itself, much like the index in a book. As with 2-3 trees, we enforce upper and lower bounds on the number of key-link pairs that can be in each node: we choose a parameter M (an even number, by convention) and build multiway trees where every node must have at most M − 1 key-link pairs (we assume that M is sufficiently small that an M-way node will fit on a page) and at least M/2 key-link pairs (to provide the branching that we need to keep search paths short), except possibly the root, which can have fewer than M/2 key-link pairs but must have at least 2. Such trees were named B-trees by Bayer and McCreight, who, in 1970, were the first researchers to consider the use of multiway balanced trees for external searching. Some people reserve the term B-tree to describe the exact data structure built by the algorithm suggested by Bayer and McCreight; we use it as a generic term for data structures based on multiway balanced search trees with a fixed page size. We specify the value of M by using the terminology “B-tree of order M.” In a B-tree of order 4, each node has at most 3 and at least 2 key-link pairs; in a B-tree of order 6, each node has at most 5 and at least 3 key-link pairs (except possibly the root, which could have 2 key-link pairs), and so forth. The reason for the exception at the root for larger M will become clear when we consider the construction algorithm in detail.

Conventions

To illustrate the basic mechanisms, we consider an (ordered) SET implementation (with keys and no values). Extending to provide an ordered ST to associate keys with values is an instructive exercise (see EXERCISE 6.16). Our goal is to support add() and contains() for a set of keys that could be huge. We use ordered keys because we are generalizing search trees, which are based on ordered keys. Extending our implementation to support other ordered operations is also an instructive exercise. In external searching applications, it is common to keep the index separate from the data. For B-trees, we do so by using two different kinds of nodes:

• Internal nodes, which associate copies of keys with pages

• External nodes, which have references to the actual data

Every key in an internal node is associated with another node that is the root of a tree containing all keys greater than or equal to that key and less than the next largest key, if any. It is convenient to use a special key, known as a sentinel, that is defined to be less than all other keys, and to start with a root node containing that key. The symbol table does not contain duplicate keys, but we use copies of keys (in internal nodes) to guide the search. (In our examples, we use single-letter keys and the character * as the sentinel that is less than all other keys.) These conventions simplify the code somewhat and thus represent a convenient (and widely used) alternative to mixing all the data with links in the internal nodes, as we have done for other search trees.

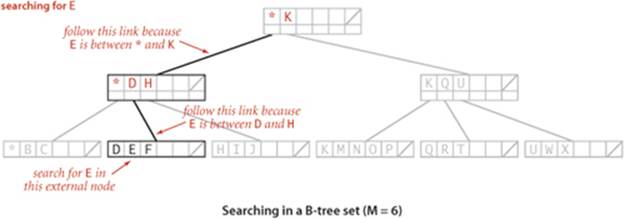

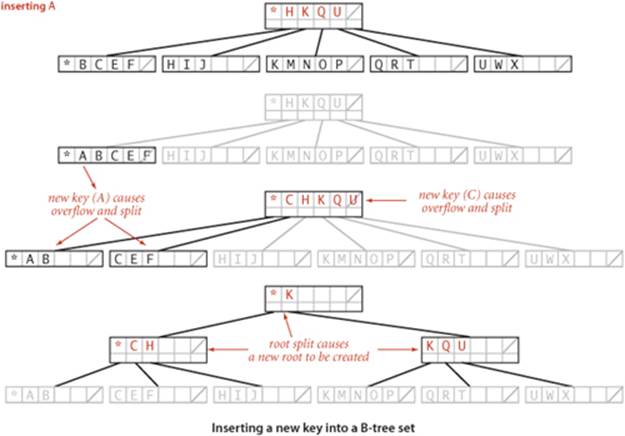

Search and insert

Search in a B-tree is based on recursively searching in the unique subtree that could contain the search key. Every search ends in an external node that contains the key if and only if it is in the set. We might also terminate a search hit when encountering a copy of the search key in an internal node, but we always search to an external node because doing so simplifies extending the code to an ordered symbol-table implementation (also, this event rarely happens when M is large). To be specific, consider searching in a B-tree of order 6: it consists of 3-nodes with 3 key-link pairs, 4-nodes with 4 key-link pairs, and 5-nodes with 5 key-link pairs, with possibly a 2-node at the root. To search, we start at the root and move from one node to the next by finding the proper interval for the search key in the current node and then exiting through the corresponding link to get to the next node. Eventually, the search process leads us to a page containing keys at the bottom of the tree. We terminate the search with a search hit if the search key is in that page; we terminate with a search miss if it is not. As with 2-3 trees, we can use recursive code to insert a new key at the bottom of the tree. If there is no room for the key, we allow the node at the bottom to temporarily overflow (become a 6-node) and then split 6-nodes on the way up the tree, after the recursive call. If the root is a 6-node, we split it into a 2-node connected to two 3-nodes; elsewhere in the tree, we replace any k-node attached to a 6-node by a (k+1)-node attached to two 3-nodes. Replacing 3 by M/2 and 6 by M in this description converts it into a description of search and insert for B-trees of order M and leads to the following definition:

Definition. A B-tree of order M (where M is an even positive integer) is a tree that either is an external k-node (with k keys and associated information) or comprises internal k-nodes (each with k keys and k links to B-trees representing each of the k intervals delimited by the keys), having the following structural properties: every path from the root to an external node must be the same length (perfect balance); and k must be between 2 and M − 1 at the root and between M/2 and M − 1 at every other node.

Representation

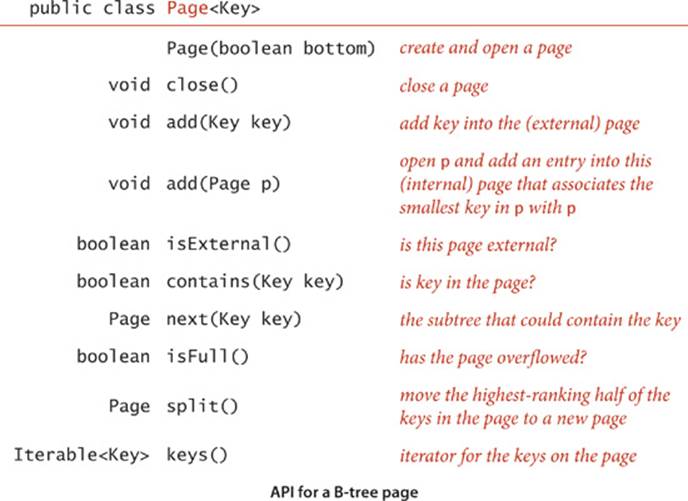

As just discussed, we have a great deal of freedom in choosing concrete representations for nodes in B-trees. We encapsulate these choices in a Page API that associates keys with links to Page objects and supports the operations that we need to test for overfull pages, split them, and distinguish between internal and external pages. You can think of a Page as a symbol table, kept externally (in a file on your computer or on the web). The terms open and close in the API refer to the process of bringing an external page into internal memory and writing its contents back out (if necessary). The add() method for internal pages is a symbol-table operation that associates the given page with the minimum key in the tree rooted at that page. The add() and contains() methods for external pages are like their corresponding SET operations. The workhorse of any implementation is the split() method, which splits a full page by moving the M/2 key-value pairs of rank greater than M/2 to a new Page and returns a reference to that page. EXERCISE 6.15 discusses an implementation of Page using BinarySearchST, which implements B-trees in memory, like our other search implementations. On some systems, this might suffice as an external searching implementation because a virtual-memory system might take care of disk references. More typical practical implementations might involve hardware-specific code that reads and writes pages. EXERCISE 6.19 encourages you to think about implementing Page using web pages. We ignore such details here in the text to emphasize the utility of the B-tree concept in a broad variety of settings.

With these preparations, the code for BTreeSET on page 872 is remarkably simple. For contains(), we use a recursive method that takes a Page as argument and handles three cases:

• If the page is external and the key is in the page, return true.

• If the page is external and the key is not in the page, return false.

• Otherwise, do a recursive call for the subtree that could contain the key.

For add() we use the same recursive structure, but insert the key at the bottom if it is not found during the search and then split any full nodes on the way up the tree.
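To make the recursive structure concrete, here is a minimal in-memory sketch. It is not the Page implementation of the exercises: the nested Page class, its TreeMap-based representation, and the small order M = 4 are all assumptions chosen for illustration, and there is no open() or close() because nothing is kept externally.

```java
import java.util.Map;
import java.util.TreeMap;

// Sketch only: an in-memory B-tree set. Page is a hypothetical stand-in for
// the Page API described in the text, backed by java.util.TreeMap.
class InMemoryBTreeSet<Key extends Comparable<Key>> {
    private static final int M = 4;   // assumed order, kept small so that splits happen early

    // External pages map each key to null; internal pages map each key
    // to the child page whose smallest key it is.
    private static class Page<K extends Comparable<K>> {
        private final boolean external;
        private final TreeMap<K, Page<K>> entries = new TreeMap<>();

        Page(boolean external)  { this.external = external; }
        boolean isExternal()    { return external; }
        boolean isFull()        { return entries.size() == M; }
        boolean contains(K key) { return entries.containsKey(key); }
        void add(K key)         { entries.put(key, null); }
        void add(Page<K> child) { entries.put(child.entries.firstKey(), child); }

        // Child whose interval contains key: the entry with the largest key <= key.
        // The sentinel guarantees that such an entry exists for every legal key.
        Page<K> next(K key)     { return entries.floorEntry(key).getValue(); }

        // Move the M/2 largest entries to a new page of the same kind.
        Page<K> split() {
            Page<K> right = new Page<>(external);
            for (int i = 0; i < M / 2; i++) {
                Map.Entry<K, Page<K>> e = entries.pollLastEntry();
                right.entries.put(e.getKey(), e.getValue());
            }
            return right;
        }
    }

    private Page<Key> root = new Page<>(true);

    InMemoryBTreeSet(Key sentinel) { add(sentinel); }

    boolean contains(Key key) { return contains(root, key); }

    private boolean contains(Page<Key> h, Key key) {
        if (h.isExternal()) return h.contains(key);   // hit or miss is decided at the bottom
        return contains(h.next(key), key);            // otherwise descend into the one candidate subtree
    }

    void add(Key key) {
        add(root, key);
        if (root.isFull()) {                          // split the root and grow the tree by one level
            Page<Key> left = root;
            Page<Key> right = root.split();
            root = new Page<>(false);
            root.add(left);
            root.add(right);
        }
    }

    private void add(Page<Key> h, Key key) {
        if (h.isExternal()) { h.add(key); return; }
        Page<Key> next = h.next(key);
        add(next, key);
        if (next.isFull()) h.add(next.split());       // split on the way up, after the recursive call
    }

    public static void main(String[] args) {
        InMemoryBTreeSet<String> set = new InMemoryBTreeSet<>("*");
        for (String key : "S E A R C H E X A M P L E".split(" ")) set.add(key);
        System.out.println(set.contains("M") + " " + set.contains("Z"));
    }
}
```

Using a sorted map inside each page keeps next() and split() to one or two library calls; a page stored on disk would more plausibly hold a sorted array and use binary search.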

Performance

The most important property of B-trees is that for reasonable values of the parameter M the search cost is constant, for all practical purposes:

Proposition B. A search or an insertion in a B-tree of order M with N items requires between log_M N and log_{M/2} N probes, a constant number for practical purposes.

Proof This property follows from the observation that all the nodes in the interior of the tree (nodes that are not the root and are not external) have between M/2 and M − 1 links, since they are formed from a split of a full node with M keys and can only grow in size (when a child is split). In the best case, these nodes form a complete tree of branching factor M − 1, which leads immediately to the stated bound. In the worst case, we have a root with two entries each of which refers to a complete tree of degree M/2. Taking the logarithm to the base M results in a very small number—for example, when M is 1,000, the height of the tree is less than 4 for N less than 62.5 billion.
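The arithmetic behind the example is easy to check. The sketch below is an illustration only: the class and method names are hypothetical, and rounding each logarithm up to a whole number of probes is our assumption about how to read the bounds.

```java
// Sketch only: evaluates the two bounds of Proposition B for given M and N.
class BTreeProbeBounds {
    // Smallest h such that base^h >= n, computed in integer arithmetic
    // to avoid floating-point rounding at exact powers.
    static int ceilLog(long base, long n) {
        int h = 0;
        long power = 1;
        while (power < n) {
            h++;
            if (power > Long.MAX_VALUE / base) break;  // next power would overflow, so it exceeds n
            power *= base;
        }
        return h;
    }

    public static void main(String[] args) {
        long M = 1000;
        long N = 62_500_000_000L;                      // 62.5 billion = 500^4
        System.out.println("best case:  " + ceilLog(M, N) + " probes");
        System.out.println("worst case: " + ceilLog(M / 2, N) + " probes");
    }
}
```

For M = 1,000 both bounds come out to 4 at N = 62.5 billion, since 500^4 is exactly 62.5 billion and 1,000^3 is only 1 billion.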

In typical situations, we can reduce the cost by one probe by keeping the root in internal memory. For searching on disk or on the web, we might take this step explicitly before embarking on any application involving a huge number of searches; in a virtual memory with caching, the root node will be the one most likely to be in fast memory, because it is the most frequently accessed node.

Space

The space usage of B-trees is also of interest in practical applications. By construction, the pages are at least half full, so, in the worst case, B-trees use about double the space that is absolutely necessary for keys, plus extra space for links. For random keys, A. Yao proved in 1979 (using mathematical analysis that is beyond the scope of this book) that the average number of keys in a node is about M ln 2. Since ln 2 is about 0.69, about 31 percent of the space is unused; equivalently, the tree occupies about 44 percent more space than the keys strictly require. As with many other search algorithms, this random model reasonably predicts results for key distributions that we observe in practice.

Algorithm 6.1 B-tree set implementation

    public class BTreeSET<Key extends Comparable<Key>>
    {
        private Page root = new Page(true);   // start with a single external page

        public BTreeSET(Key sentinel)
        {  add(sentinel);  }

        public boolean contains(Key key)
        {  return contains(root, key);  }

        private boolean contains(Page h, Key key)
        {
            if (h.isExternal()) return h.contains(key);
            return contains(h.next(key), key);
        }

        public void add(Key key)
        {
            add(root, key);
            if (root.isFull())
            {   // Split a full root and grow the tree by one level.
                Page lefthalf = root;
                Page righthalf = root.split();
                root = new Page(false);
                root.add(lefthalf);
                root.add(righthalf);
            }
        }

        public void add(Page h, Key key)
        {
            if (h.isExternal()) {  h.add(key); return;  }

            Page next = h.next(key);
            add(next, key);
            if (next.isFull())
                h.add(next.split());   // split on the way up, after the recursive call
            next.close();
        }
    }

This B-tree implementation implements multiway balanced search trees as described in the text, using a Page data type that supports search by associating keys with subtrees that could contain the key and supports insertion by including a test for overflow and a page split method.

THE IMPLICATIONS OF PROPOSITION B ARE PROFOUND and worth contemplating. Would you have guessed that you can develop a search implementation that can guarantee a cost of four or five probes for search and insert in files as large as you can reasonably contemplate needing to process? B-trees are widely used because they allow us to achieve this ideal. In practice, the primary challenge to developing an implementation is ensuring that space is available for the B-tree nodes, but even that challenge becomes easier to address as available storage space increases on typical devices.

Many variations on the basic B-tree abstraction suggest themselves immediately. One class of variations saves time by packing as many page references as possible in internal nodes, thereby increasing the branching factor and flattening the tree. Another class of variations improves storage efficiency by combining nodes with siblings before splitting. The precise choice of variant and algorithm parameter can be engineered to suit particular devices and applications. Although we are limited to getting a small constant factor improvement, such an improvement can be of significant importance for applications where the table is huge and/or huge numbers of transactions are involved, precisely the applications for which B-trees are so effective.

Suffix arrays

Efficient algorithms for string processing play a critical role in commercial applications and in scientific computing. From the countless strings that define web pages that are searched by billions of users to the extensive genomic databases that scientists are studying to unlock the secret of life, computing applications of the 21st century are increasingly string-based. As usual, some classic algorithms are effective, but remarkable new algorithms are being developed. Next, we describe a data structure and an API that support some of these algorithms. We begin by describing a typical (and a classic) string-processing problem.

Longest repeated substring

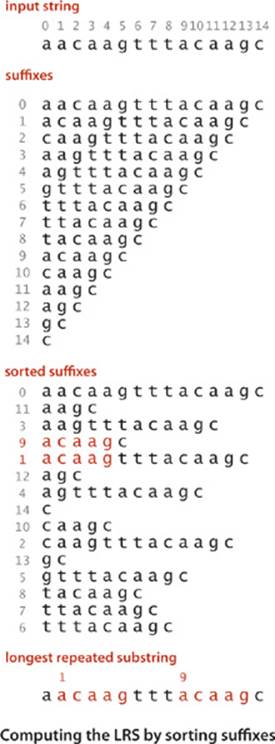

What is the longest substring that appears at least twice in a given string? For example, the longest repeated substring in the string "to be or not to be" is the string "to be". Think briefly about how you might solve it. Could you find the longest repeated substring in a string that has millions of characters? This problem is simple to state and has many important applications, including data compression, cryptography, and computer-assisted music analysis. For example, a standard technique used in the development of large software systems is refactoring code. Programmers often put together new programs by cutting and pasting code from old programs. In a large program built over a long period of time, replacing duplicate code by function calls to a single copy of the code can make the program much easier to understand and maintain. This improvement can be accomplished by finding long repeated substrings in the program. Another application is found in computational biology. Are substantial identical fragments to be found within a given genome? Again, the basic computational problem underlying this question is to find the longest repeated substring in a string. Scientists are typically interested in more detailed questions (indeed, the nature of the repeated substrings is precisely what scientists seek to understand), but such questions are certainly no easier to answer than the basic question of finding the longest repeated substring.

Brute-force solution

As a warmup, consider the following simple task: given two strings, find their longest common prefix (the longest substring that is a prefix of both strings). For example, the longest common prefix of acctgttaac and accgttaa is acc. The code at right is a useful starting point for addressing more complicated tasks: it takes time proportional to the length of the match. Now, how do we find the longest repeated substring in a given string? With lcp(), the following brute-force solution immediately suggests itself: we compare the substring starting at each string position i with the substring starting at each other starting position j, keeping track of the longest match found. This code is not useful for long strings, because its running time is at least quadratic in the length of the string: the number of different pairs i and j is N(N−1)/2, so the number of calls on lcp() for this approach would be ~N^2/2. Using this solution for a genomic sequence with millions of characters would require trillions of lcp() calls, which is infeasible.

Longest common prefix of two strings

    private static int lcp(String s, String t)
    {
        int N = Math.min(s.length(), t.length());
        for (int i = 0; i < N; i++)
            if (s.charAt(i) != t.charAt(i)) return i;
        return N;
    }
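The brute-force solution described above can be sketched as follows. This is an illustration under stated assumptions rather than code from the text: the class name is hypothetical, and lcp() takes two starting positions in the same string (instead of two strings) so that no substrings need to be created.

```java
// Sketch only: brute-force longest repeated substring, quadratic in the number of lcp() calls.
class BruteForceLRS {
    // Length of the longest common prefix of the suffixes of s starting at i and at j.
    static int lcp(String s, int i, int j) {
        int n = s.length();
        int length = 0;
        while (i + length < n && j + length < n
                && s.charAt(i + length) == s.charAt(j + length))
            length++;
        return length;
    }

    static String lrs(String text) {
        int N = text.length();
        String best = "";
        for (int i = 0; i < N; i++)
            for (int j = i + 1; j < N; j++) {          // each of the N(N-1)/2 pairs once
                int length = lcp(text, i, j);
                if (length > best.length())
                    best = text.substring(i, i + length);
            }
        return best;
    }

    public static void main(String[] args) {
        System.out.println("'" + lrs("to be or not to be") + "'");
    }
}
```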

Suffix sort solution

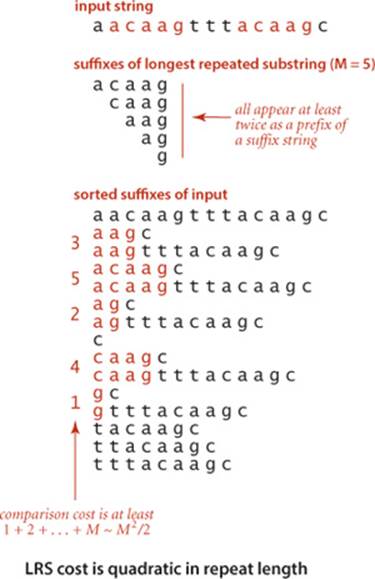

The following clever approach, which takes advantage of sorting in an unexpected way, is an effective way to find the longest repeated substring, even in a huge string: we make an array of the N suffixes of s (the substrings starting at each position and going to the end), and then we sort this array. The key to the algorithm’s correctness is that every substring appears somewhere as a prefix of one of the suffixes in the array. After sorting, the longest repeated substrings will appear in adjacent positions in the array. Thus, we can make a single pass through the sorted array, keeping track of the longest matching prefixes between adjacent strings. The key to the algorithm’s efficiency is to form the N suffixes implicitly (storing only the original string and the index of the first character in each suffix) instead of explicitly (since that would require quadratic time and space). This suffix sorting approach is significantly more efficient than the brute-force method, but before implementing and analyzing it, we consider another application of suffix sorting.
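Before turning to the API, here is a compact sketch of the idea. It is a hedged illustration, not the implementation developed later in this section: the class and method names are hypothetical, and the suffixes are represented implicitly by an array of starting positions that is sorted with a comparator.

```java
import java.util.Arrays;

// Sketch only: longest repeated substring by sorting implicit suffixes.
class SuffixSortLRS {
    // Compare the suffixes of s that start at i and at j, character by character.
    static int compareSuffixes(String s, int i, int j) {
        int n = s.length();
        while (i < n && j < n) {
            char c = s.charAt(i++), d = s.charAt(j++);
            if (c != d) return c < d ? -1 : +1;
        }
        return Integer.compare(n - i, n - j);          // the suffix that ran out first is smaller
    }

    static int lcp(String s, int i, int j) {
        int n = s.length();
        int length = 0;
        while (i + length < n && j + length < n
                && s.charAt(i + length) == s.charAt(j + length))
            length++;
        return length;
    }

    static String lrs(String text) {
        int N = text.length();
        Integer[] start = new Integer[N];              // start[r] = first index of the suffix of rank r
        for (int i = 0; i < N; i++) start[i] = i;
        Arrays.sort(start, (a, b) -> compareSuffixes(text, a, b));

        String best = "";
        for (int r = 1; r < N; r++) {                  // single pass over adjacent suffixes
            int length = lcp(text, start[r - 1], start[r]);
            if (length > best.length())
                best = text.substring(start[r], start[r] + length);
        }
        return best;
    }

    public static void main(String[] args) {
        System.out.println("'" + lrs("to be or not to be") + "'");
    }
}
```

Only N integers are stored in addition to the text itself, which is what makes the approach practical for long strings.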

Indexing a string

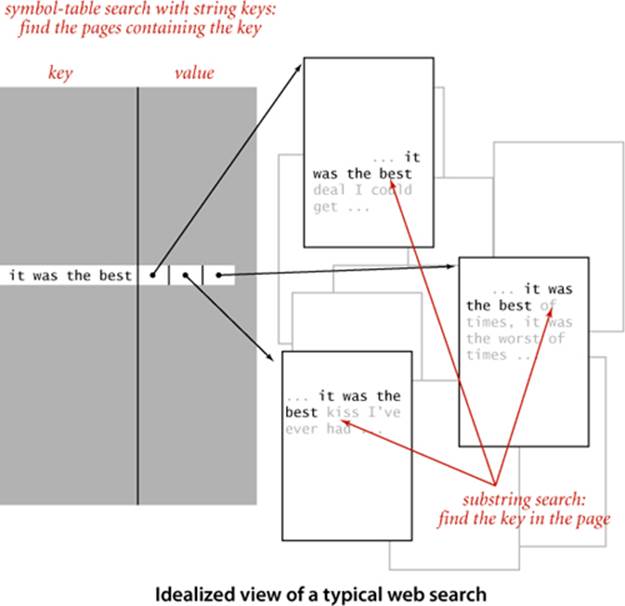

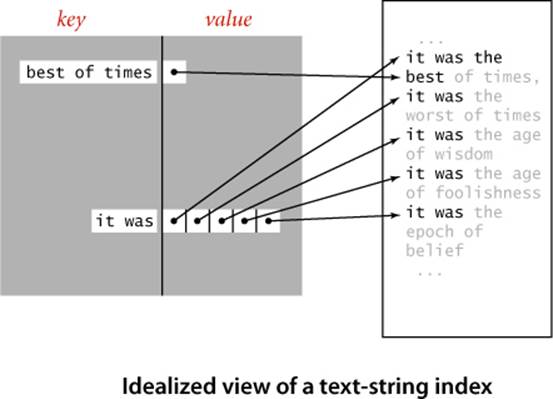

When you are trying to find a particular substring within a large text—for example, while working in a text editor or within a page you are viewing with a browser—you are doing a substring search, the problem we considered in SECTION 5.3. For that problem, we assume the text to be relatively large and focus on preprocessing the substring, with the goal of being able to efficiently find that substring in any given text. When you type search keys into your web browser, you are doing a search with string keys, the subject of SECTION 5.2. Your search engine must precompute an index, since it cannot afford to scan all the pages in the web for your keys. As we discussed in SECTION 3.5 (see FileIndex on page 501), this would ideally be an inverted index associating each possible search string with all web pages that contain it—a symbol table where each entry is a string key and each value is a set of pointers (each pointer giving the information necessary to locate an occurrence of the key on the web—perhaps a URL that names a web page and an integer offset within that page). In practice, such a symbol table would be far too big, so your search engine uses various sophisticated algorithms to reduce its size. One approach is to rank web pages by importance (perhaps using an algorithm like the PageRank algorithm that we discussed on page 502) and work only with highly-ranked pages, not all pages. Another approach to cutting down on the size of a symbol table to support search with string keys is to associate URLs with words (substrings delimited by whitespace) as keys in the precomputed index. Then, when you search for a word, the search engine can use the index to find the (important) pages containing your search keys (words) and then use substring search within each page to find them. But with this approach, if the text were to contain "everything" and you were to search for "thing", you would not find it. 
For some applications, it is worthwhile to build an index to help find any substring within a given text. Doing so might be justified for a linguistic study of an important piece of literature, for a genomic sequence that might be an object of study for many scientists, or just for a widely accessed web page. Again, ideally, the index would associate all possible substrings of the text string with each position where it occurs in the text string, as depicted at right. The basic problem with this ideal is that the number of possible substrings is too large to have a symbol-table entry for each of them (an N-character text has N(N+1)/2 substrings, counting one for each pair of starting and ending positions). The table for the example at right would need entries for b, be, bes, best, best o, best of, e, es, est, est o, est of, s, st, st o, st of, t, t o, t of, o, of, and many, many other substrings. Again, we can use a suffix sort to address this problem in a manner analogous to our first symbol-table implementation using binary search, in SECTION 3.1. We consider each of the N suffixes to be keys, create a sorted array of our keys (the suffixes), and use binary search to search in that array, comparing the search key with each suffix.

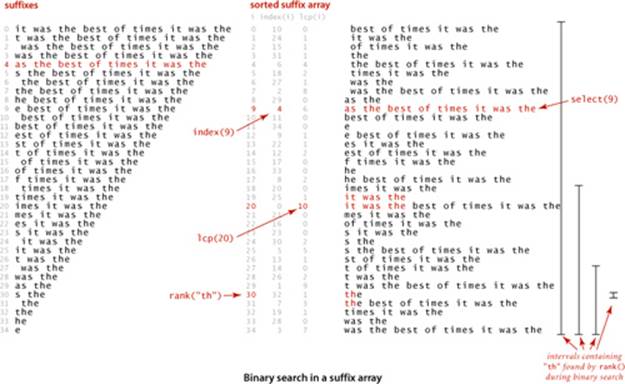

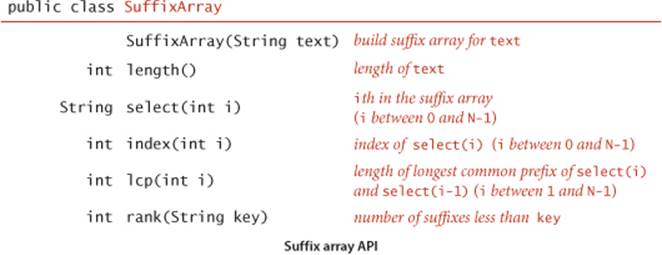

API and client code

To support client code to solve these two problems, we articulate the API shown below. It includes a constructor; a length() method; methods select() and index(), which give the string and index of the suffix of a given rank in the sorted list of suffixes; a method lcp() that gives the length of the longest common prefix of each suffix and the one preceding it in the sorted list; and a method rank() that gives the number of suffixes less than the given key (just as we have been using since we first examined binary search in CHAPTER 1). We use the term suffix array to describe the abstraction of a sorted list of suffix strings, without committing to use an array of strings as the underlying data structure.

In the example on the facing page, select(9) is "as the best of times...", index(9) is 4, lcp(20) is 10 because "it was the best of times..." and "it was the" have the common prefix "it was the" which is of length 10, and rank("th") is 30. Note also that select(rank(key)) is the first possible suffix in the sorted suffix list that has key as prefix and that all other occurrences of key in the text immediately follow (see the figure on the opposite page). With this API, the client code on the next two pages is immediate. LRS (page 880) finds the longest repeated substring in the text on standard input by building a suffix array and then scanning through the sorted suffixes to find the maximum lcp() value. KWIC (page 881) builds a suffix array for the text named as command-line argument, takes queries from standard input, and prints all occurrences of each query in the text (including a specified number of characters before and after to give context). The name KWIC stands for keyword-in-context search, a term dating at least to the 1960s. The simplicity and efficiency of this client code for these typical string-processing applications is remarkable, and testimony to the importance of careful API design (and the power of a simple but ingenious idea).

Longest repeated substring client

    public class LRS
    {
        public static void main(String[] args)
        {
            // Read the text from standard input, collapsing runs of whitespace.
            String text = StdIn.readAll().replaceAll("\\s+", " ");
            int N = text.length();
            SuffixArray sa = new SuffixArray(text);
            String lrs = "";
            for (int i = 1; i < N; i++)
            {   // Compare each sorted suffix with its predecessor.
                int length = sa.lcp(i);
                if (length > lrs.length())
                    lrs = text.substring(sa.index(i), sa.index(i) + length);
            }
            StdOut.println("'" + lrs + "'");
        }
    }

    % more tinyTale.txt
    it was the best of times it was the worst of times
    it was the age of wisdom it was the age of foolishness
    it was the epoch of belief it was the epoch of incredulity
    it was the season of light it was the season of darkness
    it was the spring of hope it was the winter of despair

    % java LRS < tinyTale.txt
    'st of times it was the '

    % java LRS < mobyDick.txt
    ',- Such a funny, sporty, gamy, jesty, joky, hoky-poky lad, is the Ocean, oh! Th'

Keyword-in-context indexing client

    public class KWIC
    {
        public static void main(String[] args)
        {
            In in = new In(args[0]);
            int context = Integer.parseInt(args[1]);

            String text = in.readAll().replaceAll("\\s+", " ");
            int N = text.length();
            SuffixArray sa = new SuffixArray(text);

            while (StdIn.hasNextLine())
            {
                String query = StdIn.readLine();
                for (int i = sa.rank(query); i < N; i++)
                {
                    // Check if sorted suffix i is a match.
                    int from1 = sa.index(i);
                    int to1 = Math.min(N, sa.index(i) + query.length());
                    if (!query.equals(text.substring(from1, to1))) break;

                    // Print context surrounding sorted suffix i.
                    int from2 = Math.max(0, sa.index(i) - context);
                    int to2 = Math.min(N, sa.index(i) + context + query.length());
                    StdOut.println(text.substring(from2, to2));
                }
                StdOut.println();
            }
        }
    }

    % java KWIC tale.txt 15
    search
    o st giless to search for contraband
    her unavailing search for your fathe
    le and gone in search of her husband
    t provinces in search of impoverishe
    dispersing in search of other carri
    n that bed and search the straw hold

    better thing
    t is a far far better thing that i do than
    some sense of better things else forgotte
    was capable of better things mr carton ent

Implementation

The code on the facing page is an elementary implementation of the SuffixArray API. The key to the implementation is a nested class Suffix that represents a suffix of a text string. A Suffix has two instance variables: a String reference to the text string and an int index of its first character. It provides four utility methods: length() returns the length of the suffix; charAt(i) returns the ith character in the suffix; toString() returns a string representation of the suffix; and compareTo() compares two suffixes, for use in sorting. Using this nested class, it is straightforward to complete the implementation. The constructor builds an array of Suffix objects and sorts them, so index(i) just returns the index associated with suffixes[i]. The implementations of length() and select() are also one-liners. The implementation of lcp() is similar to the lcp() on page 875, and rank() is virtually the same as our implementation of binary search for symbol tables, on page 381. Again, the simplicity and elegance of this implementation should not mask the fact that it is a sophisticated algorithm that enables the solution of important problems like the longest repeated substring problem that would otherwise seem to be infeasible.

Performance

The efficiency of our suffix sorting implementation depends on the fact that we form the suffixes implicitly: each suffix is represented by a reference to the text string and the index of its first character. Thus, the space to store the array of suffixes is linear in the length of the text string. This point is a bit counterintuitive because the total number of characters in the N suffixes is ~N^2/2, a quadratic function of the length of the string. Moreover, that quadratic factor gives one pause when considering the cost of sorting the array of suffixes. It is very important to bear in mind that this approach is effective for long strings because of our implicit representation for suffixes: when we exchange two suffixes, we are exchanging only references, not the whole suffixes. Now, the cost of comparing two suffixes may be proportional to the length of the suffixes in the case when their common prefix is very long, but most comparisons in typical applications involve only a few characters. If so, the running time of the suffix sort is linearithmic. For example, in many applications, it is reasonable to use a random string model:

    public int compareTo(Suffix that)
    {
        if (this == that) return 0;
        int N = Math.min(this.length(), that.length());
        for (int i = 0; i < N; i++)
        {
            if (this.charAt(i) < that.charAt(i)) return -1;
            if (this.charAt(i) > that.charAt(i)) return +1;
        }
        return this.length() - that.length();
    }

Comparing two suffixes

Proposition C. Using 3-way string quicksort, we can build a suffix array from a random string of length N with space proportional to N and ~ 2N ln N character compares, on the average.

Discussion: The space bound is immediate, but the time bound follows from a detailed and difficult research result by P. Jacquet and W. Szpankowski, which implies that the cost of sorting the suffixes is asymptotically the same as the cost of sorting N random strings (see PROPOSITION E on page 723).

Algorithm 6.2 Suffix array (elementary implementation)

    import java.util.Arrays;

    public class SuffixArray
    {
        private Suffix[] suffixes;   // array of suffixes

        public SuffixArray(String text)
        {
            int N = text.length();
            this.suffixes = new Suffix[N];
            for (int i = 0; i < N; i++)
                suffixes[i] = new Suffix(text, i);
            Arrays.sort(suffixes);
        }

        private static class Suffix implements Comparable<Suffix>
        {
            private final String text;   // reference to text string
            private final int index;     // index of suffix's first character

            private Suffix(String text, int index)
            {
                this.text = text;
                this.index = index;
            }

            private int length()       { return text.length() - index; }
            private char charAt(int i) { return text.charAt(index + i); }
            public String toString()   { return text.substring(index); }

            public int compareTo(Suffix that) // See page 882.
        }

        public int index(int i)     { return suffixes[i].index; }
        public int length()         { return suffixes.length; }
        public String select(int i) { return suffixes[i].toString(); }

        public int lcp(int i) // See Exercise 6.28.
        public int rank(String key) // See Exercise 6.28.
    }

This implementation of our SuffixArray API depends for its efficiency on the fact that substrings are constant-size references and substring extraction takes constant time (see text).
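The listing leaves lcp() and rank() to EXERCISE 6.28. One possible way to fill them in is sketched below; it should be read as an assumption-laden illustration, not as the solution intended by the exercise. The class name SuffixArraySketch and its helper methods are hypothetical, and an array of starting positions is used in place of the nested Suffix class to keep the example self-contained.

```java
import java.util.Arrays;

// Sketch only: a self-contained suffix array with lcp() and rank() filled in.
class SuffixArraySketch {
    private final String text;
    private final Integer[] start;   // start[i] = first index of the suffix of rank i

    SuffixArraySketch(String text) {
        this.text = text;
        Integer[] order = new Integer[text.length()];
        for (int i = 0; i < order.length; i++) order[i] = i;
        Arrays.sort(order, (a, b) -> compareSuffixes(text, a, b));
        this.start = order;
    }

    private static int compareSuffixes(String s, int i, int j) {
        int n = s.length();
        while (i < n && j < n) {
            char c = s.charAt(i++), d = s.charAt(j++);
            if (c != d) return c < d ? -1 : +1;
        }
        return Integer.compare(n - i, n - j);          // a proper prefix sorts first
    }

    int length()         { return start.length; }
    int index(int i)     { return start[i]; }
    String select(int i) { return text.substring(start[i]); }

    // Longest common prefix of the suffix of rank i and the suffix of rank i-1.
    int lcp(int i) {
        int a = start[i], b = start[i - 1], n = text.length();
        int length = 0;
        while (a + length < n && b + length < n
                && text.charAt(a + length) == text.charAt(b + length))
            length++;
        return length;
    }

    // Number of suffixes strictly less than key, found by binary search.
    int rank(String key) {
        int lo = 0, hi = start.length - 1;
        while (lo <= hi) {
            int mid = lo + (hi - lo) / 2;
            int cmp = compareKeyToSuffix(key, start[mid]);
            if      (cmp < 0) hi = mid - 1;
            else if (cmp > 0) lo = mid + 1;
            else return mid;                           // suffixes are distinct, so mid is the rank
        }
        return lo;
    }

    private int compareKeyToSuffix(String key, int s) {
        int n = Math.min(key.length(), text.length() - s);
        for (int k = 0; k < n; k++) {
            char c = key.charAt(k), d = text.charAt(s + k);
            if (c != d) return c < d ? -1 : +1;
        }
        return Integer.compare(key.length(), text.length() - s);
    }

    public static void main(String[] args) {
        SuffixArraySketch sa = new SuffixArraySketch("it was the best of times it was the");
        System.out.println(sa.index(9) + " " + sa.lcp(20) + " " + sa.rank("th"));
    }
}
```

For the 35-character text used in the main() method, the values agree with the ones quoted earlier in this section: index(9) is 4, lcp(20) is 10, and rank("th") is 30.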

Improved implementations

Our elementary implementation of SuffixArray (ALGORITHM 6.2) has poor worst-case performance. For example, if all the characters are equal, the sort examines every character in each suffix and thus takes quadratic time. For strings of the type we have been using as examples, such as genomic sequences or natural-language text, this is not likely to be problematic, but the algorithm can be slow for texts with long runs of identical characters. Another way of looking at the problem is to observe that the cost of finding the longest repeated substring is (at least) quadratic in the length of the longest repeated substring because all of the prefixes of the repeat need to be checked (see the diagram at right). This is not a problem for a text such as A Tale of Two Cities, where the longest repeated substring

"s dropped because it would have

been a bad thing for me in a

worldly point of view i"

has just 84 characters, but it is a serious problem for genomic data, where long repeated substrings are not unusual. How can this quadratic behavior for repeat searching be avoided? Remarkably, research by P. Weiner in 1973 showed that it is possible to solve the longest repeated substring problem in guaranteed linear time. Weiner’s algorithm was based on building a suffix tree data structure (essentially a trie for suffixes). With multiple pointers per character, suffix trees consume too much space for many practical problems, which led to the development of suffix arrays. In the 1990s, U. Manber and E. Myers presented a linearithmic algorithm for building suffix arrays directly and a method that does preprocessing at the same time as the suffix sort to support constant-time lcp(). Several linear-time suffix sorting algorithms have been developed since. With a bit more work, the Manber-Myers implementation can also support a two-argument lcp() that finds the longest common prefix of two given suffixes that are not necessarily adjacent in guaranteed constant time, again a remarkable improvement over the straightforward implementation. These results are quite surprising, as they achieve efficiencies quite beyond what you might have expected.
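The quadratic worst case of the elementary approach is easy to observe by counting character compares. The following sketch is an illustration only: the class, the counter, and the repeat() helper are hypothetical, and the sort is Java's Arrays.sort() with a comparator rather than 3-way string quicksort.

```java
import java.util.Arrays;

// Sketch only: counts the character compares used to sort the suffixes of a string.
class SuffixSortWorstCase {
    private static long charCompares;

    private static int compareSuffixes(String s, int i, int j) {
        int n = s.length();
        while (i < n && j < n) {
            charCompares++;                            // one character compare per iteration
            char c = s.charAt(i++), d = s.charAt(j++);
            if (c != d) return c < d ? -1 : +1;
        }
        return Integer.compare(n - i, n - j);
    }

    static long countCharCompares(String text) {
        charCompares = 0;
        Integer[] start = new Integer[text.length()];
        for (int i = 0; i < start.length; i++) start[i] = i;
        Arrays.sort(start, (a, b) -> compareSuffixes(text, a, b));
        return charCompares;
    }

    // A string consisting of n copies of the character c.
    static String repeat(char c, int n) {
        char[] a = new char[n];
        Arrays.fill(a, c);
        return new String(a);
    }

    public static void main(String[] args) {
        for (int n = 1000; n <= 8000; n *= 2)
            System.out.println(n + " equal characters: "
                    + countCharCompares(repeat('a', n)) + " character compares");
    }
}
```

When all characters are equal, any two suffixes that are adjacent in sorted order differ in length by one and must be compared along the full length of the shorter one, so the count is at least N(N−1)/2 no matter which compare-based sort is used.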

Proposition D. With suffix arrays, we can solve both the suffix sorting and longest repeated substring problems in linear time.

Proof: The remarkable algorithms for these tasks are just beyond our scope, but you can find on the booksite code that implements the SuffixArray constructor in linear time and lcp() queries in constant time.

A SuffixArray implementation based on these ideas supports efficient solutions of numerous string-processing problems, with simple client code, as in our LRS and KWIC examples.

SUFFIX ARRAYS ARE THE CULMINATION of decades of research that began with the development of tries for KWIC indices in the 1960s. The algorithms that we have discussed were worked out by many researchers over several decades in the context of solving practical problems ranging from putting the Oxford English Dictionary online to the development of the first web search engines to sequencing the human genome. This story certainly helps put the importance of algorithm design and analysis in context.

Network-flow algorithms

Next, we consider a graph model that has been successful not just because it provides us with a simply stated problem-solving model that is useful in many practical applications but also because we have efficient algorithms for solving problems within the model. The solution that we consider illustrates the tension between our quest for implementations of general applicability and our quest for efficient solutions to specific problems. The study of network-flow algorithms is fascinating because it brings us tantalizingly close to compact and elegant implementations that achieve both goals. As you will see, we have straightforward implementations that are guaranteed to run in time proportional to a polynomial in the size of the network.

The classical solutions to network-flow problems are closely related to other graph algorithms that we studied in CHAPTER 4, and we can write surprisingly concise programs that solve them, using the algorithmic tools we have developed. As we have seen in many other situations, good algorithms and data structures can lead to substantial reductions in running times. Development of better implementations and better algorithms is still an area of active research, and new approaches continue to be discovered.

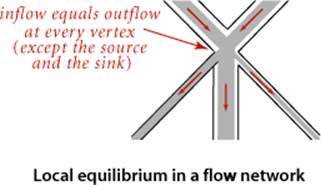

A physical model

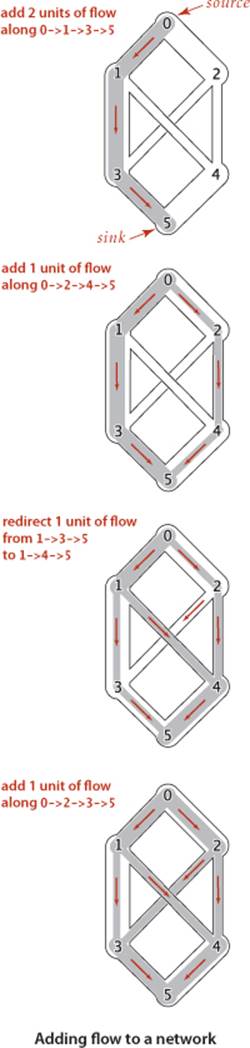

We begin with an idealized physical model in which several of the basic concepts are intuitive. Specifically, imagine a collection of interconnected oil pipes of varying sizes, with switches controlling the direction of flow at junctions, as in the example illustrated at right. Suppose further that the network has a single source (say, an oil field) and a single sink (say, a large refinery) to which all the pipes ultimately connect. At each vertex, the flowing oil reaches an equilibrium where the amount of oil flowing in is equal to the amount flowing out. We measure both flow and pipe capacity in the same units (say, gallons per second). If every switch has the property that the total capacity of the ingoing pipes is equal to the total capacity of the outgoing pipes, then there is no problem to solve: we simply fill all pipes to full capacity. Otherwise, not all pipes are full, but oil flows through the network, controlled by switch settings at the junctions, satisfying a local equilibrium condition at the junctions: the amount of oil flowing into each junction is equal to the amount of oil flowing out. For example, consider the network in the diagram on the opposite page. Operators might start the flow by opening the switches along the path 0->1->3->5, which can handle 2 units of flow, then open switches along the path 0->2->4->5 to get another unit of flow in the network. Since 0->1, 2->4, and 3->5 are full, there is no direct way to get more flow from 0 to 5, but if we change the switch at 1 to redirect enough flow to fill 1->4, we open up enough capacity in 3->5 to allow us to add a unit of flow on 0->2->3->5. Even for this simple network, finding switch settings that increase the flow is not an easy task; for a complicated network, we are clearly interested in the following question: What switch settings will maximize the amount of oil flowing from source to sink? 
We can model this situation directly with an edge-weighted digraph that has a single source and a single sink. The edges in the network correspond to the oil pipes, the vertices correspond to the junctions with switches that control how much oil goes into each outgoing edge, and the weights on the edges correspond to the capacity of the pipes. We assume that the edges are directed, specifying that oil can flow in only one direction in each pipe. Each pipe has a certain amount of flow, which is less than or equal to its capacity, and every vertex satisfies the equilibrium condition that the flow in is equal to the flow out. This flow-network abstraction is a useful problem-solving model that applies directly to a variety of applications and indirectly to still more. We sometimes appeal to the idea of oil flowing through pipes for intuitive support of basic ideas, but our discussion applies equally well to goods moving through distribution channels and to numerous other situations. As with our use of distance in shortest-paths algorithms, we are free to abandon any physical intuition when convenient because all the definitions, properties, and algorithms that we consider are based entirely on an abstract model that does not necessarily obey physical laws. Indeed, a prime reason for our interest in the network-flow model is that it allows us to solve numerous other problems through reduction, as we see in the next section.

Definitions

Because of this broad applicability, it is worthwhile to consider precise statements of the terms and concepts that we have just informally introduced:

Definition. A flow network is an edge-weighted digraph with positive edge weights (which we refer to as capacities). An st-flow network has two identified vertices, a source s and a sink t.

We sometimes refer to edges as having infinite capacity or, equivalently, as being uncapacitated. That might mean that we do not compare flow against capacity for such edges, or we might use a sentinel value that is guaranteed to be larger than any flow value. We refer to the total flow into a vertex (the sum of the flows on its incoming edges) as the vertex’s inflow, the total flow out of a vertex (the sum of the flows on its outgoing edges) as the vertex’s outflow, and the difference between the two (inflow minus outflow) as the vertex’s netflow. To simplify the discussion, we also assume that there are no edges leaving t or entering s.

Definition. An st-flow in an st-flow network is a set of nonnegative values associated with each edge, which we refer to as edge flows. We say that a flow is feasible if it satisfies the condition that no edge’s flow is greater than that edge’s capacity and the local equilibrium condition that every vertex’s netflow is zero (except s and t).

We refer to the sink’s inflow as the st-flow value. We will see in PROPOSITION E that the value is also equal to the source’s outflow. With these definitions, the formal statement of our basic problem is straightforward:

Maximum st-flow. Given an st-flow network, find an st-flow such that no other flow from s to t has a larger value.

For brevity, we refer to such a flow as a maxflow and the problem of finding one in a network as the maxflow problem. In some applications, we might be content to know just the maxflow value, but we generally want to know a flow (edge flow values) that achieves that value.
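For reference, the two feasibility conditions and the flow value can be written compactly. The notation here is an assumption introduced only for this summary: c(e) is the capacity of edge e, f(e) is its flow, and |f| is the value of the flow.

```latex
\begin{align*}
0 \le f(e) &\le c(e)
  && \text{for every edge } e \quad \text{(capacity condition)} \\
\sum_{e \text{ into } v} f(e) &= \sum_{e \text{ out of } v} f(e)
  && \text{for every vertex } v \ne s, t \quad \text{(local equilibrium)} \\
|f| &= \sum_{e \text{ into } t} f(e)
  && \text{(value of the flow)}
\end{align*}
```

The maxflow problem asks for a feasible f that makes |f| as large as possible.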

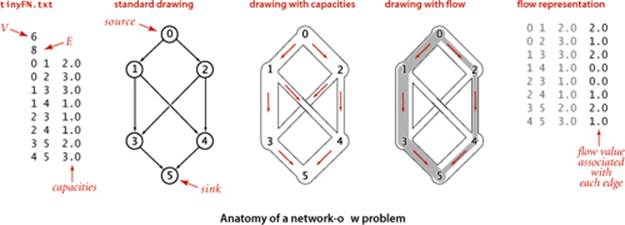

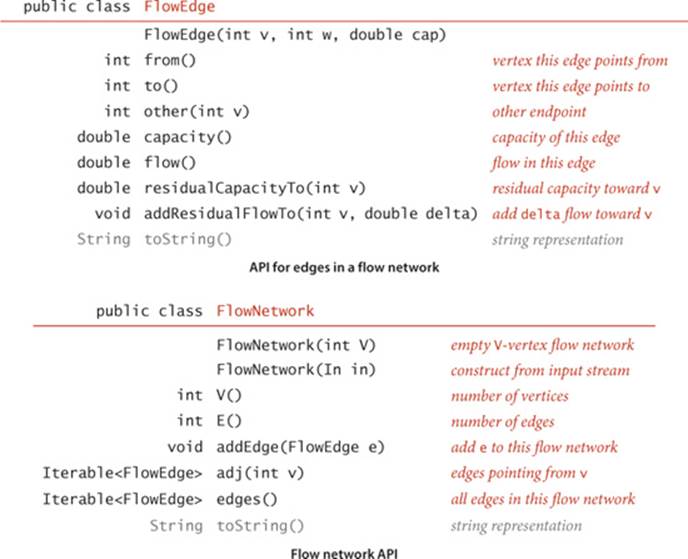

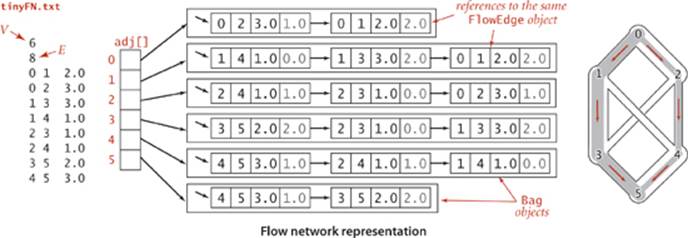

APIs

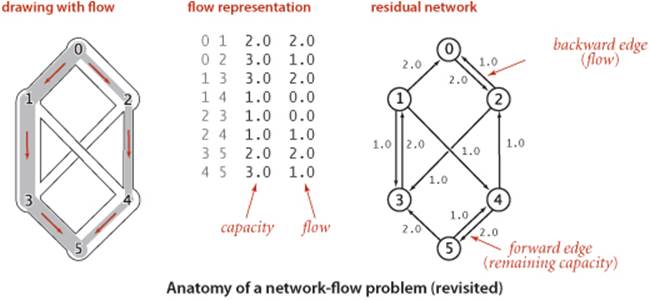

The FlowEdge and FlowNetwork APIs shown on page 890 are straightforward extensions of APIs from CHAPTER 4. We will consider on page 896 an implementation of FlowEdge that is based on adding an instance variable containing the flow to our Edge class from page 610. Flows have a direction, but we do not base FlowEdge on DirectedEdge because we work with a more general abstraction known as the residual network that is described below, and we need each edge to appear in the adjacency lists of both its vertices to implement the residual network. The residual network allows us to both add and subtract flow and to test whether an edge is full to capacity (no more flow can be added) or empty (no flow can be subtracted). This abstraction is implemented via the methods residualCapacity() and addResidualFlow() that we will consider later. The implementation of FlowNetwork is virtually identical to our EdgeWeightedGraph implementation on page 611, so we omit it. To simplify the file format, we adopt the convention that the source is 0 and the sink is V − 1. These APIs leave a straightforward goal for maxflow algorithms: build a network, then assign values to the flow instance variables in the client’s edges that maximize flow through the network. Shown at right are client methods for certifying whether a flow is feasible. Typically, we might do such a check as the final action of a maxflow algorithm.
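To make the residual-network idea concrete before the full implementation, here is a minimal sketch of an edge type that supports it. The class name ResidualEdgeSketch and the exact method signatures (taking the endpoint toward which flow is pushed) are assumptions made for illustration, not necessarily those of the FlowEdge implementation referred to above.

```java
// Hypothetical sketch of a flow edge that supports the residual-network view.
// The class name and method signatures are assumptions for illustration.
class ResidualEdgeSketch {
    private final int v;            // tail of the edge
    private final int w;            // head of the edge
    private final double capacity;  // maximum flow the edge can carry
    private double flow;            // current flow, initially zero

    ResidualEdgeSketch(int v, int w, double capacity) {
        this.v = v;
        this.w = w;
        this.capacity = capacity;
        this.flow = 0.0;
    }

    int from()        { return v; }
    int to()          { return w; }
    double capacity() { return capacity; }
    double flow()     { return flow; }

    // How much more flow can be pushed toward the given endpoint.
    double residualCapacityTo(int vertex) {
        if (vertex == w) return capacity - flow;  // forward: unused capacity
        if (vertex == v) return flow;             // backward: flow that can be removed
        throw new IllegalArgumentException("not an endpoint of this edge");
    }

    // Push delta units of flow toward the given endpoint.
    void addResidualFlowTo(int vertex, double delta) {
        if (vertex == w)      flow += delta;      // forward: add flow
        else if (vertex == v) flow -= delta;      // backward: subtract flow
        else throw new IllegalArgumentException("not an endpoint of this edge");
    }

    public static void main(String[] args) {
        ResidualEdgeSketch e = new ResidualEdgeSketch(0, 1, 5.0);
        e.addResidualFlowTo(1, 3.0);                  // push 3 units forward
        System.out.println(e.residualCapacityTo(1));  // prints 2.0 (room left)
        System.out.println(e.residualCapacityTo(0));  // prints 3.0 (flow removable)
    }
}
```

An edge is full when its residual capacity toward its head is zero, and empty when its residual capacity toward its tail is zero.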

Checking that a flow is feasible in a flow network

    private boolean localEq(FlowNetwork G, int v)
    {  // Check local equilibrium at vertex v.
       double EPSILON = 1E-11;
       double netflow = 0.0;
       for (FlowEdge e : G.adj(v))
          if (v == e.from()) netflow -= e.flow();   // e leaves v
          else               netflow += e.flow();   // e enters v
       return Math.abs(netflow) < EPSILON;
    }

    private boolean isFeasible(FlowNetwork G)
    {
       // By our convention, the source is 0 and the sink is V - 1.
       int s = 0, t = G.V() - 1;

       // Check that flow on each edge is nonnegative
       // and not greater than capacity.
       for (int v = 0; v < G.V(); v++)
          for (FlowEdge e : G.adj(v))
             if (e.flow() < 0 || e.flow() > e.capacity())
                return false;

       // Check local equilibrium at each vertex other than s and t.
       for (int v = 0; v < G.V(); v++)
          if (v != s && v != t && !localEq(G, v))
             return false;

       return true;
    }
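To try the feasibility check without the full library, the following self-contained sketch supplies stripped-down stand-ins for FlowEdge and FlowNetwork. These stand-ins are assumptions for illustration only (they omit most of the real API), and this variant of isFeasible() takes s and t as explicit arguments.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical minimal stand-in for FlowEdge (flow is fixed at construction).
class FlowEdge {
    private final int v, w;
    private final double capacity, flow;
    FlowEdge(int v, int w, double capacity, double flow) {
        this.v = v; this.w = w; this.capacity = capacity; this.flow = flow;
    }
    int from()        { return v; }
    int to()          { return w; }
    double capacity() { return capacity; }
    double flow()     { return flow; }
}

// Hypothetical minimal stand-in for FlowNetwork.
class FlowNetwork {
    private final List<List<FlowEdge>> adj = new ArrayList<>();
    FlowNetwork(int V) {
        for (int v = 0; v < V; v++) adj.add(new ArrayList<>());
    }
    int V() { return adj.size(); }
    void addEdge(FlowEdge e) {       // each edge appears on both adjacency lists
        adj.get(e.from()).add(e);
        adj.get(e.to()).add(e);
    }
    Iterable<FlowEdge> adj(int v) { return adj.get(v); }
}

class FeasibilityDemo {
    static boolean localEq(FlowNetwork G, int v) {
        double netflow = 0.0;
        for (FlowEdge e : G.adj(v))
            if (v == e.from()) netflow -= e.flow();
            else               netflow += e.flow();
        return Math.abs(netflow) < 1E-11;
    }

    static boolean isFeasible(FlowNetwork G, int s, int t) {
        for (int v = 0; v < G.V(); v++)
            for (FlowEdge e : G.adj(v))
                if (e.flow() < 0 || e.flow() > e.capacity()) return false;
        for (int v = 0; v < G.V(); v++)
            if (v != s && v != t && !localEq(G, v)) return false;
        return true;
    }

    // Path 0 -> 1 -> 2 with capacities 2 and 3; 2 units enter vertex 1.
    static FlowNetwork example(double flowOutOfVertex1) {
        FlowNetwork G = new FlowNetwork(3);
        G.addEdge(new FlowEdge(0, 1, 2.0, 2.0));
        G.addEdge(new FlowEdge(1, 2, 3.0, flowOutOfVertex1));
        return G;
    }

    public static void main(String[] args) {
        System.out.println(isFeasible(example(2.0), 0, 2));  // true
        System.out.println(isFeasible(example(3.0), 0, 2));  // false
    }
}
```

In the second call every edge respects its capacity, but vertex 1 sends out more than it receives, so the local equilibrium test fails.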

Ford-Fulkerson algorithm

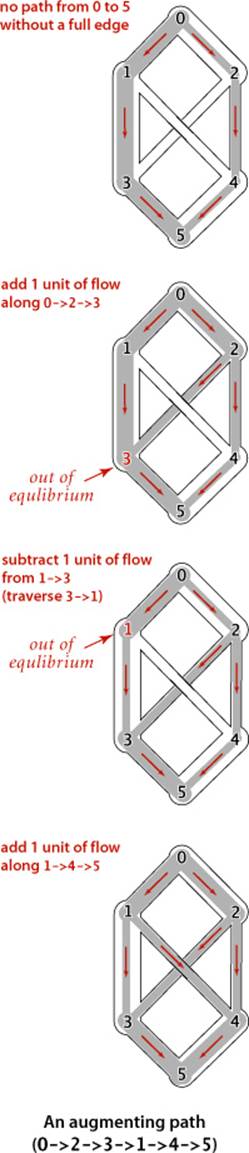

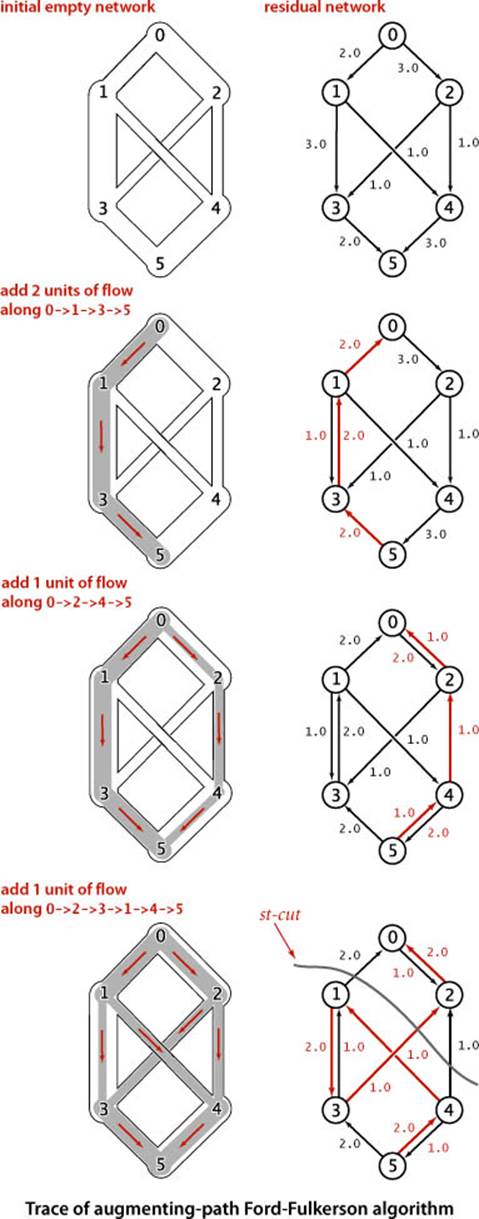

An effective approach to solving maxflow problems was developed by L. R. Ford and D. R. Fulkerson in 1962. It is a generic method for increasing flows incrementally along paths from source to sink that serves as the basis for a family of algorithms. It is known as the Ford-Fulkerson algorithm in the classical literature; the more descriptive term augmenting-path algorithm is also widely used.

Consider any directed path from source to sink through an st-flow network. Let x be the minimum of the unused capacities of the edges on the path. We can increase the network’s flow value by at least x by increasing the flow in all edges on the path by that amount. Iterating this action, we get a first attempt at computing flow in a network: find another path, increase the flow along that path, and continue until all paths from source to sink have at least one full edge (so that we can no longer increase flow in this way). This algorithm will compute the maxflow in some cases but will fall short in other cases. Our introductory example on page 886 is one such case.

To improve the algorithm so that it always finds a maxflow, we consider a more general way to increase the flow: along a path from source to sink through the network’s underlying undirected graph. The edges on any such path are either forward edges, which go with the flow (when we traverse the path from source to sink, we traverse the edge from its source vertex to its destination vertex), or backward edges, which go against the flow (when we traverse the path from source to sink, we traverse the edge from its destination vertex to its source vertex).

Now, for any path from source to sink with no full forward edges and no empty backward edges, we can increase the amount of flow in the network by increasing flow in forward edges and decreasing flow in backward edges. The amount by which the flow can be increased is limited by the minimum of the unused capacities in the forward edges and the flows in the backward edges. Such a path is called an augmenting path. An example is shown at right. In the new flow, at least one of the forward edges along the path becomes full or at least one of the backward edges along the path becomes empty. The process just sketched is the basis for the classical Ford-Fulkerson maxflow algorithm (augmenting-path method). We summarize it as follows:

Ford-Fulkerson maxflow algorithm. Start with zero flow everywhere. Increase the flow along any augmenting path from source to sink (with no full forward edges or empty backward edges), continuing until there are no such paths in the network.

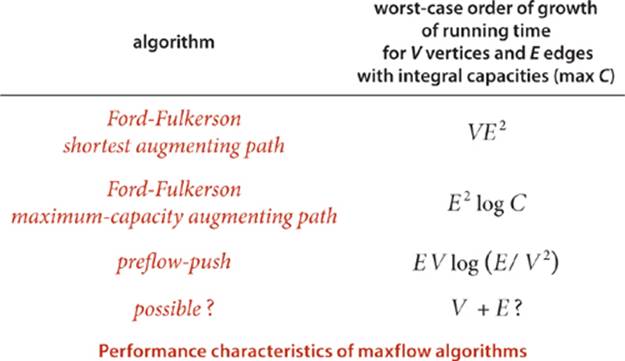

Remarkably (under certain technical conditions about numeric properties of the flow), this method always finds a maxflow, no matter how we choose the paths. Like the greedy MST algorithm discussed in SECTION 4.3 and the generic shortest-paths method discussed in SECTION 4.4, it is a generic algorithm that is useful because it establishes the correctness of a whole family of more specific algorithms. We are free to use any method whatsoever to choose the path. Several algorithms that compute sequences of augmenting paths have been developed, all of which lead to a maxflow. The algorithms differ in the number of augmenting paths they compute and the costs of finding each path, but they all implement the Ford-Fulkerson algorithm and find a maxflow.
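The generic method can be sketched in a few lines. The version below is an illustration under stated assumptions, not the implementation developed in this book: it stores capacities and flows in V-by-V integer matrices rather than in a FlowNetwork, it assumes that no two vertices are joined by edges in both directions, and it finds augmenting paths by depth-first search, which is just one arbitrary choice among many. The class and method names are hypothetical.

```java
import java.util.Arrays;

// Sketch of the augmenting-path method on a capacity matrix.
// Assumptions: integer capacities; cap[v][w] > 0 means edge v->w exists;
// no pair of antiparallel edges; DFS is an arbitrary path-finding choice.
class AugmentingPathSketch {

    // Amount of flow that can still be pushed from v to w.
    static int residual(int[][] cap, int[][] flow, int v, int w) {
        if (cap[v][w] > 0) return cap[v][w] - flow[v][w];  // forward edge
        return flow[w][v];                                 // backward edge (0 if absent)
    }

    // DFS from v; parent[] records the path and doubles as a visited mark.
    static boolean dfs(int[][] cap, int[][] flow, int v, int t, int[] parent) {
        if (v == t) return true;
        for (int w = 0; w < cap.length; w++)
            if (parent[w] == -1 && residual(cap, flow, v, w) > 0) {
                parent[w] = v;
                if (dfs(cap, flow, w, t, parent)) return true;
            }
        return false;
    }

    // Fills in flow[][] and returns the value of the resulting flow.
    static int maxflow(int[][] cap, int[][] flow, int s, int t) {
        int value = 0;
        while (true) {
            int[] parent = new int[cap.length];
            Arrays.fill(parent, -1);
            parent[s] = s;
            if (!dfs(cap, flow, s, t, parent)) break;      // no augmenting path left

            int delta = Integer.MAX_VALUE;                 // bottleneck along the path
            for (int w = t; w != s; w = parent[w])
                delta = Math.min(delta, residual(cap, flow, parent[w], w));

            for (int w = t; w != s; w = parent[w]) {
                int v = parent[w];
                if (cap[v][w] > 0) flow[v][w] += delta;    // forward: add flow
                else               flow[w][v] -= delta;    // backward: remove flow
            }
            value += delta;
        }
        return value;
    }

    // Edges 0->1, 0->2, 1->2, 1->3, 2->3, all of capacity 1.
    static int[][] example() {
        int[][] cap = new int[4][4];
        cap[0][1] = 1; cap[0][2] = 1; cap[1][2] = 1; cap[1][3] = 1; cap[2][3] = 1;
        return cap;
    }

    public static void main(String[] args) {
        int[][] flow = new int[4][4];
        System.out.println(maxflow(example(), flow, 0, 3));  // prints 2
    }
}
```

On this small network the first path found is 0-1-2-3, which blocks both directed routes to the sink. The second path, 0-2-1-3, traverses edge 1->2 backward and removes its flow, which is exactly the situation that forward-only augmentation cannot handle.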

Maxflow-mincut theorem

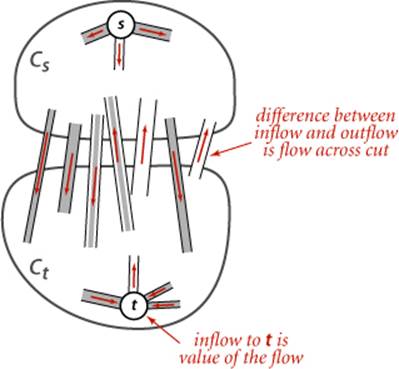

To show that any flow computed by any implementation of the Ford-Fulkerson algorithm is indeed a maxflow, we prove a key fact known as the maxflow-mincut theorem. Understanding this theorem is a crucial step in understanding network-flow algorithms. As suggested by its name, the theorem is based on a direct relationship between flows and cuts in networks, so we begin by defining terms that relate to cuts. Recall from SECTION 4.3 that a cut in a graph is a partition of the vertices into two disjoint sets, and a crossing edge is an edge that connects a vertex in one set to a vertex in the other set. For flow networks, we refine these definitions as follows:

Definition. An st-cut is a cut that places vertex s in one of its sets and vertex t in the other.

Each crossing edge corresponding to an st-cut is either an st-edge that goes from a vertex in the set containing s to a vertex in the set containing t, or a ts-edge that goes in the other direction. We sometimes refer to the set of crossing st-edges as a cut set. The capacity of an st-cut in a flow network is the sum of the capacities of that cut’s st-edges, and the flow across an st-cut is the difference between the sum of the flows in that cut’s st-edges and the sum of the flows in that cut’s ts-edges. Removing all the st-edges (the cut set) in an st-cut of a network leaves no path from s to t, but adding any one of them back could create such a path. Cuts are the appropriate abstraction for many applications. For our oil-flow model, a cut provides a way to completely stop the flow of oil from the source to the sink. If we view the capacity of the cut as the cost of doing so, to stop the flow in the most economical manner is to solve the following problem:

Minimum st-cut. Given an st-flow network, find an st-cut such that the capacity of no other cut is smaller.

For brevity, we refer to such a cut as a mincut and to the problem of finding one in a network as the mincut problem.
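The two quantities associated with a cut are easy to compute directly from their definitions. The sketch below assumes a matrix representation (cap[v][w] and flow[v][w] for the edge from v to w) and a boolean array marking the vertices on the source side; these names and this representation are assumptions chosen for brevity.

```java
// Sketch: capacity of an st-cut and flow across it, from the definitions.
// inS[v] is true when vertex v is in the set containing s.
class CutSketch {

    // Sum of capacities of st-edges (source side to sink side).
    static int capacity(int[][] cap, boolean[] inS) {
        int sum = 0;
        for (int v = 0; v < cap.length; v++)
            for (int w = 0; w < cap.length; w++)
                if (inS[v] && !inS[w]) sum += cap[v][w];
        return sum;
    }

    // Flow on st-edges minus flow on ts-edges.
    static int flowAcross(int[][] flow, boolean[] inS) {
        int sum = 0;
        for (int v = 0; v < flow.length; v++)
            for (int w = 0; w < flow.length; w++) {
                if (inS[v] && !inS[w]) sum += flow[v][w];  // st-edge
                if (!inS[v] && inS[w]) sum -= flow[v][w];  // ts-edge
            }
        return sum;
    }

    public static void main(String[] args) {
        // Edges 0->1, 0->2, 1->2, 1->3, 2->3, all of capacity 1.
        int[][] cap = new int[4][4];
        cap[0][1] = 1; cap[0][2] = 1; cap[1][2] = 1; cap[1][3] = 1; cap[2][3] = 1;

        // One unit of flow along the path 0-1-2-3.
        int[][] flow = new int[4][4];
        flow[0][1] = 1; flow[1][2] = 1; flow[2][3] = 1;

        boolean[] inS = { true, false, true, false };      // cut ({0, 2}, {1, 3})
        System.out.println(capacity(cap, inS));            // prints 2
        System.out.println(flowAcross(flow, inS));         // prints 1
    }
}
```

For this cut, 0->1 and 2->3 are st-edges and 1->2 is a ts-edge, so the flow across the cut is 1 + 1 - 1 = 1 while the capacity is 2.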

The statement of the mincut problem includes no mention of flows, and these definitions might seem to digress from our discussion of the augmenting-path algorithm. On the surface, computing a mincut (a set of edges) seems easier than computing a maxflow (an assignment of weights to all the edges). On the contrary, the maxflow and mincut problems are intimately related. The augmenting-path method itself provides a proof. That proof rests on the following basic relationship between flows and cuts, which immediately gives a proof that local equilibrium in an st-flow implies global equilibrium as well (the first corollary) and an upper bound on the value of any st-flow (the second corollary):

Proposition E. For any st-flow, the flow across each st-cut is equal to the value of the flow.

Proof: Let Cs be the vertex set containing s and Ct the vertex set containing t. This fact follows immediately by induction on the size of Ct. The property is true by definition when Ct is {t}. When a vertex is moved from Cs to Ct, local equilibrium at that vertex implies that the stated property is preserved. Any st-cut can be created by moving vertices in this way.

Corollary. The outflow from s is equal to the inflow to t (the value of the st-flow).

Proof: Let Cs be {s}.

Corollary. No st-flow’s value can exceed the capacity of any st-cut.
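The reasoning behind this corollary is a one-line chain of inequalities. In the sketch below, the notation is an assumption introduced for this purpose: |f| is the value of the flow, f(e) and c(e) are the flow and capacity of edge e, and the sums range over the st-edges and ts-edges of an arbitrary st-cut (Cs, Ct).

```latex
\begin{align*}
|f| &= \sum_{e \,\in\, \text{st-edges}} f(e) \;-\; \sum_{e \,\in\, \text{ts-edges}} f(e)
      && \text{(Proposition E)} \\
    &\le \sum_{e \,\in\, \text{st-edges}} f(e)
      && \text{(flows are nonnegative)} \\
    &\le \sum_{e \,\in\, \text{st-edges}} c(e)
      && \text{(no flow exceeds its edge's capacity)} \\
    &= \text{capacity of } (C_s, C_t).
\end{align*}
```

Equality holds exactly when every st-edge is full and every ts-edge is empty.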

Proposition F. (Maxflow-mincut theorem) Let f be an st-flow. The following three conditions are equivalent:

i. There exists an st-cut whose capacity equals the value of the flow f.

ii. f is a maxflow.

iii. There is no augmenting path with respect to f.

Proof: Condition i. implies condition ii. by the second corollary to PROPOSITION E. Condition ii. implies condition iii. because the existence of an augmenting path implies the existence of a flow with a larger flow value, contradicting the maximality of f.

It remains to prove that condition iii. implies condition i. Let Cs be the set of all vertices that can be reached from s with an undirected path that does not contain a full forward or empty backward edge, and let Ct be the remaining vertices. Then, t must be in Ct (otherwise there would be an augmenting path from s to t), so (Cs, Ct) is an st-cut whose crossing edges are all full forward edges or empty backward edges. The flow across this cut is equal to the cut’s capacity (since the forward edges are full and the backward edges are empty) and also to the value of the flow (by PROPOSITION E).
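The construction in this proof is also a practical recipe: given a maxflow, the set Cs can be found with a single graph search. The sketch below assumes a matrix representation (cap[v][w] and flow[v][w] for the edge from v to w), and its class and method names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Arrays;
import java.util.Deque;

// Sketch: recover the source side Cs of a mincut from a maxflow by searching
// along forward edges that are not full and backward edges that are not empty.
class MincutSketch {

    static boolean[] sourceSide(int[][] cap, int[][] flow, int s) {
        boolean[] inS = new boolean[cap.length];
        Deque<Integer> stack = new ArrayDeque<>();
        inS[s] = true;
        stack.push(s);
        while (!stack.isEmpty()) {
            int v = stack.pop();
            for (int w = 0; w < cap.length; w++) {
                boolean forward  = cap[v][w] - flow[v][w] > 0;  // v->w is not full
                boolean backward = flow[w][v] > 0;              // w->v is not empty
                if (!inS[w] && (forward || backward)) {
                    inS[w] = true;
                    stack.push(w);
                }
            }
        }
        return inS;
    }

    // Example network and a maxflow of value 3 for it.
    static int[][] exampleCap() {
        int[][] cap = new int[4][4];
        cap[0][1] = 3; cap[0][2] = 1; cap[1][2] = 1; cap[1][3] = 1; cap[2][3] = 3;
        return cap;
    }

    static int[][] exampleFlow() {
        int[][] flow = new int[4][4];
        flow[0][1] = 2; flow[0][2] = 1; flow[1][2] = 1; flow[1][3] = 1; flow[2][3] = 2;
        return flow;
    }

    public static void main(String[] args) {
        boolean[] inS = sourceSide(exampleCap(), exampleFlow(), 0);
        System.out.println(Arrays.toString(inS));  // prints [true, true, false, false]
    }
}
```

Here Cs is {0, 1}. The crossing edges 0->2, 1->2, and 1->3 are all full, and their capacities sum to 3, which matches the value of the flow.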

Corollary. (Integrality property) When capacities are integers, there exists an integer-valued maxflow, and the Ford-Fulkerson algorithm finds it.

Proof: Each augmenting path increases the flow by a positive integer (the minimum of the unused capacities in the forward edges and the flows in the backward edges, all of which are always positive integers).

It is possible to design a maxflow with noninteger flows, even when capacities are all integers, but we do not need to consider such flows. From a theoretical standpoint, this observation is important: allowing capacities and flows that are real numbers, as we have done and as is common in practice, can lead to unpleasant anomalous situations. For example, it is known that the Ford-Fulkerson algorithm could, in principle, lead to an infinite sequence of augmenting paths that does not even converge to the maxflow value. The version of the algorithm that we consider is known to always converge, even when capacities and flows are real-valued. No matter what method we choose to find an augmenting path and no matter what paths we find, we always end up with a flow that does not admit an augmenting path, which therefore must be a maxflow.

Residual network

The generic Ford-Fulkerson algorithm does not specify any particular method for finding an augmenting path. How can we find a path with no full forward edges and no empty backward edges? To this end, we begin with the following definition: