Pragmatic Enterprise Architecture (2014) Strategies to Transform Information Systems in the Era of Big Data

PART III Information Systems

Abstract

This part enters the main territory of enterprise architecture for information systems, which is as rich in technology specialties as the IT ecosystem is diverse. Most organizations fail to recognize the need for diverse specialization within architecture because they fail to understand the depth of complexity and the costs associated with mediocrity within each area of specialization. They also believe that a general practitioner, which we will call a solution architect, is qualified and appropriate to address the complexities across a wide array of technology areas. In reality, this is equivalent to staffing a medical center primarily with general practitioners who act as the specialists. A healthy organization maintains top specialists whom the general practitioners can consult to get expertise that is in alignment with a future state vision that reduces complexity and costs.

Keywords

information systems architecture

enterprise architecture

business architecture

operations architecture

systems architecture

control systems architecture

cross-discipline capabilities

solution architect

subject matter expert

stakeholders

Legal

Compliance

Auditing

Chief Customer Officer

outsourcing partners

customers

investors

shareholders

regulators

enterprise architects

hedgehog principle

architectural standards

technology portfolio management

TPM

organizing technologies into portfolios

architecture ROI framework

application impact

infrastructure impact

personnel impact

vendor impact

operational workflow impact

business impact

after tax implications

net present value

NPV

internal rate of return

IRR

personnel severance costs

airfare

lodging

enhanced technology portfolio management

technology categories

technology subcategories

technology metadata

reporting architecture

OLTP

online transaction processing

OLAP

online analytical processing

business intelligence

nonstatistical analysis

data mining

predictive analytics

operational data store

ODS

DW

data warehouse

data mart

DM

GIS

geographic information system

Big Data

mashup technology

data warehouse architecture

BI Architecture

rollups

aggregates

dimensions

MOLAP

ROLAP

HOLAP

WOLAP

DOLAP

RTOLAP

CEP

complex event processing

NLP

natural language processing

data visualization

cluster diagrams

terrain maps

architectural drawings

floor plans

shelf layouts

routes

connectivity diagrams

bubbles

histograms

heat maps

scatter plots

rose charts

cockpit gauges

radar diagrams

stem and leaf plots

predict behavior

longitudinal analysis

survey sampling models

stimulus-response predictive models

statistical methods

nonstatistical models

neural networks

neural nets

polynomial

Big Data architecture

structured data

unstructured data

semistructured data

velocity

volume

frequency of updates

variety

concurrent users

OldSQL

New SQL

SQL

free space

query language

ACID

atomicity

consistency

isolation

durability

node

symmetric multiprocessing

SMP

massively parallel processing

MPP

asymmetric massively parallel processing

AMPP

Hadoop

DFS

distributed file system

MapReduce

Apache Software Foundation

ASF

FOSS

IP

GFS

HDFS

GPFS

BigTable

NoSQL

Hbase

R Language

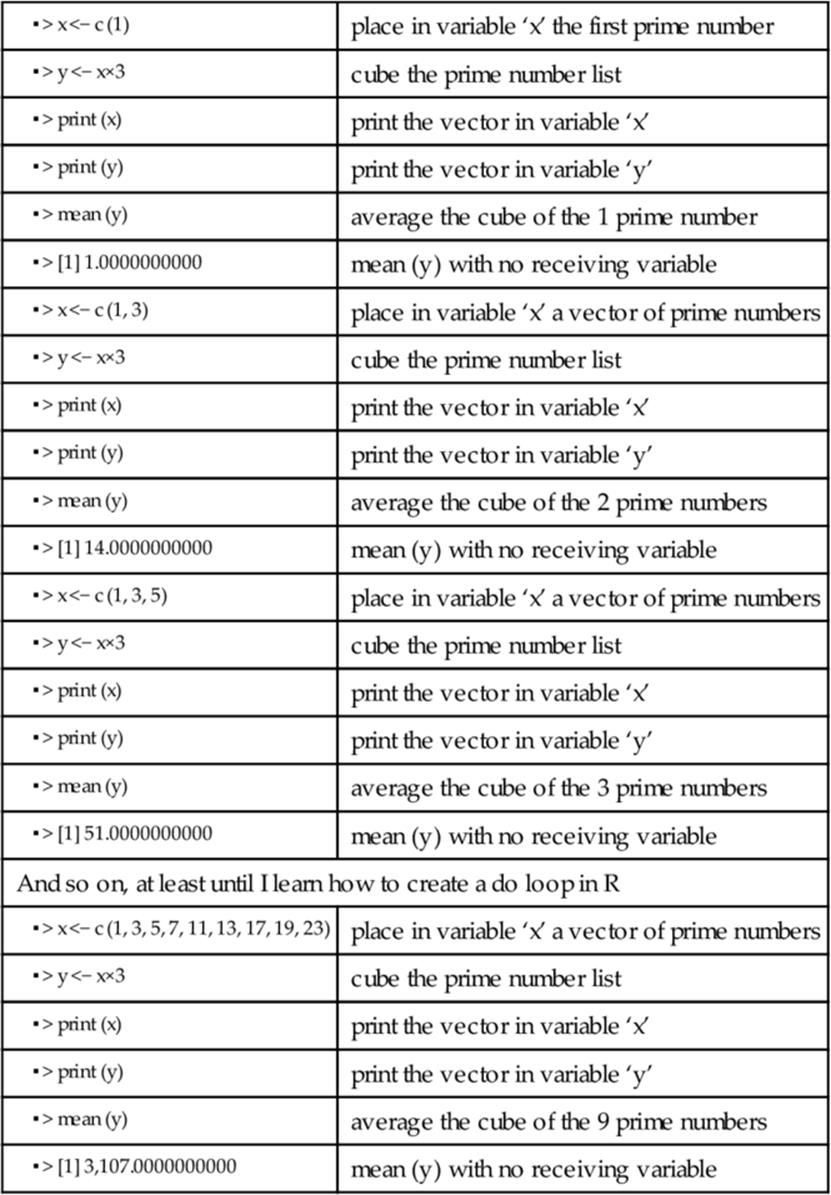

R Programming Language

Sqoop

Hive

Pig

Impala

FSF

free software foundation

CRAN

Comprehensive R Archive Network

R extensions

Luisi Prime Numbers

competing Hadoop frameworks

Cloudera

Hortonworks

MapR

IBM

Microsoft

Intel

Syncsort

SAS

Big Data is use case driven

document management/content management

knowledge management

graph DB

online transaction processing systems

data warehousing

real-time analytics

algorithmic approaches

batch analysis

advanced search

relational database technology in Hadoop

novelty discoveries

class discoveries

association discoveries

document management

content management

government archival records

business document management

IT document management

customer document management

Basho Riak

MarkLogic

MongoDB

Cassandra

Couchbase

Hadoop HDFS

Hadoop HBase

KYC

AML

SAP Hana

HP Vertica

Greenplum

Teradata

lifetime value

LTV

forecasting

Fair Isaac’s

HNC

Ward Systems

SAS complex event processing

algorithm based

matrix vector multiplication

relational algebraic operations

selections and projections

union

intersection and difference

grouping and aggregation

reducer size and replication rates

similarity joins

graph modeling

Netezza

NewSQL

e-Commerce

Akiban

Clustrix

Google Spanner

NuoDB

SQLFire

VoltDB

batch analytics

HDFS

address geocoding

linear measures event modeling

routing

topological

cartography

Neo4j

PostGIS

Oracle Spatial

Geotime

search and discovery

Lucidworks

Solr

Splunk

relational database technology in Hadoop

Splice Machine

Citus Data

use case driven

life cycle

ad hoc deployment

Big Data deployment

metadata

Big Data ecosystem

use case planning

business metadata

use case requirements

internal data discovery

external data discovery

inbound metadata

ingestion metadata

data persistence layer metadata

outbound metadata

lifecycle metadata

operations metadata

data governance metadata

compliance metadata

configuration management metadata

team metadata

directory services metadata

ecosystem administrator metadata

stakeholder metadata

workflow metadata

decommissioning metadata

metadata summary

There is no magic

Big Data accelerators

parallel and distributed processing

reduced code set that eliminates large amounts of DBMS code

fewer features

compression

proprietary hardware

SOA

OOA

LDA

CPU

LZ77

LZ78

Huffman

columnar

Big Data the future

quantum computing

code breaking

cryptography

prime number generation

traveling salesman problem

labeling images and objects within images

identifying correlations in genetic code

testing a scientific hypothesis

machine learning for problem solving (aka self-programming)

adiabatic

AQC

gate model

D-Wave

qubit

quantum error correction

quantum processor

titanium

niobium

Columbian

Kelvin

Tesla

Josephson junction

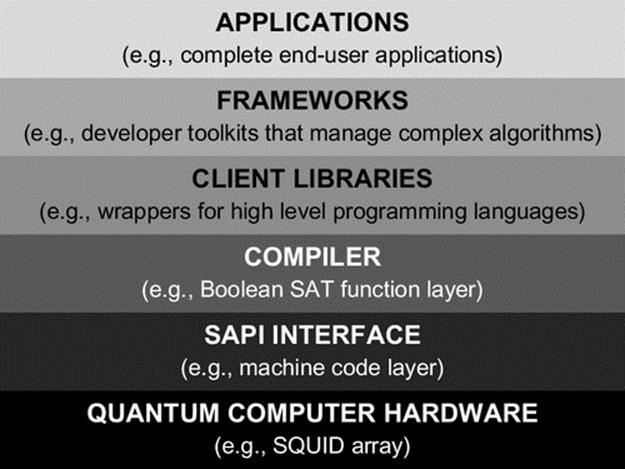

Boolean SAT

SAPI interface

compiler

client libraries

frameworks

applications

mashup architecture

data virtualization layer

cross data landscape metrics

data security

data visualization styles

self-service

LDAP

compliance architecture

treaty zone

business compliance

IT compliance

ISO 17799

ISO 27000

COSO

Committee of Sponsoring Organizations of the Treadway Commission

OFAC

Office of Foreign Assets Control

Treasury department

Uniting and Strengthening America by Providing Appropriate Tools Required to Intercept and Obstruct Terrorism

Section 314(a)

USA PATRIOT Act

Office of Federal Contract Compliance Programs

OFCCP

Equal Employment Opportunity Act

EEOA

Financial Stability Board

FSB

Global Financial Markets Association

GFMA

Bank Secrecy Act

BSA

Regulation E of the Electronic Fund Transfer Act

EFTA

Dodd-Frank

Securities and Exchange Commission

SEC

Federal Trade Commission

FTC

Office of the Comptroller of the Currency

OCC

Commodity Futures Trading Commission

CFTC

International Swaps and Derivatives Association

ISDA

Sarbanes Oxley

SOX

Basel II

Solvency II

Blocked Persons List

Targeted Countries List

Denied Persons List

Denied Entities List

FBI’s Most Wanted

Debarred Parties List

Global Watch List

Politically Exposed Persons

PEP

anti-money laundering

know your client

suspicious activity report

SAR

CIP

DTCC

NSCC

DTC

SWIFT

CICI

LEI

CFTC Interim Compliant Identifier

National Securities Clearing Corporation

Customer Identification Program

Society for the Worldwide Interbank Financial Telecommunication

Legal Entity Identifier

RIM

legal hold

records information management

Governance Risk and Compliance

GRC

XBRL

10-Q

10-K

20-F

8-K

6-K

legal compliance

HR compliance

financial compliance

application portfolio architecture

APM

applications architecture

business rules

workflow architecture

business capabilities

BPMN

workflow automation

BPM technology

application architecture

singular application

first-generation language

second-generation language

third-generation language

fourth-generation language

drag-and-drop self-service

array language

vector language

assembly language

command line interfaces

compiled language

interpreted language

data manipulation language

object-oriented language

scripting language

procedural language

rules engine

requirements traceability

error handling

software reuse

application architecture design patterns

integration pattern

distribution pattern

tier pattern

procedural pattern

processing pattern

usage pattern

analytical pattern

interactive pattern

data communication pattern

message dissemination pattern

resource sequence pattern

pipeline pattern

pipe and filter

event-driven pattern

blackboard pattern

MV pattern

integration architecture

hub and spoke

ESB

service-oriented architecture

enterprise service bus

extract transform and load

ETL

FTP

file transfer protocol

B2B

B2C

partner integration

life cycle architecture

software development life cycle

data centric life cycle

DCLC

DGLC

DLC

merger and acquisition life cycle

MALC

data center consolidation life cycle

DCCLC

corporate restructuring life cycle

CRLC

outsourcing life cycle

OSLC

insourcing life cycle

ISLC

operations life cycle

OLC

SDLC

ISO/IEC 12207

inception

high-level analysis

detail analysis

logical design

physical design

build

validation

deployment

post-implementation

logical data architecture

data requirements

data analysis

data profiling

conceptual data modeling

logical data modeling

physical data modeling

data discovery

data acquisition

data cleansing

data standardization

data integration

user acceptance

production

data governance life cycle

identifying data points

populating business data glossary

conceptual data architecture

business designated access rights

legal and compliance oversight

secure canned reporting data points

report and querying

production to nonproduction data movement

AGLC

architecture governance life cycle

analyze business direction

analyze business pain points

analyze types of technological issues

analyze all business initiative types

assess business alignment

initial architecture review

postmortem architecture review

divestiture life cycle

identify scope of business being divested

identify divested business capabilities

identify shared operations

automation supporting divested capabilities

identify dedicated operations

detach general ledger

identify unstructured data of divested areas

identify RIM data

define RIM business data

safeguard RIM data

validate RIM reporting

identify legal holds

safeguard legal hold data

validate legal hold reporting

downsize business operations

downsize automation

decommission

downsize IT operations

mergers and acquisitions

identify business scope being acquired

identify business organization impact

identify acquired automation

analyze overlapping automation

identify legal holds

compare data landscapes

identify automation impact

identify development environment impact

implement automation strategy

identify IT organization impact

general ledger integration

right-size business operations

right-size automation

right-size IT operations

data center consolidation

insourcing life cycle

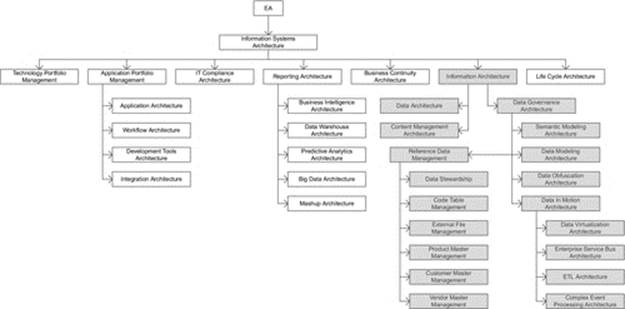

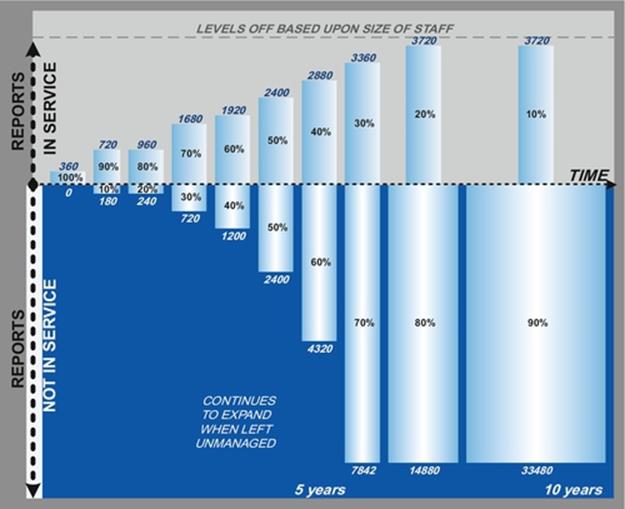

DIAGRAM Information systems architecture overview.

3.1 “Information Systems” Architecture and Governance

The global operating model for information systems architecture is one where there are various distinct architectural disciplines that require architectural standards, frameworks, and services to deliver the following:

- align the many technical disciplines across IT with the strategic direction of the business,

- provide representation of stakeholder interests across a large number of application development teams,

- identify opportunities to executive management,

- manage complexity across the IT landscape,

- exploit the synergies across the various architectural disciplines of enterprise architecture,

- optimize the return of business investment into automation,

- act as an accelerator to the automation activities across each life cycle of the enterprise,

- continually illustrate architecture’s return on investment.

Traditionally, information systems architecture has been simply referred to as enterprise architecture, without acknowledgment of there being a distinct set of architectural disciplines that belong to business architecture and operations architecture, or the distinction between information systems architecture and control systems architecture, or realization that there are a number of cross-discipline capabilities that span the aforementioned.

One may wonder why so many categories of architectural disciplines are valuable. After all, there are plenty of solution architects that are already assigned to application teams around the country and/or globe.

To understand this question more thoroughly, it is first important to look at the skill sets of solution architects and what their focus has been throughout their career, and what it is now.

Before the name “solution architect” came into fashion, solution architects would have been recognized as the best and brightest programmer analysts and developers that implemented many of the applications within a large enterprise. Since solution architects are among the few that understand the majority of the critical application systems across the enterprise, they are valuable resources that cannot be readily replaced. In fact, it can take years to replace a typical solution architect, as their accumulated knowledge of application systems is usually not documented to the extent that would be necessary to guide a substitute in a reasonable time frame.

Of the various roles across IT, solution architects have general to intermediate knowledge of many topics within technology. In fact, a typical solution architect can provide a fairly informative perspective on the widest variety of technologies of any type of resource across most any organization. So why not leverage solution architects to fill the role of enterprise architects? The answer can best be conveyed through a simple story.

A new management regime is introduced into a large enterprise. They reasonably set new business principles to leverage the economies of scale in negotiating with vendors for software licenses. They become aware of the fact that there are hundreds of different tools that are used for reporting and business intelligence (BI) purposes. However, they notice that none of these tools support the new Big Data space of technology.

A team of the top dozen solution architects from across the company is assembled, as well as two members from enterprise architecture who are subject matter experts (SMEs) in information architecture, itself an immensely vast architectural discipline.

Management proposes that a list of several Big Data technologies should be assembled for consideration to determine the best technology choice for the enterprise as a global standard.

[Rather than picking on specific vendors and products, as this is certainly not the intention of this book, we will give fictitious product names, although we will try to associate a few characteristics to them that are realistic from a high-level perspective where they are necessary to serve the purposes of this discussion.]

The team of solution architects schedules lengthy daily meetings over a period of several months. The two enterprise architects divide their time so that only one of them has to be present for each given meeting, and they dial into the meetings when their schedule permits. It is also fair to state that the goal of the two enterprise architects is to have a good collaborative series of meetings with their architectural brethren.

Unlike well-facilitated meetings, these meetings were loosely facilitated, often driven by whoever could call out the loudest. The two enterprise architects stated the importance of getting requirements from the various stakeholders, although no requirements were ever collected from any of the lines of business. To obfuscate the fact that requirements were not available, the team adopted a resolution to use specific phrases with management, such as, “What we are hearing from the business is that they want, or would like, to be able to do deep analytics.”

After many hours of meetings, the team elected the path that they wanted to take. It is a path that many architecture review boards commonly take, and if it is good enough for architecture review boards that make technology decisions every time they convene, then it must be an industry best practice. This is the approach where a team of generalists decide to leverage the feature list of every major product from its marketing materials, and capture it in a spreadsheet to be used as the master set of evaluation criteria.

Quite the feature list was assembled from scouring the marketing materials of the major Big Data products. In a number of cases, the features seemed to conflict with one another, particularly because some of the products had vastly different architectures, but why quibble over details? The weeks flew by.

Now that the feature list, which was being loosely used as business requirements, was assembled, the team proceeded to the next step of matching the candidate products to the master feature list to determine which products had more features than other products. However, not all products matched up to features on the basis of a clear yes or no. Some products partially had some of the features, and it had to be determined how much to award each product. More weeks flew by.

Finally, all of the products were mapped to the master feature list, with their varying degrees noted in the scoring system. However, a simple count of features that a given product had seemed somewhat unfair. Certainly, some features in this long list were more important than others, so now it had to be determined how much weight to assign each feature. More weeks flew by.

Eventually, the team had a new weighted score for each of the products. It should be noted that the weightings did not alter the relative ranking of the products, although they did bring some of them closer together in total score. Now, many months later, the solution architects were quite pleased with their accomplishment, which selected the most expensive product from among the candidate products. But what did the enterprise architects think of it?

In fact, the enterprise architects could have saved 9 months off the process and countless man hours of effort because it was obvious to them that the outcome had to be the biggest, most mature, most expensive product of the bunch. It should always make sense that the most expensive product would have the most features and would have been the one that had been around the longest to build up those features. Newer products generally have to be less expensive to get market share, and they take years to accumulate a litany of features. But was the most expensive product the right choice from the perspective of an SME in information architecture?
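The scoring exercise in this story can be sketched in a few lines. The sketch below is illustrative only: the product names, features, coverage values, and weights are hypothetical, and only the two 5-year cost figures ($100MM and $5MM) come from the story. It shows that a weighted feature count never consults cost or actual business requirements, so the product with the longest feature list ranks first with or without weights.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    five_year_cost_mm: float    # 5-year cost in $MM
    coverage: dict[str, float]  # feature -> degree of support, 0.0 to 1.0


def weighted_score(product: Product, weights: dict[str, float]) -> float:
    """Sum of weight * degree of support over the master feature list."""
    return sum(w * product.coverage.get(f, 0.0) for f, w in weights.items())


def rank(products: list[Product], weights: dict[str, float]) -> list[str]:
    """Product names ordered from highest to lowest score."""
    ordered = sorted(products, key=lambda p: weighted_score(p, weights), reverse=True)
    return [p.name for p in ordered]


# Hypothetical products and features; only the two cost figures come from the text.
products = [
    Product("Product A", 100.0, {"f1": 1.0, "f2": 1.0, "f3": 1.0, "f4": 0.5}),
    Product("Product B", 5.0, {"f1": 1.0, "f2": 0.5}),
]
equal_weights = {"f1": 1.0, "f2": 1.0, "f3": 1.0, "f4": 1.0}
tuned_weights = {"f1": 3.0, "f2": 2.0, "f3": 1.0, "f4": 1.0}

print(rank(products, equal_weights))
print(rank(products, tuned_weights))
```

Note that `five_year_cost_mm` is carried on each record but never used by `weighted_score`, which mirrors the flaw in the process described here.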

The best choice from the perspective of the information architecture SMEs was actually the least expensive product, which ironically did not even make the top three in any of the scorings performed by the solution architects, and was summarily dismissed. However, it was less expensive by a factor of nearly 20 to 1 over a 5-year period ($5MM versus $100MM).

In fact, from a software internals perspective, it had the most efficient architecture; it was the least complicated to install, set up, and use; it required less expensive personnel to manage, administer, and utilize it; and it had a significantly shorter time to deployment. It was also more suitable for distribution across the many countries, many of which had medium to small data centers and small budgets.

In all fairness to the solution architects, they played by the rules they were given. The actual recommendation from the two enterprise architects was to select two products. What we had found from being on conference calls with reference clients of the various products was that the least expensive product accomplished the job better than the most expensive one about 95% of the time. The remaining 5% of cases, however, needed some feature of the most expensive product. Clients indicated that the technology footprint for the most expensive product was therefore limited to the few areas that required those features, and that represented significant savings.

It is also fair to say that this author was very lucky to have been familiar with much of the internal design of the various products. However, it was that subject matter expertise that made it obvious early on which product an SME in the architectural discipline of database technology would select.

The point we make is a simple one. There is a benefit to having an SME in any of the architectural disciplines that represent areas of technology that are either already in use or will be in use across the IT landscape. Enterprise architects are SMEs in one or more particular areas of technology, as compared to a solution architect who is an expert in one or more applications and a generalist in the many technologies that those applications use.

Still another area of benefit has to do with the various corporate stakeholders from across the organization. These include the heads of departments such as Legal, Compliance, Auditing, and Chief Customer Officer, as well as external stakeholders such as outsourcing partners, customers, investors and regulators.

Since it is unrealistic to expect each stakeholder to interact with many solution architects, not to mention the fact that they may all have different interpretations of the various stakeholder interests, it is up to the few enterprise architects to incorporate the interests of the various stakeholders into the standards and frameworks of the architectural discipline in which they are an SME.

Equally important, there are valuable synergies among architectural disciplines that offer opportunities to incorporate improved standards and frameworks, which materialize simply from having enterprise architects who are SMEs in their respective disciplines explain their disciplines to, and collaborate with, one another. This leads to added data security and data privacy benefits, such as easy and automatic data masking for ad hoc reporting.

Therefore, in the modern information systems architecture, technology challenges are addressed by instantiating the architectural disciplines that correspond to the areas of technology that are in use, or are planned to be in use, around the globe. Although the number of pertinent architectural disciplines for any company will vary, approximately 30 disciplines form a basic set that we discuss below. In addition, the specific architectural disciplines that may need to be instantiated at a given point in time can vary depending upon the technologies in use and the activities that are in progress or soon to start across the enterprise.

The operating model for information systems architecture is one where the expert defines the scope of their architectural discipline, and then identifies the hedgehog principle that drives the particular discipline, and a small number of additional metrics-driven principles that provide the ability to measure efficacy of the architectural discipline across the IT landscape.

Each SME must also determine the current state, future state, and a transition plan to get from the current state to the future state. Each SME must also present their discipline to their peers of SMEs for the other architectural disciplines. Each SME would identify the standards and frameworks that they propose and why, develop and refine these artifacts, and then mentor local governance boards, solution architects, and application development teams across the IT community.

Although this will be addressed in more detail later, local governance boards, solution architects, and application development teams should jointly participate in a process that determines whether designs and implementations comply with the standards and frameworks, a process to request exceptions, and a process to escalate requests for exceptions when it is believed that the exception should have been granted and/or the standard changed.

That said, even though architectural standards would be developed with the objective of controlling costs across the enterprise, there must still be a process in place to request exceptions to evaluate opportunities for improvement. If an exception does not violate the interests of another stakeholder and is clearly advantageous cost-wise over the potential life of the design, then the exception should be approved. Likewise, if the standard can be improved to better control costs or protect the interests of stakeholders across the enterprise, then the process to update the standard should be engaged.
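The approval rule in the preceding paragraph can be sketched as a simple check. This is a minimal illustration, not a prescribed process: the `ExceptionRequest` type, its field names, and the 10% threshold used to stand in for "clearly advantageous" are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class ExceptionRequest:
    description: str
    lifetime_cost_standard: float   # cost of following the standard over the design's life
    lifetime_cost_exception: float  # cost of the proposed exception over the same period
    # Stakeholders whose interests the exception would violate (empty if none).
    violated_stakeholders: list[str] = field(default_factory=list)


def should_approve(req: ExceptionRequest, min_saving_ratio: float = 0.10) -> bool:
    """Approve only if no stakeholder interest is violated and the saving is clear.

    min_saving_ratio is a hypothetical threshold for "clearly advantageous".
    """
    if req.violated_stakeholders:
        return False
    saving = req.lifetime_cost_standard - req.lifetime_cost_exception
    return saving > min_saving_ratio * req.lifetime_cost_standard


cheaper = ExceptionRequest("use a lighter-weight tool", 100.0, 80.0)
marginal = ExceptionRequest("swap an equivalent tool", 100.0, 95.0)
blocked = ExceptionRequest("skip audit logging", 100.0, 50.0, ["Compliance"])

print(should_approve(cheaper), should_approve(marginal), should_approve(blocked))
```

A request that fails this check is not necessarily closed; as described above, it can still be escalated, or it can trigger the process to update the standard itself.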

We will now discuss a set of candidate architectural disciplines to be evaluated for inclusion into a modern information systems architecture practice.

3.1.1 Technology Portfolio Management

Technology portfolio management (TPM) is the discipline of managing the technology assets of an enterprise in a manner that is somewhat analogous to managing a portfolio of securities, whose focus is to optimize present and future value while managing risk.

At the outset, this is somewhat more challenging than one might expect, as financial securities have consistent measurement criteria and technology products do not, at least not without a good amount of work, as no industry standard has yet been established.

The challenge is that consistent measurement is only possible when comparing technologies that belong to the same area of technology and provide the same or overlapping capabilities. Standard categories are only beginning to emerge within the tools for administering TPM. That said, the small number of categories that typical TPM tools identify out of the box is simply not granular enough to support the needs of large organizations, as each high-level category should correspond directly with the architectural discipline that is most closely aligned to its core capability.

Once allocated to their associated architectural discipline, the subcategories, and in some cases, the sub-subcategories of technologies are best determined by the SME responsible for the particular architectural discipline.

For example, the subcategories for many operations and infrastructure components can be any combination of the hardware environment categories, such as mainframe, mid-range application server, database server, network server, or security server.

As another example, within the architectural discipline of workflow automation, technologies can be categorized as business process modeling notation (BPMN) tools, business process modeling (BPM) technologies, or workflow automation (WFA) tools, which we will discuss in the section that addresses the architectural discipline of workflow automation.

One approach to begin managing a portfolio of technology is to first develop an inventory of technologies in use across your company. This is not always easy, as there may be technologies purchased and administered by the business that may not be apparent to IT personnel. A thorough review of procurement contracts globally and of incoming annual maintenance fees to accounts payable is typically required.

As the list of technologies is being assembled from across the globe, a variety of information associated with each technology can be gathered, noting that much of this information can further evolve repeatedly over time. The basic information that one would start with should include information from the last point in time payment was effected.

This should include the exact name of the product, the name of the vendor, a vendor-supplied product identifier, the product versions purchased, when each version was acquired, the platforms it was acquired for, the date of the last update to the records of this product, and a high-level statement of the product’s capabilities.

One should also analyze each application system and the particular technologies that support them in production, as well as the technologies that support them in the development and deployment life cycle. To do so, however, there needs to be a clear understanding of the distinction in definition between an application and a technology.
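The basic inventory information listed above maps naturally onto a small record type. The following is a sketch under stated assumptions: the class names, field names, and sample values (including the fictitious product and vendor) are hypothetical and are not taken from any TPM tool.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class VersionPurchase:
    version: str
    acquired_on: date
    platforms: list[str]


@dataclass
class TechnologyRecord:
    product_name: str       # exact name of the product
    vendor_name: str
    vendor_product_id: str  # vendor-supplied product identifier
    capabilities: str       # high-level statement of capabilities
    record_updated_on: date # date of last update to this record
    purchases: list[VersionPurchase] = field(default_factory=list)

    def latest_purchase(self) -> Optional[VersionPurchase]:
        """Most recently acquired version, or None if nothing is recorded."""
        return max(self.purchases, key=lambda p: p.acquired_on, default=None)


# Fictitious product and vendor, used purely for illustration.
record = TechnologyRecord(
    product_name="ExampleReport",
    vendor_name="Example Vendor Inc.",
    vendor_product_id="EV-0001",
    capabilities="Ad hoc reporting against relational sources",
    record_updated_on=date(2014, 1, 15),
    purchases=[
        VersionPurchase("1.0", date(2010, 6, 1), ["mainframe"]),
        VersionPurchase("2.0", date(2012, 3, 1), ["mid-range"]),
    ],
)
print(record.latest_purchase().version)
```

Because much of this information evolves over time, keeping each version purchase as its own entry preserves the acquisition history rather than overwriting it.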

To do this we must first be careful with the use of terms. The term “business capability” refers to the business functions that are performed within a given department using any combination of manual procedures and automation. A department receives a “request” corresponding to a “business capability” that it is responsible to perform, such as the business capability for accepting and processing a payment, or the business capability of effecting a payment.

Just as business departments perform business capabilities, IT departments perform business capabilities as well, such as the business capability of a Help Desk providing advice and support to users for personal computer equipment and software.

A given business capability may be performed manually, with automation, or using a combination of manual operations with automation. The automation itself, however, may be an application, such as a funds transfer application which executes business rules specific to the business capability of funds transfer, or a technology, such as the Internet which executes generic capabilities of the particular technology.

As such, the business rules of an application must be maintained by an application development team. The application development team can be within the enterprise, either onshore or offshore, or it may be outsourced to a vendor.

So here’s the critical distinction that we are making. A technology does not contain business-specific business rules that support a business capability, whereas an application does contain business-specific business rules. Therefore, there are numerous software products that are technologies, such as rules engines, spreadsheets, and development tools (e.g., MS Access). These are simply technologies. However, once business rules are placed within a given instance of such a technology, then that instance becomes an application, which should be managed and maintained by an application development team as a production asset.

So to clarify, once a spreadsheet contains complex formulas that is used to support a business capability, that instance of that spreadsheet is an application that should be tested, its source should be controlled, it should be backed up for recovery purposes, and it should be considered as an inventory item in a disaster recovery (DR) plan.

However, if the spreadsheet is simply a document or a report, such as any word processing document like an MS Word file or Google Doc that do not contain business rules, then those instances are simply electronic documents and cannot be classified and managed as an application.
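The distinction drawn above can be expressed as a small classification rule. This is only a sketch of the reasoning: the type names, the `contains_business_rules` flag, and the list of controls are illustrative assumptions that restate the text rather than an established schema.

```python
from dataclasses import dataclass
from enum import Enum


class AssetKind(Enum):
    APPLICATION = "application"
    ELECTRONIC_DOCUMENT = "electronic document"


@dataclass
class TechnologyInstance:
    name: str
    technology: str                # the underlying product, e.g. a spreadsheet tool
    contains_business_rules: bool  # rules that support a business capability


def classify(instance: TechnologyInstance) -> AssetKind:
    """An instance of a technology becomes an application once it holds business rules."""
    if instance.contains_business_rules:
        return AssetKind.APPLICATION
    return AssetKind.ELECTRONIC_DOCUMENT


def required_controls(instance: TechnologyInstance) -> list[str]:
    """Controls the text calls for when an instance is an application."""
    if classify(instance) is AssetKind.APPLICATION:
        return ["testing", "source control", "backup", "DR plan inventory"]
    return []


pricing_sheet = TechnologyInstance("pricing model", "spreadsheet", True)
meeting_notes = TechnologyInstance("meeting notes", "word processor", False)

print(classify(pricing_sheet).value, classify(meeting_notes).value)
```

The underlying product (the spreadsheet tool itself) remains a technology in either case; only the instance is classified.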

This means that the each application must also be analyzed to determine the specific technologies that ultimately support the automation needs of a given business capability. This includes network software, database software, operating systems, and security software, as well as the various types of drivers that integrate different components together.

Also included should be document management systems, development and testing tools, and monitoring tools that support the development and maintenance of the automation upon which business capabilities rely.

3.1.1.1 Organizing Technologies into Portfolios

Portfolios of technologies represent a way to group technologies so that they are easier to manage. In general, the better the framework of portfolios, the more evenly distributed the technologies should be into those portfolios.

Organizing technologies into portfolios may be approached either bottom up, by first identifying the inventory of technologies and then attempting to compartmentalize them into portfolios, or top down, by first defining the portfolios and then allocating technologies to them. Once an inventory of technologies has been established, no matter how large it may be, the process of identifying the portfolio that each technology belongs to may be conducted.

The number of technologies can be large; we have seen it even in the thousands. Although a number of classification schemes can be used to identify portfolios, the approach that has worked best for us is to classify them into portfolios that most closely match a particular architectural discipline.

It is important to classify technologies into portfolios that correspond directly with architectural disciplines for a number of reasons. First and foremost is that there is a major difference in the result of managing technologies using a team of generalists, such as by individual solution architects, versus having an SME managing the portfolio in which they are expert.

This approach has been the best we’ve seen for managing a large portfolio of existing technologies, and when it comes to the selection of new technologies, or the selection of a future state set of technologies, it is also best.

As discussed earlier, a team of generalists, who know a great deal about many architectural disciplines, but no one discipline to the extent that they could be considered an expert, will repeatedly demonstrate a propensity to select the most expensive technology for any given capability. The approach that they take can be quite methodical, although flawed.

The approach of most generalists is to begin by getting a list of the leading technologies for a given capability from a major research company. Depending upon how this list is used, this can be the first misstep for a couple of reasons.

First, the criteria that research companies use are necessarily a best guess as to what the important characteristics are to an average enterprise, although it is difficult to define what an average enterprise may be. Unless your enterprise is close to that average, the list will likely not be as pertinent to your organization as you might like. Your enterprise may have particular strategies and technology direction that can easily outweigh the criteria used for an average organization.

Second, one must frequently take into account the relationship that research companies have with vendors, as some vendors, who sometimes hire research companies for consulting services, represent large cash streams to the research company. The advice of these firms may not be intentionally slanted at all, but we have seen at least one situation where the recommendation of a major research company was the result of deception, negligence, or incompetence.

Unfortunately, generalists are at a disadvantage when it comes to detecting questionable research, whereas an SME will tend to spot it immediately.

The next potential misstep performed by generalists is that they tend to use the product feature list from marketing literature as a substitute for requirements and evaluation criteria. This has a number of problems associated with it. The evaluation criteria themselves may not be the criteria most appropriate for your enterprise. The potentially bigger issues are that the feature list in the marketing literature is likely to be slanted toward the evaluation criteria used by the research company, and that those criteria may have been influenced by the vendor, while working with the research analyst, to favor the vendor’s own product during the evaluation process.

The final potential misstep performed by generalists is that they may not understand the all-in costs of a technology over the life of the technology. Introductory discounts and prices can distort the true cost structure, and the business benefits of the technology are often not realized due to tool complexity and hidden costs.

Vendor marketing personnel are the best at what they do. They are familiar with many of the financial ROI analysis approaches used by large organizations. Although most technical people do not enjoy performing a detailed financial analysis of a technology that is under evaluation, it is extremely important that this step is performed carefully in an impartial manner.

3.1.1.2 Architecture ROI Framework

When it comes to analyzing the all-in cost of each vendor technology, the SME will already have valuable insight into what other customers have experienced with a given technology, what the costs and benefits are, and why. Even armed with that knowledge, it is still advisable for the SME to make use of a framework to evaluate the various aspects from an architectural perspective using an architecture ROI framework.

An architecture ROI framework can contain a number of categories with which to evaluate costs and benefits. Foremost, the appropriate SMEs should determine each technology’s compatibility with the application strategy, technology strategy, and data strategy. If the technology is not compatible with the strategy of the enterprise, the technology can be rejected and the architecture ROI need not be performed.

If, however, the technology is compatible with the strategy of the enterprise, then we recommend that the architecture ROI framework address, at a minimum, the following over a projection of at least 3 years:

- application impact

- costs include new application licensing, maintenance, implementation, and decommissioning

- savings include decommissioned application license reduction, reallocation, and maintenance

- infrastructure impact

- costs include new infrastructure purchases, maintenance, installation and setup, decommissioning

- savings include decommissioned infrastructure reduction, reallocation, annual charges, and infrastructure avoidance

- personnel impact

- costs include additional employees, time and materials consultant labor, SOW costs, travel expenses, training costs, conference fees, membership fees, and overtime nonexempt charges

- savings include employee hiring avoidance, employee attrition, employee position elimination, consultant hiring avoidance, consultant personnel reduction, training avoidance, travel expense avoidance, conference fee avoidance, and membership fee avoidance

- vendor impact

- costs include hosting fees, service subscription fees, usage fee estimates, setup fees, support fees, appliance fees, and travel

- savings include hosting fee reduction, service subscription fee reduction, usage fee reduction, appliance fee reduction, and travel expense reduction

- operational workflow impact

- costs include increased rate of inbound incidents/requests, estimated increase in processing time, and average incident/request cost increase

- savings include decreased rate of incoming incidents/requests, estimated decrease in processing time, and average incident/request cost decrease

- business impact

- costs include estimated business startup costs, estimated losses from periodic loss of business capabilities, estimated loss from customer dissatisfaction, and estimated exposure from regulatory noncompliance

- savings include value of additional business capabilities, value of improved customer satisfaction, and value of enhanced regulatory reporting

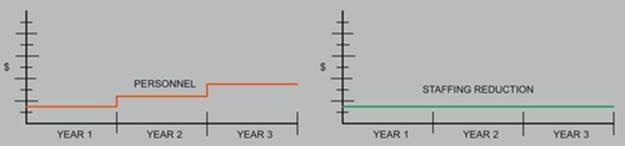

Additionally, each cost and benefit should have a visual illustration of a 3- to 5-year projection associated with it, such as in the cost illustration in red, and savings illustration in green, shown in Figure A.

FIGURE A 3-year cost-benefit projection example.

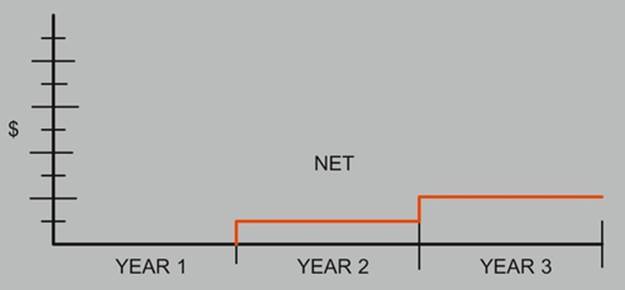

Once the figures have been reasonably verified, then it is time to prepare subtotals for each category followed at the end by a grand total chart to depict the net costs and savings of all categories, showing an architecture ROI cost, as illustrated in Figure B.

FIGURE B Net cost and savings.
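The subtotal and grand total roll-up described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the class `CategoryImpact`, the function `grand_total`, and all figures (in thousands) are hypothetical and do not come from any actual evaluation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CategoryImpact:
    """Projected costs and savings for one ROI category, one entry per year."""
    name: str
    costs: List[float]
    savings: List[float]

    def net_by_year(self) -> List[float]:
        """Savings minus costs for each projected year."""
        return [s - c for c, s in zip(self.costs, self.savings)]

    def subtotal(self) -> float:
        """Net of the category across the whole projection."""
        return sum(self.net_by_year())


def grand_total(categories: List[CategoryImpact]) -> float:
    """Net costs and savings across all categories."""
    return sum(category.subtotal() for category in categories)


# Hypothetical 3-year projection, in thousands.
categories = [
    CategoryImpact("application", costs=[500.0, 100.0, 100.0], savings=[0.0, 300.0, 400.0]),
    CategoryImpact("infrastructure", costs=[200.0, 50.0, 50.0], savings=[0.0, 150.0, 250.0]),
]
```

In this made-up example the application category breaks even over 3 years while the infrastructure category nets 100, so the grand total is 100. The remaining categories (personnel, vendor, operational workflow, and business impact) would be added to the list in the same way.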

The assumption associated with the architecture ROI framework is that it does not consider:

- after tax implications,

- net present value (NPV) to account for the time value of money,

- internal rate of return (IRR) to compare two or more investments,

- personnel severance costs as negotiated by HR,

- the distinction between airfare and lodging rates, and

- subjective measures of earnings and capital assumptions.

At this point, the architecture ROI is ready to go to finance to be included into their financial framework.
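As one illustration of what a finance framework might layer on top of the architecture ROI, NPV discounts each year's net figure back to the present using NPV = Σ CF_t / (1 + r)^t. The sketch below assumes that the first cash flow occurs at time zero and that later cash flows fall at year end; the function name `npv`, the discount rate, and the cash flows are hypothetical.

```python
from typing import Sequence


def npv(rate: float, cash_flows: Sequence[float]) -> float:
    """Net present value of cash flows, where cash_flows[0] is undiscounted (time 0)
    and cash_flows[t] is received t years later."""
    return sum(cf / (1.0 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical net figures: an initial outlay followed by two years of net savings.
example_flows = [-100.0, 60.0, 60.0]
undiscounted_net = sum(example_flows)      # what the architecture ROI would report
discounted_net = npv(0.08, example_flows)  # what finance might report at an assumed 8% rate
```

The discounted figure is lower than the undiscounted one because savings realized in later years are worth less today, which is one reason the two frameworks are kept separate.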

In conclusion, one or a few experts will tend to select the technologies that provide the greatest business value, as their selections are more likely to satisfy the capabilities actually required, to be less complex, and to come from a vendor whose core capability more closely corresponds to what is needed to satisfy the pertinent business capability. Experts are also more likely to have a better understanding of the all-in cost over the life of the technology.

Another important reason to classify technologies into portfolios that correspond directly with architectural disciplines is that it is much easier to identify a point of responsibility for a given technology that can perform the role and add real value to the users of the particular technology.

Once the appropriate portfolios for classifying technologies have been determined, it is a straightforward process to allocate those technologies that have a clear focus. It should be noted that some technologies have so many capabilities that they begin to spread into multiple architectural disciplines. When this occurs, it is important to note which capabilities a technology was acquired and approved for. Identifying which capabilities a technology is to be used for is another role for which experts within architectural disciplines are well suited.

3.1.1.3 Enhanced TPM

After each technology has been allocated to the most appropriate architectural discipline, there are a few basic steps to follow that will help with managing the content of that portfolio. Depending upon the particular architectural discipline, technologies can be further organized in useful ways.

If we consider the architectural discipline “Content Management Architecture” as an example, the technologies that could be allocated to that discipline can be organized into categories which, alongside enterprise content management systems (aka document management systems), may include:

- Web content management systems,

- mobile content management,

- collaboration management,

- component content management,

- media content management (e.g., audio, video), and

- image management systems.

By further allocating the technologies of a portfolio into such technology categories in a diagram, it becomes easy to visually depict gaps, overlaps, and oversaturation of technologies within each technology category of the particular portfolio.
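The same gap, overlap, and oversaturation analysis can be approximated programmatically before any diagram is drawn. The following is a rough sketch; the function `category_coverage`, the product names, and the saturation threshold of three technologies per category are assumptions chosen for illustration, and an SME would set the threshold for the actual portfolio.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def category_coverage(
    assignments: Iterable[Tuple[str, str]],
    categories: Iterable[str],
    saturation_threshold: int = 3,  # assumed cutoff, not taken from the text
) -> Dict[str, str]:
    """Label each technology category as a gap, covered, overlap, or oversaturated."""
    by_category = defaultdict(list)
    for technology, category in assignments:
        by_category[category].append(technology)

    report = {}
    for category in categories:
        count = len(by_category[category])
        if count == 0:
            report[category] = "gap"
        elif count >= saturation_threshold:
            report[category] = "oversaturated"
        elif count > 1:
            report[category] = "overlap"
        else:
            report[category] = "covered"
    return report


# Hypothetical allocation of products to content management categories.
assignments = [
    ("Product A", "Web content management"),
    ("Product B", "Web content management"),
    ("Product C", "Web content management"),
    ("Product D", "image management"),
    ("Product E", "collaboration management"),
    ("Product F", "collaboration management"),
]
categories = [
    "Web content management",
    "mobile content management",
    "collaboration management",
    "image management",
]
coverage = category_coverage(assignments, categories)
```

With these sample assignments, Web content management is flagged as oversaturated, mobile content management as a gap, collaboration management as an overlap, and image management as covered.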

The characteristics used to create technology subcategories within each portfolio are best determined by the SME that manages the particular architectural discipline. To speak to it generally however, the characteristics of the subcategories used should provide a good distribution of the technologies that have been allocated to the specific architectural discipline.

When the technologies belonging to a particular portfolio have been organized in such a manner, the SME is better positioned to identify technology strategy that is optimal for the particular portfolio.

Now that the various technologies have been organized into subcategories within their associated architectural discipline, it is time to consider technology metadata and metrics. Since all architectural disciplines need to acquire much of the same metadata about their respective technologies, it is best to use a shared process and repository developed by the architectural discipline TPM.

As one would expect, products that have a high concentration of adoption within a line of business are not readily subject to a change in technology direction, whereas technologies that have few users and instances within a line of business can be subject to a rapid change of technology direction.

Basic metadata can include vendor name, technology name, supported hardware and operating system environments, approved usage, whether there are any special considerations for failover or DR, and the degree to which it is compatible with each line of business application strategy, technology strategy, and data strategy.

Basic metrics can include licenses purchased, licenses consumed, annual maintenance fees, cost of additional licenses, lines of business that use the technology, the level of experience across the users, degree of user training required, number of outstanding product issues, the frequency of product patches and new releases, and the number of hours consumed to provide administration for the product.
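A shared repository entry combining a subset of the metadata and metrics above might look like the following. This is only a sketch: the class `TechnologyRecord`, its field selection, and the sample values are hypothetical, and a real repository would carry the full set of attributes listed above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TechnologyRecord:
    """One technology in a portfolio, with a subset of basic metadata and metrics."""
    vendor_name: str
    technology_name: str
    approved_usage: str
    lines_of_business: List[str]
    licenses_purchased: int
    licenses_consumed: int
    annual_maintenance_fee: float

    def license_utilization(self) -> float:
        """Fraction of purchased licenses that are actually consumed."""
        if self.licenses_purchased == 0:
            return 0.0
        return self.licenses_consumed / self.licenses_purchased

    def unused_licenses(self) -> int:
        """Licenses paid for but not in use."""
        return max(self.licenses_purchased - self.licenses_consumed, 0)


# Hypothetical repository entry.
record = TechnologyRecord(
    vendor_name="Example Vendor",
    technology_name="Example Document Manager",
    approved_usage="enterprise content management",
    lines_of_business=["Investments", "HR"],
    licenses_purchased=200,
    licenses_consumed=150,
    annual_maintenance_fee=40000.0,
)
```

Derived figures such as utilization are the kind of metric that helps an SME judge how disruptive a change in technology direction would be for a given line of business.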

With this information, the SME can take into consideration the costs associated with the potential disruption of each potential change to determine the most beneficial future state for the organization within each line of business. It then becomes possible to develop a roadmap to achieve the intended future state which optimizes business value, ultimately affecting the competitiveness of the company within the marketplace, as these practices cumulatively influence the infrastructural costs of the enterprise.

Additionally, publishing these artifacts as part of a policy of full transparency is the best way to illustrate the direction and strategy of technology within each technology portfolio. Imparting knowledge of the future state roadmap and the supporting metrics of a technology portfolio communicates all of the necessary information in the appropriate context as opposed to generalists assigning a status of buy, hold, or divest to each technology to drive toward the future direction with minimal knowledge and a lack of gathered information.

One last interesting topic to consider in TPM is to understand the circumstances when technologies drive the application strategy and when applications drive the technology strategy.

Although there are always exceptions, it is much more common to see a particular line of business drive the entire IT infrastructure relative to their line of business because applications for a given line of business have mostly evolved on some platforms more than in others. For example, an Investments line of business within an insurance company is far more likely to be Windows and Microsoft centric than IBM mainframe or Oracle centric, whereas HR within a large company is more likely to be Oracle UNIX centric than Microsoft centric. Once the suite of applications within a given line of business determines the dominant applications and their associated environments, the technology stack simply follows their lead.

In contrast, there are still occasions when technology leads the selection of applications and environment. This may help to explain IBM’s motivation to get into the Big Data space so that IBM hardware can play more of a central role in the high-end Hadoop world within large enterprises.

3.1.2 Reporting Architecture

Developing reports in the early years of IT was rather easy, especially since the volume of data available in a digital form at that time was relatively low. As the volume of information increased, it soon became valuable to report on historical data and the depiction of trends, statistics, and statistical correlations.

It was not long before reports went from batch to online transaction processing (OLTP) reporting, with applications generating reporting journals, which were simply flat file records generated during normal processing to support easy reporting afterward. The earliest advancements in the analysis of larger amounts of data were propelled by the business advantages that could be had within the most competitive industries, such as among the advertising and financial investment firms.

Soon new terms emerged; some of these terms emerged out of necessity, as the term “reporting” would prove too general and extremely ineffective within Internet search engines. Hence, a variety of more specific terms entered the language.

These included:

- statistical analysis,

- online analytical processing (OLAP),

- BI,

- nonstatistical analysis (e.g., neural networks),

- data mining,

- predictive analytics (aka forecasting models),

- operational data stores (ODS),

- data warehouse (DW),

- data marts (DM),

- geographic information systems (GIS), and more recently,

- Big Data, and

- mashup technology.

New techniques using hardware were developed in an attempt to deal with the limited ability of the already existing hardware to process large amounts of data.

These included the emergence of:

- approximately a dozen levels of a redundant array of independent disks (RAID),

- solid state drives,

- vector processing,

- parallel processing,

- supercomputers,

- multiprocessing,

- massively parallel processing (MPP),

- massively parallel processor arrays (MPPA),

- symmetric multiprocessing (SMP),

- cluster computing,

- distributed computing,

- grid computing,

- cloud computing, and

- in memory computing.

To further advance the handling of larger quantities of data, file access methods and file organizations gave way to database technologies, with a variety of database types, such as:

- transactional,

- multidimensional,

- spatial, and

- object oriented.

Alongside these technologies came a variety of database architectures, such as:

- hierarchical,

- network,

- inverted list,

- relational,

- columnar-relational hybrids, and

- true columnar (where relational database management system overhead is eliminated).

Although this can seem overly complex initially, it is not difficult to understand any reporting software architecture as long as you begin at the foundation of the technology, which is to first establish a good understanding of the I/O substructure and its performance specifications. Performance specifications vary with the type of operation, but they basically consist of two types of access, which are sequential access and random access.

From the foundation you build up to understanding programs that access the data, called access methods, as well as the way that they organize data on storage media, called file organizations.

Once access methods and file organizations are understood, then you are ready to understand the types of indexes, database architectures, and the architectures of database management systems including how they manage buffers to minimize the frequency with which the CPU must wait for data to move to and from the storage devices through the I/O substructure.

An expert in this discipline should be able to accurately calculate how long it will take to do each type of report by estimating the amount of time it takes for each block of data to traverse through the various parts of the I/O substructure to the CPU core. In this manner an expert can tell if a given technology will be sufficient for the particular type of report and quantity of data.
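A back-of-the-envelope version of such an estimate distinguishes the two access types. The sketch below is deliberately simplified: it ignores buffering, caching, and parallelism, and the function names and device figures (throughput and per-read latency) are hypothetical placeholders rather than measurements of any real hardware.

```python
def sequential_scan_seconds(data_bytes: float, throughput_bytes_per_sec: float) -> float:
    """Time to read data_bytes end to end at a sustained sequential throughput."""
    return data_bytes / throughput_bytes_per_sec


def random_read_seconds(num_reads: int, seconds_per_read: float) -> float:
    """Time to perform num_reads independent random block reads, one after another."""
    return num_reads * seconds_per_read


# Hypothetical figures: a 1 TB file on a device sustaining 200 MB/s sequentially,
# versus one million random block reads at an assumed 5 ms each.
full_scan = sequential_scan_seconds(10**12, 2 * 10**8)
index_lookups = random_read_seconds(1_000_000, 0.005)
```

Under these assumed figures both approaches take roughly 5,000 seconds, which illustrates why the choice between scanning and index-driven access depends on how much of the data a given report must touch.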

3.1.2.1 Data Warehouse Architecture

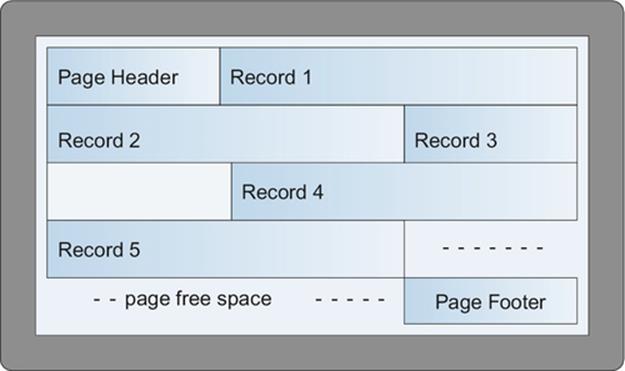

Before the volume of data grew beyond the capacity of standard reporting technologies, data was read directly from the associated production transaction system files and databases. As data volume grew, a problem emerged in that reporting and transactional activity shared the same production files and databases. As this resource contention grew, so did the need to replicate data for reporting purposes away from transactional systems.

When transactional systems were relatively few, replication of data for reporting was first implemented with the generation of files that would each be used to create a particular report. With the growth in the number of these files, a more consolidated approach was sought, and from that the concept of the data warehouse emerged as a means to support many different reports.

As the variety of transaction systems grew, along with the volume of data and number of reports, so did the complexity of the data warehouse. Soon more than one data warehouse was needed to support reporting requirements.

The complexity of creating and maintaining additional data warehouses created opportunities for data inconsistencies across data warehouses. This led the industry to conclude that manageable collections of transaction systems should have their data integrated into an ODS, where it should be easier to resolve data inconsistencies because the data came from similar transaction systems. Once the first wave of consolidation issues had been resolved, multiple ODSs could be further consolidated into a data warehouse.

With numerous transaction systems acting as the source of data bound for data warehouses, ODSs served as an intermediate step that could act as a mini-data warehouse for a collection of related transaction systems. These mini-data warehouses were easier to implement because the database designs of related transaction systems tended to be less disparate from one another than those of more distantly related transaction systems. Additionally, a number of reports could be supported from the layer of ODS databases, thereby reducing the load and complexity placed upon a single consolidated data warehouse.

With the emergence of an ODS layer, the data warehouse could return to its role of supporting the consolidated reporting needs of the organization that could otherwise only be supported using a combination of one or more ODSs. In this approach, ODSs would house the details associated with their collection of transaction system databases, and the data warehouse would house the details associated with the collection of the ODS layer.

Needless to say, housing such a large accumulation of detail data from across several transaction systems poses a major challenge to database technologies that were designed to best address the needs of transaction processing.

Using database technology designed for transactional processing, the ability to read the detail data necessary to calculate basic totals and statistics in real time was soon lost. Data warehouses needed either a new technique to support the types of reports that focused on data aggregation, or a new breed of hardware, software, and databases that could support analytical processing. In time, a new breed of hardware, software, and database technologies was born.

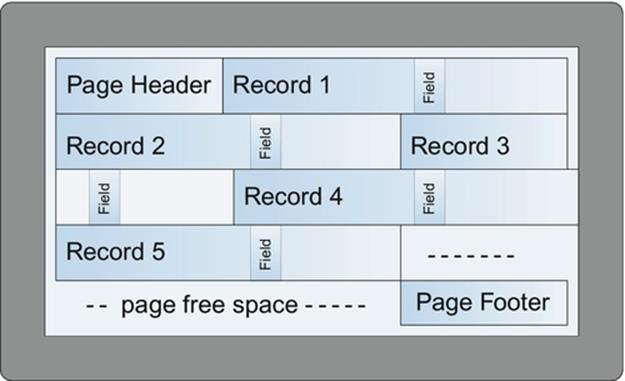

Data warehouse architecture deals with an array of complexities that occur in metadata, data, and database designs.

In metadata, issues include anomalies such as:

- ambiguously named fields,

- multiple terms that mean the same thing,

- one term that has multiple meanings,

- terms that do not represent an atomic data point such as compound fields,

- terms that have incorrect, missing, or useless definitions.

In data, issues include anomalies such as:

- sparseness of data where few values were populated for specific fields,

- invalid data like birth dates in the future,

- invalid or inconceivable values like a month of zero,

- partial loss of data due to truncation,

- invalid formats like alphabetic characters in a numeric field,

- invalid codes like a state code of ZZ,

- one field populated with data belonging to a different field like surname in first name,

- application code is required to interpret the data.
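Several of the data anomalies listed above lend themselves to simple automated checks. The following sketch covers four of them; the record layout, the field names, and the deliberately tiny set of valid state codes are hypothetical, and a real data quality process would draw its rules from the business metadata.

```python
from datetime import date
from typing import Dict, List

# Hypothetical reference data; a real list would hold every valid code.
VALID_STATE_CODES = {"NJ", "NY", "PA"}


def find_data_anomalies(record: Dict, today: date) -> List[str]:
    """Return a description of each anomaly found in one record."""
    issues = []
    if record["birth_date"] > today:
        issues.append("birth date in the future")
    if not 1 <= record["month"] <= 12:
        issues.append("month out of range")
    if not record["quantity"].isdigit():
        issues.append("non-numeric characters in numeric field")
    if record["state_code"] not in VALID_STATE_CODES:
        issues.append("invalid state code")
    return issues


# A hypothetical record exhibiting all four anomalies.
bad_record = {
    "birth_date": date(2999, 1, 1),
    "month": 0,
    "quantity": "12A",
    "state_code": "ZZ",
}
anomalies = find_data_anomalies(bad_record, today=date(2014, 1, 1))
```

Anomalies such as truncation, misplaced values, or data that requires application code to interpret are harder to detect mechanically and usually call for the data discovery techniques described later in this section.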

In individual databases, issues include anomalies such as:

- children records with no association to parent records

- children associated with the wrong parent

- duplicate records having the same business data

- schema designs that do not correctly correlate to the business

- indexes that point to incorrect rows of data

- loss of historical data
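Two of the database-level anomalies above, orphaned child records and duplicate business data, can be detected with straightforward set and counting logic. This is a minimal sketch over in-memory records; the function names and the record layout are assumptions made for illustration, and in practice such checks would typically run as queries against the database itself.

```python
from collections import Counter
from typing import Dict, Iterable, List, Sequence, Set, Tuple


def find_orphans(children: Iterable[Dict], parent_ids: Set) -> List[Dict]:
    """Child records whose parent_id matches no known parent."""
    return [child for child in children if child["parent_id"] not in parent_ids]


def find_duplicates(records: Iterable[Dict], business_keys: Sequence[str]) -> List[Tuple]:
    """Business-key values that appear on more than one record."""
    counts = Counter(tuple(record[key] for key in business_keys) for record in records)
    return [key for key, count in counts.items() if count > 1]


# Hypothetical order lines referencing orders 1 and 2; order 3 does not exist.
order_ids = {1, 2}
order_lines = [
    {"line_id": 10, "parent_id": 1, "product": "A", "quantity": 5},
    {"line_id": 11, "parent_id": 3, "product": "B", "quantity": 1},
    {"line_id": 12, "parent_id": 1, "product": "A", "quantity": 5},
]
orphans = find_orphans(order_lines, order_ids)
duplicates = find_duplicates(order_lines, ("parent_id", "product", "quantity"))
```

Note that a child associated with the wrong parent passes both checks, since the reference is structurally valid, which is why that anomaly generally requires business knowledge to detect.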

In multiple databases, issues include anomalies such as:

- inconsistent code values for the same idea like New Jersey = “99” or “NJ”

- incompatible code values for the same idea like New Jersey = Northeast US

- nonmatching values for the same fields like the same person having different birth dates

- incompatible structures that are intended to represent the same things
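Inconsistent code values across databases are commonly resolved by mapping each source system's codes onto a single standard value. The sketch below illustrates the idea using the New Jersey example; the system names `system_a` and `system_b`, the mapping table, and the function `standardize_state` are hypothetical.

```python
from typing import Dict, Optional, Tuple

# Hypothetical cross-reference: (source system, source code) -> standard code.
STATE_CODE_MAP: Dict[Tuple[str, str], str] = {
    ("system_a", "99"): "NJ",
    ("system_b", "NJ"): "NJ",
}


def standardize_state(source_system: str, code: str) -> Optional[str]:
    """Translate a source-specific state code to the standard code.

    Returns None when no mapping exists, so unmapped codes can be routed
    to a data steward rather than being guessed at.
    """
    return STATE_CODE_MAP.get((source_system, code))
```

Incompatible values, such as a region recorded where a state is expected, cannot be mapped this way because the source simply does not carry the needed level of detail.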

The process of untangling metadata, data, and database issues may require tracing the data back to the online forms and batch programs that populated the values to be able to decipher the source and meaning of the data, often requiring knowledge of data discovery techniques, data quality expertise, data cleansing, data standardization and data integration experience.

3.1.2.2 BI Architecture

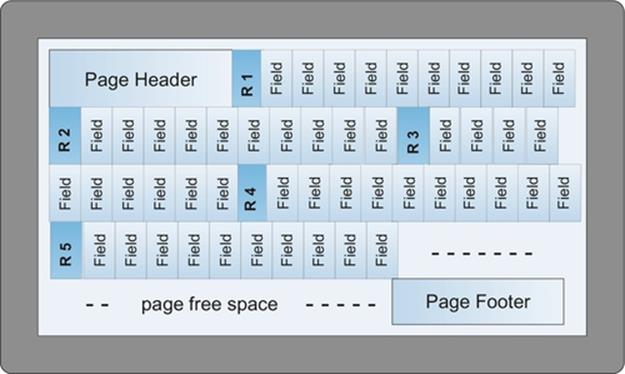

BI architecture is generally a discipline that organizes raw data into useful information for reporting to support business decision making, frequently using forms of data aggregation, commonly referred to as online analytical processing (OLAP).

OLAP comprises a set of reporting and data visualization techniques that provide the capability to view aggregated data, called aggregates (aka rollups), from different perspectives, which are called dimensions. As an example, aggregates such as “sales unit volumes” and “revenue totals” may be viewed by a variety of dimensions, such as:

- “calendar period,”

- “geographic region,”

- “sales representative,”

- “product,”

- “product type,”

- “customer,”

- “customer type,”

- “delivery method,” or

- “payment method.”

The choice of aggregates and dimensions is specified by the user, and the results are displayed in real time.

To deliver results in real time, however, the initial approach to support data aggregation techniques was somewhat primitive in that all of the desired aggregates and dimensions that would be needed had to be predicted in advance and then precalculated, typically during a batch process usually performed overnight. This also means that although the responses were in real time, the data was from the day before and would not include anything from today until the next day.

Since unanticipated business questions cannot be addressed in real time, there is sometimes the tendency to overpredict the possible aggregates and dimensions and to precalculate them as well. This technique has grown to such an extent that the batch cycle to precalculate the various aggregates by the desired dimensions has become so time consuming that it frequently creates pressure to extend the batch window of the system.

Data mart load programs literally have to calculate each aggregate for each dimension, such as totaling up all of the “sales unit volumes” and “revenue totals” by each “calendar period,” “geographic region,” “sales representative,” “product,” “product type,” “customer,” “customer type,” “delivery method,” “payment method,” and every combination of these dimensions in a long running overnight batch job.
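The workload of such a load program can be sketched as a loop over every combination of dimensions. This is a simplified illustration rather than how any particular OLAP product works; the function `precalculate_aggregates` and the sample rows are hypothetical.

```python
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def precalculate_aggregates(
    rows: List[Dict], dimensions: Sequence[str], measure: str
) -> Dict[Tuple[str, ...], Dict[Tuple, float]]:
    """Total one measure for every non-empty combination of dimensions.

    The result maps each dimension combination to its totals, keyed by the
    dimension values, so the dimensions act as an index into the aggregates.
    """
    cube = {}
    for size in range(1, len(dimensions) + 1):
        for dims in combinations(dimensions, size):
            totals = defaultdict(float)
            for row in rows:  # one full pass over the detail data per combination
                key = tuple(row[d] for d in dims)
                totals[key] += row[measure]
            cube[dims] = dict(totals)
    return cube


# Hypothetical detail rows with two dimensions and one measure.
rows = [
    {"region": "East", "product": "A", "revenue": 100.0},
    {"region": "East", "product": "B", "revenue": 50.0},
    {"region": "West", "product": "A", "revenue": 70.0},
]
cube = precalculate_aggregates(rows, ("region", "product"), "revenue")
```

Two dimensions yield 3 combinations, but the count grows as 2^n - 1, so the nine dimensions named above yield 511 combinations for each aggregate, which helps explain the pressure on the batch window.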

Precalculated aggregates were stored in a variety of representations, sometimes called data marts, star schemas, fact tables, snowflakes, or binary representations known as cubes.

The feature that these approaches had in common was that dimensions acted as indexes to the aggregates to organize the precalculated results. A number of books have been written on this approach where they will also refer to a number of OLAP variants, such as MOLAP, ROLAP, HOLAP, WOLAP, DOLAP, and RTOLAP.

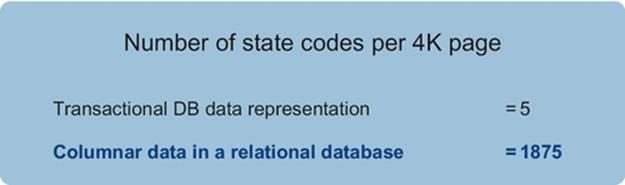

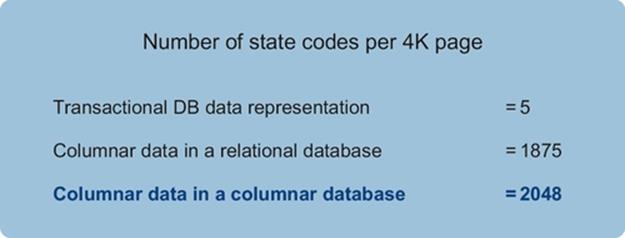

In contrast, the new breed of hardware, software, and databases approaches this problem in new ways. The two major approaches include a distributed approach to have many servers working on the many parts of the same problem at the same time, and an approach that simply compresses the data to such an extent that the details of billions of rows of data can be processed in real time on inexpensive commodity hardware, or trillions of rows of data in real time at a somewhat higher cost on mainframes.

As a result, physical data marts and the need to precalculate them are no longer necessary, with the added advantage that these new technologies can automatically support drill-down capabilities to illustrate the underlying detail data that was used to determine the aggregated totals.

The new breed of specialized hardware is typically referred to as an appliance, referring to the fact that the solution is an all included combination of software and hardware. Appliance solutions are higher priced, often significantly so in the millions of dollars, and have higher degrees of complexity associated with them particularly in areas such as failover and DR. That said, BI architecture encompasses more than just the capabilities of data aggregation.

BI can be expansive, encompassing a number of architectural disciplines that are so broad themselves that they need to stand alone from BI architecture. These include topics such as data mining, data visualization, complex event processing (CEP), natural language processing (NLP), and predictive analytics.

Data mining is an architectural discipline that focuses on knowledge discovery in data. Early forms of data mining evaluated the statistical significance between the values of pairs of data elements. It soon grew to include analysis into the statistical significance among three or more combinations of data elements.

The premise of data mining is that one never knows in advance what relationships may be discovered within the data. As such, data mining is a data analysis technique that simply looks for correlations among variables in a database by testing for possible relationships among their values and patterns of values. The types of relationships among variables may be considered directly related, inversely related, logarithmically related, or related via statistical clusters.

One challenge of data mining is that most statistical relationships found among variables do not represent business significance, such as a correlation between a zip code and a telephone area code. Therefore, a business SME is required to evaluate each correlation.

The body of correlations that have no business significance must be designated as not useful so that those correlations may be ignored going forward. The correlations that cannot be summarily dismissed are then considered by the business SME to evaluate the potential business value of the unexpected correlation.
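A very small version of this process can be sketched as a pairwise scan that skips correlations already designated as not useful. The sketch uses the Pearson coefficient, which captures only direct and inverse linear relationships and not the logarithmic or cluster relationships mentioned above; the function names, the threshold of 0.8, and the sample data are assumptions made for illustration.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, FrozenSet, List, Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def scan_correlations(
    columns: Dict[str, Sequence[float]],
    threshold: float = 0.8,  # assumed cutoff for a correlation worth reviewing
    ignored: FrozenSet[FrozenSet[str]] = frozenset(),
) -> List[Tuple[str, str, float]]:
    """Return variable pairs whose correlation magnitude meets the threshold,
    skipping pairs a business SME has already marked as not useful."""
    findings = []
    for a, b in combinations(sorted(columns), 2):
        if frozenset((a, b)) in ignored:
            continue
        r = pearson(columns[a], columns[b])
        if abs(r) >= threshold:
            findings.append((a, b, r))
    return findings


# Hypothetical variables.
columns = {
    "calls": [1, 2, 3, 4, 5],
    "churn_risk": [2, 4, 6, 8, 10],
    "noise": [3, 1, 4, 1, 5],
}
findings = scan_correlations(columns)
```

Each surviving finding would then go to the business SME, and any pair judged to have no business significance would be added to the `ignored` set for subsequent runs.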

Hence, examples of potentially useful correlations may include a correlation between the number of times that a customer contacts the customer service hotline with a certain type of issue and that customer subsequently transferring their business to a competitor, or a correlation among the values of various currencies, energy product prices, and precious metal commodity prices.

An active data mining program can cause business executives to reevaluate the level of importance that they place upon information when it is illustrated that valuable information for decision making lies hidden among vast quantities of business data. For example, data mining could discover the factors that correspond to the buying patterns of customers in different geographic regions.

Data visualization is an architectural discipline closely related to BI architecture that studies the visual representation of data, often over other dimensions such as time. Given the way that the human brain works, transforming data that exists as rows of numbers into different visual patterns across space, using different colors, intensities, shapes, sizes, and movements, can communicate clearly and bring attention to the more important aspects. Some of the common functions include drilling down, drilling up, filtering, grouping, pivoting, ranking, rotating, and sorting. There are hundreds of ways to visualize data and hundreds of products in this space, many of which are highly specialized to particular use cases in targeted applications within specific industries.

A partial list of visual representations includes:

- cluster diagrams,

- terrain maps,

- architectural drawings,

- floor plans,

- shelf layouts,

- routes,

- connectivity diagrams,

- bubbles,

- histograms,

- heat maps,

- scatter plots,

- rose charts,

- cockpit gauges,

- radar diagrams, and

- stem and leaf plots.
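Some of the representations listed above need very little machinery. As a minimal sketch, the following Python turns a row of numbers into a text-based stem-and-leaf plot; the `order_sizes` values are hypothetical, and the function assumes non-negative integers with a stem width of ten.

```python
from collections import defaultdict

def stem_and_leaf(values):
    """Return the lines of a stem-and-leaf plot for non-negative integers."""
    stems = defaultdict(list)
    for v in sorted(values):
        stems[v // 10].append(v % 10)  # tens digit(s) are the stem, units digit the leaf
    lines = []
    # Include empty stems so gaps in the distribution remain visible.
    for stem in range(min(stems), max(stems) + 1):
        leaves = " ".join(str(leaf) for leaf in stems.get(stem, []))
        lines.append(f"{stem:>2} | {leaves}".rstrip())
    return lines

order_sizes = [12, 15, 21, 23, 23, 38, 41, 47, 49]  # hypothetical data
plot = stem_and_leaf(order_sizes)
print("\n".join(plot))
```

Even in plain text, the shape of the distribution becomes visible at a glance in a way that the original row of numbers does not convey.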

3.1.2.3 Predictive Analytics Architecture

Predictive analytics encompasses such a large space that it is worthy of being its own architectural discipline. It encompasses a variety of techniques, both statistical and nonstatistical, including modeling and machine learning. Its focus, however, is identifying useful data, understanding that data, developing a predictive or forecasting capability using that data, and then deploying those predictive capabilities in useful ways across various automation capabilities of the enterprise.

Usually, the breakthroughs that propel a business forward originate on the business side or in executive management. There are a handful of factors that can lead to breakthroughs in business, where competitive advantages in technology can suddenly shift to one company within an industry for a period of time until the others catch up.

The basic types of competitive breakthroughs involve innovations in products, processes, paradigms, or any combination of these. Breakthroughs in paradigms are the most interesting because, for the most part, they facilitate a different way of looking at something. Companies that have done particularly well through breakthroughs in paradigms include Google, Apple, Facebook, and Amazon.

In a number of cases, however, a breakthrough in paradigm can be caused by mathematics, such as the mathematical developments that eventually led to and included the Black-Scholes options pricing model, which most agree marks the emergence of the discipline of quantitative analysis.

The ability of a statistical model to predict behavior or forecast a trend is dependent upon the availability of data and its correct participation in the statistical model. One advantage that statistical models have to offer is their rigor and ability to trace the individual factors that contribute to their predictive result. Statistical methods, however, require individuals to be highly skilled in this specialized area.

The architectural discipline of predictive analytics is deeply ingrained in statistics and mathematics, with numerous specialty areas.

Some examples of specialty areas include:

- longitudinal analysis, which involves the development of models that observe a particular statistical unit over a period of time,

- survey sampling models, which project the opinions and voting patterns of sample populations to a larger population, and

- stimulus-response predictive models, which forecast future behavior or traits of individuals.
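To make one of these specialty areas concrete, a survey sampling projection can be sketched as follows. This is an illustrative sketch only: it assumes a simple random sample and uses the normal approximation for the margin of error, and the function name, the sample counts, and the population size are all hypothetical.

```python
from math import sqrt

def project_proportion(sample_yes, sample_size, population_size, z=1.96):
    """Project a sample proportion onto a larger population.

    z=1.96 corresponds to an approximate 95% confidence level under the
    normal approximation, which assumes a simple random sample.
    """
    p = sample_yes / sample_size
    margin = z * sqrt(p * (1 - p) / sample_size)
    low, high = max(0.0, p - margin), min(1.0, p + margin)
    return {
        "proportion": p,
        "margin": margin,
        "projected": round(p * population_size),
        "projected_range": (round(low * population_size),
                            round(high * population_size)),
    }

# Hypothetical poll: 540 of 1,000 respondents favor a proposal,
# projected onto a population of 2,000,000.
estimate = project_proportion(sample_yes=540, sample_size=1000,
                              population_size=2_000_000)
print(estimate)
```

The margin of error shrinks with the square root of the sample size, which is why a relatively small sample can still support a projection onto a very large population.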

While knowledge of statistical methods is essential for this discipline, it should not be without knowledge of nonstatistical methods, such as neural network technology (aka neural nets).

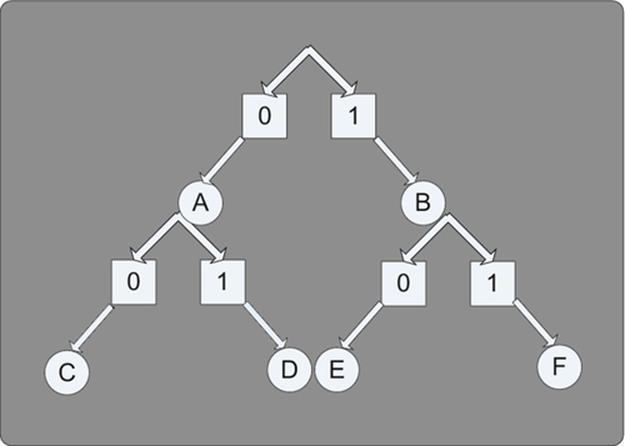

Neural networks are nonstatistical models that produce an algorithm based upon visual patterns. To be useful, numerical and textual information are converted into a visual image. The role of the algorithm is ultimately to classify each new visual image as having a substantial resemblance to an already known image.

Similar to statistical models, the ability of a neural network to predict behavior or forecast a trend is dependent upon the availability of data and its participation in the nonstatistical model to properly form the “visual image.”

Neural nets are essentially complex nonlinear modeling equations. The parameters of the equations are optimized using a particular optimization method. There are various types of neural nets that use different modeling equations and optimization methods. Optimization methods range from simple methods like gradient descent to more powerful ones like genetic algorithms.

The concepts of neural networks and regression analysis are surprisingly similar. The taxonomy of each is different, as is usually the case among the disciplines of artificial intelligence.

As examples, in regression analysis, we have independent variables; in neural networks, they are referred to as “inputs.” In regression analysis, you have dependent variables; in neural nets, they are referred to as “outputs.” In regression analysis, there are observations; in neural nets, they are referred to as “patterns.”

The patterns are the samples from which the neural net builds the model. In regression analysis, the optimization method finds coefficients. In neural nets, the coefficients are referred to as weights.
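The shared vocabulary can be illustrated with a deliberately tiny model. The sketch below fits a single weight and a bias by gradient descent; the same fit could be described as a simple regression that finds a coefficient and an intercept. The data, learning rate, and epoch count are assumptions chosen for illustration.

```python
def train(patterns, learning_rate=0.05, epochs=2000):
    """Fit output = weight * input + bias by batch gradient descent.

    In regression terms: patterns are observations, the input is the
    independent variable, the output is the dependent variable, and the
    weight and bias are the coefficient and intercept.
    """
    weight, bias = 0.0, 0.0
    n = len(patterns)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, target in patterns:
            error = (weight * x + bias) - target
            grad_w += 2 * error * x / n  # gradient of mean squared error w.r.t. weight
            grad_b += 2 * error / n      # gradient of mean squared error w.r.t. bias
        weight -= learning_rate * grad_w
        bias -= learning_rate * grad_b
    return weight, bias

# Hypothetical patterns generated from output = 2 * input + 1.
patterns = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
weight, bias = train(patterns)
print(round(weight, 4), round(bias, 4))
```

With only one input and no hidden layer, the trained "network" is exactly a linear regression line; the differences arise once nonlinear layers are added.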

Neural network “training” results in mathematical equations (models) just like regression analysis, but the neural network equations are more complex and robust than the simple “polynomial” equations produced by regression analysis. This is why neural networks are generally better at recognizing complex patterns.
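A small example shows the kind of pattern that a single linear equation cannot reproduce but a network with one hidden layer can. The sketch below computes exclusive-or (XOR) with two hidden units; the weights are set by hand purely for illustration rather than learned through training, and the step activation is an assumption chosen for readability.

```python
def step(value):
    """Threshold activation: fires (1) when the weighted sum is positive."""
    return 1 if value > 0 else 0

def tiny_network(x1, x2):
    """Two inputs, two hidden units, one output; weights are hand-set."""
    hidden_or = step(1.0 * x1 + 1.0 * x2 - 0.5)   # fires if either input is on
    hidden_and = step(1.0 * x1 + 1.0 * x2 - 1.5)  # fires only if both inputs are on
    # Output fires when "or" is on but "and" is off, which is exclusive-or.
    return step(1.0 * hidden_or - 1.0 * hidden_and - 0.5)

outputs = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)
```

The hidden layer is what supplies the nonlinearity: each hidden unit is itself a simple weighted sum, but composing them produces a result that no single weighted sum of the two inputs can match.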

That said, it should also be noted that it is often a trial-and-error process to identify the optimum type of neural network and corresponding features and settings to use given the data and the particular problem set. This tends to drive the rigorous statistician insane. Although early neural networks lacked the ability to trace the individual factors that contributed to the result, which also drove many a statistician insane, modern neural networks can now provide traceability for each and every outcome.

Early neural nets required highly specialized personnel; however, the products and training in this space have become user friendly enough for business users and even IT users to understand and use.

An early adopter of neural nets was American Express. Early on, credit card applications were evaluated manually by clerical staff. They would review the information on the credit card application and then, based upon their experience, judge whether or not the applicant was a good credit risk.

The paradigm breakthrough that AMEX created was that they envisioned that the data on credit card applications could be converted to digital images that in turn could be recognized by a neural network. If the neural net could learn the patterns of images made by the data from the credit card applications of those that proved good credit risks, as well as the patterns corresponding to bad credit risks, then it could potentially classify the patterns of images made by the data from new credit card applications as resembling good or bad credit risks, correctly and in a split second.

AMEX was so right. In fact, the error rate in evaluating a credit card application dropped significantly with neural nets, giving them the ability to evaluate credit card applications better than any company in the industry: faster, more accurately, and at a fraction of the cost. At that time, AMEX was not a dominant global credit card company, but they rapidly became the global leader and continue to endeavor to maintain that status.

Regardless of the particular technique that is adopted, the use of predictive analytics has become essential to many businesses. Some insurance companies use it to identify prospective customers that will be profitable versus those that will actually cause the company to lose money.

For example, predictive analytics have been successfully deployed to determine which customers actively rate shop for insurance policies. If customers obtain an insurance policy and then defect to another carrier within a relatively short period of time, then it ends up costing the insurance company more than it has made in profits for the given time period.

Today, retailers use predictive analytics to identify what products to feature to whom and at what time so as to maximize the return on their advertising expenditures. Internet providers use predictive analytics to determine what advertisements to display, where they should be displayed, and to whom. There are numerous applications across many industries, such as pharmaceuticals, health care, and financial services.
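The arithmetic behind this can be sketched with hypothetical numbers. The acquisition cost and monthly margin below are assumptions invented for illustration, not figures from any carrier; the point is only that a customer who leaves before the break-even month produces a net loss.

```python
from math import ceil

def months_to_break_even(acquisition_cost, monthly_margin):
    """Months a policy must stay in force before its margin covers acquisition cost."""
    return ceil(acquisition_cost / monthly_margin)

def net_result(acquisition_cost, monthly_margin, months_retained):
    """Cumulative profit when the customer leaves (negative means a loss)."""
    return monthly_margin * months_retained - acquisition_cost

# Hypothetical figures: cost to acquire and underwrite one policy,
# and the margin earned on that policy each month.
ACQUISITION_COST = 600.0
MONTHLY_MARGIN = 40.0

break_even = months_to_break_even(ACQUISITION_COST, MONTHLY_MARGIN)
loss_if_rate_shopper = net_result(ACQUISITION_COST, MONTHLY_MARGIN, months_retained=6)
print(break_even, loss_if_rate_shopper)
```

A predictive model that flags likely rate shoppers lets the carrier weigh the expected retention period against the break-even month before pursuing the prospect.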

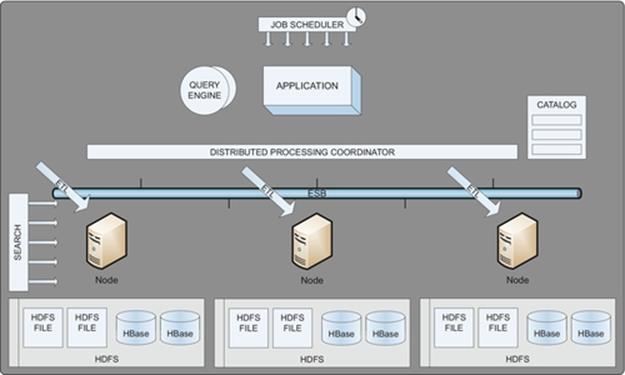

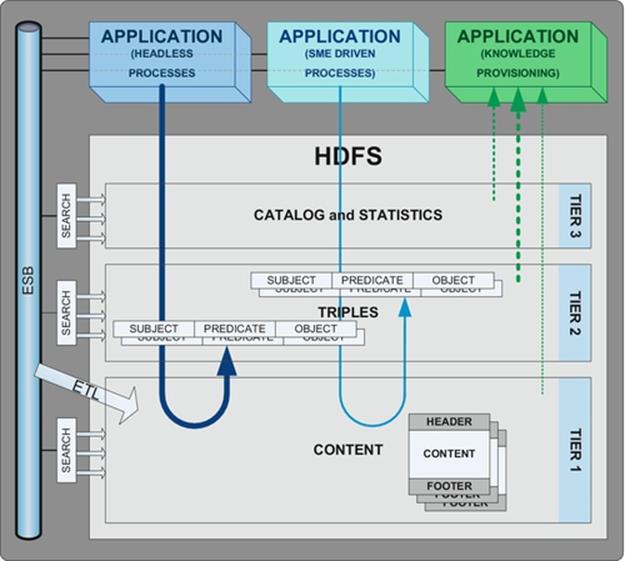

3.1.2.4 Big Data Architecture

The term “big data” means different things to different people. In its simplest form, big data refers to amounts of data large enough that it becomes difficult to analyze or report on them using the standard transactional, BI, and data warehouse technologies. Many of the “big data”-specific technologies, however, require significant budgets and usually an extensive infrastructure to support them. As such, it is critical for enterprise architecture to oversee big data with the appropriate business principles to protect the interests of the enterprise.

In the context of control systems, big data is generally understood as representing large amounts of unstructured data. In this context, truly unstructured data is data that contains no discrete data points: no layout can be designed to map the stream of data so that anyone would know where one data point begins and ends and where the next one begins.

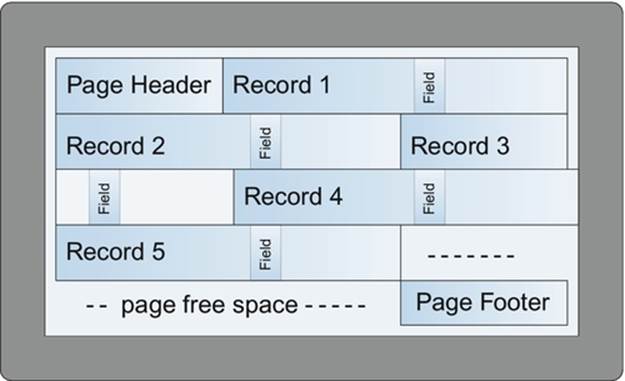

In a control system, the concept of a “record” housing unstructured data is different, as it represents a specific continuum of time when the data was recorded. In contrast, a record within an information system context will typically represent an instance of something.

In the context of information systems, big data is generally understood to be structured data and semistructured data, which is often referred to as unstructured data, as there are few examples of true unstructured data in an information system paradigm.

That said, it is important to clearly define what is meant by structured, unstructured, and semistructured data.

Structured data is the term used when it is clear what data elements exist, where, and in what form. In its simplest form, structured data is a fixed record layout; however, there are variable record layouts, including XML, that make it clear what data points exist, where, and in what form.
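Both forms can be illustrated with a short Python sketch. The field names, offsets, and sample customer record are hypothetical; the point is that in each case the layout itself tells the reader what data points exist, where they are, and in what form.

```python
import xml.etree.ElementTree as ET

# Hypothetical fixed record layout: (field name, start offset, end offset).
LAYOUT = [("customer_id", 0, 6), ("name", 6, 26), ("balance_cents", 26, 35)]

def parse_fixed(record):
    """Read each field from its known position in a fixed-width record."""
    return {name: record[start:end].strip() for name, start, end in LAYOUT}

def parse_xml(document):
    """Read each field from a self-describing, variable-layout XML record."""
    root = ET.fromstring(document)
    return {child.tag: (child.text or "").strip() for child in root}

fixed_record = "000042" + "Jane Doe".ljust(20) + "000012550"
xml_record = (
    "<customer>"
    "<customer_id>000042</customer_id>"
    "<name>Jane Doe</name>"
    "<balance_cents>000012550</balance_cents>"
    "</customer>"
)

print(parse_fixed(fixed_record))
print(parse_xml(xml_record))
```

In the fixed layout the positions carry the meaning, while in XML the tags carry it; either way, no guesswork is needed to locate a data element.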

The most common form of structured data is file and database data. This includes the content of the many databases and files within an enterprise company where there is a formal file layout or database schema. This data is typically the result of business applications collecting books and records data for the enterprise.

The next most common form of structured data refers to the content of machine generated outputs (e.g., logs) that are produced by various types of software products, such as application systems, database management systems, networks, and security software. The ability to search, monitor, and analyze machine generated output from across the operational environment can provide significant benefit to any large company.
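As a minimal sketch of searching and analyzing machine generated output, the following Python parses log lines with a regular expression and counts errors by source. The log format, the sources, and the messages are all hypothetical; real products each emit their own formats.

```python
import re
from collections import Counter

# Assumed line format: "<date> <time> <source> <LEVEL> <message>".
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<source>\S+) (?P<level>[A-Z]+) (?P<message>.*)$"
)

def parse_line(line):
    """Return the named fields of a log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None

# Hypothetical log lines gathered from several products.
log_lines = [
    "2014-03-01 09:15:02 dbms ERROR deadlock detected on table ORDERS",
    "2014-03-01 09:15:03 app INFO order 1001 accepted",
    "2014-03-01 09:15:07 firewall WARN repeated login failure from 10.0.0.7",
    "2014-03-01 09:15:09 dbms ERROR deadlock detected on table ORDERS",
]

events = [event for event in map(parse_line, log_lines) if event]
errors_by_source = Counter(e["source"] for e in events if e["level"] == "ERROR")
print(errors_by_source)
```

Once the lines are reduced to named fields, the same events can be searched, monitored, or aggregated across the whole operational environment.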

Unstructured data is the term used when it is not clear what data elements exist, where they exist, and the form they may be in. Common examples include written or spoken language, although heuristics can often be applied to discern some sampling of structured data from them.
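One simple kind of heuristic is pattern matching over free text. The sketch below pulls dates, dollar amounts, and e-mail addresses out of a written note; the patterns and the sample note are hypothetical and intentionally naive, since real language processing requires far more than regular expressions.

```python
import re

# Naive illustrative patterns; each recognizes only one common written form.
DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
AMOUNT = re.compile(r"\$\d[\d,]*(?:\.\d{2})?")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")

def extract(text):
    """Discern a sampling of structured data elements from written language."""
    return {
        "dates": DATE.findall(text),
        "amounts": AMOUNT.findall(text),
        "emails": EMAIL.findall(text),
    }

note = (
    "Customer called on 2014-02-11 about a $1,250.00 charge and asked us "
    "to reply to jane.doe@example.com before 2014-02-18."
)
extracted = extract(note)
print(extracted)
```

The rest of the note (who called, why, and what was requested) remains unstructured; the heuristics recover only the elements that happen to follow a recognizable form.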

The most unstructured data does not even have data elements. These forms of unstructured data include signal feeds from sensors involving streaming video, sound, radar, radio waves, sonar, light sensors, and charged particle detectors. Often some degree of structured data may be known or inferred with even these forms of unstructured data, such as its time, location, source, and direction.
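One way to picture this is a record whose payload is opaque while its surrounding metadata is structured. The following sketch is an assumption made for illustration, not a layout used by any particular control system; the class name, field names, and sample values are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass(frozen=True)
class SignalRecording:
    """A span of recorded signal plus the structured facts known about it."""
    source: str           # e.g., which sensor produced the feed
    location: str
    started_at: datetime
    duration: timedelta
    payload: bytes        # raw signal; no discrete data elements are assumed

    @property
    def ended_at(self):
        return self.started_at + self.duration

    def covers(self, moment):
        """True if the recording spans the given moment in time."""
        return self.started_at <= moment < self.ended_at

recording = SignalRecording(
    source="sonar-array-3",          # hypothetical sensor name
    location="dock 7",               # hypothetical location
    started_at=datetime(2014, 3, 1, 9, 0, 0),
    duration=timedelta(minutes=5),
    payload=b"\x00\x17\x2a\x11",     # placeholder bytes standing in for a signal
)
print(recording.covers(datetime(2014, 3, 1, 9, 2, 30)))
```

The record here represents a continuum of time rather than an instance of a business object, so queries are naturally phrased in terms of when, where, and from which source the signal was captured.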