Pragmatic Enterprise Architecture (2014) Strategies to Transform Information Systems in the Era of Big Data

PART IV Information Architecture

Abstract

This part separates one of the most important areas of specialization, information architecture, from the rest of the vast area of enterprise architecture so as to provide it the appropriate degree of focus and attention. Information architecture is the brain and central nervous system of any large organization and as such should be called out as a distinct set of disciplines with its own philosophy and mindset. While arguably the most important part of an organization's ecosystem, information architecture is among the most difficult for the general population to understand due to the need to intimately understand the business as well as a vast array of IT areas of specialization involving data architecture, reference data, master data, data governance, data stewardship, data discovery, data in motion, and a variety of associated disciplines that reside in operations architecture, business architecture, and the main body of enterprise architecture in the previous major section.

Keywords

Information architecture

data architecture

data governance

business data glossary architecture

data ownership architecture

data access rights architecture

ETL data masking architecture

canned report access architecture

data stewardship

data discovery

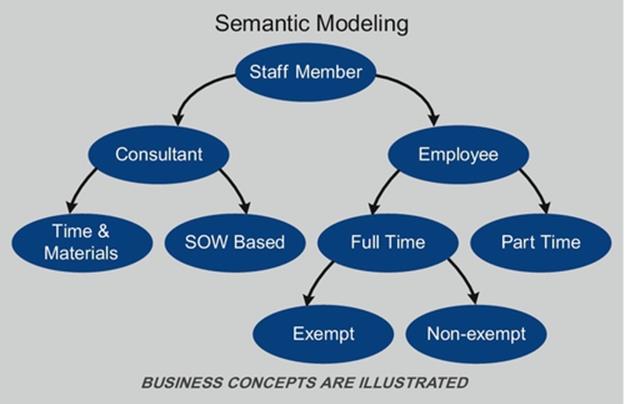

semantic modeling

architecture governance component registry

data governance dashboard

data obfuscation architecture

data modeling architecture

reference data management—product master

reference data management—code tables

reference data management—external files

data in motion architecture

data virtualization architecture

ETL architecture

ESB architecture

CEP architecture

content management architecture

master data management

MDM

logical data architecture

LDA

code tables

external files

operational workflow

reference data

activity data

initial business setup

conducting business

analyzing business

business data glossary

business metadata

identifying the source of data

extracting data to a landing zone

data profiling

data standardization

data integration

data ownership

data access rights

sensitive data

masked

encrypted

canned report with variable data

canned report with fixed data

treaty zone

jurisdictional level

authority document level

data steward level

data discovery architecture

data landscape

Big Data

ontology

document management

taxonomy of legislative jurisdictions

administration

document development

approval process

production use

metrics

data obfuscation

data access restrictions

data masking

data encryption

data at rest

DAR

data in motion

DIM

protection of business communications

SSN

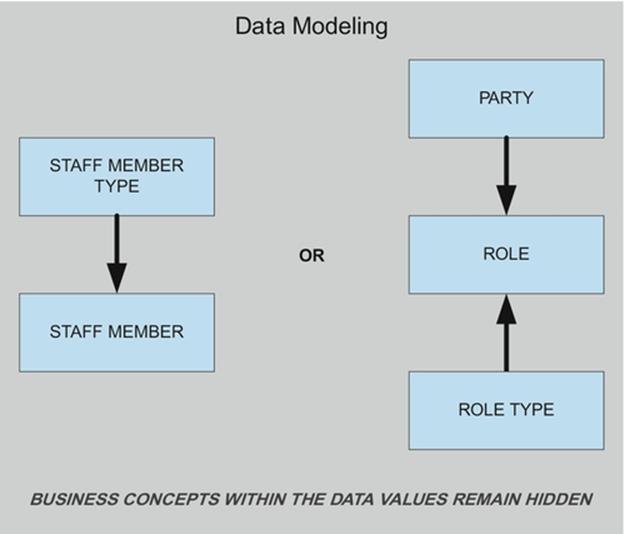

data modeling

conceptual data model

logical data model

physical data model

normalization

1NF

2NF

3NF

4NF

5NF

6NF

7NF

DKNF

BCNF

weaknesses or normalization

abstraction

rules of data abstraction

class words

1AF

2AF

3AF

4AF

transaction path analysis

TAPA

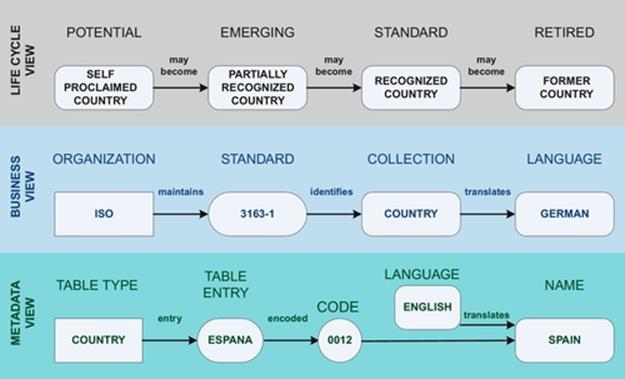

reference data management

product master management

metadata

code tables management

ISO

International Organization for Standardization

external files management

A.M Best

Bank of Canada

Bank of England

Dun & Bradstreet

Equifax

Experian

Fitch

Moody’s

Morningstar

United Nations

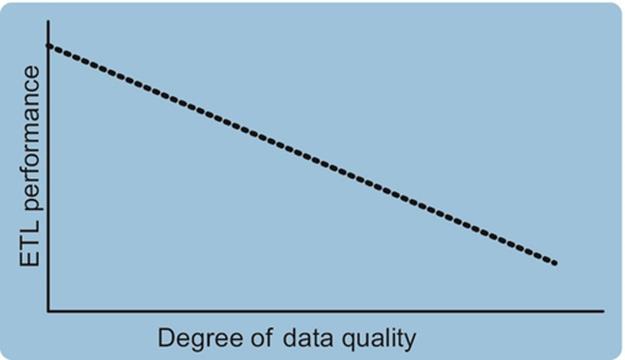

ETL

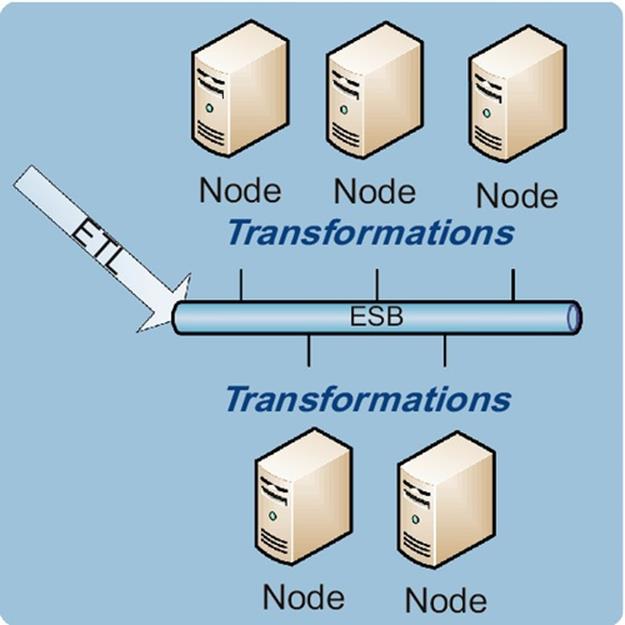

ESB

CEP

FTP

XML

data streaming

data virtualization

ODS

data warehouse

extract transform and load

ETL CASE tool

enterprise service bus

SOAP

service-oriented architecture protocol

complex event processing

content management

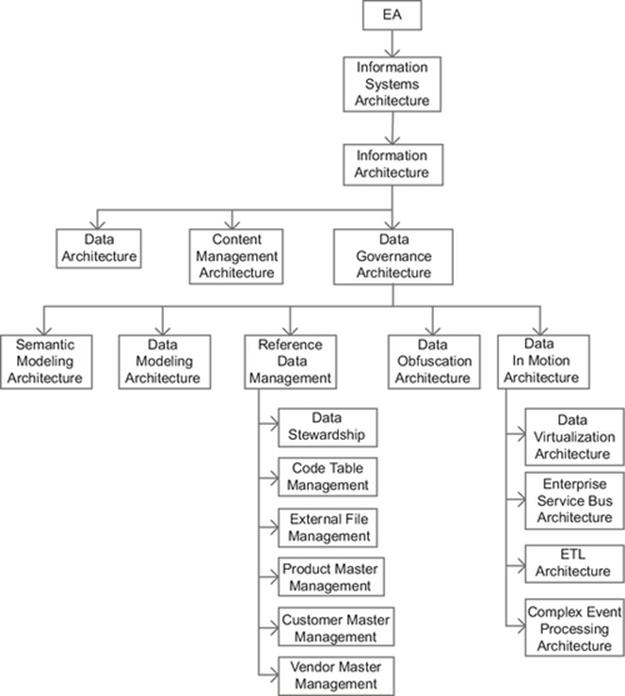

DIAGRAM Information architecture overview.

4.1 Information Architecture

Information architecture is the foundation of information systems architecture that also represents a significant number of the architectural disciplines of information systems architecture.

To begin, every enterprise has assets. As examples, it has employees, customers, IT facilities, business facilities, and financial assets. However, the most significant asset of most large enterprises is its data.

The reason for this is that the data asset of each and every company defines its individuality. A company’s data reflects its particular marketing efforts, business activities, customer interactions, product sets, employees, and financial history.

An enterprise can have its:

- employees lured to other firms,

- customers attracted away by competitors,

- data centers demolished by meteors,

- buildings lost in storms and fires, or

- capital consumed in a single catastrophic event.

An enterprise can also:

- hire new employees,

- build a new customer base,

- construct a new data center,

- acquire new buildings with leases, and

- raise new capital through investors or by borrowing.

However, if an enterprise loses or destroys its information in every location it is kept, then there is no external source from which it can be reacquired.

Information architecture is responsible for knowing everything about the data assets of the enterprise. Due to the extensive breadth and depth of information, information architecture has the greatest breadth and depth of any set of frameworks within any of the architectural disciplines.

The first contributing factor is that the number of business data points within businesses. These are the number distinct items of data that each have their own business definition that for a large enterprise can number in the millions, such as the following sample from a small segment of the employee onboarding process:

- prospective employment candidate resume received date,

- prospective employee interview date,

- prospective employee reference check date,

- prospective employee former employer verification date,

- prospective letter rejection letter date,

- prospective employee offer letter date,

- prospective employee offer letter acceptance date,

- prospective employee intended start date,

- prospective employee pre-hire medical date,

- prospective employee background check date, and

- employee start date.

The above data points are a sampling of dates that do not begin to address the hundreds of data points involved in the other types of data point involving that segment of onboarding and the many hundreds of data points involved in the following onboarding activities:

- assigned work space,

- assigned HR representative,

- assigned furniture,

- assigned equipment,

- assigned telephone number,

- assigned conference calling number,

- employee badge,

- supervisor,

- assigned fire drill station assembly area,

- benefit options,

- associated budgets,

- travel expense reimbursement,

- relocation,

- job training,

- compliance training,

- access to physical compartments,

- access to software applications,

- access to servers, and

- access to network components.

The architectural disciplines that are part of information architecture include:

- data architecture,

- data governance,

- business data glossary architecture,

- data ownership architecture,

- data access rights architecture,

- ETL data masking architecture,

- canned report access architecture,

- data stewardship,

- data discovery,

- semantic modeling,

- architecture governance component registry,

- data governance dashboard,

- data obfuscation architecture,

- data modeling architecture,

- reference data management (RDM)—product master,

- RDM—code tables,

- RDM—external files,

- data in motion (DIM) architecture,

- data virtualization architecture,

- ETL architecture,

- ESB architecture,

- CEP architecture, and

- content management architecture.

Within each of these disciplines, there are a variety of technologies that for the most part are far from inexpensive. These require subject matter experts to match the needs of the organization with the most appropriate technologies, standards, and frameworks.

Within information architecture also exists data life cycle management (DLM) (aka information life cycle management (ILM)). This is a policy-based approach to managing the flow of an information system’s data through its life cycle from creation to the time it becomes obsolete and must be properly disposed of.

As such, information architecture must ensure the inclusion of DLM policies into the appropriate information architecture frameworks, namely, data governance standards and frameworks. It should be noted that policy-based approaches are generally not successful unless their content is incorporated into the appropriate data governance standards and frameworks. DLM must also be coordinated with records information management (RIM) and legal hold (LH) in collaboration with areas such as content management architecture.

4.1.1 Master Data Management

In this section, we see that there are over 20 distinct architectural disciplines that comprise information architecture. As you probably noticed, these architectural disciplines were neither named master data management (MDM) nor had MDM participating in any part of their name.

MDM is a term that has been given a variety of different meanings and varying scope that as a term it has become relatively ineffective for communicating clearly.

As examples of what may be found within the scope of MDM:

- some variations on logical data architecture (LDA) (i.e., part of data architecture),

- all aspects of data governance,

- data glossaries of all types,

- occasionally data ownership,

- occasionally data access rights (i.e., data security),

- data masking—usually restricted to batch ETL from Production to a test environment,

- aspects of data stewardship,

- aspects of data discovery,

- occasionally semantic modeling,

- occasionally data mining,

- occasionally content management,

- some aspects of metadata—of which there are many,

- RDM—although MDM has numerous definitions for it including party and product master,

- occasionally data resellers, data concentrators, and data enhancement vendors,

- sometimes RIM and data archival,

- sometimes continuity management and disaster recovery (DR),

- sometimes LHs,

- occasionally data virtualization,

- increasingly ESB architecture,

- increasingly CEP architecture,

- all aspects of data quality—including data standardization, and

- most aspects of data profiling—the term “most” was used because there are aspects of data profiling for advanced data compression that MDM does not consider.

The variety of capabilities that participate under the umbrella of MDM is larger than the topics related to data that are not part of MDM (e.g., physical data modeling). Products marketed within the MDM space range between bundled offerings that are “all encompassing” and extremely expensive, both from a licensing and internal cost perspective, to offerings that are clearly focused and well priced for the value they deliver.

Even though a substantial portion of this book addresses topics that belong to MDM, the only reason that this section was added, with the name “MDM,” is to address the fact that someone will ask how an entire book about enterprise architecture could have missed the topic of MDM.

MDM is a term that has been given a variety of different meanings and varying scope that as a term it has become relatively ineffective for communicating a particular idea that anyone would immediately understand.

As examples of what may be found within the scope of MDM:

- some variations on LDA (i.e., part of data architecture),

- all aspects of data governance,

- data glossaries of all types,

- occasionally data ownership,

- occasionally data access rights (i.e., data security),

- data masking—usually restricted to batch ETL from production to a test environment,

- aspects of data stewardship,

- aspects of data discovery,

- occasionally semantic modeling,

- occasionally data mining,

- occasionally content management,

- some aspects of metadata—of which there are many,

- RDM—although MDM has numerous definitions for it including party and product master,

- occasionally data resellers, data concentrators, and data enhancement vendors,

- sometimes RIM and data archival,

- sometimes continuity management and DR,

- sometimes LHs,

- occasionally data virtualization,

- increasingly ESB architecture,

- increasingly CEP architecture,

- all aspects of data quality—including data standardization, and

- most aspects of data profiling—the term “most” was used because there are aspects of data profiling for advanced data compression that MDM does not consider.

The variety of capabilities that participate under the umbrella of MDM is larger than the topics related to data that are not part of MDM (e.g., physical data modeling, name and address standardization, and match and merge). Products marketed within the MDM space range between bundled offerings that are “all encompassing” and extremely expensive, both from a licensing and internal cost perspective, to offerings that are clearly focused and well priced for the value they deliver.

Even though a substantial portion of this book addresses topics that belong to MDM, the only reason that this section was added, with the name “MDM,” is to address the fact that someone will ask how an entire book about enterprise architecture could have missed the topic of MDM.

My advice on MDM is to first distill out the disciplines related to it and address them one at a time including those processes that support their governance, starting with:

- LDA

- business data glossary

- RDM

- code tables reference data—(e.g., country codes) including all codes that may be shared across applications or may be useful for business analytics and reporting including all forms of product and customer segmentation

- files that serve as reference data—(e.g., pricing feed) including all externally acquired files that can be used to support marketing, pricing, or risk analysis

- LDA reference data subject areas—(e.g., chart of accounts, customer, product, vendor, distribution channel) including any data that can be shared across the organization

The goal is to standardize all information with which management may want to analyze the business activity, such that the data representing the same concepts all reference the same names and codes. When done properly, it becomes possible to correctly determine inventory levels, costs, margins, and profitability across what may have previously been disparate collections of data that don’t match.

That said, when asked to kick off an MDM initiative, the following information is useful to gather from business and IT resources before one engages the appropriate architectural disciplines.

4.1.1.1 Business Perspective

- Is there a chief customer officer (CCO) role in the organization?

- Is procurement centralized?

- Is product development centralized?

- What is the desired business direction of the organization?

- What is the business strategy for moving the organization in the desired business direction?

- What is necessary for the business strategy to be successful?

- How would you define the concept of “your biggest competitor”?

- What company would you consider to be your biggest competitor(s)?

- What would you say is their core competency?

- What is the core competency of your organization?

- What core competencies are necessary to implement your organization’s business strategy?

- What is necessary to hone the necessary core competencies?

- What data informs and drives the necessary core competencies?

- Where does the data that informs and drive these necessary core competencies originate?

- What business capabilities do you have insight into?

- What would are the biggest business pain points in these business capabilities and why?

- Given the desired business direction what pain points are likely to emerge?

- What data enters the organization from your business areas?

- What business capabilities outside your own use data from other business areas?

- What data originates from your business area?

- What business capabilities outside your own use data originated in your business area?

- What data does your area use that enters the organization from a different business area?

- What data does your area use that originates in a different business area?

- What data that originated within your area does your area update?

- What data that originated elsewhere does your area update?

- What is the definition of customer including any classification schemes for customers?

- Is there a customer hierarchy across the organization?

- What is the definition of product including any classification schemes for products?

- Is there a product hierarchy across the organization?

- Are products uniquely and consistently identified across the organization?

- What is the definition of vendor including any classification schemes for vendors?

- Is there a vendor hierarchy across the organization?

- What is the process for handling LHs?

- After customer, product, and vendor what would you say is the next most important data?

- What percent of staff are primarily maintaining or developing new reports?

- What percent of staff are primarily monitoring and maintaining data quality?

- What is the overall budget used for developing new reports for your area of the business?

- What is the overall budget used to maintain data quality within your area of the business?

4.1.1.2 Business IT Perspective

- What infrastructure is used to support each business capability within your area?

- What external software applications are used to support each business capability in your area?

- What internal software applications are used to support each business capability in your area?

- What software products (MS Excel) are used to support each business capability in your area?

- What software products have business rules to support each business capability in your area?

- For each business capability what types of reports, ad hoc queries, and data analysis are used?

- What types of reports, ad hoc queries, and analysis are produced for executive management?

- What types of reports, ad hoc queries, and analysis are produced for internal oversight?

- What types of reports, ad hoc queries, and analysis are produced for regulatory bodies?

- What business metadata exists for report content and who maintains it?

- What is being done to remediate any lack of business metadata?

- What data quality issues if any would you say impede any of these types of reports?

- What efforts are being made to address data quality issues?

- What is the process for decommissioning business automation?

- To what degree (%) do data models have meaningful business definitions?

- To what degree (%) have data model business definitions been validated by business?

4.1.1.3 IT Perspective

- What is an LDA and is there one for the organization?

- What life cycle does the PMO use for data centric data warehouse initiatives?

- What life cycle does the PMO use for data centric data governance initiatives?

- What information architecture disciplines are you aware of within the organization?

- What data governance architecture disciplines are you aware of within the organization?

- What RDM disciplines are you aware of within the organization?

- What reporting architecture disciplines are you aware of within the organization?

- What reporting technologies are you aware of within the organization?

- What data stewardship disciplines are you aware of within the organization?

- Is DIM architecture restricted to ETL, ESB, and CEP, and which brands of each?

- Is there an ETL CASE tool in place?

- Who determines data ownership?

- Who determines ad hoc data access rights?

- Who determines canned report data access rights?

- Who determines cyclical canned report data access rights?

- Which report types use a master data source?

- Which report types do not use a master data source?

- What are the business and IT purposes for content management technologies?

- Which products are used for content management?

- How is content within each content management repository organized?

- What is the content management ontology for content?

- What is the process for managing content?

- What databases/files/content management repositories house each type of data (e.g., customer, product, vendor, distribution channel, employee, contracts)?

- What is the data lineage for each type of data?

- What are the data quality issues for each type of data?

- Is each type of data cleansed uniformly?

- Is each type of data standardized uniformly?

- Is each type of data integrated uniformly?

- What would business metadata characteristics are captured in data models?

In summary, there are absolutely great architectural patterns available to address various MDM-related challenges in cost-effective ways. Before arriving at one, however, it is critical to understand the Web of desktop software and manual processes that have been deployed to compensate for the lack of unique identifiers for data that is shared across the organization, as well as the Web of desktop software tools and manual processes that perform application integration among business systems and reporting platforms.

4.1.2 Logical Data Architecture

When developing an architecture for anything, whether that thing consists of things that are tangible, intangible, or both, it forms a foundation and foundations are clearly best when they are stable.

As mentioned earlier, the most stable foundation upon which one may organize the parts in a control system—software that operates machinery—is the discrete hardware components that one can point to and touch (e.g., anemometer, throttle). The LDA for a simple control system consisting of just these two parts would be one data subject area for the anemometer and one for the throttle.

In contrast, the role of LDA in information systems is somewhat similar to that of hardware architecture in control systems. In information systems, a well-formed framework formed by the business data can be extremely stable. If well formed in accordance with a rigorous LDA approach, the data architecture would be stable not only for the particular line of business in the particular company, but it would be stable for that line of business across all companies, geographic regions, and political jurisdictions operating the same line of business.

This is not to mean that new data points will not be discovered in one company versus another; however, it does mean that the LDA framework will be stable for use in areas such as application architecture, data warehouse architecture, and business data glossary architecture.

To describe an LDA in more detail, the first thing to remember is that it is a business artifact. The LDA diagram is an illustration of all data and information that is related to the enterprise.

For example, this includes any data about the business, whether it can be found in a computer file or database, e-mail, text message, tape-recorded telephone conversation, on an index card or a sheet of paper in a file cabinet, or in someone’s head. It also includes data that is sourced externally and brought into the enterprise.

Examples of external data include:

- code tables—ISO country codes, zip codes, currency codes

- external files—securities pricing feeds, prospective customer lists, demographic overlays

The purpose of the LDA is to depict clear distinctions of meaning among each and every business term used to represent every piece of data that can be used or referred to across the enterprise. With categories of clear distinction also comes a rigorous taxonomy.

Just as an expert wine taster is empowered with a vocabulary of almost a hundred words to describe several different characteristics of wine and its effects upon the palate and nose, the business vocabulary of a business domain empowers the participants of business, such as a business user and a developer, to communicate ideas more effectively.

Multiple interpretations and/or ambiguities are eliminated when vocabulary is used effectively. As an example, in insurance, the business meaning of something called Policy Payment Date communicates little information in comparison with something called a Policy Payment Due Date, Policy Payment Received Date, Policy Settlement Date, Policy Clearance Date, and Policy Funds Delivery Date.

As in the above example, individuals equipped with a comprehensive vocabulary are better equipped to communicate details about the business better than individuals that are not so prepared; however, the presence of a large vocabulary brings the burden of managing its documentation and disseminating it to others. As such, another important role of an LDA is to organize vocabulary, by creating easy to use categories for locating and managing it.

Such categories of information may differ from one another in one important aspect, their stability. Categories that are unstable can create massive amounts of work when information has to be reorganized to adhere to a new set of categories.

That said, perhaps the most important role of an LDA is to establish stable components of information that can be used as a foundation for other architectures, such as an object-oriented application architecture. The more stable the application architecture’s foundation, the more cost-effective maintenance will become.

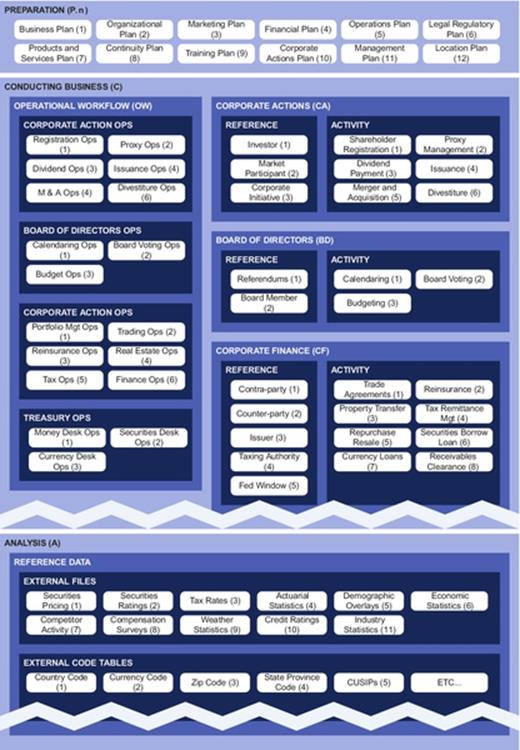

The LDA is a top-down hierarchy of data-related topics.

For example, if the business were a bagel store, we would start with three major categories of data:

- initial business setup

- conducting business

- analyzing business

For example, there are large amounts of data and information that are collected and generated well before the business is ever ready to make and sell its first bagel. There are business plans, determining the size and location of the store, its design and equipment, its staffing, its operational processes, various permits, and licensing and inspections, and then there is the matter of ownership, management, and the financial details.

The next distinct category of data and information is the data and information that is generated once the doors of the store have opened for business.

For example, using the same store, there are data and information collected and generated while conducting business, entering into transactions with customers, taking one time orders from walk-in customers, taking and supporting reoccurring orders from local convenience stores and restaurants, and performing the various operations of the business that keep supplies in check with the demands of the store’s products and services.

The third distinct category of data and information is generated only after the doors of the store are closed.

For example, the same store may wish to analyze which products and promotions were most and least popular and profitable. A trend analysis may reveal that business is cyclical while also growing at a rapid rate, or declining due to a competitor that just opened down the street. This third category of data uses the information generated in the previous category and analyzes it to help make informed management decisions.

Within each distinct category of data, there are additional distinct categories down that are driven down to the business data subject area, which is the lowest level category that is useful to compartmentalize data into.

Let’s consider what is typically the largest category of data, which is called “conducting business.” It consists of the information that is employed and generated while business is being conducted, as is legally referred to as “books and records” of the business.

This area of data and information embodies the results of each step within each operational process pertaining to customer acquisition, and engagement, as it encompasses all contractual agreements with customers for the products and services of the enterprise in support of revenue generation, and supporting services to the customer.

As defined by the Securities and Exchange Commission, “books and records” information includes purchase and sale documents, customer records, associated person records, customer complaints, and information specifically designed to assist regulators when conducting examinations of business practices and/or specific business transactions, including accessibility of books and records on a timely basis.

Within the major category of “conducting business,” the next data categories are:

- operational workflow,

- reference data, and

- activity data.

To define these, the operational workflow layer is data that represents the information about each request or event that initiate operational actions within a given operational process, and includes the assignment of resources that participate in the workflow, the steps they perform, the authorizations they receive, and the conclusion of the request. As such, the operations layer represents information about how, when, and by whom each workflow is exercised within the enterprise representing the workload, the resources involved, and the various logistics that describe how the operational processes occurred.

It should be noted that the same operational information is applicable whether the operation was performed in-house, outsourced, off-shored, or using any combination of the aforementioned.

In contrast, the reference data layer is the data that must first be established prior to a specific business transaction. To best understand this, let’s consider a simple sale transaction to purchase some number of bagels. Prior to the actual sale, the reference data that we would need to effect the purchase would include:

- product reference data—which would include the product characteristics, its unit price, and its volume discounted pricing,

- tax rate reference data—which would include the tax rates for the various possible jurisdictions within which the transaction to purchase bagels is being conducted, and

- customer reference data—which would include information about the customer, particularly institutional customers that may be purchasing significant quantities of product for delivery each morning.

The activity data layer is the data that records the all of the information about the sale, which may include orders that are made well in advance, and well as spot contracts of bagel transactions that are effected for immediate pickup or delivery.

In a large enterprise, the data subcategories of operational, reference, and activity data often include several data categories, such as:

- front office—the books and record data associated with the front office includes the business dealings that are the primary sources for generating revenue for the enterprise from sales and sometimes includes corporate finance.

As such, the front office represents the greatest profit center of the enterprise, which must fund all of the cost centers of the enterprise, which include middle office and back office areas.

Examples of front office business areas include the various sales, marketing, and distribution channels of the enterprise.

- corporate actions—the data and information of corporate actions include the business matters that affect shareholders, which may or may not require shareholders to weigh in by voting at a shareholder meeting or by mail in voting proxy

Examples of corporate actions include shareholder registration, payments of dividends, payment of debt on bonds, forward and reverse stock splits, mergers, acquisitions, and divestitures.

- board of directors—the data and information of board of directors include the business matters that are addressed by the board of directors, who are selected by the shareholders and governed by the company bylaws to provide guidance to the enterprise in the marketplace and who are accountable to ensure that the company conducts business in accordance to law.

The members of the board of directors are legally charged with the responsibility to govern the corporation and are accountable to the shareholders. The board of directors usually consists of a board chair, vice chair, secretary and treasurer, and one or more committee chairs, and board members.

The board is responsible for establishing and maintaining the corporation in the legal jurisdictions required, for selecting and appointing a chief executive, for governing the company with principles and objectives, for acquiring resources for the operation of the company, and for accounting to the public and regulators for the funds and resources of the company. The board of directors must also agree to corporate actions before shareholder voting takes place.

- corporate finance—the data and information of the corporate finance include the financial decisions of the corporation for managing investments, working capital, cash-on-hand, tax remittances, and the securities inventory to maximize the long-term value of the corporation as well as support the daily cash requirements of the operation.

Examples of corporate finance business dealings include the various activities with contra-parties and large corporations to support primary and secondary market transactions to maintain the appropriate cash balances and reserves, such as a repurchase resale agreement to raise overnight cash reserves.

The lowest level grouping within these is called subject areas of data.

A sampling of data subject areas that would typically be located within the data subcategories of operational, reference, and activity for corporate actions includes:

- corporate actions operational workflow

- shareholder registration operations

- dividend payment operations

- stock split issuance operations

- shareholder voting operations

- merger and acquisition operations

- divestiture operations

- corporate actions reference data

- investors/shareholders reference data

- corporate initiatives reference data

- market participants reference data

- corporate actions activity data

- shareholder registration

- dividend payment

- stock split issuance

- shareholder voting

- mergers and acquisitions

- divestitures

The properties of data subject areas are:

- stability of structure,

- encompasses all business information specifically within its scope,

- based upon business terminology and business concepts,

- manageable size for business stakeholders and IT staff,

- well-defined to achieve clear and unambiguous boundaries,

- creates distinctions among terms that could otherwise have multiple meanings,

- abstractions never obfuscate the true business meaning,

- organized to optimize ease of use,

- names and definitions demonstrate a consistent use of terminology, and

- compliant with the business data glossary.

The LDA has many use cases.

As examples, let’s consider the following:

- provides business context to all data,

- provides a rigorous taxonomy (aka business vocabulary) across all data,

- accelerates communication across business areas and with and across IT,

- acts as an accelerator to the majority of life cycles of the enterprise,

- software development life cycle,

- data centric life cycle,

- data governance life cycle,

- insourcing life cycle,

- outsourcing life cycle,

- merger and acquisition life cycle,

- divestiture life cycle,

- provides a business-oriented view into data governance,

- business data glossary,

- data ownership,

- business administered data access rights,

- organizes the conceptual data models as one per data subject area,

- drives database architectures,

- provides a framework for data warehousing,

- acts as an accelerator to object-oriented application architecture,

- forms the basis for a physical data architecture that identifies the physical location and ownership of data belonging to each category of data,

- maps to the business capability model, and

- teaches vast amounts of business knowledge to newly hired staff.

As a rough gauge to estimate the number of data subject areas to expect, a large enterprise, particularly a large financial conglomerate, may have an excess of 600 distinct subject areas of data. Of the total number of data subject areas, it is common for less than half to have automation associated with them.

Since the LDA is a business artifact, the best approach we have experienced is to train business users how to maintain and manage the LDA.

DIAGRAM Logical data architecture sample.

There will be departments that are the sole location across the enterprise that originate data for a given subject area of data. For these, data ownership and maintenance of the model are clear.

However, there will also be situations where multiple departments originate data for a given subject area of data. These situations will require information architecture to broker changes to data subject areas across the affected lines of business.

We live in a knowledge economy within which information is the currency. Having information with a clear understanding of its meaning allows us to operate more effectively across a large enterprise to facilitate informed decision making.

4.1.3 Data Governance

If you ask 10 different experts to define data governance, you are likely to get 11 different definitions.

Consider the following definitions:

- Data governance is a committee that convenes to prioritize what data-related initiatives should be funded, to make policies and rules, and to resolve issues among stakeholders.

- Data governance is an IT process that deploys technology to conduct data discovery and data quality initiatives.

- Data governance is a process that determines who can take what actions with what data, when, under what circumstances, using what methods.

- Data governance is the convergence of data management, data quality, data stewardship, data policies, and data security.

- Data governance is cultural shift away from thinking about data as a commodity toward thinking about data as a valuable asset class.

- Data governance is a cultural shift that treats data as an asset class realized through a data stewardship process that acts as a liaise between business and IT to define and organize business data from a business perspective standardizing the business vocabulary to facilitate communication and addressing the data-related needs of stakeholders across the enterprise.

While some of these definitions are notably better than others, none of these definitions can be held up as being completely accurate.

However, to begin to understand what data governance is more accurately, let’s first discuss what data governance is not and what it should not become for the well-being of the organization.

They are as follows:

- committee approach—organizations tend to form committees when they do not have a subject matter expert at a senior enough level to look out for the business interests of the enterprise.

Committees too frequently slow down the various data-related activities of the organization with bureaucracy in a struggle to establish the scope of their authority.

- IT owned process to determine authority over data—data must be governed by the business that is closest to where the particular subject of data originates.

Governing data from IT places those who understand the data and its business value the least in charge of it.

- process that determines data accountability—every category of data has a source, which is the only place that true data custodianship can pragmatically occur.

If we start by recognizing the natural areas of accountability that originate each category of data and then address the challenges that present themselves, we are on the correct path.

- convergence of many disciplines— data governance is responsible to determine the framework of architectural disciplines that comprise it and then integrate them in a manner that provides maximum business benefit.

The architectural disciplines that comprise data governance each require subject matter expertise associated with them.

- cultural shift—wholeheartedly data governance does require a cultural shift, although this is just part of what data governance is

The cultural shift is an important first step that serves to pave the way to achieve data governance within a large enterprise.

- The last definition listed was our own, and while it is not bad, it is still deficient.

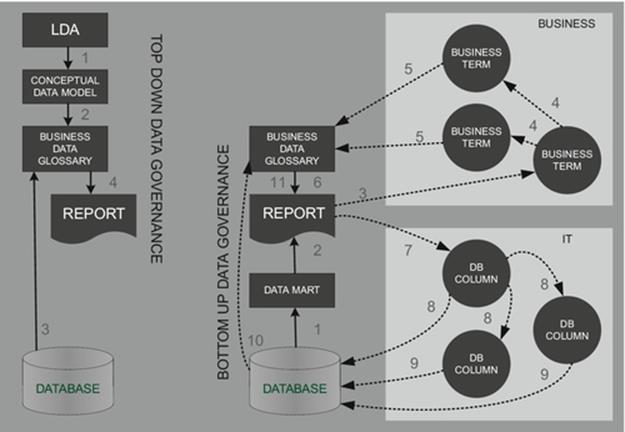

The modern view of data governance is that it is an area of specialization within information architecture that is responsible for a framework of subordinate architectural disciplines to manage data and its associated metadata as an asset of the enterprise in a comprehensive manner.

It begins with understanding the business scope of the entire data landscape of an enterprise and then expands into the following:

- business data glossary—to provide a taxonomy to uniquely identify each data point in a manner similar to establishing unique identifiers for each financial account, staff member, or unit of property

- business owner—to identify the organizational unit or units that originate data for each category of data

- business metadata—to establish a clear understanding of each data point collect the important business information about data assets in a manner similar to that of establishing important information about financial transactions, staff members, and property

- stakeholders—business owners need to have a 360° view of stakeholders (e.g., legal compliance, financial compliance, HR compliance, auditing, CCO) and what is required to protect their interests

- determining the framework of component architectures—candidate architectural disciplines for a large enterprise include:

- data stewardship—the stewardship of data begins within the business areas that originate it,

- RDM—consists of three area of specialization,

– product master management—a form of code table management that focuses on code tables that are generated internally within the enterprise,

– code table management—a form of code table management that focuses on code tables that are generated outside the enterprise,

– external file management—a form of file management that focuses on files that are purchased externally for use across the enterprise,

- DIM architecture—management of the technologies that transport data,

– extract transform and load/extract load and transform (ETL/ELT) architecture—management of a class of batch tools that transport data,

– Enterprise service bus (ESB) architecture—management of a class of real-time tools that transport data,

– data virtualization architecture—management of a class of technologies that render data from disparate sources while hiding the details of their data types, data formats, and database structures,

– complex event processing (CEP) architecture—management of a class of rules engine that inspects DIM for patterns that may be useful to detect and act upon,

- semantic modeling architecture—management of a class of models that either illustrate the relationships data values have among themselves or more advanced models, such as those that depict the usage, sources, and destinations of data,

- data modeling architecture—management of a class of models that depict conceptual, logical, or physical characteristics for classes of data, and

- data obfuscation architecture—management of the technologies that mask and/or encrypt data as it is transported.

As we can see, the modern view of data governance shifts the role of data governance from IT to the business taking the lead utilizing IT to support its automation requirements, which are focused on empowering the business to govern one of its most valuable business assets.

Now that we have established a good appreciate of what data governance is and is not, let’s look briefly at the not insignificant path of how to get there, or at least some high-level milestones.

The first step toward achieving this modern view of data governance begins when IT finally recognizes that it lacks expert knowledge about the data they manage.

To business users who observe the condition of their data, data is lots of database columns with definitions that are systematically missing, incomplete, misleading, or ambiguous. When IT eventually understands what a real business definition is, then they have taken the first step.

Some of the distinctions about “customer” that IT will eventually understand include:

- customer—any individual or legal entity that has one or more agreements or contracts with the enterprise or has amounts on deposit with the enterprise, including any of its domestic or international affiliates, or for whose person, family members, or property, the enterprise or affiliate otherwise provides protection, or an administrative or other service.

A legal definition of “customer” was established by the US Congress within the Gramm-Leach-Bliley Act (GLBA) in 1999 for financial companies as it relates to the treatment of individual persons. Although insurance companies are state regulated, they must minimally comply with federal legislation, such as the GLBA.

First, the GLBA defines a “consumer” as “an individual who obtains, from a financial institution, financial products or services which are to be used primarily for personal, family, or household purposes, and also means the legal representative of such an individual.”

A “customer” is a “consumer” that has developed a relationship with privacy rights protected under the GLBA.

For example, a “customer” is not someone simply using an automated teller machine or having a check cashed at a cash advance business. These are not ongoing relationships with the “consumer,” as compared to when the “consumer” participates in a mortgage loan, tax return preparation, or line of credit.

Privacy rights protected under the GLBA require financial institutions to provide a privacy notice to customers that explain what information the company gathers about them, where this information is shared, and how the company safeguards that information. An official policy that addresses each of the three areas of information is a legal requirement for financial companies. The privacy notice containing this information must be given to the “consumer” prior to entering into an agreement to do business. Additionally, the GLBA also requires customer notification of their right to “opt-out” under the terms of The Fair Credit Reporting Act.

The law also requires that companies keep a record of all customer complaints as part of the books and records of the firm, which would be associated with the customer.

- prospective customer—an individual or legal entity that has never had and/or does not presently have a customer relationship with the enterprise

- former customer—an individual or legal entity that previously had a customer relationship with the enterprise

- the concept of customer within the confines of a particular database—such as the business concept of customer versus the database containing only customers who are residents of Canada, and

- beneficiary—an individual or legal entity that is designated as the heir, recipient, successor, or payee only within the context of a specific customer contract, policy, or other agreement

The second step toward achieving this modern view of data governance begins when IT recognizes that they cannot realistically govern something (i.e., business data) that they lack expert knowledge in. Similarly, it would be like business users thinking that they should be governing the company’s firewall architecture.

At this point, IT is then somewhat prepared to adopt the realization that it has an even more important role to play than governing business assets, and that is to transform itself to empower the individuals that have the knowledge to govern.

This means that control shifts away from IT back to business users, which is where it actually all began decades ago. In the beginning, business users sat side by side with IT resources. At that time, many data assets were still not automated and hence were under direct control of the business.

Initially, when IT slowly began to grow into the behemoth that it is today, the separation of business and IT did not manifest itself as a problem immediately as many of the early IT resources was nearly equally as fluent in the business as the result of working so closely with the people in the business. It was only when business knowledgeable IT resources became increasingly scarce as IT continued to grow and become increasingly distant from the business.

Hence, in the modern view, there are 10 basic areas of data governance that belong to the business as opposed to IT.

The 10 areas of business-driven data governance, which can incidentally be consolidated into a data governance portal, include:

- business data glossary,

- business data ownership,

- business administered data access rights,

- business designated data masking templates for data leaving production,

- business designated canned report access and distribution,

- business data stewardship,

- business-driven data discovery,

- business-driven semantic modeling,

- data governance and architecture registry for business and IT artifacts, and

- consolidated business metrics in a data governance dashboard.

The new role of IT for data governance is to render the technologies to support these 10 areas usable to business users.

4.1.3.1 Business Data Glossary Architecture

Prior to written language, there was only spoken language. Spoken language only ever occurred one individual time, that is, unless there was the rare experience of an echo, where the same words could be heard exactly as spoken a second time. During this extensive historical period prior to written language, story tellers roamed the land to tell their stories of historical events. When the Greek myths and legends were told by these story tellers, there would invariably be subtle differences in the stories. It was not until Homer wrote down a version of each Greek myth and legend, he encountered that the variances found in the telling of myths and legends could be brought under relative control.

When written language first emerged using the alphabet as we know it, terms were not controlled by their spelling. Instead, terms were phonetically spelled, and within the same documents, the spelling of the same phonetically spelled words could and often did vary.

Plato, when reflecting upon the oral culture that surrounded him, recognized that the preliterate masses lacked the ability to truly think in a structured way when he wrote, “They have no vivid in their souls.” Without written words to capture ideas in a firm medium, how could anyone understand the numerous and complex ideas of others to ponder and weigh in ones’ mind? This was not just the discovery of the self, but the thinking self.

Eventually, when Newton developed his theories involving force, time, and mass, there were no formal definitions of these terms available to him at that time. Newton used these terms with new purpose and gave them new and precise definition for the first time. Without precise definitions for the terms we use, there is little ability to communicate to others the exact meaning of what we think.

Today, the notion of thinking and the recorded word are hardly separable. The recorded word is the prerequisite to conscious thought as we understand it. We know the exact spelling or usage of most words we employ, we can look up their meaning, we know there are reference books that use these words from which we can gather information, and we can put our thoughts about that information into a recorded form using those words to share ideas with others, using books, articles, e-mails, film, or online videos.

Once recorded, thoughts are able to become detached from the speaker speaking them. Instead, the thoughts become attached to the words so that they may be shared with others to learn and understand, and share with still other individuals. Transitioning from the oral culture to the written one is a journey that begins with the notion of things represented by sounds to the written word where language can further develop. The journey then continues from words to taxonomies with specific meaning and from taxonomies to logic.

Also from writing came mathematics. Derived data is the result of mathematics where the sentences or formulas that define derived data must be recorded to convey their derivation and meaning. If we look at the progression it starts with the emergence of the first written language around 3200 BC, then money around 2500 BC, with the emergence of numbers necessarily having to be somewhere between those time periods.

Note: The origin of numbers is debated to be well after the emergence of money which seems to be an unsupportable argument no matter how many pints of mead (i.e., fermented honey beverage predating both wine and beer) one consumes.

Regardless of the type of industry or commerce, at the core of all business and commerce is data, consisting mostly of numbers. As an example, modern energy companies analyze streams of data using distributed sensors and high-speed communications to determine where it should drill for natural gas or oil. The data points of these sensors however have precise definitions that anyone who would use them needs to understand.

While the breadth of data can vary significantly in organizations, the larger the business, generally the more data there is. However, the most interesting fact is that most data within these large enterprises is not even being recorded though it can hold the key to significantly higher productivity and profitability. As an example, large amounts of data about operational workflow in large organizations are routinely not collected.

That said many organizations spend large sums of money managing what are perceived as their other valuable assets. People assets are well managed with a vast array of HR policies and processes, and similarly, financial and property assets have meticulous oversight and management, and are even insured where possible.

However, when it comes to arguably the most valuable asset of all, data, often just the basics are collected and then few of the protections that exist for people, finance, and property assets have analogous counterparts to manage it as a company asset.

Data can be found in many locations. It can be found in computers of various types, on paper in file cabinets, index cards in boxes, in smartphones, and in people’s heads. Regardless of where it is, it is the life blood of almost every enterprise. However, for it to be useful, someone has to know where to find it and they have to be able to understand what it means.

In a way, what is needed is for business management to take ownership and responsibility of data assets the same way that corporate officers take ownership and responsibility for financial assets. But what does this mean?

Consider a bank manager who must account for every penny of his branch’s transactions. While resources are dedicated to ensure that every financial account is completely accurate to the penny, comparatively few resources are spent to ensure the underlying data collected from each transaction is equally valid.

For example, the customer’s name, address, phone number, tax ID number, birth date, nationality, mother’s maiden name, and occupation are data that are collected for a purpose. Yet no matter how well the data services organization of the enterprise secures and protects the data, the concern for the validity of its content is of less importance.

While many business users generally believe that the ownership of data and information is the responsibility of IT, others have correctly zeroed in on the fact that responsibility for data cannot reside with those who do not originate the data. IT is simply responsible for the safekeeping of the data across the many files, databases, disks, tapes, and other storage media, and the technology that supports them.

In a comparable analogy, accountants are responsible for organizing and reporting financial data that have been provided to them. Even so, they are not responsible for the business decisions and activities that generated those financial numbers. Whether it involves financial accounting or data accounting, the responsibility for the accuracy and completeness of the finances and the data must reside with the business.

As such, when someone in the business buys or sells an asset, the accountant tracks the financial asset, its cost basis, depreciation, appreciation, accretion, and so on. The accountant is not responsible for the transaction or the gain or loss of assets that resulted. The accountant’s responsibility is to organize these calculations and tabulations, and then accurately report on these figures to management and the necessary regulatory bodies.

Similarly, IT reports on the data that it organizes and safeguards for the business. Like the accountants, IT is responsible for reporting on the data, although it is not responsible for the accuracy and completeness of the data provided them.

The primary focus of data that is collected in an enterprise is usually to support the particular business operation within the particular line of business. However, the usefulness of that data across various other parts of the organization can extend well beyond that particular line of business.

As examples, it could be useful for:

- opportunities to cross sell products and services to customers,

- new product development decisions,

- merger and acquisition decisions,

- divestiture decisions,

- marketing decisions,

- expansion decisions,

- corporate restructuring decisions, and

- budgeting decisions.

Hence, to be useful across these different areas, the parts of the organization that collect data need to be aware of the impact of capturing data in a nonchalant manner. This includes eliminating a few bad habits, such as typing any characters as values into required fields just to get to their next activity faster.

These types of habits create and support entire industries, such as the development of data quality products. In the end, however, the only ones that have a chance at figuring out the correct data is the business.

Let’s also consider the usefulness of business areas managing the business metadata for data that they originate.

If we consider the alternative, which is usually having a team of data modelers in IT enter hundreds and thousands of definitions for data points for which they are not the subject matter experts, we find that the usefulness of the resulting definitions can range significantly.

For example, a small portion of data modelers research each data point with business users or industry reference materials, while a larger portion of data modelers leave the definition blank or they simply repeat the name of the data point as part of its definition.

I can’t speak to how other people react, but when I see a data point name like “Coupon Rate,” it disturbs me to see a definition the likes of “A coupon rate is the rate of a coupon.”

In contrast, a data steward or other individual that has a thorough understanding of the business would provide a somewhat more useful definition.

4.1.3.1.1 Coupon Rate

Definition: A coupon rate of a financial instrument (e.g., bond, note or other fixed income security) is the amount of interest paid by the issuer of the financial instrument per year expressed as a percentage of the “principal amount” or “face value” of the security, to the holder of the security, which is usually paid twice per year.

Purpose: The coupon rate of a financial instrument participates in calculations that determine the market price and the rate of return of the financial instrument that bears the coupon.

Data format: Coupon rates are represented or quoted in either fractions of 32nds or decimal.

Data sensitivity: Coupon rates are not considered sensitive data as there are neither external regulatory restrictions nor internal policies that restrict its disclosure for competitive or proprietary reasons.

Business synonyms: “fixed income interest,” “coupon yield.”

Subject area of data: This data point is based within the business context of the data subject area named, “PRODUCT.”

The difference in usefulness to other business stakeholders and IT is simply remarkable. One approach actually costs the enterprise money to have a high-priced IT resource to perform data entry of content that is of no use to anyone, while another approach takes a less expensive subject matter expert to record knowledge that will be useful to mentor new staff and to convey the true business meaning of the data point to every stakeholder across the enterprise.

In fact, if we were to disclose a more complete set of useful business metadata that a business subject matter expert could record, we would consider recording the following business metadata characteristics:

- LDA data subject area,

- unique business data glossary name,

- business trustee for the data glossary field,

- business definition,

- business purpose and usage,

- format of the data,

- data sensitivity, such as confidential for customer privacy,

- specific regulatory or compliance rules that are deemed applicable,

- synonyms used in the industry,

- labels typically used in applications, forms, and reports,

- business processes that originate the data content,

- business area(s) and business processes that typically use, report, or analyze the data content,

- related business data glossary fields,

- source of the data in the business where the data point originates, or if calculated, what is the formula for its derivation,

- basic business data class (e.g., date, amount, quantity, rate) and required level of precision,

- whether the data point is sent to the general ledger (GL),

- whether the data point is sent to external organizations, such as regulatory bodies,

- mapping to external standards, such as ACORD and MISMO,

- complete or sample business values or domain values, and

- global variances in definition, such as with “personally identifying information” (PII) where the European Union includes small businesses as those that have an income under ten million Euros and less than 50 employees.

4.1.3.1.2 Identifying the Source of Data

Once the business has thoroughly defined a business data point, then it is the role of IT to work with the data steward to locate its sources from across the various production databases of the company. Each source will have its own profile for data quality (e.g., data sparseness, accuracy, numerical precision versus rounding, standardization of format and values, and segment of the business, such as pertaining only to Canadian business)

4.1.3.1.3 Extracting Data to a Landing Zone

Once the sources of data have been confirmed, it can be extracted into a landing zone, either a conventional landing zone or a low cost big data landing zone. The considerations here will involve the appropriate frequency with which data extracts to the landing zone need to occur, or whether a real-time feed from the production database will be required to stream data as it is written, or updated.

The answer to this will depend upon the real-time requirements, and to an extent, it will depend upon the degree of data cleansing, standardization, and integration that may ultimately be required before the data can be useful to a real-time business capability.

What’s important here is that the extraction stage provides valuable metadata to the business data glossary to communicate to the user which applications the data is available from, and the business assessment of the degree of reliability of the data from each source being reliable.

These types of metrics can provide guidance to stakeholders that may need the data to request additional services from IT to improve the quality of the data by enhancing the application software, provide monitoring and alerts on data quality, assist business users in viewing and correcting data, or request additional services from data stewards to develop training for business users to capture the information more reliably.

4.1.3.1.4 Data Profiling

Data profiling is mostly a consideration during the development process, but in some cases can continue into production. Once landed, the data can be analyzed so that specifications can be developed to perform data cleansing (aka scrubbing) on the data.

As data cleansing occurs, the original data should be retained partially for historical purposes and partially for the possibility of needing to reprocess the data if issues arose downstream that required corrections at this early stage of processing.

Once cleansed, the data is placed in the next layer of the landing zone, with statistics and metrics about the cleansing process being stored for display into the business data glossary.

4.1.3.1.5 Data Standardization

When even the same business data is sourced from different application databases, a number of issues tend to arise. The first issue that becomes apparent is that each application tends to use different codes and/or different data types for the same things, which is easy to remedy with a good RDM code tables initiative. The additional issues tend to demonstrate somewhat greater complexity, such as:

- values of a data point not having a one to one relationship with the corresponding data point in another system,

- values of a data point corresponding to domain values that are outside the domain values of the same data point in another system,

- values of a data point containing values that correspond to something that should have been a separate data point, but was incorrectly combined together.

As in each previous phase, the standardized data is placed in yet the next layer of the landing zone, with statistics and metrics about the standardization process being stored for display into the business data glossary.

4.1.3.1.6 Data Integration

Once standardized data is available, it is possible to prepare specifications for data integration. The ideal target state for data integration is one that closely resembles the conceptual data model that data stewards and business users develop for the particular data subject areas involved.

As in each previous phase, the integrated data is placed in yet the next layer of the landing zone, with statistics and metrics about the integration process being stored for display into the business data glossary.

These metrics can be far more comprehensive and can include for each data point the following:

- sparseness,

- reliability,

- mean,

- median,

- standard deviation,

- skew, and

- any other standard or proprietary calculation.

As for the usefulness of metrics such as sparseness and reliability, if a metric for a business data glossary entry indicates that data values are missing 30% of the time or have invalid values 10% of the time, then using that business data glossary data point to organize totals or subtotals may not be practical.

At this point, the integrated data can be stored within an operational data store (ODS) layer that mirrors the data subject areas of the LDA.

If the resulting ODS layer is too large and complex to support ad hoc joins using a standard relational database management system (DBMS), then one more step should be undertaken to migrate it into a high-performance analytical data base that can support real-time joins and analytics pertaining to one or more technologies associated with big data architecture.

The result has far reaching implications for the many stakeholders of business and IT. For the first time since automation, business will have taken back control of their data asset. In addition, the data with the highest priority to the business has meaningful definitions that can be communicated across the company.

4.1.3.1.7 Shopping Cart

The next step for the business data glossary is to make it go somewhere. In other words, let’s suppose the business data glossary was also a business data catalog for business users. A business user or stakeholder can look up the data points that they need to see in a report, confirm that the data points are in fact the correct ones based upon their business metadata, and then put them in a shopping basket for reporting.

Once the shopping cart contains everything the particular business user requires, then they can automatically get a list of existing reports that they can run from a mashup technology, or they can easily assemble their own report using a mashup technology.

The benefits from a business perspective are compelling, such as:

- the correct data points required by business can be identified in seconds as opposed to negotiating months with various IT resources,

- from this point forward the analysis to determine what applications to source the data is performed just once,

- from this point forward the development work to land the data from production is performed just once,

- from this point forward the analysis and programming to cleanse the data is performed just once,

- from this point forward reporting results no longer display inconsistencies that resulted from different rounding factors and data cleansing logic,

- from this point forward the analysis and programming to standardize the data is performed just once,

- as additional data points are sourced, cleansed, standardized, and integrated, they become permanently available to stakeholders,

- the speed with which new reports can be developed is a fraction of what it was previously,

- the cost of new reports to be developed is a fraction of what it was previously,

- the development effort that ties up resources to develop duplicate reports is eliminated,

- the costs associated with developing duplicate reports are eliminated,

- the licensing costs associated with a high-end mashup technology is a fraction of the costs associated with non-mashup technologies,

- the ability to gather metrics about data usage across the entire data landscape is facilitated by a high-end mashup technology,

- data masking for ad hoc reporting is automatically facilitated by a high-end mashup technology,

- a high-end mashup technology can source data from an HDFS and/or HBase environment, and

- a high-end mashup technology will support high-speed data streaming for critical real-time reporting, analysis, and alerting.

As is now apparent, a modern business data glossary places a great deal of knowledge about the data assets in the hands of the business. This allows business to make informed decisions about how to prioritize the activities of IT to address data-related issues that are of greatest priority to the business and reveals what data assets are available and their condition for use in business decision making where informed decisions are required.

As such, business data glossary architecture is the architectural discipline responsible for building the foundation for the data assets of the company. Standing on the foundation of an LDA, the business data glossary architecture has synergies with and impacts every area of the business as well as all of the other architectural disciplines within business and information systems architectures.

4.1.3.2 Data Ownership Architecture

The next valuable area associated with data governance is data ownership architecture. The foundation for data ownership is also the LDA. Using the LDA as a source that identifies all subject areas of data across the enterprise, each subject area is designated as being owned by the business department or business departments that originate the data for that topic of data.

The principle at work here is that the most appropriate individuals to act as custodians for a collection of data points are the individuals that are responsible for originating the data for those data points. The department head, or their designated data steward, would therefore act as the person managing responsibility for that portion of the data asset.

In our earlier discussion of LDA, we know that there are many business categories that data may be organized into.

As examples of this:

- when considering the data that is originated during business preparation, the owners are the company founders,

- when considering the data that pertains to any operational workflow while conducting business, this data is originated with the workflow inside the department whose workflow it is,

- when considering the business activity data while conducting business, this data is originated with the business users that perform the activity, such as the board of directors creating conducting corporate action activity, and

- when considering analysis activities, the data originates in the business area that conducts business intelligence.

However, not all data originates within the company, and therefore data ownership is at times external to the company. In fact, there are several situations when data is originated outside the company, such as “reference data.”

While not all reference data originates outside the company, such as the reference data of corporate initiatives and company bylaws associated with “corporate actions,” there is a considerable amount of information about many reference business subject areas of data that do originate from outside the company.

Examples of data that originates outside an enterprise include:

- customer data—which can be entered through a Web interface by the customer,

- distribution channel data—which can be entered through a Web interface by the distribution channel,

- shareholder data—which is provided via feeds from brokerage firms whose customers have acquired financial instruments in your enterprise,

- issuer data—provided from external feeds,

- vendors—which can be entered through a Web interface by the vendor,

- RDM code tables—which originate from various external authorities, such as the International Organization for Standardization (ISO) which originates many categories of data including country codes and currency codes, and

- RDM external files—which originate from various authorities and may include topics, such as economic data and demographic data.

When data originates externally, it is still important to designate one or more internal departments and data stewards to act as the custodian for those data assets within the enterprise. As an example, information architecture, reference data architecture, or the data governance area of data stewardship and their associated subject matter experts are good candidates to act as data custodians for data that is typically shared across the enterprise.

The role of a data owner should include:

- fiduciary responsibilities of the data assets that are “owned” by the particular department including the proper designation of business metadata,

- mentor department personnel in their role as “owners” of the particular class of data assets,

- liaise with the individuals that are stakeholders in the data “owned” by the department,

- understand the data quality-related issues affecting the data assets “owned” by the department and their impact upon other stakeholders,

- coordinate with IT to prioritize and remedy the business critical issues affecting the data assets “owned” by the department,

- facilitate the reporting needs of the department by coordinating with IT to source, cleanse, standardize, and integrate additional data points from production applications,

- convey to IT any reporting requirements in which their assistance is requested,

- with regard to sensitive data, administer access rights to the other departments across the enterprise based upon their functional need to know,

- coordinate to acquire from the appropriate authorities the necessary RDM code tables or external files by the enterprise

As such, data ownership architecture is the data governance-related architectural discipline responsible for the frameworks associated with determining data ownership and subsequent fiduciary responsibility over the data assets that are owned by the department.

4.1.3.3 Data Access Rights Architecture