Implementing Cloud Storage with OpenStack Swift (2014)

Chapter 7. Tuning Your Swift Installation

OpenStack Swift's tremendous flexibility comes at a cost: it has a very large number of tuning options. Users running Swift as a private cloud will therefore need to tune their installation to optimize performance, durability, and availability for their unique workload. This chapter walks you through a performance benchmarking tool and the basic mechanisms available to tune your Swift cluster.

Performance benchmarking

There are several tools that can be used to benchmark the performance of your Swift cluster against a specific workload. COSBench, ssbench, and swift-bench are the most popular tools available. While swift-bench (https://pypi.python.org/pypi/swift-bench/1.0) used to be a part of the Swift project, and is therefore a common default benchmarking tool, this chapter discusses COSBench, given its completeness and the availability of graphical user interfaces with this tool.

COSBench is an open source distributed performance benchmarking tool for object storage systems. It is developed and maintained by Intel. COSBench supports a variety of object storage systems, including OpenStack Swift.

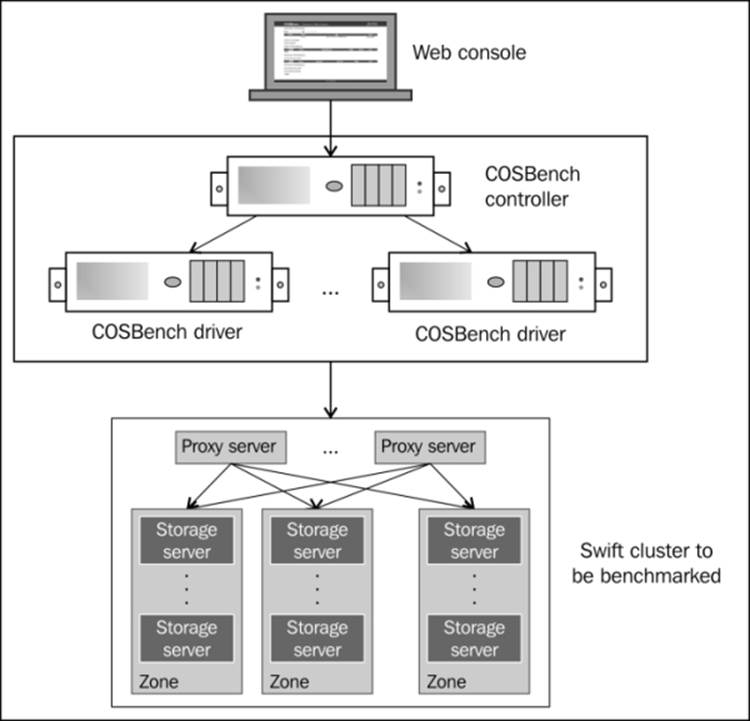

The physical configuration of COSBench is shown in the following diagram:

The key components of COSBench are:

· Driver (also referred to as COSBench driver or load generator):

· Responsible for workload generation, issuing operations to target cloud object storage, and collecting performance statistics

· In our test environment, the drivers were accessed via http://10.27.128.14:18088/driver/index.html and http://10.27.128.15:18088/driver/index.html

· Controller (also referred to as COSBench controller):

· Responsible for coordinating drivers to collectively execute a workload, collecting and aggregating runtime status or benchmark results from driver instances, and accepting workload submissions

· In our environment, the controller was accessed via http://10.27.128.14:19088/controller/index.html

A critical item to keep in mind as we start with COSBench is to ensure that the driver and controller machines do not inadvertently become performance bottlenecks. These nodes need to have adequate resources.

Next, the benchmark parameters are tied closely to your use case, and they need to be set accordingly. Chapter 8, Additional Resources, explores use cases in more detail, but a couple of benchmark-related examples are as follows:

· Audio file sharing and collaboration: This is a warm data use case, where you may want to set the ratio of read requests to write requests as relatively high, for example, 80 percent. The access rate for containers and objects may be relatively small (in tens of requests per second) with rather large objects (say a size of hundreds of MB or larger per object).

· Document archiving: This is a somewhat cold data use case, where you may want to set a relatively low read request to write request ratio, for example, 5 percent. The access rate for containers and objects may be high (in hundreds of requests per second) with medium size objects (say a size of 5 MB per object).

Keep these use cases in mind as we proceed.

In our test setup, COSBench was installed on an Ubuntu 12.04 operating system. The system also had JRE, unzip, and cURL installed prior to installing COSBench Version 0.3.3.0 (https://github.com/intel-cloud/cosbench/releases/tag/0.3.3.0). Installation takes only a few steps:

unzip 0.3.3.0.zip

ln -s 0.3.3.0/ cos

cd cos

chmod +x *.sh

More details on the installation and validation that the software has been installed correctly can be obtained from the COSBench user guide located at https://github.com/intel-cloud/cosbench. With the installation of COSBench, the user has access to a number of scripts. Some of these scripts are as follows:

· start-all.sh / stop-all.sh: Used to start/stop both controller and driver on the current node

· start-controller.sh / stop-controller.sh: Used to start/stop only controller on the current node

· start-driver.sh / stop-driver.sh: Used to start/stop only driver on the current node

· cosbench-start.sh / cosbench-stop.sh: These are internal scripts called by the preceding scripts

· cli.sh: Used to manipulate workload through command lines

The controller can be configured using the conf/controller.conf file, and the driver can be configured using the conf/driver.conf file.

The drivers can be started on all the driver nodes using the start-driver.sh script, while the controller can be started on the controller node using the start-controller.sh script.

Next, we need to create workloads. A workload can be considered as one complete benchmark test. A workload consists of workstages. Each workstage consists of work items. Finally, work items consist of operations. A workload can have more than one workstage, and workstages are executed sequentially. A workstage can have more than one work item, and the work items within a workstage are executed in parallel.
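The hierarchy described above can be pictured with a small data model. The following is only a sketch for illustration: the class names and the describe helper are hypothetical and are not part of COSBench, which reads this structure from an XML file.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Operation:
    type: str   # for example "read" or "write"
    ratio: int  # share of this operation within its work item, in percent


@dataclass
class Work:
    name: str
    workers: int
    operations: List[Operation] = field(default_factory=list)


@dataclass
class Workstage:
    name: str
    works: List[Work] = field(default_factory=list)  # executed in parallel


@dataclass
class Workload:
    name: str
    workstages: List[Workstage] = field(default_factory=list)  # executed in order


def describe(workload: Workload) -> List[str]:
    """Return one line per workstage, in execution order."""
    lines = []
    for step, stage in enumerate(workload.workstages, start=1):
        parallel = ", ".join(f"{w.name} ({w.workers} workers)" for w in stage.works)
        lines.append(f"{step}. {stage.name}: {parallel}")
    return lines


# Hypothetical three-stage workload used only to exercise the model.
demo = Workload(
    name="demo",
    workstages=[
        Workstage("prepare", [Work("prepare", 16, [Operation("prepare", 100)])]),
        Workstage(
            "normal",
            [Work("normal", 128, [Operation("read", 5), Operation("write", 95)])],
        ),
        Workstage("cleanup", [Work("cleanup", 16, [Operation("cleanup", 100)])]),
    ],
)

for line in describe(demo):
    print(line)
```

Each Workstage holds a list of Work items because several of them may run side by side, while the order of the workstages list stands for the sequential execution of stages.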

There is one normal type (main) and four special types (init, prepare, cleanup, and dispose) of work. Type main is where we will spend the rest of this discussion; the key parameters for this phase are as follows:

· workers is used to specify the number of workers used to conduct work in parallel, and thus control the load generated

· runtime (plus rampup and rampdown), totalOps, and totalBytes are used to control other parameters of the load generated, including how to start and end the work

The main phase has the operations of read, write, and delete. You will typically want to specify the number of containers and objects to be written, and the object sizes. Numbers and sizes are specified as expressions, and a variety of options, such as constant, uniform, and sequential, are available.

The workload is specified as an XML file. We will now create a workload that is fashioned after the document archiving use case discussed earlier. It uses a workload ratio of 95 percent writes and 5 percent reads. The drivers will spawn 128 workers for the main stage. Note that the listing sets runtime to 300 seconds (with rampup set to 100), so set runtime to 3600 for a one-hour run. The object size is fixed at 5 MB, and object numbers 1 through 100 are used in each of the 32 containers. The workload is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<workload name="LTS2-UAT-V1-128W-5MB-Baseline" description="LTS2 UAT workload configuration">

<auth type="swauth" config="username=8016-2588:evault-user@evault.com;password=xxxx;url=https://auth.lts2.evault.com/v1.0"/>

<storage type="swift" config=""/>

<workflow>

<workstage name="init" closuredelay="0">

<work name="init" type="init" workers="16" interval="20"

division="container" runtime="0" rampup="0" rampdown="0"

totalOps="1" totalBytes="0" config="containers=r(1,32)">

<operation type="init" ratio="100" division="container"

config="objects=r(0,0);sizes=c(0)B;containers=r(1,32)" id="none"/>

</work>

</workstage>

<workstage name="prepare" closuredelay="0">

<work name="prepare" type="prepare" workers="16" interval="20"

division="object" runtime="0" rampup="0" rampdown="0"

totalOps="1" totalBytes="0" config="containers=r(1,32);objects=r(1,50);sizes=u(5,5)MB">

<operation type="prepare" ratio="100" division="object"

config="createContainer=false;containers=r(1,32);objects=r(1,50);sizes=u(5,5)MB" id="none"/>

</work>

</workstage>

<workstage name="normal" closuredelay="0">

<work name="normal" type="normal" workers="128" interval="20"

division="none" runtime="300" rampup="100" rampdown="0"

totalOps="0" totalBytes="0">

<operation type="read" ratio="5" division="none"

config="containers=u(1,32);objects=u(1,50);" id="none"/>

<operation type="write" ratio="95" division="none"

config="containers=u(1,32);objects=u(51,100);sizes=u(5,5)MB" id="none"/>

</work>

</workstage>

<workstage name="cleanup" closuredelay="0">

<work name="cleanup" type="cleanup" workers="16" interval="20"

division="object" runtime="0" rampup="0" rampdown="0"

totalOps="1" totalBytes="0" config="containers=r(1,32);objects=r(1,100);">

<operation type="cleanup" ratio="100" division="object"

config="deleteContainer=false;containers=r(1,32);objects=r(1,100);" id="none"/>

</work>

</workstage>

<workstage name="dispose" closuredelay="0">

<work name="dispose" type="dispose" workers="16" interval="20"

division="container" runtime="0" rampup="0" rampdown="0"

totalOps="1" totalBytes="0" config="containers=r(1,32);">

<operation type="dispose" ratio="100"

division="container"

config="objects=r(0,0);sizes=c(0)B;containers=r(1,32);" id="none"/>

</work>

</workstage>

</workflow>

</workload>
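Before submitting a workload file, it can help to check it mechanically. The sketch below parses a trimmed copy of the main stage and sums the operation ratios in each work item, on the assumption that the ratios within a work item are meant to add up to 100, as they do in the listing. The helper names ratio_totals and bad_works are hypothetical, and the embedded XML is a shortened sample rather than the full workload.

```python
import xml.etree.ElementTree as ET

# Shortened sample containing only the main stage of the workload.
SAMPLE = """<workload name="demo">
  <workflow>
    <workstage name="normal">
      <work name="normal" type="normal" workers="128" runtime="300">
        <operation type="read" ratio="5"
                   config="containers=u(1,32);objects=u(1,50)"/>
        <operation type="write" ratio="95"
                   config="containers=u(1,32);objects=u(51,100);sizes=u(5,5)MB"/>
      </work>
    </workstage>
  </workflow>
</workload>"""


def ratio_totals(xml_text):
    """Map 'workstage/work' to the sum of its operation ratios."""
    root = ET.fromstring(xml_text)
    totals = {}
    for stage in root.iter("workstage"):
        for work in stage.iter("work"):
            key = f"{stage.get('name')}/{work.get('name')}"
            totals[key] = sum(
                int(op.get("ratio", "0")) for op in work.iter("operation")
            )
    return totals


def bad_works(xml_text):
    """List the work items whose ratios do not add up to 100."""
    return [key for key, total in ratio_totals(xml_text).items() if total != 100]


print(ratio_totals(SAMPLE))
print(bad_works(SAMPLE))
```

Because the parser rejects malformed markup, the same check also catches structural slips such as an unclosed tag before the file reaches the controller.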

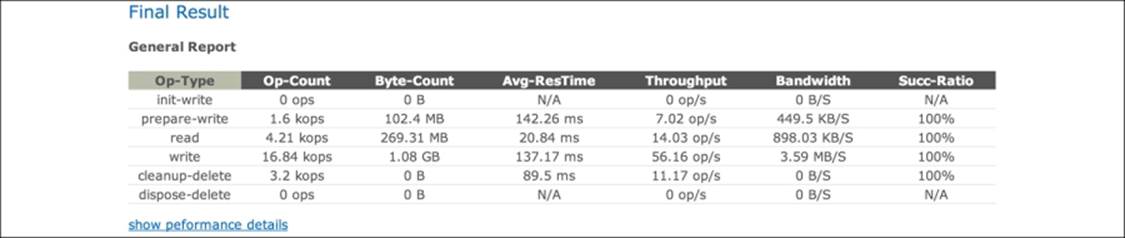

The result of workload is a series of reported metrics: throughput as measured by operations/second, response time measured by average duration between operation start to end, bandwidth as measured by MBps, success ratio (percentage successful), and other metrics. A sample unrelated report is shown in the following screenshot:
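To make the metric definitions concrete, the following sketch computes them from a list of per-operation records. It is an illustration under stated assumptions, not COSBench's own code: the Sample record and summarize function are hypothetical, only successful operations count toward throughput, response time, and bandwidth, and 1 MB is taken as 10^6 bytes. COSBench's exact definitions may differ.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Sample:
    start: float     # operation start time, in seconds
    end: float       # operation end time, in seconds
    size_bytes: int  # payload size of the operation
    ok: bool         # whether the operation succeeded


def summarize(samples: List[Sample], window_seconds: float) -> Dict[str, float]:
    """Aggregate per-operation samples into report-style metrics."""
    succeeded = [s for s in samples if s.ok]
    n_ok = len(succeeded)
    total_time = sum(s.end - s.start for s in succeeded)
    total_bytes = sum(s.size_bytes for s in succeeded)
    return {
        "throughput_ops_per_s": n_ok / window_seconds,
        "avg_response_ms": 1000 * total_time / n_ok if n_ok else 0.0,
        "bandwidth_MBps": total_bytes / 1e6 / window_seconds,
        "success_ratio_pct": 100 * n_ok / len(samples) if samples else 0.0,
    }


# Made-up samples: three successful 5 MB operations and one failure in 2 seconds.
samples = [
    Sample(0.0, 0.5, 5_000_000, True),
    Sample(0.5, 0.75, 5_000_000, True),
    Sample(1.0, 1.75, 5_000_000, True),
    Sample(1.0, 1.5, 5_000_000, False),
]
report = summarize(samples, window_seconds=2.0)
print(report)
```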

If the Swift cluster under test stands up to your workload, you are done. You may want to perform some basic tuning, but this is optional. However, if the Swift cluster is unable to cope with your workload, you need to perform tuning.

The first step is to identify bottlenecks. See Chapter 5, Managing Swift, for tools to find performance bottlenecks. Nagios or swift-recon may be particularly well suited for this. Of course, simple tools such as top may be used as well. Once you have isolated the bottleneck to particular servers and to the underlying resource (CPU, memory, I/O, disk bandwidth, or response times), you can move to the next step, which is tuning.

Hardware tuning

Chapter 6, Choosing the Right Hardware, discusses the hardware considerations in great detail. It is sufficient to say that choosing the right hardware can have a huge impact on your performance, durability, availability, and cost.

Software tuning

In Chapter 2, OpenStack Swift Architecture, we talked about Swift using two types of software modules—data path (referred to as WSGI servers in Swift documentation) and postprocessing (referred to as background daemons). In addition, there is the ring. All three merit different considerations in terms of software tuning. Also, we will briefly look at some additional tuning considerations.

The ring considerations

The number of partitions in a ring affects performance and needs to be chosen carefully because this cannot be changed easily. Swift documentation recommends a minimum of 100 partitions per drive to ensure even distribution across servers. To get the minimum number of total partitions, take the maximum anticipated number of drives, multiply it by 100, and round the result up to the nearest power of two. Using a number higher than is needed will mean extremely uniform distribution, but at the cost of performance, since more partitions put a higher burden on replicators and other postprocessing jobs. Therefore, users should not overestimate the ultimate size of the cluster.

For example, let us assume that we expect our cluster to have a maximum of 1,000 nodes each with 60 disks. That gives us 60 x 1,000 x 100 = 6,000,000 partitions. Rounded up to the nearest power of two, we get 2^23 = 8,388,608. The value that will be used to create the ring will therefore be 23. We did not discuss the disk size in this computation, but a cluster with smaller/faster disks (for example, a 2 TB SAS drive) will perform better than clusters with larger/slower disks (for example, a 6 TB SATA drive) with the same number of partitions.
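The same arithmetic can be written as a short function. This is a minimal sketch: the function name partition_power is hypothetical, and the default of 100 partitions per drive is the recommendation quoted above.

```python
def partition_power(max_drives: int, partitions_per_drive: int = 100) -> int:
    """Smallest power p such that 2**p >= max_drives * partitions_per_drive."""
    target = max_drives * partitions_per_drive
    if target < 1:
        raise ValueError("expected at least one drive and one partition per drive")
    # For target >= 1, (target - 1).bit_length() is the smallest p with 2**p >= target.
    return (target - 1).bit_length()


drives = 1000 * 60  # 1,000 nodes with 60 disks each
power = partition_power(drives)
print(power, 2 ** power)
```

Integer bit arithmetic is used instead of a floating-point logarithm so that targets that are exact powers of two are not rounded up by mistake.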

Data path software tuning

The key data path software modules are proxy, account, container, and object servers. There are literally dozens of tuning parameters, but the four most important ones in terms of performance impact are as follows:

| Parameter | Proxy server | Storage server |
| --- | --- | --- |
| workers (auto by default) | Each worker can handle a max_clients number of simultaneous requests. Ideally, having more workers means more requests can be handled without being blocked. However, there is an upper limit dictated by the CPU. Start by setting workers as 2 multiplied by the number of cores. | Same as for the proxy server. If the storage server includes account, container, and object servers, you may have to do some experimentation. |
| max_clients (1024 by default) | Since we want the most effective use of network capacity, we want a large number of simultaneous requests. You probably won't need to change the default setting. | In data published by Red Hat, filesystem calls were found to block an entire worker. This means that having a very large setting for max_clients is not useful. Experiment with this parameter, and don't be afraid to reduce this number all the way down to match threads_per_disk or even 1. |
| object_chunk_size (64 KB by default) | Given that this data is flowing over an internal Swift network, a larger setting may be more efficient. Red Hat found 2 MB to be more efficient than the default size when using a 10 Gbps network. | N/A |
| threads_per_disk (0 by default) | N/A | This parameter defines the size of the per-disk thread pool. A default value of 0 means a per-disk thread pool will not be used. In general, Swift documentation recommends keeping this small to prevent large queue depths that result in high read latencies. Try starting with four threads per disk. |
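The starting points from the table can be collected in a small helper. This is a sketch only: the starting_points function and its dictionary layout are hypothetical, the values are the initial guesses discussed above rather than recommendations for any particular cluster, and 2 MB is assumed to mean 2 x 1024 x 1024 bytes.

```python
import os
from typing import Dict, Optional


def starting_points(cores: Optional[int] = None) -> Dict[str, Dict[str, int]]:
    """Initial values to experiment from, keyed by server role."""
    if cores is None:
        cores = os.cpu_count() or 1  # fall back to 1 if the count is unknown
    workers = 2 * cores  # rule of thumb: 2 multiplied by the number of cores
    return {
        "proxy": {
            "workers": workers,
            "max_clients": 1024,  # the default; usually left unchanged
            "object_chunk_size": 2 * 1024 * 1024,  # 2 MB, reported for 10 Gbps
        },
        "storage": {
            "workers": workers,
            "threads_per_disk": 4,  # suggested first value to try
        },
    }


for role, values in starting_points(cores=8).items():
    print(role, values)
```

These numbers are only where experimentation begins; rerun the benchmark after each change and keep the setting only if the measured results improve.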

Postprocessing software tuning

The impact of tuning postprocessing software is very different from that of tuning data path software. The focus is not so much on servicing API requests as on reliability, performance, and consistency. Increasing the rate of operations for replicators and auditors makes the system more durable, because faults are found and fixed sooner, but it also increases server load. Likewise, increasing the auditor rate reduces consistency windows at the cost of a higher server load. The following are the parameters to consider:

· concurrency: Swift documentation (http://docs.openstack.org/developer/swift/deployment_guide.html) recommends setting the concurrency of most postprocessing jobs at 1, except for replicators, for which it recommends 2. If you need a higher level of durability, consider increasing this number. Durability, again, is measured by 1–P (object loss in a year), where the object size is typically 1 KB.

· interval: Unless you want to reduce the load on servers, increase reliability, or reduce consistency windows, you probably want to stick with the default value.

Additional tuning parameters

A number of additional tuning parameters are available to the user. The important ones are listed as follows:

· memcached: A number of Swift services rely on memcached to cache lookups since Swift does not cache any object data. While memcached can be run on any server, it should be turned on for all proxy servers. If memcached is turned on, please ensure adequate RAM and CPU resources are available.

· System time: Given that Swift is a distributed system, the timestamp of an object is used for a number of reasons. Therefore, it is important to ensure that time is consistent between servers. Services such as NTP may be used for this purpose.

· Filesystem: Swift is filesystem agnostic; however, XFS is the one tested by the Swift community. It is important to use a large inode size (for example, 1024 bytes) so that the default metadata and some additional metadata can be stored efficiently. Other parameters should be set as described in Chapter 3, Installing OpenStack Swift.

· Operating system: General operating system tuning is outside the scope of this book. However, Swift documentation suggests disabling TIME_WAIT and syn cookies and doubling the amount of allowed conntrack in sysctl.conf. Since the OS is usually installed on a disk that is not part of storage drives, you may want to consider a small SSD to get fast boot times.

· Network stack: Network stack tuning is also outside the scope of this book. However, there may be some additional obvious tuning, for example, enabling jumbo frames for the internal storage cluster network. Jumbo frames may also be enabled on the client facing or replication network if this traffic is over the LAN (in the case of private clouds).

· Logging: Unless custom log handlers are used, Swift logs directly to syslog. Swift generates a large amount of log data, and therefore, managing logs correctly is extremely important. Setting logs appropriately can impact both performance and your ability to diagnose problems. You may want to consider high performance variants such as rsyslog (http://www.rsyslog.com/) or syslog-ng (http://www.balabit.com/network-security/syslog-ng/opensource-logging-system).

Summary

In this chapter, we reviewed how to benchmark a Swift cluster and tune it for a specific use case for private cloud users. The next and final chapter covers use cases appropriate for OpenStack Swift and additional resources.