C# 5.0 in a Nutshell (2012)

Chapter 12. Disposal and Garbage Collection

Some objects require explicit teardown code to release resources such as open files, locks, operating system handles, and unmanaged objects. In .NET parlance, this is called disposal, and it is supported through the IDisposable interface. The managed memory occupied by unused objects must also be reclaimed at some point; this function is known as garbage collection and is performed by the CLR.

Disposal differs from garbage collection in that disposal is usually explicitly instigated; garbage collection is totally automatic. In other words, the programmer takes care of such things as releasing file handles, locks, and operating system resources while the CLR takes care of releasing memory.

This chapter discusses both disposal and garbage collection, also describing C# finalizers and the pattern by which they can provide a backup for disposal. Lastly, we discuss the intricacies of the garbage collector and other memory management options.

IDisposable, Dispose, and Close

The .NET Framework defines a special interface for types requiring a tear-down method:

public interface IDisposable

{

void Dispose();

}

C#’s using statement provides a syntactic shortcut for calling Dispose on objects that implement IDisposable, using a try/finally block. For example:

using (FileStream fs = new FileStream ("myFile.txt", FileMode.Open))

{

// ... Write to the file ...

}

The compiler converts this to:

FileStream fs = new FileStream ("myFile.txt", FileMode.Open);

try

{

// ... Write to the file ...

}

finally

{

if (fs != null) ((IDisposable)fs).Dispose();

}

The finally block ensures that the Dispose method is called even when an exception is thrown,[10] or the code exits the block early.

In simple scenarios, writing your own disposable type is just a matter of implementing IDisposable and writing the Dispose method:

sealed class Demo : IDisposable

{

public void Dispose()

{

// Perform cleanup / tear-down.

...

}

}

NOTE

This pattern works well in simple cases and is appropriate for sealed classes. In Calling Dispose from a Finalizer, we’ll describe a more elaborate pattern that can provide a backup for consumers that forget to call Dispose. With unsealed types, there’s a strong case for following this latter pattern from the outset—otherwise, it becomes very messy if the subtype wants to add such functionality itself.

Standard Disposal Semantics

The Framework follows a de facto set of rules in its disposal logic. These rules are not hard-wired to the Framework or C# language in any way; their purpose is to define a consistent protocol to consumers. Here they are:

1. Once disposed, an object is beyond redemption. It cannot be reactivated, and calling its methods or properties throws an ObjectDisposedException.

2. Calling an object’s Dispose method repeatedly causes no error.

3. If disposable object x contains or “wraps” or “possesses” disposable object y, x’s Dispose method automatically calls y’s Dispose method—unless instructed otherwise.

These rules are also helpful when writing your own types, though not mandatory. Nothing prevents you from writing an “Undispose” method, other than, perhaps, the flak you might cop from colleagues!

According to rule 3, a container object automatically disposes its child objects. A good example is a Windows container control such as a Form or Panel. The container may host many child controls, yet you don’t dispose every one of them explicitly: closing or disposing the parent control or form takes care of the whole lot. Another example is when you wrap a FileStream in a DeflateStream. Disposing the DeflateStream also disposes the FileStream—unless you instructed otherwise in the constructor.
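The three rules can be sketched in a small type. This is a minimal illustration rather than Framework code: LogWriter is a hypothetical class, and we assume that it owns the stream passed to its constructor.

```csharp
using System;
using System.IO;

// Hypothetical type for illustration: it owns the stream passed to it.
public sealed class LogWriter : IDisposable
{
    readonly Stream _inner;      // Owned disposable object (rule 3)
    bool _disposed;

    public LogWriter (Stream inner)
    {
        if (inner == null) throw new ArgumentNullException ("inner");
        _inner = inner;
    }

    public void WriteByte (byte value)
    {
        // Rule 1: members throw once the object has been disposed.
        if (_disposed) throw new ObjectDisposedException (GetType().Name);
        _inner.WriteByte (value);
    }

    public void Dispose()
    {
        if (_disposed) return;   // Rule 2: repeated calls cause no error.
        _disposed = true;
        _inner.Dispose();        // Rule 3: dispose the object we own.
    }
}
```

A consumer can call Dispose any number of times; any later call to WriteByte throws an ObjectDisposedException.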

Close and Stop

Some types define a method called Close in addition to Dispose. The Framework is not completely consistent on the semantics of a Close method, although in nearly all cases it’s either:

§ Functionally identical to Dispose

§ A functional subset of Dispose

An example of the latter is IDbConnection: a Closed connection can be re-Opened; a Disposed connection cannot. Another example is a Windows Form activated with ShowDialog: Close hides it; Dispose releases its resources.

Some classes define a Stop method (e.g., Timer or HttpListener). A Stop method may release unmanaged resources, like Dispose, but unlike Dispose, it allows for re-Starting.

With WinRT, Close is considered identical to Dispose—in fact, the runtime projects methods called Close into methods called Dispose, to make their types friendly to using statements.

When to Dispose

A safe rule to follow (in nearly all cases) is “if in doubt, dispose.” A disposable object—if it could talk—would say the following:

When you’ve finished with me, let me know. If simply abandoned, I might cause trouble for other object instances, the application domain, the computer, the network, or the database!

Objects wrapping an unmanaged resource handle will nearly always require disposal, in order to free the handle. Examples include Windows Forms controls, file or network streams, network sockets, GDI+ pens, brushes, and bitmaps. Conversely, if a type is disposable, it will often (but not always) reference an unmanaged handle, directly or indirectly. This is because unmanaged handles provide the gateway to the “outside world” of operating system resources, network connections, database locks—the primary means by which objects can create trouble outside of themselves if improperly abandoned.

There are, however, three scenarios for not disposing:

§ When obtaining a shared object via a static field or property

§ When an object’s Dispose method does something that you don’t want

§ When an object’s Dispose method is unnecessary by design, and disposing that object would add complexity to your program

The first category is rare. The main cases are in the System.Drawing namespace: the GDI+ objects obtained through static fields or properties (such as Brushes.Blue) must never be disposed because the same instance is used throughout the life of the application. Instances that you obtain through constructors, however (such as new SolidBrush), should be disposed, as should instances obtained through static methods (such as Font.FromHdc).

The second category is more common. There are some good examples in the System.IO and System.Data namespaces:

| Type | Disposal function | When not to dispose |
|---|---|---|
| MemoryStream | Prevents further I/O | When you later need to read/write the stream |
| StreamReader, StreamWriter | Flushes the reader/writer and closes the underlying stream | When you want to keep the underlying stream open (you must instead call Flush on a StreamWriter when you’re done) |
| IDbConnection | Releases a database connection and clears the connection string | If you need to re-Open it, you should call Close instead of Dispose |
| DataContext (LINQ to SQL) | Prevents further use | When you might have lazily evaluated queries connected to that context |

MemoryStream’s Dispose method disables only the object; it doesn’t perform any critical cleanup because a MemoryStream holds no unmanaged handles or other such resources.

The third category includes the following classes: WebClient, StringReader, StringWriter, and BackgroundWorker (in System.ComponentModel). These types are disposable under the duress of their base class rather than through a genuine need to perform essential cleanup. If you happen to instantiate and work with such an object entirely in one method, wrapping it in a using block adds little inconvenience. But if the object is longer-lasting, keeping track of when it’s no longer used so that you can dispose of it adds unnecessary complexity. In such cases, you can simply ignore object disposal.

Opt-in Disposal

Because IDisposable makes a type tractable with C#’s using construct, there’s a temptation to extend the reach of IDisposable to nonessential activities. For instance:

public sealed class HouseManager : IDisposable

{

public void Dispose()

{

CheckTheMail();

}

...

}

The idea is that a consumer of this class can choose to circumvent the nonessential cleanup—simply by not calling Dispose. This, however, relies on the consumer knowing what’s inside HouseManager’s Dispose method. It also breaks if essential cleanup activity is later added:

public void Dispose()

{

CheckTheMail(); // Nonessential

LockTheHouse(); // Essential

}

The solution to this problem is the opt-in disposal pattern:

public sealed class HouseManager : IDisposable

{

public readonly bool CheckMailOnDispose;

public HouseManager (bool checkMailOnDispose)

{

CheckMailOnDispose = checkMailOnDispose;

}

public void Dispose()

{

if (CheckMailOnDispose) CheckTheMail();

LockTheHouse();

}

...

}

The consumer can then always call Dispose—providing simplicity and avoiding the need for special documentation or reflection. An example of where this pattern is implemented is in the DeflateStream class, in System.IO.Compression. Here’s its constructor:

public DeflateStream (Stream stream, CompressionMode mode, bool leaveOpen)

The nonessential activity is closing the inner stream (the first parameter) upon disposal. There are times when you want to leave the inner stream open and yet still dispose the DeflateStream to perform its essential tear-down activity (flushing buffered data).
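As a sketch of how a consumer might use the leaveOpen flag, the following helper compresses text into a MemoryStream and carries on using that stream after the DeflateStream has been disposed. LeaveOpenDemo and its method names are our own, chosen for illustration.

```csharp
using System;
using System.IO;
using System.IO.Compression;
using System.Text;

public static class LeaveOpenDemo
{
    // Compresses text into a MemoryStream that the caller continues to use.
    public static MemoryStream Compress (string text)
    {
        var target = new MemoryStream();
        byte[] data = Encoding.UTF8.GetBytes (text);

        // The third argument is leaveOpen: disposing the DeflateStream flushes
        // its buffered data but leaves 'target' open.
        using (var deflate = new DeflateStream (target, CompressionMode.Compress, true))
            deflate.Write (data, 0, data.Length);

        target.Position = 0;     // Still usable: rewind for reading.
        return target;
    }

    // Reads the compressed data back, again leaving the source stream open.
    public static string Decompress (Stream source)
    {
        using (var inflate = new DeflateStream (source, CompressionMode.Decompress, true))
        using (var reader = new StreamReader (inflate, Encoding.UTF8))
            return reader.ReadToEnd();
    }
}
```

Passing false instead (or using the two-argument constructor) would close the MemoryStream along with the DeflateStream, and the attempt to set Position would then throw an ObjectDisposedException.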

This pattern might look simple, yet until Framework 4.5, it escaped StreamReader and StreamWriter (in the System.IO namespace). The result is messy: StreamWriter must expose another method (Flush) to perform essential cleanup for consumers not calling Dispose. (Framework 4.5 now exposes a constructor on these classes that lets you keep the stream open.) The CryptoStream class in System.Security.Cryptography suffers a similar problem and requires that you call FlushFinalBlock to tear it down while keeping the inner stream open.

NOTE

You could describe this as an ownership issue. The question for a disposable object is: do I really own the underlying resource that I’m using? Or am I just renting it from someone else who manages both the underlying resource lifetime and, by some undocumented contract, my lifetime?

Following the opt-in pattern avoids this problem by making the ownership contract documented and explicit.

Clearing Fields in Disposal

In general, you don’t need to clear an object’s fields in its Dispose method. However, it is good practice to unsubscribe from events that the object has subscribed to internally over its lifetime (see Managed Memory Leaks for an example). Unsubscribing from such events avoids receiving unwanted event notifications—and avoids unintentionally keeping the object alive in the eyes of the garbage collector (GC).

NOTE

A Dispose method itself does not cause (managed) memory to be released—this can happen only in garbage collection.

It’s also worth setting a field to indicate that the object is disposed so that you can throw an ObjectDisposedException if a consumer later tries to call members on the object. A good pattern is to use a publicly readable automatic property for this:

public bool IsDisposed { get; private set; }

Although technically unnecessary, it can also be good to clear an object’s own event handlers (by setting them to null) in the Dispose method. This eliminates the possibility of those events firing during or after disposal.
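The following sketch pulls these suggestions together. Clock and TickCounter are hypothetical types, invented for illustration: TickCounter subscribes to a static event over its lifetime, so its Dispose method unsubscribes, clears its own event, and sets IsDisposed.

```csharp
using System;

// Hypothetical publisher whose static event outlives its subscribers.
public static class Clock
{
    public static event EventHandler Tick;

    public static void RaiseTick()
    {
        EventHandler handler = Tick;
        if (handler != null) handler (null, EventArgs.Empty);
    }
}

public sealed class TickCounter : IDisposable
{
    public bool IsDisposed { get; private set; }
    public event EventHandler Counted;           // This object's own event
    int _count;

    public TickCounter() { Clock.Tick += OnTick; }

    public int Count
    {
        get
        {
            if (IsDisposed) throw new ObjectDisposedException (GetType().Name);
            return _count;
        }
    }

    void OnTick (object sender, EventArgs e)
    {
        _count++;
        EventHandler handler = Counted;
        if (handler != null) handler (this, EventArgs.Empty);
    }

    public void Dispose()
    {
        if (IsDisposed) return;
        Clock.Tick -= OnTick;   // Stop notifications and drop the publisher's reference to us.
        Counted = null;         // Clear our own subscribers.
        IsDisposed = true;
    }
}
```

Without the unsubscription, the static Clock.Tick event would keep every TickCounter alive for the life of the application domain.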

Occasionally, an object holds high-value secrets, such as encryption keys. In these cases, it can make sense to clear such data from fields during disposal (to avoid discovery by less privileged assemblies or malware). The SymmetricAlgorithm class in System.Security.Cryptography does exactly this, by calling Array.Clear on the byte array holding the encryption key.

Automatic Garbage Collection

Regardless of whether an object requires a Dispose method for custom tear-down logic, at some point the memory it occupies on the heap must be freed. The CLR handles this side of it entirely automatically, via an automatic GC. You never deallocate managed memory yourself. For example, consider the following method:

public void Test()

{

byte[] myArray = new byte[1000];

...

}

When Test executes, an array to hold 1,000 bytes is allocated on the memory heap. The array is referenced by the variable myArray, stored on the local variable stack. When the method exits, this local variable myArray pops out of scope, meaning that nothing is left to reference the array on the memory heap. The orphaned array then becomes eligible to be reclaimed in garbage collection.

NOTE

In debug mode with optimizations disabled, the lifetime of an object referenced by a local variable extends to the end of the code block to ease debugging. Otherwise, it becomes eligible for collection at the earliest point at which it’s no longer used.

Garbage collection does not happen immediately after an object is orphaned. Rather like garbage collection on the street, it happens periodically, although (unlike garbage collection on the street) not to a fixed schedule. The CLR bases its decision on when to collect upon a number of factors, such as the available memory, the amount of memory allocation, and the time since the last collection. This means that there’s an indeterminate delay between an object being orphaned and being released from memory. This delay can range from nanoseconds to days.

NOTE

The GC doesn’t collect all garbage with every collection. Instead, the memory manager divides objects into generations and the GC collects new generations (recently allocated objects) more frequently than old generations (long-lived objects). We’ll discuss this in more detail in How the Garbage Collector Works.

GARBAGE COLLECTION AND MEMORY CONSUMPTION

The GC tries to strike a balance between the time it spends doing garbage collection and the application’s memory consumption (working set). Consequently, applications can consume more memory than they need, particularly if large temporary arrays are constructed.

You can monitor a process’s memory consumption via the Windows Task Manager or Resource Monitor—or programmatically by querying a performance counter:

// These types are in System.Diagnostics:

string procName = Process.GetCurrentProcess().ProcessName;

using (PerformanceCounter pc = new PerformanceCounter

("Process", "Private Bytes", procName))

Console.WriteLine (pc.NextValue());

This queries the private working set, which gives the best overall indication of your program’s memory consumption. Specifically, it excludes memory that the CLR has internally deallocated and is willing to rescind to the operating system should another process need it.

Roots

A root is something that keeps an object alive. If an object is not directly or indirectly referenced by a root, it will be eligible for garbage collection.

A root is one of the following:

§ A local variable or parameter in an executing method (or in any method in its call stack)

§ A static variable

§ An object on the queue that stores objects ready for finalization (see next section)

It’s impossible for code to execute in a deleted object, so if there’s any possibility of an (instance) method executing, its object must somehow be referenced in one of these ways.

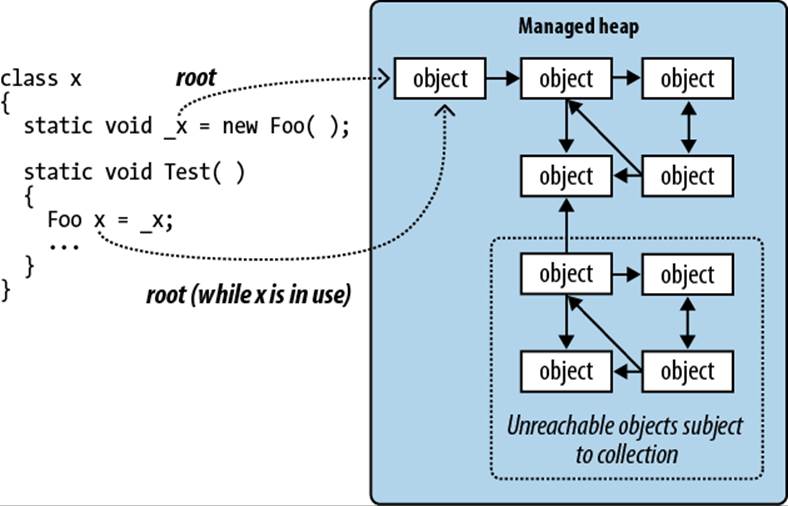

Note that a group of objects that reference each other cyclically are considered dead without a root referee (see Figure 12-1). To put it in another way, objects that cannot be accessed by following the arrows (references) from a root object are unreachable—and therefore subject to collection.

Figure 12-1. Roots
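One way to observe that a cycle alone keeps nothing alive is with a WeakReference, which tracks an object without acting as a root. The sketch below uses hypothetical Node and CycleDemo types; the outcome depends on the runtime and build settings, so treat the result as typical rather than guaranteed.

```csharp
using System;
using System.Runtime.CompilerServices;

public class Node { public Node Other; }

public static class CycleDemo
{
    // Builds two objects that reference each other and returns only a weak
    // reference, so no root refers to either object after the method returns.
    [MethodImpl (MethodImplOptions.NoInlining)]
    public static WeakReference CreateOrphanedCycle()
    {
        var a = new Node();
        var b = new Node();
        a.Other = b;
        b.Other = a;
        return new WeakReference (a);
    }

    public static bool CycleSurvivesCollection()
    {
        WeakReference weak = CreateOrphanedCycle();
        GC.Collect();
        return weak.IsAlive;   // Typically false: both nodes are unreachable.
    }
}
```

The cycle is built in a separate, non-inlined method so that no local variable in the calling method can act as a root for either node.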

Garbage Collection and WinRT

Windows Runtime relies on COM’s reference-counting mechanism to release memory instead of depending on an automatic garbage collector. Despite this, WinRT objects that you instantiate from C# have their lifetime managed by the CLR’s garbage collector, because the CLR mediates access to the COM object through an object that it creates behind the scenes called a runtime callable wrapper (Chapter 24).

Finalizers

Prior to an object being released from memory, its finalizer runs, if it has one. A finalizer is declared like a constructor, but it is prefixed by the ~ symbol:

class Test

{

~Test()

{

// Finalizer logic...

}

}

(Although similar in declaration to a constructor, finalizers cannot be declared as public or static, cannot have parameters, and cannot call the base class.)

Finalizers are possible because garbage collection works in distinct phases. First, the GC identifies the unused objects ripe for deletion. Those without finalizers are deleted right away. Those with pending (unrun) finalizers are kept alive (for now) and are put onto a special queue.

At that point, garbage collection is complete, and your program continues executing. The finalizer thread then kicks in and starts running in parallel to your program, picking objects off that special queue and running their finalization methods. Prior to each object’s finalizer running, it’s still very much alive—that queue acts as a root object. Once it’s been dequeued and the finalizer executed, the object becomes orphaned and will get deleted in the next collection (for that object’s generation).

Finalizers can be useful, but they come with some provisos:

§ Finalizers slow the allocation and collection of memory (the GC needs to keep track of which finalizers have run).

§ Finalizers prolong the life of the object and any referred objects (they must all await the next garbage truck for actual deletion).

§ It’s impossible to predict in what order the finalizers for a set of objects will be called.

§ You have limited control over when the finalizer for an object will be called.

§ If code in a finalizer blocks, other objects cannot get finalized.

§ Finalizers may be circumvented altogether if an application fails to unload cleanly.

In summary, finalizers are somewhat like lawyers—although there are cases in which you really need them, in general you don’t want to use them unless absolutely necessary. If you do use them, you need to be 100% sure you understand what they are doing for you.

Here are some guidelines for implementing finalizers:

§ Ensure that your finalizer executes quickly.

§ Never block in your finalizer (Chapter 14).

§ Don’t reference other finalizable objects.

§ Don’t throw exceptions.

WARNING

An object’s finalizer can get called even if an exception is thrown during construction. For this reason, it pays not to assume that fields are correctly initialized when writing a finalizer.

Calling Dispose from a Finalizer

A popular pattern is to have the finalizer call Dispose. This makes sense when cleanup is non-urgent and hastening it by calling Dispose is more of an optimization than a necessity.

NOTE

Bear in mind that with this pattern, you couple memory deallocation to resource deallocation—two things with potentially divergent interests (unless the resource is itself memory). You also increase the burden on the finalization thread.

This pattern can also be used as a backup for cases when a consumer simply forgets to call Dispose. However, it’s then a good idea to log the failure so that you can fix the bug.

There’s a standard pattern for implementing this, as follows:

class Test : IDisposable

{

public void Dispose() // NOT virtual

{

Dispose (true);

GC.SuppressFinalize (this); // Prevent finalizer from running.

}

protected virtual void Dispose (bool disposing)

{

if (disposing)

{

// Call Dispose() on other objects owned by this instance.

// You can reference other finalizable objects here.

// ...

}

// Release unmanaged resources owned by (just) this object.

// ...

}

~Test()

{

Dispose (false);

}

}

Dispose is overloaded to accept a bool disposing flag. The parameterless version is not declared as virtual and simply calls the enhanced version with true.

The enhanced version contains the actual disposal logic and is protected and virtual; this provides a safe point for subclasses to add their own disposal logic. The disposing flag means it’s being called “properly” from the Dispose method rather than in “last-resort mode” from the finalizer. The idea is that when called with disposing set to false, this method should not, in general, reference other objects with finalizers (because such objects may themselves have been finalized and so be in an unpredictable state). This rules out quite a lot! Here are a couple of tasks it can still perform in last-resort mode, when disposing is false:

§ Releasing any direct references to operating system resources (obtained, perhaps, via a P/Invoke call to the Win32 API)

§ Deleting a temporary file created on construction

To make this robust, any code capable of throwing an exception should be wrapped in a try/catch block, and the exception, ideally, logged. Any logging should be as simple and robust as possible.

Notice that we call GC.SuppressFinalize in the parameterless Dispose method—this prevents the finalizer from running when the GC later catches up with it. Technically, this is unnecessary, as Dispose methods must tolerate repeated calls. However, doing so improves performance because it allows the object (and its referenced objects) to be garbage-collected in a single cycle.
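To illustrate the "safe point for subclasses," here is a sketch of a subclass extending the pattern. ResourceHolder and BufferedResourceHolder are hypothetical names, and the boolean properties merely stand in for real cleanup so that the effect can be observed.

```csharp
using System;
using System.IO;

public class ResourceHolder : IDisposable
{
    public bool BaseCleanedUp { get; private set; }

    public void Dispose()                  // NOT virtual
    {
        Dispose (true);
        GC.SuppressFinalize (this);
    }

    protected virtual void Dispose (bool disposing)
    {
        BaseCleanedUp = true;              // Stand-in for the base class's real cleanup
    }

    ~ResourceHolder() { Dispose (false); }
}

public class BufferedResourceHolder : ResourceHolder
{
    MemoryStream _buffer = new MemoryStream();
    public bool DerivedCleanedUp { get; private set; }

    protected override void Dispose (bool disposing)
    {
        try
        {
            // Touch other disposable objects only when called from Dispose().
            if (disposing && _buffer != null) _buffer.Dispose();
            DerivedCleanedUp = true;
        }
        finally
        {
            base.Dispose (disposing);      // Always let the base class clean up.
        }
    }
}
```

The subclass declares neither a finalizer nor a parameterless Dispose method: it inherits both, and each ends up calling the overridden Dispose (bool).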

Resurrection

Suppose a finalizer modifies a living object such that it refers back to the dying object. When the next garbage collection happens (for the object’s generation), the CLR will see the previously dying object as no longer orphaned—and so it will evade garbage collection. This is an advanced scenario, and is called resurrection.

To illustrate, suppose we want to write a class that manages a temporary file. When an instance of that class is garbage-collected, we’d like the finalizer to delete the temporary file. It sounds easy:

public class TempFileRef

{

public readonly string FilePath;

public TempFileRef (string filePath) { FilePath = filePath; }

~TempFileRef() { File.Delete (FilePath); }

}

Unfortunately, this has a bug: File.Delete might throw an exception (due to a lack of permissions, perhaps, or the file being in use). Such an exception would take down the whole application (as well as preventing other finalizers from running). We could simply “swallow” the exception with an empty catch block, but then we’d never know that anything went wrong. Calling some elaborate error reporting API would also be undesirable because it would burden the finalizer thread, hindering garbage collection for other objects. We want to restrict finalization actions to those that are simple, reliable, and quick.

A better option is to record the failure to a static collection as follows:

public class TempFileRef

{

static ConcurrentQueue<TempFileRef> _failedDeletions

= new ConcurrentQueue<TempFileRef>();

public readonly string FilePath;

public Exception DeletionError { get; private set; }

public TempFileRef (string filePath) { FilePath = filePath; }

~TempFileRef()

{

try { File.Delete (FilePath); }

catch (Exception ex)

{

DeletionError = ex;

_failedDeletions.Enqueue (this); // Resurrection

}

}

}

Enqueuing the object to the static _failedDeletions collection gives the object another referee, ensuring that it remains alive until the object is eventually dequeued.

NOTE

ConcurrentQueue<T> is a thread-safe version of Queue<T> and is defined in System.Collections.Concurrent (see Chapter 23). There are a couple of reasons for using a thread-safe collection. First, the CLR reserves the right to execute finalizers on more than one thread in parallel. This means that when accessing shared state such as a static collection, we must consider the possibility of two objects being finalized at once. Second, at some point we’re going to want to dequeue items from _failedDeletions so that we can do something about them. This also has to be done in a thread-safe fashion, because it could happen while the finalizer is concurrently enqueuing another object.
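The dequeuing side might look something like the following. This sketch repeats the class with one addition: DrainFailedDeletions is a hypothetical helper of our own, which application code could call periodically to report on failures.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.IO;

public class TempFileRef
{
    static readonly ConcurrentQueue<TempFileRef> _failedDeletions
        = new ConcurrentQueue<TempFileRef>();

    public readonly string FilePath;
    public Exception DeletionError { get; private set; }

    public TempFileRef (string filePath) { FilePath = filePath; }

    ~TempFileRef()
    {
        try { File.Delete (FilePath); }
        catch (Exception ex)
        {
            DeletionError = ex;
            _failedDeletions.Enqueue (this);   // Resurrection
        }
    }

    // Hypothetical helper: removes and returns every failure recorded so far.
    // Once dequeued, an instance is kept alive only by the returned list.
    public static List<TempFileRef> DrainFailedDeletions()
    {
        var failures = new List<TempFileRef>();
        TempFileRef item;
        while (_failedDeletions.TryDequeue (out item))
            failures.Add (item);
        return failures;
    }
}
```

TryDequeue is safe to call while the finalizer thread is enqueuing, so no additional locking is needed. The caller can then log each item's FilePath and DeletionError at leisure, off the finalizer thread.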

GC.ReRegisterForFinalize

A resurrected object’s finalizer will not run a second time—unless you call GC.ReRegisterForFinalize.

In the following example, we try to delete a temporary file in a finalizer (as in the last example). But if the deletion fails, we reregister the object so as to try again in the next garbage collection.

public class TempFileRef

{

public readonly string FilePath;

int _deleteAttempt;

public TempFileRef (string filePath) { FilePath = filePath; }

~TempFileRef()

{

try { File.Delete (FilePath); }

catch

{

if (_deleteAttempt++ < 3) GC.ReRegisterForFinalize (this);

}

}

}

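Because the counter is tested before it is incremented, the finalizer reregisters itself after each of the first three failures; if the fourth attempt also fails, it silently gives up trying to delete the file. We could enhance this by combining it with the previous example: in other words, by adding the object to the _failedDeletions queue after the final failure.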

NOTE

Be careful to call ReRegisterForFinalize just once in the finalizer method. If you call it twice, the object will be reregistered twice and will have to undergo two more finalizations!

How the Garbage Collector Works

The standard CLR uses a generational mark-and-compact GC that performs automatic memory management for objects stored on the managed heap. The GC is considered to be a tracing garbage collector in that it doesn’t interfere with every access to an object, but rather wakes up intermittently and traces the graph of objects stored on the managed heap to determine which objects can be considered garbage and therefore collected.

The GC initiates a garbage collection upon performing a memory allocation (via the new keyword) either after a certain threshold of memory has been allocated, or at other times to reduce the application’s memory footprint. This process can also be initiated manually by calling System.GC.Collect. During a garbage collection, all threads may be frozen (more on this in the next section).

The GC begins with its root object references, and walks the object graph, marking all the objects it touches as reachable. Once this process is complete, all objects that have not been marked are considered unused, and are subject to garbage collection.

Unused objects without finalizers are immediately discarded; unused objects with finalizers are enqueued for processing on the finalizer thread after the GC is complete. These objects then become eligible for collection in the next GC for the object’s generation (unless resurrected).

The remaining “live” objects are then shifted to the start of the heap (compacted), freeing space for more objects. This compaction serves two purposes: it avoids memory fragmentation, and it allows the GC to employ a very simple strategy when allocating new objects, which is to always allocate memory at the end of the heap. This avoids the potentially time-consuming task of maintaining a list of free memory segments.

If there is insufficient space to allocate memory for a new object after garbage collection, and the operating system is unable to grant further memory, an OutOfMemoryException is thrown.

Optimization Techniques

The GC incorporates various optimization techniques to reduce the garbage collection time.

Generational collection

The most important optimization is that the GC is generational. This takes advantage of the fact that although many objects are allocated and discarded rapidly, certain objects are long-lived and thus don’t need to be traced during every collection.

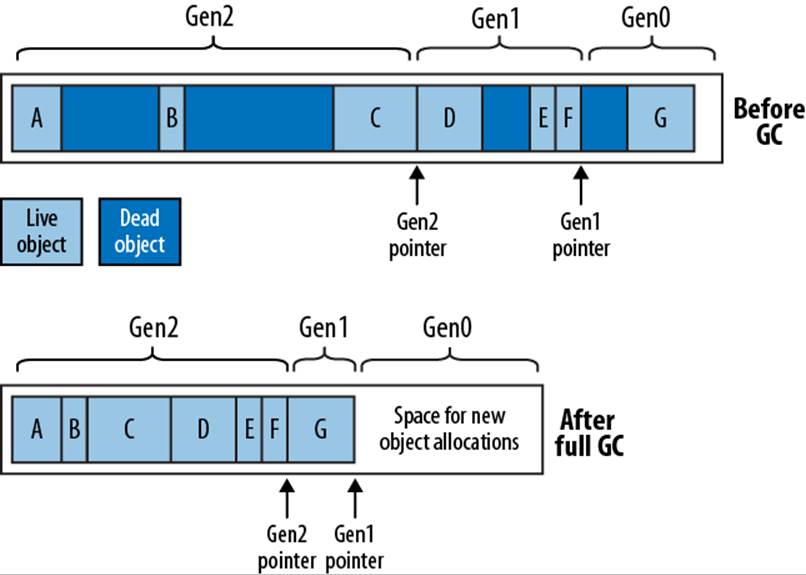

Basically, the GC divides the managed heap into three generations. Objects that have just been allocated are in Gen0 and objects that have survived one collection cycle are in Gen1; all other objects are in Gen2.

The CLR keeps the Gen0 section relatively small (a maximum of 16 MB on the 32-bit workstation CLR, with a typical size of a few hundred KB to a few MB). When the Gen0 section fills up, the GC instigates a Gen0 collection—which happens relatively often. The GC applies a similar memory threshold to Gen1 (which acts as a buffer to Gen2), and so Gen1 collections are relatively quick and frequent too. Full collections that include Gen2, however, take much longer and so happen infrequently. Figure 12-2 shows the effect of a full collection.

Figure 12-2. Heap generations

To give some very rough ballpark figures, a Gen0 collection might take less than 1 ms, which is not enough to be noticed in a typical application. A full collection, however, might take as long as 100 ms on a program with large object graphs. These figures depend on numerous factors and so may vary considerably—particularly in the case of Gen2 whose size is unbounded (unlike Gen0 and Gen1).

The upshot is that short-lived objects are very efficient in their use of the GC. The StringBuilders created in the following method would almost certainly be collected in a fast Gen0:

string Foo()

{

var sb1 = new StringBuilder ("test");

sb1.Append ("...");

var sb2 = new StringBuilder ("test");

sb2.Append (sb1.ToString());

return sb2.ToString();

}
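The promotion of surviving objects from one generation to the next can be observed with GC.GetGeneration. The following is a sketch only (GenerationDemo is a hypothetical name): on the Microsoft CLR the three values are typically 0, 1, and 2, but the exact numbers are an implementation detail and should not be relied upon.

```csharp
using System;

public static class GenerationDemo
{
    // Returns the generation of one object when first allocated and after
    // each of two forced collections.
    public static int[] ObserveGenerations()
    {
        var survivor = new object();
        var observed = new int[3];

        observed[0] = GC.GetGeneration (survivor);   // Just allocated
        GC.Collect();
        observed[1] = GC.GetGeneration (survivor);   // Survived one collection
        GC.Collect();
        observed[2] = GC.GetGeneration (survivor);   // Survived two collections

        GC.KeepAlive (survivor);   // Keep the object rooted until this point.
        return observed;
    }
}
```

The call to GC.KeepAlive matters in optimized builds, where the object would otherwise become eligible for collection after its last use.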

The large object heap

The GC uses a separate heap called the Large Object Heap (LOH) for objects larger than a certain threshold (currently 85,000 bytes). This avoids excessive Gen0 collections—without the LOH, allocating a series of 16 MB objects might trigger a Gen0 collection after every allocation.

The LOH is not subject to compaction, because moving large blocks of memory during garbage collection would be prohibitively expensive. This has two consequences:

§ Allocations can be slower, because the GC can’t always simply allocate objects at the end of the heap—it must also look in the middle for gaps, and this requires maintaining a linked list of free memory blocks.[11]

§ The LOH is subject to fragmentation. This means that the freeing of an object can create a hole in the LOH that may be hard to fill later. For instance, a hole left by an 86,000-byte object can be filled only by an object of between 85,000 bytes and 86,000 bytes (unless adjoined by another hole).

The large object heap is also nongenerational: all objects are treated as Gen2.
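A quick way to see this treatment is to compare the reported generation of a small and a large array immediately after allocation. This is a sketch with hypothetical names; on the Microsoft CLR we would expect the small array to report Gen0 and the large one Gen2, although other runtimes may differ.

```csharp
using System;

public static class LargeObjectDemo
{
    public static int GenerationOfSmallArray()
    {
        var small = new byte[1000];        // Well under the LOH threshold
        return GC.GetGeneration (small);
    }

    public static int GenerationOfLargeArray()
    {
        var large = new byte[100000];      // Above the 85,000-byte threshold
        return GC.GetGeneration (large);
    }
}
```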

Concurrent and background collection

The GC must freeze (block) your execution threads for periods during a collection. This includes the entire period during which a Gen0 or Gen1 collection takes place.

The GC makes a special attempt, though, at allowing threads to run during a Gen2 collection as it’s undesirable to freeze an application for a potentially long period. This optimization applies to the workstation version of the CLR only, which is used on desktop versions of Windows (and on all versions of Windows with standalone applications). The rationale is that the latency from a blocking collection is less likely to be a problem for server applications that don’t have a user interface.

NOTE

A mitigating factor is that the server CLR leverages all available cores to perform GCs, so an eight-core server will perform a full GC many times faster. In effect, the server GC is tuned to maximize throughput rather than minimize latency.

The workstation optimization has historically been called concurrent collection. From CLR 4.0, it’s been revamped and renamed to background collection. Background collection removes a limitation whereby a concurrent collection would cease to be concurrent if the Gen0 section filled up while a Gen2 collection was running. This means that from CLR 4.0, applications that continually allocate memory will be more responsive.

GC notifications (server CLR)

From Framework 3.5 SP1, the server version of the CLR can notify you just before a full GC will occur. This is intended for server farm configurations: the idea is that you divert requests to another server just before a collection. You then instigate the collection immediately and wait for it to complete before rerouting requests back to that server.

To start notification, call GC.RegisterForFullGCNotification. Then start up another thread (see Chapter 14) that first calls GC.WaitForFullGCApproach. When this method returns a GCNotificationStatus indicating that a collection is near, you can reroute requests to other servers and force a manual collection (see the following section). You then call GC.WaitForFullGCComplete: when this method returns, GC is complete and you can again accept requests. You then repeat the whole cycle.
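The cycle just described might be sketched as follows. FullGcMonitor is a hypothetical class, and redirectRequests and acceptRequests are hypothetical callbacks that the host would supply (to talk to a load balancer, for instance). The threshold values of 10 are arbitrary choices for illustration. Note that RegisterForFullGCNotification throws an InvalidOperationException if concurrent garbage collection is enabled.

```csharp
using System;
using System.Threading;

public static class FullGcMonitor
{
    // Both callbacks are hypothetical hooks supplied by the host application.
    public static Thread Start (Action redirectRequests, Action acceptRequests)
    {
        // Each threshold must be between 1 and 99; larger values give earlier notice.
        // Throws InvalidOperationException if concurrent GC is enabled.
        GC.RegisterForFullGCNotification (10, 10);

        var worker = new Thread (() =>
        {
            while (true)
            {
                GCNotificationStatus status = GC.WaitForFullGCApproach();
                if (status != GCNotificationStatus.Succeeded) break;   // Canceled or failed

                redirectRequests();
                GC.Collect();   // Force the collection while no requests are being served.

                status = GC.WaitForFullGCComplete();
                if (status != GCNotificationStatus.Succeeded) break;
                acceptRequests();
            }
        });
        worker.IsBackground = true;
        worker.Start();
        return worker;
    }

    // Cancels the registration, which also ends the monitoring loop.
    public static void Stop() { GC.CancelFullGCNotification(); }
}
```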

Forcing Garbage Collection

You can manually force a GC at any time, by calling GC.Collect. Calling GC.Collect without an argument instigates a full collection. If you pass in an integer value, only generations to that value are collected, so GC.Collect(0) performs only a fast Gen0 collection.
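You can observe the effect of each overload with GC.CollectionCount, which reports how many times a given generation has been collected. The helper below is a small sketch (CollectDemo and CountCollections are names chosen for this example):

```csharp
using System;

static class CollectDemo
{
  // Returns how many collections of Gen0, Gen1, and Gen2 took place
  // while the supplied action was running.
  public static int[] CountCollections (Action action)
  {
    int[] before =
    {
      GC.CollectionCount (0), GC.CollectionCount (1), GC.CollectionCount (2)
    };
    action();
    return new[]
    {
      GC.CollectionCount (0) - before[0],
      GC.CollectionCount (1) - before[1],
      GC.CollectionCount (2) - before[2]
    };
  }
}
```

Passing () => GC.Collect (0) should advance only the Gen0 counter (unless the runtime happens to start another collection of its own at the same time), whereas () => GC.Collect() should advance all three, because a Gen2 collection also collects the younger generations.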

In general, you get the best performance by allowing the GC to decide when to collect: forcing collection can hurt performance by unnecessarily promoting Gen0 objects to Gen1 (and Gen1 objects to Gen2). It can also upset the GC’s self-tuning ability, whereby the GC dynamically tweaks the thresholds for each generation to maximize performance as the application executes.

There are exceptions, however. The most common case for intervention is when an application goes to sleep for a while: a good example is a Windows Service that performs a daily activity (checking for updates, perhaps). Such an application might use a System.Timers.Timer to initiate the activity every 24 hours. After completing the activity, no further code executes for 24 hours, which means that for this period, no memory allocations are made and so the GC has no opportunity to activate. Whatever memory the service consumed in performing its activity, it will continue to consume for the following 24 hours—even with an empty object graph! The solution is to call GC.Collect right after the daily activity completes.

To ensure the collection of objects for which collection is delayed by finalizers, you can take the additional step of calling WaitForPendingFinalizers and re-collecting:

GC.Collect();

GC.WaitForPendingFinalizers();

GC.Collect();

Another case for calling GC.Collect is when you’re testing a class that has a finalizer.
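A test of this kind might look like the following sketch. Tracked and FinalizerTest are hypothetical names; the object is allocated in a separate, non-inlined method so that no local variable in the test itself can keep it alive:

```csharp
using System;
using System.Runtime.CompilerServices;
using System.Threading;

class Tracked
{
  public static int FinalizedCount;     // Incremented on the finalizer thread

  ~Tracked() { Interlocked.Increment (ref FinalizedCount); }
}

static class FinalizerTest
{
  // Creates an object and immediately drops the only reference to it.
  [MethodImpl (MethodImplOptions.NoInlining)]
  static void CreateAndAbandon() { new Tracked(); }

  // Returns true if the abandoned object's finalizer ran.
  public static bool FinalizerRuns()
  {
    int before = Tracked.FinalizedCount;
    CreateAndAbandon();
    GC.Collect();                       // Object is queued for finalization
    GC.WaitForPendingFinalizers();      // Block until the finalizer has run
    return Tracked.FinalizedCount > before;
  }
}
```

The timing of finalization is not formally guaranteed, so a test like this is best treated as a diagnostic aid rather than as a specification of runtime behavior.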

Memory Pressure

The runtime decides when to initiate collections based on a number of factors, including the total memory load on the machine. If your program allocates unmanaged memory (Chapter 25), the runtime will get an unrealistically optimistic perception of its memory usage, because the CLR knows only about managed memory. You can mitigate this by telling the CLR to assume a specified quantity of unmanaged memory has been allocated, by calling GC.AddMemoryPressure. To undo this (when the unmanaged memory is released) call GC.RemoveMemoryPressure.
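As an illustration, a wrapper around an unmanaged allocation can report its size to the GC on construction and retract it on disposal. UnmanagedBuffer is a hypothetical class written for this example; to keep the sketch short it omits the finalizer (or SafeHandle) that a real wrapper would need as a backup for disposal:

```csharp
using System;
using System.Runtime.InteropServices;

sealed class UnmanagedBuffer : IDisposable
{
  readonly long _size;
  IntPtr _memory;

  public UnmanagedBuffer (int size)
  {
    if (size <= 0) throw new ArgumentOutOfRangeException ("size");
    _memory = Marshal.AllocHGlobal (size);
    _size = size;
    GC.AddMemoryPressure (_size);       // Tell the GC about memory it can't see
  }

  public bool IsDisposed { get { return _memory == IntPtr.Zero; } }

  public void Dispose()
  {
    if (IsDisposed) return;             // Safe to call more than once
    Marshal.FreeHGlobal (_memory);
    _memory = IntPtr.Zero;
    GC.RemoveMemoryPressure (_size);    // Must match the amount added
  }
}
```

The amount passed to RemoveMemoryPressure should equal the amount previously passed to AddMemoryPressure, which is why the size is captured in a read-only field.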

Managed Memory Leaks

In unmanaged languages such as C++, you must remember to manually deallocate memory when an object is no longer required; otherwise, a memory leak will result. In the managed world, this kind of error is impossible due to the CLR’s automatic garbage collection system.

Nonetheless, large and complex .NET applications can exhibit a milder form of the same syndrome with the same end result: the application consumes more and more memory over its lifetime, until it eventually has to be restarted. The good news is that managed memory leaks are usually easier to diagnose and prevent.

Managed memory leaks are caused by unused objects remaining alive by virtue of unused or forgotten references. A common candidate is event handlers—these hold a reference to the target object (unless the target is a static method). For instance, consider the following classes:

class Host

{

public event EventHandler Click;

}

class Client

{

Host _host;

public Client (Host host)

{

_host = host;

_host.Click += HostClicked;

}

void HostClicked (object sender, EventArgs e) { ... }

}

The following test class contains a method that instantiates 1,000 clients:

class Test

{

static Host _host = new Host();

public static void CreateClients()

{

Client[] clients = Enumerable.Range (0, 1000)

.Select (i => new Client (_host))

.ToArray();

// Do something with clients ...

}

}

You might expect that after CreateClients finishes executing, the 1,000 Client objects will become eligible for collection. Unfortunately, each client has another referee: the _host object whose Click event now references each Client instance. This may go unnoticed if the Click event doesn’t fire, or if the HostClicked method doesn’t do anything to attract attention.

One way to solve this is to make Client implement IDisposable, and in the Dispose method, unhook the event handler:

public void Dispose() { _host.Click -= HostClicked; }

Consumers of Client then dispose of the instances when they’re done with them:

Array.ForEach (clients, c => c.Dispose());
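You can verify both the leak and the fix with a weak reference (described later in this chapter), which lets you observe whether an object is still alive without keeping it alive yourself. The following self-contained sketch repeats Host and Client in condensed form; LeakCheck, SurvivesCollection, and the OnClick method are additions made for this illustration:

```csharp
using System;
using System.Runtime.CompilerServices;

class Host
{
  public event EventHandler Click;
  public void OnClick() { if (Click != null) Click (this, EventArgs.Empty); }
}

class Client : IDisposable
{
  Host _host;
  public Client (Host host) { _host = host; _host.Click += HostClicked; }
  void HostClicked (object sender, EventArgs e) { }
  public void Dispose() { _host.Click -= HostClicked; }
}

static class LeakCheck
{
  static Host _host = new Host();       // Long-lived event source

  // Creates a client in its own stack frame, optionally disposes it,
  // and hands back only a weak reference to it.
  [MethodImpl (MethodImplOptions.NoInlining)]
  static WeakReference CreateClient (bool dispose)
  {
    var client = new Client (_host);
    if (dispose) client.Dispose();
    return new WeakReference (client);
  }

  // Returns true if the client is still alive after a full collection.
  public static bool SurvivesCollection (bool dispose)
  {
    WeakReference weak = CreateClient (dispose);
    GC.Collect();
    return weak.IsAlive;
  }
}
```

SurvivesCollection (false) returns true because the host's event still references the client; with an argument of true, the client has unsubscribed and should be reclaimed.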

NOTE

In “Weak References,” we’ll describe another solution to this problem, which can be useful in environments that tend not to use disposable objects (an example is WPF). In fact, the WPF framework offers a class called WeakEventManager that leverages a pattern employing weak references.

On the topic of WPF, data binding is another common cause for memory leaks: the issue is described at http://support.microsoft.com/kb/938416.

Timers

Forgotten timers can also cause memory leaks (we discuss timers in Chapter 22). There are two distinct scenarios, depending on the kind of timer. Let’s first look at the timer in the System.Timers namespace. In the following example, the Foo class (when instantiated) calls the tmr_Elapsed method once every second:

using System.Timers;

class Foo

{

Timer _timer;

Foo()

{

_timer = new System.Timers.Timer { Interval = 1000 };

_timer.Elapsed += tmr_Elapsed;

_timer.Start();

}

void tmr_Elapsed (object sender, ElapsedEventArgs e) { ... }

}

Unfortunately, instances of Foo can never be garbage-collected! The problem is that the .NET Framework itself holds references to active timers so that it can fire their Elapsed events. Hence:

§ The .NET Framework will keep _timer alive.

§ _timer will keep the Foo instance alive, via the tmr_Elapsed event handler.

The solution is obvious when you realize that Timer implements IDisposable. Disposing of the timer stops it and ensures that the .NET Framework no longer references the object:

class Foo : IDisposable

{

...

public void Dispose() { _timer.Dispose(); }

}

NOTE

A good guideline is to implement IDisposable yourself if any field in your class is assigned an object that implements IDisposable.

The WPF and Windows Forms timers behave in exactly the same way, with respect to what’s just been discussed.

The timer in the System.Threading namespace, however, is special. The .NET Framework doesn’t hold references to active threading timers; it instead references the callback delegates directly. This means that if you forget to dispose of a threading timer, a finalizer can fire which will automatically stop and dispose the timer. For example:

static void Main()

{

var tmr = new System.Threading.Timer (TimerTick, null, 1000, 1000);

GC.Collect();

System.Threading.Thread.Sleep (10000); // Wait 10 seconds

}

static void TimerTick (object notUsed) { Console.WriteLine ("tick"); }

If this example is compiled in “release” mode (debugging disabled and optimizations enabled), the timer will be collected and finalized before it has a chance to fire even once! Again, we can fix this by disposing of the timer when we’re done with it:

using (var tmr = new System.Threading.Timer (TimerTick, null, 1000, 1000))

{

GC.Collect();

System.Threading.Thread.Sleep (10000); // Wait 10 seconds

}

The implicit call to tmr.Dispose at the end of the using block ensures that the tmr variable is “used” and so not considered dead by the GC until the end of the block. Ironically, this call to Dispose actually keeps the object alive longer!

Diagnosing Memory Leaks

The easiest way to avoid managed memory leaks is to proactively monitor memory consumption as an application is written. You can obtain the current memory consumption of a program’s objects as follows (the true argument tells the GC to perform a collection first):

long memoryUsed = GC.GetTotalMemory (true);

If you’re practicing test-driven development, one possibility is to use unit tests to assert that memory is reclaimed as expected. If such an assertion fails, you then have to examine only the changes that you’ve made recently.
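One way to express such an assertion is to measure the managed heap on either side of the operation under test. The helper below is a minimal sketch; MemoryAssert and MeasureGrowth are names invented for this example, and the figure it returns is approximate because other threads may allocate at the same time:

```csharp
using System;

static class MemoryAssert
{
  // Returns the change in managed heap size (in bytes) caused by running
  // the action, forcing a full collection before each measurement.
  public static long MeasureGrowth (Action action)
  {
    long before = GC.GetTotalMemory (true);
    action();
    return GC.GetTotalMemory (true) - before;
  }
}
```

A unit test can then assert that the growth stays below some tolerance after creating and releasing the objects in question. Choose the tolerance generously, because the result includes incidental allocations made by the runtime and the test framework.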

If you already have a large application with a managed memory leak, the windbg.exe tool can assist in finding it. There are also friendlier graphical tools such as Microsoft’s CLR Profiler, SciTech’s Memory Profiler, and Red Gate’s ANTS Memory Profiler.

The CLR also exposes numerous Windows WMI counters to assist with resource monitoring.

Weak References

Occasionally, it’s useful to hold a reference to an object that’s “invisible” to the GC in terms of keeping the object alive. This is called a weak reference, and is implemented by the System.WeakReference class.

To use WeakReference, construct it with a target object as follows:

var sb = new StringBuilder ("this is a test");

var weak = new WeakReference (sb);

Console.WriteLine (weak.Target); // this is a test

If a target is referenced only by one or more weak references, the GC will consider the target eligible for collection. When the target gets collected, the Target property of the WeakReference will be null:

var weak = new WeakReference (new StringBuilder ("weak"));

Console.WriteLine (weak.Target); // weak

GC.Collect();

Console.WriteLine (weak.Target); // (nothing)

To avoid the target being collected in between testing for it being null and consuming it, assign the target to a local variable:

var weak = new WeakReference (new StringBuilder ("weak"));

var sb = (StringBuilder) weak.Target;

if (sb != null) { /* Do something with sb */ }

Once a target’s been assigned to a local variable, it has a strong root and so cannot be collected while that variable’s in use.

The following class uses weak references to keep track of all Widget objects that have been instantiated, without preventing those objects from being collected:

class Widget

{

static List<WeakReference> _allWidgets = new List<WeakReference>();

public readonly string Name;

public Widget (string name)

{

Name = name;

_allWidgets.Add (new WeakReference (this));

}

public static void ListAllWidgets()

{

foreach (WeakReference weak in _allWidgets)

{

Widget w = (Widget)weak.Target;

if (w != null) Console.WriteLine (w.Name);

}

}

}

The only proviso with such a system is that the static list will grow over time, accumulating weak references with null targets. So you need to implement some cleanup strategy.
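The simplest such strategy is to periodically remove the entries whose targets are no longer alive. The following sketch shows the idea in isolation; WeakReferenceCleanup and Prune are hypothetical names, and the code assumes that the list is accessed by only one thread at a time:

```csharp
using System;
using System.Collections.Generic;

static class WeakReferenceCleanup
{
  // Removes every entry whose target has been collected (or was never set)
  // and returns the number of entries removed.
  public static int Prune (List<WeakReference> list)
  {
    return list.RemoveAll (weak => !weak.IsAlive);
  }
}
```

A class such as Widget could call this on its static list from its constructor, perhaps only on every hundredth instantiation, so that the cost of scanning the list is amortized.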

Weak References and Caching

One use for WeakReference is to cache large object graphs. This allows memory-intensive data to be cached briefly without causing excessive memory consumption:

_weakCache = new WeakReference (...); // _weakCache is a field

...

var cache = _weakCache.Target;

if (cache == null) { /* Re-create cache & assign it to _weakCache */ }

This strategy may be only mildly effective in practice, because you have little control over when the GC fires and what generation it chooses to collect. In particular, if your cache remains in Gen0, it may be collected within microseconds (and remember that the GC doesn’t collect only when memory is low—it collects regularly under normal memory conditions). So at a minimum, you should employ a two-level cache whereby you start out by holding strong references that you convert to weak references over time.
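A two-level cache along those lines might be sketched as follows. TwoLevelCache and its members are hypothetical names, and the design makes two simplifying assumptions: the class is not thread-safe, and demotion from a strong to a weak reference happens lazily, when Get is next called after the strong lifetime has elapsed. A real implementation might use a timer to demote entries promptly:

```csharp
using System;

class TwoLevelCache<T> where T : class
{
  T _strong;                            // Level 1: keeps the value alive
  WeakReference _weak;                  // Level 2: lets the GC reclaim it
  DateTime _strongUntil;
  readonly TimeSpan _strongLifetime;

  public TwoLevelCache (TimeSpan strongLifetime)
  {
    _strongLifetime = strongLifetime;
  }

  public void Set (T value)
  {
    _strong = value;
    _weak = new WeakReference (value);
    _strongUntil = DateTime.UtcNow + _strongLifetime;
  }

  // Returns the cached value, or null if nothing was cached or the
  // value has since been collected.
  public T Get()
  {
    if (_strong != null && DateTime.UtcNow >= _strongUntil)
      _strong = null;                   // Demote: only the weak reference remains

    if (_strong != null) return _strong;
    return _weak == null ? null : (T) _weak.Target;
  }
}
```

As with any weak reference, the caller should assign the result of Get to a local variable and test that variable for null before using it.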

Weak References and Events

We saw earlier how events can cause managed memory leaks. The simplest solution is to either avoid subscribing in such conditions, or implement a Dispose method to unsubscribe. Weak references offer another solution.

Imagine a delegate that holds only weak references to its targets. Such a delegate would not keep its targets alive—unless those targets had independent referees. Of course, this wouldn’t prevent a firing delegate from hitting an unreferenced target—in the time between the target being eligible for collection and the GC catching up with it. For such a solution to be effective, your code must be robust in that scenario. Assuming that is the case, a weak delegate class can be implemented as follows:

public class WeakDelegate<TDelegate> where TDelegate : class

{

class MethodTarget

{

public readonly WeakReference Reference;

public readonly MethodInfo Method;

public MethodTarget (Delegate d)

{

Reference = new WeakReference (d.Target);

Method = d.Method;

}

}

List<MethodTarget> _targets = new List<MethodTarget>();

public WeakDelegate()

{

if (!typeof (TDelegate).IsSubclassOf (typeof (Delegate)))

throw new InvalidOperationException

("TDelegate must be a delegate type");

}

public void Combine (TDelegate target)

{

if (target == null) return;

foreach (Delegate d in (target as Delegate).GetInvocationList())

_targets.Add (new MethodTarget (d));

}

public void Remove (TDelegate target)

{

if (target == null) return;

foreach (Delegate d in (target as Delegate).GetInvocationList())

{

MethodTarget mt = _targets.Find (w =>

object.Equals (d.Target, w.Reference.Target) && // null-safe: d.Target is null for static methods

d.Method.MethodHandle.Equals (w.Method.MethodHandle));

if (mt != null) _targets.Remove (mt);

}

}

public TDelegate Target

{

get

{

var deadRefs = new List<MethodTarget>();

Delegate combinedTarget = null;

foreach (MethodTarget mt in _targets.ToArray())

{

WeakReference target = mt.Reference;

if (target != null && target.IsAlive)

{

var newDelegate = Delegate.CreateDelegate (

typeof (TDelegate), mt.Reference.Target, mt.Method);

combinedTarget = Delegate.Combine (combinedTarget, newDelegate);

}

else

deadRefs.Add (mt);

}

foreach (MethodTarget mt in deadRefs) // Remove dead references

_targets.Remove (mt); // from _targets.

return combinedTarget as TDelegate;

}

set

{

_targets.Clear();

Combine (value);

}

}

}

This code illustrates a number of interesting points in C# and the CLR. First, note that we check that TDelegate is a delegate type in the constructor. This is because of a limitation in C#—the following type constraint is illegal because C# considers System.Delegate a special type for which constraints are not supported:

... where TDelegate : Delegate // Compiler doesn't allow this

Instead, we must choose a class constraint, and perform a runtime check in the constructor.

In the Combine and Remove methods, we perform the reference conversion from target to Delegate via the as operator rather than the more usual cast operator. This is because C# disallows the cast operator with this type parameter—because of a potential ambiguity between a custom conversion and a reference conversion.

We then call GetInvocationList because these methods might be called with multicast delegates—delegates with more than one method recipient.

In the Target property, we build up a multicast delegate that combines all the delegates referenced by weak references whose targets are alive. We then clear out the remaining (dead) references from the list, to avoid the _targets list growing endlessly. (We could improve our class by doing the same in the Combine method; yet another improvement would be to add locks for thread safety [Chapter 22].)

The following illustrates how to consume this delegate in implementing an event:

public class Foo

{

WeakDelegate<EventHandler> _click = new WeakDelegate<EventHandler>();

public event EventHandler Click

{

add { _click.Combine (value); } remove { _click.Remove (value); }

}

protected virtual void OnClick (EventArgs e)

{

EventHandler target = _click.Target;

if (target != null) target (this, e);

}

}

Notice that in firing the event, we assign _click.Target to a temporary variable before checking and invoking it. This avoids the possibility of targets being collected in the interim.

[10] In Interrupt and Abort in Chapter 22, we describe how aborting a thread can violate the safety of this pattern. This is rarely an issue in practice because aborting threads is widely discouraged for precisely this (and other) reasons.

[11] The same thing may occur occasionally in the generational heap due to pinning (see The fixed Statement in Chapter 4).
