Exam Ref 70-483: Programming in C# (2013)

Chapter 3. Debug applications and implement security

When you are building your applications, you will always run into unforeseen problems. Maybe you see the error and you start looking for the problem, or a user reports the error while the application is being tested or is already in production.

Debugging is the process of finding errors in your software and removing them. The C# compiler and the .NET Framework help you identify these bugs, whether on your development machine or in a production environment. In this chapter, you learn how to use the compiler to output extra information that can help you find bugs. You also look at implementing diagnostics in your application that output critical information about its health.

When building real-world applications, it’s also important to make sure that your applications are secure. You look at validating application input to ensure that the data is correct, but also to avoid malicious actions. Another area of security has to do with the code itself. If a user can change your assemblies and deploy them in a production environment, he can change the way your application behaves without you even knowing it. In this chapter, you look at the options you have to protect yourself against these attacks.

Objectives in this chapter:

§ Objective 3.1: Validate application input

§ Objective 3.2 Perform symmetric and asymmetric encryption

§ Objective 3.3 Manage assemblies

§ Objective 3.4 Debug an application

§ Objective 3.5 Implement diagnostics in an application

Objective 3.1: Validate application input

If your application runs in total isolation and processes only its own data, you can be sure that your application behaves the way it should behave.

But in the real world, you have to deal with external input. This input can come from another system, but most of the time it comes from your users. Validating all input to your application is an area that’s sometimes overlooked but that is of critical importance.

THIS OBJECTIVE COVERS HOW TO:

§ Explain why validating application input is important.

§ Manage data integrity.

§ Use Parse, TryParse, and Convert.

§ Use regular expressions for input validation.

§ Validating JSON and XML.

Why validating application input is important

When your application is in production, it has to deal with various types of input. Some of this input comes from other systems that it integrates with, and most input is generated by users. Those users fall into two categories:

§ Innocent users

§ Malicious users

Innocent users are the ones who try to use your application to get some work done. They have no bad intentions when working with your application, but they can still make mistakes. Maybe they forget to input some required data, or they make a typo and insert invalid data.

Malicious users are a different species. They actively seek weaknesses in your application and try to exploit them. Maybe they want access to some privileged information, or they try to add or remove information. These are the users who try to insert invalid data, decompile your code to see how it works, or just start looking for hidden areas of your system.

Even when your application integrates with other applications that have no bad intentions, you still have to validate the data you’re consuming. Maybe you have developed your application, tested it, and made sure everything was working, but suddenly the other system is upgraded to a new version. The fields you were expecting are gone or moved to another location, and new data is suddenly added. If you don’t protect yourself against these kinds of situations, they can crash your application or corrupt the data in your system.

When building real world applications, you will probably use frameworks such as Windows Presentation Foundation (WPF), ASP.NET, or the Entity Framework. Those frameworks have built-in functionality to validate data, but it’s still important to make sure that you know how to perform your own validation.

Managing data integrity

When invalid data enters your application, it can be that your application crashes. Maybe you expect a valid date, but a user makes a typo. When you try to perform some calculations on the date, an exception is thrown, and your application crashes.

Of course, this is inconvenient for the user and definitely something you should avoid. But crashing isn’t the worst that can happen. What if the invalid data isn’t recognized and is saved to your database? That can lead to corrupt data and jeopardize the integrity of your data.

Let’s say, for example, that you have built an online shopping application. One of the administrators decides to do some cleaning and removes a couple of user accounts that he presumes are no longer in use. But he forgets that those accounts have a purchase history. Suddenly your data is in an inconsistent state. You have orphaned orders in your database that can’t be linked to a specific user any more.

Another situation can arise when you have a power outage or a hardware failure. Maybe you have developed an application for a bank. You receive a message that a certain amount of money should be removed from one account and added to another one. After removing the money, your application is abruptly terminated, and suddenly the money is gone.

Avoiding these types of problems is the area of managing data integrity. There are four different types of data integrity:

§ Entity integrity. States that each entity (a record in a database) should be uniquely identifiable. In a database, this is achieved by using a primary key column. A primary key uniquely identifies each row of data. It can be generated by the database or by your application.

§ Domain integrity. Refers to the validity of the data that an entity contains. This can be about the type of data and the possible values that are allowed (a valid postal code, a number within a certain range, or a default value, for example).

§ Referential integrity. The relationship that entities have with each other, such as the relationship between an order and a customer.

§ User-defined integrity. Comprises specific business rules that you need to enforce. A business rule for a web shop might involve a new customer who is not allowed to place an order above a certain dollar amount.

Most of these integrity checks are integrated into modern database systems. You can use primary keys and foreign keys to let the database perform certain basic checks on your data. A primary key uniquely identifies each row of data. Defining them makes sure that no rows have the same ID. A foreign key is used to point to another record (for example, the manager of a person or the order for an order line). You can configure the database to disallow the removal of an order without also removing the order lines.

When working with a database, you will probably use an object-relational mapper such as the Entity Framework, which enables a couple of different ways to work with your database. One approach is to define your object model in code and then let the Entity Framework generate a database that can store your model. You can annotate your classes with attributes that specify certain validation rules, or you can use a special mapping syntax to configure the way your database schema is generated.

MORE INFO: ENTITY FRAMEWORK

For more information on the Entity Framework and the different ways you can use it, see Chapter 4.

For example, when working with a web shop, you have classes for at least an order, a customer, an order line, and a product.

You need entity integrity to ensure that each entity can be uniquely identified. You do this by adding an ID property to each entity. The database helps you generate unique values for your IDs when you add entities to the database.

Referential integrity is necessary to ensure a relationship is maintained between orders, order lines, customers, and products. Foreign key constraints show which relationships are required and which are optional.

Domain integrity also comes into play. You have specific data types, such as a DateTime for your order date and shipping date. Some fields are required, such as the name of the customer and the quantity of products you want to order.

User-defined integrity is another issue. It can’t be handled automatically by the Entity Framework. You can define these checks in code or write custom code that will be executed by your database. Another way is to use a trigger. Triggers are special methods that run when data in your database is updated, inserted, or removed. Such an action triggers your method to execute. You can also use stored procedures, which are subroutines that are stored in your database and can be executed to validate date or control access to data.

Example 3-1 is a code sample that describes the Customer and Address classes. As you can see, some of the properties are annotated with special attributes. These attributes can be found in the System.ComponentModel.DataAnnotations.dll, which is included in the Entity Framework. You can add the Entity Framework to your application by installing the Entity Framework NuGet package.

MORE INFO: NUGET

NuGet is a free and open-source package manager. It’s installed as a Visual Studio extension that can be used to easily download software packages and add them to your applications. You can find more information about NuGet at http://nuget.org/.

Example 3-1. Customer and Address classes

public class Customer

{

public int Id { get; set; }

[Required, MaxLength(20)]

public string FirstName { get; set; }

[Required, MaxLength(20)]

public string LastName { get; set; }

[Required]

public Address ShippingAddress { get; set; }

[Required]

public Address BillingAddress { get; set; }

}

public class Address

{

public int Id { get; set; }

[Required, MaxLength(20)]

public string AddressLine1 { get; set; }

[Required, MaxLength(20)]

public string AddressLine2 { get; set; }

[Required, MaxLength(20)]

public string City { get; set; }

[RegularExpression(@"^[1-9][0-9]{3}\s?[a-zA-Z]{2}$")]

public string ZipCode { get; set; }

}

You can use the following predefined attributes:

§ DataTypeAttribute

§ RangeAttribute

§ RegularExpressionAttribute

§ RequiredAttribute

§ StringLengthAttribute

§ CustomValidationAttribute

§ MaxLengthAttribute

§ MinLengthAttribute

You can apply these attributes to your class members, and when you save your changes to the database, the validation code runs.

Example 3-2 shows an example of using an Entity Framework context to save a new customer to the database.

Example 3-2. Saving a new customer to the database

public class ShopContext : DbContext

{

public IDbSet<Customer> Customers { get; set; }

protected override void OnModelCreating(DbModelBuilder modelBuilder)

{

// Make sure the database knows how to handle the duplicate address property

modelBuilder.Entity<Customer>().HasRequired(bm => bm.BillingAddress)

.WithMany().WillCascadeOnDelete(false);

}

}

using (ShopContext ctx = new ShopContext())

{

Address a = new Address

{

AddressLine1 = "Somewhere 1",

AddressLine2 = "At some floor",

City = "SomeCity",

ZipCode = "1111AA"

};

Customer c = new Customer()

{

FirstName = "John",

LastName = "Doe",

BillingAddress = a,

ShippingAddress = a,

};

ctx.Customers.Add(c);

ctx.SaveChanges();

}

If you forget to set the FirstName property, the Entity Framework throws the following exception:

System.Data.Entity.Validation.DbEntityValidationException : Validation failed for one or

more entities. See 'EntityValidationErrors' property for more details.

Looking at the EntityValidationErrors property tells you that the FirstName field is required. You can run this validation code manually outside of the context of the Entity Framework. Example 3-3 shows a class that can run validation on an entity and report the errors.

Example 3-3. Running manual validation

public static class GenericValidator<T>

{

public static IList<ValidationResult> Validate(T entity)

{

var results = new List<ValidationResult>();

var context = new ValidationContext(entity, null, null);

Validator.TryValidateObject(entity, context, results);

return results;

}

}

The Entity Framework also creates foreign keys and primary keys in the database for your entities. They ensure that you don’t have entities with the same ID and that the relationships between your entities, such as from Customer to Address, are correct.

MORE INFO: ENTITY FRAMEWORK

For more information about the Entity Framework, see the Microsoft documentation at http://msdn.microsoft.com/en-us/data/ef.aspx. You can also read Programming Entity Framework: DbContext by Julia Lerman and Rowan Miller (O’Reilly Media, 2012).

Another important topic when managing data integrity with a database is using transactions. A transaction helps you group a set of related operations on a database. It ensures that those operations are seen as one distinct action. If one fails, they all fail and can easily be rolled back.

You can also run into problems when your users work concurrently with the same set of data. By using transactions, you can configure your database to throw an exception when there is a conflicting update. In your application, you can catch those exceptions and write code that handles the conflict. You could, for example, allow the user to choose which update should win, or you can let the last update win. This helps you maintain your data integrity.

MORE INFO: TRANSACTIONS

For more information on using transactions, see Chapter 4.

Using Parse, TryParse, and Convert

Most input to your application comes in as a simple string. Maybe you know that it actually represents a number or a valid date, but you have to check this to ensure that the data is valid.

The .NET Framework has some built-in types that help you convert data from one type to another.

The Parse and TryParse methods can be used when you have a string that you want to convert to a specific data type. For example, if you have a string that you know is a Boolean value, you can use the bool.Parse method, as Example 3-4 shows.

Example 3-4. Using Parse

string value = "true";

bool b = bool.Parse(value);

Console.WriteLine(b); // displays True

The bool.Parse method uses the static readonly fields TrueString and FalseString to see whether your string is true or false. If your string contains an invalid value, Parse throws a FormatException. If you pass a null value for the string, you will get an ArgumentNullException. Parse should be used if you are certain the parsing will succeed. If an exception is thrown, this denotes a real error in your application.

TryParse does things differently. You use TryParse if you are not sure that the parsing will succeed. You don’t want an exception to be thrown and you want to handle invalid conversion gracefully. Look at Example 3-5 for an example of using the int.TryParse method that tries to parse a string to a valid number.

Example 3-5. Using TryParse

string value = "1";

int result;

bool success = int.TryParse(value, out result);

if (success)

{

// value is a valid integer, result contains the value

}

else

{

// value is not a valid integer

}

As Example 3-5 shows, TryParse returns a Boolean value that indicates whether the value could be parsed. The out parameter contains the resulting value when the operation is successful. If the parsing succeeds, the variable holds the converted value; otherwise, it contains the initial value.

TryParse can be used when you are parsing some user input. If the user provides invalid data, you can show a friendly error message and let him try again.

When using the bool.Parse or bool.TryParse methods, you don’t have any extra parsing options. When parsing numbers, you can supply extra options for the style of the number and the specific culture that you want to use. Example 3-6 shows how you can parse a string that contains a currency symbol and a decimal separator. The CultureInfo class can be found in the System.Globalization namespace.

Example 3-6. Using configuration options when parsing a number

CultureInfo english = new CultureInfo("En");

CultureInfo dutch = new CultureInfo("Nl");

string value = "€19,95";

decimal d = decimal.Parse(value, NumberStyles.Currency, dutch);

Console.WriteLine(d.ToString(english)); // Displays 19.95

A complex subject is parsing a date and time. You can use the DateTime.Parse method for this, which offers several overloads (methods with the same name but different arguments):

§ Parse(string) uses the current thread culture and the DateTimeStyles.AllowWhiteSpaces.

§ Parse(string, IFormatProvider) uses the specified culture and the DateTimeStyles.AllowWhiteSpaces.

§ Parse(string, IFormatProvider, DateTimeStyles).

When parsing a DateTime, you must take into account things such as time zone differences and cultural differences, especially when working on an application that uses globalization. It’s important to parse user input with the correct culture.

MORE INFO: PARSING DATETIME

For more information on parsing dates and times, see the MSDN documentation at http://msdn.microsoft.com/en-us/library/system.datetime.parse.aspx.

The .NET Framework also offers the Convert class to convert between base types. The supported base types are Boolean, Char, SByte, Byte, Int16, Int32, Int64, UInt16, Uint32, Uint64, Single, Double, Decimal, DateTime, and String. The difference between Parse/TryParse and Convert is that Convert enables null values. It doesn’t throw an ArgumentNullException; instead, it returns the default value for the supplied type, as Example 3-7 shows.

Example 3-7. Using Convert with a null value

int i = Convert.ToInt32(null);

Console.WriteLine(i); // Displays 0

A difference between Convert and the Parse methods is that Parse takes a string only as input, while Convert can also take other base types as input. Example 3-8 shows an example of converting a double to an int. The double value is rounded.

Example 3-8. Using Convert to convert from double to int

double d = 23.15;

int i = Convert.ToInt32(d);

Console.WriteLine(i); // Displays 23

Methods such as these throw an OverflowException when the parsed or converted value is too large for the target type.

EXAM TIP

It’s important to know that when you are parsing user input, the best choice is the TryParse method. Throwing exceptions for “normal” errors is not a best practice. TryParse just returns false when the value can’t be parsed.

Using regular expressions

A regular expression is a specific pattern used to parse and find matches in strings. A regular expression is sometimes called regex or regexp.

Regular expressions are flexible. For example, the regex ^(\(\d{3}\)|^\d{3}[.-]?)?\d{3}[.-]?\d{4}$ matches North American telephone numbers with or without parentheses around the area code, and with or without hyphens or dots between the numbers.

Regular expressions have a history of being hard to write and use. Luckily, a lot of patterns are already written by someone else. Websites such as http://regexlib.com/ contain a lot of examples that you can use or adapt to your own needs. Regular expressions can be useful when validating application input, reducing to a few lines of code what can take dozens or more with manual parsing. Maybe you allow a user to use both slashes and dashes to input a valid date. Or you allow white space when entering a ZIP Code. Example 3-9 shows how cumbersome it is to validate a Dutch ZIP Code manually.

Example 3-9. Manually validating a ZIP Code

static bool ValidateZipCode(string zipCode)

{

// Valid zipcodes: 1234AB | 1234 AB | 1001 AB

if (zipCode.Length < 6) return false;

string numberPart = zipCode.Substring(0, 4);

int number;

if (!int.TryParse(numberPart, out number)) return false;

string characterPart = zipCode.Substring(4);

if (numberPart.StartsWith("0")) return false;

if (characterPart.Trim().Length < 2) return false;

if (characterPart.Length == 3 && characterPart.Trim().Length != 2)

return false;

return true;

}

If you use a regular expression, the code is much shorter. A regular expression that matches Dutch ZIP Codes is ^[1-9][0-9]{3}\s?[a-zA-Z]{2}$.

You can use this pattern with the RegEx class that can be found in the System.Text.Regular-Expressions namespace. Example 3-10 shows how you can use the RegEx class to validate a zip code.

Example 3-10. Validate a ZIP Code with a regular expression

static bool ValidateZipCodeRegEx(string zipCode)

{

Match match = Regex.Match(zipCode, @"^[1-9][0-9]{3}\s?[a-zA-Z]{2}$",

RegexOptions.IgnoreCase);

return match.Success;

}

Next to matching application input to a specific pattern, you can also use regular expressions to ensure that input doesn’t contain certain restricted characters. You can use regex to replace those characters with another value to remove them from the input.

Especially when working in the context of a web application, it is important to filter the user input. Imagine that a user inputs some HTML inside an input field that is meant for information such as a name or address. The application doesn’t validate the input and saves it straight to the database. The next time the user visits the application, the HTML is directly rendered as a part of the page. A user can do a lot of harm by using this technique, so it’s important to ensure that input doesn’t contain potentially harmful characters.

Example 3-11 shows an example of using a RegEx expression to remove all excessive use of white space. Every single space is allowed but multiple spaces are replaced with a single space.

Example 3-11. Validate a ZIP Code with a regular expression

RegexOptions options = RegexOptions.None;

Regex regex = new Regex(@"[ ]{2,}", options);

string input = "1 2 3 4 5";

string result = regex.Replace(input, " ");

Console.WriteLine(result); // Displays 1 2 3 4 5

Although regex looks more difficult than writing the validation code in plain C#, it’s definitely worth learning how it works. A regular expression can dramatically simplify your code, and it’s worth examining if you are in a situation requiring validation.

Validating JSON and XML

When exchanging data with other applications, you will often receive JavaScript Object Notation (JSON) or Extensible Markup Language (XML) data. JSON is a popular format that has its roots in the JavaScript world. It’s a compact way to represent some data. XML has a stricter schema and is considered more verbose, but certainly has its uses. It’s important to make sure that this data is valid before you start using it.

Valid JSON starts with { or [, and ends with } or ]. You can easily see whether a string starts with these characters by using the code in Example 3-12.

Example 3-12. Seeing whether a string contains potential JSON data

public static bool IsJson(string input)

{

input = input.Trim();

return input.StartsWith("{") && input.EndsWith("}")

|| input.StartsWith("[") && input.EndsWith("]");

}

Checking only the start and end characters is, of course, not enough to know whether the whole object can be parsed as JSON. The .NET Framework offers the JavaScriptSerializer that you can use to deserialize a JSON string into an object. You can find the JavaScriptSerializer in theSystem.Web.Extensions dynamic-link library (DLL) in the System.Web.Script.Serialization namespace.

Example 3-13 shows how you can use the JavaScriptSerializer. In this case, you are deserializing the data to a Dictionary<string,object>. You can then loop through the dictionary to see the property names and their values.

Example 3-13. Deserializing an object with the JavaScriptSerializer

var serializer = new JavaScriptSerializer();

var result = serializer.Deserialize<Dictionary<string, object>>(json);

If you pass some invalid JSON to this function, an ArgumentException is thrown with a message that starts with “Invalid object passed in”.

An XML file can be described by using an XML Schema Definition (XSD). This XSD can be used to validate an XML file.

Take, for example, the XML file that is described in Example 3-14.

Example 3-14. A sample XML with person data

<?xml version="1.0" encoding="utf-16" ?>

<Person xmlns:xsi="http://www.w3.org/2001/XMLSchema-

instance" xmlns:xsd="http://www.w3.org/2001/XMLSchema">

<FirstName>John</FirstName>

<LastName>Doe</LastName>

<Age>42</Age>

</Person>

You can create an XSD file for this schema by using the XML Schema Definition Tool (Xsd.exe) that is a part of Visual Studio. This tool can generate XML Schema files or C# classes.

The following line will generate an XSD file for the person.xml file:

Xsd.exe person.xml

The tool creates a file called person.xsd. You can see the content of this XSD file in Example 3-15.

Example 3-15. A sample XSD file

<?xml version="1.0" encoding="utf-8"?>

<xs:schema id="NewDataSet" xmlns="" xmlns:xs="http://www.w3.org/2001/XMLSchema"

xmlns:msdata="urn:schemas-microsoft-com:xml-msdata">

<xs:element name="Person">

<xs:complexType>

<xs:sequence>

<xs:element name="FirstName" type="xs:string" minOccurs="0" />

<xs:element name="LastName" type="xs:string" minOccurs="0" />

<xs:element name="Age" type="xs:string" minOccurs="0" />

</xs:sequence>

</xs:complexType>

</xs:element>

<xs:element name="NewDataSet" msdata:IsDataSet="true" msdata:UseCurrentLocale="true">

<xs:complexType>

<xs:choice minOccurs="0" maxOccurs="unbounded">

<xs:element ref="Person" />

</xs:choice>

</xs:complexType>

</xs:element>

</xs:schema>

By default, none of the items in the file is required. It does, however, record which elements are possible and what the structure of the file should look like.

You can now use this XSD file to validate an XML file. Example 3-16 shows a way to do this.

Example 3-16. Validating an XML file with a schema

public void ValidateXML()

{

string xsdPath = "person.xsd";

string xmlPath = "person.xml";

XmlReader reader = XmlReader.Create(xmlPath);

XmlDocument document = new XmlDocument();

document.Schemas.Add("", xsdPath);

document.Load(reader);

ValidationEventHandler eventHandler =

new ValidationEventHandler(ValidationEventHandler);

document.Validate(eventHandler);

}

static void ValidationEventHandler(object sender,

ValidationEventArgs e)

{

switch (e.Severity)

{

case XmlSeverityType.Error:

Console.WriteLine("Error: {0}", e.Message);

break;

case XmlSeverityType.Warning:

Console.WriteLine("Warning {0}", e.Message);

break;

}

}

If there is something wrong with the XML file, such as a non-existing element, the ValidationEventHandler is called. Depending on the type of validation error, you can decide which action to take.

THOUGHT EXPERIMENT

Strange errors

In this thought experiment, apply what you’ve learned about this objective. You can find answers to these questions in the Answers section at the end of this chapter.

You have developed a complex web application and deployed it to production. The application is a new hybrid of wiki and a forum. Users can use it to brainstorm on ideas and write a document together.

Suddenly users start contacting your support desk. They are all reporting “that your application looks strange.” It suddenly contains extra URLs that link to external websites that are mixed with the original website’s layout.

1. What could be the problem?

2. How will you solve it?

Objective summary

§ Validating application input is important to protect your application against both mistakes and attacks.

§ Data integrity should be managed both by your application and your data store.

§ The Parse, TryParse, and Convert functions can be used to convert between types.

§ Regular expressions, or regex, can be used to match input against a specified pattern or replace specified characters with other values.

§ When receiving JSON and XML files, it’s important to validate them using the built-in types, such as with JavaScriptSerializer and XML Schemas.

Objective review

Answer the following questions to test your knowledge of the information in this objective. You can find the answers to these questions and explanations of why each answer choice is correct or incorrect in the Answers section at the end of this chapter.

1. A user needs to enter a DateTime in a text field. You need to parse the value in code. Which method do you use?

a. DateTime.Parse

b. DateTime.TryParse

c. Convert.ToDateTime

d. Regex.Match.

2. You are working on a globalized web application. You need to parse a text field where the user enters an amount of money. Which method do you use?

a. int.TryParse(value, NumberStyles.Currency, UICulture);

b. decimal.TryParse(value, NumberStyles.Currency, UICulture);

c. decimal.TryParse(value, ServerCulture);

d. decimal.TryParse(value)

3. You need to validate an XML file. What do you use?

a. JavaScriptSerializer

b. RegEx

c. StringBuilder

d. XSD

Objective 3.2 Perform symmetric and asymmetric encryption

Security and cryptography are closely related to each other. Some important steps in building a secure application are the authentication of users, making sure your data stays confidential, and ensuring that no one can tamper with your data. The .NET Framework offers several implementations of popular algorithms that you can use to protect your applications.

THIS OBJECTIVE COVERS HOW TO:

§ Use symmetric and asymmetric encryption algorithms.

§ Work with encryption in the .NET Framework.

§ Use hashing.

§ Manage and create certificates.

§ Use the code access permissions from the System.Security namespace.

§ Secure string data.

Using symmetric and asymmetric encryption

Security is about keeping secrets. You can use complex algorithms to encrypt your data, but if you can’t keep your passwords and codes secret, everyone will be able to read your private data.

Cryptography is about encrypting and decrypting data. With encryption, you take a piece of plain text (regular text that’s human readable) and then run an algorithm over it. The resulting data looks like a random byte sequence, often called ciphertext. Decryption is the opposite process: The byte sequence is transformed into the original plain text data.

In cryptography, you can keep your algorithm secret, or you can use a public algorithm and keep your key secret.

Keeping your algorithm secret is often impractical because you would need to switch algorithms each time someone leaked the algorithm. Instead a key is kept secret. A key is used by an algorithm to control the encryption process. An encryption key is the same as a regular password: It shouldn’t be easy to guess the key you have chosen.

Another advantage of making the algorithm public is that it’s extensively tested. When a successor for the widespread Data Encryption Standard (DES) algorithm became necessary, the American National Institute of Standards and Technology (NIST) invited anyone to submit new algorithms. After the submission period was closed, NIST made the source code for these algorithms public and invited everyone to break them. Some algorithms were broken in a matter of days, and only a small number made it to the final round. Making those algorithms public improved security.

Because the algorithm is public, the key is the thing that should be kept private. The difference in symmetric and asymmetric encryption strategies lies in the way this key is used. A symmetric algorithm uses one single key to encrypt and decrypt the data. You need to pass your original key to the receiver so he can decrypt your data. And this automatically leads to the problem of securely exchanging keys.

This is where an asymmetric algorithm can be used. An asymmetric algorithm uses two different keys that are mathematically related to each other. Although they are related, it’s infeasible to determine one when you know the other. One key is completely public and can be read and used by everyone. The other part is private and should never be shared with someone else. When you encrypt something with the public key, it can be decrypted by using the private key, and vice versa.

Another difference between symmetric and asymmetric encryption has to do with performance and message size. Symmetric encryption is faster than asymmetric encryption and is well-suited for larger data sets. Asymmetric encryption is not optimized for encrypting long messages, but it can be very useful for decrypting a small key. Combining these two techniques can help you transmit large amounts of data in an encrypted way.

Let’s say that Bob and Alice want to send each other a message. They take the following steps:

1. Alice and Bob both generate their own asymmetric key pair.

2. They send each other their public key and keep their private key secret.

3. They both generate a symmetric key and encrypt it only with the other parties’ public key (so that it can be encrypted by the private key of the other person).

4. They send their own encrypted symmetric key to one another and decrypt the others symmetric key with their own private key.

5. To send a confidential message, they use the symmetric key of the other person and use it to encrypt their message.

6. The receiving person decrypts the message with their own symmetric key.

As you can see, the asymmetric encryption is used to encrypt a symmetric key. After the key is safely transmitted, Bob and Alice can use it to send larger messages to one another.

Next to using a key, another important concept in cryptography is the initialization vector (IV). An IV is used to add some randomness to encrypting data. If encrypting the same text would always give the same results, this could be used by a potential attacker to break the encryption. The IV makes sure that the same data results in a different encrypted message each time.

EXAM TIP

Try to remember the differences between symmetric and asymmetric algorithms. A symmetric algorithm uses one key; an asymmetric algorithm uses two: a key pair that consists of both a public and a private key.

MORE INFO: SYMMETRIC AND ASYMMETRIC ENCRYPTION

If you want to know more about encryption a good place to start is http://msdn.microsoft.com/en-us/library/0ss79b2x.aspx.

Working with encryption in the .NET Framework

The .NET Framework offers an extensive set of algorithms for both symmetric and asymmetric encryption.

One symmetric algorithm is the Advanced Encryption Standard (AES). AES is adopted by the U.S. government and is becoming the standard worldwide for both governmental and business use. The .NET Framework has a managed implementation of the AES algorithm in the AesManagedclass. All cryptography classes can be found in the System.Security.Cryptography class.

Example 3-17 shows an example of using this algorithm to encrypt and decrypt a piece of text. As you can see, AES is a symmetric algorithm that uses a key and IV for encryption. By using the same key and IV, you can decrypt a piece of text. The cryptography classes all work on byte sequences.

Example 3-17. Use a symmetric encryption algorithm

public static void EncryptSomeText()

{

string original = "My secret data!";

using (SymmetricAlgorithm symmetricAlgorithm =

new AesManaged())

{

byte[] encrypted = Encrypt(symmetricAlgorithm, original);

string roundtrip = Decrypt(symmetricAlgorithm, encrypted);

// Displays: My secret data!

Console.WriteLine("Original: {0}", original);

Console.WriteLine("Round Trip: {0}", roundtrip);

}

}

static byte[] Encrypt(SymmetricAlgorithm aesAlg, string plainText)

{

ICryptoTransform encryptor = aesAlg.CreateEncryptor(aesAlg.Key, aesAlg.IV);

using (MemoryStream msEncrypt = new MemoryStream())

{

using (CryptoStream csEncrypt =

new CryptoStream(msEncrypt, encryptor, CryptoStreamMode.Write))

{

using (StreamWriter swEncrypt = new StreamWriter(csEncrypt))

{

swEncrypt.Write(plainText);

}

return msEncrypt.ToArray();

}

}

}

static string Decrypt(SymmetricAlgorithm aesAlg, byte[] cipherText)

{

ICryptoTransform decryptor = aesAlg.CreateDecryptor(aesAlg.Key, aesAlg.IV);

using (MemoryStream msDecrypt = new MemoryStream(cipherText))

{

using (CryptoStream csDecrypt =

new CryptoStream(msDecrypt, decryptor, CryptoStreamMode.Read))

{

using (StreamReader srDecrypt = new StreamReader(csDecrypt))

{

return srDecrypt.ReadToEnd();

}

}

}

}

The SymmetricAlgorithm class has both a method for creating an encryptor and a decryptor. By using the CryptoStream class, you can encrypt or decrypt a byte sequence.

The .NET Framework also has support for asymmetric encryption. You can use the RSACryptoServiceProvider and DSACryptoServiceProvider classes. When working with asymmetric encryption, you typically use the public key from another party. You encrypt the data using the public key so only the other party can decrypt the data with their private key.

Example 3-18 shows how you can create a new instance of the RSACryptoServiceProvider and export the public key to XML. By passing true to the ToXmlString method, you also export the private part of your key.

Example 3-18. Exporting a public key

RSACryptoServiceProvider rsa = new RSACryptoServiceProvider();

string publicKeyXML = rsa.ToXmlString(false);

string privateKeyXML = rsa.ToXmlString(true);

Console.WriteLine(publicKeyXML);

Console.WriteLine(privateKeyXML);

// Displays:

//<RSAKeyValue>

// <Modulus>

// tYo35ywT0Q0KCNhFPu207bS8rrTk91YaxNcD2ElQ1eoWpdYnoCsdj1KaW/

as9zFLYW5slg5Qq8ltdkxZuU//

fh0j2t+7ZFH8RRAD808GkZTrUi1zv3yqMjQDphHOcNfWh+dQrPmp1ShFxEGuA9Y4Ij9RINU5jcfviPa

// B1ClLXaGbc=

// </Modulus>

// <Exponent>AQAB</Exponent>

//</RSAKeyValue>

//<RSAKeyValue>

// <Modulus>

// tYo35ywT0Q0KCNhFPu207bS8rrTk91YaxNcD2ElQ1eoWpdYnoCsdj1KaW/as9zFLYW5slg5Qq8ltdkxZuU

// fh0j2t+7ZFH8RRAD808GkZTrUi1zv3yqMjQDphHOcNfWh+dQrPmp1ShFxEGuA9Y4Ij9RINU5jcfviPa

// B1ClLXaGbc=

// </Modulus>

// <Exponent>AQAB</Exponent>

// <P>

// 4uhNaN3cPSUzr+KxHmpKyeaD39RT+kWjjDcn/9sTAV/HmDzFzjsiov3KyJ+3XCXucx5TU0lhDOLc/

// cO+Xrquqw==

// </P>

// <Q>

// zNDVw6oL7YNglrFAeqmgIL3Oj2PkUxrWvoYHCbuFwJKpkWvFBRwZfKXHzzU0zaU5bGdX7M24hW8z5s0

// eF9CRJQ==

// </Q>

// <DP>

// jkS+/GhWxZPEw5vsF7jnaY3502ZqvPna4HhYwQgX832dRKueDn9vaSidc4sIyWMTDeTOs+LHUfAQRZ/

// shbKg/w==

// </DP>

// <DQ>

// HV4QWJboUO0Wi2Ts/umViTxOAudq1LOzeOwU1ENsITmmULCoNlxaFzJaHQ7e/GGlgzKqO80fmRph0c

// U1fGqudQ==

// </DQ>

// <InverseQ>

// BW1VUOgXpkRnn2twvb72uxcbK6+o9ns3xa4Ypm+++7vzlg6t/Iyvk94xNJWjjgR+XsSpN6JEtztWol8

// bv8HEyA==

// </InverseQ>

// <D>

// IOZUrUNyr+8iA2pWWkowAOhBTZQg7qYfIc8ptjfLO4k544IFGmTV7ZR1vvbcb8vyMk0Vxrf/bLKLcOX

// zWL2rMeWYGuoTbZEeUbr0SlmesHARL7X/feCm9MIyPjhlhJieRVG3h4f+TyAVo70jmYVcSou+xAaad3

// 7o3Pa8Vny6qIk=

// </D>

//</RSAKeyValue>

The public key is the part you want to publish so others can use it to encrypt data. You can send it to someone directly or publish it on a website that belongs to you. Example 3-19 shows an example of using a public key to encrypt data and decrypt it with the private key.

Example 3-19. Using a public and private key to encrypt and decrypt data

UnicodeEncoding ByteConverter = new UnicodeEncoding();

byte[] dataToEncrypt = ByteConverter.GetBytes("My Secret Data!");

byte[] encryptedData;

using (RSACryptoServiceProvider RSA = new RSACryptoServiceProvider())

{

RSA.FromXmlString(publicKeyXML);

encryptedData = RSA.Encrypt(dataToEncrypt, false);

}

byte[] decryptedData;

using (RSACryptoServiceProvider RSA = new RSACryptoServiceProvider())

{

RSA.FromXmlString(privateKeyXML);

decryptedData = RSA.Decrypt(encryptedData, false);

}

string decryptedString = ByteConverter.GetString(decryptedData);

Console.WriteLine(decryptedString); // Displays: My Secret Data!

As you can see, you first need to convert the data you want to encrypt to a byte sequence. To encrypt the data, you need only the public key. You then use the private key to decrypt the data.

Because of this, it’s important to store the private key in a secure location. If you would store it in plain text on disk or even in a nonsecure memory location, your private key could be extracted and your security would be compromised.

The .NET Framework offers a secure location for storing asymmetric keys in a key container. A key container can be specific to a user or to the whole machine. Example 3-20 shows how to configure an RSACryptoServiceProvider to use a key container for saving and loading the asymmetric key.

Example 3-20. Using a key container for storing an asymmetric key

string containerName = "SecretContainer";

CspParameters csp = new CspParameters() { KeyContainerName = containerName };

byte[] encryptedData;

using (RSACryptoServiceProvider RSA = new RSACryptoServiceProvider(csp))

{

encryptedData = RSA.Encrypt(dataToEncrypt, false);

}

Loading the key from the key container is the exact same process. You can securely store your asymmetric key without malicious users being able to read it.

Using hashing

To understand what hashing is and see some of the ideas behind a hash code, take a look at Example 3-21. Example 3-21 shows an example of how you can implement a set class. A set stores only unique items, so it sees whether an item already exists before adding it.

Example 3-21. A naïve set implementation

class Set<T>

{

private List<T> list = new List<T>();

public void Insert(T item)

{

if (!Contains(item))

list.Add(item);

}

public bool Contains(T item)

{

foreach (T member in list)

if (member.Equals(item))

return true;

return false;

}

}

For each item that you add, you have to loop through all existing items. This doesn’t scale well and leads to performance problems when you have a large amount of items. It would be nice if you somehow needed to check only a small subgroup instead of all the items.

This is where a hash code can be used. Hashing is the process of taking a large set of data and mapping it to a smaller data set of fixed length. For example, mapping all names to a specific integer. Instead of checking the complete name, you would have to use only an integer value.

By using hashing, you can improve the design of the set class. You split the data in a set of buckets. Each bucket contains a subgroup of all the items in the set. Example 3-22 shows how you can do this.

Example 3-22. A set implementation that uses hashing

class Set<T>

{

private List<T>[] buckets = new List<T>[100];

public void Insert(T item)

{

int bucket = GetBucket(item.GetHashCode());

if (Contains(item, bucket))

return;

if (buckets[bucket] == null)

buckets[bucket] = new List<T>();

buckets[bucket].Add(item);

}

public bool Contains(T item)

{

return Contains(item, GetBucket(item.GetHashCode()));

}

private int GetBucket(int hashcode)

{

// A Hash code can be negative. To make sure that you end up with a positive

// value cast the value to an unsigned int. The unchecked block makes sure that

// you can cast a value larger then int to an int safely.

unchecked

{

return (int)((uint)hashcode % (uint)buckets.Length);

}

}

private bool Contains(T item, int bucket)

{

if (buckets[bucket] != null)

foreach (T member in buckets[bucket])

if (member.Equals(item))

return true;

return false;

}

}

If you look at the Contains method, you can see that it uses the GetHashCode method of each item. This method is defined on the base class Object. In each type, you can override this method and provide a specific implementation for your type. This method should output an integer code that describes your particular object. As a general guideline, the distribution of hash codes must be as random as possible. This is why the set implementation uses the GetHashCode method on each object to calculate in which bucket it should go.

Now your items are distributed over a hundred buckets instead of one single bucket. When you see whether an item exists, you first calculate the hash code, go to the corresponding bucket, and look for the item.

This technique is used by the Hashtable and Dictionary classes in the .NET Framework. Both use the hash code to store and access items. Hashtable is nongeneric collection; Dictionary is a generic collection.

A couple of important principles can be deduced from this. First of all, equal items should have equal hash codes. This means that you can check to determine whether two items are equal by checking their hash codes. It also means that your implementation of GetHashCode should return the same value during time. It shouldn’t depend on changing values such as the current date or time.

MORE INFO: IMPLEMENTING GETHASHCODE

For more information on how to implement GetHashCode correctly, see http://msdn.microsoft.com/en-us/library/system.object.gethashcode(v=vs.110).aspx.

These properties are important when looking at hashing in a security context. If you hash a paragraph of text and change only one letter, the hash code will change, so hashing is used to check the integrity of a message.

For example, let’s say that Alice and Bob want to send a message to each other. Alice creates a hash of the message and sends both the hash and the message to Bob. Bob creates a hash of the message he has received from Alice and compares the two hash codes with each other. If they match, Bob knows he has received the correct message.

Of course, without any additional encryption, a third party can still tamper with the message by changing both the message and the hash code. Combined with the encryption technologies that the .NET Framework offers, hashing is an important technique to validate the authenticity of a message.

The .NET Framework offers a couple of classes to generate hash values. The algorithms that the .NET Framework offers are optimized hashing algorithms that output a significantly different hash code for a small change in the data.

Example 3-23 shows an example of using the SHA256Managed algorithm to calculate the hash code for a piece of text.

Example 3-23. Using SHA256Managed to calculate a hash code

UnicodeEncoding byteConverter = new UnicodeEncoding();

SHA256 sha256 = SHA256.Create();

string data = "A paragraph of text";

byte[] hashA = sha256.ComputeHash(byteConverter.GetBytes(data));

data = "A paragraph of changed text";

byte[] hashB = sha256.ComputeHash(byteConverter.GetBytes(data));

data = "A paragraph of text";

byte[] hashC = sha256.ComputeHash(byteConverter.GetBytes(data));

Console.WriteLine(hashA.SequenceEqual(hashB)); // Displays: false

Console.WriteLine(hashA.SequenceEqual(hashC)); // Displays: true

As you can see, different strings give a different hash code and the same string gives the exact same hash code. This enables you to see whether a string has been altered by comparing the hash codes.

Managing and creating certificates

Digital certificates are the area where both hashing and asymmetric encryption come together. A digital certificate authenticates the identity of any object signed by the certificate. It also helps with protecting the integrity of data.

If Alice sends a message to Bob, she first hashes her message to generate a hash code. Alice then encrypts the hash code with her private key to create a personal signature. Bob receives Alice’s message and signature. He decrypts the signature using Alice’s public key and now he has both the message and the hash code. He can then hash the message and see whether his hash code and the hash code from Alice match.

A digital certificate is part of a Public Key Infrastructure (PKI). A PKI is a system of digital certificates, certificate authorities, and other registration authorities that authenticate and verify the validity of each involved party.

A Certificate Authority (CA) is a third-party issuer of certificates that is considered trustworthy by all parties. The CA issues certificates, or certs, that contain a public key, a subject to which the certificate is issued, and the details of the CA.

When working on your development or testing environment, you can create certificates by using the Makecert.exe tool. This tool generates X.509 certificates for testing purposes. The X.509 certificate is a widely used standard for defining digital certificates.

If you open a developer command prompt as administrator, you can run the following command to generate a testing certificate:

makecert testCert.cer

This command generates a file called testCert.cer that you can use as a certificate. You first need to install this certificate on your computer to be able to use it. After installation, it’s stored in a certificate store. The following line creates a certificate and installs it in a custom certificate store named testCertStore:

makecert -n "CN=WouterDeKort" -sr currentuser -ss testCertStore

Example 3-24 shows how to use this generated certificate to sign and verify some text. The data is hashed and then signed. When verifying, the same hash algorithm is used to make sure the data has not changed.

Example 3-24. Signing and verifying data with a certificate

public static void SignAndVerify()

{

string textToSign = "Test paragraph";

byte[] signature = Sign(textToSign, "cn=WouterDeKort");

// Uncomment this line to make the verification step fail

// signature[0] = 0;

Console.WriteLine(Verify(textToSign, signature));

}

static byte[] Sign(string text, string certSubject)

{

X509Certificate2 cert = GetCertificate();

var csp = (RSACryptoServiceProvider)cert.PrivateKey;

byte[] hash = HashData(text);

return csp.SignHash(hash, CryptoConfig.MapNameToOID("SHA1"));

}

static bool Verify(string text, byte[] signature)

{

X509Certificate2 cert = GetCertificate();

var csp = (RSACryptoServiceProvider)cert.PublicKey.Key;

byte[] hash = HashData(text);

return csp.VerifyHash(hash,

CryptoConfig.MapNameToOID("SHA1"),

signature);

}

private static byte[] HashData(string text)

{

HashAlgorithm hashAlgorithm = new SHA1Managed();

UnicodeEncoding encoding = new UnicodeEncoding();

byte[] data = encoding.GetBytes(text);

byte[] hash = hashAlgorithm .ComputeHash(data);

return hash;

}

private static X509Certificate2 GetCertificate()

{

X509Store my = new X509Store("testCertStore",

StoreLocation.CurrentUser);

my.Open(OpenFlags.ReadOnly);

var certificate = my.Certificates[0];

return certificate;

}

The SignHash method uses the private key of the certificate to create a signature for the data. VerifyHash uses the public key of the certificate to see whether the data has changed.

MORE INFO: USING MAKECERT.EXE

For more information on how to use makecert.exe, see http://msdn.microsoft.com/en-us/library/bfsktky3(v=vs.110).aspx.

One use of digital certificates is to secure Internet communication. The popular HTTPS communication protocol is used to secure communication between a web server and a client. Digital certificates are used to make sure that the client is talking to the correct web server, not to an imposter.

Using code access permissions

The days when the only way to get a program on your computer was by using a floppy disk to install it to your hard drive are long gone. Today, you can install programs from a variety of sources, which can lead to several security issues. Your computer is probably running a virus scanner, and you’re in the habit of making sure that you know the sender of an e-mail message before you open an attachment.

The .NET Framework helps you protect your computers from malicious code via a mechanism called code access security (CAS). Instead of giving every application full trust, applications can be restricted on the types of resources they can access and the operations they can execute.

When using CAS, your code is the untrusted party. You need to ask for permission to execute certain operations or access protected resources. The common language runtime (CLR) enforces security restrictions on managed code and makes sure that your code has the correct permissions to access privileged resources.

Applications that are installed on your computer or on your local intranet have full trust. They can access resources and execute all kinds of operations. When running in a sandboxed environment such as Internet Explorer or SQL Server, CAS restricts the operations that an application can execute.

Each code access permission represents one of the following rights:

§ The right to access a protected resource, such as a file

§ The right to perform a protected operation, such as accessing unmanaged code

It can also be that you are creating a plug-in system and you want to make sure that third-party plug-ins can’t compromise your security. CAS can then be used to restrict the things a plug-in is allowed to do.

CAS performs the following functions in the .NET Framework:

§ Defines permissions for accessing system resources.

§ Enables code to demand that its callers have specific permissions. For example, a library that exposes methods that create files should enforce that its callers have the right for file input/output.

§ Enables code to demand that its callers possess a digital signature. This way, code can make sure that it’s only called by callers from a particular organization or location.

§ Enforces all those restrictions at runtime.

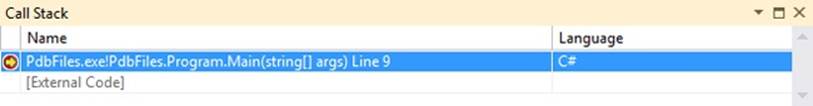

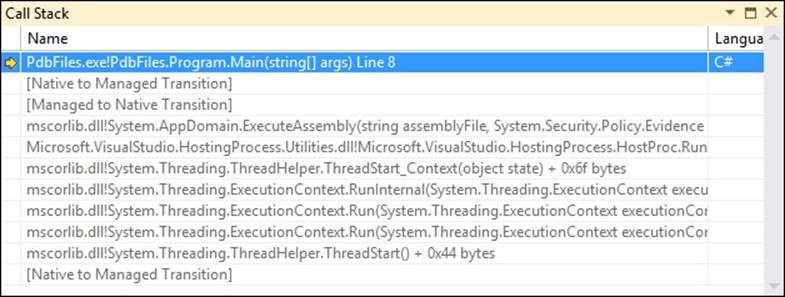

One important concept of CAS is that each and every element on the current call stack is checked. The call stack is a data structure that stores information about all the active methods at a specific moment. So if your application starts in the Main method and then calls method A which calls method B, all three methods will be on the call stack. When method B returns, only Main and A are on the call stack.

CAS walks the call stack and sees whether every element on the stack has the required permissions. This way, you can be sure that a less-trusted method cannot call some restricted code through a highly trusted method.

The base class for all things related to CAS is System.Security.CodeAccessPermission. Permissions that inherit from CodeAccessPermission are permissions such as FileIOPermission, ReflectionPermission, or SecurityPermission. When applying one of those permissions, you ask the CLR for the permission to execute a protected operation or access a resource.

You can specify CAS in two ways: declarative or imperative.

Declarative means that you use attributes to apply security information to your code. Example 3-25 shows an example of asking for the permission to read all local files by using the FileIOPermissionAttribute.

Example 3-25. Declarative CAS

[FileIOPermission(SecurityAction.Demand,

AllLocalFiles = FileIOPermissionAccess.Read)]

public void DeclarativeCAS()

{

// Method body

}

You can also do this in an imperative way, which means that you explicitly ask for the permission in the code. Example 3-26 shows how you can create a new instance of FileIOPermission and demand certain rights.

Example 3-26. Imperative CAS

FileIOPermission f = new FileIOPermission(PermissionState.None);

f.AllLocalFiles = FileIOPermissionAccess.Read;

try

{

f.Demand();

}

catch (SecurityException s)

{

Console.WriteLine(s.Message);

}

MORE INFO: CODE ACCESS SECURITY

For more information about CAS, see http://msdn.microsoft.com/en-us/library/c5tk9z76.aspx.

Securing string data

A lot of data in your application consists of simple strings. For example, passwords and credit card numbers are both strings. But the default System.String implementation is not optimized for security. Using a string for storing sensitive data has a couple of problems:

§ The string value can be moved around in memory by the garbage collector leaving multiple copies around.

§ The string value is not encrypted. If you run low on memory, it could be that your string is written as plain text to a page file on disk. The same could happen when your application crashes and a memory dump is made.

§ System.String is immutable. Each change will make a copy of the data, leaving multiple copies around in memory.

§ It’s impossible to force the garbage collector to remove all copies of your string from memory.

The .NET Framework offers a special class that can help you minimize the surface area an attacker has: System.Security.SecureString.

A SecureString automatically encrypts its value so the possibility of an attacker finding a plain text version of your string is decreased. A SecureString is also pinned to a specific memory location. The garbage collector doesn’t move the string around, so you avoid the problem of having multiple copies. SecureString is a mutable string that can be made read-only when necessary. Finally, SecureString implements IDisposable so you can make sure that its content is removed from memory whenever you’re done with it.

MORE INFO: IDISPOSABLE AND GARBAGE COLLECTION

For more info on what IDisposable is and how to use it, see Chapter 2. Chapter 2 also discusses how the garbage collector works.

A SecureString doesn’t completely solve all security problems. Because it needs to be initialized at some point, the data that is used to initialize the SecureString is still in memory. To minimize this risk and force you to think about it, SecureString can deal with only individual characters at a time. It’s not possible to pass a string directly to a SecureString. Example 3-27 shows an example of using a SecureString. The application reads one character at a time from the user and appends these characters to the SecureString.

Example 3-27. Initializing a SecureString

using (SecureString ss = new SecureString())

{

Console.Write("Please enter password: ");

while (true)

{

ConsoleKeyInfo cki = Console.ReadKey(true);

if (cki.Key == ConsoleKey.Enter) break;

ss.AppendChar(cki.KeyChar);

Console.Write("*");

}

ss.MakeReadOnly();

}

As you can see, the SecureString is used with a using statement, so the Dispose method is called when you are done with the string so that it doesn’t stay in memory any longer then strictly necessary.

At some point, you probably want to convert the SecureString back to a normal string so you can use it. The .NET Framework offers some special functionality for this. It’s important to make sure that the regular string is cleared from memory as soon as possible. This is why there is atry/finally statement around the code. The finally statement makes sure that the string is removed from memory even if there is an exception thrown in the code. Example 3-28 shows an example of how to do this.

Example 3-28. Getting the value of a SecureString

public static void ConvertToUnsecureString(SecureString securePassword)

{

IntPtr unmanagedString = IntPtr.Zero;

try

{

unmanagedString = Marshal.SecureStringToGlobalAllocUnicode(securePassword);

Console.WriteLine(Marshal.PtrToStringUni(unmanagedString));

}

finally

{

Marshal.ZeroFreeGlobalAllocUnicode(unmanagedString);

}

}

The Marshal class is located in the System.Runtime.InteropServices namespace. It offers five methods that can be used when you are decrypting a SecureString. Those methods accept a SecureString and return an IntPtr. Each method has a corresponding method that you need to call to zero out the internal buffer. Table 3-1 shows these methods.

Table 3-1. Methods for working with SecureString

|

Decrypt method |

Clear memory method |

|

SecureStringToBSTR |

ZeroFreeBSTR |

|

SecureStringToCoTaskMemAnsi |

ZeroFreeCoTaskMemAnsi |

|

SecureStringToCoTaskMemUnicode |

ZeroFreeCoTaskMemUnicode |

|

SecureStringToGlobalAllocAnsi |

ZeroFreeGlobalAllocAnsi |

|

SecureStringToGlobalAllocUnicode |

ZeroFreeGlobalAllocUnicode |

It’s important to realize that a SecureString is not completely secure. You can create an application, running in full thrust, which will be able to read the SecureString content. However, it does add to the complexity of hacking your application. All the small steps you can take to make your application more secure will create a bigger hindrance for an attacker.

THOUGHT EXPERIMENT

Choosing your technologies

In this thought experiment, apply what you’ve learned about this objective. You can find answers to these questions in the Answers section at the end of this chapter.

You are working on an application that helps users track their time and shows them when they are most productive. The application runs on a server with a web, desktop, and mobile front end being developed with the .NET Framework.

You are assigned the task of determining which security features should be used in the application.

Make a list of the possible technologies that can be used to secure the application.

Objective summary

§ A symmetric algorithm uses the same key to encrypt and decrypt data.

§ An asymmetric algorithm uses a public and private key that are mathematically linked.

§ Hashing is the process of converting a large amount of data to a smaller hash code.

§ Digital certificates can be used to verify the authenticity of an author.

§ CAS are used to restrict the resources and operations an application can access and execute.

§ System.Security.SecureString can be used to keep sensitive string data in memory.

Objective review

Answer the following questions to test your knowledge of the information in this objective. You can find the answers to these questions and explanations of why each answer choice is correct or incorrect in the Answers section at the end of this chapter.

1. Bob and Alice are using an asymmetric algorithm to exchange data. Which key should they send to the other party to make this possible?

a. Bob sends Alice his private key, and Alice sends Bob her public key.

b. Bob sends Alice his private key, and Alice sends Bob her private key.

c. Bob sends Alice his public key, and Alice sends Bob her public key.

d. Bob sends Alice his public key, and Alice sends Bob her private key.

2. You need to encrypt a large amount of data. Which algorithm do you use?

a. SHA256

b. RSACryptoServiceProvider

c. MD5CryptoServiceProvider

d. AesManaged

3. You need to send sensitive data to another party and you want to make sure that no one tampers with the data. Which method do you use?

a. X509Certificate2.SignHash

b. RSACryptoServiceProvider.Encrypt

c. UnicodeEncoding.GetBytes

d. Marshal.ZeroFreeBSTR

Objective 3.3 Manage assemblies

When building your applications, you work with source code files and projects, but this is not the way your application is deployed. The C# compiler takes your source code and produces assemblies. Managing those assemblies is important when you are deploying applications to production environments or distributing them to other parties.

THIS OBJECTIVE COVERS HOW TO:

§ Explain what an assembly is.

§ Sign assemblies using strong names.

§ Put an assembly in the GAC.

§ Version assemblies and implement side-by-side hosting.

§ Create a WinMD assembly.

What is an assembly?

Before Microsoft released the .NET Framework, the Component Object Model (COM) was dominant, but there were several problems.

One of those problems was known as “DLL hell.” Microsoft and other software companies distributed DLLs that can be used as building blocks by other applications. Problems start to arise when a company distributes a new version of a DLL without fully testing it against all applications that depend on it (which can be numerous applications which makes thorough testing almost impossible). Updating one application can lead to problems in another, seemingly unrelated applications.

Another problem had to do with the way applications were installed. Often an application would have to make changes to several parts of your system. Of course, there would be application directories copied to your system, but also changes were made to the registry and shortcuts were deployed. This made the installation process more difficult because uninstalling an application is hard and sometimes leaves traces of an application behind.

A third issue is security. Because applications made so many changes during installation, it was hard for a user to determine what was actually installed. It could be that one application in turn installs other components that form a security risk.

The .NET Framework addresses these issues and tries to solve them by making some radical changes. One important component of those changes is the concept of an assembly. An assembly still has the .dll (or .exe) extension like previous Windows components. Internally, however, they are completely different.

Assemblies are completely self-contained; they don’t need to write any information to the registry or some other location. Assemblies contain all the information they need to run. This is called the assembly’s manifest.

Another important aspect is that an assembly is language-neutral. You can write some C# code, compile it to an assembly, and then use the assembly directly from other .NET languages such as F# or Visual Basic.

In contrast to an old DLL, an assembly can be versioned, which enables you to have different versions of a specific assembly on one system without causing conflicts.

One other important change is the way assemblies are deployed. If you want, you can deploy an application by simply copying it to a new machine. All the assemblies that are required are deployed locally in the new application folder. An assembly can even contain resource files, such as images, that are directly embedded in the assembly. You can also choose to deploy an assembly in a shared way so it can be used by multiple applications.

Signing assemblies using a strong name

The CLR supports two different types of assemblies: strong-named assemblies and regular assemblies.

A regular assembly is what Visual Studio generates for you by default. It’s structurally identical to a strong-named assembly. They both contain metadata, header, manifest, and all the types that are in your assembly.

A strong-named assembly is signed with a public/private key pair that uniquely identifies the publisher of the assembly and the content of the assembly. A strong name consists of the simple text name of the assembly, its version number, and culture information. It also contains a public key and a digital signature.

Strongly naming an assembly has several benefits:

§ Strong names guarantee uniqueness. Your unique private key is used to generate the name for your assembly. No other assembly can have the exact same strong name.

§ Strong names protect your versioning lineage. Because you control the private key, you are the only one who can distribute updates to your assemblies. Users can be sure that the new version originates from the same publisher.

§ Strong names provide a strong integrity check. The .NET Framework sees whether a strong-named assembly has changed since the moment it was signed.

Overall, you can see that a strong-named assembly ensures a user that they can trust the origin and content of an assembly.

You generate a strong-named assembly by using your own private key to sign the assembly. Other users can verify the assembly by using the public key that is distributed with the assembly.

MORE INFO: PUBLIC AND PRIVATE KEY USAGE

For more information on using private and public keys to generate a digital signature see the section “Objective 3.2: Perform symmetric and asymmetric encryption” earlier in this chapter.

Signing an assembly can be done both at the command line and by using Visual Studio. The first step you have to take is to generate a key pair. A key pair is usually a file with an .snk extension that contains your public/private key information.

When using the developer command prompt, you can run the following command to generate a new key pair file:

sn -k myKey.snk

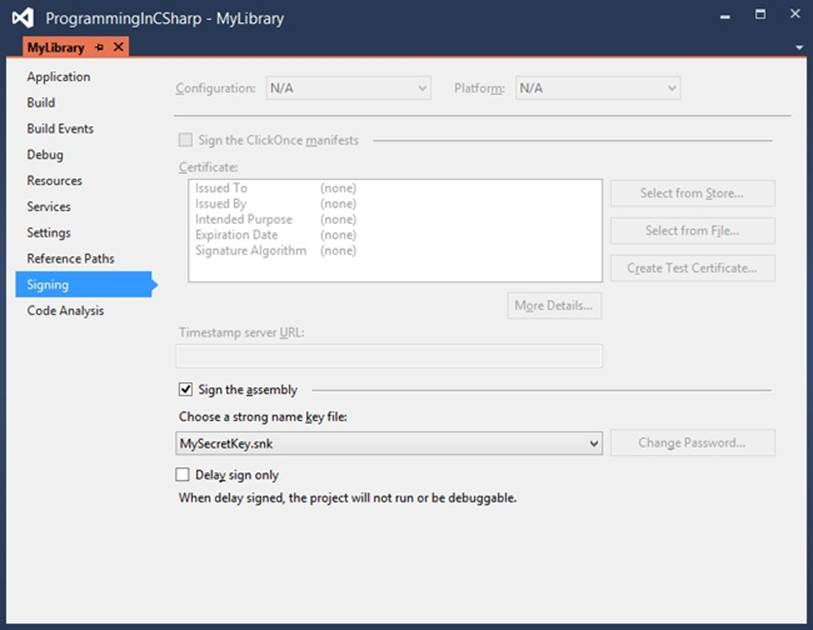

An easier way is to use Visual Studio to generate the key pair file for you. You can open the property page of the project you want to sign and then navigate to the Signing tab, as shown in Figure 3-1.

Figure 3-1. The Signing page in Visual Studio

By enabling the signing of the assembly, you can let Visual Studio generate a new key file, which is then added to your project and is used in the compilation step to strongly sign your assembly.

A strong-named assembly can reference only other assemblies that are also strongly named. This is to avoid security flaws where a depending assembly could be changed to influence the behavior of a strong-named assembly. When you add a reference to a regular assembly and try to invoke code from that assembly, the compiler issues an error:

Assembly generation failed -- Referenced assembly 'MyLib' does not have a strong name

After signing an assembly, you can view the public key by using the Strong Name tool (Sn.exe) that’s installed with Visual Studio. One of the strongly named assemblies that’s installed with the .NET Framework is System.Data. Example 3-29 shows how you can get the public key of this assembly.

Example 3-29. Inspecting the public key of a signed assembly

C:\>sn -Tp C:\Windows\Microsoft.NET\Framework\v4.0.30319\System.D

ata.dll

Microsoft (R) .NET Framework Strong Name Utility Version 4.0.30319.17929

Copyright (c) Microsoft Corporation. All rights reserved.

Identity public key (hash algorithm: Unknown):

00000000000000000400000000000000

Signature public key (hash algorithm: sha256):

002400000c800000140100000602000000240000525341310008000001000100613399aff18ef1

a2c2514a273a42d9042b72321f1757102df9ebada69923e2738406c21e5b801552ab8d200a65a2

35e001ac9adc25f2d811eb09496a4c6a59d4619589c69f5baf0c4179a47311d92555cd006acc8b

5959f2bd6e10e360c34537a1d266da8085856583c85d81da7f3ec01ed9564c58d93d713cd0172c

8e23a10f0239b80c96b07736f5d8b022542a4e74251a5f432824318b3539a5a087f8e53d2f135f

9ca47f3bb2e10aff0af0849504fb7cea3ff192dc8de0edad64c68efde34c56d302ad55fd6e80f3

02d5efcdeae953658d3452561b5f36c542efdbdd9f888538d374cef106acf7d93a4445c3c73cd9

11f0571aaf3d54da12b11ddec375b3

Public key token is b77a5c561934e089

The public key token is a small string that represents the public key. It is generated by hashing the public key and taking the last eight bytes. If you reference another assembly, you store only the public key token, which preserves space in the assembly manifest. The CLR does not use the public key token when making security decisions because it could happen that several public keys have the same public key token.

Within an organization, it’s important to secure the private key. If all employees have access to the private key, someone might leak or steal the key. They are then able to distribute assemblies that look legitimate. But without access to the private key, developers can’t sign the assembly and use it while building the application.

To avoid this problem, you can use a feature called delayed or partial signing. When using delayed signing, you use only the public key to sign an assembly and you delay using the private key until the project is ready for deployment. If you look at Figure 3-1, you can see that there is an option to activate delayed signing in Visual Studio.

IMPORTANT: ORIGIN OF A STRONG-NAMED ASSEMBLY

One thing that’s important to understand is that a strongly named assembly does not prove that the assembly comes from the original publisher. It only shows that the person who created the assembly has access to the private key.

If you want to make sure that users can verify you as the publisher, you have to use something called Authenticode. Authenticode is a technology that uses digital certificates to identify the publisher of an application. You need to buy a certificate online and then use that certificate to sign your application.

For more information on Authenticode, see http://technet.microsoft.com/en-us/library/cc750035.aspx.

Putting an assembly in the GAC

Assemblies that are local to an application are called private assemblies. You can easily deploy an application that depends on private assemblies by copying it to a new location.

Another way to deploy an assembly is to deploy it to the global assembly cache (GAC). The GAC is a specialized library for storing assemblies. It is machine-wide and it is one of the locations the CLR checks when looking for an assembly.

Normally, you want to avoid installing assemblies in the GAC. One reason to deploy to the GAC is when an assembly is shared by multiple applications. Other reasons for installing an assembly into the GAC can be the enhanced security (normally only users with administrator rights can alter the GAC) or the situation where you want to deploy multiple versions of the same assembly.

Deploying an assembly in the GAC can be done in two ways:

§ For production scenarios, use a specific installation program that has access to the GAC such as the Windows Installer 2.0.

§ In development scenarios, use a tool called the Global Assembly Cache tool (Gacutil.exe).

You can view the content of your GAC by running the following command from a developer command prompt:

gacutil -l

This returns a list of all the assemblies that are installed in the GAC.

Installing an assembly in the GAC can be done with the following command:

gacutil –i [assembly name]

You can also remove an assembly from the GAC:

gacutil –u [assembly name]

When referencing a shared assembly from your project, you can add a reference to the file located in the GAC or to a local copy of it. When Visual Studio detects that there is a GAC version of the DLL you are referencing, it will add a reference to the GAC, not to the local version.

Versioning assemblies

In stark contrast with how DLLs worked before the .NET Framework, an assembly has a version number. Inside the assembly manifest, the assembly records its own version number and the version numbers of all the assemblies that it references.

Each assembly has a version number that has the following format:

{Major Version}.{Minor Version}.{Build Number}.{Revision}

§ The Major Version is manually incremented for each major release. A major release should contain many new features or breaking changes.

§ The Minor Version is incremented for minor releases that introduce only some small changes to existing features.

§ The Build Number is automatically incremented for each build by the build server. This way, each build has a unique identification number that can be used to track it.

§ The Revision is used for patches to the production environment.

When building an assembly, there are two version numbers that you need to take into account: the file version number and the .NET assembly version number.

If you create a new project in Visual Studio, it automatically adds an AssemblyInfo.cs file to the properties of your project.

This file contains the following two lines:

[assembly: AssemblyVersion("1.0.0.0")]

[assembly: AssemblyFileVersion("1.0.0.0")]

AssemblyFileVersionAttribute is the one that should be incremented with each build. This is not something you want to do on the client, where it would get incremented with every developer build. Instead, you should integrate this into your build process on your build server.

AssemblyVersionAttribute should be incremented manually. This should be done when you plan to deploy a specific version to production.

Because the version of an assembly is important when the runtime tries to locate an assembly, you can deploy multiple versions of the same assembly to the GAC and avoid the DLL problem that happened with regular DLL files. This is called side-by-side hosting, in which multiple versions of an assembly are hosted together on one computer.

The process of finding the correct assembly starts with the version number that is mentioned in the manifest file of the original assembly to determine which assembly to load. These bindings can be influenced with specific configuration files, however.

Three configuration files are used:

§ Application configuration files

§ Publisher policy files