Client-Server Web Apps with JavaScript and Java (2014)

Chapter 13. Testing and Documentation

Without language, thought is a vague, uncharted nebula. Nothing is distinct before the appearance of language.

—Saussure

James Lind was an 18th-century Scottish physician. While serving in the Navy, he conducted what today might be described as the first clinical trial. By dividing a dozen sick sailors into groups of two and providing a controlled diet and specific treatments, he was able to determine that oranges and lemons were effective for warding off scurvy. We know today that scurvy is a result of vitamin C deficiency, but it would take more than a century of similar experiments to eventually lead Casimir Funk to coin the term “vitamin” in 1912.

Clinical trials are an application of the experimental step of the scientific method. The Oxford Dictionary describes the scientific method as “a method of procedure that has characterized natural science since the 17th century, consisting in systematic observation, measurement, and experiment, and the formulation, testing, and modification of hypotheses.” This definition, while accurate, does not capture one of the important outcomes of repeated experimentation: the characterization of phenomena and the development of hypotheses that are articulated in clear, unambiguous language. Testing at its best leads to crisp, clear descriptions of the subject being tested that allow subsequent researchers to communicate more clearly.

Software testing finds its roots in this same tradition. It essentially adopts procedures that have been practiced in the natural sciences for the past 400 years. Testing is done to prove or disprove hypotheses. In the case of software testing, this involves an assertion that all or part of a system functions as specified. Software testing can also lead to insights into how an application might be better designed, modularized, and structured. In addition, it can help clarify requirements and identify precise language to describe the system being scrutinized.

Types of Testing

Most software development projects include some claim of being tested, but what precisely is meant by this is not necessarily evident. Testing is a broad subject and can be subdivided based on the outcome of testing, the construction of the tests, the portion of an overall system being tested, or the role of the people involved.

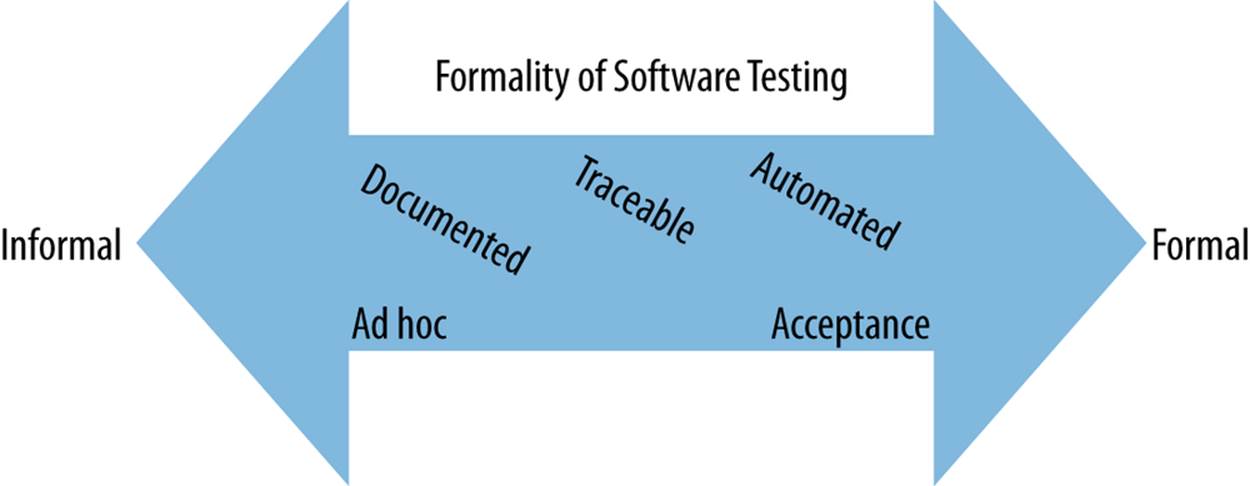

Formal Versus Informal

Ad hoc testing is used when the purpose of testing is not clear and the outcome is expected to be informal and arbitrary. It is analogous to exploratory programming in a REPL. It is a preliminary step to other forms of testing and is a way of kicking the tires of an unfamiliar application. Formalized acceptance testing is on the opposite end of the spectrum, as it is structured, organized, defined, and specifically intended to determine whether the customers are going to accept a system. Informal testing is generally a manual endeavor, whereas formalized methods can be highly automated.

Rather than memorizing a laundry list of testing types and methodologies, it is more important to understand the goal of testing and choose an approach that is rigorous enough for the project without adding unnecessary overhead. As shown in Figure 13-1, in the case of test formality, a continuum can be visualized that comprises the range of possible choices. Though the scientific method was cited in this chapter, the selection of applicable test approaches for a given project is more an art than a science.

Figure 13-1. Range of test formality

Extent of Testing

The extent of testing can vary a great deal. Simple smoke tests (or sanity tests) can be carried out to provide minimal assurance of no obvious disruptive defects, whereas exhaustive testing is intended to exercise every aspect of a system. The extent to which a system has been tested can be quantified from different perspectives. Test coverage analysis indicates which lines of code are being unit tested. Even in a system that has 100% coverage, every possible permutation of inputs would have to be exercised to substantiate a claim of exhaustive testing. Few projects can make such a claim, and those that do might only be exercising the functional aspects of the system.
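A small sketch can make the gap between coverage and exhaustive testing concrete. The class and method names below (CoverageSketch, average) are hypothetical and are not part of this chapter's project. A single check executes every line of average, so a coverage tool would report 100% for it, yet an input that was never tried still produces a wrong answer because the integer addition overflows:

```java
// Hypothetical example: full line coverage does not imply correct behavior.
final class CoverageSketch {

    // Intended to return the mean of two ints; the addition can overflow.
    static int average(int a, int b) {
        return (a + b) / 2;
    }

    public static void main(String[] args) {
        // This single check executes every line of average().
        System.out.println("small inputs correct: " + (average(2, 4) == 3));

        // An input the check above never tried: the sum wraps around,
        // so the "average" of two large positive numbers comes out negative.
        int result = average(Integer.MAX_VALUE, Integer.MAX_VALUE);
        System.out.println("large inputs give a negative result: " + (result < 0));
    }
}
```

Only a test that happens to choose large inputs would expose the defect, which is why a coverage percentage says nothing about which permutations of inputs were actually exercised.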

Nonfunctional attributes of a system can be tested as well, including load testing, stress testing, and testing on diverse platforms, browsers, or devices. Cloud deployments have led to innovations in testing that have pushed the boundaries of testing to include intentionally introducing significant system failures to ensure system resilience. For instance, Netflix’s Chaos Monkey project runs within Amazon Web Services and verifies the ability of a system to withstand repeated server failures by seeking out and terminating VM instances in Auto Scaling Groups.

Who Tests What for Whom?

The time, manner, creator, and audience for tests are also significant concerns. Unit tests can be created by developers before code is even written or added when it is all completed. Black-box testing generally involves higher-level functional tests written by testers. Unit tests are tied closely to implementation in code, while black-box tests are created without reference to the software’s internal structure. Highly isolated tests might evaluate a single tiny aspect of a system, whereas integration testing can ensure defects do not exist in interfaces between related systems.

Testing can also be considered in terms of the people who create the tests and evaluate the results. The audience for test results is initially developers, later QA analysts, and eventually those authorized to accept a system. Tests can be authored by different parties, including testers and developers. Since tests are created in relation to requirements, there can be significant involvement from business analysts and stakeholders involved in defining requirements. In fact, the definition of tests can contribute to the development of a common, unambiguous language for all parties involved in testing a system.

Testing as an Indicator of Organizational Maturity

In practice, requirements for a software system can reside in many places and exist in many forms. Although documentation is generally assumed to be a primary source, not all projects have formal documentation. Even if a project does have formally defined requirements and a system description, these tend to quickly become outdated in an actively developed system if not automatically generated or intentionally maintained. There are instances where a programmer’s memory or comments in code specifically describe expected behavior. In the absence of these, the source code itself might be the only remaining formal description of system functionality. The inaccessibility of source code is an excellent reason why tests can serve as the most accurate final definition of software requirements outside the system itself.

In fact, sets of well-defined tests can remain in use long after all original code written for a system has been replaced. In this respect, tests can be a more enduring and valuable artifact than the system under construction itself! They can be an effective means of communication throughout an organization and among stakeholders about the state and functionality of the system.

CMM to Assess Process Uniformity

Conway’s Law suggests that “Any organization that designs a system will produce a design whose structure is a copy of the organization’s communication structure.” It might also be said that software process maturity (the extent to which processes are clearly defined and controlled) is reflected by the state of an organization’s software tests. The Capability Maturity Model (CMM) defines a hierarchy of stages describing degrees of structure, process stability, and discipline for an organization reflected in processes for developing and maintaining software. This is shown in Table 13-1.

Table 13-1. Capability maturity model stages

| Level | Name | Description | Testing |
|---|---|---|---|
| Level 1 | Initial | Inconsistent, disorganized | None, ad hoc |
| Level 2 | Repeatable | Disciplined processes | Some unit testing |
| Level 3 | Defined | Standard, consistent processes | Uniform projects, unit tests run each build |
| Level 4 | Managed | Predictable processes | Continuous integration testing |
| Level 5 | Optimizing | Continuously improving processes | Coverage, code quality, reports |

An organization that is highly optimized is less reliant on the heroic effort of a few individuals. It is less prone to missed deadlines, “death marches”, and other symptoms of a project that is out of control. With the focus on application scalability, it is possible to lose sight of organizational scalability.

A higher CMM level is essential if there is an intention to grow an organization over time. Consistent processes and procedures ease the transition of new members to the team. They minimize the amount of time lost having a productive member of the team cease work to teach each new member a set of in-house, undocumented, unenforced practices. They lessen the possibility that actions taken by new members will destabilize the existing application. In the best case, good processes subtly teach employees approaches that will benefit other projects that do not yet have controls in place.

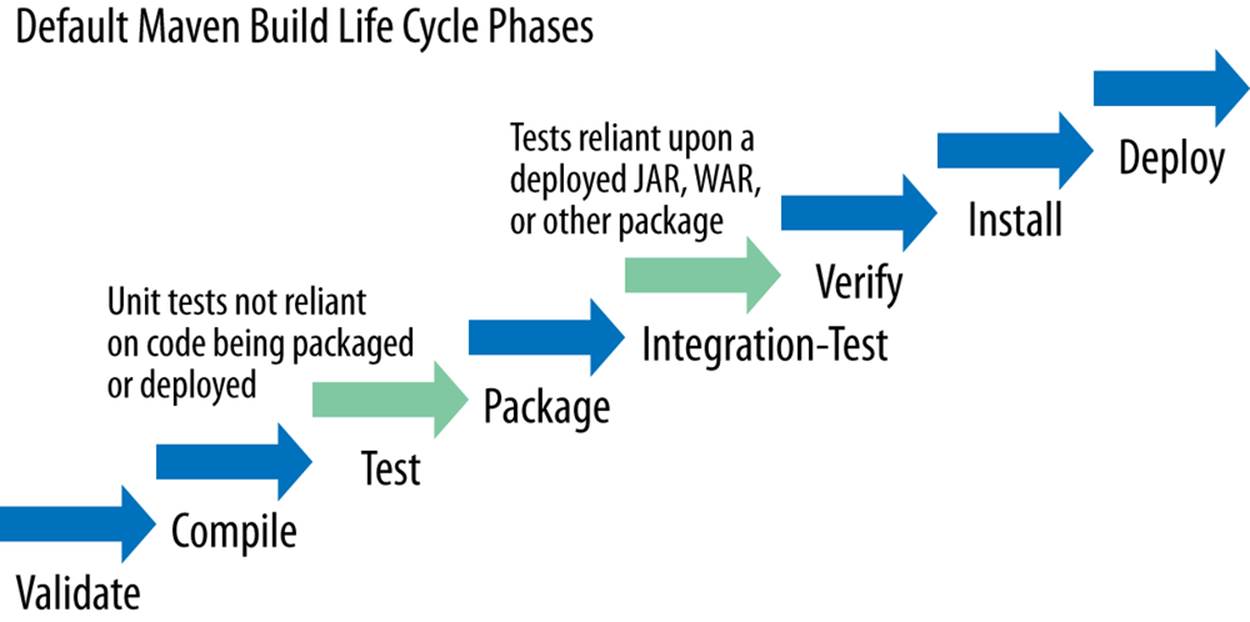

Maven to Promote Uniform Processes

Directly convincing people to adopt a consistent software practice can be difficult. It is much easier to use tools that promote or enforce practices that are in line with organizational goals. For example, Maven introduces a consistent build cycle, which allows extensibility through a plug-in environment without being so flexible as to allow significant deviation from a number of best practices.

One of the objectives of Maven is to provide a uniform build system. Maven might be perceived as inflexible when compared to Gradle or other build tools. But uniformity implies a degree of rigidity. Rigidity is not simply an evil to be avoided. Decisions must be made to implement structures and tools that provide constraints that will encourage positive uniform processes within an organization. Structured testing processes and reporting are fundamental and applicable to most software projects.

Maven includes two phases for testing by default (test and integration-test). These are shown in Figure 13-2 in the midst of the other Maven build life cycle phases. Typically, the Surefire plug-in is called during the test phase, and the Failsafe plug-in is called during the integration-test phase. Surefire reports can be presented in HTML using the Surefire Report plug-in, and the same plug-in can render the integration test reports in HTML through its failsafe-report-only goal.

Figure 13-2. Maven life cycle phases

Maven’s build life cycle is one of several ways that it promotes project uniformity. Unit testing and integration testing are included as phases by default. They occur at a logical point in the process and produce reports that can be published to a known location (the Maven site). The default phases provide a good basic framework, and Maven can be extended to handle other testing concerns if needed.

Maven can execute an application’s unit tests periodically as part of the build process when called by a continuous integration (CI) server. There are many such servers available, including the open source Jenkins or commercial products like TeamCity or Bamboo. A CI server also tends to promote standard software best practices. CI servers require the use of version control, encourage Maven assets to be properly maintained, and quickly reveal whether the build runs to completion in a reasonable amount of time.

Maven reports can be published to a designated location by the CI server. These reports provide visibility into the structure and quality of code. They also make the results of testing evident, including information on the number of tests written, their outcomes, performance, and overall code coverage.

THE GOAL OF 100% COVERAGE

Once coverage reports begin being used, it is easy to focus on a goal of 100% test coverage to the exclusion of the value of the tests themselves. Coverage tools simply determine which code paths are executed. Some code paths might never be executed in practice; others might not merit testing consideration. Test coverage therefore does not indicate test quality. Testing by definition can prove only the presence of problems. It cannot prove that none exist. Putting undue emphasis on coverage report percentages can result in programmers making changes that do not benefit the software in any meaningful way.

The makers of JUnit recognize the tension of wanting comprehensive test coverage and also recognize that some code does not require testing. They cite a maxim on their FAQ that, like many highly subjective rules of thumb, rings true:

“Test until fear turns to boredom.”

An organization can relatively quickly achieve Level 3 of the Capability Maturity Model in a preliminary way by using Maven with JUnit tests on all projects and adding each project to a CI server. Publishing reports indicating test results can contribute to clear communication beyond the development team about the state of the software and related tests. Although Maven includes capacity for handwritten documentation, it does not include mechanisms out of the box related to requirements or their relationship with the tests that are constructed.

An additional level of project review and process improvement can be accomplished by tracking project statistics over time. Data related to code complexity, test coverage, total lines of code, duplicated code, occurrences of comments, and coding practice violations are objective measures that can be calculated and stored in a database using a product like SonarQube. This helps to identify trends indicating that a code base is improving or deteriorating.

BDD to Promote Uniform Processes

Informal testing is often done with the intention of finding bugs or causing a malfunction. Such tests can be retained and run regularly to ensure that problems are not reintroduced later. The creation of a set of unit tests that run regularly at build time can become an extremely effective safety net in the ongoing support of an application. But tests should not be viewed in isolation. They are integral to other aspects of software development. Consider this statement that appeared in Code Complete by Steve McConnell (Microsoft Press) in 1993: “As you’re writing the routine, think about how you can test it. This is useful for you when you do unit testing and for the tester who tests your routine independently.”

The insight to note is that testing while designing results in better, more structured code, better naming, and other benefits. It suggests a greater appreciation of the close connection between testing and other phases of the SDLC. It also anticipates more recent software testing practices.

Test-Driven Development (TDD) takes the idea of thinking about tests while writing code to a logical conclusion where tests are actually written prior to writing the associated code. More specifically, TDD follows a cycle of writing an initially failing test case that represents a new requirement, followed by coding and refactoring until the test passes.
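The cycle can be sketched without any test framework. Everything in the following example is an assumption made for illustration: the ToNotDoList class borrows its name from this chapter's project idea, but the class, its methods, and the checks are hypothetical. The checks in ToNotDoListCheck represent the step that is written first and fails; the bodies of add and contains represent the minimal code written afterward to make them pass:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical class used only to illustrate the TDD cycle.
final class ToNotDoList {
    private final List<String> items = new ArrayList<>();

    // Written second: the simplest code that satisfies the checks below.
    void add(String item) {
        if (item == null || item.trim().isEmpty()) {
            throw new IllegalArgumentException("item must not be blank");
        }
        items.add(item.trim());
    }

    boolean contains(String item) {
        return items.contains(item);
    }

    int size() {
        return items.size();
    }
}

// Written first: each check states a requirement and fails until
// ToNotDoList implements the behavior it describes.
final class ToNotDoListCheck {

    static boolean addedItemIsListed() {
        ToNotDoList list = new ToNotDoList();
        list.add("Check email during meetings");
        return list.contains("Check email during meetings") && list.size() == 1;
    }

    static boolean blankItemIsRejected() {
        try {
            new ToNotDoList().add("   ");
            return false; // no exception means the requirement is not met
        } catch (IllegalArgumentException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println("addedItemIsListed: " + addedItemIsListed());
        System.out.println("blankItemIsRejected: " + blankItemIsRejected());
    }
}
```

Once both checks pass, the implementation can be refactored freely, with the checks rerun after each change to confirm that behavior is preserved.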

Behavior-Driven Development (BDD) was subsequently introduced by Dan North to help developers better understand where to start in TDD, what to test, what should be put in a given test, and how to name them. BDD provides a narrative style that bridges the gap between code and human language. TDD is wide open about the style of tests being created, whereas BDD follows a TDD-style workflow but provides a more formal format for behavioral specification.

BDD requires more personal commitment across an organization to be successful but addresses several areas related to attaining higher CMM levels. BDD requires involvement and communication across teams. Quality assurance testers, developers, and business analysts must coordinate efforts in order to be effective. The benefit is the construction of relevant test cases directly tied to requirements and expressed in common, unambiguous shared language.

Maven, in conjunction with mature testing frameworks, introduces a coherent organization and structure to developer processes. Including BDD encourages communication and process refinement that extends beyond developers to those involved in testing and requirement-gathering and definition.

Testing Frameworks

Testing approaches tend to be codified in testing packages. JUnit is used for unit testing in Java, while Jasmine is used for unit testing in JavaScript. JBehave is a Behavior-Driven Development framework for Java, while Cucumber is Ruby-based.

JUnit

JUnit was originally written by Erich Gamma and Kent Beck. It has grown well beyond its humble beginnings to its rather sophisticated form in JUnit 4. In previous versions, unit tests belonged to specific object hierarchies and followed method-naming conventions in order to be functional. Like many projects in the Java ecosystem, JUnit 4 relies heavily on annotations. For instance, rather than having to explicitly create methods named setUp() and tearDown(), the @Before and @After annotations are used to run code before and after each test. The @BeforeClass and @AfterClass annotations are also available to run code once before the first test in a class and once after the last. The @Test annotation replaces the former “test” naming convention for methods, and the @Ignore annotation can be used rather than the distasteful practice of commenting out tests. Since assertions are no longer inherited through the object hierarchy, a static import of the assertion methods (from org.junit.Assert) is generally required. Annotations can also be used to specify parameterized tests or test suites.
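To see why annotations remove the need for a base class and naming conventions, consider the following toy runner. It is a sketch under stated assumptions, not JUnit's implementation: the annotations Setup, Check, and Cleanup are hypothetical stand-ins for the roles played by @Before, @Test, and @After, and the runner simply uses reflection to find methods carrying those markers.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Constructor;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Toy runner, NOT JUnit's implementation: all names here are hypothetical.
final class AnnotationRunnerSketch {

    @Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD)
    @interface Setup {}    // plays the role of @Before

    @Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD)
    @interface Check {}    // plays the role of @Test

    @Retention(RetentionPolicy.RUNTIME) @Target(ElementType.METHOD)
    @interface Cleanup {}  // plays the role of @After

    // Records the order in which methods are invoked.
    static final List<String> EVENTS = new ArrayList<>();

    // No base class and no "test" prefix: the markers carry the meaning.
    static final class SampleChecks {
        @Setup   public void prepare() { EVENTS.add("setup"); }
        @Check   public void alpha()   { EVENTS.add("alpha"); }
        @Check   public void beta()    { EVENTS.add("beta"); }
        @Cleanup public void release() { EVENTS.add("cleanup"); }
    }

    // Runs every @Check method on a fresh instance, wrapped by the
    // @Setup and @Cleanup methods. Returns the number of checks run.
    static int run(Class<?> testClass) {
        Method[] methods = testClass.getDeclaredMethods();
        // Reflection does not guarantee method order; sort for stable results.
        Arrays.sort(methods, Comparator.comparing(Method::getName));
        int executed = 0;
        try {
            Constructor<?> constructor = testClass.getDeclaredConstructor();
            constructor.setAccessible(true);
            for (Method check : methods) {
                if (!check.isAnnotationPresent(Check.class)) {
                    continue;
                }
                Object instance = constructor.newInstance();
                invokeMarked(instance, methods, Setup.class);
                try {
                    check.setAccessible(true);
                    check.invoke(instance);
                } finally {
                    invokeMarked(instance, methods, Cleanup.class);
                }
                executed++;
            }
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Could not run " + testClass.getName(), e);
        }
        return executed;
    }

    private static void invokeMarked(Object instance, Method[] methods,
            Class<? extends Annotation> marker) throws ReflectiveOperationException {
        for (Method method : methods) {
            if (method.isAnnotationPresent(marker)) {
                method.setAccessible(true);
                method.invoke(instance);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(run(SampleChecks.class) + " checks run: " + EVENTS);
    }
}
```

A real framework adds far more (assertion reporting, expected exceptions, class-level hooks), but the discovery mechanism is the reason a JUnit 4 test class can be a plain Java class.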

TESTNG

Another Java unit-test framework that has gained attention in the last few years is TestNG. It has similar functionality to JUnit at this point but included certain features, such as parameterized tests, before they became available in JUnit. It is also better able to manage groups of tests. This provides the flexibility required for effective integration testing. Some developers prefer TestNG’s conventions and use of annotations over JUnit’s as well.

Unit tests are generally written in the same programming language as the main application. There are obvious advantages to this because specific isolated methods or functions of the application can be easily exercised. As use of unit tests gained popularity, they served as a sort of documentation of usage among developers. A common response to the question, “How does this project work?” is, “See the unit tests.” Unfortunately, programming languages do not help nonprogrammers clearly understand how a system functions. In many cases, requirements cannot be derived from tests even by programmers who know the language.

Jasmine and Cucumber are written to provide BDD implementations that in large part support human languages. Jasmine uses JavaScript to specify tests, while Cucumber is basically an interpreter for a little language called Gherkin. Gherkin is a business-readable DSL to describe the behavior of software. The actual programming language code used to test an application is separated into external files.

Jasmine

Jasmine expresses requirements using JavaScript strings and functions. It is small and lightweight, so it can be included easily in a project and run simply by opening a browser. The basic structure for a test includes a description of a test suite followed by blocks that contain expectations which are essentially assertions that return true or false. By convention, they reside in a JavaScript file with “Spec” in the name and are called by opening a SpecRunner.html that includes the dependent library:

    describe("Suite Title Here", function() {
        it("Expectation (assertion) Title Here", function() {
            expect(true).toBe(true);
        });
    });

While certainly closer to human language, the format of a test remains JavaScript, which to the untrained eye appears as human language riddled with a menagerie of symbols. An uninitiated individual might ask, “Why the sad winking emoticons on the last two lines?”

Cucumber

Cucumber relies on a DSL called Gherkin that closely resembles human language. The login.feature file, for example, is a structured text document with a few keywords and neat indentation:

    Feature: Login
      As a user,
      I want to be able to log in with correct credentials

      Scenario: Success login
        Given correct credentials
        When I load the page
        Then I should be able to log in

The code implementation corresponding with each step in the feature is maintained separately:

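    ...
    # No setup is performed for this step in the example.
    Given(/^correct credentials$/) do
    end

    # Open the login URL in the automated browser.
    When(/^I load the page$/) do
      Capybara.visit(VALID_LOGIN_URL)
    end

    # Fail the step unless the expected content is present;
    # has_content? on its own only returns true or false.
    Then(/^I should be able to log in$/) do
      raise 'Expected content not found' unless Capybara.page.has_content?('To NOT Do')
    end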

Regular expressions are used to match text in the test specification. Ruby code is used to perform the actual tests. In this example, Capybara is used for browser automation to open a browser, visit a URL, and determine if expected content is present.
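The way regular expressions connect specification text to executable code can be sketched in Java. This is an illustration of the idea only, not how Cucumber itself is implemented; the StepRegistrySketch class and its define, run, and demo methods are hypothetical names. Each step definition pairs a pattern with a block of code, and each line of the scenario is run through the first pattern that matches it:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

// Illustration only: a tiny registry that maps step text to code by regex.
final class StepRegistrySketch {
    // LinkedHashMap keeps definitions in the order they were registered.
    private final Map<Pattern, Runnable> steps = new LinkedHashMap<>();

    // Registers a step definition: a pattern plus the code to run on a match.
    void define(String regex, Runnable body) {
        steps.put(Pattern.compile(regex), body);
    }

    // Strips the leading keyword and runs the first matching definition.
    // Returns false when no definition matches the step text.
    boolean run(String line) {
        String text = line.trim().replaceFirst("^(Given|When|Then|And)\\s+", "");
        for (Map.Entry<Pattern, Runnable> step : steps.entrySet()) {
            if (step.getKey().matcher(text).matches()) {
                step.getValue().run();
                return true;
            }
        }
        return false;
    }

    // Runs the three steps of the login scenario and reports what executed.
    static List<String> demo() {
        List<String> executed = new ArrayList<>();
        StepRegistrySketch registry = new StepRegistrySketch();
        registry.define("^correct credentials$", () -> executed.add("credentials"));
        registry.define("^I load the page$", () -> executed.add("load"));
        registry.define("^I should be able to log in$", () -> executed.add("login"));

        String[] scenario = {
            "Given correct credentials",
            "When I load the page",
            "Then I should be able to log in"
        };
        for (String line : scenario) {
            if (!registry.run(line)) {
                executed.add("undefined: " + line);
            }
        }
        return executed;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Keeping the human-readable text and the matching code in separate places is what allows the feature file to be edited by people who never open the step definitions.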

FROM THE CUCUMBER BOOK

Cucumber helps facilitate the discovery and use of a ubiquitous language within the team, by giving the two sides of the linguistic divide a place where they can meet. Cucumber tests interact directly with the developers’ code, but they’re written in a medium and language business stakeholders can understand. By working together to write these tests—specifying collaboratively—not only do the team members decide what behavior they need to implement next, but they learn how to describe that behavior in a common language that everyone understands.

Test frameworks can be invoked directly or by build tools. The project in this chapter includes examples of tests run as part of a Maven build.

Project

The project for this chapter demonstrates how JUnit and Jasmine tests can be integrated into a Maven project build. It also includes Cucumber tests that use Capybara to call Selenium as an example of BDD applied to browser-automated functional testing.

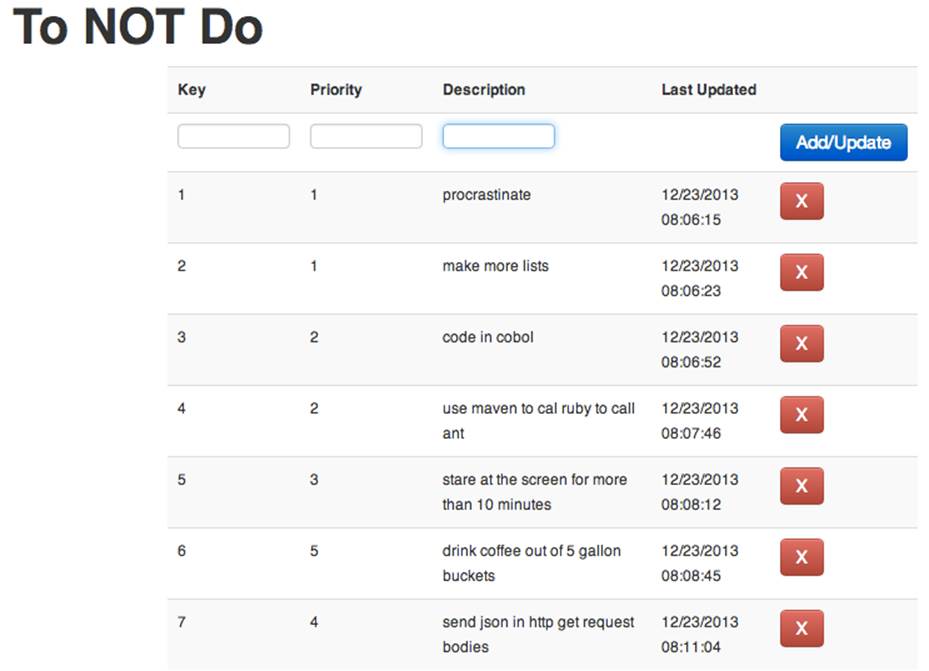

Rather than implementing something so common and mundane as a “TODO list” application, the project will consist of a “To NOT do list” web application, as shown in Figure 13-3. Such an application can be used to list things that one intends to cease doing or avoid. Fascinatingly refreshing and novel, don’t you think?

The project will demonstrate how various testing frameworks can be called from Maven throughout the course of a build. The end result is a site that includes reports on tests that have been run, as well as related documentation.

Figure 13-3. To Not Do web application

JUnit

The starting point for determining project configuration for any Maven project is the pom.xml. The dependencies section includes an entry for JUnit that is restricted to test scope:

    <dependency>
      <groupId>junit</groupId>
      <artifactId>junit</artifactId>
      <version>4.11</version>
      <scope>test</scope>
    </dependency>

Jasmine

In the same way that the JUnit module is added to the pom.xml for Java testing, a plug-in needs to be added to support JavaScript testing. Justin Searls maintains the Jasmine plug-in used in the project:

    <plugin>
      <groupId>com.github.searls</groupId>
      <artifactId>jasmine-maven-plugin</artifactId>
      <version>1.3.1.3</version>
    </plugin>

A sample test included in the project validates the presence and functionality of a dependent library:

    describe("Validate moment.min.js", function() {
        it("expects moment.min.js to be functional", function() {
            expect(
                moment("20111031", "YYYYMMDD").
                    format('MMMM Do YYYY, h:mm:ss a')
            ).toBe(
                "October 31st 2011, 12:00:00 am"
            );
        });
    });

Besides the declaration of the preceding Maven coordinates, additional configuration is required to include the JavaScript files used. When correctly configured, the tests will be run when mvn install is executed:

    ...
    [INFO]
    J A S M I N E S P E C S
    [INFO]
    Suite Title Here
    Expectation (assertion) Title Here
    Validate moment.min.js
    expects moment.min.js to be functional
    Results: 2 specs, 0 failures
    ...

The Jasmine plug-in is a perfect solution in many cases. However, browser-specific code cannot be exercised, which limits its applicability. Browser automation is better suited for testing requirements involving extensive DOM manipulation. A solution like Selenium can be used to drive various browsers. Browser automation allows each browser’s unique idiosyncrasies to be uncovered. Selenium can be used directly within Java unit tests, but in many cases, automating a browser suggests higher-level functional or integration testing. It is so effective for controlling browsers that higher-level libraries such as Capybara rely on Selenium as a driver implementation. The following Cucumber example uses Capybara in this very manner.

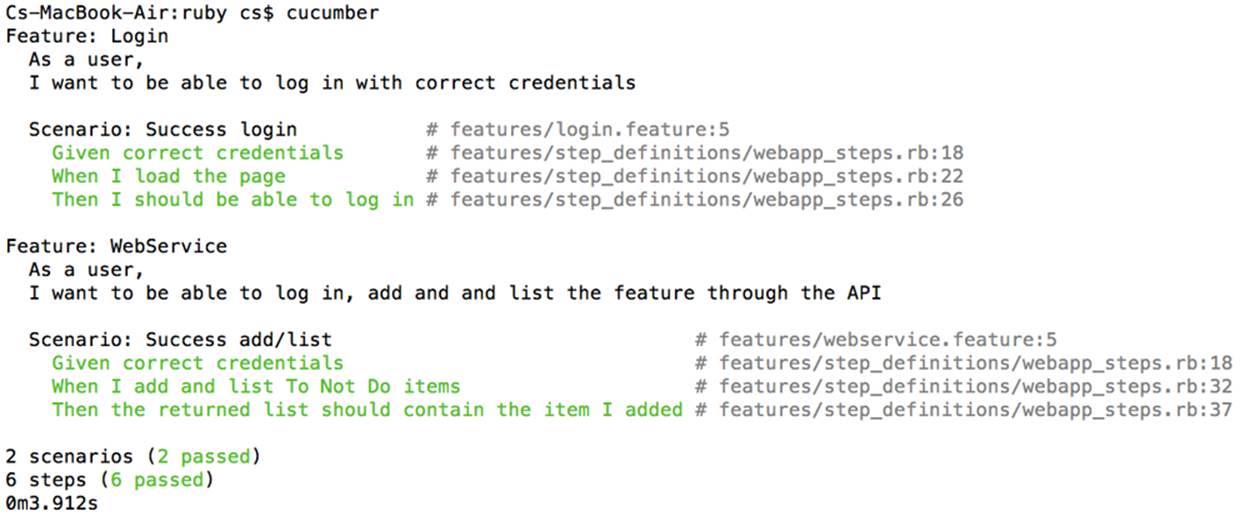

Cucumber

The /ruby directory contains a Ruby-based version of the Cucumber login test. The Gemfile lists the dependencies, and the webapp_steps.rb contains the code to process the specifications defined in the feature files. Start the application in one OS session:

mvn jetty:run

In a second session, run Cucumber:

cd ruby

cucumber

The features that have been specified will be run with output that reflects the origin of each step being executed, as shown in Figure 13-4.

Figure 13-4. Cucumber run

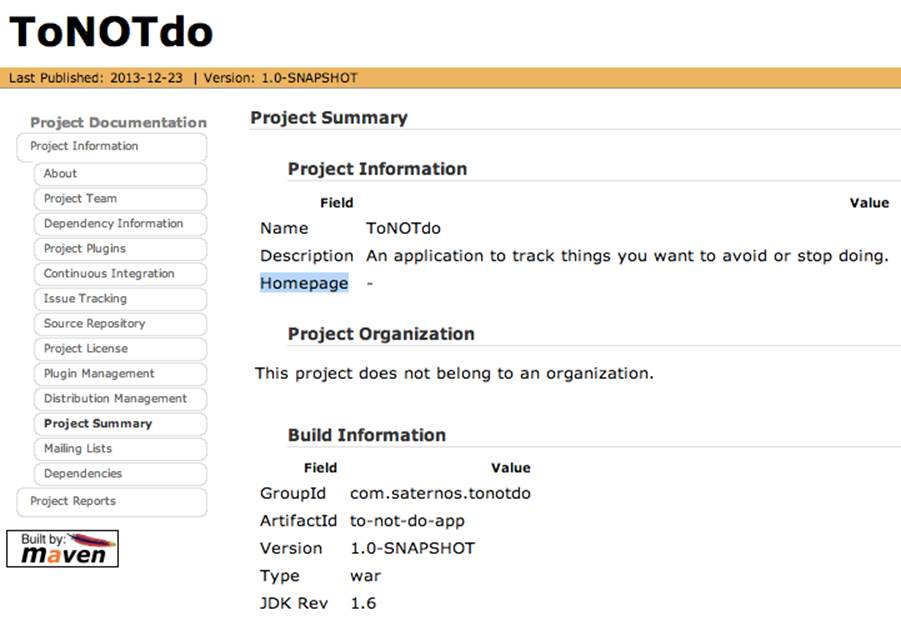

Maven Site Reports

The pom.xml included in this chapter is quite a bit longer than the ones associated with other projects in this book. Numerous dependencies and plug-ins are not associated with the core application but with testing. In addition, many of the configuration options are used to populate information in a Maven project site.

The Maven Site plug-in is used to generate a site and run it on port 8080:

mvn site:site

...

mvn site:run

The Maven /site/ directory can contain a variety of resources to customize the site. In Figure 13-5, a site.xml has been added to use a custom “skin” to provide a different style to the site.

Figure 13-5. To Not Do Maven site

Conclusion

Scalability in software requires well-architected, predictable, performant systems. Testing provides the means of ensuring that such systems are developed. Unit tests, Maven, and systems related to continuous integration promote the creation of such systems by clarifying the development process and making visible the state of an application.

Scalability of an organization requires defined processes and clear communication. The same tools listed above, along with an approach like BDD, give testing a definitive social benefit for a team that will tend to feed back into the quality of the software itself.

Software testing provides objective support based on the scientific method and practices that have been refined in the physical sciences. Properly conducted, it also provides immense practical value in demonstrating the quality and performance of an application and encouraging a common understanding of how requirements have been implemented.