Security for Web Developers (2015)

III Creating Useful and Efficient Testing Strategies

It isn’t likely that you’ll escape potential security problems with your application, but you can reduce them by ensuring you take time to think things through and perform proper testing techniques. This part of the book is all about helping you reduce the risk that someone will find your application inviting enough to try to break into it. Chapter 9 starts out by helping you think like a hacker, which is an important exercise if you actually want to see the security holes in your application. It isn’t as if someone marks security holes in red with a big sign that says, “Fix Me!” so this thought process is incredibly important.

Chapter 10 discusses sandboxing techniques. Keeping code in a sandbox doesn’t actually force it to behave, but it does reduce the damage that the code can do. Sandboxes make your system and its resources far less accessible and could make all the difference when it comes to security.

Chapters 11 and 12 discuss testing of various sorts. Chapter 11 focuses on in-house testing, which often helps locate major problems, but may not be enough to find subtle problems that could cost you later. Chapter 12 discusses third-party testing, which is an especially important option for smaller businesses that may lack a security expert. No matter where you perform testing, ensuring you test fully is essential to knowing the risks of using a particular product with your application.

9 Thinking Like a Hacker

Most developers spend their time in a world where it’s important to consider how things should work—will work when the code is correct. The whole idea of thinking about things as they shouldn’t work—will break when the code is errant—is somewhat alien. Yes, developers deal with bugs all the time, but the line of thought is different. When you think like a hacker, you might actually use code that is perfectly acceptable as written—it may not have a bug, but it may have a security hole.

This chapter contains a process that helps you view code as a hacker would. You use tools to look for potential security holes, create a test system to use while attempting to break the code, and rely on common breaches to make your life a little easier. Hackers love the Bring Your Own Device (BYOD) phenomenon because now you have all these unsecured systems floating about using operating systems that IT may not have much experience working with. Of course, there is always the ultimate application tester, the user. Users can find more ways to break applications than any developer would even want to think about, but user testing can be valuable in finding those assumptions you made that really weren’t valid.

In fact, it’s the need to think along these lines that drives many organizations to hire a security expert to think about all of the devious ways in which hackers will break perfectly functional applications in an effort to gain a tangible advantage they can use to perform specific tasks. However, this chapter assumes that you really don’t have anyone wearing the security expert shoes in your organization right now. You may consider it when the application nears completion, but by then it’s often too late to fix major problems without incurring huge costs. Thinking like a hacker when viewing your application can save your organization money, time, effort, and most importantly, embarrassment.

Defining a Need for Web Security Scans

The basic idea behind web security scans is that they tell you whether your site is currently clean and sometimes help you consider potential security holes. If you have a large setup, then buying a web security scanner is a good idea. However, smaller businesses can often make use of one of the free web security scanners online. Of course, these products really aren’t completely free. When the web security scanner does find a problem on your system, then the company that owns it will likely want you to engage it to clean your system up and protect it afterward. (There truly is no free lunch in the world of computing.)

It isn’t possible for any web security scanner to detect potential problems with your site with 100 percent accuracy. This is especially true of remote scanners—those that aren’t actually running on a system that has the required access to the server you want to test. Scanners do a remarkable job of locating existing issues, but they can’t find everything.

You must also realize that web security scanners can produce false positive results. This means that the scanner could tell you that a security issue exists when it really doesn’t.

The best safeguard against either missing issues or false positives is to use more than one web security scanner. Counting on a single web security scanner really is a good start, but you’ll likely end up working harder than needed to create a fully workable setup with the fewest possible security holes.
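One way to picture the benefit of using two scanners is to compare their reports. The following is a minimal sketch, assuming each scanner's findings have already been normalized to short identifiers (the identifiers shown in the usage note are hypothetical). Real scanners report in different formats, so some mapping step would be needed first.

```python
def triage_findings(scanner_a, scanner_b):
    """Split findings from two scanners into confirmed and needs-review lists."""
    a, b = set(scanner_a), set(scanner_b)
    return {
        # Reported by both scanners: less likely to be a false positive.
        "confirmed": sorted(a & b),
        # Reported by only one scanner: either a false positive from that
        # scanner or an issue the other scanner missed, so check by hand.
        "needs_review": sorted(a ^ b),
    }
```

For example, calling `triage_findings(["outdated-cms", "xss-search"], ["xss-search", "open-redirect"])` places `xss-search` in the confirmed list and leaves the other two findings for manual review.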

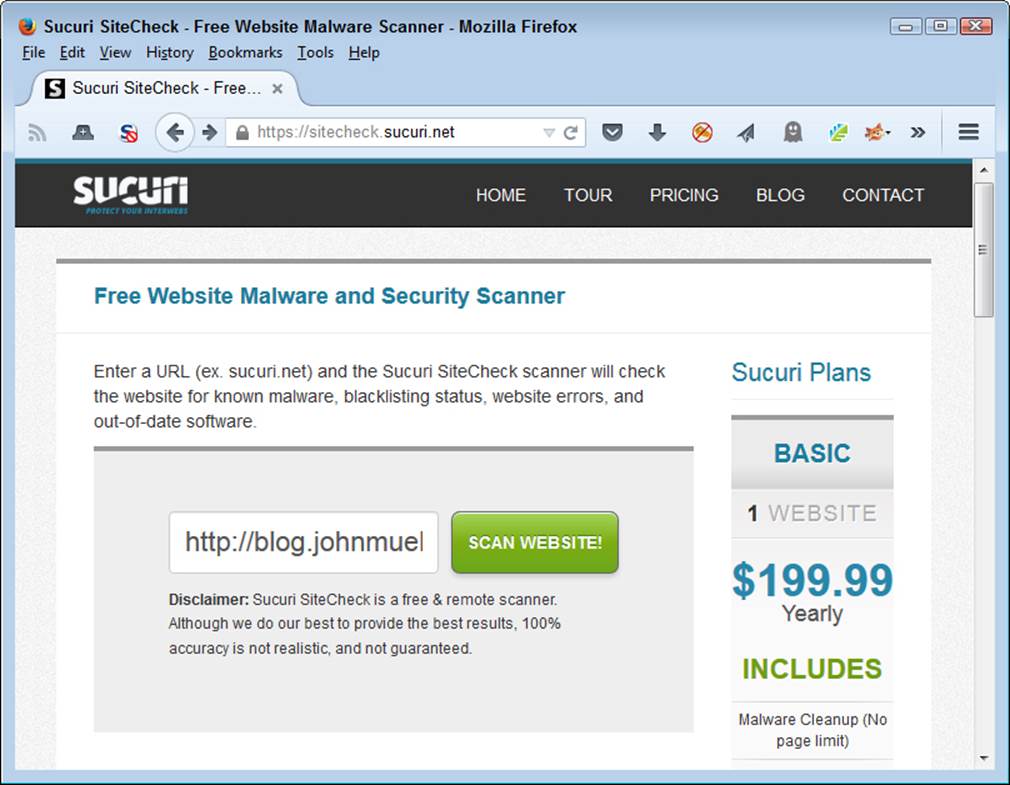

Before looking more at web security scanners, it’s important to see what tasks they perform. In this case, the example will use Sucuri (https://sitecheck.sucuri.net/), which is a free scanner that can detect a number of security issues, mostly revolving around potential infections. The main page shown in Figure 9-1 lets you enter your site URL and click Scan Website! to begin the scanning process.

Figure 9-1. Starting the scan involves entering your site URL and clicking a button.

If you do have a hosted site, make sure you check with the vendor providing the hosting services. Many of them offer low cost web security scanners. For example, you can find the offering for GoDaddy users at https://www.godaddy.com/security/malware-scanner.aspx. The vendor has a vested interest in ensuring your site isn’t compromised, so the low cost offering benefits the vendor too.

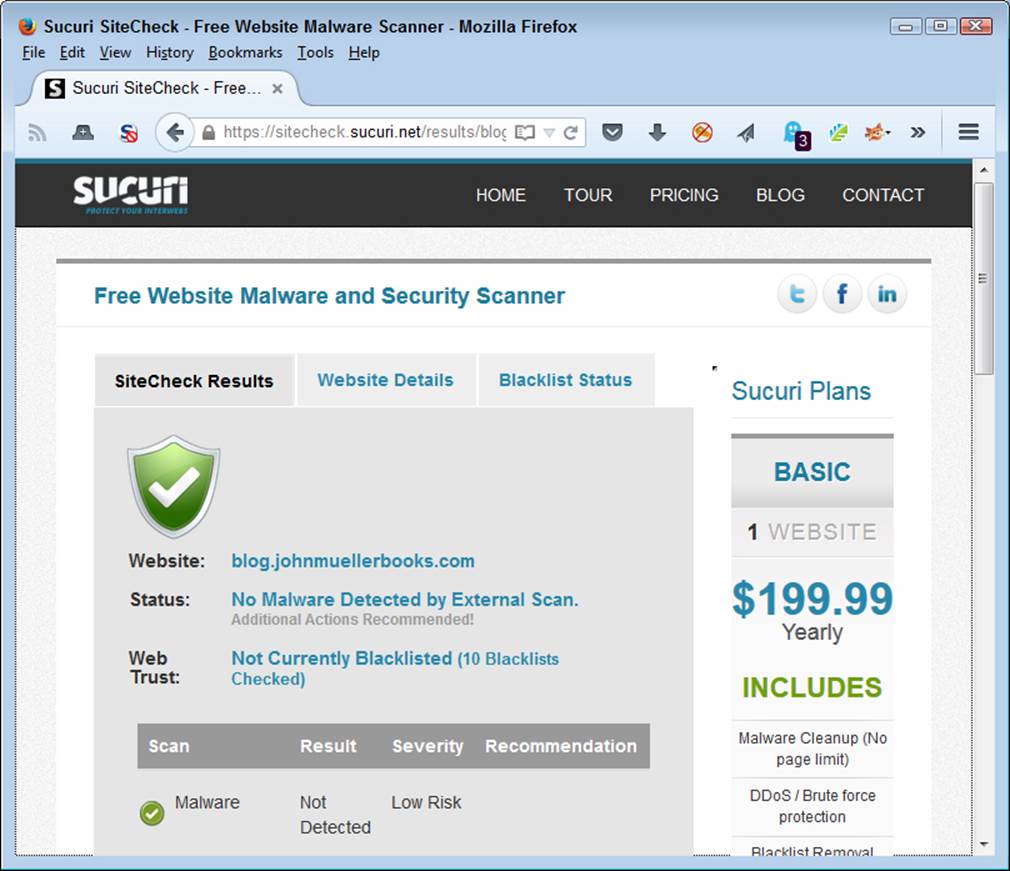

After the scan is completed, you see an overview of what the scanner found and a more detailed listing of actual checks as shown in Figure 9-2. Of course, if you want a more detailed check, you can always buy one of the Sucuri plans listed on the right side of the page. However, for the purposes of seeing what a scanner can do for you, the free version works just fine.

Figure 9-2. The completed scan shows any problems with your site.

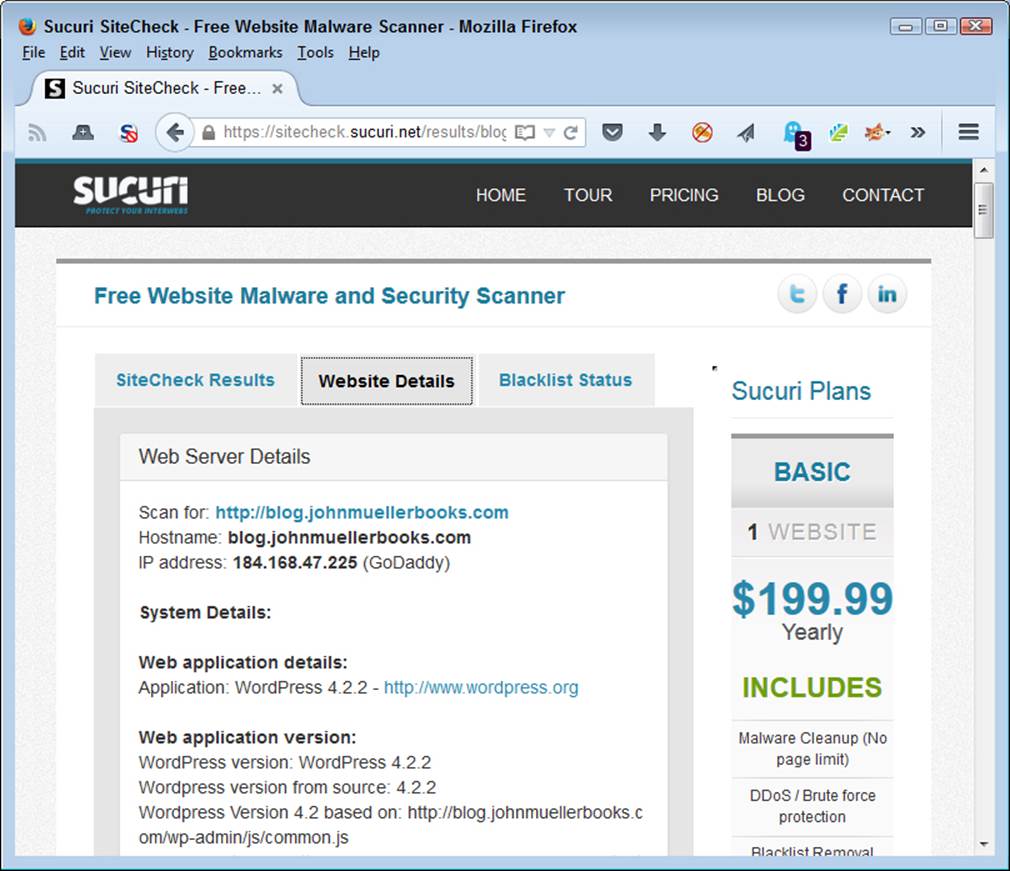

It can be interesting to view the Website Details information for a site. Figure 9-3 shows the information for my site. Interestingly enough, a hacker could employ a scanner just like this one to start looking for potential areas of interest on your site, such as which version of a particular piece of software you use. The web security scanner helps you understand the sorts of information that hackers have available. Seeing the kinds of information everyone can find is often a bit terrifying.

Figure 9-3. The detailed information can provide insights into what hackers can learn about your setup.

The detailed information can be helpful for another reason. When you use a hosted site, as many small businesses do, it’s hard to know whether the vendor providing hosting services actually keeps the software updated. Sucuri (and other) web security scanners probe the site carefully and come up with lists of version information you might not even see when using the dashboard for your site. For example, it’s possible that even though a library still works fine, it’s outdated and has a potential for creating a security breach on your system. A call to the hosting company can usually clear matters up quickly, but you need to know about the problem before you can call anyone about it.
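Much of the version information a scanner reports comes from ordinary HTTP response headers. The sketch below assumes you already have the response headers as a dictionary; it flags headers that commonly carry version strings. The list of header names is an illustrative assumption, not a complete inventory, and the function name is hypothetical.

```python
import re

# Headers that often reveal software names and versions (illustrative list).
DISCLOSING_HEADERS = ("Server", "X-Powered-By", "X-Generator")

def disclosed_versions(headers):
    """Return {header: value} for headers whose value contains a version number."""
    # Header names are case-insensitive, so compare them in lowercase.
    lowered = {name.lower(): value for name, value in headers.items()}
    found = {}
    for name in DISCLOSING_HEADERS:
        value = lowered.get(name.lower())
        # A dotted number such as 2.4.10 suggests a version string.
        if value and re.search(r"\d+(\.\d+)+", value):
            found[name] = value
    return found
```

A response that includes `Server: Apache/2.4.10 (Debian)` would be flagged, while a bare `Server: nginx` would not. Anything this function reports is visible to every visitor, including a hacker.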

You can find lists of web security scanners online. One of the better lists is from the Web Application Security Consortium (http://projects.webappsec.org/w/page/13246988/Web Application Security Scanner List). The authors have categorized the list by commercial, Software-as-a-Service (SaaS), and free open source tools. Another good list of tools appears on the Open Web Application Security Project (OWASP) site at https://www.owasp.org/index.php/Phoenix/Tools. You can find tools for all sorts of purposes on this site.

Building a Testing System

When thinking like a hacker, it’s often a good idea to have a system that you don’t really have to worry about trashing. In fact, it’s more than likely that the system will get trashed somewhere along the way, so it’s important to build such a system with ease-of-restoration in mind. The following sections provide you with a good overview of the things you should consider when building a test setup for your organization.

Considering the Test System Uses

You need a test system so that you can feel free to explore every possible avenue of hacking. Until you fully understand just how vulnerable most applications are today, you really can’t fix your own application. Developers make all sorts of mistakes that seem innocent at the time, but really cause security issues later. Something as minor as the wrong sort of comment in your code can cause all kinds of problems. Using a production system to experiment is simply a bad idea because the techniques described in this chapter could potentially leave you open to a real attack. You want to use a system that you can trash and restore as needed to ensure the lessons you learn really do stick.

The idea is to test each application under adverse conditions, but in a safe manner that doesn’t actually compromise any real data or any production systems. Giving a test system a virus will tell you how your application will react to that virus without actually experiencing the virus in a production environment. Of course, your test systems can’t connect to your production network (or else the virus could get out and infect production systems). You can also use this environment to test out various exploits and determine just what it does take to break your application as a hacker would.

A test system need not test just your application, however. You can also use it to test the effectiveness of countermeasures or the vulnerabilities of specific configurations. Testing the system as a whole helps you ensure that your application isn’t easily broken by vulnerabilities in other parts of the system setup. It’s essential to test the whole environment because you can count on any hacker doing so.

Getting the Required Training

Before a developer can really test anything, it’s important to know what to test and understand how to test it. You can begin the training process by using the OWASP Security Shepherd (https://www.owasp.org/index.php/OWASP_Security_Shepherd), which illustrates the top ten security risks for applications. The Security Shepherd can provide instruction to just one person or you can use it in a classroom environment to teach multiple students at once. The application provides a competitive environment, so in some respects it’s a security game where the application keeps score of the outcome of each session. The application supports over 60 levels and you can configure it to take the user’s learning curve and experience into account.

After you learn the basics, you need to spend some time actually breaking into software as a hacker would so that you can see the other side of the security environment. Of course, you want to perform this task in a safe and legal environment, which means not breaking into your own software. OWASP specifically designed the WebGoat application (https://www.owasp.org/index.php/Category:OWASP_WebGoat_Project) to provide vulnerabilities that an application developer can use to discover how exploits work in a real application setting. The movies at http://webappsecmovies.sourceforge.net/webgoat/ take you step-by-step through the hacking process so that you better understand what hackers are doing to your application. The training includes movies on:

• General Principles

• Code Quality

• Concurrency

• Unvalidated Parameters

• Access Control Flaws

• Authentication Flaws

• Session Management Flaws

• Cross-Site Scripting (XSS)

• Buffer Overflows

• Injection Flaws

• Insecure Storage

• Denial of Service (DOS)

• Configuration

• Web Services

• AJAX Security

The final step is a WebGoat challenge where you demonstrate your newfound skills by breaking the authentication scheme, stealing all the credit cards, and then defacing the site. The point is to fully understand hacking from the hacker’s viewpoint, but in a safe (and legal) environment.

It’s important to understand how to perfect your security testing skills. The article at http://blogs.atlassian.com/2012/01/13-steps-to-learn-perfect-security-testing-in-your-org/ provides you with thirteen steps you can follow to improve your testing methods. This information, combined with the tools in this section, should help you test any application even if your organization lacks the resources to perform testing in depth.

Creating the Right Environment

Any environment you build must match the actual application environment. This means having access to each of the operating systems you plan to support, browsers the users plan to use, and countermeasures that each system will employ (which may mean nothing at all in some cases). Make sure the systems are easy to access because you may find yourself reconfiguring them to mimic the kinds of systems that users have. In addition, you must plan to rebuild the test systems regularly.

Because of the kind of testing you perform using the test system, you must provide physical security for your lab. Otherwise, some well-meaning individual could let a virus or other nasty bit of code free in the production environment. You also don’t want to allow access to the security setup to disgruntled employees or others who might use it to make your life interesting. The test environment should reflect your production environment as closely as possible, but you also need to keep it physically separate or face the consequences.

As part of keeping production and test environments separate, you should consider using a different color of cabling for the test environment. You don’t want to connect a production system to the test environment accidentally or vice versa. In addition, it’s essential to label test systems so no one uses them in the production environment.

Using Virtual Machines

Most organizations won’t have enough hardware to run a single machine for each configuration of operating system and browser that users expect to rely on when working with the application. The solution is to use virtual machines so that you can configure one physical computer to host multiple virtual computers. Each of the virtual computers would represent a single user configuration for testing purposes.

Using virtual machines is a good idea for another reason. When the virtual system eventually succumbs to the attacks you make on it, you can simply stop that virtual machine, delete the file holding that computer, and create a new copy from a baseline configuration stored on the hard drive. Instead of hours to set up a new test system, you can create a new setup in just a few minutes.
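The stop, delete, and copy cycle is easy to script. The following is a minimal sketch, assuming the virtual machine is already stopped and that its disk is a single image file. The function name and file layout are hypothetical, and most virtualization products also provide their own snapshot or clone features that may be a better fit.

```python
import shutil
from pathlib import Path

def restore_from_baseline(baseline: Path, working: Path) -> Path:
    """Replace a trashed working disk image with a fresh copy of the baseline."""
    if not baseline.is_file():
        raise FileNotFoundError(f"baseline image not found: {baseline}")
    if working.exists():
        working.unlink()  # discard the compromised image
    # Copy rather than move so the baseline itself is never booted or attacked.
    shutil.copy2(baseline, working)
    return working
```

Keeping the baseline read-only and only ever running copies of it helps ensure that each test starts from the same known state.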

Virtual machines can solve many problems with your setup, but you also need to consider the need for higher end hardware to make them work properly. An underpowered system won’t produce the kind of results you need to evaluate security issues. The number of systems that you can create virtually on a physical machine is limited by the amount of memory, processing cycles, and other resources the system has to devote to the process.

It’s also essential that the virtual machine operating software provide support for all of the platforms you want to test. Products such as VMware (http://partnerweb.vmware.com/GOSIG/home.html) offer support for most major operating systems. Of course, this support comes at an additional cost.

As an alternative to going virtual, some organizations use older systems that are still viable, but not as useful as they once were in the production environment. Because of the way in which web applications work, these older systems usually provide all the computing horsepower needed and let an organization continue to receive some benefit from an older purchase. Of course, maintaining these old systems also incurs a cost, so you need to weigh the difference in cost between a virtual machine setup and the use of older systems (or perhaps create a combination of the two).

Getting the Tools

Unless you want to write your own security tool applications (a decidedly bad idea), you need to obtain them from someone else. Fortunately, sites such as McAfee (http://www.mcafee.com/us/downloads/free-tools/index.aspx) provide you with all the free tools you could ever want to perform tasks such as:

• Detect malware on the host system

• Assess whether the system is vulnerable to attack

• Perform forensic analysis after an attack

• Use the Foundstone Software Application Security Services (SASS) tools to make applications more secure

• Determine when an intrusion occurs

• Scan the system for various vulnerabilities

• Stress test the system

Configuring the System

Starting with a clean setup is important to conducting forensic examination of the system after attacking it. Before you install any software or perform any configuration, make sure you zero wipe the hard drive (write all zeroes to it). A number of software products let you perform this task. Writing all zeroes to the hard drive ensures that any data you do see is the result of the testing you perform, rather than information left behind by a previous installation.
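To make the idea of a zero wipe concrete, the following sketch writes zeroes to a disk image file and then verifies the result. It is an illustration of the concept only, operating on an ordinary file such as a virtual disk image. The function names are hypothetical, and wiping a physical drive is better left to a dedicated tool.

```python
import os

CHUNK = 1024 * 1024  # work in blocks of up to 1 MiB

def zero_fill(path, size):
    """Overwrite the file at `path` with `size` zero bytes and flush to disk."""
    zeros = bytes(CHUNK)
    with open(path, "wb") as target:
        remaining = size
        while remaining > 0:
            n = min(CHUNK, remaining)
            target.write(zeros[:n])
            remaining -= n
        target.flush()
        os.fsync(target.fileno())  # make sure the zeroes reach the storage

def is_all_zero(path):
    """Return True when every byte in the file at `path` is zero."""
    with open(path, "rb") as source:
        while chunk := source.read(CHUNK):
            if chunk.count(0) != len(chunk):
                return False
    return True
```

Running `is_all_zero` after the wipe gives you evidence that anything found later came from your testing.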

It’s important to create a clean setup of each platform you intend to support at the outset. Make sure you make a copy of the setup image so that you can restore it later. The setup should include the operating system, browsers, and any test software needed to support the test environment. You may also want to include software that the user commonly installs on the system if the web application interacts with the software in any way.

Products such as Norton Ghost (http://www.symantec.com/page.jsp?id=ghost) make it considerably easier to create images of each configuration. Make sure you have an image creation strategy in mind before you do anything to the clean configuration you create. You need clean images later to restore the configuration after you trash it by attacking it.

In addition to standard software, you may want to install remote access software so that you can access the system from a remote location outside the physically secured test area. External access must occur over a physically separate network to ensure there is no risk of contaminating the production environment. The use of remote access software lets more than one tester access the systems as needed from their usual workplace, rather than having to access the systems physically from within the secure area.

Some organizations provide workstations that access the test systems using a KVM (keyboard, video, and mouse) switch. Using a KVM setup with a transmitter lets you easily switch between test systems from a remote location. However, the use of remote access software is probably more flexible and faster.

Restoring the System

It won’t take long before your test system requires restoration. The viruses, adware, and Trojans that you use to test it are only one source of problems. Performing exploits and determining how easy it is to break your application will eventually damage the operating system, test application, test data, and the countermeasures used to protect the system. In short, you need some method of restoring the system to a known state quite quickly.

Restoration also includes reconfiguring the system to mimic another environment. It pays to use images to create various test environments quickly. As previously mentioned, using virtual machines saves a lot of time because you don’t have to rebuild the hard drive from scratch each time. However, make sure you also have each operating system image on a separate hard drive, DVD, or other storage media because the hard drive will eventually become corrupted.

Defining the Most Common Breach Sources

Every day sees the addition of new security breaches. It’s not likely that anyone could keep up with them all. However, some security breaches require special attention and others are indicative of the sorts of things you will see in the future. Of course, it’s nice to have an organized method for locating the security breaches, and the checklist provided by OWASP at https://www.owasp.org/index.php/Web_Application_Security_Testing_Cheat_Sheet is a start in the right direction.

Once you know about the potential attack vectors for your application, it helps to score them so that you know which vulnerabilities to fix first. An attack that lets someone access all the pictures of the company picnic is far lower priority than one that allows access to the credit card information of all your clients. One of the best systems you can use for scoring potential attack vectors is Common Vulnerability Scoring System (CVSS) (http://www.first.org/cvss/v2/faq). Using this system helps you create an organized list of problems that you can deal with to make your application more secure.
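As a rough illustration of how scoring works, the sketch below computes a CVSS version 2 base score from the six base metrics, using the equations and metric weights published in the CVSS v2 specification. The function name and the single-letter metric codes are choices made for this example. Treat it as a learning aid and check real scores against the official calculator.

```python
# Metric weights from the CVSS v2 specification.
ACCESS_VECTOR = {"L": 0.395, "A": 0.646, "N": 1.0}      # Local, Adjacent, Network
ACCESS_COMPLEXITY = {"H": 0.35, "M": 0.61, "L": 0.71}   # High, Medium, Low
AUTHENTICATION = {"M": 0.45, "S": 0.56, "N": 0.704}     # Multiple, Single, None
IMPACT = {"N": 0.0, "P": 0.275, "C": 0.660}             # None, Partial, Complete

def cvss2_base_score(av, ac, au, c, i, a):
    """Return the CVSS v2 base score (0.0 to 10.0) for the given base metrics."""
    impact = 10.41 * (1 - (1 - IMPACT[c]) * (1 - IMPACT[i]) * (1 - IMPACT[a]))
    exploitability = (
        20 * ACCESS_VECTOR[av] * ACCESS_COMPLEXITY[ac] * AUTHENTICATION[au]
    )
    f_impact = 0 if impact == 0 else 1.176
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * f_impact, 1)
```

Under these equations, a remotely exploitable flaw that partially exposes confidential data and nothing else (`cvss2_base_score("N", "L", "N", "P", "N", "N")`) scores 5.0, while one that completely exposes confidential data (`cvss2_base_score("N", "L", "N", "C", "N", "N")`) scores 7.8. Sorting your list of attack vectors by score gives you a defensible order in which to fix them.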

Of course, it also helps you know what to test. With this in mind, the following sections describe the most common breach sources as of the writing of this book. (These sections build on the information you already obtained about basic hacks from Chapter 1.) You’ll likely find even more breaches by the time the book is released because hackers are nothing if not creative in their methods of breaking software.

You can’t categorize every potential breach source hackers will use. In fact, hackers often rely on misdirection (much like magicians) to keep you from figuring out what’s going on. A recent news story about LOT Polish airlines serves to point out the results of misdirection (see http://www.computerworld.com/article/2938486/security/cyberattack-grounds-planes-in-poland.html). In this case, authorities have spent considerable time and resources ensuring that flight systems remain unaffected by potential attacks. However, they weren’t as diligent about ground systems. Hackers managed to break into the ground systems and make it impossible to create flight plans for outbound passengers. Whether the attack grounded the planes by affecting their flight systems or by affecting ground control doesn’t matter. What matters is that the planes couldn’t take off. The hackers achieved a desired result through misdirection. The lesson is that you need to look everywhere—not just where you think hackers will attack based on the latest statistics.

Avoiding SQL Injection Attacks

There are many forms of the SQL injection attack. For example, you could mess with the form data in an application to determine whether the form is capable of sending commands to the backend server. However, it’s best to start with a popular method of checking for the potential for a SQL injection attack.

Let’s say that you have a URL such as http://www.mysite.com/index.php?itemid=10. You have probably seen URLs structured like this one on many sites. One way to check for a vulnerability is to simply add a single quote after the URL, making it http://www.mysite.com/index.php?itemid=10'. When you press Enter, a vulnerable site sends back a SQL error message. The message varies by system, but the idea is that a backend server receives your URL as a SQL request that’s formatted something like: SELECT * WHERE itemid='10''. The addition of another single quote makes the query invalid, which produces the error.
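You can reproduce the single quote check on a test system without a web server. The sketch below uses Python's built-in sqlite3 module with an in-memory database; the table name, column names, and function names are hypothetical stand-ins for whatever the real application uses, and the error text differs from one database product to another.

```python
import sqlite3

def make_demo_db():
    """Create a throwaway in-memory database with one row."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE items (itemid TEXT, name TEXT)")
    db.execute("INSERT INTO items VALUES ('10', 'widget')")
    return db

def vulnerable_lookup(db, itemid):
    # UNSAFE on purpose: the request value is pasted straight into the SQL text.
    query = "SELECT * FROM items WHERE itemid='" + itemid + "'"
    return db.execute(query).fetchall()

def probe_with_quote(db, itemid):
    """Return the database error text triggered by a trailing quote, or None."""
    try:
        vulnerable_lookup(db, itemid + "'")
    except sqlite3.OperationalError as error:
        return str(error)
    return None
```

A normal request such as `vulnerable_lookup(db, "10")` returns the row, but `probe_with_quote(db, "10")` returns an error message because the extra quote unbalances the string literal. An application that shows that message to the visitor has just confirmed that the input reaches the database unchecked.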

When you do find a problem with your application that someone could potentially exploit, it’s important to make the entire development team aware of it. Demonstrating the exploit so that everyone can see how it works and what you need to do to fix it is an essential part of developer training for any organization. Products such as the Browser Exploitation Framework (BeEF) (http://beefproject.com/) can help you find the attack vector and then demonstrate it to others in your organization.

You can now start playing with the URL to see what’s possible. For example, let’s say you want to determine how many columns that query produces and what those columns are. It’s possible to use the SQL ORDER BY clause to perform this task. Change the URL so that it includes the ORDER BY clause like this: http://www.mysite.com/index.php?itemid=10 ORDER BY 1. This is the same as typing SELECT * WHERE itemid='10' ORDER BY 1 as a command. By increasing the ORDER BY value by 1 for each request, you eventually see an error again. Say you see the error when you try ORDER BY 10. The query results actually have 9 columns in this case.
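The column-counting technique can also be tried safely against an in-memory database. This is a minimal sketch using Python's sqlite3 module; the three-column table is a hypothetical example, and the lookup deliberately concatenates an unquoted value so that the appended ORDER BY clause becomes part of the query.

```python
import sqlite3

def make_demo_db():
    """Create a throwaway in-memory database with a three-column table."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE items (itemid INTEGER, name TEXT, price REAL)")
    db.execute("INSERT INTO items VALUES (10, 'widget', 9.99)")
    return db

def vulnerable_lookup(db, itemid):
    # UNSAFE on purpose: anything after the number becomes part of the query.
    return db.execute("SELECT * FROM items WHERE itemid=" + itemid).fetchall()

def count_columns(db, limit=50):
    """Raise the ORDER BY index until the database objects, as an attacker would."""
    for n in range(1, limit + 1):
        try:
            vulnerable_lookup(db, f"10 ORDER BY {n}")
        except sqlite3.OperationalError:
            return n - 1  # the last index that worked is the column count
    return None
```

Here `count_columns(make_demo_db())` returns 3, because ORDER BY 4 is the first request that fails. The attacker learns the shape of the query results without ever seeing the source code.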

A hacker will continue to add SQL commands to the basic URL to learn more about the query results from the database. For example, using the SELECT clause helps determine which data appears on screen. You can also request special information as part of the SELECT clause, such as @@version, to obtain the version number of the SQL server (giving you some idea of what vulnerabilities the SQL server might have). The point is that the original URL provides direct access to the SQL server, making it possible for someone to take the SQL server over without doing much work at all.

You can see another type of SQL injection attack dissected at http://www.w3schools.com/sql/sql_injection.asp. The underlying cause of all these attacks is that a developer used data directly from a page or request without first checking it for issues and potentially removing errant information from it.
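One common way to address that underlying cause is to validate the value and then pass it to the database as a bound parameter instead of building the SQL text yourself. The following is a minimal sketch using Python's sqlite3 module; the table and function names are hypothetical, and other database libraries use a different placeholder syntax.

```python
import sqlite3

def make_demo_db():
    """Create a throwaway in-memory database with one row."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE items (itemid INTEGER, name TEXT)")
    db.execute("INSERT INTO items VALUES (10, 'widget')")
    return db

def safe_lookup(db, raw_itemid):
    """Look up an item using a validated, bound parameter."""
    # Check the input first: int() raises ValueError for values such as
    # "10'" or "10 ORDER BY 1", so they never reach the database.
    itemid = int(raw_itemid)
    # The ? placeholder keeps the value separate from the SQL text.
    return db.execute(
        "SELECT * FROM items WHERE itemid = ?", (itemid,)
    ).fetchall()
```

With this version, `safe_lookup(db, "10")` still returns the row, while the probing values used earlier in this section raise a `ValueError` that the application can turn into a generic error page.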

Understanding Cross-Site Scripting

XSS is similar to SQL injection in many ways because of the way in which the exploit occurs. However, the actual technique differs. The two types of XSS are non-persistent (where the exploit relies on the user visiting a specially crafted link) and persistent (where the attack code is stored in secondary storage, such as a database). Both attacks rely on JavaScript code put into a place where you wouldn’t expect.

As an example of a non-persistent form of XSS, consider this link: http://www.mysite.com/index.php?name=guest. It looks like a perfectly harmless link with a name/value entry added to it. However, if you add a script to it, such as http://www.mysite.com/index.php?name=guest<script>alert('XSS')</script>, the user could see a dialog box pop up with the message, XSS. This example doesn’t do any damage, but the script could easily do anything you can do in a script. For example, you could craft the script in such a manner that it actually redirects the user to another site where the page would download a virus or other nasty piece of software.

The example shows the <script> tag in plain text. A real exploit would encode the <script> tag so that the user couldn’t easily recognize it. What the user would see is a long URL with an overly complex set of % values that appear regularly in valid URLs as well.
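To see what the encoded form looks like, you can percent-encode the script with Python's standard urllib.parse module. This is a small sketch; the function name is hypothetical, and a real attacker might use other encodings as well.

```python
from urllib.parse import quote, unquote

def encode_for_url(text):
    """Percent-encode every character that isn't a letter, digit, or one of _.-~"""
    return quote(text, safe="")

payload = "<script>alert('XSS')</script>"
encoded = encode_for_url(payload)
# encoded == "%3Cscript%3Ealert%28%27XSS%27%29%3C%2Fscript%3E"
```

The server decodes the value back to the original script (as `unquote(encoded)` does here) before the application sees it, so the disguise costs the attacker nothing.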

A persistent XSS attack is harder to implement, but also does a lot more damage. For example, consider what happens when a user logs into an application. The server sends a session ID as a cookie back to the user’s machine. Every request after that uses the session ID so that the application can maintain state information about the user. Let’s say that the attacker sends a specially crafted script to the server as part of the login process that gets stored into the database under a name that the administrator is almost certain to want to review. When the administrator clicks on the user name, the script executes and sends the administrator’s session ID to the attacker. The attacker now has administrator privileges for the rest of the session, making it possible to perform any administrator level task. You can get more detailed information about persistent XSS at https://www.acunetix.com/blog/articles/persistent-cross-site-scripting/.

In both cases, the best defense against XSS is to sanitize any input you receive. The process of sanitizing the input removes any scripts, weird characters, or other information that isn’t part of the expected response to a request. For example, if you expect a numeric input, the response shouldn’t contain alphabetic characters.
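The following sketch shows two simple forms this can take, using only the Python standard library. The first function rejects anything that isn't a plain whole number. The second escapes HTML special characters when a value is written into a page, which is a complementary technique applied at output time rather than input time. Both function names are hypothetical.

```python
import html

def parse_quantity(raw):
    """Accept only a plain run of ASCII digits; reject everything else."""
    if not (raw.isascii() and raw.isdigit()):
        raise ValueError("expected a whole number")
    return int(raw)

def render_greeting(name):
    """Build an HTML fragment with the user-supplied name safely escaped."""
    # html.escape() converts <, >, &, and quotes to harmless entities,
    # so an embedded <script> tag is displayed as text instead of running.
    return "<p>Hello, " + html.escape(name) + "</p>"
```

With these in place, `render_greeting("guest<script>alert('XSS')</script>")` produces markup in which the script appears as visible text, and `parse_quantity("4a")` raises a `ValueError` instead of passing unexpected characters along.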

Tackling Denial of Service Issues

The idea behind a DOS attack is relatively straightforward. You find an open port on a server and keep sending nonsense packets to it in an effort to overwhelm the associated service. Most servers have services that offer open ports, such as:

• DNS servers

• E-mail servers

• FTP servers

• Telnet servers

• Web servers

Of course, the more open ports you provide, the better the chance a hacker has of overwhelming your server. A first line of defense is to close ports that you don’t need by not installing services you don’t require. An application server may only require a Web server, so that’s the only service you should have installed. As an application developer, you can recommend keeping other services uninstalled (or at least inactive). When creating a private application, using a non-standard port can also help, as does requiring authentication.

Hackers are usually looking for services that don’t have a maximum number of connections, so ensuring you keep the maximum number of connections to a value that your server can handle is another step in the right direction. It’s important in a DOS attack to give the server something to do, such as perform a complex search when working with a Web server. Authentication would help keep a hacker from making requests without proper authorization.
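Most web servers let you set a connection limit in their configuration, and that is usually the right place to do it. To illustrate the idea itself, here is a minimal sketch of a connection cap built on Python's threading module. The class name is hypothetical, and the right limit depends entirely on what your server can handle.

```python
import threading

class ConnectionLimiter:
    """Track open connections and refuse new ones once the cap is reached."""

    def __init__(self, max_connections):
        self._slots = threading.BoundedSemaphore(max_connections)

    def try_acquire(self):
        """Return True if a connection slot was available, False otherwise."""
        # Non-blocking: a refused connection costs almost nothing, which is
        # the point when someone is trying to exhaust the server.
        return self._slots.acquire(blocking=False)

    def release(self):
        """Free a slot when a connection closes."""
        self._slots.release()
```

A server would call `try_acquire()` when a connection arrives, reject the connection immediately if the result is `False`, and call `release()` when an accepted connection ends.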

Still, it’s possible to bring down any server if you have enough systems sending an endless stream of worthless requests. Some of the defenses against DOS attacks include looking for patterns in the requests and then simply denying them, rather than expending system resources trying to resolve them. You can find a host of DOS attack tools to use to test your system at http://resources.infosecinstitute.com/dos-attacks-free-dos-attacking-tools/. Besides looking for patterns in the attack and attempting to resolve them yourself, you can also:

• Purchase specialized equipment designed to help mitigate DOS attacks

• Rely on your ISP to detect and mitigate DOS attacks

• Obtain the services of a cloud mitigation provider
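One simple pattern to look for is a single client sending far more requests than a normal user would. The sketch below keeps a sliding window of request times per client and denies requests beyond a threshold. The class name, the threshold, and the window length are all assumptions for illustration, and this approach alone won't stop an attack that comes from many systems at once.

```python
from collections import defaultdict, deque

class RequestThrottle:
    """Deny requests from any client that exceeds a rate within a time window."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history = defaultdict(deque)  # client -> recent request times

    def allow(self, client, now):
        """Return True to serve the request, False to deny it cheaply."""
        recent = self._history[client]
        # Forget requests that have aged out of the window.
        while recent and now - recent[0] >= self.window_seconds:
            recent.popleft()
        if len(recent) >= self.max_requests:
            return False
        recent.append(now)
        return True
```

The caller passes in the current time (for example, from `time.monotonic()`), which keeps the class easy to test. Denied requests are not recorded, so a client regains access once its earlier requests age out of the window.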

Nipping Predictable Resource Location

It’s possible to attack a system by knowing the location of specific resources and then using those resources to gain enough information to access the system. Some sites also call this type of attack forced browsing. Of course, the best way to prevent this sort of an attack is to keep resources in unpredictable locations. In addition, you can ensure that the authentication scheme works and that you properly secure resources. However, let’s look at how this particular attack works.

One example of this sort of attack is where a URL points out a valid resource and then you use that URL to access another resource owned by someone else. Let’s say that you have your agenda for a particular day located at http://www.mysite.com/Sam/10/01/2015 and that you want to access Amy’s agenda for the same day. Changing the URL to http://www.mysite.com/Amy/10/01/2015 might provide the required access if the administrator hasn’t configured the server’s authentication correctly.
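The application can guard against this kind of URL tampering by checking, on every request, that the resource named in the path belongs to the logged-in user. The following is a minimal sketch, assuming the agenda path format used in the example and a user name that comes from the authenticated session (never from the URL). The function name is hypothetical.

```python
def can_view_agenda(authenticated_user, path):
    """Allow access only when the agenda in the path belongs to the session's user."""
    parts = path.strip("/").split("/")
    # Expect exactly: owner, then three date components.
    if len(parts) != 4:
        return False
    owner = parts[0]
    # Deny by default: the owner in the URL must match the logged-in user.
    return owner == authenticated_user
```

With Sam logged in, `can_view_agenda("Sam", "/Sam/10/01/2015")` returns `True`, while changing the path to `/Amy/10/01/2015` returns `False`, no matter how the URL was obtained.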

As another example, some servers place information in specific directories. For example, you might have authorized access to http://www.mysite.com/myapp/app.html. However, you could change the URL to see if http://www.mysite.com/system/ exists. If you get a response of 200 back from the server, the directory does exist and you can start querying it for useful information. Of course, this assumes that the administrator hasn’t properly secured the system directory and that system is a standard directory location. The administrator could always change the name of the system directory and also ensure that it only allows access by those with the proper credentials.

Overcoming Unintentional Information Disclosure

Unintentional information disclosure can occur in all sorts of ways. However, the exploit always involves the hacker gaining unauthorized access to a centralized database. At one time, the source of the information would have been something like the Network Information System (NIS). However, today the information could come from any source that isn’t secure or has vulnerabilities that a hacker can exploit. There are so many of these sources that it’s not really possible to come up with a simple example that illustrates them all. You can overcome this type of hack by:

• Applying all required patches to the operating system, services, application environment, libraries, APIs, and microservices

• Configuring the border routers and firewalls to block requests that could obtain information from a sensitive source

• Restricting access to all sensitive information sources

• Never hard coding passwords or placing them where someone could easily find them

• Using two-factor authentication for any sensitive information source

• Performing audits to look for potential breaches (even if you feel the system is completely secure)

• Using assessment tools to determine whether it’s possible to access the sensitive information source from anywhere other than a designated location
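One of these items, looking for hard coded passwords, lends itself to a simple automated audit. The Python sketch below flags source lines where a name containing password, passwd, or pwd is assigned a quoted literal. The pattern and function name are assumptions for illustration. The check will miss credentials stored in other forms, so treat a clean result as a starting point rather than proof.

```python
import re

# Hypothetical pattern: a name containing "password", "passwd", or "pwd"
# that is assigned a non-empty quoted literal on the same line.
HARD_CODED_SECRET = re.compile(
    r"""(?i)\b\w*(?:password|passwd|pwd)\w*\s*[:=]\s*(['"])[^'"]+\1"""
)


def find_hard_coded_passwords(source_text):
    """Return (line_number, line) pairs that look like embedded passwords."""
    hits = []
    for number, line in enumerate(source_text.splitlines(), start=1):
        if HARD_CODED_SECRET.search(line):
            hits.append((number, line.strip()))
    return hits
```

A line that reads the value from the environment at run time, rather than assigning a literal, passes this check.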

Testing in a BYOD Environment

The BYOD phenomenon keeps building in strength. It’s important to realize that BYOD isn’t going to go away. In fact, you probably already know that BYOD is going to become the preferred method of outfitting users at some point. Organizations will eventually tell users to bring whatever device is needed to get their work done and leave everything in the hands of the user. It sounds like a disaster in the making, but that’s where users are taking things now.

According to Gartner, Inc., by 2017 half of organizations will no longer supply any devices to users (see http://www.gartner.com/newsroom/id/2466615). In addition, by 2020, 75 percent of users will pay less than $100 for a smartphone (see http://www.gartner.com/newsroom/id/2939217), so getting a device smart enough to perform most tasks won’t even be that expensive. Creating and managing applications will become harder because you must ensure that the application really does work everywhere and on any device. Of course, it has to work without compromising organizational security. The following sections will help you provide some level of testing and isolation for the BYOD environment.

It’s also important to realize that users are now participating strongly in Bring Your Own Application (BYOA). The reason this new move on the part of users is so important is that it introduces yet another level of unpredictability to the application environment. You never know when another application will cause your application woe. In fact, the third party application could provide the conduit for the next major breach your company suffers. Users will definitely continue using applications such as Dropbox, Google Docs, and CloudOn because they’re convenient and run everywhere. To ensure the integrity of the application environment, you need to continue viewing these applications as contamination just waiting to ruin your day.

One of the reasons that BYOA is such a problem is that the organization loses control over its data. If the user stores organizational data in a personal account on Dropbox, the organization can’t easily retrieve that data in the event of an emergency. In short, BYOA opens serious security holes that could be a problem for any application data that you want to protect.

Configuring a Remote Access Zone

When working within the BYOD environment, the best assumption you can make is that the device environment isn’t secure and that you can’t easily make it secure. With this in mind, a BYOD session usually has four phases:

1. The client and server create a secure tunnel to make it harder for outsiders to listen in. The intent is to prevent man-in-the-middle attacks.

2. The user supplies two forms of authentication. A two-factor authentication process makes it less likely that someone will successfully mimic the user.

3. The client makes one or more requests that the server mediates using a service mediation module. The service mediation module only honors requests for valid services. The service mediation module automatically logs every request, successful or not.

4. A service separation module provides access to public data only. It disallows access to sensitive data. The client sees just the data that the organization deems acceptable for a BYOD environment.
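Phases 3 and 4 can be sketched in a few lines of Python. Everything named here (the SERVICES registry, the list_sales handler, the sample fields) is hypothetical. The point is only to show a mediation step that honors registered services, logs every attempt, and returns nothing beyond the fields marked as public.

```python
import logging

logger = logging.getLogger("byod.mediation")


def list_sales(client_id):
    """Stand-in for a real back-end call; returns one made-up record."""
    return {"client": client_id, "total": 1200, "credit_card": "4111-XXXX"}


# Hypothetical registry: service name -> (handler, fields safe to expose).
SERVICES = {"sales": (list_sales, {"client", "total"})}


def mediate(service_name, *args):
    """Honor only registered services, log every attempt, return public fields."""
    entry = SERVICES.get(service_name)
    if entry is None:
        logger.warning("denied request for unknown service %r", service_name)
        return None
    handler, public_fields = entry
    logger.info("honoring request for service %r", service_name)
    record = handler(*args)
    # Service separation: strip everything not marked public.
    return {key: value for key, value in record.items() if key in public_fields}
```

Requesting the sales service returns the client and total but not the card number, and a request for an unregistered service is logged and refused.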

The remote access zone is part of phases 1 and 2. It consists of an external firewall and a VPN and authentication gateway. The remote access zone provides a first level of defense against intrusion.

The information gleaned from the user must appear as part of your application strategy. A BYOD access is different from local access from a desktop system the organization owns in that you have no idea of where this access occurs or whether the device itself is secure. When a user accesses your application in such a manner, you need to provide a role that matches the device used. This means that you don’t allow any sensitive information to appear on the device and could potentially limit access to other sorts of data. For example, your organization might decide that it’s acceptable to obtain a listing of sales for a particular client, but the list is read-only, which means that the user can’t make any changes to the sales list in the field. In order to make these changes, the user would need to log into the account from a local system.
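The read-only sales list can be expressed as a role that depends on where the access comes from. The Python sketch below assumes just two roles and two actions, all with hypothetical names. A real application would likely have more of each.

```python
from enum import Enum


class Role(Enum):
    LOCAL = "local"  # desktop system the organization owns
    BYOD = "byod"    # any device the organization doesn't control


# Hypothetical permission table for the sales list example.
PERMISSIONS = {
    Role.LOCAL: {"read_sales", "edit_sales"},
    Role.BYOD: {"read_sales"},
}


def role_for(is_organization_desktop):
    """Pick the role from the kind of device making the request."""
    return Role.LOCAL if is_organization_desktop else Role.BYOD


def is_allowed(role, action):
    """Return True when the role may perform the action."""
    return action in PERMISSIONS[role]
```

A user in the field gets the BYOD role and can read the sales list, while the edit action succeeds only after logging in from a local system.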

The use of a remote access zone also implies that your organization configures Mobile Device Management (MDM). This is a set of products and services that help ensure the mobile device remains as secure as possible. For example, the MDM could check mobile devices for patch requirements and ensure the user patches the device before you allow application access (or you could simply patch the device automatically). Even with an MDM in place, you can’t assume the device is secure when:

• The device reports values that imply a secure configuration, but your organization doesn’t actually implement the specified values. A malware developer won’t know which values to report and will simply report them all.

• Other authorities can override the device settings. For example, if a smartphone vendor can automatically patch the device outside your control, you must assume that some of those patches could contain viruses or other malware.

• It isn’t possible to enforce settings on the mobile device. The device may not provide the required support, your application may not be configured to support the device, or malware interferes with the updates.

Checking for Cross Application Hacks

A cross application hack is one in which one application gains access to data or other resources used by another application. In many cases, the hack occurs when two applications have access to the same data source (cross application resource access or XARA). One such recent hack is for the OS X and iOS operating systems (read about it at http://oversitesentry.com/xara-an-old-way-to-hack-cross-application-resource-access/).

Another type of cross application problem is Cross Application Scripting (CAS). In this case, the bug causes a problem where JavaScript code executes within certain types of applications. You can read about one such exploit for Android at http://news.softpedia.com/news/Apache-Cordova-for-Android-Patched-Against-Cross-Application-Scripting-453942.shtml.

The best way to verify that your application isn’t potentially exposing data through this kind of exploit is to stay on top of any news for the platforms you support. It takes a security expert to find potential problems of this sort. Unfortunately, barring a patch from an operating system or browser vendor, you can’t really do too much about this particular hack except to remain vigilant in checking your data stores for potential damage. Of course, this particular kind of problem just lends more credence to creating a remote access zone (see the previous section of the chapter).

Dealing with Really Ancient Equipment and Software

Research firms love to paint pretty pictures of precisely how the future will look. Of course, some of those dreams really do come true, but they don’t take into account the realities of the range of user equipment in use. For example, it might trouble some people to discover that (as of this writing) 95 percent of all ATMs still rely on Windows XP as an operating system (see http://info.rippleshot.com/blog/windows-xp-still-running-95-percent-atms-world). The US Navy is still using Windows XP on 100,000 desktops (see http://arstechnica.com/information-technology/2015/06/navy-re-ups-with-microsoft-for-more-windows-xp-support/). Yes, old, archaic, creaky software still exists out there and you might find it used to run your application.

According to NetMarketShare (http://www.netmarketshare.com/operating-system-market-share.aspx?qprid=10&qpcustomd=0), Windows XP still powers upwards of 14.6 percent of the systems out there. It’s important to realize that your main concern when creating an application may not be the shiny new smartphone with the updated, fully patched operating system and the latest in browser technology. The real point of concern may be that creaky old Windows XP system loaded with Internet Explorer 8 that your users insist on using. It’s interesting to note that Internet Explorer 8 still commands about 25 percent of the desktop market share (see https://www.netmarketshare.com/browser-market-share.aspx?qprid=2&qpcustomd=0).

Of course, you can always attempt to force users to upgrade. However, if you’ve dealt with the whole BYOD phenomenon for long enough, you know that users will simply ignore you. Yes, they might be able to show you a shiny new system, but they’ll continue to use the system they like: the older clunker that’s causing your application major woe.

About the only effective means you have of dealing with outdated equipment is to check the browser data during requests. Doing so lets you choose whether to allow the request to succeed. When the browser is too old, you can simply display a message telling the user to upgrade their equipment. The article at http://sixrevisions.com/javascript/browser-detection-javascript/ describes how to perform browser version checking. You can see your actual browser information at https://www.cyscape.com/showbrow.asp. The only problem with this approach is that it’s possible to thwart the check in some cases and some browsers also report incorrect version information.
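The check can also run on the server by inspecting the User-Agent header that accompanies each request. The Python sketch below looks only for Internet Explorer. The minimum version is an assumed policy and the function names are hypothetical. Because the client controls this header, the result is a hint rather than a guarantee.

```python
import re

MINIMUM_IE_VERSION = 9  # assumed policy for this example


def internet_explorer_version(user_agent):
    """Return the IE major version found in a User-Agent header, or None."""
    # IE 10 and earlier identify themselves as "MSIE <version>".
    match = re.search(r"MSIE (\d+)", user_agent)
    if match:
        return int(match.group(1))
    # IE 11 dropped "MSIE" and reports "Trident/7.0; rv:11.0" instead.
    match = re.search(r"Trident/.*rv:(\d+)", user_agent)
    if match:
        return int(match.group(1))
    return None


def is_browser_too_old(user_agent):
    """True when the request comes from an IE version below the minimum."""
    version = internet_explorer_version(user_agent)
    return version is not None and version < MINIMUM_IE_VERSION
```

When is_browser_too_old() returns True, the application can answer with the upgrade message instead of the requested page.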

Relying on User Testing

Nothing can test software in a more violent and unpredictable manner than a user. Most developers have seen users try to make software do things that it quite obviously shouldn’t because the user doesn’t realize the software shouldn’t do that. It’s this disconnect between what the software appears to do and what the user makes it do that provides the serious testing that only a user can provide.

The important part of the process is the part that will cause the most pain to developers. Any attempt to create an organized approach to user testing will only result in testing failures. Users need to have the freedom to play with the software. Actually, play time is good for everyone, but it’s an essential element of user testing. The following sections describe how you can get the user to play with your software and come up with those serious security deficiencies that only users (and apparently some hackers) seem to find.

Interestingly enough, you don’t actually have to perform your own user testing any longer. If you really want a broad base of test users, but don’t have the resources to do it yourself, you can always rely on sites such as User Testing (http://www.usertesting.com/). Theoretically, the site will provide you with honest evaluations of your application in as little as an hour (although one quote provided on the main page said the results were delivered in 20 minutes). Most of these third party testing sites offer web application, mobile application, and prototype testing.

A few third party testers, such as Applause (http://www.applause.com/web-app-testing), specifically offer “in the wild” testing where your application actually sees use on the Internet from unknown users, just as it would in the production environment. Of course, the difference is that the testing occurs without any risk to your equipment and Applause provides the tools required to obtain measurable results. In general, you would want to save this level of testing for a time when your application is almost ready for release and you want to perform a sanity check.

Letting the User Run Amok

Sometimes the best way to test your application is to give your user a set of printed steps and a goal. Watching how the user interacts with the application can tell you a lot about how the user perceives the application and where you need to make changes. Actually videotaping the user at work can be helpful, but you need to be aware that the act of videotaping will change the user’s behavior. Keylogging and other techniques are also helpful in keeping track of what the user does with the application without actually standing over the top of the user to observe (which would definitely change user behavior and your testing would fail).

Fortunately, you don’t have to rely on just the resources you have at hand. Sites such as Mashable (http://mashable.com/2011/09/30/website-usability-tools/) provide you with a wealth of testing tools you can use to check your web application for problems. The site documents the tools well and tells you why each tool is important. Most importantly, the site helps you understand the importance of specific kinds of testing that you might not have considered. For example, Check My Colours (http://www.checkmycolours.com/) verifies that people with various visual needs can actually see your site. Yes, using the wrong colors really can be a problem and testing for that issue can help you avoid potential security problems caused by user error.

Another good place to find testing tools is The Daily Egg (http://blog.crazyegg.com/2013/08/08/web-usability-tools/). Some of the tools on this site are the same as those on the Mashable site, but you obtain additional insights about them. A few of the tools are unique. For example, the list of page speed testing tools is better on The Daily Egg and the list includes GTMetrix (http://gtmetrix.com/), which can help you locate the actual source of slowdowns on your page.

Developing Reproducible Steps

Part of user testing is to obtain a set of reproducible steps. Unless you gain this kind of information, you can’t really fix application issues that cause problems. This is the reason that you need specific testing software that records what the user does in detail. Asking the user to reproduce the steps that led to an error will never work. In many cases, the user has no idea of how the error occurred and simply feels that the computer doesn’t like them. Trying to get the user to reproduce the steps later will likely lead to frustration and cause the user to dislike the application (which usually leads to more errors and more security problems). Therefore, you need to get the steps required to reproduce an error on the first try.
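To give a sense of what such recording involves, here is a minimal Python sketch of a step recorder. The class and field names are hypothetical, and commercial testing tools capture far more detail. The idea is simply to keep an ordered, timestamped list of actions that someone can replay later. A real recorder would also need to avoid capturing passwords and other sensitive input.

```python
import json
import time


class StepRecorder:
    """Collect an ordered, timestamped list of user actions for later replay."""

    def __init__(self, clock=time.time):
        self._clock = clock  # injectable so tests can use a fixed time
        self.steps = []

    def record(self, action, target, value=None):
        """Append one user action, numbering the steps from 1."""
        self.steps.append(
            {
                "n": len(self.steps) + 1,
                "time": self._clock(),
                "action": action,
                "target": target,
                "value": value,
            }
        )

    def dump(self):
        """Serialize the steps so they can be attached to a bug report."""
        return json.dumps(self.steps, indent=2)
```

Calling record() from each user interface event handler yields a list that a developer can follow step by step, without asking the user to remember anything.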

In order to create an environment where you can obtain the best level of testing and also ensure that the user has a good chance of finding potential errors, you need to perform specific kinds of testing. Letting the user run amok to see what they can do without any sort of input is useful, but the chaos hardly produces predictable results. When working through user testing, you need to consider these kinds of tests:

• Functionality: It’s important to test all of the application features. This means asking the users to try out forms, perform file manipulation and calculation tasks, search for information using application features, and try out any media features your application provides. As the user tests these various features, make sure that the tests also check out the libraries, APIs, and microservices that your application relies upon to perform most tasks.

• User Interface and Usability: The user interface must keep the user engaged and provide support for anyone with special needs. As part of this level of testing, you need to check navigation, accessibility, usefulness from multiple browser types, error messages and warnings, help and any other documentation you provide, and layouts.

• Security: Even though you have tested the application to determine whether it suffers from any of the common security breaches listed in the “Defining the Most Common Breach Sources” section of this chapter, you need to have the user test for them as well. See if the user can get the application to break in the same ways that you did. Look for ways in which the user’s method of interacting with the application creates new breach conditions.

• Load and Scalability: It’s impossible for you to test an application fully to determine how it acts under load. You need to ensure that the application scales well and that its performance degrades gracefully as load increases. However, most importantly, you need to verify that load doesn’t cause issues where a security breach can occur. It’s important to know that the application will continue to work properly no matter how much load you apply to it. Of course, the application will run more slowly when the load exceeds expectations, but that’s not the same as actually breaking—causing a failure that could let someone in.

Giving the User a Voice

Interviewing a user or allowing users to discuss the application in a gripe session after working with it is a good way to discover more potential problems with the application. In many cases, a user will be afraid to perform certain steps due to the perception that the application will break. This problem occurs even in a test environment where the user is supposed to break the application. You may not want to hear what the users have to say, but it’s better to hear it during the development stage than to put the application into production and find out that it has major issues later.

As an alternative to confrontational approaches to obtaining user input, you can also rely on surveys. An anonymous survey could help you obtain information that the user might otherwise shy away from providing. It’s important to consider the effects of stress and perceived risk on the quality of user input you receive.

Using Outside Security Testers

Penetration testing relies on the services of a third party to determine whether an application, site, or even an entire organization is susceptible to various kinds of intrusion. The attacker probes defenses using the same techniques that a hacker uses. Because the attacker is a security professional, the level of testing is likely better than what an organization can provide on its own. Most organizations use outside security testing services for these reasons:

• Locating the vulnerabilities missed by in-house audits

• Providing customers and other third parties with an independent audit of the security included with an application

• Ensuring the security testing is complete because the organization doesn’t have any security professionals on staff

• Validating the effectiveness of incident management procedures

• Training the incident handling team

• Reducing security costs for the organization as a whole

It’s a bad idea to allow someone to penetration test your application, site, or organization without having legal paperwork in place, such as a Non-Disclosure Agreement (NDA). Don’t assume that you can trust anyone, especially not someone who is purposely testing the security of your setup. Make sure you have everything in writing and that there is no chance of misunderstanding from the outset.

Considering the Penetration Testing Company

Of course, like anything, penetration testing comes with some risks. For example, it’s likely that you expect the third party tester to be completely honest with you about the vulnerabilities found in your application, but this often isn’t the case. In some cases, the third party fails to document the vulnerabilities completely, but in other cases, the penetration tester might actually be sizing your company up to determine whether a real intrusion would be a good idea. It’s important to ensure you deal with a reputable security tester and verify the types of services rendered in advance. You should spend more time checking your security services if you experience:

• Disclosure, abuse, or loss of sensitive information obtained during a penetration test

• Missed vulnerabilities or weaknesses

• Impacted availability of the target application or site

• Testing that doesn’t occur in a timely manner

• Reporting that is overly technical and hard to comprehend

• Project management that looks disorganized, rushed, or ill-considered

When it comes to penetration testing, you tend to get what you pay for. Your organization must consider the tradeoffs between cost, timeliness, and quality of testing when hiring a third party to perform penetration testing. The less you pay, the more you tend to wait, the less you get for your money, and the more likely it is that something negative will happen as a result of the testing.

In some cases, a tradeoff in amount of testing works better than other tradeoffs do. Testing just one application, rather than the entire site, will cost less and you’ll be able to hire a higher quality security firm to perform the task. Of course, if you test just one application, you can’t check for issues such as trust relationships between the target and other systems.

Managing the Project

Before you allow anyone to perform penetration testing, you need a proposal that outlines the scope, objectives, and methods of testing. Any penetration testing must include social engineering attacks because most hackers employ them. In fact, the people in your organization are the weakest link in your security. An application can provide nearly perfect security, but a single disclosure by the wrong person can thwart all your attempts at maintaining a secure environment.

Ensure that the testing methodology is well-documented and adheres to industry best practices. For example, many security firms adhere to the Open Source Security Testing Methodology Manual (OSSTMM) (check out the Institute for Security and Open Methodologies, ISECOM, site for details at http://www.isecom.org/). If the tester uses some other methodology, make sure you understand precisely what that methodology is and what sorts of benefits it provides.

The proposal should state what type of feedback you receive as part of the testing. For example, it’s important to decide whether the penetration testing includes full disclosure with a demonstration of precisely how the system is penetrated, or whether the report simply indicates that a particular area is vulnerable to attack.

Defining the time of the attack is also important. You might not want to allow testing during the busiest time of the day to avoid risking sales. On the other hand, you may need to allow testing at less convenient times if you truly want to see the effects of load on the system.

Covering the Essentials

Any organization you engage for penetration testing should have a single point of contact. It’s important that you be able to reach this contact at any time. The leader of your development team and the penetration team contact should be able to contact each other at any time to stop testing should the need arise. The contact should also have full details on precisely how the testing is proceeding and specifics about any potential issues that might occur during the current testing phase.

You should also know whether the tester has liability insurance to cover any losses incurred during testing. The liability insurance should include repayment of time invested in recovering data, down time for the system, and any potential loss of revenue that your organization might incur.

The testing team must also demonstrate competency. You don’t want just anyone penetration testing your system. The team that appears in the proposal should also be the team that does the actual testing. Look for certifications such as:

• GIAC Certified Incident Handler (GCIH)

• Certified Ethical Hacker (CEH)

• OSSTMM Professional Security Tester (OPST)

Getting the Report

The results of any penetration testing appear as a report. To ensure you get a report that you can actually use, make sure you request an example report before testing begins. The report should include a summary of the testing, details of any vulnerabilities, a risk assessment, and details of all the actions the penetration testing involves. The report must contain enough information that you can actually fix the problems found during testing, but still be readable by management staff so you get the time and resources required to perform the fixes.

Along with the report, you should also receive log files of every action taken during the testing phase. The log files should show every packet sent and received during testing so that you can go back later to follow the testing process step-by-step.

The security company you hire should also keep an archive of the testing process. You need to know how they plan to maintain this archive. Part of the reason for checking into the archive process is to ensure your confidential data remains confidential. It’s important that the archive appear as part of off-line storage, rather than a network drive on the vendor’s system.