MySQL Stored Procedure Programming (2009)

Part II. Stored Program Construction

Chapter 8. Transaction Management

A transaction is a set of one or more SQL statements that are logically grouped together and that must be either applied to the database in their entirety or not applied at all.

Consider the commonly cited example of a funds transfer from one account to another. In its simplest form, this transfer will involve two UPDATE statements: one to reduce the account balance in the "from" account, and another to increase the account balance in the "to" account. Suppose that the "from" account has been updated, but then the change to the "to" account cannot be completed. We must be sure to undo that first update, or the money that was to be transferred will have, in effect, "disappeared."

We expect database transactions to conform to the ACID principle, which means that transactions should be:

Atomic

The transaction is indivisible—either all the statements in the transaction are applied to the database, or none are.

Consistent

The database remains in a consistent state before and after transaction execution.

Isolated

While multiple transactions can be executed by one or more users simultaneously, one transaction should not see the effects of other concurrent transactions.

Durable

Once a transaction is saved to the database (an action referred to in database programming circles as a commit), its changes are expected to persist. Even if the user turns off her computer or the database server goes down, the changes will be saved. This usually means that the result of the transaction must be written to a nonvolatile form of storage, such as a hard disk (alternatively, it could be redundantly stored in multiple memory stores, written to battery-backed memory, or written to solid state disk).

Stored programs provide an excellent mechanism for defining, encapsulating, and managing transactions. Without the features available in stored programs, the calling program would need to issue the relevant SQL statements for the transaction and provide the logic to control locking and handle transaction failure. With MySQL stored program support, we can now encapsulate the multiple, interdependent SQL statements of the transaction into a single stored program. The application code, such as a PHP program, calls the stored program and transfers the responsibility for transaction management to the program executing in the database server.

In this chapter we review transactional support in MySQL and show how to create a transaction within a stored program. We also discuss how to deal with common transaction-related issues, such as lock timeouts, deadlocks, and locking strategies. We conclude by providing a general-purpose set of guidelines for transaction design.

Transactional Support in MySQL

MySQL is virtually unique among modern relational databases in that transactions are not mandatory. Under certain circumstances, they are not even possible. In fact, with MySQL, transactional support is a property not of the MySQL server itself, but of the underlying storage engine employed. Currently, the two most popular storage engines used with MySQL are MyISAM and InnoDB, although a small number of users use BerkeleyDB:

MyISAM

MyISAM does not support transactions. Using a nontransactional storage engine is fine for certain applications, in particular those that are overwhelmingly read-only. Certainly, if you do not need to manage transactions, you can improve the performance of some applications by avoiding the overhead associated with transaction management. If, on the other hand, you are building an application with a significant volume of updates, especially concurrent updates, you will probably want to avoid MyISAM and instead rely on a transactional engine.

InnoDB

InnoDB is the most popular transaction-safe MySQL storage engine. It supports ACID transactions as well as row-level locking and multiversion concurrency.

Berkeley DB

This storage engine also supports transactions but is currently less widely used than InnoDB.

In a survey conducted by MySQL AB (http://dev.mysql.com/tech-resources/quickpolls/storage-engines.html), about 60% of respondents reported using MyISAM as their primary storage engine, while 37% used InnoDB and about 1% used BerkeleyDB. However, these figures are likely to change over the next few years, as MySQL AB releases additional storage engine types, many of which will be transactional.

Tip

This chapter assumes that you are using a transactional storage engine such as InnoDB or BerkeleyDB.

First, we need to discuss the concept of isolation levels and sessions.

Isolation Levels

Before we can talk sensibly about transactions and isolation levels, we need to be clear on the concept of a session. A database session is a unique connection to the database that commences when you log on to MySQL and that terminates when you disconnect—either explicitly or when MySQL notices that your client program has "gone away."

Every session has its own memory areas and, more importantly, can hold locks on data or have a unique view of certain data. Isolation levels determine the degree to which transactions in one session may affect the data seen or accessed by another session. All isolation levels are compromises between concurrency (the ability for multiple sessions to perform operations on the database at the same time) and consistency (the degree to which a session sees a logical and correct view of the data regardless of what activities might be going on in other sessions).

The isolation level of a transaction also determines the degree to which that transaction conforms to the ACID properties described at the beginning of this chapter. Each of the four isolation levels represents a different balance between the isolation and concurrency of transactions. At the highest isolation levels, very few transactions will be able to execute concurrently, but the chances of one transaction interfering with another will be minimized. At the lower isolation levels, many transactions will be able to execute concurrently, but the chances of conflicts between transactions will be higher.

The ANSI standard defines four isolation levels, all of which are supported by MySQL when using the InnoDB engine:

READ UNCOMMITTED

This is the lowest possible isolation level. Sometimes called dirty read, this level permits a transaction to read rows that have not yet been committed. Using this isolation level might improve performance, but the idea of one user retrieving data changed by another user, which might not actually be committed, is usually unacceptable.

READ COMMITTED

At this isolation level, only committed rows can be seen by a transaction. Furthermore, any changes committed after a statement commences execution cannot be seen. For example, if you have a long-running SELECT statement in session A that queries from the BOOKS table, and session B inserts a row into BOOKS while A's query is still running, that new row will not be visible to the SELECT.

REPEATABLE READ

At this isolation level, changes made to the database by other sessions after the transaction has begun cannot be seen within the transaction until it is committed or rolled back (cancelled). (Strictly speaking, InnoDB establishes the transaction's snapshot at its first consistent read rather than at START TRANSACTION itself.) This means that if you re-execute a SELECT within your transaction, it will always show the same results, other than changes made by the transaction itself.

SERIALIZABLE

At this isolation level, every transaction is completely isolated so that transactions behave as if they had executed serially, one after the other. In order to achieve this, the RDBMS will typically lock every row that is read, so other sessions may not modify that data until the transaction is done with it. The locks are released when you commit or cancel the transaction.

You can change the isolation level in your MySQL session with the SET statement:

SET TRANSACTION ISOLATION LEVEL {READ UNCOMMITTED | READ COMMITTED |
                                 REPEATABLE READ | SERIALIZABLE}

Under normal circumstances, you should avoid changing the transaction isolation level from the default of REPEATABLE READ. In particular, think carefully before setting the isolation level to READ UNCOMMITTED or SERIALIZABLE. READ UNCOMMITTED can lead to serious problems with the integrity of the data returned by the SELECT statement, while SERIALIZABLE will have a noticeable, negative effect on performance and can also increase the chance of "deadlocks" (described later in this chapter).

Transaction Management Statements

Use the following transaction management statements in MySQL stored programs:

START TRANSACTION

Signifies the commencement of a new transaction. If an existing transaction is already in progress, then START TRANSACTION will issue an implicit COMMIT. When you issue START TRANSACTION, the autocommit property (described in the next section) is effectively and implicitly set to 0 until the transaction ends. We recommend that you explicitly commit or roll back existing transactions before any START TRANSACTION statements, since the implicit COMMIT might not be obvious to someone reading or maintaining your code.

COMMIT

Saves all changes made in the transaction to the database and then terminates a transaction. COMMIT also releases any locks that might be in effect, whether they are explicit locks from FOR UPDATE or LOCK TABLES or implicit locks acquired as a result of executing DML statements.

ROLLBACK

Undoes any changes to the database made by the transaction and then terminates that transaction. Like COMMIT, ROLLBACK releases any locks held by the transaction.

SAVEPOINT savepoint_name

Creates a named savepoint identifier that can be the target of a ROLLBACK TO SAVEPOINT statement.

ROLLBACK TO SAVEPOINT savepoint_name

Performs a rollback of all statements that have been executed since the specified savepoint was created. In this way, you can roll back only part of a transaction, preserving the rest of your changes so that they can still be committed. You may find savepoints useful when you need to save part of your work after an error has occurred. See the section "Working with Savepoints" later in this chapter for more details.

SET TRANSACTION

Allows you to change the isolation level of your transaction. See the section "Isolation Levels" earlier in this chapter for more details.

LOCK TABLES

Allows you to explicitly lock one or more tables. Note that LOCK TABLES implicitly commits any currently open transaction. We recommend that you explicitly commit or roll back your transaction before any LOCK TABLES statements. We rarely want to lock entire tables in the normal course of transaction processing, so we don't usually include LOCK TABLES statements in our transactional code.

Defining a Transaction

The default behavior of MySQL is to perform a COMMIT after the execution of each individual SQL statement, effectively turning every statement into an individual transaction. This approach is inadequate for most complex applications.

To enable transactions, allowing multiple SQL statements to be executed before a COMMIT or ROLLBACK is performed, you must take one of the following two steps:

§ Set the MySQL autocommit property or variable to 0. The default setting for AUTOCOMMIT is 1.

§ Explicitly initiate a transaction with the START TRANSACTION statement.

Since it is dangerous to assume that the MySQL environment is running with the necessary transaction setting, you should generally include either a SET AUTOCOMMIT=0 or START TRANSACTION statement in any transactional stored program.

The SET autocommit=0 statement simply ensures that MySQL will not implicitly issue a COMMIT after every SQL statement. Note, however, that if you have already initiated a transaction, issuing SET autocommit=0 will have no effect. START TRANSACTION, on the other hand, implicitly commits any currently outstanding changes in your session, terminating the existing transaction and starting a new one.
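To make the difference between autocommit and an explicit transaction concrete, here is a minimal sketch in Python. It uses the standard-library sqlite3 module as a stand-in for MySQL (an assumption for illustration only: SQLite is a different engine and this is not a MySQL client API). Opening the connection with isolation_level=None stops the driver from issuing its own BEGIN statements, so SQLite commits each statement as it completes, much like MySQL with autocommit=1, until BEGIN (SQLite's equivalent of START TRANSACTION) is issued. The table and balances are hypothetical.

```python
import sqlite3


def get_balance(conn, account_id):
    row = conn.execute(
        "SELECT balance FROM account_balance WHERE account_id = ?",
        (account_id,),
    ).fetchone()
    return row[0]


def autocommit_demo():
    # isolation_level=None: the sqlite3 driver issues no implicit BEGIN,
    # so every statement is committed as soon as it completes.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE account_balance ("
        "account_id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO account_balance VALUES (1, 1000)")

    # Autocommit: the UPDATE is already committed, so no transaction is open.
    conn.execute(
        "UPDATE account_balance SET balance = balance - 100 WHERE account_id = 1"
    )
    after_autocommit = (conn.in_transaction, get_balance(conn, 1))

    # Explicit transaction: BEGIN suspends autocommit until COMMIT or ROLLBACK.
    conn.execute("BEGIN")
    conn.execute(
        "UPDATE account_balance SET balance = balance - 100 WHERE account_id = 1"
    )
    inside_txn = (conn.in_transaction, get_balance(conn, 1))
    conn.execute("ROLLBACK")  # undoes only the second UPDATE
    after_rollback = (conn.in_transaction, get_balance(conn, 1))

    conn.close()
    return after_autocommit, inside_txn, after_rollback


print(autocommit_demo())  # ((False, 900), (True, 800), (False, 900))
```

The first UPDATE cannot be undone because it was committed the moment it finished; only the work done between BEGIN and ROLLBACK is reversed.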

We recommend that you leave nothing to chance when programming transactions in MySQL stored programs. Therefore, we suggest that you always explicitly commence a transaction with a START TRANSACTION statement and explicitly end your transaction with a COMMIT or ROLLBACK.

Tip

Wherever possible, explicitly define the beginning and end of every transaction with START TRANSACTION and COMMIT/ROLLBACK statements. Place the START TRANSACTION statement at the beginning of your transaction, and terminate it with either COMMIT or ROLLBACK. If your program ends with conditional logic as part of its error handling, you may, in fact, need to use both of these statements, in different branches of your IF or CASE statement.

Example 8-1 shows a transaction implemented in a stored procedure using a SET AUTOCOMMIT statement.

Example 8-1. Commencing a transaction using SET AUTOCOMMIT

CREATE PROCEDURE tfer_funds
    (from_account int, to_account int, tfer_amount numeric(10,2))
BEGIN
    SET autocommit=0;
    UPDATE account_balance
       SET balance=balance-tfer_amount
     WHERE account_id=from_account;
    UPDATE account_balance
       SET balance=balance+tfer_amount
     WHERE account_id=to_account;
    COMMIT;
END;

Example 8-2 defines the same transaction using START TRANSACTION.

Example 8-2. Commencing a transaction using START TRANSACTION

CREATE PROCEDURE tfer_funds
    (from_account int, to_account int, tfer_amount numeric(10,2))
BEGIN
    START TRANSACTION;
    UPDATE account_balance
       SET balance=balance-tfer_amount
     WHERE account_id=from_account;
    UPDATE account_balance
       SET balance=balance+tfer_amount
     WHERE account_id=to_account;
    COMMIT;
END;
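The following sketch reproduces the logic of Example 8-2 from a Python client, again with the standard-library sqlite3 module standing in for MySQL (an assumption for illustration; SQLite's BEGIN plays the role of START TRANSACTION). The fail_after_debit flag is a hypothetical switch that simulates the second UPDATE failing, so you can compare the outcome with and without the enclosing transaction.

```python
import sqlite3


def make_accounts():
    # In-memory SQLite database standing in for the chapter's MySQL table.
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE account_balance ("
        "account_id INTEGER PRIMARY KEY, balance INTEGER NOT NULL)"
    )
    conn.execute("INSERT INTO account_balance VALUES (1, 1000), (2, 2000)")
    return conn


def balances(conn):
    return dict(conn.execute(
        "SELECT account_id, balance FROM account_balance ORDER BY account_id"))


def tfer_funds(conn, from_account, to_account, amount,
               use_transaction=True, fail_after_debit=False):
    """Python analogue of Example 8-2; fail_after_debit is hypothetical."""
    if use_transaction:
        conn.execute("BEGIN")
    try:
        conn.execute("UPDATE account_balance SET balance = balance - ? "
                     "WHERE account_id = ?", (amount, from_account))
        if fail_after_debit:
            raise RuntimeError("simulated failure of the second UPDATE")
        conn.execute("UPDATE account_balance SET balance = balance + ? "
                     "WHERE account_id = ?", (amount, to_account))
    except (RuntimeError, sqlite3.Error):
        if use_transaction:
            conn.execute("ROLLBACK")  # undo the debit as well
        return False
    if use_transaction:
        conn.execute("COMMIT")
    return True


conn = make_accounts()
print(tfer_funds(conn, 1, 2, 100, fail_after_debit=True), balances(conn))
# False {1: 1000, 2: 2000}
print(tfer_funds(conn, 1, 2, 100, use_transaction=False,
                 fail_after_debit=True), balances(conn))
# False {1: 900, 2: 2000}
```

Without the transaction, the simulated failure leaves account 1 debited and account 2 untouched: the $100 has "disappeared," exactly as described at the start of this chapter.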

As we've said, transactions normally complete when either a COMMIT or a ROLLBACK statement is executed. However, be aware that some statements—usually Data Definition Language (DDL) statements—can cause implicit COMMITs. The statements that implicitly commit, and should therefore be avoided when a transaction is active, include the following:

| ALTER FUNCTION | ALTER PROCEDURE | ALTER TABLE |
| BEGIN | CREATE DATABASE | CREATE FUNCTION |
| CREATE INDEX | CREATE PROCEDURE | CREATE TABLE |
| DROP DATABASE | DROP FUNCTION | DROP INDEX |
| DROP PROCEDURE | DROP TABLE | UNLOCK TABLES |
| LOAD MASTER DATA | LOCK TABLES | RENAME TABLE |
| TRUNCATE TABLE | SET AUTOCOMMIT=1 | START TRANSACTION |

Working with Savepoints

Savepoints allow you to perform a partial rollback of the changes in your transaction. If you issue an unqualified ROLLBACK, all changes made in your current transaction are undone. If, however, you place a SAVEPOINT statement in your program, then you can roll back to that point in your program (and your transaction). In other words, any changes made before that statement can still be saved to the database with a COMMIT.

Generally, savepoints are intended to allow you to recover from a statement-level error without having to abort and restart your transaction. In these circumstances, the transaction includes one or more statements that might fail, yet should not force the invalidation of the entire transaction. Usually you will want to roll back to a savepoint, as part of handling the error, and then take the appropriate action, as indicated by the particular error that was raised.
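The mechanics can be sketched in a few lines of Python, using the standard-library sqlite3 module as a stand-in for MySQL (an assumption for illustration; SQLite accepts the same SAVEPOINT and ROLLBACK TO SAVEPOINT syntax for this simple case). The audit_log table and the savepoint name sp_demo are hypothetical.

```python
import sqlite3


def savepoint_demo():
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute("CREATE TABLE audit_log (audit_message TEXT)")

    conn.execute("BEGIN")
    conn.execute("INSERT INTO audit_log VALUES ('before savepoint')")
    conn.execute("SAVEPOINT sp_demo")
    conn.execute("INSERT INTO audit_log VALUES ('after savepoint')")

    # Undo only the work done since sp_demo; the transaction stays open.
    conn.execute("ROLLBACK TO SAVEPOINT sp_demo")
    still_open = conn.in_transaction

    conn.execute("COMMIT")  # commits the work done before the savepoint
    rows = [r[0] for r in conn.execute("SELECT audit_message FROM audit_log")]
    conn.close()
    return still_open, rows


print(savepoint_demo())  # (True, ['before savepoint'])
```

Note that ROLLBACK TO SAVEPOINT does not end the transaction; a COMMIT or a full ROLLBACK is still required.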

Example 8-3 demonstrates the use of a savepoint with a transaction that creates or updates a location record, and then creates or updates a department record that resides at that location:

Example 8-3. Example of a transaction that uses a savepoint

1  CREATE PROCEDURE savepoint_example(in_department_name VARCHAR(30),
2          in_location VARCHAR(30),
3          in_address1 VARCHAR(30),
4          in_address2 VARCHAR(30),
5          in_zipcode VARCHAR(10),
6          in_manager_id INT)
7  BEGIN
8      DECLARE location_exists INT DEFAULT 0;
9      DECLARE duplicate_dept INT DEFAULT 0;
10
11
12     START TRANSACTION;
13
14     -- Does the location exist?
15     SELECT COUNT(*)
16       INTO location_exists
17       FROM locations
18      WHERE location=in_location;
19
20     IF location_exists=0 THEN
21
22         INSERT INTO AUDIT_LOG (audit_message)
23         VALUES (CONCAT('Creating new location ',in_location));
24
25         INSERT INTO locations (location,address1,address2,zipcode)
26         VALUES (in_location,in_address1,in_address2,in_zipcode);
27     ELSE
28
29         UPDATE locations set address1=in_address1,
30                              address2=in_address2,
31                              zipcode=in_zipcode
32          WHERE location=in_location;
33
34     END IF;
35
36     SAVEPOINT savepoint_location_exists;
37
38     BEGIN
39         DECLARE DUPLICATE_KEY CONDITION FOR 1062;
40         DECLARE CONTINUE HANDLER FOR DUPLICATE_KEY /*Duplicate key value*/
41         BEGIN
42             SET duplicate_dept=1;
43             ROLLBACK TO SAVEPOINT savepoint_location_exists;
44         END;
45
46         INSERT INTO AUDIT_LOG (audit_message)
47         VALUES (CONCAT('Creating new department ',in_department_name));
48
49         INSERT INTO DEPARTMENTS (department_name,location,manager_id)
50         VALUES (in_department_name,in_location, in_manager_id);
51
52         IF duplicate_dept=1 THEN
53
54             UPDATE departments
55                SET location=in_location,
56                    manager_id=in_manager_id
57              WHERE department_name=in_department_name;
58         END IF;
59
60     END;
61
62     COMMIT;
63
64 END;

Here is an explanation of this complex transaction logic:

| Line(s) | Explanation |
|---|---|
| 12 | The START TRANSACTION statement denotes the start of the transaction. We can place this statement after our declarations, since they do not participate in any way in the transaction. |
| 15 | In this SQL statement we check to see if a matching location exists. |
| 20-26 | If the location does not exist (line 20), we insert an audit log record (lines 22-23) and then create the location (lines 25-26). |
| 29-32 | If the location already exists, we update it with the new details. |
| 36 | Whether or not the location existed in line 20, it definitely exists now, so we establish a savepoint indicating that we have gotten this much work done. |
| 39-44 | Define an error handler that will fire in the event of a duplicate key error (MySQL error 1062). If the handler is invoked, it sets the duplicate_dept variable, so that we can detect that the rollback has occurred, and then rolls back to our savepoint. You will find more information about handler logic in Chapter 6. |
| 46-50 | Insert an audit record and then insert a new department. If a department already exists with this name, the handler will fire, setting the duplicate_dept variable and rolling back to the savepoint. This partial rollback will undo the audit log entry for the new department, but will preserve the inserts or update executed to ensure that the location existed. |
| 52-58 | Check the duplicate_dept variable to see if there was a problem inserting the department. If so, then update the existing DEPARTMENTS record with the new information. |

Now that you have seen how to use the SAVEPOINT and ROLLBACK TO statements, we need to point out two undesirable side effects of this approach and then offer a restructuring of the program that renders savepoints unnecessary. These are the side effects:

§ The insert into the AUDIT_LOG table on line 46 will, indeed, be rolled back when the department cannot be inserted. However, the overhead of inserting and then rolling back that insert might not be trivial in a high-throughput environment.

§ The execution flow of the transaction is unclear. The rollback is defined in the handler on line 43, but actually will be triggered only when the insert fails on line 49. It is hard to tell just by looking at the INSERT statement what will happen, making it difficult to understand the overall logic of the transaction. It is, quite simply, more complicated than necessary.

We can rewrite this program to avoid the use of savepoints altogether (see Example 8-4). A hint of this approach was offered earlier in the procedure (lines 20-34): check to see if the record exists, then issue the INSERT or UPDATE as appropriate. The resulting logic is more straightforward, and actually reduces the number of SQL statements we need to code.

Example 8-4. Alternative to the SAVEPOINT implementation

CREATE PROCEDURE nosavepoint_example(in_department_name VARCHAR(30),
        in_location VARCHAR(30),
        in_address1 VARCHAR(30),
        in_address2 VARCHAR(30),
        in_zipcode VARCHAR(10),
        in_manager_id INT)
BEGIN
    DECLARE location_exists INT DEFAULT 0;
    DECLARE department_exists INT DEFAULT 0;
    START TRANSACTION;
    -- Does the location exist?
    SELECT COUNT(*)
      INTO location_exists
      FROM locations
     WHERE location=in_location;
    IF location_exists=0 THEN
        INSERT INTO AUDIT_LOG (audit_message)
        VALUES (CONCAT('Creating new location ',in_location));
        INSERT INTO locations (location,address1,address2,zipcode)
        VALUES (in_location,in_address1,in_address2,in_zipcode);
    ELSE
        UPDATE locations set address1=in_address1,
                             address2=in_address2,
                             zipcode=in_zipcode
         WHERE location=in_location;
    END IF;
    -- Does the department exist?
    SELECT COUNT(*)
      INTO department_exists
      FROM departments
     WHERE department_name=in_department_name;
    IF department_exists=1 THEN
        UPDATE departments
           SET location=in_location,
               manager_id=in_manager_id
         WHERE department_name=in_department_name;
    ELSE
        INSERT INTO AUDIT_LOG (audit_message)
        VALUES (CONCAT('Creating new department ',in_department_name));
        INSERT INTO DEPARTMENTS (department_name,location,manager_id)
        VALUES (in_department_name,in_location, in_manager_id);
    END IF;
    COMMIT;
END;
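The check-then-insert-or-update structure of Example 8-4 is not specific to stored programs. Here is a sketch of its department half as a Python function over the standard-library sqlite3 module, used as a stand-in for MySQL; the function names save_department and make_schema and the simplified schema are hypothetical. One caveat applies under concurrency: at InnoDB's default REPEATABLE READ level a plain SELECT COUNT(*) takes no locks, so two sessions could both see a count of 0; in this sketch the PRIMARY KEY on department_name would then reject the second INSERT with a duplicate-key error.

```python
import sqlite3


def make_schema():
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE departments ("
        "department_name TEXT PRIMARY KEY, location TEXT, manager_id INTEGER)"
    )
    conn.execute("CREATE TABLE audit_log (audit_message TEXT)")
    return conn


def save_department(conn, name, location, manager_id):
    """Insert or update one department inside a single transaction."""
    conn.execute("BEGIN")
    try:
        (exists,) = conn.execute(
            "SELECT COUNT(*) FROM departments WHERE department_name = ?",
            (name,),
        ).fetchone()
        if exists:
            conn.execute(
                "UPDATE departments SET location = ?, manager_id = ? "
                "WHERE department_name = ?",
                (location, manager_id, name),
            )
        else:
            # Audit only genuinely new departments: nothing to roll back later.
            conn.execute(
                "INSERT INTO audit_log VALUES (?)",
                ("Creating new department " + name,),
            )
            conn.execute(
                "INSERT INTO departments VALUES (?, ?, ?)",
                (name, location, manager_id),
            )
        conn.execute("COMMIT")
    except Exception:
        conn.execute("ROLLBACK")
        raise
```

Calling save_department twice with the same name writes a single audit row and leaves the second call's location and manager in place, with no savepoint needed.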

Tip

Savepoints can be used to partially roll back transactions in the event of an error. If you cannot achieve the same effect through the use of exception handlers and conditional logic, then savepoints may be required. Watch out, however, for SAVEPOINT-based implementations that result in unnecessary or needlessly complicated code.

One good use of savepoints is to implement "nested" transactions inside discrete stored programs. You may wish to implement a stored program that performs a small transaction without letting a rollback in that program abort any larger transaction that may be in progress. A savepoint is a good way to do this, since you can easily roll back only the statements that you have issued within the procedure. Example 8-5 shows a stored program that implements this approach.

Example 8-5. Example of a "nested" transaction using a savepoint

CREATE PROCEDURE nested_tfer_funds(
        in_from_acct   INTEGER,
        in_to_acct     INTEGER,
        in_tfer_amount DECIMAL(8,2))
BEGIN
    DECLARE txn_error INTEGER DEFAULT 0;
    DECLARE CONTINUE HANDLER FOR SQLEXCEPTION BEGIN
        SET txn_error=1;
    END;
    SAVEPOINT savepoint_tfer;
    UPDATE account_balance
       SET balance=balance-in_tfer_amount
     WHERE account_id=in_from_acct;
    IF txn_error THEN
        ROLLBACK TO savepoint_tfer;
        SELECT 'Transfer aborted ';
    ELSE
        UPDATE account_balance
           SET balance=balance+in_tfer_amount
         WHERE account_id=in_to_acct;
        IF txn_error THEN
            ROLLBACK TO savepoint_tfer;
            SELECT 'Transfer aborted ';
        END IF;
    END IF;
END;

The program in Example 8-5 creates a savepoint before issuing any DML statements. Should any errors occur, the program issues a rollback to that savepoint to ensure that the DML statements issued by the program—but only those statements—are reversed.
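The same "nested" pattern can be sketched from a Python client, with the standard-library sqlite3 module standing in for MySQL (an assumption for illustration; the helper make_accounts and the sample balances are hypothetical). The CHECK (balance >= 0) constraint is used only to make an overdraft raise an error; MySQL versions before 8.0.16 parse but ignore CHECK constraints, so a MySQL version would need another way to detect the overdraft. Unlike Example 8-5, the sketch also issues RELEASE SAVEPOINT so the savepoint does not linger; MySQL supports the same statement.

```python
import sqlite3


def make_accounts():
    conn = sqlite3.connect(":memory:", isolation_level=None)
    conn.execute(
        "CREATE TABLE account_balance (account_id INTEGER PRIMARY KEY, "
        "balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.execute("INSERT INTO account_balance VALUES (1, 500), (2, 2000)")
    conn.execute("CREATE TABLE audit_log (audit_message TEXT)")
    return conn


def nested_tfer_funds(conn, from_acct, to_acct, amount):
    """Transfer funds; on error undo only this function's own changes."""
    conn.execute("SAVEPOINT savepoint_tfer")
    try:
        conn.execute(
            "UPDATE account_balance SET balance = balance - ? WHERE account_id = ?",
            (amount, from_acct),
        )
        conn.execute(
            "UPDATE account_balance SET balance = balance + ? WHERE account_id = ?",
            (amount, to_acct),
        )
        ok = True
    except sqlite3.Error:
        # Roll back to the savepoint, not the caller's whole transaction.
        conn.execute("ROLLBACK TO SAVEPOINT savepoint_tfer")
        ok = False
    # Discard the savepoint. If the caller has no transaction open, this
    # commits; otherwise the caller's transaction simply stays open.
    conn.execute("RELEASE SAVEPOINT savepoint_tfer")
    return ok


conn = make_accounts()
conn.execute("BEGIN")  # the caller's larger transaction
conn.execute("INSERT INTO audit_log VALUES ('outer work')")
print(nested_tfer_funds(conn, 1, 2, 800))  # False: overdraft undone
print(nested_tfer_funds(conn, 1, 2, 200))  # True
conn.execute("COMMIT")
print(dict(conn.execute(
    "SELECT account_id, balance FROM account_balance ORDER BY account_id")))
# {1: 300, 2: 2200}
```

The failed transfer is reversed, but the caller's audit row and the later successful transfer are both committed.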

Transactions and Locks

The ACID properties of a transaction can only be implemented by restricting simultaneous changes to the database. This is achieved by placing locks on modified data. These locks persist until the transaction issues a COMMIT or ROLLBACK statement.

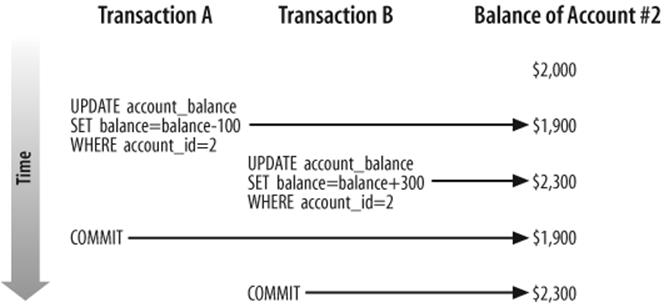

Without locks, a change made by one transaction could be overwritten by another transaction that executes at the same time. Consider, for example, the scenario shown in Figure 8-1, based on the tfer_funds procedure of Example 8-2. When two different sessions run this program for the same account number, we encounter some obvious difficulties if locks are not in place.

Figure 8-1. Illustration of a transaction without locking

In this scenario, account 2 starts with a balance of $2,000. Transaction A reduces the balance of the account by $100. Before transaction A commits, transaction B increases the account balance by $300. Because transaction B cannot see the uncommitted update made by transaction A, it increases the balance from $2,000 to $2,300. Because we allowed two transactions to simultaneously modify the same row, the database is now in an inconsistent state: the correct final balance is $2,200, but the end balance for the account will be the value set by whichever transaction commits last. If transaction B is the last to commit, then the owner of account 2 will have $100 more than she should. On the other hand, if transaction A commits last, the account owner will be $300 out of pocket!

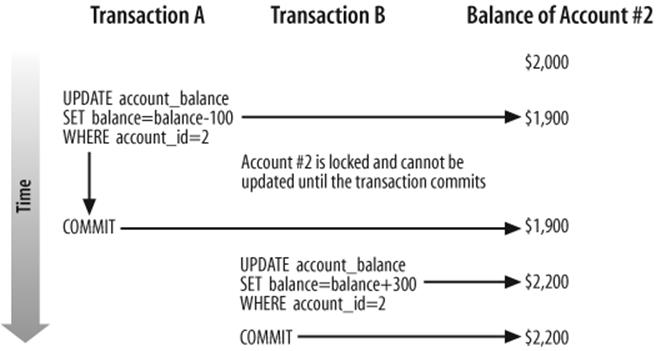

This clearly unacceptable result is completely avoidable when locks are placed on rows that have been changed, as is illustrated in Figure 8-2.

Now, when transaction A updates account 2, the relevant row is locked and cannot be updated by another transaction. Transaction B must wait for transaction A to be committed before its update can proceed. When transaction A commits, transaction B applies its update to the modified account balance, and the integrity of the account balance is maintained.

Figure 8-2. Illustration of a transaction with locking

The downside of this locking strategy is that transaction B must wait for transaction A to complete. The more programs you have waiting for locks to clear, the less throughput your transactional system will be able to support.
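The arithmetic behind Figures 8-1 and 8-2 fits in a few lines. This is a pure-Python model, not database code: each transaction computes its new balance from the value it read, and the function names are hypothetical.

```python
def final_balance_without_locks(start, debit, credit, last_to_commit):
    # Neither transaction sees the other's uncommitted change, so both
    # compute their new balance from the same starting value.
    written_by_a = start - debit
    written_by_b = start + credit
    # The row ends up holding whatever the last committer wrote.
    return written_by_a if last_to_commit == "A" else written_by_b


def final_balance_with_locks(start, debit, credit):
    # B blocks on A's row lock, then applies its change to A's committed result.
    after_a = start - debit
    return after_a + credit


print(final_balance_with_locks(2000, 100, 300))              # 2200
print(final_balance_without_locks(2000, 100, 300, "B"))      # 2300
print(final_balance_without_locks(2000, 100, 300, "A"))      # 1900
```

Only the locked version produces the correct $2,200; without locks, one of the two updates is always lost.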

MySQL/InnoDB minimizes lock contention by locking at the row level rather than locking entire tables. In our example, updates to other rows in the ACCOUNT_BALANCE table are able to proceed without restriction. Furthermore, with InnoDB, reads do not normally cause locks to occur, and readers do not need to wait for locks to be released before accessing data. Other transactional storage engines, and other RDBMSs, may behave differently.

You can, however, place locks on rows that have only been read by using the FOR UPDATE or LOCK IN SHARE MODE clause in the SELECT statement, and this is sometimes required to implement a specific locking strategy (see "Optimistic and Pessimistic Locking Strategies," later in this chapter).

In the following subsections we'll look at various types of locking situations, problems, and strategies.

Situations in Which Locks Arise

While it is possible for you to lock rows explicitly, you will generally rely on the storage engine to lock rows (or an entire table) implicitly, which it will do under the following circumstances:

§ When an UPDATE statement is executed, all rows modified will be locked.

§ An INSERT statement will cause any primary or unique key records to be locked. This will prevent a concurrent insert of a row with an identical primary key.

§ You can lock entire tables with the LOCK TABLES statement. This is not generally recommended, because it not only reduces concurrency but also operates above the storage engine layer, which means that the storage engine's deadlock-resolution mechanisms may be ineffectual.

§ If you use the FOR UPDATE or LOCK IN SHARE MODE clauses in a SELECT statement, all of the rows returned by that SELECT statement will be locked.

Locking rows as they are read is an important technique that we'll demonstrate in subsequent examples. To read and simultaneously lock a row, you include the FOR UPDATE or LOCK IN SHARE MODE clause in the SELECT statement, as follows:

SELECT select_statement_options
    [FOR UPDATE | LOCK IN SHARE MODE]

The two locking options differ in the following ways:

FOR UPDATE

When you use this clause, you acquire an exclusive lock on the row with the same characteristics as an UPDATE on that row. Only one transaction at a time can hold a FOR UPDATE lock on a given row; other locking SELECT statements (FOR UPDATE or LOCK IN SHARE MODE) and DML statements against that row will have to wait until the transaction ends.

LOCK IN SHARE MODE

When you use this clause, other sessions are prevented from applying DML to the row you have locked until your transaction ends. However, unlike FOR UPDATE, any number of SHARE MODE locks can be held on a single row simultaneously.
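The two clauses correspond to the classic shared (S) and exclusive (X) lock modes. Here is a small Python sketch of their compatibility rules, offered as a simplified model rather than InnoDB's actual lock manager: FOR UPDATE (like UPDATE or DELETE) requests X, LOCK IN SHARE MODE requests S, and a plain InnoDB SELECT requests no lock at all, so it does not appear here.

```python
SHARED = "S"      # LOCK IN SHARE MODE
EXCLUSIVE = "X"   # FOR UPDATE, UPDATE, DELETE


def compatible(held, requested):
    """True if `requested` can coexist with a lock `held` by another transaction."""
    # Only shared + shared coexist; anything involving X must wait.
    return held == SHARED and requested == SHARED


def can_grant(held_by_others, requested):
    """Grant only if the request is compatible with every lock others hold."""
    return all(compatible(held, requested) for held in held_by_others)


print(can_grant([SHARED, SHARED], SHARED))   # True: many share-mode readers
print(can_grant([SHARED], EXCLUSIVE))        # False: FOR UPDATE must wait
print(can_grant([EXCLUSIVE], SHARED))        # False: share mode must wait
print(can_grant([], EXCLUSIVE))              # True: row is unlocked
```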

Deadlocks

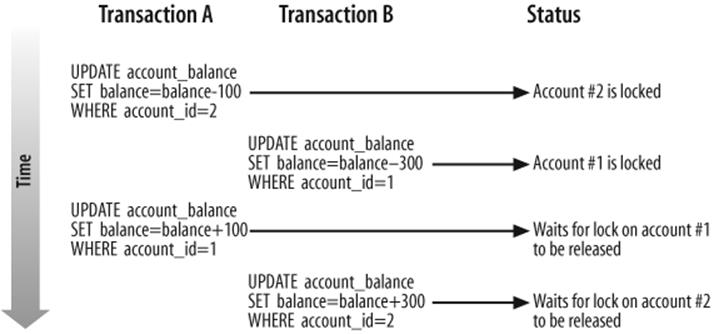

A deadlock occurs when two transactions are each waiting for the other to release a lock—they each block each other, and neither can proceed. For instance, consider the situation in which one transaction attempts to transfer $100 from account 2 to account 1. Simultaneously, another transaction attempts to transfer $300 from account 1 to account 2. If the timing of the two transactions is sufficiently unfortunate, then each may end up waiting for the other to release a lock, resulting in a stalemate that will never end. Figure 8-3 shows the sequence of events.

Figure 8-3. Sequence of events that leads to a deadlock condition

When MySQL/InnoDB detects a deadlock situation, it will force one of the transactions to roll back and issue an error message, as shown in Example 8-6. In the case of InnoDB, the transaction thus selected will be the transaction that has done the least work (in terms of rows modified).

Example 8-6. Example of a deadlock error

mysql> CALL tfer_funds(1,2,300);

ERROR 1213 (40001): Deadlock found when trying to get lock; try restarting transaction
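Deadlock detection is commonly described in terms of a wait-for graph: each blocked transaction points to the transaction holding the lock it needs, and a cycle means deadlock. The Python sketch below is a simplified model of that idea, not InnoDB's implementation. It assumes each transaction waits on at most one other, and it picks as victim the transaction with the fewest modified rows, following the rule described above. The transaction names and row counts are hypothetical.

```python
def find_deadlock(waits_for):
    """Return the transactions forming a cycle in the wait-for graph, or None.

    waits_for maps each blocked transaction to the one holding the lock it
    needs (simplification: one blocker per transaction).
    """
    for start in waits_for:
        path = []
        txn = start
        # Follow the chain of waits until it ends or revisits a transaction.
        while txn in waits_for and txn not in path:
            path.append(txn)
            txn = waits_for[txn]
        if txn in path:
            return path[path.index(txn):]
    return None


def choose_victim(cycle, rows_modified):
    """Roll back the transaction that has done the least work."""
    return min(cycle, key=lambda txn: rows_modified[txn])


# Figure 8-3 style scenario: T1 holds account 2 and wants account 1,
# T2 holds account 1 and wants account 2.
cycle = find_deadlock({"T1": "T2", "T2": "T1"})
print(cycle)                                      # ['T1', 'T2']
print(choose_victim(cycle, {"T1": 3, "T2": 1}))   # T2
```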

Deadlocks can occur in any database system, but in row-level locking databases like MySQL/InnoDB, the possibility of a deadlock is usually low. You can further reduce the frequency of deadlocks by locking rows or tables in a consistent order, and by keeping your transactions as short as possible.

If you are building (or debugging) an application in which deadlocks seem likely to occur, and you cannot reorganize your transactions to avoid them, you can add logic to your programs to handle deadlocks and retry the transaction.

Example 8-7 shows a modified version of the stored procedure in Example 8-2 that will retry its transaction up to three times in the event of a deadlock.

Example 8-7. Stored procedure with deadlock-handling logic

1  CREATE PROCEDURE tfer_funds2
2      (from_account INT, to_account INT,
3       tfer_amount numeric(10,2), OUT out_status INT,
4       OUT out_message VARCHAR(50))
5  BEGIN
6
7      DECLARE deadlock INT DEFAULT 0;
8      DECLARE attempts INT DEFAULT 0;
9
10     tfer_loop:WHILE (attempts<3) DO
11         BEGIN
12             DECLARE deadlock_detected CONDITION FOR 1213;
13             DECLARE EXIT HANDLER FOR deadlock_detected
14             BEGIN
15                 ROLLBACK;
16                 SET deadlock=1;
17             END;
18             SET deadlock=0;
19
20             START TRANSACTION;
21
22             UPDATE account_balance
23                SET balance=balance-tfer_amount
24              WHERE account_id=from_account;
25
26             UPDATE account_balance
27                SET balance=balance+tfer_amount
28              WHERE account_id=to_account;
29
30             COMMIT;
31
32         END;
33         IF deadlock=0 THEN
34             LEAVE tfer_loop;
35         ELSE
36             SET attempts=attempts+1;
37         END IF;
38     END WHILE tfer_loop;
39
40     IF deadlock=1 THEN
41         SET out_status=-1;
42         SET out_message='Failed with deadlock for 3 attempts';
43
44     ELSE
45         SET out_status=0;
46         SET out_message=CONCAT('OK (',attempts,' deadlocks)');
47     END IF;
48
49 END;

The error-handling techniques in Example 8-7 rely on statements introduced in Chapter 6. Here is a line-by-line explanation of the code:

| Line(s) | Explanation |
|---|---|
| 10 | Commence a WHILE loop that will control attempts to execute (and possibly re-execute) the transaction. The WHILE loop condition of (attempts<3) ensures that we will try no more than three times to complete this task. |
| 11 | Define an anonymous BEGIN block within the loop to contain the transaction. The END statement for this block appears on line 32. The block allows us to trap an error within the body of the loop without exiting the loop itself. |
| 12-18 | Prepare the block for the execution of the transaction. Define an EXIT handler and associate it with the deadlock error (MySQL error 1213). When a deadlock occurs, the handler will issue a ROLLBACK, set a variable indicating failure, and then terminate the block, while remaining within the loop. Line 18 resets that variable at the start of each attempt. |
| 20-30 | The SQL statements that make up the transaction for this program. |
| 33-37 | Determine if it is time to leave the loop or increment the counter. If a deadlock did not occur, the value of the deadlock variable is 0, so we use the LEAVE statement to terminate the WHILE loop. If deadlock equals 1, then the BEGIN-END block has terminated because of a deadlock, so we increment the attempts variable and (provided that attempts has not yet reached 3) allow the loop to re-execute the SQL statements and thereby retry the transaction. |
| 40-47 | On these lines we examine the deadlock and attempts variables to determine the final state of the transaction. If deadlock=1, then our most recent attempt to execute the transaction failed with a deadlock and, since we have tried three times, we terminate with an error. Otherwise, we signal a successful end to the transaction, although we note how many times we encountered a deadlock in the process. |

Going to this much effort to handle deadlocks will be overkill for most applications. Unless your application design is particularly vulnerable to deadlocks, you will encounter deadlocks so infrequently that you actually weaken your application by including so much hard-to-maintain deadlock-handling code.

As noted above, there are usually other ways to avoid deadlock scenarios. For instance, in Example 8-8 we lock the rows to be updated in numerical order before issuing any UPDATEs. Because the rows are always locked in the same order, one instance of this transaction should not cause a deadlock if another session runs the same program.

Example 8-8. Locking rows in order to avoid deadlock conditions

CREATE PROCEDURE tfer_funds3

(from_account INT, to_account INT,tfer_amount NUMERIC(10,2))

BEGIN

DECLARE local_account_id INT;

DECLARE lock_cursor CURSOR FOR

SELECT account_id

FROM account_balance

WHERE account_id IN (from_account,to_account)

ORDER BY account_id

FOR UPDATE;

START TRANSACTION;

OPEN lock_cursor;

FETCH lock_cursor INTO local_account_id;

UPDATE account_balance

SET balance=balance-tfer_amount

WHERE account_id=from_account;

UPDATE account_balance

SET balance=balance+tfer_amount

WHERE account_id=to_account;

CLOSE lock_cursor;

COMMIT;

END;
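The ordering rule behind Example 8-8 can also be shown outside the database. The following Python sketch is only an analogy: it models each account row as a threading.Lock (a stand-in for an InnoDB row lock), and the Bank class and run_opposing_transfers helper are hypothetical. Because transfer() always takes the lower-numbered account's lock first, two threads transferring in opposite directions cannot each hold one lock while waiting for the other.

```python
import threading


class Bank:
    """Toy model: one lock per account stands in for an InnoDB row lock."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.locks = {acct: threading.Lock() for acct in balances}

    def transfer(self, from_acct, to_acct, amount):
        if from_acct == to_acct:
            # Taking the same non-reentrant lock twice would block forever
            raise ValueError("from and to accounts must differ")
        # Acquire locks in ascending account order, mirroring
        # ORDER BY account_id ... FOR UPDATE in Example 8-8
        first, second = sorted((from_acct, to_acct))
        with self.locks[first], self.locks[second]:
            self.balances[from_acct] -= amount
            self.balances[to_acct] += amount


def run_opposing_transfers(bank, rounds=1000):
    """Two threads move funds between accounts 1 and 2 in opposite directions.

    Returns True only if both threads finished, i.e. neither got stuck.
    """
    def worker(src, dst):
        for _ in range(rounds):
            bank.transfer(src, dst, 1)

    threads = [
        threading.Thread(target=worker, args=(1, 2)),
        threading.Thread(target=worker, args=(2, 1)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join(timeout=10)
    return not any(t.is_alive() for t in threads)


if __name__ == "__main__":
    bank = Bank({1: 500, 2: 500})
    print(run_opposing_transfers(bank), bank.balances)
```

If transfer() instead locked from_acct first and to_acct second, the two threads could each grab one lock and wait for the other indefinitely; that is the situation InnoDB detects and resolves with error 1213.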

Lock Timeouts

A deadlock is the most severe result of locking. Yet, in many other situations, a program in one session may be unable to read or write a particular row because it is locked by another session. In this case, the program can, and by default will, wait for a certain period of time for the lock to be released. It will then either acquire the lock or time out. You can set the length of time a session will wait for an InnoDB lock to be released with the innodb_lock_wait_timeout configuration variable, which has a default of 50 seconds.

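When a timeout occurs, MySQL/InnoDB issues error code 1205, as shown in Example 8-9. By default, InnoDB then rolls back only the statement that timed out rather than the whole transaction (unless the innodb_rollback_on_timeout option is enabled), so your code should normally issue an explicit ROLLBACK before retrying.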

Example 8-9. Lock timeout error

mysql> SELECT * FROM account_balance FOR UPDATE;

ERROR 1205 (HY000): Lock wait timeout exceeded; try restarting transaction

So if you have very long-running transactions, you may want to increase the value of innodb_lock_wait_timeout or introduce error-handling code to cope with the occasional 1205 error.

In some circumstances—particularly when you mix MySQL/InnoDB and non-InnoDB tables in the same transaction (a practice we do not normally recommend)—MySQL/InnoDB may be unable to detect a deadlock. In such cases, the "lock wait timeout" error will eventually occur. If you are mixing MySQL/InnoDB and non-InnoDB tables, and you are particularly concerned about deadlocks, you may want to implement error-handling logic for lock timeouts similar to that implemented for deadlocks in Example 8-7.
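Example 8-7 retries inside the stored program, but the same bounded-retry logic can also live in the calling application and can cover error 1205 as well as 1213. Here is a minimal Python sketch; LockError and its errno attribute are hypothetical stand-ins for whatever exception and error-number attribute your MySQL driver actually provides. The transaction callable is assumed to roll back its own work before raising, which matters for 1205 in particular: after a lock wait timeout, InnoDB by default rolls back only the statement that timed out.

```python
RETRYABLE_ERRORS = {1205, 1213}  # lock wait timeout, deadlock


class LockError(Exception):
    """Hypothetical stand-in for a driver exception carrying a MySQL error number."""

    def __init__(self, errno):
        super().__init__(f"MySQL error {errno}")
        self.errno = errno


def run_with_retry(transaction, max_attempts=3):
    """Run transaction() up to max_attempts times, retrying only on lock errors.

    Returns (status, message) in the style of Example 8-7's OUT parameters.
    Any error number outside RETRYABLE_ERRORS is re-raised immediately.
    """
    failures = 0
    while True:
        try:
            transaction()
        except LockError as exc:
            if exc.errno not in RETRYABLE_ERRORS:
                raise
            failures += 1
            if failures >= max_attempts:
                return -1, f"Failed with error {exc.errno} for {failures} attempts"
        else:
            return 0, f"OK ({failures} lock errors)"
```

As in Example 8-7, the counter is reported on success, which gives some visibility into how often lock conflicts are actually occurring.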

Optimistic and Pessimistic Locking Strategies

If your transaction reads data that subsequently participates in an UPDATE, INSERT, or DELETE, you need to take steps to ensure that the integrity of your transaction is not jeopardized by the possibility of another transaction changing the relevant data between the time you read it and the time you update it.

For instance, consider the transaction in Example 8-10. This variation on our funds transfer transaction makes sure that there are sufficient funds in the "from" account before executing the transaction. It first queries the account balance and then takes an action depending on that value (the balance must be greater than or equal to the transfer amount).

Example 8-10. Funds transfer program without locking strategy

CREATE PROCEDURE tfer_funds4

(from_account int, to_account int,tfer_amount numeric(10,2),

OUT status int, OUT message VARCHAR(30))

BEGIN

DECLARE from_account_balance NUMERIC(10,2);

SELECT balance

INTO from_account_balance

FROM account_balance

WHERE account_id=from_account;

IF from_account_balance >= tfer_amount THEN

START TRANSACTION;

UPDATE account_balance

SET balance=balance-tfer_amount

WHERE account_id=from_account;

UPDATE account_balance

SET balance=balance+tfer_amount

WHERE account_id=to_account;

COMMIT;

SET status=0;

SET message='OK';

ELSE

SET status=-1;

SET message='Insufficient funds';

END IF;

END;

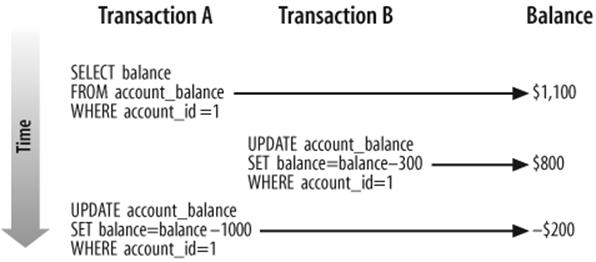

Unfortunately, as currently written, this program might, under the right circumstances, allow the "from" account to become overdrawn. Because some time elapses between the query that establishes the current balance and the update transaction that reduces that balance, another transaction could reduce the balance of the account within that window with its own UPDATE statement. This program's UPDATE would then cause a negative balance in the account.

Figure 8-4 shows the business policy violation that can result from a poor locking strategy. Transaction A determines that account 1 has sufficient funds before executing the transfer, but in the meantime transaction B has reduced the available funds by $300. When transaction A finally executes its update, the result is a negative balance.

Figure 8-4. Error resulting from a poor locking strategy

There are two typical solutions to this kind of scenario:

The pessimistic locking strategy

Assume that concurrent updates are quite likely to occur, and write programs to prevent them from happening. Generally, this means you will need to lock rows as they are read. Other transactions that want to update the row must wait until the "pessimistic transaction" ends.

The optimistic locking strategy

Assume that it is unlikely that anyone will update a row between the time we view it and the time we update it. Since we cannot be sure that this assumption is true, we must then, at the last possible moment, make sure that the row has not been updated. If the row has been updated, the transaction cannot be trusted and will have to be aborted.

Pessimistic locking strategy

Let's explore the pessimistic strategy first, with a simple example. We ensure that nobody modifies the balance of the "from" account by locking it with the FOR UPDATE clause as we retrieve the balance. We can now rest assured that when we issue our UPDATE statement, the balance of the account cannot have been altered. Example 8-11 shows how easy this is; all we needed to do was move the SELECT statement inside of the transaction and cause it to lock the rows selected with the FOR UPDATE clause.

Example 8-11. Pessimistic locking strategy

CREATE PROCEDURE tfer_funds5

(from_account INT, to_account INT,tfer_amount NUMERIC(10,2),

OUT status INT, OUT message VARCHAR(30))

BEGIN

DECLARE from_account_balance NUMERIC(10,2);

START TRANSACTION;

SELECT balance

INTO from_account_balance

FROM account_balance

WHERE account_id=from_account

FOR UPDATE;

IF from_account_balance>=tfer_amount THEN

UPDATE account_balance

SET balance=balance-tfer_amount

WHERE account_id=from_account;

UPDATE account_balance

SET balance=balance+tfer_amount

WHERE account_id=to_account;

COMMIT;

SET status=0;

SET message='OK';

ELSE

ROLLBACK;

SET status=-1;

SET message='Insufficient funds';

END IF;

END;

The pessimistic locking strategy usually results in the simplest and most robust code: code that ensures consistency between SELECT and DML statements within your transaction. The pessimistic strategy can, however, lead to long-held locks that degrade performance by forcing a large number of sessions to wait for the locks to be released. For instance, suppose that after you validate the account balance, you are required to perform some long-running validation; perhaps you need to check various other databases (credit checking, blocked accounts, online fraud, etc.) before finalizing the transaction. In this case, you may end up locking the account for several minutes, leading to disgruntlement if the customer happens to be trying to withdraw funds at the same time.
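The essence of the pessimistic strategy is that the lock is held from the moment the balance is checked until the update is committed. In this Python analogy (the Account class and concurrent_withdrawals helper are hypothetical, and a threading.Lock stands in for SELECT ... FOR UPDATE), several threads try to withdraw from the same account at once; because the check and the update happen under one lock, the balance can never go negative.

```python
import threading


class Account:
    """Toy account; row_lock plays the role of SELECT ... FOR UPDATE."""

    def __init__(self, balance):
        self.balance = balance
        self.row_lock = threading.Lock()

    def withdraw_pessimistic(self, amount):
        # Hold the lock from the balance check through the update,
        # as Example 8-11 does between FOR UPDATE and COMMIT
        with self.row_lock:
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False  # insufficient funds


def concurrent_withdrawals(account, amount, n_threads):
    """Start n_threads withdrawals at once; return the list of outcomes."""
    results = []
    results_lock = threading.Lock()

    def worker():
        ok = account.withdraw_pessimistic(amount)
        with results_lock:
            results.append(ok)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

The cost is visible too: every other thread waits while one holds row_lock, so any slow validation placed inside the with block would stall all of them, which is the long-held-lock problem described above.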

Optimistic locking strategy

The optimistic locking strategy assumes that it is unlikely that the row will be updated between the initial SELECT and the end of the transaction, and therefore does not attempt to lock that row. Instead, the optimistic strategy requires that we perform a check just before the update to ensure that the row has not been altered.

To detect if a row has been changed, we simply refetch the row—locking the row as we do so—and compare the current values with the previous values.

Example 8-12 demonstrates the optimistic locking strategy. If the account row has changed since the time of the initial balance check, the transaction is aborted (lines 33-38), although alternatively you could retry the transaction.

Example 8-12. Optimistic locking strategy

1 CREATE PROCEDURE tfer_funds6

2 (from_account INT, to_account INT, tfer_amount NUMERIC(10,2),

3 OUT status INT, OUT message VARCHAR(80) )

4

5 BEGIN

6

7 DECLARE from_account_balance NUMERIC(10,2);

8 DECLARE from_account_balance2 NUMERIC(10,2);

9 DECLARE from_account_timestamp1 TIMESTAMP;

10 DECLARE from_account_timestamp2 TIMESTAMP;

11

12 SELECT account_timestamp,balance

13 INTO from_account_timestamp1,from_account_balance

14 FROM account_balance

15 WHERE account_id=from_account;

16

17 IF (from_account_balance>=tfer_amount) THEN

18

19 -- Here we perform some long running validation that

20 -- might take a few minutes

21 CALL long_running_validation(from_account);

22

23 START TRANSACTION;

24

25 -- Make sure the account row has not been updated since

26 -- our initial check

27 SELECT account_timestamp, balance

28 INTO from_account_timestamp2,from_account_balance2

29 FROM account_balance

30 WHERE account_id=from_account

31 FOR UPDATE;

32

33 IF (from_account_timestamp1 <> from_account_timestamp2 OR

34 from_account_balance <> from_account_balance2) THEN

35 ROLLBACK;

36 SET status=-1;

37 SET message=CONCAT("Transaction cancelled due to concurrent update",

38 " of account ",from_account);

39 ELSE

40 UPDATE account_balance

41 SET balance=balance-tfer_amount

42 WHERE account_id=from_account;

43

44 UPDATE account_balance

45 SET balance=balance+tfer_amount

46 WHERE account_id=to_account;

47

48 COMMIT;

49

50 SET status=0;

51 SET message="OK";

52 END IF;

53

54 ELSE

55 ROLLBACK;

56 SET status=-1;

57 SET message="Insufficient funds";

58 END IF;

59 END$$

Optimistic locking strategies are often employed by transactions that involve user interaction, since there is sometimes the chance that a user will "go to lunch," leaving a pessimistic lock in place for an extended period. Since stored programs do not involve direct user interaction, optimistic strategies in stored programs are not required for this reason. However, an optimistic strategy might still be selected as a means of reducing overall lock duration and improving application throughput—at the cost of occasionally having to retry the transaction when the optimism is misplaced.

Choosing between strategies

Don't choose between optimistic and pessimistic strategies based on your personality or disposition. Just because your analyst assures you that you are a fairly fun-loving, optimistic guy or gal, that does not mean you should affirm this by always choosing the optimistic locking strategy!

The choice between the two strategies is based on a trade-off between concurrency and robustness: pessimistic locking is less likely to require transaction retries or failures, while optimistic locking minimizes the duration of locks, thus improving concurrency and transaction throughput. Usually, we choose optimistic locking only if the duration of the locks or the number of rows locked by the pessimistic solution would be unacceptable.

Transaction Design Guidelines

A well-designed transaction should have the following properties:

§ The integrity of the database will be maintained at all times.

§ The duration and coverage of locks will be minimized. Locks should be applied to as few rows as possible and maintained for the shortest possible duration.

§ Rollbacks will be minimal—transactions that eventually issue a rollback have needlessly consumed resources.

§ User expectations about the persistence of data will be met. For instance, a user who clicks a Save or Apply button has a reasonable expectation that the data will not disappear if he subsequently clicks Cancel on another page.

To achieve these goals, we recommend the following general guidelines for transaction design:

Keep transactions small

A transaction should generally include as small a logical unit of work as possible to reduce the duration of locks.

Avoid a transaction design that encourages rollbacks

For instance, rather than trying an insert and rolling back if there is a "duplicate key" error, check for the existence of the key value before issuing the DML.

Avoid savepoints whenever possible

The existence of a savepoint may indicate that you have failed to check for success criteria before issuing a DML statement and may indicate a transaction design that encourages rollbacks.

By default, rely on a pessimistic locking strategy

Lock rows that you SELECT if the results of the SELECT statement affect DML executed later in the transaction. Pessimistic locking is easy to implement and is a robust solution. However, issue SELECTs with FOR UPDATE as late in the transaction as possible to minimize duration of locks.

Consider optimistic locking for throughput-critical transactions

Optimistic locking requires more coding (to handle failed transactions) and may lead to user frustration if the optimism is misplaced. However, optimistic locking can reduce lock duration and thereby increase throughput for high-volume transactions.

Explicitly commence transactions and avoid leaving transactions "dangling"

Stored programs that issue transactional statements should generally take responsibility for commencing and terminating the transaction, rather than assuming that some external program is going to handle a COMMIT or ROLLBACK.
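A minimal sketch of this last guideline in Python, again using the standard-library sqlite3 module as a stand-in for MySQL (its BEGIN plays the role of START TRANSACTION). The transaction() helper and tfer_funds() function are hypothetical; the point is that every path out of the block ends in either COMMIT or ROLLBACK, so no transaction is left dangling. The connection is assumed to be opened with isolation_level=None so that the sqlite3 module does not start transactions implicitly.

```python
import sqlite3
from contextlib import contextmanager


@contextmanager
def transaction(conn):
    """Explicitly start a transaction and always end it with COMMIT or ROLLBACK."""
    conn.execute("BEGIN")
    try:
        yield conn
    except BaseException:
        conn.execute("ROLLBACK")
        raise
    else:
        conn.execute("COMMIT")


def tfer_funds(conn, from_acct, to_acct, amount):
    """Both UPDATEs are applied together or not at all."""
    with transaction(conn):
        conn.execute(
            "UPDATE account_balance SET balance = balance - ? WHERE account_id = ?",
            (amount, from_acct),
        )
        cur = conn.execute(
            "UPDATE account_balance SET balance = balance + ? WHERE account_id = ?",
            (amount, to_acct),
        )
        if cur.rowcount != 1:
            # Abort: the debit above is undone by the ROLLBACK in transaction()
            raise LookupError(f"account {to_acct} not found")
```

Because the helper owns both the start and the end of the transaction, a caller cannot forget the COMMIT or leave locks held after an error.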

While these are reasonable guidelines, there are sometimes trade-offs that you will need to consider:

§ With InnoDB's default setting of innodb_flush_log_at_trx_commit=1, every COMMIT requires a physical write of the transaction log to disk. Therefore, although it is a good idea in general to commit as soon as some logical unit of work is completed, there is a strong performance incentive to commit infrequently when possible. This usually means that for OLTP operations, you commit when the logical transaction is complete, whereas in batch programs and bulk operations, you commit infrequently. We discuss the performance implications of COMMIT in Chapter 21.

§ Checking all possible success criteria before issuing a DML statement might be overly expensive in some cases. It might be preferable to let a DML statement fail and then roll back to a savepoint under certain circumstances.

§ The trade-offs for the optimistic and pessimistic locking strategies are heavily dependent on the characteristics of your application.

§ Modular design considerations may sometimes lead you to write a stored program in such a way that the control of the overall transaction is delegated to a higher-level program.
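When checking every success criterion up front would be too expensive, a savepoint lets one failed statement be undone without discarding the rest of the transaction. The following Python sketch uses a hypothetical insert_with_savepoint() helper, with sqlite3 standing in for MySQL, whose SAVEPOINT, ROLLBACK TO SAVEPOINT, and RELEASE SAVEPOINT statements have the same form. It skips rows that would violate the primary key while keeping the others. The connection is assumed to be opened with isolation_level=None.

```python
import sqlite3


def insert_with_savepoint(conn, rows):
    """Insert rows in one transaction, skipping rows that violate a constraint.

    Each insert is bracketed by a savepoint so that a failed insert is undone
    without discarding the rows inserted before it. Returns the skipped rows.
    """
    skipped = []
    conn.execute("BEGIN")
    try:
        for row in rows:
            conn.execute("SAVEPOINT before_insert")
            try:
                conn.execute(
                    "INSERT INTO account_balance (account_id, balance) VALUES (?, ?)",
                    row,
                )
            except sqlite3.IntegrityError:
                # Undo just this statement; earlier inserts survive
                conn.execute("ROLLBACK TO SAVEPOINT before_insert")
                skipped.append(row)
            conn.execute("RELEASE SAVEPOINT before_insert")
        conn.execute("COMMIT")
    except BaseException:
        conn.execute("ROLLBACK")  # any other failure abandons the whole batch
        raise
    return skipped
```

This is exactly the "try and roll back" pattern the guidelines above discourage; it earns its place only when the up-front check costs more than the occasional partial rollback.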

Conclusion

In this chapter we looked at how to manage transactions in MySQL stored programs, allowing us to group together related database changes, applying them all or aborting them all as a single logical unit. Implementing transactions using stored programs is a fairly natural choice, since a stored program can encapsulate complex transaction logic into a single database call, providing good separation between database and application logic.

To use transactions in MySQL, you will need to create tables using one of the transactional storage engines—such as the InnoDB engine that ships with the MySQL standard distributions.

By default, MySQL runs in autocommit mode, in which each statement is committed as soon as it completes; to group several statements into a single transaction, you need to either set AUTOCOMMIT=0 or (recommended) commence a transaction with the START TRANSACTION statement. Transactions are normally terminated with a COMMIT or ROLLBACK statement, though be aware that certain DDL statements can cause implicit COMMITs to occur.

Savepoints can be used to partially roll back transactions in the event of an error. We believe, however, that the reliance on savepoints is justified in only a very few specific circumstances.

Transactional databases use locking mechanisms to prevent data inconsistencies or logical errors when rows are updated, inserted, and deleted. MySQL/InnoDB minimizes the overhead of these locking mechanisms by using an efficient row-level locking mechanism in which ordinary (nonlocking) reads never block other readers or writers. Even with this row-level locking, though, you should construct your transactions to minimize the duration of any locks taken out as a result of DML statements or SELECTs with the FOR UPDATE or LOCK IN SHARE MODE clause.

In rare circumstances, errors can occur if a lock timeout is exceeded or if an irresolvable lock conflict arises (a deadlock). There are mechanisms for reducing the frequency with which these occur, but you may want to add exception handlers to your stored programs or restructure them to handle these occurrences.

Whenever you SELECT data that is used to construct DML statements later in a transaction, you need to ensure that the data is not changed between the time it is read and the time the read data is used to modify the database. Locking the data as it is read—a pessimistic locking strategy—is usually the simplest and most robust solution. However, an optimistic locking strategy—in which the data is confirmed just prior to the DML being applied—can reduce the duration of locks and improve transaction throughput in some circumstances.

Good transaction design can improve the reliability, integrity, and performance of your application. In general, transactions—and the duration of locks—should be kept as short as possible. However, the overriding consideration is to maintain data integrity and the reliability of transaction processing.

All materials on the site are licensed Creative Commons Attribution-Sharealike 3.0 Unported CC BY-SA 3.0 & GNU Free Documentation License (GFDL)
