Data Stewardship (2014)

CHAPTER 8 Measuring Data Stewardship Progress

The Metrics

This chapter explains how to measure your progress in implementing Data Stewardship using two categories of measurement: business results metrics and operational metrics. Business results metrics measure how much better the company does business because of better data management. Operational metrics measure the number and quality of direct Data Stewardship deliverables.

Keywords

metrics; measure; operational; business; value; results; critical success factors

Introduction

As you have seen in previous chapters, the Data Stewardship program requires resources and effort to be expended to improve how the enterprise understands and manages its data. It should come as no surprise, therefore, that you are likely to be called on to measure and report the progress you have made, that is, the return on investment (ROI) of Data Stewardship.

The metrics you can record and report break down broadly into two categories: business results metrics and operational metrics. Business results metrics measure the effectiveness of Data Stewardship in supporting the data program and returning value to the company through better management of the data. Operational metrics measure the acceptance of the Data Stewardship program and how effectively the Data Stewards are performing their duties.


In the Real World

It is all very well to talk about using and reporting metrics, but there are certain critical success factors you need to keep in mind to make metrics effective and relevant. The first (especially for business results metrics) is the willingness to consider longer-term value as a result of the Data Stewardship program. A better understanding of data meaning, increasing data quality, and well-understood business rules take a while to make themselves felt in the overall metrics that the corporation pays attention to. These overall metrics (as will be discussed later in this chapter) include such items as decreasing costs, increasing profits, and shortening time to market.

The second critical success factor is that the company must be willing to attribute the improvements in information-based capabilities to the actions of the Data Governance and Data Stewardship programs. When things improve, there is often a rush to get the credit for improvement. If at least some of the improvement is not credited to better management of data through the efforts of Data Stewardship, it can be very difficult to show (and quantify) the effect that the program has on the company’s bottom line.

Finally, you must carefully tailor your metrics to ensure that they are meaningful. Start with the most critical business processes. For each of these (hopefully) few processes, identify the data elements that are most critical to it. Design your metrics (e.g., the overall quality, the number of elements with definitions, how many elements are owned and stewarded, and so on) around that data. Expand your effort as resources allow.

Business Results Metrics

Business results metrics attempt to measure the business value that the Data Stewardship effort adds. Business value includes such things as:

- Increased revenues and profits

- Reduced duplicate data and data storage

- Increased productivity in the use of data

- Reduced application development and system integration costs

- Increased ROI on projects

- Reduced time to market

- Increased adherence to audit and corporate responsibility requirements

- Reduced compliance issues

- Increased knowledge about the customer and customer satisfaction

Many of these items are difficult to measure directly. One technique is to survey the data users in the company to get their opinions on the business results of the Data Stewardship efforts. That is, find out how they see the Data Stewardship effort impacting the following items:

- Is there better understanding of the data?

- Does the data have better quality? Note that you can measure directly how well the data matches the specified quality rules, but it also helps to understand whether the perceived data quality has improved.

- Has the enterprise improved its early warning of data quality issues through profiling?

- Has the enterprise determined how many customer complaints have been driven by poor-quality data? Has this number decreased over time?

- Has the incidence of issues with regulatory and compliance organizations decreased?

- Has the enterprise reduced time spent cleansing and fixing data?

- Has the enterprise reduced time spent arguing about what data means or how it is calculated?

- Has the enterprise reduced or eliminated the efforts necessary to reconcile different reports through:

  - Consistent definitions and derivations?

  - Consistent specification of what data is included in reports?

- Have specific costs decreased due to better management of data (see the sidebar “Business Results Metrics Examples”)? Can any of these costs be quantified?

- Does the enterprise make better business decisions? This one is hard to measure, even as part of a survey. But there is usually some perception about whether the enterprise is getting “smarter” about what it does (or not).
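Where data quality rules have been specified, the direct measurement mentioned in the data quality question above can be automated and reported alongside the survey results. The following Python sketch is illustrative only: the `QualityRule` class, the `conformance_rates` function, the field names, and the sample policy records are all hypothetical, and in practice this measurement would usually run inside whatever profiling or data quality tool you already use.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Mapping, Sequence

# Hypothetical record type: one row of data as field name -> value.
Record = Mapping[str, object]


@dataclass(frozen=True)
class QualityRule:
    """A hypothetical data quality rule: a name and a pass/fail check on one record."""
    name: str
    check: Callable[[Record], bool]


def conformance_rates(records: Sequence[Record], rules: Sequence[QualityRule]) -> dict[str, float]:
    """Return, for each rule, the fraction of records (0.0 to 1.0) that pass it."""
    if not records:
        raise ValueError("cannot measure conformance on an empty set of records")
    total = len(records)
    return {rule.name: sum(1 for r in records if rule.check(r)) / total for rule in rules}


# Made-up policy records, for illustration only.
policies = [
    {"policy_id": "P1", "holder_birth_date": "1970-02-01", "state": "CA"},
    {"policy_id": "P2", "holder_birth_date": None, "state": "CA"},
    {"policy_id": "P3", "holder_birth_date": "1985-07-19", "state": "XX"},
    {"policy_id": "P4", "holder_birth_date": "1990-11-30", "state": "NY"},
]
VALID_STATES = {"CA", "NY", "TX"}  # assumed reference list of valid codes
rules = [
    QualityRule("birth date populated", lambda r: r.get("holder_birth_date") is not None),
    QualityRule("state code valid", lambda r: r.get("state") in VALID_STATES),
]

print(conformance_rates(policies, rules))
# {'birth date populated': 0.75, 'state code valid': 0.75}
```

Tracking these rates over successive measurement periods gives the trend in measured quality; comparing that trend with the survey responses shows whether perceived quality is moving in the same direction.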

Business Results Metrics Examples

The questions that you ask to try to judge the impact of the Data Stewardship effort will depend on the business you are in. But the more specific you can be, the better your measurements will be, and the more defensible any claims you make for the positive impact of Data Stewardship on the company. The following are some sample questions from an insurance company with a robust Data Stewardship function:

- Calling/mailing costs: How many times did we contact someone who already had a particular type of policy or who was not eligible for that type of policy? How much postage/time was wasted?

- Loss of productivity/opportunity cost: How many policies could have been sold if agents had only contacted eligible potential customers? How much would those policies have been worth?

- Loss of business cost: How many policyholders canceled their policies because we didn’t understand their needs or didn’t appear to value their business (a survey can give you an idea)? What is the lost lifetime value of those customers?

- Compliance cost: How much did we spend responding to regulatory or audit requests (demands!)? How much of that was attributable to poor data quality or information not being available?

Note that the business case for establishing Data Stewardship in the first place should already have established some baseline findings to compare the changes in cost against.
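Once the sidebar questions have been answered with counts, comparing them against the business-case baseline is simple arithmetic. The following Python sketch works through the calling/mailing cost question; the `ContactPeriod` class, the `percent_change` function, and every figure in it are hypothetical, chosen only to show the calculation, and it assumes the two "wasted contact" categories do not overlap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContactPeriod:
    """Hypothetical counts for one measurement period of a marketing campaign."""
    already_held_policy: int   # contacted, but already had the offered policy
    ineligible: int            # contacted, but not eligible for the offered policy
    cost_per_contact: float    # postage plus agent/handling time, per contact

    def wasted_contacts(self) -> int:
        # Assumes the two categories do not overlap (no one is counted twice).
        return self.already_held_policy + self.ineligible

    def wasted_cost(self) -> float:
        return self.wasted_contacts() * self.cost_per_contact


def percent_change(baseline: float, current: float) -> float:
    """Change of `current` relative to `baseline`, in percent (negative means a reduction)."""
    if baseline == 0:
        raise ValueError("baseline must be nonzero")
    return (current - baseline) / baseline * 100


# Made-up figures: the baseline would come from the business case,
# the current period from the latest measurement.
baseline = ContactPeriod(already_held_policy=800, ineligible=400, cost_per_contact=0.50)
current = ContactPeriod(already_held_policy=300, ineligible=200, cost_per_contact=0.50)

print(baseline.wasted_cost(), current.wasted_cost())  # 600.0 250.0
print(round(percent_change(baseline.wasted_cost(), current.wasted_cost()), 1))  # -58.3
```

The same pattern (count the bad outcomes, cost them, compare with the baseline) applies to the opportunity, lost-business, and compliance questions, although their unit costs are usually harder to estimate.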

Operational Metrics

Operational metrics are a compilation of various measures that indicate:

- The level of participation of the business.

- The level of importance given to the Data Stewardship effort.

- Numerical measures for results achieved.

- How often and how effectively the Data Stewardship deliverables are used.

There are many specific measures that can be tracked and reported on as operational metrics, including:

- What changes in maturity measures have occurred for Data Stewardship (see Chapter 9)? There are a variety of ways to measure the maturity level of the effort, and evaluating those aspects periodically can tell you whether the effort is maturing, progressing from sporadic and unmanaged attempts at data management toward a well-managed, enterprise-wide, and standardized way of doing business. The organizational awareness dimension (see Table 9.1 in Chapter 9) includes the development of metrics as one of its measurements. At level 3 (well defined), operational metrics are in place and some participation metrics are included in the evaluation of Data Stewards. Direct impacts on data quality are also being measured, and by the time the organization reaches level 4 (strategic), a formalized method for gauging the value of data quality improvement and attributing it to Data Stewardship is in place.

- How many disparate data sources have been consolidated? Many companies have a huge number of data sources, from “official” systems and data stores to servers hidden under people’s desks. Of course, the more data sources there are, the more likely it is that the data has different meanings, business rules, and quality. One goal of a Data Stewardship effort is to standardize data, identify systems of record, and dispense with redundant data sources and stores. Retiring or shutting down disparate data sources is one measure of the success of the Data Stewardship effort, as well as a sign of a maturing data management culture.

- How many standardized/owned definitions have been gathered? Business data elements form the backbone of improvements in understanding and quality. Having a robust definition is the starting point. This is a simple metric, as all you need to do is count the number of data elements with a definition in a “completed” state. An alternative is to count the data elements that have definitions as well as a set of creation and usage business rules.

- How many business functions have provided assigned stewards to participate in the Data Stewardship program? When you are first starting a Data Stewardship program, it is not unusual for some business functions to refuse to provide resources (Business Data Stewards). This is likely to change over time as the results and value of Data Stewardship become apparent, but it will also help to have a scorecard that shows which business functions have provided the required resources.

- How many business functions have added Data Stewardship performance to their compensation plans? Although we said earlier that Data Stewardship is the formalizing of responsibility that has likely been in place for a while, rewarding the Data Stewards for performing these tasks is a critical success factor. That isn’t to say that Data Stewardship is ineffective if the compensation plan doesn’t include it, but as with anything else, you’ll get better performance if you compensate people for doing a good job.

- Which business functions are the most active in meetings, driving definitions, data quality rules, and the like? Even when people are assigned to perform the Data Stewardship functions, not everyone will participate to the same degree. Noting and reporting the level of participation of the most active Data Stewards can encourage and reinforce those people, and can encourage nonparticipants to join in as they see the value and rewards of participation. Of course, an increase in the level of participation does not necessarily mean that value is being added.

- Who are the most active contributors in each business function? As discussed earlier, the Data Stewardship effort benefits greatly from support and issue reporting from the data analysts in each business function. One of the things that occurs as the program matures is that champions (typically, data analysts) appear in the field. These champions help to educate their peers, warn when Data Stewardship tenets are not being followed, and provide valuable input to the Data Stewards. Having many of these champions in each business function is a sign that the program is gaining traction, and they should be counted, recognized, and rewarded.

- How many people have been trained as stewards? One simple measure of the penetration of Data Stewardship into the enterprise is to count how many people have been trained as Data Stewards. As noted earlier, this is a rigorous training regimen. And even when Data Stewards rotate out of the assignment and new Data Stewards replace them, having the previous (trained) Data Stewards out in the enterprise is a good thing.

- How many times has stewardship metadata been accessed? After doing all the work to gather and document the metadata, you want it to be actively used. A good metric for that usage is to measure how often the metadata is viewed in whatever recording tool (e.g., a metadata repository or business glossary) you are using to store the metadata. You may need to filter the number of views a bit, such as to limit the count to unique views by separate individuals, but if the metadata is being viewed often, it is a good sign that it is not only useful but that the Data Stewardship message is getting out into the enterprise.
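Several of the operational metrics above are straightforward counts over data you can export from your metadata repository or business glossary. The following Python sketch shows three of them: completed definitions (with the stricter alternative that also requires business rules), a scorecard of which business functions have assigned Business Data Stewards, and metadata views filtered to distinct individuals. The `DataElement` class, the function names, the "completed" and "draft" status values, and the sample data are hypothetical; a real glossary tool will have its own export format and workflow states.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass, field
from typing import Iterable, Mapping, Sequence


@dataclass
class DataElement:
    """Hypothetical glossary entry; 'completed' is an assumed workflow state."""
    name: str
    definition_status: str
    business_rules: list[str] = field(default_factory=list)


def count_completed_definitions(elements: Iterable[DataElement], require_rules: bool = False) -> int:
    """Count elements with a completed definition; optionally also require business rules."""
    return sum(
        1
        for e in elements
        if e.definition_status == "completed" and (not require_rules or bool(e.business_rules))
    )


def steward_scorecard(functions: Sequence[str], stewards: Mapping[str, list[str]]) -> dict[str, bool]:
    """For each business function, report whether it has assigned at least one steward."""
    return {f: bool(stewards.get(f)) for f in functions}


def distinct_viewers(view_log: Iterable[tuple[str, str]]) -> dict[str, int]:
    """Count distinct people who viewed each element, from (user, element) view events."""
    viewers: dict[str, set[str]] = defaultdict(set)
    for user, element in view_log:
        viewers[element].add(user)
    return {element: len(users) for element, users in viewers.items()}


elements = [
    DataElement("Policy Number", "completed", ["must be unique"]),
    DataElement("Premium Amount", "completed"),
    DataElement("Agent Code", "draft", ["must match an active agent"]),
]
print(count_completed_definitions(elements))                      # 2
print(count_completed_definitions(elements, require_rules=True))  # 1

print(steward_scorecard(["Underwriting", "Claims", "Marketing"],
                        {"Underwriting": ["steward-a"], "Claims": []}))
# {'Underwriting': True, 'Claims': False, 'Marketing': False}

views = [("ana", "Policy Number"), ("ana", "Policy Number"),
         ("raj", "Policy Number"), ("raj", "Premium Amount")]
print(distinct_viewers(views))  # {'Policy Number': 2, 'Premium Amount': 1}
```

Counting distinct viewers rather than raw page views keeps one enthusiastic user (or an automated process) from inflating the usage metric.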

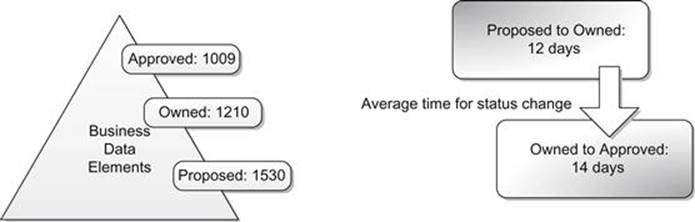

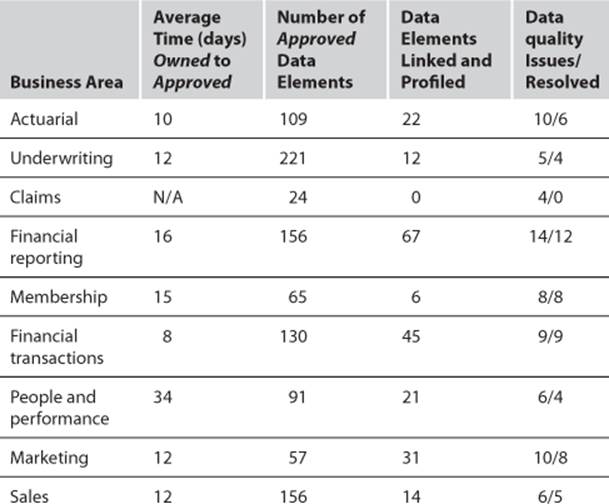

Data Stewardship work progress is another good set of operational metrics. These elements can be tracked in a table (see Table 8.1) or presented graphically in a dashboard (see Figure 8.1). They may include such things as:

FIGURE 8.1 Showing key Data Stewardship work progress in a scorecard.

- Data elements proposed, owned/stewarded, defined, and approved

- Business rules proposed, owned, and defined (e.g., data quality, creation, and usage rules)

- Average time elapsed from owned to approved

- Number of data elements linked and profiled
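The work progress items above can be computed from the status history that your glossary or workflow tool records. The following Python sketch assumes a hypothetical linear workflow (proposed, owned, defined, approved) and hypothetical field names; it counts how many elements have reached at least each stage and computes the average time elapsed from owned to approved. The linked and profiled counts would be simple tallies of the same kind and are not shown.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date
from statistics import mean

# Assumed linear workflow; real programs may have more (or different) states.
STAGES = ("proposed", "owned", "defined", "approved")


@dataclass
class ElementProgress:
    name: str
    status: str                     # one of STAGES
    owned_on: date | None = None    # date an owner/steward was assigned
    approved_on: date | None = None


def reached_counts(elements: list[ElementProgress]) -> dict[str, int]:
    """Count elements that have reached at least each stage.

    An approved element also counts as proposed, owned, and defined.
    """
    counts = {stage: 0 for stage in STAGES}
    for e in elements:
        last = STAGES.index(e.status)  # raises ValueError for an unknown status
        for stage in STAGES[: last + 1]:
            counts[stage] += 1
    return counts


def average_days_owned_to_approved(elements: list[ElementProgress]) -> float | None:
    """Average elapsed days from owned to approved, over elements that have both dates."""
    days = [(e.approved_on - e.owned_on).days for e in elements if e.owned_on and e.approved_on]
    return float(mean(days)) if days else None


elements = [
    ElementProgress("Policy Number", "approved", date(2014, 1, 6), date(2014, 1, 20)),
    ElementProgress("Premium Amount", "approved", date(2014, 1, 6), date(2014, 2, 3)),
    ElementProgress("Agent Code", "defined", date(2014, 1, 13)),
    ElementProgress("Claim Status", "proposed"),
]
print(reached_counts(elements))
# {'proposed': 4, 'owned': 3, 'defined': 3, 'approved': 2}
print(average_days_owned_to_approved(elements))  # 21.0
```

Counting cumulatively, rather than by each element's current status, means the "proposed" figure never shrinks as elements move forward, which makes the numbers easier to compare from one reporting period to the next in a table or dashboard.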

Table 8.1 Recording Key Data Stewardship Work Progress Metrics

Summary

Metrics are important to show the value that Data Stewardship adds to the enterprise, and therefore to justify the resources and effort put into the Data Stewardship program. Business results metrics show how the Data Stewardship program contributes value to the company in terms of increased profits, reduced costs, shortened time to market, fewer compliance and regulatory issues, and other measures that translate into conducting business better and more efficiently. Operational metrics show how much work the Data Stewardship program is performing by counting things like how many data elements have been defined and owned, how many stewards have been trained, how often the Data Stewardship metadata is being used, and other measures of the program’s efforts.