Computer Security Basics, 2nd Edition (2011)

Part V. Appendixes

Appendix C. The Orange Book, FIPS PUBS, and the Common Criteria

When the U.S. government writes the standards and then becomes one of the largest customers for equipment that meets them, those standards become important very quickly. Add to this the fact that once the government overcomes its own bureaucratic inertia far enough to actually accomplish something, the result tends to stay in force for a long time.

Such is the case of the Orange Book. Different organizations required different levels of security, and security professionals needed a metric to gauge whether a computer system was secure enough for its intended purpose. So the government developed the Trusted Computer System Evaluation Criteria (TCSEC) and published them in a book with an orange cover, hence the nickname “Orange Book.” The Orange Book was part of a family of security publications with different colored covers called the Rainbow Series. See the sidebar “Somewhere Over the Rainbow.”

SOMEWHERE OVER THE RAINBOW

The Orange Book was an abstract, very concise description of computer security requirements, and it raised more questions than it answered. In an attempt to help system developers, the government published a number of additional books interpreting Orange Book requirements in particularly puzzling areas. These were known collectively as the Rainbow Series because each had a different color cover, and their influence is still felt in government computing today.

Chief among the documents in the Rainbow Series was the Trusted Network Interpretation (TNI), the Red Book, which interpreted the Orange Book criteria for networks and network components. Published in 1987, this book identified security features that applied to networks but were not mentioned in the Orange Book, and it described how these features fit into the Orange Book’s graded classification of systems. For example, the Red Book discussed how the concept of group identification for discretionary access control (DAC) might be extended to Internet addresses.

Another book whose language can still be seen today is the Trusted Database Management System Interpretation (TDI), the Lavender Book. It was directed at developers of database management systems and interpreted Orange Book requirements for DBMS products. For example, it discussed how the concept of security labels might be extended to stored view definitions (obtained via DBMS query commands).

There were more than 20 books in the Rainbow Series. Some others included:

Green Book

Password Management Guideline

Tan Book

A Guide to Understanding Audit in Trusted Systems

Purple Book

Guidelines for Formal Verification Systems

Burgundy Book

A Guide to Understanding Design Documentation in Trusted Systems

In theory, the Orange Book, et al., have been superseded by the international Common Criteria, but you would not know it in some circles. The language of the Orange Book, and its rating system, is so pervasive that if you’re at all interested in computer security, you’ll need to know something about the Orange Book.

Concurrent with the development of the Orange Book was a series of government standards known as the Federal Information Processing Standards Publications (FIPS PUBS). FIPS PUBS are the language of computer system acquisition and information technology operations, as viewed by the U.S. government.

The Common Criteria is the name given to a unification of TCSEC and its European counterpart, the Information Technology Security Evaluation Criteria (ITSEC). The Common Criteria represents where security evaluation standards are heading, but the actual state of the art is found somewhere in the nexus of TCSEC, ITSEC, and the national security evaluation policies of several other nations.

The following sections describe these three standards.

About the Orange Book

The Orange Book defined four broad hierarchical divisions of security protection. In increasing order of trust, they were:

D:

Minimal security

C:

Discretionary protection

B:

Mandatory protection

A:

Verified protection

Each division consisted of one or more numbered classes, with higher numbers indicating a greater degree of security. For example, division C contained two distinct classes (C2 offered more security than C1); division B contained three classes (B3 offered more security than B2, which offered more security than B1). Each class was defined by a specific set of criteria that a system had to meet to be awarded a rating in that class.
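The ordering of divisions and classes can be captured in a few lines. This is a minimal sketch for illustration only. The names `ORANGE_BOOK_CLASSES`, `rank`, and `meets` are hypothetical, and the list assumes the seven classes the Orange Book defined (D, C1, C2, B1, B2, B3, A1), ranked from least to most trusted.

```python
# Hypothetical encoding of the Orange Book rating ladder, lowest to highest.
# Divisions run D < C < B < A; within a division, a higher class number means
# more security (C1 < C2, B1 < B2 < B3). A1 is the only class in division A.
ORANGE_BOOK_CLASSES = ["D", "C1", "C2", "B1", "B2", "B3", "A1"]


def rank(rating: str) -> int:
    """Return a rating's position on the ladder (0 = D, 6 = A1)."""
    return ORANGE_BOOK_CLASSES.index(rating)


def meets(rating: str, required: str) -> bool:
    """True if a system rated `rating` satisfies a requirement of at least `required`.

    This assumes the classes are cumulative, with each class including the
    criteria of the classes below it.
    """
    return rank(rating) >= rank(required)
```

For example, an acquisition that specifies "C2 or better" would accept a B2 system, because `meets("B2", "C2")` is true. It would reject a C1 system.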

WHY WAS THERE AN ORANGE BOOK?

What was the purpose of the Orange Book? According to the book itself, the evaluation criteria were developed with three basic objectives:

Measurement

To provide users with a metric with which to assess the degree of trust that can be placed in computer systems for the secure processing of classified or other sensitive information. For example, a user can rely on a B2 system to be “more secure” than a C2 system.

Guidance

To provide guidance to manufacturers as to what to build into their trusted commercial products to satisfy trust requirements for sensitive applications.

Acquisition

To provide a basis for specifying security requirements in acquisition specifications. Rather than specifying a hodge-podge of security requirements and having vendors respond piecemeal, the Orange Book provided a clear way to specify a coordinated set of security functions. A customer can be confident that the system she acquires has already been checked out for the needed degree of security.

As the Orange Book put it, the criteria “constitute a uniform set of basic requirements and evaluation classes for assessing the effectiveness of security controls built into . . . systems.”

These philosophical underpinnings echo through the international replacement for the Orange Book, the Common Criteria.

Orange Book Security Concepts

The Orange Book criteria had four pillars:

§ Security policy

§ Accountability

§ Assurance

§ Documentation

Security policy

A security policy is the set of rules and practices that regulate how an organization manages, protects, and distributes sensitive information. It’s the framework in which a system provides trust.

A security policy is typically stated in terms of subjects and objects. A subject is something active in the system; examples of subjects are users, processes, and programs. An object is something that a subject acts upon; examples of objects are files, directories, devices, sockets, and windows.

The Orange Book defined a security policy as follows:

Given identified subjects and objects, there must be a set of rules that are used by the system to determine whether a given subject can be permitted to gain access to a specific object.

At the lower levels of security, a security policy could be informal, and the Orange Book did not even require that it be written. For B1 systems and above, the Orange Book required a written security policy. At the higher levels, the security policy became increasingly formal and had to be expressed in mathematically precise notation.

A security model is usually required to give life to the security policy. The Orange Book criteria were based on the state-machine model developed by David Bell and Leonard LaPadula in 1973 under the sponsorship of the U.S. Air Force Electronic Systems Division (ESD). The Bell and LaPadula model was also the first mathematical model of a multilevel secure computer system. This involved the concept of a security lattice, which is now seen in most other security models. The difference between various models today often boils down to what subjects can access which objects from what level of the lattice.
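The two best-known rules of the Bell and LaPadula model are the simple security property ("no read up") and the *-property ("no write down"). Both can be sketched as checks on a dominance relation between labels. This is a simplified illustration, not the full state-machine model. It assumes a label is a hierarchical level plus a set of categories, and the level names, the `VENUS` category, and the function names are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical hierarchical levels, lowest to highest.
LEVELS = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}


@dataclass(frozen=True)
class Label:
    level: str
    categories: frozenset = frozenset()

    def dominates(self, other: "Label") -> bool:
        """A dominates B if A's level is at least B's and A holds all of B's categories."""
        return (LEVELS[self.level] >= LEVELS[other.level]
                and self.categories >= other.categories)


def can_read(subject: Label, obj: Label) -> bool:
    # Simple security property: a subject may read only objects it dominates (no read up).
    return subject.dominates(obj)


def can_write(subject: Label, obj: Label) -> bool:
    # *-property: a subject may write only objects that dominate it (no write down).
    return obj.dominates(subject)
```

Because dominance compares both levels and category sets, two labels can be incomparable. For example, `SECRET [VENUS]` and `SECRET [MARS]` do not dominate each other, which is what makes the set of labels a lattice rather than a simple ladder.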

Accountability

Accountability requirements defined in the Orange Book centered on the idea that a system must identify all users and use that information to decide whether each user could legitimately access information. The system was also required to keep track of any security-related actions taken in the system, and to be able to produce audit reports so that system access and usage could be examined.

Generally, the requirements increased in rigor as the trust classification increased. For instance, for C1, the system distinguished only between authorized users and unauthorized users; for C2 and above, each user had an individual ID. Once a user logged in, the system used the user ID, and the security profile associated with it, to make access decisions. The system also used the ID to audit user actions. The Orange Book required user auditing beginning at the C2 level.
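Individual IDs are what make a per-user audit report possible. Below is a minimal sketch of an append-only audit trail keyed by user ID. The record fields and the class and method names are hypothetical, chosen for illustration; the Orange Book did not prescribe this format.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    user_id: str   # individual ID (required from C2 up)
    event: str     # e.g. "login", "open", "delete"
    obj: str       # the object acted upon
    success: bool
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class AuditTrail:
    """A hypothetical append-only audit trail keyed by individual user ID."""

    def __init__(self) -> None:
        self._records: list[AuditRecord] = []

    def log(self, user_id: str, event: str, obj: str, success: bool) -> None:
        # Records are only ever appended, never edited or removed.
        self._records.append(AuditRecord(user_id, event, obj, success))

    def report(self, user_id: str) -> list[AuditRecord]:
        """Everything one user did, so that access and usage can be examined."""
        return [r for r in self._records if r.user_id == user_id]
```

Under C1's shared "authorized user" model there would be no `user_id` to filter on, and this report could not be produced.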

Assurance

Assurance is a guarantee (or at least a reasonable confidence) that the security policy of a trusted system has been implemented correctly and that the system’s security features accurately carry out that security policy. The Orange Book identified two types of assurance: operational assurance and life-cycle assurance. Operational assurance focused on the basic architecture and features of the system; life-cycle assurance focused on controls and standards for building and maintaining the system.

Moving up the ladder from less trusted to more trusted systems, the number of required security features grew, but only to a point. At the highest levels (B3 and A1), there were very few additional security features beyond those required for lower-level systems. What was added instead was a demand for far greater assurance, or trust, that those security features worked as intended.

A very important element of system security is the assurance that the system will function as expected and will remain available for operation over the course of its lifetime. Many vendors met the system integrity requirement by providing a set of integrity tests. These tests ran regularly, often each time the system was powered up, reminiscent of the power-on self-test (POST) performed by most computers and network appliances. More substantial security diagnostics were usually performed at scheduled preventive maintenance periods.

In theory, every operation performed by every component of a network can provide information that is useful to an attacker. A covert channel is an information path that’s not ordinarily used for communication in a system and therefore isn’t protected by the system’s normal security mechanisms. Covert channels have a nice subversive sound to them. They’re a secret way to convey information to another person or program, the computer equivalent of a spy carrying a newspaper as a signal: for example, the New York Times means the blueprints have been smuggled out of the plant, and the Washington Post means there’s been a problem.

The reason covert channels aren’t more of a problem is that getting meaningful information in this way is quite cumbersome. The Orange Book wisely reserved covert channel analysis and protection mechanisms for the highest levels of security (B2 systems and above), where the information gained by exploiting covert channels is more likely to be worth the quest.
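A toy simulation shows both how a covert channel works and why it is cumbersome. A high-level process signals one bit per time slot through a shared resource state that a low-level process is allowed to observe. This is a storage channel, as opposed to a timing channel, which signals through how long operations take. Everything here is hypothetical: a single-threaded loop stands in for two processes, and the "lock" stands in for any observable shared state.

```python
class SharedResource:
    """A resource both processes may legitimately query, e.g. a lock on a shared file.

    Its locked/unlocked state is not meant to carry messages, so the system's
    access controls do not treat it as a communication path.
    """

    def __init__(self) -> None:
        self.locked = False


def high_sender(resource: SharedResource, bit: int) -> None:
    # The high-level process signals one bit by taking or releasing the lock.
    resource.locked = bool(bit)


def low_receiver(resource: SharedResource) -> int:
    # The low-level process "reads" the bit just by checking whether the lock is held.
    return int(resource.locked)


def leak(message_bits: list[int]) -> list[int]:
    """Transfer bits one per time slot (a sequential stand-in for two processes)."""
    resource = SharedResource()
    received = []
    for bit in message_bits:
        high_sender(resource, bit)
        received.append(low_receiver(resource))
    return received
```

Even this idealized channel moves only one bit per exchange. A real one must also cope with timing, noise from other users, and synchronization, which is why extracting meaningful information this way is slow.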

Trusted facility management, another Orange Book criterion, is the assignment of a specific individual to administer the security-related functions of a system. Trusted facility management is closely related to the concept of least privilege, which means that the users in a system should have the least number of privileges—and for the shortest amount of time—needed to do their work. It’s also related to the administrative concept of separation of duties, the idea that it’s better to assign pieces of security-related tasks to several specific individuals; if no one user has total control of the system’s security mechanisms, no one user can completely compromise the system.
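Least privilege and separation of duties can be sketched as role checks. In this minimal illustration, each role holds only the functions it needs, and a helper flags any user who holds a whole group of roles that should be split across people. The role names and privilege names are hypothetical. The sketch also ignores the time dimension of least privilege (dropping privileges when they are no longer needed).

```python
# Hypothetical role definitions: no single role holds every security function.
ROLES = {
    "security_admin": {"set_clearance", "set_device_range"},
    "operator": {"backup", "restore", "shutdown"},
    "auditor": {"read_audit_trail"},
}


def permitted(user_roles: set[str], action: str) -> bool:
    """Least privilege: an action is allowed only if one of the user's roles grants it."""
    return any(action in ROLES[r] for r in user_roles)


def violates_separation(user_roles: set[str], *conflicting: str) -> bool:
    """True if one user holds every role in a group that should be split across people."""
    return set(conflicting) <= user_roles
```

For example, a user who is both `security_admin` and `auditor` could change clearances and then review the audit trail of that change. That is exactly the concentration of control that separation of duties is meant to prevent.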

The Orange Book concept of trusted recovery ensures that security is not breached when a system crash or some other system failure occurs. Trusted recovery actually involves two activities: preparing for a system failure and recovering the system. A system failure represents a possibly serious security risk because it is a time when the system is not functioning in the ordinary way. For example, if the system crashes while sensitive data is being written to disk (where it is protected by the system’s access control mechanisms), the possibility exists that the data will be left unprotected in memory. Alternatively, a data label representing a lower level of security may have been assigned to a file that was in the process of having secret materials removed from it, but the operation had not been completed when the failure occurred.

Life-cycle assurance

Life-cycle assurance ensures that once assurance activities are begun, they will continue for the rest of the time the computer or network remains in service. It is a metric of diligence to protect hardware and software from tampering or inadvertent modification at any stage in the system’s life cycle—during design, development, testing, updating, or distribution. An example of a key life-cycle assurance is configuration management, which carefully monitors and protects all changes to the system’s hardware, software, firmware, test suites, and documentation.

These and other Orange Book assurances are subject to verification by testing. For the highest levels of security, the Orange Book even required that all material and parts going into and out of the computer or network be protected by trusted distribution. This means that materials must be protected while they are at vendors’ facilities and while they are in transit, which may require secure storage, protective tamper-proof packaging, and couriers.

Documentation

The final set of requirements defined in the Orange Book was the set of documentation requirements. Developers of trusted systems sometimes complained that the documentation required for the evaluation of a trusted system was formidable. The Orange Book mandated four specific documents, although this varied by trust classification. As you’d expect, the amount and depth of the documentation increased as you moved up the trusted system ladder.

The documentation requirements were:

§ Security Features User’s Guide (SFUG)

§ Trusted Facility Manual (TFM)

§ Test documentation

§ Design documentation

The Security Features User’s Guide (SFUG) was aimed at ordinary, unprivileged system users. It told them what they needed to know about system security features and how to use them, including logging in, protecting and moving files, and why the system disallowed certain file moves, adds, and changes.

The Trusted Facility Manual (TFM) was aimed at system administrators and/or security administrators. It told them everything they needed to know about setting up the system so it would be secure, and how to enforce system security. The Orange Book required that the TFM contain warnings about the functions and privileges that must be controlled in a trusted system. The TFM was a requirement at all levels of security. As security requirements became more complex at the higher levels, the TFM addressed increasingly complex topics as well.

The test documentation requirement was also an important piece of security testing. Good test documentation is usually simple but voluminous. It was not uncommon for the test documentation for even a C1 or C2 system to consist of many volumes of test descriptions and results. For all Orange Book levels, the test documentation had to “show how the security mechanisms were tested, and results of the security mechanisms’ functional testing.”

The design documentation was a formidable requirement for most system developers. The focus of the design documentation was to document the internals of the system’s (or at least the Trusted Computing Base’s [TCB]) hardware, software, and firmware. A key task was to define the boundaries of the system and to clearly distinguish between those portions of the system that are security-relevant and those that are not.

There were two major goals of design documentation: to prove to the evaluation team that the system fulfills the evaluation criteria, and to aid the design and development team by helping them define the system’s security policy and how well that policy was implemented.

Because there were so many questions about the scope, organization, and quantity of design documentation during system development and evaluation, the government published A Guide to Understanding Design Documentation in Trusted Systems (another in the Rainbow Series: the Burgundy Book). This document summarizes all the specific design documentation requirements (36 in all!).

Rating by the Book

As discussed earlier, a security policy states the rules enforced by the system to provide the necessary degree of security. The Orange Book defined the following specific requirements in the security policy category:

§ Discretionary access control

§ Object reuse

§ Mandatory access control

§ Labels

WHAT’S A TRUSTED SYSTEM?

The Orange Book talked about “trusted” systems rather than “secure” systems. The words aren’t really synonymous. No system is completely secure. Any system can be penetrated—given enough tools and enough time. But systems can be trusted, some more than others, to do what we want them to do, and what we expect them to continue to do over time.

The Orange Book provided its own definition of a trusted system, and the language lingers to this day:

. . . a system that employs sufficient hardware and software integrity measures to allow its use to simultaneously process a range of sensitive unclassified or classified (e.g., confidential through top secret) information for a diverse set of users without violating access privileges.

The concept of the Trusted Computing Base is central to the notion of a trusted system. The Orange Book used the term TCB to refer to the mechanisms that enforce security in a system. The book defined the TCB as follows:

The totality of protection mechanisms within a computer system—including hardware, firmware, and software—the combination of which is responsible for enforcing a security policy. It creates a basic protection environment and provides additional user services required for a trusted computer system. The ability of a trusted computing base to correctly enforce a security policy depends solely on the mechanisms within the TCB and on the correct input by system administrative personnel of parameters (e.g., a user’s clearance) related to the security policy.

Not every part of an operating system needs to be trusted. An important part of an evaluation of a computer system is to identify the architecture, assurance mechanisms, and security features that comprise the TCB, and to show how the TCB is protected from interference and tampering—either accidentally or deliberately.

NO GUARANTEES

An Orange Book rating, even a very high rating, can’t guarantee security. A “trusted” system is still only as secure as the way it is administered. Much of actual system security is in the hands of your system administrator and/or your security administrator. Your administrative staff must understand the full range of system features, must set up all necessary security structures, and must train users to do their part. Often, their efforts will seem toothless unless upper management lends them weight. The more highly trusted a system is, the less individual users can compromise it. But system administration remains the vital link between a system’s theoretical security and its actual ability to function securely in the real world.

Discretionary and Mandatory Access Control

Discretionary access control (DAC) restricts access to files (and other system objects) based on the identity of users and/or the groups to which they belong. DAC is the most common type of access control mechanism found in trusted systems.

The DAC requirement specifies that users should be able to protect their own files by indicating who can and cannot access them (on a “need-to-know” basis) and by specifying the type of access allowed (e.g., read-only, read and modify, etc.). In contrast to mandatory access control (MAC), in which the system controls access, DAC is applied at the discretion of the user (or a program executing on behalf of the user). With DAC, you can choose to give away your data; with MAC, you can’t.
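The "discretion" in DAC is the owner's. Only the owner edits the file's access control list, and can grant or revoke access down to a single user. This minimal sketch assumes a simple per-user ACL of access modes. The `File` class, the mode names, and the rule that the owner implicitly has all access are hypothetical simplifications.

```python
from dataclasses import dataclass, field


@dataclass
class File:
    owner: str
    # Access control list: user -> set of access modes, e.g. {"read"} or {"read", "write"}.
    acl: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, requester: str, user: str, *modes: str) -> None:
        """Under DAC, only the owner decides who gets access, at the owner's discretion."""
        if requester != self.owner:
            raise PermissionError(f"{requester} does not own this file")
        self.acl.setdefault(user, set()).update(modes)

    def revoke(self, requester: str, user: str) -> None:
        if requester != self.owner:
            raise PermissionError(f"{requester} does not own this file")
        self.acl.pop(user, None)

    def allowed(self, user: str, mode: str) -> bool:
        # Simplification: the owner always has full access to their own file.
        return user == self.owner or mode in self.acl.get(user, set())
```

Nothing in this check considers how sensitive the file is. If the owner grants access, the access is allowed, which is exactly the "you can choose to give away your data" property.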

Object Reuse

Object reuse requirements protect files, memory, and other objects in a trusted system from being accidentally accessed by users who aren’t authorized to access them. Consider an obvious example of what could happen if object reuse features are not part of a trusted system. Suppose that when you create a new file, your system allocates a certain area of disk to that file. You store confidential data in the file, print it, and eventually delete it. However, suppose the system doesn’t actually erase the data from the physical disk, but simply rewrites the header of the file to indicate deletion. There are many ways to bypass ordinary system procedures and read the data on the disk without using the normal filesystem. Object reuse features provide security by ensuring that when an object (for example, a login ID) is assigned, allocated, or reallocated, it doesn’t contain data left over from previous usage.

Common object reuse features implemented in trusted systems include:

§ Clearing memory blocks or pages before they are allocated to a program or data.

§ Clearing disk blocks when a file is scratched or before the blocks are allocated to a file.

§ Degaussing magnetic tapes when they’re no longer needed.

§ Clearing X Window System objects before they are reassigned to another user.

§ Erasing password buffers after encryption.

§ Clearing buffered pages, documents, or screens from the local memory of a terminal or printer.
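The first two items in the list above can be illustrated with a toy block allocator. Freeing a block only returns it to the pool, much like marking a file deleted in its header, so the allocator clears the block before handing it to anyone else. This is a minimal sketch. `BlockPool` and its methods are hypothetical, and a real system might clear blocks when they are released rather than when they are reallocated (the list mentions both approaches).

```python
class BlockPool:
    """A hypothetical fixed-size block allocator that clears blocks before reuse."""

    def __init__(self, n_blocks: int, block_size: int) -> None:
        self._blocks = [bytearray(block_size) for _ in range(n_blocks)]
        self._free = list(range(n_blocks))

    def allocate(self) -> int:
        idx = self._free.pop()
        block = self._blocks[idx]
        # Object reuse: zero the block before handing it to a new owner, so no
        # data left over from a previous user can be read.
        block[:] = bytes(len(block))
        return idx

    def write(self, idx: int, data: bytes) -> None:
        block = self._blocks[idx]
        if len(data) > len(block):
            raise ValueError("data larger than block")
        block[: len(data)] = data

    def read(self, idx: int) -> bytes:
        return bytes(self._blocks[idx])

    def free(self, idx: int) -> None:
        # Freeing only returns the block to the pool; the old contents remain
        # until the block is cleared on its next allocation.
        self._free.append(idx)
```

Without the clearing step in `allocate`, the next user of a recycled block would read the previous user's `SECRET` bytes.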

Labels

A sensitivity label describes briefly who can access what information in your system. Labels and mandatory access control are separate security policy requirements, but they work together. Beginning at the B1 level, the Orange Book required that every subject (e.g., user, process) and storage object (e.g., file, directory, window, socket) have a sensitivity label associated with it. (For B2 systems and above, all system resources, such as devices and ROM, must have sensitivity labels.) A user’s sensitivity label specifies the sensitivity level, or level of trust, associated with that user; a user’s sensitivity label is usually called a clearance. A file’s sensitivity label specifies the level of trust that a user must have to be able to access that file.

Label integrity

Label integrity ensures that the sensitivity labels associated with subjects and objects are accurate representations of the security levels of these subjects and objects. Thus, a file sensitivity label, such as:

TOP SECRET [VENUS]

must actually be associated with a TOP SECRET file containing information about the planet Venus. A clearance, such as:

COMPANY CONFIDENTIAL [PAYROLL AUDIT]

must be assigned to an accountant who’s responsible for the payroll and auditing activities in your company. Similarly, when information is exported (as discussed in the next few sections), the sensitivity label written on the output must match the internal labels of the information exported.

Suppose that a user edits a TOP SECRET file to remove all TOP SECRET and SECRET information (leaving only UNCLASSIFIED information), and then changes the sensitivity label to UNCLASSIFIED. If the system crashes at this point, has it ensured that the label didn’t get written to disk before the changes to the file were written? If such a thing could happen, you’d end up with a file labeled UNCLASSIFIED that actually did contain TOP SECRET data—a clear violation of label integrity. Systems rated at B1 and above must prove that their label integrity features guard against such violations.
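One way to guard against the crash scenario above is strict ordering. Make the sanitized contents durable first, and only then lower the label. A crash between the two steps then leaves clean data under the old, higher label, which is over-classified but safe. This is a minimal sketch under loudly stated assumptions. It stores the label in a hypothetical sidecar file named `<file>.label`, whereas real trusted systems keep labels in filesystem metadata. It also relies on `os.replace` being an atomic rename on POSIX filesystems.

```python
import os
import tempfile


def _atomic_write(path: str, data: bytes) -> None:
    """Write data to path so a crash leaves either the old or the new contents."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
            f.flush()
            os.fsync(f.fileno())   # force the bytes to disk before the rename
        os.replace(tmp, path)      # atomic rename on POSIX filesystems
        # A production version would also fsync the directory to make the rename durable.
    except BaseException:
        os.unlink(tmp)
        raise


def downgrade(data_path: str, sanitized: bytes, new_label: str) -> None:
    """Replace a file's contents, then lower its (hypothetical sidecar) label, in that order.

    If the system crashes between the two steps, the file keeps its old, higher
    label over sanitized contents. The reverse order could leave TOP SECRET data
    under an UNCLASSIFIED label.
    """
    _atomic_write(data_path, sanitized)
    _atomic_write(data_path + ".label", new_label.encode())
```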

Exportation of labeled information

A trusted system must ensure that when it writes information out (to a device or to another system), that information continues to have protection mechanisms associated with it. Two important ways of securing exported information are to assign security levels to output devices and to write sensitivity labels along with data. Systems rated at B1 and above must provide secure export facilities.

The Orange Book defined two types of export devices: multilevel and single-level. Every I/O device and communications channel in a system must be designated as one type or the other. Any changes to these designations must be auditable. Typically, a system administrator designates devices during system installation and setup.

Exportation to multilevel devices

A multilevel device or a multilevel communications channel is one to which you can write information at a number of different sensitivity levels. The system must support a way of specifying the lowest (e.g., UNCLASSIFIED) and the highest (e.g., TOP SECRET) security levels allowed for data being written to such a device.

To write information to a multilevel device, the Orange Book required that the system have some way to associate a security level with it. Mechanisms may differ for different systems and different types of devices. Files written to such devices must have sensitivity labels attached to them (usually written in a header record preceding the data in the file). This prevents a user from bypassing system controls by simply copying a sensitive file to another, untrusted system or device. Of course, there must be system controls on what devices can actually be used in this way, and on what happens to the transferred data. A sensitivity label on a file isn’t much protection if a user can simply carry a CD home, or can post the file to a web page. In most trusted systems, only disks are categorized as multilevel devices.

Exportation to single-level devices

A single-level device or a single-level communications channel is one to which you can write information at only one particular sensitivity level. Usually, terminals, printers, tape drives, and communication ports are categorized as single-level devices. The level you specify for a device is usually dependent on its physical location or the inherent security of the device type. For example, your installation might locate printers in a number of different computer rooms and offices. You’d designate these printers as having sensitivity levels corresponding to the personnel who have access to the printers.

You might designate a printer in a public area as UNCLASSIFIED, whereas a printer in a highly protected office used only by an individual with a TOP SECRET clearance might be designated TOP SECRET. Once you’ve designated a device in this way, the system will be able to send to that device only information at the level associated with the device.

Unlike output sent to multilevel devices, output sent to single-level devices is not required to be labeled with the security level of the exported information, although many trusted systems do label such output. The Orange Book does require that there be some way (system or procedural) to designate the single level of information being sent to the device.
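The two export rules can be sketched together. Each device gets a minimum and maximum level, and a single-level device is one whose minimum equals its maximum. The system refuses data outside a device's range and prepends a label header only for multilevel devices. The `Device` class, the device names, and the `LABEL:` header format are hypothetical illustrations, not a format the Orange Book specified.

```python
from dataclasses import dataclass

LEVELS = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}


@dataclass(frozen=True)
class Device:
    name: str
    min_level: str
    max_level: str   # a single-level device has min_level == max_level

    @property
    def multilevel(self) -> bool:
        return self.min_level != self.max_level


def export(device: Device, data: bytes, level: str) -> bytes:
    """Return the bytes the system would send to the device, or refuse."""
    if not LEVELS[device.min_level] <= LEVELS[level] <= LEVELS[device.max_level]:
        raise PermissionError(f"{level} data not allowed on {device.name}")
    if device.multilevel:
        # Multilevel devices get the label in a header record ahead of the data.
        return f"LABEL: {level}\n".encode() + data
    # Single-level devices: the device's designation implies the level.
    return data
```

For example, a printer in a public area would be `Device("lobby-printer", "UNCLASSIFIED", "UNCLASSIFIED")`, and any attempt to send SECRET data to it is refused.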

Labeling human-readable output

The Orange Book included very clear requirements for how to label hard-copy output (output that people can see). This included pages of printed output, maps, graphics, and other displays. The system administrator must have some way of specifying the labels that appear on the output.

Subject sensitivity labels

The subject sensitivity label requirement states that the system must notify a terminal user of any change in the security level associated with that user during an interactive session. This requirement applies to systems rated at B2 and above.

The idea of subject sensitivity labels is that you must always know what security level you’re working at. Trusted systems typically display your clearance when you log in, and redisplay it if the security level changes, either automatically or at the user’s request.
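The notification rule amounts to "never change the session's level silently." A minimal sketch follows. The `Session` class and its message strings are hypothetical, and a real system would display these messages over the trusted path rather than collect them in a list.

```python
class Session:
    """A hypothetical interactive session that announces its security level."""

    def __init__(self, user: str, level: str) -> None:
        self.user = user
        self.level = level
        # The level is shown at login.
        self.messages: list[str] = [f"{user} logged in at {level}"]

    def change_level(self, new_level: str) -> None:
        if new_level != self.level:
            self.level = new_level
            # B2 and above: the user must be told whenever the level changes.
            self.messages.append(f"Security level changed to {new_level}")

    def show_level(self) -> str:
        """Redisplay the current level at the user's request."""
        return f"Current security level: {self.level}"
```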

Device labels

The device labels requirement states that each physical device attached to your system must have minimum and maximum security levels associated with it, and that these levels are used to “enforce constraints imposed by the physical environments in which the devices are located.”

Summary of Orange Book Classes

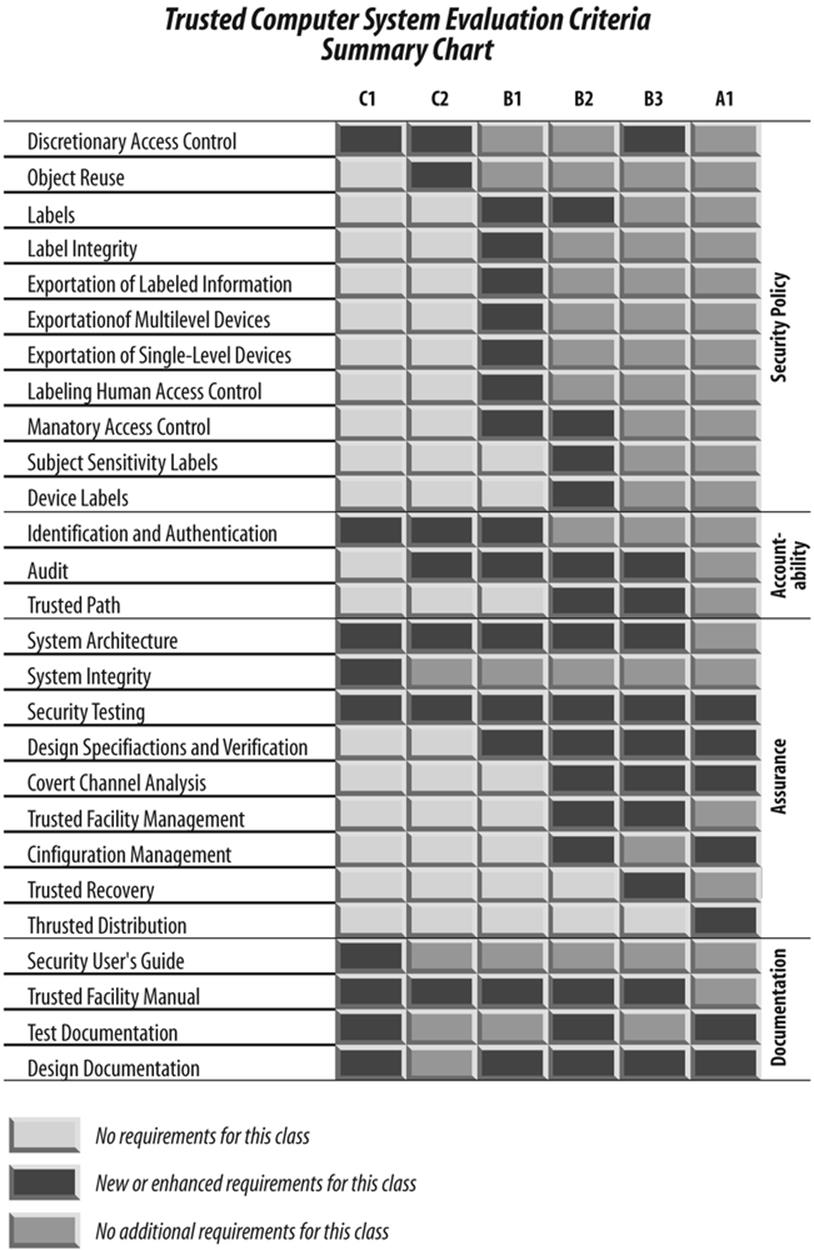

The following sections summarize very briefly the high points of each of the Orange Book evaluation classes described in this appendix. (See Figure C-1.)

Figure C-1. Comparison of evaluation classes

D Systems: Minimal Security

According to the Orange Book, division D “is reserved for systems that have been evaluated but that fail to meet the requirements for a higher evaluation class.” The Orange Book listed no requirements for division D. It’s simply a catch-all category for systems that don’t provide the security features offered by more highly rated systems.

In fact, there are no evaluated systems in this class; a vendor wouldn’t go to the trouble of getting a system evaluated if it didn’t offer a reasonable set of security features. It’s likely that the most basic personal computer systems would fall into the division D category if they were evaluated.

C1 Systems: Discretionary Security Protection

C1 systems provide rather limited security features. The Orange Book described C1 systems as an environment of “cooperating users processing data at the same level(s) of security.” At this level, security features are intended primarily to keep users from making honest mistakes that could damage the system (e.g., by writing over system memory or critical software) or could interfere with other users’ work (by deleting or modifying their programs or data). The security features of a C1 system aren’t sufficient to keep a determined intruder out.

The two main user-visible features required in the C1 class are:

Passwords (or some other mechanism)

These identify and authenticate a user before letting him use the system.

Discretionary protection of files and other objects

With systems of this kind, you can protect your own files by deciding who will be allowed to access them.

C1 systems must also provide a system architecture that can protect system code from tampering by user programs. The system must be tested to ensure that it works properly and that the security features can’t be bypassed in any obvious way. There are also specific documentation requirements. Many people feel that if an ordinary Unix or Linux system (with no enhanced security features) were submitted for evaluation, it would be given a C1 rating. (Others claim that it would get a C2 rating.)

C2 Systems: Controlled Access Protection

C2 systems provide much more stringent security than C1 systems. In addition to providing all the features required at the C1 level, C2 systems offer the following additional user-visible features:

Accountability of individual users

Through individual password controls and auditing (maintaining a record of every security-related action every user performs), the system can keep track of who’s doing what in the system.

More detailed discretionary controls

The Orange Book describes C2 requirements as follows: “These access controls shall be capable of including or excluding to the granularity of a single user.” Through access control lists or some other mechanism, you must be able to specify, for example, that only Mary and Joe can read a file and that only Sam can change it.

Object reuse

This feature makes sure that any data left in memory, on disk, or anywhere else in the system doesn’t accidentally become accessible to a user.

The C2 system architecture must allow system resources to be protected via access control features. C2 systems also require more rigorous testing and documentation.

B1 Systems: Labeled Security Protection

B1 (and higher) systems support mandatory access controls. In a MAC system, every file (and other major object) in the system must be labeled. The system uses these sensitivity labels, and the security levels of the system’s users, to enforce the system’s security policy. In MAC systems, protection of files is no longer discretionary. You can’t give another user access to one of your files unless that user has the necessary clearance to the file. The Orange Book imposed many specific labeling requirements on B1 systems, including labeling of all information that’s exported from the system.
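The mandatory check can be sketched as a dominance test between labels, in the style of the lattice-based policies (such as the Bell-LaPadula model) behind Orange Book MAC: a clearance dominates a label if its level is at least as high and it includes every one of the label's categories. This simplified sketch covers only the read check and the rule that an owner's discretionary grant is void without sufficient clearance. The level names follow U.S. classification levels; the category `PROJECT-X` and the functions `may_read` and `share` are hypothetical.

```python
from dataclasses import dataclass

# Hierarchical sensitivity levels, lowest to highest.
LEVELS = {"UNCLASSIFIED": 0, "CONFIDENTIAL": 1, "SECRET": 2, "TOP SECRET": 3}


@dataclass(frozen=True)
class Label:
    level: str
    categories: frozenset = frozenset()  # non-hierarchical compartments

    def dominates(self, other: "Label") -> bool:
        # Higher-or-equal level AND a superset of the other label's categories.
        return (LEVELS[self.level] >= LEVELS[other.level]
                and self.categories >= other.categories)


def may_read(clearance: Label, object_label: Label) -> bool:
    """Mandatory read check: the subject's clearance must dominate the object's label."""
    return clearance.dominates(object_label)


def share(owner_agrees: bool, grantee_clearance: Label, object_label: Label) -> bool:
    """A discretionary grant takes effect only if the mandatory check also passes."""
    return owner_agrees and may_read(grantee_clearance, object_label)
```

Note that a TOP SECRET clearance without the `PROJECT-X` category still cannot read a SECRET `PROJECT-X` file: level alone is not enough.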

B1 systems must have a system architecture that more rigorously separates the security-related portions of the system from those that are not security-related. B1 systems also require more stringent testing and documentation. In the B1 class, your documentation must include a model of the security policy supported by the system. This policy need not be a mathematical one (as is required in the higher classes), but it must be a clear statement of the rules enforced by the system’s security features.

B2 Systems: Structured Protection

B2 and higher systems don’t actually add many more specific user-visible security features to the set already required of B1 systems. Instead, they extend these features, and they require additional assurance that the features were designed and work properly.

In the B2 class, labeling features are extended to include all objects in the system, including devices. B2 systems also add a trusted path feature, allowing the user to communicate with the system in a direct and unmistakable way—for example, by pressing a certain key or interacting with a certain menu. The system design must offer proof that an intruder can’t interfere with, or “spoof,” this direct channel.

B2 systems support least privilege, the concept that users and programs should possess the fewest privileges they need, and for the shortest time necessary, to perform system functions. This concept is implemented via a combination of design, implementation, and assurance requirements. One example is the requirement that there be a separate system administrator and operator.

From a system design point of view, B2 systems require substantially more modularity and use of hardware features to isolate security-related functions from those that are not security-related. B2 systems also require a formal, mathematical statement of the system’s security policy, as well as more stringent testing and documentation. They also require that a configuration management system manage all changes to the system code and documentation and that system designers conduct a search for covert channels—secret ways that a developer could use the system to reveal information.

Few systems have been successfully evaluated at B2 and higher.

B3 Systems: Security Domains

There are no requirements for new user-visible features at the B3 level, but the system design and assurance features are substantially more rigorous. Trusted facility management (assignment of a specific individual as security administrator) is required, as is trusted recovery (procedures ensuring that security doesn’t fail if the system does) and the ability to signal the administrator immediately if the system detects an “imminent violation of security policy.”

The Orange Book indicated that B3 systems must be “highly resistant to penetration.” The TCB is very tightly structured to exclude code not required for the enforcement of system security.

B3 systems aren’t very common. It’s difficult enough to obtain a B3 rating that vendors may choose to go all the way for an A1 rating.

A1 Systems: Verified Design

At present, A1 systems are at the top of the security heap, although the Orange Book does discuss the possibility of defining requirements for systems that exceed current A1 requirements in areas of system architecture, testing, and formal verification.

A1 systems are pretty much functionally equivalent to B3 systems. The only actual feature provided by A1 systems beyond B3 requirements is trusted distribution, which enforces security while a trusted system is being shipped to a customer. What makes a system an A1 system is the additional assurance that’s offered by the formal analysis and mathematical proof that the system design matches the system’s security policy and its design specifications.

Only two or three systems ever received an A1 rating.

Complaints About the Orange Book

Not everyone thought the Orange Book answered all needs, and it tended to be government-specific. Some respected security practitioners disagreed with the government’s reliance on this book alone as a way of measuring trust. Here are some of the main claims about the inadequacies of the Orange Book.

§ The Orange Book model worked only in a government-classified environment, and the higher levels of security weren’t appropriate for the protection of commercial data, where data integrity rather than confidentiality was the chief concern.

§ The Orange Book emphasized protection from unauthorized access, while most security attacks actually involve insiders. (Orange Book defenders pointed out that the book’s principle of least privilege addressed this issue to some degree.)

§ The Orange Book did not address networking issues, although the Red Book did.

§ The Orange Book contained a relatively small number of security ratings.

In addition, there was a need to harmonize U.S. computer security requirements with those of nations likely to take part in joint military and commercial operations. The European Information Technology Security Evaluation Criteria (ITSEC) was similar to the U.S. TCSEC, the criteria published in the Orange Book. A merger was in order, and the result came to be known as the Common Criteria.

FIPS by the Numbers

The Federal Information Processing Standards Publications describe how the U.S. government acquires computer systems. If you or your company sells computers or anything that connects to them to the government, you will need to be aware of the FIPS PUBS that deal with that equipment and the procedures that surround it. There are actually some computer security industry certifications that are based largely on the mastery of the FIPS PUBS.

The FIPS PUBS list is maintained by the National Institute of Standards and Technology (NIST), an agency of the U.S. Department of Commerce’s Technology Administration. In 2005, over a dozen FIPS PUBS were withdrawn. Current information can be obtained by checking the FIPS web site at http://www.itl.nist.gov/fipspubs/index.htm or by calling 301-975-2832.

Some of the following references include dates, which are written in the government format of year (two or four digits), month, and day; for example, 94 Sept 28 means September 28, 1994.

FIPS 4-2 Representation of Calendar Date to Facilitate Interchange of Data Among Information Systems

Adopts American National Standard ANSI X3.30-1997: Representation of Date for Information Interchange (revision of ANSI X3.30-1985 (R1991)). FIPS 4-2 supersedes FIPS PUB 4-1, dated January 27, 1988, and updates the standard for representing calendar date and implements the U.S. government’s commitment to use four-digit year elements (e.g., 1999, 2000, etc.) in its information technology systems.
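The four-digit-year commitment can be made concrete with a small converter from the two-digit "YY Mon DD" style used in this appendix's entries to an eight-digit year-month-day string (YYYYMMDD). This is a sketch under assumptions: the YYYYMMDD target reflects the year-month-day ordering associated with ANSI X3.30, the pivot of 50 for expanding two-digit years is a hypothetical choice (it happens to fit every date in this list), and `to_yyyymmdd` is an illustrative name.

```python
from datetime import date

# Month spellings in this appendix vary ("Sept", "March"), so match on the first three letters.
_MONTHS = {name: i for i, name in enumerate(
    ["jan", "feb", "mar", "apr", "may", "jun",
     "jul", "aug", "sep", "oct", "nov", "dec"], start=1)}

PIVOT = 50  # hypothetical: two-digit years below 50 are read as 20xx, others as 19xx


def expand_year(token: str) -> int:
    """Turn a two- or four-digit year token into a four-digit year."""
    year = int(token)
    if len(token) == 4:
        return year
    return 2000 + year if year < PIVOT else 1900 + year


def to_yyyymmdd(stamp: str) -> str:
    """Convert a date such as '94 Sept 28' to the eight-digit form '19940928'."""
    parts = stamp.split()
    if len(parts) != 3:
        raise ValueError(f"need year, month, and day: {stamp!r}")
    month = _MONTHS.get(parts[1][:3].lower())
    if month is None:
        raise ValueError(f"unknown month: {parts[1]!r}")
    # date() also rejects impossible dates such as Feb 30.
    return date(expand_year(parts[0]), month, int(parts[2])).strftime("%Y%m%d")
```

For example, `to_yyyymmdd("94 Sept 28")` returns `"19940928"`. Entries such as "95 Apr" that omit the day are rejected rather than guessed.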

FIPS 5-2 Codes for the Identification of the States, the District of Columbia and the Outlying Areas of the United States, and Associated Areas—87 May 28

Provides a set of two-digit numeric codes and a set of two-letter alphabetic codes that represent the 50 states, the District of Columbia and the outlying areas of the United States, and associated areas such as the Federated States of Micronesia and Marshall Islands, and the trust territory of Palau.

Additional information on the content of this FIPS may be obtained from the Bureau of the Census at (301) 763-1522.

FIPS 6-4 Counties and Equivalent Entities of the U.S., Its Possessions, and Associated Areas—90 Aug 31

Provides the names and three-digit codes that represent the counties and statistically equivalent entities of the 50 states, the District of Columbia, and the possessions and associated areas of the United States, for use in the interchange of formatted machine-sensible data. Implements ANSI X3.31-1988. Minor editorial corrections made in January 2005.

Additional information on the content of this FIPS may be obtained from the Bureau of the Census at (301) 763-9031.
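Because FIPS 6-4 county codes are unique only within a state, they are commonly prefixed with the FIPS 5-2 numeric state code to form a five-digit identifier. A minimal sketch follows; the dictionary is a tiny hand-copied excerpt of the state table, not the full list, and `county_code` is a hypothetical helper name.

```python
# A small excerpt of FIPS 5-2: two-letter alphabetic code -> two-digit numeric code.
STATE_NUMERIC = {
    "AL": "01",  # Alabama
    "AK": "02",  # Alaska
    "CA": "06",  # California
    "DC": "11",  # District of Columbia
    "IL": "17",  # Illinois
}


def county_code(state_alpha: str, county: int) -> str:
    """Combine a FIPS 5-2 state code and a three-digit FIPS 6-4 county code."""
    numeric = STATE_NUMERIC[state_alpha.upper()]
    if not 1 <= county <= 999:
        raise ValueError(f"county code must fit in three digits: {county}")
    return f"{numeric}{county:03d}"
```

For example, Los Angeles County is county 037 in California (state 06), so `county_code("CA", 37)` returns `"06037"`.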

FIPS 10-4 Countries, Dependencies, Areas of Special Sovereignty, and Their Principal Administrative Divisions—95 Apr

Provides a list of the basic geopolitical entities in the world, together with the principal administrative divisions that comprise each entity. Each basic geopolitical entity is represented by a two-character, alphabetic country code. Each principal administrative division is identified by a four-character code consisting of the two-character country code followed by a two-character administrative division code. These codes are intended for use in activities associated with the mission of the Department of State and in national defense programs.

Note: Change notices for FIPS 10-4 are issued by the National Geospatial-Intelligence Agency (NGA), and are available on NGA’s GEOnet Names Server (GNS) at http://earth-info.nga.mil/gns/html/fips_files.html.

Additional information on the content of this FIPS may be obtained from the National Geospatial-Intelligence Agency (NGA) at (301) 227-1407.
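The four-character division codes described above split cleanly into their country and division parts. This sketch assumes the division part may be letters or digits (the entry says only that it has two characters); `parse_division_code` is a hypothetical name, and `XY07` below is a made-up code, not a real assignment.

```python
import re
from typing import NamedTuple


class DivisionCode(NamedTuple):
    country: str   # two-letter country code
    division: str  # two-character administrative division code


# Assumption: the division part may be letters or digits.
_PATTERN = re.compile(r"([A-Z]{2})([A-Z0-9]{2})")


def parse_division_code(code: str) -> DivisionCode:
    """Split a code such as 'XY07' into its country and division parts."""
    match = _PATTERN.fullmatch(code.strip().upper())
    if match is None:
        raise ValueError(f"not a four-character division code: {code!r}")
    return DivisionCode(match.group(1), match.group(2))
```

`parse_division_code("xy07")` returns `DivisionCode(country='XY', division='07')`.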

FIPS 113 Computer Data Authentication—85 May 30

Specifies a data authentication algorithm (DAA) which, when applied to computer data, automatically and accurately detects unauthorized modifications, both intentional and accidental. Based on FIPS PUB 46, this standard is compatible with requirements adopted by the Department of the Treasury and the banking community to protect electronic funds transfer transactions.

FIPS 140-2 Security Requirements for Cryptographic Modules—01 May 25

This Federal Information Processing Standard (140-2) was recently approved by the Secretary of Commerce. It specifies the security requirements that will be satisfied by a cryptographic module, providing four increasing, qualitative levels intended to cover a wide range of potential applications and environments. The areas covered, related to the secure design and implementation of a cryptographic module, include specification; ports and interfaces; roles, services, and authentication; finite state model; physical security; operational environment; cryptographic key management; electromagnetic interference/electromagnetic compatibility (EMI/EMC); self-tests; design assurance; and mitigation of other attacks.

This version supersedes FIPS PUB 140-1, January 11, 1994.

FIPS 161-2 Electronic Data Interchange (EDI)—96 May 22

FIPS 161-2 adopts, with specific conditions, the families of EDI standards known as X12, UN/EDIFACT, and HL7 developed by national and international standards developing organizations. FIPS 161-2 does not mandate the implementation of EDI systems within the federal government but requires the use of the identified families of standards when federal agencies and organizations implement EDI systems.

FIPS 180-2 Secure Hash Standard (SHS)—02 Aug

Specifies a secure hash algorithm to be used by both the transmitter and intended receiver of a message in computing and verifying a digital signature.

FIPS 180-2 superseded FIPS 180-1 as of February 1, 2003.
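The transmitter/receiver use of a hash described above can be sketched with Python's `hashlib`, using SHA-256, one of the algorithms FIPS 180-2 specifies. In a full signature scheme the digest would be signed and the signature checked; this sketch shows only the hashing half, in which the receiver recomputes the digest and compares it. The function names are hypothetical.

```python
import hashlib
import hmac


def digest(message: bytes) -> str:
    """SHA-256 digest of the message, as a hex string."""
    return hashlib.sha256(message).hexdigest()


def receiver_accepts(message: bytes, expected_hex: str) -> bool:
    # Recompute the digest and compare in constant time.
    return hmac.compare_digest(digest(message), expected_hex)
```

`digest(b"abc")` returns `ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad`, the SHA-256 example value for the message "abc"; changing even one character of the message yields an unrelated digest.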

FIPS 181 Automated Password Generator (APG)—93 Oct 05

Specifies a standard to be used by Federal organizations that require computer-generated pronounceable passwords to authenticate the personal identity of an automated data processing (ADP) system user, and to authorize access to system resources. The standard describes an automated password generation algorithm that randomly creates simple pronounceable syllables as passwords. The password generator accepts input from a random number generator based on the DES cryptographic algorithm defined in Federal Information Processing Standard 46-2.
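The idea of computer-generated pronounceable passwords can be illustrated with a much simpler scheme than the one FIPS 181 defines: stringing together consonant-vowel-consonant syllables. This is not the FIPS 181 algorithm. In particular, it draws randomness from Python's `secrets` module instead of the DES-based generator the standard specifies, and the letter sets and function names are arbitrary illustrative choices.

```python
import secrets

# Illustrative letter sets; FIPS 181 defines its own units and pronunciation rules.
CONSONANTS = "bcdfghjklmnprstvz"
VOWELS = "aeiou"


def syllable() -> str:
    """One consonant-vowel-consonant syllable, such as 'tam' or 'bik'."""
    return (secrets.choice(CONSONANTS)
            + secrets.choice(VOWELS)
            + secrets.choice(CONSONANTS))


def pronounceable_password(syllables: int = 3) -> str:
    """Join randomly chosen syllables into a pronounceable password."""
    if syllables < 1:
        raise ValueError("need at least one syllable")
    return "".join(syllable() for _ in range(syllables))
```

The trade-off is built in: restricting passwords to pronounceable patterns makes them easier to remember but reduces the number of possible passwords of a given length.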

FIPS 183 Integration Definition for Function Modeling (IDEF0)—93 Dec 21

Describes the IDEF0 modeling language (semantics and syntax), and associated rules and techniques, for developing structured graphical representations of a system or enterprise. Use of this standard permits the construction of models comprising system functions (activities, actions, processes, and operations), functional relationships, and data (information or objects) that support systems integration.

FIPS 184 Integration Definition for Information Modeling (IDEF1X)—93 Dec 21

Describes the IDEF1X modeling language (semantics and syntax), and associated rules and techniques, for developing a logical model of data. IDEF1X is used to produce a graphical information model that represents the structure and semantics of information within an environment or system. Use of this standard permits the construction of semantic data models, which may serve to support the management of data as a resource, the integration of information systems, and the building of computer databases.

FIPS 185 Escrowed Encryption Standard (EES)—94 Feb 09

This nonmandatory standard provides an encryption/decryption algorithm and a Law Enforcement Access Field (LEAF) creation method that may be implemented in electronic devices and may be used at the option of government agencies to protect government telecommunications. The algorithm and the LEAF creation method are classified and are referenced, but not specified, in the standard. Electronic devices implementing this standard may be designed into cryptographic modules that are integrated into data-security products and systems used in data-security applications. The LEAF is used in a key escrow system that provides for decryption of telecommunications when access to the telecommunications is lawfully authorized.

FIPS 186-2 Digital Signature Standard (DSS)—00 Jan 27

Specifies algorithms appropriate for applications requiring a digital, rather than written, signature. A digital signature is represented in a computer as a string of binary digits; it is computed using a set of rules and a set of parameters such that the identity of the signatory and integrity of the data can be verified. An algorithm provides the capability to generate and verify signatures. Signature generation uses a private key to generate a digital signature; signature verification uses a public key that corresponds to, but is not the same as, the private key. Each user possesses a private and public key pair. Private keys are kept secret; public keys may be shared. Anyone can verify the signature of a user by using that user’s public key. Signature generation can be performed only by the possessor of the user’s private key.

This revision supersedes FIPS 186-1 in its entirety.
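The private-key/public-key split described above can be seen in a toy version of DSA, one of the algorithms FIPS 186-2 specifies. The parameters here (p = 23, q = 11, g = 4) are deliberately tiny so the arithmetic is easy to follow; they offer no security, and a forger could succeed by chance far too often. Real DSA uses primes hundreds or thousands of bits long. The function names are hypothetical, and reducing a SHA-1 digest modulo q is a simplification for this toy size.

```python
import hashlib
import secrets

# Toy domain parameters: q divides p - 1 (22 = 2 * 11) and g = 4 has order q mod p.
P, Q, G = 23, 11, 4


def hash_to_int(message: bytes) -> int:
    # FIPS 186-2 pairs DSA with SHA-1; reduce the digest modulo q for this toy size.
    return int.from_bytes(hashlib.sha1(message).digest(), "big") % Q


def keygen() -> tuple:
    x = secrets.randbelow(Q - 1) + 1  # private key, 1 <= x <= q - 1
    y = pow(G, x, P)                  # public key, y = g^x mod p
    return x, y


def sign(message: bytes, x: int) -> tuple:
    """Signature generation: needs the private key x."""
    z = hash_to_int(message)
    while True:
        k = secrets.randbelow(Q - 1) + 1  # fresh secret per signature
        r = pow(G, k, P) % Q
        if r == 0:
            continue
        s = (pow(k, -1, Q) * (z + x * r)) % Q  # modular inverse needs Python 3.8+
        if s != 0:
            return r, s


def verify(message: bytes, signature: tuple, y: int) -> bool:
    """Signature verification: needs only the public key y."""
    r, s = signature
    if not (0 < r < Q and 0 < s < Q):
        return False
    w = pow(s, -1, Q)
    u1 = (hash_to_int(message) * w) % Q
    u2 = (r * w) % Q
    v = (pow(G, u1, P) * pow(y, u2, P)) % P % Q
    return v == r
```

Verification works because g^(u1) * y^(u2) = g^(w(z + xr)) = g^k, so v reproduces r without the verifier ever learning x.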

FIPS 188 Standard Security Label for Information Transfer—94 Sept 6

Defines a security label syntax for information exchanged over data networks and provides label encodings for use at the Application and Network Layers.

FIPS 190 Guideline for the Use of Advanced Authentication Technology Alternatives—94 Sept 28

Describes the primary alternative methods for verifying the identities of computer system users, and provides recommendations to federal agencies and departments for the acquisition and use of technology that supports these methods.

FIPS 191 Guideline for the Analysis of Local Area Network Security—94 Nov 9

Discusses threats and vulnerabilities and considers technical security services and security mechanisms.

FIPS 192 Application Profile for the Government Information Locator Service (GILS)—94 Dec 7

Describes an application profile for the GILS, which is based primarily on ANSI/NISO Z39.50-1992. GILS is a decentralized collection of servers and associated information services that is used by the public either directly or through intermediaries to find public information throughout the federal government.

FIPS 192-1 (a) & (b) Application Profile for the Government Information Locator Service (GILS)—97 Aug 1

Describes the U.S. federal government use of the international application profile for the GILS. The GILS Profile is based primarily on ISO 23950, presently equivalent to the ANSI/NISO Z39.50-1995/Version 2. GILS is a decentralized collection of servers and associated information services that is used by the public either directly or through intermediaries to find public information throughout the federal government.

FIPS 196 Entity Authentication Using Public Key Cryptography—1997 Feb 18

Specifies two challenge-response protocols by which entities in a computer system may authenticate their identities to one another. These protocols may be used during session initiation, and at any other time that entity authentication is necessary. Depending on which protocol is implemented, either one or both entities involved may be authenticated. The defined protocols are derived from an international standard for entity authentication based on public key cryptography, which uses digital signatures and random number challenges.

FIPS 197 Advanced Encryption Standard (AES)—01 Nov 26

The AES specifies a FIPS-approved cryptographic algorithm that can be used to protect electronic data. The AES algorithm is a symmetric block cipher that can encrypt (encipher) and decrypt (decipher) information. Encryption converts data to an unintelligible form called ciphertext; decrypting the ciphertext converts the data back to its original form, called plaintext.

FIPS 198 The Keyed-Hash Message Authentication Code (HMAC)—02 March

This standard describes a keyed-hash message authentication code (HMAC), a mechanism for message authentication using cryptographic hash functions. HMAC can be used with any iterative approved cryptographic hash function, in combination with a shared secret key. The cryptographic strength of HMAC depends on the properties of the underlying hash function. The HMAC specification in this standard is a generalization of Internet RFC 2104, HMAC, Keyed-Hashing for Message Authentication, and ANSI X9.71, Keyed Hash Message Authentication Code.
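The HMAC construction can be written out directly from its definition: if the key is longer than the hash's block size, hash it; pad it with zeros to the block size; then compute H((K XOR opad) || H((K XOR ipad) || message)), where ipad is the byte 0x36 repeated and opad is 0x5C repeated. This sketch fixes the hash to SHA-256 (64-byte blocks) and exists only to show the structure; in practice you would call Python's `hmac.new`, which the sketch should match byte for byte.

```python
import hashlib
import hmac

BLOCK_SIZE = 64  # SHA-256 processes 64-byte blocks


def hmac_sha256(key: bytes, message: bytes) -> bytes:
    """HMAC-SHA-256 built by hand from the keyed-hash construction."""
    if len(key) > BLOCK_SIZE:
        key = hashlib.sha256(key).digest()  # long keys are hashed first
    key = key.ljust(BLOCK_SIZE, b"\x00")    # then zero-padded to the block size
    ipad_key = bytes(b ^ 0x36 for b in key)
    opad_key = bytes(b ^ 0x5C for b in key)
    inner = hashlib.sha256(ipad_key + message).digest()
    return hashlib.sha256(opad_key + inner).digest()


def verify_tag(key: bytes, message: bytes, tag: bytes) -> bool:
    # Constant-time comparison avoids leaking how many leading bytes matched.
    return hmac.compare_digest(hmac_sha256(key, message), tag)
```

Both parties must share the secret key; anyone holding it can both create and check tags, which is why HMAC provides integrity and origin authentication between those parties but not a signature in the FIPS 186 sense.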

FIPS 199 Standards for Security Categorization of Federal Information and Information Systems—04 Feb

FIPS 199 addresses one of the requirements specified in the Federal Information Security Management Act (FISMA) of 2002, which requires all federal agencies to develop, document, and implement agency-wide information security programs for the information and information systems that support the operations and the assets of the agency, including those provided or managed by another agency, contractor, or other source. FIPS 199 provides security categorization standards for information and information systems. Security categorization standards make available a common framework and method for expressing security. They promote the effective management and oversight of information security programs, including the coordination of information security efforts throughout the civilian, national security, emergency preparedness, homeland security, and law enforcement communities. Such standards also enable consistent reporting to OMB and Congress on the adequacy and effectiveness of information security policies, procedures, and practices.
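FIPS 199 expresses a security category as a potential impact level (low, moderate, or high) for each of three security objectives: confidentiality, integrity, and availability. For an information system, each objective takes the highest value found among the types of information the system holds, the so-called high water mark. A minimal sketch of that rule follows; the function name and the two information types in the usage note are hypothetical.

```python
from enum import IntEnum
from typing import Dict, List


class Impact(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


OBJECTIVES = ("confidentiality", "integrity", "availability")


def categorize_system(info_types: List[Dict[str, Impact]]) -> Dict[str, Impact]:
    """Per-objective high water mark across the information types on a system."""
    if not info_types:
        raise ValueError("a system must hold at least one information type")
    return {obj: max(t[obj] for t in info_types) for obj in OBJECTIVES}
```

For instance, a system holding payroll data (moderate, moderate, low) and a public web page (low, high, moderate) would be categorized as moderate confidentiality, high integrity, and moderate availability.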

FIPS 201 Personal Identity Verification for Federal Employees and Contractors—05 Feb

This standard specifies the architecture and technical requirements for a common identification standard for federal employees and contractors. The overall goal is to achieve appropriate security assurance for multiple applications by efficiently verifying the claimed identity of individuals seeking physical access to federally controlled government facilities and electronic access to government information systems.

I Don’t Want You Smelling My Fish

At the time the United States was assembling the TCSEC into the Orange Book, many other nations did the same. Europe put together its ITSEC program. The Canadians developed the Canadian Trusted Computer Product Evaluation Criteria (CTCPEC) guidelines. Each project had similar goals and several differences.

With all the computers and networks in a country being responsible for an increasing part of the security, or at least the economic well being, of that country, it is only natural that each country has its own national interests at heart when it develops its security evaluation programs and capabilities.

It is a little like buying fresh seafood. Each of us has pet procedures for picking piscatorial products. (Find a good supplier, avoid fish that smell, look for clear eyes and bright pink or red gills, and well-defined fins.) The individual who has no idea what he is looking for is the most likely to be sold flounder that is past its prime. However, with networking equipment and computers, it is more than a dinner party that is being threatened. The nation that can keep itself free of intruding eavesdroppers, capacity-robbing viruses, infrastructure-damaging threats, and terrorist activities is that much further ahead on the world stage. To achieve that, each nation needs to develop and administer its own methods of ensuring secure computing.

And yet we do all have to play together. Equipment is cheaper for all if it is manufactured for a world, rather than for a national, market. Operating system software is more likely to run universally if there is a commonality to the platforms on which it plays. Working together simplifies standardization and provides a uniform landscape. Even bad artifacts of the Internet age, such as phishing, spam, and online scams are easier to prosecute if both the victim and the attacker live in countries with computer crime laws. It’s that much more difficult if the attack is launched from a country that does not acknowledge illicit computer activity as a criminal act.

The Common Criteria is an attempt to take the best of many methods and provide the world with a single method of determining a product’s security and protection levels. The project began as a joint effort between the United States (TCSEC), Europe (ITSEC), and Canada (CTCPEC). It has continued to develop into an international equipment certification system that can serve many nations at the same time. Common Criteria also frees governments from direct involvement, because a Common Criteria testing laboratory may in fact be a commercial entity that is certified for testing.

Common Criteria recognizes that equipment intended for different uses can be subjected to different tests, unlike the Orange Book, which tended to judge everything in terms of its security and defense potential. The Common Criteria process is now part of an international standard known as ISO 15408 (Common Criteria). The web site for the consortium is http://www.commoncriteriaportal.org/.

Common Criteria Evaluation Assurance Levels (EALs)

The evaluation assurance level defines the thoroughness of the testing to be done on a product. The protection profile (PP) states the need for a security solution and the rationale for the testing. Protection profiles are meant to be reusable so that every sponsor does not need to reinvent the wheel for each new product to be evaluated. If, for instance, a profile exists that defines the nature of “medium strength robustness” for security algorithms, a developer who has developed a new algorithm can submit it for Common Criteria evaluation according to that profile, if it is relevant and will give an adequate test. A protection profile should include the intended evaluation assurance level for the product to be tested.

The next requirement is to provide the definition of the security target, which is a fancy way of explaining how a product is intended to operate, in what environment it is to operate, and what components should be subjected to testing. The product to be tested is called the target of evaluation.

Next, the functionality requirements and the assurance requirements are spelled out, followed by the actual evaluation. Presumably, the product will meet the requirements of the EAL specified in the protection profile.

The EAL is a measure of how rigorous, or how scientifically deep, the testing is. Seven levels, EAL1 through EAL7, can result from a successful evaluation; EAL0 indicates failure. The various EALs and their relationship to the corresponding Orange Book ratings are displayed in Table C-1.

Table C-1. Common Criteria to Orange Book Harmony

| EAL level | Description of the level | Orange Book level (TCSEC) |
|---|---|---|
| EAL0 | Inadequate assurance | n/a |
| EAL1 | Functionally tested | n/a |
| EAL2 | Structurally tested | C1 |
| EAL3 | Methodically tested and checked | C2 |
| EAL4 | Methodically designed, tested, and reviewed | B1 |
| EAL5 | Semiformally designed and tested | B2 |
| EAL6 | Semiformally verified design and tested | B3 |
| EAL7 | Formally verified design and tested | A1 |

Returning to the analogy of selecting your own fish, the result of this standards activity is that, instead of subjecting equipment to its own evaluation process, the U.S. government has transferred equipment verification duties to signatories of the Common Criteria agreements. This is by virtue of what is called the Common Criteria Recognition Arrangement (CCRA). Within the CCRA, only evaluations up to the assurance level called EAL4 are mutually recognized; however, some European countries that were formerly part of the ITSEC agreement may recognize higher EALs as well.

Evaluations at EAL5 and above tend to involve the security requirements of the host nation’s government and likely will be performed by those nations’ own national laboratories. In other words, for small items, Common Criteria works well. For important stuff, the job is still done in-house.

Common Criteria documentation and further information can be accessed at this web site: http://niap.nist.gov/cc-scheme/index.html.

DOES ISO 15408 CERTIFICATION MEAN A PRODUCT IS ALWAYS SECURE?

Interestingly, the closer an item moves toward being a commodity, the more flexible its security issues can be. Let’s consider a common PC operating system, such as Linux or some version of Microsoft Windows. It happens that Windows 2000 is ISO 15408-certified, but regular security patches to address security vulnerabilities are still published by Microsoft for Windows 2000.

This seeming contradiction is possible, in fact necessary, because of the broad potential use for such products. As an adaptation to the process of getting an ISO 15408 certification, a vendor is allowed to stipulate certain assumptions about the operating environment. In addition, the strength of the threats to be faced in that environment can be stated in advance. Finally, the manufacturer can state which configuration of the product is to be evaluated.

As a result, this kind of broadly deployed, multiple-use product can be ISO 15408 certified, but it should be considered secure only in the assumed, specified circumstances of the test, also known as the evaluated configuration.