Information Security Management Handbook, Sixth Edition (2012)

DOMAIN 3: INFORMATION SECURITY AND RISK MANAGEMENT

Risk Management

Chapter 9. Server Virtualization: Information Security Considerations

Thomas A. Johnson

Overview

Still considered an ‘emergent technology,’ virtualization has in fact been in use since the mid-1960s (CDW, 2010) and is well established in the mainframe and minicomputer world as a common way of sharing resources (ISACA, 2010). The way the industry is now adopting and using virtualization has made the term almost as familiar as Windows, which has become a household name. Until recently, there was no software package available, much less a need for one, to provide true resource sharing on the commodity servers common to a data center. As virtualization has proliferated throughout data centers, companies have taken advantage of the technology on Intel-based servers, virtualizing everything from in-house utility servers to enterprise content management systems. With advances extending virtualization to the workstation, disk, and CPU, the technology has been revolutionizing data centers, allowing companies to realize major wins such as cost savings and streamlined recovery plans.

Virtualization can be applied to many components beyond servers, including disks and network communications. Several other forms of virtualization are emerging in the marketplace, notably workstation and disk virtualization. While these technologies can take virtualization to the next level, covering all the popular and emerging practices would fill a book. Because server virtualization has gained more exposure than other virtualization technologies and has been widely adopted, our focus will be on the issues surrounding server virtualization.

What exactly is virtualization? “Virtualization is simply the logical separation of the request for some service from the physical resources that actually provide that service” (von Hagen, 2008). Putting it another way, “you can think of virtualization as inserting another layer of encapsulation so that multiple operating systems can operate on a single piece of hardware” (Golden, 2008). If you are still confused, you are not alone. It is difficult to sum up the technology in one or two sentences, but it can be described as software or technology that allows multiple systems to share the same resources, such as processors or disks, while making each system believe that these resources are exclusively its own, when in reality they are shared.

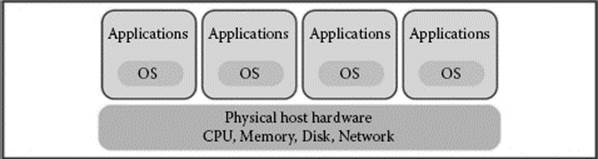

The diagram above shows logically how server virtualization works: four servers running on one physical machine. Each server instance, including its operating system and associated applications, operates in its own ecosystem while accessing the shared physical hardware. The hypervisor brokers the requests for CPU, memory, disk, and network resources and creates the illusion, for each virtual server, that those resources are dedicated to it (Rule and Dittner, 2007).
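To make the idea of brokering concrete, here is a deliberately simplified sketch, not how any real hypervisor is implemented: one physical CPU is time-sliced round-robin among guests, and each guest simply sees its own work progressing as if it had the CPU to itself. The `Guest` class, the `run_round_robin` function, the quantum, and the work units are all hypothetical; production hypervisors use far more sophisticated schedulers that also account for priorities, reservations, and multiple cores.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Guest:
    """A hypothetical guest VM with a fixed amount of abstract CPU work."""
    name: str
    work_remaining: int
    cpu_time_received: int = 0


def run_round_robin(guests: list[Guest], quantum: int) -> list[str]:
    """Time-slice one physical CPU among guests; return the order of slices.

    Each guest runs for at most `quantum` units before the toy "hypervisor"
    preempts it and hands the CPU to the next guest in the ready queue.
    """
    if quantum <= 0:
        raise ValueError("quantum must be positive")
    ready = deque(g for g in guests if g.work_remaining > 0)
    trace = []
    while ready:
        guest = ready.popleft()
        slice_len = min(quantum, guest.work_remaining)
        guest.work_remaining -= slice_len
        guest.cpu_time_received += slice_len
        trace.append(guest.name)
        if guest.work_remaining > 0:
            ready.append(guest)  # not finished: go to the back of the queue
    return trace
```

For example, three guests needing 5, 3, and 1 units with a quantum of 2 are interleaved as web, db, mail, web, db, web; each guest eventually receives exactly the CPU time it asked for, even though none of them owned the processor.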

As the illustration shows, because four machines can run on a single piece of hardware, virtualization brings cost savings. Both hard and soft costs will be covered here, as well as the underlying management, audit, and security issues. As with other innovative technologies, the lofty claims made by vendors should be evaluated carefully. Although the cost savings and improved business agility of virtualization are well documented, it is often the security and management of these systems that are lacking. Information security staff and auditors face many security and management challenges when approaching this new technology, which calls for closer collaboration between these areas (ISACA, 2010). “It is critical for all parties involved to understand the business process changes that occur as a result of migrating to a virtualized environment” (ISACA, 2010).

Benefits of Server Virtualization

In a cost-conscious environment where businesses are constantly trying to reduce spending, many are turning to virtualization. Server virtualization promises “lower [total cost of ownership], increased efficiency, positive impacts to sustainable IT plans and increased agility” (ISACA, 2010), and these promises are a major catalyst for getting virtualization projects approved.

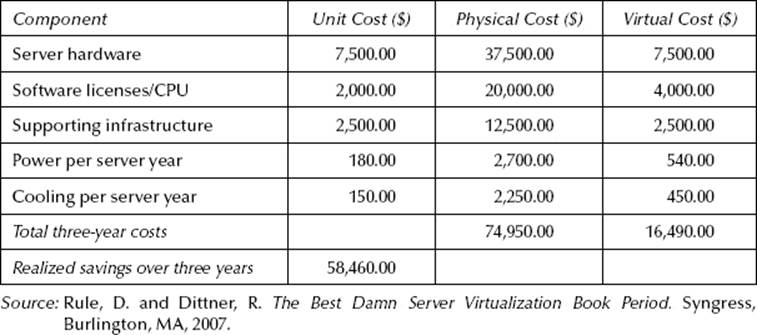

The idea that an organization could eliminate half of the servers in its data center while gaining functionality would have sounded absurd as little as five years ago. Now, companies are scrambling to get virtualization projects under way: identifying servers that are candidates for virtualization, moving them into a virtual environment, and counting the savings. Table 9.1 shows a simple example of how consolidating servers onto a single machine can generate significant cost savings (Rule and Dittner, 2007).

Reducing the acquisition cost of equipment is not the only way virtualization saves money. With fewer servers, data center power consumption drops dramatically, and because most data centers charge by the kilowatt-hour, annual savings are practically guaranteed (Fogarty, 2009). Soft costs, such as time-to-implement and the personnel costs associated with projects, also fall. When a new initiative comes from management, there is no longer any lead time for hardware to arrive and be racked, installed, and configured; a virtual server can be provisioned remotely, consistently, and quickly. Reducing the time that engineers spend provisioning hardware translates directly into cost savings.
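The hardware and power arithmetic behind these savings can be sketched as below. Every input figure is a hypothetical placeholder, not taken from Table 9.1 or any vendor, and the model deliberately ignores cooling, licensing, and the soft costs discussed above.

```python
HOURS_PER_YEAR = 24 * 365


def consolidation_savings(
    servers_before: int,
    hosts_after: int,
    cost_per_server: float,
    cost_per_host: float,
    watts_per_server: float,
    watts_per_host: float,
    price_per_kwh: float,
) -> dict[str, float]:
    """Estimate one-time hardware savings and annual power savings.

    Assumes constant power draw around the clock; real draw varies with load.
    """
    hardware_before = servers_before * cost_per_server
    hardware_after = hosts_after * cost_per_host
    kwh_before = servers_before * watts_per_server * HOURS_PER_YEAR / 1000
    kwh_after = hosts_after * watts_per_host * HOURS_PER_YEAR / 1000
    kwh_saved = kwh_before - kwh_after
    return {
        "hardware_savings": hardware_before - hardware_after,
        "annual_kwh_saved": kwh_saved,
        "annual_power_savings": kwh_saved * price_per_kwh,
    }


# Hypothetical example: five servers at $5,000 and 400 W each consolidated
# onto one $12,000 host drawing 800 W, with power priced at $0.10 per kWh.
estimate = consolidation_savings(5, 1, 5000, 12000, 400, 800, 0.10)
```

With these made-up inputs, the estimate is $13,000 saved on hardware and 10,512 kWh (about $1,051) saved on power each year.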

Table 9.1 A Simple Example of the Cost of Five Two-Way Application Servers

Imagine being able to move a set of applications to the disaster recovery site in a matter of minutes. This may sound too good to be true, but many companies are already using these capabilities to enhance their business continuity plans and reduce their recovery times. Virtualization platforms in the recovery center can balance load and handle advanced disaster scenarios, giving the company “previously unavailable options for flexibly scaling for load and dynamically shifting and aligning resources to respond to business continuity and disaster events” (ISACA, 2010).

Testing and development are two areas that are often neglected by smaller IT departments. The equipment and procedures for migrating from test to production cost time and money. Today, test environments can be built from images of real production environments so that patches and new applications can be tested. A tested application can then be rolled out to a production environment and, if there are issues, can be rolled back with the snapshotting capabilities of virtualization.
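The clone, patch, and roll-back workflow described above can be modeled in a few lines. The `ToyVM` class below is hypothetical and represents a VM's disk as a dictionary of installed packages; real platforms implement snapshots at the virtual-disk level, and their snapshot APIs differ from this sketch.

```python
import copy


class ToyVM:
    """A hypothetical VM whose 'disk' is a dict of package -> version."""

    def __init__(self, packages: dict[str, str]):
        self.packages = dict(packages)
        self._snapshots: dict[str, dict[str, str]] = {}

    def clone(self) -> "ToyVM":
        # Build a test VM from the current image of a production VM.
        return ToyVM(self.packages)

    def snapshot(self, label: str) -> None:
        # Capture a point-in-time copy of the disk state.
        self._snapshots[label] = copy.deepcopy(self.packages)

    def revert(self, label: str) -> None:
        # Roll the disk back to a captured state (KeyError if label unknown).
        self.packages = copy.deepcopy(self._snapshots[label])

    def install(self, name: str, version: str) -> None:
        self.packages[name] = version


prod = ToyVM({"app": "1.0", "os": "patch-12"})
test_vm = prod.clone()           # test against a copy of production
test_vm.snapshot("pre-patch")    # safety point before the change
test_vm.install("app", "1.1")    # apply the patch under test
test_vm.revert("pre-patch")      # the test failed, so roll back
```

Because the clone copies the package map, changes made on the test VM never touch production, and reverting restores the exact pre-patch state.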

There are a number of options available when evaluating virtualization platforms, and proper due diligence should be performed when deciding which solution is right for your business. The following three are arguably the most popular and offer enterprise-class server virtualization: VMware, Citrix XenServer, and Microsoft Hyper-V.

VMware, a commercial software company that specializes in virtualization, offers what is probably the most popular and widely adopted platform for server and desktop virtualization. Its product line includes many tools and features that allow a business to take full advantage of virtualization technology.

Citrix XenServer, a virtualization platform based on the open source Xen project, is gaining ground and offers a robust suite of products to support an enterprise virtualization effort. Although the platform has open source origins, companies such as Novell, HP, and IBM have invested time and effort in its development. XenServer is commercially supported, easy to use, and offers a capable management interface (Rule and Dittner, 2007, p. 423).

Microsoft Hyper-V, offered with the latest version of Windows Server, continues to mature and could be a good option for an organization that already runs Microsoft servers. Linux guests can run under Hyper-V, but only two distributions are supported (Microsoft, 2011). The Microsoft solution may be right for an enterprise if the right combination of licensing and existing operating systems exists. Because the product is less mature than the other platforms, it may lack the reporting and granular administrative controls offered by VMware.

Introducing a new technology naturally extends the adoption timeline to cover educating engineers, managers, and technicians. Once an engineer reaches the console of a virtual server, however, it looks and feels just like any other server; an engineer familiar with traditional servers may not even know that the server is virtualized. As a result, training can focus specifically on the virtual environment, which is another reason virtualization has been adopted so rapidly.

The statistics are compelling, the offerings continue to get more robust, and the software supporting virtualization is continually maturing. Cost-saving promises, along with many availability, testing, and development options, make virtualization seem like an obvious choice. This raises the question: What concerns should IT professionals and management have about deploying such an important technology?

Security and Control of Virtual Servers

Companies around the world are rapidly adopting server virtualization. As with any new technology, marketing efforts keep steering us toward what the technology can do for our business, but few look deeper into the risks it introduces. Virtualization is one of those technologies. Now that it has become mainstream and is more widely understood, information security professionals are starting to take note of the risks it introduces.

Administration and management are critical to maintaining control of the environment. Despite all the benefits virtualization brings to a business, left unchecked it introduces many risks that warrant attention. Investing effort in the administration and management of the virtual environment will allow management and information security personnel to identify common information security issues and institute policies, procedures, and standards to control risk. Because management needs to stand behind the policies the business puts in place, it must be actively involved from the very beginning so that the controls are already in place once the virtual environment is built.

Once the management and administration functions have been identified and the environment has been created, a postimplementation assessment will need to be performed in addition to regular auditing to ensure that the environment conforms to the set standards, policies, and procedures put forth for the implementation.

Administration

Administration of the virtual environment consists of the day-to-day care and feeding of the systems to keep them up and running and secure. “Patching, antivirus, limited services, logging, appropriate permissions and other configuration settings that represent a secure configuration for any server gain importance on a host carrying multiple guest servers” (Hoesing, 2006). Any administrative tasks being performed on a nonvirtualized server should always continue to be performed on the server once it is virtualized, but it does not stop there. Based on the policies put in place, administrative tasks will need to be created for the hypervisor as well, adding overhead to the administrative function of the server environment.

To administer the virtual environment, there are tools available for the platform that is chosen. “Virtualization tools usually include a management console used to start and stop guests and to provision resources (memory, storage) used by those guests” (Hoesing, 2006). The tools can also do things such as moving a virtual server to another physical machine, as well as adjusting the resources available to the virtual machine. The virtual console and the management tools should be tightly restricted to only appropriate and approved personnel, and the administration of security to the virtual environment should be audited regularly. “Access to the host OS and the management tool should be strictly limited to technical staff with a role-based need” (Hoesing, 2006). Access to the tools or the “management console” should be set to the highest security setting to reduce the possibility of unauthorized access. “Access to configuration files used for host and guest OSs should be limited to system administrators. Strong root passwords should be verified as well as allowed routes to and from hosts” (Hoesing, 2006). As noted, ensuring proper rights management of the tools and administrative console is important in maintaining the integrity of the environment.
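The role-based restriction on the management console can be illustrated with a deny-by-default permission check that also records every decision for later audit. The role names, the `Action` values, and the `is_authorized` function are hypothetical; real platforms provide their own role and permission models, which should be used instead.

```python
from enum import Enum, auto


class Action(Enum):
    START_STOP_GUEST = auto()
    PROVISION_RESOURCES = auto()
    MIGRATE_GUEST = auto()
    EDIT_CONFIG_FILES = auto()
    VIEW_INVENTORY = auto()


# Hypothetical roles; only virtualization administrators may touch config files.
ROLE_PERMISSIONS: dict[str, frozenset[Action]] = {
    "virtualization_admin": frozenset(Action),  # every action
    "operator": frozenset({Action.START_STOP_GUEST, Action.VIEW_INVENTORY}),
    "auditor": frozenset({Action.VIEW_INVENTORY}),
}


def is_authorized(user: str, roles: set[str], action: Action,
                  audit_log: list[str]) -> bool:
    """Allow only if some role grants the action; unknown roles get nothing.

    Every decision, allowed or denied, is appended to `audit_log` so that
    access to the console can be reviewed regularly.
    """
    allowed = any(action in ROLE_PERMISSIONS.get(r, frozenset()) for r in roles)
    audit_log.append(f"{user} {action.name} {'ALLOW' if allowed else 'DENY'}")
    return allowed
```

Denying by default means a mistyped or retired role grants no access, which matches the guidance to limit the console to staff with a role-based need.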

As virtual servers are provisioned and decommissioned, housekeeping tasks must be addressed. Images of old machines, long forgotten by IT staff and management, may still reside on the shared disk. Without monitoring and an inventory of the virtual machines, images provisioned for other business units throughout the organization will be forgotten (Fogarty, 2009). Because virtual systems are so portable, it is easy for someone to copy a system for malicious use. “With virtualization, a server (or cluster of servers) can be deployed into production with a single mouse click, potentially bypassing the controls associated with traditional system life cycle management” (ISACA, 2010). On platforms such as VMware, a virtual machine consists of a handful of files stored on disk. These files are easily portable, which is great for disaster recovery scenarios but can be disastrous in the hands of individuals with bad intentions. Unmanaged old images create a risk, so securing the images and keeping an inventory of them become top priorities. Anyone who gains access to the files can launch an instance of the virtual machine on any machine running a compatible hypervisor, regardless of hardware type. A proper cleanup and inventory should be performed on a regular basis, and the audit program should address this as well (ISACA, 2010).
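A periodic cleanup can start by scanning the shared storage for virtual machine files that do not belong to any inventoried VM. The sketch below assumes, hypothetically, a datastore mounted as an ordinary directory with one folder per VM named after the VM. It looks for `.vmx` (configuration) and `.vmdk` (virtual disk) files, the formats VMware uses; other platforms use different formats, so the suffix list must be adjusted.

```python
from pathlib import Path

# VMware configuration and virtual disk file suffixes; adjust per platform.
VM_FILE_SUFFIXES = {".vmx", ".vmdk"}


def find_orphaned_vm_files(datastore: Path, inventory: set[str]) -> list[Path]:
    """Return VM files whose parent folder is not a VM in the inventory.

    Assumes (hypothetically) a one-folder-per-VM layout where the folder
    name matches the VM name recorded in the inventory.
    """
    orphans = []
    for path in sorted(datastore.rglob("*")):
        if path.is_file() and path.suffix.lower() in VM_FILE_SUFFIXES:
            if path.parent.name not in inventory:
                orphans.append(path)
    return orphans
```

Anything this reports is a candidate for review, not automatic deletion: an orphaned image may belong to a business unit that simply never registered it.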

Running a backup system that is not designed for virtualization could render all the virtual servers on a physical machine unusable. For instance, if all the virtual machines on the same hardware launched a backup job at the same time, the underlying backbone that carries the data could be saturated to the point of a denial of service, even if disk-to-disk technology is in place (Marks, 2010). Before launching a new backup system in a virtual environment, evaluate the backup jobs and how they affect the performance of the virtual machines.
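One simple mitigation is to cap how many backups run on the same host at once. The sketch below assigns each VM a numbered backup slot so that no host exceeds a hypothetical concurrency limit in any slot. The slot length and the limit are assumptions to be tuned against measured throughput, and the sketch ignores contention on storage that is shared across hosts.

```python
from collections import defaultdict


def schedule_backups(vm_hosts: dict[str, str],
                     max_concurrent_per_host: int) -> dict[str, int]:
    """Map each VM to a backup slot (0, 1, 2, ...).

    Within any slot, no host runs more than `max_concurrent_per_host`
    backups, so jobs on one machine cannot all start together.
    """
    if max_concurrent_per_host < 1:
        raise ValueError("max_concurrent_per_host must be at least 1")
    assigned_on_host: defaultdict[str, int] = defaultdict(int)
    slots = {}
    for vm in sorted(vm_hosts):  # sorted for a deterministic schedule
        host = vm_hosts[vm]
        slots[vm] = assigned_on_host[host] // max_concurrent_per_host
        assigned_on_host[host] += 1
    return slots
```

With a limit of two, three VMs on one host are split across two slots, while a lone VM on a second host can run in the first slot.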

Running antivirus software in a virtual environment complicates matters in much the same way that backup systems do. When selecting an antivirus product, choose one that is aware of the virtual environment to prevent problems. Configuring the product and managing signature downloads are important to the health of the virtual system: virus scans and signature downloads on the same host must be staggered to prevent saturating the CPU. Antivirus measures should also be taken to protect the hypervisor.
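Staggering can be as simple as spreading the start times of the VMs on each host evenly across a maintenance window. In the sketch below, the window, the host and VM names, and the `stagger_scans` function are hypothetical. The same function could be run with a different window to schedule signature downloads.

```python
from datetime import datetime, timedelta


def stagger_scans(vms_by_host: dict[str, list[str]],
                  window_start: datetime,
                  window: timedelta) -> dict[str, datetime]:
    """Spread scan start times evenly across `window`, separately per host.

    VMs on different hosts may start together; only VMs sharing a host
    (and therefore its CPUs) are offset from one another.
    """
    schedule = {}
    for vms in vms_by_host.values():
        if not vms:
            continue
        step = window / len(vms)
        for i, vm in enumerate(sorted(vms)):
            schedule[vm] = window_start + i * step
    return schedule


plan = stagger_scans({"h1": ["vm-a", "vm-b", "vm-c", "vm-d"], "h2": ["vm-x"]},
                     datetime(2012, 1, 1, 1, 0), timedelta(hours=2))
```

Here the four VMs on host h1 start scanning at 01:00, 01:30, 02:00, and 02:30, and the single VM on h2 starts at 01:00.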

Management

Although a business may get excited about the versatility of a virtual environment and the cost savings the technology delivers, management of the virtual environment predictably gets much less attention. Designing proper management controls, including policies, standards, and best practices, will go a long way toward producing secure systems.

Policies will set the stage for what is expected of the personnel taking care of the system and outline the rules that they need to live by. Adhering to industry standards and best practices will create a strong and consistent environment, providing a configuration that has been proven by others. These governance principles are essential to protecting the virtual environment.

Design is an important element when building a virtual solution. The design should take into consideration the network design, firewall placement, and the development of a virtual network. Connectivity to the shared disk should also be segmented onto its own physical network or a virtual LAN. “A host server failure can take down several virtual servers and cripple your network. Because host server failures can be so disruptive, you need to have a plan that will help minimize the impact of an outage” (Posey, 2010). Adding at least one additional host allows administrators to spread the load across multiple machines, hedging against the possibility of a total failure.
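Whether the extra host actually provides that protection can be checked with a simple N+1 test: for every host, could the spare capacity on the remaining hosts absorb its load? This is a minimal sketch under a loudly optimistic assumption. It treats load as perfectly divisible in a single resource unit (for example, GB of RAM), whereas real placement must fit whole VMs and respect several resources at once.

```python
def survives_any_single_host_failure(capacity: dict[str, float],
                                     load: dict[str, float]) -> bool:
    """Return True if any one host's load fits in the others' spare capacity.

    Capacity and load share one abstract unit; VMs are treated as
    perfectly divisible, which overstates real failover headroom.
    """
    for failed in capacity:
        spare = sum(capacity[h] - load.get(h, 0.0)
                    for h in capacity if h != failed)
        if load.get(failed, 0.0) > spare:
            return False
    return True
```

With two 64 GB hosts loaded at 48 GB and 40 GB, losing the first host leaves only 24 GB free, so the check fails; adding an empty third 64 GB host makes it pass.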

Roles will need to be defined and procedures developed to ensure that the right people are doing the right things with the technology. “Traditionally, technology has been managed by IT within various functional and technical areas, such as storage, networking, security and computers/servers. With virtualization, these lines are significantly blurred, so enterprises that embrace virtualization need to mature their support models to reap all of the benefits that virtualization can provide” (ISACA, 2010). A training program for all the functional areas of IT would be beneficial to ensure that personnel all understand the technology and look for ways to expand on its adoption.

“Probably the single biggest mistake administrators make when virtualizing a datacenter is overloading the host servers” (Posey, 2010). While virtualization provides many options to prevent this by sharing resources across multiple physical servers, such options need to be identified and implemented. Hardware failures are not uncommon, so hardware support contracts will still be needed and possibly enhanced to provide a quicker response time. A plan for maintaining the availability of the systems will also need to be developed and added to the corporate business continuity plan.
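A basic guard against overloading is to compare what each host has allocated to its guests with what it physically has. The sketch below flags hosts whose vCPU-to-core or allocated-to-physical RAM ratio exceeds a limit. The default limits are illustrative placeholders, not vendor guidance, and allocation ratios are only a proxy for actual utilization, which should be monitored separately.

```python
from dataclasses import dataclass


@dataclass
class HostUsage:
    name: str
    physical_cores: int
    physical_ram_gb: float
    vcpus_allocated: int
    ram_allocated_gb: float


def overloaded_hosts(hosts: list[HostUsage],
                     max_vcpu_ratio: float = 4.0,
                     max_ram_ratio: float = 1.0) -> list[str]:
    """Return names of hosts whose allocation ratios exceed the limits."""
    flagged = []
    for h in hosts:
        vcpu_ratio = h.vcpus_allocated / h.physical_cores
        ram_ratio = h.ram_allocated_gb / h.physical_ram_gb
        if vcpu_ratio > max_vcpu_ratio or ram_ratio > max_ram_ratio:
            flagged.append(h.name)
    return flagged
```

For instance, a host with 8 cores running guests that total 40 vCPUs (a 5:1 ratio) would be flagged under the default 4:1 limit.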

An unpatched server operating system or hypervisor can leave vulnerabilities exposed, so all systems must be patched on a regular basis. “The most tangible risk that can come out of a lack of responsibility is the failure to keep up with the constant, labor-intensive process of patching, maintaining and securing each virtual server in a company” (Fogarty, 2009). Virtualization adds overhead to a patch management program: not only does the operating system in each virtual machine need to be patched, but the hypervisor does as well. With physical servers, patching is comparatively straightforward because each server is running and visible. In the virtual environment, an image of a server could be in any number of states and could have been built long before critical patches were issued, so it is imperative to maintain an inventory of the virtual servers that records the patch level of each virtual machine (Fogarty, 2009). Exploiting vulnerabilities in the operating system could give an attacker access to the server environment and compromise the data. Attackers recognize the value of compromising the hypervisor, so attacks targeting it are likely to increase. A comprehensive patch management program that addresses not only the operating system but also the hypervisor is paramount to securing the environment.
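A patch-level inventory like the one described can be queried for gaps across both guests and hypervisors, including machines that are powered off. In this sketch, the `Asset` record and the integer patch baseline are hypothetical simplifications; in practice, patch state is a set of individual updates rather than a single number.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    kind: str          # "guest" or "hypervisor"
    power_state: str   # "on", "off", or "suspended"
    patch_level: int   # hypothetical monotonically increasing baseline


def patch_gaps(inventory: list[Asset], required: dict[str, int]) -> list[str]:
    """List assets below the required baseline for their kind.

    Powered-off and suspended VMs are deliberately included, since they
    will come up unpatched the next time someone starts them.
    """
    gaps = [f"{a.name} ({a.kind}, {a.power_state})"
            for a in inventory if a.patch_level < required[a.kind]]
    return sorted(gaps)
```

A report like this makes a forgotten, powered-off image visible alongside an out-of-date hypervisor, which is exactly the gap the text warns about.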

Depending on the type of patch or upgrade, the environment containing the virtual servers might need to be taken offline, which could be an issue in high-availability environments. Virtual systems can achieve high availability, but it is not necessarily automatic. Ensuring that the organization has the correct licensing and virtual design will allow systems to be upgraded, patched, or even moved in the event of a hardware failure.

Whether or not a configuration management program is already in place, introducing virtualization will create a need to formalize one. Managing the configurations of systems that are harder to identify will be a challenge. Virtual machines will exist in various states (on, off, and standby), all of which must be tracked and accounted for. Managing the configuration of the virtual environment is important, because if the standards are not followed, integrity can be compromised.
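At its simplest, checking that the standards are followed means comparing each VM's settings, whatever its power state, against an approved baseline. The setting names and values below are hypothetical; for a VM that is off, the settings would have to be read from its stored configuration rather than from the running system.

```python
from typing import Optional


def config_drift(baseline: dict[str, str],
                 actual: dict[str, str]) -> dict[str, tuple[str, Optional[str]]]:
    """Return settings that differ from the baseline: key -> (expected, found).

    A setting missing from `actual` is reported with found = None.
    """
    drift = {}
    for key, expected in baseline.items():
        found = actual.get(key)
        if found != expected:
            drift[key] = (expected, found)
    return drift


def drift_report(fleet: dict[str, dict[str, str]],
                 baseline: dict[str, str]) -> dict[str, dict]:
    """Run config_drift on every VM and keep only the VMs that drifted."""
    report = {}
    for vm, settings in fleet.items():
        drift = config_drift(baseline, settings)
        if drift:
            report[vm] = drift
    return report
```

Only the deviations are reported, which keeps the output short enough for administrators and auditors to review regularly.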

Continued monitoring is necessary to ensure that the system complies with the policies and standards established by management. This holds true for any server, virtual or not. However, the complexity of the virtual environment requires enhanced monitoring of both the guests and the hypervisor. The monitoring plan should cover administrative access, access control, virtual machine state, and hardware resource consumption. A comprehensive monitoring plan should already address each of these items, but because the hypervisor and the management system for the virtual machines are additional components, extending the monitoring effort to cover them is mandatory.
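The monitoring items listed above can be expressed as simple rules over a stream of events collected from the hypervisor, the management console, and the guests. The `Event` fields, event kinds, and threshold are hypothetical and do not reflect any product's log format; the point is that hypervisor-level events get the same scrutiny as guest events.

```python
from dataclasses import dataclass


@dataclass
class Event:
    source: str        # "hypervisor", "mgmt_console", or a guest name
    kind: str          # "admin_login", "vm_state_change", or "cpu_percent"
    user: str = ""
    value: float = 0.0


def alerts(events: list[Event], approved_admins: set[str],
           cpu_threshold: float = 90.0) -> list[str]:
    """Apply three illustrative rules: unapproved admin access, VM state
    changes (always recorded for review), and high resource consumption."""
    out = []
    for e in events:
        if e.kind == "admin_login" and e.user not in approved_admins:
            out.append(f"unapproved admin login by {e.user} on {e.source}")
        elif e.kind == "vm_state_change":
            out.append(f"state change on {e.source} by {e.user or 'unknown'}")
        elif e.kind == "cpu_percent" and e.value > cpu_threshold:
            out.append(f"high CPU on {e.source}: {e.value:.0f}%")
    return out
```

Because the rules apply equally to events whose source is the hypervisor, administrative access to the host is monitored alongside activity inside the guests.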

Whether an internal audit department or a third party handles the company's technology audits, the project should be introduced to the auditors from its inception. The audit department needs to put together an audit program that addresses the monitoring and management controls and ensures that practices comply with stated policies and standards (ISACA, 2010). Internal audit staff may have difficulty keeping up with the technology and could struggle to put together an audit program. Regardless, a comprehensive audit program that tests the controls put in place is needed to identify where the risks to the data really lie. Outsourcing this function is common, and management should seriously consider doing so to obtain a comprehensive audit.

Sometimes it is easier to document what you can see. With virtualization, you cannot directly see all of the virtual servers running on each host, and you certainly cannot see servers in an “off” or “suspended” state. There may be communications between virtual machines that cannot easily be traced or reported on. Virtual server-to-server communications should be documented so that auditors can report correctly on the risks (ISACA, 2010). Comprehensive documentation of the virtual environment will help engineers troubleshoot and give auditors and other experts assessing risk the ability to see whether any areas of risk need to be addressed.
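Traffic between two guests on the same host may pass only through the host's virtual switch and never reach the physical network where it would normally be monitored. Given a VM-to-host mapping and a list of observed flows (both hypothetical inputs, since how flows are collected depends on the platform), the sketch below lists same-host flows that are missing from the documentation.

```python
def undocumented_intra_host_flows(
        vm_host: dict[str, str],
        flows: list[tuple[str, str]],
        documented: set[tuple[str, str]]) -> list[tuple[str, str]]:
    """Return (source, destination) flows between VMs on the same host
    that do not appear in the documented set."""
    result = []
    for src, dst in flows:
        host = vm_host.get(src)
        same_host = host is not None and host == vm_host.get(dst)
        if same_host and (src, dst) not in documented:
            result.append((src, dst))
    return result
```

Flows are treated as directional here, so documenting web01 to db01 does not implicitly cover db01 to web01; that is a deliberate, conservative choice.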

Risk assessments should be performed throughout the life cycle of the system. This will include a preimplementation assessment, a postimplementation assessment, and ongoing assessments until the environment is decommissioned. When choosing a virtualization technology, a risk assessment will need to be performed and this should be included in the project plan. The risks associated with the implementation can be tracked by management while approaching milestones in the project. Approaching a project with a preliminary risk assessment completed will make many of the decisions easier (vendor, topology design, etc.). When performing a security assessment after the environment has been built, there are areas that might be missed if the documentation is inaccurate or not inclusive of communications happening between virtual machines residing on the same host. This postimplementation assessment will ensure that all policies and guidelines are complied with. This will also show the need for monitoring and management software. Ongoing risk assessments and security assessments are also necessary and will be able to capture changes in the environment. The procedures and policies surrounding the virtualization technology will need to be kept up to date and modified on an as-needed basis.

“The auditor may use commercial management or assessment tools to poll the environment and compare what is found to the authorized inventory” (ISACA, 2010). VMware has a robust set of tools to assess the security of the environment, but not all hypervisors have this capability built in. A thorough evaluation of the solutions will ensure that the appropriate reporting and management tools are available to be able to do a proper audit and security assessment. “Assessment procedures should ensure that the hypervisor and related management tools are kept current with vendor patches so that communication and related actions take place as designed” (ISACA, 2010). Out-of-date management tools will not provide management and auditors with an accurate security picture of the environment.
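The comparison the quoted guidance describes, between what a poll of the environment finds and the authorized inventory, reduces to two set differences. The VM names below are hypothetical, and a real poll would need to include powered-off and suspended machines to be complete.

```python
def reconcile(discovered: set[str], authorized: set[str]) -> dict[str, list[str]]:
    """Compare polled VMs with the authorized inventory.

    'unauthorized': found in the environment but not approved.
    'missing': approved but not found (possibly moved, copied, or deleted).
    """
    return {
        "unauthorized": sorted(discovered - authorized),
        "missing": sorted(authorized - discovered),
    }


result = reconcile({"web01", "db01", "test-copy"}, {"web01", "db01", "mail01"})
```

Here the poll surfaces an unapproved VM named test-copy and an approved VM, mail01, that could not be found; both warrant follow-up.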

Keeping up with technology and the latest findings in the virtualization arena is important. New exploits are discovered regularly, so understanding them and knowing the technology will help in implementing the appropriate controls and procedures. For all the security concerns discussed, controls, processes, and procedures can be put in place to mitigate the risks of implementing server virtualization. Management's ability to monitor activities in the virtual environment is crucial and should be instituted from the beginning. Each company will need to evaluate the security risks associated with moving to a virtualized environment. “Mitigating many of these threats and having well-documented business processes and strong audit capabilities will help ensure that enterprises generate the highest possible value from their IT environments” (ISACA, 2010).

Conclusion

Virtualization can be a powerful tool for an organization—not only from a cost-saving standpoint, but also from a process and management standpoint. Being able to move machines from one location to another for disaster recovery purposes is a quantum leap from the expense and logistics of what businesses were doing just a few years ago. Making use of idle server time with multiple server instances running on one machine makes great financial sense, especially if you can cut down on the number of servers in the data center. This not only saves money, but also provides for a greener operation.

As with any new initiative, there are always risks that must be identified. A thorough review of the technology is essential to highlight these risks so that management can address them. Once the technology risks are identified, the auditors and compliance personnel should be looking at the human element behind the processes and procedures to ensure that the administrators of the new system are following the policy. This is an area that is often overlooked because it is hard not to focus on the new technology.

The auditors’ role deserves careful consideration, not only because auditors are required on any new project or initiative, but also because they will report any lack of control to management. While malicious activity is a concern, part of the control review is to make sure that people are doing their jobs accurately and following policy. All of the control reviews ultimately serve to protect the organization.

Controls are essential. By instituting controls to address the risks of virtualization, you will lower the overall risk of implementing the technology. These controls can be as simple as basic reports or as complex as technological controls. Knowing your technology is also important, and getting the training needed to create a safe computing environment is essential.

Selecting a virtualization platform should be a fully vetted process that addresses all the technical needs without forgetting information security. Reporting and auditing often take a back seat in this process, but they should be brought to the forefront, because it is difficult to evaluate what you have without the tools to do so.

In the future, virtualization may create other obstacles that need to be addressed. With the emergence of CPU virtualization, I/O virtualization, and virtual appliances, there may be control problems that need attention. The research firm Gartner (2010) reported that only 18 percent of the systems that could be virtualized had been, a figure expected to exceed 50 percent by 2012, and projected that through 2012, 60 percent of virtualized servers will be less secure than the physical servers they replace.

Virtualization is here to stay and will only get bigger and more complex. Howard Marks (2010) of Information Week said it well: “Server virtualization is a clear win for the data center, but it presents a mixed bag of challenges and opportunities for those charged with backing up their companies’ data.” Our ability to adapt and ensure the safety of the organization is critical.

References

CDW. Server virtualization [White paper], 2010. http://viewer.media.bitpipe.com/1064596181_865/1292877744_720/TT10019CDWVirtlztnComparWPFINAL.pdf.

Fogarty, K. Server virtualization: Top five security concerns. CIO, May 13, 2009. http://www.cio.com/article/492605/Server_Virtualization_Top_Five_Security_Concerns.

Gartner. Gartner says 60 percent of virtualized servers will be less secure than the physical servers they replace through 2012 [Press release], March 15, 2010. http://www.gartner.com/it/page.jsp?id=1322414.

Golden, B. Wrapping your head around virtualization. In Virtualization for Dummies, Chapter 1. Wiley, Hoboken, NJ, 2008. [Books24x7 version]. http://common.books24/7.com.ezproxy.gl.iit.edu/book/id_23388/book.asp.

Hoesing, M. Virtualization usage, risks and audit tools. Information Systems Control Journal (Online), 2006. http://www.isaca.org/Journal/Past-Issues/2006/Volume-3/Pages/JOnline-Virtualization-Usage-Risks-and-Audit-Tools1.aspx.

ISACA. Virtualization: Benefits and challenges [White paper], 2010. http://www.isaca.org/Knowledge-Center/Research/Documents/Virtulization-WP-27Oct2010-Research.pdf.

Marks, H. Virtualization and backup: VMs need protection, too. Information Week, February 12, 2010. http://www.informationweek.com/news/storage/virtualization/showArticle.jhtml?articleID=229200218&cid=RSSfeed_IWK_All.

Microsoft. Microsoft server Hyper-V home page, 2011. http://www.microsoft.com/hyper-v-server/.

Posey, B. 10 issues to consider during virtualization planning, February 5, 2010. Message posted to http://www.techrepublic.com/blog/10things/10-issues-to-consider-during-virtualization-planning/1345.

Rule, D. and Dittner, R. The Best Damn Server Virtualization Book Period. Syngress, Burlington, MA, 2007.

Von Hagen, W. Professional Xen virtualization, 2008. [Books24x7 version]. http://common.books24/7.com.ezproxy.gl.iit.edu/book/id_23442/book.asp.