Threat Modeling: Designing for Security (2014)

Part V. Taking It to the Next Level

Up to this point, you've been learning what's known about threat modeling. From this point on, it's all focused on the future: the future of threat modeling in your organization, and the future of threat modeling approaches.

This part of the book contains the following three chapters:

§ Chapter 17: Bringing Threat Modeling to Your Organization includes how to introduce threat modeling, who does what, how to integrate it into a development process, how to integrate it into roles and responsibilities, and how to overcome objections to threat modeling.

§ Chapter 18: Experimental Approaches includes a set of emerging approaches to operations threat modeling, the “Broad Street” taxonomy, adversarial machine learning, threat modeling a business, and then gets cheeky with threats to threat modeling approaches and a few thoughts on effective experimentation.

§ Chapter 19: Architecting for Success provides some final advice on going forward—all the mental models, touchpoints, and process design advice to help you develop new and better approaches to threat modeling, along with a few last words on artistry in your threat modeling.

Chapter 17. Bringing Threat Modeling to Your Organization

This chapter starts from the assumption that your organization does not threat model. If that assumption is wrong, the chapter may still help you bring more advanced threat modeling to your organization, or better organize the threat modeling you perform to generate greater impact. What you've learned through this point in the book can be applied by an individual without organizational support. This chapter is for those who want to influence the practices of the organization they're working for. (Consultants will also find it helpful.)

There are many ways to introduce a new practice to your organization. One is to stand up in front of everyone and say, “I just read this awesome book, and we should totally do this!” Another is to say, “I just tried this, and look how many bugs I found!” Yet another would be to intrigue people with a copy of Elevation of Privilege, saying “Check out this cool card game!” Each of these represents a strategy, and different strategies will work or not in different situations. There are many good books on how to work within an organization. Sam Lightstone's Making It Big in Software is one of the more comprehensive for software professionals (Pearson, 2010). Obviously, one chapter can't provide all the information that a full book will, but in this chapter you'll learn a few key strategies and how to apply each of them to individual contributors. The chapter also includes a section on convincing management because management will almost always want evidence that threat modeling is worthwhile, and that evidence will almost certainly involve the experiences of individual contributors.

Up to now, you've been learning about the tasks and processes of threat modeling, with the focus on threat modeling itself. In this chapter, you'll learn about how threat modeling interfaces with how an organization works. The chapter opens with a discussion about how to introduce threat modeling to an organization including how to convince individual contributors and how to convince management. From there, it covers who does what, including prerequisites, deliverables, roles and responsibilities, and group interaction issues such as decision models and effective meetings. Next, the chapter discusses integrating threat modeling into a software development process, including agile threat modeling, how testing and threat modeling relate, and how to measure threat modeling, along with HR issues such as training, ladders, and interviewing. The chapter closes with a discussion of how to overcome objections. Of course, overcoming objections is part of convincing people, but the details you learn about who does what and how to integrate into a process will inform how you overcome objections. For example, you can't overcome an objection that “we're an agile shop” until you know how threat modeling connects to agile development.

How To Introduce Threat Modeling

This section covers how to “sell” an idea in general, then how to sell it to individual contributors and then management. That's the path you'll need to follow, both in the book and in the workplace. Figure out how to make a convincing case, then make that case to the people who will be doing the work, and the people who will be managing them.

After reading the preceding chapters and exploring the techniques, you're obviously excited to ask your organization to start threat modeling. Your excitement may not lead to their excitement. Even with Elevation of Privilege, threat modeling may not be enough fun for a game night with your family. Threat modeling is a serious tool to reach a goal. The more clearly you can state your goal, the better you'll be able to achieve it. The more clearly you can state how your goal differs from your current state, the easier it will be to draw a straight line between the two. For example, if your organization does no threat modeling and has no one with experience doing so, perhaps a useful goal would be a threat modeling exercise that results in fixing a security bug in the next release. You might also consider that as a milestone along the way to a greater goal, such as not losing sales because of security concerns.

To introduce threat modeling into an organization, you need to convince two types of people that it's worthwhile: the individual contributors who will participate and the managers who reward them. Without both of those sets of people on board, threat modeling will not be adopted. And so you're going to need to “sell” them. Technical people are often averse to sales, but competent enterprise salespeople have many tricks and even a few skills that may help you get your ideas implemented. The salespeople you want to borrow from aren't the type who sell used cars. They're the type who spend weeks or months to understand an organization and get their product paid for and implemented. Salespeople have ways of understanding the world and bending it to their will. They understand the value of knowing who can sign a check, and who can effectively say “no.” They know that as you “work an organization” to get someone to sign that check, it's valuable to identify how each person you work with gets both an “organizational win” and a “personal win.” For example, if you're buying a new database system, the organizational win might be more flexibility in designing new queries, and the personal win might be gaining a new skill or technology for your resume. Salespeople also learn that it's useful to have a “champion” who can help you understand the organization and the various decision makers you'll need to convince along the way. Within larger organizations, a champion with whom you can test ideas and strategize can be a good complement to your technical arguments.

Applying those sales lessons to threat modeling, you can ask the following: Who signs off? It's probably someone like a VP of R&D who could require that no code goes out the door without a threat model. Why will your VP sign off? Fewer bugs? More secure products? Competitive edge? Fewer schedule changes due to fixing security bugs late? What will their personal win be? That's hard to predict. It might be something like helping their engineers add new skills, or less energy spent on making hard security decisions about those bugs.

Convincing Individual Contributors

If you're the only person in the room who wants the team to do something, few managers will sign off. You must convince individual contributors that threat modeling is a worthwhile use of their time. You should start with fun, which means Elevation of Privilege. If you don't have one of the professionally produced Microsoft or Wiley decks, spend a bit of energy to print a copy or two in color. Making an activity fun keeps people engaged, and it may help you overcome past poor experiences. Besides, fun is fun. Bringing pizza and beer rarely hurts.

But fun is not enough. At some point you'll need to give everyone a business reason to keep showing up. Maybe modeling the software with good diagrams helps people stay aligned with what's being built. Maybe good security bugs are being found and fixed. Maybe a competitor is falling behind because they're spending all their time cleaning up after a security failure. Identifying the value your team will receive is key to convincing management. Additionally, your effort may not be enough. Having a respected engineer as a champion can be a tremendous help.

Note

A test lead in Windows wrote “I can't overstate how much having Larry's support for [this tool] helped us gain credibility in the development community. You should find a Larry equivalent . . . and make sure he is sold on evangelizing the new process/tool.”

There are many objections you might face, which are covered in depth in the “Overcoming Objections to Threat Modeling” section at the end of this chapter. One objection is so common and important that it's threaded throughout the chapter, and worth discussing now: YAGNI. (For those who haven't encountered it, YAGNI is the agile development mantra of “You Ain't Gonna Need It.”) YAGNI is a mantra because it's a great way to push back on red tape in the development or operations process. The really tricky challenge in bringing in any new process is that your organization has not gone out of business despite not having that new process—so how do you convince anyone to invest in it?

Convincing Management

Management, at its core, is about bringing people together to accomplish a task (Magretta, 2002). You need management to accomplish tasks that are bigger than one person can do alone. That management can be implicit and shared when two people are collaborating, it can be a general ordering troops into a dangerous situation, and it can be all sorts of things in between. Tim Ferriss has written: “The word decision, closely related to incision, derives from the meaning ‘a cutting off.’ Making effective decisions, and learning effectively, requires massive elimination and the removal of options” (Ferriss, 2012).

This matters to you because management is management in part because of their ability to say no to good ideas. That sounds harsh, and it is; but you and your coworkers probably have dozens of good ideas every month. A manager who says yes to all of them will never bring a project to fruition. Therefore, an important part of management's job is to eliminate work that isn't highly likely to add enough business value. By default, you don't need whatever the flavor of the month is, and this month, what you don't need may appear to be threat modeling.

Therefore, to convince management, you need a plan with some “proof points.” (I really hate that jargon, but I'm betting your management loves it, so I'm going to hold my nose and use it.) One of the very first proof points will probably be buy-in from a co-worker.

Your plan needs to explain what to do, the resources required, and what value you expect it to bring. The way to present your plan will vary by organization. The more your proposal aligns with management's expectations, the less time they'll spend figuring out what you mean, and that means more time spent on the content itself. Do you want to train everyone, or just a particular group? Do you want to stop development for a month to comprehensively threat model everything? You'll need more proof points for that. Do you want to require a formal, documented threat model for each sprint? What resources will be required? Do you need a consultant or training company to come in? How much time do you want from how many people? What impact is this investment going to have? How does that compare to other efforts, either in security or some other “property,” or even compared to feature work? What evidence do you have that that impact will materialize?

If you are at a smaller organization, this will be less formal than at a large organization. At a large organization, you may find yourself presenting to layers of management and even senior executives. If you are used to presenting to engineers, presenting to executives can be very different. Nancy Duarte has an excellent and concise summary of how to do that in a Harvard Business Review article titled “How to Present to Senior Executives” (Duarte, 2012). Whoever you're presenting to, if you are not used to presenting at all, consider it a growth opportunity, not a threat.

Who Does What?

If you want an organization to start doing something, you'll need to figure out exactly who does what and when. This section covers a set of project management issues, such as prerequisites, deliverables, roles and responsibilities, and group interaction topics such as decision models and effective meetings.

Threat Modeling and Project Management

Project management is a big enough discipline to fill books and offer competing certifications. If your organization has project managers, talk to them about fitting threat modeling into their approach. If not, some baseline questions you'll need to answer include the following:

§ Participants: Who's involved?

§ Tasks: What do they do?

§ Training: How do we help them do it?

§ Prep work: What needs to be done before a kick-off?

§ Help: What do they do when confused?

§ Conflict management: Who makes a call when different participants disagree and can't reach a consensus?

§ Deliverables: What do the people produce?

§ Milestones: When are deliverables due?

§ Interaction: How does communication occur (in-person meetings, e-mail, IRC, wikis, etc.)?

As you roll out threat modeling, you'll also need to define tactical process elements for deliverables such as the following:

§ What documents need to be created?

§ What tooling should be used, and where do you get it?

§ Who creates those documents, and who signs off on them?

§ When are those documents required, and what work can be held up until they're done?

§ How are those documents named?

§ Where can someone go to find them?

Most of both lists are self-explanatory. The answers to “who” are roles. For example, developers create software diagrams, and development managers sign off on them. Naming is a question of what conventions have been established. For example, is it TM-Featurename-owner.html? Featurename-threat-model.docx? Feature.tms? Feature/threatmodel.xml?
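
Whichever naming convention you choose, it is easier to keep if something checks it. The following is a minimal sketch, assuming the team settled on the TM-Featurename-owner.html pattern from the examples above; the function names and the restriction to letters and digits are illustrative assumptions, not part of any established tool.

```python
import re

# Assumed convention: TM-<Featurename>-<owner>.html
# (one of the candidate patterns listed above; substitute your own).
THREAT_MODEL_NAME = re.compile(
    r"^TM-(?P<feature>[A-Za-z0-9]+)-(?P<owner>[A-Za-z0-9]+)\.html$"
)


def parse_threat_model_name(filename):
    """Return (feature, owner) if the filename follows the convention, else None."""
    match = THREAT_MODEL_NAME.match(filename)
    if match is None:
        return None
    return match.group("feature"), match.group("owner")


def nonconforming(filenames):
    """Return the filenames that do not follow the convention, in input order."""
    return [name for name in filenames if parse_threat_model_name(name) is None]
```

A check like this could run wherever the documents are stored, so that “where can someone go to find them?” has a predictable answer.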

Once you've answered these questions, you'll need to inform those who will be threat modeling. That might be done with a training course, an e-mail informing people of a new process, or it might be a wiki that defines your development or operational process. The approach should line up with how other processes roll out.

Prerequisites

In thinking about threat modeling, it's helpful to define what you need to kick off the threat modeling activity and what the deliverables will be. For example, creating a preliminary model of the software such as a data flow diagram (DFD) and gathering the project stakeholders in a room might be your prerequisites, along with getting Elevation of Privilege (and enough copies of this book for everyone!). How you divide the tasks is up to you. Having explicit prerequisites and deliverables can help you integrate threat modeling into other software engineering. It's important to have the right amount of process for your organization, and to respect the YAGNI mantra. Even if your organization isn't one where that's regularly invoked, it's a good idea to focus on the highest value work.

Deliverables

If you're part of an organization that thinks in terms of projects and deliverables, you can consider threat modeling as a project with its own deliverables, or you can integrate smaller activities into other parts of development.

If you treat threat modeling as a project in and of itself, there are a number of deliverables you could look for. The most important include diagrams, security requirements and non-requirements, and bugs. These should be treated like any other artifact created as part of the project, with ownership, version control, and so on. These documents should be focused on the security value you gain from creating them. Some approaches suggest that documents be created especially for the security experts involved in threat modeling, or that the documents must have large sections of introductory text copied in from elsewhere, such as requirements or design documentation. That doesn't add a lot of value. You might be surprised that a list of threats is not a deliverable. That's because lists of threats are not consumed anywhere else in development. Unlike architectural diagrams, requirements, and bugs, they are not easily added. (Bugs are a good intermediate deliverable, and are the way to bring threat lists beyond the threat modeling tasks.)

You'll need to define what the deliverables are called, where they're stored, what gates or quality checks are in place, and where they feed into. These deliverables are delivered to, and must be of a quality that's acceptable to, the project owner. Even if they are created or used by security experts, they should be designed for use by the folks to whom they are delivered. Depending on how structured an approach to threat modeling you have, there may be other, intermediate documents created as part of the process.

So when should you produce these deliverables? If you consider threat modeling as part of other work, you could look at maintaining or updating your software model as something that happens at the start of a sprint. Finding threats could happen as part of that model, as part of test planning, or at another time that makes sense for your project. Addressing threats can become a step in bug triage.

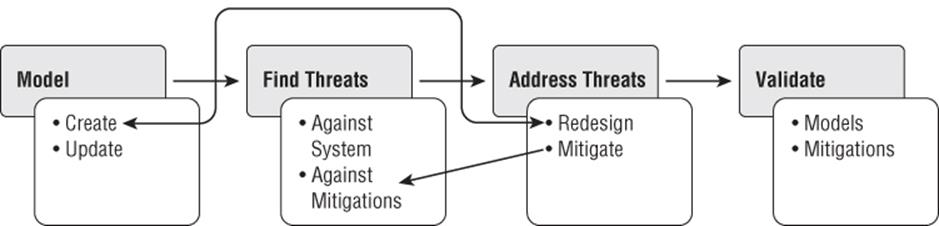

As the participants become more experienced and an organization's threat-modeling muscles develop, the approach will likely become less rigid and more fluid, and stepping between activities will feel more natural. Such a process might look like what is illustrated in Figure 17.1.

Figure 17.1 A four-stage approach with feedback

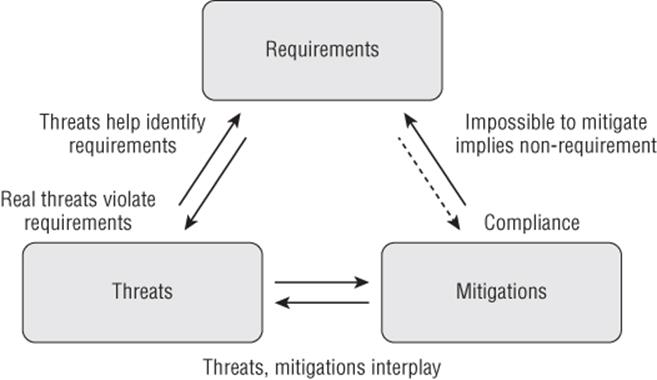

It's also possible to develop an organization's capabilities around the interplay of requirements, threats, and mitigations, as discussed in Chapter 12, “Requirements Cookbook.” As described there and shown in Figure 12-1, reproduced here as Figure 17.2, real threats violate requirements and can be mitigated in some way.

Figure 17.2 The interplay of threats, requirements, and mitigations

Direct interaction is much more common between threats and requirements, and between threats and mitigations, than between requirements and mitigations. The interaction between threats and requirements may lead to you trying to implement both a threat modeling process and a requirements process at the same time. Doing so would be a challenging task, and should not be taken on lightly.

Individual Roles and Responsibilities

When an organization wants work done, it assigns that work to people. This section covers how you can do so.

There are roughly three models that can be employed:

§ Everyone threat models.

§ Experts within the business are consulted.

§ Consultants (internal or external) are used.

Each has advantages and costs. Having everyone perform threat modeling will get you basic threat models, and an awareness of security throughout the organization. The cost is that everyone needs to develop some skill at threat modeling, at the expense of other work. Using the second model, experts within the organization report to whoever owns product delivery, and they work as part of the product team on security. The advantage is that one person owns delivery of the threat model and how that's integrated into the product. The disadvantage is that this person needs to be present at all the right meetings, and that won't always happen. Even with the best intentions, people may not realize they're going to make security decisions, or the security person may be triple-booked when everyone else is available. The final model uses consultants, either from within the company or external. Either way, consultants offer the advantage of specialization in threat modeling, but the disadvantage of lacking product context. External consultants can be extra valuable because management values what it pays for, and external consultants can sometimes deliver bad news with less worry about politics. However, external consultants may be more restricted than internal ones in terms of what they may be exposed to. Furthermore, external consultants often deliver a report that is filed away, without further action taken. You can mitigate that risk by having one of their deliverables be a list of bugs, which either they or someone in your organization can file.

An additional consideration is how people get help when needed. In smaller organizations, this can be organic; in larger ones, a defined way to get help is valuable.

The next question is what sort of skill sets or roles should be assigned threat modeling tasks? The answer, of course, is that there's more than one way to do it, and the right way is the one that works for your organization. Even within one organization, there might be multiple right ways to do it, and multiple right ways to split up the work.

For example, one business unit within Microsoft has made threat modeling a part of the program management job. In another it is driven by the software developers. Yet another has split it up so that development owns creating the diagrams, and test owns ensuring that there's an appropriate test plan, including finding threats against the system with STRIDE per element. What works for your organization cannot be a matter of one person succeeding at the tasks. Don't get me wrong—that's a necessary start. If no enthusiast has succeeded at the tasks and produced bugs that were worth fixing, you can't expect anyone else to waste their time. That may seem harsh, but if you, as the enthusiast reading this book, can't demonstrate value, how can others be expected to?

Group Interaction

Three important elements of group interaction are who's in the group, how they make decisions, and how to hold effective meetings.

Group Composition

As you move from individual threat modeling to a group effort, a natural question is what sorts of people form the group? Generally, you'll want to include the following (example job titles are shown in parentheses):

§ People who are building the system (developers, architects, systems administrators, devops)

§ People who will be testing the system (QA, test)

§ People who understand the business goals of the system (product managers, business representatives, program managers)

§ People who are tracking and managing progress (project managers, project coordinators, program managers)

Decision Models

In any group activity, there is a risk of conflict, and organizations take a variety of approaches to managing those conflicts and reaching decisions. It is important to align threat modeling decision making with whatever your organization does to make decisions, and consider where decisions may need to be made. The issues most likely to be contentious are requirements and bugs. For requirements, the issue is “what's a requirement?” For bugs, issues can include what constitutes a bug, what severity bugs have, and what bugs can be deferred or not fixed. Tools like bug bars can help (as covered in Chapter 9, “Trade-Offs When Addressing Threats”), but you will still need a way to resolve disputes.

There are a variety of decision matrices that break out decision responsibility in various ways. One of the most common is RACI: Responsible, Approver, Consulted, Informed. Responsible indicates those people who are assigned tasks and possibly deliverables. The approver makes decisions when conflict can't be handled otherwise. Those who are consulted may expect that someone will ask for their opinions, but they do not have authority to make the decisions. The last group is kept informed about progress. Various implementations will define who is expected to escalate or object in various ways, with those in the informed or consulted categories less expected to escalate. Table 17.1 shows part of a sample RACI matrix for security activities including threat modeling (Meier, 2003). The line listed as design principles is similar to my use of “requirements.”

Table 17.1 A Sample RACI Matrix for Threat Modeling and Related Tasks

| Tasks | Architect | System Administrator | Developer | Tester | Security Professional |
|---|---|---|---|---|---|
| Threat Modeling | A | | I | I | R |
| Security Design Principles | A | I | I | | C |
| Security Architecture | A | C | | | R |
| Architecture Design and Review | R | | | | A |
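
A RACI matrix is easy to keep as data, which lets you ask questions of it rather than rereading a table. The sketch below is illustrative only: the role assignments are hypothetical examples in the spirit of Table 17.1, the function names are invented here, and the rule that each task should have exactly one approver is an assumption about how you might want disputes resolved, not something RACI itself mandates.

```python
# Codes follow the RACI scheme described above.
RACI_CODES = {"R": "Responsible", "A": "Approver", "C": "Consulted", "I": "Informed"}

# Hypothetical assignments for illustration; replace with your organization's own.
raci = {
    "Threat Modeling": {
        "Architect": "A",
        "Developer": "I",
        "Tester": "I",
        "Security Professional": "R",
    },
    "Security Design Principles": {
        "Architect": "A",
        "System Administrator": "I",
        "Developer": "I",
        "Security Professional": "C",
    },
}


def roles_with(matrix, task, code):
    """Return the roles holding the given RACI code for a task, sorted by name."""
    return sorted(role for role, held in matrix[task].items() if held == code)


def tasks_without_single_approver(matrix):
    """Return tasks that do not have exactly one approver (an assumed house rule)."""
    return [
        task
        for task, assignments in matrix.items()
        if list(assignments.values()).count("A") != 1
    ]
```

For example, `roles_with(raci, "Threat Modeling", "A")` names who decides when a threat modeling conflict can't be handled otherwise, and `tasks_without_single_approver(raci)` flags rows where that answer is missing or ambiguous.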

Another large (product) team at Microsoft broke out threat modeling by discipline and activity within threat modeling, using a model of “own (O), participate (P), and validate (V),” as shown in Table 17.2.

Table 17.2 Threat Modeling Tasks by Role

| Session | Architect | Program Manager | Software Test | Penetration Test | Developer | Security Consultant |
|---|---|---|---|---|---|---|
| Requirements | O | O | V | P | V | |
| Model (software) | P | P | O | V | O | |
| Threat Enumeration | P | P | V | O | V | |
| Mitigations | P | P | O | V | O | |
| Validate | O | O | P | P | P | V |

Effective TM Meetings

A great deal has been written about how to run an effective meeting, because running an effective meeting is hard. Common issues include missing or unclear agendas, confusion over meeting goals, and wasting time. This leads to a lot of people working hard to avoid meetings, and I'm quite sympathetic to that goal. Threat modeling often includes people with different perspectives discussing complex and contentious topics. This may make it a good candidate for holding one or more meetings, or even setting up a regular meeting for a long threat modeling project. It's also helpful to define the meetings as either decision meetings, working meetings or review meetings. Each may be required, and clarity about the goals of a meeting makes it more likely that it will be effective.

The agenda for a threat modeling meeting can be complex because numerous tasks might need to be handled, including the following:

§ Project kickoff

§ Diagramming or modeling the software

§ Threat discovery

§ Bug triage

These different tasks may lead to different meeting structure or goals:

§ Creating a model of the software with a diagram can be a collaborative, action-oriented meeting with a clear deliverable. If the right people are in the room, they can likely judge whether the model of the software is complete.

§ Threat discovery can be a collaborative brainstorming meeting, and while the deliverables are clear, completeness is less obvious.

§ Bug triage may be performed in a decision meeting with an identified decision process or decision maker.

These different goals are often overlapped into a single “threat modeling” meeting. If you need to overlap, consider using three whiteboards or easels: one for diagrams, one for threats, and one for the bugs. Label each clearly and explain that each is different and has different rules. Physically moving from one whiteboard to the next can help establish the rule set for that portion of a meeting. However, it is challenging to go from collaborative to authoritative and back within a single meeting. Generally, that means it will be challenging to triage bugs in a diagram or threat-focused meeting. Similarly, threat modeling meetings with a goal of creating a document are probably not a good use of everyone's time. Finally, holding a meeting without a designated note taker will be a lot less effective.

A good threat modeling meeting focuses on tasks that require each person in the room to be present. It is likely that the meeting will require people from each discipline in your organization (such as programmers and testers). A threat model review with the wrong folks is not just a waste of time, it risks leaving you and your organization with a false sense of confidence. Make sure that you have the right architects, drivers, or whatever else is organizationally appropriate. These people can be quite busy, so it helps to ensure that they get value from the meetings, which in turn helps to ensure that the entire team shares a mental model of the system, which makes development and operations flow more smoothly.

One last comment about meetings. Words matter greatly. Threat modeling can be contentious under the best of circumstances. Meetings are far more likely to be effective if discoveries and discussions are about the code or the feature, rather than Alice's code or Bob's feature. They'll also be more effective if those presenting the system focus on what's being built, rather than the whys. (I recall one meeting in which every third sentence seemed to be about why the system was impenetrable. What turned out to be impenetrable was the description, not the system.) Similarly, you want to avoid words that inflame anyone. For example, if people believe that discussing attacks implies advocating criminal activity, then it's probably best to use another word such as threat or vulnerability; or if a team is concerned that the term “breach” implies mandatory reporting, it might be better to discuss incidents. As a last fallback, you can say “issue” or “thing.” If your threat modeling meetings become contentious, it may help to start with a brief statement from the meeting leader about the scope, goals, and process for the meeting, or even to have neutral moderators join in. The skill sets required of the moderators will depend on what turns out to be contentious.

Diversity in Threat Modeling Teams

Regardless of what you're threat modeling, it's useful to bring a diverse set of skills and perspectives to bear on analyzing a system. If you're building software, that includes those who write and test code, while if it's an operational project, it might be systems managers and architects. Unless it's a small project, it will probably also include project management.

You'll need to figure out to what extent customers or customer advocates should be involved. Often, these perspectives will inform your decisions about what's important, what's an acceptable threat, or where you need to focus your attention.

For example, in discussing their voting system threat model, Alec Yasinsac and colleagues mention the enormous value they observed from bringing together technologists, election officials, and accessibility experts with voting systems vendors. They attributed a great deal of their success to having the various parties in the same place to discuss trade-offs and feasibility (Yasinsac, 2012).

Bringing in a wide set of skills, perspectives, and backgrounds can slow down discussion as people go through the team formation process of “forming, storming, norming, and performing.” The more diversity in the group, the longer such a process will take. However, the more diverse group will also be able to find a broader set of threats.

Threat Modeling within a Development Life Cycle

Any organization that is already developing software has some sort of process or system that helps it move the software from whiteboard to customers. Smart organizations have formalized that process in some way, and try to repeat the good parts from cycle to cycle. Sometimes that includes security activities, but not always.

Note

A full discussion about how to bring security into your development life cycle is beyond the scope of this book. One could make the case that threat modeling should always be the first security activity an organization undertakes, but that sort of one-size-fits-all thinking is one of the ideas that I hope this book puts to rest. Other activities, such as fuzzing, may have a lower cost of entry and produce bugs faster. On the other hand, an hour of whiteboard threat modeling can point that fuzzer in the right direction. More important, it's helpful to think of security as something that permeates through development and deployment. One good resource for thinking about this is the Security Development Lifecycle Optimization Model (http://www.microsoft.com/security/sdl/learn/assess.aspx).

Development Process Issues

In this section, you'll learn about bringing threat modeling into either waterfall or agile development, how to integrate threat modeling into operational planning, and how to measure threat modeling.

Waterfalls and Gates

If you're using something like a waterfall process, then threat modeling plays a part at several stages, including requirements, design, and testing. (You doubtless slice your development process a little differently than the next team, but you should have roughly similar phases.) During requirements and design, you create models of the software, and you find threats. Of course, you let those activities influence each other. During testing, you ensure that the software you built matches the model of the software you intended to build, and you ensure that you're properly resolving your bugs.

If your process includes gates (sometimes also called functional milestones), such as “we can't exit the design phase until . . . ,” then those gates can be helpful places to integrate threat modeling tasks. Following are a few examples:

§ We can't exit design without a completed model of the software for threat identification, such as a DFD.

§ We can't start coding until threat enumeration is complete.

§ Test plans can't be marked complete until threat enumeration is complete.

§ Each mitigation is complete when there's a feature spec (or spec section) to cover the mitigation.
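The gates above can be sketched as simple checks over a project's status. The following Python is a minimal illustration, not a prescribed tool; `ProjectStatus`, its fields, and `blocked_gates` are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ProjectStatus:
    """Hypothetical snapshot of a project's threat modeling state."""
    has_software_model: bool = False           # e.g., a completed DFD
    threat_enumeration_complete: bool = False
    # Maps each planned mitigation to True if a spec (or spec section) covers it.
    mitigation_has_spec: dict = field(default_factory=dict)


def blocked_gates(status: ProjectStatus) -> list:
    """Return the names of gates that the project cannot yet pass."""
    blocked = []
    if not status.has_software_model:
        blocked.append("exit design")
    if not status.threat_enumeration_complete:
        blocked.append("start coding")
        blocked.append("mark test plans complete")
    for name, has_spec in status.mitigation_has_spec.items():
        if not has_spec:
            blocked.append(f"complete mitigation: {name}")
    return blocked


status = ProjectStatus(
    has_software_model=True,
    threat_enumeration_complete=False,
    mitigation_has_spec={"encrypt session cookie": True, "rate-limit login": False},
)
print(blocked_gates(status))
```

A check like this could run wherever your process already records milestone status; the point is only that each gate is a yes/no question about a threat modeling artifact.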

Threat Modeling in Agile

The process of diagramming, identifying, and addressing threats doesn't need to be a waterfall. It's been presented like that to help you understand it, but you can jump back and forth between the tasks as appropriate for your team or organization. There's nothing inherent to threat modeling that is at odds with being agile. (There is an awful lot of writing on threat modeling that is process heavy. That's not the same as threat modeling being inherently process heavy.) Feel free to apply YAGNI to whatever extent it makes sense for you.

Now, you might get super-duper agile and say, “Threat models? We ain't gonna need them.” That may be true. You've probably gotten away without threat models to date, and you're worried that threat modeling as described doesn't feel agile. (If that's the case, thanks for reading this far.) One agile practice that seems to relate very closely to threat modeling is test-driven design. In test-driven design, you carefully consider your tests before you start writing code, using the tests to drive conversations about what the code will really do. Threat modeling is a way to think through your security threats, and derive a set of test cases.
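As one hedged illustration of deriving a test case from a threat, consider a tampering threat against a message in transit. The sketch below assumes the chosen mitigation is an HMAC tag; the key, the message format, and the function names are hypothetical and exist only for this example.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real system would manage keys securely.
SECRET_KEY = b"example-key-for-illustration"


def sign(message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(SECRET_KEY, message, hashlib.sha256).digest()


def verify(message: bytes, tag: bytes) -> bool:
    """Return True only if the tag matches the message."""
    return hmac.compare_digest(sign(message), tag)


def test_tampered_message_is_rejected():
    # Threat (tampering): an attacker alters the message in transit.
    tag = sign(b"amount=10")
    assert verify(b"amount=10", tag)         # legitimate message passes
    assert not verify(b"amount=1000", tag)   # altered message is rejected


test_tampered_message_is_rejected()
```

Writing the test first forces the conversation about what “rejected” should mean before any mitigation code exists.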

If you believe that threat modeling can be useful, you'll need a model of the software and a model of the threats. That can be a whiteboard model, using STRIDE or Elevation of Privilege to drive discussion of the threats.

Even if you're not an agile maven, YAGNI can be a useful perspective. When you're adding something to the diagram, are you going to need that there? When you identify a threat, can you address it? Someone taking out the hard drive and editing bits is hard to address. You won't need that threat.

If you're in the YAGNI camp, you might be saying, “That last security problem was really a painful way to spend a week,” or you might be looking at these arguments and asking, “Do we have important security threats?” If you are in an “already experiencing pain” scenario, then you probably don't need any more selling. Pick and choose from the techniques in this book to find a set of trade-offs that work in your agile environment. If you're in the skeptical camp, you've looked at a lot of arguments for an appropriately sized investment in threat modeling. That is, invest in a small agile experiment: Try it yourself on a real system, and see if the result justifies doing more.

Operations Planning

Much like planning development, there's more than one way to plan for new deployments of networks, software, or systems. (For simplicity, please read systems as inclusive of any technology you might be planning to deploy.) Integrating threat modeling into deployment processes involves the maintenance of models of the system. These models are, admittedly, rarely prioritized, but without knowing what a system looks like, understanding the scope or impact of a change due to a new deployment is more challenging.

Finding threats against a system is a matter of understanding the changes that occur as systems are operated or modified, and ensuring that you look for threats where changes are happening. Often, but not always, the new threats are associated with changes to connectivity (including firewalls).
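If you keep even a simple model of the system, looking for threats where changes are happening can start with a comparison of the model before and after a planned change. This is a minimal sketch under that assumption; the tuple layout for a data flow and the `changed_flows` helper are hypothetical.

```python
# Each data flow is a hypothetical (source, destination, protocol) tuple.
def changed_flows(before: set, after: set) -> dict:
    """Report flows added or removed between two versions of a system model."""
    return {
        "added": sorted(after - before),
        "removed": sorted(before - after),
    }


before = {
    ("web", "app", "https"),
    ("app", "db", "tls"),
}
after = {
    ("web", "app", "https"),
    ("app", "db", "tls"),
    ("app", "partner-api", "https"),  # new outbound connection
}

for flow in changed_flows(before, after)["added"]:
    print("Look for threats against new flow:", flow)
```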

The (acceptance or deployment) testing of a change should include any planned mitigations, checking to see whether the mitigations were deployed, and checking that they both allow what's planned, and prevent what they are expected to deny. Checking the deny rules can be a tricky step. It's easy to find one case and see if it's denied, but checking the full set of what should be denied can be challenging.
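The allow and deny checks described above can be sketched as follows. The rule format is a deliberately simplified, hypothetical default-deny allow list, not the syntax of any real firewall. The example sweeps every port from an out-of-scope host rather than spot-checking a single denied case.

```python
import ipaddress

# Hypothetical allow list: (source network, destination port). Anything else is denied.
ALLOW_RULES = [
    (ipaddress.ip_network("10.0.0.0/24"), 443),
    (ipaddress.ip_network("10.0.1.0/24"), 5432),
]


def is_allowed(source: str, port: int) -> bool:
    """Default-deny evaluation of the allow list above."""
    addr = ipaddress.ip_address(source)
    return any(addr in network and port == allowed_port
               for network, allowed_port in ALLOW_RULES)


def check_rules(must_allow, must_deny):
    """Return the cases where actual behavior differs from the plan."""
    failures = [("should allow", case) for case in must_allow if not is_allowed(*case)]
    failures += [("should deny", case) for case in must_deny if is_allowed(*case)]
    return failures


must_allow = [("10.0.0.5", 443), ("10.0.1.9", 5432)]
# Sweep all ports from a host outside both allowed networks.
must_deny = [("10.0.2.7", port) for port in range(1, 65536)]
# Also check that each allowed network cannot reach the other's port.
must_deny += [("10.0.0.5", 5432), ("10.0.1.9", 443)]

print(check_rules(must_allow, must_deny))
```

Against a real deployment, `is_allowed` would be replaced by an actual connection attempt or a query to the device, and the deny sweep would usually need to be sampled rather than exhaustive.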

Testing and Threat Modeling

Don't underestimate the value of skilled testers to threat modeling. The testing mindset that asks the question “How can this break?” overlaps with threat modeling. Each threat is an answer to that question. Each layer of threat mitigation and response is a bug variant. Looking for the weakest link is similar to test planning. Unit tests can look like vulnerability proof-of-concept code. Therefore, your testers (under whatever name they work) are natural allies. Furthermore, threat modeling can solve a number of problems for testers, including the following:

§ Undervalued testing

§ Limited career advancement

§ Exclusion from meetings

Testing is sometimes undervalued, and testers often feel their work is not valued. Helping them find important bugs can increase the importance of testing, and help security practitioners get those bugs fixed.

Related to the problem of being undervalued, testers often feel like their career path is more limited than that of developers. Working with testers to enable them to perform effective security tests can help open new career paths.

Lastly, developers and architects often feel that architectural choices are detached from testing or testability, and do not bother to include testers in those discussions. When threat modeling drives security test planning, seeing the software models early becomes an important part of test activity, and this may drive greater inclusion.

This can be a virtuous circle, in which quality assurance and threat modeling are mutually reinforcing.

Measuring Threat Modeling

In any organization, it is helpful to be able to measure the quality of a product. Measuring threat modeling is a complex topic, in the same way that measuring software development is tricky. The first blush approaches, such as measuring the number of diagrams or lines of code, don't really measure the right things. The appropriate measurements will depend on the threat modeling techniques you're using and the security or quality goals of the business. You can measure how much or how broadly a larger business is applying threat modeling, and you can measure the threat model documents themselves. When you measure the models themselves, two approaches can be used: pass or fail, and additive scoring.

Either approach can start from a checklist. For example, in the Microsoft SDL Threat Modeling Tool, there's a four-element checklist per threat. (Does the threat have text? Does the mitigation have text? Is the threat marked “complete”? Is there a bug?) At the end, each threat has either a score of 0–4 or a pass (at 4). You could apply similar logic to a data flow diagram. Is there at least one process and one external entity? Is there a trust boundary? Is it labeled? You can assess the model as a whole. In The Security Development Lifecycle, Howard and Lipner present the scoring system shown as Table 17.3 (Microsoft Press, 2006).

Table 17.3 Measuring Threat Models

| Rating | Comments |
|---|---|
| 0 – No threat model | No TM in place, unacceptable |
| 1 – Not acceptable | Out of date indicated by design changes or document age |
| 2 – OK | DFD with “assets” (processes, data stores, data flows), users, trust boundaries; at least one threat per asset; mitigations for threats above a certain risk level; current |
| 3 – Good | Meets the OK bar, plus: Anon, authenticated local and remote users shown; S, T, I, E threats all accepted or mitigated |
| 4 – Excellent | Meets the good bar, plus: all STRIDE threats identified, mitigated, plus external security notes and dependencies identified; mitigations for all threats; “external security notes” include plan for customer-facing documentation |
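The four-element per-threat checklist mentioned before Table 17.3 lends itself to both scoring styles. Here is a minimal sketch; the `Threat` record and its field names are hypothetical and do not reflect the data format of the SDL Threat Modeling Tool.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    """Hypothetical threat record; field names are illustrative."""
    threat_text: str = ""
    mitigation_text: str = ""
    marked_complete: bool = False
    bug_id: str = ""


def checklist_score(threat: Threat) -> int:
    """Additive score: one point per checklist item satisfied (0 to 4)."""
    checks = [
        bool(threat.threat_text.strip()),       # Does the threat have text?
        bool(threat.mitigation_text.strip()),   # Does the mitigation have text?
        threat.marked_complete,                 # Is the threat marked complete?
        bool(threat.bug_id.strip()),            # Is there a bug?
    ]
    return sum(checks)


def checklist_passes(threat: Threat) -> bool:
    """Pass/fail: a threat passes only when all four items are satisfied."""
    return checklist_score(threat) == 4


t = Threat("Attacker replays a login token", "Add a nonce and expiry", True, "")
print(checklist_score(t), checklist_passes(t))
```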

A more nuanced approach would score the various building blocks separately. Diagram scoring might involve a quality ranking by participants, tracking the rate of change (or requested changes), and the presence of the appropriate external entities. Threat scoring could entail a measure of threats identified (as in Table 17.3), or perhaps coverage of first- and second-order threats.

You might also combine pass/fail and additive scoring. For example, you could set a bar requiring each threat to score at least 5 on some 7-point scale, and then sum the threat scores, requiring a total of 50, or 10 points per DFD element. Alternatively, you could start from whatever level the “good” threat models you look at seem to be hitting. You might also require an improvement over a prior project, perhaps 10 percent or 15 percent higher scores. Such an approach encourages people to do better without requiring an unreasonable investment in improvement for a given release.
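A combined scheme of this kind might look like the following sketch. The default thresholds are the illustrative numbers from the paragraph above, and the function names are hypothetical; integer percentages are used to avoid floating-point rounding at the boundary.

```python
def evaluate_model(threat_scores, dfd_element_count,
                   per_threat_bar=5, points_per_element=10):
    """Combine a per-threat pass bar with an additive total."""
    below_bar = [score for score in threat_scores if score < per_threat_bar]
    total = sum(threat_scores)
    required_total = points_per_element * dfd_element_count
    return {
        "all_threats_meet_bar": not below_bar,
        "total": total,
        "required_total": required_total,
        "passes": not below_bar and total >= required_total,
    }


def improved_enough(current_total, prior_total, required_gain_percent=10):
    """Check for a relative improvement over a prior project's score."""
    return current_total * 100 >= prior_total * (100 + required_gain_percent)


scores = [5, 6, 7, 5, 6, 5, 7, 6]   # one score per threat on a 7-point scale
result = evaluate_model(scores, dfd_element_count=4)
print(result["total"], result["required_total"], result["passes"])
print(improved_enough(result["total"], prior_total=42))
```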

Measuring the Wrong Thing

People focus their energy on the things that are measured by those who reward them. People also expect that if you're measuring something, there's a pass bar that indicates what is good enough. Both of these behaviors are risks for threat modeling for the same reason that few organizations measure lines of code or bugs as a measure of software productivity: Measurements can drive the wrong behavior. (There's a classic Dilbert on wrong behaviors, with the punch line, “I'm gonna write me a new minivan this afternoon!” [Adams, 1995])

Therefore, measuring threat modeling might be counterproductive. It may be that measuring is a useful way to help people develop threat modeling muscles. You might be able to use people's instinct to “game” the scoring system by awarding points for reporting (good) bugs in the threat modeling approach.

One other possible issue with a measurement scale is that it's likely to stop too early. The Howard–Lipner scoring system described earlier stops at “excellent,” reducing the incentive to strive beyond that point. What if it had an “awesome” level, which was awarded at the discretion of the scorer?

When to Complete Threat Modeling Activities

The tasks discussed in this section relate closely to the section “Iteration” in Chapter 7, “Digging Deeper into Mitigations.” The difference is that as threat modeling moves from an individual activity (as discussed in Chapter 7) to an activity situated within an organization (as discussed in this chapter), the organization may want some degree of consistency.

Two organizational factors affect when to complete threat modeling:

§ Is it a separate activity?

§ How deep do you go?

Threat modeling can be a separate activity or integrated into other work. It is very helpful to threat model early in the development cycle, when requirements and designs are being worked out. This is threat modeling in the sense of requirements analysis, finding threats, and designing mitigations. As you get close to release or delivery, it is also helpful to validate that the models match what you've built, that the mitigations weren't thoughtlessly pushed to the next version or a backlog, and that the test bugs have been addressed.

The question of how deep you go is an important one. Many security practitioners like to say “you're never done threat modeling.” Steve Jobs liked to say “great artists ship.” (That makes you wonder, was he familiar with the career of Leonardo da Vinci? On the other hand, Leonardo's work languished in obscurity for hundreds of years.) It is almost always a good idea to start with breadth first, and then iterate through threat modeling your mitigations. How long you should continue is an understudied area. In a security-perfect world, you could continue as long as you're productively finding threats, and then stop. One good way to justify needing more time to threat model mitigations is if you can find attacks against them with a few minutes of consideration or by reviewing similar mitigations. Unfortunately, that requires experience, and that experience is expensive.

Postmortems and Feedback Loops

There are two salient times to analyze your threat modeling activity: immediately after reaching a milestone and after finding an issue in code that was threat modeled and shipped. The easiest is right after some milestone, while the potentially more valuable is when threats are found by outsiders. After a milestone, memories are fresh, the work is recent, and the involved participants are available. You can ask questions such as the following:

§ Was that effective?

§ What can we do better?

§ What tasks should we ensure that we continue to perform?

All of the standard sorts of questions that you ask in a development or operations after-incident analysis can be applied to threat modeling.

The second sort of analysis is after an issue is found. You'll want to consider whether it's the sort of thing that your threat modeling should have prevented; and if you believe it is, try to understand why it was not prevented. For example, you might not have thought of that threat, which might mean spending more time finding threats, or adding structure to the process. You might have misunderstood the design or deployment scenarios, in which case more effort on appropriate modeling might be helpful. Or you might have found the threat and decided it wasn't worth addressing or documenting.

Organizational Issues

These issues relate less to process and more to the organizational structures that surround threat modeling. These issues include who leads (such as programmers or testers or systems architects), training, modifying ladders, and how to interview for threat modeling.

Who Leads?

The question of who is responsible for ensuring that threat modeling happens varies according to organization, and depends heavily on the people involved and their skills and aptitudes. It also depends on to what degree the organization is led by individuals driving tasks versus having a process or collaborative approach.

Breaking threat modeling into subtasks, such as software modeling, threat enumeration, mitigation planning, and validation, can offer more flexibility to assign the tasks to different people. The following guidelines may be helpful:

§ Developers leading threat modeling activity can be effective when developers are the strongest technical contributors or when they have more decision-making power than people in other roles. Developers are often well positioned to create and provide a model of the software they plan to build, but having them lead the threat modeling activity carries the risk of “creator blindness”—that is, not seeing threats in features they built, or not seeing the importance of those threats.

§ Testers driving the process can be effective if your testers are technical; and as discussed earlier in the section “Testing and Threat Modeling,” it can be a powerful way to align security and test goals. Testers can be great at threat enumeration.

§ Program managers or project managers can lead. A threat model diagram or threat list is just another spec that they create as part of making a great product. Program/project managers can ensure that all subtasks are executed appropriately.

§ Security practitioners can own the process, as long as they understand the development or deployment processes well enough to integrate effectively. Depending on the type of practitioner, they can be intensely helpful in threat enumeration, mitigation planning, and validation.

§ Architects (either IT architects or business architects) can also own threat modeling, ensuring that it's a step that they execute as they develop a new design. Much like developers, architects are well positioned to provide a model of the system being built, but can be at risk of creator blindness.

Regardless of who owns, leads, or drives, threat modeling tasks tend to span disciplines and require collaboration, with all the trickiness that can entail.

Training

From the dawn of time, threat modeling has been passed down from master to apprentice. Oh, who am I kidding? People have learned to threat model from their buddies. It's typically experiential learning, without a lot of the structure that this book is bringing to the field. That kind of informal apprenticeship has (you'll be shocked to learn) pros and cons. The advantage is having someone who can answer questions. The disadvantage is that without any structure, when the mentor leaves sometimes the “apprentices” flail around. Thus, the trick for security practitioners is to truly mentor, rather than dominate. That might involve leading questions, or debriefs before or after tasks.

When an organization begins its foray into threat modeling, participants need to be trained and told how they'll be evaluated. Threat modeling includes a large set of tasks, and it's important to be clear about what those tasks entail and how they should be accomplished. This takes very different forms in different organizations, including approaches like written process documentation, brown bag lunches, formal classroom training, computer-based training, and my favorite, Elevation of Privilege. Of course, Elevation of Privilege doesn't cover process elements such as what documents need to be created, so that can be integrated into the skill-teaching portion of a training.

Modifying Ladders

Job “ladders” are a common way to structure and differentiate levels of seniority within an organization. For example, someone hired right out of school would start as a junior developer, be promoted to developer, then senior developer. So let me get this out of the way. Ladders are a big company thing. They're bureaucracy personified, and reflect what happens when humans are treated as resources, not individuals. If your company is a small start-up, ladders are antithetical to the artisanal approach that it personifies; but if you want to introduce threat modeling to a company that uses them, they're a darned useful tool for setting expectations.

When you have an idea of what threat modeling approach and details work for your organization, and when you have a set of people repeatedly performing threat modeling tasks and demonstrating impact, talk to your management about what they see. Are all the people doing the same tasks in the same way? Are they delivering to the same quality and with similar impact, or are there distinctions? If there are distinctions, do they show up among individuals at different levels on the current ladders? If so, great; those distinctions are candidates for addition to the core or optional skills that define a ladder.

If, for example, your organization has five levels on the software engineering ladders, with a 1 being just out of college, and a 5 being the top, determine whether some level of subcompetency in threat modeling, such as diagram creation or using STRIDE per element, can be added to the ladder, at level 2 or 3, and then everyone who wants to be promoted must demonstrate those skills. At the higher end, perhaps refactoring or operational deployment changes have been rolled out to address systemic threats. Those would be candidate skills to expect to see at level 4 or 5.

Interviewing for Threat Modeling

When threat modeling becomes a skill set you hire or promote for, it can help to have some interview techniques. This section simply presents some questions and techniques to get you started. The same questions apply effectively to software and operations folks, but the answers will be quite different.

General knowledge

1. Tell me about threat modeling.

2. What are the pros and cons of using DFDs in threat modeling?

3. Describe STRIDE per element threat modeling.

4. How could you mitigate a threat such as X?

5. How would you approach mitigating a threat such as X?

Questions 4 and 5 are subtly different; the former asks for a list, whereas the latter asks the interviewee to demonstrate judgment and be able to discuss trade-offs. Depending on the circumstance, it might be interesting to see if the candidate asks questions to ensure he or she understands the context and constraints around the issue.

Skills testing

1. Have the candidate ask you architectural questions and draw a DFD.

2. Give the candidate a DFD, and ask them to find threats against the system.

3. Give the candidate one or more threats and ask them to describe (or code) a way to address each one.

Behavior-based questioning

Behavior-based interviewing is an interview technique in which questions are asked about a specific past situation. The belief is that by moving away from generic questions, such as “How would you approach mitigating a threat such as X?” to “Tell me about a time you mitigated a threat such as X,” the interviewee is moved from platitudes to real situations, and the interviewer can ask probing questions to understand what the candidate really did. Of course, the questions suggested here are simply starting points, and much of the value will result from the follow-up questions:

1. Tell me about your last threat modeling experience.

2. Tell me about a threat modeling bug that you had to fight for.

3. Tell me about a situation in which you discovered that a big chunk of a threat model diagram was missing.

4. Tell me about the last time you threat modeled an interview situation.

Threat Modeling As a Discipline

The last question related to who does what is: “Can we as a community make threat modeling into a discipline?” By a discipline, I mean, can enthusiasts expect threat modeling to be a career description, in the way that software engineering or quality assurance engineering is a career?

Today, it would be an unusual VP of engineering who didn't have a strategy for achieving a predictable level of quality in any releases, and a person or team assigned to ensure that it happens. It's no accident that the past few decades have seen the development of software quality assurance as a discipline. The people who cared greatly about software quality spent time and energy discussing what they do, why they do it, and how to sell and reward it within an organization.

Whatever name it goes by, and whatever nuances or approaches an organization applies, quality assurance has become a recognized organizational discipline. As such, it has career paths. There are skill sets that are commonly associated with titles or ladder levels. There are local variations, and an important division between those who write code to test code and a shrinking set that is paid to test software manually. However, there are recognized types of impact and leadership, along with a set of skills related to strategy, planning, budgeting, mentoring, and developing those around you—all of which are expected as one becomes more senior. Those expectations (and the associated rewards) are a result of testers forming a community, learning to demonstrate value to their organization and sharing those lessons with their peers, and a host of related activities. Furthermore, the discipline exists across a wide variety of organizations. You can move relatively smoothly from being a “software development engineer in test” at one company to a “software engineer in test” at another.

Should threat modelers aspire to the same? Is threat modeling a career path? Is it the sort of skill set that an organization will reward in and of itself, or is threat modeling more analogous to being able to program in Python? That is, is it a skill set within the professional toolbox of many software developers, but one that you don't expect every developer to know? It may be something your organization already does, and if a candidate knows Perl, not Python, then you'll expect that he or she can pick up Python. Or threat modeling may be more similar to version control in your organization. You expect a candidate at one level above entry grade to be able to talk about version control, branches, and integration, but that may not be sufficient to hire the person. Conversely, perhaps only a basic skill level is required, and hiring managers don't expect most people to develop much beyond that.

Perhaps threat modeling is more similar to performance expertise. There are tools to help, such as profilers, and it's easy to get started with one. There are also people with a deep knowledge of how to use them, who can have detailed conversations about what the tools mean. Expertise in making systems fast can be a real differentiator for some engineers in development or operations.

Nothing in this section should be taken as an argument that threat modeling can't develop to the extent that quality has. Quality as a discipline has been developing for decades, and it's entirely possible that over time, the security, development, or operations communities will see ways to make threat modeling a discipline in and of itself. In the meantime, we need to develop its value proposition within today's disciplines and career ladders.

Customizing a Process for Your Organization

As discussed in Chapter 6, “Privacy Tools,” the Internet Engineering Task Force (IETF) has an approach to threat modeling that is focused on their needs as a standards organization. You can treat it as an interesting case study of how the situation and demands of an organization influence process design. The approach is covered in “Guidelines for Writing RFC Text on Security Considerations” (Rescorla, 2003). The guidelines cover how to find threats, how to address them, and how to communicate them to a variety of audiences. What threats the IETF will consider was the subject of intense discussion at their November 2013 meeting (Brewer, 2013). That discussion continues as this book goes to press, but the approach used over the last decade will remain a valuable case study.

The document by Rescorla focuses on three security properties: confidentiality, data integrity, and peer authentication. There is also a brief discussion of availability. Their approach is explicitly focused on an attacker with “nearly complete control of the communications channel.” The document describes how to address threats, providing a repertoire of ways that network engineers can provide first- and second-order mitigations and the trade-offs associated with those mitigations. The threat enumeration and designing of mitigations are precursors to the creation of a security considerations section. The security considerations section is required for all new RFCs, and so acts as a gate to ensure that threat enumeration and mitigation design have taken place. These sections are a form of external security note, focused on the needs of implementers, and they also disclose the residual threats, a form of non-requirement. These forms are discussed further in Chapter 7 and Chapter 12.

You'll note that their threats are a subset of STRIDE, without repudiation or elevation of privilege. This makes sense for the IETF, defining the behavior of the network protocols, rather than the endpoints. The IETF's situation is somewhat unusual in that respect. Few other organizations define network protocols. But many organizations ship products in a family, and the products in that family will often face similar threats, allowing for a learning process and some reuse of work. The IETF is also a large organization that can amortize that work over many products.

However, the IETF, like your organization, can make decisions about what threats matter most to them, assemble lessons about mitigation techniques, and develop an approach to communicating with those who use their products. You can learn from what the IETF does.

Overcoming Objections to Threat Modeling

Earlier, you learned that your plan needs to explain what to do, what resources are required, and what value you expect it to bring. Objections can be raised against each of these, so it's important to talk about handling them. As you develop a plan, many of the objections that will be raised are valid. (Even invalid objections probably have a somewhat valid basis, even if it's only that someone doesn't like change or they like to argue.) Some of these objections will be feedback-like, and expressed as ways to improve your plan. To the extent that they don't remove value, incorporating as many of these as possible helps people realize that you're listening to them, which in turn helps them “buy in” and support your plan. Obviously, if a suggestion is counterproductive, you want to address it. For example, someone might say, “Don't we need to start threat modeling from our assets?” and you could respond, “Well, that's a common approach, but I haven't seen it add anything to threat models and it often seems to be a rathole. And since Alice has already expressed concern about the cost of these tasks, maybe we should try it without assets first.”

As you listen to objections, it may be helpful to model them as threats to one or more elements of the plan (resource, value, or planning), and start from the overall response, getting more specific as needed. Note that (all models are wrong) these meld together pretty quickly, and (some models are useful) it might be useful to ask clarifying questions. For example, if someone is objecting to the number of people involved, is that a concern about the resourcing involved, or about the quality of the evidence relative to the proposal?

Resource Objections

Objections in this group relate to the input side of the equation. At some point, even the largest organizations have no more money to spend on security, and management will start making trade-offs; but before you get there, you may well reach objections to the size of the investment. Examples include too many people or too much work per person.

Too Many People

How many people are you proposing get involved in threat modeling? It may be that your proposal really does take time from too many people. This may be a resource objection, or it may be a way of presenting a value objection (it's too many people for the expected value), or it may be a proof objection—that is, your evidence is insufficient for the organization to make the investment.

Too Much Work per Person

There are only 24 hours in a day, and only 8 or 10 of them are working hours. If you're proposing that your most senior people should spend an hour per day threat modeling, that requires pushing aside that much other work. Especially if they're senior people, that work is probably important and requires their time. A typical software process might incorporate not only the feature being worked on, but properties such as security, privacy, usability, reliability, programmability, accessibility, internationalization, and so on. Each of these is an important property, and at the end of the day, it can overwhelm the actual feature work (Shostack, 2011). You'll need to craft your proposal to constrain how much time is asked of each person. You may want to suggest what should be removed from people's workload, but doing so may anger someone who has worked to ensure that those tasks happen.

Too Much Busywork/YAGNI

A lot of activities associated with older approaches to threat modeling are like busywork, or they invite the objection that “you ain't gonna need it.” Examples include entering a description of the project into a threat modeling tool (hey, it's in the spec) or listing all your assumptions (what do you do with that?). If you get this objection, you'll want to show how each of your activities and artifacts is used and valued by people later in the chain.

Value Objections

Objections in this group relate to the output side of the equation. Someone might well believe that security investments are a good idea, but feel that this particular proposal doesn't reach the bar.

“I've Tried Threat Modeling. . .”

Many people have tried various threat modeling approaches, and many of those approaches provided incredibly low value for the work invested. It is tremendously important to respect this objection, and to understand exactly what the objector has done. You might even have to listen to them vent for a while. Once you understand what they did and where it broke down, you'll need to distinguish your proposal by showing how it is different.

There are both practical and personal objections based on past experience. The practical objections stem from complex, inefficient, or ineffective approaches that have been advocated elsewhere. Those objections inform the approaches in this book. However, there are also personal objections, which arise when those ineffective approaches were pushed in ways that repelled people. There are a number of mistakes you should avoid, including:

§ Fail to train people in what you want them to do.

§ Give people contradictory or confusing advice.

§ Threat model when it's too late to fix anything.

§ Condescend to people when they make mistakes.

§ Focus on process, rather than results.

“We Don't Fix Those Bugs”

If your approach to threat modeling is producing many bugs that are resolved with something like WONTFIX, then that's a problem. It might be that the approach finds bugs that don't matter, or it might be that the organization sets the bar high for determining which bugs matter. In either event, some adjustment is called for. If it's the approach, can you categorize the bugs that are being punted? Is it entities outside some boundary? Stop analyzing there. Is it a class of unfixable bugs? Document it once, and stop filing bugs for it. If it's one of the STRIDE types, perhaps lowering the priority until the organization is more willing to accept those bugs will help you address other security bugs. If it's the organizational response, is a single individual making the call? If so, perhaps talking to that person to understand their prioritization rationale would help.
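One way to answer the question "can you categorize the bugs that are being punted?" is to tally the WONTFIX resolutions by STRIDE type and by the reason given. The sketch below is a minimal illustration; the record fields, reason labels, and bug data are hypothetical and would need to be mapped onto whatever your bug tracker actually exports.

```python
from collections import Counter

# Hypothetical export from a bug tracker. Field names and values are
# illustrative assumptions, not the schema of any real tool.
BUGS = [
    {"id": 101, "resolution": "WONTFIX", "stride": "Repudiation", "reason": "outside trust boundary"},
    {"id": 102, "resolution": "FIXED", "stride": "Tampering", "reason": ""},
    {"id": 103, "resolution": "WONTFIX", "stride": "Repudiation", "reason": "unfixable class"},
    {"id": 104, "resolution": "WONTFIX", "stride": "Denial of Service", "reason": "outside trust boundary"},
    {"id": 105, "resolution": "FIXED", "stride": "Spoofing", "reason": ""},
]


def punted_by(bugs, field):
    """Count bugs resolved as WONTFIX, grouped by the given field."""
    return Counter(bug[field] for bug in bugs if bug["resolution"] == "WONTFIX")


# Show the largest groups first for each way of slicing the punted bugs.
for field in ("stride", "reason"):
    print(f"WONTFIX bugs by {field}:")
    for value, count in punted_by(BUGS, field).most_common():
        print(f"  {value}: {count}")
```

If one group dominates, that suggests which adjustment to try first: stop analyzing past a boundary, document an unfixable class once, or lower the priority of a STRIDE type.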

“No One Would Ever Do That”

This objection (and its close relative, “Why would anyone do that?”) is less an objection to threat modeling per se than to specific bugs being fixed. Generally, the best way to address these concerns is to look through the catalog of attackers in Appendix C “Attacker Lists” and find one who plausibly might “do that,” and/or find an instance of a similar attack being executed, possibly against another product. If you respond by asking, “If I can find you an example, will you agree to fix it?” then you can distinguish between cases where the objector really thinks no one would ever do that and cases where the real objection is that the fix seems hard or changes a cherished feature.

Objections to the Plan

Even if you propose a reasonable investment that produces a reasonable return, you may still not get approval. Recall that management is management because they'll say no to good ideas. Therefore, your threat modeling proposal not only has to be good enough to clear that bar, it has to clear it in the particular circumstances in which the proposal is made. Several of those circumstances relate to the state of the organization, and include factors such as timing and recent change, among other things. They also include things particular to your plan, such as other security activity or the quality of evidence you provide for your plan.

Timing

Management may already be investing in a couple of pilot projects, or one may have just blown up in their face. It may be the day after the budget was locked. A global recession may have just hit. These are hard objections to overcome. The best bet may be to ask when would be the right time to come back.

Change

Changing the behavior of individuals is hard, and changing the behaviors of an organization is even harder. Change requires adjustment of people, processes, and habits. Each adjustment, however much it will eventually help, requires time and energy that are taken away from productive work. Each person involved in a change wants to know how it will impact them; and if the impact is not positive, they may oppose or impede it. As such, people often fear change, and managers fear kicking off changes. If your organization has just been through a big change or a difficult change, appetite for more may be lacking.

Other Security Activity

The challenge may be that you have a decent investment proposal and a decent return, but the return on another security investment is higher. The ways to overcome this are either adjusting the investment or finding a way to improve the return. A key part of that strategy is emphasizing that finding bugs early is cheaper than finding them late. For some bugs that's because you avoid dependencies on the bug, but for many it's because the cheapest bugs to fix are the ones that you head off before you implement them. Similarly, if you can show that threat modeling could have prevented a class of bugs that continue to cause problems, then that may be sufficient justification.

Quality of Evidence

A final challenge to your plan may be that the quality of your proof points is insufficient in some way. If so, ask clarifying questions as discussed earlier, but it may simply be that you need more local experience with threat modeling before it can be formally sanctioned, or you need to gather additional data through a pilot project or something similar.

Summary

Getting an organization to adopt a new practice is always challenging. On the one hand, the issues you face will have a great deal in common with the issues faced by others who have introduced threat modeling. On the other hand, how you face them and overcome them will have aspects unique to your organization. Everyone will need to “sell” their case to individual contributors and to management.

Along the way to convincing people, you'll have to answer a variety of questions related to project management and roles and responsibilities, and ensure that those answers fit your organization's approach to building systems. Generally, that will include understanding the prerequisites to various tasks, and what deliverables those tasks will produce. As you execute the required tasks, you'll run into interaction issues, so you need to understand how decisions will be made. The decision models are likely different for different threat modeling tasks. Some of those tasks will require meetings, and making meetings effective can be tricky, especially if you overload agendas, meeting goals, and decision models. Such overload is common to threat modeling.

Organizations vary in terms of delivering technology, and they deliver technology of different types. Some organizations use waterfalls with gates and checkpoints; others are more agile. All organizations need to roll out systems, and some also deliver software. Each development and deployment approach will have different places to integrate threat modeling tasks. Most organizations also have organized testing, and testing can be a great complement to threat modeling. How an organization approaches technology also has implications for how it hires, rewards, and promotes people, including factors such as training, career ladders, and interviewing.

When attempting to bring threat modeling to an organization, you'll encounter a variety of objections that you'll need to address. Those objections can be roughly modeled as resource objections, value objections, and objections to the plan; and each can be understood and approached in the various ways described in this chapter.