SEO for Small Business: Easy SEO Strategies to Get Your Website Discovered on Google (2015)

INTRODUCTION

When we think of quality control, we might think of people in lab coats testing pharmaceuticals for consumer safety, or warehouse workers making a final product check before shipping it out. But did you know that Internet searches also have a kind of quality control? Search engines place sites they consider “valuable” higher up in search results when other users have found them useful, which makes your time online more productive and informative. Essentially, the search engine is similar to a restaurant critic, assigning scores to sites based on quality and popularity.

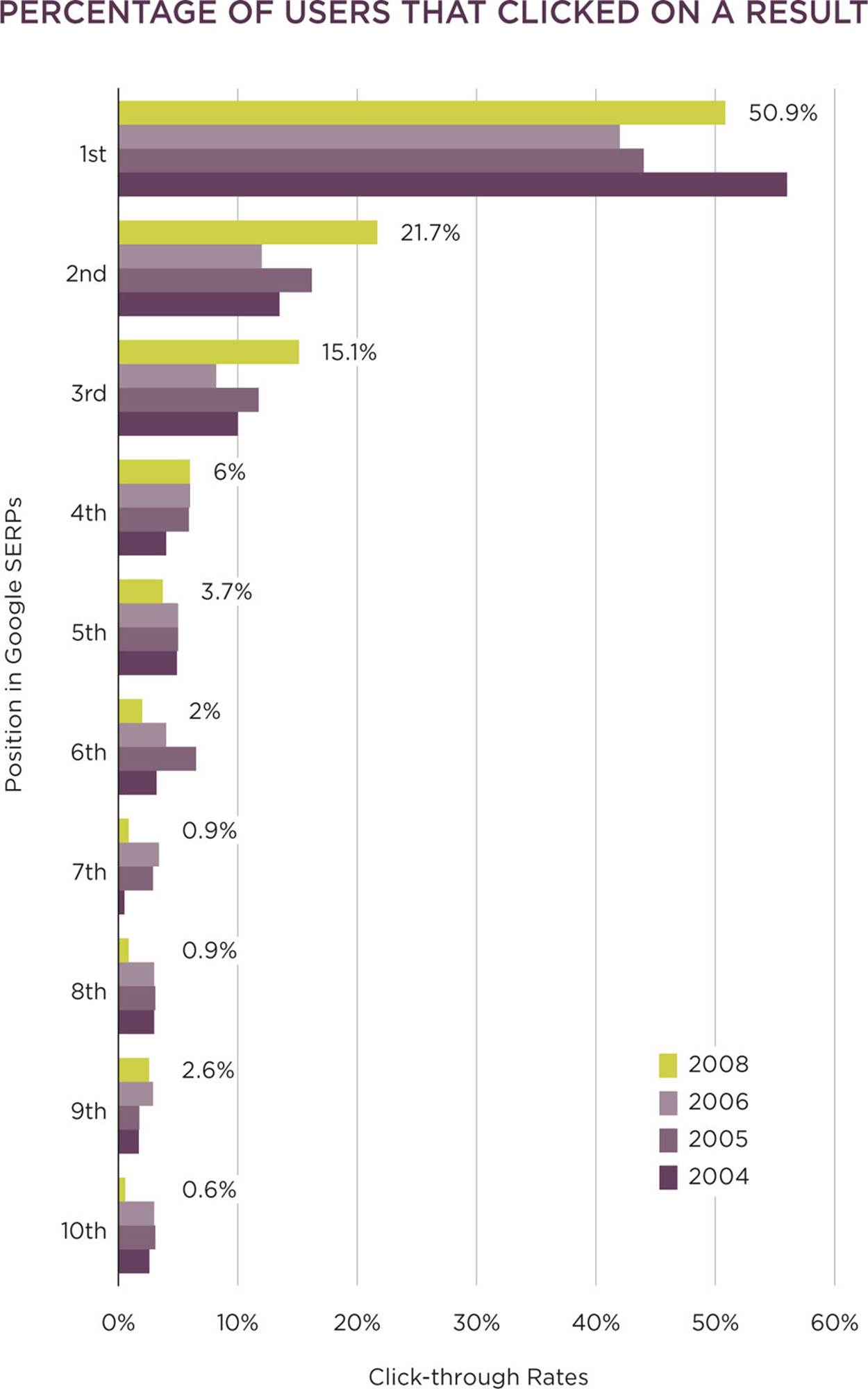

FIGURE 1.1 Clickability based on Google ranking and CTR

A valuable website achieves higher rankings because it offers useful, relevant information about a specific topic, engages readers, and gets many new and repeat visitors. In creating and promoting your own website, it’s important to ensure your content is easily searchable online because this will improve your ranking on a search engine results page, or SERP. Ranking higher makes users more likely to see and click on your website over other results. The way to do this is through search engine optimization, or SEO.

SEO is the process of improving and presenting your website in a way that will attract more visitors from search engine results. Think of SEO as a way to butter up the virtual restaurant critic and increase the chances that your website will rank higher on the results page.

THE ROLE OF ALGORITHMS IN SEO

How do search engines decide which websites are valuable? It’s not magic; it’s math. Algorithms, to be exact. An algorithm is a set of programmed rules that processes data about a site and weighs a variety of factors to determine where that site ranks. Value is assigned to a site based on the relevance of keywords and phrases in its headlines and the quality of its written content. Digestible, valuable content is defined as being keyword-rich, as well as frequently clicked on and linked to by users across the Web.

Algorithms need data to make their calculations, and special programs called spiders retrieve this data through processes called crawling and indexing. Crawling is when a search engine spider moves through the Web, following links from site to site and enabling search engines to discover new websites, as well as assess how many sites link to them. Search engine indexing is the digestion and storage of a website’s information for quick and accurate retrieval. Search engines are more likely to index sites that are popular, so the more clickable and linkable users prove a site to be, the more likely it is to get indexed.
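To make crawling and indexing more concrete, here is a minimal sketch in Python. It is a toy illustration only, not how Google or any real search engine works: the three-page "web" (`TOY_WEB`), the `.example` addresses, and the function names `crawl` and `build_index` are all hypothetical and invented for this example. The spider follows links from a starting page, counts how many links point to each page, and then stores each page's words for quick lookup.

```python
from collections import defaultdict, deque

# Hypothetical in-memory "web": address -> (page text, outgoing links).
TOY_WEB = {
    "a.example": ("fresh bread bakery", ["b.example", "c.example"]),
    "b.example": ("bakery reviews and bread recipes", ["c.example"]),
    "c.example": ("bread recipes", []),
}


def crawl(seed, web):
    """Follow links breadth-first from the seed page.

    Returns the order in which pages were discovered and a count of
    incoming links for each page that was linked to.
    """
    seen = {seed}
    queue = deque([seed])
    order = []
    inbound = defaultdict(int)
    while queue:
        url = queue.popleft()
        order.append(url)
        _text, links = web[url]
        for target in links:
            inbound[target] += 1  # one more page links to the target
            if target in web and target not in seen:
                seen.add(target)
                queue.append(target)
    return order, dict(inbound)


def build_index(pages, web):
    """Build an inverted index: word -> set of pages containing that word."""
    index = defaultdict(set)
    for url in pages:
        text, _links = web[url]
        for word in text.lower().split():
            index[word].add(url)
    return index


pages, inbound_links = crawl("a.example", TOY_WEB)
index = build_index(pages, TOY_WEB)

print(pages)                    # pages in the order the spider found them
print(inbound_links)            # how many links point to each page
print(sorted(index["bakery"]))  # pages that mention "bakery"
```

In this toy example, the page with the most incoming links is the one a link-based ranking would favor. Real search engines combine many more signals than a simple link count.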

After crawling and indexing, a search engine will consider the relative quality, popularity, and number of incoming links to give a site an initial ranking in search results.

BLACK HATS & WHITE HATS

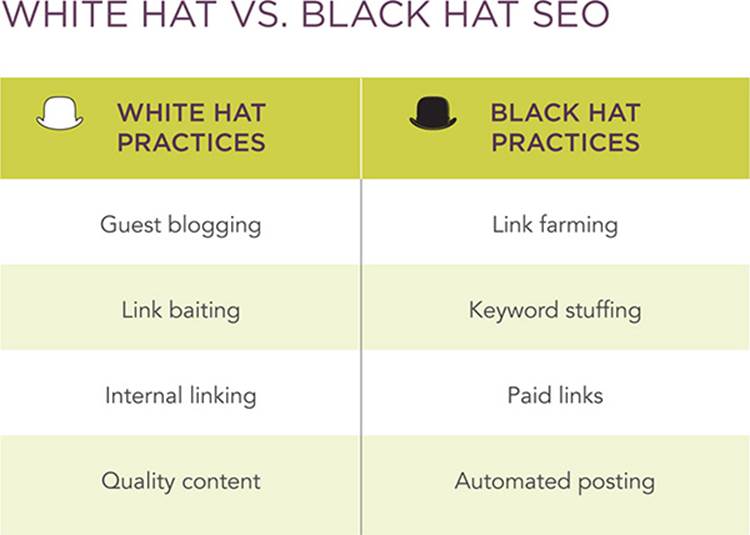

Unfortunately, some early adopters of SEO, realizing the importance of a search engine’s initial analysis, began abusing this process, using various techniques to cause advertising or malware sites to get higher rankings on a SERP.

This so-called black hat SEO behavior improves the search rankings of spam sites and corrals users into viewing ads or visiting virus-infected pages. Spammers also cram lots of potentially relevant keywords into their sites, making the sites attractive to spiders and enabling them to rank high without necessarily being of any value. Black hat SEO tactics also include planting links to spam sites all over the Web to make those sites look desirable and trustworthy to search engine algorithms.

In contrast, white hat SEO behavior adheres to ethical use principles, such as those outlined by the Google Webmaster Guidelines, which are publicly available for users to read and learn from at www.support.google.com/webmasters/answer/35769?hl=en. In general, white hat SEO involves practices that improve positioning by creating relevant and useful content, ensuring a link exists to take readers to key content within a site, and seeding it with keywords that appeal to human readers. Easy navigation and user-friendly interfaces also help sites ethically improve their rankings, as people who enjoy using these sites revisit, share, and link to them. Increased traffic through search engine hits happens automatically for sites whose managers ramp up their white hat SEO tactics.

Over time, search engine algorithms have been improved to recognize keyword cramming and other black hat tricks, and to prefer terms showing up naturally in contexts that appeal to human readers. In the spring of 2012, Google dramatically stepped up its efforts to stop spammers from engaging in unethical use of SEO strategies by enacting Penguin, an algorithm update that identified and pushed low-quality but high-ranking sites out of influential spots in SERPs. In announcing the update, the Google Webmaster Central Blog stated, “The change will decrease rankings for sites that we believe are violating Google’s existing quality guidelines. We’ve always targeted webspam in our rankings, and this algorithm represents another improvement in our efforts to reduce webspam and promote high-quality content.”
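One simple way to picture keyword cramming is to measure how much of a page's text is taken up by its single most repeated word. The Python sketch below does only that. It is an illustration, not Google's method: the function names `top_keyword_share` and `looks_stuffed`, the sample sentences, and the 30 percent threshold are assumptions chosen for this example, and search engines do not publish the checks they actually use.

```python
import re
from collections import Counter


def top_keyword_share(text):
    """Return the fraction of all words taken up by the most repeated word."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    _word, count = Counter(words).most_common(1)[0]
    return count / len(words)


def looks_stuffed(text, threshold=0.3):
    """Flag text whose top word exceeds the (arbitrary, assumed) threshold."""
    return top_keyword_share(text) > threshold


natural = "Our bakery sells fresh bread and pastries baked every morning"
stuffed = "cheap shoes cheap shoes buy cheap shoes cheap shoes online"

print(top_keyword_share(natural), looks_stuffed(natural))
print(top_keyword_share(stuffed), looks_stuffed(stuffed))
```

A check this crude would misjudge plenty of real pages, since common words such as “the” repeat naturally in long text. It is meant only to show why copy written for human readers tends to look different from copy written for spiders.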

HUMMINGBIRD

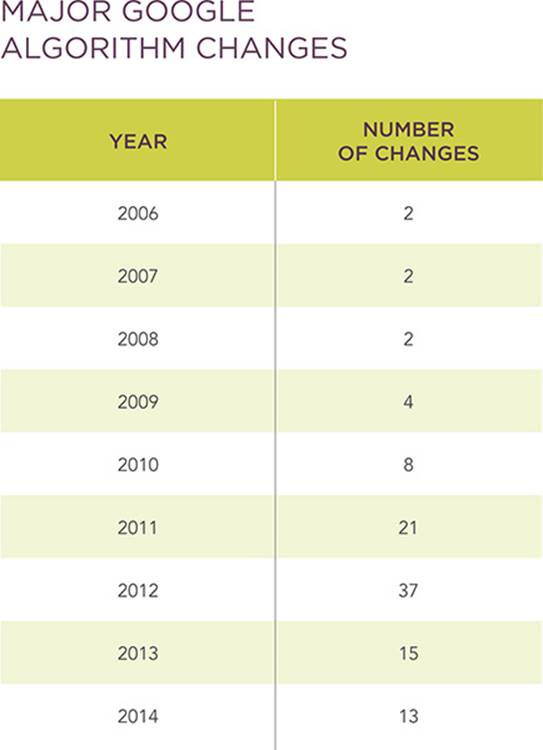

Between 2014 and 2015, Google altered its algorithm hundreds of times, with most changes being comparatively small. As spammers find new ways around the algorithm’s protective barrier, Google must constantly update its math. The algorithm changes discourage innovative spammers, while continuing to provide relevant and useful positioning of clickable content. Other algorithm changes have included Panda, in 2011, and Hummingbird, in 2013.

With Hummingbird, Google attempts to look beyond the individual keywords users type in order to better understand the meaning behind them. This improves results when users type in a more conversational-style search, such as “Where to get a haircut in Oklahoma City?” Instead of focusing on keywords, Hummingbird extrapolates the meaning behind the entire query.

Pigeon, another algorithm update, began to influence searches during the last half of 2014, providing assistance with queries involving local directory sites, such as Yelp, where distance and location play a role in results. As users’ needs change—along with the landscape of the Internet and society at large—algorithm updates will continue to tailor the types of hits users receive in response to searches.

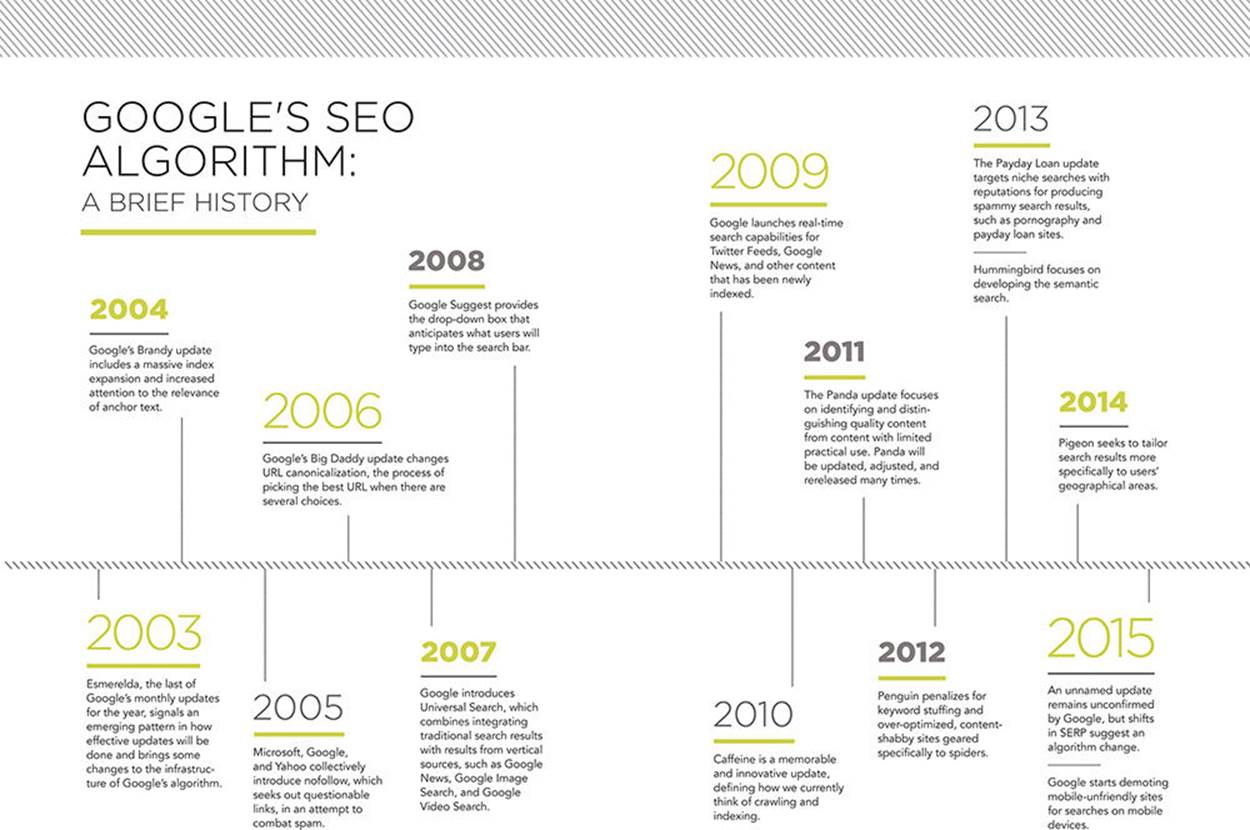

Google’s algorithm is altered 500 or more times every year. The algorithm has come a long way since February 2003, when the first named update, Boston, took place.