iOS Drawing: Practical UIKit Solutions (2014)

Chapter 7. Masks, Blurs, and Animation

Masking, blurring, and animation represent day-to-day development challenges you experience when drawing. These techniques enable you to add soft edges to your interface, depth-of-field effects, and updates that change over time. This chapter surveys these technologies, introducing solutions for your iOS applications.

Drawing into Images with Blocks

Chapter 6 introduced custom PushDraw() and PushLayerDraw() functions that combined Quartz drawing with Objective-C blocks for graphics state management and transparency layers. Listing 7-1 riffs off that idea, introducing a new function that returns an image. It uses the same DrawingStateBlock type to pass a series of drawing operations within a block, inscribing them into a new image drawing context.

Although I originally built this function to create mask images (as you’ll see in Listing 7-2), I have found myself using it in a wide variety of circumstances. For example, it’s handy for building content for image views, creating subimages for compositing, building color swatches, and more. Listing 7-1 is used throughout this chapter in a variety of supporting roles and offers a great jumping-off point for many of the tasks you will read about.

Listing 7-1 Creating an Image from a Drawing Block

    UIImage *DrawIntoImage(
        CGSize size, DrawingStateBlock block)
    {
        UIGraphicsBeginImageContextWithOptions(size, NO, 0.0);
        if (block) block();
        UIImage *image = UIGraphicsGetImageFromCurrentImageContext();
        UIGraphicsEndImageContext();
        return image;
    }

Simple Masking

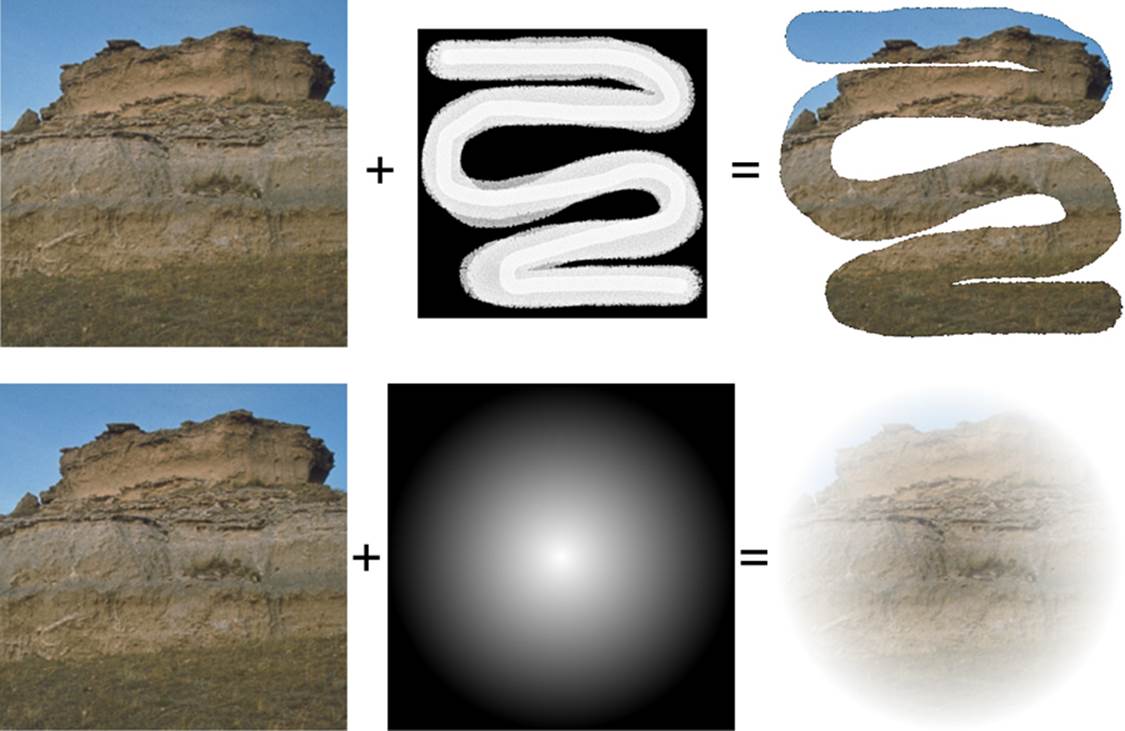

As you’ve discovered in earlier chapters, clipping enables you to limit drawing to the area inside a path. Figure 7-1 shows an example of simple mask clipping. In this example, only the portions within the path paint into the drawing context.

Figure 7-1 Clipping limits drawing within the bounds of a path. Public domain images courtesy of the National Park Service.

You achieve this result through either Quartz or UIKit calls. For example, you may call CGContextClip() to modify the context to clip to the current path or send the addClip method to a UIBezierPath instance. I built Figure 7-1 with the addClip approach.

Example 7-1 shows the code that built this figure. These commands build a path, apply a clip, and then draw an image to the context.

Example 7-1 Basic Clipping

    // Create the clipping path
    UIBezierPath *path =
        [UIBezierPath bezierPathWithOvalInRect:inset];
    UIBezierPath *inner = [UIBezierPath
        bezierPathWithOvalInRect:
        RectInsetByPercent(inset, 0.4)];

    // The even-odd rule is essential here to establish
    // the "inside" of the donut
    path.usesEvenOddFillRule = YES;
    [path appendPath:inner];

    // Apply the clip
    [path addClip];

    // Draw the image
    UIImage *agate = [UIImage imageNamed:@"agate.jpg"];
    [agate drawInRect:targetRect];
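To see why the even-odd rule matters for the donut, it can help to model the test outside Quartz. The following is a minimal sketch in plain C (which also compiles in an Objective-C file), not a description of how UIBezierPath implements clipping. The Rect type and both function names are hypothetical stand-ins introduced for illustration. A point counts as inside the clip when an odd number of the two ovals enclose it.

```c
#include <stdbool.h>

/* Hypothetical stand-in for CGRect, so the sketch needs no frameworks. */
typedef struct { double x, y, width, height; } Rect;

/* True when (px, py) lies within the oval inscribed in r. */
static bool OvalContainsPoint(Rect r, double px, double py)
{
    double rx = r.width / 2.0;
    double ry = r.height / 2.0;
    if (rx <= 0.0 || ry <= 0.0) return false;

    /* Normalize the point against the oval's center and radii. */
    double dx = (px - (r.x + rx)) / rx;
    double dy = (py - (r.y + ry)) / ry;
    return dx * dx + dy * dy <= 1.0;
}

/* Even-odd test for a path built from two ovals: the point is
   "inside" when an odd number of the ovals enclose it. */
static bool DonutContainsPoint(Rect outer, Rect inner,
                               double px, double py)
{
    int enclosing = 0;
    if (OvalContainsPoint(outer, px, py)) enclosing++;
    if (OvalContainsPoint(inner, px, py)) enclosing++;
    return (enclosing % 2) == 1;
}
```

With an outer oval in a 100×100 rectangle and an inner oval inset 30 points on each side, a point on the ring is enclosed once and gets painted, while the center is enclosed twice and stays clear.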

Complex Masking

The masks in Figure 7-2 produce results far more complex than basic path clipping. Each grayscale mask determines not only where each pixel can or cannot be painted but also to what degree that pixel is painted. As mask elements range from white down to black, their gray levels describe the degree to which a pixel contributes to the final image.

Figure 7-2 The levels in a grayscale mask establish how to paint pixels. Public domain images courtesy of the National Park Service.

These masks work by updating context state. Use the CGContextClipToMask() function to map a mask you supply into a rectangle within the current context:

    void CGContextClipToMask (
        CGContextRef c,
        CGRect rect,
        CGImageRef mask
    );

For complex drawing, perform your masking within a GState block. You can save and restore the context GState around masking calls to temporarily apply a mask. This enables you to restore your context back to its unmasked condition for further drawing tasks, as in this example:

    PushDraw(^{
        ApplyMaskToContext(mask); // See Listing 7-2
        [image drawInRect:targetRect];
    });

A context mask determines what pixels are drawn and the degree to which they are painted. A black pixel in the mask is fully obscured. No data can pass through. A white pixel allows all data to pass. Gray levels between pure black and pure white apply corresponding alpha values to the painting. For example, a medium gray is painted with 50% alpha. With black-to-white masking, the mask data must use a grayscale source image. (There’s also a second approach to this function, which you’ll see slightly later in this chapter.)
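The arithmetic behind that description can be sketched for a single color channel. This is an illustrative model in plain C, not Quartz's actual compositing code. The function name is hypothetical, and it assumes an opaque source pixel painted with normal blending, with every value running from 0.0 to 1.0.

```c
/* Blend one color channel through a grayscale mask sample.
   maskLevel 0.0 is black (nothing passes); 1.0 is white
   (the source replaces the destination completely). */
static double PaintThroughMask(double destination, double source,
                               double maskLevel)
{
    /* Clamp out-of-range mask samples. */
    if (maskLevel < 0.0) maskLevel = 0.0;
    if (maskLevel > 1.0) maskLevel = 1.0;

    /* The mask level acts as the alpha applied to the source. */
    return source * maskLevel + destination * (1.0 - maskLevel);
}
```

A medium-gray sample (0.5) painting white over black yields 0.5, which matches the 50% alpha case described above.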

Listing 7-2 demonstrates how you apply a grayscale mask to the current context. This function starts by converting a mask to a no-alpha device gray color space (see Chapter 3). It calculates the context size so the mask is stretched across the entire context (see Chapter 1).

To apply the mask, you must be in Quartz space, or the mask will be applied upside down. This function flips the context, adds the mask, and then flips the context back to UIKit coordinates to accommodate further drawing commands. This flip–apply–flip sequence applies the mask from top to bottom, just as you drew it.
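The geometry of that flip can be sketched without a context. The plain C below models only the coordinate mapping between a top-left origin (UIKit) and a bottom-left origin (Quartz). It is not the FlipContextVertically() helper itself, which adjusts the context's transform. The Rect type and function name are hypothetical.

```c
/* Hypothetical stand-in for CGRect. */
typedef struct { double x, y, width, height; } Rect;

/* Map a rectangle between top-left-origin and bottom-left-origin
   coordinates for a context of the given height. The same function
   converts in both directions. */
static Rect FlipRectVertically(Rect r, double contextHeight)
{
    Rect flipped = r;
    flipped.y = contextHeight - (r.y + r.height);
    return flipped;
}
```

Applying the mapping twice returns the original rectangle, which is why the second flip leaves the context ready for further UIKit drawing.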

If you’re looking for inspiration, a simple “Photoshop masks” Web search will return a wealth of prebuilt black-to-white masks, ready for use in your iOS applications. Make sure to check individual licensing terms, but you’ll find that many masks have been placed in the public domain or offer royalty-free terms of use.

Listing 7-2 Applying a Mask to the Context

    void ApplyMaskToContext(UIImage *mask)
    {
        if (!mask)
            COMPLAIN_AND_BAIL(@"Mask image cannot be nil", nil);

        CGContextRef context = UIGraphicsGetCurrentContext();
        if (context == NULL) COMPLAIN_AND_BAIL(
            @"No context to apply mask to", nil);

        // Ensure that mask is grayscale
        UIImage *gray = GrayscaleVersionOfImage(mask);

        // Determine the context size
        CGSize size = CGSizeMake(
            CGBitmapContextGetWidth(context),
            CGBitmapContextGetHeight(context));
        CGFloat scale = [UIScreen mainScreen].scale;
        CGSize contextSize = CGSizeMake(
            size.width / scale, size.height / scale);

        // Update the GState for masking
        FlipContextVertically(contextSize);
        CGContextClipToMask(context,
            SizeMakeRect(contextSize), gray.CGImage);
        FlipContextVertically(contextSize);
    }

Blurring

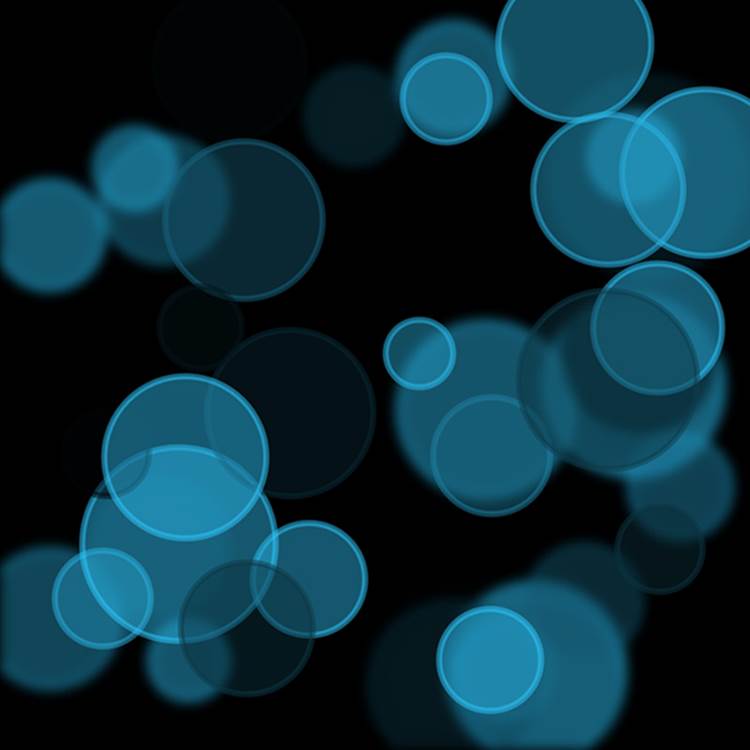

Blurring is an essential, if computationally expensive, tool for drawing. It enables you to soften transitions at boundaries when masking and build eye-pleasing visuals that create a sense of pseudo-depth. You see an example of this in Figure 7-3. Called “bokeh,” this effect refers to an aesthetic of out-of-focus elements within an image. Blurring emulates the way a photographic lens captures depth of field to create a multidimensional presentation.

Figure 7-3 Blurring builds complex and interesting depth effects.

Although blurring is a part of many drawing algorithms and is strongly featured in the iOS 7 UI, its implementation lies outside the Core Graphics and UIKit APIs. At the time this book was being written, Apple had not released APIs for their custom iOS 7 blurring. Apple engineers suggest using image-processing solutions from Core Image and Accelerate for third-party development.

Listing 7-3 uses a Core Image approach. It’s one that originally debuted with iOS 6. This implementation is simple, taking just a few lines of code, and acceptable in speed and overhead.

Acceptable is, of course, a relative term. I encourage you to time your device-based drawing tasks to make sure they aren’t overloading your GUI. (Remember that most drawing is thread safe!) Blurring is a particularly expensive operation as these things go. I’ve found that Core Image and Accelerate solutions tend to run with the same overhead on-device. Core Image is slightly easier to read; that’s the one I’ve included here.

Besides using performance-monitoring tools, you can also use simple timing checks in your code. Store the current date before drawing and examine the elapsed time interval after the drawing concludes. Here’s an example:

    NSDate *date = [NSDate date];

    // Perform drawing task here

    NSLog(@"Elapsed time: %f",
        [[NSDate date] timeIntervalSinceDate:date]);

Remember that most drawing is thread safe. Whenever possible, move your blurring routines out of the main thread. Store results for re-use, whether in memory or cached locally to the sandbox.

Note

The blurred output of the Gaussian filter is larger than the input image to accommodate blurring on all sides. The Crop filter in Listing 7-3 restores the original dimensions.

Listing 7-3 Core Image Blurring

    UIImage *GaussianBlurImage(
        UIImage *image, NSInteger radius)
    {
        if (!image) COMPLAIN_AND_BAIL_NIL(
            @"Image cannot be nil", nil);

        // Create Core Image blur filter
        CIFilter *blurFilter =
            [CIFilter filterWithName:@"CIGaussianBlur"];
        [blurFilter setValue:@(radius) forKey:@"inputRadius"];

        // Pass the source image as the input
        [blurFilter setValue:[CIImage imageWithCGImage:
            image.CGImage] forKey:@"inputImage"];

        CIFilter *crop =
            [CIFilter filterWithName: @"CICrop"];
        [crop setDefaults];
        [crop setValue:blurFilter.outputImage
            forKey:@"inputImage"];

        // Apply crop
        CGFloat scale = [[UIScreen mainScreen] scale];
        CGFloat w = image.size.width * scale;
        CGFloat h = image.size.height * scale;
        CIVector *v = [CIVector vectorWithX:0 Y:0 Z:w W:h];
        [crop setValue:v forKey:@"inputRectangle"];

        CGImageRef cgImageRef =
            [[CIContext contextWithOptions:nil]
                createCGImage:crop.outputImage
                fromRect:crop.outputImage.extent];

        // Render the cropped, blurred results
        UIGraphicsBeginImageContextWithOptions(
            image.size, NO, 0.0);

        // Flip for Quartz drawing
        FlipContextVertically(image.size);

        // Draw the image
        CGContextDrawImage(UIGraphicsGetCurrentContext(),
            SizeMakeRect(image.size), cgImageRef);

        // createCGImage:fromRect: returns an owned reference,
        // so release it once it has been drawn
        CGImageRelease(cgImageRef);

        // Retrieve the final image
        UIImage *blurred =
            UIGraphicsGetImageFromCurrentImageContext();
        UIGraphicsEndImageContext();
        return blurred;
    }

Blurred Drawing Blocks

Listing 7-4 returns once again to Objective-C blocks that encapsulate a series of painting commands. In this case, the solution blurs those drawing operations and paints them into the current context.

To accomplish this, the function must emulate a transparency layer. It cannot use a transparency layer directly as there’s no way to intercept that material, blur it, and then pass it on directly to the context. Instead, the function draws its block into a new image, using DrawIntoImage() (see Listing 7-1), blurs it (using Listing 7-3), and then draws the result to the active context.

You see the result of Listing 7-4 in Figure 7-3. This image consists of two requests to draw random circles. The first is applied through a blurred block and the second without:

    DrawAndBlur(4, ^{[self drawRandomCircles:20
        withHue:targetColor into:targetRect];});

    [self drawRandomCircles:20
        withHue:targetColor into:targetRect];

Listing 7-4 Applying a Blur to a Drawing Block

    // Return the current context size
    CGSize GetUIKitContextSize()
    {
        CGContextRef context = UIGraphicsGetCurrentContext();
        if (context == NULL) return CGSizeZero;

        CGSize size = CGSizeMake(
            CGBitmapContextGetWidth(context),
            CGBitmapContextGetHeight(context));
        CGFloat scale = [UIScreen mainScreen].scale;
        return CGSizeMake(size.width / scale,
            size.height / scale);
    }

    // Draw blurred block
    void DrawAndBlur(CGFloat radius, DrawingStateBlock block)
    {
        if (!block) return; // Nothing to do

        CGContextRef context = UIGraphicsGetCurrentContext();
        if (context == NULL) COMPLAIN_AND_BAIL(
            @"No context to draw into", nil);

        // Draw and blur the image
        UIImage *baseImage = DrawIntoImage(
            GetUIKitContextSize(), block);
        UIImage *blurred = GaussianBlurImage(baseImage, radius);

        // Draw the results
        [blurred drawAtPoint:CGPointZero];
    }

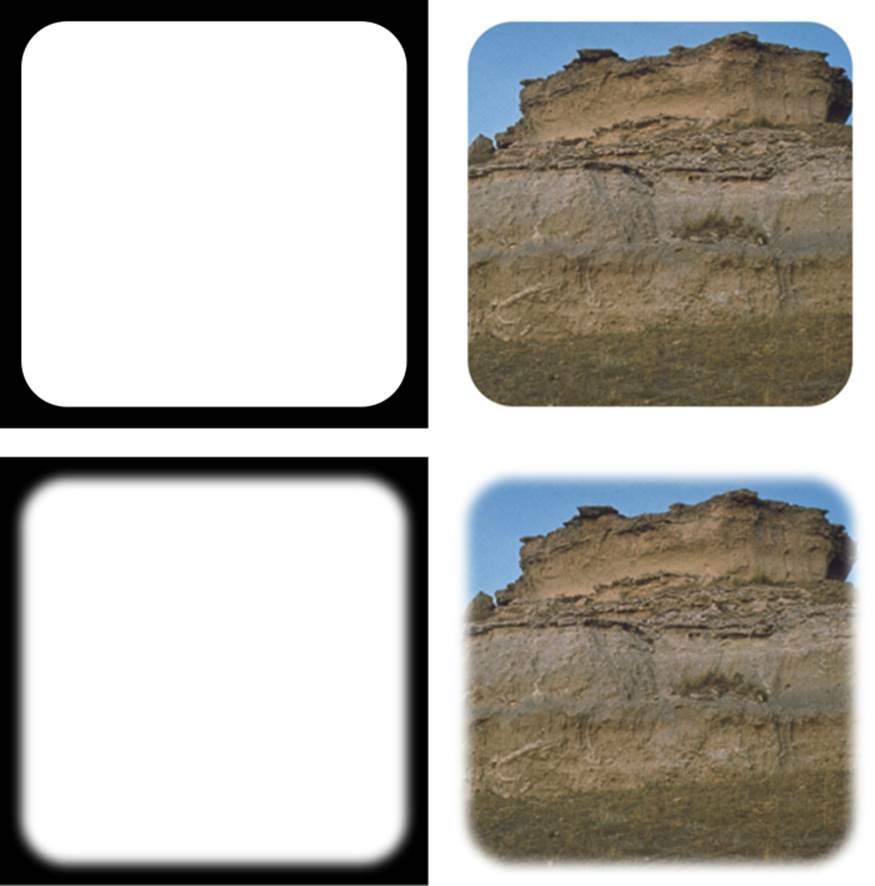

Blurred Masks

When you blur masks, you create softer edges for drawing. Figure 7-4 shows the result of painting an image using the normal outlines of a rounded-rectangle Bezier path and one that’s been blurred (see Example 7-2). In the top image, the path was filled but not blurred. In the bottom image, the DrawAndBlur() request softens the edges of the filled path.

Figure 7-4 Blurring a mask creates softer edges. Public domain images courtesy of the National Park Service.

Softened edges enable graphics to smoothly blend into each other onscreen. This technique is also called feathering. In feathering, edge masks are softened to create a smoother transition between a drawn image and its background.

Example 7-2 Drawing to a Blurred Mask

    UIBezierPath *path = [UIBezierPath
        bezierPathWithRoundedRect:inset cornerRadius:32];

    UIImage *mask = DrawIntoImage(targetRect.size, ^{
        FillRect(targetRect, [UIColor blackColor]);
        DrawAndBlur(8, ^{[path fill:[UIColor whiteColor]];}); // blurred
        // [path fill:[UIColor whiteColor]]; // non-blurred
    });

    ApplyMaskToContext(mask);
    [agate drawInRect:targetRect];

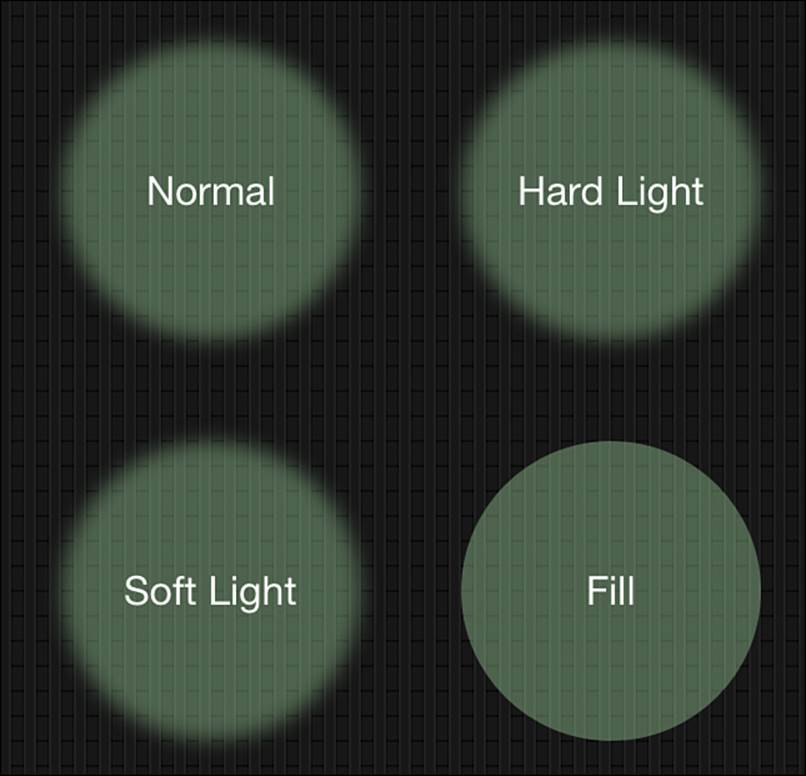

Blurred Spotlights

Drawing “light” into contexts is another common use case for blurring. Your goal in this situation is to lighten pixels, optionally add color, and blend the light without obscuring items already drawn to the context. Figure 7-5 shows several approaches. As you can see, the differences between them are quite subtle.

Figure 7-5 Blurring emulates light being shined on a background surface.

All four examples in Figure 7-5 consist of a circular path filled with a light, translucent green. Each sample, except the bottom-right circle, was blurred using the following code:

    path = [UIBezierPath bezierPathWithOvalInRect:rect];

    PushDraw(^{
        CGContextSetBlendMode(UIGraphicsGetCurrentContext(),
            blendMode);
        DrawAndBlur(8, ^{[path fill:spotlightColor];});
    });

The top-left example uses a normal blending mode, the top-right example uses a hard light mode, and the bottom-left example uses a soft light mode. Here are a few things to notice:

• The kCGBlendModeHardLight sample at the top right produces the subtlest lighting, adding the simplest highlights to the original background.

• The kCGBlendModeSoftLight sample at the bottom left is the most diffuse, with brighter highlighting.

• The kCGBlendModeNormal sample at the top left falls between these two. The center of the light field actually matches the sample at the bottom right—the one without blurring, which was also drawn using normal blending.

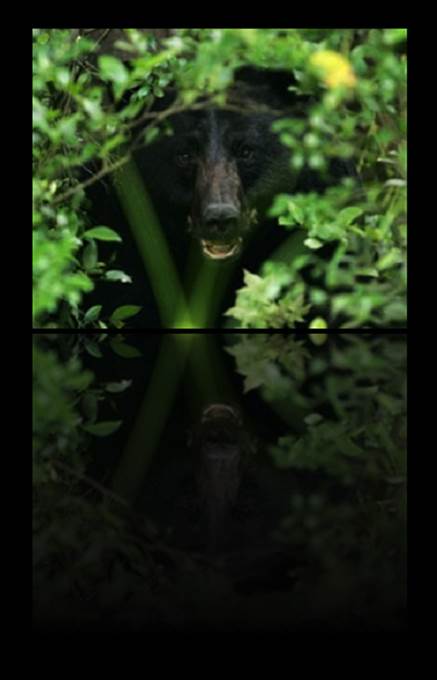

Drawing Reflections

When drawing reflections, you paint an inverted image that gently fades away. Figure 7-6 demonstrates this common technique. I’ve added a slight vertical gap to highlight where the original image ends and the reflected image begins. Most images that draw reflections use this gap to emulate a difference in elevation between the source image and a reflecting surface below it.

Figure 7-6 Gradients enable you to fade reflections. Public domain images courtesy of the National Park Service.

Listing 7-5 shows the function that built this flipped mirror. There are several things to note in this implementation:

• The context is flipped vertically at the point at which the reflection starts. This enables the reflection to draw in reverse, starting at the bottom, near the bushes, and moving up past the head of the bear.

• Unlike previous mask examples, Listing 7-5 uses a rectangle argument to limit the mask and the image drawing. This enables you to draw a reflection into a rectangle within a larger context.

• The CGContextClipToMask() function is applied slightly differently than in Listing 7-2. Instead of passing a grayscale image mask to the third parameter, this function passes a normal RGB image with an alpha channel. When used in this fashion, the image acts as an alpha mask. Alpha levels from the image determine what portions of the clipping area are affected by new updates. In this example, the drawn inverted image fades away from top to bottom.
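The fade described in the last point can be expressed as a simple ramp. The sketch below, in plain C, assumes that the gradient helper interpolates alpha linearly between its two colors, which this chapter does not confirm. The function name is hypothetical. Listing 7-5 passes white at 50% alpha and white at 0% alpha, so those serve as the start and end values here.

```c
/* Alpha applied to the reflection at a fractional position through
   the gradient: 0.0 at the gradient's first color, 1.0 at its last.
   Assumes a linear ramp between the two alpha values. */
static double ReflectionAlpha(double fraction,
                              double startAlpha, double endAlpha)
{
    /* Clamp positions that fall outside the gradient. */
    if (fraction < 0.0) fraction = 0.0;
    if (fraction > 1.0) fraction = 1.0;

    return startAlpha + (endAlpha - startAlpha) * fraction;
}
```

Under that assumption, the reflection starts at half strength next to the source image, drops to a quarter strength at the midpoint, and disappears entirely at the far edge.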

Listing 7-5 Building a Reflected Image

    // Draw an image into the target rectangle
    // inverted and masked to a gradient
    void DrawGradientMaskedReflection(
        UIImage *image, CGRect rect)
    {
        CGContextRef context = UIGraphicsGetCurrentContext();
        if (context == NULL)
            COMPLAIN_AND_BAIL(
                @"No context to draw into", nil);

        // Build gradient
        UIImage *gradient = GradientImage(rect.size,
            WHITE_LEVEL(1.0, 0.5), WHITE_LEVEL(1.0, 0.0));

        PushDraw(^{
            // Flip the context vertically with respect
            // to the origin of the target rectangle
            CGContextTranslateCTM(context, 0, rect.origin.y);
            FlipContextVertically(rect.size);
            CGContextTranslateCTM(context, 0, -rect.origin.y);

            // Add clipping and draw
            CGContextClipToMask(context, rect, gradient.CGImage);
            [image drawInRect:rect];
        });
    }

Although reflections provide an intriguing example of context clipping, they’re a feature you don’t always need to apply in applications. That’s because the CAReplicatorLayer class and layer masks accomplish this too, plus they provide live updates—so if a view’s contents change, so do the reflections. Although you can do it all in Quartz, there are sometimes good reasons why you shouldn’t. Reflections provide one good example of that rule.

You should draw reflections in Quartz when you’re focused on images rather than views. Reflections often form part of a drawing sequence rather than the end product.

Creating Display Links for Drawing

Views may need to change their content over time. They might transition from one image to another, or provide a series of visual updates to indicate application state. Animation meets drawing through a special timing class.

The CADisplayLink class provides a timer object for view animation. It fires a refresh clock that’s synced to a display’s refresh rate. This enables you to redraw views on a clock. You can use this clock to produce Quartz-based animation effects such as marching ants or to add Core Image–based transitions to your interfaces. Display links are part of the QuartzCore framework. You create these timers and associate them with run loops.

Although you can use an NSTimer to achieve similar results, using a display link frees you from trying to guess the ideal refresh interval. What’s more, a display link offers better guarantees about the accuracy of the timer (that it will fire on time). Of NSTimer, Apple writes in the documentation:

The actual time at which the timer fires potentially can be a significant period of time after the scheduled firing time.

Example 7-3 shows how you might create a display link. You should use common modes (NSRunLoopCommonModes) for the least latency. In this example, the target is a view, and the fired selector is setNeedsDisplay, a system-supplied UIView method. When triggered, this target–selector pair tells the system to mark that view’s entire bounds as dirty and request a drawRect: redraw on the next drawing cycle. The drawRect: method manually draws the content of a custom view using Quartz and iOS drawing APIs.

Example 7-3 Creating a Display Link

    CADisplayLink *link = [CADisplayLink
        displayLinkWithTarget:view
        selector:@selector(setNeedsDisplay)];
    [link addToRunLoop:[NSRunLoop mainRunLoop]
        forMode:NSRunLoopCommonModes];

A display link’s frame interval is the property that controls its refresh rate. This normally defaults to 1. At 1, the display link notifies the target each time the link timer fires. This results in updates that match the display’s refresh rate. To adjust this, change the display link’s integer frameInterval property. Higher numbers slow down the refresh rate. Setting it to 2 halves your frame rate, and so forth:

link.frameInterval = 2;
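The relationship between the frame interval and the resulting update rate is simple division. The plain C sketch below uses hypothetical function names and takes the display's refresh rate as a parameter rather than assuming a particular screen. It also reports how many milliseconds each callback has available before the next one arrives.

```c
/* Callbacks per second for a display link, given the display's
   refresh rate (in Hz) and the link's frame interval.
   Returns 0 for invalid input. */
static double EffectiveFrameRate(double refreshRate, int frameInterval)
{
    if (refreshRate <= 0.0 || frameInterval < 1) return 0.0;
    return refreshRate / frameInterval;
}

/* Time available to each callback, in milliseconds, before the
   next one is due. Returns 0 for invalid input. */
static double FrameBudgetMilliseconds(double refreshRate,
                                      int frameInterval)
{
    double fps = EffectiveFrameRate(refreshRate, frameInterval);
    return fps > 0.0 ? 1000.0 / fps : 0.0;
}
```

On a 60 Hz display, a frame interval of 2 gives 30 updates per second and roughly 33 milliseconds of drawing time for each one.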

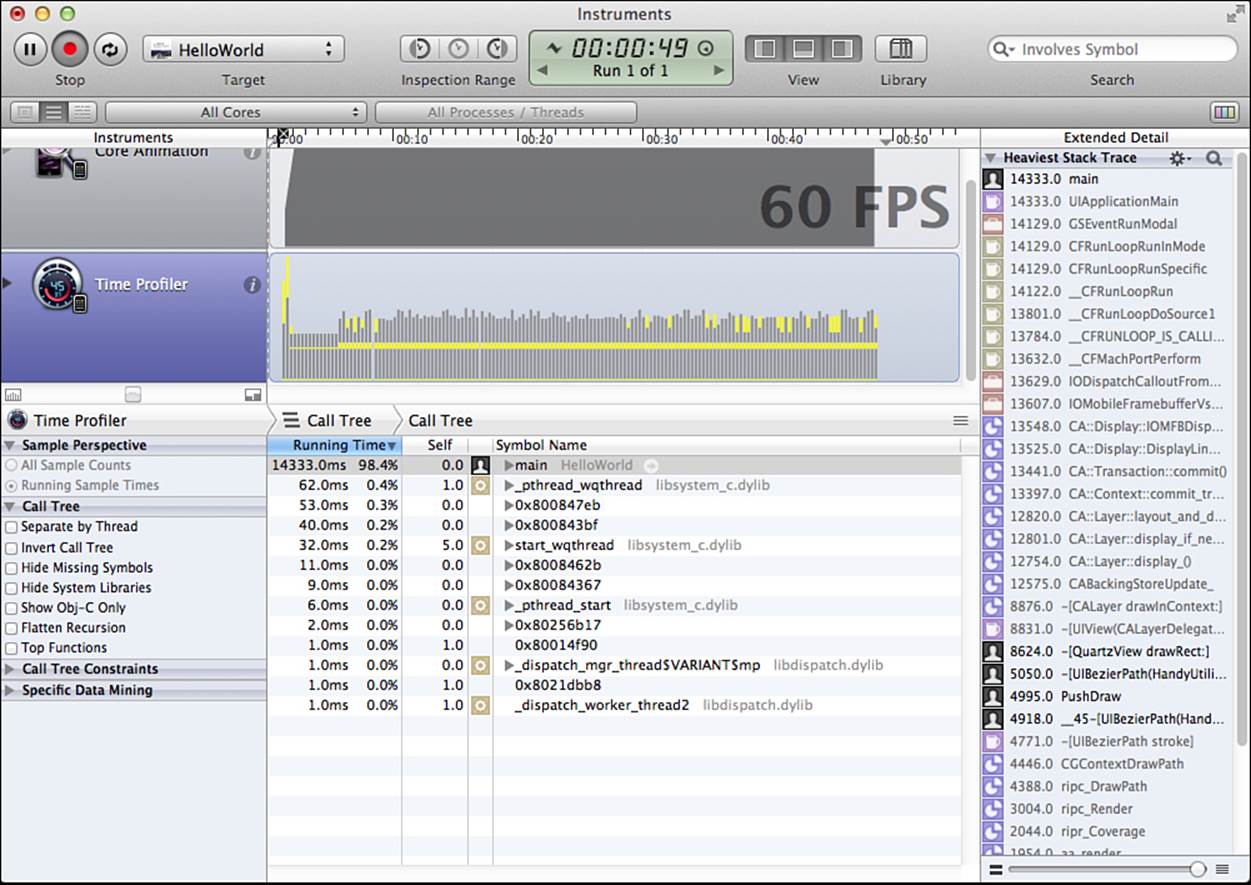

Assuming no processing bottlenecks, a well-behaved system runs at 60 frames per second (fps). You test the refresh rate using the Core Animation profiler in Instruments (see Figure 7-7) while running your app on a device with a frame interval set to 1. This way, you can get an idea of how much burden you’re placing on your app while running animated drawing tasks. If you see your refresh rate drop to, for example, 12 fps or 3 fps or worse, you need to seriously rethink how you’re performing your drawing tasks.

Figure 7-7 The Core Animation template, running in Instruments.

Note

Instruments plays a critical role in making sure your applications run efficiently by profiling how iOS applications work under the hood. The utility samples memory usage and monitors performance. This lets you identify and target problem areas in your applications and work on their efficiency before you ship apps.

Instruments offers graphical time-based performance plots that show where your applications are using the most resources. Instruments is built around the open-source DTrace package developed by Sun Microsystems.

In Xcode 5, the Debug Navigator enables you to track CPU and memory load as your application runs.

When you are done with your display loop, invalidate your display link (using invalidate). This removes it from the run loop and disassociates the target/action:

[link invalidate];

Alternatively, you can set the link’s paused property to YES and suspend the display link until it’s needed again.

Building Marching Ants

The display link technology you just read about also powers regular drawing updates. You can use this approach for many animated effects. For example, Figure 7-8 shows a common “marching ants” display. In this interface, the light gray lines animate, moving around the rectangular selection. First developed by Bill Atkinson for MacPaint on the old-style Macintosh line, it is named for the idea of ants marching in line. This presentation enables users to easily distinguish the edges of a selection.

Figure 7-8 A “marching ants” selection animates by offsetting each dash over time.

Example 7-4 presents a drawRect: implementation that draws a marching ants effect. It calculates a dash offset related to the current real-world time and strokes a path that you supply. Although Figure 7-8 uses a rectangle, you can use this code with any path shape.

This method is intended for use in a clear, lightweight view that’s stretched over your interface. This enables you to separate your selection presentation from the rest of your GUI, with a view you can easily hide or remove, as needed.

It uses a 12 point–3 point dash pattern for long dashes and short gaps. Importantly, it uses system time rather than any particular counter to establish its animation offsets. This ensures that any glitches in the display link (typically caused by high computing overhead) won’t affect the placement at each refresh, and the animation will proceed at the rate you specify.

There are two timing factors working here at once. The first is the refresh rate. It controls how often the drawRect: method fires to request a visual update. The second controls the pattern offsets. This specifies to what degree the dash pattern has moved and is calculated, independently, from system time.

To animate, Example 7-4 calculates a phase. This is what the UIBezierPath class (and, more to the point, its underlying Quartz CGPath) uses to present dashed offsets. The phase can be positive (typically, counterclockwise movement) or negative (clockwise) and specifies how far into the pattern to start drawing. This example uses a pattern that is 15 points long. Every 15 points, the dashes return to their original position.

To calculate an offset, this method applies a factor called secondsPerFrame. Example 7-4 cycles every three-quarters of a second. You can adjust this time to decrease or increase the pattern’s speed.
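The phase calculation can be isolated as a pure function of time, which makes it easy to check by hand. This is a minimal sketch in plain C with a hypothetical function name. Its secondsPerCycle parameter plays the role of secondsPerFrame in Example 7-4, and it extracts the fractional part of the cycle count by hand rather than calling floor(), only so that the sketch needs no math library.

```c
#include <stdbool.h>

/* Dash phase, in points, for a given time in seconds.
   patternLength is the total dash-plus-gap length, and
   secondsPerCycle is how long one full pattern shift takes. */
static double DashPhase(double seconds, double patternLength,
                        double secondsPerCycle, bool clockwise)
{
    if (secondsPerCycle <= 0.0) return 0.0;

    double cycles = seconds / secondsPerCycle;

    /* Keep only the fractional part of the cycle count. The cast
       truncates toward zero, so step down once for negative input
       to match floor(). */
    long long whole = (long long)cycles;
    if ((double)whole > cycles) whole--;
    double fraction = cycles - (double)whole;

    /* Negative phase for clockwise movement, as in Example 7-4. */
    return patternLength * fraction * (clockwise ? -1.0 : 1.0);
}
```

With a 15-point pattern and a 0.75-second cycle, a time of 0.375 seconds lands halfway through the cycle and produces a phase of 7.5 points (negated for clockwise movement). Because the result depends only on the time passed in, a late or skipped display link callback does not shift where the dashes land.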

Example 7-4 Displaying Marching Ants

    // The drawRect: method is called each time the display
    // link fires. That's because calling setNeedsDisplay
    // on the view notifies the system that the view contents
    // need redrawing on the next drawing cycle.
    - (void) drawRect:(CGRect)rect
    {
        CGContextRef context = UIGraphicsGetCurrentContext();
        CGContextClearRect(context, rect);

        CGFloat dashes[] = {12, 3};
        CGFloat distance = 15;
        CGFloat secondsPerFrame = 0.75f; // Adjust as desired

        NSTimeInterval ti = [NSDate
            timeIntervalSinceReferenceDate] / secondsPerFrame;

        BOOL goesCW = YES;
        CGFloat phase = distance * (ti - floor(ti)) *
            (goesCW ? -1 : 1);

        [path setLineDash:dashes count:2 phase:phase];
        [path stroke:3 color:WHITE_LEVEL(0.75, 1)];
    }

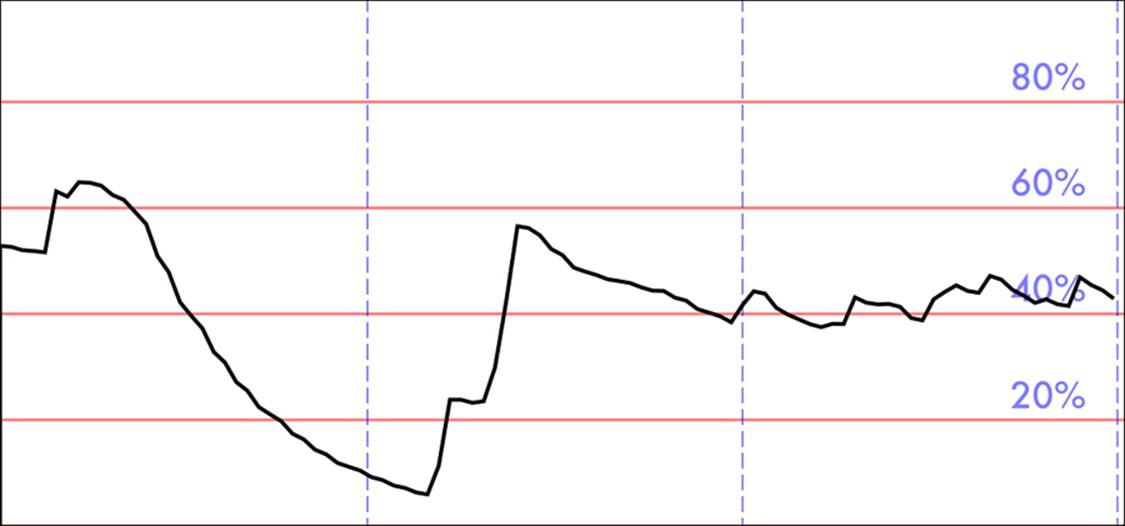

Drawing Sampled Data

There are many other applications for combining iOS drawing with a display link timer. One of the most practical involves data sampling from one of the onboard sensors. Figure 7-9 shows an app that monitors audio levels. At each link callback, it draws a UIBezierPath that shows the most recent 100 samples.

Figure 7-9 Sampling audio over time.

You can, of course, use a static background, simply drawing your data over a grid. If you use any vertical drawing elements (such as the dashed blue lines in Figure 7-9), however, you’ll want those elements to move along with your data updates. The easy way to accomplish this is to build a vertical grid and draw offset copies as new data arrives. Here’s one example of how to approach this problem:

    PushDraw(^{
        UIBezierPath *vPath = [vGrid safeCopy];
        OffsetPath(vPath, CGSizeMake(-offset * deltaX, 0));
        AddDashesToPath(vPath);
        [vPath stroke:1 color:blueColor];
    });

In this snippet, the vertical path offsets itself by some negative change in X position. Repeating this process produces drawings that appear to move to the left over time.
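One way to supply both the sample window and the grid offset is a small ring buffer. The plain C sketch below is an assumption about how such bookkeeping might look, not the sample app's actual code. The SampleHistory type, its functions, and the samplesPerGridLine parameter are all hypothetical. The capacity of 100 matches the number of samples mentioned above.

```c
#include <stddef.h>

#define SAMPLE_CAPACITY 100   /* the most recent 100 samples */

typedef struct {
    float values[SAMPLE_CAPACITY];
    size_t count;         /* samples stored, at most SAMPLE_CAPACITY */
    size_t next;          /* slot the next sample will overwrite */
    unsigned long total;  /* samples added since the start */
} SampleHistory;

/* Store a new sample, discarding the oldest once the buffer is full. */
static void SampleHistoryAdd(SampleHistory *h, float value)
{
    h->values[h->next] = value;
    h->next = (h->next + 1) % SAMPLE_CAPACITY;
    if (h->count < SAMPLE_CAPACITY) h->count++;
    h->total++;
}

/* Sample at index i, where 0 is the oldest stored sample.
   The caller must keep i below h->count. */
static float SampleHistoryGet(const SampleHistory *h, size_t i)
{
    size_t oldest =
        (h->next + SAMPLE_CAPACITY - h->count) % SAMPLE_CAPACITY;
    return h->values[(oldest + i) % SAMPLE_CAPACITY];
}

/* Horizontal shift, in points, for a vertical grid whose lines sit
   every samplesPerGridLine samples. The result is zero or negative,
   so the grid drifts left and wraps as new data arrives. */
static double GridShift(const SampleHistory *h,
                        unsigned samplesPerGridLine, double deltaX)
{
    if (samplesPerGridLine == 0) return 0.0;
    return -(double)(h->total % samplesPerGridLine) * deltaX;
}
```

At each display link callback, a view could walk indexes 0 through count - 1 to build its data path and pass the GridShift() result as the horizontal offset for the grid copy.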

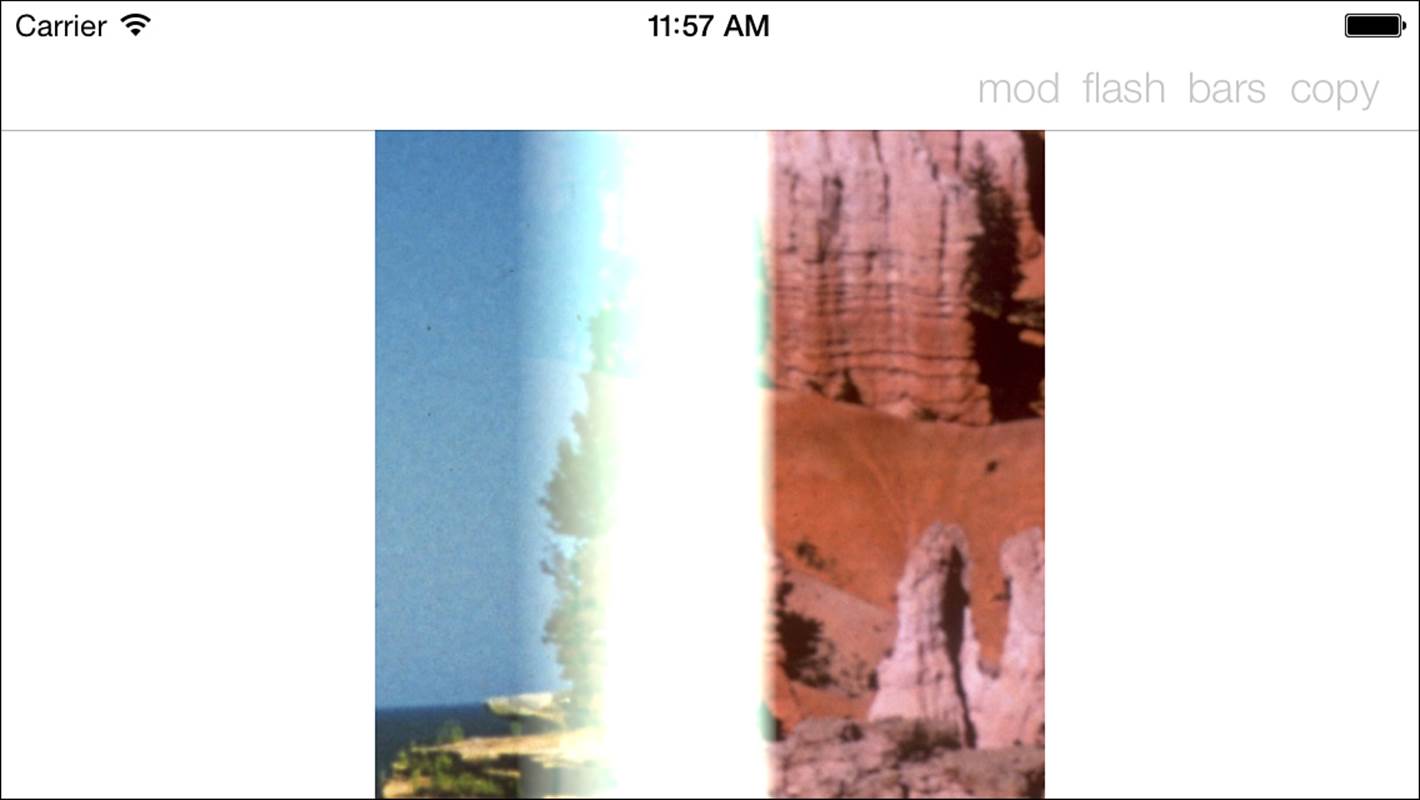

Applying Core Image Transitions

Core Image transitions are another valuable timer-meets-drawing solution. They enable you to create sequences between a source image and a target image in order to build lively visual effects that transition from one to the other.

You start by creating a new transition filter, such as a copy machine–style transition:

transition = [CIFilter filterWithName:@"CICopyMachineTransition"];

You provide an input image and a target image, and you specify how far along the transition has progressed, from 0.0 to 1.0. Listing 7-6 defines a method that demonstrates this, producing a CIImage interpolated along that timeline.

Listing 7-6 Applying the Core Image Copy Machine Transition Effect

    - (CIImage *)imageForTransitionCopyMachine: (float) t
    {
        if (!transition)
        {
            transition = [CIFilter filterWithName:
                @"CICopyMachineTransition"];
            [transition setDefaults];
        }

        [transition setValue: self.inputImage
            forKey: @"inputImage"];
        [transition setValue: self.targetImage
            forKey: @"inputTargetImage"];
        [transition setValue: @(fmodf(t, 1.0f))
            forKey: @"inputTime"];

        // This next bit crops the image to the desired size
        CIFilter *crop = [CIFilter filterWithName: @"CICrop"];
        [crop setDefaults];
        [crop setValue:transition.outputImage
            forKey:@"inputImage"];

        // The rectangle vector is (x, y, width, height)
        CIVector *v = [CIVector vectorWithX:0 Y:0
            Z:_i1.size.width W:_i1.size.height];
        [crop setValue:v forKey:@"inputRectangle"];

        return [crop valueForKey: @"outputImage"];
    }

Each Core Image filter uses a custom set of parameters, which are documented in Apple’s Core Image Filter reference. The copy machine sequence is one of the simplest transition options. As Listing 7-6 reveals, it works well with nothing more than the two images and an inputTime. You see the transition in action in Figure 7-10.

Figure 7-10 The copy machine transition moves from one image to another by mimicking a copy machine with its bright scanning bar. Public domain images courtesy of the National Park Service.

A display link enables you to power the transition process. Unlike Example 7-4, Listing 7-7 does not use real-world timing—although you could easily modify these methods to make them do so. Instead, it tracks a progress variable, incrementing it each time the display link fires, moving 5% of the way (progress += 1.0f / 20.0f) each time through.

As the link fires, the transition method updates the progress and requests a redraw. The drawRect: method in Listing 7-7 pulls the current “between” image from the filter and paints it to the context. When the progress reaches 100%, the display link invalidates itself.
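If you prefer real-world timing, the progress value can be derived from elapsed time instead of a per-callback increment. The following plain C sketch shows one way to do that. The function names and the duration parameter are hypothetical, and the timestamps are assumed to come from a monotonic source such as the display link's timestamp property.

```c
#include <stdbool.h>

/* Fraction of the transition completed at time `now`, clamped to
   the range 0.0 through 1.0. Times and duration are in seconds. */
static double TransitionProgress(double startTime, double now,
                                 double duration)
{
    if (duration <= 0.0) return 1.0;   /* Nothing to animate */

    double progress = (now - startTime) / duration;
    if (progress < 0.0) progress = 0.0;
    if (progress > 1.0) progress = 1.0;
    return progress;
}

/* True once the transition has run its full course. */
static bool TransitionIsFinished(double progress)
{
    return progress >= 1.0;
}
```

One caveat applies if you feed this value to Listing 7-6: that method passes fmodf(t, 1.0f) as the input time, so a progress of exactly 1.0 wraps around to 0.0. You may want to stop and show the target image directly once TransitionIsFinished() returns true.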

Listing 7-7 Transitioning Using Core Image and Display Links

    // Begin a new transition
    - (void) transition: (int) theType bbis: (NSArray *) items
    {
        // Disable the GUI
        for (UIBarButtonItem *item in (bbitems = items))
            item.enabled = NO;

        // Reset the current transition filter
        transition = nil;
        transitionType = theType;

        // Start the transition from zero
        progress = 0.0;
        link = [CADisplayLink displayLinkWithTarget:self
            selector:@selector(applyTransition)];
        [link addToRunLoop:[NSRunLoop mainRunLoop]
            forMode:NSRunLoopCommonModes];
    }

    // This method runs each time the display link fires
    - (void) applyTransition
    {
        progress += 1.0f / 20.0f;
        [self setNeedsDisplay];

        if (progress > 1.0f)
        {
            // Our work here is done
            [link invalidate];

            // Toggle the two images
            useSecond = ! useSecond;

            // Re-enable the GUI
            for (UIBarButtonItem *item in bbitems)
                item.enabled = YES;
        }
    }

    // Update the presentation
    - (void) drawRect: (CGRect) rect
    {
        // Fit the results
        CGRect r = SizeMakeRect(_i1.size);
        CGRect fitRect = RectByFittingRect(r, self.bounds);

        // Retrieve the image for the current progress.
        // imageForTransition: is not shown; it is assumed to
        // dispatch on transitionType to a method such as
        // imageForTransitionCopyMachine: (Listing 7-6)
        CIImage *image = [self imageForTransition:progress];

        // Draw it (it's a CIImage, not a CGImage)
        if (!cicontext) cicontext =
            [CIContext contextWithOptions:nil];
        CGImageRef imageRef = [cicontext
            createCGImage:image fromRect:image.extent];

        FlipContextVertically(self.bounds.size);
        CGContextDrawImage(UIGraphicsGetCurrentContext(),
            fitRect, imageRef);

        // createCGImage:fromRect: returns an owned reference,
        // so release it after drawing
        CGImageRelease(imageRef);
    }

Summary

This chapter discusses techniques for masking, blurring, and animating drawn content. You read about ways to apply edge effects to selections, soften visuals, and use Core Image transitions in your drawRect: routines. Here are some concluding thoughts:

• No matter what kind of drawing you do, profiling your app’s performance is a critical part of the development process. Always make space in your development schedule to evaluate and tune your rendering and animation tasks. If you find that in-app processing time is too expensive, consider solutions like threading your drawing (UIKit and Quartz are thread safe when drawn to a context) and predrawing effects into images (this is great for adding precalculated glosses to UI elements like buttons).

• Core Image transitions are a lot of fun, but a little pizzazz goes a very long way. Don’t overload your apps with flashy effects. Your app is meant to serve your user, not to draw unnecessary attention to itself. In all things UI, less is usually more.

• When drawing animated material to external screens (whether over AirPlay or via connected cables), make sure you establish a display link for updates, just as you would for drawing to the main device screen.

• Although iOS 7 uses blurring as a key UI element, Apple had not yet made those real-time APIs public at the time this book was being written. Apple engineers recommend rolling your own blurring solutions and caching the results, especially when working with static backdrops.

• Core Image isn’t just about transitions. It offers many image-processing and image-generation options you might find handy. More and more filters are arriving on iOS all the time. It’s worth taking another look if you haven’t poked around for a while.