The C++ Programming Language (2013)

Part IV: The Standard Library

41. Concurrency

Keep it simple:

as simple as possible,

but no simpler.

– A. Einstein

• Introduction

• Memory Model

Memory Location; Instruction Reordering; Memory Order; Data Races

• Atomics

atomic Types; Flags and Fences

• volatile

• Advice

41.1. Introduction

Concurrency – the execution of several tasks simultaneously – is widely used to improve throughput (by using several processors for a single computation) or to improve responsiveness (by allowing one part of a program to progress while another is waiting for a response).

The C++ standard support for concurrency is introduced in a tutorial manner in §5.3. This chapter and the next provide a more detailed and systematic view.

We call an activity potentially executed concurrently with other activities a task. A thread is the system-level representation of a computer’s facilities for executing a task. A standard-library thread (§42.2) can execute a task. A thread may share an address space with other threads. That is, all threads in a single address space can access the same memory locations. One of the central challenges of the programmer of a concurrent system is to make sure that threads access memory in a sensible manner.

The standard library’s support for concurrency includes:

• A memory model: a set of guarantees for concurrent access to memory (§41.2) that basically ensures that simple and ordinary access works as one would naively expect

• Support for programming without locks: fine-grained low-level mechanisms for avoiding data races (§41.3)

• A thread library: a set of components supporting traditional threads-and-locks-style system-level concurrent programming, such as thread, condition_variable, and mutex (§42.2)

• A task support library: a few facilities supporting task-level concurrent programming: future, promise, packaged_task, and async() (§42.4)

These topics are ordered from the most fundamental and low-level to the highest-level. The memory model is common to all programming. For programmer productivity and error minimization, work at the highest feasible level. For example, prefer a future over a mutex for exchanging information and a mutex over an atomic for anything but simple counters and the like. Leave the complexities to standard-library implementers whenever feasible.

In the context of the C++ standard library, a lock is a mutex (a mutual exclusion variable) and any abstraction built upon a mutex to provide mutually exclusive access to a resource or to synchronize the progress of several concurrent tasks.

The topic of processes, that is, threads of execution in their own address spaces and communicating though inter-process communication mechanisms [Tanenbaum,2007], is not addressed in this book. I suspect that after reading about the problems with and techniques for managing shared data, you may become sympathetic to my view that explicitly shared data is best avoided. Naturally, communication implies some form of sharing, but that sharing most often need not be directly managed by the application programmer.

Please also note that as long as you don’t pass pointers to your local data to other threads, your local data is free of the problems mentioned here. This is yet another reason to avoid global data.

This chapter is not a comprehensive guide to concurrent programming or even a complete explanation of the C++ standard-library facilities for concurrent programming. It provides:

• A basic description of the problems facing a programmer who has to deal with concurrency at the system level

• A fairly detailed overview of the concurrency facilities provided by the standard

• An introduction to the basic uses of the standard-library concurrency features at the threads-and-locks level and above It does not:

• Go into details of the relaxed memory models or lock-free programming

• Teach advanced concurrent programming and design techniques

Concurrent and parallel programming have been popular topics of research and widely used for more than 40 years, so there is an extensive specialized literature (for example, for C++-based concurrency see [Wilson,1996]). In particular, just about any presentation of POSIX threads can be used as a source of examples that can be easily improved by using the standard-library facilities described here.

In contrast to the C-style POSIX facilities and to many older C++ thread-support libraries, the standard-library thread support is type-safe. There is no longer any reason to mess around with macros or void**s to pass information among threads. Similarly, we can define tasks as function objects (e.g., lambdas) and pass them to threads without using casts or worrying about type violations. Furthermore, there is no reason to invent elaborate conventions for reporting errors from one thread to another; futures (§5.3.5.1, §42.4.4) can transmit exceptions. Given that concurrent software is often complex and that code running in different threads is often separately developed, I consider type safety and a standard (preferably exception-based) error-handling strategy even more important than for single-threaded software. The standard-library thread support also greatly simplifies notation.

41.2. Memory Model

The C++ concurrency mechanisms are mostly supplied as standard-library components. These components rely on a set of language guarantees known as the memory model. A memory model is the result of discussions between machine architects and compiler writers about how best to represent computer hardware. The memory model, as specified in the ISO C++ standard, represents a contract between the implementers and the programmers to ensure that most programmers do not have to think about the details of modern computer hardware.

To understand the problems involved, keep one simple fact in mind: operations on an object in memory are never directly performed on the object in memory. Instead, the object is loaded into a processor register, modified there, and then written back. Worse still, an object is typically first loaded from the main memory into a cache memory and from there to a register. For example, consider incrementing a simple integer x:

// add one to x:

load x into cache element Cx

load Cx into register Rx

Rx=Rx+1;

store Rx back into Cx

store Cx back into x

Memory can be shared by several threads, and cache memory may (depending on the machine architecture) be shared among threads running on the same or different “processing units” (usually called something like processors, cores, or hyper-threads; this is an area of rapid evolution of both system facilities and terminology). This opens a host of opportunities for a simple operation (such as “add one to x”) to get corrupted. It will be obvious to machine architecture experts that I am simplifying. For the few who notice that I have not mentioned store buffers, I recommend Appendix C of [McKenney,2012].

41.2.1. Memory Location

Consider two global variables b and c:

// thread 1: // thread 2:

char c = 0; char b = 0;

void f() void g()

{ {

c = 1; b = 1;

int x = c; int y = b;

} }

Now, x==1 and y==1 as anyone would expect. Why is this even worth saying? Consider what might happen if a linker allocated c and b in the same word in memory and (like most modern hardware) the machine could not load or store anything smaller than a word:

![]()

Without a well-defined and reasonable memory model, thread 1 might read the word containing b and c, change c, and write the word back into memory. At the same time, thread 2 could do the same with b. Then, whichever thread managed to read the word first and whichever thread managed to write its result back into memory last would determine the result. We might get 10, 01, or 11 (but not 00). The memory model saves us from such chaos; we get 11. The reason that 00 cannot happen is that the initializations of b and c are done (by the compiler or the linker) before either thread starts.

The C++ memory model guarantees that two threads of execution can update and access separate memory locations without interfering with each other. This is exactly what we would naively expect. It is the compiler’s job to protect us from the sometimes very strange and subtle behaviors of modern hardware. How a compiler and hardware combination achieves that is up to the compiler. We program a “machine” that is provided by a combination of hardware and very low-level (compiler-generated) software.

Bit-fields (§8.2.7) give access to parts of a word. If two threads simultaneously access two fields of the same word, all bets are off. If b and c are two fields of the same word, most hardware has no way of avoiding the problem (the race condition) from the b-and-c example without using some form of (potentially very expensive) locking. Lock and unlock operations are not a cost we could implicitly impose on bit-fields, which are commonly used in critical device drivers. Consequently, the language defines memory location as the unit of memory for which sensible behavior is guaranteed to exclude individual bit-fields.

A memory location is either an object of arithmetic type (§6.2.1), a pointer, or a maximal sequence of adjacent bit-fields all having nonzero width. For example:

struct S {

char a; // location #1

int b:5; // location #2

unsigned c:11;

unsigned :0; // note: :0 is "special" (§8.2.7)

unsigned d:8; // location #3

struct { int ee:8; } e; // location #4

};

Here, S has exactly four separate memory locations. Don’t try to update bit-fields b and c from separate threads without explicit synchronization.

From the explanation above, you might conclude that if x and y are of the same type, x=y is guaranteed to result in x being a copy of y. This is true if and only if you don’t have a data race (§41.2.4) and if x and y are memory locations. However, if x and y are of a multiword struct they are not a single memory location, and if you have a data race, all behavior is undefined, so make sure you have proper synchronization in place if you share data (§41.3, §42.3.1).

41.2.2. Instruction Reordering

To gain performance, compilers, optimizers, and hardware reorder instructions. Consider:

// thread 1:

int x;

bool x_init;

void init()

{

x = initialize(); // no use of x_init in initialize()

x_init = true;

// ...

}

For this piece of code there is no stated reason to assign to x before assigning to x_init. The optimizer (or the hardware instruction scheduler) may decide to speed up the program by executing x_init=true first.

We probably meant for x_init to indicate whether x had been initialized by initializer() or not. However, we did not say that, so the hardware, the compiler, and the optimizer do not know that.

Add another thread to the program:

// thread 2:

extern int x;

extern bool x_init;

void f2()

{

int y;

while (!x_init) // if necessary, wait for initialization to complete

this_thread::sleep_for(milliseconds{10});

y = x;

// ...

}

Now we have a problem: thread 2 may never wait and thus will assign an uninitialized x to y.

Even if thread 1 did not set x_init and x in “the wrong order,” we still may have a problem. In thread 2, there are no assignments to x_init, so an optimizer may decide to lift the evaluation of !x_init out of the loop, so that thread 2 either never sleeps or sleeps forever.

41.2.3. Memory Order

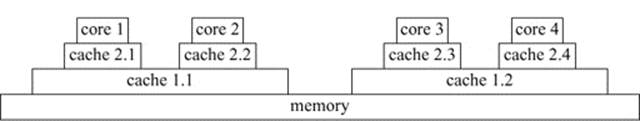

The time needed to get the value of a word from memory into a cache, then into a register, can be (on a processor’s time scale) very long. At best, maybe 500 instructions are executed before the value reaches the register, and another 500 instructions are executed before a new value reaches its intended location. The figure 500 is a guess that depends on machine architecture and varies over time, but for the last decades it has steadily increased. When there is no rush to load and store a particular value because the computation is optimized for throughput, the time taken can be much higher. A value can be “away from its location” for tens of thousands of instruction cycles. This is one of the facts that give modern hardware its amazing performance, but it also opens huge opportunities for confusion as different threads look at a value at different times and in different places in the memory hierarchy. For example, my simplified description mentions only a single cache; many popular architectures use a three-level cache. To illustrate, here is a diagram of a possible two-level cache architecture where each core has its own level-2 cache, a pair of cores share a level-1 cache, and all cores share the memory:

Memory ordering is the term used to describe what a programmer can assume about what a thread sees when it looks at a value from memory. The simplest memory order is called sequentially consistent. In a sequentially consistent memory model, every thread sees the effects of every operation done in the same order. The order is as if the instructions were done sequentially in a single thread. A thread can still reorder operations, but at every point where another thread might observe a variable, the set of operations performed before and (therefore) the value of the memory location observed must be well defined and the same for all threads. An operation that “observes” a value and thereby forces a consistent view of a memory location is called an atomic operation (see §41.3). A simple read or write does not impose an order.

There are many possible sequentially consistent orders for a given set of threads. Consider:

// thread 1: // thread 2:

char c = 0; char b = 0;

extern char b; extern char c;

void f1() void f2()

{ {

c = 1; b=1;

int x = b; int y = c;

} }

Assuming that the initialization of c and b is done statically (before any thread starts), there are three possible executions:

c = 1; b = 1; c = 1;

x = b; y = c; b = 1;

b = 1; c = 1; x = b;

y = c; x = b; y = c;

The results are 01, 10, and 11, respectively. The only result we cannot get is 00. Obviously, to get a predictable result, you need some form of synchronization of the access to the shared variables.

The sequential consistent order is just about all a programmer can effectively reason about, but on some machine architectures it imposes significant synchronization costs that can be eliminated by relaxing the rules. For example, two threads running on separate cores might decide to initiate the reads of x and y before the writes of a and b or at least before the writes had completed. That could give the nonsequentially consistent result 00. More relaxed memory models allow that.

41.2.4. Data Races

From these examples, every sensible person will conclude that we have to be very careful when programming threads. But how? First, we must avoid data races. Two threads have a data race if both can access a memory location (as defined in §41.2.1) simultaneously and at least one of their accesses is a write. Note that defining “simultaneously” precisely is not trivial. If two threads have a data race, no language guarantees hold: the behavior is undefined. This may sound drastic, but the effects of a data race can (as shown in §41.2.2) be drastic. An optimizer (or a hardware instruction scheduler) may reorder code based on assumptions about values and may execute sections of code (affecting apparently unrelated data) or not based on such assumptions.

There are many ways of avoiding data races:

• Use only a single thread. That eliminates the benefits of concurrency (unless you use processes or co-routines).

• Put a lock on every data item that might conceivably be subject to a data race. That can eliminate the benefits of concurrency almost as effectively as single threading because we easily get into a situation where all but one thread waits. Worse still, heavy use of locks increases the chances of deadlock, where a thread waits for another forever, and other locking problems.

• Try to look carefully at the code and avoid data races by selectively adding locks. This may be the currently most popular approach, but it is error-prone.

• Have a program detect all data races and either report them for the programmer to fix or automatically insert locks. Programs that can do that for programs of commercial size and complexity are not common. Programs that can do that and also guarantee the absence of deadlocks are still research projects.

• Design the code so that threads communicate only through simple put-and-get-style interfaces that do not require two threads to directly manipulate a single memory location (§5.3.5.1, §42.4).

• Use a higher-level library or tool that makes data sharing and/or concurrency implicit or sufficiently stylized to make sharing manageable. Examples include parallel implementations of algorithms in a library, directive-based tools (e.g., OpenMP), and transactional memory (often abbreviated to TM).

One way of looking at the rest of this chapter is as a bottom-up approach to arrive at support for one variant of that last style of programming. In the process, we encounter the tools needed to support just about every way of avoiding data races.

Why must programmers suffer all this complexity? An alternative would be to provide only a simple, sequentially consistent model with minimal (or no) opportunities for data races. I can offer two reasons:

[1] That is not the way the world is. The complexities of machine architectures are real, and a systems programming language, such as C++, must provide the tools for programmers to live with them. Maybe someday machine architects will deliver simpler alternatives, but for now someone must deal with a bewildering variety of low-level facilities provided by machine architects to deliver the performance that their customers demand.

[2] We (the C++ standards committee) seriously considered that. We would have liked to provide a memory model that was an improved version of what Java and C# provide. That would have saved a lot of work for the committee and for some programmers. However, this idea was effectively vetoed by the providers of operating systems and virtual machines: they insisted that they needed roughly what was then provided by the various C++ implementations – what is now provided by the C++ standard. The alternative would be for your operating systems and your virtual machines to slow down “by a factor of two or more.” I guess that programming language fanatics might have welcomed an opportunity to simplify C++ at the expense of other languages, but doing so would have been neither practical nor professional.

Fortunately, most programmers never have to work directly at the lowest level of the hardware. Most programmers do not need to understand a memory model at all and can think of reordering problems as amusing curiosities:

Write data-race-free code and don’t mess with memory order (§41.3); then the memory model guarantees that code executes as naively expected. It’s even better than sequential consistency. I find machine architecture a fascinating topic (e.g., see [Hennesey,2011] [McKenney,2012]), but as sensible and productive programmers, we stay away from the lowest levels of software whenever we can. Leave those for the experts and enjoy the higher levels that those experts provide for you.

41.3. Atomics

Lock-free programming is a set of techniques for writing concurrent programs without using explicit locks. Instead, the programmer relies on primitive operations (directly supported by hardware) to avoid data races (§41.2.4) for small objects (typically a single word or a double word). Primitive operations that do not suffer data races, often called atomic operations, can then be used in the implementation of higher-level concurrency mechanisms, such as locks, threads, and lock-free data structures.

With the notable exception of simple atomic counters, lock-free programming is for specialists. In addition to an understanding of language mechanisms, a detailed understanding of specific machine architectures and a knowledge of somewhat specialized implementation techniques are needed. Do not try lock-free programming with only the information provided here. The primary logical advantage of lock-free techniques over lock-based techniques is that classical locking problems, such as deadlock and starvation, cannot happen. For each atomic operation, it is guaranteed that every thread will eventually (and typically soon) make progress even if other threads compete for access to an atomic object. In addition, lock-free techniques can be significantly faster than lock-based alternatives.

The standard atomic types and operations provide a portable alternative to traditional ways of expressing lock-free code. Those typically either rely on assembly code or system-specific primitives. In this sense, the standard support for atomics is another step in C and C++’s long tradition of increasing portable and relatively comprehensible support for systems programming.

A synchronization operation is something that determines when a thread sees the effects of another thread; it determines what is considered to have happened before something else. Between synchronization operations a compiler and a processor are free to reorder code as long as the semantic rules of the language are maintained. In principle, nobody is looking and all that is affected is performance. A synchronization operation on one or more memory locations is a consume operation, an acquire operation, a release operation, or both an acquire and release operation (§iso.1.10).

• For an acquire operation, other processors will see its effect before any subsequent operation’s effect.

• For a release operation, other processors will see every preceding operation’s effect before the effect of the operation itself.

• A consume operation is a weaker form of an acquire operation. For a consume operation, other processors will see its effect before any subsequent operation’s effect, except that effects that do not depend on the consume operation’s value may happen before the consume operation.

An atomic operation ensures that the state of the memory is as required by the specified memory order (§41.2.2). By default, the memory order is memory_order_seq_cst (sequentially consistent; §41.2.2). The standard memory orders are (§iso.29.3):

enum memory_order {

memory_order_relaxed,

memory_order_consume,

memory_order_acquire,

memory_order_release,

memory_order_acq_rel,

memory_order_seq_cst

};

The enumerations represent:

• memory_order_relaxed: No operation orders memory.

• memory_order_release, memory_order_acq_rel, and memory_order_seq_cst: A store operation performs a release operation on the affected memory location.

• memory_order_consume: A load operation performs a consume operation on the affected memory location

• memory_order_acquire, memory_order_acq_rel, and memory_order_seq_cst: a load operation performs an acquire operation on the affected memory location.

As an example, consider (§iso.29.3) using atomic loads and stores (§41.3.1) to express a relaxed memory order:

// thread 1:

r1 = y.load(memory_order_relaxed);

x.store(r1,memory_order_relaxed);

// thread 2:

r2 = x.load(memory_order_relaxed);

y.store(42,memory_order_relaxed);

This is allowed to produce r2==42, making it appear that time went backward in thread 2. That is, memory_order_relaxed allows this execution order:

y.store(42,memory_order_relaxed);

r1 = y.load(memory_order_relaxed);

x.store(r1,memory_order_relaxed);

r2 = x.load(memory_order_relaxed);

For explanations, see the specialist literature, for example, [Boehm,2008] and [Williams,2012].

It is entirely architecture-specific whether a given memory order makes sense. Clearly, a relaxed memory model is not something to be directly used in applications programming. Utilizing a relaxed memory model is an even more specialized task than general lock-free programming. I see it as something done by a small subset of operating system kernel, device driver, and virtual machine implementers. It can also be useful in machine-generated code (as gotos can be). If two threads really do not directly share data, some machine architectures deliver significant performance improvements through the use of a relaxed memory model at the cost of complexity in the implementation of message-passing primitives (e.g., future and promise; §42.4.4).

To allow significant optimizations for architectures with relaxed memory models, the standard provides an attribute [[carries_dependency]] for transmitting memory order dependencies across function calls (§iso.7.6.4). For example:

[[carries_dependency]] struct foo* f(int i)

{

// let the caller use memory_order_consume for the result:

return foo_head[i].load(memory_order_consume);

}

You can also put [[carries__dependency]] on function arguments, and there is a function kill_dependency() for stopping the propagation of such dependencies.

One of the designers of the C++ memory model, Lawrence Crowl, summarizes:

“Dependency ordering is probably the most complex concurrency feature. It’s really worth using when

• you have a machine for which it matters,

• you have a very high bandwidth read-mostly atomic data structure, and

• you’re willing to spend a couple of weeks in testing and external reviews.

This is real expert territory.”

Consider yourself warned.

41.3.1. atomic Types

An atomic type is a specialization of the atomic template. An operation on an object of an atomic type is atomic. That is, it is performed by a single thread without interference from other threads.

The operations on atomics are very simple: loads and stores, swapping, incrementing, etc., on a simple object (usually a single memory location; §41.2.1). They have to be simple or the hardware can’t handle them directly.

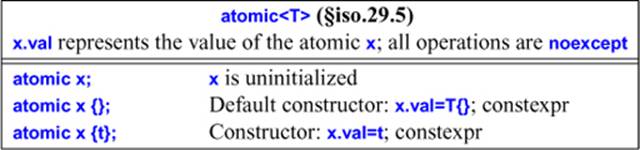

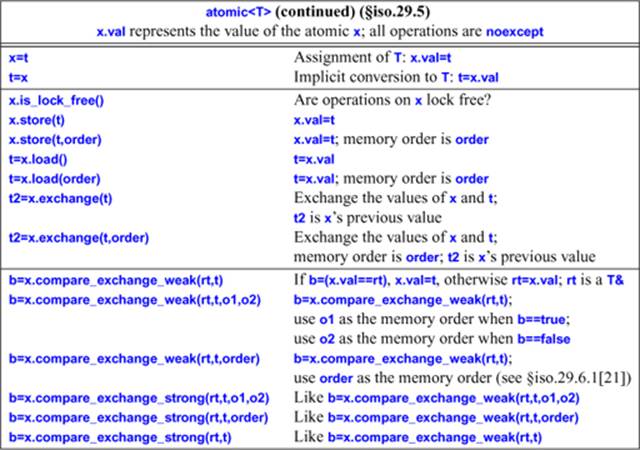

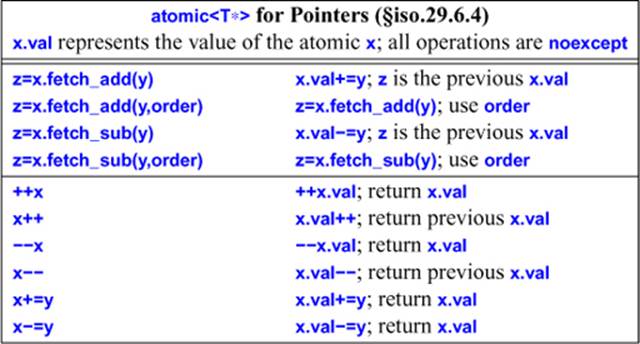

The following tables aim to give a first impression and an overview (only). Unless explicitly stated, the memory order is memory_order_seq_cst (sequentially consistent).

There are no copy or move operations for atomics. The assignment operator and constructor take values of the contained type T and access the contained value.

A default atomic (without an explicit {}) is uninitialized to allow the C standard library to be compatible.

The is_lock_free() operation is there so that you can test if these operations are lock free or if they have been implemented using a lock. On all major implementations, is_lock_free() returns true for integral and pointer types.

The atomic facilities are designed for types that map to a simple built-in type. Expect atomic<T> to be implemented using locks if T objects are large. The template argument type T must be trivially copyable (must not have user-defined copy operations).

The initialization of an atomic variable is not an atomic operation, so an initialization may have a data race with an access from another thread (§iso.29.6.5). However, a data race with an initialization is quite hard to achieve. As ever, keep initialization of nonlocal objects simple and prefer to initialize with constant expressions (you can’t have a data race before the program starts).

A simple atomic variable is close to ideal for a shared counter, such as a use count for a shared data structure. For example:

template<typename T>

class shared_ptr {

public:

// ...

~shared_ptr()

{

if (––**puc) delete p;

}

private:

T* p; // pointer to shared object

atomic<int>*puc; // pointer to use count

};

Here, *puc is an atomic (allocated somewhere by a shared_ptr constructor), so that the decrement operation (––) is atomic and the new value is correctly reported in the thread destroying a shared_ptr.

The first argument of a compare-and-exchange operation (rt in the table) is a reference so that the object referred to can be updated if the operation fails to update its target (x in the table).

The difference between compare_exchange_strong() and compare_exchange_weak() is that the weak version can fail for “spurious reasons.” That is, some peculiarity of the hardware or the implementation of x.compare_exchange_weak(rt,t) may cause a failure even if x.val==rt. Allowing such failures makes compare_exchange_weak() implementable on architectures where compare_exchange_strong() would be difficult or relatively expensive.

The classic compare-and-swap loop can be written like this:

atomic<int> val = 0;

// ...

int expected = val.load(); // read current value

do {

int next = fct(expected); // calculate new value

} while (!val.compare_exchange_weak(expected,next)); // write next to val or to expected

The atomic val.compare_exchange_weak(expected,next) reads the current value of val and compares it to expected; if equal, it writes next to val. If some other thread has written to val since we read it in preparation to an update, we have to try again. When we try again, we use the new value of expected obtained from compare_exchange_weak(). Eventually, the expected value will be written. The value of expected is “the current value of val as seen by this thread.” So, since expected is updated to the current value each time the compare_exchange_weak() is executed, we should never get an infinite loop.

Operations like compare_exchange_strong() are widely known as compare-and-swap operations (CAS operations). There is a potentially serious problem with all CAS operations (in any language and on any machine) known as the ABA problem. Consider adding a node at the head of a very simple lock-free singly-linked list if the data value is less than the head’s data:

extern atomic<Link*> head; // the shared head of a linked list

Link* nh = new Link(data,nullptr); // make a link ready for insertion

Link* h = head.load(); // read the shared head of the list

do {

if (h–>data<data) break; // if so, insert elsewhere

nh–>next = h; // next element is the previous head

} while (!head.compare_exchange_weak(h,nh)); // write nh to head or to h

This is a simplified version of code that would insert data at the right position in an ordered linked list. I read the head, use it as the next of my new Link, and then write the pointer to my new Link to head. I do that repeatedly until no other thread has managed to change the head while I was getting nh ready.

Let us examine this code in some detail. Call the value of head that I read A. If no other thread changed the value of head before I executed my compare_exchange_weak(), it finds A in head and succeeds in replacing it with my nh. If some other thread changed the value of head to Bafter I read A, my compare_exchange_weak() will fail, and I’ll go around my loop to read head again.

This looks right. What could possibly go wrong? Well, after I read the value A, some other thread changed the value of head to B and recycled the Link. Then, some thread reused the node A and reinserted it at the head of the list. Now my compare_exchange_weak() finds A and does the update. However, the list had changed; the value of head went from A to B and then back to A. That change may be significant in many different ways, but in this simplified example, A–>data may have changed so that the critical data comparison may be wrong. ABA problems can be very subtle and hard to detect. There are a variety of ways of dealing with the ABA problem [Dechev,2010]. I mention it here primarily to warn about the subtleties of lock-free programming.

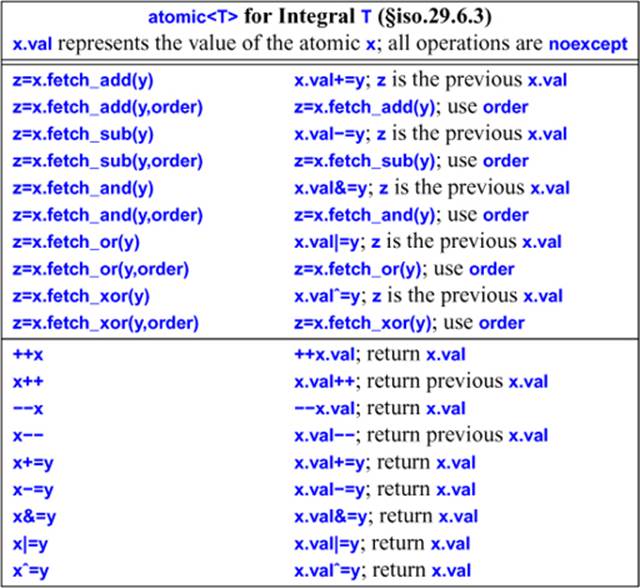

Integral atomic types offer atomic arithmetic and bit operations:

Consider the popular double-checked locking idiom. The basic idea is that if initializing some x must be done under a lock, you may not want to incur the cost of acquiring that lock every time you access x to see if the initialization has been done. Instead, you lock and initialize only if a variable x_init is false:

X x; // we need a lock to initialize an X

mutex lx; // the mutex to be used to lock x during initialization

atomic<bool> x_init {false}; // an atomic used to minimize locking

void some_code()

{

if (!x_init) { // proceed if x is uninitialized

lx.lock();

if (!x_init) { // proceed if x is still uninitialized

// ... initialize x ...

x_init = true;

}

lx.unlock();

}

// ... use x ...

}

Had init_x not been atomic, instruction reordering could have moved the initialization of x ahead of the apparently unrelated test of init_x (see §41.2.2). Making init_x atomic prevents that.

The !x_init relies on the implicit conversion from an atomic<T> to a T.

This code can be simplified further by using RAII (§42.3.1.4).

The double-checked locking idiom is represented in the standard library by once_flag and call_once() (§42.3.3), so you don’t have to write such code directly.

The standard library also supports atomic pointers:

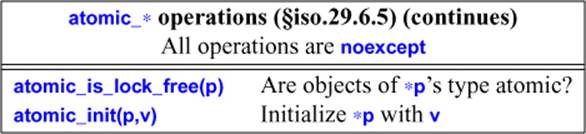

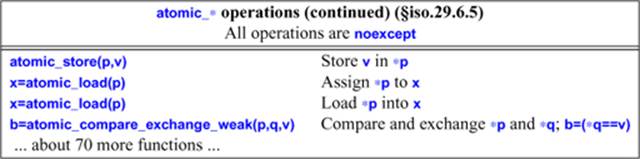

To allow the C standard library to be compatible, the atomic member function types have freestanding equivalents:

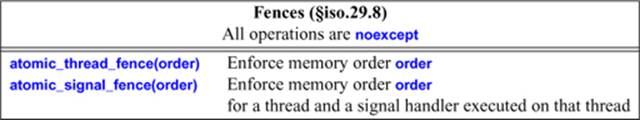

41.3.2. Flags and Fences

In addition to the support for atomic types, the standard library offers two lower-level synchronization facilities: atomic flags and fences. The primary use of these is to implement the lowest-level atomic facilities, such as spinlocks and the atomic types. They are the only lock-free mechanisms that are guaranteed to be supported on every implementation (though all major platforms support atomic types).

Essentially no programmers need to use flags or fences. Those who do usually work closely with machine architects.

41.3.2.1. atomic Flags

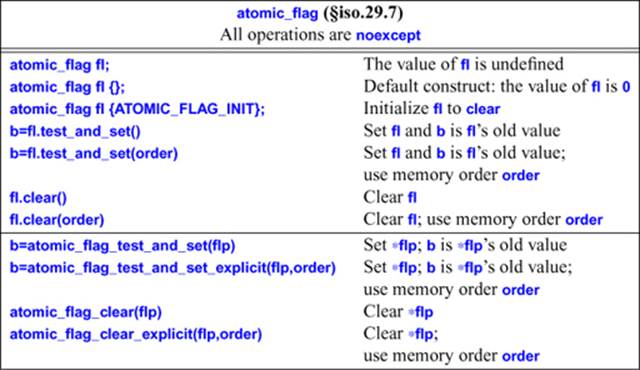

An atomic_flag is the simplest atomic type and the only one with operations guaranteed to be atomic for every implementation. An atomic_flag represents a single bit of information. If necessary, the other atomic types can be implemented using atomic_flag.

The two possible values of an atomic_flag are called set and clear.

The bool return values are true for set and false for clear.

Using {} to initialize atomic_flag seems to make sense. However, there is no guarantee that 0 represents clear. A machine where clear is 1 is rumored to exist. Clearing using ATOMIC_FLAG_INIT is the only portable and reliable way of initializing an atomic_flag. TheATOMIC_FLAG_INIT is an implementation-supplied macro.

You can think of an atomic_flag as a very simple spin lock:

class spin_mutex {

atomic_flag flag = ATOMIC_FLAG_INIT;

public:

void lock() { while(flag.test_and_set()); }

void unlock() { flag.clear(); }

};

Note that spin locks can easily become very expensive.

As usual, I leave the memory orders and their proper use to the specialist literature.

41.3.2.2. Fences

A fence, also known as a memory barrier, is an operation that restricts operation reordering according to some specified memory ordering (§41.2.3). The fence operations do not do anything else. Think of them as simply slowing down a program to a safe speed, allowing the memory hierarchy to reach a reasonably well-defined state.

Fences are used in combination with atomics (needed to observe the effects of the fences).

41.4. volatile

The volatile specifier is used to indicate that an object can be modified by something external to the thread of control. For example:

volatile const long clock_register; // updated by the hardware clock

A volatile specifier basically tells the compiler not to optimize away apparently redundant reads and writes. For example:

auto t1 {clock_register};

// ... no use of clock_register here ...

auto t2 {clock_register};

Had clock_register not been volatile, the compiler would have been perfectly entitled to eliminate one of the reads and assume t1==t2.

Do not use volatile except in low-level code that deals directly with hardware.

Do not assume that volatile has special meaning in the memory model. It does not. It is not – as in some later languages – a synchronization mechanism. To get synchronization, use an atomic (§41.3), a mutex (§42.3.1), or a condition_variable (§42.3.4).

41.5. Advice

[1] Use concurrency to improve responsiveness or to improve throughput; §41.1.

[2] Work at the highest level of abstraction that you can afford; §41.1.

[3] Prefer packaged_task and futures over direct use of threads and mutexes; §41.1.

[4] Prefer mutexes and condition_variables over direct use of atomics except for simple counters; §41.1.

[5] Avoid explicitly shared data whenever you can; §41.1.

[6] Consider processes as an alternative to threads; §41.1.

[7] The standard-library concurrency facilities are type safe; §41.1.

[8] The memory model exists to save most programmers from having to think about the machine architecture level of computers; §41.2.

[9] The memory model makes memory appear roughly as naively expected; §41.2.

[10] Separate threads accessing separate bit-fields of a struct may interfere with each other; §41.2.

[11] Avoid data races; §41.2.4.

[12] Atomics allow for lock-free programming; §41.3.

[13] Lock-free programming can be essential for avoiding deadlock and to ensure that every thread makes progress; §41.3.

[14] Leave lock-free programming to experts; §41.3.

[15] Leave relaxed memory models to experts; §41.3.

[16] A volatile tells the compiler that the value of an object can be changed by something that is not part of the program; §41.4.

[17] A C++ volatile is not a synchronization mechanism; §41.4.