C++ Recipes: A Problem-Solution Approach (2015)

CHAPTER 14

3D Graphics Programming

C++ is the programming language of choice for developers of high-performance applications. This often includes applications that are required to display 3D graphics to a user. 3D graphics are common in medical applications, design applications, and video games. All of these types of applications demand responsiveness as a key usability feature. This makes the C++ language a perfect choice for this type of program, because programmers can target and optimize for specific hardware platforms.

Microsoft supplies the proprietary DirectX API for building 3D applications for the Windows operating system. This chapter, however, looks at writing a simple 3D program using the OpenGL API. OpenGL is supported on Windows, OS X, and most Linux distributions; it’s a perfect choice in this case, because you might be using any of these operating systems.

One of the more tedious aspects of OpenGL programming is the requirement to set up and manage windows in multiple operating systems if you’re targeting more than one. This job is made much easier by the GLFW package, which abstracts this task away behind an API so you don’t have to worry about the details.

14-1. An Introduction to GLFW

Problem

You’re writing a cross-platform application containing 3D graphics, and you want a fast way to get up and running.

Solution

GLFW abstracts out the task of creating and managing a window for many popular operating systems.

How It Works

The GLFW API is written in the C programming language and can therefore be used in C++ applications without issue. The API is available to download from www.glfw.org. You can also read the documentation for the API at the same web site. The instructions to configure and build a GLFW library change frequently and so aren’t included with this chapter. At the time of this writing, the most up-to-date instructions for building GLFW can be found at www.glfw.org/docs/latest/compile.html.

The instructions for GLFW currently involve using CMake to build a project that can then be used to compile a library that you can link into your own project. Once you have this up and running, you can use the code in Listing 14-1 to run a program that initializes OpenGL and creates a window for your program.

Listing 14-1. A Simple GLFW Program

#include "GLFW/glfw3.h"

int main()

{

GLFWwindow* window;

/* Initialize the library */

if (!glfwInit())

return -1;

/* Create a windowed mode window and its OpenGL context */

window = glfwCreateWindow(640, 480, "Hello World", NULL, NULL);

if (!window)

{

glfwTerminate();

return -1;

}

/* Make the window's context current */

glfwMakeContextCurrent(window);

/* Loop until the user closes the window */

while (!glfwWindowShouldClose(window))

{

/* Render here */

/* Swap front and back buffers */

glfwSwapBuffers(window);

/* Poll for and process events */

glfwPollEvents();

}

glfwTerminate();

return 0;

}

The code in Listing 14-1 is the sample program supplied on the GLFW web site to ensure that your build is working properly. It initializes the GLFW library with a call to glfwInit. A window is created using the glfwCreateWindow function. The sample creates a window with 640 × 480 resolution and the title “Hello World”. If the window creation fails, then the glfwTerminate function is called and the program exits. If it’s successful, the program calls glfwMakeContextCurrent. The OpenGL API supports multiple rendering contexts, and you have to ensure that yours is the current context when you want to render. The main loop of the program continues until the glfwWindowShouldClose function returns true. The glfwSwapBuffers function is responsible for swapping the front buffer with the back buffer. Double-buffered rendering is useful to prevent the user from seeing unfinished frames of animation. The graphics card can display one buffer while the program is rendering into a second. These buffers are swapped at the end of each frame. The glfwPollEvents function is responsible for communicating with the operating system and receiving any messages. The program ends with a call to glfwTerminate to shut everything down.
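
The idea behind double buffering can be sketched without any graphics API at all. The following is a minimal model, not GLFW code: the DoubleBuffer class, the PixelBuffer alias, and the RenderFrame function are hypothetical names invented for this illustration, and a vector of bytes stands in for a real framebuffer.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a framebuffer: one byte per pixel.
using PixelBuffer = std::vector<unsigned char>;

// Illustrative model of a front/back buffer pair; not part of GLFW or OpenGL.
class DoubleBuffer
{
private:
    std::array<PixelBuffer, 2> m_Buffers;
    std::size_t m_FrontIndex{ 0 };

public:
    explicit DoubleBuffer(std::size_t numPixels)
        : m_Buffers{ { PixelBuffer(numPixels, 0), PixelBuffer(numPixels, 0) } }
    {
        assert(numPixels > 0);
    }

    // The buffer that is currently "on screen".
    const PixelBuffer& Front() const { return m_Buffers[m_FrontIndex]; }

    // The buffer the program is free to draw into.
    PixelBuffer& Back() { return m_Buffers[1 - m_FrontIndex]; }

    // Exchange the roles of the two buffers, as glfwSwapBuffers does conceptually.
    void Swap() { m_FrontIndex = 1 - m_FrontIndex; }
};

// Draws one "frame" into the back buffer and then presents it.
inline void RenderFrame(DoubleBuffer& buffers, unsigned char shade)
{
    for (auto& pixel : buffers.Back())
    {
        pixel = shade;
    }
    buffers.Swap();
}
```

After two calls to RenderFrame, the front buffer holds the newest frame while the back buffer still holds the frame before it. That leftover content is why a real render loop usually clears the back buffer before drawing into it.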

The OpenGL API provides a lot of its functionality through extensions, and this means the functions you’re using may not be supported directly by the platform you’re working on. Fortunately, the GLEW library is available to help with using OpenGL extensions on multiple platforms. Again, the instructions for obtaining, building, and linking this library change from time to time. The latest information can be obtained from the GLEW web site at http://glew.sourceforge.net.

Once you have GLEW up and running, you can initialize the library using the glewInit function call shown in Listing 14-2.

Listing 14-2. Initializing GLEW

#include <GL/glew.h>

#include "GLFW/glfw3.h"

int main(void)

{

GLFWwindow* window;

// Initialize the library

if (!glfwInit())

{

return -1;

}

// Create a windowed mode window and its OpenGL context

window = glfwCreateWindow(640, 480, "Hello World", NULL, NULL);

if (!window)

{

glfwTerminate();

return -1;

}

// Make the window's context current

glfwMakeContextCurrent(window);

GLenum glewError{ glewInit() };

if (glewError != GLEW_OK)

{

return -1;

}

// Loop until the user closes the window

while (!glfwWindowShouldClose(window))

{

// Swap front and back buffers

glfwSwapBuffers(window);

// Poll for and process events

glfwPollEvents();

}

glfwTerminate();

return 0;

}

It’s important that this step occurs after you have a valid and current OpenGL context, because the GLEW library relies on this in order to load the most common extensions you may be using from the OpenGL API.

There are sample applications accompanying this book that have both GLEW and GLFW included and configured. You should download these if you would like to see a project that has been configured to work with these libraries. In addition, excellent documentation is available at the libraries’ web sites (http://glew.sourceforge.net/install.html and www.glfw.org/download.html).

14-2. Rendering a Triangle

Problem

You would like to render a 3D object in your application.

Solution

OpenGL provides APIs to configure the rendering pipeline on a graphics card and display 3D objects onscreen.

How It Works

OpenGL is a graphics library that allows an application to send data to a GPU in a computer to render images to a window. This recipe introduces you to three concepts that are necessary for rendering graphics to a window when using OpenGL on a modern computer system. The first is the concept of geometry.

The geometry of an object is made up of a collection of vertices and indices. A vertex specifies a point in space that forms part of the object to be rendered onto the screen. A vertex passes through the GPU, and different operations are applied to it at different points. This recipe bypasses most of the processing of the vertices and instead specifies vertices in what is known as normalized device coordinates. A GPU transforms vertices using a vertex shader to generate vertices that sit inside a normalized cube. The resulting triangles are then rasterized into fragments, and a fragment shader determines the output color to be written to the frame buffer for each fragment. You learn more about these operations as you move through this chapter’s recipes.

The code in Listing 14-3 shows the Geometry class and how it can be used to specify storage for vertices and indices.

Listing 14-3. The Geometry Class

using namespace std;

class Geometry

{

public:

using Vertices = vector < float >;

using Indices = vector < unsigned short >;

private:

Vertices m_Vertices;

Indices m_Indices;

public:

Geometry() = default;

~Geometry() = default;

void SetVertices(const Vertices& vertices)

{

m_Vertices = vertices;

}

Vertices::size_type GetNumVertices() const

{

return m_Vertices.size();

}

Vertices::const_pointer GetVertices() const

{

return m_Vertices.data();

}

void SetIndices(const Indices& indices)

{

m_Indices = indices;

}

Indices::size_type GetNumIndices() const

{

return m_Indices.size();

}

Indices::const_pointer GetIndices() const

{

return m_Indices.data();

}

};

The Geometry class contains two vector aliases. The first alias is used to define a type that represents a vector of floats. This type is used to store vertices in the Geometry class. The second type alias defines a vector of unsigned shorts. This type alias is used to create the m_Indices vector that is used to store indices.

Indices are a useful tool when working with OpenGL because they allow you to reduce duplicate vertices in your vertex data. A mesh is typically made up of a collection of triangles, each of which shares edges with other triangles to create a complete shape that doesn’t have any holes. This means a single vertex that isn’t at the edge of an object is shared between multiple triangles. Indices let you create all the vertices for a mesh and then use the indices to represent the order in which OpenGL reads the vertices to create the individual triangles of the mesh. You see vertex and index definitions later in this recipe.
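
To make the saving concrete, consider a quad built from two triangles. The sketch below is illustrative only: the MakeQuadVertices, MakeQuadIndices, and ExpandIndexed functions are hypothetical helpers that aren't part of the Geometry class, and each vertex is assumed to hold three floats (x, y, z).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vertices = std::vector<float>;          // x, y, z per vertex
using Indices = std::vector<unsigned short>;

// Four unique corners of a quad (hypothetical example data).
inline Vertices MakeQuadVertices()
{
    return {
        -0.5f,  0.5f, 0.0f,   // 0: top left
         0.5f,  0.5f, 0.0f,   // 1: top right
         0.5f, -0.5f, 0.0f,   // 2: bottom right
        -0.5f, -0.5f, 0.0f    // 3: bottom left
    };
}

// Two triangles that share the diagonal between vertices 0 and 2.
inline Indices MakeQuadIndices()
{
    return { 0, 1, 2, 2, 3, 0 };
}

// Expands indexed geometry into the flat triangle list you would need
// if indices were not available.
inline Vertices ExpandIndexed(const Vertices& vertices, const Indices& indices)
{
    constexpr std::size_t kFloatsPerVertex{ 3 };
    Vertices expanded;
    expanded.reserve(indices.size() * kFloatsPerVertex);
    for (const auto index : indices)
    {
        const std::size_t first{ index * kFloatsPerVertex };
        assert(first + kFloatsPerVertex <= vertices.size());
        for (std::size_t i{ 0 }; i < kFloatsPerVertex; ++i)
        {
            expanded.push_back(vertices[first + i]);
        }
    }
    return expanded;
}
```

The indexed quad stores 12 floats plus 6 small indices, whereas the expanded version needs 18 floats. The gap grows quickly on real meshes, where an interior vertex is often shared by many triangles.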

A typical OpenGL program consists of multiple shader programs. Shaders allow you to control the behavior of multiple stages of the OpenGL rendering pipeline. At this point, you need to be able to create a vertex shader and a fragment shader that can act as a single pipeline for the GPU. OpenGL enforces this by having you create a vertex shader and a fragment shader independently and link them into a single shader program. You typically have more than one of these, so the Shader base class in Listing 14-4 shows how to create a base class to be shared among multiple derived shader programs.

Listing 14-4. The Shader Class

class Shader

{

private:

void LoadShader(GLuint id, const std::string& shaderCode)

{

const unsigned int NUM_SHADERS{ 1 };

const char* pCode{ shaderCode.c_str() };

GLint length{ static_cast<GLint>(shaderCode.length()) };

glShaderSource(id, NUM_SHADERS, &pCode, &length);

glCompileShader(id);

glAttachShader(m_ProgramId, id);

}

protected:

GLuint m_VertexShaderId{ GL_INVALID_VALUE };

GLuint m_FragmentShaderId{ GL_INVALID_VALUE };

GLint m_ProgramId{ GL_INVALID_VALUE };

std::string m_VertexShaderCode;

std::string m_FragmentShaderCode;

public:

Shader() = default;

virtual ~Shader() = default;

virtual void Link()

{

m_ProgramId = glCreateProgram();

m_VertexShaderId = glCreateShader(GL_VERTEX_SHADER);

LoadShader(m_VertexShaderId, m_VertexShaderCode);

m_FragmentShaderId = glCreateShader(GL_FRAGMENT_SHADER);

LoadShader(m_FragmentShaderId, m_FragmentShaderCode);

glLinkProgram(m_ProgramId);

}

virtual void Setup(const Geometry& geometry)

{

glUseProgram(m_ProgramId);

}

};

The Shader class is the first place you see the OpenGL API in use. This class contains variables for storing the IDs that OpenGL provides to act as handles to the vertex and fragment shaders as well as the shader program. The m_ProgramId field is initialized in the Link method when it’s assigned the result of the glCreateProgram function. m_VertexShaderId is assigned the value returned by the glCreateShader function, which is passed the GL_VERTEX_SHADER constant. The m_FragmentShaderId variable is initialized using the same function, but it’s passed the GL_FRAGMENT_SHADER constant. You can use the LoadShader method to load shader code for either a vertex shader or a fragment shader. You can see this when the LoadShader method is called twice in the Link method: first with the m_VertexShaderId and m_VertexShaderCode variables as parameters and the second time with the m_FragmentShaderId and m_FragmentShaderCode variables. The Link method ends with a call to glLinkProgram.

The LoadShader method is responsible for attaching the shader source code to the shader ID, compiling the shader, and attaching it to the relevant OpenGL shader program. The Setup method is used while rendering objects and tells OpenGL that you would like to make this shader program the active shader in use. This recipe needs a single shader program to render a triangle to the screen. This shader program is created by deriving a class named BasicShader from the Shader class in Listing 14-4, as shown in Listing 14-5.

Listing 14-5. The BasicShader Class

class BasicShader

: public Shader

{

private:

GLint m_PositionAttributeHandle;

public:

BasicShader()

{

m_VertexShaderCode =

"attribute vec4 a_vPosition; \n"

"void main(){ \n"

" gl_Position = a_vPosition; \n"

"} \n";

m_FragmentShaderCode =

"#version 150 \n"

"precision mediump float; \n"

"void main(){ \n"

" gl_FragColor = vec4(0.2, 0.2, 0.2, 1.0); \n"

"} \n";

}

~BasicShader() override = default;

void Link() override

{

Shader::Link();

// Query whether the program linked; success is GL_TRUE when it did

GLint success{};

glGetProgramiv(m_ProgramId, GL_LINK_STATUS, &success);

m_PositionAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vPosition");

}

void Setup(const Geometry& geometry) override

{

Shader::Setup(geometry);

glVertexAttribPointer(

m_PositionAttributeHandle,

3,

GL_FLOAT,

GL_FALSE,

0,

geometry.GetVertices());

glEnableVertexAttribArray(m_PositionAttributeHandle);

}

};

The BasicShader class begins by initializing the protected m_VertexShaderCode and m_FragmentShaderCode variables from the Shader class in its constructor. The Link method is responsible for calling the base class Link method and then retrieving handles to the attributes in the shader code. The Setup method also calls the Setup method in the base class. It then sets up the attribute in the shader program. An attribute is a variable that receives data from a data stream or from fields set using the OpenGL API functions from the application code. In this case, the attribute is a vec4 field in the GL Shading Language (GLSL) code. GLSL is used to write OpenGL shader code; this language is based on C and is therefore familiar, but it contains its own types and keywords necessary for communication with the application-side OpenGL calls.

The a_vPosition vec4 attribute in the vertex shader code is responsible for receiving every position in a stream of vertices sent to OpenGL for rendering. A handle to the attribute is retrieved using the glGetAttribLocation OpenGL API function that takes the program ID and the name of the attribute to be retrieved. The attribute handle for a vertex position can then be used with the glVertexAttribPointer function in the Setup method. This function takes the attribute handle as a parameter followed by the number of elements per vertex. In this case, the vertices are supplied with x, y, and z components; therefore the number 3 is passed to the size parameter, and OpenGL sets the missing w component of the vec4 to 1.0. The GL_FLOAT value specifies that the vertices are floating-point. GL_FALSE tells OpenGL that the vertices should not be normalized by the API when it receives them. The 0 value is the stride, which is the number of bytes between the start of one vertex and the start of the next; passing 0 tells OpenGL that the positions are tightly packed with no gaps between them. Finally, a pointer to the vertex data is supplied. After this function call, the glEnableVertexAttribArray function is called to tell OpenGL that the attribute should be enabled using the data supplied to it in the previous call, to supply position data to the vertex-shader execution system on the GPU.
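
The size, type, and stride parameters of glVertexAttribPointer together determine where OpenGL looks for each vertex in the array. The following sketch mirrors that address calculation on the CPU so you can reason about it. EffectiveStrideBytes and AttributeAt are hypothetical helper names used only for illustration; they aren't OpenGL functions.

```cpp
#include <cassert>
#include <cstddef>

// A stride of 0 means "tightly packed": the next vertex starts
// immediately after the components of the current one.
inline std::size_t EffectiveStrideBytes(std::size_t componentsPerVertex,
                                        std::size_t componentBytes,
                                        std::size_t strideBytes)
{
    return strideBytes != 0 ? strideBytes
                            : componentsPerVertex * componentBytes;
}

// Returns the address of the attribute data for the given vertex,
// following the same rule as the stride parameter described above.
inline const float* AttributeAt(const float* base,
                                std::size_t vertexIndex,
                                std::size_t componentsPerVertex,
                                std::size_t strideBytes)
{
    assert(base != nullptr);
    const auto* bytes = reinterpret_cast<const unsigned char*>(base);
    const std::size_t stride{
        EffectiveStrideBytes(componentsPerVertex, sizeof(float), strideBytes) };
    return reinterpret_cast<const float*>(bytes + vertexIndex * stride);
}
```

For the three-float positions used in this recipe, a stride of 0 and a stride of 3 * sizeof(float) describe the same layout. A nonzero stride becomes necessary once other data, such as texture coordinates, is stored between consecutive positions.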

The next step is to use these classes in the main function to render a triangle to your window. Listing 14-6 contains the complete listing for a program that achieves this.

Listing 14-6. A Program that Renders a Triangle

#include <windows.h> // Declares CALLBACK, HINSTANCE, and LPSTR for WinMain (Windows only)

#include "GL/glew.h"

#include "GLFW/glfw3.h"

#include <string>

#include <vector>

using namespace std;

class Geometry

{

public:

using Vertices = vector < float >;

using Indices = vector < unsigned short >;

private:

Vertices m_Vertices;

Indices m_Indices;

public:

Geometry() = default;

~Geometry() = default;

void SetVertices(const Vertices& vertices)

{

m_Vertices = vertices;

}

Vertices::size_type GetNumVertices() const

{

return m_Vertices.size();

}

Vertices::const_pointer GetVertices() const

{

return m_Vertices.data();

}

void SetIndices(const Indices& indices)

{

m_Indices = indices;

}

Indices::size_type GetNumIndices() const

{

return m_Indices.size();

}

Indices::const_pointer GetIndices() const

{

return m_Indices.data();

}

};

class Shader

{

private:

void LoadShader(GLuint id, const std::string& shaderCode)

{

const unsigned int NUM_SHADERS{ 1 };

const char* pCode{ shaderCode.c_str() };

GLint length{ static_cast<GLint>(shaderCode.length()) };

glShaderSource(id, NUM_SHADERS, &pCode, &length);

glCompileShader(id);

glAttachShader(m_ProgramId, id);

}

protected:

GLuint m_VertexShaderId{ GL_INVALID_VALUE };

GLuint m_FragmentShaderId{ GL_INVALID_VALUE };

GLint m_ProgramId{ GL_INVALID_VALUE };

std::string m_VertexShaderCode;

std::string m_FragmentShaderCode;

public:

Shader() = default;

virtual ~Shader() = default;

virtual void Link()

{

m_ProgramId = glCreateProgram();

m_VertexShaderId = glCreateShader(GL_VERTEX_SHADER);

LoadShader(m_VertexShaderId, m_VertexShaderCode);

m_FragmentShaderId = glCreateShader(GL_FRAGMENT_SHADER);

LoadShader(m_FragmentShaderId, m_FragmentShaderCode);

glLinkProgram(m_ProgramId);

}

virtual void Setup(const Geometry& geometry)

{

glUseProgram(m_ProgramId);

}

};

class BasicShader

: public Shader

{

private:

GLint m_PositionAttributeHandle;

public:

BasicShader()

{

m_VertexShaderCode =

"attribute vec4 a_vPosition; \n"

"void main(){ \n"

" gl_Position = a_vPosition; \n"

"} \n";

m_FragmentShaderCode =

"#version 150 \n"

"precision mediump float; \n"

"void main(){ \n"

" gl_FragColor = vec4(0.2, 0.2, 0.2, 1.0); \n"

"} \n";

}

~BasicShader() override = default;

void Link() override

{

Shader::Link();

m_PositionAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vPosition");

}

void Setup(const Geometry& geometry) override

{

Shader::Setup(geometry);

glVertexAttribPointer(

m_PositionAttributeHandle,

3,

GL_FLOAT,

GL_FALSE,

0,

geometry.GetVertices());

glEnableVertexAttribArray(m_PositionAttributeHandle);

}

};

int CALLBACK WinMain(

_In_ HINSTANCE hInstance,

_In_ HINSTANCE hPrevInstance,

_In_ LPSTR lpCmdLine,

_In_ int nCmdShow

)

{

GLFWwindow* window;

// Initialize the library

if (!glfwInit())

{

return -1;

}

// Create a windowed mode window and its OpenGL context

window = glfwCreateWindow(640, 480, "Hello World", NULL, NULL);

if (!window)

{

glfwTerminate();

return -1;

}

// Make the window's context current

glfwMakeContextCurrent(window);

GLenum glewError{ glewInit() };

if (glewError != GLEW_OK)

{

return -1;

}

BasicShader basicShader;

basicShader.Link();

Geometry triangle;

Geometry::Vertices vertices{

0.0f, 0.5f, 0.0f,

0.5f, -0.5f, 0.0f,

-0.5f, -0.5f, 0.0f

};

Geometry::Indices indices{ 0, 1, 2 };

triangle.SetVertices(vertices);

triangle.SetIndices(indices);

glClearColor(0.25f, 0.25f, 0.95f, 1.0f);

// Loop until the user closes the window

while (!glfwWindowShouldClose(window))

{

glClear(GL_COLOR_BUFFER_BIT);

basicShader.Setup(triangle);

glDrawElements(GL_TRIANGLES,

triangle.GetNumIndices(),

GL_UNSIGNED_SHORT,

triangle.GetIndices());

// Swap front and back buffers

glfwSwapBuffers(window);

// Poll for and process events

glfwPollEvents();

}

glfwTerminate();

return 0;

}

The main function in Listing 14-6 shows how and where to use the Geometry and BasicShader classes to render a triangle to your window. The OpenGL API is available to use immediately after the call to glewInit has completed successfully. The main function follows this call by initializing a BasicShader object and calling BasicShader::Link, and then by creating a Geometry object to represent the vertices of a triangle. The vertices are supplied in a post-transformed state because the vertex shader in BasicShader isn’t carrying out any operations on the data passed through. The vertices are specified in normalized device coordinates; in OpenGL, these coordinates must fit inside a cube that ranges from -1, -1, -1 to 1, 1, 1 for the x, y, and z coordinates. The indices tell OpenGL the order in which to pass the vertices to the vertex shader; in this case, you’re passing the vertices in the order they’re defined.
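
It can help to see where normalized device coordinates end up in the window. The sketch below applies the standard viewport mapping on the CPU, assuming the viewport covers the whole 640 × 480 window and starts at the origin. The WindowPoint struct and the IsInsideNdcCube and NdcToWindow functions are hypothetical names for this illustration; OpenGL performs the equivalent step for you.

```cpp
#include <cassert>

// A position in window coordinates. OpenGL places the origin at the
// lower-left corner of the window, with y increasing upward.
struct WindowPoint
{
    float x;
    float y;
};

// True when a vertex lies inside the cube from (-1, -1, -1) to (1, 1, 1).
inline bool IsInsideNdcCube(float x, float y, float z)
{
    return x >= -1.0f && x <= 1.0f &&
           y >= -1.0f && y <= 1.0f &&
           z >= -1.0f && z <= 1.0f;
}

// Maps the x and y range [-1, 1] onto [0, width] and [0, height].
inline WindowPoint NdcToWindow(float ndcX, float ndcY, float width, float height)
{
    assert(width > 0.0f && height > 0.0f);
    return WindowPoint{ (ndcX + 1.0f) * 0.5f * width,
                        (ndcY + 1.0f) * 0.5f * height };
}
```

With these assumptions, the three vertices from Listing 14-6 land at (320, 360), (480, 120), and (160, 120), which matches a triangle centered in the window with its apex at the top.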

The glClearColor function tells OpenGL the color to use to represent the background color when no other pixels have been rendered to that position. Here the color is set to light blue so it’s easy to tell when a pixel has been rendered to. Colors are represented in OpenGL using four components: red, green, blue, and alpha. The red, green, and blue components combine to generate a color for a pixel. When all the component values are 1, the color is white; and when all the values are 0, the color is black. The alpha component is used to determine how transparent a pixel is. There’s little reason to set a transparency value of less than 1 on the background color.

You can find a call to glClear in the render loop. This call uses the values set by glClearColor to fill the framebuffer and overwrite anything rendered the last time this buffer was used. Remember that when you’re using double buffering, the buffer you’re rendering to is two frames old, not one. The BasicShader::Setup function sets up the shader with the current geometry for rendering. This could have been a one-time operation in this program, but it’s more common for programs to render more than one object with a given shader.

Finally, the glDrawElements function is responsible for asking OpenGL to render the triangle. The glDrawElements call specifies that you want to render triangle primitives, the number of indices to render, the type of the indices, and a pointer to the index data stream.

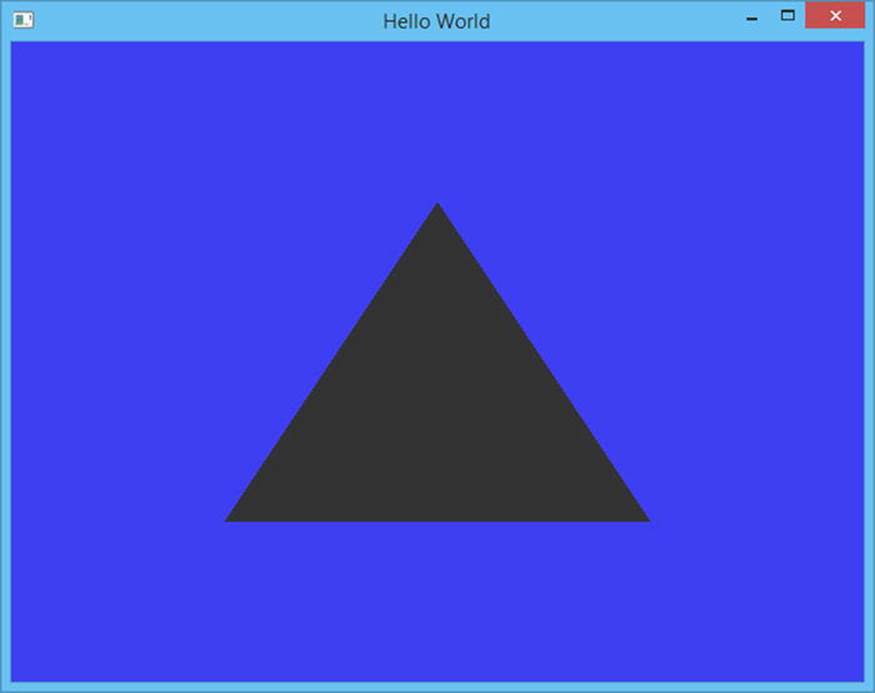

Figure 14-1 shows the output generated by this program.

Figure 14-1. The triangle rendered by the code in Listing 14-6

14-3. Creating a Textured Quad

Problem

GPU power is limited, and you would like to give your objects a more highly detailed appearance.

Solution

Texture mapping allows you to create 2D images that you can map over the surface of a mesh to give the appearance of increased geometric complexity.

How It Works

GLSL provides support for samplers that you can use to read texels from an assigned texture. A texel is a single color element from a texture; the term is short for texture element in the same way that pixel is short for picture element. The term pixel is usually used when referring to individual colors that make up the image on your display, whereas texel is used when referring to individual colors in a texture image.

A texture is mapped to a mesh using texture coordinates. Each vertex in a mesh is given an associated texture coordinate that you can use to look up the color to be applied to the fragment in a fragment shader. The texture coordinate from each vertex is interpolated across the surface of a polygon using an interpolator unit on the GPU. Interpolated values to be passed from a vertex shader to a fragment shader are represented in OpenGL using the varying keyword. This keyword makes logical sense because varyings are used to represent variables that vary across the surface of the polygon. Varyings are initialized in the vertex shader either by being assigned from an attribute or by being generated by code.
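
The interpolation applied to a varying can be approximated with a weighted average of the values at the three corners of a triangle. The following is a simplified sketch: it ignores the perspective correction that a GPU normally applies, and the TexCoord struct and Interpolate function are hypothetical names chosen for this example.

```cpp
#include <cassert>
#include <cmath>

// A 2D texture coordinate.
struct TexCoord
{
    float u;
    float v;
};

// Blends the texture coordinates of a triangle's three vertices.
// The weights describe how close the fragment is to each vertex and
// are expected to add up to 1 (barycentric weights).
inline TexCoord Interpolate(TexCoord a, TexCoord b, TexCoord c,
                            float w0, float w1, float w2)
{
    assert(std::fabs(w0 + w1 + w2 - 1.0f) < 1e-5f);
    return TexCoord{ a.u * w0 + b.u * w1 + c.u * w2,
                     a.v * w0 + b.v * w1 + c.v * w2 };
}
```

A fragment that sits exactly on a vertex receives that vertex’s coordinate unchanged, and a fragment halfway along an edge receives the average of the edge’s two endpoints. Every fragment in between gets a smoothly changing value, which is what lets one small triangle display a detailed region of a texture.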

You need a way to represent mesh data that contains texture coordinates before you can worry about having textures in your applications. Listing 14-7 shows a definition of the Geometry class that supports texture coordinates in the vertex data.

Listing 14-7. A Geometry Class that Supports Texture Coordinates

class Geometry

{

public:

using Vertices = vector < float >;

using Indices = vector < unsigned short >;

private:

Vertices m_Vertices;

Indices m_Indices;

unsigned int m_NumVertexPositionElements{};

unsigned int m_NumTextureCoordElements{};

unsigned int m_VertexStride{};

public:

Geometry() = default;

~Geometry() = default;

void SetVertices(const Vertices& vertices)

{

m_Vertices = vertices;

}

Vertices::size_type GetNumVertices() const

{

return m_Vertices.size();

}

Vertices::const_pointer GetVertices() const

{

return m_Vertices.data();

}

void SetIndices(const Indices& indices)

{

m_Indices = indices;

}

Indices::size_type GetNumIndices() const

{

return m_Indices.size();

}

Indices::const_pointer GetIndices() const

{

return m_Indices.data();

}

Vertices::const_pointer GetTexCoords() const

{

return static_cast<Vertices::const_pointer>(&m_Vertices[m_NumVertexPositionElements]);

}

void SetNumVertexPositionElements(unsigned int numVertexPositionElements)

{

m_NumVertexPositionElements = numVertexPositionElements;

}

unsigned int GetNumVertexPositionElements() const

{

return m_NumVertexPositionElements;

}

void SetNumTexCoordElements(unsigned int numTexCoordElements)

{

m_NumTextureCoordElements = numTexCoordElements;

}

unsigned int GetNumTexCoordElements() const

{

return m_NumTextureCoordElements;

}

void SetVertexStride(unsigned int vertexStride)

{

m_VertexStride = vertexStride;

}

unsigned int GetVertexStride() const

{

return m_VertexStride;

}

};

This code shows the definition for a Geometry class that stores vertices and indices in separate vectors. There are also fields that store the number of vertex position elements and the number of texture coordinate elements. A single vertex can consist of a variable number of vertex elements and a variable number of texture coordinates. The m_VertexStride field stores the number of bytes from the beginning of one vertex to the beginning of the next vertex. The GetTexCoords method is one of the more important methods in this class because it shows that the vertex data this class supports is in an array-of-structures format. There are two main ways to read in vertex data: you can set up separate streams for the vertices and the texture coordinates in separate arrays, or you can set up a single stream that interleaves the vertex position and texture-coordinate data per vertex. This class supports the latter style, because it’s generally the most efficient data format for modern GPUs. The GetTexCoords method returns the address of the first texture coordinate using m_NumVertexPositionElements as an index to find that data. This relies on your mesh data being tightly packed and your first texture coordinate coming immediately after the vertex position elements.
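
The interleaved layout is easier to picture with concrete data. The sketch below describes a quad whose vertices each hold three position floats followed by two texture-coordinate floats. The VertexLayout struct and the TexCoordsOf and MakeInterleavedQuad functions are hypothetical helpers written for this illustration and aren't part of the Geometry class.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Describes how many floats of each kind make up one interleaved vertex.
struct VertexLayout
{
    std::size_t numPositionElements;
    std::size_t numTexCoordElements;

    std::size_t FloatsPerVertex() const
    {
        return numPositionElements + numTexCoordElements;
    }

    // Bytes from the start of one vertex to the start of the next.
    std::size_t StrideBytes() const
    {
        return FloatsPerVertex() * sizeof(float);
    }
};

// Address of the texture coordinates that belong to the given vertex.
inline const float* TexCoordsOf(const std::vector<float>& vertices,
                                const VertexLayout& layout,
                                std::size_t vertexIndex)
{
    const std::size_t first{
        vertexIndex * layout.FloatsPerVertex() + layout.numPositionElements };
    assert(first + layout.numTexCoordElements <= vertices.size());
    return vertices.data() + first;
}

// Example data: x, y, z, u, v for each corner of a quad.
inline std::vector<float> MakeInterleavedQuad()
{
    return {
        -0.5f,  0.5f, 0.0f,   0.0f, 1.0f,
         0.5f,  0.5f, 0.0f,   1.0f, 1.0f,
         0.5f, -0.5f, 0.0f,   1.0f, 0.0f,
        -0.5f, -0.5f, 0.0f,   0.0f, 0.0f
    };
}
```

For vertex 0, TexCoordsOf returns the same address that GetTexCoords computes in Listing 14-7, which is the element at index 3. The stride for this layout is 5 * sizeof(float) bytes, and that value applies to both the position stream and the texture-coordinate stream.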

The next important element when rendering textured objects with OpenGL is a class that can load texture data from a file. The TGA file format is simple and easy to use and can store image data. Its simplicity means it’s a common choice of file format for uncompressed textures when working with OpenGL. The TGAFile class in Listing 14-8 shows how a TGA file is loaded.

Listing 14-8. The TGAFile Class

class TGAFile

{

private:

#ifdef _MSC_VER

#pragma pack(push, 1)

#endif

struct TGAHeader

{

unsigned char m_IdSize{};

unsigned char m_ColorMapType{};

unsigned char m_ImageType{};

unsigned short m_PaletteStart{};

unsigned short m_PaletteLength{};

unsigned char m_PaletteBits{};

unsigned short m_XOrigin{};

unsigned short m_YOrigin{};

unsigned short m_Width{};

unsigned short m_Height{};

unsigned char m_BytesPerPixel{};

unsigned char m_Descriptor{};

}

#ifndef _MSC_VER

__attribute__ ((packed))

#endif // _MSC_VER

;

#ifdef _MSC_VER

#pragma pack(pop)

#endif

std::vector<char> m_FileData;

TGAHeader* m_pHeader{};

void* m_pImageData{};

public:

TGAFile(const std::string& filename)

{

std::ifstream fileStream{ filename, std::ios_base::binary };

if (fileStream.is_open())

{

fileStream.seekg(0, std::ios::end);

m_FileData.resize(static_cast<unsigned int>(fileStream.tellg()));

fileStream.seekg(0, std::ios::beg);

fileStream.read(m_FileData.data(), m_FileData.size());

fileStream.close();

m_pHeader = reinterpret_cast<TGAHeader*>(m_FileData.data());

m_pImageData = static_cast<void*>(m_FileData.data() + sizeof(TGAHeader));

}

}

unsigned short GetWidth() const

{

return m_pHeader->m_Width;

}

unsigned short GetHeight() const

{

return m_pHeader->m_Height;

}

unsigned char GetBytesPerPixel() const

{

return m_pHeader->m_BytesPerPixel;

}

unsigned int GetDataSize() const

{

return m_FileData.size() - sizeof(TGAHeader);

}

void* GetImageData() const

{

return m_pImageData;

}

};

The TGAFile class contains a header structure that represents the header data included in a TGA file when saved by an image-editing program such as Adobe Photoshop. This structure has some interesting compiler metadata associated with it. A modern C++ compiler is aware of the memory layout of the data structures in applications. A given CPU architecture may operate more efficiently with variables that lie on certain memory boundaries, so compilers are free to insert padding bytes between the members of a structure to place them on those boundaries. This is fine for structures that are non-portable and used in a single program on a single-CPU architecture, but it may cause problems for data that is saved and loaded by different programs on different computers. To counteract this, you can specify the amount of padding a compiler can add to your structures to optimize access to individual variables. The TGAHeader struct requires that no padding be added, because the TGA file format doesn’t contain any padding when the file is saved. This is achieved when using Visual Studio by using the pragma preprocessor directive along with the pack command to push and pop a packing value of 1. This disables the automatic spacing of variables for speed efficiency. On most other compilers, you can use the __attribute__ ((packed)) compiler directive to achieve the same result.
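
You can observe the effect of packing directly by comparing the size of two structures with identical members. The sketch below uses #pragma pack, which GCC and Clang also accept, for brevity. The UnpackedTgaHeader and PackedTgaHeader structs and the PaddingBytes function are hypothetical names for this illustration, and the amount of padding in the unpacked version depends on your compiler and target.

```cpp
#include <cassert>
#include <cstddef>

// Same members as TGAHeader, laid out with the compiler's default alignment.
struct UnpackedTgaHeader
{
    unsigned char m_IdSize;
    unsigned char m_ColorMapType;
    unsigned char m_ImageType;
    unsigned short m_PaletteStart;
    unsigned short m_PaletteLength;
    unsigned char m_PaletteBits;
    unsigned short m_XOrigin;
    unsigned short m_YOrigin;
    unsigned short m_Width;
    unsigned short m_Height;
    unsigned char m_BytesPerPixel;
    unsigned char m_Descriptor;
};

// Identical members, but with padding disabled.
#pragma pack(push, 1)
struct PackedTgaHeader
{
    unsigned char m_IdSize;
    unsigned char m_ColorMapType;
    unsigned char m_ImageType;
    unsigned short m_PaletteStart;
    unsigned short m_PaletteLength;
    unsigned char m_PaletteBits;
    unsigned short m_XOrigin;
    unsigned short m_YOrigin;
    unsigned short m_Width;
    unsigned short m_Height;
    unsigned char m_BytesPerPixel;
    unsigned char m_Descriptor;
};
#pragma pack(pop)

// The header occupies 18 bytes on disk, with the width at byte 12.
static_assert(sizeof(PackedTgaHeader) == 18, "packed header must match the file layout");
static_assert(offsetof(PackedTgaHeader, m_Width) == 12, "width must sit at byte 12");

// Number of padding bytes the compiler added to the unpacked version.
inline std::size_t PaddingBytes()
{
    assert(sizeof(UnpackedTgaHeader) >= sizeof(PackedTgaHeader));
    return sizeof(UnpackedTgaHeader) - sizeof(PackedTgaHeader);
}
```

On a typical desktop compiler the unpacked structure is likely to be larger than 18 bytes, which would shift every field after the padding and make the width and height read back incorrectly. The static_assert checks are a cheap way to catch a layout mismatch at compile time rather than at runtime.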

The TGAHeader fields store metadata that represents the type of image data stored in the file. This recipe only deals with RGBA data in a TGA, so the only relevant fields are the width, height, and bytes per pixel. Note that the TGA format itself records this last value as a pixel depth in bits, so a 32-bit RGBA image stores 32 in the m_BytesPerPixel field. These are found in the file in the exact byte positions represented in the TGAHeader structure. The data from the file is mapped into the TGAHeader object by using a pointer. The filename is passed to the constructor for the class, and this file is opened and read using an ifstream object. The ifstream object is the STL class provided for reading data in from a file. The ifstream is constructed by passing it the filename to be opened and the binary data mode, because you want to read binary data from the file. The entire file is read into a vector of char variables by seeking to the end of the file, reading the position of the end of file to determine the size of the data in the file, and then seeking back to the beginning and using the size to resize the vector. The data is then read into the vector by using the ifstream read method that takes a pointer to the buffer where the data should be read and the size of the buffer to read into. You can then use reinterpret_cast to map the data read from the file onto a TGAHeader struct, and a static_cast can be used to store a pointer to the beginning of the image data.
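
If you would rather not depend on structure packing, the same fields can be read byte by byte. The sketch below is an alternative to the pointer mapping in Listing 14-8, not the approach the listing uses. The TgaInfo struct and the ReadLittleEndian16 and ParseTgaInfo functions are hypothetical names, and the sketch assumes the fixed 18-byte header layout with little-endian 16-bit fields.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// The subset of header fields this recipe cares about.
struct TgaInfo
{
    std::uint16_t width;
    std::uint16_t height;
    std::uint8_t bitsPerPixel;
    bool valid;
};

constexpr std::size_t kTgaHeaderSize{ 18 };

// Reads a 16-bit value stored least-significant byte first.
inline std::uint16_t ReadLittleEndian16(const std::vector<char>& data,
                                        std::size_t offset)
{
    assert(offset + 1 < data.size());
    const auto low = static_cast<unsigned char>(data[offset]);
    const auto high = static_cast<unsigned char>(data[offset + 1]);
    return static_cast<std::uint16_t>(low | (high << 8));
}

// Extracts the width, height, and pixel depth, or reports failure
// when the data is too short to contain a header.
inline TgaInfo ParseTgaInfo(const std::vector<char>& fileData)
{
    if (fileData.size() < kTgaHeaderSize)
    {
        return TgaInfo{ 0, 0, 0, false };
    }
    return TgaInfo{ ReadLittleEndian16(fileData, 12),
                    ReadLittleEndian16(fileData, 14),
                    static_cast<std::uint8_t>(fileData[16]),
                    true };
}
```

The size check is the main design point here. A file that failed to open or that is shorter than a header produces an invalid result instead of a pointer into memory the vector doesn’t own.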

Loading the TGA data is separated from the OpenGL texture setup by using separate classes. The data loaded from the TGA can be passed to the texture class shown in Listing 14-9 to create an OpenGL texture object.

Listing 14-9. The Texture Class

class Texture

{

private:

unsigned int m_Width{};

unsigned int m_Height{};

unsigned int m_BytesPerPixel{};

unsigned int m_DataSize{};

GLuint m_Id{};

void* m_pImageData;

public:

Texture(const TGAFile& tgaFile)

: Texture(tgaFile.GetWidth(),

tgaFile.GetHeight(),

tgaFile.GetBytesPerPixel(),

tgaFile.GetDataSize(),

tgaFile.GetImageData())

{

}

Texture(unsigned int width,

unsigned int height,

unsigned int bytesPerPixel,

unsigned int dataSize,

void* pImageData)

: m_Width(width)

, m_Height(height)

, m_BytesPerPixel(bytesPerPixel)

, m_DataSize(dataSize)

, m_pImageData(pImageData)

{

}

~Texture() = default;

GLuint GetId() const

{

return m_Id;

}

void Init()

{

GLint packBits{ 4 };

GLint internalFormat{ GL_RGBA };

GLint format{ GL_BGRA };

glGenTextures(1, &m_Id);

glBindTexture(GL_TEXTURE_2D, m_Id);

glPixelStorei(GL_UNPACK_ALIGNMENT, packBits);

glTexImage2D(GL_TEXTURE_2D,

0,

internalFormat,

m_Width,

m_Height,

0,

format,

GL_UNSIGNED_BYTE,

m_pImageData);

}

};

The Texture class initializes an OpenGL texture for use when rendering objects. The two class constructors are provided to simplify initializing the class from a TGA file or from in-memory data. The constructor that takes a TGAFile reference uses the C++11 concept of delegating constructors to call the in-memory constructor. The Init method is responsible for creating an OpenGL texture object. This method can create RGBA textures from a BGRA source using the width and height supplied in the constructor. You might notice here that the source pixels in a TGA file are back to front: they store their color channels in blue, green, red, alpha order. Passing GL_BGRA as the source format tells OpenGL to swap the red and blue channels into the correct positions for the GPU. The image data is copied onto the GPU by the glTexImage2D function so that draw calls can use this texture data in your fragment shaders.
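To make the channel reordering concrete, here is a minimal CPU-side sketch of the swap that a BGRA-to-RGBA conversion implies. It is illustrative only: in the recipe's code OpenGL performs the conversion during the upload, and the SwapBlueAndRed function is a hypothetical name that isn't part of the chapter's classes.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Illustrative only: swaps the first and third byte of every 4-byte pixel,
// turning B,G,R,A order into R,G,B,A order in place.
void SwapBlueAndRed(std::vector<unsigned char>& pixels)
{
    // Each pixel is assumed to occupy exactly four bytes.
    assert(pixels.size() % 4 == 0);
    for (std::size_t i = 0; i < pixels.size(); i += 4)
    {
        std::swap(pixels[i], pixels[i + 2]);
    }
}
```

The green and alpha bytes stay where they are; only the blue and red bytes change places.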

The next step in being able to render with textures is to look at the TextureShader class, which includes a vertex shader that can read in texture coordinates and pass them to the fragment shader through a varying object. You can see this class in Listing 14-10.

Listing 14-10. The TextureShader Class

class Shader

{

private:

void LoadShader(GLuint id, const std::string& shaderCode)

{

const unsigned int NUM_SHADERS{ 1 };

const char* pCode{ shaderCode.c_str() };

GLint length{ static_cast<GLint>(shaderCode.length()) };

glShaderSource(id, NUM_SHADERS, &pCode, &length);

glCompileShader(id);

glAttachShader(m_ProgramId, id);

}

protected:

GLuint m_VertexShaderId{ GL_INVALID_VALUE };

GLuint m_FragmentShaderId{ GL_INVALID_VALUE };

GLuint m_ProgramId{ GL_INVALID_VALUE };

std::string m_VertexShaderCode;

std::string m_FragmentShaderCode;

public:

Shader() = default;

virtual ~Shader() = default;

virtual void Link()

{

m_ProgramId = glCreateProgram();

m_VertexShaderId = glCreateShader(GL_VERTEX_SHADER);

LoadShader(m_VertexShaderId, m_VertexShaderCode);

m_FragmentShaderId = glCreateShader(GL_FRAGMENT_SHADER);

LoadShader(m_FragmentShaderId, m_FragmentShaderCode);

glLinkProgram(m_ProgramId);

}

virtual void Setup(const Geometry& geometry)

{

glUseProgram(m_ProgramId);

}

};

class TextureShader

: public Shader

{

private:

const Texture& m_Texture;

GLint m_PositionAttributeHandle;

GLint m_TextureCoordinateAttributeHandle;

GLint m_SamplerHandle;

public:

TextureShader(const Texture& texture)

: m_Texture(texture)

{

m_VertexShaderCode =

"attribute vec4 a_vPosition; \n"

"attribute vec2 a_vTexCoord; \n"

"varying vec2 v_vTexCoord; \n"

" \n"

"void main() { \n"

" gl_Position = a_vPosition; \n"

" v_vTexCoord = a_vTexCoord; \n"

"} \n";

m_FragmentShaderCode =

"#version 150 \n"

" \n"

"precision highp float; \n"

"varying vec2 v_vTexCoord; \n"

"uniform sampler2D s_2dTexture; \n"

" \n"

"void main() { \n"

" gl_FragColor = \n"

" texture2D(s_2dTexture, v_vTexCoord); \n"

"} \n";

}

~TextureShader() override = default;

void Link() override

{

Shader::Link();

m_PositionAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vPosition");

m_TextureCoordinateAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vTexCoord");

m_SamplerHandle = glGetUniformLocation(m_ProgramId, "s_2dTexture");

}

void Setup(const Geometry& geometry) override

{

Shader::Setup(geometry);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, m_Texture.GetId());

glUniform1i(m_SamplerHandle, 0);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glVertexAttribPointer(

m_PositionAttributeHandle,

geometry.GetNumVertexPositionElements(),

GL_FLOAT,

GL_FALSE,

geometry.GetVertexStride(),

geometry.GetVertices());

glEnableVertexAttribArray(m_PositionAttributeHandle);

glVertexAttribPointer(

m_TextureCoordinateAttributeHandle,

geometry.GetNumTexCoordElements(),

GL_FLOAT,

GL_FALSE,

geometry.GetVertexStride(),

geometry.GetTexCoords());

glEnableVertexAttribArray(m_TextureCoordinateAttributeHandle);

}

};

The TextureShader class inherits from the Shader class. The vertex shader code in the TextureShader class constructor contains two attributes and a varying. The position element of the vertex is passed straight through without modification to the built-in gl_Position variable, which receives the final transformed position of a vertex. The a_vTexCoord attribute is passed to the v_vTexCoord varying. Varyings are used to transfer interpolated data from vertex shaders to fragment shaders, so it’s important that both your vertex shader and your fragment shader contain a varying with the same type and name. OpenGL works out the plumbing behind the scenes to make sure the varying output from the vertex shader is passed to the same varying in the fragment shader.

The fragment shader contains a uniform. Uniforms are more like shader constants in that they’re set by a single call for each draw call, and every instance of the shader receives the same value. In this case, every instance of the fragment shader receives the same sampler ID to retrieve data from the same texture. This data is read using the texture2D function, which takes a sampler2D uniform and the v_vTexCoord varying. The texture-coordinate varying has been interpolated across the surface of a polygon, so the polygon is mapped using different texels from the texture data.
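The interpolation of a varying can be sketched on the CPU as a weighted blend of the three values output at a triangle's vertices. The code below is a simplified illustration under the assumption of plain (non-perspective-corrected) barycentric weights; the Vec2 type and the InterpolateVarying function are hypothetical and are not part of OpenGL or of this chapter's listings.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a GLSL vec2 holding a texture coordinate.
struct Vec2
{
    float u;
    float v;
};

// Blends the texture coordinates of a triangle's three vertices using
// barycentric weights, which are assumed to sum to 1.
Vec2 InterpolateVarying(const Vec2& a, const Vec2& b, const Vec2& c,
                        float weightA, float weightB, float weightC)
{
    assert(std::fabs(weightA + weightB + weightC - 1.0f) < 1e-4f);
    return Vec2{
        a.u * weightA + b.u * weightB + c.u * weightC,
        a.v * weightA + b.v * weightB + c.v * weightC };
}
```

A fragment that lies exactly on a vertex has a weight of 1 for that vertex and receives its texture coordinate unchanged; fragments in between receive a blend, which is why different texels end up mapped across the polygon.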

The TextureShader::Setup function is responsible for initializing the sampler state before each draw call. The texture unit you want to use is initialized using the glActiveTexture function. A texture is bound to this texture unit using glBindTexture, which is passed the ID of the OpenGL texture. The uniform binding is somewhat unintuitive. glActiveTexture receives the constant value GL_TEXTURE0 as the value and not 0. This allows the glActiveTexture call to associate the texture with the texture-image unit binding, but the fragment shader doesn’t use the same value; instead, it uses an index to the texture-image units. In this case, GL_TEXTURE0 can be found at index 0, so the value 0 is bound to the m_SamplerHandle uniform in the fragment shader.
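The relationship between the texture-unit constant and the index given to the sampler uniform can be shown with a small sketch. So that it compiles without the OpenGL headers, it mirrors the value of GL_TEXTURE0 in a local constant; kGlTexture0 and SamplerIndexForUnit are hypothetical names used only for this illustration.

```cpp
#include <cassert>

// Local mirror of the GL_TEXTURE0 value from the OpenGL headers, declared
// here only so that the sketch is self-contained.
constexpr unsigned int kGlTexture0{ 0x84C0 };

// Converts the constant passed to glActiveTexture (GL_TEXTURE0,
// GL_TEXTURE1, and so on) into the zero-based index that is stored in a
// sampler uniform.
int SamplerIndexForUnit(unsigned int textureUnitEnum)
{
    assert(textureUnitEnum >= kGlTexture0);
    return static_cast<int>(textureUnitEnum - kGlTexture0);
}
```

Under this scheme, a texture activated with GL_TEXTURE0 + 3 would be paired with a sampler uniform set to 3.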

The sampler parameters are then initialized for the bound texture. They’re set to clamp the texture in both directions. This would be useful for cases where you want to use values outside the normal range of 0 to 1 for texture coordinates. It’s also possible to set up textures to wrap, repeat, or mirror in these cases. The next two options configure the settings for sampling textures when they’re minified or magnified on the screen. Minification happens when the texture is being applied to an object that takes up less screen space than the texture would at a 1-to-1 mapping. This could occur with a 512 × 512 texture that was being rendered onscreen at 256 × 256. Magnification occurs in the opposite case, where the texture is being rendered to an object that is taking up more screen space than the texture provides texels for. The linear mapping uses the four texels nearest to the sampling point to work out an average of the color to be applied to the fragment. This gives textures a less blocky appearance at the expense of blurring the texture slightly. The effect is more pronounced depending on how much minification or magnification is applied to the texture.
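Clamping and linear filtering can be approximated on the CPU to show what the sampler settings ask the GPU to do. The following is a simplified sketch for a single-channel texture, assuming texel centers sit at half-texel offsets; real hardware may differ in its details, and the GrayTexture, ClampToEdge, and SampleLinear names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel texture stored row by row.
struct GrayTexture
{
    int width;
    int height;
    std::vector<float> texels;
};

// Restricts a texture coordinate to the range 0 to 1.
float ClampToEdge(float coordinate)
{
    return std::min(std::max(coordinate, 0.0f), 1.0f);
}

// Blends the four texels nearest to (u, v), weighted by distance.
float SampleLinear(const GrayTexture& texture, float u, float v)
{
    assert(texture.width > 0 && texture.height > 0);
    assert(texture.texels.size() ==
        static_cast<std::size_t>(texture.width) * static_cast<std::size_t>(texture.height));

    // Convert to texel space, where texel centers lie at x + 0.5.
    const float x{ ClampToEdge(u) * static_cast<float>(texture.width) - 0.5f };
    const float y{ ClampToEdge(v) * static_cast<float>(texture.height) - 0.5f };
    const int x0{ static_cast<int>(std::floor(x)) };
    const int y0{ static_cast<int>(std::floor(y)) };
    const float fx{ x - static_cast<float>(x0) };
    const float fy{ y - static_cast<float>(y0) };

    // Texel lookups that fall off the texture reuse the nearest edge texel.
    auto texelAt = [&texture](int tx, int ty) -> float
    {
        tx = std::min(std::max(tx, 0), texture.width - 1);
        ty = std::min(std::max(ty, 0), texture.height - 1);
        return texture.texels[static_cast<std::size_t>(ty * texture.width + tx)];
    };

    const float top{ texelAt(x0, y0) * (1.0f - fx) + texelAt(x0 + 1, y0) * fx };
    const float bottom{ texelAt(x0, y0 + 1) * (1.0f - fx) + texelAt(x0 + 1, y0 + 1) * fx };
    return top * (1.0f - fy) + bottom * fy;
}
```

Sampling the middle of a 2 × 2 texture whose left column is 0 and whose right column is 1 gives 0.5, which is the slight blurring the linear filter trades for a less blocky result.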

The TextureShader::Setup function then initializes the data streams for the vertex shader’s attribute fields. The vertex-position elements are bound to the m_PositionAttributeHandle location using the number of position elements from the geometry object as well as the stride from that location. After the attribute is initialized, it’s enabled with a call to glEnableVertexAttribArray. The m_TextureCoordinateAttributeHandle attribute is initialized using the same functions but with different data. The number of texture elements per vertex is retrieved from the geometry object, as is the texture coordinate stream. The stride of the data remains the same for both the vertex data and the texture data because they’re packed into the same stream in the array-of-structures format.
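The stride and offset arithmetic behind an interleaved, array-of-structures vertex stream can be sketched without any OpenGL calls. The VertexLayout type and the two helper functions below are hypothetical and exist only to illustrate the layout; the chapter's Geometry class stores the same information in its own member variables.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical description of one interleaved vertex: position floats
// followed immediately by texture-coordinate floats.
struct VertexLayout
{
    unsigned int numPositionElements;
    unsigned int numTexCoordElements;
};

// The stride is the number of bytes from the start of one vertex to the
// start of the next, so it covers every element of the vertex.
std::size_t StrideInBytes(const VertexLayout& layout)
{
    return sizeof(float) * (layout.numPositionElements + layout.numTexCoordElements);
}

// Returns a pointer to the texture coordinates of the given vertex, which
// sit just after that vertex's position elements.
const float* TexCoordsOfVertex(const std::vector<float>& vertices,
                               const VertexLayout& layout,
                               std::size_t vertexIndex)
{
    const std::size_t floatsPerVertex{
        layout.numPositionElements + layout.numTexCoordElements };
    const std::size_t offset{
        vertexIndex * floatsPerVertex + layout.numPositionElements };
    assert(offset + layout.numTexCoordElements <= vertices.size());
    return vertices.data() + offset;
}
```

Both attributes share one stride because they advance through the same buffer; only the starting offset differs between them.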

The code in Listing 14-11 brings all of this together and adds a main function to show how a texture and geometry can be initialized to render a quad to the screen that has a texture image applied.

Listing 14-11. The Textured Quad Program

#include "GL/glew.h"

#include "GLFW/glfw3.h"

#include <fstream>

#include <string>

#include <vector>

using namespace std;

class Geometry

{

public:

using Vertices = vector < float >;

using Indices = vector < unsigned short >;

private:

Vertices m_Vertices;

Indices m_Indices;

unsigned int m_NumVertexPositionElements{};

unsigned int m_NumTextureCoordElements{};

unsigned int m_VertexStride{};

public:

Geometry() = default;

~Geometry() = default;

void SetVertices(const Vertices& vertices)

{

m_Vertices = vertices;

}

Vertices::size_type GetNumVertices() const

{

return m_Vertices.size();

}

Vertices::const_pointer GetVertices() const

{

return m_Vertices.data();

}

void SetIndices(const Indices& indices)

{

m_Indices = indices;

}

Indices::size_type GetNumIndices() const

{

return m_Indices.size();

}

Indices::const_pointer GetIndices() const

{

return m_Indices.data();

}

Vertices::const_pointer GetTexCoords() const

{

return static_cast<Vertices::const_pointer>(&m_Vertices

[m_NumVertexPositionElements]);

}

void SetNumVertexPositionElements(unsigned int numVertexPositionElements)

{

m_NumVertexPositionElements = numVertexPositionElements;

}

unsigned int GetNumVertexPositionElements() const

{

return m_NumVertexPositionElements;

}

void SetNumTexCoordElements(unsigned int numTexCoordElements)

{

m_NumTextureCoordElements = numTexCoordElements;

}

unsigned int GetNumTexCoordElements() const

{

return m_NumTextureCoordElements;

}

void SetVertexStride(unsigned int vertexStride)

{

m_VertexStride = vertexStride;

}

unsigned int GetVertexStride() const

{

return m_VertexStride;

}

};

class TGAFile

{

private:

#ifdef _MSC_VER

#pragma pack(push, 1)

#endif

struct TGAHeader

{

unsigned char m_IdSize{};

unsigned char m_ColorMapType{};

unsigned char m_ImageType{};

unsigned short m_PaletteStart{};

unsigned short m_PaletteLength{};

unsigned char m_PaletteBits{};

unsigned short m_XOrigin{};

unsigned short m_YOrigin{};

unsigned short m_Width{};

unsigned short m_Height{};

unsigned char m_BytesPerPixel{};

unsigned char m_Descriptor{};

}

#ifndef _MSC_VER

__attribute__ ((packed))

#endif // _MSC_VER

;

#ifdef _MSC_VER

#pragma pack(pop)

#endif

std::vector<char> m_FileData;

TGAHeader* m_pHeader{};

void* m_pImageData{};

public:

TGAFile(const std::string& filename)

{

std::ifstream fileStream{ filename, std::ios_base::binary };

if (fileStream.is_open())

{

fileStream.seekg(0, std::ios::end);

m_FileData.resize(static_cast<unsigned int>(fileStream.tellg()));

fileStream.seekg(0, std::ios::beg);

fileStream.read(m_FileData.data(), m_FileData.size());

fileStream.close();

m_pHeader = reinterpret_cast<TGAHeader*>(m_FileData.data());

m_pImageData = static_cast<void*>(m_FileData.data() + sizeof(TGAHeader));

}

}

unsigned short GetWidth() const

{

return m_pHeader->m_Width;

}

unsigned short GetHeight() const

{

return m_pHeader->m_Height;

}

unsigned char GetBytesPerPixel() const

{

return m_pHeader->m_BytesPerPixel;

}

unsigned int GetDataSize() const

{

return m_FileData.size() - sizeof(TGAHeader);

}

void* GetImageData() const

{

return m_pImageData;

}

};

class Texture

{

private:

unsigned int m_Width{};

unsigned int m_Height{};

unsigned int m_BytesPerPixel{};

unsigned int m_DataSize{};

GLuint m_Id{};

void* m_pImageData;

public:

Texture(const TGAFile& tgaFile)

: Texture(tgaFile.GetWidth(),

tgaFile.GetHeight(),

tgaFile.GetBytesPerPixel(),

tgaFile.GetDataSize(),

tgaFile.GetImageData())

{

}

Texture(unsigned int width,

unsigned int height,

unsigned int bytesPerPixel,

unsigned int dataSize,

void* pImageData)

: m_Width(width)

, m_Height(height)

, m_BytesPerPixel(bytesPerPixel)

, m_DataSize(dataSize)

, m_pImageData(pImageData)

{

}

~Texture() = default;

GLuint GetId() const

{

return m_Id;

}

void Init()

{

GLint packBits{ 4 };

GLint internalFormat{ GL_RGBA };

GLint format{ GL_BGRA };

glGenTextures(1, &m_Id);

glBindTexture(GL_TEXTURE_2D, m_Id);

glPixelStorei(GL_UNPACK_ALIGNMENT, packBits);

glTexImage2D(GL_TEXTURE_2D,

0,

internalFormat,

m_Width,

m_Height,

0,

format,

GL_UNSIGNED_BYTE,

m_pImageData);

}

};

class Shader

{

private:

void LoadShader(GLuint id, const std::string& shaderCode)

{

const unsigned int NUM_SHADERS{ 1 };

const char* pCode{ shaderCode.c_str() };

GLint length{ static_cast<GLint>(shaderCode.length()) };

glShaderSource(id, NUM_SHADERS, &pCode, &length);

glCompileShader(id);

glAttachShader(m_ProgramId, id);

}

protected:

GLuint m_VertexShaderId{ GL_INVALID_VALUE };

GLuint m_FragmentShaderId{ GL_INVALID_VALUE };

GLuint m_ProgramId{ GL_INVALID_VALUE };

std::string m_VertexShaderCode;

std::string m_FragmentShaderCode;

public:

Shader() = default;

virtual ~Shader() = default;

virtual void Link()

{

m_ProgramId = glCreateProgram();

m_VertexShaderId = glCreateShader(GL_VERTEX_SHADER);

LoadShader(m_VertexShaderId, m_VertexShaderCode);

m_FragmentShaderId = glCreateShader(GL_FRAGMENT_SHADER);

LoadShader(m_FragmentShaderId, m_FragmentShaderCode);

glLinkProgram(m_ProgramId);

}

virtual void Setup(const Geometry& geometry)

{

glUseProgram(m_ProgramId);

}

};

class TextureShader

: public Shader

{

private:

const Texture& m_Texture;

GLint m_PositionAttributeHandle;

GLint m_TextureCoordinateAttributeHandle;

GLint m_SamplerHandle;

public:

TextureShader(const Texture& texture)

: m_Texture(texture)

{

m_VertexShaderCode =

"attribute vec4 a_vPosition; \n"

"attribute vec2 a_vTexCoord; \n"

"varying vec2 v_vTexCoord; \n"

" \n"

"void main() { \n"

" gl_Position = a_vPosition; \n"

" v_vTexCoord = a_vTexCoord; \n"

"} \n";

m_FragmentShaderCode =

"#version 150 \n"

" \n"

"precision highp float; \n"

"varying vec2 v_vTexCoord; \n"

"uniform sampler2D s_2dTexture; \n"

" \n"

"void main() { \n"

" gl_FragColor = \n"

" texture2D(s_2dTexture, v_vTexCoord); \n"

"} \n";

}

~TextureShader() override = default;

void Link() override

{

Shader::Link();

m_PositionAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vPosition");

m_TextureCoordinateAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vTexCoord");

m_SamplerHandle = glGetUniformLocation(m_ProgramId, "s_2dTexture");

}

void Setup(const Geometry& geometry) override

{

Shader::Setup(geometry);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, m_Texture.GetId());

glUniform1i(m_SamplerHandle, 0);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glVertexAttribPointer(

m_PositionAttributeHandle,

geometry.GetNumVertexPositionElements(),

GL_FLOAT,

GL_FALSE,

geometry.GetVertexStride(),

geometry.GetVertices());

glEnableVertexAttribArray(m_PositionAttributeHandle);

glVertexAttribPointer(

m_TextureCoordinateAttributeHandle,

geometry.GetNumTexCoordElements(),

GL_FLOAT,

GL_FALSE,

geometry.GetVertexStride(),

geometry.GetTexCoords());

glEnableVertexAttribArray(m_TextureCoordinateAttributeHandle);

}

};

int CALLBACK WinMain(

_In_ HINSTANCE hInstance,

_In_ HINSTANCE hPrevInstance,

_In_ LPSTR lpCmdLine,

_In_ int nCmdShow

)

{

GLFWwindow* window;

// Initialize the library

if (!glfwInit())

{

return -1;

}

// Create a windowed mode window and its OpenGL context

window = glfwCreateWindow(640, 480, "Hello World", NULL, NULL);

if (!window)

{

glfwTerminate();

return -1;

}

// Make the window's context current

glfwMakeContextCurrent(window);

GLenum glewError{ glewInit() };

if (glewError != GLEW_OK)

{

return -1;

}

TGAFile myTextureFile("MyTexture.tga");

Texture myTexture(myTextureFile);

myTexture.Init();

TextureShader textureShader(myTexture);

textureShader.Link();

Geometry quad;

Geometry::Vertices vertices{

-0.5f, 0.5f, 0.0f,

0.0f, 1.0f,

0.5f, 0.5f, 0.0f,

1.0f, 1.0f,

-0.5f, -0.5f, 0.0f,

0.0f, 0.0f,

0.5f, -0.5f, 0.0f,

1.0f, 0.0f

};

Geometry::Indices indices{ 0, 2, 1, 2, 3, 1 };

quad.SetVertices(vertices);

quad.SetIndices(indices);

quad.SetNumVertexPositionElements(3);

quad.SetNumTexCoordElements(2);

quad.SetVertexStride(sizeof(float) * 5);

glClearColor(0.25f, 0.25f, 0.95f, 1.0f);

// Loop until the user closes the window

while (!glfwWindowShouldClose(window))

{

glClear(GL_COLOR_BUFFER_BIT);

textureShader.Setup(quad);

glDrawElements(GL_TRIANGLES,

quad.GetNumIndices(),

GL_UNSIGNED_SHORT,

quad.GetIndices());

// Swap front and back buffers

glfwSwapBuffers(window);

// Poll for and process events

glfwPollEvents();

}

glfwTerminate();

return 0;

}

The full source for the program in Listing 14-11 shows how all the classes introduced in this recipe can be brought together to render a single textured quad. The TGAFile class is initialized to load the MyTexture.tga file. This is passed to the myTexture object, which is of type Texture. The Texture::Init function is called to initialize the OpenGL texture object. The initialized texture is in turn passed to an instance of the TextureShader class, which creates, initializes, and links an OpenGL shader program that can be used to render 2D textured geometry. The geometry is then created; the vertices specified include three position elements and two texture-coordinate elements for each vertex. OpenGL uses four vertices and six indices to render a quad made from two triangles. The vertices at indices 1 and 2 are shared by both triangles; you can see how indices can be used to reduce the required geometry definitions for the mesh. There’s another optimization advantage here: many modern GPUs cache the results from already-processed vertices, so reused vertex data can be read from a cache rather than being reprocessed by the GPU.
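The saving that indices provide can be seen by expanding an indexed mesh back into a flat vertex list. The sketch below does this for illustration only; ExpandIndexed is a hypothetical helper and isn't something the recipe needs at runtime, because glDrawElements consumes the indices directly.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: copies one whole vertex per index, producing the
// data you would have to store if the mesh were drawn without indices.
std::vector<float> ExpandIndexed(const std::vector<float>& vertices,
                                 const std::vector<unsigned short>& indices,
                                 std::size_t floatsPerVertex)
{
    assert(floatsPerVertex > 0);
    std::vector<float> expanded;
    expanded.reserve(indices.size() * floatsPerVertex);
    for (const unsigned short index : indices)
    {
        const std::size_t first{ index * floatsPerVertex };
        assert(first + floatsPerVertex <= vertices.size());
        expanded.insert(expanded.end(),
                        vertices.data() + first,
                        vertices.data() + first + floatsPerVertex);
    }
    return expanded;
}
```

For the quad in Listing 14-11, the indexed form stores 20 floats plus 6 short indices, whereas the expanded form needs 30 floats because the two shared vertices are duplicated.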

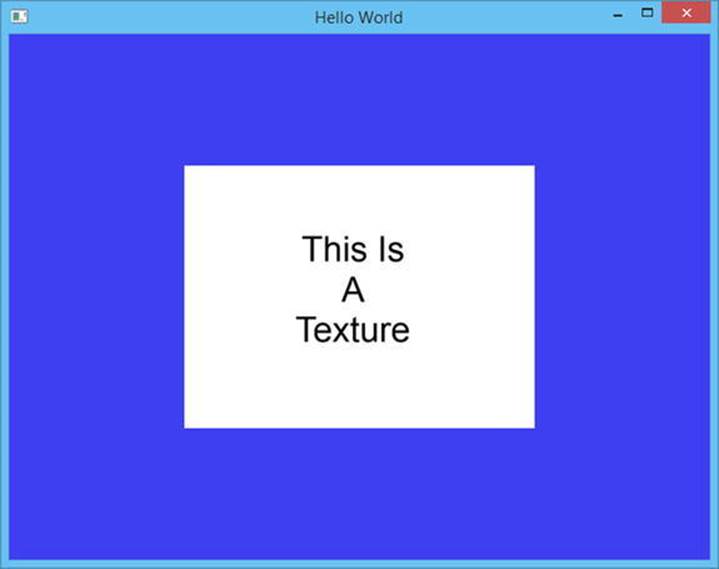

The actual rendering is trivial after all the setup work is done. There are calls to clear the frame buffer, set up the shader, draw the elements, swap the buffers, and poll for operating system events. Figure 14-2 shows what the output from this program looks like when everything is complete and working properly.

Figure 14-2. Output showing a textured quad rendered using OpenGL

14-4. Loading Geometry from a File

Problem

You would like to be able to load mesh data from files created by artists on your team.

Solution

C++ allows you to write code that can load many different file formats. This recipe shows you how to load Wavefront .obj files.

How It Works

The .obj file format was initially developed by Wavefront Technologies. It can be exported from many 3D modelling programs and is a simple text-based format, making it an ideal intermediary for learning how to import 3D data. The OBJFile class in Listing 14-12 shows how to load an .obj file from a source file.

Listing 14-12. Loading an .obj File

class OBJFile

{

public:

using Vertices = vector < float > ;

using TextureCoordinates = vector < float > ;

using Normals = vector < float > ;

using Indices = vector < unsigned short > ;

private:

Vertices m_VertexPositions;

TextureCoordinates m_TextureCoordinates;

Normals m_Normals;

Indices m_Indices;

public:

OBJFile(const std::string& filename)

{

std::ifstream fileStream{ filename, std::ios_base::in };

if (fileStream.is_open())

{

std::string line;

while (std::getline(fileStream, line))

{

stringstream lineStream{ line };

std::string firstSymbol;

lineStream >> firstSymbol;

if (firstSymbol == "v")

{

float vertexPosition{};

for (unsigned int i = 0; i < 3; ++i)

{

lineStream >> vertexPosition;

m_VertexPositions.emplace_back(vertexPosition);

}

}

else if (firstSymbol == "vt")

{

float textureCoordinate{};

for (unsigned int i = 0; i < 2; ++i)

{

lineStream >> textureCoordinate;

m_TextureCoordinates.emplace_back(textureCoordinate);

}

}

else if (firstSymbol == "vn")

{

float normal{};

for (unsigned int i = 0; i < 3; ++i)

{

lineStream >> normal;

m_Normals.emplace_back(normal);

}

}

else if (firstSymbol == "f")

{

char separator;

unsigned short index{};

for (unsigned int i = 0; i < 3; ++i)

{

for (unsigned int j = 0; j < 3; ++j)

{

lineStream >> index;

m_Indices.emplace_back(index);

if (j < 2)

{

lineStream >> separator;

}

}

}

}

}

}

}

const Vertices& GetVertices() const

{

return m_VertexPositions;

}

const TextureCoordinates& GetTextureCoordinates() const

{

return m_TextureCoordinates;

}

const Normals& GetNormals() const

{

return m_Normals;

}

const Indices& GetIndices() const

{

return m_Indices;

}

};

This code shows how to read data from an .obj file. The .obj data is stored in lines. A line that represents a vertex position starts with the letter v and contains three floating-point numbers representing the x, y, and z displacement of a vertex. A line beginning with vt contains a texture coordinate, and the two floating-point numbers represent the u and v components of the texture coordinate. The vn lines represent vertex normals and contain the x, y, and z components of the vertex normal. The last type of line you’re interested in begins with an f and represents the indices for a triangle. Each vertex is represented in the face using three numbers: an index into the list of vertex positions, an index into the texture coordinates, and an index into the vertex normals. All of this data is loaded into the four vectors in the class; there are accessors to retrieve the data from the class. The Geometry class in Listing 14-13 has a constructor that can take a reference to an OBJFile object and create a mesh that OpenGL can render.
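The three-number form of a face entry can be parsed in isolation to see how the separators are consumed. This is a minimal sketch that assumes every entry carries all three indices separated by forward slashes, such as 4/7/2; the FaceVertex type and the ParseFaceVertex and CheckParseFaceVertex functions are hypothetical and don't appear in the OBJFile class.

```cpp
#include <cassert>
#include <sstream>
#include <string>

// Hypothetical holder for the three one-based indices of a face corner.
struct FaceVertex
{
    unsigned short position;
    unsigned short texCoord;
    unsigned short normal;
};

// Parses a single "position/texCoord/normal" token. Returns false if any
// number is missing or a separator isn't a forward slash.
bool ParseFaceVertex(const std::string& token, FaceVertex& out)
{
    std::istringstream stream{ token };
    char firstSeparator{};
    char secondSeparator{};
    stream >> out.position >> firstSeparator
           >> out.texCoord >> secondSeparator
           >> out.normal;
    return !stream.fail() && firstSeparator == '/' && secondSeparator == '/';
}

// Small self-check showing the expected behavior for a well-formed token.
void CheckParseFaceVertex()
{
    FaceVertex corner{};
    const bool parsed{ ParseFaceVertex("4/7/2", corner) };
    assert(parsed);
    assert(corner.position == 4 && corner.texCoord == 7 && corner.normal == 2);
    (void)parsed;
}
```

A face line such as f 1/1/1 2/2/1 3/3/1 would contain three of these tokens, one per corner of the triangle.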

Listing 14-13. The Geometry Class

class Geometry

{

public:

using Vertices = vector < float >;

using Indices = vector < unsigned short >;

private:

Vertices m_Vertices;

Indices m_Indices;

unsigned int m_NumVertexPositionElements{};

unsigned int m_NumTextureCoordElements{};

unsigned int m_VertexStride{};

public:

Geometry() = default;

Geometry(const OBJFile& objFile)

{

const OBJFile::Indices& objIndices{ objFile.GetIndices() };

const OBJFile::Vertices& objVertexPositions{ objFile.GetVertices() };

const OBJFile::TextureCoordinates& objTextureCoordinates{

objFile.GetTextureCoordinates() };

for (unsigned int i = 0; i < objIndices.size(); i += 3U)

{

m_Indices.emplace_back(i / 3);

const unsigned int index{ objIndices[i] - 1U };

const unsigned int vertexPositionIndex{ index * 3U };

m_Vertices.emplace_back(objVertexPositions[vertexPositionIndex]);

m_Vertices.emplace_back(objVertexPositions[vertexPositionIndex+1]);

m_Vertices.emplace_back(objVertexPositions[vertexPositionIndex+2]);

const OBJFile::TextureCoordinates::size_type texCoordObjIndex{

objIndices[i + 1] - 1U };

const OBJFile::TextureCoordinates::size_type textureCoodsIndex{ texCoordObjIndex * 2U };

m_Vertices.emplace_back(objTextureCoordinates[textureCoodsIndex]);

m_Vertices.emplace_back(objTextureCoordinates[textureCoodsIndex+1]);

}

}

~Geometry() = default;

void SetVertices(const Vertices& vertices)

{

m_Vertices = vertices;

}

Vertices::size_type GetNumVertices() const

{

return m_Vertices.size();

}

Vertices::const_pointer GetVertices() const

{

return m_Vertices.data();

}

void SetIndices(const Indices& indices)

{

m_Indices = indices;

}

Indices::size_type GetNumIndices() const

{

return m_Indices.size();

}

Indices::const_pointer GetIndices() const

{

return m_Indices.data();

}

Vertices::const_pointer GetTexCoords() const

{

return static_cast<Vertices::const_pointer>(&m_Vertices[m_NumVertexPositionElements]);

}

void SetNumVertexPositionElements(unsigned int numVertexPositionElements)

{

m_NumVertexPositionElements = numVertexPositionElements;

}

unsigned int GetNumVertexPositionElements() const

{

return m_NumVertexPositionElements;

}

void SetNumTexCoordElements(unsigned int numTexCoordElements)

{

m_NumTextureCoordElements = numTexCoordElements;

}

unsigned int GetNumTexCoordElements() const

{

return m_NumTextureCoordElements;

}

void SetVertexStride(unsigned int vertexStride)

{

m_VertexStride = vertexStride;

}

unsigned int GetVertexStride() const

{

return m_VertexStride;

}

};

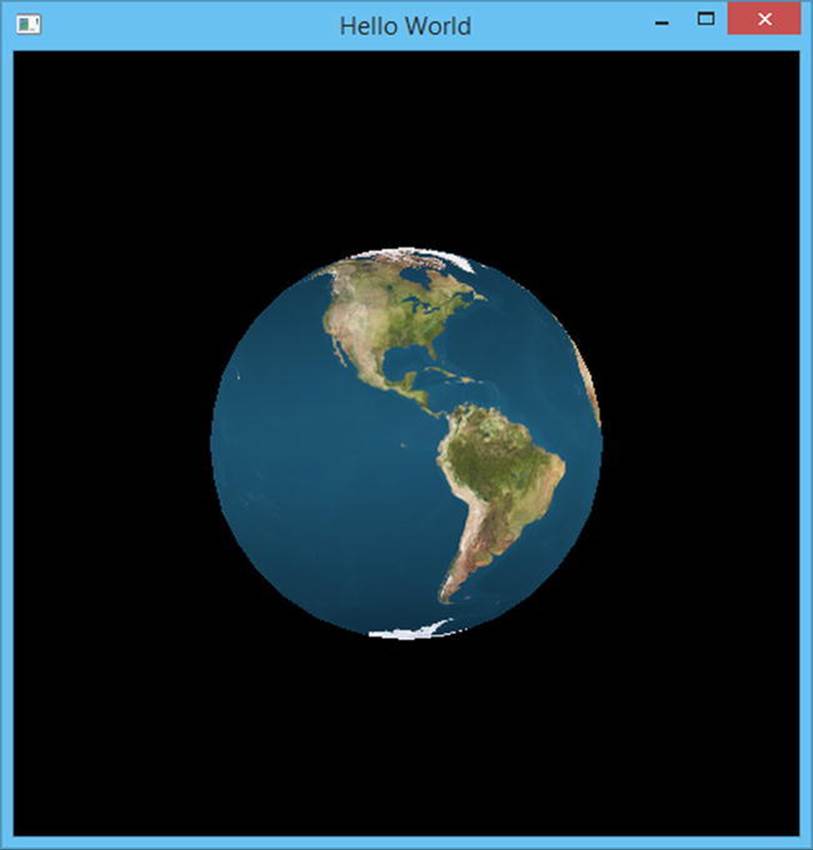

Listing 14-13 contains a constructor for the Geometry class that can build the geometry for OpenGL from an OBJFile instance. The OBJFile::m_Indices vector contains three indices per OpenGL vertex. The Geometry class for this recipe is only concerned with the vertex-position index and the texture-coordinate index, but the for loop is still configured to skip ahead three indices for each iteration. The vertex index for the Geometry object is the obj index divided by 3; the current vertex is constructed from the data obtained by looking up the obj vertex positions and texture coordinates for the given obj index obtained in each iteration of the for loop. The vertex indices and texture-coordinate indices in the .obj file start at 1 and not 0, so 1 is subtracted from each index to get the correct vector index. This index is then multiplied by 3 for vertex-position indices or 2 for texture-coordinate indices, because there are three elements per vertex position and two elements per texture coordinate read in from the original .obj file. By the end of the loop, you have a Geometry object with the vertex and texture-coordinate data loaded from the file. The code in Listing 14-14 shows how you can use these classes in a program to render a textured sphere that has been created and exported using the Blender 3D modelling package.
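The index arithmetic described here can be tried out on a few values in isolation. The following sketch mirrors the idea of the Geometry constructor under the same assumptions (three indices per corner, three floats per position, two floats per texture coordinate); ElementOffset and BuildInterleaved are hypothetical helpers, not part of the chapter's classes.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Converts a one-based .obj index into the offset of its first float in a
// flat array that holds elementsPerEntry floats for each entry.
std::size_t ElementOffset(unsigned short objIndex, std::size_t elementsPerEntry)
{
    assert(objIndex >= 1);
    return (static_cast<std::size_t>(objIndex) - 1U) * elementsPerEntry;
}

// Builds an interleaved stream of x, y, z, u, v floats. The indices are
// expected in groups of three (position, texture coordinate, normal); the
// normal index is skipped, as it is in the recipe.
std::vector<float> BuildInterleaved(const std::vector<float>& positions,
                                    const std::vector<float>& texCoords,
                                    const std::vector<unsigned short>& objIndices)
{
    std::vector<float> vertices;
    for (std::size_t i = 0; i + 2 < objIndices.size(); i += 3)
    {
        const std::size_t positionOffset{ ElementOffset(objIndices[i], 3) };
        const std::size_t texCoordOffset{ ElementOffset(objIndices[i + 1], 2) };
        assert(positionOffset + 3 <= positions.size());
        assert(texCoordOffset + 2 <= texCoords.size());
        vertices.insert(vertices.end(),
                        positions.data() + positionOffset,
                        positions.data() + positionOffset + 3);
        vertices.insert(vertices.end(),
                        texCoords.data() + texCoordOffset,
                        texCoords.data() + texCoordOffset + 2);
    }
    return vertices;
}
```

An .obj index of 3, for example, maps to offset 6 in the position array and offset 4 in the texture-coordinate array.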

Note Most of the recipes in this book are self-contained, but the OpenGL API covers a lot of code that is necessary to carry out seemingly simple tasks. Listing 14-14 contains the Texture, Shader, and TextureShader classes covered in Recipe 14-3.

Listing 14-14. Rendering a Textured Sphere

#include <cassert>

#include <fstream>

#include "GL/glew.h"

#include "GLFW/glfw3.h"

#include <memory>

#include <sstream>

#include <string>

#include <vector>

using namespace std;

class OBJFile

{

public:

using Vertices = vector < float > ;

using TextureCoordinates = vector < float > ;

using Normals = vector < float > ;

using Indices = vector < unsigned short > ;

private:

Vertices m_VertexPositions;

TextureCoordinates m_TextureCoordinates;

Normals m_Normals;

Indices m_Indices;

public:

OBJFile(const std::string& filename)

{

std::ifstream fileStream{ filename, std::ios_base::in };

if (fileStream.is_open())

{

std::string line;

while (std::getline(fileStream, line))

{

stringstream lineStream{ line };

std::string firstSymbol;

lineStream >> firstSymbol;

if (firstSymbol == "v")

{

float vertexPosition{};

for (unsigned int i = 0; i < 3; ++i)

{

lineStream >> vertexPosition;

m_VertexPositions.emplace_back(vertexPosition);

}

}

else if (firstSymbol == "vt")

{

float textureCoordinate{};

for (unsigned int i = 0; i < 2; ++i)

{

lineStream >> textureCoordinate;

m_TextureCoordinates.emplace_back(textureCoordinate);

}

}

else if (firstSymbol == "vn")

{

float normal{};

for (unsigned int i = 0; i < 3; ++i)

{

lineStream >> normal;

m_Normals.emplace_back(normal);

}

}

else if (firstSymbol == "f")

{

char separator;

unsigned short index{};

for (unsigned int i = 0; i < 3; ++i)

{

for (unsigned int j = 0; j < 3; ++j)

{

lineStream >> index;

m_Indices.emplace_back(index);

if (j < 2)

{

lineStream >> separator;

}

}

}

}

}

}

}

const Vertices& GetVertices() const

{

return m_VertexPositions;

}

const TextureCoordinates& GetTextureCoordinates() const

{

return m_TextureCoordinates;

}

const Normals& GetNormals() const

{

return m_Normals;

}

const Indices& GetIndices() const

{

return m_Indices;

}

};

class Geometry

{

public:

using Vertices = vector < float >;

using Indices = vector < unsigned short >;

private:

Vertices m_Vertices;

Indices m_Indices;

unsigned int m_NumVertexPositionElements{};

unsigned int m_NumTextureCoordElements{};

unsigned int m_VertexStride{};

public:

Geometry() = default;

Geometry(const OBJFile& objFile)

{

const OBJFile::Indices& objIndices{ objFile.GetIndices() };

const OBJFile::Vertices& objVertexPositions{ objFile.GetVertices() };

const OBJFile::TextureCoordinates& objTextureCoordinates{

objFile.GetTextureCoordinates() };

for (unsigned int i = 0; i < objIndices.size(); i += 3U)

{

m_Indices.emplace_back(i / 3);

const unsigned int index{ objIndices[i] - 1U };

const unsigned int vertexPositionIndex{ index * 3U };

m_Vertices.emplace_back(objVertexPositions[vertexPositionIndex]);

m_Vertices.emplace_back(objVertexPositions[vertexPositionIndex+1]);

m_Vertices.emplace_back(objVertexPositions[vertexPositionIndex+2]);

const OBJFile::TextureCoordinates::size_type texCoordObjIndex{

objIndices[i + 1] - 1U };

const OBJFile::TextureCoordinates::size_type textureCoodsIndex{ texCoordObjIndex * 2U };

m_Vertices.emplace_back(objTextureCoordinates[textureCoodsIndex]);

m_Vertices.emplace_back(objTextureCoordinates[textureCoodsIndex+1]);

}

}

~Geometry() = default;

void SetVertices(const Vertices& vertices)

{

m_Vertices = vertices;

}

Vertices::size_type GetNumVertices() const

{

return m_Vertices.size();

}

Vertices::const_pointer GetVertices() const

{

return m_Vertices.data();

}

void SetIndices(const Indices& indices)

{

m_Indices = indices;

}

Indices::size_type GetNumIndices() const

{

return m_Indices.size();

}

Indices::const_pointer GetIndices() const

{

return m_Indices.data();

}

Vertices::const_pointer GetTexCoords() const

{

return static_cast<Vertices::const_pointer>(&m_Vertices[m_NumVertexPositionElements]);

}

void SetNumVertexPositionElements(unsigned int numVertexPositionElements)

{

m_NumVertexPositionElements = numVertexPositionElements;

}

unsigned int GetNumVertexPositionElements() const

{

return m_NumVertexPositionElements;

}

void SetNumTexCoordElements(unsigned int numTexCoordElements)

{

m_NumTextureCoordElements = numTexCoordElements;

}

unsigned int GetNumTexCoordElements() const

{

return m_NumTextureCoordElements;

}

void SetVertexStride(unsigned int vertexStride)

{

m_VertexStride = vertexStride;

}

unsigned int GetVertexStride() const

{

return m_VertexStride;

}

};

class TGAFile

{

private:

#ifdef _MSC_VER

#pragma pack(push, 1)

#endif

struct TGAHeader

{

unsigned char m_IdSize{};

unsigned char m_ColorMapType{};

unsigned char m_ImageType{};

unsigned short m_PaletteStart{};

unsigned short m_PaletteLength{};

unsigned char m_PaletteBits{};

unsigned short m_XOrigin{};

unsigned short m_YOrigin{};

unsigned short m_Width{};

unsigned short m_Height{};

unsigned char m_BytesPerPixel{};

unsigned char m_Descriptor{};

}

#ifndef _MSC_VER

__attribute__ ((packed))

#endif // _MSC_VER

;

#ifdef _MSC_VER

#pragma pack(pop)

#endif

std::vector<char> m_FileData;

TGAHeader* m_pHeader{};

void* m_pImageData{};

public:

TGAFile(const std::string& filename)

{

std::ifstream fileStream{ filename, std::ios_base::binary };

if (fileStream.is_open())

{

fileStream.seekg(0, std::ios::end);

m_FileData.resize(static_cast<unsigned int>(fileStream.tellg()));

fileStream.seekg(0, std::ios::beg);

fileStream.read(m_FileData.data(), m_FileData.size());

fileStream.close();

m_pHeader = reinterpret_cast<TGAHeader*>(m_FileData.data());

// Assumes no image ID field or color map, so pixel data directly follows the header

m_pImageData = static_cast<void*>(m_FileData.data() + sizeof(TGAHeader));

}

}

unsigned short GetWidth() const

{

return m_pHeader->m_Width;

}

unsigned short GetHeight() const

{

return m_pHeader->m_Height;

}

unsigned char GetBytesPerPixel() const

{

return m_pHeader->m_BytesPerPixel;

}

unsigned int GetDataSize() const

{

return static_cast<unsigned int>(m_FileData.size() - sizeof(TGAHeader));

}

void* GetImageData() const

{

return m_pImageData;

}

};

class Texture

{

private:

unsigned int m_Width{};

unsigned int m_Height{};

unsigned int m_BytesPerPixel{};

unsigned int m_DataSize{};

GLuint m_Id{};

void* m_pImageData;

public:

Texture(const TGAFile& tgaFile)

: Texture(tgaFile.GetWidth(),

tgaFile.GetHeight(),

tgaFile.GetBytesPerPixel(),

tgaFile.GetDataSize(),

tgaFile.GetImageData())

{

}

Texture(unsigned int width,

unsigned int height,

unsigned int bytesPerPixel,

unsigned int dataSize,

void* pImageData)

: m_Width(width)

, m_Height(height)

, m_BytesPerPixel(bytesPerPixel)

, m_DataSize(dataSize)

, m_pImageData(pImageData)

{

}

~Texture() = default;

GLuint GetId() const

{

return m_Id;

}

void Init()

{

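// Source rows are aligned to 4-byte boundaries (the value is in bytes, not bits)

GLint packBits{ 4 };

GLint internalFormat{ GL_RGBA };

// TGA stores pixels in blue-green-red-alpha order; this assumes a 32-bit image

GLenum format{ GL_BGRA };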

glGenTextures(1, &m_Id);

glBindTexture(GL_TEXTURE_2D, m_Id);

glPixelStorei(GL_UNPACK_ALIGNMENT, packBits);

glTexImage2D(GL_TEXTURE_2D,

0,

internalFormat,

m_Width,

m_Height,

0,

format,

GL_UNSIGNED_BYTE,

m_pImageData);

}

};

class Shader

{

private:

void LoadShader(GLuint id, const std::string& shaderCode)

{

const unsigned int NUM_SHADERS{ 1 };

const char* pCode{ shaderCode.c_str() };

GLint length{ static_cast<GLint>(shaderCode.length()) };

glShaderSource(id, NUM_SHADERS, &pCode, &length);

glCompileShader(id);

glAttachShader(m_ProgramId, id);

}

protected:

GLuint m_VertexShaderId{ GL_INVALID_VALUE };

GLuint m_FragmentShaderId{ GL_INVALID_VALUE };

GLuint m_ProgramId{ GL_INVALID_VALUE };

std::string m_VertexShaderCode;

std::string m_FragmentShaderCode;

public:

Shader() = default;

virtual ~Shader() = default;

virtual void Link()

{

m_ProgramId = glCreateProgram();

m_VertexShaderId = glCreateShader(GL_VERTEX_SHADER);

LoadShader(m_VertexShaderId, m_VertexShaderCode);

m_FragmentShaderId = glCreateShader(GL_FRAGMENT_SHADER);

LoadShader(m_FragmentShaderId, m_FragmentShaderCode);

glLinkProgram(m_ProgramId);

}

virtual void Setup(const Geometry& geometry)

{

glUseProgram(m_ProgramId);

}

};

class TextureShader

: public Shader

{

private:

const Texture& m_Texture;

GLint m_PositionAttributeHandle;

GLint m_TextureCoordinateAttributeHandle;

GLint m_SamplerHandle;

public:

TextureShader(const Texture& texture)

: m_Texture(texture)

{

m_VertexShaderCode =

"attribute vec4 a_vPosition; \n"

"attribute vec2 a_vTexCoord; \n"

"varying vec2 v_vTexCoord; \n"

" \n"

"void main() { \n"

" gl_Position = a_vPosition; \n"

" v_vTexCoord = a_vTexCoord; \n"

"} \n";

m_FragmentShaderCode =

"#version 120 \n"

" \n"

"varying vec2 v_vTexCoord; \n"

"uniform sampler2D s_2dTexture; \n"

" \n"

"void main() { \n"

" gl_FragColor = \n"

" texture2D(s_2dTexture, v_vTexCoord); \n"

"} \n";

}

~TextureShader() override = default;

void Link() override

{

Shader::Link();

m_PositionAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vPosition");

m_TextureCoordinateAttributeHandle = glGetAttribLocation(m_ProgramId, "a_vTexCoord");

m_SamplerHandle = glGetUniformLocation(m_ProgramId, "s_2dTexture");

}

void Setup(const Geometry& geometry) override

{

Shader::Setup(geometry);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, m_Texture.GetId());

glUniform1i(m_SamplerHandle, 0);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glVertexAttribPointer(

m_PositionAttributeHandle,

geometry.GetNumVertexPositionElements(),

GL_FLOAT,

GL_FALSE,

geometry.GetVertexStride(),

geometry.GetVertices());

glEnableVertexAttribArray(m_PositionAttributeHandle);

glVertexAttribPointer(

m_TextureCoordinateAttributeHandle,

geometry.GetNumTexCoordElements(),

GL_FLOAT,

GL_FALSE,

geometry.GetVertexStride(),

geometry.GetTexCoords());

glEnableVertexAttribArray(m_TextureCoordinateAttributeHandle);

}

};

int main(void)

{

GLFWwindow* window;

// Initialize the library

if (!glfwInit())

{

return -1;

}

glfwWindowHint(GLFW_RED_BITS, 8);

glfwWindowHint(GLFW_GREEN_BITS, 8);

glfwWindowHint(GLFW_BLUE_BITS, 8);

glfwWindowHint(GLFW_DEPTH_BITS, 8);

glfwWindowHint(GLFW_DOUBLEBUFFER, GL_TRUE);

// Create a windowed mode window and its OpenGL context

window = glfwCreateWindow(480, 480, "Hello World", nullptr, nullptr);

if (!window)

{

glfwTerminate();

return -1;

}

// Make the window's context current

glfwMakeContextCurrent(window);

GLenum glewError{ glewInit() };

if (glewError != GLEW_OK)

{

// Release the window and other GLFW resources before exiting

glfwTerminate();

return -1;

}

TGAFile myTextureFile("earthmap.tga");

Texture myTexture(myTextureFile);

myTexture.Init();

TextureShader textureShader(myTexture);

textureShader.Link();

OBJFile objSphere("sphere.obj");

Geometry sphere(objSphere);

sphere.SetNumVertexPositionElements(3);

sphere.SetNumTexCoordElements(2);

sphere.SetVertexStride(sizeof(float) * 5);

glClearColor(0.0f, 0.0f, 0.0f, 1.0f);

glEnable(GL_CULL_FACE);

glCullFace(GL_BACK);

glEnable(GL_DEPTH_TEST);

// Loop until the user closes the window

while (!glfwWindowShouldClose(window))

{

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

textureShader.Setup(sphere);

glDrawElements(GL_TRIANGLES,

static_cast<GLsizei>(sphere.GetNumIndices()),

GL_UNSIGNED_SHORT,

sphere.GetIndices());

// Swap front and back buffers

glfwSwapBuffers(window);

// Poll for and process events

glfwPollEvents();

}

glfwTerminate();

return 0;

}
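The TGAFile class in the listing trusts its input: if the file fails to open, or is shorter than the header, GetWidth and the other accessors read through an invalid pointer. The following is a minimal sketch of a more defensive header parse, not part of the original listing. The names TgaInfo, kTgaHeaderSize, ReadU16, and ParseTgaHeader are hypothetical and introduced only for illustration. The sketch assumes an uncompressed true-color image (image type 2) with no color map, which is what the Texture class expects, and it reads fields byte by byte at the offsets implied by the packed TGAHeader struct rather than casting the buffer.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical summary of the header fields that the listing's TGAFile exposes.
struct TgaInfo
{
    std::uint16_t width{};
    std::uint16_t height{};
    std::uint8_t bitsPerPixel{};
    std::size_t pixelOffset{}; // Index of the first pixel byte in the buffer
};

// The packed TGAHeader struct in the listing adds up to 18 bytes.
constexpr std::size_t kTgaHeaderSize{ 18 };

// Assemble a little-endian 16-bit value from two consecutive bytes.
inline std::uint16_t ReadU16(const unsigned char* p)
{
    return static_cast<std::uint16_t>(p[0] | (p[1] << 8));
}

// Fills info and returns true only when the buffer holds a complete,
// uncompressed true-color image; info is left untouched on failure.
inline bool ParseTgaHeader(const std::vector<char>& fileData, TgaInfo& info)
{
    if (fileData.size() < kTgaHeaderSize)
    {
        return false; // Too short to contain a header
    }

    const auto* p = reinterpret_cast<const unsigned char*>(fileData.data());
    const std::size_t idSize{ p[0] };
    const bool hasColorMap{ p[1] != 0 };
    const bool isUncompressedTrueColor{ p[2] == 2 };
    if (hasColorMap || !isUncompressedTrueColor)
    {
        return false; // Outside the assumptions of this sketch
    }

    TgaInfo parsed;
    parsed.width = ReadU16(p + 12);
    parsed.height = ReadU16(p + 14);
    parsed.bitsPerPixel = p[16];
    // Pixel data starts after the header and the optional image ID field
    parsed.pixelOffset = kTgaHeaderSize + idSize;

    if (parsed.bitsPerPixel == 0 || parsed.bitsPerPixel % 8 != 0)
    {
        return false;
    }

    const std::size_t bytesNeeded{
        std::size_t{ parsed.width } * parsed.height * (parsed.bitsPerPixel / 8u) };
    if (parsed.pixelOffset > fileData.size() ||
        fileData.size() - parsed.pixelOffset < bytesNeeded)
    {
        return false; // Pixel data is truncated
    }

    info = parsed;
    return true;
}
```

A caller could run ParseTgaHeader on the bytes read from disk and construct the Texture only when it returns true, passing fileData.data() + info.pixelOffset as the image data. Note that the field this sketch calls bitsPerPixel is the same byte the listing names m_BytesPerPixel; the TGA format stores the pixel depth in bits there.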