Bioinformatics Data Skills (2015)

Part III. Practice: Bioinformatics Data Skills

Chapter 8. A Rapid Introduction to the R Language

In summary, data analysis, like experimentation, must be considered as an open-ended, highly interactive, iterative process, whose actual steps are selected segments of a stubbily branching, tree-like pattern of possible actions.

Data analysis and statistics: an expository overview J. W. Tukey and M. B. Wilk (1966)

…exploratory data analysis is an attitude, a state of flexibility, a willingness to look for those things that we believe are not there, as well as for those we believe might be there. Except for its emphasis on graphs, its tools are secondary to its purpose.

J. W. Tukey in a comment to E. Parzen (1979)

Many biologists are first exposed to the R language by following a cookbook-type approach to conduct a statistical analysis like a t-test or an analysis of variance (ANOVA). Although R excels at these and more complicated statistical tasks, R’s real power is as a data programming language you can use to explore and understand data in an open-ended, highly interactive, iterative way. Learning R as a data programming language will give you the freedom to experiment and problem solve during data analysis, which is exactly what we need as bioinformaticians. In particular, we’ll focus on the subset of the R language that allows you to conduct exploratory data analysis (EDA). Note, however, that EDA is only one aspect of the R language; R also includes state-of-the-art statistical and machine learning methods.

Popularized by statistician John W. Tukey, EDA is an approach that emphasizes understanding data (and its limitations) through interactive investigation rather than explicit statistical modeling. In his 1977 book Exploratory Data Analysis, Tukey described EDA as “detective work” involved in “finding and revealing the clues” in data. As Tukey’s quote emphasizes, EDA is more an approach to exploring data than using specific statistical methods. In the face of rapidly changing sequencing technologies, bioinformatics software, and statistical methods, EDA skills are not only widely applicable and comparatively stable — they’re also essential to making sure that our analyses are robust to these new data and methods.

Exploratory data analysis plays an integral role throughout an entire bioinformatics project. Exploratory data analysis skills are just as applicable in analyzing intermediate bioinformatics data (e.g., are fewer reads from this sequencing lane aligning?) as they are in making sense of results from statistical analyses (e.g., what’s the distribution of these p-values, and do they correlate with possible confounders like gene length?). These exploratory analyses need not be complex or exceedingly detailed (many patterns are visible with simple analyses and visualization); it’s just about wanting to look into the data and having the skill set to do so.

In many cases, exploratory data analysis, and especially visualization, can reveal patterns in bioinformatics data we might overlook with statistical modeling or hypothesis testing. Bioinformatics data is high dimensional and messy, with each data point being a possible mix of biological signal, measurement noise, bias due to ad hoc bioinformatics filtering criteria or analysis steps, and confounding by both technical and biological covariates. Our brains are the most sophisticated pattern-finding instruments on the planet, and exploratory data analysis is the craft of presenting data in different ways to allow our brains to find patterns, both those that indicate interesting biological signals and those that suggest potential problems. Compared to our brains, statistical tests are a blunt instrument, and one that’s even duller when working with the complex, high-dimensional datasets widespread in bioinformatics.

Although this chapter emphasizes exploratory data analysis, statistical analysis of high-dimensional genomics data is just as important; in fact, this topic is so important that you should seek books and articles that cover it in depth. As stated in Chapter 1, no amount of post-experiment data analysis can rescue a poorly designed experiment. Likewise, no amount of terrific exploratory data analysis is a substitute for having a good experimental question and applying appropriate statistical methods. Rather, EDA techniques like visualization should play an ongoing role throughout statistical analysis and complement other statistical methods, assessing the output at each step. The objective of this chapter is to teach you the EDA skills that give you the freedom to explore and experiment with your data at any stage of analysis.

Getting Started with R and RStudio

To capitalize on R’s interactive capabilities, we need a development environment that promotes interactive coding. The most popular option is RStudio, an open source integrated development environment for R. RStudio supports many features useful for working in R: syntax highlighting, quick access to R’s internal help and documentation system, and plots visible alongside code. Additionally, RStudio has an intuitive interface, is easy to use, and is the editor I’d recommend for beginner R users.

Developing code in R is a back-and-forth between writing code in a rerunnable script and exploring data interactively in the R interpreter. To be reproducible, all steps that lead to results you’ll use later must be recorded in the R script that accompanies your analysis and interactive work. While R can save a history of the commands you’ve entered in the interpreter during a session (with the command savehistory()), storing your steps in a well-commented R script makes your life much easier when you need to backtrack to understand what you did or change your analysis. RStudio simplifies developing R scripts this way by allowing you to send lines from your R script to the R interpreter with a simple keyboard shortcut: Command-Enter (for OS X) or Control-Enter (for Windows and Linux).

THE COMPREHENSIVE R ARCHIVE NETWORK (CRAN)

Among R’s greatest strengths are the numerous packages that extend its functionality. The R Project hosts many of these packages (over 6,400) on the Comprehensive R Archive Network, or CRAN, on a variety of servers that mirror the R project’s server. You can install these packages directly from within R with the install.packages() function. For example, we can install the ggplot2 package (which we’ll use throughout this chapter) as follows:

> install.packages("ggplot2")

trying URL 'http://cran.cnr.Berkeley.edu/src/contrib/ggplot2_1.0.0.tar.gz'

Content type 'application/x-gzip' length 2351447 bytes (2.2 Mb)

opened URL

==================================================

downloaded 2.2 Mb

Loading required package: devtools

* installing *source* package ‘ggplot2’ ...

** package ‘ggplot2’ successfully unpacked and MD5 sums checked

** R

** data

*** moving datasets to lazyload DB

** inst

** preparing package for lazy loading

** help

*** installing help indices

** building package indices

** installing vignettes

** testing if installed package can be loaded

Loading required package: devtools

* DONE (ggplot2)

This downloads and installs ggplot2 from a CRAN repository (for information on setting various options, including how to configure your repository to a nearby mirror, see help(install.packages)).

CRAN isn’t the only R package repository — in Chapter 9, we’ll use many packages from the Bioconductor project. Bioconductor has its own way of installing packages that we’ll learn about in “Installing and Working with Bioconductor Packages”.

To get started with the examples in this chapter, we first need to install R. You can do this by either downloading R from the R-Project’s website or installing it from a ports or package manager like Ubuntu’s apt-get or OS X’s Homebrew. Then, you’ll need to install the RStudio IDE from RStudio’s website. Be sure to keep both R and RStudio up to date. I’ve included some additional resources on getting started with RStudio in this chapter’s README on GitHub.

R Language Basics

Before getting our hands dirty working with real data in R, we need to learn the basics of the R language. Even if you’ve poked around in R and seen these concepts before, I would still recommend skimming through this section. Many of the difficulties beginning R users face stem from misunderstanding the language’s basic concepts, data structures, and behavior (which can differ quite significantly from other languages like Python and Perl). In this section, we’ll learn how to do simple calculations in R, assign values to variables, and call functions. Then, we’ll look at R’s vectors, vector data types, and vectorization. Vectors and vectorization underpin how we approach many problems in R — a theme we’ll continue to see throughout this chapter.

Simple Calculations in R, Calling Functions, and Getting Help in R

Let’s begin by looking at some simple calculations in R. Open RStudio (or a terminal R prompt) and try some basic arithmetic calculations in R:

> 4 + 3

[1] 7

> 4 - 3

[1] 1

> 4 * 3

[1] 12

> 4 / 3

[1] 1.333333

You’ll need to familiarize yourself with some R lingo: we say each line contains an expression that is evaluated by R when you press Enter. Whitespace around the arithmetic operations does not change how R evaluates these expressions. In some cases, you will need to surround parts of an expression with parentheses to indicate the order of evaluation. For example:

> 3 + 4/2

[1] 5

> (3 + 4)/2

[1] 3.5

Other mathematical operations can be performed by using functions. Functions take in zero or more arguments, do some work, and output a return value. A very important fact about R’s functions is that they copy their arguments, and do not modify their arguments in place (there are some technical exceptions to this rule we’ll ignore). In contrast, Python’s functions can modify their arguments in place. Functions are the bread and butter of working in R, so it’s necessary to understand and be able to work with functions, function arguments, and return values.
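
To make the copy behavior concrete, here is a minimal sketch. The function add_one() and the variables n and m are invented for this illustration, and it uses the <- assignment operator covered in “Variables and Assignment”:

```r
# add_one() is a hypothetical function used only for this illustration
add_one <- function(value) {
  value <- value + 1  # changes the function's own copy, not the caller's variable
  value               # the last expression evaluated is the return value
}

n <- 10
m <- add_one(n)

n  # still 10: the function did not modify its argument in place
m  # 11: the change is only visible through the return value
```

If we want to keep the result of a function, we have to capture its return value, as m does here.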

R has numerous mathematical functions (see Table 8-1 for some commonly used ones). For example, we call the function sqrt() on a (nonnegative) numeric argument:

> sqrt(3.5)

[1] 1.870829

| Function Name | Description | Example |
|---|---|---|
| exp(x) | Exponential function | exp(1), exp(2) |
| log(x, base=exp(1)), log10(), log2() | Natural, base 10, and base 2 logarithms | log(2), log10(100), log2(16) |
| sqrt(x) | Square root | sqrt(2) |
| sin(x), cos(x), tan(x), etc. | Trigonometric functions (see help(sin) for more) | sin(pi) |
| abs(x) | Absolute value | abs(-3) |
| factorial(x) | Factorial | factorial(5) |
| choose(n, k) | Binomial coefficient | choose(5, 3) |

Table 8-1. Common mathematical functions

SIGNIFICANT DIGITS, PRINT(), AND OPTIONS IN R

By default, R will print seven significant digits (which is what it did when we executed sqrt(3.5)). While seven significant digits is the default, it’s easy to print more digits of a value by using the function print(). For example:

> print(sqrt(3.5), digits=10)

[1] 1.870828693

Behind the scenes, R uses print() to format the R objects you see printed as output (note that you won’t see print() explicitly called).

Alternatively, you can change the default number of significant digits R uses by changing the global option in R. You can view the current default value of an option by calling getOption() with the option name as an argument; for example, you can retrieve the number of significant digits printed as follows:

> getOption('digits')

[1] 7

A new option value can be set using the function options():

> options(digits=9)

options() contains numerous user-customizable global options. See help(options) for more information.
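
Because options() changes a global setting, it can be handy to put the old value back when you’re done. The following is a minimal sketch that relies on options() invisibly returning the previous values of the options it changes; the variable names old, during, and after are made up for this example:

```r
old <- options(digits = 10)    # set a new value; the previous setting is returned
during <- getOption("digits")  # 10
print(sqrt(3.5))               # printed using the new digits setting

options(old)                   # restore whatever value was in effect before
after <- getOption("digits")   # same value as before the change
```

Saving and restoring the setting this way keeps a temporary change from leaking into the rest of your session.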

Returning to sqrt(3.5): here, 1.870829 is the return value of the function. This value can either be assigned to a variable (which we’ll see later), or passed directly to other functions as an argument. For example, we could pass this return value into another function, round(), to round our square root:

> round(sqrt(3.5))

[1] 2

The round() function rounds sqrt(3.5) to 2, keeping zero decimal places, because the round() function’s second argument (digits) has a default value of zero. You can learn about a function’s arguments and their default values through round()’s documentation, which you can access with help(round) (see “Getting Help in R” for more depth on R’s help and documentation system). We can change the number of digits round() uses by specifying the value of this second digits argument in either of two ways:

> round(sqrt(3.5), digits=3)

[1] 1.871

> round(sqrt(3.5), 3)

[1] 1.871

GETTING HELP IN R

As would be expected from a sophisticated scientific and statistical computing language, R has oodles of functions — far more than any reasonable human can expect to learn and remember. Consequently, you’ll need to master two of the most practical R skills:

§ Knowing how to look up a function’s documentation to recall its arguments and how it works

§ Being able to discover new useful functions

Each of R’s functions (and other objects such as constants like pi, classes, and packages) has integrated documentation accessible within R. R’s documentation includes descriptions and details about the function, all arguments of a function, and useful usage examples. You access R’s built-in documentation with the help() function or its syntactic shortcut, ?:

> help(log)

> ?log

In RStudio, this opens a special help window containing log()’s documentation. In terminal R, this documentation is handled by your default pager (probably the program less). Operators such as + and ^ need to be quoted (e.g., help('+')). R also has documentation for general topics available; see, for example, help(Quotes).

R’s help() function is useful when you already know the name of the function you need documentation for. Unfortunately, we often only have a fuzzier idea of what we need help with (e.g., what was the function in R that cross tabulates vectors?). For tasks like this, we can search R’s help system with the function help.search(), or its shortcut ??:

> help.search("cross tabulate")

> ??"cross tabulate"

In this case, help.search() would help you find the function table(), which is useful in creating counts and cross tabulations from vectors.

Also, R has the neat feature that all examples in an R help file can be executed with the function example(). For example:

> example(log)

log> log(exp(3))

[1] 3

[...]

Finally, R also has functions for listing all functions in a package (e.g., library(help="base")) and finding functions by name (e.g., apropos("norm")), which are often useful in remembering a function’s name.

The two calls round(sqrt(3.5), digits=3) and round(sqrt(3.5), 3) illustrate the two ways R matches values to a function’s arguments. First, values are matched to arguments by name. Technically, R also allows partial matching of argument names, but I would discourage this, as it decreases code readability. Second, values are matched based on position within the argument list. For functions that have many arguments with default values, such as foo(x, a=3, b=4, c=5), it’s easier to set an argument later in the argument list by specifying it by name. For example, compare setting c by position with foo(2, 3, 4, 5) to setting it by name with foo(2, c=5).
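
As a rough sketch of the difference, we can give the hypothetical foo() a body that simply reports which value ended up in which argument. The body, and the value 10 used for c, are assumptions made for this illustration:

```r
# foo() is the hypothetical function from the text; its body is invented here
# so we can see which value each argument received
foo <- function(x, a = 3, b = 4, c = 5) {
  list(x = x, a = a, b = b, c = c)
}

by_position <- foo(2, 3, 4, 10)  # we must spell out a and b just to reach c
by_name     <- foo(2, c = 10)    # a and b silently keep their defaults

identical(by_position, by_name)  # TRUE: both calls set the same values
```

Matching by name is shorter, and it keeps working if the function’s author later changes a default or adds another argument before c.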

Variables and Assignment

To store a value for future use, we assign it to a variable (also known as a symbol in R jargon) using the <- assignment operator:

> x <- 3.1

Once the variable is assigned, we can retrieve its value by evaluating it on a line:

> x

[1] 3.1

Variables can also be used in expressions and passed to functions as arguments. R will substitute the variable’s value during evaluation and return the results. These results can then be assigned to other variables:

> (x + 2)/2

[1] 2.55

> exp(x)

[1] 22.1979513

> y <- exp(2*x) - 1

> y

[1] 491.749041

RStudio Assignment Operator Shortcut

In RStudio, you can create the <- assignment operator in one keystroke using Option - (that’s a dash) on OS X or Alt - on Windows/Linux.

It’s also possible to use = for assignment, but <- is more conventional (and is what we’ll use throughout the chapter).

When we assign a value in our R session, we’re assigning it to an environment known as the global environment. The objects we create by assigning values to variables are kept in environments. We can see objects we’ve created in the global environment with the function ls():

> ls()

[1] "x" "y"

Here, we see the variable names x and y, which we assigned values earlier. When R needs to look up a variable name, it looks in the search path. Calling the function search() returns where R looks when searching for the value of a variable, which includes the global environment (.GlobalEnv) and attached packages.
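
A few related functions are useful for poking around in environments. This is only a small sketch; the variable gc_content and the helper names found_before and found_after are made up for the example:

```r
gc_content <- 0.42                     # hypothetical variable created by assignment
ls()                                   # the listing now includes "gc_content"
found_before <- exists("gc_content")   # TRUE: R can find it by name

search()[1]                            # ".GlobalEnv" is the first place R looks

rm(gc_content)                         # remove the object again
found_after <- exists("gc_content")    # FALSE: the name no longer resolves
```

Removing objects you no longer need with rm() keeps the output of ls() readable during a long interactive session.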

Vectors, Vectorization, and Indexing

Arguably the most important feature of the R language is its vectors. A vector is a container of contiguous data. Unlike most languages, R does not have a type for a single value (known as a scalar) such as 3.1 or “AGCTACGACT.” Rather, these values are stored in a vector of length 1. We can verify that values like 3.1 are vectors of length 1 by calling the function length() (which returns the length of a vector) on them:

> length(3.1)

[1] 1

To create longer vectors, we combine values with the function c():

> x <- c(56, 95.3, 0.4)

> x

[1] 56.0 95.3 0.4

> y <- c(3.2, 1.1, 0.2)

> y

[1] 3.2 1.1 0.2

R’s vectors are the basis of one of R’s most important features: vectorization. Vectorization allows us to loop over vectors elementwise, without the need to write an explicit loop. For example, R’s arithmetic operators are all vectorized:

> x + y

[1] 59.2 96.4 0.6

> x - y

[1] 52.8 94.2 0.2

> x/y

[1] 17.50000 86.63636 2.00000

Integer sequences crop up all over computing and statistics, so R has a few ways of creating these vectors. We’ll use these later in this section:

> seq(3, 5)

[1] 3 4 5

> 1:5

[1] 1 2 3 4 5

There’s one important subtle behavior of vectorized operations applied to two vectors simultaneously: if one vector is longer than the other, R will recycle the values in the shorter vector. This is an intentional behavior, so R won’t warn you when this happens (unless the longer vector’s length isn’t a multiple of the shorter vector’s length). Recycling is what allows you to add a single value to all elements of a vector; the shorter vector (the single value in this case) is recycled to all elements of the longer vector:

> x

[1] 56.0 95.3 0.4

> x - 3

[1] 53.0 92.3 -2.6

> x / 2

[1] 28.00 47.65 0.20

R does warn if it recycles the shorter vector and there are remainder elements left. Consider the following examples:

> c(1, 2) + c(0, 0, 0, 0) # (1)

[1] 1 2 1 2

> c(1, 2) + c(0, 0, 0) # (2)

[1] 1 2 1

Warning message:

In c(1, 2) + c(0, 0, 0) :

longer object length is not a multiple of shorter object length

(1) R adds a shorter vector c(1, 2) to a longer vector c(0, 0, 0, 0) by recycling the shorter values. The longer vector is all zeros so this is easier to see.

(2) When the longer vector’s length isn’t a multiple of the shorter vector’s length, there will be a remainder element. R warns about this.
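
One way to convince yourself of what recycling does is to repeat the shorter vector yourself with rep() and compare the results. This is a minimal sketch with made-up vectors named short and long:

```r
short <- c(1, 2)
long  <- c(10, 20, 30, 40)

recycled <- short + long                                   # R recycles short for us
explicit <- rep(short, length.out = length(long)) + long   # c(1, 2, 1, 2) + long

recycled                       # 11 22 31 42
identical(recycled, explicit)  # TRUE
```

Writing the rep() call out explicitly can be a reasonable choice when you want readers of your code to see that the recycling is deliberate.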

In addition to operators like + and *, many of R’s mathematical functions (e.g., sqrt(), round(), log(), etc.) are all vectorized:

> sqrt(x)

[1] 7.4833148 9.7621719 0.6324555

> round(sqrt(x), 3)

[1] 7.483 9.762 0.632

> log(x)/2 + 1 # note how we can combine vectorized operations

[1] 3.0126758 3.2785149 0.5418546

This vectorized approach is not only clearer and more readable, it’s also often computationally faster. Unlike many other languages, R allows us to completely forgo explicitly looping over vectors with a for loop. Later on, we’ll see other methods used for more explicit looping.
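
For comparison, here is a sketch of the same calculation written both ways. The explicit loop uses indexing (covered next) and is shown only to make clear what the vectorized expression saves us from writing:

```r
x <- c(56, 95.3, 0.4)

# Explicit loop: preallocate the result, then fill it one element at a time
looped <- numeric(length(x))
for (i in seq_along(x)) {
  looped[i] <- log(x[i]) / 2 + 1
}

# Vectorized: the same calculation as a single expression
vectorized <- log(x) / 2 + 1

all.equal(looped, vectorized)  # TRUE
```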

We can access specific elements of a vector through indexing. An index is an integer that specifies which element in the vector to retrieve. We can use indexing to get or set the values of specific elements in a vector:

> x <- c(56, 95.3, 0.4)

> x[2] # (1)

[1] 95.3

> x[1]

[1] 56

> x[4] # (2)

[1] NA

> x[3] <- 0.5 # (3)

> x

[1] 56.0 95.3 0.5

(1) R’s vectors are 1-indexed, meaning that the index 1 corresponds to the first element in a vector (in contrast to 0-indexed languages like Python). Here, the value 95.3 is the 2nd item, and is accessed with x[2].

(2) Trying to access an element that doesn’t exist in the vector leads R to return NA, the “not available” missing value.

(3) We can change specific vector elements by combining indexing and assignment.

Vectors can also have names, which you can set while combining values with c():

> b <- c(a=3.4, b=5.4, c=0.4)

> b

a b c

3.4 5.4 0.4

The names of a vector can be both accessed and set with names():

> names(b)

[1] "a" "b" "c"

> names(b) <- c("x", "y", "z") # change these names

> b

x y z

3.4 5.4 0.4

And just as we can access elements by their positional index, we can also access them by their name:

> b['x']

x

3.4

> b['z']

z

0.4

It is also possible to extract more than one element simultaneously from a vector using indexing. This is more commonly known as subsetting, and it’s a primary reason why the R language is so terrific for manipulating data. Indices like 3 in x[3] are just vectors themselves, so it’s natural to allow these vectors to have more than one element. R will return each element at the positions in the indexing vector:

> x[c(2, 3)]

[1] 95.3 0.5

Vectorized indexing provides some incredibly powerful ways to manipulate data, as we can use indexes to slice out sections of a vector, reorder elements, and repeat values. For example, because we often want to extract contiguous slices of a vector, we can build indexing vectors with the integer-sequence tools (seq() and the colon operator) introduced earlier in this section:

> z <- c(3.4, 2.2, 0.4, -0.4, 1.2)

> z[3:5] # extract third, fourth, and fifth elements

[1] 0.4 -0.4 1.2

Out-of-Range Indexing

Be aware that R does not issue a warning if you try to access an element in a position that’s greater than the number of elements — instead, R will return a missing value (NA; more on this later). For example:

> z[c(2, 1, 10)]

[1] 2.2 3.4 NA

Similarly, missing values in indexes lead to an NA too.

It’s also possible to exclude certain elements from vectors using negative indexes:

> z[c(-4, -5)] # exclude fourth and fifth elements

[1] 3.4 2.2 0.4

Negative Indexes and the Colon Operator

One important subtle gotcha occurs when trying to combine negative indexes with the colon operator. For example, if you wanted to exclude the second through fourth elements of a vector x, you might be tempted to use x[-2:4]. However, if you enter -2:4 in the R interpreter, you’ll see that it creates a sequence from -2 to 4 — not -2, -3, and -4. To remedy this, wrap the sequence in parentheses:

> -(2:4)

[1] -2 -3 -4
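
The sketch below shows both forms side by side on a made-up five-element vector. The variable names are invented for this example, and tryCatch() is used only so the example runs to completion despite the error:

```r
x <- c(56, 95.3, 0.4, 12, 7)   # a made-up five-element vector

neg_wrong <- -2:4              # -2 -1 0 1 2 3 4: the minus sign is applied before the colon
neg_right <- -(2:4)            # -2 -3 -4

kept <- x[neg_right]           # drops elements 2, 3, and 4, leaving 56 7

# Mixing negative and positive indexes is an error in R; we capture it here
# rather than letting it stop the script
mixed <- tryCatch(x[neg_wrong], error = function(e) "error")
```

So x[-2:4] doesn’t quietly return the wrong elements; it fails outright, which at least makes this particular mistake easy to notice.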

Indices are also often used to reorder elements. For example, we could rearrange the elements of this vector z with:

> z[c(3, 2, 4, 5, 1)]

[1] 0.4 2.2 -0.4 1.2 3.4

Or we could reverse the elements of this vector by creating the sequence of integers from 5 down to 1 using 5:1:

> z[5:1]

[1] 1.2 -0.4 0.4 2.2 3.4

Similarly, we can use other R functions to create indexes for us. For example, the function order() returns a vector of indexes that indicate the (ascending) order of the elements. This can be used to reorder a vector into increasing or decreasing order:

> order(z)

[1] 4 3 5 2 1

> z[order(z)]

[1] -0.4 0.4 1.2 2.2 3.4

> order(z, decreasing=TRUE)

[1] 1 2 5 3 4

> z[order(z, decreasing=TRUE)]

[1] 3.4 2.2 1.2 0.4 -0.4
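
Where order() tends to earn its keep is in sorting one vector by the values of another, which sort() alone can’t do. Here’s a small sketch; the gene names and lengths are invented for illustration:

```r
# Hypothetical gene names and their lengths in base pairs
genes    <- c("geneA", "geneB", "geneC", "geneD")
gene_len <- c(2100, 450, 9800, 1300)

i <- order(gene_len, decreasing = TRUE)  # 3 1 4 2

genes[i]     # "geneC" "geneA" "geneD" "geneB": names sorted by length
gene_len[i]  # 9800 2100 1300 450
```

Because both vectors are indexed with the same i, their elements stay paired up after reordering.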

Again, R’s vector index rule is simple: R will return the element at the ith position for each i in the indexing vector. This also allows us to repeat certain values in vectors:

> z[c(2, 2, 1, 4, 5, 4, 3)]

[1] 2.2 2.2 3.4 -0.4 1.2 -0.4 0.4

Again, often we use functions to generate indexing vectors for us. For example, one way to resample a vector (with replacement) is to randomly sample its indexes using the sample() function:

> set.seed(0) # we set the random number seed so this example is reproducible

> i <- sample(length(z), replace=TRUE)

> i

[1] 5 2 2 3 5

> z[i]

[1] 1.2 2.2 2.2 0.4 1.2

Just as we use certain functions to generate indexing vectors, we can use comparison operators like ==, !=, <, <=, >, and >= (see Table 8-2) to build logical vectors of TRUE and FALSE values indicating the result of the comparison test for each element in the vector. R’s comparison operators are also vectorized (and will be recycled according to R’s rule). Here are some examples:

> v <- c(2.3, 6, -3, 3.8, 2, -1.1)

> v == 6

[1] FALSE TRUE FALSE FALSE FALSE FALSE

> v <= -3

[1] FALSE FALSE TRUE FALSE FALSE FALSE

> abs(v) > 5

[1] FALSE TRUE FALSE FALSE FALSE FALSE

Logical vectors are useful because they too can be used as indexing vectors — R returns the elements with corresponding TRUE values in the indexing vector (see Example 8-1).

Example 8-1. Indexing vectors with logical vectors

> v[c(TRUE, TRUE, FALSE, TRUE, FALSE, FALSE)]

[1] 2.3 6.0 3.8

But it’s tedious to construct these logical vectors of TRUE and FALSE by hand; if we wanted to select out particular elements, using integer indexes would involve much less typing. But as you might have guessed, the real power of logical vectors in subsetting comes from creating them with comparison operators. For example, to subset v such that only elements greater than 2 are kept, we’d use:

> v[v > 2]

[1] 2.3 6.0 3.8

Note that there’s no magic or special evaluation going on here: we are simply creating a logical vector using v > 2 and then using this logical vector to select out certain elements of v. Lastly, we can use comparison operators (that return logical vectors) with vectorized logical operations (also known as Boolean algebra in other disciplines) such as & (AND), | (OR), and ! (NOT). For example, to find all elements of v greater than 2 and less than 4, we’d construct a logical vector and use this to index the vector:

> v > 2 & v < 4

[1] TRUE FALSE FALSE TRUE FALSE FALSE

> v[v > 2 & v < 4]

[1] 2.3 3.8

| Operator | Description |
|---|---|
| > | Greater than |
| < | Less than |
| >= | Greater than or equal to |
| <= | Less than or equal to |
| == | Equal to |
| != | Not equal to |
| & | Elementwise logical AND |
| \| | Elementwise logical OR |
| ! | Elementwise logical NOT |
| && | Logical AND (first element only, for if statements) |
| \|\| | Logical OR (first element only, for if statements) |

Table 8-2. R’s comparison and logical operators

We’ll keep returning to this type of subsetting, as it’s the basis of some incredibly powerful data manipulation capabilities in R.
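
Logical vectors are handy for more than subsetting. As a brief sketch using the same v as above (the name keep is made up here), we can ask where the TRUE values are, how many there are, and which elements failed the test:

```r
v <- c(2.3, 6, -3, 3.8, 2, -1.1)

keep <- v > 2 & v < 4

which(keep)  # 1 4: the positions of the TRUE values
sum(keep)    # 2: TRUE counts as 1 and FALSE as 0 when summed
v[!keep]     # 6 -3 2 -1.1: the elements that did not pass the test
```

Storing the logical vector in a variable first, as with keep, is a reasonable habit when the same condition is reused several times.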

Vector types

The last topic about vectors to cover before diving into working with real data is R’s vector types. Unlike Python’s lists or Perl’s arrays, R’s vectors must contain elements of the same type. In the context of working with statistical data, this makes sense: if we add two vectors x and y together with a vectorized approach like x + y, we want to know ahead of time whether all values in both vectors have the same type and can indeed be added together. It’s important to be familiar with R’s types, as it’s common as an R beginner to run into type-related issues (especially when loading in data that’s messy).

R supports the following vector types (see also Table 8-3):

Numeric

Numeric vectors (also known as double vectors) are used for real-valued numbers like 4.094, -12.4, or 23.0. By default, all numbers entered into R (e.g., c(5, 3, 8)) create numeric vectors, regardless of whether they’re an integer.

Integer

R also has an integer vector type for values like -4, 39, and 23. Because R defaults to giving integer values like -4, 39, and 23 the type numeric, you can explicitly tell R to treat a value as an integer by appending a capital L after the value (e.g., -4L, 39L, and 23L). (Note that the seemingly more sensible i or I aren’t used to avoid confusion with complex numbers with an imaginary component like 4 + 3i.)

Character

Character vectors are used to represent text data, also known as strings. Either single or double quotes can be used to specify a character vector (e.g., c("AGTCGAT", "AGCTGGA")). R’s character vectors recognize special characters common to other programming languages such as newline (\n) and tab (\t).

Logical

Logical values are used to represent Boolean values, which in R are TRUE and FALSE. T and F are assigned the values TRUE and FALSE, and while you might be tempted to use these shortcuts, do not. Unlike TRUE and FALSE, T and F can be redefined in code. Defining T <- 0 will surely cause problems.

| Type | Example | Creation function | Test function | Coercion function |
|---|---|---|---|---|
| Numeric | c(23.1, 42, -1) | numeric() | is.numeric() | as.numeric() |
| Integer | c(1L, -3L, 4L) | integer() | is.integer() | as.integer() |
| Character | c("a", "c") | character() | is.character() | as.character() |
| Logical | c(TRUE, FALSE) | logical() | is.logical() | as.logical() |

Table 8-3. R’s vector types

In addition to double, integer, character, and logical vectors, R has two other vector types: complex to represent complex numbers (those with an imaginary component), and raw, to encode raw bytes. These types have limited application in bioinformatics, so we’ll skip discussing them.
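
A quick way to get a feel for these types is to ask R directly. The short sketch below uses typeof() along with the test and coercion functions from Table 8-3:

```r
typeof(c(5, 3, 8))     # "double": plain numbers are numeric, even whole ones
typeof(c(5L, 3L, 8L))  # "integer": the L suffix requests integers
typeof(1:3)            # "integer": the colon operator creates integers

is.numeric(5L)         # TRUE: is.numeric() accepts both integer and double
is.integer(5)          # FALSE: 5 without the L suffix is a double

as.integer(3.9)        # 3: coercing to integer drops the fractional part
```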

R’S SPECIAL VALUES

R has four special values (NA, NULL, Inf/-Inf, and NaN) that you’re likely to encounter in your work:

NA

NA is R’s built-in value to represent missing data. Nearly all operations on an NA will return an NA (e.g., 2 + NA returns NA). There are numerous functions to handle NAs in data; see na.exclude() and complete.cases(). You can find which elements of a vector are NA with the function is.na().

NULL

NULL represents not having a value (which is different than having a value that’s missing). It’s analogous to Python’s None value. You can test if a value is NULL with the function is.null().

-Inf, Inf

These are just as they sound, negative infinite and positive infinite values. You can test whether a value is finite with the function is.finite() (and its complement is.infinite()).

NaN

NaN stands for “not a number,” which can occur in some computations that don’t return numbers, e.g., 0/0 or Inf + -Inf. You can test for these with is.nan().
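
Here is a brief sketch of how these special values behave in practice. The depth vector is made-up data with one missing value:

```r
depth <- c(12, NA, 30, 7)   # hypothetical read depths, one of them missing

is.na(depth)                # FALSE TRUE FALSE FALSE
mean(depth)                 # NA: the missing value propagates through mean()
mean(depth, na.rm = TRUE)   # 16.33333: the NA is dropped before averaging

is.nan(0/0)                 # TRUE
is.na(0/0)                  # TRUE as well: is.na() also flags NaN
is.finite(c(1, Inf, NA))    # TRUE FALSE FALSE
is.null(NULL)               # TRUE
length(NULL)                # 0
```

Many of R’s summary functions, such as mean(), sum(), and max(), accept an na.rm argument like the one used here.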

Again, the most important thing to remember about R’s vectors and data types is that vectors are of homogeneous type. R enforces this restriction through coercion, which like recycling is a subtle behavior that’s important to remember about R.

Type Coercion in R

Because all elements in a vector must have the same data type, R will silently coerce elements so that they have the same type.

R’s coercion rules are quite simple; R coerces vectors to a type that leads to no information loss about the original value. For example, if you were to try to create a vector containing a logical value and a numeric value, R would coerce the logical TRUE and FALSE values to 1 and 0, as these represent TRUE and FALSE without a loss of information:

> c(4.3, TRUE, 2.1, FALSE)

[1] 4.3 1.0 2.1 0.0

Similarly, if you were to try to create a vector containing integers and numerics, R would coerce this to a numeric vector, because integers can be represented as numeric values without any loss of information:

> c(-9L, 3.4, 1.2, 3L)

[1] -9.0 3.4 1.2 3.0

Lastly, if a string were included in a vector containing integers, logicals, or numerics, R would convert everything to a character vector, as this leads to the least amount of information loss:

> c(43L, TRUE, 3.2413341, "a string")

[1] "43" "TRUE" "3.2413341" "a string"

We can see any object’s type (e.g., a vector’s type) using the function typeof():

> q <- c(2, 3.5, -1.1, 3.8)

> typeof(q)

[1] "double"
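
Coercion matters most when data arrives messier than expected. In the following sketch, the raw_values vector is invented to mimic a column where one non-numeric entry has forced everything to character; converting it back by hand with as.numeric() turns the offending entry into NA:

```r
# Hypothetical values as they might come out of a messy file; "n/a" is not a number
raw_values <- c("4.3", "2.1", "n/a", "7")
typeof(raw_values)   # "character": one string makes the whole vector character

# as.numeric() warns about "n/a" and converts it to NA;
# suppressWarnings() is used here only to keep the example quiet
values <- suppressWarnings(as.numeric(raw_values))

values               # 4.3 2.1 NA 7.0
typeof(values)       # "double"
```

In real work you’d usually want to see that warning rather than suppress it, since it’s often the first hint that a column contains unexpected entries.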

Factors and classes in R

Another kind of vector you’ll encounter is the factor. Factors store categorical variables, such as a treatment group (e.g., “high,” “medium,” “low,” “control”), strand (forward or reverse), or chromosome (“chr1,” “chr2,” etc.). Factors crop up all over R, and occasionally cause headaches for new R users (we’ll discuss why in “Loading Data into R”).

Suppose we had a character vector named chr_hits containing the Drosophila melanogaster chromosomes where we find a particular sequence aligns. We can create a factor from this vector using the function factor():

> chr_hits <- c("chr2", "chr2", "chr3", "chrX", "chr2", "chr3", "chr3")

> hits <- factor(chr_hits)

> hits

[1] chr2 chr2 chr3 chrX chr2 chr3 chr3

Levels: chr2 chr3 chrX

Printing the hits object shows the original sequence (i.e., chr2, chr2, chr3, etc.) as well as all of this factor’s levels. The levels are the possible values a factor can contain (these are fixed and must be unique). We can view a factor’s levels by using the function levels():

> levels(hits)

[1] "chr2" "chr3" "chrX"

Biologically speaking, our set of levels isn’t complete. Drosophila melanogaster has two other chromosomes: chrY and chr4. Although our data doesn’t include these chromosomes, they are valid categories and should be included as levels in the factor. When creating our factor, we could use the argument levels to include all relevant levels:

> hits <- factor(chr_hits, levels=c("chrX", "chrY", "chr2", "chr3", "chr4"))

> hits

[1] chr2 chr2 chr3 chrX chr2 chr3 chr3

Levels: chrX chrY chr2 chr3 chr4

If we’ve already created a factor, we can add or rename existing levels with the function levels(). This is similar to how we assigned vector names using names(obj) <- . When setting levels, we use a named list to provide a mapping between the new level names (the list’s names) and the original levels (the list’s values):

> levels(hits) <- list(chrX="chrX", chrY="chrY", chr2="chr2",

chr3="chr3", chr4="chr4")

> hits

[1] chr2 chr2 chr3 chrX chr2 chr3 chr3

Levels: chrX chrY chr2 chr3 chr4
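The same list-based assignment can also merge several existing levels into one, because each list element may name more than one original level. Here is a minimal sketch; the grouping into "autosome" and "sex" is just an illustrative choice:

```r
chr_hits <- c("chr2", "chr2", "chr3", "chrX", "chr2", "chr3", "chr3")
hits_grouped <- factor(chr_hits)

# Each list name is a new level; each value lists the old level(s) it replaces
levels(hits_grouped) <- list(autosome = c("chr2", "chr3"), sex = "chrX")

levels(hits_grouped)       # "autosome" "sex"
table(hits_grouped)        # autosome: 6, sex: 1
```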

We can count up how many of each level there are in a factor using the function table():

> table(hits)

hits

chrX chrY chr2 chr3 chr4

1 0 3 3 0

Factors are a good segue into briefly discussing classes in R. An object’s class endows objects with higher-level properties that can affect how functions treat that R object. We won’t get into the technical details of creating classes or R’s object orientation system in this section (see a text like Hadley Wickham’s Advanced R for these details). But it’s important to have a working familiarity with the idea that R’s objects have a class and this can change how certain functions treat R objects.

To discern the difference between an object’s class and its type, notice that factors are just integer vectors under the hood:

> typeof(hits)

[1] "integer"

> as.integer(hits)

[1] 3 3 4 1 3 4 4
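Because a factor is stored as integer codes, converting one directly to a number returns the codes rather than the labels. This is a common trap when a numeric column has accidentally been read in as a factor. A small sketch, using a hypothetical depth_f vector:

```r
# Hypothetical read depths that were accidentally stored as a factor
depth_f <- factor(c("10", "5", "20"))

levels(depth_f)                    # "10" "20" "5" (levels are sorted as strings)
as.integer(depth_f)                # 1 3 2: the internal codes, not the depths
as.numeric(as.character(depth_f))  # 10 5 20: the values we actually wanted
```

Converting to character first recovers the labels, which can then be converted to numbers safely.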

Functions like table() are generic: they are designed to work with objects of all kinds of classes. Generic functions are also designed to do the right thing depending on the class of the object they’re called on (in programming lingo, we say that the function is polymorphic). For example, table() treats a factor differently than it would treat an integer vector. As another example, consider how the function summary() (which summarizes an object such as a vector) behaves when it’s called on a vector of numeric values versus a factor:

> nums <- c(0.97, -0.7, 0.44, 0.25, -1.38, 0.08)

> summary(nums)

Min. 1st Qu. Median Mean 3rd Qu. Max.

-1.38000 -0.50500 0.16500 -0.05667 0.39250 0.97000

> summary(hits)

chrX chrY chr2 chr3 chr4

1 0 3 3 0

When called on numeric values, summary() returns a numeric summary with the quartiles and the mean. This numeric summary wouldn’t be meaningful for the categorical data stored in a factor, so instead summary() returns the level counts like table() did. This is function polymorphism, and occurs because nums has class “numeric” and hits has class “factor”:

> class(nums)

[1] "numeric"

> class(hits)

[1] "factor"

These classes are a part of R’s S3 object-oriented system. R actually has three object orientation systems (S3, S4, and reference classes). Don’t worry too much about the specifics; we’ll encounter classes in this chapter (and also in Chapter 9), but it won’t require an in-depth knowledge of R’s OO systems. Just be aware that in addition to a type, objects have a class that changes how certain functions treat that object.
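To make the idea of class-based dispatch a little more concrete, here is a minimal sketch of an S3 generic. The function describe() and its methods are hypothetical, written only for this illustration; they are not part of R:

```r
# describe() is a hypothetical generic: UseMethod() picks a method by class
describe <- function(x, ...) UseMethod("describe")
describe.default <- function(x, ...) "an object with no special method"
describe.numeric <- function(x, ...) paste("numeric vector of length", length(x))
describe.factor  <- function(x, ...) paste("factor with", nlevels(x), "levels")

describe(c(0.97, -0.7, 0.44))        # dispatches to describe.numeric
describe(factor(c("chr2", "chrX")))  # dispatches to describe.factor
describe("some text")                # falls back to describe.default
```

This is roughly how one function name such as summary() can behave differently for numeric vectors and factors.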

Working with and Visualizing Data in R

With a knowledge of basic R language essentials from the previous section, we’re ready to start working with real data. We’ll work with a few different datasets in this chapter. All files to load these datasets into R are available in this chapter’s directory on GitHub.

The dataset we’ll use for learning data manipulation and visualization skills is from the 2006 paper “The Influence of Recombination on Human Genetic Diversity” by Spencer et al. I’ve chosen this dataset (Dataset_S1.txt on GitHub) for the following reasons:

§ It’s an excellent piece of genomic research with interesting biological findings.

§ The article is open access and thus freely accessible to the public.

§ The raw data is also freely available and is tidy, allowing us to start exploring it immediately.

§ All scripts used in the authors’ analyses are freely available (making this a great example of reproducible research).

In addition, this type of genomic dataset is also characteristic of the data generated from lower-level bioinformatics workflows that are then analyzed in R.

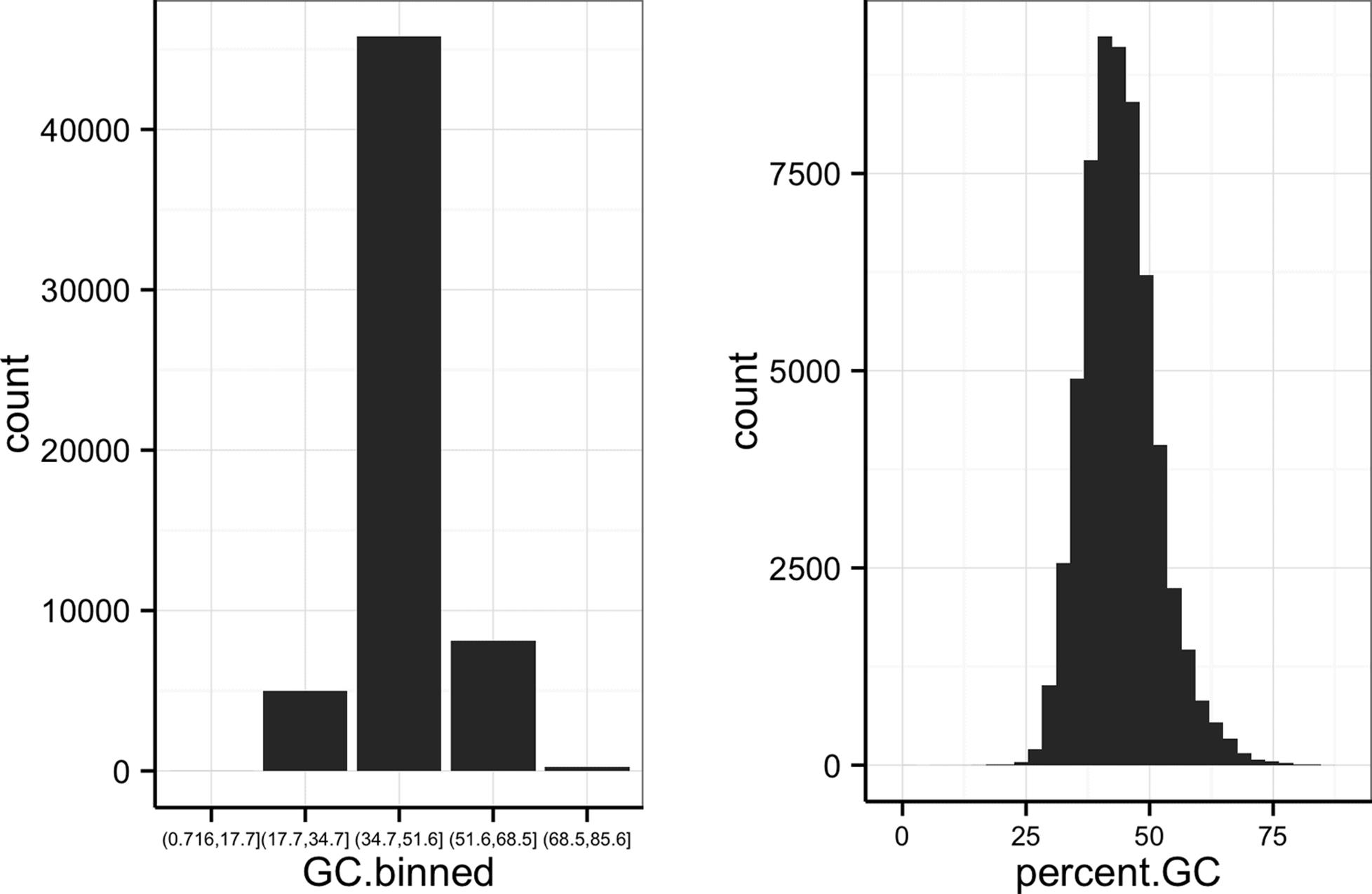

Dataset_S1.txt contains estimates of population genetics statistics such as nucleotide diversity (e.g., the columns Pi and Theta), recombination (column Recombination), and sequence divergence as estimated by percent identity between human and chimpanzee genomes (column Divergence). Other columns contain information about the sequencing depth (depth), and GC content (percent.GC). We’ll only work with a few columns in our examples; see the description of Dataset_S1.txt in this paper’s supplementary information for more detail. Dataset_S1.txt includes these estimates for 1kb windows in human chromosome 20.

Loading Data into R

The first step of any R data analysis project is loading data into R. For some datasets (e.g., Dataset_S1.txt), this is quite easy — the data is tidy enough that R’s functions for loading in data work on the first try. In some cases, you may need to use Unix tools (Chapter 7) to reformat the data into a tab-delimited or CSV plain-text format, or do some Unix sleuthing to find strange characters in the file (see “Decoding Plain-Text Data: hexdump”). In other cases, you’ll need to identify and remove improper values from data within R or coerce columns.

Before loading in this file, we need to discuss R’s working directory. When R is running, the process runs in a specific working directory. It’s important to mind this working directory while loading data into R, as which directory R is running in will affect how you specify relative paths to data files. By default, command-line R will use the directory you start the R program with; RStudio uses your home directory (this is a customizable option). You can use getwd() to get R’s current working directory and setwd() to set the working directory:

> getwd()

[1] "/Users/vinceb"

> setwd("~/bds-files/chapter-08-r") # path to this chapter's

# directory in the Github repository.

For all examples in this chapter, I’ll assume R’s working directory is this chapter’s directory in the book’s GitHub repository. We’ll come back to working directories again when we discuss R scripts in “Working with R Scripts”.
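To see how the working directory affects relative paths, here is a small self-contained sketch. It uses a temporary directory and a made-up file named toy.csv so that it doesn’t touch any real files:

```r
# Work inside a temporary directory so the example touches no real files
old_wd <- getwd()
setwd(tempdir())

writeLines("start,end\n55001,56000", "toy.csv")  # written relative to the wd
in_wd <- file.exists("toy.csv")                  # TRUE: relative path resolves
normalizePath("toy.csv")                         # the absolute path R used

setwd(old_wd)                                    # restore the original directory
still_there <- file.exists(file.path(tempdir(), "toy.csv"))  # TRUE via full path
```

Saving the old working directory and restoring it afterward keeps later relative paths from silently pointing somewhere unexpected.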

Next, it’s wise to first inspect a file from the command line before loading it into R. This will give you a sense of the column delimiter being used, whether there are comment lines (e.g., lines that don’t contain data and begin with a character like #), and if the first line is a header containing column names. Either head or less works well for this:

$ head -n 3 Dataset_S1.txt

start,end,total SNPs,total Bases,depth,unique SNPs,dhSNPs, [...]

55001,56000,0,1894,3.41,0,0, [...]

56001,57000,5,6683,6.68,2,2, [...]

From this, we see Dataset_S1.txt is a comma-separated value file with a header. If you explore this file in more detail with less, you’ll notice this data is tidy and organized. Each column represents a single variable (e.g., window start position, window end position, percent GC, etc.) and each row represents an observation (in this case, the values for a particular 1kb window). Loading tidy plain-text data like Dataset_S1.txt requires little effort, so you can quickly get started working with it. Your R scripts should organize data in a similar tidy fashion (and in a format like tab-delimited or CSV), as it’s much easier to work with tidy data using Unix tools and R.

LARGE GENOMICS DATA INTO R: COLCLASSES, COMPRESSION, AND MORE

It’s quite common to encounter genomics datasets that are difficult to load into R because they’re large files. This is either because it takes too long to load the entire dataset into R, or your machine simply doesn’t have enough memory. In many cases, the best strategy is to reduce the size of your data somehow: summarizing data in earlier processing steps, omitting unnecessary columns, splitting your data into chunks (e.g., working with a chromosome at a time), or working on a random subset of your data. Many bioinformatics analyses do not require working on an entire genomic dataset at once, so these strategies can work quite well. These approaches are also the only way to work with data that is truly too large to fit in your machine’s memory (apart from getting a machine with more memory).

If your data will fit in your machine’s memory, it’s still possible that loading the data into R may be quite slow. There are a few tricks to make the read.csv() and read.delim() functions load large data files more quickly. First, we could explicitly set the class of each column through the colClasses argument. This saves R time (often making R’s data-reading functions about twice as fast), as it takes R time to figure out what class a column has and to convert between classes. If your dataset has columns you don’t need in your analysis (and that unnecessarily take up memory), you can set their value in the colClasses vector to "NULL" (in quotes) to force R to skip them.

Additionally, specifying how many rows there are in your data by setting nrows in read.delim() can lead to some performance gains. It’s OK to somewhat overestimate this number; you can get a quick estimate using wc -l. If these solutions are still too slow, you can install the data.table package and use its fread() function, which is a much faster alternative to the read.* functions (though be warned: fread() returns a data.table, not a data.frame, which behaves differently; see the manual).

If your data is larger than the available memory on your machine, you’ll need to use a strategy that keeps the bulk of your data out of memory, but still allows for easy access from R. A good solution for moderately large data is to use SQLite and query out subsets for computation using the R package RSQLite (we’ll cover SQLite and other strategies for data too large to fit in memory in Chapter 13).

Finally, as we saw in Chapter 6, many Unix data tools have versions that work on gzipped files: zless, zcat (gzcat on BSD-derived systems like Mac OS X), and others. Likewise, R’s data-reading functions can also read gzipped files directly, so there’s no need to uncompress gzipped files first. This saves disk space and there can be some slight performance gains in reading in gzipped files, as there are fewer bytes to read off of (slow) hard disks.
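The following sketch pulls a few of these tricks together on a tiny made-up file: setting colClasses (including "NULL" to skip a column), giving an overestimate for nrows, and reading a gzipped copy directly. The file contents and variable names are invented for the example:

```r
# Write a tiny CSV to a temporary file to stand in for a large dataset
csv_path <- tempfile(fileext = ".csv")
writeLines(c("start,end,depth,note",
             "55001,56000,3.41,a",
             "56001,57000,6.68,b",
             "57001,58000,9.06,c"), csv_path)

# Declare column classes up front; "NULL" tells R to skip the note column.
# nrows may safely overestimate the true number of rows.
fast <- read.csv(csv_path,
                 colClasses = c("integer", "integer", "numeric", "NULL"),
                 nrows = 10)
colnames(fast)   # "start" "end" "depth"

# Write a gzipped copy, then read it without uncompressing it first
gz_path <- tempfile(fileext = ".csv.gz")
con <- gzfile(gz_path, "w")
writeLines(readLines(csv_path), con)
close(con)
from_gz <- read.csv(gz_path)
dim(from_gz)     # 3 rows, 4 columns
```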

With our working directory properly set, let’s load Dataset_S1.txt into R. The R functions read.csv() and read.delim() are used for reading CSV and tab-delimited files, respectively. Both are thin wrappers around the function read.table() with the proper arguments set for CSV and tab-delimited files; see help(read.table) for more information. To load the CSV file Dataset_S1.txt, we’d use read.csv() with "Dataset_S1.txt" as the file argument (the first argument). To avoid repeatedly typing a long name when we work with our data, we’ll assign this to a variable named d:

> d <- read.csv("Dataset_S1.txt")

Note that the functions read.csv() and read.delim() have the argument header set to TRUE by default. This is important because Dataset_S1’s first line contains column names rather than the first row of data. Some data files don’t include a header, so you’d need to set header=FALSE. If your file lacks a column name header, it’s a good idea to assign column names as you read in the data using the col.names argument. For example, to load a fake tab-delimited file named noheader.bed that contains three columns named chrom, start, and end, we’d use:

> bd <- read.delim("noheader.bed", header=FALSE,

col.names=c("chrom", "start", "end"))

R’s read.csv() and read.delim() functions have numerous arguments, many of which will need to be adjusted for certain files you’ll come across in bioinformatics. See Table 8-4 for a list of some commonly used arguments, and consult help(read.csv) for full documentation. Before we move on to working with the data in Dataset_S1.txt, we need to discuss one common stumbling block when loading data into R: factors. As we saw in “Factors and classes in R”, factors are R’s way of encoding categorical data in a vector. By default, R’s read.delim() and read.csv() functions will coerce a column of strings to a factor, rather than treat it as a character vector (this is the default in versions of R before 4.0.0; from R 4.0.0 onward, strings are kept as character vectors unless you ask otherwise). It’s important to know that R does this so you can disable this coercion when you need a column as a character vector. To do this, set the argument stringsAsFactors=FALSE (or use as.is; see help(read.table) for more information). In “Factors and classes in R”, we saw how factors are quite useful, despite the headaches they often cause new R users.
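Because the default has differed between R versions, it can be safest to state stringsAsFactors explicitly. Here is a minimal sketch that reads the same made-up CSV text both ways and then uses as.is to protect a single column:

```r
csv_text <- "chrom,start\nchr1,100\nchr2,200\nchr1,300"

as_factor <- read.csv(text = csv_text, stringsAsFactors = TRUE)
as_string <- read.csv(text = csv_text, stringsAsFactors = FALSE)

class(as_factor$chrom)  # "factor"
class(as_string$chrom)  # "character"

# as.is can exempt individual columns (named here) from factor conversion
mixed <- read.csv(text = csv_text, stringsAsFactors = TRUE, as.is = "chrom")
class(mixed$chrom)      # "character"
```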

| Argument | Description | Additional comments |
|---|---|---|
| header | A TRUE/FALSE value indicating whether the first row contains column names rather than data | |
| sep | A character value indicating what delimits columns; using the empty string "" treats all whitespace as a separator | |
| stringsAsFactors | Setting this argument as FALSE prevents R from coercing character vectors to factors for all columns; see argument as.is in help(read.delim) to prevent coercion to factor on specific columns | This is an important argument to be aware of, because R’s default behavior of coercing character vector columns to factors is a common stumbling block for beginners. |
| col.names | A character vector to be used as column names | |
| row.names | A vector to use for row names, or a single integer or column name indicating which column to use as row names | |
| na.strings | A character vector of possible missing values to convert to NA | Files using inconsistent missing values (e.g., a mix of “NA,” “Na,” “na”) can be corrected using na.strings=c("NA", "Na", "na"). |
| colClasses | A character vector of column classes; "NULL" indicates a column should be skipped and NA indicates R should infer the type | colClasses can drastically decrease the time it takes to load a file into R, and is a useful argument when working with large bioinformatics files. |
| comment.char | Lines beginning with this character are ignored; the empty string (i.e., "") disables this feature | This argument is useful in ignoring metadata lines in bioinformatics files. |

Table 8-4. Commonly used read.csv() and read.delim() arguments
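Several of these arguments are often needed together. As a sketch, the following reads a small made-up, headerless, tab-delimited file that has a metadata line and inconsistently coded missing values; the text and column names are invented for the example:

```r
# A fake BED-like file: one metadata line, no header, messy missing values
bed_text <- "# track name=example\nchr1\t100\t200\nchr2\tNa\t300\nchr3\t50\tna"

bd <- read.delim(text = bed_text, header = FALSE,
                 col.names = c("chrom", "start", "end"),
                 na.strings = c("NA", "Na", "na"),  # all become NA
                 comment.char = "#",                # skip the metadata line
                 stringsAsFactors = FALSE)

nrow(bd)              # 3: the metadata line was ignored
bd$start              # 100 NA 50
sum(is.na(bd$end))    # 1
```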

GETTING DATA INTO SHAPE

Quite often, data we load into R will be in the wrong shape for what we want to do with it. Tabular data can come in two different formats: long and wide. With wide data, each measured variable has its own column (Table 8-5).

| Gene | Meristem | Root | Flower |
|---|---|---|---|
| gene_1 | 582 | 91 | 495 |
| gene_2 | 305 | 3505 | 33 |

Table 8-5. A gene expression counts table by tissue in wide format

With long data, one column is used to store what type of variable was measured and another column is used to store the measurement (Table 8-6).

| Gene | Tissue | Expression |
|---|---|---|
| gene_1 | meristem | 582 |
| gene_2 | meristem | 305 |
| gene_1 | root | 91 |
| gene_2 | root | 3505 |
| gene_1 | flower | 495 |
| gene_2 | flower | 33 |

Table 8-6. A gene expression counts table by tissue in long format

In many cases, data is recorded by humans in wide format, but we need data in long format when working with plotting and statistical modeling functions. Hadley Wickham’s reshape2 package provides functions to reshape data: the function melt() turns wide data into long data, and dcast() turns long data into wide data. There are numerous resources for learning more about the reshape2 package:

§ reshape2 CRAN page

§ Hadley Wickham’s reshape page
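To make the wide-to-long conversion concrete, here is a minimal sketch using the values from the wide table above. The first part uses only base R so it runs without extra packages; the second part shows the equivalent reshape2 calls and only runs if reshape2 happens to be installed:

```r
wide <- data.frame(Gene = c("gene_1", "gene_2"),
                   Meristem = c(582, 305),
                   Root = c(91, 3505),
                   Flower = c(495, 33),
                   stringsAsFactors = FALSE)

# Base R: stack the tissue columns on top of one another
tissues <- c("Meristem", "Root", "Flower")
long <- data.frame(Gene = rep(wide$Gene, times = length(tissues)),
                   Tissue = rep(tissues, each = nrow(wide)),
                   Expression = unlist(wide[tissues], use.names = FALSE),
                   stringsAsFactors = FALSE)
long   # six rows: one per gene and tissue combination

# The same round trip with reshape2, if the package is available
if (requireNamespace("reshape2", quietly = TRUE)) {
  long_rs <- reshape2::melt(wide, id.vars = "Gene",
                            variable.name = "Tissue",
                            value.name = "Expression")
  wide_rs <- reshape2::dcast(long_rs, Gene ~ Tissue,
                             value.var = "Expression")
}
```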

Exploring and Transforming Dataframes

The Dataset_S1.txt data we’ve loaded into R with read.csv() is stored as a dataframe. Dataframes are R’s workhorse data structure for storing tabular data. Dataframes consist of columns (which represent variables in your dataset), and rows (which represent observations). In this short section, we’ll learn the basics of accessing the dimensions, rows, columns, and row and column names of a dataframe. We’ll also see how to transform columns of a dataframe and add additional columns.

Each of the columns of a dataframe is a vector just like those introduced in “Vectors, Vectorization, and Indexing”. Consequently, each element in a dataframe column has the same type. But a dataframe can contain many columns of all different types; storing columns of heterogeneous types of vectors is what dataframes are designed to do.

First, let’s take a look at the dataframe we’ve loaded in with the function head(). By default, head() prints the first six lines of a dataframe, but we’ll limit this to three using the n argument:

> head(d, n=3)

start end total.SNPs total.Bases depth unique.SNPs dhSNPs reference.Bases

1 55001 56000 0 1894 3.41 0 0 556

2 56001 57000 5 6683 6.68 2 2 1000

3 57001 58000 1 9063 9.06 1 0 1000

Theta Pi Heterozygosity X.GC Recombination Divergence Constraint SNPs

1 0.000 0.000 0.000 54.8096 0.009601574 0.003006012 0 0

2 8.007 10.354 7.481 42.4424 0.009601574 0.018018020 0 0

3 3.510 1.986 1.103 37.2372 0.009601574 0.007007007 0 0

(Note that R has wrapped the columns of this dataset.)

Other things we might want to know about this dataframe are its dimensions, which we can access using nrow() (number of rows), ncol() (number of columns), and dim() (returns both):

> nrow(d)

[1] 59140

> ncol(d)

[1] 16

> dim(d)

[1] 59140 16

We can also print the column names of this dataframe using colnames() (there’s also a row.names() function):

> colnames(d)

[1] "start" "end" "total.SNPs" "total.Bases"

[5] "depth" "unique.SNPs" "dhSNPs" "reference.Bases"

[9] "Theta" "Pi" "Heterozygosity" "X.GC"

[13] "Recombination" "Divergence" "Constraint" "SNPs"

Note that R’s read.csv() function has automatically renamed some of these columns for us: spaces have been converted to periods and the percent sign in %GC has been changed to an “X.” “X.GC” isn’t a very descriptive column name, so let’s change this. Much like we’ve set the names of a vector using names() <-, we can set column names with colnames() <-. Here, we only want to change the 12th column name, so we’d use:

> colnames(d)[12] # original name

[1] "X.GC"

> colnames(d)[12] <- "percent.GC"

> colnames(d)[12] # after change

[1] "percent.GC"
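An alternative that doesn’t depend on the column’s position is to match on the old name. The sketch below uses a tiny made-up CSV with the same kind of header, and also shows the check.names argument, which turns off the automatic renaming:

```r
toy <- read.csv(text = "total SNPs,%GC\n5,42.4\n1,37.2")
colnames(toy)   # "total.SNPs" "X.GC"

# Rename by matching the old name, so the code survives column reordering
colnames(toy)[colnames(toy) == "X.GC"] <- "percent.GC"
colnames(toy)   # "total.SNPs" "percent.GC"

# check.names=FALSE keeps the header exactly as written in the file
raw <- read.csv(text = "total SNPs,%GC\n5,42.4\n1,37.2", check.names = FALSE)
colnames(raw)   # "total SNPs" "%GC"
```

Names containing spaces or symbols must be quoted with backticks when used with the dollar sign operator, which is one reason R renames them by default.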

Creating Dataframes from Scratch

R’s data loading functions read.csv() and read.delim() read in data from a file and return the results as a data.frame. Sometimes you’ll need to create a dataframe from scratch from a set of vectors. You can do this with the function data.frame(), which creates a dataframe from vector arguments (recycling the shorter vectors when necessary). One nice feature of data.frame() is that if you provide vectors as named arguments, data.frame() will use these names as column names. For example, if we simulated data from a simple linear model using sample() and rnorm(), we could store it in a dataframe with:

> x <- sample(1:50, 300, replace=TRUE)

> y <- 3.2*x + rnorm(300, 0, 40)

> d_sim <- data.frame(y=y, x=x)

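As with R’s read.csv() and read.delim() functions, data.frame() will convert character vectors into factors (this was the default before R 4.0.0; newer versions keep them as character vectors unless you set stringsAsFactors=TRUE). You can disable this behavior by setting stringsAsFactors=FALSE.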

Similarly, we could set row names using row.names() <- (note that row names must be unique).

The most common way to access a single column of a dataframe is with the dollar sign operator. For example, we could access the column depth in d using:

> d$depth

[1] 3.41 6.68 9.06 10.26 8.06 7.05 [...]

This returns the depth column as a vector, which we can then pass to R functions like mean() or summary() to get an idea of what depth looks like across this dataset:

> mean(d$depth)

[1] 8.183938

> summary(d$depth)

Min. 1st Qu. Median Mean 3rd Qu. Max.

1.000 6.970 8.170 8.184 9.400 21.910

The dollar sign operator is a syntactic shortcut for a more general bracket operator used to access rows, columns, and cells of a dataframe. Using the bracket operator is similar to accessing the elements of a vector (e.g., vec[2]), except as two-dimensional data structures, dataframes use two indexes separated by a comma: df[row, col]. Just as with indexing vectors, these indexes can be vectors to select multiple rows and columns simultaneously. Omitting the row index retrieves all rows, and omitting the column index retrieves all columns. This will be clearer with some examples. To access the first two columns (and all rows), we’d use:

> d[ , 1:2]

start end

1 55001 56000

2 56001 57000

3 57001 58000

[...]

It’s important to remember the comma (and note that whitespace does not matter here; it’s just to increase readability). Selecting two columns like this returns a dataframe. Equivalently, we could use the column names (much like we could use names to access elements of a vector):

> d[, c("start", "end")]

start end

1 55001 56000

2 56001 57000

3 57001 58000

[...]

If we only wanted the first row of start and end positions, we’d use:

> d[1, c("start", "end")]

start end

1 55001 56000

Similarly, if we wanted the first row of data for all columns, we’d omit the column index:

> d[1, ]

start end total.SNPs total.Bases depth unique.SNPs dhSNPs reference.Bases

1 55001 56000 0 1894 3.41 0 0 556

Theta Pi Heterozygosity percent.GC Recombination Divergence Constraint SNPs

1 0 0 0 54.8096 0.009601574 0.003006012 0 0

Single cells can be accessed by specifying a single row and a single column:

> d[2, 3]

[1] 5

However, in practice we don’t usually need to access single rows or cells of a dataframe during data analysis (see Fragile Code and Accessing Rows and Columns in Dataframes).

Fragile Code and Accessing Rows and Columns in Dataframes

It’s a good idea to avoid referring to specific dataframe rows in your analysis code. This would produce code fragile to row permutations or new rows that may be generated by rerunning a previous analysis step. In every case in which you might need to refer to a specific row, it’s avoidable by using subsetting (see “Exploring Data Through Slicing and Dicing: Subsetting Dataframes”).

Similarly, it’s a good idea to refer to columns by their column name, not their position. While columns may be less likely to change across dataset versions than rows, it still happens. Column names are more specific than positions, and also lead to more readable code.

When accessing a single column from a dataframe, R’s default behavior is to return this as a vector — not a dataframe with one column. Sometimes this can cause problems if downstream code expects to work with a dataframe. To disable this behavior, we set the argument drop to FALSE in the bracket operator:

> d[, "start", drop=FALSE]

start

1 55001

2 56001

3 57001

[...]
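The effect of drop is easy to verify on a small dataframe. This sketch uses a three-row toy dataframe built from the first few windows shown earlier:

```r
toy <- data.frame(start = c(55001, 56001, 57001),
                  end   = c(56000, 57000, 58000),
                  depth = c(3.41, 6.68, 9.06))

is.data.frame(toy[, "start"])                # FALSE: a plain numeric vector
is.data.frame(toy[, "start", drop = FALSE])  # TRUE: a one-column dataframe
is.data.frame(toy[, c("start", "end")])      # TRUE: two columns are never dropped

# Three equivalent ways to pull a single column out as a vector
identical(toy$depth, toy[["depth"]])         # TRUE
identical(toy$depth, toy[, "depth"])         # TRUE
```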

Now, let’s add an additional column to our dataframe that indicates whether a window is in the centromere region. The positions of the chromosome 20 centromere (based on Giemsa banding) are 25,800,000 to 29,700,000 (see this chapter’s README on GitHub to see how these coordinates were found). We can append to our d dataframe a column called cent that has TRUE/FALSE values indicating whether the current window is fully within a centromeric region using comparison and logical operations:

> d$cent <- d$start >= 25800000 & d$end <= 29700000

Note the single ampersand (&), which is the vectorized version of logical AND. & operates on each element of the two vectors created by d$start >= 25800000 and d$end <= 29700000 and returns TRUE when both are true. How many windows fall into this centromeric region? There are a few ways to tally the TRUE values. First, we could use table():

> table(d$cent)

FALSE TRUE

58455 685

Another approach uses the fact that sum() will coerce logical values to integers (so TRUE has value 1 and FALSE has value 0). To count how many windows fall in this centromeric region, we could use:

> sum(d$cent)

[1] 685
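Here is the same logic on four made-up 1kb windows that straddle the region’s boundaries, which makes it easy to check each element by hand. Since TRUE counts as 1, mean() on a logical vector also gives the proportion of TRUE values:

```r
# Four hypothetical 1kb windows around the centromere boundaries
start <- c(25700001, 25800001, 29699001, 29700001)
end   <- start + 999

# & compares element by element and returns one value per window
cent <- start >= 25800000 & end <= 29700000
cent        # FALSE TRUE TRUE FALSE

sum(cent)   # 2 windows fall fully inside the region
mean(cent)  # 0.5, the proportion of windows inside the region
```

The double ampersand (&&) is meant for single TRUE/FALSE values, such as in if() conditions, so it isn’t what we want when building a column.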

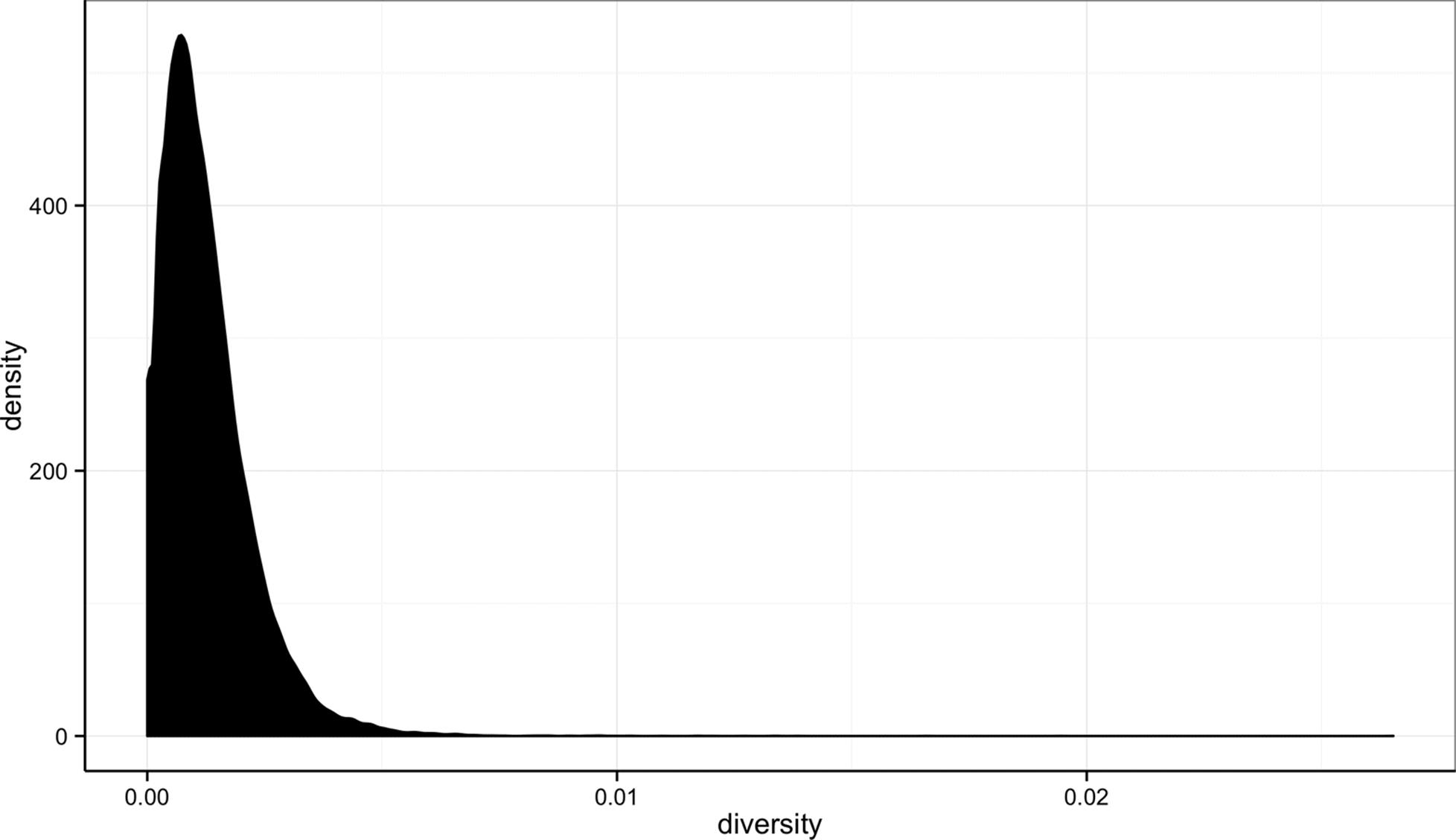

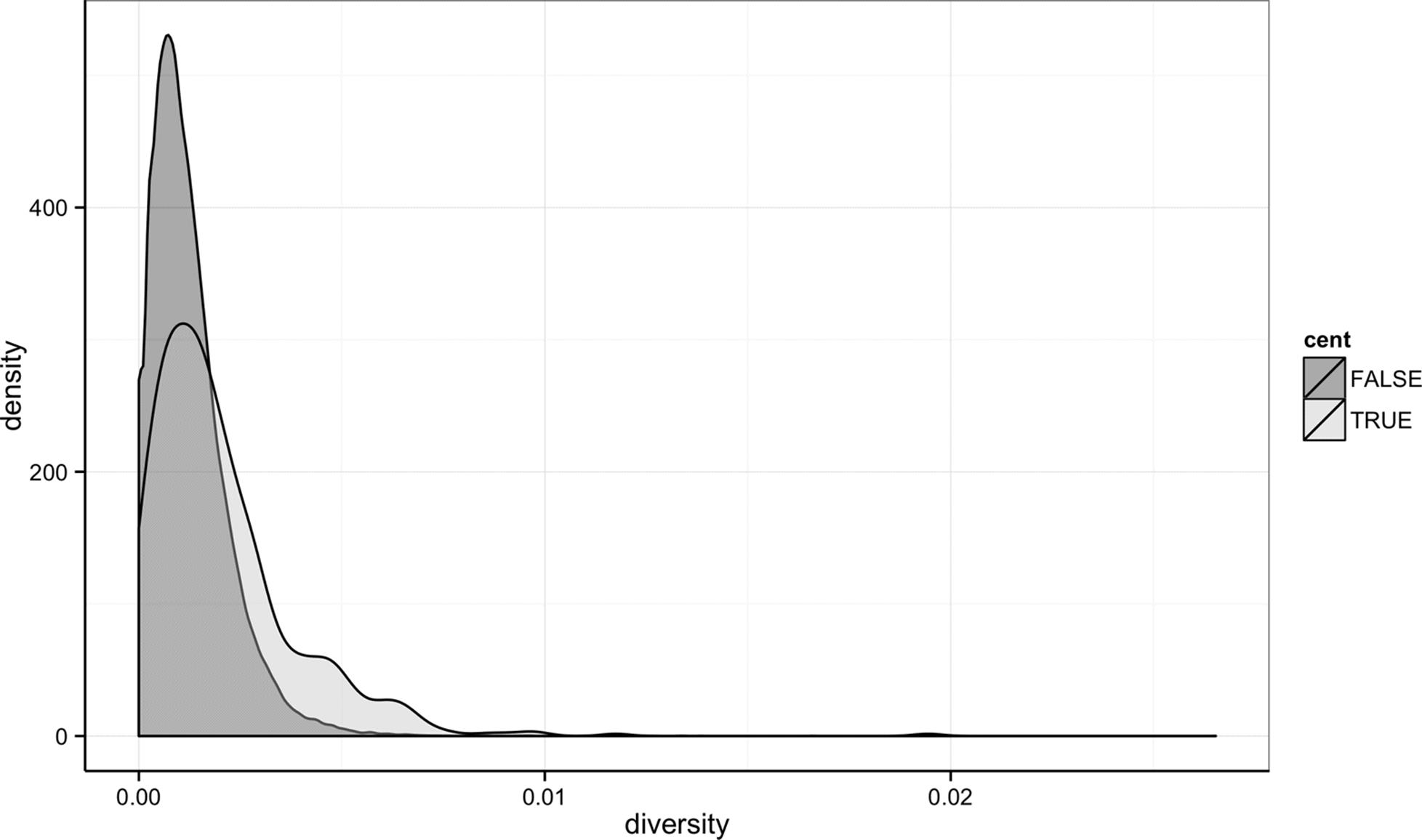

Lastly, note that according to the supplementary material of this paper, the diversity estimates (columns Theta, Pi, and Heterozygosity) are all scaled up by 10x in the dataset (see supplementary Text S1 for more details). We’ll use the nucleotide diversity column Pi later in this chapter in plots, and it would be useful to have this scaled as per basepair nucleotide diversity (so as to make the scale more intuitive). We can create a new rescaled column called diversity with:

> d$diversity <- d$Pi / (10*1000) # rescale, removing 10x and making per bp

> summary(d$diversity )

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.0000000 0.0005577 0.0010420 0.0012390 0.0016880 0.0265300

Average nucleotide diversity per basepair in this data is around 0.0012 (0.12%), roughly what we’d expect from other estimates of human diversity (Hernandez et al., 2012, Perry et al., 2012).
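As a worked check of the rescaling arithmetic, the sketch below applies it to the Pi values of the first three windows shown earlier; the variable names are just for illustration:

```r
Pi_scaled <- c(0, 10.354, 1.986)  # Pi for the first three windows, as stored
window_bp <- 1000                 # each window is 1kb
scale_by  <- 10                   # the dataset stores diversity scaled up 10x

per_bp <- Pi_scaled / (scale_by * window_bp)
per_bp         # 0.0000000 0.0010354 0.0001986
per_bp * 100   # the same values expressed as percentages
```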

Exploring Data Through Slicing and Dicing: Subsetting Dataframes

The most powerful feature of dataframes is the ability to slice out specific rows by applying the same vector subsetting techniques we saw in “Vectors, Vectorization, and Indexing” to columns. Combined with R’s comparison and logical operators, this leads to an incredibly powerful method to query out rows in a dataframe. Understanding and mastering dataframe subsetting is one of the most important R skills to develop, as it gives you considerable power to interrogate and explore your data. We’ll learn these skills in this section by applying them to the Dataset_S1.txt dataset to explore some features of this data.

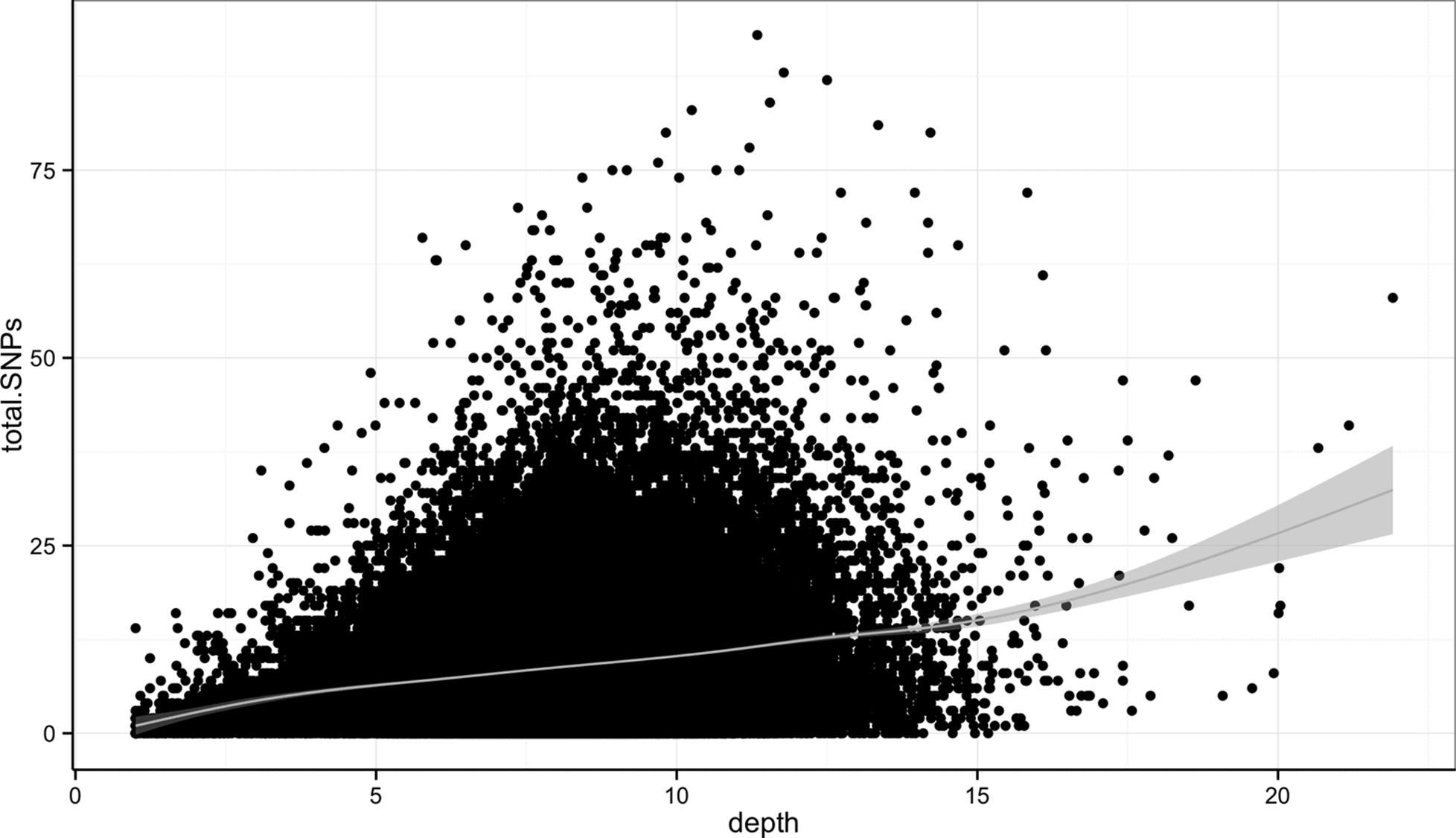

Let’s start by looking at the total number of SNPs per window. From summary(), we see that this varies quite considerably across all windows on chromosome 20:

> summary(d$total.SNPs)

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.000 3.000 7.000 8.906 12.000 93.000

Notice how right-skewed this data is: the third quartile is 12 SNPs, but the maximum is 93 SNPs. Often we want to investigate such outliers more closely. Let’s use data subsetting to select out some rows that have 85 or more SNPs (this arbitrary threshold is just for exploratory data analysis, so it doesn’t matter much). We can create a logical vector containing whether each observation (row) has 85 or more SNPs using the following:

> d$total.SNPs >= 85

[1] FALSE FALSE FALSE FALSE FALSE FALSE [...]

Recall from “Vectors, Vectorization, and Indexing” that in addition to integer indexing vectors, we can use logical vectors (Example 8-1). Likewise, we can use the logical vector we created earlier to extract the rows of our dataframe. R will keep only the rows that have a TRUE value:

> d[d$total.SNPs >= 85, ]

start end total.SNPs total.Bases depth unique.SNPs dhSNPs

2567 2621001 2622000 93 11337 11.34 13 10

12968 13023001 13024000 88 11784 11.78 11 1

43165 47356001 47357000 87 12505 12.50 9 7

reference.Bases Theta Pi Heterozygosity percent.GC Recombination

2567 1000 43.420 50.926 81.589 43.9439 0.000706536

12968 1000 33.413 19.030 74.838 28.8288 0.000082600

43165 1000 29.621 27.108 69.573 46.7467 0.000500577

Divergence Constraint SNPs cent

2567 0.01701702 0 1 FALSE

12968 0.01401401 0 1 FALSE

43165 0.02002002 0 7 FALSE

This subset of the data gives a view of windows with 85 or more SNPs. With the start and end positions of these windows, we can see if any potential confounders stand out. (If you’re curious, explore these windows using the UCSC Genome Browser; remember to use the NCBI34/hg16 version of the human genome.)

We can build more elaborate queries by chaining comparison operators. For example, suppose we wanted to see all windows where Pi (nucleotide diversity) is greater than 16 and percent GC is greater than 80. Equivalently, we could work with the rescaled diversity column but subsetting with larger numbers like 16 is easier and less error prone than with numbers like 0.0016. We’d use:

> d[d$Pi > 16 & d$percent.GC > 80, ]

start end total.SNPs total.Bases depth unique.SNPs dhSNPs

58550 63097001 63098000 5 947 2.39 2 1

58641 63188001 63189000 2 1623 3.21 2 0

58642 63189001 63190000 5 1395 1.89 3 2

reference.Bases Theta Pi Heterozygosity percent.GC Recombination

58550 397 37.544 41.172 52.784 82.0821 0.000781326

58641 506 16.436 16.436 12.327 82.3824 0.000347382

58642 738 35.052 41.099 35.842 80.5806 0.000347382

Divergence Constraint SNPs cent

58550 0.03826531 226 1 FALSE

58641 0.01678657 148 0 FALSE

58642 0.01793722 0 0 FALSE

In these examples, we’re extracting all columns by omitting the column argument in the bracket operator (e.g., col in df[row, col]). If we only care about a few particular columns, we could specify them by their position or their name:

> d[d$Pi > 16 & d$percent.GC > 80, c("start", "end", "depth", "Pi")]

start end depth Pi

58550 63097001 63098000 2.39 41.172

58641 63188001 63189000 3.21 16.436

58642 63189001 63190000 1.89 41.099

Similarly, you could reorder columns by providing the column names or column indexes in a different order. For example, if you wanted to swap the order of depth and Pi, use:

> d[d$Pi > 16 & d$percent.GC > 80, c("start", "end", "Pi", "depth")]

start end Pi depth

58550 63097001 63098000 41.172 2.39

58641 63188001 63189000 16.436 3.21

58642 63189001 63190000 41.099 1.89
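It can help to build the logical vector separately and look at it before using it inside the brackets. The following sketch does this on a four-row toy dataframe with invented values:

```r
toy <- data.frame(start      = c(1, 1001, 2001, 3001),
                  Pi         = c(41.2, 3.5, 16.4, 20.1),
                  percent.GC = c(82.1, 45.0, 82.4, 60.3),
                  depth      = c(2.39, 8.10, 3.21, 7.77))

keep <- toy$Pi > 16 & toy$percent.GC > 80  # one TRUE/FALSE per row
keep                                       # TRUE FALSE TRUE FALSE

toy[keep, c("start", "Pi")]                # rows 1 and 3, two columns
toy[keep | toy$depth > 8, ]                # | is element-wise OR: rows 1, 2, 3
nrow(toy[!keep, ])                         # 2 rows fail the combined test
```

Storing the condition in a variable like keep also avoids retyping a long expression when you need both the matching rows and their complement.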

Remember, columns of a dataframe are just vectors. If you only need the data from one column, just subset it as you would a vector:

> d$percent.GC[d$Pi > 16]

[1] 39.1391 38.0380 36.8368 36.7367 43.0430 41.1411 [...]

This returns all of the percent GC values for observations where that observation has a Pi value greater than 16. Note that there’s no need to use a comma in the bracket because d$percent.GC is a vector, not a two-dimensional dataframe.

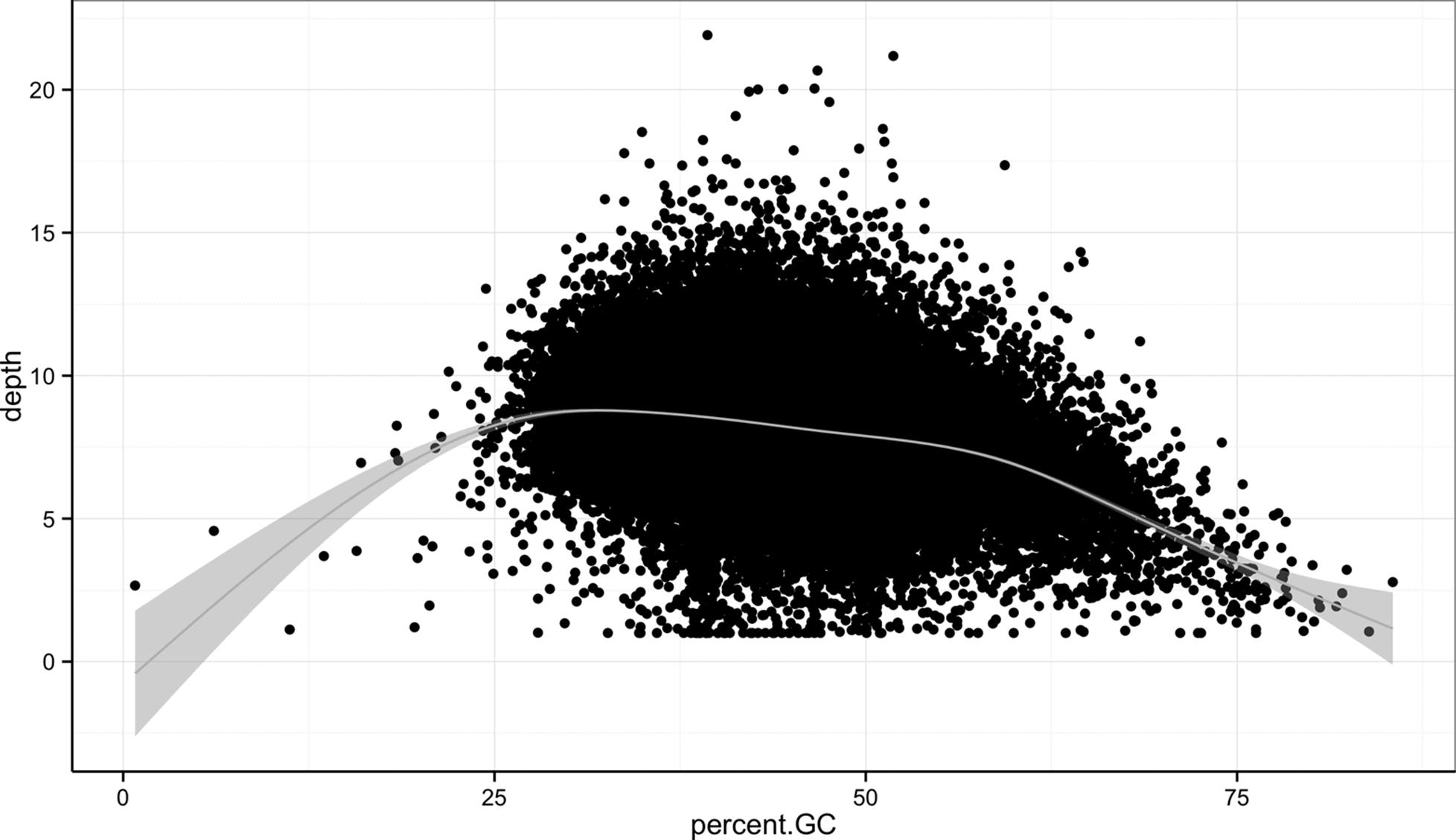

Subsetting columns can be a useful way to summarize data across two different conditions. For example, we might be curious if the average depth in a window (the depth column) differs between very high GC content windows (80% or greater) and all other windows:

> summary(d$depth[d$percent.GC >= 80])

Min. 1st Qu. Median Mean 3rd Qu. Max.

1.05 1.89 2.14 2.24 2.78 3.37

> summary(d$depth[d$percent.GC < 80])

Min. 1st Qu. Median Mean 3rd Qu. Max.

1.000 6.970 8.170 8.185 9.400 21.910

This is a fairly large difference, but it’s important to consider how many windows this includes. Indeed, there are only nine windows that have a GC content of 80% or more:

> sum(d$percent.GC >= 80)

[1] 9

As another example, consider looking at Pi by windows that fall in the centromere and those that do not. Because d$cent is a logical vector, we can subset with it directly (and take its complement by using the negation operator, !):

> summary(d$Pi[d$cent])

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.00 7.95 16.08 20.41 27.36 194.40

> summary(d$Pi[!d$cent])

Min. 1st Qu. Median Mean 3rd Qu. Max.

0.000 5.557 10.370 12.290 16.790 265.300

Indeed, the centromere does appear to have higher nucleotide diversity than other regions in this data. Extracting specific observations using subsetting and summarizing particular columns is a quick way to explore relationships within the data. Later on in “Working with the Split-Apply-Combine Pattern”, we’ll learn ways to group observations and create per-group summaries.

In addition to using logical vectors to subset dataframes, it’s also possible to subset rows by referring to their integer positions. The function which() takes a vector of logical values and returns the positions of all TRUE values. For example:

> d$Pi > 3

[1] FALSE TRUE FALSE TRUE TRUE TRUE [...]

> which(d$Pi > 3)

[1] 2 4 5 6 7 10 [...]

Thus, d[d$Pi > 3, ] is identical to d[which(d$Pi > 3), ]; subsetting operations can be expressed using either method. In general, you should omit which() when subsetting dataframes and use logical vectors, as it leads to simpler and more readable code. Under other circumstances, which() is necessary, for example, if we wanted to select the first four TRUE values in a vector:

> which(d$Pi > 10)[1:4]

[1] 2 16 21 23
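To see how logical and positional subsetting relate, here is a small sketch on made-up vectors (pi_vals and with_na are hypothetical names and values, not columns of d). It also shows one case where the two approaches differ: when the vector contains missing values.

```r
# Made-up diversity values (illustrative only)
pi_vals <- c(1.2, 12.5, 0.4, 3.3, 15.0, 11.1, 2.0, 18.7)

above <- pi_vals > 10    # logical vector, one element per value
idx   <- which(above)    # integer positions of the TRUE elements

# With no missing values, both forms select the same elements
same <- identical(pi_vals[above], pi_vals[idx])

# which() is handy when we want only the first few matches
first_two <- which(above)[1:2]

# With missing values the two forms differ: a logical NA yields an NA
# element, while which() silently drops it
with_na    <- c(5, NA, 12)
by_logical <- with_na[with_na > 10]         # keeps an NA placeholder
by_which   <- with_na[which(with_na > 10)]  # only the matching value
```

The d dataframe used in this section behaves the same under both forms, so the difference only matters for columns that contain NA.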

which() also has two related functions that return the index of the first minimum or maximum element of a vector: which.min() and which.max(). For example:

> d[which.min(d$total.Bases),]

start end total.SNPs total.Bases depth [...]

25689 25785001 25786000 0 110 1.24 [...]

> d[which.max(d$depth),]

start end total.SNPs total.Bases depth [...]

8718 8773001 8774000 58 21914 21.91 [...]
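Because which.min() and which.max() return only the first matching position, ties are easy to miss. A minimal sketch with a made-up vector (the name depth_vals and its values are assumptions for illustration):

```r
# Made-up depths with a tied minimum and a tied maximum
depth_vals <- c(4.2, 1.1, 9.7, 1.1, 9.7)

first_min <- which.min(depth_vals)  # position of the first minimum
first_max <- which.max(depth_vals)  # position of the first maximum

# To get every position that ties for the minimum, combine which() and min()
all_min <- which(depth_vals == min(depth_vals))
```

If ties matter for your analysis, the which(x == min(x)) form returns all of them rather than just the first.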

Sometimes subsetting expressions inside brackets can be quite redundant (because each column must be specified like d$Pi, d$depth, etc). A useful convenience function (intended primarily for interactive use) is the R function subset(). subset() takes two arguments: the dataframe to operate on, and then conditions to include a row. With subset(), d[d$Pi > 16 & d$percent.GC > 80, ] can be expressed as:

> subset(d, Pi > 16 & percent.GC > 80)

start end total.SNPs total.Bases depth [...]

58550 63097001 63098000 5 947 2.39 [...]

58641 63188001 63189000 2 1623 3.21 [...]

58642 63189001 63190000 5 1395 1.89 [...]

Optionally, a third argument can be supplied to specify which columns (and in what order) to include:

> subset(d, Pi > 16 & percent.GC > 80,

c(start, end, Pi, percent.GC, depth))

start end Pi percent.GC depth

58550 63097001 63098000 41.172 82.0821 2.39

58641 63188001 63189000 16.436 82.3824 3.21

58642 63189001 63190000 41.099 80.5806 1.89

Note that we (somewhat magically) don’t need to quote column names. This is because subset() follows special evaluation rules, and for this reason, subset() is best used only for interactive work.
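Inside a function or script, where the column to filter on might arrive as a character string, the bracket form is the more predictable choice. The following is a sketch under stated assumptions: rows_above() is a hypothetical helper written for this example (it is not part of R), and toy is a made-up dataframe.

```r
# Made-up dataframe (illustrative values only)
toy <- data.frame(Pi         = c(41.2, 3.4, 16.4, 8.8),
                  percent.GC = c(82.1, 40.3, 82.4, 85.0))

# Hypothetical helper: keep the rows where the named column exceeds a threshold.
# df[[column]] extracts a column by its name stored in a string, which
# subset()'s unquoted-name shortcut does not allow.
rows_above <- function(df, column, threshold) {
  df[df[[column]] > threshold, , drop = FALSE]
}

programmatic <- rows_above(toy, "Pi", 16)

# The interactive equivalent with subset()
interactive_version <- subset(toy, Pi > 16)
```

Both calls return the same two rows here; the difference is that rows_above() keeps working when the column name is only known at runtime.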

Exploring Data Visually with ggplot2 I: Scatterplots and Densities

Instead of spending time making your graph look pretty, [ggplot2 allows you to] focus on creating a graph that best reveals the messages in your data.

ggplot2: Elegant Graphics for Data Analysis Hadley Wickham

Exploratory data analysis emphasizes visualization as the best tool to understand and explore our data — both to learn what the data says and what its limitations are. We’ll learn visualization in R through Hadley Wickham’s powerful ggplot2 package, which is just one of a few ways to plot data in R; R also has a built-in graphics system (known as base graphics) and the lattice package. Every R user should be acquainted with base graphics (at some point, you’ll encounter a base graphics plot you need to modify), but we’re going to skip base graphics in this chapter to focus entirely on learning visualization with ggplot2. The reason for this is simple: you’ll be able to create more informative plots with less time and effort invested with ggplot2 than possible with base graphics.

As with other parts of this chapter, this discussion of ggplot2 will be short and deficient in some areas. This introduction is meant to get you on your feet so you can start exploring your data, rather than be an exhaustive reference. The best up-to-date reference for ggplot2 is the ggplot2 online documentation. As you progress from a beginning to an intermediate ggplot2 user, I highly recommend the books ggplot2: Elegant Graphics for Data Analysis by Hadley Wickham (Springer, 2010) and R Graphics Cookbook by Winston Chang (O’Reilly, 2012) for more detail.

First, we need to load the ggplot2 package with R’s library() function. If you don’t have ggplot2 installed, you’ll need to do that first with install.packages():

> install.packages("ggplot2")

> library(ggplot2)

ggplot2 is quite different from R’s base graphics because it’s built on top of a grammar inspired by Leland Wilkinson’s Grammar of Graphics (Springer, 2005). This grammar provides an underlying logic to creating graphics, which considerably simplifies creating complex plots. Each ggplot2 plot is built by adding layers to a plot that map the aesthetic properties of geometric objects to data. Layers can also apply statistical transformations to data and change the scales of axes and colors. This may sound abstract now, but you’ll become familiar with ggplot2’s grammar through examples.

Let’s look at how we’d use ggplot2 to create a scatterplot of nucleotide diversity along the chromosome, using the diversity column in our d dataframe. Because our data is window-based, we’ll first add a column called position to our dataframe that’s the midpoint of each window:

> d$position <- (d$end + d$start) / 2

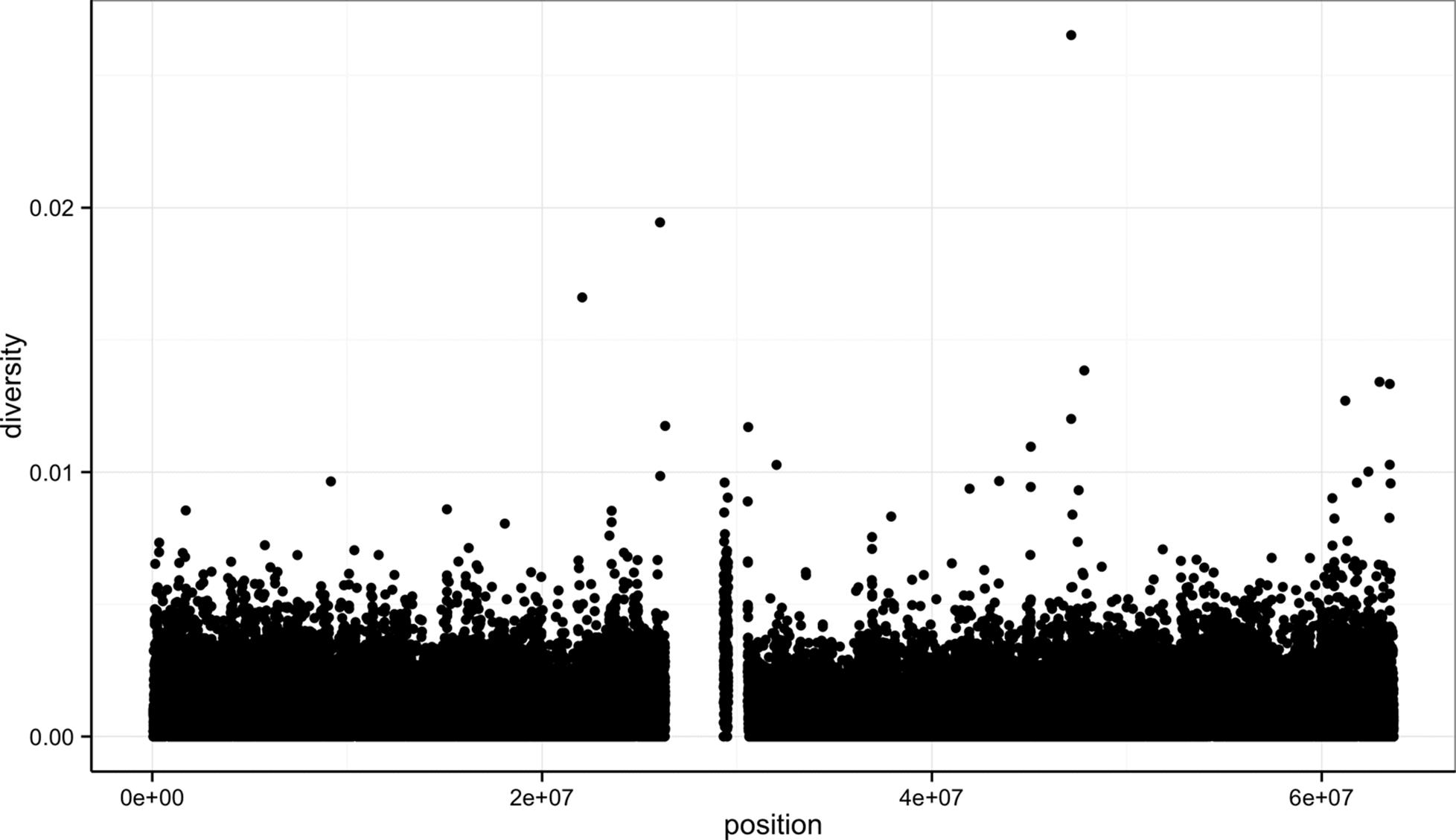

> ggplot(d) + geom_point(aes(x=position, y=diversity))

This creates Figure 8-1.

There are two components of this ggplot2 graphic: the call to the function ggplot(), and the call to the function geom_point(). First, we use ggplot(d) to supply this plot with our d dataframe. ggplot2 works exclusively with dataframes, so you’ll need to get your data tidy and into a dataframe before visualizing it with ggplot2.

Figure 8-1. ggplot2 scatterplot nucleotide diversity by position for human chromosome 20

Second, with our data specified, we then add layers to our plot (remember: ggplot2 is layer-based). To add a layer, we use the same + operator that we use for addition in R. Each layer updates our plot by adding geometric objects such as the points in a scatterplot, or the lines in a line plot.

We add geom_point() as a layer because we want points to create a scatterplot. geom_point() is a type of geometric object (or geom in ggplot2 lingo). ggplot2 has many geoms (e.g., geom_line(), geom_bar(), geom_density(), geom_boxplot(), etc.), which we’ll see throughout this chapter. Geometric objects have many aesthetic attributes (e.g., x and y positions, color, shape, size, etc.). Different geometric objects will have different aesthetic attributes (ggplot2 documentation refers to these as aesthetics). The beauty of ggplot2’s grammar is that it allows you to map geometric objects’ aesthetics to columns in your dataframe. In our diversity by position scatterplot, we mapped the x position aesthetic to the position column, and the y position to the diversity column. We specify the mapping of aesthetic attributes to columns in our dataframe using the function aes().

Axis Labels, Plot Titles, and Scales

As my high school mathematics teacher Mr. Williams drilled into my head, no plot is complete without proper axis labels and a title. While ggplot2 chooses smart labels based on your column names, you might want to change this down the road. ggplot2 makes specifying labels easy: simply use the xlab(), ylab(), and ggtitle() functions to specify the x-axis label, y-axis label, and plot title. For example, if p holds our diversity plot, we could change its x- and y-axis labels with p + xlab("chromosome position (basepairs)") + ylab("nucleotide diversity"). To avoid clutter in examples in this book, I’ll just use the default labels.

You can also set the limits for continuous axes using the function scale_x_continuous(limits=c(start, end)) where start and end are the start and end of the axes (and scale_y_continuous() for the y axis). Similarly, you can change an axis to a log10-scale using the functions scale_x_log10() and scale_y_log10(). ggplot2 has numerous other scale options for discrete scales, other axis transformations, and color scales; see http://docs.ggplot2.org for more detail.
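Putting these label and scale functions together, a minimal sketch might look like the following. The dataframe toy, its values, the plot title, and the axis limits are all made up for illustration; only the ggplot2 functions named above are used.

```r
library(ggplot2)

# Made-up windows (illustrative values only)
toy <- data.frame(position  = c(500, 1500, 2500, 3500),
                  diversity = c(0.001, 0.004, 0.002, 0.010))

# Save the basic plot in a variable so layers and labels can be added to it
p <- ggplot(toy, aes(x = position, y = diversity)) + geom_point()

# Custom axis labels, a title, and explicit x-axis limits
p_labeled <- p +
  xlab("chromosome position (basepairs)") +
  ylab("nucleotide diversity") +
  ggtitle("Diversity along a made-up region") +
  scale_x_continuous(limits = c(0, 4000))

# The same plot with a log10-scaled y axis
p_log <- p + scale_y_log10()
```

Typing p_labeled or p_log at the console draws the corresponding plot. Keeping the base plot in p makes it cheap to try several label and scale variations without repeating the data and geom specification.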

Aesthetic mappings can also be specified in the call to ggplot() — geoms will then use this mapping. Example 8-2 creates the exact same scatterplot as Figure 8-1.

Example 8-2. Including aes() in ggplot()

> ggplot(d, aes(x=position, y=diversity)) + geom_point()

Notice the missing diversity estimates in the middle of this plot. What’s going on in this region? ggplot2’s strength is that it makes answering these types of questions with exploratory data analysis techniques effortless. We simply need to map a possible confounder or explanatory variable to another aesthetic and see if this reveals any unexpected patterns. In this case, let’s map the color aesthetic of our point geometric objects to the column cent, which indicates whether the window falls in the centromeric region of this chromosome (see Example 8-3).

Example 8-3. A simple diversity scatterplot with ggplot2

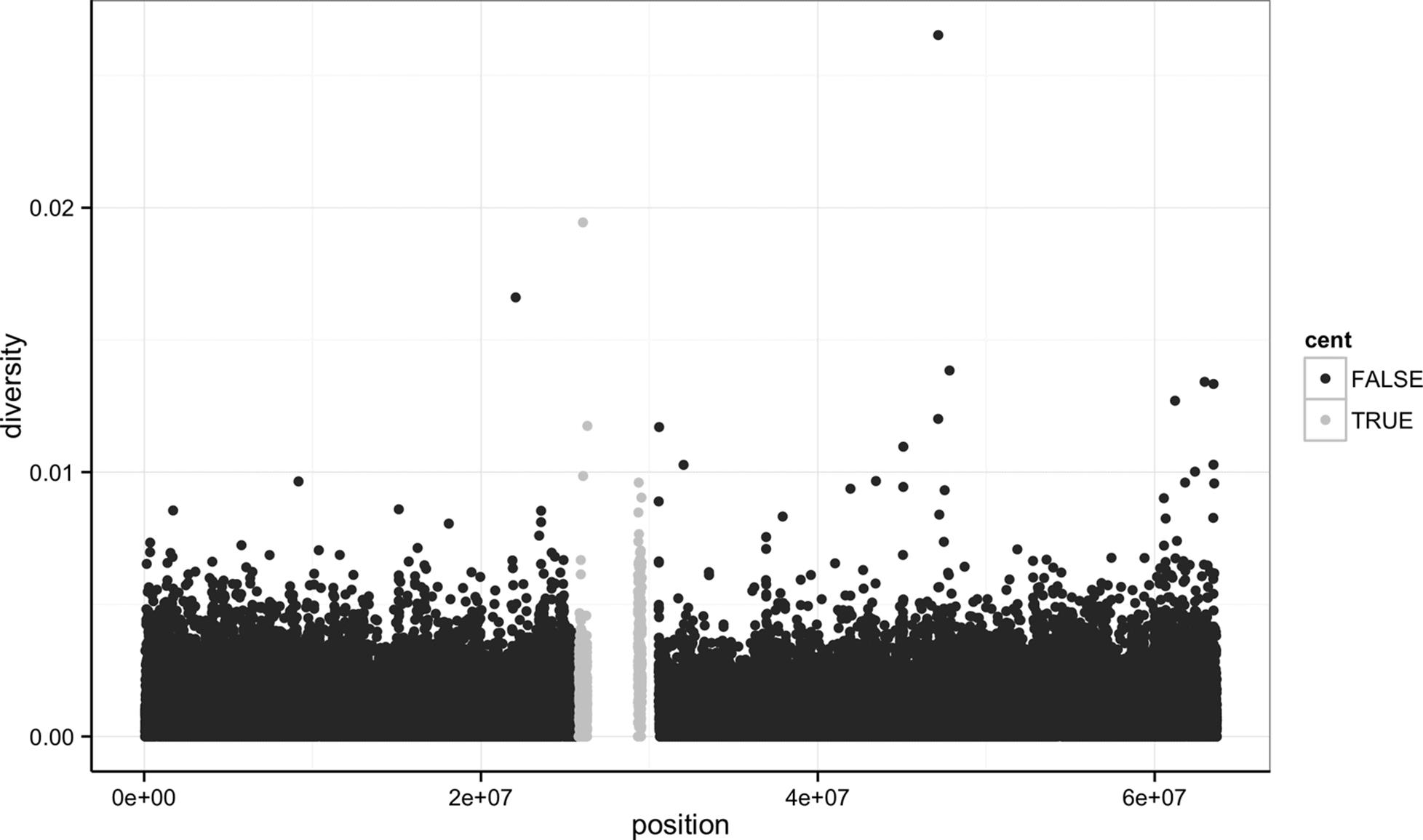

> ggplot(d) + geom_point(aes(x=position, y=diversity, color=cent))

As you can see from Figure 8-2, the region with missing diversity estimates is around the centromere. This is intentional; centromeric and heterochromatic regions were excluded from this study.

Figure 8-2. ggplot2 scatterplot nucleotide diversity by position coloring by whether windows are in the centromeric region

NOTE

Throughout this chapter, I’ve used a slightly different ggplot theme than the default to optimize graphics for print and screen. All of the code to produce the plots exactly as they appear in this chapter is in this chapter’s directory on GitHub.

A particularly nice feature of ggplot2 is that it has well-chosen default behaviors, which allow you to quickly create or adjust plots without having to consider technical details like color palettes or a legend (though these details are customizable). For example, in mapping the color aesthetic to the cent column, ggplot2 considered cent’s type (logical) when choosing a color palette. Discrete color palettes are automatically used with columns of logical or factor data mapped to the color aesthetic; continuous color palettes are used for numeric data. We’ll see further examples of mapping data to the color aesthetic later on in this chapter.
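To see this default behavior in isolation, the sketch below maps the color aesthetic first to a logical column and then to a numeric one. The dataframe toy and its values are made up for illustration; the column names simply mirror those used in this chapter.

```r
library(ggplot2)

# Made-up windows (illustrative values only)
toy <- data.frame(position  = 1:6,
                  diversity = c(0.002, 0.004, 0.001, 0.003, 0.006, 0.002),
                  cent      = c(FALSE, FALSE, TRUE, TRUE, FALSE, FALSE),
                  depth     = c(8.1, 7.9, 2.2, 1.8, 9.0, 8.4))

# Logical column mapped to color: ggplot2 picks a discrete palette
p_discrete <- ggplot(toy) +
  geom_point(aes(x = position, y = diversity, color = cent))

# Numeric column mapped to color: ggplot2 picks a continuous gradient
p_continuous <- ggplot(toy) +
  geom_point(aes(x = position, y = diversity, color = depth))
```

Printing each plot shows a two-entry legend for cent and a color bar for depth, with no palette or legend code written by hand.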

As Tukey’s quote at the beginning of this chapter explains, exploratory analysis is an interactive, iterative process. Our first plot gives a quick first glance at what the data say, but we need to keep exploring to learn more. One problem with this plot is the degree of overplotting (data oversaturating a plot so as to obscure the information of other data points). We can’t get a sense of the distribution of diversity from this figure — everything is saturated from about 0.05 and below.

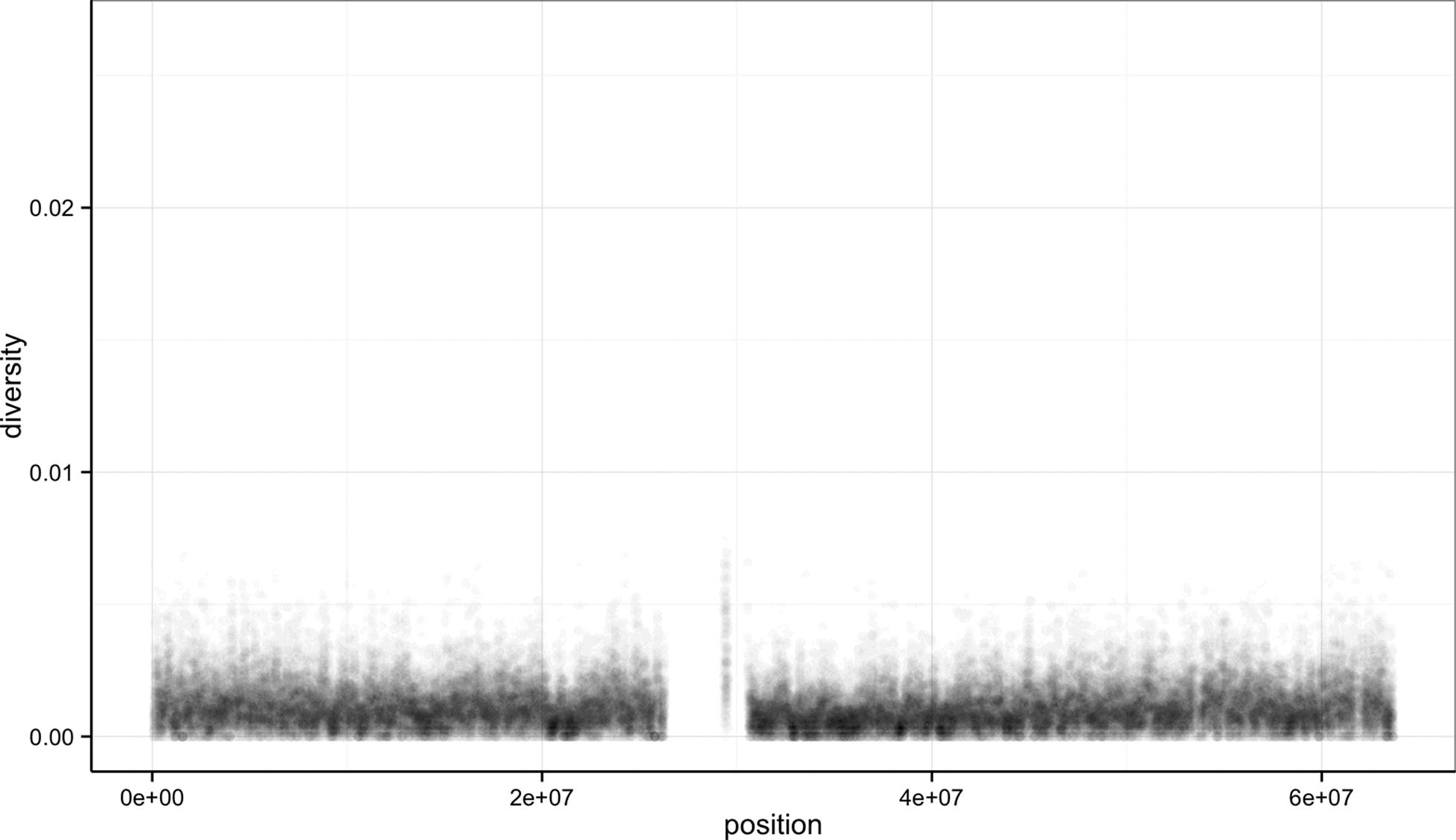

One way to alleviate overplotting is to make points somewhat transparent (the transparency level is known as the alpha). Let’s make points almost entirely transparent so only regions with multiple overlapping points appear dark (this produces a plot like Figure 8-3):

> ggplot(d) + geom_point(aes(x=position, y=diversity), alpha=0.01)

Figure 8-3. Using transparency to address overplotting

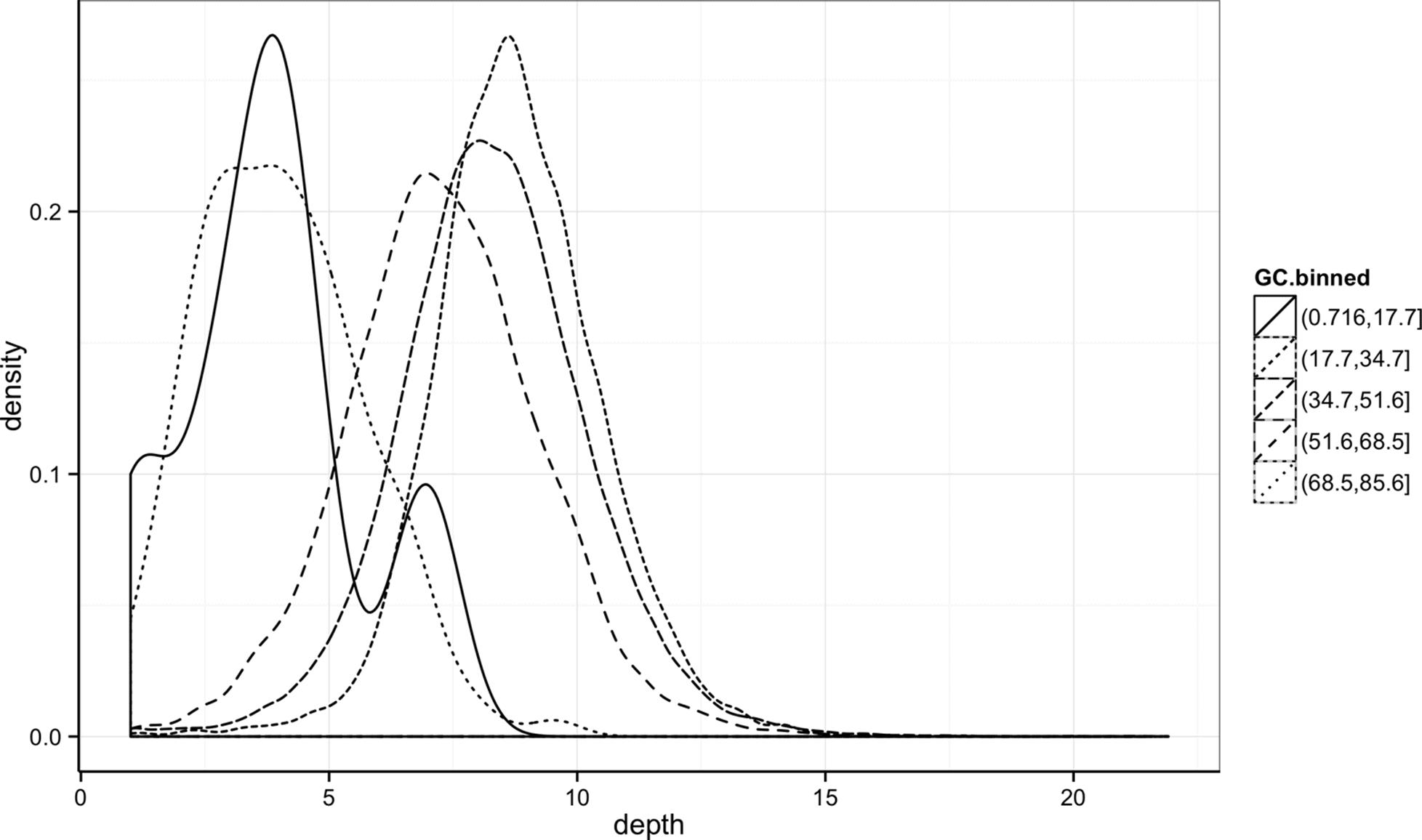

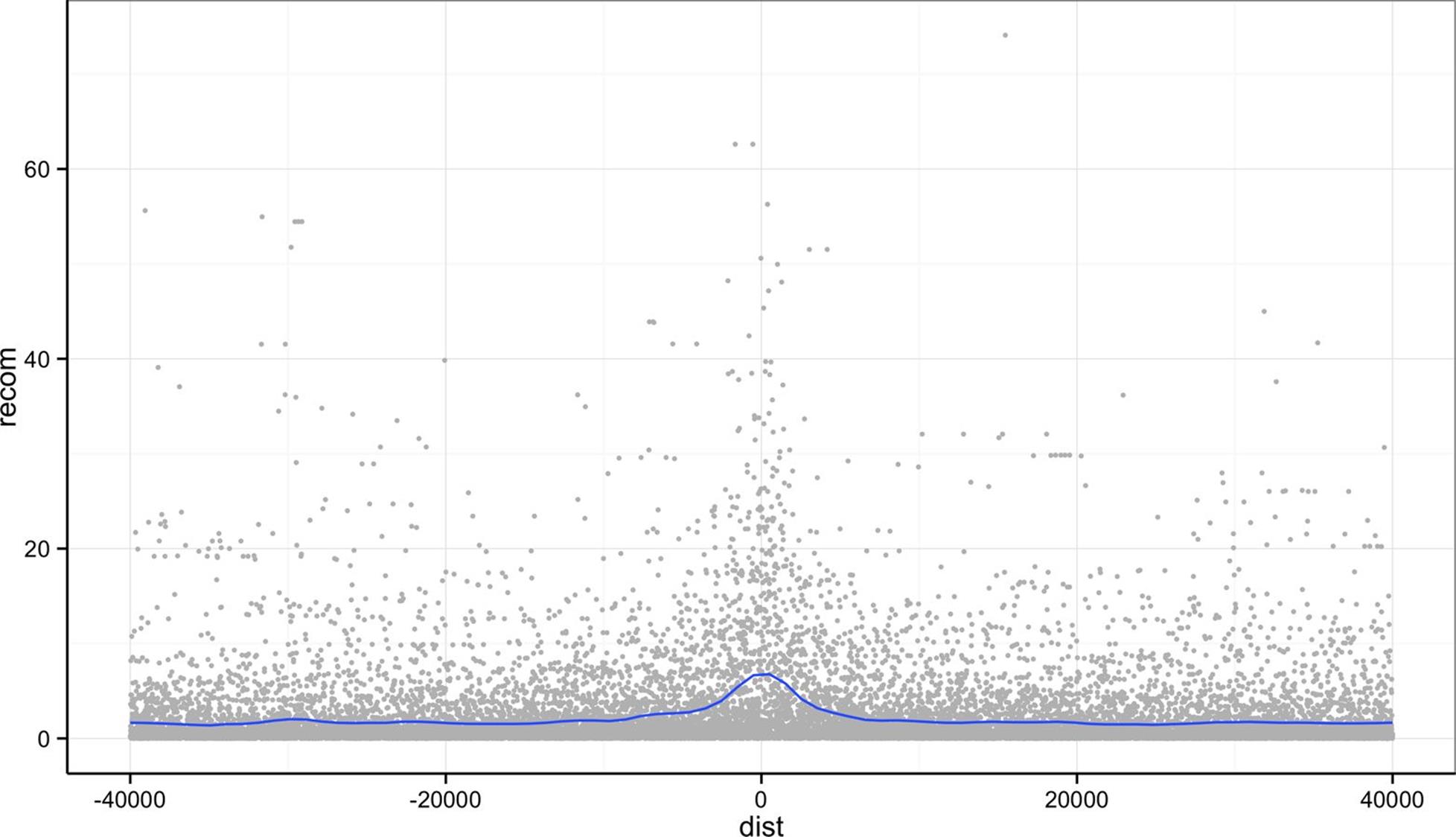

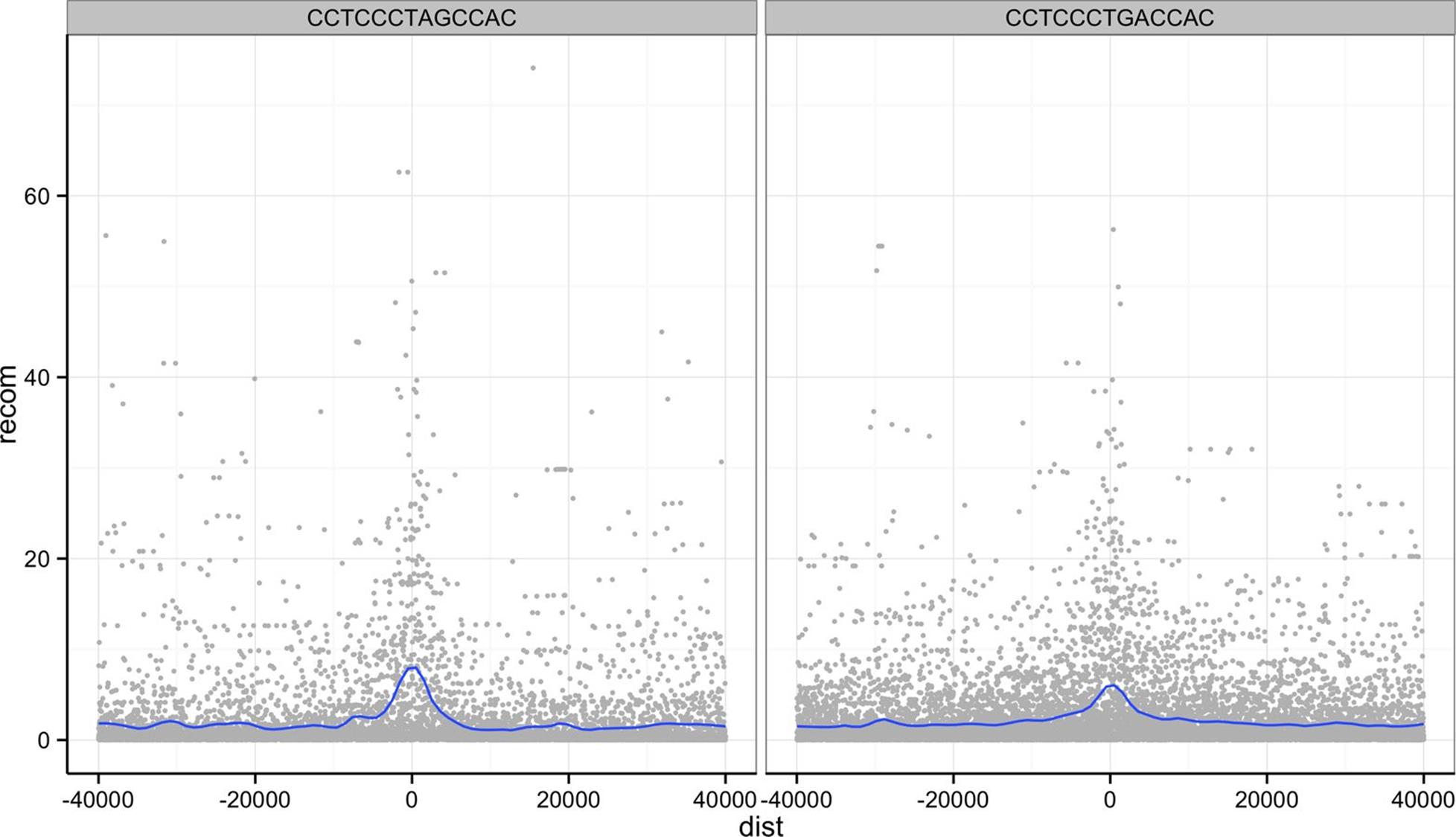

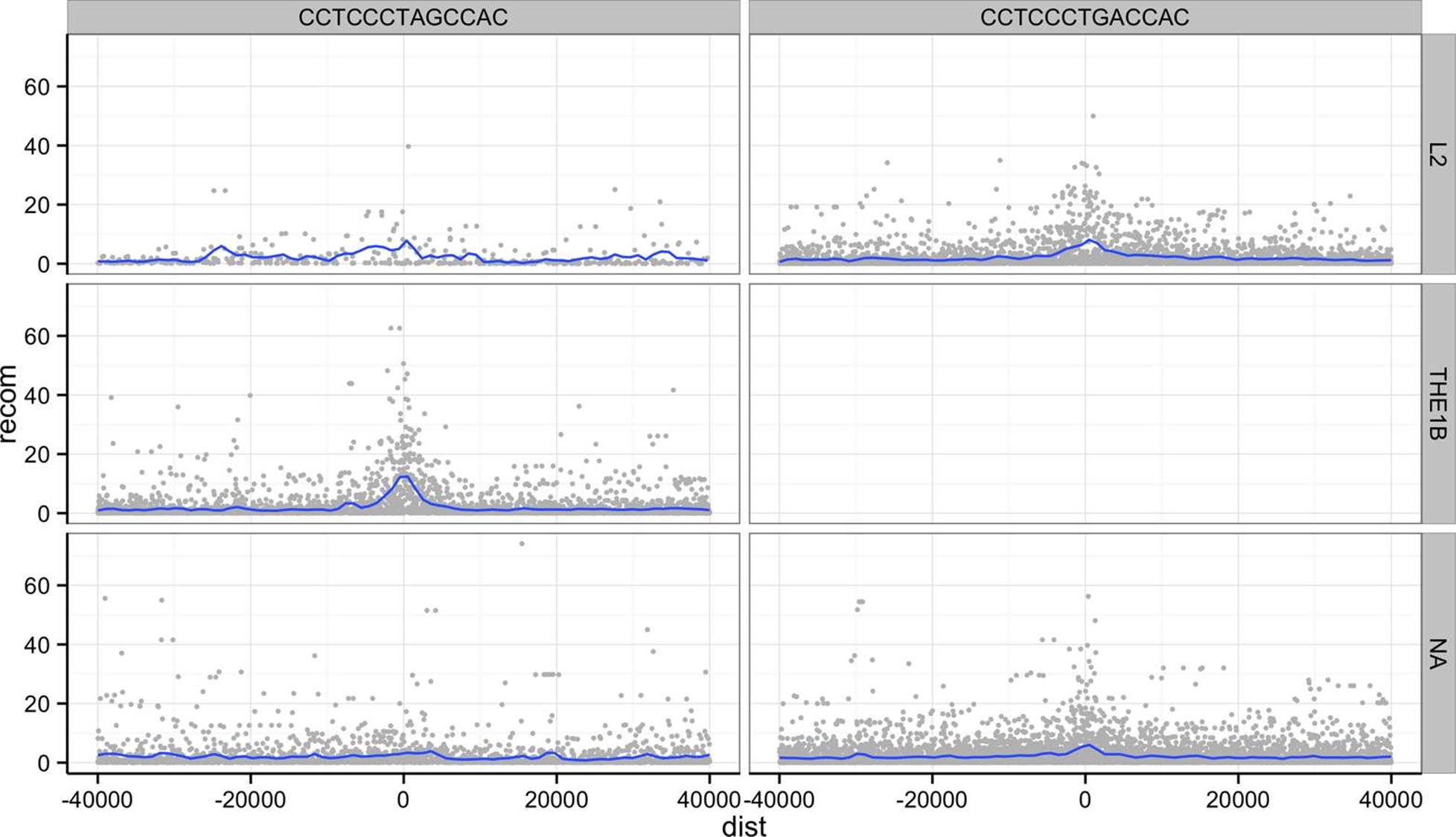

There’s a subtlety here that illustrates a very important ggplot2 concept: we set alpha=0.01 outside of the aesthetic mapping function aes(). This is because we’re not mapping the alpha aesthetic to a column of data in our dataframe, but rather giving it a fixed value for all data points.
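The distinction between setting and mapping an aesthetic can be sketched side by side. The dataframe toy and its values are assumptions made up for this example; the alpha value of 0.3 is arbitrary.

```r
library(ggplot2)

# Made-up windows (illustrative values only)
toy <- data.frame(position  = 1:6,
                  diversity = c(0.002, 0.004, 0.001, 0.003, 0.006, 0.002),
                  depth     = c(8.1, 7.9, 2.2, 1.8, 9.0, 8.4))

# Setting: alpha is outside aes(), so every point gets the same fixed transparency
p_set <- ggplot(toy) +
  geom_point(aes(x = position, y = diversity), alpha = 0.3)

# Mapping: alpha is inside aes(), so transparency varies with the depth column
# and ggplot2 adds a legend for it
p_map <- ggplot(toy) +
  geom_point(aes(x = position, y = diversity, alpha = depth))
```

A common slip is to write aes(alpha = 0.3): ggplot2 then treats 0.3 as a data value to be mapped through a scale rather than as the literal transparency, which is rarely what was intended.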