Large Scale Machine Learning with Python (2016)

Appendix A. Introduction to GPUs and Theano

Up to this point, we have run neural networks and deep learning tasks on regular CPUs. Lately, however, the computational advantages of GPUs have become widely recognized. This appendix covers the basics of GPU computing together with the Theano framework for deep learning.

GPU computing

When we use regular CPU computing packages for machine learning, such as Scikit-learn, the amount of parallelization is surprisingly limited because, by default, an algorithm utilizes only one core even when multiple cores are available. In the chapter about Classification and Regression Trees (CART), we will see some advanced examples of speeding up Scikit-learn algorithms.

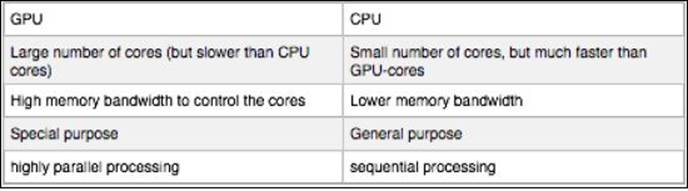

Unlike CPUs, GPUs are designed to work in parallel from the ground up. Imagine projecting an image on a screen through a graphics card; it will come as no surprise that the GPU has to process and project a lot of information (motion, color, and spatiality) at the same time. CPUs, on the other hand, are designed for sequential processing and suit tasks where more control is needed, such as branching and checking. In contrast to the CPU, a GPU is composed of many cores that can handle thousands of tasks simultaneously. On workloads that parallelize well, a GPU can outperform a CPU by as much as 100-fold at a lower cost, and modern GPUs are relatively cheap compared to state-of-the-art CPUs.

All this sounds great, but remember that the GPU is only good at a certain type of task. A CPU consists of a few cores optimized for sequential processing, while a GPU consists of thousands of smaller, more efficient cores designed to handle many tasks simultaneously.

CPUs and GPUs have different architectures that make them better suited to different tasks. There are still a lot of tasks, such as checking, debugging, and switching, that GPUs can't do effectively because of their architecture.

A simple way to understand the difference between a CPU and GPU is to compare how they process tasks. An analogy that is often made is that of the analytical and sequential left brain (CPU) and the holistic right brain (GPU). This is just an analogy and should not be taken too seriously.

See more at the following links:

· http://www.nvidia.com/object/what-is-gpu-computing.html#sthash.c4R7eJ3s.dpuf


In order to utilize the GPU for machine learning, a specific platform is required. Unfortunately, at the time of writing, there is no stable GPU computation platform other than CUDA; this means that you must have an NVIDIA graphics card installed on your computer. GPU computing will NOT work without an NVIDIA card. Yes, I know that this is bad news for most Mac users out there. I really wish it were different, but it is a limitation that we have to live with. There are other projects, such as OpenCL, that provide GPU computation for other GPU brands through libraries such as clBLAS (https://github.com/clMathLibraries/clBLAS), but they are under heavy development and are not fully optimized for deep learning applications in Python. Another limitation of OpenCL is that only AMD is actively involved, so it mostly benefits AMD GPUs. A hardware-independent GPU platform for machine learning does not seem likely in the next few years (perhaps even the next decade). Still, keep an eye on the news and developments of the OpenCL project (https://www.khronos.org/opencl/). Considering the widespread media attention that GPU computing receives, this limited accessibility might be quite underwhelming. For now, only NVIDIA seems to put serious research effort into developing GPU platforms, and serious new developments elsewhere in this field seem unlikely in the years to come.

To use CUDA, you need the following.

First, test whether the graphics card on your computer is suitable for CUDA. It must at least be an NVIDIA card. You can check this from the terminal. Switch to the root user:

$ su

Type the root password when prompted, then list the NVIDIA devices:

$ lspci | grep -i nvidia
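The same check can be scripted. The following is a minimal sketch that uses only the Python standard library; the helper names `find_nvidia_devices` and `list_nvidia_devices` are hypothetical, and the sketch assumes a Linux system where `lspci` is on the PATH. On other systems, it simply reports that nothing was found.

```python
import shutil
import subprocess


def find_nvidia_devices(lspci_output):
    """Return the lines of lspci output that mention NVIDIA (case-insensitive)."""
    return [line for line in lspci_output.splitlines()
            if "nvidia" in line.lower()]


def list_nvidia_devices():
    """Run lspci if it is available and return the matching lines."""
    if shutil.which("lspci") is None:
        return []  # lspci is not installed (for example, on macOS or Windows)
    result = subprocess.run(["lspci"], stdout=subprocess.PIPE,
                            universal_newlines=True, check=False)
    return find_nvidia_devices(result.stdout)


if __name__ == "__main__":
    devices = list_nvidia_devices()
    print(devices if devices else "No NVIDIA device reported by lspci")
```

An empty result only means that `lspci` did not report an NVIDIA device; it says nothing about whether the CUDA Toolkit itself is installed.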

If you do have an NVIDIA-based GPU, you can download the NVIDIA CUDA Toolkit (http://developer.nvidia.com/cuda-downloads).

At the time of writing, NVIDIA is on the verge of releasing CUDA version 8, which will have different installation procedures, so we advise you to follow the directions on the CUDA website. For further installation procedures, consult the NVIDIA website:

http://docs.nvidia.com/cuda/cuda-getting-started-guide-for-linux/#axzz3xBimv9ou

Theano – parallel computing on the GPU

Theano is a Python library originally developed by James Bergstra at the University of Montreal. It aims to provide more expressive ways to write mathematical functions with symbolic representations (F. Bastien, P. Lamblin, R. Pascanu, J. Bergstra, I. Goodfellow, A. Bergeron, N. Bouchard, D. Warde-Farley and Y. Bengio. Theano: new features and speed improvements. NIPS 2012 Deep Learning Workshop). Interestingly, Theano is named after the Greek mathematician who may have been Pythagoras' wife. Its strongest points are fast C-compiled computations, symbolic expressions, and GPU computation. Theano is under active development, and improvements and new features are added regularly. Theano's applications are much wider than scalable machine learning, so I will narrow the scope and use Theano for deep learning. Visit the Theano website for more information: http://deeplearning.net/software/theano/.

When we want to perform more complex computations on multidimensional matrices, basic NumPy will resort to costly loops and iterations, driving up the CPU load, as we have seen earlier. Theano aims to optimize these computations by compiling them into highly optimized C code and, if possible, utilizing the GPU. For neural networks and deep learning, Theano has the useful capability to automatically differentiate mathematical functions, which is very convenient for calculating the partial derivatives needed by algorithms such as backpropagation.

At the time of writing, Theano is used in all sorts of deep learning projects and has become one of the most used platforms in this field. Lately, new packages have been built on top of Theano in order to make deep learning functionality easier to use. Considering the steep learning curve of Theano, we will use packages built on Theano, such as theanets, pylearn2, and Lasagne.

Installing Theano

First, make sure that you install the development version from the Theano page. Note that if you do "$ pip install theano", you might end up with problems. Installing the development version from GitHub directly is a safer bet. Clone the repository, then install from the cloned folder:

$ git clone https://github.com/Theano/Theano.git

$ pip install ./Theano

If you want to upgrade Theano, you can use the following command:

$ sudo pip install --upgrade theano

If you have questions and want to connect with the Theano community, you can refer to https://groups.google.com/forum/#!forum/theano-users.

That's it, we are ready to go!
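Before going further, it can help to confirm that Python can actually find the package. The following is a small sketch using only the standard library (Python 3); the helper name `theano_status` is hypothetical, and the check only looks the package up without importing it, so it says nothing about whether the GPU configuration works.

```python
import importlib.util


def theano_status():
    """Return a short message saying whether the theano package can be found."""
    if importlib.util.find_spec("theano") is None:
        return "Theano is not installed in this environment"
    return "Theano is installed"


print(theano_status())
```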

To make sure that we set the directory path toward the Theano folder, we need to do the following:

#!/usr/bin/python

#six.moves provides a pickle import that works on both Python 2 and 3

from six.moves import cPickle as pickle

import os

#set your path to the theano folder here

path = '/Users/Quandbee1/Desktop/pthw/Theano/'

Let's import all the packages that we need:

import theano

import theano.tensor as T

import theano.tensor.nnet as nnet

import numpy as np

In order for Theano to work on the GPU (if you have an NVIDIA card + CUDA installed), we need to configure the Theano framework first.

Normally, NumPy and Theano use the double-precision floating-point format (float64). However, if we want to utilize the GPU for Theano, the 32-bit floating-point format (float32) is used. This means that we have to switch between 32-bit and 64-bit floating-point settings depending on our needs. If you want to see which configuration is used by your system by default, type the following:

print(theano.config.floatX)

output: float64

You can change your configuration to 32 bits for GPU computing as follows:

theano.config.floatX = 'float32'
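To get a feeling for what the switch from float64 to float32 costs in precision, here is a small sketch that uses only the standard library. The helper name `to_float32` is hypothetical; it rounds a regular Python float (which is 64-bit) to the nearest 32-bit float with the `struct` module. It illustrates the number format only and does not involve Theano itself.

```python
import struct


def to_float32(value):
    """Round a Python float (64-bit) to the nearest 32-bit float and back."""
    return struct.unpack("f", struct.pack("f", value))[0]


x = 0.1
x32 = to_float32(x)

print(repr(x))       # the float64 value Python stores for 0.1
print(repr(x32))     # the same number after a round trip through float32
print(abs(x - x32))  # rounding error introduced by float32
```

The difference is small, which is why float32 is usually an acceptable trade for GPU speed, but it does mean that results computed with the two settings will not match to the last digit.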

Sometimes it is more practical to change the settings via the terminal.

For a 32-bit floating point, type as follows:

$ export THEANO_FLAGS=floatX=float32

For a 64-bit floating point, type as follows:

$ export THEANO_FLAGS=floatX=float64

If you want a certain setting attached to a specific Python script, you can do this:

$ THEANO_FLAGS=floatX=float32 python your_script.py

If you want to see which computational method your Theano system is using, type the following:

print(theano.config.device)

If you want to change both settings, the floating-point precision and the computational device (GPU or CPU), for a specific script, type as follows:

$ THEANO_FLAGS=device=gpu,floatX=float32 python your_script.py

This can be very handy for testing and coding. You might not want to use the GPU all the time; sometimes it is better to use the CPU for prototyping and sketching and to run the script on the GPU once it is ready.
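The same flags can also be set from inside Python. The following is a minimal sketch, not an official Theano recipe: the helper `build_theano_flags` is a hypothetical name, and the sketch assumes that the environment variable is set before Theano is imported, since Theano reads THEANO_FLAGS when it is first imported.

```python
import os


def build_theano_flags(**settings):
    """Join keyword settings into the comma-separated THEANO_FLAGS format."""
    return ",".join("%s=%s" % (key, settings[key]) for key in sorted(settings))


# Assumption: this runs before `import theano`, because Theano reads
# THEANO_FLAGS at import time.
flags = build_theano_flags(device="cpu", floatX="float32")
os.environ["THEANO_FLAGS"] = flags
print(flags)
```

Switching the sketch to the GPU is then a matter of passing `device="gpu"` instead, which keeps the choice between prototyping on the CPU and running on the GPU in one place.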

First, let's test if the GPU works for your setup. You can skip this if you don't have an NVIDIA GPU card on your computer:

from theano import function, config, shared

import theano.tensor as T

import numpy

import time

vlen = 10 * 30 * 768 # 10 x #cores x #threads per core

iters = 1000

rng = numpy.random.RandomState(22)

x = shared(numpy.asarray(rng.rand(vlen), config.floatX))

f = function([], T.exp(x))

print(f.maker.fgraph.toposort())

t0 = time.time()

#range works on both Python 2 and Python 3

for i in range(iters):

    r = f()

t1 = time.time()

print("Looping %d times took %f seconds" % (iters, t1 - t0))

print("Result is %s" % (r,))

#an Elemwise op in the compiled graph means that the work stayed on the CPU

if numpy.any([isinstance(node.op, T.Elemwise) for node in f.maker.fgraph.toposort()]):

    print('Used the cpu')

else:

    print('Used the gpu')

Now that we know how to configure Theano, let's run through some simple examples to see how it works. Basically, every piece of Theano code is composed of the same structure:

1. The initialization, where the symbolic variables are declared.

2. The compilation, where the functions are formed.

3. The execution, where the compiled functions are applied to data.

Let's use these principles in some basic examples of vector computations and mathematical expressions:

#Initialize a simple scalar

x = T.dscalar()

fx = T.exp(T.tan(x**2)) #define the expression we want to compute

type(fx) #just to show you that fx is a Theano variable type

#Compile: create a callable function for the expression

f = theano.function(inputs=[x], outputs=[fx])

#Execute the function, on a number in this case

f(10)

As we mentioned before, we can use Theano for mathematical expressions. Look at this example where we use a powerful Theano feature called autodifferentiation, a feature that becomes highly useful for backpropagation:

fp = T.grad(fx, wrt=x)

fs = theano.function([x], fp)

fs(3)

output: 4.59
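We can sanity-check this gradient without Theano. The following sketch uses only the standard library: `f_prime` is the derivative of exp(tan(x**2)) worked out by hand with the chain rule, and `central_difference` is a plain numerical estimate. Both helper names are hypothetical and are not part of Theano; the two values should agree with each other and with the gradient that Theano reported above.

```python
import math


def f(x):
    """The function from the example: exp(tan(x**2))."""
    return math.exp(math.tan(x ** 2))


def f_prime(x):
    """Chain rule: exp(tan(x^2)) * (1 + tan(x^2)^2) * 2x."""
    t = math.tan(x ** 2)
    return math.exp(t) * (1.0 + t ** 2) * 2.0 * x


def central_difference(func, x, h=1e-6):
    """Numerical estimate of the derivative of func at x."""
    return (func(x + h) - func(x - h)) / (2.0 * h)


analytic = f_prime(3.0)
numeric = central_difference(f, 3.0)
print(analytic, numeric)
```

The point of automatic differentiation is that Theano derives the equivalent of `f_prime` for us from the symbolic expression, which matters once the expression is a full neural network rather than a one-line function.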

Now that we understand the way in which we can use variables and functions, let's compute a simple logistic function:

#now we can apply this function to matrices as well

x = T.dmatrix('x')

s = 1 / (1 + T.exp(-x))

logistic = theano.function([x], s)

logistic([[2, 3], [.7, -2],[1.5,2.3]])

output:

array([[ 0.88079708, 0.95257413],

[ 0.66818777, 0.11920292],

[ 0.81757448, 0.90887704]])

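Theano applies the compiled function element-wise to the whole matrix in a single call. On a small example like this one the benefit is not visible, but for larger computations, compiled and GPU-backed functions can be faster than the equivalent NumPy code.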
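As a cross-check on the logistic output above, the same values can be reproduced in plain Python. This is a sketch with the standard library only; the function name `logistic` mirrors the Theano example but is an ordinary Python function here, working on a list of rows instead of a Theano matrix.

```python
import math


def logistic(rows):
    """Apply 1 / (1 + exp(-x)) to every element of a list of rows."""
    return [[1.0 / (1.0 + math.exp(-value)) for value in row] for row in rows]


result = logistic([[2, 3], [0.7, -2], [1.5, 2.3]])
for row in result:
    print(["%.8f" % value for value in row])
```

The plain Python version loops over every element explicitly; the Theano version expresses the same formula once, symbolically, and leaves the looping to the compiled function.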
