Beginning HTML5 Media. Make the most of the new video and audio standards for the Web (2015)

CHAPTER 1

Encoding Video

The “art” of encoding video for HTML5 is, in many respects, a “black art.” There are no standards other than the file format; everything else, from data rate to audio, is left to your best judgment. The decisions you make in creating the MPEG4, WebM, and Ogg files are therefore “subjective,” not “objective.” Before you can play video and audio content in HTML5, you need to clearly understand how these files are created and the decisions you will need to make to ensure smooth playback. This process starts with a rather jarring revelation: video is not video. The extensions used to identify video and audio files are more like shoeboxes than anything else. The file formats (MPEG4, WebM, and Ogg) are the names on the boxes, and inside the boxes are a video track and an audio track. The box with the file format label is called a “container.”

In this chapter you’ll learn the following:

· The importance of containers and codecs.

· The audio and video codecs used to encode the audio and video files used by HTML5.

· How to use Miro Video Converter to create .mp4, .webm, and .ogv files.

· How to use the Firefogg extension to create .webm and .ogv files.

· How to use the Adobe Media Encoder to create an .mp4 file.

· The commands used by FFmpeg to encode the various audio and video formats used by HTML5.

Containers

Though you may think of a video file as, say, an .mp4 file, in reality that .mp4 file is nothing more than a container format. A container defines how to store what is inside it, not what types of data are stored there. This is a critical distinction to understand.

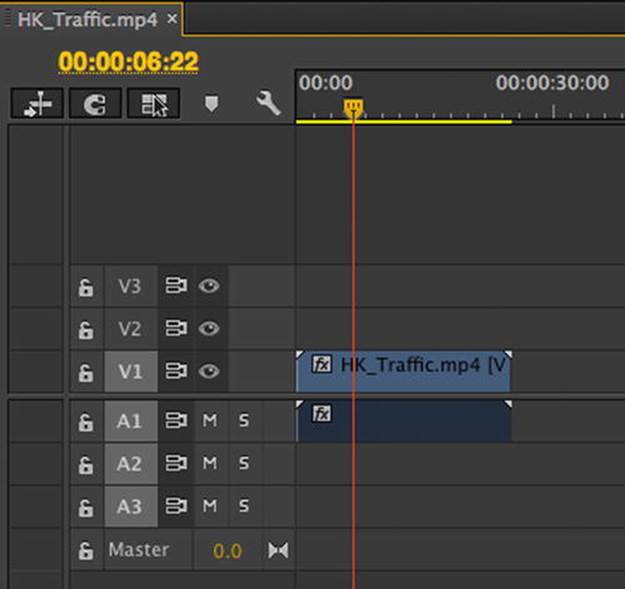

If you were able to open the box labeled MPEG4 you would see a video track (without audio), plus one or more audio tracks (without video), which is exactly what you see when you edit a video file in a video editor as shown in Figure 1-1. These tracks are usually interrelated. For example, the audio track will contain markers that allow it to synchronize with the video track. The tracks typically contain metadata such as the aspect ratio of the images of a video track or the language used in the audio track. The containers that encapsulate the tracks can also hold metadata such as the title of the video production, the cover art for the file, a list of chapters for navigation, and so on.

Figure 1-1. You can see the video and audio tracks in a video editor

In addition, video files may contain text tracks, such as captions or subtitles, tracks that contain programming information, or tracks that contain small thumbnails to help users find a particular point in the video when using fast forward or a jog control. We call these tracks “timed metadata” and will talk about them later. For now, we’ll focus on creating video files that contain a video and an audio track.

Similar to video files, audio files also consist of a container with tracks of content inside them. Often, the same container formats are used for creating audio files as for creating video files, but some simpler containers also exist for audio alone.

Video and audio signals are put into container formats by encoding to binary form and compressing the bits. This process is called encoding and the process of returning the binary data to audio/video signals is called decoding. The algorithm for encoding and decoding is a codec (COder/DECoder). We’ll talk about codecs in a bit; first we need to understand the container formats that browsers support.

Video Containers

Though there are a lot of container formats out there, thankfully browsers only support a limited set and there really are only three you need to know.

· MPEG4, which usually uses a .mp4 or .m4v extension. This container, which typically holds an H.264 or H.265 encoded video track and an AAC (Advanced Audio Coding) audio track, is based on Apple’s older .mov format and is the one most commonly produced by the video camera in your smartphone or tablet.

· Ogg, which uses the .ogg or .ogv extension. Ogg, as pointed out in the “Introduction,” is an open source format unencumbered by patents. This container holds Ogg video (the Theora codec) and Ogg audio (the Vorbis or Opus codec). This format is supported “out of the box” by all major Linux distributions and is also playable on both the Mac and Windows platforms using the VLC player, which is freely available at www.videolan.org/vlc/index.html.

· WebM, which uses the .webm extension. As pointed out in the “Introduction,” WebM is a royalty-free open source format designed specifically for HTML5 video. This container holds a VP8 or VP9 encoded video track and a Vorbis or Opus encoded audio track. This format is supported natively by many of the modern browsers, except for Internet Explorer (IE) and Safari, for which you need to install Media Foundation or QuickTime components, respectively.

All browsers that support the Ogg container format also support the more modern WebM container format. Therefore, this book focuses on WebM and we only cover Ogg in this chapter for completeness.

Note For more information regarding video and browsers, check out http://en.wikipedia.org/wiki/HTML5_Video#Browser_support.

Audio Containers

Most of the video containers are also applicable to audio-only files, but then use different MIME types and different file extensions. HTML5 supports the following audio file formats:

· MPEG4 files that are audio-only have an AAC encoded audio track and an .m4a extension.

· Ogg files that are audio-only have either a Vorbis encoded audio track and an .ogg or .oga extension, or an Opus encoded audio track and an .opus extension.

· WebM files that are audio-only have a Vorbis or Opus encoded audio track but also a .webm extension.

· MP3 files contain audio encoded with the MPEG-1 Audio Layer 3 codec, stored as a sequence of packets. While MP3 is not really a container format (e.g., it cannot contain video tracks), MP3 files have many features of a container, such as metadata in ID3 format headers. MP3 audio files use the extension .mp3.

· RIFF WAVE files are containers for audio tracks that are typically raw PCM encoded, which means that the audio data is essentially uncompressed, making for larger files. The RIFF WAVE file extension is .wav.

Each of these five audio file formats is supported natively in the audio or video elements of one or more Web browsers. Since no format is supported equally by all browsers, you will need to pick at least two to cover all browsers (e.g., .m4a and .webm with Opus). (See also http://en.wikipedia.org/wiki/HTML5_Audio#Supported_audio_codecs.)

Codecs

When you watch a video in a browser there is a lot going on under the hood that you don’t see. Your video player is actually doing three things at the same time.

· Opening the container to see which audio and video formats are used and how they are stored in the file so they can be “decoded.”

· Decoding the video stream and shooting the images to the screen.

· Decoding the audio and sending that data to the speakers.

What you can gather from this is that a video codec is an algorithm which encodes a video track to enable the computer to unpack the images in the video and shoot them to your screen. The video player is the technology that actually does the decoding and display.

Video Codecs

There are two types of video codecs: lossy and lossless.

Lossy video is the most common type. As the video is encoded, increasingly more information is, essentially, thrown out. This process is called compression. Compression starts by throwing away information that is not relevant to the visual perception of the human eye. The end result is a seriously smaller file size. The more you squeeze down the size of a video file, the more information you lose and the more the quality of the video images decreases.

Similarly, compressing a file that has already been compressed with a lossy codec is not a “good thing.” If you have ever compressed a .jpg image a few times you would have noticed the quality of the image degrade because jpg is a “lossy” image compressor. The same thing happens with video. Therefore, when you encode video, you should always keep a copy of the original files in case you have to re-encode at a later stage.

As the name implies, “lossless video” loses no information and results in files that are simply too large to be of any use for online playback. Still, lossless files are extremely useful as the master copy of a video, especially when you need to create three versions of the same file. A common lossless codec is Animation, available through QuickTime.

There are myriad codecs out there all claiming to do amazing things. Thankfully when it comes to HTML5 video we only have three with which to concern ourselves: H.264, Theora, and VP8.

H.264

If H.264 were a major crime figure it would have a few aliases: MPEG-4 part 10 or MPEG-4 AVC or MPEG-4 Advanced Video Coding. Regardless of how it is known, this codec’s primary purpose was to make a single codec available for anything from the cell phone in your pocket (low bandwidth, low CPU) to your desktop computer (high bandwidth, high CPU) and practically anything else that has a screen. To accommodate this rather broad range of situations, the H.264 standard is broken into a series of profiles, each of which defines a number of optional features that trade off complexity for file size. The most used profiles, for our purposes, are:

· Baseline: use this with iOS devices.

· Main: this is mostly an historic profile used on standard definition (SD) (4:3 Aspect Ratio) TV broadcasts.

· High: use this for Web, SD, and HD (high definition) video publishing.

You also should know that most non-PC devices such as iPhones and Android devices actually do the decoding piece on a separate chip since their main CPU is not even close to being powerful enough to do the decoding in real time. Finally, the only browser that doesn’t support the H.264 standard is Opera.

While H.264 is still the dominant codec in the MPEG world, a new codec called H.265/HEVC (MPEG High Efficiency Video Coding) is emerging. The tools to create content in H.265 will likely be the same as for creating H.264, except that the resulting files will be smaller or of higher image quality.

Theora

Theora grew out of the VP3 codec from On2 and has subsequently been published under a BSD-style license by Xiph.org. All major Linux installations and the Firefox and Opera browsers support Theora while IE and Safari as well as iOS and Android devices don’t support it. While being royalty-free and open source, it has been superseded by VP8.

VP8

This is the “New Kid On The Block.” Technically VP8 output is right up there with the H.264 high profile. It also works rather nicely in low bandwidth situations comparable to the H.264 baseline profile. As pointed out in the “Introduction,” Apple is not a huge supporter of this codec, which explains why it isn’t supported in Safari or iOS devices. You can, however, get support by installing a QuickTime component (see https://code.google.com/p/webm/downloads/list). Similarly, installation of a Microsoft Media Foundation component from the same site enables support in IE.

While VP8 is still the dominant codec in the WebM world, a new codec called VP9 is emerging. It is comparable in quality to H.265 but is royalty-free. As both of these codecs are starting to take the stage at around the same time, new hardware for hardware-accelerated encoding and decoding seems to focus on supporting both codecs. This is good news for users and publishers of HTML5 video content, in particular if you are interested in very high resolution video such as 4K.

Audio Codecs

Video without audio is a lot like watching Fred Astaire dance without Ginger Rogers. It just doesn’t seem natural.

When an audio source is digitized, this is called sampling, because a sound pressure value is sampled every few microseconds and converted into a digital value. There are basically three parameters that influence the quality of audio sampling: the sampling rate (i.e., how often the pressure is sampled during a second), the number of channels (i.e., how many locations we use to sample the same signal), and the precision of the samples (i.e., how exact a value we sample, or how many bits we use to store each sample, also called bitdepth). A typical sampling rate for phone quality sound is 8 kHz (i.e., 8,000 times per second), while stereo music quality is 44.1 kHz or 48 kHz. A typical number of channels is 2 (stereo), and a typical bitdepth is 8 bits for telephone quality and 16 bits for stereo music quality. The resulting sampled data is compressed with different codecs to reduce the storage or bandwidth footprint of digitized audio.
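Multiplying the three sampling parameters together gives the data rate of uncompressed audio, which shows why compression matters. The sketch below is a small illustration; the function name is our own, and we assume mono for the telephone case.

```python
def pcm_bitrate(sample_rate_hz: int, channels: int, bit_depth: int) -> int:
    """Uncompressed (raw PCM) data rate in bits per second."""
    return sample_rate_hz * channels * bit_depth


# Telephone quality: 8 kHz, assumed mono, 8-bit samples.
phone = pcm_bitrate(8_000, 1, 8)
# Stereo music quality: 44.1 kHz, 2 channels, 16-bit samples.
music = pcm_bitrate(44_100, 2, 16)

print(f"phone: {phone / 1000:.1f} kbps")  # phone: 64.0 kbps
print(f"music: {music / 1000:.1f} kbps")  # music: 1411.2 kbps

# One minute of stereo music: bits per second * 60 seconds / 8 bits per byte.
bytes_per_minute = music * 60 // 8
print(f"{bytes_per_minute / 1e6:.1f} MB per minute")  # 10.6 MB per minute
```

Roughly 10 MB per minute of uncompressed stereo is the footprint that the lossy codecs discussed next are designed to shrink.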

Like video, audio codecs are algorithms that encode the audio stream, and like video codecs, they come in two flavors: lossy and lossless. Since we’re dealing with video online, where we want to save as much bandwidth as possible, we need only concern ourselves with lossy audio codecs. Note that the RIFF WAVE format is uncompressed and is supported natively in all browsers except IE, so should you need uncompressed audio, this would be your choice. For compressed audio, there really are only three codecs you need to be aware of.

Before we start let’s get really clear on audio. Just because you can sit in your living room and watch a video with six or more speakers of glorious surround sound doesn’t mean web viewers get the same privilege. Most content on the Web is mono or stereo and your typical smartphone or mobile device will not offer you more than stereo output anyway. It is, however, possible to create Ogg Vorbis and MPEG AAC files with six or more channels and get these played back in your browser as surround sound, always assuming that your surround system is actually available to your web browser through your operating system. There will be occasions where you simply want to add an audio file to a web page. In this case, the three audio codecs you need to be aware of are MP3, Vorbis, and AAC.

MP3: MPEG-1 Audio Layer 3

The heading may be confusing but this is the official name for the ubiquitous MP3 file.

MP3 files can contain up to two channels (mono or stereo) of sound. There is a backward-compatible extension of MP3 for surround sound, which might also work in your browser of choice. MP3 can be encoded at different bitrates. For those who may be encountering the term “bitrate” for the first time, it is a measure of how many bits (1s and 0s) are transferred to your computer each second, usually quoted in thousands of bits per second. For example, a bitrate of 1 Kbps means 1,000 bits, or one kilobit (kb), move from the server to the MP3 player each second.

MP3 bitrates range from 32 Kbps up to 320 Kbps, with 64, 128, and 256 Kbps being common values. Simply “supersizing” the bitrate does little more than supersize the file, with only a marginally noticeable increase in audio quality. For example, a 128 Kbps file sounds a lot better than one at 64 Kbps, but the audio quality doesn’t double at 256 Kbps. Another aspect of this topic is that MP3 files allow for a variable bitrate. To understand this, consider an audio file with 5 seconds of silence in the middle. That silent section could be encoded at 32 Kbps, and as soon as the band kicks in, the bitrate jumps to 128 Kbps.
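The bitrate arithmetic above can be made concrete. This sketch assumes a hypothetical 3-minute (180-second) track and computes its size at several constant bitrates, then the duration-weighted average bitrate of the variable-bitrate example with 5 seconds of silence. Function names and the track layout are illustrative only.

```python
def audio_size_bytes(bitrate_kbps: float, seconds: float) -> float:
    """Kilobits/s * 1000 gives bits/s; times seconds; divided by 8 gives bytes."""
    return bitrate_kbps * 1000 * seconds / 8


def vbr_average_kbps(segments: list[tuple[float, float]]) -> float:
    """Duration-weighted average bitrate of (seconds, kbps) segments."""
    total_seconds = sum(sec for sec, _ in segments)
    total_kilobits = sum(sec * kbps for sec, kbps in segments)
    return total_kilobits / total_seconds


# Constant bitrate: doubling the bitrate doubles the file size.
for kbps in (64, 128, 256, 320):
    print(kbps, "Kbps ->", round(audio_size_bytes(kbps, 180) / 1e6, 2), "MB")
# 64 Kbps -> 1.44 MB, 128 Kbps -> 2.88 MB, 256 Kbps -> 5.76 MB, 320 Kbps -> 7.2 MB

# Variable bitrate: 5 s of silence at 32 Kbps inside 175 s of music at 128 Kbps.
song = [(85, 128), (5, 32), (90, 128)]
print(round(vbr_average_kbps(song), 1), "Kbps average")  # 125.3 Kbps average
```

The file size grows linearly with bitrate while perceived quality does not, which is why “supersizing” rarely pays off; variable bitrate saves bits only where the audio is simple.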

All of the modern browsers except for Opera 10.0+ support the MP3 format on the desktop. For smartphones, the decoder is, in many cases, device dependent; though you can reasonably expect an MP3 file to play, it doesn’t hurt to have an Ogg Vorbis fallback. MPEG’s MP3 has generally been superseded by the more modern and more efficient AAC.

Vorbis

Though commonly referred to as Ogg Vorbis, strictly speaking, this is incorrect. Ogg is the container and Vorbis is the audio track in the container. When Vorbis is found in WebM, it’s a WebM audio file, but with a Vorbis encoded audio track. Vorbis has, generally, a higher fidelity than MP3 and is royalty-free. There is no need to choose from a set list of fixed bitrates for Vorbis encoding; you can request the encoder to use whichever bitrate you require. As is the case for all the Ogg codecs, neither Safari nor IE supports Vorbis out of the box, and you need to install extra components to decode it.

Advanced Audio Coding

This format, more commonly known as “AAC,” was dragged into prominence by Apple in 2003, when it was designated as the default format for the iTunes Store. AAC was standardized by MPEG, first in MPEG-2 (1997) and later in MPEG-4.

In many respects AAC is a more “robust” format than its MP3 predecessor. It delivers better sound quality at the same bitrate, and it can encode audio at any bitrate without the 320 Kbps speed brake applied to the MP3 format. When used in the MP4 container, AAC has multiple profiles for the same reason H.264 does: to accommodate varying playback conditions.

As you may have guessed, there is no magical combination of containers and codecs that works across all browsers and devices. For video to work everywhere you will need a minimum of two video files, .mp4 and .webm, and stand-alone audio will require two files, .mp3 and Vorbis. Video is a little more complicated, because the audio codec inside the container must match the container too. If you are producing WebM files, the combination is VP8 and Vorbis. In the case of MP4, the video codec is H.264 and the audio duties are handled by AAC.
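The minimum set of files described above can be captured as a small lookup table. This is only an illustrative sketch: the dictionary and helper names are hypothetical, and the pairings are exactly the ones recommended in this chapter, not an exhaustive list of what each container can hold.

```python
# Container -> (video codec, audio codec), as recommended in this chapter.
VIDEO_PAIRINGS = {
    "mp4": ("H.264", "AAC"),
    "webm": ("VP8", "Vorbis"),
}

# Stand-alone audio: at least two files.
AUDIO_FILES = {
    "mp3": "MP3",
    "ogg": "Vorbis",
}


def video_files_for(basename: str) -> list[str]:
    """Hypothetical helper: every video file one clip needs to play everywhere."""
    return [f"{basename}.{ext}" for ext in VIDEO_PAIRINGS]


def describe(ext: str) -> str:
    """Summarize which codecs belong inside a given video container."""
    video, audio = VIDEO_PAIRINGS[ext]
    return f".{ext}: {video} video + {audio} audio"


print(video_files_for("traffic"))  # ['traffic.mp4', 'traffic.webm']
for ext in VIDEO_PAIRINGS:
    print(describe(ext))
```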

Encoding Video

Now that you understand the file formats and their uses and limitations, let’s put that knowledge to work and encode video. Before we start, it is important that you understand why we are using four different pieces of software to create the video files.

The main reason is the wide range of skill levels among readers of this book, from those new to this subject to those who feel comfortable using command lines to accomplish a variety of tasks. Thus the sequence will be Miro Video Converter, Firefogg, the Adobe Media Encoder (AME), and finally FFmpeg. The only commercial product is Adobe’s, and we include it because, as part of the Creative Cloud, AME has a significant installation base. As well, it gives us the opportunity to explore a critical aspect of MP4 file creation. You will also be asked to make some fundamental decisions while encoding the video file, and each of these applications gives us the opportunity to discuss the decisions made.

Finally, with the exception of FFmpeg, none of these encoders can be used for everything, so you will need to use a combination of them. The four applications demonstrated also contain features common to many of the other products out there.

We start with an uncomplicated encoder: Miro Video Converter. Alternative open source encoding software with a GUI (graphical user interface) includes Handbrake (http://handbrake.fr/) and VLC (www.videolan.org/vlc/index.html), both of which are available in Linux, Mac, and PC versions.

Encoding with Miro Video Converter

If all you are looking for is a dead-simple, easy-to-use encoder, then Miro is for you. Miro does produce reasonable-quality output, but its interface is so simple that, if you are unhappy with the end result, you might want to try one of the other applications presented here.

Miro Video Converter is an open source, GPL-licensed application available for Macintosh, Windows, and Linux computers. It is free and you can download the installer at www.mirovideoconverter.com.

Take the following steps to create a WebM, MP4, or Ogg Theora version of a video:

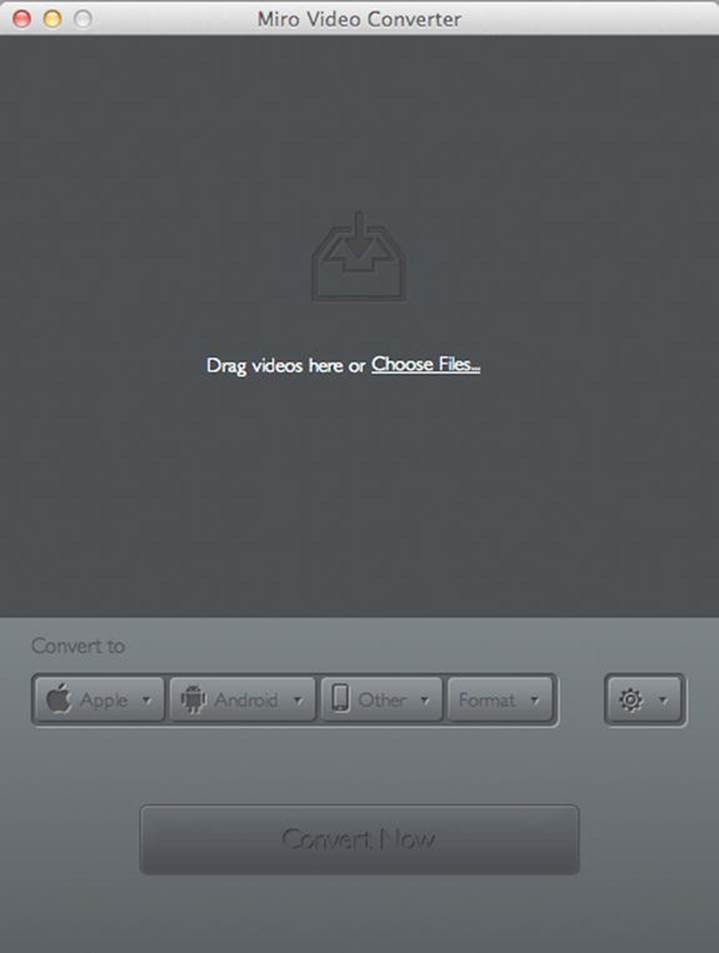

1. Run the Miro Video Converter application. When the application launches, you will see the home screen shown in Figure 1-2. Next you will need to add your original video file to the converter queue. Do this by dragging the video file to the drop area, or choose the file through the file selector. Once added, it will appear in the drop area with a thumbnail as shown in the top of Figure 1-3.

Figure 1-2. The Miro Converter home screen

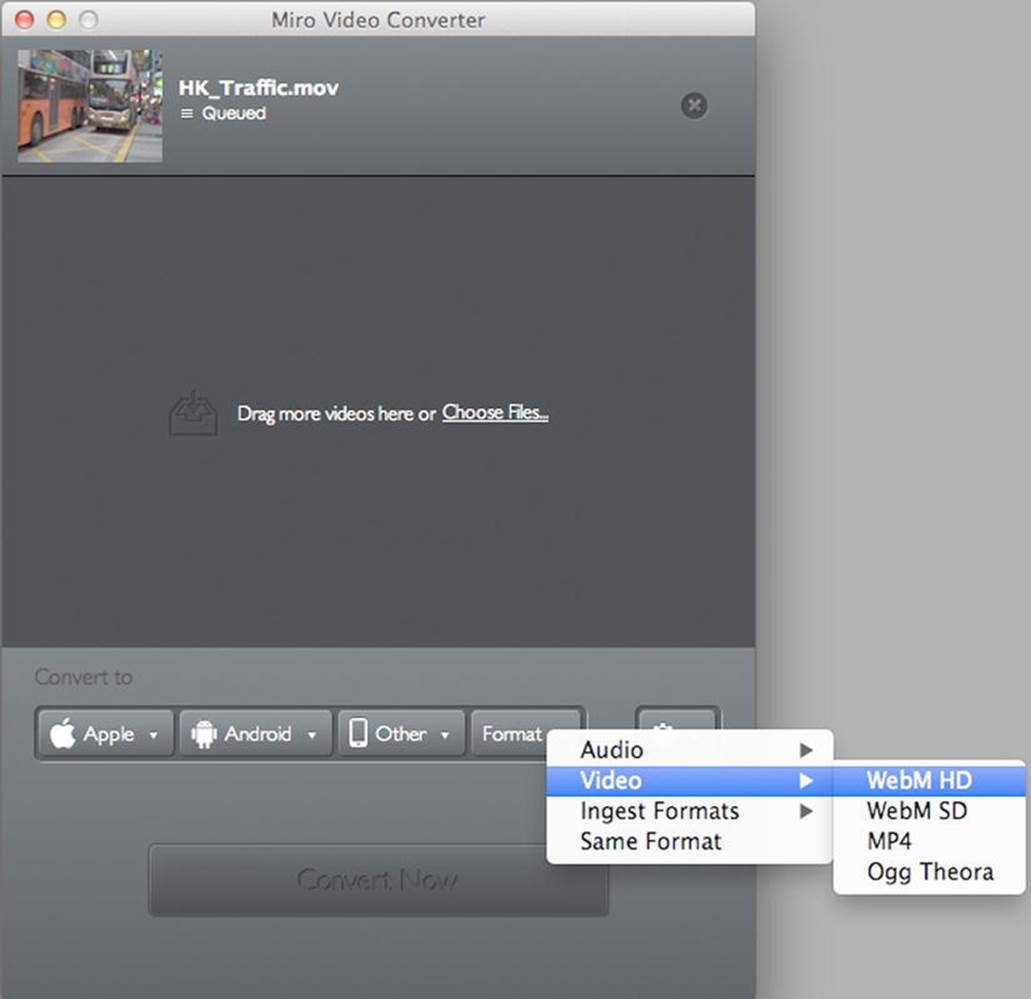

2. The plan is to create the WebM version first. To do this, click the Format button and, as shown in Figure 1-3, select WebM HD from the Video selection. The Convert Now button will turn green and change to read Convert to WebM HD. Click it.

Figure 1-3. Creating a WebM version of a video

3. As the video is converted you will see a progress bar beside the video thumbnail at the top of the interface. Depending on the size of the video this could be a rather slow process so be patient. The encoder tries to maintain the duration of the original video file, its resolution, its framerate, its audio samplerate, and the number of audio channels that were used in the original audio file.

4. When it finishes you will see a green check mark. Miro places the completed files in a separate folder on a Mac. The easiest way to find any conversion is to click the Show File link and move the file to your project’s video folder. Miro also adds the format name to the resulting filename. Feel free to remove it.

You can follow the same process to encode MP4. Just select MP4 from the Video menu in step 2 instead of WebM HD and repeat the same process. Similarly for Ogg Theora, if needed.

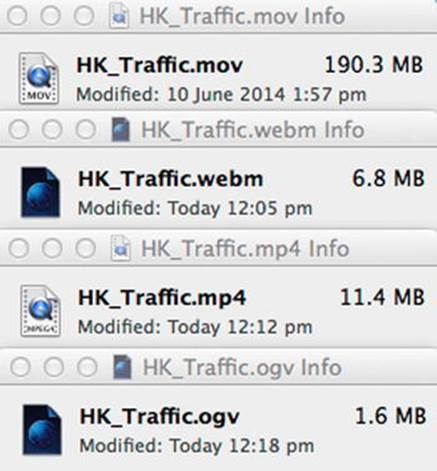

This is a great place to demonstrate just how powerful these codecs are when it comes to compression. In Figure 1-4, we have placed the original .mov version of the file above the WebM, MP4, and Ogg Theora versions just created. Note the significant difference in file size—about 180 MB—between the original and its compressed versions. Due to the lossy nature of the codecs there will be a slight loss of quality but not one that is noticeable. In fact, the Miro WebM and MP4 encodings are of comparable quality, maintaining all video parameters except for WebM having resampled the 48 kHz audio to 44.1 kHz. The Ogg Theora version is of much poorer quality because the default Miro encoding setting for Ogg Theora is suboptimal.

Figure 1-4. The drop in file size is due to the “lossy” codec

For you “Power Users”: Miro uses FFmpeg under the hood. It calls the FFmpeg command line via Python scripts. If you want to change Miro’s default encoding settings, you can edit those Python scripts (see www.xlvisuals.com/index.php?/archives/43-How-to-change-Miro-Video-Converter-3.0-output-quality-for-Ogg-Theora-on-OS-X..html). If you make these changes and re-encode Ogg Theora, the result is a 12.4 MB file of comparable quality to the WebM and MP4 files.

If you open one of the files in a browser, it will expand to the full size of your browser window. This can look a bit fuzzy if your video resolution is smaller than your current browser window. If you reduce the browser window to the resolution of your video, the image will become sharper.

As pointed out earlier, you can convert practically any video file to its WebM, Ogg Theora, and MP4 counterparts. You are also given the choice to prepare the file for a number of iOS and Android devices.

In Figure 1-3 you will notice the Apple button on the left side. Click this and you can prepare the file for playback on the full range of Apple iOS devices. When the file is converted, Miro will ask if you want this file placed in your iTunes library. The choice is up to you.

COMPRESSION SCORE CARD FOR MIRO VIDEO CONVERTER

· Original file: 190.3 MB.

· WebM: 6.8 MB.

· MP4: 11.4 MB.

· Ogg Theora: 1.6 MB.
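The score card above is easier to compare as compression ratios. This sketch uses only the sizes listed for the Miro encodes; the helper name is our own. With these numbers, WebM comes out at roughly 28:1, MP4 at about 17:1, and the (lower-quality) default Ogg Theora encode at about 119:1.

```python
def compression_ratio(original_mb: float, encoded_mb: float) -> float:
    """How many times smaller the encoded file is than the original."""
    return original_mb / encoded_mb


original_mb = 190.3
encodes_mb = {"WebM": 6.8, "MP4": 11.4, "Ogg Theora": 1.6}

for name, size_mb in encodes_mb.items():
    ratio = compression_ratio(original_mb, size_mb)
    saved_mb = original_mb - size_mb
    print(f"{name:<10} {ratio:6.1f}:1  saves {saved_mb:5.1f} MB")
```

Keep in mind that a higher ratio is not automatically better: the Ogg Theora file is the smallest here precisely because Miro’s default Theora settings sacrifice quality.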

Encoding Ogg Video with Firefogg

Firefogg is an open source, GPL-licensed Firefox extension whose sole purpose is to encode a video file to WebM or Ogg Theora. To use this extension you will need to have Firefox version 3.5 or higher installed on your computer. To add the extension, head to http://firefogg.org/ and follow the installation instructions.

Follow these steps to encode video using Firefogg:

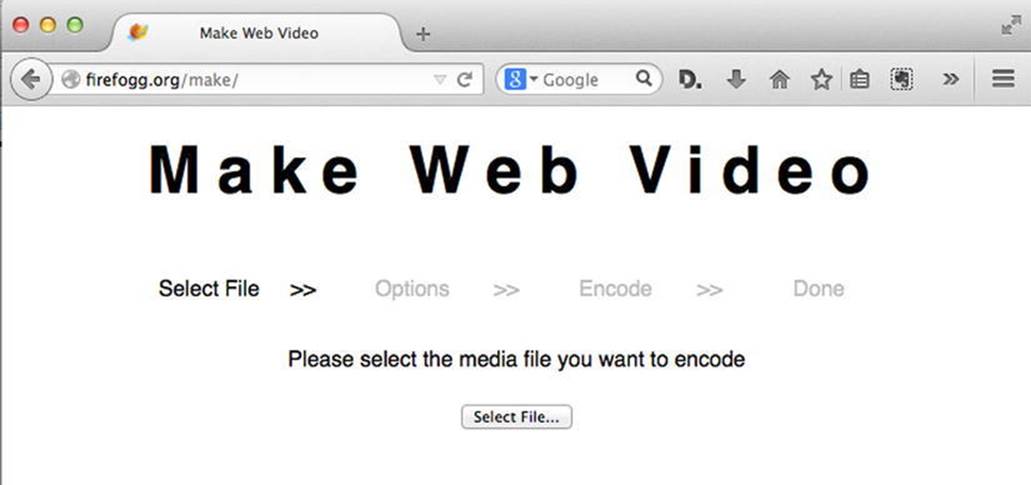

1. Launch Firefox and point your browser to http://firefogg.org/make/. You will see the Make Web Video page shown in Figure 1-5. Click the Select File … button and navigate to the file to be encoded.

Figure 1-5. We start by selecting the video to be encoded

2. When you select the file to be encoded, the interface changes to show you the specifications of the original file and two pop-downs for Format and Preset. You can choose between WebM with the VP8 and Vorbis codecs, WebM with the VP9 and Opus codecs, and Ogg Theora with Vorbis. Also choose a Preset appropriate for your input file format, which is usually pre-selected for you. If this is as far as you choose to go with adapting encoding parameters, then feel free to click the Encode button. It will ask you what to name the encoded file and where to store it.

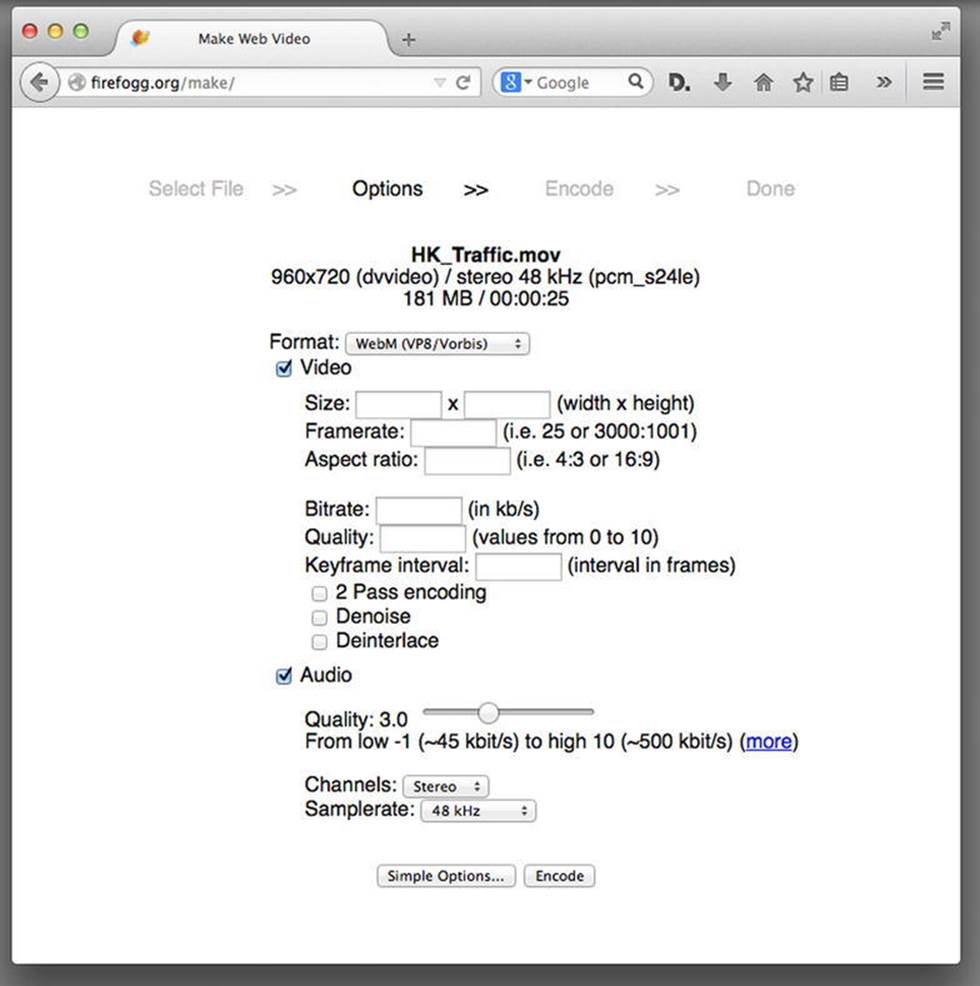

3. In this instance, we want to exert a lot more control over the encoding parameters. Click the Advanced Options button. The interface changes to that shown in Figure 1-6. It shows you the most important encoding parameters for WebM (VP8/Vorbis) video. These are actually a subset of the FFmpeg encoding parameters, and FFmpeg, as in the case of Miro Video Converter, is the actual encoding software used under the hood by Firefogg.

Figure 1-6. The Firefogg Advanced Options dialog box

The choices presented here give you absolute control over the quality of the video. Let’s go through them.

· Format: You are given three choices. The WebM (VP9/Opus) selection offers you the opportunity to use the latest version of the codecs for the WebM format. The WebM (VP8/Vorbis) selection is the most common format and the final choice Ogg (Theora/Vorbis) lets you create an Ogg Theora version of the file. We chose Ogg (Theora/Vorbis).

· Size: You can change the resolution of the video, but keep in mind that changing this value without maintaining the aspect ratio will distort the final product or place it in a letterbox. Also, increasing the physical dimensions of the video does nothing more than stretch the input pixels over more output pixels, so the encoder has to create new pixels from the existing ones. This upsampling actually reduces quality, because the interpolated pixels blur the image, and should be avoided. We chose to resize this video to 50%, which changed the width and height values to 480 x 360.

· Framerate: If you leave this section blank, the source video’s framerate will be used. A common framerate is 30 frames per second (fps). If there is not a lot of motion in the video, reducing the framerate to 15 fps will not have a noticeable effect on playback quality. We entered 30 as the value, since our example video is of road traffic and thus has a lot of motion.

· Aspect Ratio: If the video is standard definition, enter 4:3. If it is HD, enter 16:9. Our example video is 4:3.

· Bitrate: In many respects this is where the “rubber hits the road.” There is no magic number here, but studies have shown the average US broadband speed is 6.6 megabits per second (Mbps). Don’t become attached to that number, because those same studies have shown the average mobile broadband speed is 2.7 Mbps. If you are streaming HTML5 video, you may want to target the lowest common denominator, so a good place to start is 2,000 Kbps. Even then this choice could be dangerous, because the bitrate for any video is the sum of the audio and video bitrates. If you decide to use 2,700 Kbps for the video and 700 Kbps for the audio, the 3,400 Kbps total exceeds that 2.7 Mbps mobile average, and you can pretty well guarantee that the user experience on a mobile device will involve video that starts and stops. In our case, because the physical dimensions of the video are small, we decided to use 1,200 Kbps for the video track, which leaves plenty of bandwidth for the audio track.

· Quality: Your choices are any number between 0 and 10. The choice affects file size and image quality. If the video was shot using your smartphone, a value between 4 and 6 will work. Save 10 for high-quality studio productions. Our video was shot using a Flip video camera so we chose 6 for our quality setting.

· Keyframe interval: This is the time interval between two full-quality video frames. All frames in between will just be difference frames to the previous full video frame, thus providing for good compression. However, difference frames cannot be accessed when seeking, since they cannot be decoded by themselves. By default, Firefogg uses 64 frames as a keyframe interval, which—at 30 fps—means you can basically seek to a 2-sec resolution. That should be acceptable for almost all uses. Leave this blank and let the software do the work using its default setting.

· 2 pass encoding: We always select this option. This feature, also called “2 pass variable bitrate encoding,” generally improves the output quality. On its first pass, the encoder hunts through the video looking for abrupt changes between frames. The second pass does the actual encoding and makes sure to place full-quality keyframes where abrupt changes happen. This avoids difference frames being created across these boundaries, which would obviously be of poor quality. For example, assume the video subject is a car race. The cars will zip by for a few seconds and then there is nothing more than spectators and an empty track. The first pass catches and notes these two transitions. The second pass will place a new keyframe after the cars have zoomed through the frame and allow for a lower bitrate in the shot that has less motion.

· Denoise: This filter will attempt to smooth out any artifacts found in the input video. We won’t worry about this option.

· Deinterlace: Select this only if you are using a clip prepared for broadcast. We won’t worry about this option.
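Three of the settings above come down to simple arithmetic: resizing without distorting the aspect ratio, checking that video plus audio bitrate fits the available bandwidth, and working out how far apart keyframes are in time. The sketch below assumes a 960 x 720 source (implied by the 50% resize to 480 x 360) and a hypothetical 128 Kbps audio track; the helper names are our own, not Firefogg settings.

```python
from fractions import Fraction

# Average speeds quoted in the text, in Kbps.
US_BROADBAND_KBPS = 6_600
US_MOBILE_KBPS = 2_700


def scale(width: int, height: int, factor: float) -> tuple[int, int]:
    """Scale both dimensions by the same factor so the aspect ratio is kept."""
    return round(width * factor), round(height * factor)


def total_kbps(video_kbps: int, audio_kbps: int) -> int:
    """A file's bitrate is the sum of its video and audio bitrates."""
    return video_kbps + audio_kbps


def fits(video_kbps: int, audio_kbps: int, link_kbps: int) -> bool:
    """True if the combined bitrate does not exceed the available bandwidth."""
    return total_kbps(video_kbps, audio_kbps) <= link_kbps


def seek_step_seconds(keyframe_interval: int, fps: float) -> float:
    """Time between keyframes, i.e. the granularity you can seek to."""
    return keyframe_interval / fps


# Assumed 960 x 720 source, resized to 50% as in the example.
w, h = scale(960, 720, 0.5)
print(w, h, Fraction(w, h))  # 480 360 4/3

# 2,700 Kbps video + 700 Kbps audio: fine on broadband, too much for mobile.
print(fits(2_700, 700, US_BROADBAND_KBPS), fits(2_700, 700, US_MOBILE_KBPS))
# 1,200 Kbps video plus a hypothetical 128 Kbps audio track fits on mobile.
print(fits(1_200, 128, US_MOBILE_KBPS))

# Firefogg's default keyframe interval of 64 frames at 30 fps.
print(round(seek_step_seconds(64, 30), 2))  # 2.13
```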

The audio choices, as you may have noticed, are a bit limited. Our video contains nothing more than the noise of the traffic on the street and the usual background street noise. With this in mind, we weren’t terribly concerned with the audio quality. Still some choices had to be made.

· Quality: This slider is a bit deceptive because it actually sets the audio bitrate. We chose a value of 6. If audio quality is of supreme importance, select 10. If it is simply unimportant background noise, a value of 1 will do. Other than that, only trial and error will help you get the number set to your satisfaction.

· Channels: Your choices are stereo and mono. We chose mono because of the nature of the audio. If this file were professionally produced and only destined for desktop playback, then stereo would be the logical choice. Also keep in mind that choosing stereo will increase the file size.

· Sample rate: The three choices presented determine the accuracy of the audio file. If accuracy is a prime consideration, then 48 kHz or 44.1 kHz are the logical choices. We chose 22 kHz since our audio does not need to be reproduced exactly.

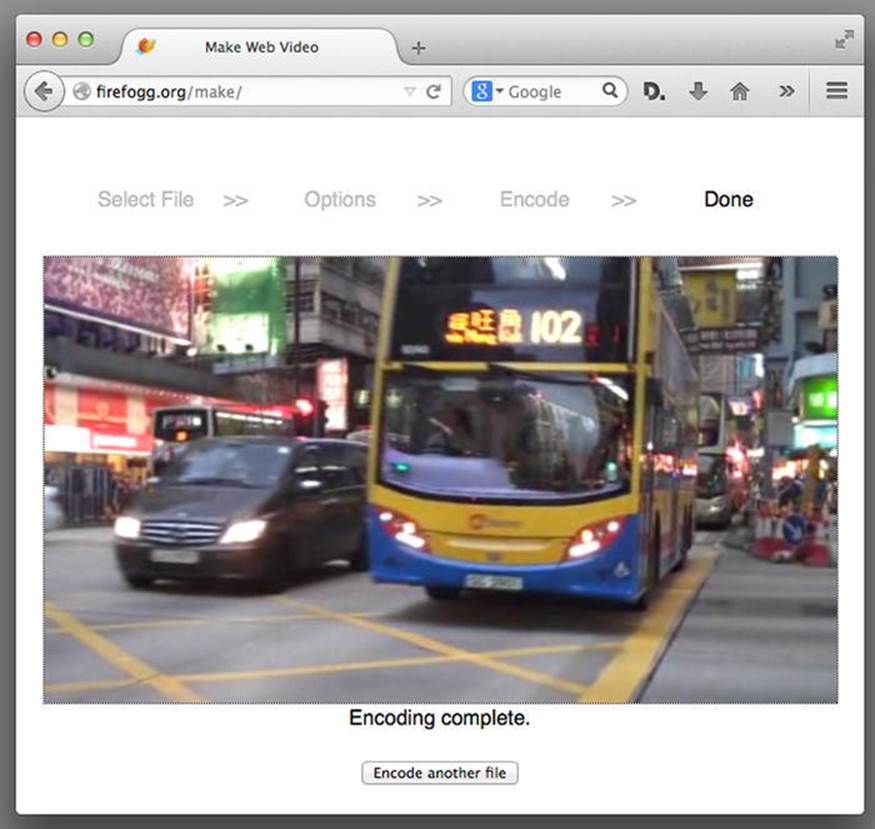

4. With our choices made we clicked the Encode button. You will see the Progress Bar twice as it completes its two passes and the video, Figure 1-7, will launch in the Firefox browser.

Figure 1-7. The Ogg Video file plays in the Firefox browser

The previous options are almost identical when encoding WebM, because they are rather typical options of any video encoder. Many more options are available in the Firefogg JavaScript API (application programming interface) (see www.firefogg.org/dev/), which allows you to include the encoding via the Firefogg Firefox extension in your own Web application.
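Since Firefogg uses FFmpeg under the hood, the same choices can be expressed as a two-pass FFmpeg command line. The sketch below only builds and prints the commands; it does not claim to reproduce what Firefogg itself passes to FFmpeg. It targets WebM (libvpx for VP8, libvorbis for Vorbis), uses the standard FFmpeg options `-b:v`, `-vf scale`, `-r`, `-g` (keyframe interval), `-pass`, `-ac`, and `-ar`, and the file names are hypothetical.

```python
import os
import shlex


def two_pass_webm(src: str, dst: str, width: int, height: int, fps: int,
                  video_kbps: int, keyint: int, channels: int,
                  sample_rate: int) -> tuple[list[str], list[str]]:
    """Build FFmpeg argument lists for a two-pass VP8/Vorbis WebM encode."""
    common = [
        "ffmpeg", "-y", "-i", src,
        "-c:v", "libvpx",             # VP8 video
        "-b:v", f"{video_kbps}k",     # target video bitrate
        "-vf", f"scale={width}:{height}",
        "-r", str(fps),               # output framerate
        "-g", str(keyint),            # keyframe interval in frames
    ]
    # Pass 1 only analyzes the video: no audio, output discarded.
    first = common + ["-pass", "1", "-an", "-f", "null", os.devnull]
    # Pass 2 does the real encode and adds the Vorbis audio track.
    second = common + ["-pass", "2", "-c:a", "libvorbis",
                       "-ac", str(channels), "-ar", str(sample_rate), dst]
    return first, second


first, second = two_pass_webm("traffic.mov", "traffic.webm", 480, 360,
                              30, 1200, 64, 1, 22050)
print(shlex.join(first))
print(shlex.join(second))
```

If FFmpeg is installed, each list can be run in order with `subprocess.run(cmd, check=True)`; the first pass writes a statistics log that the second pass reads.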

COMPRESSION SCORE CARD FOR FIREFOGG

· Original file: 190.3 MB.

· WebM (VP8/Vorbis): 14.4 MB.

· WebM (VP9/Opus): 7.6 MB.

· Ogg Theora (default settings): 11.4 MB.

· Ogg Theora (our custom encode): 4.2 MB.

Encoding an MP4 File with the Adobe Media Encoder CC

Encoding an MP4 file involves many of the same decisions around bitrate, audio quality, and so on, as those encountered in the previous example. Though a number of products will encode an .mp4, ranging in complexity from video editors to Miro, they are all remarkably similar in that you will be asked to make the same decisions regardless of the product. In this example we are using the Adobe Media Encoder to present those decisions. As well, the Media Encoder adds another degree of complexity to the process outlined to this point in the chapter. So let’s get started.

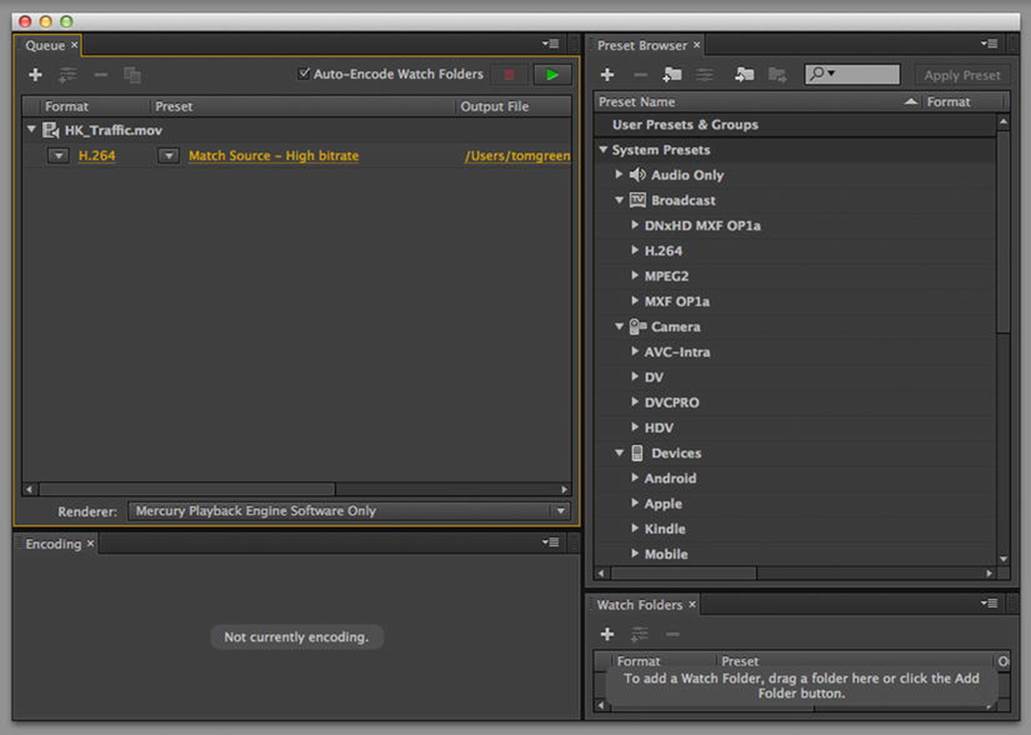

1. When the Media Encoder launches, drag your video into the queue area on the left, as shown in Figure 1-8. Over on the right side of the interface are a number of preset encoding options. We tend to ignore them, preferring, instead, to set our own values rather than use ones that may or may not fit our intention.

Figure 1-8. The file to be encoded is added to the Encoding Queue

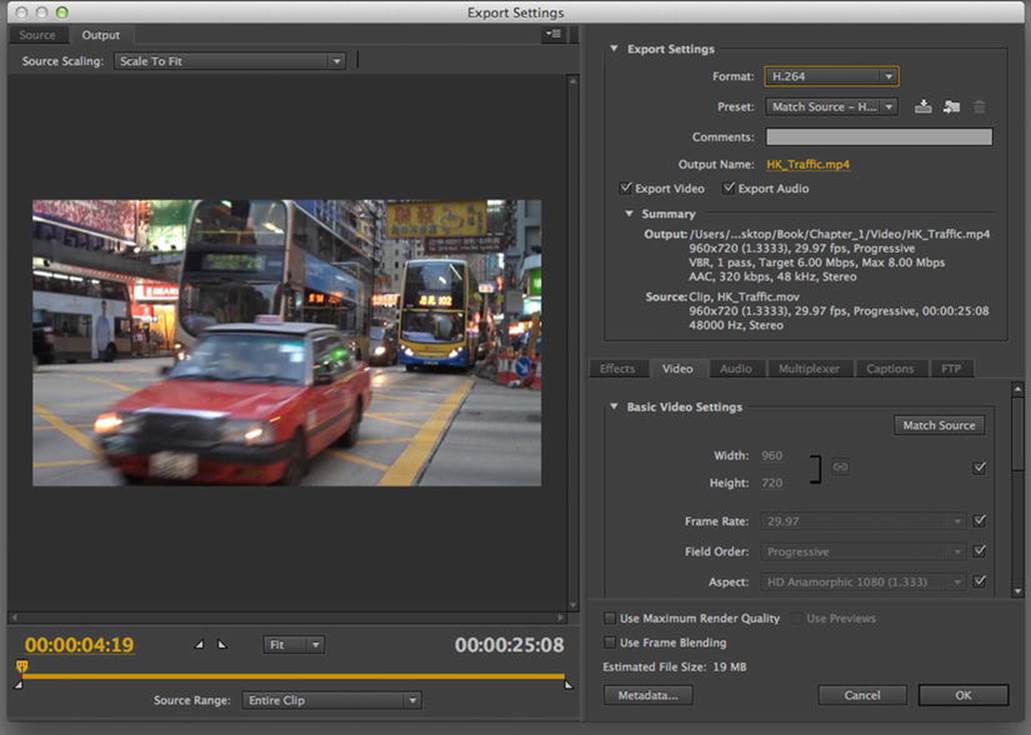

2. When the file appears, click on the “Format” link to open the Export Settings dialog box shown in Figure 1-9.

Figure 1-9. The Export Settings dialog box is where the magic happens

The left side of the dialog box allows you to set the In and Out points of the video and not much else. The right side of the dialog box is where you make some fundamental decisions.

3. The first decision is which codec to use. We clicked the format pop-down and selected the H.264 codec from the list. You don’t need to select a preset but make sure the output name includes the .mp4 extension.


Note Any output created by the Adobe Media Encoder is placed in the same folder as the source file.

4. Select Export Video and Export Audio if they are not already selected. If either is left unchecked, that track will be missing from the output file.

5. Click the Video tab to edit Basic Video Settings. This is where we set the values for the video part of the MP4 container.

6. Clicking a check box will allow you to change the values in the Basic Video Settings area. If you do need to change the physical dimensions, be sure the chain link is selected and the width and height values will change proportionally.

7. Click the check mark beside “profile” and, as pointed out earlier in this chapter, select the “High” profile, which is used for Web video. If our target included iOS devices, then “Baseline” would be the profile choice.

Setting the Bitrate

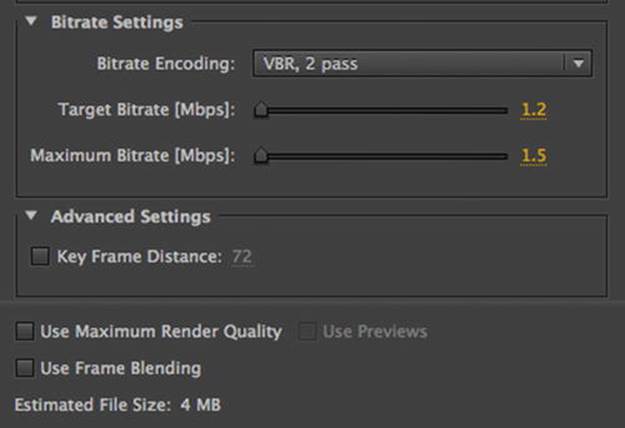

The next important decision to be made involves bitrate. If you click the pop-down you will be presented with three choices: CBR (constant bitrate), “VBR, 1 Pass” (variable bitrate), and “VBR, 2 Pass.” You will notice, as you select each one, that the Target Bitrate area changes to include an extra slider for the two VBR choices. So what are the differences?

CBR encoding applies the data rate you set to the entire video clip. Use CBR only if your clip has a similar level of motion across its entire length (think a tree in a field).

VBR encoding raises and lowers the data rate, up to the upper limit you set, based on the data required by the compressor. This explains the appearance of that extra slider. Unlike the Ogg Theora example, you get to set both the target and the upper limit for the bitrate.

When it comes to encoding MP4 video, VBR 2 Pass is the gold standard. It is the same two-pass approach we used for Ogg Theora.

1. Select “VBR, 2 Pass” from the pop-down menu.

2. Set the Target Bitrate to 1.2 Mbps and the Maximum Bitrate to 1.5 Mbps, as shown in Figure 1-10. Remember, the total bitrate is the sum of the audio and video bitrates. We know our bandwidth limit is around 2 Mbps, so this leaves us ample room for the audio. Also note that the projected file size is now sitting at 4 MB.
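The projected size follows from simple arithmetic: bits per second times seconds, divided by 8 to get bytes. The chapter does not state the clip's duration, so the 25 seconds below is a hypothetical value, and the 64 Kbps audio figure is borrowed from the audio settings chosen later in this example. estimate_size_kb is likewise a hypothetical helper, and it ignores container overhead.

```shell
#!/bin/sh
# estimate_size_kb VIDEO_KBPS AUDIO_KBPS SECONDS
# Hypothetical helper: size in kB ~= (video + audio kbps) * seconds / 8.
# Ignores container overhead; a VBR encode lands near, not on, the target.
estimate_size_kb() {
    total_kbps=$(( $1 + $2 ))
    echo $(( total_kbps * $3 / 8 ))
}

# 1.2 Mbps video + 64 Kbps audio for a hypothetical 25-second clip
estimate_size_kb 1200 64 25   # prints 3950 (kB), roughly 4 MB
```

The relationship is linear, so if the projected size looks too large, lowering the target bitrate shrinks the estimate proportionally.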

Figure 1-10. The bitrate targets are set for the H.264 codec in the .mp4 container

3. Ignore the keyframe distance setting. Leave that to the software. Now we can turn our attention to the audio track in the mp4 container.

Setting the Audio values for the AAC Codec

The mp4 container uses the H.264 codec to compress the video track and the AAC audio codec to compress the audio track in the container. In this example we are going to apply AAC encoding to the audio track in the video. Here’s how.

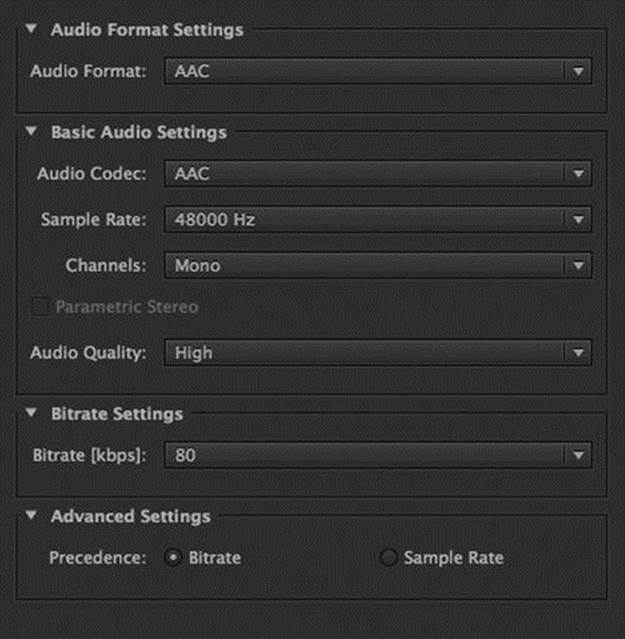

1. Click the Audio tab to open the Audio options shown in Figure 1-11.

Figure 1-11. The Audio Encoding options

2. In the format area select AAC. The other two options—Dolby Digital and MPEG—can’t be used for the Web.

3. Select AAC as the audio codec. Though there are two other options—AAC+ Version 1 and AAC+ Version 2—these are more applicable to streaming audio and radio broadcasting.

4. Reduce the Sample Rate to 32000 Hz. The reason is that the audio track is more background noise than anything else and reducing its sample rate won’t have a major effect on the audio quality.

5. Select Mono from the Channels pop-down.

6. Set the Audio Quality to High, though with the audio contained in our clip, a quality setting of Medium would not be noticeably different.

7. Set the Bitrate value to 64. If this video contained a stereo track, anything between 96 and 160 Kbps would be applicable. In our case it is a mono track, and 64 Kbps is a good middle ground. Also be aware that bitrate does affect file size: in our case, simply selecting 160 Kbps increased the file size by 1 MB.

8. Advanced Settings offers you two choices for Precedence for playback. Since we are playing this file through a browser, Bitrate is the logical choice.

9. With our choices made, click the OK button to return to the queue. When you arrive, click the green Start Queue button to start the encoding process. When it finishes, you will hear a chime.

COMPRESSION SCORE CARD FOR ADOBE MEDIA ENCODER

· Original file: 190.3 MB.

· MP4: 4 MB.

Encoding Media Resources Using FFmpeg

In this final section of the chapter, we focus on using an open source command-line tool to perform the encoding.

The tool we will be using is FFmpeg, which is also the tool of choice for many online video publishing sites, including, allegedly, YouTube. This software is available to everyone, on every major platform and is vendor-neutral.

Install Windows builds from http://ffmpeg.zeranoe.com/builds/. On Mac OS X we recommend using Homebrew (make sure to install FFmpeg with the following options: --with-libvorbis and --with-libvpx for WebM support, and --with-theora for Theora support). On Linux, there should be a package built for your distribution. Even if you have an exotic platform, you are likely to make it work by compiling from source code.

We start with encoding MPEG-4 H.264 video.

Open source tools for encoding MPEG-4 H.264 basically all use the x264 encoding library, which is published under the GNU GPL license. x264 is among the most feature-complete H.264 codecs and is widely accepted as one of the fastest with highest-quality results.

Let’s assume you have a video file from a digital video camera. It could already be in MPEG-4 H.264 format, but let’s assume it is in DV, QuickTime, VOB, AVI, MXF, or any other such format. FFmpeg understands almost all input formats (assuming you have the right decoding libraries installed). Running ffmpeg -formats lists the supported container formats, and ffmpeg -codecs lists the supported codecs.

To get all the options possible with libx264 encoding in FFmpeg, run the following command:

$ ffmpeg -h encoder=libx264

There is up-to-date information on command-line switches at https://trac.ffmpeg.org/wiki/Encode/H.264.

The following are some of the important command-line switches:

· The -c switch specifies the codec to use. “:v” specifies a video codec and libx264 the x264 encoder. “:a” specifies the audio codec and libfaac the AAC encoder.

· The -profile:v switch specifies an H.264 video profile, with a choice of baseline, main, or high.

· To constrain a bitrate, use the -b [bitrate] switch, with “:v” for video and “:a” for audio.

· To run a multi-pass encode, use the -pass [number] switch.

· To specify the number of audio channels, use -ac [channels]; to specify the sampling rate, use -ar [rate].

Following is a simple command line that converts an input.mov file to an output.mp4 file using the main profile:

$ ffmpeg -i input.mov -c:v libx264 -profile:v main -pix_fmt yuv420p -c:a libfaac output.mp4

The pixel format needs to be specified because Apple QuickTime only supports YUV planar color space with 4:2:0 chroma subsampling in H.264.

Here is a two-pass encoding example. The first pass needs only the video (the -an switch drops the audio) because its job is to analyze the video and create the temporary log files that the second pass uses as input.

$ ffmpeg -i input.mov -c:v libx264 -profile:v main -b:v 1200k -pass 1 -pix_fmt yuv420p -an temp.mp4

$ ffmpeg -i input.mov -c:v libx264 -profile:v main -b:v 1200k -pass 2 -pix_fmt yuv420p \
-c:a libfaac -b:a 64k -ac 1 -ar 22050 output.mp4
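Because both passes must use the same video settings, it can help to wrap them in a small script. The following is a minimal sketch, not a canonical recipe: encode_mp4_2pass is a hypothetical function, pass 1 sends its throwaway output to FFmpeg's null muxer instead of writing temp.mp4, and pass 2 uses FFmpeg's built-in aac encoder, since libfaac support has been removed from newer FFmpeg releases. Setting the FFMPEG variable to another command lets you dry-run it.

```shell
#!/bin/sh
# encode_mp4_2pass INPUT OUTPUT [VIDEO_BITRATE]
# Hypothetical helper: runs both FFmpeg passes with identical video
# settings so that pass 2 can read the log written by pass 1.
# Set FFMPEG to another command for a dry run.
encode_mp4_2pass() {
    src=$1
    dst=$2
    vbr=${3:-1200k}
    ff=${FFMPEG:-ffmpeg}

    # Pass 1: video only (-an); the encoded output itself is discarded.
    # -y overwrites without prompting.
    "$ff" -y -i "$src" -c:v libx264 -profile:v main -b:v "$vbr" -pass 1 \
        -pix_fmt yuv420p -an -f null /dev/null || return 1

    # Pass 2: same video settings, plus mono 64 Kbps AAC audio at 22.05 kHz.
    "$ff" -y -i "$src" -c:v libx264 -profile:v main -b:v "$vbr" -pass 2 \
        -pix_fmt yuv420p -c:a aac -b:a 64k -ac 1 -ar 22050 "$dst"
}
```

Call it as encode_mp4_2pass input.mov output.mp4 1200k. In a Windows command prompt, the equivalent null target is NUL rather than /dev/null.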

COMPRESSION SCORE CARD FOR FFMPEG MP4

· Original file: 190.3 MB.

· MP4 (unchanged encoding settings): 9.5 MB.

· MP4 (first pass w/o audio): 3.8 MB.

· MP4 (second pass): 4 MB.

Encoding Ogg Theora

Open source tools for encoding Ogg Theora basically use the libtheora encoding library, which is published under a BSD style license by Xiph.org. There are several encoders written on top of libtheora, of which the most broadly used are ffmpeg2theora and FFmpeg.

The main difference between ffmpeg2theora and FFmpeg is that ffmpeg2theora is fixed to use the Xiph libraries for encoding, while FFmpeg has a choice of codec libraries, including its own Vorbis implementation. ffmpeg2theora also has far fewer options to worry about. To use FFmpeg for encoding Ogg Theora, make sure to use the libtheora and libvorbis encoding libraries; otherwise your files may be suboptimal.
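If you do use FFmpeg for Ogg Theora, naming the Xiph encoders explicitly makes FFmpeg use libtheora and libvorbis (or fail loudly if your build lacks them). A minimal sketch with a hypothetical encode_ogv_ffmpeg helper; the quality values (7 on Theora's 0 to 10 scale, 4 on Vorbis's -1 to 10 scale) are illustrative assumptions rather than settings from this chapter.

```shell
#!/bin/sh
# encode_ogv_ffmpeg INPUT OUTPUT
# Hypothetical helper: Ogg Theora/Vorbis via FFmpeg using the Xiph
# libraries. Higher -q values mean higher quality on both scales.
# Set FFMPEG to another command for a dry run.
encode_ogv_ffmpeg() {
    "${FFMPEG:-ffmpeg}" -i "$1" \
        -c:v libtheora -q:v 7 \
        -c:a libvorbis -q:a 4 \
        "$2"
}
```

Call it as encode_ogv_ffmpeg input.mov output.ogv.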

Because ffmpeg2theora is optimized for creating Ogg Theora files and therefore has more specific options and functionality for Ogg Theora, we use it here.

The following command can be used to create an Ogg Theora video file with Vorbis audio. It will simply retain the width, height, and framerate of your input video and the sampling rate and number of channels of your input audio in a new Ogg Theora/Vorbis encoded resource.

$ ffmpeg2theora -o output.ogv input.mov

Just like H.264, Ogg Theora offers two-pass encoding to improve the quality of the video image. Here’s how to run ffmpeg2theora with two passes:

$ ffmpeg2theora -o output.ogv --two-pass input.mov

There are a lot more options available in ffmpeg2theora. For example, you can include subtitles, metadata, an index to improve seekability on the Ogg Theora file, and even options to improve the video quality with some built-in filters. Note that inclusion of an index has been a default since ffmpeg2theora version 0.27. The index will vastly improve seeking performance in browsers.

You can discover all of the options by calling:

$ ffmpeg2theora -h

Encoding WebM

Open source tools for encoding WebM basically use the libvpx encoding library, which is published under a BSD style license by Google. FFmpeg has many command line options for encoding WebM. The most important are described here: https://trac.ffmpeg.org/wiki/Encode/VP8.

If you enter the following command, you should be able to create a WebM file with VP8 video and Vorbis audio. It will simply retain the width, height, and framerate of your input video as well as the sample rate and number of channels of your input audio in a new WebM-encoded resource:

$ ffmpeg -i input.mov output.webm

Strangely, FFmpeg tries to compress the WebM file to a bitrate of 200 Kbps for the video, which results in pretty poor picture quality.

Following is a command line that targets 1,200 Kbps:

$ ffmpeg -i input.mov -b:v 1200k output.webm
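The same command can also name its encoders explicitly, so the result does not depend on which codecs your particular FFmpeg build picks by default for .webm output. This sketch wraps it in a hypothetical encode_webm function using the 1,200 Kbps video target and an assumed 64 Kbps Vorbis audio bitrate.

```shell
#!/bin/sh
# encode_webm INPUT OUTPUT [VIDEO_BITRATE] [AUDIO_BITRATE]
# Hypothetical helper: VP8 video (libvpx) + Vorbis audio (libvorbis).
# Defaults: 1200k video, 64k audio. Set FFMPEG to another command for a dry run.
encode_webm() {
    "${FFMPEG:-ffmpeg}" -i "$1" \
        -c:v libvpx -b:v "${3:-1200k}" \
        -c:a libvorbis -b:a "${4:-64k}" \
        "$2"
}
```

For the VP9/Opus variant listed in the Firefogg score card, the encoder names to swap in are libvpx-vp9 and libopus.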

COMPRESSION SCORE CARD FOR FFMPEG WEBM

· Original file: 190.3 MB.

· WebM (unchanged encoding settings): 1.7 MB.

· WebM (1200Kbps bitrate): 4.2 MB.

Using Online Encoding Services

While you can do video encoding yourself, and even set up your own automated encoding pipeline using FFmpeg, it may be easier to use an online encoding service. There are a large number of services available (e.g., Zencoder, Encoding.com, HeyWatch, Gomi, PandaStream, uEncode), with different pricing options. The use of online encoding services is particularly interesting since they will run the software for you with already optimized parameters, and the cost will scale with the amount of encoding you need to undertake.

Encoding MP3 and Ogg Vorbis Audio Files

As you may have guessed, encoding audio is far easier than encoding video. Several programs are available for encoding an audio recording to the MP3 format, including lame and FFmpeg (which, incidentally, uses the same encoding library as lame: libmp3lame). Though most audio-editing software is able to encode MP3, let’s use FFmpeg. Here’s how.

1. Enter the following command:

$ ffmpeg -i audiofile -acodec libmp3lame -aq 0 audio.mp3

The aq parameter signifies the audio quality. For libmp3lame its values range from 0 to 9, with 0, used in this example, being the best quality. There are further parameters available to change, such as bitrate, number of channels, volume, and sampling rate.

To encode an Ogg Vorbis file

2. Enter the following command:

$ ffmpeg -i audiofile -f ogg -acodec libvorbis -ab 192k audio.ogg

The ab parameter sets the target audio bitrate, here 192 Kbps. There are further parameters available to change, such as the number of channels, volume, and sampling rate.

3. You can also use oggenc to encode to Ogg Vorbis. This command is slightly easier to use and has some specific Ogg Vorbis functionality. Enter the following command to create an Ogg Vorbis file with a target bitrate of 192K:

$ oggenc audiofile -b 192 -o audio.ogg

The oggenc command also offers many extra parameters, such as the inclusion of a Skeleton track (which creates an index to improve seeking within the file) and a quality parameter, -q, with values ranging from -1 to 10, where 10 is the best quality. It also includes parameters that let you change the number of channels, the audio volume, and the sample rate, as well as a means to include name-value pairs of metadata.

oggenc accepts input audio only in raw, WAV, or AIFF format, so it is a little more restricted than the ffmpeg command.
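Since browsers have differed in which of these audio formats they play, you will often want both files from the same recording. A minimal sketch with a hypothetical encode_audio_pair function that reuses the FFmpeg settings shown above; it spells the options -c:a, -q:a, and -b:a, which are FFmpeg's newer names for -acodec, -aq, and -ab. Setting FFMPEG to another command gives a dry run.

```shell
#!/bin/sh
# encode_audio_pair INPUT BASENAME
# Hypothetical helper: writes BASENAME.mp3 (LAME, best VBR quality)
# and BASENAME.ogg (Vorbis, 192 Kbps target) from one source file.
encode_audio_pair() {
    ff=${FFMPEG:-ffmpeg}
    "$ff" -i "$1" -c:a libmp3lame -q:a 0 "$2.mp3" || return 1
    "$ff" -i "$1" -c:a libvorbis -b:a 192k "$2.ogg"
}
```

Running encode_audio_pair recording.wav audio produces audio.mp3 and audio.ogg.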

Summary

We won’t deny this has been a rather “tech heavy” chapter. The reason is simple: You can’t really use audio and video files in HTML5 if you don’t understand their underlying technologies and how these files are created. We started by explaining container formats and then moved into the all-important codecs. From there we started encoding video. Though we showed you a number of free and commercial tools used to encode video, the stress was on the decisions you will have to make at each step of the process. As we pointed out, these decisions are more subjective than objective and regardless of which software you use to encode video, you will be making similar decisions.

We ended the chapter by moving from GUI-based encoding to using a command line tool—FFmpeg—to create audio and video files. Again, you will have to make the same subjective decisions regarding a number of important factors that have a direct impact on the audio and video output.

Speaking of output, now that you know how the files are created let’s play them in HTML5. That’s the subject of the next chapter. We’ll see you there.