Microsoft Press Programming Windows Store Apps with HTML, CSS and JavaScript (2014)

Chapter 13 Media

To say that media is important to apps—and to culture in general—is a terrible understatement. Ever since the likes of Edison made it possible to record a performance for later enjoyment, and the likes of Marconi made it possible to widely broadcast and distribute such performances, humanity’s worldwide appetite for media—graphics, audio, and video—has probably outpaced the appetite for automobiles, electricity, and even junk food. In the early days of the Internet, graphics and images easily accounted for the bulk of network traffic. Today, streaming video even from a single source like Netflix holds top honors for pushing the capabilities of our broadband infrastructure! (It certainly holds true in my own household with my young son’s love of Tintin, Bob the Builder, Looney Tunes, and other such shows.)

Incorporating some form of media is likely a central concern for most Windows Store apps. Simple ones, even, probably use at least a few graphics to brand the app and present an attractive UI, as we’ve already seen on a number of occasions. Many others, especially games, will certainly use graphics, video, and audio together. In the context of this book, all of this means using the img, svg (Scalable Vector Graphics), canvas, audio, and video elements of HTML5.

Of course, working with media goes well beyond just presentation because apps might also provide any of the following capabilities:

• Organize and edit media files, including those in the pictures, music, and videos media libraries.

• Play back custom audio and video formats.

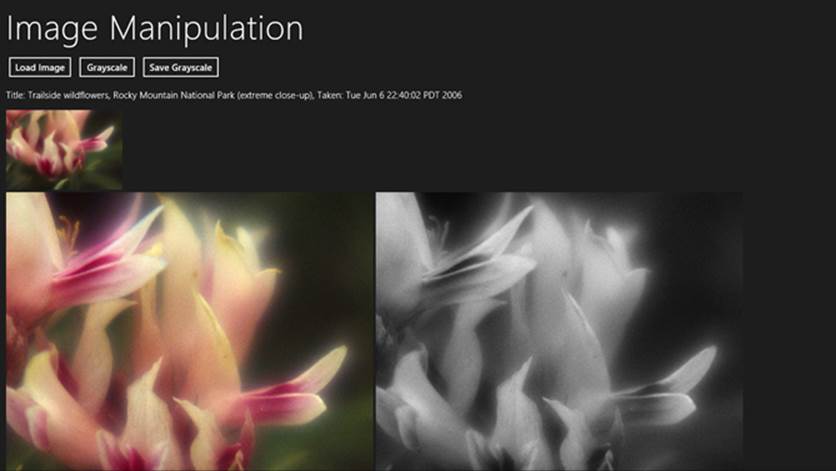

• Transcode (convert) media files, possibly applying various filters.

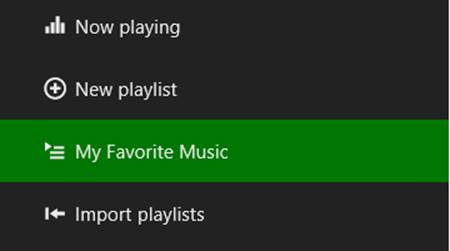

• Organize and edit playlists.

• Capture audio and video from available devices.

• Edit or modify media directly in the rendering pipeline through media stream sources.

• Stream media from a server to a device, or from a device to a Play To target, perhaps also applying digital rights management (DRM).

These capabilities, for which many WinRT APIs exist, along with the media elements of HTML5 and their particular capabilities within the Windows environment, will be our focus for this chapter.

Note As is relevant to this chapter, a complete list of audio and video formats that are natively supported for Windows Store apps can be found on Supported audio and video formats.

The Media Hub sample In the Windows SDK you’ll find the Media Hub sample, which provides a rich, end-to-end sample for many of the individual features that we’ll talk about in this chapter, including media playback, media capture, effects, system media transport controls, background audio, 3D video, and Play To. I won’t be drawing from this sample here, however, as it has its own documentation on the MediaHub sample app page.

Sidebar: Performance Tricks for Faster Apps

Various recommendations in this chapter come from two great //build talks: 50 Performance Tricks to Make Your HTML5 Apps and Sites Faster and Fast Apps and Sites with JavaScript. While some tricks are specifically for web applications running in a browser, many of them are wholly applicable to Windows Store apps written in JavaScript because they run on top of the same infrastructure as Internet Explorer.

Creating Media Elements

Certainly the easiest means to incorporate media into an app is what we’ve already been doing for years: simply use the appropriate HTML element in your layout and voila! there you have it. With img, audio, and video elements, in fact, you’re completely free to use content from just about any location. That is, the src attributes of these elements can be assigned http:// or https:// URIs for remote content, ms-appx:/// and ms-appdata:/// URIs for local content, or URIs from URL.createObjectURL for any content represented by a StorageFile object. Remember with bitmap images that it’s more memory efficient to use the StorageFile thumbnail APIs and pass the thumbnail to URL.createObjectURL instead of opening the whole image file. The img element can also use an SVG file as a source.

There are three ways to create a media element in a page or page control.

First is to include the element directly in declarative HTML. Here it’s often useful to use the preload="auto" attribute for remote audio and video to increase the responsiveness of controls and other UI that depend on those elements. (Doing so isn’t really important for local media files since they are, well, already local!) Oftentimes, media elements are placed near the top of the HTML file, in order of priority, so that downloading can begin while the rest of the document is being parsed.

On the flip side, if the user can wait a short time to start a video, use a preview image in place of the video and don’t start the download until it’s actually necessary. Code for this is shown later in this chapter in the “Video Playback and Deferred Loading” section. You can also consider using the background transfer APIs, as we discussed in Chapter 4, “Web Content and Services,” to save media files locally for later playback.

Playback for a declarative element can be automatically started with the autoplay attribute, through the built-in UI if the element has the controls attribute, or by calling <element>.play() from JavaScript.

The second method is to create an HTML element in JavaScript via document.createElement and add it to the DOM with <parent>.appendChild and similar methods. Here’s an example using media files in this chapter’s companion content, though you’ll need to drop the code into a new project of your own in a media folder:

//Create elements and add to DOM, which will trigger layout

var picture = document.createElement("img");

picture.src = "/media/wildflowers.jpg";

picture.width = 300;

picture.height = 450;

document.getElementById("divShow").appendChild(picture);

var movie = document.createElement("video");

movie.src = "/media/ModelRocket1.mp4";

movie.autoplay = false;

movie.controls = true;

document.getElementById("divShow").appendChild(movie);

var sound = document.createElement("audio");

sound.src = "/media/SpringyBoing.mp3";

sound.autoplay = true; //Play as soon as element is added to DOM

sound.controls = true; //If false, audio plays but does not affect layout

document.getElementById("divShow").appendChild(sound);

Unless otherwise hidden by styles, adding image and video elements to the DOM, plus audio elements with the controls attribute, will trigger re-rendering of the document layout. An audio element without that attribute will not cause re-rendering. As with declarative HTML, setting autoplay to true will cause video and audio to start playing as soon as the element is added to the DOM.

Finally, for audio, apps can create an Audio object in JavaScript to play sounds or music without any effect on UI. More on this later. JavaScript also has the Image class, and the Audio class can be used to load video:

//Create objects (preloading), then set other DOM object sources accordingly

var picture = new Image(300, 450);

picture.src = "http://www.kraigbrockschmidt.com/downloads/media/wildflowers.jpg";

document.getElementById("image1").src = picture.src;

//Audio object can be used to preload (but not render) video

var movie = new Audio("http://www.kraigbrockschmidt.com/downloads/media/ModelRocket1.mp4");

document.getElementById("video1").src = movie.src;

var sound = new Audio("http://www.kraigbrockschmidt.com/downloads/media/SpringyBoing.mp3");

document.getElementById("audio1").src = sound.src;

Creating an Image or Audio object from code does not create elements in the DOM, which can be a useful trait. The Image object, for instance, has been used for years to preload an array of image sources for use with things like image rotators and popup menus, and you can use the same trick for preloading image thumbnails. For remote sources, preloading means that the images have been downloaded and cached. This way, assigning the same URI to the src attribute of an element that is in the DOM, as shown above, will make that image appear immediately. The same is true for preloading video and audio, but again, this is primarily helpful with remote media because files on the local file system will load relatively quickly as is. Still, if you have large local images and want them to appear quickly when needed, preloading their thumbnails is a useful strategy.

Of course, you might want to load media only when it’s needed, in which case the same type of code can be used with existing elements, or you can just create an element and add it to the DOM as shown earlier.
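The preloading trick described above can be sketched as a small helper. This is a minimal sketch rather than code from the companion content: preloadImages and its optional ImageCtor parameter are hypothetical names, and the parameter exists only so that the helper can be exercised outside an app host.

```javascript
// Hypothetical helper: starts loading every URI in the list and returns the
// Image objects so that they stay referenced until they are needed.
function preloadImages(uris, ImageCtor) {
    // ImageCtor is an assumption that allows a stand-in for testing;
    // in an app, omit it to use the built-in Image class.
    ImageCtor = ImageCtor || Image;

    return uris.map(function (uri) {
        var img = new ImageCtor();
        img.src = uri;   // Assigning src starts the load; nothing is added to the DOM
        return img;
    });
}

// Usage in an app (the element id "image1" is assumed to exist in the page):
// var cache = preloadImages(["/media/wildflowers.jpg"]);
// document.getElementById("image1").src = cache[0].src;
```

Keeping the returned array alive is a design choice: it makes it easy to check each image’s load state later, although the host’s cache is what ultimately makes the later src assignment fast.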

Graphics Elements: Img, Svg, and Canvas (and a Little CSS)

I know you’re probably excited to get to sections of this chapter on video and audio, but we cannot forget that images have been the backbone of web applications since the beginning and remain a huge part of any app’s user experience. Indeed, it’s helpful to remember that video itself is conceptually just a series of static images sequenced over time! Fortunately, HTML5 has greatly expanded an app’s ability to incorporate image data by adding SVG support and the canvas element to the tried-and-true img element. Furthermore, applying CSS animations and transitions (covered in detail in Chapter 14, “Purposeful Animations”) to otherwise static image elements can make them appear very dynamic.

Speaking of CSS, it’s worth noting that many graphical effects that once required the use of static images can be achieved with just CSS, especially CSS3:

• Borders, background colors, and background images

• Folder tabs, menus, and toolbars

• Rounded border corners, multiple backgrounds/borders, and image borders

• Transparency

• Embeddable fonts

• Box shadows

• Text shadows

• Gradients

In short, if you’ve ever used img elements to create small visual effects, create gradient backgrounds, use a nonstandard font, or provide some kind of graphical navigation structure, there’s probably a way to do it in pure CSS. For details, see the great overview of CSS3 by Smashing Magazine as well as the CSS specs at http://www.w3.org/. CSS also provides the ability to declaratively handle some events and visual states using pseudo-selectors of hover, visited, active, focus, target, enabled, disabled, and checked. For more, see http://css-tricks.com/ as well as another Smashing Magazine tutorial on pseudo-classes.

That said, let’s review the three primary HTML5 elements for graphics:

• img is used for raster data. The PNG format is generally preferred over other formats, especially for text and line art, though JPEG makes smaller files for photographs. GIF is generally considered outdated, as the primary scenarios where GIF produced a smaller file size can probably be achieved with CSS directly. Where scaling is concerned, Windows Store apps need to consider pixel density, as we saw in Chapter 8, “Layout and Views,” and provide separate image files for each scale the app might encounter. This is where the smaller size of JPEGs can reduce the overall size of your app package in the Windows Store.

• SVGs are best used for smooth scaling across display sizes and pixel densities. SVGs can be declared inline, created dynamically in the DOM, or maintained as separate files and used as a source for an img element (in which case all the scaling characteristics are maintained). As we saw in Chapter 8, preserving the aspect ratio of an SVG is often important, for which you employ the viewBox and preserveAspectRatio attributes of the svg tag.

• The canvas element provides a drawing surface and API for creating graphics with lines, rectangles, arcs, text, and so forth, including 3D graphics via WebGL (starting in Windows 8.1). The canvas ultimately generates raster data, which means that once created, a canvas scales like a bitmap. (An app, of course, will typically redraw a canvas with scaled coordinates when necessary to avoid pixelation.) The canvas is also very useful for performing pixel manipulation, even on individual frames of a video while it’s playing.

Apps often use all three of these elements, drawing on their various strengths. I say this because when canvas first became available, developers were so enamored with it that they seemed to forget how to use img elements, and they ignored the fact that SVGs are often a better choice altogether! (And did I already say that CSS can accomplish a great deal by itself as well?)

In the end, it’s helpful to think of all the HTML5 graphics elements as ultimately producing a bitmap that the app host simply renders to the display. You can, of course, programmatically animate the internal contents of these elements in JavaScript, as we’ll see in Chapter 14, but for our purposes here it’s helpful to think of these as essentially static.

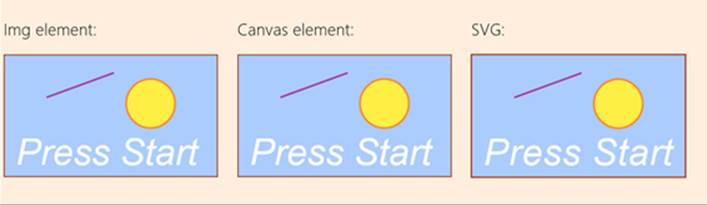

What differs between the elements is how image data gets into the element to begin with. Img elements are loaded from a source file, svgs are defined in markup, and canvas elements are filled through procedural code. But in the end, as demonstrated in scenario 1 in the HTML Graphics example for this chapter and shown in Figure 13-1, each can produce identical results.

FIGURE 13-1 Image, canvas, and svg elements showing identical results.

In short, there are no fundamental differences as to what can be rendered through each type of element (though WebGL in a canvas has much richer 3D capabilities). However, they do have differences that become apparent when we begin to manipulate those elements as with CSS. Because each element is just a node in the DOM, plain and simple, they are treated like all other nongraphic elements: CSS doesn’t affect the internals of the element, just how it ultimately appears on the page. Individual parts of SVGs declared in markup can, in fact, be separately styled so long as they can be identified with a CSS selector. In any case, such styling affects only presentation, so if new styles are applied, they are applied to the original contents of the element.

What’s also true is that graphics elements can overlap with each other and with nongraphic elements (as well as video), and the rendering engine automatically manages transparency according to the z-index of those elements. Each graphic element can have clear or transparent areas, as is built into image formats like PNG. In a canvas, any areas cleared with the clearRect method that aren’t otherwise affected by other API calls will be transparent. Similarly, any area in an SVG’s rectangle that’s not affected by its individual parts will be transparent.

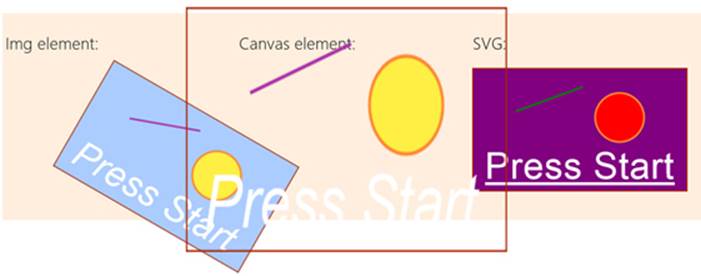

Scenario 2 in the HTML Graphics example allows you to toggle a few styles (with a check box) on the same elements shown earlier. In this case, I’ve left the background of the canvas element transparent so that we can see areas that show through. When the styles are applied, the img element is rotated and transformed, the canvas gets scaled, and individual parts of the svg are styled with new colors, as shown in Figure 13-2.

FIGURE 13-2 Styles applied to graphic elements; individual parts of the SVG can be styled if they are accessible through the DOM.

The styles in css/scenario2.css are simple:

.transformImage {

transform: rotate(30deg) translateX(120px);

}

.scaleCanvas {

transform: scale(1.5,2);

}

as is the code in js/scenario2.js that applies them:

function toggleStyles() {

var applyStyles = document.getElementById("check1").checked;

document.getElementById("image1").className = applyStyles ? "transformImage" : "";

document.getElementById("canvas1").className = applyStyles ? "scaleCanvas" : "";

document.getElementById("r").style.fill = applyStyles ? "purple" : "";

document.getElementById("l").style.stroke = applyStyles ? "green" : "";

document.getElementById("c").style.fill = applyStyles ? "red" : "";

document.getElementById("t").style.fontStyle = applyStyles ? "normal" : "";

document.getElementById("t").style.textDecoration = applyStyles ? "underline" : "";

}

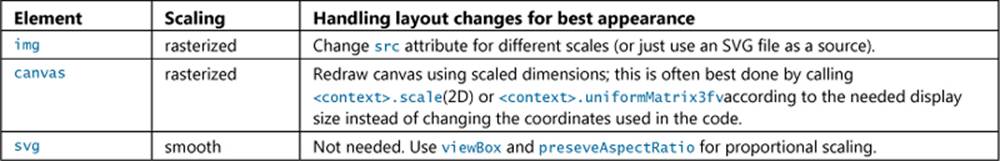

The other thing you might have noticed when the styles are applied is that the scaled-up canvas looks rasterized, as a scaled bitmap typically does. This is expected behavior, as shown in the following table of scaling characteristics. These are demonstrated in scenarios 3 and 4 of the HTML Graphics example.

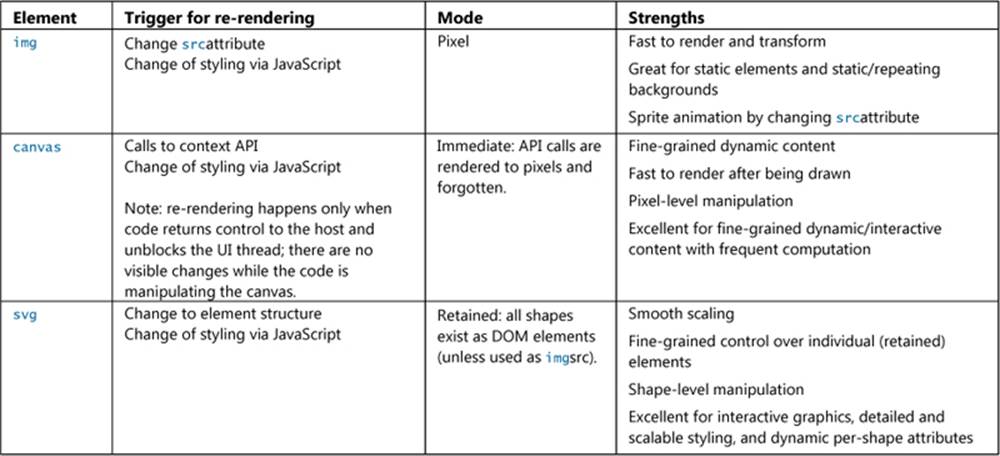

Additional Characteristics of Graphics Elements

There are a few additional characteristics to be aware of with graphics elements. First, different kinds of operations will trigger a re-rendering of the element in the document. Second is the mode of operation of each element. Third are the relative strengths of each element. These are summarized in the following table:

Sidebar: Using Media Queries to Show and Hide SVG Elements

Because SVGs generate elements in the DOM, those elements can be individually styled. You can use this fact with media queries to hide different parts of the SVG depending on its size. To do this, add different classes to those SVG elements. Then, in CSS, add or remove the display: none style for those classes within media queries like @media (min-width:300px) and (max-width:499px). You may need to account for the size of the SVG relative to the app window, but it means that you can effectively remove detail from an SVG rather than allowing those parts to be rendered with too few pixels to be useful.

In the end, HTML5 includes all three of these elements because all three are really needed. All of them benefit from full hardware acceleration, just as they do in Internet Explorer, since apps written in HTML and JavaScript run on the same rendering engine as the browser.

The best practice in app design is to explore the appropriate use of each type of element. Each element can have transparent areas, so you can easily achieve some very fun effects. For example, if you have data that maps video timings to caption or other text, you can use an interval handler (with the interval set to the necessary granularity like a half-second) to take the video’s currentTime property, retrieve the appropriate text for that segment, and render the text to an otherwise transparent canvas that sits on top of the video. Titles and credits can be done in a similar manner, eliminating the need to re-encode the video.
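The captioning idea can be sketched roughly as follows. This is only an illustration under stated assumptions: the captions array, the findCaption and drawCaption helpers, and the element ids in the usage comments are all hypothetical and do not come from the companion content.

```javascript
// Hypothetical caption data: start and end times are in seconds.
var captions = [
    { start: 0, end: 2.5, text: "Ignition" },
    { start: 2.5, end: 6, text: "Liftoff!" }
];

// Returns the caption text for the given playback time, or "" if there is none.
function findCaption(list, time) {
    for (var i = 0; i < list.length; i++) {
        if (time >= list[i].start && time < list[i].end) {
            return list[i].text;
        }
    }
    return "";
}

// Draws the current caption on a canvas that is layered over the video.
// ctx is the canvas's 2D context; width and height are the canvas dimensions.
function drawCaption(ctx, width, height, video, list) {
    ctx.clearRect(0, 0, width, height);   // Cleared areas remain transparent
    var text = findCaption(list, video.currentTime);
    if (text) {
        ctx.font = "24px sans-serif";
        ctx.fillStyle = "white";
        ctx.textAlign = "center";
        ctx.fillText(text, width / 2, height - 30);
    }
    return text;
}

// Usage in an app (element ids are assumptions), polling every half-second:
// var video = document.getElementById("video1");
// var overlay = document.getElementById("captionCanvas");
// setInterval(function () {
//     drawCaption(overlay.getContext("2d"), overlay.width, overlay.height, video, captions);
// }, 500);
```

A linear search is adequate for a handful of captions; for long caption tracks a sorted list with a remembered index would avoid rescanning on every tick.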

Some Tips and Tricks

Working with the HTML graphics elements is generally straightforward, but knowing some details can help when working with them inside a Windows Store app.

General tip To protect any content of an app view from screen capture, obtain the ApplicationView object from Windows.UI.ViewManagement.ApplicationView.getForCurrentView() and set its isScreenCaptureEnabled property to false. This is demonstrated in the Disable screen capture sample in the Windows SDK. You would do this, for example, when rendering content obtained from a rights-protected source.
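As a minimal sketch of that tip, the property can be wrapped in a small function. The function name and its viewManagement parameter are hypothetical; the parameter exists only so that the function can be exercised outside an app host and would be omitted in an app.

```javascript
// Enables or disables screen capture for the current app view.
function setScreenCaptureEnabled(enabled, viewManagement) {
    // viewManagement is an assumption for testability; by default the
    // Windows.UI.ViewManagement namespace named in the text is used.
    viewManagement = viewManagement || Windows.UI.ViewManagement;
    var view = viewManagement.ApplicationView.getForCurrentView();
    view.isScreenCaptureEnabled = !!enabled;
    return view;
}

// Usage in an app, before rendering rights-protected content:
// setScreenCaptureEnabled(false);
```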

Img Elements

• When possible, avoid loading an entire image file by using the StorageFile thumbnail APIs, getThumbnailAsync and getScaledImageAsThumbnailAsync, as described in Chapter 11, “The Story of State, Part 2.” You can pass a thumbnail to URL.createObjectURL as you would a StorageFile. Of course, if you’re using remote resources directly with http[s]:// URIs, you won’t be able to intercept the rendering to do this.

• Use the title attribute of img for tooltips, not the alt attribute. You can also use a WinJS.UI.Tooltip control, as described in Chapter 5, “Controls and Control Styling.”

• To create an image from an in-memory stream, see MSApp.createBlobFromRandomAccessStream (introduced in Chapter 10, “The Story of State, Part 1”), the result of which can be then given to URL.createObjectURL to create an appropriate URI for a src attribute. We’ll encounter this elsewhere in this chapter, and we’ll need it when working with the Share contract in Chapter 15, “Contracts.” The same technique also works for audio and video streams, including those partially downloaded from the web.

• When loading images from http:// or other remote sources, you run the risk of having the element show a red X placeholder image. To prevent this, catch the img.onerror event and supply your own placeholder:

var myImage = document.getElementById('image');

myImage.onerror = function () { onImageError(this); };

function onImageError(source) {

//Clear the handler first so that a missing placeholder cannot trigger a loop

source.onerror = null;

source.src = "placeholder.png";

}

• Supported image formats for the img element are listed at the bottom of the img element documentation. Note that as of Windows 8.1, the img element supports the Direct Draw Surface (DDS) file format for in-package content. DDS files are commonly used for game assets and benefit from full hardware acceleration and very short image decoding time. A demonstration of using these can be found in the Block compressed images sample.

• Want to do optical character recognition? Check out the Bing OCR control available from http://www.bing.com/dev/en-us/ocr, which is free to use for up to 5,000 transactions per month.
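Returning to the first tip in this list, the thumbnail approach might look something like the following. Treat it as a sketch under assumptions: showThumbnail and its urlApi parameter are hypothetical names (the parameter is there only for testing), and the oneTimeOnly option is the app host’s extension to URL.createObjectURL.

```javascript
// Hypothetical helper: shows a thumbnail of a StorageFile in an img element
// instead of loading the whole image file. Returns the promise chain from
// getThumbnailAsync so that callers can continue from it.
function showThumbnail(file, imgElement, mode, urlApi) {
    // urlApi is an assumption that allows a stand-in for testing; omit it in an app.
    urlApi = urlApi || URL;

    return file.getThumbnailAsync(mode).then(function (thumbnail) {
        // oneTimeOnly lets the host release the object URL after its first use
        imgElement.src = urlApi.createObjectURL(thumbnail, { oneTimeOnly: true });
        return thumbnail;
    });
}

// Usage in an app, given a StorageFile in the variable file:
// var mode = Windows.Storage.FileProperties.ThumbnailMode.picturesView;
// showThumbnail(file, document.getElementById("image1"), mode);
```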

Svg Elements

• <script> tags are not supported within <svg>.

• If you have an SVG file in your package (or appdata), you can load it into an img element by pointing at the file with the src attribute, but this doesn’t let you traverse the SVG in the DOM. What you can do instead is load the SVG file by using the simple XMLHttpRequest method or the WinJS.xhr wrapper (see Appendix C, “Additional Networking Topics”), and then insert the markup directly into the DOM as a child of some other element. This lets you traverse the SVG’s content and style it with CSS without having to place the SVG directly in your HTML files. Scenario 2 of the HTML Graphics example in the companion content shows this (js/scenario2.js):

WinJS.xhr({ url: "/html/graphic.svg", responseType: "text" }).done(function (request) {

//setInnerHTMLUnsafe is OK because we know the content is coming from our package.

WinJS.Utilities.setInnerHTMLUnsafe(document.getElementById("svgPlaceholder"),

request.response);

});

• PNGs and JPEGs generally perform better than SVGs, so if you don’t technically need an SVG or have a high-performance scenario, consider using scaled raster graphics. Or you can dynamically create a scaled static image from an SVG so as to use the image for faster rendering later:

<!-- in HTML-->

<img id="svg" src="somesvg.svg" style=" display: none;" />

<canvas id="canvas" style="display: none;"></canvas>

// in JavaScript

var c = document.getElementById("canvas").getContext("2d");

c.drawImage(document.getElementById("svg"),0,0);

var imageURLToUse = document.getElementById("canvas").toDataURL();

• Two helpful SVG references (JavaScript examples): http://www.carto.net/papers/svg/samples/ and http://srufaculty.sru.edu/david.dailey/svg/.

• A number of tools are available to create SVGs: see 4 useful commercial SVG tools and 5 useful open source SVG tools (both on the IDR solutions blog).
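The rasterization tip earlier in this list does not show sizing the canvas or waiting for the SVG to load, both of which matter in practice. A minimal sketch, with rasterize being a hypothetical helper name and the sizes chosen arbitrarily:

```javascript
// Hypothetical helper: draws an already-loaded image (such as an Image whose
// src is an SVG file) onto a canvas at the requested size and returns a
// data URI for the resulting bitmap.
function rasterize(image, canvas, width, height) {
    // Setting the width and height attributes also clears the canvas
    canvas.width = width;
    canvas.height = height;
    canvas.getContext("2d").drawImage(image, 0, 0, width, height);
    return canvas.toDataURL();   // PNG unless another type is requested
}

// Usage in an app; waiting for the load event ensures there is something to draw:
// var svgImage = new Image();
// svgImage.onload = function () {
//     var imageURLToUse = rasterize(svgImage, document.createElement("canvas"), 200, 200);
// };
// svgImage.src = "somesvg.svg";
```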

Canvas Elements

As you probably know, and as demonstrated in the HTML Graphics example, you obtain a 2D context for a canvas with code like this:

var c = document.getElementById("canvas").getContext("2d");

To obtain a 3D WebGL context (as can be done starting with Windows 8.1), the argument to getContext must be experimental-webgl:

var c = document.getElementById("canvas").getContext("experimental-webgl");

From that point you can use the supported WebGL APIs as documented in WebGL APIs for Internet Explorer. In this book I won’t go into any of the details about the API itself, as it quickly gets complicated. Besides, there are plenty of tutorials on the web.
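If the same code might run in hosts that name the context differently, one common approach is to try several names in turn. The helper below is a sketch of that idea only: getWebGLContext is a hypothetical name, and including the standard name webgl as a second candidate is an assumption about other hosts, not something Windows 8.1 requires.

```javascript
// Hypothetical helper: tries each context name in turn and returns the first
// context obtained, or null if none is available.
function getWebGLContext(canvas, names) {
    names = names || ["experimental-webgl", "webgl"];
    for (var i = 0; i < names.length; i++) {
        var gl = null;
        try {
            gl = canvas.getContext(names[i]);
        } catch (e) {
            // Some hosts may throw rather than return null for unknown names
        }
        if (gl) {
            return gl;
        }
    }
    return null;
}

// Usage in an app (the element id "canvas" is assumed):
// var gl = getWebGLContext(document.getElementById("canvas"));
// if (!gl) { /* fall back to a 2D rendering path */ }
```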

WebGL aside, here are other tips and tricks for the canvas (note that all the methods named here are found on the context object):

• Remember that a canvas element needs specific width and height attributes (in JavaScript, canvas.width and canvas.height), not styles. It does not accept px, em, %, or other units.

• Despite its name, the closePath method is not a direct complement to beginPath. beginPath is used to start a new path that can be stroked, clearing any previous path. closePath, on the other hand, simply connects the two endpoints of the current path, as if you did a lineTo between those points. It does not clear the path or start a new one. This seems to confuse programmers quite often, which is why you sometimes see a circle drawn with a line to the center!

• A call to stroke (or fill) is necessary to render a path; until that time, think of paths as a pencil sketch of something that’s not been inked in. Note also that stroking does not clear the current path; call beginPath before starting a new one.

• When animating on a canvas, doing clearRect on the entire canvas and redrawing every frame is generally easier to work with than clearing many small areas and redrawing individual parts of the canvas. The app host eventually has to render the canvas in its entirety with every frame anyway to manage transparency, so trying to optimize performance by clearing small rectangles isn’t an effective strategy except when you’re doing only a small number of API calls for each frame.

• Rendering canvas API calls is accomplished by converting them to the equivalent DirectX calls in the GPU. This draws shapes with automatic antialiasing. As a result, drawing a shape like a 2D circle in a color and drawing the same circle with the background color does not erase every pixel. To effectively erase a shape, use clearRect on an area that’s slightly larger than the shape itself. This is one reason why clearing the entire canvas and redrawing every frame often ends up being easier.

• To set a background image in a canvas (so that you don’t have to draw each time), you can use the canvas.style.backgroundImage property with an appropriate URI to the image.

• Use the msToBlob method on a canvas object to obtain a blob for the canvas contents.

• When using drawImage, you may need to wait for the source image to load using code such as

var myImg = new Image();

myImg.onload = function () { myContext.drawImage(myImg, 0, 0); };

myImg.src = "myImageFile.png";

• The context’s msImageSmoothingEnabled property (a Boolean) determines how images are resized on the canvas when rendered with drawImage or pattern-filling through fill, stroke, or fillText. By default, smoothing is enabled (true), which uses a bilinear smoothing method. When this flag is false, a nearest-neighbor algorithm is used instead, which is appropriate for the retro-graphics look of 1980s video games.

• Although other graphics APIs see a circle as a special case of an ellipse (with x and y radii being the same), the canvas arc function works with circles only. Fortunately, a little use of scaling makes it easy to draw ellipses, as shown in the utility function below. Note that we use save and restore so that the scale call applies only to the arc; it does not affect the stroke that’s used from main. This is important, because if the scaling factors are still in effect when you call stroke, the line width will vary instead of remaining constant.

function arcEllipse(ctx, x, y, radiusX, radiusY, startAngle, endAngle, anticlockwise) {

//Use the smaller radius as the basis and stretch the other

var radius = Math.min(radiusX, radiusY);

var scaleX = radiusX / radius;

var scaleY = radiusY / radius;

ctx.save();

ctx.scale(scaleX, scaleY);

//Note that centerpoint must take the scale into account

ctx.arc(x / scaleX, y / scaleY, radius, startAngle, endAngle, anticlockwise);

ctx.restore();

}

• There’s no rule that says you have to do everything on a single canvas element. It can be very effective to layer multiple elements directly on top of one another to optimize rendering of different parts of your display, especially where game animations are concerned. See Optimize HTML5 canvas rendering with layering (IBM developerWorks).

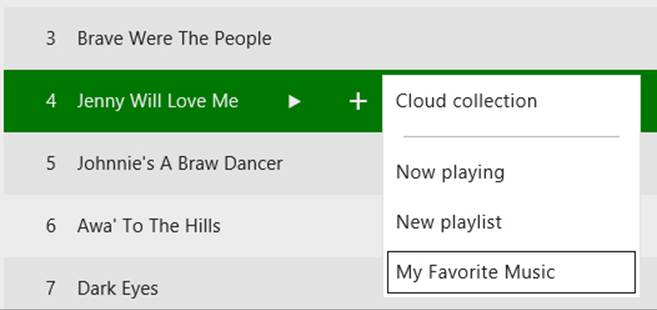

• By copying pixel data from a video, it’s possible with the canvas to dynamically manipulate a video (without affecting the source, of course). This is a useful technique, even if it’s processor-intensive (which means it might not work well on low-power devices).

Here’s an example of frame-by-frame video manipulation, the technique for which is nicely outlined in a Windows team blog post, Canvas Direct Pixel Manipulation. In the VideoEdit example for this chapter, default.html contains a video and canvas element in its main body:

<video id="video1" src="Rocket01.mp4" muted style="display: none"></video>

<canvas id="canvas1" width="640" height="480"></canvas>

In code (js/default.js), we call startVideo from within the activated handler. This function starts the video and uses requestAnimationFrame to do the pixel manipulation for every video frame:

var video1, canvas1, ctx;

var colorOffset = { red: 0, green: 1, blue: 2, alpha: 3 };

function startVideo() {

video1 = document.getElementById("video1");

canvas1 = document.getElementById("canvas1");

ctx = canvas1.getContext("2d");

video1.play();

requestAnimationFrame(renderVideo);

}

function renderVideo() {

//Copy a frame from the video to the canvas

ctx.drawImage(video1, 0, 0, canvas1.width, canvas1.height);

//Retrieve that frame as pixel data

var imgData = ctx.getImageData(0, 0, canvas1.width, canvas1.height);

var pixels = imgData.data;

//Loop through the pixels, manipulate as needed

var r, g, b;

for (var i = 0; i < pixels.length; i += 4) {

r = pixels[i + colorOffset.red];

g = pixels[i + colorOffset.green];

b = pixels[i + colorOffset.blue];

//This creates a negative image

pixels[i + colorOffset.red] = 255 - r;

pixels[i + colorOffset.green] = 255 - g;

pixels[i + colorOffset.blue] = 255 - b;

}

//Copy the manipulated pixels to the canvas

ctx.putImageData(imgData, 0, 0);

//Request the next frame

requestAnimationFrame(renderVideo);

}

Here the page contains a hidden video element (style="display: none") that is told to start playing once the document is loaded (video1.play()). In a requestAnimationFrame loop, the current frame of the video is copied to the canvas (drawImage) and the pixels for the frame are copied (getImageData) into the imgData buffer. We then go through that buffer and negate the color values, thereby producing a photographically negative image (an alternate formula to change to grayscale is also shown in the code comments, omitted above). We then copy those pixels back to the canvas (putImageData) so that when we return, those negated pixels are rendered to the display.
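Because the grayscale formula from the example’s comments is omitted here, the following sketch shows one common way to do the conversion. The weights are the widely used Rec. 601 luma coefficients, which is an assumption on my part and not necessarily the formula in the VideoEdit example; toGrayscale is likewise a hypothetical name.

```javascript
// Converts RGBA pixel data (such as the data array of an ImageData object)
// to grayscale in place. The weights are an assumption (Rec. 601 luma),
// not necessarily those used in the VideoEdit example.
function toGrayscale(pixels) {
    for (var i = 0; i < pixels.length; i += 4) {
        var gray = Math.round(0.299 * pixels[i] + 0.587 * pixels[i + 1] + 0.114 * pixels[i + 2]);
        pixels[i] = gray;
        pixels[i + 1] = gray;
        pixels[i + 2] = gray;
        // The alpha value at i + 3 is left untouched
    }
    return pixels;
}

// Usage inside a function like renderVideo, in place of the negation loop:
// toGrayscale(imgData.data);
// ctx.putImageData(imgData, 0, 0);
```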

Again, this is processor-intensive because it’s not generally a GPU-accelerated process, and it might perform poorly on lower-power devices. (Be sure, however, to run a Release build outside the debugger when evaluating performance.) It’s much better to write a video effect DLL where possible, as discussed in “Applying a Video Effect” later in this chapter. Nevertheless, it is a useful technique to know. What’s really happening is that instead of drawing each frame with API calls, we’re simply using the video as a data source. So we could, if we like, embellish the canvas in any other way we want before returning from the renderVideo function. An example of this that I enjoy is shown in Manipulating video using canvas on Mozilla’s developer site, which dynamically makes green-screen background pixels transparent so that an img element placed underneath the video shows through as a background. The same could even be used to layer two videos so that a background video is used instead of a static image. Again, be mindful of performance on low-power devices; you might consider providing a setting through which the user can disable such extra effects.
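To give a sense of the green-screen idea, here is a deliberately simple sketch that operates on the same kind of pixel buffer. The function name keyOutGreen, the default threshold, and the test for “green enough” are all assumptions made for illustration; this is not the algorithm from the Mozilla article, and real footage usually needs a more careful test.

```javascript
// Makes predominantly green pixels fully transparent in RGBA pixel data,
// modifying the data in place. A pixel counts as green when its green value
// exceeds both red and blue by more than the threshold (a simple assumption).
function keyOutGreen(pixels, threshold) {
    threshold = (threshold === undefined) ? 60 : threshold;
    var count = 0;
    for (var i = 0; i < pixels.length; i += 4) {
        var r = pixels[i], g = pixels[i + 1], b = pixels[i + 2];
        if (g - r > threshold && g - b > threshold) {
            pixels[i + 3] = 0;   // Alpha of zero lets whatever is underneath show through
            count++;
        }
    }
    return count;   // Number of pixels made transparent
}

// Usage inside a function like renderVideo, between getImageData and putImageData:
// keyOutGreen(imgData.data);
```

For the transparency to be visible, the canvas needs to sit on top of the background element (an img or another video) in the layout.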

Rendering PDFs

In addition to the usual image formats, you may need to load a PDF and display it in an img element (or a canvas by using its drawImage function). Aside from third-party libraries, you can use the WinRT APIs in Windows.Data.Pdf for this purpose. Here you’ll find a PdfDocument object that represents a document as a whole, along with a PdfPage object that represents a single page within a document.

Note Although WinRT offers a means to load and display PDFs, it does not have an API for generating PDFs. You’ll still need third-party libraries for that.

There are two ways to load a PDF into a PdfDocument:

• Given a StorageFile object (from the local file system, the file picker, removable storage, etc.), call the static method PdfDocument.loadFromFileAsync (which has two variants, the second of which takes a password if that’s necessary).

• Given some kind of random access stream object (from a partial HTTP request operation, for instance; refer to “Q&A on Files, Streams, Buffers, and Blobs” in Chapter 10), call the static method PdfDocument.loadFromStreamAsync (which again has a password variant).

In both cases the load* methods return a promise that’s fulfilled with a PdfDocument instance. Here’s an example from scenario 1 of the PDF viewer sample where the file in question (represented by pdfFileName) is located in the app package (js/scenario1.js):

var pdfLib = Windows.Data.Pdf;

Windows.ApplicationModel.Package.current.installedLocation.getFileAsync(pdfFileName)

.then(function loadDocument(file) {

return pdfLib.PdfDocument.loadFromFileAsync(file);

}).then(function setPDFDoc(doc) {

renderPage(doc, pageIndex, renderOptions);

});

The file variable from the first promise is just a StorageFile, so you can substitute any other code that results in such an object before the call to loadFromFileAsync. The setPDFDoc completed handler, as it’s named here, receives the PdfDocument, whose isPasswordProtected and pageCount properties provide you with some obvious information.

The next thing to do is then render one or more pages of that document, or portions of those pages. The API is specifically set up to render one page at a time, so if you want to provide a multipage view you’ll need to render multiple pages and display them in side-by-side img elements (using a Repeater control, perhaps), display them in a ListView control, or render those pages into a large canvas. More on this in a bit.

To get a PdfPage object for any given page, call PdfDocument.getPage with the desired (zero-based) index, as shown here from within the renderPage function of the sample (js/scenario1.js):

var pdfPage = pdfDocument.getPage(pageIndex);

At this point the page’s properties will be populated. These include the following:

• index The zero-based position of the page in the document.

• preferredZoom The preferred magnification factor (a number) for the page.

• rotation A value from the PdfPageRotation enumeration, one of normal, rotate90, rotate180, and rotate270.

• dimensions A PdfPageDimensions object containing artBox, bleedBox, cropBox, mediaBox, and trimBox, each of which is a Windows.Foundation.Rect. All of these represent intentions of the PDF’s author; for specific definitions, refer to the Adobe PDF Reference.

• size A Windows.Foundation.Size object containing the page’s basic width and height based on the dimensions.cropBox, dimensions.mediaBox, and rotation properties.

To render the page, call its renderToStreamAsync method, which, as its name implies, requires a random access stream that receives the rendering. You can create an in-memory stream, a file-based stream, or perhaps a stream to some other data store entirely, again using the APIs discussed in Chapter 10, depending on where you want the rendering to end up. Generally speaking, if you want to render just a single page for display, create an in-memory stream like the sample (js/scenario1.js):

var pageRenderOutputStream = new Windows.Storage.Streams.InMemoryRandomAccessStream();

If, on the other hand, you want to render a whole document and don’t want to gobble up so much memory that you kick out every other suspended app, you should definitely render each page into a temporary file instead. This is demonstrated in the other SDK sample for PDFs, the PDF showcase viewer sample, whose code contains a more sophisticated mechanism to build a data source for pages that are then displayed in a ListView. (This sample also has its own documentation on the PDF viewer end-to-end sample page.) Once it opens a PdfDocument, it iterates all the pages and calls the following loadPage method (which also allows for in-memory rendering; js/pdflibrary.js):

loadPage: function (pageIndex, pdfDocument, pdfPageRenderingOptions, inMemoryFlag, tempFolder) {

var filePointer = null;

var promise = null;

if (inMemoryFlag) {

promise = WinJS.Promise.wrap(new Windows.Storage.Streams.InMemoryRandomAccessStream());

} else {

// Creating file on disk to store the rendered image for a page on disk

// This image will be stored in the temporary folder provided during VDS init

var filename = this.randomFileName() + ".png";

var file = null;

promise = tempFolder.createFileAsync(filename,

Windows.Storage.CreationCollisionOption.replaceExisting).then(function (filePtr) {

filePointer = filePtr;

return filePointer.openAsync(Windows.Storage.FileAccessMode.readWrite);

}, function (error) {

// Error while opening a file

filePointer = null;

}, function (error) {

// Error while creating a file

});

}

return promise.then(function (imageStream) {

var pdfPage = pdfDocument.getPage(pageIndex);

return pdfPage.renderToStreamAsync(imageStream, pdfPageRenderingOptions)

.then(function () {

return imageStream.flushAsync();

})

// ...

Either way, your stream object must get to PdfPage.renderToStreamAsync, which has two variants. One just takes a stream, and the other takes the stream plus a PdfPageRenderOptions object that controls finer details: backgroundColor, destinationHeight, destinationWidth, sourceRect, isIgnoringHighContrast, and bitmapEncoderId. With these options, as shown in the first PDF viewer sample, you can render a whole page, a zoomed-in page, or a portion of a page (js/scenario1.js):

var pdfPage = pdfDocument.getPage(pageIndex);

var pdfPageRenderOptions = new Windows.Data.Pdf.PdfPageRenderOptions();

var renderToStreamPromise;

var pagesize = pdfPage.size;

switch (renderOptions) {

case RENDEROPTIONS.NORMAL:

renderToStreamPromise = pdfPage.renderToStreamAsync(pageRenderOutputStream);

break;

case RENDEROPTIONS.ZOOM:

// Set pdfPageRenderOptions.'destinationWidth' or 'destinationHeight' to take

// zoom factor into effect

pdfPageRenderOptions.destinationHeight = pagesize.height * ZOOM_FACTOR;

renderToStreamPromise = pdfPage.renderToStreamAsync(pageRenderOutputStream,

pdfPageRenderOptions);

break;

case RENDEROPTIONS.PORTION:

// Set pdfPageRenderOptions.'sourceRect' to the rectangle containing portion to show

pdfPageRenderOptions.sourceRect = PDF_PORTION_RECT;

renderToStreamPromise = pdfPage.renderToStreamAsync(pageRenderOutputStream,

pdfPageRenderOptions);

break;

};
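The ZOOM case above scales the page by a fixed factor. When you would rather match a particular view size, one possible approach is to compute both destination dimensions from the page’s size so that the aspect ratio is preserved. The helper below is a minimal sketch of that arithmetic; fitPageToView is a hypothetical name, and its pageSize argument is assumed to be shaped like pdfPage.size.

```javascript
// Hypothetical helper: computes a render size that fits a page inside a view
// while preserving the page's aspect ratio. pageSize is expected to look like
// pdfPage.size ({ width, height }); viewWidth and viewHeight use the same units.
function fitPageToView(pageSize, viewWidth, viewHeight) {
    // The smaller of the two ratios guarantees the page fits in both directions
    var scale = Math.min(viewWidth / pageSize.width, viewHeight / pageSize.height);

    return {
        destinationWidth: Math.round(pageSize.width * scale),
        destinationHeight: Math.round(pageSize.height * scale)
    };
}
```

The two results could then be copied to the destinationWidth and destinationHeight properties of a PdfPageRenderOptions object before calling renderToStreamAsync.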

The promise that comes back from renderToStreamAsync doesn’t have any results, because the rendering will be contained in the stream. If the operation succeeds, your completed handler will be called and you can then pass the stream on to MSApp.createBlobFromRandomAccessStream, followed by our old friend URL.createObjectURL, whose result you can assign to an img.src. If the operation fails, your error handler is called, of course. Be mindful to call the stream’s flushAsync first thing before getting the URL and to close the stream (through its close method or blob.msClose). Here’s the whole process from the sample (js/scenario1.js):

renderToStreamPromise.then(function Flush() {

return pageRenderOutputStream.flushAsync();

}).then(function DisplayImage() {

if (pageRenderOutputStream !== null) {

var blob = MSApp.createBlobFromRandomAccessStream("image/png", pageRenderOutputStream);

var picURL = URL.createObjectURL(blob, { oneTimeOnly: true });

scenario1ImageHolder1.src = picURL;

pageRenderOutputStream.close();

blob.msClose();// Closes the stream

};

},

function error() {

if (pageRenderOutputStream !== null) {

pageRenderOutputStream.close();

}

});

If you’re using file-based streams, as in the PDF showcase viewer sample, you can just hold onto a collection of StorageFile objects. When you need to render any particular page, you can grab a thumbnail from the StorageFile and pass it to URL.createObjectURL. Alternately, if you use the PdfPageRenderOptions to generate renderings that match your screen size, you can just pass those StorageFile objects to URL.createObjectURL directly. This is what the PDF showcase viewer sample does. Its data source, again, manages a bunch of StorageFile objects (or in-memory streams). To show that flow, we can see that each item in the data source is an object with pageIndex and imageSrc properties (js/pdfLibrary.js):

loadPage: function (pageIndex, pdfDocument, pdfPageRenderingOptions, inMemoryFlag, tempFolder) {

// ... all code as shown earlier

return promise.then(function (imageStream) {

var pdfPage = pdfDocument.getPage(pageIndex);

return pdfPage.renderToStreamAsync(imageStream, pdfPageRenderingOptions)

.then(function () {

return imageStream.flushAsync();

})

.then(function closeStream() {

var picURL = null;

if (inMemoryFlag) {

var renderStream = Windows.Storage.Streams.RandomAccessStreamReference

.createFromStream(imageStream);

return renderStream.openReadAsync().then(function (stream) {

imageStream.close();

pdfPage.close();

return { pageIndex: pageIndex, imageSrc: stream };

});

} else {

imageStream.close();

pdfPage.close();

return { pageIndex: pageIndex, imageSrc: filePointer };

}

});

});

},

In default.html, the app’s display is composed of nothing more than two ListView controls inside a Semantic Zoom control:

<div id="pdfViewTemplate" data-win-control="WinJS.Binding.Template">

<div id="pdfitemmainviewdiv" data-win-control="WinJS.UI.ViewBox">

<img src="/images/placeholder.jpg" alt="PDF page"

data-win-bind="src: imageSrc blobUriFromStream" style=" width: 100%; height: 100%;"/>

</div>

</div>

<div id="pdfSZViewTemplate" data-win-control="WinJS.Binding.Template" style=" display: none">

<div>

<img src="/images/placeholder.jpg" alt="PDF page thumbnail"

data-win-bind="src: imageSrc blobUriFromStream"/>

</div>

</div>

<div id="semanticZoomDiv" data-win-control="WinJS.UI.SemanticZoom"

data-win-options="{zoomedInItem: window.zoomedInItem, zoomedOutItem: window.zoomedOutItem }"

style=" height: 100%; width: 100%">

<!-- zoomed-in view. -->

<div id="zoomedInListView" data-win-control="WinJS.UI.ListView"

data-win-options="{ itemTemplate: pdfViewTemplate, selectionMode: 'none',

tapBehavior: 'invokeOnly', swipeBehavior: 'none',

layout: {type: WinJS.UI.GridLayout, maxRows: 1},}">

</div>

<!--- zoomed-out view. -->

<div id="zoomedOutListView" data-win-control="WinJS.UI.ListView"

data-win-options="{ itemTemplate: pdfSZViewTemplate, selectionMode: 'none',

tapBehavior: 'invokeOnly', swipeBehavior: 'none',

layout: {type: WinJS.UI.GridLayout}}">

</div>

</div>

The last piece that glues it all together is the blobUriFromStream initializer in the data-win-bind statements of the templates. The code for this is hiding out at the bottom of js/default.js and is where the imageSrc from the data source (a StorageFile or stream) gets sent to URL.createObjectURL:

window.blobUriFromStream = WinJS.Binding.initializer(function (source, sourceProp,

dest, destProp) {

if (source[sourceProp] !== null) {

dest[destProp] = URL.createObjectURL(source[sourceProp], { oneTimeOnly: true });

}

});

The results of all this are shown in two views below, the zoomed-in view (left) and the zoomed-out view (right), revealing a curious advertisement for Windows 7!

Video Playback and Deferred Loading

Let’s now talk about video playback. As we’ve already seen, simply including a video element in your HTML or creating an element on the fly gives you playback ability. In the code below, the video is sourced from an in-package file, starts playing by itself, loops continually, and provides controls:

<video src="/media/ModelRocket1.mp4" controls loop autoplay></video>

As with other standards we’ve discussed, I’m not going to rehash the details (properties, methods, and events) that are available in the W3C spec for the video and audio tags, found on http://www.w3.org/TR/html51/ in sections 4.8.6 to 4.8.10. Especially note the event summary in section 4.8.10.15 and that most of the properties and methods for both are found in section 4.8.10.

Note that the track element for subtitles is supported for both video and audio; you can find an example of using it in scenario 4 of the HTML media playback sample, which includes a WebVTT file (a simple text file; see media/sample-subtitle-en.vtt in the sample) that contains entries like the following to describe when a given subtitle should appear:

00:00:05.242 --> 00:00:08.501

My name is Jason Weber, and my job is to make Internet Explorer fast.

This file is then referenced in the track element in its src attribute (html/Subtitles.html):

<video id="subtitleVideo" style=" position: relative; z-index: auto; width: 50%;"

src="http://ie.microsoft.com/testdrive/Videos/BehindIE9AllAroundFast/Video.mp4"

poster="images/Win8MediaLogo.png" loop controls>

<track id="scenario3entrack" src="/media/sample-subtitle-en.vtt" kind="subtitles"

srclang="en" default>

</video>
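The platform parses the WebVTT file for you when it’s referenced by a track element. If you ever need to read cue timings yourself (to build a transcript list, for example), the timing line format shown earlier is simple to handle. The following is only a sketch: parseVttTimestamp and parseCueTiming are hypothetical helper names, and the code handles just the timing line, not the rest of the WebVTT format.

```javascript
// Converts a WebVTT timestamp ("hh:mm:ss.ttt" or "mm:ss.ttt") to seconds.
// Returns NaN if the text doesn't match that shape.
function parseVttTimestamp(text) {
    var match = /^(?:(\d{2,}):)?(\d{2}):(\d{2})\.(\d{3})$/.exec(text.trim());

    if (!match) {
        return NaN;
    }

    var hours = match[1] ? parseInt(match[1], 10) : 0;
    var minutes = parseInt(match[2], 10);
    var seconds = parseInt(match[3], 10);
    var millis = parseInt(match[4], 10);

    return hours * 3600 + minutes * 60 + seconds + millis / 1000;
}

// Splits a cue timing line ("start --> end [settings]") into times in seconds.
// Returns null if the line has no "-->" separator.
function parseCueTiming(line) {
    var parts = line.split("-->");

    if (parts.length !== 2) {
        return null;
    }

    // Cue settings (such as "align:start") may follow the end time; ignore them here
    var endText = parts[1].trim().split(/\s+/)[0];

    return { start: parseVttTimestamp(parts[0]), end: parseVttTimestamp(endText) };
}
```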

Another bit that’s helpful to understand is that video and audio are closely related, because they’re part of the same spec. In fact, if you want to play just the audio portion of a video, you can use the Audio object in JavaScript:

//Play just the audio of a video

var movieAudio = new Audio("http://www.kraigbrockschmidt.com/downloads/media/ModelRocket1.mp4");

movieAudio.load();

movieAudio.play();

You can also have a video’s audio track play in the background depending on the value assigned to the element’s msAudioCategory attribute, as we’ll see later under “Playback Manager and Background Audio.” The short of it is that if you use the value ForegroundOnlyMedia for this attribute, the video will be muted when in the background, and you can also use this condition to automatically pause the video (again, see “Playback Manager and Background Audio”). If you instead use BackgroundCapableMedia for the attribute, the video’s soundtrack can play in the background provided that you’ve done the other necessary work for background audio. I, for one, appreciate apps that take the trouble to make this work; I’ll often listen to the audio for conference talks in the background and then watch only the most important video segments.

For any given video element, you can set the width and height to control the playback size (such as 100% for full-screen). This is important when your app view changes size, and you’ll likely have CSS styles for video elements in your various media queries. Also, if you have a control to play full screen, simply make the video the size of the viewport. In addition, when you create a video element with the controls attribute, it will automatically have a full-screen control on the far right that does exactly what you expect within a Windows Store app:

In short, you don’t need to do anything special to make this work, although you can employ the :-ms-fullscreen pseudo-class in CSS for full-screen styling. When the video is full screen, a similar button (or the ESC key) returns to the normal app view. If there’s a problem going to full screen, the video element will fire an MSFullScreenError event.

Note In case you’re wondering, the audio and video elements don’t provide any CSS pseudo-selectors for styling the controls bar. As my son’s preschool teacher would say (in reference to handing out popsicles, but it works here too), “You get what you get and you don’t throw a fit and you’re happy with it.” If you’d like to do something different with these controls, you’ll need to turn off the defaults (remove the controls attribute, or set the element’s controls property to false in JavaScript) and provide controls of your own that would call the element methods appropriately.

When implementing your own controls, be sure to set a timeout to make the controls disappear (either hiding them or changing the z-index) when they’re not being used. This is especially important because whenever the video is partly obstructed by other controls, even by a single pixel, playback decoding will switch from the GPU to the CPU and thus consume more power and other system resources. So be sure to hide those controls after a short time or size the video so that there’s no overlap. Your customers will greatly appreciate it! I, for one, have been impressed with how power-efficient video is with GPU playback on ARM devices such as the Microsoft Surface. In years past, video playback was a total battery killer, but now it’s no more an impact than answering emails.
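One way to arrange such a timeout is sketched below. The helper name createControlsAutoHider and the three-second default are assumptions for illustration only; controls can be any element (or object) with a style.display property.

```javascript
// Hypothetical helper: hides a custom controls element after a period of inactivity.
// delayMs is an illustrative default, not a platform recommendation.
function createControlsAutoHider(controls, delayMs) {
    var timer = null;
    delayMs = delayMs || 3000;

    function hide() {
        timer = null;
        controls.style.display = "none";
    }

    return {
        // Call from pointer or keyboard handlers to show the controls and restart the countdown
        show: function () {
            controls.style.display = "";

            if (timer !== null) {
                clearTimeout(timer);
            }

            timer = setTimeout(hide, delayMs);
        },

        // Hides immediately, for example when playback resumes
        hideNow: function () {
            if (timer !== null) {
                clearTimeout(timer);
            }

            hide();
        }
    };
}
```

You would typically call show from the video’s pointer event handlers and hideNow when the user starts playback through your own play button.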

You can use the various events of the video element to know when the video is played and paused through the controls, among other things (though there is not an event for going full-screen), but you should also respond appropriately when hardware buttons for media control are used. For this purpose, listen for the buttonpressed event coming from the Windows.Media.SystemMediaTransportControls object. (This is a WinRT object event, so call removeEventListener as needed.) Refer to the System media transport controls sample for a demonstration; the process is basically to add a listener for buttonpressed and then enable the buttons for which you want to receive that event (js/scenario1.js):

systemMediaControls = Windows.Media.SystemMediaTransportControls.getForCurrentView();

systemMediaControls.addEventListener("buttonpressed", systemMediaControlsButtonPressed, false);

systemMediaControls.isPlayEnabled = true;

systemMediaControls.isPauseEnabled = true;

systemMediaControls.isStopEnabled = true;

systemMediaControls.playbackStatus = Windows.Media.MediaPlaybackStatus.closed;

We’ll talk more of these later under “The Media Transport Control UI” because they very much apply to audio playback where you might not have any other controls available.
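For reference, a buttonpressed handler generally switches on the button property of the event arguments. The following is a minimal sketch rather than the sample’s own handler: createButtonPressedHandler is a hypothetical name, and the media element and the Windows.Media.SystemMediaTransportControlsButton enumeration are passed in as parameters (an assumption made here so that the function has no hidden dependencies).

```javascript
// A sketch of a buttonpressed handler factory. media is a video or audio element;
// buttons is expected to be Windows.Media.SystemMediaTransportControlsButton.
function createButtonPressedHandler(media, buttons) {
    return function (e) {
        switch (e.button) {
            case buttons.play:
                media.play();
                break;

            case buttons.pause:
                media.pause();
                break;

            case buttons.stop:
                // Media elements have no stop method, so pause and rewind instead
                media.pause();
                media.currentTime = 0;
                break;
        }
    };
}
```

The function it returns is what you would pass to addEventListener for the buttonpressed event.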

I also mentioned that you might want to defer loading a video (called lazy loading) until it’s needed and show a preview image in its place. This is accomplished with the poster attribute, whose value is the image to use, and then later setting the src attribute and calling the element’s load method:

<video id="video1" poster="/media/rocket.png" width="640" height="480"></video>

var video1 = document.getElementById("video1");

video1.addEventListener("click", function clickListener() {

video1.src = "http://www.kraigbrockschmidt.com/downloads/media/ModelRocket1.mp4";

video1.load();

//Remove listener to prevent interference with video controls

video1.removeEventListener("click", clickListener);

video1.addEventListener("click", function () {

video1.controls = true;

video1.play();

});

});

In this case I’m not using preload="auto" or even providing a src value so that nothing is transferred until the video is started with a click or tap. Then that listener is removed, the video’s own controls are turned on, and playback is started. This, of course, is a more roundabout method; often you’ll use preload="auto" controls src="..." directly in the video element, as the poster attribute will handle the preview image.

Streaming video Windows Store apps can certainly take advantage of streaming media, a subject that we’ll return to in “Streaming Media and Play To” at the end of this chapter.

Sidebar: Source Attributes and Custom Formats

In web applications, video (and audio) elements can contain HTML5 source elements to provide alternate formats in case a client system doesn’t have the necessary codec for the primary source. Given that the list of supported formats in Windows is well known (refer again to Supported audio and video formats), this isn’t much of a concern for Windows Store apps. However, source is still useful because it can identify the specific codecs for the source:

<video controls loop autoplay>

<source src="video1.vp8" type="video/webm"/>

</video>

This is important when you need to provide a custom codec for your app through Windows.Media.MediaExtensionManager, outlined in the “Handling Custom Audio and Video Formats” section later in this chapter, because the codec identifies the extension to load for decoding. I show WebM as an example here because it’s not directly available to Windows Store apps (though it is in Internet Explorer). When the app host running a Windows Store app encounters the video element above, it will look for a matching decoder for the specified type.

Alternately, the Windows.Media.Core.MediaStreamSource object makes it possible for you to handle audio, video, and image formats that aren’t otherwise supported in the platform, including plug-in free decryption of protected content. We’ll also talk about this in the “Handling Custom Audio and Video Formats” section.

Disabling Screen Savers and the Lock Screen During Playback

When playing video, especially full-screen, it’s important to disable any automatic timeouts that would blank the display or lock the device. This is done through the Windows.System.Display.DisplayRequest object. Before starting playback, create an instance of this object and call its requestActive method.

var displayRequest = new Windows.System.Display.DisplayRequest();

if (displayRequest) {

displayRequest.requestActive();

}

If this call succeeds, you’ll be guaranteed that the screen will stay active despite user inactivity. When the video is complete, be sure to call requestRelease:

displayRequest.requestRelease();

See the simple Display power state sample for a reference project.

Note that Windows will automatically deactivate such requests when your app is moved to the background, and it will reactivate them when the user switches back.
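Because each call to requestActive should be balanced by a call to requestRelease, it can help to tie the two to the media element’s own events. The sketch below shows one way to do that; keepDisplayOnWhilePlaying is a hypothetical helper, and the choice of events is an assumption you may want to adjust for your app.

```javascript
// Hypothetical helper: keeps the display on only while a media element is playing.
// displayRequest is a Windows.System.Display.DisplayRequest instance; video is any
// object that supports addEventListener for the standard media events.
function keepDisplayOnWhilePlaying(video, displayRequest) {
    var active = false;

    function activate() {
        if (!active) {
            displayRequest.requestActive();
            active = true;
        }
    }

    function release() {
        if (active) {
            displayRequest.requestRelease();
            active = false;
        }
    }

    video.addEventListener("playing", activate);
    video.addEventListener("pause", release);
    video.addEventListener("ended", release);
    video.addEventListener("error", release);
}
```

The active flag ensures that repeated playing or pause events never leave the request count out of balance.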

Tip As with image content, if you have a rights-protected video for which you want to disable screen capture, call Windows.UI.ViewManagement.ApplicationView.getForCurrentView() and set the resulting object’s isScreenCaptureEnabled property to false. This is again demonstrated in the Disable screen capture sample.

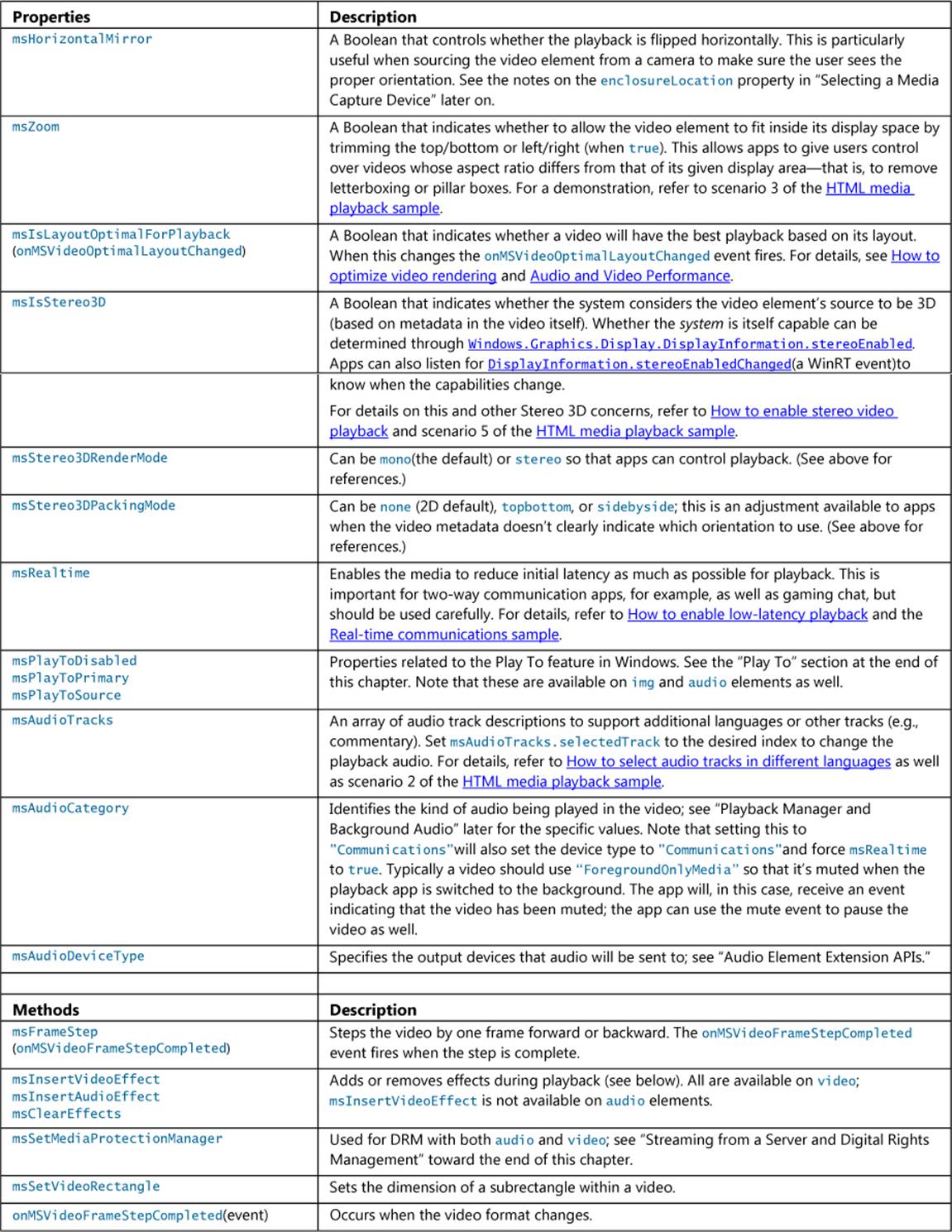

Video Element Extension APIs

Beyond the HTML5 standards for video elements, the app host adds some additional properties and methods, as shown in the following table and documented on the video element page. Also note the references to the HTML media playback sample where you can find some examples of using these.

Sidebar: Zooming Video for Smaller Screens

With video playback on small devices, it’s a good idea to provide a control that sets the msZoom property to true for full-screen playback. By default, full-screen video that doesn’t exactly match the aspect ratio of the display will have pillar boxes. On a very small screen, such as a 7” or 8” tablet, this might cause the video to be shrunk down to a size that’s hard to see. By setting msZoom to true, you remove those pillar boxes automatically. If you want to go further, you can also do full-screen playback by default and even stretch the video element to be larger than the display size, effectively zooming in even further.

Applying a Video Effect

The earlier table shows that video elements have msInsertVideoEffect and msInsertAudioEffect methods on them. WinRT provides a built-in video stabilization effect that is easily applied to an element. This is demonstrated in scenario 3 of the Media extensions sample, which plays the same video with and without the effect, so the stabilized one is muted:

vidStab.msClearEffects();

vidStab.muted = true;

vidStab.msInsertVideoEffect(Windows.Media.VideoEffects.videoStabilization, true, null);

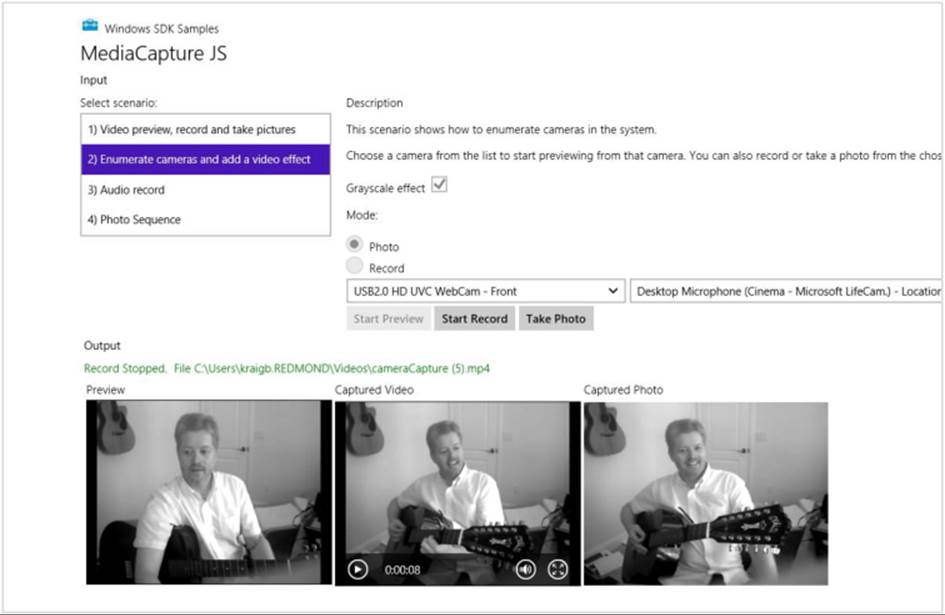

Custom effects, as demonstrated in scenario 4 of the sample, are implemented as separate dynamic-link libraries (DLLs) written in C++ and are included in the app package because a Windows Store app can install a DLL only for its own use and not for systemwide access. With the sample you’ll find DLL projects for grayscale, invert, and geometric effects, where the latter has three options for fisheye, pinch, and warp. In the js/CustomEffect.js file you can see how these are applied, with the first parameter to msInsertVideoEffect being a string that identifies the effect as exported by the DLL (see, for instance, the InvertTransform.idl file in the InvertTransform project):

vid.msInsertVideoEffect("GrayscaleTransform.GrayscaleEffect", true, null);

vid.msInsertVideoEffect("InvertTransform.InvertEffect", true, null);

The second parameter to msInsertVideoEffect, by the way, indicates whether the effect is required, so it’s typically true. The third is a parameter called config, which just contains additional information to pass to the effect. In the case of the geometric effects in the sample, this parameter specifies the particular variation:

var effect = new Windows.Foundation.Collections.PropertySet();

effect["effect"] = effectName;

vid.msClearEffects();

vid.msInsertVideoEffect("PolarTransform.PolarEffect", true, effect);

where effectName will be either “Fisheye”, “Pinch”, or “Warp”.

To be more specific, the config argument is a PropertySet that you can use to pass any information you need to the effect object. It can also communicate information back: if the effect writes information into the PropertySet, it will fire its mapchanged event.

Audio effects, not shown in the sample, are applied the same way with msInsertAudioEffect (with the same parameters). Do note that each element can have at most two effects per media stream. A video element can have two video effects and two audio effects; an audio element can have two audio effects. If you try to add more, the methods will throw an exception. This is why it’s a good idea to call msClearEffects before inserting any others.
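To keep within that limit, you might route all effect changes through a single function that clears the element first and rejects lists that are too long. The following is a sketch under that assumption; setVideoEffects and the shape of its effects argument are hypothetical, not part of the sample.

```javascript
// Hypothetical helper: replaces the effects on an element with a new set of video effects,
// refusing lists longer than the two-effect limit described in the text.
// Each entry is { id: <effect class ID string>, config: <PropertySet or null> }.
function setVideoEffects(element, effects) {
    var MAX_EFFECTS = 2;

    if (effects.length > MAX_EFFECTS) {
        throw new RangeError("At most " + MAX_EFFECTS + " video effects can be applied");
    }

    // Remove existing effects first so the inserts below start from a clean slate
    element.msClearEffects();

    effects.forEach(function (effect) {
        element.msInsertVideoEffect(effect.id, true, effect.config || null);
    });
}
```

A call might pass an array such as [{ id: "GrayscaleTransform.GrayscaleEffect" }], using the effect identifiers shown earlier.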

For additional discussion on effects and other media extensions, see Using media extensions.

Browsing Media Servers

Many households, including my own, have one or more media servers available on the local network from which apps can play media. Getting to these servers is the purpose of the one other property in Windows.Storage.KnownFolders that we haven’t mentioned yet: mediaServerDevices. As with other known folders, this is simply a StorageFolder object through which you can then enumerate or query additional folders and files. In this case, if you call its getFoldersAsync, you’ll receive back a list of available servers, each of which is represented by another StorageFolder. From there you can use file queries, as discussed in Chapter 11, to search for the types of media you’re interested in or apply user-provided search criteria. An example of this can be found in the Media Server client sample.
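As a minimal sketch of that first step, the function below collects the display names of the available servers. The name listMediaServerNames is hypothetical, and the folder is passed in as a parameter (you would pass Windows.Storage.KnownFolders.mediaServerDevices) so that the function has no hidden dependencies.

```javascript
// Hypothetical helper: produces the display names of the media servers found
// under a folder such as Windows.Storage.KnownFolders.mediaServerDevices.
// Returns whatever promise getFoldersAsync returns, fulfilled with an array of names.
function listMediaServerNames(mediaServersFolder) {
    return mediaServersFolder.getFoldersAsync().then(function (servers) {
        var names = [];

        // Each server is itself a StorageFolder
        servers.forEach(function (server) {
            names.push(server.displayName);
        });

        return names;
    });
}
```

From any one of those server folders you could then create a file query for the media types you want.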

Audio Playback and Mixing

The audio element in HTML5 has many things in common with video. For one, the audio element provides its own playback abilities, including controls, looping, and autoplay:

<audio src="media/SpringyBoing.mp3" controls loop autoplay></audio>

The same W3C spec applies to both video and audio elements, so the same code to play just the audio portion of a video is exactly what we use to play an audio file:

var sound1 = new Audio("media/SpringyBoing.mp3");

sound1.msAudioCategory = "SoundEffects";

sound1.load(); //For preloading media

sound1.play(); //At any later time

As mentioned earlier in this chapter, creating an Audio object without controls and playing it has no effect on layout, so this is what’s generally used for sound effects in games and other apps.

As with video, it’s important for many audio apps to respond appropriately to the buttonpressed event coming from the Windows.Media.SystemMediaTransportControls object so that the user can control playback with hardware buttons. This is not a concern with audio such as game sounds, however, where playback control is not needed.

Speaking of which, an interesting aspect of audio is mixing multiple sounds together, as games generally require. Here it’s important to understand that each audio element can be playing one sound: it has only one source file and one source file alone. However, multiple audio (and video) elements can be playing at the same time with automatic intermixing depending on their assigned msAudioCategory attributes. (See “Playback Manager and Background Audio” below.) In the following example, some background music plays continually (loop is set to true, and the volume is halved) while another sound is played in response to taps (see the AudioPlayback example with this chapter’s companion content):

var sound1 = new Audio("/media/SpringyBoing.mp3");

sound1.msAudioCategory = "SoundEffects"; //Set this before setting src if possible

sound1.load(); //For preloading media

//Background music

var sound2 = new Audio();

sound2.msAudioCategory = "ForegroundOnlyMedia"; //Set this before setting src

sound2.src = "http://www.kraigbrockschmidt.com/mp3/WhoIsSylvia_PortlandOR_5-06.mp3";

sound2.loop = true;

sound2.volume = 0.5; //50%;

sound2.play();

document.getElementById("btnSound").addEventListener("click", function () {

//Reset position in case we're already playing

sound1.currentTime = 0;

sound1.play();

});

By loading the tap sound when the object is created, we know we can play it at any time. When initiating playback, it’s a good idea to set the currentTime to 0 so that the sound always plays from the beginning.

The question with mixing, especially in games, is a matter of managing many different sounds without knowing ahead of time how they will be combined. You may need, for instance, to overlap playback of the same sound with different starting times, but it’s impractical to declare three audio elements with the same source. The technique that’s emerged is to use “rotating channels,” as described on HTML5 Audio Tutorial: Rotating Channels (Ajaxian website) and demonstrated in the AudioPlayback example in this chapter’s companion content. To summarize:

1. Declare audio elements for each sound (with preload="auto"), and make sure they aren’t showing controls so that they aren’t part of your layout.

2. Create a pool (array) of Audio objects for however many simultaneous channels you need.

3. To play a sound:

a. Obtain an available Audio object from the pool.

b. Set its src attribute to one that matches a preloaded audio element.

c. Call that pool object’s play method.
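The steps above can be sketched roughly as follows. This is not the code from the AudioPlayback example; createChannelPool is a hypothetical name, and the createAudio factory parameter is an assumption added so that the pool can be built from something other than real Audio objects.

```javascript
// A sketch of a rotating channel pool. createAudio is a factory that returns a new
// Audio-like object; by default it uses the Audio constructor.
function createChannelPool(channelCount, createAudio) {
    createAudio = createAudio || function () { return new Audio(); };

    var channels = [];
    var next = 0;

    for (var i = 0; i < channelCount; i++) {
        channels.push(createAudio());
    }

    return {
        play: function (src) {
            var channel = null;

            // Prefer a channel that isn't currently playing, starting at the rotation point
            for (var j = 0; j < channels.length; j++) {
                var index = (next + j) % channels.length;

                if (channels[index].paused || channels[index].ended) {
                    channel = channels[index];
                    next = (index + 1) % channels.length;
                    break;
                }
            }

            // If every channel is busy, reuse the one at the rotation point
            if (!channel) {
                channel = channels[next];
                next = (next + 1) % channels.length;
            }

            channel.src = src;   // Should match the src of a preloaded audio element
            channel.play();
            return channel;
        }
    };
}
```

A game might create one pool at startup, for example createChannelPool(8), and then call its play method with the src of whichever preloaded sound it needs.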

As sound designers in the movies have discovered, it is possible to have too much sound going on at the same time, because it gets really muddied. You might not need more than a couple dozen channels at most.

Hint Need some sounds for your app? Check out http://www.freesound.org.

Custom formats The Windows.Media.Core.MediaStreamSource object enables you to work with audio formats that don’t have native support in the platform. See “Handling Custom Audio and Video Formats” later in this chapter.

Audio Element Extension APIs

As with the video element, a few extensions are available on audio elements as well, namely those to do with effects (msInsertAudioEffect; see “Applying a Video Effect” earlier for a general discussion), DRM (msSetMediaProtectionManager), Play To (msPlayToSource, etc.), msRealtime, and msAudioTracks, as listed earlier in “Video Element Extension APIs.” In fact, every extension API for audio exists on video, but two of them have primary importance for audio:

• msAudioDeviceType Allows an app to determine which output device audio will render to: "Console" (the default) and "Communications". This way an app that knows it’s doing communication (like chat) doesn’t interfere with media audio.

• msAudioCategory Identifies the type of audio being played (see table in the next section), which determines how it will mix with other audio streams. This is also very important to identify audio that can continue to play in the background (thereby preventing the app from being suspended), as described in the next section. Note that you should always set this property before setting the audio’s src and that setting this to "Communications" will also set the device type to "Communications" and force msRealtime to true.

Do note that despite the similarities between the values in these properties, msAudioDeviceType is for selecting an output device whereas msAudioCategory identifies the nature of the audio that’s being played through whatever device. A communications category audio could be playing through the console device, for instance, or a media category could be playing through the communications device. The two are separate concepts.
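To make the distinction concrete, here is a small sketch that sets both properties on an element. The property names come from the discussion above; the configureAudio helper and its options object are hypothetical. It applies the category first (because it must precede src and, for "Communications", also changes the device type), then an explicit device type, then src. Whether an explicit device type set after a "Communications" category takes effect as expected is something to verify on the platform.

```javascript
// Hypothetical helper. The property names msAudioCategory and msAudioDeviceType
// come from the text; the options object is an assumption of this sketch.
function configureAudio(audio, options) {
    // Category first: it must be set before src, and setting
    // "Communications" also changes the device type.
    audio.msAudioCategory = options.category;

    // Device type second, so an explicit choice is applied after the category.
    if (options.deviceType) {
        audio.msAudioDeviceType = options.deviceType;
    }

    // src last, so that loading begins with the category already in place.
    audio.src = options.src;
    return audio;
}
```

In an app the first argument would be an audio element, as in configureAudio(document.createElement("audio"), { category: "Communications", deviceType: "Console", src: "voip.mp3" }).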

One other capability that’s available for audio is effects discovery, which means an app can enumerate effects that are being used in the audio processing chain on any given device. I won’t go into details here, but refer to the Windows.Media.Effects namespace and the Audio effects discovery sample in the SDK.

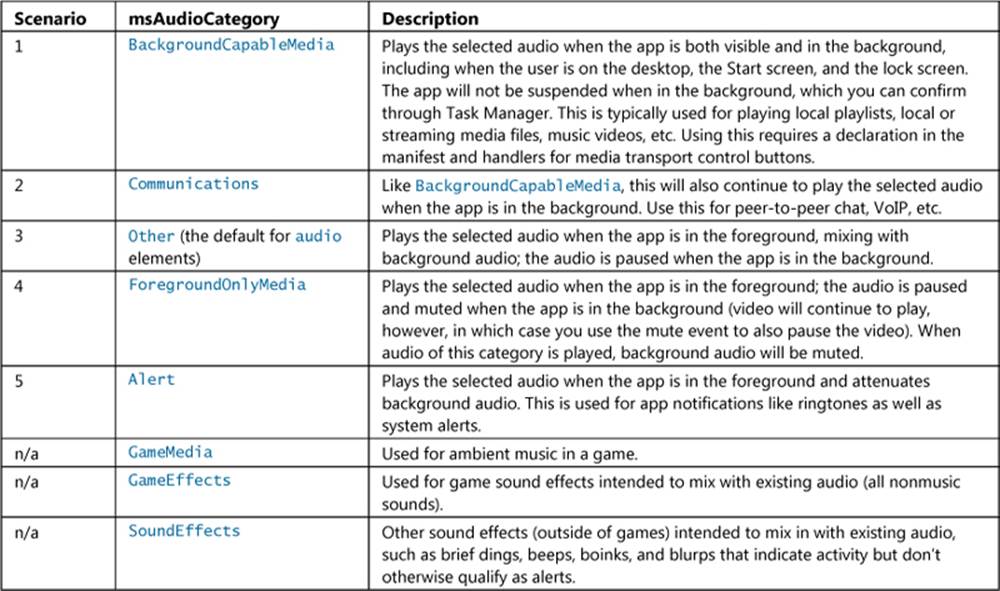

Playback Manager and Background Audio

To explore different kinds of audio playback (including the audio track of videos), let’s turn our attention to the Playback Manager msAudioCategory sample. I won’t show a screen shot of this because, doing nothing but audio, there isn’t much to show! Instead, let me outline the behaviors of its different scenarios—which align to msAudioCategory values—in the following table, as well as list those categories that aren’t represented in the sample but that can be used in your own app. In each scenario you need to first select an audio file through the file picker.

Where a single audio stream is concerned, there isn’t always a lot of difference between most of these categories. Yet as the table indicates, different categories have different effects on other concurrent audio streams. For this purpose, the Windows SDK does an odd thing by providing a second sample identical to the first, the Playback Manager companion sample. This allows you to run these apps at the same time (side by side, or one or both in the background) and play audio with different category settings to see how they combine.

How different audio streams combine is a subject that’s discussed in the Audio Playback in a Windows Store App whitepaper. However, you don’t have direct control over mixing—instead, the important thing is that you assign the most appropriate category to any particular audio stream. These categories help the playback manager perform the right level of mixing between audio streams according to user expectations, both with multiple streams in the same app, and streams coming from multiple apps (with limits on how many background audio apps can be going at once). For example, users will expect that alarms, being an important form of notification, will temporarily attenuate other audio streams (just like the GPS system in my car attenuates music when it gives directions). Similarly, users expect that an audio stream of a foreground app takes precedence over a stream of the same category of audio playing in the background.

As a developer, then, avoid playing games with the categories or trying to second guess the mixing algorithms, because you’ll end up creating an inconsistent user experience. Just assign the most appropriate category to your audio stream and let the playback manager deliver a consistent systemwide experience with audio from all sources.

Setting an audio category for any given audio element is a simple matter of setting its msAudioCategory attribute. Every scenario in the sample does the same thing for this, making sure to set the category before setting the src attribute (shown here from js/backgroundcapablemedia.js):

audtag = document.createElement('audio');
audtag.setAttribute("msAudioCategory", "BackgroundCapableMedia");
audtag.setAttribute("src", fileLocation);

You could accomplish the same thing through the audtag.msAudioCategory property, as seen in the previous section, as well as in markup:

<audio id="audio1" src="song.mp3" msAudioCategory="BackgroundCapableMedia"></audio>
<audio id="audio2" src="voip.mp3" msAudioCategory="Communications"></audio>
<audio id="audio3" src="lecture.mp3" msAudioCategory="Other"></audio>

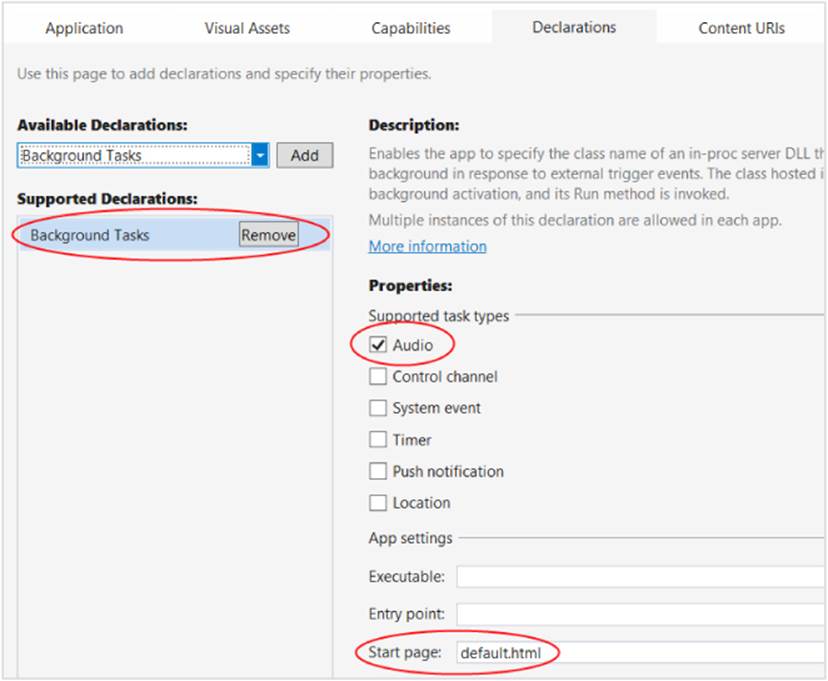

With BackgroundCapableMedia and Communications, however, simply setting the category isn’t sufficient: you also need to declare an audio background task extension in your manifest. This is easily accomplished by going to the Declarations tab in the manifest designer:

First, select Background Tasks from the Available Declarations drop-down list and click Add. Then check Audio under Supported Task Types, and identify a Start Page under App Settings. The start page isn’t really essential for background audio (because the app will never be launched for this purpose), but you need to provide something to make the manifest editor happy.

These declarations appear as follows in the manifest XML, should you care to look:

<Application Id="App" StartPage="default.html">
    <!-- ... -->
    <Extensions>
        <Extension Category="windows.backgroundTasks" StartPage="default.html">
            <BackgroundTasks>
                <Task Type="audio" />
            </BackgroundTasks>
        </Extension>
    </Extensions>
</Application>

Furthermore, background audio apps must do a few things with the Windows.Media.SystemMediaTransportControls object that we’ve already mentioned so that the user can control background audio playback through the media control UI (see the next section):

• Set the object’s isPlayEnabled and isPauseEnabled properties to true.

• Listen to the buttonpressed event and handle play and pause cases in your handler by starting and stopping the audio playback as appropriate.

These requirements also make it possible for the playback manager to control the audio streams as the user switches between apps. If you fail to provide these listeners, your audio will always be paused and muted when the app goes into the background. (You can also optionally listen to the propertychanged event that is triggered for sound level changes.)

How to do this is shown in the Playback Manager sample for all its scenarios; the following is from js/backgroundcapablemedia.js (some code omitted), and note that the propertychanged event handler is not required for background audio:

var systemMediaControls = Windows.Media.SystemMediaTransportControls.getForCurrentView();
systemMediaControls.addEventListener("propertychanged", mediaPropertyChanged, false);
systemMediaControls.addEventListener("buttonpressed", mediaButtonPressed, false);
systemMediaControls.isPlayEnabled = true;
systemMediaControls.isPauseEnabled = true;

// audtag variable is the global audio element for the page
audtag.setAttribute("msAudioCategory", "BackgroundCapableMedia");
audtag.setAttribute("src", fileLocation);
audtag.addEventListener("playing", audioPlaying, false);
audtag.addEventListener("pause", audioPaused, false);

function mediaButtonPressed(e) {
    switch (e.button) {
        case Windows.Media.SystemMediaTransportControlsButton.play:
            audtag.play();
            break;

        case Windows.Media.SystemMediaTransportControlsButton.pause:
            audtag.pause();
            break;

        default:
            break;
    }
}

function mediaPropertyChanged(e) {
    switch (e.property) {
        case Windows.Media.SystemMediaTransportControlsProperty.soundLevel:
            // Catch SoundLevel notifications and determine SoundLevel state. If it's muted,
            // we'll pause the player. If your app is playing media you feel that a user should
            // not miss if a VOIP call comes in, you may want to consider pausing playback when
            // your app receives a SoundLevel(Low) notification. A SoundLevel(Low) means your
            // app volume has been attenuated by the system (likely for a VOIP call).
            var soundLevel = e.target.soundLevel;

            switch (soundLevel) {
                case Windows.Media.SoundLevel.muted:
                    log(getTimeStampedMessage("App sound level is: Muted"));
                    break;

                case Windows.Media.SoundLevel.low:
                    log(getTimeStampedMessage("App sound level is: Low"));
                    break;

                case Windows.Media.SoundLevel.full:
                    log(getTimeStampedMessage("App sound level is: Full"));
                    break;
            }

            appMuted(); // Typically only call this for muted and perhaps low levels.
            break;

        default:
            break;
    }
}

function audioPlaying() {
    systemMediaControls.playbackStatus = Windows.Media.MediaPlaybackStatus.playing;
}

function audioPaused() {
    systemMediaControls.playbackStatus = Windows.Media.MediaPlaybackStatus.paused;
}

function appMuted() {
    if (audtag) {
        if (!audtag.paused) {
            audtag.pause();
        }
    }
}

Note For a foreground-only video, a SoundLevel.muted value reported through the propertychanged event is typically the condition you use to pause playback.
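As the comment in the sample suggests, an app would usually pause only for the muted level and perhaps the low level, rather than for every sound level change. A minimal sketch of that decision might look like the following. The handleSoundLevel function and its pauseOnLow parameter are hypothetical, and the soundLevels argument stands in for Windows.Media.SoundLevel so that the logic can be exercised outside an app.

```javascript
// Hypothetical helper. soundLevels stands in for Windows.Media.SoundLevel;
// media is an audio or video element (or anything with paused and pause()).
// Returns true if playback was paused as a result of the level change.
function handleSoundLevel(level, soundLevels, media, pauseOnLow) {
    var shouldPause = level === soundLevels.muted ||
        (pauseOnLow === true && level === soundLevels.low);

    if (shouldPause && media && !media.paused) {
        media.pause();
        return true;
    }

    return false;
}
```

Inside a propertychanged handler this could be called as handleSoundLevel(e.target.soundLevel, Windows.Media.SoundLevel, audtag, false), passing true as the last argument for media that the user should not miss during a call.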

Given that WinRT events are involved here, the page control’s unload handler makes sure to clear everything out (js/backgroundcapablemedia.js):

if (systemMediaControls) {
    systemMediaControls.removeEventListener("buttonpressed", mediaButtonPressed, false);
    systemMediaControls.removeEventListener("propertychanged", mediaPropertyChanged, false);
    systemMediaControls.isPlayEnabled = false;
    systemMediaControls.isPauseEnabled = false;
    systemMediaControls.playbackStatus = Windows.Media.MediaPlaybackStatus.closed;
    systemMediaControls = null;
}

Again, setting the media control object’s isPlayEnabled and isPauseEnabled properties to true ensures that the play/pause button is clickable in the UI and that the system controls also respond to hardware events, such as the buttons on my keyboard. My keyboard also has next, previous, and stop buttons, but unless the app sets isNextEnabled, isPreviousEnabled, and isStopEnabled to true and handles those cases in the buttonpressed event, they won’t have any effect. We’ll see more in the next section.
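Extending the handler to the other buttons follows the same pattern. The sketch below is an assumption-laden illustration, not the sample’s code: wireTransportButtons is a hypothetical helper, controls stands in for the SystemMediaTransportControls object, buttons for the Windows.Media.SystemMediaTransportControlsButton enumeration, and player for an app-defined object with play, pause, stop, next, and previous methods.

```javascript
// Hypothetical helper. In an app, controls would come from
// Windows.Media.SystemMediaTransportControls.getForCurrentView() and buttons
// would be Windows.Media.SystemMediaTransportControlsButton. player is an
// assumed app object exposing play, pause, stop, next, and previous.
function wireTransportButtons(controls, buttons, player) {
    // A button has no effect unless its is*Enabled property is true.
    controls.isPlayEnabled = true;
    controls.isPauseEnabled = true;
    controls.isStopEnabled = true;
    controls.isNextEnabled = true;
    controls.isPreviousEnabled = true;

    function onButtonPressed(e) {
        switch (e.button) {
            case buttons.play:
                player.play();
                break;
            case buttons.pause:
                player.pause();
                break;
            case buttons.stop:
                player.stop();
                break;
            case buttons.next:
                player.next();
                break;
            case buttons.previous:
                player.previous();
                break;
            default:
                break;   // Ignore buttons the app doesn't support.
        }
    }

    controls.addEventListener("buttonpressed", onButtonPressed, false);

    // Returned so the caller can pass it to removeEventListener on unload.
    return onButtonPressed;
}
```

Returning the handler is a design choice that keeps cleanup simple, since the same function reference is needed to remove a WinRT event listener later.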

Note The SystemMediaTransportControls.isEnabled property affects the entire control panel.

The other very important part to making the UI work properly is setting the playbackStatus value; otherwise, the actual audio playback will be out of sync with the system controls. Take a look at the code again and you’ll see that the playing and pause events of the audio element are wired to functions named audioPlaying and audioPaused. Those functions then set the playbackStatus to the appropriate value from the Windows.Media.MediaPlaybackStatus enumeration, whose values are playing, paused, stopped, closed, and changing.

In short, the buttonpressed event is how an app responds to system control events. Setting playbackStatus is how you then affect the system controls in response to app events.

A few additional notes about background audio:

• If the audio is paused, a background audio app will be suspended like any other, but if the user presses a play button, the app will be resumed and audio will then continue playback.

• The use of background audio is carefully evaluated with apps submitted to the Windows Store. If you attempt to play an inaudible track as a means to avoid being suspended, the app will fail Windows Store certification.

• A background audio app should be careful about how it uses the network for streaming media to support the low-power state called connected standby. For details, refer to Writing a power savvy background media app.

Now let’s see the UI that Windows displays in response to hardware buttons.

The Media Transport Control UI

As mentioned in the previous section, handling the buttonpressed event from the SystemMediaTransportControls object is required for background audio so that the user can control the audio through hardware buttons (built into many devices, including keyboards and remote controls) without needing to switch to the app. This is especially important because background audio continues to play not only when the user switches to another app but also when the user switches to the Start screen, switches to the desktop, or locks the device. Furthermore, the system controls also integrate automatically with Play To, meaning that they act as a remote control for the remote Play To device.

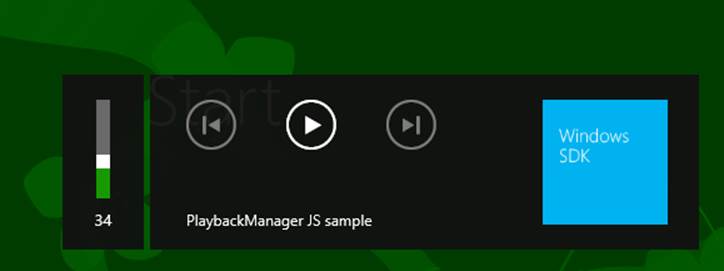

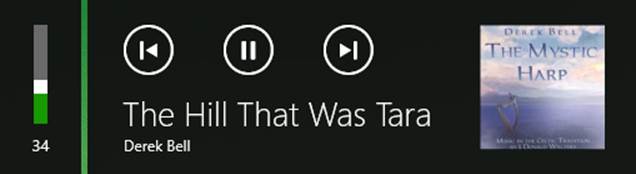

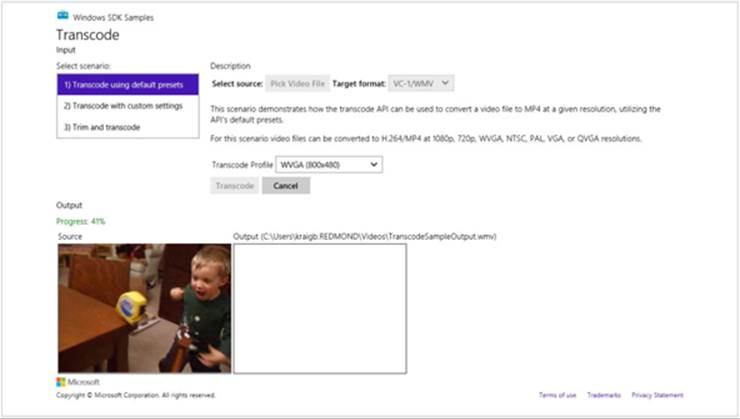

The default media control UI appears in the upper left of the screen, as shown in Figure 13-3, regardless of what is on the screen at the time. Tapping anywhere outside the specific control buttons will switch to the app.

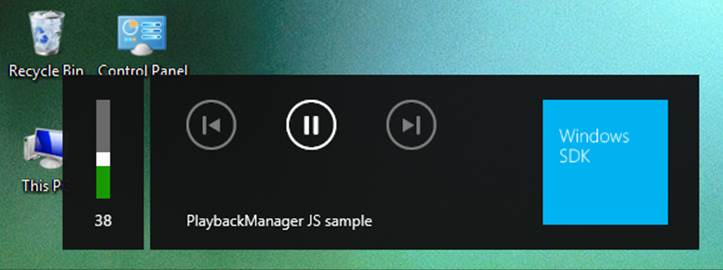

FIGURE 13-3 The system media control UI appearing above the Start screen (top) and the desktop (bottom). It will also show on the lock screen and on top of other Windows Store apps.

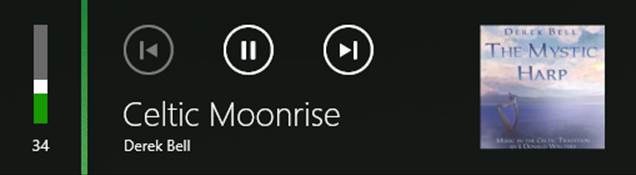

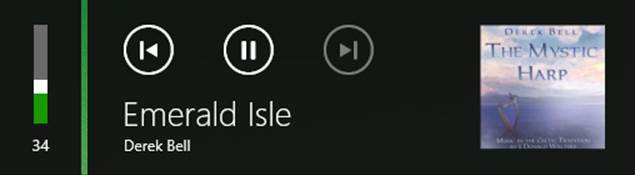

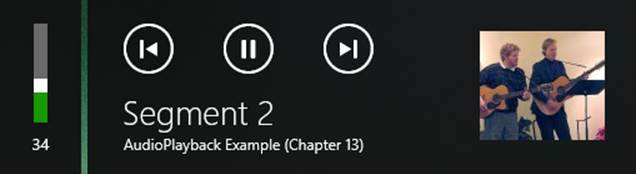

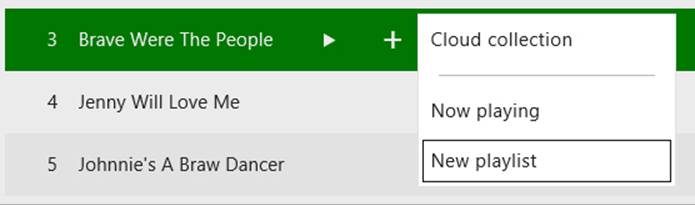

Setting the control object’s isPreviousEnabled and isNextEnabled properties to true will, as you’d expect, enable the other two buttons you see in Figure 13-3. This is demonstrated in the System media transport controls sample, in whose single scenario you can select multiple files for playback. When you have multiple files selected, it will play them in sequence, enabling and disabling the buttons depending on the position of the track in the list, as shown in Figure 13-4. (The AudioPlayback example in the companion content shows this as well—see the next section.)

FIGURE 13-4 The system media control UI with different states of the previous and next buttons. Note that the gap between the volume control and the other controls is transparent and just shows whatever is underneath.
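Enabling and disabling the previous and next buttons according to the position of the current track comes down to two comparisons. The following is a minimal sketch under the assumption of a zero-based track index; updateTrackButtons is a hypothetical name, and controls stands in for the SystemMediaTransportControls object.

```javascript
// Hypothetical helper. index is the zero-based position of the current track
// and trackCount is the number of tracks selected for playback.
function updateTrackButtons(controls, index, trackCount) {
    controls.isPreviousEnabled = index > 0;               // Nothing before the first track.
    controls.isNextEnabled = index < trackCount - 1;      // Nothing after the last track.
}
```

An app would call this whenever the current track changes, for example after handling a next or previous button press or when a track ends and the following one starts.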