Linux Kernel Networking: Implementation and Theory (2014)

CHAPTER 14. Advanced Topics

Chapter 13 dealt with the InfiniBand subsystem and its implementation in Linux. This chapter deals with several advanced topics and some topics that didn’t fit logically into other chapters. The chapter starts with a discussion about network namespaces, a type of lightweight process virtualization mechanism that was added to Linux in recent years. I will discuss the namespaces implementation in general and network namespaces in particular. You will learn that only two new system calls are needed in order to implement namespaces. You will also see several examples of how simple it is to create and manage network namespaces with the ip command of iproute2, how simple it is to move a network device from one network namespace to another, and how to attach a specified process to a specified network namespace. The cgroups subsystem also provides a resource management solution, which is different from namespaces. I will describe the cgroups subsystem and its two network modules, net_prio and cls_cgroup, and give two examples of using these cgroup network modules.

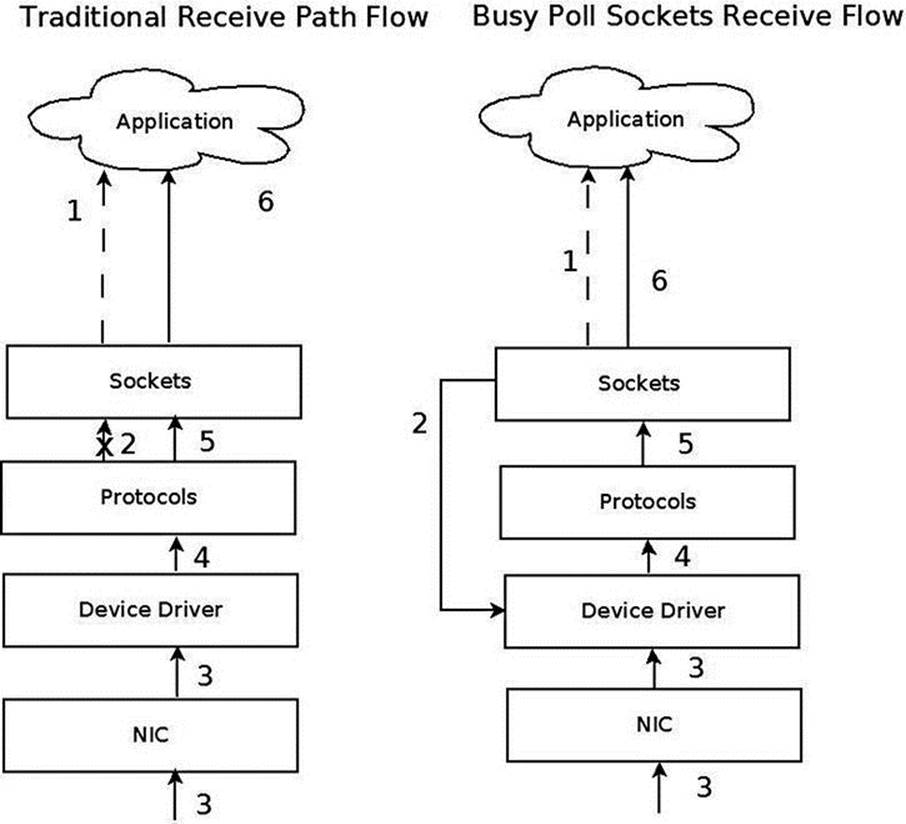

Later on in this chapter, you will learn about Busy Poll Sockets and how to tune them. The Busy Poll Sockets feature provides an interesting performance optimization technique for sockets that need low latency and are willing to pay the cost of higher CPU utilization. The Busy Poll Sockets feature is available from kernel 3.11. I will also cover the Bluetooth subsystem, the IEEE 802.15.4 subsystem, and the Near Field Communication (NFC) subsystem; these three subsystems typically work in short-range networks, and the development of new features for these subsystems is progressing at a rapid pace. I will also discuss Notification Chains, an important mechanism that you may encounter while developing or debugging kernel networking code, and the PCI subsystem, as many network devices are PCI devices. I will not delve deep into the PCI subsystem details, as this book is not about device drivers. I will conclude the chapter with three short sections: one about the teaming network driver (the new kernel link aggregation solution), one about the Point-to-Point Protocol over Ethernet (PPPoE), and finally one about Android.

Network Namespaces

This section covers Linux namespaces, what they are for, and how they are implemented. It includes an in-depth discussion of network namespaces, with some examples that demonstrate their usage. Linux namespaces are essentially a virtualization solution. Operating system virtualization was implemented in mainframes many years before solutions like Xen or KVM hit the market. The idea behind Linux namespaces, which are a form of process virtualization, is not new either: it was tried in the Plan 9 operating system (see this article from 1992: “The Use of Name Spaces in Plan 9”, www.cs.bell-labs.com/sys/doc/names.html).

Namespaces are a form of lightweight process virtualization, and they provide resource isolation. As opposed to virtualization solutions like KVM or Xen, with namespaces you do not create additional instances of the operating system on the same host; you use only a single operating system instance. I should mention in this context that the Solaris operating system has a virtualization solution named Solaris Zones, which also uses a single operating system instance, but its scheme of resource partitioning is somewhat different from that of Linux namespaces (for example, in Solaris Zones there is a global zone, which is the primary zone and has more capabilities). The FreeBSD operating system has a mechanism called jails, which also provides resource partitioning without running more than one instance of the kernel.

The main idea of Linux namespaces is to partition resources among groups of processes, so that a process (or several processes) can have a different view of the system than processes in other groups. This feature is used, for example, to provide resource isolation in the Linux containers project (http://lxc.sourceforge.net/). The Linux containers project also uses another resource management mechanism, provided by the cgroups subsystem, which will be described later in this chapter. With containers, you can run different Linux distributions on the same host using one instance of the operating system. Namespaces are also needed for the checkpoint/restore feature, which is used in high performance computing (HPC). For example, it is used in CRIU (http://criu.org/Main_Page), a software tool of OpenVZ (http://openvz.org/Main_Page), which implements checkpoint/restore functionality for Linux processes mostly in userspace, with only a small number of supporting kernel patches merged. I should mention that there were some projects to implement checkpoint/restore in the kernel, but these projects were not accepted in mainline because they were too complex; take, for example, the CKPT project: https://ckpt.wiki.kernel.org/index.php/Main_Page. The checkpoint/restore feature (sometimes referred to as checkpoint/restart) enables stopping several processes and saving them on a filesystem, and at a later time restoring those processes from the filesystem (possibly on a different host) and resuming their execution from where it was stopped. Without namespaces, checkpoint/restore has very limited use cases; in particular, live migration is only possible with them. Another use case for network namespaces is setting up an environment that simulates different network stacks for testing, debugging, and so on.
For readers who want to learn more about checkpoint/restart, I suggest reading the article “Virtual Servers and Checkpoint/Restart in Mainstream Linux,” by Sukadev Bhattiprolu, Eric W. Biederman, Serge Hallyn, and Daniel Lezcano.

Mount namespaces were the first type of Linux namespaces to be merged, in 2002, for kernel 2.4.19. User namespaces were the last to be implemented, in kernel 3.8, for almost all filesystem types. Additional namespaces may be developed in the future, as is discussed later in this section. Creating any namespace except the user namespace requires the CAP_SYS_ADMIN capability; trying to create such a namespace without CAP_SYS_ADMIN results in an -EPERM error (“Operation not permitted”). Many developers took part in the development of namespaces, among them Eric W. Biederman, Pavel Emelyanov, Al Viro, Cyrill Gorcunov, Andrew Vagin, and others.

After getting some background about process virtualization and Linux namespaces, and how they are used, you are now ready to dive into the gory implementation details.

Namespaces Implementation

As of this writing, six namespaces are implemented in the Linux kernel. Here is a description of the main additions and changes that were needed in order to implement namespaces in the Linux kernel and to support namespaces in userspace packages:

· A structure called nsproxy (namespace proxy) was added. This structure contains pointers to five of the six namespaces that are implemented. There is no pointer to the user namespace in the nsproxy structure; however, each of the other five namespace objects contains a pointer, called user_ns, to the user namespace object that owns it. The user namespace is a special case: it is referenced by a member of the credentials structure (cred), also called user_ns. The cred structure represents the security context of a process. Each process descriptor (task_struct) contains two cred pointers, for the effective and the objective credentials of the process. I will not delve into all the details and nuances of the user namespaces implementation, since this is not in the scope of this book. An nsproxy object is created by the create_nsproxy() method, and it is released by the free_nsproxy() method. A pointer to an nsproxy object, also called nsproxy, was added to the process descriptor (a process descriptor is represented by the task_struct structure, include/linux/sched.h). Let’s take a look at the nsproxy structure, as it’s quite short and should be quite self-explanatory:

struct nsproxy {
	atomic_t count;
	struct uts_namespace *uts_ns;
	struct ipc_namespace *ipc_ns;
	struct mnt_namespace *mnt_ns;
	struct pid_namespace *pid_ns;
	struct net *net_ns;
};

(include/linux/nsproxy.h)

· The nsproxy structure holds five namespace pointers (there is no user namespace pointer). Keeping a single nsproxy pointer in the process descriptor (task_struct object), instead of five namespace pointers, is an optimization: when performing fork(), the new child is likely to live in the same set of namespaces as its parent, so instead of five reference counter increments (one per namespace), only one reference counter increment (of the nsproxy object) is needed. The nsproxy count member is this reference counter; it is initialized to 1 when the nsproxy object is created by the create_nsproxy() method, decremented by the put_nsproxy() method, and incremented by the get_nsproxy() method. Note that the pid_ns member of the nsproxy object was renamed to pid_ns_for_children in kernel 3.11.
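To make the reference-counting argument concrete, here is a minimal userspace model in C. It is a sketch, not kernel code: struct nsproxy_model, struct task_model, fork_model(), and exit_model() are hypothetical names, and the namespace pointers are left opaque. The kernel's own helpers are create_nsproxy(), get_nsproxy(), and put_nsproxy(); exit_model() mimics what exit_task_namespaces(), described later in this section, achieves by calling switch_task_namespaces() with a NULL nsproxy.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical userspace model of struct nsproxy: the namespace
 * pointers are opaque; only the shared reference counter matters. */
struct nsproxy_model {
    atomic_int count;
    void *uts_ns, *ipc_ns, *mnt_ns, *pid_ns, *net_ns;
};

struct task_model {
    struct nsproxy_model *nsproxy;
};

/* Like create_nsproxy(): allocate and start with one reference. */
struct nsproxy_model *create_nsproxy_model(void)
{
    struct nsproxy_model *ns = calloc(1, sizeof(*ns));
    if (ns)
        atomic_init(&ns->count, 1);
    return ns;
}

/* Like get_nsproxy(): one increment covers all five namespaces. */
void get_nsproxy_model(struct nsproxy_model *ns)
{
    assert(ns != NULL);
    atomic_fetch_add(&ns->count, 1);
}

/* Like put_nsproxy(): free on the last reference; returns 1 if freed. */
int put_nsproxy_model(struct nsproxy_model *ns)
{
    if (atomic_fetch_sub(&ns->count, 1) == 1) {
        free(ns);
        return 1;
    }
    return 0;
}

/* fork() without CLONE_NEW* flags: the child shares the parent's nsproxy. */
void fork_model(const struct task_model *parent, struct task_model *child)
{
    get_nsproxy_model(parent->nsproxy);
    child->nsproxy = parent->nsproxy;
}

/* Like exit_task_namespaces(): detach the nsproxy and drop the
 * reference; returns 1 if this was the last user and it was freed. */
int exit_model(struct task_model *task)
{
    struct nsproxy_model *old = task->nsproxy;
    task->nsproxy = NULL;
    return old ? put_nsproxy_model(old) : 0;
}
```

Forking twice without CLONE_NEW* flags would raise count to 3, at the cost of one atomic increment per fork instead of five.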

· A new system call, unshare(), was added. This system call gets a single parameter that is a bitmask of CLONE_* flags. When the flags argument consists of one or more namespace CLONE_NEW* flags, the unshare() system call performs the following steps:

· First, it creates a new namespace (or several namespaces) according to the specified flags. This is done by calling the unshare_nsproxy_namespaces() method, which in turn creates a new nsproxy object and one or more namespaces by calling the create_new_namespaces() method. The type of the new namespace (or namespaces) is determined according to the specified CLONE_NEW* flags. The create_new_namespaces() method returns a new nsproxy object that contains the newly created namespace (or namespaces).

· Then it attaches the calling process to that newly created nsproxy object by calling the switch_task_namespaces() method.

When CLONE_NEWPID is passed to the unshare() system call, it works differently than the other flags: it affects only subsequent fork() calls. The calling process itself stays in its PID namespace; only the children it creates afterward are placed in the new PID namespace (the first of them gets PID 1). The other CLONE_NEW* flags immediately put the calling process into a new namespace.

The six CLONE_NEW* flags, which were added to support the creation of namespaces, are described later in this section. The implementation of the unshare() system call is in kernel/fork.c.
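The CLONE_NEWPID behavior described above can be observed from userspace with a short program. This is a sketch under stated assumptions: child_is_pid1_after_unshare() is a hypothetical helper name, and it needs CAP_SYS_ADMIN (without it, unshare() fails and the function returns -EPERM). After the unshare() call the caller keeps its PID, and only the first child forked afterward sees itself as PID 1.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <sched.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Hypothetical helper: calls unshare(CLONE_NEWPID) and forks once.
 * Returns 1 if the child saw itself as PID 1, 0 if it did not, or
 * -errno if a system call failed (typically -EPERM without
 * CAP_SYS_ADMIN, or -EINVAL if PID namespaces are not configured). */
int child_is_pid1_after_unshare(void)
{
    pid_t before = getpid();

    if (unshare(CLONE_NEWPID) == -1)
        return -errno;

    /* The caller is not moved into the new PID namespace. */
    assert(getpid() == before);

    pid_t child = fork();
    if (child == -1)
        return -errno;
    if (child == 0)
        _exit(getpid() == 1 ? 1 : 0);   /* report through the exit status */

    int status;
    if (waitpid(child, &status, 0) == -1)
        return -errno;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -ECHILD;
}
```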

· A new system call, setns(), was added. It attaches the calling thread to an existing namespace. Its prototype is int setns(int fd, int nstype); the parameters are:

· fd: A file descriptor which refers to a namespace. These are obtained by opening links from the /proc/<pid>/ns/ directory.

· nstype: An optional parameter. When it is one of the CLONE_NEW* namespace flags, the specified file descriptor must refer to a namespace that matches the type of the specified CLONE_NEW* flag. When nstype is not set (its value is 0), the fd argument can refer to a namespace of any type. If nstype does not correspond to the namespace type associated with the specified fd, a value of -EINVAL is returned.

You can find the implementation of the setns() system call in kernel/nsproxy.c.
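Here is a minimal sketch of calling setns() from userspace, roughly what the nsenter utility does for a single namespace. join_ns_of_pid() is a hypothetical helper; joining a namespace normally requires CAP_SYS_ADMIN, so unprivileged callers get -EPERM, and a nonzero nstype that does not match the namespace behind fd yields -EINVAL, as described above.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <sched.h>
#include <stdio.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: moves the calling thread into the namespace of
 * type `name` ("net", "uts", ...) of process `pid`. `nstype` is passed
 * to setns() unchanged: 0 accepts any namespace type, a CLONE_NEW*
 * flag demands a matching one. Returns 0 on success or -errno. */
int join_ns_of_pid(pid_t pid, const char *name, int nstype)
{
    char path[64];
    snprintf(path, sizeof(path), "/proc/%d/ns/%s", (int)pid, name);

    int fd = open(path, O_RDONLY | O_CLOEXEC);
    if (fd == -1)
        return -errno;

    int ret = (setns(fd, nstype) == -1) ? -errno : 0;
    close(fd);   /* after a successful setns() the fd is no longer needed */
    return ret;
}
```

For example, join_ns_of_pid(1234, "net", CLONE_NEWNET) would move the calling thread into the network namespace of process 1234.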

· The following six new clone flags were added in order to support namespaces:

· CLONE_NEWNS (for mount namespaces)

· CLONE_NEWUTS (for UTS namespaces)

· CLONE_NEWIPC (for IPC namespaces)

· CLONE_NEWPID (for PID namespaces)

· CLONE_NEWNET (for network namespaces)

· CLONE_NEWUSER (for user namespaces)

The clone() system call is traditionally used to create a new process. It was adjusted to support these new flags, so that it creates a new process attached to a new namespace (or namespaces). Note that you will encounter the CLONE_NEWNET flag, used for creating a new network namespace, in some of the examples later in this chapter.
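As an illustration of clone() with CLONE_NEWNET, the following sketch starts a child in a new network namespace and lets the child confirm this by comparing the device and inode numbers of its /proc/self/ns/net entry with the parent's. clone_into_new_netns() and child_fn() are hypothetical names; the glibc clone() wrapper and CAP_SYS_ADMIN are assumed (without the capability, the function returns -EPERM).

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define CHILD_STACK_SIZE (256 * 1024)

/* Hypothetical child entry point: compares its /proc/self/ns/net with
 * the parent's (passed in arg). Exit status 0 = different namespace,
 * 1 = same namespace, 2 = stat() failed. */
static int child_fn(void *arg)
{
    const struct stat *parent = arg;
    struct stat st;

    if (stat("/proc/self/ns/net", &st) == -1)
        return 2;
    return (st.st_dev != parent->st_dev || st.st_ino != parent->st_ino) ? 0 : 1;
}

/* Creates a child with clone(CLONE_NEWNET | SIGCHLD). Returns the
 * child's exit status (0 means it is in a new network namespace) or
 * -errno, typically -EPERM without CAP_SYS_ADMIN. */
int clone_into_new_netns(void)
{
    struct stat parent;
    if (stat("/proc/self/ns/net", &parent) == -1)
        return -errno;

    char *stack = malloc(CHILD_STACK_SIZE);
    if (!stack)
        return -ENOMEM;

    /* The stack grows downward on most architectures: pass its top. */
    pid_t pid = clone(child_fn, stack + CHILD_STACK_SIZE,
                      CLONE_NEWNET | SIGCHLD, &parent);
    int err = (pid == -1) ? errno : 0;

    int status = 0;
    if (pid != -1 && waitpid(pid, &status, 0) == -1)
        err = errno;
    free(stack);

    if (err)
        return -err;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -ECHILD;
}
```

Because CLONE_VM is not used, the child runs on a copy of the parent's memory, so the pointer to the parent's struct stat remains valid in the child.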

· Each of the six subsystems that have namespace support implements a namespace structure of its own. For example, the mount namespace is represented by a structure called mnt_namespace, and the network namespace is represented by a structure called net, which is discussed later in this section. I will mention the other namespaces later in this chapter.

· For creating namespaces, a method named create_new_namespaces() was added (kernel/nsproxy.c). This method gets as its first parameter a CLONE_NEW* flag or a bitmask of CLONE_NEW* flags. It first creates an nsproxy object by calling the create_nsproxy() method, and then it associates a namespace according to the specified flag; since the flag can be a bitmask of flags, the create_new_namespaces() method can associate more than one namespace. Let’s take a look at the create_new_namespaces() method:

static struct nsproxy *create_new_namespaces(unsigned long flags,
	struct task_struct *tsk, struct user_namespace *user_ns,
	struct fs_struct *new_fs)
{
	struct nsproxy *new_nsp;
	int err;

Allocate an nsproxy object and initialize its reference counter to 1:

	new_nsp = create_nsproxy();
	if (!new_nsp)
		return ERR_PTR(-ENOMEM);
	. . .

After successfully creating an nsproxy object, we should create namespaces according to the specified flags, or associate existing namespaces with the new nsproxy object we created. We start by calling copy_mnt_ns(), for the mount namespace, and then we call copy_utsname(), for the UTS namespace. I will describe the copy_utsname() method only briefly here, because the UTS namespace is discussed in the “UTS Namespaces Implementation” section later in this chapter. If CLONE_NEWUTS is not set in the flags passed to the copy_utsname() method, it does not create a new UTS namespace; it returns the UTS namespace that was passed as its last parameter (tsk->nsproxy->uts_ns). If CLONE_NEWUTS is set, the copy_utsname() method clones the specified UTS namespace by calling the clone_uts_ns() method. The clone_uts_ns() method, in turn, allocates a new UTS namespace object, copies the new_utsname object of the specified UTS namespace (tsk->nsproxy->uts_ns) into the new_utsname object of the newly created UTS namespace object, and returns the newly created UTS namespace. You will learn more about the new_utsname structure in the “UTS Namespaces Implementation” section later in this chapter:

	new_nsp->uts_ns = copy_utsname(flags, user_ns, tsk->nsproxy->uts_ns);
	if (IS_ERR(new_nsp->uts_ns)) {
		err = PTR_ERR(new_nsp->uts_ns);
		goto out_uts;
	}
	. . .

After handling the UTS namespace, we continue with calling the copy_ipcs() method to handle the IPC namespace, copy_pid_ns() to handle the PID namespace, and copy_net_ns() to handle the network namespace. Note that there is no call to a copy_user_ns() method, as the nsproxy does not contain a pointer to the user namespace, as was mentioned earlier. I will briefly describe the copy_net_ns() method here. If CLONE_NEWNET is not set in the flags passed to the create_new_namespaces() method, the copy_net_ns() method returns the network namespace that was passed as its third parameter, tsk->nsproxy->net_ns, much like copy_utsname() did, as you saw earlier in this section. If CLONE_NEWNET is set, the copy_net_ns() method allocates a new network namespace by calling the net_alloc() method, initializes it by calling the setup_net() method, and adds it to the global list of all network namespaces, net_namespace_list:

	new_nsp->net_ns = copy_net_ns(flags, user_ns, tsk->nsproxy->net_ns);
	if (IS_ERR(new_nsp->net_ns)) {
		err = PTR_ERR(new_nsp->net_ns);
		goto out_net;
	}
	return new_nsp;
	. . .
}

Note that the setns() system call, which does not create a new namespace but only attaches the calling thread to a specified namespace, also calls create_new_namespaces(), but it passes 0 as the first parameter. This implies that only an nsproxy is created (by calling the create_nsproxy() method) and no new namespace is created; instead, the calling thread is associated with the existing namespace identified by the fd argument of the setns() system call. Later in the setns() system call implementation, the switch_task_namespaces() method is invoked, and it assigns the newly created nsproxy to the calling thread (see kernel/nsproxy.c).
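The copy-or-share pattern of copy_utsname() and copy_net_ns() can be summarized in a simplified userspace model. Everything here is hypothetical (struct ns_model, copy_ns_model(), create_new_namespaces_model()); a plain integer stands in for the kernel's reference counters, and only two namespace types are modeled. Passing 0 as flags reproduces the setns() case: no new namespace is created and both existing ones are shared.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>   /* CLONE_NEWUTS, CLONE_NEWNET */
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for a namespace object (uts_namespace, net, ...). */
struct ns_model {
    int refs;
    char tag[16];
};

struct nsproxy_pair {
    struct ns_model *uts_ns;
    struct ns_model *net_ns;
};

static void put_ns_model(struct ns_model *ns)
{
    if (--ns->refs == 0)
        free(ns);
}

/* The copy_utsname()/copy_net_ns() pattern: without `flag`, take a
 * reference on the old namespace and share it; with `flag`, allocate
 * a new namespace initialized from the old one (as clone_uts_ns()
 * copies new_utsname). Returns NULL on allocation failure. */
static struct ns_model *copy_ns_model(unsigned long flags, unsigned long flag,
                                      struct ns_model *old)
{
    if (!(flags & flag)) {
        old->refs++;
        return old;
    }
    struct ns_model *ns = malloc(sizeof(*ns));
    if (!ns)
        return NULL;
    ns->refs = 1;
    memcpy(ns->tag, old->tag, sizeof(ns->tag));
    return ns;
}

/* Models create_new_namespaces() for two namespace types. */
int create_new_namespaces_model(unsigned long flags,
                                const struct nsproxy_pair *old,
                                struct nsproxy_pair *new_nsp)
{
    new_nsp->uts_ns = copy_ns_model(flags, CLONE_NEWUTS, old->uts_ns);
    if (!new_nsp->uts_ns)
        return -1;
    new_nsp->net_ns = copy_ns_model(flags, CLONE_NEWNET, old->net_ns);
    if (!new_nsp->net_ns) {
        put_ns_model(new_nsp->uts_ns);   /* unwind, like the out_* labels */
        return -1;
    }
    return 0;
}
```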

· A method named exit_task_namespaces() was added in kernel/nsproxy.c. It is called by the do_exit() method (kernel/exit.c) when a process is terminated. The exit_task_namespaces() method gets the process descriptor (task_struct object) as a single parameter. In fact, the only thing it does is call the switch_task_namespaces() method, passing the specified process descriptor and a NULL nsproxy object as arguments. The switch_task_namespaces() method, in turn, nullifies the nsproxy object of the process descriptor of the process that is being terminated. If no other processes use that nsproxy, it is freed.

· A method named get_net_ns_by_fd() was added. This method gets a file descriptor as its single parameter, and returns the network namespace associated with the inode that corresponds to the specified file descriptor. For readers who are not familiar with filesystems and with inode semantics, I suggest reading the “Inode Objects” section of Chapter 12, “The Virtual Filesystem,” in Understanding the Linux Kernel by Daniel P. Bovet and Marco Cesati (O’Reilly, 2005).

· A method named get_net_ns_by_pid() was added. This method gets a PID number as a single argument, and it returns the network namespace object to which this process is attached.

· Six entries were added under /proc/<pid>/ns, one for each namespace. These files, when opened, can be passed to the setns() system call. You can use ls -al or readlink to display the unique proc inode number that is associated with a namespace. This unique proc inode number is allocated by the proc_alloc_inum() method when the namespace is created, and is freed by the proc_free_inum() method when the namespace is released. See, for example, the create_pid_namespace() method in kernel/pid_namespace.c. In the following example, the number in square brackets on the right is the unique proc inode number of each namespace:

ls -al /proc/1/ns/
total 0
dr-x--x--x 2 root root 0 Nov 3 13:32 .
dr-xr-xr-x 8 root root 0 Nov 3 12:17 ..
lrwxrwxrwx 1 root root 0 Nov 3 13:32 ipc -> ipc:[4026531839]
lrwxrwxrwx 1 root root 0 Nov 3 13:32 mnt -> mnt:[4026531840]
lrwxrwxrwx 1 root root 0 Nov 3 13:32 net -> net:[4026531956]
lrwxrwxrwx 1 root root 0 Nov 3 13:32 pid -> pid:[4026531836]
lrwxrwxrwx 1 root root 0 Nov 3 13:32 user -> user:[4026531837]
lrwxrwxrwx 1 root root 0 Nov 3 13:32 uts -> uts:[4026531838]
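A program can read the same information with readlink(2). The following sketch splits a link target such as net:[4026531956] into the namespace type and its proc inode number. parse_ns_link() and self_ns_inum() are hypothetical helper names, and the type:[number] format is taken from the listing above.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical parser: splits a link target such as "net:[4026531956]"
 * into its type ("net") and its unique proc inode number. Returns 0 on
 * success, -1 if the text is not of the form "type:[number]". */
int parse_ns_link(const char *target, char *type, size_t typelen,
                  unsigned long long *inum)
{
    const char *colon = strchr(target, ':');
    char end = '\0';

    if (!colon || (size_t)(colon - target) >= typelen)
        return -1;
    if (sscanf(colon, ":[%llu%c", inum, &end) != 2 || end != ']')
        return -1;
    memcpy(type, target, (size_t)(colon - target));
    type[colon - target] = '\0';
    return 0;
}

/* Returns the proc inode number of the calling process's namespace
 * `name` (for example "net"), read with readlink(), or 0 on error. */
unsigned long long self_ns_inum(const char *name)
{
    char path[64], target[64], type[16];
    unsigned long long inum;

    snprintf(path, sizeof(path), "/proc/self/ns/%s", name);
    ssize_t n = readlink(path, target, sizeof(target) - 1);
    if (n == -1)
        return 0;
    target[n] = '\0';   /* readlink() does not NUL-terminate */
    if (parse_ns_link(target, type, sizeof(type), &inum) != 0)
        return 0;
    return inum;
}
```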

· Besides staying alive while at least one process is attached to it, a namespace can stay alive if either of the following conditions is met:

· A file descriptor of the namespace file under /proc/<pid>/ns/ is held open.

· The namespace proc file is bind mounted somewhere else; for example, for a PID namespace: mount --bind /proc/self/ns/pid /some/filesystem/path
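Holding a descriptor, and the usual way of checking whether two processes share a namespace, can both be sketched in userspace. same_ns() and pin_ns() are hypothetical helpers; the comparison relies on the documented rule that two processes are in the same namespace when the device and inode numbers of their /proc/<pid>/ns/<name> entries match.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: returns 1 if processes a and b share the
 * namespace of type `name` ("net", "uts", ...), 0 if they do not,
 * and -1 on error. */
int same_ns(pid_t a, pid_t b, const char *name)
{
    char path[64];
    struct stat sa, sb;

    snprintf(path, sizeof(path), "/proc/%d/ns/%s", (int)a, name);
    if (stat(path, &sa) == -1)
        return -1;
    snprintf(path, sizeof(path), "/proc/%d/ns/%s", (int)b, name);
    if (stat(path, &sb) == -1)
        return -1;
    return sa.st_dev == sb.st_dev && sa.st_ino == sb.st_ino;
}

/* Hypothetical helper: opens /proc/<pid>/ns/<name> and returns the
 * descriptor (or -1). While it stays open, the namespace stays alive
 * even if every process attached to it exits; it can later be passed
 * to setns(). */
int pin_ns(pid_t pid, const char *name)
{
    char path[64];

    snprintf(path, sizeof(path), "/proc/%d/ns/%s", (int)pid, name);
    return open(path, O_RDONLY | O_CLOEXEC);
}
```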

· For each of the six namespaces, a proc namespace operations object (an instance of the proc_ns_operations structure) is defined. This object consists of callbacks, such as inum, which returns the unique proc inode number associated with the namespace, and install, for namespace installation (in the install callback, namespace-specific actions are performed, such as attaching the specific namespace object to the nsproxy object; the install callback is invoked by the setns system call). The proc_ns_operations structure is defined in include/linux/proc_fs.h. Following is the list of the six proc_ns_operations objects:

· utsns_operations for UTS namespace (kernel/utsname.c)

· ipcns_operations for IPC namespace (ipc/namespace.c)

· mntns_operations for mount namespaces (fs/namespace.c)

· pidns_operations for PID namespaces (kernel/pid_namespace.c)

· userns_operations for user namespace (kernel/user_namespace.c)

· netns_operations for network namespace (net/core/net_namespace.c)
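To show how setns() relies on these objects, here is a hypothetical userspace model of proc_ns_operations with two namespace types. The structure and function names ending in _model or _lite are invented for illustration, and the install callbacks only swap a pointer, whereas the kernel's callbacks also check capabilities and manage references. setns_model() mirrors the nstype check described for setns() earlier in this section.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sched.h>   /* CLONE_NEWNET, CLONE_NEWUTS */

/* Hypothetical namespace objects: only the proc inode number is kept. */
struct net_model { unsigned int proc_inum; };
struct uts_model { unsigned int proc_inum; };

struct nsproxy_lite {
    struct uts_model *uts_ns;
    struct net_model *net_ns;
};

/* Hypothetical model of proc_ns_operations: a name, the CLONE_NEW*
 * type, and the inum and install callbacks. */
struct proc_ns_ops_model {
    const char *name;
    int type;
    unsigned int (*inum)(void *ns);
    int (*install)(struct nsproxy_lite *nsproxy, void *ns);
};

static unsigned int netns_inum(void *ns)
{
    return ((struct net_model *)ns)->proc_inum;
}

/* Only the nsproxy pointer is updated in this model. */
static int netns_install(struct nsproxy_lite *nsproxy, void *ns)
{
    nsproxy->net_ns = ns;
    return 0;
}

static unsigned int utsns_inum(void *ns)
{
    return ((struct uts_model *)ns)->proc_inum;
}

static int utsns_install(struct nsproxy_lite *nsproxy, void *ns)
{
    nsproxy->uts_ns = ns;
    return 0;
}

const struct proc_ns_ops_model netns_ops_model = {
    "net", CLONE_NEWNET, netns_inum, netns_install };
const struct proc_ns_ops_model utsns_ops_model = {
    "uts", CLONE_NEWUTS, utsns_inum, utsns_install };

/* The core of setns(): a nonzero nstype must match the namespace
 * type; then the namespace-specific install callback does the work. */
int setns_model(struct nsproxy_lite *nsproxy,
                const struct proc_ns_ops_model *ops, void *ns, int nstype)
{
    if (nstype && nstype != ops->type)
        return -EINVAL;
    return ops->install(nsproxy, ns);
}
```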

· For each namespace, except the mount namespace, there is an initial namespace:

· init_uts_ns: For UTS namespace (init/version.c).

· init_ipc_ns: For IPC namespace (ipc/msgutil.c).

· init_pid_ns: For PID namespace (kernel/pid.c).

· init_net: For network namespace (net/core/net_namespace.c).

· init_user_ns: For user namespace (kernel/user.c).

· An initial, default nsproxy object is defined: it is called init_nsproxy, and it contains pointers to five initial namespaces. They are all initialized to the corresponding initial namespace, except for the mount namespace, which is initialized to NULL:

struct nsproxy init_nsproxy = {
	.count	= ATOMIC_INIT(1),
	.uts_ns	= &init_uts_ns,
#if defined(CONFIG_POSIX_MQUEUE) || defined(CONFIG_SYSVIPC)
	.ipc_ns	= &init_ipc_ns,
#endif
	.mnt_ns	= NULL,
	.pid_ns	= &init_pid_ns,
#ifdef CONFIG_NET
	.net_ns	= &init_net,
#endif
};

(kernel/nsproxy.c)

· A method named task_nsproxy() was added; it gets as a single parameter a process descriptor (task_struct object), and it returns the nsproxy associated with the specified task_struct object. See include/linux/nsproxy.h.

These are the six namespaces available in the Linux kernel as of this writing:

· Mount namespaces: The mount namespace allows a process to see its own view of the filesystem and of its mount points. Mounting a filesystem in one mount namespace does not propagate to the other mount namespaces. Mount namespaces are created by setting the CLONE_NEWNS flag when calling the clone() or unshare() system calls. In order to implement mount namespaces, a structure called mnt_namespace was added (fs/mount.h), and nsproxy holds a pointer to an mnt_namespace object called mnt_ns. Mount namespaces are available from kernel 2.4.19. Mount namespaces are implemented primarily in fs/namespace.c. When creating a new mount namespace, the following rules apply:

· All previous mounts will be visible in the new mount namespace.

· Mounts/unmounts in the new mount namespace are invisible to the rest of the system.

· Mounts/unmounts in the global mount namespace are visible in the new mount namespace.

Mount namespaces use a VFS enhancement called shared subtrees, which was introduced in the Linux 2.6.15 kernel; the shared subtrees feature introduced new flags: MS_PRIVATE, MS_SHARED, MS_SLAVE, and MS_UNBINDABLE. (See http://lwn.net/Articles/159077/ and Documentation/filesystems/sharedsubtree.txt.) I will not discuss the internals of the mount namespaces implementation. For readers who want to learn more about mount namespaces usage, I suggest reading the following article: “Applying Mount Namespaces,” by Serge E. Hallyn and Ram Pai (http://www.ibm.com/developerworks/linux/library/l-mount-namespaces/index.html).

· PID namespaces: The PID namespace provides the ability for different processes in different PID namespaces to have the same PID. This feature is a building block for Linux containers. It is important for checkpoint/restore of a process, because a process checkpointed on one host can be restored on a different host even if there is a process with the same PID on that host. The first process created in a new PID namespace gets PID 1, and it behaves somewhat like the init process: when a process in that namespace dies, its orphaned children are reparented to the process with PID 1 (child reaping). Sending a SIGKILL signal to the process with PID 1 from within its own PID namespace does not kill it, and this holds in the initial PID namespace as well as in any other PID namespace. But killing the init process of one PID namespace from another (parent) PID namespace works. In this case, all of the tasks living in the former namespace are killed, and the PID namespace is stopped. PID namespaces are created by setting the CLONE_NEWPID flag when calling the clone() or unshare() system calls. In order to implement PID namespaces, a structure called pid_namespace was added (include/linux/pid_namespace.h), and nsproxy holds a pointer to a pid_namespace object called pid_ns. In order to have PID namespaces support, CONFIG_PID_NS should be set. PID namespaces are available from kernel 2.6.24. PID namespaces are implemented primarily in kernel/pid_namespace.c.

· Network namespaces: The network namespace allows creating what appears to be multiple instances of the kernel network stack. Network namespaces are created by setting the CLONE_NEWNET flag when calling the clone() or unshare() system calls. In order to implement network namespaces, a structure called net was added (include/net/net_namespace.h), and nsproxy holds a pointer to a net object called net_ns. In order to have network namespaces support, CONFIG_NET_NS should be set. I will discuss network namespaces later in this section. Network namespaces are available from kernel 2.6.29. Network namespaces are implemented primarily in net/core/net_namespace.c.

· IPC namespaces: The IPC namespace allows a process to have its own System V IPC resources and POSIX message queues resources. IPC namespaces are created by setting the CLONE_NEWIPC flag when calling the clone() or unshare() system calls. In order to implement IPC namespaces, a structure called ipc_namespace was added (include/linux/ipc_namespace.h), and nsproxy holds a pointer to an ipc_namespace object called ipc_ns. In order to have IPC namespaces support, CONFIG_IPC_NS should be set. Support for System V IPC resources is available in IPC namespaces from kernel 2.6.19. Support for POSIX message queues resources in IPC namespaces was added later, in kernel 2.6.30. IPC namespaces are implemented primarily in ipc/namespace.c.

· UTS namespaces: The UTS namespace provides the ability for different UTS namespaces to have different host name or domain name (or other information returned by the uname() system call). UTS namespaces are created by setting the CLONE_NEWUTS flag when calling the clone() or unshare() system calls. UTS namespace implementation is the simplest among the six namespaces that were implemented. In order to implement the UTS namespace, a structure called uts_namespace was added (include/linux/utsname.h), and nsproxy holds a pointer to a uts_namespace object called uts_ns. In order to have UTS namespaces support, CONFIG_UTS_NS should be set. UTS namespaces are available from kernel 2.6.19. UTS namespaces are implemented primarily in kernel/utsname.c.

· User namespaces: The user namespace allows mapping of user and group IDs. This mapping is done by writing to two procfs entries that were added for supporting user namespaces: /proc/<pid>/uid_map and /proc/<pid>/gid_map. (IDs that have no mapping are reported as the overflow IDs, which are configured in /proc/sys/kernel/overflowuid and /proc/sys/kernel/overflowgid.) A process attached to a user namespace can have a different set of capabilities than it has on the host. User namespaces are created by setting the CLONE_NEWUSER flag when calling the clone() or unshare() system calls. In order to implement user namespaces, a structure called user_namespace was added (include/linux/user_namespace.h). The user_namespace object contains a pointer to the user namespace object that created it (parent). As opposed to the other five namespaces, nsproxy does not hold a pointer to a user_namespace object. I will not delve into more implementation details of user namespaces, as it is probably the most complex namespace and as it is beyond the scope of the book. In order to have user namespaces support, CONFIG_USER_NS should be set. User namespaces are available from kernel 3.8 for almost all filesystem types. User namespaces are implemented primarily in kernel/user_namespace.c.
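The UTS and user namespace descriptions above can be combined in one small experiment that needs no privileges, assuming the kernel allows unprivileged user namespaces (many distributions can disable them). userns_uts_demo() is a hypothetical helper: it creates a user namespace and a UTS namespace with a single unshare() call, maps the caller's outer effective UID to UID 0 through /proc/self/uid_map, and then changes the host name. Changing the host name is permitted because the caller holds CAP_SYS_ADMIN in the new user namespace, which owns the new UTS namespace.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <sched.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/utsname.h>
#include <unistd.h>

/* Hypothetical helper. On success returns 0 and stores the host name
 * reported by uname() and the UID reported by getuid() afterward;
 * returns -errno on failure (for example -EPERM when unprivileged user
 * namespaces are disabled). */
int userns_uts_demo(const char *hostname, char *seen, size_t seenlen,
                    uid_t *uid_after)
{
    uid_t outer_euid = geteuid();

    if (unshare(CLONE_NEWUSER | CLONE_NEWUTS) == -1)
        return -errno;

    /* Until the map is written, getuid() returns the overflow UID. */
    char map[64];
    int len = snprintf(map, sizeof(map), "0 %u 1\n", (unsigned)outer_euid);
    int fd = open("/proc/self/uid_map", O_WRONLY | O_CLOEXEC);
    if (fd == -1)
        return -errno;
    /* The whole map must be written with a single write(). */
    ssize_t n = write(fd, map, (size_t)len);
    int err = (n == -1) ? errno : 0;
    close(fd);
    if (n != len)
        return err ? -err : -EIO;

    if (sethostname(hostname, strlen(hostname)) == -1)
        return -errno;

    struct utsname u;
    if (uname(&u) == -1)
        return -errno;
    snprintf(seen, seenlen, "%s", u.nodename);
    *uid_after = getuid();
    return 0;
}
```

The new host name is visible only inside the new UTS namespace; processes in other UTS namespaces still see the original name.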

Support for namespaces was added in four userspace packages:

· In util-linux:

· The unshare utility can create any of the six namespaces, available since version 2.17.

· The nsenter utility (which is in fact a light wrapper around the setns system call), available since version 2.23.

· In iproute2, management of network namespaces is done with the ip netns command, and you will see several examples for this later in this chapter. Moreover, you can move a network interface to a different network namespace with the ip link command as you will see in the “Moving a Network Interface to a different Network Namespace” section later in this chapter.

· In ethtool, support was added for finding out whether the NETIF_F_NETNS_LOCAL feature is set for a specified network interface. When the NETIF_F_NETNS_LOCAL feature is set, the network interface is local to its network namespace, and you cannot move it to a different network namespace. The NETIF_F_NETNS_LOCAL feature will be discussed later in this section.

· In the wireless iw package, an option was added to enable moving a wireless interface to a different namespace.


Note In a presentation in Ottawa Linux Symposium (OLS) in 2006, “Multiple Instances of the Global Linux Namespaces,” Eric W. Biederman (one of the main developers of Linux namespaces) mentioned ten namespaces; the other four namespaces that he mentioned in this presentation and that are not implemented yet are: device namespace, security namespace, security keys namespace, and time namespace. (See https://www.kernel.org/doc/ols/2006/ols2006v1-pages-101-112.pdf.) For more information about namespaces, I suggest reading a series of six articles about it by Michael Kerrisk (https://lwn.net/Articles/531114/). Mobile OS virtualization projects triggered a development effort to support device namespaces; for more information about device namespaces, which are not yet part of the kernel, see “Device Namespaces” By Jake Edge (http://lwn.net/Articles/564854/) and also (http://lwn.net/Articles/564977/). There was also some work for implementing a new syslog namespace (see the article “Stepping Closer to Practical Containers: “syslog” namespaces”, http://lwn.net/Articles/527342/).

The following three system calls can be used with namespaces:

· clone(): Creates a new process attached to a new namespace (or namespaces). The type of the namespace is specified by a CLONE_NEW* flag which is passed as a parameter. Note that you can also use a bitmask of these CLONE_NEW* flags. The implementation of the clone() system call is in kernel/fork.c.

· unshare(): Discussed earlier in this section.

· setns(): Discussed earlier in this section.


Note Namespaces do not have names inside the kernel that userspace processes can use to talk with them. If namespaces had names, the names would have to be kept globally, in yet another special namespace. This would complicate the implementation and could raise problems in checkpoint/restore, for example. Instead, userspace processes open namespace files under /proc/<pid>/ns/, and the resulting file descriptors can be used to talk to a specific namespace and to keep that namespace alive. Namespaces are identified by a unique proc inode number, which is generated when they are created and freed when they are released. Each of the six namespace structures contains an integer member called proc_inum, which is the unique proc inode number of the namespace and is assigned by calling the proc_alloc_inum() method. Each of the six namespaces also has a proc_ns_operations object, which includes namespace-specific callbacks; one of these callbacks, called inum, returns the proc_inum of the associated namespace (for the definition of the proc_ns_operations structure, refer to include/linux/proc_fs.h).
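A short sketch of how a userspace program can observe the proc inode number described above: it calls stat() on /proc/self/ns/net and also parses the symbolic link target, which has the form net:[<inode>]. The helper names are hypothetical, and the code assumes a kernel recent enough (around 3.8 and later) to expose that link format.

```c
#include <stdio.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Inode number of /proc/self/ns/<type> obtained with stat(), or 0 on error. */
static unsigned long ns_inode_by_stat(const char *type)
{
    char path[64];
    struct stat st;

    snprintf(path, sizeof(path), "/proc/self/ns/%s", type);
    if (stat(path, &st) != 0)
        return 0;
    return (unsigned long)st.st_ino;
}

/* Inode number parsed from the link target "<type>:[<inode>]", or 0 on error. */
static unsigned long ns_inode_by_readlink(const char *type)
{
    char path[64], target[128];
    unsigned long ino = 0;

    snprintf(path, sizeof(path), "/proc/self/ns/%s", type);
    ssize_t n = readlink(path, target, sizeof(target) - 1);
    if (n < 0)
        return 0;
    target[n] = '\0';   /* readlink() does not NUL-terminate */
    /* Skip everything up to '[', then read the number between the brackets. */
    if (sscanf(target, "%*[^[][%lu]", &ino) != 1)
        return 0;
    return ino;
}
```

Both helpers return the same number for a given namespace type, and different namespace types (for example net and uts) have different inode numbers.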

Before discussing network namespaces, let’s describe how the simplest namespace, the UTS namespace, is implemented. This is a good starting point to understand the other, more complex namespaces.

UTS Namespaces Implementation

In order to implement UTS namespaces, a struct called uts_namespace was added:

struct uts_namespace {
    struct kref kref;
    struct new_utsname name;
    struct user_namespace *user_ns;
    unsigned int proc_inum;
};

(include/linux/utsname.h)

Here is a short description of the members of the uts_namespace structure:

· kref: A reference counter. It is a generic kernel reference counter, incremented by the kref_get() method and decremented by the kref_put() method. Besides the UTS namespace, the PID namespace also uses a kref object as its reference counter; the other four namespaces use an atomic counter for reference counting. For more info about the kref API, look in Documentation/kref.txt.

· name: A new_utsname object, which contains fields like domainname and nodename (discussed shortly).

· user_ns: The user namespace associated with the UTS namespace.

· proc_inum: The unique proc inode number of the UTS namespace.

The nsproxy structure contains a pointer to the uts_namespace:

struct nsproxy {
    . . .
    struct uts_namespace *uts_ns;
    . . .
};

(include/linux/nsproxy.h)

As you saw earlier, the uts_namespace object contains an instance of the new_utsname structure. Let’s take a look at the new_utsname structure, which is the essence of the UTS namespace:

struct new_utsname {
    char sysname[__NEW_UTS_LEN + 1];
    char nodename[__NEW_UTS_LEN + 1];
    char release[__NEW_UTS_LEN + 1];
    char version[__NEW_UTS_LEN + 1];
    char machine[__NEW_UTS_LEN + 1];
    char domainname[__NEW_UTS_LEN + 1];
};

(include/uapi/linux/utsname.h)

The nodename member of the new_utsname is the host name, and domainname is the domain name. A method named utsname() was added; this method simply returns the new_utsname object which is associated with the process that currently runs (current):

static inline struct new_utsname *utsname(void)
{
    return &current->nsproxy->uts_ns->name;
}

(include/linux/utsname.h)

With UTS namespaces, the gethostname() system call is implemented as follows:

SYSCALL_DEFINE2(gethostname, char __user *, name, int, len)
{
    int i, errno;
    struct new_utsname *u;

    if (len < 0)
        return -EINVAL;
    down_read(&uts_sem);

Invoke the utsname() method, which accesses the new_utsname object of the UTS namespace associated with the current process:

    u = utsname();
    i = 1 + strlen(u->nodename);
    if (i > len)
        i = len;
    errno = 0;

Copy to userspace the nodename of the new_utsname object that the utsname() method returned:

    if (copy_to_user(name, u->nodename, i))
        errno = -EFAULT;
    up_read(&uts_sem);
    return errno;
}

(kernel/sys.c)
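The length logic above is easy to misread, so here is a hypothetical userspace model of it: model_gethostname() is not a kernel or libc function, its nodename argument stands in for utsname()->nodename, and memcpy() stands in for copy_to_user(). It shows that at most len bytes are copied, and that a buffer which is too short receives a truncated name without a terminating NUL.

```c
#include <errno.h>
#include <string.h>

/* Hypothetical model of the copy logic of the gethostname() system call. */
static int model_gethostname(const char *nodename, char *buf, int len)
{
    if (len < 0)
        return -EINVAL;
    int i = 1 + (int)strlen(nodename);   /* the name plus its terminating NUL */
    if (i > len)
        i = len;                         /* truncate; the NUL may be dropped */
    memcpy(buf, nodename, (size_t)i);
    return 0;
}
```

For example, copying "host1" into a 4-byte buffer yields the bytes "host" with no terminator, so callers should size the buffer generously.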

You can find a similar approach in the sethostname() and uname() system calls, which are also defined in kernel/sys.c. I should note that the UTS namespaces implementation also handles UTS procfs entries. Only two UTS procfs entries, /proc/sys/kernel/domainname and /proc/sys/kernel/hostname, are writable (this means that you can change them from userspace). Other UTS procfs entries, like /proc/sys/kernel/ostype and /proc/sys/kernel/osrelease, are not writable. If you look at the table of the UTS procfs entries, uts_kern_table (kernel/utsname_sysctl.c), you will see that some entries, like ostype and osrelease, have mode “0444”, which means they are not writable, and only two of them, hostname and domainname, have mode “0644”, which means they are writable. Reading and writing the UTS procfs entries is handled by the proc_do_uts_string() method. Readers who want to learn more about how UTS procfs entries are handled should look into the proc_do_uts_string() method and the get_uts() method; both are in kernel/utsname_sysctl.c.
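From userspace, the uname() system call and the UTS procfs entries both read the new_utsname object of the caller's UTS namespace, so they should agree. The sketch below uses a hypothetical helper, read_uts_proc(), to read an entry such as /proc/sys/kernel/hostname so that it can be compared with the nodename field returned by uname().

```c
#include <stdio.h>
#include <string.h>
#include <sys/utsname.h>

/* Read a UTS procfs entry such as /proc/sys/kernel/hostname into buf,
 * stripping the trailing newline. Returns 0 on success, -1 on error. */
static int read_uts_proc(const char *path, char *buf, size_t len)
{
    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    char *ok = fgets(buf, (int)len, f);
    fclose(f);
    if (ok == NULL)
        return -1;
    buf[strcspn(buf, "\n")] = '\0';
    return 0;
}
```

Likewise, /proc/sys/kernel/ostype matches the sysname field of struct utsname.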

Now that you have learned how the simplest namespace, the UTS namespace, is implemented, it is time to learn about network namespaces and their implementation.

Network Namespaces Implementation

A network namespace is logically another copy of the network stack, with its own network devices, routing tables, neighbouring tables, netfilter tables, network sockets, network procfs entries, network sysfs entries, and other network resources. A practical feature of network namespaces is per-namespace configuration files: when an application is run in a network namespace with ip netns exec (let’s say in ns1), each file found under /etc/netns/ns1 is used in place of the file with the same name under /etc, while files that do not exist under /etc/netns/ns1 are taken from /etc as usual. So, for example, if you created a namespace called ns1 and you have created /etc/netns/ns1/hosts, every userspace application started with ip netns exec ns1 that accesses /etc/hosts will in fact read /etc/netns/ns1/hosts. This feature is implemented using bind mounts (inside a private mount namespace that ip netns exec creates) and is available only for network namespaces managed with the ip netns command.

The Network Namespace Object (struct net)

Let’s turn now to the definition of the net structure, which is the fundamental data structure that represents a network namespace:

struct net {
    . . .
    struct user_namespace *user_ns; /* Owning user namespace */
    unsigned int proc_inum;
    struct proc_dir_entry *proc_net;
    struct proc_dir_entry *proc_net_stat;
    . . .
    struct list_head dev_base_head;
    struct hlist_head *dev_name_head;
    struct hlist_head *dev_index_head;
    . . .
    int ifindex;
    . . .
    struct net_device *loopback_dev; /* The loopback */
    . . .
    atomic_t count; /* To decided when the network
                     * namespace should be shut down.
                     */
    struct netns_ipv4 ipv4;
#if IS_ENABLED(CONFIG_IPV6)
    struct netns_ipv6 ipv6;
#endif
#if defined(CONFIG_IP_SCTP) || defined(CONFIG_IP_SCTP_MODULE)
    struct netns_sctp sctp;
#endif
    . . .
#if defined(CONFIG_NF_CONNTRACK) || defined(CONFIG_NF_CONNTRACK_MODULE)
    struct netns_ct ct;
#endif
#if IS_ENABLED(CONFIG_NF_DEFRAG_IPV6)
    struct netns_nf_frag nf_frag;
#endif
    . . .
    struct net_generic __rcu *gen;
#ifdef CONFIG_XFRM
    struct netns_xfrm xfrm;
#endif
    . . .
};

(include/net/net_namespace.h)

Here is a short description of several members of the net structure:

· user_ns represents the user namespace that created the network namespace; it owns the network namespace and all its resources. It is assigned in the setup_net() method. For the initial network namespace object (init_net), the user namespace that created it is the initial user namespace, init_user_ns.

· proc_inum is the unique proc inode number associated with the network namespace. This unique proc inode is created by the proc_alloc_inum() method, which also assigns proc_inum to be the proc inode number. The proc_alloc_inum() method is invoked by the network namespace initialization method, net_ns_net_init(), and the inode number is freed by calling the proc_free_inum() method in the network namespace cleanup method, net_ns_net_exit().

· proc_net represents the network namespace procfs entry (/proc/net) as each network namespace maintains its own procfs entry.

· proc_net_stat represents the network namespace procfs statistics entry (/proc/net/stat) as each network namespace maintains its own procfs statistics entry.

· dev_base_head points to a linked list of all network devices that belong to the network namespace.

· dev_name_head points to a hashtable of network devices, where the key is the network device name.

· dev_index_head points to a hashtable of network devices, where the key is the network device index.

· ifindex is the last device index assigned inside a network namespace. Indices are virtualized in network namespaces; this means that loopback devices always have index 1 in all network namespaces, and other network devices may have coinciding indices when they live in different network namespaces.

· loopback_dev is the loopback device. Every new network namespace is created with only one network device, the loopback device. The loopback_dev object of a network namespace is assigned in the loopback_net_init() method (drivers/net/loopback.c). You cannot move the loopback device from one network namespace to another.

· count is the network namespace reference counter. It is initialized to 1 when the network namespace is created by the setup_net() method. It is incremented by the get_net() method and decremented by the put_net() method. If the count reference counter reaches 0 in the put_net() method, the __put_net() method is called. The __put_net() method, in turn, adds the network namespace to a global list of network namespaces to be removed, cleanup_list, and the namespace is removed later.

· ipv4 (an instance of the netns_ipv4 structure) for the IPv4 subsystem. The netns_ipv4 structure contains IPv4-specific fields which are different for different namespaces. For example, in Chapter 6 you saw that the multicast routing table of a specified network namespace called net is stored in net->ipv4.mrt. I will discuss the netns_ipv4 structure later in this section.

· ipv6 (an instance of the netns_ipv6 structure) for the IPv6 subsystem.

· sctp (an instance of the netns_sctp structure) for SCTP sockets.

· ct (an instance of the netns_ct structure, which is discussed in Chapter 9) for the netfilter connection tracking subsystem.

· gen (an instance of the net_generic structure, defined in include/net/netns/generic.h) is a set of generic pointers to structures describing the per-network-namespace context of optional subsystems. For example, the sit module (Simple Internet Transition, an IPv6 tunnel, implemented in net/ipv6/sit.c) stores its private data on struct net using this mechanism. This was introduced in order not to flood struct net with pointers for every single network subsystem that needs a per-network-namespace context.

· xfrm (an instance of the netns_xfrm structure, which is mentioned several times in Chapter 10) for the IPsec subsystem.
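The count member described above follows a common reference-counting pattern. The following is a hypothetical userspace model of it, using C11 atomics instead of the kernel's atomic_t; struct net_model, get_net_model(), and put_net_model() are illustrative names, and setting queued_for_cleanup stands in for __put_net() adding the namespace to cleanup_list.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical model of the reference counter of struct net. Not kernel code. */
struct net_model {
    atomic_int count;
    bool queued_for_cleanup;
};

static void net_model_init(struct net_model *net)
{
    atomic_init(&net->count, 1);   /* setup_net() starts the count at 1 */
    net->queued_for_cleanup = false;
}

static struct net_model *get_net_model(struct net_model *net)
{
    atomic_fetch_add(&net->count, 1);
    return net;
}

static void put_net_model(struct net_model *net)
{
    /* atomic_fetch_sub() returns the old value, so 1 means the last
     * reference was just dropped. */
    if (atomic_fetch_sub(&net->count, 1) == 1)
        net->queued_for_cleanup = true;   /* like __put_net(): defer teardown */
}
```

Deferring the teardown to a cleanup list, rather than destroying the namespace inside put_net(), lets the expensive work run later in a context where it is safe to sleep.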

Let’s take a look at the IPv4-specific part of the network namespace, the netns_ipv4 structure:

struct netns_ipv4 {
    . . .
#ifdef CONFIG_IP_MULTIPLE_TABLES
    struct fib_rules_ops *rules_ops;
    bool fib_has_custom_rules;
    struct fib_table *fib_local;
    struct fib_table *fib_main;
    struct fib_table *fib_default;
#endif
    . . .
    struct hlist_head *fib_table_hash;
    struct sock *fibnl;
    struct sock **icmp_sk;
    . . .
#ifdef CONFIG_NETFILTER
    struct xt_table *iptable_filter;
    struct xt_table *iptable_mangle;
    struct xt_table *iptable_raw;
    struct xt_table *arptable_filter;
#ifdef CONFIG_SECURITY
    struct xt_table *iptable_security;
#endif
    struct xt_table *nat_table;
#endif
    int sysctl_icmp_echo_ignore_all;
    int sysctl_icmp_echo_ignore_broadcasts;
    int sysctl_icmp_ignore_bogus_error_responses;
    int sysctl_icmp_ratelimit;
    int sysctl_icmp_ratemask;
    int sysctl_icmp_errors_use_inbound_ifaddr;
    int sysctl_tcp_ecn;
    kgid_t sysctl_ping_group_range[2];
    long sysctl_tcp_mem[3];
    atomic_t dev_addr_genid;
#ifdef CONFIG_IP_MROUTE
#ifndef CONFIG_IP_MROUTE_MULTIPLE_TABLES
    struct mr_table *mrt;
#else
    struct list_head mr_tables;
    struct fib_rules_ops *mr_rules_ops;
#endif
#endif
};

(include/net/netns/ipv4.h)

You can see in the netns_ipv4 structure many IPv4-specific tables and variables, like the routing tables, the netfilter tables, the multicast routing tables, and more.
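Some of these per-namespace fields are visible from userspace as sysctls. For example, sysctl_icmp_echo_ignore_all backs /proc/sys/net/ipv4/icmp_echo_ignore_all, so reading that file returns the value for the caller's network namespace, and different namespaces can hold different values. The helper below, read_int_sysctl(), is a hypothetical sketch.

```c
#include <stdio.h>

/* Read an integer sysctl such as /proc/sys/net/ipv4/icmp_echo_ignore_all.
 * Because the value lives in struct netns_ipv4, the result reflects the
 * network namespace of the calling process. Returns -1 on error. */
static int read_int_sysctl(const char *path)
{
    FILE *f = fopen(path, "r");
    int value;

    if (f == NULL)
        return -1;
    if (fscanf(f, "%d", &value) != 1)
        value = -1;
    fclose(f);
    return value;
}
```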

Network Namespaces Implementation: Other Data Structures

In order to support network namespaces, a member called nd_net, which is a pointer to a network namespace, was added to the network device object (struct net_device). Setting the network namespace for a network device is done by calling the dev_net_set() method, and getting the network namespace associated with a network device is done by calling the dev_net() method. Note that a network device can belong to only a single network namespace at a given moment. The nd_net member is typically set when a network device is registered or when a network device is moved to a different network namespace. For example, when registering a VLAN device, both of these methods are used:

static int register_vlan_device(struct net_device *real_dev, u16 vlan_id)
{
    struct net_device *new_dev;

The network namespace to be assigned to the new VLAN device is the network namespace associated with the real device, which is passed as a parameter to the register_vlan_device() method; we get this namespace by calling dev_net(real_dev):

    struct net *net = dev_net(real_dev);
    . . .
    new_dev = alloc_netdev(sizeof(struct vlan_dev_priv), name, vlan_setup);
    if (new_dev == NULL)
        return -ENOBUFS;

Assign that network namespace to the new VLAN device by calling the dev_net_set() method:

    dev_net_set(new_dev, net);
    . . .
}

A member called sk_net, a pointer to a network namespace, was added to struct sock, which represents a socket. Setting the network namespace for a sock object is done by calling the sock_net_set() method, and getting the network namespace associated with a sock object is done by calling the sock_net() method. As with a network device, a sock object can belong to only a single network namespace at a given moment.

When the system boots, a default network namespace, init_net, is created. After boot, all physical network devices, all sockets, and the network loopback device belong to that initial namespace.
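As described for the ifindex and loopback_dev members of struct net, every network namespace, including init_net, starts with the loopback device, and device indices are allocated per namespace, so lo normally has index 1. The sketch below checks this from userspace with the standard if_nameindex() call; count_interfaces() is a hypothetical helper.

```c
#include <net/if.h>
#include <string.h>

/* Count the interfaces visible in the caller's network namespace and store
 * the index of the loopback device "lo" in *lo_index (0 if absent).
 * Returns the number of interfaces, or -1 on error. */
static int count_interfaces(unsigned int *lo_index)
{
    struct if_nameindex *list = if_nameindex();
    int n = 0;

    if (list == NULL)
        return -1;
    *lo_index = 0;
    /* The array is terminated by an entry whose if_index is 0. */
    for (struct if_nameindex *p = list; p->if_index != 0; p++) {
        if (strcmp(p->if_name, "lo") == 0)
            *lo_index = p->if_index;
        n++;
    }
    if_freenameindex(list);
    return n;
}
```

Run inside a freshly created network namespace, the same helper would report a single interface, lo, with index 1.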

Some network devices and some network subsystems need network-namespace-specific data. To enable this, a structure named pernet_operations was added; this structure includes init and exit callbacks:

struct pernet_operations {
    . . .
    int (*init)(struct net *net);
    void (*exit)(struct net *net);
    . . .
    int *id;
    size_t size;
};

(include/net/net_namespace.h)

Network devices that need network-namespace-specific data should define a pernet_operations object with init() and exit() callbacks for device-specific initialization and cleanup, respectively, and should call the register_pernet_device() method in their module initialization and the unregister_pernet_device() method when the module is removed, passing the pernet_operations object as the single parameter in both cases. For example, the PPPoE module exports information about PPPoE sessions through a procfs entry, /proc/net/pppoe. The information exported by this procfs entry depends on the network namespace to which the PPPoE device belongs (since different PPPoE devices can belong to different network namespaces). So the PPPoE module defines a pernet_operations object called pppoe_net_ops:

static struct pernet_operations pppoe_net_ops = {
    .init = pppoe_init_net,
    .exit = pppoe_exit_net,
    .id   = &pppoe_net_id,
    .size = sizeof(struct pppoe_net),
};

(net/ppp/pppoe.c)

The init callback, pppoe_init_net(), initializes the hash lock of the per-namespace pppoe_net object and creates the PPPoE procfs entry, /proc/net/pppoe, by calling the proc_create() method:

static __net_init int pppoe_init_net(struct net *net)
{
    struct pppoe_net *pn = pppoe_pernet(net);
    struct proc_dir_entry *pde;

    rwlock_init(&pn->hash_lock);

    pde = proc_create("pppoe", S_IRUGO, net->proc_net, &pppoe_seq_fops);
#ifdef CONFIG_PROC_FS
    if (!pde)
        return -ENOMEM;
#endif

    return 0;
}

(net/ppp/pppoe.c)

The exit callback, pppoe_exit_net(), only removes the PPPoE procfs entry, /proc/net/pppoe, by calling the remove_proc_entry() method:

static __net_exit void pppoe_exit_net(struct net *net)
{
    remove_proc_entry("pppoe", net->proc_net);
}

(net/ppp/pppoe.c)

Network subsystems that need network-namespace-specific data should call register_pernet_subsys() when the subsystem is initialized and unregister_pernet_subsys() when the subsystem is removed. You can find an example in net/ipv4/route.c, and there are many other examples of using these methods. The network namespace module itself also defines a net_ns_ops object and registers it in the boot phase:

static struct pernet_operations __net_initdata net_ns_ops = {
    .init = net_ns_net_init,
    .exit = net_ns_net_exit,
};

static int __init net_ns_init(void)
{
    . . .
    register_pernet_subsys(&net_ns_ops);
    . . .
}

(net/core/net_namespace.c)

Each time a new network namespace is created, the init callback (net_ns_net_init) is called, and each time a network namespace is removed, the exit callback (net_ns_net_exit) is called. The only thing that net_ns_net_init() does is allocate a unique proc inode for the newly created namespace by calling the proc_alloc_inum() method; the newly allocated unique proc inode number is assigned to net->proc_inum:

static __net_init int net_ns_net_init(struct net *net)
{
    return proc_alloc_inum(&net->proc_inum);
}

And the only thing that the net_ns_net_exit() method does is free that unique proc inode number by calling the proc_free_inum() method:

static __net_exit void net_ns_net_exit(struct net *net)
{
    proc_free_inum(net->proc_inum);
}
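The interplay of pernet_operations, its id and size members, and the gen pointer of struct net can be illustrated with a hypothetical userspace model. None of the names below are kernel APIs: struct net_sketch stands in for struct net (its gen array for struct net_generic), and setup_net_model() and cleanup_net_model() mimic running every registered init callback when a namespace is created and every exit callback, in reverse order, when it is destroyed. The demo subsystem is loosely modeled on pppoe_net_ops.

```c
#include <stddef.h>
#include <stdlib.h>

#define MAX_PERNET_OPS 8

struct net_sketch {
    void *gen[MAX_PERNET_OPS];        /* stands in for struct net_generic */
};

struct pernet_ops_model {
    int (*init)(struct net_sketch *net);
    void (*exit)(struct net_sketch *net);
    int *id;                          /* filled in at registration time */
    size_t size;                      /* bytes of private data per namespace */
};

static struct pernet_ops_model *registered[MAX_PERNET_OPS];
static int nr_registered;

static int register_pernet_model(struct pernet_ops_model *ops)
{
    if (nr_registered == MAX_PERNET_OPS)
        return -1;
    if (ops->id)
        *ops->id = nr_registered;
    registered[nr_registered++] = ops;
    return 0;
}

/* "Create" a namespace (net must be zero-initialized): allocate each
 * subsystem's private area, then run its init callback. */
static int setup_net_model(struct net_sketch *net)
{
    for (int i = 0; i < nr_registered; i++) {
        struct pernet_ops_model *ops = registered[i];
        if (ops->size) {
            net->gen[i] = calloc(1, ops->size);
            if (net->gen[i] == NULL)
                return -1;            /* error unwinding omitted in this sketch */
        }
        if (ops->init && ops->init(net) != 0)
            return -1;
    }
    return 0;
}

/* "Destroy" a namespace: run exit callbacks in reverse order, free data. */
static void cleanup_net_model(struct net_sketch *net)
{
    for (int i = nr_registered - 1; i >= 0; i--) {
        if (registered[i]->exit)
            registered[i]->exit(net);
        free(net->gen[i]);
        net->gen[i] = NULL;
    }
}

/* Demo subsystem with one int of private data per namespace. */
static int demo_net_id;

static int demo_init(struct net_sketch *net)
{
    int *priv = net->gen[demo_net_id];   /* compare pppoe_pernet(net) */
    *priv = 42;
    return 0;
}

static struct pernet_ops_model demo_net_ops = {
    .init = demo_init,
    .id = &demo_net_id,
    .size = sizeof(int),
};
```

After register_pernet_model(&demo_net_ops), every namespace set up with setup_net_model() gets its own private int, so changing it in one namespace leaves the others untouched.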

When you create a new network namespace, it has only the network loopback device. The most common ways to create a network namespace are:

· By a userspace application that creates a network namespace with the clone() system call or with the unshare() system call, setting the CLONE_NEWNET flag in both cases.

· Using the ip netns command of iproute2 (you will shortly see an example).

· Using the unshare utility of util-linux, with the --net flag.
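To make the first two methods concrete, here is a minimal sketch of the core steps that ip netns add performs (they are described in the next section): create a file under /var/run/netns, call unshare() with CLONE_NEWNET, and bind-mount /proc/self/ns/net onto that file so that the namespace outlives the process. netns_add_sketch() is a hypothetical helper, it needs CAP_SYS_ADMIN, and the real iproute2 code does more bookkeeping (for example, around mount propagation on /var/run/netns), which is omitted here.

```c
#define _GNU_SOURCE
#include <errno.h>
#include <fcntl.h>
#include <sched.h>
#include <sys/mount.h>
#include <unistd.h>

/* Hypothetical sketch of "ip netns add": `path` plays the role of
 * /var/run/netns/<name>, and its parent directory must already exist.
 * Returns 0 on success or -errno; on failure the file is removed again. */
static int netns_add_sketch(const char *path)
{
    /* 1. Create an empty file that will serve as the bind-mount target. */
    int fd = open(path, O_RDONLY | O_CREAT | O_EXCL, 0);
    if (fd < 0)
        return -errno;
    close(fd);

    /* 2. Move the calling process into a brand-new network namespace. */
    if (unshare(CLONE_NEWNET) != 0) {
        int err = -errno;
        unlink(path);
        return err;
    }

    /* 3. Pin the new namespace by bind-mounting its proc file onto the
     *    target, so it stays alive even after this process exits. */
    if (mount("/proc/self/ns/net", path, "none", MS_BIND, NULL) != 0) {
        int err = -errno;
        unlink(path);
        return err;
    }
    return 0;
}
```

Unmounting and unlinking the file later drops that extra reference, which is essentially what ip netns del does.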

Network Namespaces Management

Next you will see some examples of using the ip netns command of the iproute2 package to perform actions such as creating a network namespace, deleting a network namespace, showing all the network namespaces, and more.

· Creating a network namespace named ns1 is done by:

ip netns add ns1

Running this command first creates a file called /var/run/netns/ns1 and then creates the network namespace by calling the unshare() system call with the CLONE_NEWNET flag. Then /var/run/netns/ns1 is attached to the network namespace (/proc/self/ns/net) by a bind mount (calling the mount() system call with MS_BIND). Note that from within ns1 you can also create a new network namespace, and so on.

· Deleting a network namespace named ns1 is done by:

ip netns del ns1

Note that ip netns del unmounts and deletes the /var/run/netns/ns1 file, but the network namespace itself is not destroyed as long as one or more processes are still attached to it. Note also that when a network namespace is destroyed, all its network devices are moved to the initial, default network namespace, init_net, except for network namespace local devices, which are network devices whose NETIF_F_NETNS_LOCAL feature is set; such network devices are deleted. See more in the “Moving a Network Interface to a Different Network Namespace” section later in this chapter and in Appendix A.

· Showing all the network namespaces in the system that were added by ip netns add is done by:

ip netns list

In fact, running ip netns list simply shows the names of files under /var/run/netns. Note that network namespaces not added by ip netns add will not be displayed by ip netns list, because creating such network namespaces did not trigger creation of any file under /var/run/netns. So, for example, a network namespace created by unshare --net bash will not appear when running ip netns list.

· Monitoring creation and removal of a network namespace is done by:

ip netns monitor

After running ip netns monitor, when you add a new namespace by ip netns add ns2, you will see the message “add ns2” on screen, and after you delete that namespace by ip netns delete ns2, you will see the message “delete ns2”. Network namespaces that are added or removed by other means (not with ip netns add and ip netns delete) do not trigger any message from ip netns monitor. The ip netns monitor command is implemented by setting an inotify watch on /var/run/netns. Note that if you run ip netns monitor before adding at least one network namespace with ip netns add, you will get the following error: inotify_add_watch failed: No such file or directory. The reason is that setting a watch on /var/run/netns, which does not exist yet, fails. See the inotify_init(2) and inotify_add_watch(2) man pages.

· Starting a shell in a specified namespace (ns1 in this example) is done by:

ip netns exec ns1 bash

Note that with ip netns exec you can run any command in a specified network namespace. For example, the following command will display all network interfaces in the network namespace called ns1:

ip netns exec ns1 ifconfig -a
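The inotify mechanism behind ip netns monitor, described above, can be sketched as follows. watch_netns_dir() is a hypothetical helper; it shows why the watch fails with “No such file or directory” (ENOENT) while /var/run/netns does not exist yet.

```c
#include <errno.h>
#include <sys/inotify.h>
#include <unistd.h>

/* Watch `dir` (normally /var/run/netns) for files being created or deleted.
 * Returns an inotify file descriptor from which struct inotify_event records
 * can be read, or -errno if the watch cannot be set, for example -ENOENT
 * when the directory does not exist yet. */
static int watch_netns_dir(const char *dir)
{
    int fd = inotify_init();
    if (fd < 0)
        return -errno;
    if (inotify_add_watch(fd, dir, IN_CREATE | IN_DELETE) < 0) {
        int err = -errno;
        close(fd);
        return err;
    }
    return fd;
}
```

Each event read from the returned descriptor carries the file name and an IN_CREATE or IN_DELETE mask, which is enough to print messages like “add ns2” and “delete ns2”.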

In recent versions of iproute2 (since version 3.8), you have these two additional helpful commands:

· Show the network namespace associated with the specified pid:

ip netns identify <pid>

This is implemented by reading /proc/<pid>/ns/net and iterating over the files under /var/run/netns to find a match (using the stat() system call).

· Show the PIDs of the processes attached to a network namespace called ns1:

ip netns pids ns1

This is implemented by reading /var/run/netns/ns1 and then iterating over the /proc/<pid> entries to find a matching /proc/<pid>/ns/net entry (using the stat() system call).
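Both commands rely on the fact that two paths refer to the same namespace exactly when stat() reports the same inode for them. The following is a sketch of that matching under stated assumptions: same_namespace() and identify_netns() are hypothetical helpers, and comparing st_dev in addition to st_ino is an extra precaution added here. identify_netns() searches a directory such as /var/run/netns the way ip netns identify is described above.

```c
#include <dirent.h>
#include <stdio.h>
#include <string.h>
#include <sys/stat.h>

/* 1 if both files refer to the same namespace, 0 if not, -1 on error. */
static int same_namespace(const char *a, const char *b)
{
    struct stat sa, sb;

    if (stat(a, &sa) != 0 || stat(b, &sb) != 0)
        return -1;
    return sa.st_dev == sb.st_dev && sa.st_ino == sb.st_ino;
}

/* Search run_dir (normally /var/run/netns) for an entry that refers to the
 * same namespace as ns_file (for example /proc/<pid>/ns/net). Returns 1 and
 * copies the entry name into out on a match, 0 if nothing matches, and -1
 * if run_dir cannot be opened. */
static int identify_netns(const char *run_dir, const char *ns_file,
                          char *out, size_t len)
{
    DIR *d = opendir(run_dir);
    struct dirent *e;
    char path[4096];
    int found = 0;

    if (d == NULL)
        return -1;
    while (!found && (e = readdir(d)) != NULL) {
        if (strcmp(e->d_name, ".") == 0 || strcmp(e->d_name, "..") == 0)
            continue;
        snprintf(path, sizeof(path), "%s/%s", run_dir, e->d_name);
        if (same_namespace(path, ns_file) == 1) {
            snprintf(out, len, "%s", e->d_name);
            found = 1;
        }
    }
    closedir(d);
    return found;
}
```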


Note For more information about the various ip netns command options see man ip netns.

Moving a Network Interface to a Different Network Namespace

Moving a network interface to a network namespace named ns1 can be done with the ip command, for example: ip link set eth0 netns ns1. As part of implementing network namespaces, a new feature named NETIF_F_NETNS_LOCAL was added to the features of the net_device object (the net_device structure represents a network interface; for more information about the net_device structure and its features, see Appendix A). You can find out whether the NETIF_F_NETNS_LOCAL feature is set for a specified network device by looking at the netns-local flag in the output of ethtool -k eth0 or of ethtool --show-features eth0 (both commands are equivalent). Note that you cannot set the NETIF_F_NETNS_LOCAL feature with ethtool. This feature, when set, denotes that the network device is a network namespace local device. For example, the loopback, the bridge, the VXLAN, and the PPP devices are network namespace local devices. Trying to move a network device whose NETIF_F_NETNS_LOCAL feature is set to a different namespace fails with an error of -EINVAL, as you will shortly see in the following code snippet. The dev_change_net_namespace() method is invoked when trying to move a network interface to a different network namespace, for example by ip link set eth0 netns ns1. Let’s take a look at the dev_change_net_namespace() method:

int dev_change_net_namespace(struct net_device *dev, struct net *net, const char *pat)
{
    int err;

    ASSERT_RTNL();

    /* Don't allow namespace local devices to be moved. */
    err = -EINVAL;

Return -EINVAL if the device is a network namespace local device (that is, if the NETIF_F_NETNS_LOCAL flag is set in the features of the net_device object):

    if (dev->features & NETIF_F_NETNS_LOCAL)
        goto out;
    . . .

Actually switch the network namespace by setting nd_net of the net_device object to the new specified namespace:

    dev_net_set(dev, net);
    . . .
out:
    return err;
}

(net/core/dev.c)


Note You can also move a network interface to a network namespace named ns1 by specifying the PID of a process that is attached to that namespace, without specifying the namespace name explicitly. For example, if you know that a process whose PID is <pidNumber> is attached to ns1, running ip link set eth1 netns <pidNumber> will move eth1 to the ns1 namespace. Implementation details: getting the network namespace object when specifying one of the PIDs of its attached processes is implemented by the get_net_ns_by_pid() method, whereas getting the network namespace object when specifying the network namespace name is implemented by the get_net_ns_by_fd() method (the ip command opens /var/run/netns/ns1 and passes the resulting file descriptor to the kernel); both methods are in net/core/net_namespace.c. To move a wireless network interface to a different network namespace, you should use the iw command. For example, if you want to move wlan0 to a network namespace and you know that a process whose PID is <pidNumber> is attached to that namespace, you can run iw phy phy0 set netns <pidNumber> to move it to that network namespace. For the implementation details, refer to the nl80211_wiphy_netns() method in net/wireless/nl80211.c.

Communicating Between Two Network Namespaces

I will end the network namespaces section with a short example of how two network namespaces can communicate with each other. This can be done either by using Unix sockets or by using the Virtual Ethernet (VETH) network driver to create a pair of virtual network devices and moving one of them to another network namespace. For example, first create two namespaces, ns1 and ns2:

ip netns add ns1

ip netns add ns2

Start a shell in ns1:

ip netns exec ns1 bash

Create a pair of virtual Ethernet devices (of type veth):

ip link add name if_one type veth peer name if_one_peer

Move if_one_peer to ns2:

ip link set dev if_one_peer netns ns2

You can now set addresses on if_one (in ns1) and on if_one_peer (in ns2) as usual, with the ifconfig command or with the ip command, bring both interfaces up, and send packets from one network namespace to the other.


Note Network namespaces are not mandatory for a kernel image. By default, network namespaces are enabled (CONFIG_NET_NS is set) in most distributions. However, you can build and boot a kernel where network namespaces are disabled.

I have discussed in this section what namespaces are, and in particular what network namespaces are. I mentioned some of the major changes that were required in order to implement namespaces in general, like adding six new CLONE_NEW* flags, adding two new system calls, adding an nsproxy object to the process descriptor, and more. I also described the implementation of UTS namespaces, which are the simplest of all namespaces, and the implementation of network namespaces. Several examples were given showing how simple it is to manipulate network namespaces with the ip netns command of the iproute2 package. Next I will describe the cgroups subsystem, which provides another resource management solution, and two network modules that belong to it.

Cgroups

The cgroups subsystem is a project started by Paul Menage, Rohit Seth, and other Google developers in 2006. It was initially called “process containers,” but later it was renamed to “Control Groups.” It provides resource management and resource accounting for groups of processes. It has been part of the mainline kernel since kernel 2.6.24, and it’s used in several projects: for example by systemd (a service manager which replaced SysV init scripts; used, for example, by Fedora and by openSUSE), by the Linux Containers project, which was mentioned earlier in this chapter, by Google containers (https://github.com/google/lmctfy/), by libvirt (http://libvirt.org/cgroups.html) and more. The cgroups kernel implementation is mostly outside performance-critical paths. The cgroups subsystem implements a new Virtual File System (VFS) type named “cgroup”. All cgroups actions are done by filesystem actions, like creating cgroups directories in a cgroup filesystem, reading or writing entries in these directories, mounting cgroup filesystems, etc. There is a library called libcgroup (a.k.a. libcg), which provides a set of userspace utilities for cgroups management: for example, cgcreate to create a new cgroup, cgdelete to delete a cgroup, cgexec to run a task in a specified control group, and more. In fact, this is done by calling the cgroup filesystem operations from the libcg library. The libcg library is likely to see reduced usage in the future because it doesn’t provide any coordination among multiple parties trying to use the cgroup controllers. It could be that in the future all the cgroup file operations will be performed by a library or by a daemon and not directly. The cgroups subsystem, as currently implemented, needs some form of coordination, because there is only a single controller for each resource type. When multiple actors modify it, this necessarily leads to conflicts.
The cgroups controllers can be used by many projects, like libvirt, systemd, lxc, and more, simultaneously. When working only via cgroups filesystem operations, and when all the projects try to impose their own policy through cgroups at too low a level, without knowing about each other, they may accidentally walk over each other. If each of them talks to a common daemon instead, for example, such clashes can be avoided. For more information about libcg, see http://libcg.sourceforge.net/.
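Since cgroups are driven entirely by file operations, even finding out which control groups a process belongs to is a plain file read: /proc/self/cgroup lists one line per hierarchy in the form hierarchy-ID:controller-list:cgroup-path (with the unified cgroup v2 hierarchy, the single line looks like 0::<path>). The helper below, list_own_cgroups(), is a hypothetical sketch.

```c
#include <stdio.h>
#include <string.h>

/* Print (to out, if not NULL) and count the cgroups the calling process
 * belongs to, as listed in /proc/self/cgroup. Returns the number of lines,
 * or -1 if the file cannot be read. */
static int list_own_cgroups(FILE *out)
{
    FILE *f = fopen("/proc/self/cgroup", "r");
    char line[512];
    int n = 0;

    if (f == NULL)
        return -1;
    while (fgets(line, sizeof(line), f) != NULL) {
        line[strcspn(line, "\n")] = '\0';
        if (out != NULL)
            fprintf(out, "%s\n", line);
        n++;
    }
    fclose(f);
    return n;
}
```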

As opposed to namespaces, no new system calls were added for implementing the cgroup subsystem. As with namespaces, cgroups can be nested. There were code additions in the boot phase, mainly for the initialization of the cgroups subsystem, and in various subsystems, like the memory subsystem or the security subsystem. Here is a short, partial list of tasks that you can perform with cgroups:

· Assign a set of CPUs to a set of processes with the cpusets cgroup controller. You can also control the NUMA nodes from which memory is allocated with the cpusets cgroup controller.

· Manipulate the out of memory (oom) killer operation or create a process with a limited amount of memory with the memory cgroup controller (memcg). You will see an example later in this chapter.

· Assign permissions to devices under /dev, with the devices cgroup. You will see later an example of using the devices cgroup in the “Cgroup Devices – A Simple Example” section.

· Assign priority to traffic (see the section “The net_prio Module” later in this chapter).

· Freeze processes with the freezer cgroup.

· Report CPU resource usage of tasks of a cgroup with the cpuacct cgroup. Note that there is also the cpu controller, which can provision CPU cycles either by priority or by absolute bandwidth and provides the same or a superset of statistics.

· Tag network traffic with a class identifier (classid); see the section “The cls_cgroup Classifier” later in this chapter.

Next, I will briefly describe some of the changes that were made to support cgroups.

Cgroups Implementation

The cgroup subsystem is very complex. Here are several implementation details about the cgroup subsystem that should give you a good starting point to delve into its internals:

· A new structure called cgroup_subsys was added (include/linux/cgroup.h). It represents a cgroup subsystem (also known as a cgroup controller). The following cgroup subsystems are implemented:

· mem_cgroup_subsys: mm/memcontrol.c

· blkio_subsys: block/blk-cgroup.c

· cpuset_subsys: kernel/cpuset.c

· devices_subsys: security/device_cgroup.c

· freezer_subsys: kernel/cgroup_freezer.c

· net_cls_subsys: net/sched/cls_cgroup.c

· net_prio_subsys: net/core/netprio_cgroup.c

· perf_subsys: kernel/events/core.c

· cpu_cgroup_subsys: kernel/sched/core.c

· cpuacct_subsys: kernel/sched/core.c

· hugetlb_subsys: mm/hugetlb_cgroup.c

· A new structure called cgroup was added; it represents a control group (include/linux/cgroup.h).

· A new virtual file system was added; this was done by defining the cgroup_fs_type object and a cgroup_ops object (instance of super_operations):

```c
static struct file_system_type cgroup_fs_type = {
        .name = "cgroup",
        .mount = cgroup_mount,
        .kill_sb = cgroup_kill_sb,
};

static const struct super_operations cgroup_ops = {
        .statfs = simple_statfs,
        .drop_inode = generic_delete_inode,
        .show_options = cgroup_show_options,
        .remount_fs = cgroup_remount,
};
```

(kernel/cgroup.c)

Registering it is done as for any other filesystem, by calling the register_filesystem() method in the cgroup_init() method; see kernel/cgroup.c.

· The sysfs entry /sys/fs/cgroup is created by default when the cgroup subsystem is initialized; this is done by calling kobject_create_and_add("cgroup", fs_kobj) in the cgroup_init() method. Note that cgroup controllers can also be mounted on other directories.

· There is a global array of cgroup_subsys objects named subsys, defined in kernel/cgroup.c (note that from kernel 3.11, the array name was changed from subsys to cgroup_subsys). There are CGROUP_SUBSYS_COUNT elements in this array. A procfs entry called /proc/cgroups is exported by the cgroup subsystem. You can display the elements of the global subsys array in two ways:

· By running cat /proc/cgroups.

· By the lssubsys utility of libcgroup-tools.

· Creating a new cgroup always creates these four control files in that cgroup’s directory:

· notify_on_release: Its initial value is inherited from its parent. It represents a boolean variable, and its usage is related to the release_agent topmost-only control file, which will be explained shortly.

· cgroup.event_control: This file enables getting notifications from a cgroup, using the eventfd() system call. See man 2 eventfd and fs/eventfd.c.

· tasks: A list of the PIDs which are attached to this group. Attaching a process to a cgroup is done by writing the value of its PID to the tasks control file and is handled by the cgroup_attach_task() method, kernel/cgroup.c. Displaying the cgroups to which a process is attached is done by cat /proc/<processPid>/cgroup. This is handled in the kernel by the proc_cgroup_show() method, in kernel/cgroup.c.

· cgroup.procs: A list of the thread group IDs that are attached to this cgroup. The tasks entry allows attaching threads of the same process to different cgroups, whereas cgroup.procs has process-level granularity (all threads of a single process are moved together and belong to the same cgroup).

· In addition to these four control files, a control file named release_agent is created for the topmost cgroup root object only. The value of this file is the path of an executable that will be executed when the last process of a cgroup terminates; the notify_on_release flag mentioned earlier must be set for the release_agent feature to be enabled. The release_agent can be assigned as a cgroup mount option; this is the case, for example, in systemd in Fedora. The release_agent mechanism is based on a user-mode helper: the call_usermodehelper() method is invoked and a new userspace process is created each time the release_agent is activated, which is costly in terms of performance. See “The past, present, and future of control groups”, lwn.net/Articles/574317/. For the release_agent implementation details, see the cgroup_release_agent() method in kernel/cgroup.c.

· Apart from these four default control files and the release_agent topmost-only control file, each subsystem can create its own specific control files. This is done by defining an array of cftype (Control File type) objects and assigning this array to the base_cftypes member of the cgroup_subsys object. For example, for the memory cgroup controller, we have this definition for the usage_in_bytes control file:

```c
static struct cftype mem_cgroup_files[] = {
        {
                .name = "usage_in_bytes",
                .private = MEMFILE_PRIVATE(_MEM, RES_USAGE),
                .read = mem_cgroup_read,
                .register_event = mem_cgroup_usage_register_event,
                .unregister_event = mem_cgroup_usage_unregister_event,
        },
        . . .

struct cgroup_subsys mem_cgroup_subsys = {
        .name = "memory",
        . . .
        .base_cftypes = mem_cgroup_files,
};
```

(mm/memcontrol.c)

· A member called cgroups, which is a pointer to a css_set object, was added to the process descriptor, task_struct. The css_set object contains an array of pointers to cgroup_subsys_state objects (one such pointer for each cgroup subsystem). The process descriptor itself (task_struct) does not contain a direct pointer to the cgroup subsystems it is associated with, but these can be determined from this array of cgroup_subsys_state pointers.
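The association between a task and its cgroups can be observed from userspace in /proc/<pid>/cgroup, whose contents are generated by proc_cgroup_show(). Each line has the form hierarchy-ID:controller-list:cgroup-path, for example 4:memory:/0. The following parser is a minimal sketch; the name parse_cgroup_line() is hypothetical.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical parser for one line of /proc/<pid>/cgroup, whose format is
 * "hierarchy-ID:controller-list:cgroup-path", e.g. "4:memory:/0".
 * Returns 0 on success, -1 on malformed input or too-small buffers. */
static int parse_cgroup_line(const char *line, int *hier_id,
                             char *controllers, size_t clen,
                             char *path, size_t plen)
{
    const char *c1 = strchr(line, ':');
    const char *c2, *end;
    char *num_end;
    long id;
    size_t n;

    if (!c1)
        return -1;
    c2 = strchr(c1 + 1, ':');
    if (!c2)
        return -1;

    /* The hierarchy ID must be a number that ends exactly at the first ':'. */
    id = strtol(line, &num_end, 10);
    if (num_end == line || num_end != c1)
        return -1;
    *hier_id = (int)id;

    /* The controller list sits between the two colons and may be empty. */
    n = (size_t)(c2 - (c1 + 1));
    if (n >= clen)
        return -1;
    memcpy(controllers, c1 + 1, n);
    controllers[n] = '\0';

    /* The path runs to the end of the line; drop a trailing newline. */
    end = c2 + 1 + strcspn(c2 + 1, "\n");
    n = (size_t)(end - (c2 + 1));
    if (n >= plen)
        return -1;
    memcpy(path, c2 + 1, n);
    path[n] = '\0';
    return 0;
}
```

A caller would typically read /proc/self/cgroup line by line with fgets() and pass each line to parse_cgroup_line().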

Two cgroups networking modules were added. They will be discussed later in this section:

· net_prio (net/core/netprio_cgroup.c).

· cls_cgroup (net/sched/cls_cgroup.c).


Note The cgroup subsystem is still in its early days and likely to see a fair amount of development in its features and interface.

Next you will see a short example that illustrates how the devices cgroup controller can be used to change the write permission of a device file.

Cgroup Devices Controller: A Simple Example

Let’s look at a simple example of using the devices cgroup. Running the following command will create a devices cgroup:

```
mkdir /sys/fs/cgroup/devices/0
```

Three control files will be created under /sys/fs/cgroup/devices/0:

· devices.deny: Devices for which access is denied.

· devices.allow: Devices for which access is allowed.

· devices.list: The current access rules, that is, the devices that may be accessed.

Each entry in these control files consists of four fields (the major and minor numbers are separated by a colon, and * matches any number):

· Type: possible values are ‘a’ (all), ‘c’ (character device), and ‘b’ (block device).

· The device major number.

· The device minor number.

· Access permission: ‘r’ is permission to read, ‘w’ is permission to write, and ‘m’ is permission to perform mknod.
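These four fields map directly onto the strings written to devices.allow and devices.deny. As a minimal sketch (the helper format_dev_rule() is hypothetical), the following builds such a rule, using -1 to stand for the * wildcard:

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: build a devices cgroup rule such as "c 1:3 w".
 * type is 'a', 'b', or 'c'; a major or minor of -1 is written as '*';
 * access is a non-empty combination of 'r', 'w', and 'm'.
 * Returns 0 on success, -1 on invalid input or a too-small buffer. */
static int format_dev_rule(char type, int major, int minor,
                           const char *access, char *buf, size_t len)
{
    char maj[16], min[16];
    int n;

    if (type != 'a' && type != 'b' && type != 'c')
        return -1;
    if (access[0] == '\0' || strspn(access, "rwm") != strlen(access))
        return -1;

    if (major < 0)
        strcpy(maj, "*");
    else
        snprintf(maj, sizeof(maj), "%d", major);
    if (minor < 0)
        strcpy(min, "*");
    else
        snprintf(min, sizeof(min), "%d", minor);

    n = snprintf(buf, len, "%c %s:%s %s", type, maj, min, access);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

For instance, since /dev/null is character device 1:3, writing the rule "c 1:3 w" to devices.deny instead of "a" should deny only write access to /dev/null, rather than all access to all devices.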

By default, a new devices cgroup created under the root cgroup has all permissions:

```
cat /sys/fs/cgroup/devices/0/devices.list
a *:* rwm
```

The following command adds the current shell to the devices cgroup that you created earlier:

```
echo $$ > /sys/fs/cgroup/devices/0/tasks
```

The following command denies access to all devices, so a subsequent write to /dev/null fails:

```
echo a > /sys/fs/cgroup/devices/0/devices.deny
echo "test" > /dev/null
-bash: /dev/null: Operation not permitted
```

The following command restores access to all devices:

```
echo a > /sys/fs/cgroup/devices/0/devices.allow
```

Running the following command, which previously failed, will succeed now:

```
echo "test" > /dev/null
```
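The same experiment can be driven from a program. In this minimal sketch, attach_self() and can_open_for_write() are hypothetical helpers: the first writes the caller’s PID to a tasks file, the second tries to open a file for writing. Inside a devices cgroup whose access to /dev/null is denied, the open() call is expected to fail with EPERM, the error that bash reported above as “Operation not permitted”.

```c
#include <errno.h>
#include <fcntl.h>
#include <stdio.h>
#include <unistd.h>

/* Hypothetical helper: attach the calling process to a cgroup by writing
 * its PID to a tasks file such as /sys/fs/cgroup/devices/0/tasks.
 * Returns 0 or -errno. */
static int attach_self(const char *tasks_path)
{
    char pid[32];
    int n = snprintf(pid, sizeof(pid), "%d", (int)getpid());
    int fd = open(tasks_path, O_WRONLY);
    ssize_t w;

    if (fd < 0)
        return -errno;
    w = write(fd, pid, (size_t)n);
    if (w < 0) {
        int err = errno;   /* close() may clobber errno */
        close(fd);
        return -err;
    }
    close(fd);
    return w == n ? 0 : -EIO;
}

/* Hypothetical probe: returns 1 if `path` can be opened for writing,
 * 0 if access is denied (EPERM or EACCES), or -errno otherwise. */
static int can_open_for_write(const char *path)
{
    int fd = open(path, O_WRONLY);

    if (fd >= 0) {
        close(fd);
        return 1;
    }
    if (errno == EPERM || errno == EACCES)
        return 0;
    return -errno;
}
```

Calling attach_self("/sys/fs/cgroup/devices/0/tasks") and then can_open_for_write("/dev/null") would mirror the shell session: the probe should return 0 after "a" is written to devices.deny and 1 again after it is written to devices.allow.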

Cgroup Memory Controller: A Simple Example

You can disable the out-of-memory (OOM) killer for the processes of a cgroup, for example, like this:

```
mkdir /sys/fs/cgroup/memory/0
echo $$ > /sys/fs/cgroup/memory/0/tasks
echo 1 > /sys/fs/cgroup/memory/0/memory.oom_control
```

Now, if a memory-hogging userspace program in this cgroup reaches the cgroup’s memory limit, the OOM killer will not be invoked; instead, its tasks sleep until memory becomes available. You can re-enable the OOM killer like this:

```
echo 0 > /sys/fs/cgroup/memory/0/memory.oom_control
```
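Reading memory.oom_control shows the current settings as name/value lines, such as oom_kill_disable 1 and under_oom 0, where under_oom indicates whether the cgroup is currently in an OOM situation (newer kernels may report additional fields). A minimal sketch of extracting one value from that text; the function oom_control_get() is hypothetical:

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: return the value of `key` (e.g. "oom_kill_disable"
 * or "under_oom") from text read from memory.oom_control, which consists
 * of "name value" lines. Returns -1 if the key is not present. */
static long oom_control_get(const char *text, const char *key)
{
    size_t klen = strlen(key);
    const char *p = text;

    while (*p) {
        long val;

        /* Match the whole name, so "oom_kill" does not match
         * "oom_kill_disable". */
        if (strncmp(p, key, klen) == 0 && p[klen] == ' ' &&
            sscanf(p + klen + 1, "%ld", &val) == 1)
            return val;
        p = strchr(p, '\n');   /* advance to the next line */
        if (!p)
            break;
        p++;
    }
    return -1;
}
```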

You can use the eventfd() system call to get notifications in a userspace application about a change in the status of a cgroup. See man 2 eventfd.


Note You can limit the memory that the processes in a cgroup can use, for example to 20M, like this:

```
echo 20M > /sys/fs/cgroup/memory/0/memory.limit_in_bytes
```
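memory.limit_in_bytes accepts a plain byte count or a value with a K, M, or G suffix (binary multiples), so 20M means 20 × 1024 × 1024 = 20971520 bytes. A minimal sketch of that conversion, loosely mirroring how the kernel’s memparse() interprets these suffixes; parse_mem_size() is hypothetical, accepts decimal input only, and does not handle the larger suffixes (such as T) that memparse() also accepts:

```c
#include <ctype.h>
#include <stdlib.h>

/* Hypothetical helper: convert "20M", "4k", "1G" or "4096" to bytes.
 * Suffixes are case-insensitive binary multiples; a trailing newline
 * (as produced by echo) is allowed. Returns -1 on malformed input. */
static long long parse_mem_size(const char *s)
{
    char *end;
    long long v;

    if (!isdigit((unsigned char)*s))
        return -1;
    v = strtoll(s, &end, 10);
    switch (toupper((unsigned char)*end)) {
    case 'G':
        v <<= 10;
        /* fall through */
    case 'M':
        v <<= 10;
        /* fall through */
    case 'K':
        v <<= 10;
        end++;
        break;
    case '\0':
    case '\n':
        break;
    default:
        return -1;
    }
    if (*end == '\n')
        end++;
    return *end == '\0' ? v : -1;
}
```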

The net_prio Module

The network priority control group (net_prio) provides an interface for setting the priority of network traffic generated by various userspace applications. Usually this can be done by setting the SO_PRIORITY socket option, which sets the priority of the SKB, but it is not always possible or desirable to modify an application to use this socket option. To support the net_prio module, an object called priomap, an instance of the netprio_map structure, was added to the net_device object. Let’s take a look at the netprio_map structure:

```c
struct netprio_map {
        struct rcu_head rcu;
        u32 priomap_len;
        u32 priomap[];
};
```

(include/net/netprio_cgroup.h)

The priomap array is populated through the net_prio cgroup entries, as you will see shortly. The net_prio module exports two control files to the cgroup filesystem: net_prio.ifpriomap and net_prio.prioidx. The net_prio.ifpriomap entry is used to set the priomap object of a specified network device, as you will see in the example immediately following. In the Tx path, the dev_queue_xmit() method invokes the skb_update_prio() method to set skb->priority according to the priomap associated with the outgoing network device (skb->dev). The net_prio.prioidx entry is read-only and shows the ID of the cgroup, a unique integer that the kernel uses internally to identify it. The net_prio module is a good example of how simple it is to develop a cgroup kernel module: it takes fewer than 400 lines of code. The net_prio module was developed by Neil Horman and is available from kernel 3.3. For more information, see Documentation/cgroups/net_prio.txt. The following is an example of how to use the network priority cgroup module (note that you must load the netprio_cgroup.ko kernel module if CONFIG_NETPRIO_CGROUP is built as a module rather than built-in):

```
mkdir /sys/fs/cgroup/net_prio
mount -t cgroup -onet_prio none /sys/fs/cgroup/net_prio
mkdir /sys/fs/cgroup/net_prio/0
echo "eth1 4" > /sys/fs/cgroup/net_prio/0/net_prio.ifpriomap
```

This sequence of commands assigns priority 4 to any traffic that originates from processes belonging to the net_prio cgroup “0” and is sent through interface eth1. (As with other controllers, processes join the cgroup when their PIDs are written to its tasks file.) The last command triggers writing an entry to a field called priomap in the net_device object.
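The Tx-path lookup described earlier can be modeled in userspace. The sketch below approximates skb_update_prio() in net/core/dev.c as found in 3.x kernels; it is not the kernel code itself: struct netprio_map_model, map_alloc(), and update_prio_model() are hypothetical names, and the socket and RCU details are omitted. In those kernels the priority is taken from priomap only when skb->priority is still 0, so a priority set explicitly, for example with SO_PRIORITY, takes precedence.

```c
#include <stdint.h>
#include <stdlib.h>

/* Userspace model of struct netprio_map: priomap[] is indexed by the
 * cgroup's index and holds the priority configured via ifpriomap. */
struct netprio_map_model {
    uint32_t priomap_len;
    uint32_t priomap[];
};

/* Allocate a zeroed map with room for `len` entries. */
static struct netprio_map_model *map_alloc(uint32_t len)
{
    struct netprio_map_model *m =
        calloc(1, sizeof(*m) + len * sizeof(m->priomap[0]));

    if (m)
        m->priomap_len = len;
    return m;
}

/* Model of the Tx-path update: if the packet has no priority yet and the
 * device has a map covering this index, use the map's entry; otherwise
 * keep the packet's current priority. */
static uint32_t update_prio_model(const struct netprio_map_model *map,
                                  uint32_t prioidx, uint32_t skb_priority)
{
    if (skb_priority == 0 && map && prioidx < map->priomap_len)
        return map->priomap[prioidx];
    return skb_priority;
}
```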


Note In order to work with net_prio, CONFIG_NETPRIO_CGROUP should be set.
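The mount command used in the net_prio example is a thin wrapper around the mount() system call, with the controller list passed as the data argument. A minimal sketch, assuming CAP_SYS_ADMIN and an existing target directory; mount_cgroup_hierarchy() is a hypothetical name:

```c
#include <errno.h>
#include <sys/mount.h>

/* Hypothetical helper: mount a cgroup hierarchy with the given controllers
 * at `target`, like `mount -t cgroup -o<controllers> none <target>`.
 * Returns 0 on success or -errno. */
static int mount_cgroup_hierarchy(const char *target, const char *controllers)
{
    if (mount("none", target, "cgroup", 0, controllers) < 0)
        return -errno;
    return 0;
}
```

Here, mount_cgroup_hierarchy("/sys/fs/cgroup/net_prio", "net_prio") would correspond to the mount command in that example.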

The cls_cgroup Classifier

The cls_cgroup classifier provides an interface for tagging network packets with a class identifier (classid). You can use it in conjunction with the tc tool to assign different priorities to packets from different cgroups, as the example that you will soon see demonstrates. The cls_cgroup module exports one control file to the cgroup filesystem, net_cls.classid. The control group classifier (cls_cgroup) was developed by Thomas Graf and merged in kernel 2.6.29. Like the net_prio module discussed in the previous section, this cgroup kernel module is also fewer than 400 lines of code, which shows again that adding a cgroup controller in a kernel module is not a heavy task. Here is an example of using the control group classifier (note that you must load the cls_cgroup.ko kernel module if CONFIG_NET_CLS_CGROUP is built as a module rather than built-in):

mkdir /sys/fs/cgroup/net_cls

mount -t cgroup -onet_cls none /sys/fs/cgroup/net_cls

mkdir /sys/fs/cgroup/net_cls/0

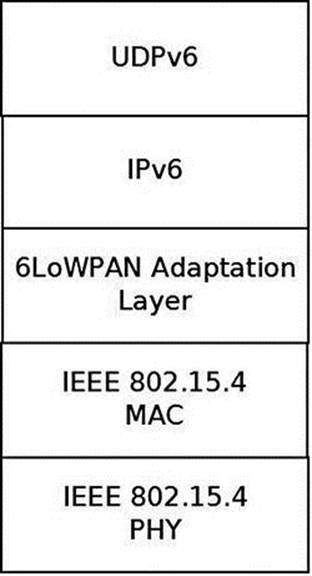

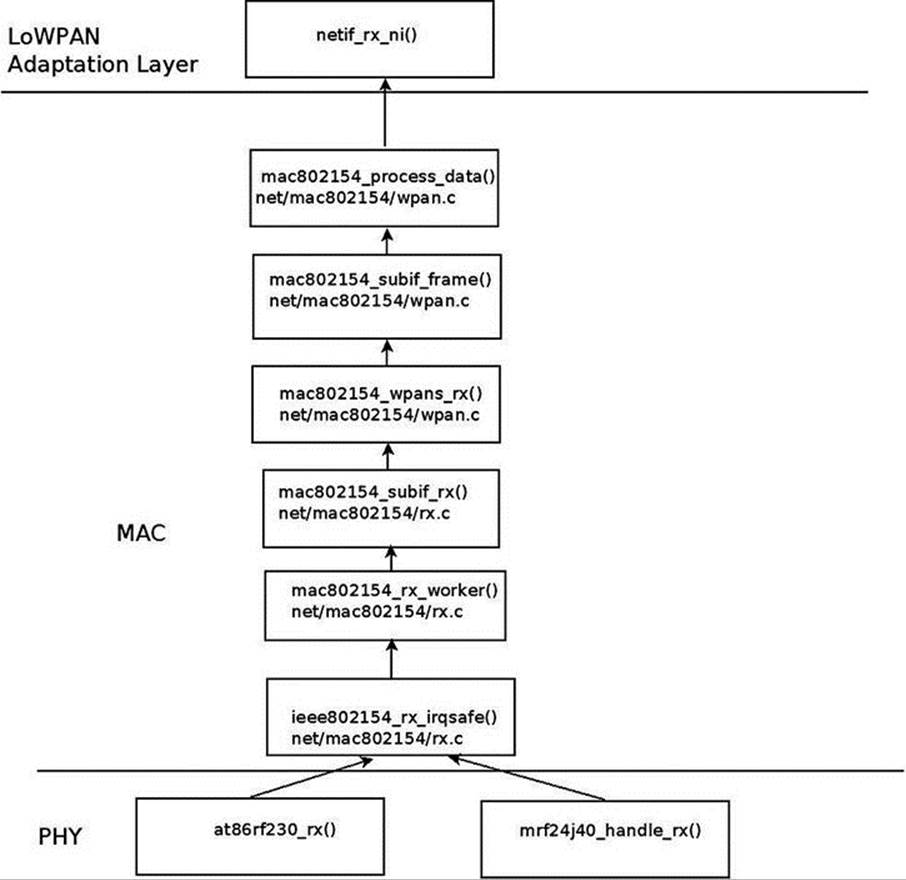

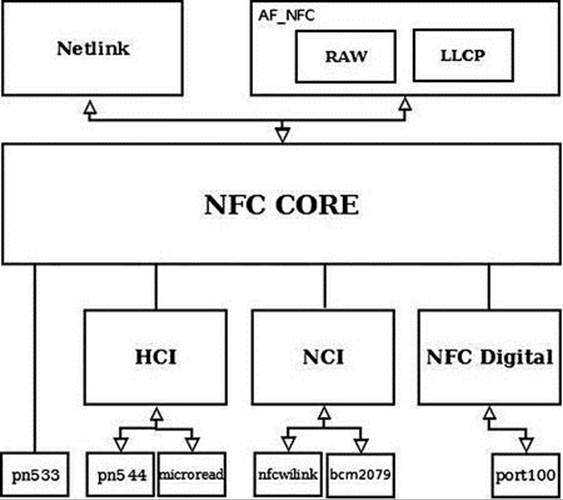

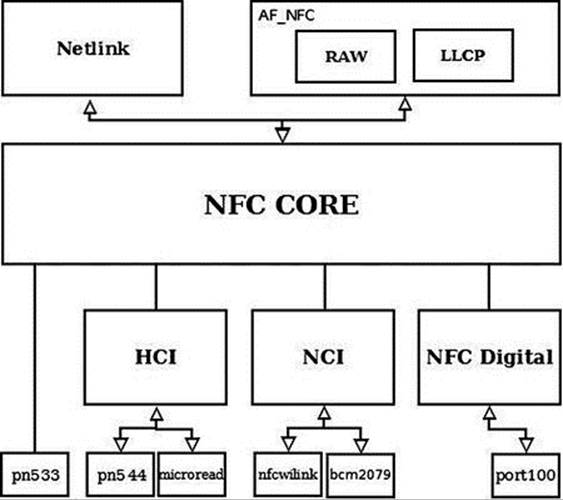

echo 0x100001 > /sys/fs/cgroup/net_cls/0/net_cls.classid
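The value written to net_cls.classid has the form 0xAAAABBBB, where AAAA is the major and BBBB the minor number of a tc handle, so 0x100001 corresponds to the tc class 10:1 (tc prints handles in hexadecimal). A minimal sketch of this encoding, with hypothetical helper names:

```c
#include <stdint.h>
#include <stdio.h>

/* Hypothetical helper: build a net_cls classid (0xAAAABBBB) from a tc
 * major:minor pair. */
static uint32_t make_classid(uint16_t major, uint16_t minor)
{
    return ((uint32_t)major << 16) | minor;
}

/* Hypothetical helper: format a classid as tc writes class handles,
 * i.e. hexadecimal "major:minor". Returns 0, or -1 if buf is too small. */
static int classid_to_tc(uint32_t classid, char *buf, size_t len)
{
    int n = snprintf(buf, len, "%x:%x",
                     (unsigned)(classid >> 16), (unsigned)(classid & 0xffff));
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

With these helpers, make_classid(0x10, 0x1) yields the 0x100001 value used above, and a tc class created with classid 10:1 is the one that matches packets from processes in this cgroup.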