Linux Kernel Networking: Implementation and Theory (2014)

APPENDIX A. Linux API

In this appendix I cover the two most fundamental data structures in the Linux Kernel Networking stack: the sk_buff and the net_device. This is reference material that can help when reading the rest of this book, as you will probably encounter these two structures in almost every chapter. Becoming familiar with and learning about these two data structures is essential for understanding the Linux Kernel Networking stack. Subsequently, there is a section about remote DMA (RDMA), which is further reference material for Chapter 13. It describes in detail the main methods and the main data structures that are used by RDMA. This appendix is a good place to always return to, especially when looking for definitions of the basic terms.

The sk_buff Structure

The sk_buff structure represents a packet. SKB stands for socket buffer. A packet can be generated by a local socket in the local machine, which was created by a userspace application; the packet can be sent outside or to another socket in the same machine. A packet can also be created by a kernel socket; and you can receive a physical frame from a network device (Layer 2) and attach it to an sk_buff and pass it on to Layer 3. When the packet destination is your local machine, it will continue to Layer 4. If the packet is not for your machine, it will be forwarded according to your routing tables rules, if your machine supports forwarding. If the packet is damaged for any reason, it will be dropped. The sk_buff is a very large structure; I mention most of its members in this section. The sk_buff structure is defined in include/linux/skbuff.h. Here is a description of most of its members:

· ktime_t tstamp

Timestamp of the arrival of the packet. Timestamps are stored in the SKB as offsets to a base timestamp. Note: do not confuse tstamp of the SKB with hardware timestamping, which is implemented with the hwtstamps of skb_shared_info. I describe theskb_shared_info object later in this appenidx.

Helper methods:

· skb_get_ktime(const struct sk_buff *skb): Returns the tstamp of the specified skb.

· skb_get_timestamp(const struct sk_buff *skb, struct timeval *stamp): Converts the offset back to a struct timeval.

· net_timestamp_set(struct sk_buff *skb): Sets the timestamp for the specified skb. The timestamp calculation is done with the ktime_get_real() method, which returns the time in ktime_t format.

· net_enable_timestamp(): This method should be called to enable SKB timestamping.

· net_disable_timestamp(): This method should be called to disable SKB timestamping.

· struct sock *sk

The socket that owns the SKB, for local generated traffic and for traffic that is destined for the local host. For packets that are being forwarded, sk is NULL. Usually when talking about sockets you deal with sockets which are created by calling the socket() system call from userspace. It should be mentioned that there are also kernel sockets, which are created by calling the sock_create_kern() method. See for example in vxlan_init_net() in the VXLAN driver, drivers/net/vxlan.c.

Helper method:

· skb_orphan(struct sk_buff *skb): If the specified skb has a destructor, call this destructor; set the sock object (sk) of the specified skb to NULL, and set the destructor of the specified skb to NULL.

· struct net_device *dev

The dev member is a net_device object which represents the network interface device associated to the SKB; you will sometimes encounter the term NIC (Network Interface Card) for such a network device. It can be the network device on which the packet arrives, or the network device on which the packet will be sent. The net_device structure will be discussed in depth in the next section.

· char cb[48]

This is the control buffer. It is free to use by any layer. This is an opaque area used to store private information. For example, the TCP protocol uses it for the TCP control buffer:

#define TCP_SKB_CB(__skb) ((struct tcp_skb_cb *)&((__skb)->cb[0]))

(include/net/tcp.h)

The Bluetooth protocol also uses the control block:

#define bt_cb(skb) ((struct bt_skb_cb *)((skb)->cb))

(include/net/bluetooth/bluetooth.h)

· unsigned long _skb_refdst

The destination entry (dst_entry) address. The dst_entry structrepresents the routing entry for a given destination. For each packet, incoming or outgoing, you perform a lookup in the routing tables. Sometimes this lookup is called FIB lookup. The result of this lookup determines how you should handle this packet; for example, whether it should be forwarded, and if so, on which interface it should be transmitted; or should it be thrown, should an ICMP error message be sent, and so on. The dst_entry object has a reference counter (the __refcnt field). There are cases when you use this reference count, and there are cases when you do not use it. The dst_entry object and the lookup in the FIB is discussed in more detail in Chapter 4.

Helper methods:

· skb_dst_set(struct sk_buff *skb, struct dst_entry *dst): Sets the skb dst, assuming a reference was taken on dst and should be released by the dst_release() method (which is invoked by the skb_dst_drop() method).

· skb_dst_set_noref(struct sk_buff *skb, struct dst_entry *dst): Sets the skb dst, assuming a reference was not taken on dst. In this case, the skb_dst_drop() method will not call the dst_release() method for the dst.

![]() Note The SKB might have a dst_entry pointer attached to it; it can be reference counted or not. The low order bit of _skb_refdst is set if the reference counter was not taken.

Note The SKB might have a dst_entry pointer attached to it; it can be reference counted or not. The low order bit of _skb_refdst is set if the reference counter was not taken.

· struct sec_path *sp

The security path pointer. It includes an array of IPsec XFRM transformations states (xfrm_state objects). IPsec (IP Security) is a Layer 3 protocol which is used mostly in VPNs. It is mandatory in IPv6 and optional in IPv4. Linux, like many other operating systems, implements IPsec both for IPv4 and IPv6. The sec_path structure is defined in include/net/xfrm.h. See more in Chapter 10, which deals with the IPsec subsystem.

Helper method:

· struct sec_path *skb_sec_path(struct sk_buff *skb): Returns the sec_path object (sp) associated with the specified skb.

· unsigned int len

The total number of packet bytes.

· unsigned int data_len

The data length. This field is used only when the packet has nonlinear data (paged data).

Helper method:

· skb_is_nonlinear(const struct sk_buff *skb): Returns true when the data_len of the specified skb is larger than 0.

· __u16 mac_len

The length of the MAC (Layer 2) header.

· __wsum csum

The checksum.

· __u32 priority

The queuing priority of the packet. In the Tx path, the priority of the SKB is set according to the socket priority (the sk_priority field of the socket). The socket priority in turn can be set by calling the setsockopt() system call with the SO_PRIORITY socket option. Using the net_prio cgroup kernel module, you can define a rule which will set the priority for the SKB; see in the description of the sk_buff netprio_map field, later in this section, and also in Documentation/cgroup/netprio.txt. For forwarded packets, the priority is set according to TOS (Type Of Service) field in the IP header. There is a table named ip_tos2prio which consists of 16 elements. The mapping from TOS to priority is done by the rt_tos2priority() method, according to the TOS field of the IP header; see the ip_forward() method in net/ipv4/ip_forward.c and the ip_tos2prio definition in include/net/route.h.

· __u8 local_df:1

Allow local fragmentation flag. If the value of the pmtudisc field of the socket which sends the packet is IP_PMTUDISC_DONT or IP_PMTUDISC_WANT, local_df is set to 1; if the value of the pmtudisc field of the socket is IP_PMTUDISC_DO or IP_PMTUDISC_PROBE, local_df is set to 0. See the implementation of the __ip_make_skb() method in net/ipv4/ip_output.c. Only when the packet local_df is 0 do you set the IP header don’t fragment flag, IP_DF; see the ip_queue_xmit()method in net/ipv4/ip_output.c:

. . .

if (ip_dont_fragment(sk, &rt->dst) && !skb->local_df)

iph->frag_off = htons(IP_DF);

else

iph->frag_off = 0;

. . .

The frag_off field in the IP header is a 16-bit field, which represents the offset and the flags of the fragment. The 13 leftmost (MSB) bits are the offset (the offset unit is 8-bytes) and the 3 rightmost (LSB) bits are the flags. The flags can be IP_MF (there are more fragments), IP_DF (do not fragment), IP_CE (for congestion), or IP_OFFSET (offset part).

The reason behind this is that there are cases when you do not want to allow IP fragmentation. For example, in Path MTU Discovery (PMTUD), you set the DF (don’t fragment) flag of the IP header. Thus, you don’t fragment the outgoing packets. Any network device along the path whose MTU is smaller than the packet will drop it and send back an ICMP packet (“Fragmentation Needed”). Getting these ICMP “Fragmentation Needed” packets is required in order to determine the Path MTU. See more in Chapter 3. From userspace, setting IP_PMTUDISC_DO is done, for example, thus (the following code snippet is taken from the source code of the tracepath utility from the iputils package; the tracepath utility finds the path MTU):

. . .

int on = IP_PMTUDISC_DO;

setsockopt(fd, SOL_IP, IP_MTU_DISCOVER, &on, sizeof(on));

. . .

· __u8 cloned:1

When the packet is cloned with the __skb_clone() method, this field is set to 1 in both the cloned packet and the primary packet. Cloning SKB means creating a private copy of the sk_buff struct; the data block is shared between the clone and the primary SKB.

· __u8 ip_summed:2

Indicator of IP (Layer 3) checksum; can be one of these values:

· CHECKSUM_NONE: When the device driver does not support hardware checksumming, it sets the ip_summed field to be CHECKSUM_NONE. This is an indication that checksumming should be done in software.

· CHECKSUM_UNNECESSARY: No need for any checksumming.

· CHECKSUM_COMPLETE: Calculation of the checksum was completed by the hardware, for incoming packets.

· CHECKSUM_PARTIAL: A partial checksum was computed for outgoing packets; the hardware should complete the checksum calculation. CHECKSUM_COMPLETE and CHECKSUM_PARTIAL replace the CHECKSUM_HW flag, which is now deprecated.

· __u8 nohdr:1

Payload reference only, must not modify header. There are cases when the owner of the SKB no longer needs to access the header at all. In such cases, you can call the skb_header_release() method, which sets the nohdr field of the SKB; this indicates that the header of this SKB should not be modified.

· __u8 nfctinfo:3

Connection Tracking info. Connection Tracking allows the kernel to keep track of all logical network connections or sessions. NAT relies on Connection Tracking information for its translations. The value of the nfctinfo field corresponds to theip_conntrack_info enum values. So, for example, when a new connection is starting to be tracked, the value of nfctinfo is IP_CT_NEW. When the connection is established, the value of nfctinfo is IP_CT_ESTABLISHED. The value of nfctinfo can change to IP_CT_RELATED when the packet is related to an existing connection—for example, when the traffic is part of some FTP session or SIP session, and so on. For a full list of ip_conntrack_info enum values seeinclude/uapi/linux/netfilter/nf_conntrack_common.h. The nfctinfo field of the SKB is set in the resolve_normal_ct() method, net/netfilter/nf_conntrack_core.c. This method performs a Connection Tracking lookup, and if there is a miss, it creates a new Connection Tracking entry. Connection Tracking is discussed in depth in Chapter 9, which deals with the netfilter subsystem.

· __u8 pkt_type:3

For Ethernet, the packet type depends on the destination MAC address in the ethernet header, and is determined by the eth_type_trans() method:

· PACKET_BROADCAST for broadcast

· PACKET_MULTICAST for multicast

· PACKET_HOST if the destination MAC address is the MAC address of the device which was passed as a parameter

· PACKET_OTHERHOST if these conditions are not met

See the definition of the packet types in include/uapi/linux/if_packet.h.

· __u8 ipvs_property:1

This flag indicates whether the SKB is owned by ipvs (IP Virtual Server), which is a kernel-based transport layer load-balancing solution. This field is set to 1 in the transmit methods of ipvs (net/netfilter/ipvs/ip_vs_xmit.c).

· __u8 peeked:1

This packet has been already seen, so stats have been done for it—so don’t do them again.

· __u8 nf_trace:1

The netfilter packet trace flag. This flag is set by the packet flow tracing the netfilter module, xt_TRACE module, which is used to mark packets for tracing (net/netfilter/xt_TRACE.c).

Helper method:

· nf_reset_trace(struct sk_buff *skb): Sets the nf_trace of the specified skb to 0.

· __be16 protocol

The protocol field is initialized in the Rx path by the eth_type_trans() method to be ETH_P_IP when working with Ethernet and IP.

· void (*destructor)(struct sk_buff *skb)

A callback that is invoked when freeing the SKB by calling the kfree_skb() method.

· struct nf_conntrack *nfct

The associated Connection Tracking object, if it exists. The nfct field, like the nfctinfo field, is set in the resolve_normal_ct() method. The Connection Tracking layer is discussed in depth in Chapter 9, which deals with the netfilter subsystem.

· int skb_iif

The ifindex of the network device on which the packet arrived.

· __u32 rxhash

The rxhash of the SKB is calculated in the receive path, according to the source and destination address of the IP header and the ports from the transport header. A value of zero indicates that the hash is not valid. The rxhash is used to ensure that packets with the same flow will be handled by the same CPU when working with Symmetrical Multiprocessing (SMP). This decreases the number of cache misses and improves network performance. The rxhash is part of the Receive Packet Steering (RPS) feature, which was contributed by Google developers (Tom Herbert and others). The RPS feature gives performance improvement in SMP environments. See more in Documentation/networking/scaling.txt.

· __be16 vlan_proto

The VLAN protocol used—usually it is the 802.1q protocol. Recently support for the 802.1ad protocol (also known as Stacked VLAN) was added.

The following is an example of creating 802.1q and 802.1ad VLAN devices in userspace using the ip command of the iproute2 package:

ip link add link eth0 eth0.1000 type vlan proto 802.1ad id 1000

ip link add link eth0.1000 eth0.1000.1000 type vlan proto 802.1q id 100

Note: this feature is supported in kernel 3.10 and higher.

· __u16 vlan_tci

The VLAN tag control information (2 bytes), composed of ID and priority.

Helper method:

· vlan_tx_tag_present(__skb): This macro checks whether the VLAN_TAG_PRESENT flag is set in the vlan_tci field of the specified __skb.

· __u16 queue_mapping

Queue mapping for multiqueue devices.

Helper methods:

· skb_set_queue_mapping (struct sk_buff *skb, u16 queue_mapping): Sets the specified queue_mapping for the specified skb.

· skb_get_queue_mapping(const struct sk_buff *skb): Returns the queue_mapping of the specified skb.

· __u8 pfmemalloc

Allocate the SKB from PFMEMALLOC reserves.

Helper method:

· skb_pfmemalloc(): Returns true if the SKB was allocated from PFMEMALLOC reserves.

· __u8 ooo_okay:1

The ooo_okay flag is set to avoid ooo (out of order) packets.

· __u8 l4_rxhash:1

A flag that is set when a canonical 4-tuple hash over transport ports is used.

See the __skb_get_rxhash() method in net/core/flow_dissector.c.

· __u8 no_fcs:1

A flag that is set when you request the NIC to treat the last 4 bytes as Ethernet Frame Check Sequence (FCS).

· __u8 encapsulation:1

The encapsulation field denotes that the SKB is used for encapsulation. It is used, for example, in the VXLAN driver. VXLAN is a standard protocol to transfer Layer 2 Ethernet packets over a UDP kernel socket. It can be used as a solution when there are firewalls that block tunnels and allow, for example, only TCP or UDP traffic. The VXLAN driver uses UDP encapsulation and sets the SKB encapsulation to 1 in the vxlan_init_net() method. Also the ip_gre module and the ipip tunnel module use encapsulation and set the SKB encapsulation to 1.

· __u32 secmark

Security mark field. The secmark field is set by an iptables SECMARK target, which labels packets with any valid security context. For example:

iptables -t mangle -A INPUT -p tcp --dport 80 -j SECMARK --selctx system_u:object_r:httpd_packet_t:s0

iptables -t mangle -A OUTPUT -p tcp --sport 80 -j SECMARK --selctx system_u:object_r:httpd_packet_t:s0

In the preceding rule, you are statically labeling packets arriving at and leaving from port 80 as httpd_packet_t. See: netfilter/xt_SECMARK.c.

Helper methods:

· void skb_copy_secmark(struct sk_buff *to, const struct sk_buff *from): Sets the value of the secmark field of the first specified SKB (to) to be equal to the value of the secmark field of the second specified SKB (from).

· void skb_init_secmark(struct sk_buff *skb): Initializes the secmark of the specified skb to be 0.

The next three fields: mark, dropcount, and reserved_tailroom appear in a union.

· __u32 mark

This field enables identifying the SKB by marking it.

You can set the mark field of the SKB, for example, with the iptables MARK target in an iptables PREROUTING rule with the mangle table.

· iptables -A PREROUTING -t mangle -i eth1 -j MARK --set-mark 0x1234

This rule will assign the value of 0x1234 to every SKB mark field for incoming traffic on eth1 before performing a routing lookup. You can also run an iptables rule which will check the mark field of every SKB to match a specified value and act upon it. Netfilter targets and iptables are discussed in Chapter 9, which deals with the netfilter subsystem.

· __u32 dropcount

The dropcountcounter represents the number of dropped packets (sk_drops) of the sk_receive_queue of the assigned sock object (sk). See the sock_queue_rcv_skb() method in net/core/sock.c.

· _u32 reserved_tailroom: Used in the sk_stream_alloc_skb() method.

· sk_buff_data_t transport_header

The transport layer (L4) header.

Helper methods:

· skb_transport_header(const struct sk_buff *skb): Returns the transport header of the specified skb.

· skb_transport_header_was_set(const struct sk_buff *skb): Returns 1 if the transport_header of the specified skb is set.

· sk_buff_data_t network_header

The network layer (L3) header.

Helper method:

· skb_network_header(const struct sk_buff *skb): Returns the network header of the specified skb.

· sk_buff_data_t mac_header

The link layer (L2) header.

Helper methods:

· skb_mac_header(const struct sk_buff *skb): Returns the MAC header of the specified skb.

· skb_mac_header_was_set(const struct sk_buff *skb): Returns 1 if the mac_header of the specified skb was set.

· sk_buff_data_t tail

The tail of the data.

· sk_buff_data_t end

The end of the buffer. The tail cannot exceed end.

· unsigned char head

The head of the buffer.

· unsigned char data

The data head. The data block is allocated separately from the sk_buff allocation.

See, in _alloc_skb(), net/core/skbuff.c:

data = kmalloc_reserve(size, gfp_mask, node, &pfmemalloc);

Helper methods:

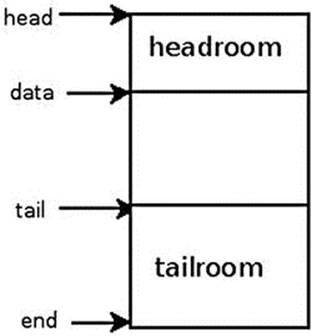

· skb_headroom(const struct sk_buff *skb): This method returns the headroom, which is the number of bytes of free space at the head of the specified skb (skb->data – skb->head). See Figure A-1.

· skb_tailroom(const struct sk_buff *skb): This method returns the tailroom, which is the number of bytes of free space at the tail of the specified skb (skb->end – skb->tail). See Figure A-1.

Figure A-1 shows the headroom and the tailroom of an SKB.

Figure A-1. Headroom and tailroom of an SKB

The following are some methods for handling buffers:

· skb_put(struct sk_buff *skb, unsigned int len): Adds data to a buffer: this method adds len bytes to the buffer of the specified skb and increments the length of the specified skb by the specified len.

· skb_push(struct sk_buff *skb, unsigned int len): Adds data to the start of a buffer; this method decrements the data pointer of the specified skb by the specified len and increments the length of the specified skb by the specified len.

· skb_pull(struct sk_buff *skb, unsigned int len): Removes data from the start of a buffer; this method increments the data pointer of the specified skb by the specified len and decrements the length of the specified skb by the specified len.

· skb_reserve(struct sk_buff *skb, int len): Increases the headroom of an empty skb by reducing the tail.

After describing some methods for handling buffers, I continue with listing the members of the sk_buff structure:

· unsigned int truesize

The total memory allocated for the SKB (including the SKB structure itself and the size of the allocated data block).

· atomic_t users

A reference counter, initialized to 1; incremented by the skb_get() method and decremented by the kfree_skb() method or by the consume_skb() method; the kfree_skb() method decrements the usage counter; if it reached 0, the method will free the SKB—otherwise, the method will return without freeing it.

Helper methods:

· skb_get(struct sk_buff *skb): Increments the users reference counter by 1.

· skb_shared(const struct sk_buff *skb): Returns true if the number of users is not 1.

· skb_share_check(struct sk_buff *skb, gfp_t pri): If the buffer is not shared, the original buffer is returned. If the buffer is shared, the buffer is cloned, and the old copy drops a reference. A new clone with a single reference is returned. When being called from interrupt context or with spinlocks held, the pri parameter (priority) must be GFP_ATOMIC. If memory allocation fails, NULL is returned.

· consume_skb(struct sk_buff *skb): Decrements the users reference counter and frees the SKB if the users reference counter is zero.

struct skb_shared_info

The skb_shared_info struct is located at the end of the data block (skb_end_pointer(SKB)). It consists of only a few fields. Let’s take a look at it:

struct skb_shared_info {

unsigned char nr_frags;

__u8 tx_flags;

unsigned short gso_size;

unsigned short gso_segs;

unsigned short gso_type;

struct sk_buff *frag_list;

struct skb_shared_hwtstamps hwtstamps;

__be32 ip6_frag_id;

atomic_t dataref;

void * destructor_arg;

skb_frag_t frags[MAX_SKB_FRAGS];

};

The following is a description of some of the important members of the skb_shared_info structure:

· nr_frags: Represents the number of elements in the frags array.

· tx_flags can be:

· SKBTX_HW_TSTAMP: Generate a hardware time stamp.

· SKBTX_SW_TSTAMP: Generate a software time stamp.

· SKBTX_IN_PROGRESS: Device driver is going to provide a hardware timestamp.

· SKBTX_DEV_ZEROCOPY: Device driver supports Tx zero-copy buffers.

· SKBTX_WIFI_STATUS: Generate WiFi status information.

· SKBTX_SHARED_FRAG: Indication that at least one fragment might be overwritten.

· When working with fragmentation, there are cases when you work with a list of sk_buffs (frag_list), and there are cases when you work with the frags array. It depends mostly on whether the Scatter/Gather mode is set.

Helper methods:

· skb_is_gso(const struct sk_buff *skb): Returns true if the gso_size of the skb_shared_info associated with the specified skb is not 0.

· skb_is_gso_v6(const struct sk_buff *skb): Returns true if the gso_type of the skb_shared_info associated with the skb is SKB_GSO_TCPV6.

· skb_shinfo(skb): A macro that returns the skb_shinfo associated with the specified skb.

· skb_has_frag_list(const struct sk_buff *skb): Returns true if the frag_list of the skb_shared_info of the specified skb is not NULL.

· dataref: A reference counter of the skb_shared_info struct. It is set to 1 in the method, which allocates the skb and initializes skb_shared_info (The __alloc_skb() method).

The net_device structure

The net_device struct represents the network device. It can be a physical device, like an Ethernet device, or it can be a software device, like a bridge device or a VLAN device. As with the sk_buff structure, I will list its important members. The net_device struct is defined in include/linux/netdevice.h:

· char name[IFNAMSIZ]

The name of the network device. This is the name that you see with ifconfig or ip commands (for example eth0, eth1, and so on). The maximum length of the interface name is 16 characters. In newer distributions with biosdevname support, the naming scheme corresponds to the physical location of the network device. So PCI network devices are named p<slot>p<port>, according to the chassis labels, and embedded ports (on motherboard interfaces) are named em<port>—for example, em1, em2, and so on. There is a special suffix for SR-IOV devices and Network Partitioning (NPAR)–enabled devices. Biosdevname is developed by Dell: http://linux.dell.com/biosdevname. See also this white paper:http://linux.dell.com/files/whitepapers/consistent_network_device_naming_in_linux.pdf.

Helper method:

· dev_valid_name(const char *name): Checks the validity of the specified network device name. A network device name must obey certain restrictions in order to enable creating corresponding sysfs entries. For example, it cannot be “ . ” or “ .. ”; its length should not exceed 16 characters. Changing the interface name can be done like this, for example: ip link set <oldDeviceName> p2p1 <newDeviceName>. So, for example, ip link set p2p1 name a12345678901234567 will fail with this message: Error: argument "a12345678901234567" is wrong: "name" too long. The reason is that you tried to set a device name that is longer than 16 characters. And running ip link set p2p1 name. will fail withRTNETLINK answers: Invalid argument, since you tried to set the device name to be “.”, which is an invalid value. See dev_valid_name() in net/core/dev.c.

· struct hlist_node name_hlist

This is a hash table of network devices, indexed by the network device name. A lookup in this hash table is performed by dev_get_by_name(). Insertion into this hash table is performed by the list_netdevice() method, and removal from this hash table is done with the unlist_netdevice() method.

· char *ifalias

SNMP alias interface name. Its length can be up to 256 (IFALIASZ).

You can create an alias to a network device using this command line:

ip link set <devName> alias myalias

The ifalias name is exported via sysfs by /sys/class/net/<devName>/ifalias.

Helper method:

· dev_set_alias(struct net_device *dev, const char *alias, size_t len): Sets the specified alias to the specified network device. The specified len parameter is the number of bytes of specified alias to be copied; if the specified lenis greater than 256 (IFALIASZ), the method will fail with -EINVAL.

· unsigned int irq

The Interrupt Request (IRQ) number of the device. The network driver should call request_irq() to register itself with this IRQ number. Typically this is done in the probe() callback of the network device driver. The prototype of the request_irq() method is:int request_irq(unsigned int irq, irq_handler_t handler, unsigned long flags, const char *name, void *dev). The first argument is the IRQ number. The sepcified handler is the Interrupt Service Routine (ISR). The network driver should call the free_irq() method when it no longer uses this irq. In many cases, this irq is shared (the request_irq() method is called with the IRQF_SHARED flag). You can view the number of interrupts that occurred on each core by running cat /proc/interrupts. You can set the SMP affinity of the irq by echo irqMask > /proc/irq/<irqNumber>/smp_affinity.

In an SMP machine, setting the SMP affinity of interrupts means setting which cores are allowed to handle the interrupt. Some PCI network interfaces use Message Signaled Interrupts (MSIs).PCI MSI interrupts are never shared, so the IRQF_SHARED flag is not set when calling the request_irq() method in these network drivers. See more info in Documentation/PCI/MSI-HOWTO.txt.

· unsigned long state

A flag that can be one of these values:

· __LINK_STATE_START: This flag is set when the device is brought up, by the dev_open() method, and is cleared when the device is brought down.

· __LINK_STATE_PRESENT: This flag is set in device registration, by the register_netdevice() method, and is cleared in the netif_device_detach() method.

· __LINK_STATE_NOCARRIER: This flag shows whether the device detected loss of carrier. It is set by the netif_carrier_off() method and cleared by the netif_carrier_on() method. It is exported by sysfs via/sys/class/net/<devName>/carrier.

· __LINK_STATE_LINKWATCH_PENDING: This flag is set by the linkwatch_fire_event() method and cleared by the linkwatch_do_dev() method.

· __LINK_STATE_DORMANT: The dormant state indicates that the interface is not able to pass packets (that is, it is not “up”); however, this is a “pending” state, waiting for some external event. See section 3.1.12, “New states for IfOperStatus” in RFC 2863, “The Interfaces Group MIB.”

The state flag can be set with the generic set_bit() method.

Helper methods:

· netif_running(const struct net_device *dev): Returns true if the __LINK_STATE_START flag of the state field of the specified device is set.

· netif_device_present(struct net_device *dev): Returns true if the __LINK_STATE_PRESENT flag of the state field of the specified device is set.

· netif_carrier_ok (const struct net_device *dev): Returns true if the __LINK_STATE_NOCARRIER flag of the state field of the specified device is not set.

These three methods are defined in include/linux/netdevice.h.

· netdev_features_t features

The set of currently active device features. These features should be changed only by the network core or in error paths of the ndo_set_features() callback. Network driver developers are responsible for setting the initial set of the device features. Sometimes they can use a wrong combination of features. The network core fixes this by removing an offending feature in the netdev_fix_features() method, which is invoked when the network interface is registered (in the register_netdevice() method); a proper message is also written to the kernel log.

I will mention some net_device features here and discuss them. For the full list of net_device features, look in include/linux/netdev_features.h.

· NETIF_F_IP_CSUM means that the network device can checksum L4 IPv4 TCP/UDP packets.

· NETIF_F_IPV6_CSUM means that the network device can checksum L4 IPv6 TCP/UDP packets.

· NETIF_F_HW_CSUM means that the device can checksum in hardware all L4 packets. You cannot activate NETIF_F_HW_CSUM together with NETIF_F_IP_CSUM, or together with NETIF_F_IPV6_CSUM, because that will cause duplicate checksumming.

If the driver features set includes both NETIF_F_HW_CSUM and NETIF_F_IP_CSUM features, then you will get a kernel message saying “mixed HW and IP checksum settings.” In such a case, the netdev_fix_features() method removes the NETIF_F_IP_CSUM feature. If the driver features set includes both NETIF_F_HW_CSUM and NETIF_F_IPV6_CSUM features, you get again the same message as in the previous case. This time, the NETIF_F_IPV6_CSUM feature is the one which is being removed by thenetdev_fix_features() method. In order for a device to support TSO (TCP Segmentation Offload), it needs also to support Scatter/Gather and TCP checksum; this means that both NETIF_F_SG and NETIF_F_IP_CSUM features must be set. If the driver features set does not include the NETIF_F_SG feature, then you will get a kernel message saying “Dropping TSO features since no SG feature,” and the NETIF_F_ALL_TSO feature will be removed. If the driver features set does not include the NETIF_F_IP_CSUM feature and does not include NETIF_F_HW_CSUM, then you will get a kernel message saying “Dropping TSO features since no CSUM feature,” and the NETIF_F_TSO will be removed.

![]() Note In recent kernels, if CONFIG_DYNAMIC_DEBUG kernel config item is set, you might need to explicitly enable printing of some messages, via <debugfs>/dynamic_debug/control interface. See Documentation/dynamic-debug-howto.txt.

Note In recent kernels, if CONFIG_DYNAMIC_DEBUG kernel config item is set, you might need to explicitly enable printing of some messages, via <debugfs>/dynamic_debug/control interface. See Documentation/dynamic-debug-howto.txt.

· NETIF_F_LLTX is the LockLess TX flag and is considered deprecated. When it is set, you don’t use the generic Tx lock (This is why it is called LockLess TX). See the following macro (HARD_TX_LOCK) from net/core/dev.c:

#define HARD_TX_LOCK(dev, txq, cpu) { \ if ((dev->features & NETIF_F_LLTX) == 0) { \

__netif_tx_lock(txq, cpu); \

} \

}

NETIF_F_LLTX is used in tunnel drivers like VXLAN, VETH, and in IP over IP (IPIP) tunneling driver. For example, in the IPIP tunnel module, you set the NETIF_F_LLTX flag in the ipip_tunnel_setup() method (net/ipv4/ipip.c).

The NETIF_F_LLTX flag is also used in a few drivers that have implemented their own Tx lock, like the cxgb network driver.

In drivers/net/ethernet/chelsio/cxgb/cxgb2.c, you have:

static int __devinit init_one(struct pci_dev *pdev,

const struct pci_device_id *ent)

{

. . .

netdev->features |= NETIF_F_SG | NETIF_F_IP_CSUM |

NETIF_F_RXCSUM | NETIF_F_LLTX;

. . .

}

· NETIF_F_GRO is used to indicate that the device supports GRO (Generic Receive Offload). With GRO, incoming packets are merged at reception time. The GRO feature improves network performance. GRO replaced LRO (Large Receive Offload), which was limited to TCP/IPv4. This flag is checked in the beginning of the dev_gro_receive() method; devices that do not have this flag set will not perform the GRO handling part in this method. A driver that wants to use GRO should call thenapi_gro_receive() method in the Rx path of the driver. You can enable/disable GRO with ethtool, by ethtool -K <deviceName> gro on/ ethtool -K <deviceName> gro off, respectively. You can check whether GRO is set by runningethtool –k <deviceName> and looking at the gro field.

· NETIF_F_GSO is set to indicate that the device supports Generic Segmentation Offload (GSO). GSO is a generalization of a previous solution called TSO (TCP segmentation offload), which dealt only with TCP in IPv4. GSO can handle also IPv6, UDP, and other protocols. GSO is a performance optimization, based on traversing the networking stack once instead of many times, for big packets. So the idea is to avoid segmentation in Layer 4 and defer segmentation as much as possible. The sysadmin can enable/disable GSO with ethtool, by ethtool -K <driverName> gso on/ethtool -K <driverName> gso off, respectively. You can check whether GSO is set by running ethtool –k <deviceName> and looking at the gso field. To work with GSO, you should work in Scatter/Gather mode. The NETIF_F_SG flag must be set.

· NETIF_F_NETNS_LOCAL is set for network namespace local devices. These are network devices that are not allowed to move between network namespaces. The loopback, VXLAN, and PPP network devices are examples of namespace local devices. All these devices have the NETIF_F_NETNS_LOCAL flag set. A sysadmin can check whether an interface has the NETIF_F_NETNS_LOCAL flag set or not by ethtool -k <deviceName>. This feature is fixed and cannot be changed by ethtool. Trying to move a network device of this type to a different namespace results in an error (-EINVAL). For details, look in the dev_change_net_namespace() method (net/core/dev.c). When deleting a network namespace, devices that do not have the NETIF_F_NETNS_LOCAL flag set are moved to the default initial network namespace (init_net). Network namespace local devices that have the NETIF_F_NETNS_LOCAL flag set are not moved to the default initial network namespace (init_net), but are deleted.

· NETIF_F_HW_VLAN_CTAG_RX is for use by devices which support VLAN Rx hardware acceleration. It was formerly called NETIF_F_HW_VLAN_RX and was renamed in kernel 3.10, when support for 802.1ad was added. “CTAG” was added to indicate that this device differ from “STAG” device (Service provider tagging). A device driver that sets the NETIF_F_HW_VLAN_RX feature must also define the ndo_vlan_rx_add_vid() and ndo_vlan_rx_kill_vid() callbacks. Failure to do so will avoid device registration and result in a “Buggy VLAN acceleration in driver” kernel error message.

· NETIF_F_HW_VLAN_CTAG_TX is for use by devices that support VLAN Tx hardware acceleration. It was formerly called NETIF_F_HW_VLAN_TX and was renamed in kernel 3.10 when support for 802.1ad was added.

· NETIF_F_VLAN_CHALLENGED is set for devices that can’t handle VLAN packets. Setting this feature avoids registration of a VLAN device. Let’s take a look at the VLAN registration method:

static int register_vlan_device(struct net_device *real_dev, u16 vlan_id) {

int err;

. . .

err = vlan_check_real_dev(real_dev, vlan_id);

The first thing the vlan_check_real_dev() method does is to check the network device features and return an error if the NETIF_F_VLAN_CHALLENGED feature is set:

int vlan_check_real_dev(struct net_device *real_dev, u16 vlan_id)

{

const char *name = real_dev->name;

if (real_dev->features & NETIF_F_VLAN_CHALLENGED) {

pr_info("VLANs not supported on %s\n", name);

return -EOPNOTSUPP;

}

. . .

}

For example, some types of Intel e100 network device drivers set the NETIF_F_VLAN_CHALLENGED feature (see e100_probe() in drivers/net/ethernet/intel/e100.c).

You can check whether the NETIF_F_VLAN_CHALLENGED is set by running ethtool –k <deviceName> and looking at the vlan-challenged field. This is a fixed value that you cannot change with the ethtool command.

· NETIF_F_SG is set when the network interface supports Scatter/Gather IO. You can enable and disable Scatter/Gather with ethtool, by ethtool -K <deviceName> sg on/ ethtool -K <deviceName> sg off, respectively. You can check whether Scatter/Gather is set by running ethtool –k <deviceName> and looking at the sg field.

· NETIF_F_HIGHDMA is set if the device can perform access by DMA to high memory. The practical implication of setting this feature is that the ndo_start_xmit() callback of the net_device_ops object can manage SKBs, which have frags elements in high memory. You can check whether the NETIF_F_HIGHDMA is set by running ethtool –k <deviceName> and looking at the highdma field. This is a fixed value that you cannot change with the ethtool command.

· netdev_features_t hw_features

The set of features that are changeable features. This means that their state may possibly be changed (enabled or disabled) for a particular device by a user’s request. This set should be initialized in the ndo_init() callback and not changed later.

· netdev_features_t wanted_features

The set of features that were requested by the user. A user may request to change various offloading features—for example, by running ethtool -K eth1 rx on. This generates a feature change event notification (NETDEV_FEAT_CHANGE) to be sent by thenetdev_features_change() method.

· netdev_features_t vlan_features

The set of features whose state is inherited by child VLAN devices. For example, let’s look at the rtl_init_one() method, which is the probe callback of the r8169 network device driver (see Chapter 14):

int rtl_init_one(struct pci_dev *pdev, const struct pci_device_id *ent)

{

. . .

dev->vlan_features=NETIF_F_SG|NETIF_F_IP_CSUM|NETIF_F_TSO| NETIF_F_HIGHDMA;

. . .

}

(drivers/net/ethernet/realtek/r8169.c)

This initialization means that all child VLAN devices will have these features. For example, let’s say that your eth0 device is an r8169 device, and you add a VLAN device thus: vconfig add eth0 100. Then, in the initialization in the VLAN module, there is this code related to vlan_features:

static int vlan_dev_init(struct net_device *dev)

{

. . .

dev->features |= real_dev->vlan_features | NETIF_F_LLTX;

. . .

}

(net/8021q/vlan_dev.c)

This means that it sets the features of the VLAN child device to be the vlan_features of the real device (which is eth0 in this case), which were set according to what you saw earlier in the rtl_init_one() method.

· netdev_features_t hw_enc_features

The mask of features inherited by encapsulating devices. This field indicates what encapsulation offloads the hardware is capable of doing, and drivers will need to set them appropriately. For more info about the network device features, seeDocumentation/networking/netdev-features.txt.

· ifindex

The ifindex (Interface index) is a unique device identifier. This index is incremented by 1 each time you create a new network device, by the dev_new_index() method. The first network device you create, which is almost always the loopback device, has ifindex of 1. Cyclic integer overflow is handled by the method that handles assignment of the ifindex number. The ifindex is exported by sysfs via /sys/class/net/<devName>/ifindex.

· struct net_device_stats stats

The statistics struct, which was left as a legacy, includes fields like the number of rx_packets or the number of tx_packets. New device drivers use the rtnl_link_stats64 struct (defined in include/uapi/linux/if_link.h) instead of thenet_device_stats struct. Most of the network drivers implement the ndo_get_stats64() callback of net_device_ops (or the ndo_get_stats() callback of net_device_ops, when working with the older API).

The statistics are exported via /sys/class/net/<deviceName>/statistics.

Some drivers implement the get_ethtool_stats() callback. These drivers show statistics by ethtool -S <deviceName>

See, for example, the rtl8169_get_ethtool_stats() method in drivers/net/ethernet/realtek/r8169.c.

· atomic_long_t rx_dropped

A counter of the number of packets that were dropped in the RX path by the core network stack. This counter should not be used by drivers. Do not confuse the rx_dropped field of the sk_buff with the dropped field of the softnet_data struct. Thesoftnet_data struct represents a per-CPU object. They are not equivalent because the rx_dropped of the sk_buff might be incremented in several methods, whereas the dropped counter of softnet_data is incremented only by theenqueue_to_backlog() method (net/core/dev.c). The dropped counter of softnet_data is exported by /proc/net/softnet_stat. In /proc/net/softnet_stat you have one line per CPU. The first column is the total packets counter, and the second one is the dropped packets counter.

For example:

cat /proc/net/softnet_stat

00000076 00000001 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000

00000005 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000 00000000

You see here one line per CPU (you have two CPUs); for the first CPU, you see 118 total packets (hex 0x76), where one packet is dropped. For the second CPU, you see 5 total packets and 0 dropped.

· struct net_device_ops *netdev_ops

The netdev_ops structure includes pointers for several callback methods that you want to define if you want to override the default behavior. Here are some callbacks of netdev_ops:

· The ndo_init() callback is called when network device is registered.

· The ndo_uninit() callback is called when the network device is unregistered or when the registration fails.

· The ndo_open() callback handles change of device state, when a network device state is being changed from down state to up state.

· The ndo_stop() callback is called when a network device state is being changed to be down.

· The ndo_validate_addr() callback is called to check whether the MAC is valid. Many network drivers set the generic eth_validate_addr() method to be the ndo_validate_addr() callback. The generic eth_validate_addr() method returns true if the MAC address is not a multicast address and is not all zeroes.

· The ndo_set_mac_address() callback sets the MAC address. Many network drivers set the generic eth_mac_addr() method to be the ndo_set_mac_address() callback of struct net_device_ops for setting their MAC address. For example, the VETH driver (drivers/net/veth.c) or the VXLAN driver (drivers/nets/vxlan.c).

· The ndo_start_xmit() callback handles packet transmission. It cannot be NULL.

· The ndo_select_queue() callback is used to select a Tx queue, when working with multiqueues. If the ndo_select_queue() callback is not set, then the __netdev_pick_tx() is called. See the implementaion of the netdev_pick_tx() method innet/core/flow_dissector.c.

· The ndo_change_mtu() callback handles modifying the MTU. It should check that the specified MTU is not less than 68, which is the minimum MTU. In many cases, network drivers set the ndo_change_mtu() callback to be the genericeth_change_mtu() method. The eth_change_mtu() method should be overridden if jumbo frames are supported.

· The ndo_do_ioctl() callback is called when getting an IOCTL request which is not handled by the generic interface code.

· The ndo_tx_timeout() callback is called when the transmitter was idle for a quite a while (for watchdog usage).

· The ndo_add_slave() callback is called to set a specified network device as a slave to a specified netowrk device. It is used, for example, in the team network driver and in the bonding network driver.

· The ndo_del_slave() callback is called to remove a previously enslaved network device.

· The ndo_set_features() callback is called to update the configuration of a network device with new features.

· The ndo_vlan_rx_add_vid() callback is called when registering a VLAN id if the network device supports VLAN filtering (the NETIF_F_HW_VLAN_FILTER flag is set in the device features).

· The ndo_vlan_rx_kill_vid() callback is called when unregistering a VLAN id if the network device supports VLAN filtering (the NETIF_F_HW_VLAN_FILTER flag is set in the device features).

![]() Note From kernel 3.10, the NETIF_F_HW_VLAN_FILTER flag was renamed to NETIF_F_HW_VLAN_CTAG_FILTER.

Note From kernel 3.10, the NETIF_F_HW_VLAN_FILTER flag was renamed to NETIF_F_HW_VLAN_CTAG_FILTER.

· There are also several callbacks for handling SR-IOV devices, for example, ndo_set_vf_mac() and ndo_set_vf_vlan().

Before kernel 2.6.29, there was a callback named set_multicast_list() for addition of multicast addresses, which was replaced by the dev_set_rx_mode() method. The dev_set_rx_mode() callback is called primarily whenever the unicast or multicast address lists or the network interface flags are updated.

· struct ethtool_ops *ethtool_ops

The ethtool_ops structure includes pointers for several callbacks for handling offloads, getting and setting various device settings, reading registers, getting statistics, reading RX flow hash indirection table, WakeOnLAN parameters, and many more. If the network driver does not initialize the ethtool_ops object, the networking core provides a default empty ethtool_ops object named default_ethtool_ops. The management of ethtool_ops is done in net/core/ethtool.c.

Helper method:

· SET_ETHTOOL_OPS (netdev,ops): A macro which sets the specified ethtool_ops for the specified net_device.

You can view the offload parameters of a network interface device by running ethtool –k <deviceName>. You can set some offload parameters of a network interface device by running ethtool –K <deviceName> offloadParameter off/on. See man 8 ethtool.

· const struct header_ops *header_ops

The header_ops structinclude callbacks for creating the Layer 2 header, parsing it, rebuilding it, and more. For Ethernet it is eth_header_ops, defined in net/ethernet/eth.c.

· unsigned int flags

The interface flags of the network device that you can see from userspace. Here are some flags (for a full list see include/uapi/linux/if.h):

· IFF_UP flag is set when the interface state is changed from down to up.

· IFF_PROMISC is set when the interface is in promiscuous mode (receives all packets). When running sniffers like wireshark or tcpdump, the network interface is in promiscuous mode.

· IFF_LOOPBACK is set for the loopback device.

· IFF_NOARP is set for devices which do not use the ARP protocol. IFF_NOARP is set, for example, in tunnel devices (see for example, in the ipip_tunnel_setup() method, net/ipv4/ipip.c).

· IFF_POINTOPOINT is set for PPP devices. See for example, the ppp_setup() method, drivers/net/ppp/ppp_generic.c.

· IFF_MASTER is set for master devices. See, for example, for bonding devices, the bond_setup() method in drivers/net/bonding/bond_main.c.

· IFF_LIVE_ADDR_CHANGE flag indicates that the device supports hardware address modification when it’s running. See the eth_mac_addr() method in net/ethernet/eth.c.

· IFF_UNICAST_FLT flag is set when the network driver handles unicast address filtering.

· IFF_BONDING is set for a bonding master device or bonding slave device. The bonding driver provides a method for aggregating multiple network interfaces into a single logical interface.

· IFF_TEAM_PORT is set for a device used as a team port. The teaming driver is a load-balancing network software driver intended to replace the bonding driver.

· IFF_MACVLAN_PORT is set for a device used as a macvlan port.

· IFF_EBRIDGE is set for an Ethernet bridging device.

The flags field is exported by sysfs via /sys/class/net/<devName>/flags.

Some of these flags can be set by userspace tools. For example, ifconfig <deviceName> -arp will set the IFF_NOARP network interface flag, and ifconfig <deviceName> arp will clear the IFF_NOARP flag. Note that you can do the same with theiproute2 ip command: ip link set dev <deviceName> arp on and ip link set dev <deviceName> arp off.

· unsigned int priv_flags

The interface flags, which are invisible from userspace. For example, IFF_EBRIDGE for a bridge interface or IFF_BONDING for a bonding interface, or IFF_SUPP_NOFCS for an interface support sending custom FCS.

Helper methods:

· netif_supports_nofcs(): Returns true if the IFF_SUPP_NOFCS is set in the priv_flags of the specified device.

· is_vlan_dev(struct net_device *dev): Returns 1 if the IFF_802_1Q_VLAN flag is set in the priv_flags of the specified network device.

· unsigned short gflags

Global flags (kept as legacy).

· unsigned short padded

How much padding is added by the alloc_netdev() method.

· unsigned char operstate

RFC 2863 operstate.

· unsigned char link_mode

Mapping policy to operstate.

· unsigned int mtu

The network interface MTU (Maximum Transmission Unit) value. The maximum size of frame the device can handle. RFC 791 sets 68 as a minimum MTU. Each protocol has MTU of its own. The default MTU for Ethernet is 1,500 bytes. It is set in the ether_setup()method, net/ethernet/eth.c. Ethernet packets with sizes higher than 1,500 bytes, up to 9,000 bytes, are called Jumbo frames. The network interface MTU is exported by sysfs via /sys/class/net/<devName>/mtu.

Helper method:

· dev_set_mtu(struct net_device *dev, int new_mtu): Changes the MTU of the specified device to a new value, specified by the mtu parameter.

The sysadmin can change the MTU of a network interface to 1,400, for example, in one of the following ways:

ifconfig <netDevice> mtu 1400

ip link set <netDevice> mtu 1400

echo 1400 > /sys/class/net/<netDevice>/mtu

Many drivers implement the ndo_change_mtu() callback to change the MTU to perform driver-specific needed actions (like resetting the network card).

· unsigned short type

The network interface hardware type. For example, for Ethernet it is ARPHRD_ETHER and is set in ether_setup() in net/ethernet/eth.c. For PPP interface, it is ARPHRD_PPP, and is set in the ppp_setup() method indrivers/net/ppp/ppp_generic.c. The type is exported by sysfs via /sys/class/net/<devName>/type.

· unsigned short hard_header_len

The hardware header length. Ethernet headers, for example, consist of MAC source address, MAC destination address, and a type. The MAC source and destination addresses are 6 bytes each, and the type is 2 bytes. So the Ethernet header length is 14 bytes. The Ethernet header length is set to 14 (ETH_HLEN) in the ether_setup() method, net/ethernet/eth.c. The ether_setup() method is responsible for initializing some Ethernet device defaults, like the hard header len, Tx queue len, MTU, type, and more.

· unsigned char perm_addr[MAX_ADDR_LEN]

The permanent hardware address (MAC address) of the device.

· unsigned char addr_assign_type

Hardware address assignment type, can be one of the following:

· NET_ADDR_PERM

· NET_ADDR_RANDOM

· NET_ADDR_STOLEN

· NET_ADDR_SET

By default, the MAC address is permanent (NET_ADDR_PERM). If the MAC address was generated with a helper method named eth_hw_addr_random(), the type of the MAC address is NET_ADD_RANDOM. The type of the MAC address is stored in theaddr_assign_type member of the net_device. Also when changing the MAC address of the device, with eth_mac_addr(), you reset the addr_assign_type with∼NET_ADDR_RANDOM (if it was marked as NET_ADDR_RANDOM before). When a network device is registered (by the register_netdevice() method), if the addr_assign_type equals NET_ADDR_PERM, dev->perm_addr is set to be dev->dev_addr. When you set a MAC address, you set the addr_assign_typeto be NET_ADDR_SET. This indicates that the MAC address of a device has been set by the dev_set_mac_address() method. The addr_assign_type is exported by sysfs via /sys/class/net/<devName>/addr_assign_type.

· unsigned char addr_len

The hardware address length in octets. For Ethernet addresses, it is 6 (ETH_ALEN) bytes and is set in the ether_setup() method. The addr_len is exported by sysfs via /sys/class/net/<deviceName>/addr_len.

· unsigned char neigh_priv_len

Used in the neigh_alloc() method,net/core/neighbour.c; neigh_priv_len is initialized only in the ATM code (atm/clip.c).

· struct netdev_hw_addr_list uc

Unicast MAC addresses list, initialized by the dev_uc_init() method. There are three types of packets in Ethernet: unicast, multicast, and broadcast. Unicast is destined for one machine, multicast is destined for a group of machines, and broadcast is destined for all the machines in the LAN.

Helper methods:

· netdev_uc_empty(dev): Returns 1 if the unicast list of the specified device is empty (its count field is 0).

· dev_uc_flush(struct net_device *dev): Flushes the unicast addresses of the specified network device and zeroes count.

· struct netdev_hw_addr_list mc

Multicast MAC addresses list, initialized by the dev_mc_init() method.

Helper methods:

· netdev_mc_empty(dev): Returns 1 if the multicast list of the specified device is empty (its count field is 0).

· dev_mc_flush(struct net_device *dev): Flushes the multicast addresses of the specified network device and zeroes the count field.

· unsigned int promiscuity

A counter of the times a network interface card is told to work in promiscuous mode. With promiscuous mode, packets with MAC destination address which is different than the interface MAC address are not rejected. The promiscuity counter is used, for example, to enable more than one sniffing client; so when opening some sniffing clients (like wireshark), this counter is incremented by 1 for each client you open, and closing that client will decrement the promiscuity counter. When the last instance of the sniffing client is closed,promiscuity will be set to 0, and the device will exit from working in promiscuous mode. It is used also in the bridging subsystem, as the bridge interface needs to work in promiscuous mode. So when adding a bridge interface, the network interface card is set to work in promiscuous mode. See the call to the dev_set_promiscuity() method in br_add_if(), net/bridge/br_if.c.

Helper method:

· dev_set_promiscuity(struct net_device *dev, int inc): Increments/decrements the promiscuity counter of the specified network device according to the specified increment. The dev_set_promiscuity() method can get a positive increment or a negative increment parameter. As long as the promiscuity counter remains above zero, the interface remains in promiscuous mode. Once it reaches zero, the device reverts back to normal filtering operation. Because promiscuity is an integer, thedev_set_promiscuity() method takes into account cyclic overflow of integer, which means it handles the case when the promiscuity counter is incremented when it reaches the maximum positive value an unsigned integer can reach.

· unsigned int allmulti

The allmulti counter of the network device enables or disables the allmulticast mode. When selected, all multicast packets on the network will be received by the interface. You can set a network device to work in allmulticast mode by ifconfig eth0 allmulti. You disable the allmulti flag by ifconfig eth0 –allmulti.

Enabling/disabling the allmulticast mode can also be performed with the ip command:

ip link set p2p1 allmulticast on

ip link set p2p1 allmulticast off

You can also see the allmulticast state by inspecting the flags that are shown by the ip command:

ip addr show

flags=4610<BROADCAST,ALLMULTI,MULTICAST> mtu 1500

Helper method:

· dev_set_allmulti(struct net_device *dev, int inc): Increments/decrements the allmulti counter of the specified network device according to the specified increment (which can be a positive or a negative integer). The dev_set_allmulti()method also sets the IFF_ALLMULTI flag of the network device when setting the allmulticast mode and removes this flag when disabling the allmulticast mode.

The next three fields are protocol-specific pointers:

· struct in_device __rcu *ip_ptr

This pointer is assigned to a pointer to struct in_device, which represents IPv4 specific data, in inetdev_init(), net/ipv4/devinet.c.

· struct inet6_dev __rcu *ip6_ptr

This pointer is assigned to a pointer to struct inet6_dev, which represents IPv6 specific data, in ipv6_add_dev(), net/ipv6/addrconf.c.

· struct wireless_dev *ieee80211_ptr

This is a pointer for the wireless device, assigned in the ieee80211_if_add() method, net/mac80211/iface.c.

· unsigned long last_rx

Time of last Rx. It should not be set by network device drivers, unless really needed. Used, for example, in the bonding driver code.

· struct list_head dev_list

The global list of network devices. Insertion to the list is done with the list_netdevice() method, when the network device is registered. Removal from the list is done with the unlist_netdevice() method, when the network device is unregistered.

· struct list_head napi_list

NAPI stands for New API, a technique by which the network driver works in polling mode, and not in interrupt-driven mode, when it is under high traffic. Using NAPI under high traffic has been proven to improve performance. When working with NAPI, instead of getting an interrupt for each received packet, the network stack buffers the packets and from time to time triggers the poll method the driver registered with the netif_napi_add() method. When working with polling mode, the driver starts to work in interrupt-driven mode. When there is an interrupt for the first received packet, you reach the interrupt service routine (ISR), which is the method that was registered with request_irq(). Then the driver disables interrupts and notifies NAPI to take control, usually by calling the__napi_schedule() method from the ISR. See, for example, the cpsw_interrupt() method in drivers/net/ethernet/ti/cpsw.

When the traffic is low, the network driver switches to work in interrupt-driven mode. Nowadays, most network drivers work with NAPI. The napi_list object is the list of napi_struct objects; The netif_napi_add() method adds napi_struct objects to this list, and the netif_napi_del() method deletes napi_struct objects from this list. When calling the netif_napi_add() method, the driver should specify its polling method and a weight parameter. The weight is a limit on the number of packets the driver will pass to the stack in each polling cycle. It is recommended to use a weight of 64. If a driver attempts to call netif_napi_add() with weight higher than 64 (NAPI_POLL_WEIGHT), there is a kernel error message. NAPI_POLL_WEIGHT is defined ininclude/linux/netdevice.h.

The network driver should call napi_enable() to enable NAPI scheduling. Usually this is done in the ndo_open() callback of the net_device_ops object. The network driver should call napi_disable() to disable NAPI scheduling. Usually this is done in thendo_stop() callback of net_device_ops. NAPI is implemented using softirqs. This softirq handler is the net_rx_action() method and is registered by calling open_softirq(NET_RX_SOFTIRQ, net_rx_action) by the net_dev_init()method in net/core/dev.c. The net_rx_action() method invokes the poll method of the network driver which was registered with NAPI. The maximum number of packets (taken from all interfaces which are registered to polling) in one polling cycle (NAPI poll) is by default 300. It is the netdev_budget variable, defined in net/core/dev.c, and can be modified via a procfs entry, /proc/sys/net/core/netdev_budget. In the past, you could change the weight per device by writing values to a procfs entry, but currently, the /sys/class/net/<device>/weight sysfs entry is removed. See Documentation/sysctl/net.txt. I should also mention that the napi_complete() method removes a device from the polling list. When a network driver wants to return to work in interrupt-driven mode, it should call the napi_complete() method to remove itself from the polling list.

· struct list_head unreg_list

The list of unregistered network devices. Devices are added to this list when they are unregistered.

· unsigned char *dev_addr

The MAC address of the network interface. Sometimes you want to assign a random MAC address. You do that by calling the eth_hw_addr_random() method, which also sets the addr_assign_type to be NET_ADDR_RANDOM.

The dev_addr field is exported by sysfs via /sys/class/net/<devName>/address.

You can change dev_addr with userspace tools like ifconfig or ip of iproute2.

Helper methods: Many times you invoke the following helper methods on Ethernet addresses in general and on dev_addr field of a network device in particular:

· is_zero_ether_addr(const u8 *addr): Returns true if the address is all zeroes.

· is_multicast_ether_addr(const u8 *addr): Returns true if the address is a multicast address. By definition the broadcast address is also a multicast address.

· is_valid_ether_addr (const u8 *addr): Returns true if the specified MAC address is not 00:00:00:00:00:00, is not a multicast address, and is not a broadcast address (FF:FF:FF:FF:FF:FF).

· struct netdev_hw_addr_list dev_addrs

The list of device hardware addresses.

· unsigned char broadcast[MAX_ADDR_LEN]

The hardware broadcast address. For Ethernet devices, the broadcast address is initialized to 0XFFFFFF in the ether_setup() method, net/ethernet/eth.c. The broadcast address is exported by sysfs via /sys/class/net/<devName>/broadcast.

· struct kset *queues_kset

A kset is a group of kobjects of a specific type, belonging to a specific subsystem.

The kobject structure is the basic type of the device model. A Tx queue is represented by struct netdev_queue, and the Rx queue is represented by struct netdev_rx_queue. Each of them holds a kobject pointer. The queues_kset object is a group of allkobjects of the Tx queues and Rx queues. Each Rx queue has the sysfs entry /sys/class/net/<deviceName>/queues/<rx-queueNumber>, and each Tx queue has the sysfs entry /sys/class/net/<deviceName>/queues/<tx-queueNumber>. These entries are added with the rx_queue_add_kobject() method and the netdev_queue_add_kobject() method respectively, in net/core/net-sysfs.c. For more information about the kobject and the device model, seeDocumentation/kobject.txt.

· struct netdev_rx_queue *_rx

An array of Rx queues (netdev_rx_queue objects), initialized by the netif_alloc_rx_queues() method. The Rx queue to be used is determined in the get_rps_cpu() method. See more info about RPS in the description of the rxhash field in the previoussk_buff section.

· unsigned int num_rx_queues

The number of Rx queues allocated in the register_netdev() method.

· unsigned int real_num_rx_queues

Number of Rx queues currently active in the device.

Helper method:

· netif_set_real_num_rx_queues (struct net_device *dev, unsigned int rxq): Sets the actual number of Rx queues used for the specified device according to the specified number of Rx queues. The relevant sysfs entries (/sys/class/net/<devName>/queues/*) are updated (only in the case that the state of the device is NETREG_REGISTERED or NETREG_UNREGISTERING). Note that alloc_netdev_mq() initializes num_rx_queues,real_num_rx_queues, num_tx_queues and real_num_tx_queues to the same value. One can set the number of Tx queues and Rx queues by using ip link when adding a device. For example, if you want to create a VLAN device with 6 Tx queues and 7 Rx queues, you can run this command:

· ip link add link p2p1 name p2p1.1 numtxqueues 6 numrxqueues 7 type vlan id 8

· rx_handler_func_t __rcu *rx_handler

Helper methods:

· netdev_rx_handler_register(struct net_device *dev, rx_handler_func_t *rx_handler void *rx_handler_data)

The rx_handler callback is set by calling the netdev_rx_handler_register() method. It is used, for example, in bonding, team, openvswitch, macvlan, and bridge devices.

· netdev_rx_handler_unregister(struct net_device *dev): Unregisters a receive handler for the specified network device.

· void __rcu *rx_handler_data

The rx_handler_data field is also set by the netdev_rx_handler_register() method when a non-NULL value is passed to the netdev_rx_handler_register() method.

· struct netdev_queue __rcu *ingress_queue

Helper method:

· struct netdev_queue *dev_ingress_queue(struct net_device *dev): Returns the ingress_queue of the specified net_device (include/linux/rtnetlink.h).

· struct netdev_queue *_tx

An array of Tx queues (netdev_queue objects), initialized by the netif_alloc_netdev_queues() method.

Helper method:

· netdev_get_tx_queue(const struct net_device *dev,unsigned int index): Returns the Tx queue (netdev_queue object), an element of the _tx array of the specified network device at the specified index.

· unsigned int num_tx_queues

Number of Tx queues, allocated by the alloc_netdev_mq() method.

· unsigned int real_num_tx_queues

Number of Tx queues currently active in the device.

Helper method:

· netif_set_real_num_tx_queues(struct net_device *dev, unsigned int txq): Sets the actual number of Tx queues used.

· struct Qdisc *qdisc

Each device maintains a queue of packets to be transmitted named qdisc. The Qdisc (Queuing Disciplines) layer implements the Linux kernel traffic management. The default qdisc is pfifo_fast. You can set a different qdisc using tc, the traffic control tool of theiproute2 package. You can view the qdisc of your network device by the using the ip command:

ip addr show <deviceName>

For example, running

ip addr show eth1

can give:

2: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:e0:4c:53:44:58 brd ff:ff:ff:ff:ff:ff

inet 192.168.2.200/24 brd 192.168.2.255 scope global eth1

inet6 fe80::2e0:4cff:fe53:4458/64 scope link

valid_lft forever preferred_lft forever

In this example, you can see that a qdisc of pfifo_fast is used, which is the default.

· unsigned long tx_queue_len

The maximum number of allowed packets per queue. Each hardware layer has its own tx_queue_len default. For Ethernet devices, tx_queue_len is set to 1,000 by default (see the ether_setup() method). For FDDI, tx_queue_len is set to 100 by default (see the fddi_setup() method in net/802/fddi.c).

The tx_queue_len field is set to 0 for virtual devices, such as the VLAN device, because the actual transmission of packets is done by the real device on which these virtual devices are based. You can set the Tx queue length of a device by using the commandifconfig (this option is called txqueuelen) or by using the command ip link show (it is called qlen), in this way, for example:

ifconfig p2p1 txqueuelen 900

ip link set txqueuelen 950 dev p2p1

The Tx queue length is exported via the following sysfs entry: /sys/class/net/<deviceName>/tx_queue_len.

· unsigned long trans_start

The time (in jiffies) of the last transmission.

· int watchdog_timeo

The watchdog is a timer that will invoke a callback when the network interface was idle and did not perform transmission in some specified timeout interval. Usually the driver defines a watchdog callback which will reset the network interface in such a case. Thendo_tx_timeout() callback of net_device_ops serves as the watchdog callback. The watchdog_timeo field represents the timeout that is used by the watchdog. See the dev_watchdog() method, net/sched/sch_generic.c.

· int __percpu *pcpu_refcnt

Per CPU network device reference counter.

Helper methods:

· dev_put(struct net_device *dev): Decrements the reference count.

· dev_hold(struct net_device *dev): Increments the reference count.

· struct hlist_node index_hlist

This is a hash table of network devices, indexed by the network device index (the ifindex field). A lookup in this table is performed by the dev_get_by_index() method. Insertion into this table is performed by the list_netdevice() method, and removal from this list is done with the unlist_netdevice() method.

· enum {...} reg_state

An enum that represents the various registration states of the network device.

Possible values:

· NETREG_UNINITIALIZED: When the device memory is allocated, in the alloc_netdev_mqs() method.

· NETREG_REGISTERED: When the net_device is registered, in the register_netdevice() method.

· NETREG_UNREGISTERING: When unregistering a device, in the rollback_registered_many() method.

· NETREG_UNREGISTERED: The network device is unregistered but it is not freed yet.

· NETREG_RELEASED: The network device is in the last stage of freeing the allocated memory of the network device, in the free_netdev() method.

· NETREG_DUMMY: Used in the dummy device, in the init_dummy_netdev() method. See drivers/net/dummy.c.

· bool dismantle

A Boolean flag that shows that the device is in dismantle phase, which means that it is going to be freed.

· enum {...} rtnl_link_state

This is an enum that can have two values that represent the two phases of creating a new link:

· RTNL_LINK_INITIALIZE: The ongoing state, when creating the link is still not finished.

· RTNL_LINK_INITIALIZING: The final state, when work is finished.

See the rtnl_newlink() method in net/core/rtnetlink.c.

· void (*destructor)(struct net_device *dev)

This destructor callback is called when unregistering a network device, in the netdev_run_todo() method. It enables network devices to perform additional tasks that need to be done for unregistering. For example, the loopback device destructor callback,loopback_dev_free(), calls free_percpu() for freeing its statistics object and free_netdev(). Likewise the team device destructor callback, team_destructor(), also calls free_percpu() for freeing its statistics object and free_netdev(). And there are many other network device drivers that define a destructor callback.

· struct net *nd_net

The network namespace this network device is inside. Network namespaces support was added in the 2.6.29 kernel. These features provide process virtualization, which is considered lightweight in comparison to other virtualization solutions like KVM and Xen. There is currently support for six namespaces in the Linux kernel. In order to support network namespaces, a structure called net was added. This structure represents a network namespace. The process descriptor (task_struct) handles the network namespace and other namespaces via a new member which was added for namespaces support, named nsproxy. This nsproxy includes a network namespace object called net_ns, and also four other namespace objects of the following namespaces: pid namespace, mount namespace, uts namespace, and ipc namespace; the sixth namespace, the user namespace, is kept in struct cred (the credentials object) which is a member of the process descriptor, task_struct).

Network namespaces provide a partitioning and isolation mechanism which enables one process or a group of processes to have a private view of a full network stack of their own. By default, after boot all network interfaces belong to the default network namespace,init_net. You can create a network namespace with userspace tools using the ip command from iproute2 package or with the unshare command of util-linux—or by writing your own userspace application and invoking the unshare() or the clone()system calls with the CLONE_NEWNET flag. Moreover, you can also change the network namespace of a process by invoking the setns() system call. This setns() system call and the unshare() system call were added specially to support namespaces. Thesetns() system call can attach to the calling process an existing namespace of any type (network namespace, pid namespace, mount namespace, and so on). You need CAP_SYS_ADMIN privilege to call set_ns() for all namespaces, except the user namespace. Seeman 2 setns.

A network device belongs to exactly one network namespace at a given moment. And a network socket belongs to exactly one network namespace at a given moment. Namespaces do not have names, but they do have a unique inode which identifies them. This unique inode is generated when the namespace is created and can be read by reading a procfs entry (the command ls –al /proc/<pid>/ns/ shows all the unique inode numbers symbolic links of a process—you can also read these symbolic links with the readlinkcommand).

For example, using the ip command, creating a new namespace called ns1 is done thus:

ip netns add myns1

Each newly created network namespace includes only the loopback device and includes no sockets. Each device (like a bridge device or a VLAN device) that is created from a process that runs in that namespace (like a shell) belongs to that namespace.

Removing a namespace is done using the following command:

ip netns del myns1

![]() Note After deleting a namespace, all its physical network devices are moved to the default network namespace. Local devices (namespace local devices that have the NETIF_F_NETNS_LOCAL flag set, like PPP device or VXLAN device) are not moved to the default network namespace but are deleted.

Note After deleting a namespace, all its physical network devices are moved to the default network namespace. Local devices (namespace local devices that have the NETIF_F_NETNS_LOCAL flag set, like PPP device or VXLAN device) are not moved to the default network namespace but are deleted.

Showing the list of all network namespaces on the system is done with this command:

ip netns list

Assigning the p2p1 interface to the myns1 network namespace is done by the command:

ip link set p2p1 netns myns1

Opening a shell in myns1 is done thus:

ip netns exec myns1 bash

With the unshare utility, creating a new namespace and starting a bash shell inside is done thus:

unshare --net bash

Two network namespaces can communicate by using a special virtual Ethernet driver, veth. (drivers/net/veth.c).

Helper methods:

· dev_change_net_namespace(struct net_device *dev, struct net *net, const char *pat): Moves the network device to a different network namespace, specified by the net parameter. Local devices (devices in which the NETIF_F_NETNS_LOCAL feature is set) are not allowed to change their namespace. This method returns -EINVAL for this type of device. The pat parameter, when it is not NULL, is the name pattern to try if the current device name is already taken in the destination network namespace. The method also sends a KOBJ_REMOVE uevent for removing the old namespace entries from sysfs, and a KOBJ_ADD uevent to add the sysfs entries to the new namespace. This is done by invoking thekobject_uevent() method specifying the corresponding uevent.

· dev_net(const struct net_device *dev): Returns the network namespace of the specified network device.

· dev_net_set(struct net_device *dev, struct net *net): Decrements the reference count of the nd_net (namespace object) of the specified device and assigns the specified network namespace to it.

The following four fields are members in a union:

· struct pcpu_lstats __percpu *lstats

The loopback network device statistics.

· struct pcpu_tstats __percpu *tstats

The tunnel statistics.

· struct pcpu_dstats __percpu *dstats

The dummy network device statistics.

· struct pcpu_vstats __percpu *vstats

The VETH (Virtual Ethernet) statistics.

· struct device dev