A practical guide to Fedora and Red Hat Enterprise Linux, 7th Edition (2014)

Part V: Programming Tools

Chapter 27 Programming the Bourne Again Shell (bash)

Chapter 28 The Python Programming Language

Chapter 29 The MariaDB SQL Database Management System

Chapter 27. Programming the Bourne Again Shell (bash)

In This Chapter

Control Structures

File Descriptors

Positional Parameters

Special Parameters

Variables

Environment, Environment Variables, and Inheritance

Array Variables

Builtin Commands

Expressions

Shell Programs

A Recursive Shell Script

The quiz Shell Script

Objectives

After reading this chapter you should be able to:

Use control structures to implement decision making and repetition in shell scripts

Handle input to and output from scripts

Use shell variables (local) and environment variables (global)

Evaluate the value of numeric variables

Use bash builtin commands to call other scripts inline, trap signals, and kill processes

Use arithmetic and logical expressions

List standard programming practices that result in well-written scripts

Chapter 5 introduced the shells and Chapter 9 went into detail about the Bourne Again Shell. This chapter introduces additional Bourne Again Shell commands, builtins, and concepts that carry shell programming to a point where it can be useful. Although you might make use of shell programming as a system administrator, you do not have to read this chapter to perform system administration tasks. Feel free to skip this chapter and come back to it if and when you like.

The first part of this chapter covers programming control structures, also called control flow constructs. These structures allow you to write scripts that can loop over command-line arguments, make decisions based on the value of a variable, set up menus, and more. The Bourne Again Shell provides many of the same kinds of constructs found in programming languages such as C.

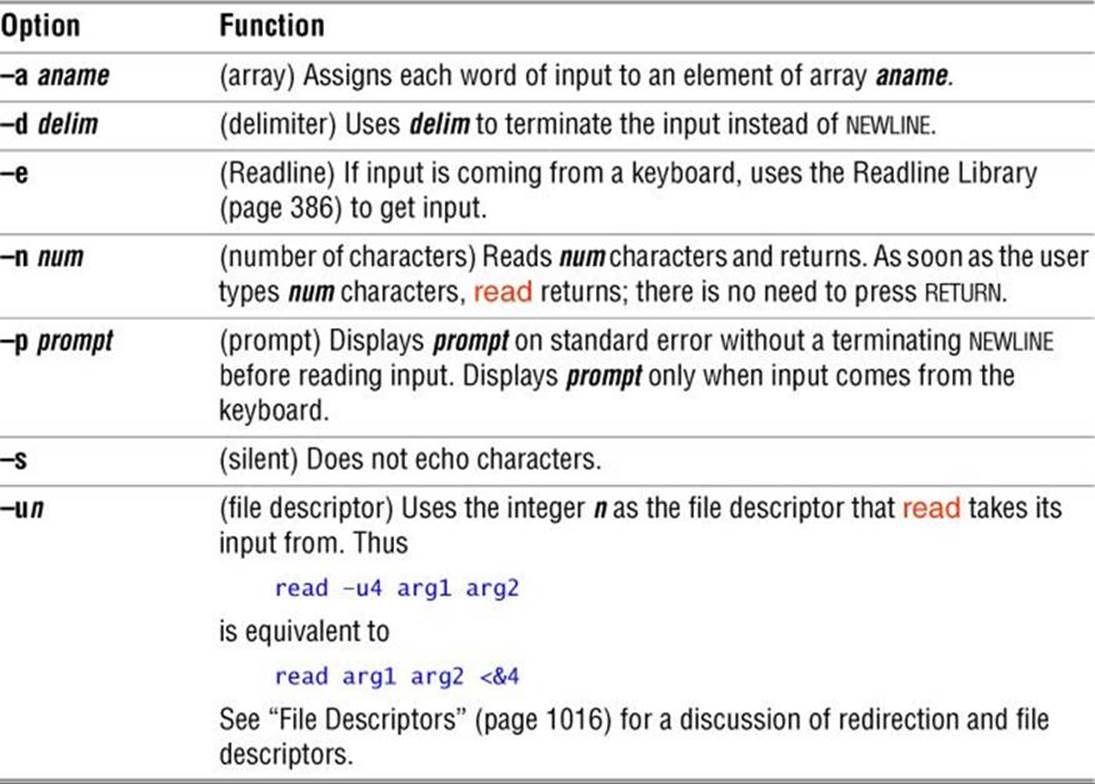

The next part of this chapter discusses parameters and variables, going into detail about array variables, shell versus environment variables, special parameters, and positional parameters. The exploration of builtin commands covers type, which displays information about a command, and read, which allows a shell script to accept user input. The section on the exec builtin demonstrates how to use exec to execute a command efficiently by replacing a process and explains how to use exec to redirect input and output from within a script.

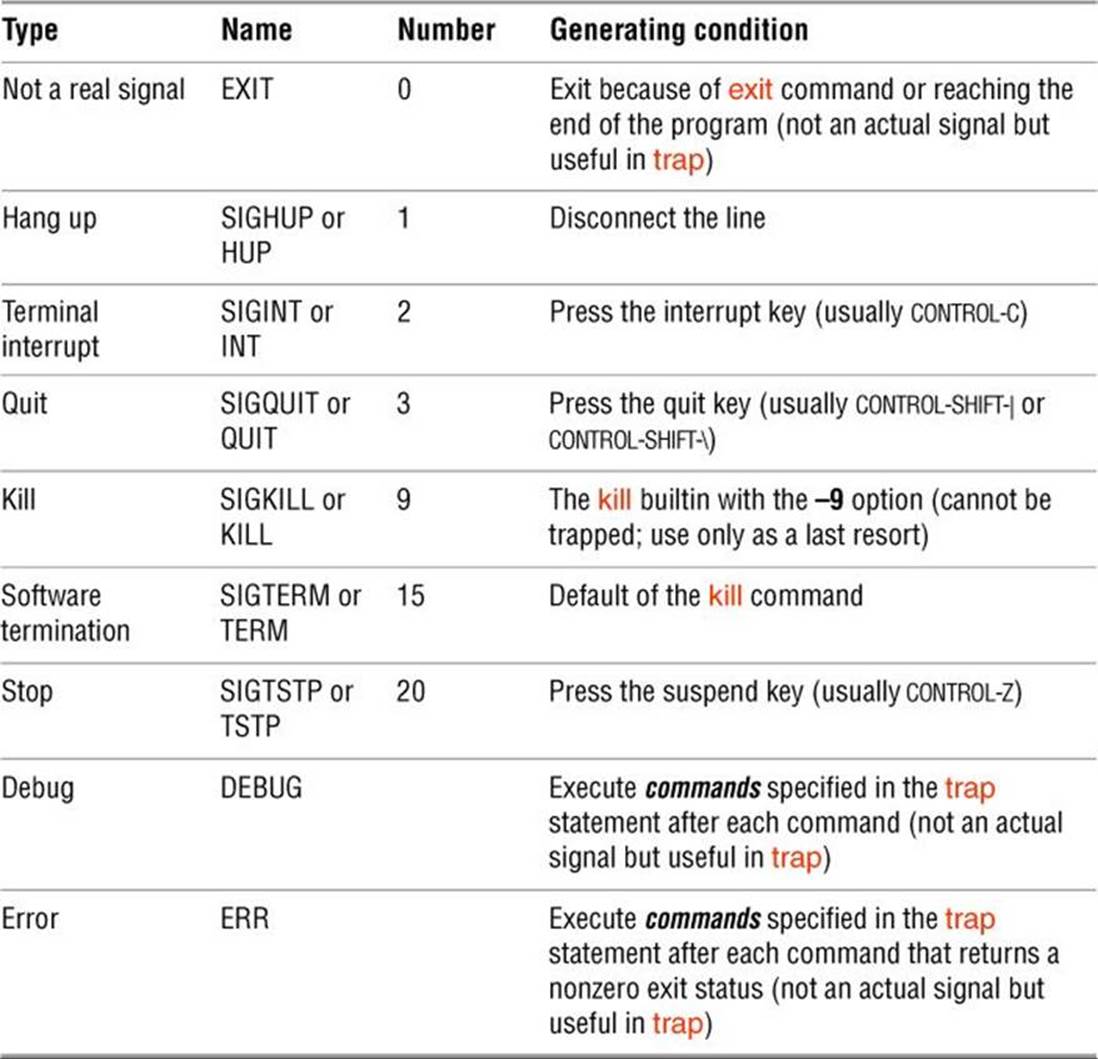

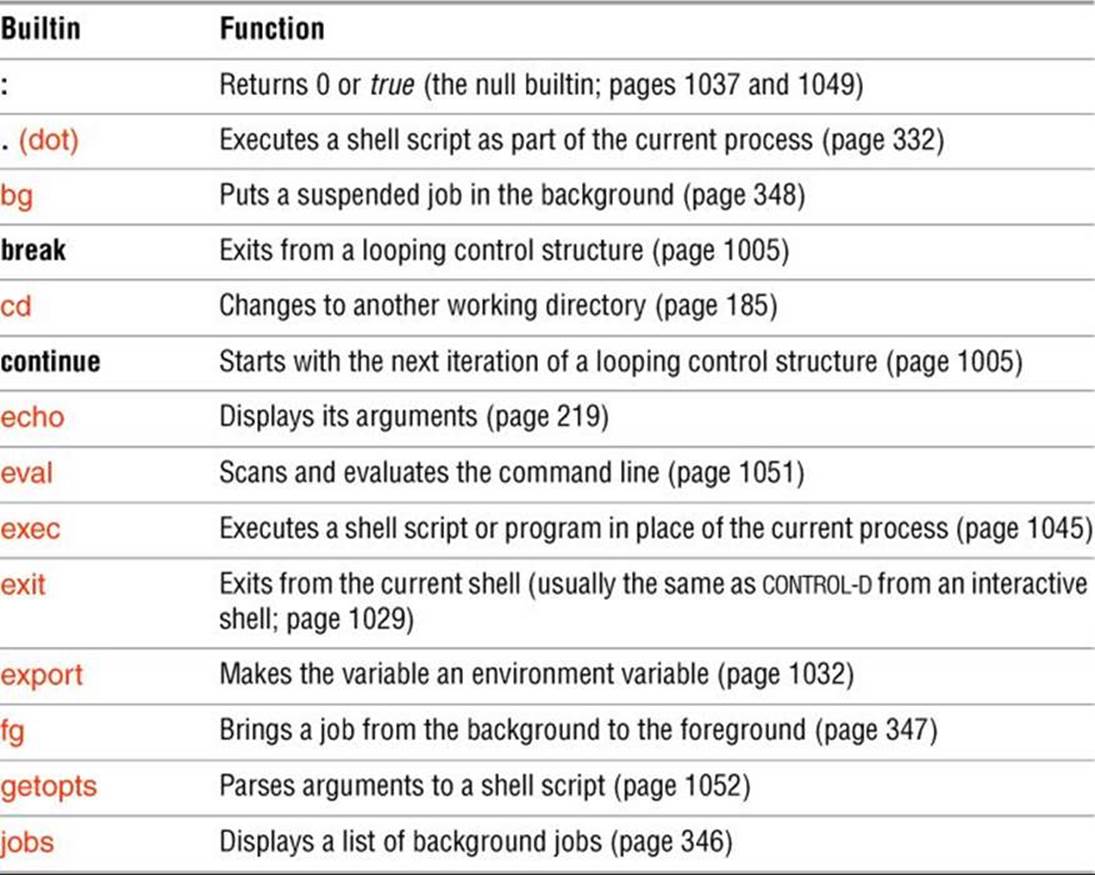

The next section covers the trap builtin, which provides a way to detect and respond to operating system signals (such as the signal generated when you press CONTROL-C). The discussion of builtins concludes with kill, which can abort a process, and getopts, which makes it easy to parse options for a shell script. Table 27-6 on page 1055 lists some of the more commonly used builtins.

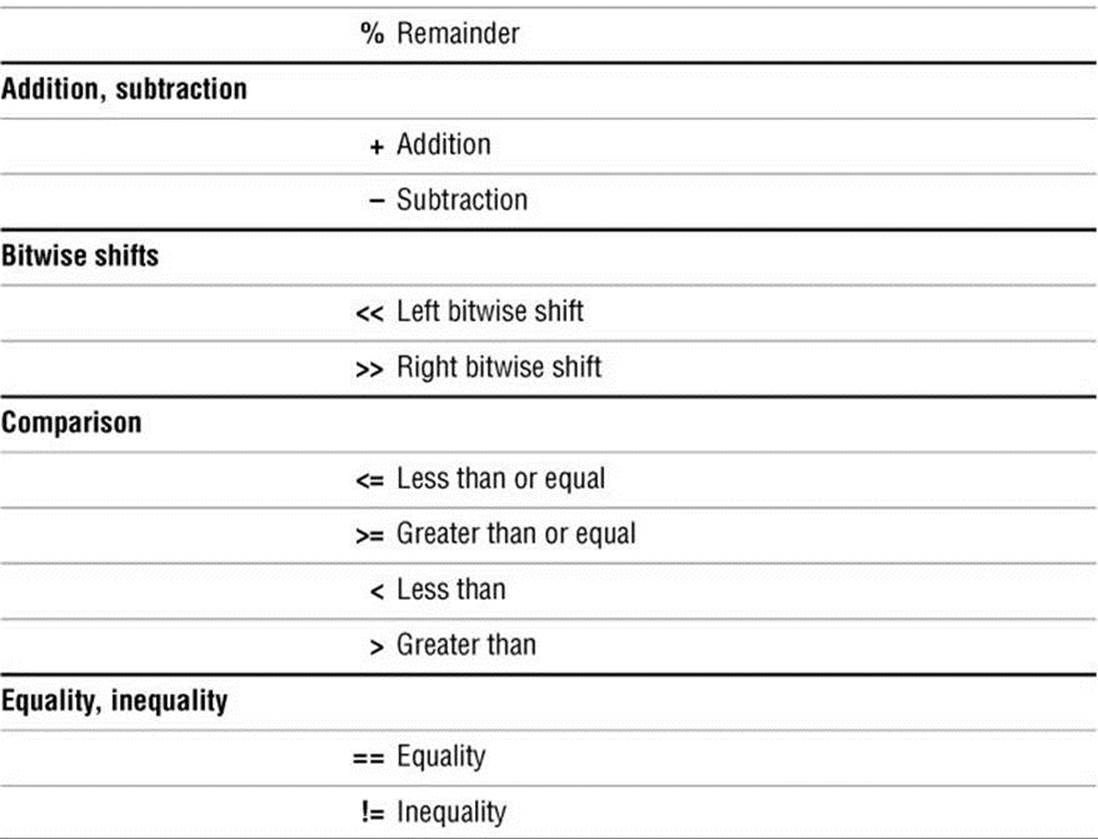

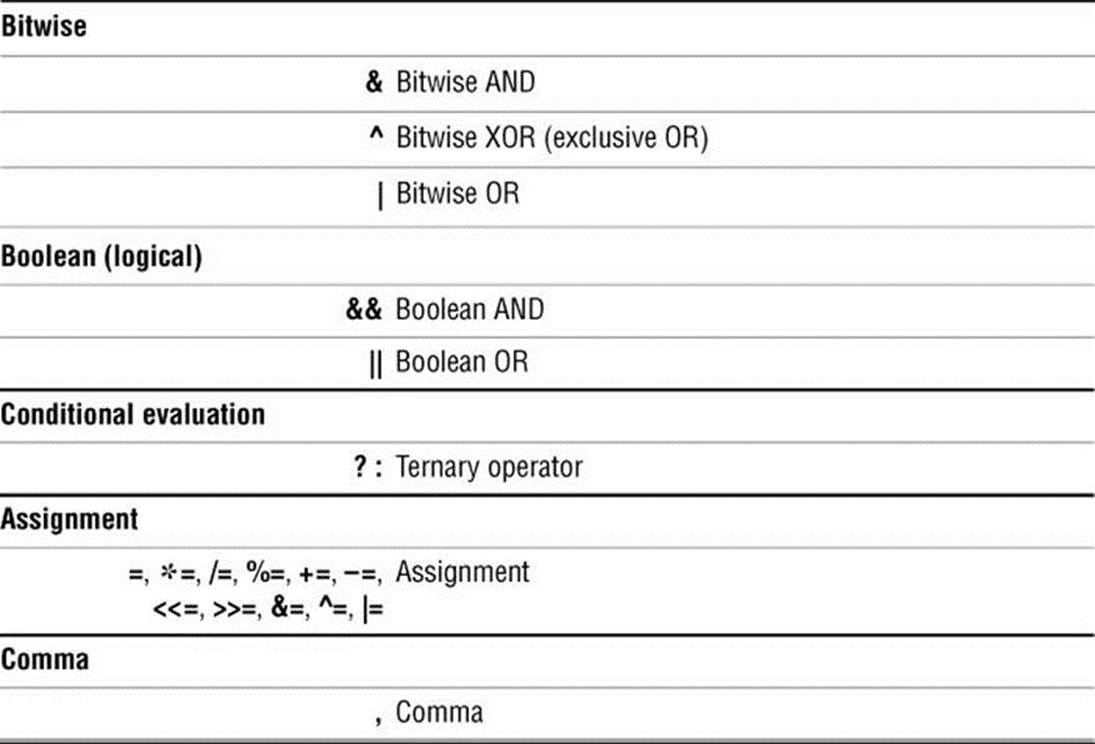

Next the chapter examines arithmetic and logical expressions as well as the operators that work with them. The final section walks through the design and implementation of two major shell scripts.

This chapter contains many examples of shell programs. Although they illustrate certain concepts, most use information from earlier examples as well. This overlap not only reinforces your overall knowledge of shell programming but also demonstrates how you can combine commands to solve complex tasks. Running, modifying, and experimenting with the examples in this book is a good way to become comfortable with the underlying concepts.

Tip: Do not name a shell script test

You can unwittingly create a problem if you name a shell script test because a bash builtin has the same name. Depending on how you call your script, you might run either your script or the builtin, leading to confusing results.

Control Structures

The control flow commands alter the order of execution of commands within a shell script. Control structures include the if...then, for...in, while, until, and case statements. In addition, the break and continue statements work in conjunction with the control structures to alter the order of execution of commands within a script.

Getting help with control structures

You can use the bash help command to display information about bash control structures. See page 134 for more information.

if...then

The if...then control structure has the following syntax:

if test-command

then

commands

fi

In the syntax descriptions in this chapter, placeholders such as test-command and commands stand for the items you supply to cause the structure to have the desired effect. The other words, such as if, then, and fi, are the keywords the shell uses to identify the control structure.

test builtin

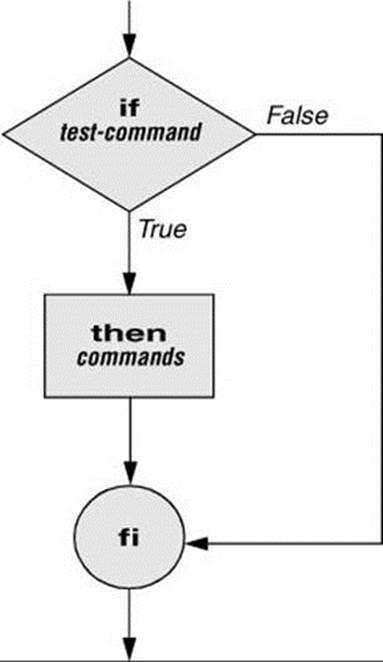

Figure 27-1 shows that the if statement tests the status returned by the test-command and transfers control based on this status. The end of the if structure is marked by a fi statement (if spelled backward). The following script prompts for two words, reads them, and then uses an if structure to execute commands based on the result returned by the test builtin when it compares the two words. (See the test info page for information on the test utility, which is similar to the test builtin.) The test builtin returns a status of true if the two words are the same and false if they are not. Double quotation marks around $word1 and $word2 make sure test works properly if you enter a string that contains a SPACE or other special character.

$ cat if1

read -p "word 1: " word1

read -p "word 2: " word2

if test "$word1" = "$word2"

then

echo "Match"

fi

echo "End of program."

$ ./if1

word 1: peach

word 2: peach

Match

End of program.

Figure 27-1 An if...then flowchart

In the preceding example the test-command is test "$word1" = "$word2". The test builtin returns a true status if its first and third arguments have the relationship specified by its second argument. If this command returns a true status (0), the shell executes the commands between the then and fi statements. If the command returns a false status (any nonzero value), the shell passes control to the statement following fi without executing the statements between then and fi. The effect of this if statement is to display Match if the two words are the same. The script always displays End of program.
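Because if examines only the exit status of the test-command, any command can take the place of test. The following sketch wraps this idea in a function; status_word is a hypothetical name used for illustration, and the else branch relies on bash leaving the test-command's status in $?.

```bash
# status_word (hypothetical) runs its arguments as a command and reports
# whether if treated the resulting exit status as true or false.
status_word() {
    if "$@"
    then
        echo "true (status 0)"
    else
        echo "false (status $?)"     # $? still holds the test-command's status
    fi
}

status_word test peach = peach       # true (status 0)
status_word test peach = pear        # false (status 1)
status_word grep -q root /dev/null   # false (status 1): grep found no match
```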

Builtins

In the Bourne Again Shell, test is a builtin—part of the shell. It is also a stand-alone utility kept in /usr/bin/test. This chapter discusses and demonstrates many Bourne Again Shell builtins. The shell will use the builtin version if it is available and the utility if it is not. Each version of a command might vary slightly from one shell to the next and from the utility to any of the shell builtins. See page 1040 for more information on shell builtins.
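The type builtin reports which version of a name bash will run, which is also a quick way to see the problem described in the earlier tip about naming a script test. A minimal sketch:

```bash
type -t test     # builtin: bash runs its own test, not the utility
type -t if       # keyword: if is a reserved word, part of the shell's syntax
type -a test     # every match in search order; the first line is
                 # "test is a shell builtin", later pathnames vary by system
```

Calling a script by a pathname that contains a slash, such as ./test, always runs that file rather than the builtin.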

Checking arguments

The next program uses an if structure at the beginning of a script to confirm that you have supplied at least one argument on the command line. The test –eq criterion compares two integers; the shell expands the $# special parameter (page 1027) to the number of command-line arguments. This structure displays a message and exits from the script with an exit status of 1 if you do not supply at least one argument.

$ cat chkargs

if test $# -eq 0

then

echo "You must supply at least one argument."

exit 1

fi

echo "Program running."

$ ./chkargs

You must supply at least one argument.

$ ./chkargs abc

Program running.

A test like the one shown in chkargs is a key component of any script that requires arguments. To prevent the user from receiving meaningless or confusing information from the script, the script needs to check whether the user has supplied the appropriate arguments. Some scripts simply test whether arguments exist (as in chkargs); other scripts test for a specific number or specific kinds of arguments.

You can use test to verify the status of a file argument or the relationship between two file arguments. After verifying that at least one argument has been given on the command line, the following script tests whether the argument is the name of an ordinary file (not a directory or other type of file). The test builtin with the –f criterion and the first command-line argument ($1) checks the file.

$ cat is_ordfile

if test $# -eq 0

then

echo "You must supply at least one argument."

exit 1

fi

if test -f "$1"

then

echo "$1 is an ordinary file."

else

echo "$1 is NOT an ordinary file."

fi

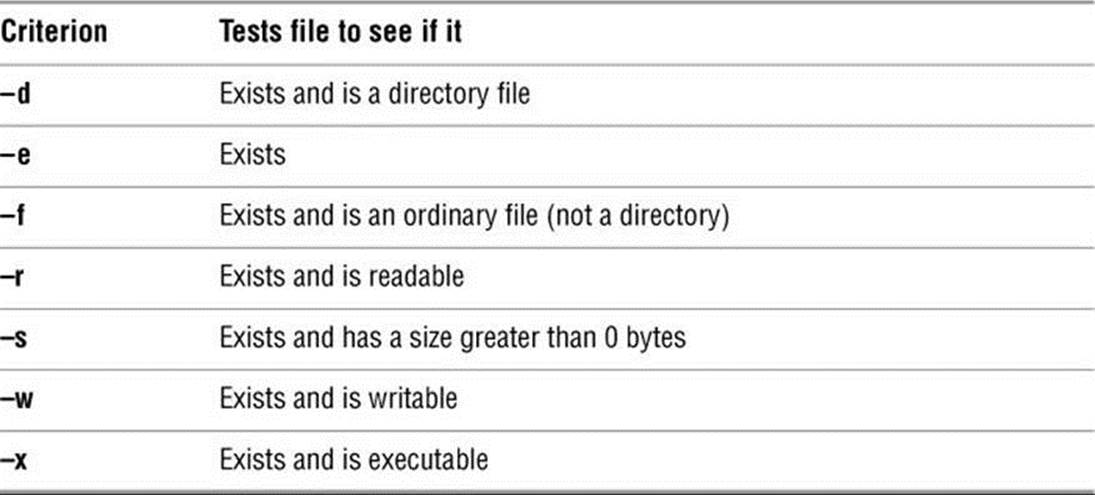

You can test many other characteristics of a file using test criteria; see Table 27-1.

Table 27-1 test builtin criteria

Other test criteria provide ways to test relationships between two files, such as whether one file is newer than another. Refer to examples later in this chapter for more information.
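The following sketch tries several commonly used single-file criteria and one two-file criterion that compares modification times. The function names describe_file and newer are hypothetical.

```bash
# describe_file (hypothetical) applies several single-file test criteria.
describe_file() {
    local f=$1
    if [ ! -e "$f" ]; then                  # -e: file exists (any type)
        echo "$f: does not exist"
        return 1
    fi
    if [ -f "$f" ]; then echo "$f: ordinary file"; fi
    if [ -d "$f" ]; then echo "$f: directory"; fi
    if [ -r "$f" ]; then echo "$f: readable"; fi
    if [ -s "$f" ]; then echo "$f: size greater than zero"; fi
    return 0
}

# newer (hypothetical) succeeds if file $1 was modified more recently than file $2.
newer() {
    [ "$1" -nt "$2" ]
}
```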

Tip: Always test the arguments

To keep the examples in this book short and focused on specific concepts, the code to verify arguments is often omitted or abbreviated. It is good practice to test arguments in shell programs that other people will use. Doing so results in scripts that are easier to debug, run, and maintain.

[] is a synonym for test

The following example—another version of chkargs—checks for arguments in a way that is more traditional for Linux shell scripts. This example uses the bracket ([]) synonym for test. Rather than using the word test in scripts, you can surround the arguments to test with brackets. The brackets must be surrounded by whitespace (SPACEs or TABs).

$ cat chkargs2

if [ $# -eq 0 ]

then

echo "Usage: chkargs2 argument..." 1>&2

exit 1

fi

echo "Program running."

exit 0

$ ./chkargs2

Usage: chkargs2 argument...

$ ./chkargs2 abc

Program running.

Usage messages

The error message that chkargs2 displays is called a usage message and uses the 1>&2 notation to redirect its output to standard error (page 335). After issuing the usage message, chkargs2 exits with an exit status of 1, indicating an error has occurred. The exit 0 command at the end of the script causes chkargs2 to exit with a 0 status after the program runs without an error. The Bourne Again Shell returns the exit status of the last command the script ran if you omit the status code.
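You can watch these exit statuses from an interactive shell by recasting chkargs2 as a function. This is a sketch; chkargs_fn is a hypothetical name, and a function uses return where a script uses exit so that a failed check does not terminate the shell that called it.

```bash
# chkargs_fn is a hypothetical function version of chkargs2.
chkargs_fn() {
    if [ $# -eq 0 ]
    then
        echo "Usage: chkargs2 argument..." 1>&2    # usage message goes to standard error
        return 1                                    # nonzero status: an error occurred
    fi
    echo "Program running."
    return 0
}

chkargs_fn abc; echo "status: $?"              # Program running. then status: 0
chkargs_fn 2>/dev/null || echo "status: $?"    # status: 1
```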

The usage message is commonly used to specify the type and number of arguments the script requires. Many Linux utilities provide usage messages similar to the one in chkargs2. If you call a utility or other program with the wrong number or wrong kind of arguments, it will often display a usage message. Following is the usage message that cp displays when you call it with only one argument:

$ cp a

cp: missing destination file operand after 'a'

Try 'cp --help' for more information.

if...then...else

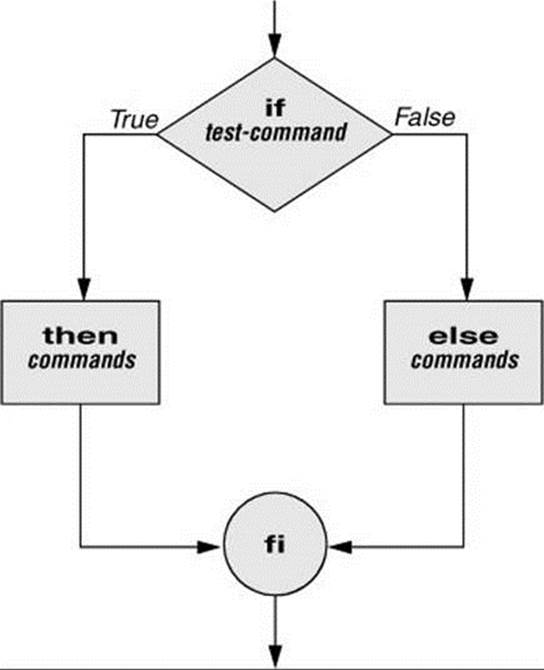

The introduction of an else statement turns the if structure into the two-way branch shown in Figure 27-2. The if...then...else control structure has the following syntax:

if test-command

then

commands

else

commands

fi

Figure 27-2 An if...then...else flowchart

Because a semicolon (;) ends a command just as a NEWLINE does, you can place then on the same line as if by preceding it with a semicolon. (Because if and then are separate shell keywords, they require a control operator between them; a semicolon and NEWLINE work equally well [page 341].) Some people prefer this notation for aesthetic reasons; others like it because it saves space.

if test-command; then

commands

else

commands

fi

If the test-command returns a true status, the if structure executes the commands between the then and else statements and then diverts control to the statement following fi. If the test-command returns a false status, the if structure executes the commands following the else statement.

When you run the out script with arguments that are filenames, it displays the files on the terminal. If the first argument is –v (called an option in this case), out uses less (page 220) to display the files one screen at a time. After determining that it was called with at least one argument, out tests its first argument to see whether it is –v. If the result of the test is true (the first argument is –v), out uses the shift builtin (page 1025) to shift the arguments to get rid of the –v and displays the files using less. If the result of the test is false (the first argument is not –v), the script uses cat to display the files.

$ cat out

if [ $# -eq 0 ]

then

echo "Usage: $0 [-v] filenames..." 1>&2

exit 1

fi

if [ "$1" = "-v" ]

then

shift

less -- "$@"

else

cat -- "$@"

fi

Optional

In out, the –– argument to cat and less tells these utilities that no more options follow on the command line and not to consider leading hyphens (–) in the following list as indicating options. Thus –– allows you to view a file whose name starts with a hyphen (page 146). Although not common, filenames beginning with a hyphen do occasionally occur. (You can create such a file by using the command cat > –fname.) The –– argument works with Linux utilities that parse their options using the standard getopt conventions, and with shell scripts that use the getopts builtin (page 1052); it does not work with more and a few other utilities. This argument is particularly useful when used in conjunction with rm to remove a file whose name starts with a hyphen (rm –– –fname), including any you create while experimenting with the –– argument.
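The following sketch creates, displays, and removes a file whose name begins with a hyphen. It works inside a scratch directory made by mktemp so it cannot touch your own files; dash_demo is a hypothetical name.

```bash
# dash_demo (hypothetical) works in a temporary directory so no real files are touched.
dash_demo() {
    local dir
    dir=$(mktemp -d) || return 1
    (
        cd "$dir" || exit 1
        echo "hello" > -fname    # the shell opens -fname; no utility parses it as an option
        cat -- -fname            # -- marks the end of options, so -fname is a filename
        rm -- -fname             # rm ./-fname works too
    )
    rmdir "$dir"
}

dash_demo    # hello
```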

if...then...elif

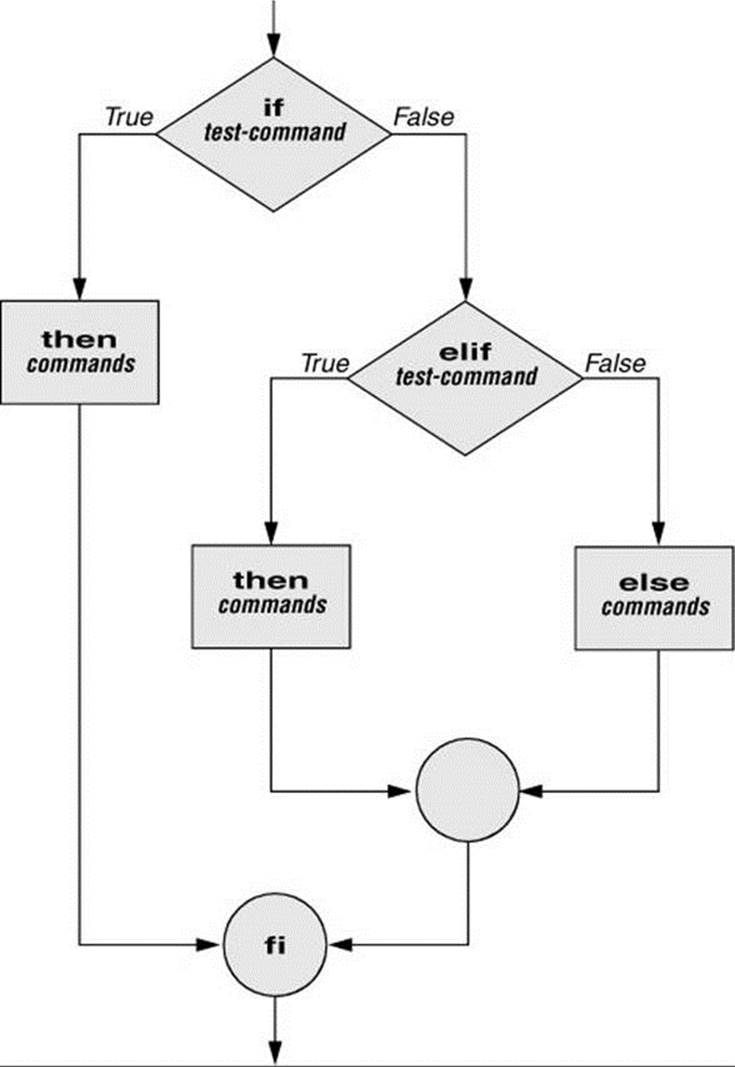

The if...then...elif control structure (Figure 27-3) has the following syntax:

if test-command

then

commands

elif test-command

then

commands

. . .

else

commands

fi

Figure 27-3 An if...then...elif flowchart

The elif statement combines the else statement and the if statement and enables you to construct a nested set of if...then...else structures (Figure 27-3). The difference between the else statement and the elif statement is that an if statement nested after an else statement must be closed by its own fi statement, whereas a series of elif statements requires only a single closing fi statement.

The following example shows an if...then...elif control structure. This shell script compares three words the user enters. The first if statement uses the Boolean AND operator (–a) as an argument to test. The test builtin returns a true status if the first and second logical comparisons are true (that is, word1 matches word2 and word2 matches word3). If test returns a true status, the script executes the command following the next then statement, passes control to the statement following fi, and terminates.

$ cat if3

read -p "word 1: " word1

read -p "word 2: " word2

read -p "word 3: " word3

if [ "$word1" = "$word2" -a "$word2" = "$word3" ]

then

echo "Match: words 1, 2, & 3"

elif [ "$word1" = "$word2" ]

then

echo "Match: words 1 & 2"

elif [ "$word1" = "$word3" ]

then

echo "Match: words 1 & 3"

elif [ "$word2" = "$word3" ]

then

echo "Match: words 2 & 3"

else

echo "No match"

fi

$ ./if3

word 1: apple

word 2: orange

word 3: pear

No match

$ ./if3

word 1: apple

word 2: orange

word 3: apple

Match: words 1 & 3

$ ./if3

word 1: apple

word 2: apple

word 3: apple

Match: words 1, 2, & 3

If the three words are not the same, the structure passes control to the first elif, which begins a series of tests to see if any pair of words is the same. As the nesting continues, if any one of the elif statements is satisfied, the structure passes control to the next then statement and subsequently to the statement following fi. Each time an elif statement is not satisfied, the structure passes control to the next elif statement. The double quotation marks around the arguments to echo that contain ampersands (&) prevent the shell from interpreting the ampersands as special characters.
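POSIX marks the –a and –o operators to test as obsolescent. An alternative is to join two separate test commands with the && control operator, which runs the second test only if the first succeeds. The following sketch reworks if3 this way; compare_words is a hypothetical function that takes the three words as arguments instead of reading them, so you can call it without answering prompts.

```bash
# compare_words (hypothetical): if3's logic, with && in place of test's -a operator.
compare_words() {
    local word1=$1 word2=$2 word3=$3
    if [ "$word1" = "$word2" ] && [ "$word2" = "$word3" ]
    then
        echo "Match: words 1, 2, & 3"
    elif [ "$word1" = "$word2" ]
    then
        echo "Match: words 1 & 2"
    elif [ "$word1" = "$word3" ]
    then
        echo "Match: words 1 & 3"
    elif [ "$word2" = "$word3" ]
    then
        echo "Match: words 2 & 3"
    else
        echo "No match"
    fi
}

compare_words apple orange apple    # Match: words 1 & 3
```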

Optional: The lnks Script

The following script, named lnks, demonstrates the if...then and if...then...elif control structures. This script finds hard links to its first argument, a filename. If you provide the name of a directory as the second argument, lnks searches for links in the directory hierarchy rooted at that directory. If you do not specify a directory, lnks searches the working directory and its subdirectories. This script does not locate symbolic links.

$ cat lnks

#!/bin/bash

# Identify links to a file

# Usage: lnks file [directory]

if [ $# -eq 0 -o $# -gt 2 ]; then

echo "Usage: lnks file [directory]" 1>&2

exit 1

fi

if [ -d "$1" ]; then

echo "First argument cannot be a directory." 1>&2

echo "Usage: lnks file [directory]" 1>&2

exit 1

else

file="$1"

fi

if [ $# -eq 1 ]; then

directory="."

elif [ -d "$2" ]; then

directory="$2"

else

echo "Optional second argument must be a directory." 1>&2

echo "Usage: lnks file [directory]" 1>&2

exit 1

fi

# Check that file exists and is an ordinary file

if [ ! -f "$file" ]; then

echo "lnks: $file not found or is a special file" 1>&2

exit 1

fi

# Check link count on file

set -- $(ls -l "$file")

linkcnt=$2

if [ "$linkcnt" -eq 1 ]; then

echo "lnks: no other hard links to $file" 1>&2

exit 0

fi

# Get the inode of the given file

set $(ls -i "$file")

inode=$1

# Find and print the files with that inode number

echo "lnks: using find to search for links..." 1>&2

find "$directory" -xdev -inum $inode -print

Max has a file named letter in his home directory. He wants to find links to this file in his and other users’ home directory file hierarchies. In the following example, Max calls lnks from his home directory to perform the search. The second argument to lnks, /home, is the pathname of the directory where Max wants to start the search. The lnks script reports that /home/max/letter and /home/zach/draft are links to the same file:

$ ./lnks letter /home

lnks: using find to search for links...

/home/max/letter

/home/zach/draft

In addition to the if...then...elif control structure, lnks introduces other features that are commonly used in shell programs. The following discussion describes lnks section by section.

Specify the shell

The first line of the lnks script uses #! (page 338) to specify the shell that will execute the script:

#!/bin/bash

In this chapter, the #! notation appears only in more complex examples. It ensures that the proper shell executes the script, even when the user is running a different shell or the script is called from a script running a different shell.

Comments

The second and third lines of lnks are comments; the shell ignores text that follows a hashmark (#) up to the next NEWLINE character. These comments in lnks briefly identify what the file does and explain how to use it:

# Identify links to a file

# Usage: lnks file [directory]

Usage messages

The first if statement tests whether lnks was called with zero arguments or more than two arguments:

if [ $# -eq 0 -o $# -gt 2 ]; then

echo "Usage: lnks file [directory]" 1>&2

exit 1

fi

If either of these conditions is true, lnks sends a usage message to standard error and exits with a status of 1. The double quotation marks around the usage message prevent the shell from interpreting the brackets as special characters. The brackets in the usage message indicate that the directory argument is optional.

The second if statement tests whether the first command-line argument ($1) is a directory (the –d argument to test returns true if the file exists and is a directory):

if [ -d "$1" ]; then

echo "First argument cannot be a directory." 1>&2

echo "Usage: lnks file [directory]" 1>&2

exit 1

else

file="$1"

fi

If the first argument is a directory, lnks displays a usage message and exits. If it is not a directory, lnks saves the value of $1 in the file variable because later in the script set resets the command-line arguments. If the value of $1 is not saved before the set command is issued, its value is lost.
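The following sketch shows why lnks saves $1 first: once set assigns new positional parameters, the old value of $1 is gone. show_reset is a hypothetical function; inside a function, set –– changes only that function's positional parameters.

```bash
# show_reset (hypothetical) mirrors the step in lnks:
# save $1 in a variable, then let set replace the positional parameters.
show_reset() {
    local file=$1                  # this copy survives the set command below
    set -- one two three           # $1, $2, $3, and $# now describe the new list
    echo "file=$file first=$1 count=$#"
}

show_reset letter    # file=letter first=one count=3
```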

Test the arguments

The next section of lnks is an if...then...elif statement:

if [ $# -eq 1 ]; then

directory="."

elif [ -d "$2" ]; then

directory="$2"

else

echo "Optional second argument must be a directory." 1>&2

echo "Usage: lnks file [directory]" 1>&2

exit 1

fi

The first test-command determines whether the user specified a single argument on the command line. If the test-command returns 0 (true), the directory variable is assigned the value of the working directory (.). If the test-command returns a nonzero value (false), the elif statement tests whether the second argument is a directory. If it is a directory, the directory variable is set equal to the second command-line argument, $2. If $2 is not a directory, lnks sends a usage message to standard error and exits with a status of 1.

The next if statement in lnks tests whether $file is missing or is not an ordinary file. This test keeps lnks from wasting time looking for links to a nonexistent file. The test builtin, when called with the three arguments !, –f, and $file, evaluates to true if the file $file does not exist or is not an ordinary file:

[ ! -f "$file" ]

The ! operator preceding the –f argument to test negates its result, yielding false if the file $file does exist and is an ordinary file.

Next lnks uses set and ls –l to check the number of links $file has:

# Check link count on file

set -- $(ls -l "$file")

linkcnt=$2

if [ "$linkcnt" -eq 1 ]; then

echo "lnks: no other hard links to $file" 1>&2

exit 0

fi

The set builtin uses command substitution (page 410) to set the positional parameters to the output of ls –l. The second field in this output is the link count, so the user-created variable linkcnt is set equal to $2. The –– used with set prevents set from interpreting as an option the first argument produced by ls –l (the first argument is the access permissions for the file and typically begins with –). The if statement checks whether $linkcnt is equal to 1; if it is, lnks displays a message and exits. Although this message is not truly an error message, it is redirected to standard error. The way lnks has been written, all informational messages are sent to standard error. Only the final product of lnks—the pathnames of links to the specified file—is sent to standard output, so you can redirect the output.

If the link count is greater than 1, lnks goes on to identify the inode (page 1254) for $file. As explained on page 206, comparing the inodes associated with filenames is a good way to determine whether the filenames are links to the same file. The lnks script uses set to set the positional parameters to the output of ls –i. The first argument to set is the inode number for the file, so the user-created variable named inode is assigned the value of $1:

# Get the inode of the given file

set $(ls -i "$file")

inode=$1

Finally lnks uses the find utility to search for files having inode numbers that match $inode:

# Find and print the files with that inode number

echo "lnks: using find to search for links..." 1>&2

find "$directory" -xdev -inum $inode -print

The find utility searches the directory hierarchy rooted at the directory specified by its first argument ($directory) for files that meet the criteria specified by the remaining arguments. In this example, the remaining arguments send the names of files having inode numbers matching $inode to standard output. Because files in different filesystems can have the same inode number yet not be linked, find must search only directories in the same filesystem as $directory. The –xdev (cross-device) argument prevents find from searching directories on other filesystems. Refer to page 203 for more information about filesystems and links.

The echo command preceding the find command in lnks, which tells the user that find is running, is included because find can take a long time to run. Because lnks does not include a final exit statement, the exit status of lnks is that of the last command it runs, find.
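On systems with GNU coreutils and GNU findutils (standard on Fedora and RHEL), stat can report the link count directly, and find's –samefile test matches files that refer to the same inode without a separate ls –i step. The following sketch assumes those GNU tools; lnks_stat is a hypothetical name, and the sketch omits the argument-count and directory checks that lnks performs.

```bash
# lnks_stat (hypothetical): a variant of lnks that assumes GNU stat and GNU find.
lnks_stat() {
    local file=$1 directory=${2:-.}     # default to the working directory
    if [ ! -f "$file" ]; then
        echo "lnks: $file not found or is a special file" 1>&2
        return 1
    fi
    # %h is the hard link count, so there is no need to parse ls -l output
    if [ "$(stat -c %h -- "$file")" -eq 1 ]; then
        echo "lnks: no other hard links to $file" 1>&2
        return 0
    fi
    echo "lnks: using find to search for links..." 1>&2
    # -samefile selects files that refer to the same inode as $file;
    # -xdev still keeps find on a single filesystem
    find "$directory" -xdev -samefile "$file" -print
}
```

Called as lnks_stat letter /home, it would search the same hierarchy as the lnks example above.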

Debugging Shell Scripts

When you are writing a script such as lnks, it is easy to make mistakes. You can use the shell’s –x option to help debug a script. This option causes the shell to display each command after it expands it but before it runs the command. Tracing a script’s execution in this way can give you information about where a problem lies.

You can run lnks (above) and cause the shell to display each command before it is executed. Setting the –x option in your interactive shell (set –x) traces the commands that shell runs; a script you run as a separate program, such as lnks, starts a new shell that does not inherit the option (unless you export the SHELLOPTS variable). To trace only the script, give the –x option to the bash command that runs it:

$ bash -x lnks letter /home

+ '[' 2 -eq 0 -o 2 -gt 2 ']'

+ '[' -d letter ']'

+ file=letter

+ '[' 2 -eq 1 ']'

+ '[' -d /home ']'

+ directory=/home

+ '[' '!' -f letter ']'

...

PS4

Each command the script executes is preceded by the value of the PS4 variable, a plus sign (+) by default, so you can distinguish debugging output from output produced by the script. You must export PS4 if you set it in the shell that calls the script. The next command sets PS4 to >>>> followed by a SPACE and exports it:

$ export PS4='>>>> '

You can also set the –x option of the shell running the script by putting the following set command near the beginning of the script:

set -x

You can put set –x anywhere in the script to turn debugging on starting at that location. Turn debugging off using set +x. The set –o xtrace and set +o xtrace commands do the same things as set –x and set +x, respectively.
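The following sketch turns tracing on for just one part of a script. The PS4 value is an illustrative choice that adds the line number; the single quotation marks keep the shell from expanding $LINENO when PS4 is assigned, so it is expanded each time a trace line is displayed. Because PS4 is set in the same shell that runs the traced commands, it does not need to be exported. The function add is hypothetical.

```bash
# Illustrative PS4: show the line number in each trace line
PS4='+ line ${LINENO}: '

# add (hypothetical) displays the sum of its two arguments
add() {
    echo $(( $1 + $2 ))
}

set -x                 # tracing on: commands are echoed to standard error
sum=$(add 2 3)
set +x                 # tracing off (bash still traces this set +x command)
echo "sum=$sum"        # sum=5
```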

for...in

The for...in control structure has the following syntax:

for loop-index in argument-list

do

commands

done

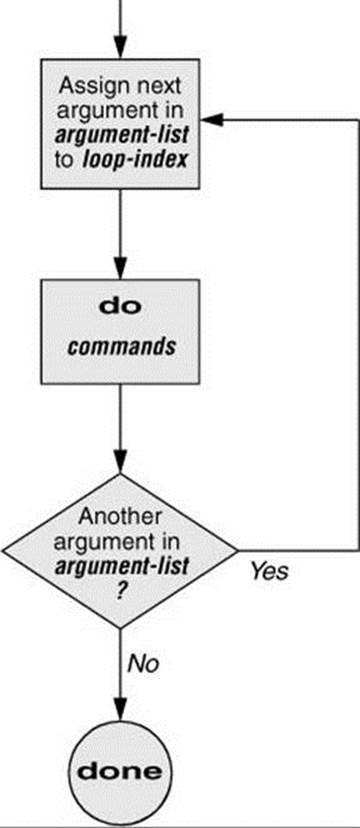

The for...in structure (Figure 27-4) assigns the value of the first argument in the argument-list to the loop-index and executes the commands between the do and done statements. The do and done statements mark the beginning and end of the for loop, respectively.

Figure 27-4 A for...in flowchart

After it passes control to the done statement, the structure assigns the value of the second argument in the argument-list to the loop-index and repeats the commands. It repeats the commands between the do and done statements one time for each argument in the argument-list. When the structure exhausts the argument-list, it passes control to the statement following done.

The following for...in structure assigns apples to the user-created variable fruit and then displays the value of fruit, which is apples. Next the structure assigns oranges to fruit and repeats the process. When it exhausts the argument list, the structure transfers control to the statement following done, which displays a message.

$ cat fruit

for fruit in apples oranges pears bananas

do

echo "$fruit"

done

echo "Task complete."

$ ./fruit

apples

oranges

pears

bananas

Task complete.

The next script lists the names of the directory files in the working directory by looping through the files in the working directory and using test to determine which are directory files:

$ cat dirfiles

for i in *

do

if [ -d "$i" ]

then

echo "$i"

fi

done

The ambiguous file reference character * matches the names of all files (except hidden files) in the working directory. Prior to executing the for loop, the shell expands the * and uses the resulting list to assign successive values to the index variable i.
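Because the shell does not split the filenames that pathname expansion produces, a for loop over * handles names that contain SPACEs correctly, provided the loop-index is quoted where it is used. The following sketch is a variant of dirfiles that takes the directory to examine as an argument; list_dirs is a hypothetical name.

```bash
# list_dirs (hypothetical): dirfiles for a directory named by its argument.
list_dirs() {
    local i
    for i in "$1"/*
    do
        if [ -d "$i" ]          # quoted, so a name such as "my dir" stays one word
        then
            echo "${i##*/}"     # strip the leading pathname, leaving the simple filename
        fi
    done
}
```

If the directory is empty, the pattern is left unexpanded, the –d test fails, and list_dirs displays nothing.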

Optional: Step Values

As an alternative to explicitly specifying values for argument-list, you can specify step values. A for...in loop that uses step values assigns an initial value to or increments the loop-index, executes the statements within the loop, and tests a termination condition at the end of the loop.

The following example uses brace expansion with a sequence expression (page 406) to generate the argument-list. This syntax works on bash version 4.0 and above; give the command echo $BASH_VERSION to see which version you are using. Before the loop starts, bash expands {0..10..2} into the list 0 2 4 6 8 10: the values begin at 0, increase by the increment value (2), and stop at the last value that does not exceed 10. Next, bash assigns each value in turn to count (the loop-index) and executes the statement between do and done once for each value.

$ cat step1

for count in {0..10..2}

do

echo -n "$count "

done

echo

$ ./step1

0 2 4 6 8 10

Older versions of bash do not support sequence expressions; you can use the seq utility to perform the same function:

$ for count in $(seq 0 2 10); do echo -n "$count "; done; echo

0 2 4 6 8 10
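Because bash performs brace expansion before it expands variables, a sequence expression cannot take its bounds from variables. When the bounds are held in variables, use seq or the C-like for syntax. The following sketch shows both behaviors; evens_upto is a hypothetical function.

```bash
# evens_upto (hypothetical) uses the C-like for syntax, which evaluates
# its bounds at run time, so the upper limit can come from a variable.
evens_upto() {
    local last=$1 count out=""
    for (( count=0; count<=last; count+=2 ))
    do
        out+="$count "
    done
    echo "${out% }"             # drop the trailing SPACE
}

evens_upto 10    # 0 2 4 6 8 10
n=10
echo {0..$n}     # {0..10}: not a valid sequence when brace expansion runs
```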

The next example uses bash’s C-like syntax to specify step values. This syntax gives you more flexibility in specifying the termination condition and the increment value. Using this syntax, the first expression initializes the loop-index, the second expression specifies the condition to be tested, and the third expression specifies the increment.

$ cat rand

# $RANDOM evaluates to a random integer from 0 through 32767

# This program simulates 10 rolls of a pair of dice

for ((x=1; x<=10; x++))

do

echo -n "Roll #$x: "

echo -n $(( $RANDOM % 6 + 1 ))

echo " " $(( $RANDOM % 6 + 1 ))

done

for

The for control structure has the following syntax:

for loop-index

do

commands

done

In the for structure, the loop-index takes on the value of each of the command-line arguments, one at a time. The for structure is the same as the for...in structure (Figure 27-4, page 995) except in terms of where it gets values for the loop-index. The for structure performs a sequence of commands, usually involving each argument in turn.

The following shell script shows a for structure displaying each command-line argument. The first line of the script, for arg, implies for arg in "$@", where the shell expands "$@" into a list of quoted command-line arguments (i.e., "$1" "$2" "$3" ...). The balance of the script corresponds to the for...in structure.

$ cat for_test

for arg

do

echo "$arg"

done

$ ./for_test candy gum chocolate

candy

gum

chocolate
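The following sketch makes the effect of the implied "$@" visible by counting how many values the loop-index takes on. count_implied and count_star are hypothetical functions; the second uses an unquoted $*, which the shell splits at SPACEs.

```bash
# count_implied (hypothetical): for arg with no list, the same as for arg in "$@"
count_implied() {
    local n=0 arg
    for arg
    do
        n=$(( n + 1 ))
    done
    echo "$n"
}

# count_star (hypothetical): unquoted $* loses the original argument boundaries
count_star() {
    local n=0 arg
    for arg in $*
    do
        n=$(( n + 1 ))
    done
    echo "$n"
}

count_implied "Marilou Smith" chas    # 2
count_star "Marilou Smith" chas       # 3
```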

The next example returns to the C-like syntax introduced under step values. In it, the loop-index is named count and is set to an initial value of 0. The condition to be tested is count<=10: bash continues executing the loop as long as this condition is true (as long as count is less than or equal to 10; see Table 27-8 on page 1059 for a list of operators). On each pass through the loop, bash adds 2 to the value of count (count+=2).

$ cat step2

for (( count=0; count<=10; count+=2 ))

do

echo -n "$count "

done

echo

$ ./step2

0 2 4 6 8 10

Optional: The whos Script

The following script, named whos, demonstrates the usefulness of the implied "$@" in the for structure. You give whos one or more users’ full names or usernames as arguments, and whos displays information about the users. The whos script gets the information it displays from the first and fifth fields in the /etc/passwd file. The first field contains a username, and the fifth field typically contains the user’s full name. You can provide a username as an argument to whos to display the user’s name or provide a name as an argument to display the username. The whos script is similar to the finger utility, although whos delivers less information.

$ cat whos

#!/bin/bash

if [ $# -eq 0 ]

then

echo "Usage: whos id..." 1>&2

exit 1

fi

for id

do

gawk -F: '{print $1, $5}' /etc/passwd |

grep -i "$id"

done

In the next example, whos identifies the user whose username is chas and the user whose name is Marilou Smith:

$ ./whos chas "Marilou Smith"

chas Charles Casey

msmith Marilou Smith

Use of "$@"

The whos script uses a for statement to loop through the command-line arguments. In this script the implied use of "$@" in the for loop is particularly beneficial because it causes the for loop to treat an argument that contains a SPACE as a single argument. This example encloses Marilou Smith in quotation marks, which causes the shell to pass it to the script as a single argument. Then the implied "$@" in the for statement causes the shell to regenerate the quoted argument Marilou Smith so that it is again treated as a single argument. The double quotation marks in the grep statement perform the same function.
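The implied "$@" matters because an unquoted $* behaves differently: the shell splits it again at each SPACE. The two functions in this sketch differ only in that respect. The names show_args and unquoted_args are illustrative; they are not standard commands.

```shell
#!/bin/bash
# show_args: print each argument in brackets, one per line
show_args() {
    local arg
    for arg                  # same as: for arg in "$@"
    do
        printf '[%s]\n' "$arg"
    done
}

# unquoted_args: loop over an unquoted $*, which the shell splits
# again at SPACEs, so one quoted argument can become several words
unquoted_args() {
    local arg
    for arg in $*
    do
        printf '[%s]\n' "$arg"
    done
}

show_args chas "Marilou Smith"       # prints [chas] and [Marilou Smith]
unquoted_args chas "Marilou Smith"   # prints [chas], [Marilou], and [Smith]
```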

gawk

For each command-line argument, whos searches the /etc/passwd file. Inside the for loop, the gawk utility extracts the first ($1) and fifth ($5) fields from each line in /etc/passwd. The -F: option causes gawk to use a colon (:) as a field separator when it reads /etc/passwd, allowing it to break each line into fields. The gawk command sets and uses the $1 and $5 arguments; they are included within single quotation marks and are not interpreted by the shell. Do not confuse these arguments with positional parameters, which correspond to command-line arguments. The first and fifth fields are sent to grep (page 232) via a pipeline. The grep utility searches its input for $id (to which the shell has assigned the value of a command-line argument). The -i option causes grep to ignore case as it searches; grep displays each line in its input that contains $id.
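If you prefer not to start gawk and grep for each argument, the read builtin can split /etc/passwd lines itself when IFS is set to a colon. The following is a minimal sketch of that alternative and is not the whos script. The lookup function name is hypothetical, and the ${1,,} lowercase expansion assumes bash 4.0 or later.

```shell
#!/bin/bash
# lookup (hypothetical name): read passwd-format lines on standard input
# and print "username full-name" for each line whose username or fifth
# (comment) field contains $1, ignoring case. Requires bash 4.0+.
lookup() {
    local pattern=${1,,}                # lowercase copy of the search string
    local user pw uid gid gecos rest line
    # IFS=: makes read split each line at colons; rest receives the
    # remaining fields (home directory and shell)
    while IFS=: read -r user pw uid gid gecos rest
    do
        line="$user $gecos"
        # compare lowercase copies so the match ignores case, like grep -i
        if [[ ${line,,} == *"$pattern"* ]]
        then
            printf '%s\n' "$line"
        fi
    done
}

# Example: lookup chas < /etc/passwd
```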

A pipeline symbol (|) at the end of a line

Under bash, a control operator such as a pipeline symbol (|) implies continuation: bash “knows” another command must follow it. Therefore, in whos, the NEWLINE following the pipeline symbol at the end of the line with the gawk command does not have to be quoted. For more information refer to “Implicit Command-Line Continuation” on page 1063.


while

The while control structure has the following syntax:

while test-command

do

commands

done

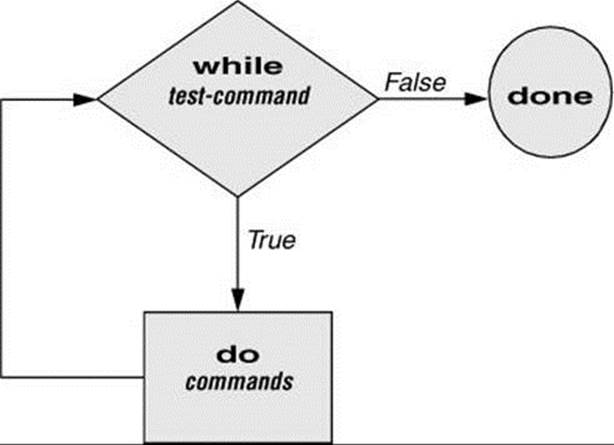

As long as the test-command (Figure 27-5) returns a true exit status, the while structure continues to execute the series of commands delimited by the do and done statements. Before each loop through the commands, the structure executes the test-command. When the exit status of the test-command is false, the structure passes control to the statement after the done statement.

Figure 27-5 A while flowchart


test builtin

The following shell script first initializes the number variable to zero. The test builtin then determines whether number is less than 10. The script uses test with the -lt argument to perform a numerical test. For numerical comparisons, you must use -ne (not equal), -eq (equal), -gt (greater than), -ge (greater than or equal to), -lt (less than), or -le (less than or equal to). For string comparisons, use = (equal) or != (not equal) when you are working with test. In this example, test has an exit status of 0 (true) as long as number is less than 10. As long as test returns true, the structure executes the commands between the do and done statements. See page 983 for information on the test builtin.

$ cat count

#!/bin/bash

number=0

while [ "$number" -lt 10 ]

do

echo -n "$number"

((number +=1))

done

echo

$ ./count

0123456789

$

The echo command following do displays number. The -n option prevents echo from issuing a NEWLINE following its output. The next command uses arithmetic evaluation [((...)); page 1056] to increment the value of number by 1. The done statement terminates the loop and returns control to the while statement to start the loop over again. The final echo causes count to send a NEWLINE character to standard output, so the next prompt is displayed at the left edge of the display rather than immediately following the 9.
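The test-command does not have to be test or [ ]. The following sketch produces the same digits as count but uses an arithmetic expression, (( number < 10 )), as the test-command. Instead of displaying the digits one at a time, it collects them in a variable (output, an illustrative name).

```shell
#!/bin/bash
number=0
output=""
# (( number < 10 )) returns a true (0) exit status while number < 10
while (( number < 10 ))
do
    output+="$number"          # append the current digit
    number=$(( number + 1 ))
done
echo "$output"
```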

Optional: The spell_check Script

The aspell utility (aspell package) checks the words in a file against a dictionary of correctly spelled words. With the list command, aspell runs in list mode: Input comes from standard input and aspell sends each potentially misspelled word to standard output. The following command produces a list of possible misspellings in the file letter.txt:

$ aspell list < letter.txt

quikly

portible

frendly

The next shell script, named spell_check, shows another use of a while structure. To find the incorrect spellings in a file, spell_check calls aspell to check a file against a system dictionary. But it goes a step further: It enables you to specify a list of correctly spelled words and removes these words from the output of aspell. This script is useful for removing words you use frequently, such as names and technical terms, that do not appear in a standard dictionary. Although you can duplicate the functionality of spell_check by using additional aspell dictionaries, the script is included here for its instructive value.

The spell_check script requires two filename arguments: the file containing the list of correctly spelled words and the file you want to check. The first if statement verifies that the user specified two arguments. The next two if statements verify that both arguments are readable files. (The exclamation point negates the sense of the following operator; the -r operator causes test to determine whether a file is readable. The result is a test that determines whether a file is not readable.)

$ cat spell_check

#!/bin/bash

# remove correct spellings from aspell output

if [ $# -ne 2 ]

then

echo "Usage: spell_check dictionary filename" 1>&2

echo "dictionary: list of correct spellings" 1>&2

echo "filename: file to be checked" 1>&2

exit 1

fi

if [ ! -r "$1" ]

then

echo "spell_check: $1 is not readable" 1>&2

exit 1

fi

if [ ! -r "$2" ]

then

echo "spell_check: $2 is not readable" 1>&2

exit 1

fi

aspell list < "$2" |

while read line

do

if ! grep "^$line$" "$1" > /dev/null

then

echo "$line"

fi

done

The spell_check script sends the output from aspell (with the list argument, so it produces a list of misspelled words on standard output) through a pipeline to standard input of a while structure, which reads one line at a time (each line has one word on it) from standard input. The test-command (that is, read line) returns a true exit status as long as it receives a line from standard input.

Inside the while loop, an if statement monitors the return value of grep, which determines whether the line that was read is in the user’s list of correctly spelled words. The pattern grep searches for (the value of $line) is preceded and followed by special characters that specify the beginning and end of a line (^ and $, respectively). These special characters ensure that grep finds a match only if the $line variable matches an entire line in the file of correctly spelled words. (Otherwise, grep would match a string, such as paul, in the output of aspell if the file of correctly spelled words contained the word paulson.) These special characters, together with the value of the $line variable, form a regular expression (Appendix A).

The output of grep is redirected to /dev/null (page 158) because the output is not needed; only the exit status is important. The if statement checks the negated exit status of grep. The leading exclamation point reverses the sense of the exit status: true becomes false, and vice versa. When grep finds a matching line, its exit status is 0, or true (false when negated). When grep does not find a match, its exit status is nonzero, or false (true when negated), which means the word was not in the file of correctly spelled words. In that case the echo builtin sends the word to standard output. The result is a list of the words that are not in the file of correctly spelled words.

Once it detects the EOF (end of file), the read builtin returns a false exit status, control passes out of the while structure, and the script terminates.
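GNU grep can perform the whole filtering step in a single command. This approach also handles words that contain characters grep would otherwise interpret as regular expression operators. The following sketch assumes GNU grep; filter_known is a hypothetical function name.

```shell
#!/bin/bash
# filter_known (hypothetical name): copy standard input to standard output,
# dropping every line that exactly matches a line in the file named by $1.
#   -v  select lines that do NOT match
#   -x  a match must span the whole line (like ^...$ in spell_check)
#   -F  treat the patterns as fixed strings, not regular expressions
#   -f  read the patterns, one per line, from a file
filter_known() {
    grep -vxF -f "$1"
}

# Example (requires aspell):  aspell list < memo | filter_known word_list
```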

Before you use spell_check, create a file of correct spellings containing words that you use frequently but that are not in a standard dictionary. For example, if you work for a company named Blinkenship and Klimowski, Attorneys, you would put Blinkenship and Klimowski in the file. The following example shows how spell_check checks the spelling in a file named memo and removes Blinkenship and Klimowski from the output list of incorrectly spelled words:

$ aspell list < memo

Blinkenship

Klimowski

targat

hte

$ cat word_list

Blinkenship

Klimowski

$ ./spell_check word_list memo

targat

hte

Refer to /usr/share/doc/aspell or aspell.net for more information.

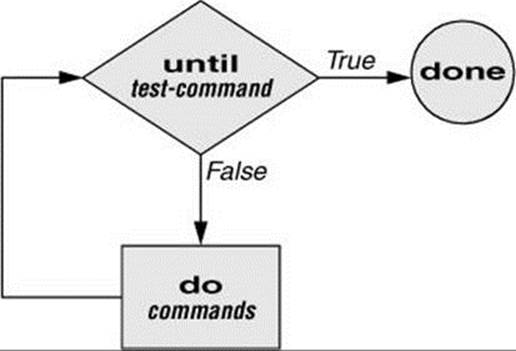

until

The until and while structures are similar, differing only in the sense of the test performed at the top of the loop. Figure 27-6 shows that until continues to loop until the test-command returns a true exit status. The while structure loops while the test-command continues to return a true or nonerror condition. The until control structure has the following syntax:

until test-command

do

commands

done

Figure 27-6 An until flowchart

The following script demonstrates an until structure that includes read (page 1041). When the user enters the correct string of characters, the test-command is satisfied and the structure passes control out of the loop.

$ cat until1

secretname=zach

name=noname

echo "Try to guess the secret name!"

echo

until [ "$name" = "$secretname" ]

do

read -p "Your guess: " name

done

echo "Very good."

$ ./until1

Try to guess the secret name!

Your guess: helen

Your guess: barbara

Your guess: rachael

Your guess: zach

Very good.
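Because until stops as soon as its test-command succeeds, it fits naturally around a command that might fail and should be retried. In the following sketch, try_connect is a hypothetical stand-in that fails twice and then succeeds. The loop also gives up after five attempts so it cannot run forever.

```shell
#!/bin/bash
# try_connect (hypothetical): fails on its first two calls, then succeeds
calls=0
try_connect() {
    calls=$(( calls + 1 ))
    (( calls >= 3 ))           # exit status of this test is the return value
}

attempts=0
until try_connect
do
    attempts=$(( attempts + 1 ))
    if (( attempts >= 5 ))
    then
        echo "giving up after $attempts attempts" 1>&2
        break
    fi
    echo "attempt $attempts failed; retrying"
    # a real script would typically sleep here before retrying
done
```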

The following locktty script is similar to the lock command on Berkeley UNIX and the Lock Screen menu selection in GNOME. The script prompts for a key (password) and uses an until control structure to lock the terminal. The until statement causes the system to ignore any characters typed at the keyboard until the user types the key followed by a RETURN on a line by itself, which unlocks the terminal. The locktty script can keep people from using your terminal while you are away from it for short periods of time. It saves you from having to log out if you are concerned about other users using your session.

$ cat locktty

#! /bin/bash

trap '' 1 2 3 20

stty -echo

read -p "Key: " key_1

echo

read -p "Again: " key_2

echo

key_3=

if [ "$key_1" = "$key_2" ]

then

tput clear

until [ "$key_3" = "$key_2" ]

do

read key_3

done

else

echo "locktty: keys do not match" 1>&2

fi

stty echo

Tip: Forget your password for locktty?

If you forget your key (password), you will need to log in from another (virtual) terminal and give a command to kill the process running locktty (e.g., killall -9 locktty).

trap builtin

The trap builtin (page 1047) at the beginning of the locktty script stops a user from being able to terminate the script by sending it a signal (for example, by pressing the interrupt key). Trapping signal 20 means that no one can use CONTROL-Z (job control, a stop from a tty) to defeat the lock. Table 27-5 on page 1047 provides a list of signals. The stty -echo command turns off keyboard echo (causes the terminal not to display characters typed at the keyboard), preventing the key the user enters from appearing on the screen. After turning off keyboard echo, the script prompts the user for a key, reads it into the user-created variable key_1, prompts the user to enter the same key again, and saves it in key_2. The statement key_3= creates a variable with a NULL value. If key_1 and key_2 match, locktty clears the screen (with the tput command) and starts an until loop. The until loop keeps reading from the terminal and assigning the input to the key_3 variable. Once the user types a string that matches one of the original keys (key_2), the until loop terminates and keyboard echo is turned on again.
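Signal numbers are not the same on every system, so bash also accepts signal names in a trap command. The following sketch ignores the same kinds of signals by name, shows that an ignored SIGINT does not stop the script, and then uses trap - to restore the default actions. It assumes bash 4.0 or later for the BASHPID variable.

```shell
#!/bin/bash
# Ignore hangup, interrupt, quit, and terminal-stop (CONTROL-Z) signals
trap '' HUP INT QUIT TSTP

# Send SIGINT to the current shell process; because it is ignored,
# execution simply continues with the next command
kill -s INT "$BASHPID"
survived=yes
echo "still running after SIGINT"

# Restore the default action for the same signals
trap - HUP INT QUIT TSTP
```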

break and continue

You can interrupt a for, while, or until loop by using a break or continue statement. The break statement transfers control to the statement following the done statement, thereby terminating execution of the loop. The continue command transfers control to the done statement, continuing execution of the loop.

The following script demonstrates the use of these two statements. The for...in structure loops through the values 1–10. The first if statement executes its commands when the value of the index is less than or equal to 3 ($index -le 3). The second if statement executes its commands when the value of the index is greater than or equal to 8 ($index -ge 8). In between the two ifs, echo displays the value of the index. For all values up to and including 3, the first if statement displays continue, executes a continue statement that skips echo $index and the second if statement, and continues with the next for statement. For the value of 8, the second if statement displays the word break and executes a break statement that exits from the for loop.

$ cat brk

for index in 1 2 3 4 5 6 7 8 9 10

do

if [ $index -le 3 ] ; then

echo "continue"

continue

fi

#

echo $index

#

if [ $index -ge 8 ] ; then

echo "break"

break

fi

done

$ ./brk

continue

continue

continue

4

5

6

7

8

break

$
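Both break and continue accept an optional numeric argument that specifies how many enclosing loops to affect. In the following sketch, break 2 exits from an inner loop and an outer loop at once, as soon as the first matching pair is found:

```shell
#!/bin/bash
# Find the first pair (i, j), scanning 1 through 5, whose product is 12
found=""
for i in 1 2 3 4 5
do
    for j in 1 2 3 4 5
    do
        if (( i * j == 12 ))
        then
            found="$i x $j"
            break 2            # leave both the inner and the outer loop
        fi
    done
done
echo "first match: $found"
```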

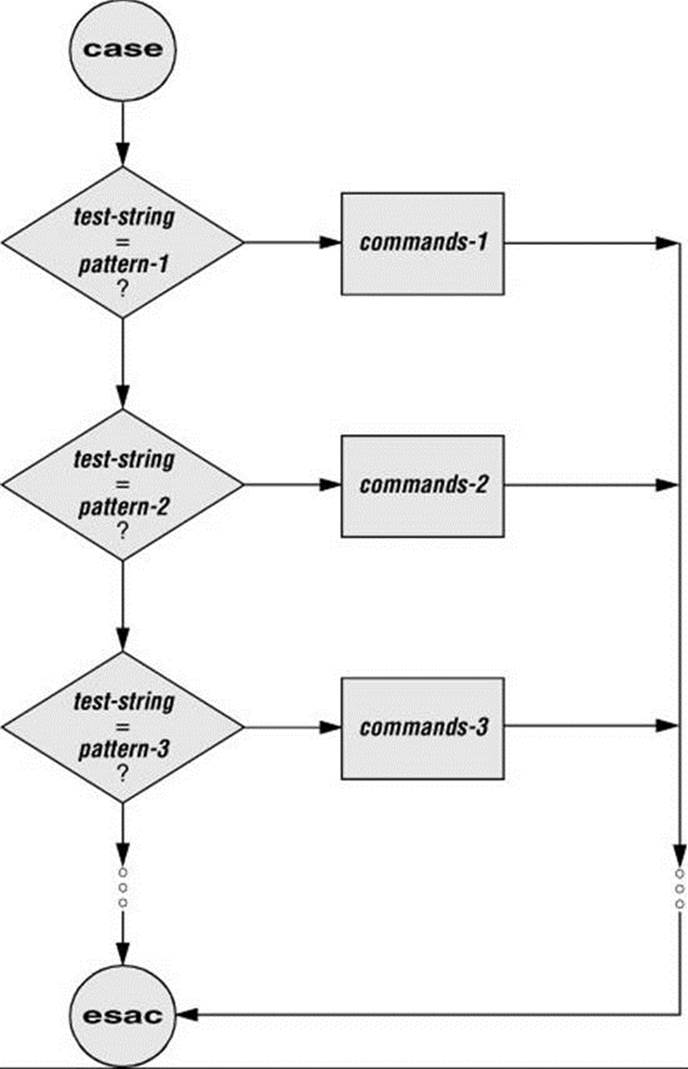

case

The case structure (Figure 27-7) is a multiple-branch decision mechanism. The path taken through the structure depends on a match or lack of a match between the test-string and one of the patterns. When the test-string matches one of the patterns, the shell transfers control to the commands following the pattern. The commands are terminated by a double semicolon (;;) control operator. When control reaches this control operator, the shell transfers control to the command following the esac statement. The case control structure has the following syntax:

case test-string in

pattern-1)

commands-1

;;

pattern-2)

commands-2

;;

pattern-3)

commands-3

;;

. . .

esac

Figure 27-7 A case flowchart

The following case structure uses the character the user enters as the test-string. This value is held in the variable letter. If the test-string has a value of A, the structure executes the command following the pattern A. The right parenthesis is part of the case control structure, not part of the pattern. If the test-string has a value of B or C, the structure executes the command following the matching pattern. The asterisk (*) indicates any string of characters and serves as a catchall in case there is no match. If no pattern matches the test-string and if there is no catchall (*) pattern, control passes to the command following the esac statement, without the case structure taking any action.

$ cat case1

read -p "Enter A, B, or C: " letter

case "$letter" in

A)

echo "You entered A"

;;

B)

echo "You entered B"

;;

C)

echo "You entered C"

;;

*)

echo "You did not enter A, B, or C"

;;

esac

$ ./case1

Enter A, B, or C: B

You entered B

The next execution of case1 shows the user entering a lowercase b. Because the test-string b does not match the uppercase B pattern (or any other pattern in the case statement), the program executes the commands following the catchall pattern and displays a message:

$ ./case1

Enter A, B, or C: b

You did not enter A, B, or C

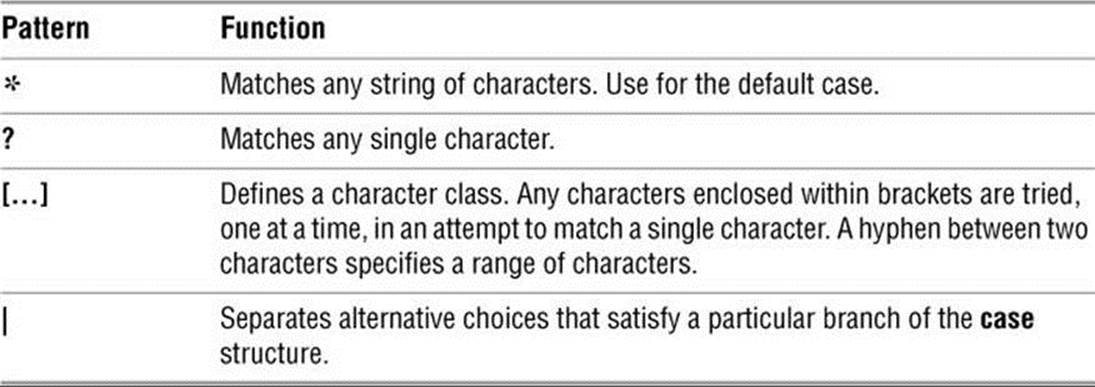

The pattern in the case structure is a glob (it is analogous to an ambiguous file reference). It can include any special characters and strings shown in Table 27-2.

Table 27-2 Patterns
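The following classify function is a sketch that uses several kinds of patterns in one case structure. The name and the categories are illustrative. The shell tries the patterns in order and executes only the commands for the first match, so notes.txt is classified by [Nn]* rather than by *.txt.

```shell
#!/bin/bash
# classify (hypothetical name): sort a string into a category
classify() {
    case "$1" in
        [Yy] | [Yy][Ee][Ss])   # | separates alternative patterns
            echo "yes" ;;
        [Nn]*)                 # [...] is a character class; * is any string
            echo "no" ;;
        *.txt)                 # any string ending in .txt
            echo "text file" ;;
        ?)                     # any single character
            echo "one character" ;;
        *)                     # catchall
            echo "other" ;;
    esac
}
```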

The next script accepts both uppercase and lowercase letters:

$ cat case2

read -p "Enter A, B, or C: " letter

case "$letter" in

a|A)

echo "You entered A"

;;

b|B)

echo "You entered B"

;;

c|C)

echo "You entered C"

;;

*)

echo "You did not enter A, B, or C"

;;

esac

$ ./case2

Enter A, B, or C: b

You entered B

Optional

The following example shows how to use the case structure to create a simple menu. The command_menu script uses echo to present menu items and prompt the user for a selection. (The select control structure [page 1012] is a much easier way of coding a menu.) The case structure then executes the appropriate utility depending on the user’s selection.

$ cat command_menu

#!/bin/bash

# menu interface to simple commands

echo -e "\n COMMAND MENU\n"

echo " a. Current date and time"

echo " b. Users currently logged in"

echo " c. Name of the working directory"

echo -e " d. Contents of the working directory\n"

read -p "Enter a, b, c, or d: " answer

echo

#

case "$answer" in

a)

date

;;

b)

who

;;

c)

pwd

;;

d)

ls

;;

*)

echo "There is no selection: $answer"

;;

esac

$ ./command_menu

COMMAND MENU

a. Current date and time

b. Users currently logged in

c. Name of the working directory

d. Contents of the working directory

Enter a, b, c, or d: a

Sun Jan 6 12:31:12 PST 2013


echo -e

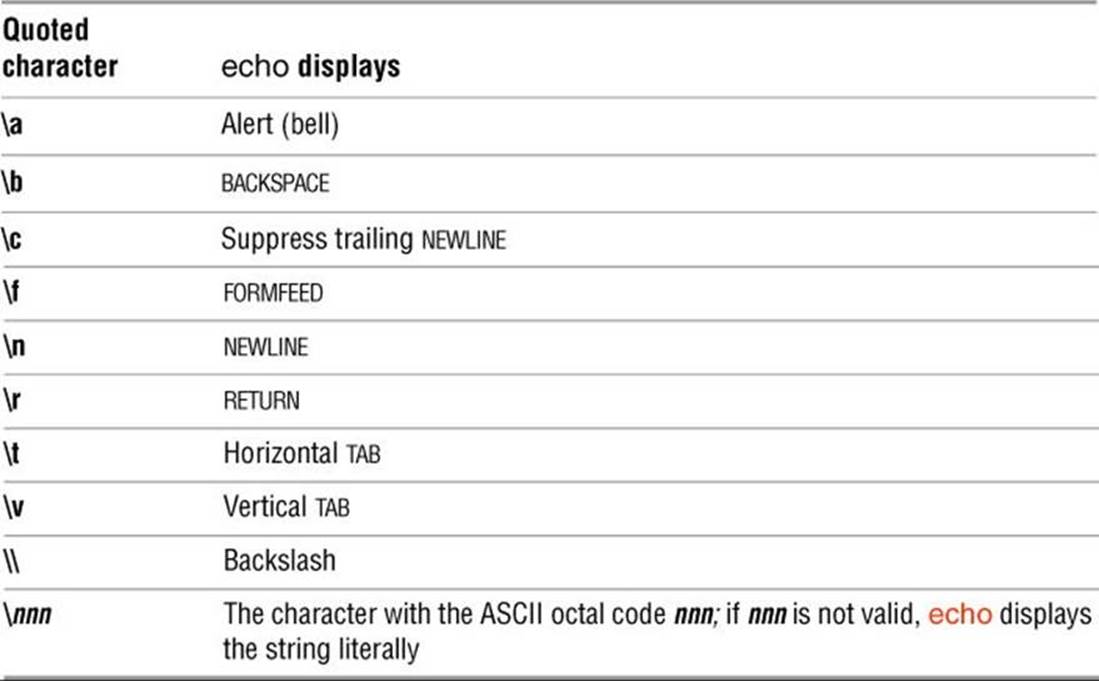

The -e option causes echo to interpret \n as a NEWLINE character. If you do not include this option, echo does not output the extra blank lines that make the menu easy to read but instead outputs the (literal) two-character sequence \n. The -e option also causes echo to interpret several other backslash-quoted characters (Table 27-3). Remember to quote the backslash-quoted character (i.e., place double quotation marks around the string) so the shell does not interpret it but rather passes the backslash and the character to echo. See xpg_echo (page 403) for a way to avoid using the -e option.

Table 27-3 Special characters in echo (must use -e)
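As an alternative to echo -e, the printf builtin always interprets backslash escapes in its format string, and it reuses the format for any extra arguments. In this brief sketch, menu_line is an illustrative variable name.

```shell
#!/bin/bash
# Backslash escapes in the format string are interpreted without any option
printf '\n COMMAND MENU\n\n'

# The format ' %s\n' is reused for each argument, printing two menu lines
printf ' %s\n' "a. Current date and time" "b. Users currently logged in"

# %s inserts an argument verbatim; \t in the format becomes a TAB
menu_line=$(printf '%s\t%s' "a." "date")
```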

You can also use the case control structure to take various actions in a script, depending on how many arguments the script is called with. The following script, named safedit, uses a case structure that branches based on the number of command-line arguments ($#). It calls vim and saves a backup copy of a file you are editing.

$ cat safedit

#!/bin/bash

PATH=/bin:/usr/bin

script=$(basename $0)

case $# in

0)

vim

exit 0

;;

1)

if [ ! -f "$1" ]

then

vim "$1"

exit 0

fi

if [ ! -r "$1" -o ! -w "$1" ]

then

echo "$script: check permissions on $1" 1>&2

exit 1

else

editfile=$1

fi

if [ ! -w "." ]

then

echo "$script: backup cannot be " \

"created in the working directory" 1>&2

exit 1

fi

;;

*)

echo "Usage: $script [file-to-edit]" 1>&2

exit 1

;;

esac

tempfile=/tmp/$$.$script

cp "$editfile" "$tempfile"

if vim "$editfile"

then

mv "$tempfile" "bak.$(basename "$editfile")"

echo "$script: backup file created"

else

mv "$tempfile" editerr

echo "$script: edit error--copy of " \

"original file is in editerr" 1>&2

fi

If you call safedit without any arguments, the case structure executes its first branch and calls vim without a filename argument. Because an existing file is not being edited, safedit does not create a backup file. If you call safedit with one argument, it runs the commands in the second branch of the case structure and verifies that the file specified by $1 does not yet exist or is the name of a file for which the user has read and write permission. The safedit script also verifies that the user has write permission for the working directory. If the user calls safedit with more than one argument, the third branch of the case structure presents a usage message and exits with a status of 1.

Set PATH

At the beginning of the script, the PATH variable is set to search /bin and /usr/bin. Setting PATH in this way ensures that the commands executed by the script are standard utilities, which are kept in those directories. By setting this variable inside a script, you can avoid the problems that might occur if users have set PATH to search their own directories first and have scripts or programs with the same names as the utilities the script calls. You can also include absolute pathnames within a script to achieve this end, although this practice can make a script less portable.

Name of the program

The next line declares a variable named script and initializes it with the simple filename of the script:

script=$(basename $0)

The basename utility sends the simple filename component of its argument to standard output, and command substitution assigns that output to the script variable. The $0 parameter holds the command the script was called with (page 1022). No matter which of the following commands the user calls the script with, the output of basename is the simple filename safedit:

$ /home/max/bin/safedit memo

$ ./safedit memo

$ safedit memo

After the script variable is set, it replaces the filename of the script in usage and error messages. By using a variable that is derived from the command that invoked the script rather than a filename that is hardcoded into the script, you can create links to the script or rename it, and the usage and error messages will still provide accurate information.
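Within bash you can get the same simple filename without running basename by using the ${parameter##pattern} expansion, which removes the longest leading match of pattern. This is a minimal sketch, and name_of is a hypothetical name. Unlike basename, this expansion produces an empty string for a name that ends in a slash.

```shell
#!/bin/bash
# name_of (hypothetical): strip everything through the last slash
name_of() {
    printf '%s\n' "${1##*/}"
}

# For an ordinary script pathname, equivalent to script=$(basename "$0")
script=${0##*/}
```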

Naming temporary files

Another feature of safedit relates to the use of the $$ parameter in the name of a temporary file. The statement following the esac statement creates and assigns a value to the tempfile variable. This variable contains the name of a temporary file that is stored in the /tmp directory, as are many temporary files. The temporary filename begins with the PID number of the shell and ends with the name of the script. Using the PID number ensures that the filename is unique. Thus safedit will not attempt to overwrite an existing file, as might happen if two people were using safedit at the same time. The name of the script is appended so that, should the file be left in /tmp for some reason, you can figure out where it came from.

The PID number is placed in front of, rather than after, $script in the filename because of the 14-character limit placed on filenames by some older versions of UNIX. Linux systems do not have this limitation. Because the PID number ensures the uniqueness of the filename, it is placed first so that it cannot be truncated. (If the $script component is truncated, the filename is still unique.) For the same reason, when a backup file is created inside the if control structure a few lines down in the script, the filename consists of the string bak. followed by the name of the file being edited. On an older system, if bak were used as a suffix rather than a prefix and the original filename were 14 characters long, .bak might be lost and the original file would be overwritten. The basename utility extracts the simple filename of $editfile before the script adds the bak. prefix.
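On current systems the mktemp utility is a common alternative to $$ for naming temporary files. mktemp creates the file itself, and the name it chooses is both unique and hard to guess. The following sketch assumes mktemp (part of coreutils on Fedora and RHEL). It adds an EXIT trap that removes the file when the script finishes, and it is not part of safedit as written.

```shell
#!/bin/bash
script=${0##*/}

# mktemp replaces XXXXXX with random characters, creates the file,
# and prints its name; stop if it cannot create the file
tempfile=$(mktemp "/tmp/$script.XXXXXX") || exit 1

# Remove the temporary file when the script exits
trap 'rm -f "$tempfile"' EXIT

echo "working copy" > "$tempfile"
```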

The safedit script uses an unusual test-command in the if structure: vim $editfile. The test-command calls vim to edit $editfile. When you finish editing the file and exit from vim, vim returns an exit code. The if control structure uses that exit code to determine which branch to take. If the editing session completed successfully, vim returns 0 and the statements following the then statement are executed. If vim does not terminate normally (as would occur if the user killed [page 465] the vim process), vim returns a nonzero exit status and the script executes the statements following else.

select

The select control structure is based on the one found in the Korn Shell. It displays a menu, assigns a value to a variable based on the user’s choice of items, and executes a series of commands. The select control structure has the following syntax:

select varname [in arg . . . ]

do

commands

done

The select structure displays a menu of the arg items. If you omit the keyword in and the list of arguments, select uses the positional parameters in place of the arg items. The menu is formatted with numbers before each item. For example, a select structure that begins with

select fruit in apple banana blueberry kiwi orange watermelon STOP

displays the following menu:

1) apple 3) blueberry 5) orange 7) STOP

2) banana 4) kiwi 6) watermelon

The select structure uses the values of the LINES (default is 24) and COLUMNS (default is 80) variables to specify the size of the display. With COLUMNS set to 20, the menu looks like this:

1) apple

2) banana

3) blueberry

4) kiwi

5) orange

6) watermelon

7) STOP

PS3

After displaying the menu, select displays the value of PS3, the select prompt. The default value of PS3 is #?, but it is typically set to a more meaningful value. When you enter a valid number (one in the menu range) in response to the PS3 prompt, select sets varname to the argument corresponding to the number you entered. An invalid entry causes the shell to set varname to null. Either way, select stores your response in the keyword variable REPLY and then executes the commands between do and done. If you press RETURN without entering a choice, the shell redisplays the menu and the PS3 prompt.

The select structure continues to issue the PS3 prompt and execute the commands until something causes it to exit—typically a break or an exit statement. A break statement exits from the loop and an exit statement exits from the script.
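Because select reads the user’s response from standard input, you can drive it without a keyboard, which is convenient for experimenting. In this sketch a here string (<<<) supplies the response 2. Standard error, where select displays the menu and the PS3 prompt, is discarded. The variable names choice and number are illustrative.

```shell
#!/bin/bash
PS3="Choose a fruit: "
select fruit in apple banana kiwi
do
    choice=$fruit      # the menu item that corresponds to the response
    number=$REPLY      # the response exactly as it was read
    break
done <<< "2" 2>/dev/null
echo "choice=$choice number=$number"
```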

The following script illustrates the use of select:

$ cat fruit2

#!/bin/bash

PS3="Choose your favorite fruit from these possibilities: "

select FRUIT in apple banana blueberry kiwi orange watermelon STOP

do

if [ "$FRUIT" == "" ]; then

echo -e "Invalid entry.\n"

continue

elif [ $FRUIT = STOP ]; then

echo "Thanks for playing!"

break

fi

echo "You chose $FRUIT as your favorite."

echo -e "That is choice number $REPLY.\n"

done

$ ./fruit2

1) apple 3) blueberry 5) orange 7) STOP

2) banana 4) kiwi 6) watermelon

Choose your favorite fruit from these possibilities: 3

You chose blueberry as your favorite.

That is choice number 3.

Choose your favorite fruit from these possibilities: 99

Invalid entry.

Choose your favorite fruit from these possibilities: 7

Thanks for playing!

After setting the PS3 prompt and establishing the menu with the select statement, fruit2 executes the commands between do and done. If the user submits an invalid entry, the shell sets varname ($FRUIT) to a null value. If $FRUIT is null, echo displays an error message; continue then causes the shell to redisplay the PS3 prompt. If the entry is valid, the script tests whether the user wants to stop. If so, echo displays an appropriate message and break exits from the select structure (and from the script). If the user enters a valid response and does not want to stop, the script displays the name and number of the user’s response. (See page 1009 for information about the echo -e option.)

Here Document

A Here document allows you to redirect input to a shell script from within the shell script itself. A Here document is so named because it is here—immediately accessible in the shell script—instead of there, perhaps in another file.

The following script, named birthday, contains a Here document. The two less than symbols (<<) in the first line indicate that a Here document follows. One or more characters that delimit the Here document follow the less than symbols; this example uses a plus sign. A SPACE between the less than symbols and the opening delimiter is optional, whereas the closing delimiter must be on a line by itself. The shell sends everything between the two delimiters to the process as standard input. In the example it is as though you had redirected standard input to grep from a file, except that the file is embedded in the shell script:

$ cat birthday

grep -i "$1" <<+

Max June 22

Barbara February 3

Darlene May 8

Helen March 13

Zach January 23

Nancy June 26

+

$ ./birthday Zach

Zach January 23

$ ./birthday june

Max June 22

Nancy June 26

When you run birthday, it lists all the Here document lines that contain the argument you called it with. In this case the first time birthday is run, it displays Zach’s birthday because it is called with an argument of Zach. The second run displays all the birthdays in June. The -i argument causes grep’s search not to be case sensitive.
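The plus sign that delimits the birthday Here document is not quoted. As a result, the shell would perform parameter expansion and command substitution on any $ or $(...) in the document. Quoting the opening delimiter turns that processing off. The following sketch shows both forms; the variable names are illustrative.

```shell
#!/bin/bash
name=Zach

# Unquoted delimiter: the shell expands $name and $(...) in the body
expanded=$(cat <<END
Hello, $name. Today is $(echo Sunday).
END
)

# Quoted delimiter: the shell passes the body through literally
literal=$(cat <<'END'
Hello, $name.
END
)

echo "$expanded"
echo "$literal"
```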

Optional

The next script, named bundle,1 includes a clever use of a Here document. The bundle script is an elegant example of a script that creates a shell archive (shar) file. The script creates a file that is itself a shell script containing several other files as well as the code needed to re-create the original files:

1. Thanks to Brian W. Kernighan and Rob Pike, The Unix Programming Environment (Englewood Cliffs, N.J.: Prentice-Hall, 1984), 98. Reprinted with permission.

$ cat bundle

#!/bin/bash

# bundle: group files into distribution package

echo "# To unbundle, bash this file"

for i

do

echo "echo $i 1>&2"

echo "cat >$i <<'End of $i'"

cat $i

echo "End of $i"

done

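Just as the shell does not treat special characters that occur in standard input of a shell script as special, the shell does not treat the special characters in a Here document as special when the opening delimiter is quoted, as it is in each Here document that bundle generates ('End of $i'). When the delimiter is not quoted, the shell performs parameter expansion, command substitution, and arithmetic expansion on the lines of the Here document.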

As the following example shows, the output of bundle is a shell script, which is redirected to a file named bothfiles. It contains the contents of each file given as an argument to bundle (file1 and file2 in this case) inside a Here document. To extract the original files from bothfiles, you simply give it as an argument to a bash command. Before each Here document is a cat command that causes the Here document to be written to a new file when bothfiles is run:

$ cat file1

This is a file.

It contains two lines.

$ cat file2

This is another file.

It contains

three lines.

$ ./bundle file1 file2 > bothfiles

$ cat bothfiles

# To unbundle, bash this file

echo file1 1>&2

cat >file1 <<'End of file1'

This is a file.

It contains two lines.

End of file1

echo file2 1>&2

cat >file2 <<'End of file2'

This is another file.

It contains

three lines.

End of file2

In the next example, file1 and file2 are removed before bothfiles is run. The bothfiles script echoes the names of the files it creates as it creates them. The ls command then shows that bothfiles has re-created file1 and file2:

$ rm file1 file2

$ bash bothfiles

file1

file2

$ ls

bothfiles

file1

file2

File Descriptors

As discussed on page 334, before a process can read from or write to a file, it must open that file. When a process opens a file, Linux associates a number (called a file descriptor) with the file. A file descriptor is an index into the process’s table of open files. Each process has its own set of open files and its own file descriptors. After opening a file, a process reads from and writes to that file by referring to its file descriptor. When it no longer needs the file, the process closes the file, freeing the file descriptor.

A typical Linux process starts with three open files: standard input (file descriptor 0), standard output (file descriptor 1), and standard error (file descriptor 2). Often these are the only files the process needs. Recall that you redirect standard output with the symbol > or the symbol 1> and that you redirect standard error with the symbol 2>. Although you can redirect other file descriptors, because file descriptors other than 0, 1, and 2 do not have any special conventional meaning, it is rarely useful to do so. The exception is in programs that you write yourself, in which case you control the meaning of the file descriptors and can take advantage of redirection.


Opening a File Descriptor

The Bourne Again Shell opens files using the exec builtin with the following syntax:

exec n> outfile

exec m< infile

The first line opens outfile for output and holds it open, associating it with file descriptor n. The second line opens infile for input and holds it open, associating it with file descriptor m.
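The following sketch opens a file for output on file descriptor 3, writes to it with two separate commands, closes it, and then reopens it for input on file descriptor 4. The file stays open between the two echo commands, so the second line follows the first instead of replacing it. The temporary file comes from mktemp, and line1 and line2 are illustrative names.

```shell
#!/bin/bash
outfile=$(mktemp) || exit 1

exec 3> "$outfile"          # open outfile for output as fd 3 (truncates once)
echo "first line" >&3       # both writes go to the already-open file
echo "second line" >&3
exec 3>&-                   # close fd 3

exec 4< "$outfile"          # open the same file for input as fd 4
read -r line1 <&4           # each read consumes the next line
read -r line2 <&4
exec 4<&-                   # close fd 4

rm -f "$outfile"
echo "$line1 / $line2"
```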


Duplicating a File Descriptor

The <& token duplicates an input file descriptor; >& duplicates an output file descriptor. You can duplicate a file descriptor by making it refer to the same file as another open file descriptor, such as standard input or output. Use the following syntax to open or redirect file descriptor n as a duplicate of file descriptor m:

exec n<&m

Once you have opened a file, you can use it for input and output in two ways. First, you can use I/O redirection on any command line, redirecting standard output to a file descriptor with >&n or redirecting standard input from a file descriptor with <&n. Second, you can use the read (page 1041) and echo builtins. If you invoke other commands, including functions (page 396), they inherit these open files and file descriptors. When you have finished using a file, you can close it using the following syntax:

exec n<&-
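Here is a minimal sketch that combines both techniques. It opens a file on file descriptor 3 and reads it line by line with read -u 3, which is equivalent to read word <&3. It then closes the descriptor. The filename words.txt is an arbitrary example. Once the descriptor is closed, any attempt to redirect from file descriptor 3 fails.

```shell
#!/bin/bash
printf 'one\ntwo\nthree\n' > words.txt   # sample input file

exec 3< words.txt                 # open words.txt for input on FD 3
count=0
while read -r -u 3 word; do       # read -u 3 reads from FD 3
    count=$((count + 1))
    echo "word $count: $word"
done
exec 3<&-                         # close FD 3

# FD 3 is now closed, so redirecting input from it fails
if ! { cat <&3; } 2>/dev/null; then
    echo "FD 3 is closed"
fi
```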

File Descriptor Examples

When you call the following mycp function with two arguments, it copies the file named by the first argument to the file named by the second argument. If you supply only one argument, the function copies the file named by the argument to standard output. If you invoke mycp with no arguments, it copies standard input to standard output.

Tip: A function is not a shell script

The mycp example is a shell function; it will not work as you expect if you execute it as a shell script. (Strictly speaking it does run, but the shell defines the function in a short-lived child shell that exits immediately, so the definition is of little use.) You can enter this function from the keyboard. If you put the function in a file, you can give the filename as an argument to the . (dot) builtin (page 332). You can also put the function in a startup file if you want it to be always available (page 397).

function mycp () {
    case $# in
        0)
            # Zero arguments
            # File descriptor 3 duplicates standard input
            # File descriptor 4 duplicates standard output
            exec 3<&0 4>&1
            ;;
        1)
            # One argument
            # Open the file named by the argument for input
            # and associate it with file descriptor 3
            # File descriptor 4 duplicates standard output
            exec 3< "$1" 4>&1
            ;;
        2)
            # Two arguments
            # Open the file named by the first argument for input
            # and associate it with file descriptor 3
            # Open the file named by the second argument for output
            # and associate it with file descriptor 4
            exec 3< "$1" 4> "$2"
            ;;
        *)
            echo "Usage: mycp [source [dest]]" >&2
            return 1
            ;;
    esac

    # Call cat with input coming from file descriptor 3
    # and output going to file descriptor 4
    cat <&3 >&4

    # Close file descriptors 3 and 4
    exec 3<&- 4<&-
}

The real work of this function is done in the line that begins with cat. The rest of the function arranges for file descriptors 3 and 4, which are the input and output of the cat command, respectively, to be associated with the appropriate files.
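The following sketch illustrates the tip about functions and scripts. It puts a hypothetical function named greet in a file named greet.sh; both names are for illustration only. Running the file with bash defines the function in a child shell that exits immediately. Sourcing the file with the . builtin defines the function in the current shell.

```shell
#!/bin/bash
# Put a (hypothetical) function definition in a file
cat > greet.sh << 'EOF'
greet () {
    echo "hello, $1"
}
EOF

# Running the file as a script defines greet in a child shell that exits at once
bash greet.sh
type greet > /dev/null 2>&1 || echo "greet is not defined after running greet.sh"

# Sourcing the file with . defines greet in the current shell
. ./greet.sh
greet world          # displays: hello, world
```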

Optional

The next program takes two filenames on the command line, sorts both, and sends the output to temporary files. The program then merges the sorted files to standard output, preceding each line by a number that indicates which file it came from.

$ cat sortmerg
#!/bin/bash

usage () {
    if [ $# -ne 2 ]; then
        echo "Usage: $0 file1 file2" 1>&2
        exit 1
    fi
}

# Default temporary directory
: "${TEMPDIR:=/tmp}"

# Check argument count
usage "$@"

# Set up temporary files for sorting
file1=$TEMPDIR/$$.file1
file2=$TEMPDIR/$$.file2

# Sort
sort "$1" > "$file1"
sort "$2" > "$file2"

# Open $file1 and $file2 for reading. Use file descriptors 3 and 4.
exec 3<"$file1"
exec 4<"$file2"

# Read the first line from each file to figure out how to start.
read -r Line1 <&3
status1=$?
read -r Line2 <&4
status2=$?

# Strategy: while there is still input left in both files:
#   Output the line that should come first.
#   Read a new line from the file that line came from.
while [ $status1 -eq 0 ] && [ $status2 -eq 0 ]
do
    if [[ "$Line2" > "$Line1" ]]; then
        printf '1.\t%s\n' "$Line1"
        read -r -u3 Line1
        status1=$?
    else
        printf '2.\t%s\n' "$Line2"
        read -r -u4 Line2
        status2=$?
    fi
done

# Now one of the files is at end of file.
# Read from each file until the end.
# First file1:
while [ $status1 -eq 0 ]
do
    printf '1.\t%s\n' "$Line1"
    read -r Line1 <&3
    status1=$?
done

# Next file2:
while [ $status2 -eq 0 ]
do
    printf '2.\t%s\n' "$Line2"
    read -r Line2 <&4
    status2=$?
done

# Close and remove both input files
exec 3<&- 4<&-
rm -f "$file1" "$file2"
exit 0

Determining Whether a File Descriptor Is Associated with the Terminal

The test -t criterion takes an argument of a file descriptor and causes test to return a value of 0 (true) or not 0 (false) based on whether the specified file descriptor is associated with the terminal (screen or keyboard). It is typically used to determine whether standard input, standard output, and/or standard error is coming from/going to the terminal.

In the following example, the is.term script uses the test -t criterion ([ ] is a synonym for test; page 1000) to see if file descriptor 1 (initially standard output) of the process running the shell script is associated with the screen. The message the script displays is based on whether test returns true (file descriptor 1 is associated with the screen) or false (file descriptor 1 is not associated with the screen).

$ cat is.term
if [ -t 1 ] ; then
    echo "FD 1 (stdout) IS going to the screen"
else
    echo "FD 1 (stdout) is NOT going to the screen"
fi

When you run is.term without redirecting standard output, the script displays FD 1 (stdout) IS going to the screen because standard output of the is.term script has not been redirected:

$ ./is.term

FD 1 (stdout) IS going to the screen

When you redirect standard output of a program using > on the command line, bash closes file descriptor 1 and then reopens it, associating it with the file specified following the redirect symbol.

The next example redirects standard output of the is.term script: The newly opened file descriptor 1 associates standard output with the file named hold. Now the test command ([ -t 1 ]) fails, returning a value of 1 (false), because standard output is not associated with a terminal. The script writes FD 1 (stdout) is NOT going to the screen to hold:

$ ./is.term > hold

$ cat hold

FD 1 (stdout) is NOT going to the screen

If you redirect standard error from is.term, the script will report FD 1 (stdout) IS going to the screen and will write nothing to the file receiving the redirection; standard output has not been redirected. You can use [ -t 2 ] to test if standard error is going to the screen:

$ ./is.term 2> hold

FD 1 (stdout) IS going to the screen

In a similar manner, if you send standard output of is.term through a pipeline, test reports that standard output is not associated with a terminal. In this example, cat copies standard input to standard output:

$ ./is.term | cat

FD 1 (stdout) is NOT going to the screen
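Scripts often use this test to change their behavior depending on where output is going. For example, ls lists filenames in columns on the screen but one per line when its output goes to a file or pipeline. The following sketch defines a hypothetical function, show_list, that displays a banner only when file descriptor 1 is associated with the terminal.

```shell
#!/bin/bash
# Hypothetical helper: add a banner only when output goes to the terminal
show_list () {
    if [ -t 1 ]; then
        echo "=== Items ==="        # banner for the screen only
    fi
    printf '%s\n' "$@"               # one item per line
}

show_list apple banana               # banner appears when run at a terminal
show_list apple banana | cat         # no banner: FD 1 is a pipeline
```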

Optional

You can also experiment with test on the command line. This technique allows you to make changes to your experimental code quickly by taking advantage of command history and editing (page 378). To better understand the following examples, first verify that test (called as [ ]) returns a value of 0 (true) when file descriptor 1 is associated with the screen and a value other than 0 (false) when file descriptor 1 is not associated with the screen. The $? special parameter (page 1029) holds the exit status of the previous command.

$ [ -t 1 ]

$ echo $?

0

$ [ -t 1 ] > hold

$ echo $?

1

As explained on page 343, the && (AND) control operator first executes the command preceding it. Only if that command returns a value of 0 (true) does && execute the command following it. In the following example, if [ -t 1 ] returns 0, && executes echo "FD 1 to screen". Although the parentheses (page 344) are not required in this example, they are needed in the next one.

$ ( [ -t 1 ] && echo "FD 1 to screen" )

FD 1 to screen

Next, the output from the same command line is sent through a pipeline to cat, so test returns 1 (false) and && does not execute echo.

$ ( [ -t 1 ] && echo "FD 1 to screen" ) | cat

$

The following example is the same as the previous one, except test checks whether file descriptor 2 is associated with the screen. Because the pipeline redirects only standard output, test returns 0 (true) and && executes echo.

$ ( [ -t 2 ] && echo "FD 2 to screen" ) | cat

FD 2 to screen

In this example, test checks whether file descriptor 2 is associated with the screen (it is) and echo sends its output to file descriptor 1 (which goes through the pipeline to cat).
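You can apply the same test to each of the three standard file descriptors in turn. In the following sketch, the hypothetical report_fds function checks file descriptors 0, 1, and 2. The second call redirects all three away from the terminal, so each check reports false.

```shell
#!/bin/bash
# Report whether each standard file descriptor is associated with a terminal
report_fds () {
    local fd
    for fd in 0 1 2; do
        if [ -t "$fd" ]; then
            echo "FD $fd is associated with a terminal"
        else
            echo "FD $fd is not associated with a terminal"
        fi
    done
}

report_fds                                   # results depend on how you run it
report_fds < /dev/null 2> /dev/null | cat    # all three report "not"
```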

Parameters

Shell parameters were introduced on page 352. This section goes into more detail about positional parameters and special parameters.

Positional Parameters

Positional parameters comprise the command name and command-line arguments. These parameters are called positional because you refer to them by their position on the command line. You cannot use an assignment statement to change the value of a positional parameter. However, the set builtin (page 1024) enables you to change the value of any positional parameter except the name of the calling program (the command name).
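Here is a minimal sketch of the set builtin changing positional parameters. The -- tells set that the words that follow are arguments rather than options. Because set replaces all the positional parameters at once, you change just one of them by passing the others back unchanged.

```shell
#!/bin/bash
# set -- replaces all positional parameters; $0 is not affected
set -- zach max helen
echo "$# parameters: $1 $2 $3"       # displays: 3 parameters: zach max helen

set -- "$1" sam "$3"                 # change only the second parameter
echo "$# parameters: $1 $2 $3"       # displays: 3 parameters: zach sam helen
```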

$0: Name of the Calling Program

The shell expands $0 to the name of the calling program (the command you used to call the program—usually the name of the program you are running). This parameter is numbered zero because it appears before the first argument on the command line:

$ cat abc

echo "This script was called by typing $0"

$ ./abc

This script was called by typing ./abc

$ /home/sam/abc

This script was called by typing /home/sam/abc

The preceding shell script uses echo to display how it was called. You can use the basename utility and command substitution to extract the simple filename of the script:

$ cat abc2

echo "This script was called by typing $(basename "$0")"

$ /home/sam/abc2

This script was called by typing abc2
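bash can also strip the directory part of a pathname without running an external utility. The ${name##pattern} expansion removes the longest leading match of pattern, so ${0##*/} removes everything through the last slash. In the sketch below, the variable path stands in for $0. The two approaches differ in some edge cases. For example, basename ignores a trailing slash, whereas ${path##*/} yields a null string when path ends with a slash.

```shell
#!/bin/bash
path=/home/sam/abc2          # stands in for $0 in this sketch
echo "$(basename "$path")"   # displays: abc2
echo "${path##*/}"           # displays: abc2 (no external command needed)
```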

When you call a script through a link, the shell expands $0 to the name of the link.

$ ln -s abc2 mylink

$ /home/sam/mylink

This script was called by typing mylink

When you display the value of $0 from an interactive shell, the shell displays its name because that is the name of the calling program (the program you are running).

$ echo $0

bash

Tip: bash versus -bash

On some systems, echo $0 displays -bash while on others it displays bash. The former indicates a login shell (page 330); the latter indicates a shell that is not a login shell. In a GUI environment, some terminal emulators launch login shells while others do not.
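From within a script or startup file, bash lets you check for a login shell another way: the shopt builtin can query the read-only login_shell option, as in this minimal sketch. Run as an ordinary script, it reports that it is not running in a login shell.

```shell
#!/bin/bash
# The login_shell option is on only in a login shell; you cannot change it
if shopt -q login_shell; then
    echo "login shell"
else
    echo "not a login shell"
fi
```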

$1–$n: Positional Parameters

The shell expands $1 to the first argument on the command line, $2 to the second argument, and so on up to $n. These parameters are short for ${1}, ${2}, ${3}, and so on. For values of n less than or equal to 9, the braces are optional. For values of n greater than 9, the number must be enclosed within braces. For example, the twelfth positional parameter is represented by ${12}. The following script displays positional parameters that hold command-line arguments:
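The following sketch shows why the braces matter. After set -- assigns twelve positional parameters, ${12} expands to the twelfth one. In contrast, $12 expands to $1 followed by the character 2.

```shell
#!/bin/bash
set -- a b c d e f g h i j k l       # twelve positional parameters
echo "${12}"                         # displays: l (the twelfth parameter)
echo "$12"                           # displays: a2 ($1 followed by the character 2)
```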

$ cat display_5args

echo First 5 arguments are $1 $2 $3 $4 $5

$ ./display_5args zach max helen

First 5 arguments are zach max helen

The display_5args script displays the first five command-line arguments. The shell expands each parameter that represents an argument that is not present on the command line to a null string. Thus the $4 and $5 parameters have null values in this example.

Caution: Always quote positional parameters

You can “lose” positional parameters if you do not quote them. See the following text for an example.