Running Linux, 5th Edition (2009)

Part III. Programming

The tools and practices in this part of the book are not needed by all users, but anyone willing to master them can add a great deal of power to their system. If you've done programming before, this part of the book gets you to a productive state on Linux quickly; if you haven't, it can show you some of the benefits of programming and serve as an introduction to its joys. Chapter 20 shows you two great tools for text editing, vi and Emacs, that you should master even if you don't plan to be a programmer. The material in this part of the book can also be a valuable reference as you read other parts.

Chapter 21: Programming Tools

Chapter 22: Running a Web Server

Chapter 23: Transporting and Handling Email Messages

Chapter 24: Running an FTP Server

Chapter 21. Programming Tools

There's much more to Linux than simply using the system. One of the benefits of free software is that you can modify it to suit your needs. This applies equally to the many free applications available for Linux and to the Linux kernel itself.

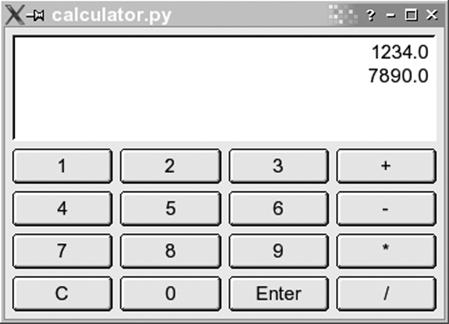

Linux supports an advanced programming interface, using GNU compilers and tools, such as the gcc compiler, the gdb debugger, and so on. An enormous number of other programming languages—ranging from such classics as FORTRAN and LISP to modern scripting languages such as Perl, Python, and Ruby—are also supported. Whatever your programming needs, Linux is a great choice for developing Unix applications. Because the complete source code for the libraries and Linux kernel is provided, programmers who need to delve into the system internals are able to do so.[*]

Many judge a computer system by the tools it offers its programmers. Unix systems have won the contest by many people's standards, having developed a very rich set over the years. Leading the parade is the GNU debugger, gdb. In this chapter, we take a close look at this invaluable utility, and at a number of other auxiliary tools C programmers will find useful.

Even if you are not a programmer, you should consider using the Revision Control System (RCS). It provides one of the most reassuring protections a computer user could ask for: backups for everything you do to a file. If you delete a file by accident, or decide that everything you did for the past week was a mistake and should be ripped out, RCS can recover any version you want. If you are working on a larger project that involves either a large number of developers or a large number of directories (or both), the Concurrent Versions System (CVS) might be more suitable for you. It was originally based on RCS, but was rewritten from the ground up and provides many additional features. Currently, another tool, called Subversion, is taking over from CVS and filling in some of the gaps that CVS left in the handling of large projects.[*] The goal of Subversion is to be "like CVS; just better." Newer installations typically use Subversion these days, but the vast majority of existing ones still use CVS. Finally, the Linux kernel itself uses yet another versioning system. It used to use a program called BitKeeper, but when licensing problems arose, Linus Torvalds wrote his own version control system, called git, which was introduced recently.

Linux is an ideal platform for developing software to run under the X Window System. The Linux X distribution, as described in Chapter 16, is a complete implementation with everything you need to develop and support X applications. Programming for X is portable across applications, so the X-specific portions of your application should compile cleanly on other Unix systems.

In this chapter, we explore the Linux programming environment and give you a five-cent tour of the many facilities it provides. Half of the trick to Unix programming is knowing what tools are available and how to use them effectively. Often the most useful features of these tools are not obvious to new users.

Since C programming has been the basis of most large projects (even though it is nowadays being replaced more and more by C++ and Java) and is the language common to most modern programmers, not only on Unix but on many other systems as well, we start by telling you what tools are available for that. The first few sections of the chapter assume you are already a C programmer.

But several other tools are emerging as important resources, especially for system administration. We examine one in this chapter: Perl. Perl is a scripting language like the Unix shells, taking care of grunt work such as memory allocation so you can concentrate on your task. But Perl offers a degree of sophistication that makes it more powerful than shell scripts and therefore appropriate for many programming tasks.

Several open source projects make it relatively easy to program in Java, and some of the tools and frameworks in the open source community are even more popular than those distributed by Sun Microsystems, the company that invented and licenses Java. Java is a general-purpose language with many potential Internet uses. In a later section, we explore what Java offers and how to get started.

Programming with gcc

The C programming language is by far the most often used in Unix software development. Perhaps this is because the Unix system was originally developed in C; it is the native tongue of Unix. Unix C compilers have traditionally defined the interface standards for other languages and tools, such as linkers, debuggers, and so on. Conventions set forth by the original C compilers have remained fairly consistent across the Unix programming board.

gcc is one of the most versatile and advanced compilers around. Unlike other C compilers (such as those shipped with the original AT&T or BSD distributions, or those available from various third-party vendors), gcc supports all the modern C standards currently in use—such as the ANSI C standard—as well as many extensions specific to gcc. Happily, however, gcc provides features to make it compatible with older C compilers and older styles of C programming. There is even a tool called protoize that can help you write function prototypes for old-style C programs.

gcc is also a C++ compiler. For those who prefer the more modern object-oriented environment, C++ is supported with all the bells and whistles, including most of the features introduced when the C++ standard was released, such as method templates. Complete C++ class libraries are provided as well, such as the Standard Template Library (STL).

For those with a taste for the particularly esoteric, gcc also supports Objective-C, an object-oriented C spinoff that never gained much popularity but may see a second spring due to its usage in Mac OS X. And there is gcj, which compiles Java code to machine code. But the fun doesn't stop there, as we'll see.

In this section, we cover the use of gcc to compile and link programs under Linux. We assume you are familiar with programming in C/C++, but we don't assume you're accustomed to the Unix programming environment. That's what we introduce here.

Warning

The latest gcc version at the time of this writing is Version 4.0. However, this is still quite new, sometimes a bit unstable, and, since it is a lot stricter about syntax than previous versions, will not compile some older code. Many developers therefore use either a version of the 3.3 series (with 3.3.5 being the current one at the time of this writing) or Version 3.4. We suggest sticking with either of those unless you know exactly what you are doing.

A word about terminology ahead: Because gcc can these days compile so much more than C (for example, C++, Java, and some other programming languages), it is considered to be the abbreviation for GNU Compiler Collection. But if you speak about just the C compiler, gcc is taken to mean GNU C Compiler.

Quick Overview

Before imparting all the gritty details of gcc, we present a simple example and walk through the steps of compiling a C program on a Unix system.

Let's say you have the following bit of code, an encore of the much overused "Hello, World!" program (not that it bears repeating):

#include <stdio.h>

int main() {
    (void)printf("Hello, World!\n");
    return 0; /* Just to be nice */
}

Several steps are required to compile this program into a living, breathing executable. You can accomplish most of these steps through a single gcc command, but we've left the specifics for later in the chapter.

First, the gcc compiler must generate an object file from this source code. The object file is essentially the machine-code equivalent of the C source. It contains code to set up the main() calling stack, a call to the printf() function, and code to return the value of 0.

The next step is to link the object file to produce an executable. As you might guess, this is done by the linker. The job of the linker is to take object files, merge them with code from libraries, and spit out an executable. The object code from the previous source does not make a complete executable. First and foremost, the code for printf() must be linked in. Also, various initialization routines, invisible to the mortal programmer, must be appended to the executable.

Where does the code for printf() come from? Answer: the libraries. It is impossible to talk for long about gcc without mentioning them. A library is essentially a collection of many object files, including an index. When searching for the code for printf(), the linker looks at the index for each library it's been told to link against. It finds the object file containing the printf() function and extracts that object file (the entire object file, which may contain much more than just the printf() function) and links it to the executable.

In reality, things are more complicated than this. Linux supports two kinds of libraries: static and shared. What we have described in this example are static libraries: libraries where the actual code for called subroutines is appended to the executable. However, the code for subroutines such as printf() can be quite lengthy. Because many programs use common subroutines from the libraries, it doesn't make sense for each executable to contain its own copy of the library code. That's where shared libraries come in.[*]

With shared libraries, all the common subroutine code is contained in a single library "image file" on disk. When a program is linked with a shared library, stub code is appended to the executable, instead of actual subroutine code. This stub code tells the program loader where to find the library code on disk, in the image file, at runtime. Therefore, when our friendly "Hello, World!" program is executed, the program loader notices that the program has been linked against a shared library. It then finds the shared library image and loads code for library routines, such as printf(), along with the code for the program itself. The stub code tells the loader where to find the code for printf() in the image file.

Even this is an oversimplification of what's really going on. Linux shared libraries use jump tables that allow the libraries to be upgraded and their contents to be jumbled around, without requiring the executables using these libraries to be relinked. The stub code in the executable actually looks up another reference in the library itself—in the jump table. In this way, the library contents and the corresponding jump tables can be changed, but the executable stub code can remain the same.
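The indirection described above can be imitated in plain C with a table of function pointers. This is only a toy analogy under our own assumptions, not how the real loader is implemented: the names greet_v1, greet_v2, jump_table, call_greet, and upgrade_library are all invented for the illustration.

```c
/* Two interchangeable "library versions" of the same routine. */
static int greet_v1(void) { return 1; }
static int greet_v2(void) { return 2; }

/* The "jump table": one slot per exported routine, owned by the library. */
enum { SLOT_GREET = 0, SLOT_COUNT };
static int (*jump_table[SLOT_COUNT])(void) = { greet_v1 };

/* The "stub" compiled into the executable: it knows only the slot number,
   never the address of the routine itself. */
int call_greet(void) {
    return jump_table[SLOT_GREET]();
}

/* "Upgrading the library" replaces the slot's contents; the stub above
   is not recompiled or changed in any way. */
void upgrade_library(void) {
    jump_table[SLOT_GREET] = greet_v2;
}
```

Calling call_greet() before and after upgrade_library() reaches two different routines through the same unchanged stub, which is the property that lets a shared library be replaced without relinking its clients.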

Shared libraries also have another advantage: their ability to be upgraded. When someone fixes a bug in printf() (or worse, a security hole), you only need to upgrade the one library. You don't have to relink every single program on your system.

But don't allow yourself to be befuddled by all this abstract information. In time, we'll approach a real-life example and show you how to compile, link, and debug your programs. It's actually very simple; the gcc compiler takes care of most of the details for you. However, it helps to understand what's going on behind the scenes.

gcc Features

gcc has more features than we could possibly enumerate here. The gcc manual page and Info document give an eyeful of interesting information about this compiler. Later in this section, we give you a comprehensive overview of the most useful gcc features to get you started. With this in hand, you should be able to figure out for yourself how to get the many other facilities to work to your advantage.

For starters, gcc supports the standard C syntax currently in use, specified for the most part by the ANSI C standard. The most important feature of this standard is function prototyping. That is, when defining a function foo(), which returns an int and takes two arguments, a (of type char *) and b (of type double), the function may be defined like this:

int foo(char *a, double b) {
    /* your code here... */
}

This contrasts with the older, nonprototype function definition syntax, which looks like this:

int foo(a, b)
char *a;
double b;
{
    /* your code here... */
}

and is also supported by gcc. Of course, ANSI C defines many other conventions, but this is the one most obvious to the new programmer. Anyone familiar with C programming style in modern books, such as the second edition of Kernighan and Ritchie's The C Programming Language (Prentice Hall), can program using gcc with no problem.
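To see why prototypes matter in practice, consider the small sketch below. The function names half and half_of_three are our own, chosen only for illustration. Because the prototype is visible at the call, the compiler converts the int argument to a double before passing it.

```c
/* Prototype: the compiler now knows half() expects a double. */
double half(double x);

double half_of_three(void) {
    /* The int constant 3 is converted to 3.0 because of the prototype.
       With only an old-style declaration such as "double half();" the
       int would be passed unconverted and the behavior would be
       undefined. */
    return half(3);
}

double half(double x) {
    return x / 2.0;
}
```

With the prototype in place, half_of_three() yields 1.5; a mismatched call such as half("three") would be rejected at compile time rather than misbehaving at runtime.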

The gcc compiler boasts quite an impressive optimizer. Whereas most C compilers allow you to use the single switch -O to specify optimization, gcc supports multiple levels of optimization. At the higher levels, gcc pulls tricks out of its sleeve, such as merging identical constants. That is, if the same string literal, such as Hello, World!, appears in several places in your program, gcc can keep a single copy and let every use share the same storage.

Of course, gcc allows you to compile debugging information into object files, which aids a debugger (and hence, the programmer) in tracing through the program. The compiler inserts markers in the object file, allowing the debugger to locate specific lines, variables, and functions in the compiled program. Therefore, when using a debugger such as gdb (which we talk about later in the chapter), you can step through the compiled program and view the original source text simultaneously.

Among the other tricks gcc offers is the ability to generate assembly code with the flick of a switch (literally). Instead of telling gcc to compile your source to machine code, you can ask it to stop at the assembly-language level, which is much easier for humans to comprehend. This happens to be a nice way to learn the intricacies of protected-mode assembly programming under Linux: write some C code, have gcc translate it into assembly language for you, and study that.

gcc works hand in hand with the GNU assembler, gas, which can also be used independently of gcc, just in case you're wondering how this assembly-language code might get assembled. (On Linux the binary is usually just called as, since there is no vendor assembler to confuse it with, as there is on other Unix operating systems such as Solaris.) In fact, you can include inline assembly code in your C source, in case you need to invoke some particularly nasty magic but don't want to write exclusively in assembly.
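As a taste of inline assembly, here is a minimal sketch using gcc's extended asm syntax. It assumes an x86 or x86-64 target; on anything else the preprocessor guard falls back to ordinary C. The function name add_ints is our own invention.

```c
/* Add two ints, using a single x86 instruction where available. */
int add_ints(int a, int b) {
#if defined(__GNUC__) && (defined(__i386__) || defined(__x86_64__))
    /* AT&T syntax: "addl src, dst" computes dst += src.
       %0 is a (read and written, hence "+r"), %1 is b (read only).
       "cc" tells gcc that the condition codes are modified. */
    __asm__("addl %1, %0" : "+r"(a) : "r"(b) : "cc");
    return a;
#else
    /* Portable fallback for other architectures or compilers. */
    return a + b;
#endif
}
```

The constraint strings are what let gcc pick registers for you and keep optimizing around the assembly; the instruction text itself is passed through to the assembler largely untouched.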

Basic gcc Usage

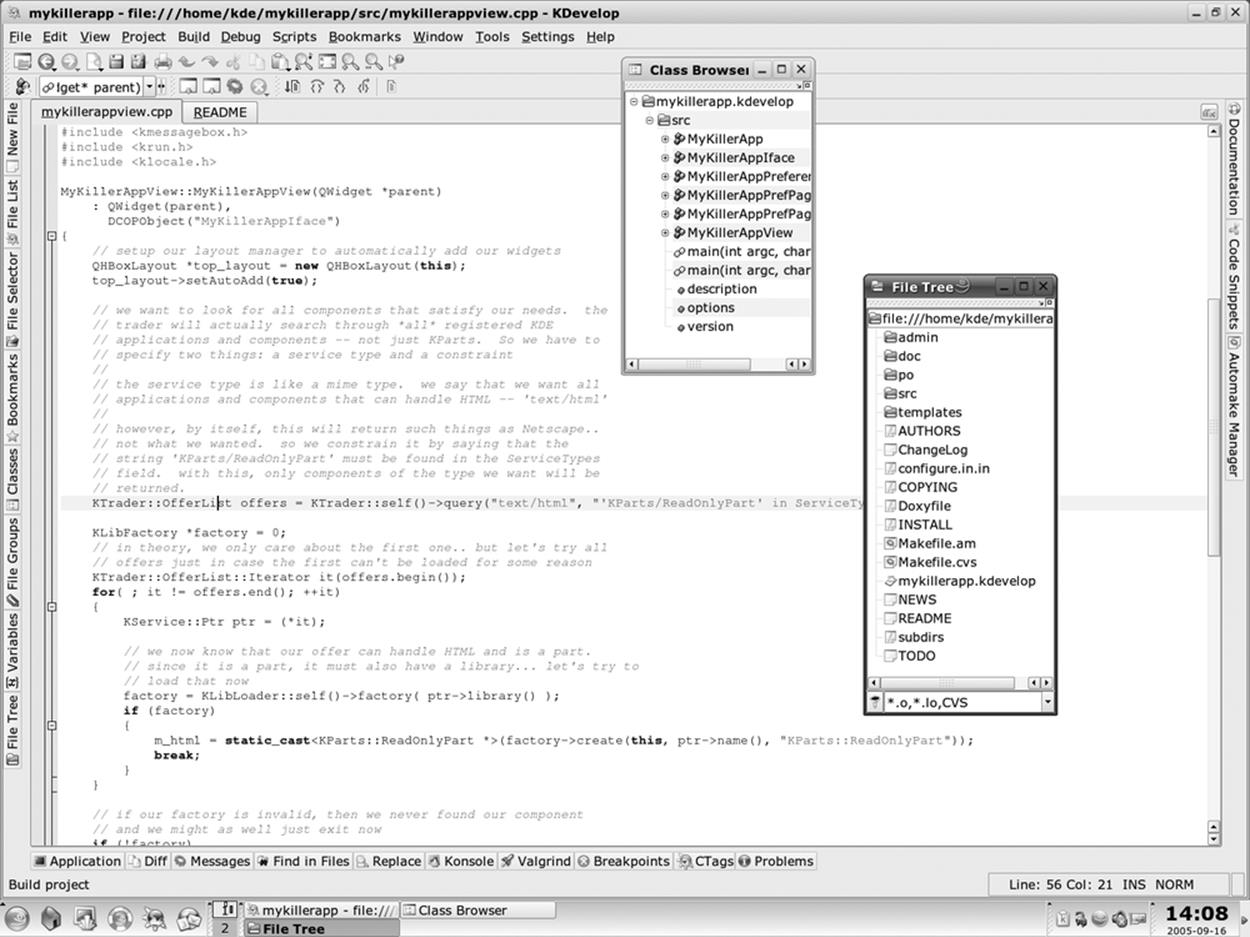

By now, you must be itching to know how to invoke all these wonderful features. It is important, especially to novice Unix and C programmers, to know how to use gcc effectively. Using a command-line compiler such as gcc is quite different from, say, using an integrated development environment (IDE) such as Visual Studio or C++ Builder under Windows. Even though the language syntax is similar, the methods used to compile and link programs are not at all the same.

Tip

A number of IDEs are available for Linux now. These include the popular open source IDE KDevelop, discussed later in this chapter. For Java, Eclipse (http://www.eclipse.org) is the leading choice among programmers who like IDEs.

Let's return to our innocent-looking "Hello, World!" example. How would you go about compiling and linking this program?

The first step, of course, is to enter the source code. You accomplish this with a text editor, such as Emacs or vi. The would-be programmer should enter the source code and save it in a file named something like hello.c. (As with most C compilers, gcc is picky about the filename extension: that is how it distinguishes C source from assembly source from object files, and so on. Use the .c extension for standard C source.)

To compile and link the program to the executable hello, the programmer would use the command:

papaya$ gcc -o hello hello.c

and (barring any errors), in one fell swoop, gcc compiles the source into an object file, links against the appropriate libraries, and spits out the executable hello, ready to run. In fact, the wary programmer might want to test it:

papaya$ ./hello
Hello, World!
papaya$

As friendly as can be expected.

Obviously, quite a few things took place behind the scenes when executing this single gcc command. First of all, gcc had to compile your source file, hello.c, into an object file, hello.o. Next, it had to link hello.o against the standard libraries and produce an executable.

By default, gcc assumes that you want not only to compile the source files you specify, but also to have them linked together (with each other and with the standard libraries) to produce an executable. First, gcc compiles any source files into object files. Next, it automatically invokes the linker to glue all the object files and libraries into an executable. (That's right, the linker is a separate program, called ld, not part of gcc itself—although it can be said that gcc and ld are close friends.) gcc also knows about the standard libraries used by most programs and tells ld to link against them. You can, of course, override these defaults in various ways.

You can pass multiple filenames in one gcc command, but on large projects you'll find it more natural to compile a few files at a time and keep the .o object files around. If you want only to compile a source file into an object file and forgo the linking process, use the -c switch with gcc, as in the following example:

papaya$ gcc -c hello.c

This produces the object file hello.o and nothing else.

By default, the linker produces an executable named, of all things, a.out. This is just a bit of left-over gunk from early implementations of Unix, and nothing to write home about. By using the -o switch with gcc, you can force the resulting executable to be named something different, in this case, hello.

Using Multiple Source Files

The next step on your path to gcc enlightenment is to understand how to compile programs using multiple source files. Let's say you have a program consisting of two source files, foo.c and bar.c. Naturally, you would use one or more header files (such as foo.h) containing function declarations shared between the two source files. In this way, code in foo.c knows about functions in bar.c, and vice versa.
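To make the arrangement concrete, here is one way the three files might look, shown as a single listing so that it compiles on its own. The function names foo_scale and bar_offset are hypothetical; in a real project each marked section would live in its own file, and both source files would begin with #include "foo.h".

```c
/* ---- foo.h: declarations shared by both source files ---- */
#ifndef FOO_H
#define FOO_H
int foo_scale(int x);      /* defined in foo.c */
int bar_offset(int x);     /* defined in bar.c */
#endif

/* ---- foo.c (would begin with: #include "foo.h") ---- */
int foo_scale(int x) {
    return 2 * bar_offset(x);   /* calls into bar.c */
}

/* ---- bar.c (would begin with: #include "foo.h") ---- */
int bar_offset(int x) {
    return x + 1;
}
```

Because foo.c sees the declaration of bar_offset() in the header, the compiler can check the call even though the definition lives in another file; the linker later connects the two object files.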

To compile these two source files and link them together (along with the libraries, of course) to produce the executable baz, you'd use the command:

papaya$ gcc -o baz foo.c bar.c

This is roughly equivalent to the following three commands:

papaya$ gcc -c foo.c
papaya$ gcc -c bar.c
papaya$ gcc -o baz foo.o bar.o

gcc acts as a nice frontend to the linker and other "hidden" utilities invoked during compilation.

Of course, compiling a program using multiple source files in one command can be time-consuming. If you had, say, five or more source files in your program, the gcc command in the previous example would recompile each source file in turn before linking the executable. This can be a large waste of time, especially if you only made modifications to a single source file since the last compilation. There would be no reason to recompile the other source files, as their up-to-date object files are still intact.

The answer to this problem is to use a project manager such as make. We talk about make later in the chapter, in "Makefiles."

Optimizing

Telling gcc to optimize your code as it compiles is a simple matter; just use the -O switch on the gcc command line:

papaya$ gcc -O -o fishsticks fishsticks.c

As we mentioned not long ago, gcc supports different levels of optimization. Using -O2 instead of -O will turn on several "expensive" optimizations that may cause compilation to run more slowly but will (hopefully) greatly enhance performance of your code.

You may notice in your dealings with Linux that a number of programs are compiled using the switch -O6 (the Linux kernel being a good example). The current version of gcc does not support optimization up to -O6, so this defaults to (presently) the equivalent of -O3, the highest level gcc implements. However, -O6 is sometimes used for compatibility with future versions of gcc to ensure that the greatest level of optimization is used.

Enabling Debugging Code

The -g switch to gcc turns on debugging code in your compiled object files. That is, extra information is added to the object file, as well as the resulting executable, allowing the program to be traced with a debugger such as gdb. The downside to using debugging code is that it greatly increases the size of the resulting object files. It's usually best to use -g only while developing and testing your programs and to leave it out for the "final" compilation.

Happily, debug-enabled code is not incompatible with code optimization. This means that you can safely use the command:

papaya$ gcc -O -g -o mumble mumble.c

However, certain optimizations enabled by -O or -O2 may cause the program to appear to behave erratically while under a debugger. It is usually best to use either -O or -g, not both.

More Fun with Libraries

Before we leave the realm of gcc, a few words on linking and libraries are in order. For one thing, it's easy for you to create your own libraries. If you have a set of routines you use often, you may wish to group them into a set of source files, compile each source file into an object file, and then create a library from the object files. This saves you from having to compile these routines individually for each program in which you use them.

Let's say you have a set of source files containing oft-used routines, such as:

float square(float x) {
    /* Code for square()... */
}

int factorial(int x, int n) {
    /* Code for factorial()... */
}

and so on (of course, the gcc standard libraries provide analogs to these common routines, so don't be misled by our choice of example). Furthermore, let's say that the code for square(), which both takes and returns a float, is in the file square.c and that the code for factorial() is in factorial.c. Simple enough, right?

To produce a library containing these routines, all you do is compile each source file, as so:

papaya$ gcc -c square.c factorial.c

which leaves you with square.o and factorial.o. Next, create a library from the object files. As it turns out, a library is just an archive file created using ar (a close counterpart to tar). Let's call our library libstuff.a and create it this way:

papaya$ ar r libstuff.a square.o factorial.o

When updating a library such as this, you may need to delete the old libstuff.a, if it exists. The last step is to generate an index for the library, which enables the linker to find routines within the library. To do this, use the ranlib command, as so:

papaya$ ranlib libstuff.a

This command adds information to the library itself; no separate index file is created. You could also combine the two steps of running ar and ranlib by using the s command to ar:

papaya$ ar rs libstuff.a square.o factorial.o

Now you have libstuff.a, a static library containing your routines. Before you can link programs against it, you'll need to create a header file describing the contents of the library. For example, we could create libstuff.h with the contents:

/* libstuff.h: routines in libstuff.a */
extern float square(float);
extern int factorial(int, int);

Every source file that uses routines from libstuff.a should contain an #include "libstuff.h" line, as you would do with standard header files.
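A source file using the library might look roughly like the sketch below. To keep the listing compilable by itself, the header contents are inlined and a stand-in definition of square() is supplied; both are assumptions made for the illustration, as are the include guard LIBSTUFF_H and the caller sum_of_squares(). In a real build the first section would be replaced by #include "libstuff.h" and the last by linking against libstuff.a.

```c
/* Contents of libstuff.h, inlined here so the sketch compiles alone.
   The include guard is our own addition. */
#ifndef LIBSTUFF_H
#define LIBSTUFF_H
extern float square(float);
extern int factorial(int, int);
#endif

/* Hypothetical caller: uses a library routine through its declaration. */
float sum_of_squares(float a, float b) {
    return square(a) + square(b);
}

/* Stand-in for the library routine; normally this comes from libstuff.a. */
float square(float x) {
    return x * x;
}
```

The caller needs only the declarations to compile; where the actual code for square() comes from is a question for the link step.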

Now that we have our library and header file, how do we compile programs to use them? First, we need to put the library and header file someplace where the compiler can find them. Many users place personal libraries in the directory lib in their home directory, and personal include files under include. Assuming we have done so, we can compile the mythical program wibble.c using the following command:

papaya$ gcc -I../include -L../lib -o wibble wibble.c -lstuff

The -I option tells gcc to add the directory ../include to the include path it uses to search for include files. -L is similar, in that it tells gcc to add the directory ../lib to the library path.

The last argument on the command line is -lstuff, which tells the linker to link against the library libstuff.a (wherever it may be along the library path). The lib at the beginning of the filename is assumed for libraries.

Any time you wish to link against libraries other than the standard ones, you should use the -l switch on the gcc command line. For example, if you wish to use math routines (specified in math.h), you should add -lm to the end of the gcc command, which links against libm. Note, however, that the order of -l options is significant. For example, if our libstuff library used routines found in libm, you must include -lm after -lstuff on the command line:

papaya$ gcc -I../include -L../lib -o wibble wibble.c -lstuff -lm

This forces the linker to link libm after libstuff, allowing those unresolved references in libstuff to be taken care of.

Where does gcc look for libraries? By default, libraries are searched for in a number of locations, the most important of which is /usr/lib. If you take a glance at the contents of /usr/lib, you'll notice it contains many library files, some with filenames ending in .a, others with filenames ending in .so.version. The .a files are static libraries, as is the case with our libstuff.a. The .so files are shared libraries, which contain code to be linked at runtime, as well as the stub code required for the runtime linker (ld.so) to locate the shared library.

At runtime, the program loader looks for shared library images in several places, including /lib. If you look at /lib, you'll see files such as libc.so.6. This is the image file containing the code for the libc shared library (one of the standard libraries, which most programs are linked against).

By default, the linker attempts to link against shared libraries. However, static libraries are used in several cases: for example, when there are no shared libraries with the specified name anywhere in the library search path. You can also specify that static libraries should be linked by using the -static switch with gcc.

Creating shared libraries

Now that you know how to create and use static libraries, it's very easy to take the step to shared libraries. Shared libraries have a number of advantages. They reduce memory consumption if used by more than one process, and they reduce the size of the executable. Furthermore, they make developing easier: when you use shared libraries and change some things in a library, you do not need to recompile and relink your application each time. You need to recompile only if you make incompatible changes, such as adding arguments to a call or changing the size of a struct.
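Why does changing the size of a struct count as an incompatible change? The sketch below, with two hypothetical versions of a struct (point_v1 and point_v2 are names we made up), shows the mismatch: an application compiled against the old header reserves fewer bytes than the upgraded library expects to find.

```c
#include <stddef.h>

/* Layout the application was compiled against (hypothetical version 1). */
struct point_v1 {
    int x;
    int y;
};

/* Layout after an incompatible library change (hypothetical version 2). */
struct point_v2 {
    int x;
    int y;
    int z;   /* new member: the struct grows */
};

/* Bytes an old caller would allocate versus bytes the new library expects. */
size_t old_caller_allocates(void) { return sizeof(struct point_v1); }
size_t new_library_expects(void)  { return sizeof(struct point_v2); }
```

If the new library writes the z member into memory an old caller allocated, it writes past the end of the object, which is why the application must be recompiled after such a change.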

Before you start doing all your development work with shared libraries, though, be warned that debugging with them is slightly more difficult than with static libraries because the debugger usually used on Linux, gdb, has some problems with shared libraries.

Code that goes into a shared library needs to be position-independent. This is just a convention for object code that makes it possible to use the code in shared libraries. You make gcc emit position-independent code by passing it one of the command-line switches -fpic or -fPIC. The former is preferred, unless the modules have grown so large that the relocatable code table is simply too small, in which case the compiler will emit an error message and you have to use -fPIC. To repeat our example from the last section:

papaya$ gcc -c -fpic square.c factorial.c

This being done, it is just a simple step to generate a shared library:[*]

papaya$ gcc -shared -o libstuff.so square.o factorial.o

Note the compiler switch -shared. There is no indexing step as with static libraries.

Using our newly created shared library is even simpler. The shared library doesn't require any change to the compile command:

papaya$ gcc -I../include -L../lib -o wibble wibble.c -lstuff -lm

You might wonder what the linker does if a shared library libstuff.so and a static library libstuff.a are available. In this case, the linker always picks the shared library. To make it use the static one, you will have to name it explicitly on the command line:

papaya$ gcc -I../include -L../lib -o wibble wibble.c libstuff.a -lm

Another very useful tool for working with shared libraries is ldd. It tells you which shared libraries an executable program uses. Here's an example:

papaya$ ldd wibble
    linux-gate.so.1 => (0xffffe000)
    libstuff.so => libstuff.so (0x400af000)
    libm.so.5 => /lib/libm.so.5 (0x400ba000)
    libc.so.5 => /lib/libc.so.5 (0x400c3000)

The three fields in each line are the name of the library, the full path to the instance of the library that is used, and where in the virtual address space the library is mapped to. The first line is something arcane, part of the Linux loader implementation that you can happily ignore.
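If you ever want to process ldd output in a program, the three fields can be picked apart with sscanf(). The helper below is only a sketch under the assumption that lines look like the ones shown above; parse_ldd_line is our own name, and real ldd output varies between systems.

```c
#include <stdio.h>

/* Split one line of ldd output into its three fields: library name,
   path of the instance used, and mapping address.
   Returns 1 on success, 0 if the line does not have all three fields
   (as with the linux-gate line, which has no path).
   name and path must each have room for at least 256 bytes. */
int parse_ldd_line(const char *line, char *name, char *path,
                   unsigned long *addr) {
    return sscanf(line, " %255s => %255s (%lx)", name, path, addr) == 3;
}
```

The %lx conversion accepts the leading 0x, so the address comes back as a number rather than a string.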

If ldd outputs not found for a certain library, you are in trouble and won't be able to run the program in question. You will have to search for a copy of that library. Perhaps it is a library shipped with your distribution that you opted not to install, or it is already on your hard disk but the loader (the part of the system that loads every executable program) cannot find it.

In the latter situation, try locating the libraries yourself and find out whether they're in a nonstandard directory. By default, the loader looks only in /lib and /usr/lib. If you have libraries in another directory, create an environment variable LD_LIBRARY_PATH and add the directories separated by colons. If you believe that everything is set up correctly, and the library in question still cannot be found, run the command ldconfig as root, which refreshes the linker system cache.

Using C++

If you prefer object-oriented programming, gcc provides complete support for C++ as well as Objective-C. There are only a few considerations you need to be aware of when doing C++ programming with gcc.

First, C++ source filenames should end in the extension .cpp (most often used), .C, or .cc. This distinguishes them from regular C source filenames, which end in .c. It is actually possible to tell gcc to compile even files ending in .c as C++ files, by using the command-line parameter -x c++, but that is not recommended, as it is likely to confuse you.

Second, you should use the g++ command in lieu of gcc when compiling C++ code. g++ is simply a driver that invokes the same compiler as gcc with a number of additional arguments, specifying a link against the C++ standard libraries, for example. g++ takes the same arguments and options as gcc.

If you do not use g++, you'll need to be sure to link against the C++ libraries in order to use any of the basic C++ classes, such as the cout and cin I/O objects. Also be sure you have actually installed the C++ libraries and include files. Some distributions contain only the standard C libraries. gcc will be able to compile your C++ programs fine, but without the C++ libraries, you'll end up with linker errors whenever you attempt to use standard objects.

[*] On a variety of Unix systems, the authors have repeatedly found available documentation to be insufficient. With Linux, you can explore the very source code for the kernel, libraries, and system utilities. Having access to source code is more important than most programmers think.

[*] The name is a very clever pun, if you think about the tool for a while.

[*] It should be noted that some very knowledgeable programmers consider shared libraries harmful, for reasons too involved to be explained here. They say that we shouldn't need to bother in a time when most computers ship with 80-GB hard disks and at least 256 MB of memory preinstalled.

[*] In the ancient days of Linux, creating a shared library was a daunting task of which even wizards were afraid. The advent of the ELF object-file format reduced this task to picking the right compiler switch. Things sure have improved!

Makefiles

Sometime during your life with Linux you will probably have to deal with make, even if you don't plan to do any programming. It's possible you'll want to patch and rebuild the kernel, and that involves running make. If you're lucky, you won't have to muck with the makefiles, but we've tried to direct this book toward unlucky people as well. So in this section, we explain enough of the subtle syntax of make so that you're not intimidated by a makefile.

For some of our examples, we draw on the current makefile for the Linux kernel. It exploits a lot of extensions in the powerful GNU version of make, so we describe some of those as well as the standard make features. Those ready to become thoroughgoing initiates into make can read Managing Projects with GNU Make (O'Reilly). GNU extensions are also well documented in the GNU make manual.

Most users see make as a way to build object files and libraries from sources and to build executables from object files. More conceptually, make is a general-purpose program that builds targets from dependencies. The target can be a program executable, a PostScript document, or whatever. The dependencies can be C code, a TeX text file, and so on.

Although you can write simple shell scripts to execute gcc commands that build an executable program, make is special in that it knows which targets need to be rebuilt and which don't. An object file needs to be recompiled only if its corresponding source has changed.

For example, say you have a program that consists of three C source files. If you were to build the executable using the command:

papaya$ gcc -o foo foo.c bar.c baz.c

each time you changed any of the source files, all three would be recompiled and relinked into the executable. If you changed only one source file, this is a real waste of time (especially if the program in question is much larger than a handful of sources). What you really want to do is recompile only the one source file that changed into an object file and relink all the object files in the program to form the executable. make can automate this process for you.

What make Does

The basic goal of make is to let you build a file in small steps. If a lot of source files make up the final executable, you can change one and rebuild the executable without having to recompile everything. To give you this flexibility, make relies on the makefile to record which files each step of the build needs.

Here's a trivial makefile. Call it makefile or Makefile and keep it in the same directory as the source files:

edimh: main.o edit.o
	gcc -o edimh main.o edit.o

main.o: main.c
	gcc -c main.c

edit.o: edit.c
	gcc -c edit.c

This file builds a program named edimh from two source files named main.c and edit.c. You aren't restricted to C programming in a makefile; the commands could be anything.

Three entries appear in the file. Each contains a dependency line that shows how a file is built. Thus, the first line says that edimh (the name before the colon) is built from the two object files main.o and edit.o (the names after the colon). This line tells make that it should execute the following gcc line whenever one of those object files changes. The lines containing commands have to begin with tabs (not spaces).

The command:

papaya$ make edimh

executes the gcc line if there isn't currently any file named edimh. However, the gcc line also executes if edimh exists but one of the object files is newer. Here, edimh is called a target. The files after the colon are called either dependencies or prerequisites.

The next two entries perform the same service for the object files. main.o is built if it doesn't exist or if the associated source file main.c is newer. edit.o is built from edit.c.

How does make know if a file is new? It looks at the timestamp, which the filesystem associates with every file. You can see timestamps by issuing the ls -l command. Since the timestamp is accurate to one second, it reliably tells make whether you've edited a source file since the latest compilation or have compiled an object file since the executable was last built.
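The timestamp comparison can be imitated in a few lines of shell. This is only a sketch of the idea, not how make is implemented: needs_rebuild is a hypothetical helper, the files are empty stand-ins created in a scratch directory, and the -nt ("newer than") test is an extension supported by bash, dash, and ksh rather than a guaranteed feature of every shell:

```shell
# Work in a scratch directory so no real files are touched.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Give the files explicit timestamps: source at 00:00, object at 01:00.
touch -t 200901010000 main.c
touch -t 200901010100 main.o

# Succeeds when target $1 is missing or older than prerequisite $2.
needs_rebuild() {
    [ ! -e "$1" ] || [ "$2" -nt "$1" ]
}

needs_rebuild main.o main.c && echo "rebuild main.o" || echo "main.o is up to date"

# Simulate editing the source after the last compilation.
touch -t 200901010200 main.c
needs_rebuild main.o main.c && echo "rebuild main.o" || echo "main.o is up to date"
```

The first check reports that main.o is up to date; after the source file receives a later timestamp, the second check asks for a rebuild.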

Let's try out the makefile and see what it does:

papaya$ make edimh

gcc -c main.c

gcc -c edit.c

gcc -o edimh main.o edit.o

If we edit main.c and reissue the command, it rebuilds only the necessary files, saving us some time:

papaya$ make edimh

gcc -c main.c

gcc -o edimh main.o edit.o

It doesn't matter in what order the three entries appear within the makefile. make figures out which files depend on which and executes all the commands in the right order. Putting the entry for edimh first is convenient because that becomes the file built by default. In other words, typing make is the same as typing make edimh.

Here's a more extensive makefile. See if you can figure out what it does:

install: all
	mv edimh /usr/local
	mv readimh /usr/local

all: edimh readimh

readimh: read.o main.o
	gcc -o readimh main.o read.o

edimh: main.o edit.o
	gcc -o edimh main.o edit.o

main.o: main.c
	gcc -c main.c

edit.o: edit.c
	gcc -c edit.c

read.o: read.c
	gcc -c read.c

First we see the target install. This is never going to generate a file; it's called a phony target because it exists just so that you can execute the commands listed under it. But before install runs, all has to run because install depends on all. (Remember, the order of the entries in the file doesn't matter.)

So make turns to the all target. There are no commands under it (this is perfectly legal), but it depends on edimh and readimh. These are real files; each is an executable program. So make keeps tracing back through the list of dependencies until it arrives at the .c files, which don't depend on anything else. Then it painstakingly rebuilds each target.

Here is a sample run (you may need root privilege to install the files in the /usr/local directory):

papaya$ make install

gcc -c main.c

gcc -c edit.c

gcc -o edimh main.o edit.o

gcc -c read.c

gcc -o readimh main.o read.o

mv edimh /usr/local

mv readimh /usr/local

This run of make does a complete build and install. First it builds the files needed to create edimh. Then it builds the additional object file it needs to create readimh. With those two executables created, the all target is satisfied. Now make can go on to build the install target, which means moving the two executables to their final home.
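One caveat about phony targets such as install and all: they behave as described only as long as no file of the same name exists in the directory. GNU make lets you declare such targets with the special .PHONY target so that their commands always run. The following shell sketch assumes GNU make and works in a scratch directory; the echo command merely stands in for real installation commands:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1

# A stray file that happens to share the target's name.
touch install

# Without .PHONY, make sees an existing file with no prerequisites
# and decides there is nothing to do.
printf 'install:\n\t@echo installing\n' > Makefile
without_phony=$(make install)

# With .PHONY, the commands run regardless of the file.
printf '.PHONY: install\ninstall:\n\t@echo installing\n' > Makefile
with_phony=$(make install)

echo "without .PHONY: $without_phony"
echo "with .PHONY:    $with_phony"
```

Only the second run prints installing; the first merely reports that the target is up to date.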

Many makefiles, including the ones that build Linux, contain a variety of phony targets to do routine activities. For instance, the makefile for the Linux kernel includes commands to remove temporary files:

clean: archclean
	rm -f kernel/ksyms.lst
	rm -f core `find . -name '*.[oas]' -print`

.

.

.

It also includes commands to create a list of object files and the header files they depend on (this is a complicated but important task; if a header file changes, you want to make sure the files that refer to it are recompiled):

depend dep:
	touch tools/version.h
	for i in init/*.c;do echo -n "init/";$(CPP) -M $$i;done > .tmpdep

.

.

.

Some of these shell commands get pretty complicated; we look at makefile commands later in this chapter, in "Multiple Commands."

Some Syntax Rules

The hardest thing about maintaining makefiles, at least if you're new to them, is getting the syntax right. OK, let's be straight about it: make syntax is really stupid. If you use spaces where you're supposed to use tabs or vice versa, your makefile blows up. And the error messages are really confusing. So remember the following syntax rules:

§ Always put a tab—not spaces—at the beginning of a command. And don't use a tab before any other line.

§ You can place a hash sign (#) anywhere on a line to start a comment. Everything after the hash sign is ignored.

§ If you put a backslash at the end of a line, it continues on the next line. That works for long commands and other types of makefile lines, too.

Now let's look at some of the powerful features of make, which form a kind of programming language of their own.

Macros

When people use a filename or other string more than once in a makefile, they tend to assign it to a macro. That's simply a string that make expands to another string. For instance, you could change the beginning of our trivial makefile to read as follows:

OBJECTS = main.o edit.o

edimh: $(OBJECTS)
	gcc -o edimh $(OBJECTS)

When make runs, it simply plugs in main.o edit.o wherever you specify $(OBJECTS). If you have to add another object file to the project, just specify it on the first line of the file. The dependency line and command will then be updated correspondingly.

Don't forget the parentheses when you refer to $(OBJECTS). Macros may resemble shell variables like $HOME and $PATH, but they're not the same.

One macro can be defined in terms of another macro, so you could say something like:

ROOT = /usr/local

HEADERS = $(ROOT)/include

SOURCES = $(ROOT)/src

In this case, HEADERS evaluates to the directory /usr/local/include and SOURCES to /usr/local/src. If you are installing this package on your system and don't want it to be in /usr/local, just choose another name and change the line that defines ROOT.
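To watch nested macros expand, you can try a sketch like the following. It assumes GNU make; the show target is hypothetical and exists only to print the value of HEADERS. The second invocation also illustrates that a definition given on the make command line takes precedence over the one in the makefile, so you can try out another ROOT (here the made-up path /opt/edimh) without editing the file:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1

# HEADERS is defined in terms of ROOT; show just prints the result.
{
    printf 'ROOT = /usr/local\n'
    printf 'HEADERS = $(ROOT)/include\n'
    printf 'show:\n\t@echo $(HEADERS)\n'
} > Makefile

default_headers=$(make show)
override_headers=$(make show ROOT=/opt/edimh)

echo "$default_headers"    # /usr/local/include
echo "$override_headers"   # /opt/edimh/include
```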

By the way, you don't have to use uppercase names for macros, but that's a universal convention.

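An extension in GNU make allows you to add to the definition of a macro. This uses a := string in place of an equals sign, which tells make to expand the right-hand side immediately; that is what lets the new definition refer to the macro's own previous value (GNU make also offers a += operator that appends directly):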

DRIVERS = drivers/block/block.a

ifdef CONFIG_SCSI

DRIVERS := $(DRIVERS) drivers/scsi/scsi.a

endif

The first line is a normal macro definition, setting the DRIVERS macro to the filename drivers/block/block.a. The next definition adds the filename drivers/scsi/scsi.a. But it takes effect only if the macro CONFIG_SCSI is defined. The full definition in that case becomes:

drivers/block/block.a drivers/scsi/scsi.a

So how do you define CONFIG_SCSI? You could put it in the makefile, assigning any string you want:

CONFIG_SCSI = yes

But you'll probably find it easier to define it on the make command line. Here's how to do it:

papaya$ make CONFIG_SCSI=yes target_name

One subtlety of using macros is that you can leave them undefined. If no one defines them, a null string is substituted (that is, you end up with nothing where the macro is supposed to be). But this also gives you the option of defining the macro as an environment variable. For instance, if you don't define CONFIG_SCSI in the makefile, you could put this in your .bashrc file, for use with the bash shell:

export CONFIG_SCSI=yes

Or put this in .cshrc if you use csh or tcsh:

setenv CONFIG_SCSI yes

All your builds will then have CONFIG_SCSI defined.
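The three ways of supplying CONFIG_SCSI can be compared with a short experiment. This is a sketch that assumes GNU make; it reuses the DRIVERS fragment shown earlier and adds a hypothetical show target that prints the resulting value:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Start from an environment in which the macro is not defined.
unset CONFIG_SCSI

{
    printf 'DRIVERS = drivers/block/block.a\n'
    printf 'ifdef CONFIG_SCSI\n'
    printf 'DRIVERS := $(DRIVERS) drivers/scsi/scsi.a\n'
    printf 'endif\n'
    printf 'show:\n\t@echo $(DRIVERS)\n'
} > Makefile

plain=$(make show)                          # macro left undefined
from_cmdline=$(make show CONFIG_SCSI=yes)   # defined on the command line
from_env=$(CONFIG_SCSI=yes make show)       # defined in the environment

echo "undefined:    $plain"
echo "command line: $from_cmdline"
echo "environment:  $from_env"
```

The first run lists only the block driver archive; the other two add drivers/scsi/scsi.a.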

Suffix Rules and Pattern Rules

For something as routine as building an object file from a source file, you don't want to specify every single dependency in your makefile. And you don't have to. Unix compilers enforce a simple standard (compile a file ending in the suffix .c to create a file ending in the suffix .o), and make provides a feature called suffix rules to cover all such files.

Here's a simple suffix rule to compile a C source file, which you could put in your makefile:

.c.o:
	gcc -c $(CFLAGS) $<

The .c.o: line means "use a .c dependency to build a .o file." CFLAGS is a macro into which you can plug any compiler options you want: -g for debugging, for instance, or -O for optimization. The string $< is a cryptic way of saying "the dependency." So the name of your .c file is plugged in when make executes this command.

Here's a sample run using this suffix rule. The command line passes both the -g option and the -O option:

papaya$ make CFLAGS="-O -g" edit.o

gcc -c -O -g edit.c

You actually don't have to specify this suffix rule in your makefile because something very similar is already built into make. It even uses CFLAGS, so you can determine the options used for compiling just by setting that variable. The makefile used to build the Linux kernel currently contains the following definition, a whole slew of gcc options:

CFLAGS = -Wall -Wstrict-prototypes -O2 -fomit-frame-pointer -pipe
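To see the built-in rule at work without writing any makefile, a sketch like the following can help. It assumes GNU make and uses the -n option, which prints the commands make would run without executing them, so no compiler is needed. The file edit.c is an empty stand-in:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1

# An empty source file is enough, because -n only prints the commands.
: > edit.c

# No makefile at all: make falls back on its built-in rule for .c files.
dry_run=$(make -n CFLAGS="-O -g" edit.o)
echo "$dry_run"
```

The printed command line should contain the -O -g options and the name edit.c; the compiler name and the exact spacing depend on your version of make.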

While we're discussing compiler flags, one set is seen so often that it's worth a special mention. This is the -D option, which is used to define symbols in the source code. Since all kinds of commonly used symbols appear in #ifdefs, you may need to pass lots of such options to your makefile, such as -DDEBUG or -DBSD. If you do this on the make command line, be sure to put quotation marks or apostrophes around the whole set. This is because you want the shell to pass the set to your makefile as one argument:

papaya$ make CFLAGS="-DDEBUG -DBSD" ...

GNU make offers something called pattern rules, which are even better than suffix rules. A pattern rule uses a percent sign to mean "any string." So C source files would be compiled using a rule such as the following:

%.o: %.c
	gcc -c -o $@ $(CFLAGS) $<

Here the output file %.o comes first, and the dependency %.c comes after a colon. In short, a pattern rule is just like a regular dependency line, but it contains percent signs instead of exact filenames.

We see the $< string to refer to the dependency, but we also see $@, which refers to the output file. So the name of the .o file is plugged in there. Both of these are built-in macros; make defines them every time it executes an entry.

Another common built-in macro is $*, which refers to the name of the dependency stripped of the suffix. So if the dependency is edit.c, the string $*.s would evaluate to edit.s (an assembly-language source file).
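If you want to check what the built-in macros hold, you can replace the compiler command with a harmless echo. The sketch below assumes GNU make; the pattern rule prints the three macros instead of compiling anything, and edit.c is an empty stand-in:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1

# A pattern rule whose command only reports the built-in macros.
# (%% is how printf writes a literal percent sign.)
printf '%%.o: %%.c\n\t@echo "target=$@ prerequisite=$< stem=$*"\n' > Makefile

: > edit.c
auto_vars=$(make edit.o)
echo "$auto_vars"   # target=edit.o prerequisite=edit.c stem=edit
```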

Here's something useful you can do with a pattern rule that you can't do with a suffix rule: add the string _dbg to the name of the output file so that later you can tell that you compiled it with debugging information:

%_dbg.o: %.c
	gcc -c -g -o $@ $(CFLAGS) $<

DEBUG_OBJECTS = main_dbg.o edit_dbg.o

edimh_dbg: $(DEBUG_OBJECTS)
	gcc -o $@ $(DEBUG_OBJECTS)

Now you can build all your objects in two different ways: one with debugging information and one without. They'll have different filenames, so you can keep them in one directory:

papaya$ make edimh_dbg

gcc -c -g -o main_dbg.o main.c

gcc -c -g -o edit_dbg.o edit.c

gcc -o edimh_dbg main_dbg.o edit_dbg.o

Multiple Commands

Any shell commands can be executed in a makefile. But things can get kind of complicated because make executes each command in a separate shell. So this would not work:

target:
	cd obj
	HOST_DIR=/home/e
	mv *.o $HOST_DIR

Neither the cd command nor the definition of the variable HOST_DIR has any effect on subsequent commands. You have to string everything together into one command. The shell uses a semicolon as a separator between commands, so you can combine them all on one line:

target:
	cd obj ; HOST_DIR=/home/e ; mv *.o $$HOST_DIR

One more change: to define and use a shell variable within the command, you have to double the dollar sign. This lets make know that you mean it to be a shell variable, not a macro.

You may find the file easier to read if you break the semicolon-separated commands onto multiple lines, using backslashes so that make considers them to be on one line:

target:
	cd obj ; \
	HOST_DIR=/home/e ; \
	mv *.o $$HOST_DIR
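The effect of the separate shells is easy to observe for yourself. The following sketch assumes GNU make; the targets separate, joined, and shellvar are hypothetical and print information instead of moving files:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1
mkdir obj

{
    # Two command lines: the cd is forgotten before pwd runs.
    printf 'separate:\n\tcd obj\n\tpwd\n'
    # One command line: cd and pwd share a shell.
    printf 'joined:\n\tcd obj ; pwd\n'
    # A shell variable needs a doubled dollar sign inside a makefile.
    printf 'shellvar:\n\tHOST_DIR=/home/e ; echo $$HOST_DIR\n'
} > Makefile

# -s keeps make from echoing the commands themselves.
separate_dir=$(make -s separate)
joined_dir=$(make -s joined)
shellvar_out=$(make -s shellvar)

echo "separate: $separate_dir"
echo "joined:   $joined_dir"
echo "shellvar: $shellvar_out"
```

Only the joined target reports a directory ending in obj, and the shellvar target prints /home/e.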

Sometimes makefiles contain their own make commands; this is called recursive make. It looks like this:

linuxsubdirs: dummy
	set -e; for i in $(SUBDIRS); do $(MAKE) -C $$i; done

The macro $(MAKE) invokes make. There are a few reasons for nesting makes. One reason, which applies to this example, is to perform builds in multiple directories (each of these other directories has to contain its own makefile). Another reason is to define macros on the command line, so you can do builds with a variety of macro definitions.

GNU make offers another powerful interface to the shell as an extension. You can issue a shell command and assign its output to a macro. A couple of examples can be found in the Linux kernel makefile, but we'll just show a simple example here:

HOST_NAME = $(shell uname -n)

This assigns the name of your network node—the output of the uname -n command—to the macro HOST_NAME.

make offers a couple of conventions you may occasionally want to use. One is to put an at sign before a command, which keeps make from echoing the command when it's executed:

	@if [ -x /bin/dnsdomainname ]; then \
	   echo \#define LINUX_COMPILE_DOMAIN \"`dnsdomainname`\"; \
	else \
	   echo \#define LINUX_COMPILE_DOMAIN \"`domainname`\"; \
	fi >> tools/version.h

Another convention is to put a hyphen before a command, which tells make to keep going even if the command fails. This may be useful if you want to continue after an mv or cp command fails:

	- mv edimh /usr/local
	- mv readimh /usr/local

Including Other makefiles

Large projects tend to break parts of their makefiles into separate files. This makes it easy for different makefiles in different directories to share things, particularly macro definitions. The line

include filename

reads in the contents of filename. You can see this in the Linux kernel makefile, for instance:

include .depend

If you look in the file .depend, you'll find a bunch of makefile entries: these lines declare that object files depend on particular header files. (By the way, .depend might not exist yet; it has to be created by another entry in the makefile.)

Sometimes include lines refer to macros instead of filenames, as in the following example:

include ${INC_FILE}

In this case, INC_FILE must be defined either as an environment variable or as a macro. Doing things this way gives you more control over which file is used.

Interpreting make Messages

The error messages from make can be quite cryptic, so we'd like to give you some help in interpreting them. The following explanations cover the most common messages.

*** No targets specified and no makefile found. Stop.

This usually means that there is no makefile in the directory where you are trying to build. By default, GNU make tries to find the file GNUmakefile first; then, if this has failed, makefile, and finally Makefile. If none of these exists, you will get this error message. If for some reason you want to use a makefile with a different name (or in another directory), you can specify the makefile to use with the -f command-line option.

make: *** No rule to make target 'blah.c', needed by 'blah.o'. Stop.

This means that make cannot find a dependency it needs (in this case, blah.c) in order to build a target (in this case, blah.o). As mentioned, make first looks for a dependency among the targets in the makefile, and if there is no suitable target, for a file with the name of the dependency. If this does not exist either, you will get this error message. This typically means that your sources are incomplete or that there is a typo in the makefile.

*** missing separator (did you mean TAB instead of 8 spaces?). Stop.

The current versions of make are friendly enough to ask you whether you have made a very common mistake: not prepending a command with a tab. If you use older versions of make, missing separator is all you get. In this case, check whether you really have a tab in front of all commands, and not before anything else.
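You can provoke the missing separator message on purpose to learn what it looks like. This sketch assumes GNU make; the hello target is hypothetical, and the first makefile deliberately indents its command with eight spaces:

```shell
# Nothing to demonstrate without make.
command -v make >/dev/null 2>&1 || { echo "make is not installed"; exit 0; }

workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Wrong: the command line starts with eight spaces.
printf 'hello:\n        @echo hello\n' > Makefile
broken=$(make hello 2>&1 || true)

# Right: the command line starts with a tab.
printf 'hello:\n\t@echo hello\n' > Makefile
fixed=$(make hello)

echo "with spaces: $broken"
echo "with a tab:  $fixed"
```

The exact wording of the error depends on your version of make, but it should mention a missing separator and the line number of the offending command.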

Autoconf, Automake, and Other Makefile Tools

Writing makefiles for a larger project usually is a boring and time-consuming task, especially if the programs are expected to be compiled on multiple platforms. From the GNU project come two tools called Autoconf and Automake that have a steep learning curve but, once mastered, greatly simplify the task of creating portable makefiles. In addition, libtool helps a lot to create shared libraries in a portable manner. You can probably find these tools on your distribution CD, or you can download them from ftp://ftp.gnu.org/gnu.

From a user's point of view, using Autoconf involves running the program configure, which should have been shipped in the source package you are trying to build. This program analyzes your system and configures the makefiles of the package to be suitable for your system and setup. A good thing to try before running the configure script for real is to issue the command:

owl$ ./configure --help

This shows all command-line switches that the configure program understands. Many packages allow different setups—for example, different modules to be compiled in—and you can select these with configure options.

From a programmer's point of view, you don't write makefiles, but rather files called Makefile.in. These can contain placeholders that will be replaced with actual values when the user runs the configure program, generating the makefiles that make then runs. In addition, you need to write a file called configure.in (newer Autoconf releases prefer the name configure.ac) that describes your project and what to check for on the target system. The Autoconf tool then generates the configure program from this file. Writing the configure.in file is unfortunately way too involved to be described here, but the Autoconf package contains documentation to get you started.

Writing the Makefile.in files is still a cumbersome and lengthy task, but even this can be mostly automated by using the Automake package. Using this package, you do not write the Makefile.in files, but rather Makefile.am files, which have a much simpler syntax and are much less verbose. By running the Automake tool, you convert these Makefile.am files to the Makefile.in files, which you distribute along with your source code and which are later converted into the makefiles themselves when the package is configured for the user's system. How to write Makefile.am files is beyond the scope of this book as well. Again, please check the documentation of the package to get started.

These days, most open source packages use the libtool/Automake/Autoconf combo for generating the makefiles, but this rather complicated and involved method is not the only one available. Other makefile-generating tools exist as well, such as the imake tool used to configure the X Window System.[*] Another is the qmake tool that ships together with the C++ GUI library Qt (downloadable from http://www.trolltech.com). It is not as powerful as the Autoconf suite, although it still lets you do most things you would want to do when it comes to makefile generation, but it is extremely easy to use and can even generate its own description files for you from scratch.

[*] X.org is planning to switch to Automake/Autoconf for the next version as well.

Debugging with gdb

Are you one of those programmers who scoff at the very idea of using a debugger to trace through code? Is it your philosophy that if the code is too complex for even the programmer to understand, the programmer deserves no mercy when it comes to bugs? Do you step through your code, mentally, using a magnifying glass and a toothpick? Are your bugs usually caused by a single-character omission, such as using the = operator when you mean +=?

Then perhaps you should meet gdb, the GNU debugger. Whether or not you know it, gdb is your friend. It can locate obscure and difficult-to-find bugs that result in core dumps, memory leaks, and erratic behavior (both for the program and the programmer). Sometimes even the most harmless-looking glitches in your code can cause everything to go haywire, and without the aid of a debugger like gdb, finding these problems can be nearly impossible, especially for programs longer than a few hundred lines. In this section, we introduce you to the most useful features of gdb by way of examples. There's a book on gdb: Debugging with GDB (Free Software Foundation).

gdb is capable of either debugging programs as they run or examining the cause for a program crash with a core dump. Programs debugged at runtime with gdb can either be executed from within gdb itself or can be run separately; that is, gdb can attach itself to an already running process to examine it. We first discuss how to debug programs running within gdb and then move on to attaching to running processes and examining core dumps.

Tracing a Program

Our first example is a program called trymh that detects edges in a grayscale image. trymh takes as input an image file, does some calculations on the data, and spits out another image file. Unfortunately, it crashes whenever it is invoked, like so:

papaya$ trymh < image00.pgm > image00.pbm

Segmentation fault (core dumped)

Now, using gdb, we could analyze the resulting core file, but for this example, we'll show how to trace the program as it runs.[*]

Before we use gdb to trace through the executable trymh, we need to ensure that the executable has been compiled with debugging code (see "Enabling Debugging Code," earlier in this chapter). To do so, we should compile trymh using the -g switch with gcc.

Note that enabling optimization (-O) with debug code (-g) is legal but discouraged. The problem is that gcc is too smart for its own good. For example, if you have two identical lines of code in two different places in a function, gdb may unexpectedly jump to the second occurrence of the line, instead of the first, as expected. This is because gcc combined the two lines into a single line of machine code used in both instances.

Some of the automatic optimizations performed by gcc can be confusing when using a debugger. To turn off all optimization (even optimizations performed without specifying -O), use the -O0 (that's dash-oh-zero) option with gcc.

Now we can fire up gdb to see what the problem might be:

papaya$ gdb trymh

GNU gdb 6.3

Copyright 2004 Free Software Foundation, Inc.

GDB is free software, covered by the GNU General Public License, and you are

welcome to change it and/or distribute copies of it under certain conditions.

Type "show copying" to see the conditions.

There is absolutely no warranty for GDB. Type "show warranty" for details.

This GDB was configured as "i586-suse-linux".

(gdb)

Now gdb is waiting for a command. (The command help displays information on the available commands.) The first thing we want to do is start running the program so that we can observe its behavior. However, if we immediately use the run command, the program simply executes until it exits or crashes.

First, we need to set a breakpoint somewhere in the program. A breakpoint is just a location in the program where gdb should stop and allow us to control execution of the program. For the sake of simplicity, let's set a breakpoint on the first line of actual code so that the program stops just as it begins to execute. The list command displays several lines of code (an amount that is variable) at a time:

(gdb) list

12 main() {

13

14 FloatImage inimage;

15 FloatImage outimage;

16 BinaryImage binimage;

17 int i,j;

18

19 inimage = (FloatImage)imLoadF(IMAGE_FLOAT,stdin);

20 outimage = laplacian_float(inimage);

21

(gdb) break 19

Breakpoint 1 at 0x289c: file trymh.c, line 19.

(gdb)

A breakpoint is now set at line 19 in the current source file. You can set many breakpoints in the program; breakpoints may be conditional (that is, triggered only when a certain expression is true), unconditional, delayed, temporarily disabled, and so on. You may set breakpoints on a particular line of code, a particular function, or a set of functions, and in a slew of other ways. You may also set a watchpoint, using the watch command, which is similar to a breakpoint but is triggered whenever a certain event takes place—not necessarily at a specific line of code within the program. We'll talk more about breakpoints and watchpoints later in the chapter.

Next, we use the run command to start running the program. run takes as arguments the same arguments you'd give trymh on the command line; these can include shell wildcards and input/output redirection, as the command is passed to /bin/sh for execution:

(gdb) run < image00.pgm > image00.pfm

Starting program: /amd/dusk/d/mdw/vis/src/trymh < image00.pgm > image00.pfm

Breakpoint 1, main () at trymh.c:19

19 inimage = (FloatImage)imLoadF(IMAGE_FLOAT,stdin);

(gdb)

As expected, the breakpoint is reached immediately at the first line of code. We can now take over.

The most useful program-stepping commands are next and step. Both commands execute the next line of code in the program, except that step descends into any function calls in the program, and next steps directly to the next line of code in the same function. next quietly executes any function calls that it steps over but does not descend into their code for us to examine.

imLoadF is a function that loads an image from a disk file. We know this function is not at fault (you'll have to trust us on that one), so we wish to step over it using the next command:

(gdb) next

20 outimage = laplacian_float(inimage);

(gdb)

Here, we are interested in tracing the suspicious-looking laplacian_float function, so we use the step command:

(gdb) step

laplacian_float (fim=0x0) at laplacian.c:21

21 i = 20.0;

(gdb)

Let's use the list command to get some idea of where we are:

(gdb) list

16 FloatImage laplacian_float(FloatImage fim) {

17

18 FloatImage mask;

19 float i;

20

21 i = 20.0;

22 mask=(FloatImage)imNew(IMAGE_FLOAT,3,3);

23 imRef(mask,0,0) = imRef(mask,2,0) = imRef(mask,0,2) = 1.0;

24 imRef(mask,2,2) = 1.0; imRef(mask,1,0) = imRef(mask,0,1) = i/5;

25 imRef(mask,2,1) = imRef(mask,1,2) = i/5; imRef(mask,1,1) = -i;

(gdb) list

26

27 return convolveFloatWithFloat(fim,mask);

28 }

(gdb)

As you can see, using list multiple times just displays more of the code. Because we don't want to step manually through this code, and we're not interested in the imNew function on line 22, let's continue execution until line 27. For this, we use the until command:

(gdb) until 27

laplacian_float (fim=0x0) at laplacian.c:27

27 return convolveFloatWithFloat(fim,mask);

(gdb)

Before we step into the convolveFloatWithFloat function, let's be sure the two parameters, fim and mask, are valid. The print command examines the value of a variable:

(gdb) print mask

$1 = (struct {...} *) 0xe838

(gdb) print fim

$2 = (struct {...} *) 0x0

(gdb)

mask looks fine, but fim, the input image, is null. Obviously, laplacian_float was passed a null pointer instead of a valid image. If you have been paying close attention, you noticed this as we entered laplacian_float earlier.

Instead of stepping deeper into the program (as it's apparent that something has already gone wrong), let's continue execution until the current function returns. The finish command accomplishes this:

(gdb) finish

Run till exit from #0 laplacian_float (fim=0x0) at laplacian.c:27

0x28c0 in main () at trymh.c:20

20 outimage = laplacian_float(inimage);

Value returned is $3 = (struct {...} *) 0x0

(gdb)

Now we're back in main. To determine the source of the problem, let's examine the values of some variables:

(gdb) list

15 FloatImage outimage;

16 BinaryImage binimage;

17 int i,j;

18

19 inimage = (FloatImage)imLoadF(IMAGE_FLOAT,stdin);

20 outimage = laplacian_float(inimage);

21

22 binimage = marr_hildreth(outimage);

23 if (binimage == NULL) {

24 fprintf(stderr,"trymh: binimage returned NULL\n");

(gdb) print inimage

$6 = (struct {...} *) 0x0

(gdb)

The variable inimage, containing the input image returned from imLoadF, is null. Passing a null pointer into the image manipulation routines certainly would cause a core dump in this case. However, we know imLoadF to be tried and true because it's in a well-tested library, so what's the problem?

As it turns out, our library function imLoadF returns NULL on failure—if the input format is bad, for example. Because we never checked the return value of imLoadF before passing it along to laplacian_float, the program goes haywire when inimage is assigned NULL. To correct the problem, we simply insert code to cause the program to exit with an error message if imLoadF returns a null pointer.
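The fix can be sketched as follows. This is only an illustration, not the actual trymh source: the FloatImage definition, load_image (standing in for imLoadF), and run_trymh are hypothetical names invented here so that the example compiles on its own.

```c
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical stand-in for the library's FloatImage type. */
typedef struct { int width, height; } *FloatImage;

/* Hypothetical stand-in for imLoadF: returns NULL if the input is unusable. */
static FloatImage load_image(FILE *in)
{
    int w, h;
    FloatImage im;

    if (in == NULL || fscanf(in, "%d %d", &w, &h) != 2)
        return NULL;                    /* bad input format */
    im = malloc(sizeof *im);
    if (im == NULL)
        return NULL;                    /* out of memory */
    im->width = w;
    im->height = h;
    return im;
}

/* Returns EXIT_FAILURE with a message instead of passing NULL along. */
int run_trymh(FILE *in)
{
    FloatImage inimage = load_image(in);

    if (inimage == NULL) {
        fprintf(stderr, "trymh: could not load input image\n");
        return EXIT_FAILURE;
    }
    /* ... it is now safe to hand inimage to the image routines ... */
    free(inimage);
    return EXIT_SUCCESS;
}
```

A main function would simply return run_trymh(stdin), so a bad input file produces an error message rather than a core dump.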

To quit gdb, just use the command quit. Unless the program has finished execution, gdb will complain that the program is still running:

(gdb) quit

The program is running. Quit anyway (and kill it)? (y or n) y

papaya$

In the following sections we examine some specific features provided by the debugger, given the general picture just presented.

Examining a Core File

Do you hate it when a program crashes and spites you again by leaving a 20-MB core file in your working directory, wasting much-needed space? Don't be so quick to delete that core file; it can be very helpful. A core file is just a dump of the memory image of a process at the time of the crash. You can use the core file with gdb to examine the state of your program (such as the values of variables and data) and determine the cause for failure.

The core file is written to disk by the operating system whenever certain failures occur. The most frequent reason for a crash and the subsequent core dump is a memory violation: trying to read or write memory to which your program does not have access. For example, attempting to write data using a null pointer can cause a segmentation fault, which is essentially a fancy way of saying, "you screwed up." Segmentation faults are a common error and occur when you try to access (read from or write to) a memory address that does not belong to your process's address space. This includes the address 0, as often happens with uninitialized pointers. Segmentation faults are often caused by trying to access an array item outside the declared size of the array, and are commonly a result of an off-by-one error. They can also be caused by a failure to allocate memory for a data structure.

Other errors that result in core files are so-called bus errors and floating-point exceptions. Bus errors result from using incorrectly aligned data and are therefore rare on the Intel architecture, which does not impose the strict alignment requirements that other architectures do. Floating-point exceptions point to a severe problem in a floating-point calculation, such as an overflow, but the most common case is a division by zero.

However, not all such memory errors will cause immediate crashes. For example, you may overwrite memory in some way, but the program continues to run, not knowing the difference between actual data and instructions or garbage. Subtle memory violations can cause programs to behave erratically. One of the authors once witnessed a bug that caused the program to jump randomly around, but without tracing it with gdb, it still appeared to work normally. The only evidence of a bug was that the program returned output that meant, roughly, that two and two did not add up to four. Sure enough, the bug was an attempt to write one too many characters into a block of allocated memory. That single-byte error caused hours of grief.
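One common form of this single-byte mistake is forgetting the terminating null character when allocating space for a string. The following is a minimal sketch of the correct allocation; the function name copy_string is our own invention and has nothing to do with the program in the anecdote.

```c
#include <stdlib.h>
#include <string.h>

/* Return a heap copy of s, or NULL if allocation fails. The caller frees it. */
char *copy_string(const char *s)
{
    size_t len = strlen(s);
    /* strlen does not count the terminating '\0', so we need len + 1 bytes.
       Allocating only len bytes would write one byte past the block. */
    char *copy = malloc(len + 1);

    if (copy == NULL)
        return NULL;
    memcpy(copy, s, len + 1);   /* copies the characters and the '\0' */
    return copy;
}
```

With malloc(len) instead of malloc(len + 1), the program might well appear to work for a long time, which is exactly what makes this kind of bug so hard to find.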

You can track down these kinds of memory problems (even the best programmers make these mistakes!) using the Valgrind package, which runs your program under its supervision and substitutes its own checking versions of the commonly used malloc() and free() functions as well as their C++ counterparts, the operators new and delete. We talk about Valgrind in "Using Valgrind," later in this chapter.

However, if your program does cause a memory fault, it will crash and dump core. Under Linux, core files are named, appropriately, core. The core file appears in the current working directory of the running process, which is usually the working directory of the shell that started the program; on occasion, however, programs may change their own working directory.

Some shells provide facilities for controlling whether core files are written. Under bash, for example, the default behavior is not to write core files. To enable core file output, you should use the command:

ulimit -c unlimited

probably in your .bashrc initialization file. You can specify a maximum size for core files other than unlimited, but truncated core files may not be of use when debugging applications.
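A program can also raise the limit for itself rather than relying on the shell. The following sketch uses the standard getrlimit and setrlimit calls with RLIMIT_CORE; the function name enable_core_dumps is our own. Note that it can raise the soft limit only as far as the hard limit, so it has no effect if the hard limit is already 0.

```c
#include <sys/resource.h>

/* Raise the soft core-file size limit to the hard limit.
   Returns 0 on success, -1 on error (errno is set by the failing call). */
int enable_core_dumps(void)
{
    struct rlimit rl;

    if (getrlimit(RLIMIT_CORE, &rl) != 0)
        return -1;
    rl.rlim_cur = rl.rlim_max;  /* the soft limit may not exceed the hard limit */
    return setrlimit(RLIMIT_CORE, &rl);
}
```

Calling this early in main has roughly the effect of running ulimit -c with the hard limit as its argument, but only for that one process and its children.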

Also, in order for a core file to be useful, the program must be compiled with debugging code enabled, as described in the previous section. Most binaries on your system will not contain debugging code, so the core file will be of limited value.

Our example for using gdb with a core file is yet another mythical program called cross. Like trymh in the previous section, cross takes an image file as input, does some calculations on it, and outputs another image file. However, when running cross, we get a segmentation fault:

papaya$ cross < image30.pfm > image30.pbm

Segmentation fault (core dumped)

papaya$

To invoke gdb for use with a core file, you must specify not only the core filename, but also the name of the executable that goes along with that core file. This is because the core file does not contain all the information necessary for debugging:

papaya$ gdb cross core

GDB is free software and you are welcome to distribute copies of it

under certain conditions; type "show copying" to see the conditions.

There is absolutely no warranty for GDB; type "show warranty" for details.

GDB 4.8, Copyright 1993 Free Software Foundation, Inc...

Core was generated by `cross'.

Program terminated with signal 11, Segmentation fault.

#0 0x2494 in crossings (image=0xc7c8) at cross.c:31

31 if ((image[i][j] >= 0) &&

(gdb)

gdb tells us that the core file was created when the program terminated with signal 11. A signal is a kind of message that is sent to a running program from the kernel, the user, or the program itself. Signals are generally used to terminate a program (and possibly cause it to dump core). For example, when you type the interrupt character, a signal is sent to the running program, which will probably kill the program.
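Not every signal has to be fatal: a program may install a handler for many of them. As a rough sketch (the names on_sigint, install_sigint_handler, and sigint_seen are ours), here is how a program could catch the signal sent by the interrupt character, SIGINT, using the standard sigaction call:

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <string.h>

/* Set by the handler; sig_atomic_t is safe to write from a signal handler. */
static volatile sig_atomic_t got_sigint = 0;

static void on_sigint(int signo)
{
    (void)signo;        /* unused */
    got_sigint = 1;     /* just record that the signal arrived */
}

/* Install the handler for SIGINT. Returns 0 on success, -1 on error. */
int install_sigint_handler(void)
{
    struct sigaction sa;

    memset(&sa, 0, sizeof sa);
    sa.sa_handler = on_sigint;
    sigemptyset(&sa.sa_mask);
    return sigaction(SIGINT, &sa, NULL);
}

/* Nonzero once SIGINT has been delivered. */
int sigint_seen(void)
{
    return got_sigint;
}
```

Without such a handler, the default action for SIGINT is to terminate the program. Catching a segmentation fault the same way is possible but rarely useful, because the program's state is already suspect by the time the signal arrives.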

In this case, signal 11 was sent to the running cross process by the kernel when cross attempted to read or write to memory to which it did not have access. This signal caused cross to die and dump core. gdb says that the illegal memory reference occurred on line 31 of the source file cross.c:

(gdb) list

26 xmax = imGetWidth(image)-1;

27 ymax = imGetHeight(image)-1;

28

29 for (j=1; j<xmax; j++) {

30 for (i=1; i<ymax; i++) {

31 if ((image[i][j] >= 0) &&

32 (image[i-1][j-1] < 0) ||

33 (image[i-1][j] < 0) ||

34 (image[i-1][j+1] < 0) ||

35 (image[i][j-1] < 0) ||

(gdb)

Here, we see several things. First of all, there is a loop across the two index variables i and j, presumably in order to do calculations on the input image. Line 31 is an attempt to reference data from image[i][j], a two-dimensional array. When a program dumps core while attempting to access data from an array, it's usually a sign that one of the indices is out of bounds. Let's check them:

(gdb) print i

$1 = 1

(gdb) print j

$2 = 1194

(gdb) print xmax

$3 = 1551

(gdb) print ymax

$4 = 1194

(gdb)

Here we see the problem. The program was attempting to reference element image[1][1194]; however, the array extends only to image[1550][1193] (remember that arrays in C are indexed from 0 to max - 1). In other words, we attempted to read the 1195th row of an image that has only 1194 rows.

If we look at lines 29 and 30, we see the problem: the values xmax and ymax are reversed. The variable j should range from 1 to ymax (because it is the row index of the array), and i should range from 1 to xmax. Fixing the two for loops on lines 29 and 30 corrects the problem.

Let's say that your program is crashing within a function that is called from many different locations, and you want to determine where the function was invoked from and what situation led up to the crash. The backtrace command displays the call stack of the program at the time of failure. If you are like the author of this section and are too lazy to type backtrace all the time, you will be delighted to hear that you can also use the shortcut bt.

The call stack is the list of functions that led up to the current one. For example, if the program starts in function main, which calls function foo, which calls bamf, the call stack looks like this:

(gdb) backtrace

#0 0x1384 in bamf () at goop.c:31

#1 0x4280 in foo () at goop.c:48

#2 0x218 in main () at goop.c:116

(gdb)

As each function is called, it pushes certain data onto the stack, such as saved registers, function arguments, local variables, and so forth. Each function has a certain amount of space allocated on the stack for its use. The chunk of memory on the stack for a particular function is called a stack frame, and the call stack is the ordered list of stack frames.
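A program can inspect its own call stack, too. The sketch below assumes the GNU C library, which provides backtrace and backtrace_symbols_fd in execinfo.h; these are glibc extensions and may be missing from other C libraries. The functions foo and bamf mirror the example above, and print_call_stack is a name of our own.

```c
#include <execinfo.h>
#include <stdio.h>

#define MAX_FRAMES 32

/* Print the current call stack to stderr; return the number of frames found. */
int print_call_stack(void)
{
    void *frames[MAX_FRAMES];
    int n = backtrace(frames, MAX_FRAMES);  /* collect return addresses */

    backtrace_symbols_fd(frames, n, 2);     /* 2 is the stderr file descriptor */
    return n;
}

int bamf(void) { return print_call_stack(); }
int foo(void)  { return bamf(); }
```

Calling foo from main prints one line per stack frame, most recent first. Function names usually appear only if the program is linked with the -rdynamic option, and an optimizing compiler may merge or drop frames, so the output is far less informative than what gdb shows.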

In the following example, we are looking at a core file for an X-based animation program. Using backtrace gives us the following:

(gdb) backtrace

#0 0x602b4982 in _end ()

#1 0xbffff934 in _end ()

#2 0x13c6 in stream_drawimage (wgt=0x38330000, sn=4)\

at stream_display.c:94

#3 0x1497 in stream_refresh_all () at stream_display.c:116

#4 0x49c in control_update_all () at control_init.c:73

#5 0x224 in play_timeout (Cannot access memory at address 0x602b7676.

(gdb)

This is a list of stack frames for the process. The most recently called function is frame 0, which is the "function" _end in this case. Here, we see that play_timeout called control_update_all, which called stream_refresh_all, and so on. Somehow, the program jumped to _end, where it crashed.

However, _end is not a function; it is simply a label that specifies the end of the process data segment. When a program branches to an address such as _end, which is not a real function, it is a sign that something must have caused the process to go haywire, corrupting the call stack. (This is known in hacker jargon as "jumping to hyperspace.") In fact, the error Cannot access memory at address 0x602b7676 is another indication that something bizarre has occurred.

We can see, however, that the last "real" function called was stream_drawimage, and we might guess that it is the source of the problem. To examine the state of stream_drawimage, we need to select its stack frame (frame number 2), using the frame command:

(gdb) frame 2

#2 0x13c6 in stream_drawimage (wgt=0x38330000, sn=4)\

at stream_display.c:94

94 XCopyArea(mydisplay,streams[sn].frames[currentframe],\

XtWindow(wgt),

(gdb) list

91

92 printf("CopyArea frame %d, sn %d, wid %d\n",currentframe,sn,wgt);

93

94 XCopyArea(mydisplay,streams[sn].frames[currentframe],\

XtWindow(wgt),

95 picGC,0,0,streams[sn].width,streams[sn].height,0,0);

(gdb)

Well, not knowing anything else about the program at hand, we can't see anything wrong here, unless the variable sn (being used as an index into the array streams) is out of range. From the output of frame, we see that stream_drawimage was called with an sn parameter of 4. (Function parameters are displayed in the output of backtrace, as well as whenever we change frames.)

Let's move up another frame, to stream_refresh_all, to see how stream_drawimage was called. To do this, we use the up command, which selects the stack frame above the current one:

(gdb) up

#3 0x1497 in stream_refresh_all () at stream_display.c:116

116 stream_drawimage(streams[i].drawbox,i);

(gdb) list

113 void stream_refresh_all(void) {

114 int i;

115 for (i=0; i<=numstreams; i++) {

116 stream_drawimage(streams[i].drawbox,i);

117

(gdb) print i

$2 = 4

(gdb) print numstreams

$3 = 4

(gdb)

Here, we see that the index variable i is looping from 0 to numstreams, and indeed i here is 4, the second parameter to stream_drawimage. However, numstreams is also 4. What's going on?

The for loop on line 115 looks funny; it should read as follows:

for (i=0; i<numstreams; i++) {

The error is in the use of the <= comparison operator. The streams array is indexed from 0 to numstreams-1, not from 0 to numstreams. This simple off-by-one error caused the program to go berserk.
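The same rule applies to any C array. As a small self-contained illustration (sum_elements is a made-up function, not part of the animation program), a loop over an array of n elements must stop before index n:

```c
#include <stddef.h>

/* Sum the n elements of values[]; the valid indices are 0 through n-1. */
long sum_elements(const int *values, size_t n)
{
    long total = 0;
    size_t i;

    /* Using i <= n here would also read values[n], one past the end. */
    for (i = 0; i < n; i++)
        total += values[i];
    return total;
}
```

Reading one element past the end may return garbage silently or may crash, depending on what happens to lie in memory after the array.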

As you can see, using gdb with a core dump allows you to browse through the image of a crashed program to find bugs. Never again will you delete those pesky core files, right?

Debugging a Running Program