Windows Internals, Sixth Edition, Part 1 (2012)

Chapter 7. Networking

Microsoft Windows was designed with networking in mind, and it includes broad networking support that is integrated with the I/O system and the Windows APIs. The four basic types of network software components are services, APIs, protocols, and drivers for network adapters—with each component layered on top of the next to form a network stack. Windows has well-defined interfaces for each layer, so in addition to using the wide variety of APIs, protocols, and network adapter device drivers that ship with Windows, third parties can extend the operating system’s networking capabilities by developing their own components.

In this chapter, we take you from the top of the Windows networking stack to the bottom. First, we present the mapping between the Windows networking software components and the Open Systems Interconnection (OSI) reference model. Then we briefly describe the networking APIs available on Windows and explain how they are implemented. You’ll learn how multiple redirector support and name resolution work, see how to access and cache remote files, and learn how a multitude of drivers interact to form a network protocol stack. After looking at the implementation of network adapter device drivers, we examine binding, which is the glue that connects services, protocol stacks, and network adapters.

Windows Networking Architecture

The goal of network software is to take a request (in the form of an I/O request) from an application on one machine, pass it to another machine, execute the request on the remote machine, and return the results to the first machine. In the course of this process, the request must be transformed several times. A high-level request, such as “read x number of bytes from file y on machine z,” requires software that can determine how to get to machine z and what communication software that machine understands. Then the request must be altered for transmission across a network—for example, divided into short packets of information. When the request reaches the other side, it must be checked for completeness, decoded, and sent to the correct operating system component for execution. Finally, the reply must be encoded for sending back across the network.

The OSI Reference Model

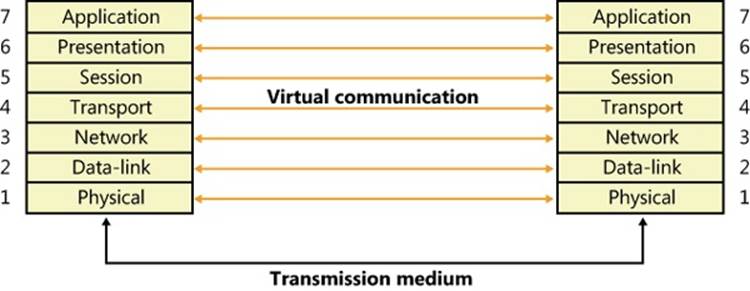

To help different computer manufacturers standardize and integrate their networking software, in 1984 the International Organization for Standardization (ISO) defined a software model for sending messages between machines. The result was the Open Systems Interconnection (OSI) reference model. The model defines six layers of software and one physical layer of hardware, as shown in Figure 7-1.

Figure 7-1. OSI reference model

The OSI reference model is an idealized scheme that few systems implement precisely, but it’s often used to frame discussions of networking principles. Each layer on one machine assumes that it is “talking to” the same layer on the other machine. Both machines “speak” the same language, or protocol, at the same level. In reality, however, a network transmission must pass down each layer on the client machine, be transmitted across the network, and then pass up the layers on the destination machine until it reaches a layer that can understand and implement the request.

The purpose of each layer in the OSI model is to provide services to higher layers and to abstract how the services are implemented at lower layers. Describing the details of each layer is beyond the scope of this book, but following is a brief description of each layer in the OSI model.

NOTE

Most network descriptions start with the top-most layer and work down to the lowest layer; however, here the description of the layers will start at the bottom and work toward the top, to demonstrate how each layer builds upon the services provided by the layer beneath it.

§ Physical. This is the lowest layer in the OSI model, and it exchanges signals between cooperating network entities over some physical medium (wire, radio, fiber, or other type). The physical layer specifies the mechanical, electrical, functional, and procedural standards for accessing the medium, such as connectors, cabling, signaling, and so on. Common examples are Ethernet (IEEE 802.3) and Wi-Fi (IEEE 802.11).

§ Datalink. This layer exchanges data frames (also called packets) between physically adjacent network entities (known as stations) using the services provided by the physical layer. By its nature, the datalink layer is tightly tied to the physical layer and is really more of an architectural abstraction than the other layers within the model. The datalink layer provides each station with its own unique address on the network, and it provides point-to-point communications between stations (such as between two systems connected to the same Ethernet). The capabilities of the datalink layer vary considerably, depending upon the physical layer. Typically, transmit and receive errors are detected by the datalink layer, and in some instances, the error might be corrected. A datalink layer can be connection oriented, which is typically used in wide area networks (WANs), or connectionless, which is typically used in local area networks (LANs). The IEEE (Institute of Electrical and Electronics Engineers) 802 committee is responsible for the majority of the LAN architectures used throughout the world, and they specify the physical and datalink layers of most networking equipment. They divide the datalink layer into two sublayers: the Logical Link Control (LLC) and the Medium Access Control (MAC). The LLC layer provides a single access method for the network layer to communicate with any 802.x MAC, insulating the network layer from the physical LAN type. The MAC layer provides access-control functions to the shared network medium, and it specifies signaling, the sharing protocol, address recognition, frame generation, CRC generation, and so on. The datalink layer does not guarantee that frames will be delivered to their destination.

§ Network. The network layer implements node addresses and routing functions to allow packets to traverse multiple datalinks. This layer understands the network topology (hiding it from the transport layer) and knows how to direct packets to the nearest router. Any network entity containing the network, datalink, and physical layers is considered to be a node, and the network layer can transfer data between any two nodes on the network. There are two types of nodes implemented by the network layer: end nodes, which are the source or destination of data, and intermediate nodes (usually referred to as routers), which route packets between end nodes. Network-layer service can be either connection oriented, where all packets traveling between the end nodes follow the same path through the network, or connectionless, where each packet is routed independently. The network layer does not guarantee that packets will be delivered to their destination.

§ Transport. The transport layer provides a transparent data-transfer mechanism between end nodes. On the sending side, the transport layer receives an unstructured stream of data from the layer above and segments the data into discrete packets, which can be sent across the network, using the services of the network layer beneath it. On the receiving side, the transport layer reassembles the packets received from the network layer into a stream of data and provides it to the layer above. This layer provides reliable data transfer and will re-transmit lost or corrupted packets to ensure that the data stream received is identical to the data stream that was sent.

§ Session. This layer implements a connection or pipe between cooperating applications. Each connection endpoint has its own address (often called a port), which is unique on that system. There are a variety of communications services provided by session layers, such as two-way simultaneous (full-duplex), two-way alternate (half-duplex), or one-way. Once a connection is established, the systems typically send periodic messages to each other to ensure that each end of the connection is functioning. If an uncorrectable transmission error is detected over a connection, the connection is typically terminated and disconnected.

§ Presentation. The presentation layer is responsible for preserving the information content of data sent over the network. It handles data formatting, including issues such as whether lines end in a carriage return/line feed (CR/LF) or just a carriage return (CR), whether data is to be compressed or encrypted, converting binary data from little-endian to big-endian, and so on. This layer is not present in most network protocol stacks, so its functionality is implemented at the application layer.

§ Application. This is a layer that handles the information transfer between two network applications, including functions such as security checks, identification of the participating machines, and initiation of the data exchange. This is the protocol that is used by two communicating applications, and is application specific.
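The segmentation and reassembly performed by the transport layer can be illustrated with a minimal Python sketch. The function names segment and reassemble, and the use of a simple integer sequence number, are assumptions made for illustration; real transport protocols such as TCP use richer headers and also handle loss and corruption.

```python
import random


def segment(stream: bytes, max_payload: int):
    """Split a byte stream into (sequence_number, chunk) packets."""
    return [
        (seq, stream[offset:offset + max_payload])
        for seq, offset in enumerate(range(0, len(stream), max_payload))
    ]


def reassemble(packets):
    """Rebuild the stream from packets that may arrive in any order."""
    # Sorting by sequence number restores the original ordering.
    return b"".join(chunk for _seq, chunk in sorted(packets))


if __name__ == "__main__":
    message = b"read x bytes from file y on machine z"
    packets = segment(message, max_payload=8)
    random.shuffle(packets)  # the network may deliver packets out of order
    assert reassemble(packets) == message
```

The sequence number is what lets the receiving side present the layer above with the same stream that the sender submitted, regardless of the order in which the network layer delivered the packets.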

The gray lines in Figure 7-1 represent protocols used in transmitting a request to a remote machine. As stated earlier, each layer of the hierarchy assumes that it is speaking to the same layer on another machine and uses a common protocol. The collection of protocols through which a request passes on its way down and back up the layers of the network is called a protocol stack.

Not all network protocol suites implement all the layers in the OSI model. (The presentation layer is rarely provided.) In particular, the TCP/IP protocol stack (which predates the OSI model) matches poorly to the abstractions of OSI. As data travels down the network stack, each layer adds a header (and possibly a trailer) to the data payload, building up a structure that is very similar to the layers of an onion. When this structure is received on a remote node, it travels up the network stack, with each layer stripping off its header (and trailer) until the data payload is delivered to the receiving application.
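The onion-like wrapping described above can be sketched in a few lines of Python. The layer names and the header and trailer byte strings (TCP|, IP|, ETH|, |FCS) are placeholders chosen for illustration only; they are not real protocol header formats.

```python
# Each entry: (layer name, header, trailer), listed in the order the data
# passes down the stack. The byte strings are illustrative placeholders.
LAYERS = [
    ("transport", b"TCP|", b""),
    ("network", b"IP|", b""),
    ("datalink", b"ETH|", b"|FCS"),
]


def encapsulate(payload: bytes) -> bytes:
    """Wrap the payload with each layer's header (and trailer) on the way down."""
    frame = payload
    for _name, header, trailer in LAYERS:
        frame = header + frame + trailer
    return frame


def decapsulate(frame: bytes) -> bytes:
    """Strip each layer's header (and trailer) on the way back up."""
    for name, header, trailer in reversed(LAYERS):
        if not (frame.startswith(header) and frame.endswith(trailer)):
            raise ValueError(f"malformed {name} framing")
        frame = frame[len(header):len(frame) - len(trailer)]
    return frame


if __name__ == "__main__":
    wire = encapsulate(b"hello")
    print(wire)  # b'ETH|IP|TCP|hello|FCS'
    assert decapsulate(wire) == b"hello"
```

Note that the outermost wrapper belongs to the lowest layer, which is why the receiving node removes headers in the reverse of the order in which the sender added them.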

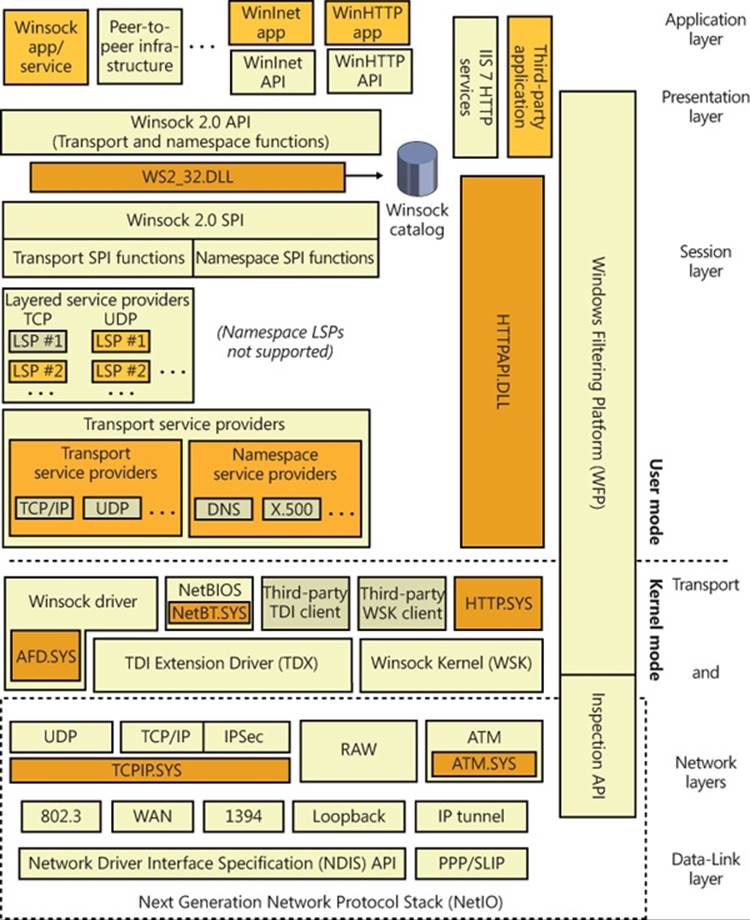

Windows Networking Components

Figure 7-2 provides an overview of the components of Windows networking, showing how each component fits into the OSI reference model and which protocols are used between layers. The mapping between OSI layers and networking components isn’t precise, which is the reason that some components cross layers. The various components include the following:

§ Networking APIs provide a protocol-independent way for applications to communicate across a network. Networking APIs can be implemented in user mode or in both user mode and kernel mode. In some cases, they are wrappers around another networking API that implements a specific programming model or provides additional services. (Note that the term networking API also describes any programming interfaces provided by networking-related software.)

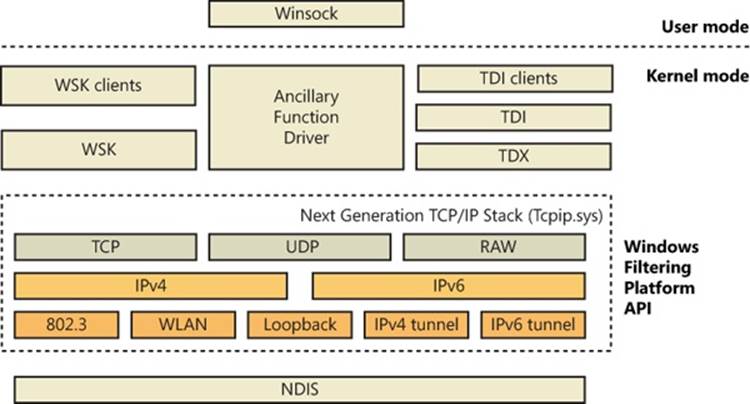

§ Transport Driver Interface (TDI) clients are legacy kernel-mode device drivers that usually implement the kernel-mode portion of a networking API. TDI clients get their name from the fact that the I/O request packets (IRPs) they send to protocol drivers are formatted according to the Windows Transport Driver Interface standard (documented in the Windows Driver Kit). This standard specifies a common programming interface for kernel-mode device drivers. (See Chapter 8, “I/O System,” in Part 2 for more information about IRPs.) TDI is deprecated and will be removed in a future version of Windows; it is now exported by the TDI Extension (TDX) driver. Kernel-mode network clients should use the Winsock Kernel (WSK) interface to access the network stack.

§ TDI transports (also known as transports) and Network Driver Interface Specification (NDIS) protocol drivers (or protocol drivers) are kernel-mode network protocol drivers. They accept IRPs from TDI clients and process the requests these IRPs represent. This processing might require network communications with a peer, prompting the TDI transport to add protocol-specific headers (for example, TCP, UDP, and/or IP) to data passed in the IRP, and to communicate with adapter drivers using NDIS functions (also documented in the Windows Driver Kit). TDI transports generally facilitate application network communications by transparently performing message operations such as segmentation and reassembly, sequencing, acknowledgment, and retransmission.

§ Microsoft has decided that TCP/IP has won the network protocol wars, so it has re-architected the network protocol portion of the network stack from being protocol-neutral to being TCP/IP-centric. The interface between the TCP/IP protocol driver and Winsock is known as the Transport Layer Network Provider Interface (TLNPI) and is currently undocumented.

§ Winsock Kernel (WSK) is a transport-independent, kernel-mode networking API that replaces the legacy TDI. WSK provides network communication by using socket-like programming semantics similar to user-mode Winsock, while also providing unique features such as asynchronous I/O operations built on IRPs and event callbacks. WSK also natively supports IP version 6 (IPv6) functionality in the Next Generation TCP/IP network stack in Windows.

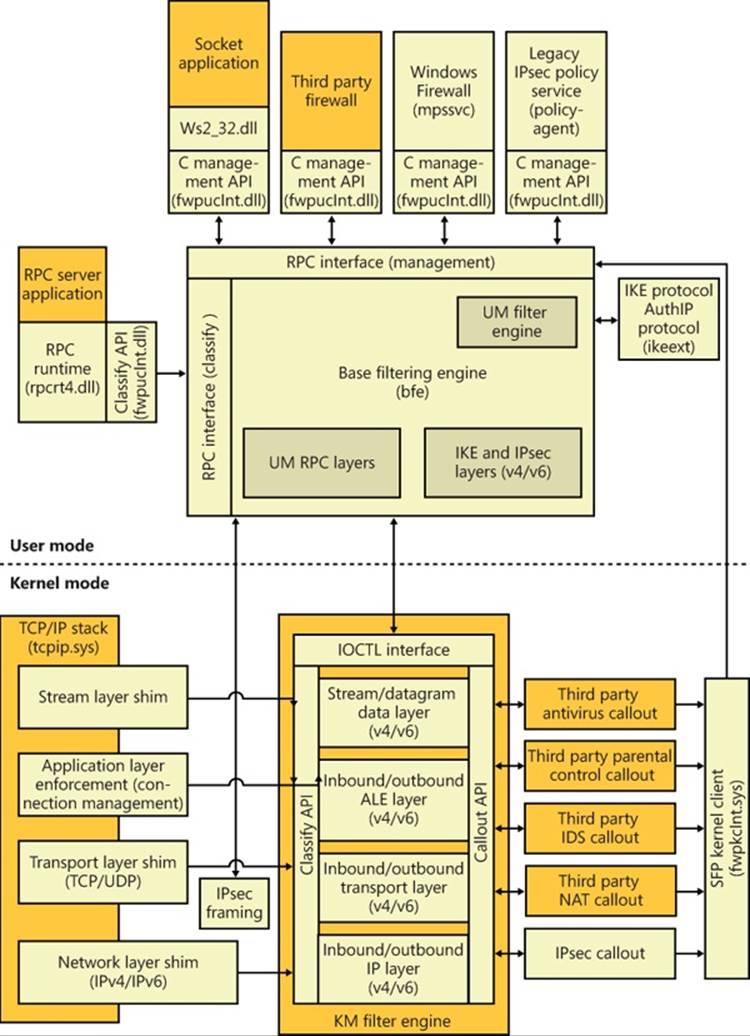

§ The Windows Filtering Platform (WFP) is a set of APIs and system services that provide the ability to create network filtering applications. The WFP allows applications to interact with packet processing at different levels of the Windows networking stack, much like file system filters. Similarly, network data can be traced, filtered, and also modified before it reaches its destination.

§ WFP callout drivers are kernel-mode drivers that implement one or more callouts, which extend the capabilities of the WFP by processing TCP/IP-based network data in ways that extend the basic functionality provided by the WFP.

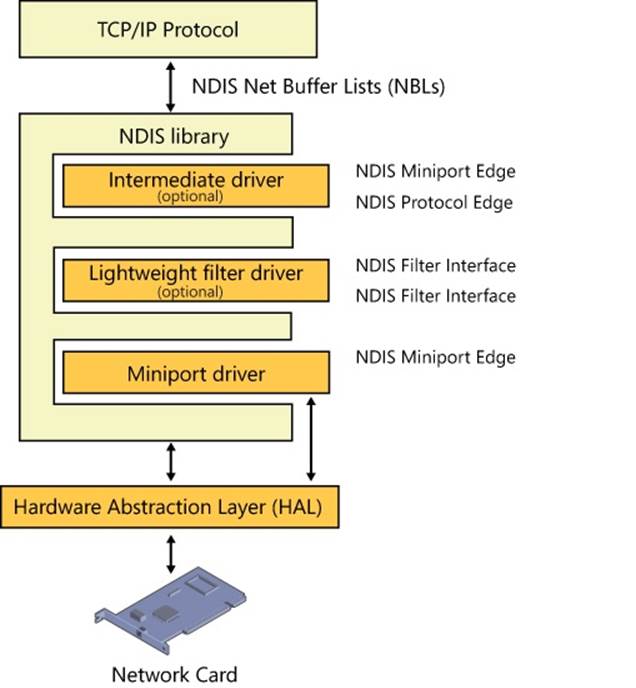

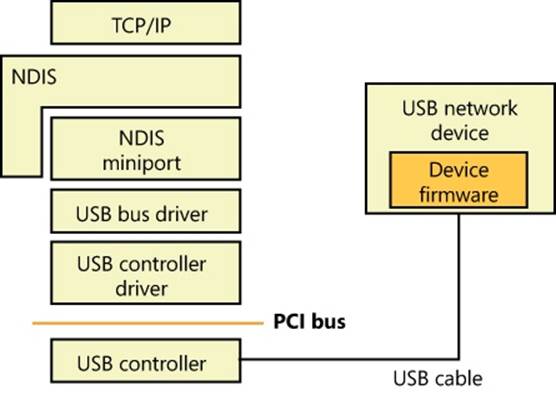

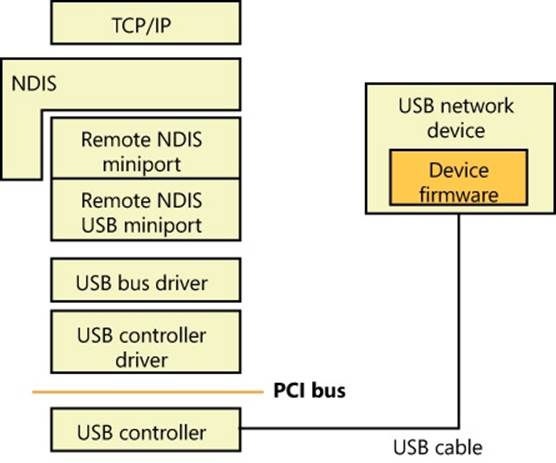

§ The NDIS library (Ndis.sys) provides an abstraction mechanism that encapsulates Network Interface Card (NIC) drivers (also known as NDIS miniports), hiding from them the specifics of the Windows kernel-mode environment. The NDIS library exports functions for use by TCP/IP and legacy TDI transports.

§ NDIS miniport drivers are kernel-mode drivers that are responsible for interfacing the network stack to a particular NIC. NDIS miniport drivers are written so that they are wrapped by the Windows NDIS library. NDIS miniport drivers don’t process IRPs; rather, they register a call-table interface to the NDIS library that contains pointers to functions that perform simple operations on the NIC, such as sending a packet or querying properties. NDIS miniport drivers communicate with network adapters by using NDIS library functions that resolve to hardware abstraction layer (HAL) functions.

As Figure 7-2 shows, the OSI layers don’t correspond to actual software. WSK transport providers, for example, frequently cross several boundaries. In fact, the bottom three layers of software and the hardware layer are often referred to collectively as the transport. Software components residing in the upper three layers are referred to as users or clients of the transport.

Figure 7-2. OSI model and Windows networking components

In the remainder of this chapter, we’ll examine the networking components shown in Figure 7-2 (as well as others not shown in the figure), looking at how they fit together and how they relate to Windows as a whole.

Networking APIs

Windows implements multiple networking APIs to provide support for legacy applications and compatibility with industry standards. In this section, we’ll briefly look at the networking APIs and describe how applications use them. Keep in mind that the decision about which API an application uses depends on characteristics of the API, such as which protocols the API can layer over, whether the API supports reliable (or bidirectional) communication, and the API’s portability to other Windows platforms the application might run on. We’ll discuss the following networking APIs:

§ Windows Sockets (Winsock)

§ Winsock Kernel (WSK)

§ Remote procedure call (RPC)

§ Web access APIs

§ Named pipes and mailslots

§ NetBIOS

§ Other networking APIs

Windows Sockets

The original Windows Sockets (Winsock) (version 1.0) was Microsoft’s implementation of BSD (Berkeley Software Distribution) Sockets, a programming API that became the standard by which UNIX systems have communicated over the Internet since the 1980s. Support for sockets on Windows makes the task of porting UNIX networking applications to Windows relatively straightforward. The modern versions of Winsock include most of the functionality of BSD Sockets but also include Microsoft-specific enhancements, which continue to evolve. Winsock supports reliable, connection-oriented communication as well as unreliable, connectionless communication. (“Reliable,” in this sense, indicates whether the sender is notified of any problems in the delivery of data to the receiver.) Windows provides Winsock 2.2, which adds numerous features beyond the BSD Sockets specification, such as functions that take advantage of Windows asynchronous I/O, to offer far better performance and scalability than straight BSD Sockets programming.

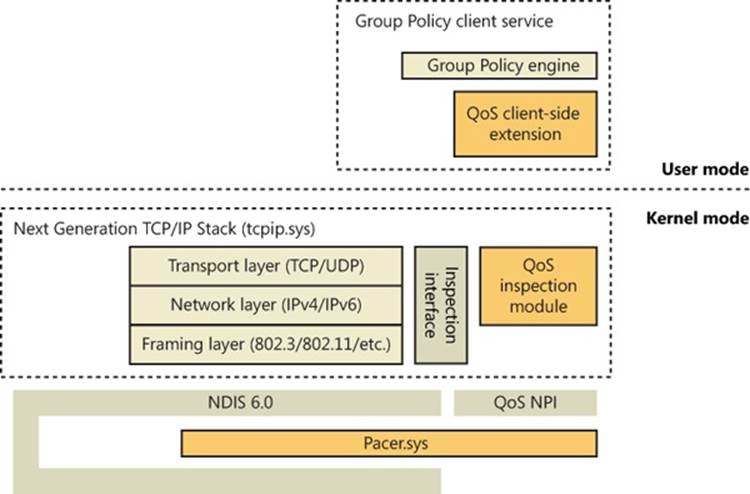

Winsock includes the following features:

§ Support for scatter-gather and asynchronous application I/O.

§ Quality of Service (QoS) conventions so that applications can negotiate latency and bandwidth requirements when the underlying network supports QoS.

§ Extensibility so that Winsock can be used with third-party protocols (deprecated).

§ Support for integrated namespaces with third-party namespace providers. A server can publish its name in Active Directory, for example, and by using namespace extensions, a client can look up the server’s address in Active Directory.

§ Support for multicast messages, where messages transmit from a single source to multiple receivers.

We’ll examine typical Winsock operation and then describe ways that Winsock can be extended.

Winsock Client Operation

The first step a Winsock application takes is to initialize the Winsock API with a call to an initialization function. A Winsock application’s next step is to create a socket that will represent a communications endpoint. The application obtains the address of the server to which it wants to connect by calling getaddrinfo (and later calling freeaddrinfo to release the information). The getaddrinfo function returns the list of protocol-specific addresses assigned to the server, and the client attempts to connect to each one in turn until it is able to establish a connection with one of them. This ensures that a client that supports both IP version 4 (IPv4) and IPv6 will connect to the appropriate and/or most efficient address on a server that might have both IPv4 and IPv6 addresses assigned to it. (IPv6 is preferred over IPv4.) Winsock is a protocol-independent API, so an address can be specified for any protocol installed on the system over which Winsock operates. After obtaining the server address, a connection-oriented client attempts to connect to the server by using connect and specifying the server address.
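The client-side sequence described above (resolve the server, then try each returned address until one connects) can be sketched in Python, whose socket module is implemented on top of Winsock on Windows and takes care of initializing the API and releasing the address list. The function name connect_to_server and the timeout value are assumptions made for this illustration.

```python
import socket


def connect_to_server(host: str, port: int, timeout: float = 3.0) -> socket.socket:
    """Resolve host and try each returned address in turn until one connects."""
    last_error = None
    # getaddrinfo returns (family, type, proto, canonname, sockaddr) tuples,
    # one per protocol-specific address assigned to the server.
    for family, kind, proto, _canon, sockaddr in socket.getaddrinfo(
            host, port, type=socket.SOCK_STREAM):
        try:
            sock = socket.socket(family, kind, proto)
        except OSError as exc:  # for example, address family not available
            last_error = exc
            continue
        try:
            sock.settimeout(timeout)
            sock.connect(sockaddr)
            return sock  # first address that accepts the connection wins
        except OSError as exc:
            last_error = exc
            sock.close()
    raise ConnectionError(f"could not connect to {host}:{port}") from last_error
```

A caller would use the returned socket for sending and receiving and close it when finished. The Python standard library's socket.create_connection performs a similar loop, so the sketch is mainly useful for seeing the individual steps.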

When a connection is established, the client can send and receive data over its socket using the send and recv APIs. A connectionless client specifies the remote address with each operation by using the connectionless equivalents of send and recv, which are sendto and recvfrom. Clients can also use the select and WSAPoll APIs to wait on or poll multiple sockets for synchronous I/O operations, or to check their state.
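As a rough illustration of the connectionless calls and of waiting on a socket with select, the following Python sketch sends one datagram over the loopback interface and waits for it to become readable. The function name udp_round_trip, the loopback address, and the buffer size are assumptions made for this example.

```python
import select
import socket


def udp_round_trip(message: bytes, timeout: float = 2.0) -> bytes:
    """Send one datagram to a local receiver and read it back."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the system pick a port
        # No connection is established; the remote address accompanies the call.
        sender.sendto(message, receiver.getsockname())
        # select blocks until the socket is readable or the timeout expires.
        readable, _, _ = select.select([receiver], [], [], timeout)
        if not readable:
            raise TimeoutError("no datagram arrived")
        data, _source = receiver.recvfrom(2048)
        return data
    finally:
        sender.close()
        receiver.close()
```

The list passed to select can hold many sockets, which is how a single thread can wait on several endpoints at once.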

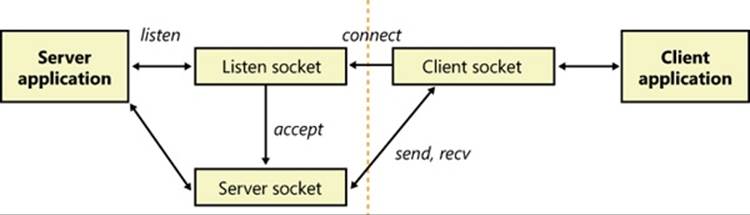

Winsock Server Operation

The sequence of steps for a server application differs from that of a client. After initializing the Winsock API, the server creates a socket and then binds it to a local address by using bind. Again, the address family specified—whether it’s TCP/IPv4, TCP/IPv6, or some other address family—is up to the server application.

If the server is connection oriented, it performs a listen operation on the socket, indicating the backlog, or the number of connections the server asks Winsock to hold until the server is able to accept them. Then it performs an accept operation to allow a client to connect to the socket. If there is a pending connection request, the accept call completes immediately; otherwise, it completes when a connection request arrives. When a connection is made, the accept function returns a new socket that represents the server’s end of the connection. (The original socket used for listening is not used for communications, only for receiving connection requests.) The server can perform receive and send operations by using functions such as recv and send. Like Winsock clients, servers can use the select and WSAPoll functions to query the state of one or more sockets; however, the Winsock WSAEventSelect function and overlapped (asynchronous) I/O extensions are preferred for better scalability. Figure 7-3 shows connection-oriented communication between a Winsock client and server.

Figure 7-3. Connection-oriented Winsock operation
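The server-side sequence (bind, listen, accept, then receive and send on the socket that accept returns) might look like the following Python sketch. The names start_server and serve_one_client, the loopback address, and the choice to reply with an uppercased copy of the data are all assumptions made for illustration.

```python
import socket
import threading


def serve_one_client(listener: socket.socket) -> None:
    """Accept a single connection and echo back uppercased data."""
    # accept returns a NEW socket for this connection; the listening
    # socket is used only to receive connection requests.
    conn, _peer = listener.accept()
    with conn:
        while True:
            data = conn.recv(4096)
            if not data:  # an empty read means the client closed its end
                break
            conn.sendall(data.upper())


def start_server(host: str = "127.0.0.1", port: int = 0, backlog: int = 5):
    """Create, bind, and listen, then handle one client on a helper thread."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind((host, port))
    listener.listen(backlog)  # backlog: pending connections held for accept
    thread = threading.Thread(target=serve_one_client, args=(listener,),
                              daemon=True)
    thread.start()
    return listener, thread
```

A client can then connect to listener.getsockname(), send a few bytes, and read the reply. A production server would loop on accept and, as noted above, would prefer event-based or overlapped I/O for scalability.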

After binding an address, a connectionless server is no different from a connectionless client: it can send and receive data over the socket simply by specifying the remote address with each operation. Most connectionless protocols are unreliable and, in general, will not know whether the destination actually received the sent data packets (which are known as datagrams). Datagram protocols are ideal for quick message passing, where the overhead of establishing a connection is too much and reliability is not required (although an application can build reliability on top of the protocol).
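To make the last point concrete, here is one way an application could layer a very simple form of reliability over datagrams: the sender retransmits until it receives an acknowledgment. This is only a sketch of a stop-and-wait scheme; the packet layout (a 4-byte sequence number followed by the payload), the ACK format, and both function names are assumptions invented for the example.

```python
import socket


def reliable_send(sock, payload: bytes, dest, seq: int,
                  retries: int = 5, timeout: float = 0.2) -> int:
    """Send one datagram, retransmitting until a matching ACK arrives.

    Returns the number of transmissions that were needed.
    """
    header = seq.to_bytes(4, "big")
    sock.settimeout(timeout)
    for attempt in range(1, retries + 1):
        sock.sendto(header + payload, dest)
        try:
            ack, _ = sock.recvfrom(16)
        except socket.timeout:
            continue  # assume the datagram (or its ACK) was lost; resend
        if ack == b"ACK" + header:
            return attempt
    raise TimeoutError("no acknowledgment received")


def lossy_receive_one(sock, drop_first: int) -> bytes:
    """Receive one message, ignoring the first drop_first datagrams
    to simulate loss, and acknowledge the one that is kept."""
    seen = 0
    while True:
        packet, sender = sock.recvfrom(2048)
        seen += 1
        if seen <= drop_first:
            continue  # pretend this datagram never arrived
        sock.sendto(b"ACK" + packet[:4], sender)
        return packet[4:]
```

Running lossy_receive_one on a helper thread with drop_first set to 1 forces reliable_send to transmit at least twice before it succeeds, which shows the retransmission path at work.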

Winsock Extensions

In addition to supporting functions that correspond directly to those implemented in BSD Sockets, Microsoft has added a handful of functions that aren’t part of the BSD standard. Two of these functions, AcceptEx (the Ex suffix is short for Extended) and TransmitFile, are worth describing because many web servers on Windows use them to achieve high performance. AcceptEx is a version of the accept function that, in the process of establishing a connection with a client, returns the client’s address and the client’s first message. AcceptEx allows the server application to queue multiple accept operations so that high volumes of incoming connection requests can be handled. With this function, a web server avoids executing multiple Winsock functions that would otherwise be required.

After establishing a connection with a client, a web server frequently sends a file, such as a web page, to the client. The TransmitFile function’s implementation is integrated with the Windows cache manager so that a file can be sent directly from the file system cache. Sending data in this way is called zero-copy because the server doesn’t have to read the file data to send it; it simply specifies a handle to a file and the byte range (offset and length) of the file to send. In addition, TransmitFile allows a server to add prefix or suffix data to the file’s data so that the server can send header information, trailer information, or both, which might include the name of the web server and a field that indicates to the client the size of the message the server is sending. Internet Information Services (IIS), which is included with Windows, uses both AcceptEx and TransmitFile to achieve better performance.
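The calling pattern of TransmitFile (hand over an open file, a byte range, and optional prefix and suffix data) can be approximated in Python as shown below. This sketch does not call TransmitFile itself: Python's socket.sendfile uses os.sendfile where the platform provides it and otherwise falls back to ordinary send calls, so whether the transfer is actually zero-copy depends on the platform. The function name send_file_with_framing is an assumption made for the example.

```python
import socket


def send_file_with_framing(sock: socket.socket, path: str, offset: int,
                           count: int, prefix: bytes = b"",
                           suffix: bytes = b"") -> int:
    """Send prefix data, a byte range of a file, and then suffix data.

    Returns the number of file bytes that were sent.
    """
    if prefix:
        sock.sendall(prefix)  # for example, a protocol header
    with open(path, "rb") as source:
        # The application never reads the file contents into its own buffer;
        # it only names the file and the range (offset and length) to send.
        sent = sock.sendfile(source, offset=offset, count=count)
    if suffix:
        sock.sendall(suffix)  # for example, trailer information
    return sent
```

A web server would typically pass response headers as the prefix, including a field that tells the client how many bytes of file data follow.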

Windows also supports a handful of other multifunction APIs, including ConnectEx, DisconnectEx, and TransmitPackets. ConnectEx establishes a connection and sends the first message on the connection. DisconnectEx closes a connection and allows the socket handle representing the connection to be reused in a call to AcceptEx or ConnectEx. TransmitPackets is similar to TransmitFile, except that it allows for the sending of in-memory data in addition to, or in lieu of, file data. Finally, by using the WSAImpersonateSocketPeer and WSARevertImpersonation functions, Winsock servers can perform impersonation (described in Chapter 6) to perform authorization or to gain access to resources based on the client’s security credentials.

Extending Winsock

Winsock is an extensible API on Windows because third parties can add a transport service provider that interfaces Winsock with other protocols, or layers on top of existing protocols, to provide functionality such as proxying. Third parties can also add a namespace service provider to augment Winsock’s name-resolution facilities. Service providers plug in to Winsock by using the Winsock service provider interface (SPI). When a transport service provider is registered with Winsock, Winsock uses the transport service provider to implement socket functions, such as connect and accept, for the address types that the provider indicates it implements. There are no restrictions on how the transport service provider implements the functions, but the implementation usually involves communicating with a transport driver in kernel mode.

NOTE

Layered service providers are not secure and can be bypassed; secure network protocol layering must be done in kernel mode. Installing itself as a Winsock layered service provider (LSP) is a technique used frequently by malware and spyware.

A requirement of any Winsock client/server application is for the server to make its address available to clients so that the clients can connect to the server. Standard services that execute on the TCP/IP protocol use well-known addresses to make their addresses available. As long as a browser knows the name of the computer a web server is running on, it can connect to the web server by specifying the well-known web server address (the IP address of the server concatenated with :80, the port number used for HTTP). Namespace service providers make it possible for servers to register their presence in other ways. For example, one namespace service provider might on the server side register the server’s address in Active Directory and on the client side look up the server’s address in Active Directory. Namespace service providers supply this functionality to Winsock by implementing standard Winsock name-resolution functions such as getaddrinfo and getnameinfo.
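The two name-resolution functions mentioned above are also exposed by Python's socket module, which makes it easy to see how a well-known address is formed. In this sketch the function name well_known_endpoint is hypothetical, and numeric flags are used so that no name lookup is performed; the address passed in must therefore already be a literal IP address.

```python
import socket


def well_known_endpoint(ip: str, port: int = 80) -> str:
    """Return the 'address:port' text for a server's well-known address."""
    # AI_NUMERICHOST: treat the input as a literal address, skip name lookup.
    info = socket.getaddrinfo(ip, port, type=socket.SOCK_STREAM,
                              flags=socket.AI_NUMERICHOST)
    family, _kind, _proto, _canon, sockaddr = info[0]
    # getnameinfo converts a socket address back into host and service text.
    host, service = socket.getnameinfo(
        sockaddr, socket.NI_NUMERICHOST | socket.NI_NUMERICSERV)
    if family == socket.AF_INET6:
        return f"[{host}]:{service}"  # IPv6 literals are bracketed
    return f"{host}:{service}"
```

For example, passing the documentation address 192.0.2.10 with the default port yields the text 192.0.2.10:80. Dropping the numeric flags would let the registered namespace providers resolve a host name instead.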

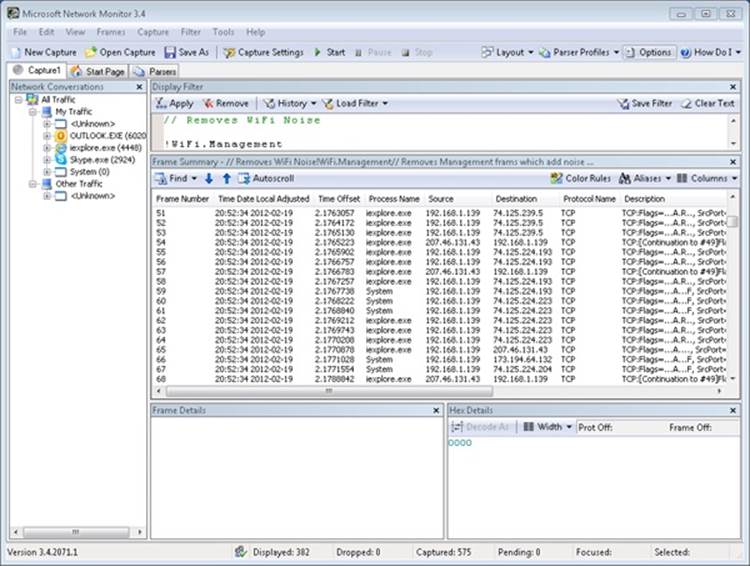

EXPERIMENT: LOOKING AT WINSOCK SERVICE AND NAMESPACE PROVIDERS

The Network Shell (Netsh.exe) utility included with Windows is able to show the registered Winsock transport and namespace providers by using the netsh winsock show catalog command. For example, if there are two TCP/IP transport service providers, the first one listed is the default provider for Winsock applications using the TCP/IP protocol. Here’s sample output from Netsh showing the registered transport service providers:

C:\Users\Toby>netsh winsock show catalog

Winsock Catalog Provider Entry

------------------------------------------------------

Entry Type: Base Service Provider

Description: MSAFD Tcpip [TCP/IP]

Provider ID: {E70F1AA0-AB8B-11CF-8CA3-00805F48A192}

Provider Path: %SystemRoot%\system32\mswsock.dll

Catalog Entry ID: 1001

Version: 2

Address Family: 2

Max Address Length: 16

Min Address Length: 16

Socket Type: 1

Protocol: 6

Service Flags: 0x20066

Protocol Chain Length: 1

Winsock Catalog Provider Entry

------------------------------------------------------

Entry Type: Base Service Provider

Description: MSAFD Tcpip [UDP/IP]

Provider ID: {E70F1AA0-AB8B-11CF-8CA3-00805F48A192}

Provider Path: %SystemRoot%\system32\mswsock.dll

Catalog Entry ID: 1002

Version: 2

Address Family: 2

Max Address Length: 16

Min Address Length: 16

Socket Type: 2

Protocol: 17

Service Flags: 0x20609

Protocol Chain Length: 1

Winsock Catalog Provider Entry

------------------------------------------------------

Entry Type: Base Service Provider

Description: MSAFD Tcpip [RAW/IP]

Provider ID: {E70F1AA0-AB8B-11CF-8CA3-00805F48A192}

Provider Path: %SystemRoot%\system32\mswsock.dll

Catalog Entry ID: 1003

Version: 2

Address Family: 2

Max Address Length: 16

Min Address Length: 16

Socket Type: 3

Protocol: 0

Service Flags: 0x20609

Protocol Chain Length: 1

.

.

.

Name Space Provider Entry

------------------------------------------------------

Description: Network Location Awareness Legacy (NLAv1) Namespace

Provider ID: {6642243A-3BA8-4AA6-BAA5-2E0BD71FDD83}

Name Space: 15

Active: 1

Version: 0

Name Space Provider Entry

------------------------------------------------------

Description: E-mail Naming Shim Provider

Provider ID: {964ACBA2-B2BC-40EB-8C6A-A6DB40161CAE}

Name Space: 37

Active: 1

Version: 0

Name Space Provider Entry

------------------------------------------------------

Description: PNRP Cloud Namespace Provider

Provider ID: {03FE89CE-766D-4976-B9C1-BB9BC42C7B4D}

Name Space: 39

Active: 1

Version: 0

.

.

.

You can also use the Autoruns utility from Windows Sysinternals (www.microsoft.com/technet/sysinternals) to view namespace and transport providers, as well as to disable or delete those that might be causing problems or unwanted behavior on the system.
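The numeric fields in the catalog output are easier to read once they are decoded. The following Python sketch parses text in the format shown above and translates the Address Family, Socket Type, and Protocol values by using the standard socket constants (for example, address family 2 is AF_INET, socket type 1 is SOCK_STREAM, and protocol 6 is TCP). The function names parse_catalog and describe are hypothetical, and the parser assumes the simple "Key: value" layout of the sample output.

```python
import socket

# IANA protocol numbers that appear in the sample output.
PROTOCOL_NAMES = {0: "unspecified", 6: "TCP", 17: "UDP"}

ENTRY_TITLES = ("Winsock Catalog Provider Entry", "Name Space Provider Entry")


def parse_catalog(text: str):
    """Turn 'netsh winsock show catalog' text into a list of dictionaries."""
    entries = []
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if line in ENTRY_TITLES:
            current = {"Kind": line}  # a title line starts a new entry
            entries.append(current)
        elif ":" in line and current is not None:
            # Split on the first colon only, so values may contain colons.
            key, _, value = line.partition(":")
            current[key.strip()] = value.strip()
    return entries


def describe(entry) -> str:
    """Summarize a transport provider entry with symbolic names."""
    family = socket.AddressFamily(int(entry["Address Family"])).name
    kind = socket.SocketKind(int(entry["Socket Type"])).name
    number = int(entry["Protocol"])
    protocol = PROTOCOL_NAMES.get(number, str(number))
    return f'{entry["Description"]}: {family}, {kind}, {protocol}'
```

Applied to the first entry in the sample output, describe reports that the MSAFD Tcpip [TCP/IP] provider handles AF_INET stream sockets over TCP. Namespace provider entries lack these three fields, so describe should be called only on transport provider entries.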

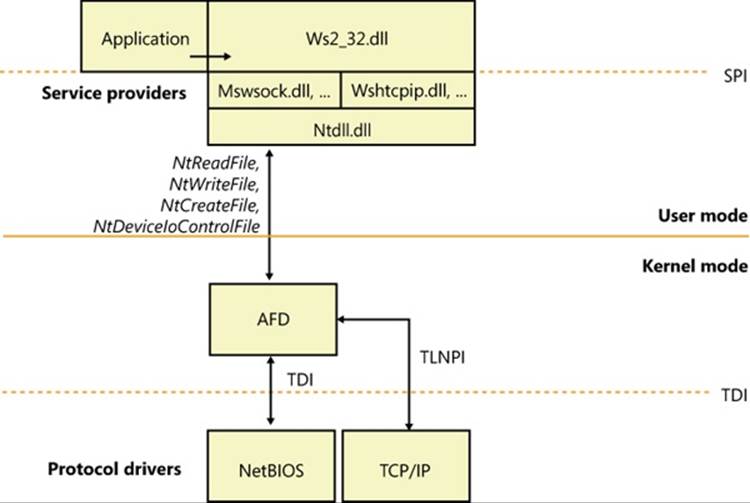

Winsock Implementation

Winsock’s implementation is shown in Figure 7-4. Its application interface consists of an API DLL, Ws2_32.dll (%SystemRoot%\System32\Ws2_32.dll), which provides applications access to Winsock functions. Ws2_32.dll calls on the services of namespace and transport service providers to carry out name and message operations. The Mswsock.dll (%SystemRoot%\System32\mswsock.dll) library acts as a transport service provider for the protocols supported by Microsoft and uses Winsock Helper libraries that are protocol specific to communicate with kernel-mode protocol drivers. For example, Wshtcpip.dll (%SystemRoot%\System32\wshtcpip.dll) is the TCP/IP helper. Mswsock.dll implements the Microsoft Winsock extension functions, such as TransmitFile, AcceptEx, and WSARecvEx.

Windows ships with helper DLLs for TCP/IPv4, TCP/IPv6, Bluetooth, NetBIOS, IrDA (Infrared Data Association), and PGM (Pragmatic General Multicast). It also includes namespace service providers for DNS (TCP/IP), Active Directory (NTDS), NLA (Network Location Awareness), PNRP (Peer Name Resolution Protocol), and Bluetooth.

Like the named-pipe and mailslot APIs (described later in this chapter), Winsock integrates with the Windows I/O model and uses file handles to represent sockets. This support requires the aid of a kernel-mode driver, so Msafd.dll (%SystemRoot%\System32\msafd.dll) uses the services of the Ancillary Function Driver (AFD—%SystemRoot%\System32\Drivers\Afd.sys) to implement socket-based functions. AFD is a Transport Layer Network Provider Interface (TLNPI) client and executes network socket operations, such as sending and receiving messages. TLNPI is the undocumented interface between AFD and the TCP/IP protocol stack. If a legacy protocol driver is installed, Windows will use the TDI-TLNPI translation driver TDX (%SystemRoot%\System32\Drivers\tdx.sys) to map TDI IRPs to TLNPI requests.

Figure 7-4. Winsock implementation

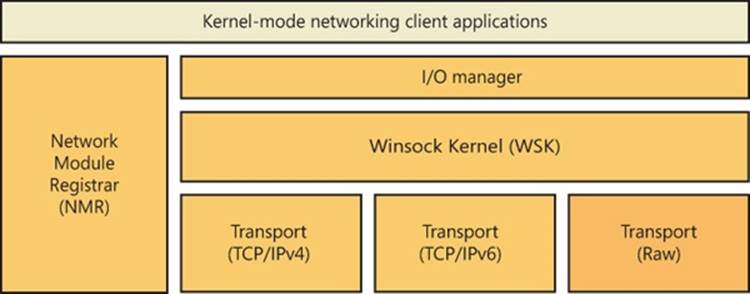

Winsock Kernel

To enable kernel-mode drivers and modules to have access to networking interfaces similar to those available to user-mode applications, Windows implements a socket-based networking programming interface called Winsock Kernel (WSK). WSK replaces the legacy TDI interface present on older versions of Windows, although the TDI interface is still maintained for transport providers. Compared to TDI, WSK provides better performance, better security, better scalability, and a much easier programming paradigm, because it relies less on internal kernel behavior and more on socket-based semantics. Additionally, WSK was written to take full advantage of the latest technologies in the Windows TCP/IP stack, which TDI was not originally designed to support. As shown in Figure 7-5, WSK makes use of the Network Module Registrar (NMR) component of Windows (part of %SystemRoot%\System32\drivers\NetIO.sys) to attach to and detach from transport protocols, and it can be used, just like Winsock, to support many types of network clients. For example, the Http.sys driver for the HTTP Server API (mentioned later in the chapter) is a WSK client. Using NMR with WSK is rather complicated, so registration-support APIs are provided to register with WSK (WskRegister, WskDeregister, WskCaptureProviderNPI, and WskReleaseProviderNPI).

NOTE

The Raw transport protocol is not really a protocol and does not perform any encapsulation of the user data. This allows the client to directly control the contents of the frames transmitted and received by the network interface.

WSK enhances security by restricting address sharing—which allows multiple sockets to use the same transport (TCP/IP) address—through the use of nondefault sharing and security descriptors on addresses. WSK uses the security descriptor specified by the first socket for an address, and it checks the owning process and thread for each subsequent attempt to use that address.

Figure 7-5. WSK overview

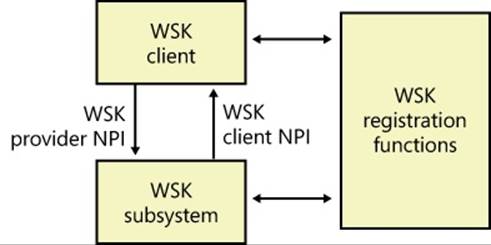

WSK Implementation

WSK’s implementation is shown in Figure 7-6. At its core is the WSK subsystem itself, which uses the Next Generation TCP/IP Stack (%SystemRoot%\System32\Drivers\Tcpip.sys) and the NetIO support library (%SystemRoot%\System32\Drivers\NetIO.sys) but is actually implemented in AFD. The subsystem is responsible for the provider side of the WSK API. The subsystem interfaces with the TCP/IP transport protocols (shown at the bottom of Figure 7-5). Attached to the WSK subsystem are WSK clients, which are kernel-mode drivers that implement the client-side WSK API in order to perform network operations. The WSK subsystem calls WSK clients to notify them of asynchronous events.

Figure 7-6. WSK implementation

WSK clients are bound to the WSK subsystem through the NMR or through the WSK’s registration functions, which allow WSK clients to dynamically detect when the WSK subsystem becomes available and then load their own dispatch table to describe the provider and client-side implementations of the WSK API. These implementations provide the standard WSK socket-based functions, such as WskSocket, WskAccept, WskBind, WskConnect, WskReceive, and WskSend, which have semantics similar to those of their user-mode Winsock counterparts (but not necessarily the same parameters). However, unlike user-mode Winsock, the WSK subsystem defines four kinds of socket categories, which identify which functions and events are available:

§ Basic sockets, which are used only to get and set information on the transport. They cannot be used to send or receive data or be bound to an address.

§ Listening sockets, which are used for sockets that accept only incoming connections.

§ Datagram sockets, which are used solely for sending and receiving datagrams.

§ Connection-oriented sockets, which support all the functionality required to send and receive network traffic over an established connection.

Apart from the socket functions described, WSK also provides events through which clients are notified of network status. Unlike the model for socket functions, in which a client controls the connection, events allow the subsystem to control the connection and merely notify the client. These include the WskAcceptEvent, WskInspectEvent, WskAbortEvent, WskReceiveFromEvent, WskReceiveEvent, WskDisconnectEvent, and WskSendBacklogEvent routines.

Finally, like user-mode Winsock, WSK can be extended through extension interfaces that clients can associate with sockets. These extensions can enhance the default functionality provided by the WSK subsystem.

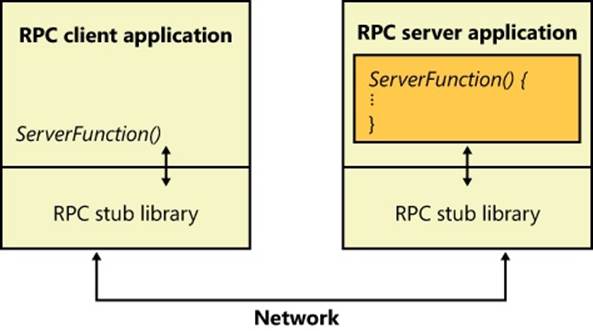

Remote Procedure Call

Remote procedure call (RPC) is a network programming standard originally developed in the early 1980s. The Open Software Foundation (now The Open Group) made RPC part of the Distributed Computing Environment (DCE) standard. Although there is a second RPC standard, SunRPC, the Microsoft RPC implementation is compatible with the OSF/DCE standard. RPC builds on other networking APIs, such as named pipes or Winsock, to provide an alternate programming model that in some respects hides the details of network programming from an application developer. Fundamentally, RPC provides a mechanism for creating programs that are distributed across a network, with portions of the application running transparently on one or more systems.

RPC Operation

An RPC facility is one that allows a programmer to create an application consisting of any number of procedures, some that execute locally and others that execute on remote computers via a network. It provides a procedural view of networked operations rather than a transport-centered view, thus simplifying the development of distributed applications.

Networking software is traditionally structured around an I/O model of processing. In Windows, for example, a network operation is initiated when an application issues an I/O request. The operating system processes the request accordingly by forwarding it to a redirector, which acts as a remote file system by making the client interaction with the remote file system invisible to the client. The redirector passes the operation to the remote file system, and after the remote system fulfills the request and returns the results, the local network card interrupts. The kernel handles the interrupt, and the original I/O operation completes, returning results to the caller.

RPC takes a different approach altogether. RPC applications are like other structured applications, with a main program that calls procedures or procedure libraries to perform specific tasks. The difference between RPC applications and regular applications is that some of the procedure libraries in an RPC application are stored and execute on remote computers, as shown in Figure 7-7, whereas others execute locally.

To the RPC application, all the procedures appear to execute locally. In other words, instead of making a programmer actively write code to transmit computational or I/O-related requests across a network, handle network protocols, deal with network errors, wait for results, and so forth, RPC software handles these tasks automatically. And the Windows RPC facility can operate over any available transport protocols loaded into the system.

Figure 7-7. RPC operation

To write an RPC application, the programmer decides which procedures will execute locally and which will execute remotely. For example, suppose an ordinary workstation has a network connection to a supercomputer (a very fast machine usually designed for high-speed vector operations). If the programmer were writing an application that manipulated large matrices, it would make sense from a performance perspective to offload the mathematical calculations to the supercomputer by writing the program as an RPC application.

RPC applications work like this: As an application runs, it calls local procedures as well as procedures that aren’t present on the local machine. To handle the latter case, the application is linked to a local library or DLL that contains stub procedures, one for each remote procedure. For simple applications, the stub procedures are statically linked with the application, but for bigger components the stubs are included in separate DLLs. In DCOM, covered later in the chapter, the latter method is typically used. The stub procedures have the same name and use the same interface as the remote procedures, but instead of performing the required operations, the stub takes the parameters passed to it and marshals them for transmission across the network. Marshaling parameters means ordering and packaging them in a particular way to suit a network link, such as resolving references and picking up a copy of any data structures that a pointer refers to.

The stub then calls RPC run-time procedures that locate the computer where the remote procedure resides, determine which network transport mechanisms that computer uses, and send the request to it using local transport software. When the remote server receives the RPC request, it unmarshals the parameters (the reverse of marshaling), reconstructs the original procedure call, and calls the procedure with the parameters passed from the calling system. When the server finishes, it performs the reverse sequence to return results to the caller.
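The stub, marshal, dispatch, and unmarshal sequence just described can be sketched in a few lines of Python. This is only an illustration of the idea: it does not reflect the wire format or the stubs that the Windows RPC runtime actually uses, the procedure name dot and the procedure number 0 are hypothetical, and a direct function call stands in for the network transport.

```python
import struct

# Hypothetical "remote" procedure as it exists on the server.
def _dot_impl(a, b):
    return sum(x * y for x, y in zip(a, b))

# Server-side table mapping a procedure number to its implementation.
SERVER_PROCEDURES = {0: _dot_impl}

HEADER = "<HII"  # procedure number, length of a, length of b

def marshal_call(opnum, a, b):
    """Package the procedure number and both vectors into one byte string."""
    header = struct.pack(HEADER, opnum, len(a), len(b))
    body = struct.pack(f"<{len(a) + len(b)}d", *a, *b)
    return header + body

def server_dispatch(request):
    """Server side: unmarshal, call the real procedure, marshal the result."""
    opnum, na, nb = struct.unpack_from(HEADER, request, 0)
    values = struct.unpack_from(f"<{na + nb}d", request, struct.calcsize(HEADER))
    a, b = values[:na], values[na:]
    return struct.pack("<d", SERVER_PROCEDURES[opnum](a, b))

def dot(a, b, transport=server_dispatch):
    """Client stub: same name and interface as the remote procedure."""
    reply = transport(marshal_call(0, a, b))  # stand-in for the network hop
    (result,) = struct.unpack("<d", reply)
    return result
```

The caller simply writes dot([1.0, 2.0], [3.0, 4.0]); nothing in the call site reveals that the arguments were flattened into bytes and rebuilt elsewhere, which is the property the stub exists to provide.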

In addition to the synchronous function-call-based interface described here, Windows RPC also supports asynchronous RPC. Asynchronous RPC lets an RPC application execute a function but not wait until the function completes to continue processing. Instead, the application can execute other code and later, when a response has arrived from the server, the RPC runtime notifies the client that the operation has completed. The RPC runtime uses the notification mechanism requested by the client. If the client uses an event synchronization object for notification, it waits for the signaling of the event object by calling either WaitForSingleObject or WaitForMultipleObjects. If the client provides an asynchronous procedure call (APC), the runtime queues the execution of the APC to the thread that executed the RPC function. (The APC will not be delivered until the requesting thread enters an alertable wait state. See Chapter 3 for more information on APCs.) If the client program uses an I/O completion port as its notification mechanism, it must call GetQueuedCompletionStatus to learn of the function’s completion. Alternatively, a client can poll for completion by calling RpcAsyncGetCallStatus.
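Two of these notification styles, waiting on an event and polling, can be mimicked with ordinary threads. The following is a loose analogy rather than a model of the Windows RPC runtime: the AsyncCall class and its method names are hypothetical, and a worker thread stands in for the remote server.

```python
import threading

class AsyncCall:
    """Runs a function in the background and signals an event when it is done."""

    def __init__(self, func, *args):
        self._done = threading.Event()   # plays the role of the event object
        self._result = None
        self._thread = threading.Thread(target=self._run, args=(func, args))
        self._thread.start()

    def _run(self, func, args):
        self._result = func(*args)
        self._done.set()                 # "notify the client" of completion

    def is_complete(self):
        """Polling style: ask whether the call has finished, without blocking."""
        return self._done.is_set()

    def wait(self, timeout=None):
        """Event style: block until the completion event is signaled."""
        if not self._done.wait(timeout):
            raise TimeoutError("call did not complete in time")
        return self._result
```

A caller would start the call, continue with other work, and later either call wait or check is_complete in a loop, depending on which style suits the application.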

In addition to the RPC runtime, Microsoft’s RPC facility includes a compiler, called the Microsoft Interface Definition Language (MIDL) compiler. The MIDL compiler simplifies the creation of an RPC application by generating the necessary stub routines. The programmer writes a series of ordinary function prototypes (assuming a C or C++ application) that describe the remote routines and then places the routines in a file. The programmer then adds some additional information to these prototypes, such as a network-unique identifier for the package of routines and a version number, plus attributes that specify whether the parameters are input, output, or both. The embellished prototypes form the developer’s Interface Definition Language (IDL) file.

Once the IDL file is created, the programmer compiles it with the MIDL compiler, which produces client-side and server-side stub routines (mentioned previously), as well as header files to be included in the application. When the client-side application is linked to the stub routines file, all remote procedure references are resolved. The remote procedures are then installed, using a similar process, on the server machine. A programmer who wants to call an existing RPC application need only write the client side of the software and link the application to the local RPC run-time facility.

The RPC runtime uses a generic RPC transport provider interface to talk to a transport protocol. The provider interface acts as a thin layer between the RPC facility and the transport, mapping RPC operations onto the functions provided by the transport. The Windows RPC facility implements transport provider DLLs for named pipes, HTTP, TCP/IP, and UDP. In a similar fashion, the RPC facility is designed to work with different network security facilities.

Most of the Windows networking services are RPC applications, which means that both local applications and applications on remote computers might call them. Thus, a remote client computer might call the server service to list shares, open files, write to print queues, or activate users on your server, all subject to security constraints, of course. The majority of client-management APIs are implemented using RPC.

Server name publishing, which is the ability of a server to register its name in a location accessible for client lookup, is in RPC and is integrated with Active Directory. If Active Directory isn’t installed, the RPC name locator services fall back on NetBIOS broadcast. This behavior allows RPC to function on stand-alone servers and workstations.

RPC Security

Windows RPC includes integration with security support providers (SSPs) so that RPC clients and servers can use authenticated or encrypted communications. When an RPC server wants secure communication, it tells the RPC runtime what authentication service to add to the list of available authentication services. When a client wants to use secure communication, it binds to the server. At that time, it must tell the RPC runtime the authentication service and authentication level it wants. Various authentication levels exist to ensure that only authorized clients connect to a server, verify that each message a server receives originates at an authorized client, check the integrity of RPC messages to detect manipulation, and even encrypt RPC message data. Obviously, higher authentication levels require more processing. The client can also optionally specify the server principal name. A principal is an entity that the RPC security system recognizes. The server must register its SSP-specific principal name with an SSP.

An SSP handles the details of performing network communication authentication and encryption, not only for RPC but also for Winsock. Windows includes a number of built-in SSPs, including a Kerberos SSP to implement Kerberos version 5 authentication (including AES support) and Secure Channel (SChannel), which implements Secure Sockets Layer (SSL) and the Transport Layer Security (TLS) protocols. SChannel also supports TLS and SSL extensions, which allow you to use the AES cipher as well as elliptic curve cryptographic (ECC) ciphers on top of the protocols. Also, because it supports an open cryptographic interface (OCI) and crypto-agile capabilities, SChannel allows an administrator to replace or add to the existing cryptographic algorithms. In the absence of a specified SSP, RPC software uses the built-in security of the underlying transport. Some transports, such as named pipes or local RPC, have built-in security. Others, like TCP, do not, and in this case RPC makes unsecure calls in the absence of a specified SSP.

NOTE

The use of unencrypted RPC might pose serious security issues for your organization.

Another feature of RPC security is the ability of a server to impersonate the security identity of a client with the RpcImpersonateClient function. After a server has finished performing impersonated operations on behalf of a client, it returns to its own security identity by calling RpcRevertToSelf or RpcRevertToSelfEx. (See Chapter 6 for more information on impersonation.)

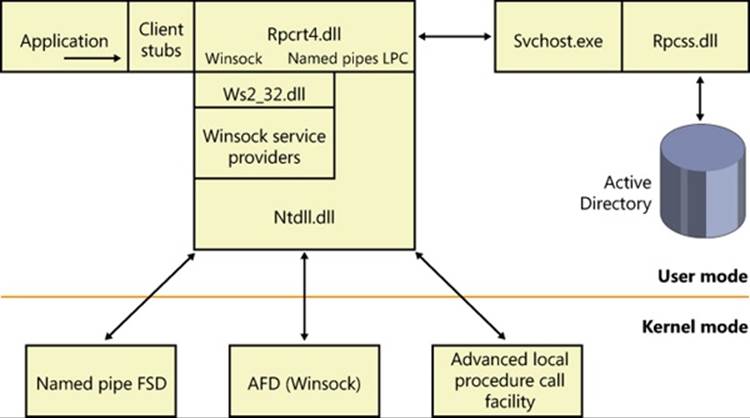

RPC Implementation

RPC implementation is depicted in Figure 7-8, which shows that an RPC-based application links with the RPC run-time DLL (%SystemRoot%\System32\Rpcrt4.dll). The RPC run-time DLL provides marshaling and unmarshaling functions for use by an application’s RPC function stubs as well as functions for sending and receiving marshaled data. The RPC run-time DLL includes support routines to handle RPC over a network as well as a form of RPC called local RPC. Local RPC can be used for communication between two processes located on the same system, and the RPC run-time DLL uses the advanced local procedure call (ALPC) facilities in kernel mode as the local networking API. (See Chapter 3 for more information on ALPCs.) When RPC is based on nonlocal communication mechanisms, the RPC run-time DLL uses the Winsock or named pipe APIs.

Figure 7-8. RPC implementation

The RPC subsystem (RPCSS—%SystemRoot%\System32\Rpcss.dll) is implemented as a Windows service. RPCSS is itself an RPC application that communicates with instances of itself on other systems to perform name lookup, registration, and dynamic endpoint mapping. (For clarity, Figure 7-8 doesn’t show RPCSS linked with the RPC run-time DLL.)

Windows also includes support for RPC in kernel mode through the kernel-mode RPC driver (%SystemRoot%\System32\Drivers\Msrpc.sys). Kernel-mode RPC is for internal use by the system and is implemented on top of ALPC. Winlogon includes an RPC server with a documented set of interfaces that user-mode RPC clients might call, while Win32k.sys includes an RPC client that communicates with Winlogon for internal notifications, such as the secure attention sequence (SAS). (See Chapter 6 for more information.) The TCP/IP stack in Windows (as well as the WFP) also uses kernel-mode RPC to communicate with the Network Storage Interface (NSI) service, which handles network configuration information.

Web Access APIs

To ease the development of Internet applications, Windows provides both client and server Internet APIs. By using the APIs, applications can provide HTTP services and use FTP and HTTP services without knowledge of the intricacies of the corresponding protocols. The client APIs include Windows Internet, also known as WinInet, which enables applications to interact with the FTP and HTTP protocols, and WinHTTP, which enables applications to interact with the HTTP protocol and is more suitable than WinInet in certain situations (Windows services and middle-tier applications). HTTP Server is a server-side API that enables the development of web server applications.

WinInet

WinInet supports the HTTP, FTP, and Gopher protocols. The APIs break down into sub-API sets specific to each protocol. By using the HTTP-related APIs (such as InternetConnect to connect to an HTTP server, followed by HttpOpenRequest to open an HTTP request handle, HttpSendRequestEx to send a request to the server and receive a response, InternetWriteFile to send a file, and InternetReadFileEx to receive a file), an application developer avoids the details of establishing a connection and formatting TCP/IP messages to the various protocols. The HTTP-related APIs also provide cookie persistence, client-side file caching, and automatic credential dialog handling. WinInet is used by core Windows components such as Windows Explorer and Internet Explorer.

NOTE

WinInet does not support server implementations or use by services. For these types of usage, use WinHTTP instead.

WinHTTP provides an abstraction of the HTTP v1.1 protocol for HTTP client applications similar to what the WinInet HTTP-related APIs provide. However, whereas the WinInet HTTP API is intended for user-interactive, client-side applications, the WinHTTP API is designed for server applications that communicate with HTTP servers. Server applications are often implemented as Windows services that do not provide a user interface and so do not desire the dialog boxes that WinInet APIs display. In addition, the WinHTTP APIs are more scalable (such as supporting uploads of greater than 4 GB) and offer security functionality, such as thread impersonation, that is not available from the WinInet APIs.

HTTP

Using the HTTP Server API implemented by Windows, server applications can register to receive HTTP requests for particular URLs, receive HTTP requests, and send HTTP responses. The HTTP Server API includes SSL support so that applications can exchange data over secure HTTP connections. The API includes server-side caching capabilities, synchronous and asynchronous I/O models, and both IPv4 and IPv6 addressing. The HTTP server APIs are used by IIS and other Windows services that rely on HTTP as a transport.

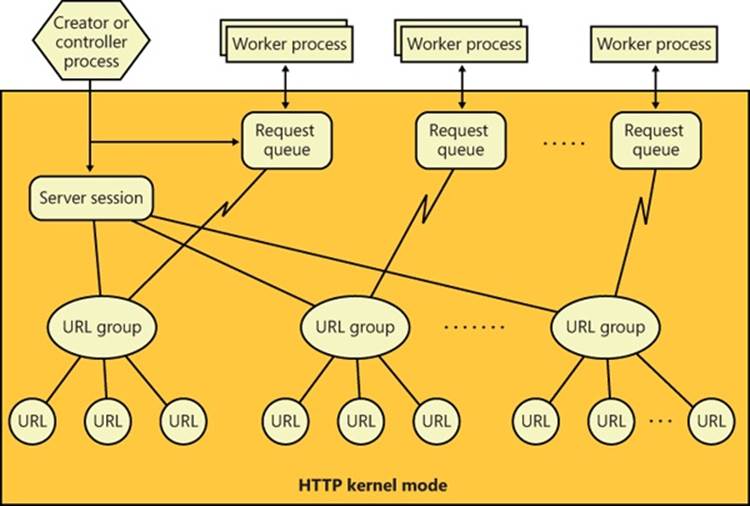

The HTTP Server API, which applications access through %SystemRoot%\System32\Httpapi.dll, relies on the kernel-mode %SystemRoot%\System32\Drivers\Http.sys driver. Http.sys starts on demand the first time any application on the system calls HttpInitialize. Applications then call HttpCreateServerSession to initialize a server session for the HTTP Server API. Next they use HttpCreateRequestQueue to create a private request queue and HttpCreateUrlGroup to create a URL group, specifying the URLs that they want to handle requests for with HttpAddUrlToUrlGroup. Using the request queues and their registered URLs (which they associate by using HttpSetUrlGroupProperty), Http.sys allows more than one application to service HTTP requests on a given port (port 80 for example), with each servicing HTTP requests to different parts of the URL namespace, as shown in Figure 7-9.
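The effect of dividing one port’s URL namespace among several request queues can be illustrated with a small routing table. This sketch is not the Http.sys algorithm: the text above does not describe the matching rules, so longest-prefix matching is an assumption made here for illustration, and the UrlRouter class, the queue names, and the path-only prefixes are all hypothetical.

```python
class UrlRouter:
    """Maps registered URL prefixes to the request queue that services them."""

    def __init__(self):
        self._prefix_to_queue = {}

    def add_url(self, prefix, queue):
        """Register a prefix on behalf of the application that owns `queue`."""
        self._prefix_to_queue[prefix] = queue

    def route(self, url_path):
        """Return the queue for the most specific matching prefix, or None."""
        matches = [p for p in self._prefix_to_queue if url_path.startswith(p)]
        if not matches:
            return None
        # Assumption: the longest (most specific) registered prefix wins.
        return self._prefix_to_queue[max(matches, key=len)]


router = UrlRouter()
router.add_url("/app1/", "queue_a")        # first application
router.add_url("/app2/", "queue_b")        # second application, same port
router.add_url("/app2/admin/", "queue_c")  # a more specific registration
```

With these registrations, a request for /app2/admin/users would be delivered to queue_c, while /app2/index.html would go to queue_b, even though all three applications share the same port.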

Figure 7-9. HTTP request queues and URL groups

HttpReceiveHttpRequest receives incoming requests directed at registered URLs, and HttpSendHttpResponse sends HTTP responses. Both functions offer asynchronous operation so that an application can use GetOverlappedResult or I/O completion ports to determine when an operation is completed.

Applications can use Http.sys to cache data in nonpaged physical memory by calling HttpAddFragmentToCache and associating a fragment name (specified as a URL prefix) with the cached data. Http.sys invokes the memory manager function MmAllocatePagesForMdlEx to allocate unmapped physical pages. (For large requests, Http.sys also attempts to use large pages to optimize access to the buffered data.) When Http.sys requires a virtual address mapping for the physical memory described by an entry in the cache—for instance, when it copies data to the cache or sends data from the cache—it uses MmMapLockedPagesSpecifyCache and then MmUnmapLockedPages after it completes its access. Http.sys maintains cached data until an application invalidates it or an optional application-specified timeout associated with the data expires. Http.sys also trims cached data in a worker thread that wakes up when the low-memory notification event is signaled. (See Chapter 10, “Memory Management,” in Part 2 for information on the low-memory notification event.) When an application specifies one or more fragment names in a call to HttpSendHttpResponse, Http.sys passes a pointer to the cached data in physical memory to the TCP/IP driver and avoids a copy operation. Http.sys also contains code for performing server-side authentication, including full SSL support, which removes the need to call back to the user-mode API to perform encryption and decryption of traffic.

Finally, the HTTP Server API contains many configuration options that clients can use to set functionality, such as authentication policies, bandwidth throttling, logging, connection limits, server state, response caching, and SSL certificate binding.

Named Pipes and Mailslots

Named pipes and mailslots are programming APIs for interprocess communication. Named pipes provide for reliable bidirectional communications, whereas mailslots provide unreliable, unidirectional data transmission. An advantage of mailslots is that they support broadcast capability. In Windows, both APIs make use of standard Windows security authentication and authorization mechanisms, which allow a server to control precisely which clients can connect to it.

The names that servers assign to named pipes and mailslots conform to the Windows Universal Naming Convention (UNC), which is a protocol-independent way to identify resources on a Windows network. The implementation of UNC names is described later in the chapter.

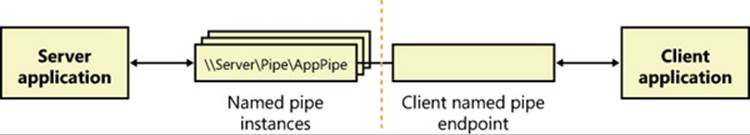

Named-Pipe Operation

Named-pipe communication consists of a named-pipe server and a named-pipe client. A named-pipe server is an application that creates a named pipe to which clients can connect. A named pipe’s name has the format \\Server\Pipe\PipeName. The Server component of the name specifies the computer on which the named-pipe server is executing. (A named-pipe server can’t create a named pipe on a remote system.) The name can be a DNS name (for example, mspress.microsoft.com), a NetBIOS name (mspress), or an IP address (131.107.0.1). The Pipe component of the name must be the string “Pipe”, and PipeName is the unique name assigned to a named pipe. The unique portion of the named pipe’s name can include subdirectories; an example of a named-pipe name with a subdirectory is \\MyComputer\Pipe\MyServerApp\ConnectionPipe.

A named-pipe server uses the CreateNamedPipe Windows function to create a named pipe. One of the function’s input parameters is a pointer to the named-pipe name, in the form \\.\Pipe\PipeName. The “\\.\” is a Windows-defined alias for “this system,” because a pipe must be created on the local system (although it can be accessed from a remote system). Other parameters the function accepts include an optional security descriptor that protects access to the named pipe, a flag that specifies whether the pipe should be bidirectional or unidirectional, a value indicating the maximum number of simultaneous connections the pipe supports, and a flag specifying whether the pipe should operate in byte mode or message mode.

Most networking APIs operate only in byte mode, which means that a message sent with one send function might require the receiver to perform multiple receive operations, building up the complete message from fragments. A named pipe operating in message mode simplifies the implementation of a receiver because there is a one-to-one correspondence between send and receive requests. A receiver therefore obtains an entire message each time it completes a receive operation and doesn’t have to concern itself with keeping track of message fragments.
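The difference between the two modes can be made concrete with a small in-memory model. The classes below are hypothetical stand-ins, not the named-pipe API: ByteModePipe delivers whatever bytes happen to be available, so the receiver has to add its own framing (a 4-byte length prefix here, chosen for illustration), whereas MessageModePipe hands back exactly one sent message per read.

```python
import struct
from collections import deque

class ByteModePipe:
    """A byte stream: message boundaries are not preserved."""
    def __init__(self):
        self._buf = bytearray()
    def write(self, data):
        self._buf += data
    def read(self, n):
        chunk = bytes(self._buf[:n])   # may be shorter than a whole message
        del self._buf[:n]
        return chunk

class MessageModePipe:
    """One read returns exactly one previously written message."""
    def __init__(self):
        self._messages = deque()
    def write(self, data):
        self._messages.append(bytes(data))
    def read(self):
        return self._messages.popleft()

def send_framed(pipe, payload):
    """Byte mode only: prefix each message with its length."""
    pipe.write(struct.pack("<I", len(payload)) + payload)

def recv_framed(pipe, max_read=3):
    """Byte mode only: rebuild one message from several small reads."""
    def read_exact(n):
        parts = bytearray()
        while len(parts) < n:
            chunk = pipe.read(min(max_read, n - len(parts)))
            if not chunk:
                raise EOFError("stream ended mid-message")
            parts += chunk
        return bytes(parts)
    (length,) = struct.unpack("<I", read_exact(4))
    return read_exact(length)
```

All of the bookkeeping in recv_framed is what a byte-mode receiver must write for itself; with the message-mode model, the receiver’s code reduces to a single read call.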

The first call to CreateNamedPipe for a particular name creates the first instance of that name and establishes the behavior of all named-pipe instances having that name. A server creates additional instances, up to the maximum specified in the first call, with additional calls to CreateNamedPipe. After creating at least one named-pipe instance, a server executes the ConnectNamedPipe Windows function, which enables the named pipe the server created to establish connections with clients. ConnectNamedPipe can be executed synchronously or asynchronously, and it doesn’t complete until a client establishes a connection with the instance (or an error occurs).

A named-pipe client uses the Windows CreateFile or CallNamedPipe function, specifying the name of the pipe a server has created, to connect to a server. If the server has performed a ConnectNamedPipe call, the client’s security profile and the access it requests to the pipe (read, write) are validated against the named pipe’s security descriptor. (See Chapter 6 for more information on the security-check algorithms Windows uses.) If the client is granted access to a named pipe, it receives a handle representing the client side of a named-pipe connection and the server’s call to ConnectNamedPipe completes.

After a named-pipe connection is established, the client and server can use the ReadFile and WriteFile Windows functions to read from and write to the pipe. Named pipes support both synchronous and asynchronous operations for message transmittal, depending upon how the handle to the pipe was opened. Figure 7-10 shows a server and client communicating through a named-pipe instance.

Figure 7-10. Named-pipe communications

Another characteristic of the named-pipe networking API is that it allows a server to impersonate a client by using the ImpersonateNamedPipeClient function. See the Impersonation section in Chapter 6 for a discussion of how impersonation is used in client/server applications. A second advanced area of functionality of the named-pipe API is that it allows for atomic send and receive operations through the TransactNamedPipe API, which behaves according to a simple transactional model in which a message is both sent and received in the same operation. In other words, it combines a write operation and a read operation into a single operation by not completing a write request until it has been read by the recipient.

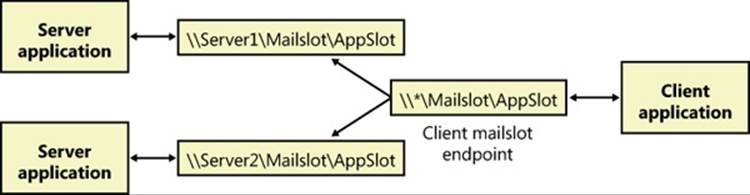

Mailslot Operation

Mailslots provide an unreliable, unidirectional, multicast network transport. Multicast is a term used to describe a sender sending a message on the network to one or more specific listeners, which is different from a broadcast, which all systems would receive. One example of an application that can use this type of communication is a time-synchronization service, which might send a source time across the domain every few seconds. Such a message would be received by all applications listening on the particular mailslot. Receiving the source-time message isn’t crucial for every computer on the network (because time updates are sent relatively frequently); therefore, a source-time message is a good example for the use of mailslots, because the loss of a message will not cause any harm.

Like named pipes, mailslots are integrated with the Windows API. A mailslot server creates a mailslot by using the CreateMailslot function. CreateMailslot accepts a UNC name of the form “\\.\Mailslot\MailslotName” as an input parameter. Again like named pipes, a mailslot server can create mailslots only on the machine it’s executing on, and the name it assigns to a mailslot can include subdirectories. CreateMailslot also takes a security descriptor that controls client access to the mailslot. The handles returned by CreateMailslot are overlapped, which means that operations performed on the handles, such as sending and receiving messages, are asynchronous.

Because mailslots are unidirectional and unreliable, CreateMailslot doesn’t take many of the parameters that CreateNamedPipe does. After it creates a mailslot, a server simply listens for incoming client messages by executing the ReadFile function on the handle representing the mailslot.

Mailslot clients use a naming format similar to that used by named-pipe clients but with variations that make it possible to send messages to all the mailslots of a given name within the client’s domain or a specified domain. To send a message to a particular instance of a mailslot, the client calls CreateFile, specifying the computer-specific name. An example of such a name is “\\Server\Mailslot\MailslotName”. (The client can specify “\\.\” to represent the local computer.) If the client wants to obtain a handle representing all the mailslots of a given name on the domain it’s a member of, it specifies the name in the format “\\*\Mailslot\MailslotName”, and if the client wants to broadcast to all the mailslots of a given name within a different domain, the format it uses is “\\DomainName\Mailslot\MailslotName”.

After obtaining a handle representing the client side of a mailslot, the client sends messages by calling WriteFile. Because of the way mailslots are implemented, only messages smaller than 424 bytes can be sent. If a message is larger than 424 bytes, the mailslot implementation uses a reliable communications mechanism that requires a one-to-one client/server connection, which precludes multicast capability. This limitation makes mailslots generally unsuitable for messages larger than 424 bytes. Figure 7-11 shows an example of a client broadcasting to multiple mailslot servers within a domain.
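The client-side naming forms and the size limit can be captured in two small helpers. These are hypothetical convenience functions, not part of any Windows API; they only build the strings a client would pass to CreateFile and apply the 424-byte rule as stated above (treating “smaller than 424 bytes” as a strict comparison, which is our reading of the text).

```python
BROADCAST_LIMIT = 424  # bytes, as stated in the text above

def mailslot_name(slot, target="."):
    r"""Build a mailslot name.

    target "."        -> the local computer
    target "*"        -> every mailslot of that name in the client's domain
    target "<name>"   -> a specific computer, or a specific domain
    """
    return r"\\{}\Mailslot\{}".format(target, slot)

def can_broadcast(message):
    """True if the message is small enough to be sent to multiple mailslots."""
    return len(message) < BROADCAST_LIMIT
```

For example, mailslot_name("TimeSync", "*") yields \\*\Mailslot\TimeSync, the form a time-synchronization sender would open to reach every listener in its own domain.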

Figure 7-11. Mailslot broadcast

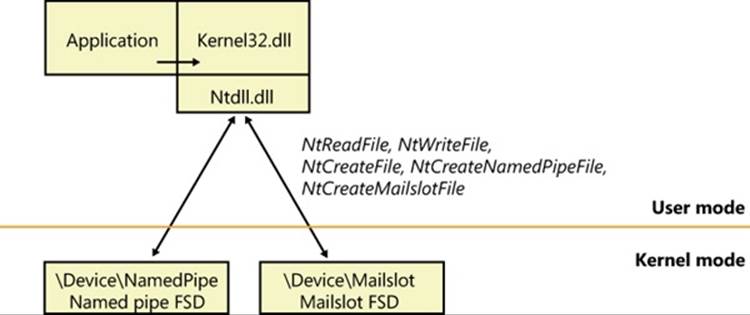

Named Pipe and Mailslot Implementation

As evidence of their tight integration with Windows, named-pipe and mailslot functions are all implemented in the Kernel32.dll Windows client-side DLL. ReadFile and WriteFile, which are the functions applications use to send and receive messages using named pipes or mailslots, are the primary Windows I/O routines. The CreateFile function, which a client uses to open either a named pipe or a mailslot, is also a standard Windows I/O routine. However, the names specified by named-pipe and mailslot applications specify file-system namespaces managed by the named-pipe file-system driver (%SystemRoot%\System32\Drivers\Npfs.sys) and the mailslot file-system driver (%SystemRoot%\System32\Drivers\Msfs.sys), as shown in Figure 7-12.

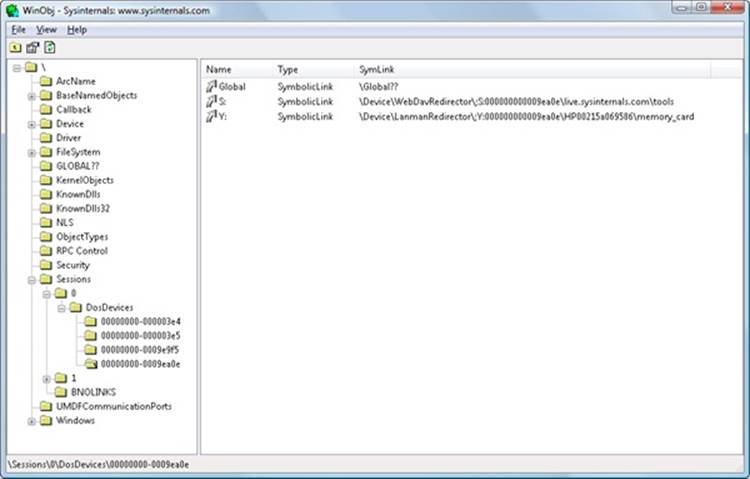

The named-pipe file-system driver creates a device object named \Device\NamedPipe and a symbolic link to that object named \Global??\Pipe. The mailslot file-system driver creates a device object named \Device\Mailslot and a symbolic link named “\Global??\Mailslot”, which points to that device object. (See Chapter 3 for an explanation of the \Global?? object manager directory.) Names passed to CreateFile of the form “\\.\Pipe\...” and “\\.\Mailslot\...” have their prefix of “\\.\” translated to “\Global??\” so that the names resolve through a symbolic link to a device object. The special functions CreateNamedPipe and CreateMailslot use the corresponding native functions NtCreateNamedPipeFile and NtCreateMailslotFile, which ultimately call IoCreateFile.
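The two-step name resolution described here (prefix translation, then symbolic-link lookup) can be traced with a short sketch. It models only the string manipulation, using the two symbolic links named above; the resolve function is hypothetical, and the real work is done by the Windows API and the object manager, not by code like this.

```python
# The two symbolic links described in the text, keyed in lowercase so that
# the link name is matched case-insensitively in this sketch.
SYMBOLIC_LINKS = {
    "pipe": r"\Device\NamedPipe",
    "mailslot": r"\Device\Mailslot",
}

WIN32_PREFIX = "\\\\.\\"      # the characters \\.\
NT_PREFIX = "\\Global??\\"    # the characters \Global??\

def resolve(win32_name):
    """Return (translated name, device-relative name) for a \\.\ name."""
    if not win32_name.startswith(WIN32_PREFIX):
        raise ValueError("expected a name that begins with \\\\.\\")
    # Step 1: replace the \\.\ prefix with \Global??\
    nt_name = NT_PREFIX + win32_name[len(WIN32_PREFIX):]
    # Step 2: follow the symbolic link named by the first path component.
    link, _, rest = nt_name[len(NT_PREFIX):].partition("\\")
    device = SYMBOLIC_LINKS[link.lower()]
    return nt_name, device + ("\\" + rest if rest else "")
```

Passing \\.\Pipe\MyServerApp\ConnectionPipe produces \Global??\Pipe\MyServerApp\ConnectionPipe and then \Device\NamedPipe\MyServerApp\ConnectionPipe, at which point the remainder of the name is the named-pipe file-system driver’s to interpret.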

Figure 7-12. Named-pipe and mailslot implementation
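The prefix translation and symbolic-link lookup described above can be modeled in a few lines of Python. This is only a sketch of the idea: the table, the function names, and the pipe name "demo" are hypothetical, and the real resolution is performed by the object manager, not by string replacement in user code.

```python
# Toy model of the name translation described in the text. The link table
# holds the two symbolic links the file-system drivers create.
SYMBOLIC_LINKS = {
    r"\Global??\Pipe": r"\Device\NamedPipe",
    r"\Global??\Mailslot": r"\Device\Mailslot",
}

WIN32_DEVICE_PREFIX = "\\\\.\\"    # the four characters \\.\
NT_GLOBAL_PREFIX = "\\Global??\\"  # the characters \Global??\


def to_nt_name(win32_name: str) -> str:
    """Swap the Win32 device prefix for the object manager directory."""
    if not win32_name.startswith(WIN32_DEVICE_PREFIX):
        raise ValueError("not a local device name: " + win32_name)
    return NT_GLOBAL_PREFIX + win32_name[len(WIN32_DEVICE_PREFIX):]


def resolve(nt_name: str) -> str:
    """Follow a symbolic link at the front of the name, ignoring case."""
    for link, target in SYMBOLIC_LINKS.items():
        if nt_name.lower().startswith(link.lower() + "\\"):
            return target + nt_name[len(link):]
    return nt_name


name = to_nt_name(r"\\.\Pipe\demo")  # "demo" is a made-up pipe name
print(name)           # \Global??\Pipe\demo
print(resolve(name))  # \Device\NamedPipe\demo
```

The remainder of the name after the device object (here, \demo) is what the file-system driver itself would interpret within its own namespace.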

Later in the chapter, we’ll discuss how the redirector file-system driver is involved when a named-pipe or mailslot name resolves to a remote system. However, when a named pipe or mailslot is created by a server or opened by a client, the appropriate file-system driver (FSD) on the machine where the named pipe or mailslot is located is eventually invoked. Named pipes and mailslots are implemented as FSDs so that they can take advantage of the existing infrastructure in the object manager, the I/O manager, the redirector (covered later in this chapter), and the Server Message Block (SMB) protocol. (For more information about SMB, see Chapter 12, “File Systems,” in Part 2.) This integration results in several benefits:

§ The FSDs use kernel-mode security functions to implement standard Windows security for named pipes and mailslots.

§ Applications can use CreateFile to open a named pipe or mailslot because FSDs integrate with the object manager namespace.

§ Applications can use Windows functions such as ReadFile and WriteFile to interact with named pipes and mailslots.

§ The FSDs rely on the object manager to track handle and reference counts for file objects representing named pipes and mailslots.

§ The FSDs can implement their own named pipe and mailslot namespaces, complete with subdirectories.

EXPERIMENT: LISTING THE NAMED-PIPE NAMESPACE AND WATCHING NAMED-PIPE ACTIVITY

It’s not possible to use the Windows API to open the root of the named-pipe FSD and perform a directory listing, but you can do this by using native API services. The PipeList tool from Sysinternals shows you the names of the named pipes defined on a computer as well as the number of instances that have been created for a name and the maximum number of instances as defined by a server’s call to CreateNamedPipe. Here’s an example of PipeList output:

    C:\>pipelist

    PipeList v1.01
    by Mark Russinovich
    http://www.sysinternals.com

    Pipe Name                                 Instances   Max Instances
    ---------                                 ---------   -------------
    InitShutdown                              3           -1
    lsass                                     6           -1
    protected_storage                         3           -1
    ntsvcs                                    3           -1
    scerpc                                    3           -1
    net\NtControlPipe1                        1           1
    plugplay                                  3           -1
    net\NtControlPipe2                        1           1
    Winsock2\CatalogChangeListener-394-0      1           1
    epmapper                                  3           -1
    Winsock2\CatalogChangeListener-25c-0      1           1
    LSM_API_service                           3           -1
    net\NtControlPipe3                        1           1
    eventlog                                  3           -1
    net\NtControlPipe4                        1           1
    Winsock2\CatalogChangeListener-3f8-0      1           1
    net\NtControlPipe5                        1           1
    net\NtControlPipe6                        1           1
    net\NtControlPipe0                        1           1
    atsvc                                     3           -1
    Winsock2\CatalogChangeListener-438-0      1           1
    Winsock2\CatalogChangeListener-2c8-0      1           1
    net\NtControlPipe7                        1           1
    net\NtControlPipe8                        1           1
    net\NtControlPipe9                        1           1
    net\NtControlPipe10                       1           1
    net\NtControlPipe11                       1           1
    net\NtControlPipe12                       1           1
    142CDF96-10CC-483c-A516-3E9057526912      1           1
    net\NtControlPipe13                       1           1
    net\NtControlPipe14                       1           1
    TSVNCache-000000000001b017                20          -1
    TSVNCacheCommand-000000000001b017         2           -1
    Winsock2\CatalogChangeListener-2b0-0      1           1
    Winsock2\CatalogChangeListener-468-0      1           1
    TermSrv_API_service                       3           -1
    Ctx_WinStation_API_service                3           -1
    PIPE_EVENTROOT\CIMV2SCM EVENT PROVIDER    2           -1
    net\NtControlPipe15                       1           1
    keysvc                                    3           -1

It’s clear from this output that several system components use named pipes as their communications mechanism. For example, the InitShutdown pipe is created by WinInit to accept remote shutdown commands, and the Atsvc pipe is created by the Task Scheduler service to enable applications to communicate with it to schedule tasks. You can determine what process has each of these pipes open by using the object search facility in Process Explorer.

NOTE

A Max Instances value of -1 means that there is no upper limit on the number of instances.
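If you want to post-process PipeList output, the following Python sketch shows one way to read its rows. It assumes only the three-column layout shown above; the PipeEntry type and the function name are hypothetical. Because pipe names can contain spaces, the two numeric columns are split off from the right.

```python
from typing import NamedTuple, Optional


class PipeEntry(NamedTuple):
    name: str
    instances: int
    max_instances: Optional[int]  # None stands for "no upper limit"


def parse_pipelist_line(line: str) -> PipeEntry:
    """Split one output row into name, instance count, and instance limit."""
    name, instances, max_instances = line.rsplit(None, 2)
    limit = int(max_instances)
    # PipeList reports -1 when the server set no limit on instances.
    return PipeEntry(name, int(instances), None if limit == -1 else limit)


sample = [
    "lsass 6 -1",
    r"net\NtControlPipe1 1 1",
    r"PIPE_EVENTROOT\CIMV2SCM EVENT PROVIDER 2 -1",
]
for entry in map(parse_pipelist_line, sample):
    limit = "unlimited" if entry.max_instances is None else entry.max_instances
    print(f"{entry.name}: {entry.instances} of {limit}")
```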

NetBIOS

Until the 1990s, the Network Basic Input/Output System (NetBIOS) programming API had been the most widely used network programming API on PCs. NetBIOS allows for both reliable connection-oriented and unreliable connectionless communication. Windows supports NetBIOS for its legacy applications. Microsoft discourages application developers from using NetBIOS because other APIs, such as named pipes and Winsock, are much more flexible and portable. NetBIOS is supported by the TCP/IP protocol on Windows.

NetBIOS Names

NetBIOS relies on a naming convention whereby computers and network services are assigned a 16-byte NetBIOS name. The sixteenth byte of a NetBIOS name is treated as a modifier that can specify a name as unique or as part of a group. Only one instance of a unique NetBIOS name can be assigned to a network, but multiple applications can assign the same group name. A client can send multicast messages by sending them to a group name.

To support interoperability with Windows NT 4 systems as well as Windows 9x/Me, Windows automatically defines a NetBIOS name for a domain that includes up to the first 15 bytes of the left-most Domain Name System (DNS) name that an administrator assigns to the domain. For example, if a domain were named mspress.microsoft.com, the NetBIOS name of the domain would be mspress.
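The name layout and the domain-name rule can be illustrated with a short Python sketch. The function names are hypothetical, and two details are assumptions rather than statements from the text: that names are uppercased and that short names are padded with spaces up to the fifteenth byte. The sketch also assumes ASCII names, so characters and bytes coincide.

```python
def netbios_name(label: str, suffix: int) -> bytes:
    """Return 15 name bytes (space padded) followed by the modifier byte."""
    raw = label.upper().encode("ascii")[:15]  # uppercasing is an assumption
    return raw.ljust(15, b" ") + bytes([suffix])


def domain_netbios_label(dns_name: str) -> str:
    """Take up to the first 15 bytes of the left-most DNS label."""
    return dns_name.split(".")[0][:15]


label = domain_netbios_label("mspress.microsoft.com")
name = netbios_name(label, 0x00)
print(label)      # mspress
print(len(name))  # 16
```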

Another concept used by NetBIOS is that of LAN adapter (LANA) numbers. A LANA number is assigned to every NetBIOS-compatible protocol that layers above a network adapter. For example, if a computer has two network adapters and TCP/IP and NWLink can use either adapter, there would be four LANA numbers. LANA numbers are important because a NetBIOS application must explicitly assign its service name to each LANA through which it’s willing to accept client connections. If the application listens for client connections on a particular name, clients can access the name only via protocols on the network adapters for which the name is registered.
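The two-adapter example works out as follows in a small Python sketch. The adapter names are made up, and the sequential numbering is an assumption for illustration; the text does not say how Windows assigns the actual LANA values.

```python
from itertools import product

adapters = ["Adapter0", "Adapter1"]  # hypothetical adapter names
protocols = ["TCP/IP", "NWLink"]     # NetBIOS-compatible protocols

# One LANA per (protocol, adapter) binding; sequential numbers are assumed.
lanas = dict(enumerate(product(protocols, adapters)))

for number, (protocol, adapter) in lanas.items():
    print(f"LANA {number}: {protocol} over {adapter}")

# A service name registered on only some LANAs is reachable only through
# the protocol and adapter pairs behind those LANAs.
registered_on = {0, 1}
reachable = [lanas[n] for n in sorted(registered_on)]
print(reachable)
```

Two protocols over two adapters give four bindings, which matches the four LANA numbers in the example.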

NetBIOS Operation

A NetBIOS server application uses the NetBIOS API to enumerate the LANAs present on a system and assign a NetBIOS name representing the application’s service to each LANA. If the server is connection oriented, it performs a NetBIOS listen command to wait for client connection attempts. After a client is connected, the server executes NetBIOS functions to send and receive data. Connectionless communication is similar, but the server simply reads messages without establishing connections.

A connection-oriented client uses NetBIOS functions to establish a connection with a NetBIOS server and then executes further NetBIOS functions to send and receive data. An established NetBIOS connection is also known as a session. If the client wants to send connectionless messages, it simply specifies the NetBIOS name of the server with the send function.

NetBIOS consists of a number of functions, but they all route through the same interface: Netbios. This routing scheme is the result of a legacy left over from the time when NetBIOS was implemented on MS-DOS as an MS-DOS interrupt service. A NetBIOS application would execute an MS-DOS interrupt and pass a data structure to the NetBIOS implementation that specified every aspect of the command being executed. As a result, the Netbios function in Windows takes a single parameter, which is a data structure that contains the parameters specific to the service the application requests.
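The single-entry-point design can be modeled as a dispatcher keyed on a command code carried inside the request structure. The Python sketch below is a toy model, not the Windows implementation: the Ncb class, its reduced field set, and the handlers are hypothetical, and the numeric codes are believed to match the Windows SDK header nb30.h but should be treated as assumptions.

```python
from dataclasses import dataclass

# Command and return codes; values assumed to match nb30.h.
NCBLISTEN = 0x11
NCBSEND = 0x14
NCBADDNAME = 0x30
NRC_GOODRET = 0x00
NRC_ILLCMD = 0x03  # "illegal command"


@dataclass
class Ncb:
    """Simplified stand-in for the control block passed to Netbios."""
    command: int
    lana_num: int = 0
    name: bytes = b""
    buffer: bytes = b""
    retcode: int = NRC_GOODRET  # filled in by netbios()


def _add_name(ncb: Ncb) -> int:
    print(f"LANA {ncb.lana_num}: register {ncb.name!r}")
    return NRC_GOODRET


def _send(ncb: Ncb) -> int:
    print(f"LANA {ncb.lana_num}: send {len(ncb.buffer)} bytes")
    return NRC_GOODRET


HANDLERS = {NCBADDNAME: _add_name, NCBSEND: _send}


def netbios(ncb: Ncb) -> int:
    """Single entry point: the control block fully describes the request."""
    handler = HANDLERS.get(ncb.command)
    ncb.retcode = handler(ncb) if handler else NRC_ILLCMD
    return ncb.retcode


netbios(Ncb(NCBADDNAME, lana_num=0, name=b"DEMO"))
netbios(Ncb(NCBSEND, lana_num=0, buffer=b"hello"))
```

Adding a command to such an interface means adding a code and a handler, while the function signature seen by applications stays the same.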

EXPERIMENT: USING NBTSTAT TO SEE NETBIOS NAMES

You can use the Nbtstat command, which is included with Windows, to list the active sessions on a system, the NetBIOS-to-TCP/IP name mappings cached on a computer, and the NetBIOS names defined on a computer. Here’s an example of the Nbtstat command with the -n option, which lists the NetBIOS names defined on the computer:

    C:\Users\Toby>nbtstat -n

    Local Area Connection:
    Node IpAddress: [192.168.0.193] Scope Id: []

                    NetBIOS Local Name Table

           Name               Type         Status
        ---------------------------------------------
        WIN-NLRTEOW2ILZ<00>  UNIQUE      Registered
        WORKGROUP      <00>  GROUP       Registered
        WIN-NLRTEOW2ILZ<20>  UNIQUE      Registered
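The value in angle brackets is the sixteenth-byte modifier, shown in hexadecimal. As a rough sketch, rows of this table could be parsed in Python as shown below; the regular expression and the function name are hypothetical and assume only the layout in the sample output.

```python
import re

# name, optional padding, <hex suffix>, type, status
ROW = re.compile(
    r"^(?P<name>.+?)\s*<(?P<suffix>[0-9A-Fa-f]{2})>\s+"
    r"(?P<type>UNIQUE|GROUP)\s+(?P<status>\S+)$"
)


def parse_name_row(line: str) -> dict:
    """Split one name-table row into its four fields."""
    match = ROW.match(line.strip())
    if match is None:
        raise ValueError("not a name-table row: " + line)
    row = match.groupdict()
    row["suffix"] = int(row["suffix"], 16)  # the sixteenth-byte modifier
    return row


rows = [parse_name_row(line) for line in (
    "WIN-NLRTEOW2ILZ<00> UNIQUE Registered",
    "WORKGROUP <00> GROUP Registered",
    "WIN-NLRTEOW2ILZ<20> UNIQUE Registered",
)]
groups = [row["name"] for row in rows if row["type"] == "GROUP"]
print(groups)  # ['WORKGROUP']
```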

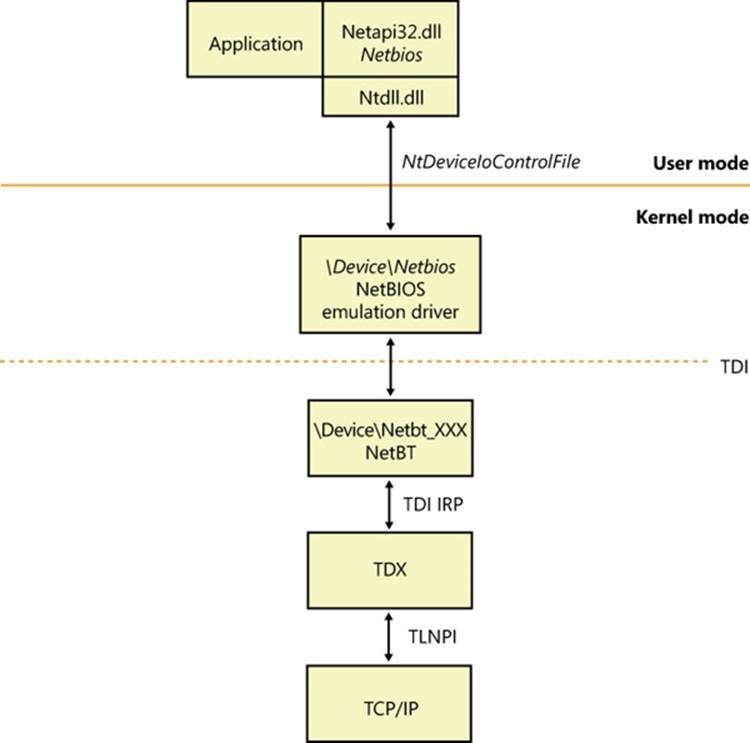

NetBIOS API Implementation

The components that implement the NetBIOS API are shown in Figure 7-13. The Netbios function is exported to applications by %SystemRoot%\System32\Netbios.dll. Netbios.dll opens a handle to the kernel-mode driver named the NetBIOS emulator (%SystemRoot%\System32\Drivers\Netbios.sys) and issues Windows DeviceIoControl file commands on behalf of an application. The NetBIOS emulator translates NetBIOS commands issued by an application into TDI commands that it sends to protocol drivers.

Figure 7-13. NetBIOS API implementation

If an application wants to use NetBIOS over the TCP/IP protocol, the NetBIOS emulator requires the presence of the NetBT driver (%SystemRoot%\System32\Drivers\Netbt.sys). NetBT is known as the NetBIOS over TCP/IP driver and is responsible for supporting NetBIOS semantics that are inherent to the NetBIOS Extended User Interface (NetBEUI) protocol (included in previous versions of Windows) but not the TCP/IP protocol. For example, NetBIOS relies on NetBEUI’s message-mode transmission and NetBIOS name-resolution facilities, so the NetBT driver implements them on top of the TCP/IP protocol.
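To see why message-mode semantics need extra work on top of TCP, recall that TCP delivers a byte stream with no message boundaries. One common technique for recovering boundaries is length-prefix framing, sketched below in Python. This illustrates the general idea only: the 4-byte header is an assumed format chosen for simplicity, not the wire format that NetBT uses, and the class and function names are hypothetical.

```python
import struct

HEADER = struct.Struct(">I")  # 4-byte big-endian length; an assumed format


def frame(message: bytes) -> bytes:
    """Prefix a message with its length so boundaries survive a byte stream."""
    return HEADER.pack(len(message)) + message


class Deframer:
    """Rebuild whole messages from arbitrarily split stream chunks."""

    def __init__(self) -> None:
        self._pending = b""

    def feed(self, chunk: bytes) -> list:
        self._pending += chunk
        messages = []
        while len(self._pending) >= HEADER.size:
            (length,) = HEADER.unpack_from(self._pending)
            end = HEADER.size + length
            if len(self._pending) < end:
                break  # wait for the rest of this message
            messages.append(self._pending[HEADER.size:end])
            self._pending = self._pending[end:]
        return messages


stream = frame(b"hello") + frame(b"netbios")
receiver = Deframer()
print(receiver.feed(stream[:6]))  # [] (first message is still incomplete)
print(receiver.feed(stream[6:]))  # [b'hello', b'netbios']
```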

Other Networking APIs

Windows includes other networking APIs that are used less frequently or are layered on the APIs already described (and outside the scope of this book). Five of these, however—Background Intelligent Transfer Service (BITS), Distributed Component Object Model (DCOM), Message Queuing (MSMQ), Peer-to-Peer Infrastructure (P2P), and Universal Plug and Play (UPnP) with Plug and Play Extensions (PnP-X)—are important enough to the operation of a Windows system and many applications to merit brief descriptions.

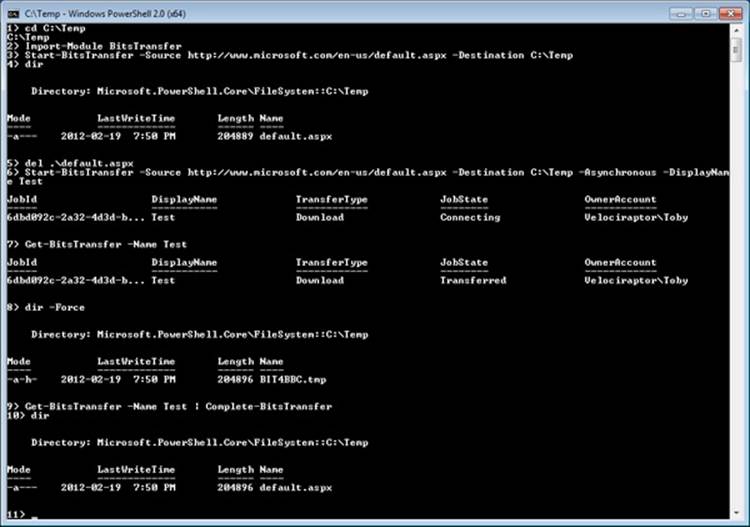

Background Intelligent Transfer Service

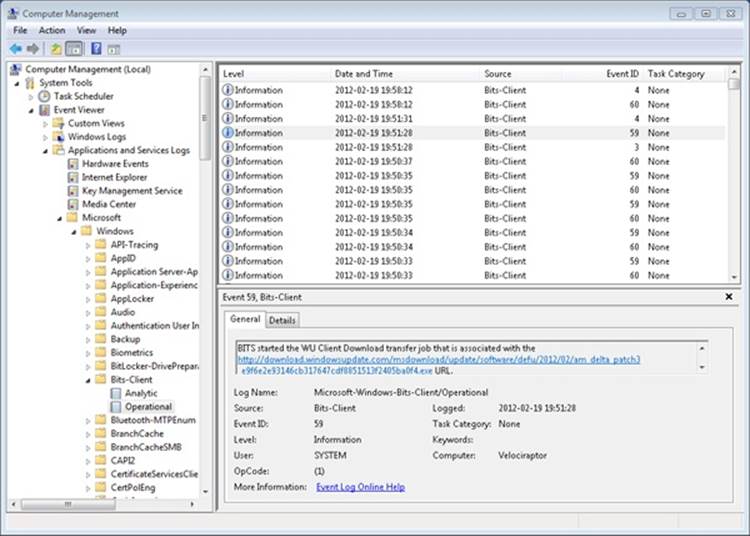

BITS is a service and an API that provides reliable asynchronous transfer of files between systems, using the SMB, HTTP, or HTTPS protocol. BITS normally runs in the background, making use of unutilized network bandwidth by monitoring network utilization and throttling itself so that it consumes only resources that would otherwise be unused; however, BITS transfers might also take place in the foreground and compete for resources with other processes running on the system.

For each user, BITS keeps track of ongoing or scheduled transfers in what are known as transfer jobs (not to be confused with jobs and job objects as described in Chapter 5). Each job is an entry in a queue and describes the files to transfer, the security context (access tokens) to run under, and the priority of the job. BITS version 4.0 is integrated with BranchCache (described later in this chapter) to further reduce network bandwidth usage.

BITS is used by many other components in Windows, such as Microsoft Update, Windows Update, Internet Explorer (version 9 and later, for downloading files), Microsoft Outlook (for downloading address books), Microsoft Security Essentials (for downloading daily virus signature updates), and others, making BITS the most widely used network file-transfer system today.

BITS provides the following capabilities:

§ Seamless data transfer. Components create BITS transfer jobs that will then run until the files are transferred. When a user logs out, the system restarts, or the system loses network connectivity, BITS pauses the transfer. The transfer resumes from where it left off once the user logs in again or network connectivity is restored. The application that created a transfer job does not need to remain running while the transfer is taking place, but the user must remain logged in. Transfer jobs created under service accounts (such as Windows Update) are always considered to be logged on, allowing those jobs to run continuously.

§ Multiple transfer types. BITS supports three transfer types: download (server to client), upload (client to server), and upload-reply (client to server, with a notification receipt from the server).

§ Prioritization of transfers. When a transfer job is created, its priority is specified (Foreground, Background High, Background Normal, or Background Low). All background priority jobs make use only of unutilized network resources, while jobs with foreground priority compete with applications for network resources. If there are multiple jobs, BITS processes them in priority order, using a round-robin scheduling system within a particular priority so that all jobs make progress on their transfers.

§ Secure data transfer. BITS normally runs the transfer job using the security context of the job’s creator, but you can also use the BITS API to specify the credentials to use for impersonating a user. For privacy across the network, you should use the HTTPS protocol.

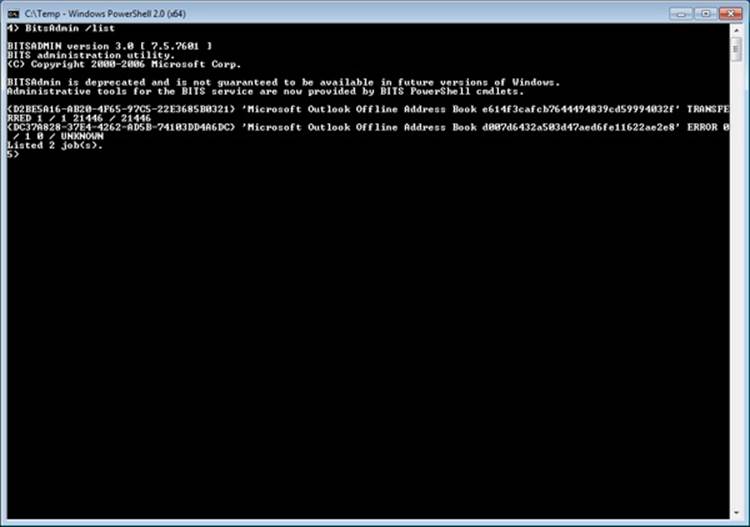

§ Management. The BITS API consists of methods for creating, starting, stopping, monitoring, enumerating, and modifying transfer jobs and for requesting notification of transfer-job status changes. Tools include BITSAdmin (which is deprecated and will be removed in a future version of Windows) and Windows PowerShell cmdlets (the preferred management mechanism).
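The prioritization rule in the list above (priority order across levels, round-robin within a level) can be sketched as a small Python simulation. This is a toy model under stated assumptions: the job names, the abstract "units of work," and the one-unit time slice are all hypothetical, and the text does not describe how BITS actually slices transfers.

```python
from collections import deque

PRIORITIES = ["Foreground", "Background High",
              "Background Normal", "Background Low"]


def schedule(jobs: dict, chunk: int = 1) -> list:
    """jobs maps name -> (priority, units of work). Return the service order.

    Levels are served from highest to lowest priority, and jobs within a
    level take turns, one chunk at a time, until they finish.
    """
    order = []
    for priority in PRIORITIES:
        queue = deque((name, work) for name, (level, work) in jobs.items()
                      if level == priority)
        while queue:
            name, remaining = queue.popleft()
            order.append(name)
            if remaining > chunk:
                queue.append((name, remaining - chunk))  # back of the line
    return order


print(schedule({
    "a": ("Background Normal", 2),
    "b": ("Foreground", 1),
    "c": ("Background Normal", 2),
}))
# ['b', 'a', 'c', 'a', 'c']
```

Jobs a and c alternate, so both make progress instead of one waiting for the other to finish.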

When downloading files, BITS writes the file to a temporary hidden file in the destination directory. Of course, BITS will impersonate the user to ensure that file-system security and quotas are enforced properly. When the application calls the IBackgroundCopyJob::Complete method (or the Complete-BitsTransfer cmdlet in PowerShell), BITS renames the temporary files to their destination names, and the files are available to the client. If there is already a file in the destination directory with the same name, BITS overwrites the file.
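The write-to-temporary-then-rename pattern described above is a general technique, and the Python sketch below shows it with ordinary file operations. It is an analogy, not BITS code: the function names, the ".partial-" prefix, and the file name "update.bin" are hypothetical, and the sketch omits impersonation and the hidden attribute.

```python
import os
import tempfile


def download(dest_dir: str, data: bytes) -> str:
    """Write incoming data to a temporary file in the destination directory."""
    fd, temp_path = tempfile.mkstemp(prefix=".partial-", dir=dest_dir)
    with os.fdopen(fd, "wb") as temp_file:
        temp_file.write(data)
    return temp_path


def complete(temp_path: str, final_name: str) -> str:
    """Rename the temporary file to its final name, replacing any old file."""
    final_path = os.path.join(os.path.dirname(temp_path), final_name)
    os.replace(temp_path, final_path)  # overwrites an existing destination
    return final_path


with tempfile.TemporaryDirectory() as dest:
    temp = download(dest, b"payload")
    final = complete(temp, "update.bin")  # "update.bin" is a made-up name
    print(sorted(os.listdir(dest)))       # ['update.bin']
```

Keeping the temporary file in the destination directory means the final step is a rename within one directory, so readers see either the old file or the complete new one.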

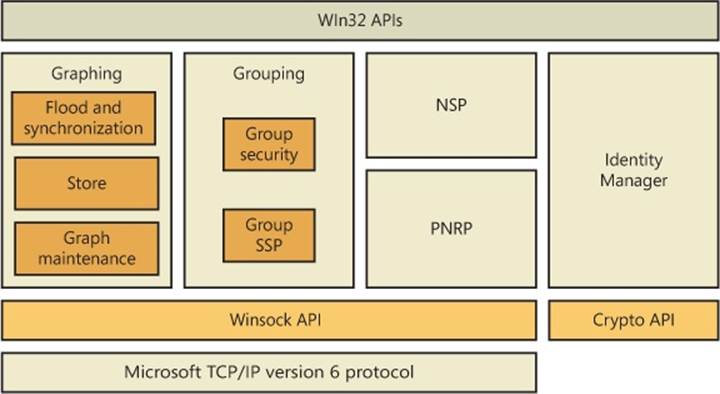

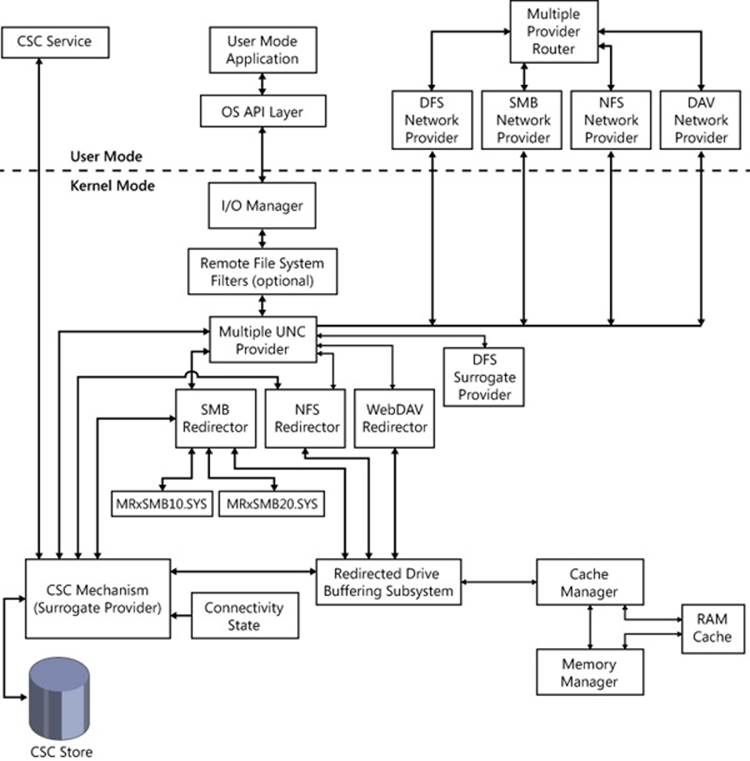

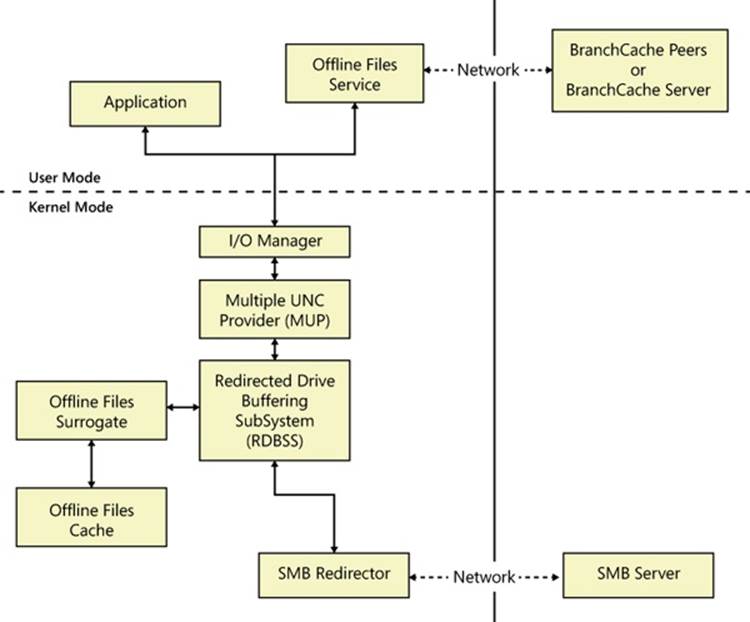

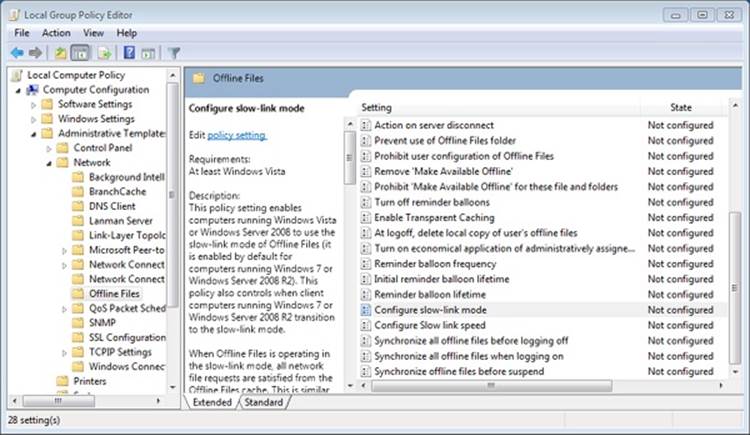

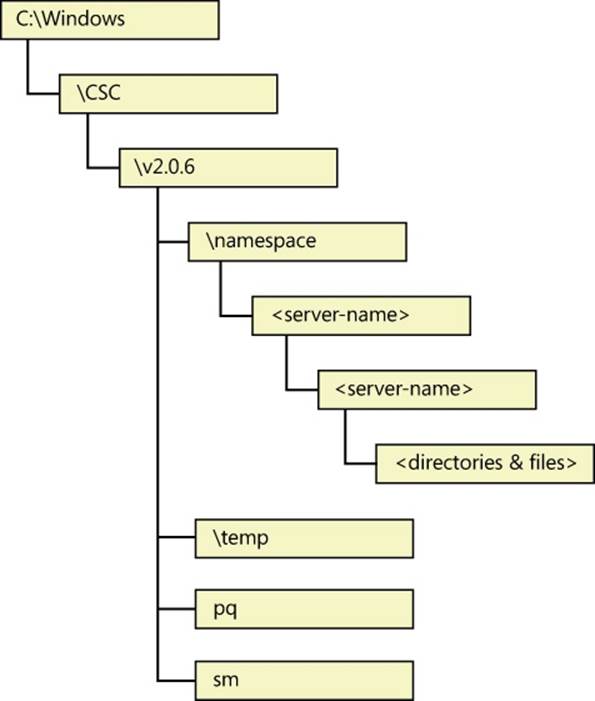

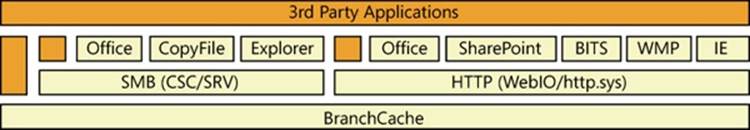

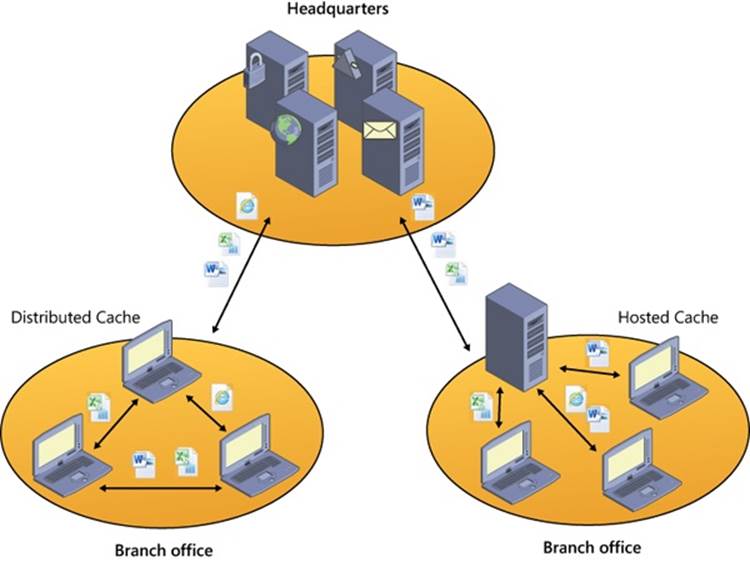

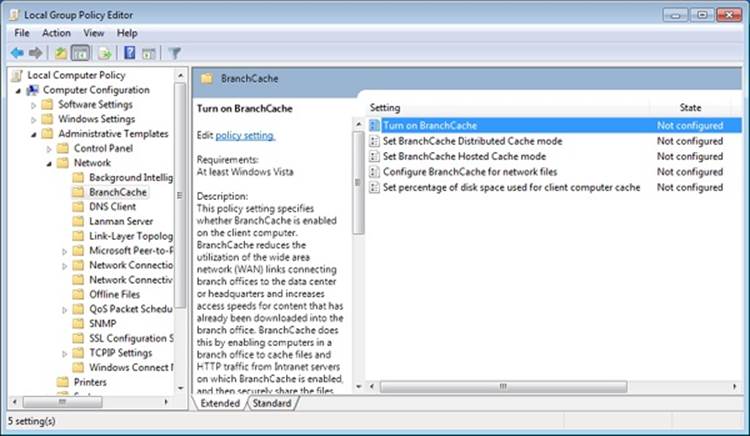

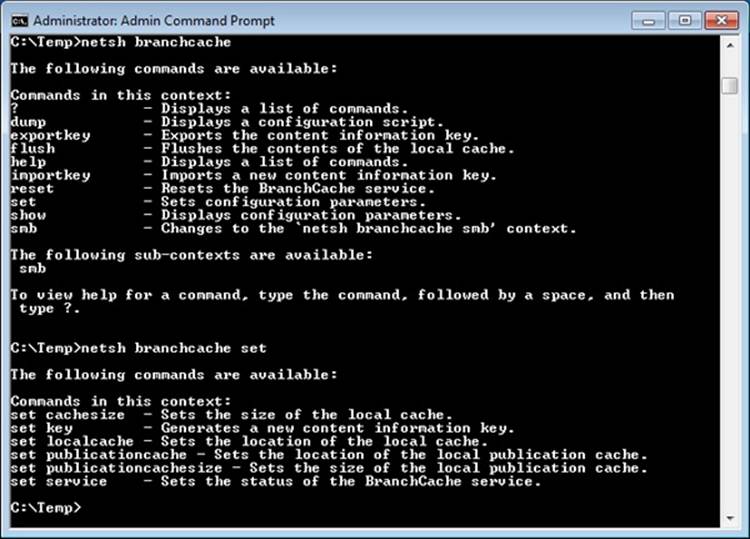

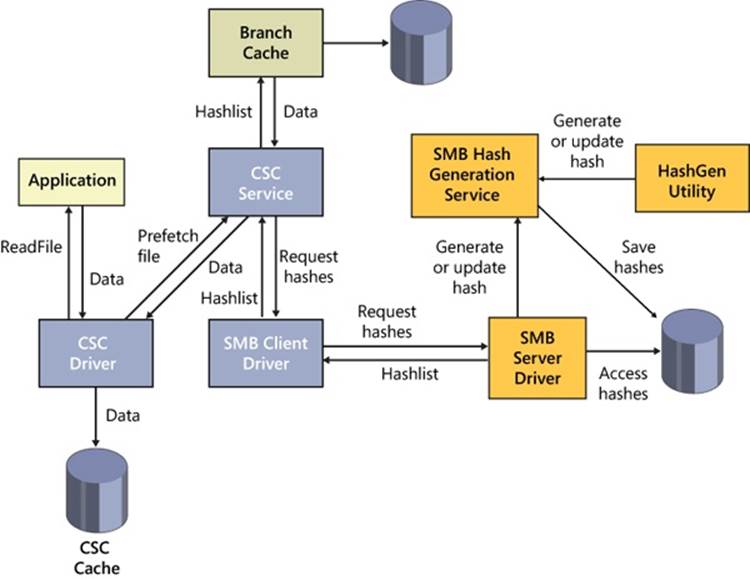

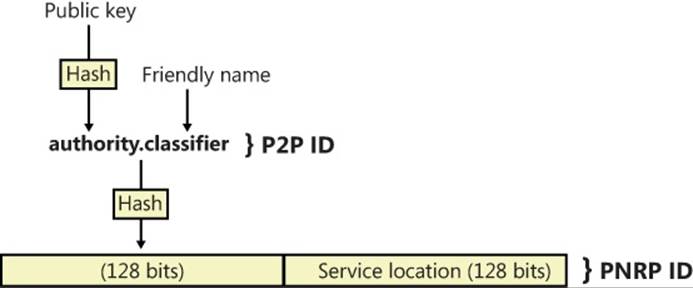

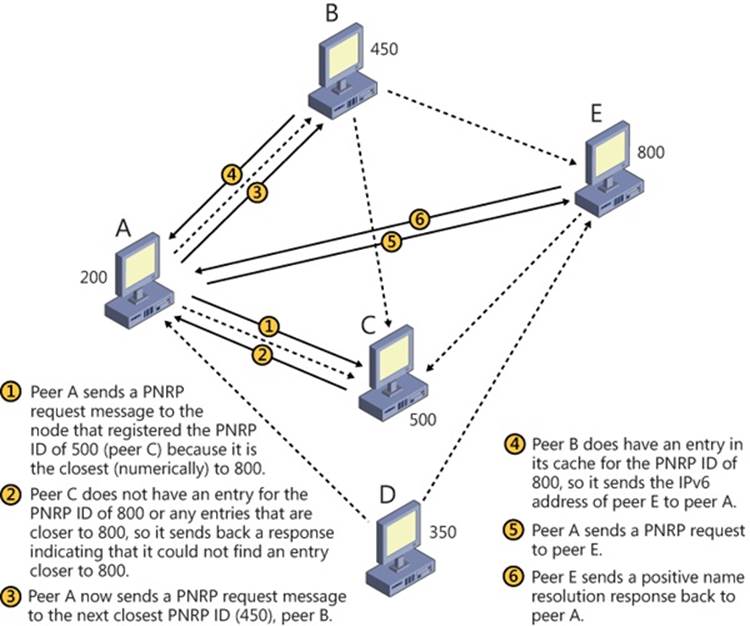

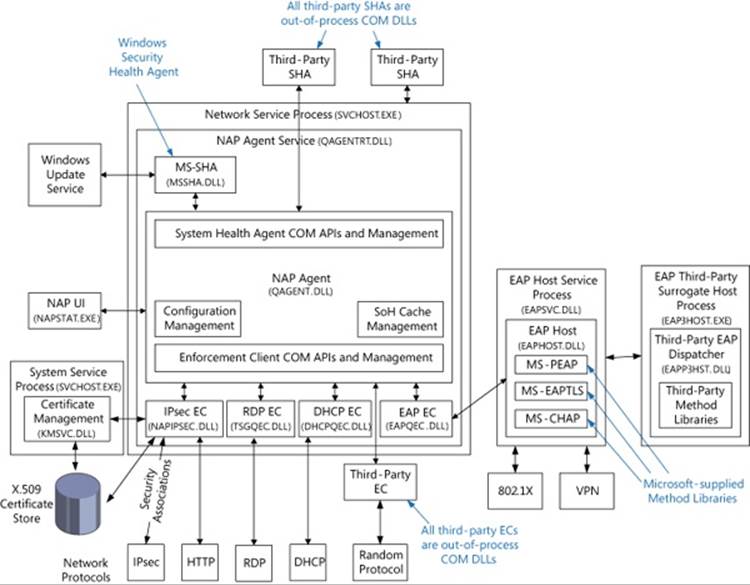

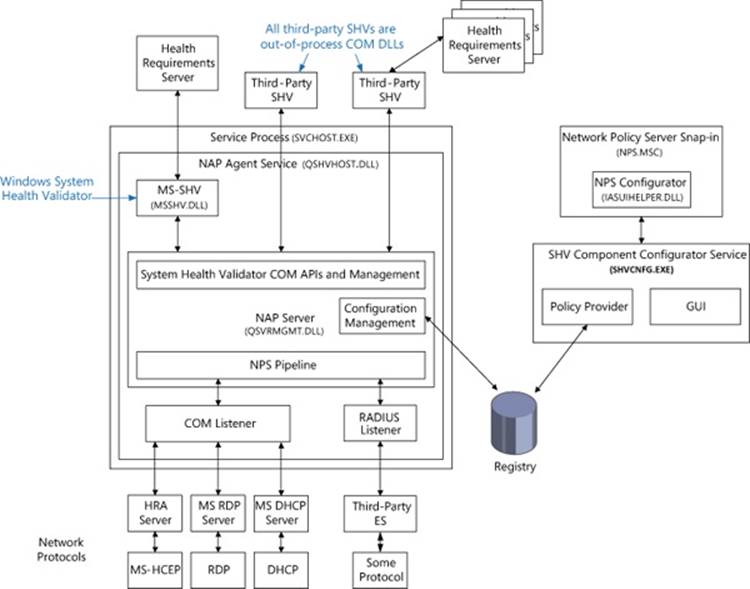

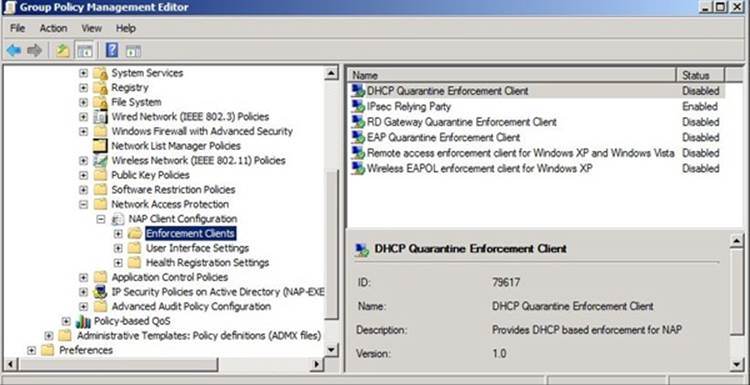

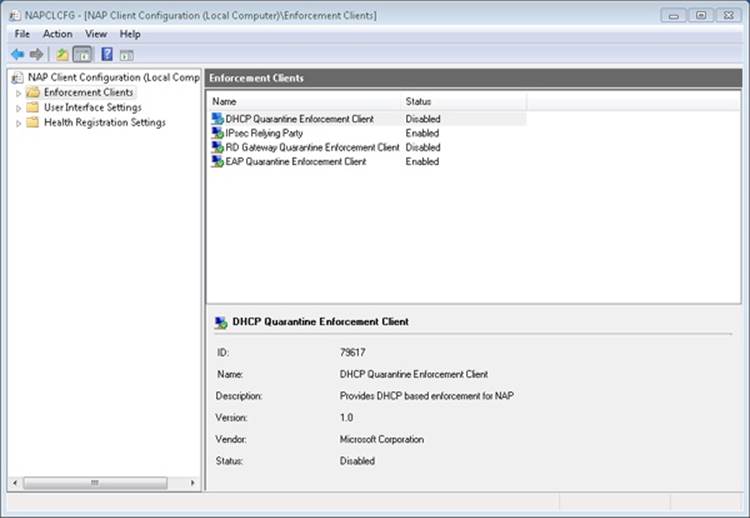

When uploading files, by default, BITS does not allow overwriting an existing file. If a finished upload would overwrite an existing file, BITS returns an error to the client. To allow overwrites, set the BITSAllowOverwrites property to True in the Internet Information Services (IIS) metabase using PowerShell or Windows Management Instrumentation (WMI) scripting.