SQL Server Integration Services Design Patterns, Second Edition (2014)

Chapter 15. Logging and Reporting Patterns

An essential part of managing any application is knowing what happens during the day-to-day usage of the application. This theme holds especially true in ETL solutions in which the data being manipulated can be used for reporting and analysis. Administrators satisfy this need through logging and reporting of the executions, errors, and statuses of the applications, which fits perfectly into the management framework concept.

The past few chapters have discussed how to set up other pieces of the management framework, including how to execute parent-child packages and how to implement centralized custom logging. This chapter will describe how to use the built-in logging in Integration Services to report on all aspects of an Integration Services application.

Integration Services provides two primary methods to help satisfy the logging and reporting need.

· Package logging and reporting

· Catalog logging and reporting

Let’s walk through how to set up each of these methods and then utilize patterns that best highlight these methods.

Package Logging and Reporting

The package logging and reporting method has been around since the first edition of Integration Services. This method is characterized by setting up logging during development of the package. A logging provider can log to different outputs, including SQL Server tables, text files, and more. The log information is stored in one object, such as one file or the sysssislog table.

Each log can be restricted to store only certain types of events, such as OnError, OnPreExecute, and OnVariableValueChanged. An administrator can then look at the logs to see what happened during the execution of the package. Once the package has been deployed to the server, you cannot change the type or amount of logging that occurs.

Package logging is the best and only option when you’re using Integration Services 2005 or 2008 or Integration Services 2012 or 2014 in package deployment mode. In the project deployment model, you can use package logging on a regular basis to keep track of errors that may occur or to ensure that packages are executing when expected. For more in-depth logging and reporting, you will want to use catalog logging and reporting, which we will discuss later in this chapter.

Let’s take a look at setting up package logging and then how to use the output.

Setting Up Package Logging

To set up logging at a package level, you will go to the package itself and turn on logging. Each package needs to be set up separately to log to the database. You can do this by right-clicking on the package and selecting the Logging option or by going to the SSIS menu at the top of the SQL Server Data Tools (SSDT) and selecting the Logging option.

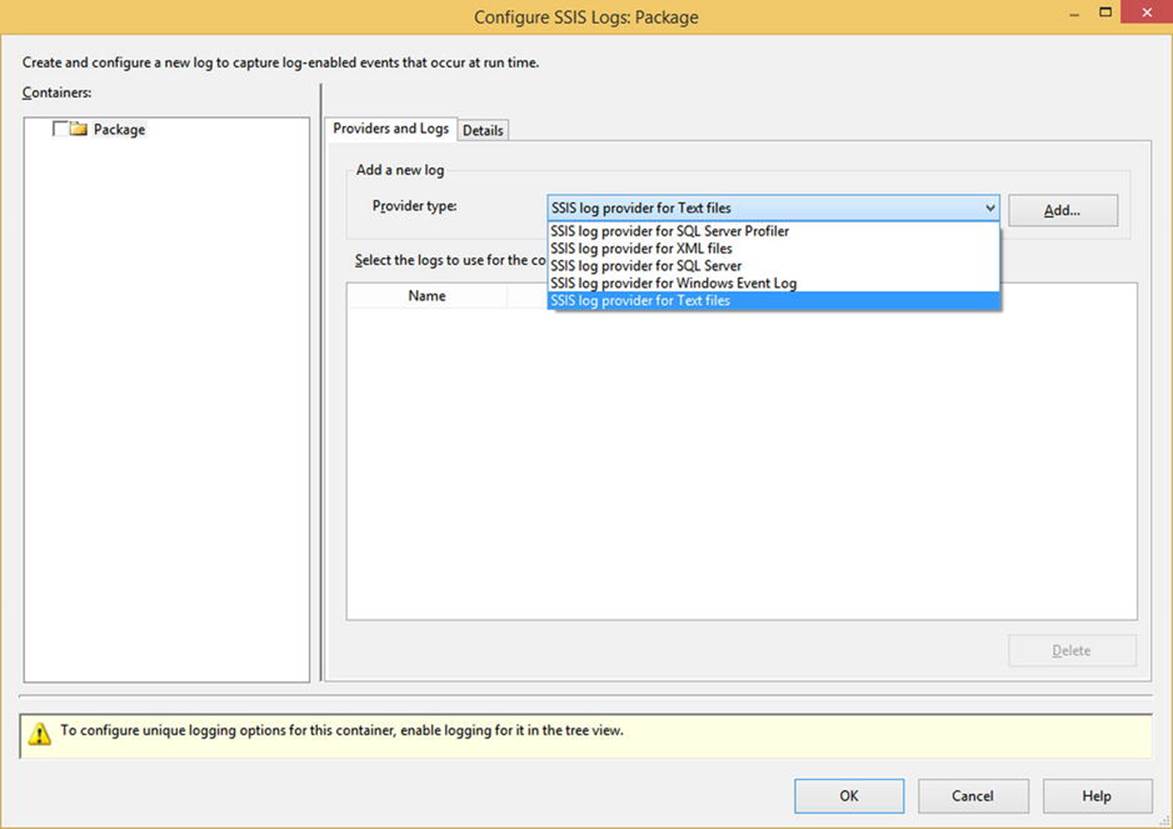

Within the logging menu, which you can see in Figure 15-1, you will decide what type of logging you want to use. Among the options are text files, XML files, and SQL Server tables. Once you have decided on the type of logging, you will select which events you want to log and at what level you want to log these events. If you select events at the highest package level, you will be able to see all events for all lower containers, too.

Figure 15-1. The SSIS logging menu

When the package runs, Integration Services creates a new table, if one is not already available, and stores the logging information within it. The table sysssislog contains the data for all recorded events.

Reporting on Package Logging

Once you’ve run the package with logging, you’ll want to know what happened! The table that contains all of the information you need is called sysssislog. By default, it will be created in the msdb database on the server of the connection manager you selected in the logging menu; however, you can change the database by specifying it directly in the connection manager.

Let’s take a look at the data in the table once we’ve run the package by running the following SQL query:

select * from msdb.dbo.sysssislog

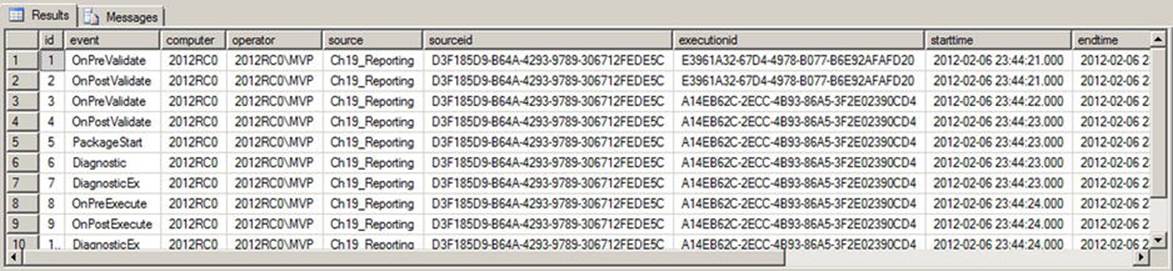

This statement returns results similar to those in Figure 15-2.

Figure 15-2. Results from the SSIS log table

Design Pattern: Package Executions

Although it is possible to use the information in the table directly, you can also combine the information to make it a little more readable. If you want to see the package executions and how long each page took to run, you can use the query in Listing 15-1:

Listing 15-1. Query to Return Package Durations

select ssis.source

, min(starttime) as package_start

, max(endtime) as package_end

,DATEDIFF(ms, min(starttime), max(endtime)) as duration_ms

from msdb.dbo.sysssislog ssis

where event in ('PackageStart', 'PackageEnd')

group by ssis.source, ssis.executionid

Catalog Logging and Reporting

The catalog logging and reporting method was introduced in Integration Services 2012 and is the best logging method to use if it is available. It can be used only if you have set up the project deployment model type. The nice thing about this type of logging is you don’t need to prepare anything in the package to utilize it. Let’s jump right into how to set up the logging and design patterns to report on that data.

Setting Up Catalog Logging

As I mentioned earlier, the benefit of catalog logging is that you don’t need to modify the package at all to use the logging output. The only preparation you need to do is to make sure your package is set to the project deployment type and deploy the package to the SSIS Catalog.

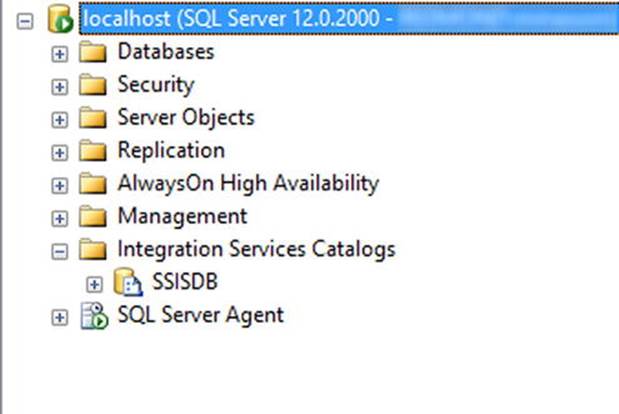

To begin setting up catalog logging and reporting, you will create an SSIS Catalog. You can do this by connecting to the database instance. If Integration Services is installed, you will see a node entitled Integration Services Catalogs. If you create a new catalog named SSISDB, it will look like Figure 15-3.

Figure 15-3. The SSISDB catalog

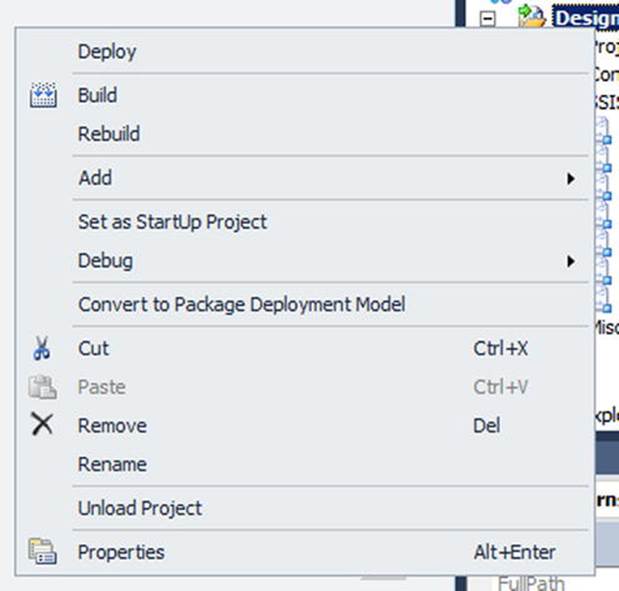

At this point, you are ready to deploy your package. First, though, you should make sure the project is set to use the project deployment model. You can do this by right-clicking on the project. If you see the option for Convert to Package Deployment Model, as shown in Figure 15-4, you are in this mode.

Figure 15-4. A project in project deployment mode

Finally, you will deploy the package to the SSIS Catalog. This stores the package in the SSISDB database and allows for some default and some configurable logging.

Next, we will look at the tables where the information for both types of logging is stored.

Catalog Tables

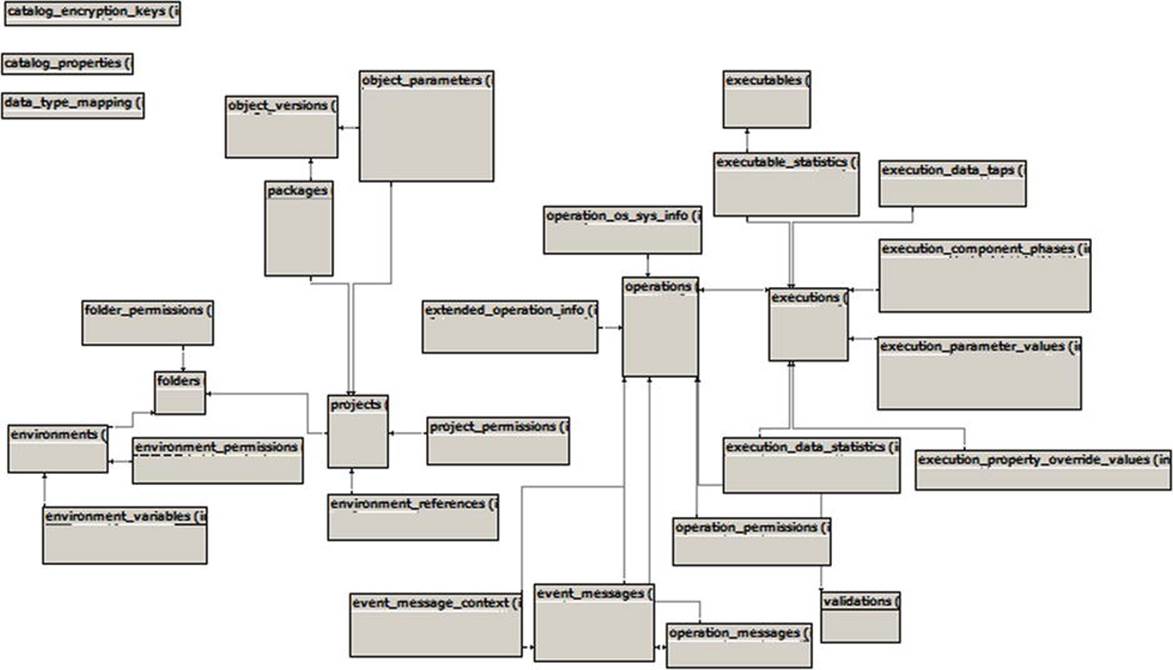

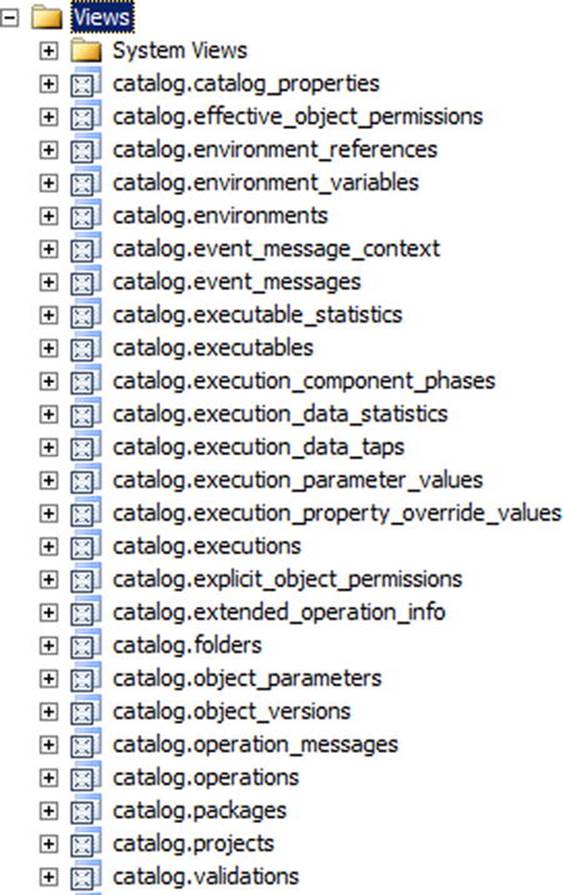

When a package runs, all of the information is stored in a set of tables that reside in the SSISDB database on the same server where the Integration Services package was deployed. Although there is a series of internal tables, you will do most of your reporting from the catalog views. Figure 15-5 shows a database diagram of the SSIS internal tables, whereas Figure 15-6 shows a list of the SSIS catalog views.

Figure 15-5. SSIS catalog internal tables

Figure 15-6. SSIS catalog views

Changing Logging Levels After the Fact

Even after the package has been deployed to the Integration Services server, you can change the amount of logging that occurs. But why would you want to do this? If you initially set up your package with a defined set of logging events, you will see only that set of data. However, you may want to include more events if you are doing more advanced troubleshooting or if you have a specific error you need to track down. On the other hand, you may want to increase the performance of a package by reducing the number of events that are recorded.

Modifying logging at the package level is not a best practice. By opening up the package to change even the slightest item, you increase the risk of a breaking change, whether by mistyping a value or choosing an unavailable logging option. In some organizations, modifications to logging made at the package level may even result in the package having to go through the change control process again. Ideally, you want to make logging changes in an external location without touching the package at all.

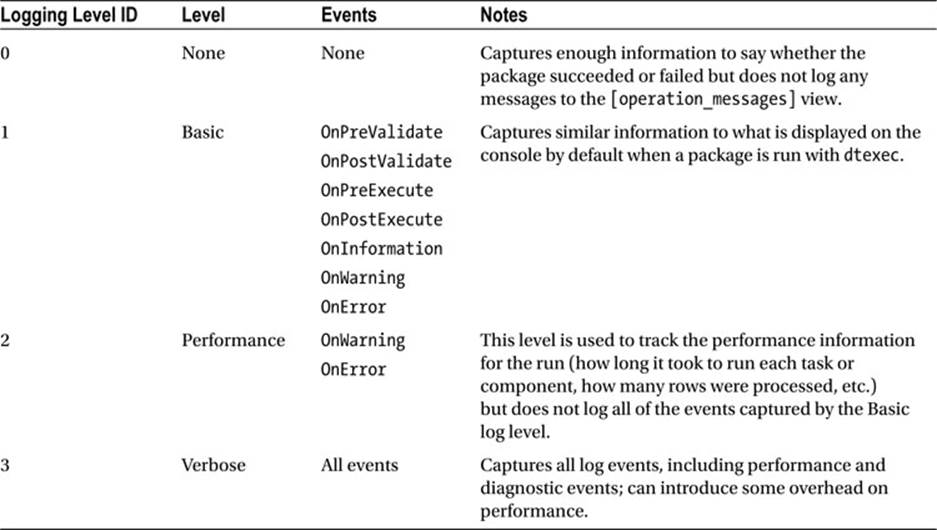

In Integration Services 2012, you can choose from four different logging levels, as described in Table 15-1.

Table 15-1. SSIS Logging Levels

Design Patterns

Now that you know how to set up and log information, let’s walk through the following design patterns:

1. Changing the logging level

2. Utilizing existing reports

3. Creating new reports

Changing the Logging Level

Now that you know what the different logging levels are and when you would use each one, let’s walk through changing the logging level. You can do this in either of two ways: through the execution interface or through a command-line execution.

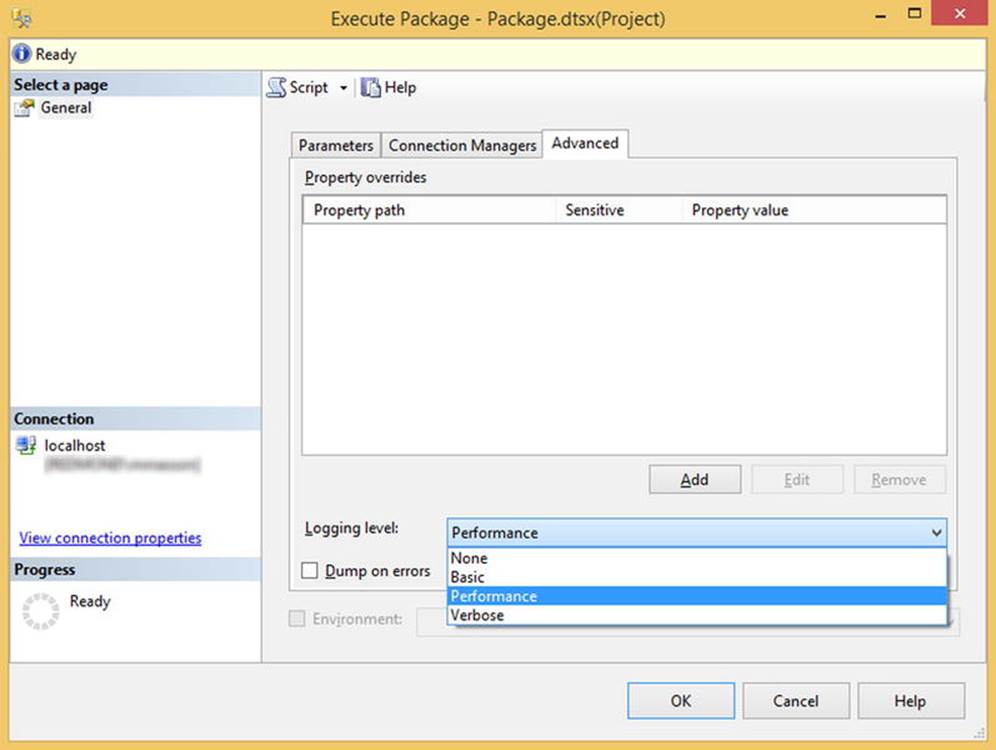

To modify the logging level through the execution interface, you will connect your Integration Services Catalog, right-click on the desired package, and select Execute. On Execute Package screen, you will see the Logging Level option on the Advanced tab. By default, the option is set to Basic, as shown in Figure 15-7. Alternatively, you can modify this value to another logging level to see more or less in the logging tables.

Figure 15-7. Execute Package screen

The other option is to modify logging through the command line. All packages can be executed through the command line, and you can set a logging level associated with an individual execution.

![]() Note Much of the functionality associated with administering Integration Services packages can be accessed through a command-line interface. By using the command line, you can integrate your Integration Services administration with your other maintenance tasks.

Note Much of the functionality associated with administering Integration Services packages can be accessed through a command-line interface. By using the command line, you can integrate your Integration Services administration with your other maintenance tasks.

Run the following code in Listing 15-2 to change the logging level to log all Verbose records for a new execution.

Listing 15-2. Statement to Modify the Logging Level for an Execution

DECLARE @execution_id INT

EXECUTE [catalog].[create_execution]

@folder_name = 'DesignPatterns'

,@project_name = 'DesignPatterns'

,@package_name = 'Ch15_Reporting.dtsx'

,@reference_id = null

,@use32bitruntime = false

,@execution_id = @execution_id OUTPUT

EXECUTE [catalog].[set_execution_parameter_value]

@execution_id

,@object_type = 50

,@parameter_name = 'LOGGING_LEVEL'

,@parameter_value = 3 --Verbose

EXECUTE [catalog].[start_execution]

@execution_id

Once you’ve done this, you can see the output from the newly set logging level by running the query in Listing 15-3.

Listing 15-3. Query to Return All Messages

select * from catalog.event_messages

where operation_id =

(select max(execution_id) from catalog.executions)

Using the Existing Reports

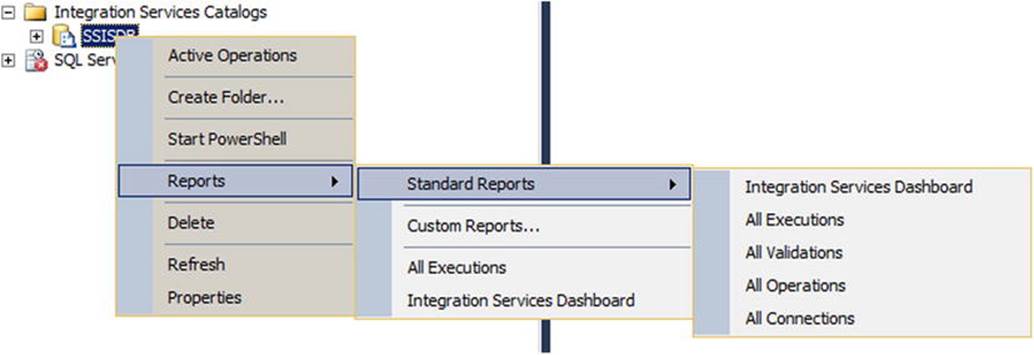

Our next design pattern is an important one: use what is provided to you. Included in the SSIS Catalog are reports that use the logging information we have just discussed. The information in these reports includes an in-depth view of all of your packages’ executions. These reports are a great start for you to see when your packages run, if any errors occur, and potential trouble areas for you to investigate.

Figure 15-8 shows all of the reports available to you. You can access all reports through the Management Studio interface and the Integration Services Catalog node.

Figure 15-8. Available catalog reports

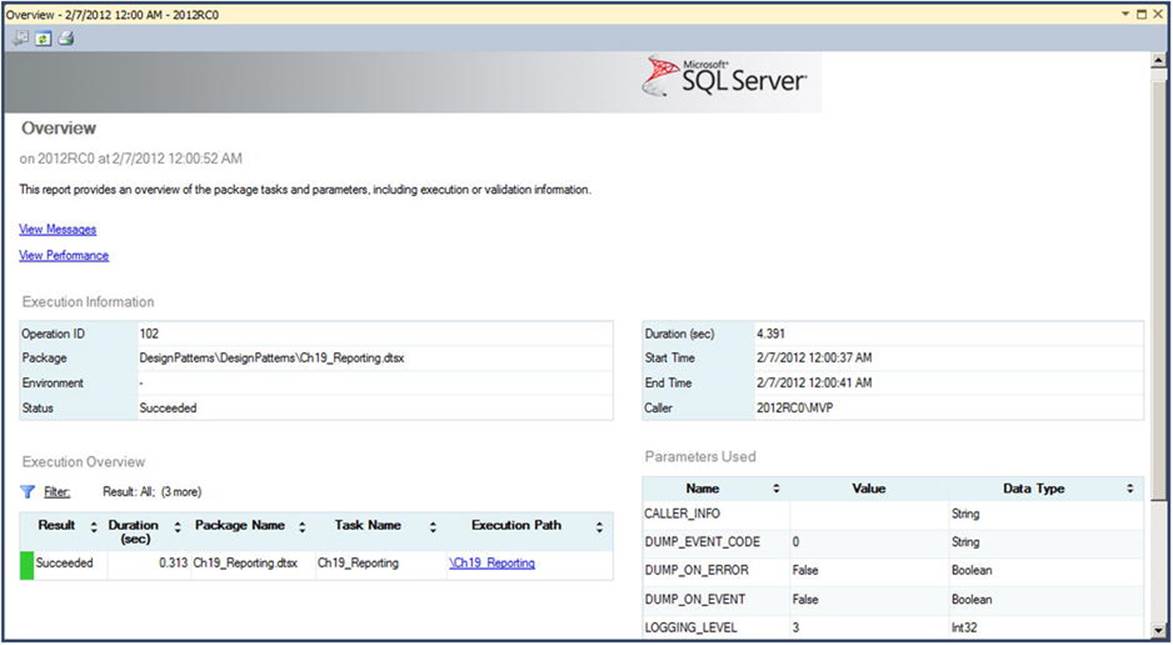

If you are looking at a specific execution, you will always want to start with the Overview report, which can be run by selecting the Overview link on any of the provided reports. In fact, at the end of an execution through the interface, you will be asked if you want to see this report. If you select yes, you will see something similar to Figure 15-9.

Figure 15-9. An overview report

Creating New Reports

Now that you’ve seen the reports that are available to you without doing any work, you may be perfectly happy. If not, you may want to dig into the data a little deeper. You can create new reports by looking at the catalog views that were described earlier. Particular reasons why you may want to do this include

1. Seeing the longest-running executions

2. Finding out why a package failed

3. Understanding the inner workings of a particular component

Let’s start with the first reason. This report is interesting because it uses the main output view, but based on the query and transformations, it becomes a helpful little tool. Listing 15-4 shows the query that lists the five longest-running packages over the past day.

Listing 15-4. Query for Five Longest-Running Packages

select top 5 e.execution_id, e.package_name, DATEDIFF(ms, start_time, end_time) as duration_ms

from catalog.executions e

where e.start_time > DATEADD(dd, -1, getdate())

order by duration_ms desc

The second reason you may want a new report is to see why a package failed. You will use an additional view for this information, the catalog.event_messages view. Restricting the data on both the executions and the event_messages view will ensure that you get only packages that failed entirely and see only the events that caused them to fail. This query can be seen in Listing 15-5.

Listing 15-5. Failed-Packages Query

select e.execution_id, e.package_name, em.*

from catalog.executions e

inner join catalog.event_messages em on e.execution_id=em.operation_id

where e.status = 4 and em.event_name = 'OnError'

The final reason is to understand the inner workings of a particular component. You can see the individual steps that occurred during the execution of each component in the data flow. For example, the query in Listing 15-6 returns each step that occurs in the execution of the sources, transformations, and destinations and how long each step takes.

Listing 15-6. Query to Return Component Phases and Times

select subcomponent_name, phase

, DATEDIFF(ms, start_time, end_time) as duration_ms

from catalog.execution_component_phases

where package_name = 'Ch16_Reporting.dtsx'

and task_name = 'Data Flow Task'

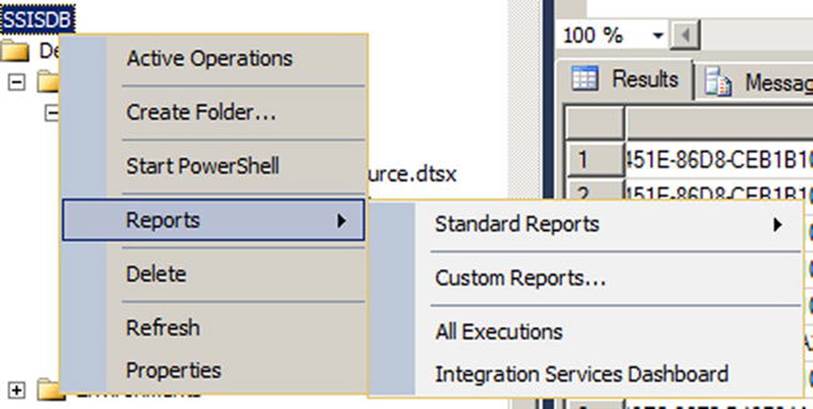

Once you have your desired query, you can either run it directly from Management Studio or embed it into a Reporting Services report to make it look like the standard reports available in the solution. To make the report through Management Studio, you can store the folders in your local Documents folder, under the structure SQL Server Management Studio\Custom Reports. To access them, you will then select the Custom Reports option under the Reports menu on the Integration Services node, as shown in Figure 15-10.

Figure 15-10. Selection of custom reports

Summary

This chapter has discussed many ways to monitor your Integration Services packages. Whether you are using an older version of the tool or the latest and greatest, you will be able to understand the internal workings of the package by following the design patterns described here. Discussions of both package logging and reporting and catalog logging and reporting have shown you how to modify the types of events you log and how to retrieve that information.