Scaling PHP Applications (2014)

Ditching the LAMP Stack

What’s wrong with LAMP?

LAMP (Linux, Apache, MySQL, PHP) is the most popular web development stack in the world. It’s robust, reliable and everyone knows how to use it. So, what’s wrong with LAMP? Nothing. You can go really far on a single server with the default configurations. But what happens when you start to really push the envelope? When you have so much traffic or load that your server is running at full capacity?

You’ll notice things tearing at the seams, and in a pretty consistent order. MySQL is always the first to go, since it’s I/O bound most of the time. Next up is Apache. With mod_php, every Apache process carries a full copy of the PHP interpreter, even when it is only serving a static file. That isn’t cheap, and Apache’s memory footprint will prove it. If you haven’t crashed yet, Linux itself will start to give up on you; the sane defaults that ship with your distribution just aren’t designed for scale.

What can we possibly do to improve on this tried-and-true model? Well, the easiest thing is to get better hardware (scale vertically) and split the components up across servers (scale horizontally). This will get you a little further, but there is a better way: scale intelligently. Optimize, swap pieces of your stack for better software, customize your defaults, and build a stack that’s reliable and fault tolerant. You want to spend your time building an amazing product, not babysitting servers.

The Scalable Stack

After lots of trial and error, I’ve found what I think is a generic, scalable stack. Let’s call it LHNMPRR… nothing is going to be as catchy as LAMP!

Linux

We still have Old Reliable, but we’re going to tune the hell out of it. This book assumes the latest release of Ubuntu Server 12.04 LTS, but most recent Linux distributions should work equally well. In some places you may need to replace apt-get with your own package manager (yum on RHEL and CentOS, for example), but the kernel tweaks and overall concepts should apply cleanly to RHEL, Debian, and CentOS. I’ll include kernel and software versions where applicable to help avoid any confusion.

HAProxy

We’ve dumped Apache and split its job up. HAProxy acts as our load balancer, and it’s a great piece of software. Many people use nginx as a load balancer, but I’ve found that HAProxy is a better choice for the job. I’ll explain why in depth in Chapter 3.

nginx

Nginx is an incredible piece of software¹. It brings the heat without any bloat and does web serving extremely well. In addition to its very low memory and CPU footprint, it is extremely reliable and can take a lot of abuse without complaining.

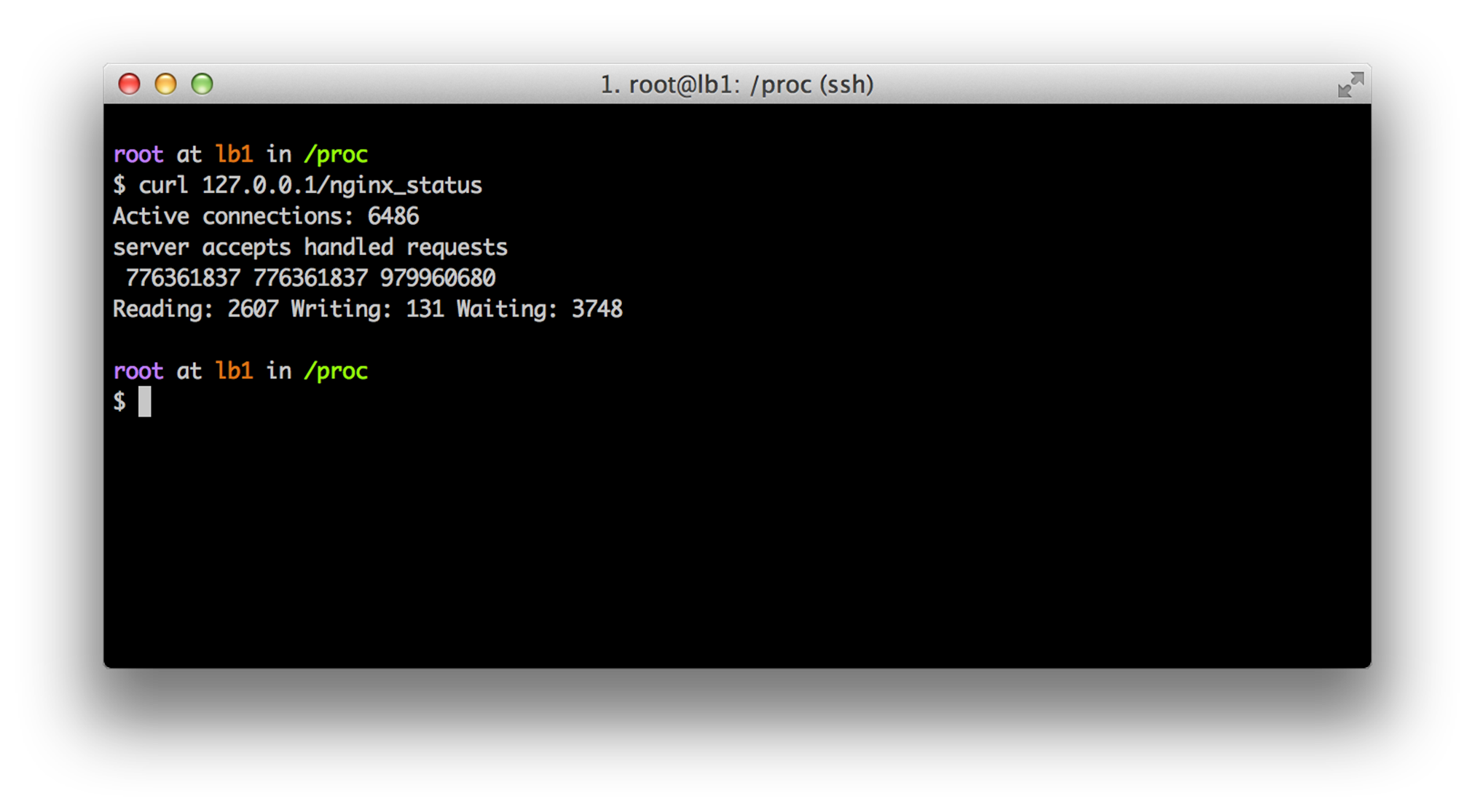

One of our busiest nginx servers at Twitpic handles well over 6,000 connections per second while using only 80MB of memory.

An example of a server with over 6000 active connections

PHP 5.5 / PHP-FPM

There are several ways to serve PHP applications: mod_php, CGI, and FastCGI behind a server like lighttpd. None of these solutions come close to PHP-FPM, the FastCGI Process Manager originally written by Andrei Nigmatulin and bundled with PHP since 5.3.3. What makes PHP-FPM so awesome? Well, in addition to being rock-solid, it’s extremely tunable, provides real-time stats, and logs slow requests so you can track down and analyze slow portions of your codebase.
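To make the “real-time stats” point concrete, here is how the status page works. When a pool sets the `pm.status_path` directive, PHP-FPM serves a status page whose default plain-text form is a list of `key: value` lines. The Python sketch below assumes that format and turns it into a dict so you can compute how busy the pool is. The exact fields vary between PHP versions, and the sample values are invented for illustration. `parse_fpm_status` and `busy_ratio` are hypothetical helper names, not part of PHP-FPM.

```python
from __future__ import annotations


def parse_fpm_status(text: str) -> dict[str, str]:
    """Parse PHP-FPM's plain-text status page into a dict.

    Each line looks like "key:   value". Some values (e.g. "start time")
    contain colons themselves, so split on the first colon only.
    """
    stats: dict[str, str] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue  # skip blank or malformed lines
        key, value = line.split(":", 1)
        stats[key.strip()] = value.strip()
    return stats


def busy_ratio(stats: dict[str, str]) -> float:
    """Fraction of the pool's workers that are currently busy (active / total)."""
    active = int(stats["active processes"])
    total = int(stats["total processes"])
    return active / total if total else 0.0


# Illustrative sample only; real output comes from the pool's pm.status_path URL.
SAMPLE = """\
pool:                 www
process manager:      dynamic
start time:           11/Nov/2013:09:18:30 +0000
accepted conn:        12073
listen queue:         0
idle processes:       4
active processes:     1
total processes:      5
max children reached: 0
"""

if __name__ == "__main__":
    stats = parse_fpm_status(SAMPLE)
    print(stats["pool"], busy_ratio(stats))  # www 0.2
```

A `busy_ratio` near 1.0, or a `max children reached` counter that keeps climbing, suggests the pool is undersized. The status page lets you spot that without attaching a profiler.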

MySQL

Most folks coming from a LAMP stack are going to be pretty familiar with MySQL, and I will cover it pretty extensively. For instance, in Chapter 5 you’ll learn how you can get NoSQL performance out of MySQL. That being said, this book is database agnostic, and most of the tips can be similarly applied to any database. There are many great databases to choose from: Postgres, MongoDB, Cassandra, and Riak to name a few. Picking the correct one for your use case is outside the scope of this book.

Redis

Redis can be used as a standalone database, but its primary strength is as a data structure server: it stores strings, hashes, lists, sets, and sorted sets, not just opaque blobs. Think of it as memcache on steroids. You can use memcache (we still do), but Redis performs just as well or better in most scenarios. It can persist to disk, so you don’t lose your cache in a crash. That matters because 100% cache misses can be catastrophic if your infrastructure can’t handle them. Redis is also updated extremely frequently by an opinionated, vocal, and smart developer, @antirez.
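A common way to use Redis as the cache described above is the cache-aside pattern. You read from the cache first. On a miss, you load from the database and write the result back with a TTL. The Python sketch below uses a tiny in-memory `TTLCache` as a stand-in for Redis so it runs without a server. Its `setex` method is modeled on the semantics of Redis’s `SETEX` command, but it is not a Redis client. `cached` and `load_user_42` are hypothetical names. The sketch also shows why a cold cache hurts: every miss falls straight through to the database.

```python
from __future__ import annotations

import time
from typing import Any, Callable


class TTLCache:
    """In-memory stand-in for Redis used as a cache (GET / SETEX-style semantics)."""

    def __init__(self) -> None:
        # key -> (expiry timestamp on the monotonic clock, value)
        self._data: dict[str, tuple[float, Any]] = {}

    def get(self, key: str) -> Any | None:
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if time.monotonic() >= expires_at:
            del self._data[key]  # lazily evict the expired key on read
            return None
        return value

    def setex(self, key: str, ttl_seconds: float, value: Any) -> None:
        self._data[key] = (time.monotonic() + ttl_seconds, value)


def cached(cache: TTLCache, key: str, ttl: float, loader: Callable[[], Any]) -> Any:
    """Cache-aside: return the cached value, or call loader() and cache its result."""
    value = cache.get(key)
    if value is None:
        value = loader()  # cache miss: hit the (slow) database
        cache.setex(key, ttl, value)
    return value


if __name__ == "__main__":
    db_queries: list[str] = []

    def load_user_42() -> dict:
        db_queries.append("SELECT ...")  # hypothetical database query
        return {"id": 42, "name": "example"}

    cache = TTLCache()
    cached(cache, "user:42", 60, load_user_42)  # miss: queries the "database"
    cached(cache, "user:42", 60, load_user_42)  # hit: served from the cache
    print(len(db_queries))  # 1
```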

Resque

Doing work in the background is one of the principal concepts of scaling. Resque is one of the best worker/job platforms available. It uses Redis as a backend so it’s inherently fast and scalable, includes a beautiful frontend to give you full visibility into the job queue, and is designed to fail gracefully. Later we’ll talk about using PHP-Resque, along with some patches I’ve provided, to build elegant background workers.
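The core Resque idea is a queue of job payloads in Redis that workers pop and execute, and it is small enough to sketch. The Python below is not PHP-Resque’s API. A `deque` stands in for a Redis list, and payloads are JSON holding a class name and an argument list, similar in spirit to what Resque stores. `job`, `enqueue`, `work_once`, and `resize_image` are hypothetical names. The point it illustrates is failing gracefully: when a job raises an exception, it moves to a failed list instead of killing the worker.

```python
from __future__ import annotations

import json
from collections import deque
from typing import Callable

# Stand-ins for Redis lists; in a Resque-style system these would live in Redis.
queue: deque[str] = deque()
failed: list[dict] = []

# Hypothetical registry mapping job class names to handler functions.
HANDLERS: dict[str, Callable[..., None]] = {}


def job(name: str) -> Callable[[Callable[..., None]], Callable[..., None]]:
    """Decorator that registers a handler under a job class name."""
    def register(fn: Callable[..., None]) -> Callable[..., None]:
        HANDLERS[name] = fn
        return fn
    return register


def enqueue(job_class: str, *args) -> None:
    """Push a JSON payload onto the tail of the queue (like RPUSH)."""
    queue.append(json.dumps({"class": job_class, "args": list(args)}))


def work_once() -> bool:
    """Pop one job from the head (like LPOP) and run it. Returns False if the queue is empty."""
    if not queue:
        return False
    payload = json.loads(queue.popleft())
    try:
        HANDLERS[payload["class"]](*payload["args"])
    except Exception as exc:  # fail gracefully: keep the job for inspection or retry
        failed.append({"payload": payload, "error": repr(exc)})
    return True


if __name__ == "__main__":
    @job("ResizeImage")
    def resize_image(image_id: int, width: int) -> None:
        if width <= 0:
            raise ValueError("width must be positive")
        print(f"resized image {image_id} to {width}px")

    enqueue("ResizeImage", 1, 600)
    enqueue("ResizeImage", 2, 0)  # this one will fail
    while work_once():
        pass
    print(len(failed))  # 1
```

Because web requests only call `enqueue`, they stay fast no matter how slow the job is. You scale the work by adding more processes that loop on `work_once`.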

Should I run on AWS?

AWS is awesome. Twitpic is a huge AWS customer—over 1PB of our images are stored on Amazon S3 and served using CloudFront. Building that kind of infrastructure on our own would be extremely expensive and involve hiring an entire team to manage it.

I used to say that running on EC2 full-time was a bad idea. It was like using your expensive iPhone to hammer a nail into the wall: it’ll get the job done, but it’s going to cost you much more than using a hammer.

I don’t think this is the case any longer. There is certainly a premium to using AWS, but it’s much cheaper than it used to be and the offering has become much better (instances with 200GB+ of memory, SSDs, provisioned IOPS). If I were starting a new company, I would build it on AWS, hands down. Reserved Instances will get your monthly cost down to something reminiscent of dedicated server hosting (albeit still 10-20% more expensive). Being able to bring up a cluster of massive servers instantly, or start a test cluster in California in less than 5 minutes, is powerful.

For a bigger, more established startup, I would maintain a fleet of dedicated servers at SoftLayer or Rackspace, and use EC2 for excess capacity, emergencies, and on-demand scaling. You can do this seamlessly with a little bit of network-fu and Amazon VPC. Sudden increase in traffic? Spin up some EC2 instances and handle the burst effortlessly. With Amazon VPC, your EC2 instances can communicate with your bare-metal servers without being exposed to the public internet.