Professional Microsoft SQL Server 2014 Integration Services (Wrox Programmer to Programmer), 1st Edition (2014)

Chapter 4. The Data Flow

WHAT’S IN THIS CHAPTER?

· Learn about the SSIS Data Flow architecture

· Reading data out of sources

· Loading data into destinations

· Transforming data with common transformations

WROX.COM CODE DOWNLOADS FOR THIS CHAPTER

You can find the wrox.com code downloads for this chapter at www.wrox.com/go/prossis2014 on the Download Code tab.

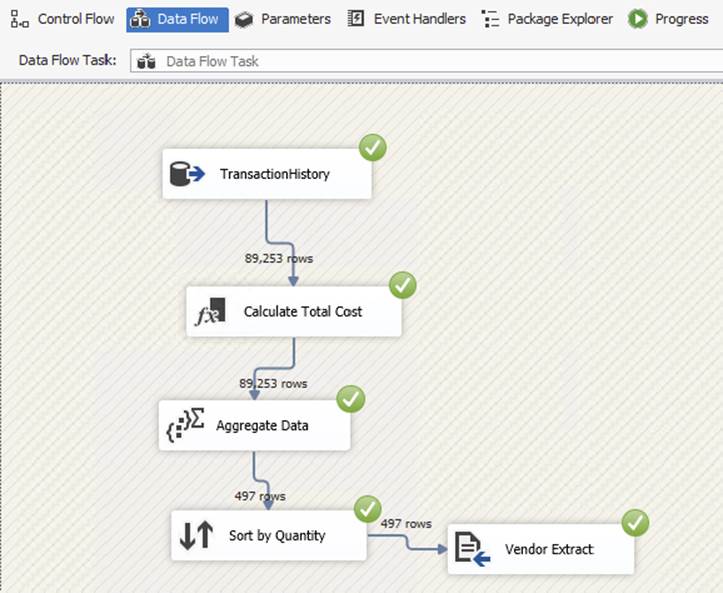

In the last chapter you were introduced to the Control Flow tab through tasks. In this chapter, you’ll continue along those lines with an exploration of the Data Flow tab, which is where you will spend most of your time as an SSIS developer. The Data Flow Task is where the bulk of your data heavy lifting occurs in SSIS. This chapter walks you through the transformations in the Data Flow Task, demonstrating how they can help you move and clean your data. You’ll notice that a few components, the CDC (change data capture) ones, aren’t covered in this chapter. They need more coverage than this chapter has room for, so they are covered in Chapter 11.

UNDERSTANDING THE DATA FLOW

The SSIS Data Flow is implemented as a logical pipeline: data flows from one or more sources, through whatever transformations are needed to cleanse and reshape it for its new purpose, and into one or more destinations. The Data Flow does its work primarily in memory, which is the source of SSIS’s strength. In most cases, it lets the Data Flow perform faster than an ELT-style environment, where the data is first loaded into a staging environment and then cleansed with SQL statements.

One of the toughest concepts to understand for a new SSIS developer is the difference between the Control Flow and the Data Flow tabs. Chapter 2 explains this further, but just to restate a piece of that concept, the Control Flow tab controls the workflow of the package and the order in which each task will execute. Each task in the Control Flow has a user interface to configure the task, with the exception of the Data Flow Task. The Data Flow Task is configured in the Data Flow tab. Once you drag a Data Flow Task onto the Control Flow tab and double-click it to configure it, you’re immediately taken to the Data Flow tab.

The Data Flow is made up of three components that are discussed in this chapter: sources, transformations (also known as transforms), and destinations. These three components make up the fundamentals of ETL. Sources extract data out of flat files, OLE DB databases, and other locations; transformations process the data once it has been pulled out; and destinations write the data to its final location.

Much of this ETL processing is done in memory, which is what gives SSIS its speed. It is much faster to apply business rules to your data in memory using a transformation than to have to constantly update a staging table. Because of this, though, your SSIS server will potentially need a large amount of memory, depending on the size of the file you are processing.

Data flows out of a source in memory buffers that are, by default, 10 megabytes in size or 10,000 rows, whichever limit is reached first. While the first transformation is working on those 10,000 rows, the source is already filling the next buffer of 10,000 rows. This architecture limits the memory SSIS consumes and, in most cases, means that if your pipeline contains five transformations, as many as 50,000 rows will be worked on in memory at the same time. This changes only when you add asynchronous components such as the Aggregate or Sort Transformation, which fully block the pipeline.
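The two limits above correspond to the Data Flow Task properties DefaultBufferMaxRows and DefaultBufferSize. The following Python sketch models how they interact under a simplifying assumption: the rows in one buffer equal the buffer size divided by an estimated row width, capped at the maximum row count. The real engine’s sizing rules take more inputs than this, and the row widths used below are hypothetical.

```python
# Simplified model of Data Flow buffer sizing (an assumption, not the engine's exact rules).

DEFAULT_BUFFER_MAX_ROWS = 10_000        # DefaultBufferMaxRows default
DEFAULT_BUFFER_SIZE = 10 * 1024 * 1024  # DefaultBufferSize default: 10 MB

def rows_per_buffer(row_width_bytes: int,
                    max_rows: int = DEFAULT_BUFFER_MAX_ROWS,
                    buffer_size: int = DEFAULT_BUFFER_SIZE) -> int:
    """Rows in one buffer: whichever limit (row count or bytes) is hit first."""
    if row_width_bytes <= 0:
        raise ValueError("row width must be positive")
    return min(max_rows, buffer_size // row_width_bytes)

def rows_in_flight(row_width_bytes: int, components: int) -> int:
    """Rough upper bound on rows in memory when each component holds one full buffer."""
    return rows_per_buffer(row_width_bytes) * components

if __name__ == "__main__":
    print(rows_per_buffer(200))     # 10000: narrow rows, the row cap wins
    print(rows_per_buffer(4_000))   # 2621: wide rows, the 10 MB cap wins
    print(rows_in_flight(200, 5))   # 50000: five transforms, one buffer each
```

Narrowing the row (for example, by selecting fewer columns) is what lets more rows fit into each buffer before the byte limit applies.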

DATA VIEWERS

Data viewers are a very important feature in SSIS for debugging your Data Flow pipeline. They let you see the data as it passes a given point in the pipeline at runtime. If you place a data viewer before and after the Aggregate Transformation, for example, you can see the data flowing into the transformation and what it looks like after the transformation is applied. Data viewers are a debugging feature of SQL Server Data Tools (SSDT) only, so once you deploy your package and run it on the server as a job or with the service, they do not appear.

To place a data viewer in your pipeline, right-click one of the paths (red or blue arrows leaving a transformation or source) and select Enable Data Viewer.

Once you run the package, the data viewers open and populate with data when the package reaches the path they are attached to. The package will not proceed until you click the green play button (>). You can also copy the data into an application such as Excel or Notepad for further investigation by clicking Copy Data. A data viewer displays one buffer at a time (up to 10,000 rows by default), so you may have to click the > button multiple times to go through all the data.

As you add more data viewers, you will eventually want to remove them to speed up execution during development. To remove one, right-click the path that has the data viewer and select Disable Data Viewer.

SOURCES

A source in the SSIS Data Flow is where you specify the location of your source data. Most sources will point to a Connection Manager in SSIS. By pointing to a Connection Manager, you can reuse connections throughout your package, because you need only change the connection in one place.

SOURCE ASSISTANT AND DESTINATION ASSISTANT

The Source Assistant and Destination Assistant are two components designed to remove the complexity of configuring a source or a destination in the Data Flow. The components determine which drivers you have installed and show you only the applicable ones. They also simplify the selection of a valid Connection Manager based on the database platform you want to connect to.

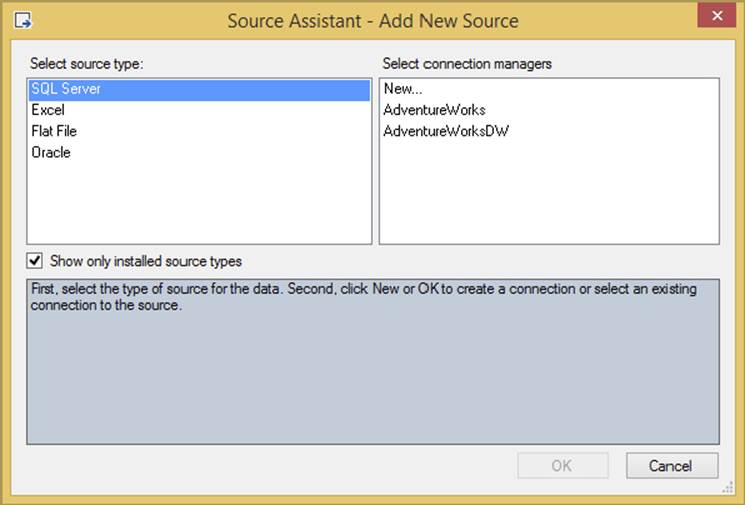

In the Source Assistant or Destination Assistant (the Source Assistant is shown in Figure 4-1), only the data providers that you have installed are actually shown. Once you select how you want to connect, you’ll see a list of Connection Managers on the right that you can use to connect to your selected source. You can also create a new Connection Manager from the same area on the right. If you uncheck the “Show only installed source types” option, you’ll see other providers like DB2 or Oracle for which you may not have the right software installed.

FIGURE 4-1

OLE DB Source

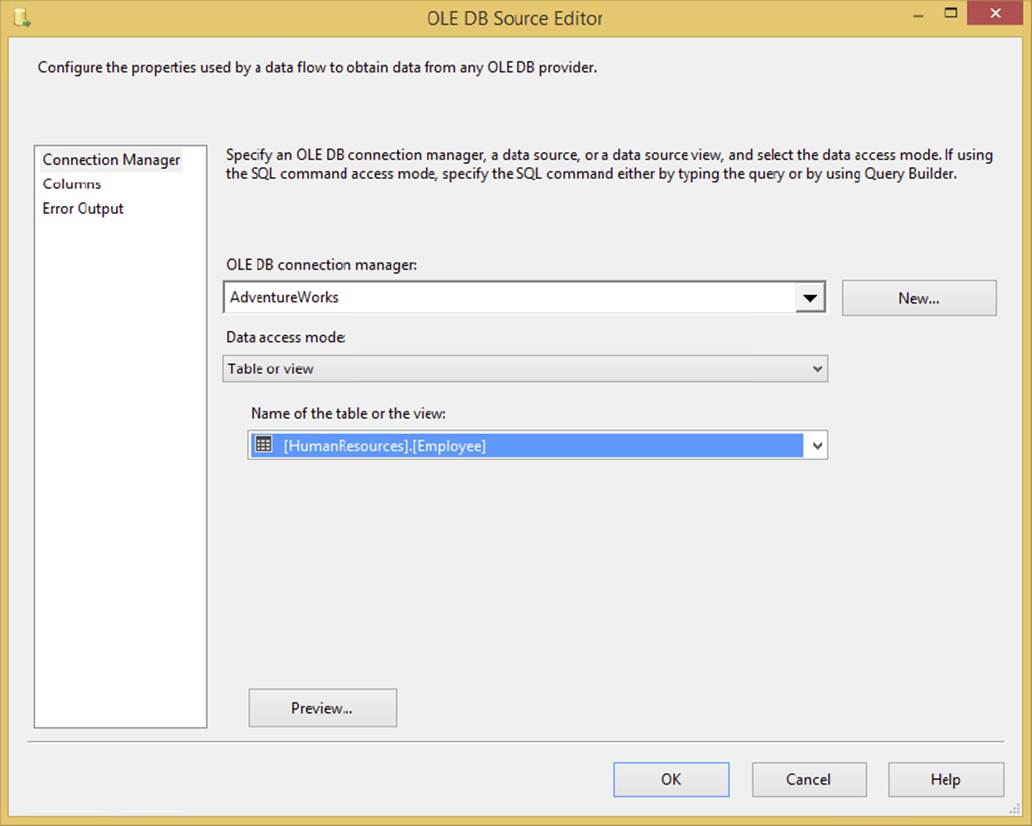

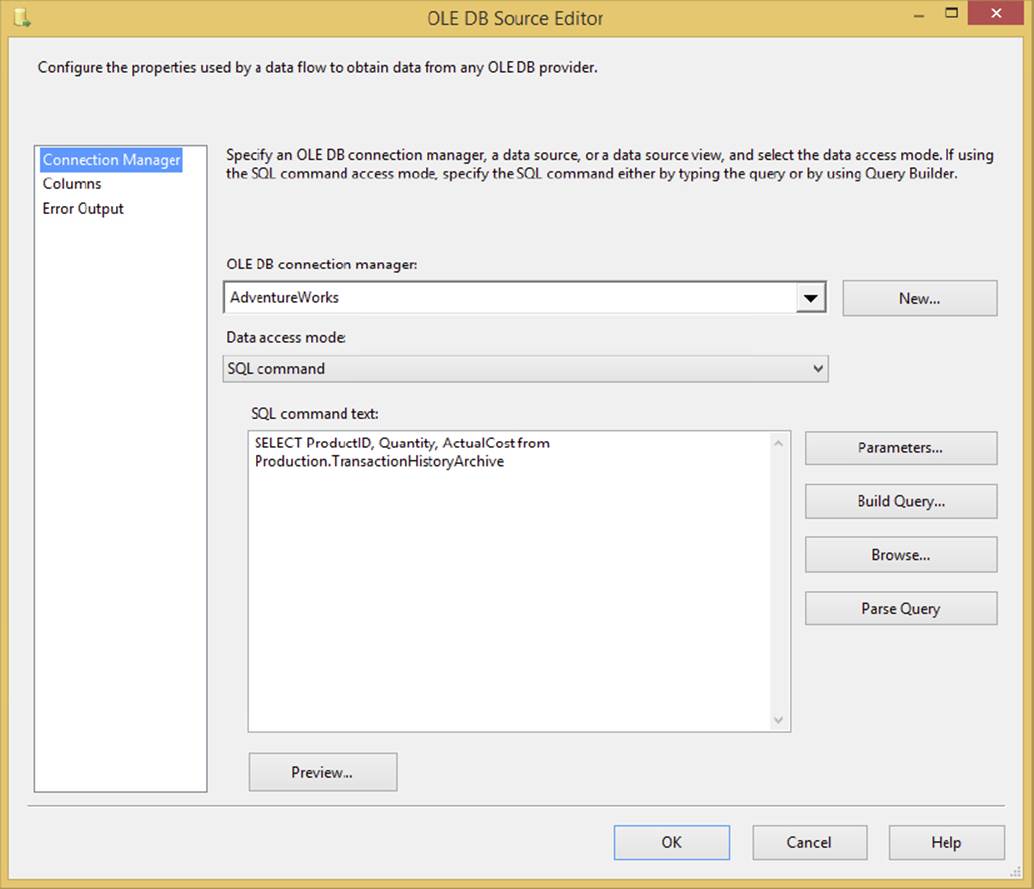

The OLE DB Source is the most common type of source, and it can point to any OLE DB–compliant Data Source such as SQL Server, Oracle, or DB2. To configure the OLE DB Source, double-click the source once you have added it to the design pane in the Data Flow tab. In the Connection Manager page of the OLE DB Source Editor (see Figure 4-2), select the Connection Manager of your OLE DB Source from the OLE DB Connection Manager dropdown box. You can also add a new Connection Manager in the editor by clicking the New button.

FIGURE 4-2

The “Data access mode” option specifies how you wish to retrieve the data. Your options are Table or view and SQL command, and each can also take its table name or query from a package variable. Once you select the data access mode, you either select the table or view or type a query. For multiple reasons that will be explained momentarily, it is a best practice to retrieve the data with a query. This query can also call a stored procedure. Additionally, you can pass parameters into the query by substituting a question mark (?) where each parameter should be and then clicking the Parameters button. You’ll learn more about parameterization of your queries in Chapter 5.
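Python’s built-in sqlite3 module uses the same positional ? marker style (its “qmark” parameter style), so it can illustrate the idea without SQL Server. This is an analogy only: the DimProduct table and its rows are hypothetical, and in SSIS the binding is configured through the Parameters button rather than in code.

```python
import sqlite3

# In-memory stand-in for a source database; the DimProduct table is hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DimProduct (ProductKey INTEGER, Color TEXT, ListPrice REAL)")
conn.executemany("INSERT INTO DimProduct VALUES (?, ?, ?)",
                 [(1, "Red", 10.0), (2, "Blue", 25.0), (3, "Red", 40.0)])

# Name only the columns you need, and write one "?" per parameter.
# Values are bound to the markers in order, much as the Parameters button
# maps package variables to the markers in an OLE DB Source query.
query = "SELECT ProductKey, ListPrice FROM DimProduct WHERE Color = ? AND ListPrice > ?"
rows = conn.execute(query, ("Red", 20.0)).fetchall()
print(rows)  # [(3, 40.0)]
```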

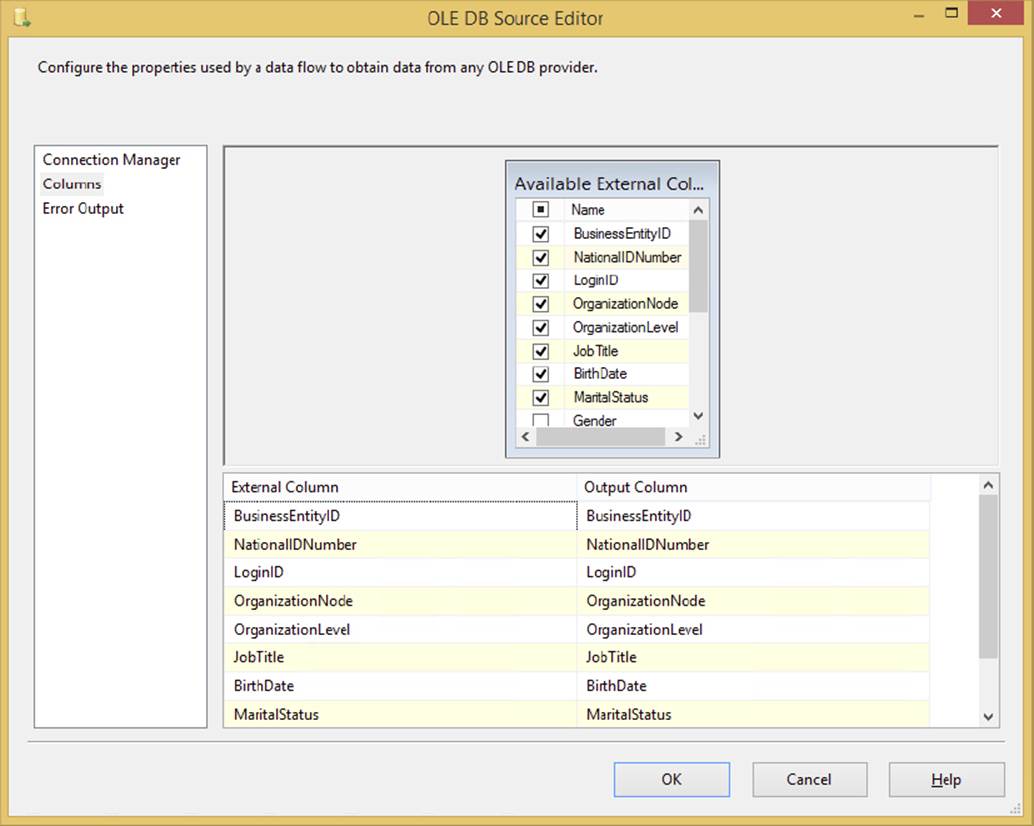

As with most sources, you can go to the Columns page to set columns that you wish to output to the Data Flow, as shown in Figure 4-3. Simply check the columns you wish to output, and you can then assign the name you want to send down the Data Flow in the Output column. Select only the columns that you want to use, because the smaller the data set, the better the performance you will get.

FIGURE 4-3

From a performance perspective, this is a case where it’s better to type a query on the Connection Manager page than to select a table. Selecting a table essentially selects all columns and all rows from the target table, transporting all that data across the network. Unchecking the unnecessary columns on the Columns page then applies a client-side filter, which is not nearly as efficient as selecting only the necessary columns in the SQL query. A narrower query also fills fewer buffers.

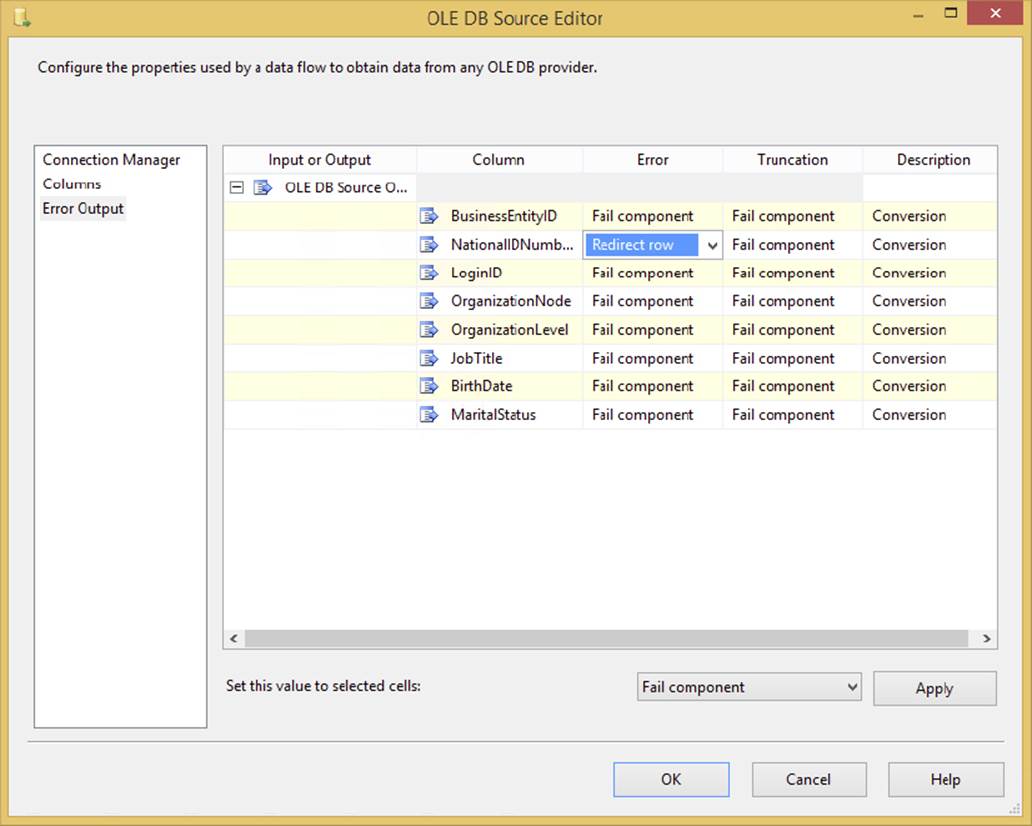

Optionally, you can go to the Error Output page (shown in Figure 4-4) and specify how you wish to handle rows that have errors. For example, you may wish to send any rows that have a data type conversion issue down a different path in the Data Flow. For each column, you can specify that if an error occurs, the failure should be ignored, the row should be redirected, or the component should fail. If you choose to ignore failures, the column for that row will be set to NULL. If you redirect the row, it will be sent down the red path coming out of the OLE DB Source.

FIGURE 4-4

The Truncation column specifies what to do if data truncation occurs. A truncation error would happen, for example, if you try to place 200 characters of data into a Data Flow column that supports only 100. You have the same options for Truncation as you do for Error. By default, a data type or truncation error causes the component to fail, which in turn causes the entire Data Flow to fail.
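As a rough model of these settings, the Python sketch below routes rows through a single conversion using the three dispositions. The row layout and the split_rows function are hypothetical; ignored failures become None (NULL), following the behavior described above for errors, and a truncation check would be routed with the same three options.

```python
from enum import Enum

class Disposition(Enum):
    FAIL = "Fail component"    # default: the error stops the Data Flow
    IGNORE = "Ignore failure"  # keep the row; the bad column becomes NULL (None)
    REDIRECT = "Redirect row"  # send the whole row down the error (red) path

def split_rows(rows, column, convert, disposition=Disposition.FAIL):
    """Convert row[column] with convert() and route each row by disposition.

    rows is a list of dicts; returns (output_rows, error_rows).
    """
    output, error_output = [], []
    for row in rows:
        try:
            value = convert(row[column])
        except ValueError as exc:
            if disposition is Disposition.FAIL:
                raise RuntimeError(f"conversion failed for {row!r}") from exc
            if disposition is Disposition.REDIRECT:
                error_output.append(row)
                continue
            value = None  # IGNORE: keep the row with a NULL column
        output.append({**row, column: value})
    return output, error_output

if __name__ == "__main__":
    rows = [{"Qty": "1"}, {"Qty": "x"}, {"Qty": "3"}]
    print(split_rows(rows, "Qty", int, Disposition.REDIRECT))
    # ([{'Qty': 1}, {'Qty': 3}], [{'Qty': 'x'}])
    print(split_rows(rows, "Qty", int, Disposition.IGNORE))
    # ([{'Qty': 1}, {'Qty': None}, {'Qty': 3}], [])
```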

Excel Source

The Excel Source is a source component that points to an Excel spreadsheet, just like it sounds. Once you point to an Excel Connection Manager, you can select the sheet from the “Name of the Excel sheet” dropdown box, or you can run a query by changing the Data Access Mode. This source treats Excel just like a database, where an Excel sheet is the table and the workbook is the database. If you do not see a list of sheets in the dropdown box, you may be on a 64-bit machine that needs the ACE driver installed, or you may need to run the package in 32-bit mode. The next section explains how to do this.

SSIS supports Excel data types, but it may not support them the way you wish by default. For example, the default format in Excel is General. If you right-click a column and select Format Cells, you’ll find that most of the columns in your Excel spreadsheet have probably been set to General. SSIS translates this general format as a Unicode string data type. In SQL Server, Unicode translates into nvarchar, which is probably not what you want. If you have a Unicode data type in SSIS and you try to insert it into a varchar column, it will potentially fail. The solution is to place a Data Conversion Transformation between the source and the destination in order to change the Excel data types. You can read more about Data Conversion Transformations later in this chapter.
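A Unicode-to-varchar load can fail because a non-Unicode column stores each character using a single code page. This Python sketch imitates that conversion by encoding a Unicode string into code page 1252. The code page, the 50-byte target length, and the to_non_unicode function are assumptions for illustration; in a package, the conversion itself belongs in a Data Conversion Transformation.

```python
def to_non_unicode(value: str, code_page: str = "cp1252", length: int = 50) -> bytes:
    """Imitate a Unicode (DT_WSTR) to non-Unicode (DT_STR) conversion.

    Raises ValueError if a character has no equivalent in the code page
    (UnicodeEncodeError is a subclass of ValueError) or if the encoded
    value is longer than the target column.
    """
    encoded = value.encode(code_page)
    if len(encoded) > length:
        raise ValueError(f"{value!r} would be truncated to {length} bytes")
    return encoded

if __name__ == "__main__":
    print(to_non_unicode("café"))      # b'caf\xe9'
    try:
        to_non_unicode("Łódź")         # Ł has no code page 1252 equivalent
    except ValueError as exc:
        print("conversion failed:", exc)
```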

Excel 64-Bit Scenarios

If you are connecting to an Excel 2007 spreadsheet or later, ensure that you select the proper Excel version when creating the Excel Connection Manager. You will not be able to connect to an Excel 2007, Excel 2010, or Excel 2013 spreadsheet otherwise. Additionally, the default Excel driver is a 32-bit driver only, and your packages have to run in 32-bit mode when using Excel connectivity. In the designer, you would receive the following error message if you do not have the correct driver installed:

The 'Microsoft.ACE.OLEDB.12.0' provider is not registered on the local machine.

To fix this, download the 64-bit version of this driver (the Microsoft Access Database Engine redistributable) from the Microsoft site. Once it is installed, you’ll be able to run packages with an Excel source in 64-bit mode.

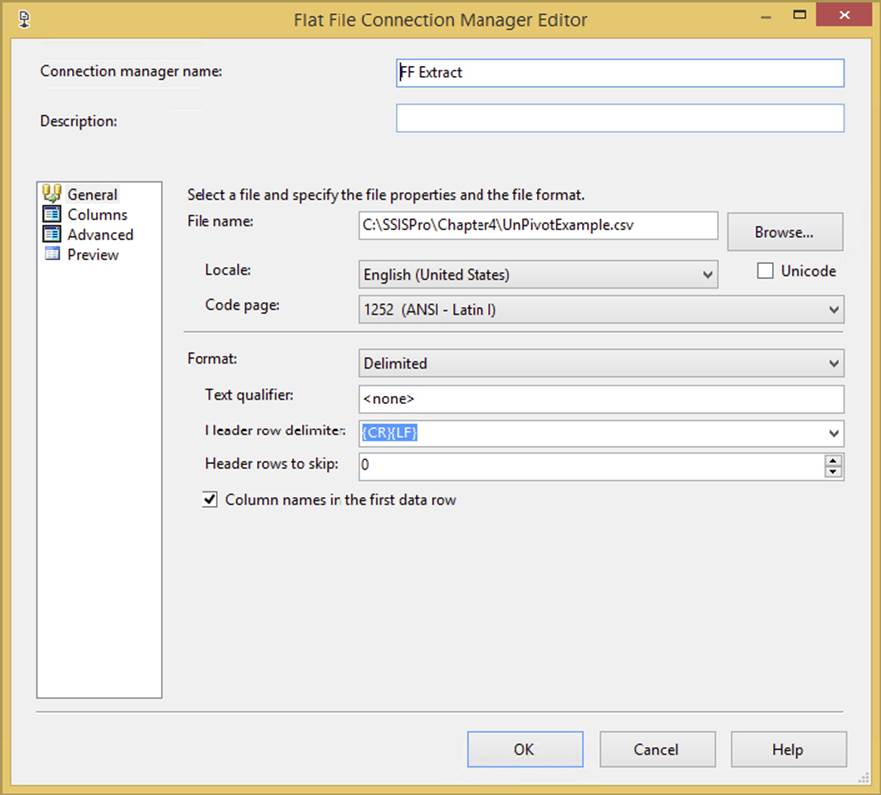

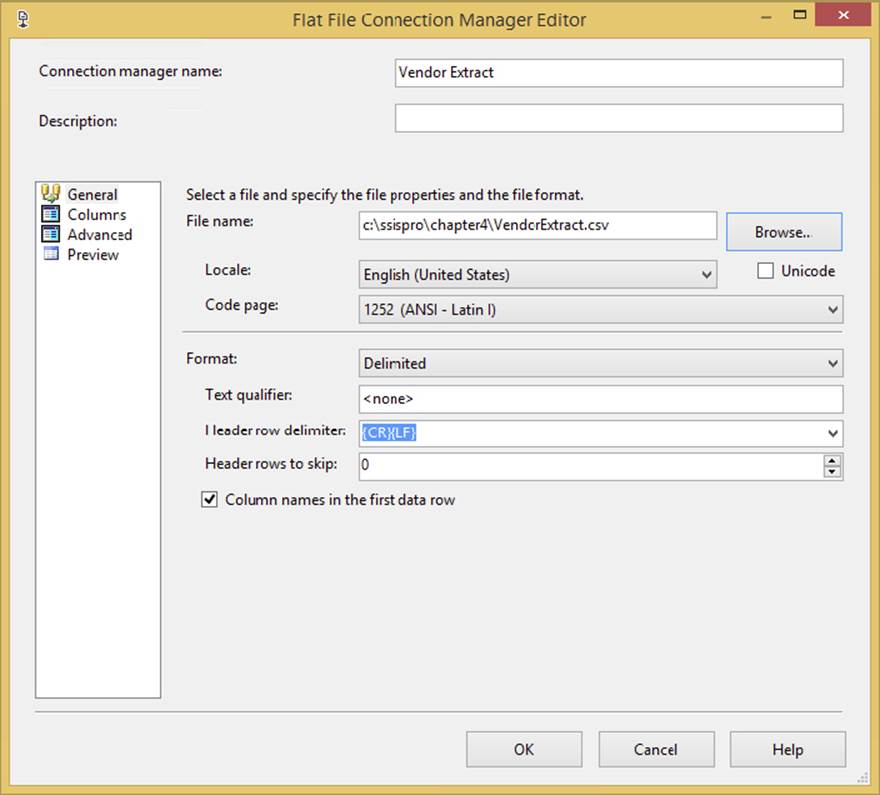

Flat File Source

The Flat File Source provides a data source for text files and other delimited or fixed-width data. Flat File Sources are typically comma- or tab-delimited files, but they can also be fixed-width or ragged-right. A fixed-width file is typically received from a mainframe or a government entity and has fixed start and stop points for each column. This format enables a fast load, but it takes the developer longer at design time to map each column. You specify a Flat File Source the same way you specify an OLE DB Source. Once you add it to your Data Flow pane, point it to a Connection Manager that is a flat file or a multiple flat file connection. Next, on the Columns tab, specify which columns you want to present to the Data Flow. All the specifications for the flat file, such as delimiter type, were previously set in the Flat File Connection Manager.
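Mapping a fixed-width file means recording a start position and a width for every column, which is why it takes longer at design time. A minimal Python sketch of that mapping follows; the three-column layout and the parse_fixed_width function are hypothetical.

```python
# Hypothetical layout: (column name, start offset, width) for each field.
LAYOUT = [("CustomerID", 0, 5), ("State", 5, 2), ("Amount", 7, 8)]

def parse_fixed_width(line: str, layout=LAYOUT) -> dict:
    """Cut one fixed-width record into named fields, trimming the padding."""
    return {name: line[start:start + width].strip() for name, start, width in layout}

if __name__ == "__main__":
    print(parse_fixed_width("00042FL 1234.50"))
    # {'CustomerID': '00042', 'State': 'FL', 'Amount': '1234.50'}
```

Because a Python slice that runs past the end of a string simply returns the shorter remainder, this sketch also tolerates a short final column, which is roughly the situation in a ragged-right file.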

In this example, you’ll create a Connection Manager that points to a file called FactSales.csv, which you can download from this book’s website at www.wrox.com/go/prossis2014. The file has a date column, a few string columns, integer columns, and a currency column. Because of the variety of data types it includes, this example presents an interesting case study for learning how to configure a Flat File Connection Manager.

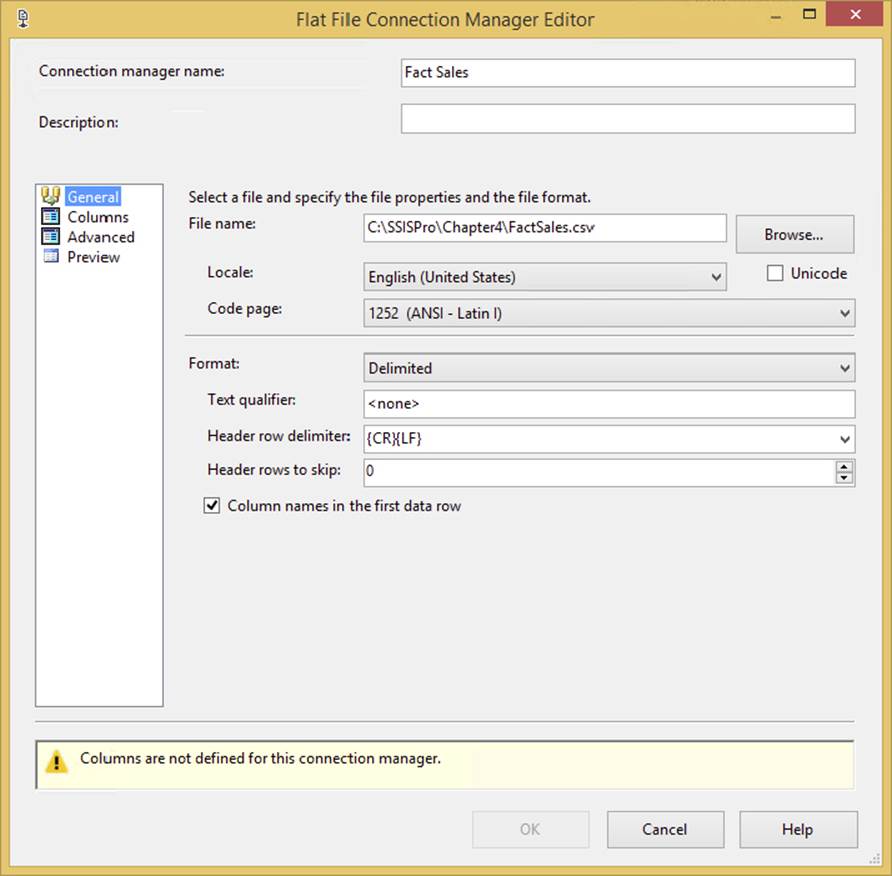

First, right-click in the Connection Manager area of the Package Designer and select New Flat File Connection Manager. This will open the Flat File Connection Manager Editor, as shown in Figure 4-5. Name the Connection Manager Fact Sales and point it to wherever you placed the FactSales.csv file. Check the “Column names in the first data row” option, which specifies that the first row of the file contains a header row with the column names.

FIGURE 4-5

Another important option is the “Text qualifier” option. Although this file doesn’t use one, your comma-delimited files may sometimes require a text qualifier. A text qualifier surrounds each column value with a character to show that any comma delimiter inside those characters should be treated as data rather than as a delimiter. For example, with double quotes, the most common text qualifier, a row may look like the following, where there are only three columns even though the commas may indicate five:

"Knight,Brian", 123, "Jacksonville, FL"

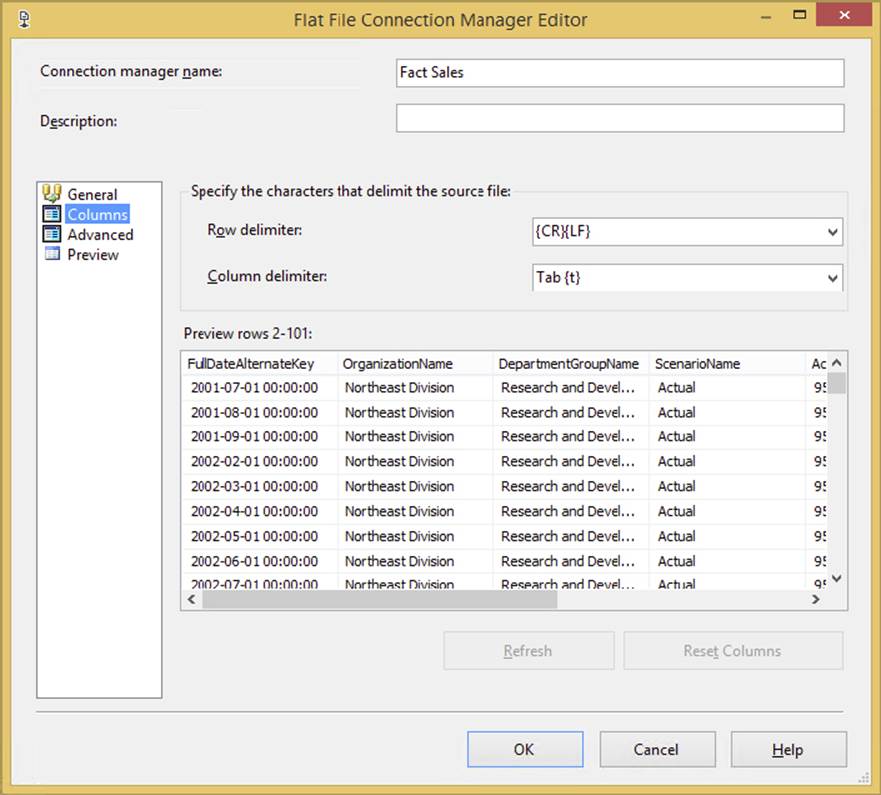

On the Columns page of the Connection Manager, you can specify what delimits each column in the flat file if you chose a delimited file. The row delimiter specifies what indicates a new row; the default is a carriage return followed by a line feed. SSIS automatically scans the Connection Manager’s file to determine the column delimiter; as shown in Figure 4-6, use a tab delimiter for the example file.

FIGURE 4-6
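Python’s standard csv module can illustrate both settings discussed above: honoring a double-quote text qualifier, and guessing a column delimiter from a sample. This is an analogy, not SSIS behavior: csv.Sniffer is Python’s own frequency-based heuristic, and the tab-delimited sample is hypothetical rather than the contents of FactSales.csv.

```python
import csv

# 1) Text qualifier: the example row from the text.
row = '"Knight,Brian", 123, "Jacksonville, FL"'
naive = row.split(",")  # ignores the qualifier: five pieces
# skipinitialspace=True is needed because this sample has a space after each comma.
qualified = next(csv.reader([row], quotechar='"', skipinitialspace=True))
print(len(naive), len(qualified))  # 5 3
print(qualified)                   # ['Knight,Brian', '123', 'Jacksonville, FL']

# 2) Delimiter detection on a hypothetical tab-delimited sample with CR LF rows.
sample = ("OrderDate\tProductKey\tSalesAmount\r\n"
          "2014-01-01\t310\t3578.27\r\n"
          "2014-01-02\t346\t3399.99\r\n")
dialect = csv.Sniffer().sniff(sample, delimiters=",\t;|")
print(repr(dialect.delimiter))     # '\t'
rows = list(csv.reader(sample.splitlines(), dialect))
print(rows[0])                     # ['OrderDate', 'ProductKey', 'SalesAmount']
```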

NOTE Often, once you make a major change to your header delimiter or your text qualifier, you’ll have to click the Reset Columns button. Doing so requeries the file in order to obtain the new column names. If you click this option, though, all your metadata in the Advanced page will be recreated as well, and you may lose a sizable amount of work.

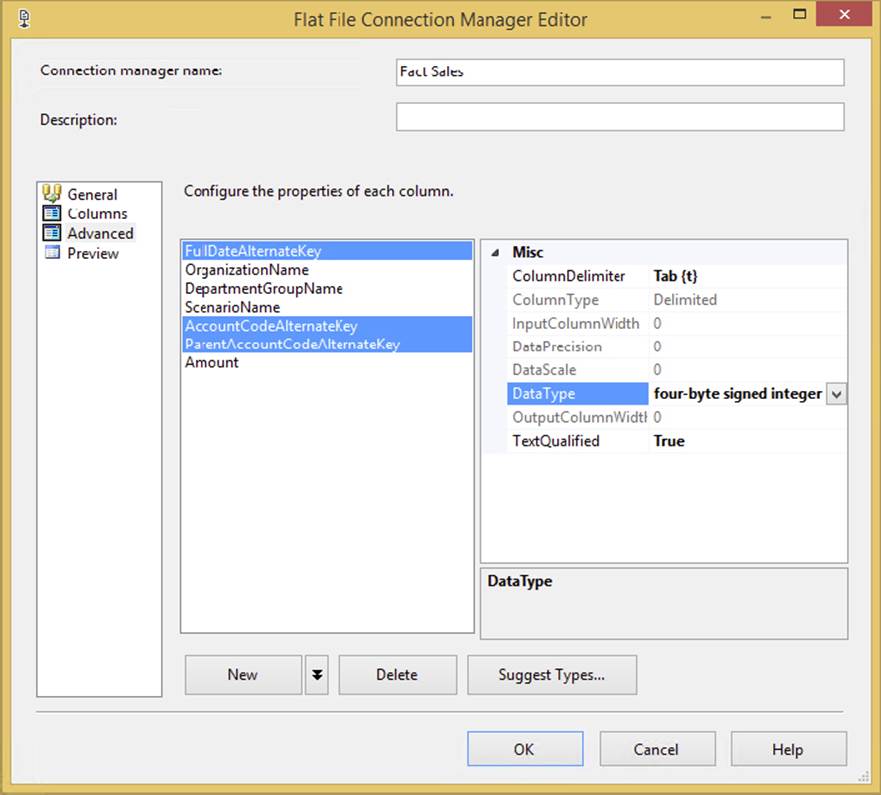

The Advanced page is the most important part of the Connection Manager. On this page, you specify the name and data type of each column in the flat file, as shown in Figure 4-7. These column names and data types are later sent to the Data Flow. If you need to change the data types or names, you can always come back to the Connection Manager, but be aware that you then need to open the Flat File Source again to refresh the metadata.

FIGURE 4-7

NOTE Making a change to the Connection Manager’s data types or columns requires that you refresh any Data Flow Task using that Connection Manager. To do so, open the Flat File Source Editor, which will prompt you to refresh the metadata of the Data Flow. Answer yes, and the metadata will be corrected throughout the Data Flow.

If you don’t want to specify the data type for each individual column, you can click the Suggest Types button on this page to have SSIS scan the first 100 records (by default) in the file and guess the appropriate data types. Generally speaking, its guesses are poor, but it’s a great place to start if you have a lot of columns.
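The following sketch shows why a sample-based guess can go wrong. It is a simplified heuristic of its own (try a four-byte integer, then a decimal, then a date, then a string), not the algorithm behind Suggest Types; only the 100-row default and the SSIS type names come from this chapter, and the DT_STR(n) notation is just for display.

```python
from datetime import datetime

SAMPLE_ROWS = 100  # the default scan size mentioned above

def _all_parse(parse, values) -> bool:
    """True if parse() accepts every value."""
    try:
        for value in values:
            parse(value)
    except ValueError:
        return False
    return True

def _int32(value: str) -> int:
    """Parse a four-byte signed integer, rejecting out-of-range values."""
    number = int(value)
    if not -2**31 <= number < 2**31:
        raise ValueError("outside four-byte range")
    return number

def _iso_date(value: str) -> datetime:
    return datetime.strptime(value, "%Y-%m-%d")

def suggest_types(rows, sample_rows: int = SAMPLE_ROWS):
    """Guess one type per column, looking only at the first sample_rows rows."""
    suggestions = []
    for column in zip(*rows[:sample_rows]):
        if _all_parse(_int32, column):
            suggestions.append("DT_I4")
        elif _all_parse(float, column):
            suggestions.append("DT_NUMERIC")
        elif _all_parse(_iso_date, column):
            suggestions.append("DT_DBTIMESTAMP")
        else:
            suggestions.append(f"DT_STR({max(len(v) for v in column)})")
    return suggestions

if __name__ == "__main__":
    rows = [["2014-01-01", "310", "3578.27", "Red"],
            ["2014-01-02", "346", "3399.99", "Blue"]]
    print(suggest_types(rows))  # ['DT_DBTIMESTAMP', 'DT_I4', 'DT_NUMERIC', 'DT_STR(4)']

    # 100 integers followed by a decimal: the default sample never sees row 101.
    tricky = [[str(i)] for i in range(100)] + [["12.5"]]
    print(suggest_types(tricky), suggest_types(tricky, sample_rows=101))
    # ['DT_I4'] ['DT_NUMERIC']
```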

If you prefer to do this manually, select each column and then specify its data type. You can also hold down the Ctrl key or Shift key and select multiple columns at once and change the data types or column length for multiple columns at the same time.

A Flat File Connection Manager initially treats each column as a 50-character string by default. Leaving this default in place harms performance when a column really holds integers that you’re trying to insert into SQL Server, and it causes truncation errors if a column contains more than 50 characters of data. The settings you make on the Advanced page of the Connection Manager are the most important work you can do to ensure that the data types for all the columns are properly defined. You should also keep the data types as small as possible. For example, if you have a zip code column that’s only 9 digits in length, define it as a 9-character string. For a non-Unicode string, this saves 41 bytes of memory per row, multiplied by however many rows you have.
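The savings in the zip code example are simple arithmetic. The sketch below computes them for a hypothetical one-million-row file, assuming one byte per character for a non-Unicode string (DT_STR) and two for a Unicode string (DT_WSTR).

```python
def bytes_saved(default_width: int, right_sized_width: int, rows: int,
                bytes_per_char: int = 1) -> int:
    """Memory saved by narrowing a string column, summed across all rows.

    bytes_per_char: 1 for a non-Unicode string (DT_STR), 2 for Unicode (DT_WSTR).
    """
    return (default_width - right_sized_width) * bytes_per_char * rows

if __name__ == "__main__":
    saved = bytes_saved(50, 9, 1_000_000)    # zip code: 50 -> 9 characters
    print(saved, round(saved / 1024**2, 1))  # 41000000 39.1
```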

A frustrating point with SSIS sometimes is how it deals with SQL Server data types. For example, a varchar maps in SSIS to a string column. It was designed this way to translate well into the .NET development world and to provide an agnostic product. The following table contains some of the common SQL Server data types and what they are mapped into in a Flat File Connection Manager.

| SQL SERVER DATA TYPE | CONNECTION MANAGER DATA TYPE |
| --- | --- |
| Bigint | Eight-byte signed integer [DT_I8] |
| Binary | Byte stream [DT_BYTES] |
| Bit | Boolean [DT_BOOL] |
| Tinyint | Single-byte unsigned integer [DT_UI1] |
| Datetime | Database timestamp [DT_DBTIMESTAMP] |
| Decimal | Numeric [DT_NUMERIC] |
| Real | Float [DT_R4] |
| Int | Four-byte signed integer [DT_I4] |
| Image | Image [DT_IMAGE] |
| Nvarchar or nchar | Unicode string [DT_WSTR] |
| Ntext | Unicode text stream [DT_NTEXT] |
| Numeric | Numeric [DT_NUMERIC] |
| Smallint | Two-byte signed integer [DT_I2] |
| Text | Text stream [DT_TEXT] |
| Timestamp | Byte stream [DT_BYTES] |
| Uniqueidentifier | Unique identifier [DT_GUID] |
| Varbinary | Byte stream [DT_BYTES] |
| Varchar or char | String [DT_STR] |
| Xml | Unicode string [DT_WSTR] |

FastParse Option

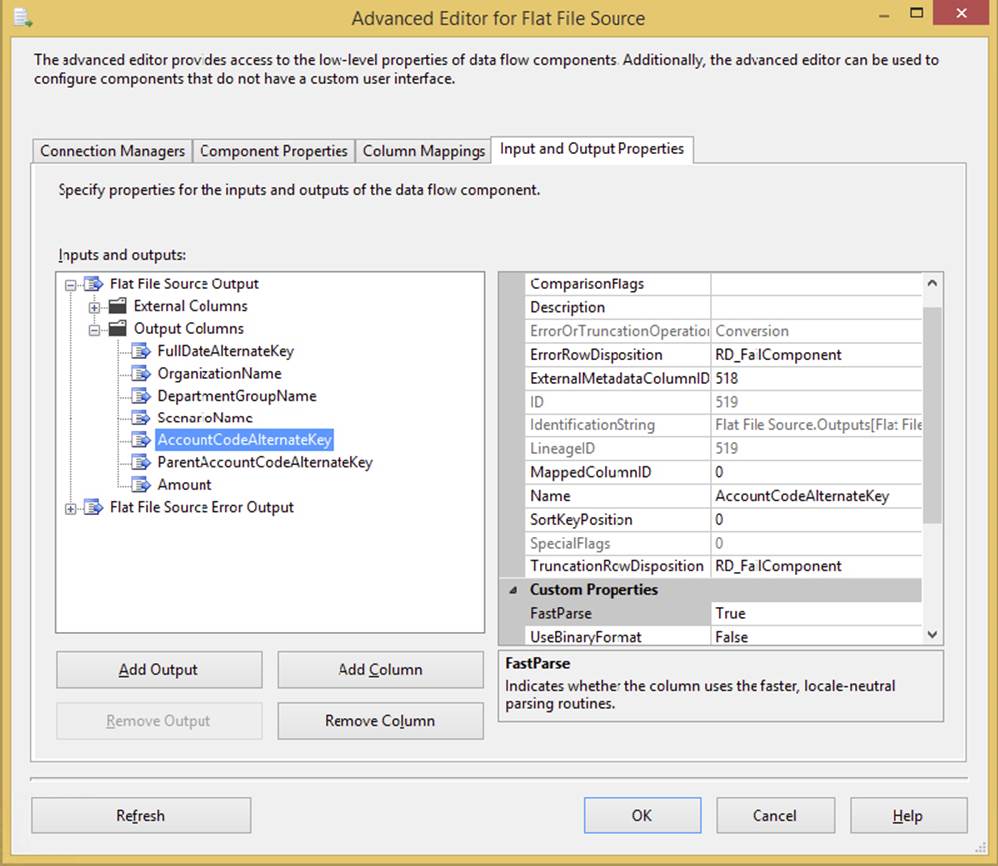

By default, SSIS issues a contract between the Flat File Source and a Data Flow. It states that the source component must validate any numeric or date column. For example, if you have a flat file in which a given column is set to a four-byte integer, every row must first go through a short validation routine to ensure that it is truly an integer and that no character data has passed through. On date columns, a quick check is done to ensure that every date is indeed a valid in-range date.

This validation is fast, but it still takes approximately 20 to 30 percent more time than skipping it. If you trust that the data in a column is valid, you can skip this validation by setting the FastParse property. To set it, go into the Data Flow Task that uses the Flat File Source, right-click the Flat File Source, and select Show Advanced Editor. From there, select the Input and Output Properties tab, and choose any number or date column under the Flat File Output ⇒ Output Columns tree. In the right pane, change the FastParse property to True, as shown in Figure 4-8.

FIGURE 4-8
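To make the trade-off concrete, the following Python sketch contrasts a validating date parser with a shortcut that trusts the input. It is an analogy for what skipping the validation gives up, not a description of SSIS internals; the ISO date format and both function names are assumptions.

```python
from datetime import date

def parse_date_validated(text: str) -> date:
    """Checked parse: the value must be a real, in-range calendar date."""
    return date.fromisoformat(text)  # raises ValueError for "2014-02-30"

def parse_date_fast(text: str) -> tuple:
    """Trusting parse (illustrative): slice fixed positions, skip the calendar check."""
    return int(text[0:4]), int(text[5:7]), int(text[8:10])

if __name__ == "__main__":
    print(parse_date_validated("2014-01-31"))  # 2014-01-31
    print(parse_date_fast("2014-02-30"))       # (2014, 2, 30): accepted although invalid
    try:
        parse_date_validated("2014-02-30")
    except ValueError as exc:
        print("rejected:", exc)
```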

MultiFlatFile Connection Manager

If you know that you want to process a series of flat files in a Data Flow, or you want to refer to many files in the Control Flow, you can optionally use the Multiple File or the MultiFlatFile (“Multiple Flat File”) Connection Manager. The Multiple File Connection Manager refers to a list of files for copying or moving, or it may hold a series of SQL scripts to execute, similar to the File Connection Manager. The Multiple Flat File Connection Manager gives you the same view as a Flat File Connection Manager, but it enables you to point to multiple files. In either case, you can point to a list of files by placing a vertical bar (|) between each filename:

C:\Projects\011305c.dat|C:\Projects\053105c.dat

In the Data Flow, the Multiple Flat File Connection Manager combines the records from all the files you have pointed to, so that they appear as a single merged file. Using this option starts the Data Flow only once for all the files, whereas a Foreach Loop Container starts it once per file. In either case, the metadata of the files must match in order to use them in the Data Flow. Most developers lean toward Foreach Loop Containers because they are easier to make dynamic. With the Multiple File and Multiple Flat File Connection Managers, you have to build the file list yourself and place the vertical bar between the filenames; a Foreach Loop Container takes care of that for you.
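The following Python sketch builds a vertical-bar file list from a folder and reads the files as one merged stream, checking that every file shares the same header. The folder, the *.dat pattern, the function names, and the assumption that each file starts with a header row are all hypothetical.

```python
import csv
import glob
import os
import tempfile

def build_file_list(folder: str, pattern: str = "*.dat") -> str:
    """Join the matching paths with '|', the separator the connection expects."""
    return "|".join(sorted(glob.glob(os.path.join(folder, pattern))))

def read_merged(file_list: str):
    """Yield data rows from every file in a '|'-separated list as one stream.

    Assumes each file starts with a header row; all headers (metadata) must match.
    """
    header = None
    for path in file_list.split("|"):
        with open(path, newline="") as f:
            reader = csv.reader(f)
            file_header = next(reader)
            if header is None:
                header = file_header
            elif file_header != header:
                raise ValueError(f"{path} has different columns: {file_header}")
            yield from reader

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        # Hypothetical files named after the example above.
        for name, rows in [("011305c.dat", [["1", "a"]]),
                           ("053105c.dat", [["2", "b"], ["3", "c"]])]:
            with open(os.path.join(folder, name), "w", newline="") as f:
                csv.writer(f).writerows([["Id", "Code"], *rows])
        print(list(read_merged(build_file_list(folder))))
        # [['1', 'a'], ['2', 'b'], ['3', 'c']]
```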

Raw File Source

The Raw File Source reads a specialized type of file that is optimized for being read quickly by SSIS. Raw files are created by a Raw File Destination (discussed later in this chapter). You can’t add columns to the Raw File Source, but you can remove unused columns from the source in much the same way you do in the other sources. Because the Raw File Source requires little translation, it can load data much faster than the Flat File Source, but the price of this speed is little flexibility. Typically, raw files are used to capture data at checkpoints so that it can be reused later in case of a package failure.

These sources are typically used for cross-package or cross-Data Flow communication. For example, if you have a Data Flow that takes four hours to run, you might wish to stage the data to a raw file halfway through the processing in case a problem occurs. Then, the second Data Flow Task would continue the remaining two hours of processing.

XML Source

The XML Source is a powerful SSIS source that can use a local or remote XML file as its source. Its configuration is a bit different from that of the OLE DB Source. First, you point to the XML file, either locally on your machine, at a UNC path, or at a remote HTTP address. The HTTP option is useful for interacting with a vendor. This source is also very useful in conjunction with the Web Service Task or the XML Task. Once you point the source to an XML file, you must either generate an XSD (XML Schema Definition) file by clicking the Generate XSD button or point to an existing XSD file. The schema can also be inline in the XML file, in which case you don’t need a separate XSD file. Which of these options you use depends on the XML you’re trying to connect to. The rest of the source resembles other sources; for example, you can filter out the columns you don’t want to send down the chain.

ADO.NET Source

The ADO.NET Source enables you to use a .NET provider as a source and make its data available for consumption inside the package. The source uses an ADO.NET Connection Manager to connect to the provider. Because the Data Flow is based on OLE DB, the OLE DB Source is preferred for best performance. However, some providers might require that you use the ADO.NET Source. Its interface looks identical to that of the OLE DB Source.

DESTINATIONS

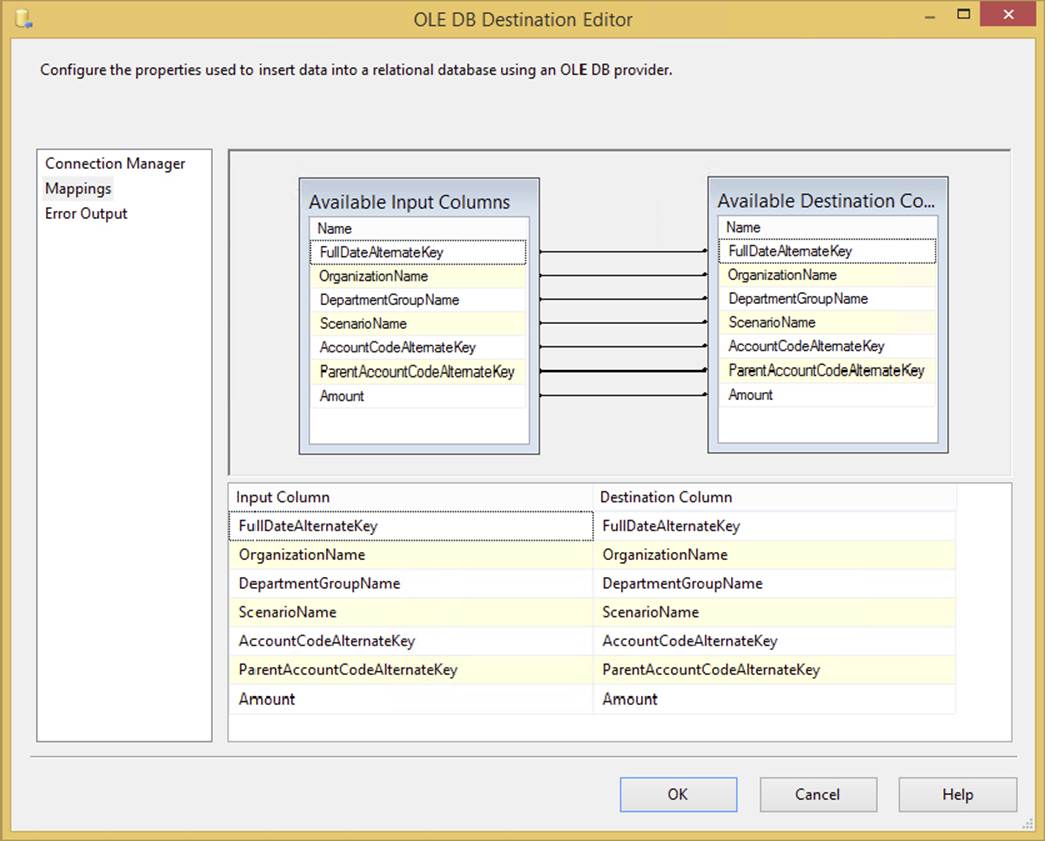

Inside the Data Flow, destinations accept the data from the Data Sources and from the transformations. The architecture can send the data to nearly any OLE DB–compliant Data Source, a flat file, or Analysis Services, to name just a few. Like sources, destinations are managed through Connection Managers. The configuration difference between sources and destinations is that in destinations, you have a Mappings page (shown in Figure 4-9), where you specify how the inputted data from the Data Flow maps to the destination. As shown in the Mappings page in this figure, the columns are automatically mapped based on column names, but they don’t necessarily have to be exactly lined up. You can also choose to ignore given columns, such as when you’re inserting into a table that has an identity column, and you don’t want to inherit the value from the source table.

FIGURE 4-9
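Name-based mapping with ignored columns can be sketched in a few lines of Python. The function and column names are hypothetical, and matching names case-insensitively is an assumption of this sketch, not documented SSIS behavior.

```python
def auto_map(input_columns, destination_columns, ignore=()):
    """Pair each destination column with the input column of the same name.

    Destination columns listed in `ignore` (for example, an identity column)
    are skipped so the database generates their values. Name matching is
    case-insensitive here, which is an assumption of this sketch.
    """
    inputs_by_name = {name.lower(): name for name in input_columns}
    mapping = {}
    for dest in destination_columns:
        if dest in ignore:
            continue
        source = inputs_by_name.get(dest.lower())
        if source is not None:  # unmatched columns stay unmapped
            mapping[dest] = source
    return mapping

if __name__ == "__main__":
    print(auto_map(
        ["ProductKey", "SalesAmount", "OrderDateKey"],      # Data Flow columns
        ["SalesID", "productkey", "SalesAmount", "Notes"],  # hypothetical table
        ignore=("SalesID",),                                # identity column
    ))
    # {'productkey': 'ProductKey', 'SalesAmount': 'SalesAmount'}
```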

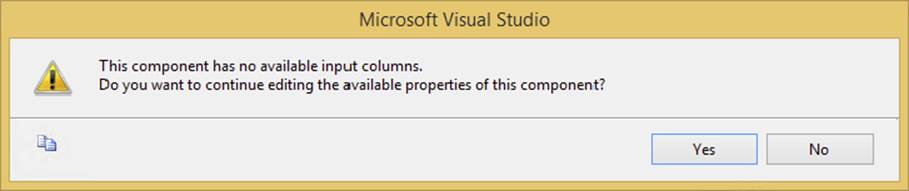

In SQL Server 2014, you can configure a destination before connecting it, but it would lack the metadata you need, so you will really want to connect it to a Data Flow path first. To do this, select the source or a transformation and drag the blue arrow to the destination. If you want to send bad data, or data that has had an error, to a destination, drag the red arrow to that destination instead. If you try to configure the destination before attaching it to a transformation or source, you will see the error in Figure 4-10. In SQL Server 2014, you can still proceed and edit the component, but it won’t be as meaningful without the live metadata.

FIGURE 4-10

Excel Destination

The Excel Destination is identical to the Excel Source except that it accepts data rather than sends data. To use it, first select the Excel Connection Manager from the Connection Manager page, and then specify the worksheet into which you wish to load data.

WARNING The big caveat with the Excel Destination is that unlike the Flat File Destination, an Excel spreadsheet must already exist with the sheet into which you wish to copy data. If the spreadsheet doesn’t exist, you will receive an error. To work around this issue, you can create a blank spreadsheet to use as your template, and then use the File System Task to copy the file over.

Flat File Destination

The commonly used Flat File Destination sends data to a flat file, and it can be fixed-width or delimited based on the Connection Manager. The destination uses a Flat File Connection Manager. You can also add a custom header to the file by typing it into the Header option in the Connection Manager page. Lastly, you can specify on this page that the destination file should be overwritten each time the Data Flow is run.

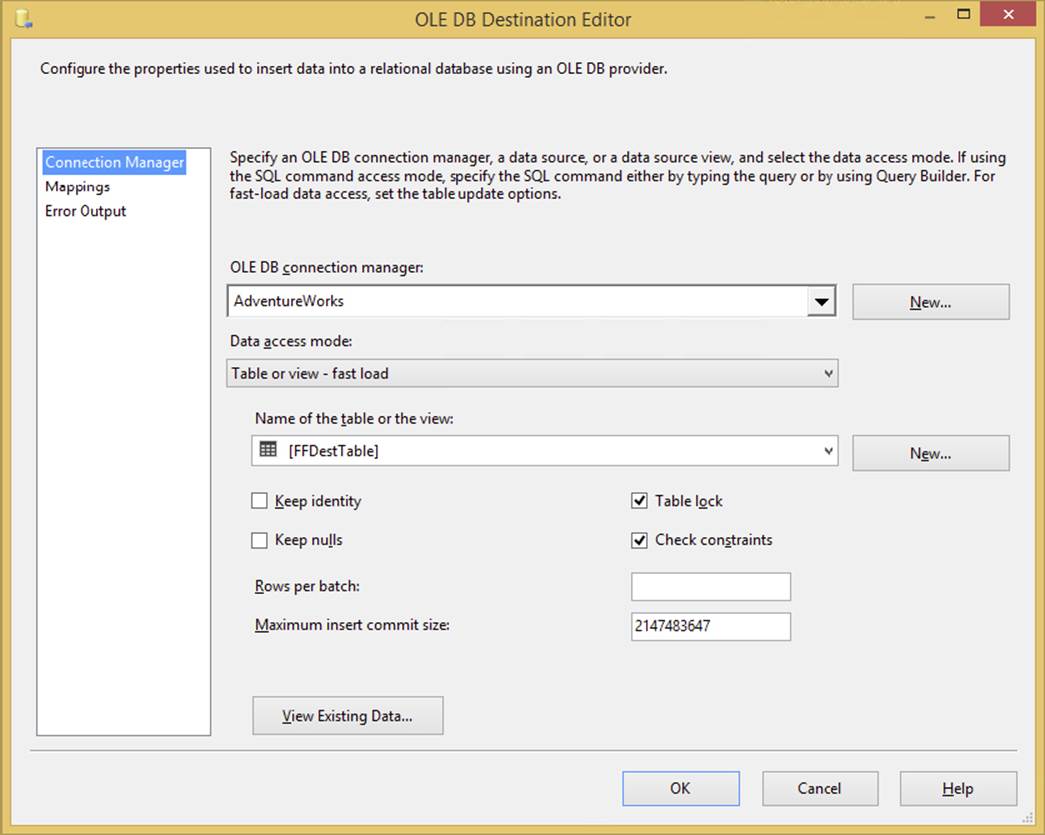

OLE DB Destination

Your most commonly used destination will probably be the OLE DB Destination (see Figure 4-11). It can write data from the source or transformation to OLE DB–compliant Data Sources such as Oracle, DB2, Access, and SQL Server. It is configured like any other source or destination, using an OLE DB Connection Manager. The Data Access Mode setting determines which other options are available: if you select Table or View - Fast Load, or its variable equivalent, several additional options appear, such as Table Lock. This Fast Load option is available only for SQL Server database instances and turns on a bulk load in SQL Server instead of a row-by-row operation.

FIGURE 4-11

A few options of note here are Rows Per Batch, which specifies how many rows are in each batch sent to the destination, and Maximum Insert Commit Size, which specifies how many rows are inserted before a commit statement is issued. The Table Lock option places a lock on the destination table to speed up the load. As you can imagine, this causes grief for users who are trying to read from the table at the same time. Another important option is Keep Identity, which enables you to insert values into a column that has the identity property set on it. Generally speaking, you can improve performance by setting Maximum Insert Commit Size to a number like 10,000, but the best value will vary according to column width.

New users commonly ask what the difference is between the fast load and the normal load (table or view option) for the OLE DB Destination. The Fast Load option specifies that SSIS will load data in bulk into the OLE DB Destination’s target table. Because this is a bulk operation, error handling via a redirection or ignoring data errors is not allowed. If you require this level of error handling, you need to turn off bulk loading of the data by selecting Table or View for the Data Access Mode option. Doing so will allow you to redirect your errors down the red line, but it causes a slowdown of the load by a factor of at least four.
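The difference between committing in batches and loading row by row can be sketched with Python’s sqlite3 module standing in for SQL Server. The Sales table and function names are hypothetical, commit_size plays the role of Maximum Insert Commit Size, and this illustrates the trade-off rather than how the OLE DB Destination is implemented: one bad row fails its whole batch, while the row-by-row loader can redirect just that row.

```python
import sqlite3

def make_connection():
    """In-memory stand-in for a destination; the Sales table is hypothetical."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Sales (SalesID INTEGER PRIMARY KEY, Amount REAL NOT NULL)")
    return conn

def fast_load(conn, rows, commit_size=10_000):
    """Batch-style load: insert and commit commit_size rows at a time.
    One bad row raises an error for its whole batch; there is no per-row redirect."""
    for start in range(0, len(rows), commit_size):
        conn.executemany("INSERT INTO Sales VALUES (?, ?)", rows[start:start + commit_size])
        conn.commit()

def row_by_row_load(conn, rows):
    """Normal-load style: one INSERT per row, so a failing row can be
    redirected to an error list while the rest of the load continues."""
    errors = []
    for row in rows:
        try:
            conn.execute("INSERT INTO Sales VALUES (?, ?)", row)
        except sqlite3.IntegrityError:
            errors.append(row)
    conn.commit()
    return errors

if __name__ == "__main__":
    rows = [(1, 10.0), (2, None), (3, 30.0)]  # row 2 violates NOT NULL

    conn = make_connection()
    print(row_by_row_load(conn, rows))  # [(2, None)]

    conn = make_connection()
    try:
        fast_load(conn, rows, commit_size=2)
    except sqlite3.IntegrityError as exc:
        conn.rollback()                 # the failed batch is discarded
        print("batch failed:", exc)
    print(conn.execute("SELECT COUNT(*) FROM Sales").fetchone()[0])  # 0
```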

Raw File Destination

The Raw File Destination is an especially speedy destination that does not use a Connection Manager. Instead, you point to the file on the server in the editor. This destination is typically written to as an intermediate point for partially transformed data. Once the file has been written, other packages can read the data by using the Raw File Source. The file is written in a native format, so it is very fast.

Recordset Destination

The Recordset Destination populates an ADO recordset, stored in a package variable of type Object, that can be used outside the Data Flow. For example, you can populate the ADO recordset, and then a Script Task could read that recordset from the variable later in the Control Flow. Unlike some of the other destinations, this destination does not support an error output.

Data Mining Model Training

The Data Mining Model Training Destination can train (the process of a data mining algorithm learning the data) an Analysis Services data mining model by passing it data from the Data Flow. You can train multiple mining models from a single destination and Data Flow. To use this destination, you select an Analysis Services Connection Manager and the mining model. Analysis Services mining models are beyond the scope of this book; for more information, please see Professional SQL Server Analysis Services 2012 with MDX and DAX by Sivakumar Harinath and his coauthors (Wrox, 2012).

NOTE The data you pass into the Data Mining Model Training Destination must be presorted. To do this, you use the Sort Transformation, discussed later in this chapter.

DataReader Destination

The DataReader Destination provides a way to extend SSIS Data Flows to external packages or programs that can use the DataReader interface, such as a .NET application. When you configure this destination, ensure that its name is something that’s easy to recognize later in your program, because you will be calling that name later. After you have configured the name and basic properties, check the columns you’d like outputted to the destination in the Input Columns tab.

Dimension and Partition Processing

The Dimension Processing Destination loads and processes an Analysis Services dimension. You have the option to perform full, incremental, or update processing. To configure the destination, select the Analysis Services Connection Manager that contains the dimension you would like to process on the Connection Manager page of the Dimension Processing Destination Editor. You will then see a list of dimensions and fact tables in the box. Select the dimension you want to load and process, and from the Mappings page, map the data from the Data Flow to the selected dimension. Lastly, you can configure how you would like to handle errors, such as unknown keys, in the Advanced page. Generally, the default options are fine for this page unless you have special error-handling needs. The Partition Processing Destination has identical options, but it processes an Analysis Services partition instead of a dimension.

COMMON TRANSFORMATIONS

Transformations or transforms are key components to the Data Flow that transform the data to a desired format as you move from step to step. For example, you may want a sampling of your data to be sorted and aggregated. Three transformations can accomplish this task for you: one to take a random sampling of the data, one to sort, and another to aggregate. The nicest thing about transformations in SSIS is that they occur in-memory and no longer require elaborate scripting as in SQL Server 2000 DTS. As you add a transformation, the data is altered and passed down the path in the Data Flow. Also, because this is done in-memory, you no longer have to create staging tables to perform most functions. When dealing with very large data sets, though, you may still choose to create staging tables.

You set up the transformation by dragging it onto the Data Flow tab design area. Then, click the source or transformation you’d like to connect it to, and drag the green arrow to the target transformation or destination. If you drag the red arrow, then rows that fail to transform will be directed to that target. After you have the transformation connected, you can double-click it to configure it.

Synchronous versus Asynchronous Transformations

Transformations are divided into two main categories: synchronous and asynchronous. Ideally, you want to use only synchronous components in SSIS. Synchronous transformations are components such as the Derived Column and Data Conversion Transformations, where rows flow into memory buffers in the transformation, and the same buffers come out. No rows are held, and typically these transformations perform very quickly, with minimal impact to your Data Flow.

Asynchronous transformations can cause a block in your Data Flow and slow down your runtime. There are two types of asynchronous transformations: partially blocking and fully blocking.

· Partially blocking transformations, such as the Union All, create new memory buffers for the output of the transformation.

· Fully blocking transformations, such as the Sort and Aggregate Transformations, do the same thing but cause a full block of the data. In order to sort the data, SSIS must first see every single row of the data. If you have a 100MB file, then you may require 200MB of RAM in order to process the Data Flow because of a fully blocking transformation. These fully blocking transformations represent the single largest slowdown in SSIS and should be considered carefully in terms of any architecture decisions you must make.

NOTE Chapter 16 covers these concepts in much more depth.

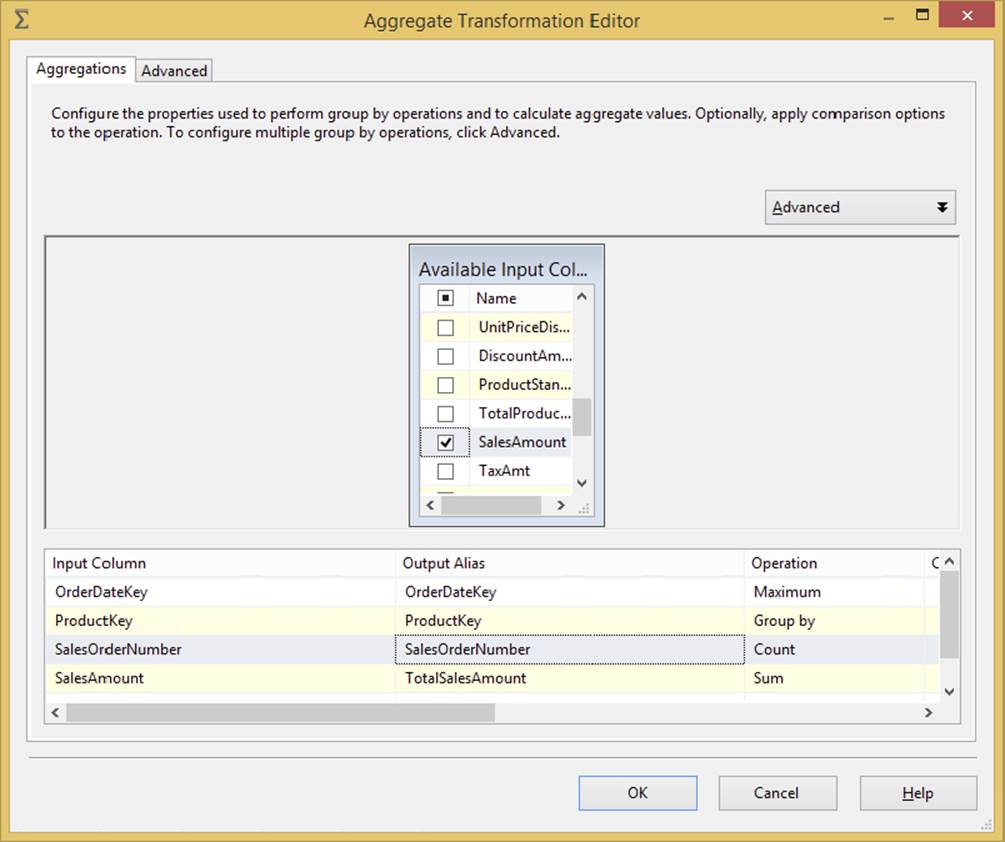

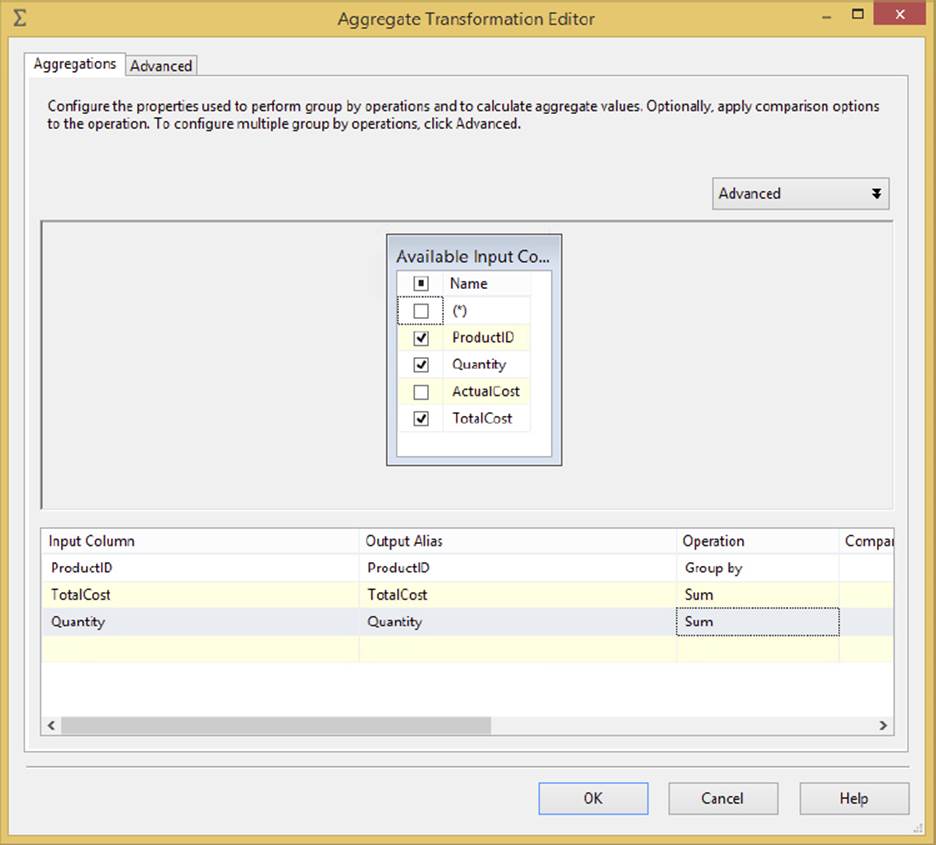

Aggregate

The fully blocking asynchronous Aggregate Transformation allows you to aggregate data from the Data Flow by applying functions like those used with a T-SQL GROUP BY clause, such as Average, Minimum, Maximum, and Count. For example, in Figure 4-12, you can see that the data is grouped together on the ProductKey column, and then the SalesAmount column is summed. Lastly, for every ProductKey, the maximum OrderDateKey is aggregated. This produces four new columns that can be consumed down the path, or future actions can be performed on them and the other columns dropped at that time.

FIGURE 4-12

The Aggregate Transformation is configured in the Aggregate Transformation Editor (see Figure 4-12). To do so, first check the column on which you wish to perform the action. The input column then appears in the grid below. Optionally, type an alias in the Output Alias column that you wish to give the column when it is output to the next transformation or destination. For example, if the column currently holds the total money per customer, you might change the name of the output column from SalesAmount to TotalCustomerSaleAmt. This will make it easier for you to recognize what the column represents along the data path. The most important option is Operation. For this option, you can select the following:

· Group By: Breaks the data set into groups by the column you specify

· Average: Averages the selected column’s numeric data

· Count: Counts the records in a group

· Count Distinct: Counts the distinct non-NULL values in a group

· Minimum: Returns the minimum numeric value in the group

· Maximum: Returns the maximum numeric value in the group

· Sum: Returns the sum of the selected column’s numeric data in the group
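To make the Group By and aggregate operations concrete, here is a minimal Python sketch of what an Aggregate output computes for a scenario like Figure 4-12. It is not SSIS code. The `aggregate` function, the `OPERATIONS` table, the output aliases, and the sample rows are all hypothetical. Because the sketch collects every row into groups before it emits anything, it also shows why this transformation is fully blocking.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping of the editor's Operation choices to Python functions.
OPERATIONS = {
    "Sum": sum,
    "Average": mean,
    "Minimum": min,
    "Maximum": max,
    "Count": len,  # counts the values (rows) in the group
    "Count distinct": lambda values: len({v for v in values if v is not None}),
}


def aggregate(rows, group_by, outputs):
    """Group rows on one column and apply (alias, operation, column) specs."""
    groups = defaultdict(list)
    for row in rows:  # every input row must be read before any output exists
        groups[row[group_by]].append(row)
    result = []
    for key, members in groups.items():
        out = {group_by: key}
        for alias, operation, column in outputs:
            out[alias] = OPERATIONS[operation]([m[column] for m in members])
        result.append(out)
    return result


sales = [
    {"ProductKey": 310, "SalesAmount": 100, "OrderDateKey": 20050701},
    {"ProductKey": 310, "SalesAmount": 250, "OrderDateKey": 20050705},
    {"ProductKey": 346, "SalesAmount": 75, "OrderDateKey": 20050702},
]
totals = aggregate(
    sales,
    "ProductKey",
    [("TotalSalesAmount", "Sum", "SalesAmount"),
     ("LastOrderDateKey", "Maximum", "OrderDateKey")],
)
```

Each extra (alias, operation, column) tuple plays the role of one more row in the editor’s grid.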

You can click the Advanced tab to see options that enable you to configure multiple outputs from the transformation. After you click Advanced, you can type a new Aggregation Name to create a new output. You will then be able to check the columns you’d like to aggregate again as if it were a new transformation. This can be used to roll up the same input data in different ways.

In the Advanced tab, the “Key scale” option sets an approximate number of keys. The default is Unspecified, which lets the transformation size its cache to the appropriate level. For example, setting this to Low optimizes the transformation for about 500,000 keys, Medium optimizes it for about 5,000,000 keys, and High optimizes it for about 25,000,000 keys. You can also set the exact number of keys by using the “Number of keys” option.

The “Count distinct scale” option optionally sets the approximate number of distinct values that the transformation can write. The default value is Unspecified, but if you set it to Low, the transformation will be optimized to write 500,000 distinct values. Setting the option to Medium optimizes it for 5,000,000 values, and High optimizes it for 25,000,000. The Auto Extend Factor specifies by what percentage the transformation can extend its memory. The default is 25 percent, but you can specify another setting to keep your RAM from getting away from you.

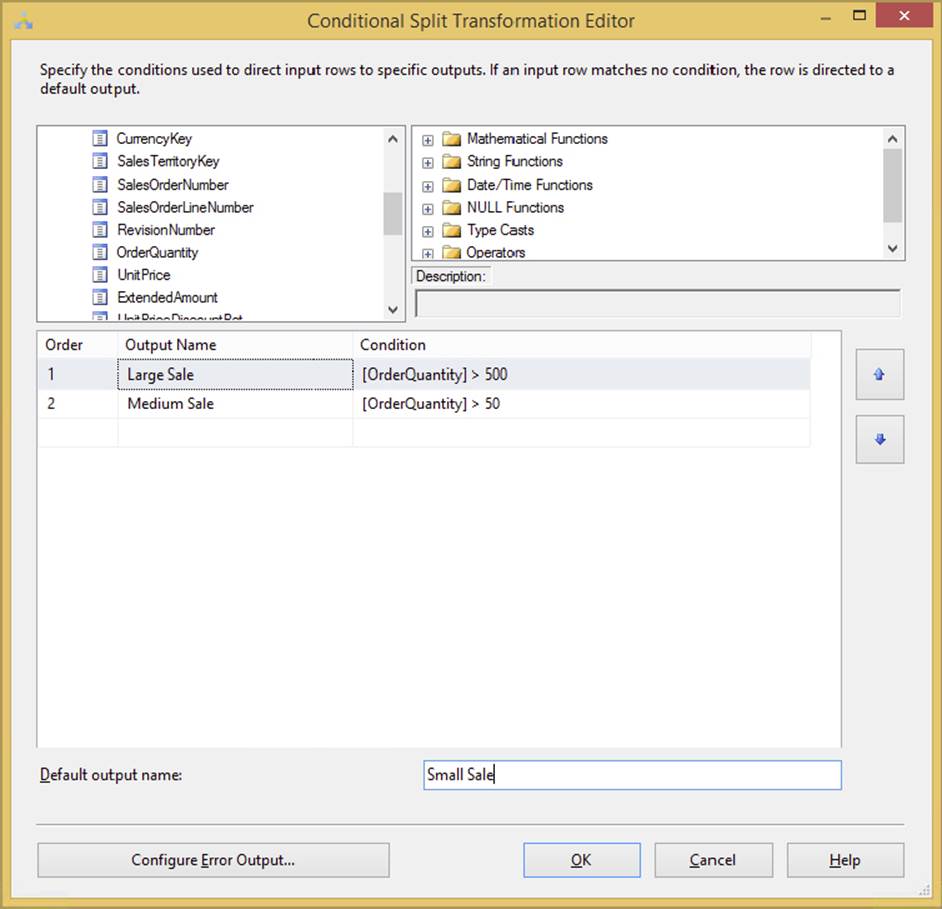

Conditional Split

The Conditional Split Transformation is a fantastic way to add complex logic to your Data Flow. This transformation enables you to send the data from a single data path to various outputs or paths based on conditions that use the SSIS expression language. For example, you could configure the transformation to send all sales with a quantity greater than 500 down one path, and sales with a quantity greater than 50 down another path. The conditions are evaluated in order, and each row goes only to the first output whose condition is true, so a sale of 1,000 units goes down the first path but not the second. Lastly, if neither condition is met, the sales go down a third path, called “Small Sales,” which essentially acts as the ELSE clause of a T-SQL CASE expression. This exact situation is shown in Figure 4-13. You can drag and drop the column or expression code snippets from the tree in the top-right panel. After you complete the condition, give it a logical name rather than the default name of Case 1. You’ll use this case name later in the Data Flow. You also can configure the “Default output name,” which will output any data that does not fit any case. Each case in the transform and the default output name will show as a green line in the Data Flow and will be annotated with the name you typed in.

FIGURE 4-13

You can also conditionally read string data by using SSIS expressions, such as the following example, which reads the first letter of the City column:

SUBSTRING(City,1,1) == "F"
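The routing rule can be sketched in a few lines of Python. This is not how SSIS implements the transformation. It assumes the cases are evaluated in the order listed and that each row goes to the first case that matches, with everything else falling to the default output. The case names follow Figure 4-14, and the sample rows and helper names are hypothetical.

```python
def conditional_split(rows, cases, default_output):
    """Send each row to the first case whose condition is true, else to the default."""
    outputs = {name: [] for name, _ in cases}
    outputs[default_output] = []
    for row in rows:
        for name, condition in cases:  # conditions are evaluated in order
            if condition(row):
                outputs[name].append(row)
                break
        else:  # no case matched, like the ELSE of a CASE expression
            outputs[default_output].append(row)
    return outputs


def city_starts_with_f(row):
    # Python version of SUBSTRING(City,1,1) == "F" (SSIS positions start at 1)
    return row["City"][0:1] == "F"


cases = [
    ("Large Sale", lambda row: row["OrderQuantity"] > 500),
    ("Medium Sales", lambda row: row["OrderQuantity"] > 50),
]
orders = [
    {"OrderQuantity": q, "City": c}
    for q, c in [(1000, "Fresno"), (120, "Boston"), (8, "Denver")]
]
routed = conditional_split(orders, cases, "Small Sales")
```

The 1,000-unit order satisfies both conditions but lands only in “Large Sale.” This is why the most restrictive condition should come first.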

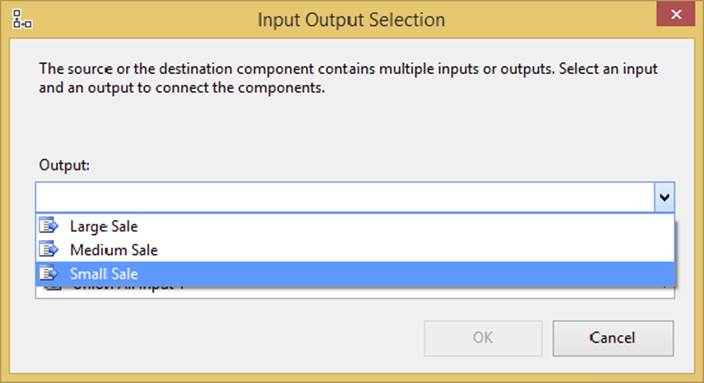

You can learn much more about the expression language in Chapter 5. Once you connect the transformation to the next transformation in the path or destination, you’ll see a pop-up dialog that lets you select which case you wish to flow down this path, as shown in Figure 4-14. In this figure, you can see three cases. The “Large Sale” condition can go down one path, “Medium Sales” down another, and the default “Small Sales” down the last path. After you complete the configuration of the first case, you can create a path for each case in the conditional split.

FIGURE 4-14

A much more detailed example of the Conditional Split Transformation is given in Chapter 8.

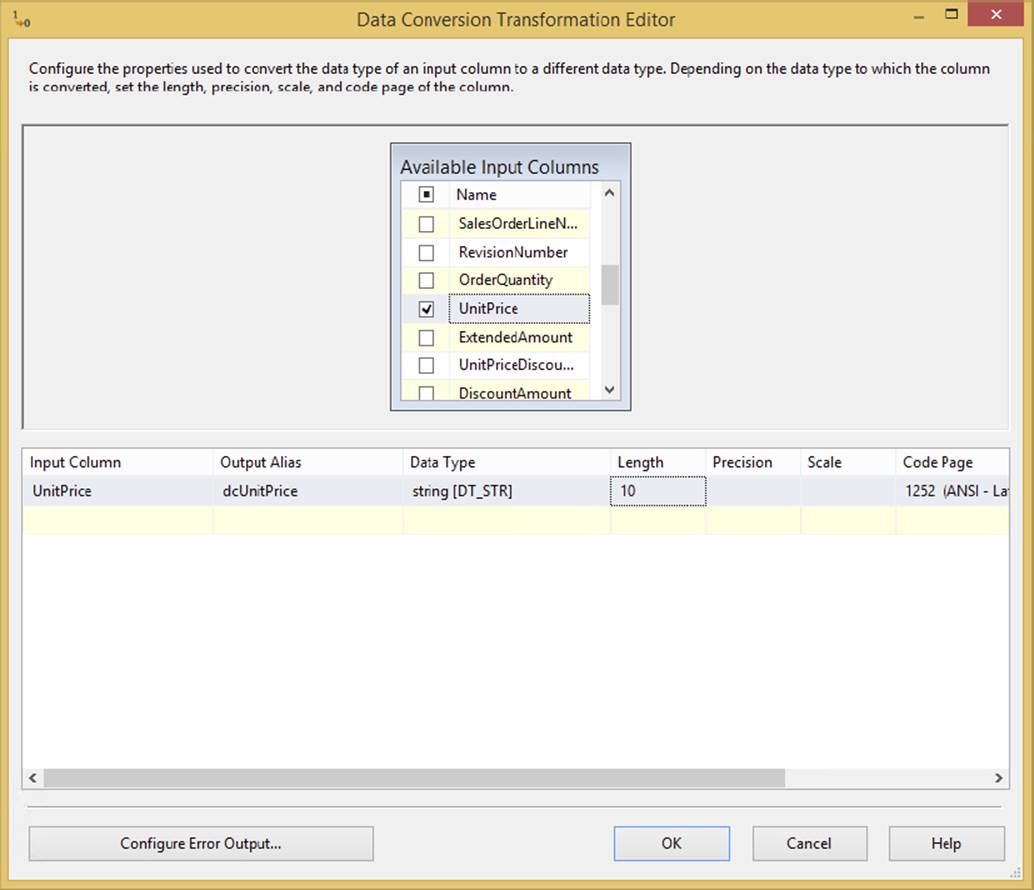

Data Conversion

The Data Conversion Transformation performs a function similar to the CONVERT or CAST functions in T-SQL. This transformation is configured in the Data Conversion Transformation Editor (see Figure 4-15), where you check each column that you wish to convert and then choose the target type in the Data Type column. The Output Alias is the column name you want to assign to the column after it is transformed. If you don’t assign it a new name, it will later be displayed as Data Conversion: ColumnName in the Data Flow. This same logic can also be accomplished in a Derived Column Transform, but this component provides a simpler UI.

FIGURE 4-15

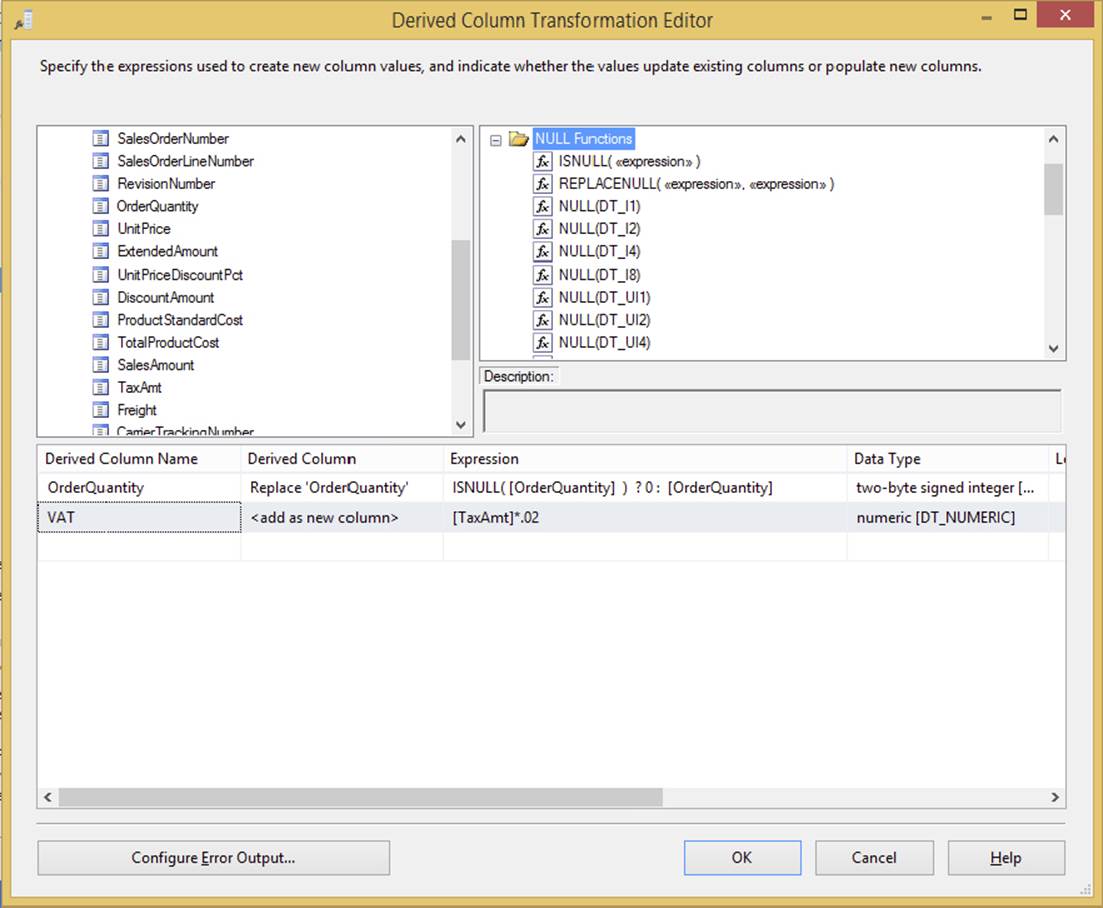

Derived Column

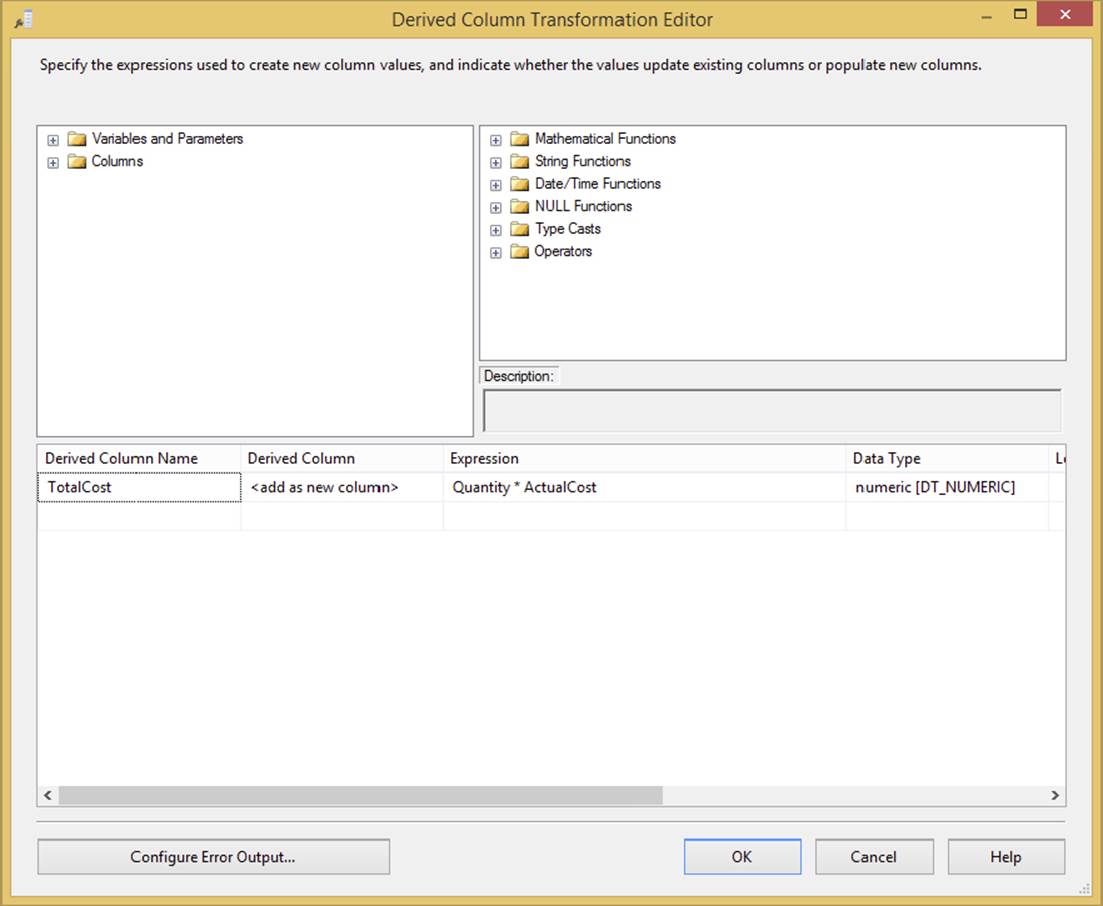

The Derived Column Transformation creates a new column that is calculated (derived) from the output of another column or set of columns. It is one of the most important transformations in your Data Flow arsenal. You may wish to use this transformation, for example, to multiply the quantity of orders by the cost of the order to derive the total cost of the order, as shown in Figure 4-16. You can also use it to find out the current date or to fill in the blanks in the data by using the ISNULL function. This is one of the top five transformations that you will find yourself using to alleviate the need for T-SQL scripting in the package.

FIGURE 4-16

To configure this transformation, drag the column or variable into the Expression column, as shown in Figure 4-16. Then add any functions to it. You can find a list of functions in the top-right corner of the Derived Column Transformation Editor. You must then specify, in the Derived Column dropdown box, whether you want the output to replace an existing column in the Data Flow or create a new column. As shown in Figure 4-16, the first derived column expression is doing an in-place update of the OrderQuantity column. The expression states that if the OrderQuantity column is null, then convert it to 0; otherwise, keep the existing data in the OrderQuantity column. If you create a new column, specify the name in the Derived Column Name column, as shown in the VAT column.
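As a rough Python analogue of the two kinds of derived columns described above, the sketch below replaces OrderQuantity in place and adds a new column. Figure 4-16’s VAT formula isn’t given in the text, so the new column here is a hypothetical TotalCost (quantity multiplied by a hypothetical UnitPrice column). This is an illustration of the row-by-row behavior, not SSIS code.

```python
def derive_columns(row):
    """Return the row with one column replaced in place and one new column added."""
    out = dict(row)  # synchronous: the same row continues down the path
    # Replace existing column: ISNULL(OrderQuantity) ? 0 : OrderQuantity
    out["OrderQuantity"] = 0 if row["OrderQuantity"] is None else row["OrderQuantity"]
    # Add as new column (hypothetical name and formula)
    out["TotalCost"] = out["OrderQuantity"] * row["UnitPrice"]
    return out


rows = [{"OrderQuantity": None, "UnitPrice": 5}, {"OrderQuantity": 3, "UnitPrice": 5}]
derived = [derive_columns(r) for r in rows]
```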

You’ll find all the available functions for the expression language in the top-right pane of the editor. There are no hidden or secret expressions in this C# variant expression language. We use the expression language much more throughout this and future chapters, so don’t worry too much about the details of the language yet.

Some common expressions can be found in the following table:

| EXAMPLE EXPRESSION | DESCRIPTION |
|---|---|
| SUBSTRING(ZipCode, 1, 5) | Captures the first five characters of a zip code |
| ISNULL(Name) ? "NA" : Name | If the Name column is NULL, replaces it with the value NA; otherwise, keeps the Name column as is |
| UPPER(FirstName) | Uppercases the FirstName column |
| (DT_WSTR, 3)CompanyID + Name | Converts the CompanyID column to a Unicode string of up to three characters and places it in front of the Name column |
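For readers who think more easily in a general-purpose language, the four expressions in the table map roughly onto the Python functions below. The function names are hypothetical. The sketch ignores SSIS-specific details such as NULL propagation through UPPER and the DT_WSTR length check, and it assumes CompanyID fits in three characters.

```python
def first_five(zip_code):
    # SUBSTRING(ZipCode, 1, 5): SSIS positions are 1-based, Python slices are 0-based
    return zip_code[0:5]


def name_or_na(name):
    # ISNULL(Name) ? "NA" : Name
    return "NA" if name is None else name


def upper_first_name(first_name):
    # UPPER(FirstName)
    return first_name.upper()


def company_prefix(company_id, name):
    # (DT_WSTR, 3)CompanyID + Name: cast to string, then concatenate
    return str(company_id) + name
```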

Lookup

The Lookup Transformation performs what equates to an INNER JOIN between the Data Flow and a second data set. The second data set can be an OLE DB table or a cache file, which is loaded by the Cache Transformation. After you perform the lookup, you can retrieve additional columns from the second data set. If no match is found, an error occurs by default. You can later choose, using the Configure Error Output button, to ignore the failure (setting any additional columns retrieved from the reference table to NULL) or redirect the rows down the second, nonmatched green path.
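Conceptually, a fully cached lookup behaves like a dictionary probe for each incoming row. The Python sketch below is an assumption-laden illustration, not the Lookup Transformation’s implementation. It builds an in-memory index of a hypothetical reference set, adds the requested columns to matched rows, and sends unmatched rows to a separate no-match list, which corresponds to the redirect option.

```python
def lookup(rows, reference, key, return_columns):
    """Split rows into matched and no-match outputs against a reference data set."""
    index = {}
    for ref in reference:
        index.setdefault(ref[key], ref)  # this sketch keeps the first row per key
    matched, no_match = [], []
    for row in rows:
        ref = index.get(row[key])
        if ref is None:
            no_match.append(row)  # like redirecting rows down the nonmatched path
        else:
            matched.append({**row, **{c: ref[c] for c in return_columns}})
    return matched, no_match


products = [{"ProductKey": 1, "EnglishProductName": "Adjustable Race"}]
orders = [{"ProductKey": 1, "Qty": 2}, {"ProductKey": 99, "Qty": 1}]
matched, no_match = lookup(orders, products, "ProductKey", ["EnglishProductName"])
```

To model the “ignore failure” option instead, you would append unmatched rows to `matched` with the returned columns set to None.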

NOTE This is a very detailed transformation; it is covered in much more depth in Chapter 7 and again in Chapter 8.

Cache

The Cache Transformation enables you to load a cache file on disk in the Data Flow. This cache file is later used for fast lookups in a Lookup Transformation. The Cache Transformation can be used to populate a cache file in the Data Flow as a transformation, and then be immediately used, or it can be used as a destination and then used by another package or Data Flow in the same package.

The cache file that’s created enables you to perform lookups against large data sets from a raw file. It also enables you to share the same lookup cache across many Data Flows or packages.

NOTE This transformation is covered in much more detail in Chapter 7.

Row Count

The Row Count Transformation counts the rows in the stream that is directed to its input. It must place that count into a variable, which can then be used in the Control Flow, for insertion into an audit table, for example. This transformation is useful for tasks that require knowing “how many?” It is especially valuable because you don’t have to commit the stream data to a physical table to retrieve the count, and it can act as a destination, terminating your data stream. If you need to know how many rows go down each output of a Conditional Split Transformation, direct each output to a separate Row Count Transformation. Each Row Count Transformation counts a single input stream and writes the row count to a Long (integer) or compatible variable. You can then use this variable to log information into storage, to build e-mail messages, or to conditionally run steps in your packages.

For this transformation, all you really need to provide in terms of configuration is the name of the variable to store the count of the input stream. You will now simulate a row count situation in a package. You could use this type of logic to implement conditional execution of any task, but for simplicity you’ll conditionally execute a Script Task that does nothing.

1. Create an SSIS package named Row Count Example. Add a Data Flow Task to the Control Flow design surface.

2. In the Control Flow tab, add a variable named iRowCount. Ensure that the variable is package scoped and of type Int32. If you don’t know how to add a variable, select Variables from the SSIS menu and click the Add Variable button.

3. Create a Connection Manager that connects to the AdventureWorks database. Add an OLE DB Source to the Data Flow design surface. Configure the source to point to your AdventureWorks database’s Connection Manager and the table [ErrorLog].

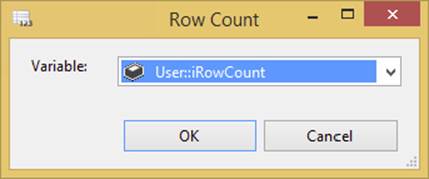

4. Add a Row Count Transformation to the Data Flow tab and connect the OLE DB Source to it. Open the Advanced Editor. Select the variable named User::iRowCount as the Variable property. Your editor should resemble Figure 4-17.

FIGURE 4-17

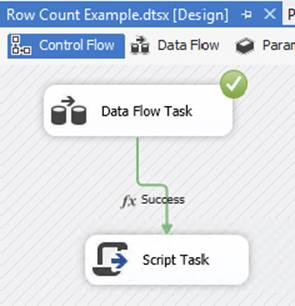

5. Return to the Control Flow tab and add a Script Task. This task won’t really perform any action. It will be used to show the conditional capability to perform steps based on the value returned by the Row Count Transformation.

6. Connect the Data Flow Task to the Script Task.

7. Right-click the arrow connecting the Data Flow and Script Tasks and select Edit. In the Precedence Constraint Editor, change the Evaluation Operation to Expression. Set the Expression to @iRowCount>0.

When you run the package, you’ll see that the Script Task is not executed. If you are curious, insert a row into the [ErrorLog] table and rerun the package, or change the source to a table that has data. You’ll see that the Script Task then shows a green checkmark, indicating that it was executed. An example of what your package may look like is shown in Figure 4-18. In this screenshot, no rows were transformed, so the Script Task never executed.

FIGURE 4-18
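The logic of the preceding exercise can be modeled in a few lines of Python. This is a sketch only, assuming a dictionary stands in for the package’s variables and an empty list stands in for the [ErrorLog] table. Rows pass through unchanged, the count is written to the variable once the stream ends, and the precedence constraint expression then decides whether the Script Task runs.

```python
def row_count(rows, variables, name):
    """Pass rows through unchanged and store how many went by in a variable."""
    count = 0
    for row in rows:
        count += 1
        yield row
    variables[name] = count  # written once the stream has ended


variables = {"User::iRowCount": 0}
error_log = []  # stands in for an empty [ErrorLog] table
for _ in row_count(error_log, variables, "User::iRowCount"):
    pass

# Precedence constraint with Evaluation Operation = Expression: @iRowCount>0
run_script_task = variables["User::iRowCount"] > 0
```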

Script Component

The Script Component enables you to write custom .NET scripts as transformations, sources, or destinations. Once you drag the component over, it will ask you if you want it to be a source, transformation, or destination. Some of the things you can do with this transformation include the following:

· Create a custom transformation that would use a .NET assembly to validate credit card numbers or mailing addresses.

· Validate data and skip records that don’t seem reasonable. For example, you can use it in a human resource recruitment system to pull out candidates that don’t match the salary requirement at a job code level.

· Read from a proprietary system for which no standard provider exists.

· Write a custom component to integrate with a third-party vendor.

Scripts used as sources can support multiple outputs, and you have the option of precompiling the scripts for runtime efficiency.

NOTE You can learn much more about the Script Component in Chapter 9.

Slowly Changing Dimension

The Slowly Changing Dimension (SCD) Transformation provides a great head start in helping to solve a common, classic changing-dimension problem that occurs in the outer edge of your data model: the dimension or lookup tables. The changing-dimension issue in online transaction and analytical processing database designs is too big to cover in this chapter, but a brief overview should help you understand the value the SCD Transformation provides.

A dimension table contains a set of discrete values with a description and often other measurable attributes such as price, weight, or sales territory. The classic problem is what to do in your dimension data when an attribute in a row changes — particularly when you are loading data automatically through an ETL process. This transformation can shave days off of your development time in relation to creating the load manually through T-SQL, but it can add time because of how it queries your destination and how it updates with the OLE DB Command Transform (row by row).

NOTE Loading data warehouses is covered in Chapter 12.

Sort

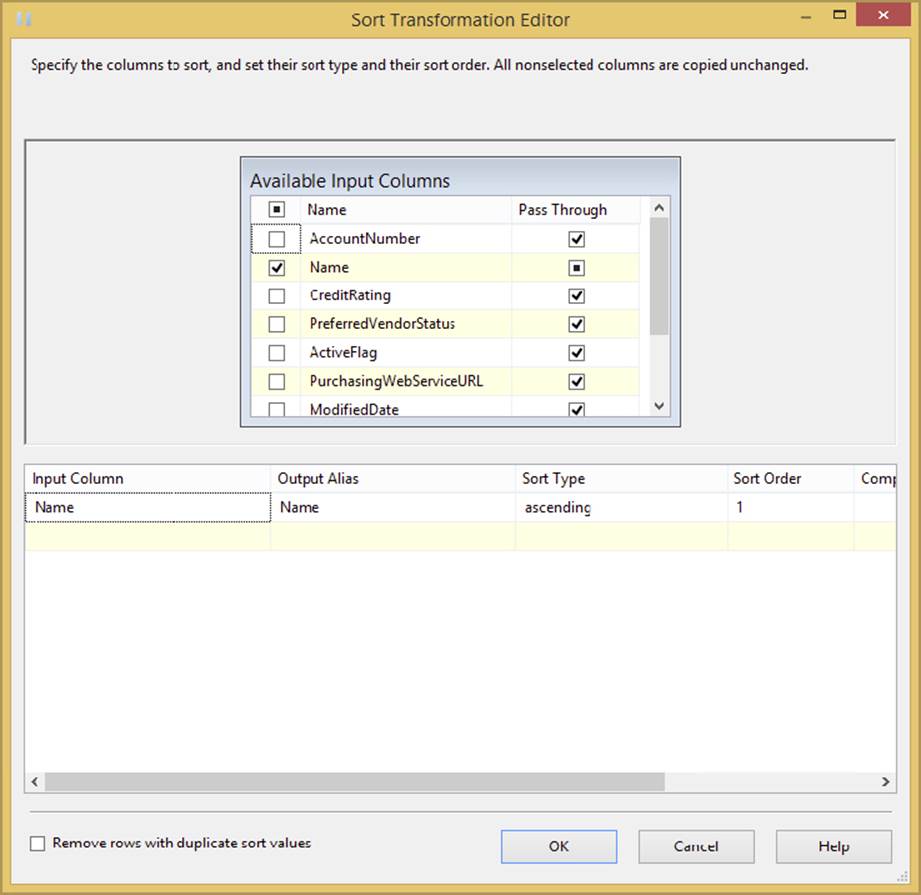

The Sort Transformation is a fully blocking asynchronous transformation that enables you to sort data based on any column in the path. This is probably one of the top ten transformations you will use on a regular basis, because some other transformations require sorted data and you may be reading from a system that doesn’t support an ORDER BY clause or whose data is not presorted. To configure it, open the Sort Transformation Editor after it is connected to the path and check the column that you wish to sort by. Then, uncheck any column you don’t want passed through to the path from the Pass Through column. By default, every column will be passed through the pipeline. You can see this in Figure 4-19, where the user is sorting by the Name column and passing all other columns in the path as output.

FIGURE 4-19

In the bottom grid, you can specify the output alias and whether you want to sort in ascending or descending order. The Sort Order column shows which column will be sorted on first, second, third, and so on. You can optionally check the “Remove rows with duplicate sort values” option. This is a great way to do rudimentary de-duplication of your data. If a second row comes in that matches the sort key of a row already seen, it is ignored and the row is dropped.
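The Sort Order and duplicate-removal settings can be illustrated with the Python sketch below. It is not the Sort Transformation’s algorithm, and the function and sample data are hypothetical. It relies on Python’s stable sort, sorting on the last key first so that the first key in Sort Order dominates. When removal is requested, it keeps only the first row for each distinct sort key.

```python
def sort_rows(rows, sort_keys, remove_duplicates=False):
    """Sort rows by (column, descending) pairs listed in Sort Order."""
    ordered = list(rows)
    # Python's sort is stable, so sorting by the last key first makes
    # the first key in Sort Order the most significant one.
    for column, descending in reversed(sort_keys):
        ordered.sort(key=lambda r: r[column], reverse=descending)
    if not remove_duplicates:
        return ordered
    result, last_key = [], object()  # sentinel never equals a real key
    for row in ordered:
        key = tuple(row[column] for column, _ in sort_keys)
        if key != last_key:  # later rows with the same sort key are dropped
            result.append(row)
            last_key = key
    return result


parts = [{"Name": "Bolt", "ID": 2}, {"Name": "Axle", "ID": 1}, {"Name": "Bolt", "ID": 3}]
deduped = sort_rows(parts, [("Name", False)], remove_duplicates=True)
```

Even in this toy version, nothing can be returned until every input row has been collected, which is the fully blocking behavior described in the note that follows.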

NOTE Because this is a fully blocking asynchronous transformation, it will slow down your Data Flow immensely. Use it only when you have to, and use it sparingly.

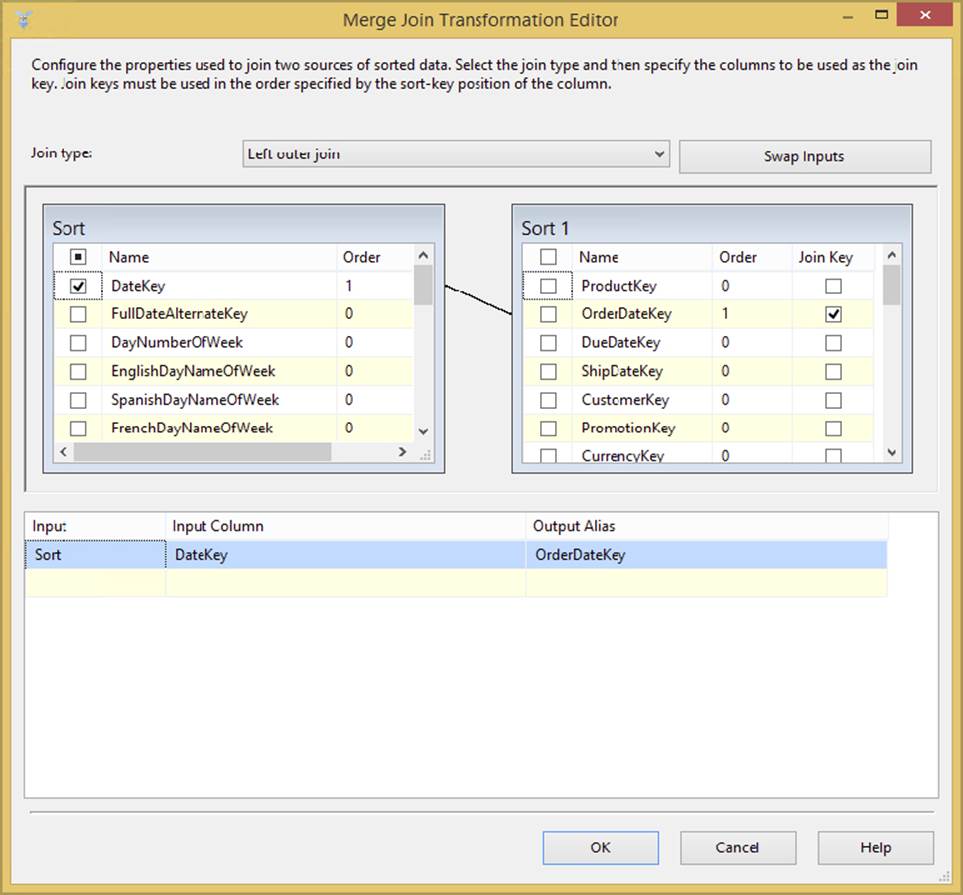

As mentioned previously, avoid using the Sort Transformation when possible because of its speed. However, some transformations, like the Merge Join and Merge, require the data to be sorted. If you place an ORDER BY clause in the OLE DB Source query, SSIS is not aware of it, because it could just as easily have been in a stored procedure.

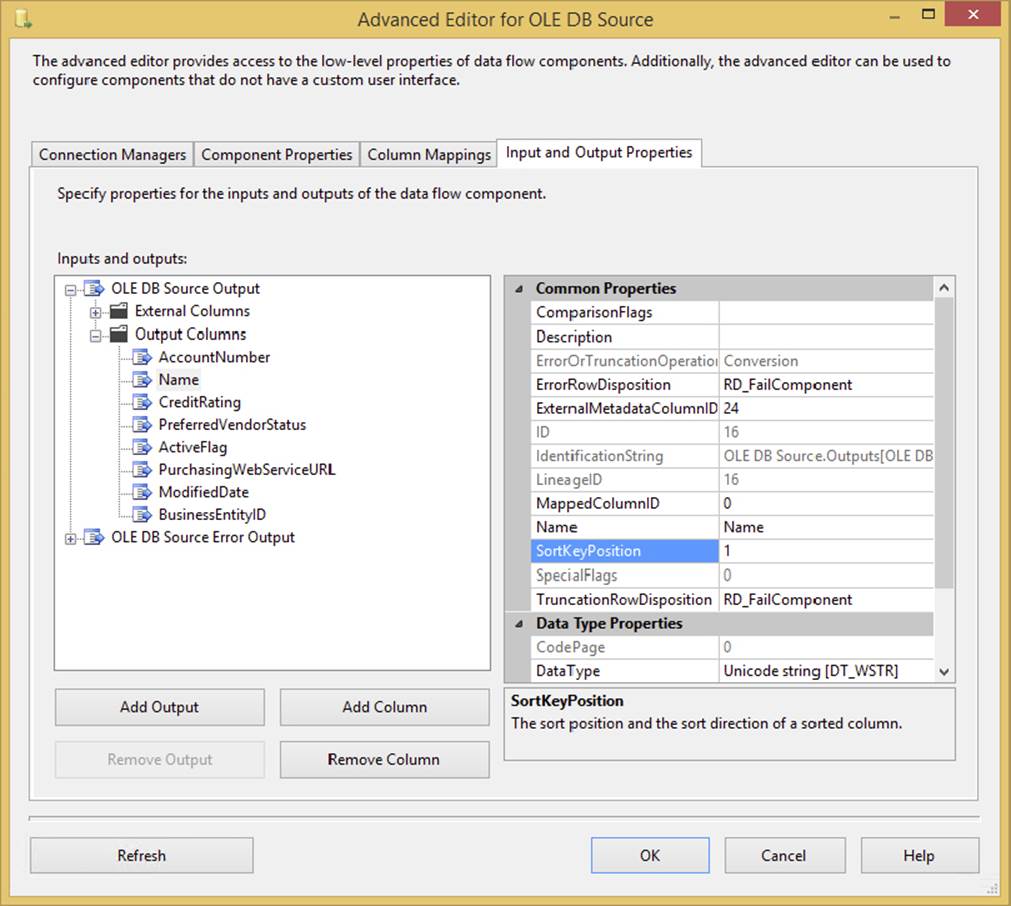

If you have an ORDER BY clause in your T-SQL statement in the OLE DB Source or the ADO.NET Source, you can use the Advanced Editor to notify SSIS that the data is already sorted, removing the need for a Sort Transformation. After ordering the data in your SQL statement, right-click the source and select Show Advanced Editor. From the Input and Output Properties tab, select OLE DB Source Output. In the Properties pane, change the IsSorted property to True.

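Then, under Output Columns, select the column you are ordering on in your SQL statement, and change its SortKeyPosition to 1 if you’re sorting by only a single column in ascending order, as shown in Figure 4-20. If you have multiple columns, set each column’s SortKeyPosition to its position in the ORDER BY clause, starting at 1. A negative value marks a descending sort; for example, -1 tells SSIS that the first sort column is in descending order. These properties only describe the existing order of the data. SSIS does not sort anything itself, so the values must match your ORDER BY clause exactly.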

FIGURE 4-20
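Because SortKeyPosition has to mirror the ORDER BY clause, it can help to work the values out mechanically. The helper below is a hypothetical Python sketch, not part of SSIS. It assumes the convention described above: each column’s value is its 1-based position in the ORDER BY, negated when the column is sorted descending. Columns not listed keep the default of 0.

```python
def sort_key_positions(order_by):
    """Map (column, "ASC"/"DESC") pairs, in ORDER BY order, to SortKeyPosition values."""
    positions = {}
    for position, (column, direction) in enumerate(order_by, start=1):
        positions[column] = -position if direction.upper() == "DESC" else position
    return positions


# ORDER BY LastName ASC, FirstName DESC
positions = sort_key_positions([("LastName", "ASC"), ("FirstName", "DESC")])
```

For this clause you would set LastName to 1 and FirstName to -2, and set IsSorted to True on the output.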

Union All

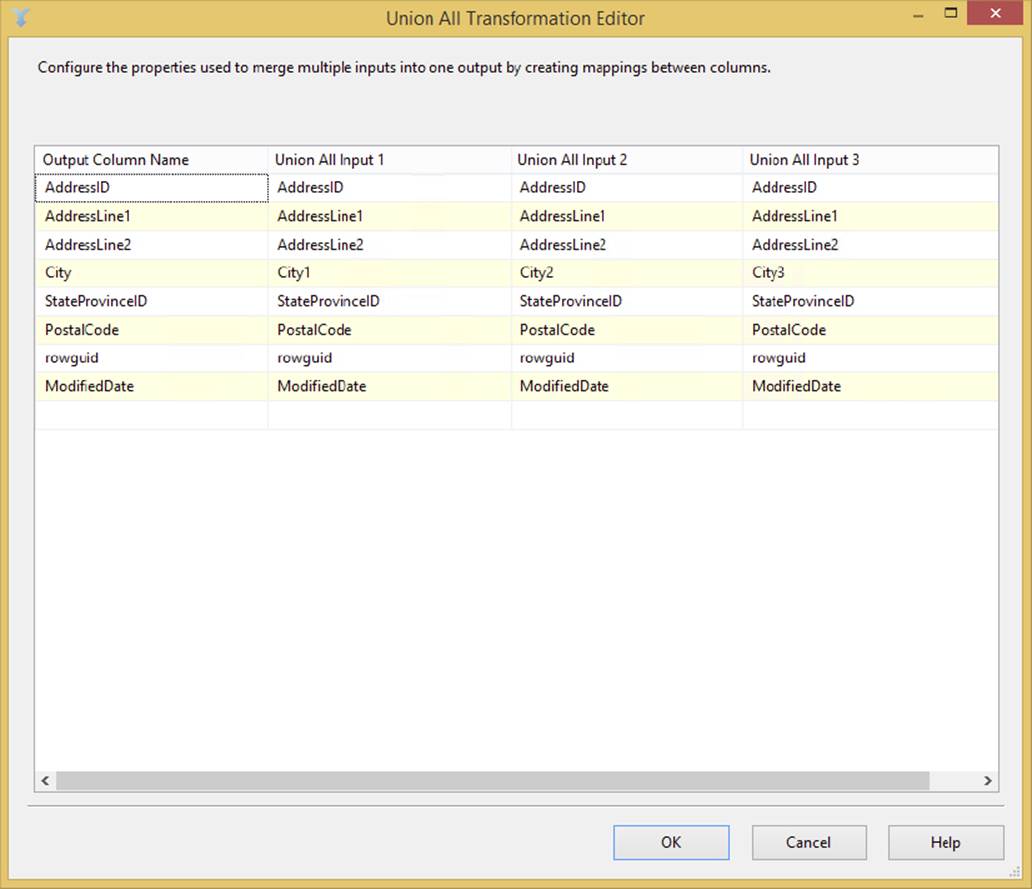

The Union All Transformation works much the same way as the Merge Transformation, but it does not require sorted data. It takes the outputs from multiple sources or transformations and combines them into a single result set. For example, in Figure 4-21, the user combines the data from three sources into a single output using the Union All Transformation. Notice that the City column is called something different in each source and that all are now merged in this transformation into a single column. Think of the Union All as essentially stacking the data on top of each other, much like the T-SQL UNION ALL operator does.

FIGURE 4-21

To configure the transformation, connect the first source or transformation to the Union All Transformation, and then connect each of the other sources or transformations to it until you are done. You can optionally open the Union All Transformation Editor to ensure that the columns map correctly, but SSIS takes care of that for you automatically. The transformation also fixes minor metadata issues. For example, if you have one input that is a 20-character string and another that is 50 characters, the output of the Union All Transformation will be the longer 50-character column. You need to open the Union All Transformation Editor only if the inputs that feed the Union All Transformation use different column names for the same data.
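The stacking behavior, including the per-input column mapping you would set in the editor when names differ, can be sketched in Python as follows. The function, the mapping format, and the column names are hypothetical stand-ins for the Figure 4-21 scenario. An input mapped to a column it doesn’t have contributes None (NULL) for that column.

```python
def union_all(inputs, column_maps):
    """Stack rows from several inputs into one output, without sorting or de-duplicating.

    column_maps[i] maps each output column name to the column name used by input i.
    """
    output = []
    for rows, mapping in zip(inputs, column_maps):
        for row in rows:
            output.append({out_col: row.get(in_col) for out_col, in_col in mapping.items()})
    return output


inputs = [[{"City": "Seattle"}], [{"CityName": "Paris"}], [{"Town": "Oslo"}, {"Town": "Oslo"}]]
column_maps = [{"City": "City"}, {"City": "CityName"}, {"City": "Town"}]
stacked = union_all(inputs, column_maps)
```

The duplicate Oslo row survives, which matches the UNION ALL (not UNION) comparison above.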

OTHER TRANSFORMATIONS

There are many more transformations you can use to complete your more complex Data Flow. Some of these transformations, like the Audit and Character Map Transformations, can be used in lieu of a Derived Column Transformation because they have a simpler UI. Others serve a more specialized purpose.

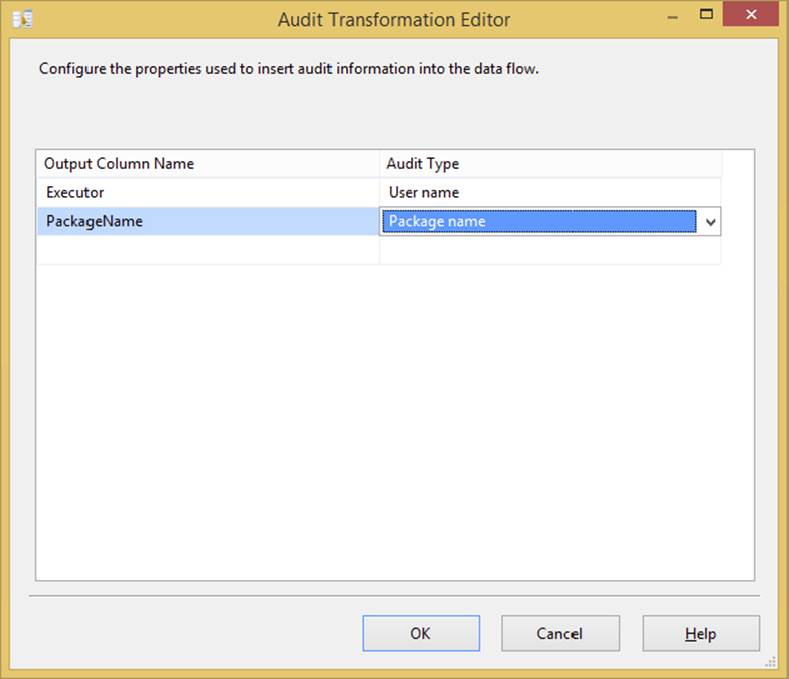

Audit

The Audit Transformation allows you to add auditing data to your Data Flow. Because of regulations such as HIPAA and Sarbanes-Oxley (SOX) that govern audits, you often must be able to track who inserted data into a table and when. This transformation helps you with that function, and it is easy to configure. For example, to track which task inserted data into the table, you can add those columns to the Data Flow path with this transformation. The functionality in the Audit Transformation can be achieved with a Derived Column Transformation, but the Audit Transformation provides an easier interface.

All other columns are passed through to the path as an output, and any auditing item you add will also be added to the path. Simply select the type of data you want to audit in the Audit Type column (shown in Figure 4-22), and then name the column that will be outputted to the flow. Following are some of the available options:

FIGURE 4-22

· Execution instance GUID: GUID that identifies the execution instance of the package

· Package ID: Unique ID for the package

· Package name: Name of the package

· Version ID: Version GUID of the package

· Execution start time: Time the package began

· Machine name: Machine on which the package ran

· User name: User who started the package

· Task name: Name of the Data Flow Task that holds the Audit Transformation

· Task ID: Unique identifier of the Data Flow Task that holds the Audit Transformation
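The pass-through-and-append pattern behind the Audit Transformation can be sketched in Python as shown here. SSIS fills these values from the running package. In this sketch they come from Python’s uuid, socket, and datetime modules, and the package name is hypothetical. Every row keeps its original columns and receives the same audit values.

```python
import socket
import uuid
from datetime import datetime


def add_audit_columns(rows, audit_values):
    """Pass every column through and append the same audit columns to each row."""
    for row in rows:
        yield {**row, **audit_values}


audit_values = {
    "ExecutionInstanceGUID": str(uuid.uuid4()),
    "PackageName": "Audit Example",  # hypothetical package name
    "MachineName": socket.gethostname(),
    "ExecutionStartTime": datetime.now(),
}
audited = list(add_audit_columns([{"CustomerID": 1}, {"CustomerID": 2}], audit_values))
```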

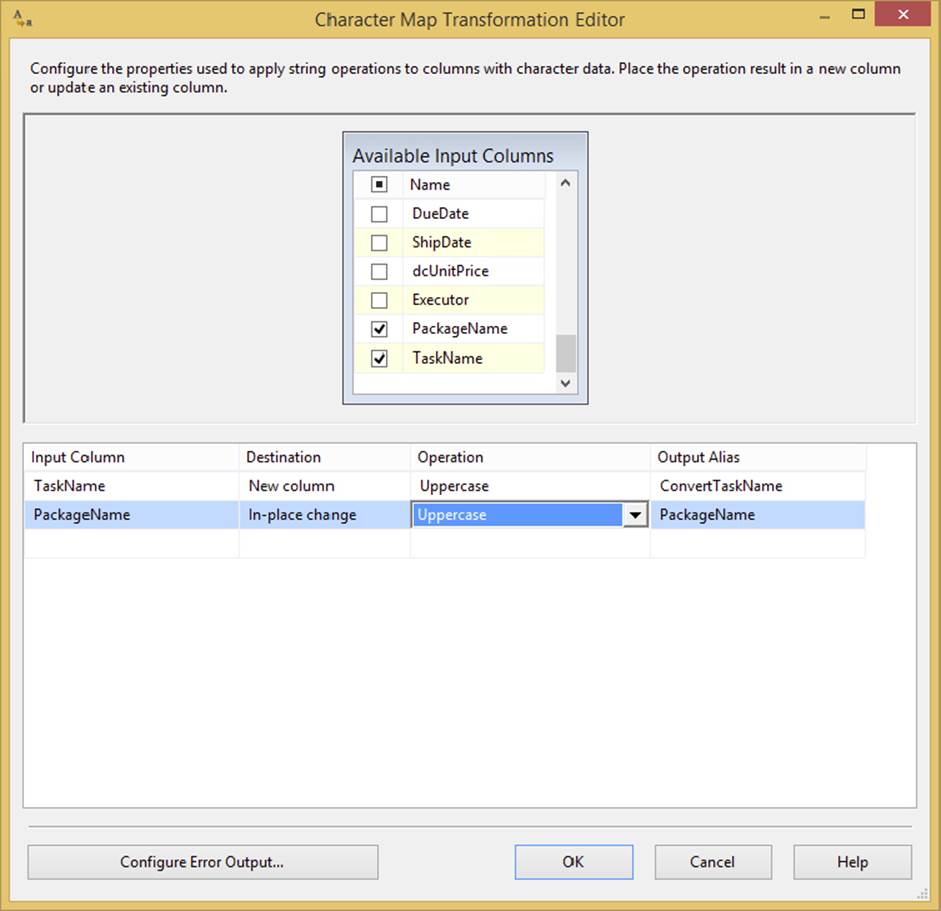

Character Map

The Character Map Transformation (shown in Figure 4-23) performs common character translations in the flow. This simple transformation can be configured in a single tab. To do so, check the columns you wish to transform. Then, select whether you want this modified column to be added as a new column or whether you want to update the original column. You can give the column a new name under the Output Alias column. Lastly, select the operation you wish to perform on the input column. The available operation types are as follows:

· Byte Reversal: Reverses the order of the bytes within each 16-bit value. For example, for the data 0x1234 0x9876, the result is 0x3412 0x7698. This uses the same behavior as LCMapString with the LCMAP_BYTEREV option.

· Full Width: Converts the half-width character type to full width

· Half Width: Converts the full-width character type to half width

· Hiragana: Converts the Katakana style of Japanese characters to Hiragana

· Katakana: Converts the Hiragana style of Japanese characters to Katakana

· Linguistic Casing: Applies the regional linguistic rules for casing

· Lowercase: Changes all letters in the input to lowercase

· Traditional Chinese: Converts the simplified Chinese characters to traditional Chinese

· Simplified Chinese: Converts the traditional Chinese characters to simplified Chinese

· Uppercase: Changes all letters in the input to uppercase

FIGURE 4-23

In Figure 4-23, you can see that two columns are being transformed — both to uppercase. For the TaskName input, a new column is added, and the original is kept. The PackageName column is replaced in-line.
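Most of the Character Map operations are self-explanatory, but Byte Reversal is easier to see with numbers. The Python sketch below assumes that LCMAP_BYTEREV swaps the high and low byte of each 16-bit UTF-16 code unit, so 0x1234 becomes 0x3412. That assumption comes from the Win32 description of the flag, not from SSIS itself. The function names are hypothetical, and the text helper handles only characters in the Basic Multilingual Plane.

```python
def byte_reversal(code_units):
    """Swap the high and low byte of each 16-bit value."""
    return [((unit & 0x00FF) << 8) | (unit >> 8) for unit in code_units]


def byte_reverse_text(text):
    """Apply the swap to each character's code point (BMP characters only)."""
    return "".join(chr(unit) for unit in byte_reversal([ord(ch) for ch in text]))


swapped = byte_reversal([0x1234, 0x9876])
```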

Copy Column

The Copy Column Transformation is a very simple transformation that copies the output of a column to a clone of itself. This is useful if you wish to create a copy of a column before you perform some elaborate transformations. You could then keep the original value as your control subject and the copy as the modified column. To configure this transformation, go to the Copy Column Transformation Editor and check the column you want to clone. Then assign a name to the new column.

NOTE The Derived Column Transformation will allow you to transform the data from a column to a new column, but the UI in the Copy Column Transformation is simpler for some.

Data Mining Query

The Data Mining Query Transformation typically is used to fill in gaps in your data or predict a new column for your Data Flow. This transformation runs a Data Mining Extensions (DMX) query against an SSAS data-mining model, and adds the output to the Data Flow. It also can optionally add columns, such as the probability of a certain condition being true. A few great scenarios for this transformation would be the following:

· You could take columns, such as number of children, household income, and marital status, to predict a new column that states whether the person owns a house or not.

· You could predict what customers would want to buy based on their shopping cart items.

· You could fill the gaps in your data where customers didn’t enter all the fields in a questionnaire.

The possibilities are endless with this transformation.

DQS Cleansing

The Data Quality Services (DQS) Cleansing Transformation performs advanced data cleansing on data flowing through it. With this transformation, your business analyst (BA) can use a tool called the Data Quality Client (included in SQL Server) to create a series of business rules that declare what good data looks like. The BA creates domains that define data in your company, such as what a Company Name column should always look like. The DQS Cleansing Transformation can then apply those business rules.

This transformation will score the data for you and tell you what the proper cleansed value should be. Chapter 10 covers this transformation in much more detail.

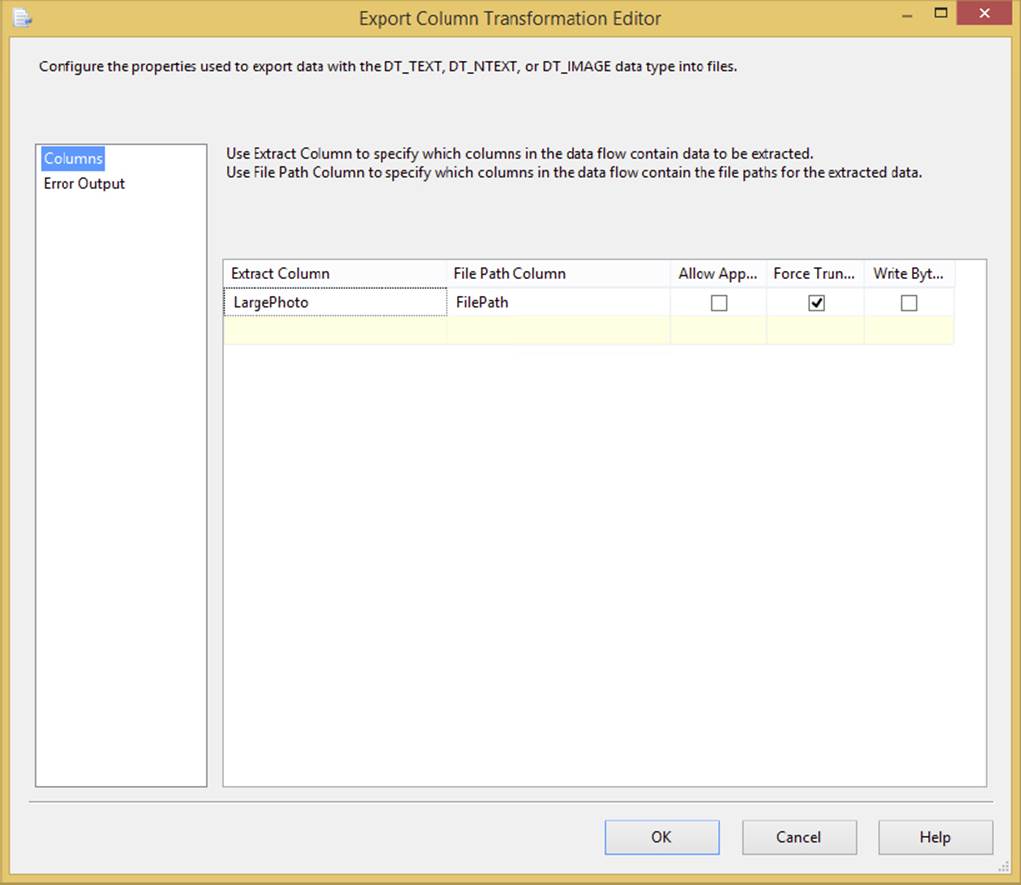

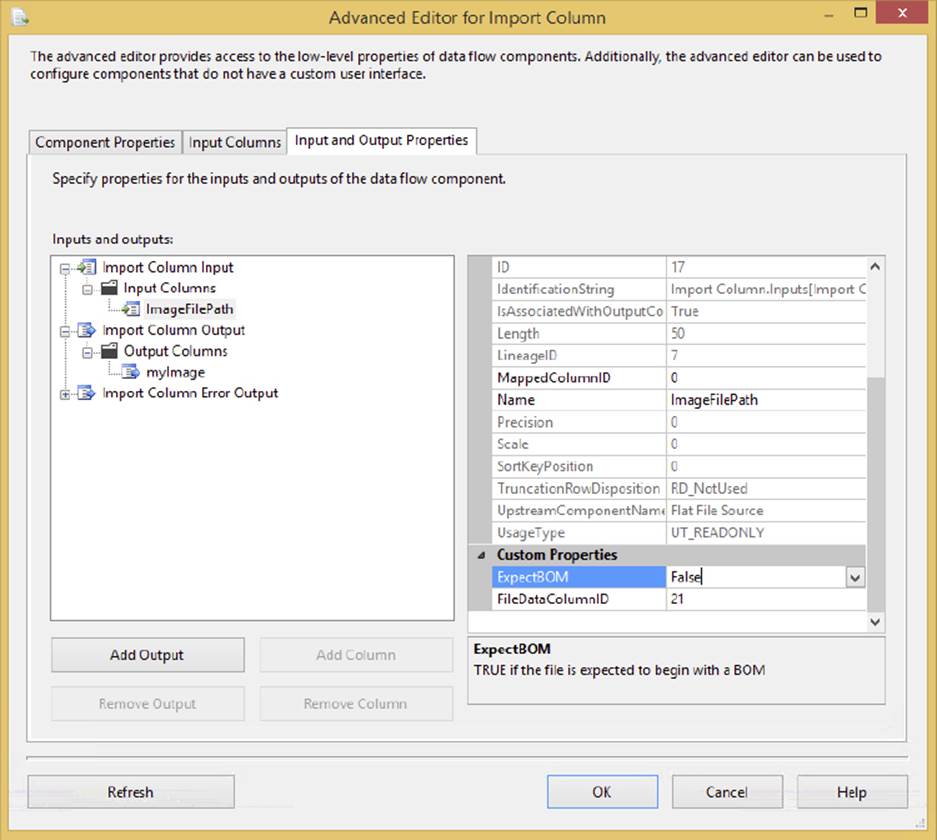

Export Column

The Export Column Transformation is a transformation that exports data to a file from the Data Flow. Unlike the other transformations, the Export Column Transformation doesn’t need a destination to create the file. To configure it, go to the Export Column Transformation Editor, shown in Figure 4-24. Select the column that contains the file from the Extract Column dropdown box. Select the column that contains the path and filename to send the files to in the File Path Column dropdown box.

FIGURE 4-24

The other options specify whether an existing file will be appended to or overwritten. The Allow Append checkbox specifies whether the output should be appended to the existing file, if one exists. If you check Force Truncate, the existing file will be overwritten if it exists. The Write BOM option specifies whether a byte-order mark is written to the file if it is a DT_NTEXT or DT_WSTR data type.

If you do not check the Allow Append or Force Truncate option and the file exists, the package will fail if the error isn’t handled. The following error is a subset of the complete error you would receive:

Error: 0xC02090A6 at Data Flow Task, Export Column [61]: Opening the file
"wheel_small.tif" for writing failed. The file exists and cannot be
overwritten. If the AllowAppend property is FALSE and the ForceTruncate
property is set to FALSE, the existence of the file will cause this failure.

The Export Column Transformation is used to extract blob-type data from fields in a database and create files in their original formats to be stored in a file system or viewed by a format viewer, such as Microsoft Word or Microsoft Paint. The trick to understanding the Export Column Transformation is that it requires an input stream field that contains digitized document data, and another field that can be used for a fully qualified path. The Export Column Transformation will convert the digitized data into a physical file on the file system for each row in the input stream using the fully qualified path.
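As a conceptual model of the behavior just described, including the Allow Append and Force Truncate options, here is a Python sketch. It is not SSIS code. The function name, the sample row, and the temporary export directory are assumptions. How SSIS treats both options being checked at once isn’t covered in this chapter, so the sketch simply rejects that combination.

```python
import tempfile
from pathlib import Path


def export_column(rows, data_column, path_column, allow_append=False, force_truncate=False):
    """Write each row's binary value to the file whose path is in path_column."""
    if allow_append and force_truncate:
        raise ValueError("this sketch accepts append or truncate, not both")
    for row in rows:
        path = Path(row[path_column])
        if path.exists() and not (allow_append or force_truncate):
            # Corresponds to the "file exists and cannot be overwritten" failure
            raise FileExistsError(f"{path} exists and cannot be overwritten")
        mode = "ab" if allow_append else "wb"  # "wb" truncates an existing file
        with open(path, mode) as f:
            f.write(row[data_column])


export_dir = Path(tempfile.mkdtemp())  # stand-in for the export directory
products = [{"ProductKey": 310, "LargePhoto": b"GIF89a"}]
for product in products:
    # Like the Derived Column step: build the file path from the primary key
    product["FilePath"] = str(export_dir / f"{product['ProductKey']}.gif")
export_column(products, "LargePhoto", "FilePath", force_truncate=True)
```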

In the following example, you’ll use existing data in the AdventureWorksDW database to output some stored images from the database back to file storage. The database has a table named DimProduct with a LargePhoto column that contains an embedded GIF image of each product, and you’ll derive a file path for each row from its primary key. Pull these images out of the database and save them into a directory on the file system.

1. Create a directory with an easy name like c:\ProSSIS\Chapter4\Export that you can use when exporting these pictures.

2. Create a new SSIS project and package named Export Column Example.dtsx. Add a Data Flow Task to the Control Flow design surface.

3. On the Data Flow design surface, add an OLE DB Source configured to the AdventureWorksDW database table DimProduct.

4. Add a Derived Column Transformation to the Data Flow design surface. Connect the output of the OLE DB Source to it.

5. Create a Derived Column Name named FilePath. Use the Derived Column setting of <add as new column>. To derive a new filename, just use the primary key for the filename and add your path to it. To do this, set the expression to the following:

"c:\\ProSSIS\\Chapter4\\Export\\" + (DT_WSTR,50)ProductKey + ".gif"

NOTE The \\ is required in the expressions editor instead of \ because of its use as an escape sequence.

6. Add an Export Column Transformation to the Data Flow design surface. Connect the output of the Derived Column Transformation to the Export Column Transformation, which will consume the input stream and separate all the fields into two usable categories: fields that can possibly be in digitized data formats, and fields that can possibly be used as filenames.

7. Set the Extract Column equal to the [LargePhoto] field, since this contains the embedded GIF image. Set the File Path Column equal to the field name [FilePath]. This field is the one that you derived in the Derived Column Transformation.

8. Check the Force Truncate option to rewrite the files if they exist. (This will enable you to run the package again without an error if the files already exist.)

9. Run the package and check the contents of the directory. You should see a list of image files in primary key sequence.

Fuzzy Lookup

If you have done some work in the world of extract, transform, and load (ETL) processes, then you’ve run into the proverbial crossroads of handling bad data. The test data is staged, but all attempts to retrieve a foreign key from a dimension table result in no matches for a number of rows. This is the crossroads of bad data. At this point, you have a finite set of options. You could create a set of hand-coded complex lookup functions using T-SQL SOUNDEX, full-text searching, or distance-based word calculation formulas. This strategy is time-consuming to create and test, complicated to implement, and dependent on a given language, and it isn’t always consistent or reusable (not to mention that everyone after you will be scared to alter the code for fear of breaking it). You could just give up and divert the row for manual processing by subject matter experts (that’s a way to make some new friends). You could just add the new data to the lookup tables and retrieve the new keys. If you just add the data, the foreign key retrieval issue is solved, but you could be adding an entry into the dimension table that skews data-mining results downstream. This is what we like to call a lazy-add. This is a descriptive, not a technical, term. A lazy-add would import a misspelled job title like “prasedent” into the dimension table when there is already an entry of “president.” It was added, but it was lazy.

The Fuzzy Lookup and Fuzzy Grouping Transformations add one more road to take at the crossroads of bad data. These transformations add a step to the process that is easy to use, consistent, scalable, and reusable, and they can significantly reduce your unmatched rows, perhaps even eliminate them. If you've already allowed bad data into your dimension tables, or you are just starting a new ETL process, you'll want to put the Fuzzy Grouping Transformation to work on your data to find data redundancy. This transformation can examine the contents of a suspect field in a staged or committed table and provide possible groupings of similar words based on provided tolerances. This matching information can then be used to clean up that table. Fuzzy Grouping is discussed later in this chapter.

If you are correcting data during an ETL process, use the Fuzzy Lookup Transformation, but my suggestion is to do so only after attempting a regular lookup on the field. This best practice is recommended because Fuzzy Lookups don't come cheap. They build specialized indexes of the input stream and the reference data for comparison purposes. You can store these indexes for efficiency, but they consume disk space when stored, or memory if you choose to rebuild them on each run. Storing the matches made by Fuzzy Lookups over time in a translation or pre-dimension table is a great design. Regular Lookup Transformations can first be run against this translation table, and only the rows in the Data Flow that can't be matched are then diverted to a Fuzzy Lookup. This technique uses Lookup Transformations and translation tables to find matches using INNER JOINs. Fuzzy Lookups then whittle the remaining unknowns down if similar matches can be found with a high level of confidence. Finally, if your last resort is to divert the item to a subject matter expert, you can save that decision into the translation table so that the ETL process can match it in the first iteration next time.
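The exact-then-fuzzy cascade with a translation table can be sketched in Python as follows. This is a minimal illustration under stated assumptions. `resolve`, the dictionary-based translation and reference tables, and the use of `difflib.SequenceMatcher` as the similarity measure are all hypothetical stand-ins. None of them reflects how SSIS's Fuzzy Lookup actually scores matches.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Stand-in similarity score between 0 and 1 (not SSIS's formula)."""
    return SequenceMatcher(None, a.upper(), b.upper()).ratio()


def resolve(value, translation, reference, threshold=0.5):
    """Return (key, route) for an incoming value.

    translation: raw value -> key, filled over time (exact lookup first).
    reference:   canonical label -> key (fuzzy fallback).
    """
    # 1. Cheap exact match against the translation table.
    if value in translation:
        return translation[value], "exact"
    # 2. Costlier fuzzy comparison, only for values the exact lookup missed.
    best_label, best_score = None, 0.0
    for label in reference:
        score = similarity(value, label)
        if score > best_score:
            best_label, best_score = label, score
    if best_label is not None and best_score >= threshold:
        key = reference[best_label]
        translation[value] = key  # save the match so the next run finds it exactly
        return key, "fuzzy"
    # 3. No match good enough: divert to a subject matter expert.
    return None, "manual"
```

An expert's eventual decision for a value routed to "manual" would be written into the same translation mapping, so that value also becomes an exact match on the next run.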

Using the Fuzzy Lookup Transformation requires an input stream of at least one field that is a string. Internally, the transformation has to be configured to connect to a reference table that will be used for comparison. The output of this transformation is a set of columns containing the following:

· Input and Pass-Through Field Names and Values: This column contains the name and value of the text input provided to the Fuzzy Lookup Transformation or passed through during the lookup.

· Reference Field Name and Value: This column contains the name and value(s) of the matched results from the reference table.

· Similarity: This column contains a number between 0 and 1 representing similarity to the matched row and column. When configuring the Fuzzy Lookup Transformation, you also set a minimum Similarity threshold that matches must meet. The closer that threshold is to 1, the more closely the two text fields must match.

· Confidence: This column contains a number between 0 and 1 representing confidence of the match relative to the set of matched results. Confidence is different from similarity because it is not calculated by examining just one word against another but by comparing the chosen match against all the other possible matches. For example, the value Knight Brian may have a low similarity score but a high confidence that it matches Brian Knight. Confidence improves the more accurately your reference data represents your subject domain, and it can change based on the sample of data coming into the ETL process.
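The difference between the two scores can be illustrated with a small Python sketch. SSIS's internal scoring formulas are not described here, so the sketch relies on assumptions throughout. Similarity is approximated by `difflib.SequenceMatcher.ratio()`, which is sensitive to word order. Confidence is a hypothetical stand-in, defined as the best candidate's share of the total similarity across all candidates.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Order-sensitive character similarity between 0 and 1 (a stand-in score)."""
    return SequenceMatcher(None, a.upper(), b.upper()).ratio()


def best_match(value, candidates):
    """Return (best candidate, its similarity, a relative confidence)."""
    scores = {c: similarity(value, c) for c in candidates}
    best = max(scores, key=scores.get)
    total = sum(scores.values())
    # Confidence here: how much the best candidate stands out from the others.
    confidence = scores[best] / total if total else 0.0
    return best, scores[best], confidence


match, sim, conf = best_match("KNIGHT BRIAN", ["BRIAN KNIGHT", "JOHN SMITH"])
# sim is 0.5 because the word order differs; conf is higher because
# JOHN SMITH scores much lower than BRIAN KNIGHT.
print(match, round(sim, 2), round(conf, 2))
```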

The Fuzzy Lookup Transformation Editor has three configuration tabs.

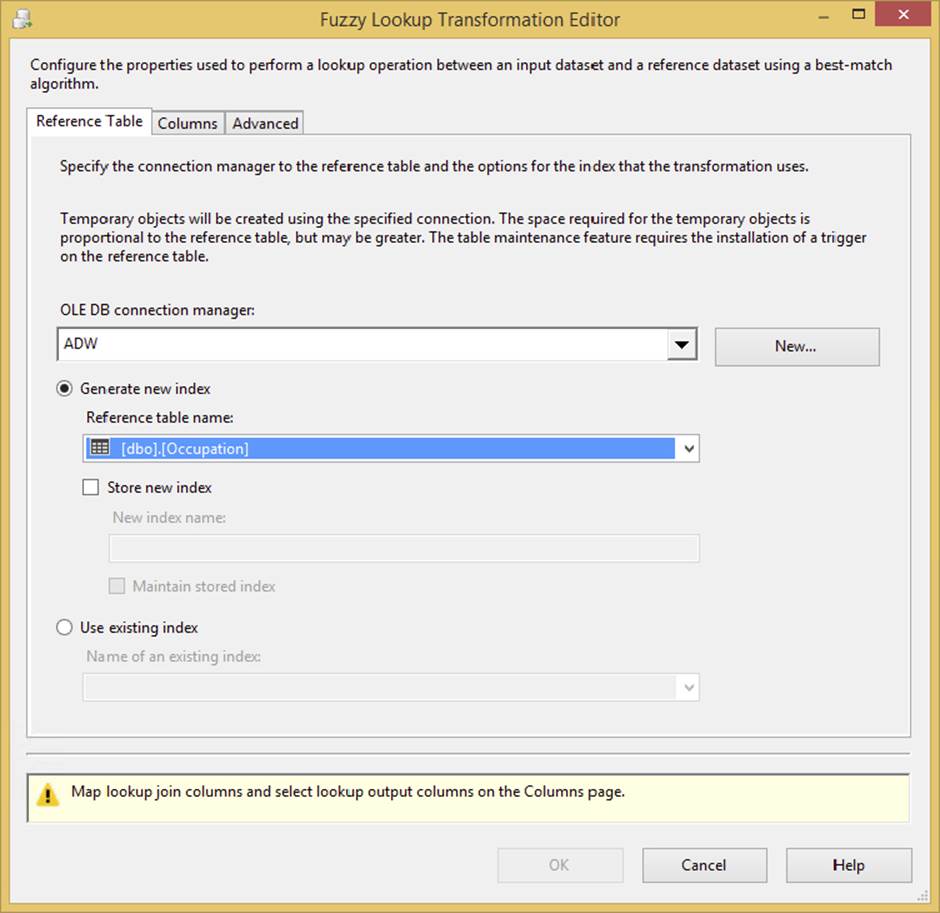

· Reference Table: This tab (shown in Figure 4-25) sets up the OLE DB Connection to the source of the reference data. The Fuzzy Lookup takes this reference data and builds a token-based index (which is actually a table) out of it before it can begin to compare items. This tab contains the options to save that index or use an existing index from a previous process. There is also an option to maintain the index, which detects changes from run to run and keeps the index current. Note that if your reference data is large, this index table can grow large as well.

FIGURE 4-25

There are a few additional settings in this tab that are of interest. The default option is "Generate new index." With this option, a table is created on the reference table's Connection Manager each time the transformation runs, and that table is populated with the index data described earlier in this section. Creating and loading this table can be an expensive process. The table is removed after the transformation is complete.

Alternatively, you can select the "Store new index" option, which creates the table and does not drop it. You can then reuse that table from other Data Flows, including Data Flows in other packages, and across multiple days. As you can imagine, though, a stored index can become stale soon after its creation. You can refresh it by running stored procedures in SQL Server, or you can select the "Maintain stored index" checkbox to create a trigger on the underlying reference table that automatically keeps the index table current. This option is available only with SQL Server reference tables, and it may slow down insert, update, and delete statements against that table.

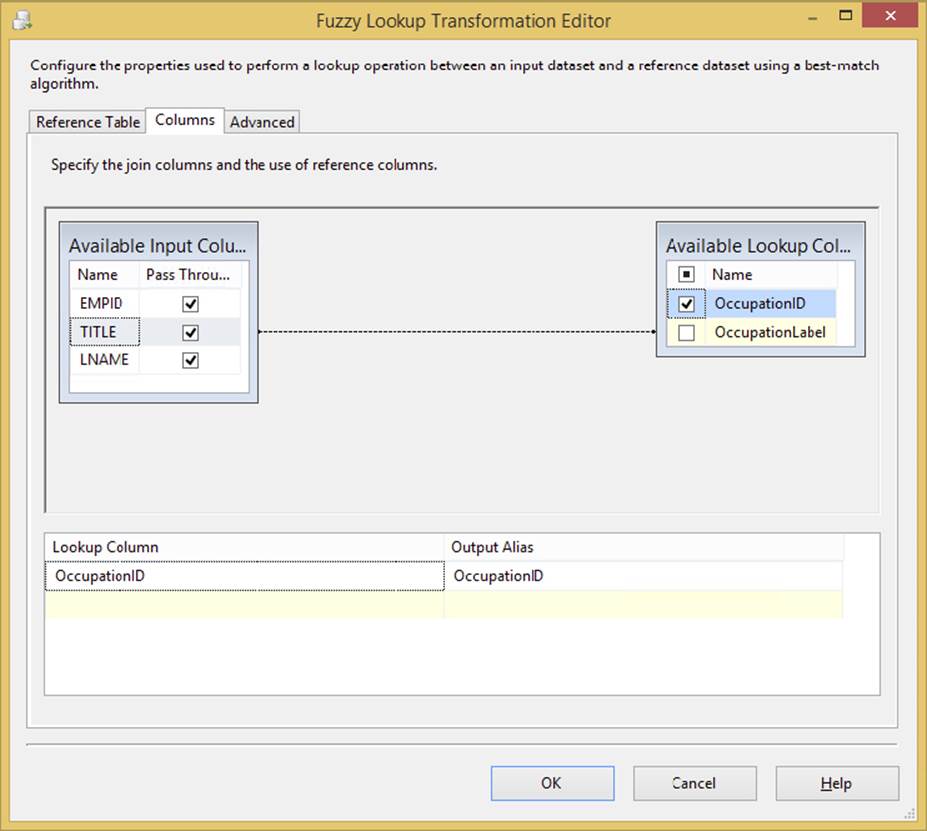

· Columns: This tab maps the one text field in the input stream to the field in the reference table for comparison. Drag and drop a field from the Available Input Columns list onto the matching field in the Available Lookup Columns list. You can also click the two fields to be compared and right-click to create a relationship. Another neat feature is the capability to add the foreign key of the lookup table to the output stream. To do this, just select the check box next to that field in the Available Lookup Columns list.

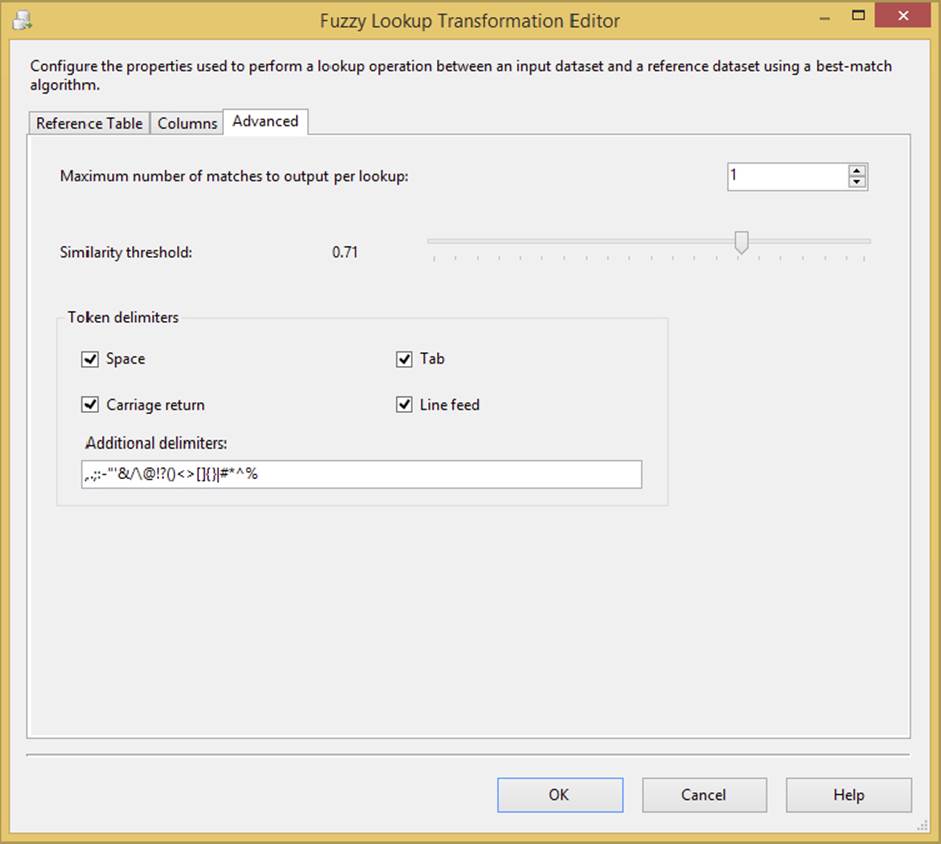

· Advanced: This tab contains the settings that control the fuzzy logic algorithms. You can set the maximum number of matches to output per incoming row. The default is 1, which means the best record is pulled from the reference table if it meets the similarity threshold. Raising this setting may generate more results that you'll have to sift through, but it may be required if there are too many closely matching strings in your domain data. A slider controls the Similarity threshold. It is recommended that you start this setting at .71 when experimenting and move it up or down as you review the results. This setting is normally determined based on a businessperson's review of the data, not the developer's. If a row cannot be found that's similar enough, the columns that you checked in the Columns tab will be set to NULL. The token delimiters can also be set if, for example, you don't want the comparison process to break incoming strings up by a period (.) or spaces. The default for this setting is all common delimiters. Figure 4-26 shows an example of an Advanced tab.

FIGURE 4-26
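The Reference Table and Advanced settings fit together roughly as follows. This Python sketch is an assumption-laden illustration, not SSIS's actual match index. It tokenizes text on configurable delimiter characters and builds an inverted index from tokens to reference keys; building this index is the one-time cost that a stored index avoids. It then scores only candidates that share a token, using Jaccard similarity. The names `tokenize`, `build_index`, and `fuzzy_lookup` are hypothetical. `max_matches` and `threshold` mirror the maximum-matches and Similarity threshold settings, and `None` plays the role of the NULL output.

```python
import re
from collections import defaultdict

DEFAULT_DELIMITERS = " .,-"  # assumption; SSIS's default delimiter list is broader


def tokenize(text, delimiters=DEFAULT_DELIMITERS):
    """Upper-case text and split it on any of the delimiter characters."""
    pattern = "[" + re.escape(delimiters) + "]+"
    return {tok for tok in re.split(pattern, text.upper()) if tok}


def build_index(reference, delimiters=DEFAULT_DELIMITERS):
    """Build token -> {reference key} plus each key's token set (the 'index')."""
    index = defaultdict(set)
    tokens_by_key = {}
    for key, label in reference.items():
        tokens = tokenize(label, delimiters)
        tokens_by_key[key] = tokens
        for tok in tokens:
            index[tok].add(key)
    return index, tokens_by_key


def fuzzy_lookup(value, index, tokens_by_key, max_matches=1, threshold=0.5,
                 delimiters=DEFAULT_DELIMITERS):
    """Return up to max_matches keys scoring >= threshold, or None (NULL)."""
    tokens = tokenize(value, delimiters)
    # Only reference rows sharing at least one token are compared at all.
    candidates = set().union(*(index.get(tok, set()) for tok in tokens))
    scored = []
    for key in candidates:
        ref = tokens_by_key[key]
        score = len(tokens & ref) / len(tokens | ref)  # Jaccard similarity
        if score >= threshold:
            scored.append((score, key))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [key for _, key in scored[:max_matches]] or None
```

The delimiter choice matters. With "-" in the list, XRAY TECH shares only the token TECH with X-RAY TECH, so it scores 0.25 and falls below a 0.5 threshold. This is the kind of behavior that tuning the token delimiters and Similarity threshold addresses.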

It’s important to not use Fuzzy Lookup as your primary Lookup Transformation for lookups because of the performance overhead; the Fuzzy Lookup transformation is significantly slower than the Lookup transformation. Always try an exact match using a Lookup Transformation and then redirect nonmatches to the Fuzzy Lookup if you need that level of lookup. Additionally, the Fuzzy Lookup Transformation does require the BI or Enterprise Edition of SQL Server.

Although this transformation neatly packages some highly complex logic in an easy-to-use component, the results won't be perfect. You'll need to spend some time experimenting with the configurable settings and monitoring the results. To that end, the following short example puts the Fuzzy Lookup Transformation to work by setting up a small table of occupation titles that will represent your dimension table. You will then import a set of person records that requires looking up each occupation in your dimension table. Not all will match, of course. The Fuzzy Lookup Transformation will be employed to find matches, and you will experiment with the settings to learn about its capabilities.

1. Use the following data (code file FuzzyExample.txt) for this next example. This file can also be downloaded from www.wrox.com/go/prossis2014 and saved to c:\ProSSIS\Chapter4\FuzzyExample.txt. The data represents employee information that you are going to import. Notice that some of the occupation titles are cut off in the text file because of the positioning within the layout. Also notice that this file has an uneven right margin. Both of these issues are typical ETL situations that are especially painful.

EMPID TITLE LNAME
00001 EXECUTIVE VICE PRESIDEN WASHINGTON
00002 EXEC VICE PRES PIZUR
00003 EXECUTIVE VP BROWN
00005 EXEC VP MILLER
00006 EXECUTIVE VICE PRASIDENS WAMI
00007 FIELDS OPERATION MGR SKY
00008 FLDS OPS MGR JEAN
00009 FIELDS OPS MGR GANDI
00010 FIELDS OPERATIONS MANAG HINSON
00011 BUSINESS OFFICE MANAGER BROWN
00012 BUS OFFICE MANAGER GREEN
00013 BUS OFF MANAGER GATES
00014 BUS OFF MGR HALE
00015 BUS OFFICE MNGR SMITH
00016 BUS OFFICE MGR AI
00017 X-RAY TECHNOLOGIST CHIN
00018 XRAY TECHNOLOGIST ABULA
00019 XRAY TECH HOGAN
00020 X-RAY TECH ROBERSON

2. Run the following SQL code (code file FuzzyExampleInsert.sql) in AdventureWorksDW or another database of your choice. This code creates your dimension table and adds the accepted entries that will be used for reference purposes. Again, this file can be downloaded from www.wrox.com/go/prossis2014:

CREATE TABLE [Occupation](
    [OccupationID] [smallint] IDENTITY(1,1) NOT NULL,
    [OccupationLabel] [varchar](50) NOT NULL,
    CONSTRAINT [PK_Occupation_OccupationID] PRIMARY KEY CLUSTERED
    (
        [OccupationID] ASC
    ) ON [PRIMARY]
) ON [PRIMARY]
GO
INSERT INTO [Occupation] SELECT 'EXEC VICE PRES'
INSERT INTO [Occupation] SELECT 'FIELDS OPS MGR'
INSERT INTO [Occupation] SELECT 'BUS OFFICE MGR'
INSERT INTO [Occupation] SELECT 'X-RAY TECH'

3. Create a new SSIS package, drop a Data Flow Task onto the Control Flow design surface, and click the Data Flow tab.

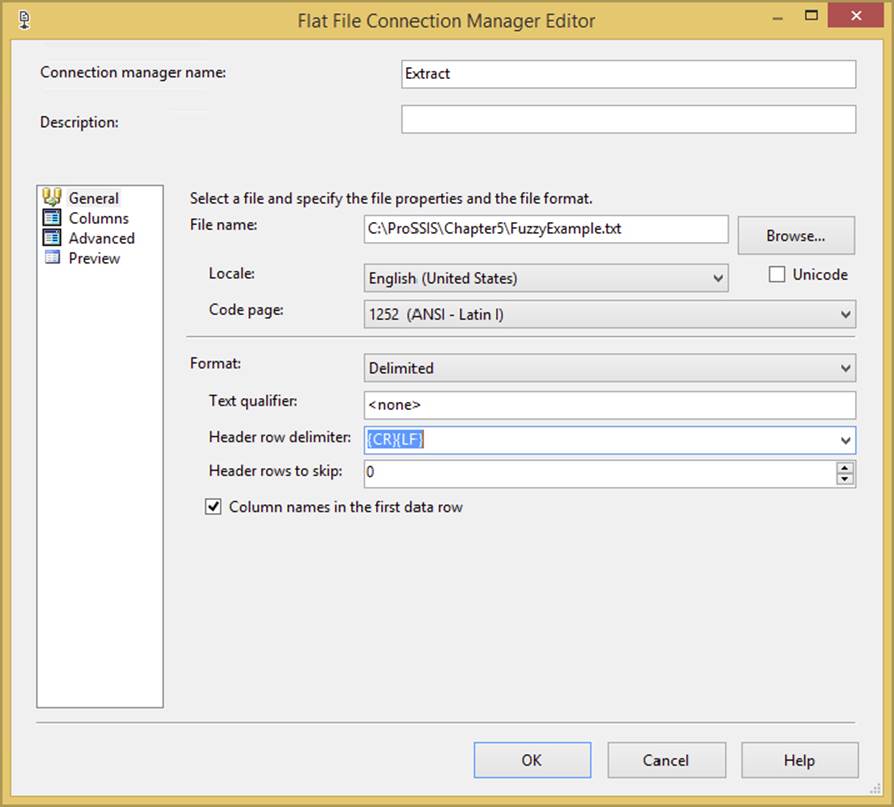

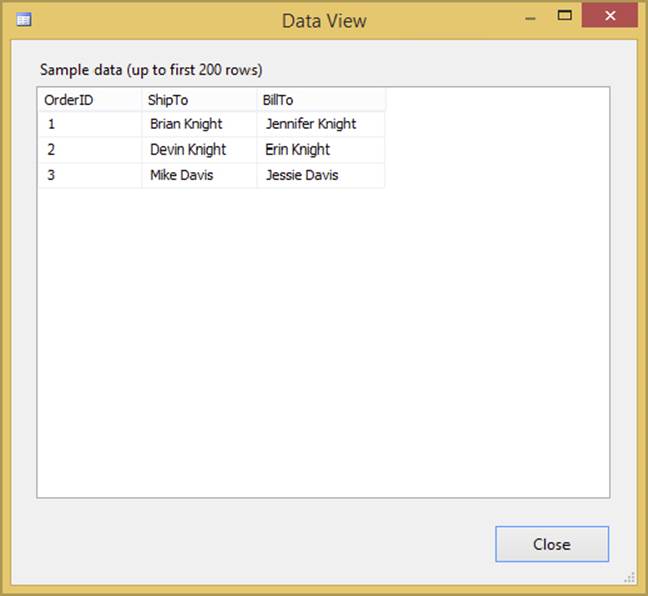

4. Add a Flat File Connection Manager. Name it Extract, and then set the filename to c:\ProSSIS\Chapter4\FuzzyExample.txt. Set the Format property to Delimited, and select the option to read the column names from the first data row, as shown in Figure 4-27.

FIGURE 4-27

5. Click the Columns tab to confirm that the connection is properly configured and shows three columns. Click the Advanced tab and ensure that the OutputColumnWidth property for the TITLE field is set to 50. Save the connection.

6. Add a Flat File Source to the Data Flow surface and configure it to use the Extract connection.

7. Add a Fuzzy Lookup Transformation and an OLE DB Destination to the Data Flow design surface. Connect the output of the Flat File Source to the Fuzzy Lookup, and connect the output of the Fuzzy Lookup to the OLE DB Destination.

8. Open the Fuzzy Lookup Transformation Editor. In the Reference Table tab, set the OLE DB Connection Manager to use the AdventureWorksDW database connection and the Occupation table. Set up the Columns tab by connecting the input to the reference table columns as in Figure 4-28, dragging the TITLE column to the OccupationLabel column on the right. In the Advanced tab, set the Similarity threshold to 0.50.

FIGURE 4-28

9. Open the editor for the OLE DB Destination. Set the OLE DB connection to the AdventureWorksDW database. Click New to create a new table to store the results. In the DDL statement that is presented to you, change the table name to [FuzzyResults]. Click the Mappings tab, accept the defaults, and save.

10. Add a grid Data Viewer to the path between the Fuzzy Lookup and the OLE DB Destination.

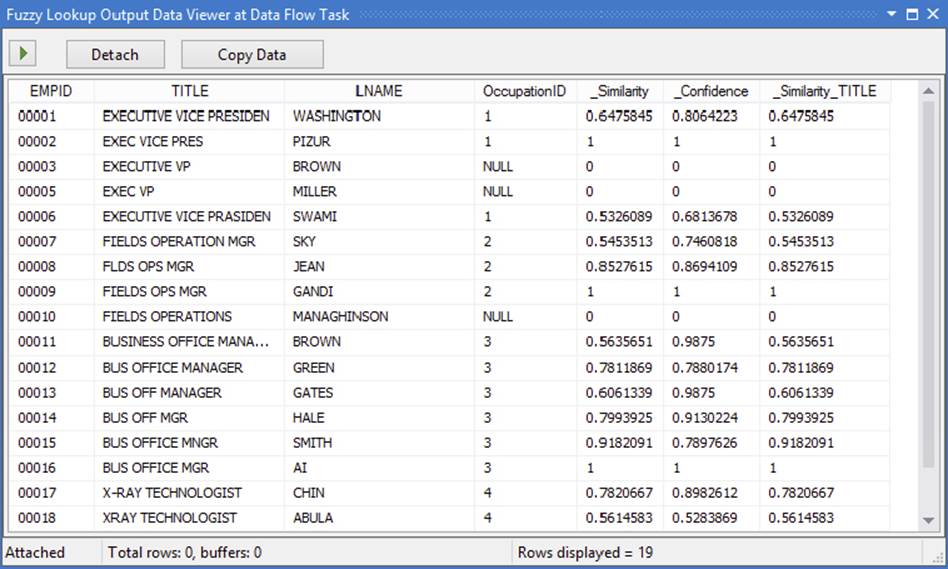

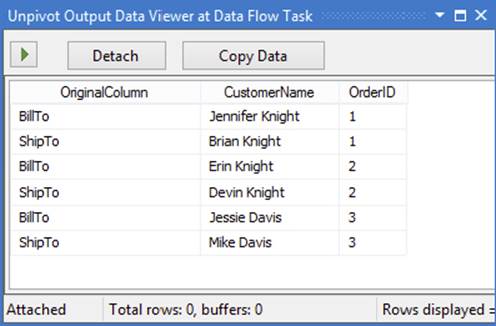

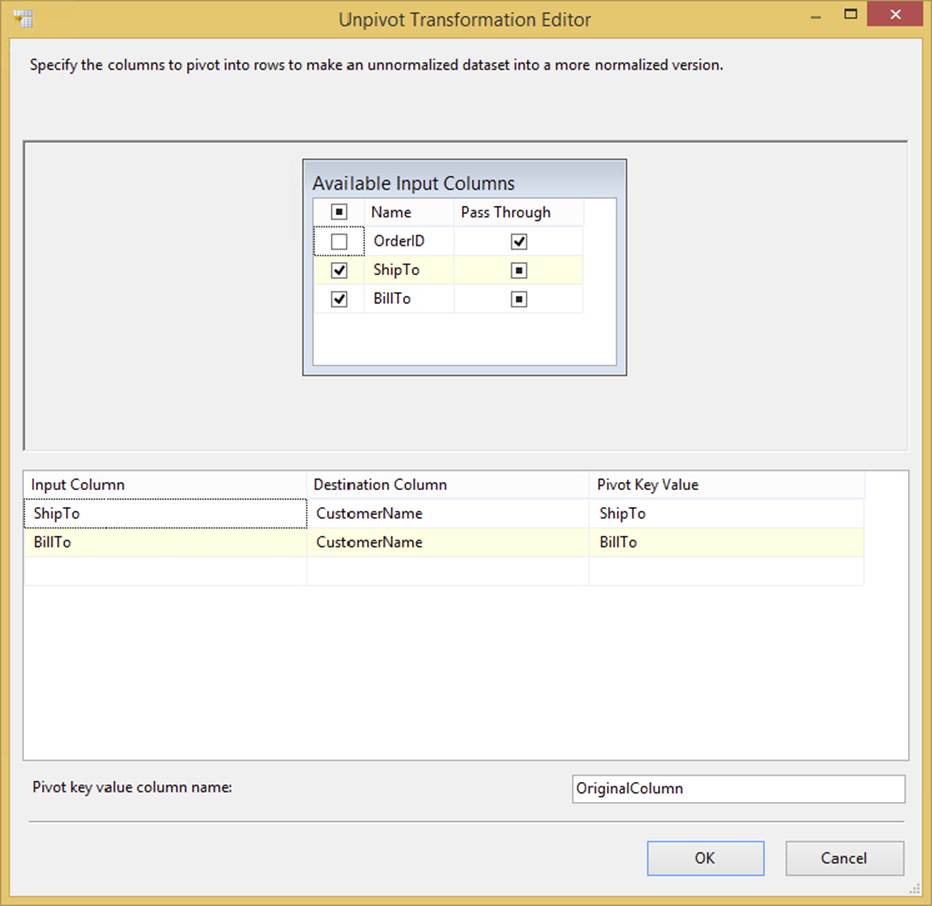

Run the package. Your results in the Data Viewer should resemble those in Figure 4-29. Notice that the logic has matched most of the items at a 50 percent similarity threshold, and you have the foreign key OccupationID added to your input for free! Had you used a strict INNER JOIN or Lookup Transformation, you would have made only three matches, a dismal hit ratio. These exact matches can be seen in the Fuzzy Lookup output, where the values are 1 for similarity and confidence. A few of the columns are now set to NULL because rows such as EXECUTIVE VP weren't 50 percent similar to the EXEC VICE PRES value. You would typically send those NULL records through a Conditional Split to a table for manual inspection.

FIGURE 4-29
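Routing the NULL matches aside is the job of a Conditional Split. In SSIS, the condition would be an expression such as ISNULL(OccupationID). The following Python sketch shows the same routing logic, assuming each output row is a dictionary and a missing match is represented by None. The function name `conditional_split` is hypothetical, and the two sample rows are taken from the example data and results described above.

```python
def conditional_split(rows, key_column="OccupationID"):
    """Route rows with a NULL (None) key to manual review and the rest onward."""
    matched, needs_review = [], []
    for row in rows:
        if row.get(key_column) is None:
            needs_review.append(row)
        else:
            matched.append(row)
    return matched, needs_review


rows = [
    {"EMPID": "00002", "TITLE": "EXEC VICE PRES", "OccupationID": 1},
    {"EMPID": "00003", "TITLE": "EXECUTIVE VP", "OccupationID": None},
]
matched, needs_review = conditional_split(rows)
print(len(matched), len(needs_review))  # 1 1
```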

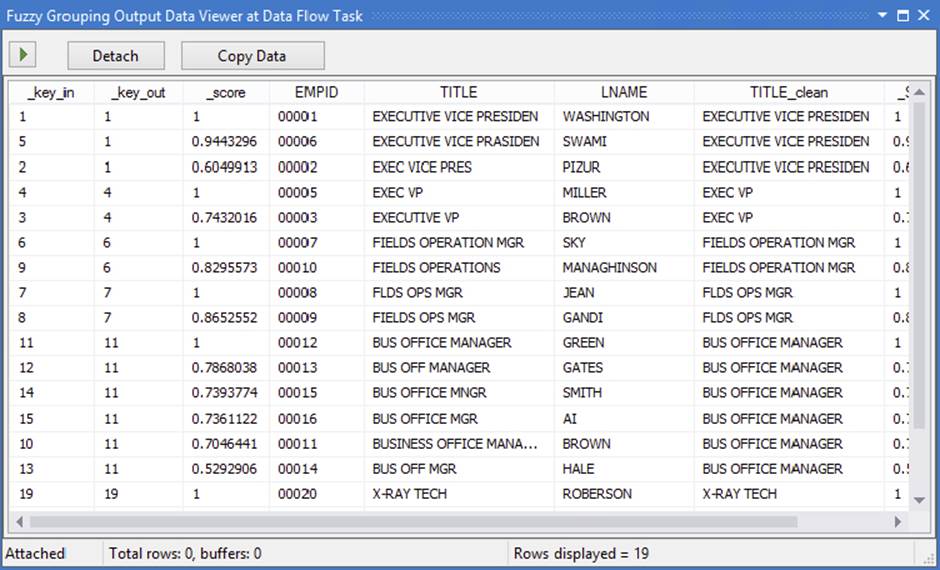

Fuzzy Grouping

In the previous section, you learned about situations where bad data creeps into your dimension tables. The blame was placed on the “lazy-add” ETL processes that add data to dimension tables to avoid rejecting rows when there are no natural key matches. Processes like these are responsible for state abbreviations like “XX” and entries that look to the human eye like duplicates but are stored as two separate entries. The occupation titles “X-Ray Tech” and “XRay Tech” are good examples of duplicates that humans can recognize but computers have a harder time with.

The Fuzzy Grouping Transformation can look through a list of similar text and group the results using the same logic as the Fuzzy Lookup. You can use these groupings in a translation table to clean up source and destination data, or to crunch fact tables into more meaningful results without altering the underlying data. The Fuzzy Grouping Transformation also expects an input stream of text, and it requires a connection to an OLE DB Data Source because it creates a set of structures in that source to use during analysis of the input stream.
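The grouping idea can be sketched in a few lines of Python. This is a deliberately simple greedy stand-in, not the Fuzzy Grouping algorithm. It uses `difflib.SequenceMatcher` as the similarity measure and treats the first value seen in each group as that group's canonical value. `fuzzy_group` and the 0.8 threshold are hypothetical choices. The sample values come from the occupation titles used in this chapter.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Stand-in similarity score between 0 and 1."""
    return SequenceMatcher(None, a.upper(), b.upper()).ratio()


def fuzzy_group(values, threshold=0.8):
    """Greedily put each value in the first group whose canonical value is similar enough."""
    groups = []  # list of (canonical value, members)
    for value in values:
        for canonical, members in groups:
            if similarity(value, canonical) >= threshold:
                members.append(value)
                break
        else:
            groups.append((value, [value]))  # no close group: start a new one
    return {canonical: members for canonical, members in groups}


groups = fuzzy_group(["X-RAY TECH", "BUS OFFICE MGR", "XRAY TECH", "BUS OFFICE MNGR"])
print(groups)
```

The resulting mapping from each member to its canonical value is the kind of translation table the text describes for cleaning up source or destination data.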

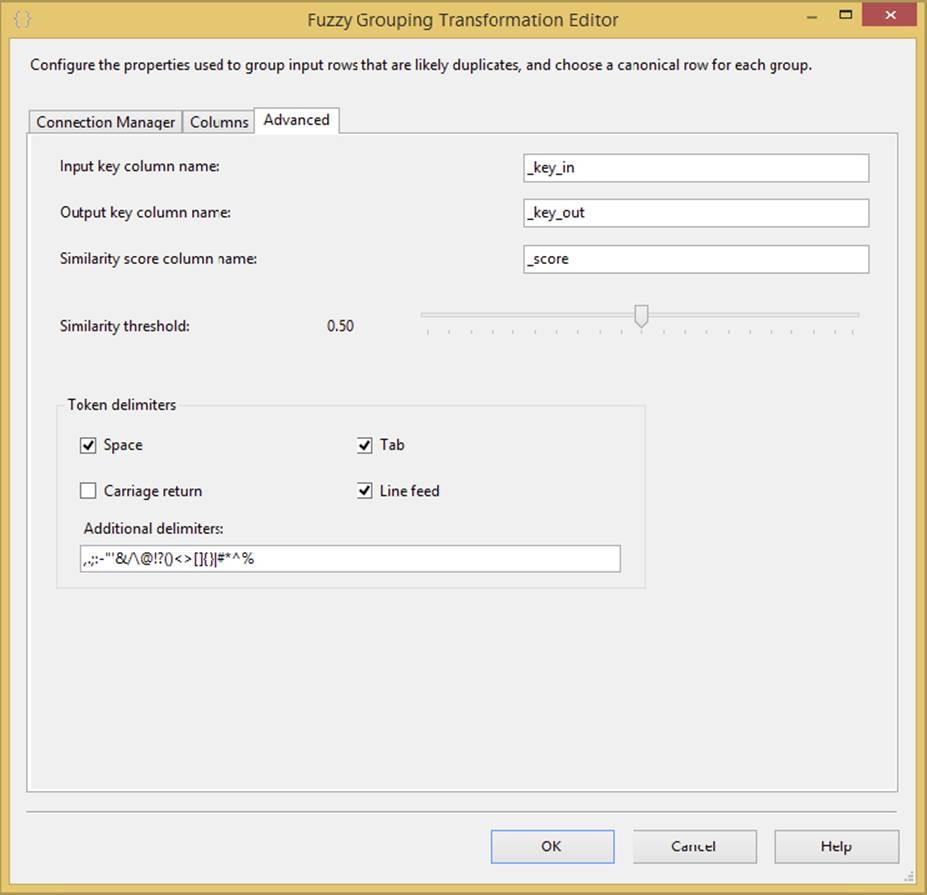

The Fuzzy Grouping Transformation Editor has three configuration tabs:

· Connection Manager: This tab sets the OLE DB connection that the transform will use to write the storage tables that it needs.

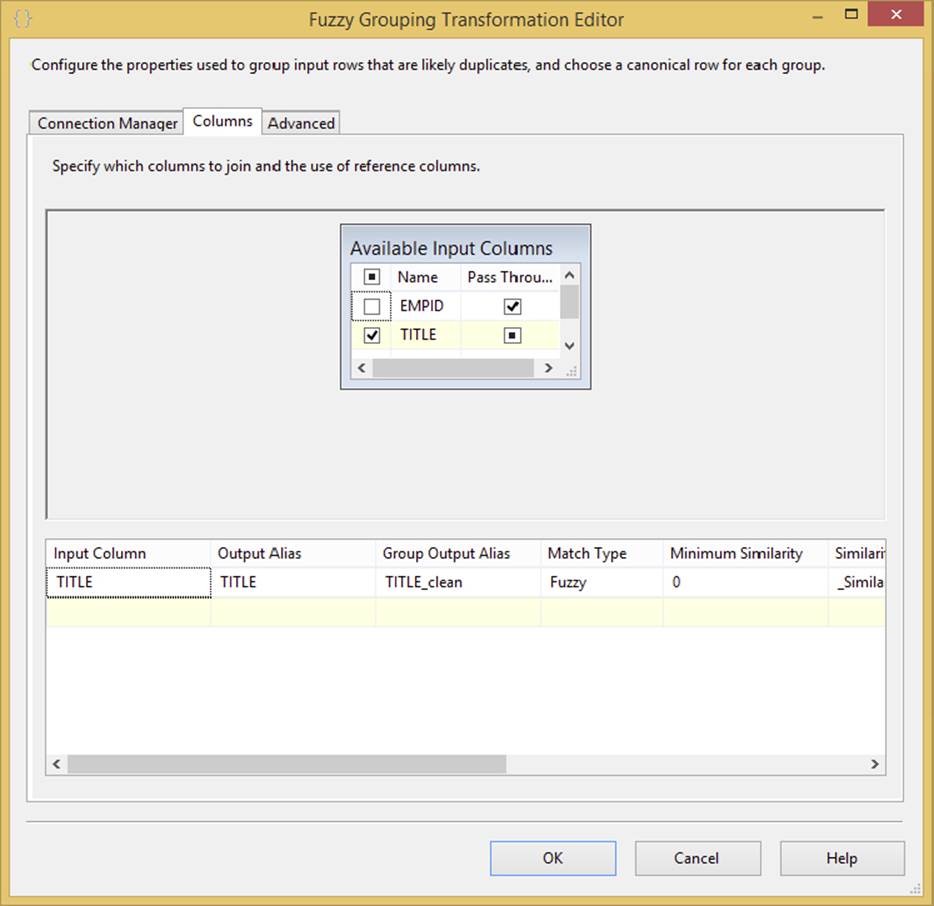

· Columns: This tab displays the Available Input Columns and allows the selection of any or all input columns for fuzzy grouping analysis. Figure 4-30 shows a completed Columns tab.

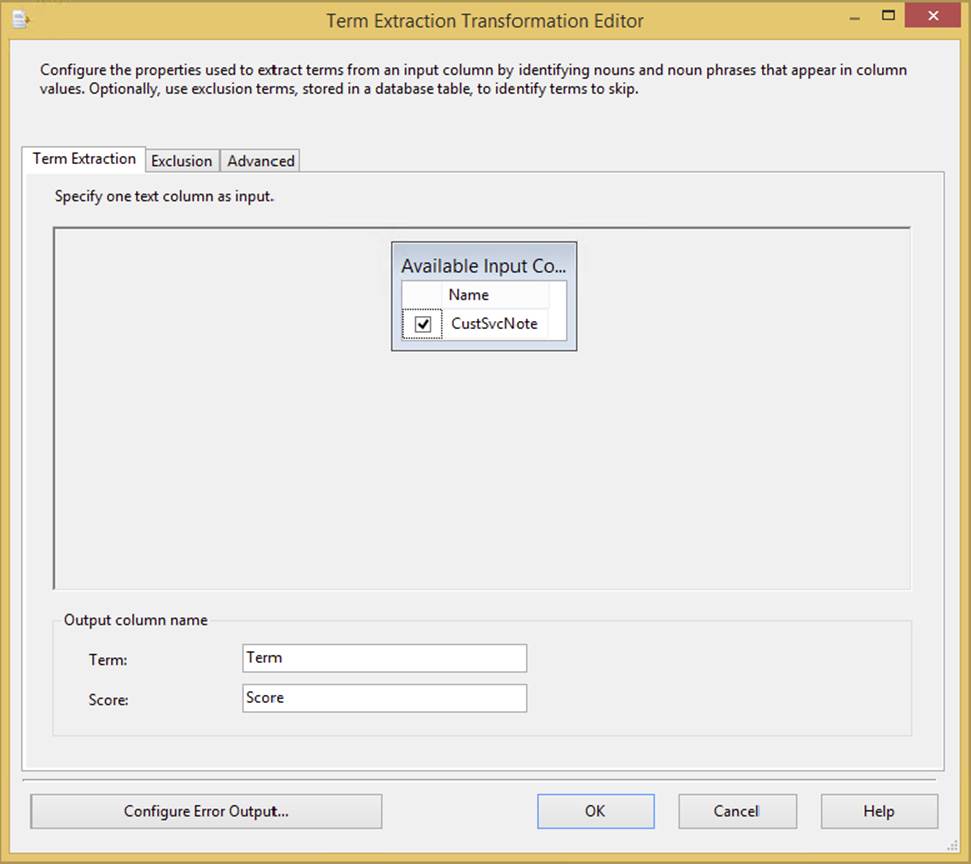

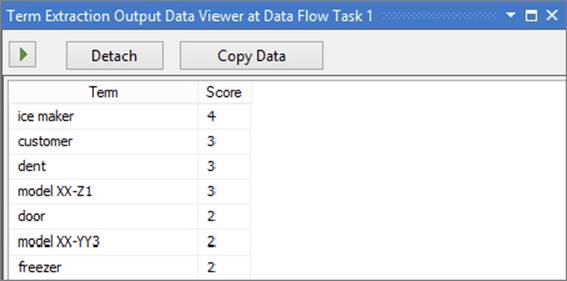

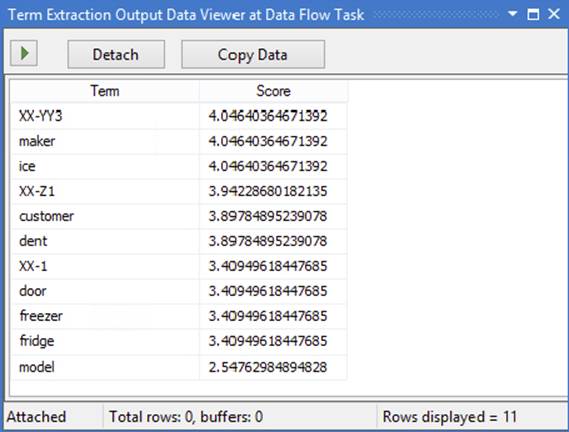

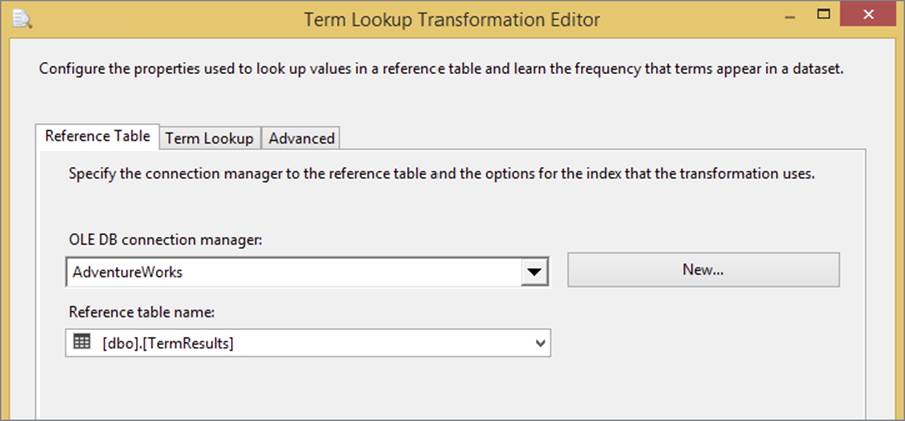

FIGURE 4-30