Building Web Apps with WordPress (2014)

Chapter 16. WordPress Optimization and Scaling

This chapter is all about squeezing the most performance possible out of WordPress through optimal server configuration, caching, and clever programming.

WordPress often gets knocked for not scaling as well as other PHP frameworks or other programming languages. The idea that WordPress doesn’t work at scale mostly comes from the fact that WordPress has traditionally been used to run small blogs on shared hosting accounts. The WordPress core team makes deliberate decisions (including continuing to support deprecated functionality and older versions of PHP and MySQL) to make sure that WordPress will boot up easily on as many hosting setups as possible, including underpowered shared hosting accounts.

So there are a lot of really slow WordPress sites out in the wild that help to give the impression that WordPress itself is slow. However, WordPress is pretty darn fast on the right setup and can be scaled using the same techniques any PHP/MySQL-based app can use. We will cover many of those techniques in this chapter, introducing you to a number of tools and concepts that can be applied to your own WordPress apps.

Terms

In this chapter, and throughout this book, we’ll throw around terms like “optimization” and “scaling.” It’s important to understand exactly what we mean by these terms.

Optimization generally refers to getting your app and scripts to run as fast as possible. In some cases, we will be optimizing for memory use or something other than speed. But for the most part, when we say “optimize,” we are talking about making things fast.

Scaling means building an app that can handle more stuff. More page views. More visits. More users. More posts. More files. More subsites. More computations.

Scaling can also mean building an app to handle bigger stuff. Bigger pages. Bigger posts. Bigger files.

The truth is that sometimes an app, or specific parts of an app, will run fine under light use or when database tables are small. But once the number of users grows, or the objects being worked on grow in number or size, the app’s performance falls off or the app locks up completely.

Scalability is a subjective measure of how well your code and application handle more and bigger stuff. Generally, you want to build your app to handle the amount of growth you expect and then some more just in case. On the other hand, you want to always weigh the pros and cons of any platform or coding decision made for the sake of scalability. These decisions usually come at a cost, both in money and also in technical debt or added complexity to your codebase. Also, some techniques that make handling many large transactions as fast as possible actually slow things down when working with fewer, smaller transactions. So it’s always important to make sure that you are building your app toward your real-world expectations and aren’t programming for scalability for the sake of it.

Scaling and optimization are closely related because applications that are fast scale better. There is more to scaling than having fast components, but fast components will make scaling easier. And having a slow application can make scaling harder. For this reason, it always makes sense to optimize your application from the inside out. In “The Truth About WordPress Performance,” a great whitepaper by Copyblogger Media and W3 Edge, the authors refer to optimizing the “origin” versus optimizing the “edge.”

Origin refers to your WordPress application, which is the source of all of the data coming out of your app. Optimizing the origin involves making your WordPress app and the server it runs on faster.

Edge refers to services outside of your WordPress application, which are further from the origin but potentially closer to your users. These services include content delivery networks (CDNs) as well as things like browser caching. Optimizing the edge involves using these services in a smart way to make the end user experience better.

Origin Versus Edge

Again, we advocate optimizing from the inside out, or from the origin to the edge. Improvements to core WordPress performance will always trickle through the edge to the end user. On the other hand, performance gains from outside services, while improving the user experience, can sometimes hide bigger issues in the origin that need to be addressed.

A typical example to illustrate this point is when a proxy server like Varnish (covered in more detail later in this chapter) is used to speed up load times on a slowly loading site. Varnish will make a copy of your fully rendered WordPress pages. If a visitor requests a page that is available in the Varnish cache, that copy is served to the visitor rather than a new one being generated through WordPress.

Serving flat files is much faster than running dynamic PHP code, so Varnish can greatly speed up a website. A page that takes 10 seconds to load on a slow WordPress setup might load in 1 second when Varnish serves a cached copy. However, 10-second load times are unacceptable, and they are still going to happen. The first time each page is loaded, it will take 10 seconds. Page loads in your admin dashboard are going to take 10 seconds. If a page copy is purged from the Varnish cache for any reason, either because the page has been updated or because Varnish needed to make room, it’s going to take 10 seconds to load a fresh copy of that page.

Varnish and tools like it are great at what they do and can be a valuable part of your application platform. At the same time, you want to make sure that these edge services aren’t hiding issues in your origin.

Testing

For this chapter, part of our definition of performance will be tied to how fast certain pages load in the web browser. We will use a few different tools to test page loads for a single user and also for many concurrent users.

For all of the tests in this chapter, we used a fresh install of WordPress, running the Twenty Thirteen theme and no other plugins. The site was hosted on a dedicated server running CentOS 6 with the following specs:

§ Intel® Xeon® E3-1220 processor

§ 4 Cores x 3.1 GHz

§ 12 GB DDR3 ECC RAM

§ 2 TB SATA hard drives in software RAID

When not otherwise specified, the server was running a minimal setup with only Apache, MySQL, and PHP installed.

What to Test

Before getting into how to test, let’s spend a little bit of time thinking about what to test. The testing tools described below primarily work by pointing your browser or another tool at a specific URL or a group of URLs for testing. But how do you choose which URLs to test?

The easy answer is to test everything, but that’s not very helpful. As important as knowing which pages to test is why those pages should be tested and what you are looking for. So here are a few things to think about when testing your app’s pages for performance.

Test a “static” page to use as a benchmark

By static here, we don’t mean a static .html file. The page should be one generated by WordPress, but choose one, like your “about” page or contact form, that has few moving parts. The results for page load on your more static pages will represent a sort of best-case scenario for how fast you can get pages to load on your app. If static pages are loading slowly, fix that first before moving on to your more complicated pages.

Test your pages with all outside page caches and accelerators turned off

You first want to make sure that your core WordPress app is running well before testing your entire platform, including CDNs, reverse proxies, and any other accelerators you are using to speed up the end user experience. If you send 100 concurrent connections to a page with a full page cache set up, the first page load might take 10 seconds and the following 99 may take 1 second each. Your average load time will be 1.09 seconds! However, as we discussed earlier, that first 10-second load time is unacceptable and hints at larger problems with your setup.
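The arithmetic behind that misleading average is one slow, uncached load averaged with 99 fast, cached ones:

$$
\frac{10\ \text{s} + 99 \times 1\ \text{s}}{100} = \frac{109\ \text{s}}{100} = 1.09\ \text{s}
$$

The average looks healthy even though one visitor in this test waited ten times longer than everyone else.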

Test your pages with all outside page caches and accelerators turned on

Turning off the outside accelerators will help you locate issues with your core app. However, you want to run tests with the services on as well. This will help you locate issues with those services. Sometimes they will slow down your app.

Test prototypical pages

Test the kinds of pages your users are most likely to interact with. If your app revolves around a custom post type (CPT), make sure that the CPT pages perform well. If your app revolves around a search of some kind, test the search form and search results pages.

Test atypical pages

While you should spend the most time focusing on the common uses of your app, it is a good idea to test the atypical or longtail uses of your app as well, especially if you have some reason to expect a performance issue there.

Test URLs in groups

Some of the following tools (like Siege and Blitz.io) allow you to specify a list of URLs. By including a list of all of the different types of pages your users will interact with, you get a better idea of what kind of traffic your site can handle. If you expect (or know from analytics) that 80% of your site traffic is on static pages and 20% is on your search pages, you can build a list of URLs with eight static pages and two search results pages, which will simulate that same 80/20 split during testing. If the test shows your site can handle 1,000 visitors per minute this way, it’s a pretty good indication that your site will be able to handle 1,000 visitors per minute in a real-world scenario.
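Here is a minimal sketch of building such a list with a shell script, assuming you will feed it to Siege (covered later in this chapter), which can read URLs from a file with its -f option and pick among them at random with -i. The make_url_list helper, the domain, the page slugs, and the search terms are all hypothetical placeholders; substitute real URLs from your own app.

```shell
#!/bin/sh
# Sketch using a hypothetical helper: build a URL list with an 80/20 split
# between "static" pages and search results pages. BASE_URL, the slugs, and
# the search terms are placeholders, not real pages on your site.
BASE_URL="http://yourdomain.com"

make_url_list() {
  out="$1"
  : > "$out"  # create or truncate the output file

  # Eight static pages = 80% of the list
  for slug in about contact team faq pricing terms privacy jobs; do
    printf '%s/%s/\n' "$BASE_URL" "$slug" >> "$out"
  done

  # Two search results pages = 20% of the list (WordPress searches use ?s=)
  for term in widgets gadgets; do
    printf '%s/?s=%s\n' "$BASE_URL" "$term" >> "$out"
  done
}

make_url_list urls.txt

# Then point Siege at the file, choosing URLs at random from the list:
#   siege -i -c100 -t2M -f urls.txt
```

Because each type of page appears in the file in proportion to its expected share of traffic, random selection approximates the 80/20 mix over a long enough run.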

Test URLs by themselves

Testing URLs in groups will make the topline results more realistic, but it will make tracking down certain performance issues harder. If your static pages load in about 1 second, but your search results pages load in 10 seconds, the 80/20 split test described above would report an average load time of about 2.8 seconds. However, the 10-second load time on the search results pages may be unacceptable. If you test a single search results page or a group of similar search results pages, you’ll be better able to diagnose and fix performance issues with the search functionality on your site.
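As a weighted average, that 80/20 mix works out to:

$$
0.8 \times 1\ \text{s} + 0.2 \times 10\ \text{s} = 0.8\ \text{s} + 2.0\ \text{s} = 2.8\ \text{s}
$$

Most of that 2.8-second figure comes from the 20% of requests that hit search, which is exactly the detail a grouped test hides.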

Test your app from locations outside your web server

The command-line tools described below can be run from the same server serving your website. It’s a good idea to run the tools from a different server outside that network so you can get a more realistic idea of what your page loads are when including network traffic to and from the server.

Test your app from inside your web server

It also makes sense to run performance tests from within your web server. This way you remove any effect the outside network traffic will have on the numbers and can better diagnose performance issues that are happening within your server.

Each preceding recommendation has a good counterpart, which is another way of saying that you really do have to test every page of your site under multiple conditions if you want the best chance of finding performance issues. The important part is to have an idea of what you are trying to test and to reduce, as much as possible, outside influences on the one piece you are focusing on.

Chrome Debug Bar

The Google Chrome Debug Bar is a popular tool with web developers that can be used to analyze and debug HTML, JavaScript, and CSS on websites. The Network tab also allows you to view all requests to a website, their responses, and the time each request took.

Similar tabs exist in the Firebug plugin for Firefox and in Internet Explorer’s Developer Tools.

To test your site’s page load time using the Chrome Debug Bar:

1. Open Chrome.

2. Click the Chrome menu and go to Tools → Developer Tools.

3. Click the Network tab of the debug bar that shows in the bottom pane.

4. Navigate to the page you want to test.

5. You will get a report of all of the requests made to the server.

6. Scroll to the bottom to see the total number of requests and final page load time.

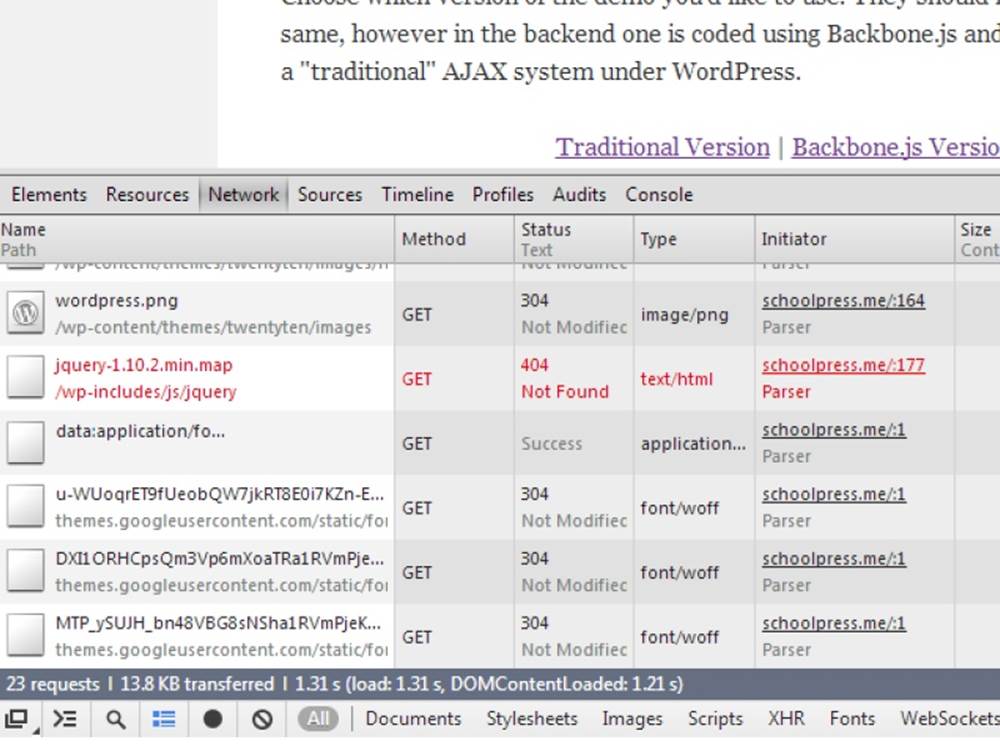

Figure 16-1 shows an example of the Chrome Debug Bar running on a website.

Figure 16-1. A shot of the Network tab of the Chrome Debug Bar

The final report will look something like the following:

```
19 requests | 35.7 KB transferred | 1.42 s (load: 1.16 s, DOMContentLoaded: 1.10 s)
```

This line is telling us the number of requests, the total amount of data transferred to and from the server, the final load time, and also the amount of time it took to load the DOM.

The DOMContentLoaded event fires once the HTML of a page has been completely loaded and parsed, without waiting for images, stylesheets, and other subresources to finish loading. For this reason, the DOMContentLoaded time will typically be smaller than the total load time reported by the debug bar.

The Chrome Debug Bar is a crude way to test load times. You have to do multiple loads manually and keep track of the load times to get a good average. However, the debug bar does give you useful information about individual file and script load times, which can be used to find bottlenecks in your site’s images or scripts.

When testing page load times with the Google Chrome Debug Bar, the first visit to a page will typically be much slower than subsequent loads, because the browser caches CSS, JavaScript, and images after that first visit. Server-side caching may also affect load times. Keep this in mind when testing. Unless you are trying to test page loads with caching enabled, you may want to disable caching in your browser and on the server.

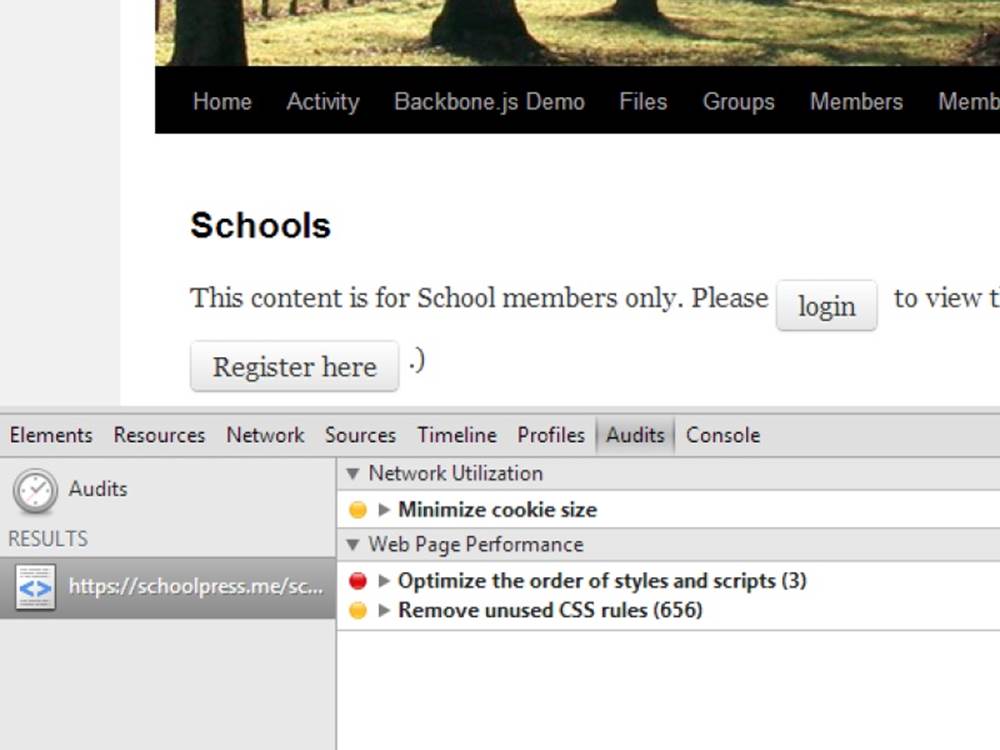

You can also use the Audit tab of the Chrome Debug Bar (or the PageSpeed Tools by Google) to get recommendations for how to make your website faster. Figure 16-2 shows an example of the report generated by the Audit tab. This chapter will cover the main tools and methods for carrying out the kinds of recommendations made by the PageSpeed audit.

Figure 16-2. The Audit tab of the Chrome Debug Bar

NOTE

There is also a PageSpeed Server offered by Google that is in beta at the time of this writing. In the future, this may be a useful tool for speeding up websites through caching, but in our experience, the tool is not flexible enough to work with complicated web apps (that can’t simply cache everything). We recommend the other tools covered in this chapter instead.

Apache Bench

Using your web browser, you can get an idea of load times for a single user under whatever load your server happens to be under at the time of testing. To get an idea of how well your server will respond under constant heavy load, you need to use a benchmarking tool like Apache Bench.

Despite the name, Apache Bench can be used to test HTTP servers other than Apache. Basically, it sends the specified number of requests to a website, using as many simultaneous connections as you tell it to, and records the average load times along with other information.

Installing Apache Bench

Apache Bench is available for all major Linux distributions. On CentOS and Red Hat servers, you can install it via the httpd-tools package. If you have the yum package manager installed, you can use this command:

```shell
yum install httpd-tools
```

On Ubuntu servers, Apache Bench will be part of the apache2-utils package. If you have apt-get installed, you can use this command:

```shell
apt-get install apache2-utils
```

Apache Bench is also available for Windows and should have been installed alongside your Apache installation. Information on how to install and run Apache Bench on Windows can be found in the Apache docs.

Running Apache Bench

The full list of parameters and options can be found in the man page or on the Apache website. The command to run Apache Bench is ab, and a typical command will look like this:

```shell
ab -n 1000 -c 100 http://yourdomain.com/index.php
```

The two main parameters for the ab command are -n and -c. The -n parameter is the total number of requests to make. The -c parameter is the number of requests to perform at the same time. In the last example, ab will make 1,000 total requests, keeping up to 100 of them in flight simultaneously.

NOTE

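Apache Bench requires a path in the URL. If you leave off the trailing slash on your domain (for example, http://yourdomain.com instead of http://yourdomain.com/) and don’t specify a file such as index.php, Apache Bench may fail with an error.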

The output will look something like this. (The report shows the results for 100 concurrent requests against the home page of a default WordPress install running on our test server.)

```
# ab -n 1000 -c 100 http://yourdomain.com/index.php
This is ApacheBench, Version 2.3 <$Revision: 655654 $>
Copyright 1996 Adam Twiss, Zeus Technology Ltd, http://www.zeustech.net/
Licensed to The Apache Software Foundation, http://www.apache.org/

Benchmarking yourdomain.com (be patient)

Server Software:        Apache/2.2.15
Server Hostname:        yourdomain.com
Server Port:            80

Document Path:          /
Document Length:        251 bytes

Concurrency Level:      100
Time taken for tests:   8.167 seconds
Complete requests:      1000
Failed requests:        993
   (Connect: 0, Receive: 0, Length: 993, Exceptions: 0)
Write errors:           0
Non-2xx responses:      7
Total transferred:      9738397 bytes
HTML transferred:       9516683 bytes
Requests per second:    122.44 [#/sec] (mean)
Time per request:       816.740 [ms] (mean)
Time per request:       8.167 [ms] (mean, across all concurrent requests)
Transfer rate:          1164.40 [Kbytes/sec] received

Connection Times (ms)
              min  mean[+/-sd] median   max
Connect:        0    0   0.4      0       2
Processing:     3  799 127.4    826    1164
Waiting:        2  714 113.7    729    1091
Total:          3  799 127.4    826    1164

Percentage of the requests served within a certain time (ms)
  50%    826
  66%    854
  75%    867
  80%    876
  90%    904
  95%    936
  98%    968
  99%    987
 100%   1164 (longest request)
```

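The main stat to track here is the first “Time per request.” In the test, the mean is shown as 816.740 milliseconds (ms), or about 0.817 seconds. What this number means [28] is that when there are 100 people hitting the site at the same time, it takes about 0.817 seconds for the server to generate the HTML for the home page. Don’t be alarmed by the “Failed requests” line: ab compares the length of every response against the first response it receives, so “Length” failures like the 993 above usually mean only that the page size varied from request to request, which is common for dynamic pages. Non-2xx responses, on the other hand, did not return a successful HTTP status and are worth investigating.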

There is a second “Time per request” stat under the first, labeled “mean, across all concurrent requests.” This is simply the first mean divided by the number of concurrent connections. In the example, it shows 8.167 ms, or a little under one hundredth of a second. It’s important to realize that this second “Time per request” number is not the load time for a single request. However, across multiple tests, this ratio (average request time / number of concurrent requests) does give you an idea of how well your server handles larger numbers of concurrent users. If the mean across concurrent requests stays the same as you increase -c, your server is scaling well. If it goes up drastically, your server is not scaling well.
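The headline numbers in the report are tied together by the test parameters. Writing T for “Time taken for tests,” n for the total number of requests, and c for the concurrency level, the sample report above works out as follows (using the rounded values from the report):

$$
\text{Requests per second} = \frac{n}{T} = \frac{1000}{8.167\ \text{s}} \approx 122.44
$$

$$
\text{Time per request (mean)} = \frac{c \cdot T}{n} = \frac{100 \times 8.167\ \text{s}}{1000} \approx 816.7\ \text{ms}
$$

$$
\text{Time per request (mean, across all concurrent requests)} = \frac{T}{n} = \frac{8.167\ \text{s}}{1000} \approx 8.167\ \text{ms}
$$

The last figure is just the reciprocal of requests per second, which is why it reflects overall throughput rather than the wait an individual visitor experiences.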

Another important stat in this report is “Requests per second,” which sometimes maps more directly to your load estimates. You can take real-life “requests per day” or “requests per hour” numbers from your site stats, convert them to requests per second, compare those to the numbers showing up in your reports, and then tweak the -n and -c inputs to match your desired conditions.
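Converting daily traffic into a per-second rate is division by the 86,400 seconds in a day. Here is a small shell sketch; per_second is a hypothetical helper, and its optional peak multiplier is our own assumption, since real traffic is rarely spread evenly across the day. Choose that factor from your own analytics.

```shell
#!/bin/sh
# Sketch using a hypothetical helper: turn a "requests per day" figure from
# your site stats into an average requests-per-second rate to compare with
# ab's report. The optional second argument is an assumed peak multiplier
# (for example, 3 if your busiest hour sees triple the average rate).
per_second() {
  per_day="$1"
  peak_factor="${2:-1}"
  awk -v d="$per_day" -v p="$peak_factor" \
    'BEGIN { printf "%.2f\n", d / 86400 * p }'
}

per_second 1000000      # prints 11.57
per_second 1000000 3    # prints 34.72
```

So a site serving a million requests a day averages under 12 requests per second, well below the roughly 122 requests per second in the sample report above, although short bursts of traffic matter more than the daily average.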

Testing with Apache Bench

There are a few tips that will help you when testing a website with Apache Bench.

First, run Apache Bench from somewhere other than the server you are testing since Apache Bench itself will be using up resources required for your web server to run. Running your benchmarks from outside locations will also give you a more realistic idea of page generation times, including network transfer times.

On the other hand, running Apache Bench from the same server the site is hosted on will take the network latency out of the equation and give you an idea of the performance of your stack irrespective of the greater Internet.

Second, start with a small number of simultaneous connections and build up to larger numbers. If you try to test 100,000 simultaneous connections right out the door, you can fry your web server, your testing server, or both. Try 100 connections, then 200, then 500, then 1,000, then more. Large errors or bottlenecks in your server and app performance can come out with as few as 100 connections. Once you pass those tests, try throwing more connections at the app.
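The ramp-up can be scripted. The following is a sketch, assuming ab is installed and that the URL points at a test site you control. The concurrency steps match the ones suggested above, while sending 10 requests per concurrent client is an arbitrary choice of ours. The ab_summary and ramp_up helpers are hypothetical; ab_summary pulls “Requests per second” and the first “Time per request” out of ab’s text report.

```shell
#!/bin/sh
# Sketch with hypothetical helpers: step up ab's concurrency and print the
# key stats for each run. Assumes ab is installed and the URL is a test site
# you control; 10 requests per concurrent client is an arbitrary choice.

# Read ab's text report on stdin and print two fields:
# requests per second, and the first (per-request) mean time in ms.
ab_summary() {
  awk '
    /^Requests per second:/ { rps = $4 }
    /^Time per request:/ && !seen { tpr = $4; seen = 1 }
    END { print rps, tpr }
  '
}

# Run ab at increasing concurrency levels against one URL.
# Output columns: concurrency, requests/sec, mean ms per request.
ramp_up() {
  url="$1"
  for c in 100 200 500 1000; do
    n=$((c * 10))
    printf '%s ' "$c"
    ab -n "$n" -c "$c" "$url" 2>/dev/null | ab_summary
  done
}

# Example (commented out so sourcing this file doesn't start a test):
# ramp_up "http://yourdomain.com/"
```

Each line of output shows one step, which makes it easy to spot the concurrency level where requests per second stop climbing and the mean time per request starts to balloon.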

Third, run multiple tests. There are a lot of factors that will affect the results of your benchmarks. No two tests will be exactly the same, so try to run a few tests on different pages of your site, at different times, under different conditions, and from different servers and geographical locations. This will give you more realistic results.

Graphing Apache Bench results with gnuplot

The -g parameter of Apache Bench can be used to specify an output file of the result data in the gnuplot format. This data file can be fed into a gnuplot script to generate a graph image.

NOTE

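You can also use the -e parameter to specify an output file in CSV (Excel) format. Rather than raw per-request data, this file lists, for each percentage of requests, the time in milliseconds within which that percentage was served.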
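If you only need a few percentiles, the CSV can be easier to work with than the gnuplot data. A sketch, assuming the file was written with something like ab -n 1000 -c 100 -e percentiles.csv http://yourdomain.com/ and that it contains a header row followed by percentage,milliseconds rows; served_within is a hypothetical helper.

```shell
#!/bin/sh
# Sketch using a hypothetical helper: print the time (ms) within which a
# given percentage of requests was served, from the CSV that "ab -e" writes.
# Assumes a header row followed by "percentage,time_in_ms" rows.
served_within() {
  file="$1"
  pct="$2"
  awk -F',' -v p="$pct" '
    NR > 1 && $1 + 0 == p + 0 { print $2; found = 1 }
    END { exit !found }   # non-zero exit status if the percentage is missing
  ' "$file"
}

# Example: served_within percentiles.csv 90
```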

You can run the following commands to set up some space for your testing and save the gnuplot data:

```shell
mkdir benchmarks
mkdir benchmarks/data
mkdir benchmarks/graphs
```

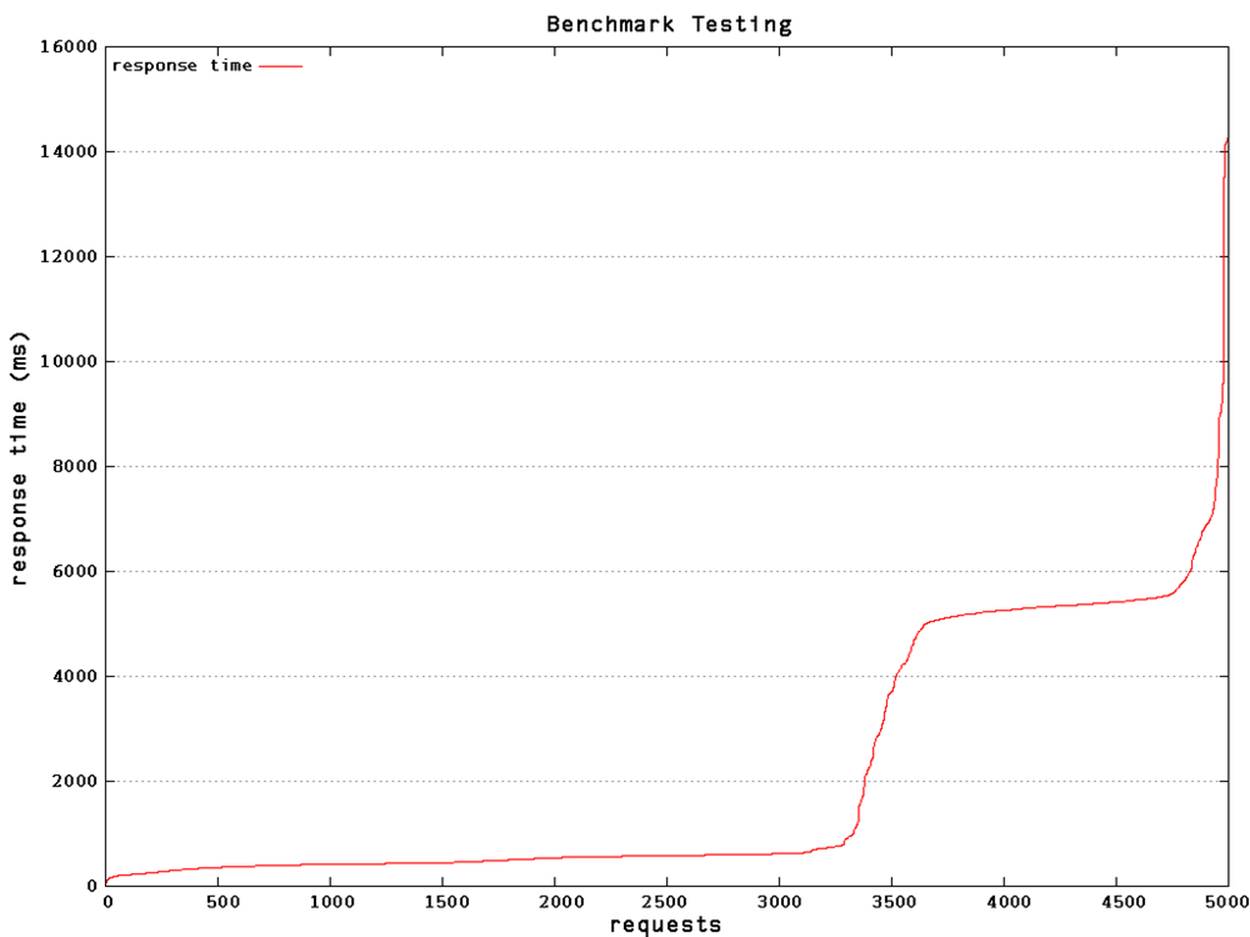

```shell
ab -n 5000 -c 200 -g benchmarks/data/testing.tsv "http://yourdomain.com/"
```

The summary report for this benchmark includes:

```
Requests per second:    95.00 [#/sec] (mean)
Time per request:       2105.187 [ms] (mean)
```

Then you’ll need to install gnuplot.[29] Once it is installed, you can create a couple of gnuplot scripts to generate your graphs. Here are two scripts modified from the examples in a blog post by Brad Landers. Put these in your benchmarks folder.

This first graph will draw a line chart showing the distribution of load times. This chart is good at showing how many of your requests loaded under certain times. You can save this script as plot1.gp:

```gnuplot
# Let's output to a png file
set terminal png size 1024,768
# This sets the aspect ratio of the graph
set size 1, 1
# The file we'll write to
set output "graphs/sequence.png"
# The graph title
set title "Benchmark testing"
# Where to place the legend/key
set key left top
# Draw gridlines oriented on the y axis
set grid y
# Label the x-axis
set xlabel 'requests'
# Label the y-axis
set ylabel "response time (ms)"
# Tell gnuplot to use tabs as the delimiter instead of spaces (default)
set datafile separator '\t'
# Plot the data (column 5 of ab's -g output is ttime, the total time per request)
plot "data/testing.tsv" every ::2 using 5 title 'response time' with lines
exit
```

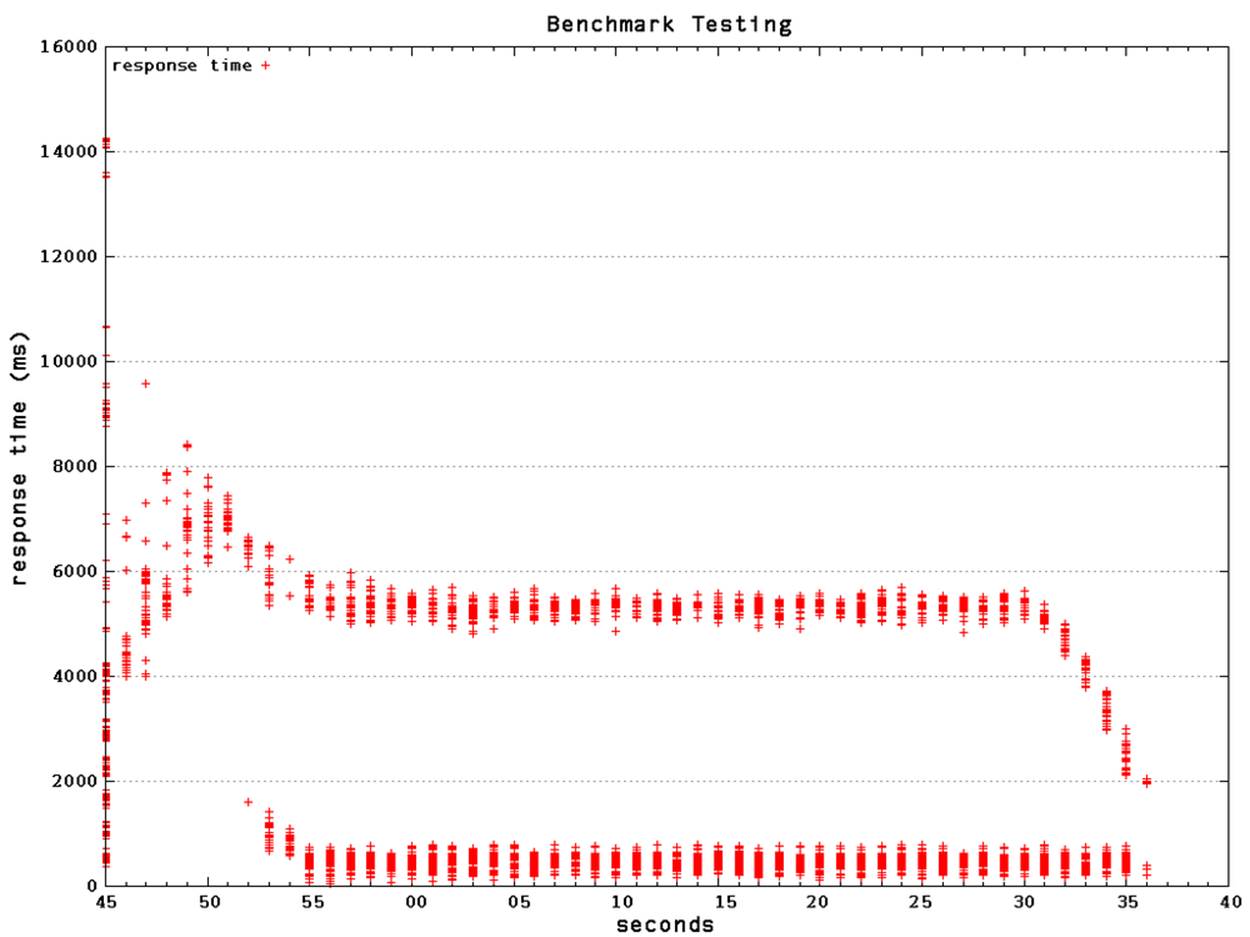

This second graph will draw a scatterplot showing the request times over the course of the test. This chart is good at showing how load times changed as the test progressed. You can save this script as plot2.gp:

```gnuplot
# Let's output to a png file
set terminal png size 1024,768
# This sets the aspect ratio of the graph
set size 1, 1
# The file we'll write to
set output "graphs/timeseries.png"
# The graph title
set title "Benchmark testing"
# Where to place the legend/key
set key left top
# Draw gridlines oriented on the y axis
set grid y
# Specify that the x-series data is time data
set xdata time
# Specify the *input* format of the time data
set timefmt "%s"
# Specify the *output* format for the x-axis tick labels
set format x "%S"
# Label the x-axis
set xlabel 'seconds'
# Label the y-axis
set ylabel "response time (ms)"
# Tell gnuplot to use tabs as the delimiter instead of spaces (default)
set datafile separator '\t'
# Plot the data (column 2 is the request start time in epoch seconds; column 5 is ttime)
plot "data/testing.tsv" every ::2 using 2:5 title 'response time' with points
exit
```

Then, to turn your benchmark data into graphs, run these commands:

```shell
cd benchmarks
gnuplot plot1.gp
gnuplot plot2.gp
```

The resulting charts should look like Figure 16-3 and Figure 16-4.

Figure 16-3. The output of the plot1.gp gnuplot script

Seeing the data in graphical form can help a lot. For example, while the summary showed a mean load time of 2,105 ms, the graphs above show us that a little over half of our requests were processed in under 1 second, and the remaining requests took over 4.5 seconds.

You might think that a two-second load time is acceptable, but a four-second load time is not. Based on the summary report, you’d think you were in the clear, when really something like 30%+ of your users would be experiencing load times over four seconds.

Figure 16-4. The output of the plot2.gp gnuplot script
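You can also get that kind of number straight from the data file without plotting it. The sketch below assumes the tab-separated layout ab writes for -g (a header row, then starttime, seconds, ctime, dtime, ttime, and wait columns, with times in milliseconds), so column 5 holds the total time for each request. The slow_share helper is hypothetical, and the four-second threshold in the example is just the cutoff from the discussion above.

```shell
#!/bin/sh
# Sketch using a hypothetical helper: report how many requests in an
# "ab -g" data file took longer than a threshold. Assumes column 5 (ttime)
# holds the total time per request in ms and that row 1 is a header.
slow_share() {
  file="$1"
  threshold_ms="$2"
  awk -F'\t' -v t="$threshold_ms" '
    NR > 1 { n++; if ($5 + 0 > t + 0) slow++ }
    END {
      if (n == 0) { print "no data"; exit 1 }
      printf "%d of %d requests (%.1f%%) took longer than %d ms\n",
        slow, n, 100 * slow / n, t
    }
  ' "$file"
}

# Example: slow_share data/testing.tsv 4000
```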

Siege

Siege is a tool like Apache Bench that will hit your site with multiple simultaneous connections and record response times. The report generated by Siege does a good job of showing just the most interesting information.

Siege may need to be installed from source. You can get the latest source files at the Joe Dog Software site.

A sample Siege command will look like this:

```shell
siege -b -c100 -d20 -t2M http://yourdomain.com
```

The -b parameter tells Siege to run in benchmark mode. The -c100 parameter says to use 100 concurrent users. The -d20 parameter makes each simulated user sleep for a random interval of up to 20 seconds between page loads. And the -t2M parameter says to run the benchmark for two minutes. You can also use -t30S to set a time in seconds or -t1H to set a time in hours.

The output will look like this:

```
** Preparing 100 concurrent users for battle.
The server is now under siege...
Lifting the server siege... done.

Transactions:                   1160 hits
Availability:                 100.00 %
Elapsed time:                 119.29 secs
Data transferred:               9.53 MB
Response time:                  0.11 secs
Transaction rate:               9.72 trans/sec
Throughput:                     0.08 MB/sec
Concurrency:                    1.05
Successful transactions:        1160
Failed transactions:               0
Longest transaction:            0.26
Shortest transaction:           0.09
```

The server was hit 1,160 times by the 100 users, with an average response time of 0.11 seconds. Every transaction succeeded (100% availability), and the longest response time was just 0.26 seconds.
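The summary numbers can be cross-checked against each other. The transaction rate is transactions divided by elapsed time, and Siege’s Concurrency figure works out, roughly, to the total time spent waiting on responses divided by elapsed time:

$$
\text{Transaction rate} = \frac{1160}{119.29\ \text{s}} \approx 9.72\ \text{trans/sec}
$$

$$
\text{Concurrency} \approx \frac{1160 \times 0.11\ \text{s}}{119.29\ \text{s}} \approx 1.07
$$

The small gap from the reported 1.05 comes from the rounded 0.11-second response time. The low concurrency also shows how much the -d20 delay shapes this test: 100 users producing 9.72 transactions per second means each user made a request only about every 10.3 seconds (100 / 9.72), so at any given moment only about one connection was actually open.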

Blitz.io

Blitz.io is a web service for running benchmarks against your websites and web apps. Blitz.io offers a nice GUI for starting a benchmark and generates some beautiful graphical reports showing your app’s response times. More importantly, Blitz.io can generate traffic coming from different areas of the world using different user agents to simulate a more realistic traffic scenario.

The service can get a little costly, but can be useful for easily generating final reports that represent a more realistic estimate of how your site will perform in the wild.

W3 Total Cache

There are a few plugins for WordPress that will help you set up various tools to increase the performance of a WordPress site. One plugin in particular, W3 Total Cache, offers just about every performance-increasing method out there.

Frederick Townes, former CTO of Mashable and the lead developer of W3 Total Cache, shares our belief that WordPress optimization should be done as close to the core WordPress app (the origin) as possible:

Mileage varies, but one thing we know for certain is that user experience sits right next to content in terms of importance - they go hand in hand. In order for a site or app to actually reach its potential, it’s critical that the stack, app and browser are all working in harmony. For WordPress, I try to make that easier than it was in the past with W3 Total Cache.

For many sites with low traffic or little dynamic content, setting up the most common settings in W3 Total Cache is all you will need to scale your app. For other sites, you may want to implement some of the methods bundled with W3 Total Cache individually so you can customize them to your specific app. In general, W3 Total Cache does a great job of making sure that all of the bundled techniques play nice together. For this reason, it’s a good idea to work with W3 Total Cache to customize things rather than use a solution outside of the plugin that could conflict with it. We’ll go over a typical configuration for W3 Total Cache, and also describe briefly how some of the techniques work in general.

W3 Total Cache is available for download from the WordPress.org plugin repository. Once the plugin is installed, a Performance menu item will be added to the admin dashboard. You’ll usually have to update permissions on various folders and files on your WordPress install to allow W3 Total Cache to work. The plugin will give you very specific messages to get things set up. Once this is done, you’re ready to start enabling the various tools bundled with the plugin.

NOTE

The W3 Total Cache plugin is available in its entirety for free through the WordPress plugin repository. You will need to purchase a plan through a content delivery network provider (CDN) to take advantage of the CDN features of W3 Total Cache. And finally, the makers of W3 Total Cache offer various support and configuration services through their website.

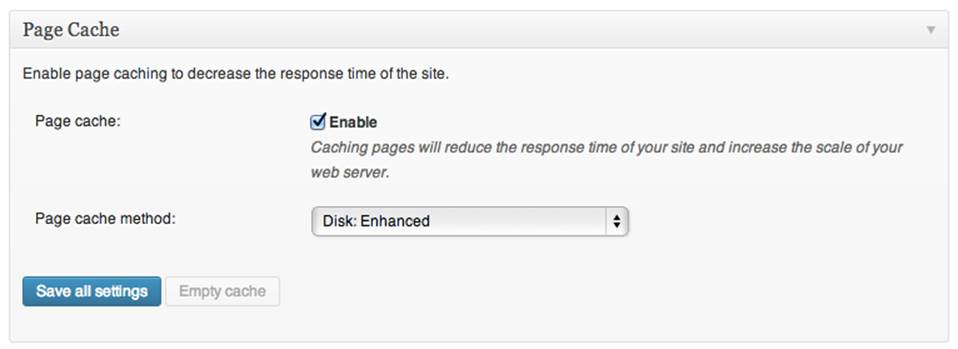

To enable the tools we want to use, go to the General Settings page of the W3 Total Cache Performance menu. Find the Enable checkbox for the Page Cache, Minify, Database Cache, Object Cache, and Browser Cache sections, check each box, and then click one of the Save All Settings buttons (see Figure 16-5).

Figure 16-5. Check Enable in the box for each performance technique that you want to use

You can typically get by using the default and recommended settings for all of the W3 Total Cache tools. Your exact settings will depend on the specifics of your app, your hosting setup, and how your users use your app. There are a lot of settings, and we won’t go over all of them, but we’ll cover a few of the more important settings in the following sections.

Page Cache Settings

A page cache is exactly what it sounds like: the caching of entire web pages after they’ve been generated. When a new user visits your site, if a cache of the page is available, that static HTML file is served instead of loading the page through PHP and WordPress. If there is no cache or the cache has expired, the page is loaded as it normally would through WordPress.

For a web server like Nginx or Apache, serving a static HTML file is much faster than serving a dynamic PHP file. Serving static files not only avoids all of the database calls and calculations required by your dynamic PHP scripts, but also plays to the strengths of your web stack, which is architected from the OS level up to the web server level primarily to push files around quickly.

Every visit that is served a static HTML file instead of generating a dynamic page in PHP is going to save you some RAM and CPU time. With more resources available, even noncached or noncacheable pages are going to load faster. So page caching can greatly speed up page loads on your site, and is one of the primary focuses of web hosts and others trying to serve WordPress sites quickly.

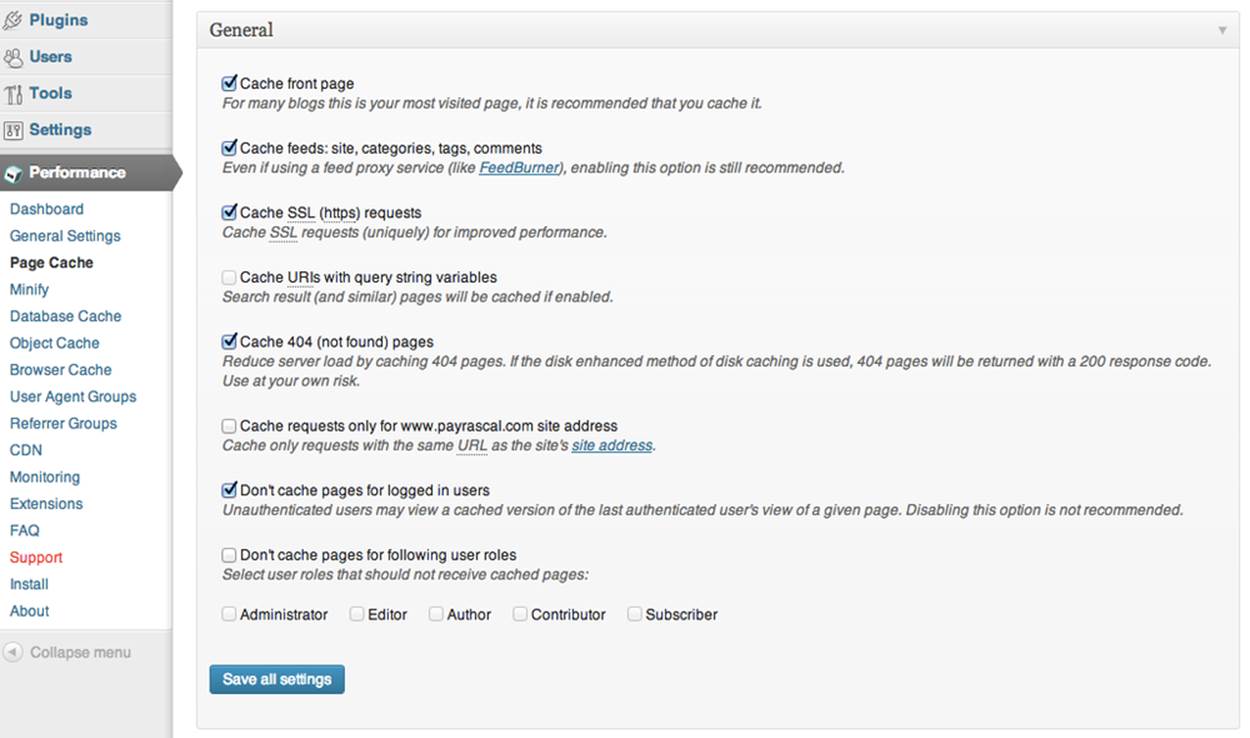

On the Page Cache page under the Performance menu, you’ll usually want to enable the following options in the General box: Cache front page, Cache feeds, Cache SSL (https) requests, Cache 404 (not found) pages, and “Don’t cache pages for logged in users.” See Figure 16-6 for an example.

The “Don’t cache pages for logged in users” checkbox is an important option to check because logged-in users will often have access to private account information, and you don’t want that stuff getting into the cache. At the very least, you might accidentally show a cached “Howdy, Jason” in the upper right of your website for users who aren’t Jason. In the worst-case scenario, you might share Jason’s personal email address or account numbers.

For these reasons, full page caching is typically going to cause problems for logged-in members. Other types of caching can still help speed up page load speeds for logged-in members, and we’ll cover a few methods later in this chapter.

Figure 16-6. W3 Total Cache page cache general settings

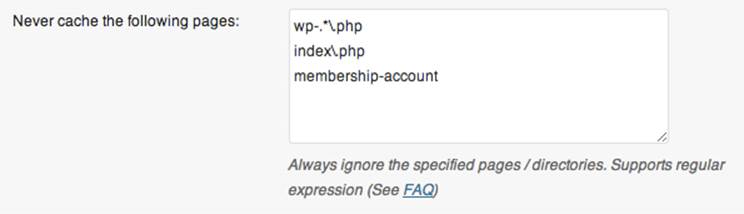

Another important option to get familiar with is the ability to exclude certain pages, paths, and URLs from the page cache. Inside of the Advanced box is a text area labeled “Never cache the following pages.” This text area is shown in Figure 16-7. Pages, paths, and URLs added to this setting are going to be ignored by the page cache, and so will be generated fresh on every page load. Place one URL string per line; regular expressions are allowed.

Figure 16-7. Never cache the following pages section in W3 Total Cache Page settings

Some common pages that you will want to exclude from the page cache include checkout pages, login pages, non-JavaScript-based contact forms, API URLs, and any other pages that should be generated dynamically on each load.
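The logged-in check and the exclusion list together form a simple decision. This Python sketch assumes one regular expression per line, as in the text area described above, and a hypothetical `is_logged_in` flag; WordPress itself detects logged-in visitors through login cookies, and W3 Total Cache's exact matching rules may differ from this one.

```python
import re

# Hypothetical "Never cache the following pages" setting: one pattern per line.
NEVER_CACHE = r"""
/checkout/
/wp-login\.php
/contact/
^/api/
"""


def compile_rules(text: str) -> list:
    # Ignore blank lines; treat every other line as a regular expression.
    return [re.compile(line.strip()) for line in text.splitlines() if line.strip()]


RULES = compile_rules(NEVER_CACHE)


def should_cache(path: str, is_logged_in: bool) -> bool:
    """Decide whether a request may be served from (or stored in) the page cache."""
    if is_logged_in:
        # Logged-in pages may contain personal details ("Howdy, Jason").
        return False
    # re.search lets a plain string such as "/checkout/" match anywhere in the path.
    return not any(rule.search(path) for rule in RULES)


print(should_cache("/about/", is_logged_in=False))            # True
print(should_cache("/checkout/step-2/", is_logged_in=False))  # False
```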

Minify

Minifying is the process of removing excess whitespace, comments, and other unnecessary characters from source files (typically JavaScript and CSS files), and often combining several files into one, to reduce the size of what is sent to the web browser. Smaller files mean faster load times.

You are probably familiar with files like jquery.min.js, which is a minified version of the jQuery library. W3 Total Cache can automatically minify all of your CSS and JS files for you. You can also enable minification of HTML (most notably the pages stored in the page cache), which can shave a bit more off page loads.

In general, minification is a good idea on production sites. On development sites, you will want to leave minification off so you can better debug CSS and scripting issues.
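The core of CSS minification is plain text processing. Here's a deliberately naive Python sketch that strips comments and collapses whitespace. Real minifiers, including the ones W3 Total Cache relies on, parse the CSS properly; this regex version would, for example, mangle a `/*` inside a quoted string and would turn a selector like `a :hover` into `a:hover`, which means something different.

```python
import re


def naive_minify_css(css: str) -> str:
    """Very rough CSS minifier for illustration; not safe for all stylesheets."""
    # Remove /* ... */ comments, including ones that span several lines.
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)
    # Collapse every run of whitespace into a single space.
    css = re.sub(r"\s+", " ", css)
    # Drop spaces around punctuation that doesn't need them.
    css = re.sub(r"\s*([{};:,>])\s*", r"\1", css)
    # The final semicolon before a closing brace is optional.
    css = css.replace(";}", "}")
    return css.strip()


source = """
/* Header styles */
.site-header {
    color: #333;
    margin: 0 auto;
}
"""
minified = naive_minify_css(source)
print(minified)  # .site-header{color:#333;margin:0 auto}
print(len(source), "->", len(minified), "characters")
```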

Database Caching

W3 Total Cache offers database caching, which stores the results of SELECT queries in a cache file (or in a memory backend). Repeated calls to the same query pull results from the cache instead of querying the database, which may be on an entirely different server that is itself part of the slowdown.

If your database server runs on solid state drives (SSDs) or has some kind of caching enabled at the MySQL layer, database caching with W3 Total Cache may not improve performance and can even make things slower. So be sure to run benchmarks before and after enabling database caching to see if it helps your site, and analyze your slow query log to identify queries that can be manually tuned. Remember, caching helps servers scale; it doesn't magically fix fundamentally slow queries or code.

If you find that specific queries are taking a long time, you can cache them individually using WP transients or other fragment caching techniques, which are covered later in this chapter.
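Whether the backend is a file, RAM, or a WordPress transient, query caching follows the same "cache-aside" pattern: check the cache, fall back to the database on a miss, and store the result with an expiration time. Here's a minimal Python sketch using an in-memory SQLite database as a stand-in for MySQL; the `QueryCache` class and its 60-second default TTL are hypothetical and not part of any WordPress or W3 Total Cache API.

```python
import sqlite3
import time
from typing import Any, Callable, Dict, List, Tuple


class QueryCache:
    """Cache-aside wrapper: reuse query results until they are ttl seconds old."""

    def __init__(self, conn: sqlite3.Connection, ttl: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.conn = conn
        self.ttl = ttl
        self.clock = clock
        self._store: Dict[Tuple[str, tuple], Tuple[float, List[Any]]] = {}

    def query(self, sql: str, params: tuple = ()) -> List[Any]:
        key = (sql, params)
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # still fresh: skip the database entirely
        rows = self.conn.execute(sql, params).fetchall()
        self._store[key] = (now + self.ttl, rows)  # expires at now + ttl
        return rows

    def flush(self) -> None:
        # Like deleting a transient: the next query goes back to the database.
        self._store.clear()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE posts (id INTEGER PRIMARY KEY, title TEXT)")
conn.execute("INSERT INTO posts (title) VALUES ('First')")
cache = QueryCache(conn, ttl=60)
print(cache.query("SELECT title FROM posts ORDER BY id"))  # [('First',)]
```

The injectable `clock` makes expiration easy to test. The trade-off is visible in the design: until an entry expires or `flush()` is called, readers see the old rows, which is why caches should be cleared when the underlying data changes.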

Object Cache

Object caching is similar to database caching, but the PHP representations of the objects are stored in cache instead of raw MySQL results. Like database caching, object caching can sometimes slow your site down instead of speeding it up; your mileage may vary. Using a persistent object cache (covered later in this chapter) will make it more likely that the object cache will speed up your site. Be sure to benchmark your site before and after configuring object caching.

Object caching is also known to cause issues with some WordPress plugins or activities in WordPress. Object caching is a powerful tool for speeding up your site, but you may have to spend time tweaking the lower levels of the scripts you use to make them work with the plugins and application code for your specific app.

CDNs

A content delivery network or CDN is a service that can serve static files for you—typically images, JavaScript files, and CSS files—on one or many colocated servers that are optimized for serving static files. So instead of loading an image off the same server that is generating the PHP pages for your site, your images will be loaded from whichever CDN server is closest to you. Even if you use your own server as a CDN, you can decrease load times because the browser will be able to load the static files and PHP page at the same time because a separate browser connection will be used for both.

W3 Total Cache can help you integrate with many of the most popular CDNs. The plugin will handle uploading all of your media files, static script files, and page cache files to the CDN, automatically redirect URLs on your site to the CDN and, most importantly, purge modified files for those CDNs that support it.

GZIP Compression

GZIP compression is another neat trick that will often speed up your site. In effect, you trade processing time (when the files are zipped up) for download time (since the files will be smaller). The browser will unzip the files on the receiving end. The time saved by downloading smaller files usually makes up for the time spent zipping and unzipping them. Of course, when using W3 Total Cache, the compression happens once when the cache is built.

But again, like everything else, run a benchmark before and after enabling GZIP compression to make sure that your site is benefiting from the feature.
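You can get a feel for the size side of this trade-off with Python's standard `gzip` module. The sketch below compresses a made-up, highly repetitive HTML page at compression level 6 (a middle setting chosen for illustration) and reports the sizes. Real pages compress less dramatically, and this is no substitute for benchmarking your own server.

```python
import gzip


def gzip_sizes(body: bytes, level: int = 6) -> tuple:
    """Return (original size, gzipped size) in bytes for a response body."""
    compressed = gzip.compress(body, compresslevel=level)
    return len(body), len(compressed)


# A made-up, highly repetitive page; real HTML usually compresses less.
page = ("<html><body>"
        + "<p class='post'>Hello, WordPress!</p>\n" * 200
        + "</body></html>").encode("utf-8")

original, compressed = gzip_sizes(page)
print(f"{original} bytes -> {compressed} bytes "
      f"({compressed / original:.1%} of the original)")

# The browser's side of the trade: decompressing restores the exact bytes.
print(gzip.decompress(gzip.compress(page)) == page)  # True
```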

Hosting

Upgrading your hosting is one of the best things you can do to improve performance for your WordPress app. More CPU and RAM will speed up PHP, MySQL, and your underlying web server. This may sound obvious, but many people get caught up in the excitement of optimizing code or using caching techniques to speed up part of their web app while ignoring a simple hosting upgrade that would improve performance across the board.

Of course, we advocate using all of the techniques in this chapter if applicable and within budget. However, one of the earliest decisions you are likely to make, possibly before you even start coding, is where you are going to host your finished web app.

You can find our specific recommendations for hosting WordPress apps on this book’s website. In this section, we’ll cover the different types of hosting to consider.

WordPress-Specific Hosts

As WordPress has become more popular for building websites, hosting companies have cropped up that are configured specifically for running WordPress sites. The earliest of these were Page.ly, Zippykid, WP Engine, and SiteGround.

The WordPress-specific hosts offer managed environments with server-side caching and a support staff that is more knowledgeable about WordPress than a typical hosting company.

The control panels for these hosts are similar to shared hosting plans, with limited flexibility in adjusting the underlying configuration. On the plus side, these hosts typically handle a lot of your caching setup, do a great job managing spam and denial of service attacks, and can quickly scale your app as load increases. On the downside, the limited configurability can be an issue with certain apps and plans can get pricey for larger sites.

Rolling Your Own Server

The alternative to WordPress-specific managed hosting is to roll your own server, either on dedicated hardware or in a cloud environment.

On the dedicated side, Rackspace is a popular choice, and 1and1 provides powerful hardware at wholesale prices. On the cloud side, Amazon EC2 is very popular, and Digital Ocean is a cost-effective alternative.

No matter which route you go, you will have to set up your own web server, install PHP and MySQL yourself, and manage all of the DNS and other server maintenance yourself. Depending on your needs and situation, this could be a good thing or a bad thing. If you need a more specific configuration for your app, you have to roll your own server. On the other hand, you’ll have to spend time or money on server administration that might be better spent elsewhere.

It’s important to know where your limits are in terms of server administration. For example, Jason is very experienced setting up web servers like Apache and configuring and maintaining PHP and MySQL. On the other hand, he has little experience managing a firewall against denial of service attacks or load balancing across multiple servers. You’ll want to choose a hosting company and option that works to your strengths and makes up for your weaknesses.

Rolling your own server and getting 10 times the raw performance for 1/10th the cost of a shared hosting plan can feel pretty good. But when you find yourself up at 3 a.m., wasting time struggling to keep your server alive against automated hacking attempts from foreign countries, the monthly fee of the managed options may not seem so steep.

Below, we’ll go quickly over a few common setups for Linux-based servers running WordPress. Details on how to set up each individual configuration are constantly evolving. We will try to always have links to the most recent instructions and reviews online at the book’s website.

WARNING

Best practices for setting up and running web servers, and the various caching tools that speed them up, are changing all the time. Also, instructions will depend on your particular server, which version of Linux it is running, which other tools you are using, and the specifics of the app itself. The proper way to use the information in the rest of this chapter is to go over the instructions provided here and in the linked articles to get an idea of how each technique works. If you decide to implement a technique on your own server, do some research (Google) to find a tutorial or instructions that are up to date and more specific to your situation.

Apache server setup

Apache is the most popular web server software in use today, and it is usually fairly painless to install on any flavor of Linux server.

Once set up, there are a few things you can do to optimize the performance of Apache for your WordPress app:

§ Disable unnecessary modules loaded by default.

§ Set up Apache to use prefork or worker multi-processing, depending on your need. A good overview of each option can be found at Understanding Apache 2 MPM (worker vs prefork). Prefork is the default and what is usually best for running WordPress.

§ If using the Apache Prefork Multi-Processing Module (default), configure the StartServers, MinSpareServers, MaxSpareServers, ServerLimit, MaxClients, and MaxRequestsPerChild values.

§ If using the worker Multi-Processing Module, configure the StartServers, MaxClients, MinSpareThreads, MaxSpareThreads, ThreadsPerChild, and MaxRequestsPerChild values.

There are a couple of settings in particular to pay attention to when optimizing Apache for your hardware and the app it's running. These settings typically have counterparts in other web servers as well, and the concepts behind them should apply to any web server running WordPress.

The following settings and instructions assume you are using the more common prefork module for Apache:

MaxClients

When Apache is processing a request to serve a file or PHP script, the server creates a child process to handle that request. The MaxClients setting in your Apache configuration tells Apache the maximum number of child processes to create.

After reaching the MaxClients number, Apache will queue up any other incoming requests. So if MaxClients is set too low, your visitors will experience long load times as they wait for Apache to even start to process their requests.

If MaxClients is set too high, Apache will use up all of your RAM and start using swap memory, which is stored on the hard drive and is much slower than physical RAM. When this happens, your visitors will experience long load times since their requests will be handled using the slower swap memory.

Besides simply being slower, using swap memory also requires more CPU as the memory is swapped from hard disks to RAM and back again, which can lead to lower performance overall. When your server backs up like this, it’s called thrashing and can quickly spiral out of control and eventually will lock up your server.

So it’s important to pick a good value for your MaxClients setting. To determine an appropriate value for MaxClients for your Apache server, take the amount of server memory you want to dedicate to Apache (typically as much as possible after you subtract the amount that MySQL and any other services on your server use) and then divide that by the average memory footprint of your Apache processes.

There is no exact way to figure out how much memory your services are using or how much memory each Apache process takes. It’s best to start conservatively and to tweak the values as you watch in real time.

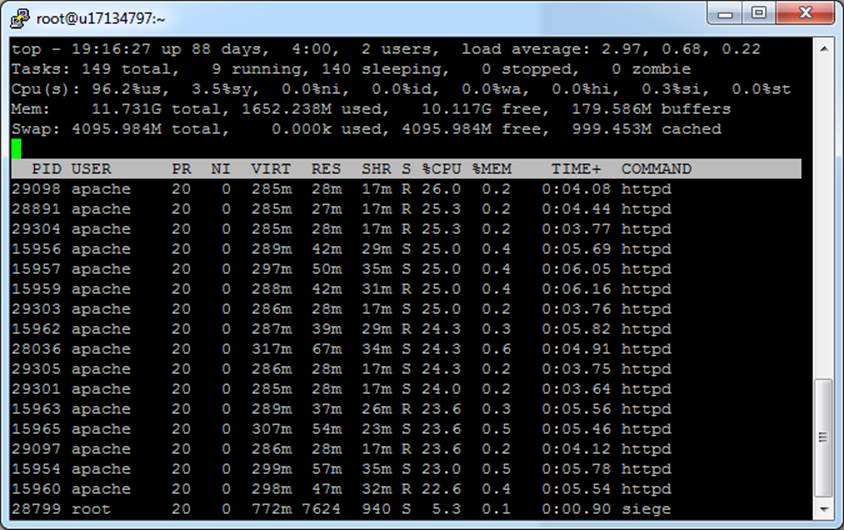

Using the command top -M, we can see the total memory on our server, how much is free, and how much active processes are currently using. On our test server, which is under no load, we have 11.7 GB of memory, 10.25 GB of it free. If we want to do a 50/50 split between Apache and MySQL (another assumption you should test and adjust for your specific app), we can dedicate about 4.5 GB to Apache and 4.5 GB to MySQL, and leave the rest (up to 2.7 GB in this case) for padding and other services running on the server.

Figure 16-8 shows an example of the output from running the top command.

Figure 16-8. Using the top command to figure out how much memory is available

To figure out how much memory Apache needs for each process, you can use top -M again when the server is under normal load. Look for processes running the httpd command. If we see our app using about 20 MB of memory for each process, we would divide 4.5 GB (~4,600 MB) by 20 MB and get 230, meaning our server should be able to support a MaxClients value of 230 in 4.5 GB of memory.

When setting the MaxClients value, set the ServerLimit value to the same number. ServerLimit is a kind of MaxMaxClients: it caps MaxClients and can only be changed by fully stopping and starting Apache. MaxClients, by contrast, can be adjusted with a graceful restart while Apache keeps serving requests, although this isn't commonly done. So in theory, ServerLimit could be set higher than MaxClients, leaving room to raise MaxClients later without a full restart.
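The MaxClients arithmetic is easy to script so you can rerun it as your measurements change. This Python sketch assumes you've already measured the memory budget for Apache and the average size of an httpd process with top; the 4.5 GB and 20 MB inputs are the example figures from the text, and `prefork_limits()` is our own helper, not an Apache tool. It also follows the advice to set ServerLimit equal to MaxClients.

```python
def prefork_limits(apache_budget_mb: float, avg_process_mb: float) -> dict:
    """Suggest MaxClients/ServerLimit from a memory budget (a starting point only)."""
    if avg_process_mb <= 0:
        raise ValueError("average process size must be positive")
    # Round down: a partial process still needs a whole process's memory.
    max_clients = int(apache_budget_mb // avg_process_mb)
    return {"MaxClients": max_clients, "ServerLimit": max_clients}


# Example figures from the text: 4.5 GB for Apache (4.5 * 1024 = 4,608 MB), ~20 MB per process.
for directive, value in prefork_limits(4.5 * 1024, 20).items():
    print(directive, value)
# MaxClients 230
# ServerLimit 230
```

Rounding down is deliberate: starting conservatively leaves headroom while you watch the server under real load and tweak the values.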

MaxRequestsPerChild

Each child process or client spun up by Apache handles multiple requests, one after another. If MaxRequestsPerChild is set to 0, these child processes are never shut down. That lowers the overhead of spinning up new child processes, but it can be bad if there is a memory leak in your app. Setting MaxRequestsPerChild to a high number like 1,000 or 2,000 is a nice compromise: processes aren't shut down and restarted too often, but if a memory leak does occur, it will be cleaned up when the child process is eventually replaced.

KeepAlive

Depending on how your Linux distribution configures Apache, KeepAlive may be turned off by default, meaning that after serving a file to a client browser, Apache closes the connection. A separate connection is then opened and closed for each file that browser requests. Since a single page may have several files associated with it in the form of images, JavaScript, and CSS, this can lead to a lot of unnecessary opening and closing of connections.

With KeepAlive turned on, Apache keeps the first connection from a web browser open and serves subsequent requests from that browser through the same connection. Once the connection has sat idle for the KeepAliveTimeout period, Apache closes it. Using a single connection instead of many can lead to great performance gains for some sites, especially if there are a lot of images or separate CSS and JavaScript files on each page (you should probably be minifying your CSS and JavaScript into one file for each anyway).

On the other hand, turning KeepAlive on requires more RAM, since each child process stays tied to its open connection, and holds onto its memory, until that connection closes, even while it sits idle.

It’s useful to experiment with turning KeepAlive on. If you do, you should change the KeepAliveTimeout value from the default 15 seconds to something smaller like 2-3 seconds—or something closer to the real load times of a single page visit on your site. This will free up the memory faster.

Also, if you turn KeepAlive on, you should probably adjust the MaxClients and MaxRequestsPerChild settings. Since each child process will be using more memory as it keeps the connection open, you may need to adjust your MaxClients value lower to avoid running out of memory. Since each connection counts as one request with respect to MaxRequestsPerChild, you may want to adjust your MaxRequestsPerChild value lower since there will be fewer requests overall per visit.

Some other good articles on optimizing Apache include:

§ “Apache Performance Tuning”

§ “Apache MPM Prefork”

§ “Apache MPM Worker”

§ “How to Set MaxClients in Apache/Prefork” at Fuscata

§ “Optimize Apache for WordPress” by Drew Strojny

§ “Apache Optimization: KeepAlive On or Off?” by Abdussamad

Nginx server setup

A popular alternative to Apache that is gaining a lot of momentum right now is Nginx. The main advantage of Nginx is that it is an asynchronous, event-driven web server, whereas Apache is a process-based web server. In practice, when many simultaneous clients hit an Apache-based server, a separate process or thread is dedicated to each connection. With Nginx, all connections are handled by a small number of worker processes, each juggling many connections at once. Since each process or thread requires its own block of memory, Nginx is more memory efficient and so can handle a higher number of simultaneous requests than Apache can.

Some good articles about installing and configuring Nginx include:

§ “Nginx,” an article from the WordPress Codex

§ “How to Install WordPress with nginx on Ubuntu 12.04” by Etel Sverdlov

Nginx in front of Apache

The trade-off in using Nginx over Apache is that Nginx has fewer module extensions than Apache. Some modules like mod_rewrite for “pretty permalinks” will have to be ported over to the Nginx way of doing things. Other modules may not have Nginx equivalents.

For this reason, it is becoming popular to set up a dual web server configuration where Nginx serves cached web pages and static content and Apache serves dynamically generated content. One article explaining how to configure this setup is “How To Configure Nginx as a Front End Proxy for Apache” by Etel Sverdlov.

The main advantage of this setup is that static files will be served from Nginx, which is configured to serve static files quickly; this eases the memory burden on Apache. However, if you are already using a CDN for your static files, then using Nginx for static files would be redundant. Also, because you are still serving PHP files through Apache, you won't gain the memory benefits of Nginx on dynamically generated pages. For these reasons, it is probably better either to use Nginx for both static files and PHP, or to use Apache with a CDN for static files.

MySQL optimization

To get the best performance out of WordPress, you will want to make sure that you’ve configured MySQL properly for your hardware and site use and that you’ve optimized the database queries in your app.

Optimizing MySQL configuration

The MySQL configuration file is typically found at /etc/my.cnf or /etc/mysql/my.cnf and can be tweaked to improve performance on your site. There are several interrelated settings. The best way to figure out a good configuration for your hardware and site is to use the MySQLTuner Perl script.

After downloading the MySQLTuner script, you will also need to have Perl installed on your server. Then run perl mysqltuner.pl and follow the recommendations given. The output will look like the following:

-------- General Statistics ----------------------------------------------

[--] Skipped version check for MySQLTuner script

[OK] Currently running supported MySQL version 5.5.32

[OK] Operating on 64-bit architecture

-------- Storage Engine Statistics ---------------------------------------

[--] Status: +Archive -BDB -Federated +InnoDB -ISAM -NDBCluster

[--] Data in MyISAM tables: 35M (Tables: 395)

[--] Data in InnoDB tables: 16M (Tables: 316)

[--] Data in PERFORMANCE_SCHEMA tables: 0B (Tables: 17)

[!!] Total fragmented tables: 327

-------- Security Recommendations ---------------------------------------

[OK] All database users have passwords assigned

-------- Performance Metrics ---------------------------------------------

[--] Up for: 26d 22h 6m 21s (8M q [3.755 qps], 393K conn, TX: 15B, RX: 1B)

[--] Reads / Writes: 95% / 5%

[--] Total buffers: 168.0M global + 2.8M per thread (151 max threads)

[OK] Maximum possible memory usage: 583.2M (7% of installed RAM)

[OK] Slow queries: 0% (0/8M)

[OK] Highest usage of available connections: 21% (33/151)

[OK] Key buffer size / total MyISAM indexes: 8.0M/21.1M

[OK] Key buffer hit rate: 100.0% (84M cached / 40K reads)

[!!] Query cache is disabled

[OK] Sorts requiring temporary tables: 0% (3 temp sorts / 1M sorts)

[!!] Joins performed without indexes: 23544

[!!] Temporary tables created on disk: 26% (359K on disk / 1M total)

[!!] Thread cache is disabled

[OK] Table cache hit rate: 34% (400 open / 1K opened)

[OK] Open file limit used: 68% (697/1K)

[OK] Table locks acquired immediately: 99% (8M immediate / 8M locks)

[OK] InnoDB data size / buffer pool: 16.1M/128.0M

-------- Recommendations -------------------------------------------------

General recommendations:

Run OPTIMIZE TABLE to defragment tables for better performance

Enable the slow query log to troubleshoot bad queries

Adjust your join queries to always utilize indexes

When making adjustments, make tmp_table_size/max_heap_table_size equal

Reduce your SELECT DISTINCT queries without LIMIT clauses

Set thread_cache_size to 4 as a starting value

Variables to adjust:

query_cache_size (>= 8M)

join_buffer_size (> 128.0K, or always use indexes with joins)

tmp_table_size (> 16M)

max_heap_table_size (> 16M)

thread_cache_size (start at 4)

One thing to note is that MySQLTuner will give better recommendations if it has at least one day’s worth of log data to process. For this reason, it should be run 24 hours after MySQL has been restarted. You’ll want to follow the recommendations given, wait 24 hours, and run the script again, then rinse and repeat over a few days to narrow in on optimal settings for your MySQL setup.

Optimizing DB queries

Unoptimized, unnecessary, or otherwise slow MySQL queries are a major drain on page load times. Finding and optimizing these slow SQL queries will speed up your site. Caching database queries, either at the database level or through transients for specific queries, will help with slow queries, but you definitely want the original SQL to be as optimized as possible.

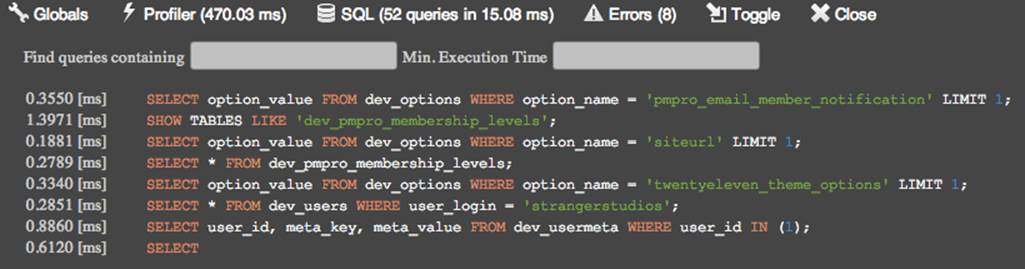

The first step in optimizing your database queries is to find out which queries are slow or otherwise unneeded. A great tool for this is the Black Box Debug Bar plugin.

The Black Box Debug Bar, shown in Figure 16-9, adds a bar to the top of your website that will show you a page’s load time in milliseconds, the number of SQL queries made and how long they took, and the number of PHP errors, warnings, and notices on the page.

Figure 16-9. The Black Box Debug Bar added to the top of all pages on your site while active

If you click on the SQL icon in the debug bar, you will also see all of the SQL queries made and the individual query times.

NOTE

Viewing the final generated SQL query is especially useful for queries that might be constructed across several PHP functions or with a lot of branching logic. For example, the final query to load posts on the blog homepage of WordPress is generated using many variables stored in the $wp_query object, depending on whether a search is being made, which page of the archive you are on, and so on.

With the debug bar turned on, you can browse around your site looking for queries that are slow or outright unnecessary.

Another way to find slow SQL queries is to enable slow query logging in your MySQL configuration file. The slow query log records slow queries as they come up in real use, so it can catch real-world cases that won't come up in testing. You don't want to rely on the slow query log alone, but it is a useful complement to testing.

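To enable slow query logging in MySQL, find your my.cnf or my.ini file and add the following lines to its [mysqld] section (option files don't use trailing semicolons). The long-query-time value is the threshold, in seconds, for what counts as a slow query; MySQL's default is 10 seconds:

[mysqld]

slow-query-log = 1

slow-query-log-file = /path/to/a/log/file

long-query-time = 1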

After updating your MySQL configuration file, you will need to restart MySQL.

When trying to optimize your DB queries, you should always be on the lookout for:

§ Cases where the same SQL query is being run more than once per page load. Store the result in a global variable or somewhere else to access it later in the page load.

§ Cases where one SQL query can be used instead of many. For example, a plugin can load all of its options at once instead of making a separate query or get_option() call for each option.

§ Cases where a SQL query is being run, but the result is not being used. Some queries may only need to be run in the dashboard or only on the frontend or only on a specific page. Change the WordPress hook being used or add PHP logic around these calls so they are only executed when needed.
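The first two items on that list can be illustrated with a simplified, SQLite-based stand-in for the wp_options table. This Python sketch counts SELECT statements with sqlite3's trace callback, comparing one query per option against a single `IN (...)` query whose result is then memoized for the rest of the page load. The table layout and helper names are hypothetical; in WordPress itself, `get_option()` already caches autoloaded options, so this matters most for your own custom tables and queries.

```python
import sqlite3
from typing import Dict, List, Optional


def make_options_table() -> sqlite3.Connection:
    # Simplified stand-in for wp_options (the real table has more columns).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE options (option_name TEXT PRIMARY KEY, option_value TEXT)")
    conn.executemany("INSERT INTO options VALUES (?, ?)",
                     [("color", "blue"), ("size", "large"), ("layout", "grid")])
    return conn


def load_one_by_one(conn: sqlite3.Connection, names: List[str]) -> Dict[str, Optional[str]]:
    # One query per option: N round-trips to the database.
    result: Dict[str, Optional[str]] = {}
    for name in names:
        row = conn.execute("SELECT option_value FROM options WHERE option_name = ?",
                           (name,)).fetchone()
        result[name] = row[0] if row else None
    return result


def load_batch(conn: sqlite3.Connection, names: List[str]) -> Dict[str, Optional[str]]:
    # One query for all options, using an IN (...) list of placeholders.
    placeholders = ", ".join("?" for _ in names)
    rows = conn.execute(
        f"SELECT option_name, option_value FROM options WHERE option_name IN ({placeholders})",
        names).fetchall()
    found = dict(rows)
    return {name: found.get(name) for name in names}


class PageLoad:
    """Per-request memo: create one per page load so results never go stale."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self._memo: Dict[tuple, Dict[str, Optional[str]]] = {}

    def options(self, names: List[str]) -> Dict[str, Optional[str]]:
        key = tuple(names)
        if key not in self._memo:
            self._memo[key] = load_batch(self.conn, names)
        return self._memo[key]


# Count SELECT statements with sqlite3's trace callback.
conn = make_options_table()
statements: List[str] = []
conn.set_trace_callback(statements.append)
names = ["color", "size", "layout"]


def selects() -> int:
    return sum(1 for s in statements if s.lstrip().upper().startswith("SELECT"))


load_one_by_one(conn, names)
print("one by one:", selects())  # 3
statements.clear()
page = PageLoad(conn)
page.options(names)
page.options(names)  # second call is answered from the memo
print("batched + memoized:", selects())  # 1
```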

If you find a necessary query that is taking a particularly long time, how you go about optimizing it will be very specific to the query itself. Here are some things to try:

§ Adjust queries to use only indexed columns in WHERE, ON, ORDER BY, and GROUP BY clauses.

§ Add WHERE clauses to your JOINs so you are joining smaller subtables.

§ Use a different table to store your data, for example, using taxonomies versus post meta, which is covered in Chapter 5.

§ Add indexes to columns that are used in WHERE, ON, ORDER BY, and GROUP BY clauses.
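To see why indexed columns matter, compare a query's plan before and after adding an index. On a real WordPress database you'd use MySQL's `EXPLAIN`; the Python sketch below uses SQLite's `EXPLAIN QUERY PLAN` instead so it runs anywhere, against a simplified, hypothetical postmeta table. (WordPress's real wp_postmeta table already indexes meta_key; the index is added here only to show the difference.) Plan wording differs between SQLite versions, so the sketch only checks whether the index is mentioned.

```python
import sqlite3


def query_plan(conn: sqlite3.Connection, sql: str, params: tuple = ()) -> str:
    # The last column of each EXPLAIN QUERY PLAN row is a readable description.
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
# Simplified postmeta table with no index on meta_key yet.
conn.execute("CREATE TABLE postmeta (meta_id INTEGER PRIMARY KEY, post_id INTEGER, "
             "meta_key TEXT, meta_value TEXT)")
conn.executemany("INSERT INTO postmeta (post_id, meta_key, meta_value) VALUES (?, ?, ?)",
                 [(i, "color" if i % 2 else "size", str(i)) for i in range(1000)])

sql = "SELECT post_id FROM postmeta WHERE meta_key = ?"
before = query_plan(conn, sql, ("color",))
conn.execute("CREATE INDEX idx_meta_key ON postmeta (meta_key)")
after = query_plan(conn, sql, ("color",))

print("before:", before)  # a full table scan
print("after: ", after)   # a search using idx_meta_key
```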

advanced-cache.php and object-cache.php

The keystones[30] that enable all of these caching techniques, including the ones used by the W3 Total Cache plugin, are the advanced-cache.php and/or object-cache.php files, which can be added to the /wp-content/ directory.

To tell WordPress to load the advanced-cache.php file, add the line define('WP_CACHE', true); to your wp-config.php file. The object-cache.php file, if present, is loaded whether or not WP_CACHE is set.

The advanced-cache.php file is loaded by wp-settings.php before the majority of the WordPress source files are loaded. Because of this, you can execute certain code (e.g., to look for a cache file on the server) and then stop PHP execution with an exit; command before the rest of WordPress loads.

If an object-cache.php file is present, it will be used to define the WP Cache API functions instead of the built-in functions found in wp-includes/cache.php. By default, WordPress caches all options in a PHP array that only lasts for a single page load, and transients are stored in the database. If you write your own object-cache.php file, you can tell WordPress to store options and transients in RAM-based storage that persists between page loads.

Plugins like W3 Total Cache are mostly a frontend for generating an advanced-cache.php file based on the settings you choose. You can also choose to roll your own advanced-cache.php or object-cache.php file or use one configured already for a specific caching tool or technique. Most of the caching techniques that follow involve using a specific advanced-cache.php or object-cache.php file to interact with another service for caching.

If you add a header comment to the top of your .php files dropped into the wp-content directory with the same structure as a plugin (plugin name, description, etc), then that information will show up on the Drop-ins tab of the plugins page in the WordPress dashboard.

Alternative PHP Cache (APC)

Alternative PHP Cache is an extension for PHP that acts as an opcode cache and can be used to store key-value pairs for object caching.

Opcode caching

When a PHP script is executed, it is first compiled to opcodes, which the PHP engine then executes. An opcode cache keeps the compiled opcodes in memory so later requests can skip the compile step until the underlying PHP scripts are updated.
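Python also compiles source into code objects (via `compile()`) before running it, so it makes a convenient analogy. This sketch caches compiled code per file and recompiles only when the file's modification time or size changes, which mirrors the idea of an opcode cache; the `CompiledCodeCache` class is hypothetical and is not how APC or OPcache are implemented internally.

```python
import os
import tempfile
from types import CodeType
from typing import Dict, Tuple


class CompiledCodeCache:
    """Toy analogue of an opcode cache: compile once, reuse until the file changes."""

    def __init__(self) -> None:
        self._cache: Dict[str, Tuple[Tuple[int, int], CodeType]] = {}
        self.compiles = 0

    def load(self, path: str) -> CodeType:
        st = os.stat(path)
        stamp = (st.st_mtime_ns, st.st_size)  # cheap "has the file changed?" check
        entry = self._cache.get(path)
        if entry is not None and entry[0] == stamp:
            return entry[1]  # reuse the compiled code; no parsing or compiling
        with open(path, encoding="utf-8") as f:
            code = compile(f.read(), path, "exec")
        self.compiles += 1
        self._cache[path] = (stamp, code)
        return code


# Usage: run the same script three times; it is compiled only once.
script = os.path.join(tempfile.mkdtemp(), "page.py")
with open(script, "w", encoding="utf-8") as f:
    f.write("result = 6 * 7\n")

cache = CompiledCodeCache()
for _ in range(3):
    namespace: dict = {}
    exec(cache.load(script), namespace)
print(namespace["result"], cache.compiles)  # 42 1
```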

Key-value cache

APC also adds the apc_store() and apc_fetch() functions, which can be used to store and retrieve bits of information from memory. A value stored in memory can typically be loaded faster than a value stored on a hard disk or in a database, especially if that value requires some computation. Plugins like W3 Total Cache or APC Object Cache Backend can be used to store the WordPress object cache inside of RAM using APC.

These are the rough steps to set up APC:

1. Install APC on your server, configure PHP to use it, and restart your web server.

2. Configure WordPress to use APC using W3 Total Cache, APC Object Cache Backend, or another plugin or custom object-cache.php script.

Here are some good links for information on using APC in general and with WordPress:

§ “Alternative PHP Cache” at PHP.net

§ “How to Install Alternative PHP Cache (APC) on a Cloud Server Running Ubuntu 12.04” by Danny Sipos

NOTE

PHP versions 5.5 and higher come bundled with OPcache, which is an alternative to APC for opcode caching. However, OPcache does not have the store and fetch functionality that APC has for object caching. For this reason, you need to either disable OPcache and use APC, or run APCu, an updated version of APC, alongside OPcache. APCu offers the store and fetch functionality but leaves the opcode caching to OPcache.

Memcached

Memcached is a system that allows you to store key-value pairs in RAM that can be used as a backend for an object cache in WordPress. Memcached is similar to APC, minus the opcode caching.

You can store your full-page caches inside of Memcached instead of in files on the server for faster load times, although the gain will be smaller on modern servers with fast solid-state drives. Memcached can be run on both Apache- and Nginx-based servers.

One of the advantages of Memcached over other object caching techniques (including Redis and APC) is that a Memcached cache can be distributed over multiple servers. So if you have multiple servers hosting your app, they can all use the one Memcached instance to store a common cache instead of having their own (often redundant) cache stores on each server. Interesting note: the enterprise version of W3 Total Cache allows you to use APC across multiple servers seamlessly.
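It helps to know how a single cache spans several Memcached servers: the servers don't talk to each other, and the client decides which server holds each key, typically by hashing the key. Here's a minimal Python sketch of a consistent-hash ring, one common technique for that decision, so that every web server maps a given key to the same cache server and adding a server only moves a fraction of the keys. The addresses, replica count, and `HashRing` class are made up for illustration and don't reproduce any particular client library.

```python
import bisect
import hashlib
from typing import List, Tuple


class HashRing:
    """Toy consistent-hash ring mapping cache keys to Memcached servers."""

    def __init__(self, servers: List[str], replicas: int = 100) -> None:
        ring: List[Tuple[int, str]] = []
        for server in servers:
            # Several virtual points per server spread keys more evenly.
            for i in range(replicas):
                ring.append((self._hash(f"{server}#{i}"), server))
        ring.sort()
        self._ring = ring
        self._hashes = [h for h, _ in ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)

    def server_for(self, key: str) -> str:
        # First ring point clockwise from the key's hash, wrapping at the end.
        index = bisect.bisect(self._hashes, self._hash(key)) % len(self._ring)
        return self._ring[index][1]


servers = ["10.0.0.1:11211", "10.0.0.2:11211", "10.0.0.3:11211"]
ring = HashRing(servers)
print(ring.server_for("wp_options:alloptions"))

keys = [f"post:{i}" for i in range(1000)]
bigger = HashRing(servers + ["10.0.0.4:11211"])
moved = sum(ring.server_for(k) != bigger.server_for(k) for k in keys)
print(f"{moved} of {len(keys)} keys moved after adding a fourth server")
```

With a naive `hash(key) % number_of_servers` scheme, adding a server would remap most keys and effectively empty the cache; the ring only reassigns the keys that now fall on the new server's points.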

These are the rough steps to set up Memcached:

1. Install the Memcached service on your server, give it some memory, and run it.

2. Use W3 Total Cache or the Memcached Object Cache plugin to update the WordPress object cache to use Memcached.

Here are some good links for information on using Memcached in general and with WordPress:

§ “Memcached” at PHP.net

§ the Memcached website

§ “WordPress + Memcached” by Scott Taylor

Redis

Redis is another system for storing key-value pairs in memory on your Apache- or Nginx-based web server. Like Memcached, it can be used as a backend for your WordPress object cache or page cache.

Unlike Memcached, Redis can store data in lists, sets, and sorted sets in addition to simple key-value hashes. These data structures aren't always useful for your apps, though, and some developers appreciate the maturity of Memcached, which was created a few years before Redis.

These are the rough steps to set up Redis:

1. Install Redis on your server, give it some memory, and run it.

2. Use a replacement for the WordPress index.php that searches the Redis cache and serves pages from there if found. A popular version is wp-redis-cache.

3. Run a plugin or other script to clear the Redis cache when posts are updated or other content changes.

Here are some good links for information on using Redis in general and with WordPress:

§ The Redis website

§ WP-Redis-Cache

§ “WordPress with Redis as a Frontend Cache” by Jim Westergren

Varnish

Varnish is a reverse proxy that can sit in front of your Apache or Nginx setup and serve cached versions of complete web pages to your visitors. Because your web server and PHP are never even loaded for cached pages, Varnish will outperform Memcached and Redis for full page caching. On the other hand, Varnish is not meant to do object caching, so it only helps with pages that can be cached in full.

These are the rough steps for setting up Varnish with WordPress:

1. Install Varnish on your server.

2. Configure Varnish to ignore the dashboard at /wp-admin/ and other sections of your site which shouldn’t be cached.

3. Use a plugin to purge the Varnish cache when posts are updated and other updates are done to WordPress. Some popular plugins to do this are WP-Varnish and Varnish HTTP Purge.

Here are some good links for information on using Varnish in general and with WordPress:

§ The Varnish website

§ “How to Install and Customize Varnish for WordPress” by Austin Gunter

§ Varnish 3.0 Configuration Templates

Batcache

Batcache uses APC or Memcached as a backend for full-page caching in WordPress. The end result should be similar to using W3 Total Cache or another plugin integrated with APC or Memcached for full-page caching.

One thing unique to Batcache is that the caching is only enabled if a page has been loaded two times within 120 seconds. A cache is then generated and used for the next 300 seconds. These values can be tweaked to fit your purposes, but the basic idea here is that Batcache is meant primarily as a defense against traffic spikes like those that happen when a website is “slashdotted,” “techcrunched,” “reddited,” or linked to by any of the other websites large enough to warrant its own verb. Another benefit of caching only pages under heavy load is that less RAM is required to store the cache.

If you tweak the default settings, you can set up Batcache to work as an always-on full page caching system.
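The default rule (cache a page only after two requests within 120 seconds, then serve that copy for 300 seconds) can be simulated in a few lines. The HotPageCache class below is a hypothetical illustration of the rule, not Batcache’s code; the current time is passed in explicitly so the behavior is easy to follow. In this sketch, setting the hit threshold to 1 turns it into an always-on page cache.

```php
<?php
// Hypothetical simulation of a "cache only hot pages" rule.
class HotPageCache
{
	private $times;            // hits needed before a page is cached
	private $seconds;          // window in which those hits must occur
	private $max_age;          // how long a cached copy is served
	private $hits = array();   // url => timestamps of recent hits
	private $pages = array();  // url => array(html, expires_at)

	public function __construct($times = 2, $seconds = 120, $max_age = 300)
	{
		$this->times = $times;
		$this->seconds = $seconds;
		$this->max_age = $max_age;
	}

	// Handle a request at time $now; returns array(html, 'HIT' or 'MISS').
	public function request($url, $now, callable $render)
	{
		if (isset($this->pages[$url]) && $this->pages[$url][1] > $now) {
			return array($this->pages[$url][0], 'HIT');
		}

		// Record this hit and forget hits that fell outside the window.
		$recent = isset($this->hits[$url]) ? $this->hits[$url] : array();
		$recent[] = $now;
		$window_start = $now - $this->seconds;
		$recent = array_values(array_filter($recent, function ($t) use ($window_start) {
			return $t > $window_start;
		}));
		$this->hits[$url] = $recent;

		$html = $render($url);
		if (count($recent) >= $this->times) {
			// The page is "hot": cache it for max_age seconds.
			$this->pages[$url] = array($html, $now + $this->max_age);
		}
		return array($html, 'MISS');
	}
}
```

With the defaults, requests at 0 and 60 seconds are both misses, but the second one caches the page, so a request at 100 seconds is a hit and the copy expires at 360 seconds.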

These are the rough steps for setting up Batcache with WordPress:

1. Set up Memcached or APC to be used as the in-memory key-value store for Batcache.

2. Download the Batcache plugin from the WordPress repository.

3. Move the advanced-cache.php file to the wp-content folder of your WordPress install, and make sure WP_CACHE is defined as true in your wp-config.php file so that WordPress loads it.

Batcache has an interesting pedigree since it was developed specifically for WordPress. It was first used on WordPress VIP sites and WordPress.com. Batcache was originally called Supercache, but the popular caching plugin WP Super Cache was released around the same time and the Supercache/Batcache authors changed the name from one famous DC Comics caped crusader to another. Here are some good links for information on using Batcache with WordPress.

§ The Batcache plugin

§ “WordPress Caching using APC and Batcache” by Jonathan D. Johnson

§ Original Batcache announcement and overview by Andy Skelton

Selective Caching

The caching methods described so far have either been full page caches or otherwise “dumb” caches storing every WordPress object in cache. Rules could then be added to tell the cache to avoid certain URLs or conditions, but basically you were caching all of the things.

Sometimes you will want to do things the other way around. You’ll want to cache specific pages and objects. This is typically done by storing information within a WordPress transient. If you have a persistent object cache like APC enabled, that stored object will load that much faster.

NOTE

What we’re calling selective caching here is commonly referred to as fragment caching. Whatever the term, the concept is the same: caching parts of a rendered web page instead of the full page.

For example, you might have a full-page cache enabled through W3 Total Cache or Varnish, but you’ll need to exclude logged-in users from seeing the cache because member-specific information could get cached. Mary could end up seeing “Welcome, Bob” in the upper right of the page. Still, some portion of each page might be the same for each user or certain kinds of users. We can selectively cache that information if it takes excessive database calls or computation to compile.

Good candidates for selective caching include reports, complicated post queries, and other bits of content that require a lot of time or memory to compute.
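The Mary and Bob scenario above comes down to splitting each page into a personalized part that is never cached and a shared part that is computed once. The sketch below is hypothetical: render_member_page() is a made-up name, and a plain PHP array passed by reference stands in for the cache. Inside WordPress, that array would be replaced by a transient.

```php
<?php
// Hypothetical sketch of selective (fragment) caching: the per-user greeting
// is rendered on every request, while the expensive shared report is cached.
function render_member_page($display_name, array &$fragment_cache, callable $build_report)
{
	// Personalized, so never cached ("Welcome, Bob" must not reach Mary).
	$greeting = 'Welcome, ' . htmlspecialchars($display_name);

	// Shared and expensive, so computed once and reused for everyone.
	if (!isset($fragment_cache['class_report'])) {
		$fragment_cache['class_report'] = $build_report();
	}

	return $greeting . "\n" . $fragment_cache['class_report'];
}
```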

The Transient API

Transients are the preferred way for WordPress apps to set and get values out of the object cache. If no persistent caching system is installed, the transients are stored inside of the wp_options table of the WordPress database. If an object caching system like APC, APCu, Memcached, or Redis is installed, then that system is used to store the transients.

At any time, the server could be rebooted or the object cache memory could be cleared, wiping out your stored transients. For this reason, when storing transients, you should always assume that the storage is temporary and unreliable. If the information to be stored needs to be saved, you can still store it inside of a transient redundantly for performance reasons, but make sure you also store it in another way—most likely by saving an option through update_option().
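The “store it twice” advice can be sketched as a two-tier lookup: a fast but volatile cache in front of a durable store. In this hypothetical TwoTierStore class, the $cache array plays the role of the transient and the $durable array plays the role of an option saved with update_option(); nothing here is a WordPress API.

```php
<?php
// Hypothetical two-tier store: a volatile cache in front of a durable copy.
class TwoTierStore
{
	public $cache = array();   // may be wiped at any time (reboot, eviction)
	public $durable = array(); // survives restarts, like wp_options

	public function save($key, $value)
	{
		$this->durable[$key] = $value; // the copy we can rely on
		$this->cache[$key] = $value;   // the copy that makes reads fast
	}

	// Returns the value, or null if it was never saved.
	public function load($key)
	{
		if (array_key_exists($key, $this->cache)) {
			return $this->cache[$key];
		}
		if (array_key_exists($key, $this->durable)) {
			// The cache was cleared: repopulate it from the durable copy.
			$this->cache[$key] = $this->durable[$key];
			return $this->durable[$key];
		}
		return null;
	}

	// Simulate a server reboot or a flushed object cache.
	public function flushCache()
	{
		$this->cache = array();
	}
}
```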

In the SchoolPress app, we need to get an average homework score across all assignments a single student has submitted. The query for this would involve running the AVG() function against the meta_value column of the wp_usermeta table. Running an average for one student with, say, 10 to 100 assignments wouldn’t be too intense; however, if you had a page showing the average score for 20 to 80 students within one class, that series of computations might take a while to run. To speed this up, we can cache the results of the full class report within a transient, as illustrated in Example 16-1.

This is a perfect use case for using transients because having access to the computed results inside of a transient will speed up repeated loads of the report, but it’s OK if the transient suddenly disappears because we can always compute the averages from scratch.

Example 16-1. SPClass

class SPClass
{
	/* ... constructor and other methods ... */

	function getStudents()
	{
		/* gets all users within the BuddyPress group for this class */
		return $this->students;	//array of student objects
	}

	function getAssignmentAverages()
	{
		//check for transient
		$this->assignment_averages =
			get_transient('class_assignment_averages_' . $this->ID);

		//no transient found? compute the averages
		//(get_transient() returns false on a miss; an empty array is a valid result)
		if(false === $this->assignment_averages)
		{
			$this->assignment_averages = array();
			$this->getStudents();
			foreach($this->students as $student)
			{
				$this->assignment_averages[$student->ID] =
					$student->getAssignmentAverages();
			}

			//save in transient
			set_transient('class_assignment_averages_' .
				$this->ID, $this->assignment_averages);
		}

		//return the averages
		return $this->assignment_averages;
	}

	//clear assignment averages transients when an assignment is graded
	//(static, because it is hooked as array('SPClass', ...) below)
	public static function clear_assignment_averages_transient($assignment_id)
	{
		//class id is stored as postmeta on the assignment post
		$assignment = new Assignment($assignment_id);
		$class_id = $assignment->class_id;

		//clear any assignment averages transient for this class
		delete_transient('class_assignment_averages_' . $class_id);
	}
}

add_action('sp_update_assignment_score', array('SPClass',
	'clear_assignment_averages_transient'));

The example includes a lot of snipped code and makes some assumptions about the SPClass and Student classes. However, you should get the idea of how this report uses transients to store the computed averages, retrieve them, and clear them out on updates.

In the preceding example, we store the array held in $this->assignment_averages in the transient. Alternatively, we could have stored the generated HTML, but storing the array still saves us most of the complex database calls and is more flexible.

The function to store a value inside of a transient is set_transient($transient, $value, $expiration), and attributes are as follows:

§ $transient: Unique name for the transient, 45 characters or less.

§ $value: The value to store. Objects and arrays are automatically serialized and unserialized for you.

§ $expiration: An optional expiration time for the transient, in seconds. By default, this value is 0, and the transient doesn’t expire until it is deleted. Once a transient has expired, get_transient() returns false for it, and an expired transient stored in the database is deleted the next time it is requested.
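To make the expiration and return-value rules concrete, the hypothetical TransientEmulator class below mimics the set/get/delete contract described above in plain PHP, with the current time passed in so expiration is easy to test. It is a teaching sketch, not WordPress’s implementation. Note that a cached empty array is a hit even though empty() treats it as empty, which is why it is safer to test get_transient() results with false === than with empty().

```php
<?php
// Hypothetical emulation of the set_transient()/get_transient() contract.
class TransientEmulator
{
	private $rows = array(); // name => array(serialized value, expires_at)

	public function set($name, $value, $expiration = 0, $now = 0)
	{
		// 0 means "never expires"; arrays and objects are serialized.
		$expires_at = $expiration > 0 ? $now + $expiration : 0;
		$this->rows[$name] = array(serialize($value), $expires_at);
		return true;
	}

	// Returns the stored value, or false if it is missing or expired.
	public function get($name, $now = 0)
	{
		if (!isset($this->rows[$name])) {
			return false;
		}
		list($data, $expires_at) = $this->rows[$name];
		if ($expires_at !== 0 && $expires_at < $now) {
			unset($this->rows[$name]); // expired: delete on read
			return false;
		}
		return unserialize($data);
	}

	public function delete($name)
	{
		unset($this->rows[$name]);
		return true;
	}
}
```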

Notice that we use a descriptive key (class_assignment_averages_) followed by the ID of the class group. This way all classes will have their own transient for storing assignment averages.

To retrieve the transient, we simply call get_transient($transient), passing one parameter with the unique name of the transient. If the transient is available and not past expiration, the value is returned. Otherwise the call returns false.

To delete the transient before expiration, we simply call delete_transient($transient), passing one parameter with the unique name of the transient. Notice that in the example, we hook into sp_update_assignment_score, which is fired when any assignment gets scored. We pass an array as the callback for the hook since the method is part of the SPClass class. The sp_update_assignment_score hook passes the $assignment_id as a parameter. The callback method uses this ID to find the assignment and the associated class ID, then deletes the corresponding class_assignment_averages_{ID} transient.
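The invalidation flow (score updated, hook fires, callback maps the assignment to its class and deletes that class’s transient) can be traced without WordPress. In this sketch, MiniHooks is a hypothetical, stripped-down stand-in for add_action() and do_action(), and the arrays of transients and assignment-to-class mappings are made-up sample data.

```php
<?php
// Hypothetical stand-in for add_action()/do_action().
class MiniHooks
{
	private $callbacks = array();

	public function on($hook, callable $callback)
	{
		$this->callbacks[$hook][] = $callback;
	}

	public function fire($hook, ...$args)
	{
		if (empty($this->callbacks[$hook])) {
			return;
		}
		foreach ($this->callbacks[$hook] as $callback) {
			call_user_func_array($callback, $args);
		}
	}
}

// Made-up data: cached averages per class, and assignment ID => class ID.
$transients = array('class_assignment_averages_3' => array(12 => 88.0));
$assignment_class = array(501 => 3);

$hooks = new MiniHooks();
$hooks->on('sp_update_assignment_score', function ($assignment_id) use (&$transients, $assignment_class) {
	// Find the class for this assignment, then drop its cached averages.
	$class_id = $assignment_class[$assignment_id];
	unset($transients['class_assignment_averages_' . $class_id]);
});
```

Firing sp_update_assignment_score with assignment 501 removes class_assignment_averages_3, so the next report load recomputes the averages.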

If you were storing transients related to posts or users, you may want to clear them out on “save_post” or “profile_update,” respectively.

NOTE

When a persistent object cache is installed through a drop-in object-cache.php file, the transient functions are fairly simple wrappers for wp_cache_set(), wp_cache_get(), and wp_cache_delete(), storing transients in the “transient” cache group. Without one, they read and write the wp_options table instead. If you wanted, you could call the wp_cache_ functions directly. Information on these functions can be found in the WordPress Codex.

Multisite Transients

In network installs, the transients set with set_transient() are specific to the current network site. So our “class_assignment_averages_1” set on one network site won’t be available on another network site. (This makes sense in the assignment scores example.)

If you’d like to set a transient network-wide, WordPress offers variants of the transient functions:

§ set_site_transient($transient, $value, $expiration)

§ get_site_transient($transient)

§ delete_site_transient($transient)

These functions work the same way as the basic transient functions; however, site transients are stored network-wide in the wp_sitemeta table instead of in the individual network site wp_options tables.

Because the transients set with set_site_transient() prefix the string _site to the front of the transient name, you only have 40 characters to work with for the name versus the usual 45.
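One way to stay within those limits is to build transient names through a helper. The build_transient_name() function below is hypothetical: it uses the 45- and 40-character limits stated above, and when a name would be too long, it keeps a short readable prefix and replaces the rest with an md5 hash of the full name (32 hex characters).

```php
<?php
// Hypothetical helper: build a transient name that respects the
// 45-character (set_transient) or 40-character (set_site_transient) limit.
function build_transient_name($prefix, $id, $network_wide = false)
{
	$name = $prefix . $id;
	$limit = $network_wide ? 40 : 45;
	if (strlen($name) > $limit) {
		// Keep part of the prefix for readability; the hash of the full name
		// keeps different long names distinct in practice.
		$name = substr($prefix, 0, $limit - 33) . '_' . md5($name);
	}
	return $name;
}
```

For example, build_transient_name('class_assignment_averages_', 42) returns class_assignment_averages_42 unchanged, because it is already short enough.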

Finally, note that a different set of hooks fires before and after a network-wide transient is set than for a single network site transient. If you’ve written code that hooks into pre_set_transient_{$transient}, set_transient_{$transient}, or setted_transient, you may need to have that code also hook into pre_set_site_transient_{$transient}, set_site_transient_{$transient}, and setted_site_transient.

Using JavaScript to Increase Performance

A useful tactic for speeding up page loads is to load certain parts of a web page through JavaScript instead of generating the same output through dynamic PHP.

This technique can make pages appear to load faster, since the frame of the web page can be loaded quickly while the time-intensive portion of the site loads afterward as a “loading…” icon flashes on the screen or a progress bar fills up. Your users get immediate feedback that the page has loaded, along with an indication to sit tight for a few seconds while the rest of the page renders.

Using JavaScript can also literally speed up your page loads. If you load all of the dynamic content of a page through JavaScript, you can then use a page cache to serve the rest of the page without hitting PHP.

For example, on many blogs, the only piece of dynamic content is the comments. Using the built-in WordPress comments and a full page cache means that recent comments won’t show up on the site until the cache clears. However, if you use a JavaScript-based commenting system like those provided through the Jetpack plugin or a service like Disqus or Facebook, then your comments section is simply a bit of static JavaScript code that loads the dynamic comments from another server.

Example 16-2 shows a bare-bones example of how you can go about loading specific content through JavaScript on an otherwise static page.

Example 16-2. JS Display Name plugin

<?php
/*
Plugin Name: JS Display Name
Plugin URI: http://bwawwp.com/js-display-name/
Description: A way to load the display name of a logged-in user through JS
Version: .1
Author: Jason Coleman
Author URI: http://bwawwp.com
*/

/*
	use this function to place the JavaScript in your theme

	if(function_exists("jsdn_show_display_name"))
	{
		jsdn_show_display_name();
	}
*/
function jsdn_show_display_name($prefix = "Welcome, ")
{
?>
<p>
	<script src="<?php echo esc_url(admin_url(
		"/admin-ajax.php?action=jsdn_show_display_name&prefix=" .
		urlencode($prefix)
	));?>"></script>
</p>
<?php
}

/*
	This function detects the JavaScript call and returns the user's display name
*/
function jsdn_wp_ajax()
{
	$current_user = wp_get_current_user();
	if(!empty($current_user->display_name))
	{
		$prefix = isset($_REQUEST['prefix']) ?
			sanitize_text_field(wp_unslash($_REQUEST['prefix'])) : '';
		//escape for HTML since document.write() inserts markup
		$text = esc_html($prefix . $current_user->display_name);
		header('Content-Type: text/javascript');
		?>
document.write(<?php echo json_encode($text);?>);
		<?php
	}
	exit;
}
add_action('wp_ajax_jsdn_show_display_name', 'jsdn_wp_ajax');
add_action('wp_ajax_nopriv_jsdn_show_display_name', 'jsdn_wp_ajax');

Custom Tables

Another tool you will definitely need in your toolbox, both when building WordPress apps in general and specifically when optimizing performance, is a custom database table or view that makes certain lookups and queries faster.