How to Use Objects: Code and Concepts (2016)

Part II: Contracts

Chapter 5. Testing

Testing is an activity not much loved in the development community: You have to write code that does not contribute a shred of new functionality to your application, and the writing is frustrating, too, because most of the time the tests do not run through, so that you have to spend even more time on figuring out whether the test or the application code is wrong. Some people think that the reason for hiring special test engineers is to shield the real developers from the chores of testing and let them get on with their work.

Fortunately, the agile development movement has taught us otherwise [28, 29, 172, 171, 92]: Testing is not the bane of productivity, but rather the backbone of productivity in software engineering. Testing makes sure we build the software we seek to build. Testing makes us think through the border cases and understand our own expectations of the software. Testing enables us to change the software and adapt it to new requirements. Testing lets us explore design and implementation alternatives quickly. Testing flattens the learning curve for new libraries. And finally, testing tells us when we are done with our task, and can go home and relax, so that we can return sprightly the next morning, if nothing else.

The purpose of this chapter is to show you that testing can actually be a very rewarding activity, and one that boosts both productivity and job satisfaction. The main point we will be pursuing is this:

Testing is all about checking our own expectations.

Whenever we write software, we are dealing with expectations of its future runtime behavior. In the previous chapter, we introduced assertions and contracts (Section 4.1) as a framework for reasoning about code to make sure it works once and for all. Many practitioners feel, however, that they need to see the code run to be convinced. Donald Knuth’s formulation of this feeling has stuck through the decades: “Beware of bugs in the above code; I have only proved it correct, not tried it.” [139]

This chapter therefore complements the previous one by pointing out motivations and techniques for testing runtime behavior. Do not make the mistake of thinking that contracts become obsolete because you engage in testing: No serious software developer can develop serious software without forming a mental picture of assertions, contracts, and invariants that explain why the code works. Tests are only one (particularly effective) way of capturing and trying out these assertions. Without having the assertions, you will not be able to write tests.

5.1 The Core: Unit Testing

Testing usually targets not whole applications, but rather single components and objects: most of the time, we perform unit testing. The underlying reasoning is, of course, that components conforming rigorously to their specifications will create a running overall application; to make sure that this is the case, we can always come back later and write tests targeting groups of components or even the entire application as their “units.” These tests are then usually called functional tests or integration tests, or acceptance tests if they concern use cases specified in the legal contract about the software. Because of its widespread use, good integration with Eclipse, and status as a pioneering implementation [34, 30], we will use JUnit in this book.

Before looking any further, let us get in the right mindset about testing:

Testing is incredibly fast and cheap.

Since testing has become a central day-to-day activity in professional software development, the available Eclipse tooling supports the developer through all steps. Here is a very simple example that “tests” the built-in arithmetic:

testing.intro.Introduction

    import static org.junit.Assert.*;

    import org.junit.Test;

    public class Introduction {
        @Test
        public void obvious() {
            assertEquals(42, 6 + 36);
        }
    }

We have created this snippet in a few seconds by letting Eclipse generate the code (Section 1.2.1): Typing @Test makes Quick-Fix (Ctrl-1) propose to add JUnit to the project’s class path. Typing assertEquals makes it propose a static import for Assert.*, a common idiom for unit testing. Running tests is equally simple:

Tool: Starting Unit Tests in Eclipse

Select Debug As/JUnit Test Case from the test class’s context menu, either in the Package Explorer or in the editor. You can also press Alt-Shift-D T. When applying these steps to packages or projects, Eclipse will execute all contained test classes.

We typically use Debug As and Alt-Shift-D, rather than Run As and Alt-Shift-X, because the debugger will frequently come in handy. Thus it is a good idea, and one that comes with only a small overhead, to start the test in debug mode.

A little side view appears that announces by a bright, encouraging, green icon that the test has succeeded. The view also offers to rerun tests—either the whole test suite, only the failed tests, or, through the context menu, selected test cases.

Tests boost productivity.

Compare this with the effort of manually trying out an application’s behavior—for instance, because a user has reported a bug somewhere. Starting the UI, and then clicking through the various menus and dialogs until you can actually exercise the code deep down that you suspect of being faulty, can take several minutes, and does so every time you have to recheck the behavior after a change to the code.

Automatic JUnit tests, in contrast, let you run the suspected code directly, by creating a few objects and calling the method that seems to be the culprit. It is true that writing the first test for an object takes more time than clicking through the UI once. However, the effort is amortized quickly if for every attempt to fix the bug, you simply have to click a button to re-execute the test. Even the runtime of the test itself is much shorter than that of the application, because less infrastructure has to come up (Section 5.3.2.1). In particular, if your application separates the core functionality from the user interface code (Section 9.1), tests of the functionality will run an order of magnitude faster than the overall application.

Run tests frequently.

Tests are most beneficial if you run them after every change to the software: If you broke something in the software machinery, you will know immediately and you can fix it while the knowledge of the machinery is fresh in your mind. Since Eclipse makes running tests so simple, there is no reason not to make sure about the software every few minutes.

Make tests fully automatic.

Frequent testing is possible only if running the tests involves no more effort than pressing a button. For this to work out, all tests have to check the expected results automatically. It is not acceptable for tests to produce output for the user to inspect, because no one will bother checking the output manually, and the bugs introduced in a change will go unnoticed.

It is this automatic testing that creates the connection between testing and reasoning about software by assertions and contracts (Section 4.1): Only if you have fixed your expectations in contracts will you be able to tell exactly which outcome to expect from a test. The test merely checks whether your expectation actually holds true. Conversely, writing automatic tests will advance your mastery of contracts. In the end, both techniques are complementary and mutually dependent tools for achieving the goal of correctness.
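The difference between a check that someone must read and a check that fails on its own can be sketched without any framework. The class SelfChecking and its helper expectEquals are hypothetical names chosen for this illustration; in a JUnit test, the role of the helper is played by assertEquals.

```java
import java.util.Objects;

final class SelfChecking {
    // Not automatic: someone has to read the console and know that 42 is right.
    static void printResult() {
        System.out.println("6 + 36 = " + (6 + 36));
    }

    // Automatic: the expectation is part of the code, so a wrong result fails loudly.
    static void checkResult() {
        expectEquals(42, 6 + 36);
    }

    // Hypothetical helper: compares expectation and outcome, throws on mismatch.
    static void expectEquals(Object expected, Object actual) {
        if (!Objects.equals(expected, actual)) {
            throw new AssertionError(
                    "expected <" + expected + "> but was <" + actual + ">");
        }
    }
}
```

Only checkResult can be rerun after every change without anyone looking at it, which is what frequent testing requires.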

Tests create fixtures for examining objects.

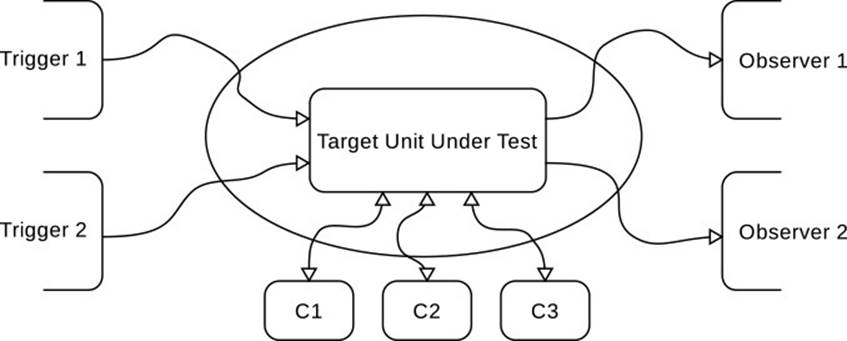

The challenge in making tests pay is to minimize the effort required to extract single functional units, whether they are objects or components, from the application and place them inside a test environment, which is also called a fixture in this context. Fig. 5.1 illustrates the point, in analogy to an electrical testing board (also sometimes called a fixture). You have to “wire up” the unit in an environment that enables it to perform its work. Some objects will “trigger” the unit by injecting data or calling methods; other objects will “observe” the behavior of the unit. Very often, these observers are just the assertEquals statements within the test case, which extract the result of computations from the unit and compare it with an expected![]() 2.1 value; sometimes they will be actual observers in the sense of the pattern.

2.1 value; sometimes they will be actual observers in the sense of the pattern.![]() 5.3.2.1

5.3.2.1 ![]() 1.1 Finally, the target unit will need some collaborators, shown at the bottom of Fig. 5.1, which provide specific services.

1.1 Finally, the target unit will need some collaborators, shown at the bottom of Fig. 5.1, which provide specific services.

Figure 5.1 Creating a Fixture

Since fixtures are a common necessity, JUnit provides extensive support for them. It pays particular attention to the case where a number of test cases (i.e., a number of different trigger scenarios in Fig. 5.1) work in the context of the same fixture. The next sample code shows the procedure. For each method annotated @Test, the framework creates a new instance of the class, so that the test starts with a clean slate. It then runs the method tagged @Before, which can create the fixture by setting up the context of the unit under test. Next, it runs the actual @Test to trigger a specific behavior. Finally, it gives the method tagged @After the chance to clean up after the test, for instance by removing files created on disk. (The names setUp and tearDown reflect the JUnit3 naming conventions, which have stuck with many developers.)

testing.intro.Fixture

    public class Fixture {
        private UnitUnderTest target;

        @Before
        public void setUp() {
            ... wire up target
        }

        @After
        public void tearDown() {
            ... free resources used in test
        }

        @Test
        public void test() {
            ... trigger behavior of target
        }
    }

Sometimes it is useful to create a common environment for all test cases, such as by setting up a generic directory structure into which the different test files can be placed. This is accomplished by methods tagged @BeforeClass and @AfterClass.

Technically, each test case gets a fresh instance of the class, so the setup could also be done in the constructor or in field initializers. However, this is considered bad practice. First, exceptions thrown during setup get reported rather badly as “instance creation failed.” Second, JUnit creates the instances of all test cases in the beginning, so that setups that acquire resources, open database connections, or build expensive structures can hit resource limits.
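The lifecycle described earlier (fresh instance, then the method tagged @Before, then the @Test, then the method tagged @After) can be mimicked by a toy runner in plain Java. This is only a sketch of the idea under simplifying assumptions, not JUnit's implementation: the annotations ToyBefore, ToyTest, and ToyAfter and the classes ToyRunner and LifecycleDemo are hypothetical names invented here, and the toy creates each instance right before its test rather than modeling when JUnit creates them.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.reflect.Constructor;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for JUnit's @Before, @Test, and @After.
@Retention(RetentionPolicy.RUNTIME) @interface ToyBefore {}
@Retention(RetentionPolicy.RUNTIME) @interface ToyTest {}
@Retention(RetentionPolicy.RUNTIME) @interface ToyAfter {}

final class ToyRunner {
    /** Runs each @ToyTest method of testClass on its own fresh instance. */
    static void run(Class<?> testClass) {
        try {
            Constructor<?> constructor = testClass.getDeclaredConstructor();
            constructor.setAccessible(true);
            for (Method test : testClass.getDeclaredMethods()) {
                if (!test.isAnnotationPresent(ToyTest.class)) {
                    continue;
                }
                Object instance = constructor.newInstance(); // clean slate
                invokeTagged(instance, ToyBefore.class);     // create the fixture
                try {
                    test.setAccessible(true);
                    test.invoke(instance);                   // trigger the behavior
                } finally {
                    invokeTagged(instance, ToyAfter.class);  // clean up in any case
                }
            }
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("toy test run failed", e);
        }
    }

    private static void invokeTagged(Object instance, Class<? extends Annotation> tag)
            throws ReflectiveOperationException {
        for (Method method : instance.getClass().getDeclaredMethods()) {
            if (method.isAnnotationPresent(tag)) {
                method.setAccessible(true);
                method.invoke(instance);
            }
        }
    }
}

// A test class in the style of the Fixture listing; it records each lifecycle step.
final class LifecycleDemo {
    static final List<String> LOG = new ArrayList<>();

    LifecycleDemo() { LOG.add("new"); }

    @ToyBefore void setUp() { LOG.add("setUp"); }
    @ToyAfter void tearDown() { LOG.add("tearDown"); }
    @ToyTest void first() { LOG.add("test"); }
    @ToyTest void second() { LOG.add("test"); }
}
```

After ToyRunner.run(LifecycleDemo.class), the log reads new, setUp, test, tearDown, new, setUp, test, tearDown: each test method gets its own instance, its own setup, and its own cleanup.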

JUnit goes even further in creating fixtures by allowing you to parameterize the working environment of the target unit. Suppose you have written some code accessing databases. Since your clients will be using different database systems, you have to test your code on all of them. To accomplish this, you instruct JUnit to use a special executor (or runner) for the test suite, the pre-defined runner Parameterized (line 1 in the next snippet). That runner will query the test class for a list of values by invoking a static method tagged @Parameters (lines 8–15). The values are passed as arguments to the constructor (lines 5–7) and can then be retrieved later by the test cases (lines 18–19).

testing.intro.ParameterizedTests

     1 @RunWith(Parameterized.class)
     2 public class ParameterizedTests {
     3     private DBType databaseType;
     4     private String host;
     5     public ParameterizedTests(DBType databaseType, String host) {
     6         ... store away the parameters
     7     }
     8     @Parameters
     9     public static List<Object[]> getDatabaseTypes() {
    10         Object parameters[][] = {
    11             { new DBType("MySQL"), "localhost" },
    12             ...
    13         };
    14         return Arrays.asList(parameters);
    15     }
    16     @Test
    17     public void test() {
    18         System.out.println("Accessing " + databaseType + " at "
    19                 + host);
    20     }
    21 }

Once such a fixture is in place, it is easy and lightweight to create yet another test, to cover yet another usage scenario for the target unit. Of course, the class can also contain further private helper methods (Section 1.4.5) that make it yet simpler to perform the elementary steps of triggering and observing the target unit.
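The mechanism behind a parameterized run, as described above, can be pictured in plain Java: a list of rows is obtained from a static method, and each row is passed to the constructor of a fresh test instance. The following is a rough sketch under stated assumptions, not the Parameterized runner itself. The classes ParameterRows, DatabaseCase, and ToyParameterized are hypothetical, plain strings stand in for the DBType class of the listing, and the second row is made up for illustration.

```java
import java.lang.reflect.Constructor;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class ParameterRows {
    // One row per fixture variant; the values are illustrative only.
    static List<Object[]> rows() {
        Object[][] parameters = {
            { "MySQL", "localhost" },
            { "PostgreSQL", "db.example.org" },
        };
        return Arrays.asList(parameters);
    }
}

final class DatabaseCase {
    private final String databaseType;
    private final String host;

    DatabaseCase(String databaseType, String host) {
        this.databaseType = databaseType;
        this.host = host;
    }

    // Stands in for a test method that uses the stored parameters.
    String describe() {
        return "Accessing " + databaseType + " at " + host;
    }
}

final class ToyParameterized {
    /** Creates one DatabaseCase per row and collects what each instance reports. */
    static List<String> runAll() {
        List<String> results = new ArrayList<>();
        try {
            Constructor<DatabaseCase> constructor =
                    DatabaseCase.class.getDeclaredConstructor(String.class, String.class);
            for (Object[] row : ParameterRows.rows()) {
                // The elements of the row become the constructor arguments.
                DatabaseCase testCase = constructor.newInstance(row);
                results.add(testCase.describe());
            }
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("could not create test instance", e);
        }
        return results;
    }
}
```

Adding a database system to the run then means adding one row; the test code itself stays unchanged.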

See each test fail once.

Tests are only software themselves, and it is easy to make mistakes in tests. Very often, you might write a test with a particular special case of your software’s behavior in mind, but not test the corresponding code segment at all.

Suppose you are unsure about whether the code for a special case in some method is correct. You trigger the method and observe the result. Because the result is as expected, you conclude that the code in question is correct. What may have happened, however, is that the actual execution has bypassed the existing bug. This problem can be avoided only if you see the bug manifest itself in the test once. Maybe you even check that the questionable code is executed using the debugger. If later the same test case succeeds, you can then be sure that you have tested the right thing and that thing is correct.
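As a hypothetical illustration (the leap-year method below is not part of the book's code), consider a method whose author forgot the century rule. A check written with the century case in mind, but using the year 2000, succeeds anyway, because 2000 is a leap year under either rule. Only the year 1900 exposes the missing case.

```java
final class FebruaryLength {
    // Deliberately incomplete: years divisible by 100 but not by 400
    // (such as 1900) are not leap years, and this code ignores that.
    static int daysInFebruary(int year) {
        return (year % 4 == 0) ? 29 : 28;
    }
}

final class CenturyRuleChecks {
    // Intended to cover the century rule, but 2000 is divisible by 400,
    // so the incomplete code above gets it right by accident.
    static boolean year2000LooksFine() {
        return FebruaryLength.daysInFebruary(2000) == 29;
    }

    // 1900 actually depends on the century rule; this check does not hold
    // for the code above.
    static boolean year1900IsCommonYear() {
        return FebruaryLength.daysInFebruary(1900) == 28;
    }
}
```

Here year2000LooksFine() returns true although the rule is missing, while year1900IsCommonYear() returns false and thereby shows the defect. Having seen the second check fail is what justifies trusting it once it succeeds.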

Write a regression test for every bug you find and fix.

There are many motivations and guidelines for testing (Section 5.4). Since testing is all about the reliability of software, bugs are at its very core: Bugs, and the possibility of bugs, are the main reason for using tests. We therefore include one fundamental guideline here (Section 5.4.1): Whenever you find a bug, write a test to expose the bug, then fix the bug, and finally see the test succeed. In this way, you document the successful solution and you ensure the bug will not resurface later on in case you just removed the symptom of the bug, rather than its source.
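A minimal sketch of this workflow, using a made-up bug report (“the word count of an empty text is 1”): the check for the reported case is written first and does not hold for the code as shipped; after the fix it holds and stays in the test suite. WordCounter, countAsShipped, and count are hypothetical names for this illustration.

```java
final class WordCounter {
    // As shipped: splitting an empty string yields one empty element,
    // so an empty text is reported as containing one word.
    static int countAsShipped(String text) {
        return text.trim().split("\\s+").length;
    }

    // Fixed: the empty text is handled before splitting.
    static int count(String text) {
        String trimmed = text.trim();
        return trimmed.isEmpty() ? 0 : trimmed.split("\\s+").length;
    }
}

final class WordCounterRegression {
    // The regression check derived from the bug report.
    static boolean emptyTextHasNoWords() {
        return WordCounter.count("") == 0;
    }
}
```

Against countAsShipped the same expectation fails, since it yields 1 for the empty text; that failure is the evidence that the check really targets the reported bug.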

Let your testing inspire a modular design.

Before we go on to more detail, let us finish with one motivation for and outlook on testing that has always driven us to think carefully about the tests we write: Tests can help you create better designs (Sections 11.1 and 12.1).

The reason is a technical one: Whether it will be easier or harder to place a unit in a fixture clearly depends on how many connections the unit has with the objects in its environment, and how many assumptions it makes about these objects. The triggers and observers will have to replicate the machinery to fulfill these assumptions before testing can even begin (Section 5.3.2.1). The underlying observation is that loose coupling provides for testability: An object that makes few assumptions, that is, one that is loosely coupled to its environment (Section 12.1.2), will be easier to test.
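A small, hypothetical example may make the point concrete. The class Greeter below makes exactly one assumption about its environment, namely that something can tell it the current hour. In a fixture, that collaborator can be replaced by a fixed value; in the application, it would be backed by the system clock. The class and its messages are invented for this sketch.

```java
import java.util.function.IntSupplier;

final class Greeter {
    // The only connection to the environment: a source for the hour of day (0-23).
    private final IntSupplier hourOfDay;

    Greeter(IntSupplier hourOfDay) {
        this.hourOfDay = hourOfDay;
    }

    String greeting() {
        int hour = hourOfDay.getAsInt();
        if (hour < 12) {
            return "Good morning";
        }
        if (hour < 18) {
            return "Good afternoon";
        }
        return "Good evening";
    }
}
```

A test can trigger every branch directly, for instance with new Greeter(() -> 9), whereas the application might pass () -> java.time.LocalTime.now().getHour(). Had Greeter read the system clock itself, the fixture would have to control the time of day before any check could be written.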

The desire to write tests quickly can therefore guide you to structure your code in a more modular fashion [163], since a failure to achieve modularity shows up immediately in the increased effort of writing tests. Conversely, the effort put into creating a modular structure (Section 6.1.1) is partly compensated by the decreased effort required for writing tests.

It is also interesting to see the situation in Fig. 5.1 as a form of reuse (Section 12.4). That is, if a unit that was intended for an entirely different environment is placed into the testing fixture and still continues to work, then the likelihood that it can be reused in yet another, nontesting environment is increased.

In fact, this argument describes the best possible outcome of extensive testing. The opposite outcome is also possible: If developers are forced to write tests and are measured only by how many acceptance tests are passed, they may be inspired to write quick and dirty code that gets them past the next release date (Section 5.1). Be careful to take the right design cues from writing tests and to assign tests a constructive role in your software processes.

By now, you should be convinced that testing is beneficial beyond the nitty-gritty of tracing bugs and ensuring correct behavior. You should be itching to check your expectations on whether and how the software you are currently writing will actually work properly. JUnit, and other tools, are waiting to assist you in this quest.

5.2 The Test First Principle

Traditional software processes consider testing a post-hoc activity: You specify what your software is supposed to do, then you write the software, and finally you validate your implementation by writing tests. Unfortunately, this is often too late: By the time a bug is discovered, no one remembers clearly how the software was supposed to work in the first place; worse yet, very often a bug is only a manifestation of a misunderstanding in the specification, and substantial changes to the software will be required at the last minute. A conclusion that has been gaining more and more support in the community since the 1990s is the test-first principle [92, 26, 171, 172]:

Start software development by writing tests.

At first, this is startling: How can you test something that is not even there? The explanation derives from the idea that the whole purpose of tests is to check your expectations. And the expectation of what the software under development will eventually do is clearly there right from the beginning—there is no reason not to write it down immediately.

Suppose that for your own source code editor, you want to build a text buffer that supports efficient modifications (Sections 1.3.1 and 4.1). Before you begin to write it, you capture some minimal expectations that you happen to think of:

testing.intro.TestFirst

    public class TestFirst {
        private TextBuffer buf;

        @Before
        public void setUp() {
            buf = new TextBuffer();
        }

        @Test
        public void createEmpty() {
            assertEquals("", buf.getContent());
        }

        @Test
        public void insertToEmpty() {
            buf.insert(0, "hello, world");
            assertEquals("hello, world", buf.getContent());
        }
    }

Unfortunately, the code does not even compile: You have not yet created the class TextBuffer or its methods getContent and insert. In fact, this is the intention of test-driven development (Sections 5.4.3 and 5.4.5): The test cases to be fulfilled drive which parts of the software need to be created and which behavior they should exhibit. Robert Martin proposes three laws that capture the essence [171]:

1. You may not write production code unless you have first written a failing unit test.

2. You may not write more of a unit test than is sufficient to fail.

3. You may not write more production code than is sufficient to make the failing unit test pass.

These laws together suggest a particular way of developing software: Write a small unit test that fails, write just the functionality that is needed, then proceed to the next test (Section 5.1), and so on, until the tests cover the desired functionality. The interesting point is that the software developed in this way is correct, because it already passes all relevant tests.

In the previous example, we obeyed (1) but slightly bent (2), because we actually checked two expected behaviors. But the interesting point is (3): We have to make the tests succeed by adding just enough production code. Using Quick-Fix (Ctrl-1), we let Eclipse generate the missing class and the two missing methods. Then, at least, the code compiles, but the tests still fail, since getContent always returns null:

testing.intro.TextBuffer

    public Object getContent() {
        // TODO Auto-generated method stub
        return null;
    }

Even if the test fails so far, we have still gained something: We can run the test, it fails, and so by the second half of rule (1), we can start on the production code.

Tests keep you focused on what you want to achieve.

The interesting point in supplying the production code is that we are up against a concrete challenge: We do not want to find the ultimate version of TextBuffer; we just want the next test to succeed. In other words, we are looking for the “simplest thing that could possibly work” [28]. In this case, we might first think about a StringBuilder. We get through the first test createEmpty by just defining:

testing.intro.TextBuffer

    private StringBuilder rep = new StringBuilder();

    public Object getContent() {
        return rep.toString();
    }

We can also solve the second task by adding:

testing.intro.TextBuffer

    public void insert(int pos, String str) {
        rep.insert(pos, str);
    }

Now both tests run through, and we can start the next round of testing-and-solving. For instance, we write a test for deleting a number of characters at a given position:

testing.intro.TestFirst

    @Test
    public void delete() {
        buf.insert(0, "hello, wonderful world");
        buf.delete(7, 10);
        assertEquals("hello, world", buf.getContent());
    }

We can implement the new method immediately:

testing.intro.TextBuffer

    public void delete(int pos, int len) {
        // StringBuilder.delete expects (start, end), so translate (pos, len)
        rep.delete(pos, pos + len);
    }

The nice thing is that after every change, you rerun all tests to make sure immediately that the new functionality works and that the old functionality continues to work (Section 5.1). You make steady progress on the goals at hand.
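Assembled from the fragments developed so far, the class at this point might look as follows. This is merely the three methods put together, with the return type Object kept as Eclipse generated it; it is a snapshot after three rounds, not a finished text buffer.

```java
final class TextBuffer {
    // Current representation; the tests do not depend on it.
    private final StringBuilder rep = new StringBuilder();

    public Object getContent() {
        return rep.toString();
    }

    public void insert(int pos, String str) {
        rep.insert(pos, str);
    }

    public void delete(int pos, int len) {
        // StringBuilder.delete expects (start, end), so translate (pos, len).
        rep.delete(pos, pos + len);
    }
}
```

Each method exists only because a test demanded it, and every further requirement would enter the class the same way: first as a failing test, then as the smallest change that makes it pass.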

Test-first keeps you focused on the clients’ perspective.

The initial depiction of objects (Section 1.1) already includes the injunction that in designing objects, we should always start from the clients’ (i.e., the outside) point of view. This insight has resurfaced in the discussion of “good” contracts for methods (Sections 4.1 and 4.2.2). Test-driven development is a simple means of achieving this goal (Section 5.4.3): If you start out by writing the client code before even thinking about the object’s internals, these internals will not cloud your judgement about a good interface.

In the example of deletion, for instance, we translated the idea “a number of characters at a given position” into parameters (pos, len), and we discovered only afterward that the current representation uses parameters (start, end) instead. Since the representation is intended to be changeable (Section 11.5.1), this is the way to go: The desirable client interface stays intact and sensible, and the details of the behavior are handled internally.

Test-first ensures testability.

Another advantage of writing tests immediately is that the new unit does not have to be extracted from its natural environment with a great effort (Section 5.1): It first lives as a stand-alone entity and is then integrated into the application context.

We remarked earlier that testing inspires modular design (Section 5.1). Test-first increases this effect, because the units are created independently from one another before they start collaborating. Since one writes only the minimal test code that fails on the unit, the necessary fixture is kept to a minimum. As a consequence, the unit will even be loosely coupled (Section 12.1): As it makes few demands on the fixture, and since it works in that context, it will make few demands on any context into which it will be deployed.

Test-first creates effective documentation.

Developers deplore writing documentation, because it does not contribute to the functionality and becomes outdated quickly anyway. Conversely, developers love reading and writing code. Test code, when it is well structured and readable (Sections 1.2.3 and 1.4.6), is very effective documentation: Other team members can read it as tutorial code that shows how the unit under test is supposed to be used. With test-first, that explanation is even supplied by the original developer, who, of course, understands best the intention of the tested unit. For the original developer, the test code serves as a reminder when the developer comes back to the unit for maintenance.

5.3 Writing and Running Unit Tests

The basic usage of JUnit has already been explained (Section 5.1): Just press Alt-Shift-D T to run the current class as a JUnit test suite. In most situations, one needs to know a bit more about the concepts and the mechanics of testing. We treat these considerations here to keep this chapter self-contained, even if they reference forward into later chapters.

5.3.1 Basic Testing Guidelines

Test development can be a substantial task, if it is taken seriously. Following a few guidelines will make this work much more effective, so that the investment in tests pays off sooner.

Keep the tests clean.

Although tests may start out as ad-hoc artifacts created to explore and fix specific bugs, they must really be seen as a long-term investment. Much of their benefit shows up during maintenance, possibly long after the software has been developed and deployed. This means, however, that the tests themselves must be maintained in parallel with the software and that they must be ready to be run at any given time. Running the tests frequently and making them automatic is one precaution, but it must also be easy to extend the test suite to cover extensions of the software, and to adapt the tests to changes in the requirements and the behavior of the software.

Since tests in this way become an integral part of the software, they should be kept as clean and as maintainable as the production code [172, Ch. 9]. For instance, one can factor out common setup and test fixtures, either in separate methods, classes, or superclasses, and one should certainly spend time on good naming to make the code self-documenting. The immediate reward for these activities is the feedback that all tests are up and running. The long-term reward is a dependable software product.
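Factoring out a shared fixture can be sketched as follows. The Cart class and all method names are hypothetical and serve only as an illustration. The checks are written in plain Java so that the sketch runs without a test library; in a JUnit class, the check methods would carry @Test and use assertEquals directly.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical unit under test: a tiny shopping cart.
class Cart {
    private final List<Integer> prices = new ArrayList<>();

    void add(int priceInCents) {
        prices.add(priceInCents);
    }

    int total() {
        int sum = 0;
        for (int p : prices) {
            sum += p;
        }
        return sum;
    }

    int itemCount() {
        return prices.size();
    }
}

class CartChecks {
    // Shared fixture, factored out so that every check starts from the same state.
    static Cart cartWithTwoItems() {
        Cart cart = new Cart();
        cart.add(250);
        cart.add(1000);
        return cart;
    }

    // The method names state the expected behavior and document the unit.
    static void totalIsSumOfItemPrices() {
        Cart cart = cartWithTwoItems();
        expectEquals(1250, cart.total());
    }

    static void addingAnItemIncreasesItemCount() {
        Cart cart = cartWithTwoItems();
        cart.add(5);
        expectEquals(3, cart.itemCount());
    }

    // Minimal stand-in for JUnit's assertEquals.
    static void expectEquals(int expected, int actual) {
        if (expected != actual) {
            throw new AssertionError("expected " + expected + " but was " + actual);
        }
    }
}
```

Because each check obtains a fresh fixture from cartWithTwoItems(), a change to the setup has to be made in one place only.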

Keep the tests up-to-date.

Tests must be maintained carefully to enable them to fulfill their role, in particular in the long run: If every month one test case gets broken and the developer does not bother to check out the problem, after a few years there will be so many rotten tests that no one dares to run them frequently, and newly broken tests will not be recognized. Such a test suite is a lost investment, one that consumes development time without ever producing any benefits.

Document known problems using succeeding tests.

A corner case arises with known problems and limitations of the software: On the one hand, they are bugs and should be documented by tests. On the other hand, you do not intend to fix them right away because they are low-priority items. Adding a test nevertheless will make JUnit report an overall “red bar,” with the consequences just discussed. The solution is to write the test, say clearly that it constitutes a failure, but make it show as “green” nevertheless, for instance by catching the (expected) exception. Later on, when there is some time, you can simply turn the test “red” and fix the known problem.
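A minimal sketch of such a “green” test for a known problem might look as follows. The PercentParser class and its limitation are invented for illustration, and the check is written in plain Java; with JUnit, the method would simply be annotated with @Test.

```java
// Hypothetical unit with a known, low-priority limitation:
// input with leading whitespace is not handled yet.
class PercentParser {
    static int parse(String text) {
        // Known problem: " 50%" fails because the text is not trimmed.
        return Integer.parseInt(text.substring(0, text.length() - 1));
    }
}

class PercentParserChecks {
    // KNOWN PROBLEM: documents the current failure but stays "green".
    // To work on the fix, remove the try/catch and expect parse(" 50%") == 50.
    static void knownProblemLeadingWhitespaceIsRejected() {
        try {
            PercentParser.parse(" 50%");
        } catch (NumberFormatException expected) {
            return; // the documented failure still occurs
        }
        throw new AssertionError(
                "known problem seems fixed; turn this into a regular test");
    }
}
```

Letting the check fail once the exception no longer occurs is a design choice of this sketch: it signals that the documentation of the known problem has become outdated.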

Test against the interface, not the implementation.

Software engineers usually distinguish between black-box and white-box tests [233]. Black-box tests capture the behavior as stated in the specification. White-box tests look at the actual source code to ensure, for instance, that every branch in the code is covered by at least one test. The danger of looking at the implementation too closely is that the tests are blind to the border cases not covered there. Also, if the implementation changes later, the original tests will no longer achieve their objective.

To integrate both perspectives, it is sensible to align tests with the specification, but to look into the implementation to discover those special cases that the specification does not mention. Such a case can be interpreted in two ways: Either the specification is incomplete, or the code does something that is not covered by its specification. If the case does not follow immediately from the specification, it should be mentioned explicitly, at least in some internal comment, so that the maintenance team can understand its necessity. In this way, the tests will finally target the software’s interface and will provide a very effective documentation.

Make each test check one aspect.

Tests are useful for capturing contracts and checking for behavior in detail. This works best if each test covers one specific behavior or reaction of the software: The test’s name links to that detail, the triggers are limited to the bare necessities, and the test’s asserts check the one expected outcome. With this setup, a failing test points immediately to a specific mechanism that has been broken. In test-driven development, each test case corresponds to a use case, and each should be documented and described independently.

Writing a separate test for each aspect requires slightly more overhead than testing a multistep behavior in a single go, because one needs to apply similar triggers and check similar assertions in the end. This overhead can be minimized by keeping tests clean and by factoring out the common code.

We note, however, that sometimes bugs manifest themselves only in specific sequences of interactions; these should, of course, be written up as a single test case. This point does not contradict the general rule, because only the granularity of what constitutes an “aspect” has changed: The aspect here is the interaction between the different invoked features.

Keep tests independent.

The order in which tests are run is not defined, even if you might observe that your specific version of JUnit executes tests in alphabetical order. Tests that rely on such an order are not very useful: The larger context complicates bug tracking, while the main point of testing is to create a small, well-defined context for the purpose. Also, such tests cannot be rerun on their own for debugging if they fail.

Test runs should be reproducible.

Tracking failures in tests is greatly simplified if the code is executed in exactly the same way each time the test is started. After the error is shown, you can use the debugger to step through the code, possibly restarting if you missed the crucial point at the first try. This implies that the fixture must be set up in the same way for each run.

Even so, it can still be useful to create randomized input data. First, of course, such tests might catch border cases that you had not identified previously. Second, creating bulk data by hand is hardly effective. There is certainly no harm in using randomized tests as a complementary set of usage scenarios besides the hand-coded fixtures. You might also consider saving the created input to disk explicitly to make the tests themselves deterministic.
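Besides saving the generated input to disk, a lighter way to keep randomized tests deterministic is to fix the seed of the random number generator. The following sketch uses this alternative; the seed value, the class name, and the use of Arrays.sort as a stand-in for the unit under test are assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.Random;

class RandomizedSortCheck {
    // Fixed seed (an arbitrary choice): every run generates the same data.
    static final long SEED = 20160101L;

    static int[] randomInput(long seed, int size) {
        Random random = new Random(seed);
        int[] data = new int[size];
        for (int i = 0; i < size; i++) {
            data[i] = random.nextInt(1000) - 500; // includes negative values
        }
        return data;
    }

    static boolean isSorted(int[] data) {
        for (int i = 1; i < data.length; i++) {
            if (data[i - 1] > data[i]) {
                return false;
            }
        }
        return true;
    }

    static void sortsRandomizedBulkData() {
        int[] data = randomInput(SEED, 10_000);
        Arrays.sort(data); // stands in for the unit under test
        if (!isSorted(data)) {
            // Reporting the seed allows the failing run to be repeated exactly.
            throw new AssertionError("not sorted for seed " + SEED);
        }
    }
}
```

If a failure shows up, the same seed reproduces the same input, so the run can be repeated under the debugger as often as necessary.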

Don’t modify the API for the tests.

One crucial problem of tests is that their assertions can often be written up best by peeking at the internal state of the target unit. At some point in the test code you might think, “I’m not sure about whether this object is actually already broken.” In such a circumstance, you would like to check its invariant, but that invariant is about the internal fields that are not accessible.

It is a bad idea to make fields public or to create getters just for testing purposes: Clients may think that these members belong to the official API and start using them in regular code, even if the documentation indicates that the member is visible only for testing purposes. Also, the test relies on the target’s internals, so that an enhancement of these internals may break the test even though the final outcome remains correct. Testing the internals goes against the grain of encapsulation and destroys its benefits.

If you feel that you really must look at the internals of an object, you can make private and protected fields visible at runtime using reflection. Line 5 gets a handle on a private field in the target unit. Line 6 overrides the access protection enforced by the JVM, so that line 7 can actually get the value.

testing.basics.AccessPrivateTest

    1  @Test
    2  public void accessPrivateState() throws Exception {
    3      TargetUnit target = new TargetUnit();
    4      target.compute();
    5      Field state = target.getClass().getDeclaredField("state");
    6      state.setAccessible(true);
    7      assertEquals(42, state.get(target));
    8  }

Test the hard parts, not the obvious ones.

Writing and maintaining tests is always an effort, and it must be justified by corresponding benefits. Since the core benefit of tests is to check for the absence of bugs in specific usage scenarios, a test is futile if it checks obvious things. For instance, testing a setter by calling the corresponding getter afterward will not be very useful. Conversely, if the setter has further side effects on the object’s state and is expected to send out change notifications, then it might be a good idea to include a test of these reactions. In general, it is useful to test behavior

• That has a complex specification

• Whose implementation you have understood poorly

• Whose implementation involves complex, possibly cross-object invariants

• That is mission-critical to the project

• That is safety-critical

Focusing on such aspects of the product also increases the immediate reward of the tests and motivates the team to keep the tests up-to-date and clean.
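To illustrate the setter example, the following sketch checks the change notifications rather than the stored value. The Person class and the NameListener interface are hypothetical, and the check is plain Java; in JUnit it would be a @Test method.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical listener interface for name changes.
interface NameListener {
    void nameChanged(String oldName, String newName);
}

// Hypothetical unit under test: the setter notifies listeners on actual changes.
class Person {
    private String name = "";
    private final List<NameListener> listeners = new ArrayList<>();

    void addNameListener(NameListener listener) {
        listeners.add(listener);
    }

    String getName() {
        return name;
    }

    void setName(String newName) {
        if (name.equals(newName)) {
            return; // no change, no notification
        }
        String oldName = name;
        name = newName;
        for (NameListener listener : listeners) {
            listener.nameChanged(oldName, newName);
        }
    }
}

class PersonChecks {
    static void setterNotifiesOnlyOnActualChange() {
        Person person = new Person();
        List<String> received = new ArrayList<>();
        person.addNameListener(
                (oldName, newName) -> received.add(oldName + "->" + newName));

        person.setName("Ada");
        person.setName("Ada"); // same value again: must not notify
        person.setName("Grace");

        List<String> expected = Arrays.asList("->Ada", "Ada->Grace");
        if (!received.equals(expected)) {
            throw new AssertionError("expected " + expected + " but was " + received);
        }
    }
}
```

The check targets the nontrivial part of the setter, namely that a repeated value does not trigger a second notification.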

Write tests that leave the “happy path.”

The happy path of a piece of software is its defined functionality: Given the right inputs, it should deliver the expected results. It is obvious that tests should cover this expected behavior to verify that the software achieves its purpose. Focusing tests on the happy path is also encouraged by the non-redundancy principle from design-by-contract: The code remains simpler if a method does not check its pre-conditions, so it is free to return any result or even crash entirely if the caller violates the contract. Testing the happy path respects such a behavior.

However, many components of a software system must be resilient to unexpected inputs or must at least fail gracefully if something does not go according to plan. Foremost, the boundary objects must be paranoid about their input: Users or other systems will routinely submit erroneous inputs and the system must cope without crashing. However, the safety-critical internal components must also not do anything dangerous just because of a bug in some other component.

In such cases, it is important to write tests that leave the software’s happy path. These tests deliberately submit ill-formatted input or input outside the specified range and check that nothing bad happens: Negative numbers in ordering items must not trigger a payment to the customer, ill-formatted strings must not bring the server crashing down, and the robot arm must not damage the surrounding equipment because of a wrong movement parameter.

Because professional software is, above all, reliable software, professional developers routinely write several times as many tests for “wrong” scenarios as for the happy path. If nothing else, writing many such tests spares you the embarrassment of seeing your customer break the software in the first demo session.
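A test that leaves the happy path can be sketched as follows, using the ordering scenario as a model. The OrderLine class and its validation rules are assumptions made for illustration; the checks are plain Java and would be @Test methods in JUnit.

```java
// Hypothetical boundary object: validates its input before accepting it.
class OrderLine {
    private final int quantity;
    private final int unitPriceInCents;

    OrderLine(int quantity, int unitPriceInCents) {
        if (quantity <= 0) {
            throw new IllegalArgumentException("quantity must be positive: " + quantity);
        }
        if (unitPriceInCents < 0) {
            throw new IllegalArgumentException("price must not be negative: " + unitPriceInCents);
        }
        this.quantity = quantity;
        this.unitPriceInCents = unitPriceInCents;
    }

    int totalInCents() {
        return quantity * unitPriceInCents;
    }
}

class OrderLineChecks {
    // Happy path: valid input yields the expected total.
    static void validOrderComputesTotal() {
        if (new OrderLine(3, 500).totalInCents() != 1500) {
            throw new AssertionError("wrong total");
        }
    }

    // Off the happy path: a negative quantity must never produce a negative total.
    static void negativeQuantityIsRejected() {
        expectRejected(-3, 500);
    }

    static void zeroQuantityIsRejected() {
        expectRejected(0, 500);
    }

    static void negativePriceIsRejected() {
        expectRejected(1, -500);
    }

    private static void expectRejected(int quantity, int unitPriceInCents) {
        try {
            new OrderLine(quantity, unitPriceInCents);
        } catch (IllegalArgumentException expected) {
            return; // the erroneous input was refused
        }
        throw new AssertionError("accepted invalid order: " + quantity + " x " + unitPriceInCents);
    }
}
```

Note that three of the four checks cover “wrong” scenarios, in line with the ratio mentioned above.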

5.3.2 Creating Fixtures

One of the most time-consuming tasks in testing is the setup of the fixture, which is then shared between different related test cases. We therefore include a brief discussion of common techniques used here.

5.3.2.1 Mock Objects

Since objects are small and focus on a single purpose, they usually require collaborators that perform parts of a larger task on their behalf. As Fig. 5.1 on page 246 indicates, these collaborators must be included in any testing fixture. However, this might be problematic: The collaborators might be developed independently and might not even exist at the time, especially in test-first scenarios. They might be large and complex, which would destroy the test’s focus. They might be complex to set up, which bloats the fixture and increases the test’s runtime. Put briefly, such collaborators need to be replaced.

Mock objects simulate part of the behavior of production objects.

Mock objects are replacements for collaborators that exhibit just the behavior required in the concrete testing scenario [163, 29]. They do not reimplement the full functionality; in fact, they might even fake the answers to specific method calls known to arise in the given test scenario.

As a simple example, the Document in a word processor might be observed by a user interface component that renders the content on the screen. When testing the document, this rendering is irrelevant and blurs the test’s focus. One therefore registers a mock observer that merely keeps all the events in a list (lines 2–3) and later checks that all expected events have arrived (line 6; the method merely loops through the given events and checks their data against that of the received events).

testing.mock.DocumentTest.testNotification

    1  Document doc = new Document("0123456789");
    2  MockObserver obs = new MockObserver();
    3  doc.addDocumentListener(obs);
    4  doc.replace(3, 2, "ABC");
    5  assertEquals("012ABC56789", doc.get());
    6  obs.checkEvents(new DocumentEvent(doc, 3, 2, "ABC"));

Mock objects can check early.

Tests are most useful if they fail early: If the final result of a test is wrong, then the bug must be hunted throughout the executed code [163]. Mock objects make it simpler to create such early failures, because they naturally “listen in” on the conversation of the application object with its collaborators. In the preceding example, for instance, the MockObserver could also have been told the expected events up front, so that any irregularity would have been spotted early, and with a stack trace and debugger state leading to the point of the modification.
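A mock that is told the expected events up front might be sketched as follows. The types TextBuffer, EditListener, and StrictMockListener are simplified, hypothetical stand-ins and not the classes of the preceding example; the check is plain Java rather than a JUnit test.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical listener interface of the unit under test.
interface EditListener {
    void replaced(int offset, int length, String text);
}

// Hypothetical, simplified unit under test.
class TextBuffer {
    private final StringBuilder content;
    private EditListener listener;

    TextBuffer(String initial) {
        content = new StringBuilder(initial);
    }

    void setListener(EditListener listener) {
        this.listener = listener;
    }

    void replace(int offset, int length, String text) {
        content.replace(offset, offset + length, text);
        if (listener != null) {
            listener.replaced(offset, length, text);
        }
    }

    String get() {
        return content.toString();
    }
}

// Mock that knows the expected events and fails at the first deviation.
class StrictMockListener implements EditListener {
    private final Deque<String> expected = new ArrayDeque<>();

    void expect(int offset, int length, String text) {
        expected.add(describe(offset, length, text));
    }

    @Override
    public void replaced(int offset, int length, String text) {
        String actual = describe(offset, length, text);
        String next = expected.poll(); // null if no further event was expected
        if (!actual.equals(next)) {
            // Thrown inside the notification: the stack trace leads to the modification.
            throw new AssertionError("expected " + next + " but got " + actual);
        }
    }

    void verifyAllReceived() {
        if (!expected.isEmpty()) {
            throw new AssertionError("missing events: " + expected);
        }
    }

    private static String describe(int offset, int length, String text) {
        return offset + "," + length + "," + text;
    }
}

class TextBufferChecks {
    static void replaceNotifiesListener() {
        TextBuffer buffer = new TextBuffer("0123456789");
        StrictMockListener mock = new StrictMockListener();
        mock.expect(3, 2, "ABC");
        buffer.setListener(mock);

        buffer.replace(3, 2, "ABC");

        mock.verifyAllReceived();
        if (!"012ABC56789".equals(buffer.get())) {
            throw new AssertionError("unexpected content: " + buffer.get());
        }
    }
}
```

The final call to verifyAllReceived() is still needed, because a strict mock can detect wrong events immediately but can recognize missing events only at the end of the test.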

Mock objects can inject errors.

Robust software also handles error situations gracefully. To test this handling, it is necessary to create these situations artificially, which might not be simple: You cannot simply unplug the network cable at a specific moment during the test to simulate network failure. However, you can create a mock network socket that behaves as if the network connection had been broken. The difference will not be visible to the target unit of the test: All it sees is an exception indicating the failure. (Note also the arbitrary result returned by read(); making the integer sequential might help debugging.)

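testing.mock.MockBrokenNetwork

    import java.io.IOException;
    import java.io.InputStream;

    public class MockBrokenNetwork extends InputStream {
        private final int breakAt;   // number of bytes delivered before the failure
        private final int length;    // total stream length if no failure occurs
        private int currentCount;    // bytes delivered so far

        public MockBrokenNetwork(int breakAt, int length) {
            this.breakAt = breakAt;
            this.length = length;
        }

        @Override
        public int read() throws IOException {
            if (currentCount == breakAt)
                throw new IOException("network unreachable");
            if (currentCount == length)
                return -1;
            // sequential dummy data, masked to the range 0..255 required of read()
            return currentCount++ & 0xFF;
        }
    }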
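How such a mock stream is used in a test might be sketched as follows. The Downloader class and its convention of returning -1 on failure are invented for illustration, and BreakingStream is a compact stand-in for a mock such as MockBrokenNetwork so that the sketch is self-contained. The checks are plain Java rather than JUnit tests.

```java
import java.io.IOException;
import java.io.InputStream;

// Compact stand-in for a mock stream: fails after breakAt bytes.
class BreakingStream extends InputStream {
    private final int breakAt;
    private int count;

    BreakingStream(int breakAt) {
        this.breakAt = breakAt;
    }

    @Override
    public int read() throws IOException {
        if (count == breakAt) {
            throw new IOException("network unreachable");
        }
        return count++ & 0xFF; // sequential dummy data in the range 0..255
    }
}

// Hypothetical unit under test: reports a failure instead of propagating it.
class Downloader {
    /** Returns the number of bytes read, or -1 if the connection broke. */
    static int download(InputStream in, int expectedLength) {
        try {
            int n = 0;
            while (n < expectedLength && in.read() != -1) {
                n++;
            }
            return n;
        } catch (IOException e) {
            return -1;
        }
    }
}

class DownloaderChecks {
    // Error injection: the connection breaks after 5 of 10 expected bytes.
    static void brokenConnectionIsReported() {
        int result = Downloader.download(new BreakingStream(5), 10);
        if (result != -1) {
            throw new AssertionError("failure not reported: " + result);
        }
    }

    // Control case: the failure point lies beyond the requested data.
    static void intactConnectionDeliversAllBytes() {
        int result = Downloader.download(new BreakingStream(20), 10);
        if (result != 10) {
            throw new AssertionError("expected 10 bytes but got " + result);
        }
    }
}
```

The target unit only sees an InputStream, so the same code path is exercised as with a real network failure.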

Separate components by interfaces to enable mock objects.

To work with the target unit of the test, the mock objects must be subtypes of the expected production classes. The easiest way to enable this is to anticipate the need for mock objects and to let the objects that are likely to require testing interact only with interfaces. As a side effect, this leads to a desirable decoupling between the system’s components. The goal of testing can then serve as an incentive to refactor the production code by introducing interfaces.

Furthermore, the introduction of interfaces leads to a design where you think in terms of roles rather than concrete objects. Roles help to focus on the essential expected behavior of objects, rather than the concrete implementation. Also, they enable the designer to differentiate between client-specific interfaces, which structures the individual objects further.

Do not introduce interfaces just because a mock object is required, since this will make the production code less readable. If the majority of types in the code are abstract interfaces, the human reader has to find, using the Type Hierarchy, that there is just a single implementation. Introduce interfaces for testing only if this also improves the production code.

Mock objects improve the design quality.

The original proposal of mock objects [163] observes several beneficial effects of mock objects on the design and structure of the production code. Beyond the possible decoupling by interfaces mentioned previously, objects tend to become smaller and will make fewer assumptions about their environment, just because recreating this environment by mock objects for tests would otherwise become so much harder. Furthermore, the use of the SINGLETON pattern is discouraged, because it fixes the concrete type of the created object so that the object cannot be replaced. As a strategic effect, mock objects encourage the use of observer or visitor relationships, both of which tend to enable extensibility of the system by new operations and hence new functionality.

5.3.3 Dependency Injection

Large frameworks, such as the JavaEE platform [201], offer applications a solid ground on which to build their specific functionality. Furthermore, they serve as containers that provide the application with predefined services such as database persistence or user authentication. To use a service, the application must get hold of the object(s) that constitute the entry point to the service’s API. As more and more services are offered and consumed, the application gets tangled up with boilerplate code to access the required API objects.

Dependency injection is the way out [251, 137, 252]. With this approach, the application declares, by simple annotations, which service objects it requires and relies on the container to provide them transparently. The boilerplate code is replaced by general framework mechanisms for providing dependencies before an object starts working. We will focus here on the core of dependency injection but note that injection frameworks usually offer much more, such as the management of the different components’ life cycles and fine-tuned mechanisms for producing service objects.

Dependency injection helps in creating fixtures.

The central point in the current context is that dependency injection can be used to create fixtures: Once the general mechanisms are in place, they are not limited to a predefined set of container-provided services, but apply equally to different objects and components within the application. If the application uses this setup throughout, then it becomes straightforward to replace production objects with mock objects for testing.

Let us make a tiny example using the JavaEE Contexts and Dependency Injection (CDI) [251], mirroring the overview provided in Fig. 5.1 directly. Some target unit requires two collaborators: one basic Service and one Collaborator, which is specified through an interface to enable its replacement. We mark both as @Inject to request suitable instances from the injection framework.

testing.inject.TargetUnit

    import javax.inject.Inject;

    public class TargetUnit {
        // both collaborators are supplied by the injection framework
        @Inject private Service service;
        @Inject private Collaborator colleague;

        public int compute() {
            return service.compute(colleague.getData());
        }
    }

The unit test must now set up the framework once (lines 1–4) and must access the target unit through the framework to enable injections (line 8). Otherwise, the test proceeds as usual (lines 9–10).

testing.inject.InjectionTest

     1  @BeforeClass
     2  public static void setup() {
     3      weld = new Weld().initialize();
     4  }
     5  @Test
     6  public void runWithInjection() {
     7      TargetUnit target =
     8          weld.instance().select(TargetUnit.class).get();
     9      int result = target.compute();
    10      assertEquals(42, result);
    11  }

The framework will pick up any beans, which are Java classes with a default constructor that obey a few restrictions, from the class path and match them against the types, and possibly other qualifiers, of an injection point [137]. In this way, the local service can be provided as a standard class without further annotations.

testing.inject.Service

    public class Service {
        public int compute(int data) {
            return data * 2;
        }
    }

The mock implementation for the Collaborator, in contrast, is marked as an alternative implementation, so that it does not usually get picked up automatically.

testing.inject.MockCollaborator

    import javax.enterprise.inject.Alternative;

    @Alternative
    public class MockCollaborator implements Collaborator {
        @Override
        public int getData() {
            return 21; // fixed answer for the test scenario
        }
    }

The container uses this special implementation only if the configuration file META-INF/beans.xml, which can be specific to the testing environment, contains a corresponding entry:

    <alternatives>
        <class>testing.inject.MockCollaborator</class>
    </alternatives>

Of course, the concept of alternative implementations also applies to concrete classes, so that we could replace the Service by a MockService in the testing environment.

As an overall result, the testing context wires up all dependencies into the target from the outside, so that the target unit contains the unmodified production code. Dependency injection has helped to create an effective fixture.
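The principle of wiring dependencies from the outside does not depend on a container. As a minimal sketch, the same fixture can be built by hand with constructor injection, which is a different technique from the field injection shown above. All types below (Calculator, Doubler, DataSource) are hypothetical counterparts chosen for illustration, and the check is plain Java rather than a JUnit test.

```java
// Hypothetical collaborator, specified through an interface to enable its replacement.
interface DataSource {
    int getData();
}

// Hypothetical basic service.
class Doubler {
    int compute(int data) {
        return data * 2;
    }
}

// Hypothetical target unit: receives its collaborators through the constructor.
class Calculator {
    private final Doubler service;
    private final DataSource source;

    Calculator(Doubler service, DataSource source) {
        this.service = service;
        this.source = source;
    }

    int compute() {
        return service.compute(source.getData());
    }
}

class CalculatorChecks {
    static void computesWithMockDataSource() {
        // The test context decides which implementation the target unit sees.
        DataSource mock = () -> 21;
        Calculator target = new Calculator(new Doubler(), mock);
        if (target.compute() != 42) {
            throw new AssertionError("expected 42 but was " + target.compute());
        }
    }
}
```

Compared with a container, the manual variant requires the test to know all dependencies of the target unit, but it needs no configuration file and no framework startup.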

5.3.4 Testing OSGi Bundles

The Eclipse platform [174] provides a solid basis for your own application development. Its strength is a flexible and expressive module system, called OSGi (short for Open Services Gateway initiative). OSGi enables components, called bundles, to coexist within a Java application. In Eclipse, the term bundle is synonymous with plugin for historical reasons. The OSGi framework provides many services, such as notifications at startup and shutdown of the application, and the dynamic loading and unloading of bundles, which go beyond the capabilities of the standard Java runtime. Testing OSGi bundles therefore requires this framework to be up and running to provide the expected infrastructure.

Tool: Quick-Start JUnit Plugin Test

As a shortcut to running tests on top of the OSGi platform, just use Debug as/JUnit Plug-in Test from the context menu of the current class or press Alt-Shift-D P (instead of Alt-Shift-D T for the usual unit tests).

Unfortunately, this configuration is rarely useful: It selects all plugins in the current workspace and from the Eclipse target platform, so that starting up the test is unbearably slow, which makes it impossible to run the test frequently.

Tool: Launching JUnit Plugin Tests

Under Debug/Debug Configurations, the category JUnit Plug-in Test provides launchers for tests that require the OSGi platform. When you enter this dialog after selecting a test class, the New Launch Configuration toolbar item will create the same setup as the quick-start described earlier. However, you can configure it immediately. Once the test is configured, you can always reinvoke it with Alt-Shift-D P.

The first task in configuring is to select only the required plugins. Go to the new test, and then to the tab titled Plug-ins. You should first Deselect all. Then, you can reselect the plugin containing the test and click Add required plug-ins. At this point, the setup contains the minimal number of necessary bundles and startup time will be quick.

Try to use Headless mode whenever you are not actually testing the user interface. Starting tests with the Eclipse user interface up, even if it is never used, takes much time. In the tab titled Main, you should therefore set the selection Run an application to Headless mode.

As seen in the Main tab, the test is run in a special work area that gets erased when the tests are launched. However, for performance reasons, the work area is not deleted between the individual test cases, as the following code shows: The first test case creates a project that the second expects to be present. (We rely here on the alphabetic order behavior of our local JUnit implementation.)

testing.doc.ResourcesUsageTest

```java
@Test
public void nonErasedBetweenTestsA() throws CoreException {
    ResourcesPlugin.getWorkspace().getRoot()
            .getProject("Retained")
            .create(null);
}

@Test
public void nonErasedBetweenTestsB() throws CoreException {
    assertTrue(ResourcesPlugin.getWorkspace().getRoot()
            .getProject("Retained").exists());
}
```

Plugin tests can exploit the powerful OSGi infrastructure. For instance, they can take advantage of libraries contained within bundles (see Section A.2.1) and can use a test workspace as a scratchpad for creating data.

In summary, testing on top of the OSGi platform is simple and cheap if you follow a few straightforward rules. Furthermore, it gives you the OSGi infrastructure for free.

5.3.5 Testing the User Interface

The user interface is, in some sense, the most important part of an application, because its quality will determine whether you earn the users’ trust. Put simply, if the interface misbehaves too often, the users will deduce that the application is a bit flaky on the whole and will not apply it for critical tasks. It is therefore desirable to test the user interface. The Eclipse environment offers the SWTBot project [83] for the purpose. Before we give a brief description, let us put the overall goal into perspective.

Minimize the need for user interface tests.

Unfortunately, user interface tests pose two new challenges, compared to tests of an application’s business logic. First, user interfaces are event-driven (see Section 7.1), meaning they react to single user inputs, one at a time, and the sequence of these inputs will determine their reaction (see Section 10.1). Think of the New Class dialog in Eclipse. It should not matter whether you enter the class name or the package name first; the checks for legality should run in the same way. However, surprisingly often the concrete code does have a preference, because its developer thought of one particular input sequence. Thorough tests must encompass not just a simple collection of input/output scenarios; they also will need to drive at an expected result by several different interaction paths. A second, more fundamental problem is that user interfaces are volatile (see Section 9.1), since they have to be adapted to the users’ changing requirements at short notice. However, even simple “style-only” changes, such as in the order or labels of buttons, can sometimes break the test cases and necessitate extensive and expensive rework.
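The idea of reaching the same expected result by several interaction paths can be sketched without any real widgets. The following is a minimal illustration only: `NewClassForm`, its methods, and its validation rules are hypothetical stand-ins and are not taken from the Eclipse New Class dialog.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, UI-free model of a "New Class"-style form (not Eclipse code).
class NewClassForm {
    private String className = "";
    private String packageName = "";

    void enterClassName(String name) { className = name; }
    void enterPackageName(String name) { packageName = name; }

    // Validation looks only at the current field contents,
    // never at the order in which the fields were filled.
    List<String> validate() {
        List<String> errors = new ArrayList<>();
        if (className.isEmpty()
                || !Character.isJavaIdentifierStart(className.charAt(0))) {
            errors.add("invalid class name");
        }
        if (packageName.isEmpty()) {
            errors.add("package name missing");
        }
        return errors;
    }
}

class InteractionPathCheck {
    // Drives two forms along different input orders and compares the outcome.
    static boolean sameResultOnBothPaths(String className, String packageName) {
        NewClassForm classFirst = new NewClassForm();
        classFirst.enterClassName(className);
        classFirst.enterPackageName(packageName);

        NewClassForm packageFirst = new NewClassForm();
        packageFirst.enterPackageName(packageName);
        packageFirst.enterClassName(className);

        return classFirst.validate().equals(packageFirst.validate());
    }

    public static void main(String[] args) {
        System.out.println(sameResultOnBothPaths("Account", "bank.core"));
        System.out.println(sameResultOnBothPaths("9lives", ""));
    }
}
```

In a real test suite, each input order would typically become its own test case, so that a failure immediately names the interaction path that the code does not handle.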

Invest in testing the core, rather than the user interface.

Fortunately, well-engineered applications separate the user interface code from the core functionality (see Section 9.1). Ideally, the user interface is a simple, shallow layer that displays the application data and triggers operations in the core. The unit tests of the core functionality will then go a long way toward making the application well behaved. At the same time, these unit tests are rather straightforward. The testing of the actual user interface can then focus on the overall integration and on demonstrating that all use cases are covered (see Sections 5.4.4 and 5.4.6).

The SWTBot simulates the events fired by the real widgets.

User interfaces, very briefly, fill the screen with widgets (see Section 7.1), such as text fields, their labels, and buttons. Whenever the user interacts with these widgets, such as by typing into a text field or clicking a button with the mouse, the widgets send events to the application; in turn, the application takes corresponding actions. In a rough approximation, one could say that the application observes the widgets (see Section 2.1), even if the notifications do not usually concern the widgets’ state.
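The observer relationship between widgets and application, and the idea of firing the widget's events from a test, can be illustrated in plain Java. This is only a rough sketch: `FakeButton`, `ClickListener`, and `ClickCounter` are hypothetical names and do not correspond to the SWT or SWTBot APIs.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for a button widget; this is not the SWT API.
class FakeButton {
    interface ClickListener {
        void clicked();
    }

    private final List<ClickListener> listeners = new ArrayList<>();

    void addClickListener(ClickListener listener) {
        listeners.add(listener);
    }

    // The essence of simulated input: fire the same notification
    // that a real mouse click on the widget would have caused.
    void simulateClick() {
        for (ClickListener listener : listeners) {
            listener.clicked();
        }
    }
}

// Hypothetical application code that observes the widget.
class ClickCounter {
    private int clicks = 0;

    ClickCounter(FakeButton button) {
        button.addClickListener(() -> clicks++);
    }

    int getClicks() {
        return clicks;
    }
}

class FakeButtonDemo {
    public static void main(String[] args) {
        FakeButton button = new FakeButton();
        ClickCounter counter = new ClickCounter(button);
        button.simulateClick();
        button.simulateClick();
        System.out.println(counter.getClicks());
    }
}
```

The application code cannot tell whether the notification came from a user or from a test, which is what makes automated, repeatable interaction sequences possible.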

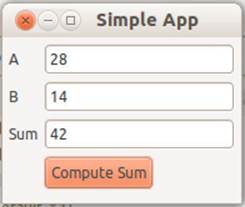

Consider the very simple application in Fig. 5.2. The user types two numbers into the fields labeled A and B, and clicks the button. The application is to compute the sum of the two numbers.

Figure 5.2 Simple Example Application for SWTBot

When the user interacts with the widgets, the application is notified about these interactions. The SWTBot allows test cases to trigger the same sequences of events automatically and repeatably. One challenge consists of identifying the components on the screen. The SWTBot offers many search methods, each of which returns a proxy for the real widget (see Section 2.4.3).

Here is a simple example for this application. Line 3 looks up the first text field (in the tree of widgets; see Section 7.1) and uses the proxy to “type” some text. Line 4 fills text field B accordingly, and line 5 searches for a button by its label and “clicks” it through the proxy. Finally, line 6 checks the result text field.

testing.swt.SimpleAppTest

```java
1 @Test
2 public void checkAddition() {
3     bot.text(0).setText("28");
4     bot.text(1).setText("14");
5     bot.button("Compute Sum").click();
6     assertEquals("42", bot.text(2).getText());
7 }
```

To apply the SWTBot, the JUnit test has to start the application under test. If you are testing whole Eclipse applications, then the SWTBot run configurations under Run as/Configurations will do the job. It is also possible to start applications programmatically. Because user interfaces involve rather subtle threading issues (see Section 7.10.1), some care must be taken. The mechanics are already available in a JUnit runner class; you just have to fill in the actual starting code:

testing.swt.SimpleAppTestRunner

```java
public class SimpleAppTestRunner
        extends SWTBotApplicationLauncherClassRunner {

    public SimpleAppTestRunner(Class<?> klass) throws Exception {
        super(klass);
    }

    @Override
    public void startApplication() {
        SimpleApp.main(new String[0]);
    }
}
```

Then, you have to tell JUnit to run the test suite with that specialized runner:

testing.swt.SimpleAppTest

```java
@RunWith(SimpleAppTestRunner.class)
public class SimpleAppTest {

    private static SWTBot bot;

    @BeforeClass
    public static void setupClass() {
        bot = new SWTBot();
    }

    ...
}
```

The SWTBot works only for OSGi plugin tests (see Section 5.3.4). Since this is its main application area, it also starts up the Eclipse platform, which takes a few hundred milliseconds. Testing the user interface is therefore slightly less lightweight than unit testing, which is another argument for focusing tests on the core functionality.

In summary, it is feasible to test the user interface with JUnit, in much the same way that one tests any component. Because of the challenges mentioned in this section, however, it is a good idea not to test aspects of the functionality that could just as easily be tested independently of the user interface by targeting the core functionality directly.
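To make the last point concrete, here is a hedged sketch of how the addition from Fig. 5.2 could be checked without any widgets. It assumes that the computation lives in a separate core class; `SumCalculator` and its `sum` method are hypothetical names and are not taken from the example application's sources.

```java
// Hypothetical core class for the application in Fig. 5.2; the name and
// method are illustrative and not taken from the example's sources.
class SumCalculator {
    // Parses both inputs as integers and returns their sum as text,
    // mirroring what the result field would display.
    // Throws NumberFormatException for non-numeric input and
    // ArithmeticException if the sum overflows an int.
    static String sum(String a, String b) {
        int x = Integer.parseInt(a.trim());
        int y = Integer.parseInt(b.trim());
        return Integer.toString(Math.addExact(x, y));
    }
}

class SumCalculatorDemo {
    public static void main(String[] args) {
        // Same scenario as the user interface test, but no platform startup.
        System.out.println(SumCalculator.sum("28", "14")); // prints 42
    }
}
```

With the arithmetic and input parsing covered this way, a user interface test only needs to show that the button is wired to the core and that the result reaches the third text field.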

5.4 Applications and Motivations for Testing

So far, we have explained the motivation for testing by exploring its benefits. For day-to-day work, this presentation might be too far removed from the actual decisions about what to test and when to test. Consequently, this section seeks to complement the previous material by identifying concrete challenges that can be addressed by tests. We hope that the list will serve as an inspiration for you to write more, and more varied, tests, and at the same time to be more conscious of the goals behind the single tests, as such an understanding will help you structure the test suites coherently.

5.4.1 Testing to Fix Bugs

A good point to start writing tests in earnest is when you encounter a really nasty bug, one that you have been tracking down for an hour or so. One of the central and most fundamental strategies in the bug hunt is, of course, to dissect the software and to isolate the faulty behavior. Tests do just that: You have a suspicion of which component is the culprit, so you extract it from its natural surroundings to examine it in detail (Fig. 5.1 on page 246). If you already have a fixture for the component, the work is much simplified. If you don’t, the potential gain in the concrete situation at hand might be a strong incentive to create one.

Write a test to expose the bug, then fix it.

Testing always starts with a failing test, after which one modifies the production code to make the test succeed (see Section 5.1). Afterward, the test can be run frequently, and can be used to make sure the desired behavior stays intact. This procedure matches the case of catching bugs perfectly.
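The procedure can be illustrated with a small, invented example. Suppose a hypothetical `Statistics.average` method originally divided by the array length unconditionally and therefore threw an `ArithmeticException` for empty input. The sketch below shows the check that exposes the bug together with the repaired code; all names and the choice of 0 as the result for empty input are assumptions made for illustration.

```java
// Hypothetical component under suspicion; names are illustrative only.
class Statistics {
    // The assumed buggy version divided by values.length unconditionally
    // and failed with ArithmeticException for an empty array.
    static int average(int[] values) {
        if (values.length == 0) {
            return 0; // the fix: define the average of no values as 0
        }
        long sum = 0;
        for (int v : values) {
            sum += v;
        }
        return (int) (sum / values.length);
    }
}

class AverageRegressionCheck {
    // Step 1: written first, this check fails against the buggy version.
    // Step 2: after the fix it passes and guards against the bug's return.
    static boolean emptyInputYieldsZero() {
        return Statistics.average(new int[0]) == 0;
    }

    public static void main(String[] args) {
        System.out.println(emptyInputYieldsZero());
        System.out.println(Statistics.average(new int[] {1, 2, 3}));
    }
}
```

Keeping the check in the suite after the fix is what turns a one-time debugging aid into a regression test.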